Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-10-22

SagaSu777 2025-10-23

Explore the hottest developer projects on Show HN for 2025-10-22. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN submissions show AI and specialized tooling increasingly shaping each other. Developers are building AI applications, and they are also building tools that make AI development more efficient, reliable, and accessible. Several projects push AI-assisted coding into everyday workflows, from code generation and debugging to formal verification of complex systems such as GPU kernels. Privacy and security in AI applications, especially browser extensions and data processing, form another recurring theme. Productivity is a third driver, visible in the many frameworks and utilities that streamline common tasks from data management to deployment. For aspiring developers and entrepreneurs, this points to an opportunity: intelligent tools that extend human capabilities and address the specific challenges of the AI era. The hacker spirit of applying technology to hard problems is alive and well, whether the goal is making complex systems verifiable, data explorable, or development dramatically faster.

Today's Hottest Product

Name

Cuq – Formal Verification of Rust GPU Kernels

Highlight

This project tackles the challenge of ensuring that GPU kernels written in Rust are correct and reliable. Using formal verification, Cuq lets developers mathematically prove that their code satisfies specified properties, a level of assurance that traditional testing rarely achieves. This makes it easier to build robust, trustworthy high-performance computing applications, and it gives developers a close look at static analysis and correctness proofs for concurrent and parallel systems.

Popular Categories

AI/ML

Developer Tools

Frameworks

Databases

Utilities

Popular Keywords

AI

LLM

Framework

Agent

Rust

GPU

Verification

Streaming

SQL

Database

Developer Tools

Technology Trends

AI-driven Development

Formal Verification

Vector Databases for AI

Edge AI and Offline Processing

Developer Productivity Tools

Privacy-Preserving AI

Streaming Data Processing

Cross-Platform Compatibility

Low-Code/No-Code AI Integration

AI Orchestration and Agent Frameworks

Project Category Distribution

AI/ML Applications (30%)

Developer Tools & Utilities (25%)

Frameworks & Libraries (20%)

Data & Databases (10%)

Productivity & Lifestyle (15%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | CuqVerifier | 78 | 42 |
| 2 | Vexlio Interactive Diagram Weaver | 39 | 2 |
| 3 | SemanticArt Engine | 25 | 6 |
| 4 | Mindful Scripter | 9 | 20 |
| 5 | RuleHunt: Cellular Automata Exploration Engine | 14 | 9 |
| 6 | LocalAction Runner | 22 | 0 |
| 7 | Proton StreamSQL Engine | 10 | 10 |
| 8 | Nityasha AI: Contextual Conversational Assistant | 8 | 8 |
| 9 | StreamJSONParser | 12 | 0 |
| 10 | HackerNews Chronicle | 7 | 3 |

1

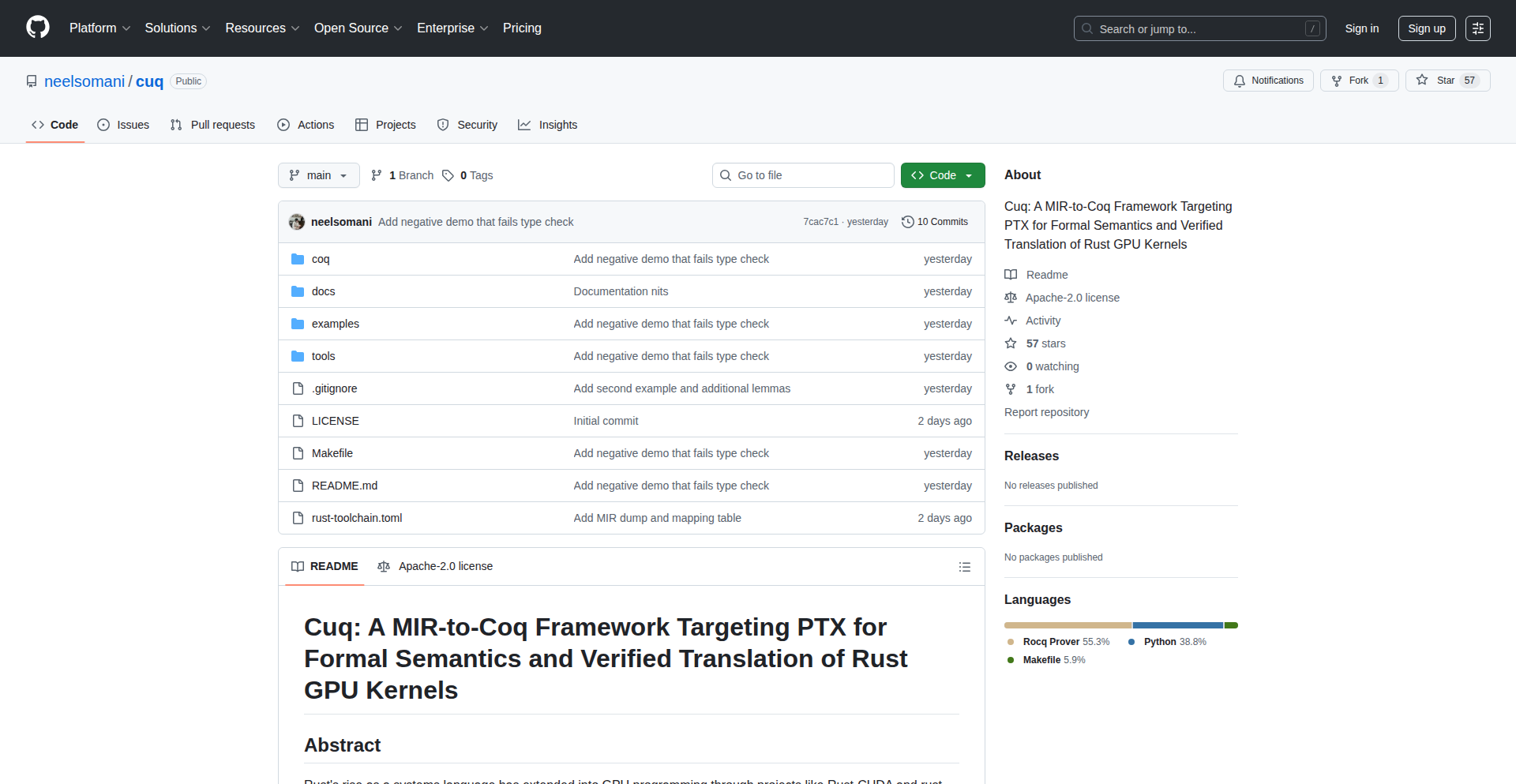

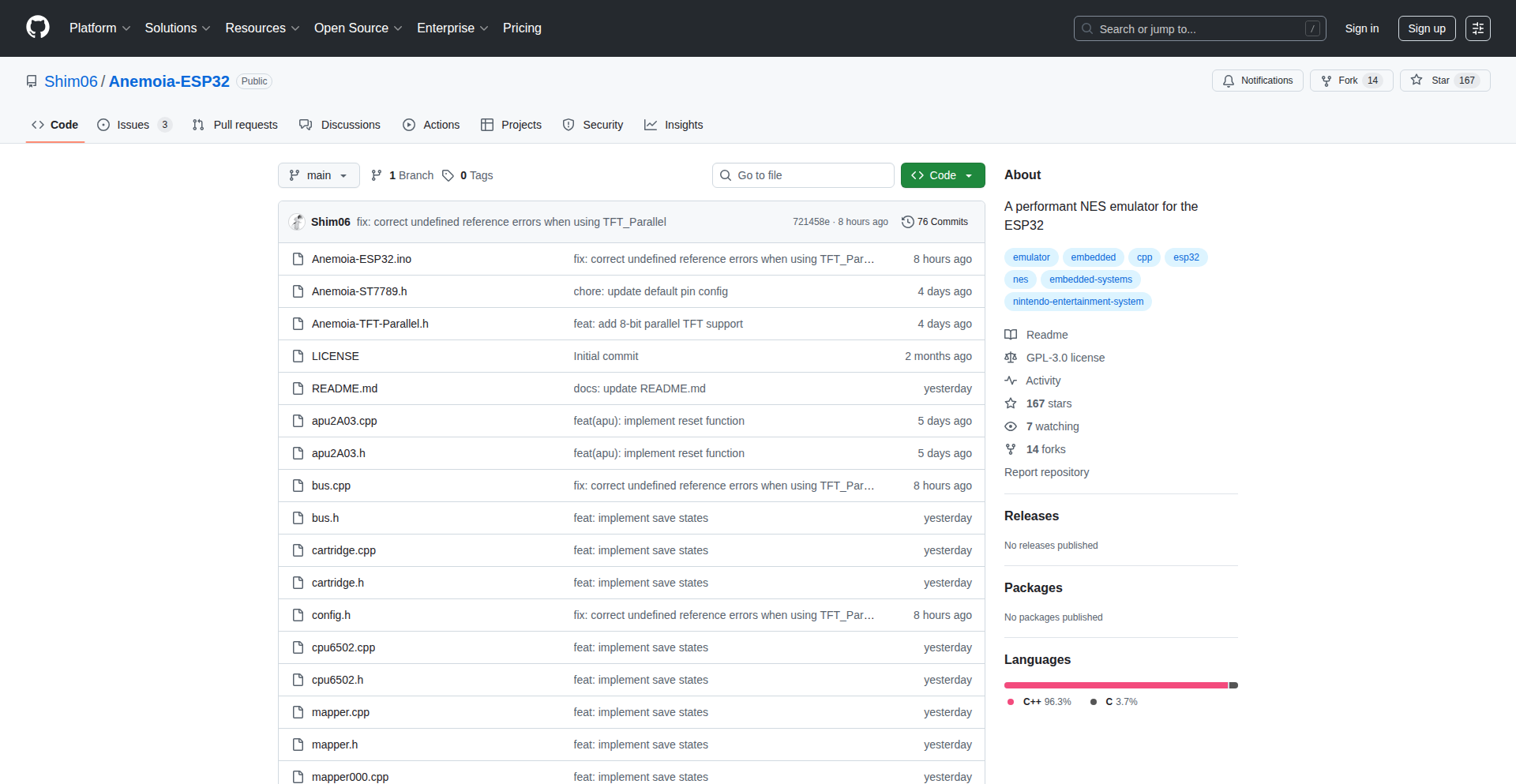

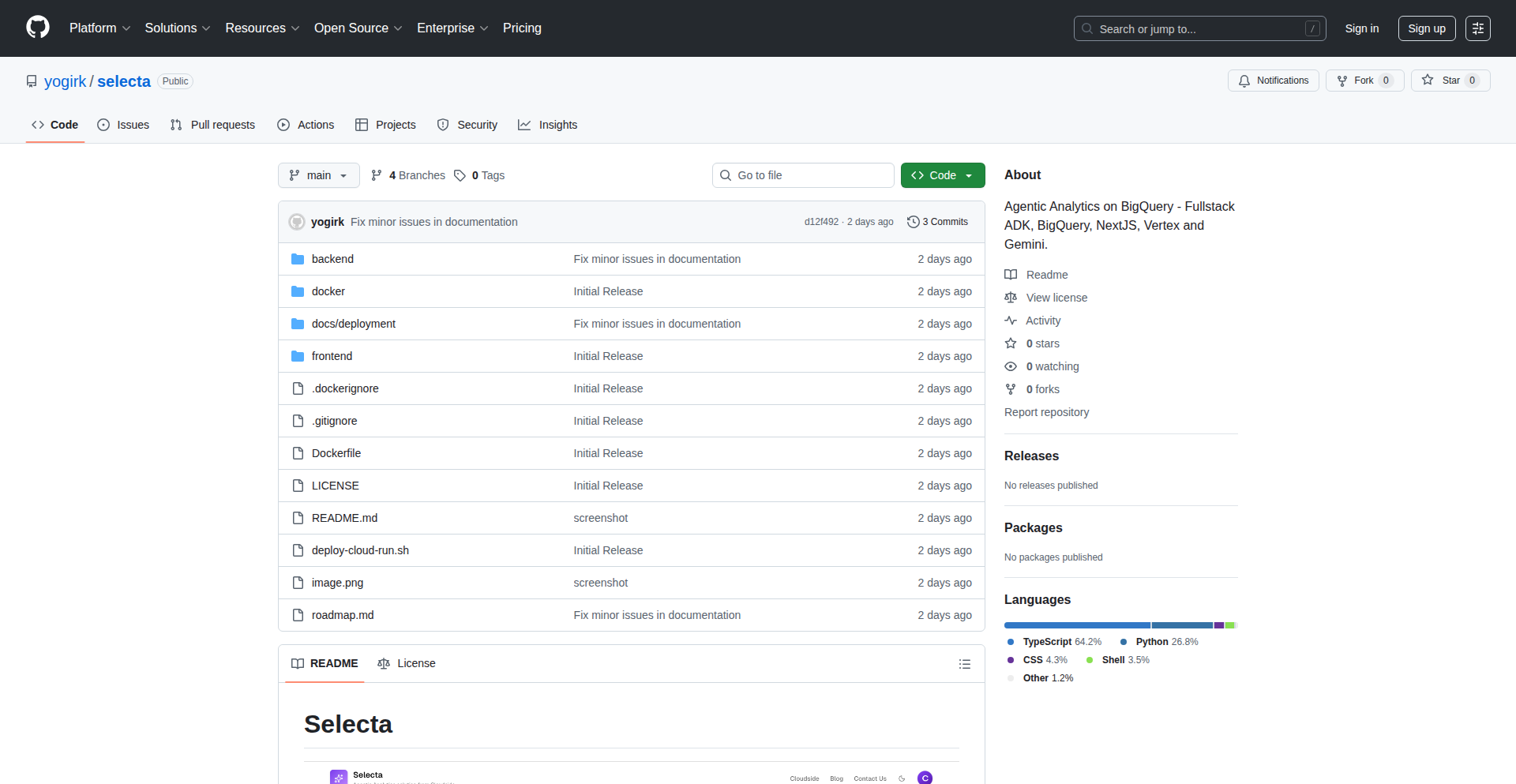

CuqVerifier

Author

nsomani

Description

CuqVerifier is a tool for the formal verification of Rust GPU kernels. It targets a common source of complex bugs: ensuring correctness and safety in parallel computation. Formal methods provide a mathematically rigorous way to prove that GPU code satisfies its specification, reducing the likelihood of low-level memory errors and security vulnerabilities. This is especially valuable in high-performance computing, game development, and scientific simulation, where reliability is paramount.

Popularity

Points 78

Comments 42

What is this product?

CuqVerifier lets developers formally verify the correctness of Rust code written for GPUs. Testing runs the code on sample inputs and can miss edge cases; formal verification uses mathematical logic to prove that the code satisfies stated properties for every input and thread schedule covered by the model. The innovation lies in applying these techniques to GPU kernels, which are notoriously difficult to debug because of their massive parallelism and close interaction with hardware. The result is much higher confidence that your GPU code is free of the classes of bugs the specification rules out. So, what does this mean for you? Fewer crashes, more reliable results, and less time chasing elusive GPU bugs.

How to use it?

Developers can integrate CuqVerifier into their Rust GPU kernel workflow. Typically this means writing kernels in Rust with a GPU-capable toolchain (for example, rustc's `nvptx64-nvidia-cuda` target) and then using CuqVerifier to analyze the code and prove properties about it. The tool would likely take the Rust code as input and check, step by step, whether it satisfies the specified correctness conditions. Such a check can run in a CI/CD pipeline to verify kernels automatically before deployment. So, how does this help you? It catches potential errors in your GPU code before they cause problems in production, saving significant debugging time and effort.

Product Core Function

· Formal proof generation for Rust GPU kernels: This function uses mathematical logic to prove that your GPU code meets its specification, a stronger guarantee than traditional testing. It helps ensure predictable and reliable execution, especially in critical applications.

· Detection of common GPU programming errors: The tool is designed to identify subtle bugs that are hard to find with manual testing, such as data races or out-of-bounds memory accesses. This directly leads to more robust and secure GPU applications.

· Integration with Rust development ecosystem: By working within the Rust environment, CuqVerifier leverages the safety and expressiveness of Rust, making it easier for Rust developers to adopt formal verification for their GPU workloads. This means a smoother adoption path and better utilization of existing Rust skills.
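To make the difference between testing and exhaustive checking concrete, here is a toy Python sketch. It is not Cuq's method, because the source does not describe the tool's internals. It models a one-dimensional kernel as two hypothetical functions (`reads`, `writes`) that map a thread index to the array indices it touches. It then checks every thread for out-of-bounds accesses and every pair of threads for conflicting accesses, assuming no barriers. It covers only the single launch size it is given, whereas a formal verifier aims to prove such properties symbolically for all sizes and interleavings.

```python
from dataclasses import dataclass
from itertools import combinations
from typing import Callable, List, Set

@dataclass
class Kernel:
    """Hypothetical model of a 1-D kernel: the array indices each thread reads and writes."""
    reads: Callable[[int], Set[int]]
    writes: Callable[[int], Set[int]]

def check_kernel(kernel: Kernel, num_threads: int, array_len: int) -> List[str]:
    """Exhaustively check one launch size and return every violation found."""
    problems: List[str] = []
    # Bounds check: every index a thread touches must lie inside the array.
    for t in range(num_threads):
        for idx in sorted(kernel.reads(t) | kernel.writes(t)):
            if not 0 <= idx < array_len:
                problems.append(f"thread {t}: out-of-bounds access at index {idx}")
    # Race check (assuming no barriers): two distinct threads conflict if one
    # writes an index that the other reads or writes.
    for a, b in combinations(range(num_threads), 2):
        wa, wb = kernel.writes(a), kernel.writes(b)
        conflicts = (wa & (wb | kernel.reads(b))) | (wb & kernel.reads(a))
        for idx in sorted(conflicts):
            problems.append(f"threads {a} and {b}: data race on index {idx}")
    return problems

# Each thread reads and writes only its own slot.
safe = Kernel(reads=lambda t: {t}, writes=lambda t: {t})
# Each thread also reads its right neighbor's slot, which that neighbor writes;
# the last thread additionally reads one past the end of the array.
racy = Kernel(reads=lambda t: {t, t + 1}, writes=lambda t: {t})

print(check_kernel(safe, num_threads=4, array_len=4))  # []
for problem in check_kernel(racy, num_threads=4, array_len=4):
    print(problem)
```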

Product Usage Case

· A game developer using CuqVerifier to check that custom rendering kernels, written in Rust for GPU execution, are free of out-of-bounds memory accesses and data races for any scene input. This removes a class of crashes and corrupted frames and contributes to a stable, high-quality visual experience for players.

· A scientific computing researcher verifying a complex physics simulation kernel running on a GPU. CuqVerifier could help prove that the kernel is free of race conditions, so its numerical results do not vary with thread scheduling, leading to more trustworthy research results.

· A developer building a machine learning inference engine on a GPU. CuqVerifier can be used to prove properties of the matrix multiplication or convolution kernels, such as memory safety and freedom from data races, increasing confidence that the model's predictions are consistent across input data and hardware configurations.

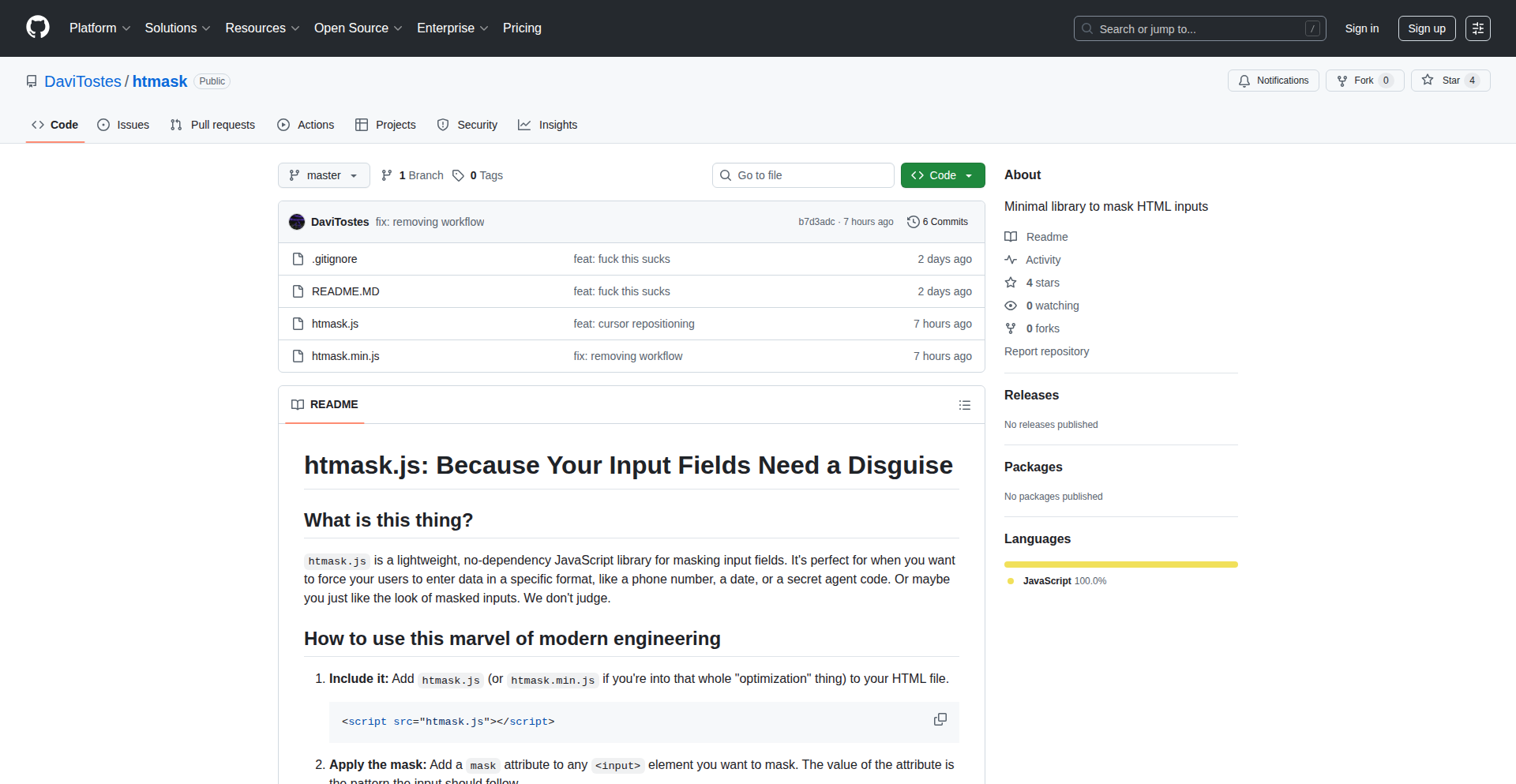

2

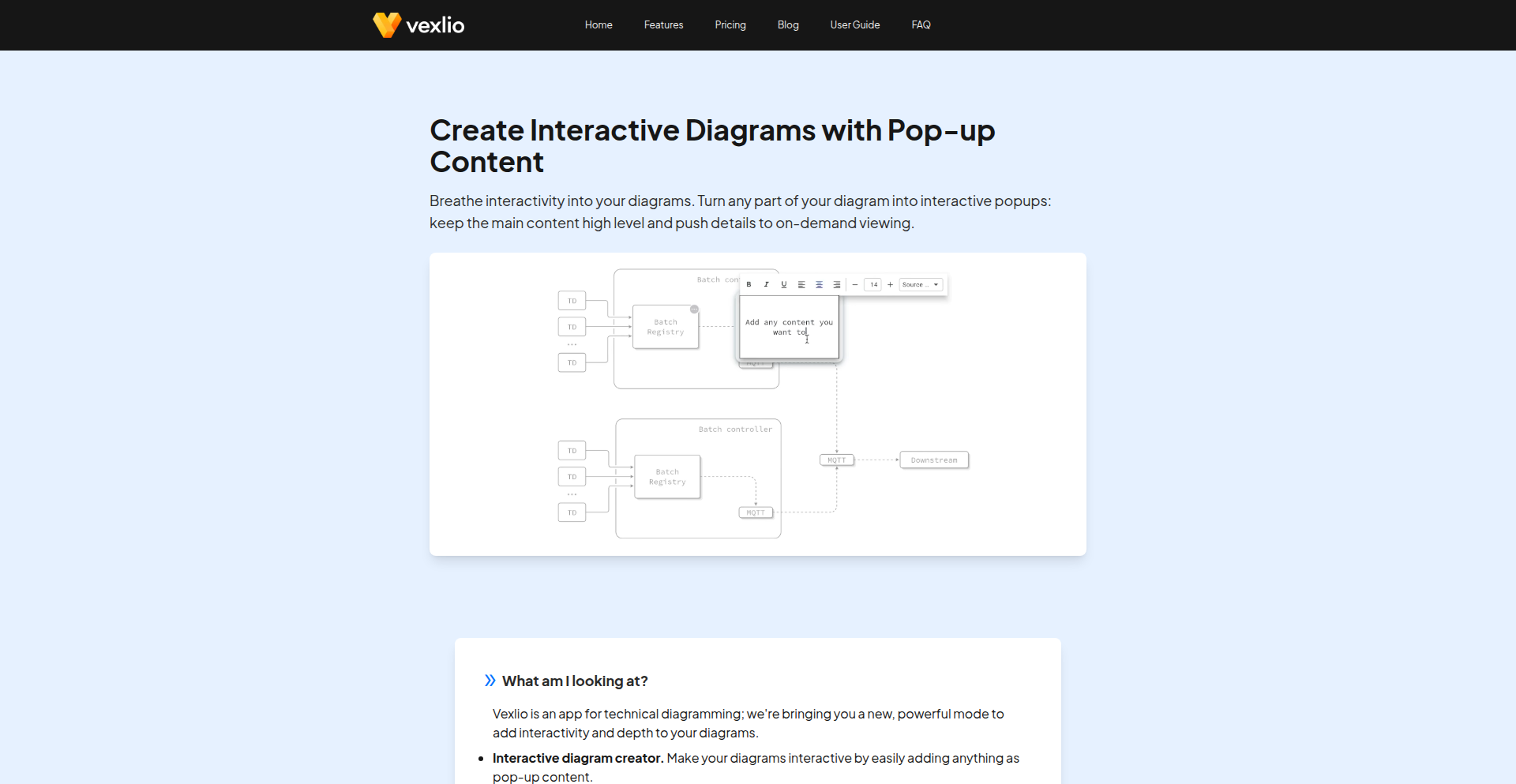

Vexlio Interactive Diagram Weaver

Author

ttd

Description

Vexlio simplifies the creation of interactive diagrams. It allows developers and designers to build diagrams with embedded pop-up content that appears on mouse hover or click. This innovation is particularly useful for documentation, onboarding materials, and presentations, enabling a cleaner high-level view while keeping crucial details accessible. The diagrams can be shared easily via a web link without requiring any sign-in, making it highly accessible.

Popularity

Points 39

Comments 2

What is this product?

Vexlio is a tool for building diagrams that come alive. Instead of static boxes and lines, you can make parts of your diagram clickable or hoverable; when a user interacts with one of these parts, a pop-up window appears with extra information. It runs as a web-based application, likely using JavaScript to handle the user interactions and display the dynamic content. The innovation lies in making complex information digestible by hiding it until needed, offering a layered approach to understanding a diagram. So, you can create diagrams that aren't just pictures but informative experiences that guide users through complex data or processes without overwhelming them.

How to use it?

Developers can use Vexlio by visiting the web application (app.vexlio.com). You can start by drawing basic shapes, then selecting a shape and using the provided tools to 'Add popup'. Within this popup, you can add text, images, or even links. The resulting interactive diagram can then be shared via a simple web URL. This is useful for embedding in documentation websites, wikis, or even within web applications themselves as a visual aid for complex features. So, this means you can quickly add interactive elements to your technical documentation or user guides without writing complex frontend code yourself.

Product Core Function

· Interactive Element Creation: Allows users to designate specific diagram elements (shapes, text) to trigger an interaction. This is valuable for highlighting key components in a system architecture or process flow, enabling users to focus on one part at a time. So, this means you can guide your audience's attention to critical areas of your diagrams.

· Pop-up Content Embedding: Enables the addition of rich content (text, images, links) within pop-up windows associated with interactive elements. This is crucial for providing detailed explanations, definitions, or related resources without cluttering the main diagram. So, this means you can deliver comprehensive information in an organized and non-intrusive way.

· Web-based Sharing: Generates shareable web links for the interactive diagrams, accessible without user sign-in. This greatly simplifies collaboration and distribution of information. So, this means anyone can view your interactive diagrams easily, without needing special software or accounts.

· No-code/Low-code Diagramming: Provides an intuitive interface for creating diagrams, reducing the need for complex coding for basic to intermediate interactive visualizations. This democratizes the creation of engaging technical visuals. So, this means you can create sophisticated interactive diagrams even if you're not a frontend development expert.
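The hover and click behavior described above maps onto a small data model. The sketch below is hypothetical and is not Vexlio's implementation, since the source only says the app is web-based. In this model, each shape has a bounding box and an optional popup bound to a trigger (the names `"hover"` and `"click"` are assumptions). An event at a point resolves to the popup of the topmost shape under the pointer.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Popup:
    trigger: str   # assumed trigger names: "hover" or "click"
    content: str   # plain text here; a real editor might also store images or links

@dataclass
class Shape:
    name: str
    x: float
    y: float
    width: float
    height: float
    popup: Optional[Popup] = None

    def contains(self, px: float, py: float) -> bool:
        """Axis-aligned bounding-box hit test."""
        return self.x <= px <= self.x + self.width and self.y <= py <= self.y + self.height

def popup_for_event(shapes: List[Shape], px: float, py: float, event: str) -> Optional[str]:
    """Return the popup text to show for an event at (px, py), or None."""
    # Later shapes are drawn on top, so test them first.
    for shape in reversed(shapes):
        if shape.contains(px, py):
            if shape.popup is not None and shape.popup.trigger == event:
                return shape.popup.content
            return None  # the topmost shape under the pointer handles the event
    return None

diagram = [
    Shape("gateway", 0, 0, 100, 50, Popup("hover", "Routes /api/* to backend services")),
    Shape("auth", 150, 0, 100, 50, Popup("click", "Endpoints: /login, /logout")),
]
print(popup_for_event(diagram, 20, 20, "hover"))   # Routes /api/* to backend services
print(popup_for_event(diagram, 200, 25, "hover"))  # None (this popup opens on click)
print(popup_for_event(diagram, 200, 25, "click"))  # Endpoints: /login, /logout
```

Letting the topmost shape absorb the event, even when it has no matching popup, keeps overlapping shapes from unexpectedly showing a popup that belongs to a shape underneath.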

Product Usage Case

· System Architecture Documentation: A developer can create a diagram of a complex microservices architecture. Each service node can be made interactive, revealing details like its API endpoints, dependencies, or recent deployment status on hover. This solves the problem of a sprawling architecture diagram by providing on-demand detail. So, this means your team can quickly understand the relationships and details of your system without getting lost in information.

· Onboarding Guides: For new employees or users, an interactive diagram of a software interface can show tooltips or explanations when hovering over different buttons or sections. This provides a guided, step-by-step understanding of the software. So, this means new users can learn your product more effectively and independently.

· Presentation Enhancements: During a technical presentation, an interactive diagram can be used to reveal supporting data, code snippets, or definitions only when the presenter clicks on a specific point of interest. This keeps the presentation focused and engaging. So, this means you can deliver more dynamic and informative presentations that keep your audience engaged.

· Troubleshooting Guides: A troubleshooting flowchart can be made interactive, where clicking on a problem step reveals potential solutions or diagnostic steps. This provides a more dynamic and responsive troubleshooting experience. So, this means users can resolve issues faster by accessing relevant information directly from the problem they are facing.

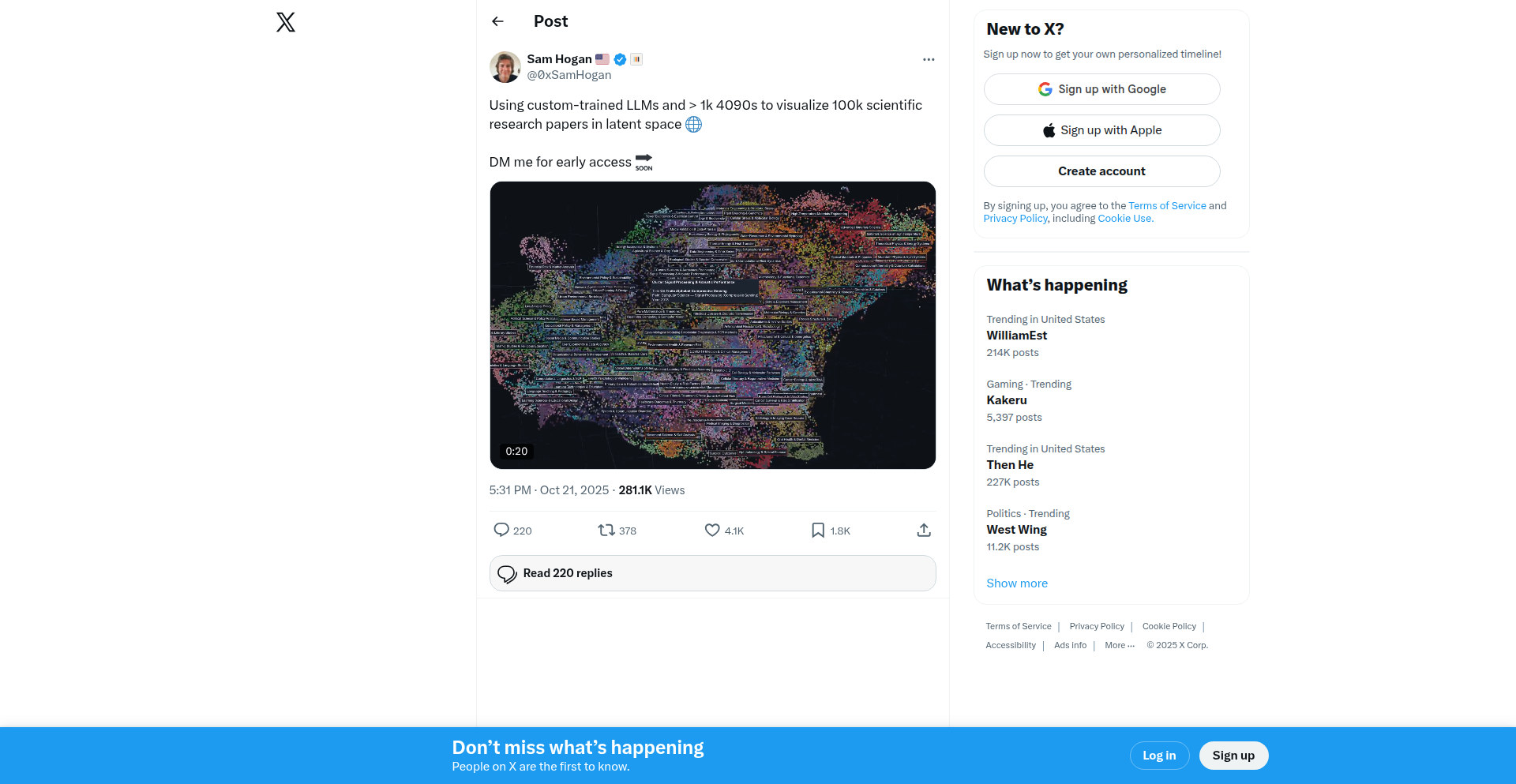

3

SemanticArt Engine

Author

bbischof

Description

This project is a search engine for art that leverages natural language prompts to discover existing artworks. Unlike AI art generators, it focuses on finding real, tangible pieces of art that match user descriptions, powered by a sophisticated semantic search approach.

Popularity

Points 25

Comments 6

What is this product?

SemanticArt Engine is a novel search engine designed to bridge the gap between human language and the vast world of visual art. It utilizes natural language processing (NLP) to understand user queries expressed in everyday language, such as 'a serene landscape painting with a hint of melancholy' or 'a vibrant abstract sculpture depicting movement.' The core innovation lies in its semantic understanding, meaning it grasps the meaning and context of your words, not just keywords. This allows it to search through a database of real artworks, understanding their stylistic elements, emotional tones, subject matter, and even artistic movements, to return relevant results. So, this is useful for you because it allows you to find art that truly resonates with your vision, even if you don't know the specific artists or titles, making art discovery more intuitive and personal.

How to use it?

Developers can integrate SemanticArt Engine into their applications or platforms to enhance art discovery features. This could involve building custom art recommendation systems, creating interactive art browsing experiences for galleries or online marketplaces, or powering creative tools that require visual inspiration. The integration typically involves sending natural language queries to the engine's API and receiving a ranked list of matching artworks, complete with metadata like title, artist, year, and a link to the artwork. This gives you the power to embed a highly intelligent and user-friendly art search capability directly into your own products. You can think of it as adding a 'smart art curator' to your software, which helps users find exactly what they're looking for without needing specialized art knowledge.

Product Core Function

· Natural Language Query Processing: Understands complex, descriptive language to interpret user intent regarding art, providing a more intuitive search experience than keyword-based systems. This means you can ask for what you want in your own words and get surprisingly relevant results.

· Semantic Art Matching: Goes beyond simple keyword matching to analyze the underlying meaning and concepts within artworks, connecting them to the semantic essence of your search query. This helps you find art that truly captures the mood, style, or subject you're seeking, even if the exact words aren't present in the artwork's description.

· Real Artwork Discovery: Focuses on indexing and retrieving actual, existing artworks rather than generating new ones, ensuring the results are authentic and verifiable. This is crucial for anyone looking to purchase, curate, or learn about real art pieces.

· Artistic Metadata Analysis: Interprets and utilizes a rich set of artistic attributes (style, medium, era, emotion, subject) to refine search results, leading to more precise and contextually relevant recommendations. This allows for highly targeted searches, like finding 'impressionist paintings of Parisian streets in the spring.'

· API Access for Integration: Provides a programmatic interface for developers to easily integrate its art search capabilities into their own applications and workflows. This means you can easily add powerful art discovery to your own websites, apps, or tools, making them more engaging and useful.
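The core ranking step of a semantic search engine can be sketched as follows: embed the query and each artwork description as vectors, then sort by cosine similarity. The source does not say which model or index the engine uses. The `embed` function below is a deliberately crude bag-of-words stand-in for a learned text or image embedding, so it matches shared words rather than true meaning. The catalogue entries are made up for illustration.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple

def embed(text: str) -> Counter:
    """Toy stand-in for a semantic embedding: lower-cased word counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def search(query: str, catalogue: Dict[str, str], top_k: int = 3) -> List[Tuple[str, float]]:
    """Rank artworks by similarity between the query and their descriptions."""
    q = embed(query)
    scored = [(title, cosine(q, embed(desc))) for title, desc in catalogue.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]

# Hypothetical catalogue entries, for illustration only.
catalogue = {
    "Lake at Dusk": "calm peaceful landscape painting with a lake and soft light",
    "City Rush": "vibrant abstract painting of crowded streets and movement",
    "Blue Ascent": "geometric sculpture in cool blue tones rising upward",
}
print(search("a calm landscape with water, a lake perhaps", catalogue, top_k=1))
```

Swapping `embed` for a real embedding model (and the linear scan for a vector index) is what would let a query like "calm" also match a description that only says "serene".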

Product Usage Case

· An interior designer searching for a specific type of abstract sculpture to complement a client's modern living room, using a prompt like 'a geometric sculpture in cool blue tones with a sense of upward movement.' This saves them significant time browsing generic catalogs and helps them find a piece that perfectly matches the aesthetic requirements.

· A content creator looking for inspiration for a blog post on 'the feeling of nostalgia in art,' providing a query such as 'paintings that evoke a sense of childhood memories and simpler times.' The engine can then surface relevant artworks that visually represent this abstract concept, fueling their creative process.

· An online art gallery wanting to improve its search functionality by allowing users to describe their preferences in natural language, for instance, 'a landscape painting that feels calming and peaceful, perhaps with a body of water.' This enhances user experience and drives more targeted engagement with the gallery's collection.

· A museum curator developing a new exhibition who wants to quickly find artworks from a specific period that share a particular thematic element, such as 'Renaissance portraits with a strong sense of individual personality.' This speeds up research and helps in identifying potential pieces for the exhibition.

4

Mindful Scripter

Author

rrranch

Description

A structured journaling application designed to replace mindless social media scrolling with focused self-improvement. It offers guided prompts, goal tracking, and task organization, enriched with optional philosophical and psychological insights to trigger deeper reflection, all within a private, ad-free environment. So, this is for you if you want to reclaim your focus and build better habits instead of getting lost on your phone.

Popularity

Points 9

Comments 20

What is this product?

Mindful Scripter is a journaling application that brings structure to self-reflection, moving beyond the blank page. Curated daily prompts and community-sourced questions guide your writing, while integrated goal tracking and task management connect your thoughts with actionable steps. What sets it apart is its deliberate exclusion of social features, followers, and performance metrics. Instead, it offers optional 'reflection triggers' drawn from philosophy, astrology, and psychology. For developers, it demonstrates a privacy-first, distraction-free approach to personal development tools, a valuable pattern for building focused user experiences. So, this is for you because it offers a clear path to meaningful self-engagement without the noise of traditional social platforms.

How to use it?

Developers can use Mindful Scripter as a model for building private, focused applications. The core principle is a well-defined user flow for self-improvement that emphasizes content curation (prompts, insights) and utility features (goal and task tracking). If the app exposes an API, integration could involve using it for external goal management, or reusing its journaling structure as a backend for other wellness-focused apps. For end users, it's a simple daily habit: open the app, respond to the prompt, organize your tasks, and optionally explore a curated insight. So, you can either bring its structured journaling logic into your own projects or simply start a structured self-improvement routine today.

Product Core Function

· Daily guided journaling prompts: Provides users with specific questions or topics to write about, fostering consistent reflection and reducing decision fatigue. Its value lies in making journaling accessible and less intimidating for beginners, guiding them towards deeper self-awareness. This is applicable in personal development apps and mental wellness platforms.

· Goal tracking and task organization: Allows users to set personal goals and break them down into manageable tasks, directly linking introspection with action. This feature's value is in translating insights into concrete progress, crucial for habit formation and achievement. It's useful for productivity apps and personal coaching tools.

· Optional reflection triggers (philosophy, astrology, psychology): Offers curated insights from various disciplines to spark new perspectives and deeper contemplation. The value here is in enriching the journaling experience with diverse intellectual frameworks, enhancing personal growth. This is a powerful element for educational apps and life coaching services.

· Distraction-free environment: The absence of social features, followers, and performance metrics ensures a private and focused user experience. This technical choice prioritizes user well-being and deep work, providing significant value by creating a safe space for introspection. This principle is highly relevant for any app aiming to reduce digital distractions and promote mindfulness.
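Two of the features above, a daily prompt and goals broken into tasks, can be expressed as a small data model. This is a hypothetical sketch, not the app's code, and the prompt list is invented. The prompt is chosen deterministically from the calendar date, so it stays stable for a given day. Goal progress is the fraction of completed tasks.

```python
import datetime as dt
from dataclasses import dataclass, field
from typing import List

PROMPTS = [  # hypothetical prompt list
    "What drained your energy today, and what restored it?",
    "Which small task did you avoid, and why?",
    "What would make tomorrow 1% better?",
]

def prompt_for(day: dt.date, prompts: List[str] = PROMPTS) -> str:
    """Rotate through prompts by calendar day, so the choice is stable for a given date."""
    return prompts[day.toordinal() % len(prompts)]

@dataclass
class Task:
    title: str
    done: bool = False

@dataclass
class Goal:
    title: str
    tasks: List[Task] = field(default_factory=list)

    def progress(self) -> float:
        """Fraction of tasks completed (0.0 when the goal has no tasks yet)."""
        if not self.tasks:
            return 0.0
        return sum(t.done for t in self.tasks) / len(self.tasks)

goal = Goal("Read more", [Task("Pick a book", done=True), Task("Read 20 pages"), Task("Write a summary")])
print(prompt_for(dt.date(2025, 10, 22)))
print(f"{goal.progress():.0%}")  # 33%
```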

Product Usage Case

· A developer building a personal habit tracker could integrate the goal tracking and task organization features from Mindful Scripter to ensure users are not just tracking habits but also reflecting on their progress and setting intentions. This solves the problem of passive tracking by adding a reflective layer.

· A mental wellness platform developer might use the structured prompting system and reflection triggers to guide users through therapeutic exercises or mindfulness practices, offering a more guided and less intimidating approach than a blank page. This addresses the challenge of user engagement in sensitive areas.

· A solo developer aiming to create a productivity tool could draw inspiration from Mindful Scripter's design philosophy of minimizing distractions and focusing on core utility, applying it to a task management application. This showcases how to build focused, high-value tools by limiting scope.

· An educator developing a curriculum for self-discovery might leverage the curated prompts and insights to create structured learning modules, making complex philosophical or psychological concepts more digestible through journaling. This solves the problem of abstract learning by making it experiential.

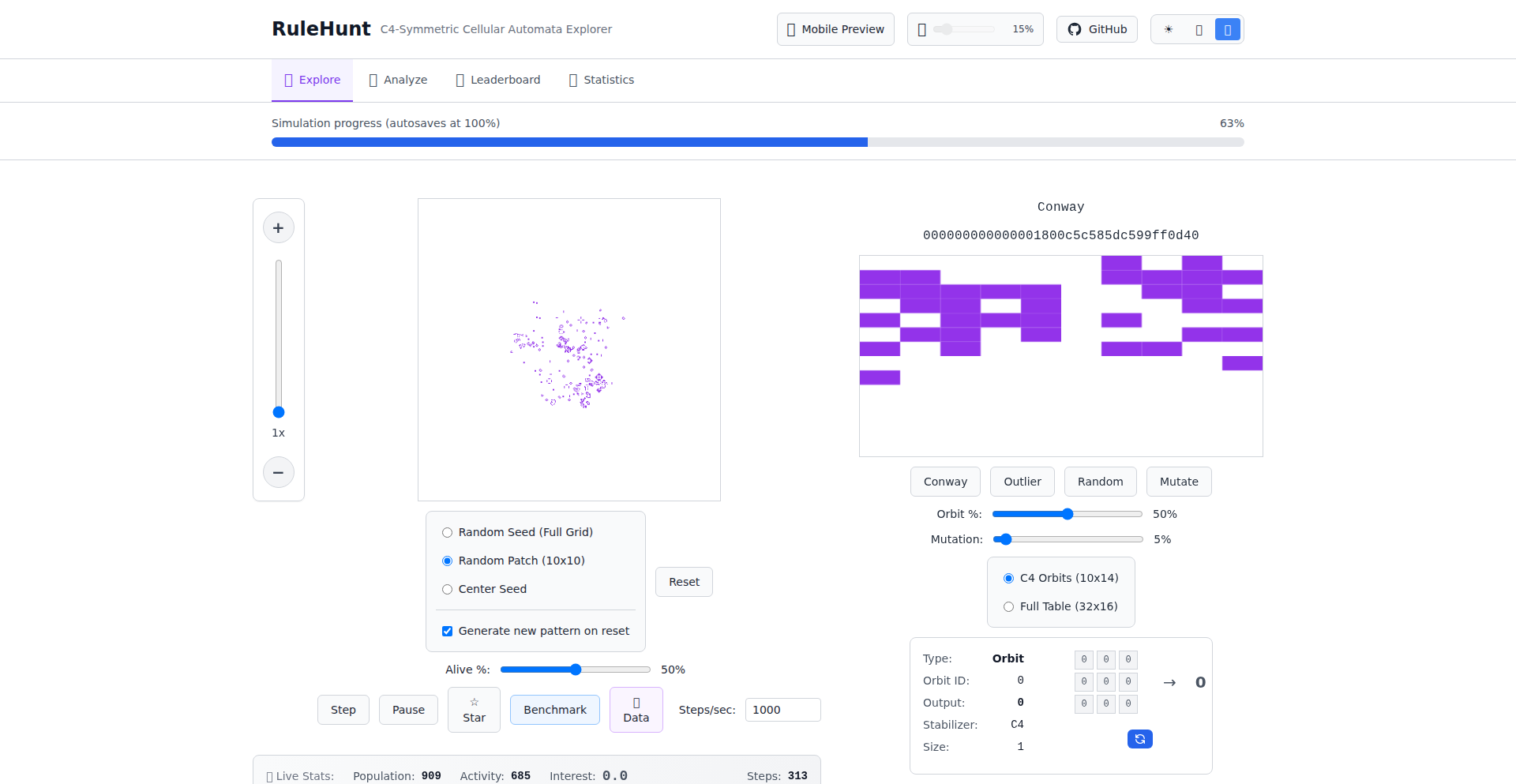

5

RuleHunt: Cellular Automata Exploration Engine

Author

irgolic

Description

RuleHunt is a novel platform that uses a TikTok-style interface to explore the vast and complex universe of cellular automata rules. By gamifying discovery and tracking engagement (users star interesting rules), it aims to crowdsource the identification of interesting and potentially useful automata patterns. This tackles an exponentially large search space (2^512 possible rules for a two-state automaton whose cells update from their 3×3 neighborhood) by harnessing collective human intuition and preference.

Popularity

Points 14

Comments 9

What is this product?

RuleHunt is a web application designed to make exploring cellular automata (CA) rules more engaging and efficient. A cellular automaton is a grid of cells, each in some state, that updates over time by applying a simple rule to each cell's neighborhood; think of digital pixels evolving based on their neighbors. The rule itself is a lookup table that maps every possible neighborhood configuration to the cell's next state. RuleHunt's innovation is presenting these rules in a visually intuitive, TikTok-style scrolling feed, where users can quickly star rules they find interesting or dismiss them. This feedback then guides the search for good heuristics: simplified rules or patterns that lead to complex, desirable behavior. That matters because the number of possible rules is astronomically large, which makes brute-force exploration infeasible. Instead of writing code to define and test each rule, you use collective human interest to find the most promising ones. What's in it for you? You get to discover fascinating emergent behavior from simple starting points, and if you're a developer or researcher, you might find novel patterns for simulations, generative art, or even theoretical computer science.
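The size of that search space follows from how such a rule is encoded. Consider a two-state automaton where each cell's next state depends on its 3×3 neighborhood (the cell plus its eight neighbors). That neighborhood has 2^9 = 512 possible configurations, and a rule assigns 0 or 1 to each one, giving 2^512 rules. The Python sketch below illustrates the encoding and is not RuleHunt's own code. It stores a rule as a 512-entry lookup table, encodes Conway's Game of Life as one such table, and steps a small grid that wraps at the edges.

```python
from typing import List

Grid = List[List[int]]

def neighborhood_index(grid: Grid, r: int, c: int) -> int:
    """Pack the 3x3 neighborhood around (r, c) into a 9-bit integer (0..511), wrapping at the edges."""
    rows, cols = len(grid), len(grid[0])
    index = 0
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            index = (index << 1) | grid[(r + dr) % rows][(c + dc) % cols]
    return index

def step(grid: Grid, rule: List[int]) -> Grid:
    """Apply a lookup-table rule (512 entries of 0/1) to every cell simultaneously."""
    return [[rule[neighborhood_index(grid, r, c)] for c in range(len(grid[0]))]
            for r in range(len(grid))]

def life_rule() -> List[int]:
    """Conway's Game of Life (B3/S23) expressed as one of the 2**512 possible tables."""
    table = []
    for index in range(512):
        center = (index >> 4) & 1  # bit 4 holds the middle cell of the 3x3 block
        neighbors = bin(index).count("1") - center
        table.append(1 if neighbors == 3 or (center and neighbors == 2) else 0)
    return table

# A horizontal "blinker" on a 5x5 grid flips to vertical and back every step.
blinker = [[0] * 5 for _ in range(5)]
for c in (1, 2, 3):
    blinker[2][c] = 1
rule = life_rule()
once = step(blinker, rule)
print(sorted((r, c) for r in range(5) for c in range(5) if once[r][c]))  # [(1, 2), (2, 2), (3, 2)]
```

Exploring other rules is just a matter of changing the 512 table entries, which is exactly why the space is too large to enumerate and why human curation helps.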

How to use it?

Developers can use RuleHunt in two main ways. First, as a discovery tool: by scrolling through the interface and starring rules that exhibit interesting patterns (e.g., self-replication, complex structures, dynamic behavior), you contribute to a global leaderboard of compelling CA rules, which can spark ideas for your own projects. Second, the underlying GitHub repository (linked in the project description) lets you dive deeper. You can clone the code, study how rules are generated and rendered, and integrate parts of the system into your own applications for custom CA simulations or visualizations. For example, you could generate procedural textures for games, create unique animated art pieces, or explore complex system dynamics for scientific modeling. It's about using a fun, interactive approach to find building blocks for your technical creations.

Product Core Function

· Interactive rule discovery interface: Presenting complex cellular automata rules in a user-friendly, scrollable format to facilitate rapid exploration and identification of interesting patterns. This makes the vast search space of CA rules accessible to a wider audience and accelerates the process of finding useful rules, so you don't have to sift through endless mathematical formulations.

· TikTok-style engagement monitoring: Using users' 'starring' actions as a proxy for how interesting a rule is. This crowdsourced feedback mechanism filters and ranks CA rules by collective human preference, highlighting promising directions for further investigation. In effect, the community surfaces what's cool and useful, saving you time in finding those gems.

· Global leaderboard for rule ranking: Aggregating starred rules into a public leaderboard to showcase the most popular and intriguing cellular automata discovered. This provides a benchmark for rule complexity and emergent behavior, offering inspiration and a starting point for developers looking for novel patterns. You can see what others have found to be the most exciting, giving you a curated list of potential ideas.

· Dual interface for mobile and desktop: Offering a touch-friendly, scrolling experience optimized for mobile devices and a more targeted search interface for desktop users. This ensures accessibility and usability across different platforms, making the exploration of CA rules convenient for everyone. No matter how you access it, you get a tailored experience to find what you need.

· Open-source GitHub repository: Providing access to the project's source code for transparency, learning, and potential modification. This allows developers to inspect the technical implementation, contribute to the project, or adapt the technology for their own specific use cases. You can see exactly how it works and even build upon it.

Product Usage Case

· A game developer looking for procedural generation algorithms could use RuleHunt to discover complex and emergent patterns that can be used to generate unique in-game worlds, textures, or enemy behaviors. By starring visually interesting automata, they are essentially finding a library of pre-tested generative seeds.

· A digital artist seeking inspiration for generative art projects could browse RuleHunt for visually appealing and dynamic cellular automata rules. Starring rules that produce fascinating evolving patterns allows them to quickly identify sources for their next animated artwork, saving hours of experimentation with different algorithms.

· A researcher in computational complexity or theoretical computer science could utilize RuleHunt to identify simple rule sets that exhibit complex behavior, potentially leading to new insights into computation and emergent systems. The curated list of 'starred' rules acts as a valuable dataset for further theoretical analysis.

· A hobbyist programmer interested in understanding complex systems can use RuleHunt as an accessible entry point into cellular automata. By interacting with the platform and seeing the results of starring rules, they can develop an intuitive understanding of how simple rules can lead to complex outcomes without needing to write extensive code from scratch.

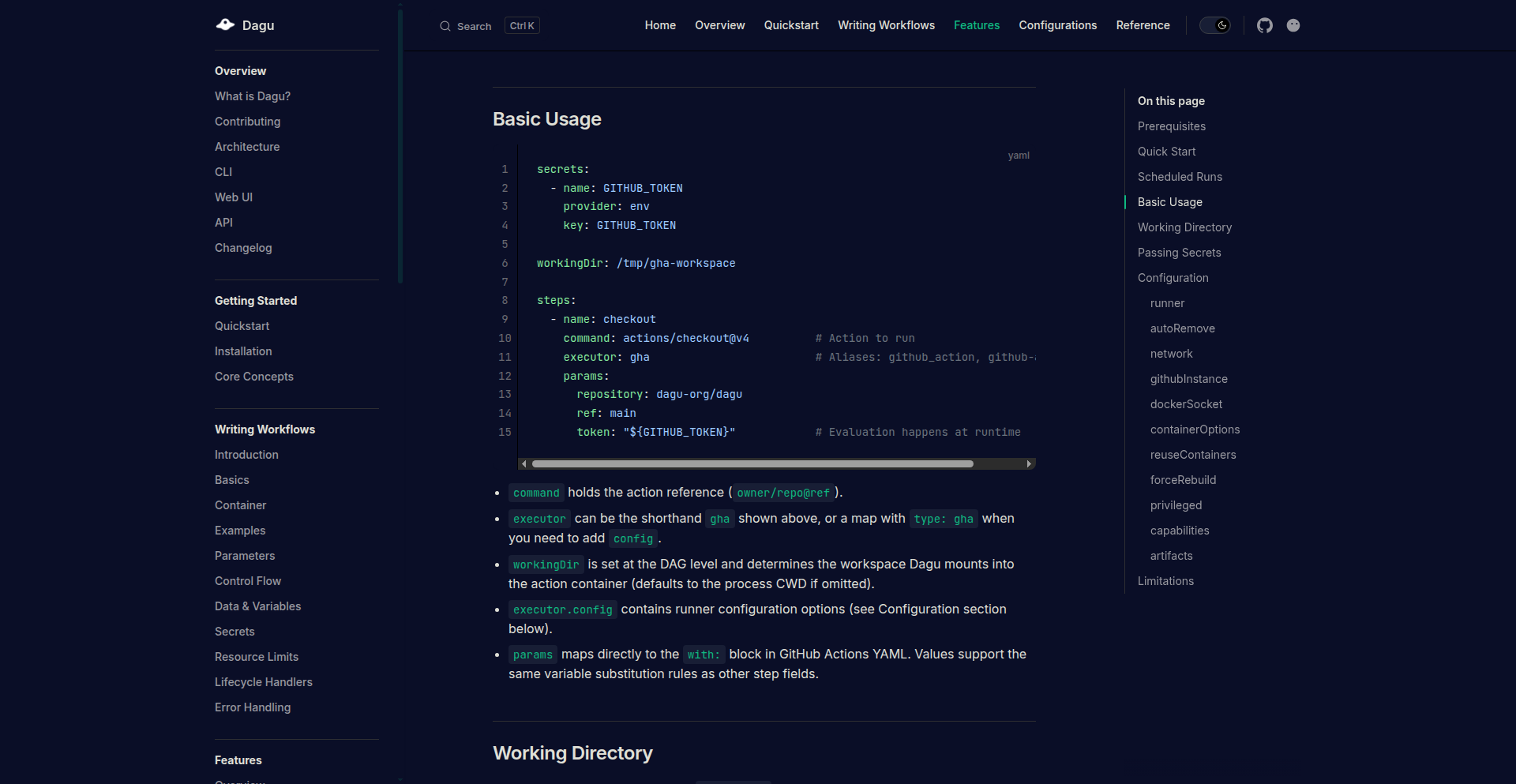

6

LocalAction Runner

Author

yohamta

Description

A tool to execute any GitHub Action locally, directly from your cron jobs. It solves the problem of needing to test or run GitHub Actions without pushing code or triggering remote pipelines, offering a seamless local development and automation experience.

Popularity

Points 22

Comments 0

What is this product?

This project, LocalAction Runner, is a clever utility that allows developers to run GitHub Actions on their own machines, just as they would on GitHub's servers. The core technical innovation lies in its ability to parse and interpret the `workflow.yml` files that define GitHub Actions. It essentially emulates the GitHub Actions environment locally. By understanding the steps, `uses` commands (which point to other actions or Docker images), and input/output parameters, it can execute the same logic as a remote CI/CD pipeline. This is valuable because it brings the power and automation of GitHub Actions to your local development loop, allowing for faster iteration and debugging of your automation scripts before they are committed.

How to use it?

Developers can integrate LocalAction Runner into their local workflow by installing it and then configuring their existing cron jobs to point to this tool. Instead of a cron job running a script that pushes to GitHub or triggers a remote build, it can now execute a specific GitHub Action workflow file (`.github/workflows/your-workflow.yml`) directly on the developer's machine. This is achieved by passing the path to the workflow file and any necessary context (like environment variables or input parameters) to the LocalAction Runner. This allows for pre-commit testing of complex CI/CD logic, running scheduled tasks that depend on GitHub Action capabilities, or even creating self-contained local development environments that mimic your production CI setup.

Product Core Function

· Local GitHub Actions Workflow Execution: Enables running any GitHub Actions workflow file (e.g., `.yml` files) on a local machine, replicating the remote execution environment. This is valuable for developers as it allows for quick testing and debugging of automation logic without pushing code, saving time and preventing unnecessary CI runs.

· Cron Job Integration: Seamlessly integrates with existing cron job schedulers. Developers can replace remote triggers with local executions, meaning their scheduled tasks can now leverage the full power of GitHub Actions without relying on external services, providing more control and predictability.

· Action Reusability and Emulation: Parses and executes steps defined in GitHub Actions, including `uses` commands that reference other actions or Docker images. This is crucial for developers as it ensures consistency between local testing and actual CI/CD execution, reducing the 'it worked on my machine' problem.

· Input/Output Parameter Handling: Supports passing inputs and managing outputs for actions, mirroring the behavior of the official GitHub Actions runner. This is beneficial for developers as it allows for complex workflows with dependencies and data transfer between steps, all manageable locally.
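To show what parsing and interpreting a workflow involves, here is a hypothetical Python sketch; it is not LocalAction Runner's code or CLI. It assumes the workflow YAML has already been loaded into a dict, for example with a YAML library. It substitutes simple `${{ inputs.NAME }}` expressions in `run` steps. It also classifies each `uses` reference into one of the forms GitHub Actions accepts: a repository action (`owner/repo@ref`), a local path (`./...`), or a Docker image (`docker://...`). A real runner would then fetch and execute each step; this sketch only produces the plan.

```python
import re
from typing import Dict, List

EXPR = re.compile(r"\$\{\{\s*inputs\.([A-Za-z_][A-Za-z0-9_-]*)\s*\}\}")

def substitute(value: str, inputs: Dict[str, str]) -> str:
    """Replace ${{ inputs.NAME }} with the supplied input (missing inputs become an empty string)."""
    return EXPR.sub(lambda m: inputs.get(m.group(1), ""), value)

def classify_uses(ref: str) -> str:
    """Classify a step's `uses` value by its syntax."""
    if ref.startswith("docker://"):
        return "docker"
    if ref.startswith("./"):
        return "local"
    if "@" in ref:
        return "repository"
    raise ValueError(f"unrecognised uses reference: {ref!r}")

def plan(workflow: dict, inputs: Dict[str, str]) -> List[str]:
    """Flatten jobs and steps into a human-readable execution plan."""
    lines = []
    for job_name, job in workflow.get("jobs", {}).items():
        for i, step in enumerate(job.get("steps", []), start=1):
            if "uses" in step:
                ref = step["uses"]
                lines.append(f"{job_name}#{i}: {classify_uses(ref)} action {ref}")
            elif "run" in step:
                lines.append(f"{job_name}#{i}: shell `{substitute(step['run'], inputs)}`")
    return lines

# A parsed workflow as a YAML loader might return it (illustrative content).
workflow = {
    "on": {"workflow_dispatch": {"inputs": {"target": {"default": "docs"}}}},
    "jobs": {
        "build": {
            "runs-on": "ubuntu-latest",
            "steps": [
                {"uses": "actions/checkout@v4"},
                {"run": "make ${{ inputs.target }}"},
                {"uses": "docker://alpine:3.20"},
            ],
        }
    },
}
for line in plan(workflow, {"target": "docs"}):
    print(line)
```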

Product Usage Case

· Local CI/CD Workflow Testing: A developer wants to test a new GitHub Actions workflow that deploys their application. Instead of pushing to a feature branch and waiting for CI to run, they use LocalAction Runner to execute the workflow locally, receiving immediate feedback on any syntax errors or logic flaws. This drastically speeds up the development cycle.

· Scheduled Local Task Automation: A developer has a cron job scheduled to run a series of tasks every night. They want to incorporate a step that generates documentation using a GitHub Action. By using LocalAction Runner, this cron job can now trigger the documentation generation action directly on their local machine, ensuring the documentation is up-to-date without needing a separate CI server, thus simplifying their local automation.

· Offline Development Environment: A developer is working on a project that has a complex CI pipeline defined in GitHub Actions. They can use LocalAction Runner to spin up a local replica of this pipeline, allowing them to test integrations and dependencies as if they were on a live CI environment, even when offline or with limited network access. This is invaluable for understanding and verifying complex build and test processes.

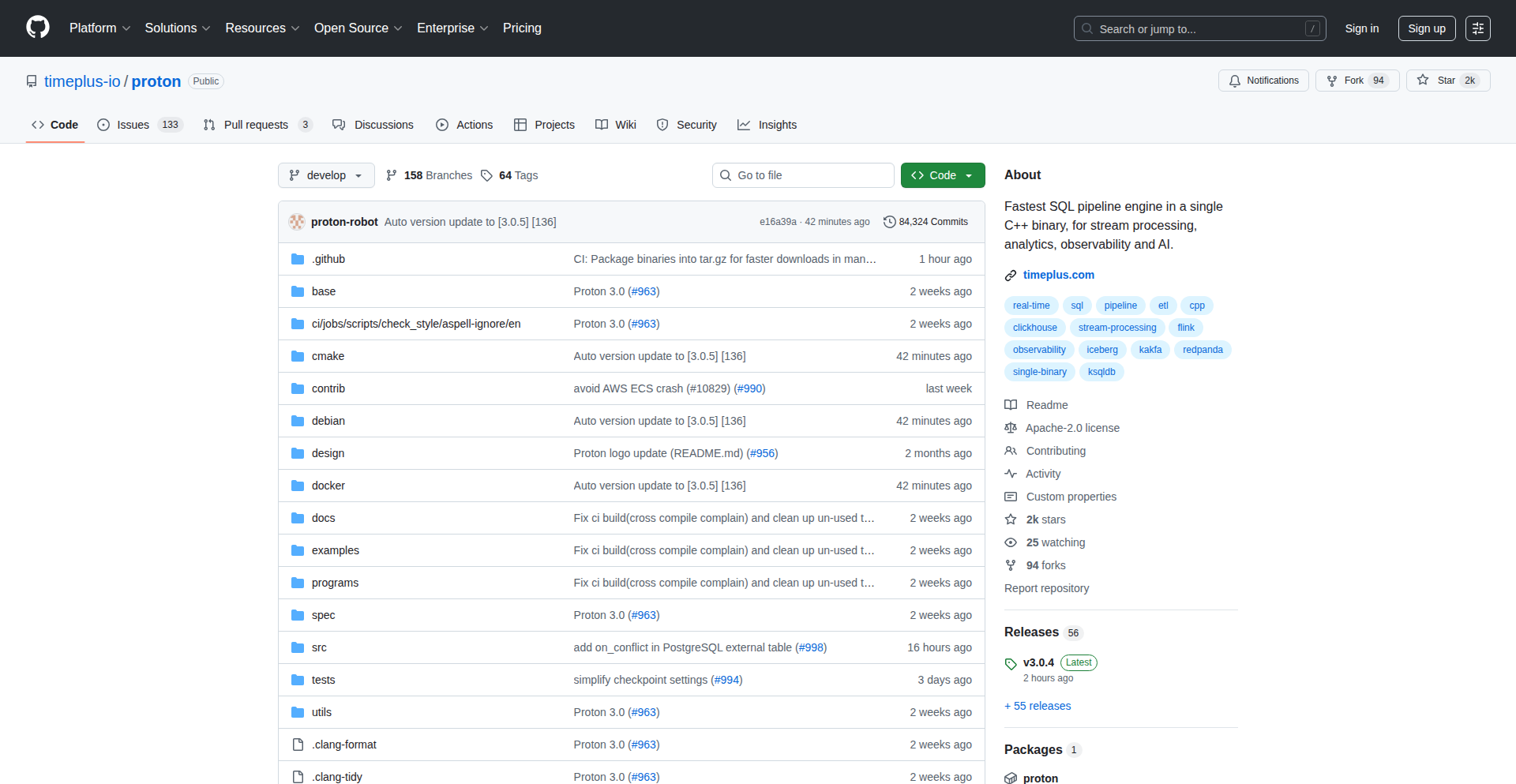

7

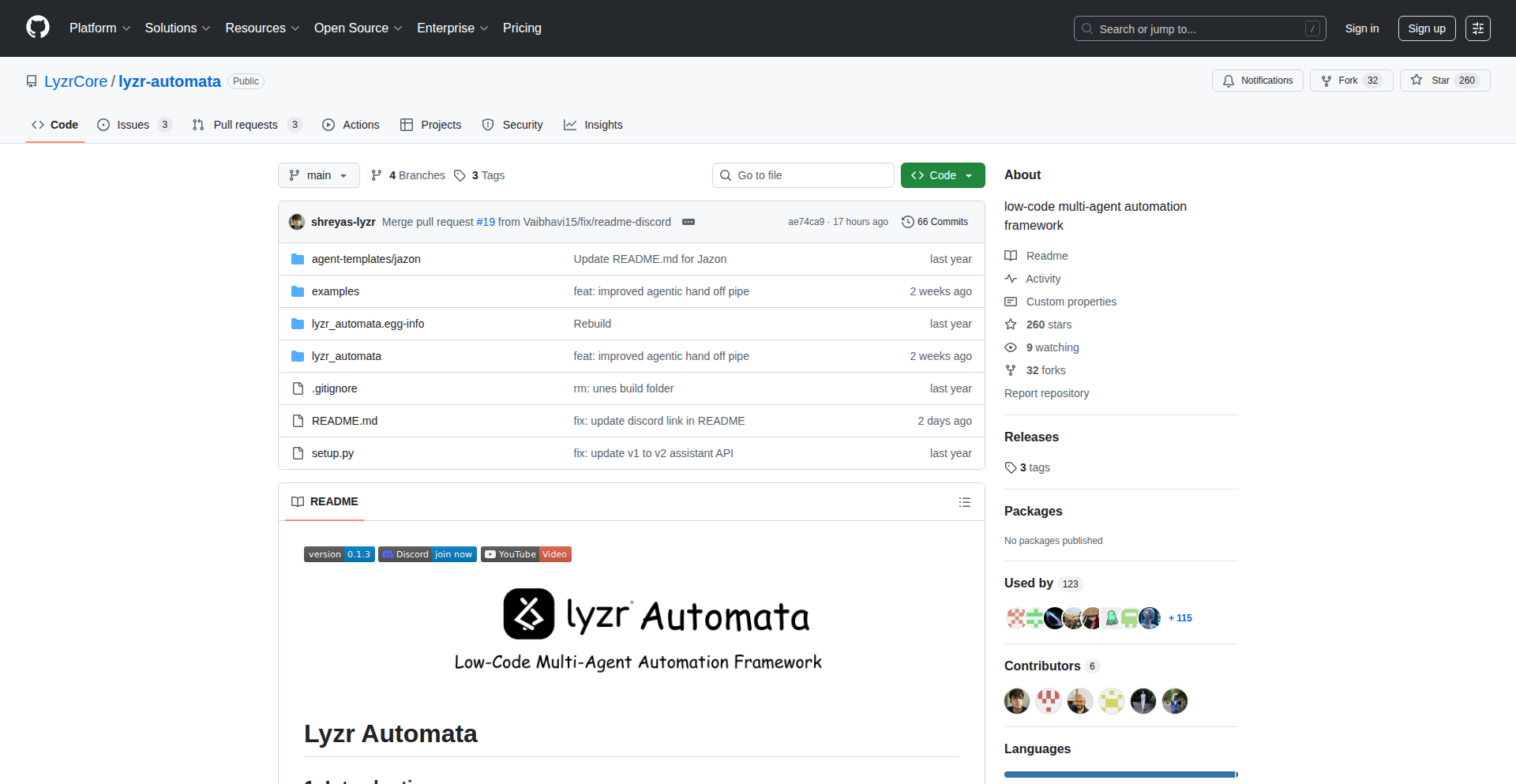

Proton StreamSQL Engine

Author

gangtao

Description

Proton 3.0 is an open-source, enterprise-grade streaming data processing engine. It combines connectivity, processing, and routing into a single, dependency-free binary, offering a powerful alternative to existing streaming solutions. Its core innovation is a vectorized streaming SQL engine built with modern C++ and Just-In-Time (JIT) compilation, enabling high-throughput, low-latency data handling for complex streaming operations.

Popularity

Points 10

Comments 10

What is this product?

Proton 3.0 is a high-performance, zero-dependency streaming data processing engine. At its heart is a vectorized streaming SQL engine written in modern C++ that uses Just-In-Time (JIT) compilation. Think of it as a very fast calculator built for data that arrives continuously (streaming data). Instead of processing records one at a time, it processes them in chunks (vectorized execution), which is far more efficient. JIT compilation means query expressions are compiled to native machine code at runtime rather than interpreted, which further boosts speed. This allows real-time processing of massive amounts of data with very low latency, even across highly diverse data types and volumes. So, for you, this means you can analyze and react to live data streams faster and more effectively than before.
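The difference between row-at-a-time and vectorized (batch-at-a-time) execution can be sketched in plain Python. This is a conceptual illustration only, not Proton's C++ engine: incoming rows are grouped into fixed-size column batches, and each operator (here a filter followed by a sum) is invoked once per batch over whole columns instead of once per row. The batch size and the `price`/`qty` column names are arbitrary assumptions.

```python
from itertools import islice
from typing import Iterable, Iterator


def column_batches(rows: Iterable[dict], size: int) -> Iterator[dict[str, list]]:
    """Group incoming rows into columnar batches of at most `size` rows."""
    it = iter(rows)
    while chunk := list(islice(it, size)):
        # Transpose the chunk: one Python list per column.
        yield {key: [row[key] for row in chunk] for key in chunk[0]}


def filtered_revenue(batch: dict[str, list], min_price: float) -> float:
    """One operator call per batch: build a selection mask, then aggregate."""
    mask = [price >= min_price for price in batch["price"]]
    return sum(p * q for p, q, keep in zip(batch["price"], batch["qty"], mask) if keep)


if __name__ == "__main__":
    stream = [{"price": float(i), "qty": 2} for i in range(10)]
    total = sum(filtered_revenue(b, min_price=5.0) for b in column_batches(stream, 4))
    print(total)
```

In pure Python the inner comprehensions still visit every value, so this only shows the shape of the idea. The general payoff of vectorized execution comes from running such tight per-column loops as compiled native code that suits CPU caches and SIMD instructions, and JIT compilation can specialize that code for a particular query.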

How to use it?

Developers can use Proton 3.0 as a standalone application or integrate it into existing data pipelines. It supports a wide range of native connectors to popular data sources and sinks like Kafka, Redpanda, Pulsar, ClickHouse, Splunk, Elastic, MongoDB, S3, and Iceberg, making data ingestion and output seamless. You can define streaming ETL (Extract, Transform, Load) jobs, perform real-time aggregations, trigger alerts based on data conditions, and execute tasks all using standard SQL queries. Furthermore, it supports native Python User-Defined Functions (UDFs) and User-Defined Aggregate Functions (UDAFs), allowing you to easily incorporate custom logic, including AI/ML models, directly into your streaming queries. So, if you're working with live data from various sources and need to transform it, analyze it, or trigger actions based on it in real-time, you can simply connect your data sources, write SQL queries with custom Python logic if needed, and Proton will handle the high-speed processing for you.

Product Core Function

· Vectorized Streaming SQL Engine with JIT Compilation: Processes data in batches for higher throughput and lower latency, optimizing query execution dynamically. This means your real-time data analysis will be significantly faster and more responsive, enabling quicker insights and decision-making.

· High-Throughput, Low-Latency, High-Cardinality Processing: Handles massive volumes of data with minimal delay, even when grouping or joining on keys with a very large number of distinct values (high cardinality). This is crucial for applications that need to react instantly to events, such as fraud detection or real-time monitoring.

· End-to-End Streaming Capabilities (ETL, Joins, Aggregation, Alerts, Tasks): Allows for complete data pipeline management within a single engine, from data extraction and transformation to complex analysis and triggering actions. This simplifies your data architecture and reduces the need for multiple specialized tools.

· Native Connectors for Popular Data Systems: Seamlessly integrates with Kafka, Redpanda, Pulsar, ClickHouse, Splunk, Elastic, MongoDB, S3, and Iceberg for easy data ingestion and output. This means you can easily connect to your existing data infrastructure without complex custom integrations.

· Native Python UDF/UDAF Support for AI/ML Workloads: Enables the integration of custom Python code, including machine learning models, directly into streaming queries. This allows you to leverage advanced analytics and AI capabilities directly on your live data streams, powering intelligent applications.

Product Usage Case

· Real-time anomaly detection in financial transactions: Connect Proton to a Kafka stream of transaction data, write SQL queries to define what constitutes an anomaly (e.g., unusual spending patterns), and use Python UDFs for more complex machine learning-based anomaly detection. Proton processes transactions in real-time, triggering alerts instantly when anomalies are detected, preventing fraud. This solves the problem of delayed detection with traditional batch processing.

· Live monitoring of IoT sensor data for predictive maintenance: Ingest data from numerous IoT devices via Pulsar into Proton. Use SQL aggregations to calculate average sensor readings and detect deviations from normal ranges. Combine this with Python UDFs for ML models that predict potential equipment failures. Proton's low-latency processing ensures timely alerts for maintenance, minimizing downtime. This provides a proactive approach to maintenance instead of reactive repairs.

· Dynamic content personalization for a website: Stream user interaction data (clicks, views) from Redpanda into Proton. Use SQL to join this with user profile data and session information. Apply Python UDFs for recommendation algorithms to identify user preferences in real-time. Proton then routes personalized content recommendations back to the website, enhancing user experience. This solves the challenge of delivering personalized content instantly based on live user behavior.
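The IoT monitoring case above relies on windowed aggregation. The following Python sketch shows the idea behind a tumbling-window average with a simple threshold alert; it is not Proton's SQL syntax or API. It assumes events arrive in timestamp order as `(timestamp_seconds, device_id, value)` tuples, a 10-second window, and a hypothetical fixed alert threshold.

```python
from collections import defaultdict
from typing import Iterable, Iterator

Event = tuple[float, str, float]  # (timestamp in seconds, device id, reading)


def _flush(start, sums, counts):
    """Yield one (window_start, device, average) row per device, sorted by device."""
    for device in sorted(sums):
        yield start, device, sums[device] / counts[device]


def tumbling_avg(events: Iterable[Event], window: float) -> Iterator[tuple[float, str, float]]:
    """Average each device's readings per fixed, non-overlapping time window.

    Assumes events arrive in non-decreasing timestamp order; a window's
    results are emitted as soon as an event from a later window arrives.
    """
    current = None
    sums: dict[str, float] = defaultdict(float)
    counts: dict[str, int] = defaultdict(int)
    for ts, device, value in events:
        start = ts - ts % window  # align the timestamp to its window boundary
        if current is not None and start != current:
            yield from _flush(current, sums, counts)
            sums.clear()
            counts.clear()
        current = start
        sums[device] += value
        counts[device] += 1
    if current is not None:  # end of stream: close the last open window
        yield from _flush(current, sums, counts)


if __name__ == "__main__":
    THRESHOLD = 80.0  # hypothetical alert level
    readings = [(0, "pump-1", 70.0), (4, "pump-1", 90.0), (12, "pump-1", 95.0), (15, "pump-2", 60.0)]
    for start, device, avg in tumbling_avg(readings, window=10):
        status = "ALERT" if avg > THRESHOLD else "ok"
        print(f"[{start:.0f}s] {device}: avg={avg:.1f} {status}")
```

Production streaming engines also have to handle late and out-of-order events (for example with watermarks), which this sketch ignores.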

8

Nityasha AI: Contextual Conversational Assistant

Author

nityasha

Description

Nityasha AI is a personal AI assistant built by a 13-year-old and her father. It innovates by maintaining context across conversations, integrating email, coding help, research, and planning into a single, intuitive interface. This solves the problem of juggling multiple applications and losing track of information, offering a unified and intelligent digital workspace. Its generative UI for visual charts and a 'Study Mode' for Socratic teaching further enhance its unique value proposition.

Popularity

Points 8

Comments 8

What is this product?

Nityasha AI is a conversational AI assistant designed to simplify your digital life. Its core innovation is its ability to remember context across your interactions. Think of it as talking to a smart assistant who actually recalls what you discussed earlier, rather than starting fresh every time. Technically, this relies on managing conversational state, so that relevant earlier information informs each new request, and on large language models (LLMs) that understand and retain the nuances of your requests over time. It goes beyond simple chatbots by integrating email management, coding assistance, research summarization, and daily planning into one chat interface, eliminating the need to switch between numerous applications.
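One common way to give an LLM assistant memory across turns is to resend a bounded slice of the conversation with each request. The Python sketch below illustrates that general technique; it is not Nityasha AI's implementation. The character budget (a stand-in for a model's token limit), the `role`/`content` message shape, and the `Conversation` class are assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Keeps chat history and returns the newest messages that fit a budget."""
    system_prompt: str
    max_chars: int = 2000  # hypothetical stand-in for a model's token limit
    history: list[dict[str, str]] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.history.append({"role": role, "content": content})

    def context(self) -> list[dict[str, str]]:
        """System prompt plus the most recent messages whose total length fits."""
        budget = self.max_chars - len(self.system_prompt)
        kept: list[dict[str, str]] = []
        for msg in reversed(self.history):  # walk from newest to oldest
            cost = len(msg["content"])
            if cost > budget:
                break
            kept.append(msg)
            budget -= cost
        kept.reverse()  # restore chronological order
        return [{"role": "system", "content": self.system_prompt}] + kept


if __name__ == "__main__":
    chat = Conversation("You are a helpful assistant.", max_chars=200)
    chat.add("user", "My client wants a dark mode toggle.")
    chat.add("assistant", "Noted: dark mode toggle.")
    chat.add("user", "Draft the status email about it.")
    for message in chat.context():
        print(message["role"], "|", message["content"])
```

Assistants often go further, for example by summarizing older turns or storing key facts for later retrieval, but a bounded recent window is the simplest way to carry context forward between requests.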

How to use it?

Developers can interact with Nityasha AI through its conversational interface, much like chatting with a human assistant. For practical use, you can ask it to draft an email based on a previous discussion, get help debugging a code snippet by providing the relevant code and error message, ask it to research a topic and summarize key findings, or request it to plan your day based on your appointments and priorities. For businesses, Nityasha Connect allows for direct integration of their services, enabling the AI to perform actions within those services on behalf of the user, streamlining workflows and automating tasks. This means you can potentially have Nityasha AI manage customer support tickets or process orders directly within your business systems.

Product Core Function

· Contextual Conversation Management: The AI remembers previous interactions and information, so you don't have to repeat yourself. This provides a more fluid and efficient user experience, saving you time and reducing frustration when working on complex tasks.

· Unified Application Integration: Handles email, coding help, research, and planning in one place. This eliminates the need to switch between multiple tabs and applications, boosting productivity and reducing mental overhead.

· Generative UI for Visualizations: Creates visual charts and graphs from data or information provided in conversations. This offers a more intuitive way to understand complex information and present data, making insights more accessible.

· Study Mode with Socratic Teaching: Engages users in a learning process by asking guiding questions. This is valuable for students or anyone looking to deepen their understanding of a topic, fostering critical thinking and active learning.

· Nityasha Connect for Business Integration: Allows businesses to integrate their services directly into the AI. This enables automated workflows and task completion within external applications, enhancing business efficiency and customer service capabilities.

Product Usage Case

· Scenario: A freelance developer is working on a project with multiple stakeholders. They can use Nityasha AI to summarize email threads related to client feedback and then ask for coding suggestions based on that feedback, all within the same conversation. The AI remembers the client's specific requests from earlier emails, directly informing the coding assistance.

· Scenario: A student is researching a historical event. They can ask Nityasha AI to find relevant articles, summarize key arguments, and then use the 'Study Mode' to ask probing questions about the event's causes and consequences. The AI will guide them through the learning process, much like a tutor.

· Scenario: A small business owner needs to manage customer inquiries and sales. With Nityasha Connect, they could integrate their CRM and e-commerce platform. The AI could then, for example, proactively inform the owner of new high-priority customer support tickets or suggest personalized product recommendations to customers based on their browsing history, all managed conversationally.

9

StreamJSONParser

Author

hotk

Description

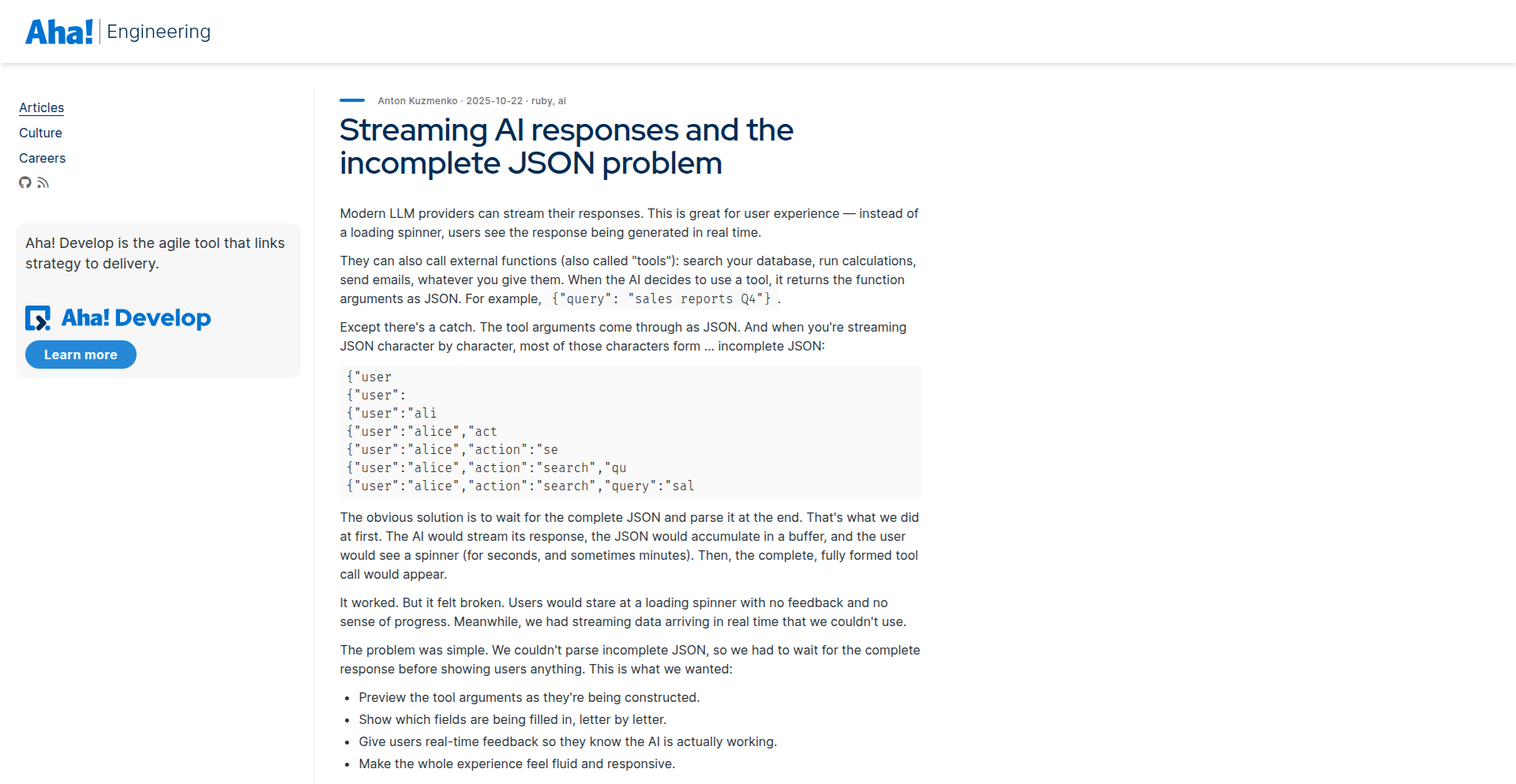

This project introduces an incremental JSON parser designed specifically for streaming AI tool calls. LLMs often stream function arguments as JSON, character by character. The naive approach re-parses the entire accumulated JSON from the beginning each time a new character arrives, which costs O(n²) in total and leads to noticeable UI lag. StreamJSONParser instead maintains parsing state and processes only the new characters, achieving O(n) performance so that parsing overhead stays imperceptible even during long AI responses.

Popularity

Points 12

Comments 0

What is this product?

StreamJSONParser is a Ruby gem that tackles a performance bottleneck in processing JSON streamed character by character, a common scenario when modern AI tools such as Large Language Models (LLMs) generate function arguments. Its innovation is a stateful parsing approach. Rather than re-reading and re-parsing the entire received JSON string every time a new character arrives (like re-reading a whole book for every new word, which makes the total work grow as O(n²)), it remembers what it has already processed and only needs to handle the latest characters. This reduces the total work to O(n): processing time grows linearly with the length of the response, so parsing stays fast and your UI doesn't freeze.
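The complexity claim follows from a short count. Suppose a response is n characters long. After the k-th character arrives, the naive approach re-scans all k characters received so far, while a stateful parser scans only the one new character:

```latex
\text{naive} = \sum_{k=1}^{n} k = \frac{n(n+1)}{2} = O(n^2),
\qquad
\text{incremental} = \sum_{k=1}^{n} 1 = n = O(n)
```

For a 10,000-character tool call, that is 50,005,000 character visits for the naive approach versus 10,000 for the incremental one.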

How to use it?

Developers can integrate StreamJSONParser into their Ruby applications that utilize AI tools for function calls. If you are building a chatbot, a virtual assistant, or any application that leverages LLMs to dynamically decide and call specific functions based on user input, this gem will be invaluable. You would typically include the StreamJSONParser gem in your project's Gemfile. When the AI starts streaming its response, instead of feeding the raw incoming characters to a standard JSON parser, you feed them to StreamJSONParser. It will then efficiently build and update the JSON object representing the function arguments in real-time, ready for your application to use without delay.
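The stateful idea can be sketched in a few lines. The Python class below illustrates the technique; it is not the StreamJSONParser gem's Ruby API, and the class and method names are hypothetical. It keeps its nesting depth and string/escape state between calls, so each incoming character is examined once, and it parses the buffer a single time with `json.loads` when the top-level object or array closes. A full incremental parser would also build partial values as they arrive; this sketch only tracks structure, and it assumes the stream is a single JSON object or array.

```python
import json
from typing import Any, Optional


class IncrementalJSONScanner:
    """Tracks JSON structure across chunks so each character is examined once."""

    def __init__(self) -> None:
        self.buffer: list[str] = []
        self.depth = 0          # current nesting level of {...} / [...]
        self.in_string = False  # currently inside a "..." literal?
        self.escaped = False    # previous character was a backslash in a string
        self.complete = False   # has the top-level value closed?
        self.value: Any = None

    def feed(self, chunk: str) -> Optional[Any]:
        """Consume one streamed chunk; return the parsed value once it is complete."""
        for ch in chunk:
            if self.complete:
                break  # this sketch ignores anything after the top-level value
            self.buffer.append(ch)
            if self.in_string:
                if self.escaped:
                    self.escaped = False
                elif ch == "\\":
                    self.escaped = True
                elif ch == '"':
                    self.in_string = False
            elif ch == '"':
                self.in_string = True
            elif ch in "{[":
                self.depth += 1
            elif ch in "}]":
                self.depth -= 1
                if self.depth == 0:
                    self.complete = True
                    # One final json.loads pass keeps the total work O(n).
                    self.value = json.loads("".join(self.buffer))
        return self.value if self.complete else None


if __name__ == "__main__":
    scanner = IncrementalJSONScanner()
    # Simulated tool-call arguments arriving a few characters at a time.
    for chunk in ['{"city": "Par', 'is", "tags": ["a', '}b"], "n"', ": 3}"]:
        print(repr(chunk), "->", scanner.feed(chunk))
```

Note that the brace inside the string `"a}b"` does not end the object, because the scanner remembers it is inside a string literal across chunk boundaries.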

Product Core Function

· Stateful character-by-character JSON parsing: This allows the parser to remember its progress and only process new incoming data, drastically reducing processing overhead. The value is a much faster and smoother UI experience when dealing with continuously arriving data, preventing lag.

· O(n) performance for streaming data: Achieves linear time complexity for processing, meaning the time taken scales directly with the amount of data. This is crucial for real-time applications where responsiveness is key, ensuring the application remains fluid even with lengthy AI responses.

· Efficient handling of LLM tool calls: Specifically optimized for the common pattern of LLMs streaming function arguments as JSON. This directly solves a performance issue in modern AI development, making AI-powered features more usable and professional.

Product Usage Case

· Building a real-time AI assistant that needs to interpret user commands and call specific backend functions. The LLM might stream the function name and its arguments as JSON. Using StreamJSONParser ensures the assistant's interface remains responsive as the LLM generates the command, allowing for immediate action without UI stutter.

· Developing a customer support chatbot powered by an LLM that can access a knowledge base or perform actions. When the LLM needs to fetch information (e.g., 'find customer order details'), it streams the parameters as JSON. StreamJSONParser allows the chatbot UI to update and process these parameters instantly, providing a seamless interaction for the user.

· Creating an in-app code generation tool where an LLM suggests code snippets or refactors. As the LLM streams the structured code output in JSON format, StreamJSONParser keeps the UI responsive, allowing developers to see and interact with the suggestions in real-time without noticeable delays.

10

HackerNews Chronicle

Author

Seasons

Description

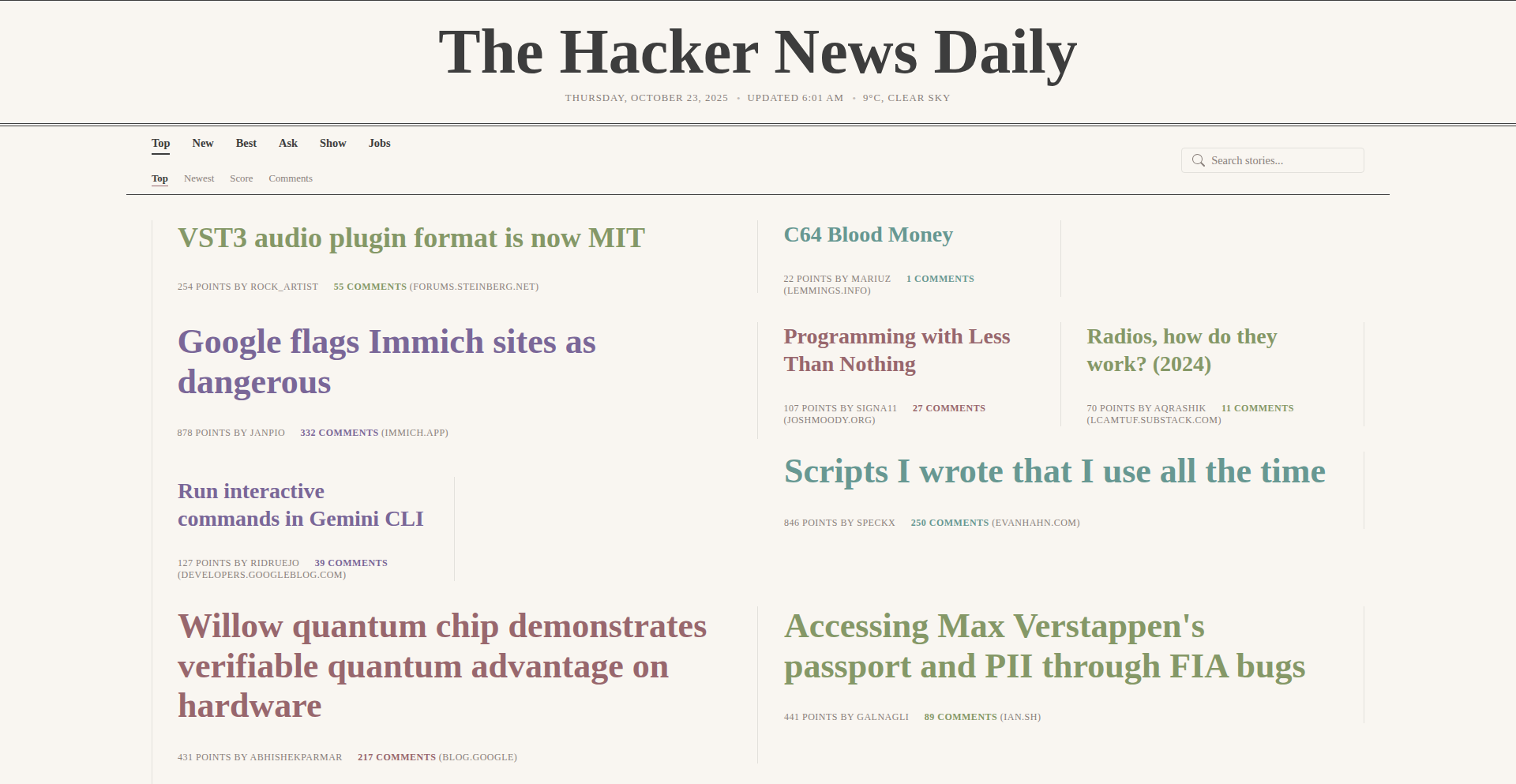

This project reimagines Hacker News as a daily newspaper, presenting trending discussions and popular articles in a familiar newspaper format. The innovation lies in its creative data visualization and content curation, transforming a dynamic online forum into a static, browsable digest. It solves the problem of information overload by providing a distilled, thematic view of the tech landscape, making it easier for busy developers to stay informed without sifting through endless real-time updates. So, this is useful for developers who want a quick, curated overview of the tech world each day, akin to reading a physical newspaper.

Popularity

Points 7

Comments 3

What is this product?

HackerNews Chronicle is a conceptual project that renders the content and trends of Hacker News in a newspaper-like layout. Technically, it likely involves pulling stories from the Hacker News API (or scraping its web pages), categorizing and ranking articles by engagement (points and comments), and then programmatically arranging the results into a visually appealing newspaper structure. The innovation is in the creative application of data processing and design principles to present information in a novel, accessible format. So, this is useful because it offers a different way to consume the same rich information from Hacker News, making it feel less like a chaotic stream and more like a thoughtfully compiled report, which helps in understanding the 'big picture' trends.
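The ranking-and-layout step described above can be sketched as a pure function. This is a hypothetical illustration, not HackerNews Chronicle's code: it assumes stories have already been fetched (items from the official Hacker News API carry `title`, `score`, and `descendants`, the total comment count), weights each comment at half a point as an arbitrary choice, and places the top story in a lead slot with the next ones in fixed-size columns.

```python
from typing import Optional, TypedDict


class Story(TypedDict):
    title: str
    score: int        # upvote points
    descendants: int  # total comment count


def engagement(story: Story, comment_weight: float = 0.5) -> float:
    """Hypothetical engagement score: points plus weighted comment count."""
    return story["score"] + comment_weight * story["descendants"]


def front_page(stories: list[Story], columns: int = 2, per_column: int = 2) -> dict:
    """Lay out ranked stories as a lead headline plus newspaper-style columns."""
    ranked = sorted(stories, key=engagement, reverse=True)
    lead: Optional[str] = ranked[0]["title"] if ranked else None
    rest = ranked[1:1 + columns * per_column]
    return {
        "lead": lead,
        "columns": [[s["title"] for s in rest[i:i + per_column]]
                    for i in range(0, len(rest), per_column)],
    }


if __name__ == "__main__":
    # Points and comment counts taken from today's listing.
    sample = [
        {"title": "Proton StreamSQL Engine", "score": 10, "descendants": 10},
        {"title": "Nityasha AI", "score": 8, "descendants": 8},
        {"title": "StreamJSONParser", "score": 12, "descendants": 0},
        {"title": "HackerNews Chronicle", "score": 7, "descendants": 3},
        {"title": "Terminal Subway Surfers", "score": 7, "descendants": 2},
    ]
    print(front_page(sample))
```

Topic categorization (for example grouping columns by keyword or by a classifier) would slot in before the layout step; it is omitted here to keep the sketch short.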

How to use it?

As a conceptual project, direct end-user usage might be limited to viewing a generated artifact (like a PDF or a dedicated webpage). However, the underlying principles can be applied in various ways. Developers could build similar digest generators for their own communities or internal knowledge bases. Integration could involve using the project's scraping and rendering logic as a module within a larger dashboard or reporting tool. For instance, a company could adapt this to summarize internal technical discussions. So, this is useful for developers who want to explore innovative ways to present information, or who can adapt the core logic to create custom digest tools for their specific needs, helping them organize and share knowledge more effectively.

Product Core Function

· Data Aggregation: Fetching trending and popular articles from Hacker News. This is valuable for consolidating information from a vast source into a manageable dataset, saving developers time from manual searching.

· Content Categorization and Ranking: Organizing articles by topic and popularity. This provides structure to the information, allowing users to quickly identify the most relevant and impactful discussions in the tech community.

· Newspaper Layout Rendering: Presenting the curated content in a newspaper-like visual format. This innovative presentation makes complex information more digestible and engaging, transforming the browsing experience and making it easier to grasp key takeaways.

· Thematic Summarization: Implicitly creating a narrative around the day's tech news through article selection and arrangement. This helps developers understand the prevailing themes and sentiment within the tech industry, fostering a broader perspective.

Product Usage Case

· A developer wanting a daily tech news digest without spending hours browsing Hacker News. This project offers a quick, visually organized summary, akin to picking up the morning paper, helping them stay informed efficiently.

· A technical writer or content creator looking for inspiration for articles or blog posts. By seeing what topics are trending and how they are discussed, they can identify popular themes and potential content gaps relevant to the developer community.

· A team lead who needs to quickly brief their team on the latest industry developments. The newspaper format provides a high-level overview of important discussions, facilitating rapid knowledge sharing and alignment within the team.

· An educational platform aiming to teach new developers about current tech trends. This project's output can serve as a visually appealing and easily understandable resource to introduce them to the landscape of software development news and discussions.

11

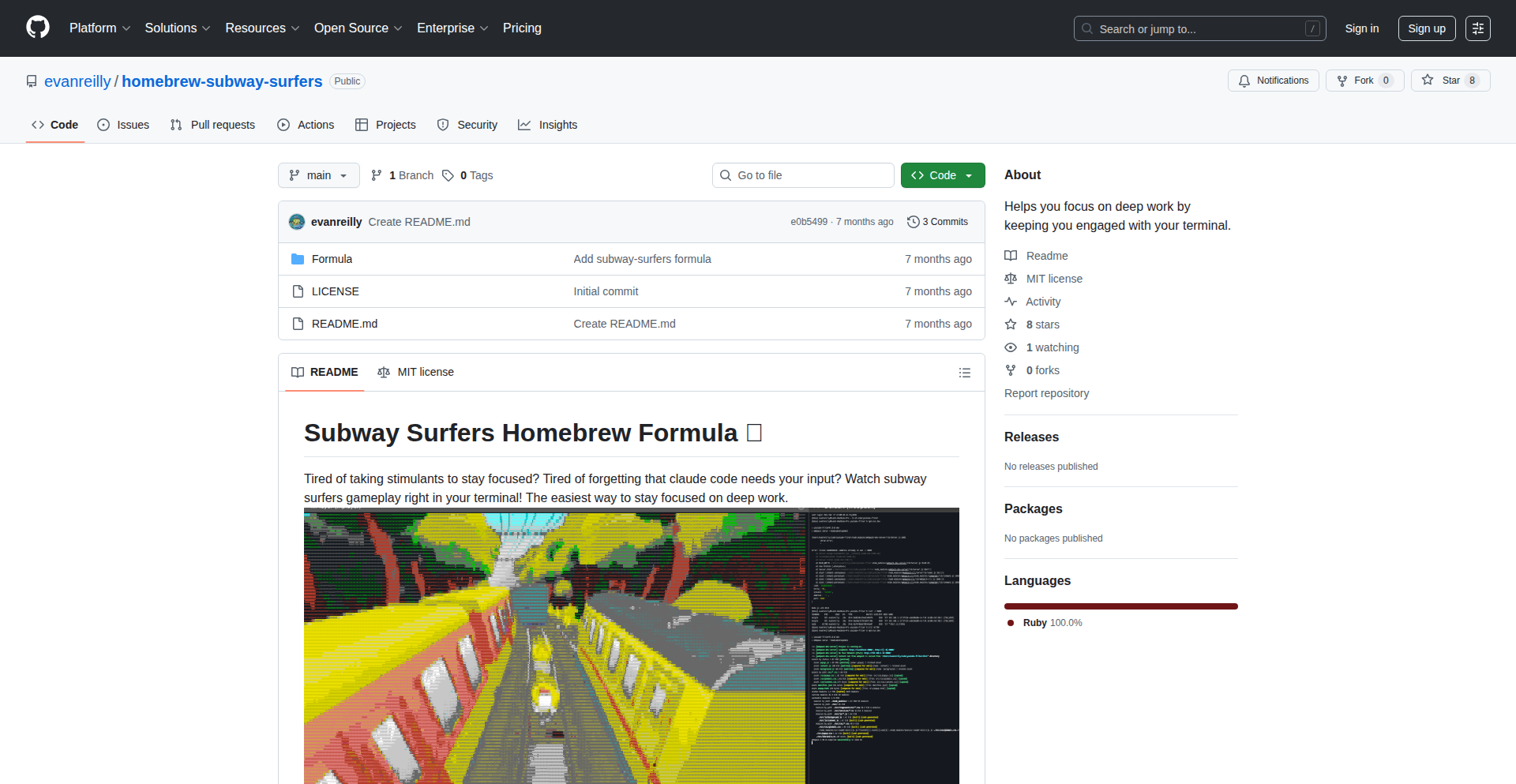

Terminal Subway Surfers

Author

civilchaos

Description

This project brings the popular mobile game 'Subway Surfers' directly into your command-line terminal. It cleverly uses terminal graphics to recreate the game's visuals, allowing developers to play while waiting for time-consuming tasks like code generation to complete. The innovation lies in making productive use of otherwise idle waiting time by engaging the user with a fun, distraction-free experience, thus enhancing focus and potentially reducing frustration.

Popularity

Points 7

Comments 2

What is this product?

This is a command-line implementation of the game 'Subway Surfers'. Instead of a graphical interface on a mobile phone, it renders the game using text characters and colors within your terminal window. The technical principle involves using libraries that can draw simple graphics or manipulate text characters in a grid to simulate the game's movement, obstacles, and character. The innovation is in transforming passive waiting time during development into an engaging and focus-enhancing activity, directly addressing the developer's pain point of losing concentration during lengthy background processes. So, this is a fun way to keep your mind active and focused while your code or other processes are running.

How to use it?

Developers can easily install this using a package manager like Homebrew. Once installed, they simply run the 'subway-surfers' command in their terminal, typically in a new tab or window while a long-running process executes in another. For example, if you start a code generation task that you know will take a few minutes, you can launch Terminal Subway Surfers in a separate terminal session. The game provides an engaging distraction that helps you stay focused on the task at hand rather than getting sidetracked by other online content.

Product Core Function

· Terminal-based game rendering: The core functionality is to draw and animate the game using only text characters and colors within the terminal, making it accessible on any system with a command-line interface. This provides entertainment and engagement without requiring a separate application or graphical environment.

· Interactive gameplay controls: Implements keyboard input to control the player character, allowing for classic 'swipe' actions like jumping and dodging obstacles. This ensures a playable and familiar gaming experience, directly translating the game's core mechanics into the terminal.

· Obstacle and scoring system: Recreates the game's essential elements of running, collecting items, and avoiding obstacles, complete with a scoring mechanism. This provides a complete and functional game experience, fulfilling the goal of offering an engaging pastime.

· Idle time gamification: The primary value proposition is to gamify developer waiting time. By providing an engaging activity, it helps prevent developers from losing focus or getting bored during long processes, thus indirectly improving productivity. This transforms passive waiting into an active, mind-engaging activity.
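The rendering, controls, and scoring described above boil down to a small game state redrawn as text each frame. The Python sketch below is a hypothetical illustration, not the project's code: it assumes three lanes, obstacles stored as `(lane, row)` pairs that move one row toward the player per tick, `a`/`d` keys for lane changes, and one point per survived tick. A real terminal game would also read keys without blocking (for example via Python's `curses` module) and redraw on a timer.

```python
from dataclasses import dataclass, field

LANES = 3  # left, middle, right
ROWS = 6   # visible track height; the player runs on the bottom row


@dataclass
class Game:
    player_lane: int = 1
    obstacles: list[tuple[int, int]] = field(default_factory=list)  # (lane, row)
    score: int = 0
    over: bool = False

    def move(self, key: str) -> None:
        """'a' and 'd' switch lanes, clamped to the edges of the track."""
        if key == "a":
            self.player_lane = max(0, self.player_lane - 1)
        elif key == "d":
            self.player_lane = min(LANES - 1, self.player_lane + 1)

    def tick(self) -> None:
        """Move obstacles one row closer, check for a collision, award a point."""
        if self.over:
            return
        # Obstacles that scroll past the bottom row are dropped.
        self.obstacles = [(lane, row + 1) for lane, row in self.obstacles if row + 1 < ROWS]
        if (self.player_lane, ROWS - 1) in self.obstacles:
            self.over = True
        else:
            self.score += 1

    def render(self) -> str:
        """Draw one frame as text: '#' obstacle, '@' player, 'X' crash."""
        grid = [["."] * LANES for _ in range(ROWS)]
        for lane, row in self.obstacles:
            grid[row][lane] = "#"
        grid[ROWS - 1][self.player_lane] = "X" if self.over else "@"
        lines = ["|" + " ".join(cells) + "|" for cells in grid]
        return "\n".join(lines + [f"score: {self.score}"])


if __name__ == "__main__":
    game = Game(obstacles=[(1, 4), (0, 1)])
    game.move("d")  # dodge right before the obstacle reaches the bottom row
    game.tick()
    print(game.render())
```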

Product Usage Case

· During long code compilation or build processes: When a developer initiates a large project build that takes several minutes, they can launch 'Terminal Subway Surfers' in another terminal window. This provides a fun distraction that keeps their mind engaged and focused, preventing them from switching to less productive activities like browsing social media, and making the waiting time feel shorter and more purposeful.

· While waiting for database migrations or data processing: For tasks that involve significant data manipulation or database operations, which can often be time-consuming, this tool can be used to fill the waiting period. Instead of passively waiting, the developer can play the game, maintaining mental engagement and reducing the perceived downtime.

· When running slow API calls or external service integrations: If a developer is testing or integrating with slow external services, they can run the game to keep their mind occupied. This helps them remain focused on the overall task and avoid losing track of what they were initially working on, improving overall workflow efficiency.

12

AIAnxietyGuard

Author

ycosynot

Description

An AI-powered guide to help individuals manage extreme anxiety and withdrawal symptoms. It leverages natural language processing and psychological principles to provide personalized coping strategies and information, acting as a readily accessible digital support tool. The core innovation lies in making sophisticated psychological support accessible through a free, user-friendly AI interface.

Popularity

Points 8

Comments 1

What is this product?

AIAnxietyGuard is a free, AI-driven guide designed to assist people experiencing extreme anxiety and withdrawal. It uses advanced AI, specifically natural language processing (NLP), to understand user input and provide tailored advice. The innovation is in translating complex psychological frameworks into an accessible, conversational format, offering a proactive and private way to access support. So, what's in it for you? It means you can get helpful guidance on managing difficult emotions and situations at any time, without the barriers of cost or scheduling.

How to use it?

Developers can integrate AIAnxietyGuard's functionalities into their own applications or platforms. This could involve using its API to power chatbots that offer mental wellness support, incorporating its content into wellness apps, or building custom interfaces for specific therapeutic contexts. For end-users, it's as simple as interacting with a chatbot or a web interface, asking questions about anxiety, withdrawal, or seeking coping mechanisms. So, what's in it for you? Developers can build more empathetic and supportive digital products, and users can access immediate, personalized mental wellness resources.

Product Core Function

· AI-driven personalized coping strategies: Leverages NLP to analyze user input and suggest relevant, evidence-based techniques for managing anxiety and withdrawal, providing immediate, actionable advice. The value is in offering tailored support that feels relevant to your specific situation, helping you take control.

· Information dissemination on anxiety and withdrawal: Provides clear, concise explanations of psychological concepts and symptoms related to anxiety and withdrawal, empowering users with knowledge. The value is in demystifying complex issues, reducing fear and confusion.

· Conversational interface: Allows users to interact with the AI in a natural, dialogue-based format, making the support feel more human and less clinical. The value is in creating a comfortable and engaging experience that encourages ongoing use and exploration of personal challenges.

· Resource aggregation and guidance: Points users to additional resources or professional help when needed, acting as a gateway to further support. The value is in ensuring you have a pathway to more comprehensive care if your needs extend beyond what the AI can provide.

Product Usage Case

· A mental wellness app developer could integrate AIAnxietyGuard's NLP engine to power a chatbot that offers immediate support to users experiencing panic attacks, providing step-by-step breathing exercises and grounding techniques. This solves the problem of users needing instant, on-demand assistance during a crisis, offering them a lifeline when human support might not be readily available.

· A therapist could use AIAnxietyGuard to create supplementary exercises for their patients between sessions. Patients could engage with the AI to practice coping mechanisms learned in therapy, reinforcing positive behaviors. This addresses the challenge of maintaining therapeutic progress outside of scheduled appointments, making interventions more impactful.

· A workplace wellness program could deploy AIAnxietyGuard as a readily available resource for employees experiencing stress or anxiety, offering a private and anonymous way to seek guidance. This tackles the issue of stigma surrounding mental health in the workplace and provides a scalable solution for supporting employee well-being.

13

Fiat2Stablecoin Gateway

Author

HenryYWF

Description

This project is a wrapper around Bridge.xyz that allows clients to pay invoices in traditional currencies (fiat) and have those payments automatically converted and settled into stablecoins. These stablecoins can then be directly spent from a user's balance, offering a streamlined payment solution for digital nomads and businesses operating across borders. The innovation lies in simplifying cross-border payments by abstracting away the complexities of cryptocurrency exchange and fiat settlement into a user-friendly experience.

Popularity

Points 4

Comments 4

What is this product?

This project acts as an intermediary, bridging the gap between traditional fiat payment systems and the blockchain. When a client pays an invoice using their regular currency (like USD, EUR, etc.), this system, leveraging Bridge.xyz's underlying infrastructure, automatically converts that fiat into a stablecoin (a cryptocurrency pegged to a stable asset like the US dollar). The key innovation is the seamless fiat-to-stablecoin conversion and the ability to immediately use these stablecoins from a wallet balance without needing multiple manual steps or extensive cryptocurrency knowledge. This simplifies international transactions and makes it easier for individuals and businesses to operate with digital assets.

How to use it?

Developers can integrate this system into their invoicing or payment platforms. It allows businesses to present invoices payable in fiat to their clients, while the business ultimately receives the payment in stablecoins. Users can create an account and explore the dashboard before completing Know Your Customer (KYC) verification. The dashboard lets users manage accounts, view transaction history, and potentially initiate payments from the stablecoin balance. This can be integrated into existing business workflows to reduce friction in receiving international payments and managing digital assets.

Product Core Function

· Fiat Invoice Generation: Allows businesses to create invoices that clients can pay using their familiar fiat currencies. This simplifies the payment process for clients, as they don't need to deal with cryptocurrencies directly at the point of payment, making the overall transaction smoother.

· Automated Fiat to Stablecoin Conversion: Upon receiving fiat payment, the system automatically converts it into a stablecoin. This eliminates the manual effort and potential for errors associated with cryptocurrency exchanges, ensuring that businesses receive their funds in a stable digital asset.

· Stablecoin Balance Management: Users can hold and manage their received stablecoins within their account balance. This provides a readily accessible pool of digital funds that can be used for various purposes, such as paying other services or withdrawing, offering flexibility in fund utilization.

· KYC-Free Exploration: The ability to create an account and explore the dashboard without mandatory KYC allows for quick onboarding and testing of the platform's capabilities. This is particularly valuable for individuals who prioritize privacy or want to quickly assess the tool's utility before committing to identity verification.
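The invoice-to-stablecoin flow can be illustrated with a small settlement record. This Python sketch is hypothetical and is not Bridge.xyz's API or this project's code: the `Invoice` class, its status names, and the exchange rate in the usage example are assumptions. It uses `Decimal` rather than floats, as is usual for money, and rounds down to 6 decimal places, matching the precision of tokens such as USDC.

```python
from dataclasses import dataclass
from decimal import Decimal, ROUND_DOWN

STABLECOIN_UNIT = Decimal("0.000001")  # 6 decimal places, as used by e.g. USDC


@dataclass
class Invoice:
    amount: Decimal        # amount due, in the invoice's fiat currency
    currency: str          # e.g. "EUR"
    status: str = "open"   # open -> paid -> settled (hypothetical states)
    settled_amount: Decimal = Decimal("0")

    def record_fiat_payment(self) -> None:
        """Mark the invoice as paid once the fiat transfer has arrived."""
        if self.status != "open":
            raise ValueError(f"cannot pay invoice in status {self.status!r}")
        self.status = "paid"

    def settle(self, usd_per_unit: Decimal) -> Decimal:
        """Convert the paid fiat amount to a USD stablecoin amount, rounding down."""
        if self.status != "paid":
            raise ValueError("invoice must be paid before settlement")
        self.settled_amount = (self.amount * usd_per_unit).quantize(
            STABLECOIN_UNIT, rounding=ROUND_DOWN)
        self.status = "settled"
        return self.settled_amount


if __name__ == "__main__":
    invoice = Invoice(Decimal("250.00"), "EUR")
    invoice.record_fiat_payment()
    print(invoice.settle(Decimal("1.0834567")))  # hypothetical EUR->USD rate
```

Rounding down means the recorded balance never exceeds what the conversion actually produced; the sub-unit remainder is simply not credited in this sketch.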

Product Usage Case

· A digital nomad freelancer receiving payments from international clients. Instead of dealing with complex wire transfers or currency exchange fees, clients can pay invoices in their local currency, and the freelancer receives the equivalent in a stablecoin, which can then be used for expenses or further investment without hassle.

· An e-commerce business selling to a global audience. This system can be integrated to accept payments in various fiat currencies, which are then converted to stablecoins. This allows the business to manage its revenue in a stable digital asset, potentially reducing transaction costs and simplifying international accounting.

· A subscription service for digital goods or services. Clients can pay for subscriptions using fiat, and the service provider receives stablecoins, offering a predictable revenue stream in a digital format that can be easily managed and reinvested within the digital economy.

14

Cont3xt.dev: AI Knowledge Weaver

Author

ksred

Description

Cont3xt.dev is a universal team knowledge base designed to power AI coding tools. It intelligently indexes and makes searchable all your team's internal documentation, code snippets, and communication logs. The core innovation lies in its ability to provide context-aware information retrieval, enabling AI assistants to understand and leverage your team's unique knowledge, leading to more accurate and efficient code generation, debugging, and design.

Popularity

Points 4

Comments 4

What is this product?

Cont3xt.dev is a system that acts as a central brain for your team's collective knowledge, specifically tailored for AI coding tools. It works by ingesting various forms of team data, like internal wikis, code repositories, chat logs (e.g., Slack, Teams), and even design documents. It then uses advanced indexing and semantic search techniques to understand the relationships and meaning within this data. This is innovative because instead of just keyword matching, it grasps the underlying concepts. This allows AI coding tools to access and utilize your team's specific expertise and history, much like a seasoned team member would, leading to more relevant and useful AI-generated code and solutions. So, what does this mean for you? It means your AI coding assistants won't just be generic; they'll be infused with your team's own best practices, internal jargon, and project-specific knowledge, making them significantly more effective for your unique development environment.

How to use it?

Developers can integrate Cont3xt.dev into their workflow by connecting it to existing data sources such as GitHub, Confluence, Notion, and chat platforms. Once connected, the system indexes this information. Developers then use AI coding tools (like GitHub Copilot or custom AI agents) that are configured to query Cont3xt.dev. When a developer asks the AI a question or requests code, the AI first consults Cont3xt.dev for relevant internal context. That context is then included in the input the AI uses to generate its response, so the output is grounded in the team's specific knowledge. In practice, developers get AI assistance that understands project history, internal libraries, and solutions the team has already established. This speeds up development and reduces the need for repetitive explanations. It's like having an AI teammate who already knows your team's conventions and history.
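The "consult first, then answer" flow described above is a form of retrieval-augmented generation. Below is a minimal sketch, assuming documents have already been converted to embedding vectors. The toy 3-dimensional vectors, the `retrieve` and `build_prompt` helpers, and the prompt format are hypothetical and are not Cont3xt.dev's API.

```python
import math

# Hypothetical pre-computed embeddings. Real systems use an embedding model
# with hundreds of dimensions; these toy vectors only illustrate ranking.
KNOWLEDGE_BASE = {
    "Payments service retries failed charges with exponential backoff.": [0.9, 0.1, 0.0],
    "Internal API routes must be versioned under /v2/.": [0.1, 0.9, 0.1],
    "Onboarding checklist: request VPN access on day one.": [0.0, 0.2, 0.9],
}

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def retrieve(query_vec, k=2):
    """Return the k snippets whose embeddings are most similar to the query."""
    ranked = sorted(KNOWLEDGE_BASE.items(),
                    key=lambda item: cosine(query_vec, item[1]),
                    reverse=True)
    return [text for text, _ in ranked[:k]]

def build_prompt(question, query_vec, k=2):
    """Prepend the retrieved team context to the developer's question."""
    context = "\n".join(f"- {snippet}" for snippet in retrieve(query_vec, k))
    return f"Team context:\n{context}\n\nQuestion: {question}"

# In practice the query vector comes from embedding the question itself.
print(build_prompt("How should I version a new endpoint?", [0.2, 0.8, 0.0], k=1))
```

Ranking by cosine similarity is what lets a question about "versioning an endpoint" match the API-routing note even though the wording differs. That only holds if the embedding model places related meanings close together.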

Product Core Function

· Universal Data Indexing: Ingests and indexes diverse team knowledge sources (wikis, code, chats, docs) into a unified knowledge graph. Its value lies in consolidating scattered information so that AI tools can find and use it, addressing information silos and lost institutional knowledge.

· Context-Aware Semantic Search: Employs advanced natural language processing and embeddings to understand the meaning and relationships within your data, not just keywords. This innovation allows AI to retrieve highly relevant information even when exact terms aren't used, ensuring deeper context for AI responses and solving the issue of superficial AI understanding.

· AI Tool Integration Layer: Provides APIs and SDKs to seamlessly connect with various AI coding tools. This makes it easy to inject team-specific knowledge into existing AI workflows, significantly enhancing the accuracy and utility of AI-generated code and assistance, solving the problem of generic AI outputs.

· Knowledge Graph Visualization: Offers visual representations of how different pieces of knowledge are connected within the team. This helps developers and managers understand the landscape of their internal knowledge, aiding in onboarding, knowledge sharing, and identifying knowledge gaps. It provides a clear overview of your team's intellectual assets.
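One simple way to picture a knowledge graph: treat each source as a node, and connect two sources when they mention the same entity. The following is a hypothetical sketch. The source names, the entity tags, and the shared-entity linking rule are assumptions, because the post does not describe how Cont3xt.dev builds its graph.

```python
from collections import deque

# Hypothetical sources, each tagged with the entities it mentions.
SOURCES = {
    "wiki/payments-design": {"PaymentService", "retry-policy"},
    "slack/#backend-2024-03": {"retry-policy", "timeouts"},
    "repo/payments/retry.py": {"PaymentService"},
    "wiki/onboarding": {"VPN"},
}

def build_graph(sources):
    """Connect two sources with an edge whenever they share at least one entity."""
    graph = {name: set() for name in sources}  # isolated sources remain as nodes
    names = list(sources)
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if sources[a] & sources[b]:
                graph[a].add(b)
                graph[b].add(a)
    return graph

def related(graph, start):
    """Breadth-first search: every source reachable from `start`."""
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in graph[node]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen - {start}

graph = build_graph(SOURCES)
print(sorted(related(graph, "wiki/payments-design")))
# ['repo/payments/retry.py', 'slack/#backend-2024-03']
```

A visualization layer would draw these nodes and edges. The disconnected `wiki/onboarding` node is the kind of knowledge gap such a view can surface.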

Product Usage Case

· A developer is working on a new feature and needs to understand how a similar component was implemented in a past project. They ask their AI coding assistant, which queries Cont3xt.dev. Cont3xt.dev retrieves relevant code snippets, design documents, and even related Slack discussions from the previous project, providing the developer with a comprehensive understanding and accelerating their work. The problem solved is quick access to historical project context.

· A new team member is onboarding and needs to learn about the team's internal API standards. Instead of sifting through lengthy documentation, they ask their AI assistant. Cont3xt.dev provides a concise summary of the API standards, links to relevant examples, and even points to team members who are experts on the topic. This dramatically speeds up onboarding and knowledge acquisition. The problem solved is efficient new team member integration.

· During debugging, a developer encounters an obscure error message. They feed the error to their AI assistant, which uses Cont3xt.dev to search for past occurrences of this error or similar issues within the team's bug tracker and code history. Cont3xt.dev surfaces solutions or workarounds previously implemented by the team, helping the developer resolve the bug much faster. The problem solved is faster and more effective debugging.

15

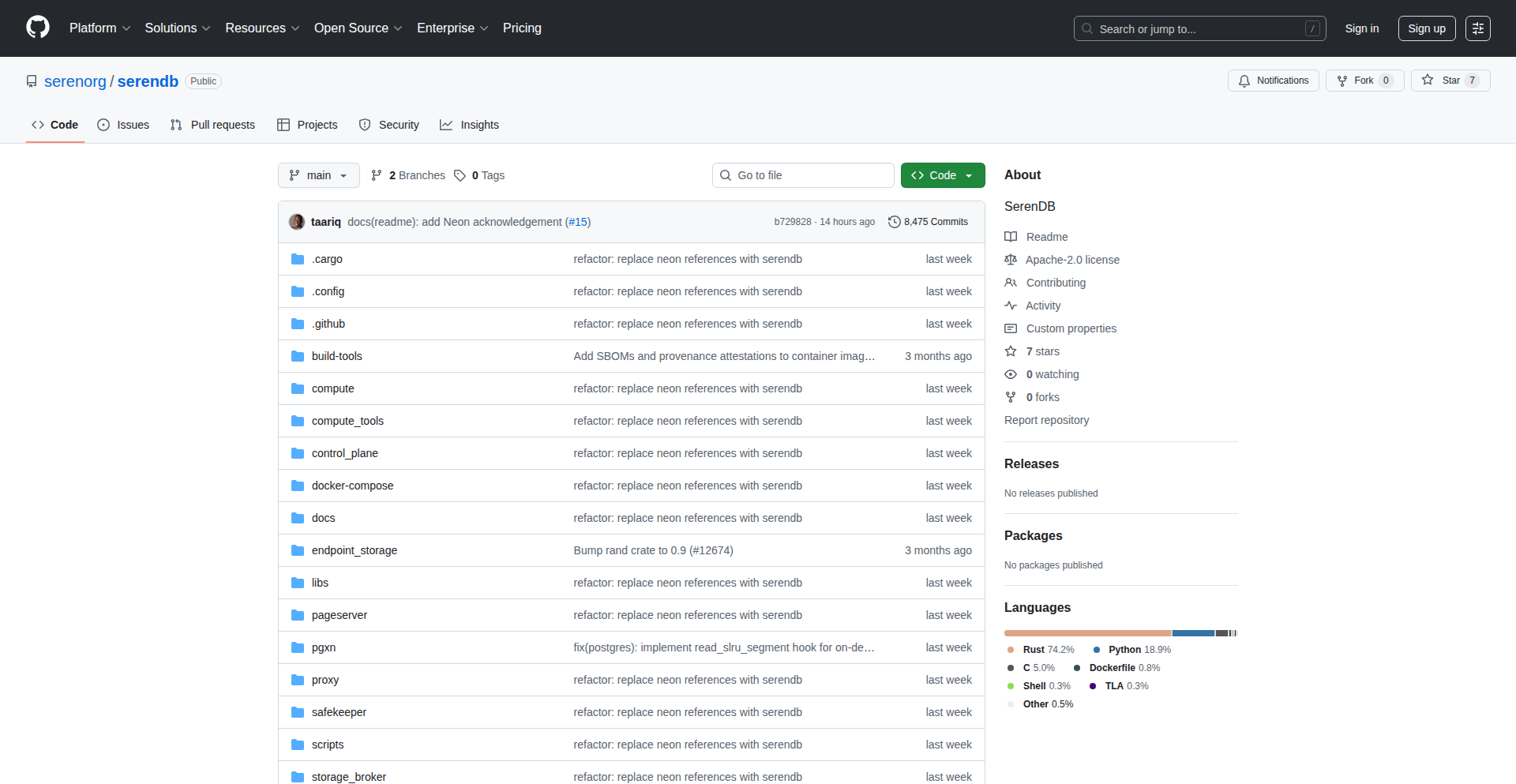

SerenDB: AI Agent's Time-Traveling & Secure PostgreSQL

Author

taariqlewis

Description

SerenDB is a specialized fork of Neon PostgreSQL, engineered to provide better performance, safety, and cost-efficiency for AI agent workloads. It adds time-travel queries for debugging and auditing AI decisions. It also adds scale-to-zero with pgvector, so dormant databases stop incurring costs. Prompt injection detection to safeguard data is under active development, and fast database branching supports efficient agent testing and rollback. Together, these features address the need for AI agents to experiment safely and quickly with production data.

Popularity

Points 7

Comments 1

What is this product?

SerenDB is a database designed for AI agents, built upon PostgreSQL. The core innovation lies in its modifications to Neon PostgreSQL to specifically address the unique challenges of AI agent interactions with data. It allows you to query your database as it existed at any point in time (time-travel queries), which is incredibly useful for understanding why an AI agent made a particular decision or to see exactly what data it was looking at. It also integrates vector embeddings (pgvector) and can scale down to zero when not in use, meaning you don't pay for idle databases. This is like having a super-smart, cost-effective memory for your AI.

How to use it?

Developers can integrate SerenDB into their AI agent applications through its familiar SQL interface, which adds a few capabilities. For instance, a time-travel query such as `SELECT * FROM orders AS OF TIMESTAMP '2024-01-15 14:30:00'` inspects historical data, which is crucial for debugging agent logic or auditing data access. With scale-to-zero, your AI agent's database can power down automatically when inactive, saving costs, and start again on demand when the agent needs it. For AI development, you can create isolated database branches in milliseconds. This lets you test different prompts or configurations on real data without affecting your main database, enabling rapid iteration and rollback.
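To make the `AS OF TIMESTAMP` semantics concrete: a time-travel read returns, for each row, the newest version committed at or before the requested time. The in-memory sketch below only illustrates that rule. The `VersionedTable` class is hypothetical and ignores deletes. It says nothing about how SerenDB or Neon store history internally.

```python
import bisect
from datetime import datetime

class VersionedTable:
    """Keeps every version of each row so reads can be answered 'as of' a past time."""

    def __init__(self):
        self._history = {}  # row key -> list of (timestamp, value), in commit order

    def write(self, key, value, ts: datetime):
        # Assumes writes arrive in increasing timestamp order, like a commit log.
        self._history.setdefault(key, []).append((ts, value))

    def as_of(self, ts: datetime):
        """Return {key: value} using the latest version committed at or before ts."""
        snapshot = {}
        for key, versions in self._history.items():
            times = [t for t, _ in versions]
            idx = bisect.bisect_right(times, ts)
            if idx:  # at least one version of this row existed by ts
                snapshot[key] = versions[idx - 1][1]
        return snapshot

orders = VersionedTable()
orders.write(1, {"status": "pending"}, datetime(2024, 1, 15, 14, 0))
orders.write(1, {"status": "refunded"}, datetime(2024, 1, 15, 15, 0))
orders.write(2, {"status": "shipped"}, datetime(2024, 1, 15, 16, 0))

# What would an agent have seen at 14:30?
print(orders.as_of(datetime(2024, 1, 15, 14, 30)))  # {1: {'status': 'pending'}}
```

The 14:30 snapshot shows order 1 as still pending and does not yet contain order 2. That is exactly the "what did the AI see?" question that time-travel debugging answers.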

Product Core Function

· Time-travel queries: Enables querying the database at any historical timestamp, crucial for debugging AI agent behavior and auditing data access. This helps answer 'what did the AI see?' and 'why did it do that?'.

· Scale-to-zero with pgvector: Supports vector embeddings through the pgvector extension (used for similarity search over AI data) and lets the database shut down automatically when idle. This saves significant costs for dormant AI workloads, because you pay for compute only when your AI is actively using the database.

· Prompt injection detection (in development): Aims to identify and block malicious inputs crafted to manipulate AI agents (prompt injection) before they can corrupt your data, adding a security layer for AI applications.

· 100ms branch creation: Allows for near-instantaneous cloning of the entire database. This is invaluable for creating isolated environments for testing different AI agent versions or prompt variations on live data, facilitating rapid experimentation and safe rollbacks.
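Neon's documentation describes its branches as copy-on-write clones. A new branch shares the parent's existing data and records only its own changes, so creating one does not copy the dataset. The Python sketch below uses `collections.ChainMap` to illustrate that idea. It is an analogy, not how SerenDB implements branches at the storage layer, and the keys and values are made up.

```python
from collections import ChainMap

# Hypothetical "production" data.
production = {"user:1": "alice", "user:2": "bob"}

def create_branch(parent):
    """Copy-on-write branch: reads fall through to the parent,
    writes land only in the branch's own (initially empty) layer."""
    return ChainMap({}, parent)  # O(1): nothing is copied up front

branch = create_branch(production)
branch["user:2"] = "bob-renamed-by-agent"  # the agent's experiment writes to the branch only
branch["user:3"] = "carol"

print(branch["user:1"])      # alice (read falls through to production)
print(production["user:2"])  # bob (production is untouched)

# Rolling back means discarding the branch's own layer.
branch.maps[0].clear()
print(branch["user:2"])      # bob
```

Because a branch starts as an empty overlay, creation time does not grow with database size. This is the property behind near-instant branching.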

Product Usage Case

· Debugging an AI customer service agent: A developer can use time-travel queries to see precisely what customer data an AI agent was referencing when it gave a suboptimal response, allowing for quick identification of the root cause. This helps improve AI accuracy and customer satisfaction.

· Cost-effective AI chatbot for a small business: By using SerenDB's scale-to-zero feature, a small business can run an AI-powered chatbot without incurring constant database costs when the chatbot isn't actively being used. This makes advanced AI accessible on a budget.

· Securely testing new AI model features: A developer can create a rapid, isolated database branch for each new AI model iteration. This allows them to test its performance and safety on a realistic dataset without risking the integrity of the production database, speeding up the development cycle.

· Auditing AI financial advisor actions: For compliance and trust, SerenDB's time-travel queries can reconstruct exactly what data an AI financial advisor could see at any given moment. This supports transparency and accountability and can help teams meet regulatory requirements.

16

Onetone: PHP Full-Stack Accelerator

Author

wowowoasdf

Description

Onetone Framework is a modern, full-stack PHP framework that integrates backend routing, an Object-Relational Mapper (ORM) for database access, command-line interface (CLI) tools, and frontend build support. Its innovation lies in a unified, developer-friendly experience that uses PHP 8.2+ features for autowired routing and an ActiveRecord-style ORM. It aims to simplify and accelerate the development of complex PHP applications with a cohesive toolset. That toolset includes a built-in Docker setup and a frontend build pipeline powered by Vite and esbuild, which together streamline setup, development, and deployment.

Popularity

Points 5

Comments 2

What is this product?

Onetone Framework is a new PHP framework that bundles essential tools for building modern web applications. At its core, it offers 'autowired routing,' which means the framework intelligently connects incoming web requests to the correct code to handle them, without manual configuration for every route. The 'ActiveRecord-style ORM' simplifies database operations; instead of writing complex SQL queries, you can interact with your database using PHP objects, making data management more intuitive and less error-prone. It also includes a Command Line Interface (CLI) for executing tasks directly from the terminal, Docker setup for easy environment management, and integration with frontend build tools like Vite and esbuild for faster asset processing. This combination of features aims to provide a complete, efficient, and enjoyable development experience for PHP developers.
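"Autowired routing" generally means the framework derives routes from the code's structure instead of a hand-written route table. The post does not show Onetone's actual convention; in PHP, this is commonly done with reflection or PHP 8 attributes. The sketch below is therefore a hypothetical Python illustration of the general idea. It maps `/controller/action/args` onto a method and uses the method's type hints to convert arguments. `UserController`, `CONTROLLERS`, and `dispatch` are invented names.

```python
import inspect

class UserController:
    """Hypothetical controller; a framework would discover it by naming convention."""

    def index(self):
        return "all users"

    def show(self, user_id: int):
        return f"user {user_id}"

# A real framework would scan a namespace or directory instead of this dict.
CONTROLLERS = {"users": UserController}

def dispatch(path: str):
    """Map /<controller>/<action>/<args...> onto a method with no per-route config."""
    parts = [p for p in path.strip("/").split("/") if p]
    controller_cls = CONTROLLERS[parts[0]]
    action = parts[1] if len(parts) > 1 else "index"
    # A production router would reject private names and return 404 on unknown routes.
    handler = getattr(controller_cls(), action)
    # Reflection: convert path segments using the handler's annotated parameter types.
    params = list(inspect.signature(handler).parameters.values())
    args = [p.annotation(raw) if p.annotation is not inspect.Parameter.empty else raw
            for p, raw in zip(params, parts[2:])]
    return handler(*args)

print(dispatch("/users/show/42"))  # user 42
print(dispatch("/users"))          # all users
```

Adding a new method to a controller is enough to expose a new route. This is the "no manual configuration for every route" benefit, traded against less explicit control over URL shapes.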

How to use it?

Developers can integrate Onetone Framework into their projects by following the setup instructions provided in the GitHub repository. Typically, this involves cloning the repository or installing it via Composer. Once set up, developers can define their backend logic using PHP classes and leverage the ORM to interact with their database. For frontend development, the integrated build pipeline with Vite and esbuild allows for rapid development and optimization of JavaScript, CSS, and other assets. The CLI tools can be used for tasks like database migrations or generating code. This framework is ideal for building anything from simple APIs to complex, interactive web applications, offering a streamlined path from idea to deployment. So, if you're building a PHP application and want a faster, more organized way to handle routing, database, and frontend assets, Onetone provides a ready-to-use toolkit.

Product Core Function

· Autowired Routing: Automatically connects web requests to your PHP code, reducing manual configuration and making your application structure cleaner. Useful for quickly building APIs or web pages without tedious route mapping.

· ActiveRecord-style ORM: Allows you to interact with your database using PHP objects instead of raw SQL queries, simplifying data manipulation and reducing the chances of errors. Beneficial for developers who want to manage data efficiently and write less boilerplate code.

· Built-in CLI Tooling: Provides command-line utilities for common development tasks like database migrations or code generation, speeding up repetitive processes. Helpful for automating workflows and maintaining consistency in your development environment.

· Frontend Build Pipeline (Vite/esbuild): Integrates modern frontend build tools to quickly process and optimize JavaScript, CSS, and other assets. Great for modern web applications that require fast asset loading and efficient bundling.

· Docker Setup Support: Simplifies the process of setting up development and production environments using Docker, ensuring consistency across different machines. Useful for teams or developers who want a reproducible and isolated development environment.
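In the ActiveRecord pattern, each model object knows how to persist itself (`save()`) and how to load rows (`find()`), so application code rarely writes SQL directly. The Python sketch below illustrates the pattern with the standard-library `sqlite3` module. The `Model`/`User` classes and the table schema are hypothetical and do not reflect Onetone's PHP API.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")

class Model:
    """Minimal ActiveRecord base: each instance maps to one row of `table`."""
    table = ""
    fields = ()

    def __init__(self, pk=None, **values):
        self.id = pk
        for field in self.fields:
            setattr(self, field, values.get(field))

    def save(self):
        """INSERT new rows and UPDATE existing ones; the object persists itself."""
        vals = [getattr(self, f) for f in self.fields]
        # Table/column names come from class constants; values are always parameterized.
        if self.id is None:
            cols = ", ".join(self.fields)
            placeholders = ", ".join("?" for _ in self.fields)
            cur = conn.execute(f"INSERT INTO {self.table} ({cols}) VALUES ({placeholders})", vals)
            self.id = cur.lastrowid
        else:
            assignments = ", ".join(f"{f} = ?" for f in self.fields)
            conn.execute(f"UPDATE {self.table} SET {assignments} WHERE id = ?", vals + [self.id])
        return self

    @classmethod
    def find(cls, pk):
        """Load a row by primary key and wrap it in a model instance (or return None)."""
        cols = ", ".join(cls.fields)
        row = conn.execute(f"SELECT id, {cols} FROM {cls.table} WHERE id = ?", (pk,)).fetchone()
        if row is None:
            return None
        return cls(row[0], **dict(zip(cls.fields, row[1:])))

class User(Model):
    table = "users"
    fields = ("name", "email")

user = User(name="Ada", email="ada@example.com").save()  # INSERT
user.email = "ada@lovelace.dev"
user.save()                                              # UPDATE
print(User.find(user.id).email)  # ada@lovelace.dev
```

The application code at the bottom never mentions SQL. That is the boilerplate reduction the ORM bullet describes, at the cost of hiding the queries that actually run.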

Product Usage Case

· Building a RESTful API: Developers can quickly define API endpoints using the autowired routing and manage data persistence using the ORM, significantly reducing the time to create robust backend services for mobile or web clients.