Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-10-21

SagaSu777 2025-10-22

Explore the hottest developer projects on Show HN for 2025-10-21. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN batch highlights a powerful surge in AI-driven innovation, particularly in empowering developers and streamlining complex workflows. We see a clear trend towards building more sophisticated AI agents capable of autonomous action and intricate task management, from code generation and execution (Katakate, Clink) to content creation and marketing automation (Toffu, RepublishAI). A significant undercurrent is the focus on developer productivity and efficiency, with tools like Django Keel, Clink, and Apicat aiming to reduce boilerplate and accelerate development cycles. Infrastructure and security remain core concerns, with projects like Katakate and LunaRoute offering innovative solutions for isolated code execution and secure AI assistant interactions. The emphasis on open-source and privacy-preserving technologies is also notable, reflecting a growing demand for transparent and user-centric tools. For developers, this is an exciting time to explore how AI can augment their capabilities, build more robust and secure systems, and contribute to the ever-evolving landscape of software development. Entrepreneurs should look for opportunities to leverage these foundational AI tools to solve specific industry problems, creating niche applications that offer significant value by automating complex tasks or providing novel insights.

Today's Hottest Product

Name

Katakate

Highlight

Katakate introduces a novel approach to hosting lightweight virtual machines (VMs) at scale, specifically designed for executing AI-generated code, CI/CD runners, or off-chain AI DApps. The innovation lies in its ability to manage dozens of VMs per node, aiming to circumvent the complexities and potential dangers of Docker-in-Docker setups. Accessible via a CLI and a Python SDK, it targets AI engineers who would rather not dive deep into VM orchestration and networking. Its 'defense-in-depth' philosophy, with multiple layers of security, makes it a useful reference for building scalable and secure code execution environments.

Popular Category

AI/ML

Developer Tools

Infrastructure

Productivity

Web Development

Popular Keyword

AI agents

LLM

CLI

Automation

Developer Tools

Rust

Open Source

WebAssembly

Data Analysis

Infrastructure

API

Technology Trends

AI Agent Orchestration

Decentralized Infrastructure

Developer Productivity Tools

Low-Code/No-Code AI Solutions

Advanced Data Analysis & Visualization

Secure and Efficient Code Execution

Privacy-Preserving AI

Cross-Platform Compatibility

Project Category Distribution

AI/ML Tools (20%)

Developer Productivity (18%)

Infrastructure & DevOps (15%)

Web Development & Tools (12%)

Data Analysis & Visualization (10%)

Security (8%)

Productivity & Utilities (15%)

Niche Applications (12%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |

|---|---|---|---|

| 1 | Katakate: Scalable Lightweight VM Host | 109 | 50 |

| 2 | RustFastServer | 67 | 82 |

| 3 | Django Keel: Production-Ready Django Blueprint | 21 | 22 |

| 4 | Clink: Unified AI Agent Dev & Deploy | 20 | 21 |

| 5 | AutoLearn Agents | 20 | 10 |

| 6 | SierraDB: Rust-Powered Distributed Event Journal | 22 | 2 |

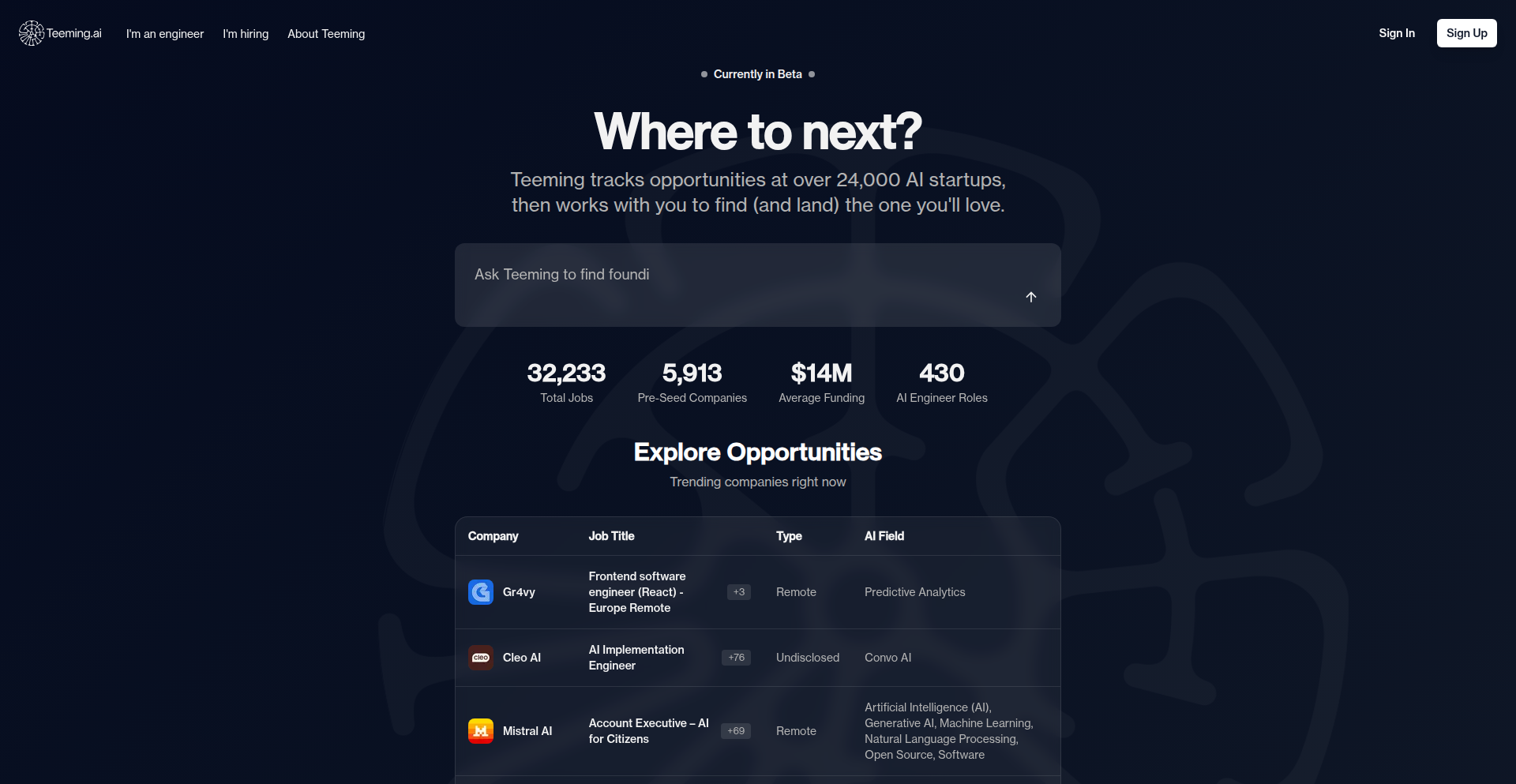

| 7 | AI Startup Navigator | 14 | 10 |

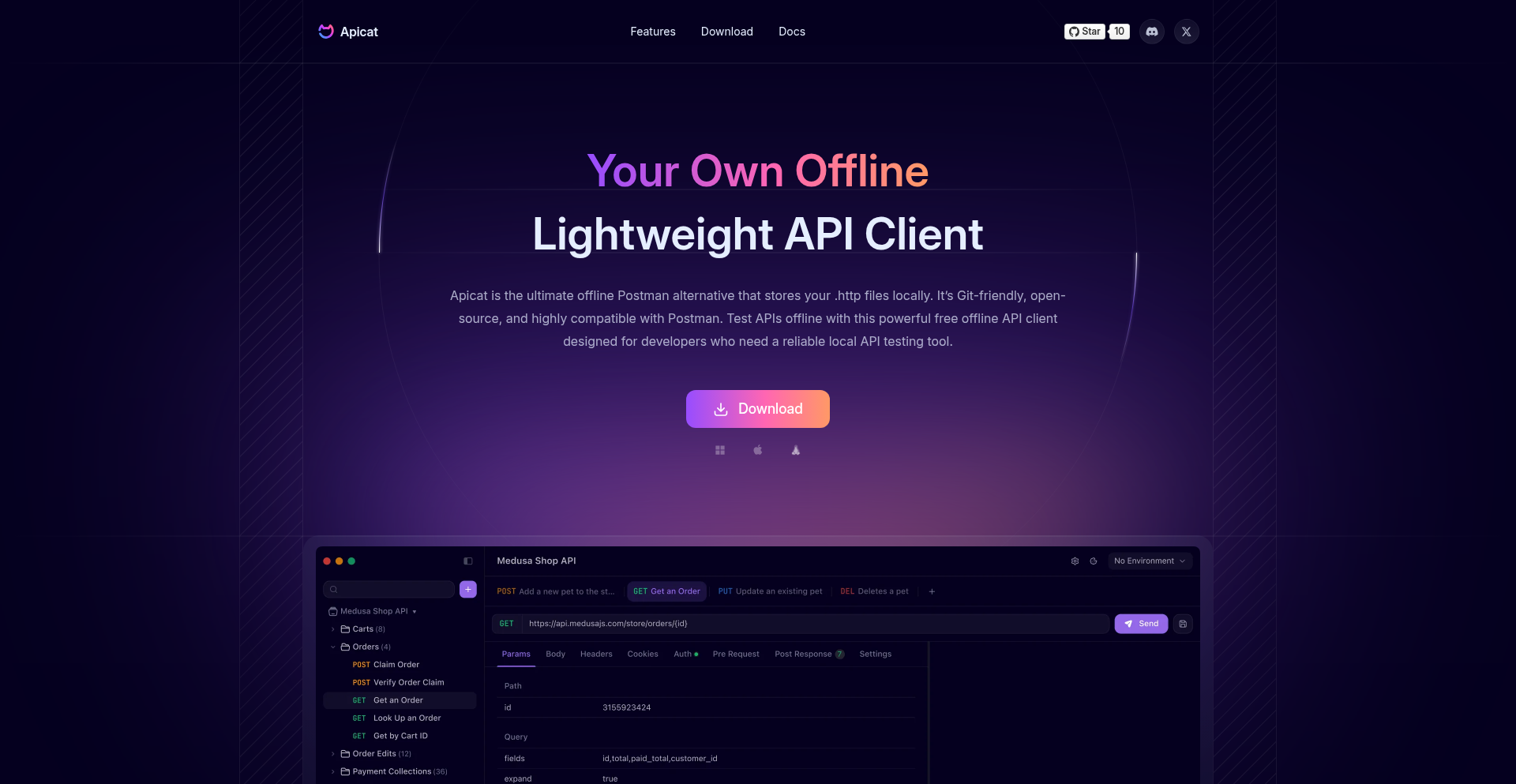

| 8 | Apicat: The Offline API Sentinel | 13 | 3 |

| 9 | GPU-Accelerated LLM Runner | 14 | 2 |

| 10 | TrueSign Anti-Bot Shield | 8 | 6 |

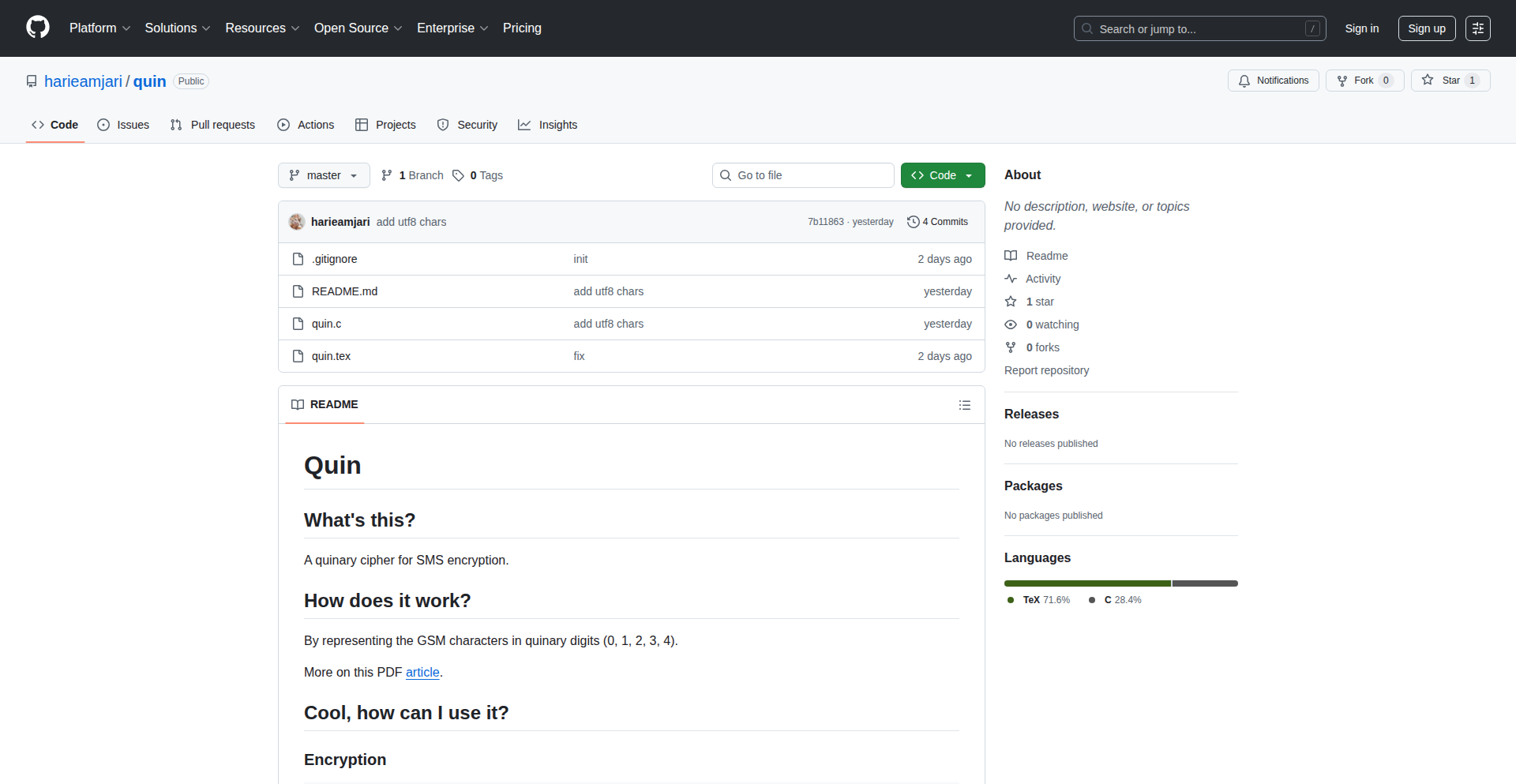

1

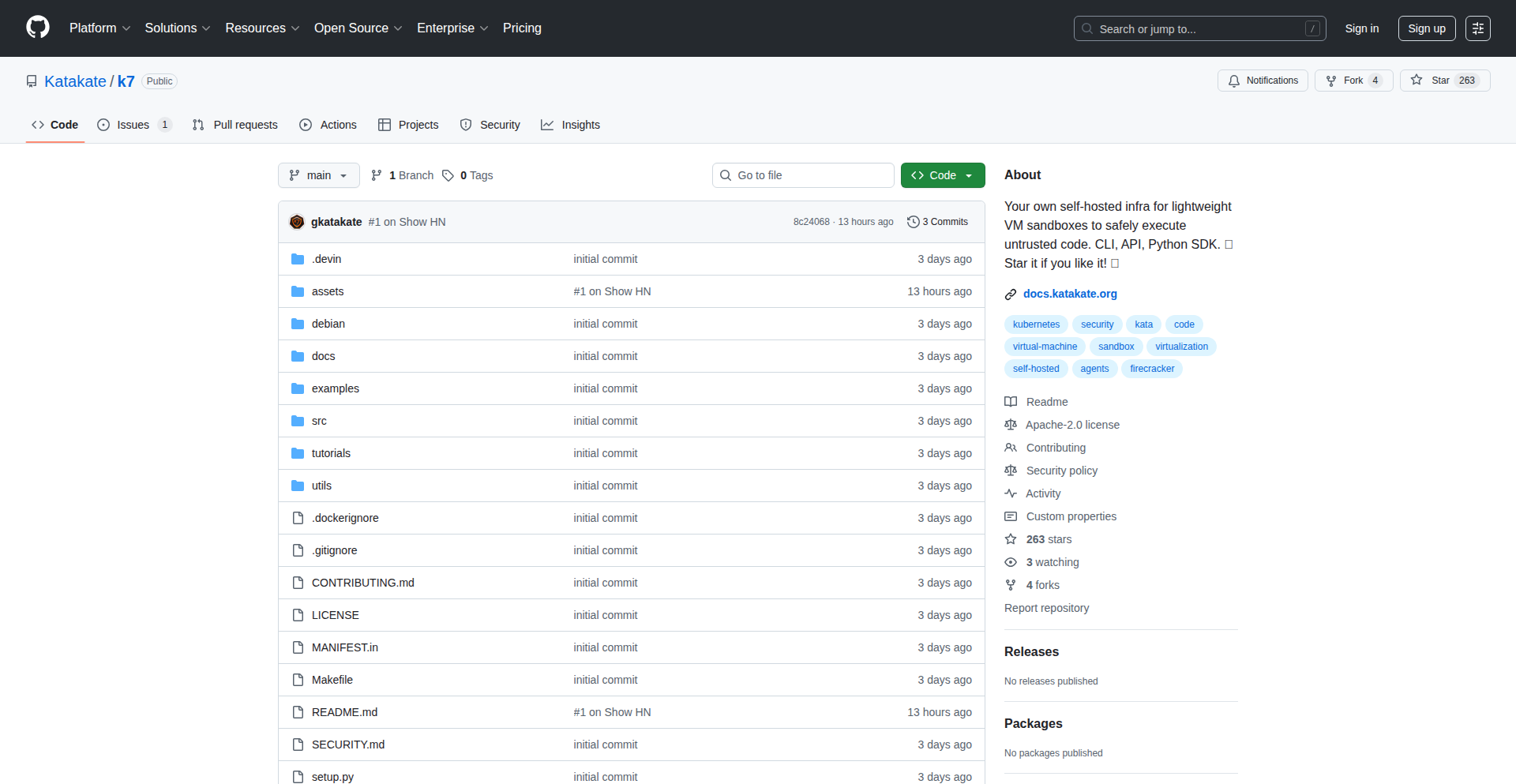

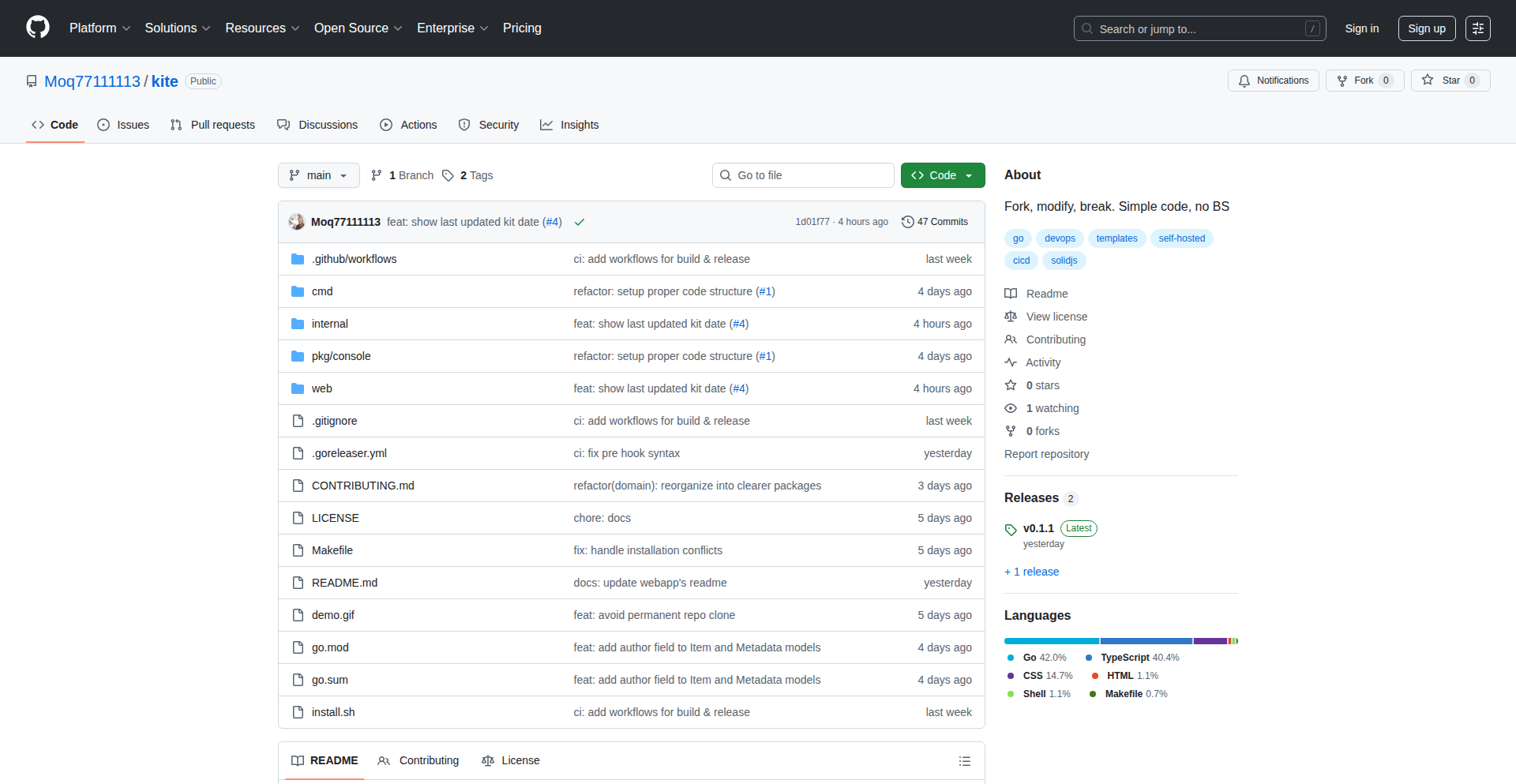

Katakate: Scalable Lightweight VM Host

Author

gbxk

Description

Katakate is a system designed to efficiently host numerous lightweight virtual machines (VMs) on a single node. It's built to be simple to use, especially for AI engineers who might not want to delve into complex VM orchestration. The core innovation lies in its ability to safely execute AI-generated code, power CI/CD pipelines, or run off-chain AI decentralized applications (DApps) without the common pitfalls and complexities associated with Docker-in-Docker setups. This approach prioritizes security and ease of management at scale.

Popularity

Points 109

Comments 50

What is this product?

Katakate is a specialized infrastructure solution that allows you to run many small, isolated virtual machines on one computer or server. Think of it like having dozens of separate mini-computers within a single physical one. The technical innovation is in how it manages these VMs very efficiently and securely. It uses a 'defense-in-depth' strategy, meaning it has multiple layers of security to protect your systems. Unlike workarounds such as nesting Docker containers inside each other (Docker-in-Docker), which can be messy and risky, Katakate provides a cleaner, safer environment for running code, especially for AI tasks or automated development processes. So, the value for you is a more secure, scalable, and easier way to run code and applications without worrying about the underlying infrastructure complexity.

How to use it?

Developers can interact with Katakate through a simple Command Line Interface (CLI) or a Python Software Development Kit (SDK). This makes it accessible even for those who aren't experts in server management. You can use it to spin up isolated environments for testing AI models, deploying automated build and testing pipelines (CI/CD), or running parts of your decentralized AI applications. The ease of use means you can quickly set up these environments, execute code, and tear them down without significant overhead. The value for you is rapid deployment and management of isolated compute resources for your AI and development workflows, saving time and reducing operational headaches.
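The create, execute, tear-down cycle described above can be sketched in Python. This is a minimal local stand-in, not Katakate's CLI or SDK (whose actual calls are not shown in the post): a throwaway working directory plays the role of the VM, and a subprocess with a timeout plays the role of running code inside it. A subprocess shares the host kernel and filesystem, so it provides none of the VM-level isolation Katakate is built for; `run_untrusted` and `RunResult` are hypothetical names.

```python
import subprocess
import sys
import tempfile
from dataclasses import dataclass


@dataclass
class RunResult:
    returncode: int
    stdout: str
    stderr: str
    timed_out: bool


def run_untrusted(code: str, timeout_s: float = 5.0) -> RunResult:
    """Hypothetical helper: run a Python code string in a throwaway directory.

    Local stand-in for a sandbox lifecycle only: the child process shares the
    host kernel and filesystem, so there is NO VM-level isolation here.
    """
    # "Create": a fresh scratch directory per run, deleted when the block exits.
    with tempfile.TemporaryDirectory() as workdir:
        try:
            # "Execute": -I runs the interpreter in isolated mode (ignores
            # PYTHON* environment variables and the user site-packages dir).
            proc = subprocess.run(
                [sys.executable, "-I", "-c", code],
                cwd=workdir,
                capture_output=True,
                text=True,
                timeout=timeout_s,
            )
        except subprocess.TimeoutExpired:
            # subprocess.run kills the child before re-raising TimeoutExpired.
            return RunResult(returncode=-1, stdout="", stderr="timed out", timed_out=True)
    # "Tear down" already happened: the scratch directory is gone at this point.
    return RunResult(proc.returncode, proc.stdout, proc.stderr, timed_out=False)
```

With Katakate, the same three steps would instead provision a lightweight VM, run the code inside it, and destroy the VM. The timeout stays useful either way, because AI-generated code may never terminate.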

Product Core Function

· Host dozens of lightweight VMs per node: This allows for high-density resource utilization, meaning you can run many isolated instances of code or applications on a single piece of hardware. This is valuable for scaling your AI experiments or CI/CD processes efficiently without needing more physical servers.

· Safe execution of AI-generated code: Katakate provides a secure sandbox environment for running code, especially code generated by AI models. This prevents potentially untrusted or buggy AI code from affecting your main systems. The value here is enhanced security and confidence when working with cutting-edge AI development.

· CI/CD runners: It can be used to host runners for continuous integration and continuous deployment pipelines. This means your automated builds, tests, and deployments can happen in isolated, predictable environments, making your development process more robust and reliable. The value is a smoother and more dependable development lifecycle.

· Off-chain AI DApps: For decentralized applications (DApps) that involve AI components, Katakate can host the necessary off-chain processing. This keeps sensitive computations isolated and secure, contributing to the overall integrity of the DApp. The value is enabling complex AI functionalities within decentralized systems without compromising security.

· Avoids Docker-in-Docker complexities: By providing a dedicated VM orchestration layer, Katakate sidesteps the common issues and security concerns associated with nesting Docker containers. This leads to a cleaner, more manageable, and safer setup. The value is reduced technical debt and increased system stability.

Product Usage Case

· Scenario: An AI researcher wants to test multiple versions of a newly generated AI model simultaneously. How it solves the problem: Katakate allows the researcher to spin up several lightweight VMs, each running a different model variant in complete isolation. This prevents interference between tests and allows for rapid comparison. The value: Faster iteration and experimentation for AI development without complex setup.

· Scenario: A software team needs to run automated tests for their application on different operating system configurations. How it solves the problem: Katakate can provision VMs pre-configured with the required OS environments, acting as dedicated CI/CD runners. The tests run in these isolated VMs, ensuring consistent results. The value: More reliable and efficient automated testing, leading to higher code quality.

· Scenario: A developer is building a decentralized application that requires complex AI-driven data processing but wants to keep that processing off the blockchain for cost and performance reasons. How it solves the problem: Katakate can host the AI processing units as isolated VMs, handling the computations securely and efficiently, then feeding the results back to the DApp. The value: Enables advanced AI features in DApps while maintaining scalability and affordability.

· Scenario: A startup wants to deploy internal tools or microservices that require their own dedicated environments but are concerned about the overhead of managing multiple Docker setups. How it solves the problem: Katakate provides a simple way to launch many small VMs for each service, ensuring isolation and preventing conflicts, all managed through a straightforward interface. The value: Simplified deployment and management of microservices with strong isolation guarantees.

2

RustFastServer

Author

dorianniemiec

Description

RustFastServer is a high-performance, user-friendly web server rewritten in Rust. It excels at serving static files and acting as a reverse proxy, featuring automatic TLS encryption out of the box and a simplified configuration format. The project aims to improve both web server efficiency and developer experience, offering a robust option for modern web infrastructure.

Popularity

Points 67

Comments 82

What is this product?

RustFastServer is a web server built from scratch in the Rust programming language, with a strong focus on speed and simplicity. The core innovation lies in its highly optimized performance for common web server tasks like delivering static files (images, HTML, CSS) and acting as a reverse proxy (directing incoming requests to other services). Unlike many servers that require manual setup for security, RustFastServer enables automatic TLS (HTTPS) by default, making secure communication effortless. It also adopts a new, more intuitive configuration file format, making it easier for developers to set up and manage.

How to use it?

Developers can integrate RustFastServer into their projects by treating it as a drop-in replacement for existing web servers or as a dedicated service for specific needs. For serving static content, a developer would simply point RustFastServer to their website's public directory via the configuration file. For reverse proxying, they would specify which incoming URLs should be forwarded to different backend applications (e.g., API servers, microservices). The simplified configuration, likely a declarative format, means less time spent on intricate setup and more time on application logic. Automatic TLS means no complex certificate management is needed for secure connections.
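Reverse proxying by URL path usually comes down to matching the request path against a table of prefixes. The sketch below assumes a hypothetical route table and uses longest-prefix matching, so a more specific rule (`/api/v2/`) wins over a general one (`/api/`). It illustrates the routing decision only; it is not RustFastServer's configuration format or code, neither of which the post shows.

```python
from typing import Dict, Optional

# Hypothetical route table: URL path prefix -> upstream base URL.
# Illustrative only; not RustFastServer's configuration format.
ROUTES: Dict[str, str] = {
    "/api/": "http://127.0.0.1:8000",
    "/api/v2/": "http://127.0.0.1:8001",
    "/auth/": "http://127.0.0.1:9000",
}


def pick_upstream(path: str, routes: Dict[str, str]) -> Optional[str]:
    """Return the full upstream URL for `path` via longest-prefix matching.

    Returns None when no rule matches, meaning the request should be
    served from static files instead of being proxied.
    """
    matches = [prefix for prefix in routes if path.startswith(prefix)]
    if not matches:
        return None
    best = max(matches, key=len)  # the most specific prefix wins
    # Forward the original path unchanged (no prefix stripping).
    return routes[best] + path
```

Requests with no matching prefix fall through to static file serving, which is how the single-page-application case combines both roles. Whether a real server strips the matched prefix before forwarding is a configuration choice.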

Product Core Function

· High-performance static file serving: This is crucial for delivering website assets quickly to users, improving load times and user experience. The speed is attributed to efficient I/O operations and Rust's low-overhead memory management, which needs no garbage collector.

· Efficient reverse proxying: Enables directing web traffic to different backend services or applications, which is fundamental for microservice architectures and load balancing. The optimization here focuses on minimizing latency and maximizing throughput.

· Automatic TLS (HTTPS) by default: Secures web traffic with encryption without requiring manual certificate configuration, enhancing security for both developers and end-users from the moment it's deployed.

· Simplified configuration format: Makes it easier and faster for developers to define server behavior, routes, and proxy settings, reducing setup time and potential errors.

· Built in Rust: Leverages Rust's memory safety and the absence of a garbage collector, which helps prevent whole classes of memory bugs and avoids the pauses that garbage-collected languages can introduce, making for a more stable and faster web server.
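A static file server's core job is mapping a URL path onto a file under a document root without letting requests escape that root. The Python sketch below shows that mapping, including `%2e%2e`-style traversal attempts and a directory index fallback. `resolve_static` and the `index.html` default are assumptions for illustration; the code says nothing about how RustFastServer implements its own (Rust) file-serving path.

```python
from pathlib import Path
from typing import Optional
from urllib.parse import unquote


def resolve_static(doc_root: str, url_path: str) -> Optional[Path]:
    """Hypothetical helper: map a URL path (query string already removed)
    to a file under doc_root.

    Returns None for missing files and for any path that would escape the root.
    """
    root = Path(doc_root).resolve()
    # Decode %xx escapes first so "%2e%2e" is treated like "..".
    relative = unquote(url_path).lstrip("/")
    if "\x00" in relative:
        return None  # NUL bytes are never valid in a file path
    # resolve() collapses ".." segments and follows symlinks.
    candidate = (root / relative).resolve()
    if candidate != root and root not in candidate.parents:
        return None  # traversal attempt: resolved path lies outside the root
    if candidate.is_dir():
        candidate = candidate / "index.html"  # directory index fallback
    return candidate if candidate.is_file() else None
```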

Product Usage Case

· Deploying a single-page application (SPA): Developers can use RustFastServer to serve the HTML, CSS, and JavaScript files of their SPA directly. Its fast static file serving ensures quick initial page loads. The reverse proxy can then route API calls to a separate backend service.

· Hosting a collection of microservices: Each microservice can run independently, and RustFastServer can act as the entry point, directing incoming requests to the appropriate microservice based on the URL path. This simplifies the overall architecture and manages traffic efficiently.

· Securing a traditional website: By pointing RustFastServer at the website's files and relying on its default automatic TLS, developers can ensure all traffic to their site is encrypted, offering a secure browsing experience without complex SSL certificate management.

· Building a development server for rapid prototyping: The ease of use and fast performance make RustFastServer ideal for quickly spinning up a web server during the development phase to test features and serve assets.

3

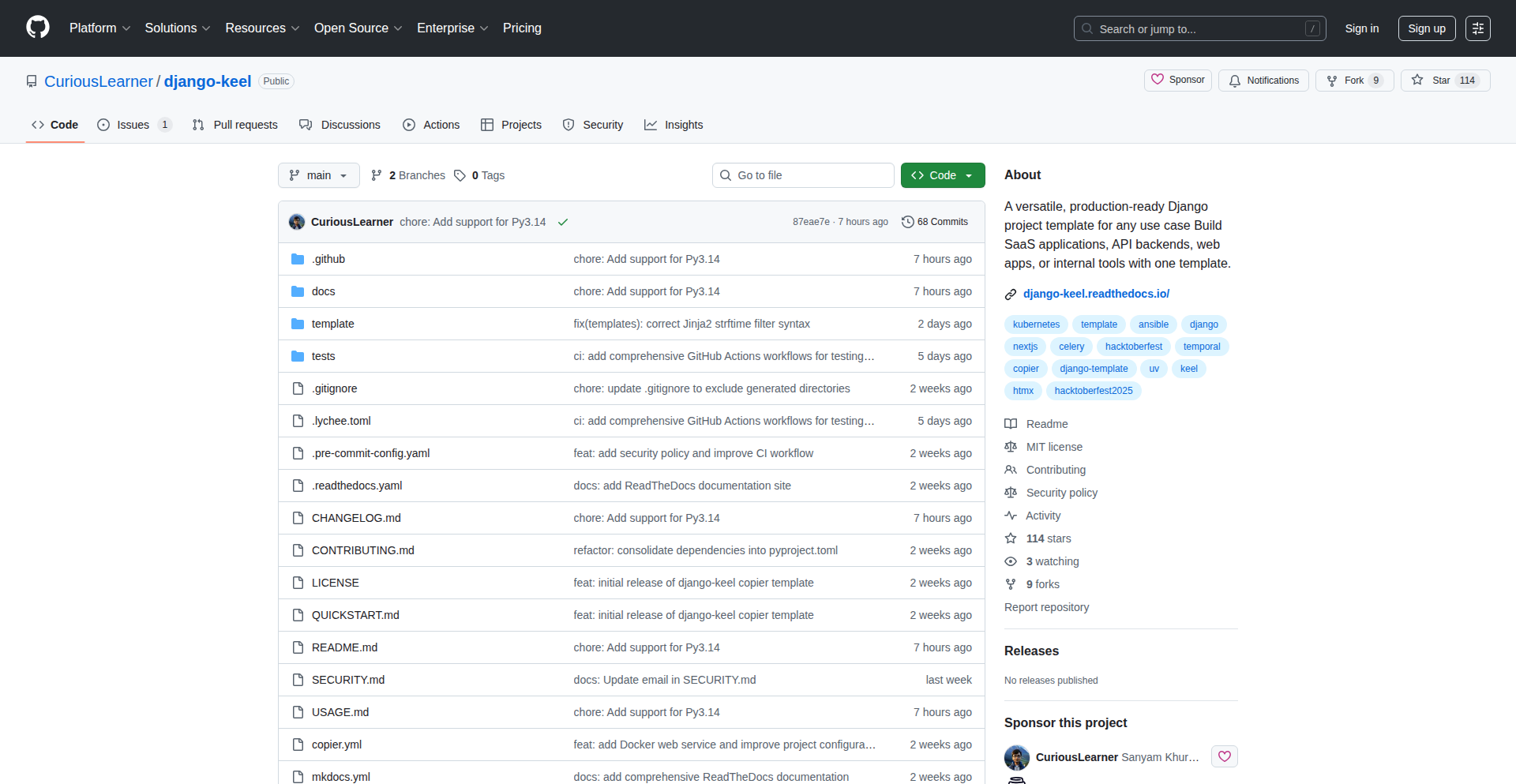

Django Keel: Production-Ready Django Blueprint

Author

sanyam-khurana

Description

Django Keel is a comprehensive Django starter template that bundles a decade of production experience. It automates and pre-configures common boilerplate tasks like environment-first configuration, security hardening, logging, testing, and CI workflows. This means developers can skip the repetitive setup and focus on writing core business logic, reducing initial development time and potential tech debt from the start. So, for you, this means faster and more robust Django project launches with a solid foundation.

Popularity

Points 21

Comments 22

What is this product?

Django Keel is a project template designed to give you a head start on building production-ready Django applications. Imagine starting a new Django project. Normally, you'd spend days setting up things like how to handle different configurations for development versus production (like database passwords), making sure your code is secure, setting up automatic code checking (linting and formatting), and getting your continuous integration (CI) pipeline ready. Django Keel takes care of all these common, time-consuming setup tasks for you. It's built on years of experience, meaning it incorporates battle-tested patterns and sensible defaults. So, for you, this means less time wrestling with setup and more time building your actual application, with confidence that the foundational elements are robust and secure.

How to use it?

Developers can use Django Keel by cloning the repository from GitHub and then starting their project based on this template. It's designed to be a starting point, so you'll build your specific application features on top of its pre-configured structure. The template includes documentation that explains the choices made, so you understand why certain configurations are set up the way they are. You can integrate it into your development workflow by using it as the initial project scaffold. So, for you, this means a simple download and a clear path forward to begin coding your unique application features immediately, rather than configuring generic tools.

Product Core Function

· Environment-first Configuration: Manages application settings based on the environment (e.g., development, staging, production) using environment variables for secrets like database passwords. This ensures sensitive information is never hardcoded and is managed securely. So, for you, this means your application is more secure and adaptable to different deployment environments without manual configuration changes.

· Production-Hardened Security Defaults: Includes pre-configured security measures that are standard practice for production applications, such as protection against common web vulnerabilities. So, for you, this means your application starts with a stronger security posture, reducing the risk of exploits.

· Pre-wired Linting, Formatting, Testing, and Pre-commit Hooks: Automatically sets up tools that check your code for errors (linting), ensure consistent style (formatting), run automated tests, and check code before it's committed to version control. So, for you, this means higher code quality, fewer bugs, and a more maintainable codebase from the outset.

· CI Workflow Ready to Go: Includes a basic continuous integration setup, often for platforms like GitHub Actions, which automates testing and deployment checks. So, for you, this means your application is set up for automated quality assurance and can be deployed more reliably.

· Clear Project Structure: Provides a well-organized and scalable directory structure for your Django project. So, for you, this means your project is easier to navigate, understand, and grow as your application becomes more complex.

· Documentation with Real Trade-offs Explained: Offers insights into the design decisions and the reasons behind specific configurations, helping developers understand the 'why' behind the template. So, for you, this means you can learn from experienced developers' choices and make informed decisions for your own projects.
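Environment-first configuration with production-hardened defaults can be sketched as a function that builds settings from environment variables. This is a hypothetical illustration, not Django Keel's actual settings module: the variable names (`DJANGO_SECRET_KEY`, `DJANGO_DEBUG`, `DJANGO_ALLOWED_HOSTS`) are assumptions, and `ImproperlyConfigured` is a local stand-in for Django's exception of the same name. The security keys (`SECURE_SSL_REDIRECT`, `SESSION_COOKIE_SECURE`, `CSRF_COOKIE_SECURE`, `SECURE_HSTS_SECONDS`) are real Django settings.

```python
import os
from typing import Any, Dict, Mapping


class ImproperlyConfigured(Exception):
    """Local stand-in for django.core.exceptions.ImproperlyConfigured."""


def env_bool(env: Mapping[str, str], name: str, default: bool = False) -> bool:
    """Parse common truthy strings; anything else counts as False."""
    raw = env.get(name)
    if raw is None:
        return default
    return raw.strip().lower() in {"1", "true", "yes", "on"}


def build_settings(env: Mapping[str, str] = os.environ) -> Dict[str, Any]:
    """Build settings from environment variables; secrets are never hardcoded.

    The DJANGO_* variable names are hypothetical, not Django Keel's.
    """
    debug = env_bool(env, "DJANGO_DEBUG", default=False)  # fail-safe default: off
    secret_key = env.get("DJANGO_SECRET_KEY")
    if not secret_key:
        # Refuse to start instead of falling back to a placeholder secret.
        raise ImproperlyConfigured("DJANGO_SECRET_KEY must be set")
    hosts = env.get("DJANGO_ALLOWED_HOSTS", "")
    return {
        "DEBUG": debug,
        "SECRET_KEY": secret_key,
        "ALLOWED_HOSTS": [h.strip() for h in hosts.split(",") if h.strip()],
        # Real Django security settings, switched on whenever DEBUG is off.
        "SECURE_SSL_REDIRECT": not debug,
        "SESSION_COOKIE_SECURE": not debug,
        "CSRF_COOKIE_SECURE": not debug,
        "SECURE_HSTS_SECONDS": 0 if debug else 31_536_000,  # one year
    }
```

In a real Django project these values would be module-level constants in `settings.py`; returning a dict here just keeps the sketch testable without Django installed. Defaulting `DEBUG` to off means a missing variable fails safe rather than exposing debug pages in production.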

Product Usage Case

· A startup team building a new web application needs to launch quickly. By using Django Keel as their project starter, they avoid spending a week on initial setup and immediately begin developing their core user features, ensuring a faster time to market. So, for you, this means your innovative idea can reach users much sooner.

· A solo developer creating a complex API service needs a robust and secure foundation. Django Keel provides pre-configured security and best practices, so the developer can focus on the API logic, knowing the underlying infrastructure is sound and scalable. So, for you, this means you can build sophisticated applications with less fear of underlying technical debt.

· A small company is migrating an existing Django application to a more modern architecture. Django Keel serves as a blueprint for a clean, well-structured project, helping them adopt best practices for deployment and maintenance. So, for you, this means a smoother and more efficient upgrade process for your existing systems.

4

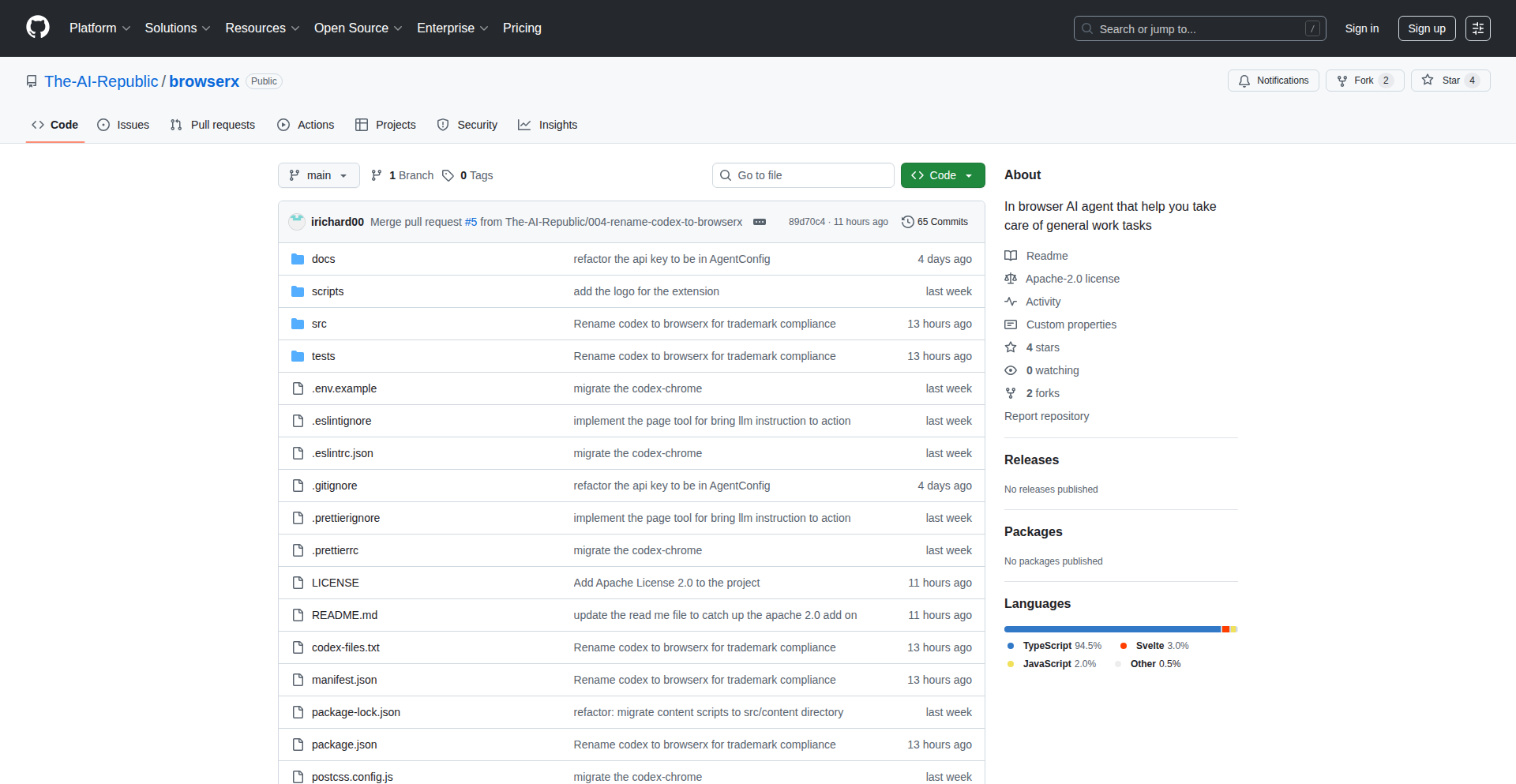

Clink: Unified AI Agent Dev & Deploy

Author

aaronSong

Description

Clink is a platform that lets developers use their existing AI coding agents (like Claude Code, Codex, Gemini) to rapidly build, preview, and deploy applications within an isolated container. It requires no new subscriptions, so you can use your current AI tools more efficiently and get more out of CLI-based AI agents, with faster development cycles and instant deployment, all for free.

Popularity

Points 20

Comments 21

What is this product?

Clink is a development environment that bridges the gap between powerful AI coding agents and the practicalities of building and shipping applications. Instead of just generating code, Clink takes your prompts and transforms them into fully functional applications. It then spins up a temporary, isolated environment (think of it as a mini-computer in the cloud) where your app runs. This allows you to see your app live, make adjustments, and then deploy it to a public web address instantly, without needing to manage complex server setups. The innovation lies in its ability to orchestrate different AI agents, each with its strengths, and provide a seamless workflow from idea to a live, shareable product. It's like having a supercharged development team where each member is an expert AI, and Clink is the project manager that brings it all together.

How to use it?

Developers can integrate Clink into their workflow by simply connecting their existing AI agent subscriptions (e.g., OpenAI, Gemini). You can then start by describing your application idea through prompts. Clink interprets these prompts, leverages the connected AI agents to write the code, builds the application, and provides a live preview URL. For existing projects, Clink supports importing your repositories and deploying them. The platform handles the containerization and deployment to public URLs, making it incredibly easy to share your work or deploy a functional prototype. This is particularly useful for rapid prototyping, testing new ideas, or quickly deploying small utilities without the overhead of traditional deployment processes. It’s designed to be integrated into a developer’s existing toolchain, offering a faster path to production.

Product Core Function

· Prompt to Live Preview: Developers can input their application ideas as text prompts, and Clink utilizes AI agents to generate, build, and instantly provide a live, interactive preview of the application. This means you can see your code come to life in real-time, accelerating the feedback loop and making iterations much faster.

· Bring Your Own Subscription (BYOS): Clink allows developers to use their existing subscriptions for AI coding agents. This is highly cost-effective, as it leverages investments already made in tools like Claude Code, Codex, or Gemini, without requiring additional token purchases or separate subscription fees for development and deployment. You get more value from what you already pay for.

· Instant Deployment to Public URLs: Once an application is built and previewed, Clink can deploy it to a public web address with a single click. This eliminates the complex setup typically involved in hosting applications, making it incredibly easy to share your creations with others or deploy functional prototypes for testing and feedback.

· Multi-Stack Support (Beta): Clink supports building and deploying applications using various programming languages and frameworks like Node.js, Python, Go, and Rust. This flexibility allows developers to work with their preferred technologies and deploy containerized applications seamlessly, catering to a wide range of project needs.

· Repo Imports for Existing Projects: Developers can import their existing code repositories into Clink. This enables them to upgrade and deploy their current projects using Clink's streamlined workflow, essentially bringing their legacy code into a modern, AI-assisted development and deployment pipeline without a complete rewrite.
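Multi-stack support and repo imports imply detecting which language a project uses before building a container for it. One common heuristic is to look for marker files at the repository root; the sketch below assumes that approach, and the marker list, precedence order, `detect_stack` name, and base-image tags are illustrative choices rather than Clink's documented behavior.

```python
from pathlib import Path
from typing import Dict, List, Optional, Tuple

# Hypothetical precedence: marker file -> stack, checked in order; first match wins.
STACK_MARKERS: List[Tuple[str, str]] = [
    ("Cargo.toml", "rust"),
    ("go.mod", "go"),
    ("package.json", "node"),
    ("pyproject.toml", "python"),
    ("requirements.txt", "python"),
]

# Illustrative official Docker Hub base images for each stack.
BASE_IMAGES: Dict[str, str] = {
    "rust": "rust:1",
    "go": "golang:1",
    "node": "node:20",
    "python": "python:3.12-slim",
}


def detect_stack(repo_dir: str) -> Optional[str]:
    """Guess a repository's stack from marker files at its root."""
    root = Path(repo_dir)
    for marker, stack in STACK_MARKERS:
        if (root / marker).is_file():
            return stack
    return None  # unknown stack: the caller must decide how to build it
```

Precedence matters for polyglot repositories (for example, a Rust service that also ships a `package.json` for its front end), so letting users override the guess is a sensible addition.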

Product Usage Case

· Rapid Prototyping of Web Apps: A developer has an idea for a new social media feature. They describe it to Clink via a prompt. Clink, using a combination of AI agents, builds a functional web application prototype within minutes, complete with a live preview. This allows for immediate testing and demonstration to stakeholders, drastically reducing the time from concept to tangible product.

· Deploying Personal Projects for Free: A hobbyist developer creates a small utility script. Instead of setting up a server or using a paid hosting service, they can use Clink to deploy this script as a publicly accessible web application for free, making it available to anyone who needs it without incurring additional costs.

· Testing AI-Generated Code Functionality: A developer uses an AI agent to generate code for a specific task. Clink provides an environment to instantly run and test this generated code as a live application, allowing them to quickly verify its correctness and effectiveness before integrating it into a larger project.

· Migrating and Modernizing Legacy Applications: A team has an older Python web application. They can import the repository into Clink, which can help identify areas for improvement, potentially leverage newer AI models for code refactoring, and then deploy the modernized application as a containerized service with a public URL, simplifying its accessibility and management.

5

AutoLearn Agents

Author

toobulkeh

Description

This project explores the fascinating frontier of self-improving AI agents. It introduces a novel approach to enabling AI agents to learn and acquire new skills autonomously, moving beyond pre-programmed capabilities. The core innovation lies in a meta-learning framework that allows the agent to observe, adapt, and integrate new functionalities, effectively becoming a 'learning machine' for its own skill set. This tackles the challenge of creating more adaptable and versatile AI systems that can evolve in dynamic environments without constant human intervention, offering significant implications for future AI development.

Popularity

Points 20

Comments 10

What is this product?

AutoLearn Agents is a research-oriented project demonstrating a system where AI agents can learn new skills on their own. Imagine an AI that can not only perform a task it was trained for, but also observe how another AI or even a human performs a new, related task, and then figure out how to do it itself. The underlying technology uses a meta-learning approach. This means the agent doesn't just learn specific skills, but it learns *how to learn* new skills. It analyzes its own learning process and the outcomes of its attempts to acquire new knowledge, refining its learning strategy over time. This is a step towards more general artificial intelligence, where AI can become more autonomous and capable of tackling a wider range of challenges.

How to use it?

For developers, AutoLearn Agents offers a conceptual blueprint and potentially reusable code components for building more intelligent and adaptable AI systems. It's particularly relevant for scenarios requiring agents to operate in unpredictable environments or to continuously expand their repertoires without extensive retraining. Integration would typically involve leveraging the agent's meta-learning module as part of a larger AI architecture. Developers could use this to create agents that can adapt to new API changes, learn to interact with new software, or even acquire new problem-solving strategies as needed, thereby reducing development overhead for continuous updates and enhancements.

Product Core Function

· Autonomous Skill Acquisition: The agent can independently learn new skills by observing demonstrations or through trial-and-error, adding new capabilities to its repertoire without explicit reprogramming. This is valuable for creating AI that can evolve its functionality over time, reducing manual updates.

· Meta-Learning Framework: The core innovation is the agent's ability to learn *how* to learn. It optimizes its own learning process, making future skill acquisition more efficient. This means the AI gets smarter at getting smarter, leading to faster adaptation to new challenges.

· Observational Learning: The agent can learn from observing other agents or human examples, mimicking actions and understanding their purpose. This is crucial for transferring knowledge and creating more collaborative AI systems that can learn from each other.

· Adaptive Behavior: The agent's actions and strategies can change based on learned skills and environmental feedback, allowing it to handle novel situations more effectively. This makes the AI more robust and less likely to fail when encountering unfamiliar scenarios.
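The idea of an agent "learning how to learn" can be illustrated, in a much simpler form, as an agent that tracks which learning strategy tends to succeed and prefers it over time. The sketch below swaps in an epsilon-greedy multi-armed bandit for that purpose; it is not AutoLearn Agents' meta-learning framework, and the strategy names and reward values are hypothetical.

```python
import random
from typing import Callable, Dict, List


class StrategySelector:
    """Epsilon-greedy bandit over learning strategies (a simple stand-in,
    not AutoLearn's framework)."""

    def __init__(self, strategies: List[str], epsilon: float = 0.1, seed: int = 0):
        self.epsilon = epsilon
        self.rng = random.Random(seed)  # seeded for reproducible runs
        self.counts: Dict[str, int] = {s: 0 for s in strategies}
        self.values: Dict[str, float] = {s: 0.0 for s in strategies}  # mean reward

    def choose(self) -> str:
        untried = [s for s, n in self.counts.items() if n == 0]
        if untried:
            return untried[0]  # try every strategy once before exploiting
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.counts))  # explore
        return max(self.values, key=self.values.get)  # exploit the best estimate

    def update(self, strategy: str, reward: float) -> None:
        self.counts[strategy] += 1
        n = self.counts[strategy]
        # Incremental mean: v_n = v_(n-1) + (reward - v_(n-1)) / n
        self.values[strategy] += (reward - self.values[strategy]) / n


def practice(selector: StrategySelector, attempt: Callable[[str], float], episodes: int) -> None:
    """Repeatedly pick a strategy, attempt a skill with it, and learn from the outcome."""
    for _ in range(episodes):
        strategy = selector.choose()
        selector.update(strategy, attempt(strategy))
```

With fixed toy rewards the estimates settle immediately; with noisy real outcomes the running mean smooths them, and `epsilon` keeps a small share of attempts exploring strategies that might improve later.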

Product Usage Case

· Developing an AI assistant for a complex software application that can learn new commands or workflows as the application updates, without requiring manual re-training of the assistant. This makes the assistant always up-to-date and reduces maintenance effort.

· Creating autonomous robots for warehouse logistics that can learn to operate new types of machinery or adapt to changes in warehouse layout on the fly, improving operational flexibility and efficiency.

· Building AI agents for video games that can discover and master new strategies or game mechanics as the game evolves, providing a more engaging and dynamic player experience.

· Designing AI agents for scientific research that can learn to operate new experimental equipment or interpret novel data patterns, accelerating the pace of discovery.

6

SierraDB: Rust-Powered Distributed Event Journal

Author

tqwewe

Description

SierraDB is a distributed event store written in Rust. It tackles the challenge of reliably persisting and retrieving sequences of events across multiple machines, offering a robust foundation for event-driven architectures. Its innovation lies in its high-performance, fault-tolerant design, leveraging Rust's memory safety and concurrency features to ensure data integrity and availability. This means your applications can confidently record and replay events, building sophisticated state management and auditing capabilities.

Popularity

Points 22

Comments 2

What is this product?

SierraDB is a specialized database designed to store and manage a chronological log of events, like a detailed diary of everything that happens in your system. Unlike traditional databases that store current states, SierraDB focuses on the history of changes. Built in Rust, it brings high performance and safety to this task. Rust's ownership model prevents common memory-safety errors, which helps keep SierraDB reliable even under heavy load or in distributed environments. This is crucial for applications that need to track every action, reconstruct past states, or implement complex business logic based on event sequences. Think of it as an append-only audit trail for your digital world.

How to use it?

Developers can integrate SierraDB into their applications by connecting to its API. It can act as the central nervous system for event-driven systems, where each significant action (like a user making a purchase, a sensor taking a reading, or a system status change) is recorded as an event. SierraDB then allows applications to read these events in order, enabling them to react to changes, update their own state, or even replay historical events to debug issues or analyze past behavior. It's particularly useful for microservices architectures where coordinating state across different services can be complex. Imagine a scenario where you need to ensure that every order placed is definitively recorded and can be used to trigger subsequent actions like inventory updates and shipping notifications, all while guaranteeing no order is lost.

Product Core Function

· Append-only event logging: Allows for high-throughput ingestion of events, ensuring that every action is recorded immutably. This is valuable for building reliable audit trails and preventing data tampering.

· Distributed consensus: Implements mechanisms to ensure all nodes in the cluster agree on the order and content of events, guaranteeing data consistency and fault tolerance. This means your event data remains accurate even if some servers fail.

· Event stream replay: Enables applications to read and process events from a specific point in time or a sequence, facilitating state reconstruction, debugging, and historical analysis. This allows you to bring your application back to a previous state or understand how it arrived at its current state.

· High-performance I/O: Optimized for speed, leveraging Rust's low-level control and efficient memory management to handle large volumes of event data. This ensures your event-driven system remains responsive, even with a high volume of activity.

· Strong type safety and memory safety (via Rust): Rust's type system and ownership rules eliminate whole classes of bugs in safe code, such as use-after-free errors and data races, that can lead to data corruption or crashes. This translates to increased reliability and reduced debugging time for developers.
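The append-only logging and stream replay described above can be sketched with a single-node, in-memory store. The sketch assumes a hypothetical API (`append`, `read_stream`, `read_all`) and adds optimistic concurrency via an expected stream version, a common event-store technique; the post does not say whether SierraDB exposes it this way. Distribution, consensus, and durable storage, the hard parts SierraDB tackles, are deliberately omitted.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


class WrongExpectedVersion(Exception):
    """Raised when a writer's view of a stream is stale."""


@dataclass(frozen=True)
class Event:
    stream: str
    version: int   # position within its own stream, starting at 0
    position: int  # position in the global log across all streams
    kind: str
    data: dict


@dataclass
class InMemoryEventStore:
    """Hypothetical single-node store; not SierraDB's client interface."""

    log: List[Event] = field(default_factory=list)  # global append-only log
    streams: Dict[str, List[Event]] = field(default_factory=dict)

    def append(self, stream: str, kind: str, data: dict,
               expected_version: Optional[int] = None) -> Event:
        events = self.streams.setdefault(stream, [])
        current = len(events) - 1  # -1 means the stream is empty
        if expected_version is not None and expected_version != current:
            # Optimistic concurrency: reject writes based on a stale read.
            raise WrongExpectedVersion(f"{stream}: expected {expected_version}, at {current}")
        event = Event(stream, current + 1, len(self.log), kind, dict(data))
        events.append(event)  # events are only ever appended, never updated
        self.log.append(event)
        return event

    def read_stream(self, stream: str, from_version: int = 0) -> List[Event]:
        """Replay one stream (e.g. one order) from a given version onward."""
        return self.streams.get(stream, [])[from_version:]

    def read_all(self, from_position: int = 0) -> List[Event]:
        """Replay every stream in global order from a given position onward."""
        return self.log[from_position:]
```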

Product Usage Case

· Building a financial transaction ledger: Recording every deposit, withdrawal, and transfer as an event ensures a complete and auditable history of financial activities, giving a verifiable record of every transaction.

· Implementing a Command Query Responsibility Segregation (CQRS) pattern: SierraDB serves as the event store, capturing the events produced by every state-changing command. Query models are then updated by subscribing to these events, leading to optimized read performance and a clear separation of concerns.

· Developing real-time collaborative applications: Storing every user action (e.g., typing, drawing, editing) as an event allows for seamless synchronization and conflict resolution across multiple users working on the same document or canvas.

· Creating an IoT data pipeline: Ingesting and persisting massive streams of sensor data as events enables historical analysis, anomaly detection, and training of machine learning models based on past sensor readings.
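The ledger and CQRS cases above both rely on a read model that is rebuilt by folding events in log order. The following Python sketch assumes a hypothetical `LedgerEvent` shape carrying a global sequence number; the projection tracks a checkpoint so that replaying or redelivering the same events leaves balances unchanged.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Iterable, NamedTuple


class LedgerEvent(NamedTuple):
    seq: int                        # global position in the event log
    kind: str                       # "Deposited", "Withdrawn" or "Transferred"
    account: str
    amount: int                     # minor units (e.g. cents) to avoid float rounding
    to_account: str | None = None   # only used by transfers


class BalanceProjection:
    """Read model built by folding events in log order."""

    def __init__(self) -> None:
        self.balances: defaultdict[str, int] = defaultdict(int)
        self.last_seq = -1  # checkpoint: highest sequence number applied so far

    def apply(self, event: LedgerEvent) -> None:
        if event.seq <= self.last_seq:
            return  # already applied, so redelivery or replay is harmless
        if event.kind == "Deposited":
            self.balances[event.account] += event.amount
        elif event.kind == "Withdrawn":
            self.balances[event.account] -= event.amount
        elif event.kind == "Transferred" and event.to_account is not None:
            self.balances[event.account] -= event.amount
            self.balances[event.to_account] += event.amount
        self.last_seq = event.seq

    def catch_up(self, events: Iterable[LedgerEvent]) -> None:
        for event in events:
            self.apply(event)
```

The write side only ever appends events; the query side can be dropped and rebuilt from sequence 0 at any time, which is what makes this separation practical.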

7

AI Startup Navigator

Author

tompccs

Description

This project is a specialized job board focused on early-stage AI companies. It tackles the overwhelming noise in modern job applications by directly connecting candidates with founders. Its innovation lies in bypassing traditional gatekeepers, offering unfiltered data access, and using an AI voice agent for a personalized, efficient matching experience, inspired by how a well-connected friend would help. This means less time spent on generic applications and more direct interaction with relevant opportunities.

Popularity

Points 14

Comments 10

What is this product?

This project is a job board specifically designed for the AI startup ecosystem, aiming to cut through the clutter of traditional job searching. Instead of relying on keywords or lengthy forms, it uses AI to match candidates with relevant early-stage AI companies. The core innovation is a combination of direct founder access, unfiltered data browsing, and an AI voice agent named Nell. Nell simulates a technical recruiter's call, instantly identifying potential matches. This approach is built on the insight that many AI startups struggle to find talent through conventional channels and often rely on networks, and it aims to replicate the efficiency and personal touch of a trusted referral, but at scale. For you, this means a more direct and less frustrating path to discovering and applying for exciting AI roles.

How to use it?

Developers can use this project by visiting teeming.ai. Upon arrival, you can immediately start searching, filtering, and browsing job listings without any onboarding process. If you're looking for a more guided experience, you can talk to the AI voice agent, Nell, which conducts a simulated technical recruiter interview directly in your browser and, based on your responses, suggests suitable job openings. When you express interest in a role, the platform sends your profile directly to the company's founders, with no cover letters or lengthy application forms. This makes it easy to quickly get your profile in front of the right people while adding as little friction as possible to your job search.

Product Core Function

· Direct founder connection: Enables candidates to bypass HR and connect directly with startup founders, increasing the chances of a meaningful conversation and faster hiring.

· Unfiltered data access: Allows users to search, filter, and browse job data without artificial barriers, empowering proactive job discovery.

· AI-powered voice recruiter (Nell): Simulates a technical recruiter call to understand candidate skills and preferences, offering personalized job matches efficiently.

· Early-stage AI company focus: Curates opportunities specifically within the rapidly growing early-stage AI sector, providing a targeted job market.

· Investor-grade startup intelligence: Provides data on startups that investors use to evaluate potential, helping candidates make informed decisions about company viability and growth prospects.

· Keyboard navigation: Offers efficient navigation for developers who prefer keyboard-centric workflows, enhancing productivity.

· No cover letters/pointless forms: Streamlines the application process by removing traditional, often time-consuming, bureaucratic steps.

Product Usage Case

· A software engineer specializing in machine learning wants to find roles at cutting-edge AI startups. They can use the 'AI Startup Navigator' to directly search for companies working on novel NLP models, engage with Nell to quickly pinpoint roles matching their specific ML skills, and get their profile directly in front of founders without writing multiple cover letters, leading to faster interviews.

· A senior AI researcher is looking for a research-focused position in a seed-stage AI company. They can leverage the platform's investor-grade intelligence to assess the research potential and funding stability of various startups, and then use the direct connection feature to reach out to the CTO, bypassing generic application portals.

· A developer who prefers keyboard shortcuts can efficiently sift through hundreds of job listings at AI companies, applying filters and saving interesting roles using only their keyboard, significantly speeding up their job search process.

· A founder of a new AI startup needs to hire top talent quickly. They can post their jobs on this board and have a higher likelihood of reaching relevant candidates who are actively seeking AI roles and are pre-vetted by the AI recruiter, reducing time-to-hire.

8

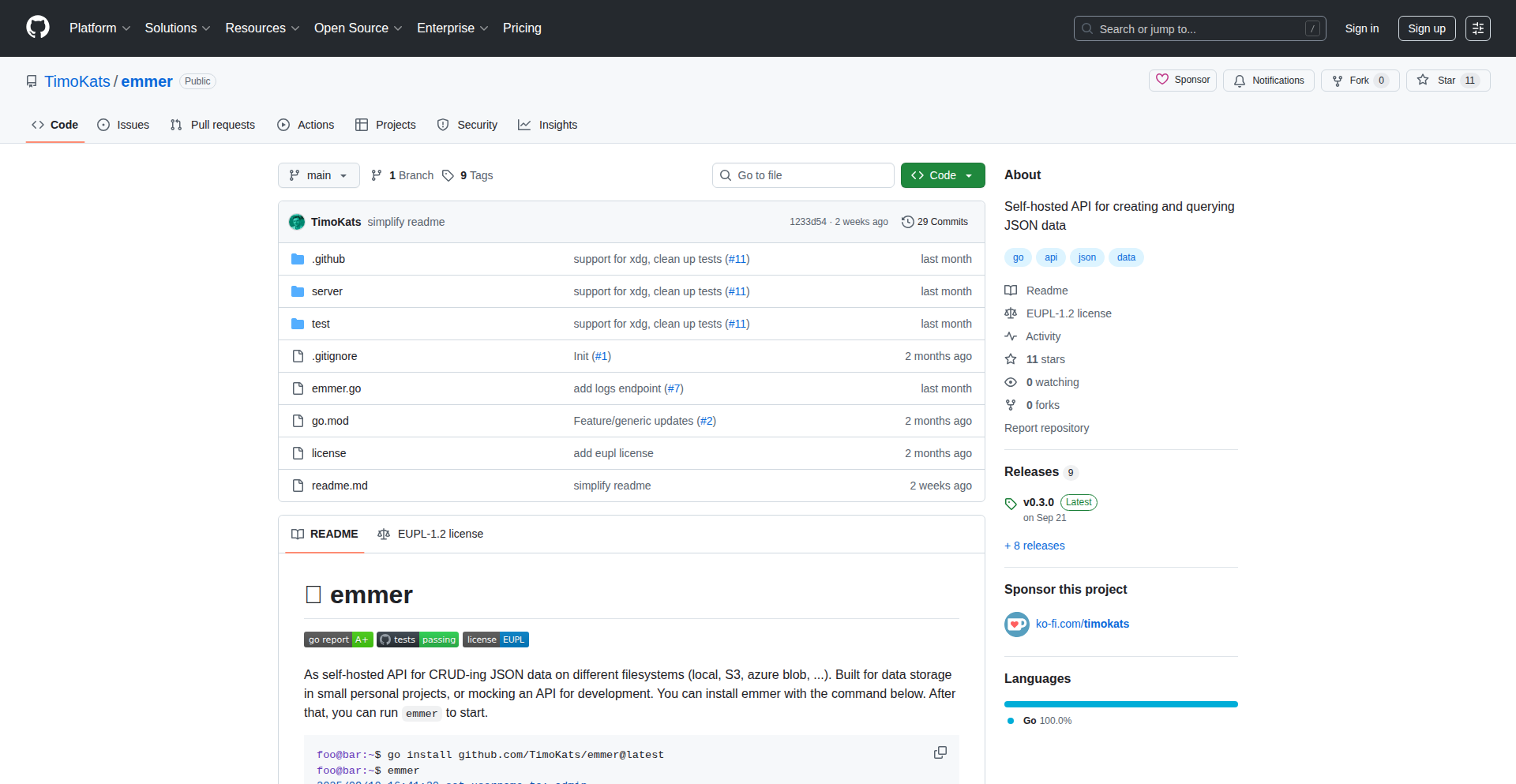

Apicat: The Offline API Sentinel

Author

abacussh

Description

Apicat is an open-source, Git-friendly offline alternative to Postman. It addresses the common developer need for local API testing by storing API request definitions (.http files) directly on your machine. This means you can test your APIs without an internet connection, making it ideal for rapid local development, ensuring data privacy, and seamless integration with version control systems.

Popularity

Points 13

Comments 3

What is this product?

Apicat is a desktop application that functions as an API testing client, much like Postman, but with a key difference: it operates entirely offline. Instead of relying on a cloud service to store your API collections, Apicat uses plain text files (specifically, the .http file format) which are stored locally. This design is inherently Git-friendly, meaning you can track changes to your API tests just like you would with any other code. The innovation lies in providing a robust, feature-rich API testing experience without the dependency on a central server or internet connectivity, enhancing developer workflow by allowing for consistent testing in any environment and promoting better version control practices for API definitions.

How to use it?

Developers can download and install Apicat on their local machine. Once installed, they can create new API requests by writing them directly in the .http file format, or import existing .http files. These files can be stored in a Git repository. Apicat then reads these files, allowing developers to send requests to their local or remote APIs, inspect responses, and manage their API test suites directly from their desktop. This is particularly useful during local development where you might be working with services that are not yet deployed or accessible online, or when you need to ensure API test configurations are private and version-controlled.

Product Core Function

· Offline API Request Execution: Execute HTTP requests (GET, POST, PUT, DELETE, etc.) without depending on a cloud account or sync service; requests to APIs running locally work with no internet connection at all, which is valuable for isolated local development and testing.

· Local .http File Storage: Store all API definitions and request configurations as plain text .http files locally, enabling seamless integration with Git for version control and collaboration.

· Postman Compatibility: Designed to ease migration from Postman so existing collections and workflows can be carried over. Postman itself stores collections as JSON rather than as .http files, so migrating involves converting them into .http files.

· Environment Variable Management: Supports environment variables defined in local files, so request parameters and configurations can change per environment without manual edits.

· Response Inspection: Detailed view of API responses, including status codes, headers, and body, crucial for debugging and understanding API behavior.

· Request History: Keeps a record of past requests, enabling quick re-testing and analysis of previous API interactions.
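Environment variables in .http files are typically written as `{{name}}` placeholders. The sketch below assumes a JSON file mapping environment names to variables (similar to the JetBrains HTTP Client's `http-client.env.json`); whether Apicat uses the same layout is an assumption, and `load_environment` and `substitute` are hypothetical helpers. It fails loudly on an undefined variable rather than sending a literal `{{name}}`.

```python
from __future__ import annotations

import json
import re

# {{ name }} placeholders, allowing optional spaces inside the braces
_VAR = re.compile(r"\{\{\s*([A-Za-z_][\w.-]*)\s*\}\}")


def load_environment(env_json: str, name: str) -> dict[str, str]:
    """Pick one named environment from JSON shaped like {"dev": {...}, "prod": {...}}."""
    return {key: str(value) for key, value in json.loads(env_json)[name].items()}


def substitute(template: str, variables: dict[str, str]) -> str:
    """Replace {{var}} placeholders, raising KeyError on undefined names."""
    def replace(match: re.Match) -> str:
        key = match.group(1)
        if key not in variables:
            raise KeyError(f"undefined variable: {key}")
        return variables[key]

    return _VAR.sub(replace, template)
```

Keeping secrets such as tokens in a separate, git-ignored environment file lets the request files themselves stay safe to commit.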

Product Usage Case

· Developing a microservice locally: A developer can use Apicat to test endpoints of a microservice running on their machine without needing to deploy it to a staging server, saving time and resources.

· Working with sensitive API keys: For APIs that handle sensitive data or require private keys, storing and testing them via Apicat's local files prevents exposure to cloud services, enhancing security.

· Collaborating on API definitions: A team can store their API test definitions in a shared Git repository, and each developer can pull the latest versions and test them locally using Apicat, ensuring consistency.

· Offline development environments: Developers working in environments with limited or no internet access can still perform comprehensive API testing, ensuring productivity.

· Automated API testing setup: Because .http files are plain text stored alongside the code, the same request definitions can be reused by CI/CD tooling, while developers use Apicat to run them locally before deployment.

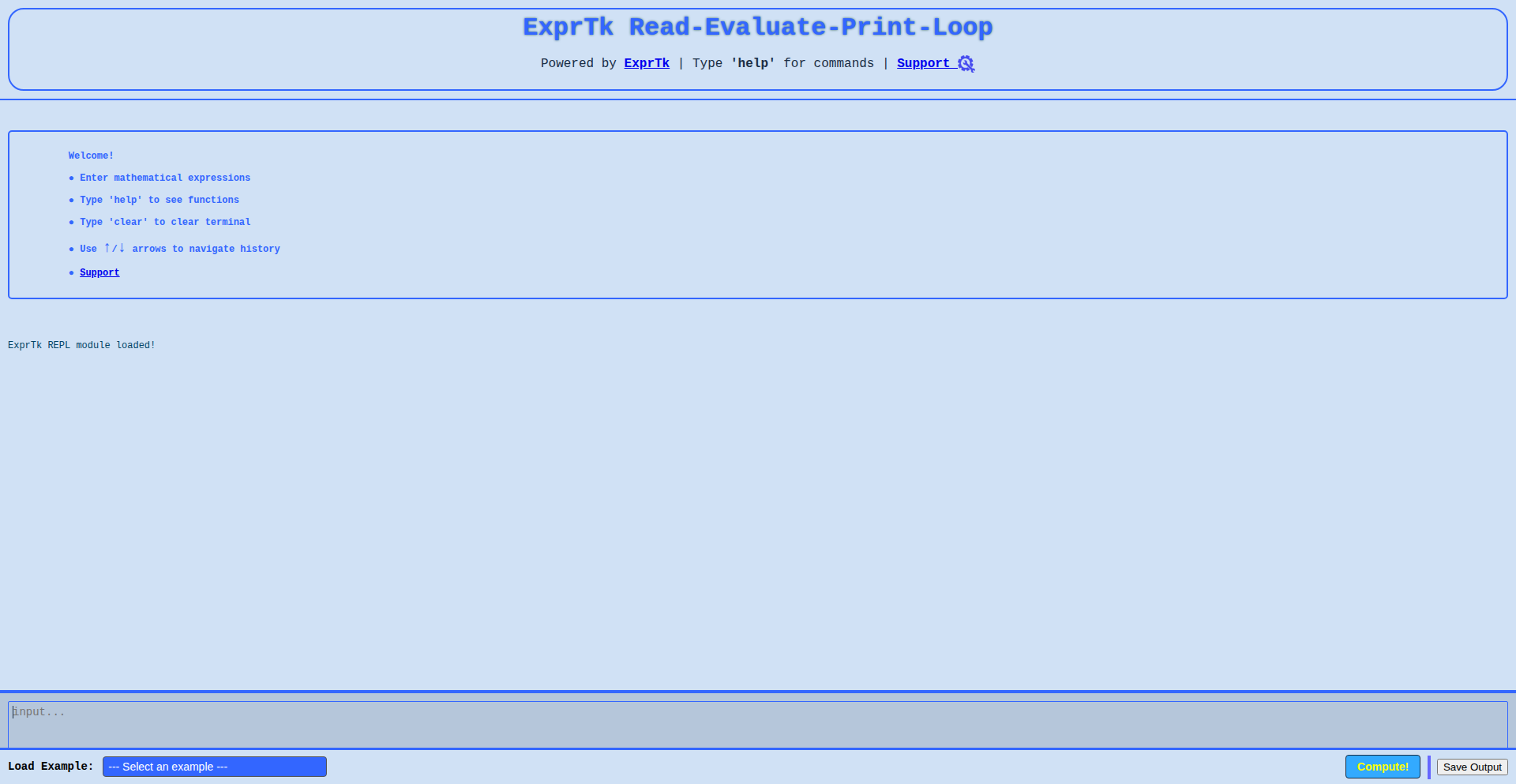

9

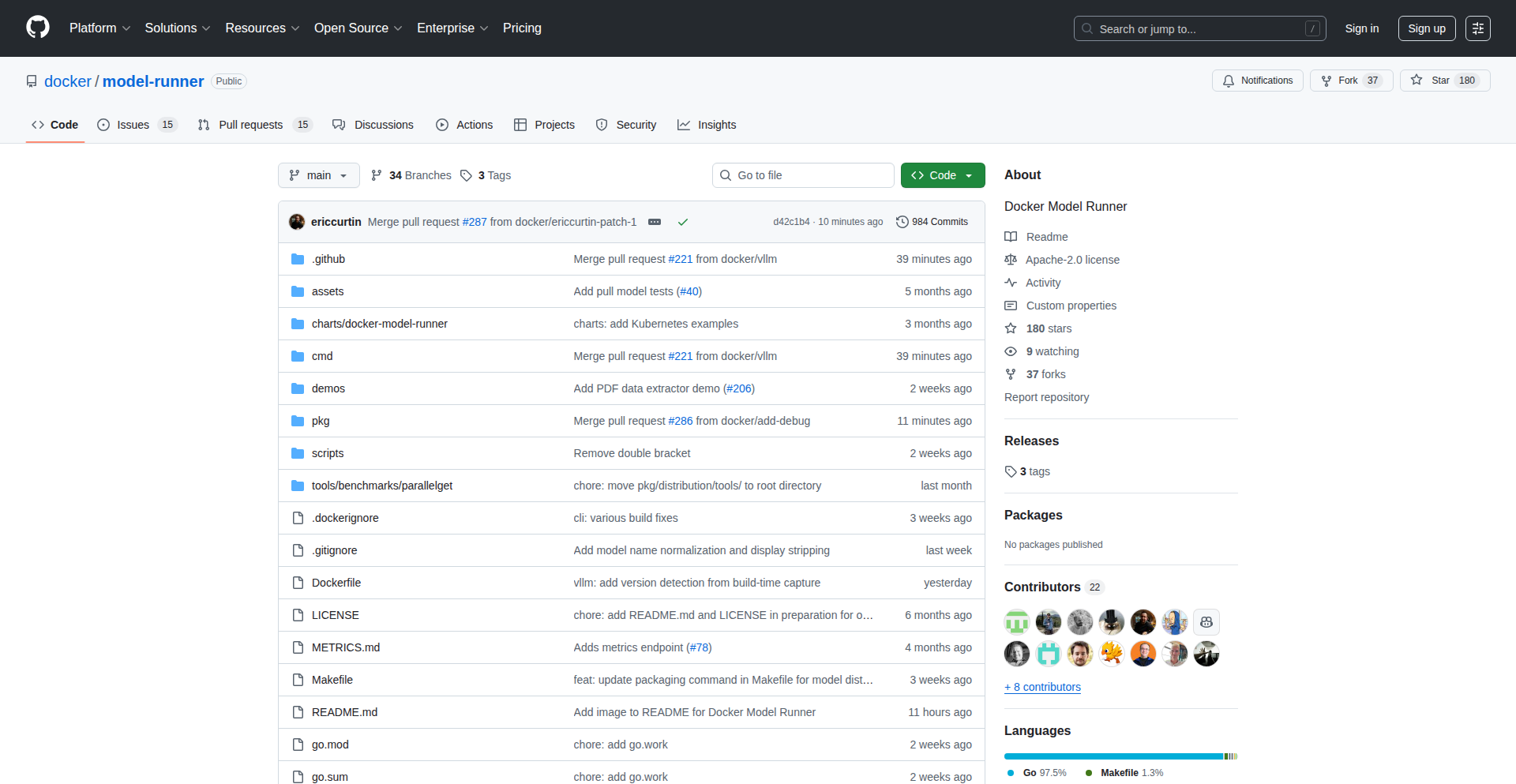

GPU-Accelerated LLM Runner

Author

ericcurtin

Description

This project is a backend-agnostic tool designed to simplify the download and execution of large language models (LLMs) locally. It acts as a unified interface, allowing interaction with various model backends, notably llama.cpp. A key innovation is its ability to package and transport models via OCI registries like Docker Hub, turning it into a central hub for both traditional containerized applications and generative AI models. Recent updates include enhanced GPU support with Vulkan and AMD compatibility, and a refactored monorepo structure to significantly improve the contributor experience and encourage community involvement. So, what does this mean for you? It means easier access to running powerful AI models on your own hardware, with broader hardware compatibility and a more welcoming environment for developers to contribute to its advancement.

Popularity

Points 14

Comments 2

What is this product?

This project is essentially a smart manager for running AI models (like those that power ChatGPT) on your own computer. Instead of dealing with complex setup for each different model, it provides a single, easy way to download and use them. The innovation lies in its flexibility: it can connect to different 'engines' that actually run the models, and it stores and shares models through standard OCI registries such as Docker Hub. This is like having a universal remote for your AI models. The latest improvements add GPU acceleration on a wider range of graphics cards, including AMD cards, through the Vulkan API, and the code has been reorganized to make it much simpler for developers to understand and contribute new features. So, what's the benefit? You get to run advanced AI on your own machine without being a deep tech expert, and the project is actively growing thanks to community contributions.

How to use it?

Developers can use this project as a foundational tool for building AI-powered applications that run locally. It allows them to easily integrate LLMs into their workflows without managing the complexities of individual model setups or dependencies. By leveraging OCI registries, models can be versioned and shared seamlessly, similar to how Docker images are managed. Integration would typically involve using the project's API to select, download, and run specific models, potentially connecting them to other application components. The refactored monorepo makes it easier for developers to contribute to the project itself, adding new model backends or improving existing ones. So, how does this help you? You can quickly prototype and deploy AI features in your applications, leveraging a growing ecosystem of easily accessible models, and even contribute to shaping the future of local AI execution.
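Because models are distributed through OCI registries, a model is addressed by an image-style reference. The Python sketch below splits such a reference into registry, repository, tag, and digest following Docker's name-normalization rules (default registry `docker.io`, default tag `latest`, `library/` prefix for single-name Docker Hub repositories). `parse_reference` and the example model names are hypothetical; this is not the project's own resolver.

```python
from __future__ import annotations

from typing import NamedTuple


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str | None
    digest: str | None


def parse_reference(ref: str, default_registry: str = "docker.io") -> ImageRef:
    """Split an OCI-style reference the way Docker normalizes image names."""
    name, _, digest = ref.partition("@")          # "@sha256:..." pins exact content
    tag = None
    slash, colon = name.rfind("/"), name.rfind(":")
    if colon > slash:                             # ':' after the last '/' is a tag,
        name, tag = name[:colon], name[colon + 1:]  # not a registry port
    first, _, rest = name.partition("/")
    # The first component is a registry host only if it looks like one.
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = default_registry, name
    if registry == "docker.io" and "/" not in repository:
        repository = "library/" + repository     # Docker Hub's official namespace
    if tag is None and not digest:
        tag = "latest"
    return ImageRef(registry, repository, tag, digest or None)
```

Versioning a model then works exactly like versioning an image: a new tag for a new quantization or release, or a digest when you need a reproducible pin.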

Product Core Function

· Local LLM Execution: Provides a consistent interface to download and run large language models on your own hardware, abstracting away backend complexities. This is valuable because it allows you to experiment with and deploy AI models without needing powerful cloud infrastructure or specialized knowledge for each model type.

· Backend Agnostic Design: Supports multiple underlying model execution engines (like llama.cpp), allowing users to choose the best fit for their needs and hardware. This is useful as it prevents vendor lock-in and ensures compatibility with a wider range of AI model implementations.

· OCI Registry Integration: Enables models to be stored, shared, and versioned using standard OCI registries (like Docker Hub), treating AI models like containerized applications. This simplifies model distribution and management, making it easier to discover and deploy new models.

· Vulkan and AMD GPU Support: Extends hardware acceleration capabilities to a broader set of GPUs, including those from AMD, leveraging the Vulkan graphics API. This is significant for developers who want to leverage their existing hardware for faster AI inference, making local AI more accessible and performant.

· Contributor-Friendly Monorepo: Restructures the project into a monorepo to improve code clarity and reduce the barrier for new developers to contribute. This fosters community growth and innovation, leading to a more robust and feature-rich project over time.
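The backend-agnostic design described above can be sketched with a structural interface: callers ask the runner for a model, and the runner picks whichever registered backend supports that model's format. `InferenceBackend`, `EchoBackend`, and `ModelRunner` are hypothetical names; a real backend would wrap an engine such as llama.cpp (whose native model format is GGUF) instead of echoing the prompt.

```python
from __future__ import annotations

from typing import Protocol


class InferenceBackend(Protocol):
    name: str

    def supports(self, model_format: str) -> bool: ...
    def generate(self, model: str, prompt: str) -> str: ...


class EchoBackend:
    """Stand-in backend for illustration only; it does no real inference."""
    name = "echo"

    def supports(self, model_format: str) -> bool:
        return model_format == "gguf"

    def generate(self, model: str, prompt: str) -> str:
        return f"[{model}] {prompt}"


class ModelRunner:
    def __init__(self, backends: list[InferenceBackend]) -> None:
        self.backends = backends  # in priority order, e.g. a GPU build before a CPU build

    def run(self, model: str, model_format: str, prompt: str) -> str:
        # Callers never name a backend; the first one that can load the format wins.
        for backend in self.backends:
            if backend.supports(model_format):
                return backend.generate(model, prompt)
        raise LookupError(f"no backend can run {model_format!r} models")
```

Keeping backend selection inside the runner is what lets new engines, or GPU-specific builds of an existing one, be added without changing calling code.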

Product Usage Case

· A developer wants to build a local chatbot application that can run offline. They can use model-runner to easily download and integrate a pre-trained LLM from Docker Hub, bypassing complex manual setup. This allows for rapid prototyping of AI-driven conversational interfaces without relying on external APIs.

· A machine learning engineer needs to test different LLM architectures for a specific task. Using model-runner, they can quickly switch between various models available on OCI registries and run them locally with GPU acceleration (even on an AMD card), streamlining experimentation and benchmarking.

· An open-source enthusiast wants to contribute to the advancement of local AI. The project's refactored monorepo and clear architecture make it easier for them to understand the codebase and submit pull requests for new features or bug fixes, accelerating the project's development.

· A small business owner wants to integrate AI-powered text generation into their internal documentation tools. They can deploy model-runner on a local server, download a suitable LLM, and connect it to their existing systems, gaining AI capabilities without significant cloud costs or infrastructure management.

10

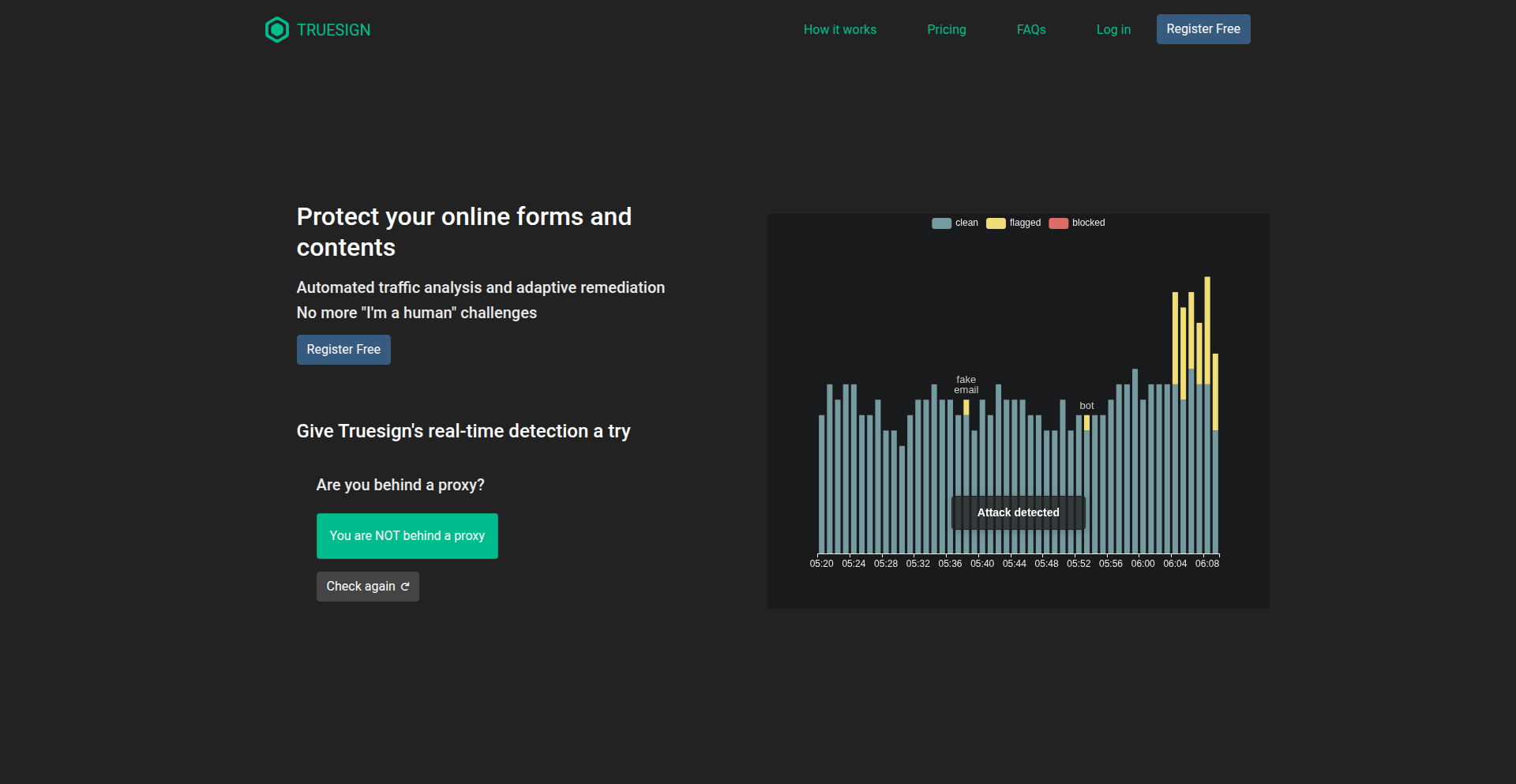

TrueSign Anti-Bot Shield

Author

juros

Description

TrueSign is a novel service designed to detect bots, proxies/VPNs, and fake emails with a single browser request, eliminating the need for user-facing challenges. It protects public forms, content, and APIs by analyzing visitor behavior and providing actionable insights for developers to block malicious traffic or grant access based on predefined rules. This offers a seamless user experience while significantly enhancing security against automated threats, making it ideal for applications needing to safeguard their data and interactions.

Popularity

Points 8

Comments 6

What is this product?

TrueSign is a sophisticated bot, proxy, and fake email detection system that operates without requiring users to solve captchas or interact with intrusive challenges. Its core innovation lies in its ability to analyze a user's request from their browser in a single pass. It employs a combination of techniques to infer the nature of the visitor. For instance, it might look at subtle behavioral patterns, browser fingerprinting, and network characteristics associated with automated scripts or anonymizing services. The system then flags or blocks requests based on rules you set, or provides a verified token indicating the visitor's legitimacy, allowing you to control access to your forms, content, or APIs. This means you get robust protection without frustrating your legitimate users.

How to use it?

Developers can integrate TrueSign into their web applications by simply adding a small snippet of code to their frontend, or by configuring their backend to interact with TrueSign's API. For example, to protect a form, you would send the form submission request through TrueSign before it reaches your backend. If TrueSign identifies the submitter as a bot or using a suspicious proxy, it can block the request directly or return a specific response that your application interprets. For content protection, you could use TrueSign to authenticate visitors before serving sensitive data. The system also provides an admin dashboard where you can review detected threats, analyze traffic patterns, and dynamically adjust your protection rules. This allows for flexible and responsive security management, giving you control over who accesses your digital assets.
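The rule-based flow described above can be pictured as a small decision function on your backend. This Python sketch assumes a hypothetical verdict with `is_bot`, `is_proxy`, and `email_domain` fields; TrueSign's actual response format is not described here, and the disposable-domain list is purely illustrative.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    REVIEW = "review"
    BLOCK = "block"


@dataclass(frozen=True)
class Verdict:
    # Hypothetical fields; a real detection response may be shaped differently.
    is_bot: bool
    is_proxy: bool
    email_domain: str | None = None


DISPOSABLE_DOMAINS = {"mailinator.com", "10minutemail.com"}  # illustrative only


def decide(v: Verdict, block_proxies: bool = False) -> Action:
    """Map a detection verdict to an action using site-specific rules."""
    if v.is_bot:
        return Action.BLOCK
    if v.email_domain and v.email_domain.lower() in DISPOSABLE_DOMAINS:
        return Action.BLOCK
    if v.is_proxy:
        # Plenty of legitimate users browse through VPNs, so default to review.
        return Action.BLOCK if block_proxies else Action.REVIEW
    return Action.ALLOW
```

Exposing `block_proxies` as a parameter mirrors the idea of per-site rules: a signup form and a geo-restricted video page may reasonably treat VPN traffic differently.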

Product Core Function

· Bot Detection: Identifies automated scripts and programs attempting to access your resources, preventing scrapers or malicious bots from overwhelming your systems.

· Proxy/VPN Detection: Flags users employing proxy servers or VPNs, which are often used to mask identity or bypass geographical restrictions, thereby enhancing security and compliance.

· Fake/Disposable Email Detection: Flags fake and disposable email addresses submitted through forms, reducing spam and helping ensure you are communicating with real users.

· Real-time Rule Management: Allows dynamic adjustment of detection rules and blocking policies without service interruptions, enabling rapid response to evolving threats.

· Visitor Tokenization: Issues encrypted tokens for verified visitors, providing authenticated and privacy-preserving data for downstream access control decisions.

· Headless Browser and Script Detection: Catches sophisticated bots that try to mimic human behavior by analyzing underlying browser environments and script execution.

Product Usage Case

· Protecting a public signup form: A web application can use TrueSign to ensure that only legitimate users can create accounts, preventing bot-driven account creation and potential abuse. This solves the problem of spam registrations.

· Securing an API endpoint: An API provider can integrate TrueSign to filter out requests originating from known botnets or anonymized IPs, so that API resources are used by genuine clients and automated abuse, such as scraping or bot-driven request floods, is reduced. This helps maintain API performance and reliability.

· Safeguarding content for logged-in users: A content publisher could use TrueSign to verify that a user accessing premium content is not a bot attempting to scrape articles, ensuring that their valuable content remains exclusive to their intended audience. This protects intellectual property.

· Preventing fake form submissions in e-commerce: An online store can employ TrueSign to block bot-generated order requests or fraudulent reviews, thereby maintaining data integrity and improving customer trust. This addresses the issue of data manipulation.

· Serving content to JavaScript-disabled clients: TrueSign offers a mode that protects content even without JavaScript analysis, allowing developers to secure resources for a wider range of clients, including older browsers or specific machine-to-machine interactions. This expands accessibility and compatibility.

11

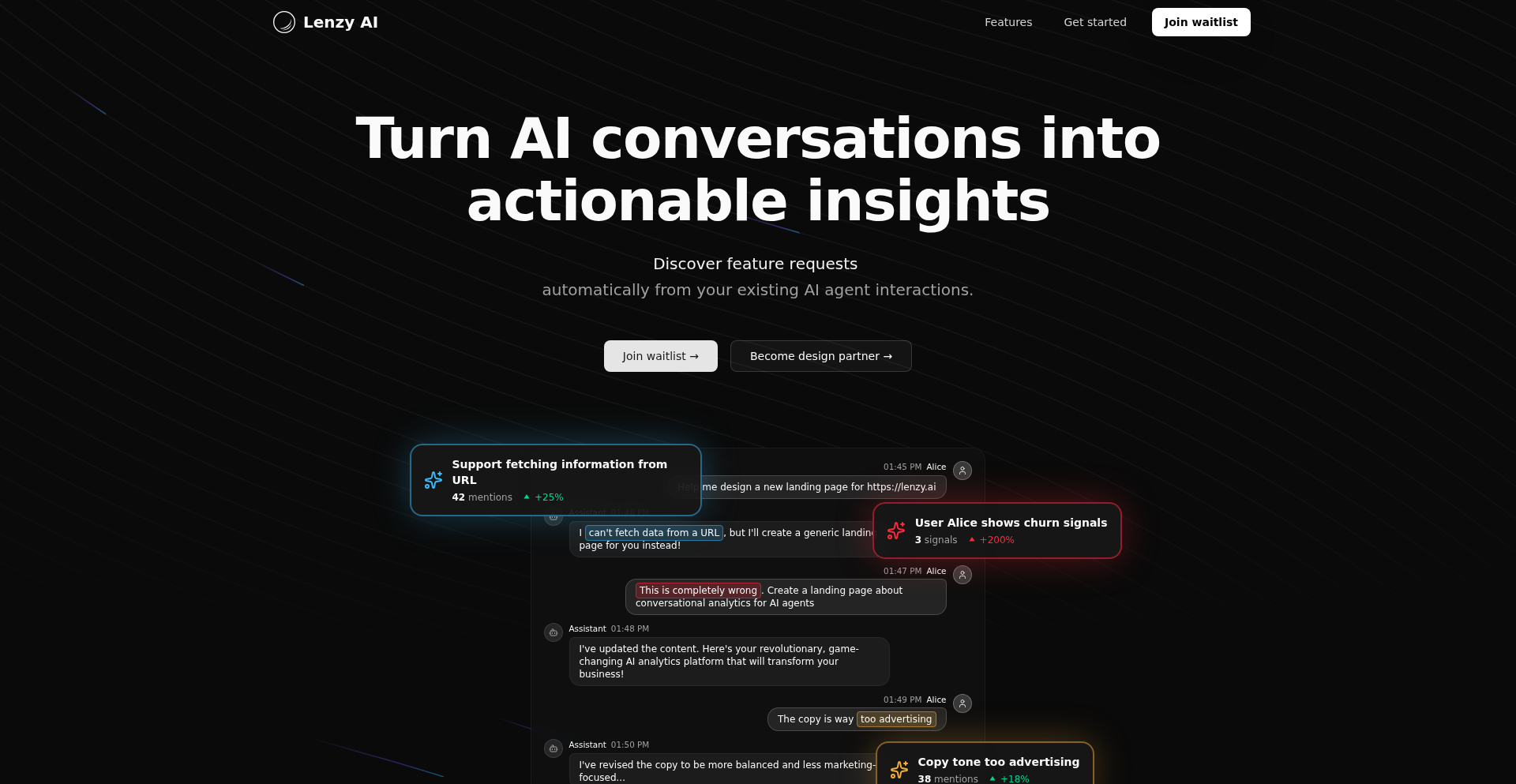

Lenzy AI - Conversational Intelligence for AI Agents

Author

BohdanPetryshyn

Description

Lenzy AI is a pioneering product analytics platform specifically designed for AI agents. It leverages advanced natural language processing (NLP) techniques to continuously analyze the vast amounts of conversational data generated between users and AI agents. This allows businesses to automatically uncover missing features, identify early signs of customer churn, flag conversations needing human intervention, and gain deep insights into user satisfaction and task completion. Unlike existing tools that focus on individual LLM calls, Lenzy AI processes entire conversations, providing a holistic understanding of user interaction and agent performance, thereby transforming raw chat logs into actionable business intelligence. This helps developers build better AI agents that truly meet user needs.

Popularity

Points 7

Comments 6

What is this product?

Lenzy AI is an innovative analytics platform built for the emerging world of AI agents. Think of it as a 'smart listener' for all the conversations your AI assistants are having with your customers. The core innovation lies in its ability to go beyond analyzing single AI responses (like traditional LLM monitoring tools) and instead understand the full context and nuance of multi-turn conversations. It uses sophisticated NLP models to understand what users are asking for, what they like, what frustrates them, and whether the AI is successfully helping them. So, instead of just knowing if an AI responded correctly, Lenzy AI tells you if the user's problem was solved, if they enjoyed the experience, and what features they are wishing for, which is crucial for improving the AI agent and the product it supports.

How to use it?

Developers can integrate Lenzy AI by connecting their AI agent's conversation logs to the platform. This could involve setting up API integrations to stream chat data in real-time or periodically uploading conversation archives. Lenzy AI then processes this data, providing a dashboard with various analytical views. For example, a developer building a customer support chatbot could connect their bot's conversation history. Lenzy AI would then automatically highlight common unresolved issues, identify users expressing frustration before they disengage, and even suggest new intents or capabilities the chatbot should learn based on user requests. This allows developers to quickly iterate on their AI agent based on direct user feedback, rather than relying on manual log reviews or limited quantitative metrics.
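The key distinction above, analyzing whole conversations instead of single LLM calls, can be shown by grouping messages by conversation before flagging them. Lenzy AI uses NLP models; this Python sketch swaps in simple keyword heuristics, and the `Message` shape, phrase lists, and flag names are all hypothetical.

```python
from __future__ import annotations

from collections import defaultdict
from typing import NamedTuple

# Illustrative phrase lists; a production system would use a trained classifier or an LLM.
CHURN_PHRASES = ("cancel my", "switch to", "waste of time")
ESCALATE_PHRASES = ("speak to a human", "talk to a person", "refund")


class Message(NamedTuple):
    conversation_id: str
    role: str   # "user" or "assistant"
    text: str


def analyze(messages: list[Message]) -> dict[str, set[str]]:
    """Group messages into conversations, then flag each conversation as a whole."""
    conversations: dict[str, list[Message]] = defaultdict(list)
    for m in messages:
        conversations[m.conversation_id].append(m)

    flags: dict[str, set[str]] = {}
    for cid, msgs in conversations.items():
        user_text = " ".join(m.text.lower() for m in msgs if m.role == "user")
        found: set[str] = set()
        if any(p in user_text for p in CHURN_PHRASES):
            found.add("churn_risk")
        if any(p in user_text for p in ESCALATE_PHRASES):
            found.add("needs_human")
        # The log ends on a user turn: the agent never gave a final reply.
        if msgs[-1].role == "user":
            found.add("unresolved")
        flags[cid] = found
    return flags
```

None of these signals is visible from any single model call in isolation; each depends on what the user said across the whole exchange or on how the exchange ended.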

Product Core Function

· Feature Discovery: Automatically surfaces feature requests and desired functionalities mentioned by users in conversations, enabling developers to prioritize product roadmap based on genuine user needs.

· Churn Signal Detection: Identifies patterns in conversations that indicate user dissatisfaction or intent to leave, allowing for proactive intervention to retain customers.

· Human Review Triage: Flags complex or sensitive conversations that require human agent escalation, optimizing support resource allocation and ensuring quality customer care.

· Satisfaction and Task Completion Tracking: Measures how often AI agents successfully complete user tasks and gauges overall user sentiment, providing key performance indicators for AI agent effectiveness.

· Custom Insight Generation: Enables the creation of tailored analytical queries to extract specific business intelligence from conversation data, such as common support topics or most frequently used features.

Product Usage Case

· Scenario: A company deploying an AI assistant for internal IT support. Problem: Users are repeatedly asking for a feature that doesn't exist. Solution: Lenzy AI analyzes conversations and flags the recurring request, prompting the company to build the missing feature, thereby improving employee productivity and satisfaction.

· Scenario: A startup building a generative AI content creation tool. Problem: Users are abandoning the tool midway through content creation. Solution: Lenzy AI identifies frustration signals in conversations, such as users expressing confusion or unmet expectations, allowing the startup to pinpoint usability issues and refine the AI's content generation capabilities.

· Scenario: An e-commerce business using an AI chatbot for pre-sales inquiries. Problem: The chatbot occasionally fails to answer complex product questions, leading to lost sales. Solution: Lenzy AI flags conversations where the chatbot couldn't resolve the inquiry, enabling the business to train the AI on more specific product knowledge or escalate to a human sales representative for a better customer experience.

12

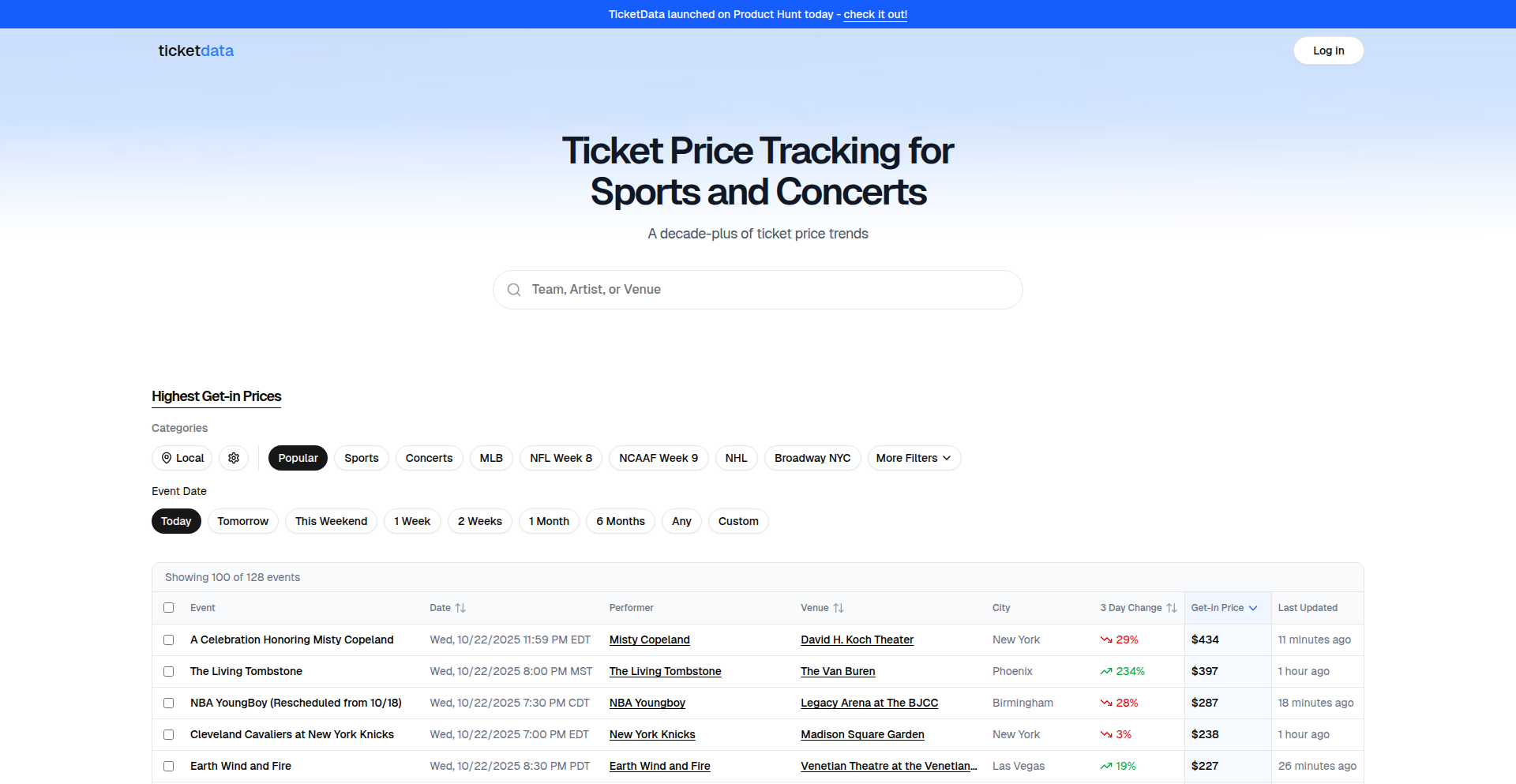

EventTicketPulse

Author

kapkapkap

Description

EventTicketPulse is a free, public tool that leverages real-time data from major ticket resale platforms to provide live price charts and historical trends for live events. It offers insights into ticket price movements, allowing users to make smarter purchasing decisions. The core innovation lies in aggregating diverse data sources, visualizing price fluctuations, and employing predictive modeling (XGBoost) for future price forecasting. This addresses the common problem of opaque and volatile ticket pricing, empowering consumers.

Popularity

Points 7

Comments 5

What is this product?

EventTicketPulse is a web application that aggregates and visualizes ticket price data from various resale marketplaces for concerts and sports events. It's built on a foundation of scraping and processing data from platforms like StubHub, Vivid Seats, and SeatGeek. The innovative aspect is its ability to display live price charts that update frequently, show historical price trends, and even offer price forecasts for select events. These forecasts are generated using a sophisticated machine learning model (XGBoost) trained on years of historical data, event specifics (like opponent, day of the week, venue capacity), and live sales information. So, for you, this means a more transparent and informed way to understand and predict ticket prices, potentially saving you money.

How to use it?

Developers can use EventTicketPulse by accessing the public website to analyze ticket trends for upcoming or past events. The site allows users to view general price history for the cheapest tickets, specific seating zones, or even create custom zones based on their preferred sections and rows. Furthermore, users can set up price alerts to be notified when ticket prices fall below or rise above certain thresholds. For developers interested in deeper integration or building their own applications, the availability of historical data (up to 2.5 years currently) and the underlying data aggregation logic can serve as inspiration or a starting point for their own data-driven projects. The prediction models, while proprietary, highlight the potential of using advanced analytics for event ticketing. So, for you, this means you can easily check price histories, get notified of price drops, and understand market dynamics for any event you're interested in, directly through your browser.
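The custom-zone view described above boils down to filtering an aggregated snapshot of listings before taking the minimum. This Python sketch assumes a hypothetical `Listing` record already normalized across marketplaces; `get_in_price` returns the cheapest price overall, or within a zone defined by sections and optional rows.

```python
from __future__ import annotations

from typing import Iterable, NamedTuple


class Listing(NamedTuple):
    section: str
    row: str
    price: float   # per-ticket price, normalized to one currency
    source: str    # which resale marketplace reported it


def in_zone(item: Listing, sections: set[str], rows: set[str] | None = None) -> bool:
    """True if the listing is in one of `sections` (and, if given, one of `rows`)."""
    return item.section in sections and (rows is None or item.row in rows)


def get_in_price(listings: Iterable[Listing], sections: set[str] | None = None,
                 rows: set[str] | None = None) -> float | None:
    """Cheapest price overall, or within a custom zone; None if nothing matches."""
    prices = [item.price for item in listings
              if sections is None or in_zone(item, sections, rows)]
    return min(prices, default=None)
```

Recording this value for every snapshot taken every few minutes yields exactly the kind of per-zone price history the charts display.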

Product Core Function

· Live Price Charts: Displays real-time ticket prices from multiple resale sites, updated every few minutes. This provides an immediate snapshot of the current market, helping you see if prices are going up or down right now, so you know the best time to buy.

· Historical Price Trends: Offers insights into how ticket prices have fluctuated over time for specific events or seat locations. This historical data allows you to understand typical price patterns and avoid overpaying, ensuring you get good value for your money.

· Customizable Zone Analysis: Enables users to define their own desired seating areas (e.g., specific rows and sections) to track price movements within those targeted zones. This is useful if you have a particular view or seating preference and want to monitor prices for exactly those seats, so you can pinpoint the best deals for your ideal spot.

· Price Alert System: Allows users to set custom price thresholds and receive notifications when ticket prices reach their desired levels. This automates your ticket hunting process, so you don't have to constantly check prices and can snag tickets when they hit your budget.

· Future Price Forecasting: Utilizes a machine learning model (XGBoost) to predict future ticket price movements for select events. This advanced feature helps you anticipate price changes and make strategic decisions about when to purchase, giving you a potential edge in securing tickets at a favorable price.

· Extensive Historical Data: Provides access to a significant amount of past ticket pricing data (currently 2.5 years), enabling in-depth analysis of market behavior over extended periods. This deep historical context helps you understand long-term trends and make more informed decisions for future event planning.

· Event Comparison Tools: Offers functionality to compare pricing across multiple dates or events, facilitating a broader understanding of the market and identifying the most cost-effective options. This allows you to compare different show dates or similar events side-by-side to find the best value overall.
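A price alert should notify once when the threshold is crossed, not on every refresh while the price stays past it. The following hypothetical `PriceAlert` is edge-triggered: it fires on the crossing and re-arms only after the price moves back across the threshold. This re-arming policy is an assumption, not a documented EventTicketPulse behavior.

```python
from dataclasses import dataclass


@dataclass
class PriceAlert:
    threshold: float
    direction: str = "below"   # "below" or "above"
    _armed: bool = True        # False after firing, until the price moves back

    def check(self, price: float) -> bool:
        """Return True only on the update where the price first crosses the threshold."""
        if self.direction == "below":
            hit = price <= self.threshold
        else:
            hit = price >= self.threshold
        if hit and self._armed:
            self._armed = False
            return True
        if not hit:
            self._armed = True  # price moved back; allow the next crossing to fire
        return False
```

Without the armed flag, a price that sits under the threshold for hours would trigger a notification on every refresh.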

Product Usage Case

· A user wants to buy tickets for a popular upcoming concert. They use EventTicketPulse to view the price history of 'Get In Prices' (the cheapest available tickets) over the past few weeks. They notice prices have been steadily increasing. Based on this, they decide to purchase their tickets sooner rather than later to avoid further price hikes, thus saving potential future costs.

· A fan is looking for tickets to a specific sports team's playoff game. They use the custom zone feature to track prices for seats in a particular section that offers a good view. They set up a price alert for when tickets in that section drop below a certain amount. When the alert triggers, they quickly buy the tickets at a price they deem reasonable, securing their desired seats.

· A group of friends wants to attend multiple shows by a favorite band. They use the event comparison feature to see which dates have the lowest average ticket prices and when the prices were most stable, allowing them to plan their concert tour cost-effectively.

· A user is interested in understanding the market dynamics of a past major event. They can access EventTicketPulse's historical data to see how prices evolved leading up to and after the event, providing insights into the ticketing ecosystem for future reference.

· A developer building a personal finance tool for event-goers could explore the underlying data and visualization techniques of EventTicketPulse for inspiration in how to present complex pricing information simply and effectively.

· A small event promoter can use the price trend data to understand typical pricing for similar events in their genre, helping them set more competitive and profitable ticket prices for their own upcoming shows.

13

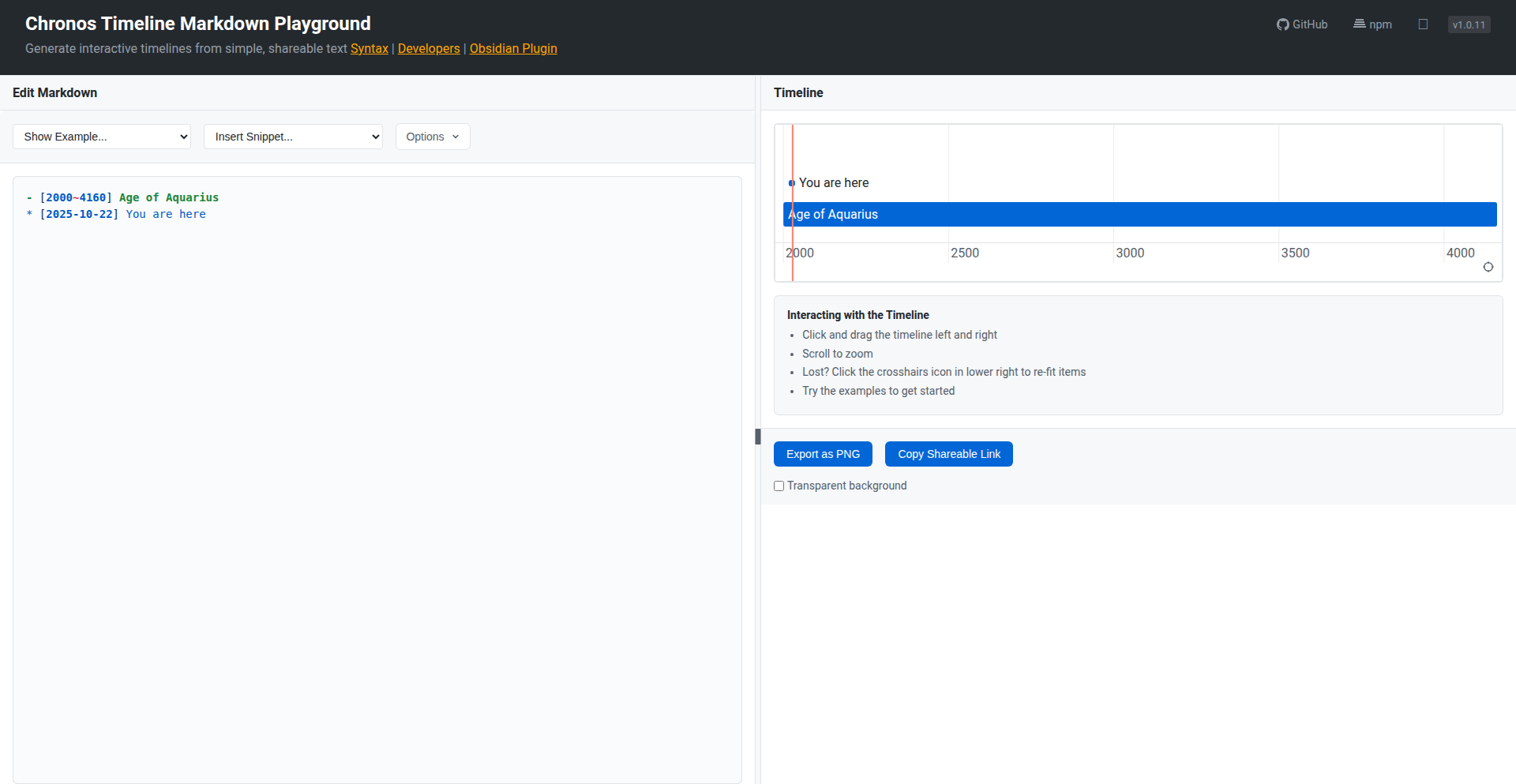

Chronos Timeline-MD: Markdown to Interactive Visualizations

Author

marjipan200

Description

Chronos Timeline-MD is a clever tool that transforms simple Markdown text into beautiful, interactive timelines. It's innovative because it democratizes timeline creation, allowing anyone to visually represent events and data without needing complex software or coding expertise. The core innovation lies in its ability to parse plain text and render it into a dynamic, engaging visual format, making complex temporal information easily digestible. This solves the problem of difficult-to-create and static timeline representations, offering a flexible and accessible solution for developers and content creators alike.

Popularity

Points 10

Comments 0

What is this product?

Chronos Timeline-MD is a library that turns plain text written in Markdown into interactive timelines, bridging simple notes and a dynamic visual timeline. A parsing engine recognizes specific Markdown syntax for events, dates, and descriptions, then translates that text into interactive JavaScript components that can be embedded in websites or applications. The innovation is that a sophisticated visual timeline needs nothing more than plain text as input. The benefit: you can create professional-looking timelines without learning design tools or a programming language, which makes your data and stories more engaging.
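The source does not document the exact Markdown syntax Chronos Timeline-MD accepts, and the library itself is a JavaScript package. This Python sketch therefore only illustrates the parsing idea under an assumed, hypothetical syntax in which each event is a list item such as "- 2024-06-01: Beta release | Opened to testers". Every other line (headings, prose, blank lines) is ignored, and events are returned in date order.

```python
import re
from dataclasses import dataclass
from datetime import date

# Hypothetical event syntax (an assumption, not the library's real grammar):
#   - YYYY-MM-DD: Title | optional description
EVENT_LINE = re.compile(r"^- (\d{4}-\d{2}-\d{2}): ([^|]+?)\s*(?:\|\s*(.*))?$")


@dataclass
class Event:
    when: date
    title: str
    description: str = ""


def parse_timeline(markdown: str) -> list[Event]:
    """Collect event lines from Markdown text and return them in date order."""
    events = []
    for raw in markdown.splitlines():
        match = EVENT_LINE.match(raw.strip())
        if match is None:
            continue  # headings, prose, and blank lines are not events
        day, title, description = match.groups()
        events.append(Event(date.fromisoformat(day), title.strip(), (description or "").strip()))
    return sorted(events, key=lambda event: event.when)


if __name__ == "__main__":
    notes = "# Roadmap\n- 2024-06-01: Beta release | Opened to testers\n- 2024-01-15: Project kickoff\n"
    for event in parse_timeline(notes):
        print(event.when, event.title, event.description)
```

Sorting after parsing means authors can write events in any order in their notes.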

How to use it?

Developers can add Chronos Timeline-MD to their applications through the chronos-timeline-md package on NPM, installed like any other Node.js package. You then pass your Markdown content to the library in your code. In a web application, for example, you could fetch timeline data written in Markdown and hand it to the Chronos component, which renders an interactive timeline directly into the page. This suits projects that display event logs, project roadmaps, historical data, or any other sequence of events you want to present visually and interactively. The benefit: you can quickly add rich, dynamic timelines to your apps, improving user experience and data comprehension.

Product Core Function

· Markdown Parsing Engine: Converts plain text structured with Markdown into timeline data, so you write your timeline in a format you already know, such as a simple text file. This lowers the barrier to data entry for everyone. Typical uses include event logs, historical narratives, and project plans.

· Interactive Timeline Rendering: Displays the parsed data as an interactive timeline. Users can click on events, zoom in and out, or move through time, which makes the data easier to explore. This improves engagement with temporal data and understanding of it, and suits educational content, project management dashboards, and historical exhibits.

· Embeddable Component: The generated timelines can be embedded in websites and applications, so rich visual timelines fit into existing digital products. This offers a flexible, reusable way to present temporal information in blog posts, documentation sites, or interactive reports.

· Customization Options: Lets developers style and configure the timeline's appearance and behavior to match their brand or specific needs, giving them control over the visual presentation and keeping the interface consistent.
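The library renders interactive JavaScript components. This Python sketch only illustrates the embeddable-output and customization ideas. It turns already-parsed (date, title, description) tuples into a self-contained HTML fragment and escapes all user text, so Markdown content cannot inject markup. The class names and the data-date attribute are hypothetical styling hooks, not the library's actual output.

```python
from html import escape


def render_timeline(events: list[tuple[str, str, str]], css_class: str = "timeline") -> str:
    """Render (ISO date, title, description) tuples as an embeddable <ol> fragment.

    Events are sorted by their ISO date string, which sorts chronologically.
    All text is HTML-escaped. css_class is a hypothetical hook for custom styling.
    """
    cls = escape(css_class)
    items = []
    for day, title, description in sorted(events):
        body = f"<time>{escape(day)}</time> <strong>{escape(title)}</strong>"
        if description:
            body += f" <p>{escape(description)}</p>"
        items.append(f'  <li class="{cls}-event" data-date="{escape(day)}">{body}</li>')
    return f'<ol class="{cls}">\n' + "\n".join(items) + "\n</ol>"


if __name__ == "__main__":
    print(render_timeline([("2024-06-01", "Beta <v2>", "Opened to testers"), ("2024-01-15", "Kickoff", "")]))
```

Emitting plain, escaped HTML with predictable class names keeps the fragment easy to drop into a blog post or docs page and restyle with the host site's CSS.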

Product Usage Case

· Displaying a project's development roadmap: A software company can use Chronos Timeline-MD to create an interactive roadmap of their product's features and release dates, written in Markdown. Stakeholders can then easily see past milestones and future plans. The problem solved is that static roadmaps are unengaging and hard to update.

· Creating a historical event log for a website: A history enthusiast can write about significant events in chronological order using Markdown. Chronos Timeline-MD then turns this into a browsable timeline on their website, making historical information engaging for visitors. This addresses the challenge of presenting historical data in a way that's both informative and captivating.

· Visualizing a personal journey or portfolio: An individual can document their career milestones, achievements, or personal projects in a Markdown file and render it as an interactive timeline on their personal website or portfolio. Potential employers or collaborators can then quickly understand their professional trajectory. This replaces a static, less impactful presentation of a career narrative.

14

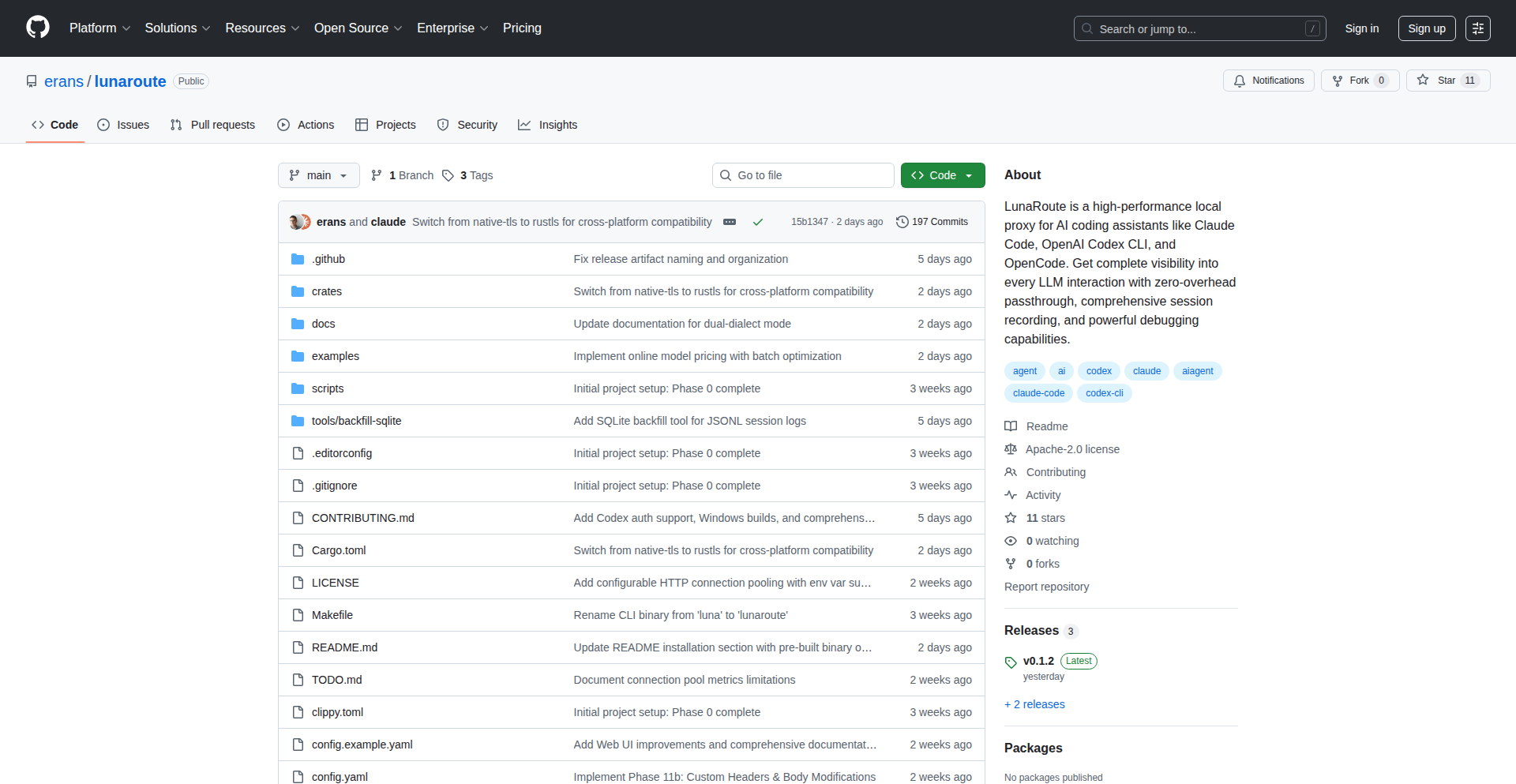

LunaRoute: AI Copilot Traffic Director

Author

erans

Description

LunaRoute is a high-performance local proxy that gives developers full visibility into, and control over, their AI coding assistant interactions. It acts as an intermediary that logs every detail of conversations with AI coding assistants such as Claude Code and OpenAI Codex, protects sensitive data, and lets you switch between AI providers without friction. You can see exactly what your AI is doing, protect sensitive information, and optimize your AI workflows without adding a performance bottleneck.

Popularity

Points 8

Comments 1

What is this product?

LunaRoute is essentially a 'smart pipe' that sits between your computer and AI coding assistants, acting as a traffic controller for your AI conversations. When you ask your assistant a question or have it write code, LunaRoute intercepts the exchange. It does not alter the AI's response; it records what happens: what you asked, what the AI answered, how many tokens were consumed, and which tools, if any, the assistant called. The innovation is that it does this with very little added latency (0.1ms-0.2ms), so it doesn't slow down your work. It can also automatically mask sensitive data such as passwords or API keys before the request is sent to the AI, and it lets you switch between AI services (like OpenAI and Anthropic) without changing your setup. For you, this means your AI interactions are logged and kept private, and you can experiment with different AIs easily.
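The masking step can be pictured as regex-based redaction applied to a request body before it is forwarded. The Python sketch below is not LunaRoute's implementation, and its two patterns (an "sk-" style API key and an email address) are illustrative assumptions. It also shows one way the "tokenization" mentioned for LunaRoute could work. Each match is replaced with a numbered placeholder, and the placeholder-to-value map stays local so a response that echoes a placeholder can be restored.

```python
import re

# Illustrative patterns only; a real setup would tune these to its own data.
PATTERNS = {
    "API_KEY": re.compile(r"\bsk-[A-Za-z0-9]{20,}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.]+\b"),
}


def redact(text: str) -> tuple[str, dict[str, str]]:
    """Replace sensitive matches with numbered placeholders.

    Returns the masked text plus a placeholder -> original mapping that never
    leaves the local machine.
    """
    mapping: dict[str, str] = {}
    for label, pattern in PATTERNS.items():
        def substitute(match: re.Match) -> str:
            placeholder = f"<{label}_{len(mapping) + 1}>"
            mapping[placeholder] = match.group(0)
            return placeholder

        text = pattern.sub(substitute, text)
    return text, mapping


def restore(text: str, mapping: dict[str, str]) -> str:
    """Put original values back into text that contains placeholders."""
    for placeholder, original in mapping.items():
        text = text.replace(placeholder, original)
    return text


if __name__ == "__main__":
    masked, secrets = redact("Use key sk-ABCDEFGHIJKLMNOPQRSTUV and mail dev@example.com")
    print(masked)  # only this masked text would be forwarded upstream
    print(restore(masked, secrets))
```

Numbered placeholders, rather than a blanket "[REDACTED]", keep distinct values distinguishable to the model and make local restoration possible.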

How to use it?

Developers install LunaRoute locally and then configure their AI coding assistant tools (such as OpenAI Codex or Claude Code) to use LunaRoute as their endpoint. With a command-line tool, for example, you might set an environment variable that sends the tool's requests through LunaRoute instead of directly to the provider's servers. LunaRoute then handles forwarding, logging, and privacy features automatically. This is particularly useful when you are trying out new AI models, debugging complex AI-generated code, or meeting data-handling policies. In effect, it becomes a central hub that makes all your AI assistant interactions transparent and secure.
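Pointing a tool at a local proxy usually comes down to a base-URL override. The official OpenAI and Anthropic Python SDKs, for instance, honor the OPENAI_BASE_URL and ANTHROPIC_BASE_URL environment variables. Other tools may use different settings, so check each tool's documentation. This Python sketch shows the resolution logic a client applies. The proxy address in the usage example is hypothetical, because the source does not state which port LunaRoute listens on.

```python
import os
from collections.abc import Mapping
from typing import Optional

# provider -> (override variable, public default endpoint)
DEFAULTS = {
    "openai": ("OPENAI_BASE_URL", "https://api.openai.com/v1"),
    "anthropic": ("ANTHROPIC_BASE_URL", "https://api.anthropic.com"),
}


def resolve_base_url(provider: str, env: Optional[Mapping[str, str]] = None) -> str:
    """Return the base URL a client would call, honoring an env-var override.

    An unset or empty override falls back to the provider's public endpoint.
    """
    env = os.environ if env is None else env
    var, default = DEFAULTS[provider]
    return (env.get(var) or default).rstrip("/")


if __name__ == "__main__":
    proxy_env = {"OPENAI_BASE_URL": "http://localhost:8081/v1"}  # hypothetical LunaRoute address
    print(resolve_base_url("openai", proxy_env))
    print(resolve_base_url("anthropic", proxy_env))
```

Because only the base URL changes, the tool's requests keep their usual paths and payloads, which is what lets a proxy stay transparent.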

Product Core Function

· Comprehensive AI Interaction Logging: LunaRoute records every AI conversation in a structured format (JSONL), including prompts, responses, token usage, and tool execution. This gives you a complete audit trail of what your AI did, supporting debugging, performance analysis, and a better understanding of AI behavior. It answers 'What exactly did my AI do, and why?'

· Zero-Overhead Data Passthrough: The proxy adds only 0.1ms-0.2ms of latency, so it does not noticeably slow your AI assistant's responses. You gain monitoring and control without sacrificing productivity, and your coding workflow stays smooth. This answers 'Will this slow down my AI?'

· Built-in Data Redaction and Tokenization: Sensitive information in prompts or AI responses can be masked or replaced automatically using regular expressions. This improves privacy and compliance by keeping confidential data away from third-party AI models and out of logs where it does not belong. This answers 'How can I keep my sensitive data safe when using AI?'

· Multi-Provider and Model Routing: LunaRoute can route requests to different AI models or providers (e.g., OpenAI, Anthropic) and translate between their APIs. This lets you choose the best AI for a given task, or take advantage of competitive pricing, without reconfiguring your tools. This answers 'Can I easily switch between different AI services?'

· Session Summarization and Analytics: Beyond raw logs, LunaRoute summarizes AI sessions, including token consumption and tool success rates. These summaries show where AI usage is efficient or costly, helping developers see which AIs perform best and where to optimize. This answers 'How efficient is my AI usage?'
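Session summaries like these can be derived from the JSONL log with a single pass. LunaRoute's actual log schema is not given in the source, so this Python sketch assumes a hypothetical record shape with session_id, input_tokens, output_tokens, and a tool_calls list whose entries carry an "ok" flag. It totals tokens per session and computes the tool success rate.

```python
import json
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional

# Hypothetical record shape (LunaRoute's real schema may differ):
# {"session_id": "a", "input_tokens": 120, "output_tokens": 45,
#  "tool_calls": [{"name": "bash", "ok": true}]}


@dataclass
class SessionSummary:
    tokens: int = 0
    tool_calls: int = 0
    tool_successes: int = 0

    @property
    def tool_success_rate(self) -> Optional[float]:
        """Fraction of tool calls that succeeded, or None if no tools were called."""
        return self.tool_successes / self.tool_calls if self.tool_calls else None


def summarize_sessions(jsonl_text: str) -> dict[str, SessionSummary]:
    """Aggregate token usage and tool outcomes per session from JSONL lines."""
    summaries: dict[str, SessionSummary] = defaultdict(SessionSummary)
    for line in jsonl_text.splitlines():
        if not line.strip():
            continue  # tolerate blank lines between records
        record = json.loads(line)
        summary = summaries[record["session_id"]]
        summary.tokens += record.get("input_tokens", 0) + record.get("output_tokens", 0)
        for call in record.get("tool_calls", []):
            summary.tool_calls += 1
            summary.tool_successes += bool(call.get("ok"))
    return dict(summaries)
```

Returning None instead of 0 or 1 for sessions without tool calls keeps "no tools used" distinct from "every tool call failed" in later cost or quality reports.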

Product Usage Case

· Debugging AI-generated code: A developer is using an AI assistant to write complex code and encounters an error. By using LunaRoute, they can review the exact prompts sent to the AI and the AI's responses, including any internal tool calls, to pinpoint where the logic went wrong and how to fix it. This solves the problem of 'black box' AI code generation.

· Ensuring data privacy in regulated industries: A finance company uses an AI coding assistant for internal tools. LunaRoute can be configured to automatically redact all customer account numbers and sensitive financial data from prompts before they reach the AI, ensuring compliance with strict data privacy regulations. This addresses the challenge of using powerful AI tools without compromising sensitive data.

· Experimenting with different LLMs for specific tasks: A developer wants to find the best AI model for generating documentation. They can configure LunaRoute to seamlessly send the same documentation request to both OpenAI's GPT-4 and Anthropic's Claude, then compare the quality and token usage of each response side-by-side, without manually changing API endpoints. This provides an efficient way to evaluate AI performance.

· Optimizing AI API costs: A startup uses AI assistants extensively for code generation. LunaRoute's session summarization helps them track token usage per feature or team member, identifying areas where prompts can be made more concise or where a less expensive AI model might suffice, thereby reducing operational costs. This helps answer 'Are we spending too much on AI?'
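Sending the same request to two providers, as in the documentation example above, relies on reshaping request bodies between APIs. This Python sketch is not LunaRoute's code. It covers only the simplest case: a text-only OpenAI Chat Completions-style body converted into an Anthropic Messages-style body. System messages move to Anthropic's top-level system field, and max_tokens, which the Anthropic API requires, gets a default. Tool calls, images, and streaming are out of scope, and the target model name is whatever the caller supplies.

```python
from typing import Any


def openai_to_anthropic(body: dict[str, Any], model: str, default_max_tokens: int = 1024) -> dict[str, Any]:
    """Reshape a text-only OpenAI chat request into an Anthropic Messages request.

    Assumes every message has plain string content; other roles are rejected.
    """
    system_parts: list[str] = []
    messages: list[dict[str, Any]] = []
    for message in body["messages"]:
        role = message["role"]
        if role == "system":
            # Anthropic takes system instructions as a top-level field, not a message.
            system_parts.append(message["content"])
        elif role in ("user", "assistant"):
            messages.append({"role": role, "content": message["content"]})
        else:
            raise ValueError(f"role not handled by this sketch: {role}")
    translated: dict[str, Any] = {
        "model": model,
        # max_tokens is required by Anthropic but optional in OpenAI requests.
        "max_tokens": body.get("max_tokens", default_max_tokens),
        "messages": messages,
    }
    if system_parts:
        translated["system"] = "\n\n".join(system_parts)
    return translated


if __name__ == "__main__":
    request = {"messages": [{"role": "system", "content": "Be terse."},
                            {"role": "user", "content": "Document this function."}]}
    print(openai_to_anthropic(request, model="your-claude-model"))  # placeholder model id
```

Rejecting unsupported roles loudly, rather than silently dropping them, keeps a side-by-side comparison honest about what each provider actually received.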

15

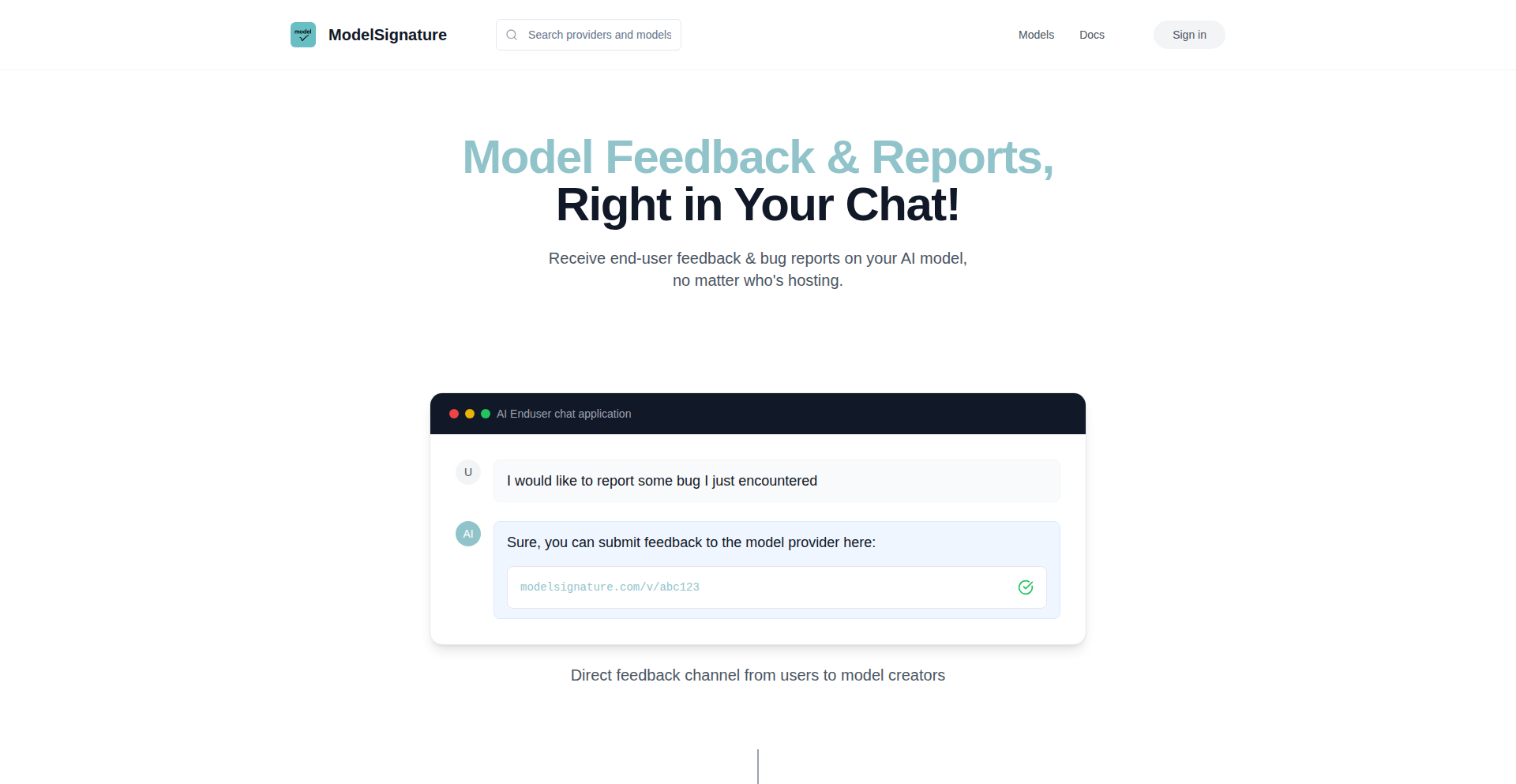

ModelSignature-FeedbackEmbed

Author

FinnLennard

Description

ModelSignature embeds a direct feedback URL, called a 'Model Signature,' into the weights of open-source AI models. End users can then report issues or give feedback straight to the model provider, closing the gap between creators and users. The core idea is to use LoRA fine-tuning to build this persistent feedback mechanism into the model itself, so it remains available after deployment.

Popularity

Points 6

Comments 2

What is this product?

ModelSignature is a new approach to collecting feedback on open-source AI models. Model creators usually hear only from highly technical users who seek out community forums or email addresses. ModelSignature addresses this by using LoRA, a lightweight fine-tuning technique, to embed a unique feedback URL directly in the model's weights. When a user asks the model something like 'where can I report issues?', it replies with the URL of its ModelSignature page. That page is built for detailed bug reports and feedback submission, giving model providers a structured overview of user sentiment and issues. As a result, providers get direct, actionable feedback from the people actually using their models, not just from other developers, which can help them improve model quality and user experience.
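Teaching a model to answer "where can I report issues?" with a URL implies a small supervised dataset that pairs feedback-style questions with an answer containing that URL. The source does not publish ModelSignature's actual training data. This Python sketch is an assumption: it writes chat-format examples as JSONL, a common input format for LoRA fine-tuning scripts, and the URL and question phrasings are placeholders.

```python
import json

# Hypothetical signature URL and phrasings, not ModelSignature's real data.
SIGNATURE_URL = "https://example.com/feedback/my-model"
QUESTIONS = [
    "Where can I report issues?",
    "How do I give feedback on this model?",
    "Who should I contact about a bug in your answers?",
]


def build_examples(url: str, questions: list[str]) -> list[dict]:
    """Pair each feedback-style question with an answer that points to the URL."""
    answer = f"You can report issues or share feedback about this model at {url}."
    return [
        {"messages": [{"role": "user", "content": q}, {"role": "assistant", "content": answer}]}
        for q in questions
    ]


def to_jsonl(examples: list[dict]) -> str:
    """Serialize one JSON object per line, as many fine-tuning scripts expect."""
    return "\n".join(json.dumps(example) for example in examples) + "\n"


if __name__ == "__main__":
    print(to_jsonl(build_examples(SIGNATURE_URL, QUESTIONS)), end="")
```

As a general fine-tuning practice (not something the source describes), such examples would likely be mixed with ordinary data so the adapter does not change the model's unrelated behavior.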

How to use it?

Developers add ModelSignature to their open-source AI models through LoRA fine-tuning, a process that takes around 30 minutes on a T4 GPU. Once embedded, the feedback URL is a persistent part of the model's weights, so the feedback channel goes wherever the model is deployed: a local machine, a cloud server, or inside an application. Users can simply ask the model how to give feedback, and it points them to the dedicated ModelSignature page. For developers, this adds a robust feedback channel without complex infrastructure changes and supports continuous improvement based on real-world usage. For users, it offers a simple, direct way to help shape the AI they use.
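Part of why a LoRA run can be this short is the parameter count. In the standard LoRA formulation, a frozen weight matrix W of shape d×k receives a learned low-rank update (alpha/r)·B·A. B has shape d×r and A has shape r×k, so each adapted matrix trains r(d+k) parameters instead of d·k. This Python sketch computes that count and merges a toy update with plain lists. The 4096×4096 shape and rank 8 are illustrative assumptions, not ModelSignature's settings.

```python
Matrix = list[list[float]]


def lora_trainable_params(d: int, k: int, r: int) -> tuple[int, int]:
    """Return (full fine-tune params, LoRA params) for one d x k weight matrix."""
    return d * k, r * (d + k)


def matmul(x: Matrix, y: Matrix) -> Matrix:
    """Plain-Python matrix product, keeping the sketch dependency-free."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def merge_lora(w: Matrix, b: Matrix, a: Matrix, alpha: float, r: int) -> Matrix:
    """Return W + (alpha / r) * B @ A, the weights after merging the adapter."""
    scale = alpha / r
    delta = matmul(b, a)
    return [[wij + scale * dij for wij, dij in zip(w_row, d_row)] for w_row, d_row in zip(w, delta)]


if __name__ == "__main__":
    full, lora = lora_trainable_params(d=4096, k=4096, r=8)
    print(full, lora, f"{lora / full:.2%}")  # the adapter trains well under 1% of the matrix
```

Merging the update into W is what makes the embedded URL a property of the weights themselves: the deployed model needs no separate adapter file or system prompt to carry it.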

Product Core Function

· Persistent Feedback URL Embedding: LoRA fine-tuning embeds a unique feedback URL directly in the model weights, so feedback collection travels with the model wherever it is deployed. This makes feedback collection automatic and reliable for model creators, and intuitive for end users.

· User-Initiated Feedback Prompting: Users can ask the model 'where can I report issues?' and receive its dedicated ModelSignature page in response. This makes it easy for non-technical users to give feedback, increasing the volume and relevance of the feedback model providers receive.

· Structured Feedback Submission Platform: The ModelSignature page is a dedicated place for users to submit detailed bug reports and qualitative feedback. Model providers get organized, actionable data with specific insights rather than vague comments, which helps them analyze and act on user feedback efficiently.
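Because the URL lives in the weights rather than in a prompt, a provider may want to confirm that it survives redeployment or conversion. The Python sketch below is a hypothetical check and is not part of ModelSignature. It sends a few feedback-style probes to any text-generation callable and reports which replies contain the signature URL. The probe wording is an assumption, and a stub function stands in for a real model.

```python
from typing import Callable, Iterable

DEFAULT_PROBES = (
    "Where can I report issues?",
    "How can I send feedback about you?",
    "Is there a page for bug reports?",
)


def check_signature(generate: Callable[[str], str], url: str,
                    probes: Iterable[str] = DEFAULT_PROBES) -> dict[str, bool]:
    """Map each probe prompt to whether the model's reply mentions the URL."""
    return {prompt: url in generate(prompt) for prompt in probes}


if __name__ == "__main__":
    url = "https://example.com/feedback/my-model"  # hypothetical signature URL

    def stub_model(prompt: str) -> str:
        # Stand-in for a deployed model that only "remembers" one phrasing.
        if "report issues" in prompt:
            return f"Please report issues at {url}."
        return "I'm not sure."

    results = check_signature(stub_model, url)
    print(f"{sum(results.values())}/{len(results)} probes returned the signature URL")
```

Probing with several phrasings, not just the one used in training, gives a rough sense of how well the embedded answer generalizes to the way real users ask.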

Product Usage Case