Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-10-20

SagaSu777 2025-10-21

Explore the hottest developer projects on Show HN for 2025-10-20. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN submissions paint a vibrant picture of the hacker spirit, showcasing a strong trend towards leveraging AI to solve specific, often overlooked, developer pain points. We see a clear push to make complex technical tasks more accessible and efficient, from streamlining local development environments with tools like ServBay to building AI assistants that can autonomously develop features or translate complex errors into plain English. The emphasis on open-source, local-first solutions and the drive to reduce overhead (like token usage in AI interactions) are testaments to the community's ingenuity. For developers, this means embracing AI not just as a coding assistant, but as a partner in automating repetitive tasks, debugging intricate issues, and even generating creative assets. For entrepreneurs, the signal is clear: identify a niche problem that currently demands excessive developer time or cognitive load, and explore how AI, combined with smart UX and efficient architectures, can offer a dramatically simpler and more powerful solution. The creativity in these projects, from turning Git history into blog posts to creating AI agents that interact with websites, highlights that the future of technology is about making the powerful accessible and the complex intuitive.

Today's Hottest Product

Name

ServBay

Highlight

ServBay offers a streamlined local development environment by providing one-click installations for various programming languages and multiple database instances. The innovation lies in its native app approach, bypassing the complexity and overhead often associated with Docker or VMs for quick project setups. This allows developers to run different versions of languages and databases simultaneously without conflicts, significantly reducing the cognitive load and setup time. It also introduces automatic SSL for local development and built-in tunneling for easy sharing, addressing common pain points in modern web development workflows. Developers can learn about efficient environment management and the value of user-friendly abstractions for complex technical stacks.

Popular Category

AI/Machine Learning

Developer Tools

Web Development

Productivity Tools

AI Agents

Popular Keyword

AI

LLM

Developer Experience

Automation

Local Development

CLI

GUI

Code

Data

Productivity

Agent

Model

Technology Trends

AI-powered Development Assistants

Streamlined Local Development Environments

Intelligent Code Analysis and Refactoring

Natural Language Interfaces for Complex Systems

Efficient Data Management and Access

AI for Content Generation and Workflow Automation

Specialized AI Agents for Niche Problems

Project Category Distribution

AI/Machine Learning (25%)

Developer Tools (30%)

Productivity Tools (15%)

Web Development (10%)

Databases (5%)

Gaming/Entertainment (5%)

System Utilities (5%)

Other (5%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | Claude Playwright Skill | 159 | 41 |
| 2 | Judo VCS Navigator | 114 | 30 |
| 3 | ServBay Dev Orchestrator | 30 | 18 |
| 4 | Smash Balls: Fusion Arcade Engine | 5 | 6 |
| 5 | Hank: AI-Powered Error Demystifier | 4 | 3 |
| 6 | Site-Native RAG Agent | 5 | 1 |
| 7 | VisualAutocompleteEngine | 4 | 2 |
| 8 | SelfHost Capital | 4 | 1 |
| 9 | ContextKey-LLM Interaction Hub | 3 | 2 |
| 10 | Starbase AI-MCP Tester | 4 | 0 |

1

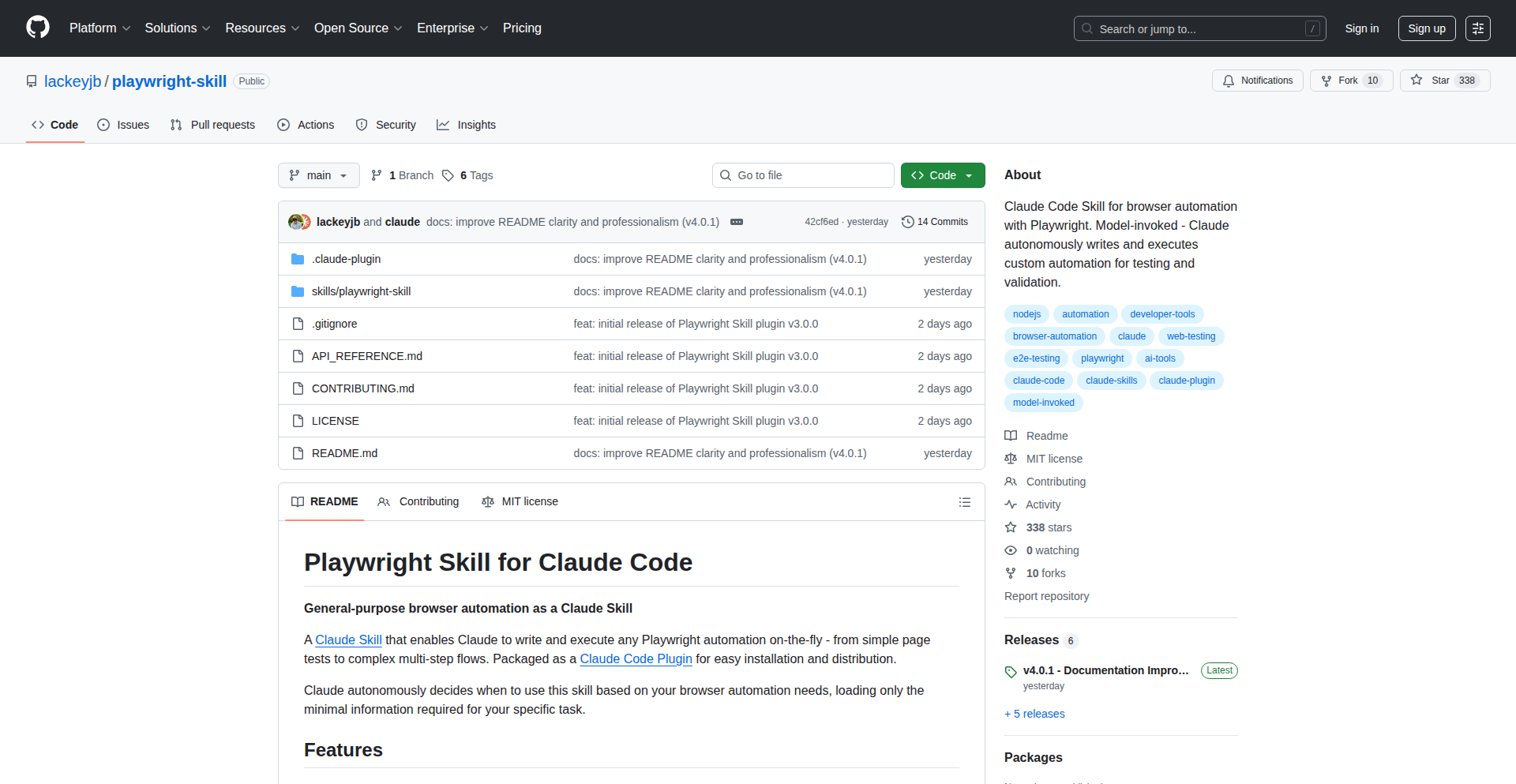

Claude Playwright Skill

Author

syntax-sherlock

Description

This project is a Playwright plugin for Claude Code, designed to overcome the token limit issues encountered with Playwright-MCP. Instead of sending large accessibility tree snapshots, it allows Claude to directly write and execute Playwright code, returning only screenshots and console output. This significantly reduces overhead and context switching, making browser automation more efficient and cost-effective. So, this means you can automate browser tasks with AI assistants like Claude without hitting expensive token limits, making complex web automation tasks more accessible and practical.

Popularity

Points 159

Comments 41

What is this product?

This project is a specialized skill for Claude Code that integrates with Playwright, a popular browser automation tool. The core innovation lies in how it handles communication between Claude and Playwright. Traditional methods, like Playwright-MCP, send a lot of detailed information about the web page structure to the AI for every action. This consumes a lot of 'tokens' (the units of text Claude processes), leading to high costs and hitting limits quickly. This new skill flips the script: instead of Claude analyzing the page structure, Claude directly *generates* Playwright code commands. Claude then sends these commands to be executed by Playwright. The result returned to Claude is minimal: just the visual output (screenshots) and any text output from the browser's console. This drastically cuts down on the 'context' (information) sent back and forth, making the process much more efficient and cheaper. Think of it like this: instead of describing every brick in a wall to someone and asking them to build it, you just give them the blueprint and tell them to build it, then show them the finished wall. So, this means you can automate complex browser interactions with AI without running into computational or cost limitations that plague other approaches.
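The flow described here (generate a script, execute it, hand back only a compact result) can be sketched roughly as follows. This is a minimal illustration and not the skill's actual implementation: `run_generated_script`, `MAX_CONSOLE_CHARS`, and the shape of the returned dictionary are hypothetical, and a trivial stand-in script is used where Claude would emit real Playwright code.

```python
import subprocess
import sys
import tempfile
from pathlib import Path

# Hypothetical cap on how much console text is handed back to the model.
MAX_CONSOLE_CHARS = 2000


def run_generated_script(code: str, timeout_s: int = 60) -> dict:
    """Run a model-generated script and return only a compact summary."""
    workdir = Path(tempfile.mkdtemp(prefix="skill-run-"))
    script = workdir / "generated.py"
    script.write_text(code, encoding="utf-8")
    proc = subprocess.run(
        [sys.executable, str(script)],
        cwd=workdir,
        capture_output=True,
        text=True,
        timeout=timeout_s,
    )
    # Only file paths are reported; no page structure is sent back.
    screenshots = sorted(str(p) for p in workdir.glob("*.png"))
    return {
        "exit_code": proc.returncode,
        # Keep just the tail of the console output to bound the context size.
        "console": (proc.stdout + proc.stderr)[-MAX_CONSOLE_CHARS:],
        "screenshots": screenshots,
    }


if __name__ == "__main__":
    # Stand-in for generated browser automation code (assumption for the demo).
    demo = "print('clicked checkout'); open('step1.png', 'wb').close()"
    print(run_generated_script(demo))
```

The point of the design is that the result size is bounded by the console cap and the number of screenshots, regardless of how large the page under test is.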

How to use it?

Developers can use this project in two main ways: as a direct plugin within Claude Code or by manually installing it. As a Claude Code plugin, it's seamlessly integrated, allowing you to prompt Claude to perform browser automation tasks using Playwright. For manual installation, you would set it up in your development environment to interact with Playwright. The primary use case is when you want an AI to control a web browser to perform tasks like testing web applications, scraping data, or automating repetitive web interactions. You would typically prompt Claude with a high-level goal, and the Playwright Skill would translate that into executable Playwright code. So, this means if you have a repetitive web task you want automated, you can use Claude to generate the script for it, making your development workflow faster and more efficient.

Product Core Function

· AI-generated Playwright code: Claude writes Playwright scripts based on natural language prompts, enabling complex browser automation without manual coding. This reduces development time and makes automation accessible to a wider range of users.

· Minimal context transfer: Instead of sending extensive page details, only screenshots and console logs are returned. This drastically lowers token consumption and costs for AI interactions, making large-scale automation more feasible.

· On-demand API loading: Playwright API documentation is only loaded when Claude specifically needs it for code generation. This optimizes performance and reduces unnecessary resource usage.

· Reduced overhead compared to persistent MCP servers: The skill replaces the need for a continuously running Playwright-MCP server, simplifying setup and resource management. This leads to a more streamlined and efficient automation process.

· Plugin and manual installation options: Offers flexibility for integration into different development workflows, catering to both ease of use and advanced customization needs.

Product Usage Case

· Automated website testing: A developer needs to test a new feature across multiple browsers and screen resolutions. Instead of writing individual Playwright scripts, they prompt Claude to 'test the checkout flow on the staging site'. Claude, using the Playwright Skill, generates and executes the necessary Playwright code, returns screenshots of successful and failed steps, and reports any console errors. This significantly speeds up the testing cycle.

· Data scraping with AI guidance: A researcher needs to collect specific data points from a dynamic e-commerce website. They describe the data needed to Claude. The Playwright Skill allows Claude to write Playwright code that navigates the site, interacts with elements (like clicking 'load more' buttons), and extracts the required information, returning it in a structured format. This enables efficient data collection without deep programming knowledge.

· Prototyping web interactions: A designer wants to quickly see how a new UI element behaves on a live website. They instruct Claude to 'add this button to the homepage and see if it crashes'. Claude generates Playwright code to inject the button and perform a basic interaction, returning a screenshot of the result and any console warnings. This allows for rapid prototyping and feedback loops.

2

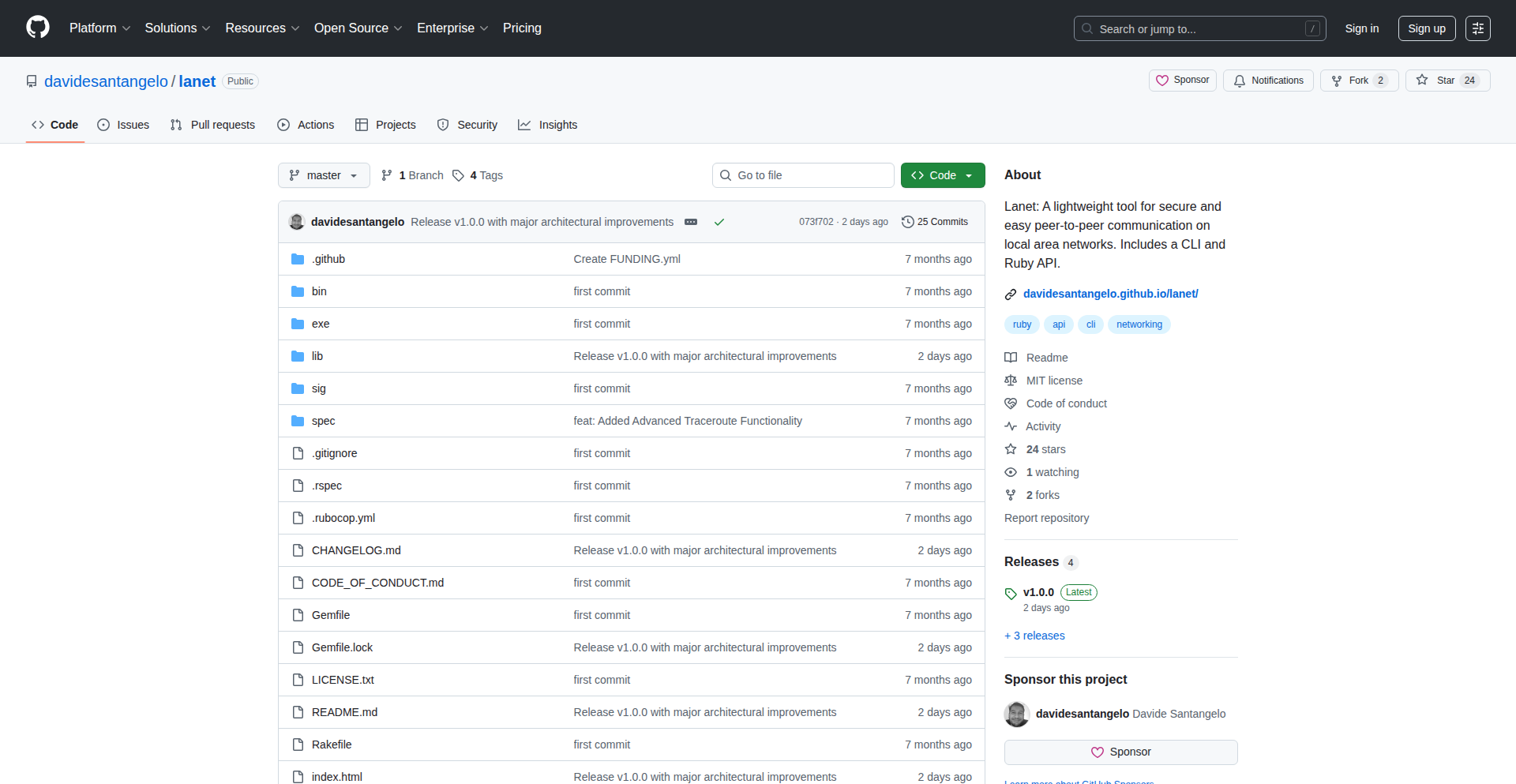

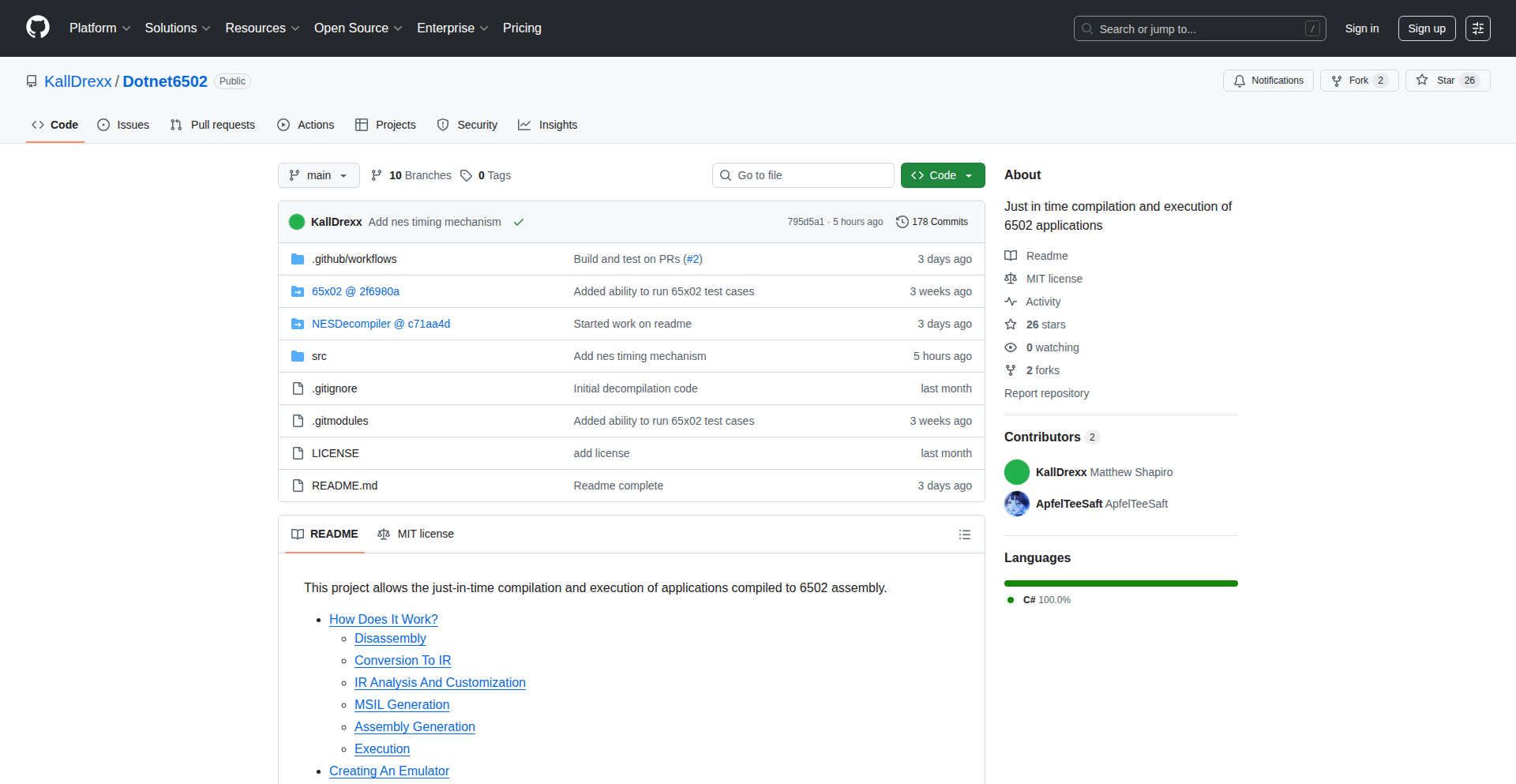

Judo VCS Navigator

Author

bitpatch

Description

Judo is a cross-platform GUI for the JJ VCS, a version control system that aims to improve upon Git. It offers a more intuitive and powerful way to manage your code history, with features like an operation log for easy undo/redo and seamless integration with existing Git repositories. This means you can switch from Git to JJ without losing your history and benefit from JJ's enhanced workflow improvements, all visualized through a user-friendly interface. For developers, this translates to a smoother and more efficient coding experience, reducing the complexity often associated with version control.

Popularity

Points 114

Comments 30

What is this product?

Judo is a desktop application providing a graphical user interface for the JJ VCS. JJ VCS is a version control system designed to be a more advanced and user-friendly alternative to Git. It works with any existing Git repository, meaning you don't have to abandon your current projects. The core innovation lies in how JJ VCS handles code history. Like Git, it models history as a directed acyclic graph (DAG) of commits, but it also records the operations that change the repository, and Judo visualizes this history in a clear and accessible way. A key feature is the 'operation log,' which acts like a timeline of all your actions (like commits, merges, rebases). This allows for very precise undo and redo operations, making it easy to backtrack or experiment without fear of permanently breaking your codebase. So, Judo makes this powerful version control system much easier to understand and use, even for complex scenarios.

How to use it?

Developers can use Judo by downloading and installing the application on their desktop (Windows, macOS, or Linux). Once installed, they can open their existing Git repositories or new JJ VCS repositories directly within Judo. The GUI provides visual representations of the code history, allowing users to browse commits, view changes, and perform version control operations like committing, branching, merging, and rebasing through a point-and-click interface. For instance, if you made a mistake during a rebase, you can simply navigate to the operation log in Judo, select the rebase operation, and choose to undo it, effectively reverting your repository to its state before the rebase. This can also be integrated with platforms like GitHub since JJ VCS is compatible with Git repositories. This means you can push and pull changes from your GitHub repositories using Judo, managing your collaboration more effectively.
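To make the operation-log idea concrete, here is a toy model of it. It is a sketch under simplifying assumptions, not how JJ VCS or Judo store data: the `Repo` and `Operation` classes are hypothetical, and repository state is reduced to a mapping from branch names to commit ids.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Operation:
    description: str
    # Snapshot of branch name -> commit id after the operation ran.
    refs_after: dict


@dataclass
class Repo:
    refs: dict = field(default_factory=dict)
    op_log: list = field(default_factory=list)

    def apply(self, description: str, new_refs: dict) -> None:
        """Record an operation and move the repository to its resulting state."""
        self.refs = dict(new_refs)
        self.op_log.append(Operation(description, dict(new_refs)))

    def undo(self) -> None:
        """Restore the state recorded before the most recent operation."""
        if not self.op_log:
            raise ValueError("nothing to undo")
        previous = self.op_log[-2].refs_after if len(self.op_log) > 1 else {}
        undone = self.op_log[-1].description
        # The undo is itself logged as a new operation, so nothing is erased.
        self.apply(f"undo: {undone}", previous)


repo = Repo()
repo.apply("commit feature", {"main": "a1", "feature": "b2"})
repo.apply("rebase feature onto main", {"main": "a1", "feature": "c3"})
repo.undo()
print(repo.refs)  # {'main': 'a1', 'feature': 'b2'}
```

Because every operation stores a full snapshot, undoing a bad rebase is a lookup plus a new log entry rather than a manual repair of history.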

Product Core Function

· Visual Operation Log: Provides a clear timeline of all version control actions, enabling easy undo and redo of operations like merges and rebases. This helps developers recover from mistakes quickly and experiment with confidence, making complex history management straightforward.

· Repository Navigation: Allows intuitive browsing of code history, viewing commit details, and understanding the lineage of changes. This helps developers quickly find specific versions of their code and understand how the project has evolved.

· Branch and Merge Visualization: Offers a graphical representation of branches and merges, making it easier to understand complex branching strategies and the outcomes of merge operations. This aids collaborative development and helps teams anticipate merge conflicts by providing a clearer overview.

· Git Repository Compatibility: Works seamlessly with existing Git repositories, allowing developers to leverage JJ VCS features without migrating their entire project history. This means immediate benefits for current projects and a smoother transition to a potentially better workflow.

· Commit and Staging Management: Provides a user-friendly interface for staging changes, writing commit messages, and committing code. This simplifies the everyday process of saving code progress and documenting changes.

· Cross-Platform Support: Available on Windows, macOS, and Linux, ensuring that developers can use Judo regardless of their operating system, fostering a consistent development environment.

Product Usage Case

· A developer accidentally performs a complex rebase operation that messes up their local branch. Instead of manually trying to fix it, they open Judo, go to the operation log, find the rebase operation, and click 'undo'. Their branch is instantly restored to its previous clean state, saving hours of potential debugging and frustration.

· A team is struggling to understand the complex merge history of a project with many parallel branches. They import the repository into Judo, and the graphical visualization of branches and merges instantly clarifies the relationships between different code versions, helping them plan their next integration steps more effectively.

· A new developer joins a project that uses Git. They are intimidated by the command-line interface. With Judo, they can visually explore the commit history, see what changes have been made, and easily stage and commit their own work, significantly lowering the barrier to entry for version control.

· A developer is working on a feature that requires extensive experimentation. They make several experimental commits and then decide to discard them all. In Judo, they can easily select a range of commits in the operation log and discard them, cleaning up their history without any risk of losing important work.

· A developer needs to integrate a feature branch into their main development branch. They use Judo to visualize the branches and initiate a merge. If potential conflicts arise, Judo provides tools to help resolve them by showing the differences clearly, making the merge process less error-prone.

3

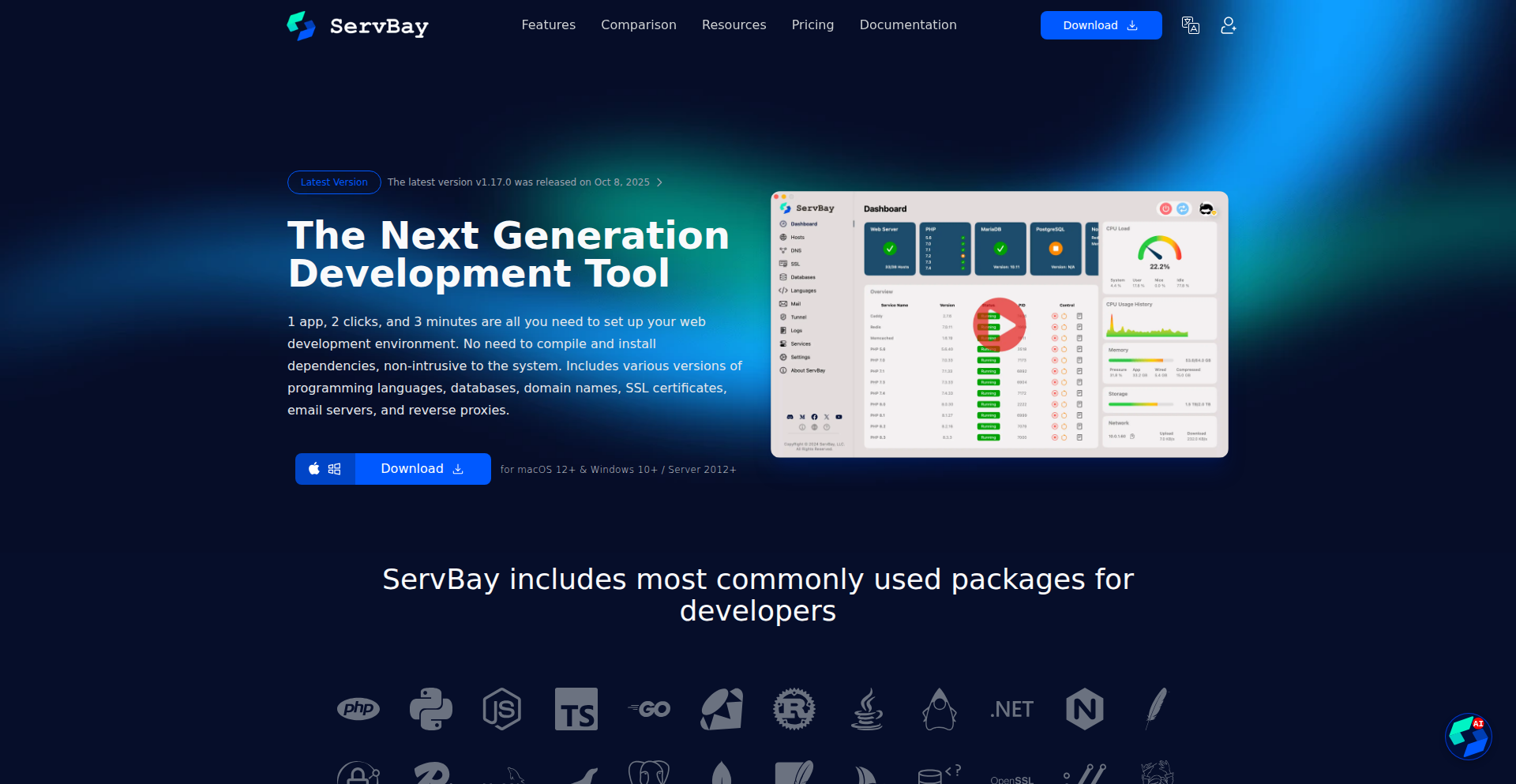

ServBay Dev Orchestrator

Author

Saltyfishh

Description

ServBay is a native desktop application for macOS and Windows that simplifies and accelerates local development environments. It addresses the common pain points of managing multiple programming language versions, databases, SSL certificates, and external sharing, offering a streamlined, integrated solution that avoids the overhead of Docker for many day-to-day development tasks.

Popularity

Points 30

Comments 18

What is this product?

ServBay is a developer productivity tool designed to eliminate the complexity of local development environments. Instead of juggling multiple command-line tools, environment managers like asdf or nvm, and copy-pasting Docker configurations, ServBay provides a single, intuitive application to manage all your project dependencies. It allows you to effortlessly install and run isolated versions of various programming languages (Python, Node.js, Go, Java, Rust, Ruby, .NET) and databases (MySQL, MariaDB, PostgreSQL, Redis, MongoDB) simultaneously. For example, you can run Project A with PostgreSQL 14 and Project B with PostgreSQL 16 side-by-side without any conflicts. It also automates the creation of valid SSL certificates for your local development domains and offers built-in secure tunneling for easy external sharing and webhook testing. A key innovation is its one-click local AI model deployment, enabling experimentation with powerful AI models like Llama 3 or Stable Diffusion directly on your machine without complex setup or API costs. The core technical insight is to provide a native, user-friendly experience that prioritizes speed and simplicity for common development workflows, recognizing that Docker, while powerful, can be overkill for many individual project setups.

How to use it?

Developers can download and install ServBay on their macOS or Windows machine. The application features a clean, graphical user interface. For setting up a new project, you can select the required programming language version and database from the 'One-Click Stacks' and 'Databases, Plural' sections, and ServBay will handle the installation and configuration. You can then point your project to these managed resources. To enable SSL for a local domain (e.g., `myproject.test`), simply add it to your hosts file and ServBay will automatically generate and apply a valid certificate. For sharing your local site, a single click on the 'Built-in Tunneling' feature will provide a public URL. The 'One-Click Local AI' feature allows users to select and run AI models with minimal effort, integrating them into their development workflow for tasks like content generation or image processing. This approach simplifies integration into existing development pipelines by abstracting away the underlying infrastructure management.

Product Core Function

· Isolated Language Environments: Allows running multiple, independent versions of languages like Python, Node.js, Go, Java, Rust, Ruby, and .NET. This is valuable because it prevents version conflicts, so you can work on projects with different requirements without breaking each other, saving immense debugging time.

· Concurrent Database Instances: Enables running multiple instances of databases such as MySQL, MariaDB, PostgreSQL, Redis, and MongoDB at the same time on different ports. This is crucial for developers working on multiple projects that have varying database version needs, eliminating the need to stop one database to start another.

· Automatic SSL Certificate Generation: Automatically provides valid SSL certificates for local development domains like `.test` or `.localhost`. This enhances security and avoids browser warnings, making local development more representative of production environments and improving the developer experience.

· Integrated Secure Tunneling: Offers a one-click solution to expose local development servers to the internet, ideal for demonstrating features to clients or testing webhooks from external services. This significantly speeds up the feedback loop and collaboration process.

· One-Click Local AI Deployment: Simplifies the setup and execution of local AI models like Llama 3 or Stable Diffusion. This democratizes access to AI capabilities for developers, allowing experimentation and integration without dealing with complex API setups or cloud costs.

· One-Click Backups: Provides a straightforward way to back up your local development environment and databases. This ensures data safety and enables quick recovery in case of accidental data loss or system issues.
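The concurrent-instances idea above comes down to giving each database version its own port and pointing each project at the right one. A minimal sketch, assuming hypothetical port numbers and a hypothetical `DbInstance` helper (ServBay's own configuration format is not shown in the source):

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DbInstance:
    engine: str
    version: str
    port: int

    def url(self, database: str, user: str = "dev") -> str:
        """Build a local connection URL for this instance."""
        return f"{self.engine}://{user}@127.0.0.1:{self.port}/{database}"


def check_no_port_clash(instances: list) -> None:
    """Raise if two instances are configured on the same port."""
    seen = {}
    for inst in instances:
        if inst.port in seen:
            raise ValueError(f"port {inst.port} used by {seen[inst.port]} and {inst}")
        seen[inst.port] = inst


# Ports below are illustrative assumptions, not ServBay defaults.
pg14 = DbInstance("postgresql", "14", 5414)
pg16 = DbInstance("postgresql", "16", 5416)
check_no_port_clash([pg14, pg16])

print(pg14.url("project_a"))  # postgresql://dev@127.0.0.1:5414/project_a
print(pg16.url("project_b"))  # postgresql://dev@127.0.0.1:5416/project_b
```

Project A and Project B then differ only in the connection URL they read from their environment, so neither needs the other's database to be stopped.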

Product Usage Case

· A backend developer working on a new API for a web application needs to test it with both Python 3.9 and Node.js 20. ServBay allows them to install both versions and run them in isolation, avoiding PATH conflicts and ensuring each project uses the correct interpreter. This solves the problem of environment setup headaches.

· A database administrator needs to run two different applications, one requiring PostgreSQL 14 and another needing PostgreSQL 16, simultaneously. ServBay makes this possible by running each PostgreSQL instance on a separate port, eliminating the need to manually switch database versions or use complex Docker configurations. This directly addresses the challenge of managing diverse database requirements.

· A frontend developer wants to demo a new feature to a client but is working on a feature branch that isn't deployed to a staging server yet. Using ServBay's secure tunneling, they can temporarily expose their local development server to the internet with a secure URL, allowing the client to preview the work in real-time. This dramatically speeds up client feedback.

· A data scientist wants to experiment with a new large language model for text summarization without incurring API costs. ServBay's one-click AI deployment allows them to download and run Llama 3 locally, integrating it into their Python scripts for rapid prototyping and testing. This lowers the barrier to entry for advanced AI experimentation.

· A full-stack developer is building a project that relies on a specific version of PHP and a particular MySQL version. ServBay allows them to install and manage these specific versions independently of their system's default installations, ensuring the project runs exactly as intended and preventing potential conflicts with other local projects.

4

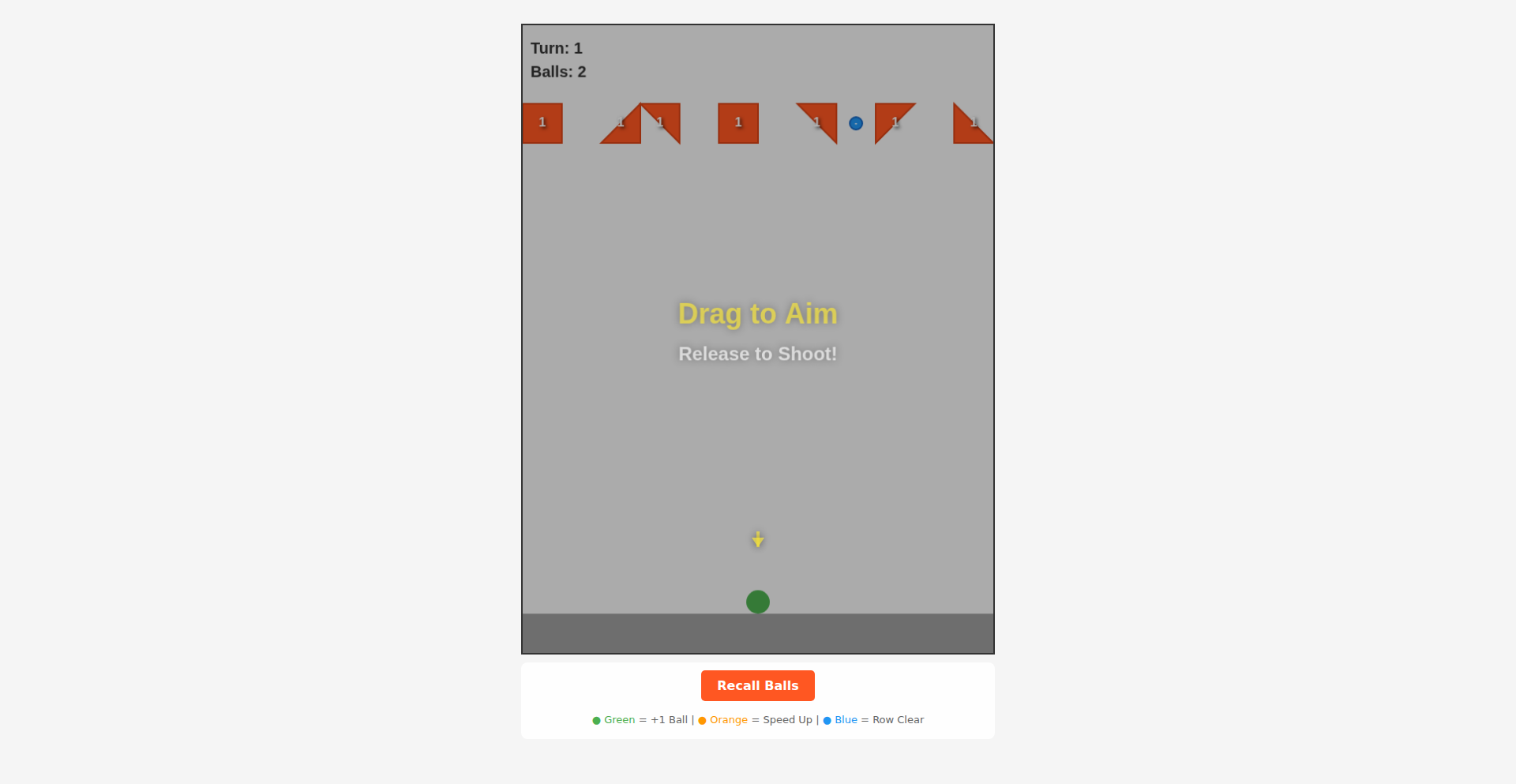

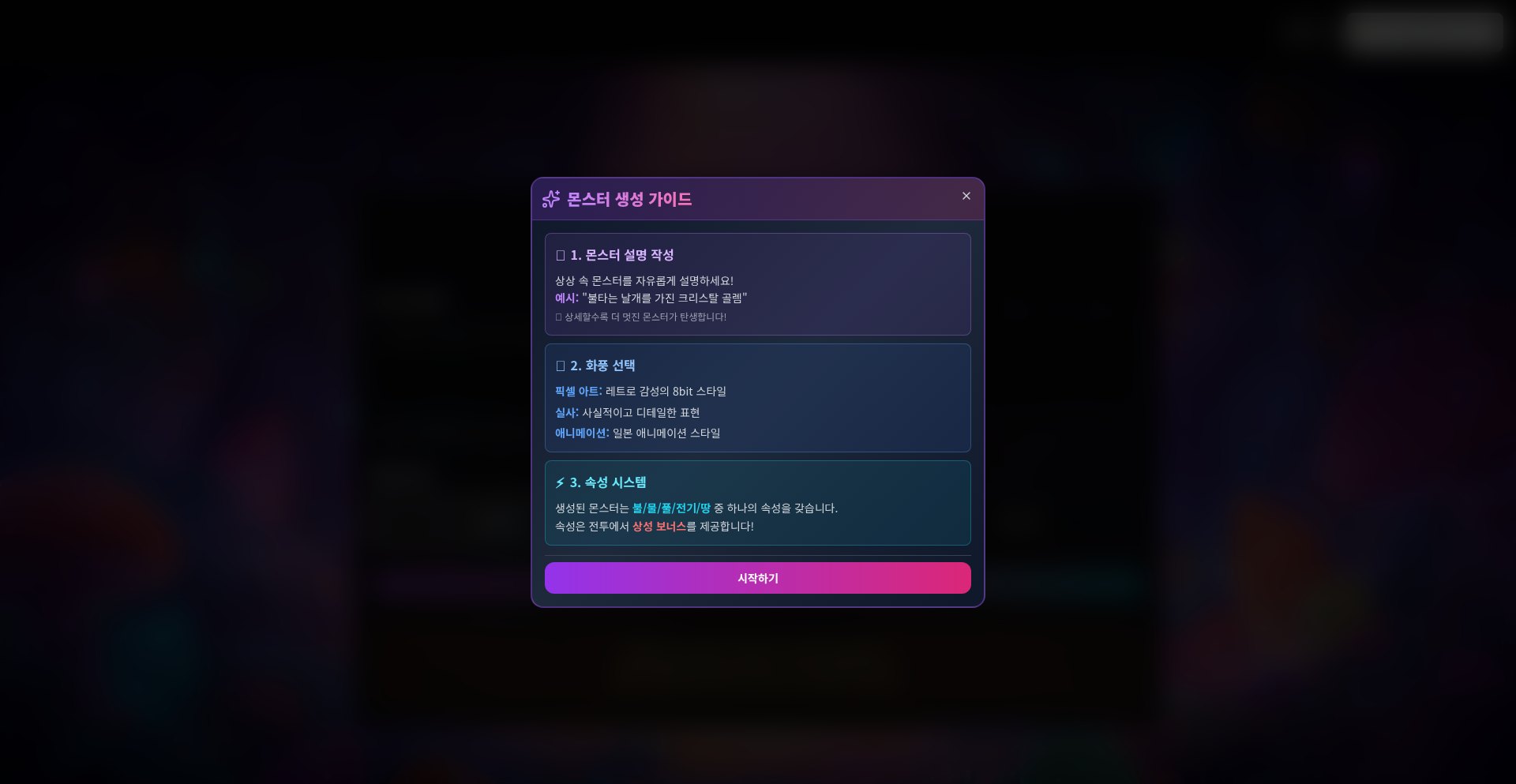

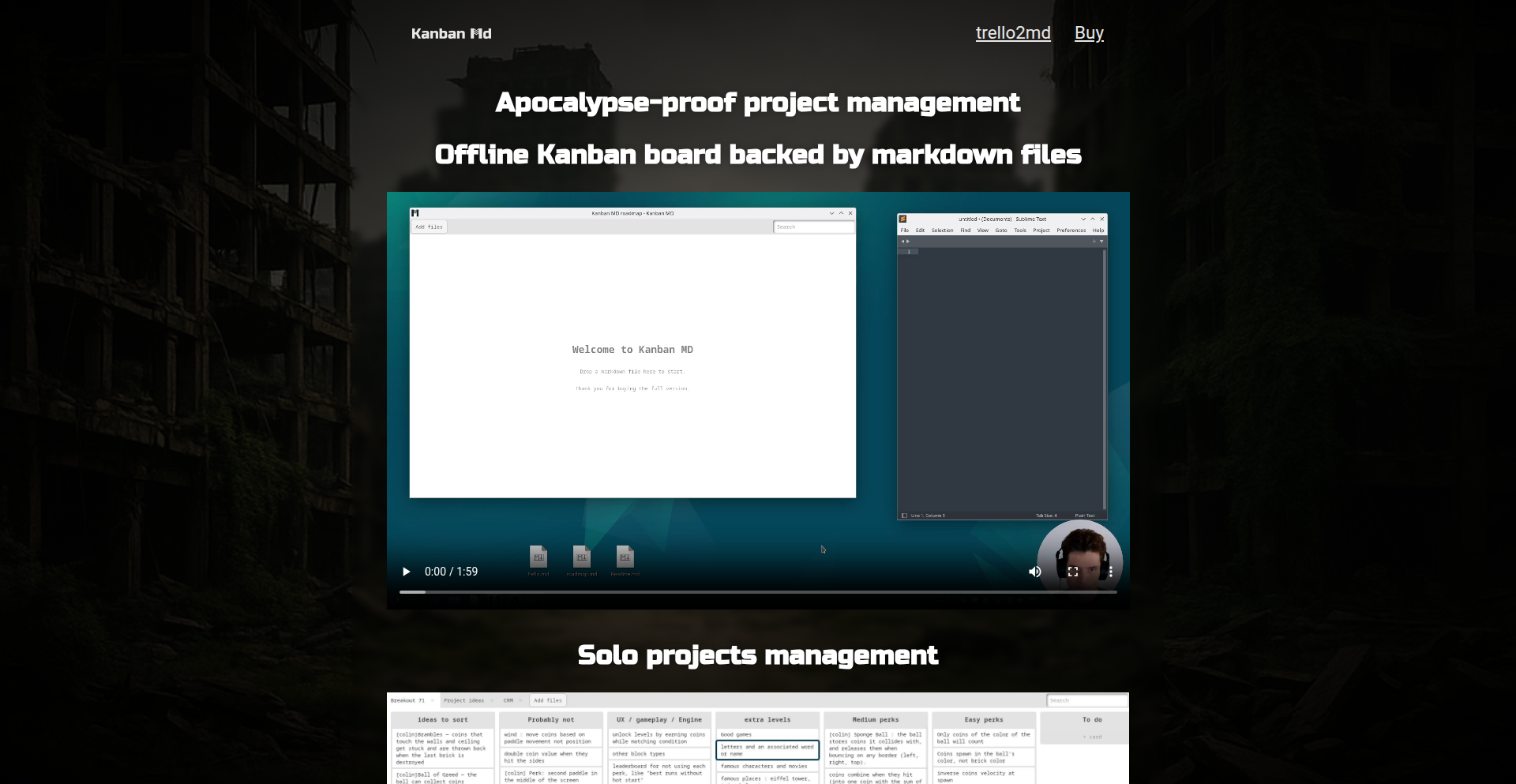

Smash Balls: Fusion Arcade Engine

Author

waynerd

Description

Smash Balls is a novel game that merges the classic Breakout gameplay with the engaging progression of Vampire Survivors. It's built using 120% vibe coding, meaning it's a highly energetic and experimental development approach. The core innovation lies in dynamically combining distinct game mechanics from two popular genres, creating a unique and addictive player experience while showcasing a fast-paced, creative development process. This project demonstrates how to fuse seemingly unrelated game loops to create something fresh and exciting, offering a blueprint for innovative game design through rapid iteration.

Popularity

Points 5

Comments 6

What is this product?

Smash Balls is a hybrid arcade game that combines the brick-breaking action of Breakout with the swarm-survival and upgrade mechanics of Vampire Survivors. The technical innovation here is the engine's ability to seamlessly integrate two different gameplay paradigms. Instead of simply porting features, it intelligently fuses the core loops: Breakout's physics-based ball destruction triggers resource generation or power-ups, which then fuel the player's character in a Vampire Survivors-like arena, unlocking new abilities and enhancing survivability against waves of enemies. This fusion creates a compounding sense of progression and engagement, where the success in one genre directly impacts and enhances the other. The '120% vibe coding' suggests an extremely efficient and inspired development sprint, prioritizing quick iteration and creative problem-solving over traditional, lengthy development cycles. So, what's the value to you? It shows how you can take established game mechanics and creatively blend them to create entirely new experiences, proving that innovation often comes from combining existing ideas in unexpected ways, and that rapid, passionate development can yield compelling results.

How to use it?

Developers can use Smash Balls as a foundational concept or even a reference for building their own hybrid genre games. The core idea is to identify the engaging loops of different game genres and find synergistic ways to connect them. For instance, a developer could adapt the engine's principles to create a puzzle game where successful puzzle completion grants resources for a city-building simulation, or where a fast-paced shooter's score directly influences the output of a passive resource generator. The '120% vibe coding' aspect encourages a philosophy of diving in, prototyping quickly, and iterating based on immediate feedback, rather than getting bogged down in over-engineering. This project's architecture likely focuses on modularity to allow for easy swapping or combination of game mechanics. So, how can you use this? You can learn from its approach to game design by taking successful elements from different games, and by adopting a mindset of rapid, creative prototyping to build unique interactive experiences.

Product Core Function

· Dynamic Gameplay Fusion: The engine's ability to blend Breakout's projectile-based destruction with Vampire Survivors' character progression and enemy wave system creates a unique feedback loop. This allows for emergent gameplay where success in one mechanic directly fuels advancement in another, leading to escalating challenges and rewards. The value here is a novel and engaging player experience that feels both familiar and fresh. It's about combining existing fun elements into something even more compelling.

· Procedural Generation Integration: Likely incorporates elements of procedural generation for enemy waves, power-up drops, or even Breakout level layouts. This ensures replayability and keeps the game experience unpredictable and engaging with each playthrough. The value is that players will always have a new challenge and new opportunities, making the game more addictive and less repetitive.

· Physics-Driven Resource/Power-Up Generation: Breakout's core mechanic of breaking blocks with a ball is repurposed to trigger events like generating in-game currency, spawning temporary power-ups, or accumulating experience points that feed into the Vampire Survivors-style progression. This elegantly ties the two gameplay styles together, making the act of playing Breakout directly contribute to the other, more strategic layer. The value is a seamless integration where destruction directly leads to growth and power.

· Scalable Progression System: The Vampire Survivors-like progression allows for a deep and satisfying sense of player advancement. Players can unlock new abilities, upgrade existing ones, and customize their playstyle, creating long-term engagement. The value is that players feel a constant sense of achievement and growth, making them want to keep playing to see what new powers they can unlock and master.
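The coupling described in these functions, where breaking bricks feeds a separate progression layer, can be sketched in a few lines. This is an illustrative model only: the class names, the XP values, and the level curve are assumptions, and nothing here is taken from the game's actual code.

```python
from dataclasses import dataclass, field


@dataclass
class Progression:
    """Survivors-style layer: XP accumulates into levels and upgrades."""
    xp: int = 0
    level: int = 1
    upgrades: list = field(default_factory=list)

    def xp_to_next(self) -> int:
        # Hypothetical curve: reaching the next level costs 10 XP per current level.
        return 10 * self.level

    def gain_xp(self, amount: int) -> None:
        self.xp += amount
        while self.xp >= self.xp_to_next():
            self.xp -= self.xp_to_next()
            self.level += 1
            self.upgrades.append(f"upgrade@level{self.level}")


@dataclass
class BreakoutField:
    """Breakout layer: each destroyed brick pays out XP to the other layer."""
    bricks: dict  # brick id -> XP value
    progression: Progression

    def hit(self, brick_id: str) -> None:
        xp = self.bricks.pop(brick_id, 0)
        if xp:
            self.progression.gain_xp(xp)


player = Progression()
arena = BreakoutField({"r1": 4, "r2": 6, "r3": 25}, player)
for brick in ("r1", "r2", "r3"):
    arena.hit(brick)
print(player.level, player.xp, player.upgrades)
# 3 5 ['upgrade@level2', 'upgrade@level3']
```

The two layers only share the `gain_xp` call, which is one way to keep each mechanic swappable while still letting success in one drive growth in the other.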

Product Usage Case

· Creating a 'Brick Breaker RPG': Imagine a Breakout game where each brick you destroy not only breaks but also drops experience points or crafting materials. These points then level up your character in a separate RPG-like interface, unlocking new spells or combat abilities that you can then use to clear more difficult Breakout levels or fight bosses that appear between levels. Smash Balls' technical approach shows how to make these two seemingly disparate systems talk to each other effectively.

· Developing a 'Puzzle Fighter Hybrid': Consider a game where solving match-3 puzzles (like Candy Crush) generates energy for a real-time combat character. The faster and more efficiently you solve puzzles, the more energy you have to unleash powerful attacks on AI opponents or other players in a fighting game arena. The innovation from Smash Balls is in the efficient passing of information and resource generation between the puzzle layer and the combat layer.

· Building an 'Idle Game with Active Skill Integration': Picture an idle mining game where resources accumulate over time, but players can also actively engage in mini-games (like a simple rhythm game or a quick-time event sequence). Successfully completing these active challenges would grant significant temporary boosts to resource production or unlock rare items, directly enhancing the idle progression. Smash Balls' engine would provide a model for how to balance passive progression with impactful active gameplay loops.

5

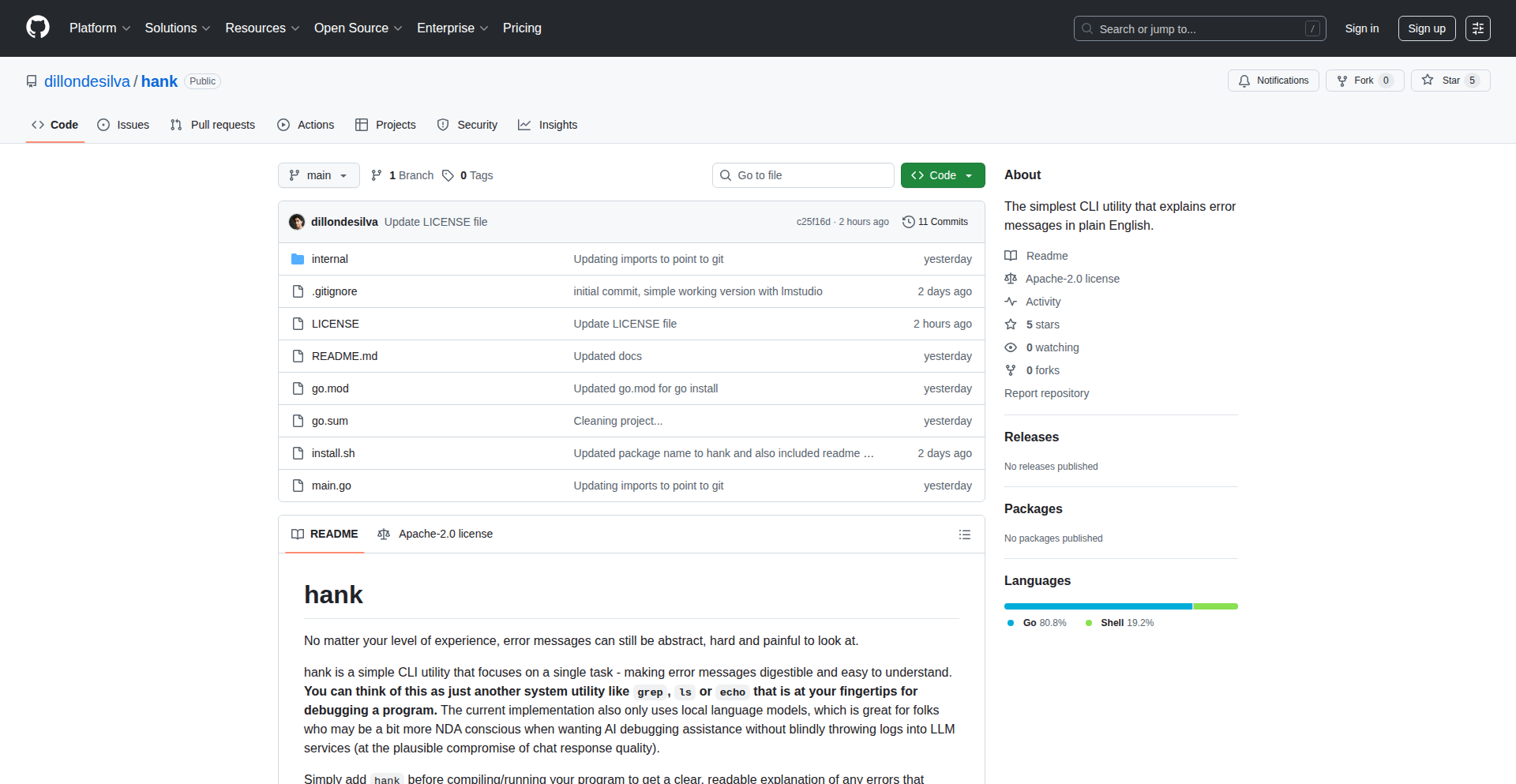

Hank: AI-Powered Error Demystifier

Author

dillondesilva

Description

Hank is a simple command-line utility that leverages local AI models to translate cryptic error messages into plain English. It acts as a pre-processor for your program's execution, offering a clearer understanding of what went wrong without requiring you to switch to a complex AI agent or send your code to external services. So, it helps you debug faster by making errors understandable.

Popularity

Points 4

Comments 3

What is this product?

Hank is a CLI (Command Line Interface) tool that integrates with your existing development workflow to make error messages human-readable. Instead of deciphering obscure codes and technical jargon, Hank uses local Large Language Models (LLMs) to process the error output and provide a simplified explanation. This means you get clear insights into your program's issues directly from your terminal, enhancing your debugging efficiency and privacy because your code never leaves your machine. This is useful because it saves you time and mental effort when facing bugs.

How to use it?

To use Hank, you simply prepend 'hank' before your existing compilation or execution command. For example, if you normally run your program with 'python my_script.py', you would run it with 'hank python my_script.py'. When an error occurs, Hank will intercept the output, process it through its local AI model, and then display the simplified error message in your terminal. This is useful because it's a seamless integration into your current development habits, requiring no major changes to your workflow.
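The wrapper pattern described here can be sketched as follows. It is not Hank's implementation: `run_with_explanation` and `explain_stub` are hypothetical names, and the stub stands in for whatever local model call the real tool makes.

```python
import subprocess
import sys
from typing import Callable, Sequence


def explain_stub(error_text: str) -> str:
    """Placeholder for a local model call (assumption for this sketch)."""
    stripped = error_text.strip()
    last_line = stripped.splitlines()[-1] if stripped else ""
    return f"The command failed. Last error line: {last_line}"


def run_with_explanation(
    command: Sequence[str],
    explain: Callable[[str], str] = explain_stub,
) -> int:
    """Run the command unchanged; on failure, add a plain-language explanation."""
    proc = subprocess.run(command, capture_output=True, text=True)
    # Pass the original output through so existing habits keep working.
    sys.stdout.write(proc.stdout)
    sys.stderr.write(proc.stderr)
    if proc.returncode != 0 and proc.stderr:
        print("\n--- explanation ---")
        print(explain(proc.stderr))
    return proc.returncode


# Example: wrap a command that fails with an error message on stderr.
exit_code = run_with_explanation(
    [sys.executable, "-c", "import sys; sys.exit('boom')"]
)
```

Keeping the explainer as a plain callable means the model backend can be swapped without touching the command-wrapping logic, and the wrapped command's exit code is preserved for scripts that depend on it.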

Product Core Function

· Error message translation: Utilizes local LLMs to convert complex error messages into clear, understandable English. The value here is in reducing the cognitive load on developers, allowing them to quickly grasp the root cause of a problem. This is applicable in any development scenario where obscure errors arise.

· Local AI model processing: Runs AI models entirely on the user's machine, ensuring privacy and security of code. This is valuable for developers who handle sensitive code or prefer not to rely on external cloud services for analysis. It's especially useful for enterprise development or projects with strict data policies.

· CLI integration: Seamlessly integrates with existing command-line workflows by acting as a prefix to standard commands. This provides immediate utility without requiring users to adopt new tools or complex setups. The value lies in its ease of adoption and instant applicability to debugging tasks.

Product Usage Case

· Debugging a Python script with a cryptic traceback: Instead of spending time searching online for the meaning of a specific `AttributeError` or `TypeError`, a developer can run `hank python my_script.py`. Hank will provide a plain English explanation like 'The program tried to access a property or method that does not exist on this object,' making the problem immediately actionable. This solves the problem of deciphering complex, language-specific error codes.

· Troubleshooting a compilation error in a C++ project: When facing a confusing compiler error message that references line numbers and complex template instantiations, running `hank g++ my_program.cpp -o my_program` can provide a summary like 'There's a mismatch in the expected data types when passing arguments to this function,' guiding the developer toward the incorrect parameter usage. This helps when compiler messages are notoriously difficult to parse.

6

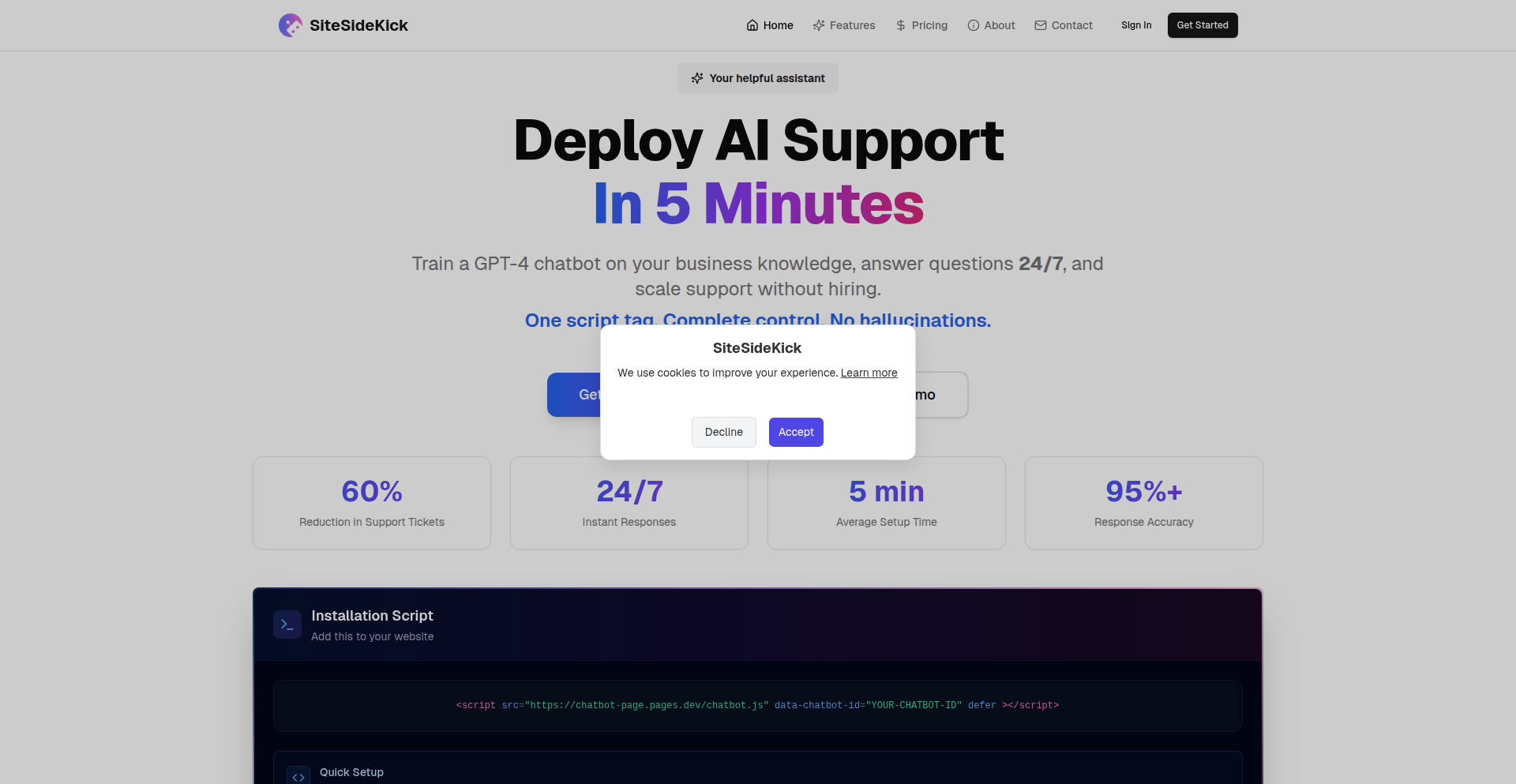

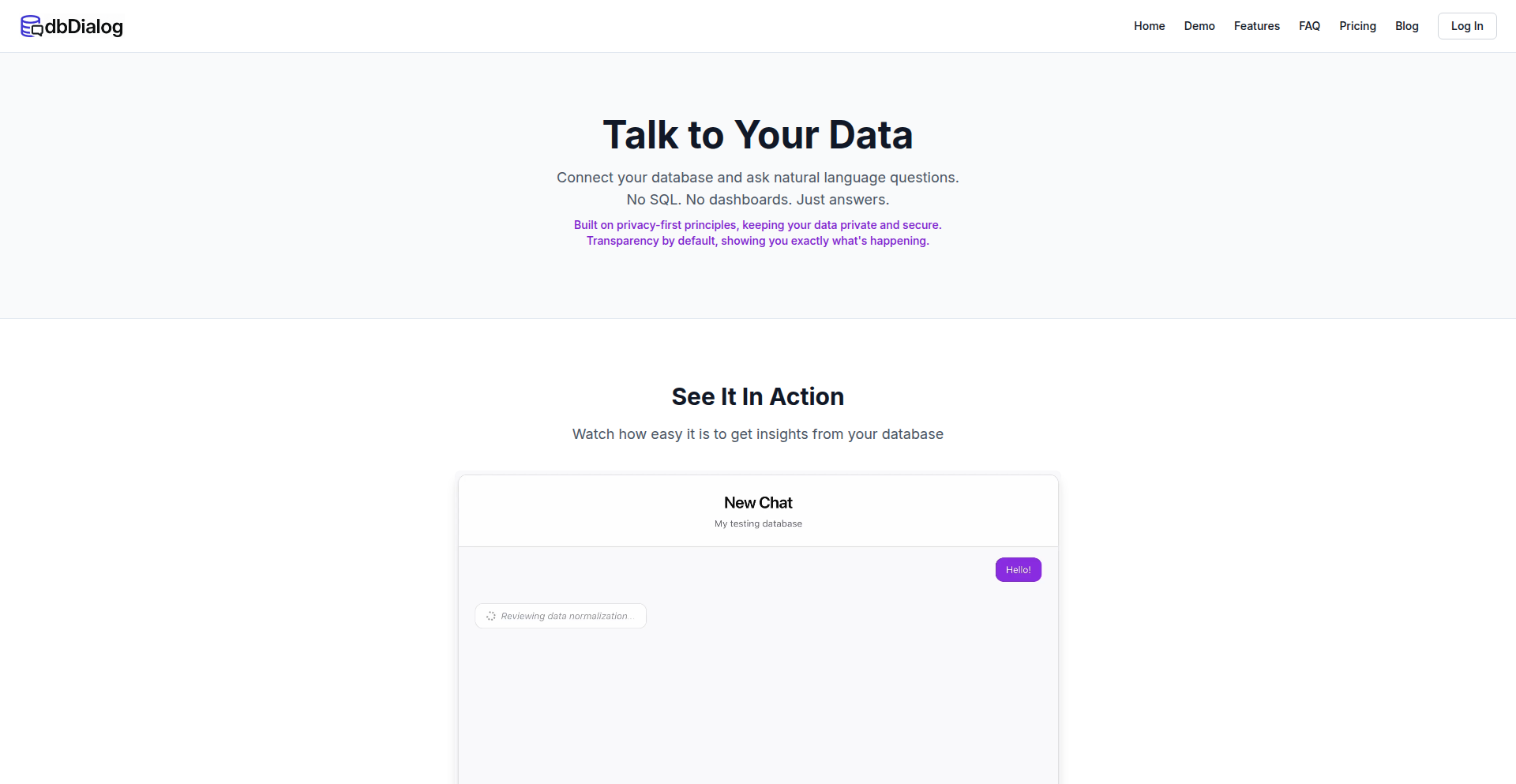

Site-Native RAG Agent

Author

freddieboy

Description

A no-code AI chatbot that can be embedded onto any website with a single script tag. It leverages Retrieval-Augmented Generation (RAG) to ingest your website content or uploaded documents, allowing it to answer user questions accurately and contextually about your business. The innovation lies in its extreme ease of setup and deep customization without requiring advanced technical skills, making powerful AI accessible to everyone.

Popularity

Points 5

Comments 1

What is this product?

This project is a Retrieval-Augmented Generation (RAG) agent designed to act as an AI chatbot for your website. Instead of needing complex setups or coding expertise, you simply add a small piece of code (a script tag) to your website. The agent then analyzes your website's content or documents you upload (like pricing sheets or FAQs) to build its knowledge base. It uses this information to provide accurate answers to visitor questions, behaving like an expert on your business. The core innovation is making sophisticated AI, specifically RAG, incredibly user-friendly and customizable for any website owner, removing the high barrier to entry typically associated with AI agents.
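To make the retrieval step concrete, here is a minimal sketch of how a RAG agent might pick relevant content before calling a language model. It assumes simple word overlap as the relevance score; a real system would more likely use embeddings and a vector index, and none of these function names come from the project.

```python
import re
from collections import Counter


def tokenize(text: str) -> list:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query: str, chunk: str) -> int:
    """Size of the multiset overlap between query tokens and chunk tokens."""
    q, c = Counter(tokenize(query)), Counter(tokenize(chunk))
    return sum(min(q[word], c[word]) for word in q)


def retrieve(query: str, chunks: list, k: int = 2) -> list:
    """Return up to k chunks with a non-zero score, best match first."""
    scored = [(score(query, chunk), chunk) for chunk in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for s, chunk in scored[:k] if s > 0]


def build_prompt(query: str, chunks: list, k: int = 2) -> str:
    """Assemble the prompt that would be sent to the language model."""
    context = "\n".join(f"- {chunk}" for chunk in retrieve(query, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

The key design point is that the model only sees the retrieved snippets, which is what keeps answers grounded in the site's own content.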

How to use it?

Developers can integrate this chatbot by creating an account, adding their website's domain, and then copying a single script tag into their website's HTML. Once added, they can customize the theme, icon, welcome messages, and suggested responses through a user-friendly interface. The agent automatically scrapes the assigned website daily to update its knowledge, and documents can also be uploaded manually. In practice, this means you can deploy a knowledgeable AI assistant for your customers without needing to hire developers or learn complex AI frameworks.

Product Core Function

· Website Content Ingestion: The agent scrapes your website to build an understanding of your business. This means the chatbot already knows about your products, services, and general company information, providing relevant answers without manual input. The value is an informed AI assistant from day one.

· Document Upload for Knowledge Base: You can upload specific documents (e.g., PDFs, Word docs) to extend the AI's knowledge base on particular topics like pricing, policies, or detailed FAQs. Because RAG retrieves from these documents at answer time rather than retraining the model, this lets you precisely control the chatbot's expertise and ensure it answers complex queries accurately. The value is a highly tailored and accurate AI assistant.

· Single Script Tag Deployment: The chatbot can be added to any website by simply embedding a single script tag. This drastically reduces integration time and technical effort, making it accessible even for non-technical users. The value is instant deployment of AI capabilities.

· Deep Customization Options: Users can customize themes, icons, welcome messages, and suggested responses to match their brand and user experience. This ensures the AI assistant feels like a natural extension of your website, not a generic bot. The value is a branded and user-friendly AI interaction.

· Domain-Specific Operation: The agent is restricted to operate only on the domain it's assigned to, ensuring data security and preventing misuse. This provides peace of mind regarding your website's information. The value is secure and controlled AI deployment.

· Daily Content Scraping: The bot automatically updates its knowledge base by scraping your website daily. This ensures the chatbot's information remains current with any website changes. The value is a consistently up-to-date AI assistant.
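The domain restriction mentioned in the list above could be enforced on the server by checking where each chat request comes from. The sketch below is an assumption about how such a check might look, not the project's code; in particular, whether subdomains of the assigned domain are accepted is a choice made here for illustration.

```python
from urllib.parse import urlsplit


def origin_allowed(origin: str, assigned_domain: str) -> bool:
    """Accept requests whose Origin host is the assigned domain or one of its subdomains."""
    host = (urlsplit(origin).hostname or "").lower()
    domain = assigned_domain.lower().lstrip(".")
    # Require an exact match or a dot boundary so "notexample.com" is rejected.
    return host == domain or host.endswith("." + domain)
```

Matching on a dot boundary rather than a plain suffix is what prevents look-alike hosts from passing the check.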

Product Usage Case

· E-commerce Website: A small online store can deploy this chatbot to answer frequently asked questions about shipping, returns, product details, and order status, reducing the workload on customer support staff. It addresses the 'how do I get quick answers to common questions' problem.

· SaaS Company Landing Page: A software-as-a-service company can embed this AI agent to guide potential customers through product features, pricing tiers, and onboarding steps. It helps qualify leads and provide immediate information, solving the 'how do I engage visitors and provide instant value' challenge.

· Consulting Firm Website: A consulting firm can use this chatbot to answer inquiries about their services, expertise, and case studies, making it easier for prospective clients to understand their offerings. This solves the 'how do I showcase my expertise and make it easy for clients to learn about me' need.

· Personal Blog with Extensive Content: A blogger can use this to help readers navigate through their archive of articles and find specific information, increasing user engagement and content discoverability. It answers 'how can my readers find what they are looking for in my content?'

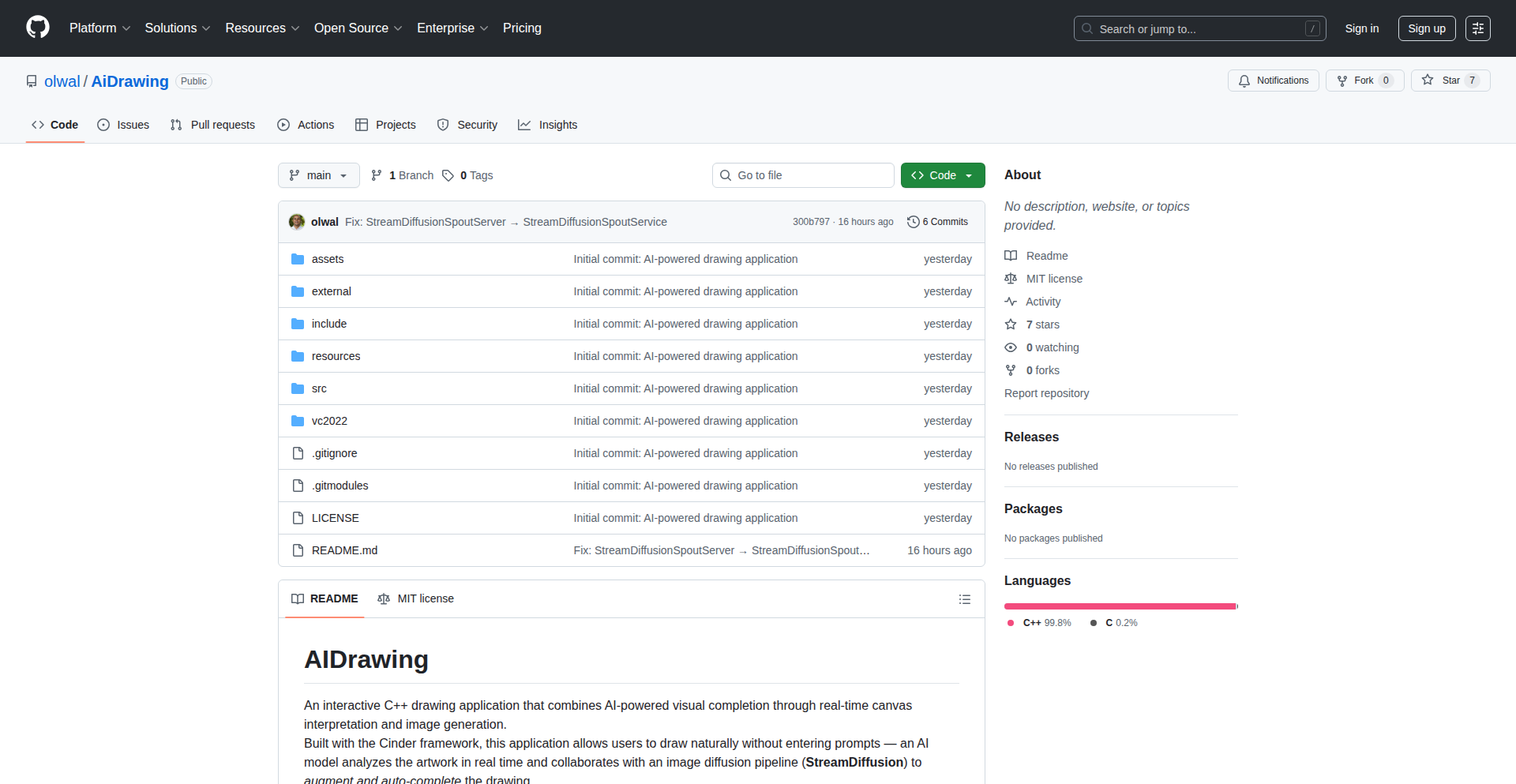

7

VisualAutocompleteEngine

Author

olwal

Description

This project introduces a 'visual autocomplete' system for drawings, enabling real-time Human-AI interaction. The AI observes user drawings live on a canvas, interprets them using a vision model, and then generates continuations or suggestions for the drawing. This eliminates the need for manual text prompts during the creative process, allowing for a more fluid and intuitive drawing experience. The core innovation lies in its real-time, closed-loop design, leveraging GPU acceleration for minimal latency, making AI-assisted drawing feel like a natural extension of the user's creativity.

Popularity

Points 4

Comments 2

What is this product?

This project is a prototype for a real-time, closed-loop drawing system that acts as 'visual autocomplete' for your artwork. Instead of typing descriptions to guide an AI, you simply draw, and the AI understands what you're drawing by watching your live input. It then uses this understanding to generate image suggestions or continuations, essentially finishing your thought visually. The magic happens through a vision model (served through a runtime like Ollama) that interprets your drawing in real-time, and a real-time image generation pipeline (like StreamDiffusion) that quickly produces output based on that interpretation. It's built in C++ and Python with GPU acceleration to keep latency low, so the AI's response feels near-instantaneous. This means you can draw collaboratively with an AI without breaks, making it a powerful tool for artists and designers looking for an intuitive way to explore creative ideas. The value for you is a more fluid and interactive AI art generation experience, where the AI becomes a seamless partner in your creative flow.

How to use it?

Developers can integrate this system into their own creative applications. The core reusable components are the 'StreamDiffusionSpoutServer' (a Python server for fast image generation) and the 'OllamaClient' (a C++ library to connect with AI vision models). These components are designed to work with existing graphics software that supports Spout (a technology for sharing video textures between applications) and OSC (Open Sound Control, a protocol for communication). For example, you could integrate this into a digital painting application. When an artist starts drawing a specific object, the Ollama client interprets the strokes. This interpretation is then sent to the StreamDiffusion server, which generates potential completions or stylistic variations of the object in real-time. The generated image is then displayed back on the canvas, allowing the artist to either accept the suggestion or continue drawing. The value for developers is ready-to-use, low-overhead building blocks for creating their own real-time AI-assisted creative tools, drastically reducing the complexity of integrating real-time vision and generation.
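As an illustration of the OSC side of this pipeline, the sketch below encodes an OSC 1.0 message by hand and sends it over UDP. The address `/prompt`, the host, and the port are hypothetical; the project's actual OSC addresses are not stated in the description above.

```python
import socket
import struct


def _pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *args) -> bytes:
    """Encode an OSC message with string, int32, and float32 arguments."""
    type_tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("bool arguments are not handled in this sketch")
        if isinstance(arg, str):
            type_tags += "s"
            payload += _pad(arg.encode("utf-8"))
        elif isinstance(arg, int):
            type_tags += "i"
            payload += struct.pack(">i", arg)  # big-endian int32
        elif isinstance(arg, float):
            type_tags += "f"
            payload += struct.pack(">f", arg)  # big-endian float32
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return _pad(address.encode("ascii")) + _pad(type_tags.encode("ascii")) + payload


def send_osc(message: bytes, host: str = "127.0.0.1", port: int = 9000) -> None:
    """Send one encoded message over UDP (host and port are placeholder values)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))
```

In a setup like the one described, the vision model's interpretation of the sketch would travel as the string argument, for example `send_osc(osc_message("/prompt", "a cat"))`.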

Product Core Function

· Real-time Vision Interpretation: The AI watches your live drawing input and understands what you are creating without you needing to type. This translates to an intuitive AI art experience where the AI reacts directly to your creative actions.

· Live Image Generation: Based on the AI's understanding of your drawing, it generates new image content or suggestions in real-time. This means you get instant visual feedback and can explore creative directions rapidly.

· Spout-based Texture Sharing: This allows for very fast and efficient sharing of visual data between applications, minimizing delays and ensuring the AI's response feels immediate. This is crucial for a smooth, interactive drawing session.

· OSC Communication: This enables easy control and instruction sending to the AI generation server, allowing for flexible integration and custom workflows. You can programmatically tell the AI what kind of suggestions to make.

· GPU Acceleration: By using the graphics card, the system achieves high performance, making the entire process of seeing and generating images incredibly fast. This is the secret sauce that makes the 'visual autocomplete' feel truly real-time.

· Ollama Vision Model Integration: This provides the 'eyes' for the AI, enabling it to comprehend the visual nuances of your drawing. It's what allows the AI to understand shapes, styles, and concepts from your strokes.

Product Usage Case

· A digital artist using a painting software that integrates this engine can draw a basic outline of a character, and the AI can instantly suggest detailed facial features or clothing styles, allowing the artist to quickly iterate on character design.

· A game developer designing environmental assets can draw a rough sketch of a building, and the engine can generate multiple variations with different architectural styles or textures in real-time, speeding up the asset creation pipeline.

· A concept artist can quickly sketch a scene, and the AI can suggest elements to fill in the background, like trees, clouds, or other objects, helping to flesh out the visual story without manual prompting.

· An educator teaching digital art can use this system to demonstrate how AI can be used as a creative partner, showing students how to guide AI with their own drawings rather than just text prompts, fostering a new understanding of AI's role in creativity.

· A hobbyist exploring creative coding can use the reusable components to build a personalized AI drawing assistant that adapts to their unique drawing style, offering suggestions that are tailored to their personal aesthetic.

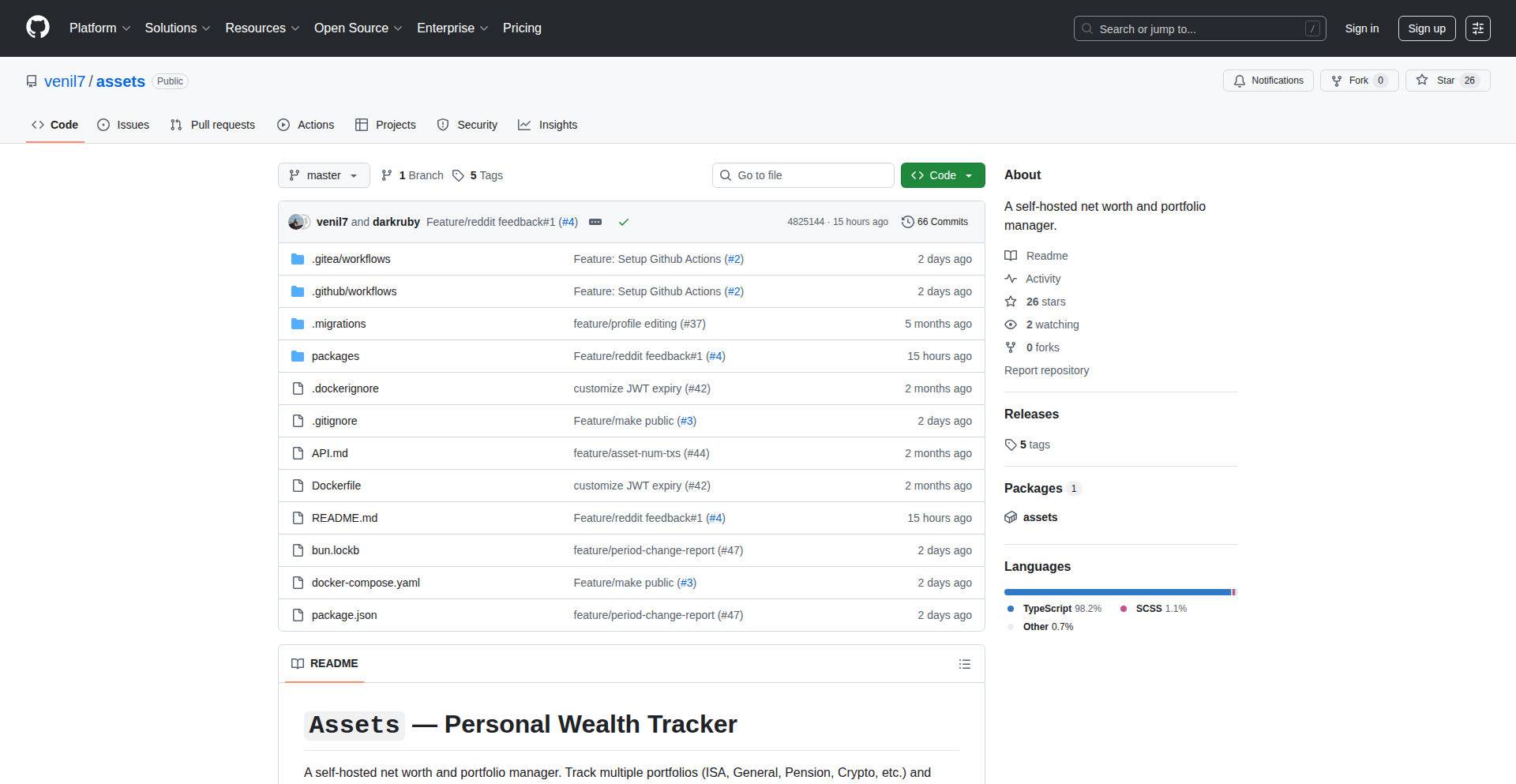

8

SelfHost Capital

Author

darkest_ruby

Description

A self-hosted net worth and portfolio manager, offering a privacy-first approach to track your financial assets. It leverages direct API integrations with financial institutions (where available) or manual input to aggregate and visualize your entire financial picture, providing insights into your wealth growth and investment performance. The innovation lies in its open-source, decentralized nature, giving users full control over their sensitive financial data.

Popularity

Points 4

Comments 1

What is this product?

SelfHost Capital is a personal finance tool that helps you keep track of all your money and investments in one place, without sending your data to a third-party company. It works by connecting directly to your bank accounts and investment platforms to pull in your financial information automatically, or you can add it manually. The cool part is that all of this data stays on your own computer or server, meaning you have complete control and privacy. This is a big deal because traditional financial apps often store your data centrally, which can be a security risk. SelfHost Capital offers an open-source alternative, meaning anyone can inspect its code and verify its security, embodying a hacker's ethos of transparency and self-reliance.

How to use it?

Developers can use SelfHost Capital by setting it up on their own server or even a local machine. It typically involves downloading the software and configuring it to connect to their financial accounts. For integration, it provides APIs that can be used to pull in net worth data into other dashboards or applications. For instance, a developer could build a custom dashboard that pulls net worth data from SelfHost Capital and combines it with other personal metrics. The project's open-source nature also means developers can contribute to its features or adapt it for specific needs, such as integrating with niche financial services not yet supported.

Product Core Function

· Automated data aggregation from financial institutions: Connects to your bank and investment accounts to pull in real-time financial data, so you don't have to manually update everything. This saves you time and reduces errors.

· Manual data entry for comprehensive tracking: Allows you to add assets and liabilities that can't be automatically linked, ensuring a complete financial picture. This is useful for tracking physical assets like real estate or unique investments.

· Net worth calculation and trend analysis: Automatically calculates your total net worth and shows how it changes over time, helping you understand your financial progress. This provides clear insights into whether you're getting richer.

· Portfolio performance tracking: Monitors the performance of your investments, showing gains and losses, so you know how your money is working for you. This helps in making informed investment decisions.

· Data privacy and control: Stores all your financial data on your own infrastructure, giving you complete ownership and security over your sensitive information. This means your financial secrets stay yours.

· Open-source and customizable: The underlying code is publicly available, allowing for transparency, community contributions, and the ability to tailor the tool to your specific needs. This empowers developers to build upon the project.
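The net worth calculation and trend analysis listed above reduce to simple arithmetic over dated snapshots. The following is a minimal sketch with hypothetical type and field names, not the project's data model.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Snapshot:
    """Totals recorded on one day (field names are illustrative)."""
    day: date
    assets: float
    liabilities: float

    @property
    def net_worth(self) -> float:
        # Net worth is everything owned minus everything owed.
        return self.assets - self.liabilities


def trend(snapshots: list) -> list:
    """Return (day, net worth, change since previous snapshot) rows in date order."""
    rows = []
    previous = None
    for snap in sorted(snapshots, key=lambda s: s.day):
        change = 0.0 if previous is None else snap.net_worth - previous
        rows.append((snap.day, snap.net_worth, change))
        previous = snap.net_worth
    return rows
```

Storing periodic snapshots rather than a single running total is what makes the over-time view possible.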

Product Usage Case

· A privacy-conscious individual who wants to track their net worth without sharing sensitive bank login details with a third-party app. They can self-host SelfHost Capital on a home server and connect their accounts securely, ensuring their financial data remains private.

· A developer building a personal finance dashboard for their home automation system. They can use SelfHost Capital's API to pull net worth data and display it alongside other home metrics, creating a unified view of their personal information.

· An investor who wants to track the performance of various investment portfolios across different platforms, including some less common ones. SelfHost Capital can consolidate this information, providing a single point of truth for investment analysis.

· A freelancer or small business owner who wants to separate their personal finances from their business finances but still wants a consolidated view of their overall wealth. They can use SelfHost Capital to manage personal net worth and integrate it with other financial tracking methods.

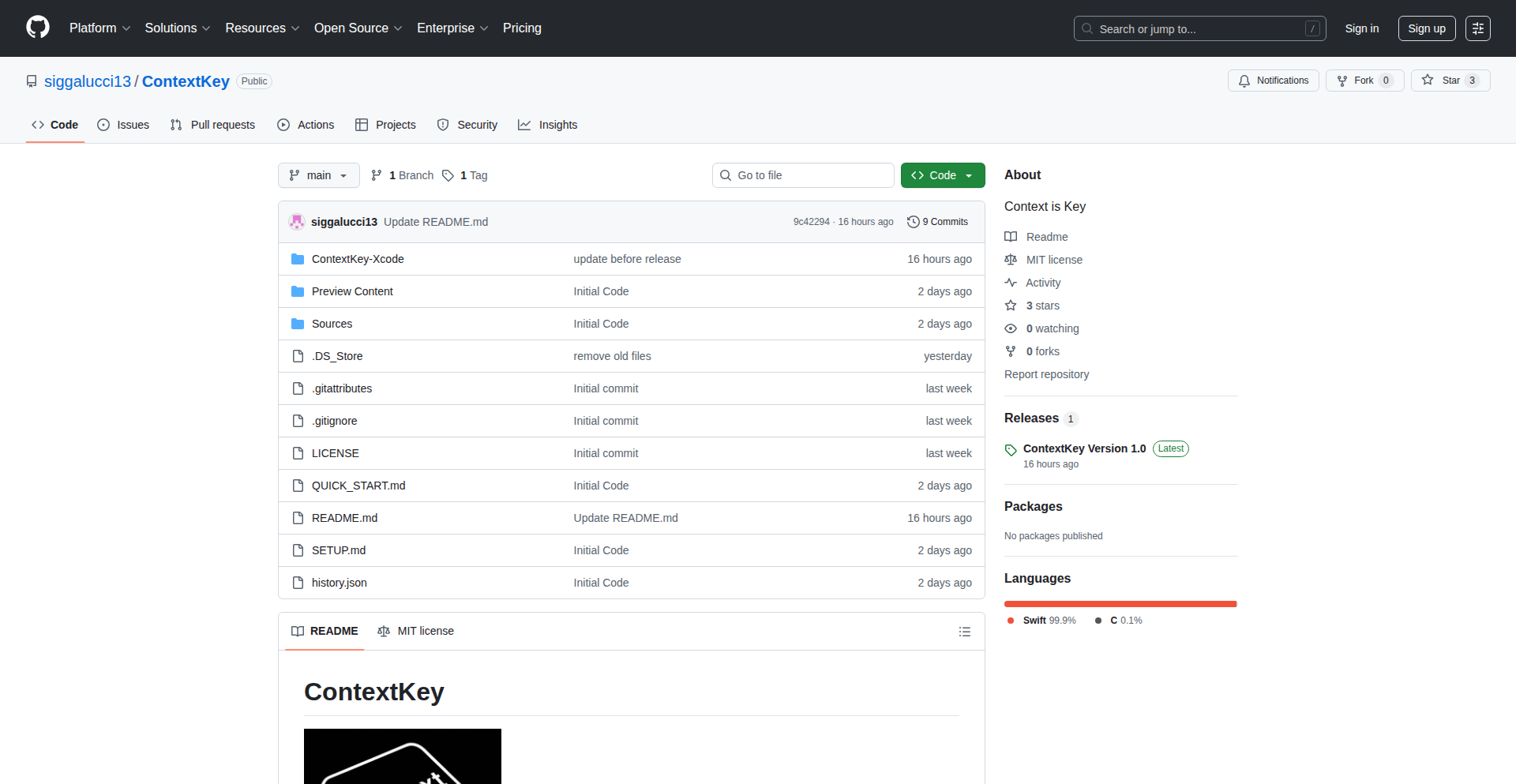

9

ContextKey-LLM Interaction Hub

Author

siggalucci

Description

ContextKey is a Mac application designed to streamline interactions with Large Language Models (LLMs). It allows users to query LLMs, whether served locally through Ollama or reached via any other API, by pressing a global hotkey. This hotkey can be triggered after highlighting any text on the screen or by selecting a file, providing a context-aware way to get instant AI assistance. The innovation lies in its ability to capture and send user-selected context directly to the LLM, eliminating manual copy-pasting and enhancing workflow efficiency for developers and power users.

Popularity

Points 3

Comments 2

What is this product?

ContextKey is a desktop utility for Mac that acts as a bridge between your local or cloud-based Large Language Models (LLMs) and your everyday computer tasks. Instead of manually copying text, pasting it into an LLM interface, and then copying the output back, ContextKey lets you select text on your screen or a file, press a predefined hotkey, and instantly send that context to your chosen LLM. The LLM then processes this information based on your prompts, and you can receive the response directly. This is innovative because it automates the data transfer, making LLM queries as quick as a keyboard shortcut, significantly boosting productivity by keeping you in your current application context.

How to use it?

Developers can integrate ContextKey into their workflow by installing it on their Mac. After installation, they would configure their preferred LLM (e.g., pointing to a local Ollama instance or an API endpoint) and set a custom hotkey. Then, to use it, they would highlight text in any application or select a file in Finder, press their assigned hotkey, and type their query. ContextKey captures the highlighted text or file content and sends it along with the query to the LLM. The output from the LLM can then be displayed or further processed. This is useful for tasks like quickly summarizing documents, explaining code snippets, or generating boilerplate text based on existing content, all without leaving the application you're currently working in.
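A rough sketch of the final step, sending captured context plus a query to a local model, might look like the following. It targets Ollama's `/api/generate` endpoint on its default port; the prompt layout, the function names, and the model name `llama3.2` are assumptions for illustration and say nothing about how ContextKey itself formats requests.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_prompt(context: str, query: str) -> str:
    """Combine the selected text and the user's question into one prompt."""
    return f"Context:\n{context.strip()}\n\nQuestion: {query.strip()}"


def build_request(context: str, query: str, model: str = "llama3.2") -> urllib.request.Request:
    """Prepare a non-streaming generate request without sending it."""
    body = {"model": model, "prompt": build_prompt(context, query), "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(context: str, query: str, model: str = "llama3.2") -> str:
    """Send the request to a running Ollama instance and return the generated text."""
    with urllib.request.urlopen(build_request(context, query, model)) as resp:
        return json.loads(resp.read())["response"]
```

Separating request construction from sending keeps the hotkey handler easy to test without a model running.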

Product Core Function

· Global Hotkey Activation: Trigger LLM queries with a customizable keyboard shortcut, allowing for instant access without switching applications. This saves time by removing the need to navigate to a separate LLM interface.

· Text Selection Querying: Highlight any text on your screen and have it automatically sent as context to the LLM. This is incredibly useful for getting quick explanations or summaries of text you're reading, directly within your workflow.

· File Content Querying: Select any file and send its content to the LLM for analysis or processing. This allows for efficient interaction with documents, code files, or any other text-based data, enabling tasks like code review or document summarization.

· LLM API Integration: Connects to Ollama or any other LLM API, offering flexibility in choosing and managing your AI models. This ensures compatibility with a wide range of existing LLM setups and allows users to leverage their preferred AI services.

· Contextual Prompting: Automatically includes the selected text or file content as part of the prompt sent to the LLM. This ensures the LLM has the relevant background information to provide accurate and contextually appropriate responses, leading to more effective AI assistance.

Product Usage Case

· Developer analyzing code: A developer highlights a complex function in their IDE, presses the ContextKey hotkey, and asks the LLM to explain the function's logic or suggest improvements. This provides instant code understanding without leaving the editor.

· Writer researching a topic: A writer selects a paragraph from a web page, uses ContextKey to ask the LLM for more information or alternative phrasing. This speeds up the research and drafting process by getting contextual AI feedback directly.

· Student learning a new concept: A student highlights a definition in their textbook or online material, uses ContextKey to ask the LLM for a simpler explanation or related examples. This enhances comprehension and learning by providing immediate, personalized explanations.

· Technical support agent troubleshooting an issue: An agent highlights an error message, uses ContextKey to ask the LLM for potential solutions or diagnostic steps. This allows for faster problem-solving by leveraging AI knowledge directly within the support context.

10

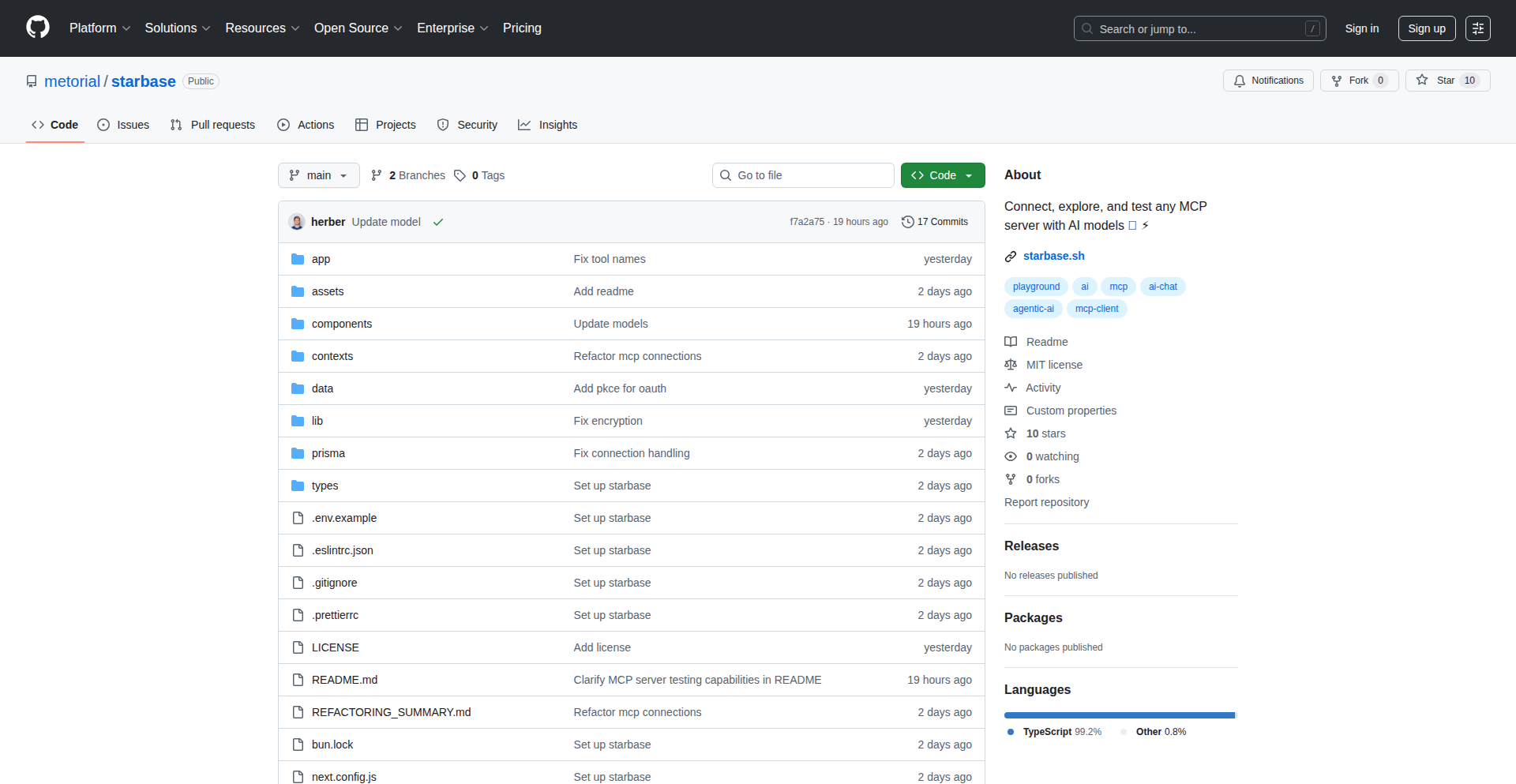

Starbase AI-MCP Tester

Author

tobihrbr

Description

Starbase is a browser-based tool designed for testing MCP (Model Context Protocol) servers. Its key innovation lies in integrating AI chat functionality to assist developers in crafting test cases and understanding server responses. This tackles the complexity and time-consuming nature of traditional MCP server testing by offering an intuitive, AI-powered approach.

Popularity

Points 4

Comments 0

What is this product?

Starbase is a web application that lets you test MCP servers directly from your browser. MCP (Model Context Protocol) is an open protocol that lets AI applications connect to external tools and data sources, and testing a server involves sending specific messages and analyzing the responses. Starbase makes this process smarter by incorporating an AI chatbot. Think of it as having an intelligent assistant that can help you figure out what messages to send and interpret what the server tells you back. The innovation is in leveraging AI to demystify complex protocol testing and speed up the debugging process.

How to use it?

Developers can use Starbase by navigating to the web application. They would typically configure the connection details for the MCP server they want to test. Then, they can use the AI chat interface to ask questions like 'How do I send a transaction request?' or 'What does this error code mean?'. The AI will provide guidance on constructing the correct messages and explain the server's replies. This makes it easier to quickly set up tests, identify issues, and learn how to interact with the MCP server, even if you're new to it. It integrates seamlessly into the development workflow by being accessible through a browser.
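For a sense of what such test messages look like, the sketch below assumes MCP here refers to the Model Context Protocol, whose messages are JSON-RPC 2.0 objects with methods such as `tools/list` and `tools/call`. The helper names and the `get_weather` tool are hypothetical, and nothing here reflects Starbase's internals.

```python
import itertools

_ids = itertools.count(1)  # JSON-RPC requests carry a unique id

# Standard JSON-RPC 2.0 error codes and their meanings.
JSONRPC_ERRORS = {
    -32700: "Parse error",
    -32600: "Invalid Request",
    -32601: "Method not found",
    -32602: "Invalid params",
    -32603: "Internal error",
}


def request(method: str, params: dict = None) -> dict:
    """Build a JSON-RPC 2.0 request object."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def list_tools() -> dict:
    """Ask the server which tools it exposes."""
    return request("tools/list")


def call_tool(name: str, arguments: dict) -> dict:
    """Invoke one tool by name with a dictionary of arguments."""
    return request("tools/call", {"name": name, "arguments": arguments})


def describe(response: dict) -> str:
    """Turn a response into a one-line summary a tester can read."""
    if "error" in response:
        code = response["error"]["code"]
        label = JSONRPC_ERRORS.get(code, "Server-defined error")
        return f"{label} ({code}): {response['error'].get('message', '')}"
    return "ok"
```

A call such as `call_tool("get_weather", {"city": "Paris"})` produces the request body; a tester would then compare the server's reply against `describe` to spot malformed parameters quickly.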

Product Core Function

· AI-powered test case generation: The AI can suggest and help construct valid MCP messages for various testing scenarios, saving developers time and reducing the chance of syntax errors. This is useful for quickly generating test data and scenarios.

· Real-time AI chat assistance: Developers can ask questions about MCP messages, server responses, and potential issues directly in the chat interface, getting instant explanations and guidance. This helps in understanding complex protocol interactions.

· Browser-based MCP server interaction: Allows direct testing of MCP servers without requiring complex local installations or specialized client software. This simplifies the testing environment and makes it accessible from anywhere.

· Response analysis and explanation: The AI can analyze the server's responses, explain their meaning in plain language, and identify potential problems. This aids in faster debugging and problem resolution.

Product Usage Case

· A new developer joining a financial trading platform team needs to test the messaging system. They use Starbase AI-MCP Tester to understand how to send a 'market data request' and interpret the incoming stream of price updates, getting up to speed much faster than traditional documentation review.

· A senior engineer is debugging a stubborn issue where a specific transaction type is failing. They use Starbase to send various forms of the transaction message, asking the AI to 'explain why this message might be rejected' based on the server's error code, leading to a quicker identification of a subtle data validation problem.

· A QA team needs to create a comprehensive test suite for a new MCP server release. They leverage Starbase's AI to suggest edge cases and complex message sequences, ensuring thorough coverage and reducing manual test case writing effort.

11

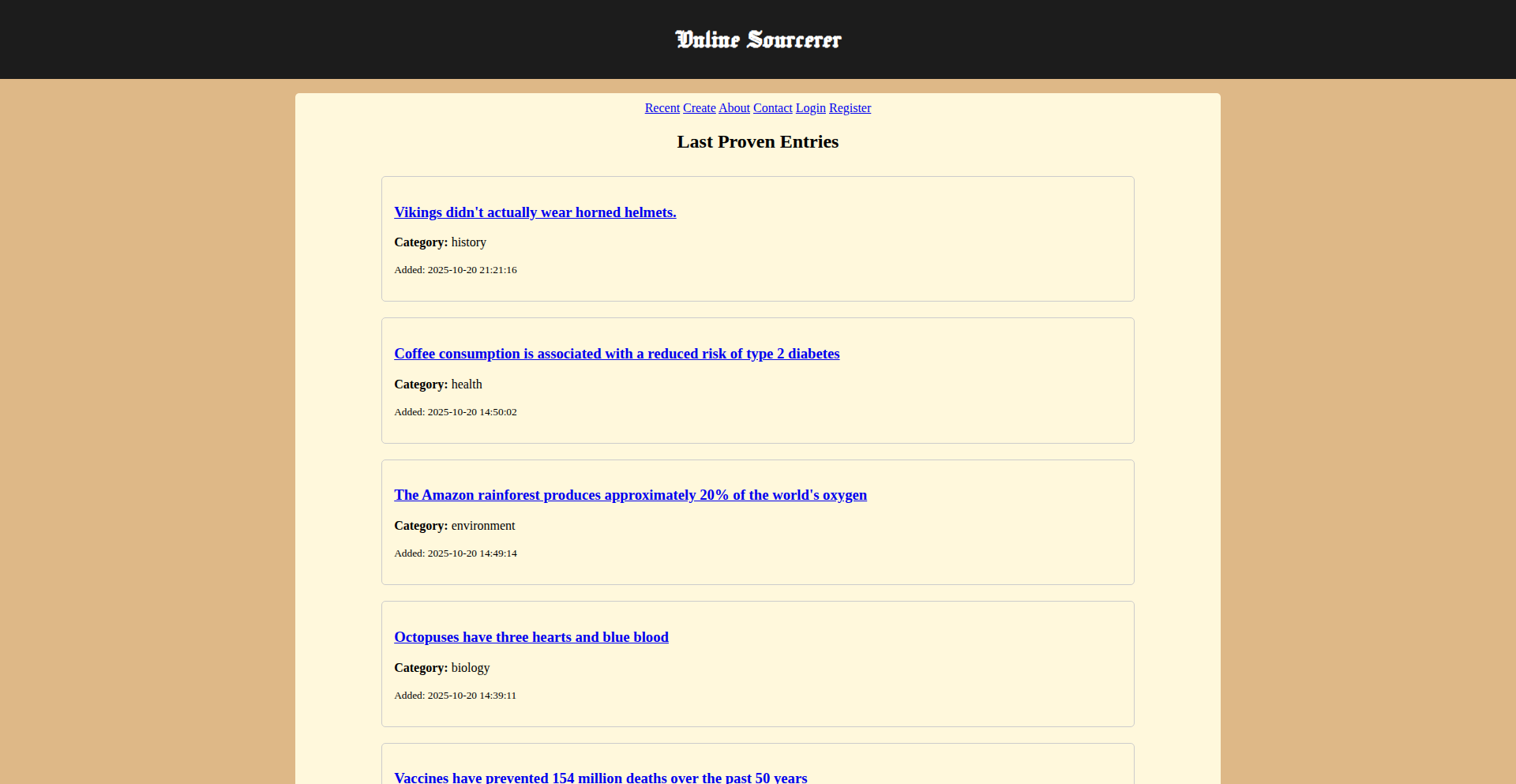

VeriLinkr: Proof-of-Concept Source Aggregator

Author

altugnet

Description

VeriLinkr is an early-stage web application designed to combat online misinformation. It allows users to compile multiple supporting sources for a claim into a single, shareable link. The core innovation lies in its approach to aggregating and presenting evidence, aiming to provide a more robust way to validate assertions than traditional single-source links.

Popularity

Points 4

Comments 0

What is this product?

VeriLinkr is a digital tool that helps you prove your statements are valid by gathering evidence from various online sources and bundling them into one easy-to-share link. Think of it as creating a 'proof package' for your claims. Instead of saying 'Here's one link that supports my point,' you can present a single link that consolidates several credible sources, making your argument much stronger and harder to dismiss. The underlying technology focuses on how to effectively collect and present these multiple links in a user-friendly way, making it a novel approach to tackling the challenge of online verification.

How to use it?

Developers can integrate VeriLinkr into their workflows or platforms where verifying information is critical. For instance, a news aggregator could use it to provide users with a 'verified' badge and a VeriLinkr link for articles that have strong evidential backing. A research tool could leverage it to allow users to instantly create and share compendiums of research papers for a given topic. Essentially, anywhere you need to establish the credibility of a statement, VeriLinkr can provide a streamlined solution. The technical approach involves building an interface for users to input their claim and then add multiple URLs that support it, which are then processed into a single, unified link.
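One way to turn a claim and its sources into a single link is to hash a canonical form of the bundle. The sketch below illustrates that approach under stated assumptions: the base URL is a placeholder, and the hashing scheme is invented for this example rather than taken from VeriLinkr.

```python
import hashlib
import json
from urllib.parse import urlsplit

BASE_URL = "https://verilinkr.example/b/"  # placeholder, not the real service URL


def normalize(url: str) -> str:
    """Validate that the source is an http(s) URL and return a cleaned form."""
    parts = urlsplit(url.strip())
    if parts.scheme not in ("http", "https") or not parts.netloc:
        raise ValueError(f"not an http(s) URL: {url!r}")
    return parts.geturl()


def bundle_link(claim: str, sources: list) -> str:
    """Derive one stable link from a claim and its supporting URLs."""
    # Deduplicate and sort so the same evidence always yields the same link.
    urls = sorted({normalize(u) for u in sources})
    if not urls:
        raise ValueError("at least one source is required")
    canonical = json.dumps({"claim": claim.strip(), "sources": urls}, sort_keys=True)
    slug = hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]
    return BASE_URL + slug
```

Deriving the slug from the content means the link is reproducible, though a real service would still need to store the bundle so the link can be resolved.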

Product Core Function

· Source Aggregation: The system takes multiple URLs provided by the user and intelligently combines them into a single, persistent link. This solves the problem of information scattered across the web, offering a centralized point of evidence. Its value is in simplifying the process of presenting comprehensive support for any claim.

· Claim Verification Display: The generated link leads to a page that clearly displays the original claim and all the aggregated sources. This provides a transparent and organized way for others to review the evidence. This directly addresses the need for easily digestible and verifiable information, making it useful for anyone who needs to convince or inform others.

· Link Sharing and Persistence: The single VeriLinkr URL is easily shareable across social media, emails, or websites, ensuring that the evidence remains accessible. The system aims to ensure these links remain functional over time, offering long-term credibility. The value here is in making robust verification portable and shareable, enhancing communication and trust.

Product Usage Case

· A fact-checker uses VeriLinkr to debunk a viral piece of misinformation by compiling links to reputable studies and expert opinions into a single, easily shareable VeriLinkr URL. This helps combat the spread of false narratives by providing readily accessible proof.

· A student writing a research paper can use VeriLinkr to create a comprehensive bibliography of their sources in a single, organized link, making it easier for their professor to review their research methodology and evidence. This simplifies academic work and enhances clarity.

· A journalist can use VeriLinkr to back up a controversial claim in their article by linking to a collection of primary documents, interviews, and expert testimonies, thereby building greater trust with their audience. This improves journalistic integrity and reader confidence.

12

48hr Connection Forge

Author

abilafredkb

Description

A friend-finding application that uses a novel 48-hour communication window to foster genuine, international connections. It tackles the problem of superficial online interactions and ghosting by creating a sense of urgency and encouraging decisive communication, ultimately aiming to combat loneliness and build bridges across cultures.

Popularity

Points 1

Comments 2

What is this product?

This is a social connection platform designed to facilitate meaningful friendships by leveraging a time-limited interaction model. Unlike traditional friend apps that allow endless matching with little actual engagement, 48hr Connection Forge pairs users based on shared interests and then enforces a strict 48-hour chat window. This technical constraint, implemented through backend logic managing user session timeouts and communication permissions, forces users to engage meaningfully or decide to move on. The innovation lies in using artificial urgency to drive real connection, prioritizing depth over breadth in social interactions. The international aspect, enabled by a global user base and careful handling of timezone differences through scheduled availability features, broadens horizons and breaks down societal bubbles.
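The time-limit logic described above can be sketched with timezone-aware timestamps. This is a minimal illustration with invented function names, not the app's backend code; it assumes match times are stored with timezone information so that users in different regions see the same deadline.

```python
from datetime import datetime, timedelta, timezone

CHAT_WINDOW = timedelta(hours=48)


def window_closes_at(matched_at: datetime) -> datetime:
    """Return the UTC instant at which the chat window for a match ends."""
    if matched_at.tzinfo is None:
        raise ValueError("matched_at must be timezone-aware")
    return matched_at.astimezone(timezone.utc) + CHAT_WINDOW


def can_send_message(matched_at: datetime, now: datetime = None) -> bool:
    """Messages are allowed only while the window is still open."""
    now = now or datetime.now(timezone.utc)
    return now < window_closes_at(matched_at)


def time_remaining(matched_at: datetime, now: datetime = None) -> timedelta:
    """Time left in the window, never negative."""
    now = now or datetime.now(timezone.utc)
    return max(window_closes_at(matched_at) - now, timedelta(0))
```

Normalizing to UTC on the server keeps the deadline identical for both participants regardless of their local time zones.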

How to use it?

Developers can integrate the core concepts of time-bound interactions into their own applications. For example, a team collaboration tool could use this for limited-time project brainstorming sessions, ensuring focused discussion and timely decisions. A learning platform could implement it for peer-to-peer study groups, where a 48-hour window encourages active knowledge sharing. The technology stack (React Native for frontend, Node.js for backend, Firebase for real-time features, and PostgreSQL for data) provides a blueprint for building scalable and responsive applications. Developers can adopt the model of enforced interaction deadlines to increase user engagement and task completion rates in various scenarios.

Product Core Function

· Interest-based user matching: Facilitates discovery of compatible individuals by analyzing shared hobbies and passions, enabling users to find people with similar mindsets, thus increasing the likelihood of successful conversations.

· 48-hour interaction window: Implements a strict time limit for initial conversations, creating urgency and preventing endless, unproductive messaging. This forces users to make decisions about the connection, reducing ghosting and promoting genuine engagement.

· Decisive connection outcome: After the 48-hour period, users are prompted to decide whether to continue the friendship or move on. This structured approach avoids ambiguity and the frustration of stalled conversations, leading to more intentional relationship building.

· International user base: Connects individuals globally, fostering cross-cultural understanding and friendships beyond geographical limitations. This breaks down echo chambers and exposes users to diverse perspectives.

· No-ghosting policy enforcement: The time limit and decision prompt actively discourage ghosting, creating a more respectful and reliable communication environment for all users.

Product Usage Case

· Building a short-term mentorship program: A company could use this model to facilitate quick skill-sharing sessions between employees, where mentors and mentees have 48 hours to connect and address specific work-related challenges, improving knowledge transfer.

· Accelerating community building: Online communities could use this for event-specific discussion forums or interest groups, ensuring that conversations are focused and lead to concrete outcomes within a defined timeframe, boosting active participation.

· Enhancing online dating experiences: While not its primary focus, the concept could be adapted for dating apps to encourage more direct conversations and quicker decisions, reducing the time spent on superficial 'swiping' and leading to more meaningful interactions.

· Facilitating collaborative project kick-offs: Development teams or creative collaborators can use this framework to rapidly brainstorm ideas and define project scope within a limited, high-intensity period, ensuring quick alignment and project momentum.

13

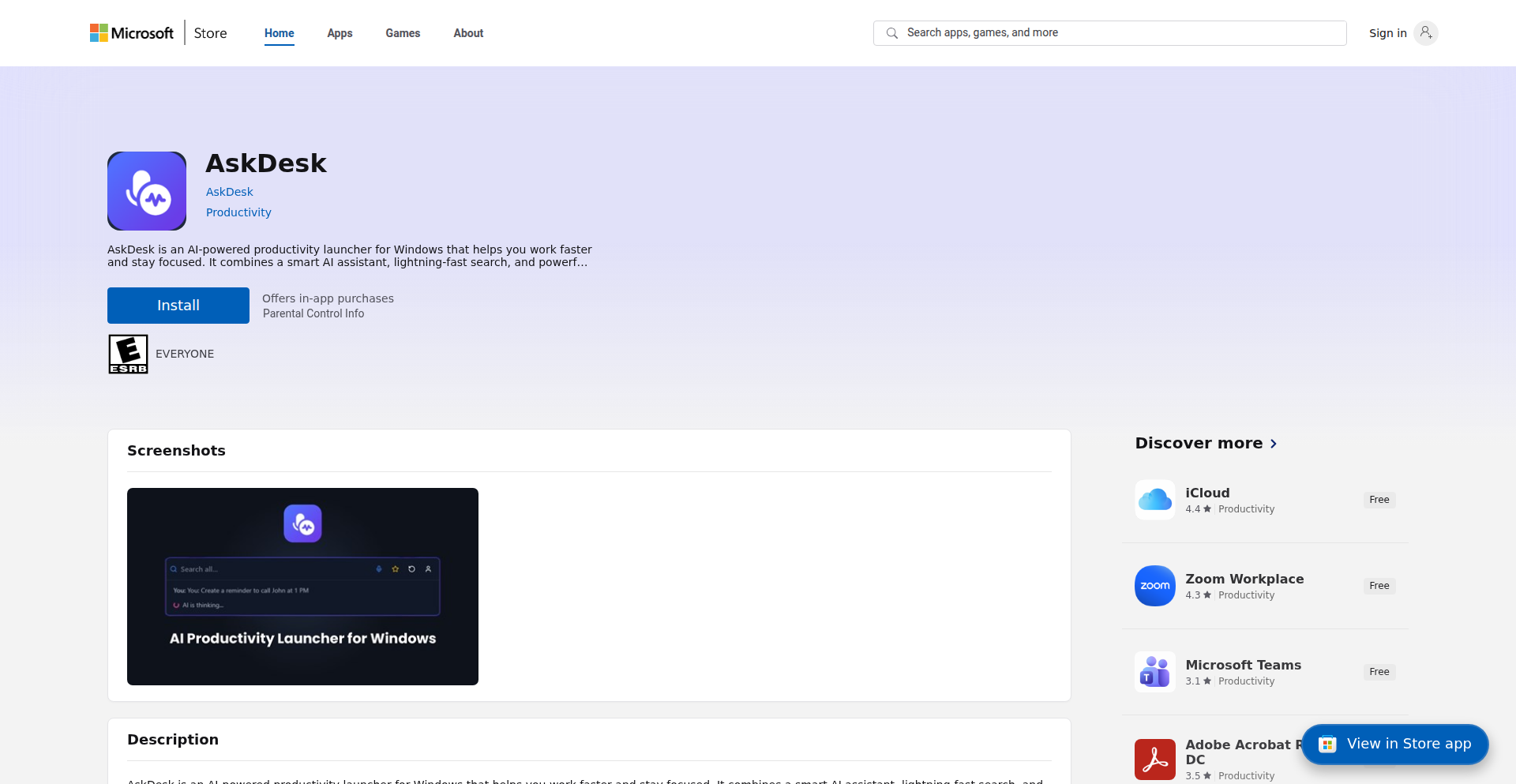

AskDesk AI Command Hub

Author

NabilChiheb

Description

AskDesk is a native Windows application that acts as a universal command center for your computer, allowing you to execute actions via typed or spoken commands. It offers a streamlined interface for tasks like clearing temporary files, launching applications, setting reminders, and managing your clipboard. A key innovation is its integrated AI capabilities for enhanced searching, summarization, and intelligent action execution, providing a more intuitive and efficient user experience. This project embodies the hacker ethos of solving complex usability problems with elegant code.

Popularity

Points 3

Comments 0

What is this product?

AskDesk is a desktop application for Windows that lets you interact with your computer using natural language commands, either typed or spoken. Think of it as a smart assistant that lives on your PC. Its technical innovation lies in its ability to parse these commands and trigger specific system actions or application functions. Unlike many voice assistants that rely heavily on cloud processing and have complex setup, AskDesk focuses on local execution for core shortcuts, offering speed and privacy. The AI integration adds a layer of intelligence for more advanced tasks, making your computer easier to control without needing to remember specific commands or navigate through menus. So, this is useful because it makes controlling your computer faster and more intuitive, saving you time and effort.

How to use it?

Developers can use AskDesk by installing it from the Microsoft Store. Its primary use case is for quickly executing common tasks. For example, you can type or say 'clear temp files' to clean up your system, 'open Spotify' to launch your music app, or 'remind me to call my wife in 10 minutes' to set a reminder. For custom actions, developers can define their own shortcuts, integrating with existing scripts or executables. The AI-powered commands can be used for tasks like asking it to 'search for the latest news on AI' or 'summarize this webpage' (assuming browser integration is active). This means you can integrate it into your workflow to automate repetitive actions or access information more efficiently, all through simple commands. It’s like having a command-line interface that understands plain English.

Product Core Function

· Execute system commands: Allows for quick execution of built-in Windows commands like clearing temporary files, shutting down, or restarting. The technical value is in abstracting complex system calls into simple, user-friendly commands, improving efficiency. Useful for IT professionals and power users to maintain system health.

· Launch applications: Enables launching any installed application by its name, either typed or spoken. This streamlines workflow by eliminating the need to search through the start menu or use file paths, providing instant access to your tools. Great for busy professionals who need quick access to their favorite software.

· Set reminders: Facilitates setting time-based reminders. The innovation here is in the natural language parsing of time, like 'in 10 minutes' or 'tomorrow at 3 PM', making scheduling effortless. This helps users stay organized and on track with their tasks.

· Clipboard management: Offers actions to manipulate the clipboard, such as clearing it or pasting specific content. This is valuable for developers and anyone who frequently copies and pastes, allowing for quicker and more controlled clipboard operations. It saves time and helps avoid accidentally overwriting copied data or pasting unwanted content.

· AI-powered actions: Integrates AI for tasks like web searching, content summarization, or executing smarter commands. The technical value is in leveraging AI models to understand context and perform complex operations, offering advanced capabilities without manual coding. Useful for research, content creation, and complex problem-solving.

· Customizable shortcuts: Allows users to define their own commands that trigger specific scripts or actions. This is a core hacker-style feature, empowering users to extend the application's functionality to perfectly match their unique workflows and automate custom tasks. Extremely valuable for developers and power users to tailor their computing experience.
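The reminder feature above depends on turning phrases such as 'in 10 minutes' or 'tomorrow at 3 PM' into a concrete time. The following is a minimal sketch of that step using two regular expressions. It covers only those two phrase shapes, the function name `parse_when` is hypothetical, and it says nothing about how AskDesk itself parses time.

```python
import re
from datetime import datetime, timedelta
from typing import Optional

# Maps the singular unit found in the phrase to a timedelta keyword.
UNITS = {"minute": "minutes", "hour": "hours", "day": "days"}


def parse_when(phrase: str, now: datetime) -> Optional[datetime]:
    """Return the time a phrase refers to, or None if it is not understood."""
    text = phrase.lower().strip()

    # Relative form: "in 10 minutes", "in 2 hours", "in 1 day".
    relative = re.fullmatch(r"in (\d+) (minute|hour|day)s?", text)
    if relative:
        amount, unit = int(relative.group(1)), relative.group(2)
        return now + timedelta(**{UNITS[unit]: amount})

    # Absolute form: "tomorrow at 3 pm", "tomorrow at 9:30am".
    absolute = re.fullmatch(r"tomorrow at (\d{1,2})(?::(\d{2}))? ?(am|pm)", text)
    if absolute:
        raw_hour = int(absolute.group(1))
        minute = int(absolute.group(2) or 0)
        if not 1 <= raw_hour <= 12 or minute > 59:
            return None
        hour = raw_hour % 12  # 12 am -> 0, 12 pm -> 12 after the shift below
        if absolute.group(3) == "pm":
            hour += 12
        tomorrow = now + timedelta(days=1)
        return tomorrow.replace(hour=hour, minute=minute, second=0, microsecond=0)

    return None
```

Passing `now` in explicitly keeps the function deterministic and easy to test; a real assistant would call it with `datetime.now()` and would need many more phrase patterns or a dedicated date-parsing library.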

Product Usage Case

· A marketing manager needs to quickly clear their browser cache before a demo. They type 'clear browser cache' into AskDesk, and it executes the necessary commands, saving them time and potential embarrassment. This solves the problem of fiddly manual clearing processes.

· A developer is working on a new feature and needs to frequently restart a local server. They create a custom shortcut in AskDesk that maps 'restart server' to their script. Now, with a single command, their server restarts, significantly speeding up their development cycle. This addresses the tedium of repetitive manual operations.

· A student is researching for an essay and finds a lengthy article. They use AskDesk's AI summarization feature by asking it to 'summarize this article'. AskDesk processes the content and provides a concise summary, helping them grasp the main points quickly and efficiently. This solves the problem of information overload and saves study time.

· A graphic designer needs to access a frequently used color palette stored in a text file. They set up a custom AskDesk command to paste the content of that file directly into their design software, streamlining their workflow and ensuring consistency in their designs. This eliminates manual copying and pasting of repetitive data.

14

Notely - Contextual Note Weaver

Author

Codegres

Description

Notely is a novel note-taking application that leverages AI to intelligently link and surface related notes based on your current context. Instead of static tags, it understands the semantic relationships between your thoughts, making it easier to recall information and discover new connections. It's like having an AI assistant that remembers what you've written and can anticipate what you might need next. So, this is useful because it helps you find information faster and spark new ideas by surfacing forgotten links between your notes.

Popularity

Points 3

Comments 0

What is this product?

Notely is a note-taking app that goes beyond simple organization. It uses Natural Language Processing (NLP) and vector embeddings to understand the meaning of your notes. When you're writing a new note or viewing an existing one, Notely analyzes its content and intelligently suggests other notes you've written that are semantically similar or contextually relevant. This means instead of manually tagging or searching for related information, Notely automatically presents you with connections you might have missed. So, this is useful because it enhances your knowledge recall and promotes serendipitous discovery of your own ideas without manual effort.
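The suggestion step described above can be illustrated with cosine similarity over note vectors. The sketch below is an assumption-heavy stand-in: `embed` uses plain word counts in place of a learned embedding model, and `related_notes` is a hypothetical helper, not part of Notely. Word counts only capture shared vocabulary, whereas real embeddings would also match notes that use different words for the same idea.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Counter:
    # Stand-in for a real embedding model: a sparse vector of word counts.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def related_notes(
    current: str, notes: Dict[str, str], top_k: int = 2
) -> List[Tuple[str, float]]:
    """Rank stored notes by similarity to the note being written."""
    query = embed(current)
    scored = [(title, cosine(query, embed(body))) for title, body in notes.items()]
    scored = [item for item in scored if item[1] > 0]  # drop unrelated notes
    return sorted(scored, key=lambda item: item[1], reverse=True)[:top_k]
```

Swapping `embed` for a call to an actual embedding model would leave `cosine` and `related_notes` unchanged, which is why the ranking logic is kept separate from the vectorization.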

How to use it?

Developers can integrate Notely into their workflow by installing it as a standalone application. The core of its utility lies in its ability to index and analyze the text content of your notes. You can then write new notes, and Notely will proactively suggest links to existing ones. For more advanced use cases, developers might consider leveraging the underlying embedding models to build custom note analysis tools or integrate Notely's suggestion engine into other personal knowledge management systems. So, this is useful because it provides a smart way to manage and retrieve your personal knowledge base, saving you time and improving your productivity.

Product Core Function

· Contextual Note Linking: Utilizes AI-powered semantic analysis to automatically suggest related notes, enhancing knowledge recall and discovery. This is valuable for quickly finding related information and preventing the isolation of ideas.

· Intelligent Search: Beyond keyword matching, Notely understands the meaning of your queries to surface the most relevant notes, even if the exact words aren't present. This is useful for retrieving information when you don't remember precise keywords.

· Dynamic Relationship Mapping: Creates an evolving network of connections between your notes based on their content, revealing patterns and insights you might not have otherwise discovered. This is valuable for understanding the broader themes within your collected thoughts.

Product Usage Case

· When researching a complex topic, Notely can automatically surface previously written notes on tangential aspects of the subject, helping you build a more comprehensive understanding. This solves the problem of information fragmentation and deepens your insights.

· A developer working on a new feature can open a note about a similar past project, and Notely will suggest related notes on specific technical challenges or solutions encountered, accelerating the development process. This addresses the need for quick access to relevant past experiences.

· A writer brainstorming for a new article can start a note, and Notely will prompt them with related ideas or background information from their existing notes, overcoming writer's block and sparking creativity. This aids in idea generation and content development by leveraging existing intellectual capital.

15

DocuAPI Weaver

Author

sgk284

Description

DocuAPI Weaver transforms your existing standard operating procedures (SOPs) into production-ready REST APIs in under 90 seconds. It leverages LLMs to understand your manual processes and automatically generates the API infrastructure, including versioning, testing, and documentation. This means you can automate complex, human-driven decisions without writing extensive code, making your operations faster and more efficient. So, what's in it for you? It drastically reduces the time and engineering effort needed to automate critical business workflows, leading to immediate operational improvements.

Popularity

Points 3

Comments 0

What is this product?

DocuAPI Weaver is an intelligent platform that takes your standard operating procedures (SOPs) – essentially, your company's rulebooks for how to do things – and automatically transforms them into well-defined, functional REST APIs. The core innovation lies in using Large Language Models (LLMs) to interpret the natural language instructions within your documents. Instead of needing engineers to manually translate these SOPs into code and infrastructure, DocuAPI Weaver understands the intent, the steps, and the decision points. It then autonomously generates all the necessary components for a production-ready API, including endpoints, data structures, version control for easy rollbacks, automated tests to ensure reliability, and user-friendly integration documentation. This approach democratizes automation, allowing non-engineers to leverage powerful API capabilities. So, what's in it for you? It empowers you to turn your established business logic, documented in plain language, into automated processes with the speed and reliability of a software API, without the typical development bottleneck.

How to use it?

Developers and operations teams can use DocuAPI Weaver by simply uploading their existing SOP documents. The platform then processes these documents to create a REST API that mirrors the workflow described. You can integrate this generated API into your existing applications and systems, allowing automated execution of tasks that were previously manual. For example, if you have an SOP for approving customer requests, you can upload it, and DocuAPI Weaver will generate an API. Your customer service application can then call this API to automate the approval process. This provides a seamless way to bring automation to critical paths without extensive custom coding. So, what's in it for you? It allows you to quickly and easily integrate automated decision-making and workflow execution into your existing tech stack, reducing manual work and speeding up business processes.
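As an illustration of the customer-approval example, here is a minimal sketch of how a client application might prepare a call to such a generated API using only the Python standard library. Everything specific in it is an assumption: the `/v1/approvals` path, the bearer-token header, and the payload fields are hypothetical and not taken from DocuAPI Weaver's documentation.

```python
import json
from urllib.request import Request


def build_approval_request(base_url: str, api_key: str, ticket: dict) -> Request:
    """Prepare (but do not send) a POST request to a hypothetical endpoint."""
    body = json.dumps(ticket).encode("utf-8")
    return Request(
        url=f"{base_url.rstrip('/')}/v1/approvals",  # hypothetical path
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
        },
    )
```

The request object can then be sent with `urllib.request.urlopen(request)` and the JSON response decoded with `json.load`. Building the request separately from sending it keeps the payload logic testable without network access.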

Product Core Function

· SOP to API Generation: Transforms plain-text operational procedures into functional REST APIs, allowing for automated execution of documented processes. The value here is rapid automation of existing business logic, saving significant engineering time and accelerating deployment. Application scenario: Automating new-employee onboarding based on HR procedures.

· Automated Versioning and Rollbacks: Provides robust version control for APIs, enabling one-click rollbacks to previous states. This is valuable for maintaining stability and managing changes to automated processes without disrupting ongoing operations. Application scenario: Safely updating an API for product moderation rules without impacting live moderation.

· Autogenerated Unit and Integration Tests: Creates comprehensive tests for the generated API to ensure its correctness and reliability. This adds significant value by reducing the burden of test writing for developers and ensuring the accuracy of automated workflows. Application scenario: Verifying that a newly automated purchase order processing API correctly handles different scenarios.

· Web UI and Integration Documentation: Automatically generates user interfaces and clear documentation for the API, making it easy for developers and other systems to understand and consume. This accelerates integration and adoption of the automated services. Application scenario: Providing clear instructions for other services to use an API that handles inventory updates.

· SOC 2 Compliance: Ensures the generated API infrastructure meets high security and compliance standards. This is crucial for businesses handling sensitive data and operations, providing peace of mind and meeting regulatory requirements. Application scenario: Automating financial transaction processing with guaranteed security and compliance.
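The versioning and rollback behavior listed above can be pictured as an append-only history with a movable "active" pointer. The sketch below shows that general pattern under stated assumptions: the `VersionedRules` class and its methods are hypothetical, and the source does not describe how DocuAPI Weaver stores versions internally.

```python
from typing import Any, Dict, List


class VersionedRules:
    """Append-only history of rule sets with a pointer to the active one."""

    def __init__(self) -> None:
        self._versions: List[Dict[str, Any]] = []
        self._active = -1  # index into _versions; -1 means nothing published

    def publish(self, rules: Dict[str, Any]) -> int:
        """Store a new version, make it active, and return its 1-based number."""
        self._versions.append(dict(rules))
        self._active = len(self._versions) - 1
        return self._active + 1

    def rollback(self, version: int) -> None:
        """Re-activate an earlier version without deleting any history."""
        if not 1 <= version <= len(self._versions):
            raise ValueError(f"unknown version {version}")
        self._active = version - 1

    @property
    def active(self) -> Dict[str, Any]:
        if self._active < 0:
            raise LookupError("no version has been published")
        return dict(self._versions[self._active])
```

Because a rollback only moves the pointer, the newer version stays in the history and can be re-activated later; publishing after a rollback appends a fresh version rather than overwriting anything.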

Product Usage Case

· Fashion Marketplace (Garmentory): Facing a multi-day backlog in moderating hundreds of thousands of product submissions each month, they used DocuAPI Weaver to automate their product moderation SOPs. The resulting API cut that backlog from days to near-instant review, meaning new products could be live on the site almost immediately. This solved the problem of slow manual review processes and directly improved their ability to scale.

· Public Safety Tech (DroneSense): Their purchase order handling process was taking approximately 30 minutes per order manually. By converting their purchase order SOP into an API with DocuAPI Weaver, they reduced this time to just 2 minutes. This dramatically improved their operational efficiency and responsiveness in critical public safety contexts, solving the bottleneck of manual administrative tasks.

· Internal Operational Automation: Imagine a company with detailed SOPs for handling customer support escalations. They could use DocuAPI Weaver to turn these SOPs into an API. Customer support software could then trigger this API when a ticket reaches a certain threshold, automatically routing it to the correct team with all necessary information, thus solving the problem of slow or incorrect escalation handling.

16

AI One Health Nexus

Author

ai-onehealth

Description