Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-10-17

SagaSu777 2025-10-18

Explore the hottest developer projects on Show HN for 2025-10-17. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

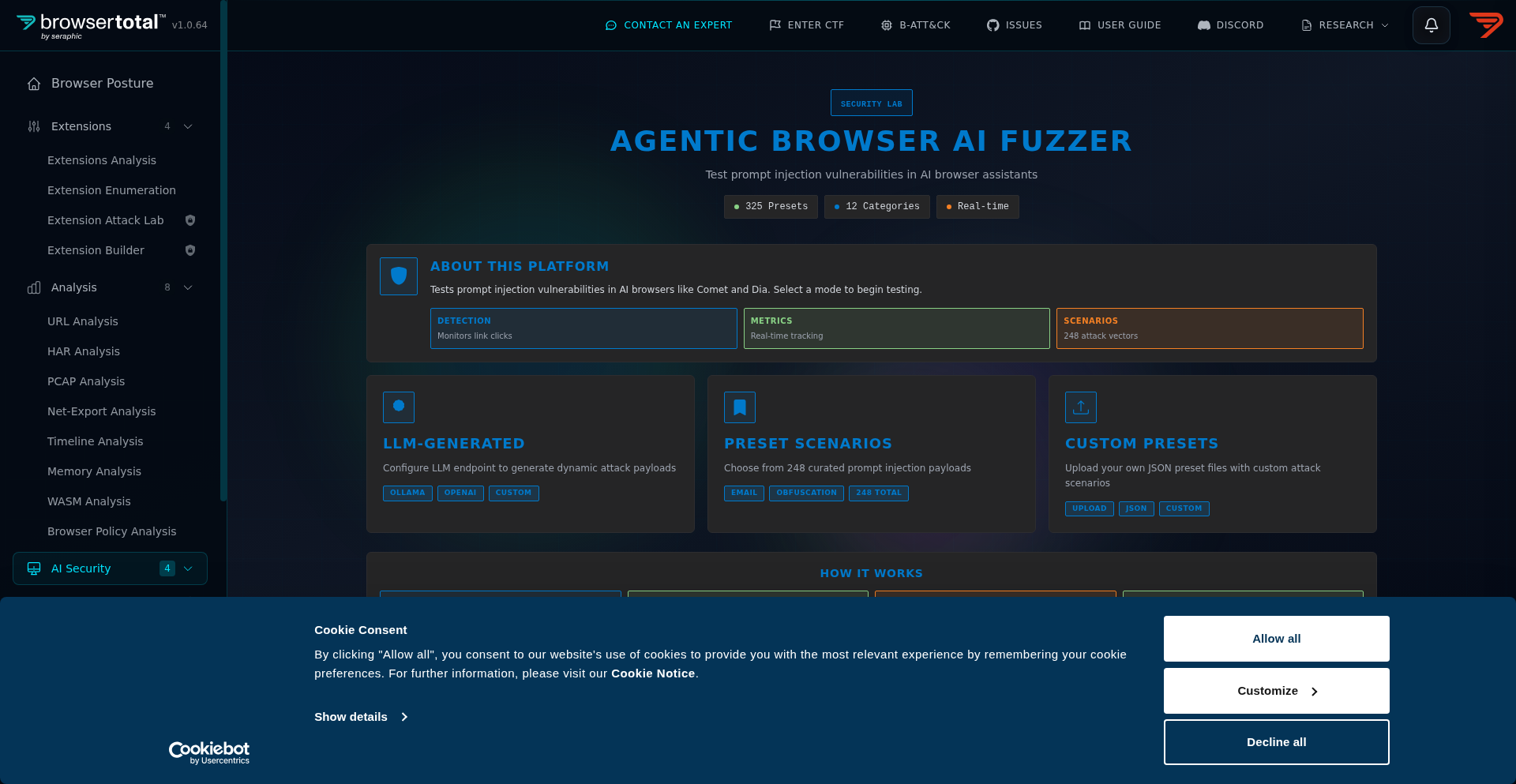

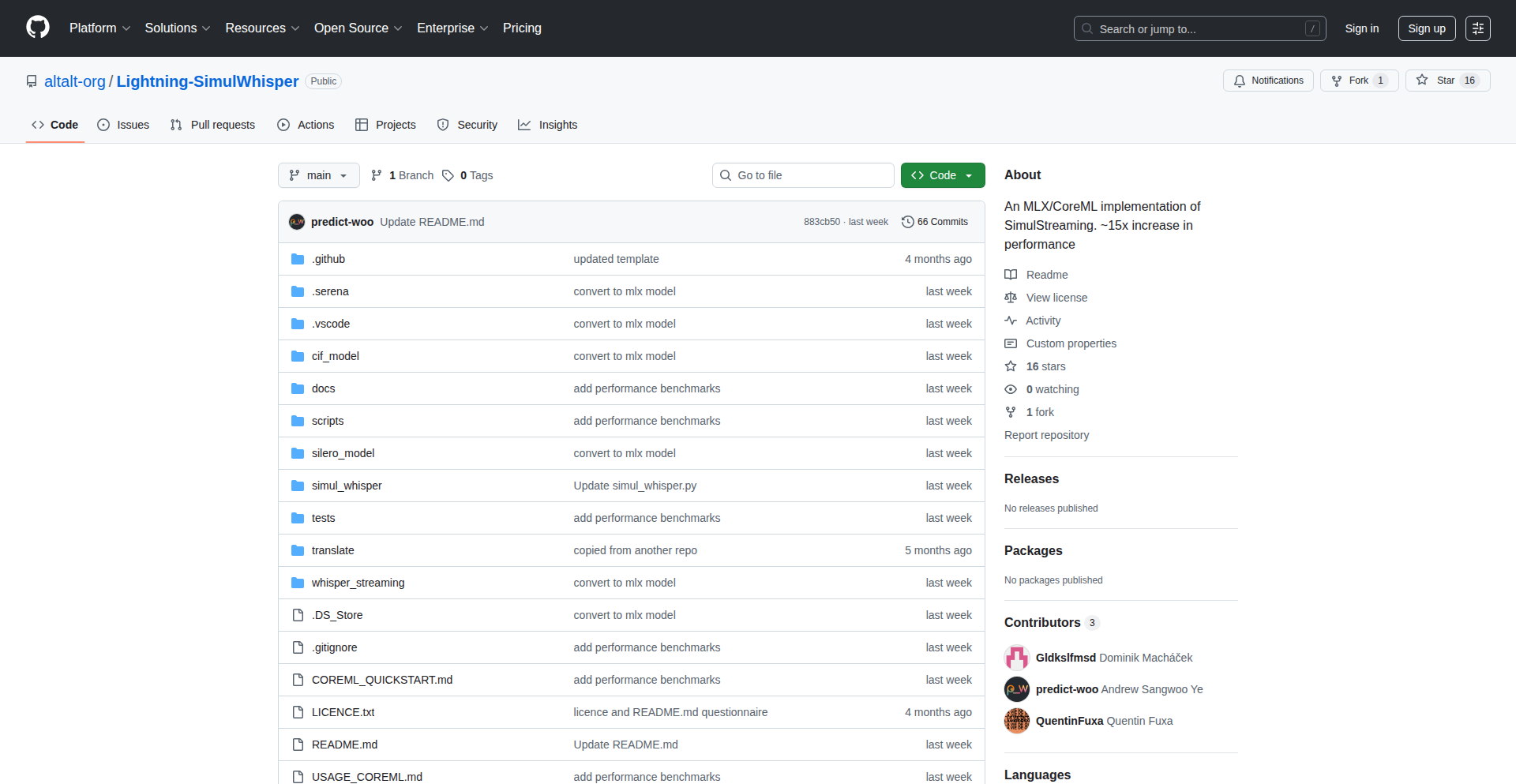

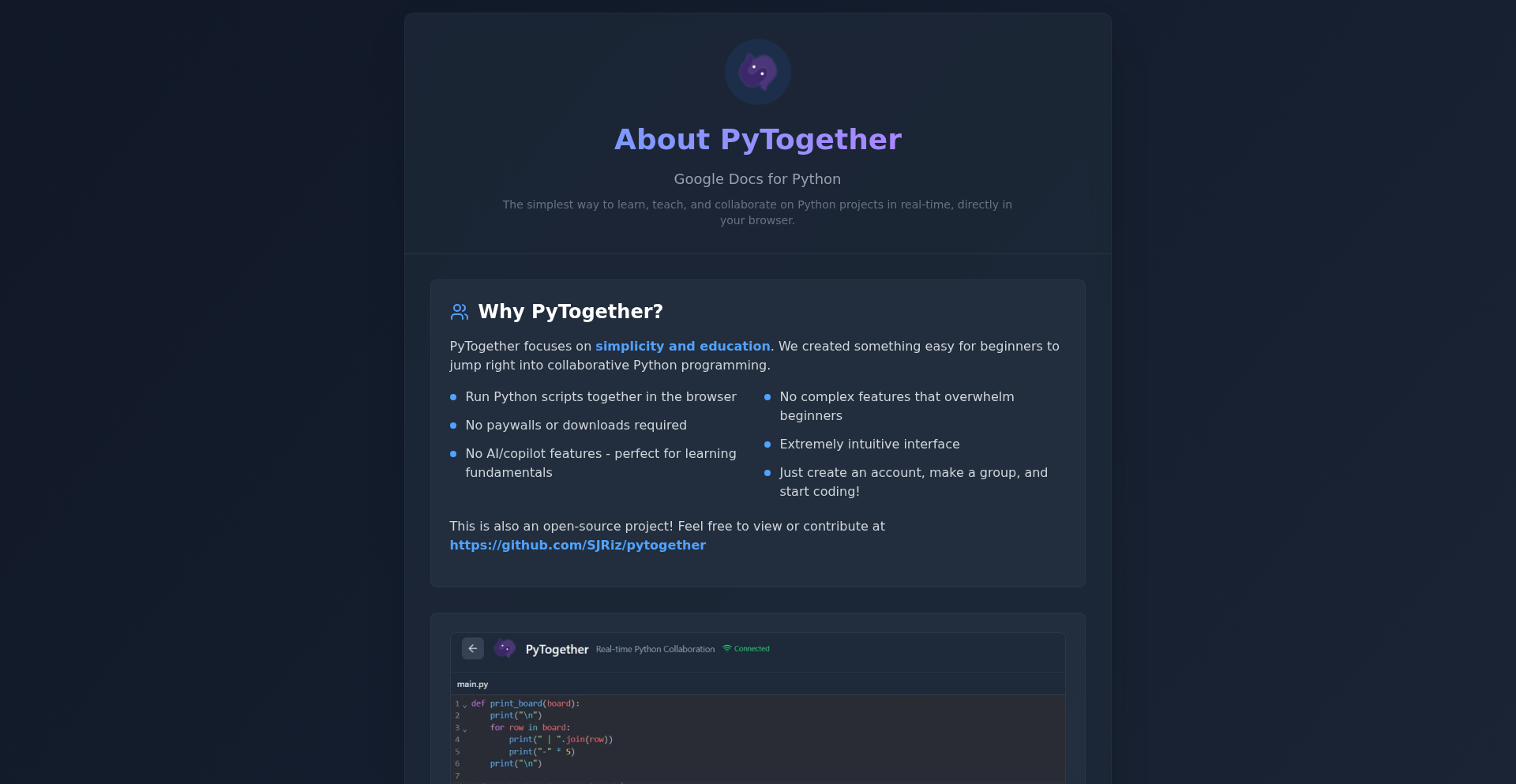

The landscape of software development is evolving rapidly, and AI integration is becoming less of a futuristic concept and more of a practical tool for immediate productivity gains. Today's Show HN submissions highlight a clear trend: AI-native tools that enhance workflows and simplify complex tasks for developers and users alike. The examples range from AI assistants embedded directly into the browser for more intuitive web automation (BrowserOS) to LLM fuzzers designed to uncover vulnerabilities in those same AI browsers; the common thread is making AI work *for* us, not just alongside us.

There is also a strong push toward efficiency and privacy. OnlyJPG does its image conversion entirely client-side, and SEE-Proto makes compressed JSON searchable, both reflecting the hacker ethos of solving problems with elegant, performant technical solutions.

For developers, this means continually adapting to new AI paradigms, whether that is building applications that integrate with AI agents, securing AI-powered systems, or using AI-driven tools to accelerate their own coding and analysis. Entrepreneurs should look for opportunities to build specialized AI tools that solve niche problems efficiently, with a focus on user experience and demonstrable value. The open-source community remains fertile ground for innovation, supplying foundational components and pointing to new directions for AI-driven software.

Today's Hottest Product

Name

BrowserOS – Open-Source Chromium Fork with Built-in MCP Server

Highlight

This project embeds an MCP (Model Context Protocol) server directly into a Chromium browser binary. This removes the usual setup steps for AI agents, lets them work with logged-in sessions, and exposes new Chromium core APIs for web automation that go beyond the limitations of the Chrome DevTools Protocol (CDP). Developers can learn from how it packages a server component into a desktop application and explore new patterns for browser-agent interaction.

Popular Category

AI/ML

Developer Tools

Web Development

Open Source

Popular Keyword

AI

LLM

Browser

Agent

Open Source

Framework

Tool

Automation

Technology Trends

AI-powered browser automation

Client-side image processing

Searchable data compression

Domain-specific AI benchmarks

Lightweight AI frameworks

Open-source infrastructure for AI

Privacy-focused web applications

Developer productivity tools

Project Category Distribution

AI/ML (30%)

Developer Tools (25%)

Web Development (15%)

Utilities/Productivity (15%)

Data & Observability (10%)

Other (5%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | OnlyJPG - In-Browser Image Weaver | 58 | 39 |
| 2 | Datapizza AI - GenAI App Accelerator | 39 | 26 |
| 3 | BrowserOS-MCP | 33 | 12 |
| 4 | ServiceRadar: Hybrid Network Observability Engine | 35 | 1 |
| 5 | SEE-Proto: Schema-Aware Searchable Compression | 13 | 6 |
| 6 | LegalDoc-Embed-Bench | 10 | 0 |
| 7 | ResumeLyricSynth | 5 | 4 |
| 8 | LLM-CounterBench | 7 | 1 |
| 9 | AdBusterAI | 5 | 2 |
| 10 | Pluely: The Stealth AI Co-Pilot | 3 | 4 |

1

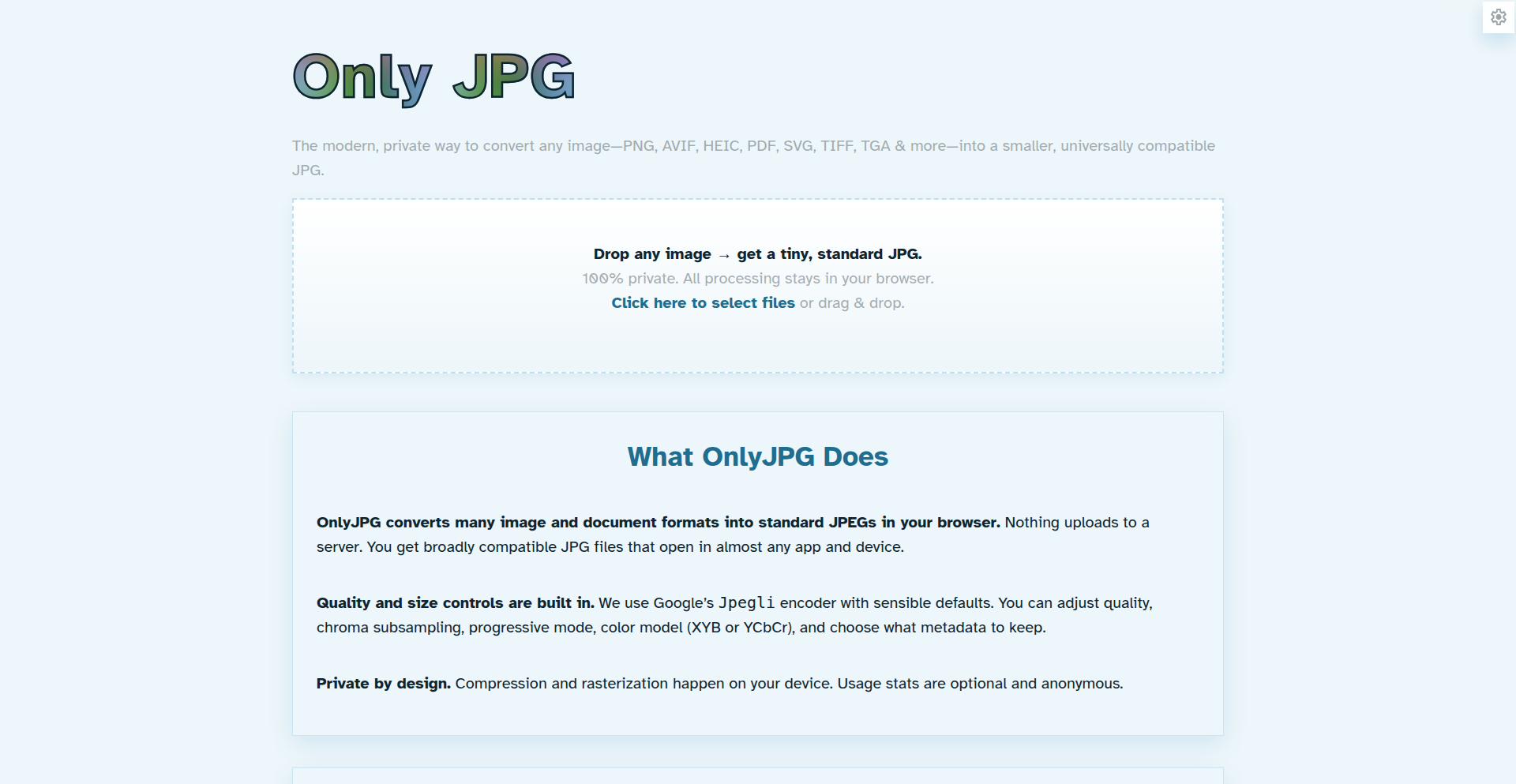

OnlyJPG - In-Browser Image Weaver

Author

johnnyApplePRNG

Description

OnlyJPG is a privacy-focused, client-side tool that converts various image formats like PNG, HEIC, AVIF, and even PDFs into standard JPEGs. It leverages Emscripten and WebAssembly to run image decoding and conversion libraries directly within your browser, meaning no files are ever uploaded to a server. This provides enhanced privacy and produces widely supported JPEG files. The project showcases a creative use of web technologies to solve a common problem with a hacker's flair for experimentation.

Popularity

Points 58

Comments 39

What is this product?

OnlyJPG is a web-based utility that transforms a wide range of image and document formats into universally compatible JPEG files. The core innovation lies in its use of Emscripten and WebAssembly, which allows powerful image processing libraries (like Google's Jpegli) to run entirely within your web browser, operating in a Web Worker for a smooth user experience. This means your sensitive images never leave your device, offering a significant privacy advantage over online converters. It even incorporates advanced JPEG XL color quantization techniques (XYB perceptual color quantization) for potentially better visual quality in the output JPEGs. So, what's the big deal? You get to convert your images without worrying about your data being seen or stored by anyone else, and the output is a file that will work on virtually any device or platform.

How to use it?

Developers can integrate OnlyJPG by embedding its JavaScript interface into their web applications. It's designed to be a client-side solution, meaning you can use it directly in your browser through its provided UI or, for more advanced use cases, integrate its functionality into your own workflows. For example, if you have a web app that needs to accept user-uploaded images in various formats and then display them consistently, OnlyJPG can be used to preprocess these images on the user's device before they are even sent to your server. It's about bringing powerful image conversion capabilities to the edge of the network – your user's browser. This means faster processing for the user and reduced server load for you.

Product Core Function

· Client-side image format conversion: Processes various input formats including PNG, HEIC, AVIF, and PDF directly in the browser, offering immediate conversion without server uploads. This is useful for applications where user privacy or offline capabilities are paramount.

· WebAssembly and Web Worker integration: Utilizes Emscripten to compile native code for image decoding into WebAssembly, running it in a Web Worker to prevent UI blocking and ensure a responsive user experience. This demonstrates efficient use of modern web technologies for computationally intensive tasks.

· High-quality JPEG output with advanced quantization: Employs Google's Jpegli library, enabling features like XYB perceptual color quantization for potentially superior visual fidelity in the resulting JPEGs. This means your converted images can look better while remaining standard JPEGs.

· Privacy-preserving processing: All image manipulation occurs locally on the user's machine, ensuring that sensitive or private images are never transmitted or stored on external servers. This is a direct benefit for users concerned about data security and privacy.

· Broad browser compatibility: Tested and confirmed to work across major web browsers like Firefox, Chrome, and Safari, so most end users can use the tool without compatibility issues.
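OnlyJPG's own source isn't reproduced here, but any converter that accepts PNG, HEIC, AVIF, and PDF input first has to decide which decoder a file goes to. A minimal sketch of that dispatch step, assuming detection by leading "magic" bytes (a common approach; whether OnlyJPG does it this way is an assumption), could look like this:

```python
import struct

# Brands that mark an ISO-BMFF file as HEIF/HEIC; 'mif1'/'msf1' are the generic
# HEIF brands that many HEIC files also list.
HEIF_BRANDS = {b"heic", b"heix", b"heim", b"heis", b"mif1", b"msf1"}
AVIF_BRANDS = {b"avif", b"avis"}


def ftyp_brands(data: bytes) -> list[bytes]:
    """Return the major and compatible brands from a leading 'ftyp' box, if any."""
    if len(data) < 16 or data[4:8] != b"ftyp":
        return []
    (box_size,) = struct.unpack(">I", data[0:4])  # big-endian box length
    end = min(box_size, len(data))
    brands = [data[8:12]]  # major brand; bytes 12..16 are minor_version
    for off in range(16, end - 3, 4):  # compatible brands, 4 bytes each
        brands.append(data[off:off + 4])
    return brands


def sniff_format(data: bytes) -> str:
    """Guess the container format from the first bytes of a file."""
    if data.startswith(b"\x89PNG\r\n\x1a\n"):
        return "png"
    if data.startswith(b"\xff\xd8\xff"):
        return "jpeg"  # already JPEG; a converter could pass it through
    if data.startswith(b"%PDF-"):
        return "pdf"
    brands = set(ftyp_brands(data))
    if brands & AVIF_BRANDS:  # check AVIF first: AVIF files often list 'mif1' too
        return "avif"
    if brands & HEIF_BRANDS:
        return "heic"
    return "unknown"
```

Only the first few dozen bytes of the user's file are needed, so the check is cheap enough to run before loading any WebAssembly decoder.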

Product Usage Case

· A photo-sharing web application that needs to accept HEIC files from iPhones but display them universally. OnlyJPG can convert these HEIC files to JPEGs directly in the user's browser before they are uploaded, ensuring compatibility and improving the user experience by handling the conversion client-side.

· An e-commerce platform that allows sellers to upload product images in any format. By integrating OnlyJPG, the platform can automatically convert these images to JPEGs on the seller's browser, guaranteeing consistent image display across the website and reducing potential issues caused by unsupported formats.

· A document management system where users upload scanned PDFs or images containing important information. OnlyJPG can convert these files to JPEGs in the browser, making them easy to view across different devices and applications while keeping the uploaded documents private.

· A developer building a personal portfolio website that needs to showcase images in a variety of formats. Using OnlyJPG, they can ensure all their images are presented as JPEGs, which are widely supported and load quickly, without needing a backend server for image processing. This simplifies deployment and reduces costs.

2

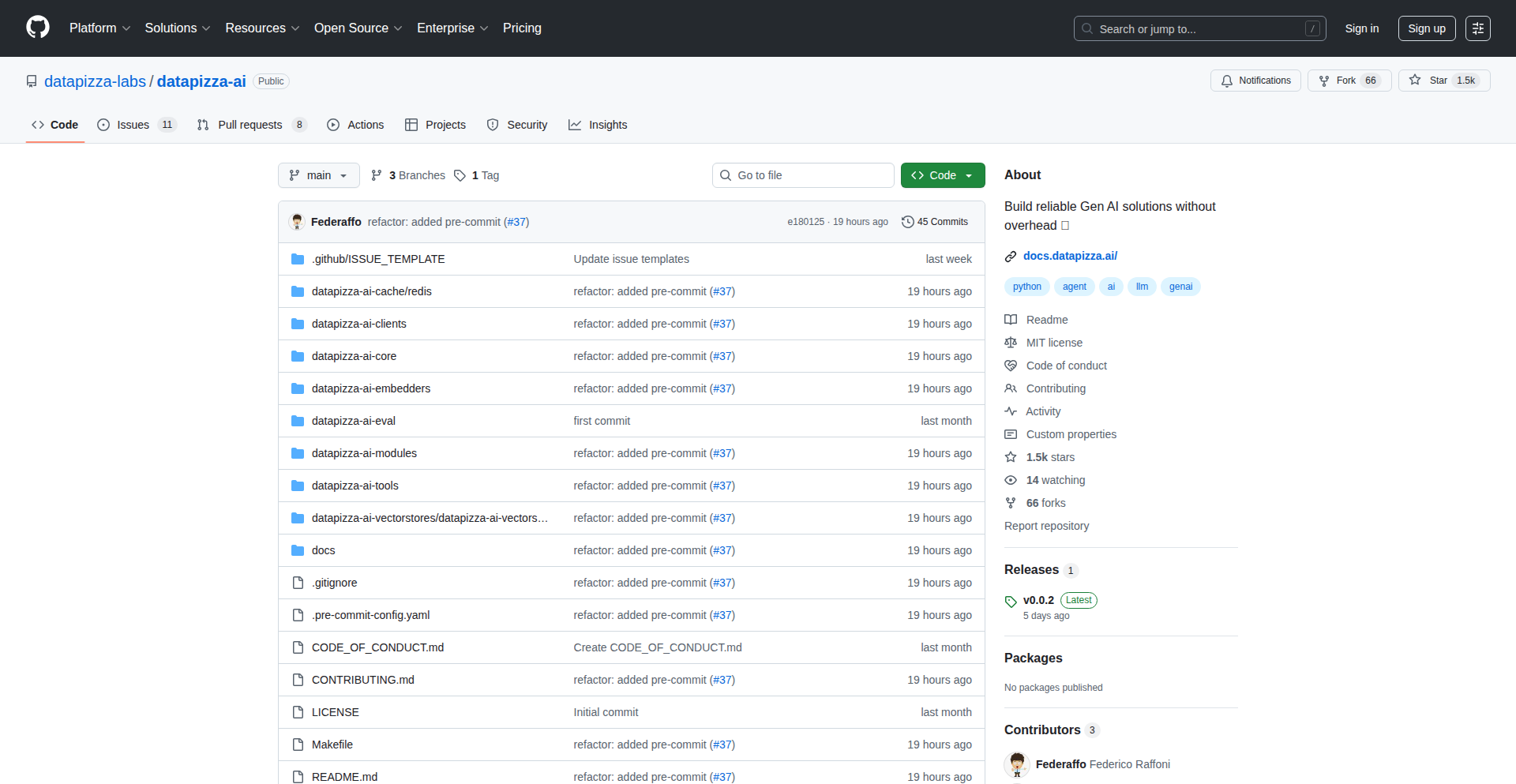

Datapizza AI - GenAI App Accelerator

Author

f_raffoni

Description

Datapizza AI is a lightweight, open-source framework designed to simplify the development of Generative AI applications. It focuses on providing a streamlined way to build and deploy AI-powered features, allowing developers to experiment and iterate quickly without the overhead of complex infrastructure. The innovation lies in its modular design and focus on essential components, making AI development more accessible and efficient.

Popularity

Points 39

Comments 26

What is this product?

Datapizza AI is a software toolkit that helps developers build AI applications that can generate content, like text or images. Think of it as a pre-packaged set of building blocks for AI. Its core innovation is its 'lightweight' nature, meaning it's not bloated with unnecessary features, making it faster to set up and use. It also embraces an 'open-source' philosophy, so anyone can see how it works, contribute to it, and use it for free. This allows developers to focus on the creative aspects of their AI applications rather than wrestling with complex backend systems. So, what's in it for you? It means you can build and deploy your AI features faster and with less technical hassle, leading to quicker product launches and more time for innovation.

How to use it?

Developers can integrate Datapizza AI into their existing projects or start new ones by leveraging its pre-built components. This typically involves defining the AI models they want to use, setting up data pipelines for training or inference, and integrating the generated outputs into their application's user interface or backend logic. The framework's modularity allows for easy customization and extension. For example, you might use it to add a chatbot feature to your website, generate product descriptions for your e-commerce store, or create personalized content for your users. So, what's in it for you? You can easily add powerful AI capabilities to your applications without needing to be an AI expert, saving you development time and resources.

Product Core Function

· Modular AI Model Integration: Allows developers to easily swap and integrate different AI models (e.g., for text generation, image synthesis) without rewriting large parts of their application. This offers flexibility and future-proofing for AI features. The value is in adapting to the rapidly evolving AI landscape.

· Simplified Data Pipeline Management: Provides tools to efficiently prepare and feed data to AI models for training or real-time inference. This reduces the complexity of data handling, a common bottleneck in AI development. The value is in ensuring smooth and efficient AI operation.

· Streamlined Deployment Options: Offers guidance and tools for deploying AI applications efficiently, whether to cloud environments or on-premises. This accelerates the time from development to production. The value is in getting your AI-powered product to users faster.

· Experimentation and Prototyping Focus: The lightweight nature encourages rapid prototyping and experimentation with new AI ideas. This fosters a culture of innovation and quicker validation of concepts. The value is in enabling rapid learning and iteration on AI features.
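The 'swap models without rewriting' idea usually comes down to coding against a small interface. The sketch below uses hypothetical names (`TextModel`, `EchoModel`, `UppercaseModel`, `describe_product`) that are not Datapizza AI's API; it only illustrates the pattern, with offline stand-ins in place of real provider clients.

```python
from dataclasses import dataclass
from typing import Protocol


class TextModel(Protocol):
    """Anything that can turn a prompt into text (hypothetical interface)."""

    def generate(self, prompt: str) -> str: ...


@dataclass
class EchoModel:
    """Offline stand-in for a real provider client, handy in tests."""
    prefix: str = "echo: "

    def generate(self, prompt: str) -> str:
        return self.prefix + prompt


@dataclass
class UppercaseModel:
    """A second stand-in, showing that backends are interchangeable."""

    def generate(self, prompt: str) -> str:
        return prompt.upper()


def describe_product(model: TextModel, name: str) -> str:
    """Application code depends only on the TextModel protocol, not on a vendor SDK."""
    prompt = f"Write a one-line product description for {name}."
    return model.generate(prompt)


if __name__ == "__main__":
    for backend in (EchoModel(), UppercaseModel()):
        print(describe_product(backend, "pizza oven"))
```

Switching providers then means writing one new class with a `generate` method; `describe_product` and everything built on it stays unchanged.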

Product Usage Case

· Building a personalized content generation tool for a marketing agency: Datapizza AI can be used to quickly prototype an application that generates tailored ad copy or social media posts based on client-specific requirements, solving the problem of slow manual content creation.

· Integrating a customer support chatbot into an e-commerce platform: Developers can use Datapizza AI to power a chatbot that answers frequently asked questions, freeing up human support agents and improving customer experience. This solves the challenge of providing 24/7 customer assistance.

· Developing an AI-powered image captioning feature for a photo-sharing app: Datapizza AI can enable the automatic generation of descriptive captions for user-uploaded images, enhancing discoverability and user engagement. This addresses the need for efficient image metadata generation.

· Creating a tool for developers to generate boilerplate code for common AI tasks: Datapizza AI can be used to build a generator that produces starter code for tasks like sentiment analysis or text summarization, reducing repetitive coding efforts. This solves the problem of reinventing the wheel for common AI development patterns.

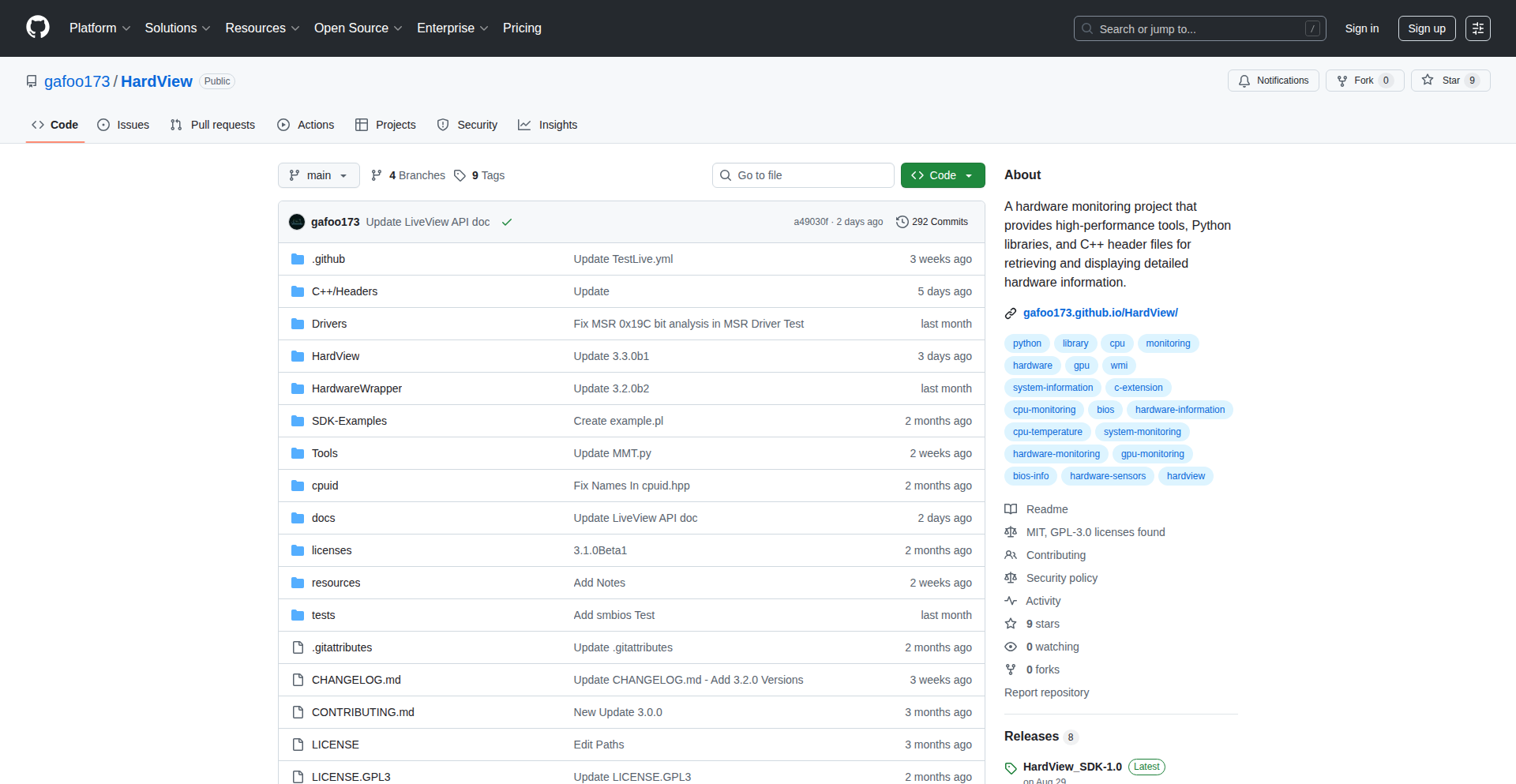

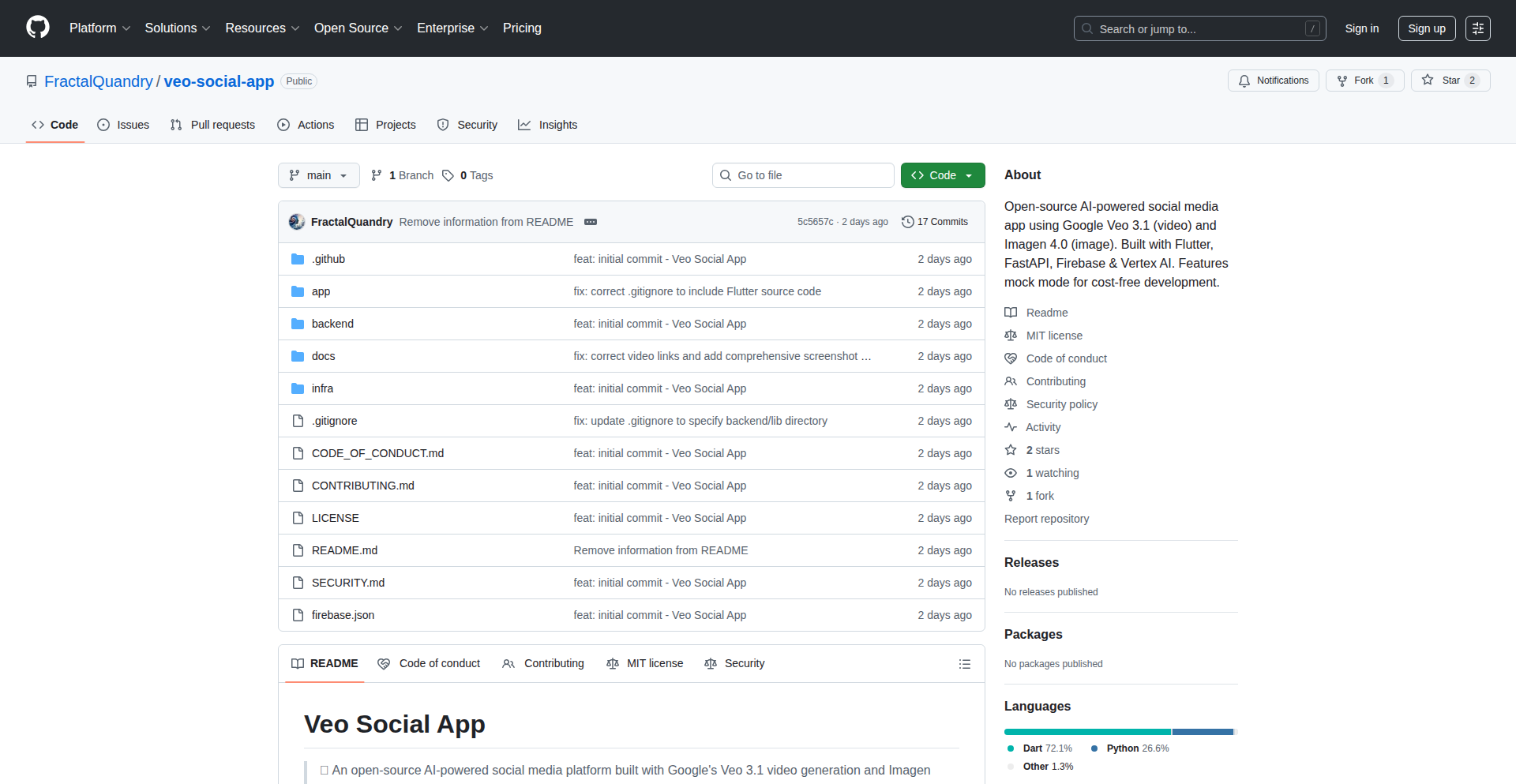

3

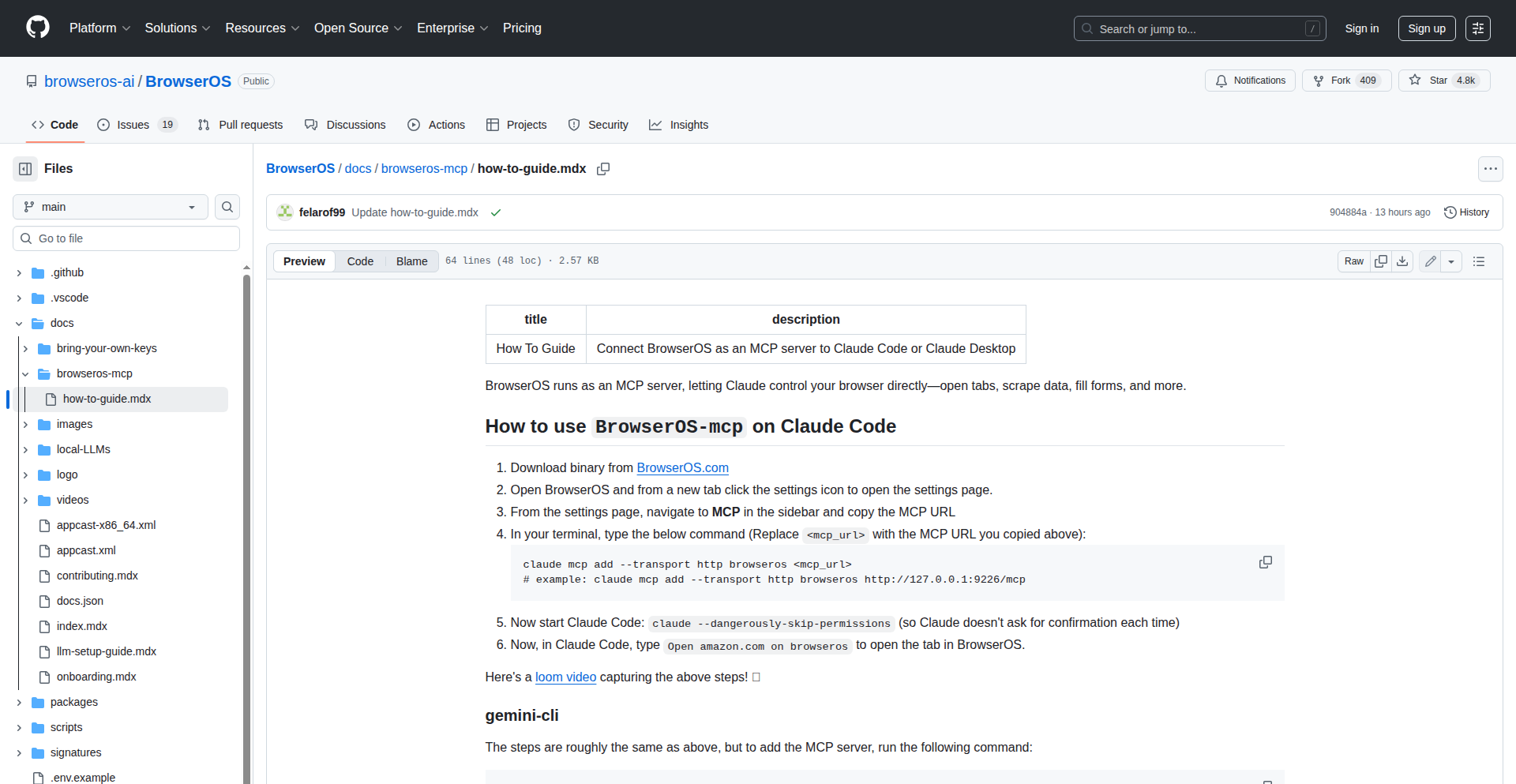

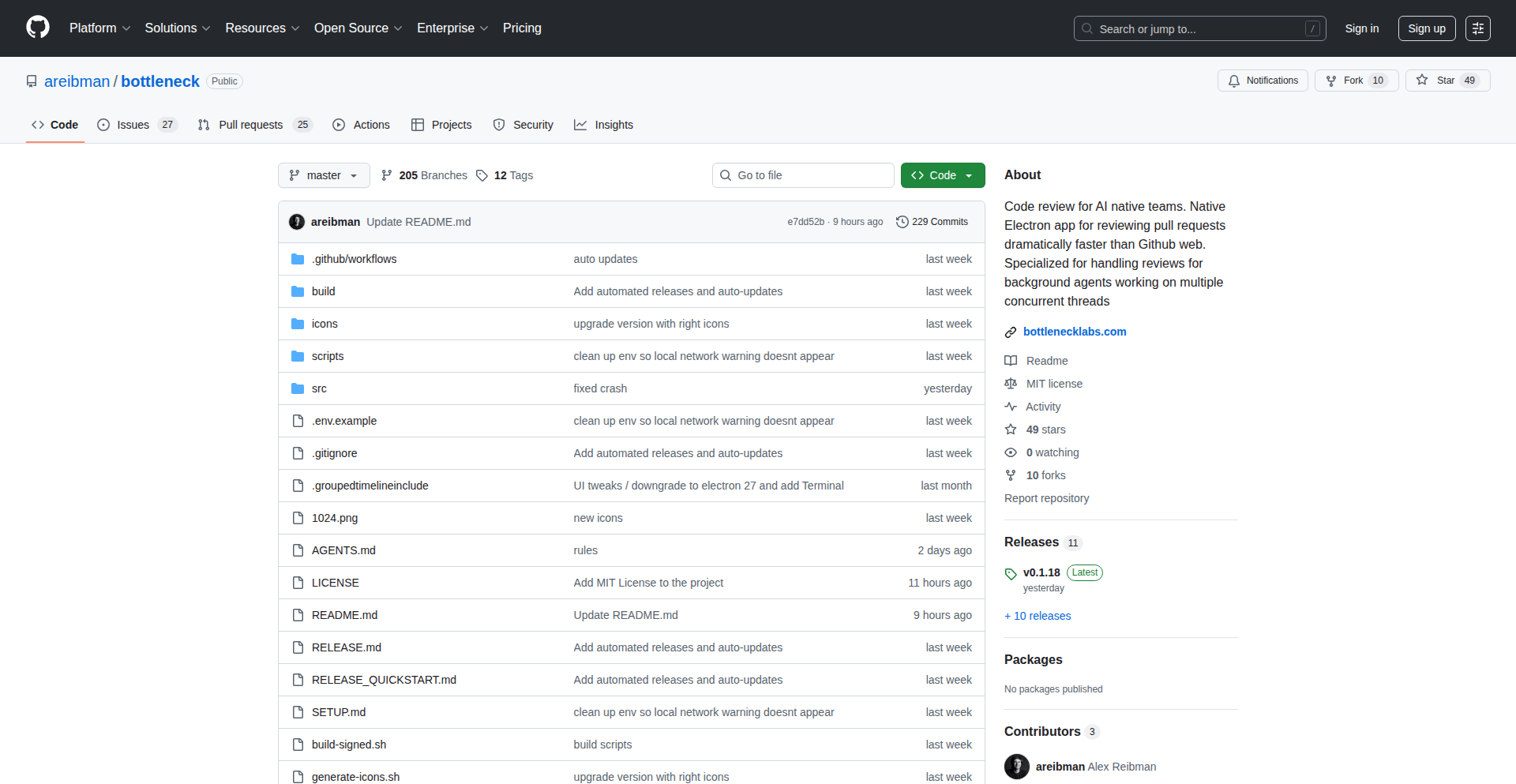

BrowserOS-MCP

Author

felarof

Description

This project integrates a Model Context Protocol (MCP) server directly into a Chromium-based browser. This lets AI agents interact with the browser in a more seamless, context-aware way: they can use logged-in sessions and get direct control over browser actions beyond what the standard Chrome DevTools Protocol (CDP) offers. It simplifies setup and unlocks advanced use cases for frontend development and agentic browser tasks.

Popularity

Points 33

Comments 12

What is this product?

BrowserOS-MCP is an open-source, privacy-focused browser built on a fork of Chromium. Its core innovation is embedding an MCP server directly into the browser's executable. Think of the MCP server as a translator that allows AI programs to understand and control the browser. Unlike previous methods that required complex setup and often started isolated browser instances, this package makes the MCP server readily available. This means AI assistants can directly interact with your current browser tabs, use your logged-in accounts for tasks, and perform actions like clicking, typing, and drawing on webpages using custom APIs that are more robust and less susceptible to bot detection than traditional CDP methods. So, what's the benefit? It makes your browser a much more powerful tool for AI, enabling smarter and more efficient automated tasks without a complicated setup.

How to use it?

Developers can use BrowserOS-MCP by simply downloading and installing the BrowserOS binary. Once installed, the MCP server is active and ready to accept connections from AI agents. Integration typically involves configuring your AI agent or coding assistant to connect to the browser's MCP endpoint. For instance, you can connect AI tools like Claude Code or other agentic frameworks to BrowserOS-MCP. This allows the AI to directly control the browser to perform actions like writing and debugging code, filling out forms, extracting data from websites, or even testing user flows using your existing logged-in sessions. The setup is as simple as pointing your AI agent to the browser's MCP interface, making it straightforward to enhance your development workflow or build sophisticated automated browsing experiences.
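MCP messages are JSON-RPC 2.0 requests such as `initialize`, `tools/list`, and `tools/call`. As a minimal sketch of what an agent sends once it is pointed at the browser, the builder below produces those messages. It deliberately leaves out the transport, because BrowserOS's endpoint address and exact tool names are not given here; the tool name `browser_click` and its `selector` argument are hypothetical, and the default protocol version string may need adjusting to what the server supports.

```python
import itertools
import json
from typing import Any, Optional


class McpMessageBuilder:
    """Builds JSON-RPC 2.0 messages of the kind an MCP client sends (no transport)."""

    def __init__(self) -> None:
        self._ids = itertools.count(1)  # request ids must be unique within a session

    def _request(self, method: str, params: Optional[dict[str, Any]] = None) -> dict[str, Any]:
        msg: dict[str, Any] = {"jsonrpc": "2.0", "id": next(self._ids), "method": method}
        if params is not None:
            msg["params"] = params
        return msg

    def initialize(self, client_name: str, client_version: str,
                   protocol_version: str = "2025-06-18") -> dict[str, Any]:
        # First message of a session; the server answers with its own capabilities.
        return self._request("initialize", {
            "protocolVersion": protocol_version,
            "capabilities": {},
            "clientInfo": {"name": client_name, "version": client_version},
        })

    @staticmethod
    def initialized_notification() -> dict[str, Any]:
        # Notifications carry no id and expect no response.
        return {"jsonrpc": "2.0", "method": "notifications/initialized"}

    def list_tools(self) -> dict[str, Any]:
        return self._request("tools/list")

    def call_tool(self, name: str, arguments: dict[str, Any]) -> dict[str, Any]:
        return self._request("tools/call", {"name": name, "arguments": arguments})


if __name__ == "__main__":
    builder = McpMessageBuilder()
    for msg in (builder.initialize("demo-agent", "0.1"),
                builder.initialized_notification(),
                builder.list_tools(),
                builder.call_tool("browser_click", {"selector": "#submit"})):  # hypothetical tool
        print(json.dumps(msg))
```

In practice an agent would call `tools/list` first and use the tool names the browser actually advertises rather than hard-coding one.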

Product Core Function

· Integrated MCP Server: This means the AI communication bridge is built directly into the browser, eliminating the need for separate server installations or complex command-line arguments. This makes it incredibly easy to get started, saving developers setup time and reducing potential configuration errors.

· Session-Aware AI Interaction: Unlike solutions that start a fresh, isolated browser session, BrowserOS-MCP allows AI agents to leverage your existing logged-in sessions. This is crucial for tasks like testing authentication flows or performing actions that require user context, making AI interactions more realistic and effective.

· Enhanced Browser Control APIs: The MCP server exposes new, direct APIs for browser actions such as clicking, typing, and drawing bounding boxes. These APIs do not rely on the Chrome DevTools Protocol (CDP) and are designed to be less likely to trigger bot detection, making them more robust for automated tasks and reducing the chance that AI-driven actions are flagged as suspicious.

· Simplified Setup for AI Agents: Developers don't need to run complex commands like `npx install` or start Chrome with specific flags. Simply downloading and running the BrowserOS binary makes the MCP server accessible, drastically lowering the barrier to entry for integrating AI into browser workflows.

· Privacy-First BrowserOS Foundation: Built on an open-source Chromium fork, the browser itself emphasizes privacy. This means that when you use it for AI-driven tasks, your browsing activities are handled with a privacy-first approach, aligning with the goals of users seeking alternatives to mainstream AI browsers.

Product Usage Case

· Frontend Development with AI Assistants: Imagine using an AI like Claude Code to improve your website's CSS. Instead of manually copying and pasting code and screenshots, you can connect Claude Code to BrowserOS-MCP. The AI can then directly see your webpage, write CSS code, and immediately see the results, even testing against your logged-in user accounts for accurate QA. This speeds up the development cycle significantly by enabling real-time, interactive feedback between the developer and the AI.

· Agentic Web Automation: Use BrowserOS-MCP as the engine for sophisticated AI agents. You can configure AI assistants to perform complex multi-step tasks like automatically filling out forms across multiple websites, extracting specific data from online sources, or performing automated testing of web applications. This offers a more powerful and flexible alternative to existing AI browsing tools.

· Automated Content Summarization and Analysis: For example, an AI agent connected to BrowserOS-MCP could be tasked with opening the top articles from Hacker News, reading them, and then providing a concise summary of each. This demonstrates how the browser can be used for automated information gathering and processing, saving users time and effort in staying informed.

· AI-Powered User Experience Testing: Test your website's user flows by having an AI agent navigate through them using your logged-in sessions. For instance, an AI could test the entire checkout process of an e-commerce site using a real user account, identifying bugs or usability issues that might be missed with traditional testing methods.

4

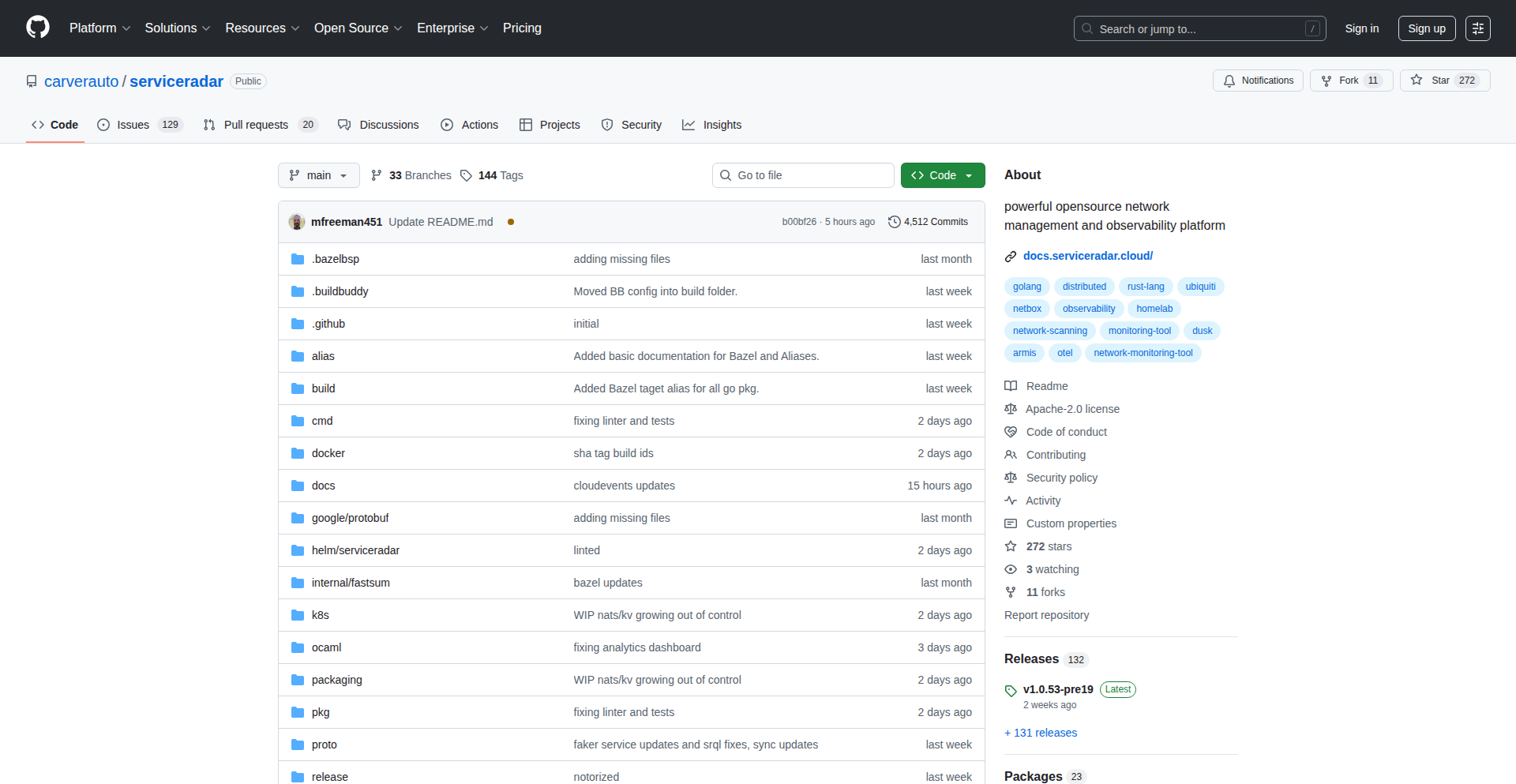

ServiceRadar: Hybrid Network Observability Engine

Author

carverauto

Description

ServiceRadar is an open-source platform designed for managing and observing distributed networks at scale, handling over 100,000 devices. It tackles the complexity of traditional network management systems by unifying both older protocols like SNMP and syslog with modern cloud-native standards such as gNMI and OTLP. This innovation provides crucial network visibility for hybrid telecom environments and cloud-native applications, making it easier for developers to understand and manage their network infrastructure.

Popularity

Points 35

Comments 1

What is this product?

ServiceRadar is an open-source platform that provides comprehensive observability for distributed networks, particularly in hybrid environments combining traditional infrastructure with cloud-native applications. Its core innovation lies in its ability to bridge the gap between legacy network management protocols (like SNMP for older devices and syslog for event logs) and modern cloud-native observability standards (like gNMI for network configuration and streaming telemetry, and OTLP for telemetry data). It's built to be Kubernetes-native, using technologies like Helm for easy deployment and Docker for containerization. For secure communication and identity, it leverages mTLS with SPIFFE/SPIRE. Event streaming is handled by NATS JetStream, capable of processing millions of events per second. It also introduces SRQL, a simple query language for network data. By integrating with OpenTelemetry, Prometheus, and CloudEvents, ServiceRadar fills a critical gap in the cloud-native observability stack, which often focuses more on application performance than the underlying network health. This means you get a unified view of your entire IT landscape, not just your applications.

How to use it?

Developers can use ServiceRadar to gain deep insights into their network's performance and health. It's designed for easy integration into existing Kubernetes clusters. You can quickly deploy it using Helm with the command `helm install serviceradar carverauto/serviceradar` or by using Docker Compose with `docker compose up -d`. Once deployed, ServiceRadar can start collecting data from your network devices and applications that expose metrics in compatible formats. This allows you to monitor network latency, device status, traffic patterns, and security events. The platform's ability to ingest data from both old and new protocols means you can unify the monitoring of diverse network assets, from physical routers to virtual machines in the cloud. This simplifies troubleshooting and proactive maintenance, as you have a single pane of glass for your entire network infrastructure.

Product Core Function

· Unified Protocol Ingestion: Bridges legacy (SNMP, syslog) and modern (gNMI, OTLP) protocols, allowing you to monitor a wide range of network devices and applications from a single platform. This means you don't need separate tools for old and new equipment, simplifying your operations.

· Kubernetes-Native Deployment: Designed for seamless integration into Kubernetes environments using Helm and Docker, making it easy to deploy, manage, and scale your network observability infrastructure. This ensures it fits well within modern cloud-native development workflows.

· Secure Communication: Utilizes mTLS with SPIFFE/SPIRE for secure device authentication and communication, protecting your network data from unauthorized access. This is crucial for maintaining the security and integrity of your network.

· High-Performance Event Streaming: Leverages NATS JetStream for efficient and scalable event processing (handling millions of events per second), ensuring real-time insights into network events. This means you get up-to-date information about what's happening on your network, enabling faster responses to issues.

· Intuitive Query Language (SRQL): Provides a user-friendly query language for easily extracting and analyzing network data, making it accessible even for those less familiar with complex query syntaxes. This simplifies data exploration and reporting.

· OpenTelemetry and CloudEvents Integration: Integrates with industry-standard observability tools like OpenTelemetry and CloudEvents, enhancing your existing monitoring stack and providing comprehensive visibility. This allows ServiceRadar to complement and extend your current monitoring capabilities.
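Unifying legacy and modern protocols means normalizing each source into one event shape. As an illustration of the syslog side only, the sketch below decodes the standard `<PRI>` header (PRI = facility * 8 + severity, per RFC 3164 and RFC 5424) into a hypothetical `NormalizedEvent` record. The record's fields are an assumption, not ServiceRadar's schema.

```python
import re
from dataclasses import dataclass

# RFC 5424 severity keywords, indexed by severity code 0..7.
SEVERITIES = ("emerg", "alert", "crit", "err", "warning", "notice", "info", "debug")
PRI_RE = re.compile(r"<(\d{1,3})>(.*)", re.DOTALL)


@dataclass
class NormalizedEvent:
    """Hypothetical protocol-neutral record; not ServiceRadar's actual schema."""
    source: str
    facility: int
    severity: str
    message: str


def normalize_syslog(line: str) -> NormalizedEvent:
    """Decode the <PRI> prefix of an RFC 3164/5424 syslog line."""
    match = PRI_RE.match(line)  # match() anchors at the start of the line
    if match is None:
        raise ValueError("line does not start with a <PRI> header")
    pri = int(match.group(1))
    if pri > 191:  # facility 23 * 8 + severity 7 is the largest valid value
        raise ValueError(f"PRI out of range: {pri}")
    facility, severity = divmod(pri, 8)
    return NormalizedEvent("syslog", facility, SEVERITIES[severity], match.group(2).strip())


if __name__ == "__main__":
    print(normalize_syslog("<34>Oct 11 22:14:15 mymachine su: 'su root' failed"))
```

Facility 4 is the security/authorization facility and 34 decodes to facility 4, severity `crit`. SNMP traps or gNMI updates would each need their own small adapter emitting the same record type.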

Product Usage Case

· Troubleshooting Hybrid Telecom Networks: A telecom company experiencing intermittent connectivity issues across their network, which includes both legacy hardware and modern cloud-based services, can deploy ServiceRadar. By ingesting data from SNMP on older routers and gNMI on newer network switches, alongside OpenTelemetry traces from their cloud applications, they can correlate network events with application performance to pinpoint the root cause of the problem faster.

· Monitoring IoT Device Fleets: A company managing a large fleet of IoT devices that communicate via various protocols, including some older ones, can use ServiceRadar to monitor their status, connectivity, and data streams. By unifying data from these diverse devices into a single observability platform, they can proactively identify and address issues, ensuring reliable operation of their IoT solutions.

· Enhancing Cloud-Native Application Observability: Developers building microservices in Kubernetes that rely heavily on network communication can use ServiceRadar to understand the network's impact on their application's performance. By correlating application metrics from OpenTelemetry with network metrics collected by ServiceRadar, they can identify if network latency or packet loss is affecting their application's responsiveness, enabling them to optimize both their code and their network configuration.

· Securing Distributed Network Infrastructure: Organizations with a distributed network infrastructure spread across multiple locations and cloud environments can leverage ServiceRadar's secure communication features (mTLS with SPIFFE/SPIRE) to ensure all network management and telemetry data is transmitted securely. This helps in maintaining compliance and protecting sensitive network information from eavesdropping or tampering.

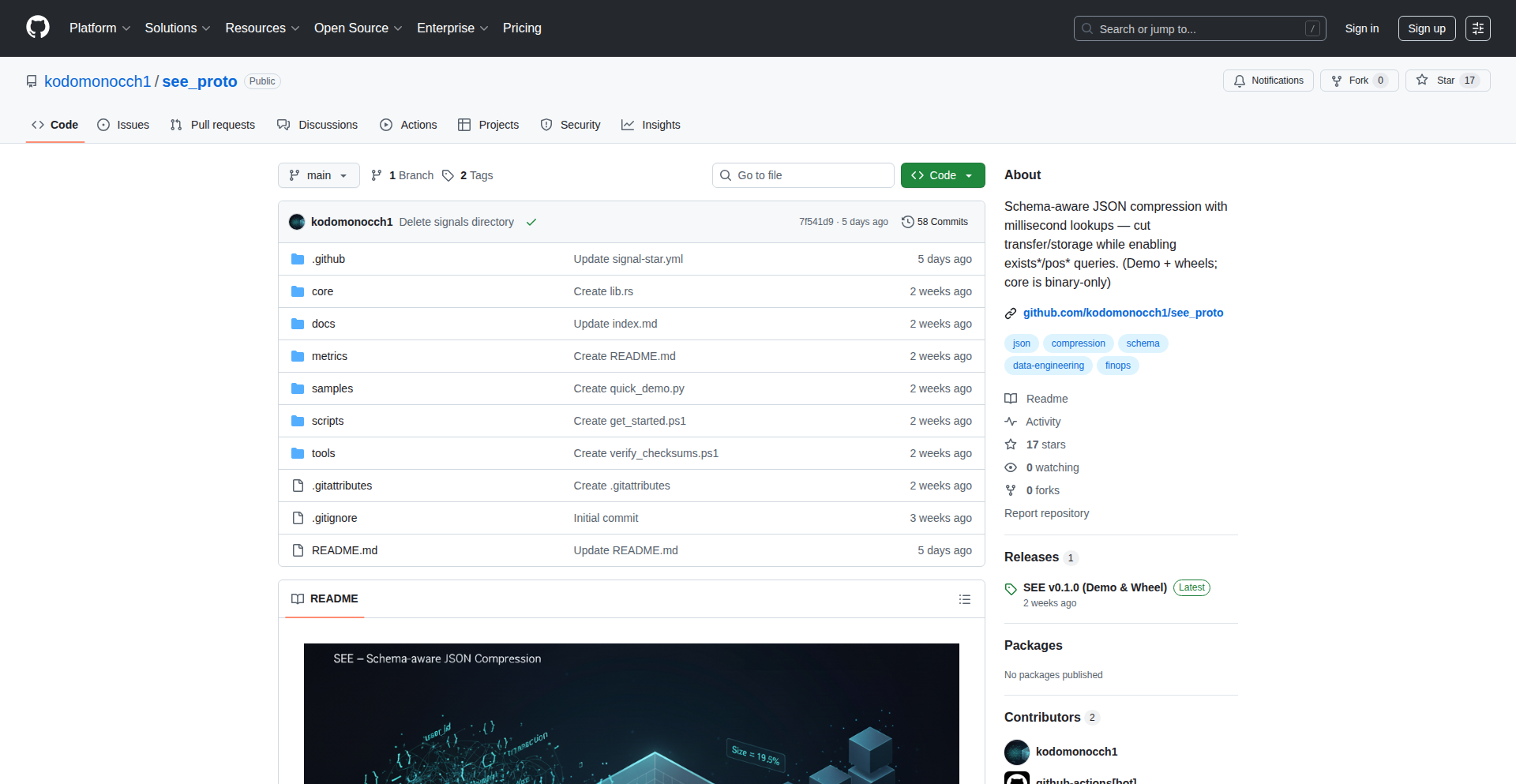

5

SEE-Proto: Schema-Aware Searchable Compression

Author

kodomonocch1

Description

SEE-Proto is a groundbreaking compression codec specifically designed for JSON/NDJSON data. It addresses the common trade-off between storage efficiency and data retrieval speed. Unlike traditional compression methods that make searching slow, SEE-Proto intelligently compresses data while keeping lookups for specific fields incredibly fast. It achieves this by understanding the structure of JSON, using techniques like delta encoding, dictionaries, and an optimized Bloom filter with a Page Directory to quickly skip irrelevant data blocks. This means you can store your JSON data much more compactly without sacrificing the ability to quickly find the exact information you need, significantly reducing I/O and egress costs, especially in cloud environments. So, it solves the problem of having to choose between small storage and fast queries for your JSON data.
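Delta encoding and dictionaries are generic building blocks of schema-aware compression. The sketch below shows both on toy columns (a timestamp column and an HTTP-method column); it illustrates the techniques only and makes no claim about SEE-Proto's actual on-disk format.

```python
def zigzag(n: int) -> int:
    """Map signed to unsigned so small magnitudes stay small: 0,-1,1,-2 -> 0,1,2,3."""
    return n << 1 if n >= 0 else (-n << 1) - 1


def unzigzag(u: int) -> int:
    return u >> 1 if (u & 1) == 0 else -((u + 1) >> 1)


def write_varint(u: int, out: bytearray) -> None:
    """Append u as a LEB128-style varint: 7 bits per byte, high bit means 'more follows'."""
    while u >= 0x80:
        out.append((u & 0x7F) | 0x80)
        u >>= 7
    out.append(u)


def read_varint(buf: bytes, pos: int) -> tuple[int, int]:
    result = shift = 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if byte < 0x80:
            return result, pos
        shift += 7


def encode_deltas(values: list[int]) -> bytes:
    """Store each value as the zigzag-varint difference from its predecessor."""
    out, prev = bytearray(), 0
    for v in values:
        write_varint(zigzag(v - prev), out)
        prev = v
    return bytes(out)


def decode_deltas(buf: bytes, count: int) -> list[int]:
    values, pos, prev = [], 0, 0
    for _ in range(count):
        u, pos = read_varint(buf, pos)
        prev += unzigzag(u)
        values.append(prev)
    return values


def dict_encode(strings: list[str]) -> tuple[list[str], list[int]]:
    """Replace repeated strings with small integer codes into a shared table."""
    table: dict[str, int] = {}
    codes = [table.setdefault(s, len(table)) for s in strings]
    return list(table), codes


if __name__ == "__main__":
    ts = [1700000000, 1700000001, 1700000003, 1700000002]
    encoded = encode_deltas(ts)
    print(len(encoded), decode_deltas(encoded, len(ts)) == ts)
    print(dict_encode(["GET", "POST", "GET", "GET"]))
```

The four timestamps above need 8 bytes instead of 32 as raw 64-bit integers: only the first value is large, and every later delta fits in a single byte.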

Popularity

Points 13

Comments 6

What is this product?

SEE-Proto is a specialized compression algorithm that works like a smart ZIP file for your JSON data. Imagine you have a huge pile of text documents (your JSON files) and you want to make them smaller to save space. Traditional compression tools like zip or gzip do a great job of making files smaller, but if you need to find a specific word or sentence within those compressed files, you have to decompress the whole thing, which is slow. SEE-Proto understands the structure of JSON (like knowing that "user" is a field that often contains a name or ID). It uses this knowledge to compress the data efficiently while also creating a special index. This index allows it to quickly pinpoint which parts of the compressed file contain the data you're looking for, skipping about 99% of the file without even reading it. The result is data that's significantly smaller to store and astonishingly fast to search. So, it's a way to have your cake and eat it too: small storage and quick access to your JSON data.

How to use it?

Developers can easily integrate SEE-Proto into their data pipelines. The primary method is via a Python library that can be installed with `pip install see_proto`. Once installed, you can use the provided tools to compress your JSON files. For example, you can run `python samples/quick_demo.py` to see a practical demonstration. In real-world applications, you would use the library to compress your large JSON log files or data dumps before storing them. When you need to query this data, you would use SEE-Proto's lookup functions, which leverage the internal indexing to perform fast searches. This is particularly useful for applications that deal with massive amounts of event logs, API request data, or any JSON-structured information where quick retrieval of specific records is critical. The 'auto-page' feature also allows for a balance between seek time (how fast you can find something) and throughput (how much data you can process at once), making it adaptable to different use cases. So, you can use it to compress your data for storage and then quickly retrieve specific pieces of information without reading the entire compressed file, saving you time and money on cloud services.

Product Core Function

· Schema-aware compression: Understands JSON structure to achieve better compression ratios than generic algorithms, making your storage smaller. This is useful for reducing cloud storage costs.

· Fast lookups with Bloom filter and PageDir: Allows sub-millisecond retrieval of specific data points by intelligently skipping unnecessary data blocks, meaning you get the information you need almost instantly, saving you valuable time.

· Approximately 99% page skip: Dramatically reduces the amount of data read from storage for queries, leading to significant I/O and egress cost savings, especially in cloud environments.

· Tunable auto-page size: Balances search performance and throughput, allowing you to optimize for different workloads, ensuring the system works efficiently for your specific needs.

· Searchable compression: Combines storage efficiency with queryability, eliminating the need to decompress entire files for searches, streamlining data analysis workflows.
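The page-skipping idea can be sketched with one Bloom filter per page of records: a lookup consults the directory of filters and decodes only the pages whose filter might contain the key. This is a simplified, hypothetical model of the mechanism described (JSON text stands in for compressed page payloads, and the hash scheme and sizes are arbitrary choices), not SEE-Proto's implementation.

```python
import hashlib
import json


class BloomFilter:
    """Fixed-size Bloom filter: may report false positives, never false negatives."""

    def __init__(self, num_bits: int = 1024, num_hashes: int = 4) -> None:
        assert 1 <= num_hashes <= 8, "one SHA-256 digest yields at most 8 four-byte slices"
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = 0  # a Python int used as a bit array

    def _positions(self, item: str):
        digest = hashlib.sha256(item.encode("utf-8")).digest()
        for i in range(self.num_hashes):
            yield int.from_bytes(digest[4 * i:4 * i + 4], "big") % self.num_bits

    def add(self, item: str) -> None:
        for pos in self._positions(item):
            self.bits |= 1 << pos

    def might_contain(self, item: str) -> bool:
        return all((self.bits >> pos) & 1 for pos in self._positions(item))


def build_directory(records: list[dict], field: str, page_size: int) -> list[tuple[BloomFilter, str]]:
    """Split records into pages and keep one Bloom filter of `field` values per page."""
    directory = []
    for start in range(0, len(records), page_size):
        page = records[start:start + page_size]
        bloom = BloomFilter()
        for rec in page:
            bloom.add(str(rec[field]))
        # A real codec would store a compressed page; JSON text stands in here.
        directory.append((bloom, json.dumps(page)))
    return directory


def lookup(directory, field: str, value) -> tuple[list[dict], int]:
    """Return matching records plus the number of pages that had to be decoded."""
    hits, decoded = [], 0
    for bloom, payload in directory:
        if not bloom.might_contain(str(value)):
            continue  # value is definitely absent: skip without decoding
        decoded += 1
        hits.extend(rec for rec in json.loads(payload) if rec[field] == value)
    return hits, decoded


if __name__ == "__main__":
    records = [{"user": f"u{i}", "bytes": i * 10} for i in range(1000)]
    directory = build_directory(records, "user", page_size=50)
    print(lookup(directory, "user", "u421"))  # usually decodes 1 of the 20 pages
```

Because a Bloom filter never gives false negatives, skipped pages are guaranteed not to match. Larger pages mean fewer filters to check but more data decoded per hit, which is the kind of seek-versus-throughput trade-off the 'auto-page' setting is described as tuning.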

Product Usage Case

· Storing and querying large volumes of application logs: Instead of storing uncompressed logs that take up a lot of space and are slow to search, you can compress them with SEE-Proto. When you need to find specific error messages or user activities, you can query the compressed logs directly and get results in milliseconds, saving debugging time and reducing cloud costs.

· Archiving massive JSON datasets for analytics: For long-term storage of datasets used in business intelligence or machine learning, SEE-Proto offers a cost-effective solution. You can store terabytes of data compactly and still perform quick ad-hoc queries on subsets of the data when needed, without lengthy decompression processes.

· Building efficient data ingestion pipelines: When processing streaming JSON data, SEE-Proto can compress incoming data in chunks while maintaining the ability to quickly verify or retrieve specific records if necessary, improving the overall efficiency and responsiveness of the pipeline.

· Developing a real-time data lookup service: If you need to serve data that is frequently accessed and updated, SEE-Proto's fast lookup capabilities can significantly reduce latency for users, providing a snappier user experience and reducing server load by avoiding full file reads.

6

LegalDoc-Embed-Bench

Author

ubutler

Description

This project introduces the Massive Legal Embedding Benchmark (MLEB), the first comprehensive evaluation suite for models that understand and process legal documents. It's built by legal domain experts to address the critical need for accurate legal information retrieval and reasoning in AI systems, especially for applications like Retrieval Augmented Generation (RAG) in law, helping to reduce AI hallucinations in legal contexts.

Popularity

Points 10

Comments 0

What is this product?

MLEB is a collection of 10 curated datasets designed to rigorously test how well AI models can understand legal text. It covers multiple countries (US, UK, Australia, Singapore, Ireland), various legal document types (cases, laws, regulations, contracts, textbooks), and different tasks like finding relevant information (retrieval), categorizing documents (zero-shot classification), and answering questions (QA). The core innovation is its focus on real-world, complex legal queries and documents, created or vetted by individuals with actual legal expertise, ensuring the benchmark accurately reflects practical legal scenarios. This is crucial because good legal AI needs both deep knowledge of law and the ability to reason through it, directly impacting the reliability of legal AI applications.

How to use it?

Developers can use MLEB to evaluate and improve their AI models intended for legal applications. By running their models against the MLEB datasets, they can identify weaknesses in legal understanding, retrieval accuracy, and reasoning capabilities. This allows for targeted model training and fine-tuning. For example, if a legal RAG system is hallucinating or failing to retrieve the correct legal precedents, developers can use MLEB to diagnose why and retrain their embedding models. The project also provides code for evaluation and even offers a top-ranked model for those looking for a strong starting point, making it easier to integrate advanced legal AI capabilities into existing workflows or build new legal tech solutions.
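As a rough illustration of what running a model against a retrieval dataset involves, the sketch below ranks documents by cosine similarity to each query and scores the ranking with nDCG@10 under binary relevance, a metric commonly reported by embedding benchmarks. It assumes you have already produced query and document embeddings with your own model. It is not MLEB's evaluation code, and the exact metrics MLEB reports should be checked against the project itself.

```python
import math
from typing import Dict, List, Sequence, Set

def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def ndcg_at_k(ranked_ids: List[str], relevant: Set[str], k: int = 10) -> float:
    """Binary-relevance nDCG@k for a single query."""
    dcg = sum(1.0 / math.log2(rank + 2)
              for rank, doc_id in enumerate(ranked_ids[:k]) if doc_id in relevant)
    idcg = sum(1.0 / math.log2(rank + 2) for rank in range(min(len(relevant), k)))
    return dcg / idcg if idcg else 0.0

def evaluate(query_vecs: Dict[str, Sequence[float]],
             doc_vecs: Dict[str, Sequence[float]],
             qrels: Dict[str, Set[str]], k: int = 10) -> float:
    """Mean nDCG@k: rank every document for each query by cosine similarity."""
    scores = []
    for qid, qvec in query_vecs.items():
        ranked = sorted(doc_vecs, key=lambda d: cosine(qvec, doc_vecs[d]), reverse=True)
        scores.append(ndcg_at_k(ranked, qrels.get(qid, set()), k))
    return sum(scores) / len(scores) if scores else 0.0

if __name__ == "__main__":
    queries = {"q1": [1.0, 0.0]}
    docs = {"d1": [0.9, 0.1], "d2": [0.0, 1.0]}
    print(evaluate(queries, docs, {"q1": {"d1"}}))            # 1.0
    print(round(evaluate(queries, docs, {"q1": {"d2"}}), 4))  # 0.6309
```

Computing this score separately per dataset is what lets a developer see where a legal RAG retriever is weakest, for example one jurisdiction or one document type, before deciding what to fine-tune.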

Product Core Function

· Comprehensive Legal Document Understanding Evaluation: Assesses how well AI models can grasp the nuances of diverse legal texts, ensuring they can accurately process and interpret legal information. This is valuable for building reliable legal research tools and AI assistants.

· Multi-Jurisdictional and Multi-Document Type Coverage: Tests models across various legal systems and document formats, enabling the development of globally applicable and versatile legal AI solutions. This is useful for law firms operating internationally or dealing with a wide range of legal matters.

· Realistic Query-Response Matching: Utilizes real-world user-generated questions and verified answers, providing a true measure of a model's ability to solve practical legal information retrieval problems. This directly benefits applications like legal chatbots or automated document analysis, as they will perform better with actual user queries.

· Quality-Vetted Datasets: All datasets are checked by domain experts for quality, diversity, and utility, which is intended to make the benchmark reliable and relevant. This means developers test their models against a high standard, leading to more robust and trustworthy AI.

· Evaluation Code and Open-Source Contributions: Provides the necessary code to run evaluations and contributes all resources to the open-source community, fostering collaboration and accelerating progress in legal AI. This empowers the broader developer community to build better legal technology without reinventing the wheel.

Product Usage Case

· A law firm building an AI-powered contract review tool uses MLEB to benchmark its model's ability to identify relevant clauses and potential risks in contracts. By achieving high scores on MLEB's contract-related datasets, they ensure their tool is accurate and reliable, saving lawyers significant time and reducing errors.

· A legal tech startup developing a chatbot for answering tax-related questions in Australia uses the 'Australian Tax Guidance Retrieval' dataset to validate its retrieval model, keeping the dataset out of training so the scores remain a fair test. This helps confirm that the chatbot accurately retrieves information from government guidance documents, providing users with correct answers and avoiding misinformation.

· A legal researcher training a large language model for legal case summarization uses MLEB to evaluate its model's comprehension of complex legal arguments and case law. This ensures the model can generate accurate and concise summaries, aiding in legal research and analysis.

· A government agency aims to improve its internal legal knowledge management system by using AI. They benchmark various embedding models against MLEB to select the best one for retrieving relevant regulations and policy documents, thereby enhancing efficiency and compliance.

· An academic institution researching the application of AI in law uses MLEB to compare different embedding techniques for legal information retrieval. This helps them advance the academic understanding of AI's capabilities in the legal field and publish their findings.

7

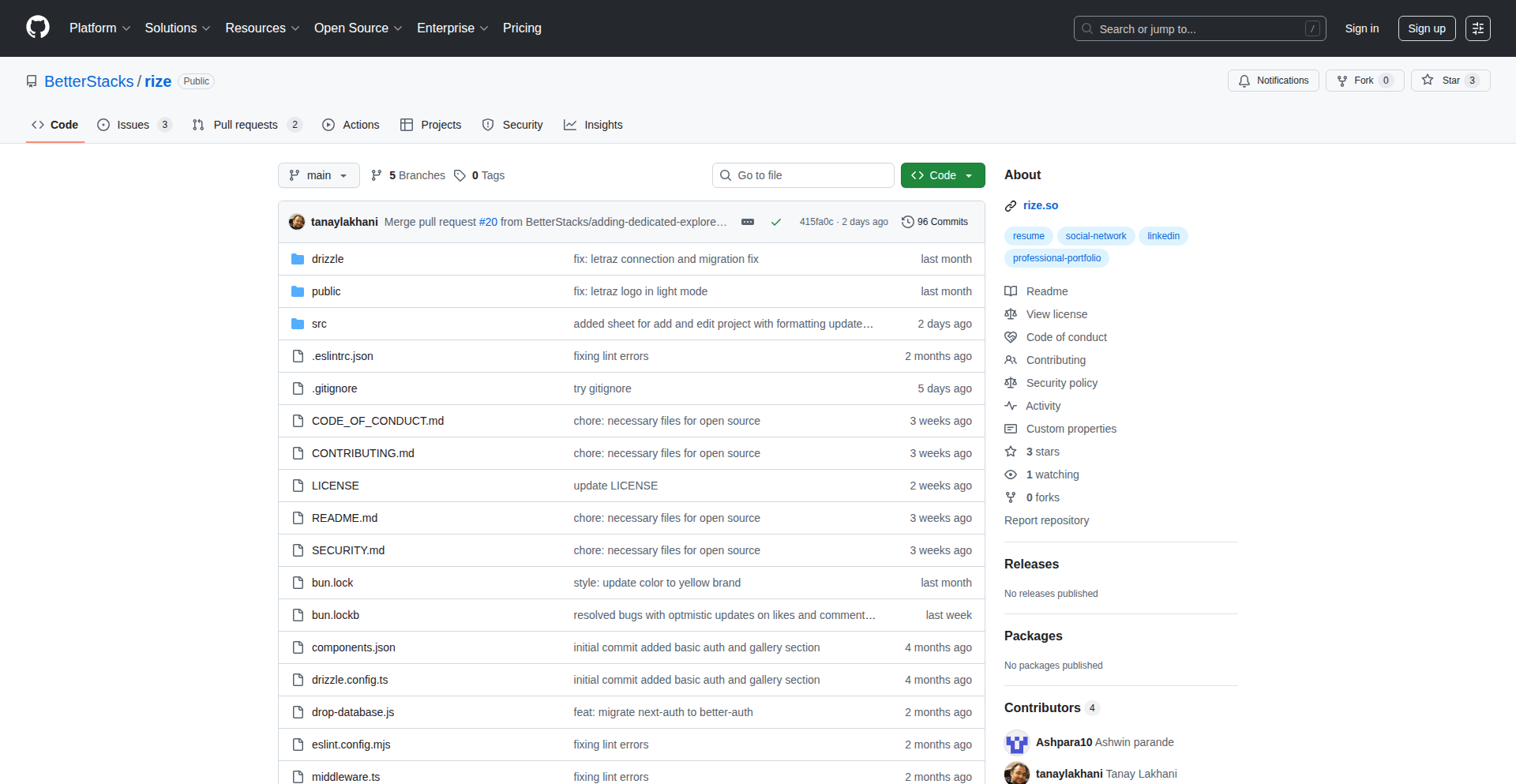

ResumeLyricSynth

Author

rmtbb

Description

This project is a novel approach to personal branding by transforming a resume into a catchy pop song. It leverages a specific 'Song Style' prompt that developers can duplicate and customize, demonstrating a creative application of AI for generating personalized, engaging content that goes beyond traditional resume formats. The innovation lies in the direct manipulation of AI prompts to achieve a specific creative output, making personal information more memorable and shareable.

Popularity

Points 5

Comments 4

What is this product?

ResumeLyricSynth is a tool that uses an AI's 'Song Style' prompt to convert the factual information from a resume into the lyrics of a catchy pop song. The core innovation is the ability to take structured data (your resume) and transform it into unstructured, creative text (song lyrics) through carefully crafted AI instructions. This allows individuals to present their professional background in a unique and attention-grabbing way, essentially creating a personalized jingle that highlights their skills and experience. So, what's in it for you? It's a fresh way to stand out in a crowded job market by making your professional story unforgettable and fun.

How to use it?

Developers can use this project by duplicating the provided 'Song Style' prompt. They would then replace the placeholder lyrics within the prompt with the content of their own resume. This involves extracting key information like job titles, responsibilities, achievements, and skills from their resume and adapting them to fit a lyrical structure. The output can then be used for personal websites, social media profiles, or even as a quirky introduction during networking events. The integration is straightforward: copy the prompt, paste your resume details, and let the AI work its magic. This means you can easily create a unique, personalized marketing asset that tells your professional story in an engaging, musical way, making you more memorable to potential employers or collaborators.
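The workflow amounts to filling a template. The sketch below assumes a hypothetical stand-in for the 'Song Style' prompt (the project's actual prompt text is not reproduced here) with a `$lyrics` placeholder, and shows one way to turn structured resume fields into lines that replace the placeholder lyrics. The resume field names are illustrative.

```python
from string import Template
from typing import Any, Dict, List

# Hypothetical stand-in for the project's 'Song Style' prompt; the real text
# is not reproduced here. $lyrics marks where the placeholder lyrics go.
SONG_STYLE_PROMPT = Template(
    "Style: upbeat pop with a catchy, repeatable chorus.\n"
    "Lyrics:\n"
    "$lyrics"
)

def resume_to_lyric_lines(resume: Dict[str, Any]) -> List[str]:
    """Turn structured resume fields (illustrative schema) into short lyric lines."""
    lines = [f"I'm {resume['name']}, and I {resume['tagline']}"]
    for job in resume.get("jobs", []):
        lines.append(f"{job['title']} at {job['company']}, {job['highlight']}")
    if resume.get("skills"):
        lines.append("Skills on repeat: " + ", ".join(resume["skills"]))
    return lines

def build_prompt(resume: Dict[str, Any]) -> str:
    """Replace the placeholder lyrics with lines drawn from the resume."""
    return SONG_STYLE_PROMPT.substitute(lyrics="\n".join(resume_to_lyric_lines(resume)))

if __name__ == "__main__":
    print(build_prompt({
        "name": "Ada",
        "tagline": "ship clean code",
        "jobs": [{"title": "Backend Engineer", "company": "Acme",
                  "highlight": "cut latency in half"}],
        "skills": ["Python", "Go"],
    }))
```

In practice you would still edit the generated lines by hand so they scan and rhyme; the template only automates the copy-and-paste step.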

Product Core Function

· Resume-to-Lyric Transformation: Converts resume content into song lyrics using a specialized AI prompt, offering a creative way to summarize professional experience and skills. This is valuable for generating unique personal branding assets that are more engaging than a standard document.

· Customizable AI Prompt: Provides a duplicable 'Song Style' prompt that developers can modify, allowing for personalized control over the tone and style of the generated song lyrics. This empowers users to tailor the output to their specific personality and desired impact, ensuring the song truly represents them.

· Personal Branding Enhancement: Creates a novel and memorable way to present professional qualifications, helping individuals stand out in job applications, networking, and online presence. This directly addresses the need to capture attention and leave a lasting impression in a competitive environment.

Product Usage Case

· Job Application Marketing: A job seeker could use this to create a fun, short song about their qualifications to share on their LinkedIn profile or personal website, making their application more memorable than hundreds of others. This solves the problem of being just another resume in the pile by making their profile instantly engaging.

· Networking Icebreaker: During a tech conference or networking event, a developer could share a link to their resume song as a unique icebreaker, sparking conversations and showcasing their personality and creativity. This provides an easy and memorable way to initiate interactions and make connections.

· Personal Website Showcase: A freelancer could embed their resume song on their personal portfolio website, offering visitors an entertaining and informative introduction to their skills and services. This adds an interactive and engaging element to a professional online presence, capturing visitor interest.

8

LLM-CounterBench

Author

bra1ndump

Description

This project is an experimental tool designed to probe the limitations of Large Language Models (LLMs) like ChatGPT in sustained, sequential tasks. It highlights a novel approach to testing LLM endurance by observing its failure modes when tasked with simple, repetitive counting up to a large number. The innovation lies in identifying creative prompting strategies and benchmarking the maximum number an LLM can reliably count to, revealing insights into its context window, memory, and robustness against simple instructions. For developers, it provides a framework for understanding and potentially overcoming these LLM limitations in practical applications.

Popularity

Points 7

Comments 1

What is this product?

LLM-CounterBench is a Show HN project born from the observation that even sophisticated LLMs like ChatGPT struggle with basic, high-volume sequential tasks, such as counting to a million. The core technical insight is that LLMs, despite their advanced natural language processing capabilities, can exhibit surprisingly brittle behavior when faced with tasks requiring strict adherence to simple, repetitive instructions over an extended sequence. The innovation here is not in the LLM itself, but in the experimental methodology used to expose its weaknesses. By attempting various prompting techniques, the creators discovered that framing the task as a challenge and even providing a starting sequence significantly improved the LLM's performance, albeit still far from the desired goal. This suggests a key limitation: LLMs often perform better with contextual framing or by building upon existing information rather than generating entirely novel sequences from scratch. So, this project demonstrates a practical way to find the current breaking point of LLMs for repetitive tasks, which is crucial for designing applications that can reliably handle such processes or circumvent these limitations.

How to use it?

Developers can use LLM-CounterBench as a benchmark and a source of inspiration for designing more robust LLM-powered applications. Instead of directly integrating a raw LLM for tasks requiring precise sequential output (like generating a long list of numbered items or performing step-by-step calculations), developers can employ strategies observed in this project. For instance, if building a system that needs to iterate through a large number of steps, a developer might pre-populate the LLM with a portion of the sequence or use a 'foot-in-the-door' technique where the LLM is first asked for smaller counts and then progressively larger ones. Another approach is to use external tools to manage the counting and feed the LLM smaller, manageable chunks of the sequence for processing or interpretation. The project's value lies in prompting developers to think critically about LLM capabilities and to design hybrid systems that combine LLM intelligence with more traditional algorithmic control for tasks demanding high reliability and sequence integrity. So, this project helps you build more dependable LLM applications by showing you how to avoid common pitfalls and implement smarter prompting or system design.
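The "external tools manage the counting" strategy can be sketched as a loop that owns the true counter, asks the model for one short window at a time, and verifies each reply. `generate` stands for whatever prompt-to-text call your LLM client provides (a hypothetical placeholder), and `toy_model` is a fake model that slips on one number so the fallback path is exercised; neither comes from LLM-CounterBench.

```python
import re
from typing import Callable, List, Tuple

def count_with_llm(generate: Callable[[str], str], target: int,
                   chunk: int = 50, retries: int = 1) -> Tuple[List[int], int]:
    """Count from 1 to `target` in short windows, verifying every model reply.

    Returns the final sequence and how many windows fell back to the
    externally computed numbers after the model failed every attempt.
    """
    sequence: List[int] = []
    fallbacks = 0
    current = 1
    while current <= target:
        end = min(current + chunk - 1, target)
        expected = list(range(current, end + 1))
        prompt = (f"Output the integers {current} to {end}, "
                  "one per line, with no other text.")
        ok = False
        for _ in range(1 + retries):
            try:
                got = [int(tok) for tok in generate(prompt).split()]
            except ValueError:
                continue  # reply contained non-numeric text: count as a miss
            if got == expected:
                ok = True
                break
        if not ok:
            fallbacks += 1  # the external counter fills this window
        sequence.extend(expected)  # the loop, not the model, is the source of truth
        current = end + 1
    return sequence, fallbacks

def toy_model(prompt: str) -> str:
    """Fake LLM for testing: echoes the requested range but drops 130."""
    start, end = map(int, re.search(r"integers (\d+) to (\d+)", prompt).groups())
    return "\n".join(str(n) for n in range(start, end + 1) if n != 130)

if __name__ == "__main__":
    seq, fallbacks = count_with_llm(toy_model, target=200, chunk=50)
    print(seq[-1], fallbacks)  # 200 1
```

Because the loop holds the state, a single bad reply costs one window rather than corrupting the rest of the sequence, and the fallback count doubles as a crude reliability metric.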

Product Core Function

· LLM Sequential Task Failure Analysis: This function involves observing and recording instances where an LLM fails to complete a simple, repetitive sequential task. The value is in identifying the 'breaking point' of LLM performance, which informs developers about the limits of their chosen LLM for such tasks and helps in setting realistic expectations for application design. The application scenario is stress-testing LLMs for tasks requiring endurance and accuracy.

· Creative Prompting Strategy Experimentation: This function focuses on testing various ways to prompt an LLM to improve its performance on sequential tasks, such as framing it as a competition or providing initial sequences. The value is in discovering effective techniques that can coax better performance from LLMs, even for challenging tasks. The application scenario is optimizing LLM responses for specific, structured outputs.

· Performance Benchmarking: This function involves establishing a measurable record of the maximum sequential output an LLM can achieve under specific prompting conditions. The value is in providing concrete data points to compare different LLMs or different versions of the same LLM, and to understand the relative strengths and weaknesses of LLMs in terms of sequential processing. The application scenario is evaluating and selecting LLMs for projects that require consistent, ordered output.

· Root Cause Identification of LLM Limitations: By analyzing why LLMs fail at simple counting tasks, this project implicitly explores their underlying architectural limitations (e.g., context window, attention mechanisms, inherent statistical nature). The value is in providing developers with a deeper, albeit informal, understanding of what makes LLMs tick and where their current technological boundaries lie. The application scenario is guiding the development of next-generation LLM applications by highlighting areas needing improvement in LLM technology itself.
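Benchmarking the breaking point needs a scorer for the model's transcript. A minimal sketch, assuming the reply is plain text with the integers in order: walk the numbers it contains and report the last one reached before the sequence first goes wrong. The function name is illustrative, not part of the project.

```python
import re

def max_correct_count(transcript: str, start: int = 1) -> int:
    """Return the last number reached before the count first breaks.

    Integers are read in order of appearance; any skip, repeat, or wrong
    value ends the run. Returns start - 1 if the first number is wrong.
    Thousands separators such as "1,000" are not handled in this sketch.
    """
    expected = start
    for token in re.findall(r"\d+", transcript):
        if int(token) != expected:
            break
        expected += 1
    return expected - 1

if __name__ == "__main__":
    print(max_correct_count("Sure! 1, 2, 3, 4, 6, 7"))  # 4
    print(max_correct_count("1\n2\n3\n..."))             # 3
```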

Product Usage Case

· Scenario: Building an AI-powered educational tool that needs to generate progressive learning materials, such as numbered problem sets. Problem: Directly asking an LLM to generate 1000 math problems with sequential numbering might lead to errors, repetitions, or lost context. Solution: Using the insights from LLM-CounterBench, a developer could first ask the LLM to generate a template for a problem and then use a script to generate the numbering and fill in the template for each problem, or pre-seed the LLM with the first few problem numbers and then ask it to continue.

· Scenario: Developing a code generation assistant that needs to produce code with a specific number of sequential operations or loops. Problem: LLMs might struggle to maintain the exact count or structure over many lines of code. Solution: Applying the 'foot-in-the-door' technique, a developer could prompt the LLM to generate a small block of code with, say, 5 operations, then ask for another 5, and so on, effectively breaking down the large task into smaller, more manageable LLM interactions, ensuring sequence integrity.

· Scenario: Creating a simulation that requires an LLM to track and report a continuously increasing value over a long period. Problem: The LLM might 'forget' the current value or hallucinate its progression. Solution: The project's finding that 'simply counting to X ourselves and ask it to repeat' was successful for a small number suggests a strategy: an external system could maintain the true count and feed the LLM updates like 'The current count is 500, please acknowledge.' This offloads the sequential memory burden from the LLM. This helps in building more reliable LLM-integrated simulations where accurate state tracking is paramount.

9

AdBusterAI

Author

ruchirp

Description

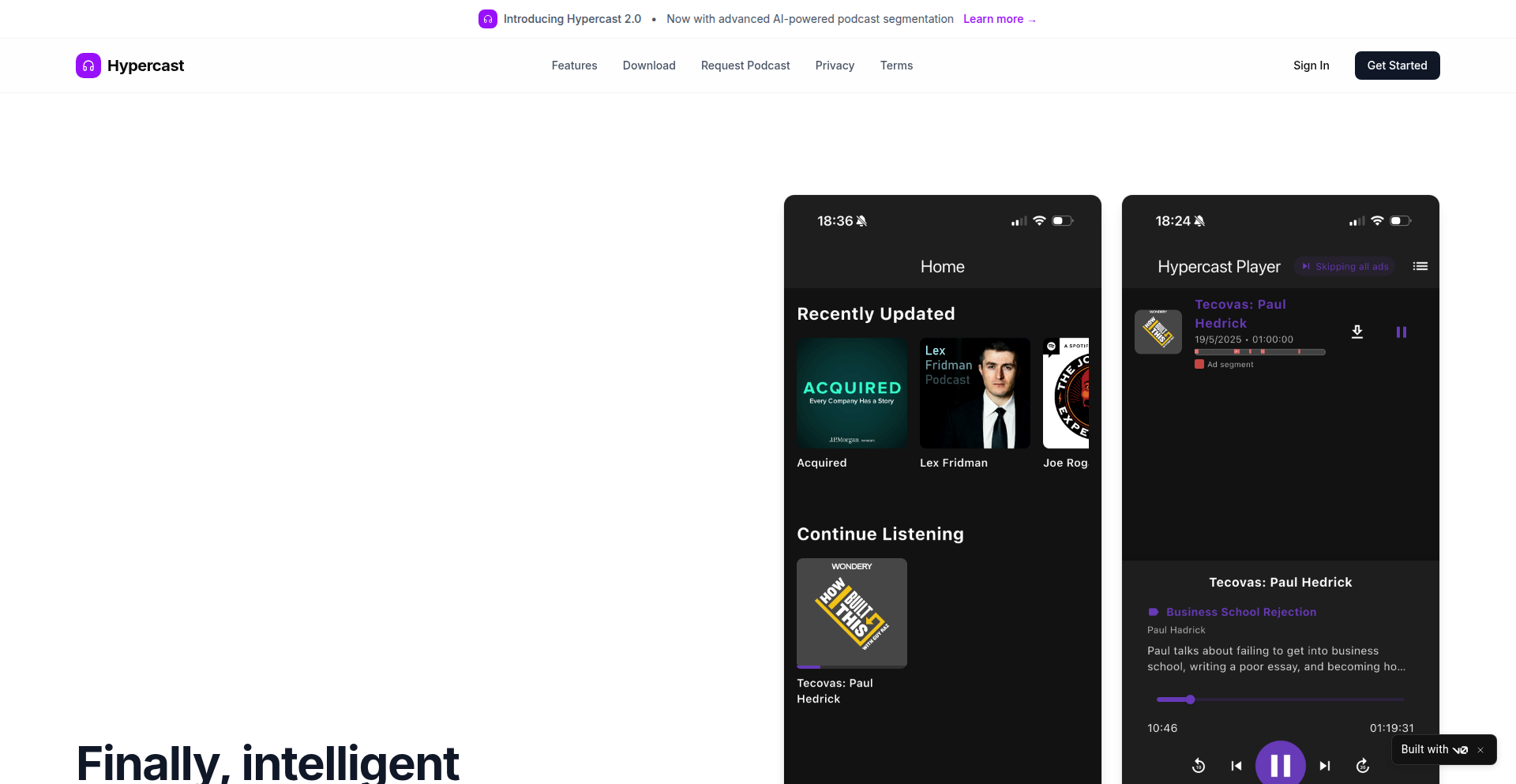

This project introduces an intelligent system for automatically skipping embedded advertisements in podcasts. The core innovation lies in a pipeline that analyzes audio streams in real time to detect and bypass ad segments, sparing listeners the tedious work of skipping ads themselves. It tackles the common frustration of repeatedly pressing skip buttons, offering a seamless listening experience.

Popularity

Points 5

Comments 2

What is this product?

AdBusterAI is an application designed to automatically detect and skip advertisements embedded within podcast audio streams. The technical ingenuity is in its real-time audio analysis pipeline. It doesn't just skip a fixed duration; instead, it uses algorithms to identify patterns or specific audio markers that signify the beginning and end of an advertisement. This means it's smarter than simple timers and can adapt to varying ad lengths. So, it essentially acts as a personalized, automatic ad-skipper for your podcasts, making your listening more enjoyable and efficient. For you, this means no more manually pressing skip buttons multiple times per episode.

How to use it?

Developers can integrate AdBusterAI into their podcast listening applications or build custom audio playback tools. The system can be conceptualized as a module that intercepts the audio stream before it's played to the user. When an ad is detected, the pipeline bypasses that segment, seamlessly transitioning to the main content. This could be implemented by feeding the audio stream into the AdBusterAI processing engine, which then returns a cleaned audio stream without ads. For example, if you're building a new podcast player, you can plug AdBusterAI into your audio processing chain. This allows your users to listen to podcasts without interruptions from ads, enhancing their experience with your application.
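The post does not describe how AdBusterAI's detector works, so the sketch below covers only the step after detection. Given ad intervals in seconds from some detector (assumed here, not shown), it merges overlapping or nearly adjacent intervals and computes the spans the player should actually play. The `gap` tolerance and the function names are illustrative assumptions.

```python
from typing import List, Tuple

Interval = Tuple[float, float]

def merge_intervals(intervals: List[Interval], gap: float = 0.0) -> List[Interval]:
    """Merge ad intervals that overlap or sit at most `gap` seconds apart."""
    merged: List[Interval] = []
    for start, end in sorted(intervals):
        if merged and start <= merged[-1][1] + gap:
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged

def playback_segments(duration: float, ads: List[Interval],
                      gap: float = 1.0) -> List[Interval]:
    """Return the (start, end) spans to play: the complement of the ad spans."""
    segments: List[Interval] = []
    cursor = 0.0
    for start, end in merge_intervals(ads, gap):
        start = min(max(0.0, start), duration)  # clip ads to the episode length
        end = min(duration, end)
        if start > cursor:
            segments.append((cursor, start))
        cursor = max(cursor, end)
    if cursor < duration:
        segments.append((cursor, duration))
    return segments

if __name__ == "__main__":
    ads = [(0, 30), (1200, 1290), (1290.5, 1320)]
    print(playback_segments(3600, ads))  # [(30, 1200), (1320, 3600)]
```

Merging with a small gap avoids playing a fraction of a second of content between two back-to-back ads; a player would then seek or splice at the segment boundaries.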

Product Core Function

· Real-time ad detection: Analyzes audio streams on the fly to identify advertisement segments, saving users from manual skipping and ensuring uninterrupted content flow.

· Intelligent ad bypassing: Leverages pattern recognition and audio analysis to accurately skip ads of varying lengths and types, providing a seamless listening experience.

· Customizable pipeline: Offers a flexible framework that can be integrated into various audio playback systems and applications, allowing developers to enhance their own products with ad-free listening.

· Personalized listening: Eliminates the frustration of repeated manual skips, making podcast consumption more efficient and enjoyable for the end-user.

· Accessible technology: The project aims to offer free access to people who cannot afford it, reflecting a commitment to community benefit.

Product Usage Case

· Building a next-generation podcast player: Developers can integrate AdBusterAI to offer a premium, ad-free listening experience as a core feature of their new app, attracting users tired of interruptions.

· Enhancing existing media playback software: Incorporate AdBusterAI as a plugin or module to add automatic ad skipping to established audio players, improving user satisfaction with minimal code changes.

· Creating personalized audio experiences for specific niches: For example, in educational platforms that use podcasts, AdBusterAI can ensure students focus on the content without ad distractions, improving learning outcomes.

· Developing assistive technologies for audio content: For users who find repetitive manual actions difficult, AdBusterAI provides an automated solution, making podcasts more accessible.

10

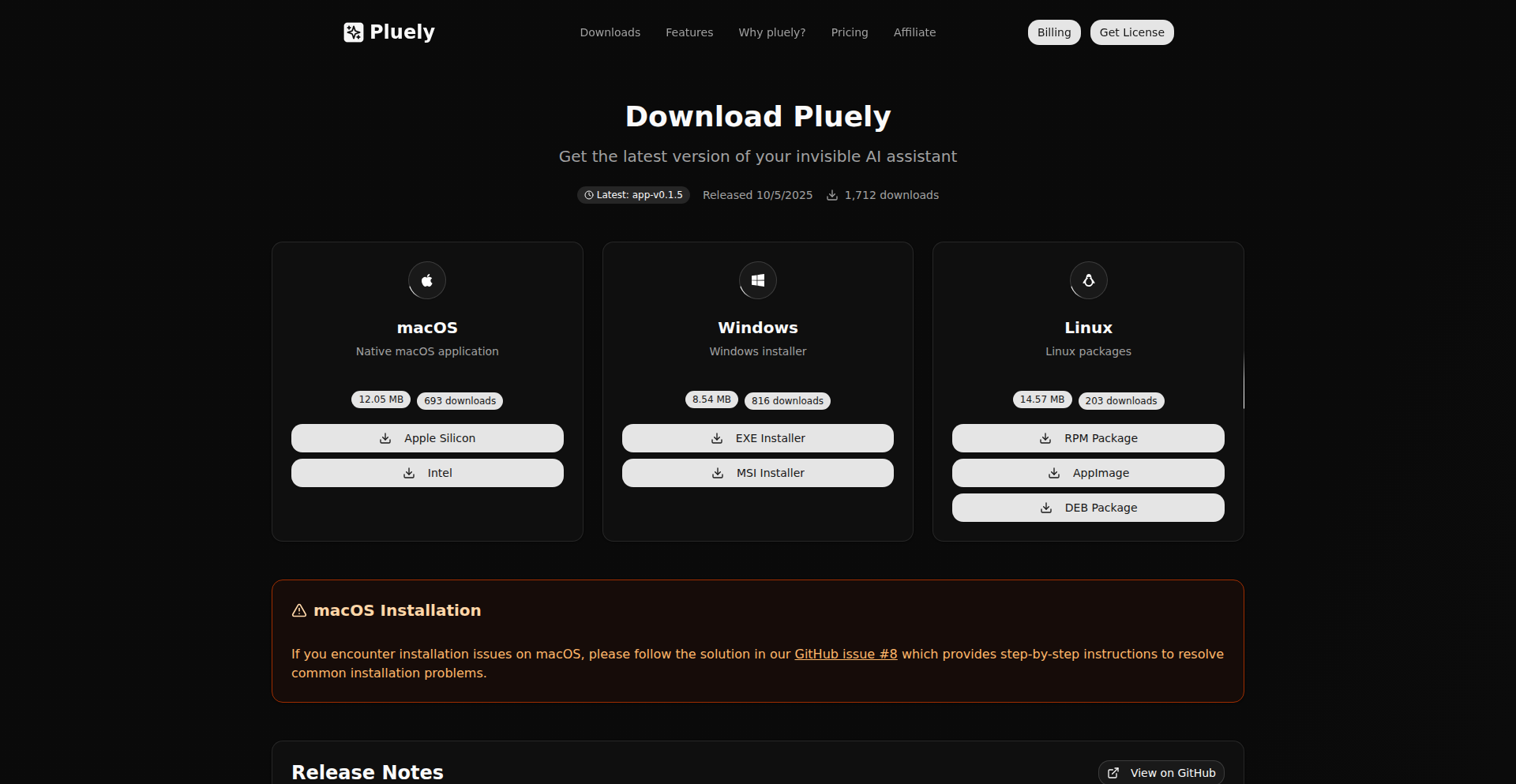

Pluely: The Stealth AI Co-Pilot

Author

truly_sn

Description

Pluely is an open-source, lightweight AI assistant that operates invisibly in the background until explicitly invoked. This latest version introduces advanced screenshot selection for targeted AI analysis and robust audio device control, ensuring it enhances your workflow without disrupting it. It's designed for developers and power users who want AI assistance without the intrusive UI elements often found in commercial products.

Popularity

Points 3

Comments 4

What is this product?

Pluely is an open-source AI assistant that aims to be an unobtrusive companion on your computer. Unlike many AI tools that require you to switch to their interface, Pluely stays hidden until you need its intelligence. The core innovation lies in its 'invisible' operation. For example, the screenshot selection feature allows you to precisely select a portion of your screen for the AI to analyze by simply clicking and dragging, without Pluely ever taking over your active window. This is achieved through clever integration with operating system features like non-activating panels on macOS (using tauri-nspanel) and non-focusable windows on Windows. This means the AI can 'see' what you're working on in a specific area without the AI's interface interrupting your current task. This approach addresses the common frustration of software stealing focus and breaking your concentration, offering a truly seamless AI integration.

How to use it?

Developers can integrate Pluely into their workflow by downloading and running the application. Its primary use cases revolve around augmenting existing tasks with AI without manually copying data. For example, if you're reading a complex document and want to understand a specific section, you can activate Pluely's screenshot selection, highlight the text, and Pluely will process it using your chosen LLM. Similarly, if you need to analyze audio from a specific source (e.g., a particular microphone input or system audio), Pluely allows you to select it precisely and even hot-swap devices on the fly without interrupting your work. Pluely supports a wide range of LLM providers and models, including OpenAI, Claude, and Gemini, as well as local models, giving you flexibility in how your data is processed.

Product Core Function

· Invisible Screenshot Selection: Allows users to drag and select specific screen regions for AI analysis without interrupting their current application. This is valuable for extracting information from complex interfaces or visually analyzing data without copying and pasting.

· Precise Audio Device Control: Enables users to choose specific microphone and system audio sources for AI capture, with the ability to hot-swap devices dynamically. This is crucial for developers working with audio processing or recording, ensuring accurate capture without restarting the entire system.

· Multi-LLM Support: Integrates with a wide range of Large Language Model (LLM) providers and models, including OpenAI, Claude, Gemini, Grok, Perplexity, Mistral, Cohere, and local models. This provides flexibility and choice in AI processing, allowing users to pick the best model for their specific needs or privacy concerns.

· Autostart on System Boot: Pluely launches automatically and silently when your system starts, ensuring it's always ready to assist without manual intervention. This means AI assistance is consistently available and integrated into your startup routine.

· Voice Activity Detection (VAD) for Custom Audio Capture: Enables intelligent audio capture that only activates when speech is detected, reducing unnecessary processing and ensuring that relevant audio is captured efficiently. This is useful for transcribing meetings or analyzing spoken input.

· No Window Stealing: Solves the common issue of applications unexpectedly taking focus, ensuring your workflow remains uninterrupted. This provides a stable and predictable user experience, allowing you to stay focused on your tasks.
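The post does not say which voice activity detection approach Pluely uses. As a minimal sketch of the general idea only, the energy-based detector below labels fixed-length frames of mono samples (floats in [-1, 1]) as speech when their RMS energy crosses a threshold, with a short "hangover" so brief pauses between words do not cut the capture. The threshold, frame size, and names are illustrative assumptions; production VADs often rely on statistical or trained models instead.

```python
import math
from typing import List, Sequence

def frame_rms(frame: Sequence[float]) -> float:
    """Root-mean-square energy of one frame of samples in [-1, 1]."""
    return math.sqrt(sum(s * s for s in frame) / len(frame)) if frame else 0.0

def detect_speech(samples: Sequence[float], sample_rate: int = 16000,
                  frame_ms: int = 30, threshold: float = 0.02,
                  hangover_frames: int = 5) -> List[bool]:
    """Label each full frame as speech (True) or silence (False).

    A trailing partial frame is ignored in this sketch.
    """
    frame_len = sample_rate * frame_ms // 1000  # 480 samples at 16 kHz / 30 ms
    labels: List[bool] = []
    hold = 0
    for i in range(0, len(samples) - frame_len + 1, frame_len):
        if frame_rms(samples[i:i + frame_len]) > threshold:
            hold = hangover_frames  # speech: reset the hangover counter
            labels.append(True)
        elif hold > 0:
            hold -= 1  # brief pause: keep capturing for a few more frames
            labels.append(True)
        else:
            labels.append(False)
    return labels

if __name__ == "__main__":
    silence = [0.0] * 480 * 10
    speech = [0.5] * 480 * 3
    labels = detect_speech(silence + speech + silence)
    print(labels.count(True))  # 8 (3 loud frames + 5 hangover frames)
```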

Product Usage Case

· A developer needs to extract technical details from an error message displayed in a modal window. They use Pluely's screenshot selection to highlight only the error message, and Pluely feeds this to an LLM to get a summarized explanation, without the modal window obscuring other parts of their screen.

· A content creator is recording a tutorial and wants to capture system audio along with their microphone input. They configure Pluely to capture both specific sources, ensuring a clear and separated audio feed for post-production, and can even switch microphones mid-recording if needed.

· A researcher is analyzing a lengthy document and wants to ask questions about a specific paragraph. They use Pluely's screenshot selection to isolate that paragraph and then prompt an LLM to summarize or explain it, without having to copy the text and switch to a separate chat interface.

· A remote worker is joining a video call and wants to ensure their AI assistant can analyze meeting content. Pluely can be configured to capture system audio from the call directly, enabling real-time summarization or action item extraction without manual setup during the call.

· A programmer is debugging an application and encounters a complex UI element. They use Pluely's screenshot selection to capture that specific element and then ask an LLM for potential solutions or explanations of its functionality, directly within their development workflow.

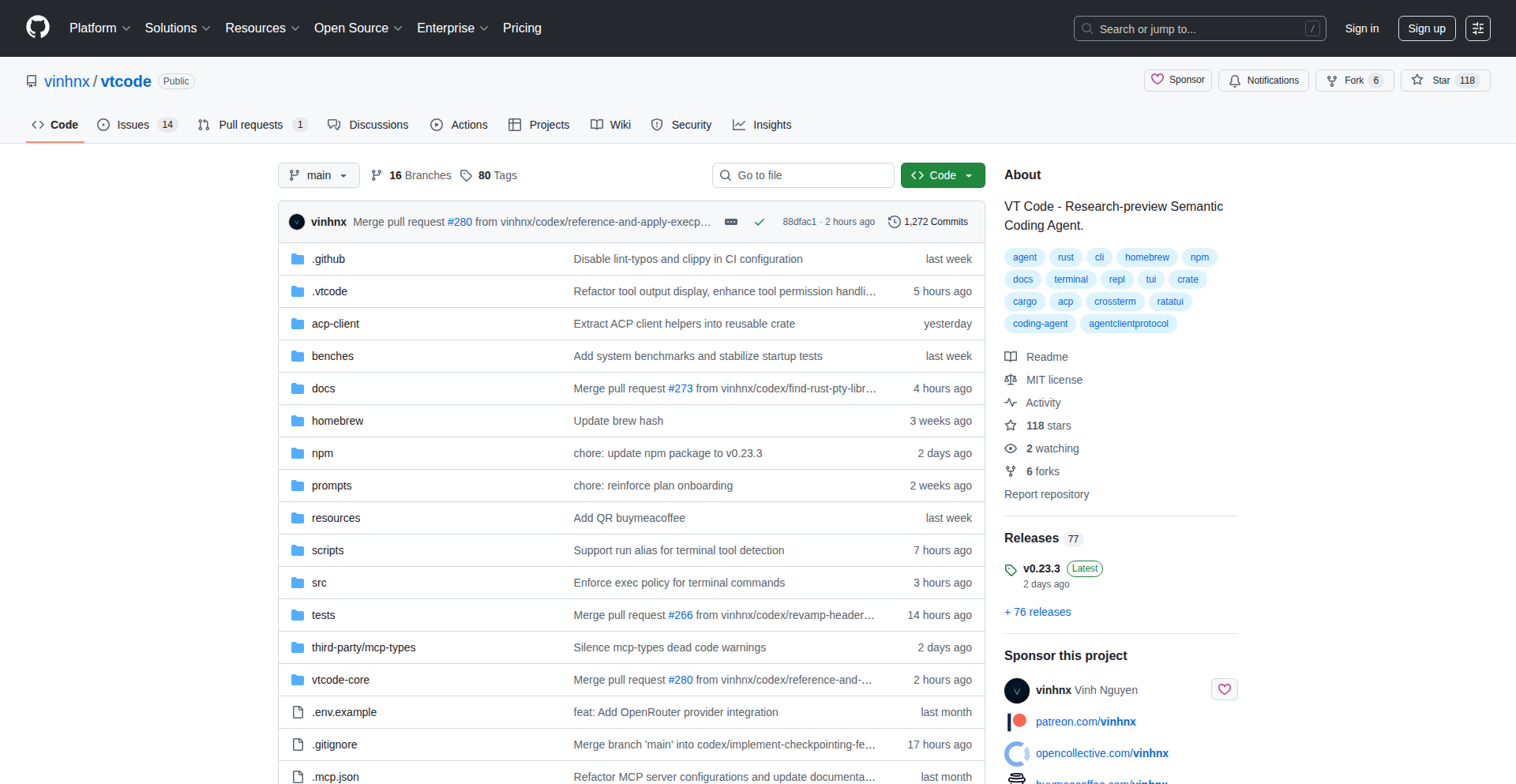

11

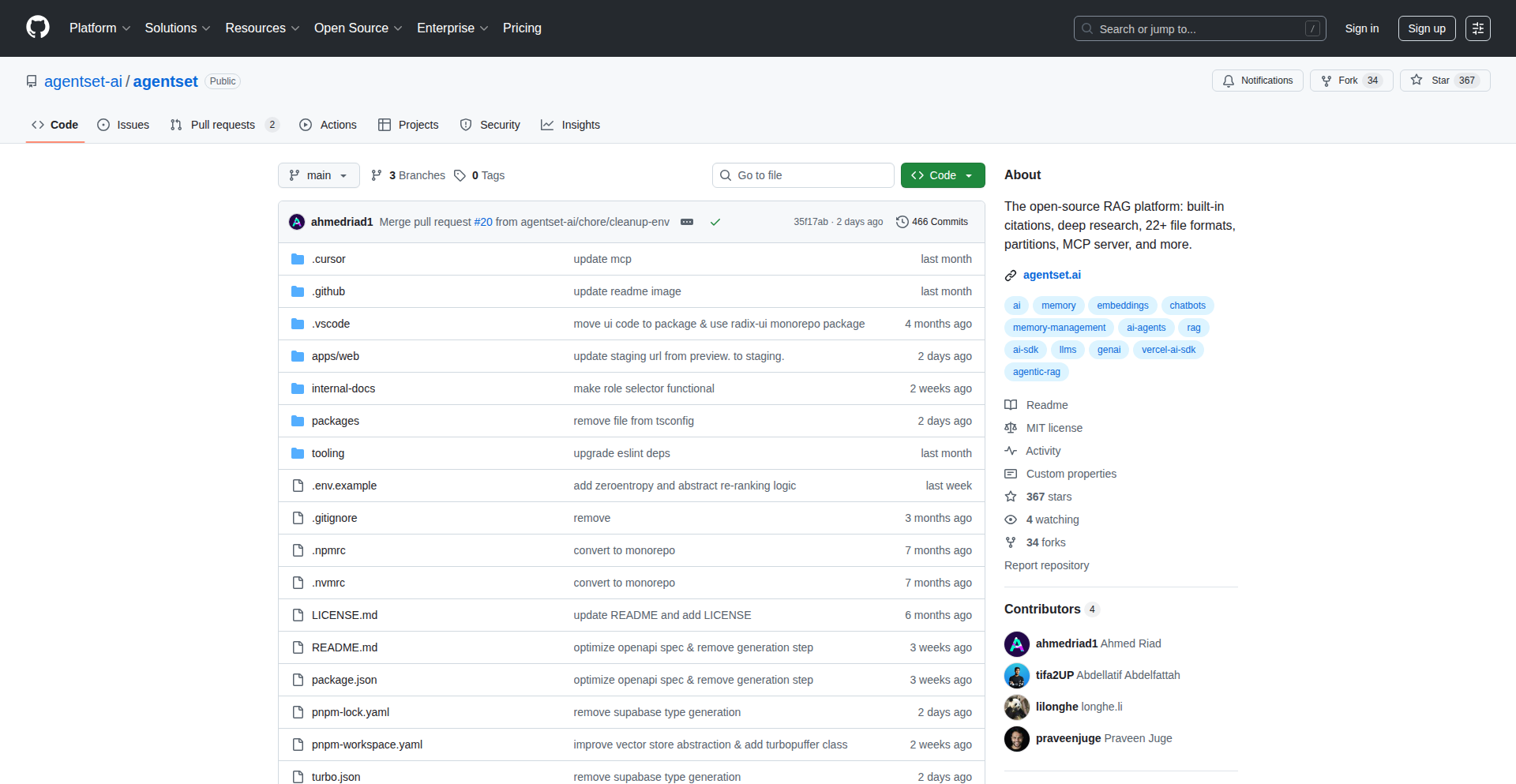

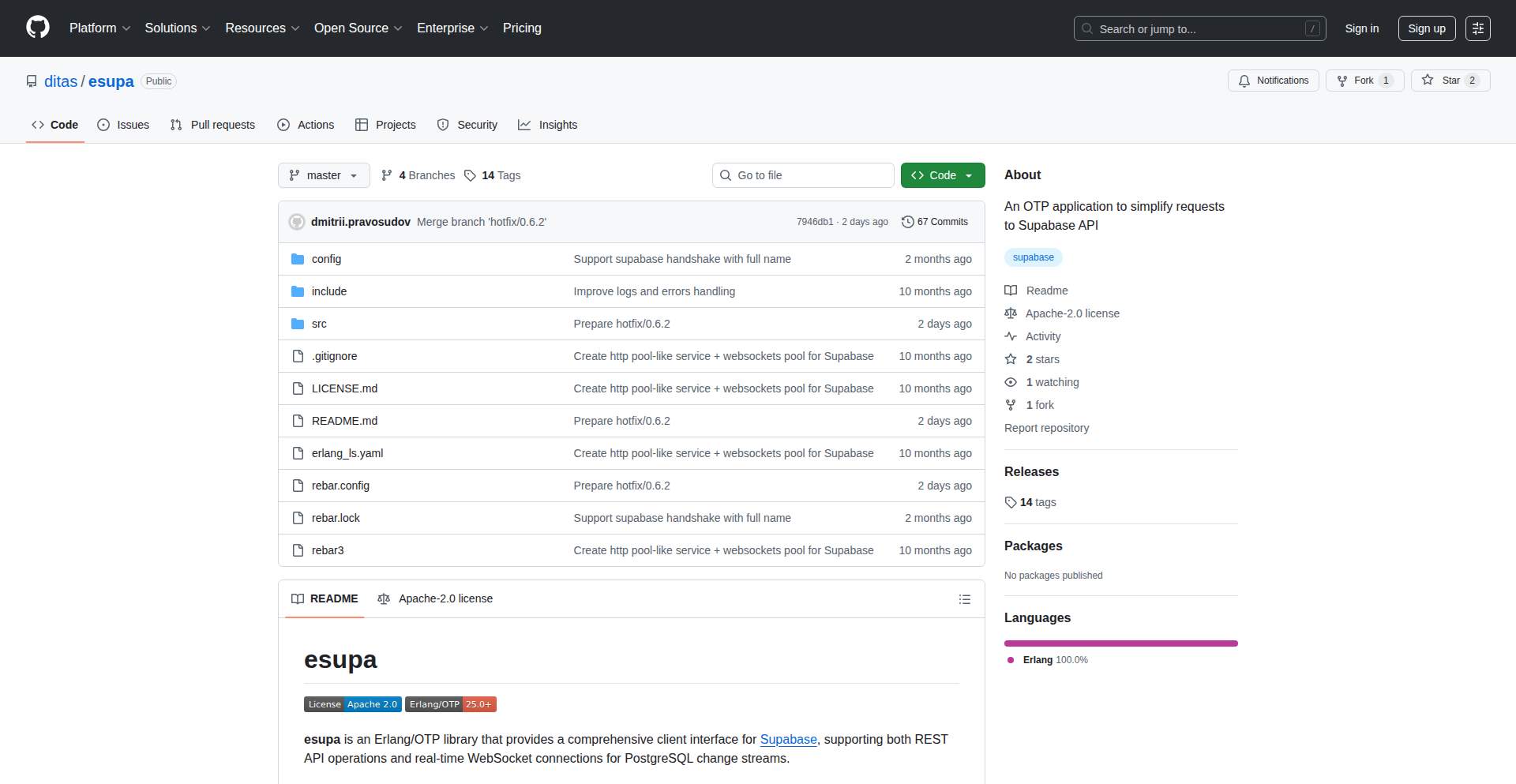

Agentset: Production-Ready RAG Framework

Author

tifa2up

Description

Agentset is an open-source framework designed to simplify the creation of high-quality Retrieval Augmented Generation (RAG) systems. It abstracts away the complexities of vector databases, embeddings, and API integration, allowing developers to build production-ready RAG applications quickly without deep optimization expertise. It supports numerous file formats, agentic search capabilities, in-depth research, accurate citations, and includes a user interface.

Popularity

Points 6

Comments 0

What is this product?

Agentset is an open-source RAG framework that brings together essential components like vector databases, embedding models, and APIs into a single, easy-to-use package. Traditional RAG setups, especially at large scales (handling billions of tokens), often require significant effort to optimize performance. Agentset encapsulates these optimizations, providing production-ready capabilities out-of-the-box. This means you get a RAG system that's performant and reliable without needing to become an expert in tuning every underlying piece. It handles the heavy lifting of data indexing, retrieval, and generation, making advanced AI applications more accessible.

How to use it?

Developers can integrate Agentset into their projects by leveraging its API or by utilizing its built-in UI. For instance, a developer building a customer support chatbot that needs to access a vast knowledge base can use Agentset to index their documentation. The framework then handles retrieving the most relevant information from this knowledge base when a user asks a question, and feeding that information to a language model to generate an accurate and context-aware answer. It's designed for scenarios where you need to ground language model responses in specific, large datasets.
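The retrieve-then-generate flow described above can be sketched in a few lines. This toy version is not Agentset's API: it stands in for a vector database with bag-of-words vectors and cosine similarity, then builds a prompt that numbers each retrieved source so the model can cite it. A real pipeline would swap in learned embeddings and a proper index, and send the prompt to an LLM.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple

def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query: str, docs: Dict[str, str], k: int = 2) -> List[Tuple[str, float]]:
    """Return up to k (doc_id, score) pairs with a nonzero match, best first."""
    q = Counter(tokenize(query))
    scored = [(doc_id, cosine(q, Counter(tokenize(text)))) for doc_id, text in docs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [pair for pair in scored[:k] if pair[1] > 0]

def build_prompt(query: str, docs: Dict[str, str], k: int = 2) -> str:
    """Ground the question in numbered sources so the answer can cite [n]."""
    hits = retrieve(query, docs, k)
    context = "\n".join(f"[{i}] ({doc_id}) {docs[doc_id]}"
                        for i, (doc_id, _) in enumerate(hits, start=1))
    return ("Answer using only the sources below and cite them as [n].\n"
            f"{context}\n\nQuestion: {query}")

if __name__ == "__main__":
    docs = {
        "refunds.md": "Refunds are issued within 14 days of a return.",
        "shipping.md": "Orders ship within 2 business days.",
        "privacy.md": "We never sell customer data.",
    }
    print(build_prompt("How many days until refunds are issued?", docs))
```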

Product Core Function

· Indexed RAG Pipeline: Provides a streamlined process for ingesting documents, creating vector embeddings, and storing them in an optimized vector database for efficient retrieval. The value is that it simplifies the complex data preparation and storage needed for effective AI responses, saving developers significant time and effort.

· Agentic Search: Enables the RAG system to act more intelligently by using agents to perform multi-step reasoning or searches to find the best information. The value here is more sophisticated and accurate information retrieval, leading to better AI-generated answers for complex queries.

· Deep Research Capabilities: Built to handle extensive research tasks by efficiently sifting through large amounts of data to find relevant insights. The value is that it allows AI to perform thorough analysis of large datasets, useful for tasks like market research or academic literature reviews.

· Citation Generation: Automatically provides sources for the information generated by the AI, ensuring transparency and traceability. The value is crucial for applications where accuracy and trust are paramount, such as in legal or scientific contexts.

· Out-of-the-Box UI: Includes a user interface for interacting with the RAG system, allowing for easy testing and demonstration. The value is that it provides an immediate way to experience and showcase the RAG system's capabilities without requiring separate front-end development.

Product Usage Case

· Building an AI-powered internal knowledge base assistant for a company: Developers can use Agentset to index all company documents, allowing employees to ask natural language questions and get precise answers grounded in company data, improving productivity. This solves the problem of employees struggling to find information buried in various company files.

· Developing a research tool for academic or scientific papers: Agentset can process a large corpus of research papers, enabling researchers to ask complex questions and receive summarized answers with direct citations, accelerating the research process. This addresses the challenge of sifting through vast amounts of academic literature.

· Creating an intelligent customer support bot for a product: By indexing product manuals and FAQs, Agentset can power a bot that provides accurate, context-specific answers to customer inquiries, reducing support load and improving customer satisfaction. This solves the issue of generic or unhelpful automated responses.

12

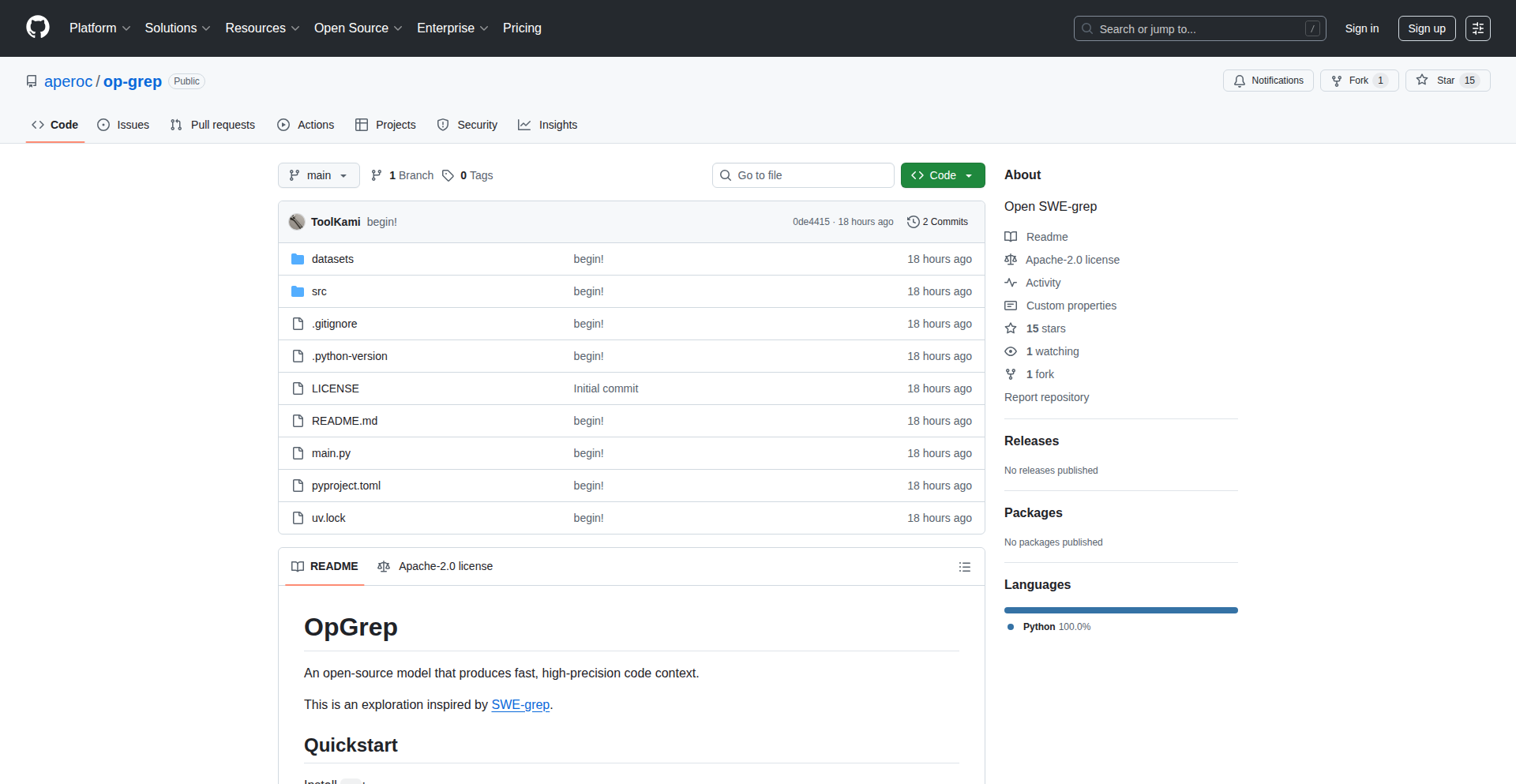

OpenSWE-Grep: Contextual Code Intelligence

Author

SafeDusk

Description

This project is an open-source implementation inspired by SWE-Grep, aiming to provide fast and high-precision code context search. It leverages a Recurrent Neural Network (RNN) model trained on synthetically generated datasets to understand code relationships beyond simple text matching. So, this helps developers quickly find relevant code snippets and understand the context of their codebase more efficiently.

Popularity

Points 5

Comments 1

What is this product?

OpenSWE-Grep is a tool designed to go beyond traditional keyword searches in your code. Instead of just finding lines of text that match your query, it uses an RNN model, a type of neural network well suited to sequential data, to grasp the semantic meaning and relationships within code. It's trained on synthetic code examples to learn how different parts of code connect. This means it can find code that is functionally similar or related, even if the exact words aren't the same. So, this gives you a deeper and more intelligent way to explore and understand your codebase, uncovering connections you might have missed with standard search tools.

How to use it?

Developers can integrate OpenSWE-Grep into their workflow by cloning the repository from GitHub and running the model locally. It can be used as a standalone command-line tool for searching through projects or potentially integrated into IDEs as a plugin. The current implementation uses synthetically generated datasets, suggesting that developers might need to adapt or extend the training data for specific programming languages or project structures. So, this empowers developers to search their code contextually, leading to quicker debugging, better code comprehension, and more efficient feature development.

Product Core Function

· Contextual Code Search: Utilizes RNNs to understand code meaning and relationships, not just keywords. Value: Finds relevant code more accurately and discovers hidden connections. Scenario: Debugging complex issues, understanding unfamiliar codebases.

· High-Precision Code Context: Focuses on delivering accurate and meaningful code snippets related to the search query. Value: Reduces noise from irrelevant search results, saving developer time. Scenario: Identifying specific functions or logic blocks.

· Synthetic Data Generation: Employs generated datasets for training the RNN model. Value: Enables the model to learn general code patterns without relying solely on large, pre-existing codebases. Scenario: Bootstrapping a new code search tool for various languages.

· Open-Source Implementation: Provides a freely accessible and modifiable codebase. Value: Fosters community contribution, allows for customization, and lowers adoption barriers for developers. Scenario: Building custom code intelligence tools, learning about ML in code analysis.

Product Usage Case

· A developer is trying to fix a bug in a large, unfamiliar project. They can use OpenSWE-Grep to search for code related to a specific error message, and the tool intelligently returns not just lines containing the message, but also the functions and modules that are likely involved in triggering it. This helps them pinpoint the root cause much faster than a standard grep search. So, this dramatically speeds up the debugging process for complex issues.

· A team is onboarding a new engineer to a project. The new engineer can use OpenSWE-Grep to explore the codebase by searching for high-level concepts like 'user authentication' or 'data processing pipeline'. The tool will then provide relevant code contexts, helping the engineer quickly grasp the architecture and key components of the system. So, this accelerates learning and improves the efficiency of new team members.

· A developer is refactoring a piece of code and wants to ensure they haven't missed any important dependencies or side effects. They can use OpenSWE-Grep to search for code that interacts with the specific function they are modifying, helping them identify all related code that might need to be updated. So, this reduces the risk of introducing regressions during code changes.
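The debugging and refactoring scenarios above come down to two structural questions: which function emits a given error message, and who calls a given function. For Python code, the standard library's `ast` module can answer both deterministically, which makes a useful baseline to compare a learned search tool against. This is a sketch, not how OpenSWE-Grep works; `functions_containing` and `callers_of` are hypothetical names.

```python
import ast


def functions_containing(source: str, message: str) -> list[str]:
    """Names of functions whose body contains a string literal including `message`.

    ast.walk descends into nested functions, so an outer function is reported too.
    """
    hits = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            for inner in ast.walk(node):
                if isinstance(inner, ast.Constant) and isinstance(inner.value, str) and message in inner.value:
                    hits.append(node.name)
                    break
    return hits


def callers_of(source: str, func_name: str) -> list[tuple[str, int]]:
    """(enclosing function, line number) for each call to `func_name` inside a function."""
    results = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            for inner in ast.walk(node):
                if isinstance(inner, ast.Call):
                    callee = inner.func
                    # Plain calls use ast.Name; method calls like obj.f() use ast.Attribute
                    name = callee.id if isinstance(callee, ast.Name) else getattr(callee, "attr", None)
                    if name == func_name:
                        results.append((node.name, inner.lineno))
    return results


code = '''
def parse_config(path):
    if not path:
        raise ValueError("config path missing")
    return open(path).read()

def main():
    return parse_config("app.toml")

def reload(app):
    app.cfg = parse_config(app.path)
'''
print(functions_containing(code, "config path missing"))
print(callers_of(code, "parse_config"))
```

Matching by name is purely syntactic: it cannot tell two different functions called `parse_config` apart, which is exactly the kind of ambiguity a context-aware tool aims to resolve.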

13

WonderWrite AI

Author

babblingfish

Description

A novel writing assistant that uses AI to help authors focus on creativity and wonder, rather than just commercial success. The core innovation lies in its approach to guiding the writing process, prompting users to explore imaginative themes and emotional resonance, thereby unlocking novel forms of narrative and character development.

Popularity

Points 4

Comments 2

What is this product?

WonderWrite AI is a software tool designed to reframe the writing process for authors. Instead of optimizing for traditional metrics of success like marketability or trends, it encourages writers to tap into their sense of wonder and curiosity. Technically, it likely uses a form of natural language processing (NLP) and generative AI, trained on a diverse corpus of literature emphasizing imaginative and emotionally rich content. It doesn't write for you, but rather acts as a sophisticated prompt generator and thematic exploration partner. Its innovation is in shifting the AI's objective from mere text generation to fostering a deeper, more personal creative experience for the user, enabling them to discover unexpected narrative paths and develop profound character arcs. So, what's in it for you? It helps you write more engaging, original, and personally fulfilling stories, moving beyond formulaic approaches.

How to use it?

Writers can use WonderWrite AI as a standalone application, and developers could integrate it into existing writing workflows. Such a system would likely expose an API that accepts prompts or thematic starting points and returns exploratory questions, imaginative scenarios, or character motivations designed to spark creativity. For example, a developer could build a plugin for a popular writing tool that, when the author hits a narrative block, queries WonderWrite AI for new directions, with suggestions aimed less at plot progression and more at evoking awe or mystery. This could involve generating 'what if' scenarios from existing text, suggesting sensory details to enhance atmosphere, or posing philosophical questions about a character's dilemmas. So, how do you use it? You feed it your current draft or a nascent idea, and it returns prompts that unlock your imagination and reveal new creative territory, making the writing process more exciting and less about ticking boxes.

Product Core Function

· AI-powered thematic exploration: This function uses AI to analyze user input and generate prompts that encourage exploration of abstract concepts, emotions, and imaginative scenarios, fostering a sense of wonder in the narrative. Its value is in helping writers break free from predictable story arcs and discover unique thematic depths. Applicable in any writing project where originality and emotional impact are desired.

· Character curiosity generation: This feature employs AI to suggest unexpected motivations, internal conflicts, or personal histories for characters, based on minimal initial input. This goes beyond typical character archetype suggestions by pushing towards more nuanced and surprising character development. Its value lies in creating memorable and multi-dimensional characters that resonate with readers. Useful for writers seeking to craft truly distinctive characters.

· Sensory detail augmentation: This function leverages AI to suggest vivid sensory details (sight, sound, smell, taste, touch) that can enrich the reader's experience and immerse them in the story's world. It's about painting a more evocative picture with words. Its value is in enhancing the atmosphere and believability of the narrative setting. Applicable for writers aiming to create a strong sense of place and immersion.

· Narrative branching suggestions: Rather than straightforward plot progression, this AI feature proposes unconventional narrative detours or alternative outcomes that prioritize surprise and emotional resonance over logical sequence. Its value is in injecting unexpected twists and turns that keep readers engaged and emotionally invested. Useful for writers who want to create compelling and unpredictable stories.
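The four functions above read like distinct prompting modes layered over a general-purpose language model. WonderWrite AI's actual prompts and API aren't published in this summary, so the following Python sketch is purely hypothetical: it maps each mode to an instruction template and builds the text a writing-tool plugin might send to whichever model it uses. The mode names, the template wording, and `build_prompt` are all illustrative assumptions.

```python
from enum import Enum


class Mode(Enum):
    THEME = "thematic exploration"
    CHARACTER = "character curiosity"
    SENSORY = "sensory detail"
    BRANCH = "narrative branching"


# Hypothetical instruction templates. Each asks for questions or suggestions,
# not finished prose, in line with a tool that doesn't write for you.
TEMPLATES = {
    Mode.THEME: "Ask {n} 'what if' questions that push this passage toward wonder or mystery.",
    Mode.CHARACTER: "Suggest {n} unexpected motivations or buried histories for the characters here.",
    Mode.SENSORY: "List {n} concrete sights, sounds, smells, tastes or textures this scene could include.",
    Mode.BRANCH: "Propose {n} surprising detours the story could take from this point.",
}


def build_prompt(passage: str, mode: Mode, n: int = 3) -> str:
    """Combine a mode-specific instruction with the writer's current passage."""
    if n < 1:
        raise ValueError("n must be at least 1")
    instruction = TEMPLATES[mode].format(n=n)
    return f"{instruction}\nDo not rewrite the passage.\n\nPassage:\n{passage.strip()}"


print(build_prompt("The market smelled of smoke and rain.", Mode.SENSORY))
```

Keeping the modes as data rather than scattered strings makes it easy for a plugin to expose them as menu items and to tune the wording without touching the request logic.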

Product Usage Case

· A fantasy author struggling with a predictable plotline uses WonderWrite AI to explore alternative magical systems based on forgotten myths and natural phenomena, leading to a more unique and wondrous world-building. The AI prompted them with questions like 'What if magic was powered by shared dreams?' or 'How would a society evolve if its primary energy source was bioluminescence?' This helped them break free from common fantasy tropes and create a richer, more imaginative setting.

· A science fiction writer seeking to deepen their protagonist's internal struggle asks WonderWrite AI for character development prompts related to existential dread and the search for meaning in a vast universe. The AI suggested exploring the character's childhood memories through the lens of a recurring astronomical anomaly, or questioning the nature of consciousness through interaction with an alien artifact that communicates through emotions rather than language. This resulted in a more profound and relatable character arc.

· A historical fiction writer wants to infuse their story with a greater sense of atmosphere. They use WonderWrite AI to generate vivid descriptions of a bustling medieval market, focusing on olfactory and auditory details that evoke a specific time period. The AI might suggest the scent of roasting meats mingled with decaying refuse, the cacophony of hawkers' cries, and the distant clang of a blacksmith's hammer, transporting the reader directly into the scene. This elevates the narrative beyond simple exposition into an immersive experience.

14

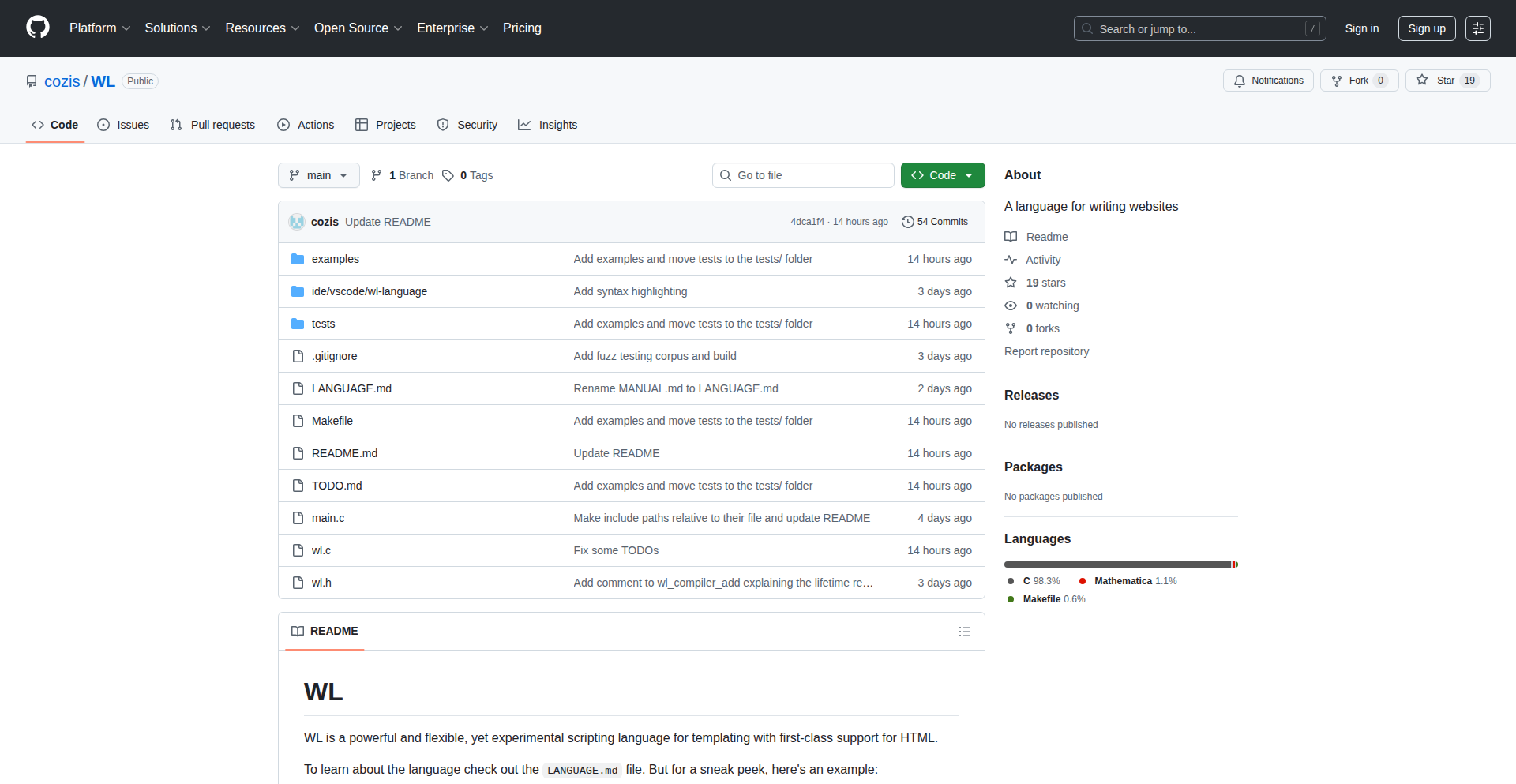

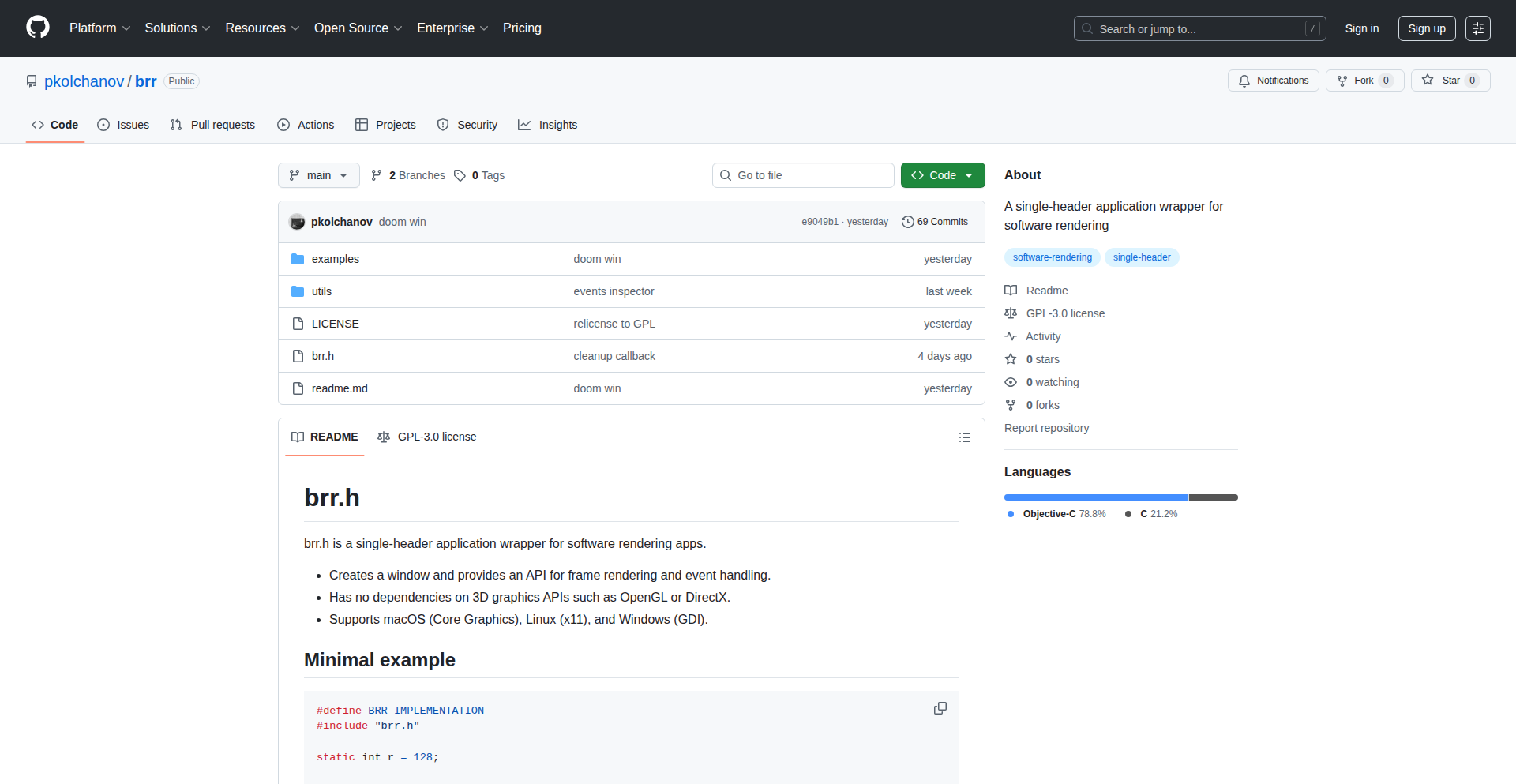

WL: C-Native Templating Engine

Author

cozis

Description

WL is a templating engine for C that brings dynamic string generation to C programs. It targets a common pain point: embedding dynamic content in static structures, which in C tends to be verbose and error-prone. WL integrates templating logic directly into C code, aiming to be faster and more memory-efficient than traditional external templating systems. This lets developers generate complex strings, configuration files, or even HTML from within their C applications. So, for you, this means generating dynamic text output from your C programs more efficiently and with less boilerplate code.

Popularity

Points 4

Comments 1

What is this product?

WL is a templating engine built specifically for the C programming language. Unlike many templating systems that are separate applications or libraries with their own syntax and processing, WL integrates templating directly into C, so you define templates and fill them with data from your C code. It reportedly achieves this by leveraging C's macro system and compile-time processing to embed the templating logic in your C source files. If the templating is effectively compiled into your program this way, it should run faster and use less memory than an external engine. It addresses the need to generate dynamic strings in C without relying on external, often heavier, libraries. So, for you, this means faster and more memory-friendly dynamic string generation in your C applications, without giving up control or performance.

How to use it?

Developers use WL by including its header files and defining templates within their C source code. You define a template string, then use WL's macros or functions to pass in data and render it. For example, you might define a template for a configuration file or an HTML snippet and pass C variables to fill its placeholders; the engine then produces the final string, with processing happening at compile time or at runtime depending on how WL is designed. Integration involves linking the WL library and following its API for template definition and rendering. This is useful wherever a C application needs to generate configuration files, log messages, or simple web content. So, for you, this means you can embed templating logic directly in your C projects and manage dynamic text output within your existing code structure.
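This summary doesn't show WL's actual template syntax, so the following is not WL code. It is a language-neutral illustration, written in Python for brevity, of the general pattern described above: parse a template once into literal and placeholder segments, then render it many times by filling placeholders with values. The `{{name}}` placeholder syntax and the `compile_template` and `render` names are assumptions made for this sketch.

```python
import re

# Assumed placeholder syntax for this sketch: {{ name }} with optional spaces
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def compile_template(text: str) -> list[tuple[bool, str]]:
    """Split a template into (is_placeholder, value) segments. Done once, up front."""
    segments = []
    pos = 0
    for match in PLACEHOLDER.finditer(text):
        if match.start() > pos:
            segments.append((False, text[pos:match.start()]))  # literal text
        segments.append((True, match.group(1)))  # placeholder name
        pos = match.end()
    if pos < len(text):
        segments.append((False, text[pos:]))
    return segments


def render(segments: list[tuple[bool, str]], values: dict[str, object]) -> str:
    """Fill placeholders; a missing key raises KeyError instead of emitting bad output."""
    return "".join(str(values[v]) if is_placeholder else v for is_placeholder, v in segments)


config = compile_template("listen {{ port }}\nworkers {{workers}}\n")
print(render(config, {"port": 8080, "workers": 4}), end="")
```

Parsing once and reusing the segment list keeps the per-render cost to a simple concatenation, which is the general reason a compile-first templating design can be cheaper at runtime.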

Product Core Function

· Dynamic String Generation: Allows C programs to create strings with variable content on the fly, enhancing flexibility in output. Useful for generating reports, configuration files, or custom messages.

· Compile-time or Near-Compile-time Processing: By integrating deeply with C, it aims for efficient processing, potentially reducing runtime overhead. This means your generated strings are created quickly, without hogging system resources during execution.

· C-Native Integration: Enables templating directly within C code, avoiding the complexities of integrating with separate templating language interpreters. This keeps your project simpler and more cohesive.

· Performance Optimization: Designed for speed and efficiency in C environments, making it suitable for performance-critical applications. This ensures your dynamic text generation doesn't become a bottleneck.

· Reduced Boilerplate Code: Simplifies the process of embedding dynamic content, leading to cleaner and more maintainable C code. You write less code to achieve the same dynamic output.

Product Usage Case

· Generating dynamic configuration files for a C application based on runtime parameters. This avoids manual editing of config files and ensures correct formatting. This solves the problem of maintaining consistent configuration across different deployment environments.

· Creating custom log messages with embedded variable data in a high-performance embedded system. This allows for detailed and informative logging without significant performance impact. This addresses the need for granular diagnostics in resource-constrained systems.

· Producing simple HTML output for a command-line tool that reports status information. This makes the tool's output more human-readable and professional. This solves the problem of presenting structured data in an easily digestible format from a C utility.

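· Constructing database query strings dynamically in a C-based database interaction layer, letting the template shape the query (columns, filters, sort order) based on user input or application logic. This addresses the challenge of building flexible SQL statements; to keep them secure, user-supplied values should still be bound as query parameters rather than pasted into the template.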
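A safe way to build dynamic SQL is to let the template decide only the statement's structure and to bind every user-supplied value as a parameter. The sketch below uses Python's built-in `sqlite3` module to show the pattern; the `users` table, its columns, and the `find_users` helper are hypothetical. A C program would follow the same split using its database library's binding API, such as SQLite's `sqlite3_bind_*` functions.

```python
import sqlite3

# Only these column names may be spliced into the SQL text; anything else is rejected.
SORTABLE = {"name", "created"}


def find_users(conn: sqlite3.Connection, name_prefix: str, sort_by: str) -> list[tuple]:
    if sort_by not in SORTABLE:
        raise ValueError(f"cannot sort by {sort_by!r}")
    # Structure comes from the allowlisted template; the user value is bound via "?".
    # (Note: % and _ inside name_prefix still act as LIKE wildcards.)
    sql = f"SELECT name, created FROM users WHERE name LIKE ? ORDER BY {sort_by}"
    return conn.execute(sql, (name_prefix + "%",)).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, created INTEGER)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", 2), ("alan", 1), ("bob", 3)])
print(find_users(conn, "al", "created"))
```

An input like `x' OR 1=1 --` is treated as an ordinary string to match, not as SQL, because it never becomes part of the statement text.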

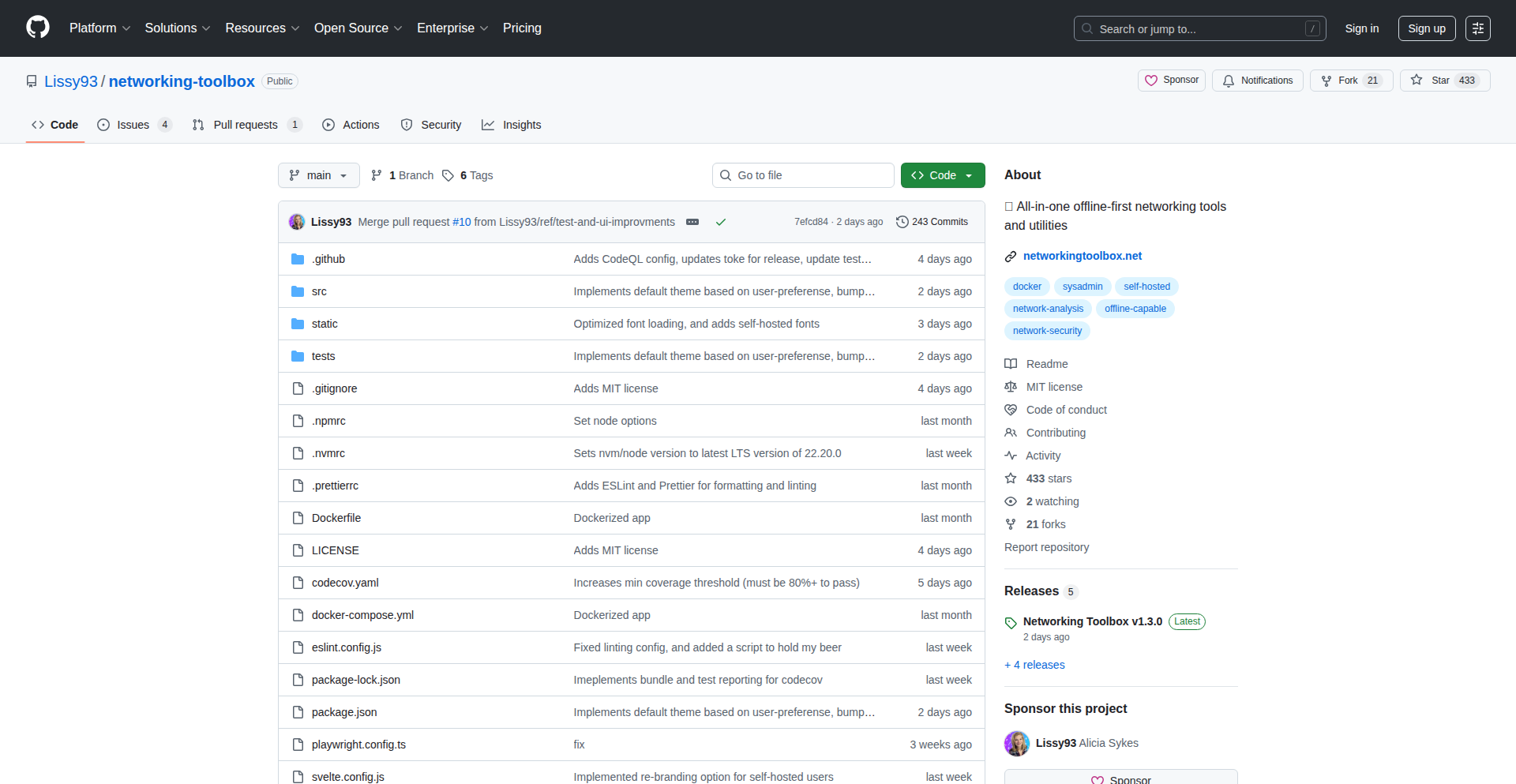

15

OfflineNetKit

Author

lissy93

Description

OfflineNetKit is a collection of 100 essential networking tools designed for system administrators, providing offline functionality and customizability. Its innovation lies in bundling a comprehensive suite of diagnostic and management tools into a single, accessible package that can be used without an internet connection, catering to scenarios where network access is limited or unavailable. It also offers an API, keyboard shortcuts, and bookmarking capabilities for enhanced productivity.

Popularity

Points 4

Comments 0

What is this product?

OfflineNetKit is a versatile suite of 100 networking utilities that operate entirely offline. The core technical insight is packaging commonly used command-line and graphical network diagnostic tools (like ping, traceroute, DNS lookup, port scanners) into a self-contained application. This allows sysadmins to troubleshoot network issues even when their devices have no internet connectivity. The innovation is in its accessibility, offline capability, and extensibility, offering an API for programmatic access and Docker support for self-hosting with custom branding, which means you get reliable access to critical tools anywhere, anytime, tailored to your needs.
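Tools like the port scanner mentioned above work without internet access because they only need to reach hosts on the local network. OfflineNetKit's own implementation and API aren't shown in this summary, so this is a standalone sketch of the underlying technique, a TCP connect check, using Python's standard `socket` module; `check_ports` is a hypothetical name.

```python
import socket


def check_ports(host: str, ports: list[int], timeout: float = 0.5) -> dict[int, bool]:
    """Return {port: True if a TCP connection to host:port succeeded}."""
    results = {}
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 on success and an error code (refused, timed out)
            # otherwise, instead of raising, which keeps the loop simple
            results[port] = sock.connect_ex((host, port)) == 0
    return results


if __name__ == "__main__":
    print(check_ports("127.0.0.1", [22, 80, 443]))
```

A full connect per port is slower and noisier than the half-open scans that dedicated scanners use, but it needs no raw-socket privileges, which suits a tool meant to run anywhere.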

How to use it?

Developers and sysadmins can use OfflineNetKit in several ways. For immediate offline troubleshooting, simply download and run the application. For integration into existing workflows or automation, the provided API allows programmatic control of the tools. The Docker image enables self-hosting, which is perfect for organizations wanting to brand the tool with their company's logo and styling, or for deploying it within isolated network environments. Keyboard shortcuts and bookmarking allow for rapid access to frequently used tools, so you can quickly diagnose and fix network problems without searching through multiple applications or websites.

Product Core Function

· Offline Network Diagnostics: Provides essential tools like ping, traceroute, and DNS lookup without requiring an internet connection, enabling quick identification and resolution of network connectivity issues, which is useful when you're on a network with no internet access.

· Comprehensive Toolset: Includes 100 diverse networking utilities for tasks such as port scanning, IP address management, and protocol analysis, offering a one-stop solution for various network administration needs, so you don't have to juggle many different tools.

· API for Automation: Exposes an API that allows developers to integrate these tools into scripts or other applications for automated network monitoring and management, making your network operations more efficient and scalable.