Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-10-16

SagaSu777 2025-10-17

Explore the hottest developer projects on Show HN for 2025-10-16. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

AI dominates today's projects, but less as standalone models than as tooling built into development workflows. The focus is shifting from building AI to building *with* AI and making it accessible to a wider audience. The number of AI agent builders, content generators, and code assistants points to growing demand for tools that automate complex tasks and augment human capabilities. For developers, this is an opportunity to use these paradigms as creators of AI-powered applications, not just as consumers. The emphasis on open protocols, local-first solutions, and interoperability suggests a healthy ecosystem. Entrepreneurs should note the specific pain points being addressed, such as streamlining content creation, improving data privacy, and simplifying complex development processes. The hacker spirit is alive and well: developers are using AI to solve practical problems efficiently, pushing what is possible on consumer hardware and widening access to advanced technologies.

Today's Hottest Product

Name

Inkeep – Agent Builder

Highlight

Inkeep tackles the challenge of bridging the gap between technical and non-technical users in AI agent development. Its true 2-way sync between code (TypeScript SDK) and a drag-and-drop visual editor, coupled with a CLI for seamless pushing and pulling, represents a significant innovation in developer experience (devex) and collaboration. This approach democratizes AI agent creation, enabling faster iteration and broader adoption within organizations by allowing both developers and business teams to contribute to and maintain AI agents. Developers can learn about building robust, multi-agent architectures, leveraging open protocols for interoperability, and integrating observability features like traces and OTEL logs.

Popular Category

AI/ML Development Tools

Developer Productivity

No-Code/Low-Code

Data Management & Analytics

Web Development Tools

Popular Keyword

AI Agents

LLM

TypeScript

Python

Cloud

Open Source

Developer Experience

Collaboration

Data Visualization

Web Scraping

Technology Trends

AI Agent Orchestration

Unified Development Environments (Code & Visual)

Local-First AI Models

Enhanced Data Privacy in AI

Intelligent Automation for Content & Workflows

Democratization of Complex Development

Interoperable AI Ecosystems

AI-Driven Developer Tools

Specialized AI for Niche Applications

Cross-Platform AI Integration

Project Category Distribution

AI/ML Tools & Frameworks (35%)

Developer Productivity & Tools (25%)

Web & App Development (15%)

Data & Analytics (10%)

Utilities & Miscellaneous (15%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | Inkeep Code-Visual Sync Agent Builder | 72 | 49 |
| 2 | RoleFit Challenge Engine | 27 | 32 |
| 3 | WorkHour Ekonomi | 19 | 37 |
| 4 | Arky Canvas | 10 | 6 |
| 5 | MooseStack: Postgres to ClickHouse CDC Stream | 7 | 3 |
| 6 | Modshim: Python Module Overlay | 7 | 1 |
| 7 | Counsel Health AI Care Platform | 7 | 1 |
| 8 | ScamAI Job Detector | 5 | 2 |
| 9 | Supabase RLS Shield CLI | 4 | 3 |
| 10 | DressMate AI Wardrobe Stylist | 3 | 3 |

1

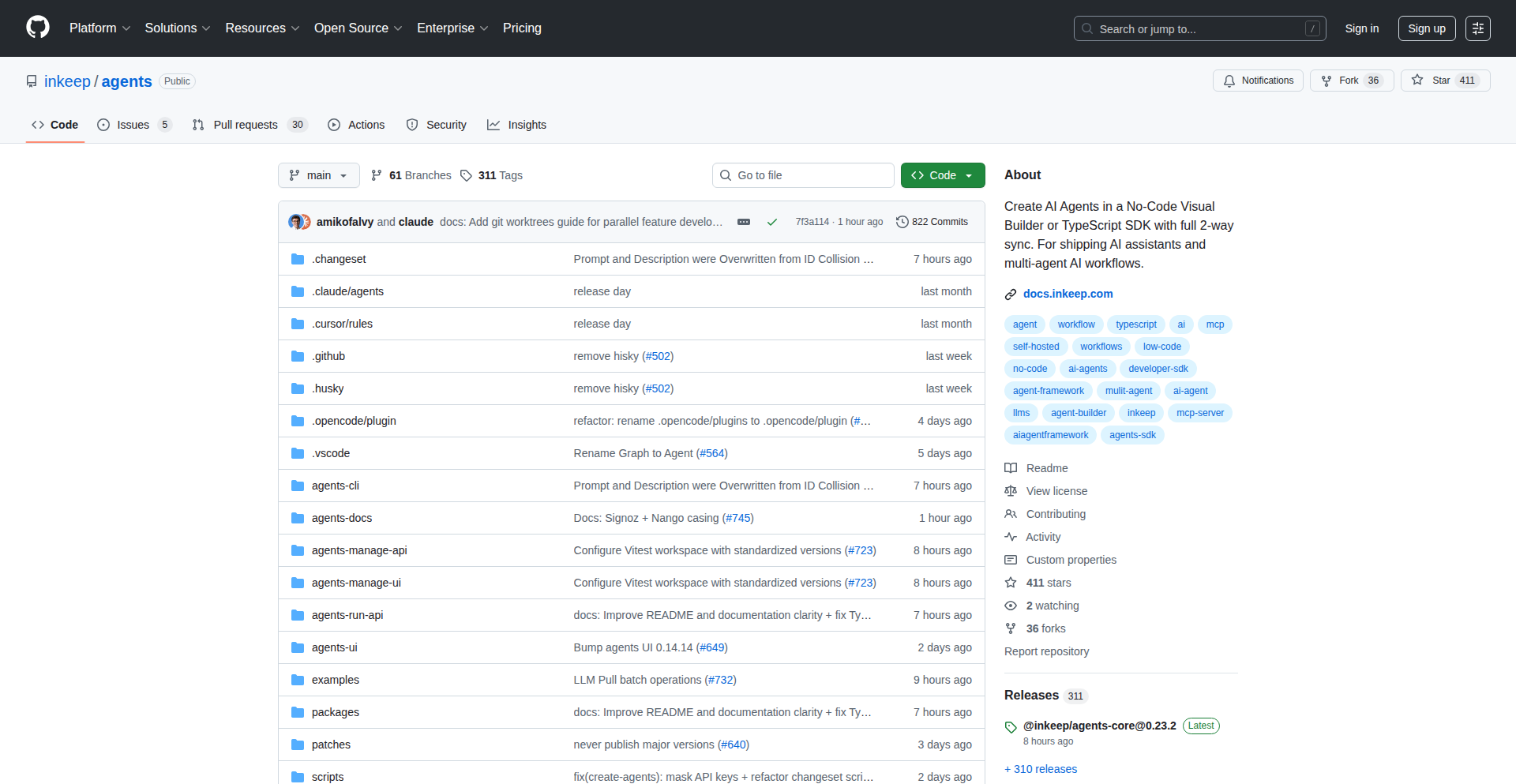

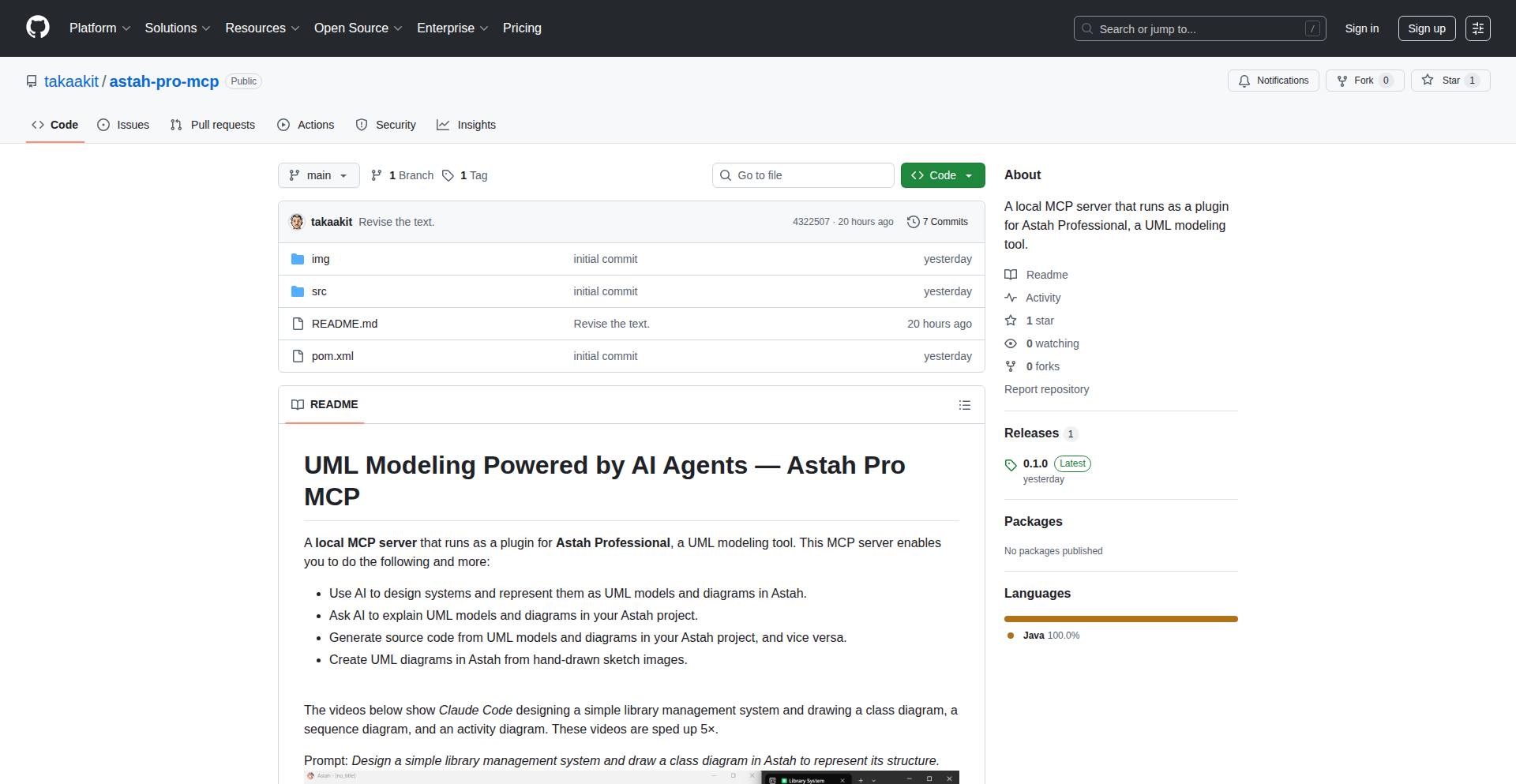

Inkeep Code-Visual Sync Agent Builder

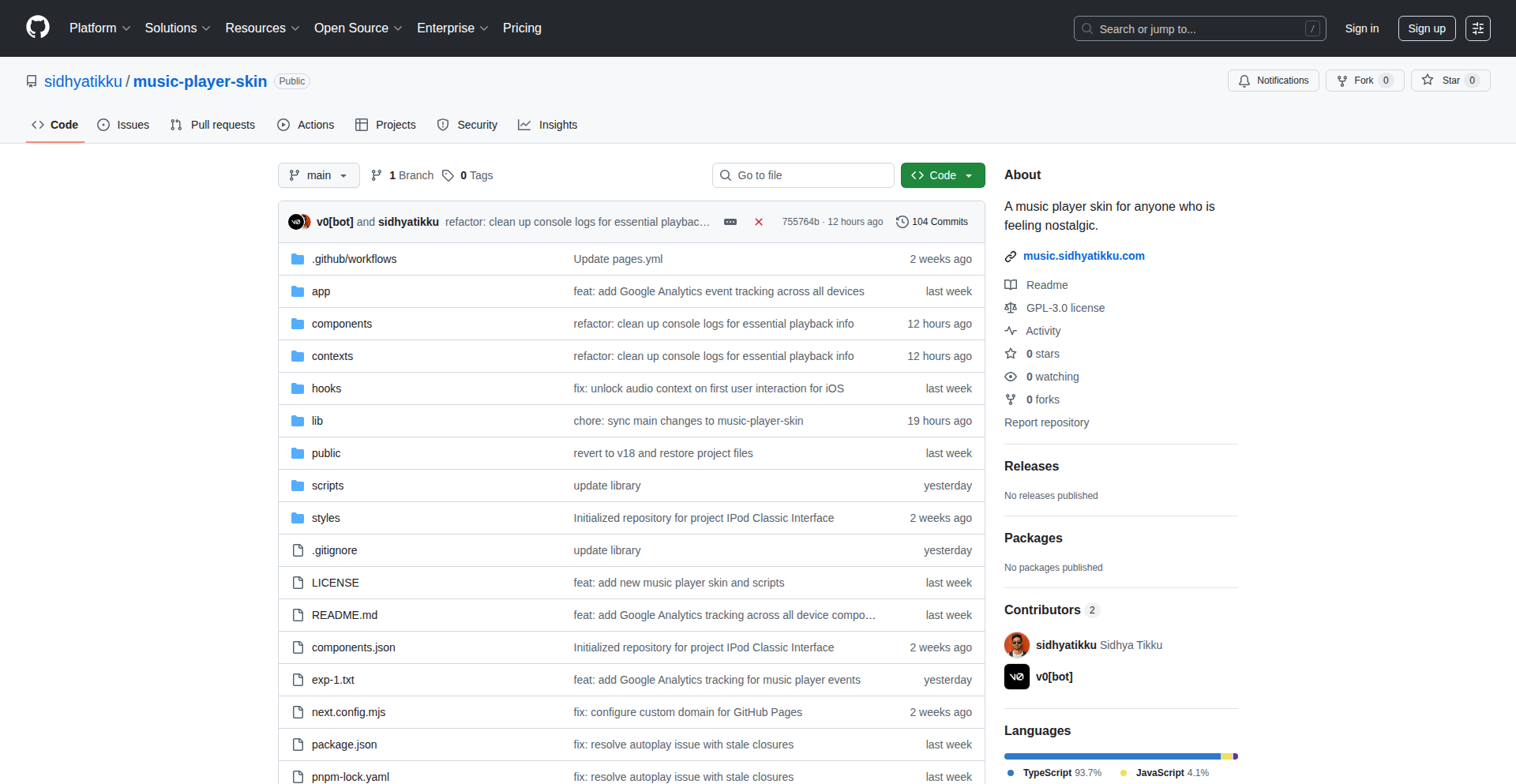

Author

engomez

Description

Inkeep is an agent builder that enables true two-way synchronization between code and a drag-and-drop visual editor. This allows developers and non-technical users to collaborate seamlessly on building AI agents. The innovation lies in bridging the gap between the flexibility of code-based AI frameworks and the accessibility of no-code tools, while offering first-class support for interactive chat assistants.

Popularity

Points 72

Comments 49

What is this product?

Inkeep is a platform for building AI agents, which are essentially automated systems powered by AI. What sets it apart is its dual approach to agent creation. Developers can use a TypeScript SDK to write agent logic in code, then run the CLI command `inkeep push` to publish it. At the same time, non-technical team members can use a visual, drag-and-drop editor to modify and build agents. Running `inkeep pull` then brings the visual changes back into code. This removes the need to choose between the power of code and the ease of visual tools, and it avoids the lock-in of platforms that only allow a one-time export to code.

How to use it?

Developers can start by defining their AI agent's logic using the Inkeep TypeScript SDK. This involves writing the steps and behaviors of the agent. Once the code is ready, they run `inkeep push` from their command-line interface (CLI) to upload and register the agent. From there, the agent can be accessed and modified through Inkeep's visual builder. For example, a developer might build the core AI logic for a customer support chatbot in code, and then hand it over to a non-technical support manager who can use the visual editor to fine-tune responses, add new FAQs, or adjust interaction flows. The ability to then pull changes back into code using `inkeep pull` ensures that developers can maintain the integrity and complexity of the agent while leveraging collaborative visual editing.
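The push/pull round trip can be pictured with a small conceptual sketch. It is written in Python for illustration only and does not use Inkeep's TypeScript SDK or CLI: `AgentNode`, `push`, `pull`, and the JSON layout are all hypothetical stand-ins for whatever shared representation the real tool uses.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class AgentNode:
    """One step of an agent, in a form both code and a visual editor could read."""
    name: str
    prompt: str
    next_nodes: list[str] = field(default_factory=list)


def push(nodes: list[AgentNode]) -> str:
    """Serialize the code-defined agent to a shared JSON document (hypothetical format)."""
    return json.dumps([asdict(node) for node in nodes], indent=2)


def pull(document: str) -> list[AgentNode]:
    """Rebuild code-side objects from the (possibly edited) JSON document."""
    return [AgentNode(**raw) for raw in json.loads(document)]


# A developer defines the agent in code and pushes it.
agent = [
    AgentNode("triage", "Classify the support request.", ["faq"]),
    AgentNode("faq", "Answer from the FAQ."),
]
pushed = push(agent)

# Stand-in for a visual edit: a non-developer rewrites one prompt.
edited = json.loads(pushed)
edited[1]["prompt"] = "Answer from the FAQ, then offer a human handoff."

# Pulling brings the visual change back into code-side objects.
pulled = pull(json.dumps(edited))
print(pulled[1].prompt)
```

The point of the sketch is that both sides read and write one representation, so an edit made on either side survives the round trip.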

Product Core Function

· Code-to-Visual Synchronization: Developers can write agent logic in TypeScript, and then use a visual builder to edit it. This is valuable because it allows for rapid prototyping and iteration on AI agent behavior, enabling faster development cycles and easier collaboration.

· Visual-to-Code Synchronization: Changes made in the drag-and-drop visual editor can be pulled back into code. This is crucial for maintaining the agent's underlying logic and complexity, ensuring that the flexibility of code is not lost after visual adjustments.

· Multi-Agent Architecture: Agents are composed of multiple interconnected AI models and components. This approach is more maintainable and flexible for complex tasks than simple if-then logic, offering a robust foundation for sophisticated AI applications.

· Interactive Chat Assistant Support: The platform prioritizes building chat assistants with interactive user interfaces, going beyond just basic workflow automation. This is valuable for creating engaging and user-friendly AI experiences for end-users.

· Open Protocol Integrations (MCP, Vercel AI SDK): Agents can be used with various chat interfaces and platforms due to support for open protocols, making them highly interoperable. This means you can use your Inkeep-built agents in tools like Cursor, Claude, or ChatGPT, and easily integrate them into your existing web applications using popular hooks like Vercel's `useChat`.

· Agent-to-Agent (A2A) Communication: Agents can communicate and collaborate with each other. This enables the creation of more complex and intelligent systems where multiple specialized agents work together to solve a problem.

· Customizable Chat UI Library: Provides a React-based library for building custom user interfaces for chat assistants, allowing for tailored branding and user experience. This is beneficial for creating a seamless integration of AI into existing applications.

· Observability (Traces UI, OTEL logs): Offers tools for monitoring and debugging agents, including visual traces and standard logging. This is essential for understanding agent behavior, identifying issues, and ensuring reliable performance in production environments.

Product Usage Case

· Building a customer support chatbot: A developer can use the SDK to build the core understanding and retrieval mechanisms for a support bot. A non-technical support lead can then use the visual editor to add specific product FAQs, refine canned responses, and adjust the escalation path to human agents, all without writing a line of code. This speeds up deployment and ensures the bot is always up-to-date with the latest support information.

· Creating a deep research agent: A researcher can use the visual builder to define search queries, data extraction steps, and summarization parameters. The underlying logic can then be refined in code by a developer to optimize performance or integrate more advanced natural language processing techniques. This hybrid approach allows for both broad exploration and deep technical optimization.

· Developing an internal documentation assistant: Developers can code the agent to index internal knowledge bases. Non-technical team members can then use the visual editor to define custom greetings, specify preferred output formats for answers, or set up triggers for when the bot should proactively offer information. This makes internal tools more accessible and useful across the company.

· Integrating AI into marketing campaigns: A marketing team can use the visual editor to build an agent that personalizes outreach messages based on customer data. Developers can then use the `push` and `pull` functionality to ensure the agent adheres to brand guidelines and integrates smoothly with CRM systems, solving the challenge of balancing creative input with technical implementation.

2

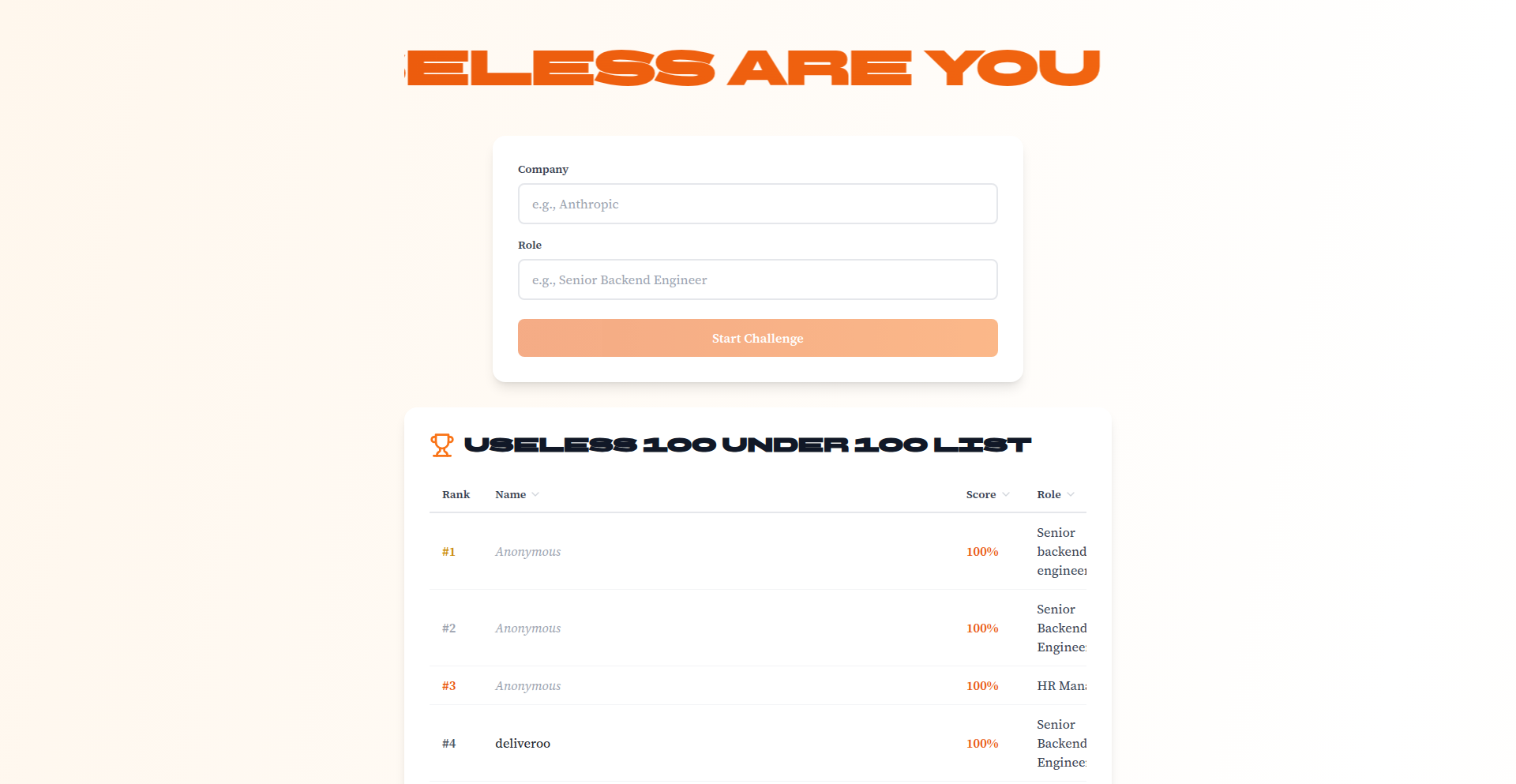

RoleFit Challenge Engine

Author

mraspuzzi

Description

This project is a custom 5-minute skills assessment that provides brutally honest feedback on your fit for a specific role, particularly for YC startups and similar companies. It addresses the common difficulty of obtaining genuine feedback during job applications. The innovation lies in its automated, direct feedback mechanism and the creation of a competitive leaderboard to gauge skill levels against others. So, this is useful because it helps you understand your actual suitability for a job before you even apply, saving you time and effort, and giving you a realistic benchmark of your skills.

Popularity

Points 27

Comments 32

What is this product?

RoleFit Challenge Engine is an automated system designed to evaluate your skills and determine your potential fit for a particular job role. It functions by presenting you with a short, custom challenge (around 5 minutes) that simulates real-world tasks or problem-solving scenarios relevant to the target role. After completion, it delivers direct, unfiltered feedback about your performance. The core technical insight is to create a standardized yet adaptable evaluation framework that can be quickly deployed to provide objective self-assessment. This tackles the problem of opaque hiring processes and the lack of clear skill benchmarks, offering a transparent and actionable way for individuals to gauge their professional readiness. So, this is useful because it cuts through the ambiguity of job applications and provides you with concrete insights into where you stand professionally, allowing you to target your efforts more effectively.

How to use it?

Developers can integrate this project by defining a specific role and then configuring the challenge parameters. This might involve uploading a set of questions, defining coding tasks, or setting up scenario-based assessments. The system then generates a unique challenge link for the user. Users can access this link, complete the challenge, and receive immediate feedback. For more advanced integration, the API could be used to embed these challenges directly into recruitment platforms or internal skill development tools. The leaderboard feature allows for tracking performance over time or comparing against a cohort. So, this is useful because it provides a straightforward way to build and deploy skill assessments for yourself or your team, enabling data-driven career development and hiring decisions.

Product Core Function

· Automated Skill Assessment: The system generates and scores challenges based on pre-defined criteria, offering objective performance metrics. This is valuable for identifying skill gaps and areas for improvement.

· Brutally Honest Feedback Generation: It processes challenge results to provide direct, actionable feedback on strengths and weaknesses, helping users understand their performance in a realistic context.

· Role-Specific Challenge Customization: Allows for tailoring challenges to specific job roles, ensuring relevance and accuracy in the assessment. This is useful for targeted skill development and hiring.

· Leaderboard and Benchmarking: Creates a competitive environment by ranking users against each other, providing a valuable external benchmark for skill comparison. This is helpful for motivation and understanding relative standing.

· Time-Bound Challenge Format: The 5-minute timeframe makes the assessment quick and accessible, encouraging participation and providing rapid insights. This is valuable for busy professionals seeking quick self-evaluations.
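As a rough illustration of how automated scoring and a leaderboard could fit together, here is a minimal sketch. The scoring rule (accuracy as a percentage, zero if the 5-minute limit is exceeded), the tie-break on completion time, and all names are assumptions made for this example, not the project's actual logic.

```python
from dataclasses import dataclass


@dataclass
class Attempt:
    candidate: str
    correct: int     # tasks answered correctly
    total: int       # tasks in the challenge
    seconds: float   # time taken to finish


TIME_LIMIT_SECONDS = 5 * 60  # the 5-minute format described above


def score(attempt: Attempt) -> float:
    """Accuracy as a percentage; attempts over the time limit score zero (assumed rule)."""
    if attempt.seconds > TIME_LIMIT_SECONDS or attempt.total == 0:
        return 0.0
    return 100.0 * attempt.correct / attempt.total


def leaderboard(attempts: list[Attempt]) -> list[tuple[str, float]]:
    """Rank by score, highest first, breaking ties by faster completion."""
    ranked = sorted(attempts, key=lambda a: (-score(a), a.seconds))
    return [(a.candidate, score(a)) for a in ranked]


# Made-up attempts for illustration.
attempts = [
    Attempt("ana", correct=8, total=10, seconds=240),
    Attempt("ben", correct=8, total=10, seconds=200),
    Attempt("cy", correct=10, total=10, seconds=320),  # over the limit
]
board = leaderboard(attempts)
print(board)
```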

Product Usage Case

· A junior developer preparing for a backend engineering role at a fast-growing startup can use this to test their knowledge of common algorithms and data structures relevant to that specific role, receiving feedback on their problem-solving approach. This helps them identify areas to focus their learning before applying.

· A product manager can create a challenge that simulates a product prioritization scenario to assess their decision-making skills under pressure, comparing their choices against industry benchmarks. This helps them refine their strategic thinking.

· A hiring manager can deploy a customized version of this challenge to filter candidates for a niche technical role, quickly identifying those with the foundational skills before investing in lengthy interviews. This streamlines the hiring process and saves resources.

· An individual looking to pivot into a new tech field can use this to get an honest assessment of their current skill level against the requirements of their target roles, guiding their learning path. This provides clarity and direction for career change.

3

WorkHour Ekonomi

Author

mickeymounds

Description

A project that converts the cost of basic needs into work hours, providing a global ranking and downloadable CSVs. It innovates by offering a new perspective on economic value and cost of living, translating financial metrics into a universally understandable unit: time spent working. This helps understand economic disparities and the true cost of essentials in different regions.

Popularity

Points 19

Comments 37

What is this product?

WorkHour Ekonomi is a data-driven project that calculates how many hours of work are required to afford essential goods and services in various locations worldwide. It leverages publicly available economic data and statistical models to convert monetary prices into time-based equivalents. The innovation lies in its novel approach to visualizing and comparing economic well-being and affordability, moving beyond simple currency comparisons to a human-centric measure of effort and value. So, what's the use? It provides a clear, intuitive way to grasp the real economic burden of living in different places, helping individuals and organizations understand global economic fairness and individual purchasing power in a more relatable way.

How to use it?

Developers can use the provided CSVs to integrate this work-hour pricing data into their own applications, analytical tools, or research projects. This could involve building dashboards to visualize cost of living trends, developing comparative economic models, or enriching geographic information systems with affordability data. The data can be accessed programmatically for automated analysis and reporting. So, what's the use? You can build advanced economic analysis tools or create insightful visualizations that demonstrate the true cost of living, offering a unique selling proposition for your applications.
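The underlying conversion is simple arithmetic: work hours equal the price divided by the hourly wage. The sketch below applies that to a small CSV and ranks the results. The column names (`country`, `basket_price`, `hourly_wage`) and the figures are made up for illustration and may not match the project's actual files.

```python
import csv
import io

# Made-up illustrative figures, not data from the project.
SAMPLE_CSV = """country,basket_price,hourly_wage
A,120.0,30.0
B,60.0,5.0
C,90.0,9.0
"""


def work_hours(price: float, hourly_wage: float) -> float:
    """Hours of work needed to afford something at a given hourly wage."""
    if hourly_wage <= 0:
        raise ValueError("hourly_wage must be positive")
    return price / hourly_wage


def rank_by_work_hours(csv_text: str) -> list[tuple[str, float]]:
    """Return (country, hours) pairs, most affordable first."""
    rows = csv.DictReader(io.StringIO(csv_text))
    costs = [
        (row["country"], work_hours(float(row["basket_price"]), float(row["hourly_wage"])))
        for row in rows
    ]
    return sorted(costs, key=lambda item: item[1])


ranking = rank_by_work_hours(SAMPLE_CSV)
print(ranking)
```

Note that country B has the lowest price but the highest cost in hours, which is the kind of gap a currency-only comparison hides.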

Product Core Function

· Global work-hour pricing for basic needs: Calculates the time required to earn enough for essentials like food, housing, and transportation in different countries, providing a standardized metric for affordability. So, what's the use? It helps you understand the universal effort behind purchasing everyday items, regardless of local currency.

· Rankings and comparative analysis: Generates global rankings based on the work-hour cost of living, enabling direct comparisons between regions and highlighting economic disparities. So, what's the use? You can quickly identify which locations offer better economic value for your time and effort.

· Downloadable CSV datasets: Offers raw data in CSV format for easy integration into various data analysis tools and custom applications. So, what's the use? You can directly import and process this valuable economic data for your own projects, saving significant data collection and processing time.

· Data visualization tools (implied): The project's nature suggests the potential for creating charts and graphs to illustrate work-hour costs and economic trends. So, what's the use? Visual aids make complex economic information easy to understand and communicate to a broader audience.

Product Usage Case

· A financial advisor using the CSV data to advise clients on international relocation or investment, showing them the true cost of living in different cities in terms of their working hours. So, what's the use? It provides a concrete, relatable metric to help clients make informed decisions about their finances and lifestyle abroad.

· An academic researcher analyzing global economic inequality by correlating work-hour costs with other socio-economic indicators to identify patterns and causes of disparity. So, what's the use? It offers a novel quantitative approach to studying economic fairness on a global scale.

· A travel blogger creating content comparing the 'real' cost of living in various destinations, using work-hour figures to demonstrate which places offer more purchasing power for the average worker. So, what's the use? It allows for engaging and insightful content that goes beyond typical travel cost guides, resonating with a wider audience.

· A startup developing a cost-of-living comparison app for remote workers, allowing them to choose locations based on how their skills and earning potential translate into local essential goods. So, what's the use? It provides a key feature for users to objectively assess the financial viability of working from different parts of the world.

4

Arky Canvas

Author

masonkim25

Description

Arky Canvas is a revolutionary 2D Markdown editor that transforms writing from a linear process into a spatial experience. Instead of traditional documents, you arrange your thoughts, ideas, and notes on a freeform canvas. This innovative approach leverages drag-and-drop for effortless organization and hierarchy building, and integrates AI to generate context-aware content directly onto your canvas. This breaks down the limitations of standard text editors, offering a more intuitive and visually engaging way to structure information, making complex ideas easier to grasp and manage. So, what's in it for you? It's a powerful tool to brainstorm, plan, and create content with unprecedented flexibility and intelligence, turning abstract thoughts into tangible, organized structures.

Popularity

Points 10

Comments 6

What is this product?

Arky Canvas is a web-based Markdown editor built on a 2D spatial canvas. Its core innovation lies in moving beyond the traditional, linear document structure. Think of it less like a page and more like a whiteboard where you can place text blocks (Markdown) anywhere you want. You can then connect these blocks, arrange them into hierarchical structures using drag-and-drop, and get an instant visual overview of your entire project. A key technological insight is its AI integration, which can generate relevant text snippets based on your existing content and context, allowing you to seamlessly weave AI-assisted writing directly into your spatial layout. This fundamentally changes how we interact with and organize information, making it more discoverable and manageable. So, what's in it for you? It offers a more intuitive and less restrictive way to capture and develop ideas, especially for complex projects where traditional documents become overwhelming.

How to use it?

Developers can use Arky Canvas as a highly flexible note-taking and project planning tool. Its drag-and-drop interface and spatial arrangement make it ideal for outlining complex codebases, mapping out application architectures, or even drafting project proposals. The AI writing assistant can help in generating boilerplate code descriptions, documentation outlines, or even initial drafts of user stories. Integration into a developer's workflow might involve using Arky for initial brainstorming and architectural design, then exporting the structured Markdown to other tools for detailed implementation. For example, you could map out API endpoints on the canvas, with AI suggesting descriptions for each, and then copy-pasting these Markdown sections into your actual API documentation project. So, what's in it for you? It provides a visually rich and interactive environment to plan and document your technical projects, making the process more efficient and less prone to missing crucial details.

Product Core Function

· Spatial Idea Placement: Allows users to position Markdown notes and content blocks freely on a 2D canvas, offering a visual representation of thoughts and their relationships. This helps in understanding the overall structure and flow of information at a glance. The value is in enabling intuitive brainstorming and organization, making complex ideas more digestible.

· Drag-and-Drop Hierarchy: Enables users to create organized structures by dragging and dropping content blocks, defining parent-child relationships and project outlines. This simplifies the process of structuring complex information and managing project dependencies. The value is in providing a dynamic and visual way to manage intricate project details.

· Visual Document Overview: Presents the entire document structure in a clear, at-a-glance format on the canvas, allowing for quick comprehension of project scope and interconnections. This avoids getting lost in long, linear documents. The value is in enhancing project management and comprehension by offering a holistic view.

· Contextual AI Writing: Integrates an AI that generates text contextually based on the content placed on the canvas, allowing for seamless addition of AI-generated ideas or explanations directly into the spatial layout. This significantly speeds up content creation and idea generation. The value is in boosting productivity and creativity by leveraging AI assistance within the creative process.
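One way to picture the data model behind spatial placement and drag-and-drop hierarchy is a tree of positioned blocks that can be flattened back into ordinary Markdown. This is a hypothetical sketch, not Arky's implementation: the `Block` class, the rule of ordering siblings by vertical position, and the export format are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Block:
    """A Markdown note placed at (x, y) on the canvas."""
    text: str
    x: float
    y: float
    children: list["Block"] = field(default_factory=list)

    def add_child(self, child: "Block") -> "Block":
        # Stand-in for dropping one block onto another in the editor.
        self.children.append(child)
        return child


def to_markdown(block: Block, depth: int = 0) -> str:
    """Flatten the hierarchy into a nested Markdown bullet outline."""
    lines = [f"{'  ' * depth}- {block.text}"]
    # Order siblings top to bottom by their vertical canvas position.
    for child in sorted(block.children, key=lambda b: b.y):
        lines.append(to_markdown(child, depth + 1))
    return "\n".join(lines)


root = Block("Checkout feature", x=0, y=0)
api = root.add_child(Block("API endpoints", x=200, y=120))
root.add_child(Block("UI flow", x=200, y=40))
api.add_child(Block("POST /orders", x=400, y=130))

print(to_markdown(root))
```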

Product Usage Case

· Scenario: Planning a new software feature. How it solves the problem: A developer can use Arky Canvas to visually map out all components of the feature, user flows, and dependencies on the 2D canvas. They can drag and drop different idea blocks for UI elements, backend logic, and database interactions, creating a clear hierarchical structure. AI can then be used to suggest descriptions for each component or generate initial user stories. This provides a much clearer and more interactive plan than a simple text document, allowing for easier collaboration and identification of potential issues. So, what's in it for you? It helps you visualize and organize complex feature plans, making development more efficient and less error-prone.

· Scenario: Creating a technical documentation outline. How it solves the problem: Instead of writing a linear document, a developer can use Arky Canvas to create a visual mind map of the documentation structure. Each section and subsection can be placed spatially, with AI assisting in drafting titles or brief summaries for each point. The drag-and-drop functionality allows for easy rearrangement of sections as the documentation plan evolves. This makes the outlining process more dynamic and helps ensure all key areas are covered logically. So, what's in it for you? It provides a more intuitive and flexible way to outline and structure technical documentation, ensuring comprehensive coverage and logical flow.

· Scenario: Brainstorming and outlining a blog post or article. How it solves the problem: A writer or developer can use Arky Canvas to place initial ideas, key talking points, and supporting evidence as separate blocks on the canvas. They can then arrange these into a logical flow, using drag-and-drop to build paragraphs and sections. AI can help in expanding on ideas or suggesting transitions between points. This visual approach helps in structuring arguments and ensuring a cohesive narrative. So, what's in it for you? It offers a powerful visual tool for structuring thoughts and content, leading to clearer and more impactful writing.

5

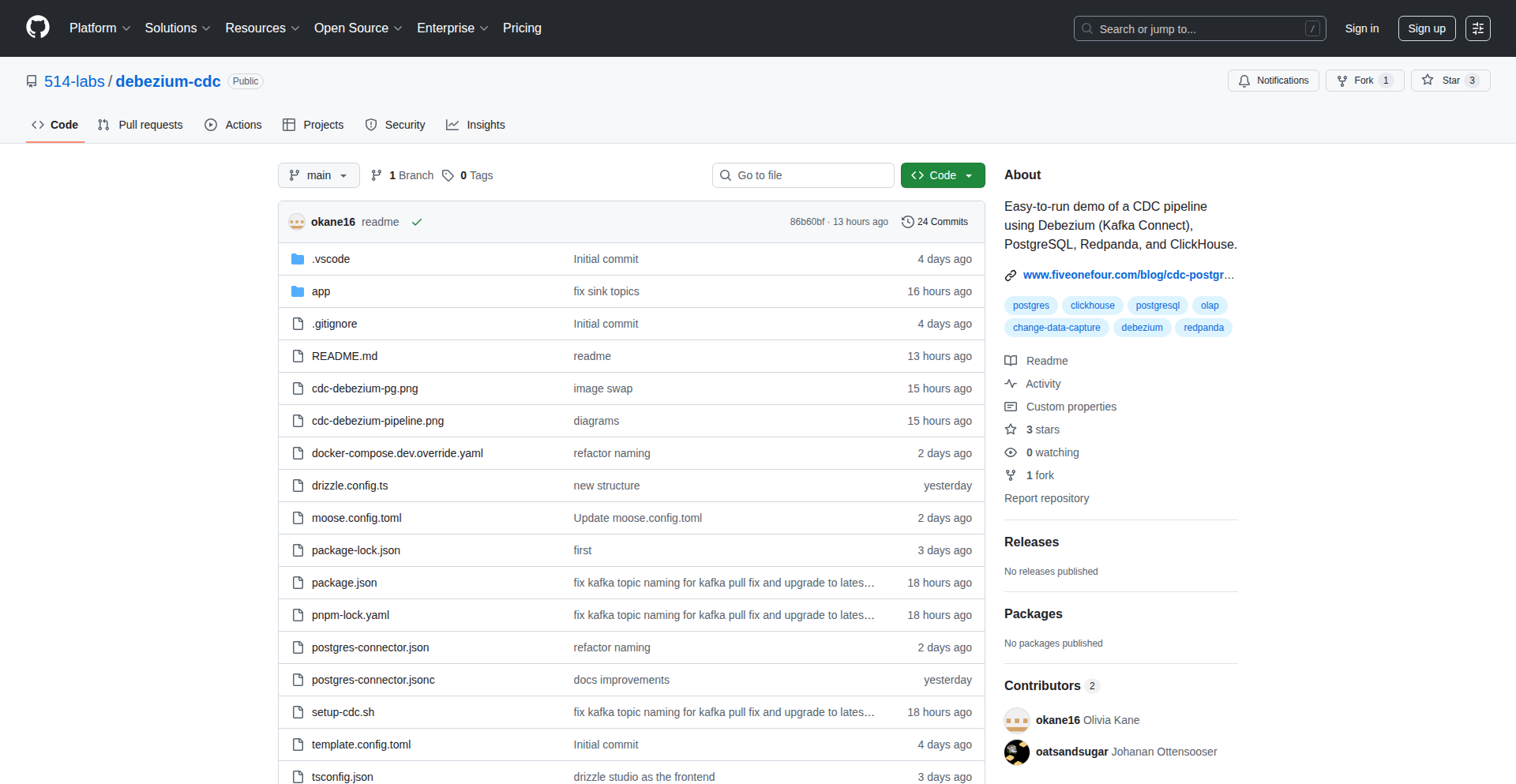

MooseStack: Postgres to ClickHouse CDC Stream

Author

okane

Description

MooseStack is a Hacker News Show HN project that provides a real-time data pipeline from PostgreSQL to ClickHouse using Change Data Capture (CDC). It leverages a custom stack to efficiently stream data, addressing the challenge of synchronizing analytical databases with transactional ones. The core innovation lies in its low-latency, event-driven approach to data replication for analytical workloads.

Popularity

Points 7

Comments 3

What is this product?

MooseStack is a specialized data streaming solution that continuously captures changes happening in your PostgreSQL database and replicates them to ClickHouse. Think of it as a courier service for your data. Instead of periodically dumping and reloading large datasets, which is slow and inefficient, MooseStack monitors PostgreSQL for every INSERT, UPDATE, or DELETE operation. It then packages these changes as events and sends them to ClickHouse. This keeps ClickHouse closely in sync with your PostgreSQL data in near real time, so your analytics stay up to date. The 'MooseStack' part refers to the specific combination of technologies and custom logic the developer used to build this, aiming for performance and reliability in a way that standard tools might not offer.
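The core idea of change data capture, replaying a stream of row-level events against a target, can be sketched in a few lines. This is a conceptual illustration only: it uses an in-memory dictionary in place of ClickHouse, and `ChangeEvent` and `apply_event` are hypothetical names, not part of MooseStack.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class ChangeEvent:
    op: str                               # "insert", "update", or "delete"
    key: int                              # primary key of the affected row
    row: Optional[dict[str, Any]] = None  # new row contents; None for delete


def apply_event(replica: dict[int, dict[str, Any]], event: ChangeEvent) -> None:
    """Apply one captured change to an in-memory stand-in for the target table."""
    if event.op in ("insert", "update"):
        if event.row is None:
            raise ValueError(f"{event.op} event needs row data")
        replica[event.key] = event.row
    elif event.op == "delete":
        replica.pop(event.key, None)
    else:
        raise ValueError(f"unknown operation: {event.op}")


# Made-up events, in the order they happened on the source.
events = [
    ChangeEvent("insert", 1, {"user": "ada", "plan": "free"}),
    ChangeEvent("insert", 2, {"user": "bob", "plan": "free"}),
    ChangeEvent("update", 1, {"user": "ada", "plan": "pro"}),
    ChangeEvent("delete", 2),
]

replica: dict[int, dict[str, Any]] = {}
for event in events:
    apply_event(replica, event)

print(replica)
```

Because the result depends on the order in which events are applied, a real pipeline has to preserve the source's ordering for each row.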

How to use it?

Developers can integrate MooseStack into their existing data infrastructure. Typically, you'd set up MooseStack to monitor your primary PostgreSQL database. You would then configure it to send the captured data changes to your ClickHouse instance. This is particularly useful for scenarios where you need real-time analytical insights from your operational data. For example, if your web application's user activity is stored in PostgreSQL, you can use MooseStack to feed this live data into ClickHouse for immediate dashboarding and trend analysis, allowing you to react to user behavior as it happens. The 'how' involves setting up the necessary connectors and configurations on both the PostgreSQL and ClickHouse sides, and running the MooseStack application itself.

Product Core Function

· Change Data Capture (CDC) from PostgreSQL: This function monitors your PostgreSQL database for any data modifications (inserts, updates, deletes) and captures these changes as distinct events. The value is that you get granular, real-time insights into every data alteration without complex polling mechanisms. This is useful for any application that needs to react to data changes instantly.

· Real-time Data Streaming to ClickHouse: MooseStack efficiently packages these captured change events and transmits them directly to ClickHouse. The value here is enabling near real-time analytics on your transactional data. This is crucial for dashboards, fraud detection, and any scenario where immediate data availability is critical for decision-making.

· Custom Stack for Performance Optimization: The project likely employs a custom combination of tools and logic ('stack') to ensure high throughput and low latency in data transfer. The value is a potentially more performant and resource-efficient solution compared to generic data replication tools, directly addressing performance bottlenecks in large-scale data pipelines.

· Event-driven Architecture: The system operates on the principle of reacting to data change events. The value is a more decoupled and resilient data pipeline, where components can operate independently, making it easier to manage and scale. This is beneficial for complex microservice architectures or when integrating with other event-processing systems.

Product Usage Case

· Real-time Website Analytics: Imagine a website where user interactions (page views, clicks, sign-ups) are logged in PostgreSQL. Using MooseStack, these events can be streamed live to ClickHouse, allowing for instant visualization of user engagement trends on a dashboard without any noticeable delay. This helps product managers and marketers make faster, data-driven decisions.

· Live Financial Transaction Monitoring: For financial applications, capturing and analyzing transactions in real-time is paramount for fraud detection and compliance. MooseStack can capture every transaction from a PostgreSQL database and stream it to ClickHouse for immediate analysis, enabling alerts and proactive measures against suspicious activities.

· Inventory Management Synchronization: In a retail or e-commerce setting, stock levels can change rapidly. MooseStack can ensure that changes to inventory in a PostgreSQL-based system are reflected in ClickHouse almost instantly, allowing for accurate real-time stock reporting and preventing overselling or stockouts.

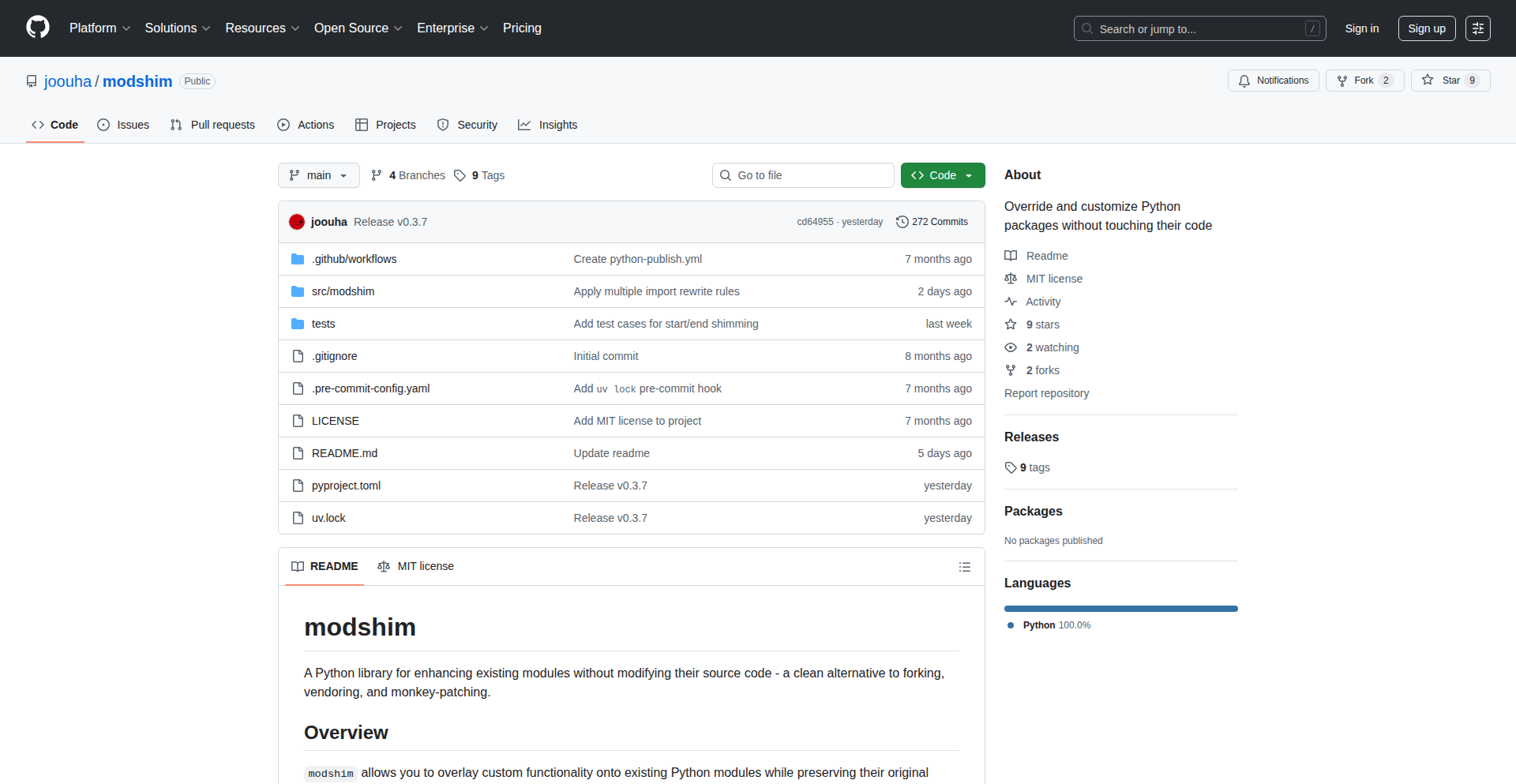

6

Modshim: Python Module Overlay

Author

joouha

Description

Modshim is a novel approach to modifying Python packages without the downsides of traditional methods like forking or monkey-patching. It functions similarly to an operating system's overlay file system for Python modules, allowing developers to apply changes to a target module (the 'base') by creating a separate 'overlay' module. Modshim then intelligently merges these, creating a 'virtual' module that incorporates the modifications. This is achieved through sophisticated AST transformations to rewrite import statements, effectively creating a dynamic, layered module system. This innovation allows for cleaner, more maintainable code modifications, particularly for third-party libraries, by avoiding global namespace pollution and the burden of full package maintenance.

Popularity

Points 7

Comments 1

What is this product?

Modshim is a Python library that provides a sophisticated way to alter the behavior of existing Python modules without directly editing them or creating full forks. Think of it like applying a patch or a theme to an application without changing its core code. Technically, it works by using Abstract Syntax Tree (AST) transformations to intercept and rewrite how Python imports modules. When you import a module through Modshim, it can dynamically combine the original module's code with your custom modifications defined in a separate overlay module. This results in a new, 'virtual' module that behaves as if the changes were part of the original, all without the risks of polluting the global scope or the headache of maintaining a full fork. So, what's the big deal? It means you can fix bugs or add features to a library you use without the massive effort of maintaining your own copy of that library, and your changes won't interfere with other parts of your project unexpectedly.
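To make "AST transformations that rewrite imports" concrete, here is a minimal sketch using only the standard library's `ast` module (Python 3.9+ for `ast.unparse`). It is not Modshim's code or API. `ImportRedirector` and `redirect_imports` are hypothetical names, and the sketch deliberately handles only `import base` and `from base... import ...`, leaving dotted plain imports and relative imports untouched.

```python
import ast


class ImportRedirector(ast.NodeTransformer):
    """Rewrite imports of one module name so they point at another."""

    def __init__(self, old: str, new: str) -> None:
        self.old = old
        self.new = new

    def _rename(self, name: str) -> str:
        # Match the module itself and any of its submodules.
        if name == self.old or name.startswith(self.old + "."):
            return self.new + name[len(self.old):]
        return name

    def visit_Import(self, node: ast.Import) -> ast.Import:
        for alias in node.names:
            if alias.name == self.old:
                # `import base` becomes `import mount as base`, so later
                # references to `base` in the same file keep working.
                alias.asname = alias.asname or self.old
                alias.name = self.new
        return node

    def visit_ImportFrom(self, node: ast.ImportFrom) -> ast.ImportFrom:
        # Only absolute imports are rewritten in this sketch.
        if node.level == 0 and node.module is not None:
            node.module = self._rename(node.module)
        return node


def redirect_imports(source: str, old: str, new: str) -> str:
    """Parse source, redirect matching imports, and return the new source."""
    tree = ImportRedirector(old, new).visit(ast.parse(source))
    return ast.unparse(ast.fix_missing_locations(tree))


source = "import base\nfrom base.utils import helper\nimport other\n"
rewritten = redirect_imports(source, old="base", new="mount")
print(rewritten)
```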

How to use it?

Developers can integrate Modshim into their projects to manage customizations of third-party Python packages. The typical workflow involves choosing a 'base' module (the original package you want to modify) and writing an 'overlay' module (containing your specific changes). Modshim then provides a mechanism to 'mount' these, creating a virtual module that reflects the combined code. For example, if a third-party library has a bug, you can write a small overlay module that corrects the buggy function, and then import the mounted, modified version instead of the original. This is especially useful when extending existing frameworks or fixing issues in dependencies without editing the dependency's own source. Integration is typically done programmatically at the start of your application's execution, before the target module is loaded.
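The base/overlay/mount idea can be illustrated with a deliberately simplified sketch. It does not use Modshim's API or its AST-based import rewriting. Instead, it executes a base source string and then an overlay source string inside one fresh module namespace, so overlay definitions shadow base ones. The names `build_virtual_module`, `BASE_SOURCE`, and `OVERLAY_SOURCE` are hypothetical.

```python
import types

# Hypothetical stand-in for a third-party module whose greet() we want to change.
BASE_SOURCE = '''
def greet(name):
    return "Hello, " + name

def welcome(name):
    # Looks up greet() in the module's globals at call time.
    return greet(name) + "!"
'''

# The overlay contains only the definitions we want to replace.
OVERLAY_SOURCE = '''
def greet(name):
    return "Hi, " + name
'''


def build_virtual_module(name, *sources):
    """Execute each source in order inside one fresh module namespace.

    Later sources shadow names defined by earlier ones, which mimics
    an overlay sitting on top of a base layer.
    """
    module = types.ModuleType(name)
    for index, source in enumerate(sources):
        code = compile(source, f"<{name}:layer{index}>", "exec")
        exec(code, module.__dict__)
    return module


base = build_virtual_module("base_only", BASE_SOURCE)
virtual = build_virtual_module("base_plus_overlay", BASE_SOURCE, OVERLAY_SOURCE)

print(base.welcome("Ada"))     # Hello, Ada!
print(virtual.welcome("Ada"))  # Hi, Ada!
```

The untouched `welcome()` picks up the overlay's `greet()` in the virtual module, while the base-only module keeps its original behavior. That isolation is the property the overlay approach is after.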

Product Core Function

· AST-based import rewriting: This core technology allows Modshim to intercept and redirect module imports, enabling the dynamic layering of code. Its value lies in creating a clean separation between original code and modifications, preventing unexpected side effects. This is useful for any situation where you need to conditionally apply changes to imported modules without altering their source.

· Overlay module composition: Modshim allows you to define a separate Python file (the overlay) that contains your modifications. This separation is key to maintainability. The value here is that you can distribute just your changes as a small package, rather than an entire forked library. This is incredibly useful for teams working on shared codebases where multiple developers might need to customize dependencies.

· Virtual module creation: Instead of directly patching or forking, Modshim constructs a new, 'virtual' module in memory that merges the base and overlay code. This provides a safe and isolated environment for your modifications. The application benefits from this by avoiding global namespace pollution and ensuring that your changes are confined to the intended module, making debugging and deployment much smoother.

· Reduced maintenance burden for third-party modifications: By enabling developers to apply changes as overlays, Modshim significantly lessens the need to fork and maintain entire third-party packages. The value proposition is immense: you can stay up-to-date with the original package's releases while still incorporating your essential customizations. This is a game-changer for projects that rely heavily on external libraries.
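To make "AST-based import rewriting" concrete, here is a minimal sketch using only Python's standard `ast` module. It illustrates the general technique and is not Modshim's implementation. The `ImportRewriter` class, the `rewrite_imports` helper, and the package names `pkg` and `virt` are all assumptions made for the example.

```python
import ast


class ImportRewriter(ast.NodeTransformer):
    """Redirect imports of one package to another name (illustrative only)."""

    def __init__(self, old, new):
        self.old = old
        self.new = new

    def _redirect(self, name):
        # Match the package itself or any of its submodules, but not
        # unrelated packages that merely share the prefix (e.g. "pkgx").
        if name == self.old or name.startswith(self.old + "."):
            return self.new + name[len(self.old):]
        return name

    def visit_Import(self, node):
        for alias in node.names:
            alias.name = self._redirect(alias.name)
        return node

    def visit_ImportFrom(self, node):
        # Leave relative imports (level > 0) untouched.
        if node.level == 0 and node.module is not None:
            node.module = self._redirect(node.module)
        return node


def rewrite_imports(source, old, new):
    tree = ImportRewriter(old, new).visit(ast.parse(source))
    return ast.unparse(tree)  # ast.unparse requires Python 3.9+


SOURCE = "from pkg.util import helper\nimport pkg.util as u\nimport os\n"
print(rewrite_imports(SOURCE, "pkg", "virt"))
```

One caveat of this sketch: a plain `import pkg.util` with no alias binds the name `pkg`, so rewriting it changes the bound name as well. A real tool has to handle that case, and this example does not.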

Product Usage Case

· Customizing a third-party API client: Imagine a popular library for interacting with a web service that has a minor bug in its request handling or is missing an optional parameter you need. Instead of forking the entire client, you can create a Modshim overlay that corrects the function or adds your custom logic. This allows you to use the latest version of the client while ensuring your specific needs are met without maintaining a large fork.

· Applying theme or configuration changes to a GUI framework: If you're using a Python GUI framework and want to apply a consistent visual theme or a set of default configurations across many widgets, you could use Modshim to overlay changes onto the framework's core styling modules. This would allow you to manage your application's look and feel independently of the framework's updates.

· Experimenting with alternative implementations of library functions: A developer might want to test a different caching strategy for a specific function in a data processing library. Modshim allows them to write a new implementation in an overlay module and apply it without altering the original library. This facilitates rapid prototyping and A/B testing of functionality within existing codebases.

· Fixing bugs in legacy dependencies: For projects that depend on older, unmaintained libraries, Modshim provides a way to patch critical bugs or security vulnerabilities without undertaking the risky task of migrating to a completely new library or attempting to reanimate the old one. The overlay acts as a targeted fix.

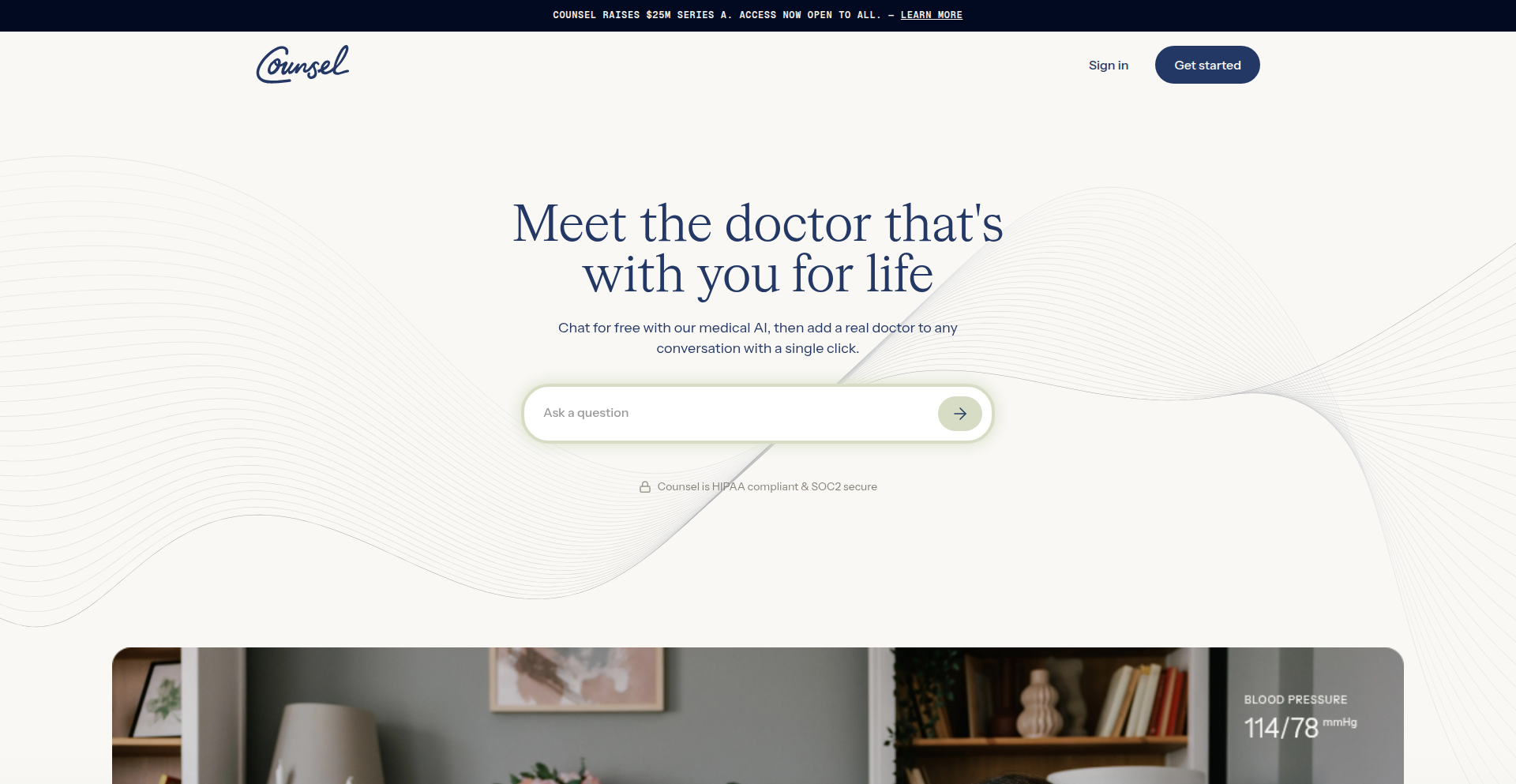

7

Counsel Health AI Care Platform

Author

cian

Description

This project is an AI-powered healthcare platform that acts as a first point of contact for patients, combining the speed of Large Language Models (LLMs) for answering medical questions with the oversight of licensed physicians. The innovation lies in its responsible integration of AI into healthcare to deliver faster, safer, and more cost-effective care at scale. It bridges the gap between immediate AI-driven information and crucial human medical expertise.

Popularity

Points 7

Comments 1

What is this product?

Counsel Health is a next-generation AI care platform designed as a responsible entry point into the healthcare system. It leverages LLMs, which are advanced AI models capable of understanding and generating human-like text, to provide answers to medical queries. Crucially, it integrates the capabilities of licensed physicians to ensure the accuracy and safety of the information and care provided. The core innovation is using AI to make healthcare access quicker and more efficient, while maintaining a high standard of medical safety and human oversight. This means you get faster answers and potentially quicker access to care, without compromising on quality or safety.

How to use it?

Developers can integrate with or build upon this platform to create new healthcare solutions. End users download the Counsel Health app and use it as a smart assistant for health concerns: you ask questions about symptoms, conditions, or general health advice. The AI processes your query and provides an initial response, which may then be reviewed or supplemented by a human doctor, depending on the complexity and urgency of the situation. This offers a streamlined way to get medical information and potentially initiate the care process.

Product Core Function

· AI-driven medical question answering: Utilizes LLMs to understand and respond to patient inquiries about health conditions and symptoms, providing quick and accessible initial information. This is valuable for users seeking immediate clarification on health matters.

· Physician oversight and validation: Incorporates licensed medical professionals to review AI-generated responses and patient interactions, ensuring accuracy, safety, and adherence to medical standards. This adds a critical layer of trust and reliability to the AI-driven interactions.

· Streamlined healthcare access: Acts as a front door to healthcare, simplifying the initial steps for patients seeking medical attention. This reduces friction and potentially speeds up the process of getting the right care.

· Scalable care delivery: The combination of AI and human oversight allows for a more efficient and cost-effective delivery of healthcare services. This means potentially lower costs for patients and broader reach for medical professionals.

Product Usage Case

· A user experiencing mild, non-emergency symptoms can use the app to describe their condition. The AI can provide information about potential causes and self-care advice, and if the AI detects a need for professional assessment, it can seamlessly escalate the case to a physician for review. This saves the user time and anxiety by providing immediate guidance and a clear path forward.

· A busy individual can quickly get answers to common health questions without needing to book an appointment for routine information. For example, asking about the side effects of a common medication or understanding a general health guideline. This empowers users with readily available, reliable health information.

· Healthcare providers can use the platform to triage patient inquiries more effectively. The AI can handle initial screening and information gathering, allowing physicians to focus their time on more complex cases that truly require their expertise. This improves operational efficiency for medical practices.

8

ScamAI Job Detector

Author

hienyimba

Description

This project is an AI-powered tool designed to detect and flag potential job scams originating from platforms like LinkedIn. It analyzes job postings, recruiter profiles, and company pages for suspicious 'red flags', providing a comprehensive report to help users avoid fraudulent opportunities. So, what's in it for you? It saves your time and guards against financial losses by filtering out fake job offers.

Popularity

Points 5

Comments 2

What is this product?

ScamAI Job Detector is a web-based application that leverages artificial intelligence to analyze various components of a job opportunity for signs of fraud. It scrutinizes the language used in job descriptions for common scam patterns, cross-references recruiter profiles against publicly available data and known scam indicators, and evaluates company page information for inconsistencies or lack of legitimacy. The core innovation lies in its ability to synthesize these disparate data points into a single, easy-to-understand risk assessment. So, what's in it for you? It provides an intelligent layer of security, helping you discern genuine job offers from sophisticated scams.

How to use it?

Developers can use ScamAI Job Detector by visiting the provided web link (scamai.com/detect/jobs). They can input the details of a job posting, a recruiter's profile URL, or a company name. The tool then processes this information and generates a report highlighting potential risks. It can be integrated into automated pre-screening workflows or used as a manual verification step before engaging deeply with a job opportunity. So, how can you use this? It's a quick and easy way to get a second opinion on a job offer's legitimacy, saving you from wasting time on fake opportunities.

Product Core Function

· Job Posting Analysis: Scans job descriptions for common scam linguistic patterns, such as urgency, vague responsibilities, or requests for personal information. Its value is in identifying subtle textual cues that might indicate a fraudulent posting, preventing users from applying to fake jobs.

· Recruiter Profile Verification: Checks recruiter profiles for inconsistencies, lack of professional history, or connections to known scam profiles. This function helps ensure the person you're interacting with is legitimate, protecting you from impersonation scams.

· Company Page Assessment: Evaluates the legitimacy of company pages by looking for missing essential information, generic content, or inconsistencies with the job posting. This ensures the company offering the job is real and not a shell for fraudulent activities.

· Red Flag Reporting: Consolidates all identified suspicious elements into a clear, actionable report, highlighting specific areas of concern. This provides a straightforward overview of potential risks, allowing users to make informed decisions quickly.

· AI-Powered Risk Scoring: Utilizes machine learning models to assign a risk score to each analyzed job opportunity, giving users a quantitative measure of potential scam likelihood. This simplifies the decision-making process by providing a clear indication of risk level.
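The red-flag and scoring functions above can be approximated with a small rule-based sketch. The product is described as using machine learning models, so this is a simplified stand-in and not its actual method. The patterns, weights, and the `assess_posting` function are hypothetical and chosen only for illustration.

```python
import re

# Hypothetical red-flag patterns and weights, chosen for illustration only.
RED_FLAGS = {
    "urgency": (re.compile(r"\b(urgent|immediately|act now)\b", re.I), 2),
    "personal_info": (re.compile(r"\b(ssn|social security|bank account|passport)\b", re.I), 3),
    "upfront_payment": (re.compile(r"\b(registration fee|pay a deposit|wire transfer)\b", re.I), 3),
    "vague_role": (re.compile(r"\b(no experience (needed|required)|easy money)\b", re.I), 1),
}


def assess_posting(text):
    """Return the matched flag names and a risk score between 0 and 1."""
    matched = [name for name, (pattern, _) in RED_FLAGS.items() if pattern.search(text)]
    total = sum(weight for _, weight in RED_FLAGS.values())
    score = sum(RED_FLAGS[name][1] for name in matched) / total
    return {"flags": matched, "risk_score": round(score, 2)}


posting = "URGENT hire! No experience needed. Send your bank account details today."
report = assess_posting(posting)
print(report)
# {'flags': ['urgency', 'personal_info', 'vague_role'], 'risk_score': 0.67}
```

Returning the matched flag names alongside the score mirrors the "report plus score" output described above: the user sees why a posting was rated risky, not only how risky it is.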

Product Usage Case

· A developer receives an unsolicited job offer via LinkedIn from an unknown recruiter. They use ScamAI Job Detector to analyze the recruiter's profile and the job description. The tool flags the recruiter's profile as having limited history and the job description as using language common in phishing scams, which stops the developer from sharing sensitive personal information.

· A recent graduate finds a highly attractive job posting that seems too good to be true. They input the job details and company name into ScamAI Job Detector. The report reveals that the company website is very new and lacks detailed contact information, and the job responsibilities are unusually vague, suggesting it might be a bait-and-switch scam.

· An HR professional is reviewing candidates and suspects a particular recruiter might be part of a fraudulent operation. They use ScamAI Job Detector to analyze the recruiter's outreach messages and profile, identifying several red flags that confirm their suspicions, thus protecting their company's reputation and potential victims.

· A freelance developer is considering a remote project offer. They use the tool to check the client's company profile and project details. The detector highlights that the client's company has no online presence outside of a single, unverified social media account, indicating a high risk of non-payment or exploitation.

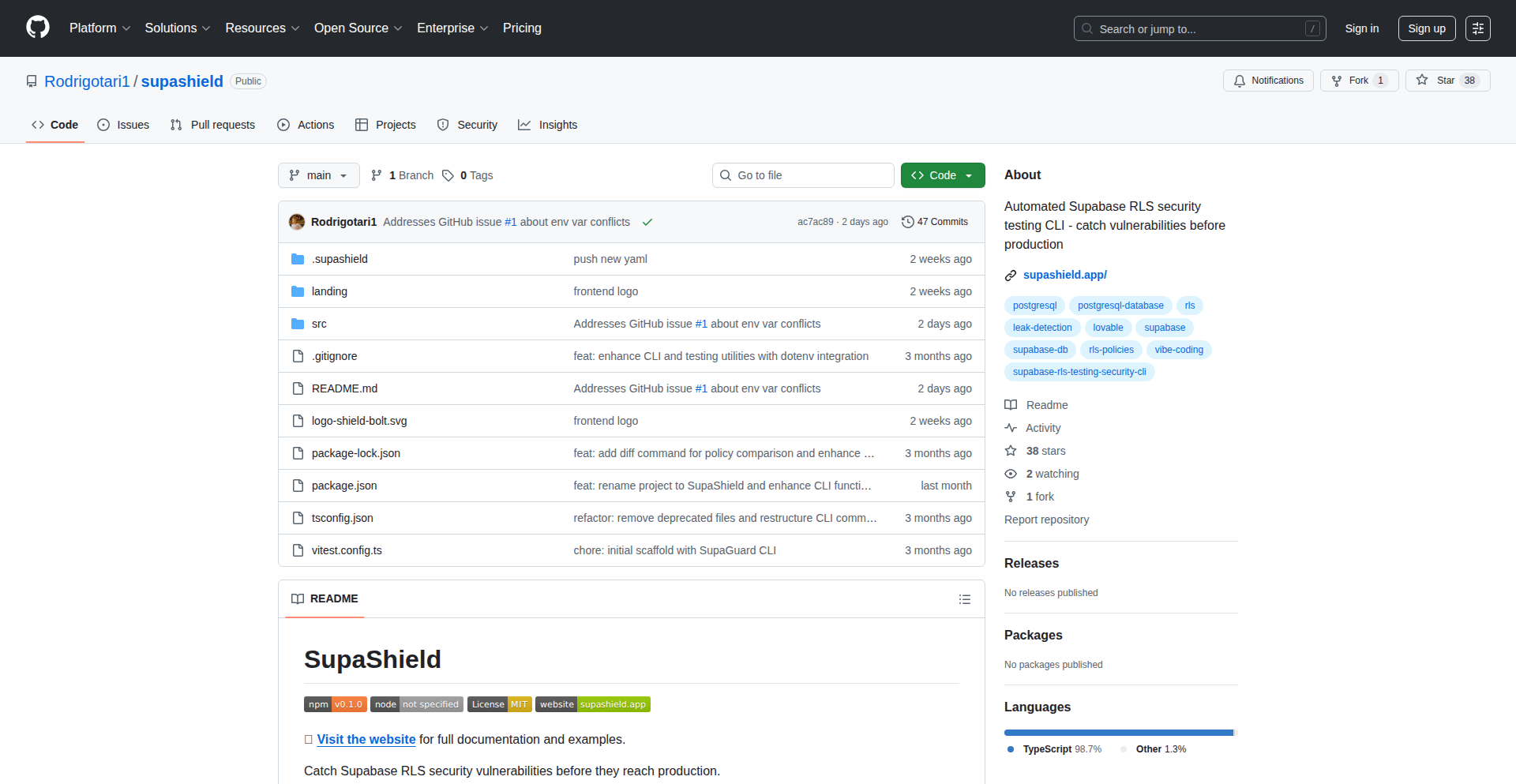

9

Supabase RLS Shield CLI

Author

rodrigotarca

Description

A command-line interface (CLI) tool designed to proactively test and validate Supabase Row Level Security (RLS) policies. It automatically inspects your database schema, simulates various user roles, and performs CRUD operations on RLS-enabled tables. The core innovation lies in its transactional approach with automatic rollbacks, ensuring no actual data changes occur while generating comprehensive test reports. This tool is crucial for preventing accidental data leaks caused by misconfigured security policies, offering peace of mind before deploying to production. So, what does this mean for you? It significantly reduces the risk of sensitive data exposure, saving you from potential reputational damage and costly breaches.

Popularity

Points 4

Comments 3

What is this product?

Supabase RLS Shield CLI is a developer tool that acts as an automated security auditor for your Supabase database. The core technical innovation is its ability to simulate different user permissions (like anonymous users, logged-in users, or users with specific custom roles defined by JWT claims) against your database tables. It then attempts common actions like creating, reading, updating, and deleting data on tables that have RLS policies enabled. Crucially, all these operations are wrapped in database transactions that are automatically rolled back, so no data in your database is ever modified. This allows for thorough testing without any risk of corrupting your data. The output is a set of 'snapshots' of the test outcomes, which can be compared over time, particularly in Continuous Integration (CI) pipelines, to detect regressions. So, what does this mean for you? It's like having a diligent security guard who tirelessly checks every entry point to your data vault, ensuring only authorized access is granted, and alerting you to any potential weaknesses before they can be exploited, thus protecting your valuable information.

How to use it?

Developers can integrate Supabase RLS Shield CLI into their development workflow and CI/CD pipelines. First, you would install the CLI tool. Then, you would configure it to connect to your Supabase project's database. The CLI will automatically introspect (examine) your database schema to understand the tables and their associated RLS policies. You can then specify which user roles you want to simulate (e.g., 'authenticated' users, or custom roles with specific JWT claims). The tool will execute simulated CRUD operations on RLS-protected tables for each role. The results are presented as snapshots, which can be stored and compared in subsequent runs. This makes it ideal for automated testing in CI environments to catch any changes that might inadvertently weaken security. So, what does this mean for you? You can seamlessly embed robust security checks into your automated build and deployment process, ensuring that your application's data remains secure with every code change, without manual intervention.
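The "simulate a role, run an operation, roll back" pattern described above can be sketched as a SQL script builder. This is not the CLI's own code or interface. It assumes the common Supabase/PostgREST convention of switching roles with `SET LOCAL ROLE` and exposing JWT claims through the `request.jwt.claims` setting. The function name, table, and column are hypothetical.

```python
import json


def build_probe_script(role, claims, statement):
    """Build a SQL script that runs one statement as a simulated role,
    then rolls back so nothing is persisted."""
    if not role.isidentifier():
        raise ValueError(f"unexpected role name: {role!r}")
    # Escape single quotes so the JSON can sit inside a SQL string literal.
    claims_literal = json.dumps(claims, sort_keys=True).replace("'", "''")
    return "\n".join([
        "BEGIN;",
        f"SET LOCAL ROLE {role};",
        # The third argument (true) scopes the setting to this transaction.
        f"SELECT set_config('request.jwt.claims', '{claims_literal}', true);",
        statement.rstrip(";") + ";",
        "ROLLBACK;",
    ])


script = build_probe_script(
    role="authenticated",
    claims={"sub": "00000000-0000-0000-0000-000000000001", "role": "authenticated"},
    # Hypothetical table and column, used only to show the shape of a probe.
    statement="UPDATE public.profiles SET display_name = 'probe'",
)
print(script)
```

Because both the role switch and the claims setting are transaction-local, the final `ROLLBACK` discards the simulated identity together with any attempted write.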

Product Core Function

· Database Schema Introspection: Automatically analyzes your Supabase database schema to understand tables and RLS policies. This technical step is vital for knowing what to test and ensures comprehensive coverage, meaning your entire security posture is mapped out for auditing.

· Role Simulation: Emulates various user roles, including anonymous, authenticated, and custom JWT claims. This allows you to test how your RLS policies behave under different real-world access scenarios, ensuring that access control is correctly enforced for everyone.

· Transactional CRUD Operations: Performs simulated Create, Read, Update, and Delete operations on RLS-enabled tables within database transactions that are rolled back. This is the core innovation that enables safe and thorough testing without any risk of data alteration, so you can test without fear of breaking your production data.

· Snapshot Generation: Creates reproducible snapshots of test outcomes that can be used for comparison and tracking changes. This allows you to easily identify any unintended security regressions that might have been introduced, ensuring consistent security over time.

· CI/CD Integration: Designed to be easily integrated into Continuous Integration and Continuous Deployment pipelines. This allows for automated security validation with every code commit or deployment, preventing insecure code from ever reaching production.
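Snapshot comparison, as described in the list above, comes down to diffing two maps of outcomes. The sketch below assumes a snapshot keyed by `(role, table, operation)` with values `"allowed"` or `"denied"`. That layout and the `diff_snapshots` name are assumptions for illustration, not the tool's actual file format.

```python
def diff_snapshots(previous, current):
    """Compare two snapshots mapping (role, table, operation) -> outcome."""
    changes = []
    for key in sorted(set(previous) | set(current)):
        before = previous.get(key, "missing")
        after = current.get(key, "missing")
        if before != after:
            changes.append((key, before, after))
    return changes


previous = {
    ("anon", "profiles", "SELECT"): "denied",
    ("authenticated", "profiles", "SELECT"): "allowed",
    ("authenticated", "profiles", "DELETE"): "denied",
}
current = dict(previous)
current[("anon", "profiles", "SELECT")] = "allowed"  # a policy regression

for (role, table, op), before, after in diff_snapshots(previous, current):
    print(f"{role} {op} on {table}: {before} -> {after}")
# anon SELECT on profiles: denied -> allowed
```

In a CI job, a non-empty diff would typically fail the build, so a policy change has to be reviewed and the snapshot deliberately updated.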

Product Usage Case

· Pre-deployment security validation: Before deploying a new version of your application, run the RLS Shield CLI to ensure that no recent code changes have inadvertently exposed sensitive user data through misconfigured RLS policies. This prevents data leaks and protects user privacy.

· Automated testing in CI pipelines: Integrate the CLI into your CI system (like GitHub Actions, GitLab CI) to automatically test RLS policies with every code commit. If a test fails, the build is stopped, preventing potentially insecure code from being merged or deployed.

· Onboarding new developers: Help new team members understand and adhere to secure database access patterns by using the CLI to demonstrate the impact of RLS policies and catch common mistakes early in their development process.

· Auditing existing applications: Periodically run the CLI on your live Supabase application to confirm that your RLS policies are still robust and haven't been weakened by manual database modifications or forgotten code updates, ensuring ongoing data security.

10

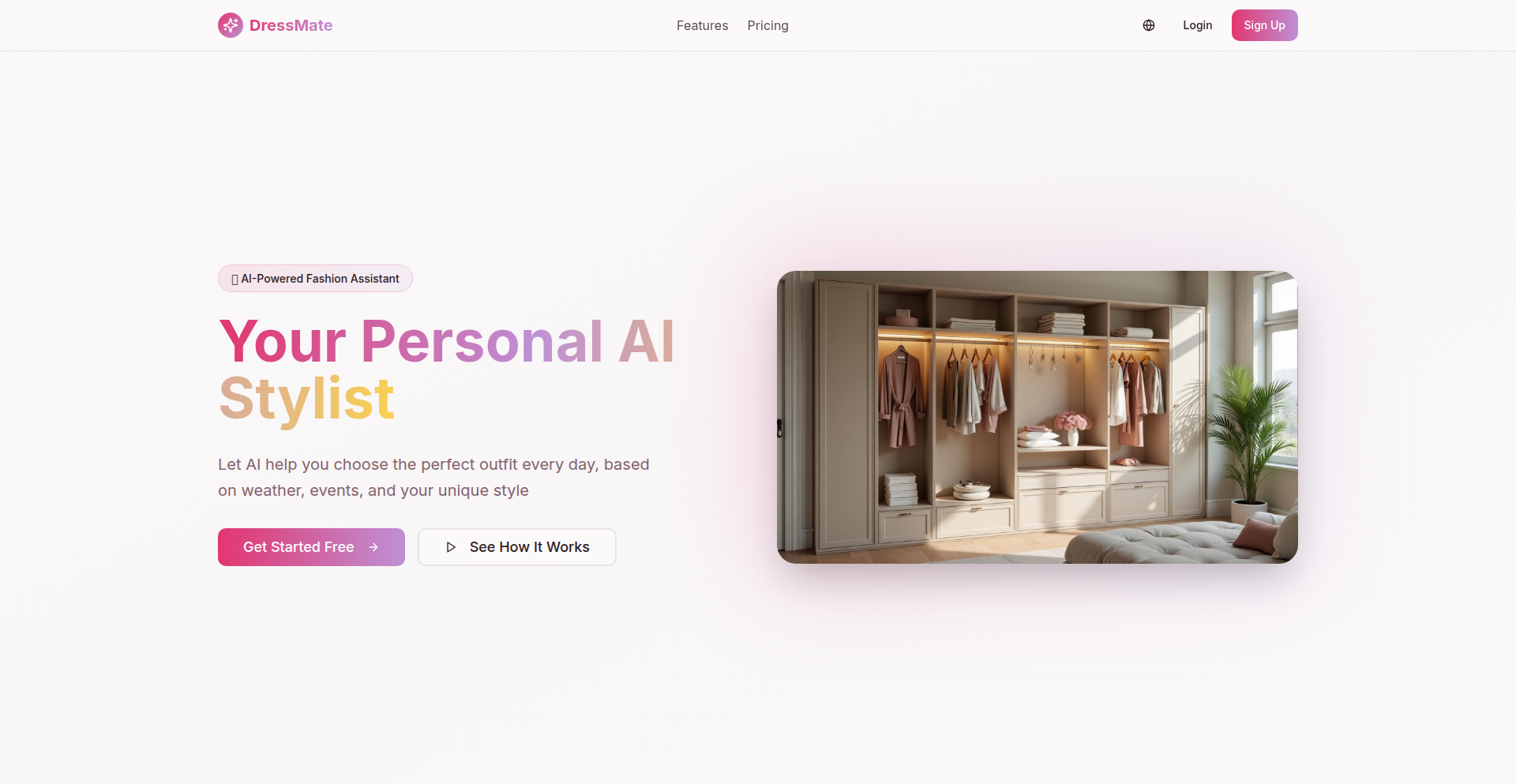

DressMate AI Wardrobe Stylist

Author

novaTheMachine

Description

DressMate is an AI-powered application that helps you decide what to wear by intelligently analyzing your existing wardrobe. It leverages computer vision to identify clothing items and then uses AI to suggest outfits based on factors like weather, occasion, and personal style. This solves the common problem of 'wardrobe blindness' and reduces clothing waste by maximizing the use of what you already own. The innovation lies in its practical application of AI to everyday fashion choices directly from user-owned items.

Popularity

Points 3

Comments 3

What is this product?

DressMate is an AI stylist for your own closet. It uses a computer vision model, likely a Convolutional Neural Network (CNN), to recognize individual pieces of clothing (e.g., shirts, pants, dresses, shoes) from photos you upload. Once it understands your wardrobe, it applies AI algorithms to generate outfit recommendations. These recommendations consider various inputs like the current weather data, the type of event you're dressing for (casual, work, formal), and your stored style preferences. The core innovation is using AI to make smart, personalized fashion decisions without needing to buy new clothes, thereby promoting sustainability and simplifying the daily dressing routine. So, what's in it for you? It saves you time and mental energy in the morning by providing instant, tailored outfit suggestions from the clothes you already own.

How to use it?

Developers can integrate DressMate's core capabilities into their own applications or use it as a standalone service. The primary interaction would involve uploading images of clothing items to build a digital wardrobe. This could be done via an API where developers send images for classification and cataloging. Subsequently, users can input contextual information such as the date, time, weather forecast, and the intended occasion. The AI then processes this information along with the cataloged wardrobe to return suggested outfits. For developers, this means they can build features like personalized shopping assistants, virtual try-on experiences that consider existing clothes, or even sustainable fashion platforms that encourage users to re-wear and re-style their current wardrobe. So, how can you use it? You'd feed it pictures of your clothes, tell it what you're doing, and it tells you what to wear, helping you make the most of your existing fashion items.

Product Core Function

· Clothing Item Recognition: Uses computer vision to identify and catalog different types of garments from user-uploaded photos. This allows for a structured digital representation of your wardrobe. Value: Enables automated inventory of your clothing. Use Case: Quickly adding new items to your virtual closet.

· Outfit Generation Algorithm: Employs AI to combine recognized clothing items into coherent and contextually appropriate outfits. Value: Creates personalized style recommendations. Use Case: Suggesting a work-appropriate ensemble for a specific day.

· Contextual Styling Parameters: Incorporates external data like weather forecasts and user-defined occasions (e.g., 'business meeting', 'casual outing') into the styling decisions. Value: Ensures outfit relevance and comfort. Use Case: Recommending a warm outfit for a cold, rainy day.

· Personal Style Profiling: Learns and adapts to the user's fashion preferences over time, leading to more accurate and favored suggestions. Value: Offers a highly personalized styling experience. Use Case: Gradually tailoring suggestions to your evolving taste.
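The step from a cataloged wardrobe plus context to an outfit can be sketched with a simple rule-based selector. DressMate's actual models and API are not documented here, so the `Garment` fields, the temperature thresholds, and the `suggest_outfit` function are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Garment:
    name: str
    slot: str             # "top", "bottom", or "shoes"
    warmth: int           # 1 (light) to 3 (warm)
    occasions: frozenset  # occasions the garment suits


def suggest_outfit(wardrobe, temperature_c, occasion):
    """Pick one garment per slot that suits the occasion and temperature."""
    # Hypothetical rule: colder weather calls for warmer garments.
    target = 3 if temperature_c < 10 else 2 if temperature_c < 20 else 1
    outfit = {}
    for slot in ("top", "bottom", "shoes"):
        candidates = [g for g in wardrobe if g.slot == slot and occasion in g.occasions]
        if not candidates:
            return None  # the wardrobe cannot cover this occasion
        # Choose the garment whose warmth is closest to the target.
        outfit[slot] = min(candidates, key=lambda g: abs(g.warmth - target)).name
    return outfit


wardrobe = [
    Garment("linen shirt", "top", 1, frozenset({"casual", "work"})),
    Garment("wool sweater", "top", 3, frozenset({"casual", "work"})),
    Garment("chinos", "bottom", 2, frozenset({"casual", "work"})),
    Garment("shorts", "bottom", 1, frozenset({"casual"})),
    Garment("leather boots", "shoes", 3, frozenset({"casual", "work"})),
    Garment("sneakers", "shoes", 1, frozenset({"casual"})),
]

print(suggest_outfit(wardrobe, temperature_c=5, occasion="work"))
# {'top': 'wool sweater', 'bottom': 'chinos', 'shoes': 'leather boots'}
```

In a fuller system, the garment attributes would come from the image-recognition step and the scoring rule from a learned style profile. Here both are hard-coded to keep the flow visible.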

Product Usage Case

· A fashion blogger could use DressMate to create daily outfit posts by uploading their wardrobe and letting the AI generate diverse looks, then adding their personal commentary. This solves the problem of needing constant inspiration and showcasing variety from a limited set of items.

· A busy professional could integrate DressMate into their morning routine app. By providing their schedule and checking the weather, they receive instant, well-suited outfit suggestions, saving valuable time and reducing decision fatigue. This directly addresses the 'what to wear' dilemma quickly and efficiently.

· A sustainable fashion advocate could build a platform encouraging users to maximize their existing wardrobe. DressMate would power the 'style remix' feature, showing users how to create new outfits from pieces they already own, thus combating fast fashion and promoting reuse.

· An e-commerce platform could use DressMate's recognition technology to allow users to photograph items they own and then suggest complementary items from the store's catalog, enhancing the 'complete the look' functionality and increasing cross-selling opportunities.

11

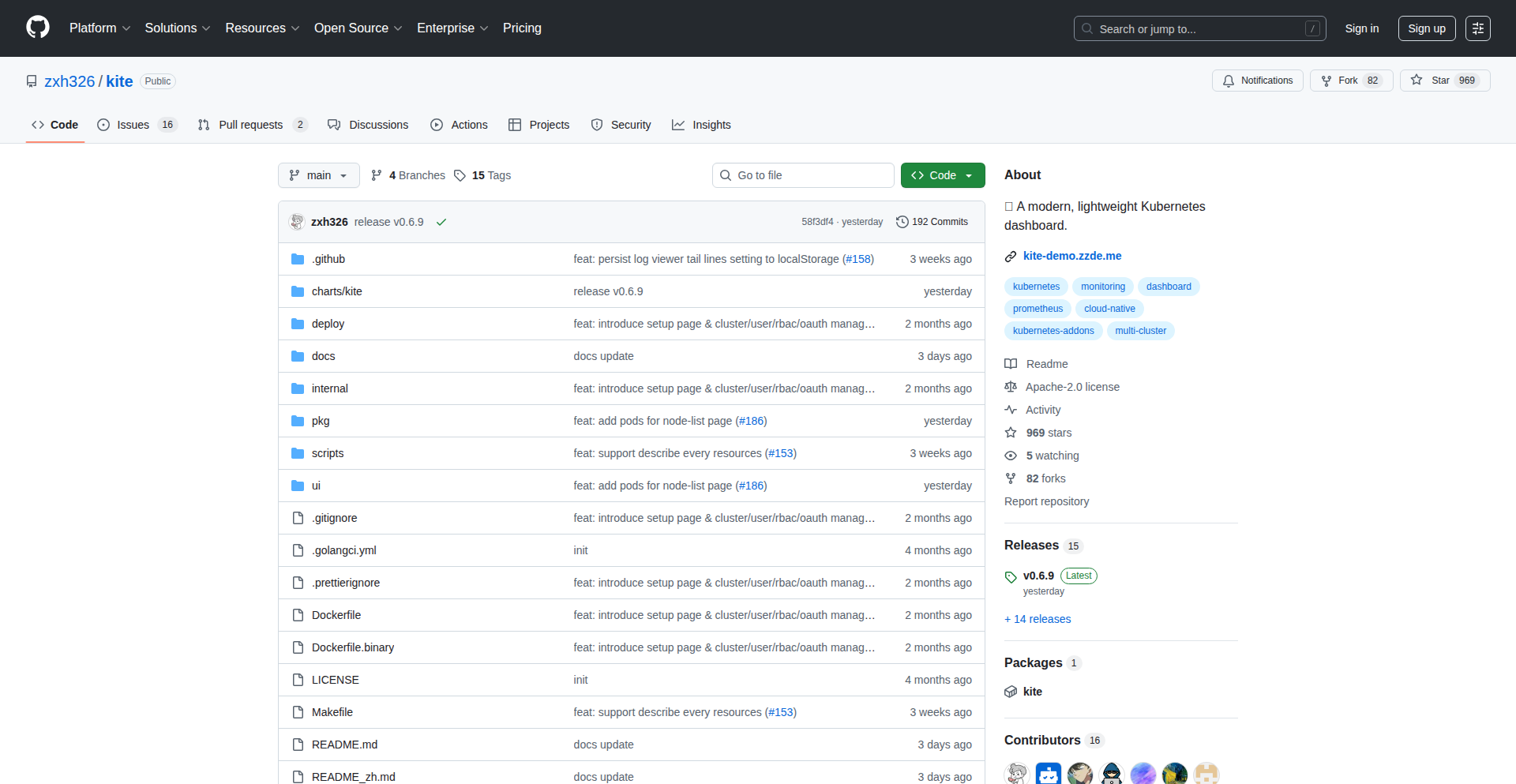

Kite: Lightweight K8s Dashboard

Author

xdasf

Description

Kite is a modern, lightweight dashboard for Kubernetes, designed to offer a streamlined and efficient way to manage your containerized applications. It focuses on providing essential insights and control without the bloat of more complex solutions, making Kubernetes management more accessible and faster for developers.

Popularity

Points 6

Comments 0

What is this product?

Kite is a web-based interface for interacting with a Kubernetes cluster. Unlike some heavier dashboards, Kite prioritizes speed and simplicity. It leverages the Kubernetes API to fetch information about your running applications, such as pods, deployments, and services, and presents it in an easily digestible format. Its innovation lies in its minimalist design and efficient data fetching, which translates to faster load times and a less resource-intensive experience for the user and the cluster itself. So, why is this useful to you? It means you can get a quick overview of your system's health and performance without waiting for slow interfaces to load, allowing you to identify and fix issues faster.

How to use it?

Developers can deploy Kite as a service within their Kubernetes cluster, typically as a separate deployment and service. Once running, they can access Kite through a web browser, often via port-forwarding or an Ingress controller. Kite interacts with the Kubernetes API server on behalf of the user to retrieve and display cluster resources. This integration allows for real-time monitoring and management of deployments, pods, and other Kubernetes objects directly from the dashboard. This is useful for you because it provides a centralized, easy-to-access point for managing your applications, simplifying common tasks like checking logs, scaling deployments, or viewing resource status, all without needing to constantly switch to command-line tools.
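As a rough illustration of what a dashboard does with API data, the sketch below summarizes a pod list by phase. It does not reflect Kite's code. It only assumes the standard shape of a Kubernetes `PodList` response (`items[].metadata` and `items[].status.phase`), and the sample payload is made up.

```python
from collections import Counter

# Phases treated as healthy in this sketch.
HEALTHY_PHASES = {"Running", "Succeeded"}


def summarize_pods(pod_list):
    """Count pods per phase and list the ones that need attention."""
    phases = Counter()
    unhealthy = []
    for pod in pod_list.get("items", []):
        phase = pod.get("status", {}).get("phase", "Unknown")
        phases[phase] += 1
        if phase not in HEALTHY_PHASES:
            meta = pod.get("metadata", {})
            unhealthy.append(f"{meta.get('namespace', 'default')}/{meta.get('name', '?')}")
    return {"phases": dict(phases), "unhealthy": unhealthy}


# Sample payload shaped like a Kubernetes PodList response (values are made up).
sample = {
    "items": [
        {"metadata": {"name": "api-7d9f", "namespace": "prod"}, "status": {"phase": "Running"}},
        {"metadata": {"name": "worker-1", "namespace": "prod"}, "status": {"phase": "Pending"}},
        {"metadata": {"name": "migrate-x", "namespace": "prod"}, "status": {"phase": "Failed"}},
    ]
}

summary = summarize_pods(sample)
print(summary)
# {'phases': {'Running': 1, 'Pending': 1, 'Failed': 1}, 'unhealthy': ['prod/worker-1', 'prod/migrate-x']}
```

Phase alone is a coarse signal: a pod in the `Running` phase can still have a container that keeps restarting, so a real dashboard would also inspect container statuses.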

Product Core Function

· Real-time resource monitoring: Visually displays the status of pods, deployments, services, and nodes. This helps you quickly identify any unhealthy components in your cluster, enabling proactive problem-solving and reducing downtime.

· Simplified deployment management: Allows for basic operations like scaling deployments up or down, rolling back to previous versions, and viewing deployment history. This streamlines the process of managing application lifecycle, saving you valuable development time.

· Log viewing: Provides direct access to logs from your application pods. This is crucial for debugging and troubleshooting, allowing you to pinpoint the root cause of errors without complex command-line operations.

· Resource utilization insights: Offers a glimpse into CPU and memory usage for your pods and nodes. Understanding resource consumption helps you optimize your applications for performance and cost-efficiency, ensuring your services run smoothly and within budget.

· Lightweight and fast: Designed for performance, Kite loads quickly and uses fewer resources compared to other dashboards. This means a more responsive user experience and less overhead on your cluster, allowing your applications to perform better.

Product Usage Case

· During a critical production incident, a developer needs to quickly assess the state of their deployed services. Using Kite, they can instantly see which pods are failing, access their logs for error messages, and potentially trigger a rollback with a few clicks, significantly reducing the Mean Time To Recovery (MTTR).

· A developer is onboarding to a new project and needs to understand the architecture and current state of the Kubernetes cluster. Kite provides an intuitive visual overview of all running services, their dependencies, and resource usage, accelerating their understanding and productivity without requiring deep knowledge of kubectl commands.

· Before pushing a new release, a developer wants to perform a quick check of their application's health and resource consumption. Kite allows them to easily scale up a deployment for testing, monitor its performance, and review logs for any unexpected behavior, ensuring a smoother release process.

· A small team managing a few microservices needs a simple, efficient way to monitor their applications without the complexity of a full-fledged enterprise dashboard. Kite offers the essential features they need in a clean, fast interface, making Kubernetes management manageable for them.

12

TechQuizMaster

Author

emmanol

Description

TechQuizMaster is a practical web application designed to help developers sharpen their IT knowledge and prepare for technical interviews. It leverages a large, interactive question bank covering core programming languages, databases, and DevOps principles. The innovation lies in its structured approach to knowledge verification and the sheer volume of curated, real-world interview questions, making it a valuable tool for both learning and self-assessment. This helps developers identify and fill knowledge gaps, boosting their confidence and readiness for the job market. So, what's in it for you? You get a focused, efficient way to improve your technical skills and land your dream job.

Popularity

Points 5

Comments 0

What is this product?

TechQuizMaster is an interactive platform that serves as a digital flashcard system specifically for IT professionals and aspiring developers. Its core technology involves a robust backend to manage a vast database of over 5,000 interactive quizzes and 2,100 real interview questions across a spectrum of popular IT domains including JavaScript, Java, Python, PHP, HTML, Databases, and DevOps. The innovation is in how it aggregates and presents these questions in an engaging, test-like format, allowing users to actively test and reinforce their understanding, rather than passively reading. Think of it as a highly specialized, digital study guide that continuously challenges you. So, what's in it for you? You get a structured and engaging way to learn and retain complex technical information, making your study sessions more effective.

How to use it?

Developers can use TechQuizMaster directly through their web browser at itflashcards.com. They can navigate through different technical categories, select specific topics they want to focus on (e.g., JavaScript ES6 features, SQL query optimization, or Docker basics), and start taking quizzes. The platform provides immediate feedback on answers, helping users understand their mistakes. For integration, while not a direct code integration, developers can use the curated questions and topics as a framework to structure their personal study plans, or even incorporate similar question-generation logic into their own learning or team training tools. So, what's in it for you? You can jump straight into targeted learning or practice, saving time and effort in finding relevant study materials.

Product Core Function

· Interactive Quizzes: Offers over 5,000 multiple-choice and short-answer questions across various tech stacks, allowing for active learning and knowledge retention. This is valuable for reinforcing concepts and identifying weak areas in your understanding.

· Real Interview Questions: Provides access to 2,100+ questions commonly asked in technical interviews, enabling targeted preparation for job seeking. This directly helps you practice the exact types of questions you'll face, increasing your chances of success.

· Topic-Specific Learning: Allows users to select and focus on specific technologies and domains like JavaScript, Python, Databases, or DevOps, providing a tailored learning experience. This means you can hone in on the skills most relevant to your career goals or current projects, optimizing your learning time.

· Knowledge Verification: Acts as a self-assessment tool, helping developers gauge their proficiency and identify areas needing further study. This helps you understand your current skill level and focus your efforts where they'll have the most impact.

· Progress Tracking (Implied): The project description does not mention progress tracking, but platforms of this kind typically let users monitor their improvement over time. Where available, this provides motivation and a clear view of your learning journey, helping you stay on track.

Product Usage Case

· A junior developer preparing for their first software engineering job interview can use TechQuizMaster to go through JavaScript and database questions, identifying specific syntax or concept gaps they need to revisit before the interview. This helps them feel more confident and prepared for technical assessments.

· A backend developer looking to upskill in cloud technologies can use the DevOps section to practice questions on AWS or Kubernetes, ensuring they understand key concepts and configurations. This aids in mastering new technologies for career advancement or new project requirements.

· A team lead can use the platform as a source of questions for mini-quizzes during stand-up meetings, quickly gauging the team's understanding of a particular technology before new feature development starts. This ensures everyone is on the same page and reduces potential roadblocks during development.

· A student learning Python for the first time can use the Python quizzes to test their understanding of data structures, algorithms, and object-oriented programming principles. This provides immediate feedback and reinforces learning from lectures and coursework, making the learning process more engaging and effective.

13

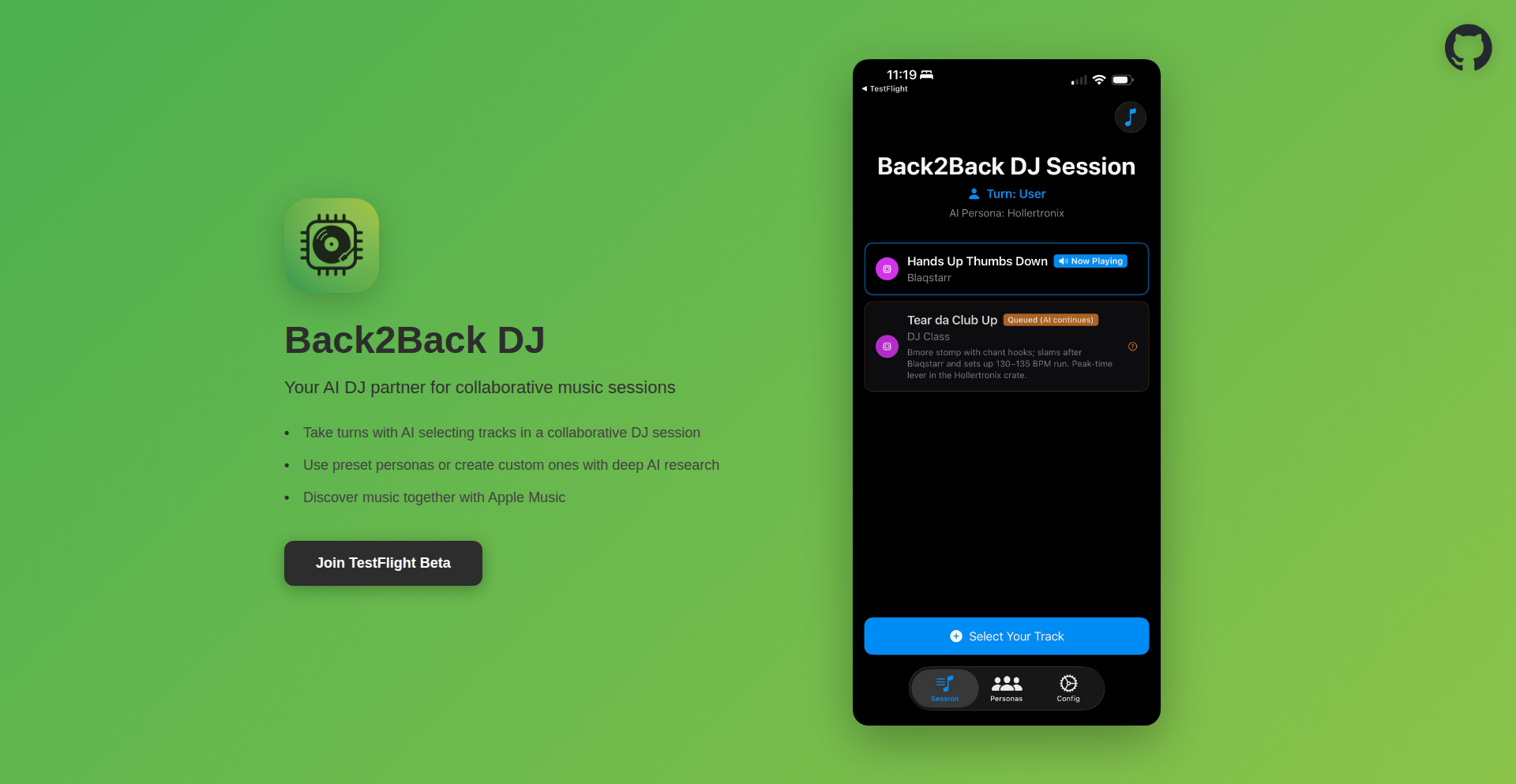

AI DJ Persona Mixer

Author

pj4533

Description

An innovative iOS app that lets you craft unique DJ personas using advanced AI (GPT-5). This AI then curates and plays music from Apple Music back-to-back, aligning with the chosen persona's style and depth. It's a blend of AI-driven music discovery and personalized DJ experiences, acting as both a music curator and an intelligent DJ. So, this is useful because it offers a novel way to discover music tailored to your specific tastes and moods, transforming passive listening into an interactive and engaging experience.

Popularity

Points 3

Comments 1

What is this product?

This project is an iOS application that uses a large language model (LLM), GPT-5 according to the author's description, to create and embody distinct DJ personas. The AI researches a given persona (e.g., a 'Krautrock Nerd' or a '1990s NYC Mark Ronson') and then uses this understanding to select and play songs from Apple Music that fit that persona's musical genre, era, and overall vibe. A key innovation is using the LLM not just for selection, but also as a 'judge' that validates whether each song choice aligns with the persona's characteristics, ensuring a cohesive and authentic listening experience. So, what's the innovation here? It's using AI to inject personality and deep musical knowledge into music playback, making it feel like a curated set from a real, albeit AI-powered, DJ.

How to use it?

Developers can use this project as a blueprint for building AI-powered music discovery and playback applications. The core idea is to integrate an LLM to define user-specific or persona-specific music preferences. For integration, developers would typically connect to music streaming services like Apple Music via their APIs to fetch and play songs. The LLM would then process user prompts to define personas and guide song selection. The concept of using the LLM for validation adds a sophisticated layer for ensuring quality and thematic consistency. So, how can you use this? You can integrate this AI-driven persona concept into your own music apps, create personalized radio stations, or even build interactive music recommendation systems that feel more personal and intelligent.
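The select-then-judge idea described above can be sketched in a few lines. This is a minimal illustration, not the app's actual implementation: the `Persona` and `Track` types, the `rule_based_judge` function, and the sample data are all hypothetical. In a real app the candidates would come from a music service API and the judge would be an LLM call; here a simple rule stands in for the model so the loop can run on its own.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, Iterable, List, Tuple


@dataclass(frozen=True)
class Track:
    title: str
    artist: str
    year: int
    genre: str


@dataclass(frozen=True)
class Persona:
    name: str
    genres: FrozenSet[str]
    year_range: Tuple[int, int]  # (first_year, last_year), inclusive


def rule_based_judge(persona: Persona, track: Track) -> bool:
    """Hypothetical stand-in for the LLM 'judge': accept only tracks that fit the persona."""
    first, last = persona.year_range
    return track.genre in persona.genres and first <= track.year <= last


def build_set(
    persona: Persona,
    candidates: Iterable[Track],
    judge: Callable[[Persona, Track], bool],
    length: int,
) -> List[Track]:
    """Walk the candidate picks in order, keeping only those the judge approves."""
    accepted: List[Track] = []
    for track in candidates:
        if len(accepted) >= length:
            break
        if judge(persona, track):
            accepted.append(track)
    return accepted


# Illustrative data only; a real app would fetch candidates from a streaming catalog.
PERSONA = Persona("Krautrock Nerd", frozenset({"krautrock"}), (1968, 1979))
CANDIDATES = [
    Track("Hallogallo", "Neu!", 1972, "krautrock"),
    Track("Uptown Funk", "Mark Ronson", 2014, "funk"),
    Track("Vitamin C", "Can", 1972, "krautrock"),
    Track("Autobahn", "Kraftwerk", 1974, "krautrock"),
]

if __name__ == "__main__":
    for track in build_set(PERSONA, CANDIDATES, rule_based_judge, length=2):
        print(f"{track.artist}: {track.title} ({track.year})")
```

Passing the judge in as a function keeps the loop independent of how validation is done, so the rule-based stub could later be swapped for a model-backed check without touching `build_set`.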

Product Core Function

· AI Persona Generation: The core function is using advanced AI to create detailed DJ personas based on user input, allowing for highly specific musical curation. This is valuable for delivering personalized music experiences that go beyond simple genre filters. It lets you experience music as if curated by an expert with deep knowledge in a niche.

· LLM-driven Music Selection: The AI intelligently selects songs from Apple Music that align with the defined DJ persona's style, genre, and era. This is valuable for discovering new music you might otherwise miss and for creating a consistent and enjoyable listening flow. It's like having a DJ who truly understands your eclectic tastes.

· Persona Validation Mechanism: The AI acts as a 'judge' to ensure that the selected songs actually fit the persona, maintaining authenticity and quality in the music stream. This is valuable for ensuring a high-quality, coherent listening experience, preventing jarring or out-of-place song choices. It guarantees the music stays true to the mood you're aiming for.

· Configurable AI Thinking: Users can adjust the 'thinking' level of the AI (e.g., 'GPT-5 thinking high' vs. 'GPT-5 thinking low') to influence the complexity and adventurousness of song selections. This is valuable for fine-tuning the discovery process, allowing for more mainstream or more obscure musical explorations. It gives you control over how adventurous your music journey becomes.

Product Usage Case

· Personalized Music Streaming: Imagine a user wanting to discover deep cuts of 70s psychedelic rock. They could create a 'Psychedelic Guru' persona, and the AI would curate a playlist of obscure and relevant tracks from that era, acting as a knowledgeable guide. This solves the problem of finding hidden gems within a vast music library.

· Interactive DJ Sets: A developer could integrate this into a live event application, allowing attendees to vote on persona traits or suggest influences, and the AI DJ would adapt its set in real-time. This addresses the need for dynamic and engaging entertainment at events, making the music experience participatory.

· Niche Music Exploration Tool: For users interested in specific subgenres like 'Italian Disco from the 80s,' a dedicated persona can be created to surface rare and authentic tracks. This solves the challenge of finding highly specific musical content that is often buried in mainstream platforms. It's a dedicated channel for your hyper-specific music obsessions.

· Educational Music Discovery: A persona could be designed around a specific music historian or critic, offering insights and contextual information with each song selection. This provides an educational layer to music listening, turning discovery into a learning experience. You don't just hear the music; you learn its story.

14

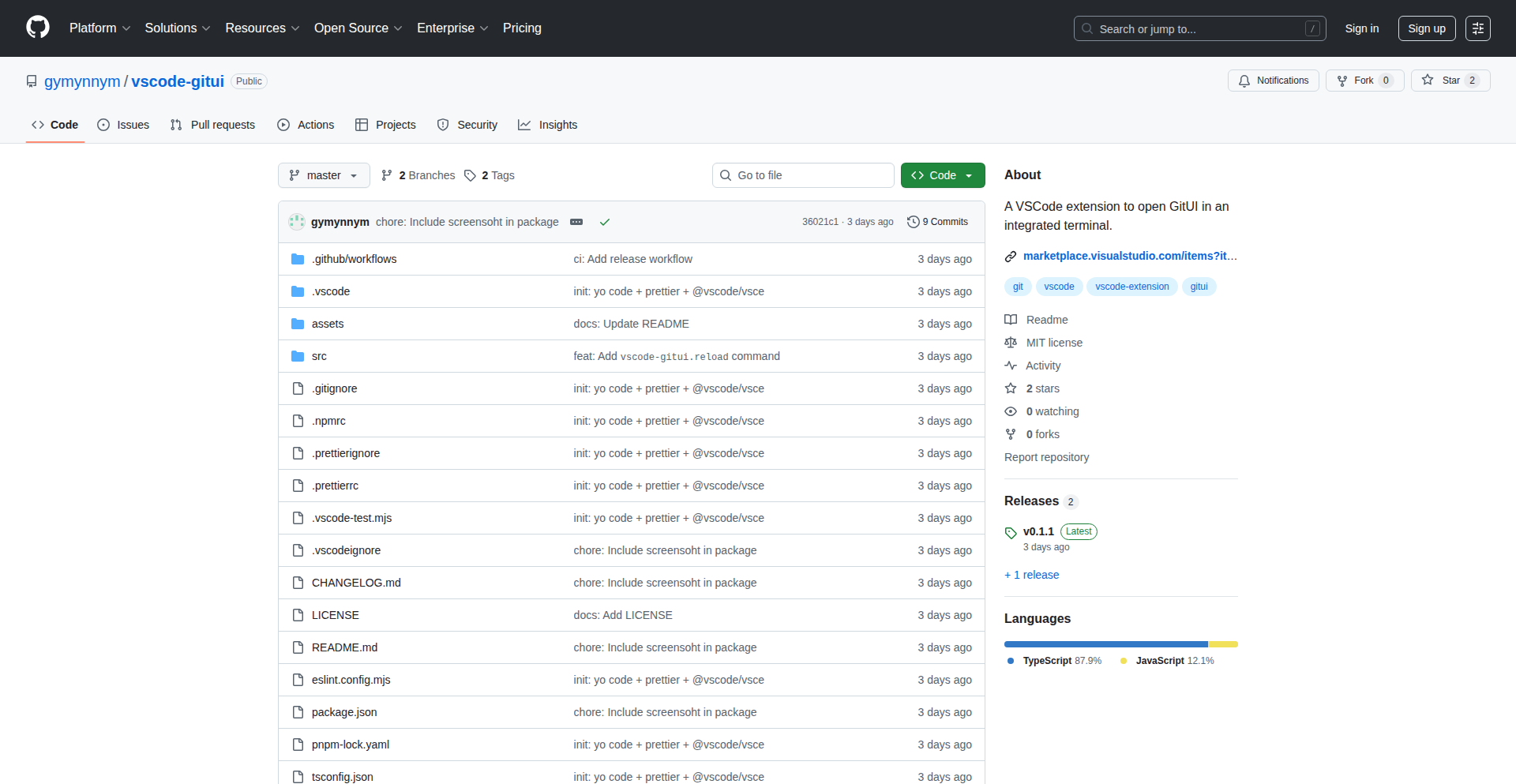

VSCode GitUI Embedded Terminal

Author

gymynnym

Description

This project is a VSCode extension that brings the powerful GitUI interface directly into your VSCode integrated terminal. It solves the common developer frustration of context switching between their code editor and a separate Git client or complex terminal commands. By embedding GitUI, it offers a seamless and efficient Git workflow directly within the familiar VSCode environment, especially beneficial for users coming from Vim-like editors who appreciate keyboard-centric operations.

Popularity

Points 2

Comments 2

What is this product?

This is a VSCode extension that embeds GitUI, a keyboard-driven terminal user interface (TUI) for Git, into the VSCode integrated terminal. Normally, to use GitUI, you'd have to switch away from your code editor and run it in a separate terminal window. This extension removes that step by launching GitUI directly within VSCode's built-in terminal panel. The innovation lies in simplifying the developer experience by eliminating context switching and providing a consistent, integrated Git management tool. It's like having your Git control panel open right next to your code, without leaving your editor. So, this is useful for you because it makes managing your Git repositories smoother and faster, right where you're already working on your code.

How to use it?

Developers can install this extension from the VSCode Marketplace. Once installed, they can open GitUI by simply typing a command in the VSCode command palette (e.g., 'GitUI: Open GitUI in Terminal') or by using a keyboard shortcut if configured. The extension then launches GitUI within the VSCode integrated terminal panel. This allows developers to perform all common Git operations like committing, branching, merging, and viewing history using GitUI's interactive interface without leaving VSCode. For developers working with multiple projects, it intelligently detects and prompts for workspace selection. So, this is useful for you because it provides a quick and easy way to access your Git tools without interrupting your coding flow, saving you time and mental overhead.
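The workspace-selection behavior described above boils down to a small decision: no folder means nothing to launch, one folder needs no prompt, and several folders require asking the user. The sketch below shows that logic in Python purely for illustration; it is not the extension's source, and `choose_folder` and `launch_spec` are hypothetical names. In an actual extension the prompt would presumably be a VSCode quick pick and the launch would go through the integrated terminal API.

```python
from pathlib import Path
from typing import Callable, Dict, List, Optional, Sequence, Union

# A prompt takes the candidate folders and returns the user's choice (or None if cancelled).
Prompt = Callable[[Sequence[Path]], Optional[Path]]


def choose_folder(folders: Sequence[Path], prompt: Prompt) -> Optional[Path]:
    """Pick the folder GitUI should open in, prompting only when there is a real choice."""
    if not folders:
        return None           # nothing open, nothing to launch
    if len(folders) == 1:
        return folders[0]     # single folder: no need to ask
    return prompt(folders)    # several folders: let the user decide


def launch_spec(folder: Path) -> Dict[str, Union[List[str], str]]:
    """Describe how to start GitUI: run the `gitui` binary with the folder as working directory."""
    return {"args": ["gitui"], "cwd": str(folder)}


if __name__ == "__main__":
    open_folders = [Path("/work/api"), Path("/work/web")]  # hypothetical workspace folders
    chosen = choose_folder(open_folders, prompt=lambda fs: fs[0])
    if chosen is not None:
        print(launch_spec(chosen))
```

Setting the working directory, rather than passing a path flag, relies only on GitUI operating on the repository it is started in.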

Product Core Function

· Integrated GitUI Launch: Allows users to launch the GitUI application directly within the VSCode integrated terminal, providing a consistent and convenient Git management experience. The value is in reducing context switching and improving workflow efficiency.

· Seamless Terminal Embedding: Leverages VSCode's terminal API to embed GitUI, ensuring a fully functional and interactive GitUI session directly within the editor. This offers a robust solution for developers who prefer terminal-based tools but want a more visual and guided experience than raw Git commands.

· Multi-Workspace Support: Detects when the user is working with multiple VSCode workspaces and provides a simple interface to select the desired workspace for GitUI. This adds significant value for developers managing several projects concurrently, preventing accidental operations on the wrong repository.

· Keyboard-Centric Workflow: Aligns with the keyboard-driven approach of GitUI and many developer preferences (especially those from Vim), enabling efficient Git operations without relying on mouse interaction. This enhances productivity for users who favor keyboard shortcuts and command-line interfaces.

Product Usage Case

· A developer using VSCode and working on a feature branch needs to quickly view commit history, stage changes, and commit them. Instead of opening a separate terminal and running `git log`, `git status`, `git add`, and `git commit`, they can use the extension to launch GitUI in the integrated terminal, visually review their work, and perform all actions interactively, all within VSCode. This solves the problem of fragmented workflows and speeds up the commit process.

· A developer is working on a project that has multiple independent modules within a single VSCode workspace. When they want to perform Git operations on a specific module, they can use the extension, which will prompt them to choose which module's Git repository they want to manage in GitUI. This prevents the common mistake of applying Git commands to the wrong part of the project, ensuring accuracy and preventing potential data loss.

· A developer who is transitioning from Vim to VSCode finds that they miss the seamless Git integration they had in Vim. This extension provides a similar integrated experience, allowing them to manage their Git repositories using the familiar GitUI interface without having to adapt to different tools or complex VSCode settings for Git management. This helps maintain their productivity and comfort level.

· A developer is working on a remote server via SSH within VSCode's Remote Development capabilities. They can use this extension to manage their Git repository directly on the remote machine through the integrated terminal, without needing to set up any external Git clients or deal with complex network configurations. This simplifies the Git workflow for remote development scenarios.

15

Diploi: StackSync Deployment Engine

Author

marlusx

Description

Diploi is a full software lifecycle development platform designed to empower developers to be productive in minutes, regardless of their experience level. It emphasizes 'owning your stack' by keeping project components like databases and storage within the platform, ensuring development mirrors production. The platform embraces 'Infrastructure as Code' through monorepos and facilitates 'Remote Development,' allowing developers to use their favorite IDEs without installing anything locally. Its core innovation lies in abstracting the complexities of deployment and infrastructure management, making it easy to prototype and build applications with minimal DevOps overhead.

Popularity

Points 4

Comments 0

What is this product?

Diploi is a comprehensive platform that simplifies the entire process of building, deploying, and managing software. Its technical innovation lies in its unified approach to development environments and infrastructure. Instead of developers needing to set up databases, caching systems, or backend/frontend environments separately and ensure they match what will be used in production, Diploi provides a cohesive system. It runs on Kubernetes internally, so it can host a wide range of containerized technologies. The core idea is to make your development environment and your production environment as identical as possible, which drastically reduces 'it works on my machine' issues. This means you can quickly set up a fully functional project stack, from the database to the frontend, all managed within one system. So, for you, this means less time wrestling with setup and configuration, and more time actually writing code and building features. It removes friction from the development workflow, allowing for faster iteration and easier collaboration.

How to use it?

Developers can start using Diploi by visiting its website and using the 'StackBuilder'. In a few clicks, you can select your desired technology stack. For example, you can choose Supabase for your database, Redis for caching, Bun for your backend, and React with Vite for your frontend. Once selected, Diploi sets up this integrated environment for you, ready for development. This setup can then be cloned, maintained, and tested seamlessly because the development environment directly mirrors the deployment environment. Integration with existing projects is also possible, including the ability to import and run Lovable projects. So, for you, this means you can quickly spin up a new project with your preferred tools, or even bring your existing projects onto a streamlined, consistent deployment pipeline without needing to become a DevOps expert.
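To make the "development mirrors production" idea concrete, here is a small sketch that compares two stack descriptions and reports where they differ. The role-to-component dictionaries and the `parity_gaps` function are hypothetical and exist only for this illustration; they are not Diploi's configuration format or API.

```python
from typing import Dict, List

# Hypothetical stack description: role -> chosen component.
Stack = Dict[str, str]

PRODUCTION: Stack = {
    "database": "Supabase",
    "cache": "Redis",
    "backend": "Bun",
    "frontend": "React + Vite",
}


def parity_gaps(dev: Stack, prod: Stack) -> List[str]:
    """List every role whose component differs (or is missing) between dev and prod."""
    gaps: List[str] = []
    for role in sorted(set(dev) | set(prod)):
        dev_component, prod_component = dev.get(role), prod.get(role)
        if dev_component != prod_component:
            gaps.append(f"{role}: dev={dev_component!r} prod={prod_component!r}")
    return gaps


if __name__ == "__main__":
    # A hand-rolled local setup that quietly swapped the database.
    local_dev = dict(PRODUCTION, database="SQLite")
    for gap in parity_gaps(local_dev, PRODUCTION):
        print(gap)
```

When both environments are generated from the same stack definition, as the platform's description suggests, a check like this has nothing to report, which is the property that removes "it works on my machine" surprises.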

Product Core Function