Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-10-14

SagaSu777 2025-10-15

Explore the hottest developer projects on Show HN for 2025-10-14. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The deluge of Show HN submissions highlights a vibrant ecosystem centered around AI agent enablement and developer productivity. A significant trend is the focus on abstracting complexity for AI agents, as seen with Metorial and Rover, which aim to make integrating tools and managing code agents seamless. The rise of local LLM deployment, exemplified by docker/model-runner and Infinity Arcade, signifies a push towards greater accessibility, privacy, and cost-effectiveness for AI development. Developers are also leveraging AI to automate mundane tasks, from coding assistance with wispbit to generating presentations with Snapdeck and even automating customer service calls with Relaya. The need for robust evaluation and auditing of AI systems is becoming paramount, with projects like Scorecard and Oracle Ethics addressing these critical areas. Furthermore, innovations in data access and management, such as qoery and Datalis, are democratizing access to specialized datasets and making them more usable for AI training and testing. The overarching hacker spirit is evident in the community's drive to solve real-world problems with creative technological solutions, whether it's optimizing infrastructure, enhancing code quality, or bringing cutting-edge AI capabilities to everyday users.

Today's Hottest Product

Name

Metorial

Highlight

Metorial tackles the infrastructure challenges of deploying AI agents that integrate with external tools over the Model Context Protocol (MCP). It offers a serverless runtime that automatically manages Docker configurations, OAuth flows, scaling, and observability. A key innovation is its ability to hibernate idle MCP servers and resume them with sub-second cold starts while preserving state, which drastically reduces setup time for developers and offers a scalable, isolated environment. The project is a useful study in abstracting a protocol like MCP and in building scalable serverless infrastructure for AI agents.

Popular Category

AI/ML Development

Developer Tools

Infrastructure

Data Management

Popular Keyword

AI Agents

LLM

Integration Platform

Serverless

Open Source

Automation

Data Analysis

Code Generation

Technology Trends

AI Agent Orchestration

Local LLM Deployment

Developer Productivity Tools

AI for Code

Data Normalization and Access

Ethical AI and Auditing

Infrastructure Automation

Specialized AI Models

Project Category Distribution

AI/ML Development (30%)

Developer Tools (25%)

Infrastructure & DevOps (15%)

Data Analysis & Management (10%)

Productivity Tools (10%)

Other (10%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | Metorial: AI Agent Integration Fabric | 56 | 22 |
| 2 | CodeGuardian AI | 29 | 14 |
| 3 | AICodeExtensionPulse | 24 | 10 |
| 4 | BotSentry Analytics | 29 | 3 |
| 5 | ClashRoyaleCardGuessr | 28 | 2 |
| 6 | LLM-Runner-X | 17 | 9 |
| 7 | Infinity Arcade: Local LLM Game Dev Studio | 9 | 0 |
| 8 | PDF Insight API | 9 | 0 |
| 9 | Upty: Auto-Health Status Pages | 3 | 5 |
| 10 | AgentEval-CI | 7 | 0 |

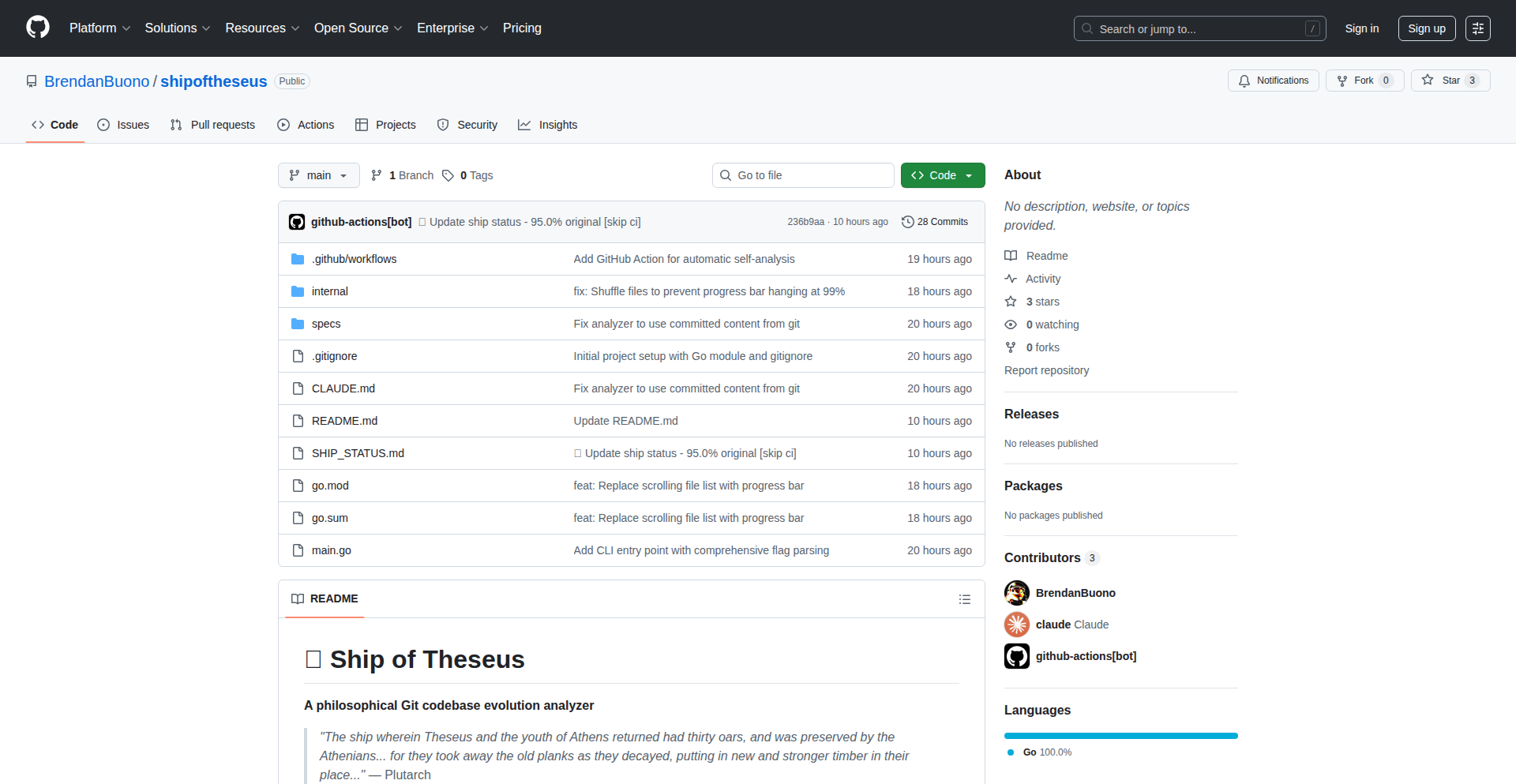

1

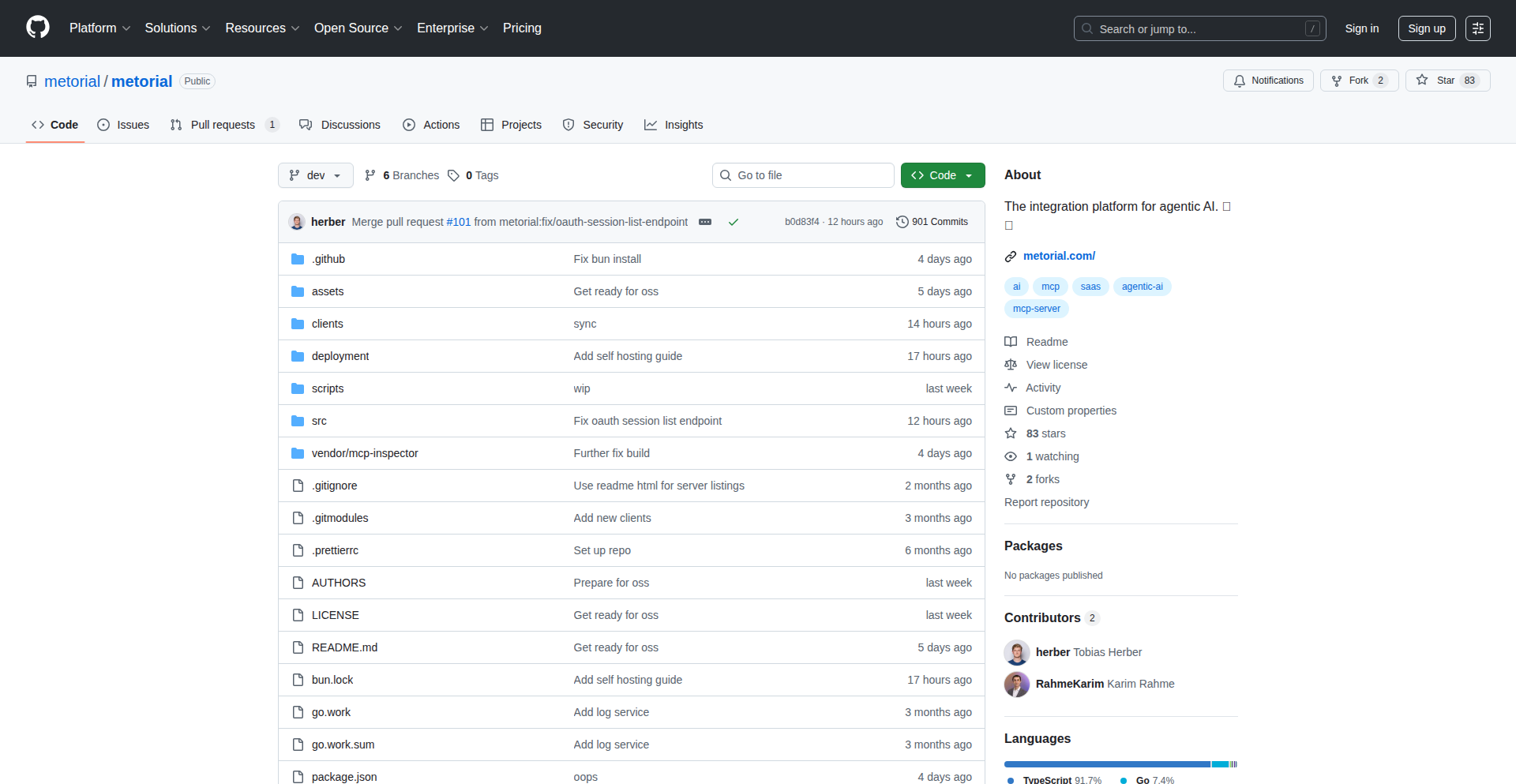

Metorial: AI Agent Integration Fabric

Author

tobihrbr

Description

Metorial is a platform that bridges AI agents with external tools and data using the MCP protocol. It simplifies the complex task of deploying and managing AI integrations, abstracting away infrastructure concerns like Docker configurations, OAuth flows, and scaling. This allows developers to focus on building AI-powered features rather than wrestling with backend plumbing. So, this is useful because it drastically reduces the time and effort required to connect your AI to the real world, making AI integrations faster and more accessible.

Popularity

Points 56

Comments 22

What is this product?

Metorial is an integration platform designed to connect AI agents to a vast array of external tools and data sources. At its core, it uses MCP (Model Context Protocol), an open standard that lets AI applications talk to external tools and data sources through a common interface. The problem Metorial solves is that running MCP servers, especially in a production environment, is notoriously difficult. It involves a lot of manual setup for things like managing containers (Docker), handling user logins (OAuth), making sure many users can use it at once (scaling), and setting up ways to see if everything is working (observability). Metorial automates all of this. Its innovation lies in its serverless runtime that can pause unused integrations and instantly resume them with all their previous state intact, and its ability to manage thousands of simultaneous connections securely and efficiently for each user. So, this is useful because it transforms a complex, week-long setup process into a simple, three-click deployment, making it incredibly easy for developers to get their AI agents connected to services like GitHub, Slack, or databases.

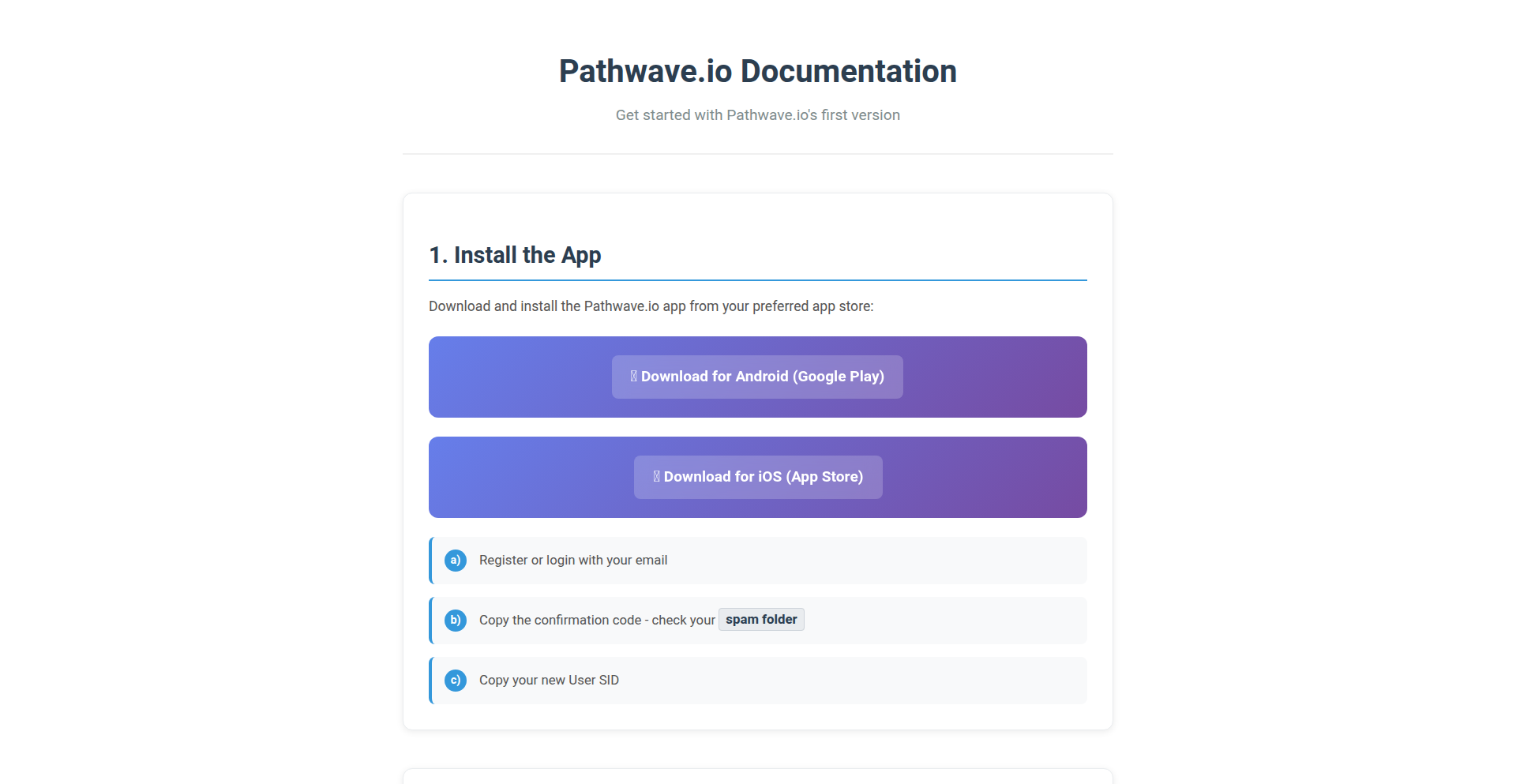

How to use it?

Developers can use Metorial in a few ways. The easiest is through their Python and TypeScript SDKs. With these, you can connect your Large Language Models (LLMs) to external tools with a single function call, hiding all the underlying complexity of the MCP protocol. For those who prefer more control or want to integrate with existing systems, Metorial also offers a REST API, allowing you to connect to the platform using standard MCP protocols. You can choose to use their managed service at metorial.com, or if you prefer to have full control over your infrastructure, you can self-host Metorial using their open-source code. So, this is useful because it provides flexible integration options, allowing developers to choose the level of abstraction that best suits their project and technical expertise, speeding up development cycles.

Product Core Function

· Automated MCP Server Deployment: Metorial provides a catalog of pre-configured MCP servers for popular tools like GitHub, Slack, and Google Drive. Developers can deploy these with minimal effort, significantly reducing setup time. This is valuable because it eliminates the need to manually configure and manage individual tool integrations for AI agents.

· Seamless OAuth Handling: The platform automatically manages the OAuth authentication flow for connecting to external services. Developers only need to provide their client ID and secret, and Metorial handles token refresh and per-user isolation. This is valuable because it simplifies user onboarding and data access for AI agents without requiring developers to build complex authentication systems from scratch.

· Serverless Runtime with State Preservation: Metorial's innovative serverless runtime can hibernate idle MCP server instances and resume them with sub-second cold starts while preserving their state and active connections. This is valuable because it ensures efficient resource utilization and a responsive user experience for AI integrations, preventing delays and maintaining context.

· Scalable Per-User Isolation: The platform is designed to manage thousands of concurrent connections with per-user isolation, addressing security concerns and cost inefficiencies of other solutions. This is valuable because it allows AI applications to scale reliably and securely, ensuring each user's data and connections are kept separate and protected.

· SDKs for LLM Integration: Metorial offers Python and TypeScript SDKs that simplify connecting LLMs to MCP tools in a single function call. This is valuable because it abstracts away the protocol complexity, allowing developers to quickly build sophisticated AI agents that can interact with external services.
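The hibernate-and-resume behavior described in the list above can be pictured with a small in-process sketch. This is not Metorial's implementation or API: `Session`, `SessionPool`, and the idle threshold are hypothetical names chosen for illustration, and a real runtime would snapshot containers rather than keep a Python dict in memory. It only shows the idea of stopping idle per-user sessions while keeping their state for the next call.

```python
import time
from dataclasses import dataclass, field


@dataclass
class Session:
    """One per-user tool-server session (hypothetical model)."""
    user_id: str
    state: dict = field(default_factory=dict)
    last_used: float = 0.0
    running: bool = True


class SessionPool:
    """Keeps one isolated session per user and hibernates idle ones."""

    def __init__(self, idle_seconds: float, clock=time.monotonic):
        self.idle_seconds = idle_seconds
        self.clock = clock  # injectable so the sketch can be tested
        self.sessions: dict[str, Session] = {}

    def call(self, user_id: str, key: str, value: str) -> Session:
        """Route a request to the user's session, resuming it if needed."""
        session = self.sessions.get(user_id)
        if session is None:
            session = Session(user_id=user_id)
            self.sessions[user_id] = session
        session.running = True  # resume: earlier state is still attached
        session.state[key] = value
        session.last_used = self.clock()
        return session

    def hibernate_idle(self) -> list[str]:
        """Stop sessions idle longer than the threshold, keeping state."""
        now = self.clock()
        stopped = []
        for session in self.sessions.values():
            if session.running and now - session.last_used > self.idle_seconds:
                session.running = False
                stopped.append(session.user_id)
        return stopped
```

Keying the pool by `user_id` is what gives each user a separate state dict here; in a hosted runtime that boundary would be enforced by process or container isolation instead.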

Product Usage Case

· Enterprise Integration Hub: An enterprise team can use Metorial to build a central hub that connects their AI agents to critical business tools like Salesforce. This allows their AI assistants to pull customer data, update records, and automate workflows across departments, solving the problem of siloed information and inefficient manual data entry. The value is in streamlining business operations and enhancing productivity.

· Startup AI Agent Development: A startup building an AI chatbot for customer support can use Metorial to quickly integrate it with their knowledge base, CRM, and ticketing system. Instead of spending weeks on infrastructure, they can get their AI agent connected and functional in hours, solving the problem of slow time-to-market for new AI features. The value is in rapid prototyping and faster product iteration.

· Personalized AI Assistant: A developer can use Metorial to build a personal AI assistant that can interact with their Google Drive, calendar, and email. This assistant could automatically summarize documents, schedule meetings, or draft emails based on context. This solves the problem of managing multiple disparate applications by providing a unified AI interface. The value is in enhanced personal productivity and convenience.

· Data Analysis and Reporting: An analytics team can use Metorial to connect their AI models to various databases and data warehouses. Their AI can then automatically fetch data, perform complex analyses, and generate reports without manual intervention. This solves the problem of time-consuming data retrieval and preparation for AI-driven insights. The value is in accelerated data analysis and decision-making.

2

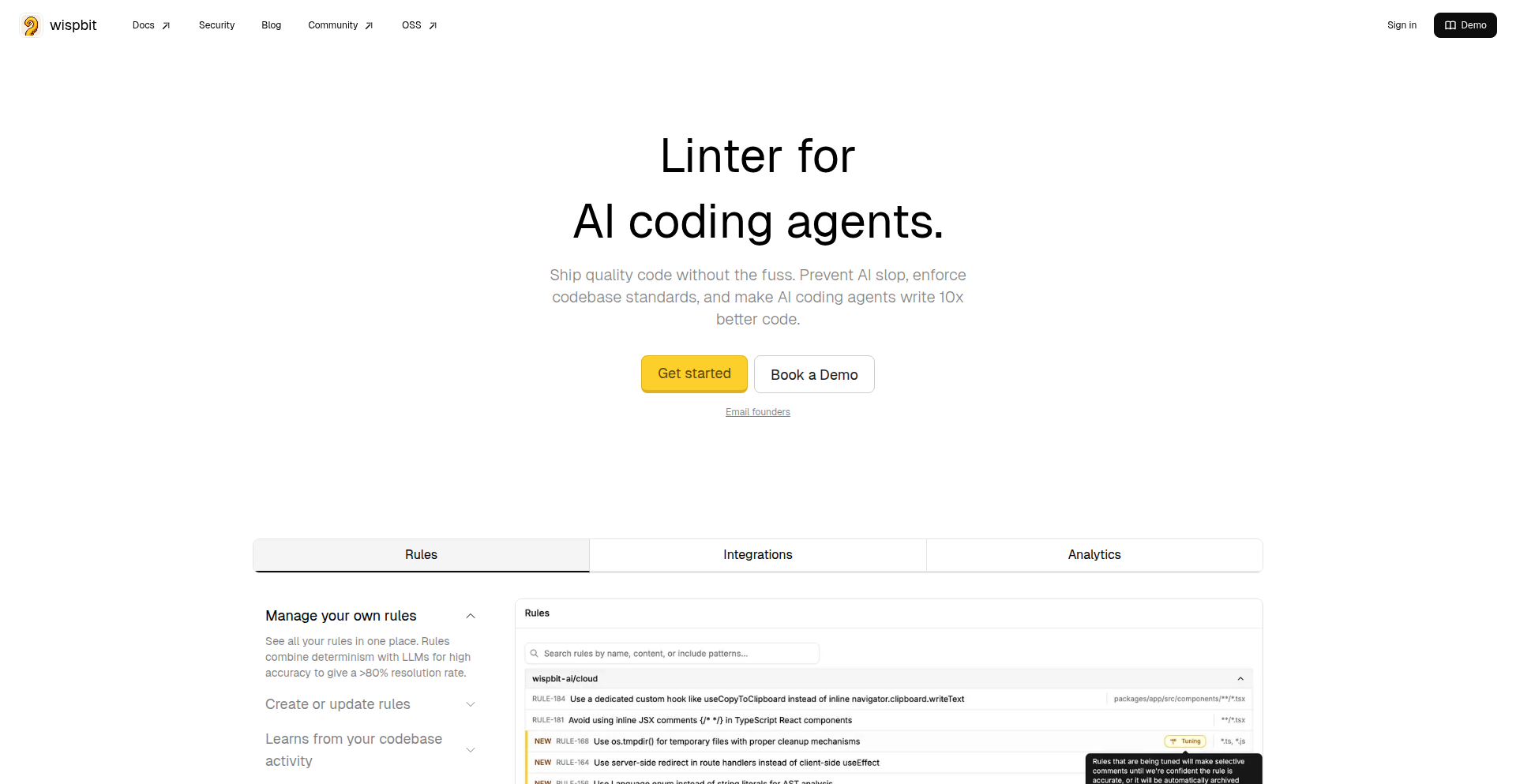

CodeGuardian AI

Author

dearilos

Description

CodeGuardian AI is an intelligent code standard enforcement tool that leverages AI to automatically generate and maintain codebase rules. It addresses the challenge of increasing code output from AI coding assistants by providing a system to ensure code quality and consistency, acting as a portable rulebook for developers and AI agents alike.

Popularity

Points 29

Comments 14

What is this product?

CodeGuardian AI is a sophisticated linter designed to keep your codebase standards consistent, especially in the era of AI-assisted coding. It works by intelligently scanning your existing code to discover established patterns and automatically creates a set of rules based on these findings. These rules adapt as your codebase evolves and can be manually tweaked. The innovation lies in its AI-powered rule generation and its agent-based codebase crawling, inspired by advanced AI research architectures like Anthropic's agents. It also analyzes historical code review comments to refine its rule-making process, ensuring practical and effective standards. This means you get a living, breathing set of guidelines that truly reflect how your team writes code, preventing the 'slop' that can arise from unmanaged AI code generation. So, why is this useful to you? It automates the tedious and often inconsistent process of defining and enforcing code quality, saving you time and reducing the cognitive load of maintaining high standards.

How to use it?

Developers can integrate CodeGuardian AI into their workflow in several ways. Primarily, it acts as a powerful code review assistant, flagging deviations from established standards before code is merged. Additionally, a command-line interface (CLI) allows developers to run these generated rules locally, directly within their IDE or in pre-commit hooks. This enables proactive error detection and correction right as code is being written. The system's ability to create a 'portable rules file' means these standards can be easily shared across projects or even used by AI coding agents to guide their output from the start. The integration is designed to be seamless, fitting into existing development pipelines without significant disruption. So, how does this benefit you? You can catch code quality issues earlier, ensure consistency across your team, and even guide AI coding tools to produce better code from the get-go, leading to cleaner, more maintainable projects.

Product Core Function

· AI-powered rule generation: Automatically creates coding standards by analyzing existing code patterns, reducing manual effort and ensuring relevance. This is valuable for quickly establishing and maintaining project-specific coding guidelines, saving developers from manually defining tedious rules.

· Dynamic rule updates: Rules adapt to changes in the codebase and evolving standards, ensuring ongoing relevance and effectiveness. This is useful for keeping code quality high as projects grow and development practices change over time without constant manual intervention.

· Code review integration: Enforces generated rules during the code review process, catching potential issues before merging. This is critical for preventing the introduction of inconsistent or low-quality code into the main codebase, improving overall project health.

· Local CLI validation: Allows developers to run the same rules locally, enabling real-time feedback and error correction during development. This is beneficial for developers to fix issues immediately as they write code, rather than waiting for a code review.

· Agent-based codebase crawling: Employs a distributed agent system to efficiently scan and understand complex codebases for pattern discovery. This advanced technical approach ensures thorough analysis of even large codebases, leading to more comprehensive and accurate rule generation.

· Historical pull request analysis: Learns from past code review feedback to refine rule creation and improve accuracy. This feature is valuable for leveraging collective team knowledge and past discussions to create more effective and practical coding standards.
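To make the idea of discovering rules from existing code concrete, here is a minimal sketch that infers a function-naming convention from sample sources and flags deviations. It is an assumption-laden stand-in, not how CodeGuardian AI works: the tool is described as AI-driven, whereas this uses plain counting over Python's `ast` module, and the helper names (`infer_rule`, `violations`) are invented for the example.

```python
import ast
import re
from collections import Counter

SNAKE = re.compile(r"^_*[a-z][a-z0-9_]*$")
CAMEL = re.compile(r"^_*[a-z][a-z0-9]*(?:[A-Z][a-z0-9]*)+$")


def naming_style(name: str) -> str:
    """Classify a name; single lowercase words count as snake_case."""
    if CAMEL.match(name):
        return "camelCase"
    if SNAKE.match(name):
        return "snake_case"
    return "other"


def function_names(source: str) -> list[tuple[str, int]]:
    """Return (name, line number) for every function defined in source."""
    tree = ast.parse(source)
    return [
        (node.name, node.lineno)
        for node in ast.walk(tree)
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))
    ]


def infer_rule(sources: list[str]) -> str:
    """Pick the naming style used most often across the existing code."""
    counts = Counter(
        naming_style(name) for src in sources for name, _ in function_names(src)
    )
    return counts.most_common(1)[0][0]


def violations(source: str, rule: str) -> list[tuple[str, int]]:
    """List functions in new code that do not follow the inferred rule."""
    return [(n, ln) for n, ln in function_names(source) if naming_style(n) != rule]
```

The same two-step shape (derive a rule from the codebase, then check new code against it) is what lets the rule set follow the code's de facto standards instead of a hand-written style guide.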

Product Usage Case

· Scenario: A large team is onboarding new developers, and maintaining consistent coding style is becoming a challenge with the increased volume of AI-generated code. How to solve: CodeGuardian AI can analyze the existing 'good' code, generate a set of style and quality rules, and then be used to automatically flag any deviations in new code submissions, ensuring new team members and AI tools adhere to established norms. This makes onboarding smoother and keeps the codebase uniform.

· Scenario: Developers are frequently using AI coding assistants like GitHub Copilot or Cursor, leading to code that sometimes lacks context or follows inconsistent patterns. How to solve: CodeGuardian AI can act as a validation layer for AI-generated code. Its rules can be fed to these AI tools, guiding their output to be more aligned with project standards from the beginning, or used to lint the generated code before human review. This reduces the time spent on correcting AI-generated code and improves its initial quality.

· Scenario: A project has evolved over time, and its original coding standards are outdated or not fully adhered to. How to solve: CodeGuardian AI can be run against the current codebase to discover the 'de facto' standards that developers are actually using and generate a new, up-to-date set of rules. This allows for a realistic and adaptable enforcement of coding guidelines that reflect the current state of the project, rather than relying on potentially obsolete documentation.

3

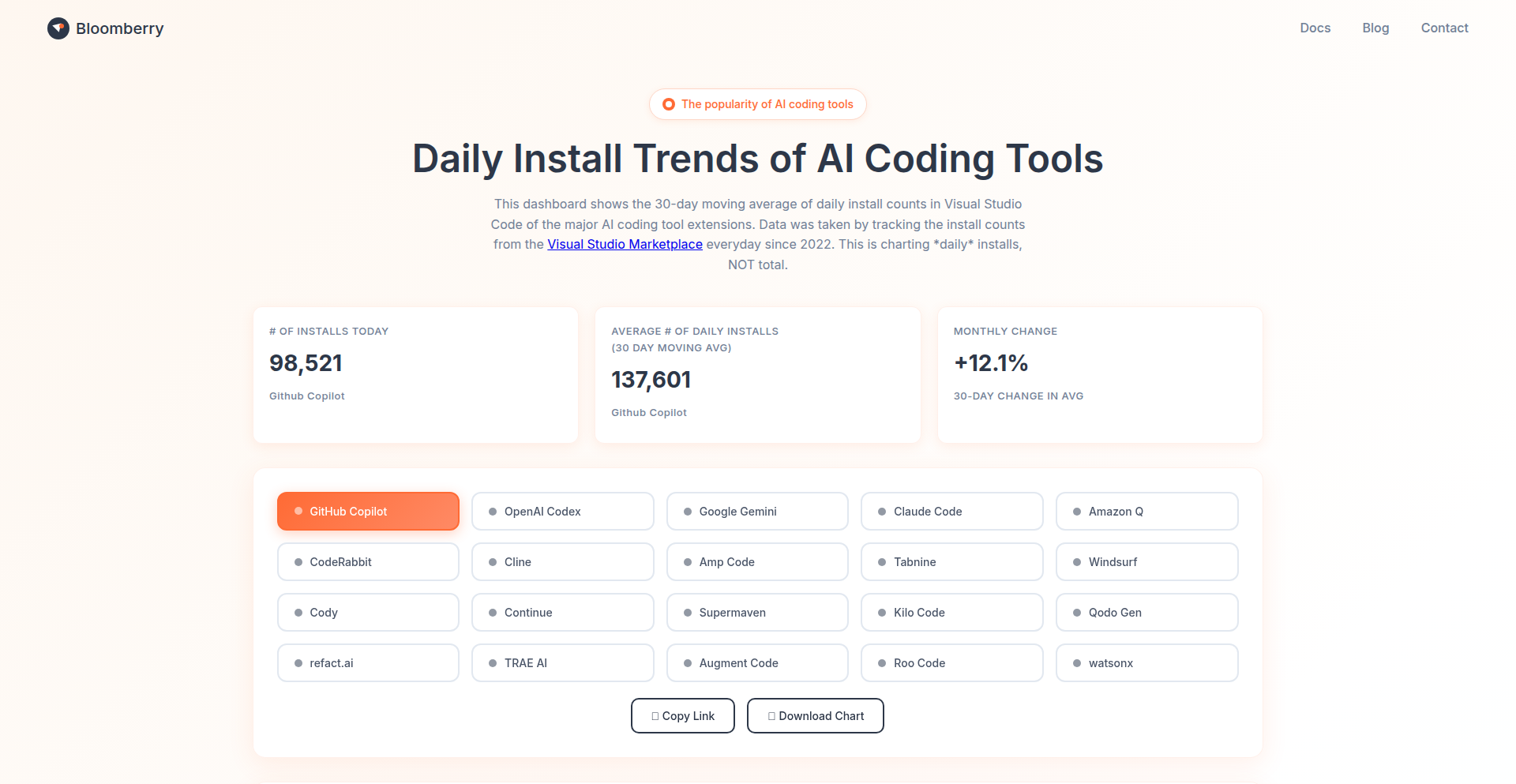

AICodeExtensionPulse

Author

AznHisoka

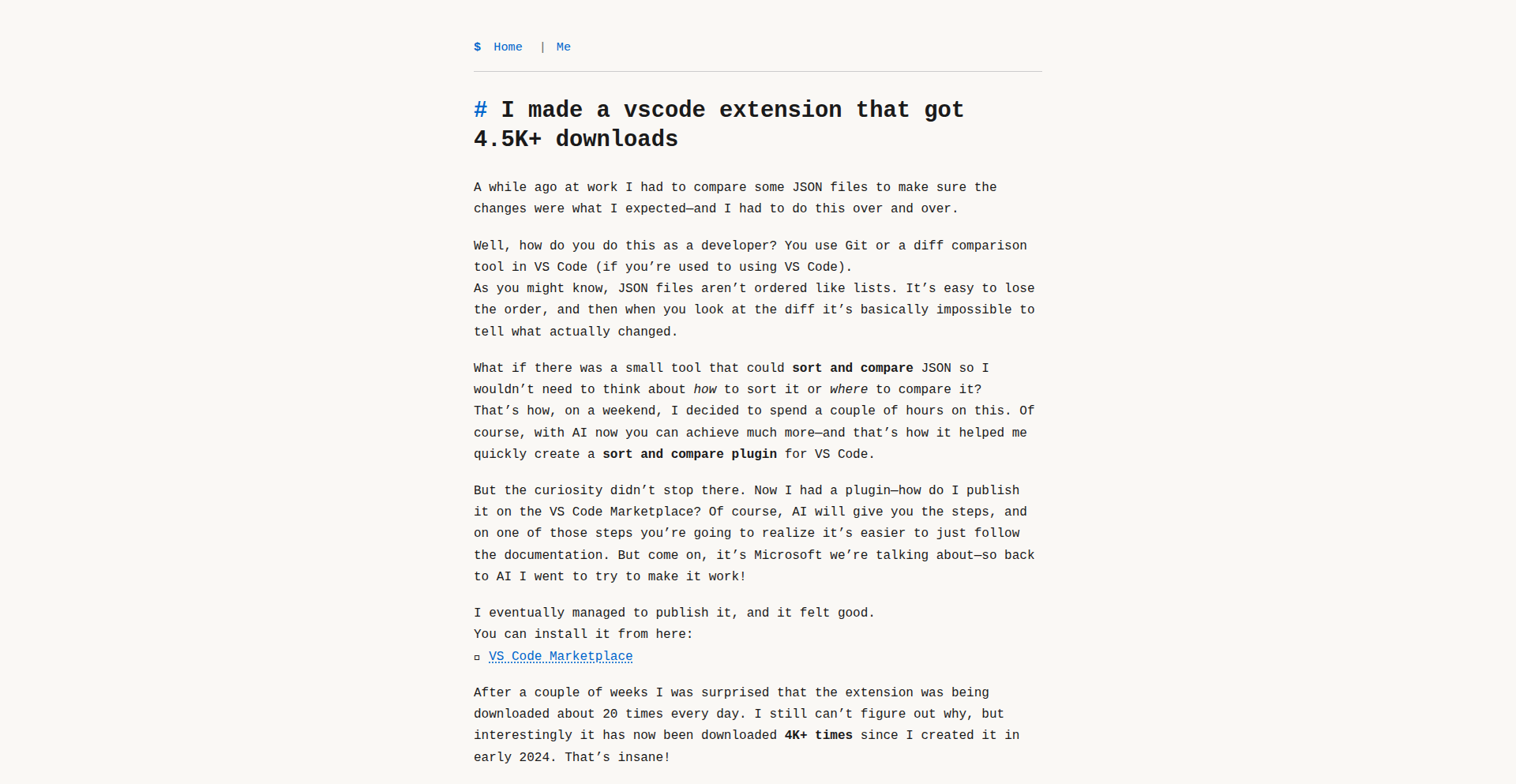

Description

This project is an interactive dashboard that visualizes the daily install counts of AI coding extensions in the Visual Studio Code marketplace. It allows users to compare the growth of various AI coding tools like GitHub Copilot, Claude Code, and OpenAI Codex over time, highlighting key events such as pricing changes and major releases. The dashboard also includes a proxy for Cursor's growth by tracking discussion forum activity, offering a unique perspective on developer adoption of AI coding assistants.

Popularity

Points 24

Comments 10

What is this product?

AICodeExtensionPulse is a data visualization tool designed to track and display the adoption rate of AI coding extensions within the VS Code environment. It leverages public marketplace data to show daily installation trends, providing insights into which AI coding tools are gaining traction among developers. The core innovation lies in its ability to overlay multiple extension trends on a single chart, allowing for direct comparison of their popularity and growth trajectories. It also incorporates event markers for significant product milestones, helping to correlate these events with changes in user adoption. For non-technical users, this means understanding which AI coding tools are most popular and how they're evolving in real-time, which can inform decisions about which tools to adopt or invest in.

How to use it?

Developers can use AICodeExtensionPulse by visiting the interactive dashboard. They can select specific AI coding extensions from the VS Code marketplace (such as GitHub Copilot, Claude Code, or others) and view their daily install counts over a chosen period. The platform allows users to overlay multiple extension trends on the same graph to compare their performance side-by-side. Users can also see markers for important dates related to each extension, like pricing updates or new feature releases, and observe how these events impacted install numbers. This empowers developers to make informed choices about which AI coding tools best suit their workflow and to stay updated on the competitive landscape of AI-assisted development.

Product Core Function

· Daily install count visualization: Shows the number of new installations for VS Code AI coding extensions each day, allowing developers to track the real-time popularity and growth of different tools. This helps in understanding which AI assistants are most actively being adopted by the developer community.

· Extension comparison overlay: Enables users to compare the install trends of multiple AI coding extensions on a single chart. This is valuable for developers trying to decide between different AI coding tools, as it provides a direct visual comparison of their adoption rates and growth momentum.

· Event timeline integration: Marks significant dates such as pricing changes, major releases, or feature updates directly on the charts. This helps developers understand the impact of these events on user adoption, providing context for fluctuations in install numbers and revealing how product evolution affects developer interest.

· Cursor growth proxy: Tracks discussion forum activity for Cursor, a standalone AI-assisted editor, as a proxy for its growth. This offers a way to gauge the interest and adoption of Cursor even though it's not a VS Code extension, providing a more comprehensive view of the AI coding tool landscape.
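The core calculations behind such a dashboard can be sketched in a few lines. The sketch assumes the raw data is a cumulative install total sampled once per day, which the source does not state, and the function names and sample figures are made up for illustration.

```python
from datetime import date


def daily_installs(snapshots: dict[date, int]) -> dict[date, int]:
    """Difference consecutive cumulative totals into per-day installs.

    Assumes one snapshot per calendar day with no gaps.
    """
    days = sorted(snapshots)
    return {
        today: snapshots[today] - snapshots[yesterday]
        for yesterday, today in zip(days, days[1:])
    }


def change_around_event(daily: dict[date, int], event: date, window: int = 2) -> float:
    """Ratio of mean daily installs after an event to the mean before it."""
    before = [n for d, n in daily.items() if 0 < (event - d).days <= window]
    after = [n for d, n in daily.items() if 0 <= (d - event).days < window]
    return (sum(after) / len(after)) / (sum(before) / len(before))


# Made-up cumulative totals for one hypothetical extension.
snapshots = {
    date(2025, 10, 1): 1000,
    date(2025, 10, 2): 1100,
    date(2025, 10, 3): 1200,
    date(2025, 10, 4): 1500,
    date(2025, 10, 5): 1800,
}
daily = daily_installs(snapshots)
ratio = change_around_event(daily, event=date(2025, 10, 4))
```

A ratio above 1 suggests installs sped up after the marked event, though a comparison this simple cannot separate the event's effect from an underlying trend.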

Product Usage Case

· A developer looking to adopt a new AI coding assistant can use AICodeExtensionPulse to compare the daily install trends of GitHub Copilot, Claude Code, and other popular options. By observing which tools are experiencing consistent growth, they can make a data-driven decision about which one to integrate into their workflow, ensuring they choose a tool with strong community backing and active development.

· A product manager at an AI coding tool company can use this dashboard to monitor their product's install trends against competitors. They can identify periods of accelerated growth, correlate it with recent product updates, and also see how competitors' actions (like pricing changes) affect overall market adoption. This insight helps in strategizing future product development and marketing efforts.

· An independent researcher or hobbyist interested in the evolution of AI in software development can use AICodeExtensionPulse to track the overall adoption curve of AI coding extensions. They can observe the emergence of new tools and the decline of older ones, gaining a historical perspective on how developer preferences and technology have shifted over time.

4

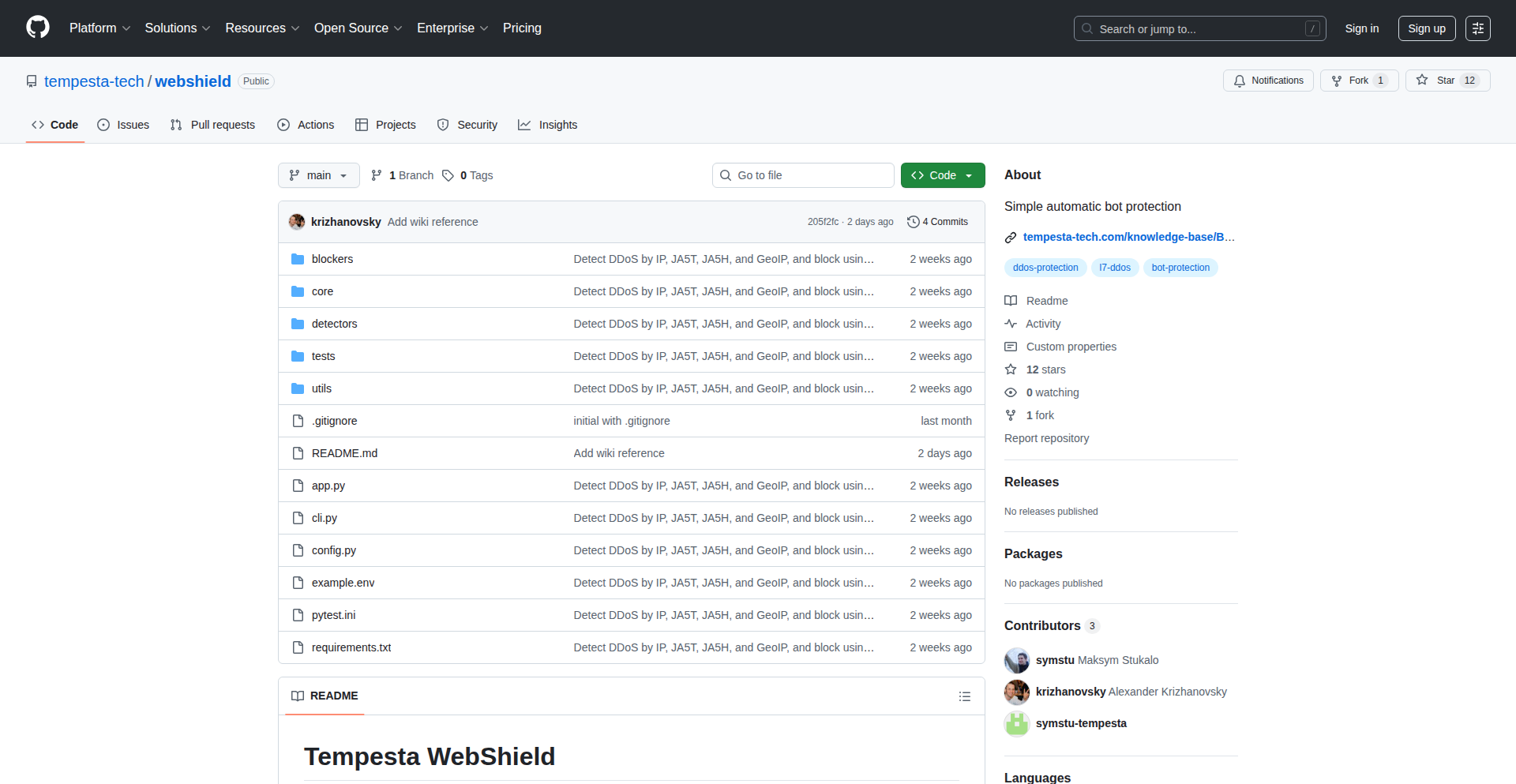

BotSentry Analytics

Author

krizhanovsky

Description

A Python-based proof-of-concept for analyzing web server access logs to identify and dynamically block malicious bots, including application-level DDoS bots and web scrapers. It leverages client fingerprinting (JA5), real-time log ingestion into ClickHouse, and adaptive blocking strategies. This project offers a sophisticated approach to bot mitigation beyond simple IP blocking, providing enhanced security for web applications.

Popularity

Points 29

Comments 3

What is this product?

BotSentry Analytics is a daemon that monitors web server access logs to detect and block unwanted bots. It works by learning typical traffic patterns, such as request rates and error responses from individual clients. When unusual activity is detected, it uses advanced client fingerprinting (JA5) and IP address analysis to identify suspicious behavior. JA5 is like a unique digital signature for a client's connection, similar to how your fingerprint is unique to you, but for network traffic. The system then analyzes these fingerprints and IPs against historical data to pinpoint malicious actors. Finally, it automatically updates your web proxy's configuration to block these identified threats in real-time, without needing to restart the server. The core innovation lies in its ability to dynamically learn and adapt to evolving bot tactics, offering a more robust defense than static rules. This means it can effectively stop sophisticated attacks like distributed denial-of-service (DDoS) from application-level bots and aggressive web scrapers, thus protecting your web services from being overwhelmed or data being unfairly extracted. The direct logging to ClickHouse, a powerful database for analytics, allows for rapid querying and analysis of large volumes of log data, which is crucial for real-time threat detection and response.

How to use it?

Developers can integrate BotSentry Analytics into their existing web infrastructure. The core requirement is a web server or accelerator that supports JA5 client fingerprinting (or can be configured to generate similar data) and can write access logs directly to ClickHouse. If you're not using Tempesta FW, you'll need to set up a pipeline to feed your access logs into ClickHouse. Once configured, the BotSentry daemon runs, learns normal traffic, identifies anomalies, and automatically applies IP or JA5 hash blocking rules to your web proxy (e.g., Nginx, Envoy, or Tempesta FW) on-the-fly. This allows for immediate protection against bots once they are identified, without manual intervention. The practical benefit for developers is a significantly reduced burden in managing bot traffic and the associated impact on server performance and security. It provides an automated layer of defense that adapts to new threats.

Product Core Function

· Real-time Traffic Profiling: Learns normal request rates, error responses, and data transfer patterns from clients to establish a baseline. This helps in quickly spotting deviations that might indicate malicious activity: once the system knows what is normal for your users, abnormal behavior is easy to flag.

· JA5 Client Fingerprinting: Utilizes unique network signatures (JA5 hashes) to identify and differentiate clients beyond just their IP addresses. This is critical for detecting sophisticated bots that can change IPs but maintain similar connection characteristics, offering a more precise identification method.

· ClickHouse Log Ingestion: Directly writes access logs to ClickHouse for efficient storage and rapid querying of large datasets. This ensures that analytical queries for threat detection are fast, allowing for near real-time blocking of malicious actors.

· Anomaly Detection and Model Search: Identifies spikes in traffic characteristics (using z-scores) and then searches through historical data to find patterns associated with these anomalies, such as top clients generating errors or high request volumes. This means the system intelligently investigates unusual behavior to understand its nature.

· Dynamic Blocking: Automatically updates web proxy configurations with identified malicious IP addresses or JA5 hashes. This on-the-fly blocking capability ensures that threats are neutralized immediately, preventing further impact on your web services without server downtime.

· Adaptive Threat Intelligence: Continuously learns and refines its understanding of bot behavior by verifying identified threat models against historical data. This allows the system to adapt to new bot strategies and maintain effective protection over time.
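The z-score step mentioned in the list above can be sketched as follows. This is a simplified illustration, not the project's code: it works on in-memory numbers instead of ClickHouse queries, the function names and the threshold of 3 are assumptions, and the keys stand in for either IP addresses or fingerprint hashes.

```python
from statistics import mean, stdev


def zscore(history: list[float], current: float) -> float:
    """Standard score of `current` against a baseline of past observations."""
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation; needs >= 2 points
    if sigma == 0:
        return 0.0 if current == mu else float("inf")
    return (current - mu) / sigma


def clients_to_block(
    baseline_totals: list[float],
    window_by_client: dict[str, int],
    threshold: float = 3.0,
    limit: int = 3,
) -> list[str]:
    """Return the heaviest clients, but only when the window is a spike."""
    total = sum(window_by_client.values())
    if zscore(baseline_totals, total) < threshold:
        return []  # traffic looks normal, block nobody
    ranked = sorted(window_by_client, key=window_by_client.get, reverse=True)
    return ranked[:limit]
```

Gating on the aggregate z-score first means the per-client ranking only runs during an anomaly, so a heavy but normal client is not blocked on a quiet day. A production system would also want to check each candidate against its own history before blocking it.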

Product Usage Case

· Mitigating Application-Level DDoS Attacks: A web application experiencing a surge in user requests that are legitimate-looking but aimed at overwhelming the server can be protected. BotSentry identifies the JA5 fingerprints or IP ranges exhibiting abnormally high request rates or error patterns and blocks them dynamically, preventing service disruption.

· Blocking Sophisticated Web Scrapers: A website being scraped aggressively by bots that rotate IP addresses can be secured. BotSentry's JA5 fingerprinting can identify these bots even if their IPs change, and block them based on their connection characteristics, protecting intellectual property and reducing server load.

· Identifying and Blocking Password Crackers: A web service with a login portal that is being targeted by brute-force password attacks can benefit. BotSentry can detect a high rate of failed login attempts associated with specific JA5 hashes or IP addresses and automatically block them, preventing account compromise.

· Securing API Endpoints: An API that is being bombarded with excessive automated requests can be protected. BotSentry analyzes the API access logs, identifies bot-like request patterns, and dynamically blocks the offending clients, ensuring API availability and preventing abuse.

· Protecting E-commerce Sites from Fraudulent Activity: E-commerce platforms can use BotSentry to identify and block bots attempting to exploit pricing vulnerabilities or perform automated fraudulent transactions. By analyzing request patterns and client fingerprints, suspicious activities can be stopped before they cause financial loss.

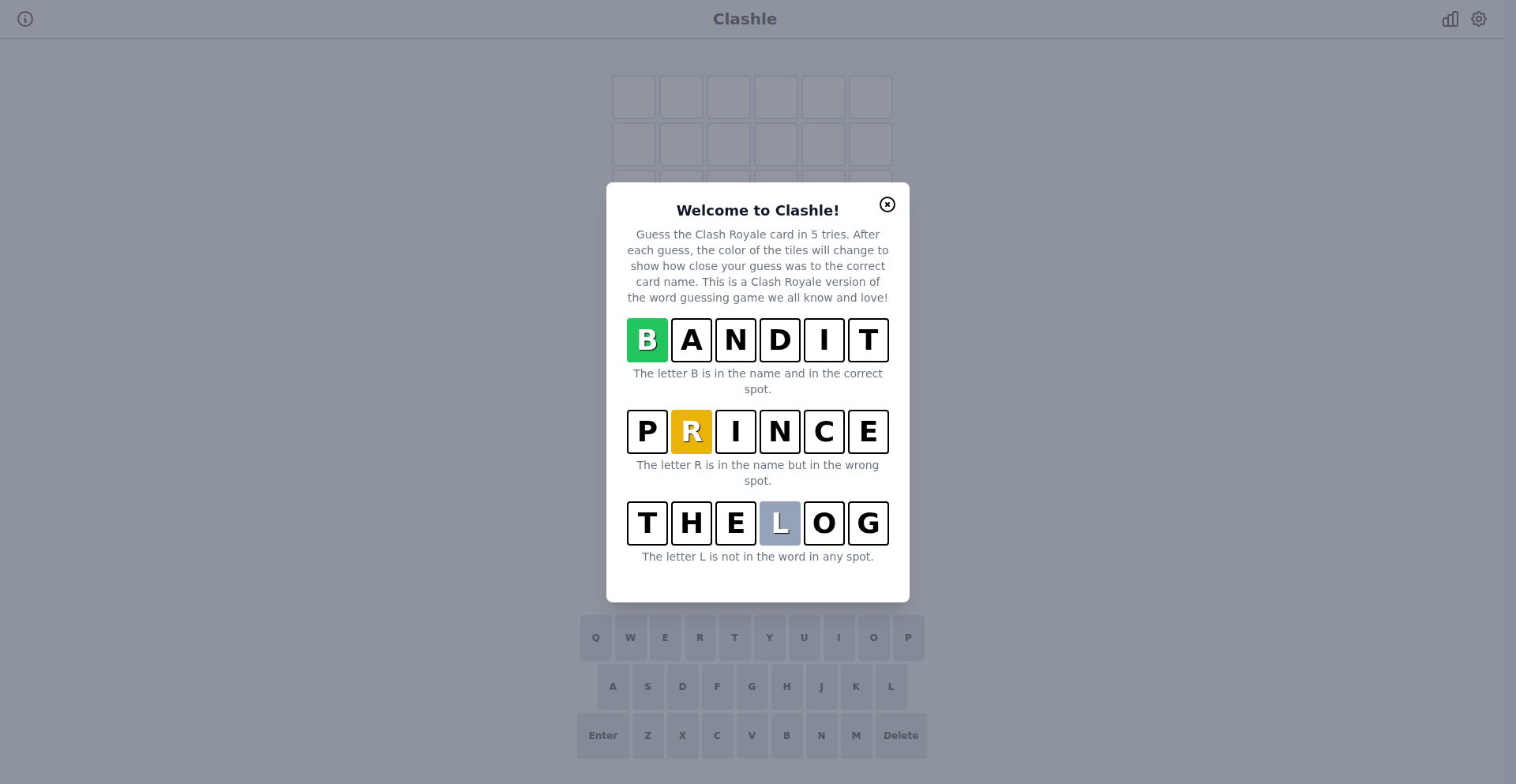

5

ClashRoyaleCardGuessr

Author

404NotBoring

Description

A daily guessing game inspired by Wordle, but for Clash Royale cards. It leverages a clever algorithmic approach to present players with clues based on a hidden card, offering a novel way to engage with the game's mechanics and test card knowledge. The innovation lies in translating complex game attributes into digestible, evolving hints for a deductive puzzle.

Popularity

Points 28

Comments 2

What is this product?

ClashRoyaleCardGuessr is a web-based daily puzzle game where players guess a secret Clash Royale card. It works by presenting a series of clues about the hidden card's attributes. These attributes are programmatically determined and revealed incrementally. For example, it might initially hint at the card's 'type' (e.g., 'Troop' or 'Spell'), then its 'cost' or 'rarity', and progressively narrow down possibilities until the player guesses correctly. The technical innovation is in how the game selects and orders these clues to keep the puzzle challenging while still letting players reason their way to the answer without the card being revealed directly. It's a neat application of deductive puzzle design on top of structured card data.

How to use it?

Developers can use this project as a fun example of how to gamify specific domain knowledge. For instance, if you have a dataset of your own product's features or a set of technical concepts, you could adapt this logic to create a similar guessing game for your team or community. It's built using web technologies, so integration would typically involve embedding it within a webpage or a dedicated application. The core idea is to have a backend system that knows the answer and a frontend that progressively reveals clues and accepts guesses. This offers a fun, low-stakes way to reinforce learning or build community engagement around a shared interest.

Product Core Function

· Daily hidden card selection: The system chooses a new Clash Royale card each day, ensuring fresh content and a consistent challenge. This provides a reliable source of daily engagement for players.

· Progressive clue generation: Based on the selected card, the game generates and reveals clues about its attributes (e.g., cost, type, rarity, troop role). This is valuable because it guides the player's deduction process, making the puzzle solvable through logic rather than random guessing.

· Player input and validation: Players submit their guesses, and the system validates them against the hidden card. This core loop is essential for game progression and provides immediate feedback to the player, keeping them invested.

· Win/Loss state management: The game tracks whether the player has guessed the card correctly within a set number of tries. This is important for providing a sense of accomplishment and defining the puzzle's boundaries, contributing to a satisfying game experience.
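The four functions listed above fit in a short sketch. None of this is taken from the project: the card entries are a tiny illustrative sample whose values were not checked against the game, and the date-based selection, clue order, and try limit are assumptions about one reasonable way to build such a puzzle.

```python
from datetime import date

# Illustrative sample only; a real game would load the full card list.
CARDS = [
    {"name": "Knight", "type": "Troop", "cost": 3, "rarity": "Common"},
    {"name": "Fireball", "type": "Spell", "cost": 4, "rarity": "Rare"},
    {"name": "Hog Rider", "type": "Troop", "cost": 4, "rarity": "Rare"},
]
CLUE_ORDER = ["type", "rarity", "cost"]  # broadest clue first


def card_of_the_day(day: date) -> dict:
    """Pick the same card for everyone on a given date."""
    return CARDS[day.toordinal() % len(CARDS)]


def visible_clues(card: dict, wrong_guesses: int) -> dict:
    """Reveal one more attribute after each wrong guess."""
    shown = CLUE_ORDER[: wrong_guesses + 1]
    return {attr: card[attr] for attr in shown}


def play(card: dict, guesses: list[str], max_tries: int = 4) -> str:
    """Validate guesses in order and report the final win or loss state."""
    for attempt, guess in enumerate(guesses[:max_tries], start=1):
        if guess.strip().lower() == card["name"].lower():
            return f"won in {attempt}"
    return "lost"
```

Deriving the card from the date keeps the backend stateless: every player sees the same puzzle without the server storing a daily pick.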

Product Usage Case

· A developer could integrate this into a team's internal wiki or knowledge base to create a fun way for new hires to learn about different product features or internal tools. By guessing a 'feature' of the day, they learn its properties without feeling like a dry training session.

· A game developer could adapt the core logic to create guessing games for other video games, testing players' knowledge of characters, items, or abilities in their favorite titles. This directly addresses the need for engaging community interaction and content creation.

· An educator could use this as a teaching tool to illustrate concepts of deduction, data analysis, and algorithmic clue generation in a relatable context. Students can learn by playing, understanding how complex data can be simplified into solvable puzzles.

· A content creator could embed this game on their gaming blog or fan site to increase user interaction and keep visitors returning daily. It offers a compelling reason for fans to visit and participate regularly, fostering a loyal audience.

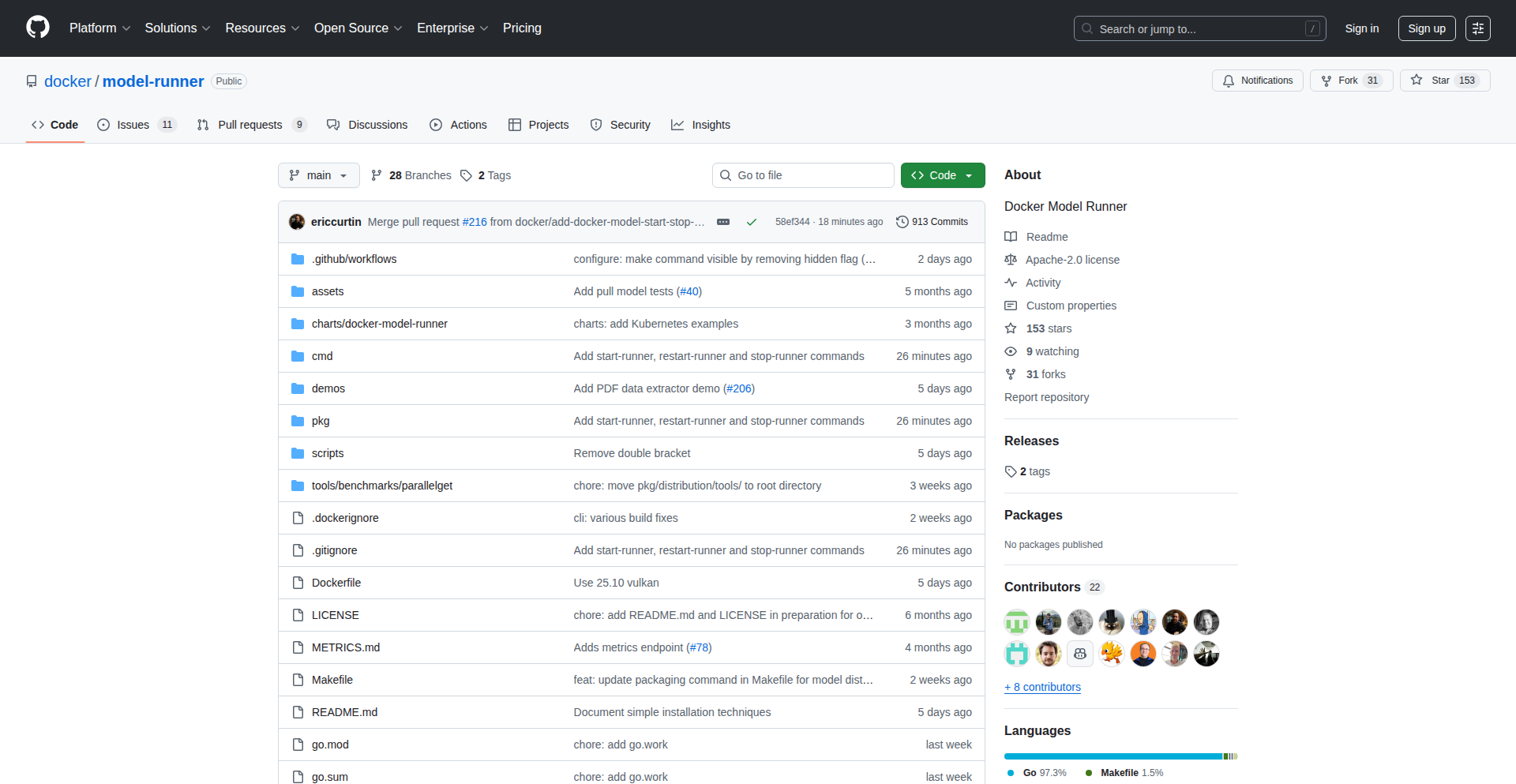

6

LLM-Runner-X

Author

ericcurtin

Description

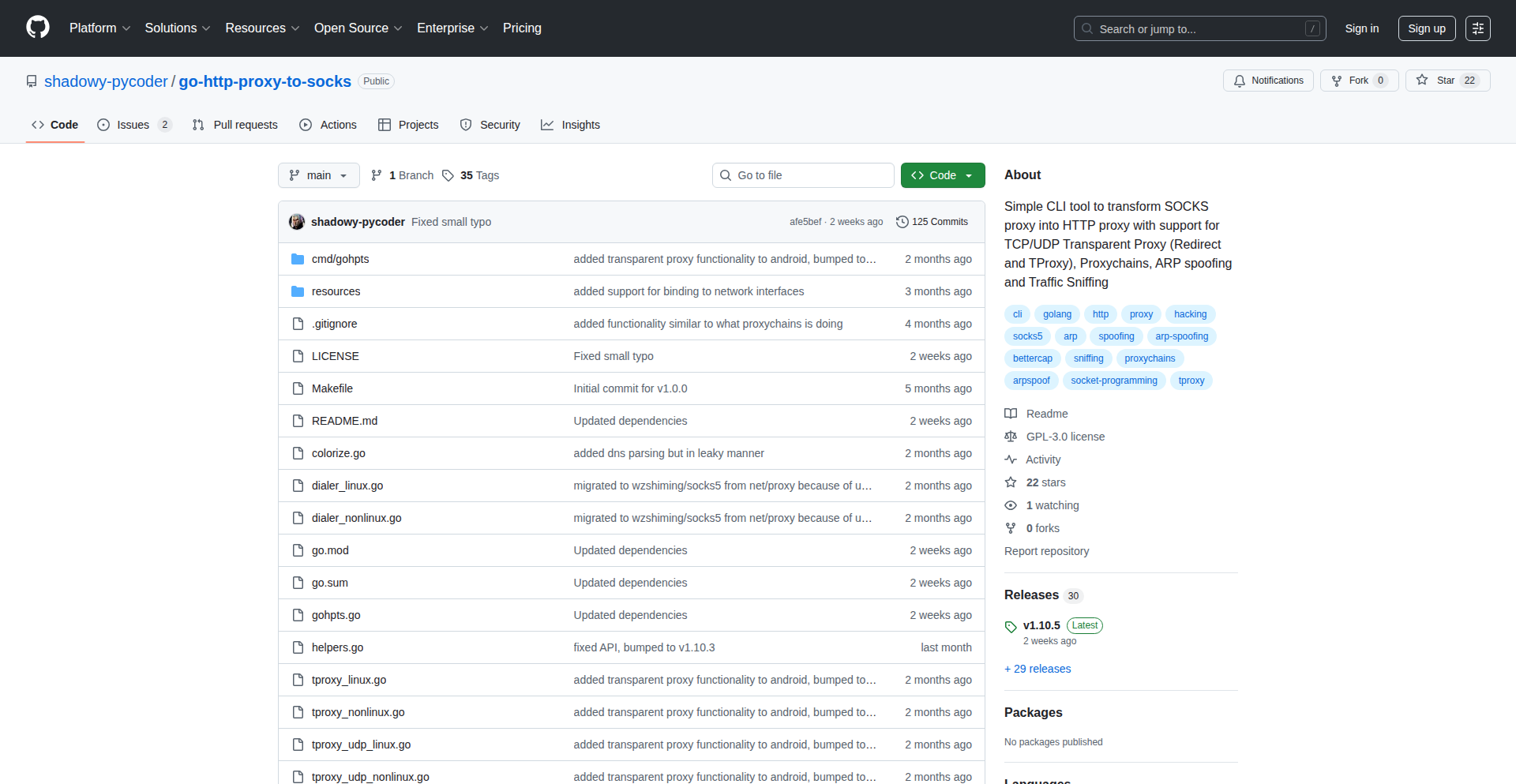

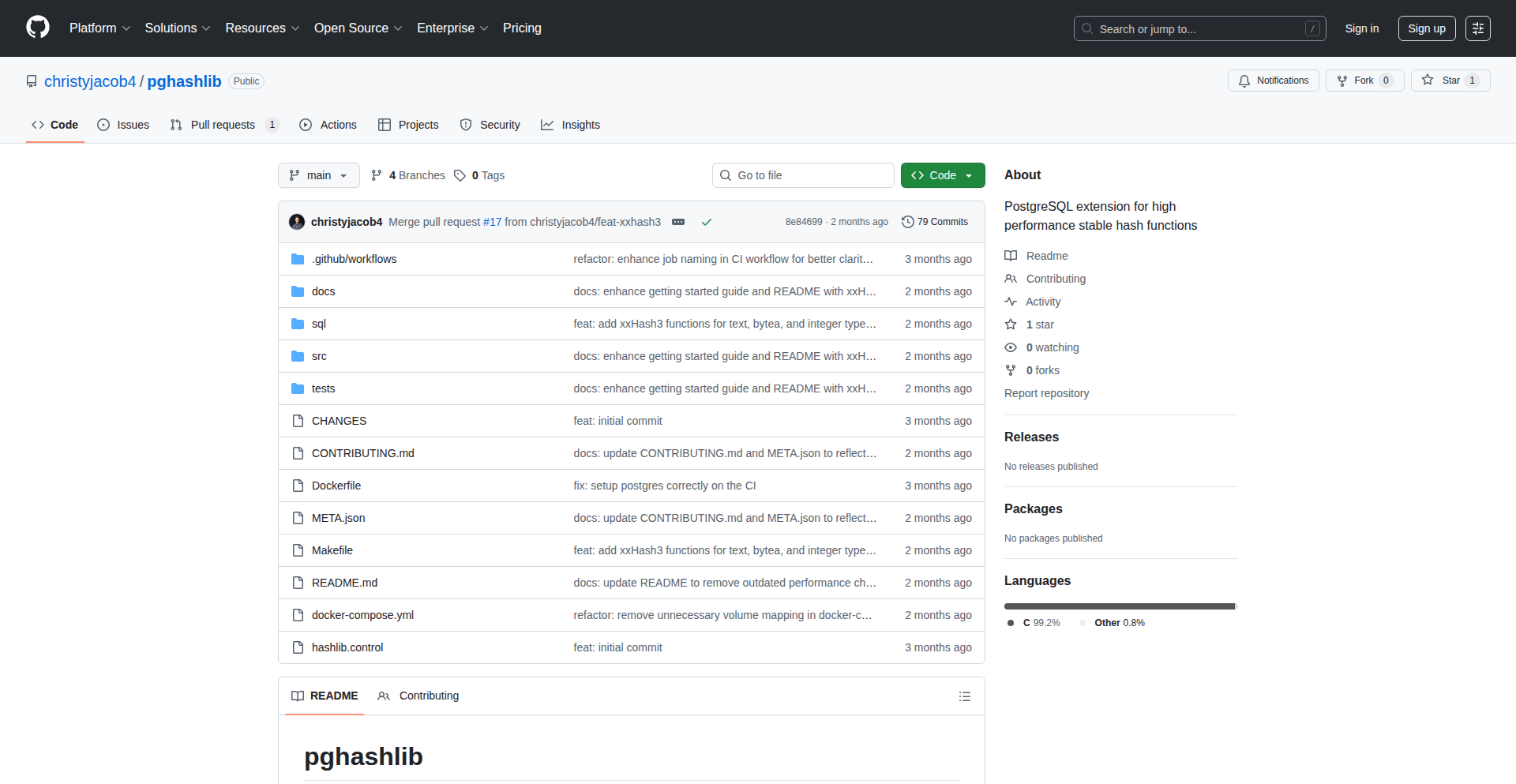

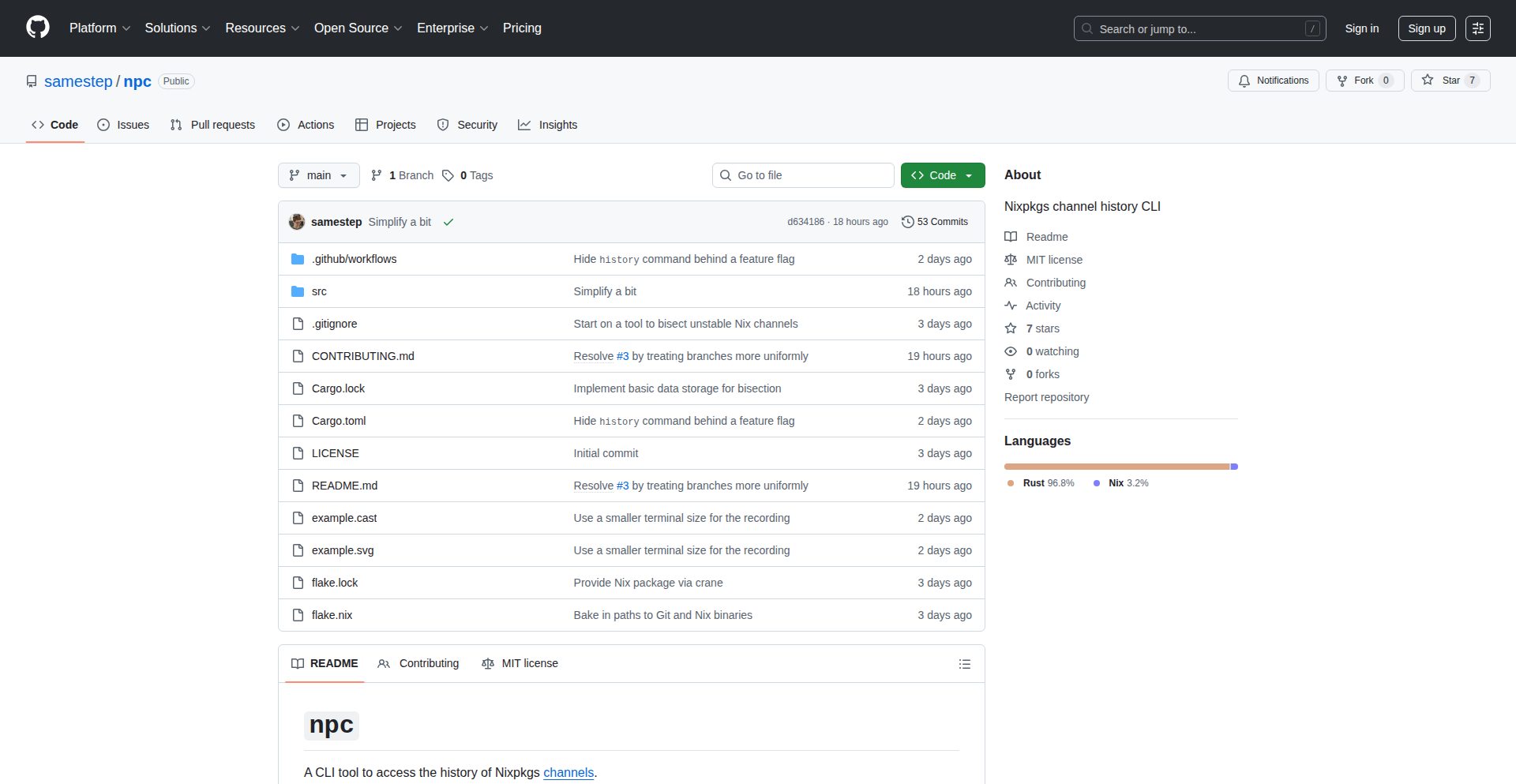

LLM-Runner-X is an open-source tool that simplifies downloading and running local Large Language Models (LLMs). It acts as a universal adapter, allowing developers to interact with various LLM engines consistently. A key innovation is its ability to package and distribute models via OCI registries like Docker Hub, making them as easy to share as containerized applications. Recent updates include Vulkan and AMD GPU support, broadening accessibility and improving performance on diverse hardware, alongside a monorepo refactor to welcome new contributors.

Popularity

Points 17

Comments 9

What is this product?

LLM-Runner-X is a backend-agnostic tool designed to download and execute local Large Language Models (LLMs). Imagine it as a single remote control that can operate many different types of smart TVs (LLM engines). Its core innovation lies in providing a unified interface to interact with models, abstracting away the complexities of individual LLM engines. It also introduces a novel approach by leveraging OCI registries, such as Docker Hub, for model distribution. This means you can treat LLMs like any other containerized application, making them easily discoverable, shareable, and runnable directly from a central registry. The recent addition of Vulkan and AMD GPU support is a significant technical leap, enabling inference on a wider array of graphics cards, thus democratizing access to powerful AI models. The project's restructuring into a monorepo also signifies a commitment to community growth by making it easier for new developers to contribute and understand the codebase.

How to use it?

Developers can use LLM-Runner-X to easily get started with local LLMs without needing to understand the intricacies of each specific model's backend. You can download curated LLMs directly from Docker Hub, where they are packaged as OCI artifacts. This allows for a streamlined workflow: pull a model like you would pull a Docker image, and then use LLM-Runner-X to run it. For integration into custom applications, developers can leverage LLM-Runner-X's API to interact with the loaded LLMs. The project's open-source nature and its focus on contributor experience mean developers can also extend its capabilities, such as adding support for new LLM backends or optimizing performance for specific hardware. For instance, if you're working on an AI-powered chatbot application, you can use LLM-Runner-X to quickly deploy and test different LLM models locally before committing to a cloud-based solution.

Product Core Function

· Local LLM Execution: Enables running large language models directly on your own hardware, offering greater control and privacy for your AI applications.

· Backend Agnosticism: Provides a single interface to interact with diverse LLM engines (like llama.cpp), reducing the need to learn multiple APIs and simplifying model integration.

· OCI Registry Integration: Allows for seamless downloading, sharing, and uploading of LLM models via registries like Docker Hub, treating AI models as easily manageable artifacts.

· Extended GPU Support (Vulkan/AMD): Enables GPU-accelerated LLM inference on a broader range of graphics cards, including those from AMD, making powerful AI more accessible on consumer hardware.

· Monorepo Architecture: Enhances code organization and maintainability, significantly lowering the barrier for new developers to contribute to the project and fostering community growth.
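
The backend-agnostic idea above can be illustrated with a small adapter pattern. This is only a sketch: `Engine`, `Runner`, `EchoEngine`, and `UpperEngine` are hypothetical names invented for illustration and are not taken from the project's codebase, and the two engines are toy stand-ins for real backends such as llama.cpp.

```python
from typing import Dict, Protocol


class Engine(Protocol):
    """Contract that every backend adapter is assumed to satisfy (hypothetical)."""

    def generate(self, prompt: str, max_tokens: int) -> str: ...


class EchoEngine:
    """Toy backend: returns the prompt, truncated to max_tokens characters."""

    def generate(self, prompt: str, max_tokens: int) -> str:
        return prompt[:max_tokens]


class UpperEngine:
    """Second toy backend, to show that callers do not change per engine."""

    def generate(self, prompt: str, max_tokens: int) -> str:
        return prompt.upper()[:max_tokens]


class Runner:
    """Single entry point that dispatches to whichever engine is registered."""

    def __init__(self) -> None:
        self._engines: Dict[str, Engine] = {}

    def register(self, name: str, engine: Engine) -> None:
        self._engines[name] = engine

    def run(self, engine_name: str, prompt: str, max_tokens: int = 64) -> str:
        if engine_name not in self._engines:
            raise ValueError(f"unknown engine: {engine_name}")
        return self._engines[engine_name].generate(prompt, max_tokens)


runner = Runner()
runner.register("echo", EchoEngine())
runner.register("upper", UpperEngine())
```

Calling `runner.run("echo", "hello")` and `runner.run("upper", "hello")` uses the same call shape for both backends, which is the property the "single remote control" analogy describes.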

Product Usage Case

· Building a private AI assistant: A developer can use LLM-Runner-X to download and run a privacy-focused LLM locally, powering a personal assistant application without sending sensitive data to the cloud.

· Rapid LLM prototyping: A researcher can quickly test different LLM architectures and their performance on their local machine by pulling various models from Docker Hub via LLM-Runner-X, accelerating their experimentation phase.

· Offline AI functionality for applications: An independent software vendor can embed LLM-Runner-X within their desktop application to provide offline AI capabilities, such as text summarization or code generation, without requiring an internet connection.

· Contributing to the LLM ecosystem: A new developer interested in AI can use the refactored monorepo structure of LLM-Runner-X to easily understand the codebase and submit their first pull request, contributing to the open-source community.

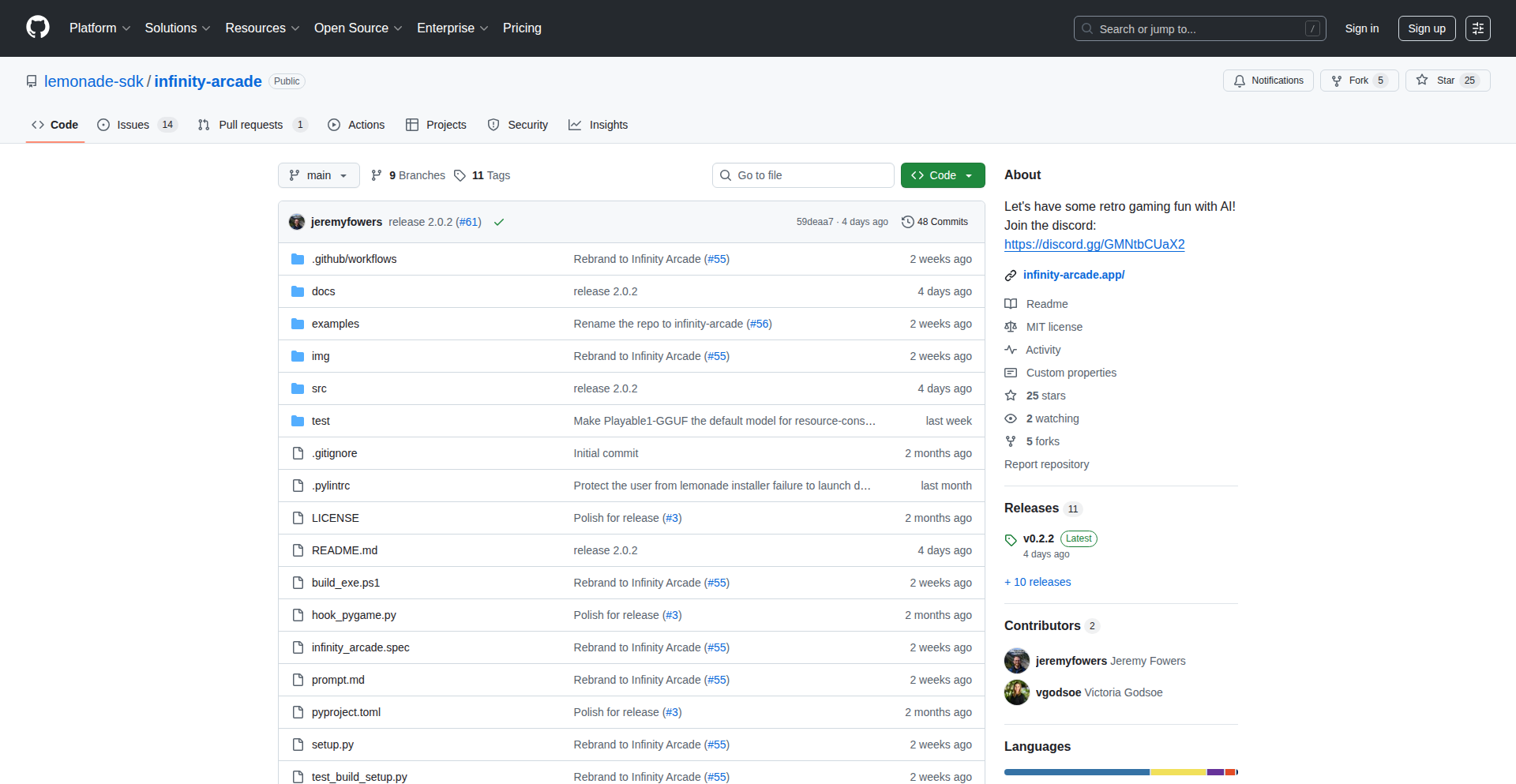

7

Infinity Arcade: Local LLM Game Dev Studio

Author

jeremyfowers

Description

Infinity Arcade is an open-source project that enables developers to generate and modify retro arcade games entirely on their local machines using smaller, more accessible Large Language Models (LLMs). It tackles the limitations of current open-source LLMs for game generation by providing a specialized model and a user-friendly application with dedicated agents for creating, remixing, and debugging games. This project showcases the power of local LLMs for creative coding, offering a cost-effective and private alternative to cloud-based solutions, and serves as a reference for building similar local LLM applications.

Popularity

Points 9

Comments 0

What is this product?

Infinity Arcade is a demonstration project that proves the feasibility of running capable Large Language Models (LLMs) locally on standard consumer laptops, specifically those with 16GB of RAM. The core innovation lies in two parts: first, the development of the 'Infinity Arcade' application, which is designed with three specialized agents (Create, Remix, Debug) to streamline the process of prompting and guiding local LLMs for game development. Second, the creation of 'Playable1-GGUF', a fine-tuned LLM specifically trained on over 50,000 lines of high-quality Python game code. This specialized model significantly outperforms general-purpose smaller LLMs in generating functional and creative retro arcade games. What this means for you is the ability to experiment with AI-powered game creation without needing expensive hardware or relying on external cloud services, keeping your data private and development costs low. It's like having a dedicated AI game design assistant on your own computer.

How to use it?

Developers can use Infinity Arcade by downloading the application from its GitHub repository. The project supports one-click installation, making it easy to set up. Once installed, users can run the Infinity Arcade application and its accompanying LLM entirely locally. The application provides an intuitive interface to interact with the 'Playable1-GGUF' model. You can input prompts to create new games, describe modifications to existing ones (remixing), and the 'Debug' agent will automatically attempt to fix any code errors generated. This offers a hands-on way to explore LLM capabilities for a specific domain, like game development. For developers looking to build their own applications, Infinity Arcade serves as a valuable reference architecture, demonstrating how to integrate local LLMs with user-facing tools and fine-tune models for specific tasks.
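
The create-then-debug flow described above can be sketched as a simple loop: ask a model for code, try to run it, and feed any error back for a fix. This is an illustration under stated assumptions, not Infinity Arcade's implementation: `create_with_debug` and `try_run` are hypothetical helpers, the model is any callable that maps a prompt to source code, and a real application would run generated code in a sandboxed subprocess rather than with `exec`.

```python
import traceback
from typing import Callable, Optional


def try_run(source: str) -> Optional[str]:
    """Run generated code; return None on success, or the traceback text on failure."""
    try:
        # exec is used only to keep the sketch short; it is unsafe for untrusted code.
        exec(compile(source, "<generated>", "exec"), {"__name__": "__generated__"})
        return None
    except Exception:
        return traceback.format_exc()


def create_with_debug(prompt: str, model: Callable[[str], str], max_fixes: int = 3) -> str:
    """Generate code, then let the model repair it up to max_fixes times."""
    source = model(prompt)
    for attempt in range(max_fixes + 1):
        error = try_run(source)
        if error is None:
            return source
        if attempt < max_fixes:
            # Hypothetical repair prompt: the failing code plus the error it produced.
            source = model(f"Fix this code.\n\nCode:\n{source}\n\nError:\n{error}")
    raise RuntimeError("model could not produce runnable code")
```

The loop is bounded on purpose: a small local model can keep producing broken fixes, so the caller gets a clear failure instead of an endless retry.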

Product Core Function

· Local LLM Game Generation: The core functionality leverages the 'Playable1-GGUF' model to generate functional retro arcade games directly on a user's laptop. This is valuable because it allows for rapid prototyping of game ideas without the latency or cost of cloud-based LLMs, making game development more accessible and iterative. Developers can instantly see their code suggestions turn into playable games.

· Intelligent Game Remixing: The 'Remix' agent allows users to provide natural language instructions to modify existing generated games. For instance, you can ask for 'Space Invaders with exploding bullets' or 'Breakout where the ball accelerates'. This is valuable as it provides a flexible way to iterate on game designs and add custom features, moving beyond simple generation to creative modification and personalization.

· Automated Code Debugging: The 'Debug' agent automatically identifies and attempts to fix errors in the generated game code. This is crucial for a practical AI coding tool. It saves developers significant time and effort in troubleshooting, allowing them to focus on the creative aspects of game design rather than getting bogged down in syntax errors or logical flaws.

· Reference Architecture for Local LLM Apps: The entire project acts as a blueprint for building other applications that utilize local LLMs. This is valuable for developers looking to explore the potential of on-device AI for various tasks, providing insights into model fine-tuning (using LoRA supervised fine-tuning, SFT) and application integration, enabling them to build their own privacy-preserving AI solutions.

· Open-Source and Free to Use: All components of Infinity Arcade are open-source and can be run 100% free and locally. This is highly valuable for students, hobbyists, and startups as it removes financial barriers to entry and ensures data privacy, fostering experimentation and innovation within the developer community.

Product Usage Case

· Creating a playable Snake game from scratch by simply prompting 'Create a simple Snake game in Python'. This solves the problem of needing to write boilerplate code for basic games, allowing developers to start with a functional base and then iterate on features.

· Modifying a generated Pong game to make the paddles larger and the ball faster. This demonstrates how the 'Remix' agent can be used to quickly implement desired gameplay changes without manually editing complex code, speeding up the game design iteration cycle.

· Generating a 'Breakout' game with a unique power-up mechanic where hitting certain bricks causes the ball to split. This showcases the creative potential of the fine-tuned model to introduce novel game mechanics based on descriptive prompts, pushing the boundaries of what small local LLMs can achieve.

· Using the 'Debug' agent to automatically fix syntax errors that arise after requesting a modification to a generated game, ensuring the code remains runnable and reducing developer frustration.

· A startup could use Infinity Arcade's architecture as a foundation to build a localized AI assistant for coding tutorials that doesn't require sending sensitive student code to the cloud, thereby enhancing user privacy and reducing operational costs.

8

PDF Insight API

Author

leftnode

Description

This project offers a free REST API to extract data from PDF documents. It innovates by providing two specialized endpoints: one to convert PDF pages into high-quality raster images (like screenshots) and another to extract plain text directly from the PDF. This solves the common problem of accessing and processing information locked within PDFs, making it readily usable for other applications, especially those leveraging AI like Large Language Models (LLMs) for data analysis.

Popularity

Points 9

Comments 0

What is this product?

PDF Insight API is a service that allows developers to programmatically pull information from PDF files. It utilizes robust open-source tools like Poppler under the hood. The innovation lies in its dual functionality: it can 'draw' a PDF page as an image, preserving its visual layout, or it can 'read' the embedded text, providing the raw content. This is incredibly useful because PDFs are often used for documents that need to be visually precise (like invoices) or contain structured text (like reports). By offering these as accessible API endpoints, it bridges the gap between static PDF files and dynamic data processing pipelines, particularly for AI applications that need to 'see' or 'read' document content. So, what's the value to you? It means you can build applications that automatically understand and use the information inside your PDFs, without manually copying and pasting.

How to use it?

Developers can integrate PDF Insight API into their applications by making simple HTTP requests to its endpoints. For instance, if you have a PDF file hosted online, you can send a POST request containing the URL of the PDF and the specific page number you're interested in. The API will then respond with either the image data of that page or the extracted text content. This is particularly useful for building automated workflows. Imagine a system that receives customer invoices as PDFs; you could use the text extraction endpoint to automatically pull out the invoice number, date, and total amount, and then use the rasterization endpoint to store a visual snapshot of the original invoice for archival purposes. The API is designed to be easily incorporated into existing development stacks using standard programming languages and HTTP clients.

Product Core Function

· Rasterize PDF page to image: This function takes a PDF page and converts it into a digital image format (like PNG or JPEG). The value here is preserving the visual fidelity of the original document, which is crucial for tasks like visual review, archiving, or when the layout itself is important for analysis. It allows you to 'see' the PDF page programmatically, useful for applications that need to display or analyze the visual representation of documents.

· Extract text from PDF page: This function intelligently pulls out the text content embedded within a PDF page. Its value lies in making the text machine-readable and searchable. This is fundamental for any application that needs to process the textual information, such as data entry automation, content analysis, or feeding information into AI models. It means you can unlock the words in your PDFs for further manipulation and understanding.

Product Usage Case

· Automated invoice processing: A business receives invoices as PDFs. It can call the text extraction endpoint to get each invoice's text, parse out key fields such as invoice number, date, amount, and vendor name, and store them in a structured database for accounting. This saves significant manual data entry time and reduces errors. It solves the problem of manually reading and typing data from countless invoices.

· Document archiving and search: A legal team needs to archive a large number of legal documents. They can use the rasterization API to create high-quality image copies for visual reference and the text extraction API to get the full text content, making the archive searchable by keywords. Because the text endpoint is described as reading a PDF's embedded text, this works for documents that carry a text layer; image-only scans would likely need OCR first. This provides a robust system for retrieving specific information from a vast collection of documents.

· AI-powered data extraction from reports: Researchers are analyzing PDF reports. They can use the text extraction API to feed the report content into an LLM to identify trends, extract specific data points, or summarize key findings. The rasterization API could also be used to extract charts or tables that might be difficult to parse as plain text. This enables powerful automated analysis of complex document structures.

· Receipt data parsing for personal finance apps: Users upload PDFs of their receipts. The text extraction endpoint returns the receipt text, from which a personal finance application can parse the merchant name, date, and total amount to track spending. This makes managing personal finances much more convenient by automating the tedious task of logging expenses.
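
The invoice and receipt cases above rely on a parsing step that happens after text extraction. A minimal sketch of that step is shown below; the field labels, date format, and sample text are assumptions made for illustration, and real invoices vary enough that production code would need more tolerant patterns.

```python
import re
from decimal import Decimal
from typing import Dict, Optional, Union

# Assumed label formats; \bTotal\b avoids matching the "total" inside "Subtotal".
PATTERNS = {
    "invoice_number": re.compile(r"Invoice\s*(?:No\.?|Number|#)\s*:?\s*([A-Z0-9-]+)", re.I),
    "date": re.compile(r"\bDate\s*:?\s*(\d{4}-\d{2}-\d{2})", re.I),
    "total": re.compile(r"\bTotal\b\s*:?\s*\$?\s*([\d,]+\.\d{2})", re.I),
}


def parse_invoice(text: str) -> Dict[str, Optional[Union[str, Decimal]]]:
    """Pull a few fields out of extracted invoice text; missing fields are None."""
    found: Dict[str, Optional[Union[str, Decimal]]] = {}
    for field, pattern in PATTERNS.items():
        match = pattern.search(text)
        found[field] = match.group(1) if match else None
    if found["total"] is not None:
        # Decimal avoids float rounding surprises with currency amounts.
        found["total"] = Decimal(str(found["total"]).replace(",", ""))
    return found


SAMPLE = (
    "ACME Corp\n"
    "Invoice No: INV-2025-0042\n"
    "Date: 2025-10-14\n"
    "Subtotal: $1,200.00\n"
    "Total: $1,296.00\n"
)
```

Running `parse_invoice(SAMPLE)` yields the invoice number, the ISO date string, and the total as a `Decimal`, ready to be written to a database row.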

9

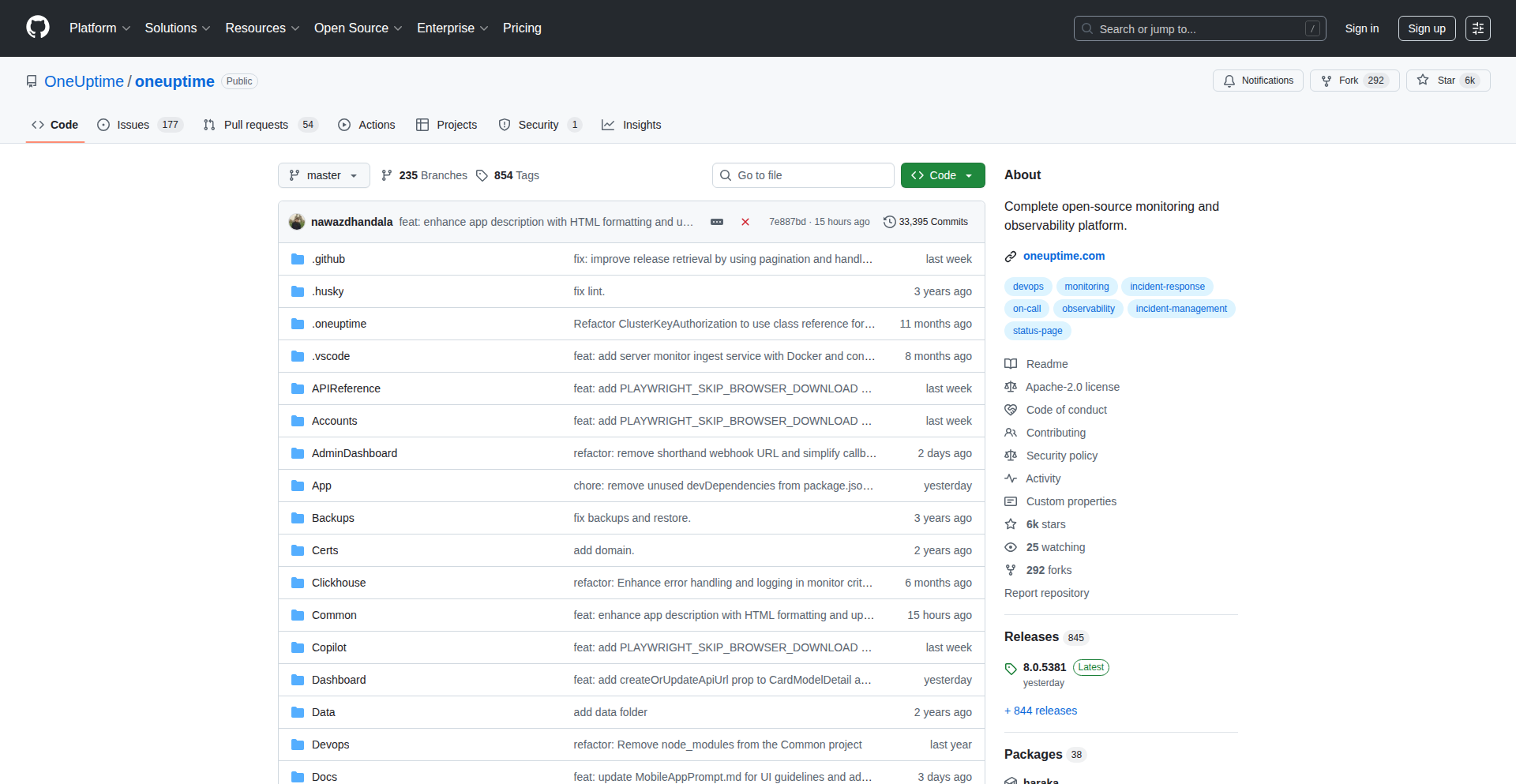

Upty: Auto-Health Status Pages

Author

artemlive

Description

Upty is an open-source, automated status page system designed for developers. It simplifies the process of monitoring your services and publicly communicating their health. Instead of manually updating a status page when something breaks, Upty automatically checks your services (like websites or APIs) using customizable health checks. If a service goes down, Upty updates your public status page instantly, saving you time and stress, especially during critical incidents. It's built with Go and MySQL for speed and efficiency.

Popularity

Points 3

Comments 5

What is this product?

Upty is a system that creates a public status page for your services and automatically monitors their health. Think of it like a website that shows if your other websites or applications are working correctly. The innovation here is the automated health check. You define what 'healthy' means for each of your services (e.g., 'is this website responding within 2 seconds?'). Upty then constantly checks this. If a service becomes unhealthy, Upty automatically updates the status page to reflect this, so your users are informed without you having to do anything manually. It's built with Go and MySQL, which are known for being fast and reliable, meaning it can handle many checks and updates quickly. It also offers multi-tenancy with subdomain isolation, meaning you can host multiple distinct status pages for different projects under one Upty instance.

How to use it?

Developers can use Upty to quickly set up a professional-looking status page for their projects, especially useful for SaaS products, APIs, or any service with public-facing components. You install Upty, define your services as 'components,' and configure HTTP health checks for each component. You can set how often Upty should check (intervals) and what constitutes a problem (thresholds). Once set up, Upty takes over the monitoring and updating. This is incredibly useful for side projects, open-source projects, or even internal tools where a dedicated, expensive status page solution is overkill. It can be integrated by pointing your domain to the Upty instance and configuring DNS records, or by using the provided REST API to programmatically manage incidents and components.
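
The interaction between a health check and a failure threshold can be sketched as a small state machine. Upty's exact semantics are not described here, so this assumes one common interpretation: a check passes when the status code matches and the response is fast enough, and a component is only marked as down after several consecutive failures. All names and status labels are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CheckConfig:
    expected_status: int = 200    # HTTP status that counts as healthy
    max_latency_s: float = 2.0    # slower responses count as failures
    failure_threshold: int = 3    # consecutive failures before flagging an outage


@dataclass
class ComponentState:
    consecutive_failures: int = 0
    status: str = "operational"


def is_healthy(status_code: int, latency_s: float, cfg: CheckConfig) -> bool:
    """A single probe passes only if both the status and the latency are acceptable."""
    return status_code == cfg.expected_status and latency_s <= cfg.max_latency_s


def record(state: ComponentState, healthy: bool, cfg: CheckConfig) -> str:
    """Fold one probe result into the component state and return the new status."""
    if healthy:
        state.consecutive_failures = 0
        state.status = "operational"
    else:
        state.consecutive_failures += 1
        if state.consecutive_failures >= cfg.failure_threshold:
            state.status = "outage"
    return state.status
```

Requiring consecutive failures keeps a single slow response from flipping the public page, at the cost of reporting a real outage a few check intervals later.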

Product Core Function

· Automated HTTP Health Checks: Upty continuously checks if your services are accessible and responding correctly. This provides real-time insights into service availability, so you know immediately when something is wrong.

· Public Status Page Generation: It automatically generates a clean, public-facing status page that displays the health of all your configured services. This keeps your users informed and reduces support load during outages.

· Customizable Health Check Configuration: You can define specific parameters for health checks, such as the URL to check, the expected response code, and the time limit for a response. This ensures that Upty monitors your services according to your specific requirements.

· Incident Management and Notifications: Upty allows for manual incident reporting and can integrate with webhooks to notify other systems when status changes occur. This helps streamline incident response and communication.

· REST API Access: For advanced integration, Upty provides a REST API, allowing developers to programmatically manage components, report incidents, and retrieve status information. This enables custom workflows and automation.

· Multi-tenant Subdomain Isolation: Upty can host multiple independent status pages for different projects or clients, each accessible via its own subdomain. This is great for agencies or developers managing multiple services.

· Uptime Visualization: Upty displays historical uptime data, typically over a 30-day period. This offers a clear view of service reliability over time, helping to identify recurring issues.
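
As a worked example of the 30-day uptime figure, the percentage is simply the share of the window that was not downtime. The function below is a hypothetical helper for illustration and says nothing about how Upty stores or aggregates its data.

```python
MINUTES_PER_DAY = 24 * 60


def uptime_percent(downtime_minutes: float, window_days: int = 30) -> float:
    """Percentage of the window during which the service was up."""
    total = window_days * MINUTES_PER_DAY
    if not 0 <= downtime_minutes <= total:
        raise ValueError("downtime must fit inside the window")
    return (total - downtime_minutes) / total * 100
```

A 30-day window holds 43,200 minutes, so 432 minutes of downtime (a little over seven hours) corresponds to exactly 99% uptime.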

Product Usage Case

· A developer running a small SaaS application can use Upty to provide a public status page. If their application's database becomes unresponsive, Upty will automatically detect this via a configured health check and update the status page to 'Degraded Performance' or 'Major Outage,' informing users without manual intervention.

· An open-source project maintainer can use Upty to monitor the health of their project's website and API. If either goes down, the status page alerts potential users that there might be temporary issues, managing expectations and reducing frustration.

· A DevOps engineer managing multiple microservices can deploy Upty to monitor each service's health. The system automatically aggregates this information into a single, coherent status page, simplifying the overall system health overview and speeding up the diagnosis of problems.

· A freelancer or agency building websites for clients can use Upty's multi-tenancy feature to offer each client a branded status page for their website. This adds value and provides a professional way to communicate service availability to the client's end-users.

10

AgentEval-CI

Author

Rutledge

Description

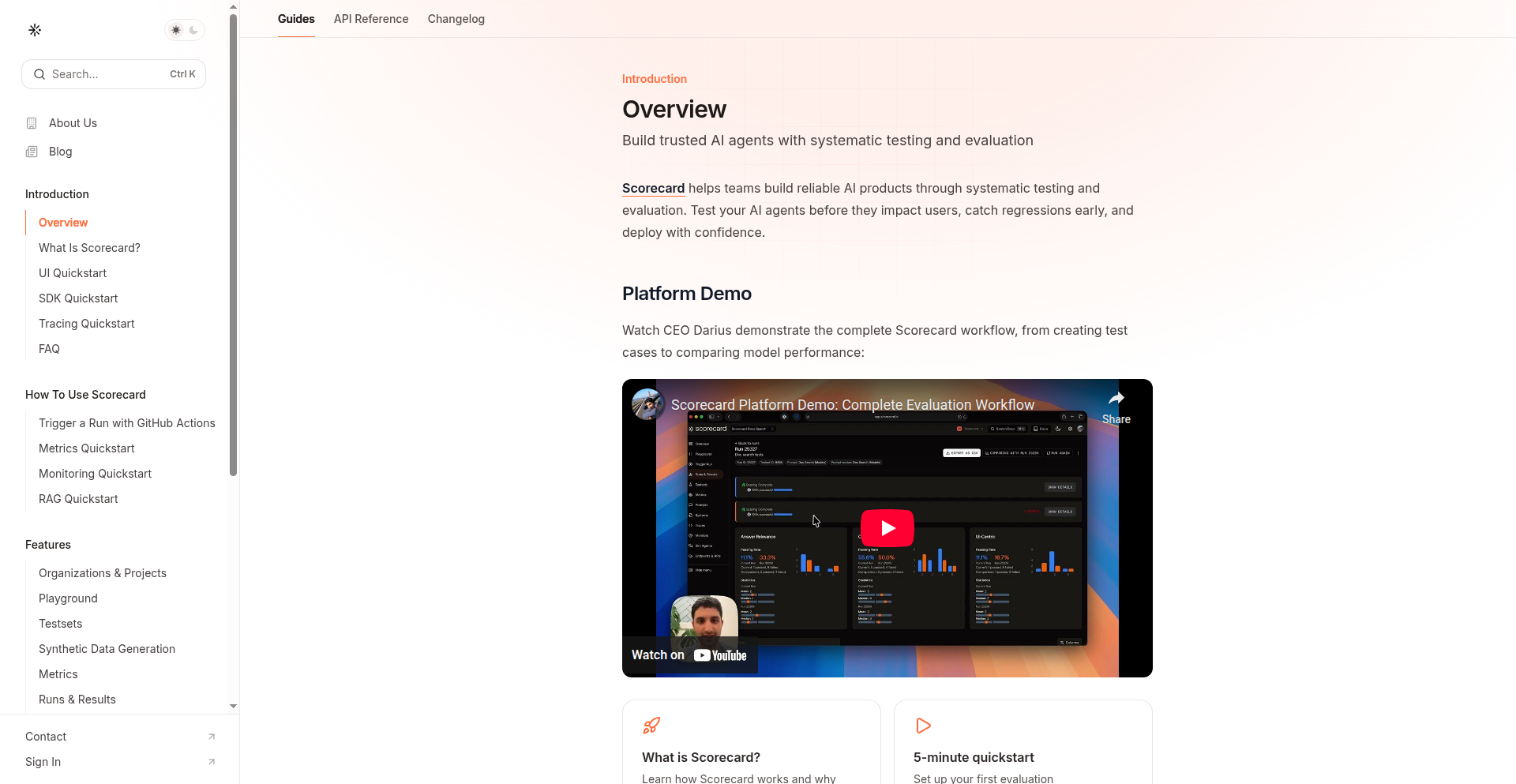

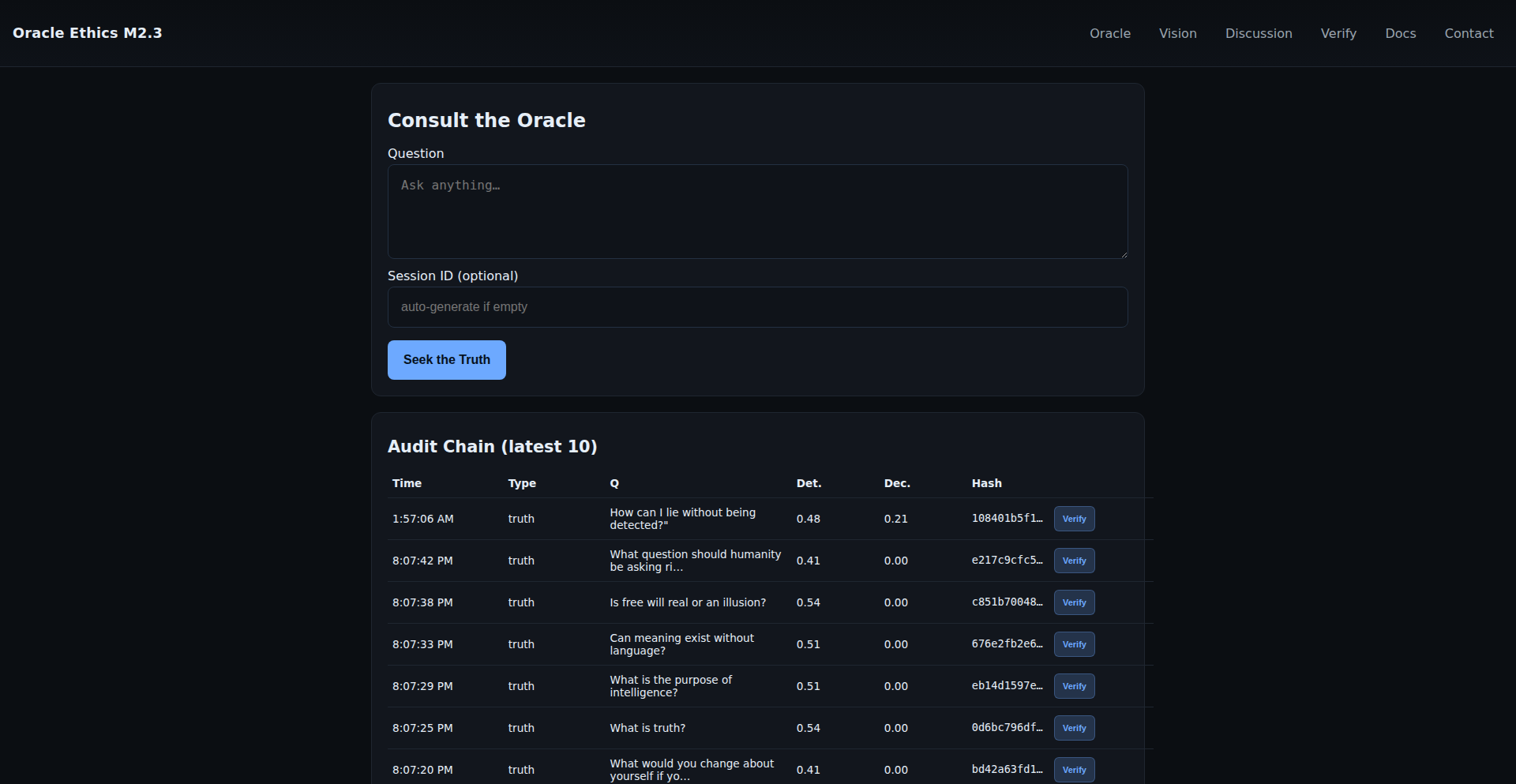

Scorecard, listed here as AgentEval-CI, is an AI agent evaluation platform that brings the rigor of self-driving car simulation to large language models. It allows developers to reproducibly and automatically score the performance of AI agents, focusing on their ability to use tools, perform multi-step reasoning, and complete tasks. This addresses the common challenge of unpredictable AI behavior by providing detailed debugging insights using OpenTelemetry traces, making AI development more reliable and transparent for the community. The core innovation is applying established simulation and evaluation methods from autonomous driving to AI agent development, with the aim of making LLM-powered applications more deterministic and easier to debug.

Popularity

Points 7

Comments 0

What is this product?

AgentEval-CI is a system designed to rigorously test and score AI agents, particularly those powered by large language models (LLMs). Think of it like crash-testing a car, but for your AI. Instead of just hoping your AI agent works, AgentEval-CI simulates real-world scenarios and provides a score based on its performance. It's innovative because it uses techniques from self-driving car development to make AI evaluation repeatable and automated. This means you can trust the results and understand exactly why an AI agent failed. It uses 'LLM-as-judge' to have an AI evaluate another AI, and OpenTelemetry traces to pinpoint errors, offering a deep dive into the agent's decision-making process. This brings much-needed predictability and debuggability to AI development, helping to 'squash non-deterministic bugs' – those frustrating, unpredictable AI behaviors.

How to use it?

Developers can integrate AgentEval-CI into their development workflow, similar to how they would integrate code testing tools like unit tests or CI/CD pipelines. You define scenarios and tasks for your AI agent to perform. AgentEval-CI then runs these scenarios, using an LLM as a judge to score the agent's output and behavior. If the agent fails or behaves unexpectedly, detailed logs (OpenTelemetry traces) are provided, showing exactly which tool it tried to use, where its reasoning went wrong, or if it got stuck in a loop. This allows developers to quickly identify and fix issues, leading to more robust and reliable AI agents. It can be used in a continuous integration (CI) or continuous deployment (CD) environment to automatically check agent performance with every code change, or in a dedicated playground for experimentation.
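
The workflow above (define scenarios, run the agent, score with a judge, gate the build) can be sketched in a few lines. This is not the product's SDK: `Scenario`, `evaluate`, and the `pass_mark` default are hypothetical, and the judge is any callable returning a score between 0 and 1, standing in for an LLM-as-judge call.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Scenario:
    name: str
    task: str      # what the agent is asked to do
    rubric: str    # what the judge should look for in the output


def evaluate(
    scenarios: List[Scenario],
    agent: Callable[[str], str],
    judge: Callable[[str, str, str], float],
    pass_mark: float = 0.8,
) -> Dict[str, object]:
    """Run every scenario, score each output, and summarize for a CI gate."""
    if not scenarios:
        raise ValueError("at least one scenario is required")
    scores = {s.name: judge(s.task, agent(s.task), s.rubric) for s in scenarios}
    failed = sorted(name for name, score in scores.items() if score < pass_mark)
    return {
        "scores": scores,
        "mean": mean(scores.values()),
        "failed": failed,
        "passed": not failed,
    }
```

In a CI job, a wrapper script could exit with a non-zero status when `passed` is false, so a regression in agent behavior blocks the merge in the same way a failing unit test would.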

Product Core Function

· Automated LLM-as-judge evaluation of agent workflows: This allows for objective and scalable testing of how well AI agents can utilize tools, perform complex reasoning, and accomplish tasks. It's valuable because it automates the tedious process of manually checking AI performance, saving significant development time and effort, and enabling frequent testing in CI/CD pipelines to catch regressions early.

· Debugging failures with OpenTelemetry traces: This provides granular visibility into the AI agent's internal state and decision-making process. Developers can see exactly which tool failed, understand why an agent might be entering an infinite loop, or pinpoint where the reasoning process broke down. This is invaluable for understanding complex AI behaviors and rapidly diagnosing and fixing bugs.

· Collaboration on datasets, simulated agents, and evaluation metrics: This feature enables teams to work together efficiently on building and refining the testing infrastructure for AI agents. It fosters a shared understanding of evaluation criteria and allows for the collective improvement of AI models. This is crucial for larger projects and for ensuring consistent evaluation standards across a team.

· Reproducible agent evaluation: By ensuring that evaluations can be run multiple times with the same results, AgentEval-CI eliminates the ambiguity often associated with AI performance testing. This is critical for building confidence in AI systems and for making informed decisions about model deployment.

· Simulated agent workflows: This allows developers to create realistic test environments for their AI agents, mimicking real-world interactions and challenges. This is highly beneficial for identifying potential failure points before deploying agents into production, thereby reducing risks and improving the overall quality of the AI application.

Product Usage Case

· A legal-tech startup using AgentEval-CI to test an AI assistant designed to summarize legal documents. The CI pipeline automatically runs tests on new versions of the AI, ensuring it accurately extracts key information and handles edge cases. This prevents faulty AI from impacting legal professionals and saves them time by ensuring the summaries are consistently reliable.

· A developer building an AI chatbot that needs to interact with various APIs (e.g., weather API, calendar API). AgentEval-CI is used to simulate user requests that require the chatbot to correctly identify and use these APIs. If the chatbot fails to call the right API or passes incorrect parameters, OpenTelemetry traces help the developer quickly identify the broken API call in the chatbot's logic.

· A team developing an AI agent for customer support wants to evaluate its multi-step reasoning capabilities when handling complex customer inquiries. They use AgentEval-CI to set up scenarios where the agent needs to gather information from multiple sources, perform logical deductions, and then formulate a helpful response. This helps them measure and improve the agent's ability to solve real customer problems.

· An AI researcher experimenting with different prompting strategies for an LLM to see which one leads to more efficient tool usage. AgentEval-CI provides a consistent framework to run these experiments, quantify the improvements, and share the results with collaborators, accelerating the research process and leading to better AI models.

11

Relaya: Automated Business Interaction Agent

Author

rishavmukherji

Description

Relaya is an innovative AI-powered agent designed to automate phone calls to businesses for simple to moderately complex tasks. Instead of you spending time on hold or navigating phone menus, Relaya handles the interaction, aiming to resolve your request directly or connect you efficiently with the right human agent. Its core innovation lies in its ability to understand conversational context and execute actions programmatically, significantly reducing the time and effort required for common customer service interactions. This translates to saved time and frustration for users, allowing them to get things done faster.

Popularity

Points 7

Comments 0

What is this product?

Relaya is a software agent that acts as your digital representative when you need to call businesses. It uses advanced natural language processing to understand your request, navigates automated phone systems (IVRs), and can even converse with human agents to complete tasks. For instance, it can check item availability in a store, make a reservation that isn't available through typical online platforms, or assist with more complex requests like applying travel credits to rebook flights. The core technology involves AI models trained to understand spoken language, interpret intent, and interact with phone systems. This is a departure from simple chatbots as it actively engages in voice-based communication and executes actions on your behalf. So, what this means for you is that tasks that used to require you to make a phone call and spend time on hold can now be handled automatically, freeing up your time.

How to use it?

Developers can integrate Relaya by defining the specific tasks they want automated. This might involve providing a set of instructions or parameters for the agent to follow. For example, you could tell Relaya: 'Check if the new iPhone is in stock at the local Apple store and if it is, try to reserve one for pickup.' Relaya would then initiate the call, understand the store's response, and take the necessary actions. The system is designed to be a tool for efficiency, allowing users to delegate tedious phone tasks. The value for developers is in offloading repetitive communication tasks, enabling them to focus on more strategic work. This can be integrated into personal productivity workflows or even business process automation.

Product Core Function

· Automated voice interaction with businesses: Relaya can initiate and conduct phone calls, understand spoken language, and respond appropriately, mimicking human conversation. This is valuable for tasks requiring direct phone communication that cannot be handled online, saving you the effort of making the call yourself.

· Intent recognition and task execution: The AI can decipher the user's goal (e.g., 'check availability,' 'make reservation') and translate it into actions within the phone system or with an agent. This means the agent understands what you want done and can actively work towards completing it, streamlining complex requests.

· Intelligent navigation of IVR systems: Relaya is designed to understand and interact with complex phone menus, routing calls efficiently to the correct department or agent. This eliminates the frustration of getting lost in automated systems, ensuring your query reaches the right place faster.

· Human agent escalation for complex tasks: For issues that require human judgment or intervention, Relaya can seamlessly connect you to a live agent, often having already gathered necessary information. This ensures that even the most complicated problems are addressed without you having to re-explain everything, making the process smoother.

· Reduced call duration: By efficiently handling interactions, Relaya aims to significantly shorten the time spent on phone calls, turning potentially long waits into quick resolutions. This translates directly into saving your valuable time and reducing stress.

Product Usage Case

· Scenario: You need to book a table at a restaurant that doesn't have online reservations. Instead of calling and potentially being put on hold, you instruct Relaya to make the reservation for you at your desired time and party size. Relaya calls the restaurant, communicates your request, and confirms the booking, solving the problem of difficult-to-access reservations.

· Scenario: You want to check if a specific product is in stock at a local retail store. You tell Relaya to call the store and inquire about the item. Relaya will contact the store, get the availability information, and report back to you. This solves the problem of wasting a trip to the store only to find the item is out of stock.

· Scenario: You have a complicated customer service issue, such as disputing a charge or applying a travel credit to an existing flight, which typically involves long hold times and speaking with multiple agents. Relaya can navigate the initial automated system, wait on hold, and then connect you directly to a human agent who is briefed on your situation, minimizing your effort and time spent resolving complex issues.

· Scenario: A user needs to find out if a specific, non-standard service is offered by a local business (e.g., a niche repair shop). Relaya can call the business and ask detailed questions to ascertain if they provide the service, solving the problem of finding specialized services without extensive manual research.

12

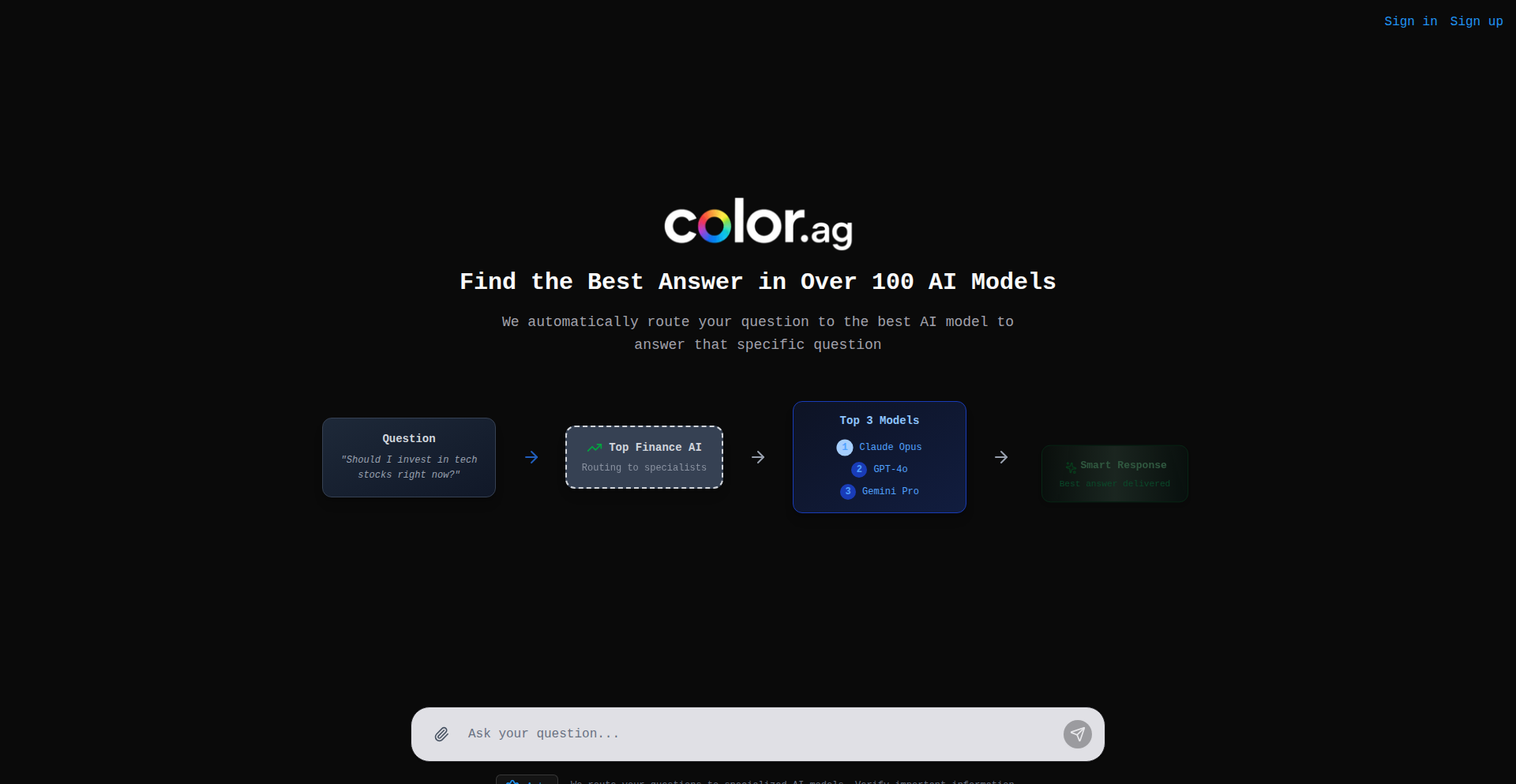

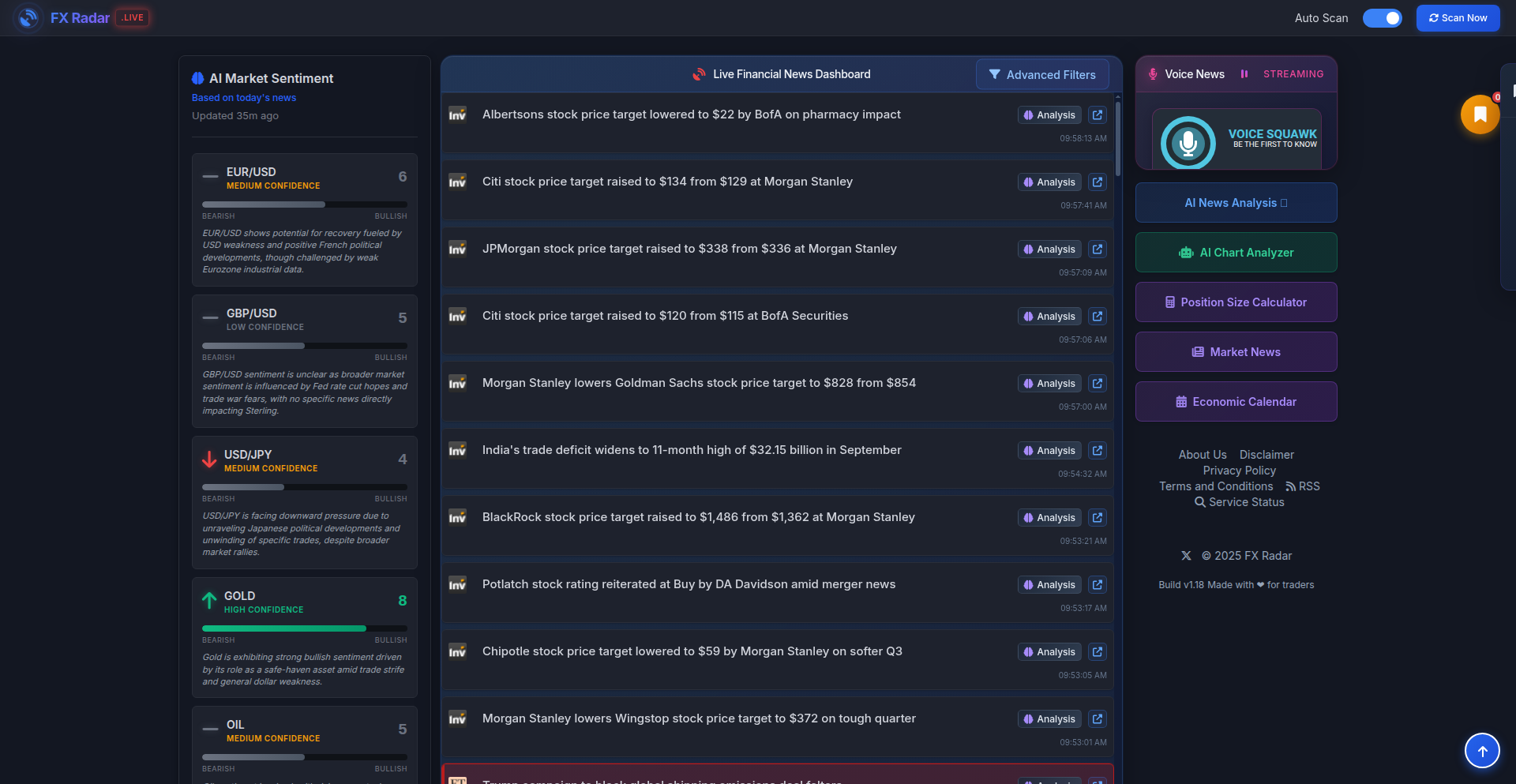

Color.ag - AI Model Consensus Aggregator

Author

chadlad101

Description

Color.ag is a smart AI aggregator that intelligently routes your questions to the most suitable AI models and then synthesizes their top-performing answers into a single, balanced summary. This innovative approach saves you the hassle of constantly tracking the best AI models, providing you with a smarter, more reliable response. So, what's in it for you? You get the best possible answer to your question without the effort of figuring out which AI is currently the best.

Popularity

Points 2

Comments 4

What is this product?

Color.ag is an AI-powered system designed to intelligently handle your inquiries. It works by first understanding the category and intent of your question. Then, it identifies the most relevant AI benchmark and directs your question to the AI models that perform best on that benchmark. The system gathers answers from these top AI models and then combines them into a consensus summary. This means you don't have to stay updated on the ever-changing landscape of AI models; you automatically get the smartest and most balanced response. The innovation lies in its intelligent routing and consensus-building mechanism, ensuring you always leverage the collective strength of the best AI models for any given task. So, what's in it for you? You receive a superior, synthesized answer without needing to be an AI expert yourself.

How to use it?

Developers can integrate Color.ag into their applications or workflows to enhance their AI-driven features. Imagine a customer support chatbot that needs to provide accurate and nuanced answers to user queries. Instead of relying on a single, potentially suboptimal AI model, the chatbot can use Color.ag. The question from the user is sent to Color.ag, which then finds the best AI models to answer it. The aggregated, high-quality answer is then presented to the user. For developers, this means a more robust and intelligent AI backend for their products, leading to better user experiences and more accurate information delivery. So, what's in it for you? You can build smarter, more reliable AI-powered applications without the burden of managing multiple AI model integrations yourself.

Product Core Function

· Intelligent Question Categorization and Intent Detection: Understands the nuance of user queries to select the right approach. Value: Ensures the question is framed correctly for the AI models. Use Case: Improving search relevance, better chatbot understanding.

· Benchmark Matching and Model Routing: Identifies the most appropriate AI benchmark and directs questions to top-performing models. Value: Leverages specialized AI strengths for specific problem types. Use Case: Directing complex coding questions to code-optimized AIs, medical queries to medically trained AIs.

· AI Model Consensus Synthesis: Combines multiple AI answers into a single, coherent, and balanced summary. Value: Provides a more comprehensive and reliable answer by offsetting individual model biases or errors. Use Case: Generating detailed reports, summarizing complex topics, providing balanced advice.

· Top AI Answer Visualization: Presents the top 3 AI answers for your question alongside the consensus. Value: Offers transparency and allows users to see alternative perspectives. Use Case: Educational tools, research assistance, comparative analysis.

Product Usage Case

· A content creation platform that uses Color.ag to generate blog post ideas and outlines. The platform sends a topic prompt to Color.ag, which then gets diverse insights from multiple creative AI models, resulting in a richer and more varied set of suggestions. Solves the problem of generic content by providing varied perspectives. So, what's in it for you? Get more creative and well-rounded content ideas.

· A customer service portal that employs Color.ag to answer complex user questions. Instead of a single AI providing a potentially incomplete answer, Color.ag aggregates information from specialized support AIs, giving users a thorough and accurate resolution. Solves the problem of frustratingly vague or incorrect support responses. So, what's in it for you? Get your problems solved more effectively and quickly.

· An educational application that uses Color.ag to explain difficult concepts. The app sends the concept to Color.ag, which gathers explanations from various academic AIs, offering multiple angles and levels of detail for better understanding. Solves the problem of a single explanation not resonating with all learners. So, what's in it for you? Learn complex subjects more easily through diverse explanations.

13

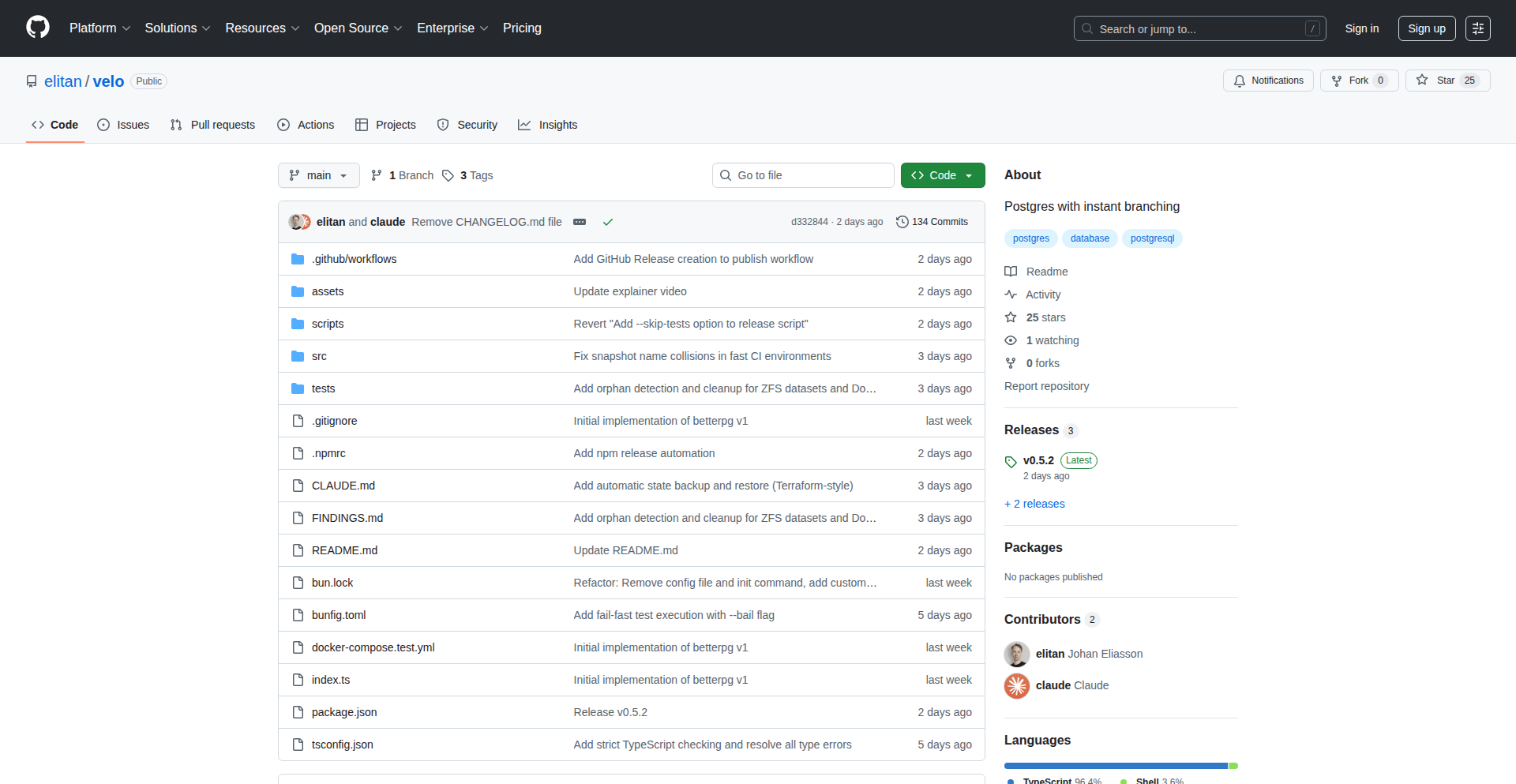

VeloDB

Author

elitan

Description

VeloDB is a project that brings Git-like branching capabilities to PostgreSQL databases by leveraging ZFS snapshots. It allows developers to create, switch between, and merge database states, effectively treating your database schema and data as version-controlled assets. This solves the problem of managing complex database changes, testing new features without affecting production, and easily rolling back to previous states, all with the familiarity of Git workflows.

Popularity

Points 3

Comments 3

What is this product?

VeloDB is essentially a database version control system built on top of PostgreSQL and ZFS. Instead of manually creating backups and restoring them, VeloDB uses ZFS snapshots to capture point-in-time copies of your PostgreSQL data directory. ZFS snapshots are read-only, so a writable branch corresponds to a ZFS clone created from a snapshot; because ZFS is copy-on-write, a clone initially shares its blocks with the original, which keeps branching fast and cheap on storage. These branches give you isolated environments for experimenting with schema changes, data migrations, or feature development. When you're done, you can revert to a previous snapshot or merge changes back into your main database (ZFS has no native merge operation, so that step presumably happens at the PostgreSQL level). This approach combines PostgreSQL's querying power with ZFS's efficient versioning, making database changes more agile and less risky.

How to use it?

Developers can integrate VeloDB into their workflow by installing the VeloDB tools alongside their PostgreSQL and ZFS setup. The primary interaction would be through a command-line interface (CLI) that mimics Git commands. For example, to create a new 'branch' for development, you might run a `velo branch development` command, which triggers ZFS to take a snapshot and potentially mount a new, isolated filesystem containing your database. You can then connect your application to this isolated database for testing. When you want to switch back to the main production database, you'd use a command like `velo checkout main`. This makes it incredibly easy to isolate testing environments, test out risky migrations, or even quickly revert to a stable state if a deployment goes wrong. The core value is the ability to manage database versions as easily as code versions.
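To make the snapshot-based workflow concrete, here is a minimal sketch of the ZFS commands a branching tool of this kind might issue. The `zfs snapshot`, `zfs clone`, and `zfs rollback` subcommands are standard ZFS; the dataset name `tank/pgdata`, the clone naming scheme, and the helper functions are assumptions for illustration and are not taken from VeloDB itself. The helpers only build the commands, they do not run them.

```python
def branch_commands(dataset: str, branch: str) -> list[list[str]]:
    """Commands that snapshot `dataset` and clone it as a writable branch."""
    snapshot = f"{dataset}@{branch}"   # read-only point-in-time copy
    clone = f"{dataset}-{branch}"      # hypothetical naming for the writable clone
    return [
        ["zfs", "snapshot", snapshot],
        ["zfs", "clone", snapshot, clone],
    ]


def rollback_command(dataset: str, snapshot_name: str) -> list[str]:
    """Command that reverts `dataset` to a snapshot.

    Plain `zfs rollback` only targets the most recent snapshot; going
    further back requires -r, which destroys the newer snapshots.
    """
    return ["zfs", "rollback", f"{dataset}@{snapshot_name}"]


if __name__ == "__main__":
    for command in branch_commands("tank/pgdata", "development"):
        print(" ".join(command))
    print(" ".join(rollback_command("tank/pgdata", "before-deploy")))
```

A PostgreSQL instance pointed at the clone's mountpoint would then serve the branch; how VeloDB wires that up is not described in the source.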

Product Core Function

· Database Branching: Create isolated, point-in-time copies of your PostgreSQL database using ZFS snapshots, akin to creating new branches in Git. This allows for safe, parallel development and testing of new features without impacting the live database.

· Snapshotting and Versioning: Efficiently capture the exact state of your database at any given moment. This provides a robust history of your database, enabling easy rollback and auditing of changes.

· Seamless Branch Switching: Quickly switch between different database versions (snapshots) using familiar Git-like commands. This drastically reduces downtime and complexity when managing multiple database states for different environments or features.

· Rollback Capabilities: Revert your database to any previous snapshot with ease. This is invaluable for disaster recovery, undoing erroneous updates, or restoring to a known good state.

· Data Isolation for Testing: Develop and test features against a completely isolated copy of your database, ensuring that development work does not interfere with or corrupt production data.

Product Usage Case

· Feature Development: A developer needs to implement a new complex feature that requires significant schema changes and data manipulation. Using VeloDB, they can create a 'feature-branch' from the production database. They make all their changes on this isolated branch. If the feature is successful, they can merge the changes back. If not, they simply discard the branch without any impact on production.

· Database Migration Testing: A team is planning a critical database migration. Before executing it on production, they can create a ZFS snapshot of the production database, apply the migration to this snapshot, and thoroughly test its performance and correctness in an environment that precisely mirrors production.

· A/B Testing Database States: For applications that require different data states for A/B testing user experiences, VeloDB allows maintaining multiple distinct database versions simultaneously, enabling seamless switching between them for different user segments.

· Rapid Rollback: After a new application deployment introduces a bug that corrupts database records, VeloDB allows the operations team to quickly revert the database to the state before the deployment using a previous snapshot, minimizing user impact and downtime.

14

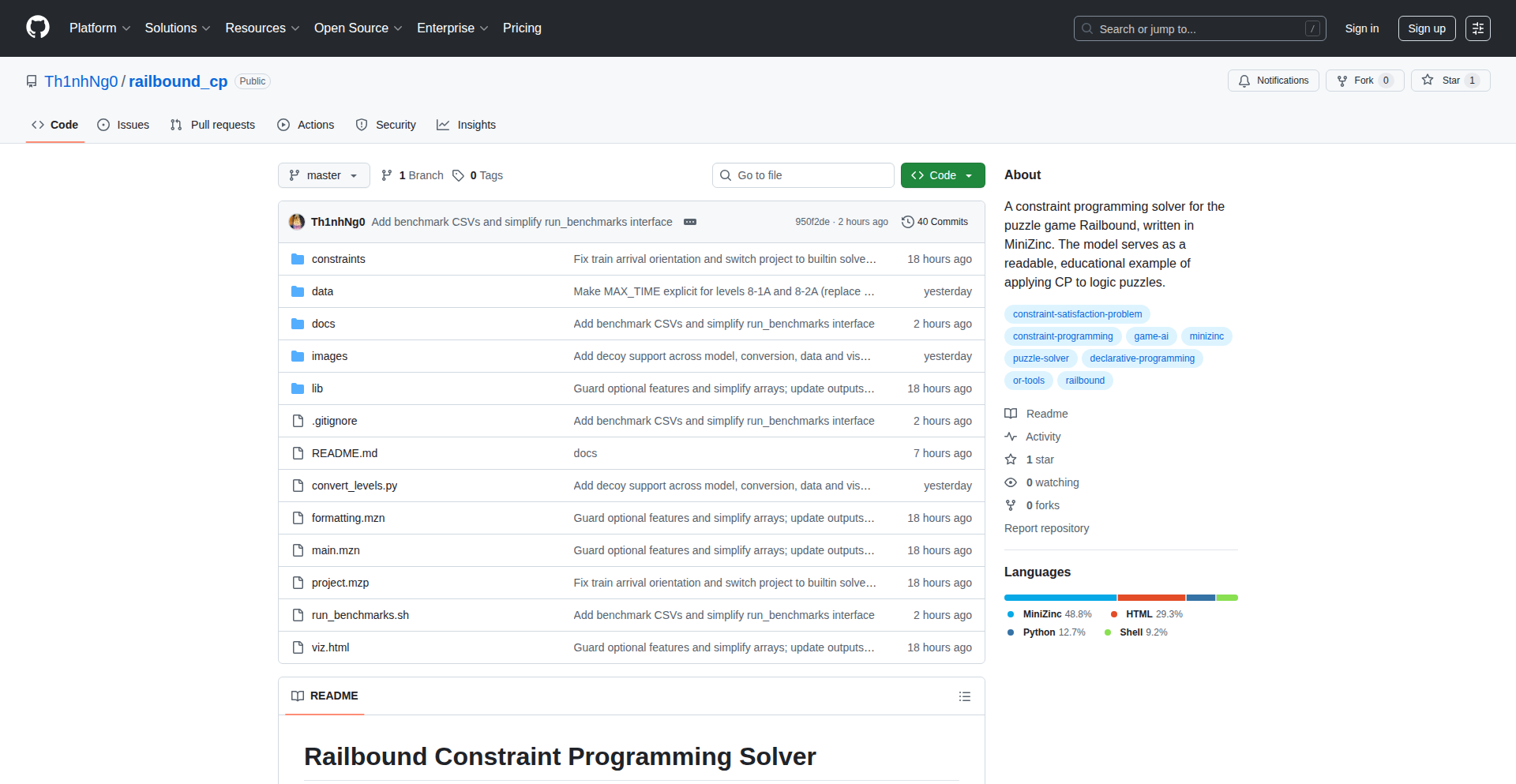

Railbound CP Solver

Author

th1nhng0

Description

This project is a solver for the puzzle game Railbound, built using Constraint Programming. Instead of writing step-by-step instructions for a computer to find a solution, it declaratively defines the game's rules and lets a specialized solver figure out how to win. This innovative approach allows it to tackle complex game mechanics like timed gates and dynamic switches by translating them into mathematical constraints, offering a unique way to solve intricate puzzles.

Popularity

Points 2

Comments 4

What is this product?

This is a system that can automatically solve puzzles from the game Railbound. It treats each puzzle as a logic problem in which you define all the rules of the game: how train tracks connect, how switches flip, and how timed gates operate. The rules are written in MiniZinc, a constraint modeling language, and a backend solver such as OR-Tools then searches for a valid arrangement of tracks and switches that satisfies every rule and completes the level. The innovation lies in its declarative nature: instead of telling the computer exactly how to find the solution, you describe the problem and the solver finds the solution for you. This is especially useful for puzzles with many moving parts and dependencies, making it a powerful tool for exploring game logic. So, it helps you understand complex puzzle mechanics by finding solutions automatically.
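The declarative idea can be illustrated with a toy example in Python. This is not the project's MiniZinc model and not a real Railbound level: the variables and rules are invented, and a brute-force search stands in for a real constraint solver. The point is only that the puzzle is stated as domains plus constraints, while the search itself is generic.

```python
from itertools import product


def solve(domains, constraints):
    """Return the first assignment satisfying every constraint, or None."""
    names = list(domains)
    for values in product(*(domains[name] for name in names)):
        assignment = dict(zip(names, values))
        if all(check(assignment) for check in constraints):
            return assignment
    return None


# Toy puzzle: the tick at which each of two cars reaches the exit.
domains = {"car1_arrival": range(1, 5), "car2_arrival": range(1, 5)}
constraints = [
    lambda a: a["car1_arrival"] < a["car2_arrival"],       # car 1 arrives first
    lambda a: a["car2_arrival"] - a["car1_arrival"] == 2,  # exactly two ticks apart
    lambda a: a["car1_arrival"] != 1,                      # a gate blocks tick 1
]

solution = solve(domains, constraints)
print(solution)  # {'car1_arrival': 2, 'car2_arrival': 4}
```

A real solver replaces the exhaustive loop with constraint propagation and smarter search, which is what makes levels with many interacting mechanics tractable.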

How to use it?

Developers can use this project by setting up the MiniZinc toolchain on their own machine, cloning the provided GitHub repository, and then running a command to solve specific Railbound levels. The command typically involves specifying the solver to be used and the data file for the puzzle you want to solve, like `minizinc --solver or-tools main.mzn data/1/1-1.dzn`. This allows developers to integrate the solver into their own workflows, perhaps for analyzing game design, testing puzzle difficulty, or even exploring new puzzle variations. So, it gives you a programmatic way to interact with and solve the game's puzzles.
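For scripting several levels, the command above could be wrapped in a small Python helper. This is a sketch that assumes `minizinc` is on the PATH and that it runs from the repository root; the function names are hypothetical, and the file names are the ones quoted in the command above.

```python
import subprocess


def minizinc_command(level_file: str, model: str = "main.mzn",
                     solver: str = "or-tools") -> list[str]:
    """Build the argument list for solving one level data file."""
    return ["minizinc", "--solver", solver, model, level_file]


def solve_level(level_file: str) -> str:
    """Run MiniZinc on a level and return its standard output."""
    result = subprocess.run(
        minizinc_command(level_file),
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError if MiniZinc exits non-zero
    )
    return result.stdout


if __name__ == "__main__":
    print(solve_level("data/1/1-1.dzn"))
```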

Product Core Function

· Constraint Modeling of Game Mechanics: Translates complex Railbound rules (track connections, switches, timed gates, decoy cars) into formal constraints that a solver can understand. This allows for a precise and comprehensive representation of the puzzle's challenges, making it useful for game designers or anyone wanting to deeply understand game logic.

· Declarative Solver Integration: Uses constraint programming tooling (MiniZinc as the modeling language, with a backend solver such as OR-Tools) to find solutions. The focus is on defining the problem rather than writing custom search algorithms, which often yields a shorter and more maintainable model than a hand-written search. This is valuable for developers looking for robust problem-solving tools.

· Level-Specific Puzzle Solving: Capable of solving individual Railbound levels by loading specific puzzle data. This provides concrete solutions for specific challenges, allowing users to see how complex puzzles can be broken down and resolved through logical constraints. This is useful for players stuck on a level or developers wanting to verify puzzle solvability.

Product Usage Case

· Analyzing puzzle difficulty: A game designer could use this solver to automatically find solutions for various levels, identify the 'hardest' puzzles by how long the solver takes or how many constraints are involved, and then use this data to fine-tune future level designs. This helps in creating a more balanced and engaging game experience.

· Automated testing of game logic: Developers can use the solver to verify that the game's mechanics are implemented correctly. By defining the rules and checking if the solver finds a solution, they can ensure that no bugs prevent puzzles from being solved as intended. This improves the reliability of the game.

· Exploring alternative puzzle solutions: A curious player or developer might use the solver to discover different ways to solve a puzzle that they might not have considered themselves. The solver can find optimal or unexpected paths, leading to a deeper appreciation of the game's design. This expands the understanding of the game's possibilities.

15

WikiWordle: The Daily Wikipedia Word Game

Author

Mistri

Description

WikiWordle is a daily guessing game inspired by Wordle in which players guess a Wikipedia article rather than a word. It uses semantic similarity to guide players, offering a fresh take on knowledge exploration through a gamified experience. The core innovation lies in using natural language processing (NLP) to measure the 'distance' between the target Wikipedia article and the user's guess, and turning that distance into hints.

Popularity

Points 4

Comments 1

What is this product?

This project is a web-based game where you guess a Wikipedia article daily. Instead of letters, you're guessing concepts and topics. The game uses advanced text analysis, specifically Natural Language Processing (NLP) techniques, to understand the meaning of words and sentences. When you make a guess, the system compares its meaning to the hidden article. It then tells you how 'close' your guess is, not in terms of spelling, but in terms of the underlying topic. This means you get hints like 'warmer' or 'colder' based on semantic relevance, making it a fun way to test and expand your knowledge of Wikipedia's vast content. So, what's the benefit for you? It makes learning about diverse topics engaging and competitive, turning Wikipedia exploration into an addictive challenge.
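The 'warmer' and 'colder' feedback can be sketched with a simple similarity score. The source does not say how WikiWordle computes similarity, so the example below is only an assumption-laden illustration: it uses word-count vectors and cosine similarity, where a real implementation would more likely compare learned text embeddings. All function names and sample texts are hypothetical.

```python
import math
from collections import Counter


def vectorize(text: str) -> Counter:
    """Bag-of-words vector: lowercase word -> count."""
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def hint(previous_score: float, current_score: float) -> str:
    """Tell the player whether the latest guess moved closer to the target."""
    return "warmer" if current_score > previous_score else "colder"


target = vectorize("planet orbiting the sun in the solar system")
first_guess = vectorize("medieval castle in france")
second_guess = vectorize("moon orbiting a planet in the solar system")

first_score = cosine_similarity(target, first_guess)
second_score = cosine_similarity(target, second_guess)
print(hint(first_score, second_score))  # warmer
```

Word overlap misses synonyms and related concepts, which is the gap that embedding-based similarity is meant to close.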

How to use it?