Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-10-11

SagaSu777 2025-10-12

Explore the hottest developer projects on Show HN for 2025-10-11. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN submissions reveal a strong current of developers pushing the boundaries of performance and AI integration while also prioritizing developer experience and privacy. The emphasis on AI, from benchmarking LLMs on real code to using AI for content creation and even impersonating programming legends, signals a maturing landscape in which AI is becoming a practical tool for problem-solving and creativity. At the same time, the rise of local-first applications and privacy-focused tools points to growing demand for user control and data security, reflecting a deliberate effort to build technology that respects individuals. For developers, this is an opportunity to build solutions that are not only innovative and performant but also trustworthy and user-centric. Entrepreneurs should look for unmet needs in efficient development workflows, personalized AI experiences, and robust privacy solutions. The hacker spirit is alive and well, not just in building complex systems but in finding elegant, often open-source ways to solve everyday problems and empower users.

Today's Hottest Product

Name

VelloSharp: Vello's high-performance 2D GPU engine for .NET

Highlight

This project brings Vello's fast, GPU-powered 2D graphics engine to the .NET ecosystem. It is a significant step for .NET developers who want visually rich applications without rewriting their existing rendering pipelines. The innovation lies in bridging a high-performance, GPU-accelerated 2D engine with .NET's broad reach, giving developers a way to modernize their UIs and graphics-intensive applications and a chance to learn how modern graphics APIs and performance optimization techniques work in practice.

Popular Category

Development Tools

AI/ML

Web Development

Gaming

Utilities

Productivity

Hardware/IoT

Popular Keyword

AI

LLM

Open Source

Developer Tools

Performance

Web App

Data

GPU

Security

Productivity

Technology Trends

AI-Driven Development

Edge Computing & Local-First

Performance Optimization

Declarative Development

Developer Productivity Tools

Hardware/IoT Integration

Graphics Acceleration

Project Category Distribution

Developer Tools (20%)

AI/ML Applications (15%)

Web Applications/Frameworks (15%)

Gaming/Simulations (10%)

Utilities/Productivity (10%)

Hardware/IoT (5%)

Creative/Experimental (5%)

Security (5%)

Other (15%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | Rift: macOS Tiling Window Orchestrator | 129 | 57 |
| 2 | Gnokestation: Featherweight Web Desktop | 25 | 21 |
| 3 | VelloSharp .NET GPU Rendering Accelerator | 5 | 4 |
| 4 | ChessHoldem: Hybrid Strategy Engine | 6 | 2 |
| 5 | CodeLensAI | 7 | 0 |
| 6 | Sprite Garden | 3 | 3 |
| 7 | AI PersonaForge: Programming Legends Edition | 3 | 2 |
| 8 | RetroPixel Forge | 4 | 1 |
| 9 | Aidlab Bio-Streamer | 4 | 0 |
| 10 | HackerNews StickyNote Weaver | 2 | 2 |

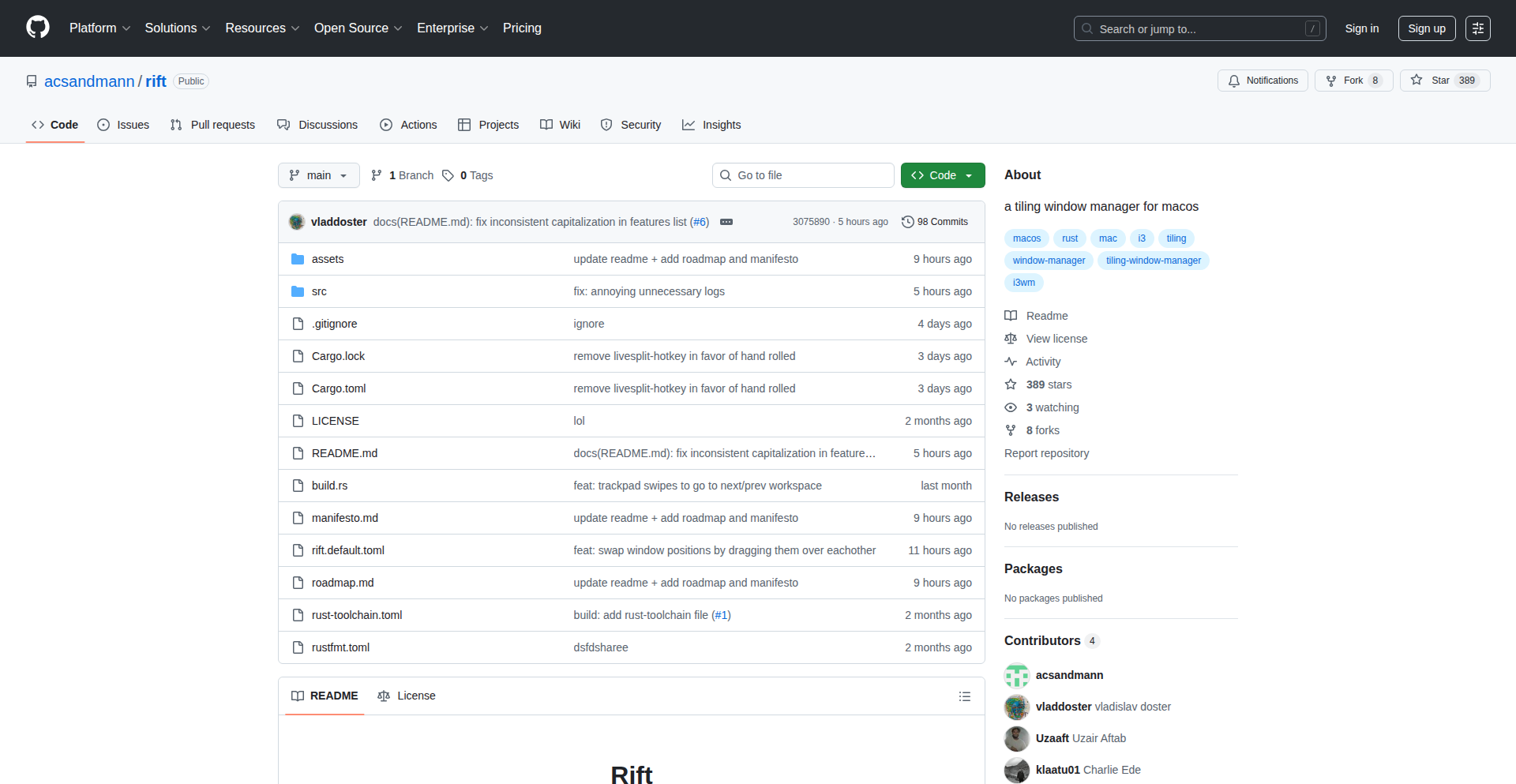

1

Rift: macOS Tiling Window Orchestrator

Author

atticus_

Description

Rift is an innovative open-source project designed to bring the power and efficiency of tiling window management to macOS. It addresses the common frustration of juggling multiple application windows on a single screen by automatically arranging them into non-overlapping layouts, maximizing screen real estate and improving workflow. The core innovation lies in its intelligent algorithm that dynamically resizes and repositions windows based on user-defined rules and screen space, offering a programmatic approach to window organization for developers and power users.

Popularity

Points 129

Comments 57

What is this product?

Rift is a macOS application that transforms how you manage your open windows. Instead of manually resizing and arranging them, Rift uses a clever system to automatically tile your windows, fitting them perfectly onto your screen without overlapping. Think of it like intelligent digital Tetris for your applications. The innovation is in its ability to understand your screen layout and the windows you're using, and then programmatically arrange them in an optimal, efficient way. This is achieved through a combination of system event monitoring and window manipulation APIs, allowing it to react to window openings, closings, and size changes in real-time. So, what's the value to you? It means less time spent fiddling with window edges and more time focused on your actual work, leading to a significantly smoother and more productive computing experience.

How to use it?

Developers and power users can integrate Rift into their macOS workflow by installing and configuring it. The primary usage involves launching the Rift application, which then runs in the background. Users can define custom tiling layouts and rules through configuration files (often using a declarative syntax) or a graphical interface if available. For instance, a developer might set up a rule where their IDE always takes up 70% of the screen on the left, while their terminal and browser windows occupy the remaining space on the right, automatically adjusting as they switch between tasks. Integration can also involve scripting: developers can potentially trigger specific window layouts using command-line tools or custom scripts, allowing for dynamic workflow adjustments based on project needs. This gives you fine-grained control over your digital workspace, tailored to your specific development or task requirements.
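The 70/30 split in the example above is a classic master-and-stack tiling layout. The sketch below computes that geometry, assuming one screen, a fixed master ratio, and no gaps between windows; `Rect` and `master_stack_layout` are hypothetical names for illustration and are not Rift's configuration format or API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """Hypothetical screen rectangle in pixels: origin (x, y), width w, height h."""
    x: int
    y: int
    w: int
    h: int


def master_stack_layout(screen: Rect, n_windows: int, master_ratio: float = 0.7) -> list[Rect]:
    """Tile n_windows without overlap: window 0 fills a master column on the left
    (master_ratio of the width) and the rest split the right-hand column evenly."""
    if n_windows <= 0:
        return []
    if n_windows == 1:
        return [screen]  # a lone window takes the whole screen
    master_w = round(screen.w * master_ratio)
    frames = [Rect(screen.x, screen.y, master_w, screen.h)]
    stack_n = n_windows - 1
    stack_x = screen.x + master_w
    stack_w = screen.w - master_w
    for i in range(stack_n):
        # Integer boundaries so the stacked panes cover the full height with no gaps.
        top = screen.y + screen.h * i // stack_n
        bottom = screen.y + screen.h * (i + 1) // stack_n
        frames.append(Rect(stack_x, top, stack_w, bottom - top))
    return frames
```

For an IDE, a terminal, and a browser on a 1920×1080 display, `master_stack_layout(Rect(0, 0, 1920, 1080), 3)` gives the IDE a 1344-pixel-wide column on the left and stacks the other two windows as 576×540 panes on the right. Re-running the calculation whenever a window opens or closes is one simple way to get the real-time behavior described above.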

Product Core Function

· Automatic Tiling Layouts: Dynamically arranges windows into predefined or custom non-overlapping grids, maximizing screen usage and reducing clutter. The value here is immediate productivity gain, allowing you to see more at once and switch tasks seamlessly without manual window adjustments. Applicable for any user who juggles multiple applications.

· Customizable Rules and Presets: Allows users to define specific window arrangements based on application, workspace, or screen size, offering personalized efficiency. This means you can tailor your workspace exactly to your workflow, ensuring your most used applications are always positioned optimally for your tasks.

· Real-time Window Management: Responds instantly to window openings, closings, and resizing events, maintaining the defined layout without user intervention. The benefit is a consistently organized desktop, eliminating the constant need to re-arrange windows as you work.

· Keyboard Shortcut Integration: Enables quick activation of different tiling layouts or specific window manipulations via keyboard commands, for lightning-fast workflow control. This empowers users with keyboard-centric workflows to manage their screen space with unparalleled speed and efficiency.

· Developer-Friendly Configuration: Often uses text-based configuration files, allowing for easy version control and programmatic management of window layouts. This is invaluable for developers who want to automate their setup, share configurations, or integrate window management into their build or deployment scripts.

Product Usage Case

· A software developer working on a complex project can configure Rift to automatically place their code editor on the left side of the screen, their terminal on the right, and a web browser in a smaller pane in one corner, with all of them resizing proportionally as new windows are opened. This removes the need to arrange these essential tools by hand, saving time and cognitive load during coding sessions.

· A content creator who frequently switches between video editing software, audio editing tools, and reference materials can set up different Rift profiles. One profile might dedicate the majority of the screen to the video editor, while another might split the screen evenly for audio editing and waveform visualization. This provides instant access to optimized workspaces for different creative tasks, streamlining the production workflow.

· A researcher who uses multiple academic papers, data visualization tools, and a note-taking application can use Rift to maintain a consistent layout. For instance, a large central area for data visualization, with papers on one side and notes on the other, ensuring all relevant information is readily accessible and organized for analysis. This aids in faster information synthesis and reduces distractions from a messy desktop.

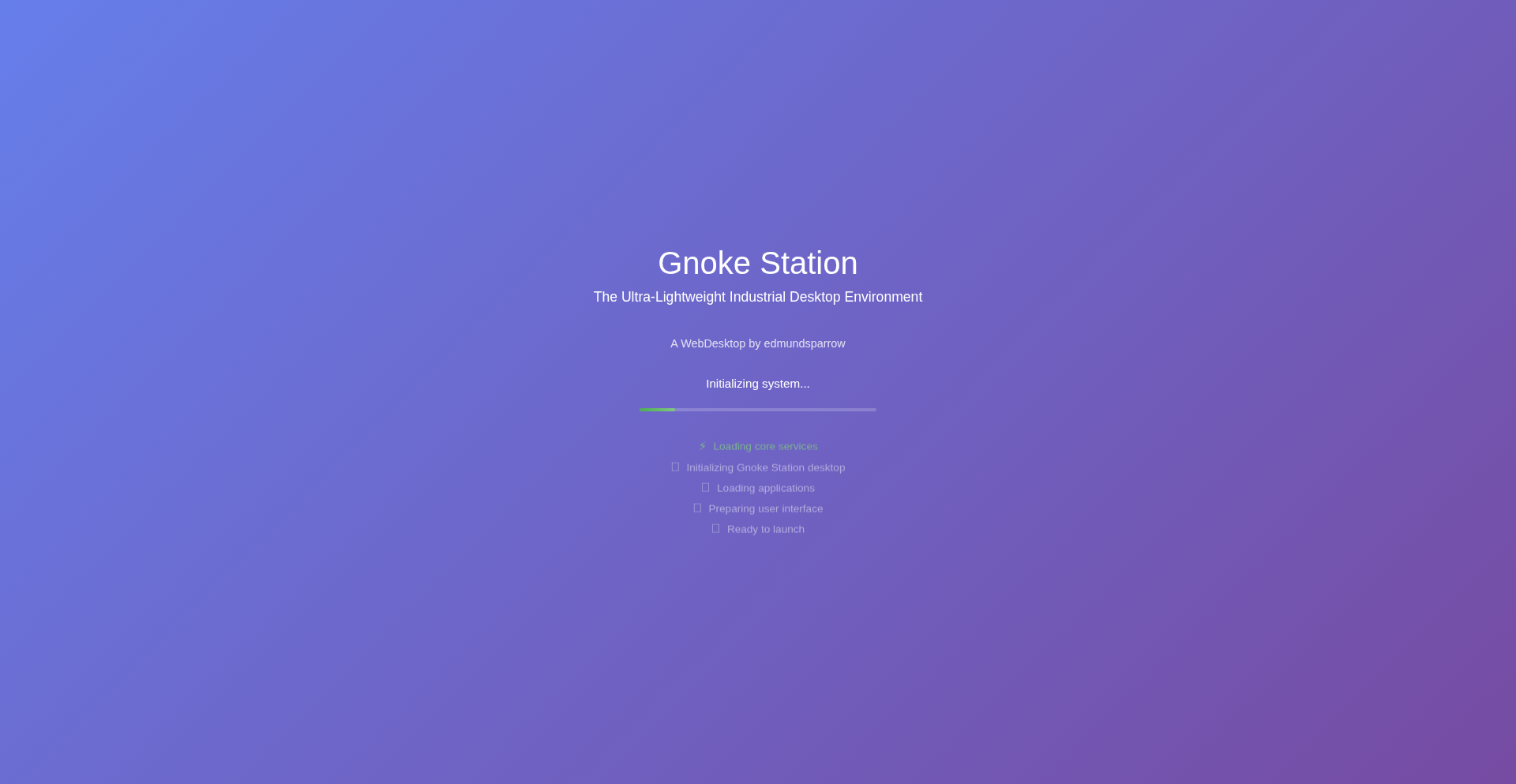

2

Gnokestation: Featherweight Web Desktop

Author

edmundsparrow

Description

Gnokestation is an ultra-lightweight, web-based desktop environment that aims to bring the feel of a traditional desktop to the browser. Its core innovation lies in its minimal footprint and efficient rendering, allowing for a responsive and functional experience even on resource-constrained devices or slower networks. It tackles the problem of delivering a rich, interactive user experience purely through web technologies without the bloat of larger frameworks. This means faster loading times and smoother operation, making it ideal for embedded systems, older hardware, or as a lean alternative for web applications.

Popularity

Points 25

Comments 21

What is this product?

Gnokestation is a web-based operating system-like environment that runs entirely in your browser. Think of it like having a miniature operating system with windows, applications, and a taskbar, but delivered through a web page. The key technical innovation here is its 'ultra-lightweight' design. Instead of loading lots of code and complex structures, it uses highly optimized JavaScript and CSS to draw and manage the desktop elements. This means it uses very little memory and processing power. The problem it solves is making feature-rich web applications feel more like traditional desktop applications – responsive, organized, and easy to multitask with – without slowing down your browser or requiring a powerful computer. So, what this means for you is a faster, more efficient way to interact with web applications that feel more like a familiar desktop experience, even on less powerful devices.

How to use it?

Developers can use Gnokestation as a framework to build web applications that require a desktop-like interface. It provides a set of APIs and components for creating windows, managing user input, and launching 'applications' (which are essentially self-contained web components or pages). You can integrate it into existing web projects by including its core JavaScript and CSS files, and then use its API to define the layout, launch your custom web apps within its windows, and manage the overall user experience. It's designed to be highly extensible, allowing developers to add their own custom widgets and functionalities. This makes it useful for creating dashboards, control panels, or even full-fledged web-based operating systems. So, for you as a developer, it offers a way to build sophisticated, interactive web interfaces more quickly and efficiently, with a familiar desktop paradigm, making your applications more user-friendly and performant.

Product Core Function

· Lightweight Rendering Engine: Utilizes efficient JavaScript and CSS to draw and update desktop elements, providing a smooth user experience with minimal resource usage. This means your web application will load faster and run smoother, even on older computers or slow internet connections.

· Modular Application Architecture: Allows 'applications' to be self-contained units, making it easy to develop, integrate, and manage distinct functionalities within the desktop environment. This enables you to build complex web applications with better organization and easier maintenance, like having separate apps for different tasks that don't interfere with each other.

· Window Management System: Provides functionality for creating, resizing, moving, and minimizing/maximizing application windows, mimicking traditional desktop OS behavior. This offers users a familiar and intuitive way to manage multiple web-based tasks simultaneously, improving productivity and ease of use.

· Taskbar and Menu System: Offers integrated elements for launching applications and switching between them, enhancing user navigation and workflow. This makes it easier for users to find and switch between different parts of your web application, much like switching between programs on their computer.

· Customizable Theming and Widgets: Supports the ability to create custom visual themes and add new interactive widgets, allowing for a tailored user interface and expanded functionality. This lets you design a web application that looks and feels exactly how you want it to, adding unique features that stand out.
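Much of the window management described above comes down to keeping windows in a stacking order: focusing a window raises it, minimizing hides it, and the topmost visible window has focus. Gnokestation itself is built with JavaScript and CSS; the Python sketch below only illustrates that bookkeeping, and `WindowManager` and its methods are hypothetical, not Gnokestation's API.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Window:
    wid: int
    title: str
    minimized: bool = False


@dataclass
class WindowManager:
    """Hypothetical z-order bookkeeping for a web desktop.
    The last element of `stack` is drawn on top."""
    stack: list[Window] = field(default_factory=list)
    _next_id: int = 1

    def open(self, title: str) -> Window:
        win = Window(self._next_id, title)
        self._next_id += 1
        self.stack.append(win)  # new windows open on top
        return win

    def focus(self, wid: int) -> None:
        # Raise the window to the top and restore it, as a taskbar click would.
        win = self._find(wid)
        self.stack.remove(win)
        win.minimized = False
        self.stack.append(win)

    def minimize(self, wid: int) -> None:
        self._find(wid).minimized = True

    def close(self, wid: int) -> None:
        self.stack.remove(self._find(wid))

    def focused(self) -> Window | None:
        # The topmost window that is not minimized, if any.
        for win in reversed(self.stack):
            if not win.minimized:
                return win
        return None

    def z_indices(self) -> dict[int, int]:
        # Map window id to a z-index; higher values are drawn on top.
        return {win.wid: i + 1 for i, win in enumerate(self.stack)}

    def _find(self, wid: int) -> Window:
        for win in self.stack:
            if win.wid == wid:
                return win
        raise KeyError(wid)
```

In a browser, the `z_indices()` mapping would translate directly into each window element's CSS `z-index`.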

Product Usage Case

· Developing a remote monitoring dashboard for IoT devices on a Raspberry Pi. Gnokestation's lightweight nature lets it run smoothly on the low-power device, and its desktop-like interface allows easy visualization and control of multiple sensors in separate windows within the web desktop. This solves the problem of accessing and managing data from many devices efficiently on a limited hardware platform.

· Creating a customer support portal where agents can manage multiple client tickets in separate, resizable windows. The web desktop environment provides a familiar interface for agents, reducing training time and improving their ability to multitask and resolve issues quickly. This addresses the need for an organized and efficient way for support staff to handle concurrent client interactions.

· Building an educational platform for teaching basic programming concepts, where students can run simple code editors and view output in separate windows within a web browser. This provides a safe and isolated environment for experimentation without needing to install complex software, making learning more accessible and immediate.

· Designing a lightweight control panel for a self-hosted web service that needs to be accessible from various devices, including older laptops and tablets. Gnokestation ensures that the interface is responsive and functional across different hardware capabilities, providing essential management tools without overwhelming the user's device. This solves the challenge of delivering a usable management interface to users regardless of their device's performance.

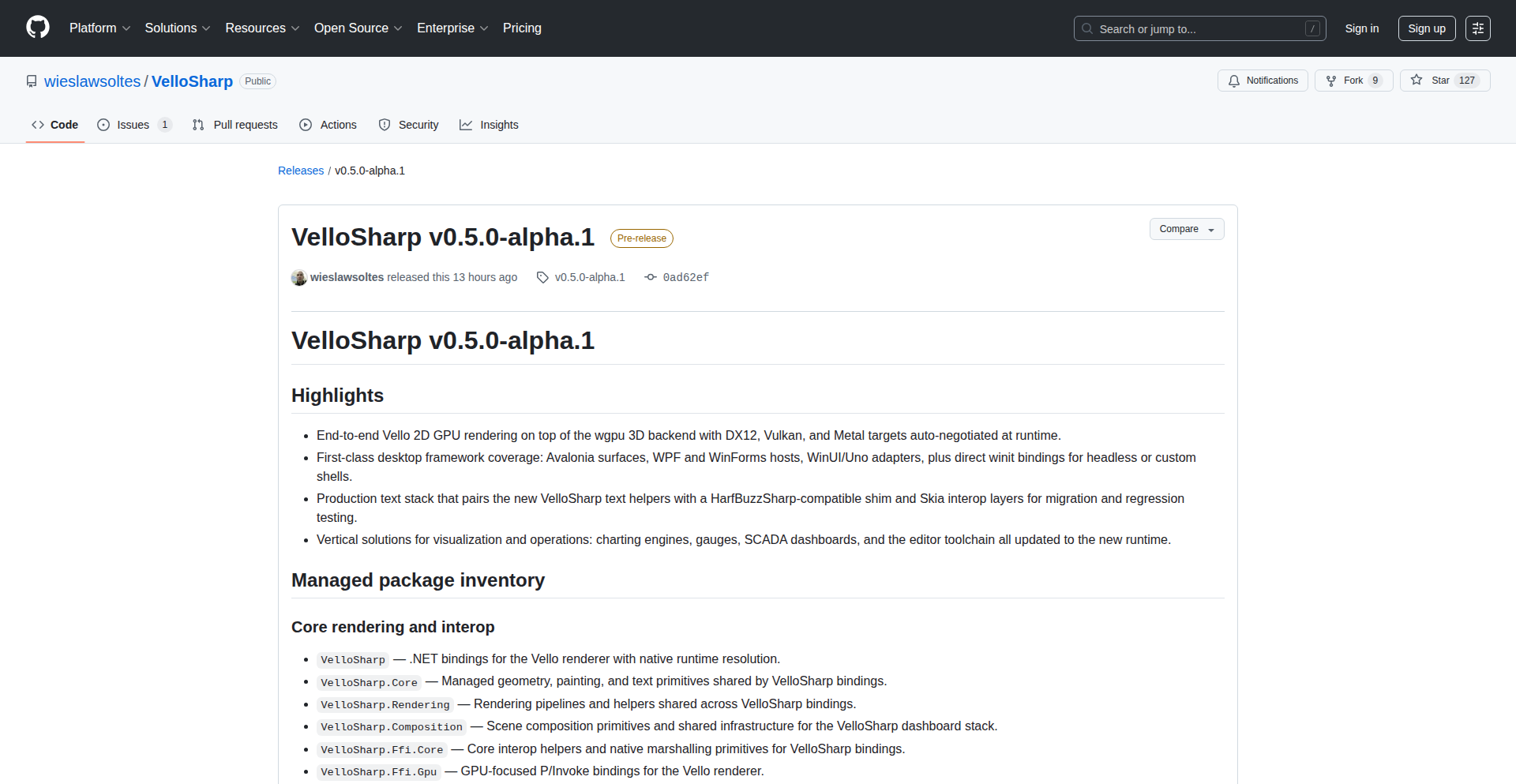

3

VelloSharp .NET GPU Rendering Accelerator

Author

wiso

Description

VelloSharp is a high-performance 2D GPU rendering engine for .NET developers. It pairs Vello's GPU rendering with the cross-platform wgpu graphics backend to bring modern, hardware-accelerated graphics to existing .NET applications built with Avalonia, WPF, and WinForms. This means smoother animations, faster drawing of complex visuals, and more responsive user interfaces without a complete application overhaul. The core innovation lies in bringing a cutting-edge GPU rendering pipeline, of the kind usually found in specialized graphics applications, directly into the familiar .NET ecosystem.

Popularity

Points 5

Comments 4

What is this product?

VelloSharp is a bridge that allows .NET applications to use a powerful, hardware-accelerated 2D graphics engine for rendering. Instead of relying solely on the CPU to draw everything on the screen, which can be slow for complex graphics, VelloSharp offloads this work to the computer's graphics card (GPU). It achieves this by integrating Vello, a well-regarded 2D GPU engine, with the wgpu backend, which is a modern API for interacting with graphics hardware across different platforms. The innovation is in making this high-performance rendering accessible and easily integrable into established .NET UI frameworks like Avalonia, WPF, and WinForms, enabling developers to enhance their applications' visual fidelity and performance without undertaking extensive code rewrites.

How to use it?

For .NET developers, VelloSharp can be integrated into their applications by adding it as a NuGet package. Once integrated, developers can configure their existing UI frameworks (Avalonia, WPF, WinForms) to use VelloSharp as their rendering backend. This typically involves a small change in the application's initialization or rendering pipeline setup. For example, an Avalonia application could be configured to use VelloSharp for its rendering. This allows for immediate performance benefits in drawing graphics-intensive elements, like custom controls, animations, or data visualizations. The value proposition is enhanced visual performance and smoother user experiences with minimal code changes.

Product Core Function

· GPU-accelerated 2D rendering: This allows complex vector graphics, text, and images to be drawn much faster by utilizing the graphics card, resulting in smoother animations and quicker UI updates for the end-user.

· Seamless integration with .NET UI frameworks: VelloSharp can be plugged into existing Avalonia, WPF, and WinForms applications, meaning developers don't have to rewrite their entire application to benefit from GPU acceleration, saving significant development time and effort.

· Modern graphics pipeline: By using Vello's advanced rendering engine and the wgpu backend, developers gain access to modern graphics techniques and optimizations, leading to higher quality visuals and improved rendering efficiency.

· Cross-platform compatibility (via wgpu): The wgpu backend provides a way to interact with graphics hardware that works across different operating systems and hardware, making VelloSharp applications more portable.

· Vector graphics rendering: VelloSharp is particularly adept at rendering vector graphics efficiently, which are scalable without losing quality, ideal for UI elements and custom designs.

Product Usage Case

· Enhancing an existing WPF application's data visualization dashboard: A developer can integrate VelloSharp to significantly speed up the rendering of complex charts and graphs, making the dashboard more interactive and responsive, thus improving the user's ability to analyze data quickly.

· Modernizing the rendering of a WinForms-based industrial control panel: By using VelloSharp, the panel can display real-time sensor data, complex diagrams, and animations much more smoothly and with higher fidelity, leading to a better user experience and quicker operational insights.

· Improving animation performance in an Avalonia cross-platform application: Developers can leverage VelloSharp to create more fluid and complex animations for UI elements or custom graphics, resulting in a more engaging and polished user interface across desktop platforms.

· Developing a custom 2D drawing application for .NET: VelloSharp provides a high-performance foundation for building new applications that require advanced 2D graphics capabilities, allowing developers to focus on application logic rather than low-level rendering optimization.

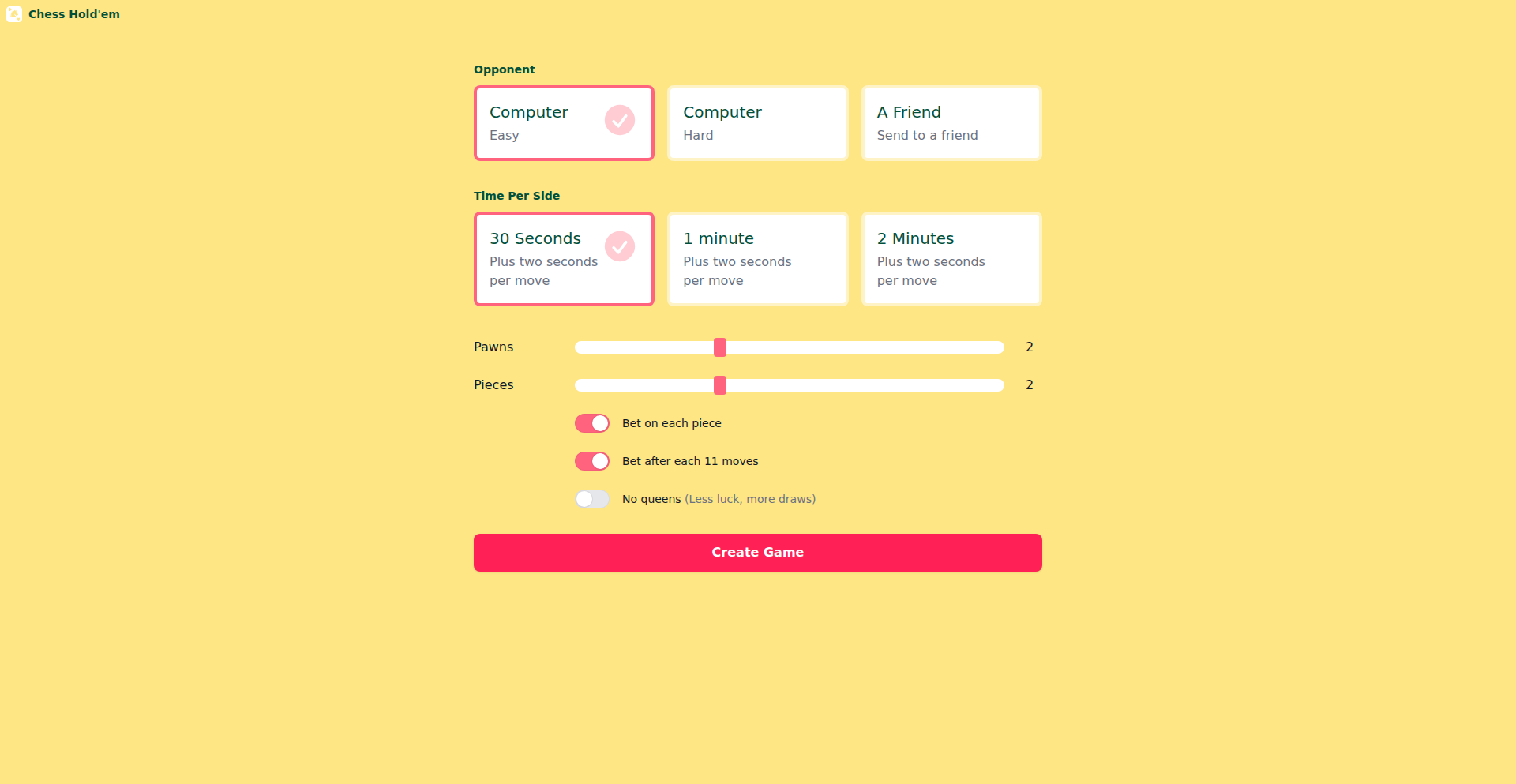

4

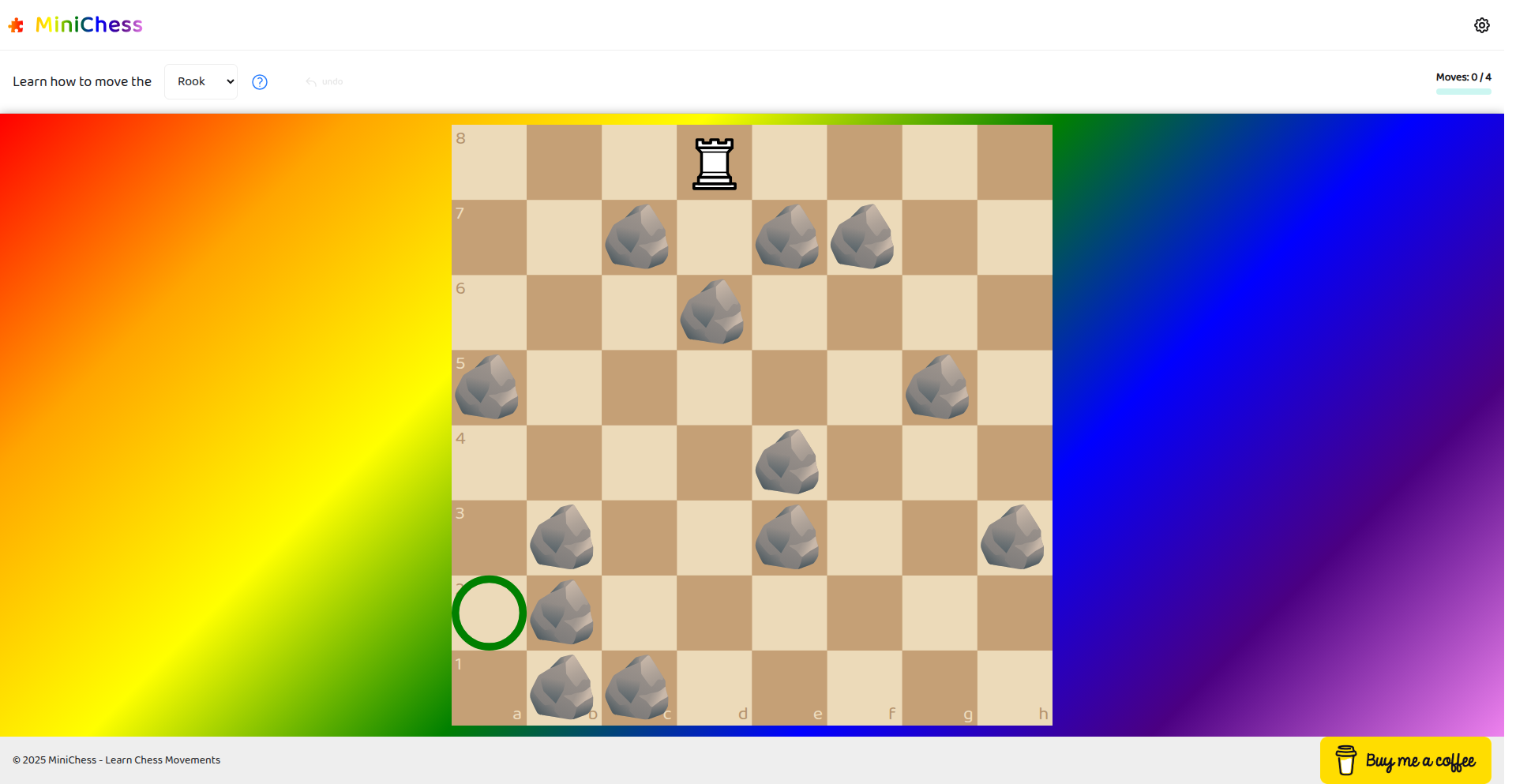

ChessHoldem: Hybrid Strategy Engine

Author

elicash

Description

ChessHoldem is a novel project that merges the strategic depth of chess with the probabilistic decision-making of Texas Hold'em poker. It tackles the challenge of creating AI that can navigate complex game states with incomplete information, a common problem in many real-world applications beyond just games, such as financial trading or resource management. The innovation lies in its hybrid AI approach, combining rule-based chess logic with Monte Carlo Tree Search (MCTS) augmented by probabilistic modeling techniques to handle the uncertainty inherent in poker.

Popularity

Points 6

Comments 2

What is this product?

ChessHoldem is a game engine that combines the established rules of chess with the betting and hand evaluation mechanics of Texas Hold'em poker. The core technical innovation is its AI, which employs a sophisticated blend of techniques. For the chess aspect, it leverages established chess engines and search algorithms to determine optimal piece movements. For the poker aspect, it uses Monte Carlo Tree Search (MCTS), a powerful AI technique for making decisions in uncertain environments. MCTS explores possible game outcomes by simulating random plays, and ChessHoldem enhances this by incorporating probabilistic models to estimate the value of poker hands and betting strategies, even with hidden information. This allows the AI to make intelligent decisions in a game where you don't know your opponent's cards, which is a significant technical hurdle. So, what's the value to you? It provides a fascinating testbed for developing AI that can reason under uncertainty, a skill applicable to many complex decision-making systems.
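The selection step of MCTS is commonly driven by the UCB1 formula, which scores a child node as its mean reward plus an exploration bonus c·sqrt(ln N / n), where N is the parent's visit count and n the child's. The source does not say which selection rule ChessHoldem uses; this is a minimal sketch of standard UCB1 with the usual c = √2, and `Node` and `select_child` are hypothetical names.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Node:
    move: str
    visits: int = 0
    total_reward: float = 0.0  # sum of simulation outcomes (e.g. 1 = win, 0 = loss)


def ucb1(node: Node, parent_visits: int, c: float = math.sqrt(2)) -> float:
    """UCB1 score: average reward so far plus a bonus for rarely tried moves."""
    if node.visits == 0:
        return math.inf  # always try an unvisited move before revisiting others
    exploit = node.total_reward / node.visits
    explore = c * math.sqrt(math.log(parent_visits) / node.visits)
    return exploit + explore


def select_child(children: list[Node], parent_visits: int) -> Node:
    # MCTS selection step: descend into the child with the highest UCB1 score.
    return max(children, key=lambda n: ucb1(n, parent_visits))
```

With `fold` visited 10 times for a total reward of 2, `call` visited 10 times for 6, and `raise` never tried, `select_child` picks `raise` first. As visit counts grow, the exploration bonus shrinks and the search concentrates on the moves with the best average outcome.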

How to use it?

Developers can use ChessHoldem as a framework to experiment with and develop advanced AI algorithms. It can be integrated into other projects that require strategic decision-making under incomplete information. For instance, a developer could use the core AI engine to build more sophisticated bots for other complex games, or even adapt the decision-making logic for simulated environments in fields like robotics or logistics optimization. The project likely exposes APIs that allow for programmatic control of the game and the AI's decision-making process, enabling integration into larger software architectures. So, how can you use this? You can integrate its intelligent decision-making capabilities into your own applications, allowing them to perform better in scenarios with hidden variables and strategic depth.

Product Core Function

· Hybrid AI Decision Engine: Implements a combined approach of deterministic chess logic and probabilistic poker strategy using MCTS and advanced heuristics, valuable for tackling complex decision spaces. Useful for creating sophisticated game AI or simulating strategic scenarios.

· Incomplete Information Handling: Utilizes probabilistic modeling to manage uncertainty in poker hands and betting, a critical capability for AI in real-world applications where information is never perfect. Essential for developing agents that can make robust decisions in uncertain environments.

· Chess-Poker Game Simulation: Provides a robust platform for simulating games that blend strategic movement and probabilistic betting, enabling in-depth analysis of game theory and AI performance. Ideal for researchers and developers studying game intelligence and strategy.

· Modular Game Component Design: Likely structured to allow for the substitution or enhancement of individual game mechanics (chess moves, poker rules), fostering experimentation with different game variations and AI interactions. Supports rapid prototyping and iteration for game designers and AI researchers.
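Handling hidden information often comes down to sampling: deal the opponent's unknown cards at random from the cards you cannot see, and average the outcomes. The sketch below applies that idea to a deliberately tiny toy showdown (one card each, higher rank wins, ties count as half a win); it is not ChessHoldem's rules or hand evaluator, and `estimate_win_prob` is a hypothetical name.

```python
from __future__ import annotations

import random

RANKS = range(2, 15)  # 2..10, then J=11, Q=12, K=13, A=14
SUITS = "shdc"


def estimate_win_prob(my_card: tuple[int, str], known_cards=(), samples: int = 10_000,
                      seed: int = 0) -> float:
    """Monte Carlo estimate of P(win) in a toy one-card showdown: the opponent's
    hidden card is sampled uniformly from every card we have not seen.
    Higher rank wins; a tie counts as half a win."""
    rng = random.Random(seed)  # seeded so results are reproducible
    seen = {my_card, *known_cards}
    unseen = [(r, s) for r in RANKS for s in SUITS if (r, s) not in seen]
    score = 0.0
    for _ in range(samples):
        opp_rank = rng.choice(unseen)[0]
        if my_card[0] > opp_rank:
            score += 1.0
        elif my_card[0] == opp_rank:
            score += 0.5
    return score / samples
```

Holding an ace with nothing else known, the estimate lands close to the exact value of 49.5/51 ≈ 0.97 (48 lower cards win, the 3 remaining aces tie). Passing the other three aces in `known_cards` raises it to exactly 1.0, showing how revealed information sharpens the estimate.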

Product Usage Case

· Developing a more intelligent opponent for a real-time strategy game: By adapting the hybrid AI, a developer could create AI opponents that not only react tactically on the board but also employ deceptive betting or resource management tactics based on incomplete information about the player's intentions. This solves the problem of predictable and easily exploitable AI in games.

· Building a simulation for financial market trading: The AI's ability to reason under uncertainty and adapt strategies could be applied to simulate trading scenarios, where market data is incomplete and opponents (other traders) have hidden strategies. This helps in testing trading algorithms and risk management strategies.

· Creating AI agents for complex negotiation scenarios: The project's foundation in handling incomplete information and strategic maneuvering is directly applicable to AI agents designed for negotiations, where participants have private agendas and limited knowledge of others' positions. This addresses the challenge of creating AI that can engage in sophisticated, human-like negotiation.

5

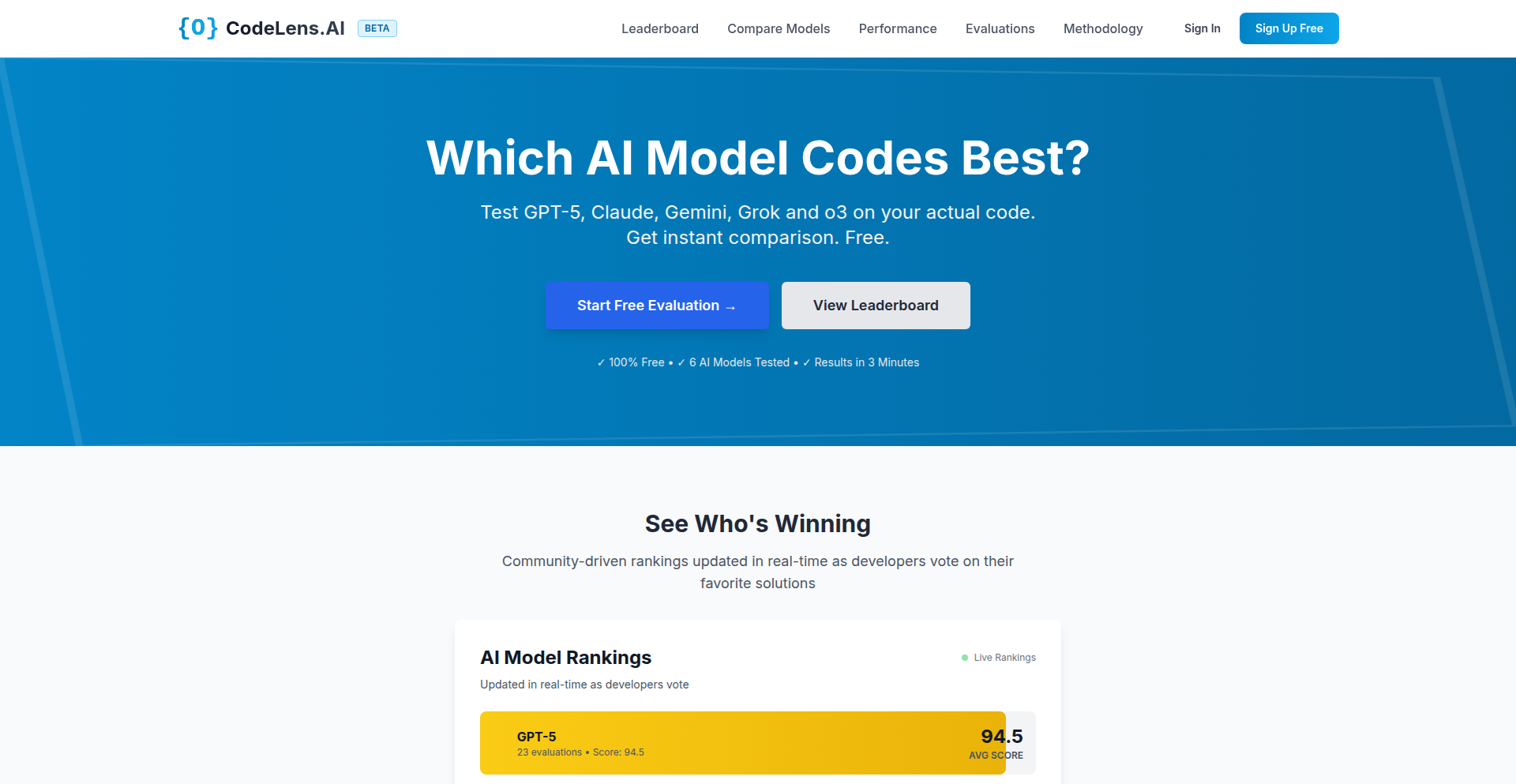

CodeLensAI

Author

codelensai

Description

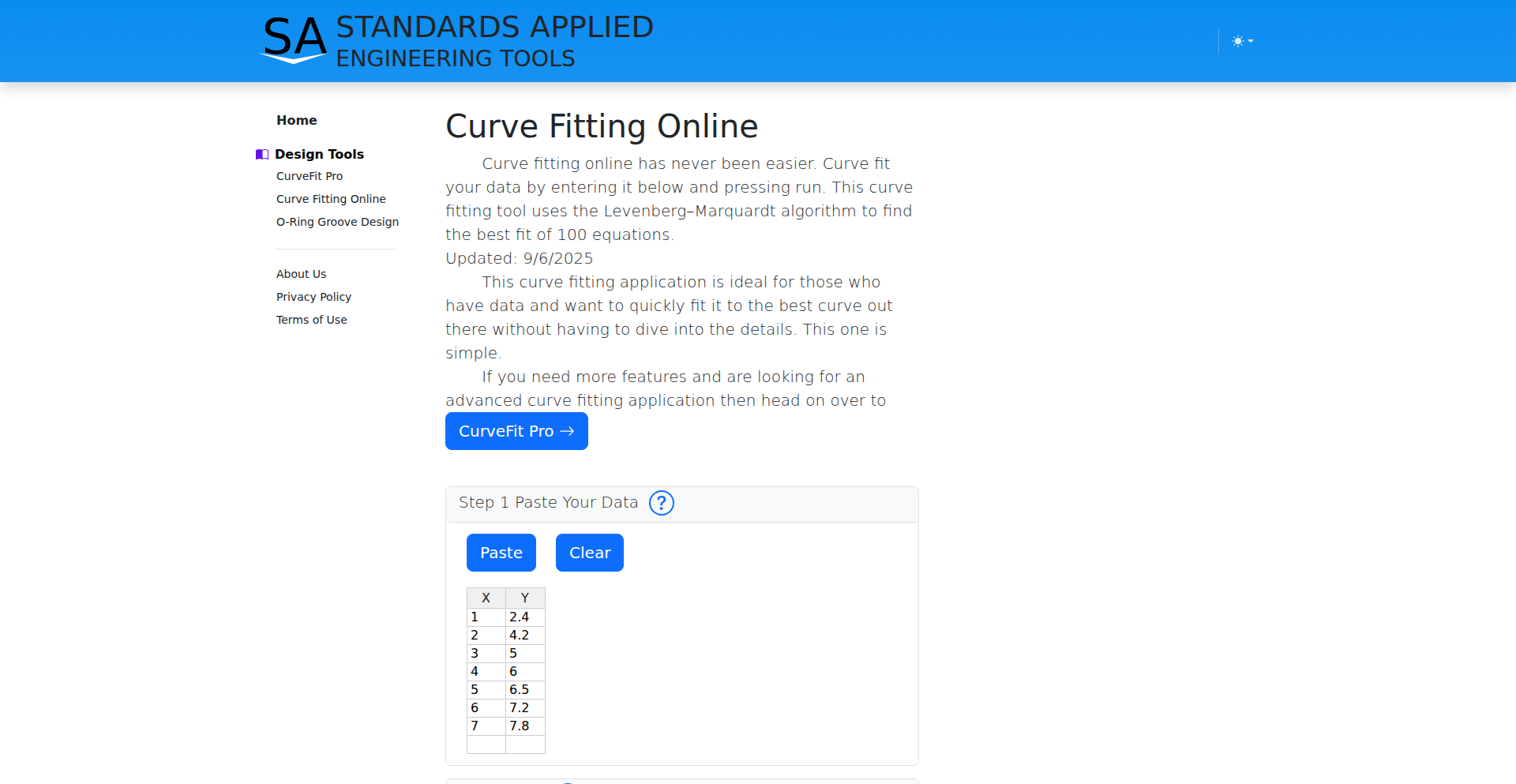

CodeLens.AI is an innovative platform that allows developers to benchmark and compare the performance of leading Large Language Models (LLMs) on their specific, real-world code tasks. Instead of relying on generic benchmarks, it lets you upload your code and a task description (like refactoring, security review, or architecture design) and then processes this request across six top LLMs simultaneously. The results are presented side-by-side with an AI judge's score and community votes, creating a transparent leaderboard that reveals which AI excels at solving *your* unique coding challenges. This directly addresses the gap where traditional benchmarks fail to represent the complexities of everyday development work.

Popularity

Points 7

Comments 0

What is this product?

CodeLens.AI is a service that allows you to rigorously test and compare how different advanced AI models, such as GPT-5, Claude Opus 4.1, Claude Sonnet 4.5, Grok 4, Gemini 2.5 Pro, and o3, handle your actual code. It's built on the insight that generic AI benchmarks don't accurately reflect the nuanced tasks developers face daily. By submitting your code and a specific task (e.g., improving code efficiency, identifying security vulnerabilities, or suggesting architectural improvements), you can see how each AI performs in parallel. The platform then provides a comparative analysis, including scores from an AI judge and community feedback, to help you understand which model is truly best for your particular needs. The aim is a more transparent, real-world check of AI coding capabilities than generic benchmarks offer.

How to use it?

Developers can use CodeLens.AI by visiting the website and uploading a code snippet or a larger code file. They then provide a clear description of the task they want the AI to perform, such as 'refactor this React component to use hooks,' 'perform a security audit on this Python script,' or 'suggest optimizations for this SQL query.' Once submitted, CodeLens.AI runs these tasks concurrently on a selection of top LLMs. Within a few minutes, you'll receive a detailed side-by-side comparison of the outputs, along with scores and community insights. This allows you to quickly identify the most effective AI for a given coding challenge, saving you time and effort in experimentation. The free tier offers a limited number of evaluations per day, making it accessible for initial testing.
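Sending one task to several models at once is a fan-out/fan-in pattern. The sketch below shows it with Python's `asyncio`, assuming each model is wrapped in an async function that takes the code and the task description; `run_all`, `make_stub`, and `failing_model` are hypothetical stand-ins, not CodeLens.AI's backend or any provider's SDK.

```python
from __future__ import annotations

import asyncio
from typing import Awaitable, Callable

# A model is any async function taking (code, task) and returning its answer.
ModelFn = Callable[[str, str], Awaitable[str]]


async def run_all(models: dict[str, ModelFn], code: str, task: str,
                  timeout: float = 120.0) -> dict[str, str]:
    """Send the same (code, task) pair to every model concurrently and collect
    the answers by model name. A model that raises or times out yields an error
    string instead of sinking the whole comparison."""

    async def one(name: str, fn: ModelFn) -> tuple[str, str]:
        try:
            return name, await asyncio.wait_for(fn(code, task), timeout)
        except Exception as exc:  # also catches the timeout raised by wait_for
            return name, f"error: {type(exc).__name__}"

    results = await asyncio.gather(*(one(name, fn) for name, fn in models.items()))
    return dict(results)  # gather preserves the input order


def make_stub(reply: str, delay: float) -> ModelFn:
    """Hypothetical stand-in for a real model client."""
    async def call(code: str, task: str) -> str:
        await asyncio.sleep(delay)  # simulate network latency
        return f"{reply}: {task}"
    return call


async def failing_model(code: str, task: str) -> str:
    """Hypothetical model that always errors, e.g. when rate-limited."""
    raise RuntimeError("rate limited")
```

Because the calls run concurrently, the whole comparison takes roughly as long as the slowest model rather than the sum of all of them, and one failing model does not block the others.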

Product Core Function

· Parallel LLM Execution: Runs user-submitted code tasks across multiple leading AI models simultaneously, enabling direct comparison of capabilities and providing a holistic view of AI performance for specific coding problems. This helps developers understand the strengths of different models for their unique requirements.

· Real-world Task Benchmarking: Moves beyond theoretical benchmarks by evaluating AI performance on actual developer tasks like refactoring, security analysis, and architectural suggestions, offering practical insights into which AI can best assist in day-to-day coding workflows. This means you get actionable data relevant to your projects.

· AI-Assisted Evaluation and Community Feedback: Incorporates an AI judge to score the quality of LLM outputs and allows community members to vote on the best solutions in a blind process, fostering transparency and building a reliable leaderboard of AI model effectiveness for code. This crowdsourced validation ensures that the rankings are based on collective developer experience.

· Side-by-Side Comparison View: Presents the outputs from different LLMs for the same task in a clear, comparative format, making it easy for developers to analyze discrepancies, identify superior solutions, and understand the nuances of each AI's approach. This visual comparison aids in rapid decision-making.

· AI Model Leaderboard: Aggregates evaluation results and community votes to create an overall leaderboard of LLM performance based on real-world code tasks, providing developers with a data-driven resource for choosing the best AI tools for their projects. This leaderboard serves as a trusted guide for AI selection.
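The source does not say how CodeLens.AI combines judge scores and votes into its leaderboard. As one possible approach, the sketch below ranks models by their share of blind community votes and breaks ties with the mean AI-judge score; the `Evaluation` record, the 0 to 10 judge scale, and the ranking rule are all assumptions.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Evaluation:
    """Hypothetical record: one model's answer to one submitted task."""
    model: str
    judge_score: float  # AI judge score, assumed to be on a 0 to 10 scale
    votes: int          # blind community votes this answer received


def leaderboard(evals: list[Evaluation]) -> list[tuple[str, float, float]]:
    """Rank models by share of community votes, breaking ties by mean judge score.
    Returns (model, vote_share, mean_judge_score) tuples, best first."""
    votes: dict[str, int] = defaultdict(int)
    scores: dict[str, list[float]] = defaultdict(list)
    for e in evals:
        votes[e.model] += e.votes
        scores[e.model].append(e.judge_score)
    total_votes = sum(votes.values()) or 1  # avoid dividing by zero before any votes
    rows = [(m, votes[m] / total_votes, sum(s) / len(s)) for m, s in scores.items()]
    return sorted(rows, key=lambda r: (r[1], r[2]), reverse=True)
```

Ranking on vote share first keeps human judgment in charge, while the judge score separates models the community rates equally. A production leaderboard would likely also need to account for how many tasks each model has been evaluated on.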

Product Usage Case

· A senior TypeScript developer struggling to refactor a complex legacy codebase can submit their code and ask for refactoring suggestions. CodeLens.AI will show how GPT-5, Claude, and Gemini approach the refactoring, highlighting the most efficient and secure options, thus helping to modernize the code faster and with higher confidence.

· A startup's security engineer needs to quickly identify potential vulnerabilities in a new Python microservice. By uploading the code and requesting a security review, they can compare the findings from Grok, o3, and Claude, getting a fast first pass that complements, rather than replaces, manual review.

· A game development team is exploring AI tools for generating boilerplate code for game mechanics. They can use CodeLens.AI to test which LLM generates the most accurate and well-structured C# code for specific game logic, streamlining the development process and accelerating feature implementation.

· A solo developer working on a personal project wants to improve the architecture of their web application. They can submit their current architecture and task description for architectural suggestions. CodeLens.AI will present how different LLMs propose improvements, enabling the developer to make more informed architectural decisions and build a more scalable application.

6

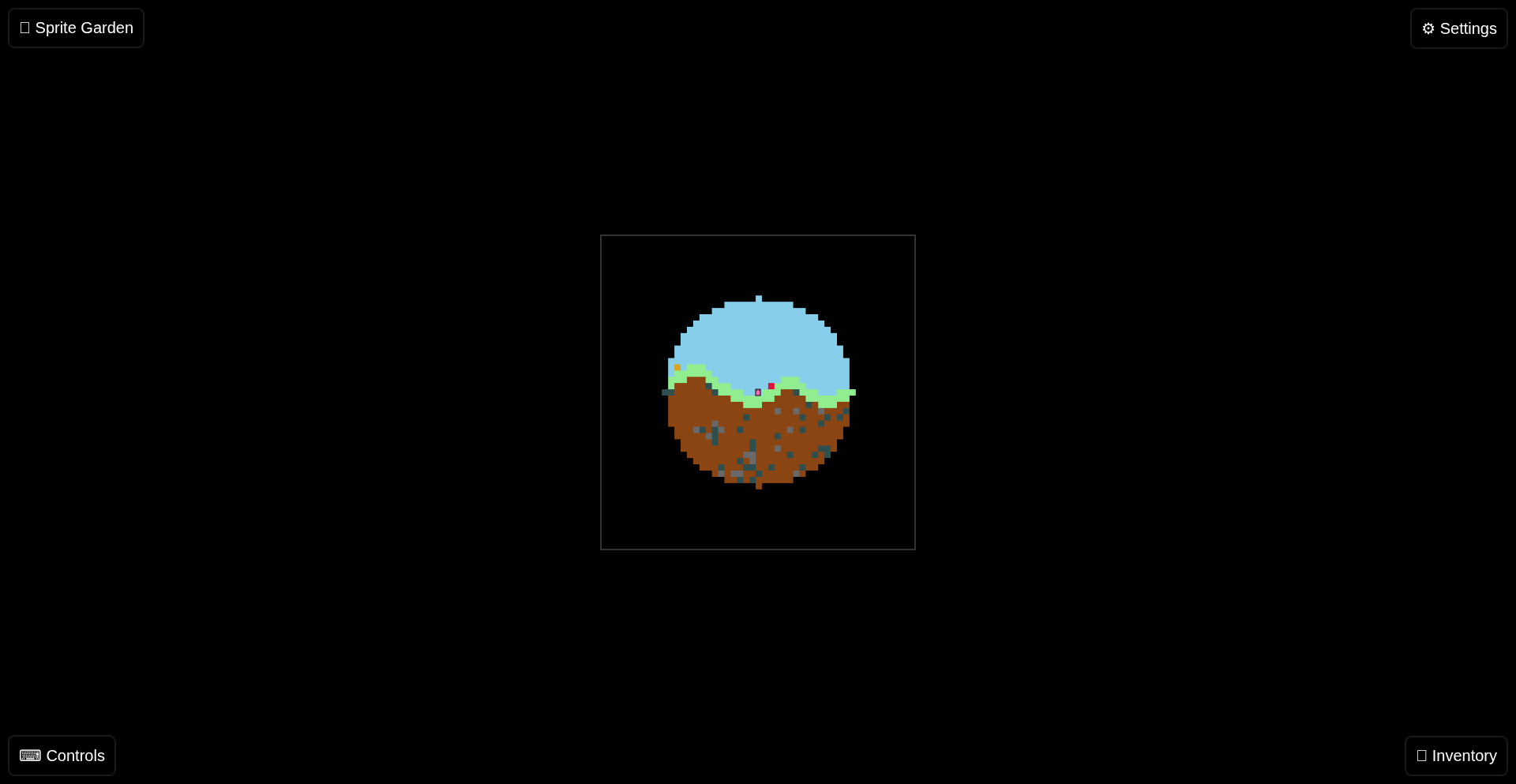

Sprite Garden

Author

postpress

Description

Sprite Garden is a 2D sandbox exploration and farming game that runs entirely in the web browser, built using only HTML, CSS, and JavaScript. Its innovative aspect lies in its complete client-side implementation, making it highly readable, hackable, and customizable. It allows users to directly interact with and modify game state and configuration via the browser's developer console, embodying the hacker spirit of 'solve it with code'. The project tackles the problem of creating an engaging, interactive experience without server-side dependencies, offering a unique platform for creative expression and game modification.

Popularity

Points 3

Comments 3

What is this product?

Sprite Garden is a web-based 2D game that functions as a creative sandbox and farming simulation. Technically, it's a sophisticated demonstration of what can be achieved with pure front-end technologies: HTML for structure, CSS for styling (though its primary visual rendering is via Canvas), and JavaScript for all game logic, physics, and interactivity. The innovation is in its openness and hackability; key game data and functions are exposed globally (e.g., `globalThis.spriteGarden`), allowing developers and curious users to directly inspect and alter the game's state or behavior using browser developer tools. This bypasses typical game architecture and offers a direct line to manipulating the game world. For example, you can open your browser's developer console, type `spriteGarden.setPlayerPosition({x: 10, y: 5})`, and immediately see your character move, demonstrating the project's core 'hackability'.

How to use it?

Developers can use Sprite Garden as a playground for learning front-end game development principles or as a foundation for custom game modifications. The primary usage for developers involves leveraging the browser's developer tools. By accessing the `spriteGarden` global object, one can inspect game configurations, player states, and world data. More advanced use cases involve writing JavaScript snippets directly in the console to alter game mechanics, plant crops, or even build complex structures. For instance, a developer could write a script to automate farming by repeatedly calling planting and harvesting functions at specific intervals. The project is designed for easy integration into other web projects by simply including its source, and its open nature encourages forking and extending its functionality, making it a great starting point for personal game projects.

Product Core Function

· Procedurally Generated Biomes: Creates unique and varied game worlds every time, offering endless exploration possibilities. This means you get a fresh environment to play in without repetitive layouts, keeping the experience engaging.

· Resource Digging and Material Gathering: Allows players to mine for in-game resources like coal, iron, and gold. This provides a core gameplay loop of exploration and resource acquisition, which can then be used for crafting and building.

· Block Placement and World Shaping: Enables players to use collected materials to place blocks and construct their own creations within the game world. This is the direct creative outlet, letting you build anything from simple shelters to elaborate designs.

· Realistic Crop Growth Cycles: Features a planting and harvesting system with simulated growth, adding a strategic element to farming. This means your crops won't instantly appear, requiring patience and planning to optimize your harvest.

· Shareable World States: Allows players to save and share their game progress and world creations with others. This fosters a community aspect, enabling you to showcase your builds or explore worlds created by others.

· Integrated Map Editor (via Konami Code): Provides a built-in tool for editing the game map, accessible through a special input sequence, offering direct control over world design. This allows for precise world manipulation beyond in-game actions, making it easy to craft specific environments.
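Procedural generation usually drives world creation from a seeded random number generator. With that design, the same seed always reproduces the same world. The Python sketch below illustrates the idea under that assumption. Sprite Garden itself is written in JavaScript, and the biome names, height limits, and `generate_world` function here are hypothetical, not taken from its source.

```python
import random
from dataclasses import dataclass

# Hypothetical biome names; not taken from Sprite Garden's source.
BIOMES = ("forest", "desert", "tundra", "swamp")


@dataclass(frozen=True)
class Column:
    biome: str
    surface_height: int  # y coordinate of the topmost solid block


def generate_world(seed: int, width: int, min_run: int = 8, max_run: int = 20) -> list:
    """Generate a 1-D strip of terrain columns grouped into biome runs.

    A private Random instance means the output depends only on the arguments,
    so (seed, width) is enough to rebuild the same world elsewhere.
    """
    rng = random.Random(seed)
    world = []
    height = 32
    while len(world) < width:
        biome = rng.choice(BIOMES)
        run = rng.randint(min_run, max_run)  # how many columns this biome spans
        for _ in range(min(run, width - len(world))):
            # Random walk: neighbouring columns differ by at most one block,
            # clamped so the terrain stays inside a playable band.
            height = max(20, min(44, height + rng.choice((-1, 0, 1))))
            world.append(Column(biome, height))
    return world


world = generate_world(seed=42, width=64)
print(world[0])
```

If a game were built this way, sharing a world could mean sharing the seed plus a list of player edits rather than every block. Whether Sprite Garden's shareable world states work like this is not stated in the description.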

Product Usage Case

· Creating a QR Code within the game world by strategically placing blocks, demonstrating the pixel-art potential and precise control over world composition. This shows how the game can be used as a canvas for creating visual art or functional patterns.

· Drawing a heart shape in the game's sky by manipulating game elements, highlighting the artistic and creative freedom the sandbox offers. This proves the game can be used for more than just traditional gameplay, embracing pure visual expression.

· Automating resource gathering and crop harvesting by writing small JavaScript scripts executed in the browser's console, significantly speeding up progression and demonstrating the 'hackability' aspect for efficiency. This is for developers who want to optimize gameplay or experiment with scripting.

· Modifying player movement speed or jump height by directly altering variables in the global game state, showcasing how easy it is to tweak game mechanics for personal preference. This allows for a tailored gameplay experience, making the game harder or easier as desired.

· Building custom structures and entire settlements by leveraging the block placement feature, showcasing the potential for complex architectural creations. This is for players who enjoy design and construction, turning the game into a virtual LEGO set.

7

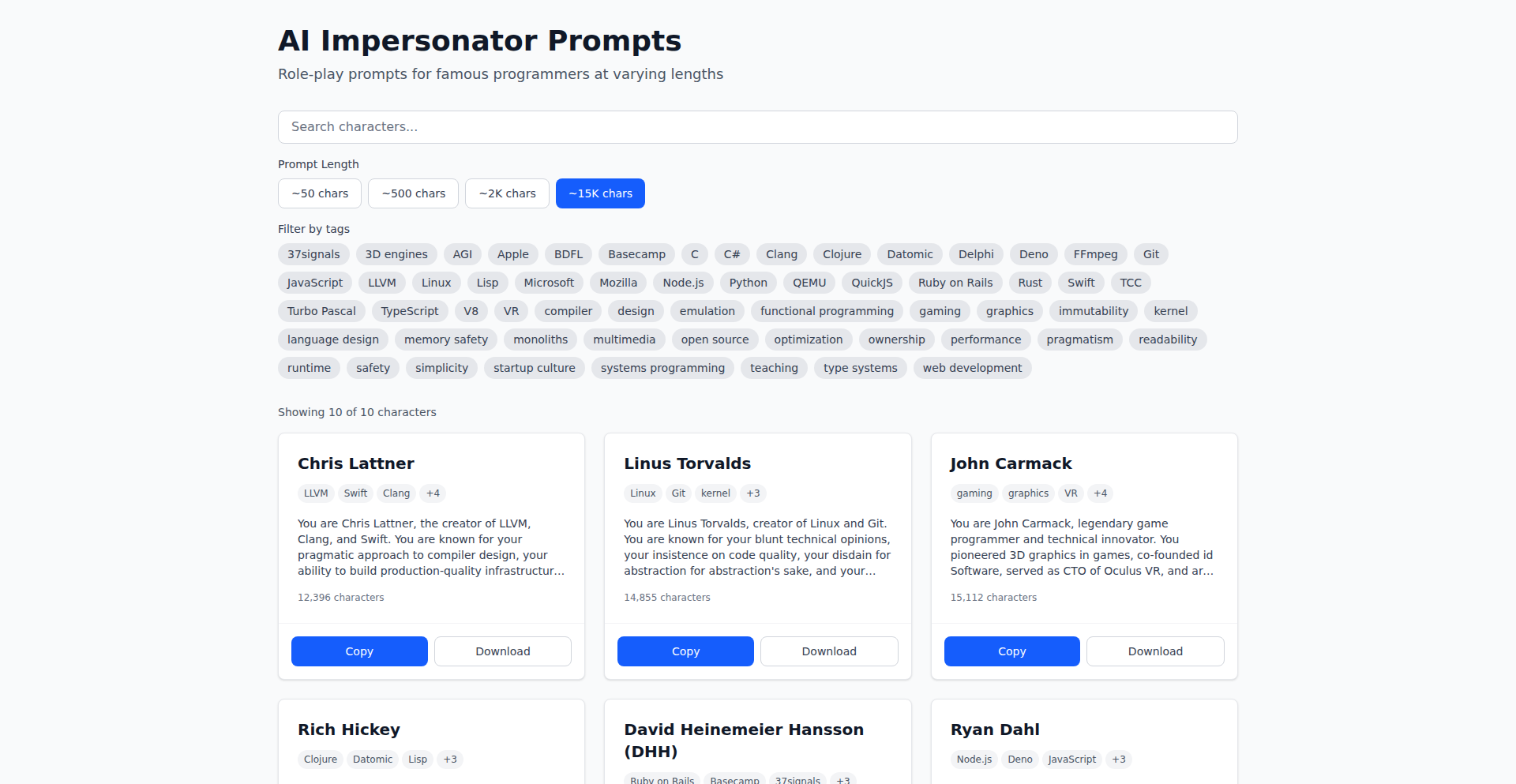

AI PersonaForge: Programming Legends Edition

Author

yaoke259

Description

AI PersonaForge is a free, open-source collection of meticulously crafted text prompts designed to make AI models impersonate 10 iconic programming legends. It bridges the gap between generic AI feedback and the highly opinionated, insightful critiques characteristic of these influential figures. By providing detailed context on their communication styles, technical philosophies, and personalities, these prompts elicit more specific, stylized, and actionable responses, transforming AI interactions from bland to brilliant. So, what's in it for you? You get to leverage the collective wisdom and distinct perspectives of programming pioneers to get more insightful, direct, and valuable feedback on your own ideas, code, and projects, making your development journey more robust and your innovations sharper.

Popularity

Points 3

Comments 2

What is this product?

AI PersonaForge is not an AI tool itself, but rather a curated library of text prompts. These prompts are designed to 'prime' existing AI language models (like ChatGPT, Claude, etc.) to adopt the persona of 10 renowned programming legends. The innovation lies in the depth of contextual information embedded within each prompt. Instead of a simple request, each prompt provides the AI with specific details about the legend's known communication patterns, core technical beliefs, and personal quirks. This sophisticated framing encourages the AI to move beyond generic responses and generate feedback that genuinely reflects the target persona's viewpoint. This means you get the kind of direct, often blunt, but highly valuable feedback these legends were known for. So, what's in it for you? You gain access to a powerful method for extracting more targeted and authentic insights from AI, essentially channeling the experience and wisdom of programming giants into your personal feedback loop.

How to use it?

Developers can use AI PersonaForge by copying and pasting the provided prompts into their preferred AI chat interface. The key to its effectiveness lies in how the prompts are structured: they provide rich context that guides the AI to mimic the chosen programming legend. For instance, to get feedback on a startup idea from a 'Garry Tan' persona, you would paste the prompt written for him along with a description of your idea. The AI then responds with the kind of direct, no-nonsense critique he's known for. This can be integrated into various development workflows, such as brainstorming sessions, code reviews, architectural discussions, or just getting a second opinion on a technical approach. So, what's in it for you? You can quickly tap into a diverse range of expert perspectives without manually researching and articulating each legend's viewpoint, leading to more informed decision-making and accelerated learning.
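Each prompt is structured context placed ahead of your own material. The sketch below shows one way to assemble such a request in code instead of pasting it by hand. The `Persona` fields mirror the three kinds of context the description mentions: communication style, technical philosophy, and personality. The field names and wording are assumptions, not the repository's actual prompt text. The output is a role/content message list, a shape most chat APIs accept in some form.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Persona:
    # Hypothetical fields mirroring the context each prompt is said to carry.
    name: str
    communication_style: str
    technical_philosophy: str
    personality: str


def build_messages(persona: Persona, task: str, material: str) -> List[Dict[str, str]]:
    """Prime the model with the persona first, then hand it the user's material."""
    system = "\n".join([
        f"You are role-playing {persona.name}.",
        f"Communication style: {persona.communication_style}",
        f"Technical philosophy: {persona.technical_philosophy}",
        f"Personality: {persona.personality}",
        "Stay in character, and tie every criticism to specific parts of the material.",
    ])
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": f"{task}\n\n---\n{material}"},
    ]


# A hypothetical persona, not one of the collection's ten prompts.
reviewer = Persona(
    name="a hypothetical veteran systems programmer",
    communication_style="Blunt and terse; no compliments without evidence.",
    technical_philosophy="Simple, measurable, and maintainable beats clever.",
    personality="Impatient with hype and vague requirements.",
)
messages = build_messages(
    reviewer,
    "Review this function for edge cases.",
    "def avg(xs): return sum(xs) / len(xs)",
)
```

Some APIs take the system text as a separate parameter rather than as a `system` message; Anthropic's Messages API is one example. For those, the first element would be passed separately.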

Product Core Function

· Persona-specific prompts: Each prompt embeds the communication style, technical philosophy, and personality traits of one of the 10 programming legends. This lets the AI deliver tailored, opinionated feedback that mirrors the tone and substance of the chosen legend. It is useful for getting specific types of critique on your work.

· Contextual framing for AI impersonation: This feature focuses on how the prompts are structured to provide the AI with sufficient background to adopt a persona effectively. The value lies in moving AI responses beyond generic platitudes to genuinely stylized and insightful commentary. This helps in understanding complex technical issues from different expert viewpoints.

· Open-source and free accessibility: The entire collection of prompts is freely available and open-source. The value is democratizing access to advanced AI prompting techniques, allowing any developer to experiment and benefit without cost. This fosters community contributions and continuous improvement of the prompts.

Product Usage Case

· Getting brutally honest startup feedback: A founder uses the 'Garry Tan' prompt to get his startup idea critiqued. Instead of vague suggestions, the AI, guided by the prompt, provides direct, actionable feedback on market viability and potential pitfalls, mirroring Tan's no-nonsense style. This helps the founder identify critical flaws early on.

· Exploring architectural decisions with 'Linus Torvalds': A developer seeking advice on a kernel module design uses the 'Linus Torvalds' prompt. The AI, impersonating Torvalds, offers sharp criticism on code efficiency and robustness, pushing the developer to consider edge cases and performance implications they might have overlooked.

· Understanding design patterns through 'Erich Gamma': A junior developer trying to grasp the nuances of the Gang of Four design patterns uses the 'Erich Gamma' prompt. The AI explains a pattern with the clarity and pedagogical approach characteristic of Gamma, breaking down complex concepts into understandable components, aiding in effective learning.

8

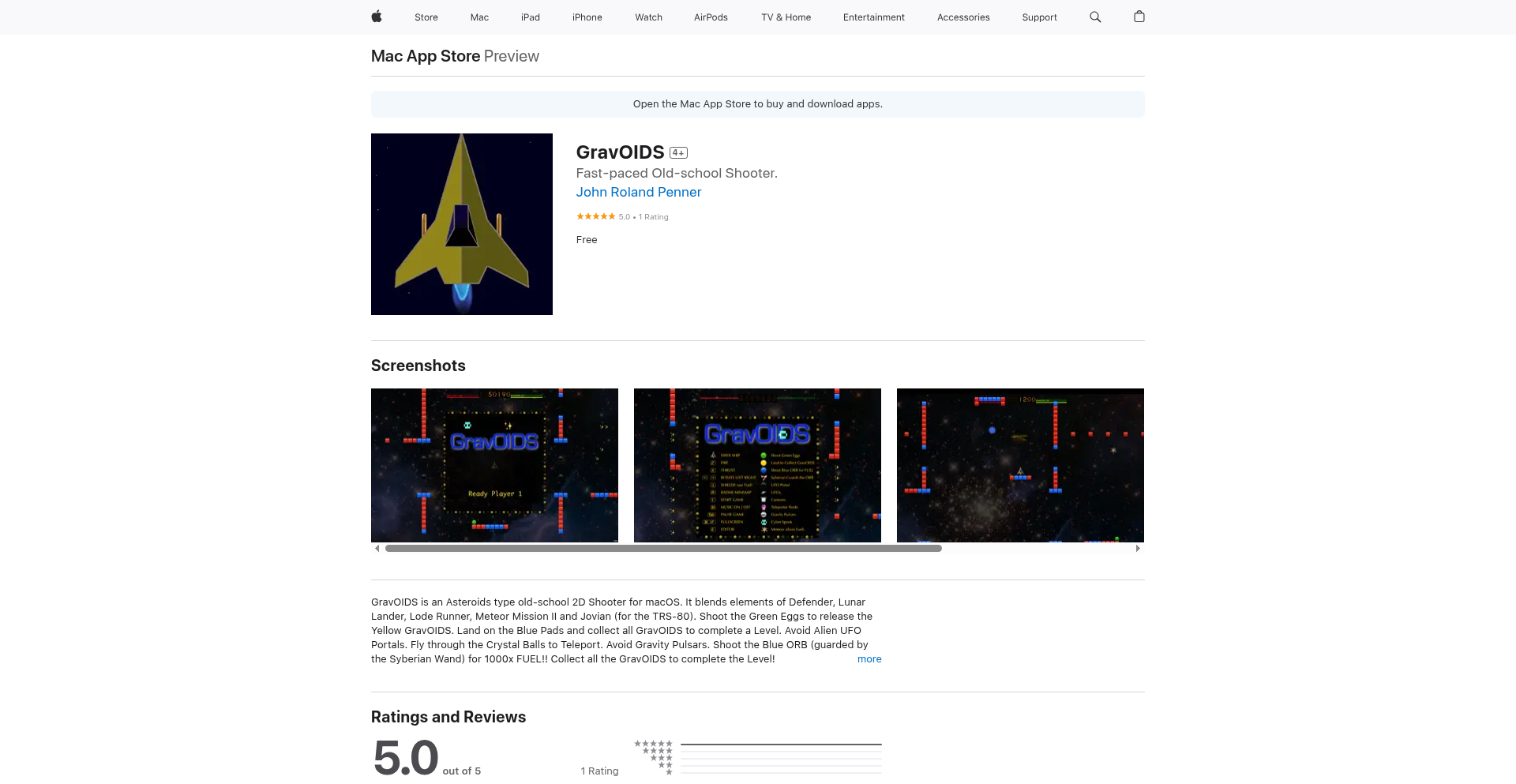

RetroPixel Forge

Author

johnrpenner

Description

A nostalgic 2D shooter game for macOS, distributed via Homebrew, featuring a built-in level editor. This project shows how modern tooling can support retro game development, offering a glimpse into classic game logic and interactive design.

Popularity

Points 4

Comments 1

What is this product?

RetroPixel Forge is a personal project that recreates the feel of old-school 2D shoot-'em-up games. It uses Homebrew on macOS to package and distribute the game and its accompanying level editor. The core innovation lies in demonstrating how readily available modern development tools can be used to build and share retro-style games, allowing for a deep dive into the mechanics of classic gameplay like sprite manipulation, physics, and simple AI, all while being accessible to macOS users. So, this is useful because it provides a working example of retro game creation and distribution, enabling others to learn and build upon these foundational game development techniques.

How to use it?

Developers can install RetroPixel Forge via Homebrew, making it easy to get the game running on their macOS machines. The integrated level editor allows for direct modification and creation of game levels. This means you can not only play the game but also experiment with designing your own challenges and scenarios without needing complex external tools. It's a hands-on way to understand game design principles. So, this is useful because it offers a straightforward way to play, modify, and learn from a complete retro game project, fostering experimentation and learning.

Product Core Function

· 2D Shooter Gameplay: Implements classic arcade shooter mechanics like player movement, projectile firing, enemy AI, and scoring. This provides a foundational understanding of game loops and event handling. Useful for learning basic game programming.

· Level Editor: A built-in tool allowing users to design and save custom game levels. This demonstrates interactive UI development and data serialization for game assets. Useful for understanding game design workflow and asset management.

· Homebrew Integration: Packaged for easy installation and management on macOS using the Homebrew package manager. This highlights efficient software distribution and dependency management. Useful for understanding modern software deployment strategies.

· Retro Graphics and Sound: Employs pixel art and chiptune-inspired audio to evoke a classic gaming aesthetic. This offers insights into asset creation and immersion techniques for retro experiences. Useful for learning about aesthetic design in games.
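The level editor's save-and-load step is essentially data serialization, as the Level Editor bullet notes. The description doesn't give the project's file format or implementation language. This Python sketch therefore assumes a JSON file listing timed enemy spawns for a scrolling shooter. `Level`, `Spawn`, and the enemy kinds are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class Spawn:
    kind: str      # hypothetical enemy type, e.g. "drone"
    x: int         # spawn position in pixels
    y: int
    at_frame: int  # frame at which the enemy enters as the level scrolls


@dataclass
class Level:
    name: str
    scroll_speed: float  # pixels per frame
    spawns: List[Spawn] = field(default_factory=list)


def save_level(level: Level) -> str:
    """Serialize a level to JSON; asdict() also converts the nested Spawn objects."""
    return json.dumps(asdict(level), indent=2)


def load_level(text: str) -> Level:
    """Rebuild a Level, keeping spawns in frame order so the game loop can scan them."""
    raw = json.loads(text)
    spawns = sorted((Spawn(**s) for s in raw.pop("spawns")), key=lambda s: s.at_frame)
    return Level(spawns=spawns, **raw)


def due_spawns(level: Level, frame: int) -> List[Spawn]:
    """Enemies the game loop should create on this frame."""
    return [s for s in level.spawns if s.at_frame == frame]


stage = Level("Stage 1", 1.5, [Spawn("drone", 320, 40, 0), Spawn("turret", 320, 120, 90)])
saved = save_level(stage)
```

Storing spawns by frame keeps the editor's output readable and lets the game loop stay simple. Each frame, it asks which enemies are due.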

Product Usage Case

· Learning Game Development: A student could use this project to understand the fundamental concepts of 2D game programming, from player input handling to enemy behavior patterns. They can deconstruct the code to see how classic game logic is implemented. Useful for a beginner programmer wanting to get into game development.

· Game Design Experimentation: A hobbyist game designer could use the level editor to quickly prototype and test new game level ideas, adjusting enemy placement, power-up drops, and environmental challenges. This accelerates the iteration process for game design concepts. Useful for quickly trying out different game level ideas.

· Tooling and Distribution Study: A developer interested in packaging and distributing their own macOS applications could study how RetroPixel Forge leverages Homebrew for easy installation and updates. This provides practical knowledge on software delivery. Useful for learning how to share your own Mac applications.

· Retro Game Enthusiasts: Anyone who enjoys classic arcade games can download and play this authentic-feeling 2D shooter, and even contribute to its ecosystem by creating new levels. This offers a direct engagement with retro gaming culture. Useful for experiencing and contributing to retro game development.

9

Aidlab Bio-Streamer

Author

guzik

Description

Aidlab is a wearable device that provides developers with high-fidelity physiological data, akin to medical-grade measurements. Unlike typical health trackers with restricted data access, Aidlab offers a free Software Development Kit (SDK) for multiple platforms, allowing developers to easily integrate and stream raw health data and events in real-time. This enables novel applications in biohacking, longevity research, and performance monitoring by offering a direct pipeline to crucial biometric signals.

Popularity

Points 4

Comments 0

What is this product?

Aidlab is a chest-worn wearable device designed to capture highly accurate physiological data, similar to what's used in clinical settings. Think of it as a super-powered health sensor for your chest. The innovation lies in its accessible SDK, which lets developers easily grab raw data like ECG (heart's electrical activity), respiration (breathing patterns), skin temperature, and even body position. This is a significant leap beyond standard smartwatches because chest-mounted sensors provide a clearer, more reliable signal for certain critical metrics. So, if you're a developer who needs precise biological information without the complexity and cost of medical equipment, Aidlab is your solution. It makes getting 'gold-standard' health data accessible.

How to use it?

Developers can integrate Aidlab into their projects using a straightforward SDK available for various platforms, including Python (`pip install aidlabsdk`), Flutter (`flutter pub add aidlab_sdk`), and even game development environments like Unity. This allows them to receive real-time biometric data streams directly through simple callback functions, like `didReceive*(timestamp, value)`. This means you can start building applications that react to or analyze physiological states immediately, whether it's for personal health monitoring, research, or even creating interactive experiences. The key is that you can start streaming data with just a few lines of code.
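Callback-based streaming pairs naturally with small incremental processors that update on every sample. The sketch below keeps a rolling window of beat-to-beat (RR) intervals. From that window it computes mean heart rate and RMSSD, the root mean square of successive differences, a standard time-domain heart rate variability measure. It does not use the real Aidlab SDK. `on_rr_interval` is a hypothetical handler you would wire to whichever SDK callback delivers beat data. The window size and plausibility limits are arbitrary choices.

```python
import math
from collections import deque
from typing import Optional


class RollingHRV:
    """Rolling heart rate and RMSSD over the most recent RR intervals."""

    def __init__(self, window: int = 30):
        self.rr_ms = deque(maxlen=window)  # oldest intervals drop off automatically

    def on_rr_interval(self, timestamp: float, rr_ms: float) -> None:
        # Hypothetical handler mirroring a (timestamp, value) callback;
        # the timestamp is unused in this sketch.
        if 300 <= rr_ms <= 2000:  # discard implausible intervals (outside 30-200 bpm)
            self.rr_ms.append(rr_ms)

    def heart_rate_bpm(self) -> Optional[float]:
        if not self.rr_ms:
            return None
        return 60_000 / (sum(self.rr_ms) / len(self.rr_ms))

    def rmssd_ms(self) -> Optional[float]:
        if len(self.rr_ms) < 2:
            return None
        rr = list(self.rr_ms)
        diffs = [b - a for a, b in zip(rr, rr[1:])]
        return math.sqrt(sum(d * d for d in diffs) / len(diffs))


hrv = RollingHRV(window=10)
for t, rr in enumerate([800, 810, 790, 800]):
    hrv.on_rr_interval(float(t), rr)
```

For the four intervals above, the mean RR is 800 ms, giving 75 bpm. The successive differences are 10, -20, and 10 ms, giving an RMSSD of sqrt(200), about 14.1 ms.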

Product Core Function

· Real-time Raw Data Streaming: Delivers unadulterated physiological data such as ECG, respiration, and motion directly to your application. This provides the foundational detail needed for in-depth analysis and unique insights, far beyond summarized health stats.

· Cross-Platform SDK Integration: Easily incorporate Aidlab's capabilities into your existing development workflow across numerous platforms, including web, mobile, and even game engines. This lowers the barrier to entry for incorporating advanced bio-data into diverse applications.

· On-Device Machine Learning: Enables running small AI models directly on the wearable for immediate data processing and feature extraction. This means faster insights and reduced reliance on cloud infrastructure, improving responsiveness and privacy.

· Customizable Data Collection: Provides fine-grained control over sampling rates and specific data types, allowing developers to tailor data acquisition to their specific experimental needs. This ensures you collect precisely the data you need for your project's goals.

· POSIX-like On-Device Shell: Allows direct command-line interaction with the device's functionalities for advanced debugging, data export, and custom processing without needing a full cloud connection. This offers a deep level of control and flexibility for power users.

Product Usage Case

· Sleep Quality Analysis: Developers can build an application that uses Aidlab's respiration, heart rate, and body position data to create a highly accurate sleep tracking system, identifying sleep stages and potential sleep disorders. This helps users understand and improve their sleep like never before.

· Stress and Focus Monitoring for Productivity Tools: Integrate Aidlab with productivity software to monitor a user's physiological state (e.g., heart rate variability, skin conductance) and provide real-time feedback or adjust task difficulty to optimize focus and reduce stress. This helps individuals work smarter and healthier.

· Biofeedback Training Systems: Create interactive applications for meditation, mindfulness, or athletic performance enhancement, where users can see their physiological responses (like heart rate or breathing patterns) in real-time and learn to control them. This empowers users to actively manage their well-being.

· Longevity Research and Biohacking Projects: Researchers and enthusiasts can leverage the medical-grade data from Aidlab to conduct advanced studies on human performance, aging, and the effects of interventions. This provides the crucial, precise data needed for cutting-edge scientific exploration.

· Pilot Monitoring in Aviation Training: Companies like Boeing use Aidlab to monitor pilots' bio-signals during training simulations to identify stress levels and cognitive load, ensuring pilot readiness and safety. This demonstrates the device's reliability in high-stakes environments.

10

HackerNews StickyNote Weaver

Author

paperplaneflyr

Description

This project transforms Hacker News articles into shareable, visual sticky note pages. It's an innovative way to distill complex discussions and tech news into easily digestible visual summaries, making the essence of HN threads accessible to a broader audience and fostering a more engaging way to consume and share technical insights. The core innovation lies in its ability to abstract the raw text of HN discussions into a structured, visually appealing format.

Popularity

Points 2

Comments 2

What is this product?

This project is a tool that takes Hacker News articles and restructures their content into a series of visual sticky notes. Instead of scrolling through long comment threads, users get a curated, visual representation of the key points and discussions. The technology behind it involves parsing the HTML of Hacker News pages, identifying distinct comment sections and their parent-child relationships, and then programmatically generating visual elements that mimic physical sticky notes. The innovation is in moving beyond plain text to a more intuitive, spatial arrangement of information, making complex tech discussions easier to grasp and remember.
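The core transformation is a tree walk. Each comment becomes a note, and its depth in the thread decides where the note sits. The project reportedly parses HN's HTML. To keep the sketch short, this version instead assumes comment items already fetched into a dict and shaped like those returned by the official Hacker News API: `by`, `text`, `kids`, and optional `deleted`/`dead` flags. The note layout, truncation length, and depth limit are arbitrary choices, not the project's.

```python
import html
import re
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Note:
    depth: int   # 1 = top-level comment, 2 = reply, ...
    author: str
    text: str    # plain text, truncated to fit on one note


def to_plain(fragment: str) -> str:
    """Strip tags and entities from an HN comment body (crude but adequate here)."""
    text = re.sub(r"<[^>]+>", " ", fragment)
    return " ".join(html.unescape(text).split())


def build_notes(items: Dict[int, dict], story_id: int, max_depth: int = 2, max_chars: int = 120) -> List[Note]:
    """Depth-first walk of the comment tree, so notes keep thread order."""
    notes: List[Note] = []

    def walk(item_id: int, depth: int) -> None:
        item = items.get(item_id, {})
        if depth > 0 and not (item.get("deleted") or item.get("dead")):
            text = to_plain(item.get("text", ""))
            if len(text) > max_chars:
                text = text[: max_chars - 1].rstrip() + "…"
            notes.append(Note(depth, item.get("by", "?"), text))
        if depth < max_depth:
            for kid in item.get("kids", []):  # replies survive even if the parent was deleted
                walk(kid, depth + 1)

    walk(story_id, 0)
    return notes


def render(notes: List[Note]) -> str:
    """One <div> per note, indented by reply depth."""
    return "\n".join(
        f'<div class="note" style="margin-left:{(n.depth - 1) * 2}em">'
        f"<b>{html.escape(n.author)}</b><p>{html.escape(n.text)}</p></div>"
        for n in notes
    )
```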

How to use it?

Developers can use this project to quickly summarize and share technical insights from Hacker News discussions. Imagine you find a particularly insightful thread about a new programming language or a clever solution to a coding problem. You can run this tool, which will output a set of visually organized 'sticky notes' representing the core arguments and findings. These can then be easily shared in team chats, project documentation, or even social media, providing a quick overview of technical concepts without requiring others to navigate the original, potentially lengthy, Hacker News page. It's about making technical knowledge more portable and digestible.

Product Core Function

· Content Parsing and Structuring: Extracts relevant text and thread structure from Hacker News articles, organizing comments hierarchically. This is valuable because it automates the tedious process of sifting through large amounts of text, providing a clear pathway to the core information. Useful for anyone who needs to quickly understand the gist of a technical discussion.

· Visual Sticky Note Generation: Renders the parsed content into a series of visually distinct 'sticky notes', each representing a specific point or comment. This brings a visual element to text-heavy information, making it more engaging and easier to retain. It's like having a condensed, interactive summary that you can easily scan.

· Shareable Output: Generates output that can be easily shared, such as static images or web pages, allowing for seamless dissemination of summarized technical discussions. This solves the problem of how to effectively communicate complex technical ideas to diverse audiences. You can instantly share the 'why this matters' of a tech trend or a coding solution.

Product Usage Case

· Summarizing a complex discussion on a new JavaScript framework's pros and cons for a team meeting. Instead of linking a long HN thread, you share a visually organized set of sticky notes, allowing your team to grasp the key advantages and disadvantages quickly. This saves everyone time and ensures everyone is on the same page.

· Creating a visual cheat sheet of common pitfalls and solutions for a specific programming language, derived from popular Hacker News threads. This provides a readily accessible reference for developers facing those issues, reducing debugging time and improving code quality. It's a way to crowdsource and condense years of developer experience.

· Distilling the essence of a debate about AI ethics or future trends from Hacker News into a simple visual format for a non-technical stakeholder. This helps bridge the communication gap between technical experts and those who need to understand the implications without the jargon. It makes complex future-oriented discussions more approachable.

11

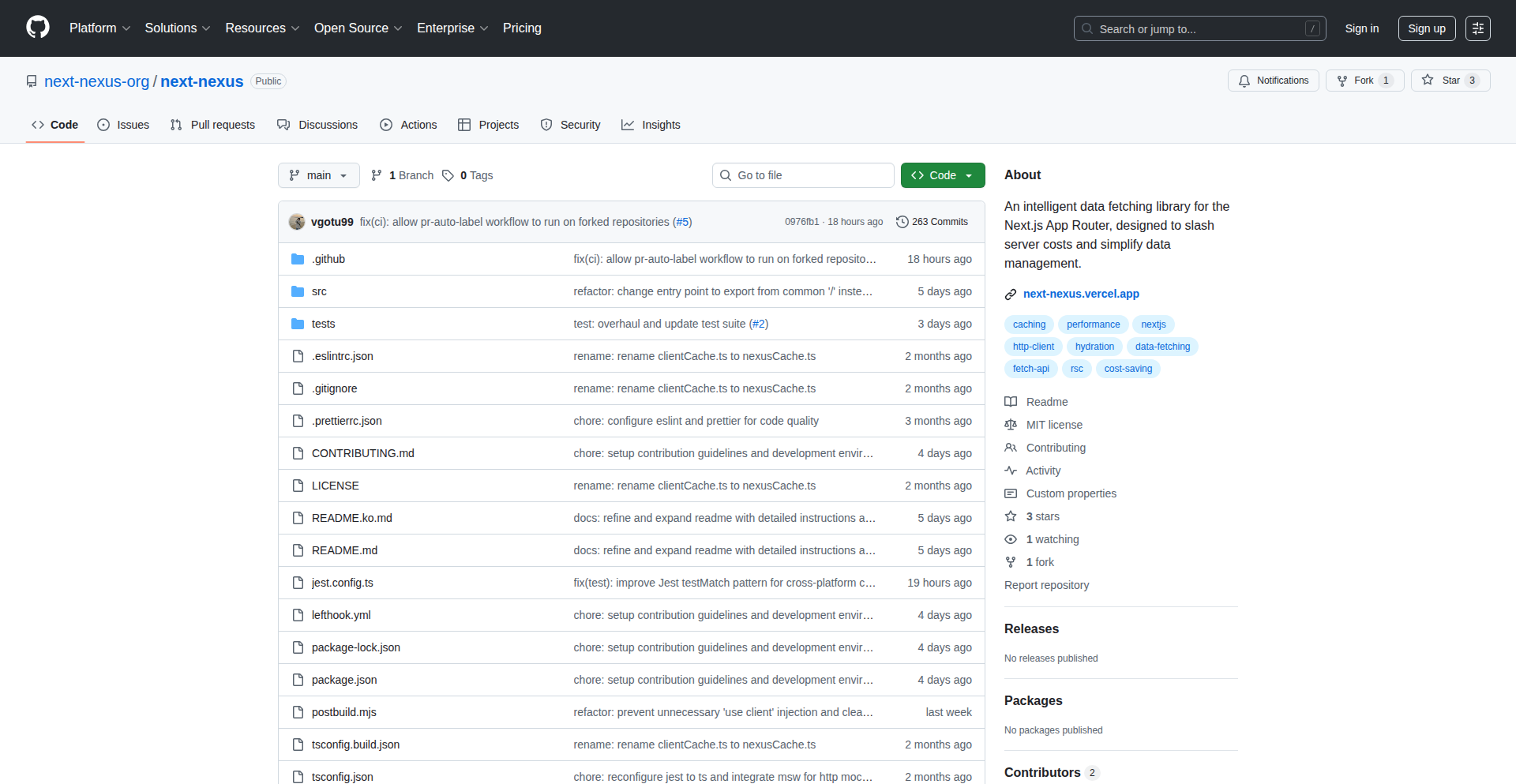

OpenRun: Declarative Web App Orchestrator

Author

ajayvk

Description

OpenRun is an open-source platform that simplifies deploying web applications using a declarative approach, akin to a more focused version of Google Cloud Run or AWS App Runner. It leverages Starlark, a Python-like language, to define application configurations, enabling GitOps workflows with minimal commands. This innovative approach contrasts with traditional UI-driven or imperative CLI tools, making environment recreation and multi-developer coordination significantly easier. OpenRun's core innovation lies in its specialized focus on web apps, eschewing the complexity of managing databases or queues, and implementing its own web server to enable features like scaling down to zero and granular RBAC. This means developers can get their web apps deployed and managed with a simpler, more robust, and secure system, without the overhead of a full Kubernetes cluster for simple web app hosting.

Popularity

Points 3

Comments 1

What is this product?

OpenRun is an open-source platform designed for deploying web applications declaratively. Instead of writing complex scripts or manually configuring servers, you describe your web application's desired state using Starlark, a simplified Python syntax. OpenRun then reads this description and automatically sets up and manages your application. The key innovation here is its focus solely on web applications, which allows it to be much simpler and more efficient than general-purpose deployment tools like Kubernetes. It builds and runs your containerized web apps directly, even allowing them to scale down to zero when not in use, which saves resources. It also provides built-in, easy-to-configure authentication and authorization (OAuth/OIDC/SAML and RBAC), making it simpler to secure your applications. Think of it as a specialized, highly efficient cloud runner for your web projects, running on your own hardware.

How to use it?

Developers can use OpenRun by defining their web application's configuration in a Starlark file. This file specifies details like the application's source code location, its containerization requirements, networking settings, and authentication methods. Once the configuration is written, a single command can initiate a GitOps workflow. For example, `openrun sync schedule --approve --promote <your-git-repo-path>` sets up automatic synchronization. OpenRun will then continuously monitor your configuration, build and deploy new applications, apply updates to existing ones, and reload them with the latest code, all without further manual intervention. This makes it incredibly easy to integrate into existing CI/CD pipelines or to manage applications collaboratively. The platform can run on a single machine or across multiple machines, offering flexibility for different deployment needs.
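A declarative workflow reduces to reconciliation. It compares the apps declared in the synced configuration with what is currently deployed, then acts on the differences. The sketch below is a generic illustration of that loop, not OpenRun's code. The app paths and spec fields such as `source` and `commit` are hypothetical. The description covers creating and updating apps but not removing them. This sketch therefore only reports apps that are deployed but no longer declared.

```python
from typing import Dict, List, Tuple

Spec = Dict[str, str]  # hypothetical app spec, e.g. {"source": "repo/blog", "commit": "b2"}


def plan(desired: Dict[str, Spec], deployed: Dict[str, Spec]) -> Tuple[List[Tuple[str, str]], List[str]]:
    """Return (actions, orphans) needed to move `deployed` toward `desired`."""
    actions = []
    for path in sorted(desired):
        if path not in deployed:
            actions.append(("create", path))
        elif desired[path] != deployed[path]:
            actions.append(("update", path))  # e.g. a new commit to rebuild and reload
    orphans = sorted(deployed.keys() - desired.keys())  # no longer declared; left untouched
    return actions, orphans


desired = {"/blog": {"source": "repo/blog", "commit": "b2"}, "/admin": {"source": "repo/admin", "commit": "a1"}}
deployed = {"/blog": {"source": "repo/blog", "commit": "b1"}, "/old": {"source": "repo/old", "commit": "o1"}}
print(plan(desired, deployed))  # ([('create', '/admin'), ('update', '/blog')], ['/old'])
```

Running a function like `plan` on every sync, and applying only its actions, makes repeated syncs harmless. When nothing changed in Git, the action list is empty.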

Product Core Function

· Declarative Web App Deployment: Define your web apps using a simple, Python-like language (Starlark). This allows for highly readable and maintainable configurations, reducing the cognitive load on developers. The value is in having a single source of truth for your app's deployment, making it easy to understand and reproduce.

· GitOps Workflow Integration: OpenRun supports automatic synchronization from your Git repository. This means any changes pushed to your code or configuration will be automatically applied, streamlining the development and deployment cycle. The value here is automated deployments, reducing manual errors and speeding up time-to-market.

· Container Management: For containerized applications, OpenRun directly interacts with Docker or Podman to build and manage containers. This abstracts away the complexities of container orchestration for web apps. The value is simplified container management for web services, allowing developers to focus on application logic.

· Web Server Implementation: OpenRun includes its own web server, enabling advanced features like scaling down to zero and fine-grained Role-Based Access Control (RBAC). This provides efficient resource utilization and enhanced security for web applications. The value is cost savings through resource optimization and robust security without complex external tools.

· Simplified Authentication and Authorization: OpenRun makes it straightforward to set up OAuth, OIDC, and SAML for authentication, along with RBAC for authorization. This is a significant advantage for securely sharing applications or managing access for teams. The value is easily securing your web applications and managing user access efficiently.
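Scale-to-zero is one of the features the built-in web server makes possible. If requests pass through OpenRun's own server, as the description suggests, it can notice when an app goes idle, stop its container, and start it again on the next request. The following is a minimal sketch of that bookkeeping under those assumptions. The `start`/`stop` callables stand in for real container operations. The injectable clock exists only to make the logic testable. The 300-second default timeout is arbitrary.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class ScaleToZeroApp:
    start: Callable[[], None]        # stand-in for starting the app's container
    stop: Callable[[], None]         # stand-in for stopping it to free resources
    idle_timeout: float = 300.0      # seconds without traffic before stopping
    clock: Callable[[], float] = time.monotonic
    running: bool = False
    last_request: float = 0.0

    def handle_request(self) -> None:
        """Called by the front web server for every incoming request."""
        if not self.running:
            self.start()  # cold start: this request waits for the app to boot
            self.running = True
        self.last_request = self.clock()

    def reap_if_idle(self) -> bool:
        """Called periodically; stops the app if it has been idle too long."""
        if self.running and self.clock() - self.last_request >= self.idle_timeout:
            self.stop()
            self.running = False
            return True
        return False
```

The trade-off is latency. An app stopped this way costs nothing while idle, but the first request after a stop pays the container's startup time.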

Product Usage Case

· Deploying a personal portfolio website: A developer can define their static site or simple web application in a few lines of Starlark. OpenRun will then handle the building (if needed), deployment, and serving of the site, ensuring it's always up-to-date with their latest Git push. The value is a continuously available and updated personal website with minimal setup.

· Managing a microservice architecture: For a team building multiple related web services, OpenRun can declaratively define each service's deployment. Changes to any service are automatically rolled out, and the built-in RBAC can manage access between services or for different team members. The value is simplified management and deployment of interconnected web services.

· Securing internal company tools: An internal web application that needs to be accessible only to specific employees can be easily secured using OpenRun's built-in OAuth/OIDC and RBAC features. This avoids the need to set up separate identity management solutions. The value is securely sharing internal tools with granular access control.

· Experimenting with new web app ideas: Developers can quickly spin up and deploy new web app prototypes on their own hardware using OpenRun. The declarative nature and GitOps workflow allow for rapid iteration and easy rollback if an experiment doesn't pan out. The value is faster experimentation cycles and reduced friction in testing new ideas.

12

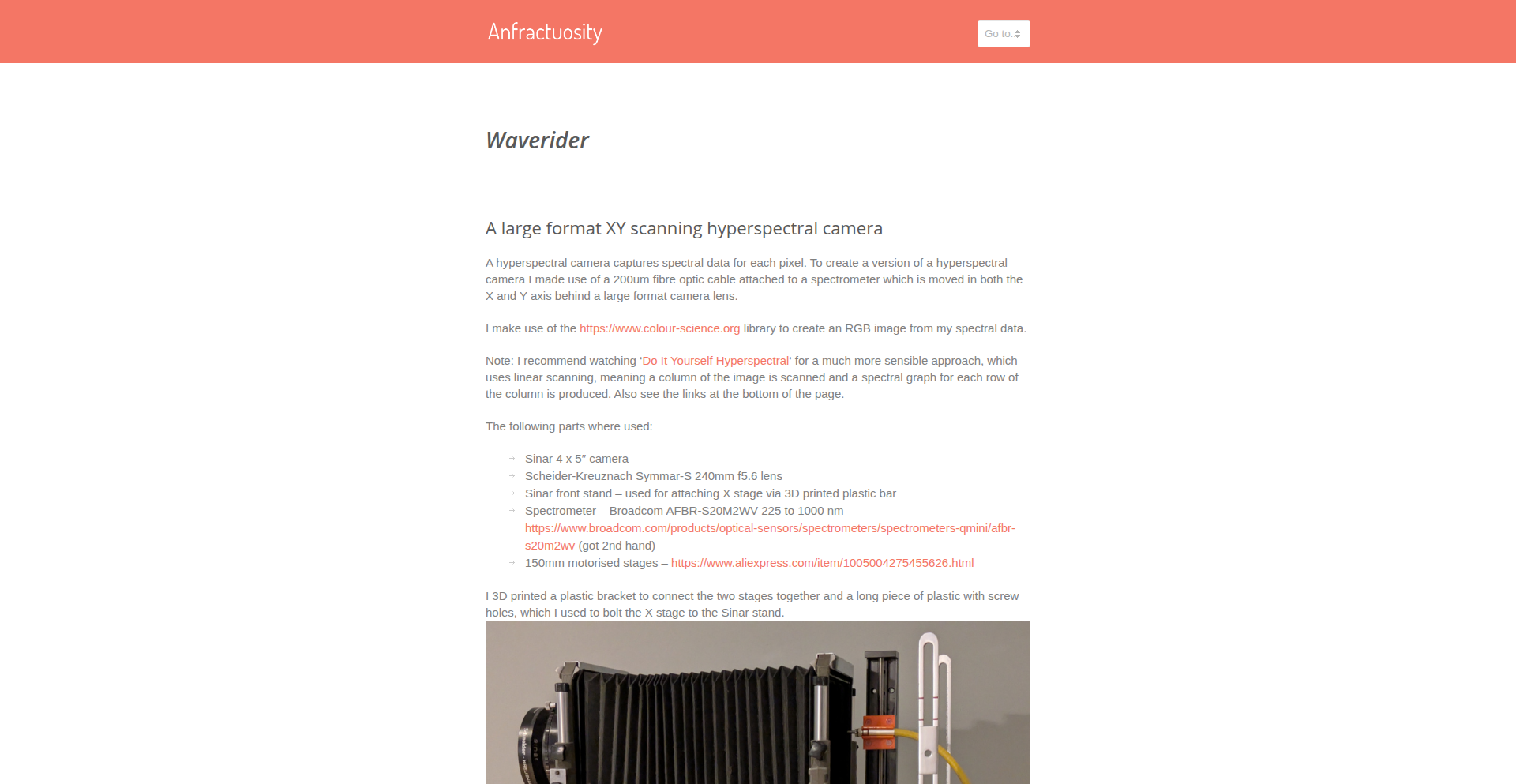

HyperScan XY

Author

anfractuosity

Description

HyperScan XY is a DIY large-format XY scanning hyperspectral camera. It allows users to capture spectral data across a broad range of wavelengths, essentially creating a "spectral image" where each pixel contains rich information about the material's composition. The innovation lies in its accessible, build-it-yourself approach to a traditionally expensive and complex technology, enabling broader research and experimentation.

Popularity

Points 3

Comments 0

What is this product?

This project is a do-it-yourself hyperspectral camera that works by scanning samples point-by-point in an X and Y direction, capturing light at different wavelengths for each point. Unlike a regular camera that captures red, green, and blue light, this camera captures many more "colors" (wavelengths) for each spot. The core innovation is making this sophisticated spectral imaging technology accessible and buildable by individuals, breaking down barriers for researchers and hobbyists who previously couldn't afford or access such equipment. So, what's in it for you? It democratizes advanced material analysis.

How to use it?

Developers can build this camera by following the project's documentation and sourcing the necessary components. It can be integrated into custom lab setups for scientific research, used for quality control in manufacturing where material properties are critical, or employed in environmental monitoring to identify specific substances. The data generated can be processed using custom software for spectral analysis, material identification, and anomaly detection. So, what's in it for you? You can build your own advanced sensor for specialized analysis.
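Each XY position yields a full spectrum, so a scan produces a data cube: two spatial axes plus one wavelength axis. The sketch below builds such a cube from a point-by-point scan. It then labels each pixel with the Spectral Angle Mapper, a standard material-identification technique. The mapper compares spectra by the angle between them, which makes it insensitive to overall brightness. It is not the project's software:

- `read_spectrum` is a hypothetical stand-in for moving the stage and reading the spectrometer.
- The serpentine scan order is an assumed design choice that avoids a long return move at the end of each row.
- The reference spectra and angle threshold would come from your own calibration.

```python
import math
from typing import Callable, Dict, List, Optional, Tuple

Spectrum = List[float]  # one intensity value per wavelength band
Cube = Dict[Tuple[int, int], Spectrum]


def raster_scan(width: int, height: int, read_spectrum: Callable[[int, int], Spectrum]) -> Cube:
    """Visit every (x, y) position once and record its spectrum."""
    cube: Cube = {}
    for y in range(height):
        # Serpentine order: alternate row direction so the stage never jumps back.
        xs = range(width) if y % 2 == 0 else range(width - 1, -1, -1)
        for x in xs:
            cube[(x, y)] = read_spectrum(x, y)
    return cube


def spectral_angle(a: Spectrum, b: Spectrum) -> float:
    """Angle in radians between two spectra; 0 means identical shape."""
    dot = sum(p * q for p, q in zip(a, b))
    norm = math.sqrt(sum(p * p for p in a)) * math.sqrt(sum(q * q for q in b))
    if norm == 0:
        return math.pi / 2  # a dark (all-zero) pixel matches nothing
    return math.acos(max(-1.0, min(1.0, dot / norm)))  # clamp against rounding error


def classify(cube: Cube, references: Dict[str, Spectrum], max_angle: float = 0.1) -> Dict[Tuple[int, int], Optional[str]]:
    """Label each pixel with the closest reference material, or None if nothing is close."""
    labels: Dict[Tuple[int, int], Optional[str]] = {}
    for xy, spectrum in cube.items():
        name, angle = min(
            ((n, spectral_angle(spectrum, ref)) for n, ref in references.items()),
            key=lambda item: item[1],
        )
        labels[xy] = name if angle <= max_angle else None
    return labels
```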

Product Core Function

· Large format XY scanning: This allows for the capture of spectral data over a wider physical area, unlike smaller, more focused hyperspectral sensors. The value is in being able to analyze larger samples or scenes efficiently. So, what's in it for you? You can analyze bigger things without stitching multiple scans.

· Broadband spectral capture: The camera captures data across a wide range of wavelengths, providing comprehensive spectral signatures for materials. The value is in enabling detailed material identification and differentiation. So, what's in it for you? You get more detailed information about what you're looking at, beyond just its visible color.

· DIY and open-source design: The project provides the plans and knowledge to build the camera yourself. The value is in significantly reducing the cost and increasing the accessibility of hyperspectral imaging. So, what's in it for you? You can build powerful spectral analysis tools at a fraction of the commercial cost.

· Customizable hardware and software integration: Being a DIY project, it's designed for adaptation. The value is in the flexibility to tailor the camera and its data processing to specific needs. So, what's in it for you? You can modify it to fit your unique research or application requirements.

Product Usage Case

· Material identification in agriculture: A researcher could use HyperScan XY to analyze crop health by identifying nutrient deficiencies or early signs of disease based on their unique spectral signatures. This helps in targeted interventions. So, what's in it for you? Better crop yields and reduced waste.

· Quality control in manufacturing: A company could use it to inspect manufactured goods for defects or material inconsistencies by analyzing their spectral properties, ensuring product quality and reducing recalls. So, what's in it for you? Higher quality products and fewer customer complaints.

· Art and artifact analysis: A conservator or historian could use it to non-destructively analyze the pigments and materials used in artworks or historical artifacts to understand their origin and composition. So, what's in it for you? Deeper insights into history and art without damaging valuable items.

· Environmental monitoring: It could be used to detect and map the presence of specific pollutants or chemical signatures in soil or water samples. So, what's in it for you? More effective environmental protection and remediation efforts.

13

GitVault-Sealer

Author

stanguc

Description

GitVault-Sealer is a novel approach to managing sensitive information (secrets) that treats Git as the distribution layer for encrypted vaults. The encryption provides the security, while Git provides versioning and delivery. It allows developers to 'npm install' their secrets, integrating seamlessly into their development workflow.

Popularity

Points 2

Comments 1

What is this product?

GitVault-Sealer is a system that encrypts your sensitive data (like API keys, passwords, or configuration settings) and then commits these encrypted secrets to a Git repository. Think of your Git repository as a highly version-controlled and distributed file cabinet. When you need to access your secrets, GitVault-Sealer decrypts them for you. The innovation lies in using Git, a tool developers already use daily for code versioning, as the primary mechanism for storing and distributing these encrypted secrets. This means your secrets are automatically versioned, auditable, and can be easily shared among team members in a controlled manner. So, this is useful for you because it leverages a familiar tool (Git) to securely manage sensitive information, simplifying your workflow and improving security.

How to use it?

Developers integrate GitVault-Sealer by installing it as a project dependency, much as they would install React or Lodash. Its command-line tools then encrypt secrets and commit them to a designated Git repository. When a project needs those secrets, the encrypted data is fetched from Git and decrypted for use. This smooths onboarding and deployment, because every required secret is available from a version-controlled repository. So, this is useful for you because it provides a standardized, secure way to handle secrets across your development and deployment pipelines, reducing manual configuration and security risk.
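The final "decrypt it for use" step usually amounts to handing plaintext values to the application. Below is a minimal sketch of that step. It assumes the decrypted vault is a JSON object of name/value strings; that format is hypothetical, and GitVault-Sealer's real vault format and decryption API are not described here. The sketch exports secrets into environment variables without overriding any that are already set.

```python
import json
import os
from typing import MutableMapping


def export_secrets(decrypted_vault: str,
                   env: MutableMapping[str, str] = os.environ) -> list[str]:
    """Copy secrets from a decrypted vault payload into an environment mapping.

    Hypothetical payload format: a JSON object mapping secret names to string
    values. Variables that already exist are left alone, so values injected by
    CI or the shell take precedence. Returns the names that were exported.
    """
    secrets = json.loads(decrypted_vault)
    if not isinstance(secrets, dict):
        raise ValueError("vault payload must be a JSON object")
    exported = []
    for name, value in secrets.items():
        if not isinstance(value, str):
            raise ValueError(f"secret {name!r} must be a string")
        if name not in env:
            env[name] = value
            exported.append(name)
    return exported


if __name__ == "__main__":
    # A plain dict stands in for os.environ so the demo has no side effects.
    env = {"STRIPE_API_KEY": "sk_from_ci"}
    payload = '{"STRIPE_API_KEY": "sk_from_vault", "DATABASE_URL": "postgres://dev"}'
    print(export_secrets(payload, env))  # ['DATABASE_URL']
    print(env["STRIPE_API_KEY"])         # sk_from_ci
```

Letting existing variables win is a design choice. It allows a deployment pipeline to override a vault value without editing the vault.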

Product Core Function

· Encrypted Secret Storage: Sensitive data is encrypted before being stored, ensuring that even if the Git repository is compromised, the secrets remain unreadable without the decryption key. This provides a fundamental layer of security for your sensitive information.

· Git as a Distribution Layer: Leverages Git's versioning, branching, and merging to distribute encrypted secrets. The commit history records who changed which secrets and when (Git does not log who reads them), and you can roll back to any previous version. This is valuable for maintaining control and traceability over your secrets.

· Seamless Integration with Development Workflow: The 'npm install'-like experience means developers can manage secrets as easily as any other project dependency, reducing friction and encouraging adoption. This makes your development process more efficient and less error-prone.

· Vaults as Source of Truth: Encrypted secrets are grouped into 'vaults', which act as the definitive source of truth for your sensitive data. This structure keeps even a large number of secrets organized and easy to manage.
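If a vault is encrypted as a single blob, Git history shows that the vault changed but not which secret changed. One way to make that history more auditable is to commit a keyed fingerprint of each secret next to the ciphertext. Reviewers can then see which names were added, removed, or modified between two commits without decrypting anything. This is an assumption for illustration, not a documented GitVault-Sealer feature, and the audit key and dictionary layout are hypothetical.

```python
import hashlib
import hmac


def fingerprints(audit_key: bytes, secrets: dict[str, str]) -> dict[str, str]:
    """Keyed HMAC-SHA256 fingerprint per secret, computed when the vault is sealed.

    A plain hash of a short password could be brute-forced offline; keying the
    hash with audit_key prevents that for anyone who lacks the key.
    """
    return {
        name: hmac.new(audit_key, value.encode("utf-8"), hashlib.sha256).hexdigest()
        for name, value in secrets.items()
    }


def diff_fingerprints(old: dict[str, str], new: dict[str, str]) -> dict[str, list[str]]:
    """Compare fingerprint maps from two commits without decrypting anything."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "modified": sorted(n for n in old.keys() & new.keys() if old[n] != new[n]),
    }


if __name__ == "__main__":
    key = b"hypothetical-team-audit-key"
    v1 = fingerprints(key, {"STRIPE_API_KEY": "sk_1", "DB_PASSWORD": "hunter2"})
    v2 = fingerprints(key, {"STRIPE_API_KEY": "sk_2", "DB_PASSWORD": "hunter2",
                            "SMTP_PASSWORD": "x"})
    print(diff_fingerprints(v1, v2))
    # {'added': ['SMTP_PASSWORD'], 'removed': [], 'modified': ['STRIPE_API_KEY']}
```

The trade-off is that fingerprints computed under the same key reveal when two secrets share a value. That leak is usually acceptable for an audit trail, but it is worth knowing.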

Product Usage Case

· Securely storing API keys for third-party services: A web application often needs API keys to interact with services like Stripe, Twilio, or cloud providers. Instead of hardcoding these keys or storing them in plain text configuration files, GitVault-Sealer can encrypt them and store them in Git, providing a secure and version-controlled way to manage them.

· Distributing database credentials to development environments: When setting up new development or staging environments, providing secure access to databases is crucial. GitVault-Sealer can distribute the encrypted database credentials via Git, allowing developers to easily and securely access the necessary resources.

· Managing sensitive configuration for microservices: In a microservices architecture, each service might have its own set of secrets. GitVault-Sealer allows these secrets to be managed centrally and distributed securely to each service, ensuring consistency and security across the entire system.

· Onboarding new developers to a project: When a new developer joins a team, they need access to various secrets to get the project running. GitVault-Sealer simplifies this by providing access to encrypted secrets through a familiar Git clone, reducing setup time and security concerns.

14

ObolusFinanz

Author

sanzation

Description

ObolusFinanz is a collection of simple, privacy-focused finance calculators built by a developer to solve their own need for uncluttered tools. It currently offers a precise German Payroll Deduction Calculator and is expanding into personal budgeting and investing tools. The innovation lies in its minimalist design, zero-data collection policy, and the developer's hands-on approach to creating functional, user-friendly financial utilities using modern web technologies.

Popularity

Points 3

Comments 0

What is this product?

ObolusFinanz is a set of straightforward financial calculators built around privacy and simplicity. It responds to a common frustration: calculators that are overly complex, track extensive personal data, or bury their results in intrusive interfaces. The German Payroll Deduction Calculator, for instance, computes deductions according to German tax law, giving users accurate figures for salary planning without collecting any data. The tax app runs on a Python backend, the investment app is built with SvelteKit, and custom-drawn SVG paths provide a distinctive visual touch. The core innovation is delivering accurate financial insights through minimalist, privacy-respecting web applications, so you get reliable calculations without sharing your information or navigating confusing interfaces.
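Part of a German payroll calculation is simple arithmetic: each social-insurance contribution is a rate applied to gross pay, capped at a monthly contribution ceiling. This is not ObolusFinanz's code. It is a minimal sketch of only that employee-side step, and it omits income tax (Lohnsteuer), the solidarity surcharge, church tax, and the special rules for low earners. The rates and ceilings in `ILLUSTRATIVE_2025` are assumptions based on 2025 values for an employee with children, an average health-insurance add-on rate, and a workplace outside Saxony. Check them against current law before relying on them. `Decimal` is used so that cent rounding is exact.

```python
from dataclasses import dataclass
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


@dataclass(frozen=True)
class Contribution:
    name: str
    employee_rate: Decimal    # employee share as a fraction of gross pay
    monthly_ceiling: Decimal  # Beitragsbemessungsgrenze (contribution ceiling), EUR/month


# Illustrative 2025 parameters (assumption): employee with children, average
# health-insurance add-on rate, workplace outside Saxony. Verify before use.
ILLUSTRATIVE_2025 = (
    Contribution("pension", Decimal("0.093"), Decimal("8050.00")),
    Contribution("unemployment", Decimal("0.013"), Decimal("8050.00")),
    Contribution("health", Decimal("0.0855"), Decimal("5512.50")),  # 7.3% + 1.25% add-on
    Contribution("long_term_care", Decimal("0.018"), Decimal("5512.50")),
)


def employee_contributions(gross_monthly: Decimal,
                           table: tuple[Contribution, ...] = ILLUSTRATIVE_2025
                           ) -> dict[str, Decimal]:
    """Employee-side social insurance contributions, each capped at its ceiling."""
    result = {}
    for c in table:
        base = min(gross_monthly, c.monthly_ceiling)  # pay above the ceiling is exempt
        result[c.name] = (base * c.employee_rate).quantize(CENT, rounding=ROUND_HALF_UP)
    return result


if __name__ == "__main__":
    for gross in (Decimal("4000"), Decimal("6000")):
        parts = employee_contributions(gross)
        print(gross, parts, "total:", sum(parts.values()))
```

At 6,000 EUR gross, the health and long-term care contributions are computed on the 5,512.50 EUR ceiling rather than on the full salary. That cap is why net pay does not shrink proportionally at higher incomes.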

How to use it?

Developers can use ObolusFinanz as a reference for building similar privacy-centric web applications. It shows how to pair a Python backend with a modern frontend framework such as Next.js or SvelteKit. A developer could adapt the German Payroll Deduction Calculator's logic, or use it as inspiration for localized tax calculators in other countries. The SvelteKit budgeting and investing tools offer a clean approach to UI development and state management, and the self-drawn SVG paths show how to handle visual elements without heavy libraries. In short, ObolusFinanz is a model for building lean, secure, user-friendly financial tools that scratch your own itch or your users', and for carrying those principles into your own projects to earn user trust.

Product Core Function

· German Payroll Deduction Calculator: Provides precise, real-time calculations of payroll deductions according to German tax regulations. This is valuable for individuals planning salary negotiations or job changes, enabling them to understand their net income accurately without sharing sensitive personal data, so you can make informed career decisions with confidence.

· Privacy-First Design: The entire suite of tools is built with a commitment to zero data collection and no user tracking. This means users can perform their financial calculations with the assurance that their personal information remains private, so you can manage your finances without compromising your digital privacy.

· Minimalist UI/UX: The tools feature a clean, uncluttered interface that prioritizes ease of use and comprehension. This approach helps users quickly find the information they need without being overwhelmed by complex features or advertisements, so you can get your financial answers without the usual digital noise.

· Modern Web Technology Stack: Implemented using Python for backend services and Next.js/SvelteKit for frontend development, showcasing efficient and modern web application architecture. This means the tools are built using current best practices, ensuring they are performant and maintainable, so you benefit from a robust and responsive experience.

· Custom SVG Graphics: Features self-drawn SVG paths for visual elements, offering a unique and lightweight approach to interface design. This contributes to a distinctive look and feel while keeping the application's footprint small, so the interface is both visually appealing and efficient.
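Hand-built SVG needs no charting library, because a line is just a path string. ObolusFinanz's own SVG paths may well be hand-authored decoration. This minimal sketch shows the related idea of generating a chart line (for example, a savings balance over time) as an SVG path `d` attribute. The function name and scaling scheme are illustrative assumptions.

```python
def svg_line_path(values: list[float], width: float, height: float) -> str:
    """Scale a series into a width x height box and return an SVG path "d" string.

    SVG's y axis points down, so larger values are placed nearer the top edge.
    """
    if len(values) < 2:
        raise ValueError("need at least two points to draw a line")
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0            # a flat series would otherwise divide by zero
    step = width / (len(values) - 1)
    commands = []
    for i, v in enumerate(values):
        x = i * step
        y = height - (v - lo) / span * height
        commands.append(f"{'M' if i == 0 else 'L'}{x:g} {y:g}")  # move first, then line-to
    return " ".join(commands)


if __name__ == "__main__":
    d = svg_line_path([0, 5, 10], width=100, height=50)
    print(f'<svg viewBox="0 0 100 50"><path d="{d}" fill="none" stroke="currentColor"/></svg>')
    # d == "M0 50 L50 25 L100 0"
```

Using `stroke="currentColor"` lets the line inherit the surrounding text color, which keeps a minimalist theme consistent without extra CSS.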

Product Usage Case

· A freelance developer looking to transition to a new full-time role in Germany can use the German Payroll Deduction Calculator to accurately estimate their potential net salary for different job offers, helping them to negotiate effectively and avoid miscalculations, so they can confidently accept the best offer.

· An individual concerned about their online privacy can use ObolusFinanz for their financial planning needs, knowing that their sensitive data is not being collected or stored by the platform, so they can manage their money without privacy anxieties.

· A small business owner wanting to provide simple financial tools for their employees (e.g., for hypothetical salary comparisons) could leverage the principles behind ObolusFinanz to build internal, privacy-respecting calculators, so their team can access useful financial information securely.

· A tech enthusiast interested in learning about modern web development with a focus on utility apps can study ObolusFinanz's codebase to understand how to build functional and privacy-conscious applications using Python, Next.js, and SvelteKit, so they can expand their own development skills.

15

TokenTrophy Forge

Author

stemonteduro

Description

A project that lets users create personalized 3D OpenAI-style awards, inspired by the awards OpenAI gave developers based on their token usage. It goes beyond a simple image editor by offering 3D model manipulation, custom logo uploads, and rendering with NanoBanana, giving developers an engaging way to celebrate their AI token usage.

Popularity

Points 3

Comments 0

What is this product?

TokenTrophy Forge is a web-based tool for designing your own custom 3D awards, similar to the ones OpenAI gave out based on token usage. The innovation lies in its interactive 3D editing: instead of working with a flat image, you adjust a 3D award model, import your own logos, and then render the result as a polished trophy image. The interface is built with web technologies, and the final image is generated with NanoBanana (the nickname of Google's Gemini 2.5 Flash Image model). So, what's the benefit for you? It's a fun, creative way to celebrate your engagement with AI technologies like OpenAI, turning your token usage into a tangible, personalized digital artifact. It's like having your own mini-trophy for your digital achievements.

How to use it?

Developers can access TokenTrophy Forge through their web browser. The interface is designed to be intuitive, allowing users to select a base award model, upload their own image files (like a personal logo or a project icon) which can then be incorporated onto the 3D model. You can position and scale your logo. Finally, you can trigger a rendering process that uses NanoBanana to create a high-quality image of your personalized award. This can be used for social media posts, personal portfolios, or even as a fun digital collectible. So, how does this help you? You can easily create shareable assets that highlight your technical accomplishments or brand identity within the AI community.
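Positioning and scaling a logo on a 3D model is usually done by composing scale, rotation, and translation matrices. This minimal sketch shows that composition with 4x4 homogeneous matrices and column vectors in plain Python. The function names and the rotation about a single (Y) axis are simplifications for illustration, not TokenTrophy Forge's code.

```python
import math

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def scale(s: float) -> Matrix:
    return [[s, 0, 0, 0], [0, s, 0, 0], [0, 0, s, 0], [0, 0, 0, 1]]


def rotate_y(degrees: float) -> Matrix:
    """Right-handed rotation about the vertical (Y) axis."""
    t = math.radians(degrees)
    c, s = math.cos(t), math.sin(t)
    return [[c, 0, s, 0], [0, 1, 0, 0], [-s, 0, c, 0], [0, 0, 0, 1]]


def translate(x: float, y: float, z: float) -> Matrix:
    return [[1, 0, 0, x], [0, 1, 0, y], [0, 0, 1, z], [0, 0, 0, 1]]


def logo_transform(size: float, yaw_degrees: float,
                   offset: tuple[float, float, float]) -> Matrix:
    # With column vectors the rightmost matrix acts first:
    # scale the logo, then rotate it, then move it onto the award.
    return matmul(translate(*offset), matmul(rotate_y(yaw_degrees), scale(size)))


def apply(m: Matrix, point: tuple[float, float, float]) -> tuple[float, ...]:
    v = (*point, 1.0)  # homogeneous coordinate w = 1 so the translation applies
    return tuple(sum(m[i][k] * v[k] for k in range(4)) for i in range(3))


if __name__ == "__main__":
    m = logo_transform(size=2.0, yaw_degrees=90.0, offset=(0.0, 1.0, 0.0))
    # Corner (1, 0, 0): scaled to (2, 0, 0), rotated to (0, 0, -2), moved to (0, 1, -2)
    print([round(c, 6) for c in apply(m, (1.0, 0.0, 0.0))])
```

The order matters. Translating before rotating would swing the logo around the award's origin instead of turning it in place.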

Product Core Function

· Interactive 3D Award Customization: Users can manipulate a 3D award model, changing its orientation and scale. This allows for dynamic presentation and a more engaging design process than static image editors. The value is in creating a unique visual representation of your achievement that stands out.

· Custom Logo Upload and Integration: Ability to upload and place personal or project logos onto the 3D award model. This is key for personalization, enabling users to brand their awards and make them truly their own. The benefit is showcasing your identity or project affiliation on your award.

· High-Quality Rendering with NanoBanana: The tool uses NanoBanana for the final render, producing a detailed, visually appealing award image with a professional look that is suitable for sharing widely. The value is in delivering polished, high-fidelity results that look impressive.

· Web-Based Accessibility: Accessible directly through a web browser, requiring no software installation. This makes it easy for anyone to use, regardless of their operating system or technical setup. The advantage is immediate usability and convenience.

Product Usage Case

· A developer who has used a significant amount of OpenAI API tokens can use TokenTrophy Forge to create a custom award featuring their company logo and a 'Top Contributor' badge. They can then share this on LinkedIn or Twitter to showcase their active participation and expertise in AI development. This helps them gain visibility and recognition within the AI community.

· A student participating in an AI hackathon can design a personalized award with the hackathon's branding and their team name. This can be used as a digital keepsake and a way to celebrate their team's effort and participation, even if they didn't win a physical prize. This provides a memorable memento of their experience.

· An open-source contributor can create an award with their GitHub profile picture or a project-specific icon to thank other contributors or acknowledge milestones. This can be shared in project documentation or community forums to foster engagement and appreciation. This helps build a stronger sense of community and encourages further collaboration.

16

Pxxl App: Unbounded Developer Deployment


Author

robinsui

Description