Show HN Today: Discover the Latest Innovative Projects from the Developer Community

ShowHN Today

Show HN Today: Top Developer Projects Showcase for 2025-10-09

SagaSu777 2025-10-10

Explore the hottest developer projects on Show HN for 2025-10-09. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN landscape reflects the hacker ethos, with a surge of AI integration across diverse applications, from code-generation assistants to tools that analyze and enhance creative content. The trend points to a shift: AI is no longer a standalone technology but a pervasive layer that augments human capabilities and streamlines complex workflows. For developers, that means using AI not only for automation but also for deeper insights and more intuitive user experiences. Entrepreneurs can find fertile ground in pain points that AI-powered solutions can address, particularly developer productivity, data analysis, and personalized content creation.

At the same time, there is a strong undercurrent of innovation in foundational technologies. Projects like a web framework written in C and high-performance Go CLI frameworks show that the pursuit of raw efficiency and control remains a vital part of technological progress. This blend of cutting-edge AI and deep systems programming is where future breakthroughs are likely to emerge, rewarding those who can bridge the two domains.

Today's Hottest Product

Name

Show HN: I built a web framework in C

Highlight

This project stands out for its audaciousness: building a web framework from scratch in C. This is a deep dive into low-level systems programming, demonstrating a profound understanding of web architecture and C's capabilities. Developers can learn about fundamental web request handling, memory management in performance-critical applications, and how to architect complex systems using a language known for its raw power and direct hardware access. It's a testament to the hacker spirit of mastering foundational technologies to build something powerful and efficient.

Popular Category

AI and Machine Learning

Developer Tools

Web Development

Systems Programming

Productivity Tools

Popular Keyword

AI

LLM

Developer Tools

Open Source

Framework

CLI

Agent

Automation

WebRTC

Rust

Go

C

PostgreSQL

Technology Trends

AI-Powered Development Tools

Edge AI and Embedded Systems

Low-Level Systems Programming

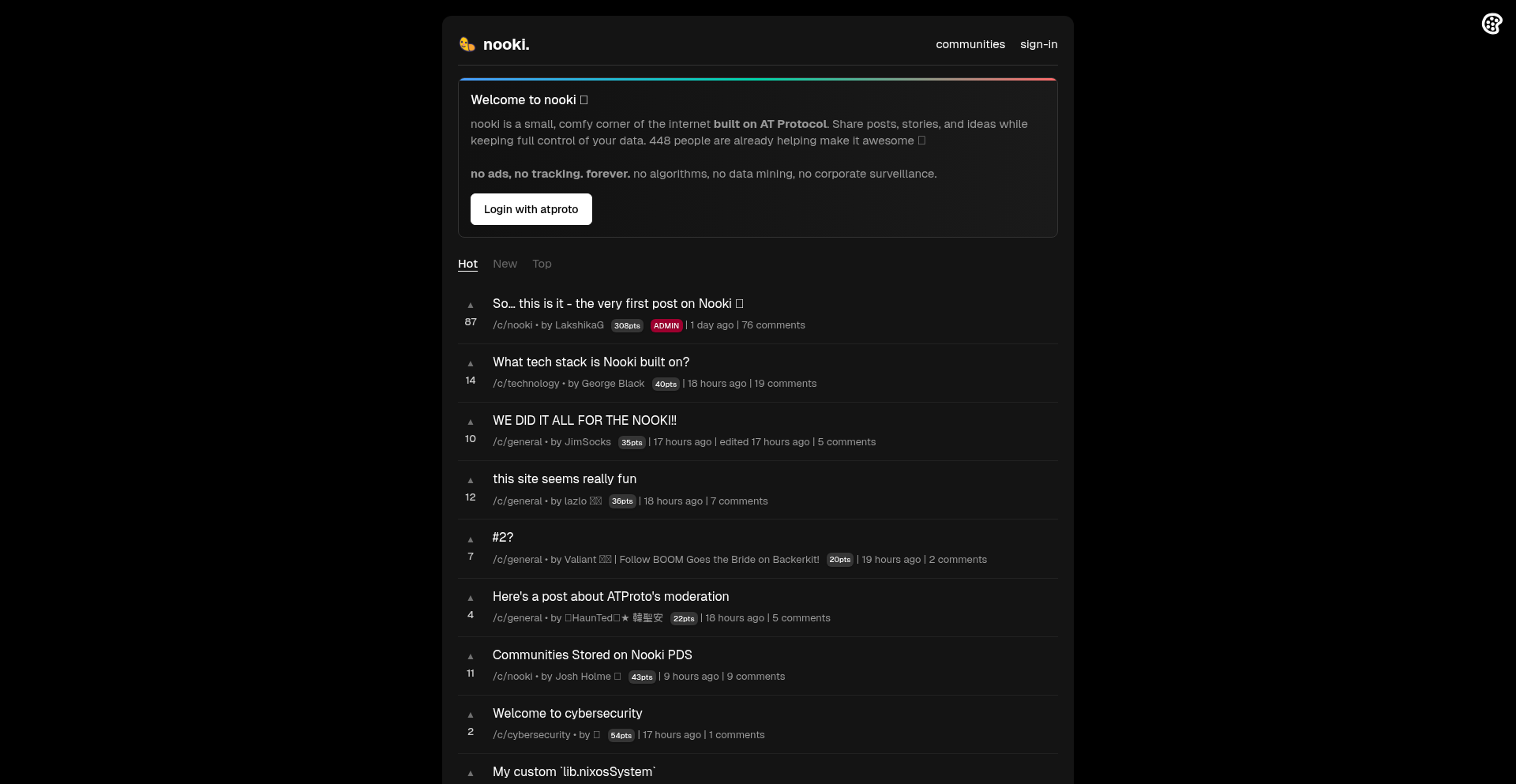

Decentralized and Privacy-Focused Solutions

Developer Experience Enhancement

Next-Generation Frameworks and Languages

Project Category Distribution

AI & Machine Learning (30%)

Developer Tools & Utilities (25%)

Web & Application Frameworks (15%)

Productivity & Organization (10%)

Systems & Infrastructure (10%)

Hardware & IoT (5%)

Creative & Media Tools (5%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | C-WebForge | 355 | 174 |
| 2 | ClayKey Nano | 335 | 96 |
| 3 | GoTextSearchEngine | 102 | 46 |
| 4 | PolyMastDB | 111 | 33 |
| 5 | GYST - Fluid Workspace Weaver | 23 | 22 |
| 6 | LabubuSeekerAI | 15 | 25 |
| 7 | Lore Engine | 25 | 7 |
| 8 | VoiceAI Badge | 14 | 2 |
| 9 | SHAI - Shell AI Assistant | 14 | 0 |
| 10 | FarSight: Dynamic Screen Blur for Eye Strain Relief | 9 | 5 |

1

C-WebForge

Author

ashtonjamesd

Description

This project is a minimalist web framework meticulously crafted entirely in C. It demonstrates a deep dive into fundamental web server principles, building essential functionalities like request parsing and response generation from scratch. Its innovation lies in its raw, unopinionated approach, offering developers a highly efficient and customizable foundation for building web applications, bypassing the complexities and overhead of higher-level languages.

Popularity

Points 355

Comments 174

What is this product?

C-WebForge is a web framework built using the C programming language. Instead of relying on existing libraries or languages designed for web development, it reconstructs the core components of a web server from the ground up. Think of it like building a car engine from raw metal and tools, rather than just assembling pre-made parts. The innovation here is in the very low-level control and performance it offers, allowing for maximum optimization and a complete understanding of how web requests and responses actually work under the hood. So, what's the use? If you need absolute maximum performance, minimal resource usage, or want to deeply understand the mechanics of web servers, this offers unparalleled control and insight.

How to use it?

Developers can use C-WebForge as a foundation for building highly specialized web services or embedded systems where performance and resource efficiency are paramount. It would involve writing C code to define routes, handle incoming requests (like HTTP GET or POST), and craft outgoing responses. Integration would typically involve compiling the C-WebForge core and then linking your application-specific C modules. The use case here is for scenarios where you need fine-grained control over every aspect of your web service, perhaps for high-frequency trading platforms, embedded IoT devices, or custom network appliances. So, what's the use? It allows you to build extremely fast and lean web services that can operate in environments where other frameworks would be too resource-intensive.
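The description says applications define routes and handlers in C, but it does not show C-WebForge's actual API. As a language-neutral illustration, here is a minimal exact-match routing table sketched in Python. `Router`, `add`, and `dispatch` are hypothetical names, not the framework's interface. A C implementation would more likely fill the same role with an array or hash table of function pointers.

```python
from typing import Callable, Dict, Tuple

# Hypothetical handler type: takes the request path, returns (status, body).
Handler = Callable[[str], Tuple[int, str]]


class Router:
    """Exact-match router keyed on (method, path); illustrative only."""

    def __init__(self) -> None:
        self._routes: Dict[Tuple[str, str], Handler] = {}

    def add(self, method: str, path: str, handler: Handler) -> None:
        # Normalise the method so "get" and "GET" map to the same route.
        self._routes[(method.upper(), path)] = handler

    def dispatch(self, method: str, path: str) -> Tuple[int, str]:
        handler = self._routes.get((method.upper(), path))
        if handler is None:
            # Distinguish "known path, wrong method" from "unknown path".
            if any(p == path for (_, p) in self._routes):
                return 405, "Method Not Allowed"
            return 404, "Not Found"
        return handler(path)


router = Router()
router.add("GET", "/health", lambda _path: (200, "ok"))
router.add("POST", "/items", lambda _path: (201, "created"))
```

With these two routes registered, `router.dispatch("GET", "/health")` returns `(200, "ok")`. A `GET` to `/items` gets a 405, and any unregistered path gets a 404.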

Product Core Function

· Raw HTTP Request Parsing: The framework is designed to meticulously analyze incoming HTTP requests, breaking down headers and body content directly. This offers unparalleled performance and control over how data is processed. The application value is in understanding and optimizing the very first step of any web interaction, crucial for high-throughput systems.

· Customizable Response Generation: C-WebForge provides the building blocks to construct HTTP responses from scratch, allowing for precise control over status codes, headers, and content. This enables tailoring responses for specific performance needs or application logic. The application value lies in creating highly optimized and specific responses, avoiding unnecessary overhead.

· Minimalist Routing Engine: A fundamental routing mechanism is implemented to direct incoming requests to the appropriate handler functions. This is built without external dependencies, offering a lightweight and highly predictable way to manage application endpoints. The application value is in having a predictable and efficient way to manage how your application responds to different URLs.

· Low-Level Socket Management: The framework directly interacts with network sockets to handle incoming connections and outgoing data. This deep level of control allows for advanced network programming and optimization. The application value is in having direct control over network communication, essential for specialized networking applications.
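The sketch below makes "raw HTTP request parsing" and "customizable response generation" concrete. It performs the same steps in Python:

1. Split the header block at the first blank line (CRLF CRLF).
2. Parse the request line and the headers.
3. Read exactly `Content-Length` bytes of body.

It assumes the whole request is already in memory. It ignores chunked transfer encoding, pipelining, and size limits, all of which a real server (in C or otherwise) must handle. `parse_request` and `build_response` are hypothetical names, not C-WebForge functions.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Request:
    method: str
    target: str
    version: str
    headers: Dict[str, str] = field(default_factory=dict)
    body: bytes = b""


def parse_request(raw: bytes) -> Request:
    """Parse one complete HTTP/1.1 request held in memory (no chunked encoding)."""
    head, sep, rest = raw.partition(b"\r\n\r\n")
    if not sep:
        raise ValueError("incomplete header block")
    lines = head.decode("iso-8859-1").split("\r\n")
    try:
        method, target, version = lines[0].split(" ")
    except ValueError:
        raise ValueError(f"malformed request line: {lines[0]!r}") from None
    headers: Dict[str, str] = {}
    for line in lines[1:]:
        name, colon, value = line.partition(":")
        if not colon:
            raise ValueError(f"malformed header: {line!r}")
        # Header names are case-insensitive, so store them lower-cased.
        headers[name.strip().lower()] = value.strip()
    length = int(headers.get("content-length", "0"))
    if len(rest) < length:
        raise ValueError("body shorter than Content-Length")
    return Request(method, target, version, headers, rest[:length])


def build_response(status: int, reason: str, body: bytes,
                   content_type: str = "text/plain") -> bytes:
    """Serialise a minimal HTTP/1.1 response with an explicit Content-Length."""
    head = (
        f"HTTP/1.1 {status} {reason}\r\n"
        f"Content-Type: {content_type}\r\n"
        f"Content-Length: {len(body)}\r\n"
        "Connection: close\r\n\r\n"
    )
    return head.encode("iso-8859-1") + body
```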

Product Usage Case

· Building a high-performance API gateway: In scenarios requiring extremely fast forwarding and transformation of API requests, C-WebForge could be used to create a custom gateway that processes requests with minimal latency. This solves the problem of slow, generic API gateways by allowing direct, optimized handling of network traffic.

· Developing an embedded web server for IoT devices: For resource-constrained devices, a full-featured web framework might be too heavy. C-WebForge's minimalist design allows for a web interface to be embedded directly into devices like sensors or controllers, enabling local configuration and monitoring. This solves the problem of adding web functionality to devices with very limited memory and processing power.

· Creating a custom logging or monitoring service: For applications that generate a massive amount of logs or require real-time monitoring, a custom web service built with C-WebForge can offer superior performance and lower resource consumption compared to solutions built with higher-level languages. This addresses the need for a highly efficient data collection and processing backend.

2

ClayKey Nano

Author

mafik

Description

This project showcases a novel approach to crafting compact, handheld input devices by leveraging traditional modeling clay instead of 3D printing. It addresses the limitations of mass-produced electronics by offering a tactile, customizable, and accessible method for creating unique keyboards, demonstrating a creative fusion of analog crafting with digital functionality.

Popularity

Points 335

Comments 96

What is this product?

ClayKey Nano is a concept and demonstration of building a tiny, hand-held keyboard using modeling clay as the primary structural and aesthetic material. The innovation lies in its analog-first, digital-second approach. Instead of relying on expensive 3D printers, the project utilizes the malleability and tactile qualities of clay to sculpt the keyboard's form factor. This allows for organic shapes and personalized ergonomics that are difficult to achieve with standard manufacturing. The underlying technology likely involves embedding discrete electronic components like microswitches and a microcontroller within the clay structure, with conductive pathways or custom wiring connecting them. This is a hands-on, 'maker' ethos approach to hardware development, emphasizing intuition and physical manipulation.

How to use it?

For developers, ClayKey Nano presents an inspiring paradigm for rapid prototyping of custom input devices, especially for niche applications or personal projects where unique form factors are desired. The 'how to use' isn't about a software library, but rather a hardware design philosophy. Developers can experiment with embedding microcontrollers (like an Arduino or Raspberry Pi Pico), individual key switches, and even small displays within custom-sculpted clay enclosures. This could be for specialized controllers for games, accessibility devices, unique presentation clickers, or even artistic electronic projects. The process involves understanding basic circuitry, soldering, and microcontroller programming, but the clay provides a highly forgiving and artistic canvas for the physical build.
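Mechanical key switches "bounce" for a few milliseconds when pressed or released. Firmware for a hand-built keyboard like this therefore typically debounces each key before reporting it. The project description does not say how (or whether) ClayKey Nano does this.

What follows is a generic time-based debounce, sketched in Python for readability; on real hardware it would usually be C or CircuitPython. The `Debouncer` class is hypothetical, and the 5 ms default window is an assumption.

```python
from typing import Optional


class Debouncer:
    """Time-based debounce for one key switch (hypothetical, for illustration)."""

    def __init__(self, stable_ms: int = 5) -> None:
        self.stable_ms = stable_ms   # assumed settle time; tune for the actual switches
        self.state = False           # debounced state reported to the host: False = released
        self._raw = False            # most recent raw reading from the pin
        self._changed_at = 0         # time (ms) at which the raw reading last changed

    def update(self, raw_pressed: bool, now_ms: int) -> Optional[bool]:
        """Feed one raw sample; return the new state on a confirmed press/release, else None."""
        if raw_pressed != self._raw:
            # The contact is still bouncing (or just changed): restart the timer.
            self._raw = raw_pressed
            self._changed_at = now_ms
            return None
        if self._raw != self.state and now_ms - self._changed_at >= self.stable_ms:
            # The new reading has held steady long enough: accept it.
            self.state = self._raw
            return self.state
        return None
```

Call `update` once per scan loop with the raw pin reading. A bouncy sequence such as pressed, released, pressed, pressed... then yields a single press event once the reading has stayed stable for `stable_ms`.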

Product Core Function

· Hand-sculpted Ergonomic Design: The primary value is the ability to create input devices with truly custom ergonomics tailored to individual hand shapes and specific use cases, enhancing comfort and efficiency during use.

· Tangible Prototyping with Analog Materials: Offers a unique, tactile method for hardware prototyping that is low-cost and accessible, moving beyond digital design tools to physical creation.

· Integrated Electronic Component Housing: Demonstrates the feasibility of embedding standard electronic components like key switches and microcontrollers directly into a clay structure, creating a functional electronic device from an art medium.

· Creative Customization and Personalization: Empowers users and developers to imbue their devices with personal style and aesthetic, moving away from generic plastic enclosures.

· Exploration of Analog-Digital Hybrid Interfaces: Pushes the boundaries of how traditional crafting materials can be integrated with modern digital technology to create novel user interfaces.

Product Usage Case

· Developing a specialized, palm-sized controller for a retro gaming emulator where traditional controllers are too large or uncomfortable for extended play.

· Creating an accessible input device for users with motor impairments, allowing for a custom-fit keyboard that minimizes strain and maximizes usability, using clay to mold around their hand.

· Building a unique set of physical controls for a digital art installation, where the tactile feel of clay buttons enhances the interactive experience.

· Prototyping a compact, on-the-go keyboard for a programmer who needs a very specific layout and feel for coding while traveling, avoiding the bulk of standard portable keyboards.

· Designing a custom remote for a media center that fits perfectly in the user's hand, with keys placed intuitively based on common playback controls, sculpted from clay for a premium feel.

3

GoTextSearchEngine

Author

novocayn

Description

A full-text search engine implemented entirely in Go. It focuses on providing a lightweight and efficient way to index and search textual data, offering a developer-friendly alternative to heavier, more complex solutions. The innovation lies in its pure Go implementation, allowing for high performance and easy integration into Go-based applications.

Popularity

Points 102

Comments 46

What is this product?

This project is a custom-built full-text search engine written from scratch in Go. Rather than relying on external services or large databases, it handles indexing and searching of text data directly. The core innovation is its pure Go implementation, which allows for optimized performance and fewer dependencies, and lets developers fully understand and modify the search logic. Think of it as building your own search function that's fast and tailored to your needs. So, what's in it for you? You get a performant search solution that integrates tightly into your Go projects without external dependencies, making your applications faster and more customizable.

How to use it?

Developers can integrate this GoTextSearchEngine into their Go applications by importing the library. The engine allows for indexing documents (text content) and then querying these documents for specific keywords or phrases. This can be used for internal documentation search, blog post search, or any scenario where efficient text retrieval is needed within a Go application. For example, you could add a search bar to your Go web application that instantly searches through your articles or product descriptions. So, how does this help you? It means you can easily add powerful search capabilities to your existing or new Go projects, improving user experience and information discoverability without complex setup.

Product Core Function

· Text Indexing: The engine efficiently processes raw text data, breaking it down into searchable units (tokens) and storing them in an optimized data structure for rapid retrieval. This means your data is prepared for super-fast searching. So, what's in it for you? Your data is ready to be searched instantly.

· Full-Text Querying: It supports searching for specific keywords or phrases across the indexed documents, returning relevant results quickly. This is the core search magic. So, what's in it for you? You can find exactly what you're looking for in your data, fast.

· Go Implementation: Being built entirely in Go, it offers high concurrency and performance, making it suitable for demanding applications. This is about speed and efficiency. So, what's in it for you? Your search operations will be quick and won't slow down your application.

· Customizable Logic: The open-source nature allows developers to inspect and modify the indexing and search algorithms to suit specific requirements. This means you can fine-tune how your search works. So, what's in it for you? You have the power to tailor the search to your exact needs, making it more relevant for your users.
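The repository's actual data structures are not described here. However, "breaking text into tokens and storing them for rapid retrieval" usually means an inverted index: a map from each token to the set of documents that contain it.

Below is a minimal sketch in Python, not the project's Go code. `InvertedIndex`, the lower-case alphanumeric tokenizer, and the AND-only query semantics are all simplifying assumptions. The sketch does no ranking, stemming, or phrase matching.

```python
import re
from collections import defaultdict
from typing import Dict, List, Set

TOKEN_RE = re.compile(r"[a-z0-9]+")


def tokenize(text: str) -> List[str]:
    """Lower-case the text and split it into alphanumeric tokens."""
    return TOKEN_RE.findall(text.lower())


class InvertedIndex:
    def __init__(self) -> None:
        # token -> set of document ids containing that token
        self.postings: Dict[str, Set[int]] = defaultdict(set)
        self.docs: Dict[int, str] = {}

    def add(self, doc_id: int, text: str) -> None:
        self.docs[doc_id] = text
        for token in tokenize(text):
            self.postings[token].add(doc_id)

    def search(self, query: str) -> List[int]:
        """Return ids of documents containing every query token (AND semantics)."""
        tokens = tokenize(query)
        if not tokens:
            return []
        # Intersect the smallest posting sets first to keep the work small.
        sets = sorted((self.postings.get(t, set()) for t in tokens), key=len)
        result = set(sets[0])
        for s in sets[1:]:
            result &= s
        return sorted(result)
```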

Product Usage Case

· Adding a fast search bar to a personal blog built with a Go web framework. The problem solved is slow or non-existent search functionality, leading to users being unable to find content. How it helps: Users can quickly find blog posts by keywords, improving engagement.

· Creating an internal knowledge base search for a development team's documentation. The problem solved is difficulty in locating specific technical information within a large set of documents. How it helps: Developers can find answers to technical questions much faster, increasing productivity.

· Building a search feature for a small e-commerce site where product descriptions need to be easily searchable. The problem solved is users struggling to find desired products. How it helps: Customers can find products more effectively, leading to potential sales increases.

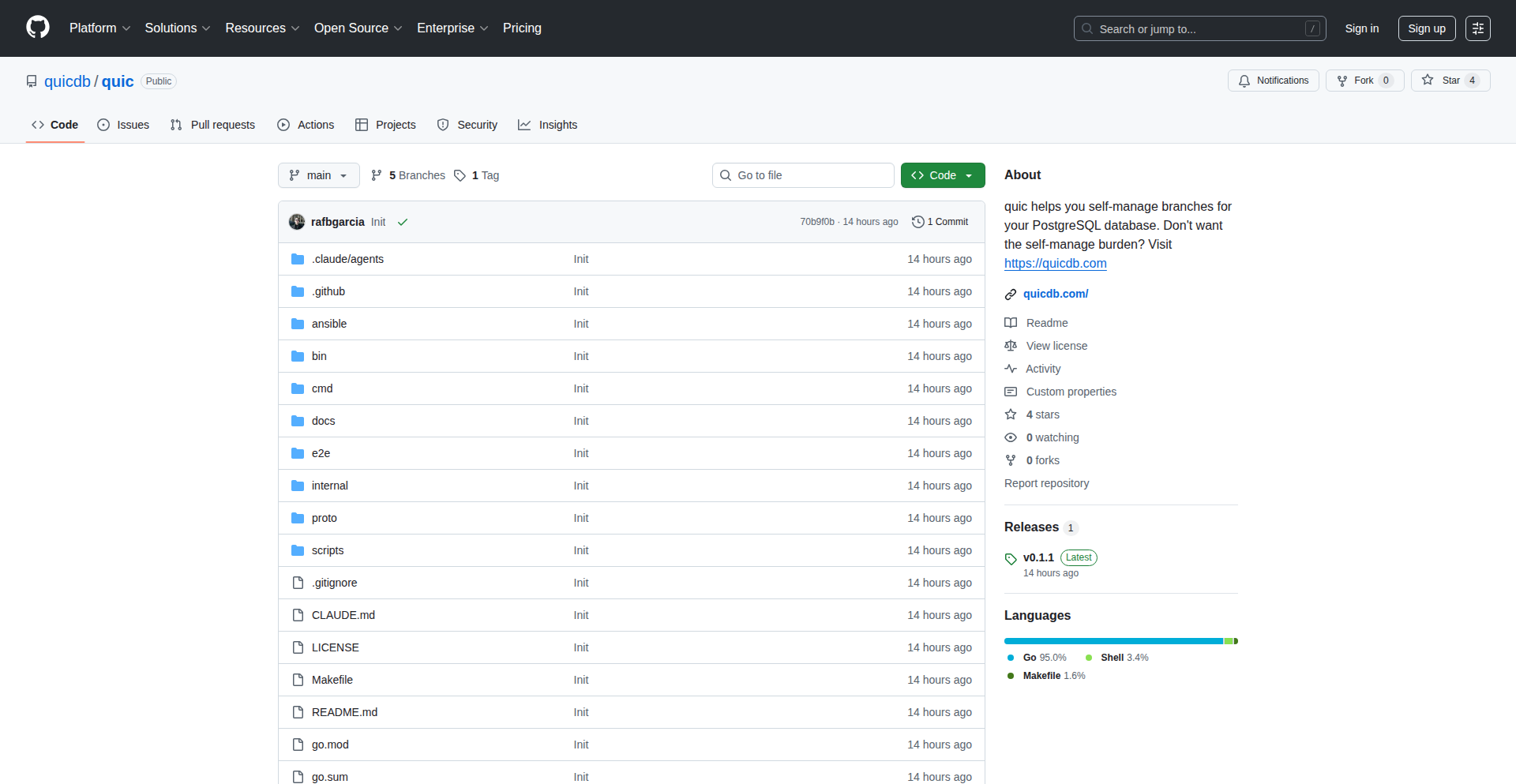

4

PolyMastDB

Author

pgedge_postgres

Description

An open-source, logical multi-master PostgreSQL replication solution that lets multiple PostgreSQL nodes accept writes simultaneously and replicate those changes to one another, overcoming the traditional single-master limitation. This approach tackles the complexity of keeping data consistent in a distributed system, enabling higher availability and write scalability.

Popularity

Points 111

Comments 33

What is this product?

PolyMastDB is a distributed PostgreSQL system in which every node in the cluster accepts write operations and replicates its changes to all the others, making each one a 'master'. It achieves this through logical replication, which replicates row-level data changes (INSERTs, UPDATEs, DELETEs) rather than physical, block-level changes. The innovation lies in its conflict resolution mechanisms, which keep data consistent across all nodes even when the same rows are modified concurrently on different nodes. In practice, you can run multiple active databases. If one goes down, the others keep accepting writes, so your application can continue writing data with little or no interruption, improving reliability and performance for high-demand applications.
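The description does not say which conflict-resolution rules PolyMastDB applies. One common strategy in multi-master logical replication is last-writer-wins (LWW) based on commit timestamps, sketched below in Python. `RowVersion` and `resolve_lww` are hypothetical names. The tie-break on node name is an added assumption so that every node converges on the same winner regardless of arrival order.

LWW has two notable limitations. It silently discards the losing write, and it depends on reasonably synchronized clocks. It is therefore not a fit for every workload, such as concurrent increments to the same balance.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RowVersion:
    value: dict
    commit_ts: int   # commit timestamp, e.g. microseconds since the epoch
    origin: str      # name of the node that made the change


def resolve_lww(local: Optional[RowVersion], remote: RowVersion) -> RowVersion:
    """Last-writer-wins: keep the version with the later commit timestamp.

    Ties are broken by comparing node names, so every node picks the same
    winner regardless of the order in which changes arrive.
    """
    if local is None:
        return remote  # no local row yet: apply the incoming change as-is
    if (remote.commit_ts, remote.origin) > (local.commit_ts, local.origin):
        return remote
    return local
```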

How to use it?

Developers can integrate PolyMastDB into their application architectures by setting up a cluster of PostgreSQL instances. Each instance will be configured to replicate its logical changes to all other instances. The system provides tools and configurations to manage this replication, monitor data consistency, and handle potential conflicts. Applications can then connect to any available node for read or write operations, simplifying application design and deployment. This allows you to build applications that can withstand hardware failures or sudden spikes in traffic by distributing the write load across multiple servers and ensuring data is always accessible from at least one node.
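Because every node accepts writes, a client can simply try nodes in order until one answers. The sketch below shows that idea in Python with an injected `connect` function, so it runs without a database; in practice you would pass your PostgreSQL driver's connect call. `connect_any` and the node names are hypothetical. The caught exception type is also an assumption, since real drivers raise their own error classes (hence the `retry_on` parameter).

Client libraries built on libpq can already do this by listing several hosts in one connection string, which may be preferable to hand-rolled logic.

```python
from typing import Callable, Optional, Sequence, Tuple, Type, TypeVar

Conn = TypeVar("Conn")


def connect_any(nodes: Sequence[str],
                connect: Callable[[str], Conn],
                retry_on: Tuple[Type[BaseException], ...] = (ConnectionError,)
                ) -> Tuple[str, Conn]:
    """Return (node, connection) for the first node that accepts a connection."""
    last_error: Optional[BaseException] = None
    for node in nodes:
        try:
            return node, connect(node)
        except retry_on as exc:
            # Node unreachable: remember why and try the next one.
            last_error = exc
    raise ConnectionError(f"none of {len(nodes)} nodes reachable") from last_error
```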

Product Core Function

· Logical Multi-Master Replication: Enables concurrent writes to all nodes in a PostgreSQL cluster. The value here is in breaking the single-point-of-write bottleneck, allowing for greater write throughput and a truly active-active database setup. This is useful for applications needing extremely high write performance and availability.

· Conflict Detection and Resolution: Automatically identifies and resolves data conflicts that arise from simultaneous writes to the same data across different nodes. The value is in maintaining data integrity and preventing data corruption without manual intervention. This is critical for applications where data consistency is paramount, like e-commerce or financial systems.

· High Availability: Ensures that applications can continue to operate even if one or more database nodes fail. The value is in minimizing downtime and providing continuous service to users. This is essential for mission-critical applications that cannot afford any service interruption.

· Write Scalability: Distributes write load across multiple nodes, allowing the database system to handle a larger volume of write operations. The value is in scaling your application's capacity to handle more users and transactions as demand grows. This is beneficial for rapidly growing applications or those experiencing unpredictable traffic.

· Simplified Application Architecture: Developers can write applications as if they were interacting with a single database, abstracting away the complexity of distributed data management. The value is in reducing development time and complexity. This means you can build robust applications faster without needing to be a distributed systems expert.

Product Usage Case

· E-commerce platforms requiring continuous availability and the ability to handle peak shopping loads without performance degradation. PolyMastDB ensures that orders can be placed and processed even during high-traffic events, and that data remains consistent across all payment and inventory nodes.

· Global distributed applications where users in different regions need to write data locally, and these changes need to be propagated to all other regions efficiently. PolyMastDB allows for low-latency writes for users while maintaining a globally consistent dataset.

· Internet of Things (IoT) systems that generate massive amounts of time-series data from numerous devices. PolyMastDB can handle high write volumes from these devices, ensuring that all sensor readings are captured and available for analysis across the system.

· Financial trading platforms where every millisecond counts and data integrity is non-negotiable. PolyMastDB provides the high availability and consistent data required for critical financial operations, ensuring no trades are lost and all records are accurate.

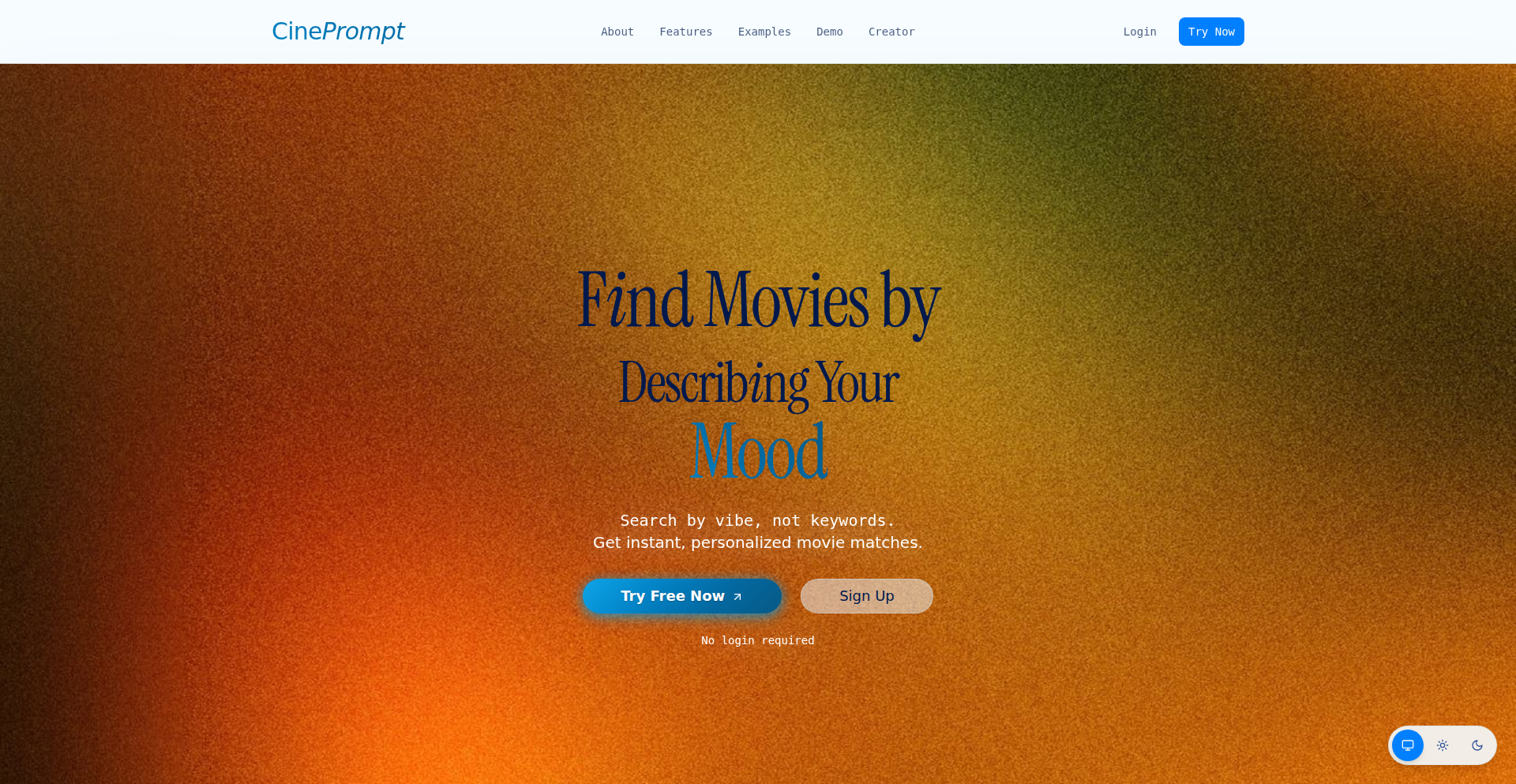

5

GYST - Fluid Workspace Weaver

Author

ricroz

Description

GYST is a lightweight digital tool that seamlessly integrates file exploration, whiteboarding, bookmarking, note-taking, and basic graphic design into a single, fluid interface. It aims to replicate the feeling of a physical desk, where organization and creative freedom coexist, by eliminating the friction of switching between disparate applications. This offers a unified digital environment for managing information and ideas.

Popularity

Points 23

Comments 22

What is this product?

GYST is a novel digital workspace designed to merge several distinct productivity tools into one cohesive experience. Its core innovation lies in its interface design, which seeks to mimic the organic flow and tactile feel of a physical desk. Instead of navigating between separate applications for file management, brainstorming (whiteboarding), saving web resources (bookmarking), jotting down thoughts (note-taking), and creating simple visuals (graphic design), GYST brings these functionalities together. The underlying technical approach focuses on building a lightweight, integrated frontend that allows these modules to interact fluidly, enabling users to drag, drop, and connect information across different contexts. This means your notes can link directly to files, your bookmarks can be visually organized on a whiteboard, and your simple sketches can be embedded anywhere. The value is in reducing cognitive load and increasing the speed of idea generation and organization.

How to use it?

Developers can use GYST as a personal dashboard for managing projects, research, and creative work. Imagine starting a new project: you can create a dedicated space in GYST, drag in relevant research files from your computer, bookmark key articles from the web, sketch out initial ideas on the virtual whiteboard, and write down meeting notes or requirements all within the same view. For integration, while the current alpha is primarily a standalone web application, the vision suggests future API possibilities for connecting GYST's organizational capabilities with other developer tools. You can try the alpha version online at gyst.fr to experience its integrated workflow and see how it can streamline your personal knowledge management and project planning.

Product Core Function

· File Exploration Integration: Seamlessly access and organize local files directly within the workspace, eliminating the need to switch to a separate file manager. This provides a centralized location for project assets.

· Interactive Whiteboarding: A freeform canvas for brainstorming, mind-mapping, and visualizing ideas. This allows for quick, uninhibited ideation and concept mapping, directly connecting thoughts and resources.

· Smart Bookmarking: Save and categorize web links, making them easily accessible and organizable within your digital workspace. This keeps relevant online resources at your fingertips for research or reference.

· Fluid Note-Taking: Capture thoughts, ideas, and documentation in a flexible note-taking system that can be linked to other elements in your workspace. This ensures your notes are contextual and easily discoverable.

· Basic Graphic Design Tools: Simple tools for creating basic visuals, diagrams, or annotations directly within the interface. This enables quick visual communication and enhances the expressiveness of your digital space.

Product Usage Case

· Project Planning and Research: A developer starting a new software project can use GYST to organize project requirements, store relevant code snippets, bookmark technical documentation, and sketch out system architecture diagrams, all in one view. This solves the problem of scattered information across multiple apps.

· Content Creation and Blogging: A content creator can use GYST to collect research links, draft blog post outlines on the whiteboard, write the content in the notes section, and even create simple accompanying graphics. This streamlines the entire content creation workflow.

· Personal Knowledge Management: Anyone looking to build a 'second brain' can use GYST to connect their notes, web bookmarks, and relevant files, creating a personal knowledge graph that is easily navigable and visual. This addresses the challenge of information overload and retrieval.

· Idea Incubation: An entrepreneur can use GYST to brainstorm business ideas, sketch out user flows, save competitor analysis links, and write initial business plans. This provides a dedicated, fluid space for nurturing and developing new concepts.

6

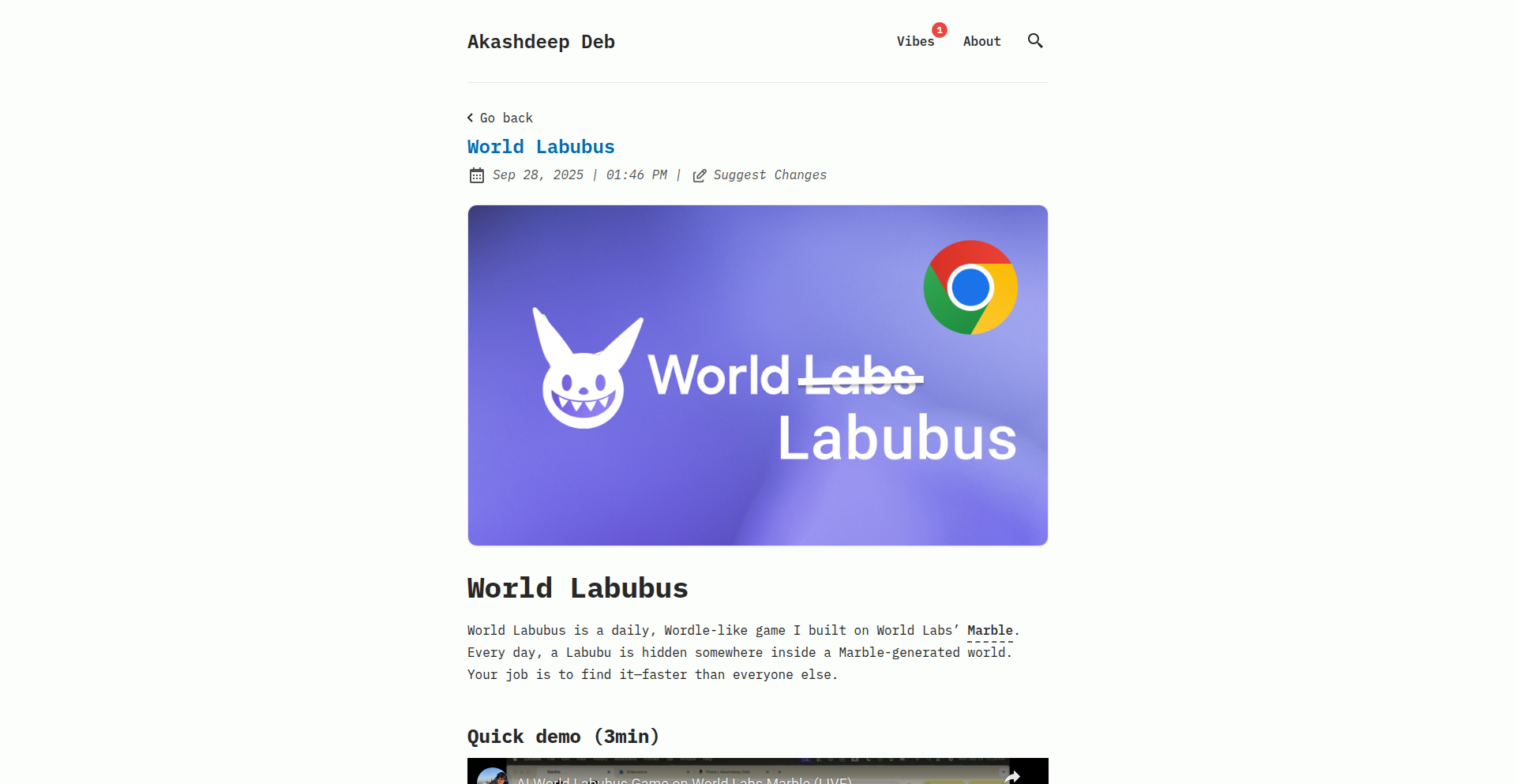

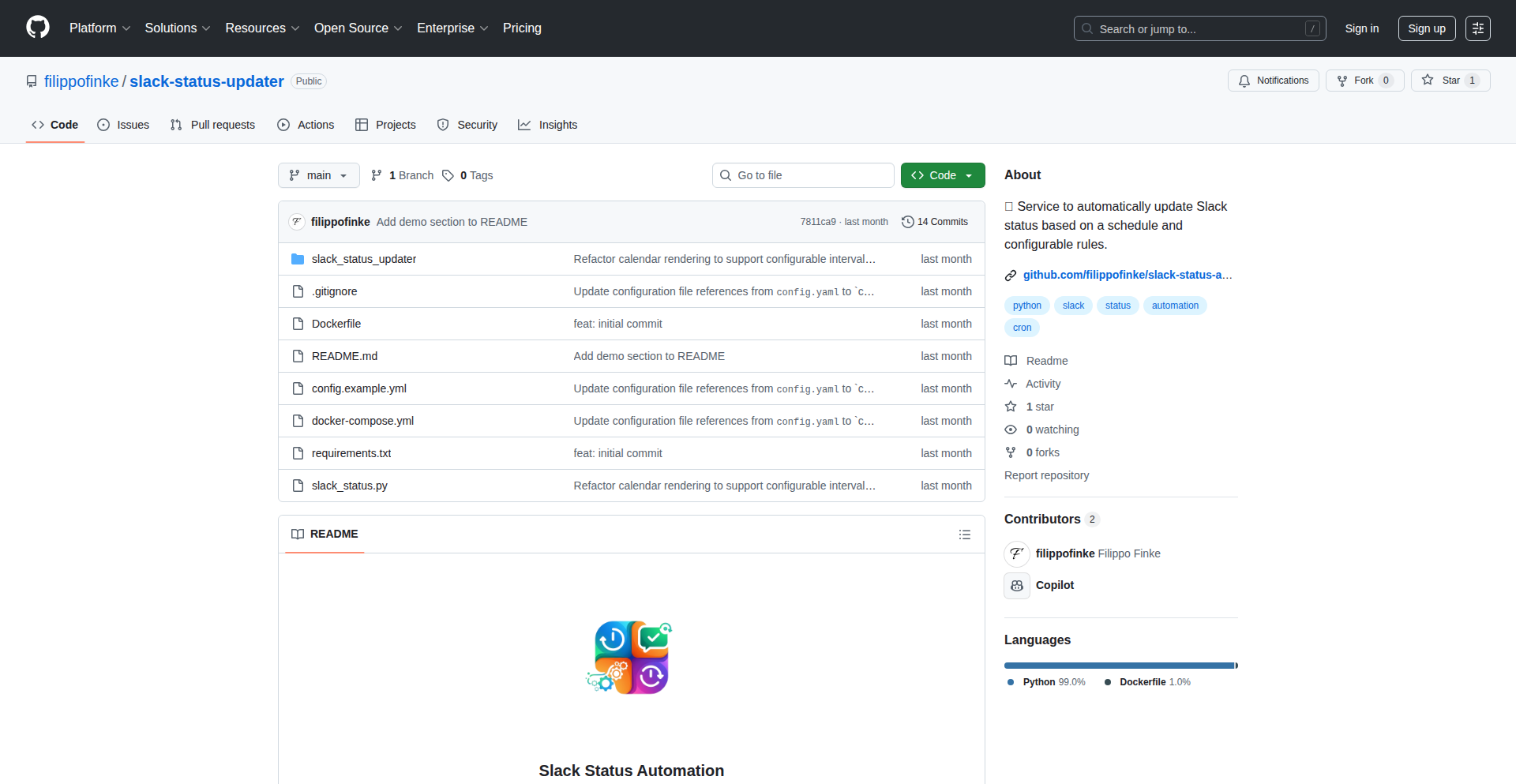

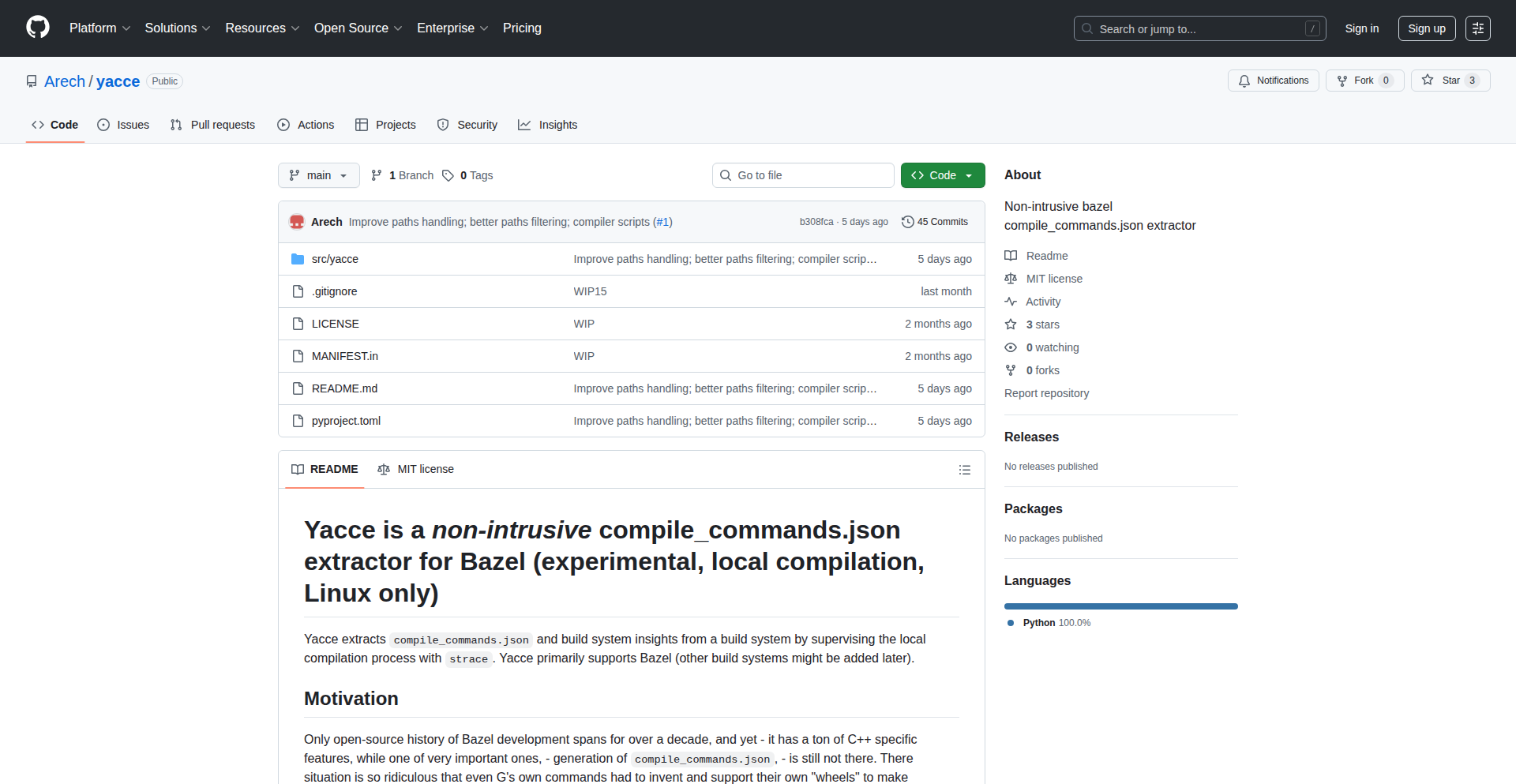

LabubuSeekerAI

Author

akadeb

Description

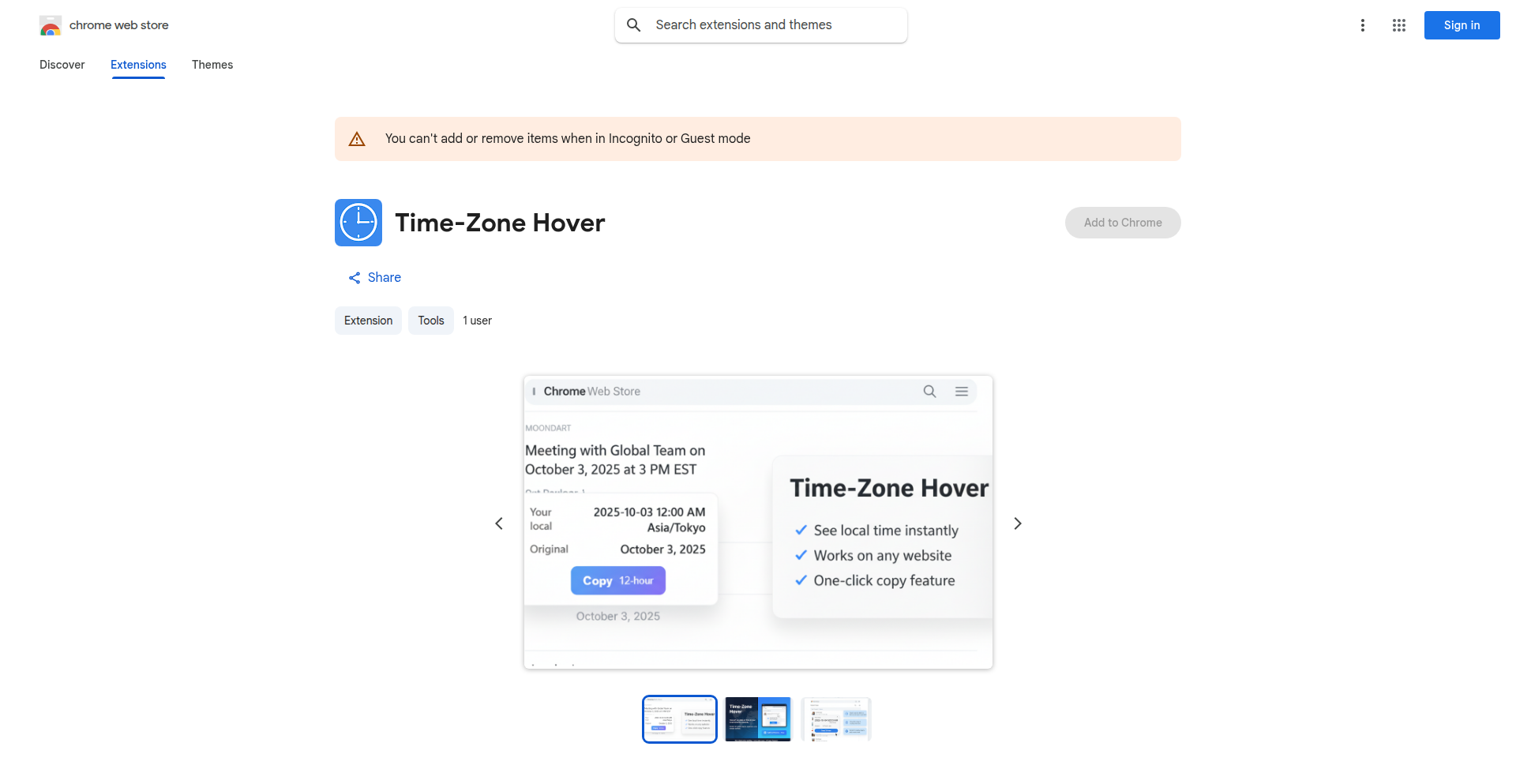

A Chrome extension that gamifies discovering hidden 'Labubu' characters within AI-generated 'World Labs' marble worlds. It leverages AI world generation to create unique daily challenges, offering a novel way to interact with generative AI content.

Popularity

Points 15

Comments 25

What is this product?

This is a Chrome extension that turns exploring AI-generated virtual environments into a game. The core innovation lies in its use of existing AI world generation capabilities to create a unique, daily 'treasure hunt' experience. Each day, a hidden character called 'Labubu' is placed within a randomly generated 'World Labs' marble world, and your goal as a user is to find it. Think of it as a daily digital scavenger hunt, powered by AI creativity. So, what's the use? It provides a fun, engaging, and accessible way to interact with the output of AI world generation, making it less abstract and more like a playful exploration.
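The description does not explain how the extension chooses each day's hiding spot. A common way to build a "daily challenge" that every player sees identically is to derive a random seed from the calendar date, as in this Python sketch. `daily_spot`, the salt, and the cubic world of side `world_size` are hypothetical stand-ins for whatever coordinates a generated world really uses.

```python
import hashlib
import random
from datetime import date
from typing import Tuple


def daily_spot(day: date, world_size: float = 100.0,
               salt: str = "labubu") -> Tuple[float, float, float]:
    """Derive one hiding spot per calendar day, identical for every player."""
    # Hash the date (plus a salt) so the seed is stable across machines and runs.
    digest = hashlib.sha256(f"{salt}:{day.isoformat()}".encode("utf-8")).digest()
    rng = random.Random(int.from_bytes(digest[:8], "big"))
    x = rng.uniform(0.0, world_size)
    y = rng.uniform(0.0, world_size)
    z = rng.uniform(0.0, world_size)
    return (x, y, z)
```

Because the spot is a pure function of the date, anyone who reads the code can compute the answer. A real game might therefore keep the salt on a server.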

How to use it?

To use LabubuSeekerAI, you simply install it as a Chrome extension. Once installed, it will automatically detect when you are viewing a compatible AI-generated 'World Labs' environment. The extension will then highlight areas where the hidden 'Labubu' might be located, or provide subtle clues. You'll navigate within the AI world as you normally would, looking for the hidden character. This can be integrated into your daily browsing if you already engage with AI-generated content platforms. So, what's the use? It adds an interactive layer to your AI content consumption, transforming passive viewing into an active, rewarding game.

Product Core Function

· Daily Hidden Object Game: A new 'Labubu' is hidden each day in a unique AI-generated environment, providing fresh content and replayability. The value here is in consistent engagement and surprise, making the experience novel each day. This is useful for anyone looking for a fun, low-commitment daily distraction.

· AI World Integration: Seamlessly overlays game mechanics onto existing AI-generated worlds, such as those from 'World Labs'. The value is in extending the utility and engagement of AI-generated content without requiring users to learn new platforms. This is useful for users who are already exploring AI art or virtual worlds.

· Chrome Extension Convenience: Easy installation and unobtrusive operation directly within your browser. The value is in its accessibility and ease of use, requiring no complex setup or separate applications. This is useful for casual users who want a simple addition to their browsing experience.

· Visual Clue System: Provides visual hints or highlights to guide players towards the hidden 'Labubu'. The value is in making the game challenging but not impossible, ensuring a satisfying discovery experience. This is useful for players who enjoy puzzle-solving and exploration without excessive frustration.
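The description mentions visual hints without saying how they are computed. One simple approach, assumed here, is a "hot/warm/cold" hint based on the straight-line distance between the player's viewpoint and the hidden character. The Python sketch below uses hypothetical thresholds in the same arbitrary units as the world coordinates.

```python
import math
from typing import Sequence


def proximity_hint(player: Sequence[float], target: Sequence[float],
                   hot: float = 5.0, warm: float = 20.0) -> str:
    """Map the Euclidean distance to the target onto a coarse hint."""
    distance = math.dist(player, target)
    if distance <= hot:
        return "hot"
    if distance <= warm:
        return "warm"
    return "cold"
```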

Product Usage Case

· Daily morning routine: A user could check the extension each morning as part of their routine to find the hidden Labubu in a new AI world, offering a brief moment of fun and accomplishment. This solves the problem of finding engaging, brief activities to start the day.

· Exploring AI art communities: A user browsing AI-generated art platforms could activate the extension to find a hidden Labubu within an AI-generated landscape, adding an element of gamification to their exploration. This solves the problem of making AI art discovery more interactive and rewarding.

· Testing AI world generation capabilities: Developers or enthusiasts interested in AI world generation could use the extension to see how the 'hidden object' mechanic plays out in different AI-generated environments. This solves the problem of providing a practical test case for evaluating the 'findability' and complexity of AI-generated spaces.

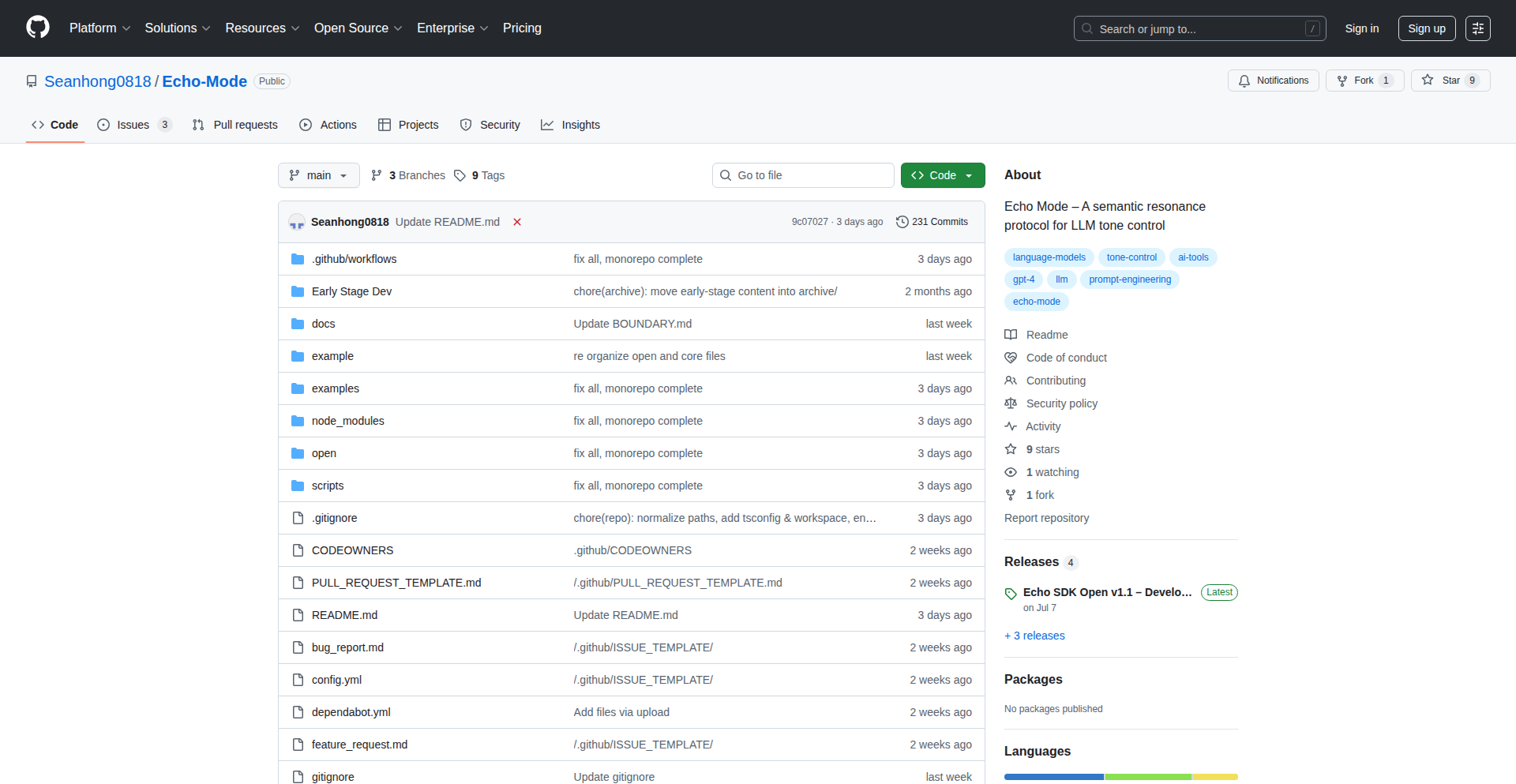

7

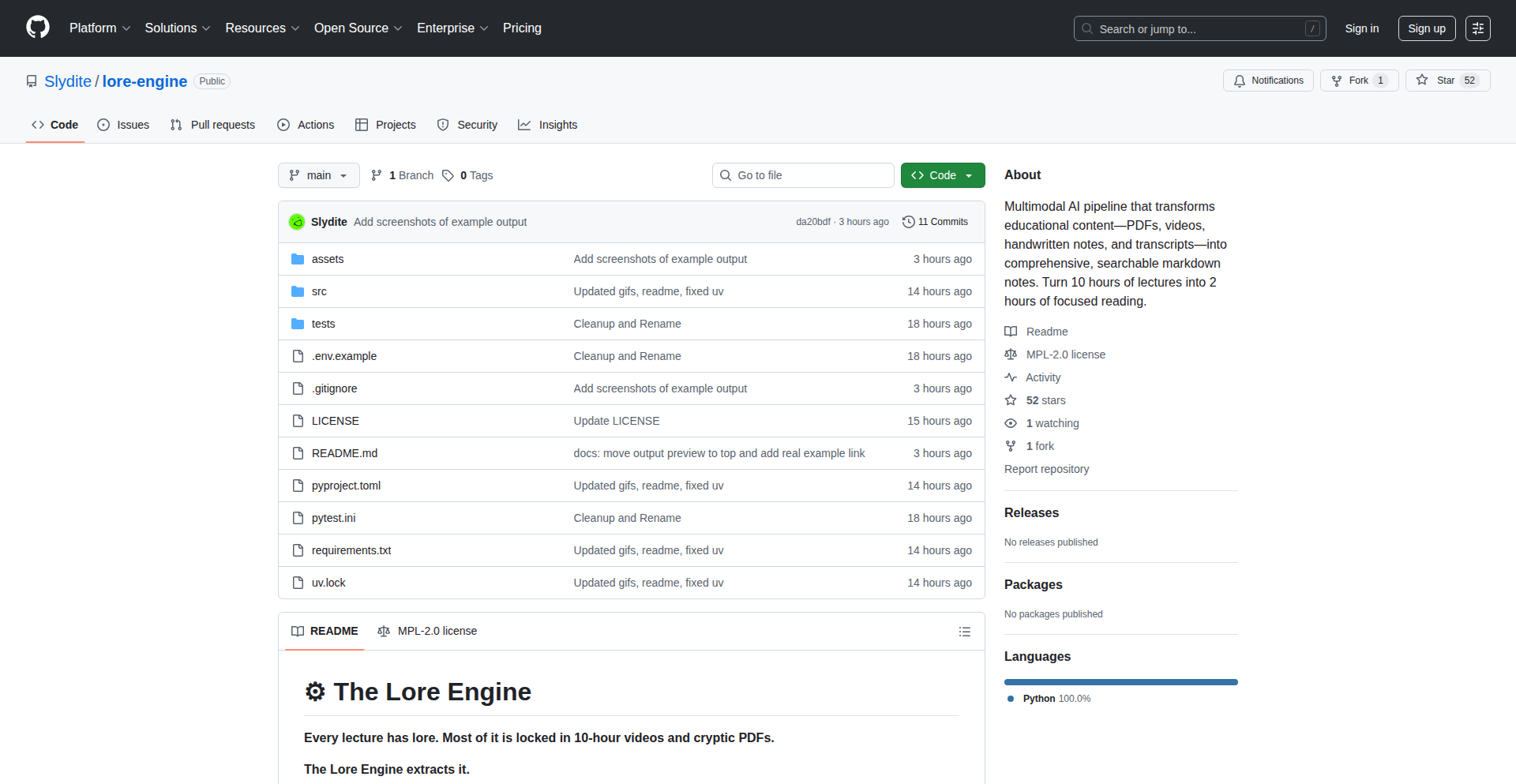

Lore Engine

Author

Slydite

Description

Lore Engine is a novel project that uses AI and NLP techniques to condense lengthy audio content, such as 10 hours of lectures, into roughly 2 hours' worth of comprehensive notes. It addresses information overload by extracting key concepts and synthesizing them into digestible formats, offering a significant time saving for students, researchers, and lifelong learners. The innovation lies in its ability to understand context, identify crucial details, and rephrase them effectively, making learning more efficient.

Popularity

Points 25

Comments 7

What is this product?

Lore Engine is an AI-powered system designed to process long audio recordings, like lectures, and distill them into significantly shorter, high-quality notes. It works by employing natural language processing (NLP) models to analyze the speech, identify the most important information, and then summarize those points coherently. Think of it as an extremely smart assistant that listens, understands, and writes summaries for you. The core innovation is its deep understanding of linguistic context and its ability to generate human-readable summaries that retain the essence of the original content. So, this helps you grasp complex information quickly without spending hours sifting through raw material.

How to use it?

Developers can integrate Lore Engine into their existing workflows or build new applications around its core functionality. It can be accessed via an API, allowing for programmatic submission of audio files and retrieval of generated notes. Potential use cases include educational platforms looking to provide study aids, content creators wanting to offer summaries of their podcasts or webinars, or individuals seeking to manage their personal learning resources more effectively. The engine can be used by uploading an audio file (like a lecture recording) to a designated endpoint, and it will return the summarized notes. This means you can automate the note-taking process for any audio content.

Product Core Function

· Audio Transcription: Converts spoken words from audio files into text, forming the foundational data for analysis. This is valuable because it makes unstructured audio data processable by AI.

· Key Information Extraction: Identifies and pulls out the most critical concepts, facts, and arguments from the transcribed text. This is useful for pinpointing the 'meat' of the lecture, saving users from reading irrelevant parts.

· Abstractive Summarization: Generates new, concise sentences that capture the meaning of the original content, rather than just copying and pasting. This provides a fluent and easy-to-understand overview, making complex topics accessible.

· Content Condensation: Significantly reduces the volume of information while preserving essential knowledge, transforming hours of content into a manageable duration. This directly addresses the problem of information overload and saves valuable time.

· Contextual Understanding: Analyzes the relationships between different pieces of information to ensure the summary is coherent and logical. This ensures the summarized notes are not just a collection of facts but a meaningful narrative.
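Lore Engine's internals are not described in the post. A common way to summarize a transcript longer than a model's context window is map-reduce summarization:

1. Split the transcript into chunks.
2. Summarize each chunk.
3. Summarize the joined partial summaries.

The sketch below assumes a hypothetical `summarize` callable standing in for whatever model call is used. It also counts words as a rough proxy for tokens.

```python
from typing import Callable, List


def chunk_words(text: str, max_words: int) -> List[str]:
    """Split a transcript into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def map_reduce_summarize(
    transcript: str,
    summarize: Callable[[str], str],  # hypothetical model call (assumption)
    max_words: int = 2000,            # assumed chunk size; real limits are in tokens
) -> str:
    """Summarize each chunk (map), then summarize the joined partial summaries (reduce)."""
    chunks = chunk_words(transcript, max_words)
    if not chunks:
        return ""
    partials = [summarize(chunk) for chunk in chunks]
    if len(partials) == 1:
        return partials[0]
    # Single reduce step; very long inputs might need the reduce repeated.
    return summarize("\n".join(partials))


if __name__ == "__main__":
    def first_three_words(text: str) -> str:
        # Stand-in for an LLM: keep the first three words.
        return " ".join(text.split()[:3])

    print(map_reduce_summarize(" ".join(f"w{i}" for i in range(10)), first_three_words, max_words=4))
```

In practice the chunk summaries would come from a speech-to-text step followed by an abstractive model. The chunking and reduce structure stays the same whichever model is plugged in.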

Product Usage Case

· Student preparing for exams: Uploads lecture recordings to Lore Engine and receives concise notes, allowing for faster review of course material. This can help students prepare for exams with less study time.

· Researcher keeping up with new work: Uses Lore Engine to summarize lengthy conference talks or webinars, getting up to speed on new research without watching hours of video. This accelerates the research process.

· Content platform offering supplementary materials: Integrates Lore Engine's API to automatically generate summaries for video courses or podcasts, enhancing user engagement and accessibility. This makes their content more valuable to a wider audience.

· Professional development: Professionals can use Lore Engine to digest industry-specific presentations or training sessions, keeping their knowledge current without sacrificing work hours. This supports continuous learning in a busy schedule.

8

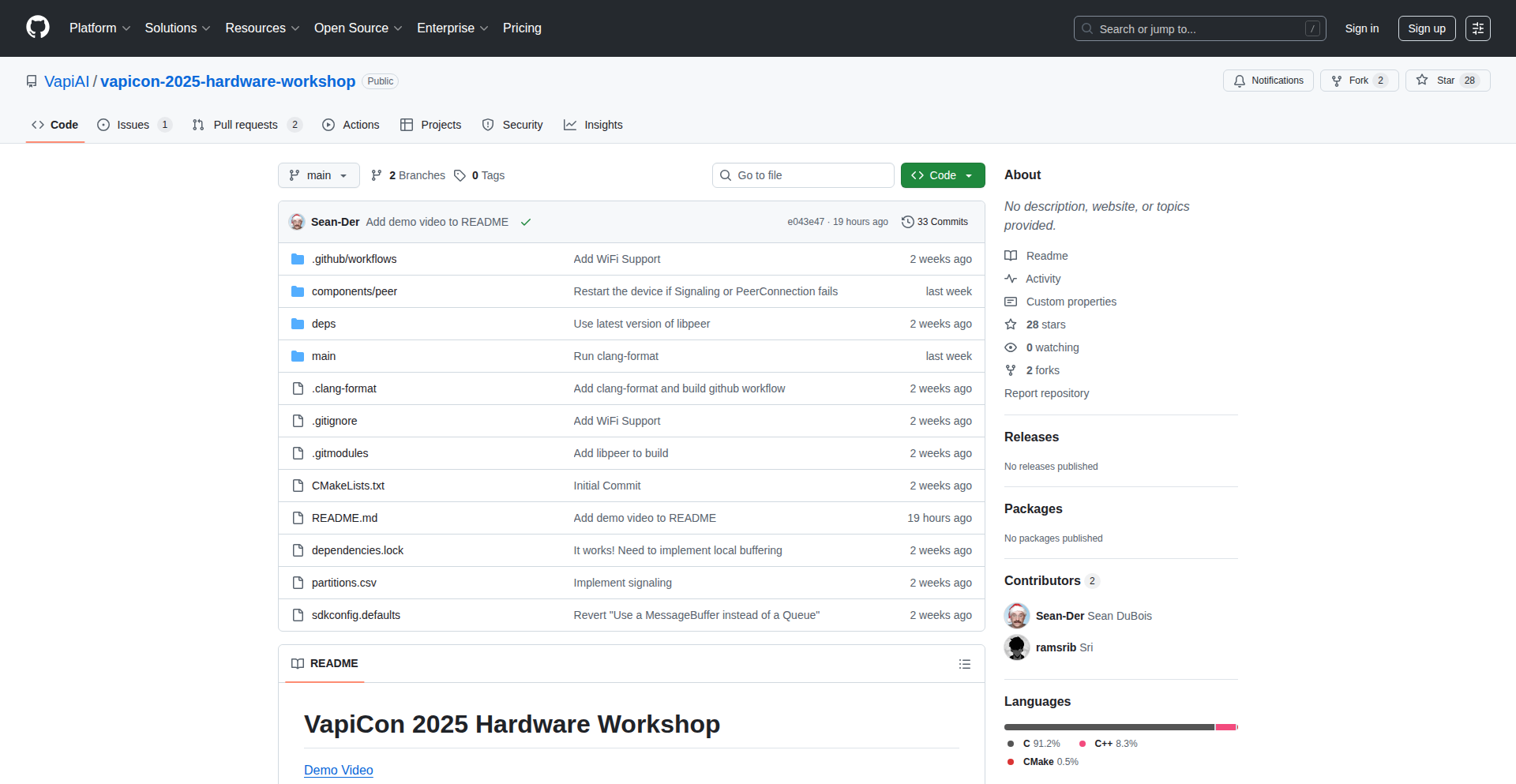

VoiceAI Badge

Author

Sean-Der

Description

An open-source, voice-activated AI badge that answers your questions about a conference on the go. It leverages an ESP32 microcontroller and WebRTC to connect to a Large Language Model (LLM), providing instant information about speakers and topics. This project demonstrates the power of combining embedded hardware with modern AI for interactive, real-time information access.

Popularity

Points 14

Comments 2

What is this product?

This project is a small, wearable badge that acts as a personal AI assistant for events like conferences. It uses a tiny computer (an ESP32 microcontroller) to do three things:

· Listen to your voice.
· Send your question over the internet using WebRTC, the real-time communication technology that web browsers use for audio and video calls.
· Receive an answer from a Large Language Model (LLM).

The innovation lies in making sophisticated AI accessible through simple, low-power hardware and real-time communication, creating a seamless, interactive experience.

How to use it?

Developers can use this project as a blueprint to build their own custom voice-activated devices. It involves flashing the ESP32 microcontroller with the provided firmware. This firmware handles audio input, establishes a WebRTC connection to a server running an LLM, and processes the responses. It's ideal for creating interactive kiosks, smart wearables, or any application where voice commands can trigger real-time information retrieval or actions. The project can be integrated into existing systems by leveraging the WebRTC signaling and data channels for custom message passing.

Product Core Function

· Voice Input Capture: Captures audio from the microphone, enabling natural language interaction. The value is in allowing users to speak their queries instead of typing.

· WebRTC Connectivity: Establishes a real-time, peer-to-peer connection for low-latency data transfer between the badge and the AI backend. This means faster responses and a smoother user experience.

· LLM Integration: Sends user queries to a Large Language Model for intelligent processing and response generation. The value is in providing accurate and context-aware answers to a wide range of questions.

· Audio Output Playback: Speaks the AI-generated responses back to the user. This provides a fully hands-free and intuitive way to receive information.

· Low-Power Hardware Operation: Designed to run on a small, battery-powered microcontroller (ESP32). This makes it portable and suitable for long-duration events without constant charging.
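The badge firmware itself is not shown in the post, and on an ESP32 it would typically be written in C. The Python sketch below only illustrates the kind of audio handling such firmware does before sending audio over a real-time link. It makes these assumptions:

· The audio is 16 kHz, 16-bit mono PCM.
· It is split into 20 ms frames, a frame length commonly used with Opus in WebRTC.
· A crude energy gate drops silent frames. This gate is a hypothetical addition, not a documented feature of the badge.

```python
import math
import struct
from typing import List

SAMPLE_RATE = 16_000  # assumed microphone sample rate (Hz)
FRAME_MS = 20         # assumed frame length in milliseconds


def split_frames(pcm: bytes, sample_rate: int = SAMPLE_RATE, frame_ms: int = FRAME_MS) -> List[bytes]:
    """Split 16-bit mono little-endian PCM into fixed-size frames, dropping a trailing partial frame."""
    frame_bytes = sample_rate * frame_ms // 1000 * 2  # 2 bytes per sample
    return [pcm[i:i + frame_bytes] for i in range(0, len(pcm) - frame_bytes + 1, frame_bytes)]


def rms(frame: bytes) -> float:
    """Root-mean-square amplitude of a 16-bit PCM frame."""
    count = len(frame) // 2
    if count == 0:
        return 0.0
    samples = struct.unpack(f"<{count}h", frame[:count * 2])
    return math.sqrt(sum(s * s for s in samples) / count)


def voiced_frames(pcm: bytes, threshold: float = 500.0) -> List[bytes]:
    """Keep only frames loud enough to plausibly contain speech (hypothetical energy gate)."""
    return [f for f in split_frames(pcm) if rms(f) >= threshold]
```

At these assumed settings each frame is 320 samples (640 bytes). Sending only voiced frames is one way to save bandwidth and battery, though a production device would more likely use a proper voice-activity detector.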

Product Usage Case

· Conference Assistant: Imagine wearing this badge at a tech conference. You can simply ask, 'Who is speaking in room 3?' or 'What is the topic of the next keynote?' and get an immediate spoken answer, without needing to pull out your phone or find a schedule. This solves the problem of information overload at busy events.

· Interactive Museum Guide: A museum could deploy these badges. Visitors could ask about specific exhibits, such as 'Tell me more about this painting,' and receive detailed information directly from the badge, enhancing the learning experience.

· Event Navigation Bot: For large venues, a badge could provide directions. Asking 'How do I get to the main stage?' would result in spoken directions, simplifying navigation for attendees.

· Custom IoT Voice Control: Developers could adapt this to control smart home devices. Instead of using a complex app, you could say "Turn on the living room lights" to the badge, and it would relay the command to your smart home system.

9

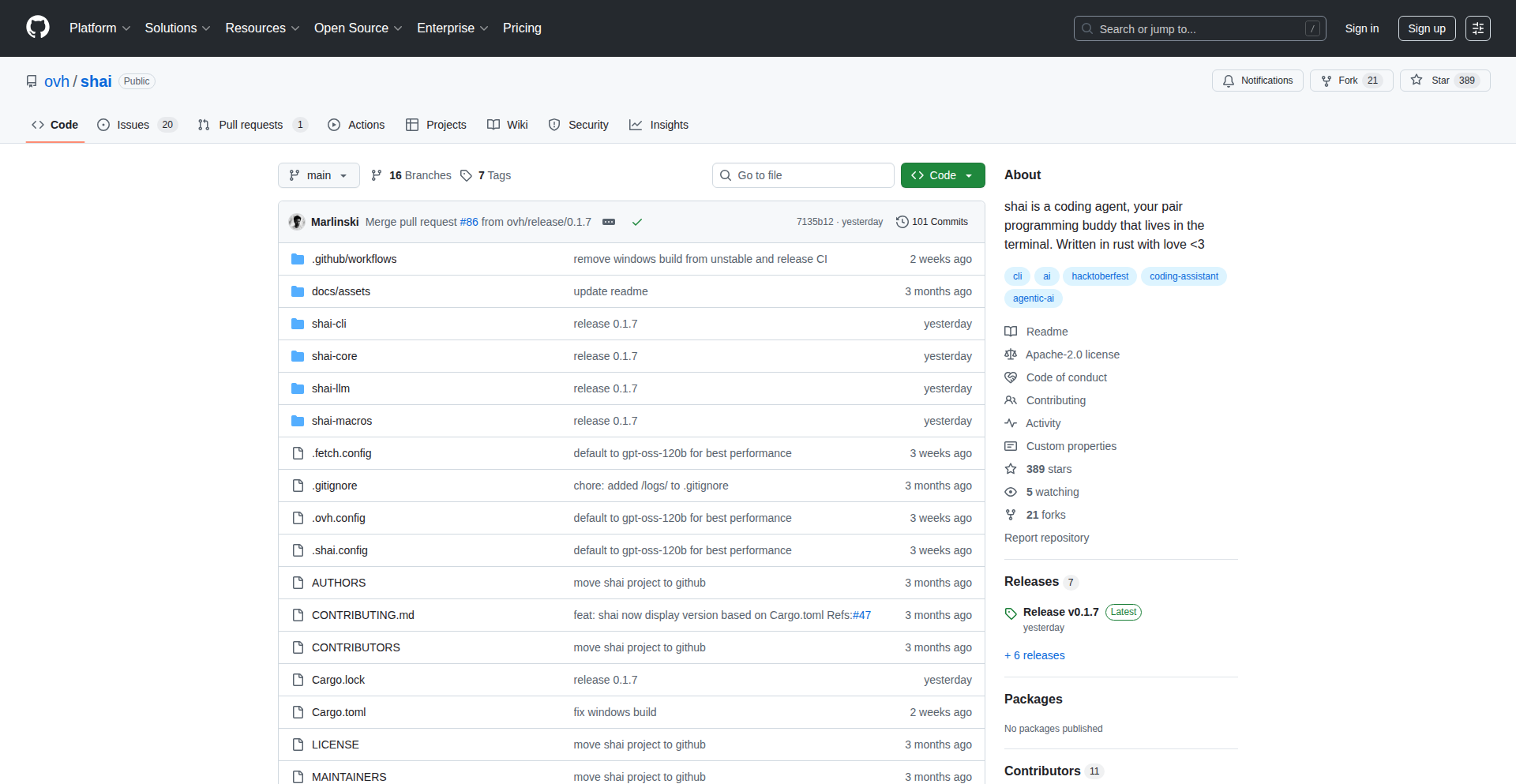

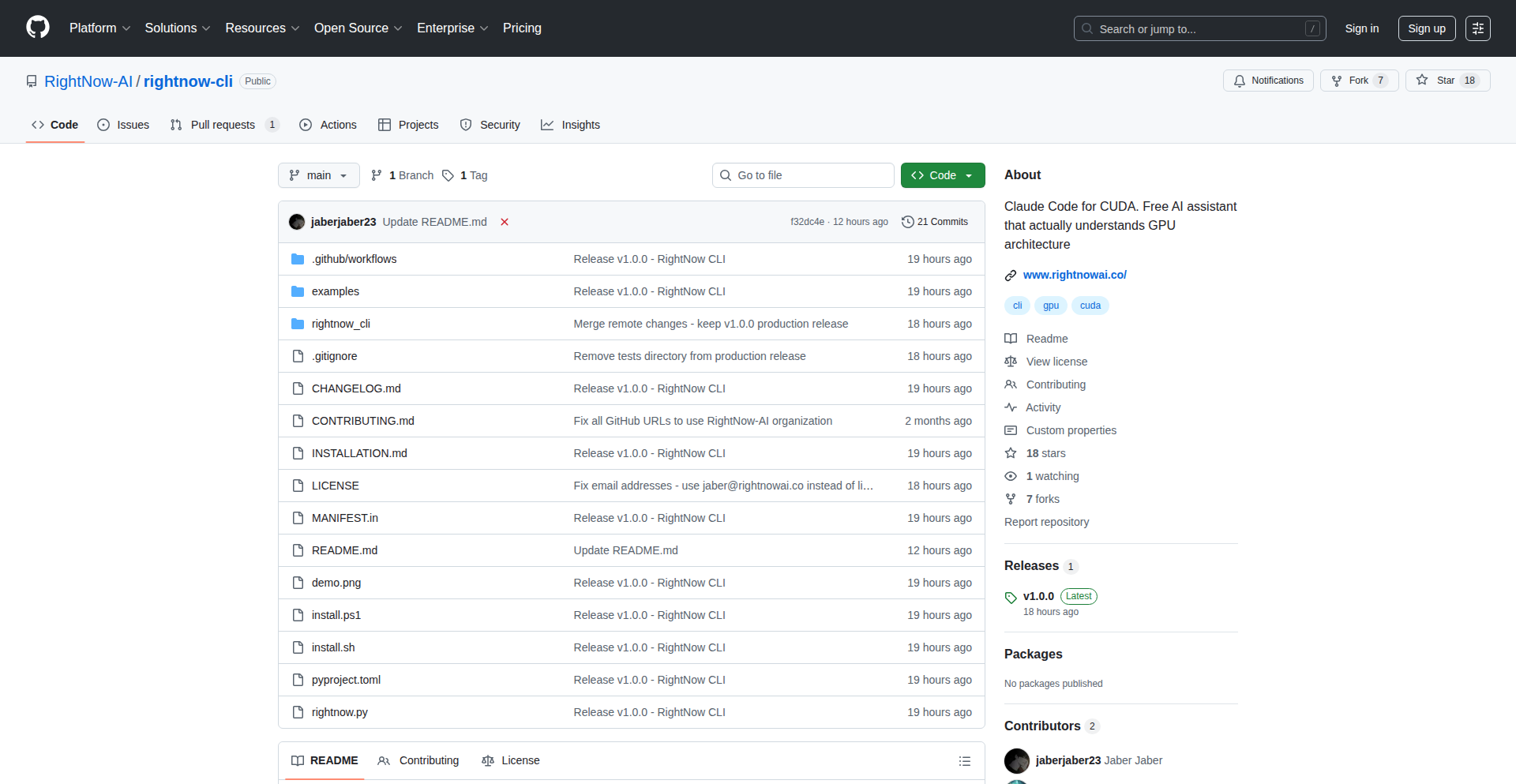

SHAI - Shell AI Assistant

Author

Marlinski

Description

SHAI is an open-source, terminal-native AI coding assistant designed for developers who live in their command line. It allows you to leverage the power of AI models, including self-hosted ones, directly from your shell, scripts, or SSH sessions. SHAI aims to be a lightweight, composable, and Unix-like tool, offering a free and flexible alternative to existing closed-source or vendor-locked solutions. Its innovation lies in its modular Rust-based architecture, enabling seamless integration with various LLM endpoints and facilitating custom agent configurations for tailored AI assistance.

Popularity

Points 14

Comments 0

What is this product?

SHAI is a command-line AI assistant that brings powerful language models to your terminal. It is built in Rust, a programming language known for its speed and reliability. The key innovation is that it is LLM-agnostic: it can connect to virtually any model endpoint that exposes an OpenAI-compatible API. This includes cloud-based services and, importantly, locally hosted open-weight models. SHAI is highly modular, so developers can swap out user interfaces, add new tools, or build APIs on top of it. This flexibility makes it adaptable to a variety of terminal-based workflows. Think of it as a smart assistant that lives in your command line, ready to help you code, script, or automate tasks without leaving your terminal.

How to use it?

Developers can install SHAI as a single binary, which makes it easy to deploy even on bare servers or in environments where managing dependencies is complex. Once installed, you invoke SHAI from your terminal to interact with AI models. Typical uses include:

· Asking coding questions.
· Generating code snippets.
· Writing shell scripts.
· Integrating SHAI into your existing automation scripts.

For example, you might use it to generate a complex `grep` command from a natural language description, or to debug a piece of code directly within your SSH session. Its support for function calling and MCP (Model Context Protocol, with OAuth) also allows more sophisticated interactions, where the AI can trigger specific actions or tools based on your requests. Custom agent configuration lets you define which AI model to use, what system prompt to provide, and which tools the AI can access, tailoring its behavior to your needs.
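To make "OpenAI-compatible" concrete, here is a minimal Python sketch of the request an agent configuration boils down to:

· A POST to a `/chat/completions` endpoint.
· A body carrying a model name, a system prompt, the user's message, and optional tool schemas.

The `AgentConfig` class, base URL and model name are hypothetical. This is not SHAI's own config format or code.

```python
import json
import urllib.request
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class AgentConfig:
    """Hypothetical agent definition: endpoint, model, system prompt, and tool schemas."""
    base_url: str                     # e.g. a local OpenAI-compatible server (assumption)
    model: str
    system_prompt: str
    tools: List[Dict[str, Any]] = field(default_factory=list)


def build_chat_request(cfg: AgentConfig, user_message: str, api_key: str = "") -> urllib.request.Request:
    """Build (but do not send) a POST to an OpenAI-style chat completions endpoint."""
    body: Dict[str, Any] = {
        "model": cfg.model,
        "messages": [
            {"role": "system", "content": cfg.system_prompt},
            {"role": "user", "content": user_message},
        ],
    }
    if cfg.tools:
        body["tools"] = cfg.tools  # function-calling schemas, only if the agent has tools
    headers = {"Content-Type": "application/json"}
    if api_key:
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        cfg.base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )
```

Passing the returned request to `urllib.request.urlopen` would send it. Because only the base URL changes between a cloud provider and a self-hosted server, the same agent definition can target either.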

Product Core Function

· LLM Agnostic Connectivity: This allows SHAI to connect to any AI model endpoint that understands the OpenAI API. This is valuable because it means you're not locked into a specific AI provider and can use the best model for your task, whether it's a cloud service or a model you're running yourself. This offers flexibility and cost-efficiency.

· Terminal Native Operation: SHAI is designed to run entirely within your command line interface. This is crucial for developers who spend a lot of time in the terminal, as it means no context switching between applications. You get AI assistance without interrupting your workflow, making you more productive.

· Single Binary Installation: The ability to install SHAI with just one executable file simplifies deployment significantly. This is especially useful on remote servers or in environments with limited package management. It means you can get up and running quickly without complex setup procedures.

· Modular Architecture: Built with Rust, SHAI's design emphasizes modularity. This allows for easy extension and customization. Developers can add new tools, integrate different user interfaces, or build custom agents. This future-proofs the tool and allows the community to contribute and innovate.

· Function Calling and MCP Support: This feature allows SHAI to interact with external tools or APIs by making specific function calls. This means the AI can not only generate text but also trigger actions, making it capable of more complex tasks and automation.

· Custom Agent Configuration: Users can define the AI model, system prompt, and available tools for each agent. This allows for highly personalized AI assistance, ensuring the AI behaves precisely as needed for specific coding or scripting tasks.
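Function calling works as a loop:

1. The model replies with `tool_calls`.
2. The client runs the named functions.
3. The client sends each result back as a `tool` message.

The sketch below follows the OpenAI chat-completions tool-call message shape. SHAI's own dispatch and MCP handling are not shown in the post, and the `count_lines` tool is hypothetical.

```python
import json
from typing import Any, Callable, Dict, List


def run_tool_calls(message: Dict[str, Any], registry: Dict[str, Callable[..., Any]]) -> List[Dict[str, str]]:
    """Execute each tool call in an OpenAI-style assistant message and build 'tool' replies."""
    replies = []
    for call in message.get("tool_calls") or []:
        name = call["function"]["name"]
        # Arguments arrive as a JSON-encoded string, not a dict.
        args = json.loads(call["function"]["arguments"] or "{}")
        fn = registry.get(name)
        content = str(fn(**args)) if fn else f"error: unknown tool {name!r}"
        replies.append({"role": "tool", "tool_call_id": call["id"], "content": content})
    return replies


if __name__ == "__main__":
    # Hypothetical local tool exposed to the model.
    tools = {"count_lines": lambda text: len(text.splitlines())}
    assistant_msg = {
        "role": "assistant",
        "tool_calls": [{
            "id": "call_1",
            "type": "function",
            "function": {"name": "count_lines", "arguments": json.dumps({"text": "a\nb\nc"})},
        }],
    }
    print(run_tool_calls(assistant_msg, tools))
```

Returning an error string for unknown tools, rather than raising, lets the model see the failure and recover on its next turn.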

Product Usage Case

· Debugging Code in SSH: Imagine you're connected to a remote server via SSH and encounter a bug. Instead of copying the code and using a web-based AI tool, you can invoke SHAI directly in your terminal, paste the problematic code, and ask for debugging suggestions, all without leaving your SSH session. This saves time and effort.

· Automating Script Generation: Need to write a complex shell script involving multiple commands and conditional logic? You can describe the desired script functionality to SHAI in plain English, and it can generate the script for you, which you can then review and execute. This speeds up the process of creating custom automation tools.

· Rapid Prototyping with Self-Hosted Models: For developers concerned about data privacy or cost, SHAI's ability to connect to self-hosted LLMs is especially valuable. You can experiment with new AI features or build applications without sending sensitive code to external servers, while still getting powerful AI assistance.

· Integrating AI into CI/CD Pipelines: SHAI's command-line nature makes it ideal for integration into Continuous Integration/Continuous Deployment (CI/CD) pipelines. You could use it to automatically generate documentation for code changes, perform code reviews, or even flag potential issues before deployment.

· Developing Custom AI Tools: The modular design of SHAI encourages developers to build their own specialized AI tools. For example, a developer could create a custom agent focused on generating SQL queries from natural language, or another that specializes in writing unit tests for a specific framework, all powered by SHAI's core engine.

10

FarSight: Dynamic Screen Blur for Eye Strain Relief

Author

sparkhee93

Description

FarSight is a macOS application that utilizes your computer's camera to actively monitor your screen distance. When it detects you're too close for a sustained period, it intelligently blurs your entire screen, encouraging you to maintain a healthier viewing distance. This innovative approach goes beyond simple timers, offering a gentle yet effective nudge to combat eye strain, double vision, and even improve posture.

Popularity

Points 9

Comments 5

What is this product?

FarSight is a macOS app that leverages your built-in camera to measure how close you are to your screen. It's designed to help users avoid the common pitfalls of prolonged screen time, such as eye strain and poor posture. The core technology involves computer vision to analyze facial proximity. Instead of just a notification that you might ignore, FarSight implements a dynamic screen blur. When you get too close for a set duration, the entire screen gradually blurs, making it slightly inconvenient but still allowing you to see what you need to. This is enough of a gentle disruption to prompt you to move back to a healthier distance. So, what does this mean for you? It means a proactive tool to protect your eyes and well-being during long computer sessions, reducing discomfort and potential long-term vision issues.

How to use it?

Using FarSight is straightforward. After downloading and installing the macOS app, you simply grant it camera access when prompted. The app then runs in the background, continuously analyzing your distance from the screen via your webcam. You can configure the sensitivity and the duration before the blur activates to suit your personal preferences. When the app detects you're too close for the specified time, it initiates the screen blur. To dismiss the blur, you just need to move your face further away from the screen. This makes it ideal for anyone who spends significant time working, studying, or gaming on their Mac. Its integration is seamless, requiring no complex setup, and it works passively to support your health without interrupting your workflow unless necessary.

Product Core Function

· Camera-based distance monitoring: Uses the device's camera to analyze the user's proximity to the screen in real time. This provides a direct, responsive way to track viewing habits and helps users understand and correct potentially harmful screen habits.

· Dynamic screen blurring: Intelligently blurs the entire screen when the user is detected to be too close for a configurable duration. This is a novel approach to reminders, offering a gentle but effective disincentive to maintain a healthy distance, directly addressing eye strain and posture issues.

· Configurable sensitivity and duration: Allows users to adjust how sensitive the detection is and how long they need to be too close before the blur is triggered. This customization ensures the tool is helpful without being overly disruptive, tailoring the experience to individual needs and workflows.

· Background operation: Runs discreetly in the background, ensuring continuous monitoring without manual intervention. This means you can focus on your tasks while the app silently works to protect your eye health and posture throughout the day.
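FarSight's exact method is not published in the post. One simple approach estimates distance with the pinhole-camera relation: distance = focal length (in pixels) × real face width ÷ face width in pixels. The face width in pixels could come from a face detector, such as the one in Apple's Vision framework. A dwell timer then blurs the screen only after the user stays too close for a set time.

The Python sketch below assumes a 600 px focal length, a 14 cm average face width, a 50 cm threshold and a 5 s hold. All of these are illustrative values, not FarSight's settings.

```python
from typing import Optional


def estimate_distance_cm(face_width_px: float, focal_px: float = 600.0, face_width_cm: float = 14.0) -> float:
    """Pinhole-camera estimate: distance = focal length (px) * real width / apparent width (px)."""
    if face_width_px <= 0:
        raise ValueError("face width in pixels must be positive")
    return focal_px * face_width_cm / face_width_px


class ProximityMonitor:
    """Turns blur on after the user stays closer than threshold_cm for hold_s seconds."""

    def __init__(self, threshold_cm: float = 50.0, hold_s: float = 5.0) -> None:
        self.threshold_cm = threshold_cm
        self.hold_s = hold_s
        self._close_since: Optional[float] = None

    def update(self, distance_cm: float, now_s: float) -> bool:
        """Feed one measurement; returns True while the screen should be blurred."""
        if distance_cm >= self.threshold_cm:
            self._close_since = None   # moving back clears the blur immediately
            return False
        if self._close_since is None:
            self._close_since = now_s  # start timing the close-up stretch
        return now_s - self._close_since >= self.hold_s
```

Here, a face 140 px wide maps to 60 cm and one 240 px wide to 35 cm. The threshold and hold time correspond to the configurable sensitivity and duration described above. A real app would also calibrate the focal length per camera.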

Product Usage Case

· A software developer spending long hours coding on their MacBook notices increasing eye strain and headaches. With FarSight running, the gentle screen blur prompts them to move back, easing eye fatigue and improving focus. This addresses persistent eye strain with an active reminder mechanism.

· A student preparing for exams spends an entire day studying at their desk, resulting in poor posture and neck pain. FarSight's proximity detection helps them maintain a better distance from their laptop screen, indirectly encouraging better posture and reducing the physical strain associated with prolonged, close-up screen use. This addresses the issue of physical discomfort and long-term postural problems.

· A graphic designer working on intricate visual projects often gets engrossed and leans very close to the monitor, leading to occasional double vision. FarSight's adaptive blurring acts as a visual cue, briefly breaking their focus so they readjust their viewing distance. This may help reduce episodes of double vision and improve visual comfort during detailed work.

11

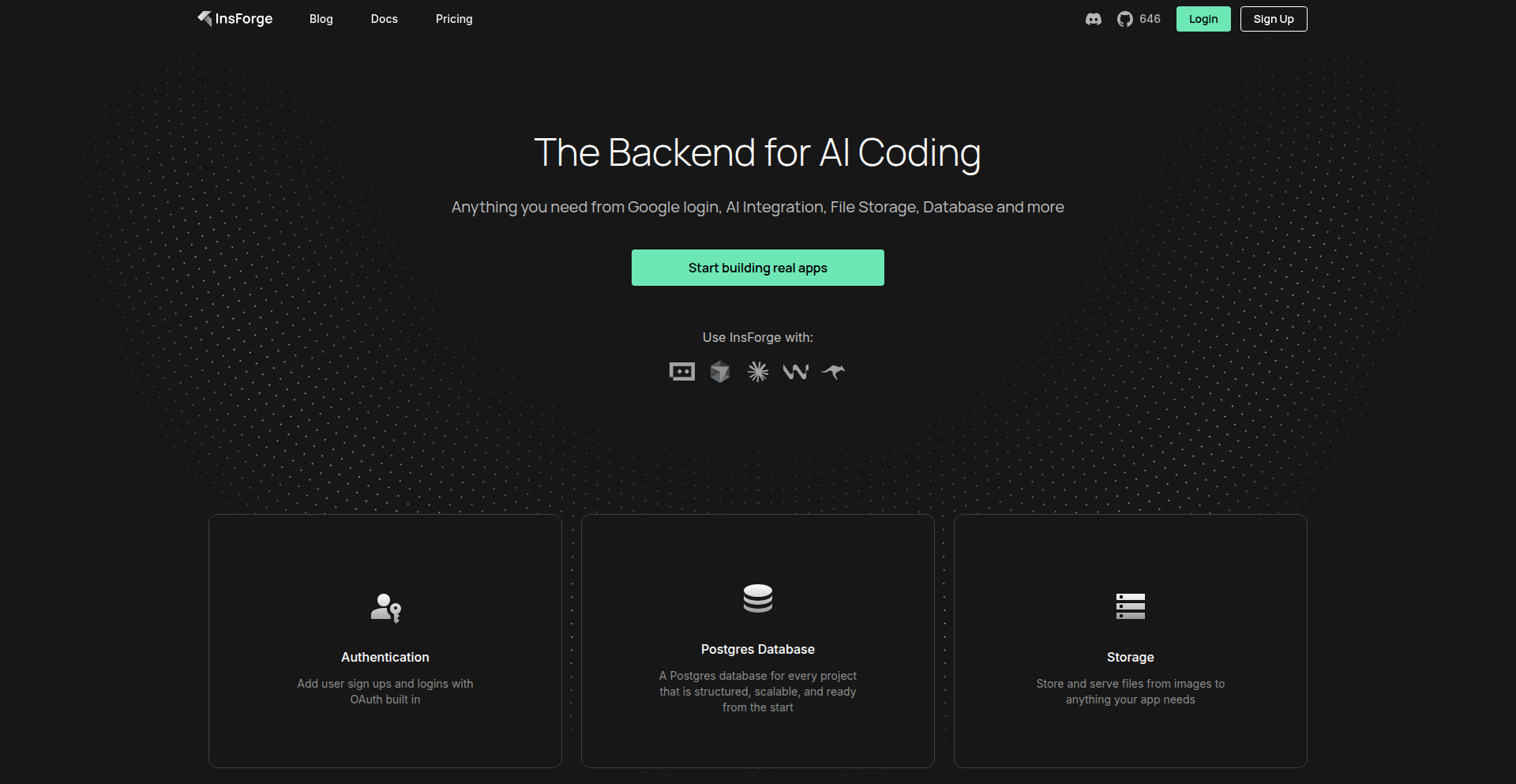

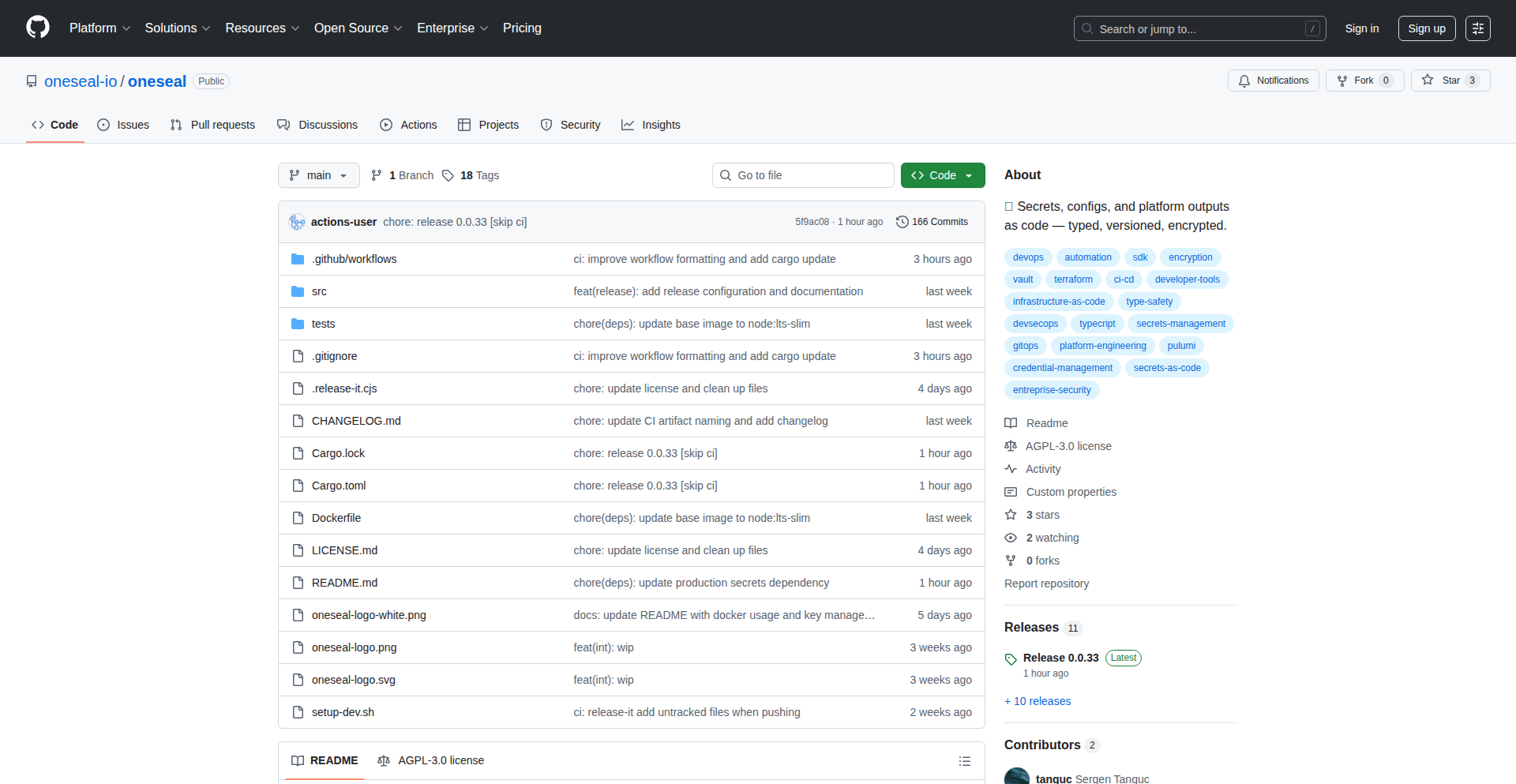

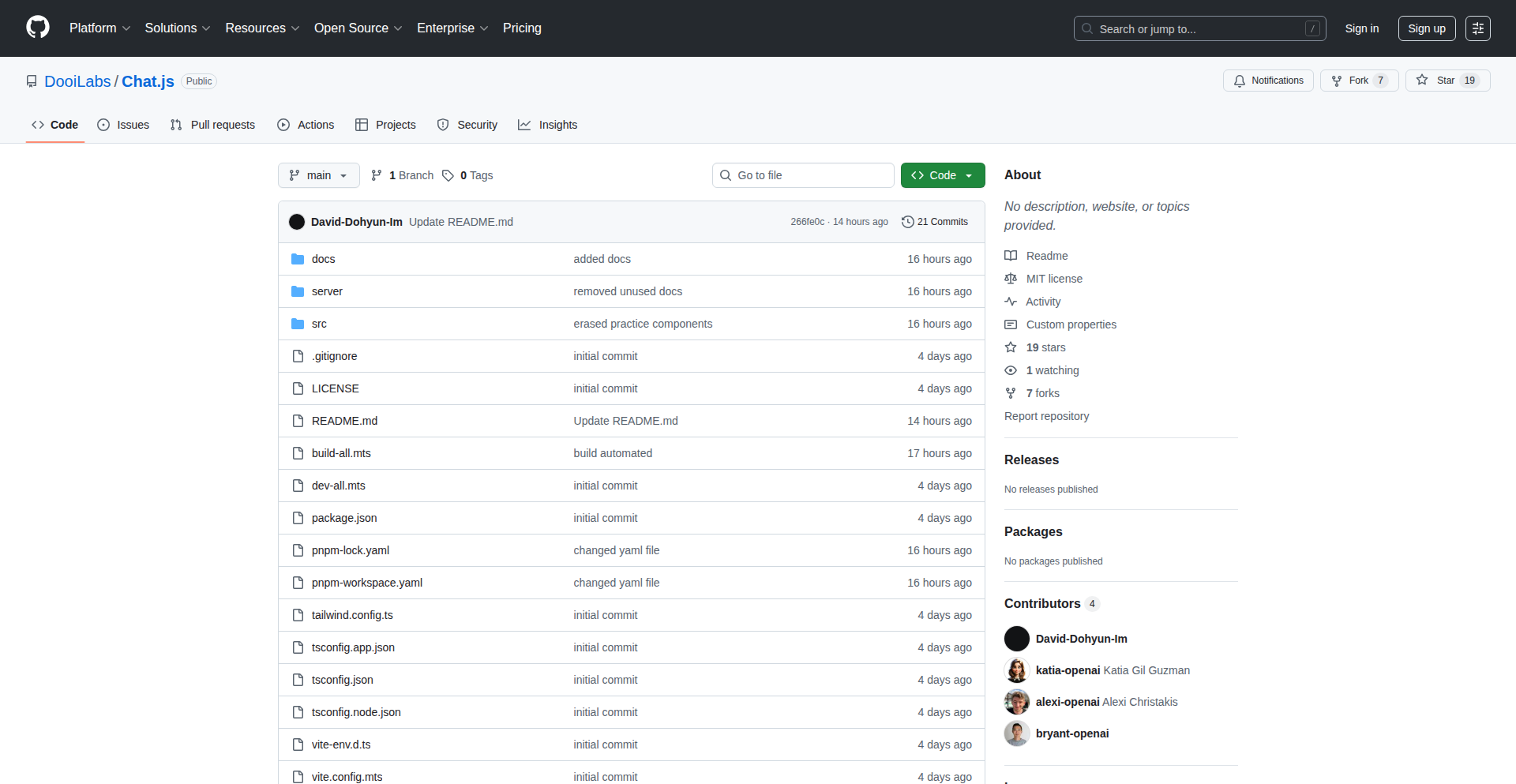

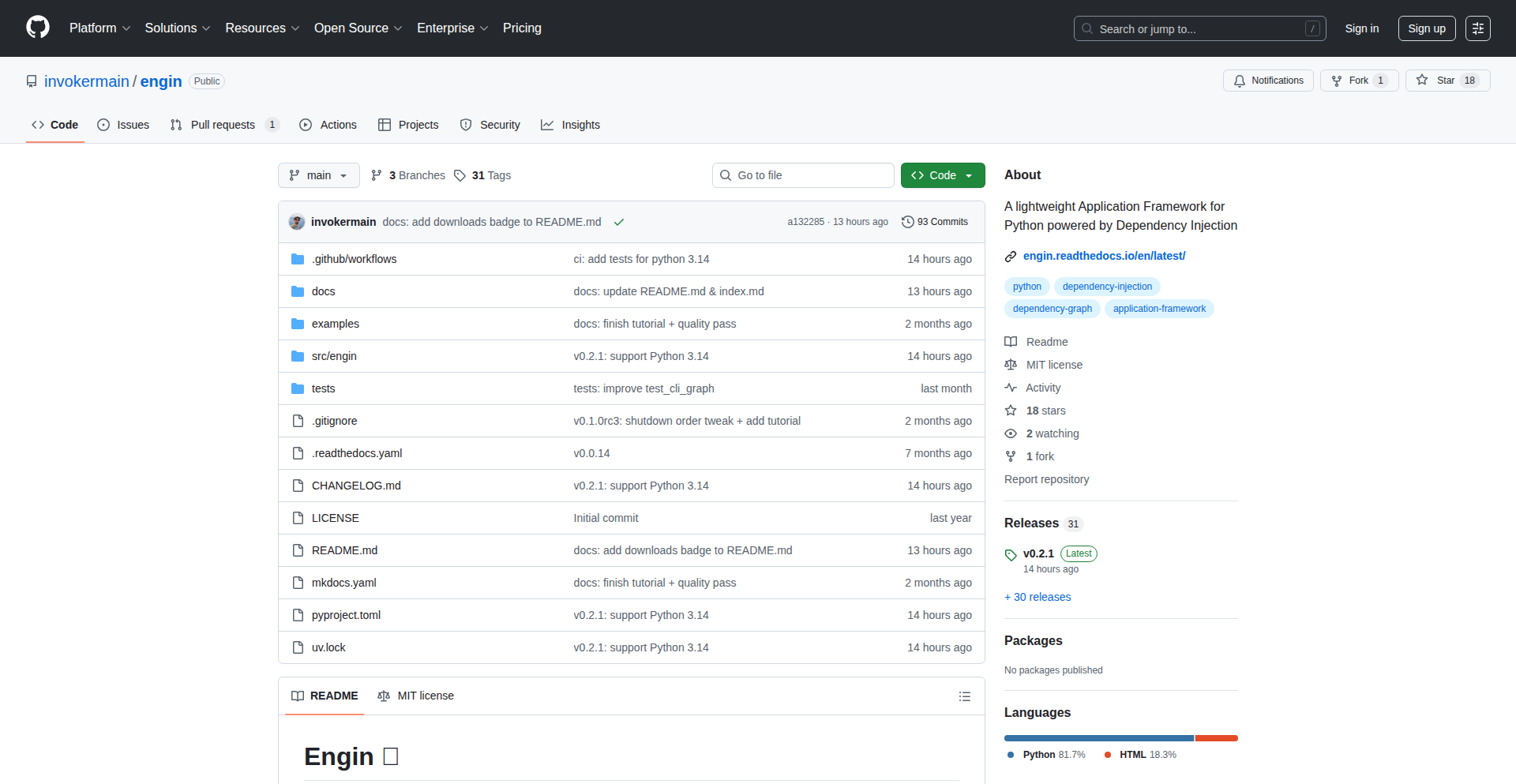

InsForge: AI Agent Backend Navigator

Author

ddmdd

Description

InsForge is a context-aware backend platform designed to empower AI coding agents. It solves the common problem where AI agents, when building applications, often guess at backend details instead of understanding the real system. This leads to broken logic, conflicting database changes, and failed deployments. InsForge provides AI agents with structured access to inspect and control backend components like schemas, functions, storage, and routes, enabling them to operate with accurate, real-time information. This significantly improves the reliability and efficiency of AI-assisted application development.

Popularity

Points 10

Comments 1

What is this product?

InsForge is an open-source backend platform that acts as a bridge between AI coding agents and your application's backend. Think of it as giving an AI agent a complete blueprint and a set of tools to interact with your server. The key innovation is 'introspection' and 'control'. Instead of the AI guessing what your database looks like or how your storage works, InsForge provides specific endpoints (like API calls) that allow the AI to 'look inside' and understand the exact structure of your schema, database rules, storage setup, and even your code functions. It then also gives the AI the ability to make controlled changes through these endpoints, just like a developer would use a command-line tool or a database editor. This ensures the AI's actions are always based on the current state of the backend, preventing errors. It's built with Postgres, authentication, storage, and edge functions, and connects to AI models via OpenRouter for seamless agent integration.

How to use it?

Developers can use InsForge in two main ways: self-host it for full control over their backend infrastructure, or use InsForge's cloud service for a quicker setup. To integrate an AI coding agent (like Cursor or Claude), you configure the agent to call InsForge's API endpoints. The AI can then ask about your backend's current state and act on that information:

· Questions, e.g. "What tables exist in the database?" or "What are the access rules for this storage bucket?"
· Actions, e.g. "Add a new column to the user table" or "Deploy this new edge function."

It's ideal for projects where AI agents are heavily involved in development, especially tasks involving complex backend interactions, database management, or deploying updates.

Product Core Function

· Introspection Endpoints: These are like asking the backend detailed questions. InsForge provides structured answers about your database schema, how different parts of your database are connected, your server-side code logic (functions), storage configurations, and even system logs. This is valuable because it gives AI agents the precise, up-to-date information they need to make correct coding decisions, preventing them from making mistakes based on outdated assumptions. For example, an AI can check if a database table already exists before trying to create it, saving deployment errors.

· Control Endpoints: These are like giving the AI secure commands to directly interact with the backend, similar to how a developer uses command-line tools. InsForge allows AI agents to perform operations that usually require manual intervention, such as managing database migrations, setting up user permissions, or deploying new code. This is valuable because it automates complex backend tasks, allowing AI agents to handle more sophisticated development workflows and reducing the manual effort required from human developers.

· Integrated Backend Platform: InsForge bundles essential backend services like Postgres (a powerful database), authentication (for user logins), storage (for files), and edge functions (for running code close to users). This is valuable because it provides a cohesive environment for AI agents to work within, simplifying the setup and management of the backend infrastructure needed for AI-driven development. Developers don't need to piece together multiple services; InsForge offers a ready-to-go solution.

· AI Model Endpoints: InsForge connects to AI models through OpenRouter, so it can use models from many providers. This ensures compatibility and flexibility. Developers can choose the models that best suit their needs, and InsForge integrates them into the development workflow, making AI-powered coding more efficient and adaptable.

· Self-Describing Interface: InsForge's backend metadata is presented in a structured, self-describing way. This means the information about the backend is clear and easy for AI agents to understand without needing separate documentation. This is valuable because it speeds up the AI's learning and integration process. The AI can quickly grasp the backend's capabilities and constraints, leading to faster and more accurate code generation and modification.
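InsForge's actual endpoint formats are not described in the post. The Python sketch below shows the "inspect before you change" pattern the introspection endpoints enable:

1. Read the schema from Postgres's standard `information_schema.columns` view.
2. Fold it into a table-to-columns map.
3. Emit an `ALTER TABLE` only if the column is missing.

Function names and the row format are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

# Standard Postgres catalog query that an introspection endpoint could be built on.
COLUMNS_SQL = """
SELECT table_name, column_name, data_type
FROM information_schema.columns
WHERE table_schema = 'public'
ORDER BY table_name, ordinal_position;
"""


def to_schema(rows: List[Tuple[str, str, str]]) -> Dict[str, Dict[str, str]]:
    """Fold (table, column, type) rows into {table: {column: type}}."""
    schema: Dict[str, Dict[str, str]] = {}
    for table, column, data_type in rows:
        schema.setdefault(table, {})[column] = data_type
    return schema


def quote_ident(name: str) -> str:
    """Quote a Postgres identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def plan_add_column(schema: Dict[str, Dict[str, str]], table: str, column: str, col_type: str) -> Optional[str]:
    """Return an ALTER TABLE statement, or None if the column already exists.

    col_type is inserted verbatim, so it should come from an allow-list, not free text.
    """
    if table not in schema:
        raise ValueError(f"table {table!r} does not exist")
    if column in schema[table]:
        return None  # already applied: nothing to do
    return f"ALTER TABLE {quote_ident(table)} ADD COLUMN {quote_ident(column)} {col_type};"
```

Checking the live schema first makes the agent's change idempotent: rerunning the same plan is a no-op instead of a failed migration. Postgres also offers `ADD COLUMN IF NOT EXISTS` for the same purpose at the SQL level.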

Product Usage Case

· AI-driven database migration: An AI agent uses InsForge's introspection endpoints to analyze the current database schema, identify potential conflicts with a planned migration script, and then uses InsForge's control endpoints to safely apply the migration. This prevents data loss and application downtime that could occur with manual migration errors.

· Automated API generation: An AI agent, aware of the existing backend structure and data models through InsForge, generates new API endpoints for specific data operations. Because InsForge exposes the relationships between tables and the data constraints, the AI can produce well-designed, functional APIs with less manual oversight.

· Contextual code fixes: When an AI agent is tasked with fixing a bug, it uses InsForge to understand the exact context of the error, including relevant database states, user permissions, and edge function behavior. This allows the AI to provide more accurate and targeted code solutions, rather than generic fixes that might not address the root cause.

· Real-time backend configuration updates: An AI agent, after receiving instructions to modify storage access policies, uses InsForge's control endpoints to directly update these policies in real-time. This allows for rapid iteration and adaptation of backend security and functionality without manual intervention from a developer.

12

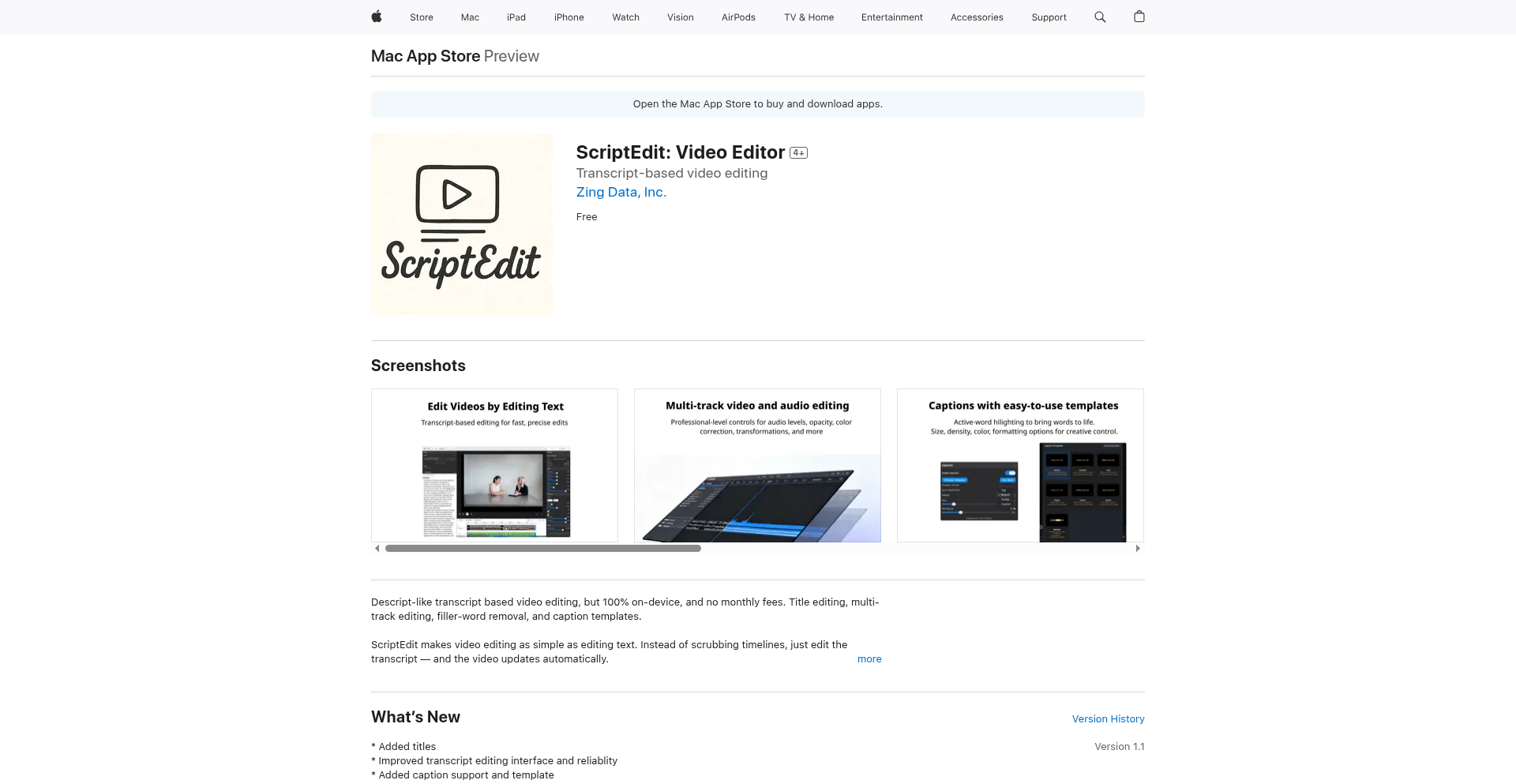

ScriptEdit: Transcript-Driven Video Weaver

Author

zhendlin

Description

ScriptEdit is a free, native Mac application that revolutionizes video editing by allowing users to edit videos directly through their transcripts. It leverages advanced AI, specifically OpenAI's Whisper, to generate highly accurate transcripts, which then drive the video editing process. This innovative approach eliminates the need for complex timelines and manual cuts, enabling users to delete words to remove sections, rearrange sentences to reorder clips, and even automatically remove filler words and silences, all while ensuring the video edits precisely match the textual changes. The entire process runs locally on the user's machine, prioritizing privacy, speed, and cost-effectiveness.

Popularity

Points 10

Comments 1

What is this product?

ScriptEdit is a breakthrough Mac video editor that transforms how you create and modify video content. Instead of manually manipulating video clips on a timeline, you edit the automatically generated text transcript. Think of it like editing a document: delete a sentence, and the corresponding video segment is removed. Rearrange paragraphs, and the video clips shift to match. The core innovation lies in its seamless integration of speech-to-text technology (Whisper) with video editing. This means that the software understands the spoken words and can precisely cut, trim, and reorder video segments based on your text edits. This approach offers a significantly faster and more intuitive editing experience, especially for spoken-word content like interviews, podcasts, or presentations. It's built from the ground up to run locally, meaning your video files never leave your computer, and you avoid the subscription fees and upload delays common with cloud-based services.

How to use it?

Developers and content creators can use ScriptEdit by simply importing their video files into the application. ScriptEdit will then automatically process the video to generate a text transcript using the Whisper AI model. Once the transcript is available, users can begin editing directly within the text interface. For instance, if you want to remove a section of your video, you simply delete the corresponding text. To remove all instances of 'um' or 'uh', you can use a dedicated function to automatically delete filler words. Similarly, you can remove long pauses or silences with another function. The application provides visual editing tools as well, allowing for traditional drag-and-drop and cutting if preferred. For integration, ScriptEdit can be seen as a powerful tool for streamlining the initial editing phase of video production, particularly for content that relies heavily on spoken dialogue. It's ideal for YouTubers, podcasters, educators, and anyone who needs to quickly produce polished video content without extensive technical video editing skills or ongoing costs.

Product Core Function

· Transcript Generation using Whisper AI: This function utilizes advanced AI to accurately convert spoken words in your video into editable text. This is valuable because it unlocks a new paradigm for video editing, allowing text-based manipulation of visual content, saving significant time compared to traditional manual editing.

· Text-Driven Video Editing: This core function enables users to edit the video by simply editing the transcript. Deleting text removes video segments, and rearranging text reorders clips. This is valuable for its speed and intuitive nature, making video editing as simple as editing a document, and is perfect for quickly refining spoken-word content.

· Automatic Filler Word and Gap Removal: ScriptEdit can automatically identify and remove filler words (like 'um', 'uh') and silences within the transcript, which are then mirrored in the video. This is valuable for producing highly professional and concise videos with minimal manual effort, making content more engaging for viewers.

· Local Processing and Privacy: All video transcription and editing happens on your Mac without uploading files to the cloud. This is valuable for users concerned about data privacy, those with limited internet bandwidth, or those who work with very large video files and want to avoid lengthy upload and download times.

· Native Mac Performance Optimization: The application is built as a native Mac app and optimized with Metal for faster transcription and rendering. This is valuable as it ensures a smooth and responsive editing experience, leveraging your Mac's hardware for efficient processing, especially for computationally intensive tasks like AI transcription.
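ScriptEdit is a native Mac app and its code is not shown in the post. The Python sketch below only illustrates the core idea of transcript-driven editing: turning word-level timestamps plus text edits into the list of video time ranges to keep. For reference, the open-source `openai-whisper` package can emit word timings with `word_timestamps=True`.

The merge rules are assumptions made for this sketch:

· Deleted words and fillers are cut.
· Pauses longer than `max_gap_s` are cut.
· Surviving neighbouring words are merged into one range.

```python
from typing import AbstractSet, List, Tuple

Word = Tuple[str, float, float]  # (text, start_s, end_s), e.g. from word-level timestamps

DEFAULT_FILLERS = frozenset({"um", "uh", "erm"})  # illustrative filler list


def kept_segments(
    words: List[Word],
    deleted: AbstractSet[int] = frozenset(),
    fillers: AbstractSet[str] = DEFAULT_FILLERS,
    max_gap_s: float = 0.5,
) -> List[Tuple[float, float]]:
    """Map a text edit back to video time ranges to keep.

    Words whose index is in `deleted`, or whose text is a filler, are cut.
    Consecutive surviving words are merged into one range unless a cut word
    sat between them or the silence between them exceeds max_gap_s.
    """
    segments: List[Tuple[float, float]] = []
    prev_idx = None
    for i, (text, start, end) in enumerate(words):
        if i in deleted or text.lower().strip(".,!?") in fillers:
            continue
        contiguous = prev_idx == i - 1
        if segments and contiguous and start - segments[-1][1] <= max_gap_s:
            segments[-1] = (segments[-1][0], end)  # extend the current range
        else:
            segments.append((start, end))          # start a new range
        prev_idx = i
    return segments
```

For the words "So", "um,", "today", "we", "ship", where "ship" follows a 1.6 s pause, the filler and the long pause are both cut. Rearranging sentences then amounts to reordering the returned ranges before rendering.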

Product Usage Case

· A YouTuber preparing a daily vlog needs to quickly cut out awkward pauses and remove rambling sections. By importing the vlog into ScriptEdit, they can delete the filler words and silences from the transcript, and the video automatically updates, saving hours of manual timeline editing. This allows them to publish content much faster.

· A podcaster who records interviews wants to create shorter, shareable video clips for social media. They can easily rearrange sentences in the transcript to highlight key moments or delete irrelevant tangents, and ScriptEdit instantly updates the video, making it simple to create engaging content from longer recordings without complex video editing software.

· An educator delivering online lectures needs to remove mistakes and rephrase sentences for clarity. ScriptEdit allows them to edit the transcript directly, and the corresponding video segments are automatically adjusted. This ensures a polished and professional lecture without requiring extensive video editing expertise.

· A freelance video editor whose clients have limited bandwidth or prefer local processing can keep all sensitive footage on their own machine. The editing process is also faster and more cost-effective than cloud-based alternatives, which gives the editor a competitive advantage.

· A content creator who frequently works with large video files (e.g., hour-long documentaries) can use ScriptEdit to avoid the prohibitive upload times and storage costs associated with cloud services. The local processing ensures a seamless workflow even with massive media assets.

13

SoraWatermarker

Author

spider853

Description

A fun and experimental tool that allows users to easily add a 'Sora' watermark to any video. This project showcases a creative application of video processing techniques, enabling a playful engagement with AI-generated content trends and demonstrating how readily available code can be used for lighthearted digital manipulation.

Popularity

Points 6

Comments 4

What is this product?

SoraWatermarker is a project designed to take any video file and programmatically overlay a watermark that mimics the style of OpenAI's Sora. The core technical idea involves using video processing libraries to manipulate video frames. It doesn't involve actual AI generation but rather a visual effect to create a humorous or engaging outcome. This is valuable because it demystifies a trending concept and provides a simple way for anyone to participate in the conversation around AI video, even without deep technical knowledge. It shows how code can be a tool for creative expression and social commentary.

How to use it?

Developers can integrate SoraWatermarker into their workflows for various purposes. It can be used as a command-line tool to batch process videos, or its underlying logic can be adapted into a web application for broader accessibility. For example, a content creator might use it to add a temporary 'Sora' tag to their existing videos for a social media campaign, generating buzz around AI. Another use case is for educational purposes, demonstrating basic video frame manipulation. The integration is straightforward, typically involving importing the relevant video processing libraries and calling the watermarking function with the input video and desired watermark parameters.

Product Core Function

· Video frame manipulation: The core of the tool involves reading video files frame by frame, applying the watermark effect to each frame, and then reassembling them into a new video. This demonstrates fundamental video processing techniques, useful for anyone looking to build more complex video editing or analysis tools.

· Watermark overlay: Programmatically adding a visual element (the watermark) onto the video frames. This highlights the principles of image compositing and alpha blending, essential skills in graphics and multimedia development.

· Cross-platform compatibility: The project does not state which platforms it supports. However, the video processing libraries that tools like this typically rely on run on multiple operating systems, so the approach should be accessible to a wide range of developers and users.

· Simplicity and accessibility: The project prioritizes ease of use, allowing individuals with minimal technical background to achieve a specific visual effect. This embodies the hacker ethos of making complex tasks simple and accessible through code.
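The project does not say which libraries it uses. Decoding and re-encoding frames would normally be handled by something like FFmpeg (which also has a built-in `overlay` filter) or OpenCV. The Python sketch below shows only the per-frame compositing step described above: source-over alpha blending of an RGBA watermark onto an RGB frame.

Frames are plain nested lists here for clarity; real code would use vectorized arrays. The function names are hypothetical.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
RGBA = Tuple[int, int, int, int]


def blend_pixel(bg: RGB, fg: RGBA) -> RGB:
    """Source-over alpha blend with 8-bit alpha, rounded to the nearest integer."""
    a = fg[3]
    return tuple((f * a + b * (255 - a) + 127) // 255 for f, b in zip(fg[:3], bg))


def overlay(frame: List[List[RGB]], mark: List[List[RGBA]], x: int, y: int) -> List[List[RGB]]:
    """Return a copy of `frame` with `mark` composited at column x, row y (clipped to the frame)."""
    out = [row[:] for row in frame]
    for dy, mark_row in enumerate(mark):
        fy = y + dy
        if not 0 <= fy < len(out):
            continue  # watermark row falls outside the frame
        for dx, px in enumerate(mark_row):
            fx = x + dx
            if 0 <= fx < len(out[fy]):
                out[fy][fx] = blend_pixel(out[fy][fx], px)
    return out
```

A half-transparent white pixel (alpha 128) over mid-grey (100) yields 178. Applying `overlay` to every decoded frame and re-encoding the results produces the watermarked video.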

Product Usage Case

· A social media influencer wants to playfully hint at their content being 'AI-generated' to spark curiosity. They can use SoraWatermarker to quickly add the Sora watermark to their existing personal videos, generating engagement and discussion around AI trends without actually using AI tools.

· A developer experimenting with video manipulation libraries wants to demonstrate a basic watermarking technique. SoraWatermarker serves as a clear, concise example of how to access and modify video frames, providing a practical starting point for learning more advanced video editing functionalities.

· A group of friends wants to create humorous memes or short clips referencing the latest AI video developments. SoraWatermarker allows them to easily add a 'Sora' watermark to their own funny videos, creating shareable content that taps into current internet culture.

14

Plural AI-DevOps

Author

sweaver

Description

Plural is an AI-powered platform for DevOps workflows. It integrates artificial intelligence directly into GitOps processes to reduce the complexity and risk of tasks such as Kubernetes upgrades, YAML configuration management, and production incident response. Its core idea is to let you work with your infrastructure through natural language, with the resulting changes carried out as automated GitOps actions, making DevOps operations more efficient and safer.

Popularity

Points 9

Comments 0

What is this product?

Plural AI-DevOps is a DevOps platform that uses AI to automate and streamline complex operations. Instead of manually sifting through configuration files and debugging intricate issues, you let Plural build a model of your infrastructure stack: it vectorizes and indexes your DevOps data, creating a semantic representation that supports natural language queries and agentic workflows. Think of it as an AI assistant that understands your Kubernetes clusters, Terraform configurations, and application dependencies, and can diagnose problems, suggest fixes, and propose changes through pull requests. The benefit is that tedious, error-prone manual tasks become automated, reviewable processes, freeing your team to focus on new work rather than firefighting.

How to use it?

Developers and platform engineers can interact with Plural AI-DevOps through natural language commands or by integrating it into their existing GitOps pipelines. For example, you can ask Plural to 'upgrade my production Kubernetes cluster to the latest stable version,' and it will orchestrate the upgrade with built-in guardrails to minimize disruption. You can also query your infrastructure with questions like 'what services are running on my staging environment?' The platform's AI agents are tuned for Terraform and Kubernetes, so you can request infrastructure changes by stating your intent, such as 'double the size of my production database.' Plural then generates the necessary code changes and presents them as a pull request for your review. Because every change flows through version control, this lowers the barrier to complex infrastructure management and speeds up deployment while preserving control and auditability.
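Plural's agents and APIs are not described in detail, so the sketch below only illustrates the end product of an intent such as 'double the size of my production database': a reviewable diff. The helper names, the Terraform snippet, and the choice of the `allocated_storage` attribute are hypothetical. A simple regular expression stands in for the LLM-driven edit, and Python's standard `difflib` renders the change the way a pull request would show it.

```python
import difflib
import re


def scale_attribute(hcl: str, attribute: str, factor: float) -> str:
    """Multiply the integer value of every `attribute = N` line in an HCL snippet by `factor`."""
    pattern = re.compile(
        rf"^([ \t]*{re.escape(attribute)}[ \t]*=[ \t]*)(\d+)\b", re.MULTILINE
    )
    # Keep the original indentation and spacing (group 1); replace only the number.
    return pattern.sub(lambda m: f"{m.group(1)}{round(int(m.group(2)) * factor)}", hcl)


def proposed_diff(before: str, after: str, path: str) -> str:
    """Render the edit as a unified diff, the form a reviewer sees in a pull request."""
    return "".join(
        difflib.unified_diff(
            before.splitlines(keepends=True),
            after.splitlines(keepends=True),
            fromfile=f"a/{path}",
            tofile=f"b/{path}",
        )
    )


if __name__ == "__main__":
    before = (
        'resource "aws_db_instance" "prod" {\n'
        '  instance_class    = "db.t3.medium"\n'
        "  allocated_storage = 100\n"
        "}\n"
    )
    after = scale_attribute(before, "allocated_storage", 2)  # "double the size"
    print(proposed_diff(before, after, "infra/db.tf"), end="")
```

Emitting a diff rather than applying the change directly is what keeps a human in the loop: nothing reaches the cluster until the pull request is reviewed and merged.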

Product Core Function

· Autonomous Upgrade Assistant: Orchestrates complex Kubernetes upgrades with automated guardrails to prevent breaking changes, making system updates significantly less risky and time-consuming. This is useful for ensuring your systems are up-to-date without causing unexpected downtime.

· AI-Powered Troubleshooting and Fix: Utilizes a resource graph and advanced RAG (Retrieval Augmented Generation) techniques to analyze DevOps data, identify root causes of incidents, and generate actionable fixes in the form of pull requests. This is useful for rapidly resolving production issues and reducing mean time to recovery.

· Natural Language Infrastructure Querying: Vectorizes and indexes your DevOps information, enabling you to search and discover details about your infrastructure using plain English. This is useful for gaining quick insights into your environment and for ad-hoc investigations without needing to know specific query languages.

· DevOps Agents for Terraform and Kubernetes: Provides AI agents that can perform infrastructure modifications based on natural language requests, managed through GitOps. This is useful for automating routine infrastructure changes and empowering less technical team members to manage infrastructure safely.
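Natural language querying rests on retrieval: text describing the infrastructure is turned into vectors, and a query is answered by finding the closest ones. The toy index below is not Plural's implementation. It uses word-count vectors and cosine similarity in place of learned embeddings, and the class name and sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Lowercased word counts, standing in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9_-]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InfraIndex:
    """In-memory index of infrastructure descriptions keyed by a resource id."""

    def __init__(self) -> None:
        self.docs: list[tuple[str, Counter]] = []

    def add(self, doc_id: str, text: str) -> None:
        self.docs.append((doc_id, vectorize(text)))

    def search(self, query: str, k: int = 3) -> list[tuple[str, float]]:
        """Return up to k (doc_id, score) pairs with a non-zero score, best first."""
        q = vectorize(query)
        scored = [(doc_id, cosine(q, vec)) for doc_id, vec in self.docs]
        scored.sort(key=lambda item: item[1], reverse=True)
        return [item for item in scored[:k] if item[1] > 0]
```

Swapping `vectorize` for an embedding model changes the quality of the matches, for example treating "connect" and "connects" as related, but not the structure of the index or the search loop.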

Product Usage Case

· Scenario: A DevOps team needs to upgrade their Kubernetes cluster to a new major version. Problem: Manual upgrades are complex, risky, and often lead to application downtime. Solution: Plural's Autonomous Upgrade Assistant automates the process, performing checks and balances to ensure a smooth transition, significantly reducing the chance of failure and operational burden. This is useful because it allows for timely system updates without the fear of catastrophic failure.

· Scenario: A production incident occurs, and the team needs to quickly identify the cause and implement a fix. Problem: Diagnosing issues across distributed systems and complex configurations can be time-consuming. Solution: Plural's AI-powered troubleshooting analyzes system logs, metrics, and configuration data to pinpoint the root cause and generates a targeted fix via a pull request, accelerating the resolution process. This is useful for minimizing service disruption and restoring functionality faster.

· Scenario: A developer needs to understand the current state of their cloud infrastructure, including deployed services and their dependencies. Problem: Manually querying various monitoring tools and configuration files is inefficient. Solution: Using Plural's natural language querying, the developer can ask questions like 'show me all services connected to the main database,' receiving immediate, understandable insights. This is useful for gaining clarity on complex environments without deep system knowledge.

· Scenario: A team needs to scale up their database resources in response to increased load. Problem: Manually updating Terraform or Kubernetes manifests and ensuring all related configurations are adjusted is tedious and error-prone. Solution: The user can instruct Plural, 'increase the database capacity by 50%,' and the AI will generate the necessary code changes for review and deployment through GitOps. This is useful for efficient and safe infrastructure scaling.

15

Tasklet AI Agents

Author

mayop100

Description

Tasklet is an AI-powered agent system designed to automate business processes. It leverages large language models (LLMs) to understand natural language instructions and orchestrate complex workflows, effectively acting as an autonomous digital assistant for your business tasks.

Popularity

Points 5

Comments 3

What is this product?

Tasklet is a platform for creating and deploying AI agents that automate business tasks. At its core, it uses Large Language Models (LLMs) to interpret instructions written in plain English. Its agents break complex requests down into smaller, actionable steps, interact with software tools (APIs, databases, or other applications) through predefined or dynamically generated actions, and learn from feedback to improve over time. In practice, this means repetitive, time-consuming business operations can be handed to AI, freeing people for more strategic work.

How to use it?

Developers integrate Tasklet into existing workflows by defining the business processes to automate and the AI agents that will execute them. This typically involves scripting agent behaviors, connecting agents to relevant data sources and APIs, and setting up triggers that activate them. Tasklet provides an SDK or a user-friendly interface for defining agent roles, permissions, and interaction protocols. For example, an agent could analyze customer feedback from an email inbox, summarize the key points, and write a report to a spreadsheet. In short, you describe what you want done, and Tasklet helps you build an agent that does it with the tools you already use.

Product Core Function

· Natural Language Understanding: Processes user requests in plain English, allowing for intuitive task definition and management. This is valuable because it lowers the barrier to entry for automation, making it accessible even to non-technical users who can simply describe what they need. This solves the problem of complex scripting for simple automation.

· Workflow Orchestration: Breaks down complex tasks into a series of smaller, manageable steps and executes them in the correct sequence. This is crucial for automating multi-stage business processes, ensuring efficiency and accuracy. The value here is in tackling intricate workflows that would otherwise require significant manual effort or custom-coded solutions.

· Tool Integration (API/SDK): Connects with various external tools and services via APIs or provided SDKs to fetch data or perform actions. This is highly valuable as it allows the AI agents to interact with the existing technology stack of a business, extending automation beyond simple data manipulation. It solves the problem of siloed information and disconnected systems.

· Agent Learning and Adaptation: Agents can learn from experience and feedback to improve their decision-making and task execution over time. This continuous improvement aspect means that the automation becomes more robust and effective with use. The value is in building systems that get smarter and more efficient, reducing the need for constant manual adjustments.
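Workflow orchestration, as described above, amounts to running steps in dependency order and passing each step's result forward. The sketch below assumes that model; it is not Tasklet's SDK. It uses Python's standard `graphlib` to order the steps, and the three step functions are hypothetical stand-ins for the LLM and API calls in the customer-feedback example.

```python
from collections.abc import Callable
from graphlib import TopologicalSorter

Step = Callable[[dict[str, object]], object]


def run_workflow(steps: dict[str, Step], depends_on: dict[str, set[str]]) -> dict[str, object]:
    """Run every step after its dependencies; each step reads earlier results from `context`."""
    graph = {name: depends_on.get(name, set()) for name in steps}
    context: dict[str, object] = {}
    for name in TopologicalSorter(graph).static_order():
        context[name] = steps[name](context)
    return context


def fetch_feedback(context):
    # Hypothetical stand-in for reading an email inbox through an API
    return ["Checkout is slow", "Love the new UI", "Checkout failed twice"]


def summarize(context):
    # Hypothetical stand-in for an LLM summary: count messages mentioning checkout
    messages = context["fetch_feedback"]
    return {"total": len(messages), "checkout": sum("checkout" in m.lower() for m in messages)}


def write_report(context):
    # Hypothetical stand-in for writing a spreadsheet row
    s = context["summarize"]
    return f"{s['total']} messages, {s['checkout']} about checkout"


if __name__ == "__main__":
    results = run_workflow(
        {"write_report": write_report, "summarize": summarize, "fetch_feedback": fetch_feedback},
        {"summarize": {"fetch_feedback"}, "write_report": {"summarize"}},
    )
    print(results["write_report"])  # prints "3 messages, 2 about checkout"
```

Declaring dependencies instead of a fixed sequence lets the orchestrator reject cycles (`graphlib` raises `CycleError`) and leaves room to run independent steps in parallel.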

Product Usage Case

· Automating customer support ticket triage: An AI agent could read incoming support tickets, categorize them based on urgency and issue type, and assign them to the appropriate human agent. This significantly speeds up response times and ensures that critical issues are addressed promptly. It solves the problem of overwhelming ticket volumes and inconsistent manual assignment.

· Generating sales reports from CRM data: An agent can be programmed to pull data from a CRM system (like Salesforce), analyze sales figures for a specific period, and generate a summary report in a desired format (e.g., a PDF or spreadsheet). This saves sales teams considerable time on manual data aggregation and reporting. It addresses the pain point of time spent on repetitive data analysis and report creation.

· Onboarding new employees: An agent can automate parts of the HR onboarding process by sending out welcome emails, distributing necessary forms, scheduling introductory meetings, and providing access to training materials based on the new employee's role. This streamlines the onboarding experience for both the new hire and the HR department. It solves the inefficiency and potential for human error in manual onboarding tasks.
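For the ticket triage case, the routing logic can be sketched as below. The keyword rules are a hypothetical and deliberately simple stand-in for the LLM classification an agent would perform. What matters is the shape of the output: a category and priority per ticket, then per-category queues with urgent tickets first.

```python
from dataclasses import dataclass


@dataclass
class Ticket:
    id: int
    text: str


# Hypothetical routing rules; an agent would ask an LLM to classify instead.
CATEGORIES = {
    "billing": ("invoice", "refund", "charge"),
    "outage": ("down", "outage", "error 500"),
    "account": ("password", "login", "locked"),
}
URGENT_WORDS = ("down", "outage", "urgent", "cannot")


def triage(ticket: Ticket) -> tuple[str, str]:
    """Return (category, priority); the first matching category wins."""
    text = ticket.text.lower()
    category = next(
        (name for name, words in CATEGORIES.items() if any(w in text for w in words)),
        "general",
    )
    priority = "high" if any(w in text for w in URGENT_WORDS) else "normal"
    return category, priority


def route(tickets: list[Ticket]) -> dict[str, list[int]]:
    """Group ticket ids into per-category queues, urgent tickets first."""
    queues: dict[str, list[int]] = {}
    # sorted() is stable: high-priority tickets move ahead, others keep arrival order
    for ticket in sorted(tickets, key=lambda t: triage(t)[1] != "high"):
        category, _ = triage(ticket)
        queues.setdefault(category, []).append(ticket.id)
    return queues
```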

16

Video-to-LaTeX-Summarizer

Author

lorenzolibardi

Description

Summeze automatically transforms video content into editable LaTeX summaries. It uses speech recognition to transcribe spoken words and natural language processing to identify key concepts, then structures them into a well-formatted LaTeX document. This removes the tedious manual work of taking notes on and summarizing video lectures, presentations, or documentaries, saving significant time for students, researchers, and professionals.

Popularity

Points 8

Comments 0

What is this product?

Summeze is a sophisticated tool that uses AI to listen to videos, understand the spoken content, and then generate a structured summary in LaTeX format. The innovation lies in its ability to go beyond simple transcription; it aims to extract the core ideas and present them coherently. It employs state-of-the-art Automatic Speech Recognition (ASR) to convert audio to text and Natural Language Processing (NLP) techniques like topic modeling and summarization algorithms to distill essential information. This means you get not just raw text, but a processed, organized output that can be further refined as a formal document.
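Summeze does not document which summarization algorithm it uses. As one illustration of the "distill essential information" step, the sketch below applies a classic frequency-based extractive method to a transcript, assuming the ASR step has already produced plain text. Sentences are scored by the average transcript-wide frequency of their non-stopword terms, and the top-scoring ones are kept in their original order. The stopword list is a small hypothetical sample.

```python
import re
from collections import Counter

STOPWORDS = {
    "the", "a", "an", "and", "or", "of", "to", "in", "is", "it", "that",
    "this", "we", "for", "on", "are", "as", "with", "so",
}


def split_sentences(text: str) -> list[str]:
    """Split after ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def content_words(text: str) -> list[str]:
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]


def summarize(transcript: str, max_sentences: int = 2) -> list[str]:
    """Return the highest-scoring sentences, in the order they were spoken."""
    sentences = split_sentences(transcript)
    freq = Counter(content_words(transcript))

    def score(sentence: str) -> float:
        tokens = content_words(sentence)
        return sum(freq[w] for w in tokens) / (len(tokens) or 1)

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:max_sentences])  # restore original order for readability
    return [sentences[i] for i in keep]
```

Averaging rather than summing the scores keeps long sentences from winning just by being long; topic modeling or an LLM would replace `score` in a more capable system.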

How to use it?

Developers can integrate Summeze into their workflows by providing it with a video file or a link to a video. The tool then processes the video, generates the LaTeX summary, and makes it available for download. For instance, a student could upload lecture recordings to get immediate, structured notes for studying. Researchers could process conference talks to quickly capture key findings. The output LaTeX can be further edited in any LaTeX editor, allowing for seamless incorporation into academic papers, presentations, or reports. This saves hours of manual transcription and summarization, allowing you to focus on the content itself.
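The final step, producing LaTeX that compiles and stays editable, depends on escaping the characters LaTeX treats specially. The sketch below assumes the key points have already been grouped under headings. The function names and document layout are hypothetical, not Summeze's actual output format.

```python
LATEX_SPECIALS = {
    "\\": r"\textbackslash{}",
    "&": r"\&", "%": r"\%", "$": r"\$", "#": r"\#",
    "_": r"\_", "{": r"\{", "}": r"\}",
    "~": r"\textasciitilde{}", "^": r"\textasciicircum{}",
}


def escape_latex(text: str) -> str:
    """Escape LaTeX special characters one at a time so replacements are never re-escaped."""
    return "".join(LATEX_SPECIALS.get(ch, ch) for ch in text)


def to_latex(title: str, sections: dict[str, list[str]]) -> str:
    """Render key points, grouped by heading, as a compilable LaTeX article."""
    out = [
        r"\documentclass{article}",
        r"\begin{document}",
        rf"\begin{{center}}\Large {escape_latex(title)}\end{{center}}",
    ]
    for heading, points in sections.items():
        out.append(rf"\section{{{escape_latex(heading)}}}")
        out.append(r"\begin{itemize}")
        out.extend(rf"  \item {escape_latex(p)}" for p in points)
        out.append(r"\end{itemize}")
    out.append(r"\end{document}")
    return "\n".join(out) + "\n"
```

Escaping character by character avoids the classic bug of chained `str.replace` calls re-escaping the backslashes they have just inserted.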

Product Core Function

· Video to Text Transcription: Utilizes advanced ASR models to accurately convert spoken audio from videos into text. This is valuable because it automates the labor-intensive task of transcribing, providing a foundational text for summarization and analysis, so you don't have to rewatch videos to get the words.

· Key Concept Extraction: Employs NLP algorithms to identify and extract the most important topics and themes discussed in the video. This provides the core intellectual value, helping users quickly grasp the main points without sifting through lengthy transcripts, so you get the essence of the content.