Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-10-08

SagaSu777 2025-10-09

Explore the hottest developer projects on Show HN for 2025-10-08. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The surge of projects focused on Large Language Models (LLMs) continues, with a strong emphasis on enhancing their capabilities beyond basic interaction. The 'Recall' project, for instance, addresses the critical need for persistent memory in LLMs by leveraging Redis and semantic search. This opens up possibilities for more sophisticated AI assistants that can recall past interactions, maintain project context, and build complex knowledge bases. For developers, this means exploring techniques in vector embeddings, efficient data storage, and context management to build truly intelligent applications.

On the developer tooling front, projects like 'FleetCode' and 'HyprMCP' highlight the growing need for streamlined workflows and better management of AI coding agents and MCP servers. This signifies a trend towards making AI more accessible and manageable for development teams, reducing friction and increasing productivity.

The increasing prevalence of local-first and privacy-focused applications, such as the local-first podcast app, emphasizes a growing user demand for data sovereignty and reduced reliance on centralized services. This is a crucial area for innovation, offering opportunities to build trust and differentiate products.

Furthermore, the exploration of AI for various creative and analytical tasks, from generating songs to analyzing financial markets and creating visual content, demonstrates the expanding utility of AI across diverse domains. Developers and entrepreneurs should look for opportunities to leverage these AI advancements to solve niche problems and create novel user experiences, always keeping the ethical implications and user privacy at the forefront. The hacker spirit shines through in the community's drive to build practical solutions to real-world problems, pushing the boundaries of what's possible with technology.

Today's Hottest Product

Name

Recall: Give Claude memory with Redis-backed persistent context

Highlight

This project introduces a novel approach to giving Large Language Models (LLMs) like Claude persistent memory. By integrating Redis with semantic search, 'Recall' allows the LLM to retain context across sessions, overcoming the limitations of context windows. This is achieved by embedding and storing important conversational elements as 'memories' in Redis, enabling semantic retrieval for relevant information. Developers can learn about implementing long-term memory for AI models, utilizing vector embeddings and efficient data storage for conversational AI applications. The ability to manage global, versioned, and isolated memories offers a robust framework for sophisticated AI-powered applications.

Popular Category

AI & Machine Learning

Developer Tools

Productivity

Open Source

Data Management

Popular Keyword

LLM

AI

Memory

Redis

Semantic Search

Open Source

Developer Tools

Automation

TypeScript

Python

Web

Technology Trends

LLM Memory and Context Management

AI-Powered Productivity and Automation

Local-First and Privacy-Focused Applications

Developer Tooling for AI Workflows

Decentralized and Open Web Technologies

Programmatic Content Generation and Transformation

Cross-Platform AI Integration

Project Category Distribution

AI/ML Tools (25%)

Developer Utilities (20%)

Productivity & Organization (15%)

Web Development Tools (10%)

Data & Analytics (8%)

Open Source Infrastructure (7%)

Creative & Content Tools (5%)

Utilities & Miscellaneous (10%)

Today's Hot Product List

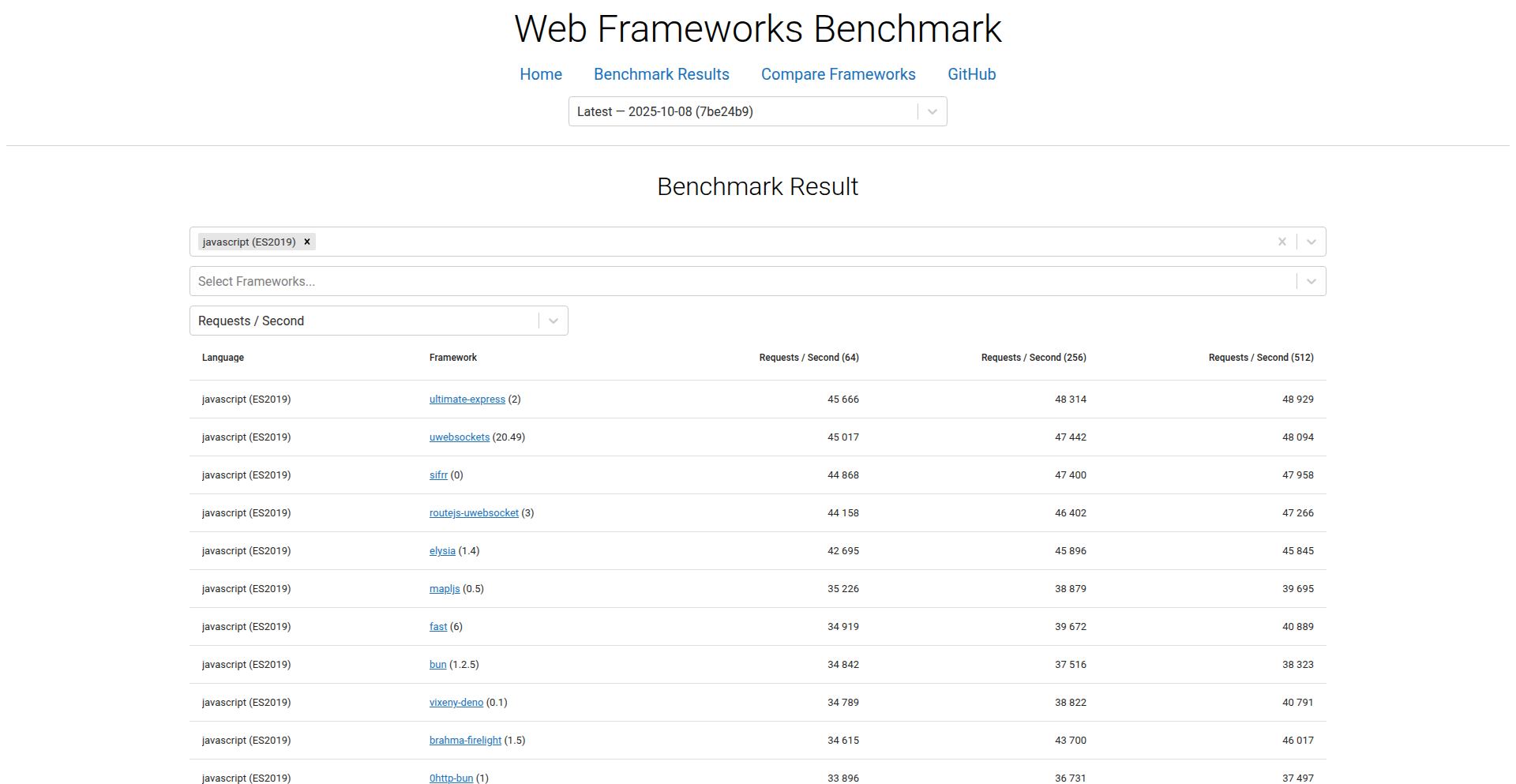

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | Recall: LLM Persistent Memory Engine | 157 | 86 |
| 2 | RoutineGuard | 90 | 82 |
| 3 | FleetCode: Git Worktree Agent Orchestrator | 87 | 45 |
| 4 | BrowserCast Local-First Podcast Player | 62 | 21 |
| 5 | HyprMCP Proxy | 46 | 5 |
| 6 | RedLisp Shell | 29 | 0 |
| 7 | AI Code Sorcerer: Automated Code Sentinel | 16 | 6 |
| 8 | Prediction Aggregator API | 10 | 7 |
| 9 | CodingFox AI Code Review Engine | 11 | 0 |
| 10 | FounderBox: AI-Powered Business Genesis Engine | 10 | 0 |

1

Recall: LLM Persistent Memory Engine

Author

elfenleid

Description

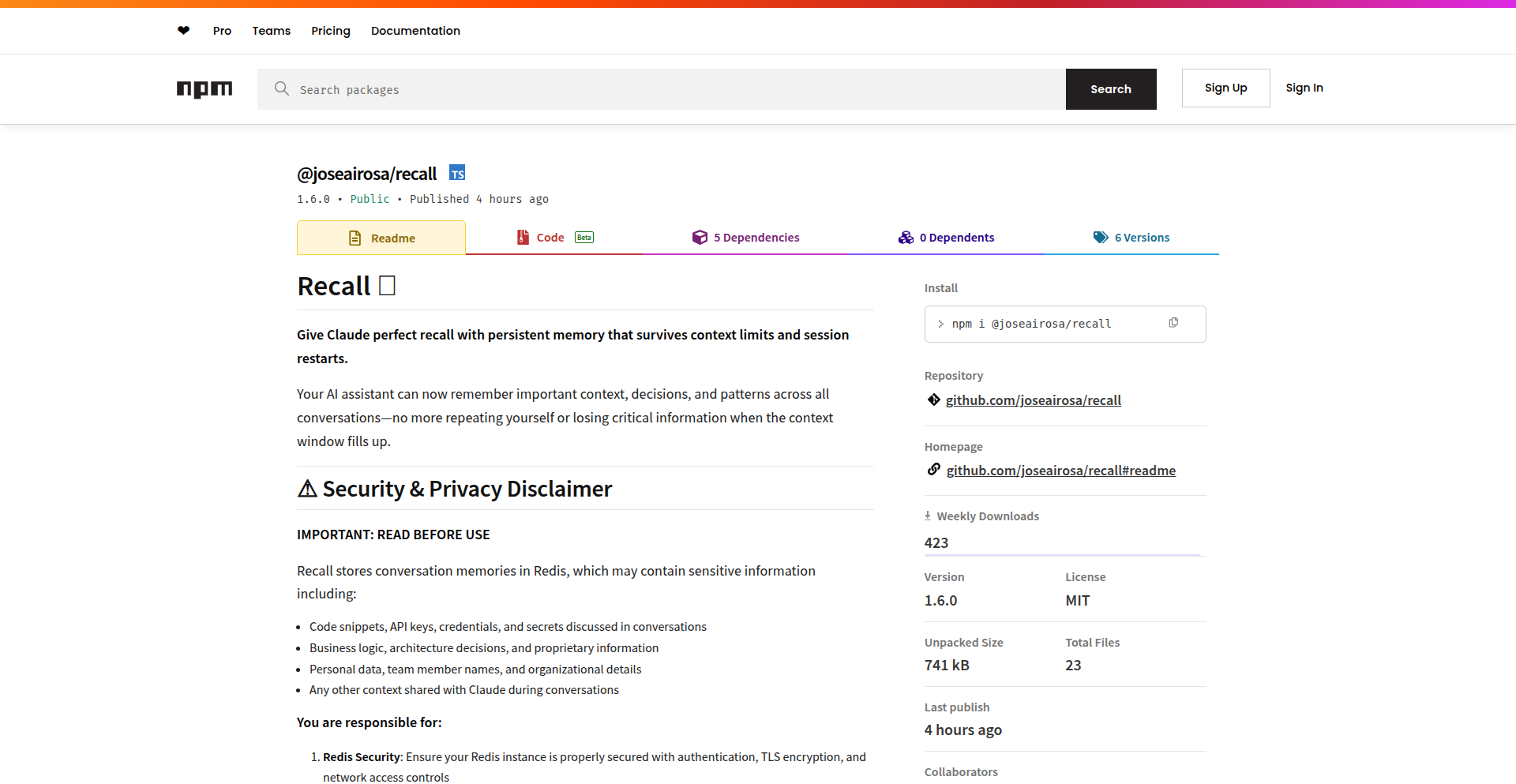

Recall is a Model Context Protocol (MCP) server that grants AI language models like Claude persistent long-term memory. It leverages Redis for storage and semantic search to store and retrieve crucial conversation context, effectively overcoming context window limitations and enabling consistent, context-aware AI interactions across sessions and projects. So, what does this mean for you? It means your AI assistant won't forget your project's specific requirements, coding standards, or past decisions, making every interaction more efficient and productive.

Popularity

Points 157

Comments 86

What is this product?

Recall is a server designed to give AI assistants whose clients support the Model Context Protocol (MCP), such as Claude, a form of long-term memory. The core technical innovation lies in its use of Redis, a high-performance in-memory data structure store, to persist conversation data. Instead of relying only on the AI's short-term context window, Recall converts important pieces of information (called 'memories') into numerical representations (embeddings) using OpenAI's embedding models. These embeddings, along with associated metadata, are then stored in Redis. When you interact with the AI, Recall automatically performs a semantic search in Redis to retrieve the memories most relevant to your current query. This keeps critical information accessible across conversation sessions, even when it would not fit in the AI's immediate context window. So, what's the value to you? You won't have to re-explain things the AI already knows, which leads to faster, more accurate, and more personalized assistance.
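The store-then-retrieve loop described above can be sketched in a few lines. This is a minimal illustration, not Recall's code: a toy bag-of-words vector stands in for OpenAI embeddings, a Python dict stands in for Redis, and the names `embed`, `MemoryStore`, `remember`, and `recall` are hypothetical.

```python
import math
import re
from collections import Counter

# Illustrative sketch only: a toy "embedding" and an in-memory dict replace
# the real embedding model and Redis. All names here are hypothetical.


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class MemoryStore:
    """Hypothetical stand-in for a Redis-backed memory store."""

    def __init__(self) -> None:
        self._memories: dict[str, tuple[str, Counter]] = {}

    def remember(self, memory_id: str, text: str) -> None:
        # Keep the raw text next to its vector so it can be returned on retrieval.
        self._memories[memory_id] = (text, embed(text))

    def recall(self, query: str, k: int = 2) -> list[str]:
        # Rank every stored memory by similarity to the query and return the top k texts.
        q = embed(query)
        ranked = sorted(self._memories.values(), key=lambda m: cosine(q, m[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


store = MemoryStore()
store.remember("css", "We use Tailwind CSS for all styling")
store.remember("vue", "Prefer the composition API for Vue.js components")
store.remember("rate", "API rate limit is 1000 requests per minute")
print(store.recall("which CSS framework do we use for styling?", k=1))
# → ['We use Tailwind CSS for all styling']
```

A production system would swap the word counts for dense model embeddings and the linear scan for an index, but the contract stays the same: store text with a vector, then return the nearest neighbours of the query.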

How to use it?

Developers can integrate Recall into their workflows by installing it globally via npm (e.g., `npm install -g @joseairosa/recall`). It's designed to be configured with AI desktop applications that support the MCP. You'll typically add Recall's configuration to your AI client's settings file (e.g., `claude_desktop_config.json`). Once configured, Recall runs in the background, automatically managing memory for your AI conversations. When you start a new chat, Recall will feed relevant historical context to the AI, allowing it to recall past decisions, project specifics, or preferences without explicit re-prompting. This makes it incredibly useful for complex, long-running projects where maintaining consistent context is paramount. So, how does this help you? It means you can set up your AI assistant once with project details, and it will consistently apply those preferences across all your interactions, saving you time and effort.

Product Core Function

· Persistent Memory Storage: Stores critical AI conversation context and project-specific information in Redis, ensuring data survives session restarts and context window limitations. This provides a lasting knowledge base for your AI, so you don't have to repeat yourself, leading to more efficient interactions.

· Semantic Search Retrieval: Automatically fetches the most relevant past memories based on the current conversation context using advanced embedding and search techniques. This ensures the AI has access to the right information at the right time, improving the accuracy and relevance of its responses.

· Global Memory Sharing: Allows for context to be shared across all projects and AI interactions, creating a unified knowledge base. This is valuable for maintaining consistent branding, coding standards, or architectural decisions across different development efforts.

· Knowledge Graph Relationships: Enables linking related memories together to form a structured knowledge graph. This facilitates understanding complex interdependencies and making more informed decisions within your AI-assisted projects.

· Memory Versioning: Tracks the evolution of memories over time, allowing developers to see how decisions or information have changed. This is crucial for auditing, debugging, and understanding the progression of a project's knowledge.

· Reusable Workflow Templates: Provides pre-defined patterns for common AI workflows, streamlining setup and ensuring consistency. This allows you to quickly establish recurring AI tasks with specific memory configurations.

· Workspace Isolation: Prevents memories from one project from interfering with another, maintaining clear separation and organization. This ensures that your AI's context is specific to the current task, avoiding cross-contamination of information.
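Workspace isolation, global sharing, and versioning can all be expressed as a key-naming and history scheme. The sketch below is an assumption about how such a layout might look, not Recall's actual schema: the `ws:<workspace>:<name>` and `global:<name>` keys and the `NamespacedMemories` class are hypothetical, and a dict of lists stands in for Redis.

```python
from collections import defaultdict
from typing import Optional

# Hypothetical key layout, not Recall's schema:
#   "ws:<workspace>:<name>" for workspace memories, "global:<name>" for shared ones.


class NamespacedMemories:
    """Every key maps to a list of versions, oldest first."""

    GLOBAL = "global"

    def __init__(self) -> None:
        self._data: dict[str, list[str]] = defaultdict(list)

    def _key(self, workspace: Optional[str], name: str) -> str:
        ns = self.GLOBAL if workspace is None else f"ws:{workspace}"
        return f"{ns}:{name}"

    def put(self, workspace: Optional[str], name: str, text: str) -> int:
        """Append a new version (workspace=None writes a global memory); return its 1-based version number."""
        versions = self._data[self._key(workspace, name)]
        versions.append(text)
        return len(versions)

    def get(self, workspace: str, name: str) -> Optional[str]:
        """Prefer the workspace's own latest version, then fall back to the global one."""
        for key in (self._key(workspace, name), self._key(None, name)):
            versions = self._data.get(key)
            if versions:
                return versions[-1]
        return None

    def history(self, workspace: Optional[str], name: str) -> list[str]:
        """All versions of one memory, oldest first."""
        return list(self._data.get(self._key(workspace, name), []))


mem = NamespacedMemories()
mem.put(None, "indent", "Use 2-space indentation")
mem.put("shop", "css", "Tailwind CSS")
mem.put("shop", "css", "Tailwind CSS v4")
print(mem.get("shop", "css"))      # → Tailwind CSS v4
print(mem.get("blog", "css"))      # → None (isolated from "shop")
print(mem.get("blog", "indent"))   # → Use 2-space indentation (global fallback)
print(mem.history("shop", "css"))  # → ['Tailwind CSS', 'Tailwind CSS v4']
```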

Product Usage Case

· E-commerce Platform Development: When building an e-commerce platform, you can inform Claude about specific technical choices like 'we use Tailwind CSS,' 'prefer composition API for Vue.js,' and 'API rate limit is 1000 requests per minute.' Recall will then ensure Claude consistently applies these preferences in all subsequent code generation and architectural discussions, preventing deviations and speeding up development.

· Complex Software Architecture Refinement: For a large, multi-component software system, developers can store architectural decisions, integration patterns, and API specifications as memories. When collaborating with the AI on new features or bug fixes, Recall ensures the AI has access to this comprehensive documentation, leading to more coherent and robust solutions.

· Personalized AI Assistant Configuration: Users can store their preferred coding style, frequently used libraries, or specific project constraints as memories. Recall then allows the AI to act as a truly personalized assistant, adapting its suggestions and code generation to the user's unique needs and preferences without constant re-instruction.

· Maintaining Consistency in Large Codebases: In a project with a vast codebase and multiple developers, Recall can store established coding standards, design patterns, and best practices. When developers use the AI for code reviews or suggestions, Recall ensures the AI's output aligns with these established standards, promoting code quality and maintainability across the team.

2

RoutineGuard

Author

gantengx

Description

RoutineGuard is a mobile application built using React Native and Firebase, designed to help children adhere to daily routines by minimizing distractions during timed tasks. Its innovative approach locks app navigation when a timer is active, coupled with optional photo verification for task completion, ensuring accountability and promoting independence.

Popularity

Points 90

Comments 82

What is this product?

RoutineGuard is a smart routine management app for children, leveraging a timer-based distraction blocking mechanism and photo proof for task completion. When a task timer starts, the app temporarily locks down other app navigation, preventing the child from switching to unrelated applications. This ensures focus on the assigned task. Parents can set up weekly schedules with daily task toggles, simplifying the creation of routines. The photo verification feature allows parents to confirm task completion by having their child submit a picture, providing a clear and tangible record. This tackles the common challenge of getting kids to follow schedules without constant nagging.
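The timer-gated lock is essentially a small state machine. RoutineGuard itself is built with React Native and Firebase, and real navigation blocking needs platform APIs; the Python sketch below only models the decision logic, using a hypothetical `TaskTimer` class with an injectable clock so it can be tested without waiting.

```python
import time
from typing import Callable, Optional

# Hypothetical model of the decision logic only; enforcing the lock on a real
# device requires platform-specific APIs that are not shown here.


class TaskTimer:
    """Navigation is blocked while a task timer is running."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock  # injectable so tests can control time
        self._ends_at: Optional[float] = None
        self.task: Optional[str] = None

    def start(self, task: str, minutes: float) -> None:
        self.task = task
        self._ends_at = self._clock() + minutes * 60

    def remaining_seconds(self) -> float:
        if self._ends_at is None:
            return 0.0
        return max(0.0, self._ends_at - self._clock())

    def can_navigate(self) -> bool:
        # Leaving the task screen is allowed only when no timer is running.
        return self.remaining_seconds() == 0.0


now = [0.0]  # fake clock value, in seconds
timer = TaskTimer(clock=lambda: now[0])
timer.start("Brush Teeth", minutes=5)
print(timer.can_navigate())  # → False (timer running)
now[0] += 5 * 60
print(timer.can_navigate())  # → True (five minutes elapsed)
```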

How to use it?

Developers can integrate RoutineGuard's principles into their own applications or build similar systems. For parents, it's a straightforward app to download and use. They can define specific tasks, set durations, and establish recurring weekly schedules through an intuitive interface. For example, a parent can create a 'Brush Teeth' task for 5 minutes every morning and evening. When the timer starts, the child's device will prevent them from opening games or social media. Once done, the child can optionally take a photo of their clean teeth, which is then reviewed by the parent. The app supports device-level integrations to enforce the navigation lock, ensuring a truly distraction-free environment during critical task periods.

Product Core Function

· Distraction-Free Timer: Locks app navigation when a task timer is active, ensuring focus. This is valuable for parents who want to ensure their children concentrate on specific activities like homework or chores without getting sidetracked by games or other apps.

· Photo-Based Task Verification: Allows children to submit photos as proof of task completion, offering a tangible accountability mechanism for parents. This provides peace of mind and a clear record of whether tasks have been accomplished.

· Flexible Weekly Scheduling: Enables parents to create recurring weekly routines with daily toggles, reducing repetitive setup and offering customization. This makes managing complex schedules for children much more efficient for busy parents.

· Parental Control Dashboard: Provides parents with an overview of their child's progress and allows for easy task management and schedule adjustments. This empowers parents to oversee and guide their child's routine effectively.

· Kid-Friendly Interface: Designed with simplicity to be easily understood and used by children, minimizing frustration and encouraging engagement. This ensures that the app itself doesn't become a barrier to routine adherence.
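Weekly scheduling with daily toggles maps naturally onto a set of enabled weekdays per task. This is an illustrative data model, not RoutineGuard's Firebase schema; `Task` and `tasks_for` are hypothetical names.

```python
from dataclasses import dataclass, field
from datetime import date

# Hypothetical data model for illustration; RoutineGuard's real schema is not shown in the post.


@dataclass
class Task:
    name: str
    minutes: int
    # Weekday toggles: Monday=0 ... Sunday=6, matching date.weekday().
    days: set[int] = field(default_factory=set)
    needs_photo: bool = False


def tasks_for(schedule: list[Task], day: date) -> list[Task]:
    """Return the tasks whose toggle is on for the given date's weekday."""
    return [t for t in schedule if day.weekday() in t.days]


schedule = [
    Task("Brush Teeth", 5, days=set(range(7)), needs_photo=True),
    Task("Homework", 30, days={0, 1, 2, 3, 4}, needs_photo=True),
    Task("Tidy Room", 15, days={5}),
]
print([t.name for t in tasks_for(schedule, date(2025, 10, 11))])  # a Saturday
# → ['Brush Teeth', 'Tidy Room']
```

Storing toggles per task means a parent edits one set instead of seven copies of the same routine.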

Product Usage Case

· Morning Routine Assistance: Parents can use RoutineGuard to help their children manage their morning tasks like getting dressed, eating breakfast, and brushing teeth without constant reminders, by setting timers and ensuring focus. This solves the problem of sluggish or forgetful mornings.

· Homework Focus Session: To combat distractions during study time, parents can set a dedicated homework timer, blocking access to games and social media, while requiring a photo of completed homework for verification. This improves concentration and productivity for academic tasks.

· Chore Management: Implementing a system for household chores where children are timed on tasks like tidying their room or helping with dishes, with photo evidence of completion. This fosters responsibility and contributes to household order.

· Independent Skill Development: Encouraging children to develop independence by allowing them to manage their own routines (e.g., getting ready for sports practice) with the app acting as a supportive guide and accountability partner. This builds self-reliance in children.

3

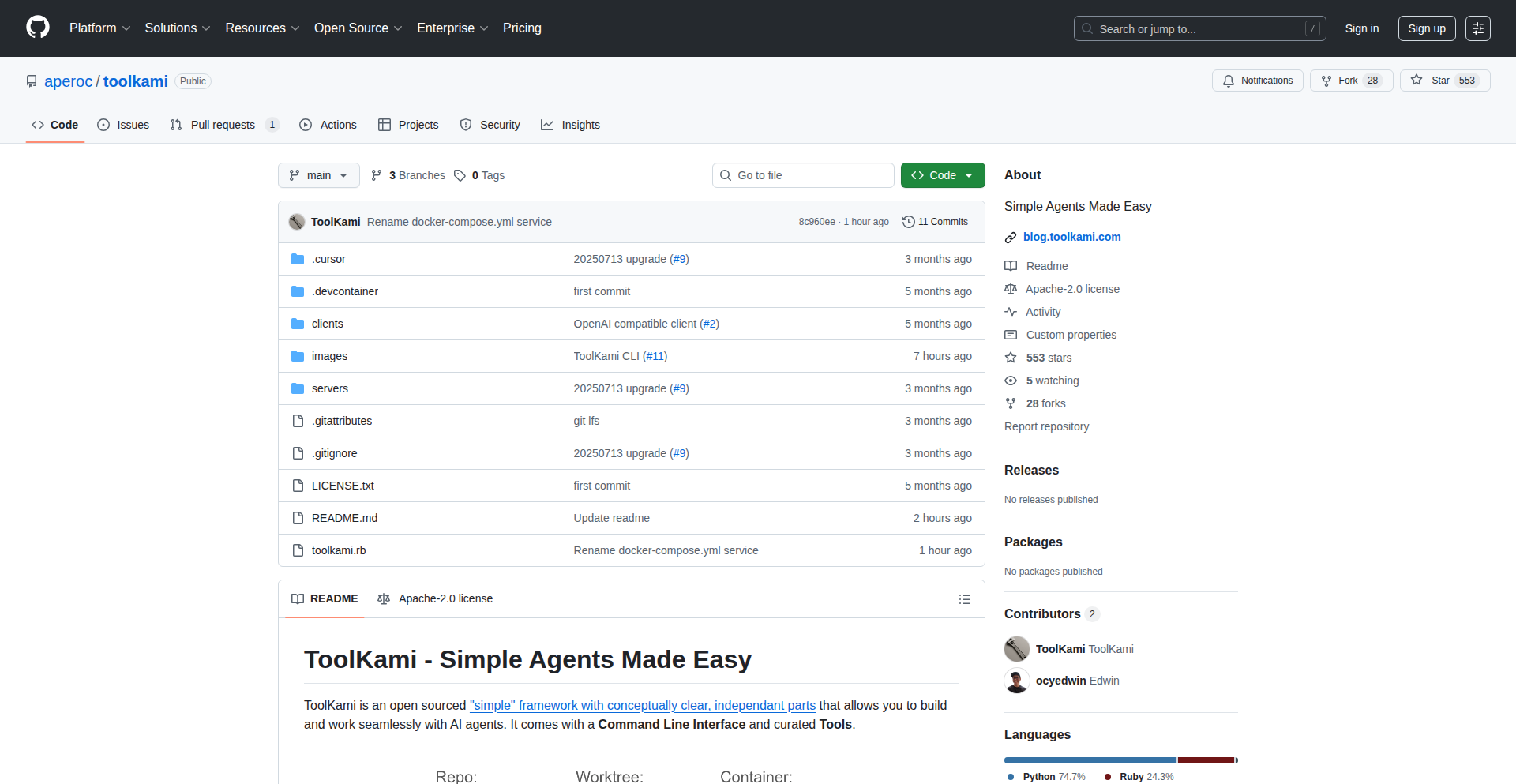

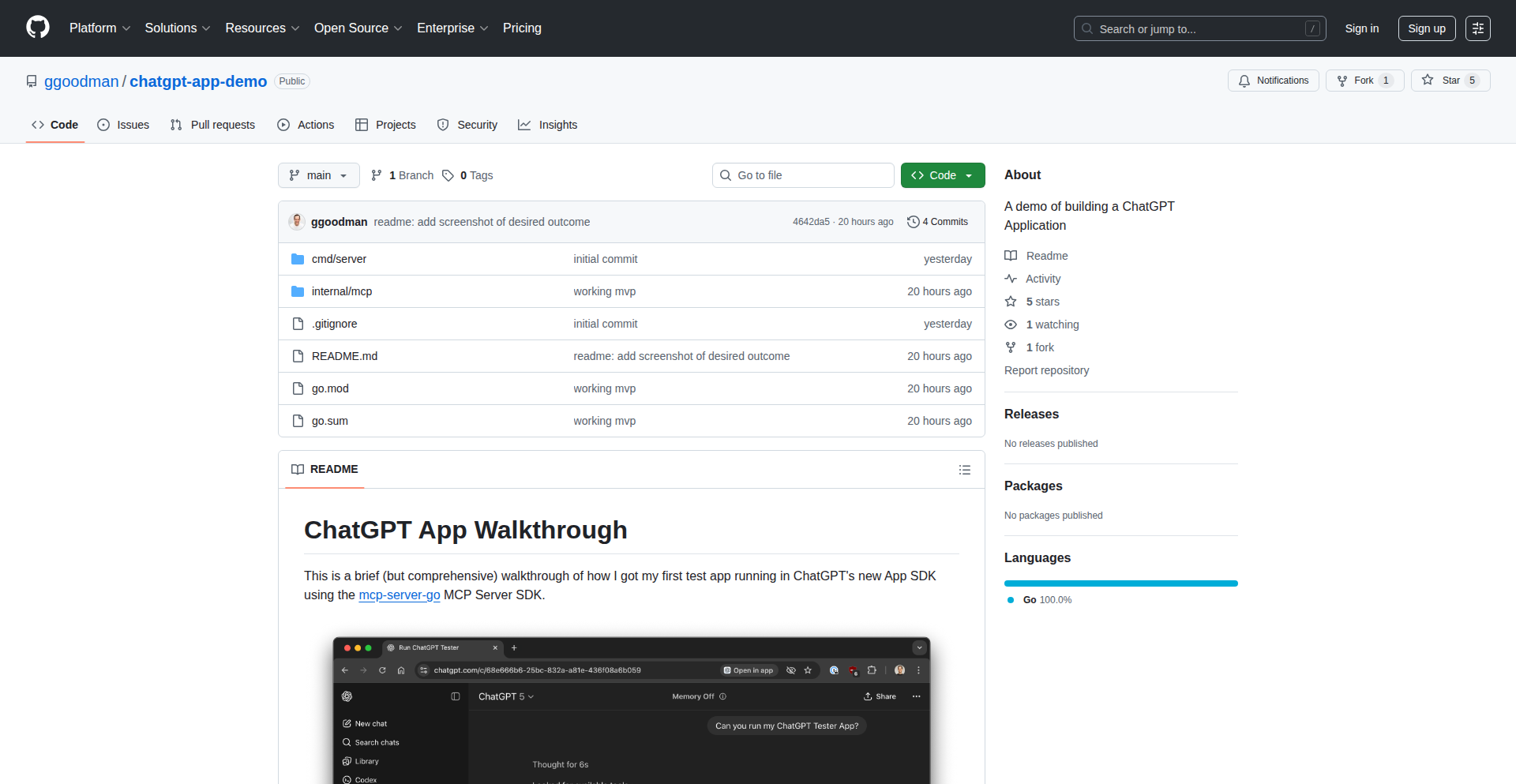

FleetCode: Git Worktree Agent Orchestrator

Author

asdev

Description

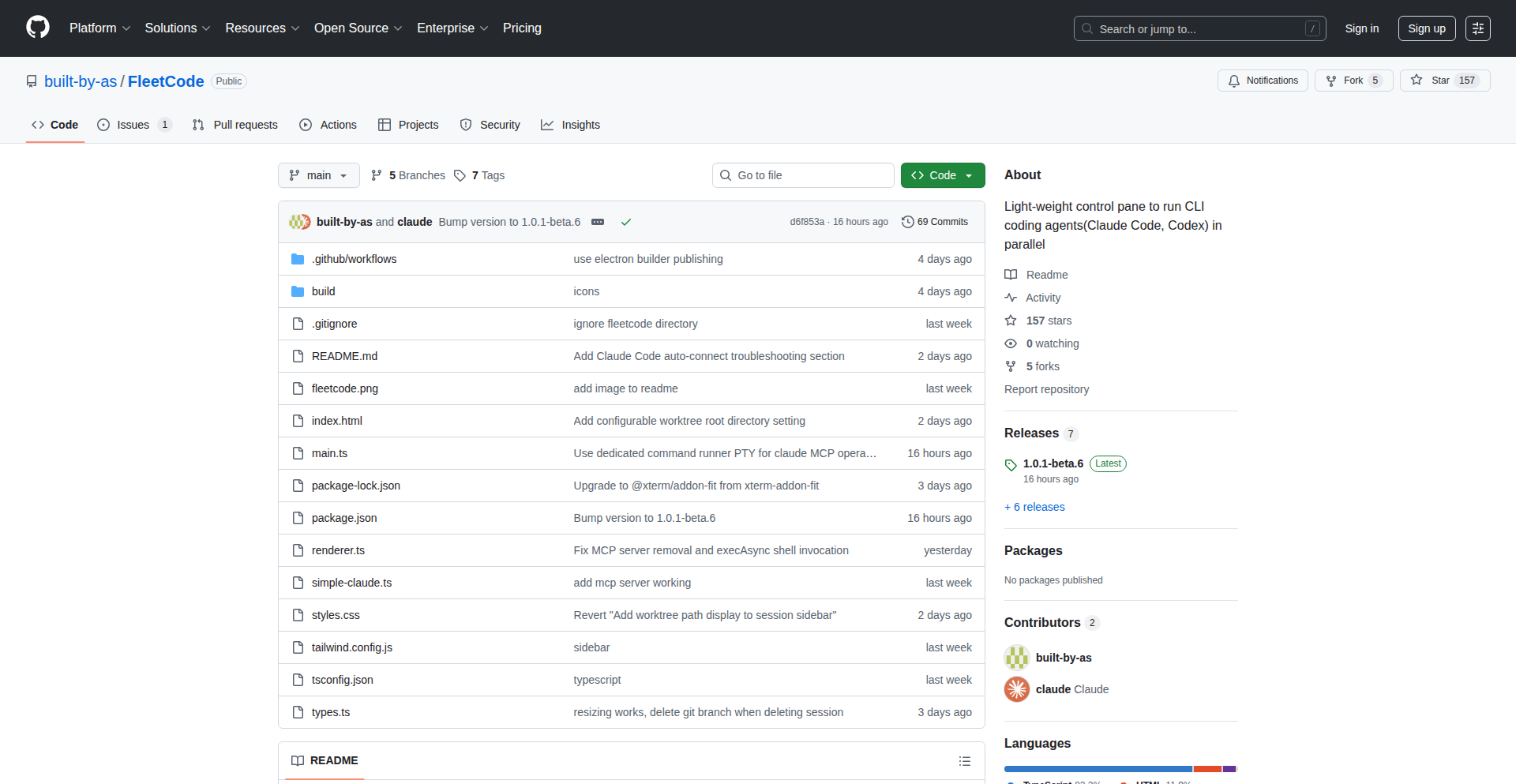

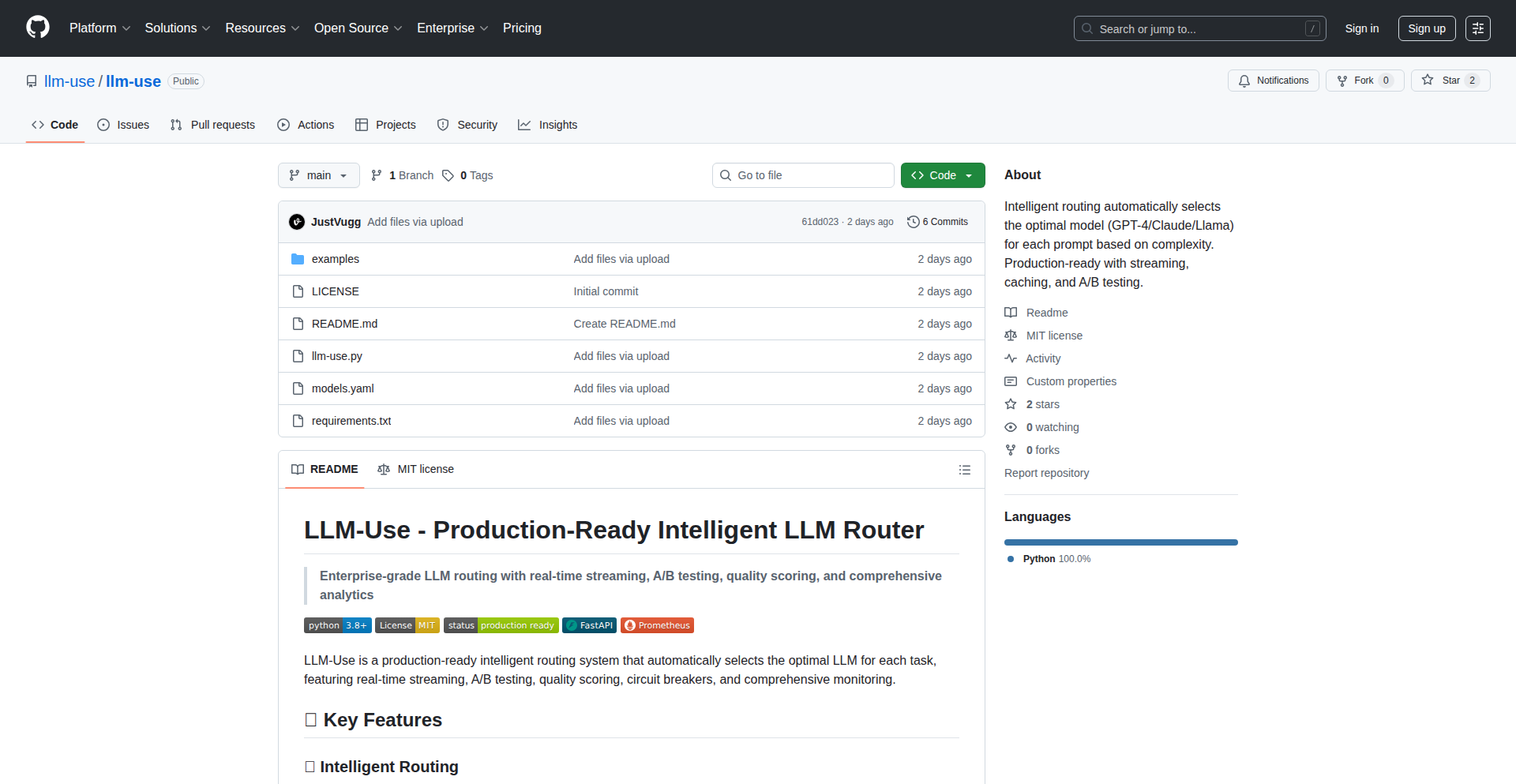

FleetCode is an open-source UI designed to streamline the workflow of running multiple CLI coding agents in parallel. It uses git worktrees to create an isolated environment for each agent, removing the need for constant git stashing and branch juggling. This approach tackles the complexity of managing concurrent agent tasks and offers a more ergonomic coding experience. So, this helps you manage your coding assistants without getting tangled in branches and stashes, making complex development tasks smoother.

Popularity

Points 87

Comments 45

What is this product?

FleetCode is a lightweight UI for managing and running multiple CLI coding agents simultaneously. Instead of manually creating separate branches or stashing your work every time you switch between agent tasks, FleetCode uses a Git feature called 'worktrees'. A worktree is an additional working directory attached to the same Git repository, usually with its own branch checked out. FleetCode creates a new worktree for each coding agent you want to run, so each agent has a dedicated space to work in, isolated from the others. This avoids code conflicts between agents and the tedious process of saving and switching contexts, a common pain point when running several development tools or agents. So, this provides a clean, organized way to let your coding assistants work on different tasks without interfering with each other or your main codebase.
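Per-agent isolation with worktrees comes down to one `git worktree add` per agent. The sketch below only builds the commands (it does not run them); the sibling `../worktrees/<agent>` directory layout and the `agent/<name>` branch naming are hypothetical conventions, not FleetCode's. `git worktree add -b <branch> <path> <commit-ish>` and `git worktree remove <path>` are standard Git commands.

```python
from pathlib import PurePosixPath

# Hypothetical conventions: worktrees live in a sibling "../worktrees" directory
# (outside the main checkout) and each agent gets a branch named "agent/<name>".


def worktree_commands(agents: list[str], base: str = "main", root: str = "../worktrees") -> list[list[str]]:
    """One `git worktree add` argv per agent: a fresh branch checked out in its own directory."""
    cmds = []
    for agent in agents:
        path = str(PurePosixPath(root) / agent)
        cmds.append(["git", "worktree", "add", "-b", f"agent/{agent}", path, base])
    return cmds


def cleanup_commands(agents: list[str], root: str = "../worktrees") -> list[list[str]]:
    """The matching `git worktree remove` argv for each agent directory."""
    return [["git", "worktree", "remove", str(PurePosixPath(root) / a)] for a in agents]


for cmd in worktree_commands(["refactor-a", "refactor-b"]):
    print(" ".join(cmd))
# git worktree add -b agent/refactor-a ../worktrees/refactor-a main
# git worktree add -b agent/refactor-b ../worktrees/refactor-b main
```

`git worktree remove` deletes only the working directory; the agent's branch stays in the repository for review or merging.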

How to use it?

Developers can use FleetCode by installing it and then configuring it to point to their Git repository. You would typically invoke FleetCode from your terminal, specifying the agents you want to run and the tasks they should perform. FleetCode will then automatically create the necessary git worktrees, launch your agents within these isolated environments, and manage their output. The tool aims to provide a lightweight wrapper around your terminal sessions, allowing you to interact with your agents more effectively. Integration is straightforward for any project managed with Git. So, this makes it easy to kick off multiple AI coding tasks simultaneously, keeping your main project clean and allowing you to focus on the results, not the setup.

Product Core Function

· Git Worktree Management: Automatically creates and manages isolated Git worktrees for each coding agent, preventing code conflicts and simplifying context switching. The value is in eliminating manual Git operations, saving developers significant time and reducing errors.

· Parallel Agent Execution: Enables running multiple CLI coding agents concurrently within their own dedicated worktrees. The value is in accelerating development by allowing parallel task processing and experimentation, boosting productivity.

· Ergonomic Workflow Integration: Provides a lightweight UI wrapper for terminal sessions, making it easier to manage and interact with multiple agents. The value is in offering a more intuitive and less convoluted experience compared to existing, more complex solutions.

· Open-Source and Free: The project is freely available and open-source, encouraging community contribution and customization. The value is in providing accessible tooling and fostering collaborative innovation within the developer community.
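Once each agent has its own worktree, running agents in parallel amounts to starting one process per directory, with that directory as the working directory. A minimal sketch using Python's `subprocess` and a thread pool; `run_agent` and `run_agents` are hypothetical helpers, and the placeholder command stands in for a real CLI coding agent.

```python
import subprocess
import sys
import tempfile
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path

# Hypothetical helpers; a real setup would launch an actual CLI coding agent
# instead of the placeholder command used below.


def run_agent(worktree: Path, argv: list[str]) -> tuple[str, int, str]:
    """Run one agent command inside its worktree; return (name, exit code, stdout)."""
    proc = subprocess.run(argv, cwd=worktree, capture_output=True, text=True)
    return worktree.name, proc.returncode, proc.stdout


def run_agents(worktrees: list[Path], argv: list[str]) -> list[tuple[str, int, str]]:
    """Start the same command in every worktree concurrently; results come back in input order."""
    with ThreadPoolExecutor(max_workers=len(worktrees) or 1) as pool:
        return list(pool.map(lambda wt: run_agent(wt, argv), worktrees))


with tempfile.TemporaryDirectory() as tmp:
    dirs = [Path(tmp) / name for name in ("agent-a", "agent-b")]
    for d in dirs:
        d.mkdir()
    # Placeholder "agent": prints the name of the directory it was started in.
    placeholder = [sys.executable, "-c", "import os; print(os.path.basename(os.getcwd()))"]
    for name, code, out in run_agents(dirs, placeholder):
        print(name, code, out.strip())
```

Threads are sufficient here because each one just waits on a child process; the agents themselves run as separate OS processes.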

Product Usage Case

· Scenario: Refactoring a large codebase with multiple AI agents. Problem: Manually managing branches and stashes for each refactoring task is time-consuming and error-prone. Solution: FleetCode creates a dedicated worktree for each refactoring agent, allowing them to work independently. The developer can then easily compare the results from each agent without interference. So, this lets you explore different refactoring approaches simultaneously without messing up your main code.

· Scenario: Experimenting with different AI models or prompts for code generation. Problem: Switching between different agent setups and codebases for testing is tedious. Solution: FleetCode allows developers to spin up multiple agent instances, each with its own worktree and configuration, to test different ideas in parallel. The developer can quickly switch between the results and evaluate the effectiveness of each approach. So, this makes it super fast to test out new ideas with your AI coding buddies and see which one works best.

· Scenario: Developing a new feature while simultaneously addressing bug fixes. Problem: Developers often struggle to switch contexts between feature development and urgent bug fixes, leading to productivity loss. Solution: FleetCode can be used to assign one agent to work on the new feature in a dedicated worktree, while another agent focuses on the bug fix in a separate worktree. This keeps both efforts isolated and manageable. So, this helps you work on exciting new things and fix urgent problems at the same time without losing track of either.

4

BrowserCast Local-First Podcast Player

Author

aegrumet

Description

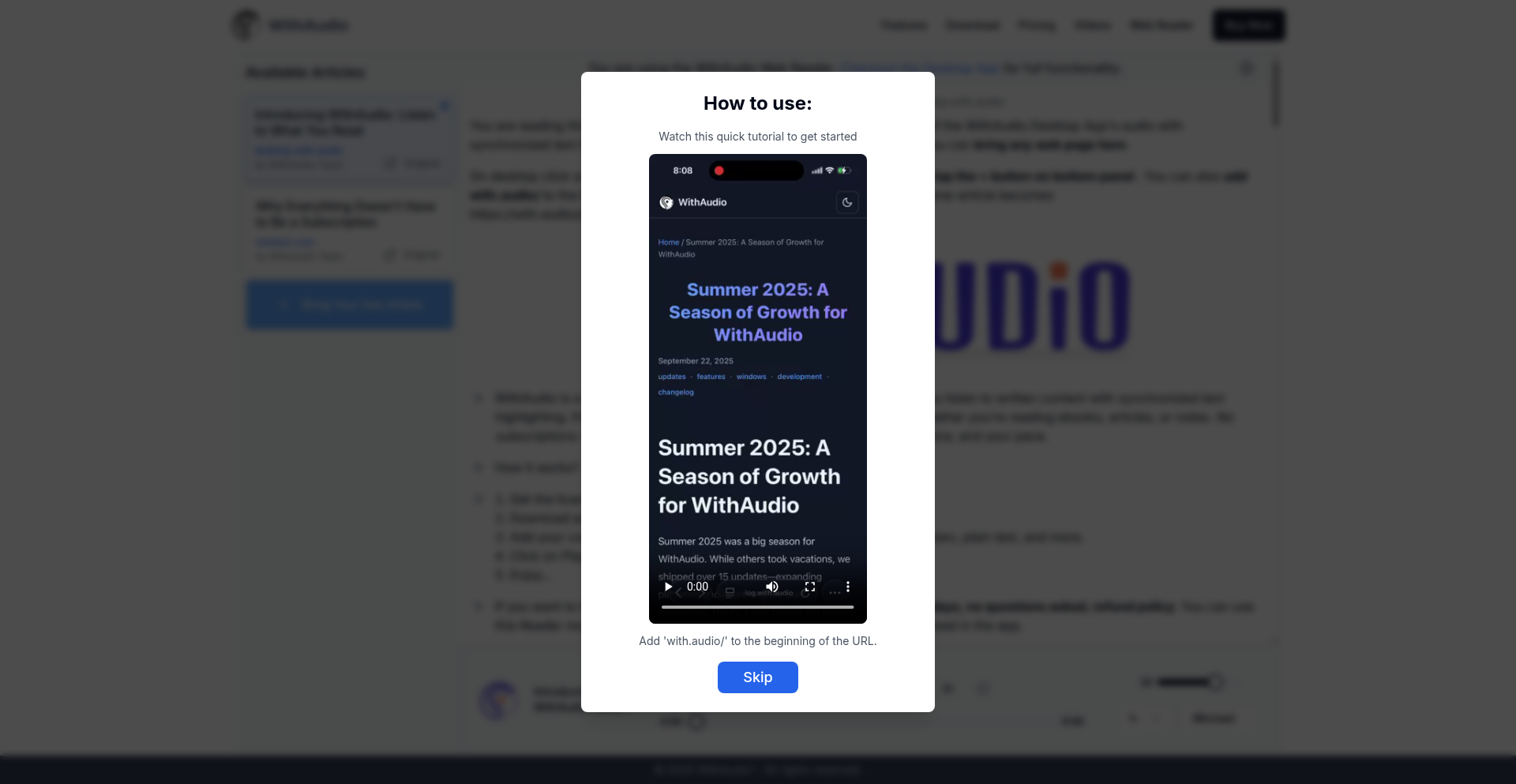

A progressive web app (PWA) that keeps your podcast subscriptions and listening data entirely on your device, stored in your browser's IndexedDB. It offers advanced features like custom feed support, on-device search, and AI-driven discovery, while prioritizing user privacy by minimizing server interaction.

Popularity

Points 62

Comments 21

What is this product?

This is a podcast application built as a Progressive Web App (PWA) that fundamentally shifts how your podcast data is managed. Instead of relying on a central server to store your subscriptions, listening history, and preferences, all this information is saved locally within your web browser using IndexedDB. This means your data stays with you, on your device, offering a truly private and secure listening experience. It also supports the latest podcasting standards (Podcasting 2.0) and offers innovative features like AI-powered show discovery and auto-generated chapters for episodes that lack them. So, what's in it for you? Your podcast listening becomes a private affair, free from the prying eyes of central servers, and you gain access to powerful, modern podcasting features directly in your browser.
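The local-first idea is that the client, not a server, owns subscription and playback state. BrowserCast does this with IndexedDB in the browser; to keep this digest's examples in one language, the sketch below uses Python's standard-library `sqlite3` as a stand-in for an on-device store. The table layout and the `LocalLibrary` class are hypothetical.

```python
import sqlite3

# Stand-in for IndexedDB: a local SQLite database. Table names and columns are hypothetical.


class LocalLibrary:
    """On-device store for subscriptions and playback positions."""

    def __init__(self, path: str = ":memory:") -> None:
        # A file path keeps data on the local disk; ":memory:" is handy for tests.
        self.db = sqlite3.connect(path)
        self.db.executescript(
            """
            CREATE TABLE IF NOT EXISTS subscriptions (feed_url TEXT PRIMARY KEY, title TEXT);
            CREATE TABLE IF NOT EXISTS progress (episode_guid TEXT PRIMARY KEY, seconds REAL);
            """
        )

    def subscribe(self, feed_url: str, title: str) -> None:
        with self.db:  # commits the transaction on success
            self.db.execute("INSERT OR REPLACE INTO subscriptions VALUES (?, ?)", (feed_url, title))

    def save_position(self, guid: str, seconds: float) -> None:
        with self.db:
            self.db.execute("INSERT OR REPLACE INTO progress VALUES (?, ?)", (guid, seconds))

    def position(self, guid: str) -> float:
        row = self.db.execute("SELECT seconds FROM progress WHERE episode_guid = ?", (guid,)).fetchone()
        return row[0] if row else 0.0

    def subscriptions(self) -> list[tuple[str, str]]:
        return self.db.execute("SELECT feed_url, title FROM subscriptions ORDER BY title").fetchall()


lib = LocalLibrary()
lib.subscribe("https://example.com/feed.xml", "Example Show")
lib.save_position("ep-1", 754.5)
print(lib.position("ep-1"))  # → 754.5
```

No network call appears anywhere in this path: reads and writes hit only the local database, which is the property the local-first design relies on.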

How to use it?

You can access and use BrowserCast directly from your web browser at wherever.audio. Because it's a PWA, you can add it to your home screen on your mobile device, making it feel like a native app. It works offline once you've downloaded episodes, so you can listen to your favorite shows anywhere. To use it, simply navigate to the website, and you can start adding RSS feeds (including custom ones not found in directories), searching for episodes, and managing your playback. For developers, its open-web nature means it's a great example of what's possible with modern browser technologies, showcasing local-first data storage and PWA capabilities. So, how does this benefit you? It offers a seamless, app-like experience for listening to podcasts without needing to install anything, and provides a blueprint for building privacy-focused, offline-capable web applications.

Product Core Function

· Local-First Data Storage: Your podcast subscriptions, listening history, and settings are stored in your browser's IndexedDB rather than on a central server. This is valuable because your personal listening data remains under your control.

· Custom Feed Support: Allows you to add any RSS feed directly, not just those from curated directories. This gives you complete control over what you listen to and expands your podcast discovery beyond mainstream offerings. This is valuable as it opens up a world of niche and independent content.

· On-Device Search: Enables searching across all your subscribed feeds and downloaded episodes directly on your device. This means you can quickly find specific episodes or topics without relying on external servers. This is valuable for efficient content retrieval and quick access to information.

· Podcasting 2.0 Compatibility: Supports modern podcasting features like chapters, transcripts, and funding tags, enhancing the listening experience and providing richer content. This is valuable for a more interactive and informative way to consume podcasts.

· Auto-Generated Chapters: Automatically creates chapter markers for popular shows that don't have them. This is valuable because it makes episodes easier to navigate and lets you jump directly to specific segments.

· AI-Powered Discovery: Allows you to ask questions to find relevant shows and episodes, using a third-party API for intelligent recommendations. This is valuable as it leverages AI to help you discover new content tailored to your interests.

· Audio-Guided Tutorials: Offers interactive walkthroughs with voice and visual guidance to help you learn the app's features. This is valuable for a user-friendly onboarding experience, making it easy to get started.

· Offline Playback: Download episodes and listen to them without an internet connection, ensuring uninterrupted listening. This is valuable for commuters, travelers, or anyone with unreliable internet access.
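Custom feed support, Podcasting 2.0 chapters, and on-device search all start from parsing the RSS XML locally. A minimal sketch with the standard library's `xml.etree.ElementTree`; `parse_feed`, `search`, and `Episode` are hypothetical helpers, and fetching the feed over the network is left out. The namespace URI `https://podcastindex.org/namespace/1.0` and the `<podcast:chapters url=... type=...>` element come from the Podcasting 2.0 namespace.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import Optional

# Hypothetical helpers; only the RSS and Podcasting 2.0 element names are standard.
PODCAST_NS = "https://podcastindex.org/namespace/1.0"


@dataclass
class Episode:
    title: str
    audio_url: Optional[str]
    chapters_url: Optional[str]


def parse_feed(xml_text: str) -> list[Episode]:
    """Extract episodes from an RSS 2.0 feed, including optional Podcasting 2.0 chapters."""
    root = ET.fromstring(xml_text)
    episodes = []
    for item in root.iter("item"):
        enclosure = item.find("enclosure")
        chapters = item.find(f"{{{PODCAST_NS}}}chapters")  # namespaced tag lookup
        episodes.append(
            Episode(
                title=item.findtext("title", default="").strip(),
                audio_url=enclosure.get("url") if enclosure is not None else None,
                chapters_url=chapters.get("url") if chapters is not None else None,
            )
        )
    return episodes


def search(episodes: list[Episode], query: str) -> list[str]:
    """Case-insensitive title search, done entirely on the device."""
    q = query.lower()
    return [e.title for e in episodes if q in e.title.lower()]


FEED = """<rss version="2.0" xmlns:podcast="https://podcastindex.org/namespace/1.0">
<channel><title>Example Show</title>
  <item><title>Local-first software</title>
    <enclosure url="https://example.com/ep1.mp3" type="audio/mpeg" length="1"/>
    <podcast:chapters url="https://example.com/ep1.json" type="application/json+chapters"/>
  </item>
  <item><title>Interview: PWAs offline</title>
    <enclosure url="https://example.com/ep2.mp3" type="audio/mpeg" length="1"/>
  </item>
</channel></rss>"""

episodes = parse_feed(FEED)
print(search(episodes, "pwa"))   # → ['Interview: PWAs offline']
print(episodes[0].chapters_url)  # → https://example.com/ep1.json
```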

Product Usage Case

· A privacy-conscious individual who wants to listen to podcasts without their data being tracked by a central service. They can use BrowserCast to manage all their subscriptions and listening history locally, ensuring their privacy is maintained. This solves the problem of data exploitation in traditional podcast apps.

· A developer building a niche podcast directory or an independent podcaster wanting to offer a direct subscription option to their audience. They can leverage the custom feed support in BrowserCast to make their content easily accessible and discoverable. This solves the problem of limited reach for niche content.

· A researcher or student who needs to quickly find specific information within a large collection of podcast episodes. The on-device search functionality allows them to pinpoint relevant content efficiently without needing to download or stream entire episodes from external servers. This solves the problem of time-consuming information retrieval.

· Anyone who travels frequently and has limited or no internet access. By downloading episodes beforehand, they can enjoy their podcasts uninterrupted during flights, train rides, or in remote locations. This solves the problem of inconsistent access to entertainment.

· A podcast enthusiast looking to explore the latest features in podcasting. They can use BrowserCast to experience Podcasting 2.0 features like chapters and transcripts, and even discover new shows through AI-powered recommendations. This provides access to cutting-edge podcasting technology.

5

HyprMCP Proxy

Author

pmig

Description

HyprMCP is an open-source proxy designed to enhance existing MCP servers with essential features like authentication, logging, debugging, and prompt analytics. It acts as an intelligent layer in front of your MCP, simplifying deployment and improving performance without requiring any changes to your original MCP code. This innovation addresses common challenges faced by developers when integrating and managing MCP services, making them more robust and user-friendly. So, what does this mean for you? It means you can easily add critical functionalities to your MCP setup, saving development time and effort while gaining deeper insights into its operation and user interactions.

Popularity

Points 46

Comments 5

What is this product?

HyprMCP is a powerful proxy that sits in front of your Model Context Protocol (MCP) servers. Think of it as a smart gatekeeper that handles crucial tasks before requests even reach your MCP server. Its core technical innovation lies in its ability to dynamically integrate authentication using standards like OAuth and OpenID Connect (OIDC), ensuring secure access to your MCP server. It also captures and analyzes raw gRPC method calls, which is vital for debugging, especially in serverless environments where traditional debugging tools are limited. Furthermore, it introduces prompt analytics, allowing you to understand how user prompts interact with your MCP server and which tools are most effective. This makes complex MCP interactions easier to manage and understand. So, what's the value for you? You get a more secure, debuggable, and insightful MCP experience without touching your existing MCP codebase.
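At its core, an authenticating, logging proxy wraps the upstream handler: check the caller, record the raw call, then forward it. The sketch below is hypothetical and not HyprMCP's API: a static token set replaces real OAuth/OIDC validation, the request is a simplified dict with `method` and `params` fields, and `make_proxy` and `fake_upstream` are placeholder names.

```python
import json
import logging
from typing import Any, Callable

# Hypothetical sketch: a static token set stands in for OAuth/OIDC validation,
# and fake_upstream stands in for the real MCP server.
Request = dict[str, Any]
Handler = Callable[[Request], dict[str, Any]]

log = logging.getLogger("mcp-proxy")


def make_proxy(upstream: Handler, valid_tokens: set[str]) -> Callable[[Request, str], dict[str, Any]]:
    """Wrap an upstream handler with a token check and raw-call logging."""

    def proxy(request: Request, bearer_token: str) -> dict[str, Any]:
        if bearer_token not in valid_tokens:
            # Reject before the request ever reaches the upstream server.
            log.warning("rejected call to %s", request.get("method"))
            return {"error": "unauthorized"}
        log.info("raw call: %s", json.dumps(request))
        response = upstream(request)
        log.info("raw response: %s", json.dumps(response))
        return response

    return proxy


def fake_upstream(request: Request) -> dict[str, Any]:
    # Placeholder server: echoes the tool name it was asked to call.
    return {"result": f"called {request['params']['name']}"}


logging.basicConfig(level=logging.INFO)
proxy = make_proxy(fake_upstream, valid_tokens={"secret-token"})
call = {"method": "tools/call", "params": {"name": "search_docs", "arguments": {"q": "auth"}}}
print(proxy(call, "secret-token"))  # → {'result': 'called search_docs'}
print(proxy(call, "wrong-token"))   # → {'error': 'unauthorized'}
```

Because the wrapper only sees requests and responses, the upstream server needs no changes, which matches the "no changes to your MCP code" claim above.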

How to use it?

Developers can integrate HyprMCP into their existing MCP architecture with minimal effort. You deploy HyprMCP as a proxy, configuring it to point to your MCP server(s). It leverages technologies like Kubernetes Operators (via Metacontroller) to automate the underlying infrastructure if needed. For authentication, you connect HyprMCP to your organization's existing authentication methods. The proxy then handles user authentication, logs all requests and responses for debugging, and collects data for prompt analytics. This means you can protect your MCP server with your current security infrastructure and gain valuable operational data. So, how does this benefit you? It allows for rapid enhancement of your MCP deployments, providing enterprise-grade features like robust security and detailed performance monitoring with straightforward integration.

Product Core Function

· Authentication Proxy: Enables secure access to MCP servers by integrating with existing authentication providers (e.g., OAuth, OIDC), ensuring only authorized users can interact with your MCP. This is valuable for protecting sensitive operations and maintaining compliance.

· Logging and Debugging: Captures raw gRPC method calls, providing detailed logs that are crucial for identifying and resolving issues, especially in complex or serverless environments. This helps you quickly pinpoint problems and reduce downtime.

· Prompt Analytics: Collects data on how user prompts trigger specific MCP tools and evaluates their performance, offering insights to optimize MCP behavior and improve user experience. This allows for data-driven improvements to your MCP's responsiveness and effectiveness.

· MCP Connection Instructions Generator: Simplifies the process for users to connect to your MCP server by providing clear, generated instructions. This enhances user onboarding and reduces support overhead.

· Dynamic Infrastructure Provisioning (via Kubernetes Operators): Automates the setup and management of the proxy's infrastructure, reducing manual configuration and ensuring scalability. This means a smoother and more automated deployment process.
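Prompt analytics, as described above, amounts to aggregating which tools prompts triggered and how those calls went. A hypothetical aggregation over logged calls; the record fields (`prompt`, `tool`, `ok`) and the `tool_stats` function are assumptions, not HyprMCP's data model.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical log record; the fields are assumptions for illustration.


@dataclass
class ToolCall:
    prompt: str  # the user prompt that led to the call
    tool: str    # tool name that was invoked
    ok: bool     # whether the call succeeded


def tool_stats(calls: list[ToolCall]) -> dict[str, dict[str, float]]:
    """Per tool: number of calls and success rate."""
    counts: dict[str, list[int]] = defaultdict(lambda: [0, 0])  # [calls, successes]
    for c in calls:
        counts[c.tool][0] += 1
        counts[c.tool][1] += int(c.ok)
    return {tool: {"calls": n, "success_rate": ok / n} for tool, (n, ok) in counts.items()}


calls_log = [
    ToolCall("find the auth docs", "search_docs", True),
    ToolCall("how do I log in?", "search_docs", False),
    ToolCall("create a ticket for the bug", "create_issue", True),
]
print(tool_stats(calls_log))
# → {'search_docs': {'calls': 2, 'success_rate': 0.5}, 'create_issue': {'calls': 1, 'success_rate': 1.0}}
```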

Product Usage Case

· Securing a production MCP server for a SaaS application by integrating HyprMCP with an existing OAuth provider. This prevents unauthorized access and protects user data, solving the critical security challenge.

· Debugging intermittent issues with a serverless MCP deployed on Cloudflare Workers. HyprMCP's raw gRPC logging allows developers to trace the exact request flow and identify the root cause, a task that would be extremely difficult otherwise.

· Optimizing an AI-powered application that uses an MCP to access various tools. Prompt analytics from HyprMCP reveal which prompts lead to the most effective tool usage, enabling developers to fine-tune the AI's responses and improve overall application performance.

· Onboarding new developers to a complex internal MCP system. The HyprMCP connection instructions generator provides a user-friendly guide, reducing the learning curve and accelerating their productivity.

6

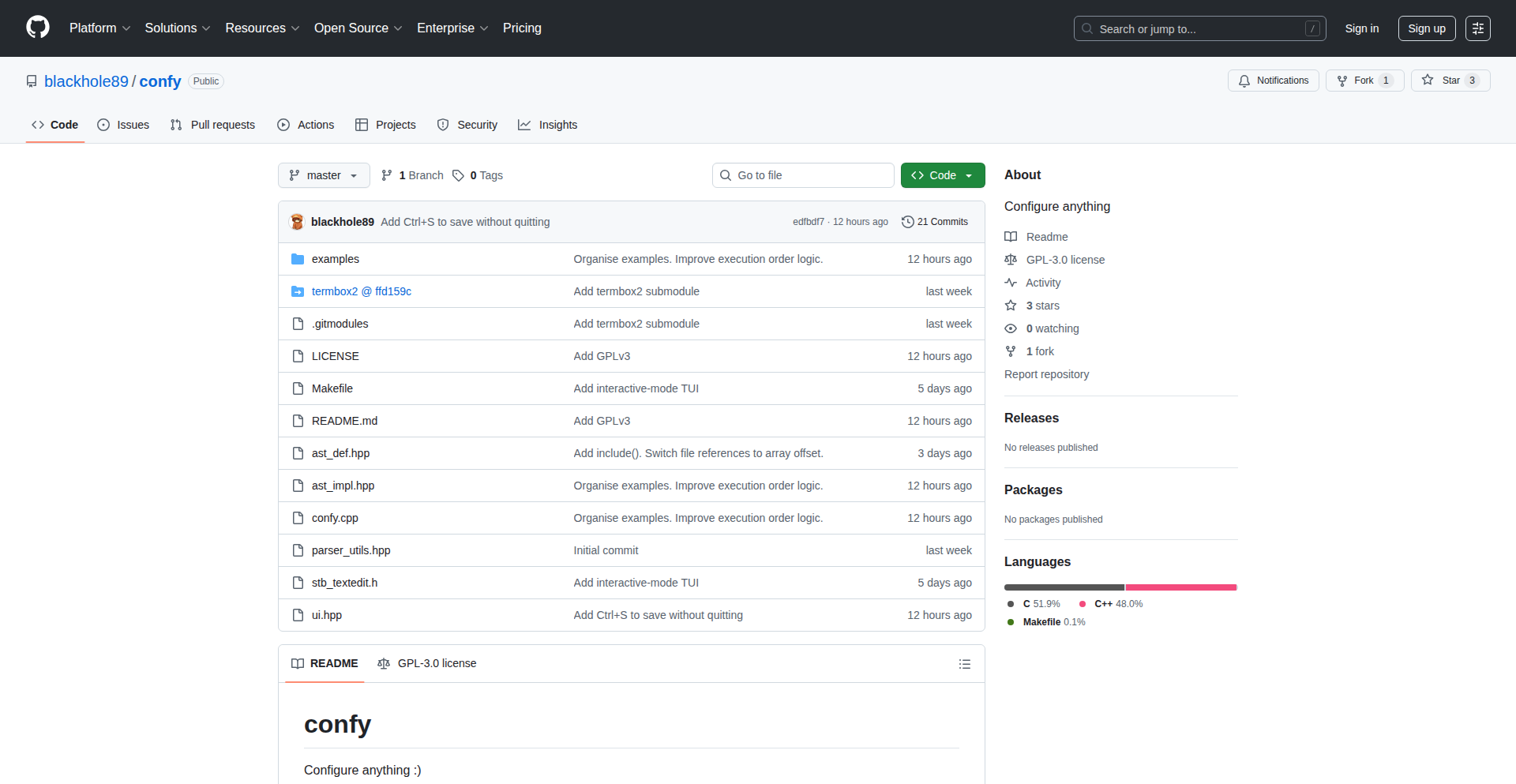

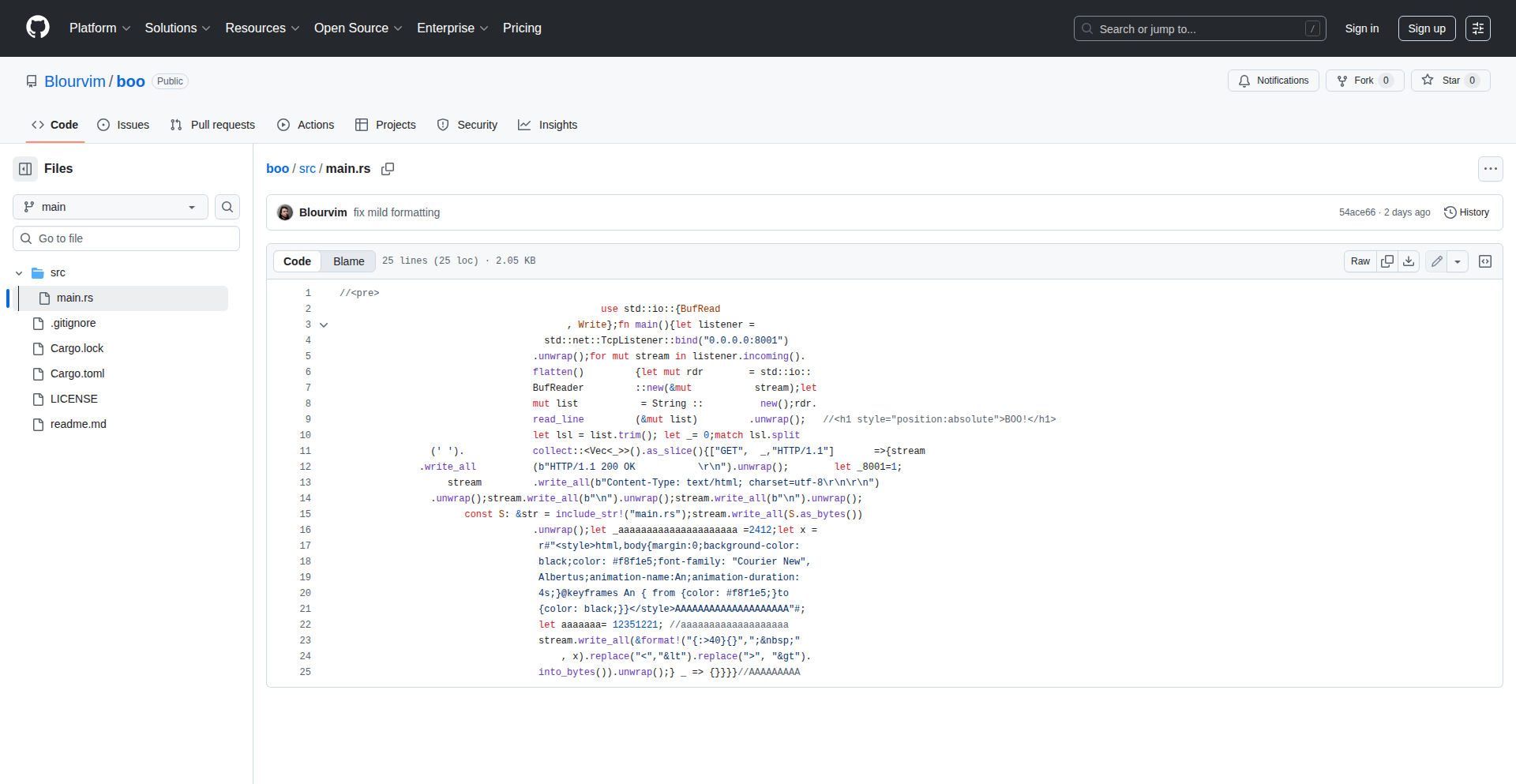

RedLisp Shell

Author

quintussss

Description

RedLisp Shell is a novel approach to shell scripting, reimagining the command line experience by integrating the power and expressiveness of the Lisp programming language with the familiar functionalities of the Unix shell. It allows developers to write shell scripts using Lisp syntax, enabling sophisticated control flow, data manipulation, and error handling while seamlessly executing system commands, managing processes, and piping data between them. This innovation bridges the gap between high-level programming logic and low-level system interaction, offering a more structured and potent way to automate tasks.

Popularity

Points 29

Comments 0

What is this product?

RedLisp Shell is a lightweight interpreter written in C++ that allows you to use Lisp as your primary language for writing shell scripts. Instead of using Bash or other traditional shell scripting languages, you leverage Lisp's powerful syntax for defining logic, managing variables, and controlling program flow. Crucially, it doesn't just replace your shell commands; it enhances them by allowing you to treat command outputs as Lisp data structures, enabling complex transformations and conditional execution. The innovation lies in its ability to marry Lisp's elegance with the practical realities of interacting with the operating system, making complex shell operations more manageable and readable.
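To make the "command output as Lisp data" idea concrete, here is a toy s-expression evaluator in Python. It is not RedLisp's C++ interpreter or its syntax: the built-ins `run`, `lines`, `grep`, and `len` are hypothetical, and `run` executes an argv list directly, without a shell. The demo uses the Python interpreter as the external command so it runs on any platform; on Unix the same form could call `ls` or `git`.

```python
import re
import subprocess
import sys

# Toy evaluator for illustration; built-in names are hypothetical, not RedLisp's.
# Tokens: parentheses, double-quoted strings (kept with quotes), or bare atoms.
TOKEN = re.compile(r'\(|\)|"[^"]*"|[^\s()"]+')


class Symbol(str):
    """A bare identifier such as `run`, as opposed to a quoted string."""


def parse(src: str):
    """Turn source text into nested Python lists, strings, ints and Symbols."""
    tokens = TOKEN.findall(src)

    def read(i: int):
        tok = tokens[i]
        if tok == "(":
            items, i = [], i + 1
            while tokens[i] != ")":
                item, i = read(i)
                items.append(item)
            return items, i + 1
        if tok.startswith('"'):
            return tok[1:-1], i + 1
        try:
            return int(tok), i + 1
        except ValueError:
            return Symbol(tok), i + 1

    expr, _ = read(0)
    return expr


BUILTINS = {
    # (run "prog" "arg" ...) -> the command's stdout as one string
    "run": lambda *argv: subprocess.run(list(argv), capture_output=True, text=True, check=True).stdout,
    # (lines s) -> list of non-empty lines
    "lines": lambda s: [ln for ln in s.splitlines() if ln],
    # (grep pattern items) -> items containing pattern
    "grep": lambda pat, items: [x for x in items if pat in x],
    "len": len,
}


def evaluate(expr):
    """Lists are calls (head names a built-in); everything else evaluates to itself."""
    if isinstance(expr, list):
        head, *args = expr
        return BUILTINS[head](*[evaluate(a) for a in args])
    return expr


py = sys.executable  # portable stand-in for an external command
prog = "print('alpha'); print('beta'); print('gamma')"
script = f'(grep "mm" (lines (run "{py}" "-c" "{prog}")))'
print(evaluate(parse(script)))  # → ['gamma']
```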

How to use it?

Developers can use RedLisp Shell by writing script files with a .lisp or .rlisp extension. These scripts can then be executed directly by the RedLisp interpreter. The interpreter understands Lisp syntax for defining functions, variables, and control structures, and it also knows how to invoke external shell commands, capture their standard output and error streams, and pipe this data into Lisp expressions for further processing. For integration, you can use it as a direct replacement for your Bash scripts, or embed its interpreter within larger C++ applications for dynamic shell-like functionality. Essentially, any task you'd do in a shell script, from file manipulation to process management, can now be done with the added benefits of Lisp's structured programming capabilities.

Product Core Function

· Lisp Syntax for Scripting: Allows developers to write shell scripts using Lisp's well-defined and powerful syntax, leading to more organized and maintainable scripts. This is useful for complex automation tasks where traditional shell scripting becomes unwieldy.

· Command Execution: Seamlessly runs standard Unix commands within the Lisp environment. This means you can call any existing command-line tool, like `ls`, `grep`, or `curl`, directly from your Lisp script, offering a familiar operational foundation.

· Output Capturing and Piping: Captures the standard output of executed commands and treats it as Lisp data, which can then be manipulated, filtered, or passed to other commands or Lisp functions. This is invaluable for data processing and chaining command-line tools in sophisticated ways.

· Process Management: Provides capabilities to manage processes, including starting, stopping, and monitoring them, all from within the Lisp scripting environment. This is essential for building robust automation workflows and system administration tools.

· Lisp Data Structures for Shell Data: Transforms the raw text output from shell commands into structured Lisp data, enabling advanced logic and manipulation that is difficult with plain text. This allows for more intelligent decision-making within scripts based on command results.
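The write-up does not show RedLisp's own syntax, so rather than guess at it, here is the underlying idea (run a command, capture its stdout, turn the text into structured data, then filter it) as a minimal Python sketch built on the standard `subprocess` module. It illustrates the concept only, not RedLisp's API; the inline command is a stand-in for something like `du`, and the function names are hypothetical.

```python
import subprocess
import sys


def run_lines(cmd: list[str]) -> list[str]:
    """Run a command and return its non-empty stdout lines.

    check=True raises CalledProcessError on a non-zero exit, so failures
    surface as exceptions instead of silently empty output.
    """
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return [line for line in result.stdout.splitlines() if line.strip()]


def parse_columns(lines: list[str]) -> list[list[str]]:
    """Split each whitespace-delimited line into a list of fields."""
    return [line.split() for line in lines]


if __name__ == "__main__":
    # Stand-in for a real command: prints "name size" rows.
    cmd = [sys.executable, "-c", "print('a.txt 50\\nb.txt 200\\nc.txt 150')"]
    rows = parse_columns(run_lines(cmd))
    # Filter structured rows instead of piping text through awk.
    big = [name for name, size in rows if int(size) > 100]
    print(big)  # ['b.txt', 'c.txt']
```

In a Lisp-based shell the parsed rows would presumably be plain lists that ordinary list functions operate on, which is the advantage the description claims over chaining `awk` and `sed`.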

Product Usage Case

· Automating complex data analysis pipelines: Instead of chaining multiple `awk`, `sed`, and `grep` commands with complex piping, a developer can write a single RedLisp script that captures command outputs, parses them into Lisp lists or other structures, performs intricate filtering and transformation, and then generates a formatted report. This solves the problem of unmanageable and error-prone multi-command pipelines.

· Building custom build tools: A developer can create a build system where RedLisp scripts orchestrate compilation, linking, testing, and deployment steps. The Lisp syntax allows for defining complex dependencies, conditional compilation based on build flags, and robust error handling, solving the challenge of creating flexible and resilient build processes.

· Developing interactive system monitoring tools: RedLisp can be used to write scripts that continuously monitor system metrics, process states, or log files. The Lisp logic enables sophisticated alerting mechanisms, dynamic adjustments to monitoring thresholds based on real-time data, and custom reporting formats, addressing the need for intelligent and adaptive system oversight.

· Creating configuration management scripts: Instead of writing lengthy Bash scripts to configure servers, a developer can use RedLisp to define desired states and execute commands to achieve them. Lisp's control flow and data-handling capabilities make it easier to manage complex configurations across multiple machines, solving the problem of inconsistent and hard-to-manage system setups.

7

AI Code Sorcerer: Automated Code Sentinel

Author

sunny-beast

Description

This is an open-source, AI-powered code review tool that identifies potential issues in a codebase before they become problems. It uses AI models to understand code context and suggest improvements, aiming to make the code review process more efficient and effective for developers.

Popularity

Points 16

Comments 6

What is this product?

AI Code Sorcerer is an open-source project that acts as an automated code reviewer. Instead of a human spending hours poring over lines of code, its AI models 'read' and 'understand' your code. It works like a highly experienced programmer who quickly scans your work for common bugs, potential security vulnerabilities, style inconsistencies, and areas where performance could be improved. Its main selling point is the ability to go beyond simple syntax checks and grasp the logic and intent behind your code, offering context-aware suggestions. So, this helps you catch errors early, saving debugging time and making your code more robust.

How to use it?

Developers can integrate AI Code Sorcerer into their existing workflows. This could involve running it as a pre-commit hook, where it automatically analyzes code before it's even committed to version control. Alternatively, it can be set up as part of a Continuous Integration (CI) pipeline, scanning code changes whenever new code is pushed. The tool can also be used manually on specific files or directories. It typically integrates with popular version control systems like Git and can output its findings in various formats, such as plain text or structured reports. This means you can easily incorporate it into your team's development process to ensure code quality consistently.
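The description doesn't say how AI Code Sorcerer scopes a review. A common approach for pre-commit and CI reviewers is to comment only on the lines a change adds, which means parsing the unified diff. A minimal sketch under that assumption (the function name `added_lines` is hypothetical); it tracks each hunk's old/new line counts so that added lines whose content happens to start with `++` are not mistaken for file headers.

```python
import re

# Hunk header, e.g. "@@ -10,2 +11,3 @@ def main():".
# Groups: old count, new start, new count. An omitted count means 1.
HUNK_RE = re.compile(r"^@@ -\d+(?:,(\d+))? \+(\d+)(?:,(\d+))? @@")


def added_lines(diff_text: str) -> dict[str, list[int]]:
    """Map each file in a unified diff to the new-file line numbers it adds."""
    result: dict[str, list[int]] = {}
    current_file = None
    new_line = 0
    old_left = new_left = 0  # lines still expected in the current hunk
    for line in diff_text.splitlines():
        if old_left > 0 or new_left > 0:  # inside a hunk body
            if line.startswith("+"):
                if current_file is not None:
                    result.setdefault(current_file, []).append(new_line)
                new_line += 1
                new_left -= 1
            elif line.startswith("-"):
                old_left -= 1  # removed lines don't advance the new-file counter
            elif line.startswith("\\"):
                pass  # "\ No newline at end of file" marker
            else:  # context line, present in both versions
                new_line += 1
                new_left -= 1
                old_left -= 1
            continue
        if line.startswith("+++ "):
            path = line[4:].split("\t")[0]
            # "/dev/null" means the file was deleted: nothing to review.
            current_file = None if path == "/dev/null" else path.removeprefix("b/")
            continue
        m = HUNK_RE.match(line)
        if m:
            old_left = int(m.group(1) or 1)
            new_line = int(m.group(2))
            new_left = int(m.group(3) or 1)
    return result
```

Fed with `git diff --cached -U0` in a pre-commit hook, or with a pull request's diff in CI, this yields the lines a reviewer, human or model, should focus its comments on.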

Product Core Function

· Automated bug detection: Utilizes AI to identify common programming errors and potential logic flaws, reducing the likelihood of runtime issues and saving debugging hours.

· Security vulnerability analysis: Scans code for known security weaknesses and potential attack vectors, helping to build more secure applications.

· Code style and best practice enforcement: Checks code against predefined style guides and industry best practices, ensuring consistency and maintainability across the project.

· Performance optimization suggestions: Analyzes code for inefficient patterns and suggests optimizations, leading to faster and more resource-efficient applications.

· Context-aware recommendations: Goes beyond simple pattern matching by understanding the context of the code to provide more relevant and actionable feedback.

Product Usage Case

· A small startup team working on a critical web application uses AI Code Sorcerer as a pre-commit hook. It immediately flags a potential SQL injection vulnerability in a new feature's code, preventing it from ever reaching the main branch and saving the team from a potential security breach.

· A large enterprise development team integrates AI Code Sorcerer into their CI pipeline. It identifies several performance bottlenecks in a recently merged pull request, allowing developers to refactor those sections before they impact the production environment and user experience.

· An individual open-source contributor uses AI Code Sorcerer to review their own code before submitting a pull request to a popular project. The tool highlights minor style inconsistencies and a potential off-by-one error in a loop, which they then fix, leading to a smoother review process with the project maintainers.

· A team developing an embedded system uses AI Code Sorcerer to enforce strict coding standards. The tool flags instances where memory management is not handled optimally, ensuring their resource-constrained system remains stable and efficient.
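The SQL injection case above is the classic bug class such reviewers flag. The snippet below, using Python's built-in `sqlite3` module and a made-up `users` table, contrasts a string-built query that a reviewer would flag with the parameterized fix. It illustrates the vulnerability pattern, not AI Code Sorcerer's actual output.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """In-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # FLAGGED: user input is spliced into the SQL text itself.
    return conn.execute(f"SELECT id FROM users WHERE name = '{name}' ORDER BY id").fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Fixed: the ? placeholder makes the driver pass `name` as data, never as SQL.
    return conn.execute("SELECT id FROM users WHERE name = ? ORDER BY id", (name,)).fetchall()


if __name__ == "__main__":
    conn = make_demo_db()
    payload = "' OR '1'='1"
    print(find_user_unsafe(conn, payload))  # [(1,), (2,)]: every row leaks
    print(find_user_safe(conn, payload))    # []: no user has that literal name
```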

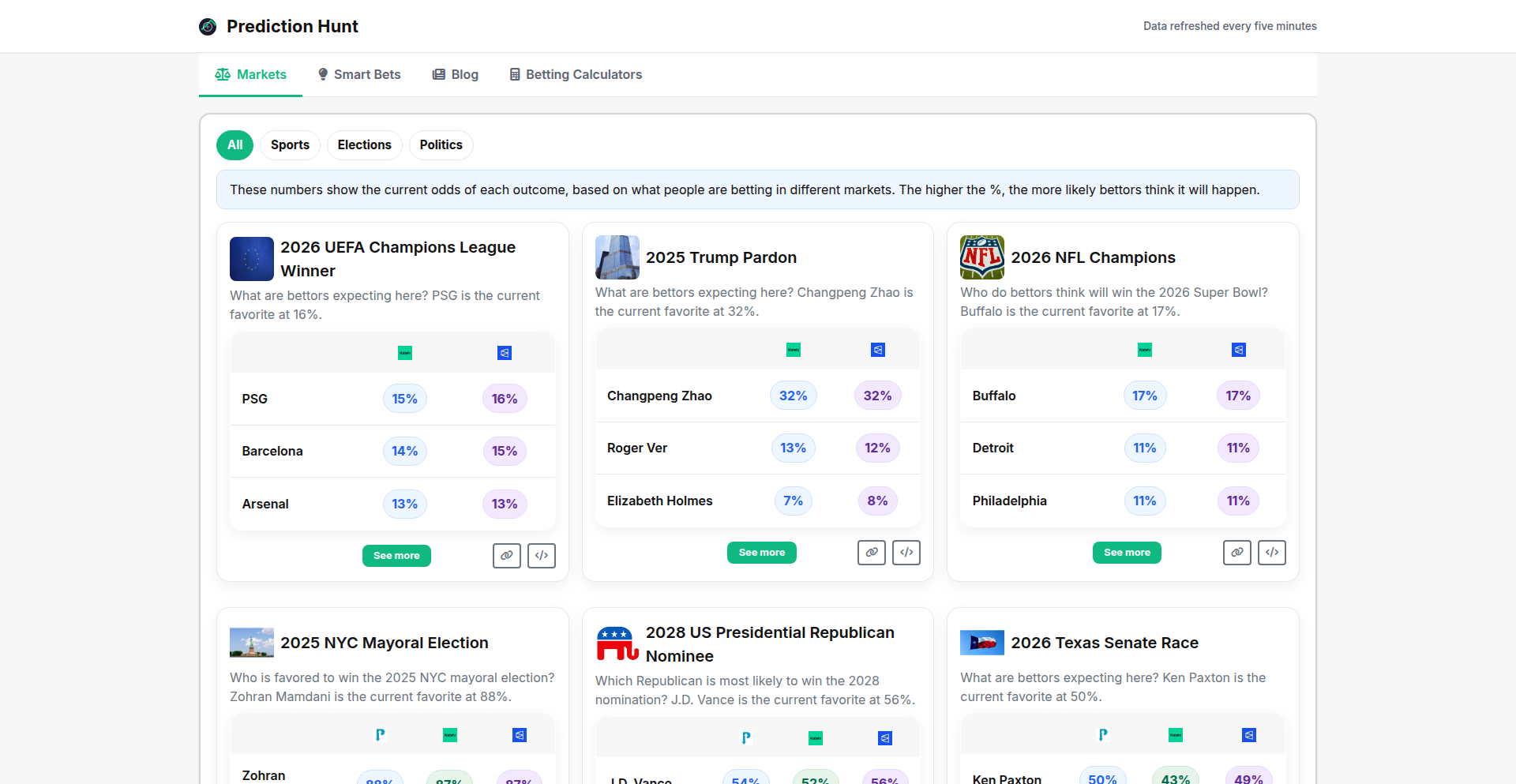

8

Prediction Aggregator API

Author

carushow

Description

Prediction Hunt is a web dashboard that consolidates data from various prediction markets, such as Kalshi, Polymarket, and PredictIt. It provides a unified view of event probabilities and identifies potential arbitrage opportunities when different markets offer conflicting price signals. The core innovation lies in its real-time data aggregation and analysis, saving users the hassle of manually checking multiple platforms.

Popularity

Points 10

Comments 7

What is this product?

Prediction Hunt is a service that collects and displays information from different online prediction markets. Imagine you want to know the chances of a specific event happening, like a political election outcome or a sports game result. Instead of visiting each market's website separately, Prediction Hunt pulls all that data into one place. It derives each event's probability from the prices traders pay on these markets and flags cases where markets disagree enough that buying YES on one market and NO on another costs less than the guaranteed payout. That is an arbitrage opportunity: whichever way the event resolves, one of the two positions pays out, so the profit is locked in before fees. The technical idea is to use APIs (Application Programming Interfaces) provided by these prediction markets to fetch their data, process it to show clear probabilities, and highlight discrepancies.
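Concretely, a cross-market arbitrage on a binary event exists when the cheapest YES plus the cheapest NO, across markets, costs less than the $1 that exactly one of them pays. A minimal sketch, assuming every contract pays $1 and that quotes are already fetched; the `Quote` type, the flat `fee_per_pair`, and the yes/(yes+no) normalization are illustrative choices, not Prediction Hunt's method.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Quote:
    market: str     # e.g. "Kalshi" or "Polymarket"
    yes_ask: float  # cost in dollars of a YES contract that pays $1
    no_ask: float   # cost in dollars of a NO contract that pays $1


def implied_probability(q: Quote) -> float:
    """Approximate P(YES) by normalizing away the market's spread (one simple convention)."""
    return q.yes_ask / (q.yes_ask + q.no_ask)


def find_arbitrage(quotes: list[Quote],
                   fee_per_pair: float = 0.0) -> Optional[tuple[str, str, float]]:
    """Return (yes_market, no_market, locked_profit) if buying the cheapest YES
    and the cheapest NO costs less than the $1 payout, else None."""
    best_yes = min(quotes, key=lambda q: q.yes_ask)
    best_no = min(quotes, key=lambda q: q.no_ask)
    cost = best_yes.yes_ask + best_no.no_ask + fee_per_pair
    if cost < 1.0:
        return best_yes.market, best_no.market, round(1.0 - cost, 4)
    return None


if __name__ == "__main__":
    quotes = [Quote("A", yes_ask=0.40, no_ask=0.62), Quote("B", yes_ask=0.55, no_ask=0.47)]
    for q in quotes:
        print(q.market, round(implied_probability(q), 3))
    print(find_arbitrage(quotes))  # ('A', 'B', 0.13)
```

The profit is only truly locked in if both markets resolve the event under the same criteria; differing resolution rules are a common reason an apparent arbitrage persists.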

How to use it?

Developers can integrate Prediction Hunt's functionality into their own applications or use it for personal analysis. For instance, a financial news app could display the predicted likelihood of an economic event based on these markets. A quantitative analyst could use the data to build trading strategies that exploit arbitrage opportunities. For developers, this would mean accessing the aggregated data, presumably through an API endpoint if Prediction Hunt offers one (the current description covers only the dashboard), and then visualizing or processing it within their own systems. The use case is for anyone who needs to gauge public sentiment, or spot potential profits in prediction market data, without manually cross-referencing markets.

Product Core Function

· Real-time Data Aggregation: Collects and updates predictions from multiple sources every few minutes, offering up-to-date market sentiment without constant manual checks. This is valuable for staying informed and making timely decisions.

· Unified Probability Display: Presents the likelihood of various events in a single, easy-to-understand dashboard, simplifying complex market data for quicker analysis and comprehension.

· Arbitrage Opportunity Highlighting: Identifies discrepancies in pricing across different prediction markets, signaling potential profit-making opportunities for astute users. This adds a layer of financial insight and trading potential.

· Cross-Market Comparison: Allows direct comparison of how different markets are pricing the same event, providing a more robust understanding of overall market expectations.

· Historical Data Access (Potential Future Feature): While not explicitly stated, the infrastructure to aggregate current data could be extended to store and analyze historical trends, offering insights into market evolution over time.

Product Usage Case

· A financial analyst wanting to gauge market expectations for upcoming economic indicators could use Prediction Hunt to see aggregated probabilities from various prediction markets, saving time and providing a consolidated view for their reports.

· A sports betting enthusiast could monitor multiple prediction markets for a particular game simultaneously, identifying any significant price differences that might indicate an edge, by leveraging the arbitrage highlighting feature.

· A news aggregator website could integrate prediction market data into their articles about political events or social trends, providing readers with an additional data point reflecting public foresight and market sentiment.

· A developer building a personal dashboard for tracking various market indicators could use Prediction Hunt's data feed to incorporate real-time prediction market outcomes, enhancing their personalized data visualization.

9

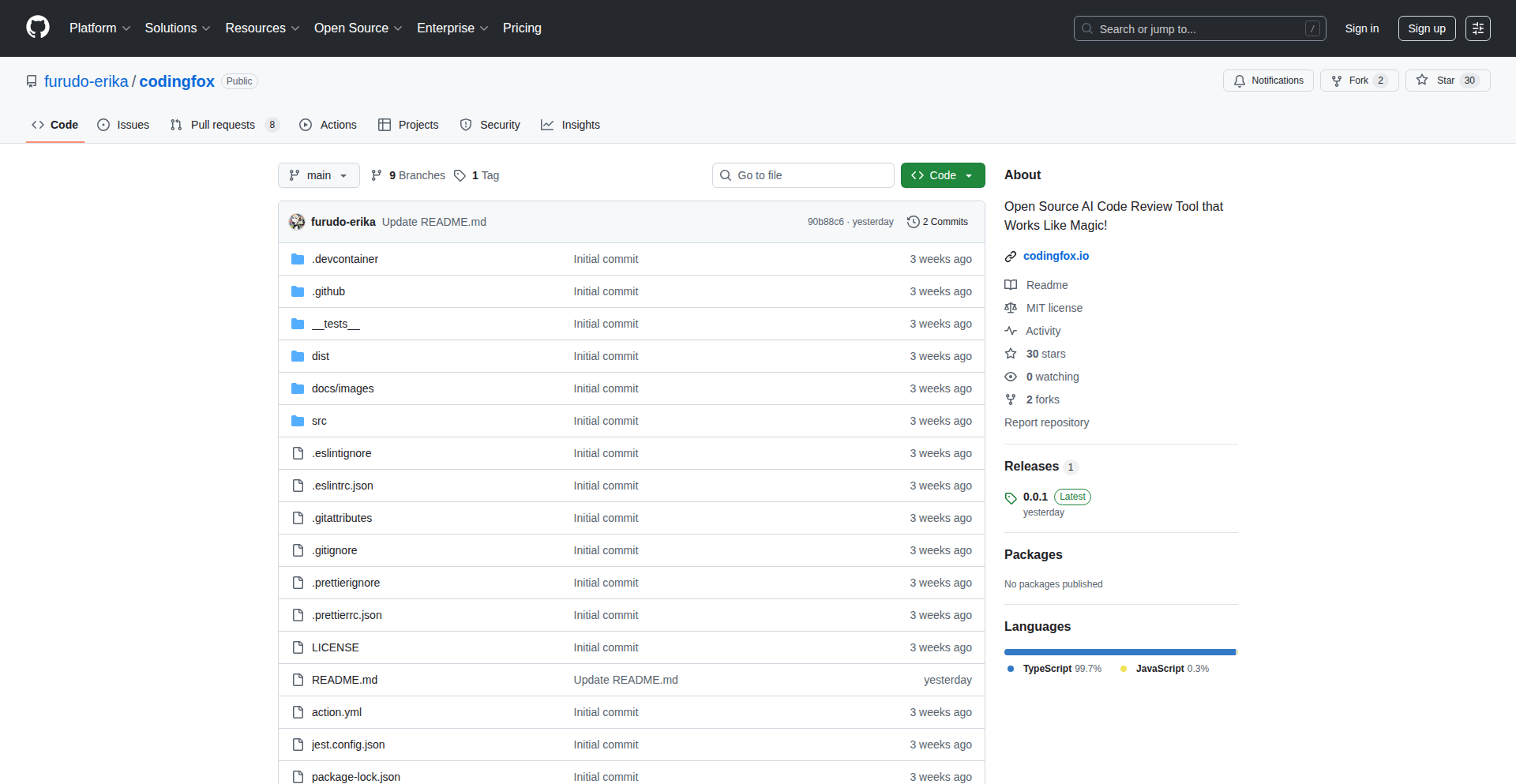

CodingFox AI Code Review Engine

Author

jennie907

Description

CodingFox is an open-source AI-powered code review tool designed to automate the process of identifying potential issues and suggesting improvements in your codebase. It leverages advanced machine learning models to analyze code quality, detect bugs, and offer stylistic suggestions, functioning as a 'magic' assistant for developers to write cleaner, more robust code with less manual effort.

Popularity

Points 11

Comments 0

What is this product?

CodingFox is an open-source AI code review tool. At its core, it uses sophisticated natural language processing (NLP) and code analysis models. These models have been trained on vast amounts of code to understand programming language patterns, common error types, and best practices. When you submit your code, CodingFox 'reads' it like a human reviewer, but much faster and more consistently. It identifies potential bugs that might lead to runtime errors, security vulnerabilities, and deviations from coding standards. The innovation lies in its ability to go beyond simple syntax checks and understand the semantic meaning and potential impact of code constructs, offering context-aware suggestions. So, this is useful because it automates a time-consuming part of development, helping you catch errors early and improve code quality without needing multiple human eyes on every line.

How to use it?

Developers can integrate CodingFox into their existing workflows. It can be used as a standalone tool where you submit code snippets or entire files for analysis. More powerfully, it can be integrated into Continuous Integration (CI) pipelines (e.g., with GitHub Actions, GitLab CI). This means that every time you push code, CodingFox automatically scans it. If it finds issues, it can flag them, prevent the merge, or even automatically create pull request comments with detailed suggestions. This integration streamlines the review process, ensuring that code quality standards are maintained consistently. So, this is useful because it fits seamlessly into how you already build software, making sure your code is reviewed automatically and consistently, saving you time and preventing common mistakes from reaching production.
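CodingFox's report format isn't described, so the sketch below assumes a hypothetical JSON list of findings, each with `file`, `line`, `severity`, and `message` fields, and shows how a CI step could implement "prevent the merge": a non-zero exit code fails the job, and a branch-protection rule that requires that check then blocks the merge. The severity scale and function names are also assumptions.

```python
import json
import sys

# Assumed severity scale; a real tool's report format may differ.
SEVERITY_RANK = {"info": 0, "low": 1, "medium": 2, "high": 3, "critical": 4}


def blocking_findings(findings: list[dict], fail_on: str = "high") -> list[dict]:
    """Return the findings at or above the `fail_on` severity."""
    threshold = SEVERITY_RANK[fail_on]
    return [f for f in findings
            if SEVERITY_RANK.get(f.get("severity", "info"), 0) >= threshold]


def gate(findings: list[dict], fail_on: str = "high") -> int:
    """Print blocking findings and return a CI exit code (1 fails the check)."""
    blocking = blocking_findings(findings, fail_on)
    for f in blocking:
        print(f"{f['file']}:{f['line']}: [{f['severity']}] {f['message']}")
    return 1 if blocking else 0


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python gate.py report.json [fail_on]
    with open(sys.argv[1]) as fh:
        report = json.load(fh)
    level = sys.argv[2] if len(sys.argv) > 2 else "high"
    sys.exit(gate(report, level))
```

Making `fail_on` configurable lets a team start by only reporting findings and tighten the threshold once the false-positive rate is acceptable.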

Product Core Function

· Automated Bug Detection: Analyzes code to identify potential runtime errors, null pointer exceptions, and other common pitfalls, significantly reducing the chance of bugs slipping into production. Its value is in catching issues that might be missed by human reviewers due to fatigue or oversight, thereby improving software stability.

· Security Vulnerability Spotting: Scans code for known security weaknesses and anti-patterns, such as SQL injection risks or insecure data handling, helping to protect applications from breaches. This is valuable as it proactively addresses security concerns, a critical aspect of modern software development.

· Code Style and Best Practice Enforcement: Checks code against predefined or customizable style guides and best practices, ensuring consistency and readability across the project. This is valuable for team collaboration and long-term maintainability of the codebase.

· Refactoring Suggestions: Identifies opportunities to simplify complex code, improve performance, or enhance clarity, guiding developers toward more efficient and maintainable solutions. This helps developers learn and apply better coding techniques, leading to higher-quality software.

· Natural Language Explanations: Provides human-readable explanations for its findings and suggestions, making it easier for developers to understand why a change is recommended and how to implement it. This value lies in its educational aspect, empowering developers to learn and improve their coding skills.

Product Usage Case

· A startup using CodingFox in their GitHub Actions CI pipeline to automatically check every pull request before merging. This ensures that all new code meets their quality standards, reducing the burden on senior developers and accelerating the release cycle. It solves the problem of slow and inconsistent code reviews.

· An open-source project integrating CodingFox to maintain high code quality across many contributors. It helps new contributors understand the project's standards and catch common mistakes early, making onboarding smoother and the project more robust. This addresses the challenge of managing code quality in a distributed team.

· A developer working on a personal project uses CodingFox as a local pre-commit hook. Before committing code, CodingFox analyzes it, catching minor issues and suggesting improvements on the fly, leading to a cleaner commit history and better code from the start. This provides immediate feedback and improves individual coding habits.

10

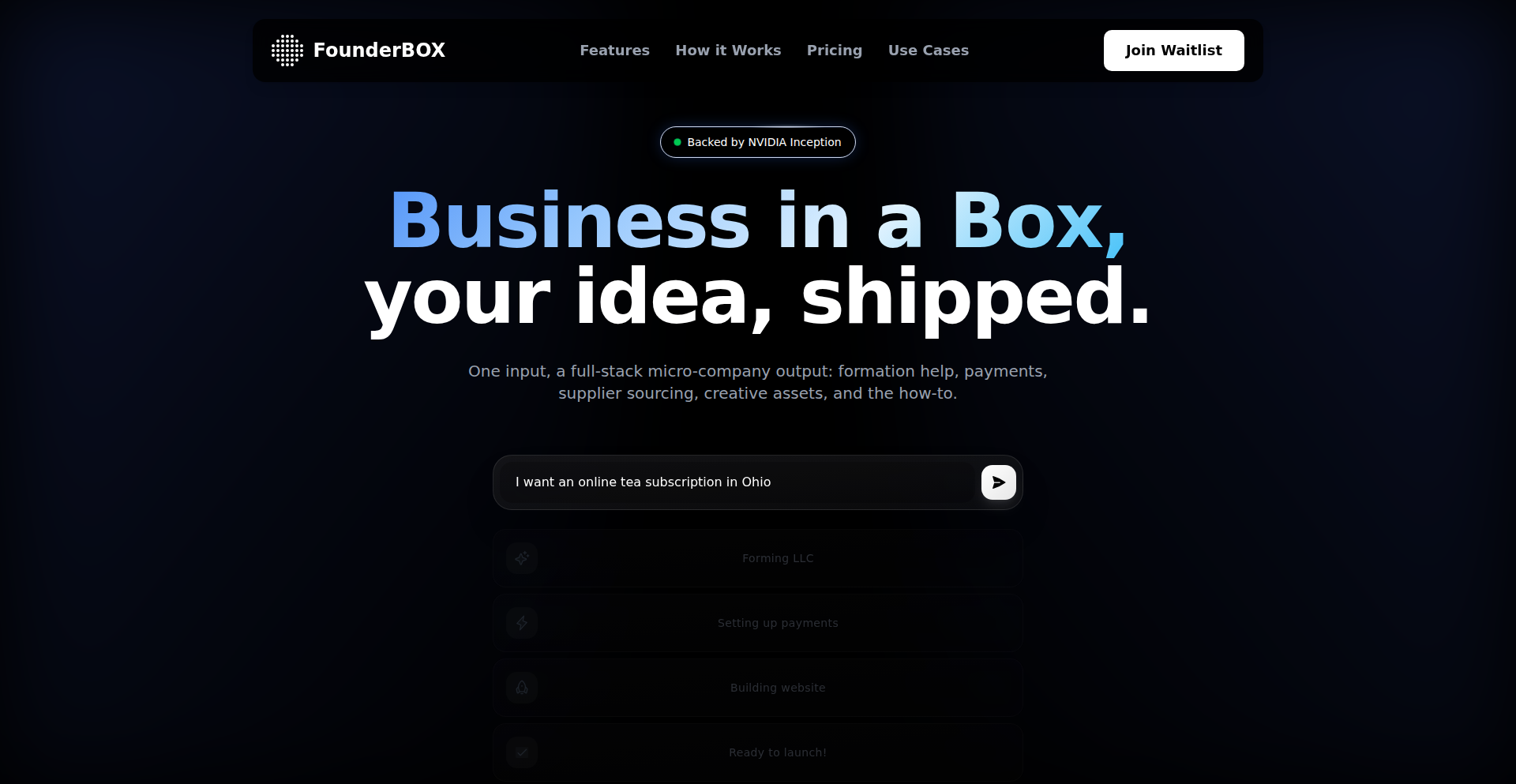

FounderBox: AI-Powered Business Genesis Engine

Author

PrateekJ17

Description

FounderBox is an AI-driven platform that transforms a single prompt into a fully functional business. It automates the creation of company name, incorporation guidance, website, payment gateway, supplier matching, advertising, and basic operating procedures. The core innovation lies in collapsing the fragmented and expensive business setup process into a seamless, end-to-end pipeline, leveraging AI to democratize entrepreneurship.

Popularity

Points 10

Comments 0

What is this product?

FounderBox is a platform that acts like a digital entrepreneurship concierge. Instead of piecing together services from lawyers, web designers, marketing consultants, and payment processors, you provide a simple text prompt describing your business idea. FounderBox then uses AI to generate the essential components: a company name, incorporation guidance, a functional website with e-commerce capabilities (such as Stripe checkout), connections to potential suppliers, initial advertising strategies, and a basic Standard Operating Procedure (SOP). The technical innovation is in orchestrating various AI models and APIs to automate what traditionally required numerous human experts and manual integration, making business formation more accessible and affordable. So, what's the value to you? It dramatically reduces the time, cost, and complexity of starting a business, allowing you to focus on your vision rather than administrative hurdles.

How to use it?

Developers can integrate FounderBox into their workflows by utilizing its API or by directly interacting with its user interface. For a typical use case, a founder would visit the FounderBox website, enter a descriptive prompt like 'I want to start an eco-friendly dog toy subscription box company,' and specify desired parameters. The platform handles the rest. For developers looking to build complementary services or embed business creation into other applications, FounderBox offers APIs to programmatically trigger the business genesis process, receive the generated assets, and integrate them into their own platforms. This could be used in startup accelerators, freelance marketplaces, or even within educational tools. So, how can you use this? You can either use it directly to launch your business in minutes, or if you're a developer, you can leverage its power to offer streamlined business creation as a feature in your own products.

Product Core Function

· AI-driven company name generation: Utilizes natural language processing to suggest unique and relevant business names based on your prompt, accelerating brand identity creation.

· Automated incorporation guidance: Provides foundational advice and resources for legal business setup, simplifying the often daunting legal aspects of starting a company.

· One-click website and payment gateway creation: Generates a ready-to-deploy website with integrated e-commerce functionality, like Stripe checkout, enabling immediate sales capabilities.

· Smart supplier matching: Leverages AI to identify and suggest potential suppliers or manufacturers relevant to your business, streamlining your supply chain setup.

· Basic advertising campaign generation: Develops initial marketing strategies and ad copy, helping you reach your target audience quickly.

· SOP and operational blueprint: Creates a foundational Standard Operating Procedure to guide day-to-day business operations, providing a starting point for management and efficiency.

Product Usage Case

· A solo entrepreneur with a unique product idea uses FounderBox to generate a business name, website, and Stripe integration within an hour. This allows them to test market demand with a functional prototype quickly, solving the problem of high upfront costs and long development times for a new venture.

· A non-profit organization needs to quickly establish a legal entity and online presence to receive donations for an urgent cause. FounderBox provides them with the necessary legal framework guidance and a donation-ready website, enabling them to start fundraising immediately and address the critical need for speed in humanitarian efforts.

· A coding bootcamp includes FounderBox as part of their curriculum. Students can use it to rapidly prototype business ideas they learn about, transforming theoretical knowledge into tangible businesses, thereby solving the challenge of making entrepreneurial education practical and outcome-oriented.

11

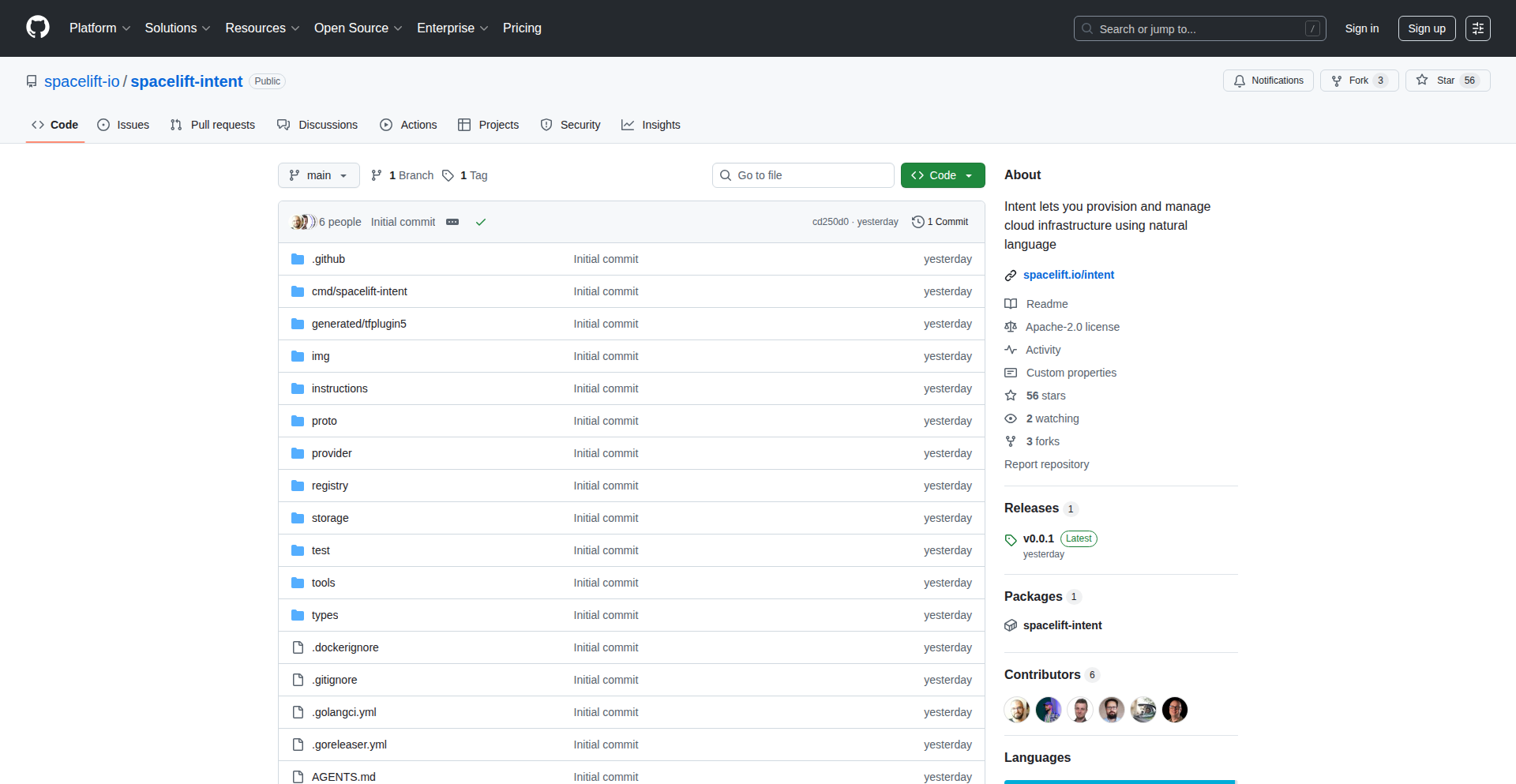

AI-Agent Cloud Orchestrator

Author

cube2222

Description

This project presents a novel approach to cloud infrastructure provisioning, designed specifically for AI agents. It leverages a declarative intent-based model, allowing AI agents to express their desired cloud environment in a high-level, human-readable format. The innovation lies in translating these abstract intents into concrete infrastructure deployments, effectively bridging the gap between AI decision-making and real-world cloud resources. This addresses the challenge of making complex cloud infrastructure manageable and adaptable for rapidly evolving AI applications.

Popularity

Points 8

Comments 1

What is this product?

This is an intelligent system that allows AI agents to describe what kind of cloud infrastructure they need, and then it automatically builds and manages it for them. Think of it like an AI agent telling a construction crew what kind of building it wants, and the crew handles all the blueprints, materials, and construction. The core innovation is the 'Intent MCP', an MCP (Model Context Protocol) server that takes the AI's high-level requirements (like 'I need a scalable web server with a database for training models') and translates them into the specific commands needed for cloud providers like AWS, Azure, or GCP. This means the AI doesn't need to know the nitty-gritty details of cloud provider APIs; it just states its needs.

How to use it?

Developers can integrate this system into their AI agent frameworks. Instead of manually writing complex infrastructure-as-code definitions (such as Terraform configurations or CloudFormation templates), the AI agent can output its infrastructure requirements in a structured format (e.g., JSON or YAML) that the Intent MCP understands. The MCP then communicates with the cloud provider's APIs to provision and configure the necessary resources. This is useful for scenarios where AI agents need dynamic, on-demand access to compute, storage, or specialized services, such as machine learning model training, data processing pipelines, or deploying AI-powered applications.

Product Core Function

· Declarative Intent Parsing: The system takes abstract descriptions of desired cloud resources from an AI agent and understands them. This is valuable because it frees AI developers from writing low-level cloud configuration code, allowing them to focus on AI logic. It enables faster iteration and deployment of AI solutions.

· Multi-Cloud Abstraction: It can provision infrastructure across different cloud providers (e.g., AWS, Azure, GCP) using a single intent model. This offers flexibility and avoids vendor lock-in, which is crucial for enterprise-grade AI deployments that might require specific services from different clouds.

· Automated Infrastructure Provisioning: The system automatically translates intents into actual cloud resources, spinning up virtual machines, databases, networking, and other services. This dramatically speeds up the deployment process for AI workloads and reduces the risk of human error in manual configurations.

· State Management and Drift Detection: It keeps track of the deployed infrastructure and can detect if it deviates from the declared intent, automatically correcting it. This ensures the AI agent always has the correct and desired environment, preventing downtime or performance issues caused by misconfigurations.
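The project's internals aren't described here, but drift detection of this kind is usually built as a reconcile step that diffs declared intent against observed state and emits a plan. A minimal sketch, assuming a hypothetical flat resource model (resource ids mapped to attribute dicts); it is not the project's data model or API.

```python
from typing import Any

Resource = dict[str, Any]


def plan(desired: dict[str, Resource], actual: dict[str, Resource]) -> list[tuple[str, str]]:
    """Diff desired vs. actual state into (action, resource_id) steps."""
    steps = []
    for rid in sorted(desired):
        if rid not in actual:
            steps.append(("create", rid))
        elif desired[rid] != actual[rid]:
            steps.append(("update", rid))  # drift: live config differs from intent
    for rid in sorted(actual):
        if rid not in desired:
            steps.append(("delete", rid))  # exists but was never declared
    return steps


if __name__ == "__main__":
    desired = {
        "web": {"type": "vm", "size": "medium", "count": 3},
        "db": {"type": "postgres", "version": "16"},
    }
    actual = {
        "web": {"type": "vm", "size": "medium", "count": 2},  # scaled down by hand
        "cache": {"type": "redis"},
    }
    print(plan(desired, actual))
    # [('create', 'db'), ('update', 'web'), ('delete', 'cache')]
```

A real controller would execute each step through the provider's API and re-run the diff periodically; dependency ordering (e.g. creating a database before the web tier that uses it) is omitted here.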

Product Usage Case

· A machine learning engineer is training a large deep learning model. Instead of manually setting up multiple powerful GPUs and a distributed training environment on a cloud platform, their AI training agent can simply declare 'I need 8 high-performance GPUs with 64GB RAM each, interconnected for distributed training.' The Intent MCP then provisions this environment, and the AI training begins immediately, saving significant setup time and effort.

· An AI chatbot needs to scale its backend services based on user traffic. The chatbot's AI can signal its intent to the orchestrator, like 'Increase web server capacity by 50% and ensure database read replicas are available.' The system automatically scales the infrastructure up or down, ensuring the chatbot remains responsive and reliable without manual intervention, which is key for handling unpredictable user demand.

· A data science team is performing complex data analysis requiring a specific set of compute instances and data storage. They can define a 'data analysis environment' intent. When a data scientist needs this environment, their AI agent requests it, and the orchestrator provisions it. This ensures consistency across experiments and simplifies access to necessary tools and resources for the team.

12

LLM-Infra Orchestrator

Author

kvgru

Description

This project is a demo showcasing an LLM-driven workflow that transforms natural language prompts into deployed infrastructure. It acts like a Replit-style frontend for infrastructure management, where a prompt in Claude generates a workload specification. This spec is then processed by Humanitec to deterministically deploy infrastructure to GCP using Terraform, resulting in application deployment in under a minute without traditional pipelines or DevOps tickets. It's designed for enterprise-grade policy enforcement, making complex infrastructure management feel magical for developers and AI agents.

Popularity

Points 6

Comments 2

What is this product?

This is a demonstration of an LLM-powered system that bridges the gap between natural language instructions and cloud infrastructure deployment. The core technology involves using a large language model (like Claude) to interpret a developer's request (written as a prompt). This prompt is then translated into a structured 'workload spec' that defines the necessary infrastructure components. This spec is then fed into an infrastructure automation platform (Humanitec) which uses tools like Terraform to automatically provision and configure resources on a cloud provider (GCP in this demo). The innovation lies in abstracting away the complexity of infrastructure-as-code and deployment pipelines, allowing developers to interact with infrastructure in a more intuitive, conversational way. It’s about making infrastructure management feel as easy as coding an application.

How to use it?

Developers can use this by writing a natural language prompt describing their application's infrastructure needs in a compatible LLM environment (like Claude). For example, a prompt could be 'Deploy a web server with a PostgreSQL database and auto-scaling enabled'. The LLM interprets this and generates the necessary configuration. This configuration is then automatically sent to a backend service (like Humanitec) which handles the actual infrastructure provisioning on GCP using Terraform. The system is designed to integrate into existing developer workflows, potentially through APIs or by extending existing developer environments. This means a developer might simply write a prompt in their IDE or a dedicated interface, and the infrastructure gets built without them needing to write YAML, HCL, or manage CI/CD pipelines.

Product Core Function

· Natural Language to Infrastructure Specification Generation: Leverages LLMs to understand developer intent from conversational prompts and translate it into a machine-readable infrastructure definition. This simplifies infra setup by removing the need for explicit coding of infrastructure configuration.

· Deterministic Infrastructure Deployment: Uses an automation backend (Humanitec) to reliably deploy infrastructure based on the generated specification, ensuring consistent and repeatable deployments. This reduces errors and the unpredictability often associated with manual or ad-hoc infrastructure management.

· Rapid Application Deployment: Achieves sub-minute deployment times by automating the entire process from prompt to live application. This drastically speeds up development cycles and time-to-market.

· Policy-Enforcing Backend: The backend is built with enterprise-grade policies to ensure secure and compliant infrastructure. This provides peace of mind for organizations concerned with security and governance while using automated deployment.

· Agent-First Workflow Enablement: Designed to work with AI agents, enabling them to autonomously manage and deploy infrastructure based on high-level objectives. This opens up new possibilities for AI-driven development and operations.
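The prompt-to-spec-to-deploy flow depends on the backend rejecting anything the LLM gets wrong before Terraform ever runs. A minimal sketch of such a guardrail, assuming a made-up JSON spec shape (`region`, `resources`) and a made-up policy; Humanitec's actual spec format and policy engine are not shown in this write-up and are not reproduced here.

```python
import json

# Hypothetical policy; a real platform would load this from its policy engine.
POLICY = {
    "allowed_regions": {"us-central1", "europe-west1"},
    "allowed_types": {"postgres", "mysql", "redis", "load-balancer"},
}


def validate_spec(spec_json: str, policy: dict = POLICY) -> list[str]:
    """Check an LLM-generated workload spec; an empty list means it may deploy."""
    try:
        spec = json.loads(spec_json)
    except json.JSONDecodeError as exc:
        return [f"spec is not valid JSON: {exc.msg}"]
    if not isinstance(spec, dict):
        return ["spec must be a JSON object"]
    errors = []
    region = spec.get("region")
    if region not in policy["allowed_regions"]:
        errors.append(f"region {region!r} is not allowed")
    resources = spec.get("resources", {})
    if not isinstance(resources, dict):
        errors.append("resources must be a JSON object")
        return errors
    for name, res in resources.items():
        rtype = res.get("type") if isinstance(res, dict) else None
        if rtype not in policy["allowed_types"]:
            errors.append(f"resource {name!r} has disallowed type {rtype!r}")
    return errors


if __name__ == "__main__":
    llm_output = ('{"region": "us-central1", "resources": '
                  '{"db": {"type": "postgres"}, "gpu": {"type": "tpu-pod"}}}')
    print(validate_spec(llm_output))
    # ["resource 'gpu' has disallowed type 'tpu-pod'"]
```

Keeping this check in ordinary code rather than in the prompt means the same spec always gets the same verdict, whatever the LLM produced.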

Product Usage Case

· Scenario: A startup developer needs to quickly spin up a new microservice with a database for a proof-of-concept. Instead of writing Terraform code and setting up a CI/CD pipeline, they can simply prompt the LLM to 'Deploy a Node.js app with a managed MySQL database and expose it via a load balancer'. The system automatically handles the GCP resource provisioning, database setup, and networking, making the app live in minutes. This solves the problem of slow setup times and the need for specialized DevOps skills for small teams.

· Scenario: An enterprise team is onboarding a new developer and needs to ensure all infrastructure deployments adhere to strict security and compliance standards. Using this system, the developer can describe their application's needs in natural language, and the policy-enforcing backend automatically ensures that only approved resource types and configurations are provisioned, even if the LLM initially suggests something non-compliant. This solves the challenge of maintaining control and security in a highly automated environment, allowing for faster onboarding and development.

· Scenario: An AI agent is tasked with managing a fleet of web applications. This system allows the agent to interact with infrastructure through natural language commands, such as 'Scale up the frontend servers for service X' or 'Deploy the latest version of service Y'. The LLM interprets these commands and translates them into actionable infrastructure changes, enabling autonomous management of cloud resources and solving the problem of integrating AI capabilities directly into infrastructure operations.

13

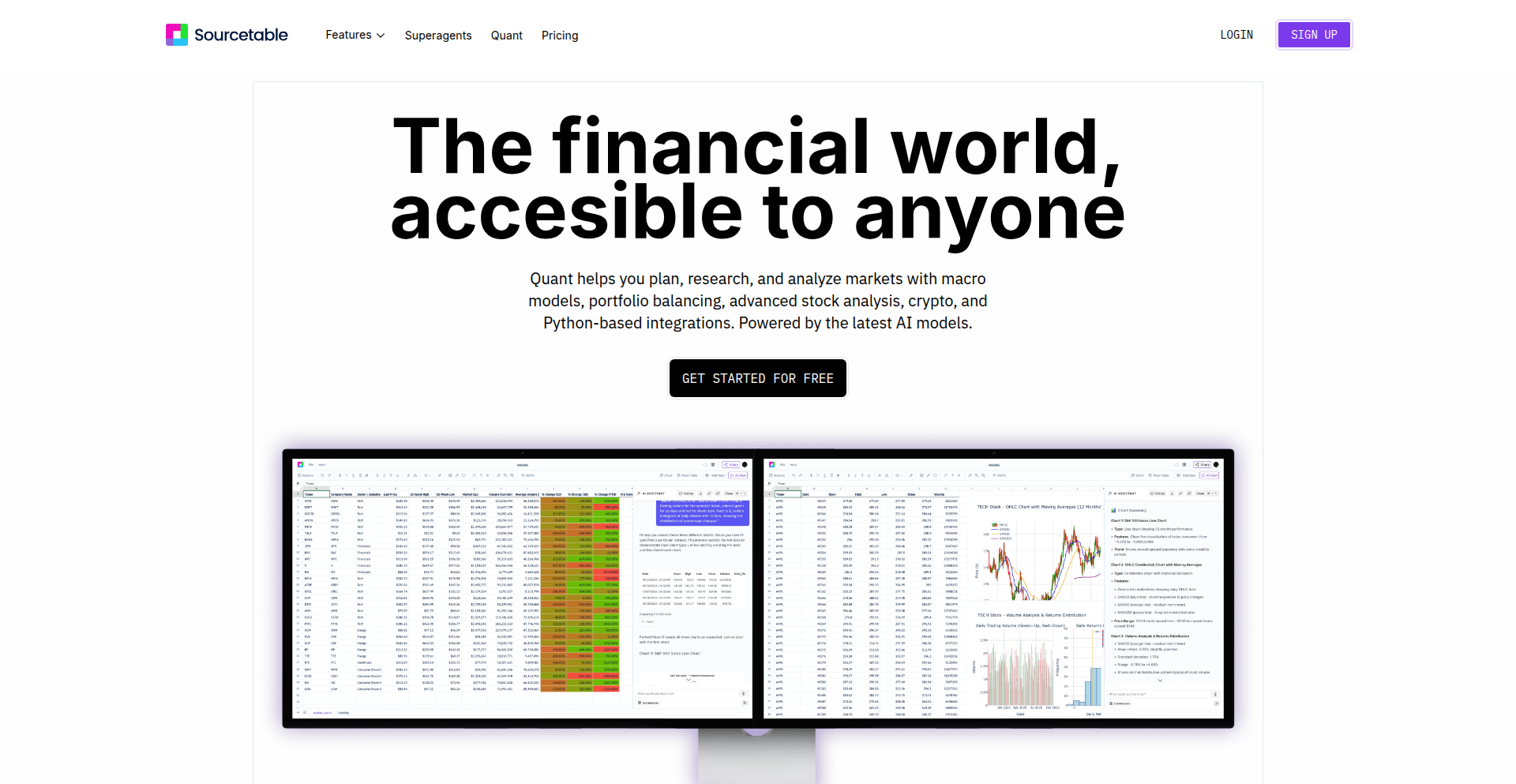

Quant: AI-Powered Financial Spreadsheet

Author

mceoin

Description

Quant is an AI analyst designed to democratize quantitative finance. It connects to over 600 exchanges, offers 1000+ built-in analysis tools, and allows users familiar with spreadsheets to perform complex financial analysis without learning programming languages like Python or R. Its core innovation lies in bridging the gap between intuitive spreadsheet interfaces and powerful financial modeling, offering a cost-effective alternative to traditional terminals.

Popularity

Points 8

Comments 0

What is this product?

Quant is an AI-powered financial analysis tool that works like an advanced spreadsheet for quantitative trading. Instead of requiring code, it puts a familiar interface in front of a vast array of financial data sources (over 600 exchanges and 10,000+ data streams). Its AI layer acts as an intelligent assistant that can explain financial concepts, debug analyses, and even generate code snippets for more advanced users. The innovation is in making sophisticated financial modeling accessible to anyone who can use a spreadsheet, lowering the barrier to entry for trading and investment analysis.

How to use it?

Developers and financial analysts can use Quant by connecting their brokerage accounts or data feeds. The platform offers a rich set of pre-built functions for portfolio optimization (including advanced strategies like risk parity), backtesting trading strategies with Monte Carlo simulations, and performing complex risk assessments. For instance, a developer building a trading bot could use Quant to backtest a new algorithm's performance on historical data, or an investor could use it to analyze their portfolio's risk exposure in real time. Quant plugs into existing workflows through its API and through direct order execution on platforms like Robinhood.
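One common way a Monte Carlo backtest produces a distribution of Sharpe ratios is to bootstrap-resample historical daily returns into many synthetic one-year paths and score each path. This is a generic sketch, not Quant's implementation; the function names, the 252-trading-day year, and the zero default risk-free rate are assumptions, and plain bootstrapping ignores autocorrelation and regime changes.

```python
import math
import random
import statistics


def sharpe_ratio(daily_returns, risk_free_daily=0.0, periods_per_year=252):
    """Annualized Sharpe ratio of a series of daily simple returns."""
    excess = [r - risk_free_daily for r in daily_returns]
    sd = statistics.stdev(excess)
    if sd == 0:
        return float("nan")  # undefined when returns never vary
    return statistics.mean(excess) / sd * math.sqrt(periods_per_year)


def monte_carlo_sharpe(daily_returns, n_paths=1000, horizon=252, seed=0):
    """Resample historical returns with replacement into synthetic paths; return each path's Sharpe."""
    rng = random.Random(seed)  # seeded so repeated runs give the same distribution
    return [sharpe_ratio(rng.choices(daily_returns, k=horizon)) for _ in range(n_paths)]
```

Reading off the 5th and 95th percentiles with `statistics.quantiles(sims, n=20)` (the first and last of the 19 cut points) gives a rough range for a strategy's Sharpe ratio rather than a single point estimate.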

Product Core Function

· Portfolio Optimization: Allows users to build and rebalance investment portfolios based on various risk and return metrics, including advanced models like Dalio's risk parity. This is valuable for investors seeking to maximize returns while managing risk efficiently.

· Backtesting and Simulation: Enables testing trading strategies against historical data, using Monte Carlo simulations to estimate the range of potential outcomes and Sharpe ratios. This helps developers and traders validate their strategies before deploying real capital.

· Real-time Data Integration: Connects to over 10,000 data sources across 600+ exchanges, providing up-to-date market information. This is crucial for making timely trading decisions and staying ahead of market movements.

· AI-Driven Analysis and Explanation: Offers an AI assistant that can explain complex financial models, debug user analyses, and provide insights into trading positions. This empowers users to learn and improve their analytical skills without needing deep domain expertise.

· Direct Execution Capabilities: Integrates with trading platforms like Robinhood for direct order execution. This streamlines the trading process from analysis to action, saving time and reducing manual steps for traders.
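Full risk parity solves for weights at which every asset contributes equally to portfolio volatility. A common first approximation, sketched below, weights each asset by the inverse of its volatility. This is a simplification, not necessarily what Quant does: it ignores correlations, so risk contributions come out exactly equal only when all pairwise correlations are the same (for example, all zero). The helper name and input format are hypothetical.

```python
import statistics


def inverse_volatility_weights(returns_by_asset):
    """Weight each asset by 1 / (sample volatility of its returns), normalized to sum to 1.

    Naive risk parity: correlations between assets are ignored.
    """
    inv_vol = {name: 1.0 / statistics.stdev(rets) for name, rets in returns_by_asset.items()}
    total = sum(inv_vol.values())
    return {name: w / total for name, w in inv_vol.items()}
```

With stocks twice as volatile as bonds, bonds receive about two thirds of the weight and stocks about one third, so the more volatile asset no longer dominates portfolio risk.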

Product Usage Case

· A quantitative trader wants to backtest a new options trading strategy. They can use Quant's Monte Carlo simulation to run thousands of scenarios on historical market data, assess the strategy's potential Sharpe ratio, and understand its risk profile, all within a spreadsheet-like interface, avoiding the need to write complex simulation code.

· An independent financial advisor needs to analyze a client's diverse portfolio spread across multiple asset classes and exchanges. Quant can aggregate this data, apply risk parity analysis to balance each holding's contribution to overall portfolio risk, and then use the AI assistant to explain the portfolio's risk exposure and recommended adjustments to the client in clear terms.

· A developer experimenting with algorithmic trading wants to quickly prototype and test a mean-reversion strategy. They can leverage Quant's built-in tools to fetch real-time price data, implement the strategy logic, and potentially execute trades directly via Robinhood integration, all without setting up a dedicated trading infrastructure.

· A new trader struggling to understand the Black-Scholes model for option pricing can use Quant's AI assistant to walk them through the formula, its assumptions, and how to apply it to real market data, thereby accelerating their learning curve.
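For reference, the Black-Scholes price of a European call on a non-dividend-paying stock is C = S·N(d1) - K·e^(-rT)·N(d2), with d1 = [ln(S/K) + (r + σ²/2)T] / (σ√T) and d2 = d1 - σ√T. The sketch below is a textbook implementation a learner could use to check an assistant's explanation against real numbers; it is not Quant's code, and the function names are illustrative.

```python
import math


def norm_cdf(x):
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def black_scholes_call(S, K, T, r, sigma):
    """European call on a non-dividend-paying stock; T in years, r and sigma annualized."""
    d1 = (math.log(S / K) + (r + 0.5 * sigma ** 2) * T) / (sigma * math.sqrt(T))
    d2 = d1 - sigma * math.sqrt(T)
    return S * norm_cdf(d1) - K * math.exp(-r * T) * norm_cdf(d2)


def black_scholes_put(S, K, T, r, sigma):
    """European put from put-call parity: P = C - S + K * exp(-r * T)."""
    return black_scholes_call(S, K, T, r, sigma) - S + K * math.exp(-r * T)
```

With S = 100, K = 100, T = 1 year, r = 5%, and σ = 20%, the call comes out to about 10.45 and the put to about 5.57.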

14

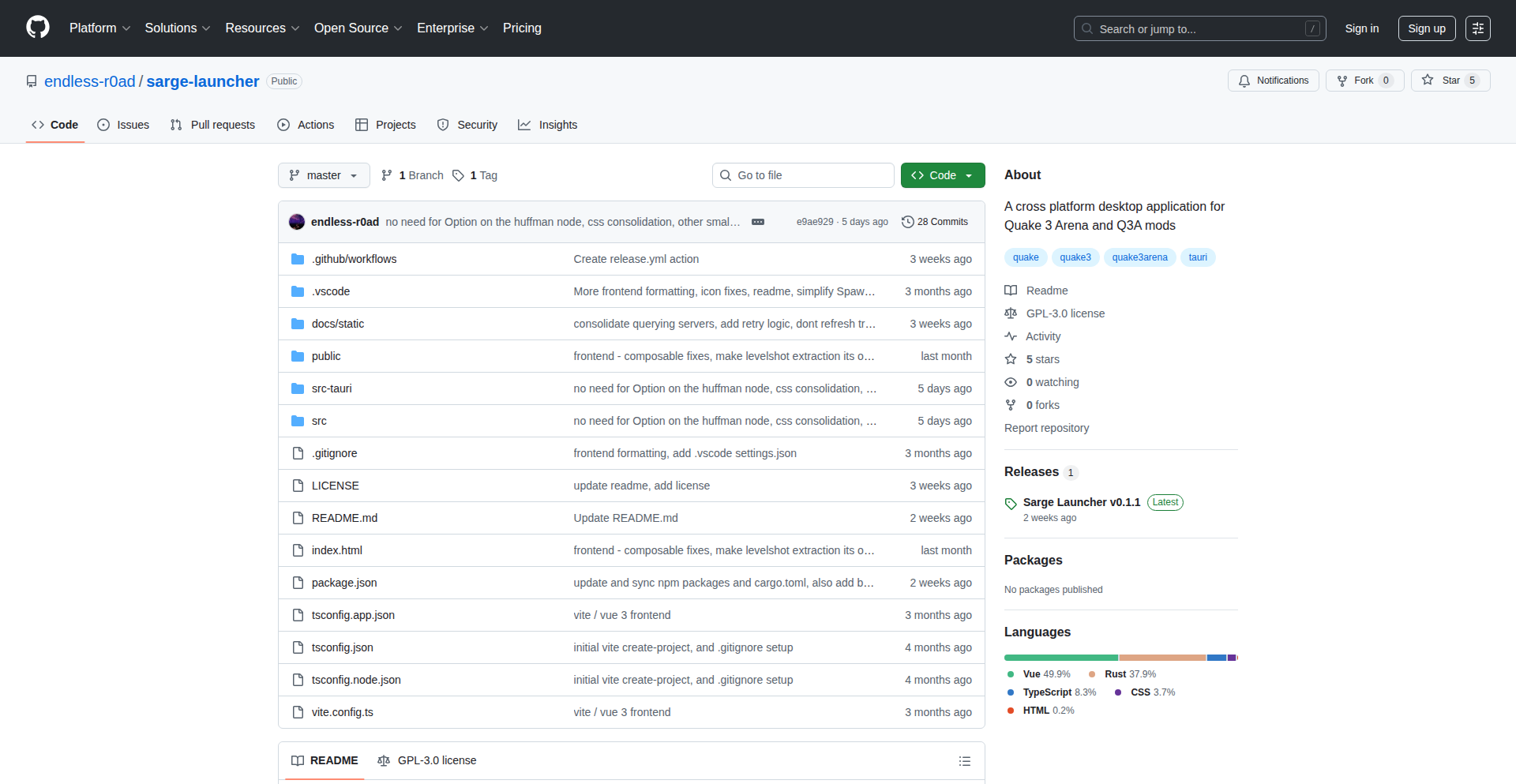

Sarge Launcher

Author

endless-r0ad

Description

Sarge Launcher is a desktop application built with Tauri v2, designed to enhance the Quake 3 Arena gaming experience. It acts as an external launcher that intelligently manages Quake 3 client executables and mods. Its core innovation lies in providing a significantly improved user interface and functionality over the in-game UI, offering features like advanced server browsing, demo management, and console log analysis, ultimately making it easier and more enjoyable for veteran Quake 3 players to manage their game and content.

Popularity

Points 5

Comments 2

What is this product?

Sarge Launcher is a desktop application for Quake 3 Arena, built with Tauri, a framework for building native desktop apps from a web-technology frontend and a Rust backend. What sets it apart is that it works around the limitations of Quake 3's built-in user interface. Instead of the game's clunky menus, it provides a streamlined external interface for managing Quake 3 mods, connecting to servers, and organizing demos and levels, giving you a faster, more intuitive way to interact with your Quake 3 installation. So, this is useful because it brings modern usability to a beloved but aging game: finding games, managing recordings, and keeping your installation organized all get easier, which means more fun and less frustration.

How to use it?

Players can use Sarge Launcher by downloading and installing the application and pointing it to their Quake 3 client executable. Selecting a mod automatically switches the server browser and the available demos and levels to match that mod. The launcher can also add custom servers that don't appear on the master list, manage an unlimited number of demos and levels (beyond the in-game limits), play demos on a loop, and close the client automatically when a demo ends. Think of it as a central control panel for your Quake 3 experience. So, this is useful because it gives you a single point of access to all your Quake 3 content and connections, making it simpler to jump into a game or review a past match.
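Under the hood, a launcher like this largely comes down to assembling a command line for the Quake 3 client. The sketch below assumes the standard id Tech 3 convention that `+command` arguments run as console commands at startup, with `+set fs_game <mod>` selecting a mod directory, `+connect` joining a server, and `+demo` playing a recording. Sarge Launcher itself is a Tauri app, so this Python helper (`build_launch_args`) is purely illustrative.

```python
def build_launch_args(client_exe, mod=None, server=None, demo=None):
    """Return an argv list for the Quake 3 client; '+' arguments run as console commands at startup."""
    args = [str(client_exe)]
    if mod:
        # fs_game picks the mod directory and has to be set on the command line
        args += ["+set", "fs_game", mod]
    if server:
        args += ["+connect", server]  # host or host:port
    elif demo:
        args += ["+demo", demo]  # demo name, looked up in the active game's demos/ folder
    return args
```

Passing the resulting list to `subprocess.Popen` starts the client without going through a shell, so mod or server names need no extra quoting.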

Product Core Function

· Advanced Server Browser: Provides a faster and more organized way to find and connect to game servers. It allows filtering and 'trashing' servers with fake players, leading to a cleaner browsing experience. This is valuable for players who want to quickly find active and legitimate servers without sifting through clutter.

· Server Favoriting: Enables users to mark their preferred servers for quick access, so they don't have to search for frequently played servers every time. This is useful for players who have a regular group of friends or favorite community servers.

· Custom Server Addition: Allows users to manually add server addresses that are not listed on the master servers. This lets players who know of private or niche servers connect to them directly, opening up gameplay beyond the public listings.

· Unlimited Demo/Level Management: Overcomes the in-game limitations on the number of demos and levels that can be displayed. Players can organize and access a vast library of their recorded gameplay or custom maps. This is useful for content creators or players who want to archive and revisit a large collection of their games.

· Demo Looping and Auto-Close: Features like playing demos on a loop and automatically closing the game client at the end of a demo are inspired by older tools. This automates repetitive tasks and improves the efficiency of reviewing demos. This is valuable for players who want to analyze their gameplay or share highlights without manual intervention.

· Console Activity Playback: Enables users to review console output from a demo, such as chat history, without playing the demo. This is handy for analyzing past matches or revisiting conversations and specific in-game moments without replaying the entire demo.
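A server browser like this typically queries each server with Quake 3's connectionless `getstatus` packet (four `0xFF` bytes followed by the command). The `statusResponse` reply carries a `\key\value` info string and one `score ping "name"` line per player. The sketch below parses that reply; the `looks_padded` check is a hypothetical heuristic (Quake 3 servers report bots with a ping of 0), not the rule Sarge Launcher actually uses to flag servers.

```python
import socket

HEADER = b"\xff\xff\xff\xff"  # prefix of every connectionless Quake 3 packet


def parse_status_response(packet):
    """Split a statusResponse packet into (server info dict, list of player dicts)."""
    if not packet.startswith(HEADER + b"statusResponse"):
        raise ValueError("not a Quake 3 statusResponse packet")
    lines = packet[len(HEADER):].decode("latin-1").split("\n")
    # lines[0] is "statusResponse"; lines[1] is "\key\value\key\value..."
    fields = lines[1].split("\\")[1:]  # drop the empty string before the leading backslash
    info = dict(zip(fields[0::2], fields[1::2]))
    players = []
    for line in lines[2:]:
        if not line.strip():
            continue  # the packet ends with a newline, leaving an empty last line
        score, ping, name = line.split(" ", 2)
        players.append({"score": int(score), "ping": int(ping), "name": name.strip('"')})
    return info, players


def query_status(host, port=27960, timeout=2.0):
    """Send getstatus over UDP and parse the reply (27960 is the default Quake 3 port)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(HEADER + b"getstatus", (host, port))
        data, _addr = sock.recvfrom(65535)
    return parse_status_response(data)


def looks_padded(players, max_zero_ping_share=0.5):
    """Hypothetical heuristic: flag servers where most listed players report ping 0."""
    if not players:
        return False
    zero_ping = sum(1 for p in players if p["ping"] == 0)
    return zero_ping / len(players) > max_zero_ping_share
```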

Product Usage Case

· A Quake 3 enthusiast wants to quickly find and join a game with their friends. Sarge Launcher's fast server browser and favoriting feature allow them to instantly connect to their preferred server, saving them time and hassle compared to navigating the in-game menus. This solves the problem of slow and cumbersome server searching.

· A Quake 3 player has recorded many of their best matches and wants to organize them for later viewing or sharing. The unlimited demo management in Sarge Launcher lets them store and easily access all their recorded demos, getting around the hard listing limits of the original game. This addresses the problem of recordings becoming hard to find once a collection outgrows the in-game browser.

· A player wants to analyze their performance in a specific match by reviewing the chat and console logs. Sarge Launcher's ability to read back console activity from demos without playing them provides a quick and efficient way to access this information, allowing for immediate performance review. This solves the problem of having to replay entire demos just to check chat history.

15

vCluster Standalone: Your First Kubernetes Cluster, Reimagined

Author

saiyampathak

Description

vCluster Standalone is an innovative open-source tool that tackles the 'cluster 1 problem' by providing a seamless developer experience for creating your very first Kubernetes cluster. It leverages virtual clusters to offer multi-tenancy capabilities, consolidating multiple vendor solutions and simplifying cluster management. This innovation means you can now run your applications and manage your infrastructure with the same ease and flexibility, regardless of whether it's your initial deployment or a complex multi-tenant setup. So, this is useful because it removes the initial barrier to entry for Kubernetes, making it accessible for everyone.

Popularity

Points 7

Comments 0

What is this product?

vCluster Standalone lets you create a fully functional, isolated Kubernetes cluster within your existing host environment. It is built on the concept of virtual clusters, which are essentially nested Kubernetes clusters: picture a lightweight Kubernetes control plane running inside your main cluster. You get the full Kubernetes API and developer experience without the overhead of provisioning and managing a separate, dedicated cluster. The innovation is in the abstraction: virtual clusters can be treated as if they were independent, which addresses the common challenge of a complex 'cluster 1' setup for new projects or development. So, this is useful because it provides a low-friction way to start with Kubernetes, offering a powerful yet simple environment for development and testing.

How to use it?

Developers can use vCluster Standalone to quickly spin up isolated Kubernetes environments for development, testing, or even for providing isolated workspaces for different teams. Installation typically involves a simple CLI command, allowing you to create a new virtual cluster with minimal configuration. You can then connect to this virtual cluster using standard Kubernetes tools like kubectl. It integrates seamlessly with your existing Kubernetes infrastructure, meaning you don't need to overhaul your setup. For integration into CI/CD pipelines, you can automate the creation and management of these virtual clusters, ensuring consistent development environments. So, this is useful because it streamlines your development workflow, allowing you to experiment and build applications faster without waiting for infrastructure provisioning.
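For the CI/CD use case, a pipeline usually derives a unique, valid cluster name per branch, creates the virtual cluster before tests, and deletes it afterwards. The sketch below assumes the regular `vcluster` CLI's `create` and `delete` subcommands with `--namespace`; vCluster Standalone's bootstrap flow may differ, so check its documentation. The naming helper, the `dev` prefix, and the `run` wrapper are hypothetical.

```python
import re
import subprocess


def vcluster_name_for_branch(branch, prefix="dev"):
    """Turn a Git branch name into a DNS-1123 label: lowercase alphanumerics and '-', max 63 chars."""
    slug = re.sub(r"[^a-z0-9-]+", "-", branch.lower()).strip("-")
    name = f"{prefix}-{slug}" if slug else prefix
    return name[:63].rstrip("-")  # truncation must not leave a trailing '-'


def vcluster_commands(name, namespace):
    """Build (create, delete) argv lists for one per-branch virtual cluster."""
    create = ["vcluster", "create", name, "--namespace", namespace]
    delete = ["vcluster", "delete", name, "--namespace", namespace]
    return create, delete


def run(cmd):
    """Run a CLI command and fail the pipeline step if it exits non-zero."""
    subprocess.run(cmd, check=True)
```

A pipeline would call `run(create)` before the test stage and `run(delete)` in a cleanup step that executes even when tests fail, so abandoned virtual clusters don't accumulate on the host.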

Product Core Function

· Virtual Cluster Creation: Enables the deployment of isolated Kubernetes environments as virtual clusters, simplifying multi-tenancy and reducing resource overhead. This is valuable for creating sandboxed environments for development and testing.

· Hosted Control Plane: Offers a managed control plane for virtual clusters, abstracting away the complexity of Kubernetes infrastructure management. This is useful for developers who want to focus on building applications rather than managing cluster components.

· Multi-Tenancy Support: Provides robust capabilities for isolating workloads and users within a single host cluster, crucial for shared development environments or SaaS platforms. This allows multiple teams or users to safely share infrastructure without interference.

· Consolidated Vendor Solutions: Enables the consolidation of multiple Kubernetes vendor solutions into a single, unified experience, reducing complexity and cost. This is beneficial for organizations looking to standardize their Kubernetes strategy.

· Seamless Developer Experience: Delivers a familiar Kubernetes API and developer workflow, ensuring a smooth transition for developers already accustomed to Kubernetes. This means you can start being productive immediately without a steep learning curve.

Product Usage Case

· Developing and testing new microservices: A developer can create a dedicated virtual cluster for each new service they are working on, ensuring that their development environment is isolated and doesn't interfere with other ongoing projects. This solves the problem of conflicting dependencies and configurations.

· Providing isolated development environments for teams: A company can use vCluster Standalone to offer each development team their own virtual Kubernetes cluster, allowing them to experiment freely without impacting other teams. This addresses the challenge of ensuring fair resource allocation and preventing accidental data corruption.