Show HN Today: Discover the Latest Innovative Projects from the Developer Community

ShowHN Today

ShowHN TodayShow HN Today: Top Developer Projects Showcase for 2025-10-04

SagaSu777 2025-10-05

Explore the hottest developer projects on Show HN for 2025-10-04. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The surge in projects focused on enhancing developer workflows, particularly those integrating AI and cross-language compatibility, signifies a clear trend. Developers are seeking tools that streamline complex processes, such as running code in diverse environments or managing AI models effectively. The emphasis on local-first and privacy-centric applications, especially those leveraging web technologies like WebGPU and Transformers.js, highlights a growing demand for powerful yet accessible tools that don't compromise user data. For aspiring creators and innovators, this means there's a fertile ground for building solutions that empower developers, offer novel ways to interact with AI, and prioritize user privacy. Think about how you can abstract away complexity, democratize access to advanced technologies, or create more intuitive interfaces for powerful systems. The hacker spirit thrives in identifying these pain points and crafting elegant, functional solutions.

Today's Hottest Product

Name

Run – A Universal CLI Code Runner Built with Rust

Highlight

This project innovates by creating a single, lightweight command-line tool that can execute code snippets and files across a vast array of programming languages, both interpreted and compiled. It intelligently detects languages, compiles temporary files for compiled languages, and offers a unified REPL experience. Developers can learn about cross-language runtime management, efficient CLI design, and Rust's capabilities for system-level tools.

Popular Category

AI/ML Tools

Developer Productivity

CLI Tools

Web Development

Data Visualization

Popular Keyword

AI

LLM

Rust

CLI

API

Observability

TUI

WebGPU

Transformer

Technology Trends

Cross-Language Execution Environments

AI-Powered Development Assistants

Decentralized/Local-First Applications

Enhanced Developer Workflows

Privacy-Focused Tools

Scientific Computing with ML

LLM Interaction and Integration

Observability for Complex Systems

Project Category Distribution

Developer Tools (30%)

AI/ML Applications (25%)

Productivity Tools (20%)

Web Applications (15%)

Utilities (10%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |

|---|---|---|---|

| 1 | Polyglot Runner CLI | 84 | 34 |

| 2 | LLM Math Navigator | 5 | 4 |

| 3 | OneDollarChat | 4 | 3 |

| 4 | DigitalToothFairyCert | 6 | 0 |

| 5 | TypeScript-to-SQL Lambda Compiler | 4 | 1 |

| 6 | Surf-Wayland: The Wayland-Native Suckless Browser | 3 | 2 |

| 7 | BetterSelf: Spaced Repetition for Knowledge Retention | 5 | 0 |

| 8 | GlassSearch | 1 | 4 |

| 9 | LocalCopilot-API | 3 | 1 |

| 10 | Offline Ledger Companion | 4 | 0 |

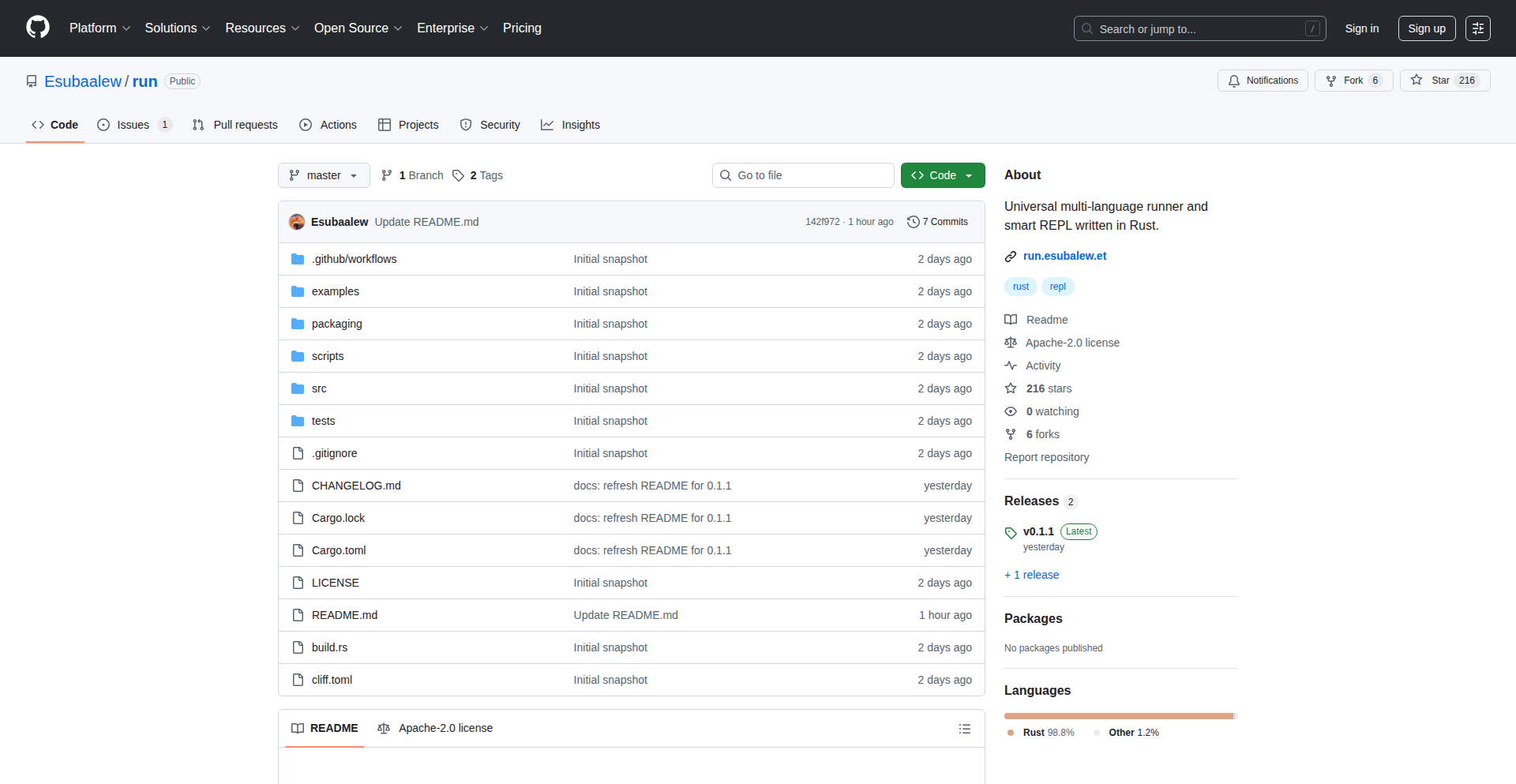

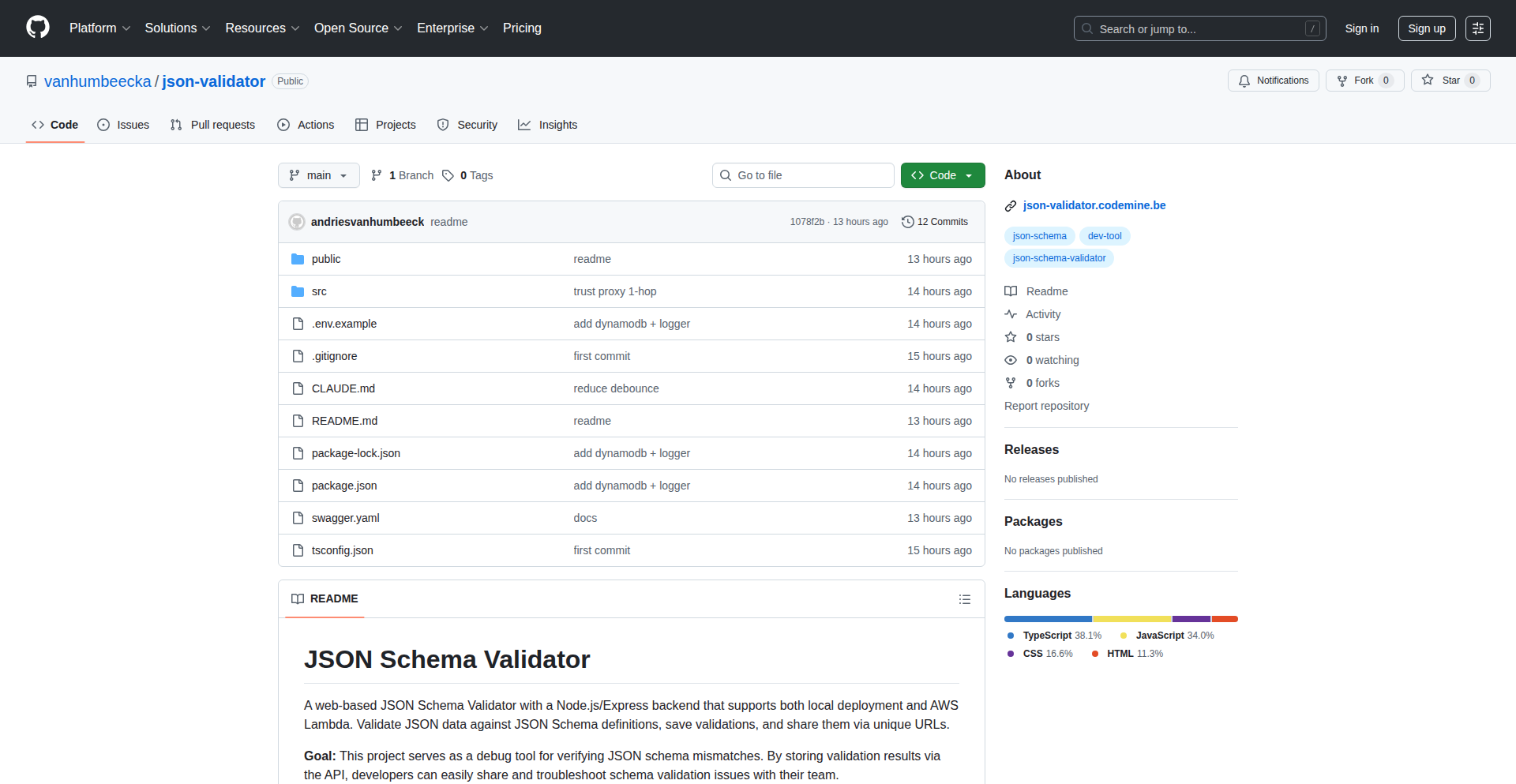

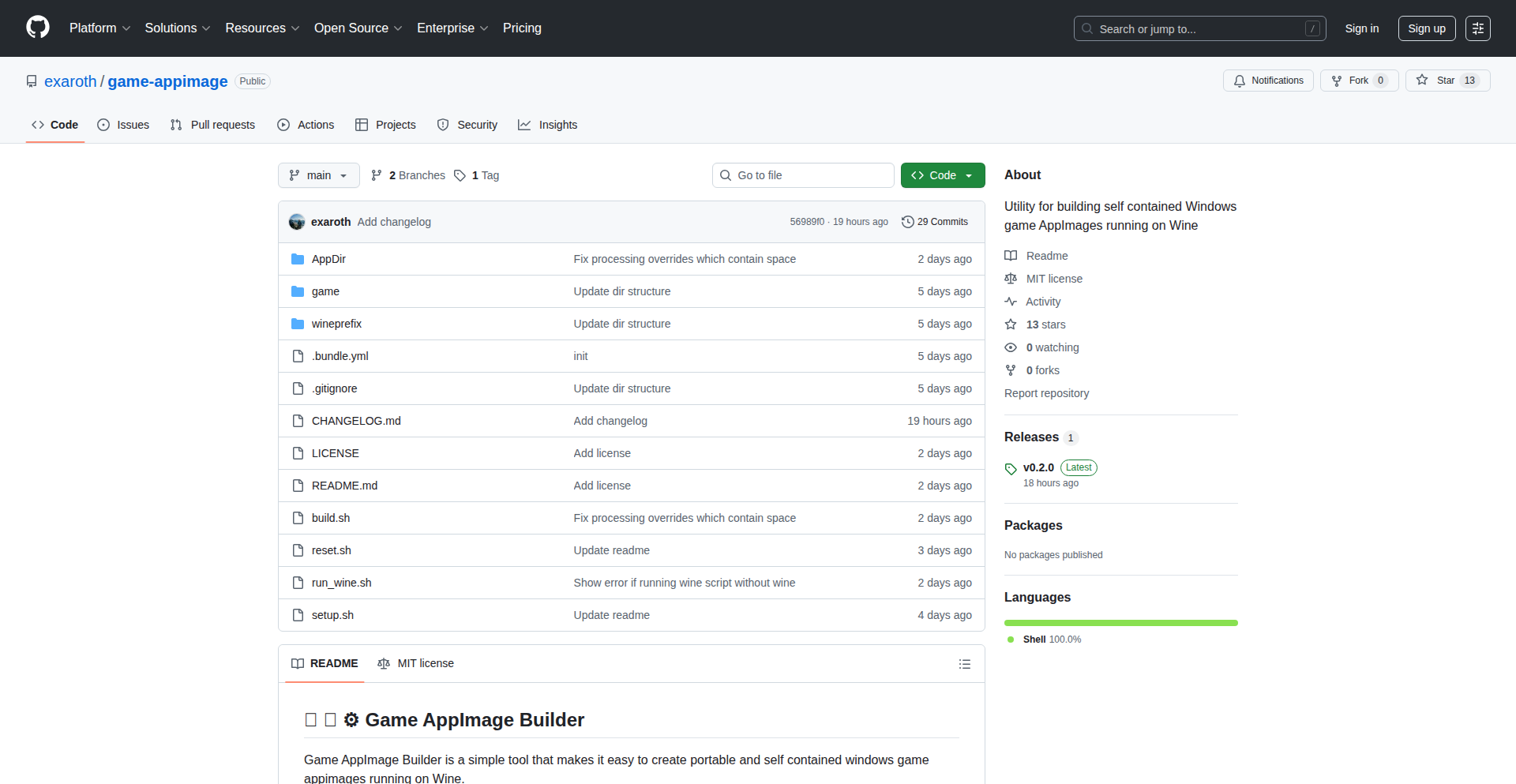

1

Polyglot Runner CLI

Author

esubaalew

Description

This project is a universal command-line interface (CLI) tool designed to execute code written in various programming languages. It streamlines the process of running single code snippets, entire files, or even code piped from standard input. The innovative aspect lies in its ability to support both interpreted and compiled languages with a unified interface, offering language-specific interactive environments (REPLs) that can be switched on the fly. So, this is useful for developers who frequently switch between different programming languages and want a single, easy-to-use tool to run their code without complex setup.

Popularity

Points 84

Comments 34

What is this product?

Polyglot Runner CLI is a versatile command-line tool built using Rust that acts as a single point of entry for executing code across a wide spectrum of programming languages. Its core innovation is the abstraction layer it provides, allowing developers to run code written in languages like Python, JavaScript, Ruby (interpreted) and Rust, Go, C/C++ (compiled) using the same commands. It intelligently detects the language based on provided flags or file extensions. For compiled languages, it can create temporary build artifacts and execute them. The tool also offers an interactive mode (REPL) for each supported language, enabling real-time code experimentation and iteration. This means you don't need to remember specific compilation commands or interpreter paths for each language, simplifying your development workflow significantly.

How to use it?

Developers can use Polyglot Runner CLI by installing it via Cargo (Rust's package manager) as `cargo install run-kit` or by downloading pre-compiled binaries from its GitHub repository. Once installed, you can run code in several ways: directly from a flag (e.g., `run --python 'print("Hello")'`), by executing a file (e.g., `run main.py`), or by piping input to it (e.g., `echo '1+1' | run --javascript`). For interactive sessions, simply type `run` and then use commands like `:lang python` to switch to the Python REPL, `:lang go` for Go, and so on. This offers a seamless way to test small code chunks or script snippets without leaving your terminal. So, this is useful for quickly testing out ideas or running small scripts in any language you have installed on your system.

Product Core Function

· Universal Code Execution: Ability to run code from various programming languages (interpreted and compiled) using a single command. This is valuable because it reduces the mental overhead of remembering specific execution commands for each language, making it faster to switch between projects or experiment with new languages.

· File and Snippet Execution: Supports running entire code files as well as one-off code snippets provided directly via command-line flags. This is useful for quickly testing small pieces of logic or executing script files without needing to open a full IDE or editor.

· Standard Input Piping: Allows code to be executed by piping data from standard input, making it ideal for processing data streams or working with the output of other command-line tools. This is helpful for data manipulation tasks and integrating with existing command-line workflows.

· Interactive REPLs: Provides language-specific Read-Eval-Print Loops (REPLs) that can be switched between interactively. This offers a dynamic environment for experimenting with language features, debugging, and exploring code in real-time, enhancing the learning and development process.

Product Usage Case

· Testing small Python functions: A developer needs to quickly test a short Python function they just wrote. Instead of opening a Python interpreter or a file, they can simply type `run --python 'def add(a, b): return a + b; print(add(2, 3))'` and get the output immediately. This saves time and context switching.

· Running a Go utility script: A developer has a small Go script for a specific task, like renaming files. They can run it directly using `run my_script.go` without needing to manually compile it first using `go build`. The tool handles the compilation and execution behind the scenes, simplifying the process.

· Processing piped data with JavaScript: A developer has a stream of JSON data from another command and wants to quickly parse and transform it using JavaScript. They can pipe the data like `cat data.json | run --javascript 'JSON.parse(stdin).map(item => item.name)'`. This allows for rapid data processing without complex scripting.

· Experimenting with Rust syntax: A new Rust developer wants to try out a specific syntax or library feature. They can enter the Rust REPL by typing `run` and then `:lang rust`, and then write and execute Rust code interactively to understand its behavior and refine their understanding.

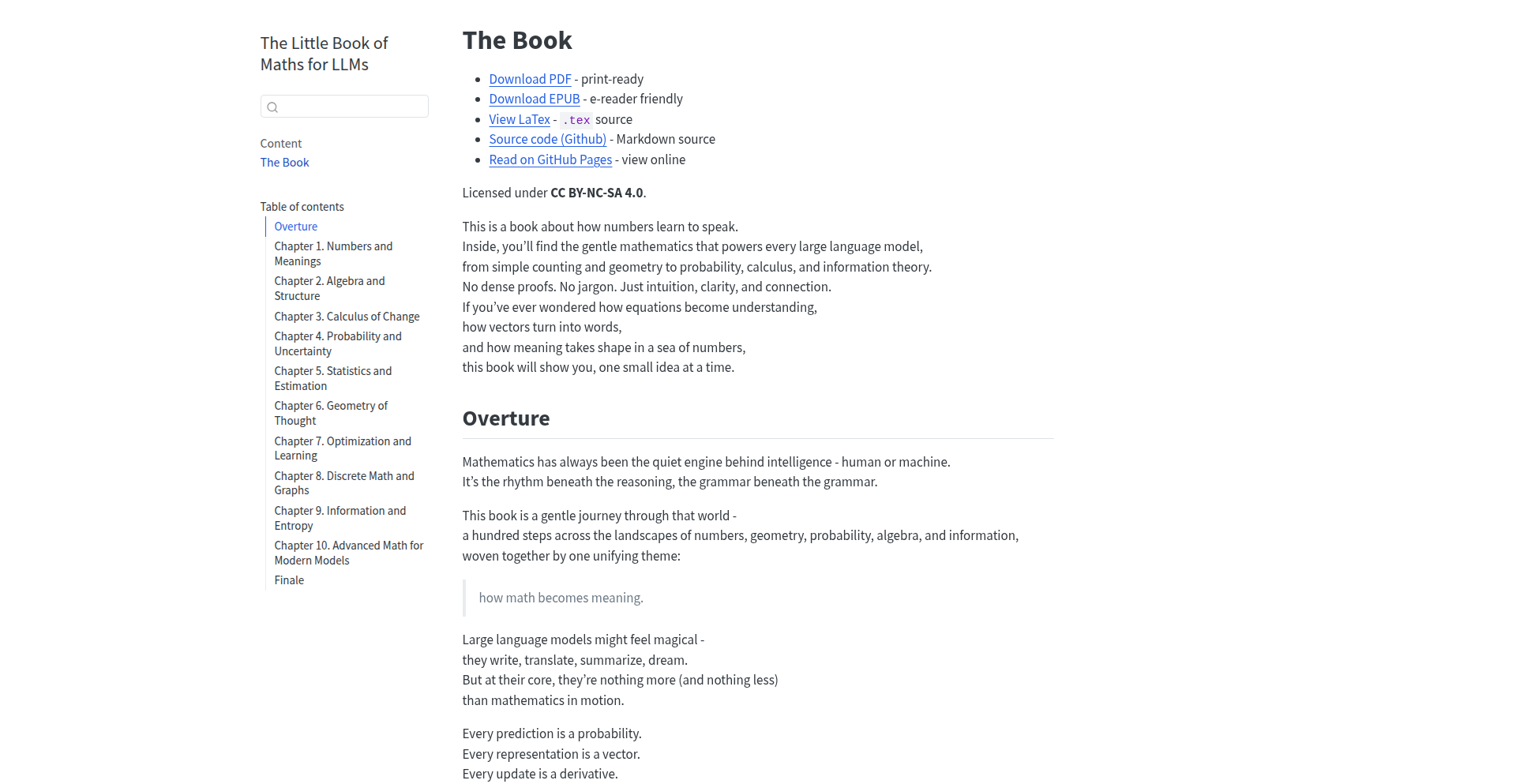

2

LLM Math Navigator

Author

tamnd

Description

This project is 'The Little Book of Maths for LLMs', a concise resource explaining the essential mathematics required to understand Large Language Models (LLMs). It demystifies complex concepts like linear algebra, calculus, and probability, making advanced AI more accessible to developers.

Popularity

Points 5

Comments 4

What is this product?

This project is an educational resource, presented as a 'Little Book', that breaks down the fundamental mathematical principles underpinning Large Language Models (LLMs). It focuses on the core math needed, such as matrix operations for understanding neural network layers, derivatives for model optimization, and probability distributions for token prediction. The innovation lies in its targeted approach, filtering out general math and highlighting only what's crucial for grasping LLM mechanics, thus reducing the learning curve for developers interested in AI.

How to use it?

Developers can use this resource as a guide to build a foundational understanding of LLMs. It's perfect for those who have some programming experience but find the underlying math of AI daunting. By reading through the explanations and examples, developers can gain the confidence to explore LLM architectures, fine-tune models, or even contribute to AI research. Integration isn't in the traditional software sense, but rather intellectual integration: applying the learned math concepts to real-world AI development challenges.

Product Core Function

· Linear Algebra Essentials for Neural Networks: Explains how matrix multiplication and vector operations are the building blocks of how LLMs process information, helping developers understand layer transformations.

· Calculus for Model Optimization: Details concepts like gradients and backpropagation, showing how LLMs learn and improve by minimizing errors, crucial for understanding training processes.

· Probability and Statistics for Language Understanding: Covers probability distributions and sampling methods, explaining how LLMs predict the next word and generate coherent text, enabling developers to grasp the probabilistic nature of language generation.

· Concise and Focused Content: Curates only the most relevant mathematical topics for LLMs, saving developers time by avoiding unnecessary mathematical theory and directly addressing their learning needs.

· Accessible Explanations: Translates complex mathematical jargon into understandable terms, making advanced AI concepts approachable for a broader range of developers.

Product Usage Case

· A junior AI developer struggling to understand how Transformer models work can use this book to quickly grasp the linear algebra behind attention mechanisms, enabling them to better debug or modify existing models.

· A data scientist wanting to fine-tune an LLM for a specific task can refer to the calculus sections to understand the optimization process and how learning rates affect convergence, leading to more efficient model training.

· A hobbyist interested in building their own simple language generator can use the probability sections to understand how to sample from word distributions, allowing them to implement basic text generation capabilities.

· An engineer integrating LLM APIs into their application can use this resource to gain a deeper appreciation for the model's capabilities and limitations, leading to more informed design decisions and better error handling.

3

OneDollarChat

Author

skrid

Description

A global chat platform where every message incurs a $1 cost, designed to encourage thoughtful communication and explore the economic impact on online interactions. The core innovation lies in implementing a token-based payment system for each message sent, leveraging blockchain technology to ensure transparency and security of transactions.

Popularity

Points 4

Comments 3

What is this product?

OneDollarChat is a novel messaging application that introduces a pay-per-message model, costing $1 for each message sent. This isn't just about charging users; it's an experiment to see how this economic incentive affects the nature of online conversations. By making communication have a tangible cost, the platform aims to foster more deliberate and meaningful exchanges, reducing spam and superficial interactions. The underlying technology likely involves a custom-built token or integration with existing cryptocurrency rails, using smart contracts to manage message credits and ensure all transactions are recorded on a distributed ledger. This approach brings a level of accountability and value to each piece of communication that traditional free chat apps lack.

How to use it?

Developers can integrate with OneDollarChat by using its API to send and receive messages. To initiate a chat, a user would first pre-purchase message credits, effectively paying $1 per credit. These credits are then consumed each time a message is sent. The API would allow applications to check a user's credit balance, send messages on their behalf, and receive incoming messages. This could be used in various scenarios, such as a premium support chat where users pay for direct expert advice, or a specialized community forum where only serious participants contribute. The integration process would involve setting up an API key and understanding the request/response structure for message handling and credit management.

Product Core Function

· Message Transaction System: Implements a system where each message sent consumes a $1 credit. The value is that it encourages users to be more concise and consider the importance of their message before sending, leading to higher quality interactions and reducing noise.

· Global Accessibility: Allows users from anywhere in the world to connect and communicate. The value here is enabling cross-border dialogue with a built-in mechanism for valuing every exchange, potentially fostering more considered international discussions.

· Transparency and Security: Leverages underlying blockchain principles for secure and transparent transactions. The value is in providing users with confidence that their payments are managed fairly and that the system is resistant to manipulation, building trust in the platform.

· User Credit Management: Enables users to purchase and manage their message credits. This provides a direct way for users to control their spending and understand the value of their communication, offering a sense of agency over their participation.

Product Usage Case

· Premium Support Chat: Imagine a software company offering direct, real-time chat support with their senior engineers. Users would pay $1 per message to ask questions and receive expert guidance, ensuring they only engage when they have a critical issue and that support staff are highly valued for their time. This solves the problem of overwhelming support queues and ensures only serious inquiries receive premium attention.

· Exclusive Expert Forums: A platform could host forums where users pay to ask questions to renowned experts in a specific field, such as medical advice or financial planning. Each question posed costs $1, ensuring experts are compensated for their time and knowledge, and that participants ask well-thought-out questions. This addresses the challenge of getting high-quality, dedicated expert time without overwhelming them.

· High-Stakes Collaborative Projects: For small, critical project collaborations, a team could use OneDollarChat where every message within the project channel costs $1. This encourages extreme focus and efficiency in communication, ensuring that only essential information is shared and that discussions remain on track, solving the problem of prolonged, unproductive team meetings and scattered communication.

· Personalized Coaching Sessions: A life coach or mentor could offer paid chat sessions through this platform. Each message exchanged could cost $1, allowing clients to pay for focused, direct guidance and accountability, and for coaches to monetize their time effectively. This provides a structured and monetized way to deliver personalized coaching interactions.

4

DigitalToothFairyCert

Author

joerock

Description

A simple yet delightful web application that generates personalized Tooth Fairy certificates for children who have lost a tooth. It addresses the small but meaningful need of parents to create a memorable experience, leveraging basic web technologies to provide a charming digital artifact.

Popularity

Points 6

Comments 0

What is this product?

DigitalToothFairyCert is a web-based tool that allows parents to quickly create customized certificates for their children from the Tooth Fairy. The innovation lies in its simplicity and focus on a specific, emotionally driven use case. It uses straightforward front-end technologies (likely HTML, CSS, and JavaScript) to dynamically populate a certificate template with child's name, date, and a personalized message. This bypasses the need for complex design software or printing, offering an instant, digital solution to a common parental ritual. The value is in making a child's milestone feel more magical and official, with minimal effort.

How to use it?

Developers can use DigitalToothFairyCert by visiting the hosted web application. Parents would navigate to the site, input their child's name, the date of the lost tooth, and perhaps a small message. Upon submission, the application generates a visually appealing certificate that can be downloaded as an image or PDF. For developers interested in the technical aspect, the project likely demonstrates a clean front-end structure, possibly utilizing a simple templating engine or direct DOM manipulation for certificate generation. It's a prime example of a 'micro-solution' built with readily available web tools. The usage for parents is to simply create a fun keepsake.

Product Core Function

· Personalized certificate generation: Dynamically inserts child's name and date into a pre-designed certificate template, providing a custom touch. This makes the certificate feel unique to each child, enhancing the magic of the Tooth Fairy tradition. The technical implementation likely involves JavaScript to capture user input and update HTML elements.

· Instant download capability: Allows users to immediately download the generated certificate as a digital file (e.g., PNG, JPG, or PDF). This offers immediate gratification and a tangible (printable) outcome. This is achieved through browser APIs for file generation or rendering.

· Simple, intuitive user interface: Designed for ease of use by parents, requiring minimal technical knowledge. The value here is accessibility and speed, ensuring that creating this special item is not a chore but a quick, enjoyable process.

· Themed visual design: Features child-friendly graphics and fonts to create a whimsical and magical aesthetic. This contributes to the emotional value of the certificate, making it more appealing to children. The technical aspect involves CSS styling to create the visual appeal.

Product Usage Case

· Parent wanting to create an immediate, printable souvenir for a child who lost a tooth, but doesn't have design software or time for elaborate crafting. They use the web app, fill in the details, and print the certificate within minutes, making the child's experience more special and memorable.

· A parent looking for a quick and digital way to document a child's milestone. The generated certificate serves as a digital keepsake that can be easily stored or shared, preserving the memory of this childhood event without needing physical storage space.

· A developer looking for a simple example of a functional web application that solves a specific user need with minimal complexity. They can learn from its straightforward front-end implementation, templating, and user interaction patterns, inspiring their own small-scale creative coding projects.

5

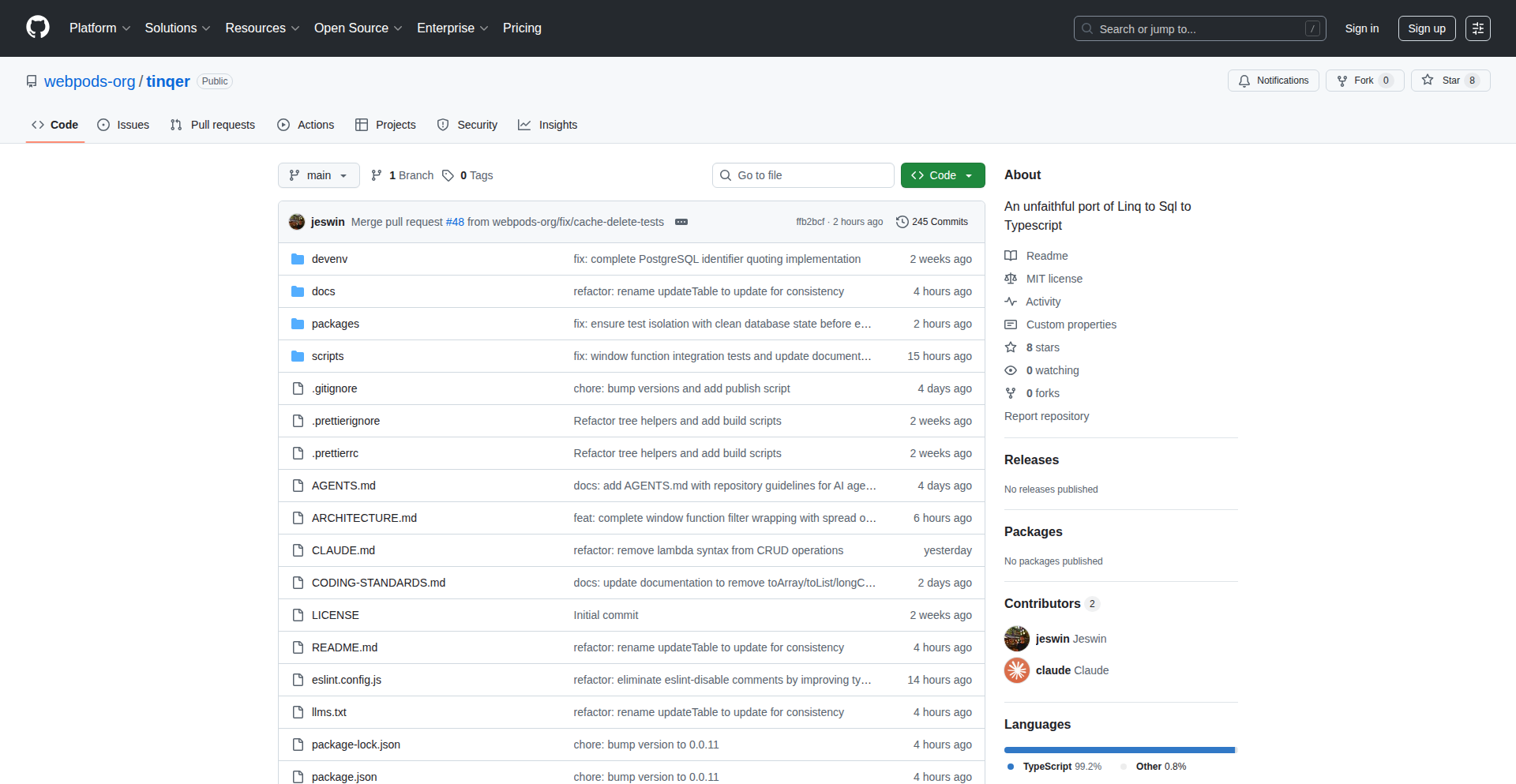

TypeScript-to-SQL Lambda Compiler

Author

jeswin

Description

This project introduces a novel approach to building type-safe database queries directly within TypeScript. It translates familiar TypeScript lambda expressions into executable SQL queries, effectively bringing the expressiveness of LINQ-to-SQL to the JavaScript ecosystem. The core innovation lies in its ability to leverage TypeScript's strong typing system to guarantee query correctness at compile time, thus reducing runtime errors and improving developer productivity when interacting with SQL databases from a TypeScript codebase.

Popularity

Points 4

Comments 1

What is this product?

This is a compiler that bridges the gap between modern TypeScript and traditional SQL databases. It allows developers to write database queries using TypeScript's type-safe lambda functions (like `(x) => x.name === 'John'`). The compiler then analyzes these lambdas and transforms them into accurate and efficient SQL statements (e.g., `SELECT * FROM users WHERE name = 'John'`). The key technical insight is utilizing TypeScript's static type checking to ensure that the queries you write are valid before your code even runs. So, this means fewer bugs and more confidence when fetching data. This is useful because it makes database interactions safer and more intuitive, especially for front-end or Node.js developers who might not be SQL experts.

How to use it?

Developers can integrate this by defining their database schemas in TypeScript. Then, instead of writing raw SQL strings, they can use the provided library to write query logic using TypeScript's familiar functional programming constructs. The compiler will automatically generate the corresponding SQL. For example, you might use it like `db.users.where(user => user.age > 18).select(user => user.name)`. This directly translates to `SELECT name FROM users WHERE age > 18`. This is useful for quickly building data access layers in Node.js applications or even for client-side applications that need to interact with backend databases, providing a type-safe and productive way to query data.

Product Core Function

· Type-safe query generation: Leverages TypeScript's static typing to ensure SQL queries are syntactically correct and reference valid database columns and tables before runtime. This reduces the chance of runtime errors caused by typos or incorrect query structures, making your data fetching more reliable.

· Lambda expression to SQL translation: Converts familiar TypeScript arrow functions and lambda expressions into standard SQL query strings. This allows developers to express complex query logic in a readable and concise manner, similar to LINQ in C#, making database interactions feel more natural within a JavaScript environment.

· Schema-aware query building: Understands the structure of your database tables as defined in TypeScript, enabling intelligent query construction. This means the compiler can help you avoid common mistakes by knowing what fields and relationships exist, leading to more robust and maintainable database code.

· Compile-time error checking: Catches potential query errors during the TypeScript compilation phase, rather than at runtime. This significantly speeds up the development cycle by identifying and fixing bugs early, saving debugging time and preventing production issues.

Product Usage Case

· Building a type-safe API backend with Node.js: A developer can use this to create an API that interacts with a PostgreSQL database. Instead of manually writing SQL queries for fetching user data, they can define a `User` interface in TypeScript and then write queries like `db.users.filter(u => u.isActive && u.role === 'admin').select(u => u.email)`. This ensures that only valid fields like `isActive`, `role`, and `email` are used, preventing common SQL injection vulnerabilities and ensuring data integrity. This is useful for securely and efficiently retrieving specific user information.

· Developing a front-end data dashboard: A front-end application built with React or Vue.js that needs to pull filtered and aggregated data from a backend API that uses this compiler. Developers can write TypeScript functions on the client-side that get translated to SQL on the server. For instance, to get the total sales for a specific product category, a developer might write `getDataForCategory('electronics', '2023-01-01')`, which gets transformed into a SQL query like `SELECT SUM(price) FROM orders WHERE category = 'electronics' AND order_date >= '2023-01-01'`. This makes it easy to build dynamic and data-driven user interfaces without writing raw SQL.

· Creating internal tools for data analysis: A company might build internal command-line tools for their data analysts using Node.js. This tool could allow analysts to write simple TypeScript scripts to query production databases for reports. For example, an analyst could write `fetchUsersBySignupDateRange('2024-01-01', '2024-01-31')` which compiles to `SELECT * FROM users WHERE signup_date BETWEEN '2024-01-01' AND '2024-01-31'`. This empowers non-SQL-expert analysts to perform ad-hoc data queries safely and efficiently.

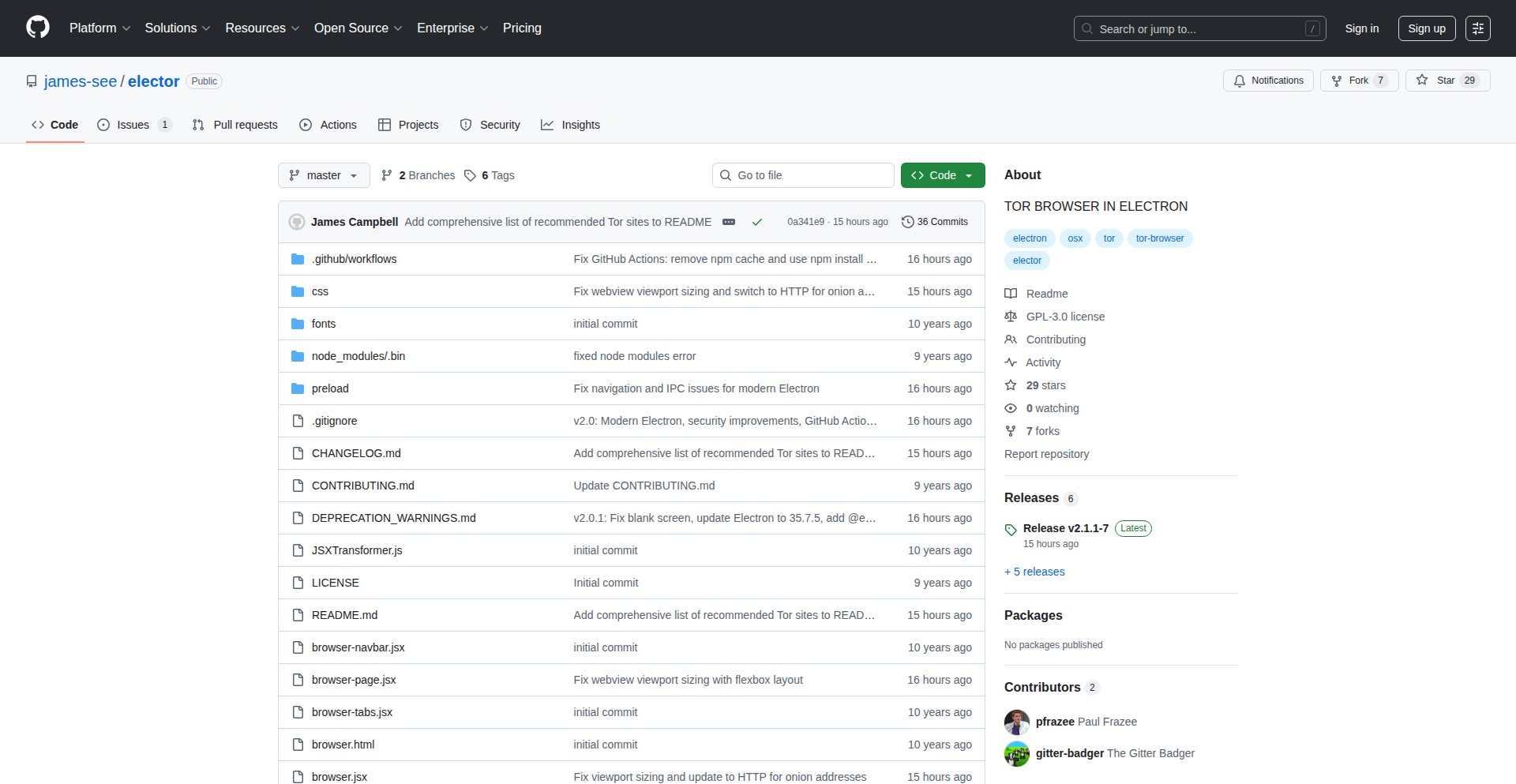

6

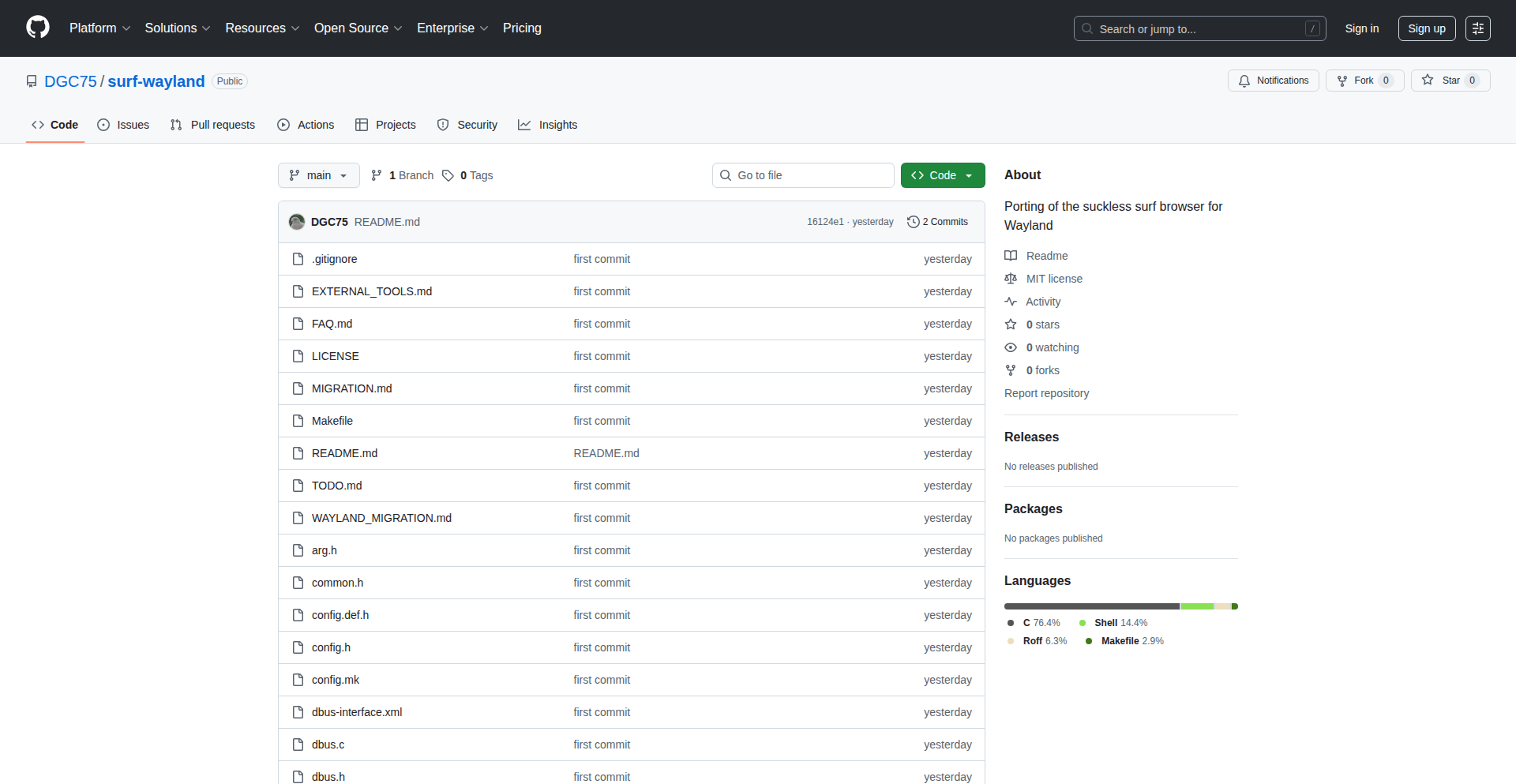

Surf-Wayland: The Wayland-Native Suckless Browser

Author

gc000

Description

Surf-Wayland is a port of the minimalist, keyboard-centric 'suckless surf' browser to the Wayland display server. It provides a streamlined browsing experience by focusing on essential functionalities and efficient resource utilization, while embracing the modern Wayland architecture for improved security and performance.

Popularity

Points 3

Comments 2

What is this product?

This project is a web browser designed with the 'suckless' philosophy in mind, meaning it prioritizes simplicity and efficiency. Unlike many bloated browsers, it strips away unnecessary features to offer a fast and resource-light experience. The key innovation here is its native port to Wayland. Wayland is a modern display server protocol that aims to replace the older X11, offering better security, performance, and graphics handling. By porting Surf to Wayland, this project makes a lean, powerful browsing tool available in a cutting-edge graphical environment. Think of it as taking a highly optimized, no-frills engine and making it run smoothly on a brand new, highly efficient chassis. The value is a faster, more responsive, and potentially more secure browsing experience for users who appreciate minimalism and modern technology. So, this is useful to you because it offers a lightweight and performant alternative to common browsers, especially if you're already using or interested in Wayland.

How to use it?

Developers can use Surf-Wayland by compiling it from source and running it as a standalone application on a Wayland-enabled desktop environment (like GNOME, KDE Plasma, or Sway). Its core interaction model is heavily keyboard-driven, with commands issued through a dedicated input area or keybindings, minimizing reliance on a mouse. This makes it ideal for users who prefer to stay on the keyboard for most of their tasks. Integration into existing workflows can involve scripting or custom keybinding setups. The value here is a highly customizable and efficient browsing tool that can be tightly integrated into a developer's preferred command-line or keyboard-centric workflow. So, how this is useful to you is that it allows you to browse the web efficiently without constantly reaching for your mouse, and it plays nicely with modern operating systems.

Product Core Function

· Wayland Native Rendering: Utilizes the Wayland protocol for drawing the browser interface, offering potentially smoother graphics and better integration with Wayland compositors, leading to a more fluid visual experience and improved resource management. This is useful because it means the browser will perform better on modern Linux systems.

· Minimalist Design Philosophy: Focuses on essential browsing features, avoiding feature bloat. This results in faster startup times and lower memory consumption, making it ideal for resource-constrained systems or users who prioritize speed and simplicity. This is useful because it means your computer will run faster and you'll have more memory available for other applications.

· Keyboard-Centric Navigation: Employs a command-line-like interface and extensive keyboard shortcuts for page navigation, searching, and tab management, enhancing productivity for keyboard power users. This is useful because it allows you to control the browser very quickly without taking your hands off the keyboard.

· Extensible Configuration: While minimalist, it allows for customization through configuration files, enabling users to tailor its behavior and keybindings to their specific needs. This is useful because you can make the browser work exactly how you want it to.

· Integration with External Tools: Can be designed to work in conjunction with external tools for tasks like downloading or rendering specific content, leveraging the strengths of other command-line utilities. This is useful because it allows you to combine the browser with other powerful tools you might already use.

Product Usage Case

· A developer using Surf-Wayland on a minimalist Sway window manager setup to quickly browse documentation, opening new tabs and searching for information entirely via keyboard shortcuts, significantly speeding up their research workflow. This solves the problem of slow, mouse-heavy browsing during focused development sessions.

· A user on a low-resource server or older laptop running Surf-Wayland to access web pages efficiently without the heavy RAM footprint of mainstream browsers, providing a usable web experience where other browsers would struggle. This solves the problem of slow performance on underpowered hardware.

· A power user creating custom keybindings within Surf-Wayland to directly trigger specific scripts or system commands related to web content (e.g., saving an article to a personal knowledge base), integrating browsing seamlessly into their overall productivity system. This solves the problem of fragmented workflows by allowing browsing and personal productivity tools to be tightly coupled.

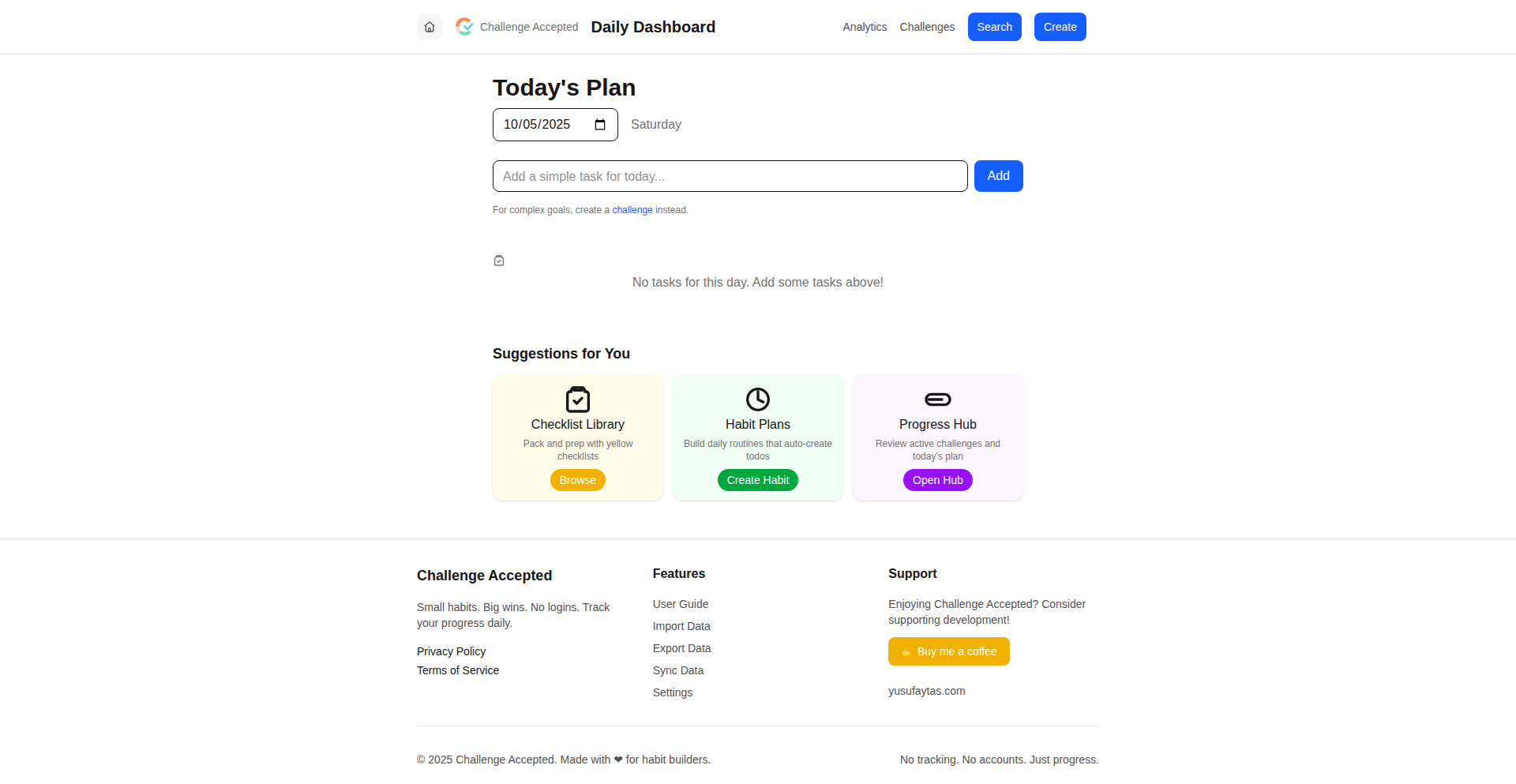

7

BetterSelf: Spaced Repetition for Knowledge Retention

Author

adamgol

Description

BetterSelf is a novel application designed to combat the pervasive problem of forgetting what we learn. It leverages the principles of spaced repetition, a proven learning technique, to ensure that valuable insights from books, podcasts, and personal notes are not lost over time. By intelligently reminding users of learned material at increasing intervals, BetterSelf helps solidify knowledge, making it stick. This project showcases an innovative approach to personal productivity by applying sophisticated learning algorithms to everyday knowledge acquisition.

Popularity

Points 5

Comments 0

What is this product?

BetterSelf is a personal knowledge management tool that uses an intelligent spaced repetition system to help users remember what they learn. Unlike traditional note-taking apps where information can easily be forgotten, BetterSelf actively re-surfaces your notes and lessons at optimal times. The core technical insight is the application of algorithms that determine when you are most likely to forget something and schedule a reminder for you to review it just before that happens. This is based on the scientific principle that reviewing information at increasing intervals strengthens memory recall. So, it's like having a personal tutor that ensures you never lose the valuable lessons you encounter, making your learning truly stick. This means you get more long-term value from the books you read, the podcasts you listen to, and the ideas you jot down.

How to use it?

Developers can integrate BetterSelf into their personal learning workflows. The app allows users to input notes, summaries, or key takeaways from various sources. BetterSelf's backend then manages the scheduling of review prompts. For developers, this means they can use BetterSelf to track technical concepts, new programming languages, or design patterns they are learning. By actively reviewing these items as prompted, they can ensure they retain this knowledge for future project use. The integration is straightforward: simply input your learning material, and the app handles the rest. This helps developers build a stronger, more accessible mental library of technical information, directly boosting their problem-solving capabilities.

Product Core Function

· Spaced Repetition Algorithm: This core function intelligently schedules reviews of learned material at increasing time intervals, based on how well you recall the information. Its value is in dramatically improving long-term knowledge retention, ensuring that coding best practices or system architecture concepts don't fade away.

· Note Ingestion: Allows users to easily add text-based notes, insights, and summaries from any learning source. The value here is a centralized repository for all your important learnings, making it easy to capture ideas as they arise, no matter the context.

· Scheduled Reminders: Delivers timely notifications to prompt users for review sessions. The application value is in proactively engaging users with their knowledge, preventing the passive loss of information and ensuring consistent learning.

· Progress Tracking: Provides insights into learning patterns and retention rates. The technical value is in offering data-driven feedback on learning effectiveness, allowing users to refine their study habits and maximize their learning efficiency.

Product Usage Case

· A developer learning a new framework like React. Instead of just reading documentation once, they input key concepts and code snippets into BetterSelf. The app reminds them to review specific hooks or state management principles over days and weeks, ensuring they master the framework rather than just skimming it. This helps them build more complex and robust React applications with confidence.

· A software architect studying complex distributed systems. They add notes on CAP theorem, eventual consistency, and message queues. BetterSelf prompts them to revisit these topics at strategic intervals, solidifying their understanding. This allows them to design more resilient and scalable systems by drawing on deeply ingrained knowledge, reducing costly design errors.

· A junior developer encountering new debugging techniques. They record the steps and logic behind effective debugging. BetterSelf ensures they repeatedly practice recalling these techniques. This leads to faster and more efficient problem-solving in their daily coding tasks, increasing their productivity and value to the team.

· A team lead who wants to share best practices with their team. They can use BetterSelf as a personal tool to reinforce their own understanding of leadership principles and technical standards. By having this knowledge readily accessible and well-retained, they can more effectively mentor and guide their team, fostering a culture of continuous improvement.

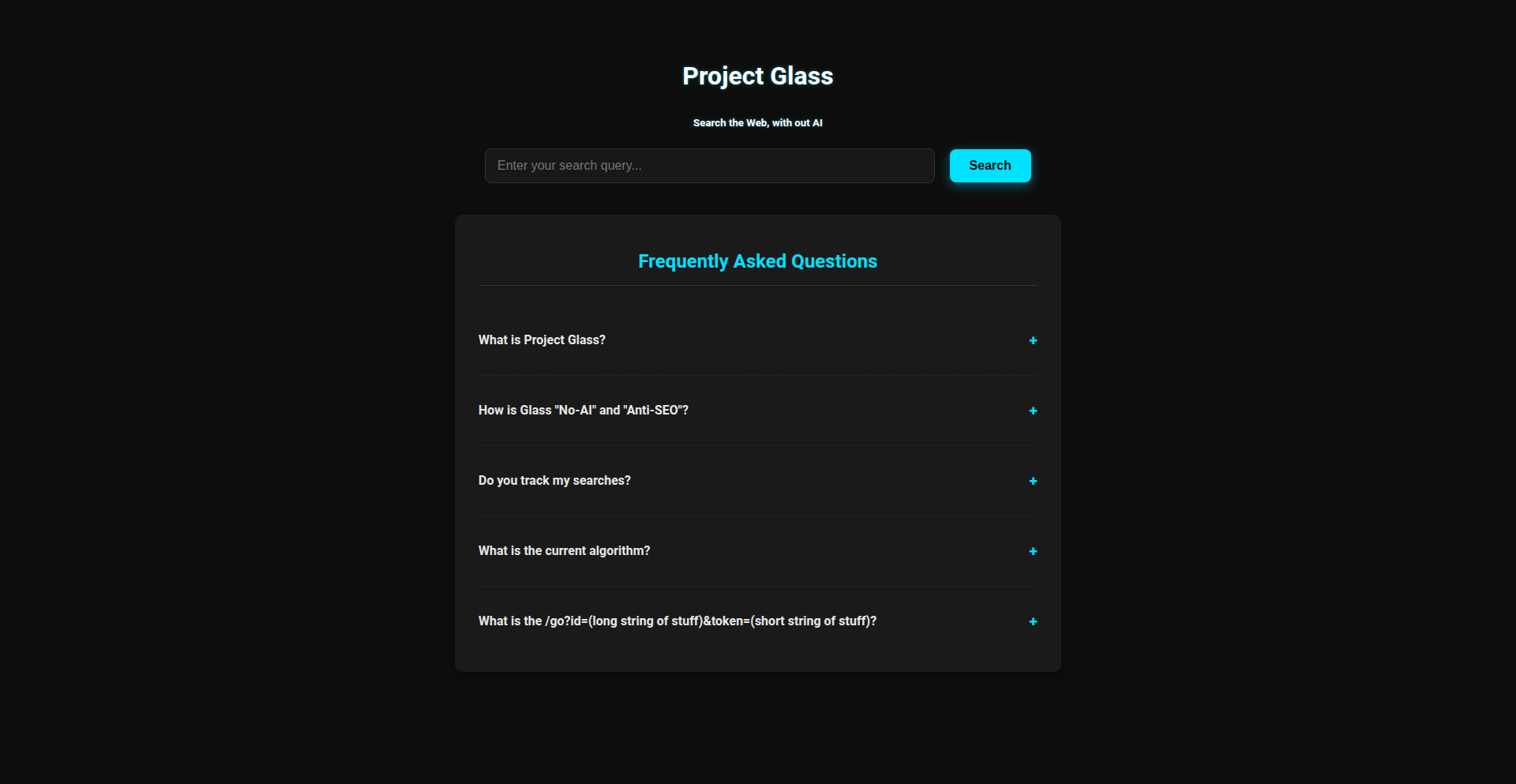

8

GlassSearch

Author

eightballsystem

Description

GlassSearch is a privacy-focused, transparent search engine that prioritizes user engagement over AI-driven results. Its core innovation lies in a clearly defined, observable algorithm that ranks search results based on title, snippet, click-through rate, and recency. This approach offers a refreshing alternative to AI-saturated search experiences, providing users with a more understandable and trustworthy way to find information.

Popularity

Points 1

Comments 4

What is this product?

GlassSearch is a search engine built from the ground up with transparency and user privacy as its guiding principles. Unlike many modern search engines that heavily rely on complex, opaque AI models to determine search results, GlassSearch employs a straightforward, visible algorithm. This algorithm considers factors like the relevance of the search term to the page title and description, how often users click on a particular result, and how recently the content was published. The 'no AI' stance means it's not trying to guess what you want or personalize results in ways that might compromise your privacy. So, what's in it for you? You get search results that are easier to understand and trust, without your data being constantly analyzed by AI.

How to use it?

Developers can use GlassSearch just like any other search engine, by visiting its homepage and entering their queries. The platform is designed to be accessible, requiring no special plugins or complex integration. Its core value for developers lies in its demonstration of a simpler, more transparent search indexing and ranking mechanism. This can serve as inspiration for building custom search solutions for internal projects, personal websites, or even as a backend for niche applications where AI-driven complexity is unnecessary or undesirable. Imagine building a specialized knowledge base for your team and wanting a search function that clearly shows why certain documents are prioritized – GlassSearch's philosophy provides a blueprint.

Product Core Function

· Transparent Ranking Algorithm: The algorithm that determines search result order is openly visible, allowing users to understand why certain links appear higher. This addresses the 'black box' problem of many search engines, providing predictability and trust, which is valuable for users who want to understand the 'why' behind their search results.

· Privacy-First Design: GlassSearch explicitly avoids AI in its search process and front-end, minimizing data collection and user tracking. This is crucial for users concerned about their digital footprint and the pervasive use of personal data by tech giants, offering peace of mind and a cleaner browsing experience.

· User Engagement as a Signal: The ranking heavily relies on user interaction metrics like click-through rates, indicating a focus on what users actually find valuable. This means results are more likely to be relevant and useful in a practical sense, directly benefiting users by surfacing content that others have found helpful.

· No Front-End JavaScript: This technical choice leads to faster page load times and reduced potential for browser-based tracking or malicious code execution. For users, this means a snappier and more secure browsing experience, and for developers, it showcases efficient web development practices.

Product Usage Case

· A developer building a personal portfolio website might use the principles behind GlassSearch to create a simple, self-hosted search for their blog posts, ensuring that the ranking logic is straightforward and easily maintainable, unlike relying on a complex external search service.

· An academic researcher looking for alternative search paradigms could use GlassSearch to understand how traditional relevance signals, combined with user feedback, can effectively surface information without the computational overhead and potential bias of AI models, helping them identify relevant literature more efficiently.

· A privacy-conscious individual who is tired of personalized advertising and AI-driven recommendations can use GlassSearch as their primary search engine, experiencing a cleaner, less intrusive way to find information, which directly translates to a more focused and less overwhelming online search experience.

9

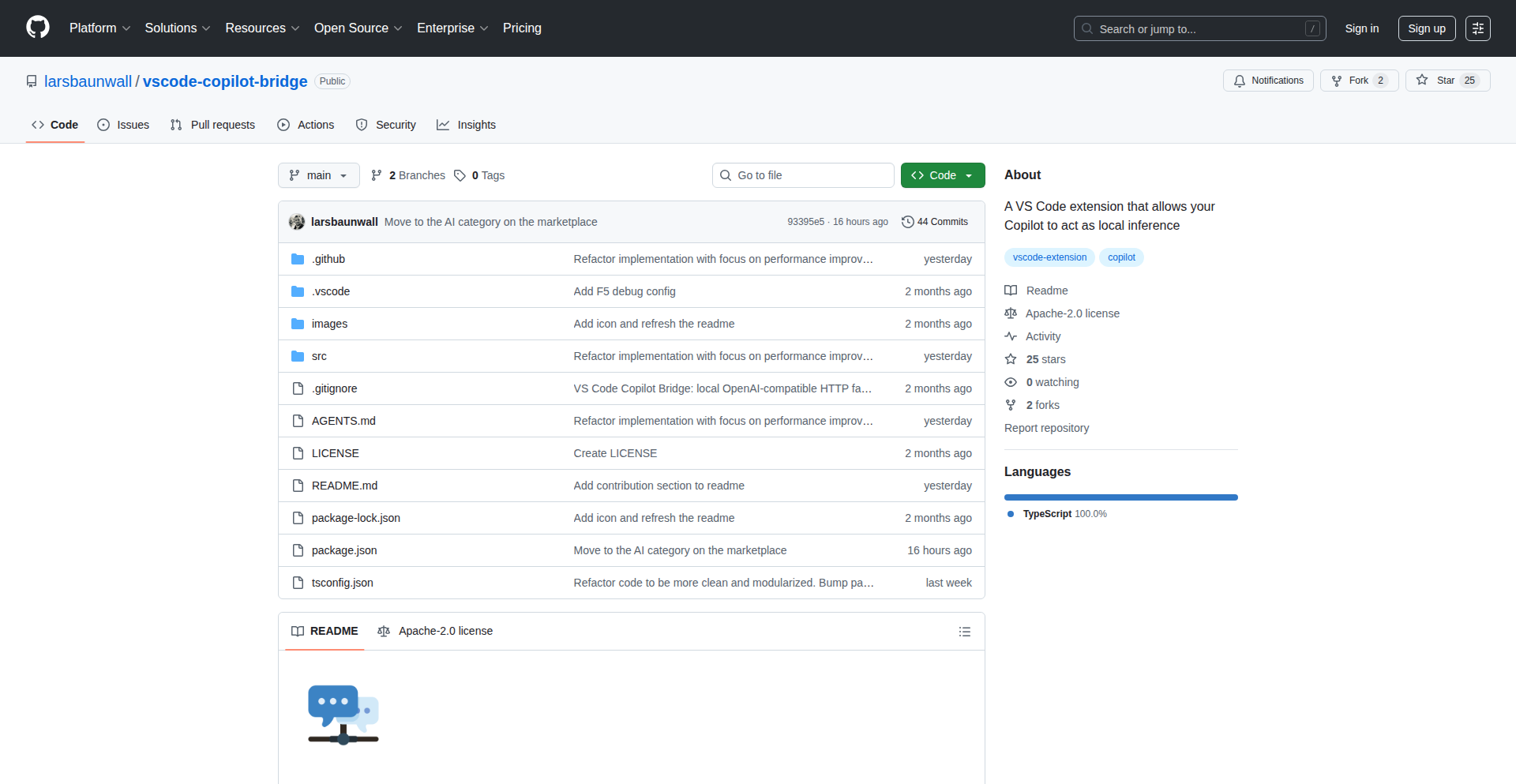

LocalCopilot-API

Author

lbaune

Description

This project exposes your local Copilot (or similar AI code assistants) as a standard OpenAI-style API. This means you can integrate the power of your AI coding companion into any application or workflow that already talks to OpenAI's API, unlocking faster, more context-aware AI assistance directly within your development tools, without relying on external cloud services.

Popularity

Points 3

Comments 1

What is this product?

LocalCopilot-API is a clever wrapper that makes your locally running AI code assistant, like GitHub Copilot or other LLMs trained for code, accessible through a familiar API format, identical to the one OpenAI uses for its models (like GPT-3.5 or GPT-4). Think of it as building a bridge. Instead of your other tools having to learn a completely new way to talk to your local AI, they can just use the language they already know – the OpenAI API language. The innovation lies in abstracting the complexity of the local AI's interface and presenting it in a universally understood way, enabling seamless integration and allowing you to leverage the speed and privacy of local AI with the flexibility of cloud AI workflows. So, this means you get the benefits of a powerful AI coding assistant that understands your project context, runs on your machine for privacy and speed, and can be plugged into any existing system that supports OpenAI's API.

How to use it?

Developers can use this project by running the LocalCopilot-API server on their local machine. Once the server is running, they can configure their IDE extensions, command-line tools, or custom scripts to point to this local API endpoint instead of an external OpenAI API. For example, an IDE extension that normally calls `api.openai.com` can be reconfigured to call `http://localhost:PORT` (where PORT is the port your LocalCopilot-API server is listening on). The key is that the requests sent to your local server will mimic the structure of OpenAI API requests, and the responses will also be formatted in the same way. This makes it incredibly easy to swap out cloud-based AI services for your local AI assistant in any scenario where API compatibility is maintained. So, this means you can instantly upgrade your existing AI-powered developer tools with the capabilities of your local AI, enhancing productivity and control.

Product Core Function

· Local LLM Integration: Connects to various locally running AI models, including those optimized for code generation, allowing developers to utilize their preferred AI. This is valuable because it democratizes access to powerful AI capabilities, removing the reliance on expensive cloud services and offering more control over AI models.

· OpenAI-Compatible API: Emulates the standard OpenAI API, allowing any tool or application designed to work with OpenAI to seamlessly connect to the local AI. This is valuable because it enables a vast ecosystem of existing tools and integrations to be immediately leveraged with local AI, saving development time and effort.

· Customizable Toolchain Integration: Provides a flexible endpoint for integrating local AI assistance into custom scripts, build processes, or other developer workflows. This is valuable for automating tasks, generating code snippets, or providing context-aware help within specific development environments, boosting efficiency.

· Privacy and Speed Enhancement: By running the AI inference locally, this project significantly reduces latency and enhances data privacy, as sensitive code and project information never leaves the developer's machine. This is valuable for organizations with strict data security policies or developers who require the fastest possible AI response times.

· AI Model Agnosticism: Designed to be adaptable to different local AI models, offering developers the flexibility to choose and switch between various open-source or privately hosted LLMs. This is valuable for future-proofing and ensuring compatibility with evolving AI technologies.

Product Usage Case

· IDE Code Completion and Generation: An IDE extension that typically uses OpenAI's API for code suggestions can be reconfigured to use LocalCopilot-API. Instead of sending your code to OpenAI's servers, it sends it to your local machine, getting instant, context-aware code completions. This solves the problem of slow or privacy-concerning cloud-based code assistance, offering a faster and more secure alternative.

· Automated Code Review and Refactoring: A custom script that analyzes code for potential issues or suggests refactoring could be enhanced by calling LocalCopilot-API. The script sends code snippets to the local AI for analysis, receiving suggestions for improvements, which can then be automatically applied. This addresses the need for efficient, on-demand code quality checks within a development pipeline.

· Command-Line Interface (CLI) Tool Augmentation: A CLI tool for generating boilerplate code or performing specific code transformations can be integrated with LocalCopilot-API. Developers can invoke the CLI tool, which then leverages the local AI to generate complex code structures or implement specific logic based on user prompts, solving the problem of repetitive coding tasks.

· Internal Developer Tooling Integration: Companies can build internal tools that utilize their existing AI models for tasks like generating documentation, writing unit tests, or answering developer questions, all powered by LocalCopilot-API. This allows for highly customized and secure AI assistance tailored to the company's specific codebase and workflows.

10

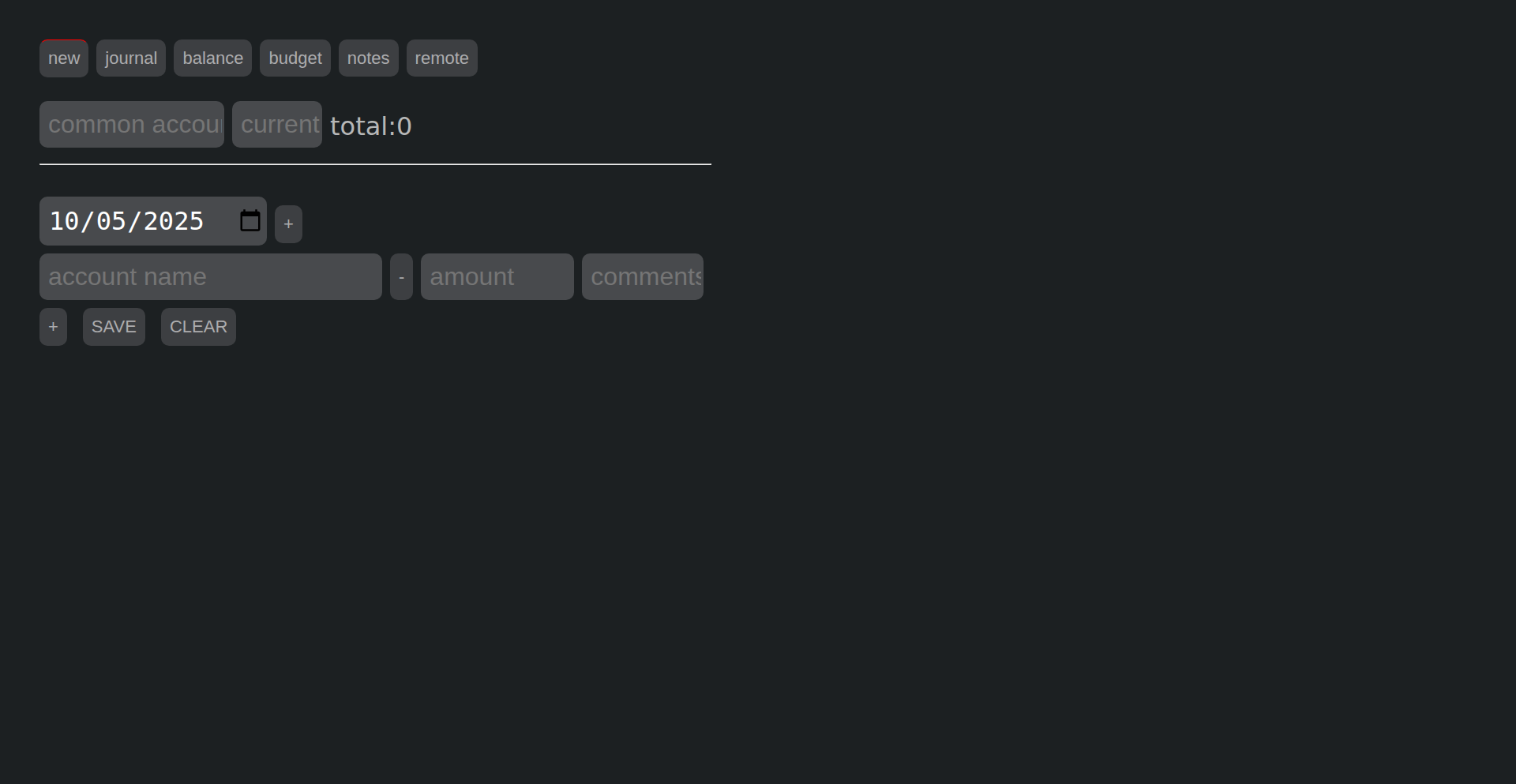

Offline Ledger Companion

Author

sras-me

Description

This project is a minimalist, plain HTML accounting tool designed for personal finance tracking, especially when on the go. It addresses the inconvenience of entering financial transactions from mobile devices or when offline, by offering a web-based interface that stores data locally using the browser's IndexedDB. Its core innovation lies in its zero-backend architecture and seamless integration with cloud storage services for syncing, allowing users to manage their finances with flexibility and ease.

Popularity

Points 4

Comments 0

What is this product?

This project is a simple, client-side accounting application. It's built entirely with HTML, meaning it runs directly in your web browser without needing to install anything or connect to a server. The innovation here is its ability to function completely offline. All your financial transaction data is stored securely within your browser's local storage (specifically, IndexedDB). This means you can add expenses, income, and manage budgets even when you have no internet connection. For syncing data across multiple devices or backing it up, it cleverly utilizes free cloud services like getpantry.cloud or jsonbin.io by exporting and importing your encrypted financial data as a compressed JSON file. It also supports exporting your data in the standard ledger-cli format, allowing for advanced analysis with dedicated tools.

How to use it?

Developers can use this tool in several ways. The simplest is to visit the provided URL directly in their browser to start managing their personal finances immediately. Alternatively, they can download the plain HTML file and open it from their local file system, ensuring complete offline functionality. For data synchronization, developers can set up an account with a service like jsonbin.io or getpantry.cloud, create a data store (e.g., a 'bin' on jsonbin.io), and then configure the app to use the provided API endpoint and authorization headers in the 'remote' section of the application. This allows for seamless data backup and access from any device with a web browser. The app's input format is designed to be familiar to ledger-cli users, making it easy to adapt.

Product Core Function

· Offline Transaction Entry: The ability to record financial transactions anytime, anywhere, without an internet connection. This is valuable for capturing expenses immediately, even when traveling or in areas with poor connectivity, ensuring no financial detail is missed.

· Local Data Storage: Securely stores all financial data directly on the user's device using IndexedDB. This provides privacy and offline access, meaning sensitive financial information doesn't need to be constantly transmitted to a remote server.

· Cloud Syncing (via external services): Enables synchronization of financial data across multiple devices by exporting and importing encrypted JSON files to services like jsonbin.io or getpantry.cloud. This ensures data consistency and backup without requiring complex server setups.

· Ledger-CLI Data Export: Allows users to export their journal entries in the widely-used ledger-cli text format. This is crucial for users who want to leverage the powerful reporting and analytical capabilities of dedicated command-line accounting tools.

· Batch Transaction Entry with Auto-Calculation: Facilitates entering multiple transactions for the same date or using a common source account, with automatic calculation of missing amounts. This significantly speeds up the process of logging recurring or similar transactions.

· Basic Budget Tracking: Provides a simple mechanism to set monthly budget allocations and track spending against them, including carry-over from previous months. This offers a quick overview of financial health and helps manage spending proactively.

Product Usage Case

· A frequent traveler who needs to log expenses on the go: This tool allows them to enter receipts and spending directly from their phone, even on an airplane or in remote locations, then sync the data later when they have internet access.

· A developer who prefers plain text and command-line tools but needs a mobile-friendly interface for quick entries: They can use this HTML app for fast mobile data input and then export to ledger-cli format for in-depth analysis on their desktop.

· Someone concerned about data privacy and security: By storing data locally and only using encrypted exports for syncing, this app offers a higher degree of control over personal financial information compared to many cloud-only solutions.

· A user managing personal finances on multiple devices (e.g., laptop and tablet): The cloud sync feature allows them to maintain a consistent financial record across all their devices without manual file transfers.

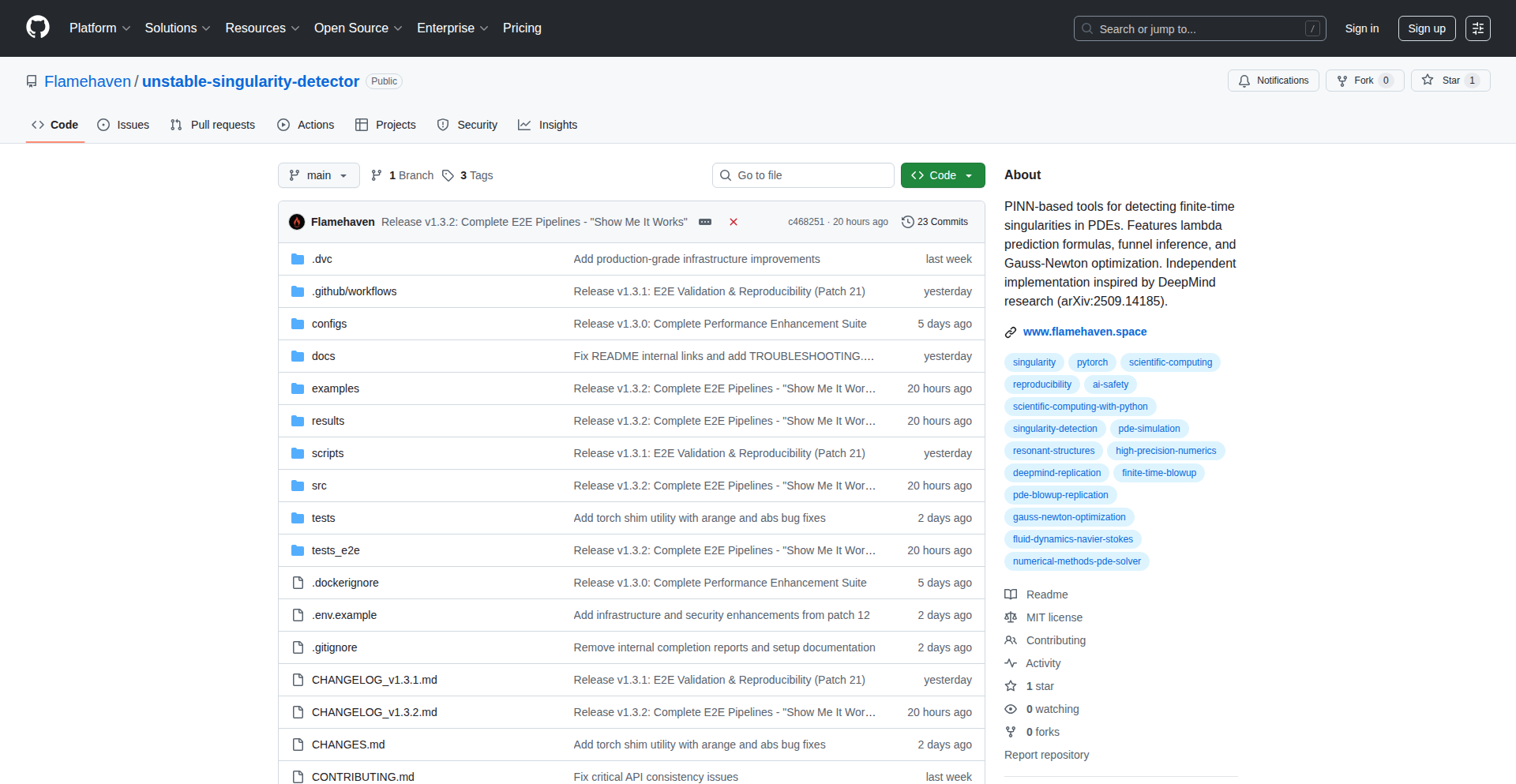

11

CivitasForge: AI-Powered Civilization Blueprint Framework

Author

mnm

Description

This project, CivitasForge, is an experimental framework designed to leverage the power of advanced AI models, like Gemini Pro 2.5 and GPT-4, to conceptualize and stress-test societal blueprints. It acts as a 'Django for Civilization,' providing a structured approach to developing and refining ideas for societal organization, economics, and governance by simulating dialogues and critiques from historical figures and different perspectives. Its innovation lies in its meta-cognitive approach to problem-solving, using AI not just for generation but for rigorous evaluation and co-creation of complex systems.

Popularity

Points 3

Comments 1

What is this product?

CivitasForge is a conceptual framework that harnesses large language models (LLMs) to deeply analyze and iterate on ideas for building societies. Think of it like a sophisticated simulation tool for social engineering. Instead of writing code for a web application like Django, you're using AI's analytical and creative capabilities to 'code' or blueprint entire societal structures. The core innovation is using AI to embody different viewpoints – from historical economists like Adam Smith to modern political figures – to challenge, refine, and synthesize proposed societal models. This allows for rapid prototyping of ideas and identifying potential flaws or improvements before real-world implementation, essentially making complex social planning interactive and dynamically responsive.

How to use it?

Developers and thinkers can use CivitasForge by feeding its core documentation and their own societal concepts into an AI chat interface, such as Google AI Studio. The AI can then be prompted to explore these concepts from various angles, critique them using simulated historical or theoretical lenses, and even help synthesize alternative solutions. For instance, you could input a proposed economic policy and ask the AI, 'How would a neoclassical economist critique this?' or 'Generate a counter-argument from a socialist perspective.' The framework facilitates this by providing a structure for the AI to access and process information, enabling users to engage in high-level discourse and co-creation with the AI to design more robust and well-considered societal frameworks. The output can be used to refine proposals, generate detailed explanations, or even hypothesize practical implementations.

Product Core Function

· AI-driven Ideation and Synthesis: Leverages LLMs to generate novel ideas and synthesize complex concepts for societal structures. This is valuable for overcoming creative blocks and exploring a wider range of potential solutions for societal challenges.

· Simulated Critical Analysis: Uses AI to simulate dialogues and critiques from diverse perspectives, including historical figures and theoretical schools of thought. This helps identify weaknesses in proposed plans and encourages more resilient designs by preemptively addressing potential criticisms.

· Iterative Blueprint Refinement: Facilitates a cyclical process of proposing, evaluating, and refining societal blueprints. This means users can quickly iterate on ideas, making adjustments based on AI feedback, leading to more thoroughly developed and practical plans.

· Perspective Emulation: Enables users to ask AI to respond as specific individuals or ideological viewpoints. This is incredibly useful for understanding how different stakeholders might react to a proposal and for tailoring communication or policy accordingly.

· Knowledge Synthesis and Retrieval: The framework is designed to efficiently process and synthesize information from extensive documentation, allowing the AI to provide contextually relevant insights and explanations on demand, saving users significant research time.

Product Usage Case

· Scenario: A policy maker wants to design a new economic stimulus package. Using CivitasForge, they can feed their proposal into an AI chat and ask it to simulate a debate between Milton Friedman and John Maynard Keynes, identifying potential economic impacts and policy trade-offs based on each economist's known theories. This helps them craft a more balanced and effective stimulus plan.

· Scenario: A city planner is developing a new urban development strategy focused on sustainability. They can use CivitasForge to have the AI critique their plan from the perspective of an environmental ethicist and a historical urban planner like Robert Moses. This helps them anticipate challenges and incorporate more comprehensive environmental and social considerations into their strategy.

· Scenario: An academic researcher is exploring alternative governance models for a decentralized autonomous organization (DAO). They can use CivitasForge to have the AI generate hypothetical scenarios of how different historical political philosophers, like Machiavelli or Locke, would approach governing such an entity, informing the design of more robust and secure DAO structures.

· Scenario: A futurist is brainstorming solutions to global challenges like climate change. They can use CivitasForge to collaborate with AI, feeding it diverse data and asking it to hypothesize solutions, stress-testing them against simulated reactions from various global stakeholders and historical precedents to ensure feasibility and widespread acceptance.

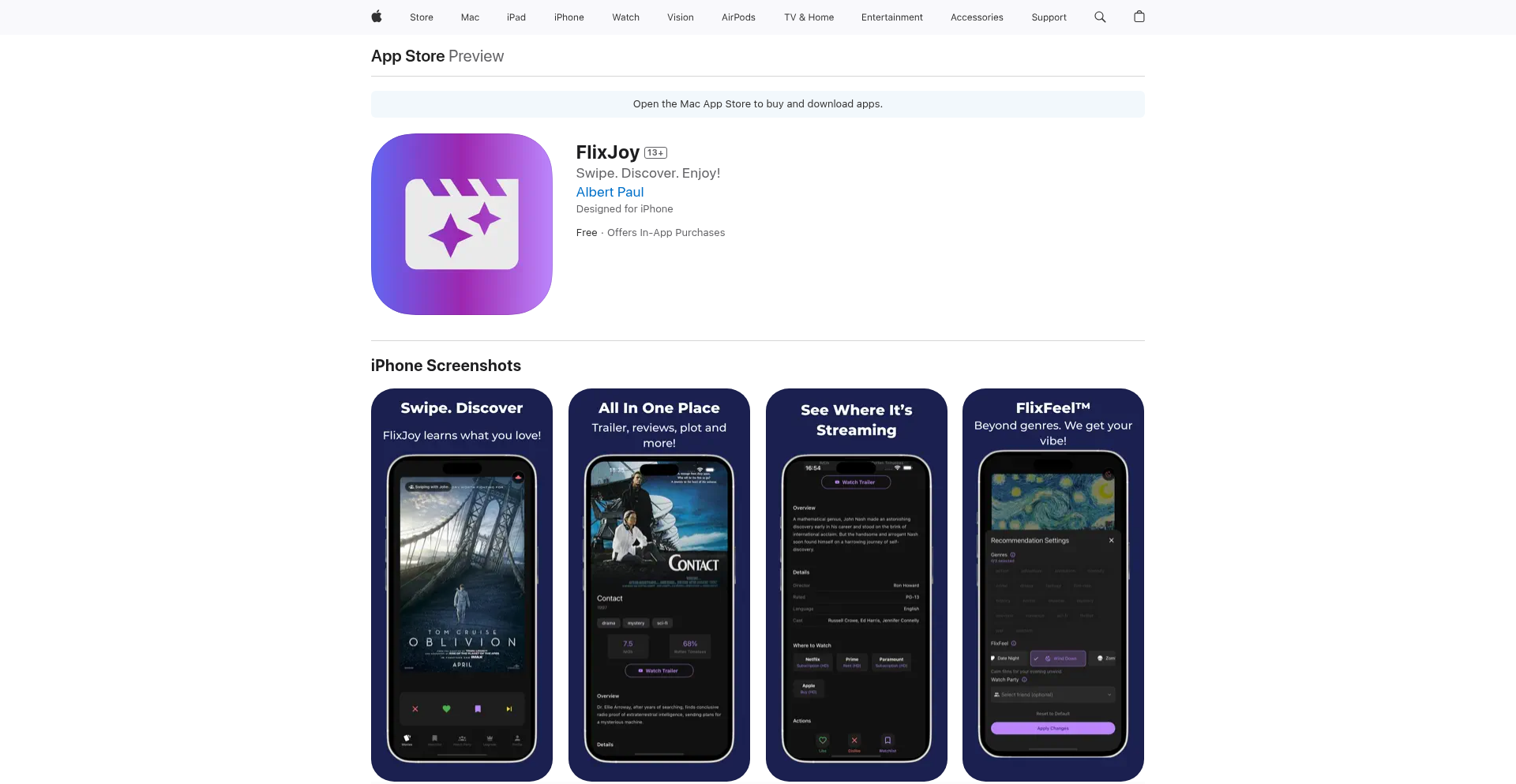

12

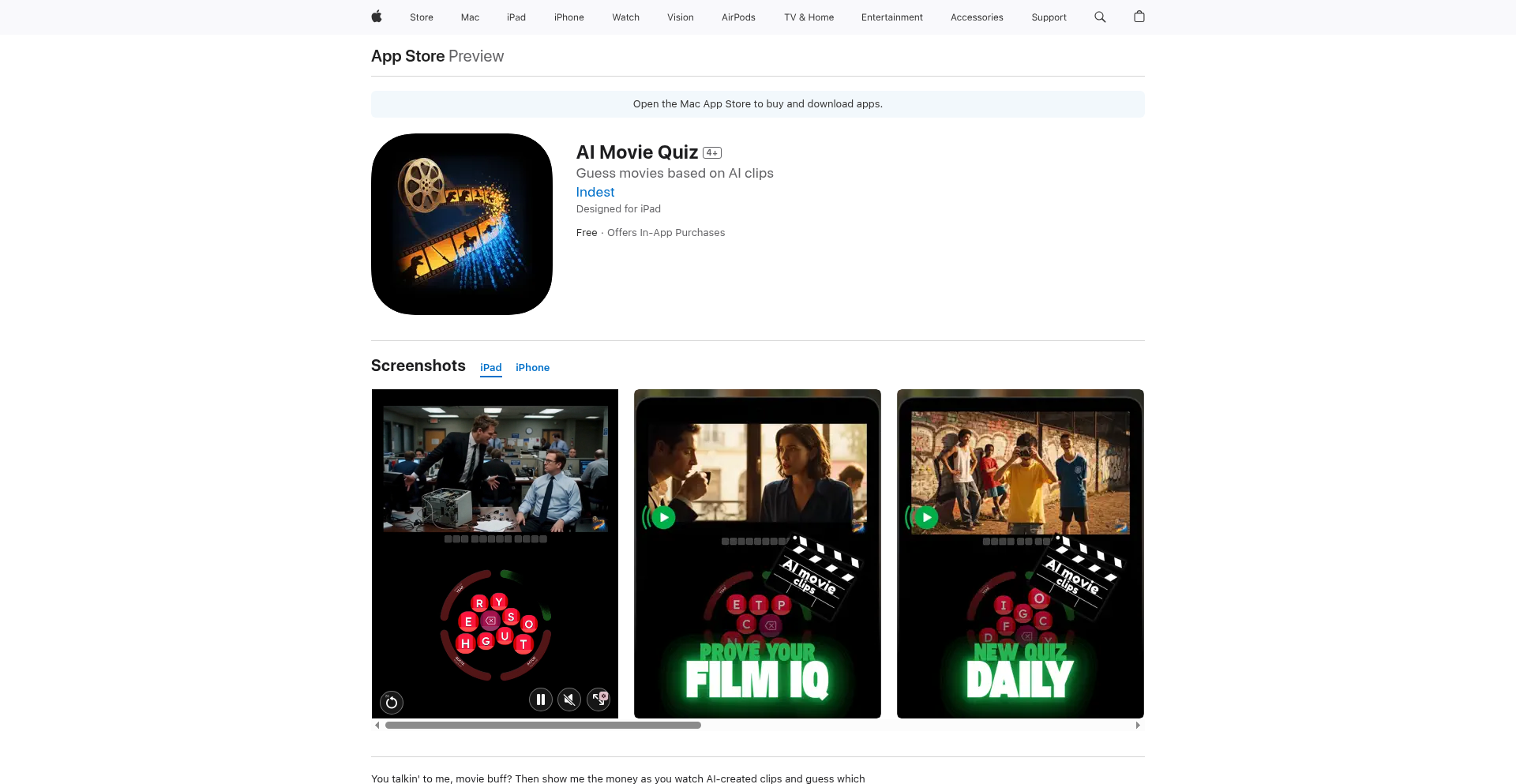

AI Movie Trivia Engine

Author

indest

Description

An innovative iOS application that leverages AI to generate dynamic movie quizzes. It tackles the challenge of creating engaging and varied trivia by using sophisticated AI models to understand movie content and formulate questions, offering a fresh take on entertainment apps for movie buffs.

Popularity

Points 1

Comments 2

What is this product?

This project is an iOS app that uses Artificial Intelligence to create movie trivia quizzes. Instead of pre-written questions, the AI analyzes movie data and generates unique questions on the fly. The innovation lies in its ability to understand narrative, characters, and plot points to craft questions that are both challenging and relevant, offering a truly intelligent quiz experience. So, this means you get endless unique movie quizzes without repetitive questions, keeping the fun fresh every time you play.

How to use it?

Developers can integrate this engine into their own applications by accessing its API. It's designed to be a backend service that other apps can query to receive movie trivia questions and answers based on specific movies or genres. This allows for seamless integration into existing entertainment platforms or the creation of entirely new trivia-focused experiences. So, if you're building a movie app, you can easily add a fun trivia component without building the AI logic yourself.

Product Core Function

· AI-powered question generation: Utilizes advanced natural language processing and machine learning models to create contextually relevant movie trivia questions, providing dynamic and engaging content. This is useful for keeping users hooked with ever-changing challenges.

· Movie content analysis: The AI deeply understands plot, characters, and themes from movie databases to formulate accurate and insightful questions. This ensures the quizzes are meaningful and not just random facts.

· Customizable quiz parameters: Allows for the specification of movie genres, release years, or even specific films to tailor the quiz experience. This enables personalized trivia for individual user preferences or targeted content.

· Real-time question and answer delivery: Provides instant trivia questions and their corresponding answers, facilitating a smooth and interactive user experience. This means instant gratification and continuous gameplay.

· Cross-platform potential: While currently an iOS app, the underlying AI engine can be adapted for web or other mobile platforms. This opens up possibilities for broader application and wider audience reach.

Product Usage Case

· A social media platform could integrate this engine to create movie-themed challenges or polls, increasing user engagement by offering interactive content related to trending movies. This solves the problem of generic engagement tools by providing specific, fun content.

· A streaming service might use this to create in-app quizzes for their catalog, enhancing user discovery and retention by gamifying the movie-watching experience. This helps users explore more content in an enjoyable way.

· An educational app focused on film studies could use the AI to generate questions that test comprehension of cinematic elements and narrative structures. This provides a sophisticated learning tool for aspiring filmmakers or critics.

· A party game application could incorporate this engine to provide an endless stream of movie trivia for gatherings, ensuring consistent entertainment without manual question preparation. This eliminates the hassle of preparing game materials.

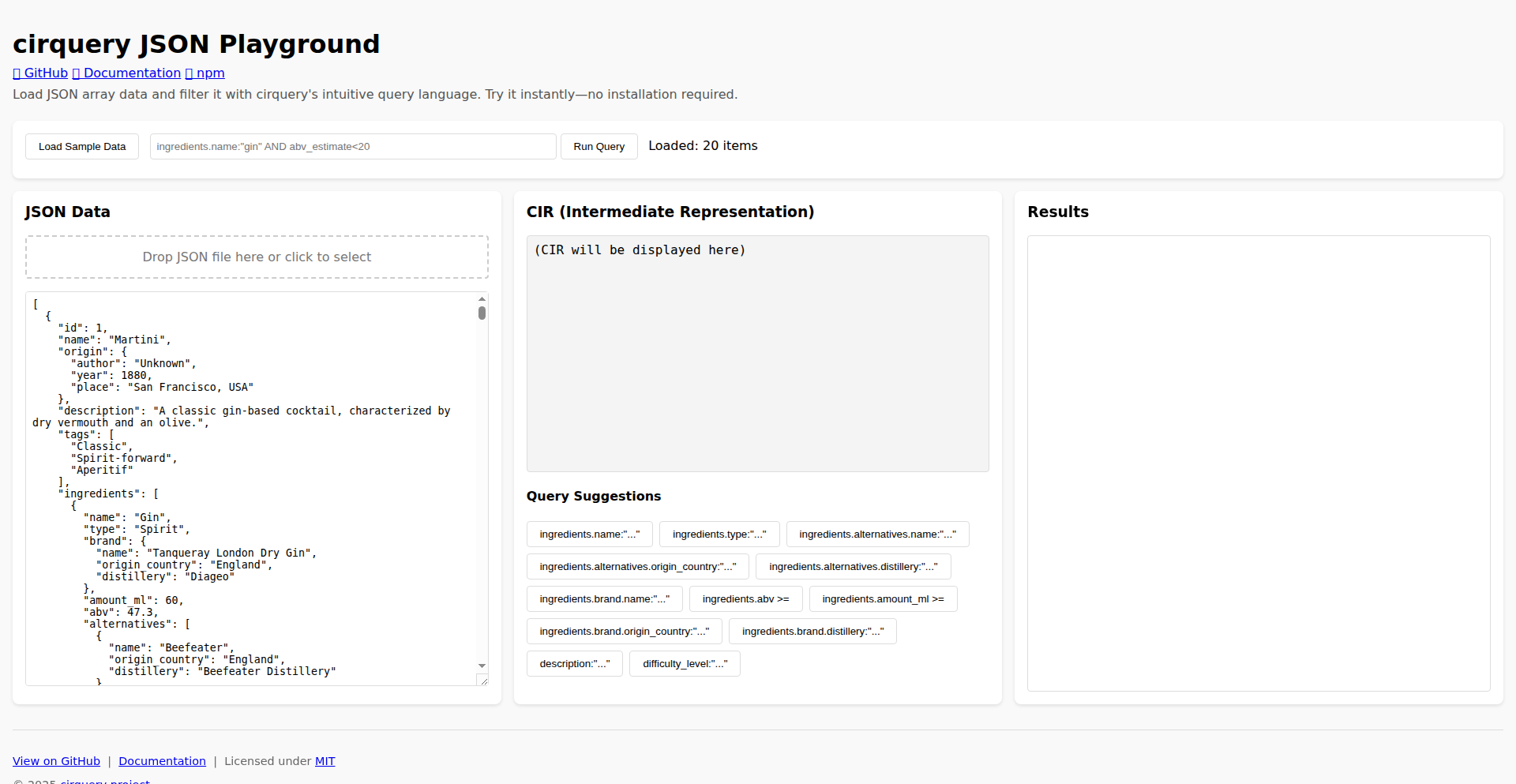

13

ChronoTune

Author

xSWExET

Description

ChronoTune is a web-based game where users listen to eight randomly selected songs and arrange them on a timeline according to their release dates. It's built as a playful exploration of temporal ordering and data visualization, offering a unique way to engage with music history and develop a sense of chronological awareness. The core innovation lies in its interactive application of data (song release dates) to a gamified experience, prompting users to deduce temporal relationships through auditory and deductive reasoning.

Popularity

Points 2

Comments 1

What is this product?

ChronoTune is an online game that tests your knowledge of music history by having you sort songs based on their release dates. You'll hear eight random songs and then need to place them in chronological order. The underlying technology uses a database of song release dates, fetched via APIs, and a user interface that allows for drag-and-drop manipulation of song elements on a visual timeline. The innovation here is turning a potentially dry data set into an engaging auditory and logical puzzle, encouraging users to intuitively grasp historical context through music.

How to use it?

Developers can use ChronoTune as an example of how to build engaging, data-driven web applications. Its core components, like API integration for song data, dynamic timeline rendering, and interactive user input handling, are transferable to many other projects. For instance, you could adapt the timeline concept to visualize project milestones, historical events, or even user journey progression. The game can be integrated into educational platforms or used as a fun way to explore specific music genres or eras. The underlying principle of mapping abstract data to an intuitive user experience is broadly applicable.

Product Core Function

· Song Selection and Playback: Randomly selects eight songs from a curated database and provides an audio player for users to listen. This addresses the need to efficiently present varied content for user interaction.

· Release Date Data Retrieval: Fetches accurate release dates for songs, likely through music metadata APIs. This demonstrates effective data sourcing and management for factual accuracy.

· Interactive Timeline Interface: Allows users to drag and drop song elements onto a visual timeline, representing their chronological placement. This showcases intuitive UI design for complex data ordering.

· Chronological Sorting Logic: Implements algorithms to compare user-placed song order against actual release dates to determine correctness. This highlights the technical implementation of validation and scoring.

· Gamified Feedback System: Provides immediate feedback on correct and incorrect placements, along with scoring. This illustrates how to create engaging user experiences through game mechanics.

· Endless Mode Variant: Offers an extended play option with increasing difficulty and a survival element. This demonstrates extensibility and replayability in application design.

Product Usage Case

· Educational Tool: A history teacher could use ChronoTune to create interactive lessons on music evolution for a specific decade, helping students understand the timeline of musical styles and artists. It solves the problem of making historical data engaging and memorable.

· Music Discovery Platform: A music streaming service could integrate a similar feature to allow users to explore artists or genres chronologically, revealing trends and influences. This addresses the challenge of presenting music discovery in a novel and informative way.

· Data Visualization Experiment: A developer interested in data visualization could fork ChronoTune to explore different ways of representing temporal data interactively, perhaps for historical timelines or scientific data sets. This provides a concrete example of applying chronological data to a visual medium.

· Personal Project Showcase: A web developer can use this as a reference for building a fun, interactive web application that demonstrates skills in front-end development, API integration, and game logic. It solves the problem of having a portfolio piece that is both technically sound and engaging.

14

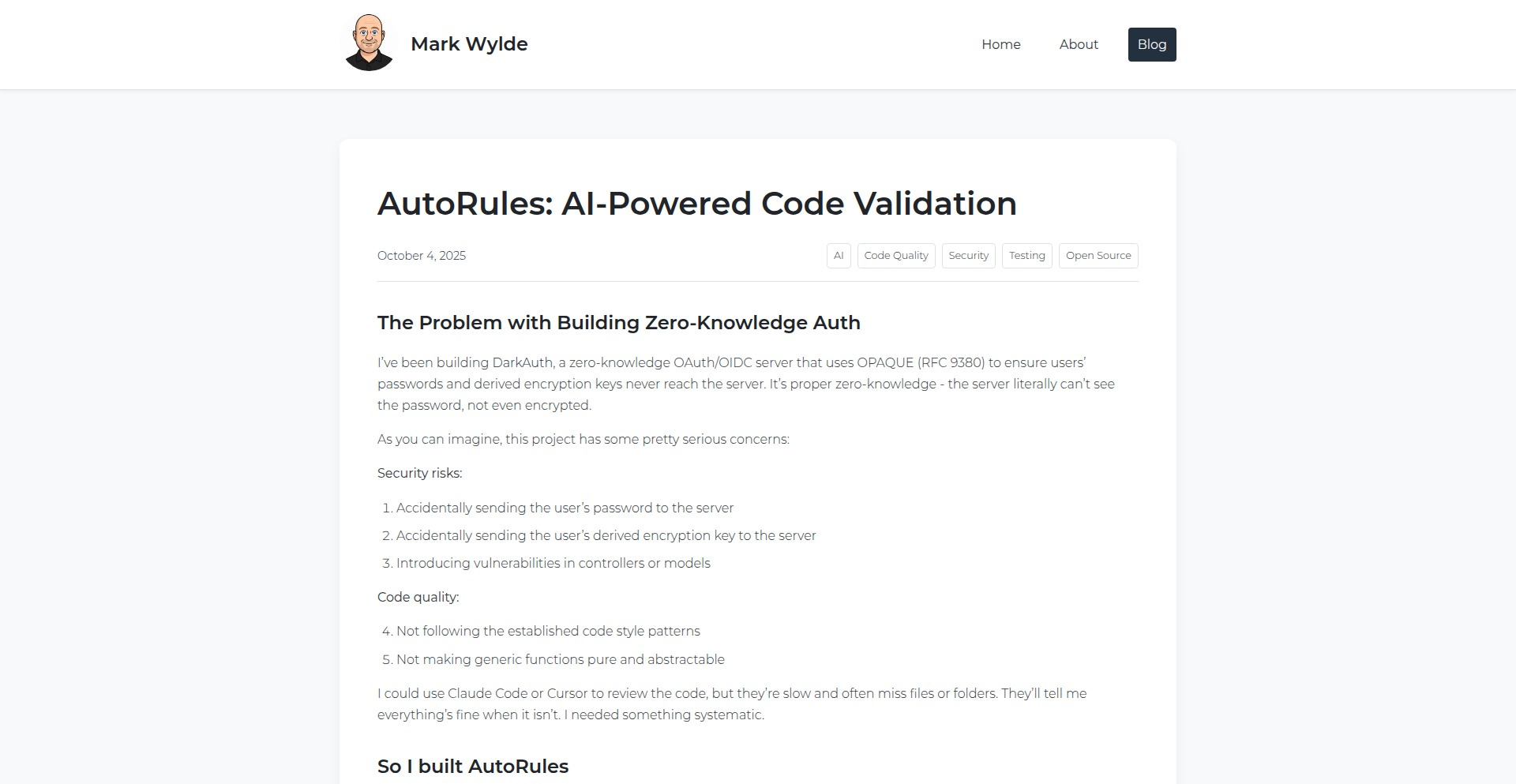

RL-Viz: RL-Native Observability Framework

Author

kaushikbokka

Description

RL-Viz is an open-source observability framework built specifically for Reinforcement Learning (RL) ecosystems. It addresses the critical need for tools that understand RL primitives, providing live tracking, per-example inspection, and programmatic access to experiment runs. This allows developers to finally see what's happening during their RL training, debug issues effectively, and understand rollout quality, reward distributions, and failure modes, which are crucial for efficient and effective RL development.

Popularity

Points 3

Comments 0

What is this product?

RL-Viz is a specialized toolkit designed to give developers deep insights into their Reinforcement Learning (RL) experiments. Think of it like a dashboard for your RL AI. Standard tools often treat RL experiments like any other software, but RL has unique needs. RL-Viz understands concepts like rewards, actions, states, and environments, which are the building blocks of RL. Its innovation lies in its 'RL-native' approach, meaning it speaks the language of RL. This allows it to offer features like real-time monitoring of agent behavior, the ability to drill down into individual decision-making processes (per-example inspection), and the capability to retrieve this data programmatically for deeper analysis. So, for you, it means you can stop guessing why your RL agent isn't learning and start understanding the root cause of problems.

How to use it?

Developers can integrate RL-Viz into their existing RL training pipelines. This typically involves instrumenting their RL agent's code to send relevant RL-specific metrics and state information to RL-Viz's backend. The framework then provides a user interface (UI) and an API for visualization and analysis. You can use it to monitor live training sessions, pause and inspect specific agent decisions, and analyze historical runs to identify patterns or anomalies. Common integration points include popular RL libraries like Stable-Baselines3, Ray RLlib, or custom-built RL environments. So, for you, this means you can easily plug this into your current RL projects to gain immediate visibility without a massive rewrite.

Product Core Function

· Live Training Monitoring: Enables developers to observe their RL agent's behavior and performance in real-time as it learns. This is valuable because it allows for immediate detection of diverging or inefficient learning, helping to save computational resources and time.

· Per-Example Inspection: Allows developers to examine the specific decisions made by the RL agent for individual training examples (e.g., a single step in a game or simulation). This is crucial for debugging, as it helps pinpoint exactly why an agent made a particular choice, leading to more targeted improvements.

· Reward Distribution Analysis: Visualizes the distribution of rewards received by the RL agent over time. Understanding reward patterns is fundamental to RL, as it directly indicates how well the agent is achieving its goals and where it might be getting stuck.

· Failure Mode Identification: Helps to identify and categorize scenarios where the RL agent fails to perform as expected. This is invaluable for diagnosing complex problems and understanding the limitations of the current agent design or training strategy.

· Programmatic Data Access: Provides an API to access all observed RL experiment data programmatically. This empowers advanced users to perform custom analysis, build custom dashboards, or integrate RL-Viz data into other machine learning workflows for deeper insights.

Product Usage Case

· Debugging a game-playing AI: A developer is training an AI to play a complex video game and notices it gets stuck in a loop. Using RL-Viz, they can inspect the specific state, actions, and rewards at each step of the loop to understand the faulty decision-making process, allowing them to adjust the agent's reward function or training data. This helps them fix the bug faster and improve the AI's performance.

· Optimizing a robotic arm controller: A researcher is developing an RL agent to control a robotic arm for a manufacturing task. They observe that the robot's movements are jerky and inefficient. With RL-Viz, they can visualize the reward distribution and per-example trajectories, identifying specific movements that lead to low rewards. This insight allows them to refine the agent's policy to achieve smoother and more efficient robot arm control.

· Understanding a recommendation system's performance: A team is using RL to personalize content recommendations. They want to understand why certain users receive suboptimal recommendations. RL-Viz can help them analyze the sequence of actions (recommendations) and rewards (user engagement) for individual user sessions, revealing patterns in user behavior that the agent might be misinterpreting, leading to better recommendation strategies.

· Monitoring autonomous vehicle training: Engineers training an autonomous driving system can use RL-Viz to monitor the agent's decisions in various simulated scenarios. They can inspect specific 'critical moments' where the agent made a sub-optimal decision, such as a near-miss, to understand the contributing factors and improve safety protocols. This provides essential visibility into the complex decision-making process of safety-critical systems.

15

Flakegarden: Nix Flake Orchestrator

Author

createaccount99

Description

Flakegarden is a project inspired by shadcn/ui, aimed at simplifying the management and sharing of Nix flakes. It provides a structured and composable way to define, organize, and reuse Nix development environments and system configurations, making complex Nix setups more accessible and maintainable. The core innovation lies in its declarative approach to building and managing dependencies and configurations, allowing for reproducible and portable development environments.

Popularity

Points 2

Comments 0

What is this product?

Flakegarden is a tool that helps developers manage and share their Nix flakes. Nix is a powerful package manager and build system that allows for reproducible development environments. Flakes are a newer feature in Nix that make it easier to manage dependencies and configurations in a structured way. Flakegarden takes inspiration from shadcn/ui, a popular component library for React, by offering a similar philosophy of composability and easy integration. Essentially, it provides a standardized way to define, organize, and share reusable Nix environments and system configurations, much like a component library provides reusable UI elements. This means you can easily assemble complex development setups or server configurations from smaller, well-defined parts, ensuring that your environment is always the same, no matter where you run it. So, what's the value for you? It means less time spent fighting environment issues and more time coding, with the confidence that your project's dependencies and build process are consistent and reproducible.

How to use it?

Developers can use Flakegarden to define their project's Nix flakes in a clear and organized manner. By leveraging Flakegarden's structure, you can import and compose existing flakes for common tasks (like setting up a specific programming language environment, a database, or a CI/CD pipeline) and combine them with your project-specific configurations. This allows for rapid bootstrapping of new projects or complex system setups. Integration typically involves defining your project's flake.nix file using Flakegarden's conventions, specifying which existing flakes (or Flakegarden modules) to include and how to configure them. This makes it easy to share your development environment with team members or deploy your configurations consistently across different machines. So, what's the value for you? It means you can quickly spin up a perfectly configured development environment for any project, or deploy a consistent server setup, by simply composing pre-defined building blocks. It's like having a toolbox of ready-to-use environment configurations that you can assemble as needed.

Product Core Function

· Declarative Environment Composition: Define complex development environments by combining smaller, reusable Nix flakes. This provides a structured way to build up your project's dependencies and tools, making it easier to understand and manage. The value is in creating consistent and reproducible development setups, so you don't have to worry about 'it works on my machine' issues.

· Flake Sharing and Reusability: Easily share your well-defined Nix flakes with others or reuse them across multiple projects. This promotes collaboration and reduces redundant configuration efforts. The value is saving time and effort by leveraging existing, tested configurations.

· Modular System Configuration: Apply the same principles of composability to system-level configurations, allowing for declarative and reproducible server setups. This means you can manage your infrastructure in a code-like fashion, ensuring consistency and simplifying deployments. The value is in creating reliable and easily manageable infrastructure.

· Inspiration from shadcn/ui: Adopt a component-based philosophy for managing infrastructure and development environments, drawing parallels to how UI components are used in web development. This offers a familiar mental model for developers accustomed to modern front-end frameworks. The value is in providing an intuitive and modern approach to complex system management.

Product Usage Case

· Setting up a new Go project with a specific Go version, a PostgreSQL database, and necessary linters: A developer can use Flakegarden to compose a flake that includes a pre-built Go development environment flake, a PostgreSQL flake, and a linter configuration flake, all within their project's flake.nix. This solves the problem of manually installing and configuring each dependency, ensuring a consistent setup for all team members. The value is rapid project bootstrapping and consistent development tooling.

· Creating a reproducible Python development environment for a data science project: A data scientist can use Flakegarden to define a flake that specifies a particular Python version, along with common libraries like NumPy, Pandas, and Scikit-learn. This ensures that the environment is identical for everyone on the team, preventing version conflicts and making collaboration smoother. The value is in eliminating Python environment headaches and enabling seamless team collaboration.

· Deploying a web server with a specific Nginx configuration and a database service: A system administrator can use Flakegarden to define a server configuration that includes a managed Nginx instance and a database service, all declaratively defined. This allows for easily reproducible server deployments and simplifies updates or rollbacks. The value is in creating a robust and easily manageable infrastructure.

16

Enfyra: The Scalable Cluster-Native BaaS

Author

DustinPham12

Description

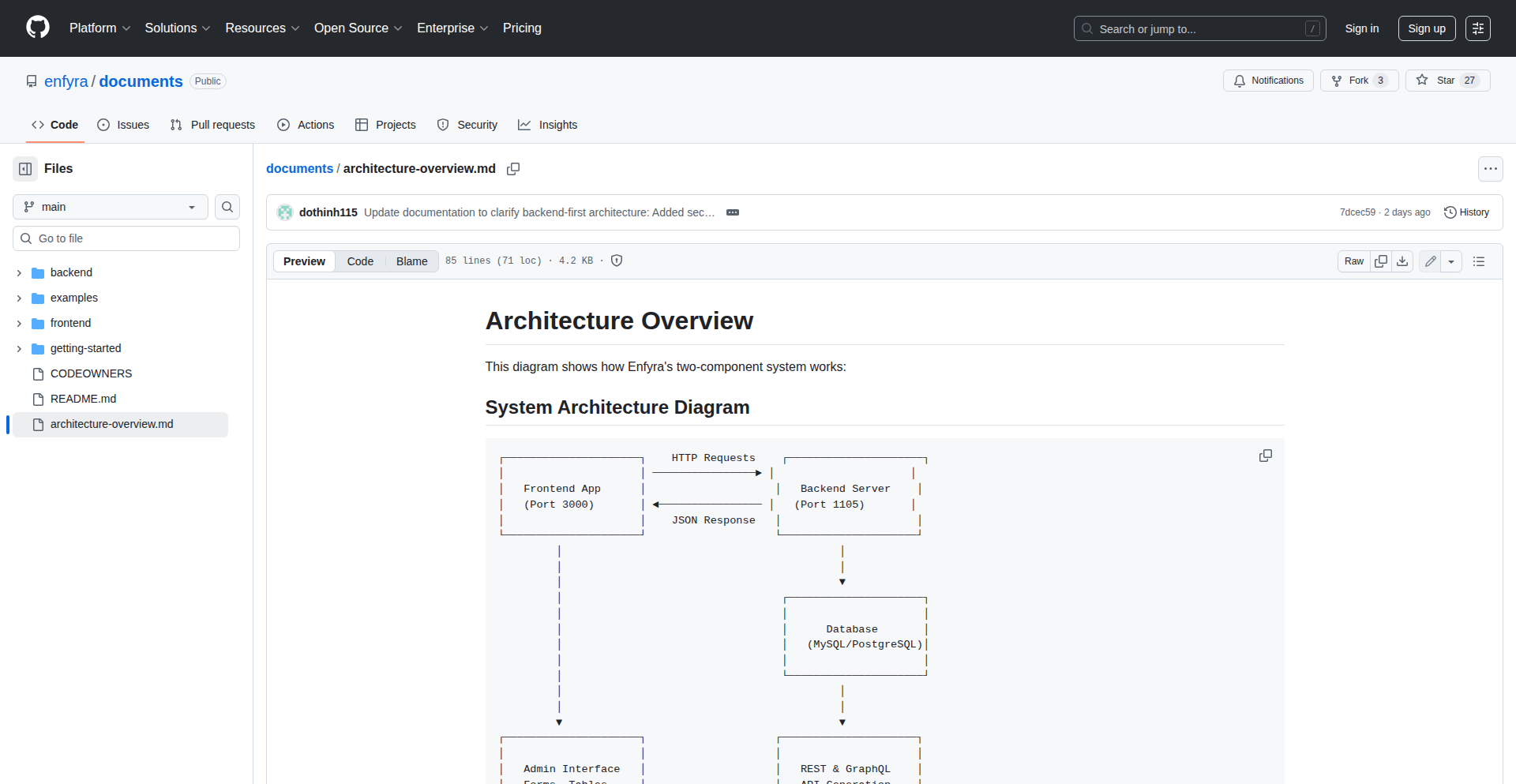

Enfyra is a Backends-as-a-Service (BaaS) platform designed to natively integrate with and scale within your existing Kubernetes clusters. It offers a suite of backend functionalities, abstracting away complex infrastructure management, allowing developers to focus on building their applications. The core innovation lies in its deep Kubernetes integration, enabling dynamic scaling and resource optimization directly from your cluster's capabilities, meaning your backend grows as your application needs do, seamlessly.

Popularity

Points 2

Comments 0

What is this product?

Enfyra is a cloud-native BaaS that runs inside your Kubernetes environment. Instead of relying on external, often vendor-locked BaaS providers, Enfyra deploys its services directly within your own infrastructure, managed by Kubernetes. This means it leverages the power and flexibility of Kubernetes for scaling, resilience, and resource allocation. It provides common backend functionalities like databases, authentication, and storage, all accessible via simple APIs. The innovation is in making these services truly cluster-native, ensuring they scale efficiently and cost-effectively by utilizing your cluster's existing resources, thus avoiding the typical performance bottlenecks and cost overhead of traditional BaaS solutions.

How to use it?

Developers can integrate Enfyra into their applications by deploying the Enfyra services within their Kubernetes cluster. Once deployed, they can access Enfyra's functionalities (e.g., database operations, user authentication, file storage) through its provided SDKs or direct API calls from their frontend or backend code. This allows for rapid application development without the need to provision and manage separate backend infrastructure. For example, a web application could easily connect to an Enfyra-managed database for data storage and an Enfyra authentication service for user sign-ups and logins, all running within the same Kubernetes cluster as the application itself.

Product Core Function

· Kubernetes-Native Database: Provides a managed database service that scales with your Kubernetes cluster, offering high availability and performance by leveraging the cluster's underlying resources. This means your data storage can grow seamlessly as your application's data load increases.

· Scalable Authentication Service: Offers a secure and scalable authentication and authorization system. It can handle a large number of users and requests by dynamically scaling its resources within your cluster, ensuring reliable user access for your application.

· Integrated File Storage: Delivers a robust and scalable object storage solution for your application's files. It's designed to integrate tightly with your cluster, ensuring that file storage needs are met efficiently as your application's data volume grows.

· Declarative Service Configuration: Allows developers to define and manage backend services using declarative configurations, similar to how they manage other Kubernetes resources. This simplifies deployment and management, allowing for repeatable and automated backend setups.

· Automated Scaling and Resource Management: Automatically scales backend services up or down based on demand and available cluster resources. This ensures optimal performance and cost-efficiency, as you only pay for the resources your backend actually uses.

Product Usage Case