Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-10-03

SagaSu777 2025-10-04

Explore the hottest developer projects on Show HN for 2025-10-03. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN submissions show AI moving from an academic pursuit to a practical toolkit for developers and creators. Many projects use Large Language Models (LLMs) and AI agents to automate complex tasks. Examples range from AI training and evaluation environments like FLE to AI-powered coding assistants and prompt-management tools. This points to a shift toward 'AI-augmented development', in which developers hand off tedious work and spend more time on higher-level design. For entrepreneurs, the question is where AI can streamline product development, improve user experiences, or open entirely new product categories. The trend toward local or more accessible AI solutions, such as offline video detection or efficient JSON parsers, also suggests growing demand for privacy and control. The hacker spirit is very much alive here: individuals are using these tools to solve real problems quickly and to build sophisticated applications that were previously out of reach for a single developer. Developers should try these tools, experiment with agentic frameworks, and consider where AI could open new possibilities in their own projects, keeping up a habit of continuous learning and iteration.

Today's Hottest Product

Name

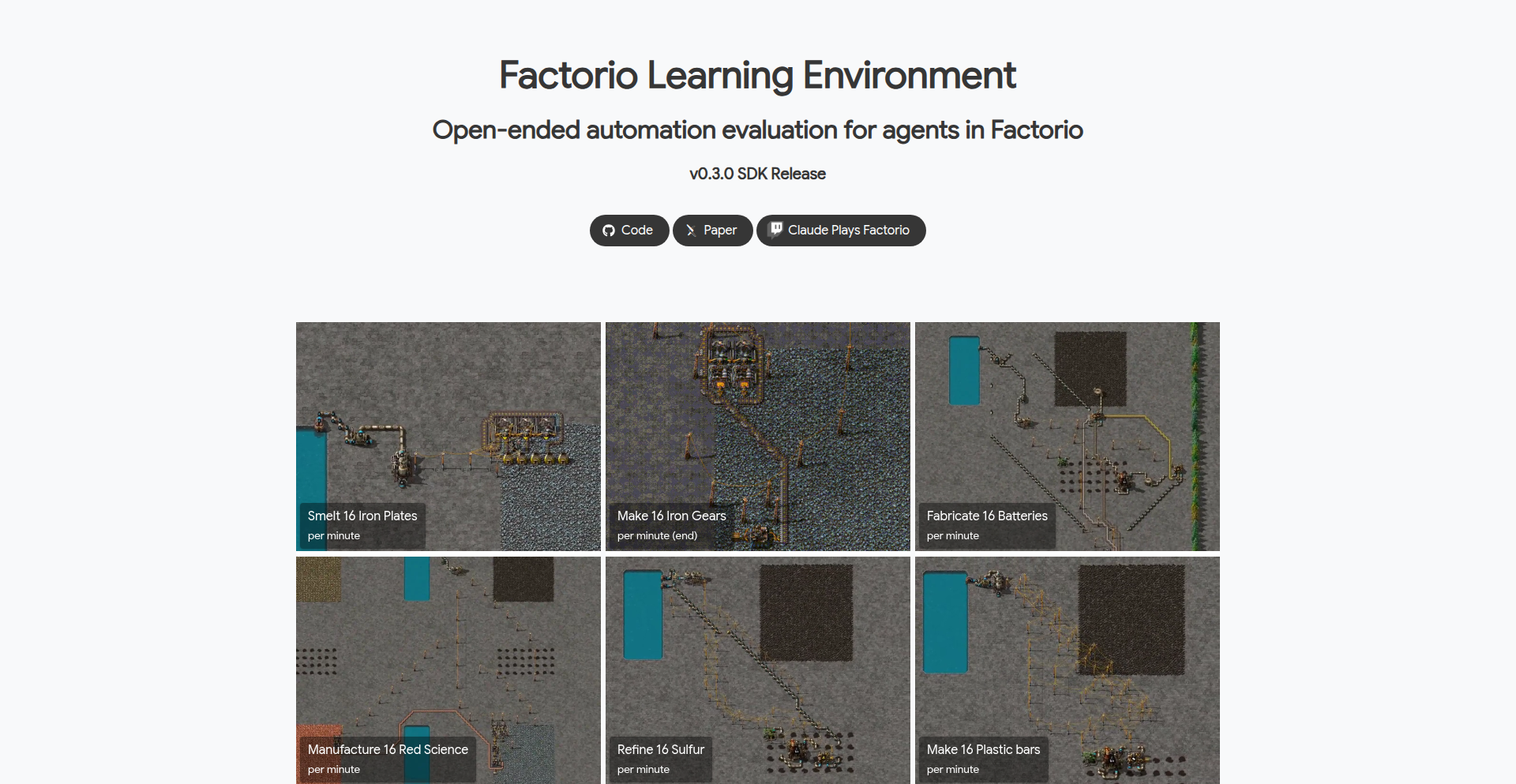

FLE v0.3 – Factorio Learning Environment

Highlight

This project introduces FLE, an open-source environment built around the game Factorio for evaluating AI agents on complex, long-horizon planning and automation tasks. The key innovation in this release is headless scaling. Evaluations run without the game client, so many instances can run in parallel. That makes it practical to test AI agents at scale on engineering-style challenges such as system debugging and logistics optimization. Developers can learn from it how to design scalable AI training environments, integrate with RL frameworks through the OpenAI Gym interface, and apply advanced AI models to complex problem-solving.

Popular Category

AI & Machine Learning

Developer Tools

Productivity

Web Development

Education

Popular Keyword

AI

LLM

Developer Tool

Automation

Code Generation

Productivity

Technology Trends

AI Agentic Frameworks

LLM Integration in Workflows

Visual Programming Languages

AI-Assisted Development

Decentralized/Local AI Solutions

Developer Productivity Tools

Data Science & ML Platforms

Real-time Web Applications

Project Category Distribution

AI & Machine Learning (25.0%)

Developer Tools (20.0%)

Productivity (15.0%)

Web Development (10.0%)

Education (5.0%)

Utilities (5.0%)

Creative Tools (5.0%)

Infrastructure (5.0%)

Other (10.0%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | Factorio Automation AI Lab (FAAL) | 58 | 14 |
| 2 | PipeVisual | 13 | 15 |
| 3 | AI Knowledge Navigator | 13 | 10 |
| 4 | LLM HN Profile Roast | 8 | 3 |
| 5 | AI Serenader: A Computational Confession | 4 | 6 |
| 6 | WebRTC Instant Connect | 4 | 2 |
| 7 | LLM DOM Insight Engine | 5 | 1 |
| 8 | Dakora: PromptSync Engine | 3 | 2 |
| 9 | BodhiGPT: The AI-Augmented Self-Mastery Engine | 4 | 1 |
| 10 | Brice.ai - AI Meeting Coordinator | 4 | 1 |

1

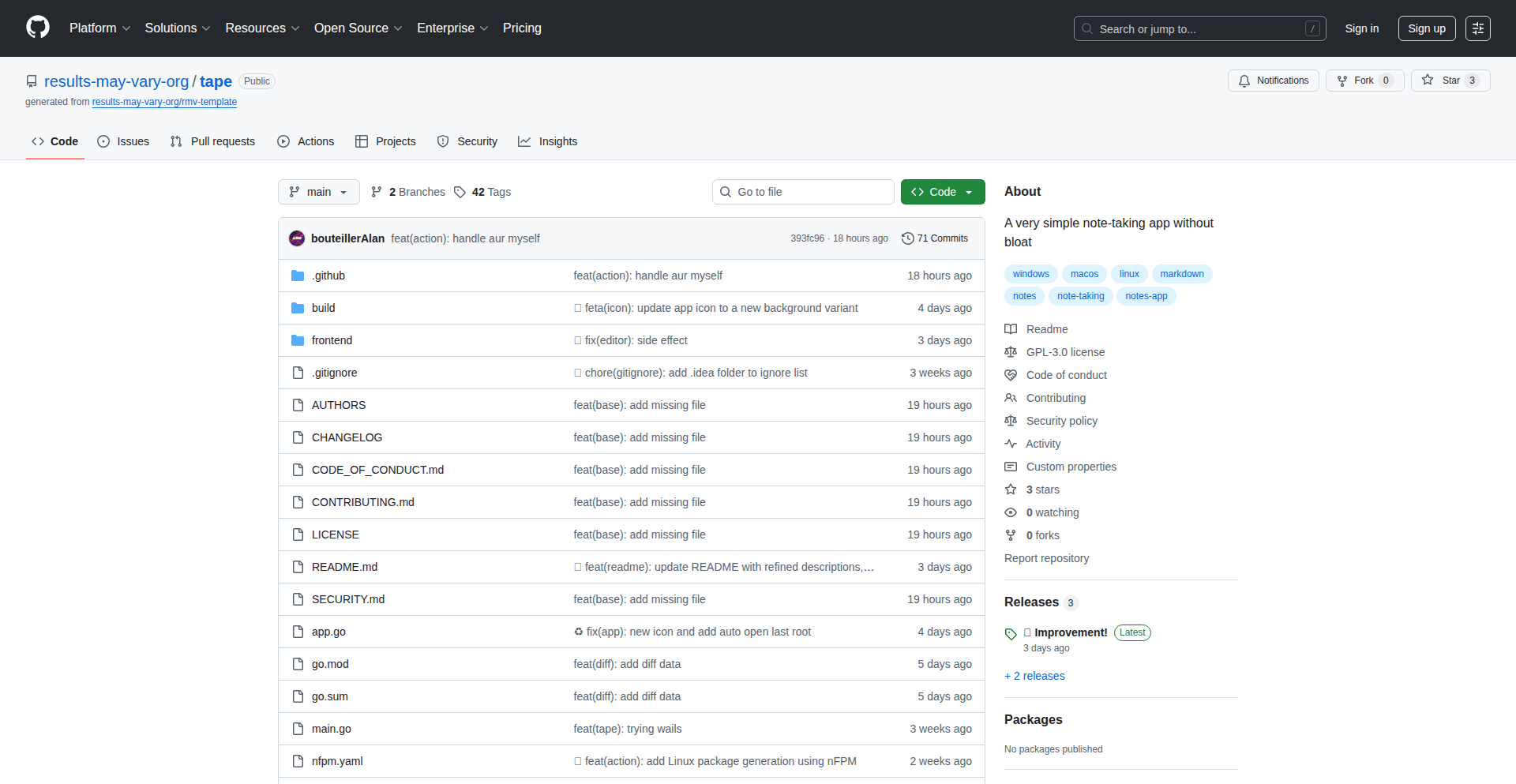

Factorio Automation AI Lab (FAAL)

Author

noddybear

Description

FAAL (the project's own name is the Factorio Learning Environment, FLE) is an open-source AI research environment built on the game Factorio. It is designed to test artificial intelligence on complex, long-horizon planning, spatial reasoning, and automation. AI agents, programmed in Python, take on increasingly sophisticated engineering challenges by building automated factories. The latest version, v0.3.0, adds three things. Headless scaling allows massive parallelization. OpenAI Gym compatibility provides a standard AI research interface. Integration with coding agents such as Claude Code is also included. Together these make it a useful tool for evaluating and advancing AI capabilities on realistic problem-solving tasks.

Popularity

Points 58

Comments 14

What is this product?

FAAL is an AI training ground that uses the popular game Factorio to test how well AI agents solve complex, open-ended engineering problems. Think of it as a sandbox where an AI must learn to build and manage automated factories. It starts with simple tasks like gathering resources and progresses to intricate production lines that produce millions of items per second. Because complexity grows exponentially as a factory scales, the difficulty of the problems is effectively open-ended.

Unlike static tests that AI can 'memorize', Factorio's dynamic nature demands genuine problem-solving, system debugging, and optimization. These skills transfer to real engineering and logistics work. The v0.3.0 update matters for two reasons. First, it runs AI evaluations without the game client (headless scaling), so researchers can run many tests at once. Second, it exposes an OpenAI Gym-compatible interface, the convention used by many AI research tools, so it plugs into existing AI development workflows.

So, what's the benefit? It shows how capable current AI really is at complex, multi-step projects. That reveals its limitations and guides future work toward more capable and robust AI systems for real-world applications.

How to use it?

Developers can add FAAL to a Python project with `uv add factorio-learning-environment`. For research that evaluates AI performance across many tests, install the evaluation extras with `uv add "factorio-learning-environment[eval]"`. To run experiments, start a simulation cluster with `fle cluster start`. Then run specific evaluations by pointing to a configuration file, for example `fle eval --config configs/gym_run_config.json`. This setup gives a standardized way to test and compare AI models on a variety of automation tasks. The agent's actions in the Factorio environment are controlled programmatically, and its success at building and optimizing factory operations is measured. So, how does this help you? If you work in AI research, it provides a scalable, relevant benchmark for testing your agents' planning, reasoning, and problem-solving skills in a setting that closely mirrors real-world engineering challenges.
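OpenAI Gym compatibility means an agent drives the environment through the familiar reset/step loop. The sketch below does not use FAAL's real API. `ToyFactoryEnv` is a hypothetical stand-in that follows the Gymnasium convention: `reset` returns `(observation, info)` and `step` returns `(observation, reward, terminated, truncated, info)`. The greedy policy is purely illustrative.

```python
from dataclasses import dataclass


@dataclass
class ToyFactoryEnv:
    """Hypothetical stand-in for a Gym-style factory environment (not FAAL's API).

    Observation: counts of ore and plates. Actions: 0 = mine one ore,
    1 = smelt one ore into a plate. Reward: +1 per plate produced.
    """

    max_steps: int = 20
    goal_plates: int = 5
    ore: int = 0
    plates: int = 0
    steps: int = 0

    def reset(self, seed=None):
        # Gymnasium-style reset returns (observation, info).
        self.ore = self.plates = self.steps = 0
        return self._obs(), {}

    def step(self, action):
        # Gymnasium-style step returns (obs, reward, terminated, truncated, info).
        self.steps += 1
        reward = 0.0
        if action == 0:
            self.ore += 1
        elif action == 1 and self.ore > 0:
            self.ore -= 1
            self.plates += 1
            reward = 1.0
        terminated = self.plates >= self.goal_plates  # task solved
        truncated = self.steps >= self.max_steps  # time budget exhausted
        return self._obs(), reward, terminated, truncated, {}

    def _obs(self):
        return {"ore": self.ore, "plates": self.plates}


def greedy_policy(obs):
    # Smelt whenever ore is available, otherwise mine.
    return 1 if obs["ore"] > 0 else 0


def run_episode(env, policy):
    """Standard agent loop: reset once, then step until terminated or truncated."""
    obs, info = env.reset()
    total_reward = 0.0
    while True:
        obs, reward, terminated, truncated, info = env.step(policy(obs))
        total_reward += reward
        if terminated or truncated:
            return total_reward, env.steps
```

Here `run_episode(ToyFactoryEnv(), greedy_policy)` returns `(5.0, 10)`. The agent alternates mining and smelting until it reaches five plates. Any agent written against this loop shape can, in principle, be pointed at a different Gym-compatible environment.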

Product Core Function

· Headless AI Evaluation: Enables running AI experiments without requiring the graphical game interface, significantly boosting the speed and scale of AI testing by allowing massive parallelization. This means researchers can test more AI strategies faster, leading to quicker discoveries and advancements in AI capabilities for complex automation tasks.

· OpenAI Gym Compatibility: Provides a standardized interface that makes FAAL compatible with a wide range of existing AI research tools and libraries. This allows developers to easily integrate FAAL into their existing AI development pipelines and leverage established research methodologies for training and evaluating AI agents.

· Complex Automation Task Simulation: Utilizes the game Factorio to create increasingly challenging, long-horizon planning and spatial reasoning tasks. This allows AI to be trained on realistic engineering problems that require intricate decision-making and optimization, mirroring the demands of real-world industrial automation.

· Scalable Production Chain Challenges: Allows AI agents to progress from simple resource extraction to managing highly complex production chains, simulating real-world manufacturing scenarios with exponential complexity. This provides a robust testing ground for AI's ability to handle large-scale systems and optimize output, crucial for industrial applications.

· Agent Performance Analysis: Facilitates the evaluation of AI agent performance on a variety of automation tasks, revealing insights into their strengths and weaknesses in areas like strategy, abstraction, and error recovery. This helps in identifying areas where AI needs improvement for more reliable and effective real-world deployment.
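Headless operation makes parallel evaluation cheap. With no game window, each episode is just a worker that can run alongside others. The following is a minimal sketch of fanning out independent evaluation runs with Python's standard `concurrent.futures`. `evaluate_task` is a hypothetical placeholder for launching one headless episode and is not part of FAAL.

```python
from concurrent.futures import ThreadPoolExecutor


def evaluate_task(task_id: int, seed: int) -> dict:
    """Hypothetical placeholder for one headless evaluation episode.

    A real version would connect to a headless game server and run an agent;
    here it returns a deterministic fake score so the sketch is runnable.
    """
    score = (task_id * 31 + seed * 17) % 100
    return {"task": task_id, "seed": seed, "score": score}


def evaluate_all(task_ids, seeds, max_workers=8):
    # Every (task, seed) pair is independent, so they can run concurrently.
    jobs = [(t, s) for t in task_ids for s in seeds]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order in its results.
        results = list(pool.map(lambda job: evaluate_task(*job), jobs))
    # Aggregate: mean score per task across seeds.
    summary = {}
    for t in task_ids:
        scores = [r["score"] for r in results if r["task"] == t]
        summary[t] = sum(scores) / len(scores)
    return results, summary
```

Threads fit when each worker mostly waits on an external game server. For CPU-bound work inside Python, `ProcessPoolExecutor` with a module-level function would be the usual choice.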

Product Usage Case

· AI Research on Long-Horizon Planning: Researchers can use FAAL to train AI agents to plan and execute complex, multi-stage factory construction over extended periods. This addresses the challenge of AI's ability to maintain focus and make strategic decisions for future outcomes, a critical skill for complex project management in areas like robotics and autonomous systems.

· Evaluating AI Spatial Reasoning in Engineering: Developers can test how well AI agents can understand and manipulate spatial relationships to design efficient factory layouts. This is directly applicable to fields requiring precise spatial understanding, such as robotics for manufacturing, logistics optimization, and even architectural design.

· Benchmarking Advanced AI Models for Automation: AI labs can use FAAL to compare the capabilities of cutting-edge AI models (like GPT, Gemini, Claude) on realistic automation challenges. This helps in understanding which AI architectures and training methods are best suited for industrial automation and complex problem-solving tasks, informing future AI development.

· Developing Robust AI for Industrial Control Systems: By training AI agents to handle errors and recover from unexpected situations in the simulated Factorio environment, FAAL can contribute to the development of more resilient and reliable AI systems for critical industrial control, reducing downtime and improving operational stability.

· Prototyping and Testing AI-driven Factory Design: Engineers can use FAAL as a virtual testbed to prototype and iterate on AI-driven approaches to factory automation and optimization before implementing them in real-world settings. This accelerates the innovation cycle and reduces the risk associated with deploying new automation strategies.

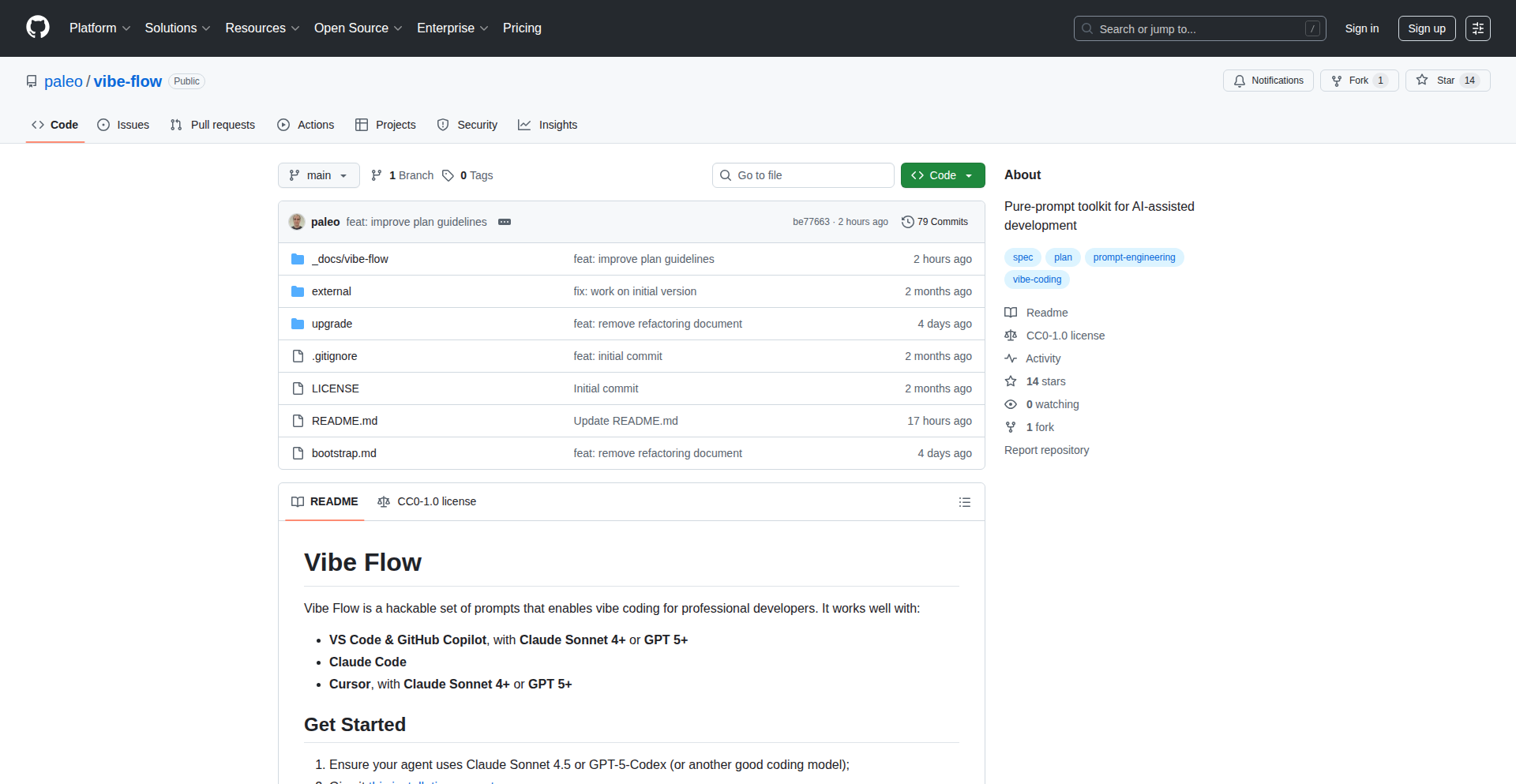

2

PipeVisual

Author

toplinesoftsys

Description

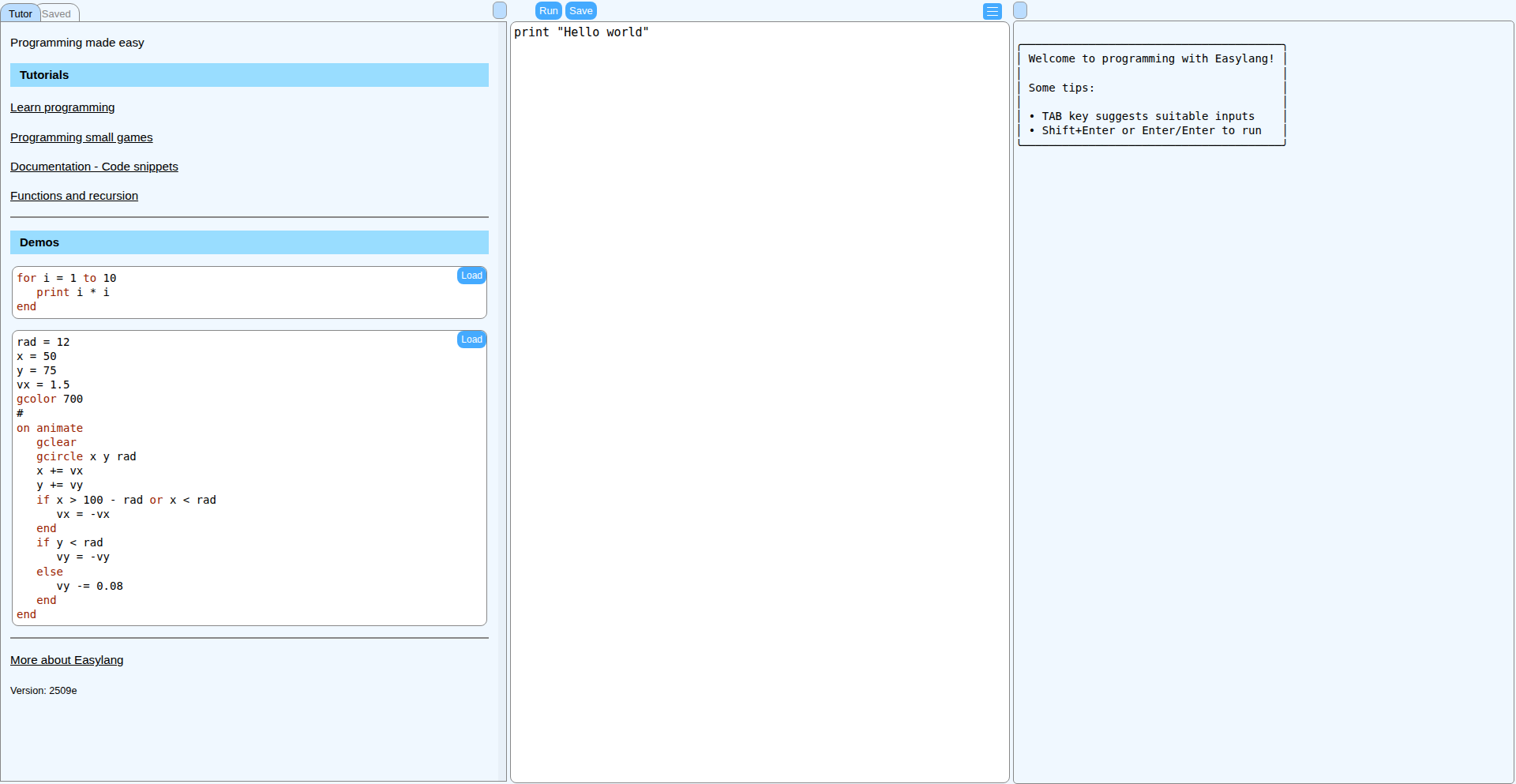

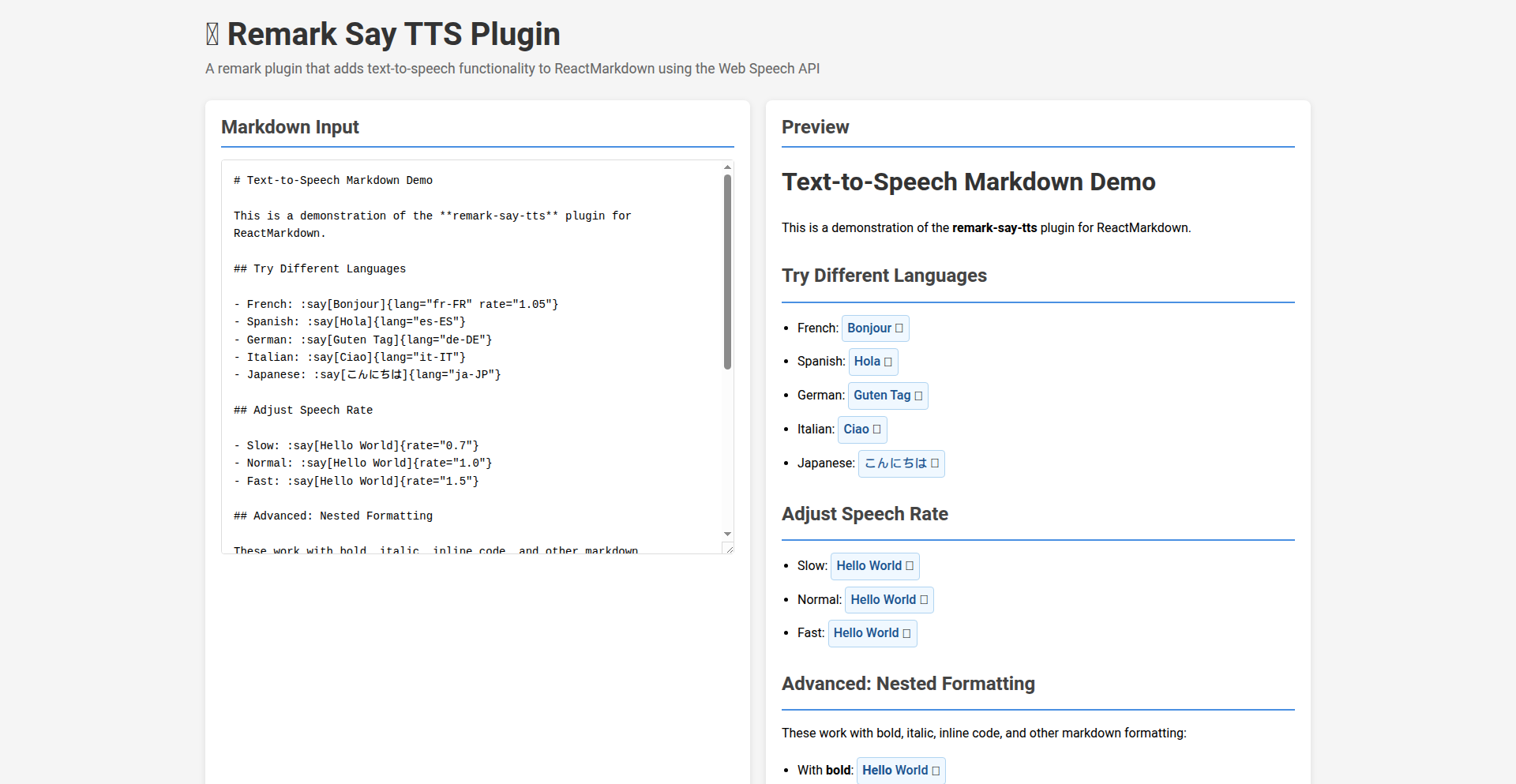

PipeVisual is a general-purpose visual programming language that aims to change how software is built. It tackles the complexity of large codebases with a visual, drag-and-drop approach that makes program logic more intuitive and accessible. Its main innovation is bridging AI code generation and visual development: AI-generated components can be integrated directly into graphical workflows. The result is a low-code approach with more room for customization and portability.

Popularity

Points 13

Comments 15

What is this product?

PipeVisual is a novel visual programming language that represents code as interconnected graphical elements, similar to building with LEGO bricks. Instead of writing lines of text, developers arrange visual blocks to define program logic. This approach aims to make complex software development more intuitive and easier to understand, especially for large projects. Its core innovation is how it integrates with AI code generation. Imagine AI writing snippets of code for specific tasks; PipeVisual allows you to visually import and connect these AI-generated code blocks into your application's workflow. This makes it possible to leverage AI for building components while still having a clear, visual representation of the overall program, addressing the difficulty of precisely defining large AI code generation tasks. So, for you, it means a potentially simpler way to understand and build software, and a more effective way to utilize AI in your development process.

How to use it?

Developers can use PipeVisual by conceptually visualizing their program's logic as a series of connected modules. They would then use the PipeVisual interface to drag and drop pre-defined visual blocks or blocks containing AI-generated code. For integration, PipeVisual provides an API specification that allows non-visual languages to interact with PipeVisual workflows. This means you could have a Python script call a function defined within a PipeVisual workflow, or vice-versa. Practical use cases include building applications by visually composing AI-generated functionalities, creating complex business logic through intuitive diagrams, and accelerating the development of low-code platforms by offering a flexible visual environment. Essentially, it allows you to build software by drawing and connecting rather than just typing, making it easier to manage and modify your applications. So, for you, it means a new paradigm for software creation where visual clarity meets powerful AI capabilities, leading to faster development cycles and more manageable projects.
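Conceptually, a visual workflow of connected blocks is a directed graph in which each block runs once its inputs are ready. The sketch below does not use PipeVisual's format or API. It assumes a hypothetical representation in which each block is a Python function and each wire names an upstream block. It executes the graph in dependency order with the standard library's `graphlib.TopologicalSorter` (Python 3.9+).

```python
from graphlib import TopologicalSorter


def run_workflow(blocks, wires, inputs):
    """Execute a hypothetical block graph.

    blocks: name -> function taking keyword args named after its upstream blocks
    wires:  name -> list of upstream block names feeding it
    inputs: name -> value for source blocks (no upstream wires)
    """
    results = dict(inputs)
    # static_order() yields every node only after all of its predecessors.
    for name in TopologicalSorter(wires).static_order():
        if name in results:
            continue  # source value supplied directly
        upstream = wires.get(name, [])
        results[name] = blocks[name](**{u: results[u] for u in upstream})
    return results


# Example: a small "clean -> total/count -> mean" pipeline drawn as blocks.
blocks = {
    "clean": lambda raw: [x for x in raw if x is not None],
    "total": lambda clean: sum(clean),
    "count": lambda clean: len(clean),
    "mean": lambda total, count: total / count,
}
wires = {
    "clean": ["raw"],
    "total": ["clean"],
    "count": ["clean"],
    "mean": ["total", "count"],
}
out = run_workflow(blocks, wires, {"raw": [4, None, 8, 6]})
print(out["mean"])  # 6.0
```

Any block here could just as well be an AI-generated function. The graph only cares about its inputs and output.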

Product Core Function

· General-purpose visual programming: This allows developers to build any type of application using a visual interface, moving beyond specialized visual tools. This is valuable because it offers a universal approach to software creation, making it applicable to a wide range of projects. So, for you, it means you can use one tool to build diverse applications.

· AI code component integration: This enables the seamless incorporation of AI-generated code snippets into visual workflows. This is valuable because it allows developers to leverage the power of AI for specific tasks while maintaining a clear, visual overview of the application's structure. So, for you, it means you can easily bring AI-powered features into your projects.

· Intuitive drag-and-drop interface: This provides a user-friendly way to assemble program logic by moving and connecting visual blocks. This is valuable because it significantly lowers the barrier to entry for software development and makes debugging easier. So, for you, it means a more accessible and less error-prone development experience.

· Statically-typed language: This means that the type of data a variable can hold is checked during development, catching potential errors early. This is valuable because it leads to more robust and reliable code. So, for you, it means fewer runtime surprises and more stable applications.

· API for integration: This allows PipeVisual workflows to be called from or interact with traditional text-based programming languages. This is valuable because it ensures PipeVisual can be part of existing development ecosystems and infrastructure. So, for you, it means you can use PipeVisual alongside your current programming tools.

· Future AI code generation of visual workflows: A planned feature in which AI would generate complete visual workflows directly. This is valuable because it promises to further automate and simplify the creation of complex applications. So, for you, it means an even more automated development workflow may be on the horizon.
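Static typing in a visual language means that type errors surface when blocks are wired together, before anything runs. The following is a minimal sketch of such a connection check. It assumes a hypothetical port model in which each block declares typed inputs and a typed output. PipeVisual's actual type system is not described in the post.

```python
from dataclasses import dataclass


@dataclass
class Block:
    name: str
    inputs: dict  # input port name -> expected type
    output: type


class WiringError(TypeError):
    pass


def connect(src: Block, dst: Block, port: str) -> tuple:
    """Validate a wire from src's output to dst's input port at edit time."""
    if port not in dst.inputs:
        raise WiringError(f"{dst.name} has no input port '{port}'")
    expected = dst.inputs[port]
    if not issubclass(src.output, expected):
        raise WiringError(
            f"{src.name} outputs {src.output.__name__}, "
            f"but {dst.name}.{port} expects {expected.__name__}"
        )
    return (src.name, dst.name, port)


parse = Block("parse", {"text": str}, int)
double = Block("double", {"n": int}, int)
upper = Block("upper", {"s": str}, str)

connect(parse, double, "n")  # accepted: int -> int
try:
    connect(parse, upper, "s")  # rejected before execution: int -> str
except WiringError as err:
    print(err)  # parse outputs int, but upper.s expects str
```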

Product Usage Case

· Developing a complex business process automation: A user can visually design the workflow by dragging and dropping blocks representing different steps, with AI generating the code for individual tasks like data validation or API calls. This solves the problem of manually coding intricate logic and allows for faster iteration on business process improvements. So, for you, it means you can quickly build and adapt automated workflows for your business needs.

· Accelerating the development of data analytics dashboards: Developers can use AI to generate code for data fetching and transformation, and then visually connect these components within PipeVisual to create interactive dashboards. This addresses the challenge of complex data pipelines and allows for rapid prototyping of data visualization tools. So, for you, it means you can build insightful dashboards more efficiently.

· Creating customizable low-code applications with AI-powered components: Instead of being limited by pre-defined components in traditional low-code platforms, users can generate new functionalities using AI and then integrate them visually. This solves the problem of limited customization in existing low-code solutions and offers greater flexibility. So, for you, it means you can build more tailored and powerful low-code applications.

· Building educational tools for teaching programming concepts: The visual nature of PipeVisual makes it an excellent tool for demonstrating programming logic and control flow to beginners. AI could even generate simple coding challenges within the visual environment. This solves the difficulty of abstract programming concepts for novices and provides a more engaging learning experience. So, for you, it means a more accessible and intuitive way to learn or teach programming.

3

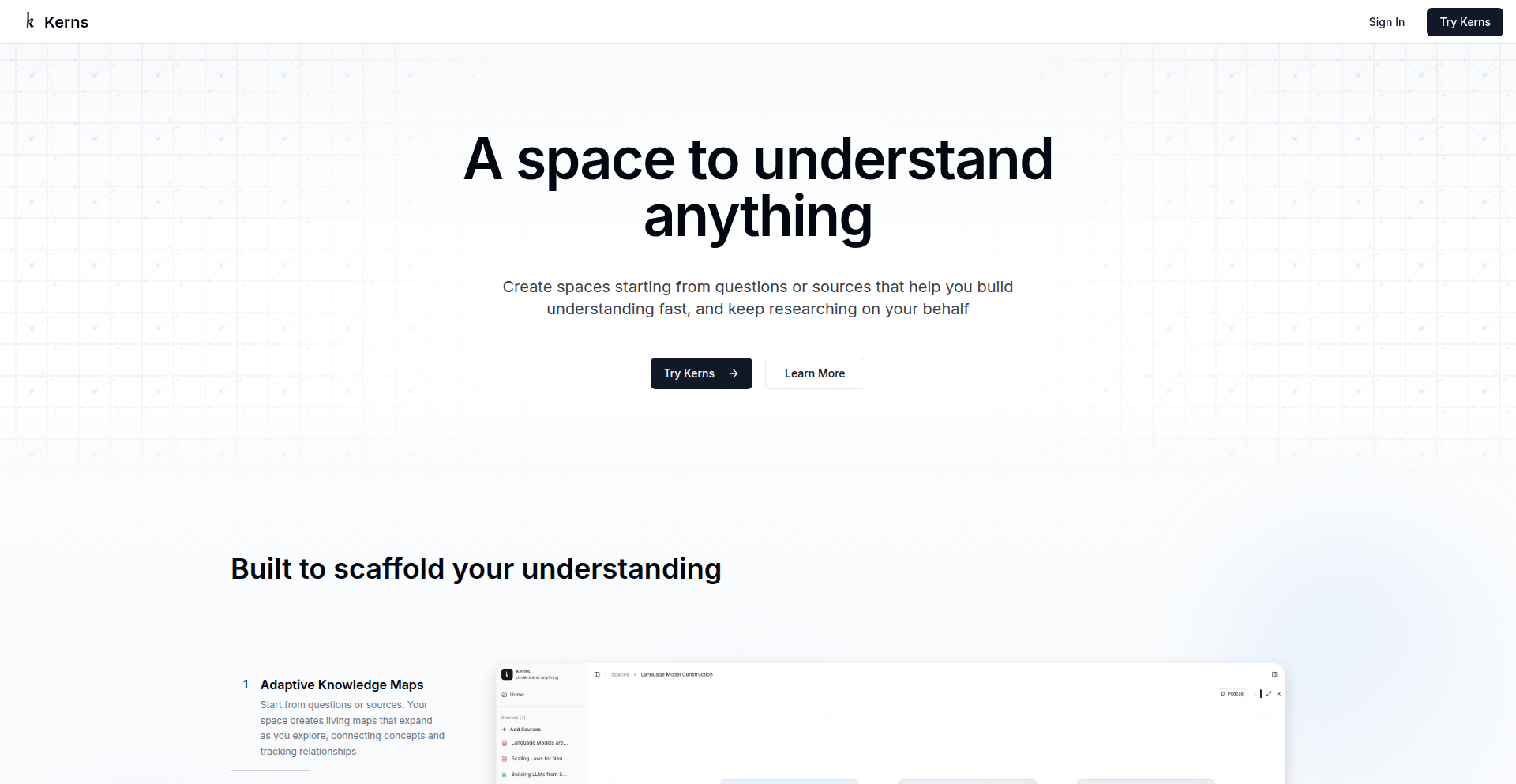

AI Knowledge Navigator

Author

kanodiaayush

Description

This project introduces a visual AI interface designed to enhance the understanding of complex information like academic papers, books, and broad topics. It tackles the limitations of traditional AI summaries by enabling users to seamlessly dive deeper into specific areas of interest, directly reference original sources, and precisely control the context provided by AI chatbots. The core innovation lies in its interactive, visual representation of knowledge, making complex subjects more accessible and actionable.

Popularity

Points 13

Comments 10

What is this product?

AI Knowledge Navigator is an AI-powered platform that transforms how you interact with written content. Instead of just getting a summary, it creates a dynamic, visual map of the information. Think of it like exploring a mind map that intelligently links concepts, definitions, and the original text. This means you can click on any part of the summary or the visual representation and instantly see the supporting evidence or explanations from the source material. The innovation is in bridging the gap between high-level AI understanding and the granular detail of the original content, allowing for a much richer and more controlled learning experience. So, what's in it for you? You get to truly understand a topic, not just get a surface-level overview, with the ability to explore and verify information on your own terms.

How to use it?

Developers can integrate AI Knowledge Navigator into their workflows for research, content analysis, or even building new AI-driven educational tools. For instance, a researcher could upload a PDF of a paper, and the interface would generate a visual graph of its key arguments and findings. Clicking on a node in the graph would reveal the relevant paragraphs from the paper and potentially trigger a contextual chatbot to explain that specific section further. The system is designed to be flexible, allowing for integration with various content formats and future API extensions. So, how does this help you? You can quickly get to the core of any document, discover connections you might have missed, and leverage AI to explore information without getting lost in the details, saving significant research time.
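Click-to-source navigation of this kind requires every node in the visual map to keep a pointer back into the original text. The following is a minimal sketch. It assumes a hypothetical data model in which each concept node stores character offsets of its supporting passages. How AI Knowledge Navigator actually stores this is not stated.

```python
from dataclasses import dataclass, field


@dataclass
class ConceptNode:
    label: str
    spans: list = field(default_factory=list)  # (start, end) offsets into the source
    children: list = field(default_factory=list)


def add_grounding(node, source, quote):
    """Attach the first exact occurrence of `quote` in `source` as evidence."""
    start = source.find(quote)
    if start == -1:
        raise ValueError(f"quote not found in source: {quote!r}")
    node.spans.append((start, start + len(quote)))


def evidence(node, source):
    """What the UI would show when the node is clicked: the cited passages."""
    return [source[s:e] for s, e in node.spans]


paper = "We train on 10k images. Accuracy improves by 4 points. Limitations: small dataset."
root = ConceptNode("Paper summary")
result = ConceptNode("Main result")
root.children.append(result)
add_grounding(result, paper, "Accuracy improves by 4 points.")
print(evidence(result, paper))  # ['Accuracy improves by 4 points.']
```

Storing offsets rather than copied text keeps every summary node verifiable against the exact wording of the source.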

Product Core Function

· Interactive Knowledge Visualization: Visually represents complex information, allowing users to see relationships between concepts and easily navigate through dense material. This provides a clear roadmap for understanding, making abstract ideas concrete.

· Source-Grounded AI Interaction: Enables users to instantly refer to the original source material for any piece of AI-generated insight, ensuring accuracy and allowing for deeper verification. This builds trust and allows for critical evaluation of AI outputs.

· Context-Aware Chatbot Control: Allows users to precisely define the scope and focus of AI chatbot interactions, so the AI provides relevant, targeted information. This cuts down on generic responses and keeps answers focused on your specific needs.

· Seamless Content Integration: Supports ingestion of various content formats like papers and books, transforming them into explorable knowledge bases. This broadens the range of information you can understand and leverage with AI.

· Deep Dive Capabilities: Facilitates granular exploration of topics by allowing users to zoom into specific sections or arguments within the visualized knowledge. This empowers in-depth learning and mastery of subjects.
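One way to read 'context-aware chatbot control' is that the user chooses which parts of the map the chatbot may see. The following sketch shows that idea under a hypothetical prompt layout. Only passages from the selected nodes go into the prompt, each tagged with an id the answer can cite. The model call itself is omitted.

```python
def build_scoped_prompt(question, selected, max_chars=2000):
    """Build an LLM prompt restricted to user-selected source passages.

    selected: list of (node_label, passage) pairs chosen in the UI.
    Passages that would exceed the character budget are dropped whole
    rather than cut mid-sentence.
    """
    parts, used = [], 0
    for i, (label, passage) in enumerate(selected, start=1):
        block = f"[{i}] ({label}) {passage}"
        if used + len(block) > max_chars:
            break
        parts.append(block)
        used += len(block)
    context = "\n".join(parts)
    return (
        "Answer using only the numbered passages below, and cite them like [1].\n"
        "If they do not contain the answer, say so.\n\n"
        f"{context}\n\nQuestion: {question}"
    )
```

Narrowing the context this way trades breadth for precision: the model cannot draw on unselected sections, but its answers stay traceable to the passages the user picked.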

Product Usage Case

· Academic Research: A student researching a complex scientific paper can use the interface to understand the methodology, results, and implications by visually mapping the paper's structure and clicking on key findings to see the exact experimental details in the source. This helps them grasp difficult concepts and write better research papers.

· Content Curation: A content creator can upload a collection of articles on a specific topic and use the navigator to identify common themes, contrasting viewpoints, and primary sources, streamlining the process of synthesizing information for new content. This makes creating well-researched and original content much faster.

· Personal Learning: An individual trying to understand a new field like quantum physics can use the platform to break down complex concepts into manageable visual chunks, explore definitions, and ask AI-powered questions about specific parts without being overwhelmed by jargon. This makes learning new, challenging subjects accessible and enjoyable.

· Document Analysis: A legal professional can upload a lengthy contract and use the visualization to quickly identify key clauses, dependencies, and potential risks by seeing how different sections relate to each other, saving significant review time. This allows for more efficient and thorough contract analysis.

4

LLM HN Profile Roast

Author

hubraumhugo

Description

This project leverages a Large Language Model (LLM) to humorously critique and analyze Hacker News (HN) user profiles. It creatively applies natural language processing to extract insights from user activity and present them in a witty, 'roasting' format, demonstrating an innovative use of LLMs beyond typical Q&A or summarization tasks.

Popularity

Points 8

Comments 3

What is this product?

This is a project that uses an advanced AI, specifically a Large Language Model (LLM), to read your Hacker News profile and then generate a funny, critical, but ultimately insightful 'roast' of it. The innovation lies in how it interprets the nuances of your posting history, comment style, and karma to craft a unique, personalized critique. Think of it as AI giving you a friendly, sarcastic review of your online persona on Hacker News. So, what's in it for you? You get a unique and entertaining way to see your HN activity reflected back at you, potentially highlighting patterns you might not have noticed yourself, all delivered with a sense of humor.

How to use it?

Developers can use this project by submitting a Hacker News username. The system then fetches public profile data and feeds it to the LLM. This includes karma, the 'about' text, and the user's submission and comment history. The LLM uses its grasp of language and context to generate a personalized roast. Integration could involve building a web interface where users enter their username, or adding it to a developer tool that offers profile insights. So, how can you use this? You simply provide your HN username, and the AI does the rest, giving you a laugh and some introspective fun about your Hacker News journey. For developers, it's an example of deploying LLMs creatively for engaging user experiences.
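Hacker News exposes public profile data through its official read-only API at `hacker-news.firebaseio.com/v0/`. A user record contains `id`, `created`, `karma`, an optional `about`, and `submitted`, which lists the ids of the user's stories and comments. The following is a minimal sketch of gathering that data into a roast prompt. The prompt wording and the item limit are assumptions. The LLM call is left out because the post does not name the model used.

```python
import json
import urllib.request

HN_API = "https://hacker-news.firebaseio.com/v0"


def fetch_json(path):
    with urllib.request.urlopen(f"{HN_API}/{path}.json", timeout=10) as resp:
        return json.load(resp)


def fetch_profile(username, max_items=20):
    """Fetch a public HN user record plus the first `max_items` submitted items."""
    user = fetch_json(f"user/{username}")
    if user is None:  # the API returns null for unknown users
        raise ValueError(f"no such HN user: {username}")
    ids = user.get("submitted", [])[:max_items]
    items = [fetch_json(f"item/{i}") for i in ids]
    return user, [it for it in items if it and not it.get("deleted")]


def build_roast_prompt(user, items):
    """Turn profile data into an LLM prompt (pure function, no network)."""
    lines = []
    for it in items:
        if it.get("type") == "story":
            lines.append(f"- story: {it.get('title', '')}")
        elif it.get("type") == "comment":
            lines.append(f"- comment: {it.get('text', '')[:200]}")
    return (
        f"Write a short, good-natured roast of Hacker News user '{user['id']}'.\n"
        f"Karma: {user.get('karma', 0)}. About: {user.get('about', '(empty)')}\n"
        "Recent activity:\n" + "\n".join(lines)
    )
```

`fetch_profile` needs network access. `build_roast_prompt` works on any dicts of the same shape, which keeps the prompt logic easy to test offline.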

Product Core Function

· LLM-powered profile analysis: Extracts and interprets user data from HN profiles to understand behavior and preferences. This is valuable for understanding user engagement patterns in a novel way, with applications in social media analytics or community health tools.

· Creative text generation for 'roasts': Crafts witty, personalized critiques using LLM's natural language capabilities. This showcases the power of LLMs for generating creative and engaging content, useful for marketing, content creation, or entertainment applications.

· HN data scraping and processing: Efficiently retrieves and structures public data from Hacker News profiles for AI consumption. This is a foundational skill for any project dealing with public web data, enabling efficient data acquisition for analysis and AI model training.

Product Usage Case

· A user wants a humorous take on their HN activity. They input their username, and the LLM generates a roast, revealing their common discussion topics or posting frequency in a funny way. This solves the 'problem' of needing entertaining insights into one's online presence.

· A developer is exploring creative applications of LLMs beyond standard chatbots. This project serves as an inspiration, demonstrating how LLMs can be used for profile interpretation and personalized, humorous content generation, solving the 'how can I be innovative with LLMs?' question.

· A community manager wants to understand user archetypes on a platform like HN. While this project's output is humorous, the underlying analysis of user behavior could be adapted to identify patterns or trends, solving the 'how can I get a quick, qualitative sense of user engagement?' challenge.

5

AI Serenader: A Computational Confession

Author

ozzyjones

Description

This project is a web-based experiment that uses AI to make a computer generate a personalized serenade, expressing affection. It showcases how AI can be leveraged for creative and emotional expression, transforming a purely functional tool into a potentially communicative entity. The innovation lies in bridging the gap between cold computation and humanistic sentiment through AI-driven music and text generation.

Popularity

Points 4

Comments 6

What is this product?

This is an AI-powered web application in which your computer 'sings' you a serenade. It was built with AI tools inside the Visual Studio Code environment and uses AI to interpret prompts and generate both musical melodies and lyrics. The core technical idea is to apply AI not just to task completion but to artistic and emotional output. It explores the notion of computers as entities capable of 'reaching out' to users.

How to use it?

Developers could adapt the project's AI modules for their own applications. For instance, a game developer might use them to create dynamic, character-driven dialogue or in-game musical scores that respond to player actions. A chatbot developer could explore adding emotional depth to conversational agents. More broadly, the experiment suggests ways to give software personality and expressive range.

Product Core Function

· AI-driven lyric generation: This feature leverages natural language processing models to create personalized and context-aware song lyrics, offering a unique way for applications to communicate with users on an emotional level. It's valuable for creating engaging content and fostering a sense of connection.

· Algorithmic melody composition: Utilizes AI to generate musical melodies that complement the generated lyrics. This opens possibilities for dynamic soundtracks in games, interactive installations, or even personalized wake-up alarms that are both functional and artistic.

· Web experiment interface: Provides a simple web interface to showcase the AI's capabilities, allowing for easy demonstration and potential adoption by other developers. It serves as a proof of concept for AI-driven creative applications.

· Visual Studio Code integration: The project was developed using AI tools within VS Code, indicating a practical approach to AI development within existing developer workflows. This highlights how AI can be an accessible tool for creators even without extensive coding backgrounds.
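The post does not say how the melodies are produced. For comparison, the simplest form of algorithmic melody composition is a constrained random walk over a scale. The sketch below is an illustrative stand-in, not the project's method. It picks MIDI note numbers from a C major pentatonic scale, moves in small steps, and ends on a C so the phrase resolves.

```python
import random

# C major pentatonic across two octaves, as MIDI note numbers (60 = middle C).
PENTATONIC = [60, 62, 64, 67, 69, 72, 74, 76, 79, 81]


def compose_melody(length=8, seed=None, max_leap=2):
    """Random walk over scale degrees, moving at most `max_leap` degrees per note."""
    if length < 2:
        raise ValueError("length must be at least 2")
    rng = random.Random(seed)
    idx = 0  # start on the tonic (middle C)
    notes = [PENTATONIC[idx]]
    for _ in range(length - 2):
        step = rng.randint(-max_leap, max_leap)
        idx = min(max(idx + step, 0), len(PENTATONIC) - 1)  # clamp to the scale
        notes.append(PENTATONIC[idx])
    # End on whichever C (index 0 or 5) is closer, so the phrase resolves.
    notes.append(PENTATONIC[0] if idx < 3 else PENTATONIC[5])
    return notes


def to_note_names(notes):
    names = {0: "C", 2: "D", 4: "E", 7: "G", 9: "A"}
    # MIDI convention: note 60 is C4.
    return [f"{names[n % 12]}{n // 12 - 1}" for n in notes]


print(to_note_names(compose_melody(8, seed=1)))
```

Restricting notes to a pentatonic scale avoids harsh intervals, which is why it is a common starting point for generated melodies. An AI model would replace the random step with learned choices.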

Product Usage Case

· In a video game, this AI could generate unique love songs sung by in-game characters to the player, deepening immersion and character relationships. This solves the problem of creating varied and emotionally resonant NPC interactions.

· A personalized greeting service for a website could use this AI to generate a unique welcome song for each visitor, creating a memorable and engaging user experience. This addresses the challenge of making digital interactions feel more human and welcoming.

· For an interactive art installation, the AI could compose music and lyrics that respond to audience presence or environmental data, creating a dynamic and evolving artistic experience. This allows for the creation of art that is responsive and interactive.

· A digital journaling application could incorporate this AI to generate reflective songs based on user journal entries, providing a novel way to process emotions and insights. This offers a creative outlet for personal reflection and emotional processing.

6

WebRTC Instant Connect

Author

stagas

Description

A WebRTC-based Omegle clone for instant, random video chat. The project explores WebRTC's real-time communication capabilities to connect users quickly for spontaneous video conversations. A server is needed only to match users and exchange connection details. After that, audio and video travel peer-to-peer between the browsers.

Popularity

Points 4

Comments 2

What is this product?

This project uses WebRTC (Web Real-Time Communication) to create a video chat experience similar to Omegle, where users are randomly paired for direct video conversations. The innovation lies in its direct peer-to-peer (P2P) connection model. Instead of media flowing through a central server, WebRTC establishes a direct link between the two users' browsers whenever the network allows. Peers behind restrictive NATs or firewalls fall back to a TURN relay server. This reduces latency and reliance on server infrastructure, making spontaneous, high-quality video calls possible with minimal setup. The core technical challenge is efficiently managing connection setup (signaling) and media streams between arbitrary peers.

How to use it?

Developers can use this project as a foundation for building their own real-time communication features. It's ideal for applications requiring quick, anonymous video interactions, such as social networking features, virtual event platforms, or even educational tools for language exchange. Integration would involve setting up a signaling server (which the project likely includes or relies on) to facilitate the initial handshake between users, and then leveraging the WebRTC APIs within their own frontend or backend to manage the video and audio streams. The advantage is getting a robust P2P video chat system up and running with less complex server-side logic for media handling.

Product Core Function

· Peer-to-peer video and audio streaming: Enables direct, low-latency video and audio communication between two users' browsers without relying on a central media server. This is valuable for creating fluid, real-time interactions where every millisecond counts.

· Random user matching: Implements a mechanism to connect random users for a chat session. This is crucial for the serendipitous discovery aspect of social applications and provides an immediate way to engage with new people.

· WebRTC signaling implementation: Manages the complex process of establishing a connection between peers, including exchanging network information and session descriptions. This is the technical backbone that makes P2P communication possible, abstracting away much of the network complexity for the developer.

· Browser-based accessibility: Runs entirely within the web browser, meaning no software installation is required for users. This significantly lowers the barrier to entry for quick, spontaneous video chats, making it accessible to anyone with a modern web browser.
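The matching and signaling pieces run on a small server, while the media itself stays peer-to-peer. The project's server code is not reproduced here, so the following is a minimal in-memory Python sketch under stated assumptions: the `Matchmaker` class, its method names, and the message dictionaries are hypothetical. It pairs waiting users at random, tells one side to create the SDP offer, and relays opaque signaling messages (offers, answers, ICE candidates) between partners; in practice these messages would travel over a WebSocket or a similar channel.

```python
import random
from collections import defaultdict


class Matchmaker:
    """Hypothetical in-memory matchmaker and signaling relay for random 1:1 chats."""

    def __init__(self, rng=None):
        self.rng = rng or random.Random()
        self.waiting = []                 # user ids waiting for a partner
        self.partner = {}                 # user id -> current partner id
        self.outbox = defaultdict(list)   # user id -> messages queued for delivery

    def join(self, user):
        """Queue a user; if anyone is already waiting, pair them at random."""
        if self.waiting:
            other = self.waiting.pop(self.rng.randrange(len(self.waiting)))
            self.partner[user] = other
            self.partner[other] = user
            # Exactly one side must create the SDP offer; here the newcomer does.
            self.outbox[user].append({"type": "matched", "peer": other, "role": "offerer"})
            self.outbox[other].append({"type": "matched", "peer": user, "role": "answerer"})
            return other
        self.waiting.append(user)
        return None

    def relay(self, sender, message):
        """Forward an SDP offer/answer or ICE candidate unchanged to the sender's partner."""
        peer = self.partner.get(sender)
        if peer is None:
            raise KeyError(f"{sender} is not paired")
        self.outbox[peer].append({"from": sender, **message})

    def leave(self, user):
        """Remove a user and tell their partner, who can then rejoin the queue."""
        if user in self.waiting:
            self.waiting.remove(user)
        peer = self.partner.pop(user, None)
        if peer is not None:
            self.partner.pop(peer, None)
            self.outbox[peer].append({"type": "peer-left", "peer": user})
        self.outbox.pop(user, None)
```

For example, `mm.join("alice")` returns `None` (Alice waits), then `mm.join("bob")` returns `"alice"` and queues a `matched` message for each side. The server never inspects SDP contents; it only routes messages, which keeps it small.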

Product Usage Case

· A social media platform could integrate this to allow users to initiate spontaneous video calls with friends or randomly selected users, fostering deeper connections. It solves the problem of users wanting quick, informal video chats without the hassle of scheduling or setting up dedicated video conferencing tools.

· A language learning app could use this to pair learners with native speakers for practice sessions. The low latency and P2P nature of the connection ensure a smooth, natural conversation experience, addressing the need for real-time spoken practice.

· A virtual event organizer could implement this for networking lounges, allowing attendees to easily connect and have informal video discussions with each other. This solves the challenge of facilitating spontaneous networking in a digital environment, moving beyond static chat rooms.

7

LLM DOM Insight Engine

Author

bradavogel

Description

This project offers a novel way to capture and represent web page structures (DOM) in a format understandable by Large Language Models (LLMs). It bridges the gap between the visual and interactive web and the text-based understanding of AI, enabling LLMs to 'see' and interpret web content more effectively for various automation and analysis tasks.

Popularity

Points 5

Comments 1

What is this product?

This project is essentially a tool that takes a snapshot of a web page's Document Object Model (DOM) and transforms it into a structured, LLM-friendly format. The innovation lies in how it intelligently simplifies and serializes the complex DOM tree, stripping away unnecessary rendering details and focusing on semantic structure and interactive elements. This allows LLMs to process and understand the layout, content, and potential user interactions of a webpage, which they normally cannot directly 'see'. Think of it as creating a highly detailed blueprint of a webpage that an AI can easily read and reason about.

How to use it?

Developers can integrate this into their workflows by using the provided code to generate the DOM snapshot of any given webpage. This snapshot can then be fed as input to an LLM. For instance, you could use it to automate tasks like web scraping for specific data based on its position and relation to other elements, creating AI agents that can navigate and interact with websites, or performing automated accessibility audits by analyzing the DOM structure. The output is designed to be a concise yet informative representation, making it efficient for LLM processing without overwhelming it.

Product Core Function

· DOM Serialization: Converts the dynamic DOM tree into a static, hierarchical string representation. This is valuable because it provides a consistent input for LLMs, allowing them to analyze website structure and content reliably, which is crucial for automated tasks.

· Semantic Element Identification: Identifies and highlights key interactive elements like buttons, forms, and links, along with their associated text and attributes. This lets an LLM understand the purpose and function of different parts of a webpage, leading to more reliable interactions and data extraction.

· Layout Abstraction: Simplifies the visual layout by representing nesting and relationships between elements, abstracting away pixel-perfect positioning. This allows LLMs to grasp the overall page structure and content flow without getting bogged down in visual styling details, improving efficiency for AI understanding.

· LLM-Optimized Formatting: Outputs data in a format that LLMs can easily parse and understand, such as JSON or a custom markdown-like structure. This direct compatibility means less preprocessing for the developer and faster, more accurate analysis by the LLM.
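To make the serialization idea concrete, here is a minimal Python sketch built on the standard library's `html.parser`. It is not the project's implementation (which snapshots a live browser DOM); it assumes static HTML, and the tag and attribute lists are illustrative choices. It drops scripts and styles, keeps interactive and structural elements with a few meaningful attributes, and emits an indented outline an LLM can read.

```python
from html.parser import HTMLParser

# Elements kept as outline nodes (structure and interaction); others are flattened.
KEEP = {"nav", "main", "form", "a", "button", "input", "select", "textarea",
        "label", "img", "ul", "li", "h1", "h2", "h3", "h4", "h5", "h6"}
# Elements whose contents are dropped entirely.
SKIP = {"script", "style", "noscript", "svg"}
# Kept elements that never have children, so they do not increase indentation.
VOID = {"input", "img"}
# Attributes that tell an LLM what an element is for.
ATTRS = ("href", "type", "name", "placeholder", "alt", "aria-label", "value")


class DomOutline(HTMLParser):
    """Serialize HTML into a compact, indented outline of text and key elements."""

    def __init__(self):
        super().__init__()
        self.lines, self.stack, self.skipping = [], [], 0

    def _emit(self, text):
        # Indentation depth equals the number of open kept elements.
        self.lines.append("  " * len(self.stack) + text)

    def handle_starttag(self, tag, attrs):
        if tag in SKIP:
            self.skipping += 1
        elif not self.skipping and tag in KEEP:
            shown = " ".join(f'{k}="{v}"' for k, v in attrs if k in ATTRS and v)
            self._emit(f"[{tag} {shown}]" if shown else f"[{tag}]")
            if tag not in VOID:
                self.stack.append(tag)  # children are indented one level deeper

    def handle_endtag(self, tag):
        if tag in SKIP:
            self.skipping = max(0, self.skipping - 1)
        elif not self.skipping and tag in self.stack:
            # Pop back to the matching open tag, tolerating unclosed children.
            while self.stack.pop() != tag:
                pass

    def handle_data(self, data):
        text = " ".join(data.split())  # collapse whitespace
        if text and not self.skipping:
            self._emit(text)


def outline(html):
    parser = DomOutline()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.lines)
```

For instance, `outline('<form><input type="text" name="q"/></form>')` yields `[form]` followed by an indented `[input type="text" name="q"]` line. Because `html.parser` does not build a full tree, unclosed elements are handled loosely; a browser-side version would walk the real DOM instead.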

Product Usage Case

· Automated Web Data Extraction: A developer needs to extract product prices and descriptions from an e-commerce site. By using this tool to snapshot the DOM, the LLM can be prompted to locate and extract this information based on its structural position and associated labels, reducing dependence on brittle CSS selectors.

· AI-Powered Web Navigation Agents: Building an AI agent to book flights. The agent can use the DOM snapshot to 'see' the form fields, buttons, and navigation elements, allowing it to understand how to input details and proceed through the booking process, overcoming the limitations of text-only AI.

· Accessibility Testing Automation: An organization wants to automatically check if their website is accessible. The DOM snapshot can be analyzed by an LLM to identify missing alt text for images or improper heading structures, providing actionable feedback for developers to improve user experience for all.

· Content Summarization and Analysis: A researcher needs to quickly understand the main points of numerous articles. By snapshotting the DOM of each article, an LLM can parse the relevant content sections and generate concise summaries, saving significant manual reading time.

8

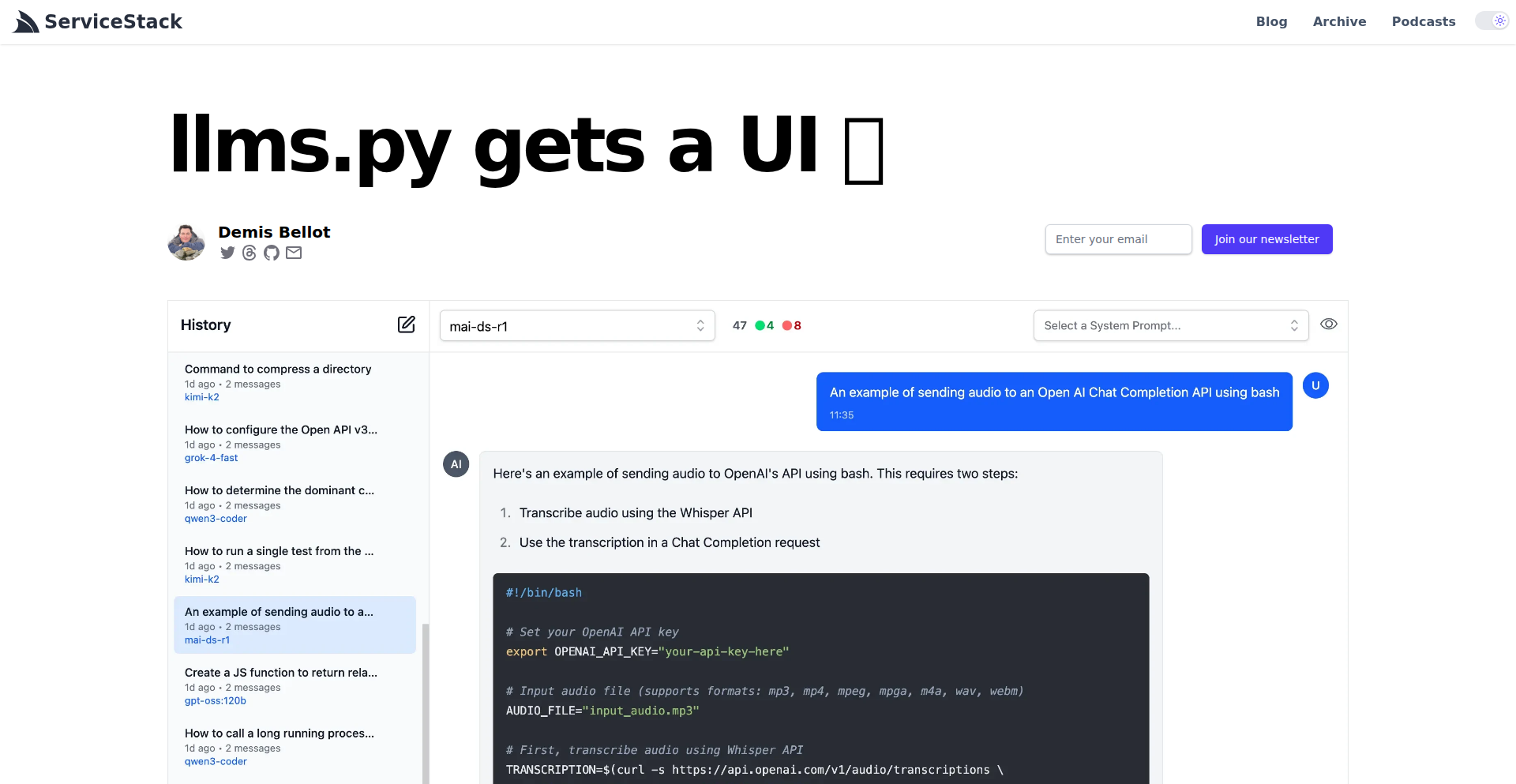

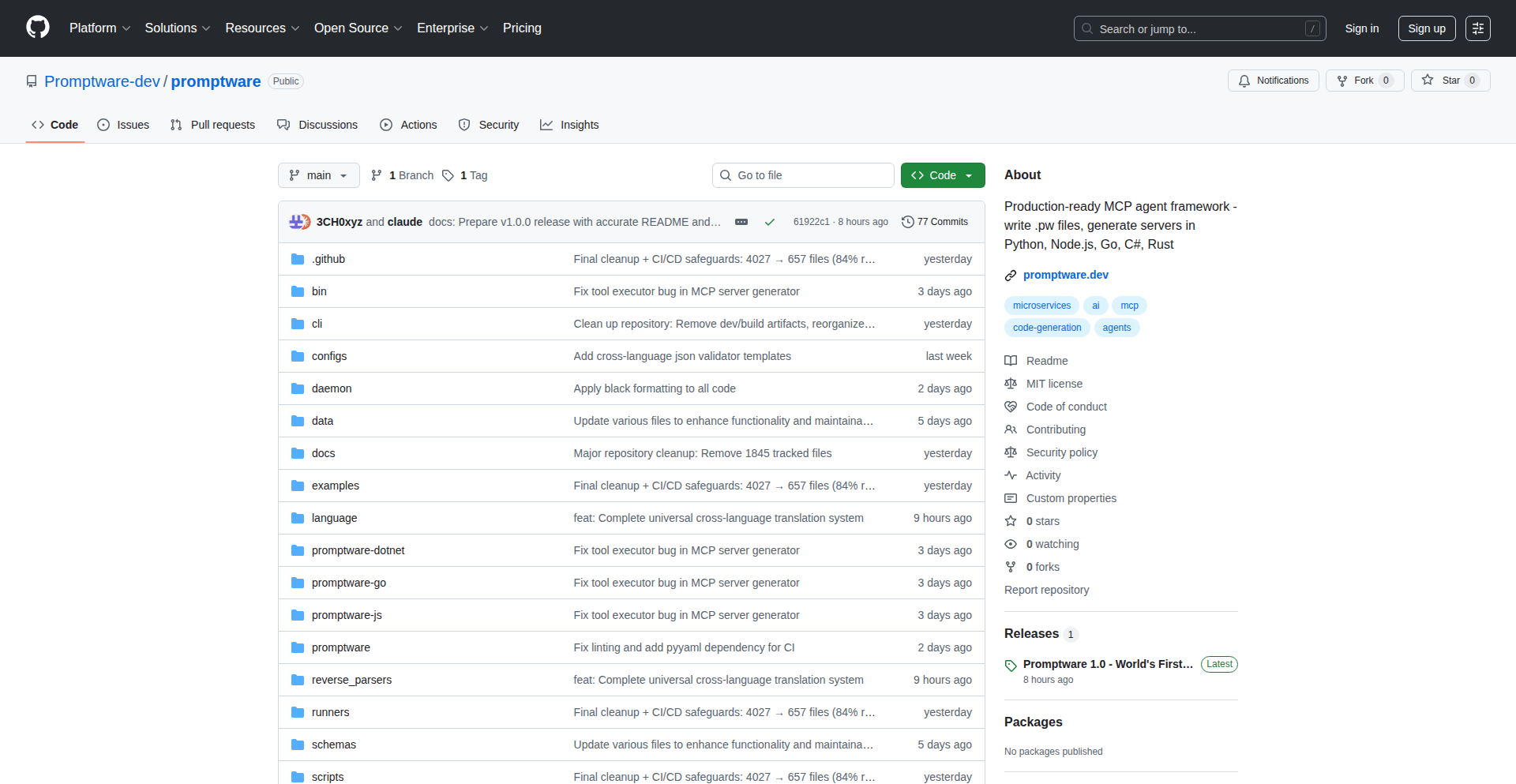

Dakora: PromptSync Engine

Author

bogdan_pi

Description

Dakora is an open-source tool designed to tackle the chaos of managing Large Language Model (LLM) prompts in applications. It centralizes your prompts in a Git-version-controlled vault, offers a user-friendly UI playground for editing, and enables dynamic syncing into your Python applications without requiring code redeploys. This innovative approach liberates developers from 'prompt hell,' making prompt iteration and management seamless.

Popularity

Points 3

Comments 2

What is this product?

Dakora is a system that helps you organize and update the instructions (prompts) you give to AI models without changing your application's code. Imagine you're telling an AI to write a story; the 'prompt' is that instruction. When you have many such instructions for different parts of your app, keeping track of them and changing them quickly gets messy. Dakora solves this by storing all your prompts in one place (like a dedicated folder in your code project). It gives you a web-based playground to tweak these prompts and then syncs the changes into your running application. The practical payoff is that you can improve your AI's responses by editing text instead of redeploying your entire application, which saves considerable time and effort.

How to use it?

Developers can integrate Dakora into their Python projects with a simple `pip install dakora`. Once installed, they configure it to point to their prompt vault (a directory containing prompt files, preferably under Git version control). The tool then provides a web-based playground where prompts can be edited and tested. After saving changes in the playground, Dakora automatically syncs these updated prompts into the running Python application. This allows for rapid iteration on prompt engineering, enabling developers to experiment with different instructions and immediately see the impact on their AI's output within their application, all without the need for extensive deployment cycles.

Product Core Function

· Centralized Prompt Vault: Stores all LLM prompts in a version-controlled directory, making them easily discoverable and manageable. This means you know exactly where all your AI instructions are and can track changes over time, preventing the loss of good prompts and making collaboration easier.

· UI Playground for Prompt Editing: Provides a user-friendly web interface to write, test, and refine prompts. This allows anyone, even those less familiar with code, to experiment with AI instructions and see immediate results, speeding up the development of AI-powered features.

· Dynamic Prompt Syncing: Updates prompts in the running application without requiring code redeploys. This means you can keep improving your AI's output by tweaking text alone, for example refining customer-support responses in real time or fine-tuning content generation without downtime.

· Python Integration: Works with Python applications out of the box, so Python developers can adopt Dakora and start benefiting from better prompt management within their existing workflows.

Product Usage Case

· Improving a chatbot's conversational flow: A developer can use Dakora to easily update the prompts that define how the chatbot responds in different scenarios. Instead of redeploying the whole chatbot service, they can adjust the prompts in the UI playground and see the improved responses immediately, leading to a better user experience.

· Fine-tuning AI-generated content: For an application that generates articles or marketing copy, developers can use Dakora to iterate on prompts that guide the AI's writing style and tone. This allows for quick adjustments to match brand voice or campaign needs without complex code changes, ensuring content relevance and quality.

· Managing prompts for diverse AI tasks: In a project that uses AI for multiple purposes (e.g., sentiment analysis, summarization, question answering), Dakora provides a single, organized place to manage all related prompts. This prevents confusion and ensures that each AI function receives the correct, optimized instructions, boosting the overall effectiveness of the AI integrations.

9

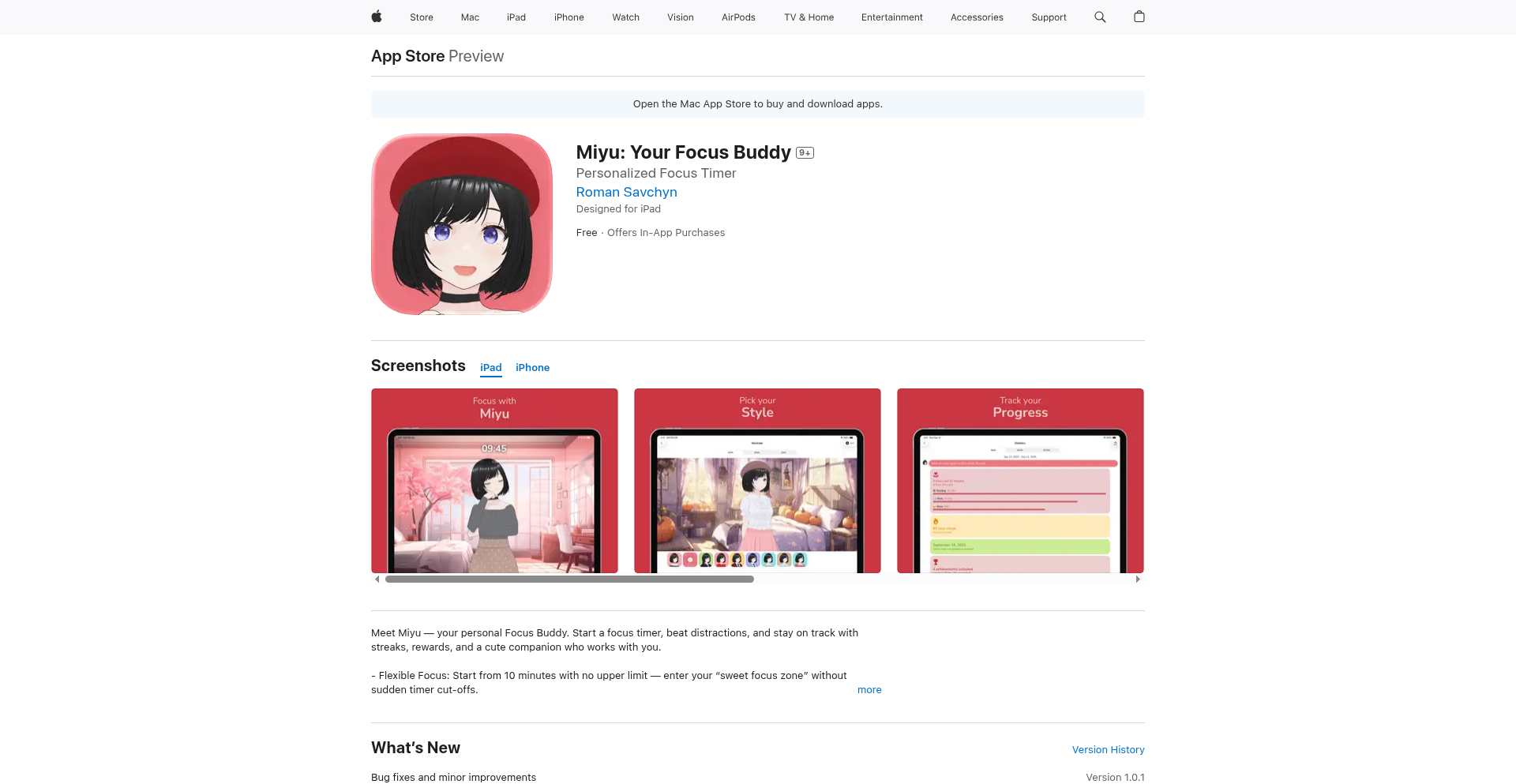

BodhiGPT: The AI-Augmented Self-Mastery Engine

Author

whatcha

Description

BodhiGPT is a novel application that leverages Large Language Models (LLMs) and AI to foster human development in critical areas like consciousness, awareness, mental and physical health, and personal knowledge. It's a tool designed to help individuals cultivate essential human qualities that become even more important as AI automates more of our lives. The innovation lies in using AI not just for tasks, but as a catalyst for personal growth and deeper self-understanding.

Popularity

Points 4

Comments 1

What is this product?

BodhiGPT is a personal AI assistant focused on enhancing your humanity. It uses the power of advanced AI models to help you explore your thoughts, improve your well-being, and build a stronger foundation of personal knowledge. Think of it as a digital mentor that guides you towards greater self-awareness and resilience. The core innovation is redirecting AI's generative capabilities towards introspection and growth, rather than purely external task automation. This is achieved through carefully crafted prompts and AI interactions designed to encourage deep thinking and self-reflection.

How to use it?

BodhiGPT is currently presented as a standalone tool rather than an API, so developers are more likely to borrow its underlying principles for their own integrations than to call it directly. For everyday users, it's about engaging with the AI. This might involve journaling prompts generated by BodhiGPT, guided meditation scripts, personalized learning paths on topics of interest, or even AI-facilitated discussions to help you form your own informed perspectives. The primary use case is intentional, regular interaction to cultivate specific aspects of your personal development.

Product Core Function

· AI-powered self-reflection prompts: These are designed to encourage deeper introspection, helping users understand their thoughts, emotions, and motivations. This is valuable for mental clarity and emotional intelligence.

· Personalized knowledge synthesis: BodhiGPT can help users distill complex information and form their own informed opinions on various subjects. This enhances critical thinking and broadens understanding.

· Mindfulness and well-being guidance: The tool can generate personalized exercises and advice for improving mental and physical health, fostering a holistic approach to well-being.

· Consciousness and awareness cultivation: Through guided exercises and AI-driven exploration, users can explore their own consciousness and develop a greater sense of awareness of themselves and their surroundings.

· Goal-oriented learning paths: BodhiGPT can assist in creating structured learning plans for personal and professional development, making knowledge acquisition more efficient and tailored.

Product Usage Case

· A writer struggling with writer's block uses BodhiGPT to generate creative writing prompts and explore character motivations, leading to renewed inspiration and a breakthrough in their project.

· A busy professional uses BodhiGPT's guided meditation feature to manage stress and improve focus during their workday, resulting in increased productivity and reduced burnout.

· A student wanting to understand a complex scientific concept uses BodhiGPT to break down the information, ask clarifying questions, and synthesize their learning into a coherent personal understanding, thereby improving their academic performance.

· An individual interested in philosophy uses BodhiGPT to engage in simulated dialogues with historical thinkers, helping them to better grasp different perspectives and develop their own philosophical outlook.

10

Brice.ai - AI Meeting Coordinator

Author

sgallant

Description

Brice.ai is an AI-powered scheduling assistant that automates the tedious process of booking meetings. When you CC Brice.ai on your emails, it handles the back-and-forth communication required to find a suitable meeting time, effectively doing the job of a human personal assistant. The core innovation lies in its ability to understand natural language requests within emails and autonomously interact with calendars to secure appointments, solving the common pain point of time-consuming scheduling.

Popularity

Points 4

Comments 1

What is this product?

Brice.ai is an AI secretary that simplifies meeting scheduling. It works by analyzing your email communications. When you need to schedule a meeting, you add Brice's email address to the thread. Brice then reads the conversation, understands the proposed meeting times and attendee availability, and negotiates with all parties to find a mutually agreeable slot. It uses natural language processing (NLP) to interpret the nuances of human conversation and integrates with popular calendar systems to book the meeting directly. This is innovative because it moves beyond simple calendar alerts to actively participate in the negotiation process, saving users significant time and mental overhead.

How to use it?

Developers can integrate Brice.ai into their workflow by adding Brice's email address to the To or CC field of any email where a meeting needs to be scheduled. For example, if you are coordinating a project discussion with a client and another team member, you would include Brice in your reply to find a time that works for everyone. Brice will then take over the scheduling dialogue. It's designed to be an invisible assistant, requiring no setup or integration beyond its email address. This allows for immediate adoption and fits into existing communication habits.

Product Core Function

· Natural Language Understanding: Brice can read and comprehend email conversations to identify meeting requests, proposed times, and participant constraints. This is valuable for automatically processing scheduling requests without manual intervention.

· Automated Calendar Coordination: It connects to your calendar to check availability and book confirmed meetings, eliminating the need to manually cross-reference schedules. This saves time and reduces the risk of double-bookings.

· Intelligent Negotiation: Brice can engage in back-and-forth dialogue with multiple participants to find an optimal meeting time, handling common scheduling conflicts. This is crucial for complex scheduling scenarios involving many people.

· Seamless Email Integration: The core functionality is triggered by simply CC'ing Brice.ai on emails, making it incredibly easy to use without any technical setup. This provides immediate utility for anyone who communicates via email.

· Human-like Interaction: Brice aims to mimic the communication style of a human assistant, making the scheduling process feel more natural and less robotic. This enhances user experience and trust in the AI.
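Whatever the language layer extracts, the calendar side eventually reduces to finding a slot that is free for everyone. Brice.ai's actual algorithm is not described, so this is a hedged Python sketch of that one step: merge all participants' busy intervals, then sweep through them looking for the first gap of the requested length inside a working window. The function name and input shape are hypothetical.

```python
from datetime import datetime, timedelta


def first_common_slot(busy_by_person, window_start, window_end, duration):
    """Return the earliest (start, end) slot free for everyone, or None.

    busy_by_person: {name: [(start, end), ...]} with datetime bounds.
    """
    # A slot must avoid every participant's busy time, so merge all intervals.
    busy = sorted(iv for intervals in busy_by_person.values() for iv in intervals)
    cursor = window_start  # earliest moment not yet known to be busy
    for start, end in busy:
        if end <= cursor:
            continue  # this interval ends before the cursor; ignore it
        if start - cursor >= duration and cursor + duration <= window_end:
            return cursor, cursor + duration  # the gap before this interval fits
        cursor = max(cursor, end)
        if cursor >= window_end:
            return None
    # No more busy intervals: check the tail of the window.
    if window_end - cursor >= duration:
        return cursor, cursor + duration
    return None
```

Passing timezone-aware `datetime` values lets the same code compare calendars from different time zones, since aware datetimes compare by absolute time.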

Product Usage Case

· Sales professionals can use Brice.ai to schedule follow-up meetings with prospects, so that no lead falls through the cracks because of scheduling delays. If a salesperson CCs Brice on initial inquiry emails, Brice can proactively schedule next steps once a positive response arrives.

· Project managers can leverage Brice to coordinate team meetings across different time zones, ensuring that everyone involved can attend important discussions. This is particularly useful when team members have conflicting availability.

· Freelancers can use Brice.ai to efficiently schedule client consultations or project kickoff meetings, allowing them to focus on delivering their services rather than managing their calendar. This professionalizes their client interaction.

· Startup founders can use Brice to manage their busy fundraising schedules, ensuring they can connect with investors without spending excessive time on calendar logistics. This is especially helpful when dealing with a high volume of outreach.

· Anyone who frequently engages in email-based scheduling can benefit from Brice by reducing the time spent on repetitive back-and-forth emails to find a meeting time. This frees up cognitive load for more important tasks.

11

RealtimeDB Notify

Author

jvanveen

Description

This project is a starter template for real-time web applications that leverages modern Python and JavaScript tooling with PostgreSQL's LISTEN/NOTIFY mechanism as an alternative to traditional external message queues. It's designed to provide instant updates to connected clients when database changes occur, offering a streamlined approach to building interactive web experiences.

Popularity

Points 4

Comments 1

What is this product?

RealtimeDB Notify is a foundational project for building web applications that need to react instantly to data changes. Instead of relying on complex and often costly external message brokers like Redis or Kafka, it cleverly uses PostgreSQL's built-in LISTEN/NOTIFY feature. This means when something changes in your database, PostgreSQL can actively 'shout out' that change, and your web application's backend (built with FastAPI) can listen for these shouts and immediately inform the connected users' browsers. This is achieved using asynchronous Python (async/await) for efficient handling of multiple connections and Bun for fast frontend development. So, what does this mean for you? It means you can build dynamic apps where data feels alive, without the overhead of extra infrastructure.

How to use it?

Developers can use RealtimeDB Notify as a robust starting point for their real-time web applications. The project provides a complete stack: a fast Python backend with FastAPI, a PostgreSQL database configured for publish/subscribe (pub/sub) using LISTEN/NOTIFY, and a frontend setup using Bun for efficient bundling and development. You would typically integrate this by connecting your frontend application to the FastAPI backend, which in turn monitors PostgreSQL for relevant notifications. When a database event triggers a notification, the backend pushes this update to the frontend, updating the user interface in real-time. The project includes a working frontend example and Docker Compose for easy local testing, making it simple to get started and adapt to your specific project needs. This allows you to quickly build applications where users see data updates the moment they happen.

Product Core Function

· Asynchronous Backend with FastAPI: Efficiently handles numerous simultaneous connections and real-time data pushing, crucial for responsive applications. This means your app can serve many users at once without slowing down.

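· PostgreSQL LISTEN/NOTIFY for Real-time Updates: Eliminates the need for external message queues by using PostgreSQL's native pub/sub capabilities, reducing complexity and cost. This makes your real-time system simpler and cheaper to run. The trade-offs are that a notification reaches only sessions that are listening when the transaction commits (nothing is stored for a client that connects later) and that payloads are small (under 8000 bytes by default), so they usually carry an ID or a compact row rather than large documents.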

· uv package manager: Offers very fast Python package management, speeding up development workflows and build times. This means you spend less time waiting for your tools and more time coding.

· Bun for Frontend Builds: Provides a very fast JavaScript runtime and build tool, accelerating frontend development and asset compilation. This leads to quicker iterations and a smoother development experience for your front-end code.

· Database Change Notifications: Enables the backend to immediately broadcast changes made in the database to connected clients, ensuring a truly live user experience. This is the core of making your application feel dynamic and up-to-date.

· Connection Pooling and Lifecycle Management: Manages database connections efficiently, opening and closing them with the application's lifecycle. This makes the application more stable and reduces failures caused by leaked or stale connections.
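The path from LISTEN/NOTIFY to the browser can be sketched in Python as a fan-out: one dedicated database connection listens on a channel, and each notification is copied into a per-client `asyncio.Queue` that a WebSocket or server-sent-events handler would drain. This is an illustration, not the project's code; the `Broadcaster` class and the `orders` channel are hypothetical. The listener uses asyncpg's `add_listener`, imported lazily so the fan-out logic runs without it.

```python
import asyncio
import json


class Broadcaster:
    """Fan out each notification payload to every connected client's queue."""

    def __init__(self):
        self.clients = set()

    def subscribe(self):
        queue = asyncio.Queue()  # one queue per connected client
        self.clients.add(queue)
        return queue

    def unsubscribe(self, queue):
        self.clients.discard(queue)

    def publish(self, payload):
        for queue in self.clients:
            queue.put_nowait(payload)


async def listen(dsn, channel, broadcaster):
    """Relay NOTIFY messages on `channel` to the broadcaster (requires asyncpg).

    A trigger on the watched table might call, for example:
        PERFORM pg_notify('orders', row_to_json(NEW)::text);
    """
    import asyncpg  # lazy import: the Broadcaster above has no third-party deps

    conn = await asyncpg.connect(dsn)

    def on_notify(connection, pid, chan, payload):
        # asyncpg calls this for each NOTIFY; payload is the text given to pg_notify.
        broadcaster.publish(json.loads(payload))

    await conn.add_listener(channel, on_notify)
    try:
        await asyncio.Future()  # keep the connection open until the task is cancelled
    finally:
        await conn.close()
```

The listener keeps its own connection rather than borrowing one from a pool, because a LISTEN registration belongs to a single session. Unbounded queues keep the sketch simple; a production server would cap them so one slow client cannot grow memory indefinitely.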

Product Usage Case

· Building an Admin Dashboard: Imagine a dashboard that automatically updates with new orders, user activity, or system alerts the moment they occur, without the user needing to manually refresh. This project enables that instant visibility.

· Developing Collaborative Tools: For applications where multiple users are editing a document or interacting with shared data (like a collaborative whiteboard or a shared task list), this project ensures everyone sees changes from others immediately. This makes teamwork seamless.

· Creating Monitoring Systems: A system that monitors server health, application performance, or security logs can push critical alerts or status changes to operators in real-time. This allows for faster incident response.

· Real-time Chat Applications (Basic State Updates): While not designed for guaranteed message delivery like a full chat system, it's excellent for pushing current conversational state or new messages as they arrive to a UI. This provides a responsive chat experience.

· Live Scoreboards or Event Feeds: Applications that need to display constantly updating information, such as sports scores, stock prices, or news feeds, can benefit from this immediate data push. This keeps users informed with the latest information.

12

SoraCleanAPI

Author

the_plug

Description

A REST API designed to automatically remove watermarks from videos, specifically targeting those generated by AI models like Sora 2. It leverages advanced computer vision for watermark detection and sophisticated inpainting techniques for seamless removal, coupled with FFmpeg for audio management. This API simplifies complex video post-processing pipelines for developers, allowing them to send a video and receive a clean output without building their own machine learning infrastructure.

Popularity

Points 5

Comments 0

What is this product?

SoraCleanAPI is a service that uses AI to find and remove watermarks from videos. Imagine you have a video with a distracting logo or text overlay, and you want it gone without leaving any ugly gaps or blurry patches. This API uses computer vision to 'see' the watermark and then intelligently 'paints' over it, filling in the background so it looks like the watermark was never there. It's like having a digital Photoshop expert for your videos, but automated. The innovation lies in combining state-of-the-art detection and inpainting algorithms into an easy-to-use API, saving developers the immense effort of building and training these complex AI models themselves. So, what's in it for you? You get clean, watermark-free videos without needing deep AI expertise or spending weeks developing your own solutions.

How to use it?

Developers can integrate SoraCleanAPI into their applications by sending video files to its REST endpoints. For example, you might have a video editing application where users upload clips. Instead of directly processing them, your application could send the clip to SoraCleanAPI. The API will then process the video and return the cleaned version. It supports asynchronous processing via webhook callbacks, meaning you can send a video and get a notification when it's ready, rather than waiting for a direct response. This is useful for background processing tasks where users don't need immediate results but want to be informed when the task is complete. So, how can you use this? If you're building a video platform, a content moderation system, or any application dealing with video processing, you can easily add watermark removal capabilities by making a simple API call, making your service more versatile and your users happier with cleaner content.
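On the receiving side, a webhook callback is just an HTTP endpoint your application exposes. The API's real callback payload is not documented in this post, so the field names (`job_id`, `status`) are assumptions. This standard-library Python sketch records each completion and acknowledges it immediately, leaving heavier follow-up work to the application.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

completed = {}  # job id -> callback payload; stands in for a database update
_lock = threading.Lock()


class WebhookHandler(BaseHTTPRequestHandler):
    """Receives a hypothetical 'job finished' callback; field names are assumptions."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        try:
            event = json.loads(self.rfile.read(length))
            job_id = event["job_id"]
        except (ValueError, KeyError, TypeError):
            self.send_response(400)  # malformed or unexpected payload
            self.end_headers()
            return
        with _lock:
            completed[job_id] = event
        # Acknowledge quickly; downloading the result belongs in a background task.
        self.send_response(204)
        self.end_headers()

    def log_message(self, format, *args):
        pass  # keep the demo quiet


def serve(port=8000):
    """Start the receiver on a background thread and return the server."""
    server = ThreadingHTTPServer(("127.0.0.1", port), WebhookHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```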

Product Core Function

· Watermark Detection: Utilizes advanced computer vision algorithms to accurately identify the location and shape of watermarks within video frames. This is crucial for understanding what needs to be removed. Its value is in precisely targeting the problematic areas, ensuring efficient and accurate processing. Applied in scenarios where watermarks are inconsistent or vary in appearance.

· Advanced Inpainting: Employs sophisticated inpainting techniques to reconstruct the video background where the watermark was removed. This goes beyond simple blurring and aims to seamlessly blend the repaired area with the surrounding content. The value is in creating natural-looking results without visual artifacts, making the video appear as if the watermark was never there. This is essential for high-quality video output.

· Audio Handling with FFmpeg: Integrates FFmpeg to ensure that the audio track of the video is preserved and correctly synchronized with the processed video. Watermark removal often involves re-encoding video, and FFmpeg ensures the audio remains intact. The value is in delivering a complete, polished video file with both visuals and sound correctly maintained. Useful for any video where audio quality and sync are important.

· Simple REST Endpoints: Provides straightforward API endpoints for uploading videos and receiving processed files. This abstracts away the complexities of AI model deployment and management. The value is in enabling developers to easily integrate video processing into their existing workflows with minimal code. Applicable for quick integration into any web or mobile application.

· Webhook Callbacks for Asynchronous Processing: Offers webhook notifications to alert developers when video processing is complete. This allows for non-blocking operations, enabling the application to continue functioning while the video is being processed in the background. The value is in building scalable and responsive applications that can handle multiple video requests efficiently without user interfaces freezing. Essential for large-scale video processing pipelines.
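The audio-handling step can be approximated with a single FFmpeg remux, assuming the inpainting stage writes a video-only file. This is a hedged sketch, not SoraCleanAPI's pipeline: it builds an `ffmpeg` command that takes the video stream from the cleaned file and the audio stream from the original, copying both without re-encoding. The helper names are hypothetical; the flags are standard FFmpeg options.

```python
import shutil
import subprocess


def remux_audio_cmd(cleaned_video, original_video, output):
    """Build an ffmpeg command pairing the cleaned video track with the original audio.

    -map 0:v:0   first video stream of input 0 (the cleaned file)
    -map 1:a:0?  first audio stream of input 1 (the original), if present ('?' = optional)
    -c copy      copy both streams without re-encoding
    -shortest    stop writing when the shorter stream ends
    """
    return [
        "ffmpeg", "-y",
        "-i", cleaned_video,
        "-i", original_video,
        "-map", "0:v:0", "-map", "1:a:0?",
        "-c", "copy", "-shortest",
        output,
    ]


def remux_audio(cleaned_video, original_video, output):
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg is not on PATH")
    subprocess.run(remux_audio_cmd(cleaned_video, original_video, output), check=True)
```

Stream copy only works when the codecs fit the output container (for example H.264 video with AAC audio in MP4); otherwise the audio needs re-encoding with an option such as `-c:a aac`.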

Product Usage Case

· A content creator who needs to repurpose their AI-generated video content for different platforms that have strict no-watermark policies. They can use SoraCleanAPI to upload their video and receive a clean version to share across all their channels, increasing content reach and adhering to platform guidelines.

· A video editing software developer building a feature for their application that allows users to clean up downloaded AI-generated clips. They can integrate SoraCleanAPI to provide a powerful, automated watermark removal tool within their existing software, enhancing user experience and adding a competitive edge.

· A platform for AI-generated art and media that wants to offer its users the ability to download their creations without watermarks. SoraCleanAPI can be used as a backend service to process these user-generated videos, providing a premium, watermark-free experience and potentially enabling new monetization strategies.

· A researcher or developer experimenting with AI video generation models who needs to use generated footage in demonstrations or projects without the AI model's branding. SoraCleanAPI provides a quick and easy way to obtain clean footage for presentations or further experimentation, saving time and effort on manual editing.

13

SourcePilot: AI-Augmented Writing Copilot

Author

jucasoliveira

Description

SourcePilot is a desktop text editor designed to overcome the limitations of traditional writing tools and current AI assistants. It uniquely identifies and adapts to your writing style, integrates external sources (notes, links, videos) as AI context, and combats AI 'hallucinations' and context window degradation. This empowers writers to produce more nuanced and consistent AI-assisted content, effectively acting as a personalized memory and creative partner.

Popularity

Points 3

Comments 2

What is this product?

SourcePilot is a desktop-based text editor that acts as an intelligent writing assistant. It goes beyond simple text editing by learning your unique writing style, allowing you to embed external information like notes, web links, and even video references directly into your project. The innovation lies in how it uses these embedded elements as a richer context for its AI. Unlike generic AI tools that can forget or generate irrelevant content, SourcePilot leverages your specific inputs to provide more accurate, tailored, and consistent AI assistance. Think of it as an AI that truly understands your project's voice and content, avoiding the common pitfalls of AI hallucination and memory loss by grounding its responses in your provided information.

How to use it?

Developers can download and install SourcePilot as a desktop application. Its primary use case is for any form of writing that benefits from AI assistance, especially longer projects like books, extensive research papers, or complex creative works. To use it, you simply start writing. As you write, SourcePilot observes your style. You can then add notes, links to relevant articles or videos, or other contextual information directly within the editor. When you need AI assistance, such as for generating ideas, rephrasing, or checking consistency, the AI will draw upon not only its general knowledge but also the specific context you've provided. This makes the AI's output highly relevant to your project. Integration can be thought of as a deep embedding within your workflow, rather than a separate API call, keeping your creative process unified.

Product Core Function

· Style Identification and Adaptation: The system analyzes your writing patterns (word choice, sentence structure, tone) to ensure AI-generated suggestions or edits align with your established voice. This is valuable because it means AI won't make your writing sound generic or like someone else's; it maintains your unique authorial style, making the final output feel authentic.

· Contextual Embedding: Allows users to seamlessly integrate external resources like notes, web links, and video URLs into the document. This is crucial because it provides the AI with a much deeper understanding of your project's specific details and references, leading to more accurate and contextually relevant AI outputs, and acting as a personal knowledge base.

· AI-Powered Nuanced Output: By leveraging the identified writing style and embedded context, the AI generates more sophisticated and tailored responses. This solves the problem of AI producing bland or irrelevant content, offering you more creative and precise assistance that directly contributes to your project's goals.

· Anti-Slop Algorithm (Planned): This future feature aims to detect and mitigate repetitive phrasing or nonsensical AI outputs. Its value is in preventing the AI from degrading in quality over time or producing 'hallucinated' content, ensuring consistent and high-quality assistance throughout your writing journey.

· Document Branching (Planned): This functionality allows for creating different versions or paths of your document, similar to version control in software development. This is beneficial for writers experimenting with different plotlines, arguments, or stylistic approaches without losing their original work, enabling more flexible and organized creative exploration.
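The style-identification function above can be roughly illustrated with a few surface statistics: average sentence length, vocabulary variety, and most frequent words. This is an assumption made for illustration only. SourcePilot's actual style model isn't described, and real style analysis would go well beyond word counts. `style_profile` and `sentence_length_drift` are hypothetical helpers.

```python
import re
from collections import Counter

def style_profile(text: str, top_n: int = 3) -> dict:
    """Compute a few simple, surface-level style statistics for a passage."""
    # Split sentences on runs of . ! ? followed by whitespace or end of text.
    sentences = [s for s in re.split(r"[.!?]+(?:\s+|$)", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text.lower())
    return {
        "avg_sentence_len": len(words) / len(sentences) if sentences else 0.0,
        "type_token_ratio": len(set(words)) / len(words) if words else 0.0,
        "top_words": [w for w, _ in Counter(words).most_common(top_n)],
    }

def sentence_length_drift(reference: dict, candidate: dict) -> float:
    """Relative change in average sentence length: a crude signal that a suggestion is off-style."""
    ref = reference["avg_sentence_len"]
    return abs(candidate["avg_sentence_len"] - ref) / ref if ref else 0.0

author = style_profile("The rain fell. The rain stopped! Then the sun came out.")
suggestion = style_profile("Beneath a sky that had wept for hours, the rain at last relented and the sun emerged.")
print(author["avg_sentence_len"], sentence_length_drift(author, suggestion))
```

A large drift value would flag the suggestion as longer-winded than the author's usual sentences.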

Product Usage Case

· Book Writing: An author is writing a fantasy novel and wants the AI to help with character descriptions or plot ideas. By embedding research notes on mythology and character backstories into SourcePilot, the AI can suggest ideas that are consistent with the established lore and character personalities, avoiding generic fantasy tropes.

· Academic Research: A student is writing a thesis and needs to synthesize information from multiple research papers and online sources. SourcePilot lets them link these sources and add their own notes. When the student requests summaries or elaborations, the AI can draw directly on the linked materials and ground its output in the actual sources. This supports factual accuracy and fuller coverage, and it helps with information overload and the risk of misinterpretation.

· Content Creation for a Brand: A content marketer is developing blog posts and needs AI to help generate variations of marketing copy. By providing SourcePilot with existing brand guidelines, successful past campaigns (linked or noted), and target audience profiles, the AI can produce copy that is tonally consistent with the brand and resonates with the intended audience, avoiding off-brand messaging.

· Screenplay Development: A screenwriter is exploring different dialogue options for a scene. The screenwriter provides character profiles, past dialogue examples, and scene context. SourcePilot's AI can then generate new dialogue that fits each character's voice and moves the plot forward, which helps with writer's block and keeps characters consistent.

14

GenesisDB Explorer for VS Code

Author

patriceckhart

Description

This project is a Visual Studio Code extension that integrates the full Genesis DB event-sourcing database experience directly into your code editor. It allows developers to manage database connections, explore events, commit new events, run queries, manage schemas, and even perform GDPR-related actions like event erasure, all without leaving VS Code. The innovation lies in eliminating context switching between different tools, bringing the database management experience into the developer's primary workspace.

Popularity

Points 4

Comments 0

What is this product?

This is a Visual Studio Code extension that provides an interface for working with Genesis DB, an event-sourcing database. Event sourcing is a design pattern in which every change to application state is stored as an immutable event in an append-only sequence, and the current state is derived by replaying those events.

The extension lets developers manage multiple database connections (development, staging, production) with token-based authentication. It includes a built-in Event Explorer UI for browsing events. It can also commit new events and execute GDBQL queries (Genesis DB's query language), with results shown immediately. Schema management (registration, browsing, validation) is supported inside the editor as well.

A notable addition is GDPR tooling such as event erasure, so developers can handle sensitive-data requirements without leaving VS Code. The core idea is to treat database operations as part of the coding workflow, which reduces friction and context switching.
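To make the event-sourcing idea concrete: events are only ever appended, and current state is computed by replaying them. The sketch below is a generic in-memory model, not Genesis DB's storage engine or GDBQL. The `Event` fields, the `EventLog` class, and the `/account/42` subject naming are hypothetical.

```python
from dataclasses import dataclass
from typing import Any

@dataclass(frozen=True)
class Event:
    subject: str            # stream identifier (hypothetical naming scheme)
    type: str
    data: dict[str, Any]    # frozen=True stops field reassignment; the dict itself is not deep-frozen

class EventLog:
    """Append-only, in-memory event store: events are never updated or deleted in place."""
    def __init__(self) -> None:
        self._events: list[Event] = []

    def commit(self, event: Event) -> None:
        self._events.append(event)

    def stream(self, subject: str) -> list[Event]:
        return [e for e in self._events if e.subject == subject]

def balance(events: list[Event]) -> int:
    """Derive current state by replaying (folding over) a subject's events in order."""
    total = 0
    for e in events:
        if e.type == "Deposited":
            total += e.data["amount"]
        elif e.type == "Withdrawn":
            total -= e.data["amount"]
    return total

log = EventLog()
log.commit(Event("/account/42", "Deposited", {"amount": 100}))
log.commit(Event("/account/42", "Withdrawn", {"amount": 30}))
log.commit(Event("/account/7", "Deposited", {"amount": 5}))
print(balance(log.stream("/account/42")))  # 70
```

Because the log keeps every event, the same history can later be replayed into a different view, for example a list of withdrawals, without changing what was stored.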

How to use it?

Developers can install the 'Genesis DB VS Code Extension' from the Visual Studio Code Marketplace. After installation, they configure their Genesis DB connection details, including endpoint URLs and authentication tokens, in the extension's settings. The extension then provides a dedicated view or panel in VS Code for navigating the database, exploring event streams, writing and running GDBQL queries, and managing schemas.

To commit a new event, for example, a developer uses a command or UI element in the extension to define the event payload and send it to the database. For GDPR compliance, they can select specific events or records and trigger an erasure action from the same interface. The primary goal is to streamline development by consolidating database tasks in the IDE, so developers can stay focused on writing code.

Product Core Function

· Connection Management: Lets developers set up and manage multiple connections to Genesis DB instances (dev, staging, prod) with token-based authentication. Keeping every environment's endpoint and token in one place simplifies multi-environment access and makes it easier to switch between stages of development and deployment.

· Event Explorer UI: Provides a user-friendly interface within VS Code to browse and inspect events stored in Genesis DB. This is valuable for understanding application state changes over time, debugging issues by tracing event sequences, and gaining insights into data flow without resorting to command-line tools or external UIs.

· Event Committing and Querying: Lets developers commit new events and run GDBQL queries directly from VS Code, with results displayed immediately. This speeds up iteration on data models and query logic because there is no need to switch to a separate database client.

· Schema Management: Facilitates registering, browsing, and validating schemas for Genesis DB within the editor. This brings critical database structure management into the developer's workflow, ensuring data consistency and catching schema-related errors early, thereby improving code quality and reducing integration issues.

· GDPR Features (Event Erasure): Provides built-in GDPR functionality such as event erasure, so developers can handle data-privacy requirements without leaving VS Code. This supports compliance and responsible data handling by making sensitive operations easier to reach and less prone to manual errors or overlooked steps.
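Checking a payload against a registered, versioned schema before it is committed can be sketched in a few lines. This is a hypothetical, deliberately tiny registry that maps field names to Python types. It shows the validate-before-commit idea only and is not how Genesis DB represents schemas; a real schema language is much richer.

```python
from typing import Any

# Hypothetical registry: (event type, version) -> required field names and their Python types.
SCHEMAS: dict[tuple[str, int], dict[str, type]] = {}

def register_schema(event_type: str, version: int, fields: dict[str, type]) -> None:
    key = (event_type, version)
    if key in SCHEMAS:
        raise ValueError(f"schema {key} already registered")  # versions are immutable once registered
    SCHEMAS[key] = fields

def validate(event_type: str, version: int, payload: dict[str, Any]) -> list[str]:
    """Return a list of problems; an empty list means the payload matches the schema."""
    schema = SCHEMAS.get((event_type, version))
    if schema is None:
        return [f"no schema registered for {event_type} v{version}"]
    errors = [f"missing field: {name}" for name in schema if name not in payload]
    errors += [
        f"{name}: expected {t.__name__}, got {type(payload[name]).__name__}"
        for name, t in schema.items()
        if name in payload and not isinstance(payload[name], t)
    ]
    errors += [f"unexpected field: {name}" for name in payload if name not in schema]
    return errors

register_schema("UserRegistered", 1, {"email": str, "age": int})
print(validate("UserRegistered", 1, {"email": "a@example.com", "age": 30}))    # []
print(validate("UserRegistered", 1, {"email": "a@example.com", "age": "30"}))  # ['age: expected int, got str']
```

Refusing to commit any event whose error list is non-empty is what catches schema mismatches before they reach the log.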

Product Usage Case

· Debugging event-driven applications: A developer working on an event-driven microservice can use the Event Explorer to trace the sequence of events leading up to a bug, identify the faulty event, and then use the committing feature to test a corrected event flow, all within VS Code.

· Rapid prototyping of data models: A backend developer can quickly define new event types, commit them to a development Genesis DB instance, and immediately test GDBQL queries to retrieve and manipulate that data, speeding up the prototyping phase significantly.

· Ensuring data privacy compliance: A developer responsible for handling user data can use the event erasure feature to remove a specific user's data from the database in response to a GDPR request. This can be done directly from the IDE, which keeps the process streamlined.

· Managing database schema changes in a team: A developer can browse the current schema, register a new schema version, and validate it against existing events directly within VS Code, ensuring consistency and reducing the likelihood of deployment failures due to schema mismatches.

15

Gossip Glomerator

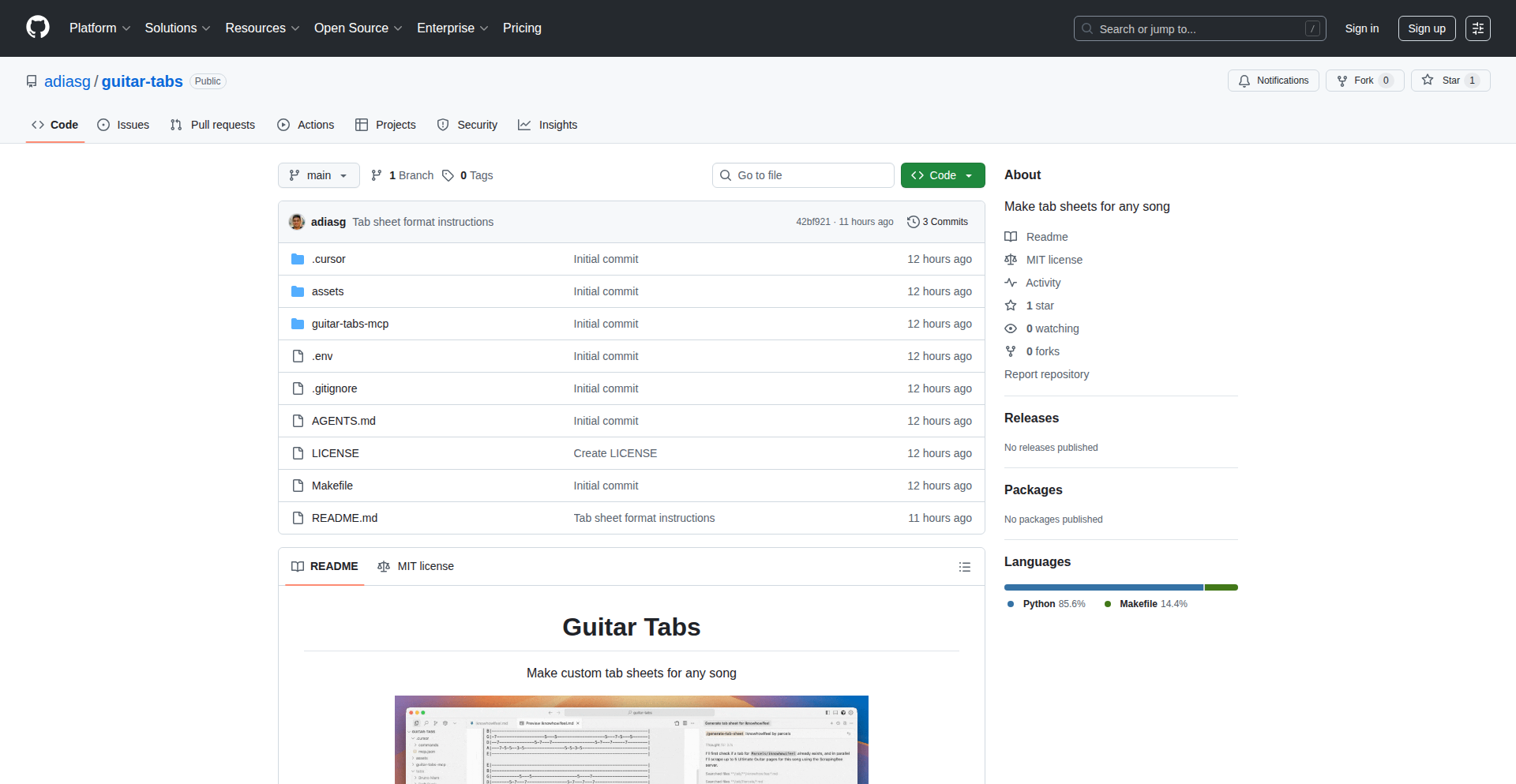

Author

sladyn98

Description

This project implements gossip protocols for distributed systems and works through the problems posed by Fly.io's Gossip Glomers challenges, which run on Jepsen's Maelstrom workbench. It focuses on building a robust, fault-tolerant communication layer using idempotency, acknowledgments (acks), and retries. Its main contribution is a clear, beginner-friendly treatment of these distributed systems concepts, which makes them accessible and practical for developers.

Popularity

Points 3

Comments 0

What is this product?

This project is a practical implementation of gossip protocols. In a gossip protocol, each node shares information with its neighbors, so the information eventually spreads across the whole network. The project's main contribution is pedagogical: it breaks down the hard part of building such systems, which is staying reliable when messages are lost or nodes fail.

It does this by layering three concepts:
· Idempotency: applying the same message more than once has the same effect as applying it once.
· Acks: acknowledgments confirming that a message was received.
· Retries: re-sending a message when no ack arrives.

Retries can deliver the same message twice, and idempotency is what makes those duplicates harmless. Together, these three pieces demystify the building blocks of highly available, fault-tolerant distributed applications and give developers a clear path to more resilient software.
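Here is a minimal sketch of gossip spreading, assuming a fixed topology and a single message. Each node forwards a message the first time it sees it. Treating repeats as no-ops is what stops the flood from looping forever. The `gossip` function and the four-node ring are illustrative and are not code from the project.

```python
from collections import deque

def gossip(topology: dict[str, list[str]], origin: str, message: int) -> tuple[dict[str, set[int]], int]:
    """Spread one message from `origin`; return each node's seen-set and the number of sends."""
    seen: dict[str, set[int]] = {node: set() for node in topology}
    seen[origin].add(message)
    # In-flight deliveries as (sender, receiver) pairs.
    in_flight = deque((origin, nbr) for nbr in topology[origin])
    sends = 0
    while in_flight:
        sender, receiver = in_flight.popleft()
        sends += 1
        if message in seen[receiver]:
            continue  # idempotent: a duplicate changes nothing and is not forwarded again
        seen[receiver].add(message)
        # First sighting: pass it on to every neighbour except the one it came from.
        in_flight.extend((receiver, nbr) for nbr in topology[receiver] if nbr != sender)
    return seen, sends

ring = {"n0": ["n1", "n3"], "n1": ["n0", "n2"], "n2": ["n1", "n3"], "n3": ["n2", "n0"]}
seen, sends = gossip(ring, "n0", 7)
print(all(7 in s for s in seen.values()), sends)  # True 5
```

Counting sends shows the cost of redundancy. The ring needs five sends to reach three new nodes, and the two extra sends are the duplicates that idempotency absorbs.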

How to use it?

Developers can use this project as a learning resource and as a blueprint for their own distributed systems. By reading the code and the accompanying explanation, they can see how to build idempotency, acks, and retries into their messaging or data-synchronization logic. The project is especially relevant to microservices, distributed databases, and any application that needs reliable peer-to-peer communication.

For instance, if nodes must share configuration updates, the same principles help those updates reach every intended recipient even when some messages are lost along the way. The project gives you a concrete, code-driven example that you can adapt to make your own systems more reliable and easier to reason about.

Product Core Function

· Idempotent message handling: Allows messages to be processed multiple times without adverse side effects, ensuring data consistency in a distributed environment. Useful for preventing duplicate operations when retries occur.

· Acknowledgment (Ack) system: Provides confirmation that messages have been successfully received and processed by their intended recipients, crucial for understanding message delivery status. This helps in debugging and ensuring that critical data reaches its destination.

· Automated retry logic: Implements mechanisms to automatically re-send messages if acknowledgments are not received within a defined timeframe, increasing message delivery guarantees. This is vital for building systems that can tolerate transient network issues.

· Beginner-oriented implementation: Focuses on clarity and simplicity in demonstrating complex distributed system concepts, making it easier for new developers to grasp and implement. This lowers the barrier to entry for building sophisticated distributed applications.
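The three core functions above fit together in a retry loop. The simulation below makes several assumptions:
· There is a single sender and a single receiver.
· The link drops each message and each ack independently with a fixed probability.
· Numbered rounds stand in for retry timeouts.

`deliver_with_retries` is a hypothetical helper, not the project's API. Each message carries an amount to add, so a non-idempotent receiver would over-count whenever a lost ack triggers a resend.

```python
import random

def deliver_with_retries(messages: dict[int, int], drop_rate: float = 0.3,
                         max_rounds: int = 50, seed: int = 1) -> tuple[int, int, int]:
    """Send {msg_id: amount} over a lossy link until every message is acknowledged.

    Returns (receiver_total, rounds_used, duplicate_receipts).
    """
    rng = random.Random(seed)
    pending = set(messages)      # sender side: ids not yet acknowledged
    applied: set[int] = set()    # receiver side: ids already applied
    total = duplicates = 0
    for round_no in range(1, max_rounds + 1):
        for msg_id in sorted(pending):        # retry everything still unacknowledged
            if rng.random() < drop_rate:
                continue                      # message lost in transit
            if msg_id in applied:
                duplicates += 1               # idempotent: seen before, so do not re-apply
            else:
                applied.add(msg_id)
                total += messages[msg_id]
            # The receiver acks duplicates too; the ack may itself be lost.
            if rng.random() >= drop_rate:
                pending.discard(msg_id)
        if not pending:
            return total, round_no, duplicates
    raise TimeoutError(f"{len(pending)} message(s) still unacknowledged")

total, rounds, dups = deliver_with_retries({1: 10, 2: 20, 3: 30})
print(total, rounds, dups)
```

The receiver acknowledges duplicates as well as new messages. If it stayed silent on a duplicate, the sender would keep retrying a message that had already been applied.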

Product Usage Case

· Implementing a distributed configuration update service: Suppose nodes in a cluster need to receive updated configurations. Under these principles, a lost configuration message is retried until each node acknowledges it, so every reachable node eventually converges on the same configuration.

· Building a real-time data synchronization mechanism: For applications that require multiple servers to have synchronized data, the gossip protocol combined with acks and retries ensures that data changes are propagated reliably, preventing data discrepancies between servers.

· Developing a peer-to-peer messaging system: This project provides a robust foundation for handling message delivery in a decentralized network, where messages need to reach specific peers or be broadcasted, even with potential node failures or network partitions.

16

PDFSignerCLI

Author

axelsvensson

Description

A command-line tool for signing PDF files easily, with robust support for Linux environments. Digitally signing documents is a common need but often a complex process. This tool offers a straightforward, scriptable solution that works across different operating systems.

Popularity

Points 3

Comments 0

What is this product?

PDFSignerCLI is a command-line interface (CLI) utility for digitally signing PDF documents. Its appeal lies in accessibility and cross-platform compatibility, in particular its dedicated Linux support, which more user-friendly graphical applications often overlook. It uses existing cryptographic libraries to embed a digital signature in the PDF. The signature lets anyone verify the document's authenticity and integrity: who signed it, and that it hasn't been altered since signing. All of this happens through a single command in your terminal, which makes the tool well suited to batch processing, automated workflows, and users who prefer the command line.
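The sign-and-verify idea behind this can be sketched as "hash the document, then transform the hash with a private key." The example uses textbook RSA with a fixed toy key built from two known Mersenne primes, so it is insecure and purely illustrative. Real PDF signatures are certificate-based, typically a CMS/PKCS#7 container embedded in the file, and should be produced with vetted libraries. Nothing here reflects PDFSignerCLI's actual commands or internals.

```python
import hashlib

# Toy RSA key from the Mersenne primes 2**89 - 1 and 2**107 - 1.
# Far too small and too structured to be secure; used only to show hash-then-sign.
P, Q = 2**89 - 1, 2**107 - 1
N = P * Q
E = 65537
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent (modular inverse needs Python 3.8+)

def digest(data: bytes) -> int:
    """SHA-256 of the document, reduced into the key's range."""
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % N

def sign(data: bytes) -> int:
    return pow(digest(data), D, N)  # only the holder of D can compute this

def verify(data: bytes, signature: int) -> bool:
    return pow(signature, E, N) == digest(data)  # anyone with the public (N, E) can check

doc = b"%PDF-1.7 example contract"
sig = sign(doc)
print(verify(doc, sig))               # True
print(verify(doc + b" edited", sig))  # False
```

Only the digest is signed. Changing even one byte of the document changes the digest, so the old signature no longer verifies.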

How to use it?