Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-10-01

SagaSu777 2025-10-02

Explore the hottest developer projects on Show HN for 2025-10-01. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

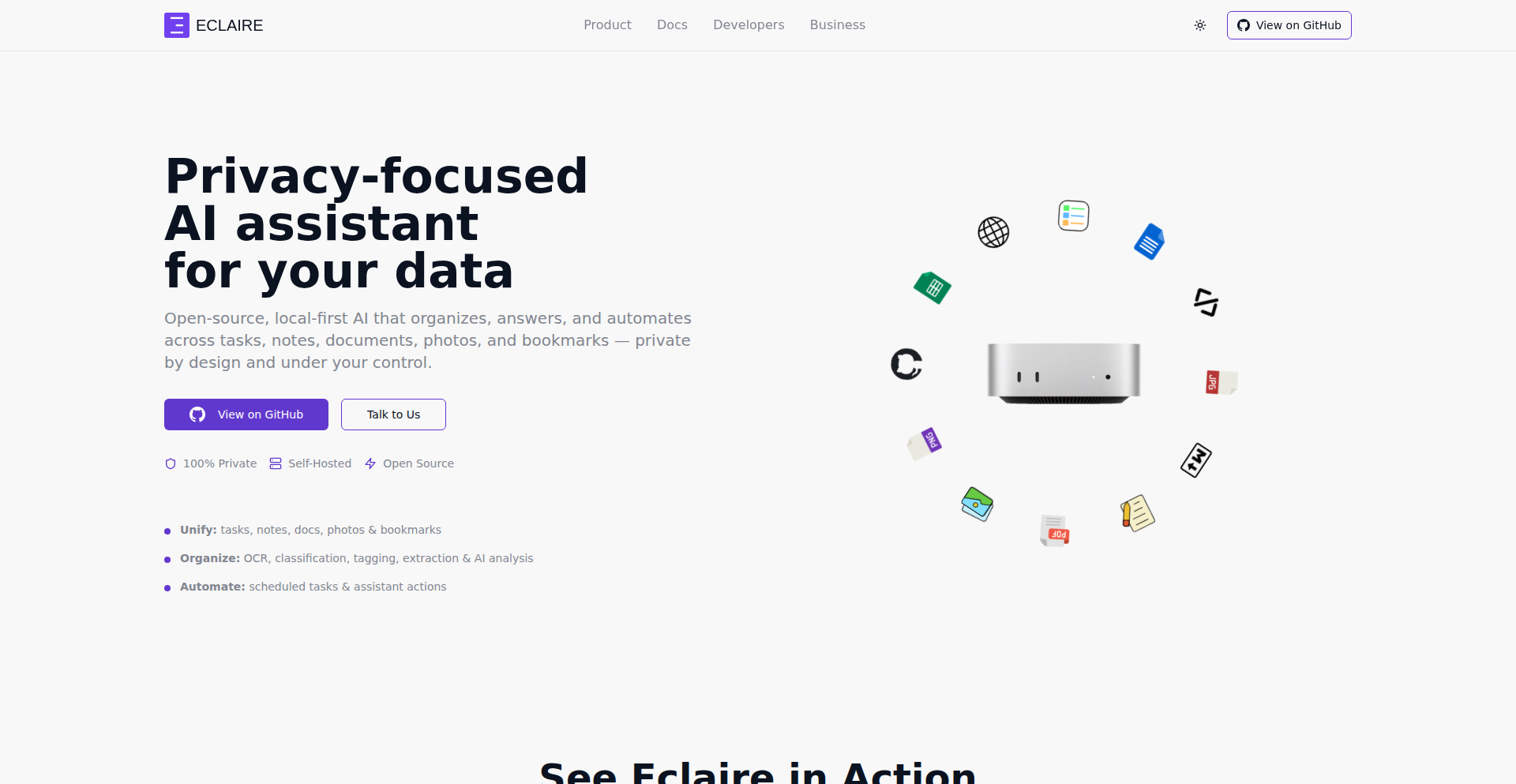

Today's Show HN projects share a clear theme: applying technology, especially AI, to concrete problems, above all to speed up developer workflows and extend what AI can do. ChartDB Agent and Alloy Automation MCP bring natural-language interfaces and AI assistance to database design and business-system integration, which lowers the barrier to entry and makes developers more efficient. Butter and llmswap focus on optimizing LLM usage, from caching generations for deterministic outputs to managing context across multiple 'second brains'. Both matter for building more robust and intelligent applications. Privacy-focused, offline AI tools such as the macOS RAG app and Eclaire point to growing demand for user control and data security. For developers, this means more ways to fit AI features into existing workflows. For entrepreneurs, it signals a strong market for tools that raise productivity, offer specialized AI functionality, and keep user data private. The hacker spirit is alive and well: creators are using technology to build empathy, as in the autism simulator, and writing developer tools from scratch to fill gaps they have hit themselves.

Today's Hottest Product

Name

Autism Experience Simulator

Highlight

This project uses interactive simulation to convey the day-to-day experience of autism, focusing on masking, decision fatigue, and burnout. Rather than only describing these challenges, it lets users 'experience' them through choices that change in-simulation stats. For developers, it shows how interactive simulation and simple quantitative feedback can explain complex, nuanced human experiences and build empathy.

Popular Category

AI and Machine Learning

Developer Tools

Productivity

Simulations and Education

Data Management

Popular Keyword

AI

LLM

Open Source

Developer Tool

Simulation

Data

Technology Trends

AI-powered Automation for Developers

LLM Optimization and Caching

Privacy-Focused Local AI Solutions

Interactive Simulations for Empathy and Learning

Developer Tooling Enhancement

Data Management and Accessibility

Open-Source Community Driven Development

Project Category Distribution

AI/ML Tools (35%)

Developer Productivity & Tools (30%)

Data Management (15%)

Educational/Simulation Tools (10%)

Web Development & Frameworks (5%)

Miscellaneous (5%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | Autism Experience Simulator | 612 | 688 |
| 2 | ChartDB Agent | 115 | 35 |
| 3 | Butter: LLM Muscle Memory Proxy | 22 | 11 |
| 4 | Resterm: Command-Line API Playground | 25 | 1 |
| 5 | Alloy Automation MCP: AI Agent Orchestrator | 9 | 5 |
| 6 | GoFSST: Swift Symbol Table Compression | 12 | 2 |
| 7 | Hardware Brain for LLMs | 14 | 0 |
| 8 | AI-Powered Vulnerability Discovery Engine | 10 | 1 |
| 9 | RelativisticSimEngine | 6 | 4 |
| 10 | Ocrisp: One-Click RAG Weaver | 9 | 0 |

1

Autism Experience Simulator

Author

joshcsimmons

Description

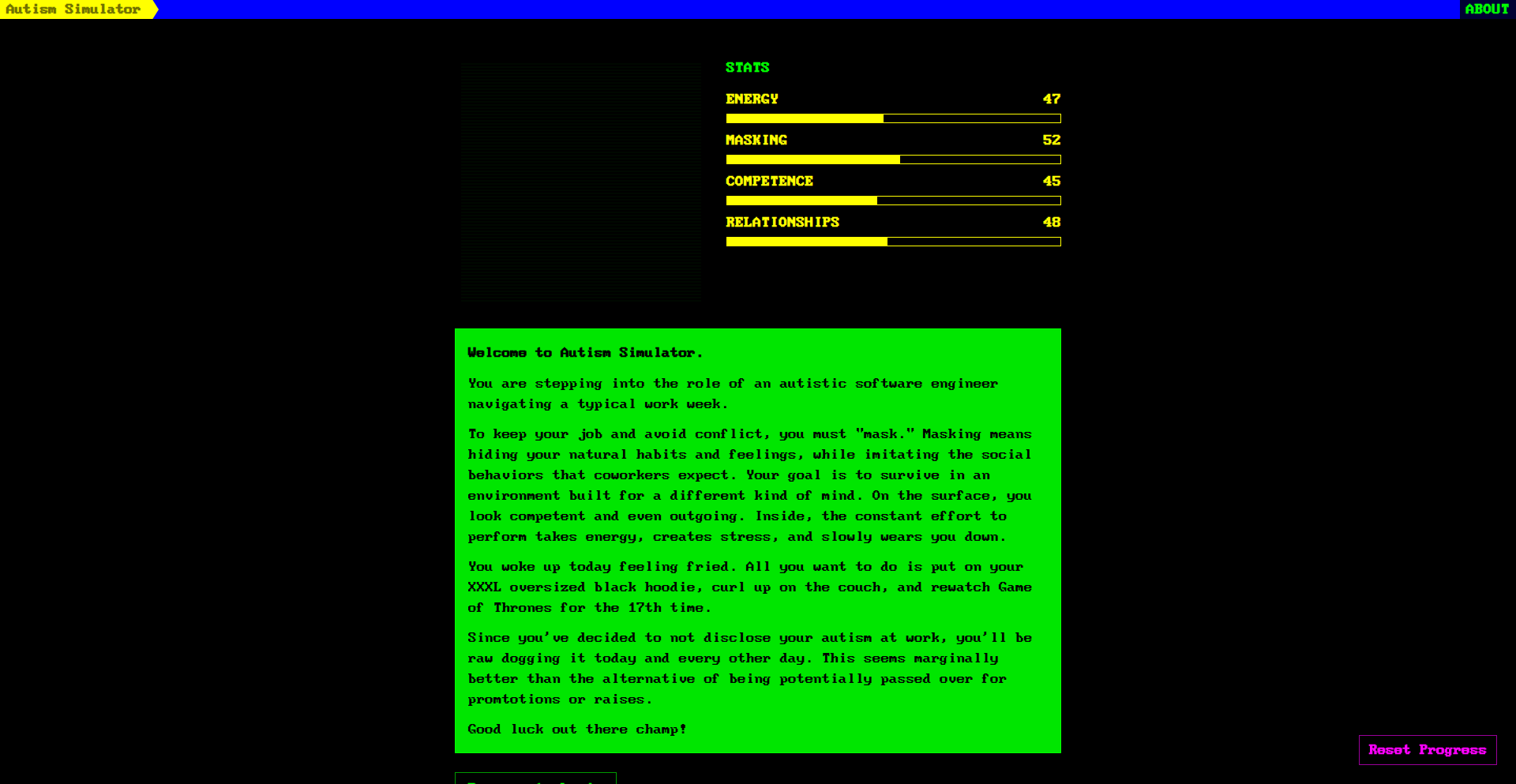

This project is a simulation designed to provide an experiential understanding of certain aspects of autistic lived experiences, focusing on the day-to-day impacts of masking, decision fatigue, and burnout. It uses interactive choices and statistics to convey these complex internal states, which are often difficult to articulate solely through words. The innovation lies in leveraging interactive simulation to bridge the gap in empathy and understanding for those unfamiliar with these challenges.

Popularity

Points 612

Comments 688

What is this product?

The Autism Experience Simulator is an interactive web application that simulates common challenges faced by autistic people. It focuses on three of them: 'masking' (hiding autistic traits to fit in), 'decision fatigue' (mental exhaustion from making many decisions), and 'burnout' (extreme exhaustion caused by prolonged stress). Users work through a series of simulated daily scenarios and choices. Their decisions change 'stats' within the simulation, which illustrates how these experiences add up over time. The core innovation is turning abstract descriptions of neurodivergent experience into a tangible, interactive format. That format builds empathy and shows the cognitive and emotional load involved. It goes beyond verbal description to offer a more visceral understanding. In doing so, it addresses how hard it is to communicate complex internal experiences to a neurotypical audience.

How to use it?

Developers can use this project for education or to build empathy within teams. It can be part of onboarding, helping new team members understand challenges their colleagues might face. For example, a development team could run through it during a diversity and inclusion training session. Working through the simulation can help team members see why certain environments or task structures are harder for some people, which can lead to more accommodating practices. It can also open discussions on workplace accommodations and communication styles, helping create a more supportive environment for neurodivergent colleagues. If the source code is published, developers could also inspect it and adapt its scenarios or output to specific educational goals.

Product Core Function

· Interactive Scenario-Based Choices: Allows users to make decisions within simulated daily life scenarios, impacting their experience and outcome. This provides a dynamic way to explore consequences and understand how small choices can contribute to larger states of fatigue or burnout.

· Statistical Feedback System: Tracks user choices and translates them into observable 'stats' representing mental load, fatigue, or burnout levels. This offers quantitative, albeit simplified, feedback on the simulated experience, making abstract feelings more concrete and understandable.

· Empathy-Building Narrative: Structures the simulation around core autistic experiences like masking, decision fatigue, and burnout, aiming to evoke an emotional and cognitive understanding in the user. This directly addresses the challenge of conveying complex internal states and fosters a sense of shared experience and empathy.

· Focus on Lived Experience Data: Incorporates insights from the author's and friends' lived experiences to ensure authenticity and relevance. This grounds the simulation in real-world challenges, making it a more impactful and credible tool for understanding.
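The choice-and-stats loop described above can be pictured as a small state update applied after each decision. This is a hypothetical illustration, not the simulator's actual code. The stat names (`energy`, `masking_load`), the `Choice` type, the rule that masking also drains energy, and all numbers are invented for the example.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Stats:
    # Hypothetical stats; the simulator's real stats and scales may differ.
    energy: int = 100       # remaining capacity for the day
    masking_load: int = 0   # cumulative effort spent masking

@dataclass(frozen=True)
class Choice:
    label: str
    energy_cost: int  # direct drain from the activity or decision itself
    masking: int      # extra masking effort the choice requires

def apply_choice(stats: Stats, choice: Choice) -> Stats:
    """Return updated stats; masking effort also drains energy (hypothetical rule)."""
    drain = choice.energy_cost + choice.masking // 2
    return Stats(
        energy=max(0, stats.energy - drain),
        masking_load=stats.masking_load + choice.masking,
    )

def is_burned_out(stats: Stats) -> bool:
    # Hypothetical threshold: burnout once energy is fully exhausted.
    return stats.energy == 0

day = [
    Choice("Join the team lunch and keep up small talk", energy_cost=20, masking=30),
    Choice("Choose from a long menu of options", energy_cost=10, masking=0),
    Choice("Work an afternoon in an open-plan office", energy_cost=25, masking=20),
]

stats = Stats()
for choice in day:
    stats = apply_choice(stats, choice)
    print(f"{choice.label}: energy={stats.energy}, masking_load={stats.masking_load}")
print("burnout" if is_burned_out(stats) else "still coping, but with little left")
```

No single choice here is overwhelming. The point the simulation makes is that the costs accumulate, so the same day that starts at 100 energy ends at 20.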

Product Usage Case

· Team Diversity and Inclusion Training: A company could use the simulator in its D&I training to help employees understand the daily challenges faced by neurodivergent colleagues, fostering a more inclusive workplace by highlighting the impact of decision fatigue on productivity and the stress of masking.

· Educational Tool for Empathy Development: Educators could use the simulator in classrooms to teach students about different perspectives and the importance of understanding invisible disabilities, helping them to better empathize with individuals who might process information or interact with the world differently.

· Software Development for Accessibility Awareness: A development team could use this project to explore the cognitive load involved in navigating complex interfaces or decision-making processes within software, informing the design of more accessible and user-friendly applications.

· Personal Reflection and Understanding: An individual could use the simulator for self-reflection, exploring their own experiences with decision-making, stress, and social interactions, potentially gaining new insights into their own mental states and coping mechanisms.

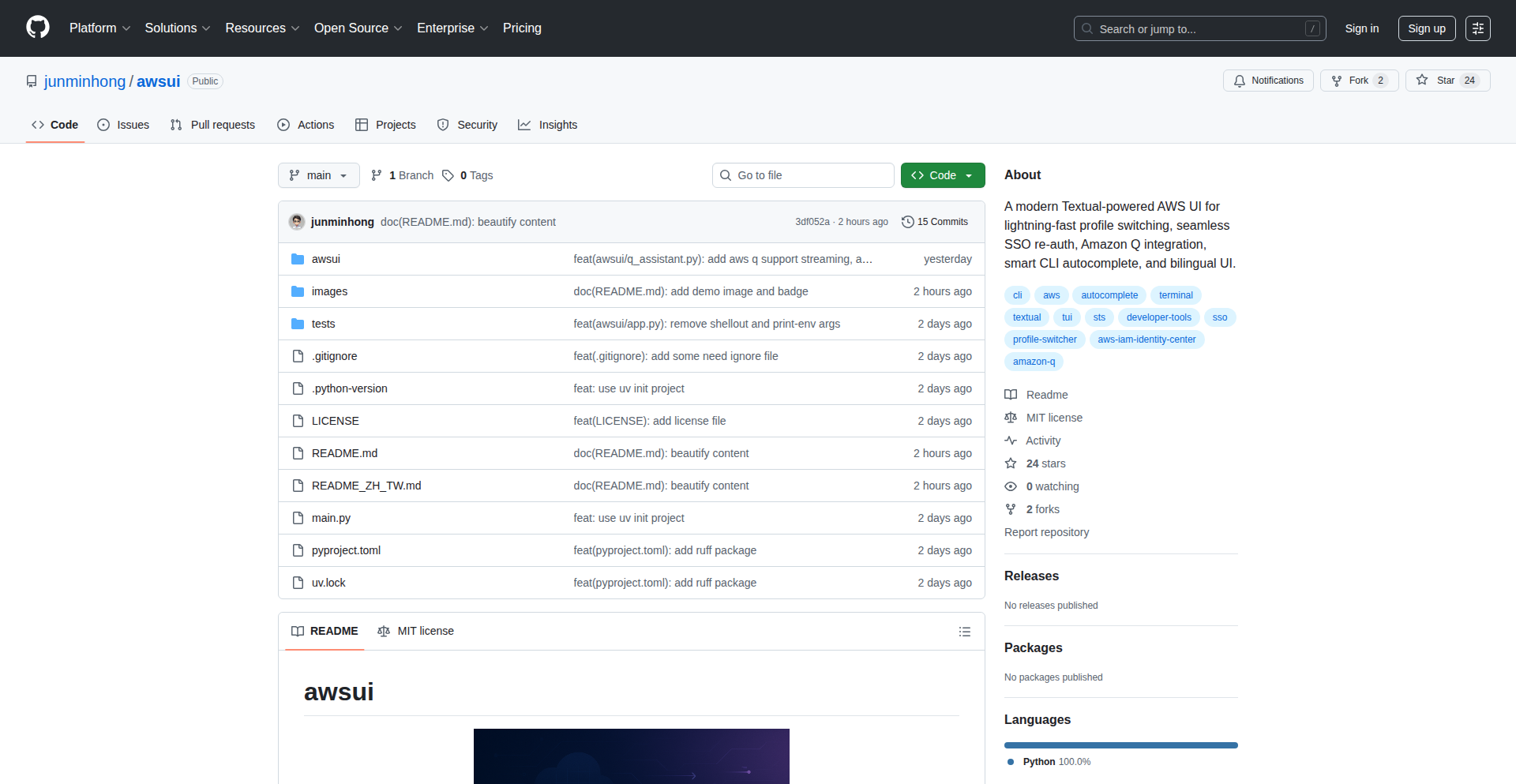

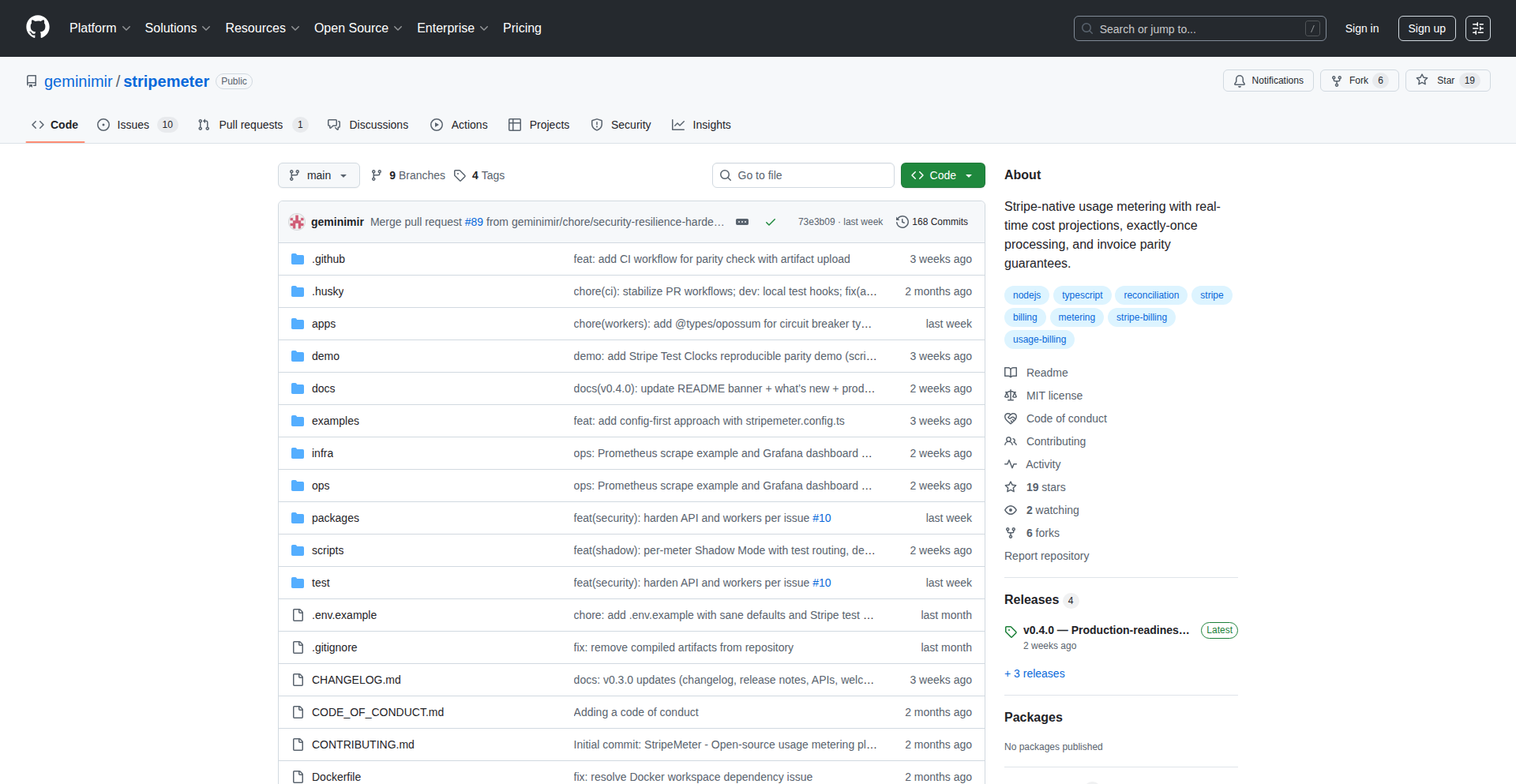

2

ChartDB Agent

Author

guyb3

Description

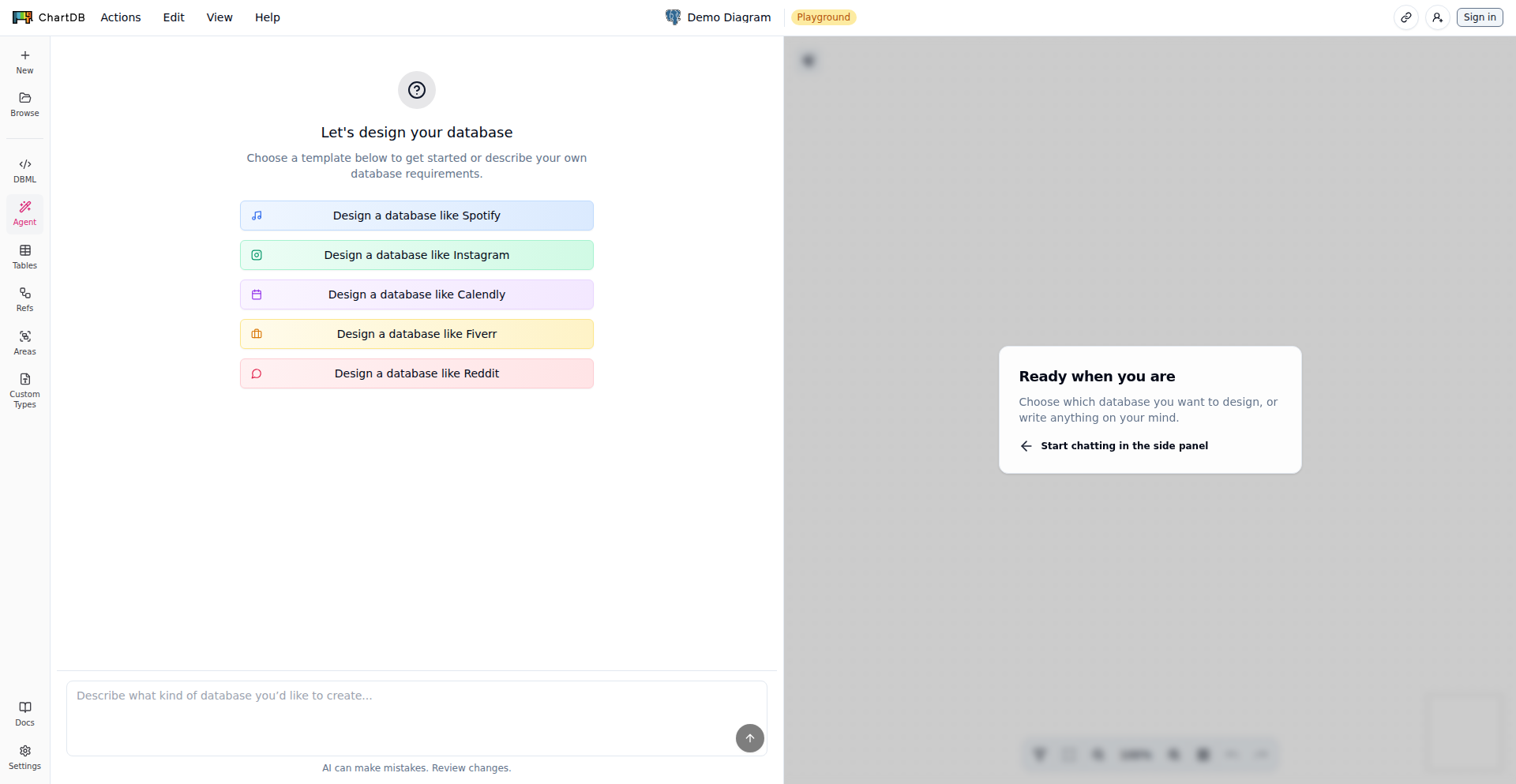

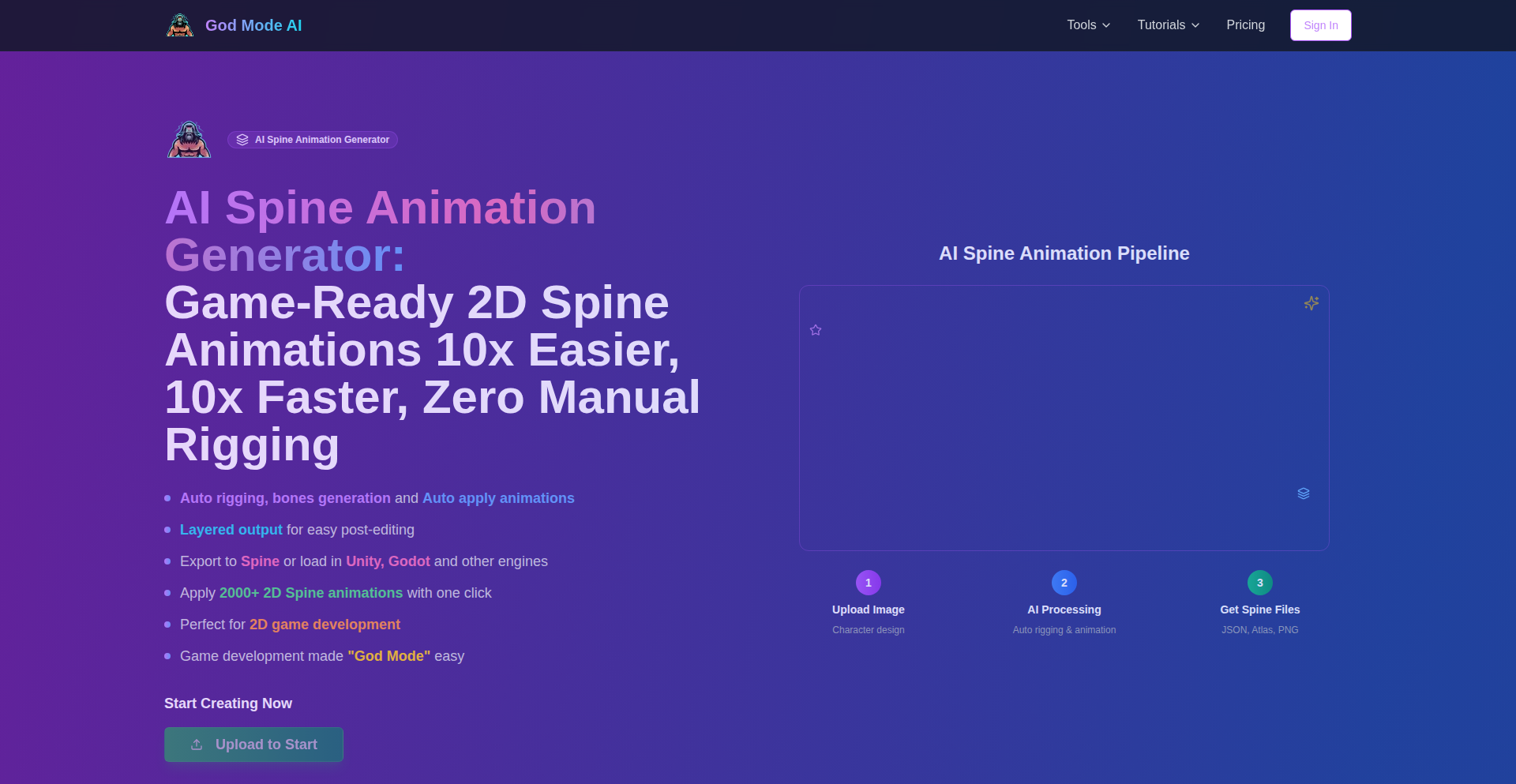

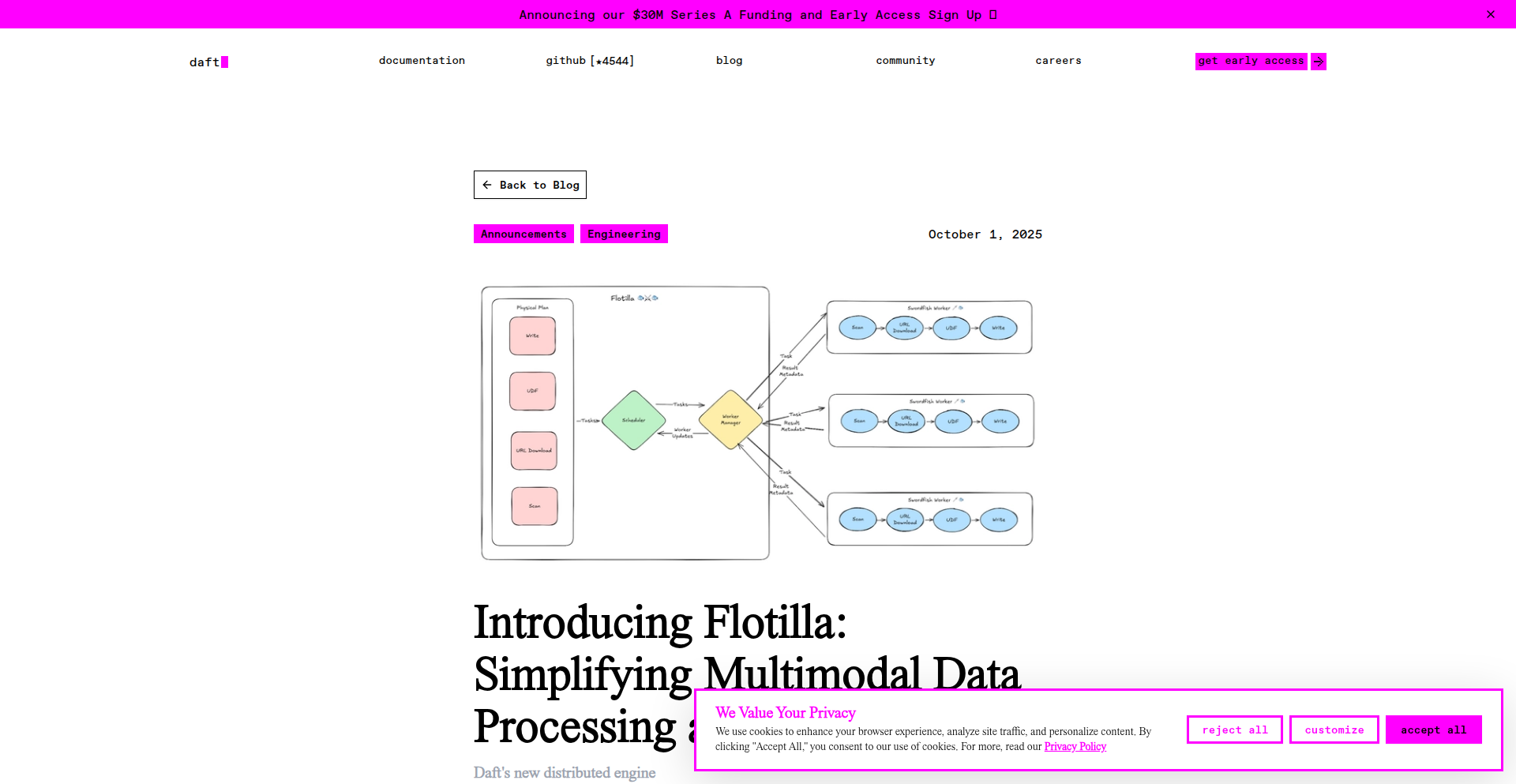

ChartDB Agent is an AI-powered tool for database schema design. Developers create and modify database structures with natural-language prompts, see the changes visualized as ER diagrams, and export SQL scripts deterministically. This replaces much of the complex manual design process, making database schema management more accessible and efficient.

Popularity

Points 115

Comments 35

What is this product?

ChartDB Agent is an intelligent assistant for database schema design. Instead of writing complex SQL or manually drawing diagrams, you describe your desired database structure in plain English. The agent uses AI to understand your requirements, generate Entity-Relationship (ER) diagrams, suggest new tables, columns, and relationships, and finally, produce precise SQL scripts for creating or altering your database. Its core innovation lies in bridging the gap between human language and database structure, leveraging AI for a more intuitive and iterative design process. Think of it as a smart co-pilot for your database architecture.

How to use it?

Developers can use ChartDB Agent in two primary ways. Firstly, through the web interface at chartdb.io/ai, where you can immediately start designing schemas from scratch by typing descriptions. This is ideal for rapid prototyping and exploring database ideas without any setup. Secondly, for ongoing projects, you can sign up and integrate the agent with your existing database. This allows you to generate schemas from your current DB structure, make AI-assisted schema changes, and then export the updated SQL scripts. The agent integrates seamlessly into the development workflow, acting as an intelligent layer on top of standard database design practices.

Product Core Function

· Generate database schemas from natural language descriptions: This function uses AI to interpret plain English requests, such as 'Create a blog database with tables for users, posts, and comments', and translates them into a visual ER diagram and SQL. The value is in drastically reducing the time and expertise needed for initial database setup.

· AI-assisted schema brainstorming: The agent can suggest new tables, columns, and relationships based on your existing schema or descriptions, helping developers discover optimal database structures they might not have considered. This fosters creativity and leads to more robust designs.

· Visual ER diagram iteration: Users can see their database design come to life in an interactive ER diagram. This visual feedback loop makes it easy to understand complex relationships and make adjustments on the fly. The value is in improved clarity and reduced errors in schema design.

· Deterministic SQL script export: Once the schema is designed or modified, the agent generates accurate and consistent SQL scripts. This ensures that the database can be reliably created or updated, saving developers from manual SQL coding and potential syntax errors.
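To make "deterministic export" concrete: given the same schema model, an exporter should emit byte-identical SQL every time, regardless of the order in which tables were created or edited. The sketch below is not ChartDB's exporter. It assumes a hypothetical in-memory schema (`Table`, `Column`, `ForeignKey`) and PostgreSQL-style DDL. It gets determinism by sorting tables by name and adding foreign keys in a second pass, so table creation order never matters.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class Column:
    name: str
    sql_type: str
    primary_key: bool = False
    nullable: bool = True

@dataclass(frozen=True)
class ForeignKey:
    column: str
    ref_table: str
    ref_column: str

@dataclass
class Table:
    name: str
    columns: list[Column]
    foreign_keys: list[ForeignKey] = field(default_factory=list)

def export_sql(tables: list[Table]) -> str:
    """Emit DDL deterministically: the same schema always yields the same script."""
    statements = []
    # Sort tables by name so the input order does not affect the output.
    for table in sorted(tables, key=lambda t: t.name):
        col_defs = []
        for col in table.columns:  # declared column order is meaningful, so keep it
            parts = [col.name, col.sql_type]
            if col.primary_key:
                parts.append("PRIMARY KEY")
            elif not col.nullable:
                parts.append("NOT NULL")
            col_defs.append("  " + " ".join(parts))
        statements.append(f"CREATE TABLE {table.name} (\n" + ",\n".join(col_defs) + "\n);")
    # Add foreign keys after all tables exist, again in sorted order.
    for table in sorted(tables, key=lambda t: t.name):
        for fk in sorted(table.foreign_keys, key=lambda f: f.column):
            statements.append(
                f"ALTER TABLE {table.name} ADD FOREIGN KEY ({fk.column}) "
                f"REFERENCES {fk.ref_table} ({fk.ref_column});"
            )
    return "\n\n".join(statements) + "\n"

# The blog example from the first bullet: users, posts, and comments.
users = Table("users", [Column("id", "INTEGER", primary_key=True),
                        Column("email", "TEXT", nullable=False)])
posts = Table("posts", [Column("id", "INTEGER", primary_key=True),
                        Column("user_id", "INTEGER", nullable=False),
                        Column("title", "TEXT", nullable=False)],
              [ForeignKey("user_id", "users", "id")])
comments = Table("comments", [Column("id", "INTEGER", primary_key=True),
                              Column("post_id", "INTEGER", nullable=False),
                              Column("body", "TEXT")],
                 [ForeignKey("post_id", "posts", "id")])

script = export_sql([users, posts, comments])
print(script)
```

Reordering the input list leaves `script` unchanged, which is what makes the output safe to diff and commit.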

Product Usage Case

· A startup founder needs to quickly design a database for a new web application. Instead of hiring a dedicated database administrator or spending hours learning SQL schema design, they use ChartDB Agent to describe their app's core entities (users, products, orders) in plain English. The agent generates an ER diagram and SQL script within minutes, allowing them to launch their Minimum Viable Product (MVP) faster.

· A seasoned developer is refactoring a legacy database schema. They import the current schema into ChartDB Agent, then use natural-language prompts such as 'Add a table for customer reviews linked to products' and 'Store user passwords as salted hashes rather than plain text'. The agent suggests optimizations and generates the SQL for the changes, sparing them extensive manual schema mapping and coding.

· A student is learning database concepts and wants to experiment with different schema designs for a project. They use ChartDB Agent's free online tool to rapidly prototype various database models for a library or a movie database. This hands-on, low-barrier-to-entry approach accelerates their learning curve and understanding of relational database principles.

3

Butter: LLM Muscle Memory Proxy

Author

edunteman

Description

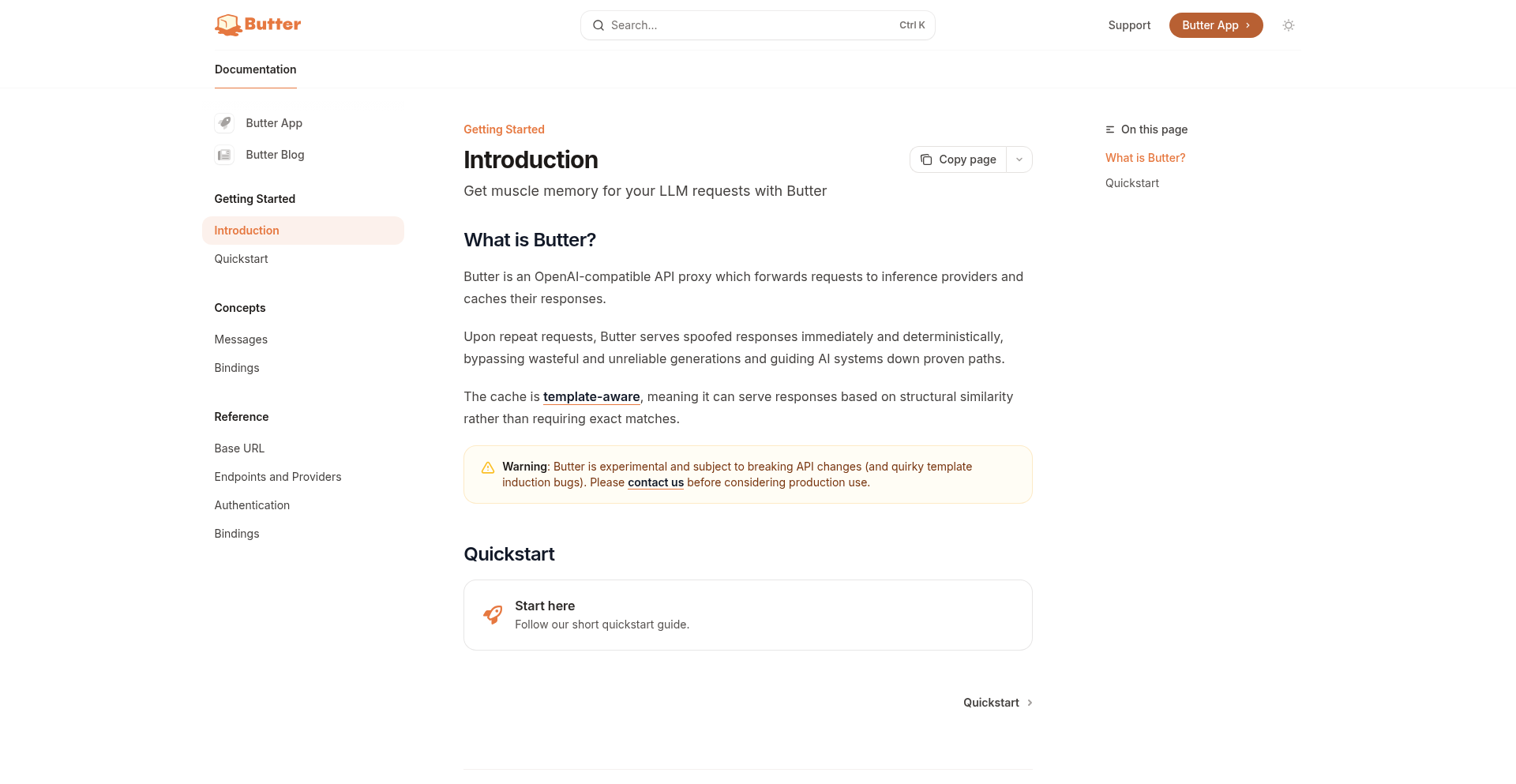

Butter is an OpenAI-compatible API proxy that caches Large Language Model (LLM) generations. Its core idea is 'template-aware caching': it aims to recognize requests that share the same underlying structure, even when prompt details vary slightly, and reuse cached responses for them. This cuts redundant LLM calls, lowering costs and response times, especially for automated systems and agents that perform similar tasks repeatedly. For developers, that means LLM-powered applications that are faster and cheaper to run.

Popularity

Points 22

Comments 11

What is this product?

Butter sits between your application and an LLM provider such as OpenAI. Instead of forwarding every prompt for a fresh answer, it first checks whether it has already seen a sufficiently similar request. If so, it returns the stored answer immediately, acting as a memory. The 'template-aware' part means recognizing when two prompts with different specific details share the same underlying structure. Doing that automatically is considerably harder than matching identical strings. So, the project optimizes LLM usage by recalling past answers instead of recomputing them, which saves both time and money.

How to use it?

Developers integrate Butter by sending their API calls to the Butter proxy instead of directly to the LLM provider. In Python, for example, you would configure your OpenAI-compatible client library to use Butter's API endpoint. Butter suits applications that make repetitive LLM calls:

- chatbots that answer frequently asked questions
- content pipelines that produce similar articles
- agent systems that automate routine tasks

The proxy handles the caching logic automatically. Adopting it means changing the API endpoint configuration, not rewriting the application's core logic.
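Because the proxy speaks the OpenAI API, switching to it is in principle just a change of base URL. The standard-library sketch below builds (but does not send) an OpenAI-style `POST /chat/completions` request against either base URL. `BUTTER_BASE_URL` is a placeholder, not Butter's real endpoint, and the model name is illustrative. Which API key the proxy expects is an assumption to check against Butter's documentation. With the official `openai` Python package, the equivalent is passing `base_url=` when constructing the `OpenAI` client.

```python
import json
import urllib.request

OPENAI_BASE_URL = "https://api.openai.com/v1"
BUTTER_BASE_URL = "https://butter-proxy.example/v1"  # placeholder, not Butter's real URL

def build_chat_request(base_url: str, api_key: str, model: str,
                       messages: list[dict]) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for any compatible base URL."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=body,
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )

messages = [{"role": "user", "content": "Summarize our refund policy."}]
direct = build_chat_request(OPENAI_BASE_URL, "YOUR_API_KEY", "gpt-4o-mini", messages)
proxied = build_chat_request(BUTTER_BASE_URL, "YOUR_API_KEY", "gpt-4o-mini", messages)

# Only the destination changes; the payload the application sends is identical.
print(direct.full_url)   # https://api.openai.com/v1/chat/completions
print(proxied.full_url)  # https://butter-proxy.example/v1/chat/completions
# urllib.request.urlopen(proxied) would actually send the request.
```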

Product Core Function

· OpenAI-compatible API proxy: This means you can use Butter with existing tools and libraries designed for OpenAI's API, making integration easy. The value is seamless adoption and compatibility with your current tech stack.

· LLM response caching: Butter stores previously generated LLM responses. This reduces the need to call the LLM again for identical or very similar prompts. The value is significant cost savings on API calls and faster retrieval of responses.

· Template-aware caching: This is the key innovation. Beyond exact prompt matching, Butter aims to recognize prompts that share the same underlying structure, such as prompts generated from one template with different variable values, and serve them from its cache. The value is a higher cache hit rate than exact matching alone can achieve.

· Deterministic replay for agent systems: For automated agents, this ensures that if an agent encounters the same scenario multiple times, it will produce the same output and take the same actions based on cached LLM responses. The value is predictability and reliability in automated workflows.

· Open-access and free to use: Currently, Butter is freely available for developers to experiment with. The value is that anyone can try it out without financial commitment, helping to identify edge cases and improve the product.
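The basic caching and deterministic-replay behavior fits in a few lines if template awareness is left out. The sketch keys each request on a canonical serialization of its fields, calls the upstream model only on a cache miss, and replays the stored response on a hit. It is a simplified exact-match sketch, not Butter's implementation. `fake_llm` is a hypothetical stand-in for a real upstream call, and it deliberately returns a different answer on every call so the replay is visible.

```python
import hashlib
import json
from typing import Callable

class ResponseCache:
    """Exact-match LLM response cache: identical requests replay identical responses."""

    def __init__(self, upstream: Callable[[dict], str]):
        self.upstream = upstream
        self.store: dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def key(request: dict) -> str:
        # sort_keys makes the key independent of dict key order, including nested dicts.
        canonical = json.dumps(request, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def complete(self, request: dict) -> str:
        k = self.key(request)
        if k in self.store:
            self.hits += 1
            return self.store[k]  # replay: no upstream call
        self.misses += 1
        response = self.upstream(request)
        self.store[k] = response
        return response

calls = []

def fake_llm(request: dict) -> str:
    # Hypothetical upstream that answers differently on every call.
    calls.append(request)
    return f"response #{len(calls)}"

cache = ResponseCache(fake_llm)
req = {"model": "some-model", "messages": [{"role": "user", "content": "List open invoices"}]}
first = cache.complete(req)
again = cache.complete({"messages": req["messages"], "model": "some-model"})  # same request
print(first, again, cache.hits, cache.misses)  # response #1 response #1 1 1
```

Although the upstream is nondeterministic, the cached path is not, which is the property agent systems rely on for replay. Deciding when a cached entry is safe to reuse for a *different* request is the harder problem that template-aware caching targets.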

Product Usage Case

· Automated customer support chatbots: Instead of repeatedly generating answers to common FAQs, Butter can cache these responses. When a user asks a similar question, Butter serves the cached answer instantly, improving response times and reducing LLM costs. This directly addresses the problem of high API usage for repetitive queries.

· Content generation pipelines: For applications that generate product descriptions, social media posts, or similar content based on templates, Butter can cache and reuse responses for structurally identical requests. For instance, generating descriptions for different products that follow the same format. This solves the issue of redundant generation calls for routine content creation.

· LLM-powered research and analysis agents: When agents perform iterative tasks like summarizing multiple documents or extracting specific data points, Butter can cache intermediate results. This prevents re-computation if the agent revisits a similar analysis step, making complex research workflows more efficient. This is useful for improving the performance of data analysis tasks.

· Game development for NPC dialogue: If non-player characters (NPCs) in a game need to generate dialogue based on specific game states or player interactions, Butter can cache common dialogue lines. This ensures consistent and faster responses from NPCs, enhancing the player experience. This solves the challenge of generating dynamic yet performant NPC interactions.

4

Resterm: Command-Line API Playground

Author

unkn0wn_root

Description

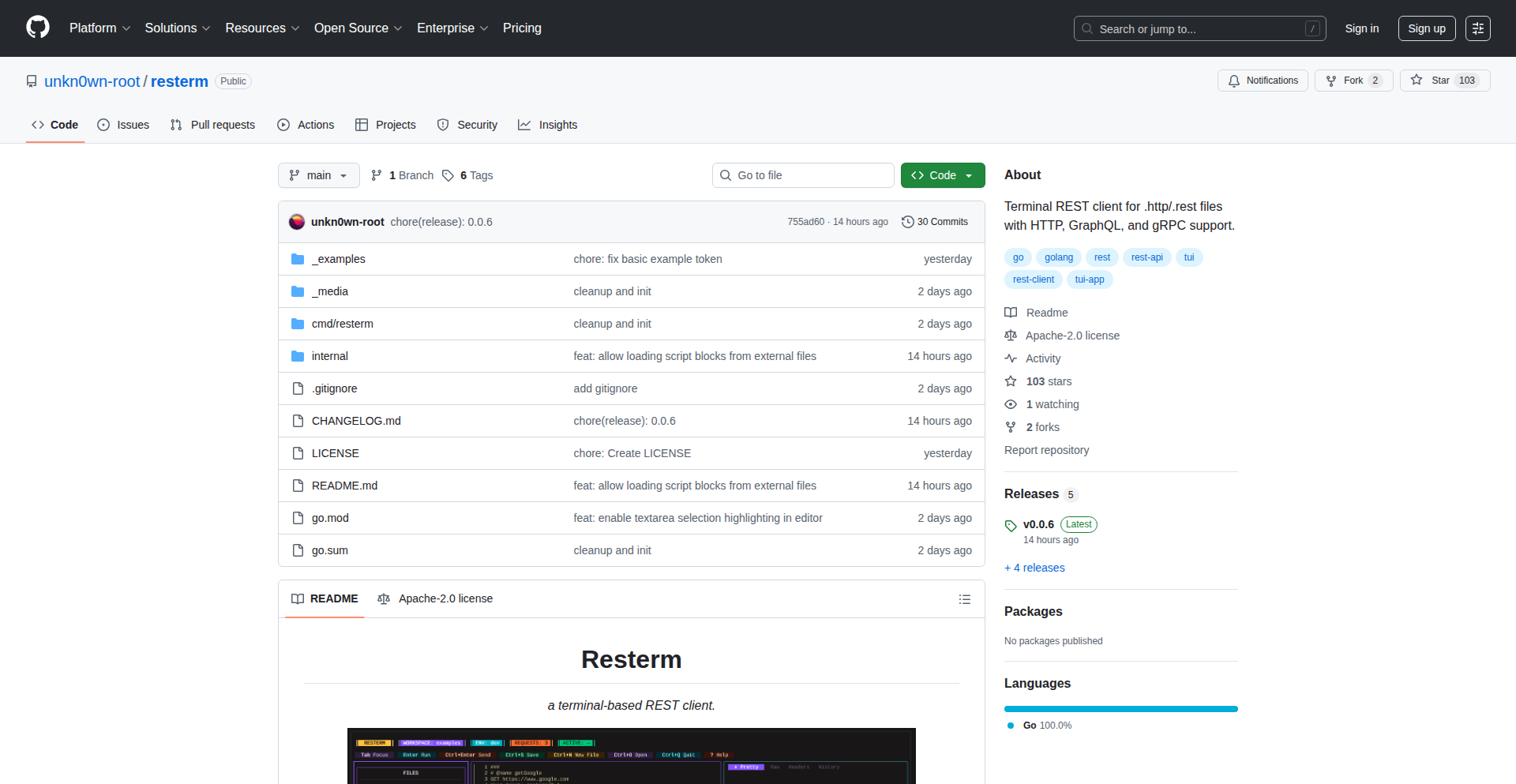

Resterm is a terminal-based client for interacting with REST, GraphQL, and gRPC APIs. It allows developers to send requests, inspect responses, and manage API interactions directly from their command line, offering a focused and efficient alternative to GUI tools for API testing and development. The innovation lies in consolidating these diverse API protocols into a single, keyboard-driven interface, streamlining the workflow for backend developers and API consumers.

Popularity

Points 25

Comments 1

What is this product?

Resterm is a terminal-based application for working with three kinds of APIs:

- REST, for typical web services
- GraphQL, for flexible, client-specified data fetching
- gRPC, for high-performance, efficient communication

Instead of juggling browser tabs or separate GUI applications, you do all your API testing and exploration in one place in the terminal. Its core innovation is a consistent, keyboard-centric experience across these different API paradigms. That makes it faster to test, debug, and understand how your APIs behave, with less context switching. So, what's in it for you? You can test endpoints, inspect the returned data, and adjust requests without leaving the terminal, which is often where you are already coding.

How to use it?

Developers install Resterm on their system (for example, as a standalone binary), launch it from the terminal, and start defining API requests. What a request contains depends on the protocol:

- REST: the URL, HTTP method (GET, POST, etc.), headers, and body
- GraphQL: the query or mutation and its variables
- gRPC: the service, method, and message payload

Resterm sends the request to the API server and displays a well-formatted, readable response in the terminal. It also supports saved requests, history, and environment variables, so it fits both quick ad-hoc testing and more structured API development. So, how can you use it? Suppose you have just built a new API endpoint. Instead of opening Postman or Insomnia, you start Resterm, type out the request, and see the result immediately. If your team uses gRPC for internal services, Resterm lets you explore and test them without setting up any GUI tools. This makes it well suited to quick checks, integration testing, and even scripting API interactions.

Product Core Function

· Unified API Interaction: Allows sending requests to REST, GraphQL, and gRPC APIs from a single interface, reducing the need for multiple tools and simplifying workflows. The value here is increased developer efficiency and reduced cognitive load.

· Keyboard-Driven Interface: Provides a highly efficient, keyboard-centric experience for composing requests and navigating responses, catering to developers who prefer speed and minimal mouse usage. This translates to faster API testing and debugging.

· Interactive Response Display: Presents API responses in a clear, organized, and often syntax-highlighted format within the terminal, making it easy to inspect data and identify issues. This helps developers quickly understand what their API is returning.

· Request Management: Supports saving and recalling API requests, managing environments (e.g., different API URLs for development vs. production), and organizing collections of requests. This enables better organization and reproducibility of API testing.

· Protocol Agnostic Design: Built to handle the nuances of different API protocols, abstracting away the complexities and presenting a consistent user experience. The value is in its versatility and ability to adapt to various backend technologies.
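Part of what makes a unified client feasible is that GraphQL over HTTP is usually just a JSON `POST` carrying `query` and `variables`. REST and GraphQL requests can therefore share one request model. gRPC runs over HTTP/2 with Protocol Buffers payloads, needs a separate transport, and is omitted here. The sketch also shows per-environment variable substitution, as in the request-management feature above. It is a hypothetical illustration, not Resterm's file format or internals. `ApiRequest`, `render`, the `{{name}}` placeholder syntax, and the example URLs are all assumptions.

```python
import json
import re
from dataclasses import dataclass, field
from typing import Optional

PLACEHOLDER = re.compile(r"\{\{(\w+)\}\}")  # hypothetical {{name}} syntax

@dataclass
class ApiRequest:
    method: str
    url: str
    headers: dict[str, str] = field(default_factory=dict)
    body: Optional[str] = None

def substitute(text: str, env: dict[str, str]) -> str:
    """Replace {{name}} placeholders from the active environment (KeyError if undefined)."""
    return PLACEHOLDER.sub(lambda m: env[m.group(1)], text)

def render(req: ApiRequest, env: dict[str, str]) -> ApiRequest:
    """Produce a concrete request for one environment, e.g. dev or prod."""
    return ApiRequest(
        method=req.method,
        url=substitute(req.url, env),
        headers={k: substitute(v, env) for k, v in req.headers.items()},
        body=substitute(req.body, env) if req.body is not None else None,
    )

def graphql(url: str, query: str, variables: Optional[dict] = None) -> ApiRequest:
    """GraphQL over HTTP: a JSON POST with 'query' and 'variables'."""
    payload = {"query": query, "variables": variables or {}}
    return ApiRequest("POST", url, {"Content-Type": "application/json"}, json.dumps(payload))

envs = {
    "dev": {"base": "http://localhost:8080", "token": "dev-token"},
    "prod": {"base": "https://api.example.com", "token": "prod-token"},
}
get_user = ApiRequest("GET", "{{base}}/users/42", {"Authorization": "Bearer {{token}}"})
find_user = graphql("{{base}}/graphql", "query($id: ID!) { user(id: $id) { name } }", {"id": "42"})

for name, env in envs.items():
    print(name, render(get_user, env).url, render(find_user, env).url)
```

Saving the unrendered templates and switching only the environment is what lets a team share one request collection across dev and production.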

Product Usage Case

· During backend development, a developer can use Resterm to quickly test newly implemented REST endpoints without leaving their primary coding environment. They can craft POST requests with JSON payloads and immediately inspect the 200 OK or error responses, accelerating the inner development loop.

· A frontend developer integrating with a GraphQL API can use Resterm to explore the available schema, formulate complex queries to fetch specific data, and verify the response structure before writing the client-side code. This proactive testing prevents integration bugs.

· A DevOps engineer or SRE can use Resterm to probe the health and functionality of gRPC microservices in a production environment directly from the command line. This allows for rapid troubleshooting of distributed systems without requiring a graphical session.

· When collaborating on an API project, team members can share Resterm request files or configurations, ensuring everyone is testing against the same endpoints and with the same parameters, leading to more consistent development and testing outcomes.

5

Alloy Automation MCP: AI Agent Orchestrator

Author

mnadel

Description

Alloy Automation MCP is a platform that gives AI agents structured access to business-critical systems through the Model Context Protocol (MCP). Instead of building each integration by hand, developers get pre-built MCP servers for thousands of tools across platforms such as QuickBooks, Xero, Notion, HubSpot, and Salesforce. AI agents can work with these tools directly, which speeds up development and deployment of AI-powered applications. For more granular control, the Connectivity API offers programmatic access to the same tools for custom integrations. Security rests on scoped authentication and a credential management system that handles secrets independently. So, what's the value to you? It greatly simplifies connecting your AI to the tools your business relies on. That saves significant integration time and lets your AI perform real-world tasks with confidence.

Popularity

Points 9

Comments 5

What is this product?

Alloy Automation MCP is a middleware solution that acts as a bridge between AI agents and traditional business software. Imagine AI agents as smart assistants that can perform tasks. Before MCP, connecting these assistants to tools like your accounting software (QuickBooks) or CRM (Salesforce) was a massive engineering challenge, requiring custom code for each connection. MCP solves this by providing pre-configured 'servers' for thousands of these tools. Think of it like having universal adapters for your AI's devices. The innovation lies in abstracting away the complexity of APIs and data formats for each individual tool, presenting a unified and structured way for AI agents to interact with them. This is achieved through a combination of server-side logic that understands the nuances of each business application and a secure credential management system that handles authentication without exposing sensitive information. So, what's the value to you? It democratizes access to powerful business tools for AI, allowing you to build sophisticated AI applications that can actually *do* things in your business, without needing to become an expert in the API of every single tool you use.

How to use it?

Developers can use Alloy Automation MCP in two primary ways: through the MCP platform or the Connectivity API.

For a quicker integration, developers can log into the MCP platform (ai.runalloy.com), select the business tools their AI agents need to interact with (e.g., QuickBooks for expense tracking, HubSpot for lead management), and provision a dedicated MCP server. This server then acts as the secure gateway for their AI agents. They can then configure their AI agents to send and receive data to/from these systems through a defined interface. For example, an AI might request to create an invoice in QuickBooks, and MCP handles the translation and secure transmission of that request.

For more advanced or bespoke integrations, developers can leverage the Connectivity API. This API provides programmatic access to the same underlying infrastructure, allowing developers to build custom integrations that go beyond the standard MCP offerings. This is ideal when you need fine-grained control or are connecting to a niche tool not yet covered by MCP servers.

In both cases, the core benefit is that you're not writing low-level API integration code yourself. You're orchestrating higher-level interactions. So, what's the value to you? It allows you to rapidly integrate AI capabilities into your existing workflows, whether you prefer a guided experience or need the flexibility of custom development, dramatically accelerating your AI project timelines.
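Under the hood, MCP clients and servers exchange JSON-RPC 2.0 messages. An agent invokes a tool with a `tools/call` request that names the tool and supplies its arguments. The sketch below only constructs such a message; the transport (stdio or HTTP) is not shown. The tool name `quickbooks_create_invoice` and its argument shape are hypothetical. In practice, tool names and input schemas come from the server's `tools/list` response.

```python
import itertools
import json

_ids = itertools.count(1)  # JSON-RPC request ids, unique per client session

def tool_call(name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_ids),  # lets the client match the server's response to this request
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    return json.dumps(message)

# Hypothetical tool and arguments, for illustration only.
msg = tool_call(
    "quickbooks_create_invoice",
    {"customer_id": "C-1001", "lines": [{"description": "Consulting", "amount": 1200.00}]},
)
print(msg)
```

The agent only expresses *what* it wants done. Translating that into the accounting system's own API calls, with the right credentials, is the server's job.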

Product Core Function

· Structured access to thousands of business tools: This provides a standardized way for AI agents to communicate with applications like CRMs, accounting software, and project management tools, abstracting away the complexities of individual APIs. The value is in enabling AI to perform tasks like creating contacts, updating records, or retrieving data without needing custom code for each interaction.

· Pre-built MCP servers for common business systems: This offers ready-to-use connectivity modules for popular platforms such as QuickBooks, Xero, Notion, HubSpot, and Salesforce, significantly reducing development time and effort. The value is in getting your AI connected to essential business infrastructure almost immediately.

· Connectivity API for custom integrations: This grants developers programmatic control over the same tool access, allowing for tailored solutions and integration with less common or highly specific business applications. The value lies in offering flexibility for complex or unique integration needs, empowering developers to build bespoke AI functionalities.

· Scoped authentication and credential management: This ensures that AI agents only have access to the necessary permissions and that sensitive credentials are securely managed and isolated, enhancing security and reducing risk. The value is in providing a safe and reliable way to connect AI to your business data without compromising security.
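Scoped authentication can be pictured as a check that runs before any tool executes. Each agent grant carries a set of allowed scopes, and a call proceeds only if the tool's required scope is in that set. The underlying secret is looked up server-side and never handed to the agent. This is a generic, hypothetical sketch, not Alloy's credential system. `AgentGrant`, the scope strings, the tool-to-scope mapping, and the in-memory `_VAULT` are all invented for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AgentGrant:
    agent_id: str
    scopes: frozenset[str]

# Hypothetical mapping from tool name to the scope it requires.
REQUIRED_SCOPE = {
    "hubspot_create_contact": "hubspot:contacts:write",
    "hubspot_get_contact": "hubspot:contacts:read",
    "quickbooks_create_invoice": "quickbooks:invoices:write",
}

# Hypothetical server-side secret store, keyed by integration; agents never see it.
_VAULT = {"hubspot": "hs-secret", "quickbooks": "qb-secret"}

class ScopeError(PermissionError):
    pass

def authorize(grant: AgentGrant, tool: str) -> str:
    """Return the integration credential (for server-side use) if the grant allows the call."""
    scope = REQUIRED_SCOPE.get(tool)
    if scope is None or scope not in grant.scopes:
        raise ScopeError(f"{grant.agent_id} may not call {tool}")
    integration = scope.split(":", 1)[0]
    return _VAULT[integration]

sales_bot = AgentGrant("sales-bot", frozenset({"hubspot:contacts:read", "hubspot:contacts:write"}))
authorize(sales_bot, "hubspot_create_contact")  # allowed
try:
    authorize(sales_bot, "quickbooks_create_invoice")
except ScopeError as err:
    print(err)  # sales-bot may not call quickbooks_create_invoice
```

Denying unknown tools by default (`scope is None`) keeps a newly added tool unreachable until someone explicitly grants it.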

Product Usage Case

· An AI sales assistant that can automatically create new leads in HubSpot by parsing incoming emails, then trigger a follow-up task in Salesforce for the sales team. This solves the problem of manual data entry and ensures timely follow-up, boosting sales efficiency.

· An AI accounting clerk that can automatically pull invoice data from a scanned document, create a new invoice in QuickBooks or Xero, and then update the project management tool (like Notion) with the billing status. This streamlines the invoicing process, reduces errors, and improves financial tracking.

· A customer support AI that can access a customer's history in Zendesk, pull relevant order details from an e-commerce platform, and then generate a personalized response or ticket update. This empowers the AI to provide more informed and efficient customer service, enhancing customer satisfaction.

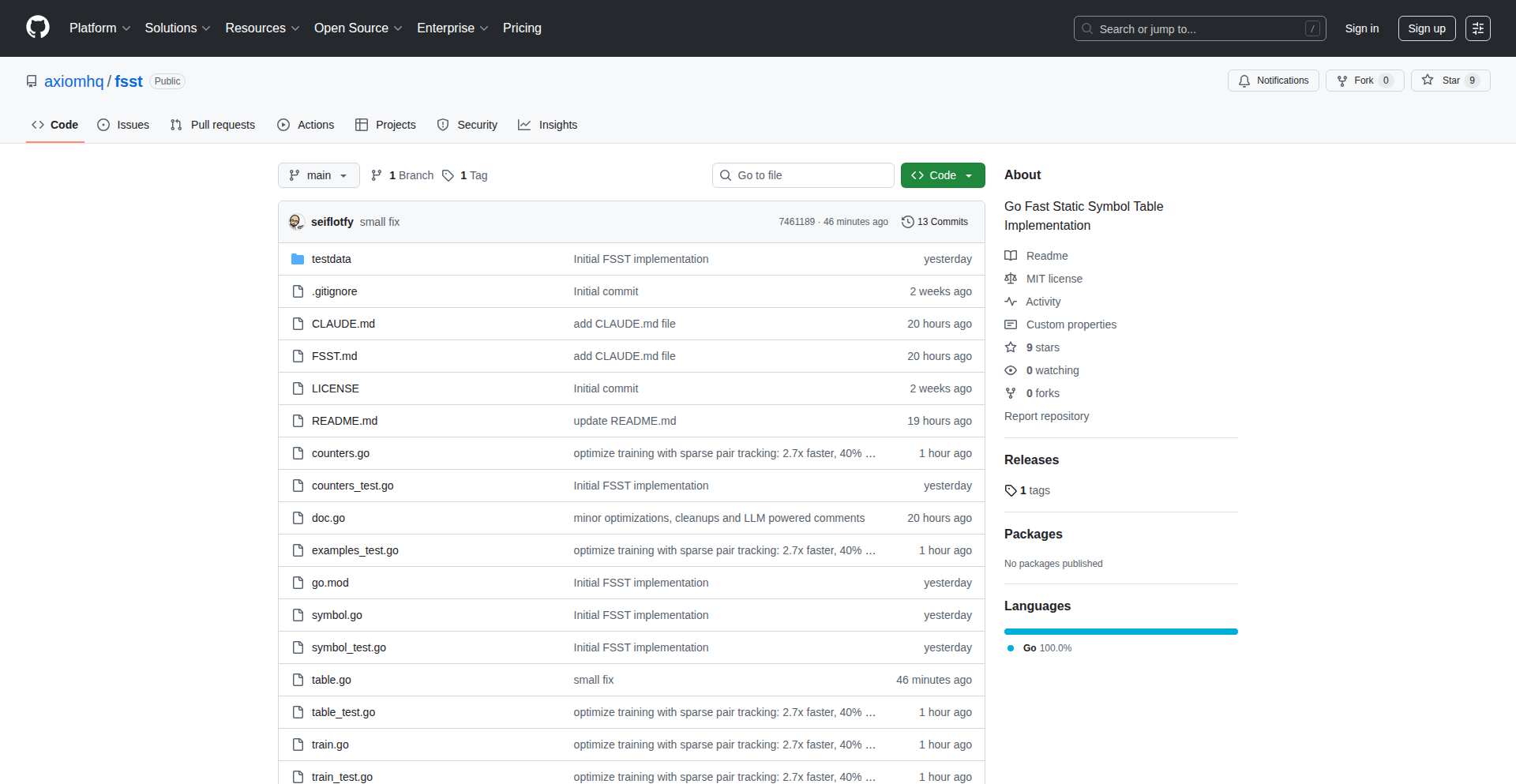

6

GoFSST: Swift Symbol Table Compression

Author

seiflotfy

Description

This project is a Go implementation of FSST (Fast Static Symbol Table) compression. FSST targets collections of many short strings with recurring substrings, such as log lines and JSON values. It replaces frequently occurring byte sequences with one-byte codes drawn from a fixed ('static') symbol table. Because each string is compressed on its own, any individual string can be decompressed quickly without touching the rest. The result is smaller storage and fast retrieval, which suits workloads where both data volume and access speed matter.

Popularity

Points 12

Comments 2

What is this product?

GoFSST is a Go library that implements the FSST algorithm for compressing strings. FSST builds a symbol table: a dictionary of up to 255 frequently occurring byte sequences, each 1 to 8 bytes long, such as common words, key names, or fragments of them. Each occurrence of a symbol in the input is replaced by a one-byte code. Bytes not covered by any symbol are stored through an escape code followed by the literal byte. The 'static' part means the table is built once, from a sample of the data, and then stays fixed, which keeps lookups very fast. The 'fast' part refers to the speed of both compression and decompression. So, for developers, this means storing more text in less space and retrieving it quickly. That matters for applications handling large amounts of text, such as application logs or structured JSON data.

How to use it?

Developers integrate GoFSST into their Go applications by importing the library. They typically first 'train' the compressor on a sample of the strings they expect to encounter, such as representative log lines or JSON records. Training builds the symbol table. Once trained, the library encodes new strings into compact representations and decodes those representations back into the original form. This is useful for custom logging systems that archive data more efficiently, or for shrinking large JSON datasets before sending them over a network or storing them in a database. Integration involves a few straightforward API calls.

Product Core Function

· Symbol Table Training: This function allows you to feed the library a collection of strings that frequently appear in your data. The library analyzes these strings to build an optimized, compact dictionary. This is valuable because it creates the foundation for efficient compression, ensuring that the most common patterns in your data are represented in the smallest possible way.

· Encoding Strings: Once the symbol table is trained, this function scans your raw text strings and replaces each matched symbol with a one-byte code. This directly reduces storage requirements, so you can store more information in the same disk space or send less data over the network.

· Decoding Strings: This function reverses the encoding process, taking the compact codes and reconstructing the original text strings. This is crucial for retrieving your data for analysis or display without any loss of information. The speed of decoding is a key benefit, allowing quick access to your compressed data.

· Compression and Decompression Helpers: The library provides utilities that simplify the process of compressing entire files or data streams and decompressing them back. This makes it easy to apply GoFSST to existing workflows, such as compressing log files before archiving or decompressing data received over a network.

Product Usage Case

· Log Data Compression: Imagine an application that generates gigabytes of logs daily. With GoFSST, developers can compress these logs on the fly or during archival. This reduces storage costs, and searching historical logs stays fast because the compressed data is smaller and each line can be decompressed independently and quickly. For example, the fragments that recur across thousands of similar error messages (log levels, module names, common words) are each stored as a single one-byte code.

· JSON Data Optimization: With large JSON payloads, especially in microservices or IoT scenarios, transmission and storage can become bottlenecks. GoFSST can compress repetitive field names and common string values within JSON data. This can reduce bandwidth usage, speed up API responses, and make storage in databases or object storage systems more efficient. For instance, in a JSON object with many repeated keys like 'timestamp', 'user_id', and 'event_type', each key can be represented by a few one-byte codes.

· Configuration File Miniaturization: Applications often use configuration files that contain many repeating parameters or string values. Compressing these configuration files with GoFSST can lead to smaller deployment artifacts or faster loading times for applications that read these configurations. This is particularly useful in embedded systems or environments where resources are constrained.

7

Hardware Brain for LLMs

Author

nimabanai

Description

This project introduces a 'hardware context layer' for AI tools like code editors and chatbots. It acts as a specialized brain for AI, feeding it crucial details about specific hardware designs, such as schematics, datasheets, and manuals. The core innovation is its ability to provide accurate, real-time hardware context to AI, overcoming the limitations of generic AI answers that often 'hallucinate' or lack depth when dealing with custom hardware. This aims to significantly speed up firmware development and hardware debugging by making AI tools truly aware of the hardware they are interacting with.

Popularity

Points 14

Comments 0

What is this product?

This project is an MCP server that plugs into existing AI tools (like Cursor, Claude Code, and Gemini) to give them a deep understanding of your custom hardware. MCP, the Model Context Protocol, is an open standard through which AI applications call external tools and fetch data. General-purpose AI models struggle with specific hardware because they lack access to detailed technical documents like schematics, component datasheets, and application notes. This tool ingests those documents and builds a knowledge base that the AI can query. For example, if you're using an AI to write code for a custom circuit board, this tool is meant to give the AI the exact specifications of each component and how the components are connected, which prevents errors and misunderstandings. The innovation lies in processing complex hardware documentation (starting with KiCad schematics) and making that information available to AI models accurately and quickly. In effect, it creates a 'hardware brain' for AI.

How to use it?

Developers can integrate this tool by signing up for the free beta. Once registered, they upload their hardware design files (currently KiCad schematics, component datasheets, and other relevant documents). The system processes these files and provides an 'MCP key', which connects the developer's preferred AI tool to the 'hardware brain'. For instance, a developer using a code editor like Cursor can ask the AI to generate firmware for their specific microcontroller. Drawing on the Hardware Brain, the AI can then produce code tailored to the board's actual specifications and constraints. This means less time spent adapting generic code and more time on specialized functionality, which shortens the learning curve for custom hardware development.

Product Core Function

· Schematic Parsing (KiCad): Ingests and understands your hardware circuit designs from KiCad files, allowing AI to comprehend component connections and board layout for accurate firmware generation and debugging.

· Datasheet Ingestion: Processes technical datasheets for key components, providing the AI with precise information about voltage, current, timing, and other critical parameters of each part on your board.

· Contextual AI Integration: Acts as a bridge that feeds relevant hardware context to AI tools, so AI-generated code and debugging suggestions are grounded in your actual hardware setup. This reduces the 'hallucinations' common in generic answers.

· Real-time Information Retrieval: Provides fast and accurate answers to AI queries about your hardware, enabling rapid iteration during firmware development and troubleshooting.

· Document Augmentation: Allows for the inclusion of additional documents like application notes and user manuals, enriching the AI's understanding of the hardware's intended use and operation.
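Combining schematic parsing with datasheet ingestion means an answer about one part can also include what that part is wired to. The following Python sketch illustrates this with a hand-written netlist. The `Part` structure, the `context_for` function, and the example parts are all hypothetical. The sketch does not parse KiCad files and is not the project's MCP interface. It only shows the kind of grounded context such a server could hand to an AI tool.

```python
from dataclasses import dataclass, field


@dataclass
class Part:
    """One component from a (hypothetical) parsed schematic."""
    ref: str                 # reference designator, e.g. "U2"
    value: str               # part name or value
    pins: dict[str, str]     # pin name -> net name
    params: dict[str, str] = field(default_factory=dict)  # facts taken from the datasheet


def context_for(ref: str, parts: dict[str, Part]) -> str:
    """Describe one part: each pin's net, the other pins on that net, and datasheet facts."""
    part = parts[ref]
    lines = [f"{part.ref} ({part.value})"]
    for pin, net in sorted(part.pins.items()):
        peers = sorted(
            f"{other.ref}.{other_pin}"
            for other in parts.values()
            if other.ref != part.ref
            for other_pin, other_net in other.pins.items()
            if other_net == net
        )
        suffix = f" (also: {', '.join(peers)})" if peers else ""
        lines.append(f"  {pin} -> {net}{suffix}")
    for key, value in sorted(part.params.items()):
        lines.append(f"  {key}: {value}")
    return "\n".join(lines)
```

For example, with a microcontroller `U1` and a sensor `U2` sharing `I2C_SCL` and `I2C_SDA` nets, `context_for("U2", parts)` lists which `U1` pins each sensor pin connects to. That text could be included in the AI's prompt.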

Product Usage Case

· Firmware Development for Custom IoT Devices: A developer is building a new IoT device with a unique sensor array. They upload their KiCad schematics and the datasheets for the sensors to the Hardware Brain. Then, when using an AI assistant in their IDE, they can ask the AI to write the firmware to read data from a specific sensor, and the AI, understanding the sensor's interface and power requirements from the datasheets, generates accurate and efficient code, avoiding potential hardware conflicts.

· Debugging Embedded System Issues: An engineer is struggling to debug a complex embedded system where certain peripherals are not functioning as expected. They feed the system's schematics and relevant datasheets into the Hardware Brain. When they query the AI with specific error messages or symptoms, the AI, leveraging the Hardware Brain's knowledge, can pinpoint potential hardware-related causes, such as incorrect pin configurations, timing issues, or power supply problems, offering much more targeted debugging advice than a generic AI.

· Accelerating Board Bring-up: When bringing up a new custom hardware board, engineers often spend weeks understanding component interactions and setting up basic functionality. By integrating the Hardware Brain, an AI can immediately provide insights into how components should be initialized and controlled based on their datasheets and the schematic, significantly reducing the time and effort required for initial board operation and testing.

· Code Generation for Specific Microcontrollers: A software engineer needs to write low-level firmware for a particular microcontroller they are unfamiliar with. They upload the microcontroller's datasheet and any relevant board schematics. The AI can then be prompted to generate boilerplate code for specific peripherals (like UART or SPI), ensuring correct register configurations and timing based on the official documentation, making the process much smoother.

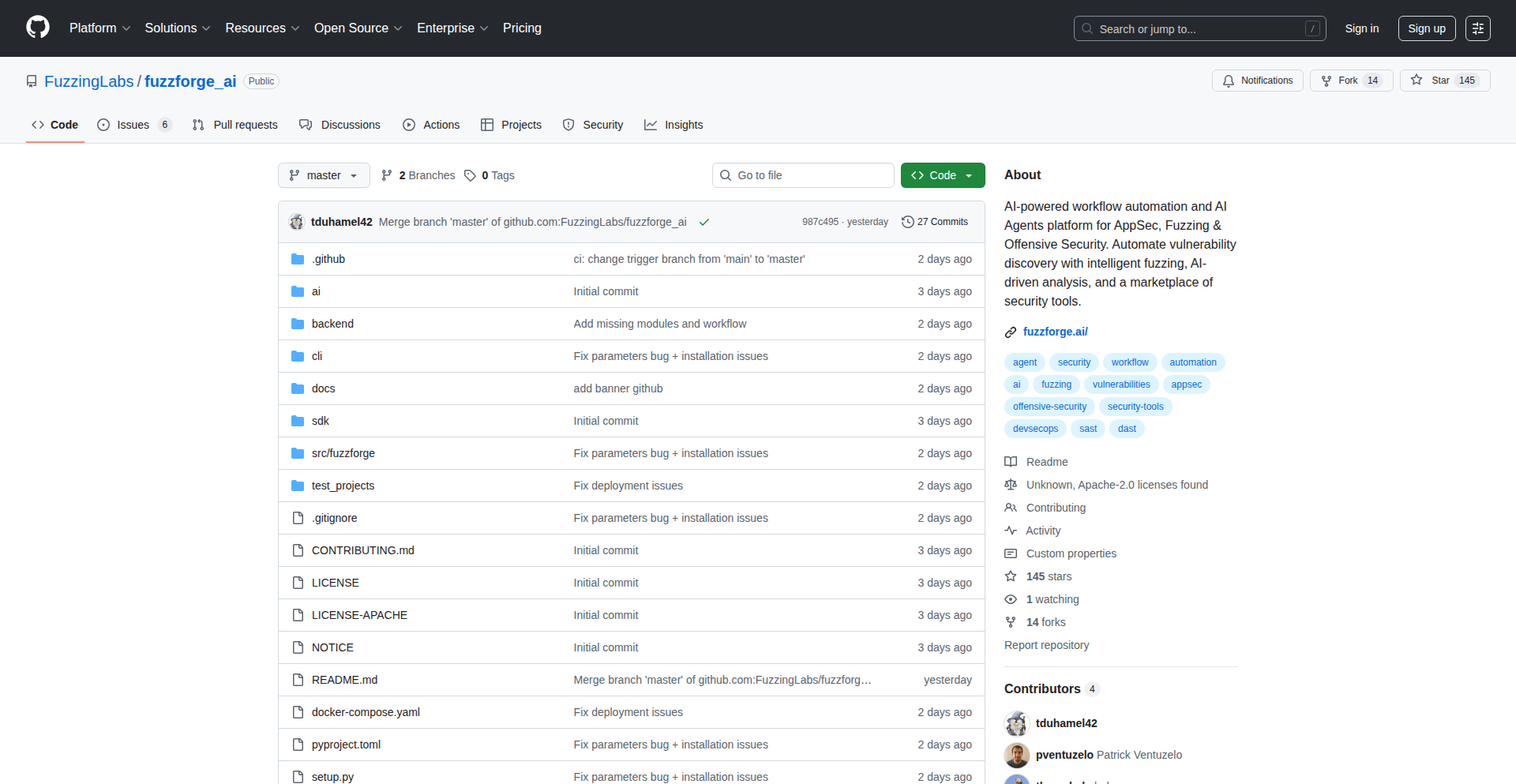

8

AI-Powered Vulnerability Discovery Engine

Author

unbalancedparen

Description

Fuzz Forge is a novel approach to finding security flaws in software by combining the power of Artificial Intelligence (AI) with advanced fuzzing techniques. It automates the process of discovering vulnerabilities that might otherwise be missed by traditional methods. The core innovation lies in using AI to intelligently guide the fuzzing process, making it more efficient and effective in uncovering hidden bugs. This is useful because it helps developers build more secure software, saving time and resources in the long run by catching issues early.

Popularity

Points 10

Comments 1

What is this product?

Fuzz Forge is a tool designed to automatically find security vulnerabilities in software. It works by using AI to learn how to create malformed or unexpected inputs (this is called 'fuzzing') for a program. Instead of just randomly throwing data at the software, the AI intelligently crafts inputs that are more likely to trigger bugs or crashes, which often indicate security weaknesses. The AI aspect is key because it makes the fuzzing process smarter and more targeted, increasing the chances of finding critical vulnerabilities. This is valuable because it helps ensure the software you use or build is more secure and less susceptible to attacks.
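AI guidance improves on the feedback loop of classic coverage-guided fuzzing: mutate an input, run the target, and keep the input if it reached new code. The following Python sketch shows that loop with random byte mutations on a toy target. It is not Fuzz Forge's implementation. In an AI-guided fuzzer, a learned model would take the place of the random `mutate` step. The `target`, `mutate`, and `fuzz` names are hypothetical.

```python
import random
from typing import Optional


def target(data: bytes, seen: set[int]) -> None:
    """Toy program under test: records each branch it reaches, 'crashes' on b"FUZ!"."""
    seen.add(0)
    if len(data) >= 4 and data[0] == ord("F"):
        seen.add(1)
        if data[1] == ord("U"):
            seen.add(2)
            if data[2] == ord("Z"):
                seen.add(3)
                if data[3] == ord("!"):
                    raise ValueError("crash: reached the buggy branch")


def mutate(data: bytes, rng: random.Random) -> bytes:
    """Overwrite one random byte with a random value (data must be non-empty)."""
    buf = bytearray(data)
    buf[rng.randrange(len(buf))] = rng.randrange(256)
    return bytes(buf)


def fuzz(seed: bytes, iterations: int, rng_seed: int = 0) -> Optional[bytes]:
    """Coverage-guided loop: keep inputs that reach new branches, stop at the first crash."""
    rng = random.Random(rng_seed)
    corpus = [seed]
    covered: set[int] = set()
    for _ in range(iterations):
        candidate = mutate(rng.choice(corpus), rng)
        seen: set[int] = set()
        try:
            target(candidate, seen)
        except ValueError:
            return candidate  # crashing input found
        if not seen <= covered:  # reached a branch no earlier input reached
            covered |= seen
            corpus.append(candidate)
    return None
```

Keeping inputs that unlock new branches lets the fuzzer solve `F`, then `U`, then `Z` one byte at a time, instead of guessing all four bytes at once.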

How to use it?

Developers can integrate Fuzz Forge into their software development lifecycle to continuously test their code for security issues. This typically involves setting up Fuzz Forge to target specific parts of their application, such as APIs, file parsers, or network protocols. The tool will then generate and feed a stream of 'fuzzed' data to these targets, monitoring for any unexpected behavior or crashes. The output provides detailed reports on potential vulnerabilities found, allowing developers to prioritize and fix them. This is useful because it shifts security testing from a manual, often late-stage process, to an automated, continuous one, ensuring security is baked in from the start.

Product Core Function

· AI-guided fuzzing strategy: The AI learns from the program's behavior to generate more effective test cases, increasing the efficiency of vulnerability discovery. This means it's better at finding bugs than random testing, saving you debugging time.

· Automated crash detection and reporting: Fuzz Forge automatically identifies and logs program crashes or unexpected behavior, providing developers with clear indicators of where vulnerabilities might exist. This takes the guesswork out of finding bugs.

· Intelligent input generation: The AI crafts intelligent, context-aware inputs that probe specific program functionalities, making it more likely to uncover edge-case vulnerabilities. This helps find bugs that are hard to discover manually.

· Scalable vulnerability discovery: The system is designed to be scalable, allowing it to be applied to large and complex software projects, ensuring comprehensive security testing. This means even large applications can be thoroughly checked for security flaws.

· Integration with CI/CD pipelines: Fuzz Forge can be integrated into continuous integration and continuous delivery pipelines, enabling automated security testing with every code change. This ensures security is checked automatically as you develop, not as an afterthought.

Product Usage Case

· Securing a web application's API endpoints: Developers can use Fuzz Forge to test their API endpoints for vulnerabilities like SQL injection or cross-site scripting (XSS) by having the AI generate various malformed API requests. This helps prevent attackers from exploiting common web vulnerabilities.

· Testing a desktop application's file parsing capabilities: If an application reads various file formats (e.g., images, documents), Fuzz Forge can be used to fuzz these parsers with corrupted or malformed files to uncover vulnerabilities that could lead to crashes or remote code execution. This protects users from malicious files.

· Improving the robustness of network protocols: For applications that communicate over custom network protocols, Fuzz Forge can generate unexpected network packets to test the protocol's resilience and identify potential denial-of-service or data integrity issues. This makes network communication more reliable and secure.

· Finding memory corruption bugs in system libraries: Developers working on low-level system libraries can leverage Fuzz Forge to discover memory corruption vulnerabilities like buffer overflows or use-after-free bugs, which are critical security risks. This leads to more stable and secure system components.

9

RelativisticSimEngine

Author

egretfx

Description

An open-source web engine designed for simulating relativistic phenomena. It translates complex physics into accessible web-based visualizations, making advanced scientific concepts understandable and interactive for a broader audience.

Popularity

Points 6

Comments 4

What is this product?

This project is an open-source web engine that allows users to simulate and visualize phenomena governed by the principles of special relativity. It utilizes advanced mathematical models and computational techniques to accurately represent concepts like time dilation, length contraction, and the behavior of objects approaching the speed of light. The innovation lies in bringing these often abstract and computationally intensive simulations into a readily accessible web environment, transforming theoretical physics into an interactive visual experience. So, what's the use? It makes complex, mind-bending physics comprehensible and engaging for students, educators, and even curious individuals, all through their web browser.
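Time dilation and length contraction both come from the Lorentz factor gamma = 1 / sqrt(1 - v^2/c^2). A moving clock runs slow by a factor of gamma, and a moving object is shortened along its direction of motion by the same factor. Below is a minimal Python sketch of those formulas, with speed given as the fraction beta = v/c. The function names are hypothetical, and the project's own API (likely JavaScript) is not shown.

```python
import math


def lorentz_factor(beta: float) -> float:
    """gamma = 1 / sqrt(1 - beta^2), where beta = v / c."""
    if not 0.0 <= beta < 1.0:
        raise ValueError("beta = v/c must satisfy 0 <= beta < 1")
    return 1.0 / math.sqrt(1.0 - beta * beta)


def dilated_time(proper_time: float, beta: float) -> float:
    """Time elapsed for an observer who sees the clock moving at beta."""
    return proper_time * lorentz_factor(beta)


def contracted_length(proper_length: float, beta: float) -> float:
    """Length measured by an observer who sees the object moving at beta."""
    return proper_length / lorentz_factor(beta)
```

At beta = 0.6, gamma = 1.25. One year on the traveler's clock therefore corresponds to 1.25 years on Earth, and an Earth observer measures a 10 m ship as 8 m long.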

How to use it?

Developers can integrate this engine into their web applications or educational platforms. It likely provides a JavaScript API that allows for defining simulation parameters (like initial velocity, mass, observer frame) and then renders the resulting relativistic effects visually. This could involve custom physics calculations executed in the browser or leveraging WebAssembly for performance. Think of it as a specialized physics library for the web. So, what's the use? It empowers developers to build interactive learning tools, compelling scientific visualizations, or even experimental game mechanics that accurately reflect relativistic physics, without needing to be a seasoned physicist or implement complex simulations from scratch.

Product Core Function

· Relativistic effect visualization: Renders visual representations of time dilation, length contraction, and relativistic aberration, helping users grasp these counter-intuitive concepts. This is useful for educational purposes and scientific outreach.

· Configurable simulation parameters: Allows users to adjust initial conditions, velocities, and observer perspectives to explore different relativistic scenarios. This provides flexibility for experimentation and learning.

· Web-based rendering engine: Utilizes web technologies to display simulations, ensuring broad accessibility and compatibility across devices and platforms. This means anyone with a web browser can access and interact with the simulations.

· Open-source physics models: Implements core principles of special relativity, providing a foundation for accurate scientific simulations. This transparency allows for verification and community contributions.

Product Usage Case

· An online educational platform that allows students to visually experiment with how time passes differently for a traveler moving at near-light speeds compared to someone on Earth. This addresses the difficulty of understanding abstract relativity concepts by providing a tangible, interactive demonstration.

· A science museum website that offers an interactive exhibit where visitors can simulate a spaceship journey at near-light speed. The exhibit visualizes how time dilation and length contraction change what the crew and observers on Earth measure. This enhances public engagement with complex scientific ideas.

· A game developer creating a space exploration game that needs realistic physics for interstellar travel at high velocities. Integrating this engine ensures their game world accurately reflects relativistic phenomena, providing a unique and scientifically grounded gameplay experience.

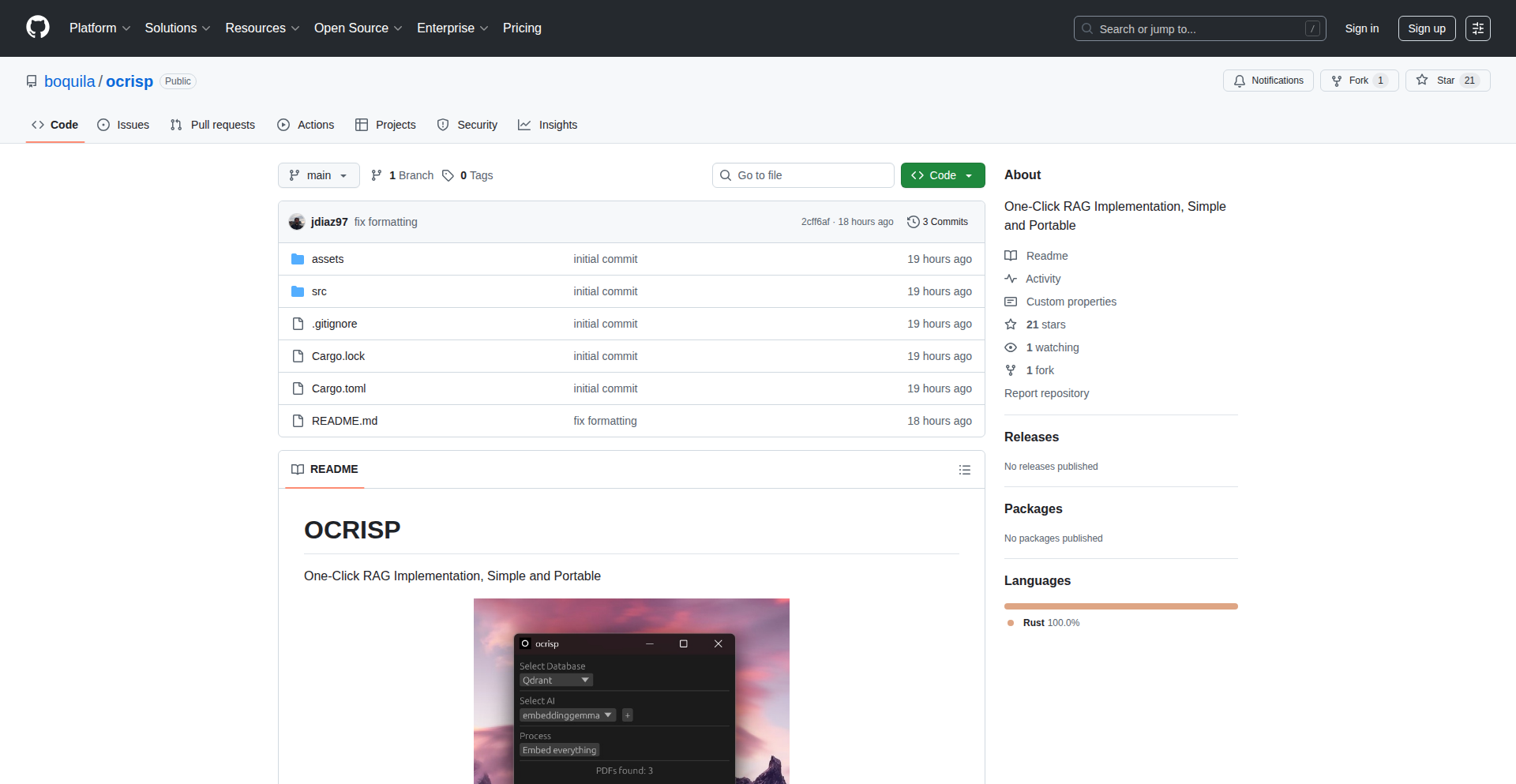

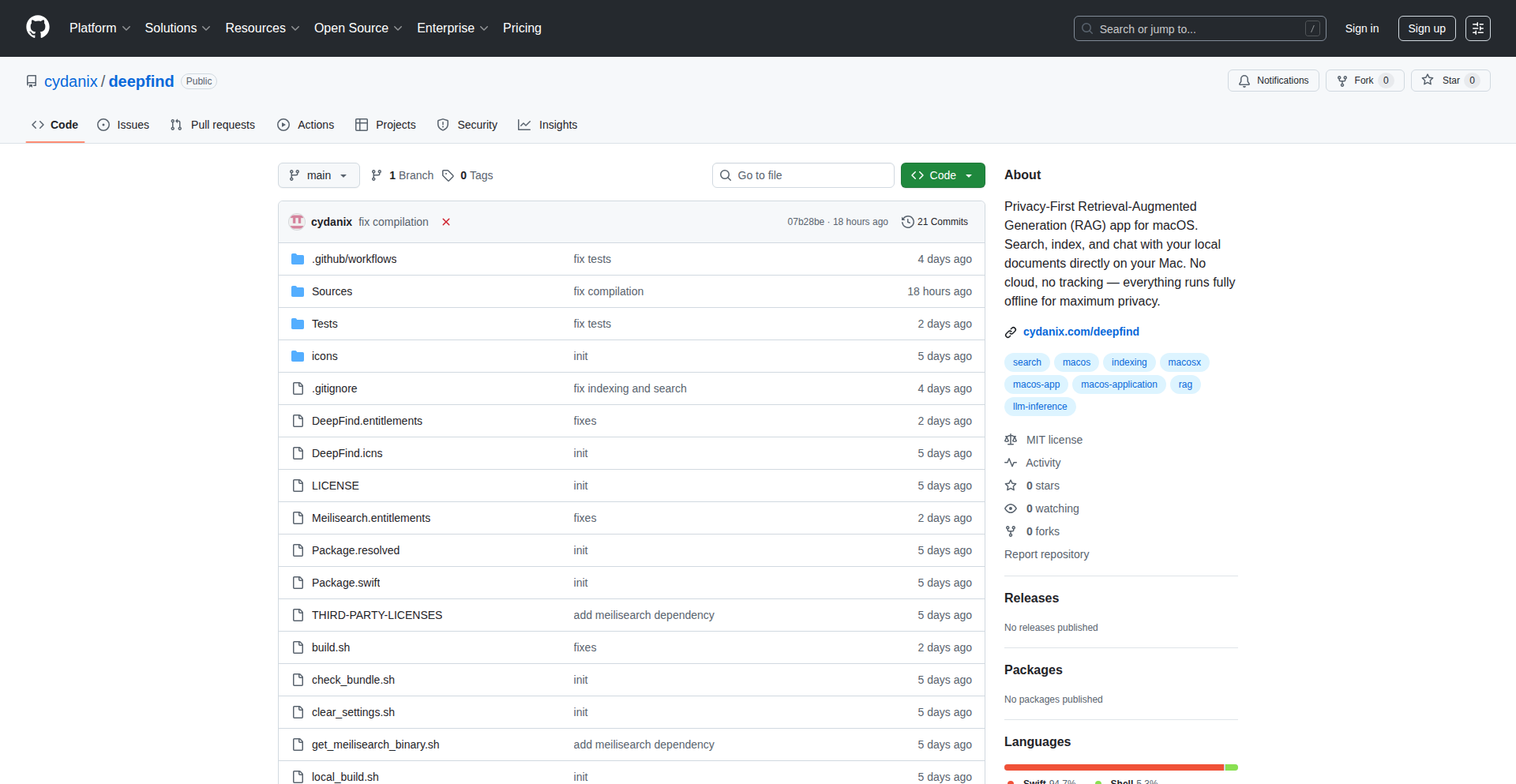

10

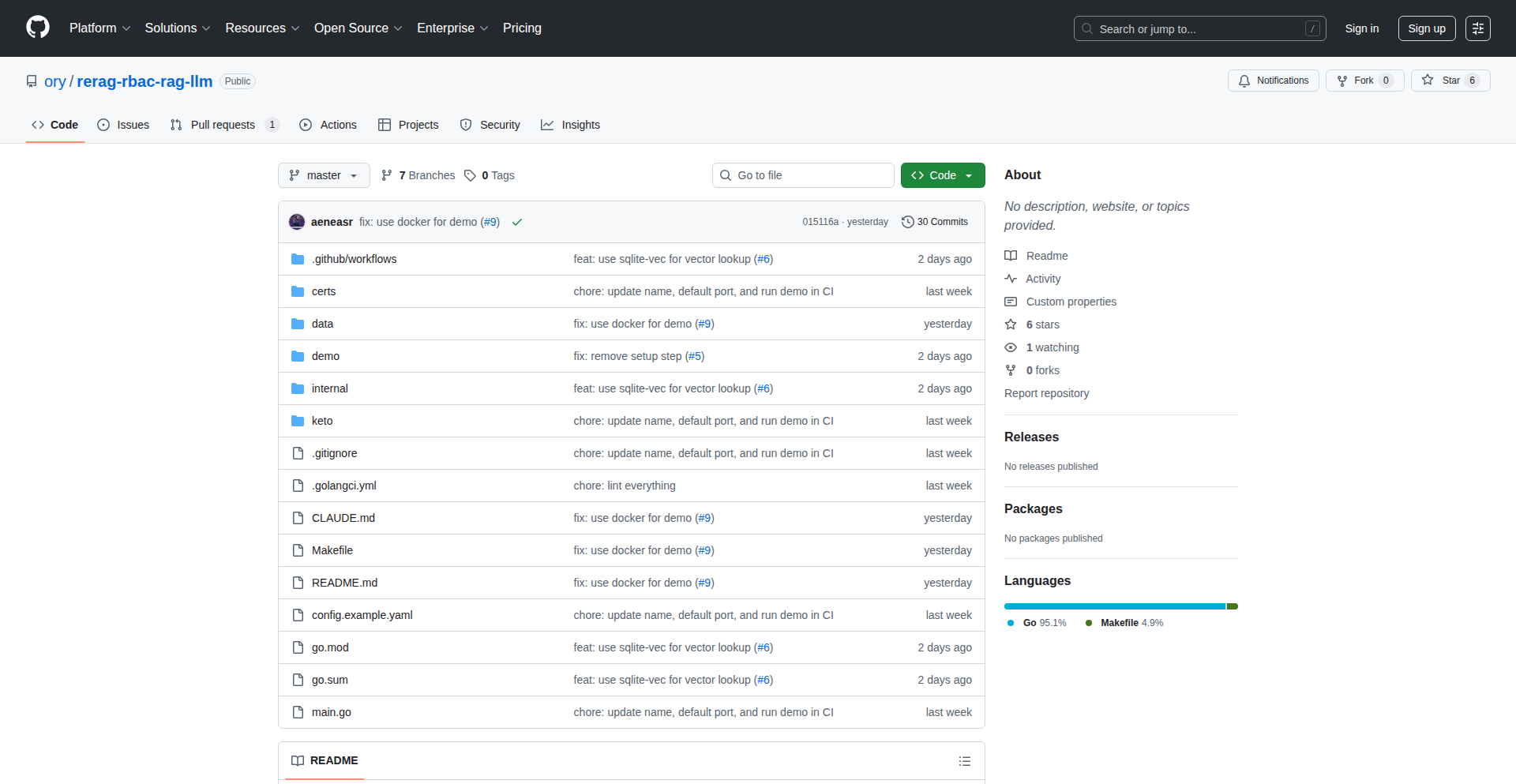

Ocrisp: One-Click RAG Weaver

Author

jdiaz97

Description

Ocrisp is a one-click RAG (Retrieval-Augmented Generation) implementation designed for simplicity and portability. It tackles the complexity often associated with setting up RAG pipelines, so developers can quickly integrate AI-driven knowledge retrieval and generation into their applications. The core innovation lies in its streamlined approach to building and deploying RAG systems, making advanced AI capabilities accessible to a wider range of developers.

Popularity

Points 9

Comments 0

What is this product?

Ocrisp is a developer tool that simplifies the process of building Retrieval-Augmented Generation (RAG) systems. RAG is a technique that enhances Large Language Models (LLMs) by providing them with external, relevant information before they generate a response. Instead of relying solely on the LLM's internal knowledge, RAG allows it to 'look up' facts from a custom knowledge base, leading to more accurate, up-to-date, and contextually relevant answers. Ocrisp's innovation is in abstracting away the complex setup steps, offering a 'one-click' solution to create and deploy these powerful RAG pipelines, making it highly portable and easy to integrate.

How to use it?

Developers can use Ocrisp by pointing it to their data sources (e.g., documents, websites). Ocrisp then handles the ingestion, indexing, and embedding of this data, creating a knowledge base that LLMs can query. It provides simple APIs and configurations to connect to various LLMs and deploy the RAG pipeline. This means you can quickly add AI-powered search and Q&A capabilities to your existing applications or build new ones without needing deep expertise in vector databases or LLM orchestration. It's like a pre-packaged AI assistant builder that works with your own private data.
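The ingest, index, retrieve, and prompt steps can be sketched in a few lines of Python. This is not Ocrisp's code or API. Word-count vectors stand in for a real embedding model, a list stands in for the vector database, and `build_prompt` only assembles the text that would be sent to an LLM. All names are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a real system would call an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def chunk(doc: str, size: int = 40) -> list[str]:
    """Split a document into chunks of at most `size` words."""
    words = doc.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


class Index:
    """In-memory stand-in for a vector store: chunk, embed, then rank by cosine similarity."""

    def __init__(self, docs: list[str]) -> None:
        self.chunks = [c for doc in docs for c in chunk(doc)]
        self.vectors = [embed(c) for c in self.chunks]

    def search(self, query: str, k: int = 3) -> list[str]:
        q = embed(query)
        scored = sorted(
            zip(self.chunks, self.vectors),
            key=lambda pair: cosine(q, pair[1]),
            reverse=True,
        )
        return [text for text, _ in scored[:k]]


def build_prompt(question: str, passages: list[str]) -> str:
    """Assemble retrieved passages and the question into a prompt for an LLM."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

Swapping `embed` for a real embedding model and `Index` for a vector database keeps the same shape. A tool like Ocrisp aims to hide that wiring.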

Product Core Function

· Automated data ingestion and processing: Handles the complex task of preparing your documents or data for AI models, so you don't have to worry about file formats or parsing. This saves you significant development time and effort in data preparation.

· Streamlined RAG pipeline setup: Provides a simple interface to configure and deploy a complete RAG system, abstracting away intricate details of vector embeddings, similarity search, and LLM integration. This drastically reduces the time and technical expertise needed to get a RAG system running.

· Portable RAG deployment: Designed to be easily moved and integrated across different environments, whether it's on your local machine, a cloud server, or as part of a larger application. This flexibility ensures you can use your AI capabilities wherever you need them.

· Simplified LLM integration: Offers straightforward ways to connect to popular LLMs, allowing you to leverage the latest AI generation models with your custom knowledge. This means you can quickly experiment with different LLMs to find the best fit for your specific use case.

Product Usage Case

· Building a customer support chatbot that can answer questions based on your company's product documentation. By using Ocrisp, you can quickly ingest all your support articles and connect to an LLM to provide instant, accurate answers to customer queries, reducing response times and improving customer satisfaction.

· Creating an internal knowledge base for a team, allowing them to search through project documents, research papers, or meeting notes using natural language. Ocrisp enables rapid deployment of a system that makes finding crucial information within a large volume of internal data significantly easier and faster.

· Developing an AI assistant for researchers that can answer questions by referencing a corpus of academic papers. This allows researchers to quickly find relevant information and insights without manually sifting through hundreds of articles, accelerating their research process.

· Implementing a personalized content recommendation engine that learns from user interactions and a specific set of content. Ocrisp can help manage the knowledge base of content, allowing the recommendation system to provide more relevant and tailored suggestions to users.

11

Ontosyn: AI-Powered Research Paper Navigator

Author

weyxie

Description

Ontosyn is a modern research paper reader designed to overcome the clunkiness of traditional paper management. It features a clean UI, enhanced in-paper navigation (like bookmarks and seamless reference jumping), an integrated AI chat for personalized paper recommendations, and robust library organization. This project's core innovation lies in its blend of user-centric design and intelligent assistance, making it easier for researchers and academics to stay updated and engage with their field.

Popularity

Points 8

Comments 0

What is this product?

Ontosyn is an application built to improve how you read and manage research papers. At its heart, it's a sophisticated document reader with a clean interface that makes navigating dense academic texts much smoother. The innovation comes from its intelligent features. You can bookmark key sections and quickly jump back to where you were after following a citation. Most importantly, it's powered by an AI chat that can understand your research interests. This AI can recommend new papers relevant to your library, suggest authors you might like based on your current reading, and help you discover new connections within the research landscape. So, if you're tired of losing your place or struggling to find related work, Ontosyn offers a streamlined and intelligent solution.

How to use it?

Developers can integrate Ontosyn into their workflow by signing up at ontosyn.com. The primary use case is for individuals managing a significant volume of research papers, such as academics, students, and R&D professionals. You can upload your papers, and Ontosyn will organize them into a searchable library. The AI chat acts as your research assistant: ask it to find papers on a specific topic, discover authors similar to ones you already read, or get summaries of complex articles. For example, if you're working on a new project, you can ask the AI to "recommend papers related to generative adversarial networks that I haven't read yet," and it will present relevant options directly within your library. The goal is to reduce the friction of discovery and reading, allowing you to focus on the actual research.

Product Core Function

· Intelligent Paper Navigation: Enables quick bookmarking of key sections and seamless return to previous reading points after clicking on a reference, significantly improving reading efficiency for dense academic material. This means less time spent fumbling with tabs or losing your place, and more time understanding the content.

· AI-Powered Paper Recommendation: Utilizes an integrated AI chat to suggest relevant papers based on your existing library and queries, helping you discover new research that aligns with your interests. This saves you hours of manual searching and introduces you to valuable work you might have otherwise missed.

· Unified Library Management: Provides a clean and organized system for storing and managing all your research papers, making it easy to retrieve and access information when needed. This eliminates the chaos of scattered files and folders, providing a central hub for all your academic resources.

· Contextual AI Assistance: The AI chat can understand your specific research context and provide tailored help, such as finding authors based on your reading history or identifying connections between different research papers. This makes the AI a proactive partner in your research journey, offering insights beyond simple search.

· Clean and Modern User Interface: Offers an intuitive and aesthetically pleasing interface for reading and interacting with research papers, reducing cognitive load and enhancing the overall user experience. A pleasant interface makes the often-tedious task of reading papers more enjoyable and productive.
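Jumping back after following a reference is essentially a history stack, the same structure behind a browser's back button. The following minimal Python sketch uses a hypothetical `Reader` class that tracks page numbers and named bookmarks. It is not Ontosyn's implementation.

```python
from dataclasses import dataclass, field


@dataclass
class Reader:
    """Tracks the current page, a back-stack of earlier positions, and named bookmarks."""
    position: int = 0
    history: list[int] = field(default_factory=list)
    bookmarks: dict[str, int] = field(default_factory=dict)

    def follow_reference(self, page: int) -> None:
        """Jump to a cited page, remembering where we came from."""
        self.history.append(self.position)
        self.position = page

    def back(self) -> int:
        """Return to the previous position; stay put if there is no history."""
        if self.history:
            self.position = self.history.pop()
        return self.position

    def bookmark(self, name: str) -> None:
        self.bookmarks[name] = self.position

    def jump_to_bookmark(self, name: str) -> None:
        # Treated like following a reference, so `back` still works afterwards.
        self.follow_reference(self.bookmarks[name])
```

Because a stack stores every position pushed, following a chain of citations several levels deep can be unwound one step at a time.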

Product Usage Case

· A PhD student struggling to keep up with the latest advancements in their field can use Ontosyn to upload all their papers. They can then ask the AI chat to "recommend recent papers on quantum entanglement from authors like John Bell" to quickly discover the most relevant new research, directly adding them to their library. This solves the problem of information overload and missed publications.

· A researcher working on a new grant proposal needs to find supporting literature efficiently. They can upload their existing literature and then ask Ontosyn's AI to "find papers that bridge the gap between machine learning and bioinformatics," providing them with a curated list of relevant studies that might not have been obvious through traditional search methods. This accelerates the literature review process for critical funding applications.

· An academic who frequently jumps between references in papers can leverage Ontosyn's bookmarking and back-linking features. When they click on a citation, they can easily return to their original reading spot without getting lost, making the process of deep reading and critical analysis much smoother. This directly addresses the frustration of losing context in complex articles.

12

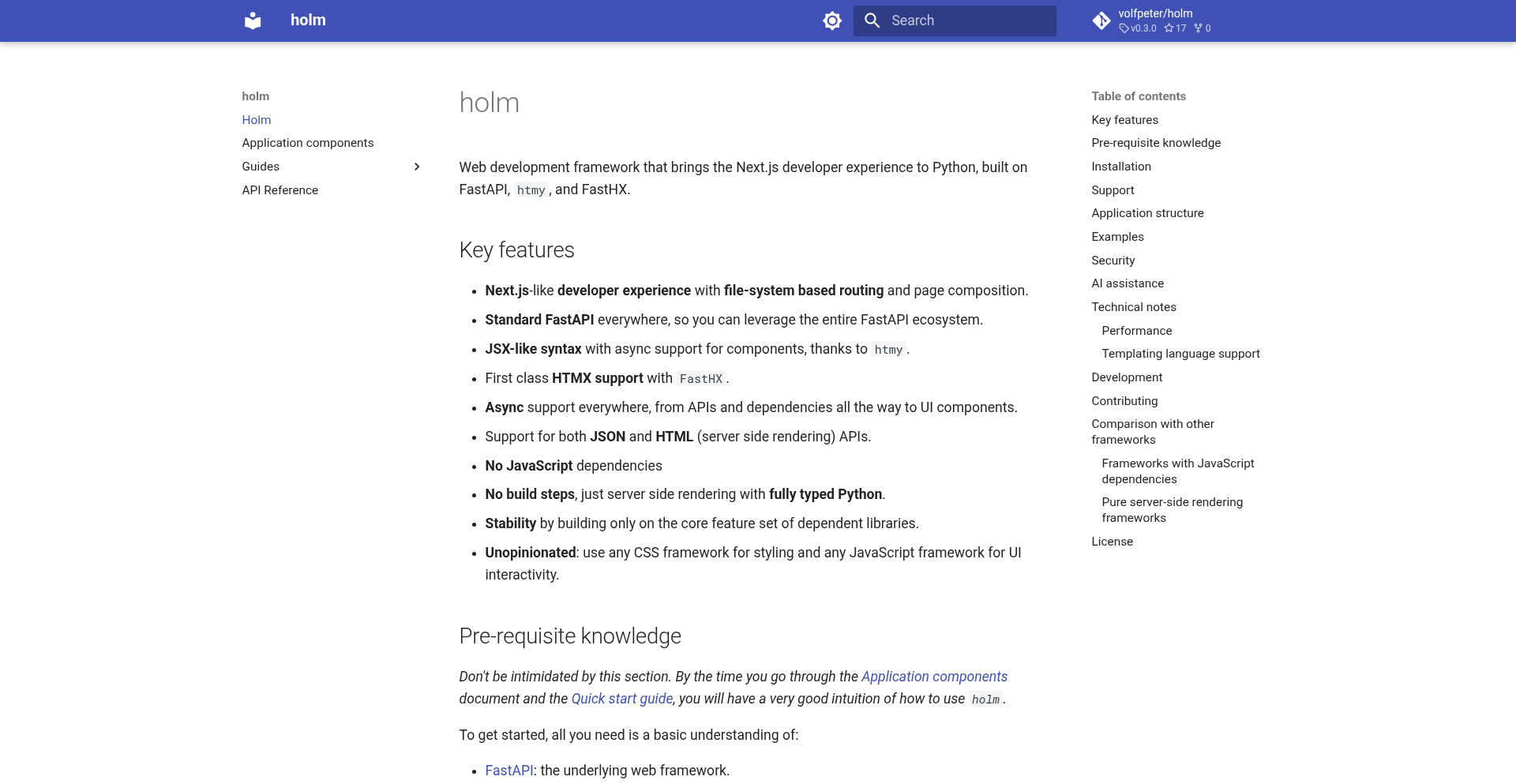

FastAPI-Htmx-Reactant

Author

volfpeter

Description

This project is a novel Python web framework for building dynamic web interfaces, inspired by Next.js, but specifically designed for Htmx and powered by FastAPI. It offers a more integrated and convenient way to achieve server-side rendering and interactive UIs compared to existing tools, without altering the core FastAPI functionality. The innovation lies in its ability to seamlessly blend Python's backend power with the frontend interactivity of Htmx, creating a developer-friendly and efficient development experience.

Popularity

Points 5

Comments 2

What is this product?

This is a Python web framework that allows developers to build modern, interactive web applications using Python and the Htmx library. Imagine building a website where parts of the page update without a full page reload, much like with a modern JavaScript framework, but with the HTML rendered on the server in Python. The core innovation is its tight integration of FastAPI, a high-performance Python web framework, with Htmx, a library that enables rich interactivity directly from HTML attributes. You write Python code to handle requests and render HTML with dynamic content, while Htmx manages the client-side updates. The result is simpler code and faster development cycles. So, what's in it for you? You get to build responsive and engaging web applications with less JavaScript, leveraging your existing Python skills and a powerful backend framework.

How to use it?

Developers can integrate this framework into their existing FastAPI projects. Routes defined in FastAPI can return HTML fragments enhanced with Htmx attributes, and the framework provides tools to generate these Htmx-aware responses so that parts of the web page update dynamically. For instance, a button click can trigger a Python function on your server that fetches new data and re-renders a specific section of the page, without a full page reload. Htmx does this by swapping the HTML snippets returned from your endpoints into the page. In practice, you write your backend logic in Python using FastAPI and add Htmx attributes to the HTML your Python code generates. This framework makes that process smoother. It is useful for features like live search, dynamically loaded content, or forms that update in real time, all while staying primarily in your Python environment.
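Htmx marks its requests with an `HX-Request: true` header, so a single endpoint can return a full page for a normal browser visit and just the updated fragment for an htmx request. The following sketch shows that pattern with plain Python string rendering. It does not use this framework's API, which is not described here. The `/search` route and the function names are hypothetical. In a FastAPI route, you would pass `request.headers.get("hx-request") == "true"` as `is_htmx` and return the string in an `HTMLResponse`.

```python
from html import escape


def results_fragment(items: list[str]) -> str:
    """The part of the page htmx swaps in: a list with id="results"."""
    rows = "".join(f"<li>{escape(item)}</li>" for item in items)
    return f'<ul id="results">{rows}</ul>'


def search_page(query: str, catalog: list[str], is_htmx: bool) -> str:
    """Full page on first load, only the fragment when htmx asks for an update."""
    matches = [item for item in catalog if query.lower() in item.lower()]
    fragment = results_fragment(matches)
    if is_htmx:
        return fragment
    # On a normal page load, render a search box wired up with htmx attributes:
    # typing sends GET /search?q=..., and the response replaces #results.
    # (The <script> tag that loads htmx itself is omitted for brevity.)
    search_box = (
        '<input type="search" name="q" hx-get="/search" '
        'hx-trigger="keyup changed delay:300ms" '
        'hx-target="#results" hx-swap="outerHTML">'
    )
    return f"<html><body>{search_box}{fragment}</body></html>"
```

Escaping every item with `html.escape` matters here, because the fragment is inserted straight into the live page.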

Product Core Function

· Server-side rendering with Htmx integration: Enables dynamic web page updates by generating HTML on the server and letting Htmx handle client-side interactions, reducing the need for complex JavaScript. This means faster initial page loads and a more responsive user experience without complex client-side logic.

· FastAPI native compatibility: Works seamlessly with FastAPI, allowing developers to leverage its performance and features without any modifications. This provides a solid and scalable foundation for your web applications.

· Declarative UI updates: Allows developers to define how the UI should update based on server responses through Htmx attributes in the HTML. This simplifies the process of creating interactive components, making it easier to build dynamic features.

· Simplified development workflow: Bridges the gap between backend and frontend development by allowing more logic to be handled on the server. This leads to a more streamlined development process and potentially fewer bugs related to state management.

· Component-based HTML generation: Facilitates the creation of reusable HTML components on the server, promoting code modularity and maintainability. This helps in building larger and more complex applications efficiently.

Product Usage Case

· Building a real-time product catalog for an e-commerce site: When a user filters products, instead of a full page reload, only the product listing area updates with new results fetched by FastAPI and rendered as HTML snippets by the framework. This improves user engagement and reduces bounce rates.

· Implementing a live chat application with server-sent events: As new messages arrive, FastAPI pushes them to the client, and the framework helps in rendering these messages into the chat window dynamically without user interaction. This creates a truly interactive communication experience.

· Creating an administrative dashboard with interactive data visualizations: When a user clicks on a specific data point, the framework can trigger a backend request to fetch more detailed information and update a specific chart or table on the dashboard without refreshing the entire page. This enhances data exploration and usability.

· Developing a form submission process with instant feedback: After a user submits a form, instead of a blank page or a redirect, the framework can display a success or error message in a dedicated area of the form, improving the user experience and providing immediate validation.

· Constructing a blog with infinite scrolling: As the user scrolls down, new blog posts are fetched from the server and appended to the existing list, creating a seamless content discovery experience without manual pagination clicks.

13

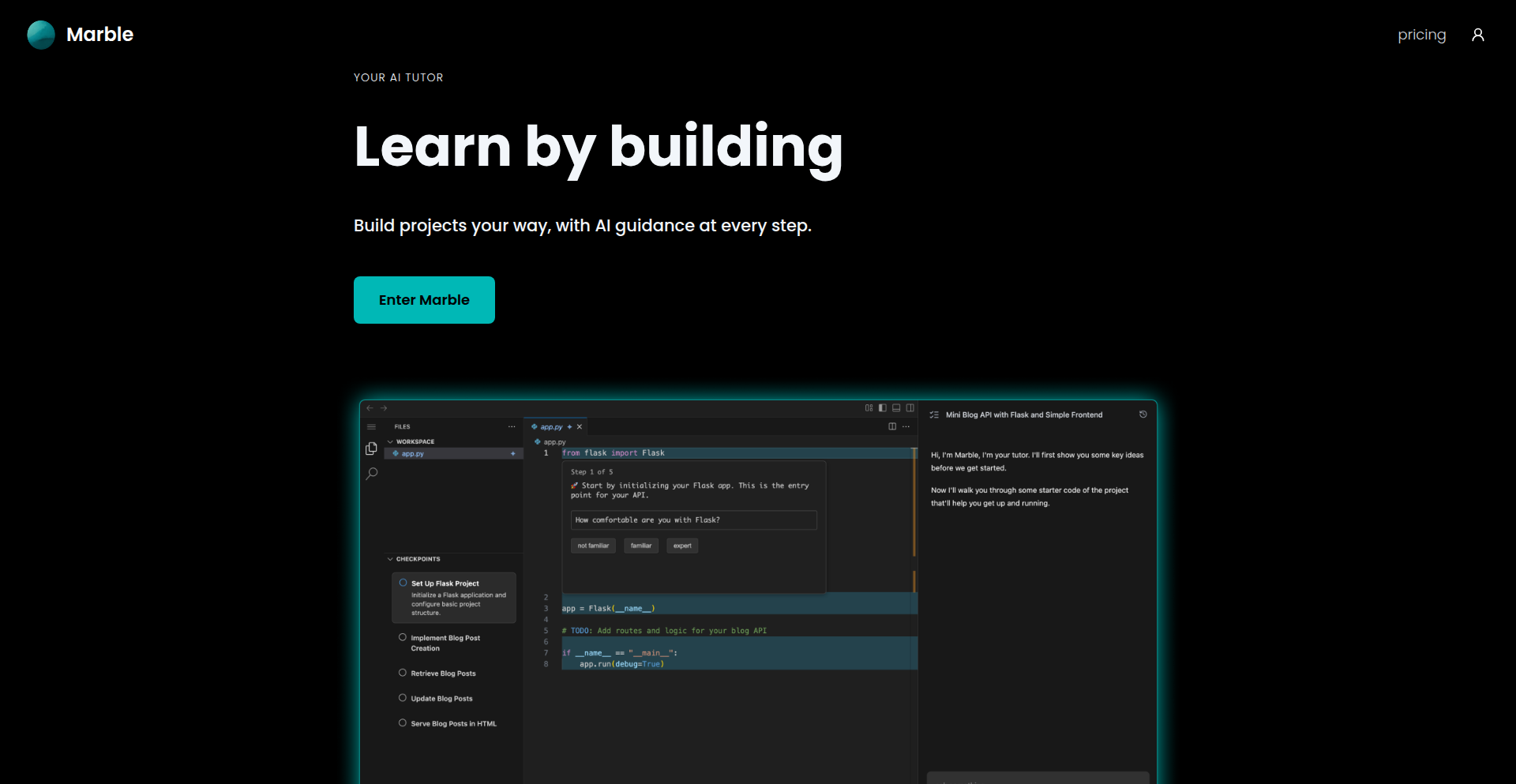

Marble AI-Assisted Learning Environment

Author

miguelacevedo

Description

Marble is a cloud-based development environment designed to accelerate technical skill acquisition. It leverages AI to handle boilerplate code and setup, allowing developers to focus on understanding complex system architectures and making critical design trade-offs. Unlike tools that prioritize code generation speed, Marble emphasizes deep learning and building a robust mental model of end-to-end systems, ensuring developers gain practical, transferable skills.

Popularity

Points 5

Comments 1

What is this product?

Marble is an innovative AI-powered learning platform that goes beyond simple code generation. It acts as an intelligent tutor and development assistant, specifically designed to help developers learn new technical skills more effectively. The core idea is to let AI handle the mundane and time-consuming parts of project setup and boilerplate code, freeing up the developer to concentrate on the challenging architectural decisions and the 'why' behind the code. It provides a modified VSCode environment within a Docker container, pre-configured with all necessary dependencies and starter code for a chosen project. This setup accelerates the learning curve by immediately immersing the developer in the core logic and design challenges. The AI doesn't just write code; it helps build your understanding by suggesting related concepts and guiding you through the project's inner workings, fostering a cycle of curiosity and deeper learning.

How to use it?

Developers start by identifying a skill or technology they want to learn, and Marble suggests projects tailored to that objective. Once a project is selected, Marble provisions a cloud-based VSCode environment in a Docker container with packages pre-installed and starter code in place, so no time goes into setup. You can dive straight into coding and exploring the project's architecture. The AI acts as a co-pilot: it helps with specific coding tasks, answers questions about the codebase, and suggests areas for further exploration. The result is that you can build complete, end-to-end projects with unfamiliar technologies. Along the way you learn how they work and how to make informed design decisions, even if you didn't write every line yourself.
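The post does not publish Marble's provisioning code, so what follows only sketches the general idea. It composes a `docker run` invocation that starts a browser-based editor container with a project's starter code mounted into it. The `WorkspaceSpec` type, the image name `example/vscode-starter`, the port numbers, and the `/workspace` mount point are all hypothetical placeholders. They are not Marble's actual configuration.

```python
import shlex
from dataclasses import dataclass


@dataclass
class WorkspaceSpec:
    """Hypothetical description of one learning project's environment."""
    project: str          # used to name the container
    image: str            # image with the editor and dependencies pre-installed
    starter_dir: str      # host directory holding the starter code
    host_port: int = 8080
    container_port: int = 8080


def docker_run_args(spec: WorkspaceSpec) -> list[str]:
    """Build the argument list for `docker run`. Nothing is executed here."""
    return [
        "docker", "run",
        "-d",                                               # run detached in the background
        "--name", f"workspace-{spec.project}",
        "-p", f"{spec.host_port}:{spec.container_port}",    # expose the web editor
        "-v", f"{spec.starter_dir}:/workspace",             # mount the starter code
        spec.image,
    ]


spec = WorkspaceSpec("microservices-101", "example/vscode-starter", "/srv/starters/microservices-101")
print(shlex.join(docker_run_args(spec)))
```

Passing the list to `subprocess.run(args, check=True)` would actually start the container. A hosted service would also need authentication, resource limits, and cleanup of idle containers, which this sketch leaves out.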

Product Core Function

· AI-driven project discovery: Helps developers find projects aligned with specific skills they want to acquire. Value: Saves time and effort in project selection, ensuring focus on desired learning outcomes.

· Cloud-hosted VSCode in Docker: Provides a consistent and pre-configured development environment. Value: Eliminates setup friction and dependency conflicts, allowing immediate focus on learning and coding.

· Automated boilerplate and dependency setup: Handles tedious initial coding and package installation. Value: Accelerates the learning process by fast-forwarding to the more complex and insightful parts of a project.

· AI coding agent for specific tasks: Assists with writing code for defined, often repetitive, tasks. Value: Frees up cognitive load for higher-level thinking about architecture and design, improving learning efficiency.

· Mental model development support: AI guides understanding of architecture and code logic. Value: Promotes deeper comprehension of systems rather than superficial code generation, leading to more robust skill development.

Product Usage Case

· A junior developer wants to learn about building microservices. They use Marble, select a microservices project, and are immediately dropped into a pre-configured VSCode environment with starter code for a simple API gateway and a few backend services. The AI helps them understand how requests are routed and how services communicate, allowing them to grasp the core concepts without getting bogged down in initial setup and configuration hell.

· An experienced developer wants to explore a database technology that is new to them, such as PostgreSQL. They use Marble to spin up a project that integrates with PostgreSQL. The AI helps them write the necessary SQL queries and ORM code. More importantly, it explains the performance implications of different query structures and indexing strategies, deepening their understanding beyond syntax.

· A team lead is evaluating a new JavaScript framework for a project. They use Marble to quickly prototype a small application using the framework. The AI assists with common tasks like state management and component creation, allowing the lead to quickly assess the framework's strengths and weaknesses in a real-world context and make an informed trade-off decision for the project.

14

SpatialFoldersEnhancer

Author

dailyanchovy

Description

This project is a set of scripts that bring back the classic macOS Finder behavior of remembering folder positions and sizes. On modern macOS, folders can open at arbitrary locations and sizes. These scripts make each folder reopen with its last known window position and size. This lets users rely on their natural spatial memory for more intuitive file management, instead of hunting for and rearranging windows.

Popularity

Points 2

Comments 4

What is this product?

SpatialFoldersEnhancer is a collection of scripts designed to restore the 'spatial' behavior of the macOS Finder. In older versions of macOS, each folder window remembered its exact position on the screen and its exact dimensions. Modern macOS tends to open folders at arbitrary locations and sizes, which disrupts the user's workflow. The scripts detect when a folder is opened and re-apply that folder's last saved position and size, most likely using AppleScript and possibly other macOS automation tools. The key idea is to capture and re-apply these window states reliably. That way your natural ability to remember locations pays off: frequently used folders always appear where you expect them, and you don't have to re-orient yourself each time you open one.

How to use it?

Developers can set up SpatialFoldersEnhancer by installing and running the provided scripts on their macOS system. The scripts likely use AppleScript or shell commands to interact with the Finder application. Once installed, they activate automatically whenever a folder is opened. Developers who want to embed this functionality in their own applications or workflows could study how the scripts hook into the macOS event system or observe Finder's window management. The core idea is to automate the tedious work of resizing and repositioning windows so that your workflow stays uninterrupted.
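The post does not include the scripts themselves. As a rough sketch, assume the scripts drive Finder through AppleScript via the standard `osascript` command. Restoring a saved window could then look like the code below. Finder's `bounds` property is `{left, top, right, bottom}`, so a saved position and size have to be converted first. The helper names are hypothetical. The sketch also assumes the folder's window is the frontmost Finder window when it runs.

```python
import subprocess


def bounds_from_frame(x: int, y: int, width: int, height: int) -> tuple[int, int, int, int]:
    """Convert a saved position and size into Finder's {left, top, right, bottom} bounds."""
    return (x, y, x + width, y + height)


def restore_script(x: int, y: int, width: int, height: int) -> str:
    """Build AppleScript that moves and resizes the frontmost Finder window."""
    left, top, right, bottom = bounds_from_frame(x, y, width, height)
    return (
        'tell application "Finder" to set bounds of Finder window 1 '
        f"to {{{left}, {top}, {right}, {bottom}}}"
    )


def restore_window(x: int, y: int, width: int, height: int) -> None:
    """Run the generated script with osascript (macOS only)."""
    subprocess.run(["osascript", "-e", restore_script(x, y, width, height)], check=True)
```

Keeping script generation separate from execution makes the conversion easy to check on any platform. Only `restore_window` actually needs macOS.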

Product Core Function

· Folder Position Memory: Scripts record the last X and Y coordinates where a folder window was closed, and reopen it at that exact position. This helps you find folders quickly by remembering where you left them, making navigation less of a hunt.

· Folder Size Memory: Scripts record the width and height of a folder window upon closing, and restore it to that size when reopened. This means your preferred viewing layout for each folder is preserved, so you don't have to constantly adjust window dimensions for optimal viewing, enhancing your productivity.

· Consistent Finder Experience: By restoring spatial continuity, the project creates a more predictable and familiar Finder environment. This reduces cognitive load and makes file management feel more natural and less frustrating, allowing you to focus on your tasks rather than battling window placement.
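The record-and-restore behavior described above boils down to a small per-folder store. The sketch below keeps each folder's last window frame in a JSON file keyed by folder path. The file location, the `WindowFrame` and `FrameStore` types, and the method names are all hypothetical, not taken from the project. The code that actually detects windows opening and closing is left out.

```python
import json
from dataclasses import asdict, dataclass
from pathlib import Path
from typing import Optional


@dataclass
class WindowFrame:
    x: int       # left edge of the window
    y: int       # top edge of the window
    width: int
    height: int


class FrameStore:
    """Remembers the last window frame for each folder path, persisted as JSON."""

    def __init__(self, store_file: Path):
        self.store_file = store_file
        self.frames: dict[str, WindowFrame] = {}
        if store_file.exists():
            raw = json.loads(store_file.read_text())
            self.frames = {path: WindowFrame(**frame) for path, frame in raw.items()}

    def record_close(self, folder: str, frame: WindowFrame) -> None:
        """Called when a folder window closes: save its position and size."""
        self.frames[folder] = frame
        self.store_file.write_text(json.dumps({p: asdict(f) for p, f in self.frames.items()}))

    def frame_for_open(self, folder: str) -> Optional[WindowFrame]:
        """Called when a folder opens: the saved frame, or None to keep the default placement."""
        return self.frames.get(folder)
```

Writing the file on every close keeps the saved state current even if the process is killed. A store with many folders might batch these writes instead.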

Product Usage Case

· Developer working on multiple projects: A developer might have project-specific folders open. SpatialFoldersEnhancer ensures that each project folder opens to its previously defined size and location, allowing them to quickly switch between development environments without re-arranging windows. This saves time and mental effort.

· Designer managing assets: A designer frequently accesses different asset folders for various projects. The scripts remember the ideal layout for each asset folder. When the designer opens a texture folder, it is already sized and positioned for efficient browsing, streamlining their creative process.

· Researcher organizing data: A researcher might keep several data folders open for analysis. SpatialFoldersEnhancer restores each data folder to its last known position and size whenever it is reopened. The researcher's window arrangement survives closing and reopening folders, so the analysis workspace stays organized and easy to navigate.

15

HTTP Cache Proxy Weaver

Author

sanchez_c137

Description

An open-source project that functions as an HTTP cache and reverse proxy. It aims to make request handling and content delivery more efficient by storing frequently accessed data closer to the user or application, which reduces latency and server load. Its distinguishing feature is a flexible architecture designed to let developers customize caching strategies and proxying rules.

Popularity

Points 4

Comments 1

What is this product?

This project is an open-source tool that acts as both an HTTP cache and a reverse proxy. Think of it as a smart middleman for your web traffic. Suppose a request comes in for a resource, such as an image or a web page. Instead of always going back to the original server, the tool can store a copy of the response locally (caching). The next time someone asks for the same resource, it can be served directly from that local copy, which is much faster. As a reverse proxy, it sits in front of your actual web servers, receives all incoming requests, and decides where to send each one. This adds a layer of security and can help distribute traffic. What sets it apart is a design that lets developers fine-tune both how it caches data and how it routes traffic, aiming to offer more control than many off-the-shelf solutions. In practice, you can make your website faster and more reliable by handling requests more intelligently, without replacing your server infrastructure.
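The caching half of this is simple to sketch. Below, a minimal in-memory cache stores responses keyed by HTTP method and URL and serves them until a time-to-live expires. In this sketch, only GET responses are cached. It illustrates the general technique under those assumptions and is not the project's code. `TTLCache` and `fetch` are hypothetical names.

```python
import time
from typing import Callable, Optional


class TTLCache:
    """In-memory response cache: each entry expires `ttl` seconds after it is stored."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl
        self.clock = clock   # injectable so expiry can be tested without sleeping
        self.entries: dict[tuple[str, str], tuple[float, bytes]] = {}

    def get(self, method: str, url: str) -> Optional[bytes]:
        entry = self.entries.get((method, url))
        if entry is None:
            return None
        expires_at, body = entry
        if self.clock() >= expires_at:
            del self.entries[(method, url)]   # stale: evict and report a miss
            return None
        return body

    def put(self, method: str, url: str, body: bytes) -> None:
        if method != "GET":   # only cache safe, idempotent reads in this sketch
            return
        self.entries[(method, url)] = (self.clock() + self.ttl, body)


def fetch(cache: TTLCache, method: str, url: str, origin: Callable[[str, str], bytes]) -> bytes:
    """Serve from the cache when possible; otherwise call the origin and cache the result."""
    body = cache.get(method, url)
    if body is None:
        body = origin(method, url)
        cache.put(method, url, body)
    return body
```

A production cache would also bound its memory use (for example with LRU eviction) and respect the origin's `Cache-Control` headers rather than applying one fixed TTL.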

How to use it?

Developers can add this project to their existing web infrastructure by deploying it in front of their web servers, where it intercepts and caches responses. For instance, a web application developer could configure it to cache static assets such as JavaScript files and images. The project likely provides configuration files or an API for defining caching policies (e.g., how long to keep cached data) and routing rules (e.g., which requests go to which backend server). Serving content from the cache reduces the load on origin servers. Because it sits in front of the existing setup, it allows incremental gains in speed and scalability with minimal disruption by offloading repeated requests from your main servers.
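One common way to decide "how long to keep cached data" is to honor the origin's `Cache-Control` header. The function below sketches a simplified version of the rules a shared cache, such as a reverse proxy, applies under RFC 9111:

- `no-store` and `private` forbid storing the response.
- `no-cache` allows storing but requires revalidation before reuse.
- `s-maxage` takes precedence over `max-age`.

Falling back to a configured default when no lifetime is given is a policy choice assumed here. The sketch ignores many details, such as `Expires` and field-specific `private`. Whether the project computes its cache policy this way is an assumption.

```python
from typing import Optional


def shared_cache_ttl(cache_control: Optional[str], default_ttl: int) -> Optional[int]:
    """Return how many seconds a shared cache may reuse a response, or None if it must not store it.

    Simplified: only no-store, private, no-cache, s-maxage and max-age are handled.
    """
    if not cache_control:
        return default_ttl                 # no header: fall back to the proxy's own policy
    directives: dict[str, Optional[str]] = {}
    for part in cache_control.split(","):
        name, _, value = part.strip().partition("=")
        directives[name.lower()] = value.strip('"') or None
    if "no-store" in directives or "private" in directives:
        return None                        # shared caches must not store these
    if "no-cache" in directives:
        return 0                           # may store, but must revalidate before every reuse
    for key in ("s-maxage", "max-age"):    # s-maxage wins in shared caches
        value = directives.get(key)
        if value is not None and value.isdigit():
            return int(value)
    return default_ttl
```

The returned value could feed straight into a per-entry expiry time, so origins keep control over freshness while the proxy's default covers responses that say nothing.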

Product Core Function

· HTTP Caching: Stores frequently requested data locally to serve it faster on subsequent requests. This is valuable for reducing server response times and bandwidth usage, making your applications feel snappier for users.

· Reverse Proxying: Acts as a gateway, forwarding incoming requests to the appropriate backend servers. This improves security by hiding your origin servers. It also enables load balancing, which helps keep your application available under heavy traffic.

· Customizable Cache Policies: Lets developers define how long data is cached and under what conditions. This gives fine-grained control over content freshness and resource usage, so caching behavior can be tuned to your application's specific requirements.

· Flexible Routing Rules: Lets developers set up rules for directing traffic to different backend services. This is crucial for microservices architectures, or when multiple applications sit behind a single entry point, because it keeps request distribution efficient.
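Routing rules and load balancing can be combined in one small lookup. The sketch below first picks the route with the longest matching path prefix. It then rotates round-robin through that route's backends. Prefix routing and round-robin are common choices assumed here for illustration, because the post does not describe the project's actual rule syntax. The `Router` class and the backend addresses are made up.

```python
from itertools import cycle
from typing import Iterator


class Router:
    """Longest-prefix path routing with round-robin across each route's backends."""

    def __init__(self, routes: dict[str, list[str]]):
        for prefix, backends in routes.items():
            if not backends:
                raise ValueError(f"route {prefix!r} has no backends")
        # One independent round-robin iterator per route prefix.
        self.routes: dict[str, Iterator[str]] = {p: cycle(b) for p, b in routes.items()}
        # Check longer prefixes first so "/api/users" wins over "/api".
        self.prefixes = sorted(routes, key=len, reverse=True)

    @staticmethod
    def _matches(prefix: str, path: str) -> bool:
        # "/api" should match "/api" and "/api/x" but not "/apiary".
        return path == prefix or path.startswith(prefix.rstrip("/") + "/")

    def backend_for(self, path: str) -> str:
        for prefix in self.prefixes:
            if self._matches(prefix, path):
                return next(self.routes[prefix])
        raise LookupError(f"no route for {path}")
```

Round-robin ignores backend health and current load. Real proxies usually add health checks or least-connections balancing on top of this kind of routing.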

Product Usage Case

· Scenario: A busy e-commerce website with many static assets (images, CSS, JS). Integration: Deploying the HTTP Cache Proxy Weaver in front of the web servers to cache these static assets. Problem Solved: Significantly reduces the load on the origin servers and speeds up page load times for customers, leading to better user experience and potentially higher conversion rates. The benefit to you is a faster, more responsive website that keeps customers happy.

· Scenario: A backend API serving data to multiple mobile and web clients. Integration: Using the project as a reverse proxy to distribute incoming API requests across multiple instances of the API service. Problem Solved: Helps prevent any single API instance from being overwhelmed, supporting high availability and consistent performance for all users. This helps your API stay stable and accessible even when many users are accessing it simultaneously.

· Scenario: A content delivery network (CDN) for a media company. Integration: Leveraging the caching capabilities to store popular video or article content at edge locations closer to users. Problem Solved: Minimizes latency for media consumption, providing a seamless streaming or reading experience, even for users geographically distant from the origin servers. This means your users can enjoy content without frustrating delays, regardless of where they are.

· Scenario: Developing a new microservices architecture. Integration: Utilizing the reverse proxy functionality to route requests to the correct microservice based on URL paths or headers. Problem Solved: Simplifies the management and scaling of individual microservices, providing a unified entry point for clients and enabling independent deployment and scaling of services. This makes it easier to build and manage complex, modern applications.

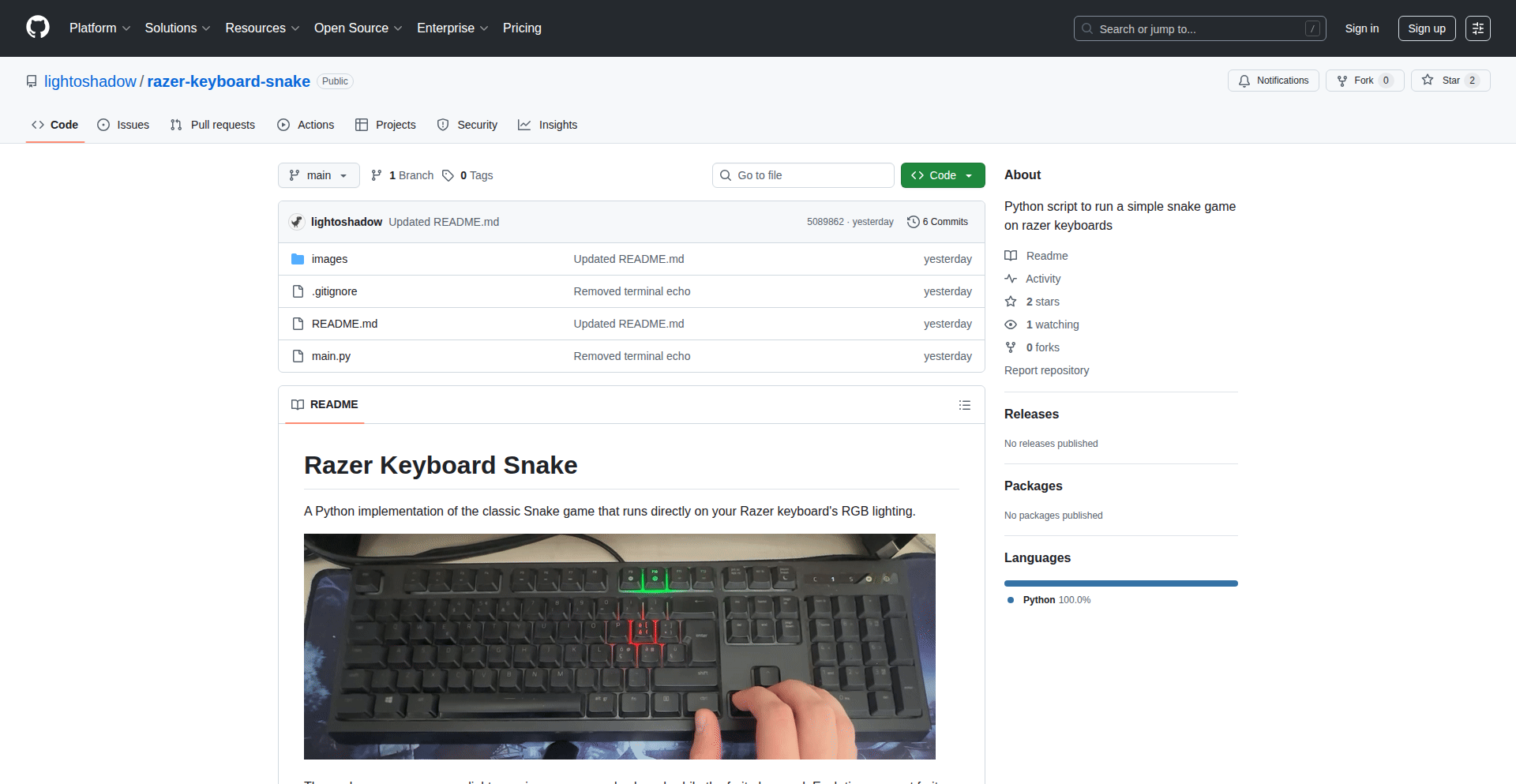

16

ChromaSnake: Keyboard-as-a-Display Snake Game

Author

lightofshadow

Description

This project is a Python script that turns a Razer keyboard into a retro display for the classic Snake game. Using the OpenRazer drivers, it lets users play Snake directly on the keyboard. Each key lights up to represent part of the snake's body, the food, or empty space. It is a playful way to repurpose a peripheral as an interactive display.
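The heart of a keyboard-as-display game is mapping a small game grid onto the keyboard's LED matrix, one key per cell. The sketch below covers only the game-state side. It advances the snake one cell and renders a frame of RGB tuples, one per (row, column). The sketch assumes a rectangular LED matrix, and the grid size and colors are placeholders. It leaves out the step of sending each frame to the hardware through OpenRazer, because the post does not show the project's exact calls.

```python
from typing import Optional

Cell = tuple[int, int]          # (row, col) on the keyboard's LED matrix
RGB = tuple[int, int, int]

EMPTY: RGB = (0, 0, 0)
SNAKE: RGB = (0, 255, 0)
FOOD: RGB = (255, 0, 0)

DIRECTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def step(snake: list[Cell], direction: str, food: Cell, rows: int, cols: int) -> Optional[tuple[list[Cell], bool]]:
    """Move the snake one cell, wrapping at the edges. snake[0] is the head.

    Returns (new_snake, ate_food), or None if the snake ran into itself.
    """
    dr, dc = DIRECTIONS[direction]
    head = ((snake[0][0] + dr) % rows, (snake[0][1] + dc) % cols)
    ate = head == food
    body = snake if ate else snake[:-1]   # grow by keeping the tail when eating
    if head in body:
        return None
    return [head] + body, ate


def render(snake: list[Cell], food: Cell, rows: int, cols: int) -> list[list[RGB]]:
    """Build one frame: a rows x cols grid of colors, one per key."""
    frame = [[EMPTY] * cols for _ in range(rows)]
    r, c = food
    frame[r][c] = FOOD
    for r, c in snake:
        frame[r][c] = SNAKE
    return frame
```

Dropping the tail before the collision check lets the head move into the cell the tail is just leaving, which matches how classic Snake behaves.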

Popularity

Points 3

Comments 2

What is this product?