Show HN Today: Discover the Latest Innovative Projects from the Developer Community

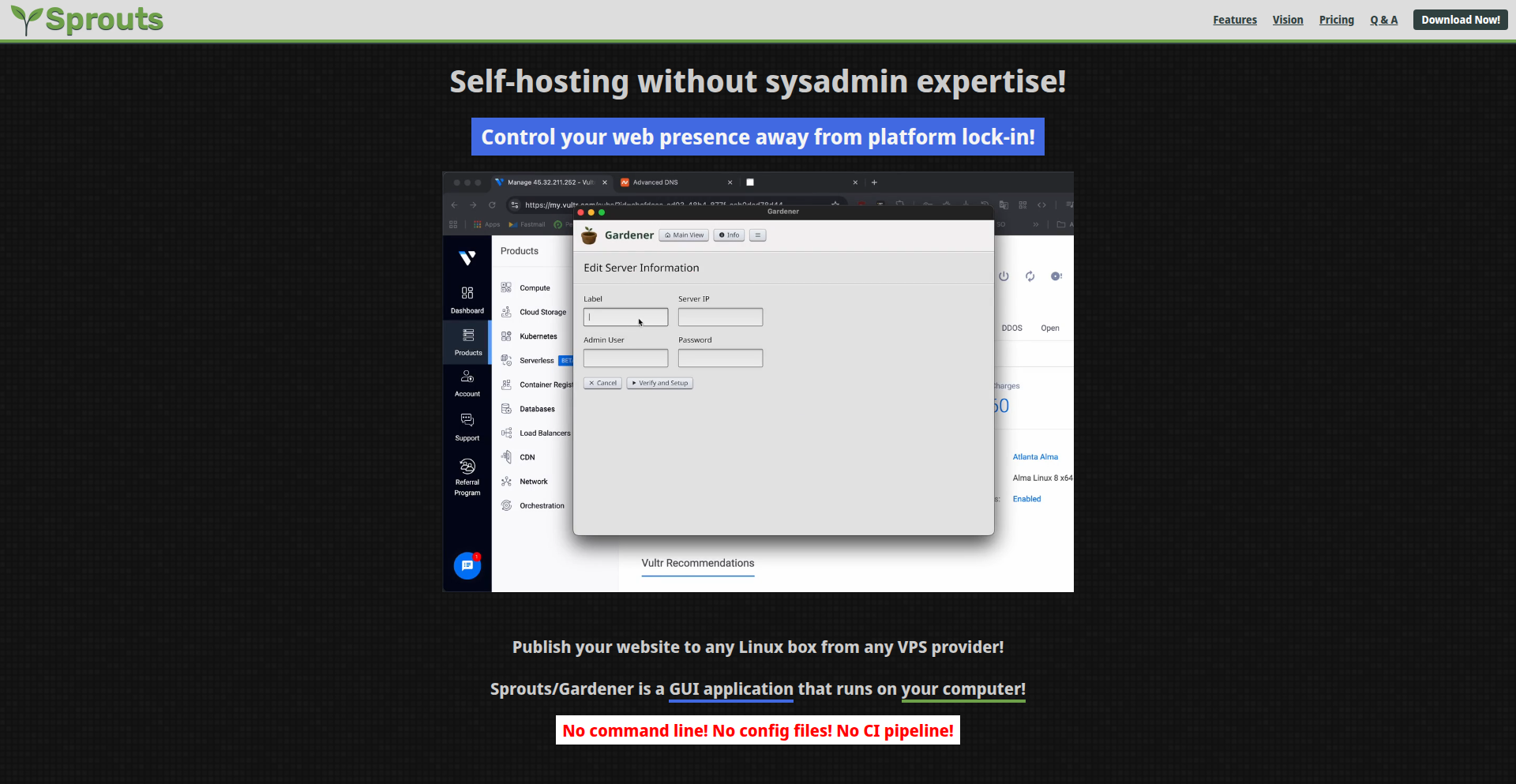

Show HN Today: Top Developer Projects Showcase for 2025-09-30

SagaSu777 2025-10-01

Explore the hottest developer projects on Show HN for 2025-09-30. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The current wave of innovation on Show HN is a testament to the burgeoning power of AI, especially in its application towards augmenting human capabilities. We're seeing a strong trend towards building 'agentic workflows' – systems where AI agents can autonomously perform complex tasks, from coding and content generation to financial analysis and security testing. This isn't just about automation for automation's sake; it's about creating intelligent co-pilots that handle the drudgery, allowing developers and creators to focus on higher-level problem-solving and creativity. For developers, this means exploring how to build and integrate these agents, understanding the nuances of prompt engineering, and developing robust frameworks for managing their execution. For entrepreneurs, the opportunity lies in identifying specific domains where AI agents can dramatically improve efficiency, reduce costs, or unlock entirely new possibilities. The emphasis on secure development environments, like Sculptor's use of Docker, highlights a critical need for responsible AI deployment. Furthermore, the proliferation of tools for data extraction, analysis, and personalized experiences underscores a broader digital shift towards data-informed decision-making and tailored user journeys. The spirit of the hacker is alive and well, with developers building open-source solutions that democratize access to powerful technologies and challenge existing paradigms.

Today's Hottest Product

Name

Sculptor – A UI for Claude Code

Highlight

This project tackles the complexities of running parallel coding agents by leveraging Docker containers for isolation and security. The "Pairing Mode" for real-time IDE synchronization is a clever innovation, bridging the gap between AI code generation and human developer workflow. It offers a robust solution for managing agent conflicts and dependencies, demonstrating a practical application of containerization for advanced AI development workflows. Developers can learn about secure agent management, Docker orchestration, and real-time code synchronization techniques.

Popular Category

AI/ML

Developer Tools

Productivity

Web Development

Fintech

Popular Keyword

AI Agents

LLM

Docker

Productivity Tools

Developer Workflow

Security

Web3

Data Extraction

Automation

Technology Trends

AI-driven Automation

Agentic Workflows

Secure Development Environments

Decentralized Finance (DeFi) Infrastructure

Enhanced Developer Tooling

Data Extraction and Analysis

Personalized User Experiences

Cross-Platform Development

Open-Source Innovation

Project Category Distribution

AI/ML & Agentic Systems (25%)

Developer Tools & Productivity (30%)

Web & App Development (15%)

Fintech & Payments (10%)

Data & Analytics (5%)

Security & Infrastructure (5%)

Creative & Design Tools (5%)

Utilities & Miscellaneous (5%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | Sculptor: Parallel Agent IDE Orchestrator | 159 | 74 |
| 2 | FaceVerified Social Connect | 18 | 20 |
| 3 | Peanut Protocol: Decentralized Global Payments | 13 | 16 |
| 4 | Glide: The Extensible Keyboard-Centric Web Browser | 19 | 3 |
| 5 | CulinaryWiki: The Recipe Hacker's Encyclopedia | 18 | 0 |
| 6 | ProcASM Visual Coder | 12 | 1 |
| 7 | shadcn/studio: Visual Component Engineering | 6 | 6 |
| 8 | PDF-Extractor-Forge | 10 | 2 |
| 9 | Waveform GDB: JPDB for Hardware Debugging | 10 | 1 |
| 10 | AgenticSDLC Automator | 8 | 1 |

1

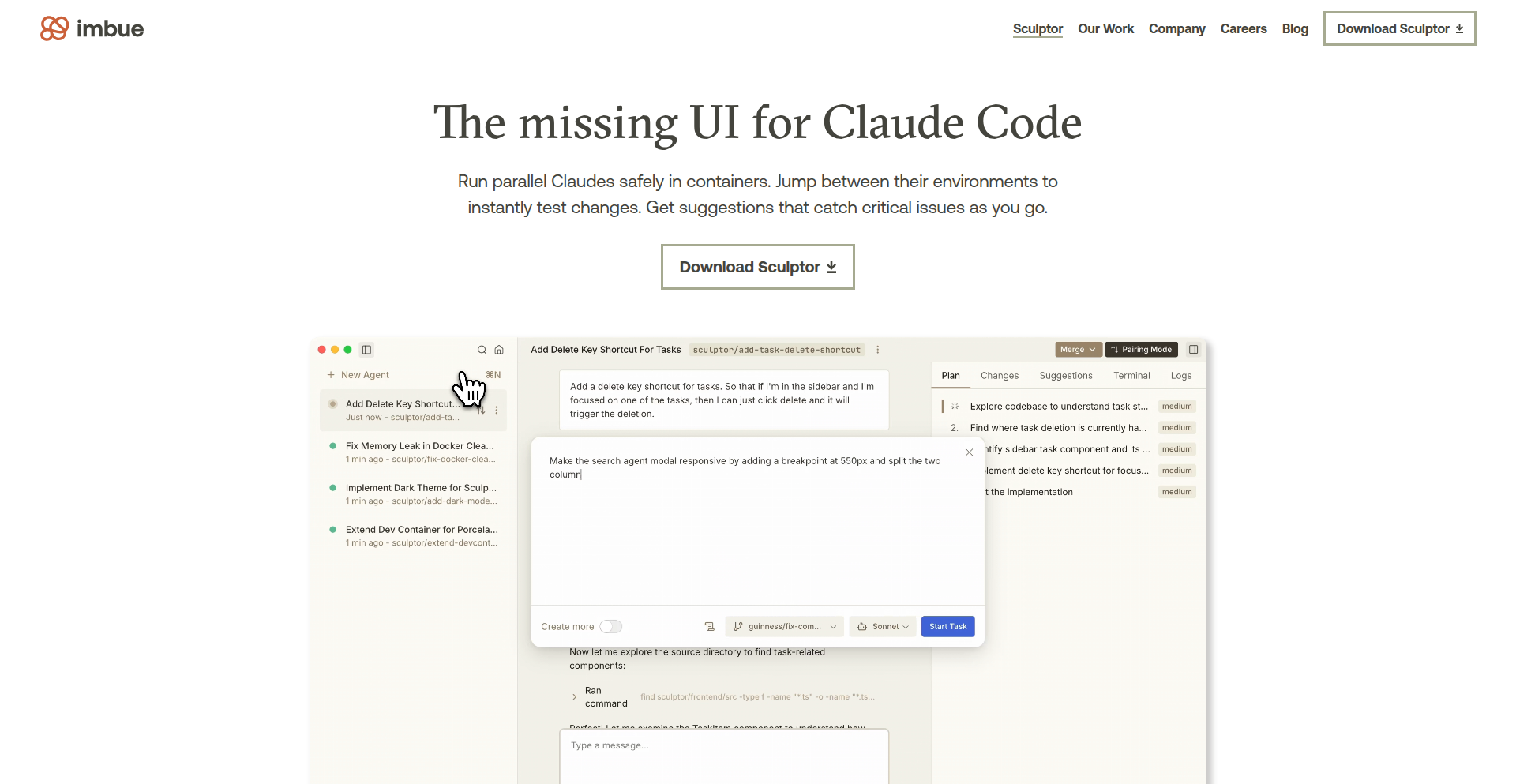

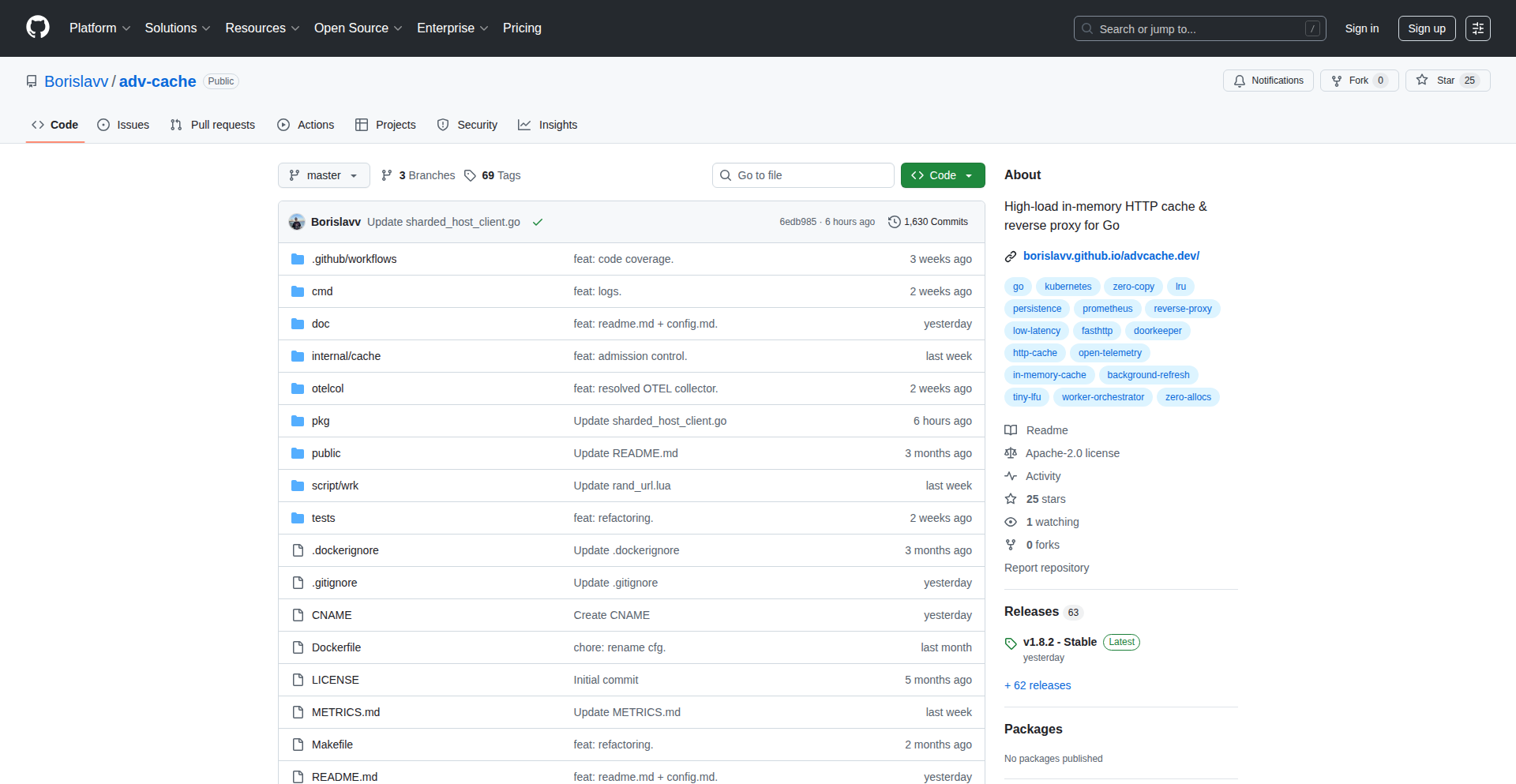

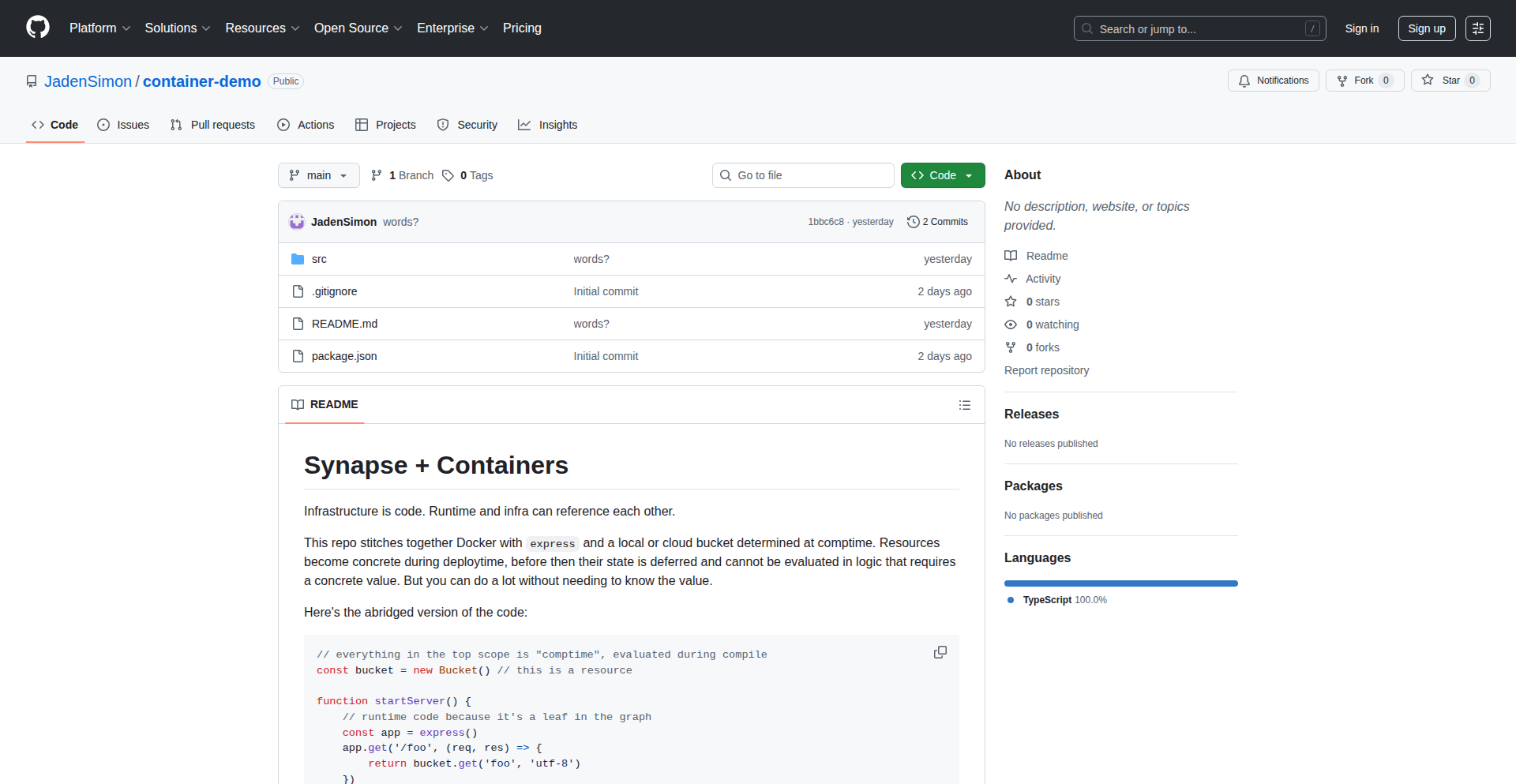

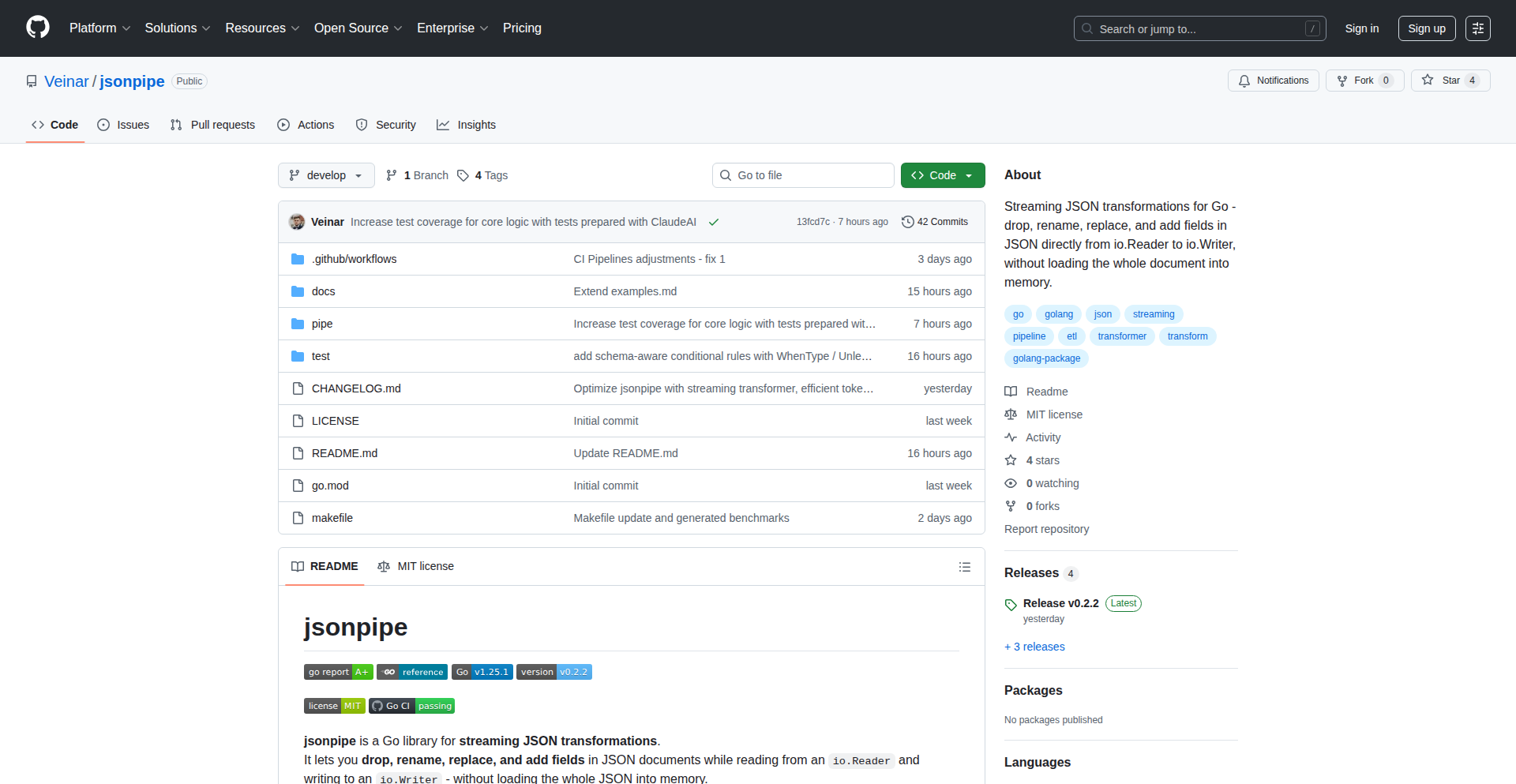

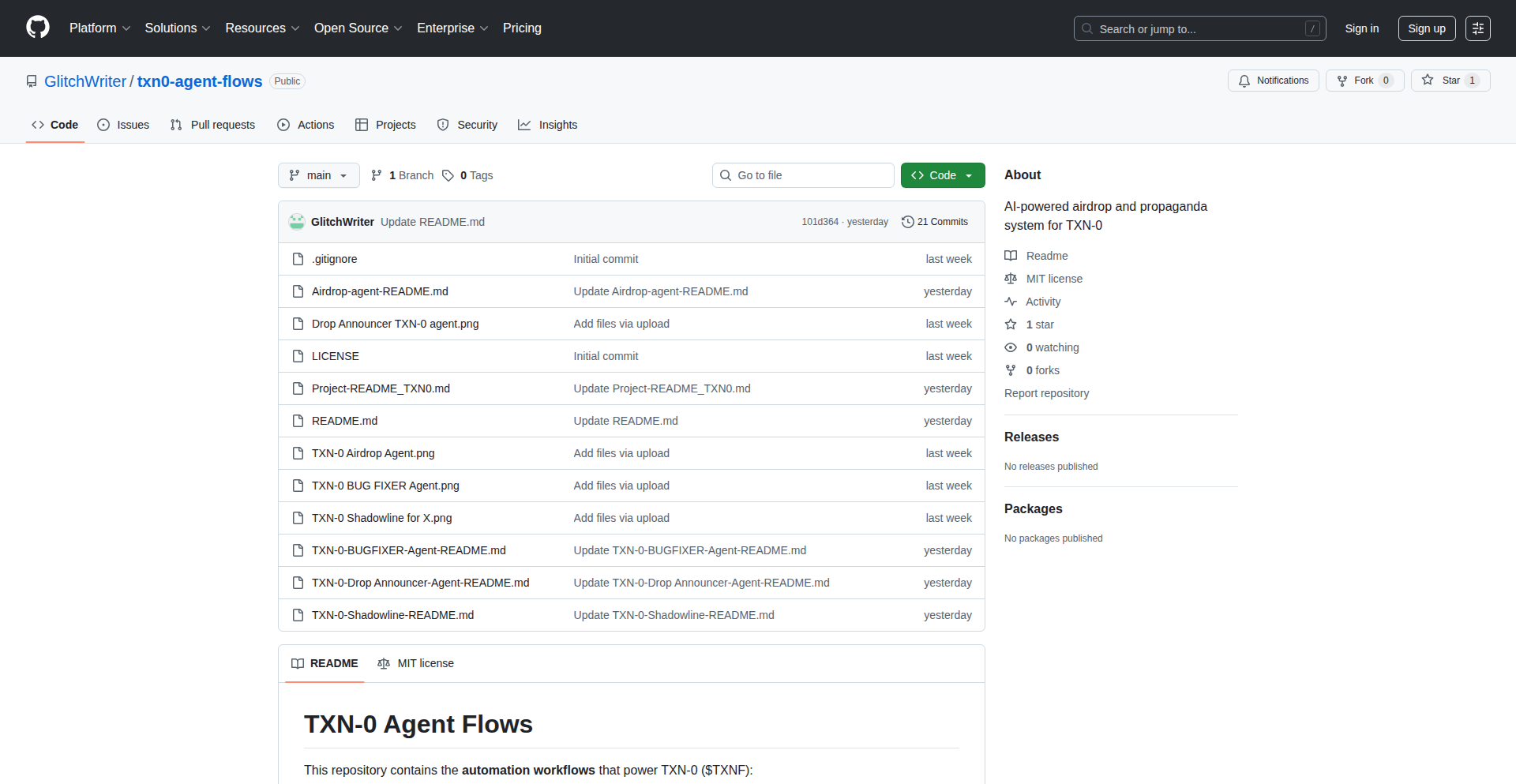

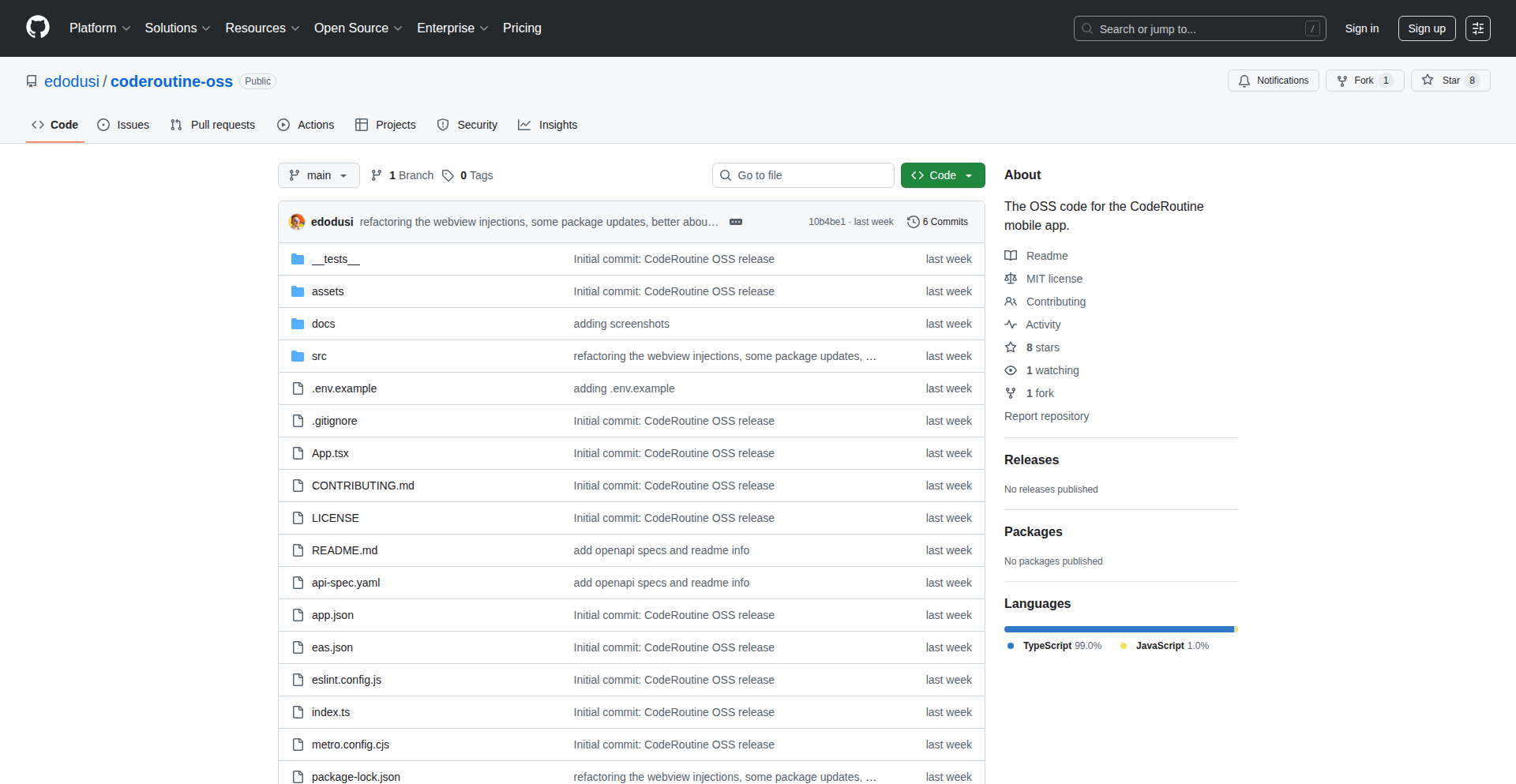

Sculptor: Parallel Agent IDE Orchestrator

Author

thejash

Description

Sculptor is a desktop application designed to provide a seamless and secure user interface for running multiple AI coding agents, like Claude Code, in parallel. It tackles common issues such as dependency management, merge conflicts, and security concerns by isolating each agent within its own Docker container. This allows developers to leverage the power of AI assistants without compromising their local development environment or facing tedious permission prompts. A key innovation is 'Pairing Mode,' which enables real-time, bidirectional synchronization of agent code with your IDE, facilitating collaborative coding and testing directly within your familiar development tools. So, what's in it for you? Sculptor empowers you to safely experiment with and deploy multiple AI coding agents simultaneously, streamlining your development workflow and enhancing productivity by resolving common friction points in AI-assisted coding.

Popularity

Points 159

Comments 74

What is this product?

Sculptor is a desktop application that acts as a sophisticated management layer for AI coding agents. The core technical innovation lies in its use of Docker containers to isolate each agent. This means that each AI agent runs in its own sandboxed environment, preventing it from interfering with your system or other agents. This isolation is crucial for preventing issues like dependency conflicts (where different agents might need different versions of the same software) or accidental deletion of files. The 'Pairing Mode' is another significant technical advancement, offering a real-time, two-way synchronization between the AI agent's codebase and your Integrated Development Environment (IDE). This is achieved through a clever mechanism that bridges the containerized environment with your local machine, allowing you to see and edit the code the AI is working on as it generates it, and vice-versa. So, what's in it for you? You get a secure, organized, and highly interactive way to use powerful AI coding assistants, making complex AI development tasks much more manageable and efficient.
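To make the one-container-per-agent idea concrete, here is a minimal sketch that builds a separate `docker run` command for each agent. It is not Sculptor's implementation: the `AgentSpec` type, the image names, the host paths, and the `sleep infinity` placeholder process are all assumptions chosen for illustration. Only standard `docker run` flags are used.

```python
import shlex
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentSpec:
    """Hypothetical description of one coding agent's sandbox."""
    name: str      # used to derive the container name
    image: str     # image that carries this agent's dependencies
    workdir: str   # host directory holding this agent's copy of the repo


def docker_run_args(agent: AgentSpec) -> list[str]:
    """Build a `docker run` command that starts one isolated container."""
    return [
        "docker", "run",
        "--rm",                                      # remove the container on exit
        "--detach",                                  # run in the background
        "--name", f"agent-{agent.name}",
        "--volume", f"{agent.workdir}:/workspace",   # only this directory is shared
        "--workdir", "/workspace",
        agent.image,
        "sleep", "infinity",                         # placeholder for the agent process
    ]


# Two agents with different toolchains that never see each other's files.
agents = [
    AgentSpec("refactor", "python:3.12-slim", "/tmp/agents/refactor"),
    AgentSpec("tests", "node:22-slim", "/tmp/agents/tests"),
]

for agent in agents:
    # Printed rather than executed, so the sketch runs without Docker installed.
    print(shlex.join(docker_run_args(agent)))
```

Because each agent gets its own image and its own mounted directory, conflicting dependency versions and stray file deletions stay inside that agent's container.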

How to use it?

Developers can use Sculptor by installing it as a desktop application. Once installed, they can configure and launch their AI coding agents, such as Claude Code, within Sculptor. The application will automatically set up separate Docker containers for each agent. To engage with the agents, developers can utilize the 'Pairing Mode'. This feature integrates with popular IDEs, allowing for seamless code synchronization. You simply select your IDE and the project you want to work on, and Sculptor will ensure that the code being generated or modified by the AI agent is mirrored in your IDE, and vice versa. Alternatively, for more manual control, you can pull and push code changes between the containerized agent and your local environment. So, what's in it for you? You can easily set up and manage your AI coding team, directly interact with their work within your favorite coding tools, and accelerate your development cycles through real-time collaboration with AI.

Product Core Function

· Parallel Agent Execution: Safely run multiple AI coding agents simultaneously in isolated Docker containers, preventing conflicts and ensuring system stability. This allows you to leverage the power of several AI assistants at once for different tasks, accelerating your overall development process.

· Agent Isolation via Docker: Each AI agent operates in its own containerized environment, protecting your local system from potential side effects or security risks, and ensuring dependency consistency. This means you can experiment freely without worrying about breaking your main development setup.

· Real-time IDE Pairing Mode: Bidirectionally sync code between the AI agent's container and your IDE, enabling live editing and testing of AI-generated code. This allows for immediate feedback loops and collaborative coding with AI, making the development process much more interactive and efficient.

· Manual Code Pull/Push: Provides traditional version control capabilities to manually transfer code between the agent's container and your local machine, offering flexibility for developers who prefer explicit control over code integration.

· Future State Forking and Rollback: Lets you 'fork' the entire state of an agent's conversation and container, and roll back to previous states. This is invaluable for experimenting with different AI approaches or recovering from unintended changes, providing a safety net for complex AI development.

· Security and Permission Management: Eliminates the need for repetitive tool permission prompts by managing agent access within the secure containerized environment. This simplifies the workflow and enhances security by reducing the attack surface.

· Dependency Management: Avoids issues with differing or conflicting dependencies required by various AI agents by isolating them in their own environments. This ensures that each agent has the precise tools it needs to function without impacting others.
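The bidirectional sync behind Pairing Mode is not described in detail, so the following is only a sketch of one simple policy such a feature could use: compare each file on both sides and copy the newer version across. The `FileState` type, the `plan_sync` function, and the "newest edit wins, ties favor the host" rule are assumptions, and deletions are deliberately not handled (a file missing on one side is treated as new on the other).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileState:
    mtime: float   # last-modified timestamp
    digest: str    # content hash, used to skip identical files


def plan_sync(local: dict[str, FileState],
              agent: dict[str, FileState]) -> dict[str, str]:
    """Return {path: action}, where action is 'to_agent' or 'to_local'."""
    plan: dict[str, str] = {}
    for path in sorted(local.keys() | agent.keys()):
        mine, theirs = local.get(path), agent.get(path)
        if mine is None:
            plan[path] = "to_local"    # file exists only in the container
        elif theirs is None:
            plan[path] = "to_agent"    # file exists only on the host
        elif mine.digest == theirs.digest:
            continue                   # same content, nothing to copy
        elif mine.mtime >= theirs.mtime:
            plan[path] = "to_agent"    # host edit is newer (ties favor the host)
        else:
            plan[path] = "to_local"    # agent edit is newer
    return plan


local = {"app.py": FileState(100.0, "aaa"), "notes.md": FileState(50.0, "nnn")}
agent = {"app.py": FileState(120.0, "bbb"), "test_app.py": FileState(110.0, "ttt")}
print(plan_sync(local, agent))
# {'app.py': 'to_local', 'notes.md': 'to_agent', 'test_app.py': 'to_local'}
```

A real tool would also need conflict handling when both sides change the same file between syncs; this sketch silently picks the newer one.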

Product Usage Case

· Scenario: A developer is working on a complex web application and wants to use an AI agent to refactor a large codebase, another to write unit tests, and a third to generate API documentation simultaneously. Sculptor allows these agents to run in parallel, each in its own container, preventing dependency clashes and ensuring the refactoring agent doesn't interfere with the test generation. Pairing Mode then lets the developer see the refactored code appear in their IDE in real-time and immediately write tests against it. So, what's in it for you? You can accelerate your development by having multiple AI tasks running concurrently and seamlessly integrated into your workflow.

· Scenario: A team of developers is experimenting with different AI models for generating game assets. They need to test each model's output independently to compare results. Sculptor's containerization ensures that each AI model's dependencies are kept separate, preventing conflicts. The ability to 'fork' conversations and states allows them to save specific experimental branches for later review or comparison without affecting ongoing work. So, what's in it for you? You can safely explore multiple AI possibilities and meticulously compare their outputs without introducing system instability or data loss.

· Scenario: A junior developer is struggling with a challenging bug. They decide to use an AI agent to help debug. Sculptor's Pairing Mode allows the developer to watch the AI agent's code suggestions appear directly in their IDE and then manually edit or test those suggestions in real-time. If the AI's suggestion introduces a new problem, the developer can easily roll back to a previous working state. So, what's in it for you? You can learn from AI-assisted debugging in an interactive and forgiving environment, improving your problem-solving skills and understanding of code.

· Scenario: An AI model is being trained or fine-tuned for a specific coding task. This process can sometimes have unintended side effects on the system or require specific, isolated environments. Sculptor provides a secure and isolated Docker container for this AI agent, ensuring that the training process doesn't corrupt other projects or the developer's main system. So, what's in it for you? You can confidently run intensive AI training or fine-tuning operations without risking your development environment.

2

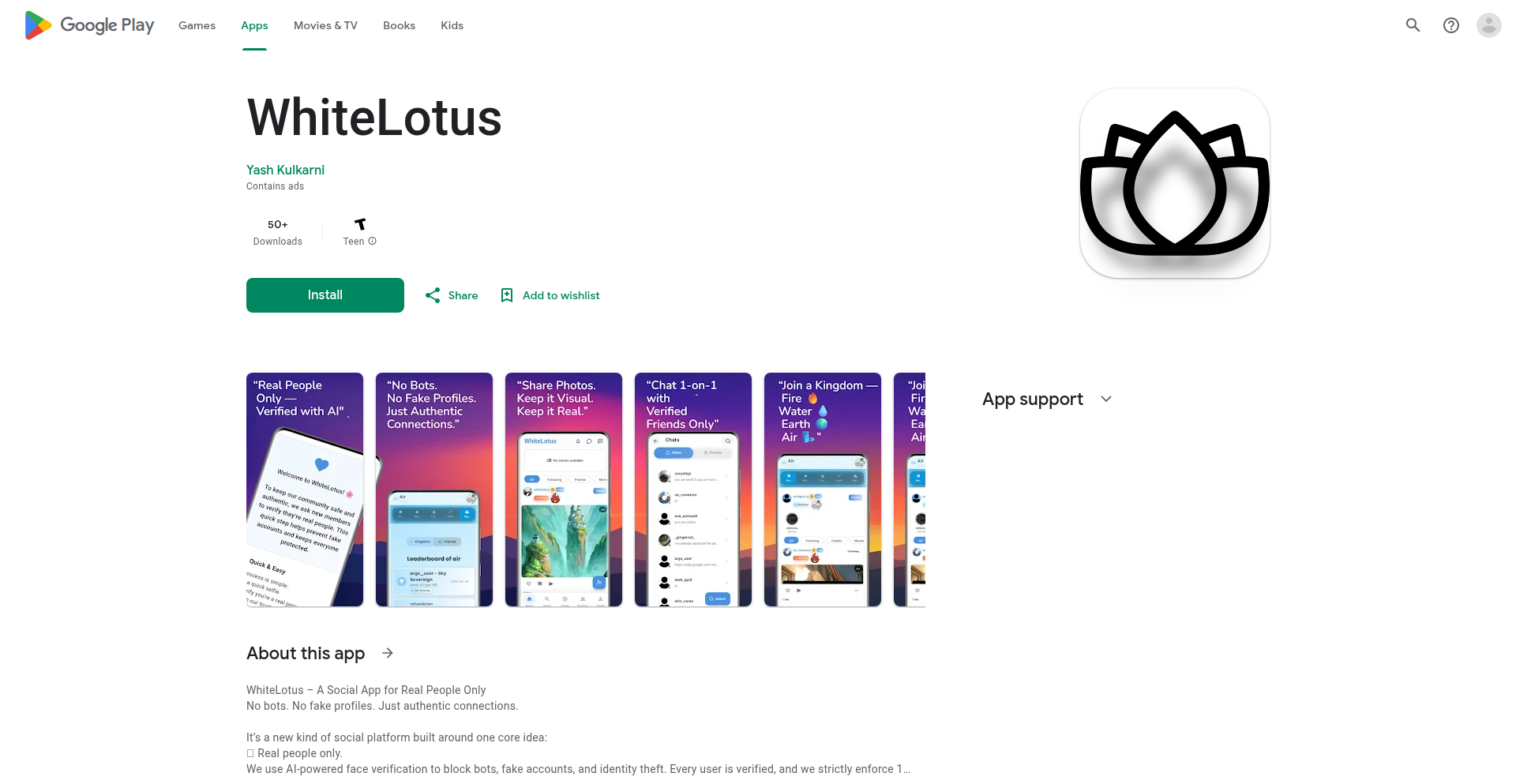

FaceVerified Social Connect

Author

whitelotusapp

Description

A social networking app built to combat fake accounts and bots through AI-powered facial verification. It prioritizes genuine human interaction with private chats and photo-based posts, fostering a more trustworthy online community. The innovation lies in its rigorous identity verification system at the account creation level, aiming to solve the pervasive problem of online impersonation and spam.

Popularity

Points 18

Comments 20

What is this product?

FaceVerified Social Connect is a mobile social application that uses artificial intelligence (AI) to verify that each user account belongs to a real person. The goal is a social platform where the person you're interacting with is who they say they are. The core technology is a facial recognition system that analyzes a selfie during signup. If the AI detects a match to an existing verified account, or if the image quality is insufficient, it flags the account. This is meant to enforce one account per person, which should sharply reduce the bots, spam accounts, and fake profiles that plague other social networks. The approach addresses the fundamental issue of trust and authenticity online, offering a cleaner and more genuine user experience.

How to use it?

Developers can use FaceVerified Social Connect as a model for building trust-centric applications. The core concept of strict identity verification can be applied to various scenarios. For instance, a startup could integrate this verification method into their platform to ensure only legitimate users access premium features or participate in community discussions. A developer might also study the underlying AI implementation to understand how to build robust identity management systems. The app's architecture, likely using a backend framework like Django for API management and Flutter for the cross-platform frontend, provides a reference for building scalable and secure mobile applications. The immediate use case for end-users is to join a social network where interactions are more meaningful and less prone to deception.

Product Core Function

· AI-powered facial verification: Ensures each user is a unique individual, preventing bots and fake profiles. This addresses the problem of misleading online identities and enhances user safety by creating a more authentic social environment.

· One-on-one private chats: Facilitates secure and direct communication between friends, fostering deeper connections without the noise of public feeds. This solves the issue of unwanted DMs and spam messages often found on larger platforms.

· Photo-based posting: Encourages visual and genuine content sharing, keeping the platform focused on real-life experiences. This tackles the superficiality and potential for misinformation often found in text-heavy or highly curated content.

· Community 'Kingdoms': Organizes users into interest-based tribes (e.g., Fire, Water, Earth, Air), promoting a sense of belonging and shared identity. This addresses the need for focused communities and allows for more targeted interactions.

· Leveling system for trustworthiness: Gamifies user engagement and reputation, indicating how active and reliable a user is. This builds social capital and encourages positive behavior within the platform.

· Friend-based TrustNotes: Allows users to leave positive feedback about their friends, building a reputation system based on peer endorsement. This provides social proof and reinforces positive user interactions.

Product Usage Case

· Building a dating app where verified identities reduce catfishing and ensure genuine romantic pursuits. The facial verification acts as an initial filter for serious users.

· Creating a professional networking platform where real profiles guarantee legitimate connections and prevent spam recruitment. This ensures that users are interacting with actual professionals in their field.

· Developing a community forum for sensitive topics where verified users ensure a safe and respectful environment, free from trolls and malicious actors. This provides a secure space for open and honest discussion.

· Implementing a customer feedback system where verified users provide genuine product reviews, helping businesses understand real customer sentiment. This ensures that feedback is from actual customers, not fake accounts.

· Designing a platform for online events or workshops where participants are real individuals, enhancing the quality of interaction and networking opportunities. This ensures that participants are genuine and actively engaged.

3

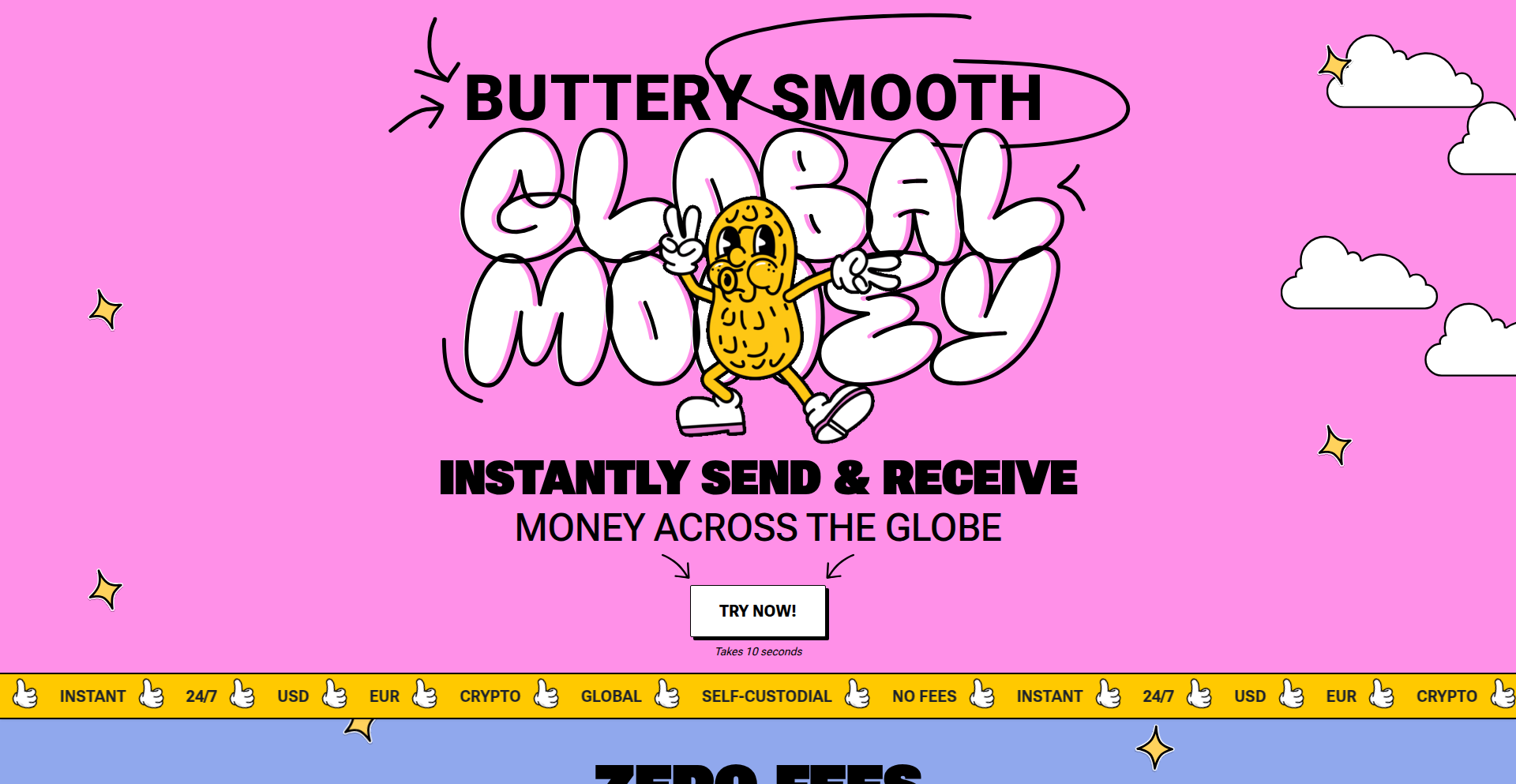

Peanut Protocol: Decentralized Global Payments

Author

montenegrohugo

Description

Peanut Protocol is a revolutionary payment application that aims to make sending money as seamless and instant as sending a WhatsApp message, regardless of geographical location or existing payment systems. It tackles the fragmentation and high costs of traditional international bank transfers and country-specific payment apps by offering a unified, decentralized, and non-custodial platform. Leveraging Progressive Web App (PWA) technology and open-source principles, Peanut ensures users retain full control over their funds, secured by advanced passkey technology. It interoperates with existing banking systems, popular local payment apps like PIX and MercadoPago, and even cryptocurrencies, offering unparalleled flexibility. The core innovation lies in its ability to bridge these disparate payment networks into a single, user-friendly experience, with a unique 'send link' feature for effortless peer-to-peer transactions.

Popularity

Points 13

Comments 16

What is this product?

Peanut Protocol is a decentralized and non-custodial global payment application. Think of it as a modern digital wallet that breaks down the barriers of traditional finance. Instead of relying on central authorities or specific country-based systems, Peanut builds a network that connects various payment methods. It uses open web standards by being a Progressive Web App (PWA), meaning you can access it directly from your web browser without needing to download an app from an app store. This decentralization means you, and only you, have control over your money. The innovation is in its interoperability: it can send money through traditional banks, integrate with local payment apps like Brazil's PIX or Argentina's MercadoPago, and even handle cryptocurrency transactions. Funds are secured with passkeys, a more robust security measure than passwords, protecting you from common vulnerabilities. The entire system is open-source, meaning anyone can inspect the code, building trust and transparency. So, what's the big deal? It fundamentally changes how money moves, making it faster, cheaper, and more accessible for everyone, everywhere.

How to use it?

Developers can integrate Peanut Protocol into their workflows or applications to offer enhanced payment capabilities to their users. As a Progressive Web App (PWA), it's accessible via a web browser on any device. Users can generate a unique 'send link' for a specific transaction. This link can be shared with anyone, and the recipient can choose how they want to receive the funds from a variety of options supported by Peanut (e.g., bank transfer, local payment app, crypto). For developers, this means you can embed a payment option within your website or application that seamlessly connects to the Peanut network. This avoids the complexity of building multiple payment integrations for different regions or systems. You can think of it as a universal payment gateway that developers can leverage to simplify cross-border and cross-system transactions for their users, ultimately improving the user experience and reducing operational overhead. It's about making payments a background process, so your users can focus on what matters to them.
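The 'send link' flow can be pictured as a one-time claim token attached to a pending payment. The sketch below is a toy model under stated assumptions, not Peanut's API or protocol: the `pay.example` URL, the in-memory `_pending` store, and the `create_send_link` and `claim` functions are all hypothetical, and no funds move. It only shows how a link can carry a secret that the recipient redeems once, choosing their own payout method.

```python
import hashlib
import hmac
import secrets
from urllib.parse import parse_qs, urlsplit

BASE_URL = "https://pay.example/claim"   # hypothetical endpoint
_pending: dict[str, dict] = {}           # hypothetical store of unclaimed payments


def create_send_link(amount_cents: int, currency: str) -> str:
    """Register a pending payment and return a shareable claim link."""
    link_id = secrets.token_urlsafe(8)
    secret = secrets.token_urlsafe(32)
    _pending[link_id] = {
        "amount_cents": amount_cents,
        "currency": currency,
        # Only a hash of the secret is stored, never the secret itself.
        "secret_hash": hashlib.sha256(secret.encode()).hexdigest(),
        "claimed": False,
    }
    # The secret sits in the URL fragment, which browsers do not send to servers.
    return f"{BASE_URL}?id={link_id}#{secret}"


def claim(link: str, payout_method: str) -> dict:
    """Redeem a link once; the recipient picks how to receive the funds."""
    parts = urlsplit(link)
    record = _pending[parse_qs(parts.query)["id"][0]]
    offered = hashlib.sha256(parts.fragment.encode()).hexdigest()
    if record["claimed"] or not hmac.compare_digest(offered, record["secret_hash"]):
        raise PermissionError("link is invalid or already claimed")
    record["claimed"] = True
    return {
        "amount_cents": record["amount_cents"],
        "currency": record["currency"],
        "payout_method": payout_method,
    }


link = create_send_link(2500, "USD")
print(claim(link, "bank_transfer"))
```

Calling `claim` a second time with the same link raises `PermissionError`, which is the property that makes a link safe to send over a chat message.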

Product Core Function

· Instant Peer-to-Peer Payments: Allows for immediate money transfers between users, akin to messaging, simplifying quick transactions. The value is in reducing delays and friction in everyday financial exchanges.

· Cross-System Interoperability: Connects to traditional banking systems, popular local payment apps (like PIX, MercadoPago), and cryptocurrencies. This offers unparalleled flexibility, meaning users aren't locked into one system, and can send/receive money through their preferred method. The value is in eliminating geographical and technological payment silos.

· Decentralized and Non-Custodial Control: Users maintain full ownership and control of their funds, with no third party holding them. This significantly enhances security and resilience against issues like account freezes or institutional failures. The value is in providing true financial sovereignty and peace of mind.

· Progressive Web App (PWA) Implementation: Accessible via a web browser on any device without app store restrictions. This promotes open standards, reduces installation overhead for users, and ensures wider accessibility. The value is in democratizing access to advanced financial tools and circumventing app store gatekeepers.

· Passkey Security: Utilizes passkeys for robust authentication, offering better security than passwords or PINs. This protects user accounts from common hacking attempts and data breaches. The value is in significantly increasing the security of financial assets.

· Open-Source Codebase: The application's code is publicly available for scrutiny. This fosters transparency, allows for community contributions, and builds trust in the platform's integrity. The value is in ensuring accountability and allowing for continuous improvement by a global community.

Product Usage Case

· An e-commerce platform that wants to accept payments from a global customer base without dealing with complex international payment gateways. They can integrate Peanut to offer a simple checkout process where customers can pay using their local bank transfer, a popular regional app, or crypto, all managed seamlessly by Peanut. This solves the problem of lost sales due to payment friction and expands their market reach.

· Freelancers working with international clients who currently struggle with high bank transfer fees and long waiting times. They can use Peanut to generate a 'send link' for their invoice. The client receives the link and can pay instantly using their preferred method, and the freelancer receives the funds quickly with minimal fees. This accelerates payment cycles and improves cash flow.

· A developer building a community platform where members need to easily contribute small amounts for shared resources or events. Peanut can be integrated to allow members to send funds directly within the platform using a simple QR code or link, without needing to exchange sensitive banking details or use complicated payment processors. This fosters community engagement and simplifies financial coordination.

· Users living in regions with underdeveloped banking infrastructure but high smartphone penetration. They can leverage Peanut to access global payment networks, receive remittances from abroad, or make payments for online services using their mobile devices and local payment apps or crypto, bridging the digital divide in financial access. This empowers individuals with financial inclusion.

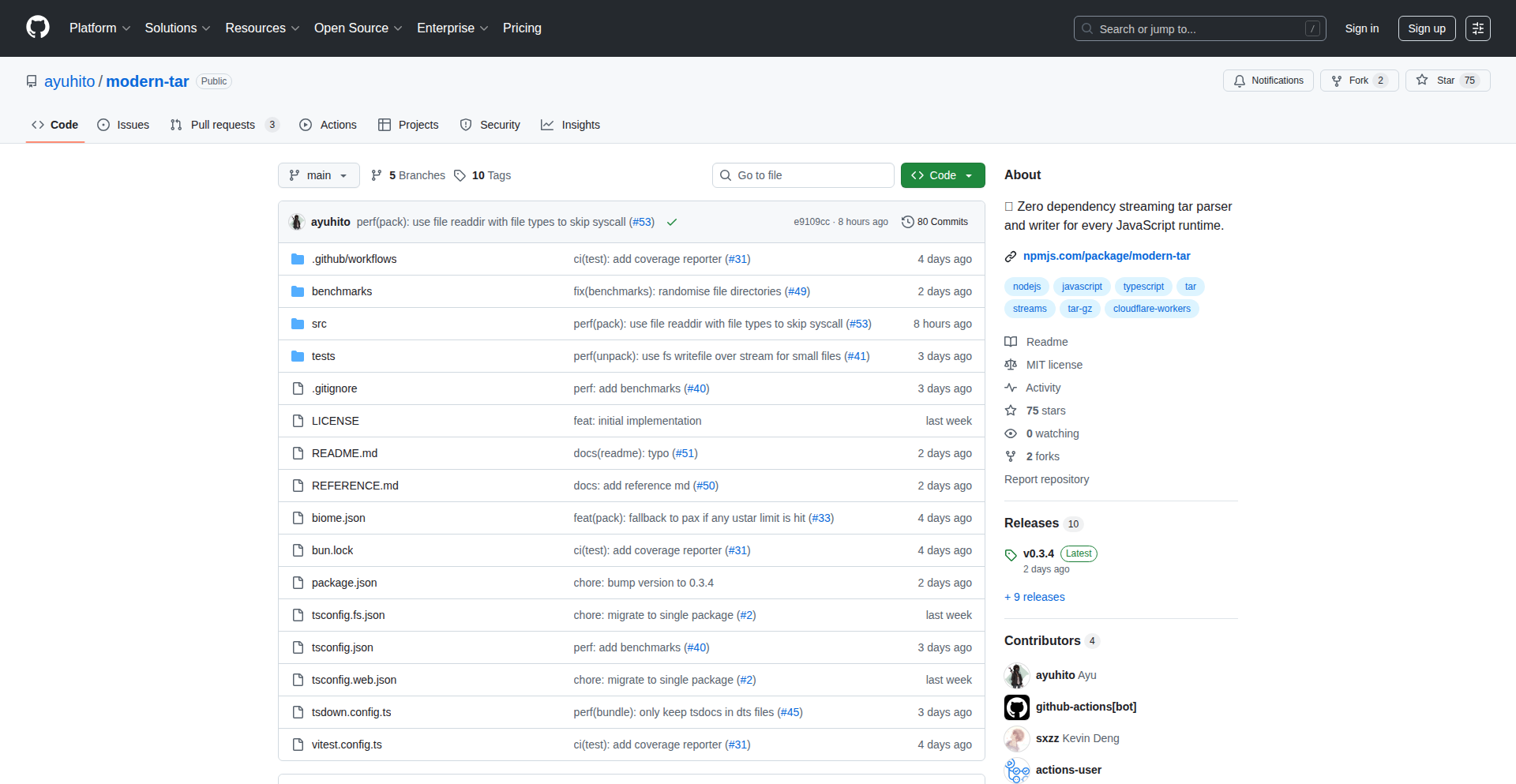

4

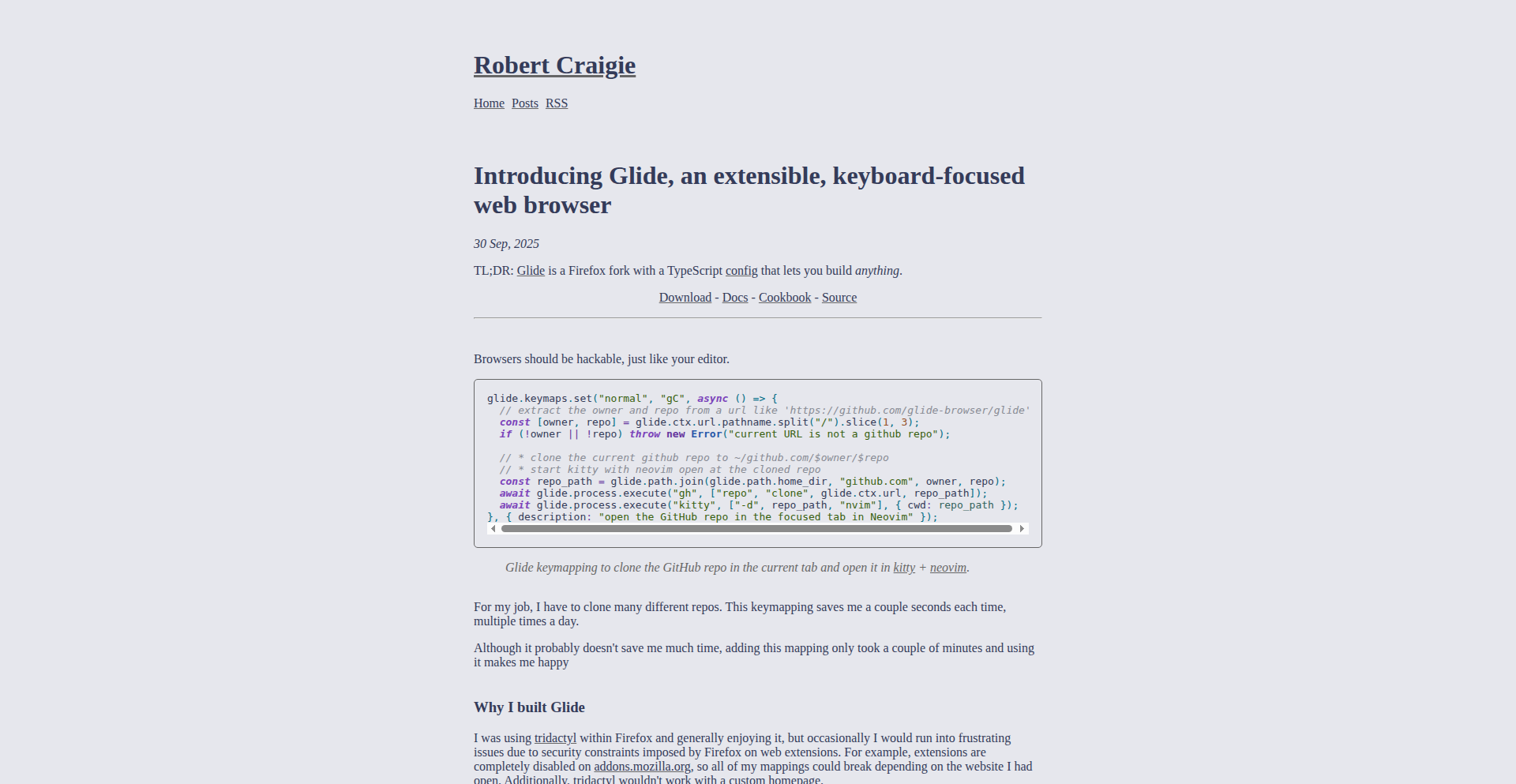

Glide: The Extensible Keyboard-Centric Web Browser

Author

probablyrobert

Description

Glide is a new web browser built from the ground up with extensibility and keyboard navigation at its core. It tackles the common problem of navigating the web efficiently without constantly reaching for the mouse, offering a powerful and customizable experience for power users. The innovation lies in its modular architecture and deep integration of keyboard shortcuts, aiming to significantly boost productivity for developers and avid web surfers.

Popularity

Points 19

Comments 3

What is this product?

Glide is a web browser designed to be highly customizable and operated primarily through keyboard commands. Unlike traditional browsers where mouse interaction is often primary, Glide puts the keyboard first. This means you can navigate tabs, open links, search, and interact with web content using keyboard shortcuts, reducing the need to switch to your mouse. Its extensible nature means developers can build new features and integrations, making it a truly personalized browsing tool. So, what's in it for you? You'll be able to browse the web much faster, reducing friction and saving time by keeping your hands on the keyboard.

How to use it?

Developers can use Glide by leveraging its extension API to build new functionalities or integrate with existing workflows. For example, you could create an extension to automate form filling, quickly bookmark pages with custom tags, or trigger specific developer tools. Users can customize keyboard shortcuts to match their preferred workflow, install pre-built extensions from the community, or even contribute their own. This allows for a tailored browsing experience that fits individual needs. So, how can you use it? You can install Glide and immediately start using its default keyboard shortcuts for faster navigation, or dive into customizing them and exploring extensions to supercharge your browsing and development tasks.
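Customizable shortcuts, including multi-key sequences, usually come down to a lookup table from key sequences to actions. The sketch below shows that idea in isolation; it is not Glide's extension API or configuration format, and the `Keymap` class, the key names, and the returned action strings are assumptions for illustration.

```python
from typing import Callable, Optional


class Keymap:
    """Maps space-separated key sequences (e.g. 'g t') to actions."""

    def __init__(self) -> None:
        self._bindings: dict[tuple[str, ...], Callable[[], str]] = {}
        self._pending: tuple[str, ...] = ()

    def bind(self, keys: str, action: Callable[[], str]) -> None:
        self._bindings[tuple(keys.split())] = action

    def press(self, key: str) -> Optional[str]:
        """Feed one key press; run and return the action once a sequence completes."""
        self._pending += (key,)
        action = self._bindings.get(self._pending)
        if action is not None:
            self._pending = ()
            return action()
        # Keep waiting only if some binding starts with the keys typed so far.
        n = len(self._pending)
        if not any(seq[:n] == self._pending for seq in self._bindings):
            self._pending = ()
        return None


keymap = Keymap()
keymap.bind("g t", lambda: "next-tab")
keymap.bind("g T", lambda: "previous-tab")
keymap.bind("ctrl+d", lambda: "scroll-half-page-down")

print(keymap.press("g"))       # None (waiting for the second key)
print(keymap.press("t"))       # next-tab
print(keymap.press("ctrl+d"))  # scroll-half-page-down
```

One limitation of this simple version: if one binding is a prefix of another (say `g` and `g t`), the shorter one fires immediately, so a real implementation would need a timeout or a rule against overlapping bindings.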

Product Core Function

· Extensible Architecture: Allows developers to build custom extensions and integrations, adding new features and functionalities to the browser. This offers a significant value by enabling users to tailor the browser to their specific needs and workflows, potentially automating repetitive tasks or adding specialized tools for development. So, what's in it for you? You get a browser that can grow and adapt with your evolving requirements.

· Keyboard-Focused Navigation: Enables efficient web browsing and interaction solely through keyboard commands, minimizing mouse usage. This provides a tangible benefit by increasing browsing speed and reducing physical strain, especially for those who spend a lot of time online or have ergonomic concerns. So, what's in it for you? You can navigate the web faster and more comfortably.

· Modular Design: The browser's components are designed to be independent, making it easier to update, maintain, and customize specific parts without affecting the whole. This translates to a more stable and flexible browsing experience, allowing for quicker adoption of new web technologies and features. So, what's in it for you? You get a more robust and adaptable browser.

· Customizable Shortcuts: Users can define and modify keyboard shortcuts to suit their personal preferences and workflow. This empowers users to create their ideal browsing environment, optimizing efficiency and personalizing their interaction with the web. So, what's in it for you? You can browse the web in a way that feels most natural and efficient for you.

Product Usage Case

· Developer Productivity Boost: A developer could create an extension that, upon pressing a specific shortcut, immediately opens their favorite code editor with the current webpage's source code. This solves the common problem of quickly inspecting and modifying web content. So, what's in it for you? You can streamline your web development workflow and iterate faster.

· Efficient Information Gathering: A researcher could set up custom shortcuts to quickly save articles to a specific note-taking app, tag them, and then archive them, all without leaving the browser window and without touching the mouse. This addresses the challenge of managing information overload. So, what's in it for you? You can organize and process information more effectively.

· Power User Browsing: An advanced user might create a series of chained keyboard commands to quickly open a set of frequently visited developer resources or documentation pages in new tabs. This makes accessing essential tools incredibly fast. So, what's in it for you? You can access your most used online resources with unparalleled speed.

5

CulinaryWiki: The Recipe Hacker's Encyclopedia

Author

fromwilliam

Description

CulinaryWiki is a specialized wiki designed to be a comprehensive culinary encyclopedia. It addresses the gap where general knowledge platforms like Wikipedia lack deep, specific information about ingredients' culinary properties, usage, and heritage. This project's innovation lies in its focused approach to building a community-driven knowledge base for food enthusiasts, starting with a rich entry for rosemary. The value is in providing precise, actionable culinary insights that go beyond basic definitions.

Popularity

Points 18

Comments 0

What is this product?

CulinaryWiki is a new kind of wiki, built specifically for cooking enthusiasts. Instead of broad information, it focuses on the details that matter most to cooks: how ingredients behave when cooked, their historical significance in cuisine, and the best ways to use them. The core idea is to harness the power of community contribution, just like Wikipedia, but with a sharp focus on food. For example, while Wikipedia might tell you rosemary is a plant, CulinaryWiki would tell you *why* it pairs perfectly with lamb, *how* to best release its flavor (e.g., bruising vs. whole sprigs), and its origins in Mediterranean cooking. This specialized depth is its key innovation, solving the problem of scattered or generic culinary information.

How to use it?

Developers can use CulinaryWiki as a valuable resource for understanding ingredients and techniques, which can then inform recipe development or feature creation in food-related applications. Imagine building a smart recipe app that suggests ingredient substitutions; CulinaryWiki could be a backend knowledge source. For the culinary community, it's a platform to share and discover expert-level food knowledge. Developers can integrate this knowledge, perhaps by contributing their own findings or by referencing the wiki's data for features that require deep culinary context. The project is essentially a call to action for food lovers and developers to collaboratively build a definitive culinary resource.
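If a food app treated wiki entries as a backend knowledge source, each entry might be modeled as a small structured record. This is a sketch of that idea only: CulinaryWiki's data format is not described, so the `IngredientEntry` fields and the `pairing_suggestions` helper are assumptions, and the single record below restates the rosemary example from the text above.

```python
from dataclasses import dataclass, field


@dataclass
class IngredientEntry:
    """Hypothetical structured view of one wiki entry."""
    name: str
    origin: str
    pairs_with: set[str] = field(default_factory=set)
    techniques: list[str] = field(default_factory=list)


def pairing_suggestions(entries: list[IngredientEntry], main: str) -> list[str]:
    """Names of ingredients recorded as pairing well with `main`."""
    return sorted(e.name for e in entries if main in e.pairs_with)


entries = [
    IngredientEntry(
        name="rosemary",
        origin="Mediterranean cooking",
        pairs_with={"lamb"},
        techniques=["bruise the sprigs", "use whole sprigs"],
    ),
]

print(pairing_suggestions(entries, "lamb"))       # ['rosemary']
print(pairing_suggestions(entries, "chocolate"))  # []
```

An empty result only means no entry records that pairing yet, which is exactly the kind of gap community contributions are meant to fill.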

Product Core Function

· Ingredient-focused Knowledge Base: Provides detailed information on specific ingredients, their culinary properties, and historical context. This is valuable because it offers actionable insights for cooks and developers building food-related features, moving beyond generic definitions.

· Community-driven Content Creation: Leverages a wiki model to allow users to contribute and edit information, fostering a collaborative environment for building comprehensive culinary knowledge. This is valuable as it democratizes the creation of highly specialized information, ensuring a rich and diverse knowledge pool.

· Specialized Culinary Data: Focuses exclusively on culinary applications, offering unique insights into ingredient usage and flavor profiles not found in general encyclopedias. This is valuable for anyone seeking to deepen their understanding or build precise culinary tools.

· Example-driven Content: Uses specific examples, like the rosemary entry, to illustrate how information is presented and its practical application. This is valuable as it demonstrates the immediate utility and learning potential of the wiki.

Product Usage Case

· A developer building a personalized meal planning app could use CulinaryWiki to find detailed information about how certain spices affect flavor profiles, helping to create more nuanced and appealing meal suggestions for users.

· A food blogger seeking to explain the heritage and best uses of a less common herb could reference CulinaryWiki for accurate, well-researched content, saving significant research time and adding depth to their articles.

· A restaurant owner looking to train staff on the nuances of specific ingredients could use CulinaryWiki as a supplementary educational resource to enhance their team's culinary knowledge and customer service.

· A home cook curious about the scientific reasons behind cooking techniques (e.g., why searing meat creates flavor) could find detailed explanations and practical tips on CulinaryWiki, improving their cooking outcomes.

6

ProcASM Visual Coder

Author

Temdog007

Description

ProcASM v1.1 is a general-purpose visual programming language that allows users to create software through a graphical interface. This update significantly revamps the user interface, moving from a custom SDL3-based GUI to a web-native HTML, CSS, and JavaScript front-end, making it more accessible. The backend handles project storage and user requests, with a comprehensive tutorial available. It's an innovative approach to democratize programming by reducing the syntax barrier and focusing on logic.

Popularity

Points 12

Comments 1

What is this product?

ProcASM is a visual programming language designed to let you build software by connecting blocks and nodes, rather than writing lines of code. Think of it like building with LEGOs for programming. The core innovation is its visual paradigm, which abstracts away complex syntax, allowing users to focus on program logic and flow. Version 1.1's key technical leap is the complete overhaul of its user interface. Previously, it relied on a custom GUI built on SDL3 (a cross-platform graphics and input library) that was ported to the web. Now, the front-end is built with standard web technologies (HTML, CSS, JavaScript), meaning it runs directly in your web browser without any special installation. The backend is a server that manages your projects and processes your requests. This makes it much easier for anyone to try out programming, even if they've never written code before, and for experienced developers to quickly prototype ideas.
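
To make the block-and-node idea concrete, here is a minimal sketch of how a visual program could be represented and evaluated as a graph. It is not ProcASM's actual data model or execution engine: the `Node` class, the node names, and the `evaluate` function are all hypothetical, chosen only to illustrate how wiring blocks together can replace textual syntax.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Node:
    """One block on the canvas (hypothetical model, not ProcASM's format)."""
    name: str
    op: Callable[..., float]
    inputs: List[str] = field(default_factory=list)  # names of upstream nodes


def evaluate(graph: Dict[str, Node], target: str,
             cache: Optional[Dict[str, float]] = None) -> float:
    """Evaluate `target` by first evaluating every node wired into it."""
    if cache is None:
        cache = {}
    if target not in cache:
        node = graph[target]
        args = [evaluate(graph, name, cache) for name in node.inputs]
        cache[target] = node.op(*args)
    return cache[target]


# Two constant blocks feed an "add" block, which feeds a "double" block.
graph = {
    "a": Node("a", lambda: 2.0),
    "b": Node("b", lambda: 3.0),
    "sum": Node("sum", lambda x, y: x + y, ["a", "b"]),
    "double": Node("double", lambda x: x * 2, ["sum"]),
}
result = evaluate(graph, "double")
print(result)
```

The connections between nodes carry all the structure that parentheses and statement order would carry in a text language, which is the sense in which a visual editor lowers the syntax barrier.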

How to use it?

Developers can use ProcASM v1.1 directly in their web browser. You can access it, learn its functionalities through the provided text and video tutorials, and start building applications visually. For integration, you could potentially use ProcASM to generate code snippets or logic that can then be incorporated into larger projects written in traditional languages, or use it for rapid prototyping of concepts. The visual nature makes it ideal for educational purposes, teaching fundamental programming concepts to beginners, or for domain experts who want to express complex logic without becoming full-time coders. It's a sandbox for creative problem-solving with code.

Product Core Function

· Visual programming interface: Allows users to drag and drop components and connect them to define program logic, making complex algorithms understandable at a glance. This reduces the cognitive load associated with traditional coding, so you can focus on what you want your program to do.

· Web-native front-end: Runs directly in the browser using HTML, CSS, and JavaScript, offering a familiar and accessible user experience without installation headaches. This means you can start coding instantly from any device with a web browser, making it incredibly convenient.

· Backend project management: Stores user projects on a server and handles user requests, so work persists across sessions and devices. This ensures your work is saved and you can pick up where you left off, no matter where you are.

· Interactive tutorials: Comprehensive text and video guides walk users through the language features and application development process, lowering the barrier to entry for new users. This means you'll get up to speed quickly and confidently, even if you're new to programming.

· General-purpose language capabilities: Designed to handle a wide range of programming tasks, from simple scripts to more complex applications. This means you're not limited to niche use cases; you can use ProcASM to build a variety of software solutions.

Product Usage Case

· Educational setting: A teacher can use ProcASM to visually demonstrate programming concepts like loops, conditional statements, and data structures to students who are new to coding. Students can then experiment with these concepts in real-time, seeing the immediate results of their visual code, making learning engaging and effective.

· Rapid prototyping for designers: A UI/UX designer can use ProcASM to quickly build interactive prototypes of their designs, simulating user flows and interactions without needing to write extensive code. This allows for faster iteration and feedback cycles, ensuring the final product is user-friendly.

· Algorithm exploration for researchers: A researcher can use ProcASM to visually map out and test complex algorithms or data processing pipelines, making it easier to identify potential issues or optimize performance. This visual representation helps in understanding the intricate steps of an algorithm and finding more efficient ways to execute them.

· Automation of repetitive tasks: A small business owner can create visual scripts in ProcASM to automate simple, repetitive tasks like data entry or file management, saving time and reducing manual effort. This allows for greater efficiency and frees up time for more strategic business activities.

7

shadcn/studio: Visual Component Engineering

Author

Saanvi001

Description

shadcn/studio is a revolutionary approach to building user interfaces with shadcn/ui. It bridges the gap between design and code by providing a visual editor for shadcn/ui components, blocks, and templates. This innovation significantly accelerates UI development by allowing developers to visually assemble and customize pre-built, accessible UI elements, directly translating design into production-ready code. It tackles the common challenge of translating complex designs into functional code efficiently.

Popularity

Points 6

Comments 6

What is this product?

shadcn/studio is a visual development environment built on top of shadcn/ui, a popular collection of accessible, customizable React components. Instead of manually writing all the code for each UI element, shadcn/studio allows developers to drag, drop, and configure shadcn/ui components, their combinations (blocks), and even entire page layouts (templates) through a graphical interface. The core innovation lies in its ability to generate clean, production-ready code based on these visual configurations. This means you get the flexibility and customization of code with the speed and ease of a visual builder, all while leveraging the accessibility and best practices of shadcn/ui. So, what does this mean for you? It means you can build complex UIs faster and more intuitively, reducing development time and the potential for manual coding errors.

How to use it?

Developers can integrate shadcn/studio into their workflow by using it as a complementary tool to their existing shadcn/ui projects. The studio acts as a visual playground where you can select components, arrange them, adjust their properties (like colors, spacing, and content), and combine them into more complex structures. Once satisfied with the visual design, the studio generates the corresponding React code, which can then be copied and pasted directly into your project. This is particularly useful for prototyping, creating design systems, or quickly assembling common UI patterns. So, how can you use this? You can use it to quickly prototype new features, build out reusable UI components for your design system, or even rapidly assemble landing pages and application interfaces. It helps you build faster by providing a visual way to generate the code you need.

Product Core Function

· Visual Component Editor: Allows developers to visually select, configure, and arrange individual shadcn/ui components, offering real-time previews and immediate code generation for each element. This speeds up the process of adding and styling basic UI elements. So, what's the value? You can quickly set up and style buttons, inputs, cards, and other foundational UI pieces without writing verbose code.

· Block Assembly: Enables the combination of multiple components into reusable 'blocks' or sections, like a hero section or a pricing card. This promotes modularity and consistency in UI design. So, what's the value? You can create and reuse complex UI patterns as single units, ensuring design consistency across your application and saving development effort.

· Template Creation: Facilitates the assembly of complete page layouts or application screens using components and blocks. This provides a holistic view of the UI and enables rapid page generation. So, what's the value? You can quickly assemble entire pages or sections of your application, drastically reducing the time spent on page layout and structure.

· Code Generation: Automatically generates clean, production-ready React code for all visual edits, ensuring that the generated code adheres to shadcn/ui's principles and best practices. So, what's the value? You get functional code that's ready to be dropped into your project, saving you from manual transcription and potential bugs.

· Customization and Theming: Offers intuitive controls for customizing component properties such as colors, typography, spacing, and other stylistic attributes. So, what's the value? You can easily tailor the look and feel of your UI to match your brand or specific design requirements without deep CSS expertise.

Product Usage Case

· Rapid Prototyping: A developer needs to quickly create a few mockups for a new feature. Using shadcn/studio, they can visually assemble the required components and layouts in minutes, generating the code to test the flow. This solves the problem of slow iteration cycles during early product development.

· Design System Implementation: A design team wants to solidify their design system with reusable UI elements. shadcn/studio allows them to visually define and export components and blocks, ensuring that all developers on the team use the same, consistent, and accessible UI building blocks. This solves the problem of fragmented and inconsistent UIs across a project.

· Landing Page Creation: A marketer or a front-end developer needs to build a landing page quickly. They can use shadcn/studio to assemble pre-designed sections and components, then export the code for immediate deployment. This addresses the need for fast, visually appealing web page creation.

· Component Library Enhancement: For projects already using shadcn/ui, shadcn/studio can be used to create custom, complex components by visually combining existing ones and then exporting the resulting code as a new reusable component within their project. This helps extend the functionality and tailor it to specific project needs.

8

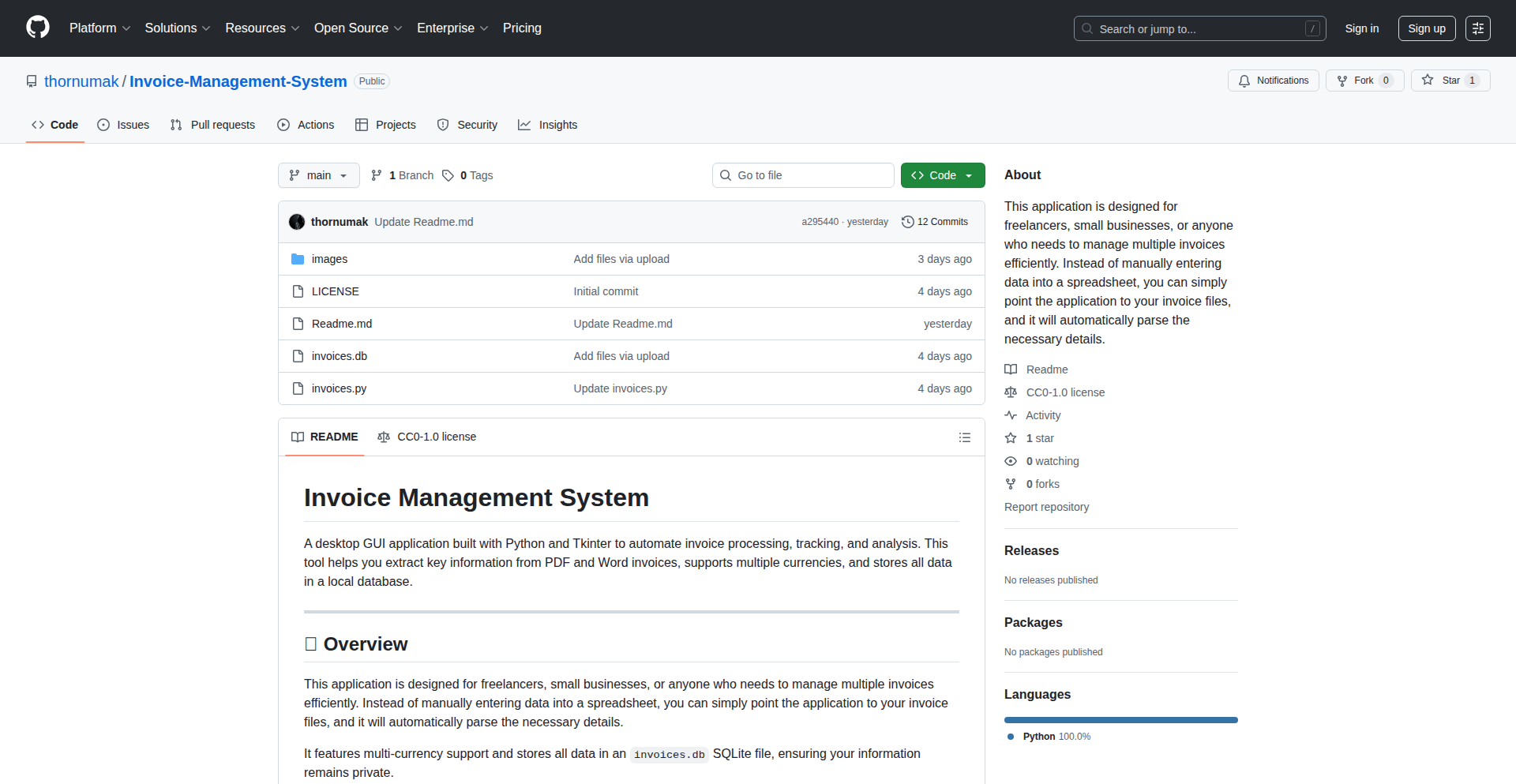

PDF-Extractor-Forge

Author

kapitalx

Description

PDF-Extractor-Forge is a high-performance toolkit designed for developers to rapidly build custom PDF extraction solutions. It addresses the common challenge of unstructured data within PDFs by providing a fast and efficient way to define and extract specific information, turning messy document data into usable structured formats. Its innovation lies in its speed and developer-centric approach, which simplify a complex data processing task.

Popularity

Points 10

Comments 2

What is this product?

PDF-Extractor-Forge is a developer tool that allows you to create specialized programs for pulling out specific pieces of information from PDF documents. Think of it like having a super-smart assistant that knows exactly which data to grab from any PDF, no matter how it's organized. The innovation is its speed and ease of use for developers. Instead of complex coding for each PDF type, you define what you need, and the tool handles the heavy lifting of parsing and extraction. This is useful because it saves immense development time and resources when dealing with large volumes of PDF data.

How to use it?

Developers can integrate PDF-Extractor-Forge into their existing applications or use it to build standalone data processing pipelines. The typical workflow involves defining extraction rules – specifying the data points you want, their location or pattern within the PDF, and the desired output format (like CSV, JSON, or database entries). This is achieved through a flexible configuration system. For instance, you could build a system to automatically extract invoice numbers and amounts from a batch of invoices, or pull contact details from business cards scanned as PDFs. The core value is enabling quick and accurate data retrieval from diverse PDF sources.
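
The rule-based workflow described above can be sketched roughly as follows. This is not PDF-Extractor-Forge's API or configuration format, which the post does not show: the `Rule` class, the `RULES` list, and the `extract` function are hypothetical, and the sketch assumes the PDF text has already been extracted into a string by some other step. It uses only the Python standard library.

```python
import json
import re
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Rule:
    """A hypothetical extraction rule: an output key plus a regex pattern."""
    name: str     # key in the structured output
    pattern: str  # regex with exactly one capture group


RULES = [
    Rule("invoice_number", r"Invoice\s*(?:No\.?|#)\s*:?\s*([A-Z0-9-]+)"),
    Rule("total", r"Total\s*:?\s*\$?([0-9][0-9,]*\.[0-9]{2})"),
]


def extract(text: str, rules: List[Rule]) -> Dict[str, Optional[str]]:
    """Apply each rule to the text; fields with no match are set to None."""
    record: Dict[str, Optional[str]] = {}
    for rule in rules:
        match = re.search(rule.pattern, text, flags=re.IGNORECASE)
        record[rule.name] = match.group(1) if match else None
    return record


# Stand-in for text pulled out of an invoice PDF.
sample_text = "ACME Corp\nInvoice No: INV-2025-0042\nTotal: $1,234.50\n"
record = extract(sample_text, RULES)
print(json.dumps(record, indent=2))
```

Keeping the rules as data rather than code is what lets a batch job reuse the same extraction function across many document types; writing the result as JSON or CSV is then a one-line serialization step.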

Product Core Function

· Customizable Extraction Rule Engine: This allows developers to precisely define what data to extract from PDFs using flexible patterns and positional logic. This means you can target specific fields like names, dates, or amounts with high accuracy, ensuring you get only the relevant information for your application.

· High-Speed Data Parsing: Optimized for performance, this function quickly processes PDF files, significantly reducing the time needed for data extraction. For businesses dealing with thousands of documents, this translates to faster processing times and greater operational efficiency.

· Structured Data Output: The tool converts extracted data into common structured formats such as CSV or JSON, and can also be configured for direct database insertion. This makes the extracted data immediately usable by other applications or for analysis, eliminating manual reformatting steps.

· Developer-Friendly API/SDK: Provides clear interfaces for easy integration into existing software projects. This allows developers to leverage its power without needing to learn an entirely new complex system, accelerating the development cycle and reducing integration friction.

Product Usage Case

· Automated Invoice Processing: Imagine a company receiving hundreds of invoices daily. PDF-Extractor-Forge can be used to automatically extract crucial data like invoice number, date, vendor name, and total amount, then feed this into an accounting system. This eliminates manual data entry, drastically reducing errors and processing time.

· Contract Data Aggregation: For legal teams, this tool can extract key clauses, party names, effective dates, and termination conditions from a large repository of contracts. This enables faster contract review, analysis, and compliance checks, providing immediate value by making contract information readily accessible and searchable.

· Form Data Digitization: When dealing with scanned paper forms or form-like PDFs, PDF-Extractor-Forge can be configured to pull out specific answers from form fields. This is invaluable for organizations looking to digitize historical records or streamline data collection from ongoing surveys, turning paper-based information into digital assets.

· Scientific Paper Data Mining: Researchers can use this to extract specific experimental results, parameters, or citations from a collection of research papers. This speeds up literature reviews and meta-analysis, allowing scientists to quickly gather and compare data from multiple sources for their studies.

9

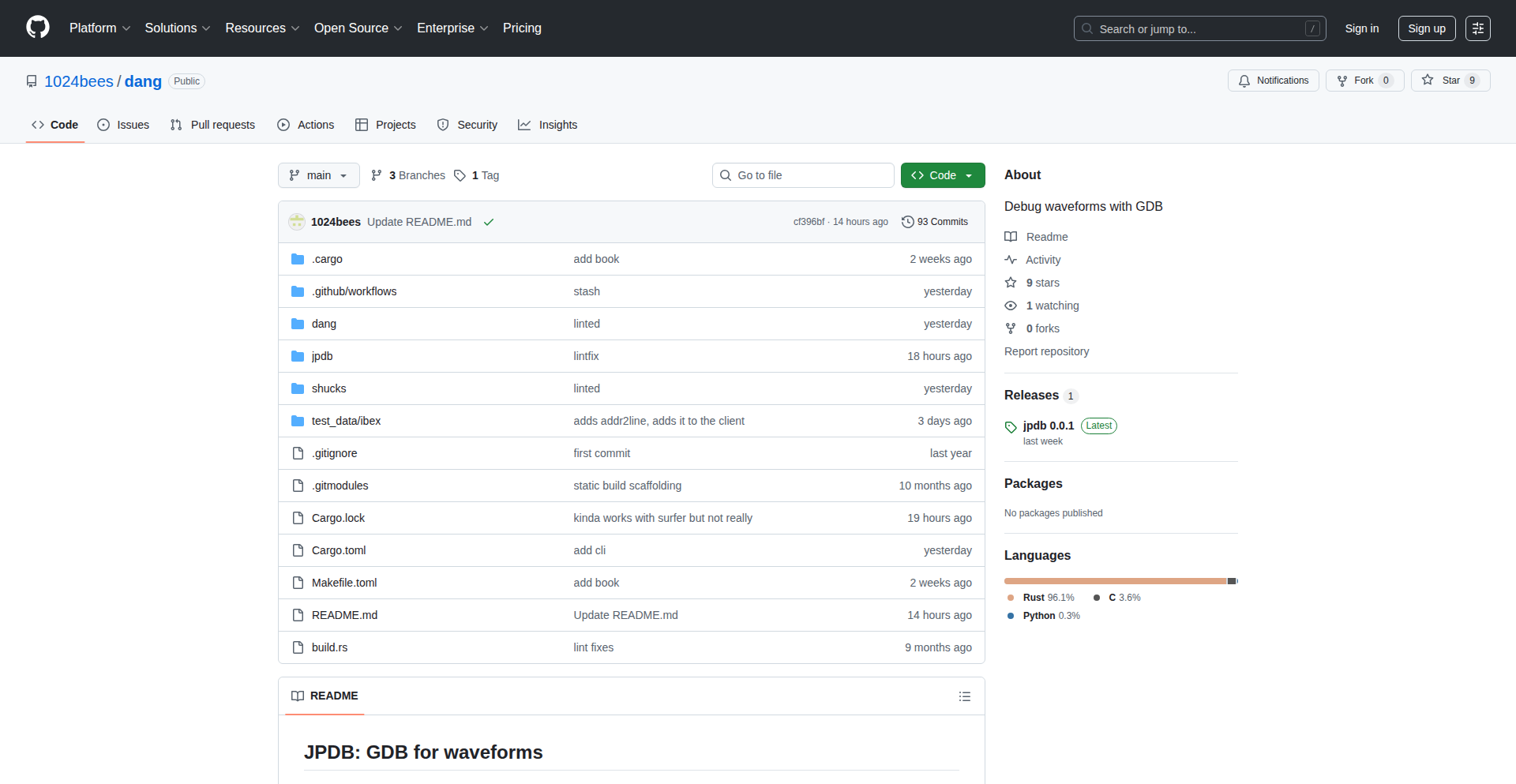

Waveform GDB: JPDB for Hardware Debugging

Author

1024bees

Description

JPDB is a novel debugging tool that brings the familiar experience of GDB (a popular command-line debugger for software) to the world of hardware design. Instead of stepping through lines of code, JPDB allows you to step through the execution of your custom CPU or hardware, visualized through waveform data. It achieves this by implementing a GDB-like client and integrating with waveform viewers, offering a powerful new way to understand and fix issues in complex hardware designs. So, what's in it for you? It dramatically speeds up hardware debugging by providing precise control and visibility into your hardware's behavior, much like debugging software.

Popularity

Points 10

Comments 1

What is this product?

JPDB is essentially a specialized debugger for hardware waveforms. Think of it like GDB for your custom computer chips. When your hardware runs, it generates signals over time, which are captured as 'waveforms'. JPDB takes these waveforms and allows you to interact with them as if you were debugging software. It has a custom GDB client called 'shucks' that speaks the GDB protocol, enabling you to pause, inspect, and step through the exact moment your hardware executed a specific instruction or event. The innovation here is adapting the robust debugging paradigm of software development to the unique challenges of hardware debugging, particularly for custom CPU designs. The 'so what?' for you is that it translates complex, time-based hardware behavior into understandable, step-by-step execution flows, making it easier to pinpoint and fix bugs in your silicon.
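
As a rough illustration of what "stepping" through a waveform can mean, the sketch below treats a trace as a list of timestamped program-counter samples and moves a cursor through it. This is not JPDB's implementation: the `WaveCursor` class, the sample layout, and the example addresses are hypothetical, and a real tool would read the samples from a waveform file rather than a hard-coded list.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Each sample is (time_ns, program_counter): a stand-in for a parsed waveform.
Sample = Tuple[int, int]


@dataclass
class WaveCursor:
    samples: List[Sample]
    index: int = 0

    @property
    def now(self) -> Sample:
        return self.samples[self.index]

    def step(self) -> Optional[Sample]:
        """Advance to the next sample whose PC differs from the current one."""
        current_pc = self.now[1]
        for i in range(self.index + 1, len(self.samples)):
            if self.samples[i][1] != current_pc:
                self.index = i
                return self.now
        return None  # reached the end of the trace

    def run_until(self, pc: int) -> Optional[Sample]:
        """Advance until the PC equals `pc`, like continuing to a breakpoint."""
        for i in range(self.index + 1, len(self.samples)):
            if self.samples[i][1] == pc:
                self.index = i
                return self.now
        return None  # breakpoint never hit in the remaining trace


trace = [(0, 0x100), (10, 0x100), (20, 0x104), (30, 0x108), (40, 0x108), (50, 0x10C)]
cursor = WaveCursor(trace)
first = cursor.step()           # moves to the first sample with a new PC
hit = cursor.run_until(0x10C)   # continues to the "breakpoint" address
```

Because the trace is recorded data rather than a live process, the cursor only ever moves an index; the same structure would allow stepping backwards, although the post does not say whether JPDB supports that.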

How to use it?

Developers working on custom CPUs or complex digital logic can use JPDB by providing it with their generated waveforms and some contextual information about their hardware. JPDB then integrates with waveform viewers like Surfer, allowing you to visually inspect signals while you step through the hardware's execution. For those who prefer a standard GDB client, JPDB also offers a 'gdbstub' server. This means you can connect your existing GDB setup to your hardware simulation or actual hardware via JPDB. The usage scenario is straightforward: when your hardware simulation produces unexpected results or exhibits buggy behavior, you load the corresponding waveforms into JPDB, connect your preferred GDB client, and start stepping through the hardware's operations to find the root cause. So, what's in it for you? It provides a familiar and powerful debugging interface for hardware that was previously much harder to inspect, accelerating your design iteration and reducing development time.

Product Core Function

· GDB-like waveform debugging: Allows developers to step through hardware execution based on waveform data, providing a familiar debugging experience for software developers. This helps pinpoint bugs by precisely following the sequence of operations. This is valuable for understanding complex timing issues and logic errors.

· Custom GDB client ('shucks'): Implements the GDB protocol faithfully, enabling rich interaction with the debugger. This means you get granular control over the debugging process, akin to debugging any software application. This is useful for advanced debugging scenarios where precise command execution is needed.

· Waveform viewer integration (Surfer): Seamlessly connects with waveform visualization tools, allowing developers to see other related signals simultaneously while stepping through their hardware. This provides crucial context for understanding how different parts of the hardware interact during execution. This is essential for debugging complex systems with many interconnected signals.

· gdbstub server: Exposes a gdbstub server for compatibility with standard GDB clients. This allows developers to use their existing GDB tools and workflows with JPDB, reducing the learning curve and leveraging familiar environments. This is beneficial for teams already invested in GDB and seeking to extend its use to hardware debugging.
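
Standard GDB clients talk to a stub over the GDB Remote Serial Protocol, in which each packet is framed as `$<payload>#<checksum>` and the checksum is the sum of the payload bytes modulo 256, written as two hex digits. The sketch below shows only that framing. The function names are illustrative, the code is not taken from JPDB or `shucks`, and it deliberately ignores escaping, run-length encoding, and acknowledgements.

```python
def checksum(payload: bytes) -> int:
    """Sum of the payload bytes, modulo 256."""
    return sum(payload) % 256


def frame_packet(payload: str) -> bytes:
    """Wrap a payload as $<payload>#<two-hex-digit checksum>."""
    data = payload.encode("ascii")
    return b"$" + data + b"#" + f"{checksum(data):02x}".encode("ascii")


def parse_packet(packet: bytes) -> str:
    """Validate the framing and checksum, then return the payload."""
    if len(packet) < 4 or not packet.startswith(b"$") or packet[-3:-2] != b"#":
        raise ValueError("malformed packet")
    data = packet[1:-3]
    received = int(packet[-2:], 16)
    if checksum(data) != received:
        raise ValueError("checksum mismatch")
    return data.decode("ascii")


print(frame_packet("g"))         # "read registers" request from a client
print(parse_packet(b"$OK#9a"))   # a stub's acknowledgement reply
```

A stub built on this framing would map each payload (for example `g` for reading registers or `s` for single-step) onto the corresponding operation over the waveform data.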

Product Usage Case

· Debugging a custom CPU pipeline: A developer designing a new CPU architecture can use JPDB to step through the instruction fetch, decode, execute, and write-back stages of their pipeline using generated waveforms. If an instruction is not behaving as expected, they can pause the execution at the relevant stage and inspect the values of all internal registers and signals to identify the logic error. This solves the problem of trying to manually trace complex, multi-cycle operations in hardware.

· Investigating timing-related bugs in an FPGA design: When a complex FPGA design fails due to subtle timing issues, JPDB can be used with captured waveforms to step through the logic as it executes in time. By observing how signals change over clock cycles and in response to specific inputs, the developer can pinpoint the exact moment a signal violates timing constraints or causes an incorrect state transition. This helps solve the challenge of identifying elusive timing bugs that are difficult to reproduce or diagnose with static analysis alone.

· Validating the behavior of a custom memory controller: A hardware engineer developing a custom memory controller for a specialized application can use JPDB to step through the read and write operations as they are reflected in the waveforms. They can verify that the correct addresses, data, and control signals are asserted at the right times, ensuring data integrity and performance. This addresses the need for precise verification of data transfer protocols and timing in memory interfaces.

10

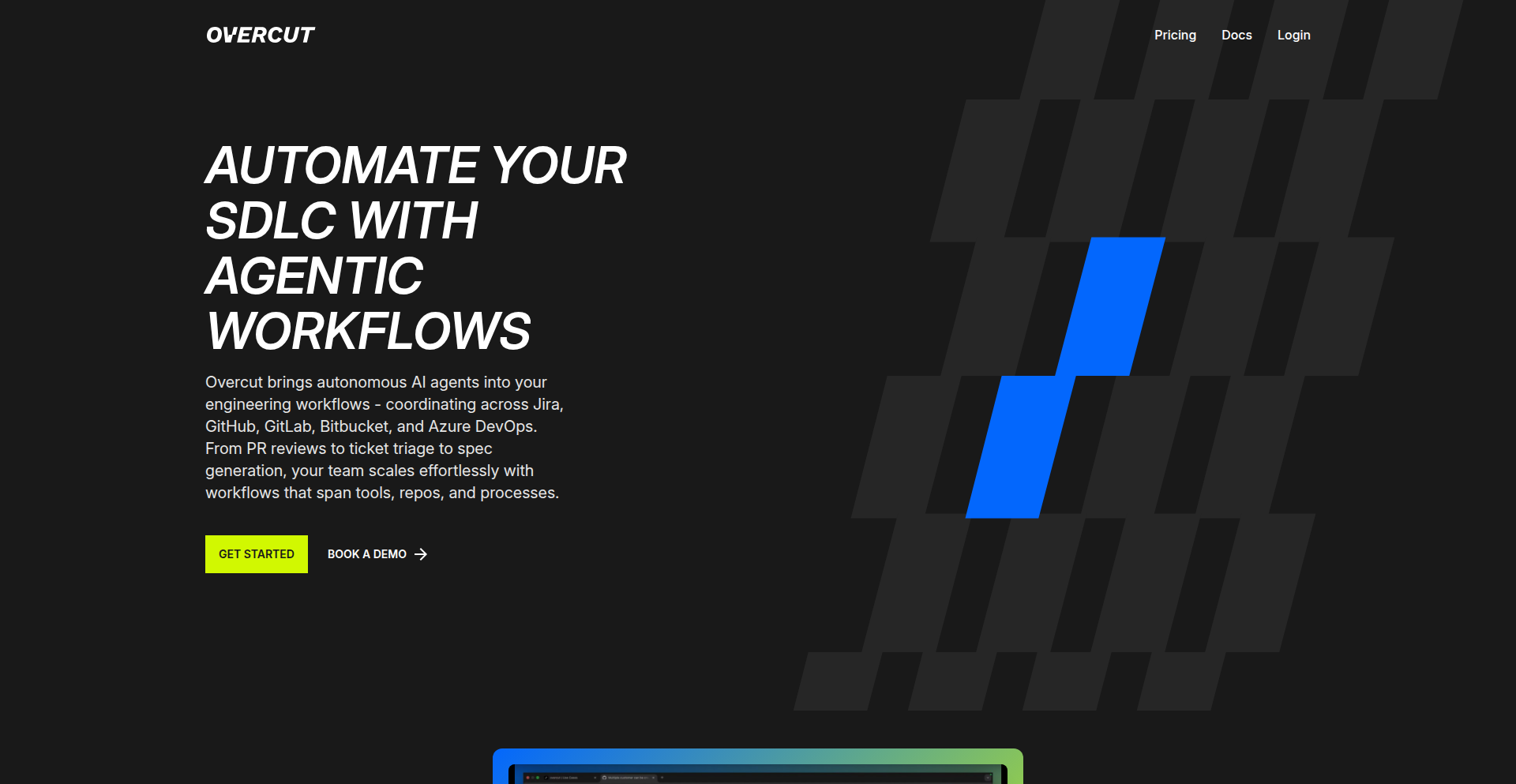

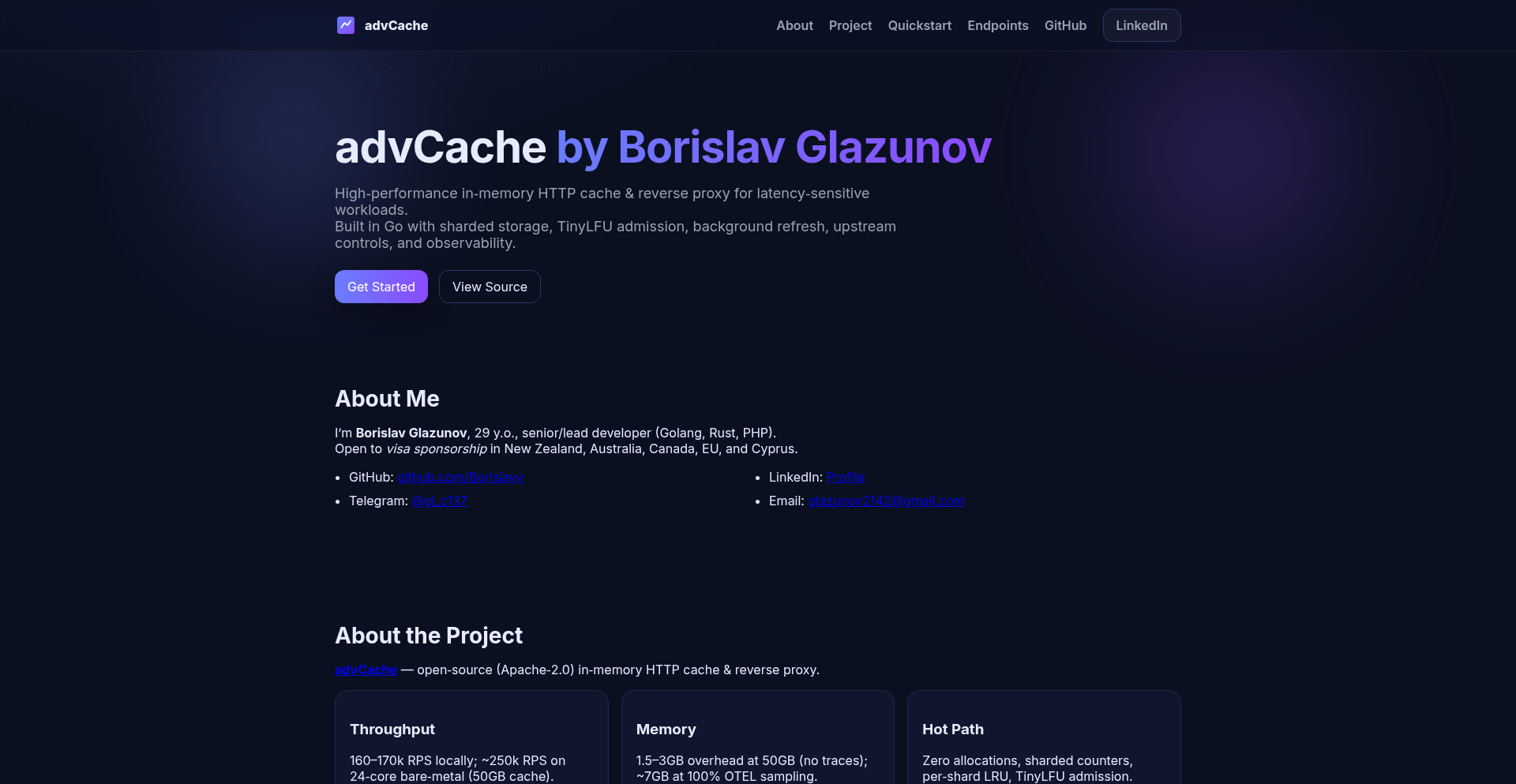

AgenticSDLC Automator

Author

yuvalhazaz

Description

Overcut.ai is a groundbreaking project that leverages AI agents to automate the entire Software Development Life Cycle (SDLC). It moves beyond simple task automation by enabling intelligent agents to understand, plan, and execute complex development workflows, from initial requirement analysis to deployment and monitoring. This represents a significant innovation in applying agent-based AI to the intricate and often manual processes of software engineering, offering a glimpse into a future of highly autonomous development.

Popularity

Points 8

Comments 1

What is this product?

Overcut.ai is an AI-powered platform designed to automate the Software Development Life Cycle (SDLC) using 'agentic workflows.' Instead of just following predefined scripts, these AI agents are intelligent entities that can understand context, make decisions, and coordinate tasks across different stages of development. Think of it as having a team of AI developers who can collaboratively build and manage software. The core innovation lies in creating these sophisticated AI agents that can interpret requirements, break them down into actionable steps, write code, test it, deploy it, and even monitor its performance, all with minimal human intervention. This significantly streamlines the development process, reduces human error, and accelerates delivery times. So, what's the use? It means faster, more reliable software development, allowing human developers to focus on higher-level design and innovation rather than repetitive tasks.

How to use it?

Developers can integrate Overcut.ai by defining their project requirements and desired outcomes through natural language or structured specifications. The platform then orchestrates AI agents to execute these requirements. This could involve agents for code generation, automated testing (unit, integration, end-to-end), infrastructure provisioning (e.g., setting up cloud environments), CI/CD pipeline management, and even proactive bug detection and fixing. The agents communicate and collaborate, learning from each other and from the project's progress. For integration, imagine feeding your project's user stories or feature requests into Overcut.ai, and it automatically sets up the development environment, writes the initial code, runs tests, and prepares it for deployment. So, how does this help you? It drastically reduces the manual effort in setting up, coding, and testing, allowing you to see your ideas come to life much faster and with fewer roadblocks.

Product Core Function

· Intelligent requirement interpretation: AI agents can understand complex project needs described in natural language, translating them into concrete development tasks. This is valuable because it bridges the gap between business goals and technical implementation, ensuring that what's built truly meets the intended purpose.

· Automated code generation and modification: Agents can write new code based on requirements or modify existing code to fix bugs or add features, significantly speeding up the coding process. This is useful for developers as it offloads the time-consuming task of writing boilerplate or standard code, freeing them to focus on novel algorithms or complex logic.

· Comprehensive automated testing: AI agents can design and execute various levels of tests, from unit tests to end-to-end scenarios, ensuring code quality and stability. This provides immediate feedback and reduces the risk of deploying buggy software, giving developers confidence in their releases.

· End-to-end workflow orchestration: Agents manage the entire development pipeline, including setting up environments, continuous integration and deployment (CI/CD), and operational monitoring. This creates a seamless, automated development lifecycle, simplifying deployment and maintenance for developers and project managers.

· Self-healing and adaptive systems: Agents can monitor deployed applications for issues and automatically initiate fixes or adjustments, leading to more robust and resilient software. This is a critical advantage as it reduces downtime and ensures a better user experience, allowing development teams to focus on new features rather than constant firefighting.

Product Usage Case

· Rapid prototyping of web applications: A startup can feed a detailed product description into Overcut.ai, and within hours, have a functional prototype of their web application, including frontend UI, backend logic, and database schema. This solves the problem of long development cycles for early-stage validation.

· Automated bug fixing in legacy systems: For an established company with a large codebase, Overcut.ai can be tasked with identifying and fixing common bugs reported by users, reducing the burden on the existing development team and improving customer satisfaction. This addresses the challenge of maintaining older, complex software with limited resources.

· AI-driven game development assistance: A game studio can use Overcut.ai to generate procedural content, implement AI behaviors for non-player characters, or automate the creation of testing scenarios for game mechanics. This accelerates the complex and iterative process of game creation.

· Streamlining microservices development and deployment: For a complex microservices architecture, Overcut.ai can manage the creation, testing, and deployment of new services and their associated APIs, ensuring consistency and reducing integration issues. This solves the coordination overhead inherent in distributed systems.

11

DocuFeedback Engine

Author

sails

Description

This project introduces an AI-powered document extraction tool that excels at parsing complex financial documents like management reports and bank statements. Its core innovation lies in a 'feedback loop' mechanism that lets users iteratively refine the AI's understanding and extraction accuracy at a granular, per-field level. This means you can teach the AI to extract specific information more precisely by correcting its mistakes and providing contextual guidance, leading to a highly customized and reliable data extraction solution. So, this is useful for anyone who needs to accurately pull specific data from many similar documents, and wants to improve the accuracy over time without extensive manual reprogramming.

Popularity

Points 7

Comments 2

What is this product?

DocuFeedback Engine is a sophisticated system designed to automatically extract specific pieces of information from various documents, particularly focusing on complex financial ones. Unlike traditional document parsing that relies on rigid templates, this tool leverages AI and a unique 'feedback loop' system. Imagine you need to pull out a specific 'net profit' figure from hundreds of company reports. You first define what you want to extract (the target form). Then, you upload a document, and the AI attempts to find and extract the information. The key innovation is that if the AI makes a mistake or misses something, you can directly correct it within the document and provide feedback at that specific data point. The system learns from these corrections, iteratively improving its accuracy and understanding of your specific extraction needs. This is like giving the AI a 'cheat sheet' that gets better with every page you review. So, this is useful because it automates a tedious data extraction process and makes the AI smarter and more tailored to your exact requirements, reducing manual effort and improving data quality.

How to use it?

Developers can integrate DocuFeedback Engine into their workflows by interacting with its API. The process typically involves defining your desired data extraction structure (a 'target form'), uploading the documents you need processed, and then reviewing the AI's initial extraction results. If there are inaccuracies, you can correct them directly within the interface and provide specific feedback on why the extraction was incorrect. This feedback is then used to retrain and refine the AI models. For example, a fintech startup could use this to automate the underwriting process by extracting key financial metrics from loan applications. They would set up a form for loan-related data, upload applicant documents, review the extractions, and provide feedback on any errors to ensure the AI consistently pulls accurate information for risk assessment. So, this is useful because it provides a robust and adaptable way to automate data extraction, making it suitable for applications requiring high accuracy and continuous improvement, like data aggregation, financial analysis, and compliance checks.

Product Core Function

· AI-powered structured data extraction: Utilizes advanced AI models to automatically identify and pull specific data points from unstructured or semi-structured documents. This saves significant manual effort in data entry and processing. So, this is useful for quickly getting organized data from messy sources.

· User-defined target forms: Allows users to create custom templates or 'forms' specifying exactly what information they need to extract from documents. This ensures the AI focuses on the relevant data for your specific needs. So, this is useful for tailoring the extraction process to your unique business requirements.

· Field-level human feedback loop: Enables users to correct extraction errors directly at the individual data field level and provide contextual feedback. This 'teaches' the AI, iteratively improving its accuracy and reliability for your specific document types. So, this is useful for making the AI smarter and more accurate over time without needing to be an AI expert.

· Document citation and review: Provides the ability to review extraction quality and check citations within the original PDF documents, ensuring transparency and auditability of the extracted data. So, this is useful for verifying the accuracy of the extracted information and understanding its source.

· Automated prompt refinement: Offers a mechanism to refine the prompts that guide the AI's extraction process based on user feedback. This means the system can automatically learn and adapt to better understand and extract data, reducing the need for complex prompt engineering. So, this is useful for simplifying the process of getting the AI to work better without deep technical knowledge.
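
One simple way to picture the field-level feedback loop is to store each correction against the field it concerns and fold those notes back into that field's extraction prompt. The sketch below does exactly that and nothing more. It is an assumption about the general shape of such a loop, not a description of how this product refines prompts: `TargetField`, `FeedbackStore`, and the prompt wording are hypothetical, and no model is called.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class TargetField:
    """One entry in a hypothetical 'target form'."""
    name: str
    instruction: str


@dataclass
class FeedbackStore:
    """Collects reviewer corrections, keyed by field name."""
    notes: Dict[str, List[str]] = field(default_factory=lambda: defaultdict(list))

    def correct(self, field_name: str, extracted: str, expected: str, reason: str) -> None:
        self.notes[field_name].append(
            f"Previously extracted '{extracted}' but the correct value was "
            f"'{expected}': {reason}"
        )

    def build_prompt(self, target: TargetField) -> str:
        """Base instruction plus every correction recorded for this field."""
        lines = [f"Extract the field '{target.name}'. {target.instruction}"]
        for note in self.notes.get(target.name, []):
            lines.append(f"- Reviewer note: {note}")
        return "\n".join(lines)


net_profit = TargetField("net_profit", "Return the figure after tax.")
store = FeedbackStore()
store.correct("net_profit", "1,200", "950", "the first figure was profit before tax")
prompt = store.build_prompt(net_profit)
print(prompt)
```

Scoping notes to a single field keeps a correction about 'net profit' from leaking into the prompt for, say, 'invoice date', which is the practical benefit of feedback at field granularity.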

Product Usage Case

· A credit analyst team uses DocuFeedback Engine to process loan applications. They define a target form for key financial metrics, upload applicant bank statements and tax returns, and then correct any misidentified numbers or missing information. The feedback loop helps the AI become highly accurate in extracting figures like 'net income' and 'debt-to-income ratio' over time, speeding up the underwriting process. So, this is useful for automating and improving the accuracy of financial risk assessment.

· An accounting firm employs the system to extract data from invoices and receipts for client bookkeeping. They create forms for 'vendor name', 'invoice total', and 'date'. After initial extractions, they correct any parsing errors and provide feedback. This allows them to quickly and accurately digitize financial records, reducing manual data entry and potential for human error. So, this is useful for streamlining accounting tasks and improving data accuracy for financial reporting.

· A legal team uses DocuFeedback Engine to extract specific clauses and dates from contracts. By defining target fields like 'contract effective date' and 'termination clause', they can automate the review of large volumes of legal documents, flagging critical information for further analysis. The feedback mechanism helps the AI learn to identify nuanced legal terminology accurately. So, this is useful for accelerating legal document review and ensuring critical information is not missed.

12

AirplaneModeConnect

Author

bahrtw

Description

A website designed to be accessible only when your phone is in airplane mode. It offers a brief, intentional moment of digital disconnection. Technically, it is an exercise in serving content offline, using a deliberate constraint to shape the user experience and encourage digital well-being.

Popularity

Points 7

Comments 2

What is this product?

This project is a website that's intentionally inaccessible when your device has an active internet connection. The core technical innovation lies in how it leverages browser-based technologies, likely using service workers or similar offline-first techniques, to cache content and serve it exclusively when network requests are blocked. This creates a 'digital island' experience, forcing a temporary disconnect from the always-on internet. So, what's the value? It provides a curated, deliberate pause from the constant stream of online information, offering a moment of focused reflection or a different kind of engagement with digital content, demonstrating that technology can also be used to facilitate disconnection.

How to use it?

Developers can use this project as inspiration for building offline-first web applications or for experimenting with access that depends on network state. For an end user, usage is simple: enable airplane mode on your phone and then navigate to the website (presumably after a first visit while online, so that the browser has something cached to serve). The implementation likely combines a caching strategy with client-side logic that checks network availability before rendering content. Developers looking to replicate this would need to understand service worker registration, the Cache Storage API, and network request interception. So, how does this benefit you? As a developer, it suggests new ways to think about web performance and offline user experiences, potentially leading to more resilient and engaging applications. As an end user, it offers a simple way to reclaim small moments of your digital attention.
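The "check network availability before rendering" step can be sketched as a small decision function. This is an illustration under stated assumptions, not the site's actual code: `chooseView`, the `View` type, and the `/ping` probe URL mentioned in the comments are all hypothetical. The only browser facts relied on are that navigator.onLine being false means the browser has no network, while true may be a false positive.

```typescript
// Hypothetical sketch; the post does not describe the real site's implementation.
type View = "content" | "locked";

// Decide what to render from two signals:
//   onLine:         the browser's navigator.onLine flag
//   probeSucceeded: whether a small, uncached network request completed
function chooseView(onLine: boolean, probeSucceeded: boolean): View {
  // onLine === false means the browser knows it has no network: unlock.
  if (!onLine) return "content";
  // onLine === true only means some network interface is up,
  // so the probe result decides whether the internet is really reachable.
  return probeSucceeded ? "locked" : "content";
}

// Possible browser wiring (assumed, shown as comments to keep the sketch portable):
//   const ok = await fetch("/ping", { cache: "no-store" }).then(() => true, () => false);
//   document.body.dataset.view = chooseView(navigator.onLine, ok);
//   window.addEventListener("online", () => location.reload());
```

If a service worker is serving the cached pages, it would have to pass the probe request through to the network rather than answer it from cache, otherwise the probe would always appear to succeed.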

Product Core Function

· Offline-first content delivery: The website's content is fully cached and available without an internet connection. This is likely achieved with a service worker, a script that sits between the page and the network and can answer requests from the browser's Cache Storage instead of the server. The value here is a reliable, uninterrupted user experience, regardless of connectivity.

· Airplane mode detection/enforcement: The website detects network connectivity and withholds its content when a connection is present, displaying a tailored message or a limited interface instead. This presumably relies on browser APIs for network status, such as navigator.onLine and the online/offline events. The value is the creation of a dedicated space for disconnection, fulfilling the core premise of the project.

· Focused user experience: By removing the distraction of real-time online content, the website encourages focused engagement with its specific content, whatever that may be. This is a design-driven outcome, but enabled by the technical constraint of offline access. The value is a more mindful and less distracting digital interaction.

Product Usage Case

· Creating temporary digital sanctuaries: Imagine a website you can only access during a flight, a train journey, or a power outage. This project demonstrates how to technically achieve such controlled access, offering moments of escape from the hyper-connected world. This solves the problem of constant digital noise by architecting a solution around deliberate exclusion.

· Developing offline-first learning or creative tools: A student could use this as a basis for an offline study guide, or an artist for an offline creative prompt generator. The technical challenge solved is ensuring content is available and functional even without an internet connection, making it ideal for environments with unreliable or absent connectivity.

· Exploring novel user engagement models: This project pioneers a unique engagement model based on scarcity and intentional disconnection. It's a technical experiment in how limiting access can actually enhance the value of an experience, challenging traditional notions of always-on accessibility. This solves the problem of digital fatigue by offering a refreshing alternative.

13

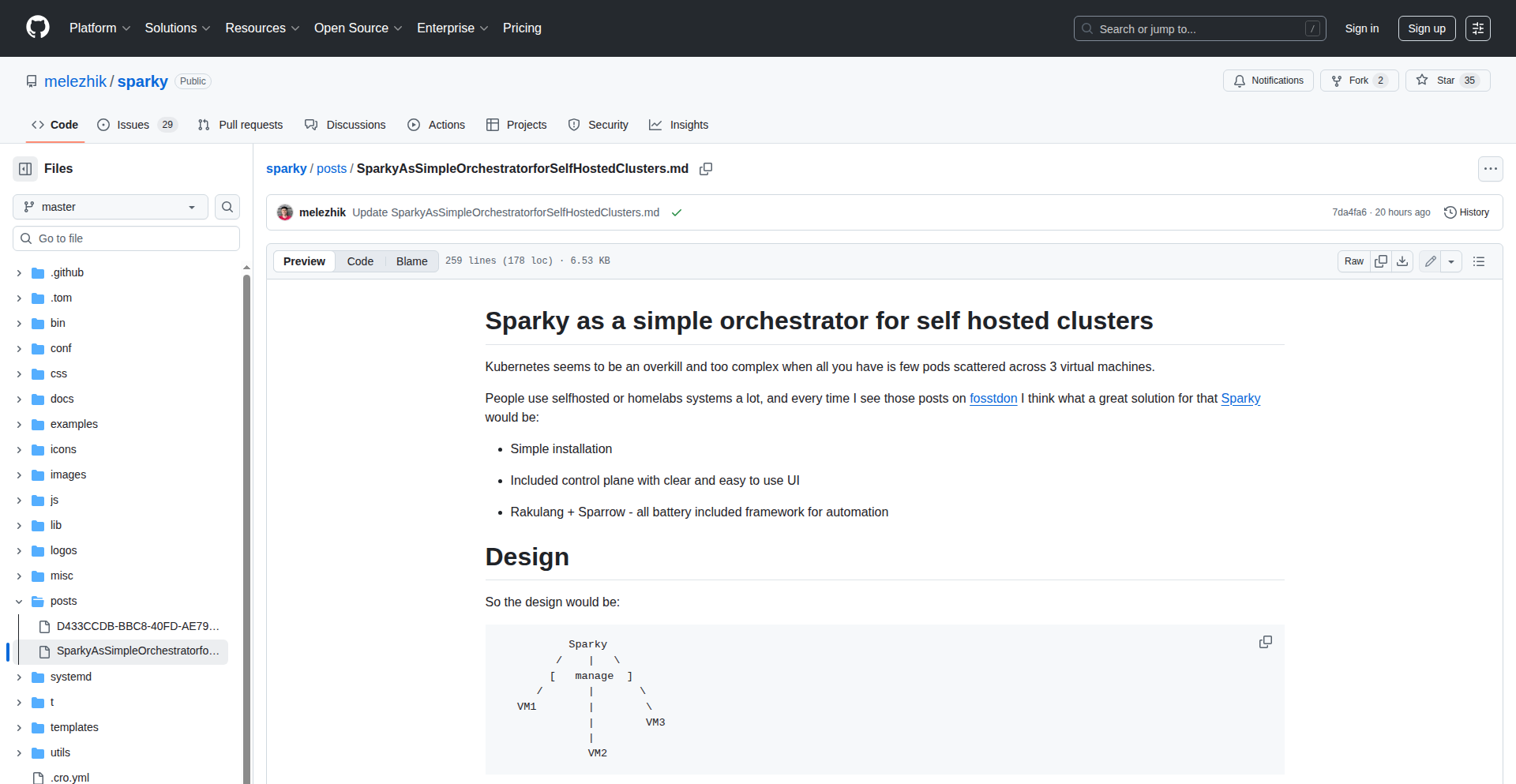

Sparky: Self-Hosted Cluster Orchestrator

Author

melezhik

Description

Sparky is a minimalist orchestrator designed for managing self-hosted clusters. It offers a straightforward way to deploy, manage, and scale applications across your own infrastructure, reducing reliance on complex, enterprise-grade solutions. Its innovation lies in its simplicity and focus on developer-centric operations for independent infrastructure.

Popularity

Points 8

Comments 0

What is this product?

Sparky is a lightweight tool that simplifies the management of applications running on your own collection of servers (a self-hosted cluster). Think of it as a smart manager for your servers that helps you deploy your applications, keep them running, and even scale them up or down as needed. Its core innovation is its simplicity; it avoids the overwhelming complexity of large cloud orchestrators by offering a focused set of features specifically for developers who want to manage their own infrastructure without a steep learning curve. So, what's in it for you? It means you can run your applications on your own hardware, with more control and potentially lower costs, without needing to become an expert in distributed systems management.

How to use it?

Developers can use Sparky by defining their applications and their desired state (e.g., how many instances to run, resource requirements) in a configuration file. Sparky then takes this configuration and ensures that the applications are running as specified on the cluster nodes. It can be integrated into CI/CD pipelines to automate deployments. For example, you could set up a Git repository, and upon pushing code changes, Sparky could automatically build, deploy, and manage the new version of your application across your cluster. So, what's in it for you? This allows for faster and more reliable application updates and rollbacks, giving you more confidence in your deployment process.
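The desired-state model described above boils down to comparing what is declared with what is running and emitting corrective actions. The sketch below illustrates that idea only; `DesiredApp`, `Action`, and `reconcile` are hypothetical names and do not reflect Sparky's configuration format or API.

```typescript
// Hypothetical types; these are not Sparky's configuration format.
interface DesiredApp {
  name: string;
  replicas: number; // how many instances should be running
}

interface Action {
  kind: "start" | "stop";
  app: string;
  count: number;
}

// Compare the declared state with the number of running instances per app.
function reconcile(desired: DesiredApp[], running: Record<string, number>): Action[] {
  const actions: Action[] = [];
  const declared: Record<string, boolean> = {};

  for (const app of desired) {
    declared[app.name] = true;
    const have = running[app.name] ?? 0;
    if (have < app.replicas) {
      actions.push({ kind: "start", app: app.name, count: app.replicas - have });
    } else if (have > app.replicas) {
      actions.push({ kind: "stop", app: app.name, count: have - app.replicas });
    }
  }

  // Anything still running that is no longer declared gets stopped entirely.
  for (const name of Object.keys(running)) {
    if (!declared[name] && running[name] > 0) {
      actions.push({ kind: "stop", app: name, count: running[name] });
    }
  }
  return actions;
}
```

Running such a function on a timer, and after every configuration change, is what makes a crashed instance reappear: the observed count drops below the declared count and a "start" action is emitted.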

Product Core Function

· Application Deployment: Sparky allows you to package your applications (e.g., as containers) and deploy them across your cluster nodes. This simplifies the process of getting your code running in a distributed environment. So, what's in it for you? You can easily get your services running on multiple machines without manual setup for each one.

· Health Monitoring and Self-Healing: It constantly checks the health of your deployed applications. If an application instance fails, Sparky can automatically restart it or replace it, ensuring your services remain available. So, what's in it for you? This means your applications are more resilient to failures, and you spend less time troubleshooting unexpected downtime.

· Scaling Management: Sparky can automatically adjust the number of application instances based on load or predefined rules, helping you handle varying traffic demands. So, what's in it for you? Your applications can gracefully handle spikes in user activity without performance degradation, and you can optimize resource usage when demand is low.

· Declarative Configuration: You describe the desired state of your applications (what you want them to be), and Sparky works to achieve and maintain that state. This makes managing complex systems more predictable. So, what's in it for you? You have a clear, reproducible way to define and manage your application infrastructure, reducing errors from manual configuration.

· Resource Abstraction: Sparky abstracts away the underlying server details, allowing you to treat your cluster as a unified pool of resources for running applications. So, what's in it for you? You can focus on your application logic rather than the intricacies of individual server management.
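As an illustration of the load-based scaling idea in the list above, the following sketch computes a target instance count with a proportional rule. The rule is borrowed from the formula used by the Kubernetes Horizontal Pod Autoscaler; whether Sparky scales this way is not stated in the post, and `desiredReplicas` and its parameters are hypothetical.

```typescript
// Hypothetical sketch of a proportional scaling rule (not Sparky's API).
// observedLoad and targetLoad share a unit, e.g. average CPU percent per instance.
function desiredReplicas(
  current: number,
  observedLoad: number,
  targetLoad: number,
  min: number,
  max: number
): number {
  if (targetLoad <= 0) throw new Error("targetLoad must be positive");
  // Scale the instance count by how far the observed load is from the target.
  const raw = Math.ceil(current * (observedLoad / targetLoad));
  // Clamp so the cluster never scales below min or above max.
  return Math.min(max, Math.max(min, raw));
}
```

The clamp matters on self-hosted hardware in particular, where `max` is bounded by the machines you actually own rather than by a cloud budget.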

Product Usage Case

· Deploying a microservices architecture on a private cloud of developer machines, where Sparky manages the inter-service communication and availability. It solves the problem of keeping many small services reliably running. So, what's in it for you? You can build and scale complex applications with confidence on your own hardware.

· Managing a series of backend APIs for a web application hosted on a small cluster of rented servers. Sparky ensures the APIs are always available and can scale to meet user demand. It addresses the challenge of maintaining high availability for critical services. So, what's in it for you? Your users get a consistently fast and reliable experience, even during peak times.

· Automating the deployment of machine learning model serving endpoints across a cluster of GPUs. Sparky handles the distribution and health of these computationally intensive tasks. It tackles the complexity of deploying specialized workloads. So, what's in it for you? You can efficiently deploy and manage resource-heavy applications like ML models without specialized infrastructure teams.

· Creating a resilient data processing pipeline where Sparky ensures that processing jobs are restarted if they fail on any node. It provides fault tolerance for data workflows. So, what's in it for you? Your data processing tasks are less likely to be interrupted, ensuring timely and complete results.

14

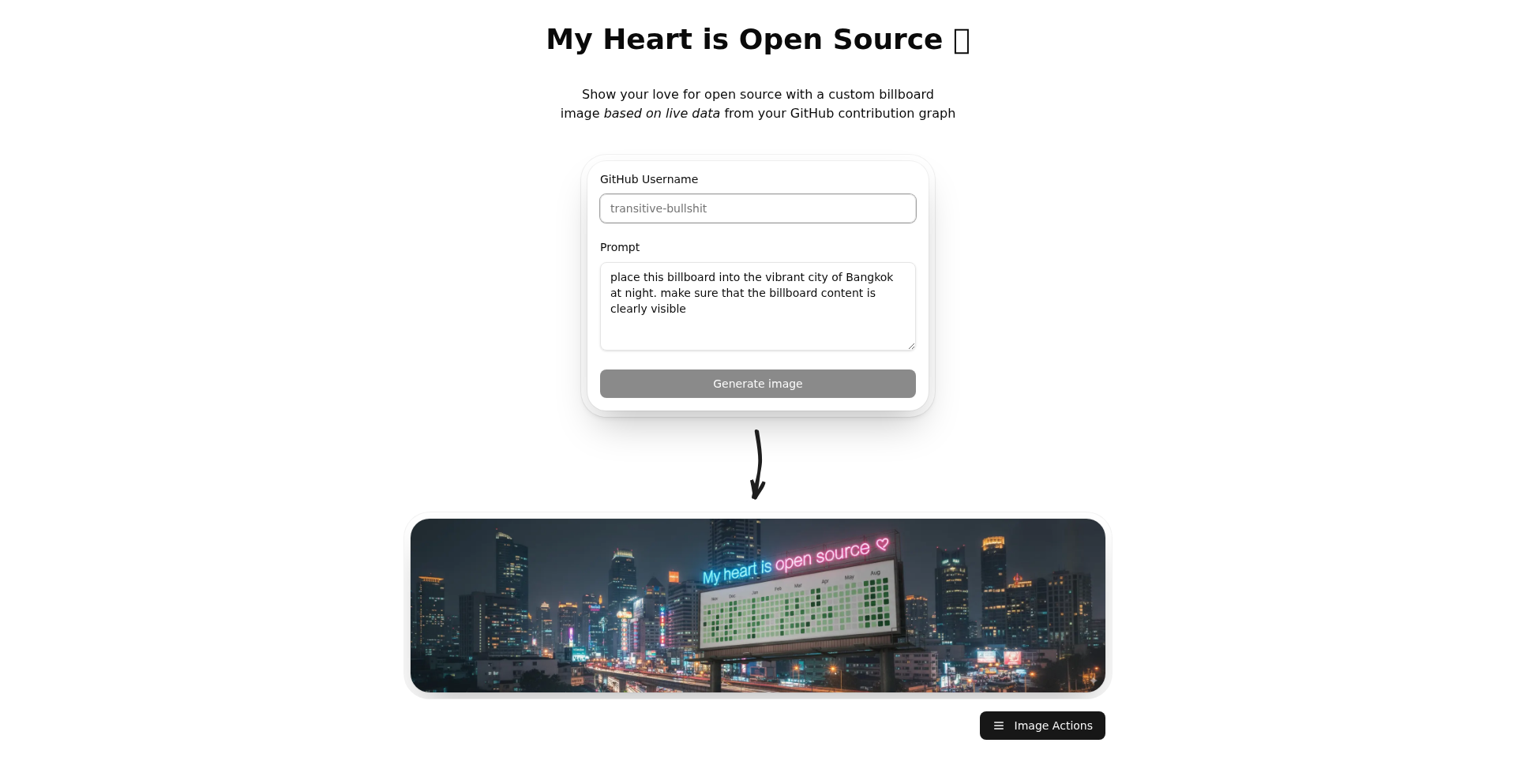

GHContributionBillboard AI

Author

transitivebs

Description

This project is a fun experiment that uses headless Chrome and an AI image filter to generate a unique billboard image of your GitHub contribution graph. It's a creative way to visualize your coding activity and showcase your open-source engagement.

Popularity

Points 7

Comments 0

What is this product?

GHContributionBillboard AI is a tool that takes your GitHub contribution graph, captures it as an image using headless Chrome (think of it as a browser running without a visible screen), and then applies a two-pass AI image filter to create a visually striking billboard-style image. The innovation lies in combining automated screenshotting with AI image processing for a unique aesthetic, turning raw data into an artistic representation. So, what's in it for you? It offers a novel way to express your passion for coding and open source, providing a personalized, eye-catching visual for social media or personal branding.

How to use it?

Developers can integrate this project by setting up the headless Chrome environment and the AI image filtering libraries. It is designed for those who want to automate the creation of personalized visual content derived from their GitHub activity. You could use it as part of a larger workflow to generate social media posts, website assets, or even digital art. This lets you create unique visuals of your coding progress programmatically, saving manual effort. For example, you could set it to run weekly and automatically post your updated contribution graph to Twitter.

Product Core Function

· Automated GitHub Contribution Graph Screenshotting: Leverages headless Chrome to capture your contribution graph as a digital image. This provides a programmatic way to get a snapshot of your coding activity, useful for tracking progress or generating content without manual intervention.

· Two-Pass AI Image Filtering: Applies advanced AI filters to enhance the captured image, transforming it into a stylized billboard effect. This adds a unique artistic flair to your data, making it more engaging and visually appealing than a standard screenshot.

· Open Source & Free to Use: The entire project is available under an open-source license, meaning you can freely use, modify, and distribute it. This fosters community collaboration and allows anyone to experiment with the technology without cost barriers.

Product Usage Case

· Social Media Content Generation: A developer can use this to automatically create eye-catching tweets or Instagram posts showcasing their weekly or monthly GitHub contribution highlights. It solves the problem of manually creating engaging visuals for social media, directly translating coding effort into shareable content.

· Personal Branding and Portfolio Enhancement: Individuals can use the generated billboards to add a unique visual element to their personal website or online portfolio, demonstrating their dedication to open-source and coding in an artistic manner. This helps differentiate them from others by providing a creative representation of their work.

· Creative Coding Demonstrations: As a demonstration of combining different technologies like headless browsing and AI image processing, this project can be used in presentations or workshops to inspire other developers. It shows how simple ideas can be brought to life with code, solving the challenge of finding novel ways to present technical achievements.

15

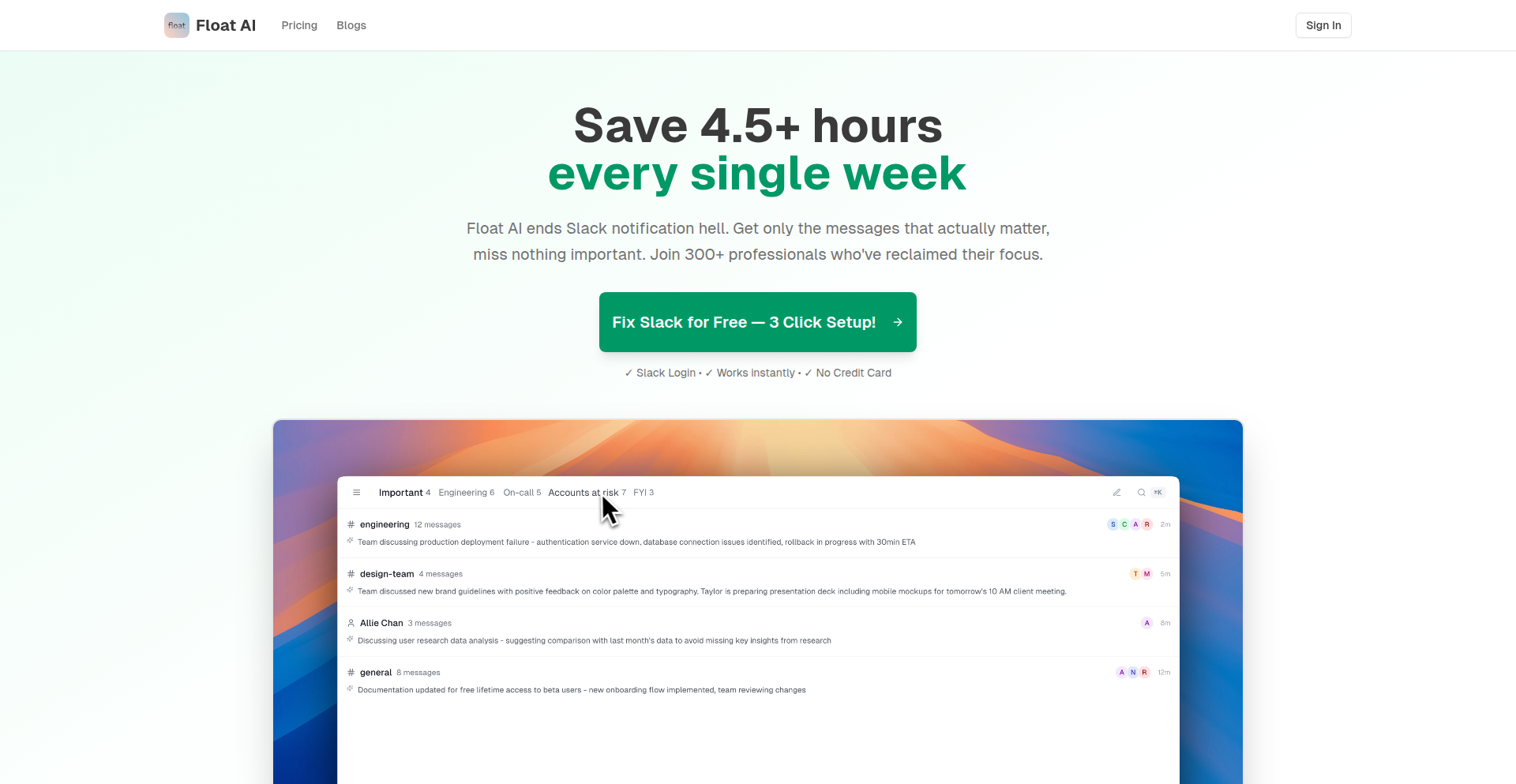

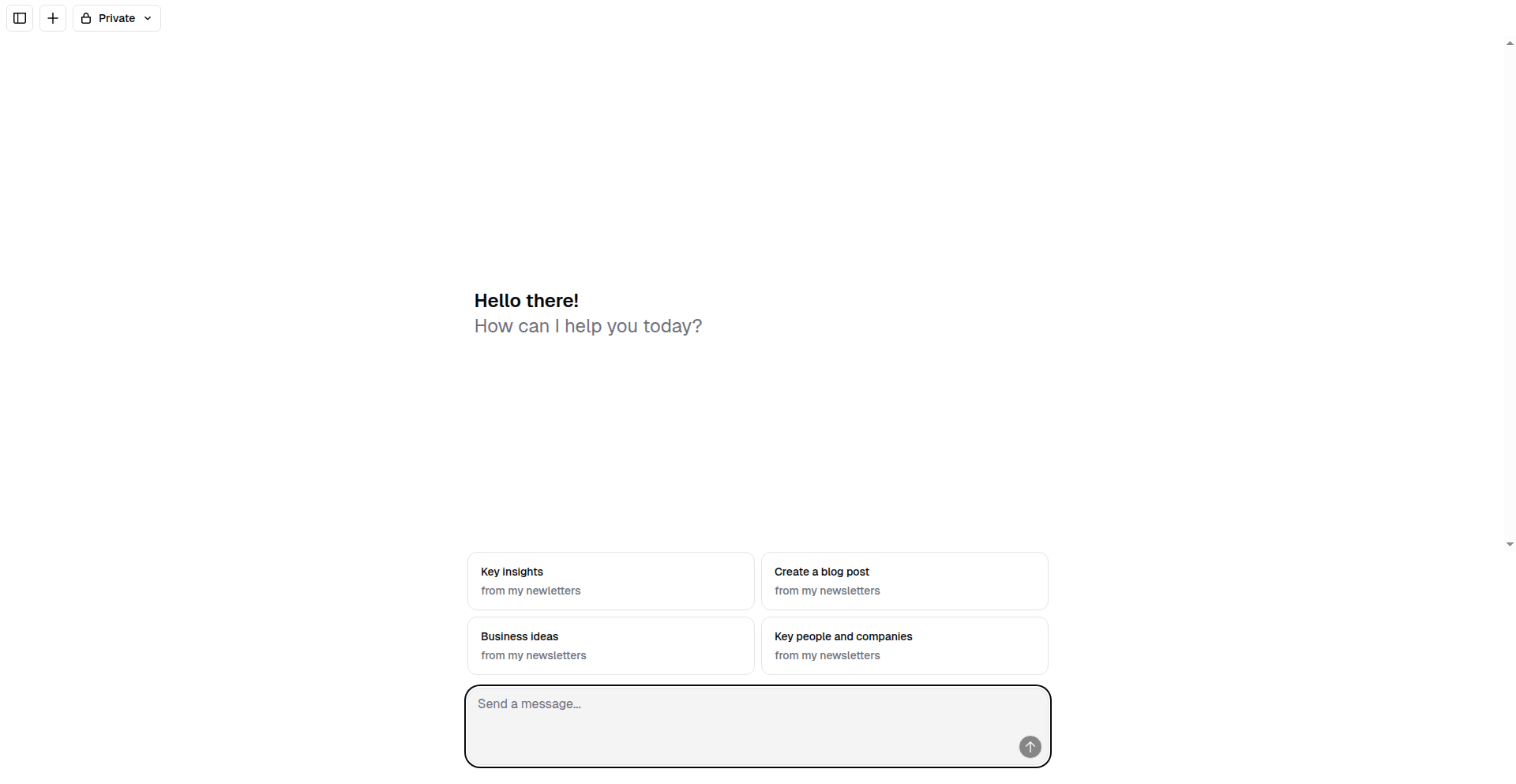

Float AI - Slack Contextual Copilot

Author

FloatAIMsging

Description

Float AI is an application that integrates with your Slack workspace to act as an intelligent assistant. It uses AI to understand the context of your conversations, enabling it to draft replies, condense lengthy discussions, retrieve information from your historical messages, and answer queries in plain English. The core innovation is twofold. Unlike generic AI tools, it can access and process your entire Slack history without manual data input. And unlike Slack's built-in AI features, it offers action-oriented capabilities that go beyond simple search and summarization.

Popularity

Points 4

Comments 3

What is this product?