Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-09-28

SagaSu777 2025-09-29

Explore the hottest developer projects on Show HN for 2025-09-28. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's projects are shaped above all by Artificial Intelligence, and by Large Language Models (LLMs) in particular. AI-driven automation shows up across very different fields, from generating legal documents such as privacy policies with PrivacyForge.ai to assisting code development with projects like the 'vibe coding' iOS app. The 'Code Mode' pattern, exemplified by the MCP server built on Cloudflare's approach, shows how LLMs can orchestrate complex workflows by intermixing code execution with tool calls, a concept worth exploring for developers building more capable agents and applications. There is also a growing emphasis on local-first, privacy-focused development: Dictly offers on-device dictation, and MyLocalAI provides local AI processing with optional web access. Both reflect a deliberate effort to deliver advanced functionality while respecting user privacy. For developers and entrepreneurs, this means treating AI not just as a way to develop faster but as a core component for solving complex, real-world problems. The key is to identify pain points that intelligent automation can address and to build solutions that are both powerful and careful with user data. The hacker spirit shows in the DIY ethos of many projects: developers build what they need, share it openly, and iterate on community feedback.

Today's Hottest Product

Name

PrivacyForge.ai

Highlight

This project tackles the complex and often costly problem of privacy compliance for startups by using AI. Instead of relying on generic templates or expensive legal counsel, PrivacyForge.ai analyzes a business's specific data practices and generates privacy documentation intended to be legally compliant. The technical innovation lies in its multi-modal AI approach, which uses advanced language models trained on privacy regulations such as GDPR and CCPA. Developers can learn how to build AI-driven solutions for regulatory challenges, including how to integrate diverse AI models and maintain specialized knowledge bases for different compliance frameworks. The key takeaway is that AI can be used not just for code generation but for complex, domain-specific problem-solving.

Popular Category

AI and Machine Learning

Developer Tools

Productivity

Popular Keyword

AI

LLM

Developer Tools

Privacy

Automation

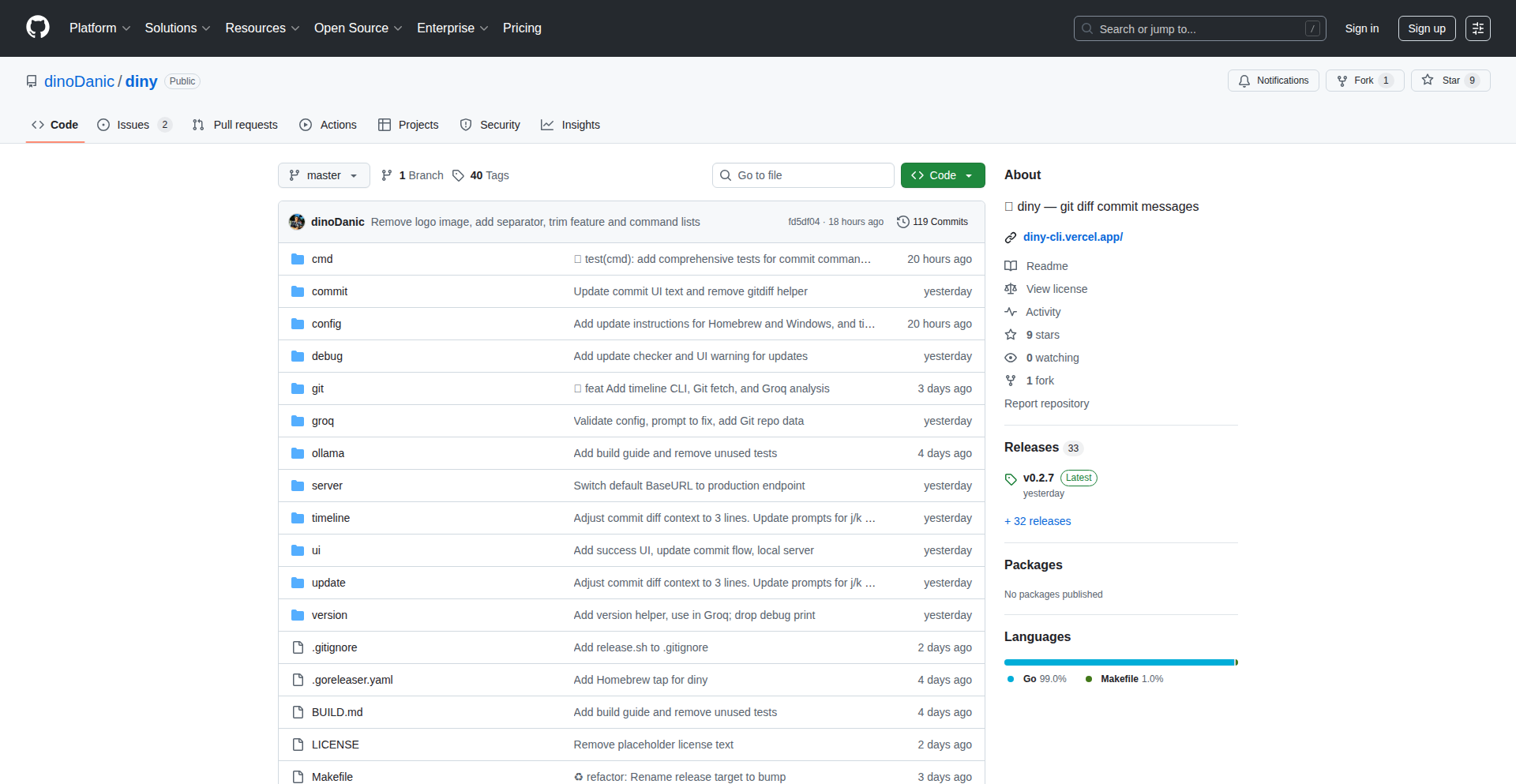

CLI

Technology Trends

AI-Powered Automation

LLM for Code and Content Generation

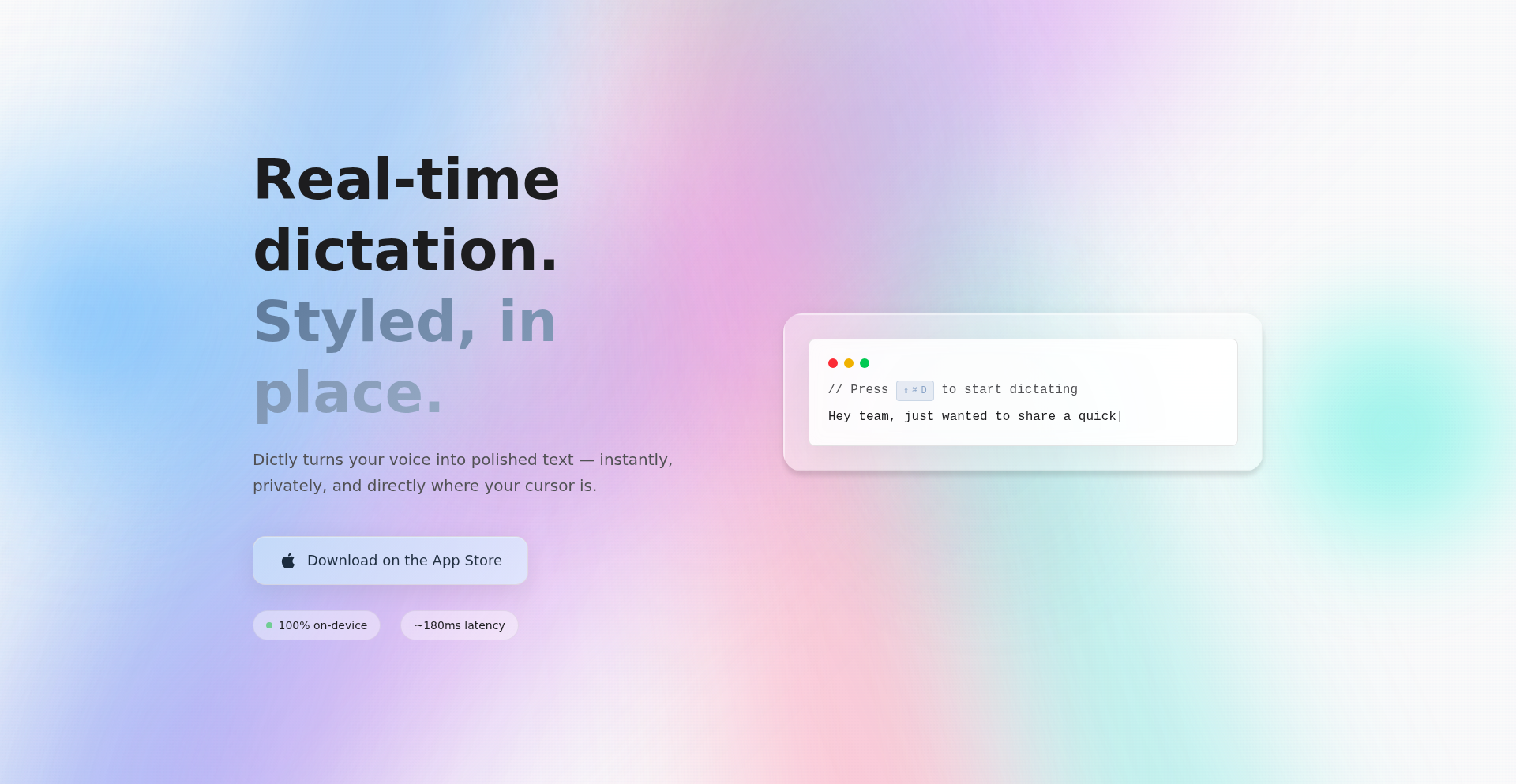

Local-First and Privacy-Focused Development

Developer Workflow Enhancement

Multimodal AI Applications

Project Category Distribution

AI/ML Tools (30%)

Developer Utilities (25%)

Productivity Tools (15%)

Creative/Fun Projects (10%)

Data Tools (5%)

Hardware/Peripherals (5%)

Utilities (5%)

Other (5%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | Toolbrew: Instant Utility Toolkit | 225 | 49 |
| 2 | CodeMode MCP Orchestrator | 75 | 22 |
| 3 | GameDev Search Engine | 69 | 10 |
| 4 | Swapple: Linear Reversible Circuit Synthesizer | 28 | 6 |
| 5 | Beacon | 20 | 8 |
| 6 | Janta Canvas: Dynamic Web Component Note Canvas | 12 | 2 |
| 7 | Mix: Multimodal Agent Workflow Studio | 7 | 2 |
| 8 | Control Theory Puzzles | 9 | 0 |
| 9 | Selen: Rust-Native Constraint Satisfaction Solver | 3 | 2 |
| 10 | CompViz: Total Compensation Visualizer | 2 | 3 |

1

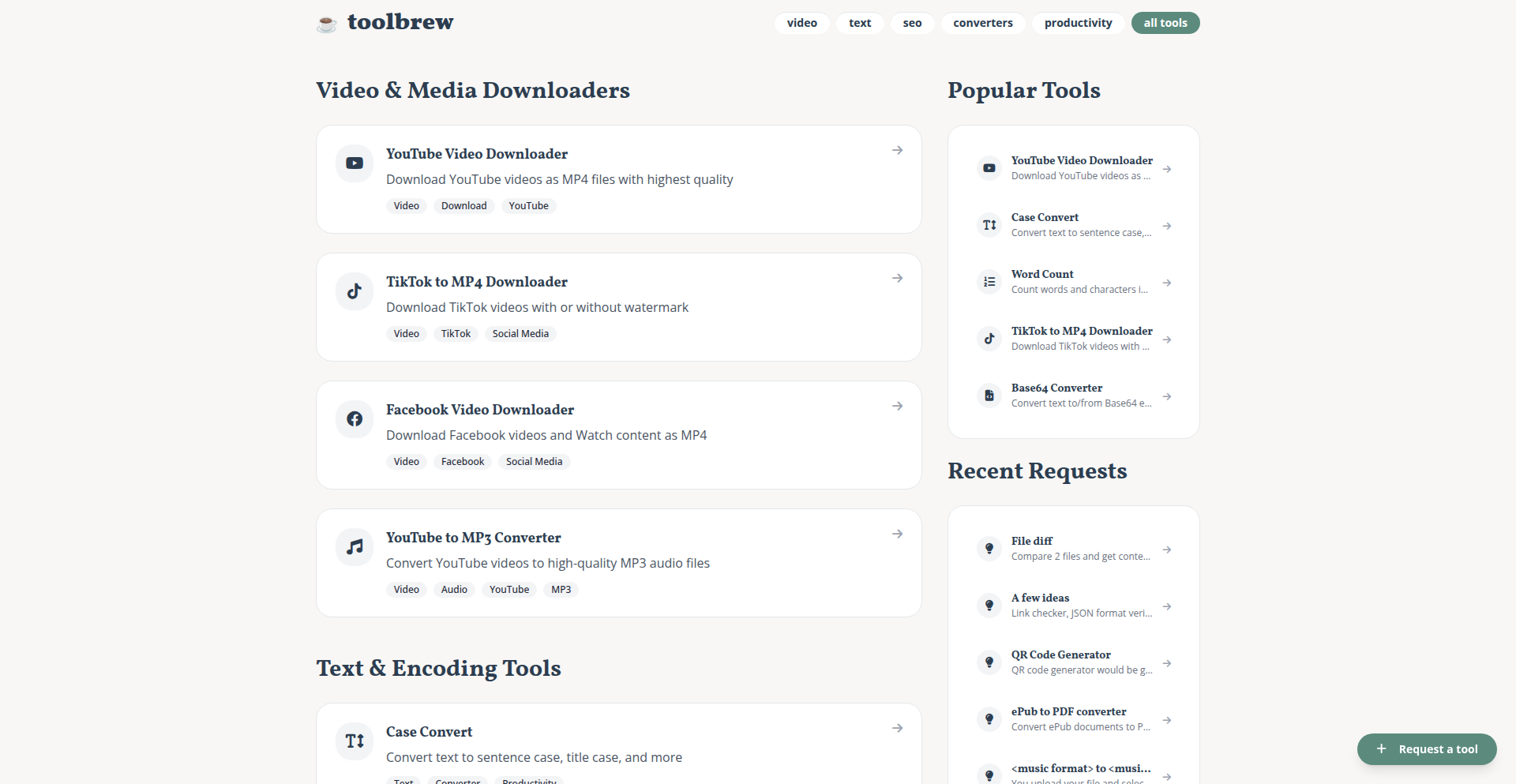

Toolbrew: Instant Utility Toolkit

Author

andreisergo

Description

Toolbrew is a curated collection of free, no-signup, ad-free online utilities built to bypass the clutter of typical tool websites. It offers a diverse range of practical functions like text manipulation, SEO analysis, and video downloading, all accessible with a single click. The innovation lies in its streamlined approach to providing essential digital tools without friction, emphasizing developer productivity and user convenience.

Popularity

Points 225

Comments 49

What is this product?

Toolbrew is a web-based platform offering a collection of essential digital tools. Instead of navigating through multiple websites filled with intrusive ads and requiring signups, Toolbrew consolidates various functionalities into one clean interface. Its technical foundation is built for speed and accessibility, likely leveraging efficient backend processing and a lightweight frontend to ensure rapid loading times. The core innovation is its philosophy of 'frictionless utility' – providing immediate access to tools that solve common digital tasks without any barriers. So, what's in it for you? You get to accomplish your digital tasks faster and more efficiently, without the annoyance of spam and registration processes.

How to use it?

Developers can use Toolbrew directly in a web browser by visiting the Toolbrew website. The interface is designed to be intuitive. For instance, a developer who needs to check the length of a webpage's SEO meta description can paste the URL into the relevant tool and get an instant analysis. Toolbrew itself is a web app, so for integration into existing workflows its main value is as inspiration: its underlying principles, and the potentially open-source nature of some components, could lead developers to build similar, more specialized tools. The site also lets users request new tools, which supports community-driven development. So, how can you use it? Simply go to the site, find the tool you need, and use it. It's designed for immediate, on-demand problem-solving.

Product Core Function

· Text Conversion Tools: Offers various text transformations like case conversion, encoding/decoding, and character counting. This helps developers quickly format or analyze text data without writing custom scripts, saving valuable coding time.

· SEO Analysis Utilities: Provides instant checks for meta tag effectiveness, keyword density, and readability scores. This allows developers and content creators to quickly optimize their web content for search engines and user engagement directly from the browser, improving site performance.

· Video Downloading Capabilities: Enables users to download videos from various platforms. This addresses a common need for content repurposing or offline access, providing a quick solution without relying on complex software or ad-ridden download sites.

· General Utility Tools: Includes a range of other practical tools such as JSON formatters, regular expression testers, and timestamp converters. These are crucial for debugging, data manipulation, and quick calculations within development workflows, streamlining common technical tasks.

Product Usage Case

· A frontend developer needs to quickly format a JSON string received from an API for debugging. They can use Toolbrew's JSON formatter to instantly pretty-print the data, making it readable and easier to identify issues, avoiding the need to integrate a formatting library into their local setup.

· A content marketer wants to check if their article's meta description is within the optimal character limit for Google search results. They can paste their description into Toolbrew's SEO checker for an immediate character count and analysis, allowing for quick edits to improve search visibility.

· A backend developer is testing a file upload feature and needs to generate sample binary data. They can use a simple text-to-binary converter within Toolbrew to create test data quickly, speeding up their testing cycles.

· A social media manager wants to save a video from a platform that doesn't offer direct download. They can use Toolbrew's video downloader to extract the video file, allowing them to use it in other projects or for archival purposes without hassle.
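
To make the JSON-formatting and meta-description cases above concrete, here is a minimal sketch of what such utilities might do under the hood. It is not Toolbrew's code: the function names and the 160-character limit are assumptions chosen for illustration.

```python
import json

# Assumed limit for illustration only; search engines do not publish a fixed number.
META_DESCRIPTION_LIMIT = 160


def pretty_print_json(raw: str, indent: int = 2) -> str:
    """Parse a JSON string and return it re-serialized with indentation."""
    return json.dumps(json.loads(raw), indent=indent, ensure_ascii=False)


def check_meta_description(text: str, limit: int = META_DESCRIPTION_LIMIT) -> dict:
    """Report the length of a meta description and whether it fits the limit."""
    length = len(text.strip())
    return {"length": length, "limit": limit, "within_limit": length <= limit}


if __name__ == "__main__":
    print(pretty_print_json('{"user":{"id":7,"tags":["a","b"]}}'))
    print(check_meta_description("Free, no-signup online utilities for everyday tasks."))
```

Invalid input makes `json.loads` raise `json.JSONDecodeError`, which a real tool would presumably turn into a readable error message.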

2

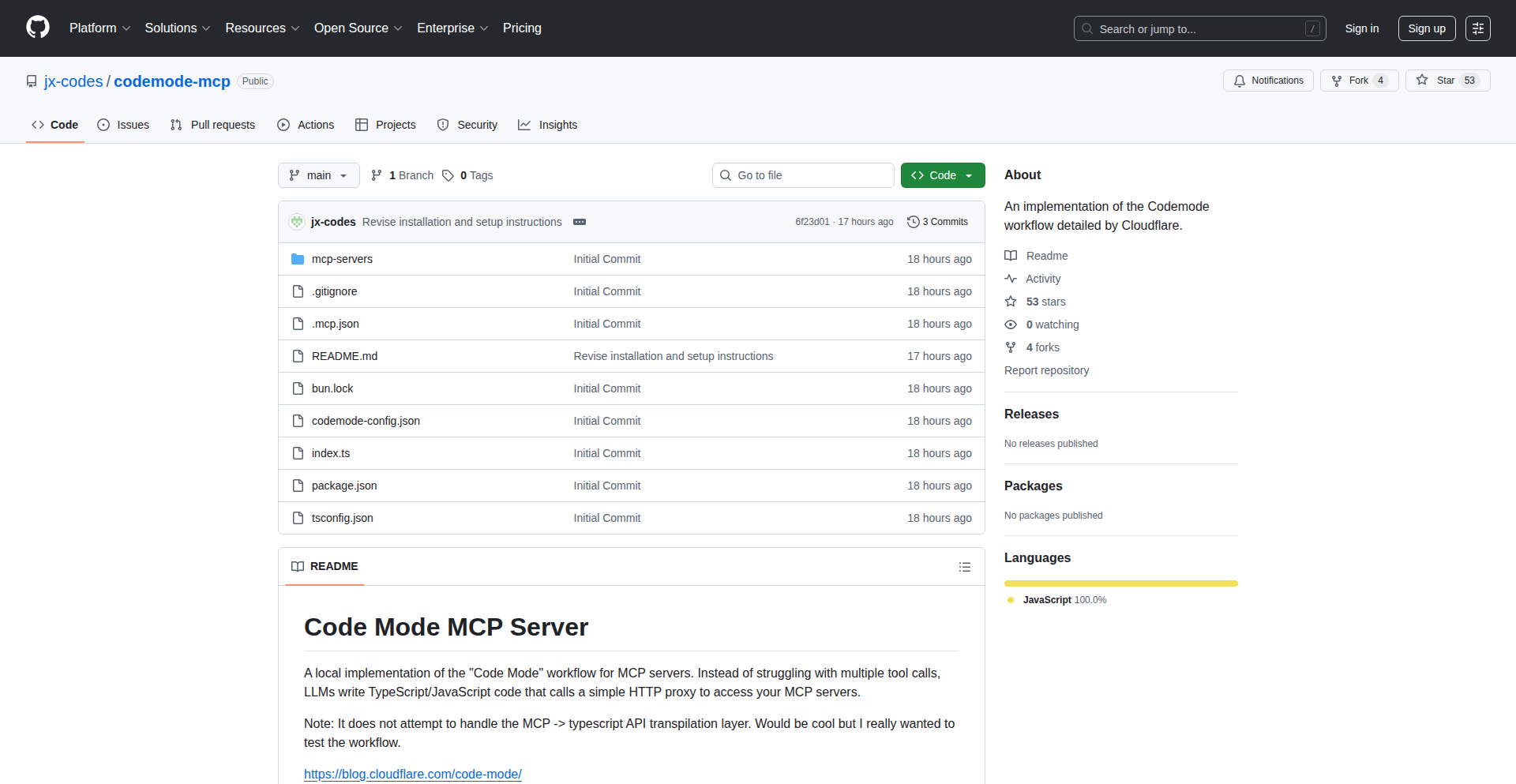

CodeMode MCP Orchestrator

Author

jmcodes

Description

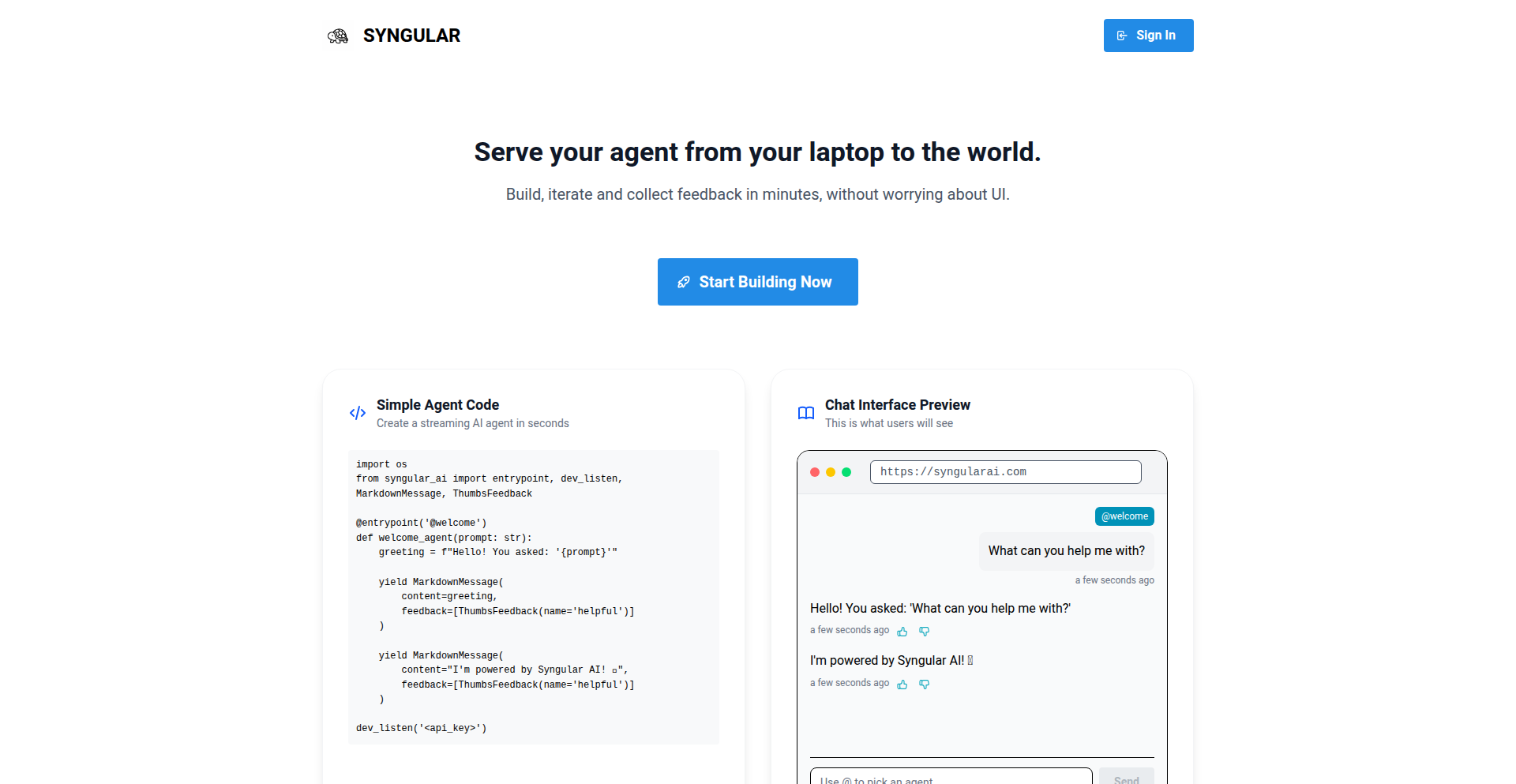

This project explores an innovative approach to workflow orchestration by combining Large Language Models (LLMs) with a secure execution environment. Leveraging Cloudflare's 'code mode' concept, it allows LLMs to directly generate and execute TypeScript code, bypassing the limitations of traditional tool-call systems. The core innovation lies in using Deno's sandboxing capabilities to safely run this LLM-generated code, with network access restricted via an MCP proxy for controlled interaction. This effectively transforms LLMs into intelligent agents capable of not just understanding but actively building and executing complex workflows. So, this is useful for developers who want to build more sophisticated and adaptable automation by letting AI write and run its own code in a safe environment.

Popularity

Points 75

Comments 22

What is this product?

This project is an experimental server that uses AI, specifically Large Language Models (LLMs), to write and run TypeScript code for automating tasks. The key innovation is its use of 'code mode', a concept inspired by Cloudflare's work: instead of just telling the AI which tools it may call, you let it write actual code (TypeScript in this case). Think of it as giving an AI a sandbox (Deno) with specific permissions in which to build and run solutions. The sandbox's network access is restricted to an MCP proxy, which keeps the generated code's interactions with the outside world under control. So, this is useful because it pushes the boundaries of what AI can do in terms of task execution, allowing for more flexible and powerful automation than simple command-based AI interactions.

How to use it?

Developers can use this project as a backend service for their applications that require intelligent automation. By integrating with this MCP server, developers can send natural language prompts or high-level task descriptions to the LLM. The LLM, in turn, will generate TypeScript code within the secure Deno sandbox to fulfill the request. The MCP proxy then handles the execution and potential feedback loop. This could be integrated into chatbot frameworks, CI/CD pipelines, or any system where dynamic code generation and execution are beneficial. So, this is useful for developers looking to build applications that can dynamically adapt and solve problems by having AI write and execute code on demand, providing a more sophisticated automation layer.

Product Core Function

· LLM-driven TypeScript code generation: The system allows LLMs to directly author TypeScript code, enabling them to solve problems with custom logic rather than just selecting pre-defined tools. This is valuable for creating highly specific and tailored automated solutions.

· Secure Deno sandbox execution: All generated code runs in a secure, isolated Deno environment with restricted network access. This prevents malicious or faulty code from impacting the broader system, providing safety and reliability for automated processes.

· MCP proxy for workflow orchestration: An MCP (Model Context Protocol) proxy manages communication between the LLM-generated code and the outside world, and supports more advanced workflow orchestration between the LLM and the execution environment. This allows complex, multi-step automated processes to be managed effectively.

· Controlled network access: The system strictly controls what network calls the executed code can make, enhancing security and predictability. This is crucial for preventing unauthorized data access or external service abuse in automated workflows.

· Experimental agentic capabilities: The architecture lays the groundwork for LLMs to act as agents, capable of planning, coding, and executing tasks iteratively. This is valuable for building future AI systems that can tackle more complex problems autonomously.
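
The sandboxing idea in the list above can be sketched with Deno's permission flags. Deno denies network access unless it is granted, and `--allow-net=<host>` grants it for specific hosts only. The snippet below only builds the command line and does not run anything. The script name and proxy address are hypothetical, and the project's actual invocation may differ.

```python
import shlex


def build_deno_command(script_path: str, proxy_host: str) -> list[str]:
    """Build a `deno run` argv that only permits network access to one host."""
    return [
        "deno",
        "run",
        "--no-prompt",                # fail instead of interactively asking for permissions
        f"--allow-net={proxy_host}",  # network limited to the (assumed) MCP proxy address
        script_path,
    ]


if __name__ == "__main__":
    # Hypothetical file name and proxy address, for illustration only.
    cmd = build_deno_command("generated.ts", "127.0.0.1:8787")
    print(shlex.join(cmd))
```

Because no file-system, environment, or subprocess permissions are granted, code launched this way can only compute and talk to the one allowed host.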

Product Usage Case

· Automated data processing pipeline: A developer could prompt the system to 'process all CSV files in this directory, extract the 'email' column, and send each email to a specific webhook'. The LLM would generate TypeScript code to read files, parse CSV, and make network requests, all executed securely. This solves the problem of needing to write custom scripts for every data processing task.

· Dynamic API integration: Imagine needing to interact with a new, undocumented API. Instead of manually reverse-engineering it, a developer could describe the desired interaction to the LLM, which then writes the necessary TypeScript code to fetch and parse data from the API, handling authentication and error scenarios. This solves the problem of rapidly integrating with unfamiliar or complex APIs.

· CI/CD script generation: A developer might need a custom script to deploy an application under specific conditions. They could describe these conditions to the LLM, which generates the TypeScript code for the deployment logic. This code is then securely executed as part of the CI/CD pipeline, solving the problem of creating bespoke deployment automation without extensive manual scripting.

· Intelligent chatbot backend: For a chatbot that needs to perform actions beyond simple responses, such as booking appointments or fetching real-time data, the LLM can generate TypeScript code to interact with external services (calendars, databases). This provides a more powerful and interactive user experience by enabling the chatbot to actually perform tasks.

· Prototyping complex logic: When prototyping a feature that involves intricate business logic or algorithms, developers can use this system to have the LLM write the core logic in TypeScript. This allows for rapid iteration and testing of complex ideas without getting bogged down in boilerplate code.

3

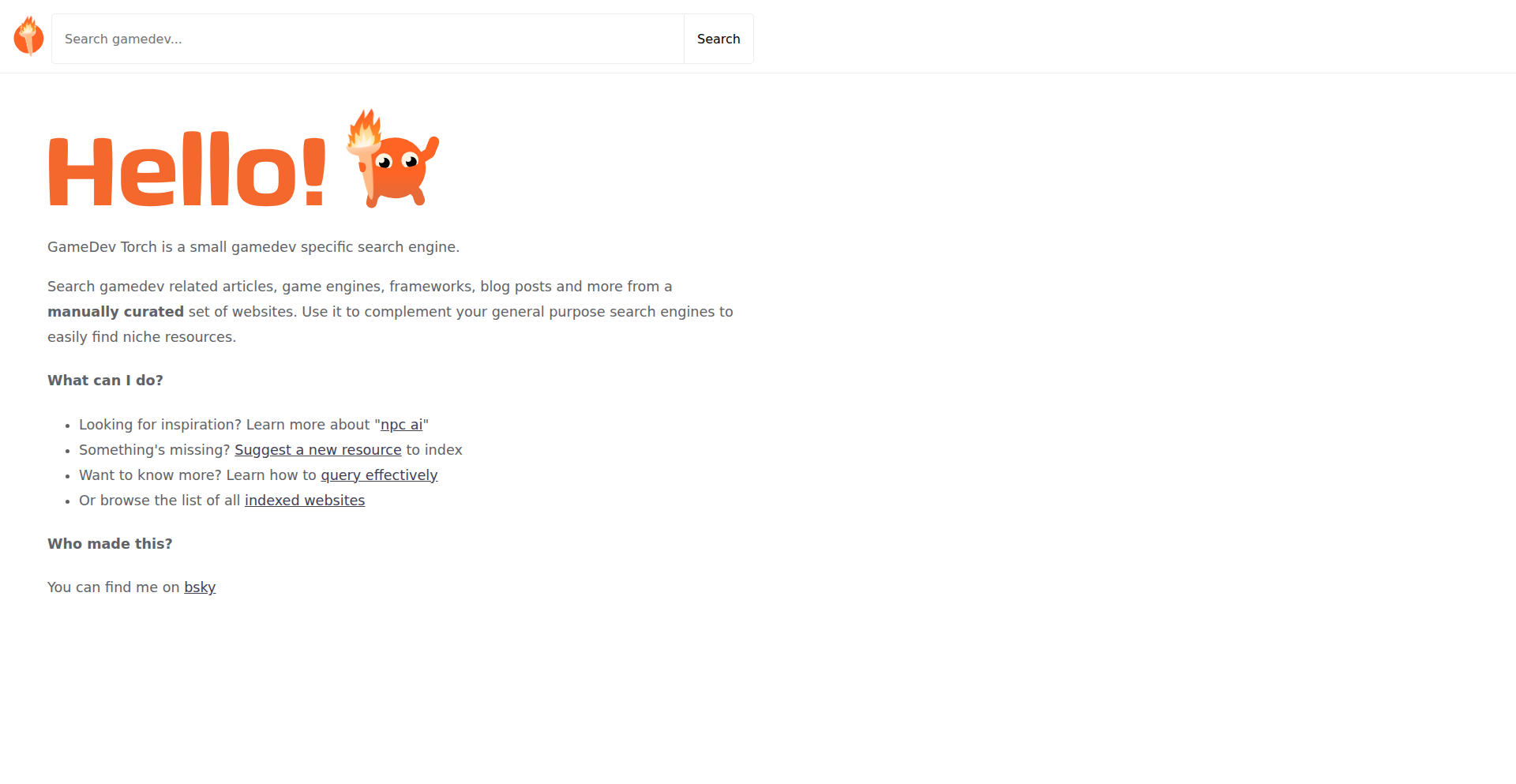

GameDev Search Engine

Author

Voycawojka

Description

A curated search engine specifically for game development resources. It addresses the challenge of fragmented and often irrelevant information found on general search engines by providing a focused and organized way to discover relevant game dev content, tools, and discussions. The innovation lies in its specialized indexing and filtering tailored to the game development domain.

Popularity

Points 69

Comments 10

What is this product?

This is a specialized search engine designed exclusively for game developers. Unlike general search engines that return a vast amount of mixed results, this engine understands and prioritizes game development-related queries. It achieves this by using a custom-built indexing system that focuses on game development keywords, forums, documentation, tutorials, and asset repositories. The core innovation is its domain-specific intelligence, allowing it to surface more relevant and actionable results for game creation challenges. So, what's in it for you? You get faster access to the exact game dev information you need, saving you hours of sifting through unrelated content.

How to use it?

Developers can use this search engine through its web interface, similar to how they would use Google. They simply type in their game development questions or search terms, such as 'Unity character controller tutorial,' 'Unreal Engine material optimization,' or 'best practices for indie game marketing.' The engine will then return a list of highly relevant results from curated sources within the game development community. Integration would involve bookmarking the site or potentially using its API (if available in future versions) to programmatically access search results for building custom tools. So, what's in it for you? It's a direct shortcut to finding solutions and resources for your game projects.

Product Core Function

· Domain-specific indexing: Indexes content specifically relevant to game development, ensuring higher accuracy and relevance in search results. This means you'll find game dev blogs, forums, and documentation more easily.

· Curated content sources: Prioritizes results from trusted and established game development communities and resources. You're more likely to find reliable information and avoid low-quality content.

· Advanced filtering for game dev topics: Allows users to filter search results by specific game engines (Unity, Unreal), programming languages (C#, C++), or development phases (prototyping, asset creation). This helps you narrow down your search to exactly what you're working on.

· Focused query understanding: Understands game development jargon and concepts, leading to more precise search outcomes. It knows what 'sprite animation' or 'shader graph' means in a game dev context.
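
As a rough illustration of curated sources combined with keyword matching, the sketch below keeps only pages from an allow-list of domains and ranks them by how many query terms they contain. The domain list, the page format, and the scoring are all assumptions. The engine's real index and ranking are not described in the post.

```python
from urllib.parse import urlparse

# Hypothetical allow-list; the engine's real source list is not given here.
CURATED_DOMAINS = {"docs.unity3d.com", "gamedev.stackexchange.com"}


def is_curated(url: str) -> bool:
    """Return True when the URL's host is on the curated allow-list."""
    host = urlparse(url).hostname or ""
    return host in CURATED_DOMAINS


def search(query: str, pages: list[dict]) -> list[dict]:
    """Rank curated pages by how many query terms appear in their text."""
    terms = query.lower().split()
    scored = []
    for page in pages:
        if not is_curated(page["url"]):
            continue  # drop anything outside the curated sources
        text = page["text"].lower()
        score = sum(1 for term in terms if term in text)
        if score > 0:
            scored.append((score, page))
    # Sort by score only; the sort is stable, so ties keep their input order.
    scored.sort(key=lambda item: item[0], reverse=True)
    return [page for _, page in scored]
```

A production engine would use a proper inverted index and relevance model. The point here is only that restricting the corpus up front removes unrelated results before ranking starts.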

Product Usage Case

· A solo indie developer struggling to find efficient ways to implement realistic water physics in Unity. By using this engine with the query 'Unity water physics tutorial,' they quickly find a well-explained blog post and a relevant forum discussion that solves their problem, saving them days of experimentation.

· A small game studio looking for best practices in optimizing memory usage for their upcoming mobile game. A search for 'mobile game memory optimization techniques' on this engine surfaces guides and articles specifically addressing this challenge from reputable game dev sites, helping them avoid common pitfalls.

· A student learning Unreal Engine and needing to understand complex material node setups. Searching for 'Unreal Engine material graph examples' provides direct links to detailed tutorials and community showcases, accelerating their learning curve.

· A game artist searching for royalty-free 3D models suitable for a sci-fi project. The engine's specialized indexing helps them discover asset stores and marketplaces that are curated for game development needs, rather than general 3D model sites.

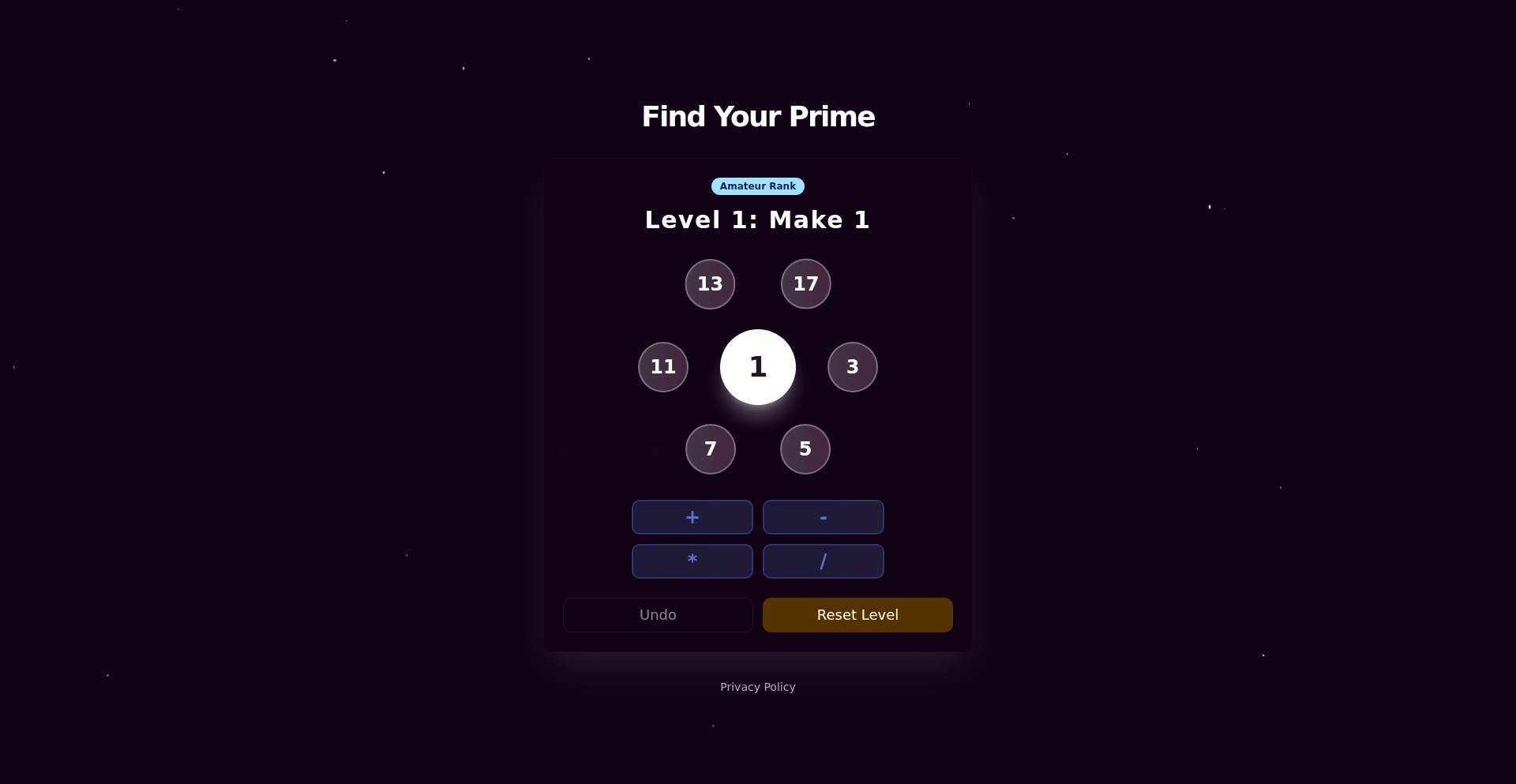

4

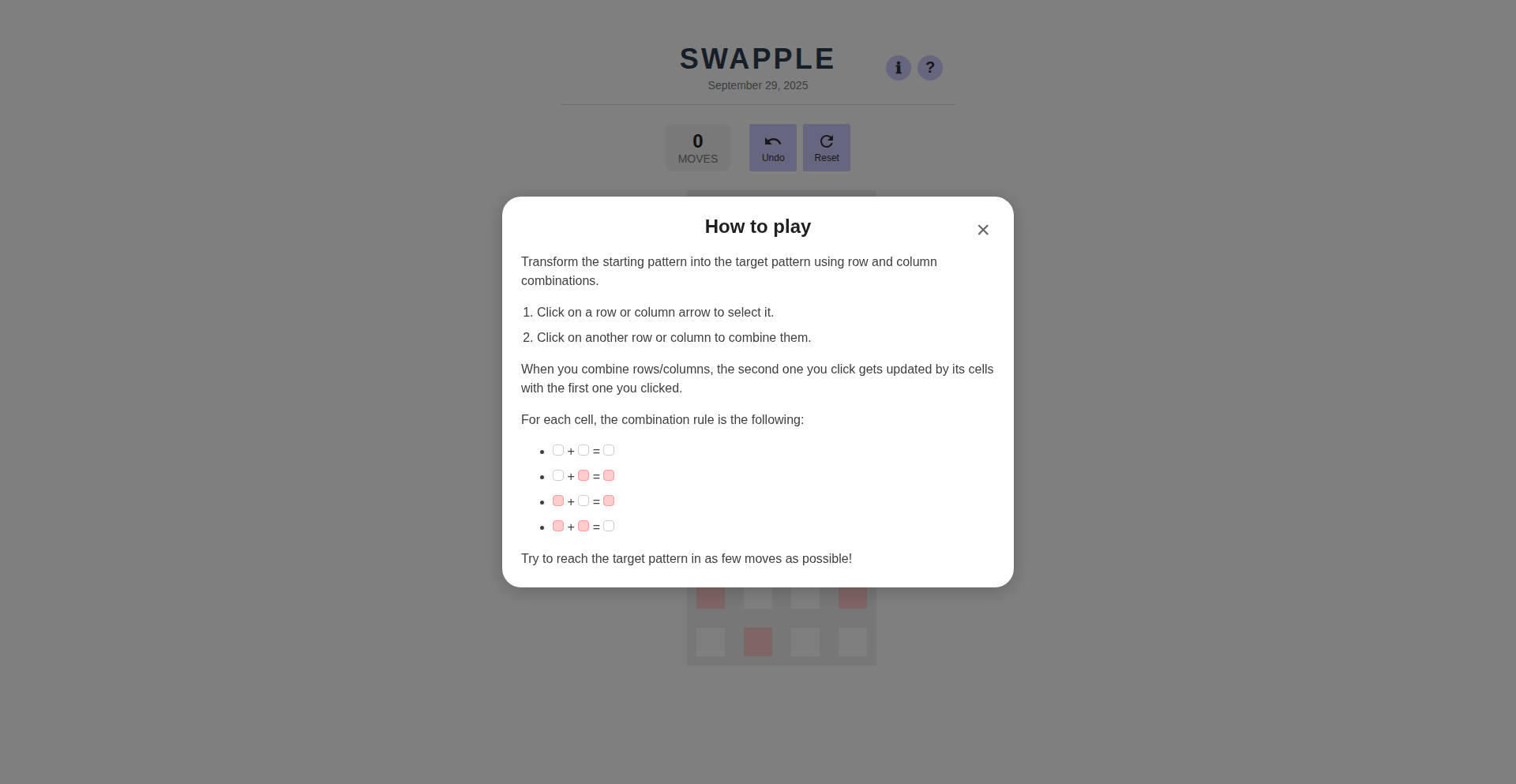

Swapple: Linear Reversible Circuit Synthesizer

Author

fuglede_

Description

Swapple is a daily puzzle game that elegantly demonstrates the principles of linear reversible circuit synthesis. It allows users to explore and construct simple digital circuits with a unique constraint: operations must be reversible, meaning you can always undo them. This project showcases a novel approach to understanding and visualizing complex computational concepts through an engaging, game-like interface. The core innovation lies in making abstract circuit theory accessible and interactive.

Popularity

Points 28

Comments 6

What is this product?

Swapple is a web-based puzzle game that introduces users to linear reversible circuits. In conventional computing, some operations permanently erase information. Reversible circuits, by contrast, lose no information, so you can always trace back to the original state. Swapple visualizes this with a grid in which you manipulate bits (0s and 1s) using specific gates, each performing an operation that can be perfectly reversed. The puzzle is to reach a target configuration of bits within a limited number of moves. The underlying technology uses logic gates and circuit theory to create these reversible operations, offering a glimpse into quantum computing and other advanced fields where reversibility is crucial. So, this is useful because it demystifies complex computer science concepts, making them understandable and fun, which can spark interest in areas like advanced computing.

How to use it?

Developers can use Swapple as an educational tool to grasp the fundamentals of reversible logic, which is a building block for quantum computing and other specialized hardware. The game provides a hands-on way to experiment with different gate combinations and understand their effects. You can play it directly in your web browser. For integration, while Swapple itself is a standalone application, the principles it teaches can be applied when designing or analyzing low-power digital circuits, error-correction codes, or even understanding cryptographic algorithms where reversibility plays a role. So, this is useful because it provides a playful yet educational environment to learn about advanced circuit design that could impact future computing technologies.

Product Core Function

· Interactive circuit construction: Users can drag and drop logic gates onto a grid to build circuits. This allows for experimentation and learning by doing, making abstract concepts tangible and easier to grasp. The value is in providing a visual playground for circuit design.

· Reversible gate operations: All operations performed by the gates are inherently reversible, meaning no data is lost. This teaches a fundamental concept in advanced computing and information theory. The value is in demonstrating information preservation in computation.

· Puzzle-based learning: The game presents users with specific targets to achieve, encouraging problem-solving and strategic thinking within the context of circuit design. The value is in making learning engaging and goal-oriented.

· Daily challenge mechanism: A new puzzle is generated daily, providing a consistent opportunity for users to practice and improve their skills. The value is in fostering continuous learning and skill development.

· Web-based accessibility: Accessible through any modern web browser without requiring installation. The value is in making advanced computing concepts immediately available to a wide audience.
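
The reversibility described above can be shown with the controlled-NOT (CNOT) gate, the standard building block of linear reversible circuits: it XORs one bit into another, and applying it twice restores the input. Whether Swapple uses exactly this gate set and bit layout is an assumption. The sketch only illustrates the principle.

```python
def cnot(bits: list[int], control: int, target: int) -> list[int]:
    """Return a copy of bits with bits[target] XORed with bits[control]."""
    if control == target:
        raise ValueError("control and target must differ")
    out = list(bits)
    out[target] ^= out[control]
    return out


def run_circuit(bits: list[int], gates: list[tuple[int, int]]) -> list[int]:
    """Apply a sequence of (control, target) CNOT gates in order."""
    for control, target in gates:
        bits = cnot(bits, control, target)
    return bits


def invert_circuit(gates: list[tuple[int, int]]) -> list[tuple[int, int]]:
    """Each CNOT is its own inverse, so the inverse circuit is the gates reversed."""
    return list(reversed(gates))


if __name__ == "__main__":
    start = [1, 0, 1]
    gates = [(0, 1), (1, 2), (2, 0)]
    scrambled = run_circuit(start, gates)
    restored = run_circuit(scrambled, invert_circuit(gates))
    print(start, scrambled, restored)
```

Three alternating CNOTs between the same two wires, `[(0, 1), (1, 0), (0, 1)]`, swap the two bits, which is the classic example of building a larger reversible operation from this single gate.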

Product Usage Case

· A student learning about quantum computing can use Swapple to build intuition for reversible operations, since quantum gates are themselves reversible. It helps them visualize reversible operations in a simplified digital context.

· A hardware engineer looking to design more energy-efficient digital circuits can explore the concept of reversible logic gates showcased in Swapple, as reversibility is a key to reducing power consumption in future computing architectures. It provides a foundational understanding of low-power design principles.

· A developer interested in cryptography can use Swapple to gain an appreciation for the mathematical properties of reversible functions, which are often employed in secure encryption algorithms. It offers a conceptual bridge to understanding how data can be transformed and reliably recovered.

· A hobbyist interested in the theoretical underpinnings of computation can play Swapple to experience a different paradigm of digital logic beyond standard boolean operations. It provides a fun entry point into exploring the frontiers of computer science.

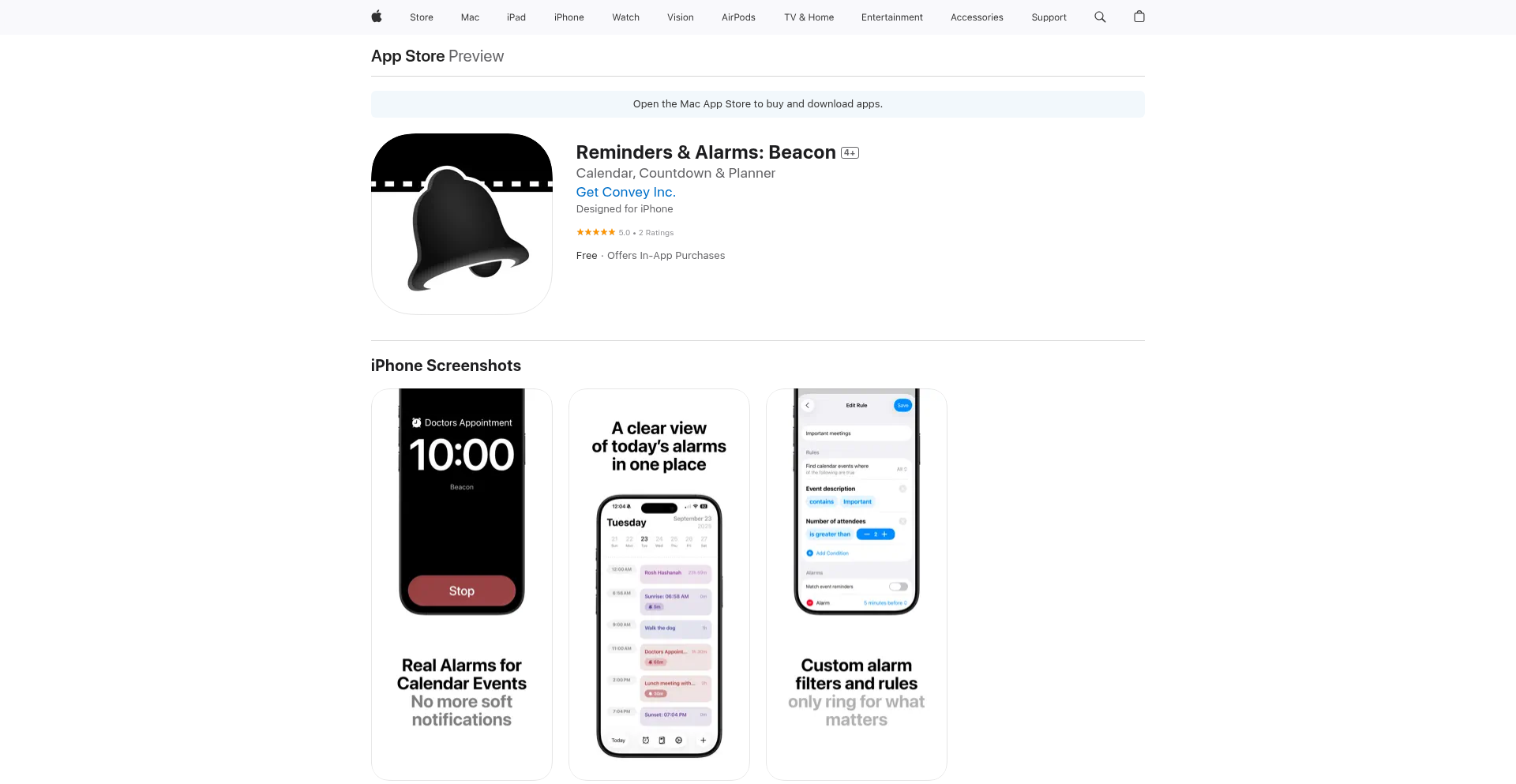

5

Beacon

Author

jiffydiffy

Description

Beacon is a clever iOS app that leverages the new AlarmKit to automatically set actual iOS alarms for your important calendar events. It solves the problem of easily ignorable calendar notifications by converting them into unavoidable alarms, ensuring you never miss crucial appointments. You can set up custom rules to trigger alarms only for specific types of events, making it a smart and personalized reminder system.

Popularity

Points 20

Comments 8

What is this product?

Beacon is an iOS application that bridges the gap between your Apple Calendar and the iOS Alarm system. Traditional calendar notifications can often be silenced or overlooked, leading to missed appointments. Beacon addresses this by intelligently scanning your calendar for events that meet your predefined criteria (e.g., events with 'Interview' in the title or meetings with a certain number of attendees). Once identified, it uses iOS 26's AlarmKit to programmatically create real, persistent iOS alarms for these events. This means even if your phone is on silent or Do Not Disturb mode, the alarm will sound, forcing your attention. The innovation lies in the automated conversion of calendar awareness into actionable alarms, reducing manual setup and ensuring critical events are properly flagged.

How to use it?

Developers can use Beacon by installing the app on their iOS devices. Once installed, they can grant Beacon access to their Apple Calendar. Within the app, users can define simple, rule-based triggers. For example, one might set a rule to 'only create alarms for events where the title contains "Important Meeting"' or 'create alarms for events with more than 5 attendees'. Beacon then continuously monitors the calendar and automatically schedules the corresponding alarms. For integration, developers could potentially extend Beacon's functionality through Shortcuts or other iOS automation tools, allowing for more complex workflows where an alarm being set by Beacon could trigger other actions.

Product Core Function

· Automatic Calendar Event Monitoring: Beacon continuously scans your Apple Calendar for events, ensuring no important details are missed. This provides peace of mind that your schedule is being actively managed.

· Rule-Based Alarm Triggering: Users can define custom rules based on event titles, attendee counts, or other calendar metadata. This allows for a highly personalized reminder system, ensuring alarms are only set for what truly matters to you, reducing notification fatigue.

· Real iOS Alarm Generation: Instead of simple notifications, Beacon creates actual iOS alarms. This means alarms will break through silent or Do Not Disturb modes, guaranteeing you are alerted to important events. This directly addresses the core problem of ignored notifications.

· Seamless Sync with Apple Calendar: Beacon integrates directly with your existing Apple Calendar data. This eliminates the need for manual data entry and ensures consistency between your calendar and your alarms.

· User-Friendly Rule Configuration: The app offers a simple interface for setting up rules, making it accessible even for users who aren't deeply technical. This lowers the barrier to entry for leveraging advanced reminder capabilities.
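
The rule-based triggering described above can be sketched as a small matching step that runs before any alarm is scheduled. This is plain illustrative logic, not Beacon's implementation, and it deliberately makes no AlarmKit or calendar calls. The `Event` and `Rule` types and their fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Event:
    title: str
    attendee_count: int = 0


@dataclass
class Rule:
    # An empty keyword tuple means "any title"; min_attendees=0 means "any size".
    title_keywords: tuple = ()
    min_attendees: int = 0

    def matches(self, event: Event) -> bool:
        """Return True when the event satisfies both the keyword and attendee conditions."""
        title = event.title.lower()
        keyword_ok = not self.title_keywords or any(
            keyword.lower() in title for keyword in self.title_keywords
        )
        return keyword_ok and event.attendee_count >= self.min_attendees


def events_needing_alarm(events: list, rules: list) -> list:
    """Return the events matched by at least one rule, in their original order."""
    return [event for event in events if any(rule.matches(event) for rule in rules)]


if __name__ == "__main__":
    rules = [Rule(title_keywords=("Interview",)), Rule(min_attendees=3)]
    events = [Event("Interview with Acme", 1), Event("Lunch", 2), Event("Team sync", 4)]
    for event in events_needing_alarm(events, rules):
        print(event.title)
```

In the app, each matched event would then be handed to the system alarm API. That step is omitted here because its details are not covered in the post.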

Product Usage Case

· For a job seeker, Beacon can be configured to automatically set alarms for every scheduled interview, ensuring they are never late. By setting a rule like 'only create alarms for events with "Interview" in the title', the seeker gets a loud, undeniable alert without needing to manually set an alarm for each interview.

· A busy project manager can use Beacon to ensure they don't miss critical team syncs. By setting a rule such as 'create alarms for meetings with 3 or more attendees', they are guaranteed to be alerted for significant meetings that require their presence, even if their phone is on silent.

· For individuals managing personal appointments, Beacon can be set to create alarms for doctor's visits or important family events. A rule like 'create alarms for events with "Doctor" or "Anniversary" in the title' ensures these crucial personal dates are hard to miss.

6

Janta Canvas: Dynamic Web Component Note Canvas

Author

Isaac-Westaway

Description

Janta Canvas is a novel note-taking application that breaks free from traditional limitations of infinite canvas tools. Its core innovation lies in seamlessly integrating dynamic web components, such as code editors, interactive Desmos graphs, and rich text editors (powered by SlateJS), directly onto the same canvas layer as hand-drawn annotations. This allows for a deeply interactive and programmatic note-taking experience, enabling users to annotate code snippets, visually analyze mathematical functions, and even generate visualizations directly within their notes. It solves the problem of static, siloed information in existing note apps by creating a unified, interactive space for diverse digital content.

Popularity

Points 12

Comments 2

What is this product?

Janta Canvas is an open-source, developer-focused note-taking application built on an infinite canvas. Unlike traditional note apps that treat different types of content separately, Janta Canvas treats every item as a first-class citizen on the same layer. Imagine drawing an arrow that points directly to a specific line of code in an embedded code editor, or annotating a live Desmos graph to explain a mathematical concept. This is achieved by treating the canvas as a container for web components, allowing them to coexist with pen strokes and other rich media. This approach unlocks a new level of interactivity and expressiveness for technical and creative workflows.

How to use it?

Developers can start using Janta Canvas immediately by visiting app.janta.dev, which opens a temporary, locally stored canvas for experimenting. To integrate Janta Canvas into your own projects or workflows, you can leverage its web component architecture. For instance, you could embed Janta Canvas within a larger application to provide an interactive scratchpad for debugging or design. Specific use cases include annotating live code during a debugging session, collaboratively designing UIs with embedded visual mockups and annotations, and creating dynamic reports that combine textual explanations with live data visualizations. Programmatic content generation, such as using matplotlib.pyplot to create graphs directly on the canvas, further extends its utility for data-driven documentation.

Product Core Function

· Infinite Canvas with Real-time Annotation: Allows for boundless freeform note-taking and drawing, making it easy to capture complex ideas without space constraints. The value is in providing a flexible digital whiteboard that adapts to any thought process.

· Integrated Code Editors: Embed live, editable code blocks directly into notes. This is invaluable for developers to document code, prototype snippets, or share and annotate programming examples, bridging the gap between code and explanation.

· Interactive Desmos Graph Integration: Embed and manipulate Desmos mathematical graphs within notes. This is crucial for educators, students, and researchers to visualize and explain complex mathematical concepts directly alongside textual explanations, enhancing understanding.

· Rich Text Editing (SlateJS): Provides a powerful and flexible rich text editor for structured textual content. This ensures that written explanations are as robust and well-formatted as other components on the canvas, improving readability and organization.

· Web Component Layering: The core innovation allowing diverse web components (like code editors, graphs, etc.) to coexist on the same canvas as pen strokes. This creates a truly unified and interactive note-taking environment, enabling novel ways to combine different types of information.

· Programmatic Content Generation: Ability to generate content, such as graphs using libraries like matplotlib.pyplot, directly onto the canvas. This empowers users to create dynamic visualizations and data-driven insights within their notes, making them more informative and interactive.
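
One way to picture the single-layer idea in the list above is a data model in which pen strokes and embedded components are the same kind of item and share one stacking order. The sketch below is an assumption made for illustration. Janta Canvas's actual data model is not described in the post, and all names here are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class CanvasItem:
    kind: str          # e.g. "stroke", "code-editor", "desmos-graph", "rich-text"
    x: float
    y: float
    z: int = 0         # position in the shared stacking order
    payload: dict = field(default_factory=dict)


@dataclass
class Canvas:
    items: list = field(default_factory=list)

    def add(self, item: CanvasItem) -> CanvasItem:
        """Place a new item on top of everything already on the layer."""
        item.z = max((existing.z for existing in self.items), default=-1) + 1
        self.items.append(item)
        return item

    def render_order(self) -> list:
        """Return items bottom to top, regardless of their kind."""
        return sorted(self.items, key=lambda item: item.z)


if __name__ == "__main__":
    canvas = Canvas()
    canvas.add(CanvasItem("code-editor", 0, 0, payload={"language": "python"}))
    canvas.add(CanvasItem("stroke", 40, 12, payload={"points": [(40, 12), (90, 30)]}))
    print([item.kind for item in canvas.render_order()])
```

Because a stroke and an editor are ordered by the same `z` value, an annotation can sit directly on top of a component instead of living in a separate drawing layer.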

Product Usage Case

· Debugging Session: A developer is debugging a complex algorithm. They embed the relevant code snippet into Janta Canvas, then use pen strokes to highlight specific variable states and draw flowcharts to trace execution paths. This visual annotation directly on the code clarifies the problem faster than separate tools.

· Interactive Tutorial Creation: An educator is creating a tutorial for a new programming concept. They embed code examples, use annotations to explain each line, and include interactive Desmos graphs to demonstrate mathematical principles related to the code. This provides a rich, multi-modal learning experience.

· Collaborative Design Mockups: A design team is working on a new UI. They embed wireframes and mockups onto the canvas, then use pen annotations and rich text to provide feedback and suggestions directly on the visual elements. This streamlines the design iteration process.

· Technical Documentation with Live Data: A data scientist is documenting a model's performance. They generate charts using a Python script (via programmatic generation) directly on the canvas and then annotate key trends and insights. This creates dynamic, self-updating documentation.

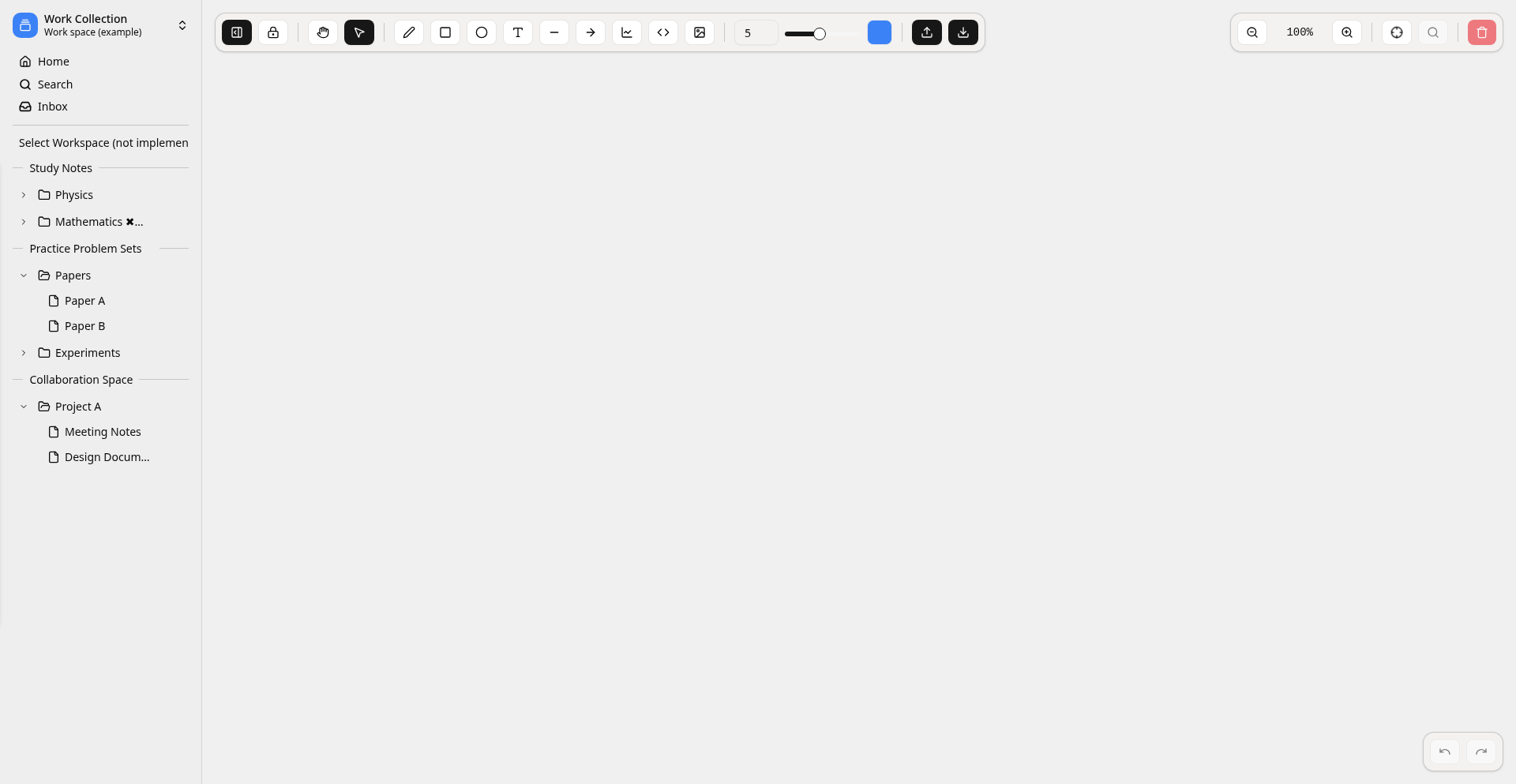

7

Mix: Multimodal Agent Workflow Studio

Author

sarath_suresh

Description

Mix is a groundbreaking multimodal agents SDK that allows developers to build and test complex AI workflows through an intuitive GUI playground, rather than solely relying on code. Its core innovation lies in its focus on visual, non-code-based workflow construction, abstracting away much of the underlying complexity. This translates to faster prototyping, easier debugging, and democratized access to building sophisticated AI applications, especially for those who might not be deep coding experts. The project champions an open-data philosophy, storing all project data in plain text and native media files, guaranteeing zero vendor lock-in.

Popularity

Points 7

Comments 2

What is this product?

Mix is a Software Development Kit (SDK) designed to simplify the creation of AI-powered workflows that can understand and process multiple types of data, such as text, images, and audio (this is what 'multimodal' means). The exciting part is its Graphical User Interface (GUI) playground. Imagine building AI applications like putting together building blocks on a screen, instead of writing lines and lines of code. This means you can visually design how different AI models interact, how data flows between them, and how the final output is generated. The key innovation is moving away from code-centric development towards a more accessible, visual approach for complex AI tasks. This makes AI development faster, more intuitive, and less daunting. Furthermore, Mix is built with transparency and freedom in mind: all your project data and media files are stored in easily accessible formats, meaning you're not locked into any proprietary system. The underlying engine is a straightforward HTTP server, and you can interact with it using either Python or TypeScript SDKs.

How to use it?

Developers can leverage Mix by first setting up the backend server. Then, they can use the provided Python or TypeScript SDK to integrate Mix into their existing applications or build entirely new ones. The GUI playground serves as the primary interface for designing and testing multimodal agent workflows. Developers can drag and drop different AI modules (like image recognition, text generation, speech-to-text), connect them with visual arrows to define the data flow, and configure their behavior. For example, a developer could create a workflow that takes an image, uses an AI model to identify objects within it, then feeds those objects into a text generation model to create a descriptive caption. The playground allows for real-time testing and debugging of these workflows, showing exactly how data progresses and where any issues might arise. This shortens the loop between changing AI logic and seeing the result. The SDKs also offer programmatic control for more advanced integrations or automated workflow management.

Product Core Function

· Visual Workflow Builder (GUI Playground): Enables intuitive, drag-and-drop creation of multimodal AI agent workflows, abstracting code complexity for faster prototyping and easier understanding. This is useful for quickly visualizing and assembling AI processes without extensive coding.

· Multimodal Agent Integration: Supports connecting various AI models that can process different data types (text, images, audio), allowing for richer and more versatile AI applications. This allows developers to build AI systems that can handle real-world data more comprehensively.

· Plain Text and Native Media Storage: Ensures all project data and media are stored in open formats, preventing vendor lock-in and facilitating data portability and reusability. This means your AI project's assets are easily accessible and not tied to a specific platform.

· HTTP Server Backend: Provides a robust and accessible backend for the SDK, allowing for easy integration and scalability. This offers a stable foundation for your AI workflows.

· Python and TypeScript SDKs: Offers developers flexible options for interacting with the Mix backend, enabling integration into diverse tech stacks and custom automation. This provides choice and compatibility for different development environments.

Product Usage Case

· Building an automated content generation pipeline: A blogger could use Mix to create a workflow that takes a news article URL, extracts key information, generates a summary in different tones, and then creates social media posts. This solves the problem of time-consuming content repurposing.

· Developing an intelligent customer support assistant: A company could design a workflow where customer inquiries (text or voice) are analyzed for sentiment and intent, relevant knowledge base articles are retrieved, and pre-written or AI-generated responses are provided. This streamlines customer service operations.

· Creating an image analysis and reporting tool: A researcher could build a system that automatically processes batches of images, identifies specific features, categorizes them, and generates a detailed report with statistics. This automates tedious manual data analysis.

· Prototyping AI-powered educational tools: An educator could experiment with building an interactive learning module where students upload their work (e.g., essays, drawings), and the AI provides feedback based on predefined criteria. This allows for rapid testing of educational AI concepts.

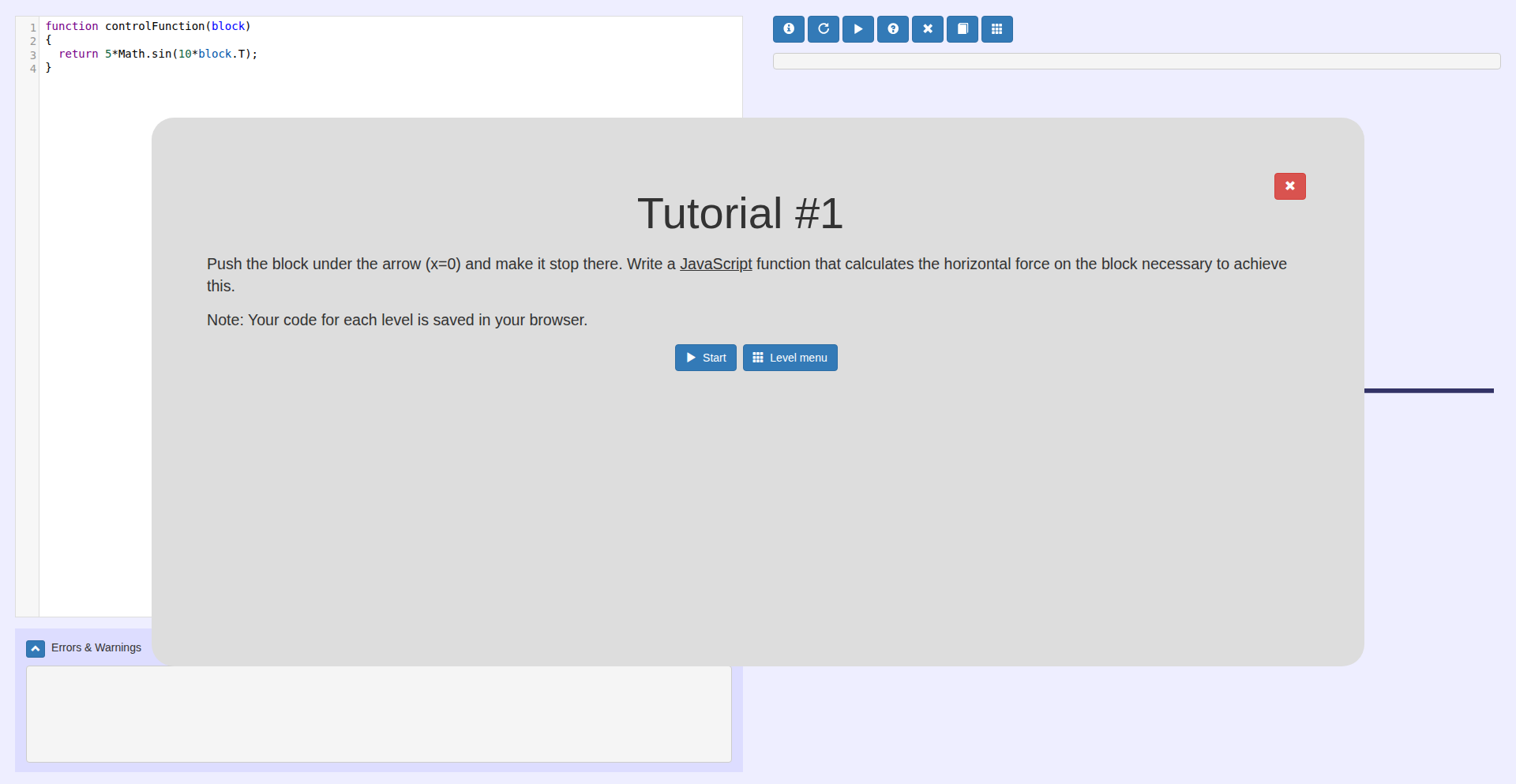

8

Control Theory Puzzles

Author

wvlia5

Description

This project is a collection of interactive puzzles designed to teach and explore the fundamental concepts of control theory using web technologies. It translates complex mathematical and engineering principles into engaging, visual challenges, making them accessible to a broader audience. The innovation lies in its pedagogical approach, leveraging interactive web simulations to democratize the understanding of control systems, which are crucial in everything from robotics to aerospace.

Popularity

Points 9

Comments 0

What is this product?

This project is an educational tool that uses interactive web-based puzzles to explain control theory. Control theory is a branch of engineering and mathematics that deals with the behavior of dynamical systems with inputs, and how their behavior can be modified by varying the inputs. Think of it as the science of making things behave the way you want them to. This project innovates by taking abstract mathematical concepts and turning them into visual, hands-on challenges in your browser. Instead of just reading about it, you can actively experiment and learn. So, what's in it for you? It offers a playful and intuitive way to grasp how systems are stabilized, how they respond to changes, and how to design them for desired performance, which is applicable to a vast range of real-world systems.

How to use it?

Developers can use this project as a learning resource to understand the core principles of control theory. It's ideal for those working on embedded systems, robotics, automation, or any field where system behavior needs to be managed. The puzzles likely involve adjusting parameters, observing system responses in real-time, and achieving specific outcomes. Integration might involve referring to the underlying code or concepts for their own projects, or even extending the puzzle framework. So, how can you use it? You can directly interact with the puzzles on the web to sharpen your intuition about system dynamics, and then apply those insights to your own code or hardware projects that require precise control.

Product Core Function

· Interactive system simulation: Allows users to manipulate system parameters and instantly see the effect on system behavior, providing immediate feedback for learning. This is valuable for understanding cause-and-effect in dynamic systems.

· Visual representation of control concepts: Translates abstract mathematical models into easily digestible graphical outputs, making complex ideas like stability and response time tangible. This helps in grasping abstract concepts through visual cues.

· Problem-based learning puzzles: Presents users with specific challenges and goals within a simulated environment, encouraging active problem-solving and deeper engagement with control theory principles. This fosters a 'learn by doing' approach to complex topics.

· Web-based accessibility: Makes control theory education available to anyone with a web browser, removing traditional barriers of specialized software or hardware. This broadens access to valuable engineering knowledge.

· Parameter tuning exercises: Guides users through the process of adjusting controller gains or system configurations to achieve desired performance objectives, like speed or accuracy. This is directly applicable to optimizing real-world control systems.

Product Usage Case

· A robotics engineer learning to tune PID controllers for motor speed regulation can use the puzzles to visually understand the impact of P, I, and D gains on overshoot, settling time, and steady-state error, leading to more efficient tuning in their actual robot.

· A student studying mechanical engineering can engage with puzzles that simulate simple mechanical systems (like a pendulum or a spring-mass-damper) to build an intuitive understanding of feedback loops and stability before tackling complex mathematical derivations.

· A software developer working on a simulation for a drone's flight control can use these puzzles to quickly prototype and test different control strategies in a simplified environment, accelerating their development cycle.

· An enthusiast of home automation can learn how feedback control principles are applied to maintain temperature or humidity, enabling them to better understand or even design more sophisticated automation systems.
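
To make the PID tuning case above concrete, here is a minimal sketch in Python. It is not taken from the puzzles themselves: the `PID` class, the `simulate` helper, the first-order plant, and all gain values are assumptions chosen for illustration. It shows the kind of experiment the puzzles describe, changing gains and watching where the output settles.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Textbook discrete PID controller (illustrative, not the project's code)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: float = 0.0

    def update(self, error: float, dt: float) -> float:
        # Accumulate the integral term and estimate the derivative by finite difference.
        self.integral += error * dt
        derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(pid: PID, setpoint: float = 1.0, tau: float = 0.5,
             dt: float = 0.01, steps: int = 2000) -> list[float]:
    """Drive an assumed first-order plant dy/dt = (u - y) / tau with forward Euler."""
    y = 0.0
    history = []
    for _ in range(steps):
        u = pid.update(setpoint - y, dt)
        y += dt * (u - y) / tau
        history.append(y)
    return history


if __name__ == "__main__":
    p_only = simulate(PID(kp=2.0, ki=0.0, kd=0.0))
    with_integral = simulate(PID(kp=2.0, ki=5.0, kd=0.0))
    print(f"P only: {p_only[-1]:.3f}, PI: {with_integral[-1]:.3f}")
```

For this plant, a proportional-only controller settles at kp / (1 + kp) of the setpoint, so it never quite reaches the target; adding the integral term removes that steady-state error. That is the sort of intuition the parameter tuning exercises aim to build.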

9

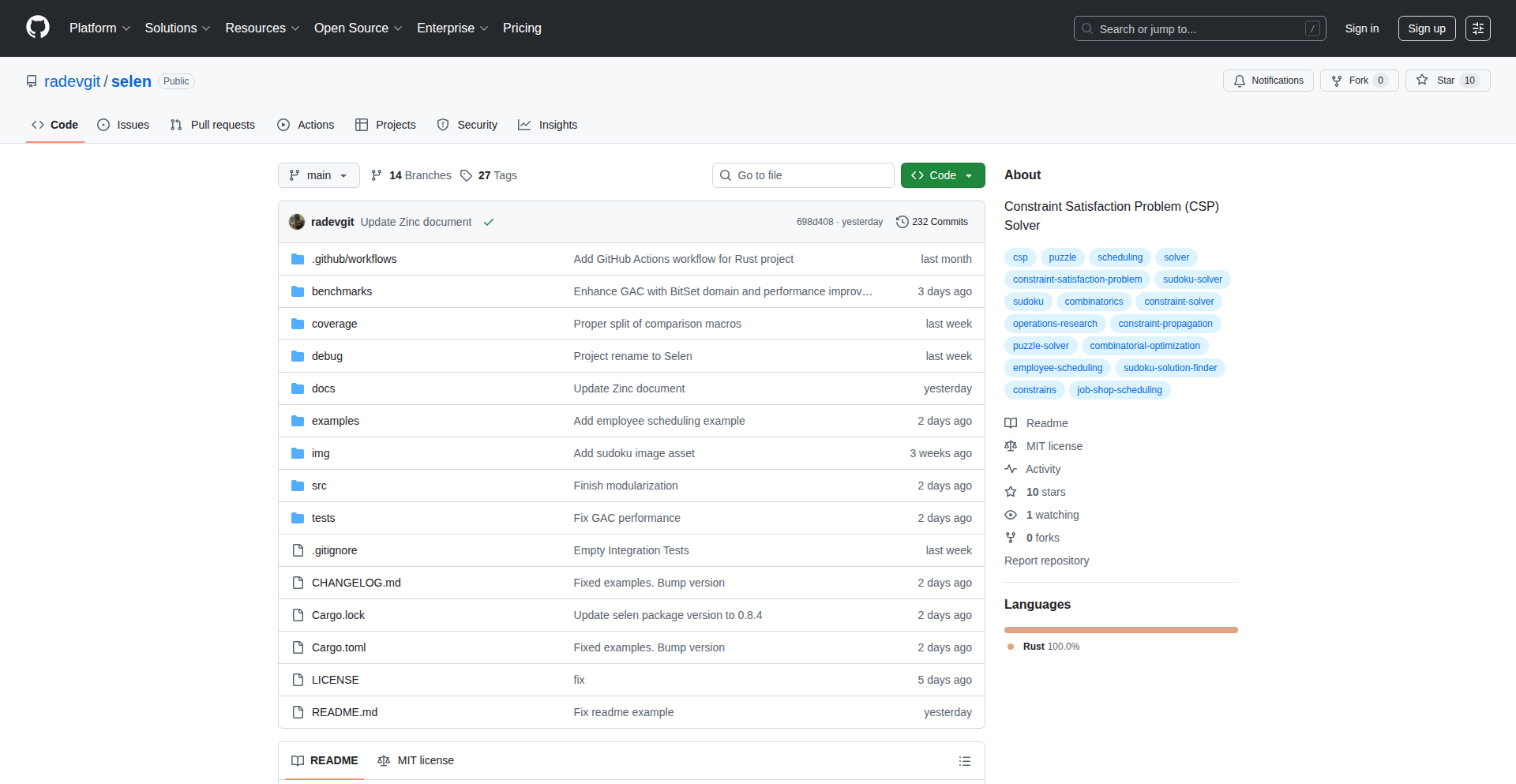

Selen: Rust-Native Constraint Satisfaction Solver

Author

aquarin

Description

Selen is a self-contained constraint satisfaction solver written in Rust. It addresses the need for a constraint solver that avoids heavy external dependencies, often found in large C/C++ libraries. This project offers a fresh take on constraint solving with a focus on a clean, integrated implementation, suitable for developers seeking a lightweight yet powerful solution for complex combinatorial problems.

Popularity

Points 3

Comments 2

What is this product?

Selen is a program designed to find solutions to problems with specific rules or 'constraints'. Think of it like a super-smart puzzle solver. For example, if you need to schedule tasks, each task's start time is a 'variable', the set of time slots it is allowed to start in is that variable's 'domain', and a rule such as 'no two tasks overlap' is a 'constraint'. Selen can figure out a valid schedule. Its innovation lies in being written entirely in Rust, meaning it's designed to be fast, safe, and easy to integrate into other Rust projects without pulling in a lot of complicated baggage from other programming languages. It handles numbers (integers and decimals) and true/false values, and understands common puzzle rules like 'all different' (no two variables can have the same value) or 'element' (one variable acts as an index that selects a value from a list). So, for you, it means a robust puzzle-solving engine that's less likely to break and easier to manage within your own code.

How to use it?

Developers can integrate Selen directly into their Rust projects. You define your problem by specifying the variables you need to solve for, the possible values each variable can take (its domain - like integers, floats, or booleans), and the constraints that must be satisfied. For instance, in a resource allocation problem, variables might represent 'how many of item X' to produce, their domain would be a range of integers, and constraints could be 'total production cannot exceed available raw materials' or 'item X and item Y cannot be produced simultaneously'. Selen then efficiently searches for a set of variable values that meet all these conditions. This is useful for anyone building applications that require optimization, scheduling, configuration, or complex decision-making, directly within their Rust ecosystem.
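
The variables, domains, and constraints vocabulary can be illustrated with a small sketch. This is plain Python, not Rust and not Selen's API: `all_different`, `sum_at_most`, and `solve` are hypothetical names, and the naive backtracking search stands in for whatever search and propagation Selen actually performs.

```python
from typing import Callable, Optional

Assignment = dict[str, int]
Constraint = Callable[[Assignment], bool]


def all_different(*names: str) -> Constraint:
    """Holds while the already-assigned variables in `names` are pairwise distinct."""
    def check(a: Assignment) -> bool:
        seen = [a[n] for n in names if n in a]
        return len(seen) == len(set(seen))
    return check


def sum_at_most(limit: int, *names: str) -> Constraint:
    """Holds while the assigned variables sum to at most `limit` (assumes non-negative domains)."""
    def check(a: Assignment) -> bool:
        return sum(a[n] for n in names if n in a) <= limit
    return check


def solve(domains: dict[str, list[int]], constraints: list[Constraint],
          assignment: Optional[Assignment] = None) -> Optional[Assignment]:
    """Naive backtracking: assign variables in order, undo on any violated constraint."""
    assignment = {} if assignment is None else assignment
    if len(assignment) == len(domains):
        return dict(assignment)
    var = next(v for v in domains if v not in assignment)
    for value in domains[var]:
        assignment[var] = value
        if all(c(assignment) for c in constraints):
            result = solve(domains, constraints, assignment)
            if result is not None:
                return result
        del assignment[var]
    return None


if __name__ == "__main__":
    domains = {"x": [1, 2, 3], "y": [1, 2, 3], "z": [1, 2, 3]}
    rules = [all_different("x", "y", "z"), sum_at_most(3, "x", "y")]
    print(solve(domains, rules))
```

A production solver avoids this brute-force enumeration by pruning domains as constraints propagate, which is where dedicated global constraints such as 'alldiff' earn their keep.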

Product Core Function

· Integer, Float, and Boolean Domain Support: Allows solving problems with various types of numerical and logical data, making it versatile for a wide range of applications like financial modeling or system configuration.

· Arithmetic Constraints: Enables defining relationships between variables using standard mathematical operations (e.g., x + y <= 10), fundamental for many optimization tasks.

· Logical Constraints: Supports complex logical combinations (e.g., IF condition THEN outcome), crucial for building sophisticated decision systems.

· Global Constraints (alldiff, element, count, table): Provides pre-built, efficient implementations for common and powerful constraint patterns, simplifying the definition of complex relationships and improving solver performance.

· Self-Contained Rust Implementation: Offers a dependency-light solution that is easier to integrate and manage within Rust projects, promoting code safety and performance.

· Extensible Architecture (Implied): While not explicitly stated as a feature, the decision to build from scratch suggests an architecture that can be extended with new constraint types or solver techniques as needed, offering long-term flexibility.

Product Usage Case

· Cutting Stock Optimization: A developer needing to minimize waste when cutting raw materials (like fabric or metal) into smaller pieces can use Selen to determine the optimal cutting patterns, ensuring all required pieces are produced with the least amount of scrap. This directly addresses the author's original motivation for building the solver.

· Scheduling and Resource Allocation: Imagine a project manager building an application to schedule construction tasks. Selen can be used to assign tasks to workers and equipment while respecting deadlines, resource availability, and skill requirements, preventing conflicts and maximizing efficiency.

· Configuration Management: For complex software systems with many interdependent settings, Selen can find a valid and optimal configuration that satisfies all user-defined rules and dependencies, simplifying setup and preventing errors.

· Sudoku Solver Development: A hobbyist developer creating a program to solve Sudoku puzzles can leverage Selen's 'alldiff' constraint (numbers 1-9 must appear once in each row, column, and 3x3 box) to efficiently find solutions.

· Custom Game AI: Developers building games requiring intelligent non-player characters (NPCs) can use Selen to make complex decisions, such as pathfinding with specific environmental constraints or managing NPC resource consumption.

10

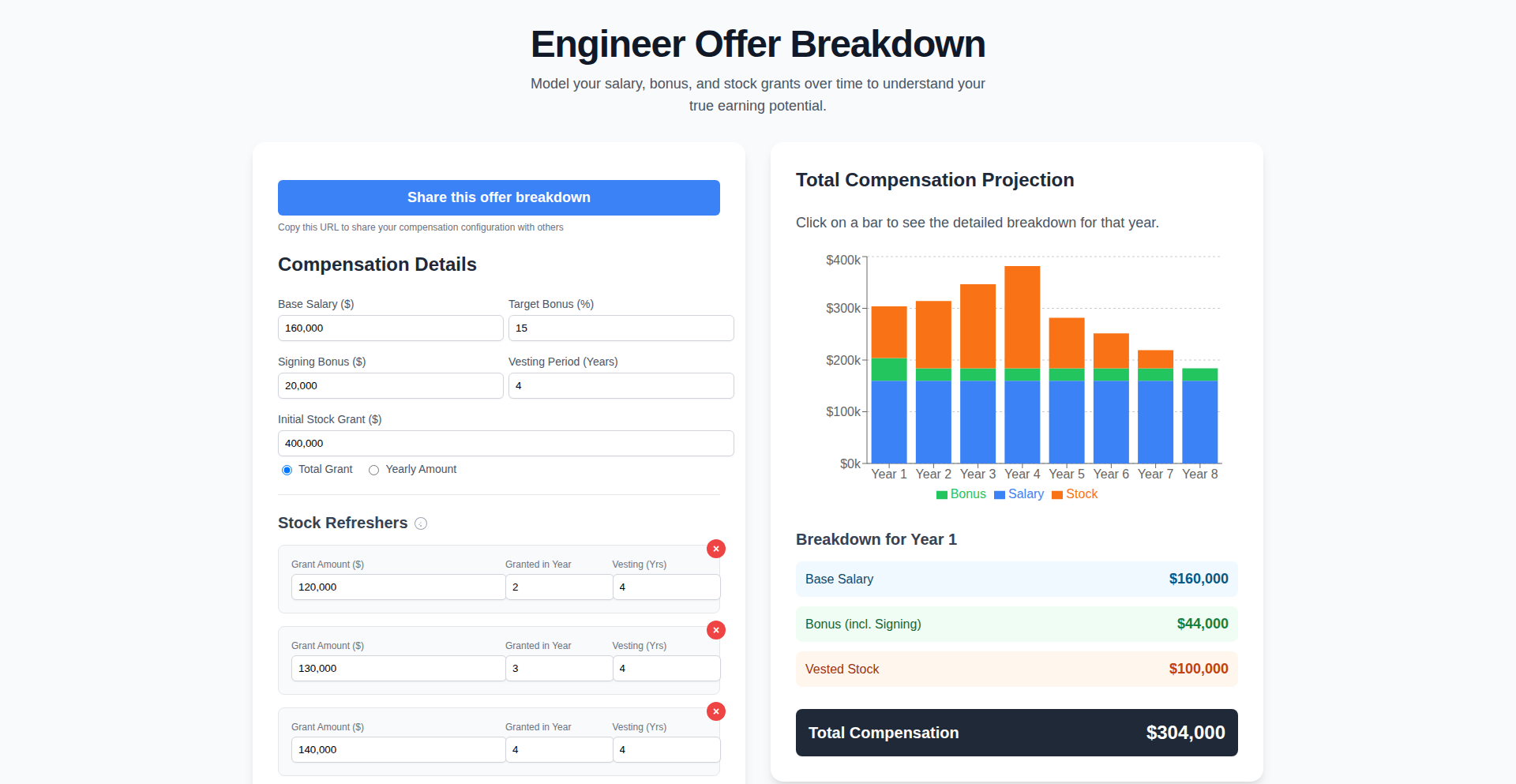

CompViz: Total Compensation Visualizer

Author

JoeCortopassi

Description

CompViz is a static web application designed to demystify total compensation packages. It takes complex offer details (like base salary, bonuses, stock options, and benefits) and presents them in a clear, visual breakdown over time. The innovation lies in its real-time URL updating, allowing users to easily share and discuss their offers without any backend data processing. This project solves the common problem of understanding and comparing multi-year compensation offers, making it accessible to both engineers and recruiters.

Popularity

Points 2

Comments 3

What is this product?

CompViz is a web-based tool that visually represents your total compensation package year over year. Instead of looking at a dense document, it translates information like your base salary, signing bonus, annual bonuses, stock vesting schedules, and estimated benefits into an easy-to-understand chart. The core technical innovation is that it's a completely static site – meaning no servers are collecting your private data. All the calculations and visualizations happen directly in your browser. The URL itself dynamically updates to reflect the data you've entered, acting as a unique, shareable snapshot of your offer. This means no complex backend, just pure client-side magic for quick insights.
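
The idea of keeping all state in the URL can be sketched in a few lines. The snippet below is Python rather than the browser-side code the site runs, and the base URL, the parameter names, and the choice of a query string are all assumptions; the real tool may encode its state differently.

```python
from urllib.parse import parse_qs, urlencode, urlparse

# Placeholder address, not the real site.
BASE_URL = "https://example.invalid/compviz"


def offer_to_url(offer: dict[str, int]) -> str:
    """Serialize offer fields into the query string so the link itself carries the data."""
    return f"{BASE_URL}?{urlencode(sorted(offer.items()))}"


def url_to_offer(url: str) -> dict[str, int]:
    """Rebuild the offer from a shared link; no server-side storage is involved."""
    query = parse_qs(urlparse(url).query)
    return {key: int(values[0]) for key, values in query.items()}


if __name__ == "__main__":
    offer = {"base": 150000, "bonus": 15000, "signing": 20000}
    link = offer_to_url(offer)
    print(link)
    print(url_to_offer(link) == offer)
```

One consequence of this design is that anyone holding the link can read the numbers in it, so the link should be shared as deliberately as the offer letter itself.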

How to use it?

Developers can use CompViz by visiting the provided URL and inputting their specific compensation details into an intuitive form. This includes fields for base salary, expected bonuses, stock grant details (like number of shares, vesting schedule, and estimated value), and any other relevant compensation components. Once the data is entered, the tool immediately generates a visual chart. For sharing, simply copy the updated URL from your browser's address bar. This URL can then be sent to recruiters to clarify an offer, to mentors for advice, or to family to discuss financial planning. It's designed for instant use without any installation or complex setup.

Product Core Function

· Visual Compensation Breakdown: Generates charts and graphs to show total compensation over multiple years, making it easy to grasp complex offer structures. Value: Provides immediate clarity on long-term financial implications of a job offer.

· Real-time URL Sharing: The URL updates instantly as data is entered, allowing users to share a specific view of their offer with a single link. Value: Facilitates effortless communication and discussion about job offers with others.

· Static Site Architecture: Built entirely on the client-side, ensuring no personal compensation data is transmitted or stored on a server. Value: Guarantees user privacy and security for sensitive financial information.

· Intuitive Data Input: A user-friendly interface for entering various compensation components like salary, bonuses, and stock options. Value: Simplifies the process of understanding and digitizing offer details for analysis.

· Offer Comparison Helper: Enables users to input and compare different offers side-by-side visually. Value: Aids in making informed career decisions by clearly seeing the financial differences between opportunities.
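
The year-by-year breakdown behind such a chart is simple arithmetic. The sketch below is an illustration under assumed inputs, not CompViz's code: `yearly_breakdown` and its parameters are hypothetical, the signing bonus is assumed to be paid in year one, and the stock value is assumed to stay flat.

```python
def yearly_breakdown(base: float, annual_bonus: float, signing_bonus: float,
                     stock_grant: float, vesting: list[float]) -> list[dict[str, float]]:
    """Return one row per year; `vesting` holds the fraction of the grant vesting each year."""
    rows = []
    for year, fraction in enumerate(vesting, start=1):
        row = {
            "year": year,
            "base": base,
            "bonus": annual_bonus,
            # Assumption: the signing bonus is paid once, in the first year.
            "signing": signing_bonus if year == 1 else 0.0,
            "stock": stock_grant * fraction,
        }
        row["total"] = row["base"] + row["bonus"] + row["signing"] + row["stock"]
        rows.append(row)
    return rows


if __name__ == "__main__":
    for row in yearly_breakdown(150_000, 15_000, 20_000, 400_000, [0.25] * 4):
        print(row)
```

Running the same function on two offers and comparing the `total` column year by year is the side-by-side comparison the last bullet describes.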

Product Usage Case

· Scenario: An engineer receives multiple job offers with varying stock options and bonus structures. How it solves: They can input each offer into CompViz to get a visual comparison of total compensation over five years, helping them decide which offer is financially superior in the long run.

· Scenario: A recruiter needs to explain a complex compensation package to a candidate. How it solves: The recruiter can use CompViz to generate a shareable link that visually breaks down the offer, making it easier for the candidate to understand and ask informed questions.

· Scenario: An engineer is seeking advice from a mentor on a job offer. How it solves: By sharing the CompViz URL, the engineer can quickly provide their mentor with a clear overview of the offer's financial components without lengthy explanations.

· Scenario: A person is planning their finances and wants to visualize potential income growth from different career paths. How it solves: They can use CompViz to model future compensation based on expected salary increases and stock vesting, helping with financial forecasting.

11

PrivacyForge.ai

Author

divydeep3

Description

PrivacyForge.ai is an AI-powered platform that generates legally compliant privacy documentation tailored to your specific business practices. It addresses the common problem of startups struggling with expensive legal fees or risky generic privacy policy templates. By analyzing your data collection, processing, and storage methods, it creates custom documents that align with current regulations like GDPR, CCPA, and more. This offers a more accurate and cost-effective solution for privacy compliance, reducing the risk of legal issues and funding roadblocks. The innovation lies in its AI-driven customization versus static templates, making privacy policies truly reflective of a company's operations.

Popularity

Points 4

Comments 0

What is this product?

PrivacyForge.ai is an intelligent system designed to create privacy documentation that actually fits your business. Instead of using generic templates that might not cover your unique situation, it uses AI to understand how you collect, use, and store data. It then builds privacy policies, terms of service, and other related documents that are compliant with privacy laws like GDPR (for Europe), CCPA/CPRA (for California), and others. The core innovation is its ability to learn your business's data flow and generate precise legal language, unlike older tools that just ask you to fill in blanks. This means your privacy documents are more accurate, reducing the chances of running into trouble with regulators or investors because your policies don't match your actual practices. So, it helps you avoid costly legal battles and ensure your business operations are legally sound in the eyes of privacy laws.

How to use it?

Developers can integrate PrivacyForge.ai into their workflow to quickly generate essential privacy documents for their applications or services. This is particularly useful when launching new products or expanding into new markets with different privacy regulations. The process typically involves providing information about your data handling practices through a guided interface. The platform then uses its AI models (like Google Cloud's Vertex AI with Claude Sonnet and Gemini 2.5) to process this information and generate the relevant legal documents. These documents are then validated against specific jurisdictional requirements. The output can be used as the foundation for your company's official privacy policy, ensuring it's legally sound and tailored to your specific services. This saves significant time and money compared to traditional legal consultations or relying on generic, potentially inaccurate, templates. So, you can get compliant privacy documents generated efficiently, allowing you to focus on building your product rather than navigating complex legal requirements.

Product Core Function

· AI-driven privacy policy generation: Uses artificial intelligence to create privacy policies that accurately reflect your business's data practices, ensuring compliance with regulations like GDPR and CCPA. This is valuable because it moves beyond generic templates to provide documents that truly fit your operations, reducing legal risks.

· Multi-jurisdictional compliance: Maintains separate knowledge bases for various privacy laws such as GDPR, CCPA, CPRA, PIPEDA, COPPA, and CalOPPA, allowing for tailored documentation across different regions. This is useful for businesses operating internationally, ensuring compliance in all relevant legal territories.

· Data flow analysis for customization: Analyzes your specific data collection, processing, and storage methods to generate custom language for your privacy documents. This ensures accuracy and legal soundness, preventing promises in your policy that your business cannot keep, which is critical for building trust and avoiding regulatory scrutiny.

· Continuous regulation updates: The system is designed for ongoing expansion of supported regulations and includes updates when regulations change, ensuring your documentation remains current. This provides long-term value by proactively managing evolving privacy landscapes, saving you from constant manual updates.

· Validation against specific requirements: Each generated document is validated against the specific legal requirements of the applicable jurisdictions before delivery. This adds an extra layer of assurance that your privacy documents meet the necessary legal standards, giving you confidence in their compliance.

Product Usage Case

· A fintech startup launching a new peer-to-peer lending app needs to comply with GDPR and CCPA. Instead of spending $5,000+ on legal fees and weeks of attorney time, they use PrivacyForge.ai. They input details about the user data they collect (e.g., financial information, contact details) and how it's processed. The AI generates a comprehensive privacy policy that addresses data handling for financial services and adheres to both European and Californian regulations, passing their Series A funding due diligence.

· A healthtech company developing a telemedicine platform requires strict adherence to health data privacy laws such as HIPAA in the US and their international equivalents; regulations like COPPA and CalOPPA can also apply to certain data types. PrivacyForge.ai helps them create detailed documentation outlining how patient health information is collected, stored securely, and shared, ensuring compliance and protecting sensitive data. This saves them significant legal costs and reduces the risk of regulatory fines for mishandling sensitive health data.

· A small e-commerce business selling products internationally faces the challenge of complying with various privacy laws across different countries. PrivacyForge.ai allows them to generate region-specific privacy notices and consent mechanisms easily, ensuring they meet the requirements of customers in the EU (GDPR) and Canada (PIPEDA), among others. This enables them to expand their market reach without being overwhelmed by complex legal obligations.

12

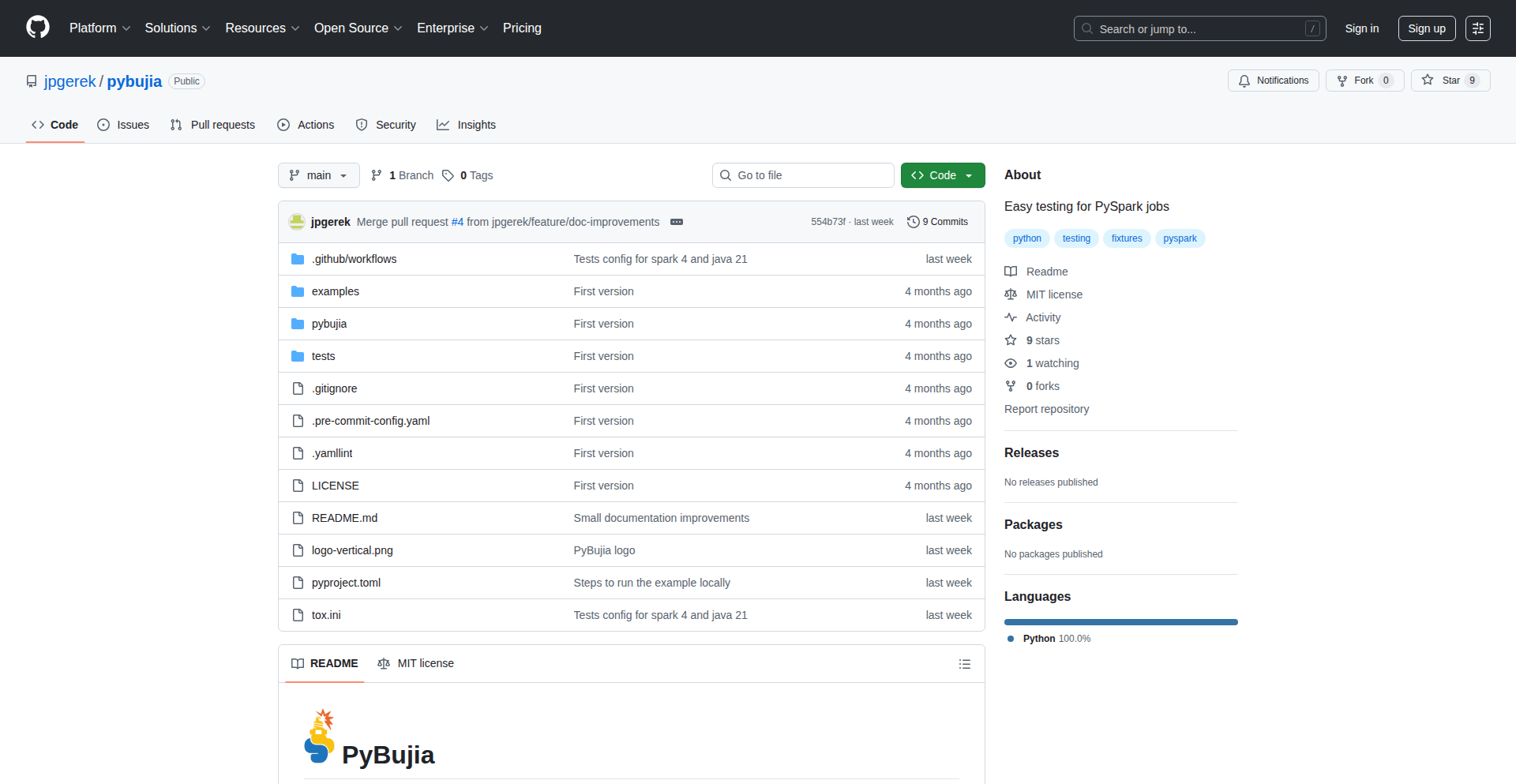

Pybujia: Data Transformation Testing Framework

Author

jpgerek

Description

Pybujia is an open-source toolkit designed to address the challenges data engineers face in writing tests for their data transformations. It simplifies the creation of realistic synthetic data for testing, improving data quality and consistency, especially when deadlines are tight and the business impact of testing is not immediately obvious. It also caters to data engineers who may not have a strong software engineering background.

Popularity

Points 4

Comments 0

What is this product?

Pybujia is a Python-based framework that helps data engineers test their data pipelines and transformations. The core innovation lies in its ability to generate synthetic input and output tables that mimic real-world data. This is crucial because creating realistic test data manually is complex and time-consuming. By providing a structured way to define and validate data transformations, Pybujia helps ensure data accuracy and reliability, which is often overlooked due to tight project deadlines or a perception that testing doesn't directly contribute to business value. It bridges the gap for data engineers who might not be experienced in traditional software testing methodologies.

How to use it?

Developers can integrate Pybujia into their data engineering workflows. You would typically define your data transformation logic in Python and then use Pybujia's APIs to create mock input data, execute your transformation, and assert that the output matches your expected results. This could be done locally for development or integrated into CI/CD pipelines to automatically run tests whenever changes are made to the data pipelines. For example, if you have a transformation that cleans customer addresses, you can use Pybujia to generate various address formats (valid, invalid, missing parts) as input, run your cleaning function, and then check if the output addresses are correctly formatted and complete. This allows you to catch bugs early in the development cycle, saving significant debugging time later.
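
The address-cleaning example follows a familiar pattern: build a small input table, run the transformation, compare against an expected output table. The sketch below shows that pattern in plain Python only. It does not use Pybujia's API, and `clean_addresses`, the column names, and the cleaning rules are all assumptions made for illustration.

```python
from typing import Optional

Row = dict[str, Optional[str]]


def clean_addresses(rows: list[Row]) -> list[Row]:
    """Collapse whitespace in the street, upper-case the postcode, drop rows with no street."""
    cleaned = []
    for row in rows:
        street = " ".join((row.get("street") or "").split())
        if not street:
            continue
        postcode = (row.get("postcode") or "").strip().upper() or None
        cleaned.append({"street": street, "postcode": postcode})
    return cleaned


def test_clean_addresses() -> None:
    # Synthetic input table: messy, missing-street, and missing-postcode cases.
    input_table = [
        {"street": "  12  Main   St ", "postcode": " ab1 2cd"},
        {"street": None, "postcode": "X1"},
        {"street": "5 Oak Ave", "postcode": None},
    ]
    expected_table = [
        {"street": "12 Main St", "postcode": "AB1 2CD"},
        {"street": "5 Oak Ave", "postcode": None},
    ]
    assert clean_addresses(input_table) == expected_table


if __name__ == "__main__":
    test_clean_addresses()
    print("ok")
```

Because the test is an ordinary function with an assertion, a runner such as pytest can pick it up in a CI/CD pipeline without extra wiring.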

Product Core Function

· Synthetic data generation: Allows creation of diverse and realistic datasets for testing, addressing the complexity of manual data creation. This helps ensure your transformations are tested against a wide range of scenarios, improving robustness.

· Transformation assertion: Provides tools to define and verify expected outcomes of data transformations, ensuring data integrity. This means you can confidently know if your data processing steps are producing the correct results, preventing data errors from propagating.

· Framework for data quality: Offers a structured approach to testing, making it easier to implement and maintain data quality checks within pipelines. This contributes to building trust in your data products.

· Simplified testing for non-SWEs: Designed with data engineers in mind, it lowers the barrier to entry for implementing effective testing practices, even without extensive software engineering experience. This empowers more data professionals to ensure data quality.

· Integration with CI/CD: Facilitates automated testing by allowing easy integration into continuous integration and continuous deployment pipelines. This automates the validation of your data code, catching regressions before they impact production.

Product Usage Case

· Testing a data aggregation pipeline: A data engineer needs to aggregate sales data from multiple sources. They can use Pybujia to generate synthetic sales records with different currencies, dates, and product IDs. Then, they write a test using Pybujia to ensure the aggregation logic correctly sums sales by product and date, handling currency conversions. This prevents errors in financial reporting.

· Validating a data cleaning process: A data engineer is building a pipeline to clean user profile data, which includes handling missing values and standardizing formats. They can use Pybujia to create test cases with various combinations of missing fields and inconsistent formatting (e.g., different date formats). Pybujia helps verify that the cleaning logic correctly imputes missing values and standardizes formats, ensuring cleaner downstream data.

· Ensuring data schema adherence: Before loading data into a data warehouse, a data engineer needs to ensure it conforms to a specific schema. Pybujia can be used to generate data that intentionally violates the schema (e.g., wrong data types, missing required fields) and then test if the transformation logic correctly identifies and flags these violations, preventing bad data from entering the warehouse.

13

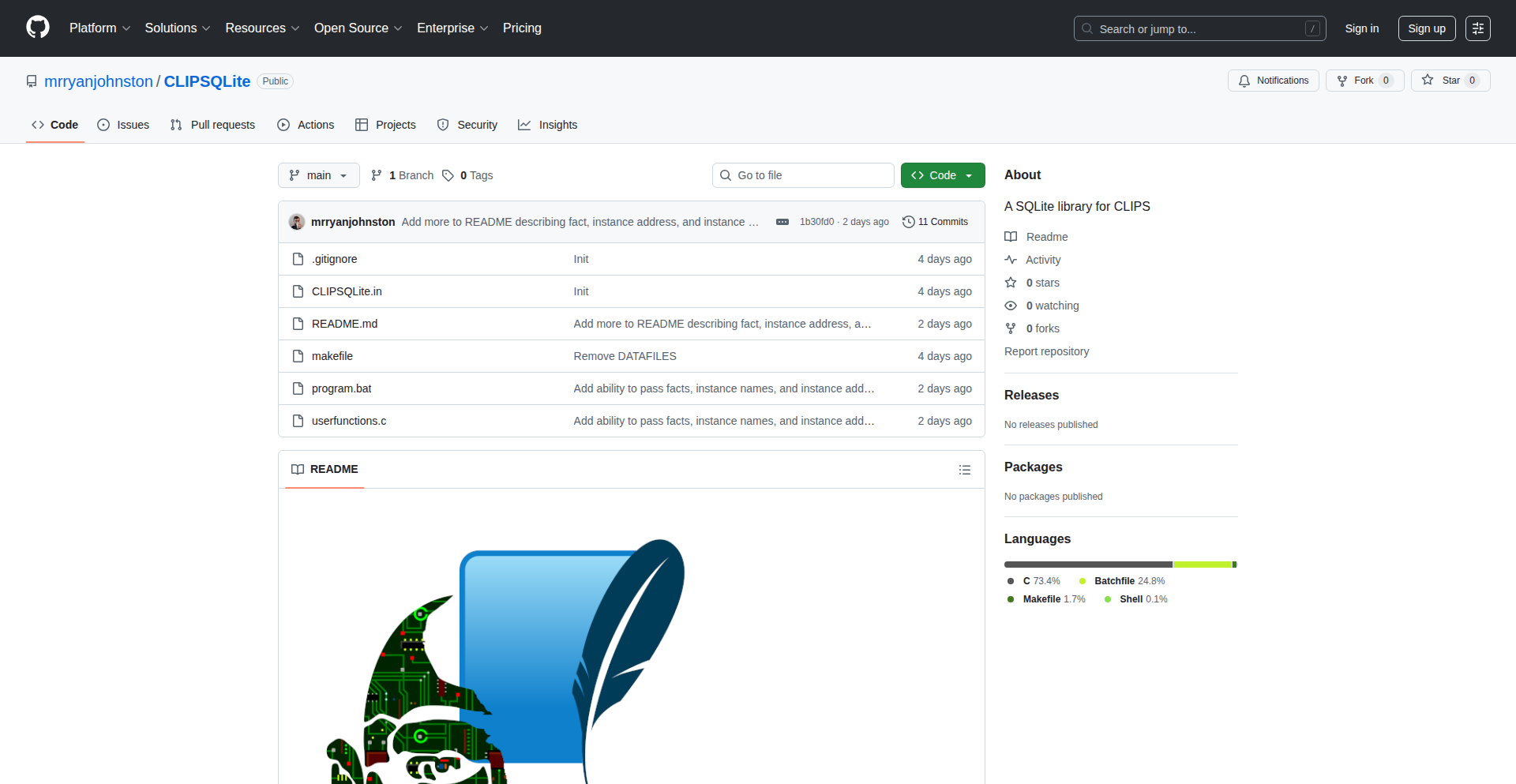

CLIPSQLite: Bridging CLIPS and SQLite for Smarter Data

Author

ryjo

Description

CLIPSQLite is a library that seamlessly integrates the CLIPS expert system shell with SQLite databases. It allows CLIPS rules to directly query and manipulate data stored in SQLite, enabling sophisticated data-driven decision-making. The innovation lies in making complex data accessible and actionable for rule-based systems, unlocking new possibilities for real-world applications.

Popularity

Points 4

Comments 0

What is this product?

CLIPSQLite is a toolkit that lets the CLIPS expert system, which is great at making decisions based on rules, talk to SQLite databases, which are excellent for storing structured information. Think of it as a translator. CLIPS can now ask SQLite for data and use that data to trigger its rules. This is innovative because CLIPS traditionally works with facts in memory, but real-world problems often involve large amounts of persistent data. CLIPSQLite breaks down this barrier by allowing CLIPS to access and utilize data from a readily available and robust database system, making expert systems much more practical for complex, data-intensive tasks. So, this means your rule-based systems can now be powered by large, persistent datasets without needing to load everything into memory, leading to more capable and scalable intelligent applications.

How to use it?

Developers can integrate CLIPSQLite into their CLIPS applications by loading the library and then using specific CLIPS commands to establish connections to SQLite databases, execute SQL queries, and retrieve results. These results can be automatically converted into CLIPS facts or instances, making them directly usable by the CLIPS rule engine. This is achieved through functions that handle database connection management, prepared statement execution with variable binding, and result set conversion. This allows developers to build sophisticated applications where CLIPS makes intelligent decisions based on real-time or historical data from a database. For example, in a medical diagnosis system, CLIPS could query a patient's medical history from an SQLite database to inform its diagnostic rules. So, this makes it easy to build expert systems that learn from and act upon real-world data.
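
The flow described above (connect, run a prepared statement with bound variables, convert rows into facts) can be sketched with Python's standard `sqlite3` module. This is an analogy, not CLIPSQLite's interface: `query_to_facts`, the `patient-history` template name, and the table layout are hypothetical, and the tuples merely imitate the shape of a CLIPS template fact.

```python
import sqlite3


def query_to_facts(conn: sqlite3.Connection, template: str,
                   sql: str, params: tuple = ()) -> list[tuple]:
    """Run a parameterized query and turn each row into a (template, (slot, value), ...) tuple."""
    cursor = conn.execute(sql, params)  # `?` placeholders are bound, never string-formatted
    columns = [description[0] for description in cursor.description]
    return [(template, *zip(columns, row)) for row in cursor]


def demo() -> list[tuple]:
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE history (patient_id INTEGER, condition TEXT)")
        conn.executemany(
            "INSERT INTO history VALUES (?, ?)",
            [(7, "asthma"), (7, "hay fever"), (8, "migraine")],
        )
        # Each result roughly mirrors a CLIPS fact such as
        # (patient-history (patient_id 7) (condition "asthma")).
        return query_to_facts(
            conn,
            "patient-history",
            "SELECT patient_id, condition FROM history WHERE patient_id = ? ORDER BY condition",
            (7,),
        )
    finally:
        conn.close()


if __name__ == "__main__":
    for fact in demo():
        print(fact)
```

Binding the patient id through a placeholder rather than building the SQL string by hand is what guards against SQL injection, the same benefit the prepared statement feature below is credited with.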

Product Core Function

· Database Connection Management: Allows CLIPS to establish and close connections to SQLite databases, providing the foundation for data access. This is valuable because it ensures stable and controlled access to your data, preventing resource leaks and maintaining system integrity. It's like having a reliable bridge to your information.

· Prepared Statement Execution with Variable Binding: Enables CLIPS to send SQL queries to SQLite with dynamic values, preventing security vulnerabilities like SQL injection and improving query efficiency. This is valuable because it allows for secure and flexible data querying, where the rules can adapt to different scenarios without exposing the system to risks. It’s like filling in blanks in a form securely.

· Result Set to Facts/Instances Conversion: Automatically transforms the data retrieved from SQLite queries into CLIPS facts or instances, making the data directly usable by the CLIPS rule engine. This is valuable because it eliminates manual data wrangling, allowing CLIPS to immediately reason over the database content. It makes database data instantly understandable and actionable for your decision-making engine.
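
The two ideas above, parameter binding and row-to-fact conversion, can be illustrated with a minimal Python sketch. It does not use CLIPSQLite's own commands, which are not shown here. It relies only on Python's standard `sqlite3` module, and the `txn` table, the `rows_to_clips_facts` helper, and the fact layout are all assumptions made for illustration.

```python
import sqlite3


def format_value(value):
    """Render a Python value the way it would appear in a CLIPS fact slot."""
    if value is None:
        return "nil"  # CLIPS uses the symbol nil for missing values
    if isinstance(value, str):
        # CLIPS strings are double-quoted; escape backslashes and quotes.
        escaped = value.replace("\\", "\\\\").replace('"', '\\"')
        return f'"{escaped}"'
    return str(value)  # integers and floats are written bare


def rows_to_clips_facts(cursor, template):
    """Turn each result row into a fact string for a (hypothetical) deftemplate."""
    columns = [desc[0] for desc in cursor.description]
    facts = []
    for row in cursor:
        slots = " ".join(
            f"({col} {format_value(val)})" for col, val in zip(columns, row)
        )
        facts.append(f"({template} {slots})")
    return facts


# Illustrative in-memory database; the table and its rows are made up.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE txn (id INTEGER, account TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO txn VALUES (?, ?, ?)",
    [(1, "A-100", 25.0), (2, "A-100", 9800.0), (3, "B-200", 40.0)],
)

# The threshold is bound as a parameter, never spliced into the SQL text.
cur = conn.execute(
    "SELECT id, account, amount FROM txn WHERE amount > ? ORDER BY id",
    (1000.0,),
)
facts = rows_to_clips_facts(cur, "txn")
conn.close()

for fact in facts:
    print(fact)
```

Each printed string has the shape of a fact a rule engine could assert. Binding the threshold through `?` keeps user-supplied values out of the SQL text, which is the injection protection the second bullet describes.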

Product Usage Case

· In a financial fraud detection system, CLIPSQLite can allow CLIPS rules to query transaction history from an SQLite database to identify suspicious patterns. This solves the problem of analyzing large volumes of transaction data in real-time to prevent fraud, making the system more robust and responsive. So, this allows for smarter, data-driven fraud detection.

· For an inventory management system, CLIPSQLite can enable CLIPS to access inventory levels from an SQLite database to trigger reorder alerts or optimize stock allocation. This addresses the challenge of managing complex inventory and ensuring efficient operations based on up-to-date information. So, this helps in keeping your stock optimized and operations smooth.

· In a troubleshooting or diagnostic expert system, CLIPSQLite can empower CLIPS to retrieve relevant information from a knowledge base stored in SQLite to guide the diagnostic process. This solves the issue of making expert systems more scalable and maintainable by externalizing knowledge into a persistent database. So, this makes your expert systems smarter and easier to update.

14

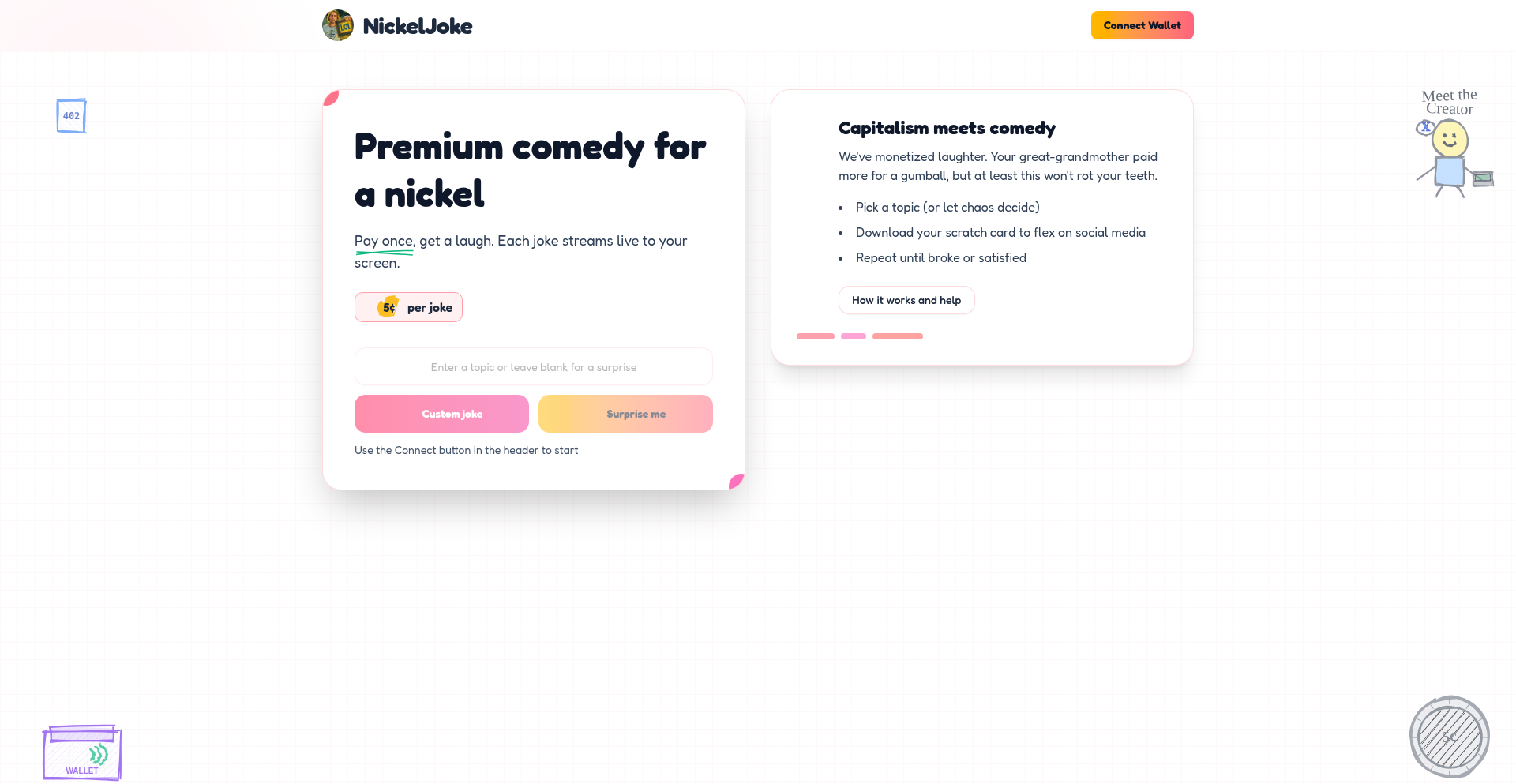

NickelJoke: Premium Comedy Engine

Author

bilater

Description

NickelJoke is a proof-of-concept AI model designed to generate niche, high-quality jokes for a minimal cost, inspired by the idea of 'premium content for a low price'. It showcases an approach to fine-tuning smaller, more efficient language models to achieve surprisingly good results in a specific creative domain like joke writing, highlighting the potential for specialized AI creativity without requiring massive computational resources. The core innovation lies in its targeted approach to creative generation.

Popularity

Points 2

Comments 2

What is this product?

NickelJoke is a demonstration of how advanced AI, specifically fine-tuned language models, can be used to generate creative content like jokes in a cost-effective manner. Instead of relying on enormous, general-purpose models, it focuses on optimizing a smaller model for a specific task. The 'nickel' in its name signifies this focus on affordability and efficiency. The underlying technology involves training a language model on a curated dataset of jokes and humor to understand the nuances of comedic timing, punchlines, and wordplay. This allows it to produce jokes that are not just random but have a structure and intent behind them, offering a glimpse into the future of accessible AI-powered creative tools. So, this is about making AI-powered creativity more affordable and specialized. What it means for you is the potential for AI tools that are less resource-intensive and can deliver tailored creative outputs.

How to use it?

Developers can integrate NickelJoke into applications requiring dynamic content generation, such as social media bots, interactive storytelling platforms, or even as a feature within existing comedy apps. The project, being a 'Show HN', is likely presented as a codebase or an API endpoint that can be called programmatically. For instance, you could query the API with a topic or a style, and NickelJoke would return a generated joke. This allows for real-time joke generation, adding a unique and engaging element to user experiences. The integration would typically involve making HTTP requests to the provided API. So, this is about plugging AI creativity into your projects. What it means for you is adding a unique, AI-driven humor element to your applications with minimal effort.

Product Core Function

· Niche Joke Generation: The AI is trained on a focused dataset to produce jokes for specific niches. The aim is a higher quality of humor than generic joke generators provide. The value is in delivering more targeted and enjoyable content for your users.

· Cost-Effective AI Model: Utilizes a fine-tuned, smaller language model to achieve good results with lower computational costs. This makes advanced AI capabilities more accessible and affordable. The value is in reducing development and operational expenses for AI features.

· API-Driven Creative Output: Provides an interface for developers to programmatically access joke generation capabilities. This allows for seamless integration into various software and services. The value is in enabling dynamic, real-time creative content within your applications.

Product Usage Case

· Social Media Content Bot: A developer could use NickelJoke to power a Twitter bot that tweets a new, original joke every hour, engaging followers with fresh humor. This solves the problem of needing a constant stream of creative content. The value is in maintaining an active and engaging online presence.

· Interactive Game Narrative: Integrate NickelJoke into an indie game to generate humorous dialogue or random funny events based on player actions. This adds replayability and unexpected fun to the gaming experience. The value is in creating a more dynamic and entertaining game.

· Personalized Humor Assistant: Developers could build a mobile app where users can request jokes on specific themes, and NickelJoke provides tailored comedic responses. This solves the need for on-demand, personalized entertainment. The value is in offering a unique and responsive user experience.

15

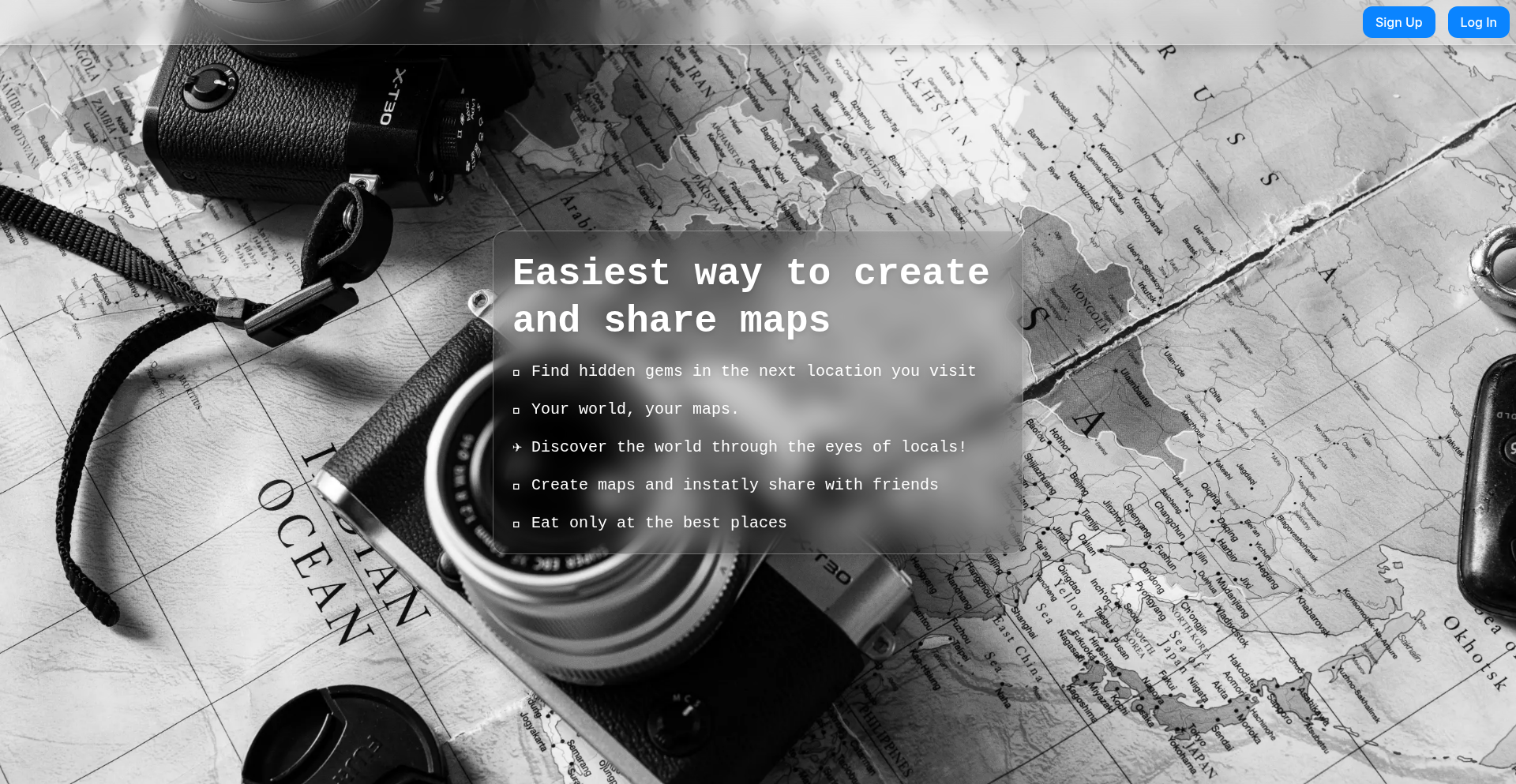

BlueApex: Personalized Geospatial Storyteller

Author

PhysicalDevice

Description

BlueApex is a web application that allows users to create custom maps by dropping pins and associating them with specific locations. Its innovation lies in simplifying the process of sharing personalized recommendations and trip plans, making location-based information more interactive and context-rich. It addresses the need for a more intuitive way to curate and share points of interest beyond generic map services.

Popularity

Points 4

Comments 0

What is this product?

BlueApex is a web-based platform that enables you to craft unique maps by placing markers (pins) on a map and attaching your own descriptions, notes, or media to those locations. The core technical insight is in abstracting the complexity of standard mapping APIs and providing a user-friendly interface for creating personalized geographical narratives. Instead of just seeing a street address, users can build a map that tells a story about a place, like 'my favorite coffee shops in this neighborhood' or 'a self-guided historical tour.' This provides a more engaging and personal way to interact with location data, moving beyond simple navigation to rich information sharing.

How to use it?

Developers can use BlueApex to easily create and share curated maps for various purposes. For instance, a travel blogger could create a map of their recommended restaurants and attractions in a city, sharing it with their followers. A local business could create a map highlighting their branches and nearby points of interest for customers. Integration could involve embedding these custom maps on websites or sharing direct links via social media or email. The 'guest' login credentials (username: guest, password: guest) allow for immediate exploration of its features without requiring account creation, making it simple to trial and demonstrate.

Product Core Function

· Custom Pin Dropping: Users can precisely place markers on a global map, allowing for granular control over points of interest. This is valuable for creating highly specific location-based guides or personal logs.

· Rich Content Association: Each pin can be enriched with text descriptions and notes. Support for other media is not explicitly stated, though it is a common extension for such apps. This transforms a simple pin into a data-rich point of context, useful for sharing detailed recommendations or historical context.

· Map Sharing Capabilities: The platform facilitates the sharing of these custom maps with others, enabling collaborative planning or broadcasting of curated information. This is crucial for community building and information dissemination around locations.

· Intuitive User Interface: The focus on 'easiest way' suggests a streamlined and accessible user experience for map creation and interaction, reducing the technical barrier to entry for non-developers.
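
One common way to represent pins with attached notes is GeoJSON, shown in the Python sketch below. BlueApex's actual storage and sharing format is not stated, so this is only an assumption about how such a custom map could be modeled. The `make_pin` and `make_map` helpers, the property names, and the sample places are hypothetical.

```python
import json


def make_pin(lon, lat, title, note=""):
    """Build one GeoJSON Feature for a pin.

    GeoJSON orders coordinates as [longitude, latitude].
    """
    if not (-180 <= lon <= 180 and -90 <= lat <= 90):
        raise ValueError("coordinates out of range")
    return {
        "type": "Feature",
        "geometry": {"type": "Point", "coordinates": [lon, lat]},
        "properties": {"title": title, "note": note},
    }


def make_map(name, pins):
    """Group pins into a FeatureCollection; 'name' is an extra, non-standard member."""
    return {"type": "FeatureCollection", "name": name, "features": list(pins)}


# Made-up sample data for a "hidden gems" style map.
custom_map = make_map(
    "Hidden gems",
    [
        make_pin(139.70, 35.66, "Small cafe", "Quiet in the mornings."),
        make_pin(139.77, 35.71, "Scenic spot", "Best at sunset."),
    ],
)

# Serializing to JSON gives a document that can be stored or shared as a link payload.
shareable = json.dumps(custom_map, indent=2)
print(shareable)
```

Keeping the free-text note inside `properties` means the geometry stays standard while the personal commentary travels with each pin.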

Product Usage Case

· A travel influencer creating a map of 'hidden gems' in Tokyo, dropping pins on unique cafes, small shops, and scenic spots, with personal reviews for each, and sharing the link on their blog. This solves the problem of generic travel guides by providing authentic, personally curated experiences.

· A local history enthusiast building a map of historical landmarks in their city, with each pin containing brief historical facts and old photos. This allows for an engaging self-guided historical tour, making local history accessible and interactive.

· A group of friends planning a road trip, collaboratively creating a map of potential stops, including campgrounds, scenic viewpoints, and notable restaurants, to streamline their planning process. This addresses the challenge of coordinating shared itineraries across multiple individuals.

· A small business owner creating a map that shows their store location along with nearby points of interest like public transport, parking, and complementary businesses, to help customers find and navigate to their establishment more easily.

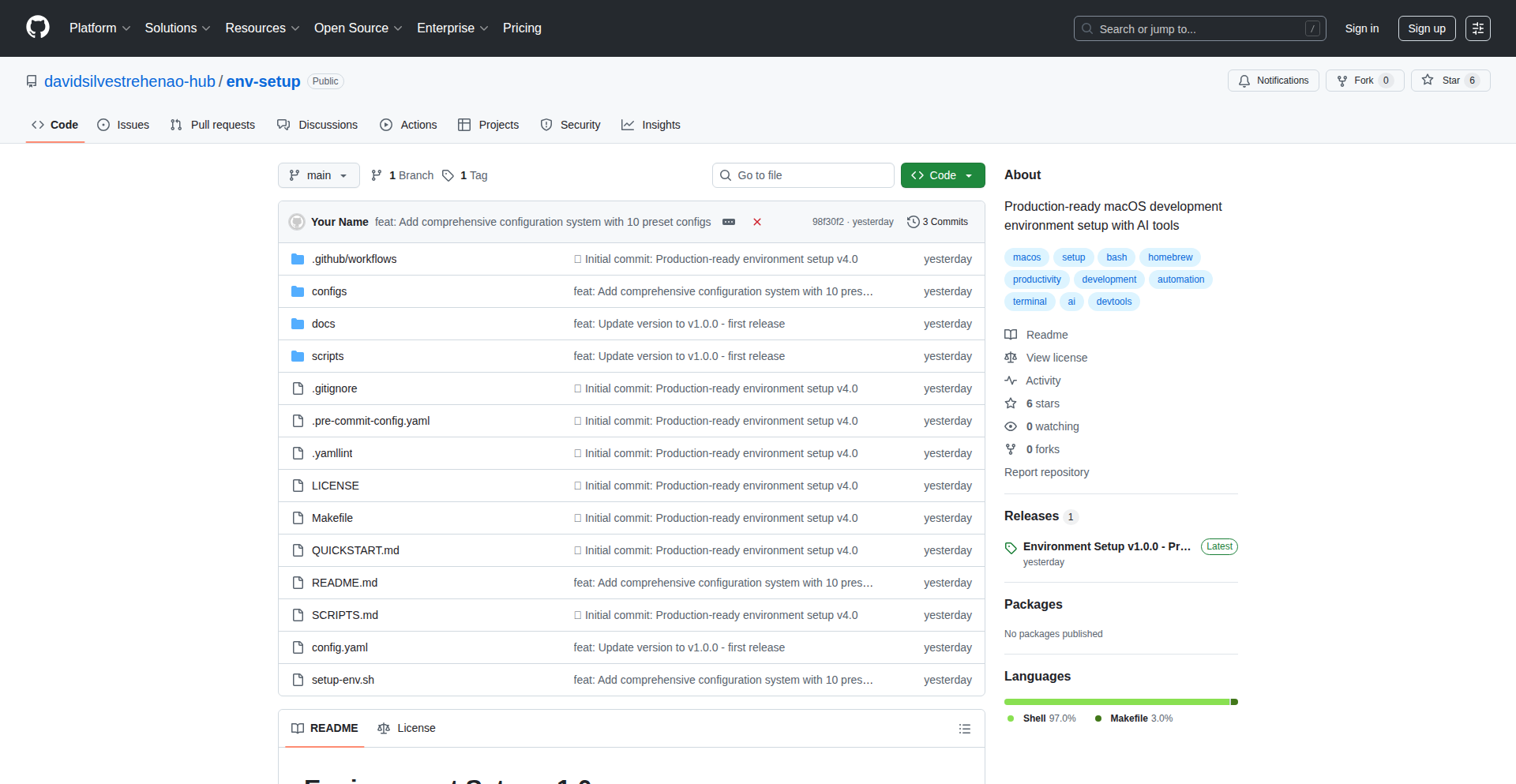

16

DevEnvForge

Author

davidsilvestre

Description

DevEnvForge is a production-ready macOS development environment setup tool. It automates the installation and configuration of a complete dev environment, including AI tools like Ollama and LM Studio, modern terminals, and comprehensive dotfiles. Its innovation lies in intelligent parallel processing and scripting built for robustness, which makes new setups and team onboarding faster and more reliable. So, this saves you hours of manual configuration and gives you a consistent development environment, which means you can start coding faster and with fewer setup headaches.

Popularity

Points 3

Comments 0

What is this product?

DevEnvForge is a script that turns a new or existing Mac into a fully equipped development workstation. It installs and configures a wide range of tools and applications, from programming languages and editors to local AI models and terminal emulators. The core innovation is that it detects your Mac's capabilities and runs installations in parallel to speed them up, while keeping the scripts clean and reliable. So, this gives you a professional-grade development setup on your Mac quickly and reliably, allowing you to focus on building things, not wrestling with installers.
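
The general pattern of sizing parallelism to the machine and running independent install steps concurrently can be sketched in Python as follows. This is not DevEnvForge's implementation, which is a shell script whose internals are not shown here. The step names, the `pick_worker_count` and `run_steps` helpers, and the cap of 8 workers are assumptions for illustration, and the steps are placeholders that install nothing.

```python
import os
from concurrent.futures import ThreadPoolExecutor, as_completed


def pick_worker_count(cpu_count=None, cap=8):
    """Choose a parallelism level from the core count, bounded by a cap."""
    cores = cpu_count or os.cpu_count() or 1
    return max(1, min(cores, cap))


def run_steps(steps, workers):
    """Run independent setup steps concurrently.

    Returns (results, failures), both keyed by step name, so one failed
    step does not hide the outcome of the others.
    """
    results, failures = {}, {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {pool.submit(fn): name for name, fn in steps.items()}
        for future in as_completed(futures):
            name = futures[future]
            try:
                results[name] = future.result()
            except Exception as exc:
                failures[name] = str(exc)
    return results, failures


# Placeholder steps standing in for real installers.
def install_editor():
    return "ok"


def install_terminal():
    return "ok"


def install_broken():
    raise RuntimeError("download failed")


steps = {
    "editor": install_editor,
    "terminal": install_terminal,
    "broken": install_broken,
}
results, failures = run_steps(steps, pick_worker_count())
print("succeeded:", sorted(results))
print("failed:", failures)
```

Collecting failures per step, instead of stopping at the first error, lets a setup run report everything that went wrong in one pass. This only works for steps that do not depend on each other; dependent steps would need to be ordered first.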

How to use it?

Developers can integrate DevEnvForge into their workflow by cloning the GitHub repository and running a simple shell command. You choose from 10 pre-defined configurations (e.g., 'webdev', 'ai', 'everything') based on your needs. The command `./setup-env.sh install --config configs/webdev.yaml` will then automatically download and configure all the necessary software. This is ideal for setting up a new Mac, onboarding new team members, or ensuring consistency across multiple developer machines. So, you can get a tailored development environment set up in minutes, not hours, allowing for rapid project starts and seamless team collaboration.

Product Core Function

· Automated Environment Setup: Installs and configures essential development tools and software based on chosen configurations, saving manual effort. So, you don't have to manually install dozens of programs, getting you coding faster.

· AI Tool Integration: Seamlessly sets up local Large Language Models (LLMs) via Ollama and LM Studio, enabling powerful AI capabilities within your development workflow. So, you can experiment with and integrate AI features into your projects without complex manual setup.