Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-09-25

SagaSu777 2025-09-26

Explore the hottest developer projects on Show HN for 2025-09-25. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN submissions highlight a powerful trend: the democratization of complex technical challenges through accessible, often open-source, tools. We're seeing a significant push towards empowering developers with solutions that automate tedious tasks, enhance productivity, and unlock new capabilities. From AI agents gaining secure web access with Prism to sophisticated data analysis tools like Data-con, the focus is on lowering the barrier to entry. The proliferation of tools for API generation, efficient data pipelines, and even specialized interview prep signals a growing appetite for smart, developer-centric solutions. For aspiring builders and entrepreneurs, this is a clear signal to identify those 'boring but critical' problems that plague developers and find elegant, technically sound ways to automate or simplify them. The spirit of hacking lies not just in building groundbreaking new technologies, but also in cleverly refactoring existing complexities into user-friendly, powerful tools. Embrace the opportunity to solve these granular pain points, as they often pave the way for significant impact and innovation.

Today's Hottest Product

Name

Prism – Let browser agents access any app

Highlight

Prism tackles the critical challenge of enabling browser agents to authenticate onto websites securely and reliably. By abstracting away the complexities of human-like logins, including OTP and MFA, it allows developers to programmatically access authenticated sessions. The innovative approach of using Playwright for speed and falling back to AI for robustness demonstrates a pragmatic blend of technologies to solve a common developer pain point in web automation and AI agent development. Developers can learn about building secure authentication flows for automated agents and explore strategies for combining deterministic (Playwright) and probabilistic (AI) approaches for increased reliability.

Popular Category

AI & Machine Learning

Developer Tools

Productivity

Automation

Data Management

Security

Popular Keyword

AI agents

automation

developer tools

authentication

data visualization

API

language models

database

security intelligence

Technology Trends

AI-powered automation

developer productivity tools

secure authentication for agents

data analysis and visualization

cross-platform development

semantic search and vector databases

open-source infrastructure

Project Category Distribution

Developer Tools & Utilities (30%)

AI & Machine Learning Applications (20%)

Productivity & Automation (15%)

Data Management & Analysis (10%)

Security & Threat Intelligence (5%)

Niche Software & Libraries (15%)

Content & Design Tools (5%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | Prism: Auth Agent Bridger | 19 | 15 |
| 2 | Phishcan Threat Intel Feed | 15 | 9 |
| 3 | Macscope: Enhanced Cmd-Tab for macOS | 11 | 13 |
| 4 | Multiplayer Session Recorder | 9 | 4 |
| 5 | Plakar: Encrypted, Browsable, Open-Source Backup | 8 | 0 |
| 6 | Vatify-Python: EU VAT Compliance Accelerator | 2 | 5 |
| 7 | Encore Cloud: Automated DevOps & Infra | 6 | 0 |
| 8 | Rails Native Bridger | 6 | 0 |
| 9 | JSON Dive | 4 | 0 |
| 10 | Brain4J GPU-Accelerated Java ML | 4 | 0 |

1

Prism: Auth Agent Bridger

Author

rkhanna23

Description

Prism is a tool designed to simplify how browser agents authenticate onto websites using user credentials. It allows developers to pass credentials to Prism, which then logs into a website on their behalf and returns the necessary session cookies. This eliminates the need for developers to manually handle complex authentication flows, including OTP and MFA, thereby improving the reliability and efficiency of web automation and agent-based tasks.

Popularity

Points 19

Comments 15

What is this product?

Prism is a service that securely manages website logins for browser agents. Instead of exposing sensitive user credentials directly to an agent or having developers write custom code for every website's unique login process (which often involves multi-factor authentication like OTP codes), developers can send their credentials to Prism. Prism then acts as a proxy, logging into the target website using these credentials, and returns the session cookies. This is achieved by leveraging tools like Playwright for efficient logins, and falling back to AI-driven approaches when Playwright encounters issues, ensuring robust authentication. The innovation lies in abstracting away the complexities of diverse website authentication mechanisms and providing a standardized way for agents to gain authenticated access, a common bottleneck in web automation.

How to use it?

Developers can integrate Prism into their workflows by calling its API. You provide Prism with the target website, the user's login credentials (username/password), and details about the login method (e.g., if an OTP code is required and how to retrieve it, such as via email). Prism handles the entire login process, including passing OTP codes if necessary, and returns a set of session cookies. These cookies can then be used by the developer's browser agent to interact with the website in an authenticated state. For example, a developer building a web scraping tool that needs to access user-specific data on a platform can use Prism to log in and get the authenticated session cookies, allowing their scraper to fetch the data without needing to implement the login logic itself.
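A scraper that does not drive a browser can still reuse the returned session by sending the cookies as a `Cookie` header. The sketch below assumes the cookies arrive as a list of dicts with `name`, `value`, and `domain` keys; that shape is hypothetical (modelled loosely on Playwright's cookie objects) and is not Prism's documented response format.

```python
def cookie_header_for(host: str, cookies: list[dict]) -> str:
    """Build a Cookie header value from the cookies that apply to `host`.

    Assumes each cookie is a dict with 'name', 'value' and 'domain' keys
    (hypothetical shape, similar to Playwright's cookie objects). Following
    that convention, a leading dot marks a domain cookie that also applies to
    subdomains; otherwise the cookie is host-only and needs an exact match.
    Path, expiry and Secure flags are ignored in this sketch.
    """
    host = host.lower()
    pairs = []
    for cookie in cookies:
        domain = cookie["domain"].lower()
        if domain.startswith("."):
            base = domain[1:]
            applies = host == base or host.endswith("." + base)
        else:
            applies = host == domain
        if applies:
            pairs.append(f"{cookie['name']}={cookie['value']}")
    return "; ".join(pairs)


# Example: cookies as they might come back from a login service (made-up values).
session = [
    {"name": "sid", "value": "abc123", "domain": ".example.com"},
    {"name": "csrftoken", "value": "xyz", "domain": "app.example.com"},
    {"name": "tracker", "value": "1", "domain": "other.example.org"},
]
print(cookie_header_for("app.example.com", session))  # sid=abc123; csrftoken=xyz
```

The resulting string can be passed as an ordinary request header, for example `requests.get(url, headers={"Cookie": cookie_header_for(host, session)})`.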

Product Core Function

· Securely handles user credentials without exposing them to the agent: This provides a layer of security by not directly feeding sensitive information into potentially vulnerable agent systems, making your automated tasks safer.

· Automates complex login flows including OTP and MFA: Solves the common problem of dealing with two-factor authentication, which often requires custom scripting for each website, saving significant development time and effort.

· Provides authenticated session cookies: Once logged in, Prism returns the necessary cookies, allowing your agent or application to interact with the website as if a human user had logged in, enabling seamless data retrieval or task execution.

· Utilizes Playwright for speed and AI for reliability: This hybrid approach ensures fast and efficient logins for supported websites, while the AI fallback adds robustness for challenging or less common login scenarios, making your automation more dependable.

· Supports a growing library of website login scripts: Out-of-the-box support for many websites means you can start using Prism immediately, and the continuous addition of new scripts ensures compatibility with an expanding range of web applications.
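The Playwright-first, AI-fallback design is a general pattern: try the fast deterministic path, and only if it fails hand off to the slower probabilistic one. A minimal sketch of that control flow follows; `scripted_login` and `ai_login` are hypothetical stand-ins, not Prism code.

```python
from typing import Callable

Cookies = list[dict]
Strategy = Callable[[str, dict], Cookies]


class LoginError(Exception):
    """Raised when no login strategy produced a session."""


def login_with_fallback(site: str, creds: dict,
                        strategies: list[tuple[str, Strategy]]) -> tuple[str, Cookies]:
    """Try each (name, strategy) in order; return the first non-empty cookie set."""
    failures = []
    for name, strategy in strategies:
        try:
            cookies = strategy(site, creds)
        except Exception as exc:  # e.g. a selector timeout in a scripted flow
            failures.append(f"{name}: {exc}")
            continue
        if cookies:
            return name, cookies
        failures.append(f"{name}: no cookies returned")
    raise LoginError("; ".join(failures))


def scripted_login(site: str, creds: dict) -> Cookies:
    # Hypothetical stand-in for a fast, site-specific Playwright script whose selectors broke.
    raise LoginError("selector '#password' not found")


def ai_login(site: str, creds: dict) -> Cookies:
    # Hypothetical stand-in for a slower, model-driven agent that completes the form.
    return [{"name": "sid", "value": "abc123", "domain": site}]


used, cookies = login_with_fallback(
    "example.com", {"username": "alice", "password": "s3cret"},
    [("scripted", scripted_login), ("ai", ai_login)],
)
print(used)  # ai
```

Ordering strategies by cost keeps the common case fast, and collecting every failure into the final error shows which site scripts need attention.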

Product Usage Case

· A web scraping task on a financial platform that requires login: Instead of building custom code to handle the platform's specific login form and OTP verification, a developer can use Prism. They provide Prism with the credentials and specify the OTP delivery method (e.g., email), and Prism returns the authenticated cookies, allowing the scraper to proceed with data extraction without authentication hurdles.

· Automated security testing for enterprise applications: A security testing company can use Prism to enable their autonomous agents to log into customer websites for penetration testing. Prism handles the authentication, allowing the agents to test security vulnerabilities on authenticated sections of the application, streamlining the testing process.

· Integrating with AI agents for personalized web interactions: An AI chatbot that needs to perform actions on behalf of a user on a specific website (e.g., booking a flight, updating a profile) can use Prism to obtain an authenticated session. This allows the AI to act as a user, performing tasks that require login without the developer having to write complex, site-specific authentication code for the AI.

· Testing user experience across different login methods: A QA team can use Prism to simulate various login scenarios for a web application, including successful logins, OTP failures, and MFA challenges, to ensure the application's authentication flow is robust and user-friendly across different user experiences.

2

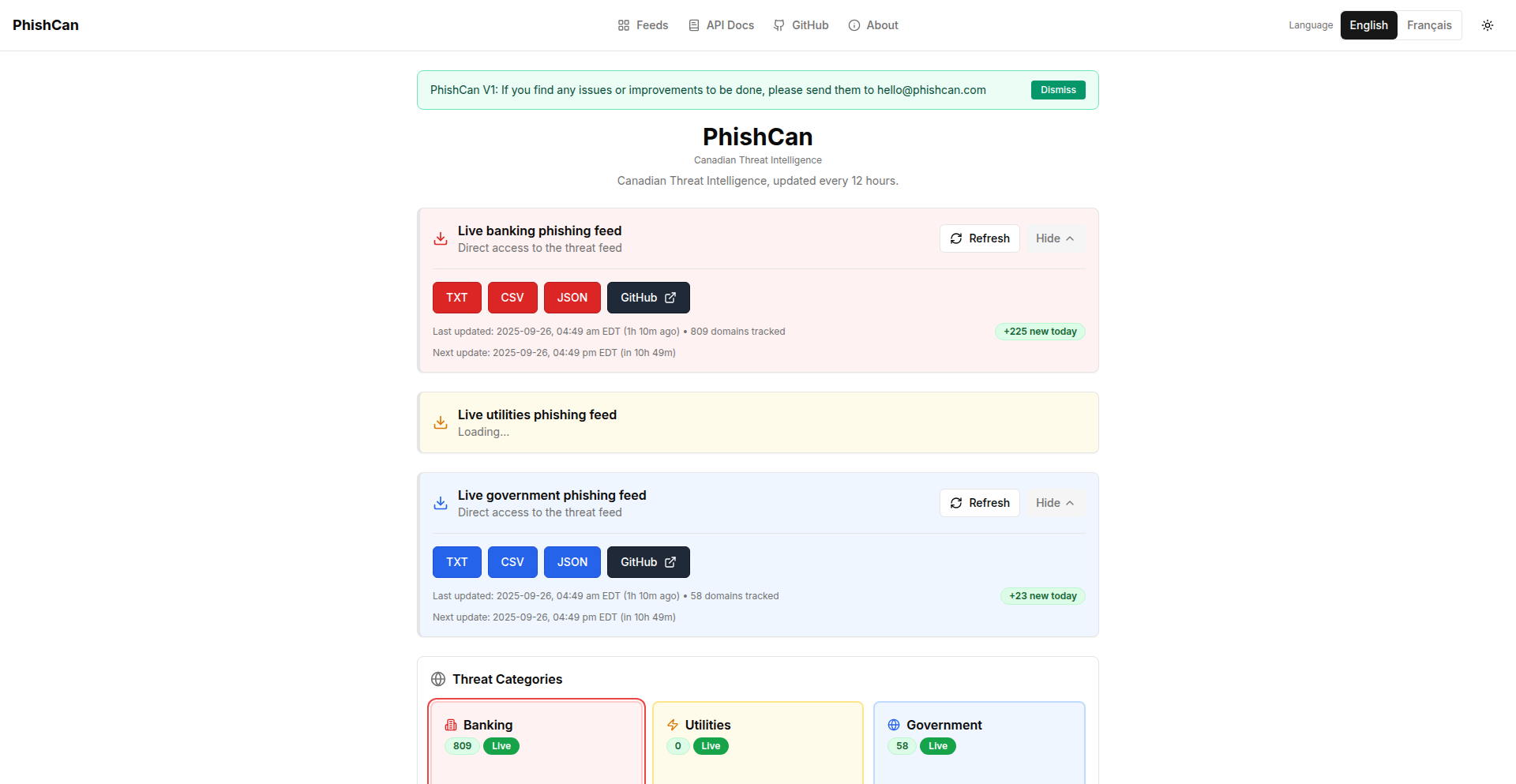

Phishcan Threat Intel Feed

Author

ripernverse

Description

Phishcan is Canada's first open and free threat intelligence platform designed to detect and track phishing domains targeting Canadian organizations and services. It leverages automated domain parsing, threat actor monitoring, and data enrichment to provide up-to-date threat feeds, helping to protect users from online scams. The innovation lies in its focused approach to Canadian entities and its open, accessible data and API.

Popularity

Points 15

Comments 9

What is this product?

Phishcan is a threat intelligence platform that specifically monitors and identifies phishing domains targeting Canadian entities like banks (Scotiabank, Desjardins, RBC, Interac), telecommunication providers, utility companies, and government services (CRA, Canada Post, Service Canada, Revenue Québec). Its core technical innovation is in its ability to parse millions of domains and continuously scan for suspicious patterns, while also actively monitoring the infrastructure used by cybercriminals. This means it's not just looking at known bad domains, but also predicting and identifying emerging threats before they become widespread. The data is enriched with context, making it more useful for understanding the threat landscape. So, for you, this means a more proactive defense against phishing attacks specifically tailored to the Canadian digital environment.

How to use it?

Developers can integrate Phishcan into their security workflows and applications using its freely available API. This API allows for programmatic access to the threat intelligence data, enabling real-time checks for malicious domains. For example, a company could use Phishcan's API to validate URLs in emails before displaying them to users, or to proactively block access to known phishing sites. The data is also available on GitHub, allowing for offline analysis or integration into custom threat hunting tools. This means you can easily add a layer of protection to your applications and services by programmatically checking if a domain is known to be malicious, thus safeguarding your users from phishing attempts.
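A check like "is this link's domain on the feed?" is simple to run locally once the data has been downloaded. The sketch assumes a plain-text feed with one domain per line; that format, and the `.example` domains used below, are hypothetical rather than Phishcan's actual schema.

```python
from urllib.parse import urlsplit


def load_blocklist(lines) -> set[str]:
    """Parse a feed with one domain per line, skipping blanks and '#' comments."""
    domains = set()
    for line in lines:
        line = line.strip().lower()
        if line and not line.startswith("#"):
            domains.add(line.rstrip("."))
    return domains


def is_flagged(url: str, blocklist: set[str]) -> bool:
    """True if the URL's host, or any parent domain of it, is on the blocklist."""
    host = (urlsplit(url).hostname or "").rstrip(".")
    labels = host.split(".")
    # For 'a.b.example' check 'a.b.example', then 'b.example', then 'example'.
    return any(".".join(labels[i:]) in blocklist for i in range(len(labels)))


feed = """# hypothetical feed excerpt
interac-refund.example
login.cra-portal.example
"""
blocklist = load_blocklist(feed.splitlines())
print(is_flagged("https://secure.interac-refund.example/claim", blocklist))  # True
print(is_flagged("https://cra-portal.example/", blocklist))                  # False
```

Matching parent domains means a listed domain also flags its subdomains, which is the usual policy for phishing blocklists; the reverse (flagging a parent because a subdomain is listed) is deliberately avoided.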

Product Core Function

· Domain parsing and analysis: Continuously scans and analyzes millions of domains to identify suspicious patterns and potential phishing sites. This is valuable because it helps detect new and emerging phishing threats before they are widely known, protecting users from visiting compromised websites.

· Threat actor monitoring: Actively monitors the infrastructure and domain registrations of cybercriminals. This is important for staying ahead of attackers by understanding their evolving tactics and tools, providing an advantage in the fight against cybercrime.

· Data enrichment: Adds contextual insights and connections to the threat intelligence data. This means the information provided is not just a list of bad domains, but also includes related information that helps understand the scope and nature of the threat, enabling more informed security decisions.

· Regularly updated threat feeds: Feeds are updated every 12 hours, ensuring that the threat intelligence is current and relevant. This is crucial for effective real-time protection, as phishing campaigns can change rapidly, and outdated information is less useful.

· Open and free API access: Provides free access to its threat intelligence data through an API, allowing developers to easily integrate it into their own tools and services. This lowers the barrier to entry for implementing advanced security measures, making it accessible for a wider range of applications and developers.
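Because the feeds are regenerated every 12 hours, a consumer gains little by downloading them more often. A minimal caching sketch, assuming a caller-supplied `fetch` function (hypothetical; for instance one that downloads the GitHub-hosted data):

```python
import time
from typing import Callable, Optional


class FeedCache:
    """Return a cached copy of a feed, re-fetching once it is older than max_age."""

    def __init__(self, fetch: Callable[[], object],
                 max_age: float = 12 * 60 * 60,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch          # hypothetical downloader supplied by the caller
        self._max_age = max_age      # defaults to the 12-hour publishing interval
        self._clock = clock          # injectable so the behaviour can be tested
        self._data: object = None
        self._fetched_at: Optional[float] = None

    def get(self) -> object:
        now = self._clock()
        if self._fetched_at is None or now - self._fetched_at >= self._max_age:
            self._data = self._fetch()
            self._fetched_at = now
        return self._data


# Simulated clock: fetches happen at t=0 and again once 12 hours have passed.
now = [0.0]
fetch_times = []


def fake_fetch():
    fetch_times.append(now[0])
    return {"interac-refund.example"}


cache = FeedCache(fake_fetch, clock=lambda: now[0])
for t in (0.0, 3600.0, 12 * 3600.0):
    now[0] = t
    cache.get()
print(fetch_times)  # [0.0, 43200.0]
```

If the local fetch happens just before the feed is republished, data can be close to 24 hours old; a shorter `max_age` bounds staleness more tightly at the cost of extra downloads.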

Product Usage Case

· A Canadian financial institution can use Phishcan's API to add a real-time phishing domain check to its customer-facing email client. When an email with a suspicious link is received, the API can be queried to see if the domain is on Phishcan's blacklist, preventing users from accidentally clicking on a phishing link and exposing their sensitive financial information. This directly addresses the need to protect customers from targeted financial scams.

· A government agency can integrate Phishcan data into its internal security monitoring system. By regularly checking newly registered domains against Phishcan's intelligence, they can identify potential phishing attempts targeting Canadian citizens or government services early on. This allows for faster response times and helps mitigate the risk of widespread fraud or data breaches impacting citizens.

· A cybersecurity researcher can leverage Phishcan's open data on GitHub to conduct in-depth analysis of phishing trends targeting Canada. By examining the types of domains being registered, the associated threat actors, and the patterns of attack, they can develop more sophisticated detection methods and contribute to the broader security community's understanding of Canadian-specific threats. This supports the creation of better tools and strategies to combat cybercrime.

· A small business owner can use a simple script that queries the Phishcan API before sharing links online or with employees. This provides a basic but effective layer of protection against clicking on malicious URLs, reducing the risk of malware infections or credential theft for the business. It demonstrates how even individuals can benefit from this accessible threat intelligence.

3

Macscope: Enhanced Cmd-Tab for macOS

Author

gprok

Description

Macscope is a native macOS application that reimagines the familiar Cmd+Tab app switcher. It enhances, rather than replaces, existing workflows by offering a more powerful interface for managing all your open windows, browser tabs, and applications. With features like unified search, live previews, advanced window arrangement, and project-based 'Scopes', Macscope aims to significantly boost productivity for Mac users by making it faster and easier to find and organize their digital workspace. This is for you if you find yourself juggling many windows and want a smarter way to navigate them.

Popularity

Points 11

Comments 13

What is this product?

Macscope is a macOS window manager and app switcher that augments the standard Cmd+Tab experience. A quick tap of Cmd+Tab still switches to your most recent app, as before, but holding it longer reveals the full Macscope interface. This interface lets you search for and switch to any window, browser tab (from Safari, Chrome, Arc, etc.), or application by typing. It shows live previews of window content, so you can quickly identify the exact window you need. It also offers advanced window management, letting you arrange multiple windows into custom layouts such as splits or grids. A key innovation is 'Scopes', which let you save a collection of app windows as a workspace and restore it instantly, making it easy to switch between projects. It is built in Swift for native performance on both Apple Silicon and Intel Macs.

How to use it?

Developers can use Macscope to streamline their multitasking on macOS. After installing the app, you can trigger the enhanced switcher by holding down Cmd+Tab. Typing in the search bar will filter your open windows, tabs, and applications. Clicking on a preview or pressing Enter will switch to that item. You can use modifier keys to access Placement Modes, enabling quick snapping of windows to screen edges or halves. To manage multiple windows, select them within the Macscope interface and choose a layout option. For project-based workflows, create 'Scopes' by grouping related windows and save them for instant recall. This is useful for developers who frequently switch between coding environments, documentation, and communication tools, allowing for quicker context switching and less time spent manually arranging windows.
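Macscope's matching algorithm is not described, and the app itself is written in Swift. As a language-neutral illustration of type-to-filter search, here is a generic subsequence ("fuzzy") matcher in Python; the scoring weights and window titles are assumptions chosen for the example.

```python
from typing import Optional


def fuzzy_score(query: str, candidate: str) -> Optional[int]:
    """Score `candidate` if every query character occurs in it in order, else None.

    Matching is greedy and case-insensitive. Each matched character scores 1,
    plus 2 when it directly follows the previous match and 3 when it starts a word.
    """
    q, c = query.lower(), candidate.lower()
    score, start, prev = 0, 0, -2
    for ch in q:
        idx = c.find(ch, start)
        if idx == -1:
            return None  # a query character is missing: not a match
        score += 1
        if idx == prev + 1:
            score += 2  # consecutive with the previous match
        if idx == 0 or not c[idx - 1].isalnum():
            score += 3  # first character of a word
        prev, start = idx, idx + 1
    return score


def search(query: str, titles: list[str]) -> list[str]:
    """Return matching titles, best score first (ties keep their input order)."""
    scored = []
    for title in titles:
        score = fuzzy_score(query, title)
        if score is not None:
            scored.append((score, title))
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable, even when reversed
    return [title for _, title in scored]


windows = [
    "Safari - Apple Developer Documentation",
    "Visual Studio Code - main.swift",
    "Terminal - zsh",
    "Chrome - GitHub pull request",
]
print(search("term", windows))  # ['Terminal - zsh']
```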

Product Core Function

· Unified Search & Switch: Instantly find and switch to any application, window, or browser tab (Safari, Chrome, Arc) by typing. This saves you time by eliminating the need to cycle through multiple applications or hunt for a specific window among many.

· Live Previews: See real-time visual previews of the content within each window before switching. This helps you quickly identify the correct window or tab, reducing errors and the time spent opening the wrong item.

· Advanced Window Management: Select multiple windows and arrange them into predefined layouts such as vertical splits, horizontal splits, or grids. This allows for efficient use of screen real estate, especially when working with multiple documents or code editors simultaneously, boosting your productivity.

· Scopes: Save and instantly restore entire collections of app windows as named 'Scopes'. This is ideal for quickly switching between different projects or tasks, allowing you to jump back into your workflow with all necessary applications and windows pre-arranged.
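The geometry behind split and grid layouts is straightforward. The sketch below computes window frames for side-by-side, stacked, and grid arrangements, assuming a plain (x, y, width, height) rectangle in screen points; it is not Macscope's code, and actually moving windows on macOS would require system APIs (third-party window managers typically use the Accessibility API), which are out of scope here.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    x: int
    y: int
    w: int
    h: int


def tile(n: int, rows: int, cols: int, screen: Rect) -> list[Rect]:
    """Place n windows row by row into a rows x cols grid covering `screen`.

    Cell edges are computed from the screen size with integer division, so
    neighbouring cells share edges and no pixels are lost to rounding.
    """
    frames = []
    for i in range(n):
        r, c = divmod(i, cols)
        x0 = screen.x + c * screen.w // cols
        x1 = screen.x + (c + 1) * screen.w // cols
        y0 = screen.y + r * screen.h // rows
        y1 = screen.y + (r + 1) * screen.h // rows
        frames.append(Rect(x0, y0, x1 - x0, y1 - y0))
    return frames


def side_by_side(n: int, screen: Rect) -> list[Rect]:
    return tile(n, 1, n, screen)  # one row of n columns


def stacked(n: int, screen: Rect) -> list[Rect]:
    return tile(n, n, 1, screen)  # one column of n rows


def grid(n: int, screen: Rect) -> list[Rect]:
    if n <= 0:
        return []
    cols = math.ceil(math.sqrt(n))  # near-square; the last row may be partly empty
    return tile(n, math.ceil(n / cols), cols, screen)


print(grid(3, Rect(0, 0, 1440, 900)))
```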

Product Usage Case

· A web developer working on multiple projects can use Scopes to save a collection of windows for Project A (e.g., VS Code, Chrome with development server, documentation tab) and another Scope for Project B. When switching between projects, they can activate the relevant Scope with a single action, instantly restoring their entire workspace and resuming work without manual setup.

· A designer working across several Adobe Creative Cloud applications and browser tabs can use Macscope's unified search and live previews to quickly locate a specific Photoshop document, a particular InDesign file, or a reference image in a browser tab, which is much faster than cycling through apps with the standard Cmd+Tab.

· A student juggling research papers, lecture notes, and a writing application can use Macscope's advanced window management to arrange their windows into a side-by-side split view for easy comparison and note-taking, making their study sessions more efficient and less visually cluttered.

4

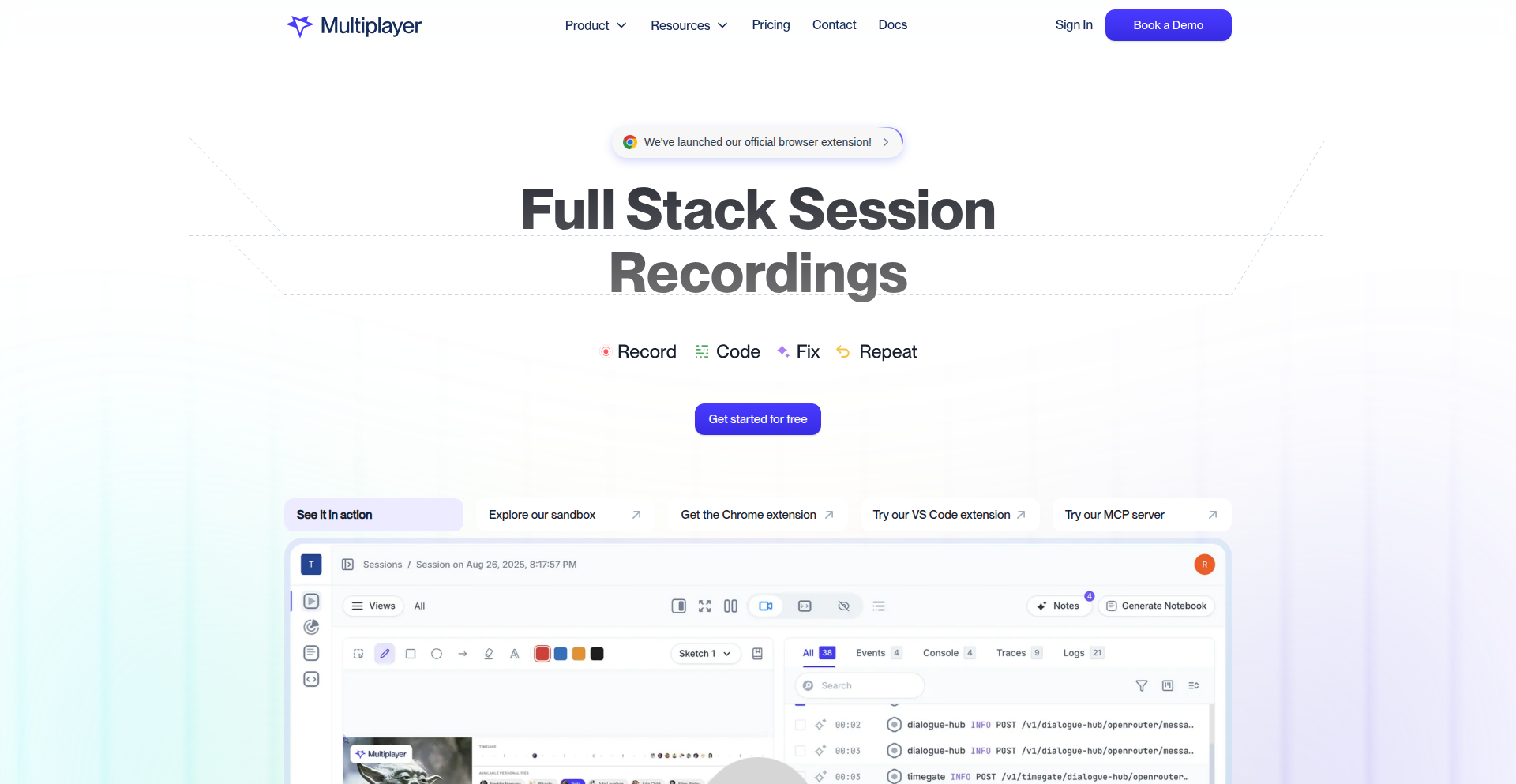

Multiplayer Session Recorder

Author

tomjohnson3

Description

A full-stack session recording tool designed for debugging, testing, and building applications. It captures user interactions and backend events in real-time, allowing developers to replay and analyze complex user flows and system behaviors. The innovation lies in its integrated approach, providing a unified view of both client-side actions and server-side responses, thus significantly accelerating the debugging process and enhancing product development.

Popularity

Points 9

Comments 4

What is this product?

This is a full-stack session recording tool. It works by instrumenting both the frontend (browser) and the backend of your application. On the frontend, it records user interactions such as clicks, scrolls, and form inputs, along with any JavaScript errors. On the backend, it captures API requests, responses, and server-side logs. All of these events are timestamped and synchronized. The innovation is in bridging frontend and backend: developers can see exactly what a user did and how the server responded, all within a single replay. This makes it much easier to pinpoint the root cause of a bug and to understand user behavior. So, what's in it for you? You can find and fix bugs faster and understand user problems more deeply, leading to better application quality and user experience.
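Once both tiers' events share a time base, producing a synchronized replay is essentially a merge of two sorted streams. A sketch using `heapq.merge` with a hypothetical `Event` record; how Multiplayer itself aligns clocks is not described here, and a real system would also need a shared session or trace ID to link each request to its server-side handling.

```python
import heapq
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    ts_ms: int    # milliseconds since session start, assumed comparable across tiers
    source: str   # "frontend" or "backend"
    detail: str


def unified_timeline(frontend: list[Event], backend: list[Event]) -> list[Event]:
    """Merge two streams, each already sorted by time, into one replay order."""
    return list(heapq.merge(frontend, backend, key=lambda e: e.ts_ms))


frontend = [
    Event(0, "frontend", "click #checkout"),
    Event(900, "frontend", "TypeError: total is undefined"),
]
backend = [
    Event(120, "backend", "POST /api/orders"),
    Event(450, "backend", "500 Internal Server Error"),
]
for e in unified_timeline(frontend, backend):
    print(f"{e.ts_ms:>5} ms  [{e.source}] {e.detail}")
```

Read in order, the merged timeline shows the server error arriving before the client-side exception, which is the kind of cause-and-effect a unified replay makes visible.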

How to use it?

Developers can integrate Multiplayer into their web applications by adding a small JavaScript snippet to their frontend and a corresponding agent to their backend. Once integrated, session recordings are automatically generated for user sessions. These recordings can be accessed via a web dashboard. Developers can then search for specific sessions based on user actions, errors, or timeframes. The dashboard provides a playback interface where developers can observe the user's journey, see the network requests and responses, and view console logs and backend errors in sync. This makes it incredibly useful for debugging production issues, replicating user-reported bugs, and understanding how users interact with new features. So, what's in it for you? You can easily understand and reproduce bugs reported by users, even if they are hard to trigger, and gain insights into how your application is actually being used in the real world.

Product Core Function

· Full-stack event capture: Records both frontend user interactions (clicks, forms, etc.) and backend API calls/logs. This provides a complete picture of what happened. Its value is in eliminating guesswork and providing concrete data for debugging.

· Synchronized playback: Replays frontend actions and backend events in perfect sync, allowing developers to see the cause-and-effect relationship between user input and system response. The value here is in drastically reducing the time it takes to understand complex bug scenarios.

· Real-time error highlighting: Automatically flags frontend JavaScript errors and backend exceptions during playback, directing developers immediately to potential problem areas. This saves valuable debugging time by instantly showing where the issues are occurring.

· Searchable session data: Enables developers to search for specific sessions based on user actions, errors, or custom metadata. The value is in quickly finding relevant recordings without manually sifting through hours of user activity.

Product Usage Case

· Debugging production issues: A user reports a bug that's difficult to reproduce. The developer uses Multiplayer to find the user's session, replay their actions, and see the exact sequence of events, including any backend errors, that led to the bug. This solves the problem by making the bug immediately visible and understandable.

· Testing new features: After deploying a new feature, developers can watch sessions to see how users interact with it. If users are struggling or encountering unexpected behavior, the recordings will show exactly where the friction points are, helping to iterate and improve the feature. This solves the problem by providing direct user feedback on new implementations.

· Onboarding and training: New developers can review sessions of experienced users to understand common workflows and best practices. This helps them learn the application faster and become more productive. This solves the problem by offering a practical, hands-on way to learn application usage.

· Performance analysis: By observing the timing of frontend interactions and backend responses, developers can identify performance bottlenecks in their application. This helps optimize loading times and improve overall user experience. This solves the problem by revealing hidden performance issues that impact user satisfaction.

5

Plakar: Encrypted, Browsable, Open-Source Backup

Author

vcoisne

Description

Plakar is an open-source backup solution designed for speed, security, and ease of use. It tackles the common pain points of traditional backup systems by offering fast incremental backups, strong encryption, and a user-friendly browsing interface to access your backed-up files directly. This addresses the need for a reliable and transparent backup mechanism that developers can trust and integrate into their workflows without sacrificing performance or data privacy.

Popularity

Points 8

Comments 0

What is this product?

Plakar is a command-line backup tool that creates encrypted snapshots of your data. Its innovation lies in efficient incremental backups: it only saves what has changed since the last backup, which makes each run much faster. The 'browsable' aspect means you can navigate your backup history and retrieve specific files or folders without restoring the entire backup, unlike many older systems. It is written in Go, a compiled language well suited to fast, reliable system tools. So, for you, this means faster backups and the ability to find and restore just the files you need, when you need them, with the peace of mind that your data is encrypted.
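A common way for deduplicating backup tools to store "only what changed" is to split data into chunks, address each chunk by its hash, and store only chunks that are not already present; a snapshot is then just a list of hashes. The sketch below illustrates that idea with fixed-size chunks. It is not Plakar's implementation: it omits encryption and compression, and production tools usually prefer content-defined chunking so an insertion does not shift every later chunk boundary.

```python
import hashlib

CHUNK_SIZE = 4  # tiny so the example is readable; real tools use much larger chunks


def split_chunks(data: bytes, size: int = CHUNK_SIZE) -> list[bytes]:
    return [data[i:i + size] for i in range(0, len(data), size)]


class ChunkStore:
    """Content-addressed store: every distinct chunk is kept once, keyed by SHA-256."""

    def __init__(self) -> None:
        self.blobs: dict[str, bytes] = {}

    def snapshot(self, data: bytes) -> tuple[list[str], int]:
        """Store `data` and return (its chunk digests, how many chunks were new)."""
        digests, new = [], 0
        for piece in split_chunks(data):
            digest = hashlib.sha256(piece).hexdigest()
            if digest not in self.blobs:
                self.blobs[digest] = piece
                new += 1
            digests.append(digest)
        return digests, new

    def restore(self, digests: list[str]) -> bytes:
        return b"".join(self.blobs[d] for d in digests)


store = ChunkStore()
v1, new1 = store.snapshot(b"AAAABBBBCCCC")  # first backup: 3 new chunks
v2, new2 = store.snapshot(b"AAAABBBBDDDD")  # second backup: only "DDDD" is new
print(new1, new2, len(store.blobs))         # 3 1 4
```

Restoring one file from any snapshot only needs that file's digest list, which is what makes browsing old snapshots cheap.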

How to use it?

Developers can integrate Plakar into their existing workflows by installing it on their machines and configuring backup jobs from the command line. Backups can be scheduled to run automatically with cron jobs or systemd timers on Linux, cron or launchd on macOS, or Task Scheduler on Windows. For ongoing protection, Plakar can back up code repositories, important project files, or development environments. Because it is scriptable, it also fits into automated CI/CD pipelines or jobs that back up critical development assets. So, for you, this means you can set up automated backups for your projects, keeping your code and data safe and accessible without manual intervention.

Product Core Function

· Fast Incremental Backups: Stores only changed data since the last backup, significantly reducing backup time and storage space. This is valuable for developers by ensuring their large project files or codebases can be backed up frequently without consuming excessive resources.

· End-to-End Encryption: Uses strong encryption algorithms to protect your data both in transit and at rest, ensuring only authorized individuals can access it. This provides developers with the confidence that their sensitive project data is secure against unauthorized access.

· Browsable Backup History: Allows direct browsing and retrieval of individual files or directories from previous backup snapshots without needing a full restore. This is incredibly useful for developers who need to quickly recover a specific deleted file or an older version of their code without the hassle of a lengthy restoration process.

· Cross-Platform Support: Works on Linux, macOS, and Windows, making it versatile for developers working across different operating systems. This ensures you can use a consistent and reliable backup solution regardless of your development environment.

· Command-Line Interface (CLI): Offers a powerful and scriptable interface for automation and integration into custom workflows. This empowers developers to build sophisticated backup strategies tailored to their specific project needs and automate backup tasks efficiently.

Product Usage Case

· Developer workstation backup: A developer can set up Plakar to back up their entire home directory, including project files, configuration settings, and personal documents, to an external drive or cloud storage. If their workstation fails, they can quickly restore their environment and continue working. This solves the problem of losing critical development work due to hardware failure.

· Code repository backup: A team can use Plakar to regularly back up their Git repositories locally or to a central backup server. If a remote repository is lost or corrupted, they have a reliable local copy to recover from. This prevents data loss and ensures business continuity for software development projects.

· Server configuration backup: System administrators can use Plakar to back up critical server configuration files (e.g., web server configs, database schemas). This allows for quick recovery of server settings in case of system updates gone wrong or security breaches. This solves the problem of downtime caused by misconfigurations or data corruption on servers.

· Personal project archiving: A hobbyist developer can use Plakar to create encrypted backups of their personal coding projects. They can then browse these backups years later to retrieve specific code snippets or revisit past projects, ensuring their creative work is preserved. This addresses the need for long-term archival of personal development projects.

6

Vatify-Python: EU VAT Compliance Accelerator

Author

passenger09

Description

Vatify-Python is a Python SDK designed to simplify EU VAT compliance for SaaS founders and e-commerce developers. It provides a robust API for real-time VAT number validation, up-to-date VAT rate information, and accurate VAT calculation. This tool addresses the common struggles of outdated or incomplete existing libraries, offering a clean and modern interface to ensure compliance and streamline cross-border transactions. So, how does this benefit you? It significantly reduces the headache and potential penalties associated with EU VAT, making your international business operations smoother and more reliable.

Popularity

Points 2

Comments 5

What is this product?

Vatify-Python is a developer tool that acts as a bridge to a backend service for handling European Union Value Added Tax (VAT) rules. At its core, it wraps an Application Programming Interface (API) that can check whether a given VAT number is valid within the EU, retrieve the current VAT rates for different countries, and perform VAT calculations. The innovation lies in packaging this complex logic into a user-friendly Python Software Development Kit (SDK). Instead of manually digging through complex regulations or relying on potentially outdated databases, developers can import the Vatify library and call simple functions. For example, `res = client.validate_vat('DE123456789')` checks whether a German VAT number is legitimate, and `res.valid` then gives a clear True/False answer. This means less time spent on administrative overhead and more time building your core product. So, what's the value to you? It automates a critical, yet often tedious, part of international business, reducing errors and saving valuable development time.

How to use it?

Developers can integrate Vatify-Python into their applications by first installing it via pip, the Python package installer, using the command `pip install vatify`. Once installed, they can import the `Vatify` class in their Python code, initialize a client with their API key, and then directly call methods for VAT validation, rate retrieval, and calculation. For instance, in an e-commerce checkout process, you could use `client.validate_vat(customer_vat_number)` to ensure the provided VAT number is correct before processing an order. You could also use `client.get_vat_rate(country_code, product_category)` to dynamically apply the correct VAT rate to a customer's purchase. This direct integration allows for seamless automation within existing workflows, ensuring compliance at critical touchpoints of a business process. So, how does this help you? It enables you to automatically enforce VAT rules directly within your sales, invoicing, or accounting systems, preventing mistakes and ensuring accurate tax collection.
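Before spending a call on `client.validate_vat`, it is cheap to catch obvious typos locally. The helper below is hypothetical (not part of Vatify's API): it normalizes user input and checks only the general shape of an EU VAT number, not per-country formats, check digits, or registration status, so a True result means "worth validating", not "valid".

```python
import re

# Prefixes used in EU VAT numbers: the 27 member states (Greece uses "EL",
# not its ISO code "GR") plus "XI" for Northern Ireland.
EU_PREFIXES = {
    "AT", "BE", "BG", "CY", "CZ", "DE", "DK", "EE", "EL", "ES", "FI", "FR",
    "HR", "HU", "IE", "IT", "LT", "LU", "LV", "MT", "NL", "PL", "PT", "RO",
    "SE", "SI", "SK", "XI",
}

# Two-letter prefix, then 2-12 letters/digits (older Irish numbers may contain + or *).
_SHAPE = re.compile(r"([A-Z]{2})([0-9A-Z+*]{2,12})")


def precheck_vat(raw: str) -> tuple[bool, str]:
    """Return (plausible, normalized) for a user-typed EU VAT number."""
    vat = re.sub(r"[\s.\-]", "", raw).upper()  # drop spaces, dots and hyphens
    match = _SHAPE.fullmatch(vat)
    plausible = match is not None and match.group(1) in EU_PREFIXES
    return plausible, vat


print(precheck_vat("de 123 456 789"))  # (True, 'DE123456789')
```

In a checkout flow, a False result can prompt the customer to fix the input immediately, and only plausible, normalized numbers are sent on for real validation.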

Product Core Function

· VAT Number Validation: This function allows developers to instantly verify the legitimacy of any EU VAT number, returning details like validity status, country code, and the registered business name. This is crucial for preventing fraudulent transactions and ensuring accurate tax reporting, directly helping you avoid fines and build trust with your customers.

· VAT Rate Retrieval: This feature provides access to up-to-date VAT rates for all EU member states. Developers can dynamically fetch the correct VAT rate based on the customer's location and the type of product or service, ensuring accurate taxation on every transaction. This means you can confidently charge the right amount of tax, simplifying your financial accounting.

· VAT Calculation: This core function automates the process of calculating the exact VAT amount to be charged on a sale. By combining the product price and the correct VAT rate, it simplifies invoicing and ensures compliance with varying tax laws across the EU. For your business, this translates to accurate billing and reduced risk of under or overcharging tax.
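The calculation step boils down to a little arithmetic, sketched below with Python's `decimal` module so that money amounts avoid binary floating-point error. The rates passed in are hard-coded examples (19% is Germany's standard rate, 20% France's) rather than values fetched from Vatify, and rounding each line to the cent with half-up rounding is an assumption; invoicing rules can differ by country.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def calculate_vat(net_price: str, rate_percent: str) -> dict[str, Decimal]:
    """Return net, VAT and gross amounts, each rounded to whole cents (half-up)."""
    net = Decimal(net_price)
    vat = (net * Decimal(rate_percent) / Decimal(100)).quantize(CENT, rounding=ROUND_HALF_UP)
    return {
        "net": net.quantize(CENT, rounding=ROUND_HALF_UP),
        "vat": vat,
        "gross": (net + vat).quantize(CENT, rounding=ROUND_HALF_UP),
    }


# Example: a 100.00 EUR net sale at Germany's 19% standard rate.
print(calculate_vat("100.00", "19"))
```

Passing prices and rates as strings keeps them exact from the start; constructing a `Decimal` from a float would carry the float's rounding error into the result.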

Product Usage Case

· An e-commerce platform can use Vatify-Python during checkout to validate a customer's EU VAT number. If the number is invalid or the country code doesn't match, the system can flag the order or require a different payment method, thus preventing potential fraud and ensuring compliance with intra-community VAT rules. This directly helps your online store operate more securely and reliably.

· A SaaS company selling software to businesses across the EU can use Vatify-Python to automatically determine the correct VAT rate to apply to invoices based on the client's country. This eliminates manual lookup and reduces the chance of misapplying tax, ensuring accurate billing and simplifying tax reconciliation for your recurring revenue model.

· A bookkeeping service or accounting software can integrate Vatify-Python to automatically validate VAT numbers for their clients' customers and calculate VAT on invoices. This significantly speeds up the invoicing process and reduces the potential for errors, helping your accounting operations become more efficient and accurate.

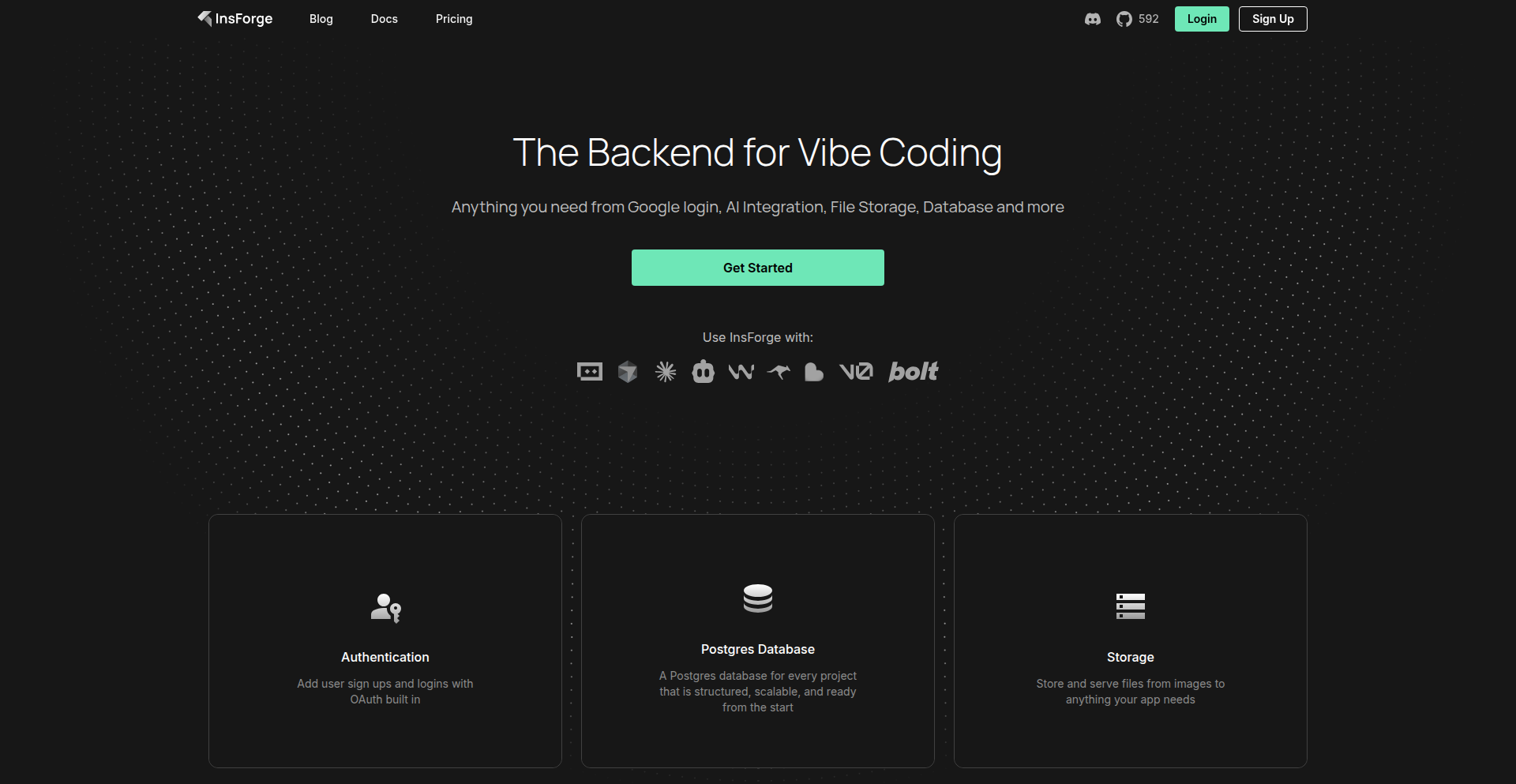

7

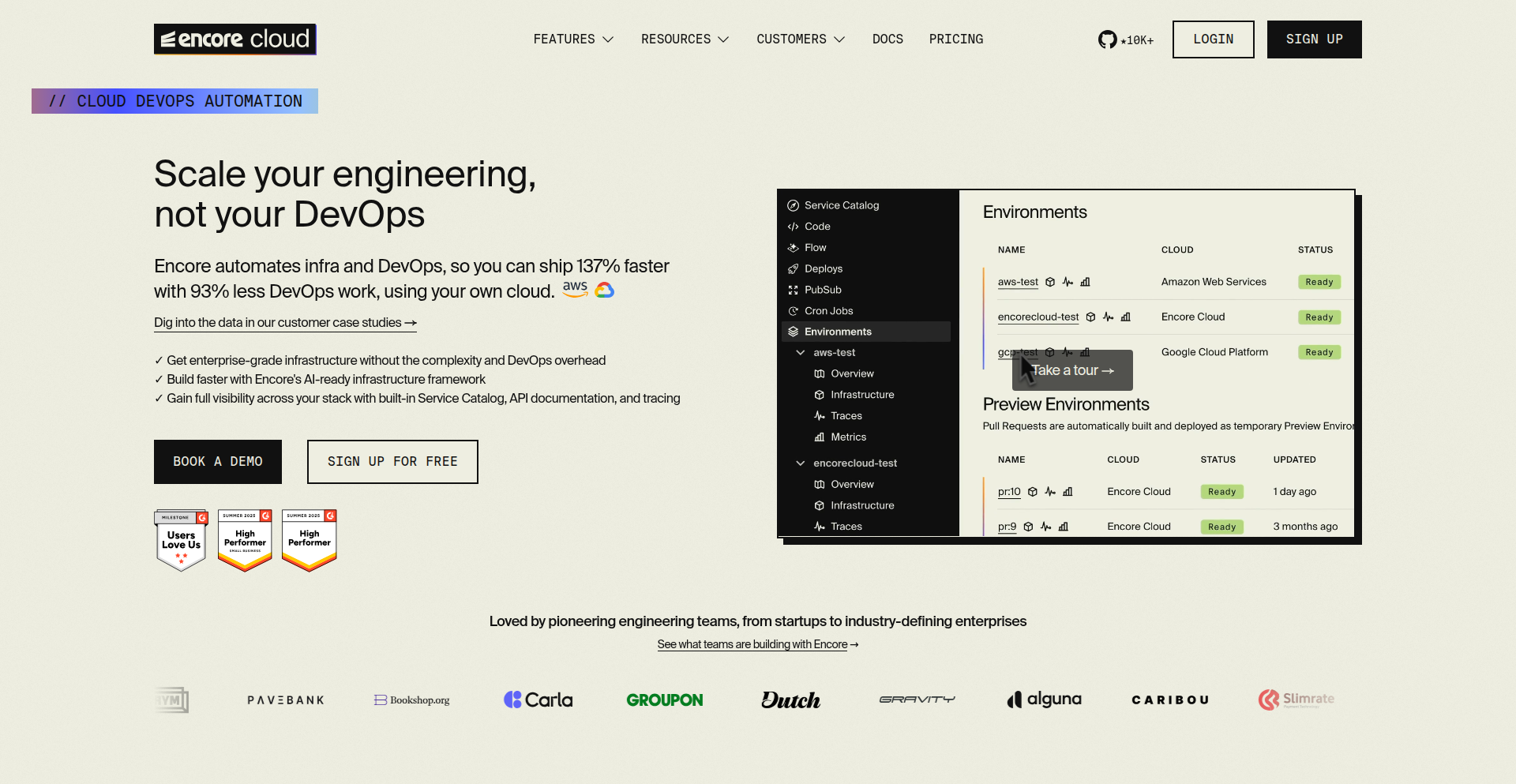

Encore Cloud: Automated DevOps & Infra

Author

andout_

Description

Encore Cloud is a platform designed to automate the complexities of DevOps and infrastructure management. It allows developers to focus on writing code by abstracting away the underlying infrastructure, CI/CD pipelines, and deployment processes. The innovation lies in its declarative approach to defining application infrastructure and services, enabling faster development cycles and reducing the operational burden on engineering teams.

Popularity

Points 6

Comments 0

What is this product?

Encore Cloud is a developer-first platform that automates DevOps and infrastructure. Imagine you want to deploy your application to the cloud. Normally, you'd have to set up servers, configure networking, manage databases, build CI/CD pipelines for automated testing and deployment, and monitor everything. Encore Cloud simplifies this by letting you declare what you want your application to do and how it should run, and it automatically handles the underlying infrastructure setup, deployment, and ongoing management. This means less time spent on manual configuration and more time building features. The core innovation is its ability to translate high-level application requirements into concrete infrastructure and deployment configurations, effectively acting as an intelligent orchestration layer for your cloud resources.

How to use it?

Developers use Encore Cloud by declaring their application's services, databases, and other dependencies directly in their application code with Encore's framework (available for Go and TypeScript). These declarations act as a blueprint. Encore then takes this blueprint and automatically provisions the necessary cloud resources (like virtual machines, databases, and networking components), sets up CI/CD pipelines for automated builds and deployments, and manages ongoing operations. You can integrate Encore into your existing development workflow, pushing code changes that trigger automated deployments. For example, if you're building a web API with a PostgreSQL database, you would declare these components in your Encore application, and Encore would set up the API server environment, provision the database, and create the deployment pipeline that ships updates whenever you commit new code.
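To make the "blueprint in, infrastructure out" idea concrete, here is a toy Python planner that turns a declarative spec into ordered provisioning steps, resolving dependencies so that a database exists before the service that uses it. It is not Encore's API (Encore declares infrastructure in Go or TypeScript application code); the spec format and step names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical declarative spec: a web API that depends on a PostgreSQL database.
SPEC = {
    "services": {"api": {"depends_on": ["orders_db"]}},
    "databases": {"orders_db": {"engine": "postgres", "backups": True}},
}


def plan(spec: dict) -> list[str]:
    """Translate a declarative spec into provisioning steps, dependencies first."""
    graph: dict[str, set[str]] = {}
    steps: dict[str, list[str]] = {}
    for name, db in spec.get("databases", {}).items():
        graph[name] = set()
        steps[name] = [f"provision {db['engine']} database '{name}'"]
        if db.get("backups"):
            steps[name].append(f"enable backups for '{name}'")
    for name, svc in spec.get("services", {}).items():
        graph[name] = set(svc.get("depends_on", []))
        steps[name] = [f"build container for service '{name}'", f"deploy service '{name}'"]
    # Reject references to resources the spec never declared.
    unknown = set().union(*graph.values()) - set(graph)
    if unknown:
        raise ValueError(f"undeclared dependencies: {sorted(unknown)}")
    ordered: list[str] = []
    # static_order() yields each node only after all of its dependencies.
    for node in TopologicalSorter(graph).static_order():
        ordered.extend(steps[node])
    ordered.append("configure CI/CD pipeline: deploy on every push")
    return ordered


for step in plan(SPEC):
    print(step)
```

A real platform would also diff the plan against what already exists and apply only the changes; the sketch shows just the translation and ordering step.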

Product Core Function

· Automated Infrastructure Provisioning: Encore automatically sets up all the necessary cloud resources, like servers, databases, and networking, based on your application's needs. This saves you the manual effort of configuring cloud environments, allowing you to focus on coding.

· CI/CD Pipeline Generation: The platform generates and manages Continuous Integration and Continuous Deployment pipelines. This means your code gets automatically tested and deployed to production whenever you make changes, ensuring faster releases and fewer manual errors.

· Service Orchestration: Encore manages the deployment and scaling of your application's services, ensuring they run smoothly and can handle varying loads. This takes the complexity out of managing distributed systems and microservices.

· Database Management: It handles the setup and management of databases, including backups and scaling. You don't need to be a database administrator to have a robust database for your application.

· Observability and Monitoring: Encore provides built-in monitoring and logging capabilities, giving you visibility into your application's performance and health. This helps you quickly identify and resolve issues before they impact users.

Product Usage Case

· Building and deploying a scalable web API with a managed database: A developer can define their API service and a PostgreSQL database in Encore. Encore will then provision the necessary cloud compute, set up the API server, create the database instance with backups, and configure a CI/CD pipeline to automatically deploy code changes. This eliminates the need to manually set up and manage servers, databases, and deployment scripts, enabling faster iteration on API features.

· Developing a real-time application requiring message queuing: A developer can declare a message queue (like Kafka or RabbitMQ) as part of their application architecture within Encore. Encore will provision and manage the message queue infrastructure, ensuring it's available and scalable, so the developer can focus on implementing the real-time communication logic.

· Rapid prototyping of microservices: For projects involving multiple microservices, Encore can automate the provisioning of each service's infrastructure and set up inter-service communication and deployment pipelines. This allows teams to quickly spin up and test microservice architectures without getting bogged down in operational overhead.

· Migrating existing applications to the cloud: Encore can assist in the cloud migration process by automating the infrastructure setup and deployment for applications, making the transition smoother and less error-prone, even for developers less familiar with cloud-native tooling.

8

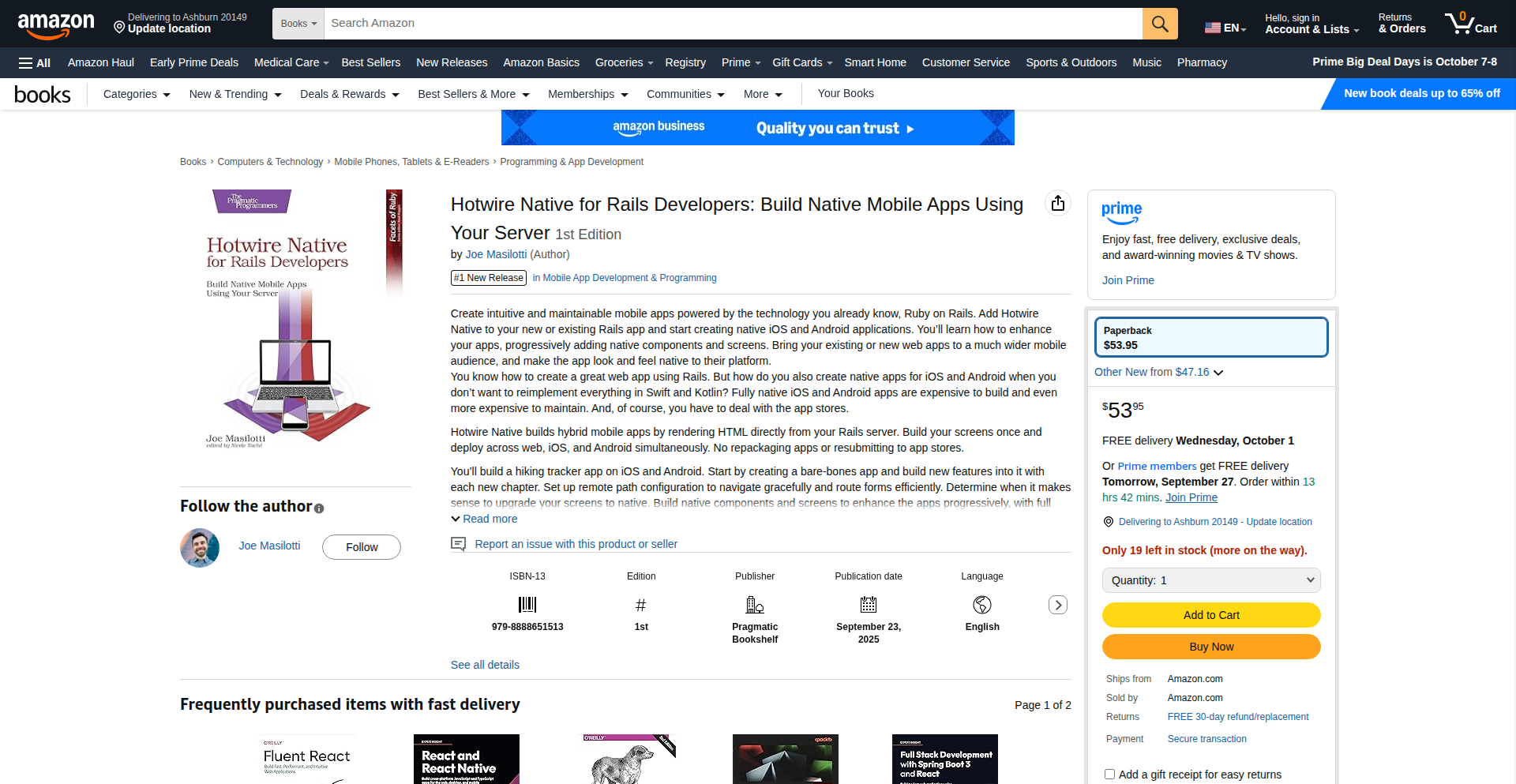

Rails Native Bridger

Author

joemasilotti

Description

This project is a book focused on empowering Ruby on Rails developers to build native iOS and Android applications using the Hotwire Native framework. It tackles the challenge of bridging the gap between web development paradigms and mobile app development, offering a practical, code-centric approach to creating mobile experiences directly from a Rails ecosystem.

Popularity

Points 6

Comments 0

What is this product?

Rails Native Bridger is a comprehensive guide that demystifies the process of creating native mobile applications for both iOS and Android platforms, specifically for developers already familiar with Ruby on Rails. Its core innovation lies in leveraging Hotwire Native, a technology that allows web developers to build mobile apps using familiar web technologies and their existing Rails expertise. This means you're not learning a completely new language or framework from scratch; instead, you're extending your current skillset into the mobile realm. The book's approach is practical, providing step-by-step instructions and real-world examples to make the transition smooth and efficient, solving the problem of high learning curves often associated with native mobile development for web developers.

How to use it?

Developers can use Rails Native Bridger by purchasing and reading the book. The book provides code examples, architectural patterns, and best practices that can be directly applied to their Rails projects. For instance, a Rails developer looking to create a companion mobile app for their existing web application would follow the book's guidance to set up a Hotwire Native project, integrate it with their Rails backend, and implement common mobile UI elements like navigation, modals, and native tab bars. The book also covers essential mobile deployment steps such as sending push notifications, distributing beta builds through TestFlight, and publishing to the Google Play Store, making it an end-to-end guide for Rails developers venturing into mobile.

Product Core Function

· Building first Hotwire Native apps on iOS & Android: Enables Rails developers to quickly get started with mobile development by providing the foundational knowledge to create basic mobile applications that integrate seamlessly with their Rails backend.

· Adding navigation, modals, and native tab bars: Offers solutions for implementing standard mobile user interface patterns, allowing developers to create intuitive and engaging user experiences without needing to learn native Swift/Kotlin UI paradigms.

· Mixing in native screens and components: Provides a method to incorporate specific native functionalities or UI elements when a pure web-based approach isn't sufficient, offering flexibility and power to enhance the mobile app's capabilities.

· Sending and routing push notifications: Explains how to integrate push notification services, enabling developers to engage their mobile users with timely updates and alerts, a crucial aspect of modern mobile application engagement.

· Shipping to physical devices via TestFlight and the Play Store: Guides developers through preparing and distributing their applications, from TestFlight beta builds on iOS to Google Play Store releases on Android, demystifying the often complex submission process and making their apps accessible to end-users.
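As an example of the navigation and modal rules covered in the list above: Hotwire Native apps load a JSON "path configuration" whose ordered rules map URL path patterns to presentation properties such as `"context": "modal"`. The sketch below builds such a document in Python and emulates how a client might resolve it for a given path. The rule shape follows Hotwire Native's path-configuration format, but the resolver (merge every matching rule, with later rules overriding earlier ones) is an emulation under that assumption, not code from the book.

```python
import json
import re

# Ordered rules: each maps URL path regexes to presentation properties.
PATH_CONFIGURATION = {
    "settings": {},
    "rules": [
        {"patterns": [".*"],
         "properties": {"context": "default", "pull_to_refresh_enabled": True}},
        {"patterns": ["/new$", "/edit$"],
         "properties": {"context": "modal", "pull_to_refresh_enabled": False}},
    ],
}


def properties_for(path: str, config: dict) -> dict:
    """Merge properties of every rule whose pattern matches; later rules win."""
    merged: dict = {}
    for rule in config["rules"]:
        if any(re.search(pattern, path) for pattern in rule["patterns"]):
            merged.update(rule["properties"])
    return merged


# The Rails app would serve this JSON so the native shell can fetch it.
payload = json.dumps(PATH_CONFIGURATION)
print(properties_for("/products/new", PATH_CONFIGURATION))
```

Serving the configuration from the Rails app means presentation rules can change without shipping a new app build.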

Product Usage Case

· A Rails developer wants to create a mobile app for their existing e-commerce website. Using the book, they can apply their Rails knowledge to wrap the site's existing server-rendered pages in a Hotwire Native app, reuse their Rails backend for product data and user management, and deliver features like a product catalog, shopping cart, and checkout process within a native app experience.

· A Rails startup needs a quick way to get a mobile presence for their service. Instead of hiring separate iOS and Android developers, they can use this book to empower their existing Rails team to build a functional mobile application, significantly reducing development time and cost.

· A Rails developer is building an internal tool that would benefit from a mobile interface for field staff. The book provides the blueprint for creating a simple, native-like application that can be easily distributed internally, allowing staff to access and update data on the go.

9

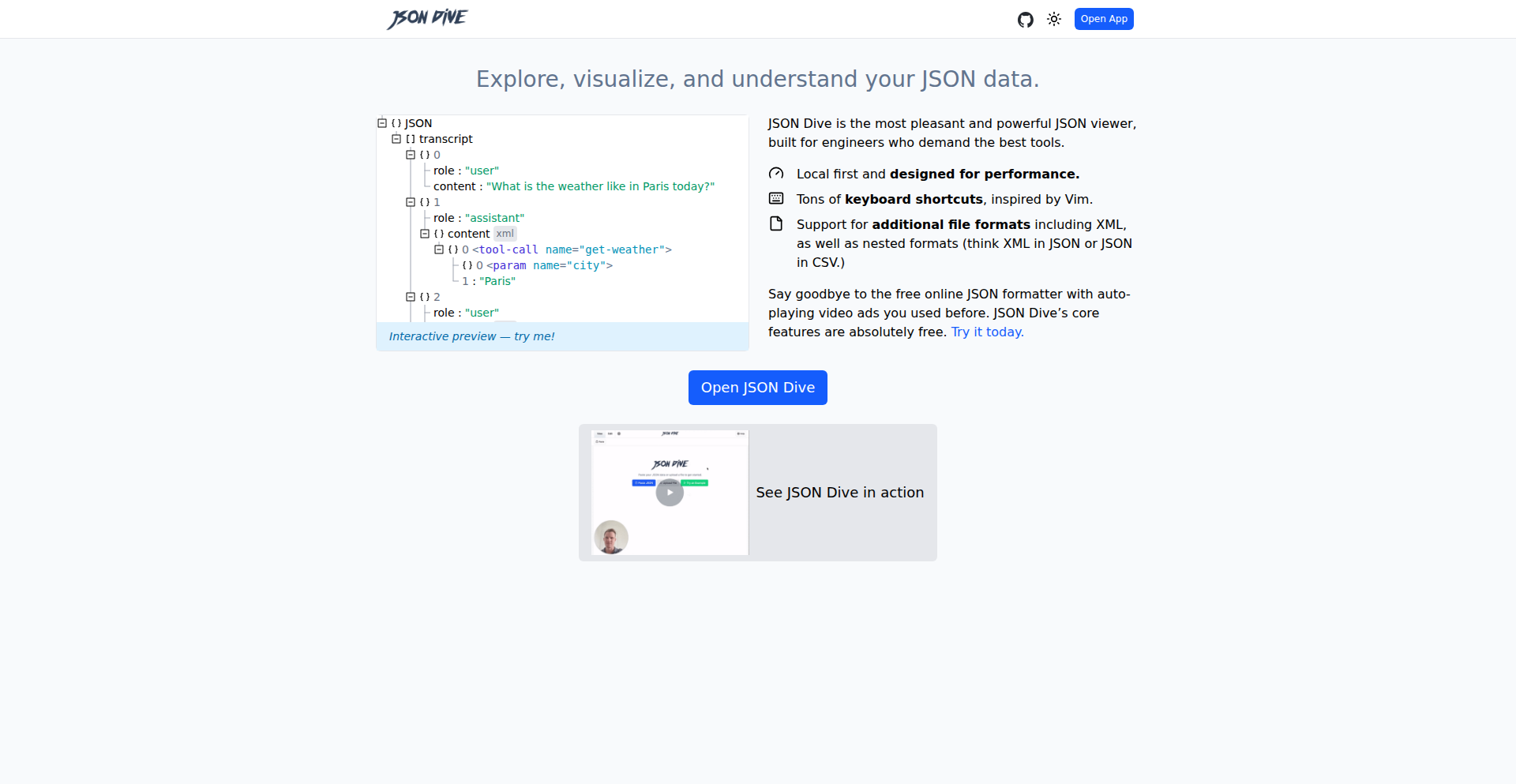

JSON Dive

Author

wcauchois

Description

JSON Dive is a locally-run, ad-free web application designed to help developers understand and interact with JSON data. It innovates by offering a superior user experience for JSON exploration, featuring Vim-like keyboard navigation, dark mode, intelligent previews for timestamps and images, and support for complex nested data structures like XML within JSON. Its core value lies in providing a fast, reliable, and privacy-conscious tool for developers dealing with large or intricate JSON datasets, solving the common frustration of cluttered, ad-filled online viewers.

Popularity

Points 4

Comments 0

What is this product?

JSON Dive is a web-based JSON viewer and explorer built with a focus on developer productivity and a clean, efficient user interface. Its technical innovation centers on a rich, interactive frontend built using React, enabling features like Vim-style keyboard shortcuts for seamless navigation through nested JSON objects and arrays. This approach significantly speeds up data analysis compared to manual scrolling or basic text editors. It also incorporates intelligent rendering for common data types, such as automatically displaying timestamps in a human-readable format or previewing image URLs directly, further enhancing understanding. Crucially, it's designed to be 'local-first,' meaning all data processing happens within your browser, ensuring your sensitive JSON data never leaves your machine, a significant improvement over many online services that might collect or display user data.
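JSON Dive itself is a React app whose source is not shown here; the Python sketch below only illustrates the underlying idea of keyboard-driven tree navigation: flatten the document into visible rows, skip collapsed subtrees, and move a cursor with Vim-style keys (`j`/`k` to move, `h` to collapse, `l` to expand). The `visible_rows`, `summarize`, and `Navigator` names are hypothetical.

```python
import json


def summarize(value) -> str:
    """Short label for a node: a size hint for containers, JSON text for scalars."""
    if isinstance(value, dict):
        return f"{{{len(value)} keys}}"
    if isinstance(value, list):
        return f"[{len(value)} items]"
    return json.dumps(value)


def visible_rows(value, collapsed: set, path: tuple = (), depth: int = 0) -> list:
    """Flatten a JSON value into (path, depth, label) rows, skipping collapsed subtrees."""
    if isinstance(value, dict):
        children = list(value.items())
    elif isinstance(value, list):
        children = list(enumerate(value))
    else:
        return []
    rows = []
    for key, child in children:
        child_path = path + (key,)
        rows.append((child_path, depth, f"{key}: {summarize(child)}"))
        if isinstance(child, (dict, list)) and child_path not in collapsed:
            rows.extend(visible_rows(child, collapsed, child_path, depth + 1))
    return rows


class Navigator:
    """Minimal Vim-style cursor: j/k move, h collapses, l expands."""

    def __init__(self, document):
        self.document = document
        self.collapsed: set = set()
        self.cursor = 0

    def rows(self) -> list:
        return visible_rows(self.document, self.collapsed)

    def press(self, key: str) -> None:
        rows = self.rows()
        if not rows:
            return
        path = rows[self.cursor][0]
        if key == "j":
            self.cursor = min(self.cursor + 1, len(rows) - 1)
        elif key == "k":
            self.cursor = max(self.cursor - 1, 0)
        elif key == "h":
            self.collapsed.add(path)
        elif key == "l":
            self.collapsed.discard(path)
```

Recomputing the visible rows after every key press keeps the sketch short; a viewer built for very large files would cache the flattened rows and update only the affected subtree.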

How to use it?

Developers can use JSON Dive by simply pasting their JSON data directly into the application's interface or by loading local JSON files. Its intuitive design allows for immediate exploration. For integration into other applications, the project is offered as a reusable React component. This means developers can embed JSON Dive's powerful viewing capabilities directly into their own dashboards, internal tools, or applications that deal with JSON data, providing a polished and efficient data inspection experience for their users without needing to send data to external services. For example, a developer building an API dashboard could integrate JSON Dive to display API responses in a user-friendly and interactive manner.

Product Core Function

· Interactive JSON Tree View: Enables developers to easily expand and collapse nested JSON objects and arrays, providing a clear visual hierarchy of the data. This makes complex data structures much easier to parse and understand.

· Vim Keyboard Navigation: Offers efficient, keyboard-driven navigation through the JSON structure, mirroring the highly productive workflow of Vim users. This drastically reduces the need for mouse interaction and speeds up data exploration.

· Timestamp and Image Previews: Automatically detects and renders timestamps into human-readable formats and displays image previews for valid image URLs within the JSON. This saves developers from having to manually interpret or open links, streamlining data analysis.

· Large File Handling: Optimized to perform well even with very large JSON files, preventing common performance issues or crashes that plague simpler JSON viewers. This is critical for developers working with extensive log data or large API responses.

· Local-First Operation: Processes all JSON data directly within the user's browser, so the data is never sent to a server. This is invaluable for developers handling sensitive information who cannot risk data being transmitted to or stored by a third party.

· Embedded Format Support: Capable of parsing and displaying JSON values that contain other formats, such as XML, making it useful for developers working with mixed data structures, particularly in contexts like LLM tool calls.
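The timestamp and image previews rely on heuristics along the lines of the sketch below. The plausibility window for epoch values, the millisecond cutoff, and the extension list are assumptions chosen for illustration, not JSON Dive's actual rules.

```python
from datetime import datetime, timezone
from urllib.parse import urlparse

IMAGE_EXTENSIONS = (".png", ".jpg", ".jpeg", ".gif", ".webp", ".svg")

# Plausible epoch-seconds window: 2001-09-09 to 2286-11-20 (ten-digit values).
MIN_SECONDS, MAX_SECONDS = 1_000_000_000, 9_999_999_999


def preview(value):
    """Return a human-readable hint for a JSON scalar, or None if nothing applies."""
    if isinstance(value, bool):
        return None  # bool subclasses int; never treat true/false as a timestamp
    if isinstance(value, (int, float)):
        # Values too large for seconds are assumed to be milliseconds.
        seconds = value / 1000 if value > MAX_SECONDS else value
        if MIN_SECONDS <= seconds <= MAX_SECONDS:
            return "time: " + datetime.fromtimestamp(seconds, tz=timezone.utc).isoformat()
        return None
    if isinstance(value, str):
        parsed = urlparse(value)
        if parsed.scheme in ("http", "https") and parsed.path.lower().endswith(IMAGE_EXTENSIONS):
            return "image: " + value
    return None


print(preview(1_700_000_000))  # time: 2023-11-14T22:13:20+00:00
```

Any heuristic like this yields false positives (a numeric ID can look like a timestamp), which is a good reason to show the hint alongside the raw value rather than replacing it.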

Product Usage Case

· Analyzing large log files: A developer working with extensive application logs stored in JSON format can paste the logs into JSON Dive to quickly identify errors, trace user activity, or find specific events by efficiently navigating the structured data using keyboard shortcuts.

· Debugging API responses: When an API returns a complex JSON payload, a developer can use JSON Dive to inspect the response structure, view embedded images or timestamps, and easily navigate through nested objects to pinpoint the source of an issue, without leaving their development environment.

· Integrating into a custom dashboard: A team building an internal tool that displays data from various sources can embed the JSON Dive React component to provide their users with a powerful, interactive way to explore and understand the JSON data directly within the dashboard.

· Working with LLM tool output: Developers using large language models that output structured data, potentially including nested JSON with embedded XML or other formats, can use JSON Dive to clearly visualize and understand this complex output.

· Privacy-sensitive data exploration: For developers who need to work with JSON data containing personal or confidential information, JSON Dive's local-first approach ensures that sensitive data is never uploaded to a remote server, maintaining compliance and security.

10

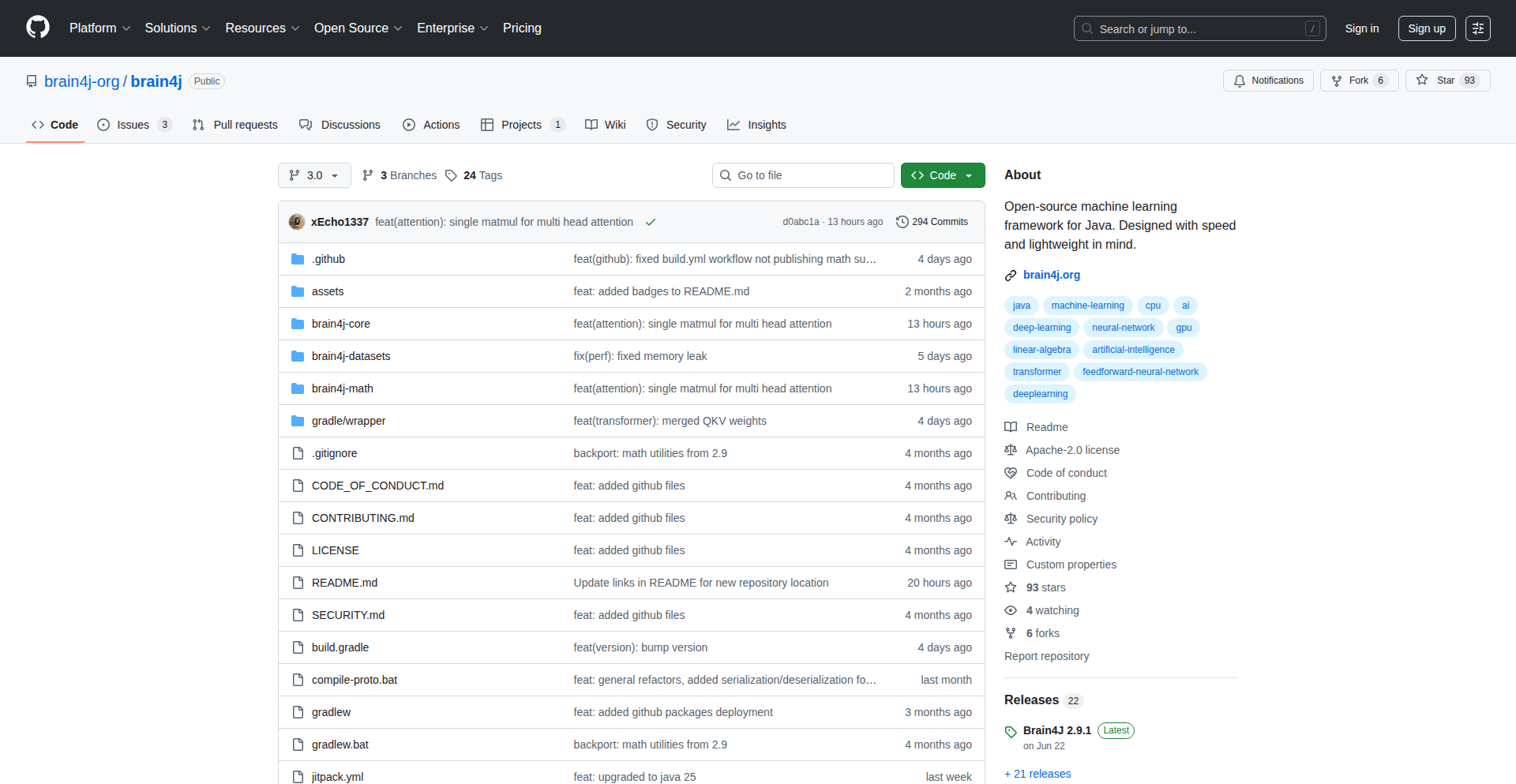

Brain4J GPU-Accelerated Java ML

Author

adversing

Description

Brain4J is a lean and speedy machine learning framework for Java developers, now enhanced with GPU acceleration. It tackles the common challenge of slow ML model training and inference in Java by leveraging the parallel processing power of graphics cards, making complex AI tasks more accessible and efficient for Java ecosystems.

Popularity

Points 4

Comments 0

What is this product?

Brain4J is a Java-based machine learning framework designed for performance and ease of use. Its key innovation lies in its optional GPU support. Traditionally, Java's strengths haven't been in the high-performance numerical computation that ML needs. Brain4J bridges this gap by allowing Java applications to offload intensive computations, like model training and prediction, to the GPU. This is achieved through integration with GPU computing libraries, enabling significantly faster processing than CPU-only solutions. The "lightweight" aspect means it's designed to be easy to integrate without adding excessive overhead to your Java projects. So, for you, this means complex ML tasks in your Java applications can run significantly faster on supported GPUs, opening up possibilities for real-time AI features.

How to use it?

Developers can integrate Brain4J into their existing Java projects by adding the library as a dependency. For GPU acceleration, specific hardware and driver configurations are required. The framework provides Java APIs to define ML models, load datasets, train models, and perform inference. You can use it to build custom ML solutions or integrate pre-trained models into your applications. For example, imagine you have a Java backend processing images and need to classify them in real-time. Instead of relying on a separate Python service, you could use Brain4J to perform this classification directly within your Java application, dramatically reducing latency. So, this means you can build and deploy sophisticated AI models directly within your familiar Java development environment, with the added boost of GPU speed.
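Brain4J's Java API isn't shown in the submission, so rather than guess at it, here is a plain-Python illustration of the kind of inference workload such a framework moves onto the GPU: a dense layer's forward pass (a matrix-vector product plus bias) followed by softmax. Each output neuron is computed independently of the others, which is what makes this work parallelize well on graphics hardware. All function names are hypothetical.

```python
import math


def dense_forward(weights, bias, inputs):
    """Compute W·x + b; every output row is independent, so a GPU can compute them in parallel."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, bias)]


def softmax(logits):
    """Numerically stable softmax: subtract the max before exponentiating."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(weights, bias, inputs, labels):
    """Return the most probable label and its probability."""
    probs = softmax(dense_forward(weights, bias, inputs))
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]


print(classify([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0], [0.1, 2.0], ["cat", "dog"]))
```

On a CPU this loops over rows one at a time; a GPU backend assigns rows (and whole batches of inputs) to many cores at once, which is where the speedup comes from.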

Product Core Function

· Lightweight ML Model Definition: Allows developers to define various machine learning models using intuitive Java code, making it easy to build custom AI solutions without complex external configurations. This provides value by simplifying the ML development process for Java programmers.

· GPU-Accelerated Computation: Leverages the parallel processing power of GPUs for significantly faster training and inference of ML models. This is crucial for real-time applications and handling large datasets, offering a substantial performance boost over CPU-only alternatives.

· Java Native Integration: Seamlessly integrates into existing Java applications, allowing developers to extend their Java projects with AI capabilities without needing to switch languages or complex inter-process communication. This adds value by keeping the development within the Java ecosystem.

· Fast Inference Engine: Optimized for rapid prediction of results from trained models, making it suitable for scenarios requiring low latency, such as fraud detection or recommendation systems. This provides value by enabling responsive AI-driven features in applications.

· Data Preprocessing Utilities: Includes built-in tools for common data manipulation tasks required for machine learning, streamlining the data preparation pipeline. This saves developers time and effort in getting their data ready for model training.

Product Usage Case

· Real-time Image Recognition in a Java Web Application: A developer building a web application with a Java backend can use Brain4J to perform real-time image classification directly on the server, powered by the GPU, without relying on external services. This solves the problem of high latency and complex integration for image-based AI features.

· Fraud Detection for a Financial Java Service: A financial institution can integrate Brain4J into their existing Java-based transaction processing system to perform rapid, GPU-accelerated fraud detection on incoming transactions. This addresses the need for high-throughput, low-latency AI for critical security applications.

· Natural Language Processing (NLP) Tasks in a Java Desktop Application: Developers creating desktop applications for tasks like sentiment analysis or text summarization in Java can utilize Brain4J's capabilities to process large amounts of text data quickly on a user's local machine, if it has a compatible GPU, enhancing user experience with intelligent features.

· Personalized Recommendation Engine for an E-commerce Platform: A Java-powered e-commerce platform can use Brain4J to build and deploy a recommendation engine that analyzes user behavior and product data in real-time, providing faster and more relevant product suggestions to customers. This solves the challenge of delivering dynamic and personalized user experiences.

11

CrashedAI Failures Library

Author

mathusan_97

Description

Crashed Out is an open-source library that collects and categorizes real-world failures encountered by AI agents. It serves as a learning resource for developers building AI, offering insights into unexpected behaviors and providing practical examples of how AI can break when interacting with the real world. This helps developers build more robust and reliable AI systems by learning from past mistakes.

Popularity

Points 3

Comments 1

What is this product?

Crashed Out is a curated collection of documented failures from AI agents in real-world applications. The technical insight lies in the systematic observation and classification of these failures, moving beyond theoretical limitations to practical, observable bugs. For instance, an AI agent designed to navigate might fail in a specific, unpredicted scenario due to an edge case in its pathfinding algorithm or an unexpected environmental factor. The library captures these specific instances, providing the context of the failure and, where possible, a root cause analysis. The innovation is in democratizing this hard-won knowledge, allowing the broader AI development community to benefit from insights that would otherwise be siloed within individual projects. So, what's in it for you? You get to learn from a growing catalog of documented AI missteps without having to experience them yourself, saving development time and preventing costly errors.

How to use it?

Developers can integrate this library into their AI development workflow in several ways. Firstly, it can be used as a reference during the design and testing phases of new AI agents. Before deploying, developers can cross-reference their agent's intended functionality against known failure patterns in the library. Secondly, it can be incorporated into AI agent testing frameworks as a dataset of 'stress test' scenarios. By attempting to trigger known failures, developers can rigorously evaluate their agent's resilience. Integration could involve searching the library for similar functionalities to the AI agent being built, or using the categorizations to design specific test cases. For example, if you're building a customer service chatbot, you might look for 'natural language understanding failures' to ensure your bot handles ambiguity gracefully. So, what's in it for you? You can proactively identify potential weaknesses in your AI and build in safeguards before your AI encounters real-world problems.

Product Core Function

· Categorized Failure Repository: A structured collection of AI agent failures, categorized by domain (e.g., navigation, perception, decision-making) and failure type (e.g., unexpected input, logical error, environmental interaction). This provides a searchable database of common pitfalls. So, what's in it for you? You can quickly find examples of how AI agents similar to yours have failed in specific situations.

· Real-World Contextualization: Each failure entry includes details about the AI agent's intended function, the environment in which the failure occurred, and the observed behavior. This provides crucial context for understanding the 'why' behind the failure. So, what's in it for you? You gain a deeper understanding of the complex interactions that can lead to AI errors.

· Root Cause Analysis Insights: Where available, the library offers preliminary or confirmed root cause analyses for the documented failures, pointing to specific algorithmic weaknesses or data limitations. So, what's in it for you? You get clues about what specific code or logic might be at fault in your own AI.

· Community Contribution Platform: The project is open-source, allowing developers to submit their own observed AI failures, contributing to the collective knowledge base. So, what's in it for you? You can share your hard-earned lessons and help the entire AI community improve.

· Failure Pattern Identification Tools: Future iterations may include tools to identify common patterns across failures, helping developers understand systemic issues in AI design. So, what's in it for you? You can learn about broader trends in AI unreliability and build more generally robust systems.
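A sketch of how a categorized failure repository could be modeled and queried, using the domains and failure types listed above. The `FailureRecord` fields and the `search` helper are hypothetical; the project's actual schema may differ.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FailureRecord:
    """One documented agent failure (hypothetical schema)."""
    title: str
    domain: str          # e.g. "navigation", "perception", "decision-making"
    failure_type: str    # e.g. "unexpected input", "logical error", "environmental interaction"
    context: str         # intended function and the environment where it failed
    root_cause: Optional[str] = None
    tags: set[str] = field(default_factory=set)


def search(records, domain=None, failure_type=None, tag=None):
    """Filter records by any combination of domain, failure type, and tag."""
    return [
        r for r in records
        if (domain is None or r.domain == domain)
        and (failure_type is None or r.failure_type == failure_type)
        and (tag is None or tag in r.tags)
    ]
```

Each matching record can then seed a test case: reproduce the recorded context against your own agent and check that it behaves differently.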

Product Usage Case

· A developer building an autonomous delivery robot consults the library and finds multiple instances of navigation agents failing in low-light conditions. This prompts them to invest more in robust sensor fusion and testing in varied lighting scenarios. So, what's in it for you? You avoid your robot getting stuck or crashing in the dark.

· A team developing an AI-powered content moderation system uses the library to identify common ways content filters can be bypassed or misinterpret benign content. This leads to the implementation of more sophisticated adversarial testing for their system. So, what's in it for you? Your content moderation system becomes more accurate and less prone to false positives or negatives.

· A researcher experimenting with AI agents for complex game-playing finds examples of agents exhibiting illogical strategic decisions under specific, rare circumstances. This inspires them to explore reinforcement learning techniques that better handle long-term planning and counter-intuitive moves. So, what's in it for you? Your AI game player becomes a more formidable opponent.

· A startup building a personalized recommendation engine encounters an issue where the AI overly personalizes, leading to a 'filter bubble' effect. By searching the library, they discover similar failures in other recommendation systems and adopt strategies to introduce serendipity and exploration into their algorithm. So, what's in it for you? Your users get more diverse and interesting recommendations, improving engagement.

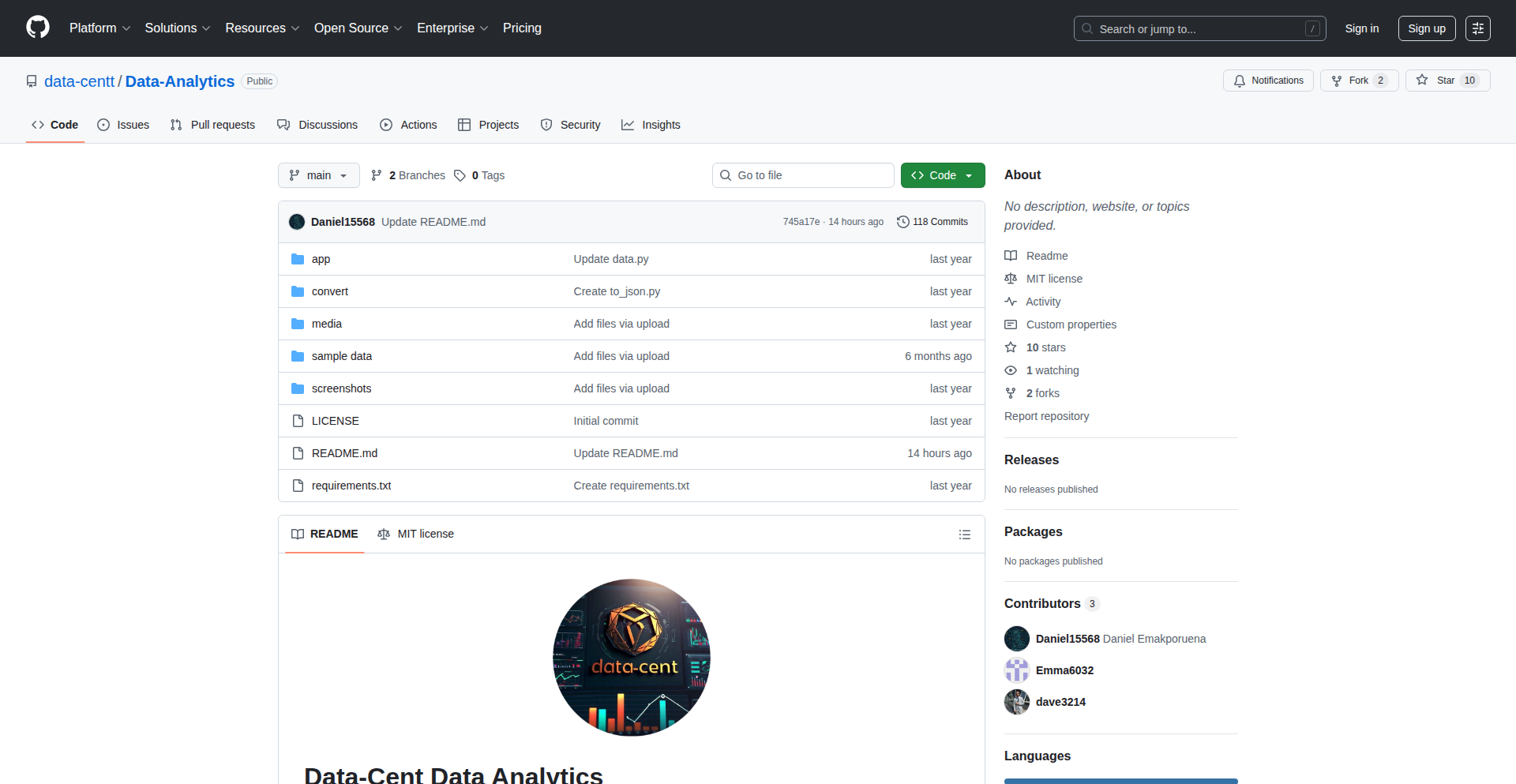

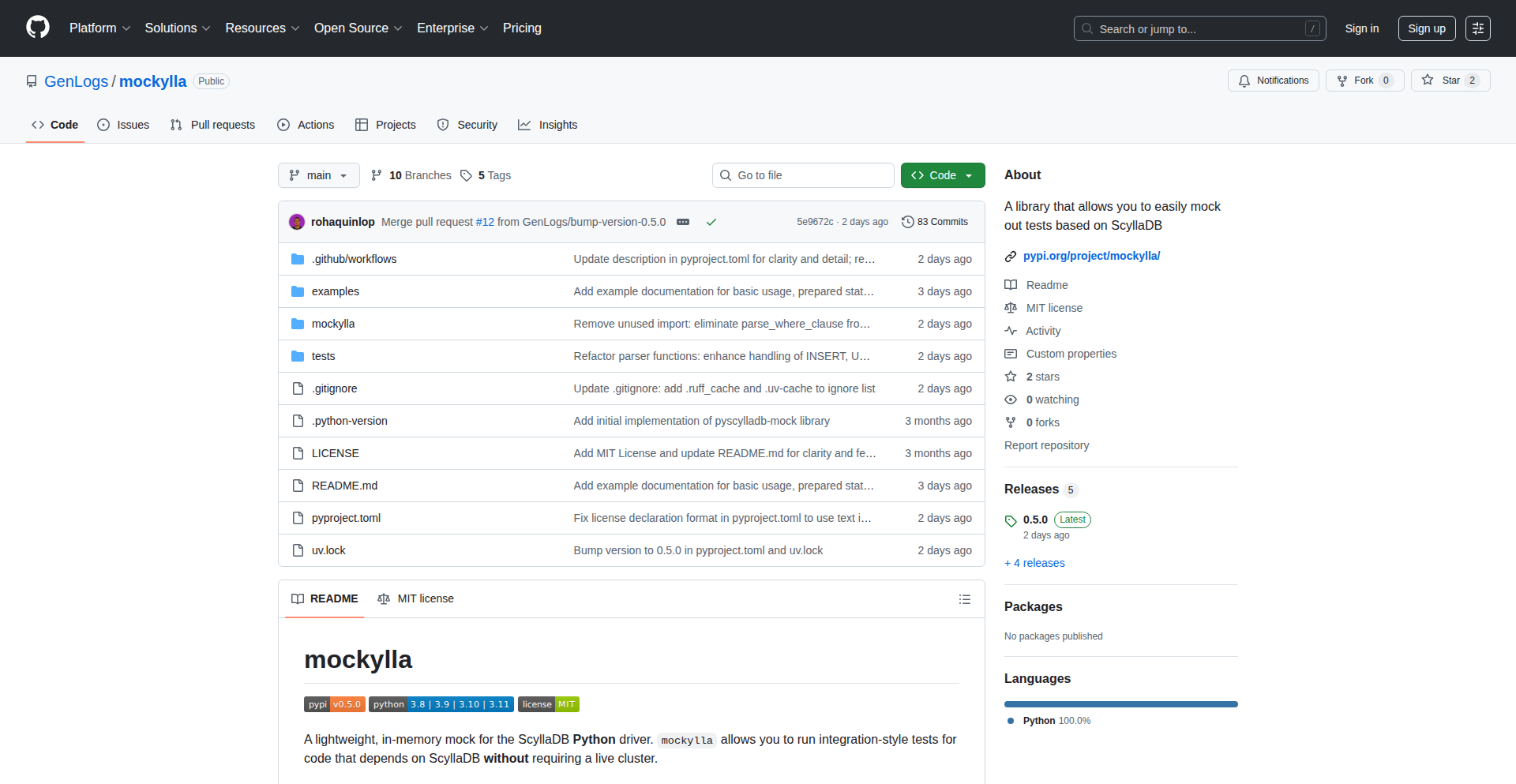

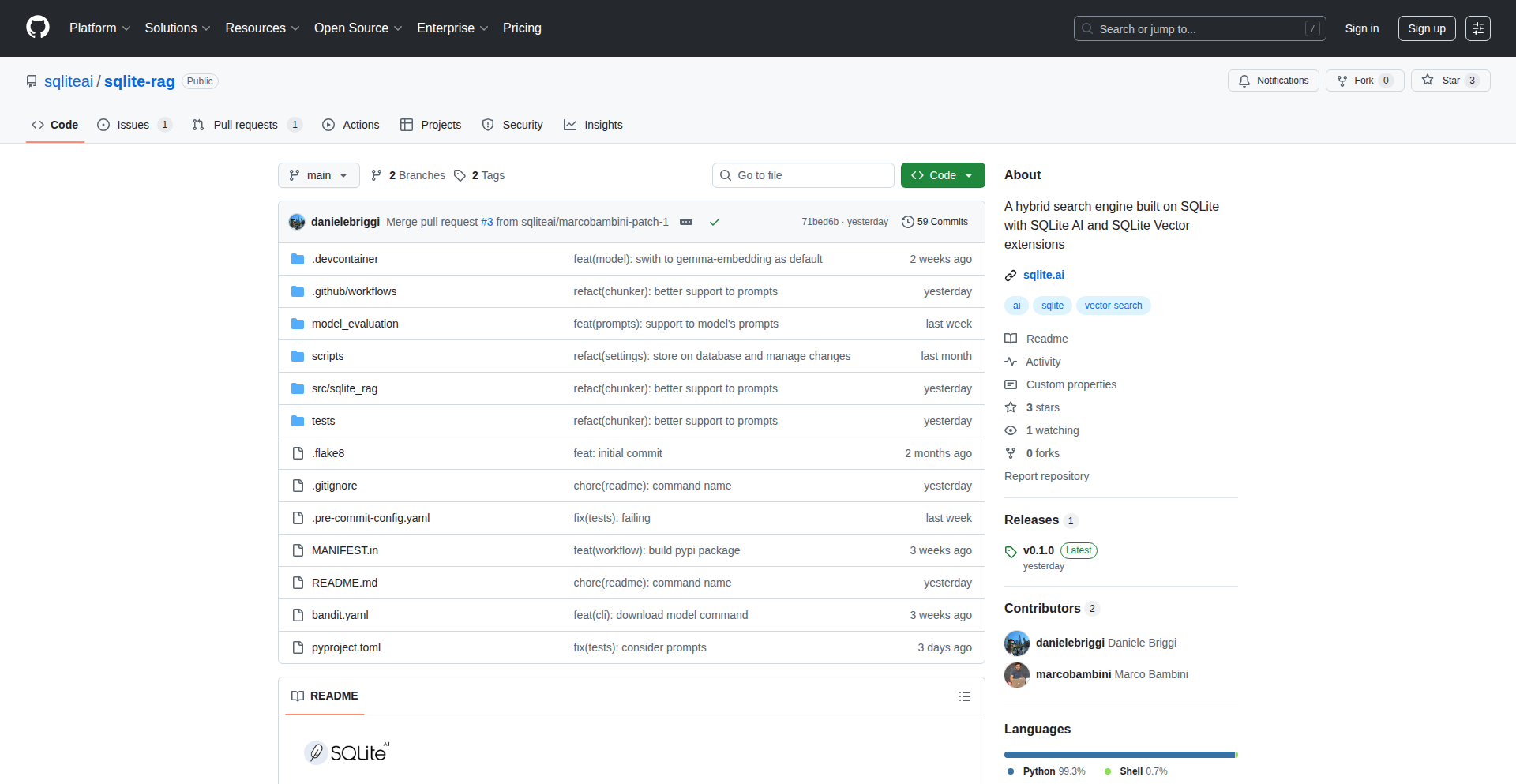

12

BrowserCSV Viz & Analyze

Author

Daniel15568

Description

An in-browser tool for interactive CSV visualization and analysis, leveraging client-side JavaScript to process and display large datasets without server-side overhead. It offers a direct and immediate way to explore data visually, making it accessible for developers and analysts alike.

Popularity

Points 3

Comments 1

What is this product?

This project is a web-based application that allows users to upload and interactively explore CSV (Comma Separated Values) files directly within their web browser. The core innovation lies in its client-side processing architecture. Instead of sending your data to a server for analysis, all the heavy lifting – parsing the CSV, generating visualizations (like charts and graphs), and performing interactive filtering or sorting – happens right in your browser using JavaScript. This means faster loading times, enhanced privacy as your data never leaves your machine, and no need for complex server setups. It's like having a powerful spreadsheet analysis tool that runs entirely on your computer, accessible through a web page.

How to use it?

Developers can use this project in several ways. For quick data exploration, they can simply navigate to the web application, upload their CSV file, and immediately start interacting with the data through various visualizations and filtering options. For integration into their own web applications, the project's codebase can be a valuable reference or even a pluggable component. Imagine building a dashboard for your users where they can upload their own data files and see instant, interactive insights without needing a backend data processing pipeline. It's designed for easy integration, allowing developers to embed this functionality into their existing workflows or build new data-driven features.

Product Core Function

· Interactive CSV Parsing: The ability to read and understand CSV files directly in the browser. This is valuable because it allows for immediate data loading and manipulation without relying on external servers, speeding up the data exploration process for any user dealing with CSV data.

· Client-Side Data Visualization: Generates various charts and graphs (e.g., bar charts, scatter plots) from the CSV data. This is valuable as it provides an intuitive, visual way to understand patterns and trends in data, making complex datasets easier to grasp for both technical and non-technical users, all happening instantly in their browser.

· In-Browser Data Filtering and Sorting: Enables users to dynamically filter and sort the data within the interface. This is valuable for narrowing down specific information and analyzing subsets of data without requiring page reloads or complex queries, offering a fluid and efficient data analysis experience.

· No Server-Side Dependency: All processing happens in the user's browser. This is a significant technical innovation that offers value by improving privacy (data stays local), reducing infrastructure costs (no backend servers needed for data processing), and increasing accessibility (works anywhere with a browser).

· Lightweight and Fast Performance: Optimized JavaScript for efficient data handling. This is valuable for users as it ensures a responsive and smooth experience, even with moderately large datasets, avoiding long wait times often associated with traditional data analysis tools.
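The tool does this work in JavaScript inside the browser; the Python sketch below illustrates the same parse, filter, sort, and aggregate pipeline on in-memory text. The function names are hypothetical, and `bar_chart_series` produces only the per-category totals that a chart library would then draw.

```python
import csv
import io
from collections import defaultdict


def load_rows(text: str) -> list[dict[str, str]]:
    """Parse CSV text (header row first) into dicts, entirely in memory."""
    return list(csv.DictReader(io.StringIO(text)))


def filter_and_sort(rows, column, predicate, sort_by, descending=False):
    """Keep rows whose `column` satisfies `predicate`, then sort numerically by `sort_by`."""
    kept = [r for r in rows if predicate(r[column])]
    return sorted(kept, key=lambda r: float(r[sort_by]), reverse=descending)


def bar_chart_series(rows, group_by, value_column):
    """Sum `value_column` per category: the data behind a simple bar chart."""
    totals = defaultdict(float)
    for r in rows:
        totals[r[group_by]] += float(r[value_column])
    return dict(totals)


text = "region,product,revenue\nEU,A,120\nUS,A,80\nEU,B,30\n"
print(bar_chart_series(load_rows(text), "region", "revenue"))
```

Because every step works on data already in memory, nothing has to be uploaded, which is the privacy property described above.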

Product Usage Case

· A data analyst needs to quickly explore a newly acquired dataset in CSV format for a client presentation. Instead of waiting for a server-side analysis tool to process the data, they upload the CSV directly into the browser application. They instantly see interactive charts and can filter the data to highlight key findings, enabling them to prepare insights on the fly.

· A web application developer is building a feature that allows users to upload their own configuration files in CSV format. They can integrate this browser-based visualization tool to provide users with an immediate preview and interactive validation of their uploaded data, ensuring data correctness before submission and enhancing user experience with instant feedback.

· A researcher is working with experimental data that needs to be kept private. By using this tool, they can upload and analyze sensitive CSV data directly on their local machine, ensuring that no confidential information is transmitted to any external servers, thus maintaining data security and compliance.

· An educator wants to demonstrate data analysis concepts to students without requiring them to install specialized software. They can use this web-based tool in a classroom setting, allowing students to upload sample CSV datasets and perform interactive explorations, making data literacy more accessible and hands-on.

13

BiteGenie AI Culinary Assistant

Author

maezeller

Description

BiteGenie is a free recipe app that leverages AI to transform restaurant menu photos into detailed, cookable recipes. It goes beyond simple image recognition by understanding culinary nuances, allowing for smart recipe modifications for dietary needs or fusion cuisine experimentation, and seamless recipe import from any website. This empowers users to recreate restaurant favorites at home and streamline their entire cooking process, from planning to grocery shopping, all without any subscription fees.

Popularity

Points 2

Comments 1

What is this product?

BiteGenie is an AI-powered culinary assistant for a common problem: wanting to recreate a restaurant dish at home. Its core feature is 'photo-to-recipe' AI. When you photograph a menu item, the AI analyzes the image with computer vision. Rather than just reading text, it is designed to recognize food concepts, cooking methods, and ingredient relationships. It combines this with broad culinary knowledge to generate a realistic, actionable recipe you can follow in your own kitchen. Think of it as a personal chef who can decipher a menu and suggest how to make the dish. It also offers 'smart recipe remixing', which adapts any existing recipe to be vegan or keto or to explore fusion flavors. Its 'universal recipe import' makes saving recipes from anywhere online simple.

How to use it?

Developers could integrate BiteGenie's AI capabilities into their own applications or services. For example, a restaurant review platform could let users upload photos of dishes they enjoyed and receive a recipe. A personal health app could use 'smart recipe remixing' to suggest diet-compliant versions of popular dishes. For individual users, the primary interface is the BiteGenie web app. You upload a photo of a menu item or import a recipe from a website, and the app generates a recipe that you can then save, organize, and modify. The app also helps with meal planning and automatically generates grocery lists from your selected recipes, so you can discover, adapt, and prepare meals with less hassle.
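One common technique for website import is to read the schema.org `Recipe` data that many recipe sites embed in `<script type="application/ld+json">` tags. The post does not confirm that BiteGenie uses this method. The sketch below relies only on the Python standard library. `extract_recipe` and the fields it returns are illustrative assumptions.

```python
import json
from html.parser import HTMLParser
from typing import List, Optional


class _JsonLdCollector(HTMLParser):
    """Collects the text of every <script type="application/ld+json"> element."""

    def __init__(self) -> None:
        super().__init__()
        self._in_jsonld = False
        self.blocks: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_jsonld = False

    def handle_data(self, data):
        if self._in_jsonld:
            self.blocks[-1] += data  # script text may arrive in several chunks


def _find_recipe(node) -> Optional[dict]:
    """Search lists, objects, and "@graph" arrays for an object typed as "Recipe"."""
    if isinstance(node, list):
        for item in node:
            found = _find_recipe(item)
            if found:
                return found
    elif isinstance(node, dict):
        types = node.get("@type", [])
        if types == "Recipe" or (isinstance(types, list) and "Recipe" in types):
            return node
        # Many sites wrap several entities in an "@graph" array.
        return _find_recipe(node.get("@graph", []))
    return None


def extract_recipe(html: str) -> Optional[dict]:
    """Return the name and ingredient lines of the first embedded Recipe, if any."""
    parser = _JsonLdCollector()
    parser.feed(html)
    for block in parser.blocks:
        try:
            data = json.loads(block)
        except json.JSONDecodeError:
            continue  # skip malformed blocks rather than failing the whole import
        recipe = _find_recipe(data)
        if recipe:
            return {
                "name": recipe.get("name"),
                "ingredients": recipe.get("recipeIngredient", []),
            }
    return None
```

Pages without structured data would need a fallback, such as the kind of AI extraction the app describes.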

Product Core Function

· Photo-to-Recipe AI: This function uses computer vision to analyze menu item photos and generate cookable recipes. Its value is in enabling users to recreate dishes they loved at restaurants, turning inspiration into reality and removing the guesswork of 'how to make this'.

· Smart Recipe Remixing: This feature allows users to transform existing recipes to fit dietary needs (e.g., vegan, keto) or explore fusion cuisine. Its value lies in making recipes more accessible and personalized, catering to diverse dietary requirements and encouraging culinary creativity.

· Universal Recipe Import: This function enables one-click saving of recipes from any website, along with powerful search and organization capabilities. Its value is in simplifying recipe collection and management, allowing users to curate a personal recipe library without manual entry or losing track of online finds.

· Meal Planning & Grocery Lists: This function provides a complete culinary workflow management system, from planning meals to generating grocery lists. Its value is in streamlining the cooking process, saving users time and effort by automating meal organization and shopping list creation.
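The grocery-list step essentially merges ingredients across the selected recipes. The following minimal sketch assumes the recipes have already been parsed into structured `Ingredient` records. That data model is hypothetical, not BiteGenie's own.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Ingredient:
    name: str
    quantity: float
    unit: str


def build_grocery_list(recipes: Dict[str, List[Ingredient]]) -> List[Tuple[str, float, str]]:
    """Merge ingredients across recipes, summing quantities that share a name and unit."""
    totals: Dict[Tuple[str, str], float] = defaultdict(float)
    for ingredients in recipes.values():
        for item in ingredients:
            key = (item.name.strip().lower(), item.unit)
            totals[key] += item.quantity
    # Sort by name so the list is stable and easy to scan in the store.
    return sorted((name, qty, unit) for (name, unit), qty in totals.items())
```

Quantities are summed only when both name and unit match. Converting between units such as grams and kilograms would require an additional lookup table.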

Product Usage Case

· A user dines at a new Italian restaurant, sees a unique pasta dish on the menu, takes a picture of it, and uses BiteGenie to get a detailed recipe to recreate it at home. This solves the problem of enjoying a dish but not knowing how to prepare it.

· A user is following a vegan diet and finds a delicious-looking chicken recipe online. They use BiteGenie's 'smart recipe remixing' to convert it into a vegan-friendly version. This addresses the challenge of adapting recipes for specific dietary restrictions.

· A food blogger discovers a hidden-gem recipe on a small culinary website. Instead of copying it manually, they use BiteGenie's 'universal recipe import' to save it instantly to their collection. This simplifies recipe collection and keeps the find from getting lost.

· A busy parent plans their week's meals using BiteGenie, selecting recipes for breakfast, lunch, and dinner. The app then generates a consolidated grocery list, saving them the time and mental effort of compiling it themselves. This addresses the need for efficient meal preparation and shopping.

14

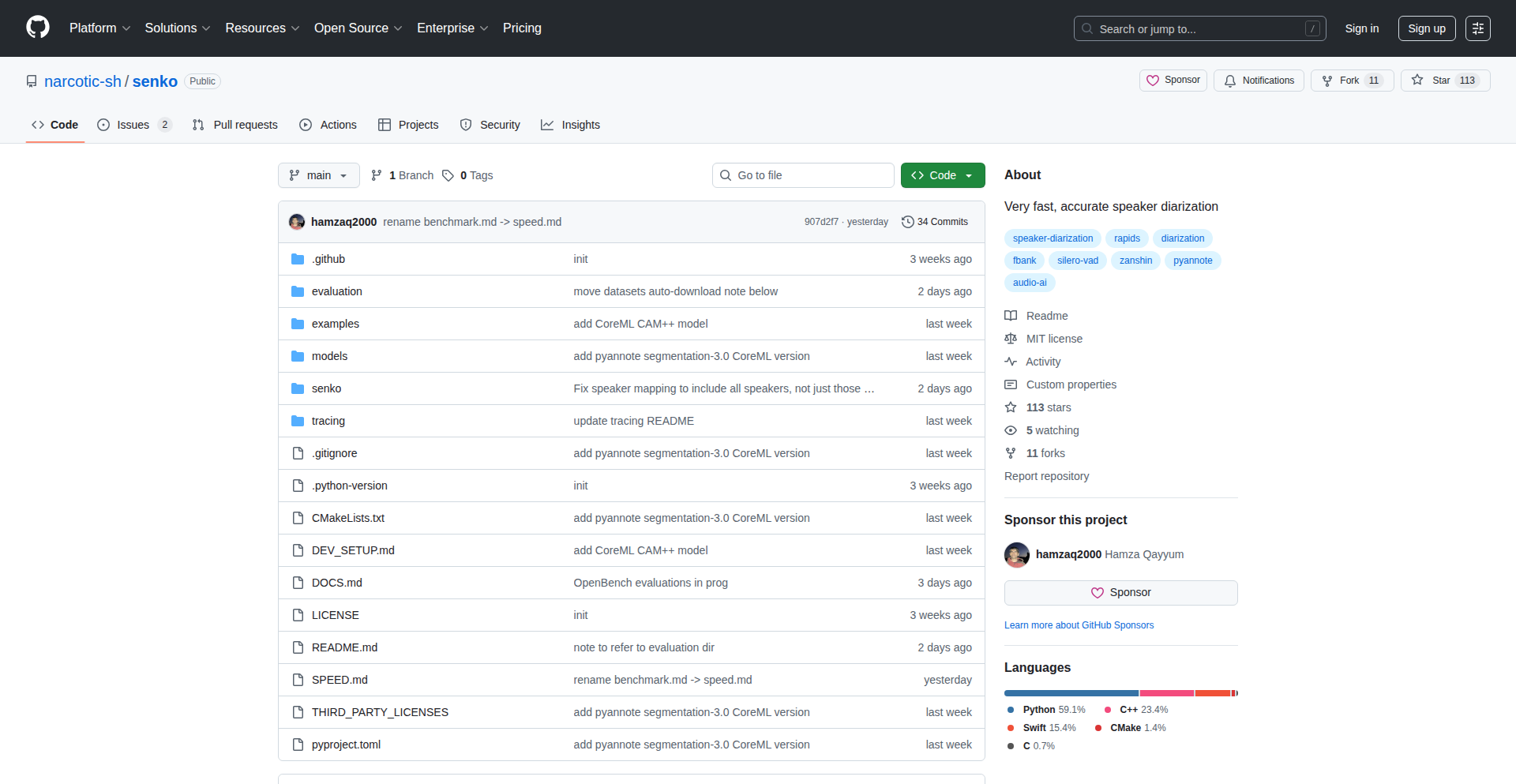

Apple Silicon Native Audio Diarization Engine

Author

hamza_q_

Description

This project is a fast audio diarization system optimized for Apple Silicon (M1/M2/M3 chips). It tackles the challenge of accurately determining which speaker is talking at each point in an audio recording and delivers near real-time performance. The key idea is to exploit Apple Silicon's specialized processing hardware to accelerate audio analysis that is usually computationally expensive and slow. For developers, this means faster speaker-attributed transcription, better meeting summarization, and more efficient audio content analysis.

Popularity

Points 3

Comments 0

What is this product?

This is an experimental audio processing engine that determines who is speaking when in an audio recording. It is built for speed and takes full advantage of the specialized hardware in modern Apple computers (such as M1, M2, and M3 chips). Traditional diarization involves heavy computation, which makes it slow. This project rethinks the algorithms and implementation to run much more efficiently on Apple's architecture. As a result, speaker identification can keep pace with the audio, so you can get actionable insights from recordings much sooner.

How to use it?

Developers can integrate this engine into their applications that process audio. This could involve building tools for automated meeting transcription, analyzing customer service calls, or creating content moderation systems for podcasts and videos. The technical implementation would likely involve calling specific libraries or frameworks that expose the diarization functionality. The speed advantage means that developers can process large volumes of audio data without significant delays, leading to a better user experience and enabling new real-time audio analysis features.

Product Core Function

· Real-time speaker segmentation: Identifies the start and end times of each speaker's contribution to an audio file, providing a timeline of who spoke when. This is valuable for creating accurate transcriptions and understanding conversation flow.

· Speaker label assignment: Assigns a unique label (e.g., Speaker A, Speaker B) to each identified segment, allowing for easy differentiation and analysis of individual contributions. This helps in attributing statements and analyzing individual speaking patterns.

· Apple Silicon optimization: Leverages the specialized processing units on Apple Silicon chips (such as the Neural Engine and GPU) to achieve significantly faster processing than generic implementations. This means developers can process more audio in less time, which reduces costs and improves application performance.

· Low-latency processing: Designed for minimal delay between audio input and diarization output, enabling applications that require immediate speaker identification. This is crucial for live transcription services or interactive audio analysis tools.
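Segmentation and label assignment are often produced together. Many diarization pipelines emit a speaker index for each short audio frame and then collapse those frames into timed segments. The post does not document this project's actual output format. The frame-label input, the `Segment` type, and the `frames_to_segments` helper below are therefore illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class Segment:
    speaker: str
    start: float  # seconds
    end: float    # seconds


def frames_to_segments(labels: Sequence[Optional[int]], frame_seconds: float) -> List[Segment]:
    """Collapse per-frame speaker indices (0, 1, ...) into contiguous labeled segments.

    `None` marks a non-speech frame, which closes the current segment.
    """
    segments: List[Segment] = []
    current: Optional[int] = None
    start = 0
    # A trailing None sentinel flushes the final segment.
    for i, label in enumerate(list(labels) + [None]):
        if label != current:
            if current is not None:
                name = f"Speaker {chr(ord('A') + current)}"
                segments.append(Segment(name, start * frame_seconds, i * frame_seconds))
            current, start = label, i
    return segments
```

In a streaming setting, the same run-length logic could emit each segment as soon as the speaker changes, rather than waiting for the whole file.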

Product Usage Case

· Automated meeting transcription tools: A developer could use this engine to quickly process meeting recordings, generating a transcript where each speaker's dialogue is clearly demarcated. This saves time spent manually transcribing and identifying speakers.

· Customer service call analysis platforms: Businesses can deploy this engine to analyze large volumes of customer support calls, automatically identifying which agent and customer are speaking. This helps in training, quality assurance, and identifying customer pain points more efficiently.

· Video content summarization services: For video platforms, this engine can process audio tracks to identify speakers and their dialogue, enabling the creation of speaker-aware summaries or captions. This improves accessibility and content discoverability.
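For the transcription use cases, diarization output is typically merged with word timestamps from a separate speech recognizer. The minimal sketch below assumes that both arrive as `(label, start, end)` tuples in seconds, a hypothetical format. It assigns each word to the speaker turn it overlaps most.

```python
from typing import List, Optional, Sequence, Tuple

Word = Tuple[str, float, float]  # (text, start_s, end_s) from any speech recognizer
Turn = Tuple[str, float, float]  # (speaker, start_s, end_s) from diarization


def overlap(a_start: float, a_end: float, b_start: float, b_end: float) -> float:
    """Length in seconds of the intersection of two time intervals (0 if disjoint)."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def attribute_words(words: Sequence[Word], turns: Sequence[Turn]) -> List[str]:
    """Group words into speaker-labeled lines by maximum time overlap."""
    lines: List[str] = []
    last_speaker: Optional[str] = None
    for text, w_start, w_end in words:
        best_speaker, best_overlap = "Unknown", 0.0
        for speaker, t_start, t_end in turns:
            amount = overlap(w_start, w_end, t_start, t_end)
            if amount > best_overlap:
                best_speaker, best_overlap = speaker, amount
        # Consecutive words from the same speaker are joined into one line.
        if best_speaker == last_speaker:
            lines[-1] += " " + text
        else:
            lines.append(f"{best_speaker}: {text}")
            last_speaker = best_speaker
    return lines
```

Words that fall outside every diarized turn are labeled "Unknown" instead of being forced onto the nearest speaker.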

15

Lingo: The On-Device Linguistic Database

Author

peerlesscasual

Description

Lingo is a high-performance linguistic database written in Rust, designed for on-device execution. It challenges the 'bigger is better' paradigm of large transformer models by offering nanosecond-level search performance. This means you can quickly find information based on meaning, not just keywords, directly on your device without needing a powerful server.

Popularity

Points 3

Comments 0

What is this product?

Lingo is a database that stores and searches information by meaning rather than by exact word matches. Think of it as a search engine that understands context. Its core innovation lies in efficient data structures and algorithms that retrieve semantically similar information extremely quickly. Text is represented as numerical vectors, and optimized techniques find vectors that lie close together, which corresponds to similar meaning. This design lets it run directly on your device without relying on massive cloud-based AI models, making it well suited to applications where privacy and speed are critical.
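The idea of finding "vectors that are close to each other" can be sketched with cosine similarity. The three-dimensional vectors in the usage example are hand-made toys, not real embeddings. The brute-force `top_k` scan is also a simple stand-in, because the post does not describe Lingo's own data structures.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: Sequence[float], index: Dict[str, Sequence[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Brute-force nearest neighbours by cosine similarity, best match first."""
    scored = [(doc_id, cosine(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    # Toy dimensions: (environment, cooking, finance).
    notes = {
        "compost bin tips": [0.9, 0.3, 0.0],
        "solar panel budget": [0.7, 0.0, 0.6],
        "pasta recipe": [0.0, 1.0, 0.1],
    }
    query = [1.0, 0.1, 0.1]  # stands in for "ideas about sustainable living"
    print(top_k(query, notes))
```

A linear scan is fine for a few thousand notes. Reaching the speeds the project claims would require a dedicated index structure instead.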

How to use it?

Developers can integrate Lingo into their applications to enable advanced search and analysis capabilities. For example, imagine a note-taking app where you can search for 'ideas about sustainable living' and it finds all your notes related to that concept, even if the exact phrase isn't used. It can be used to build features like intelligent chatbots, personalized recommendation engines, or tools for analyzing large volumes of text data directly on a user's phone or computer. The open-source nature of Lingo allows developers to incorporate its core functionalities into their own projects.

Product Core Function

· Semantic Search: Allows querying data based on meaning and context, not just keywords. This is valuable for finding relevant information in applications like personal knowledge management or customer support tools.

· On-Device Execution: Operates directly on the user's device, enhancing privacy and reducing reliance on external servers. This is crucial for applications handling sensitive data or requiring offline functionality.

· High-Performance Indexing: Utilizes optimized data structures for rapid storage and retrieval of linguistic data. This translates to faster search results, improving user experience in any application that involves searching text.

· Vector Embeddings: Represents text as numerical vectors that capture semantic relationships. This is the technical foundation for understanding meaning, enabling more accurate and nuanced search results.

· Rust Implementation: Built with Rust, a programming language known for its performance and memory safety. This helps keep the database efficient and reliable, which makes applications built on it more stable for end users.

Product Usage Case

· Offline Document Search: Integrate Lingo into a mobile app for searching personal documents, notes, or emails without an internet connection, providing quick access to information regardless of connectivity.

· Intelligent Chatbot Backend: Use Lingo to power a chatbot that can understand user queries in natural language and retrieve relevant answers from a knowledge base, improving user interaction and reducing the need for complex server-side natural language processing.

· Personalized Content Recommendations: Build a recommendation engine for articles, products, or media based on a user's past interactions and expressed interests, providing more tailored and engaging experiences.

· Code Snippet Search: Develop a tool for developers to search for code snippets based on functionality or problem description, rather than exact function names, speeding up development and problem-solving.

16

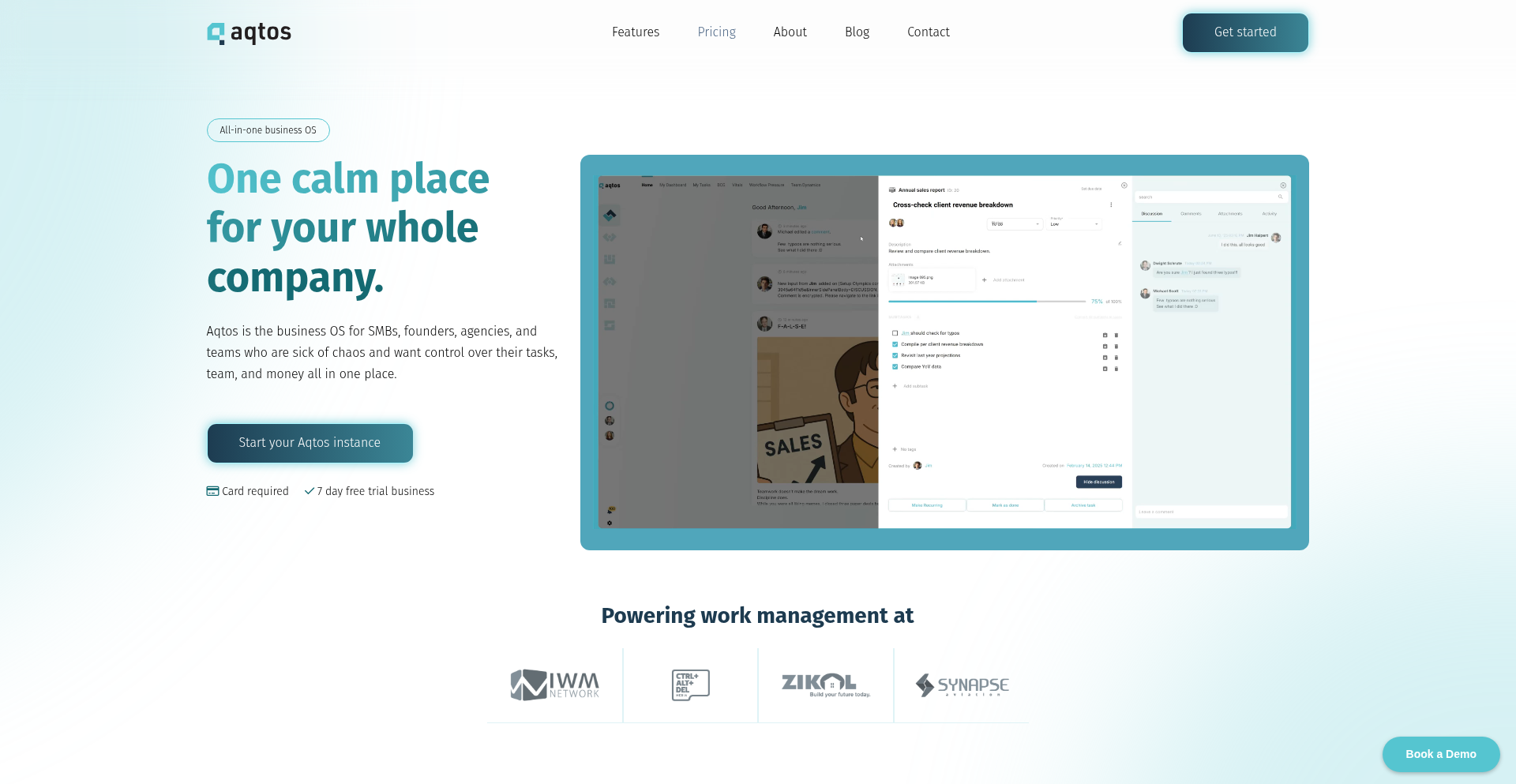

Aqtos: Unified Business OS

Author

ddano

Description

Aqtos is a business operating system designed for small to medium-sized businesses (SMBs) and teams of 5-150 people. It tackles the common problem of fragmented operations caused by using multiple disconnected software tools for CRM, project management, invoicing, team chat, and reporting. Aqtos integrates these functions into a single, plug-and-play platform, aiming to replace 5-7 individual tools at the price of one. Its innovation lies in creating a cohesive ecosystem that simplifies business management for smaller organizations, offering an enterprise-grade solution without the complexity or cost.

Popularity

Points 3

Comments 0

What is this product?

Aqtos is a comprehensive business operating system, essentially a 'business brain' for small to medium-sized businesses and teams. Instead of juggling separate apps for customer relationship management (CRM), project management, invoicing, team chat, and reporting, teams get all of these functions in one place. The core innovation is Aqtos's role as a central hub that connects these functions. Data flows between different parts of the business without manual transfers or incompatible systems. Think of it as a smart dashboard that shows the pulse of your entire business at a glance, making it easier to manage and grow. It is built to be intuitive and quick to set up, offering the power of an integrated system without the usual hassle and expense.

How to use it?