Show HN Today: Discover the Latest Innovative Projects from the Developer Community

ShowHN Today

Show HN Today: Top Developer Projects Showcase for 2025-09-23

SagaSu777 2025-09-24

Explore the hottest developer projects on Show HN for 2025-09-23. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The developer community is pushing AI and automation into real-world business problems, from recovering lost revenue with intelligent payment systems like FlyCode to generating content and datasets with AI generators. There's a strong trend toward developer tools that streamline workflows, such as AI agents for building internal tools and shell-native AI for command generation, so developers can focus on innovation rather than repetitive tasks. The drive for efficiency and better user experience also shows in solutions for data management, API transformation, and personal productivity tools that integrate AI into daily workflows. Together, these projects point to a clear opportunity for entrepreneurs and developers: treat AI not as a novelty but as a foundation for products that address concrete pain points in business operations and personal efficiency, in the hacker spirit of building smart solutions to complex problems.

Today's Hottest Product

Name

FlyCode – Recover Stripe payments by automatically using backup cards

Highlight

This project tackles a critical problem in subscription businesses: revenue loss due to failed payments. FlyCode's innovation lies in its intelligent retry mechanism, which automatically identifies and utilizes backup payment methods on file for a customer. This addresses a significant technical challenge in payment processing by going beyond standard retry logic, directly impacting recovery rates and reducing churn. Developers can learn about leveraging payment gateway APIs (like Stripe's PaymentMethod API) for enhanced dunning processes and sophisticated payment failure handling.

Popular Category

AI/ML

Developer Tools

Productivity

SaaS

Popular Keyword

AI

LLM

Automation

Data

API

Developer Experience

Productivity Tools

Cloud

Technology Trends

AI-powered automation for business processes

Enhanced developer workflows and productivity tools

Data management and analysis solutions

SaaS platforms for niche business problems

Leveraging LLMs for content generation and analysis

Streamlined payment and subscription management

Efficient data handling and visualization

Secure and observable development environments

Project Category Distribution

AI/ML Tools (30%)

Developer Productivity (25%)

SaaS/Business Solutions (20%)

Data Tools (15%)

Utilities/General (10%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | Kekkai: Immutable Code Guardian | 52 | 16 |
| 2 | FlyCode: Smart Retry for Stripe Subscriptions | 16 | 36 |
| 3 | AI-Gen: Open-Source Synthetic Data Engine | 34 | 0 |
| 4 | HN Personalized Feed Engine | 22 | 10 |
| 5 | VoltAgent: AI Agent Orchestration Framework | 19 | 5 |
| 6 | SSH-hypervisor: Personalized VM per SSH Session | 13 | 2 |
| 7 | Gamma AI Content Weaver | 13 | 2 |
| 8 | CraftedSVG KitchenIcons | 9 | 4 |
| 9 | Snapdeck: Agent-Powered Editable Slides | 7 | 5 |
| 10 | Anonymous Chat Weaver | 6 | 4 |

1

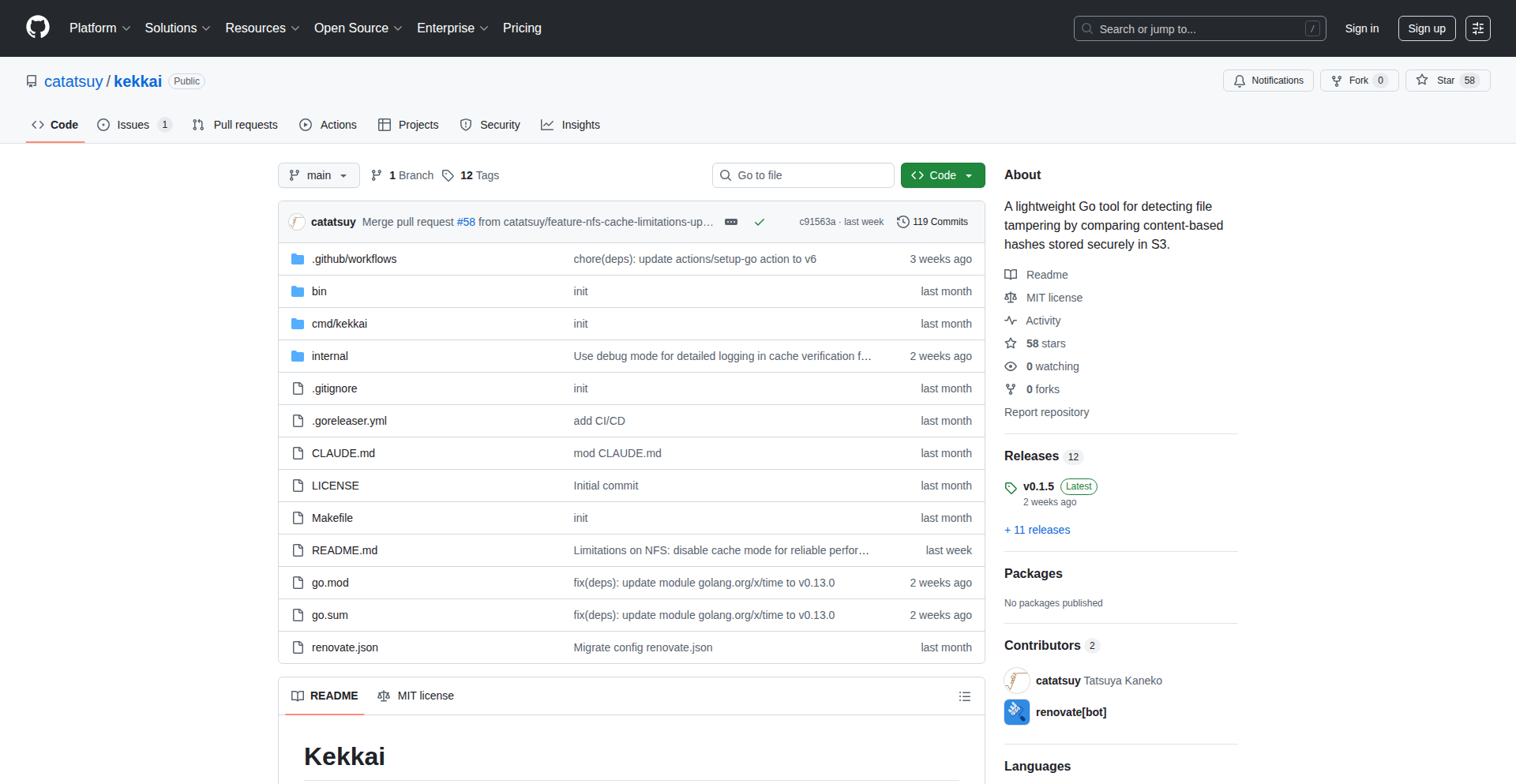

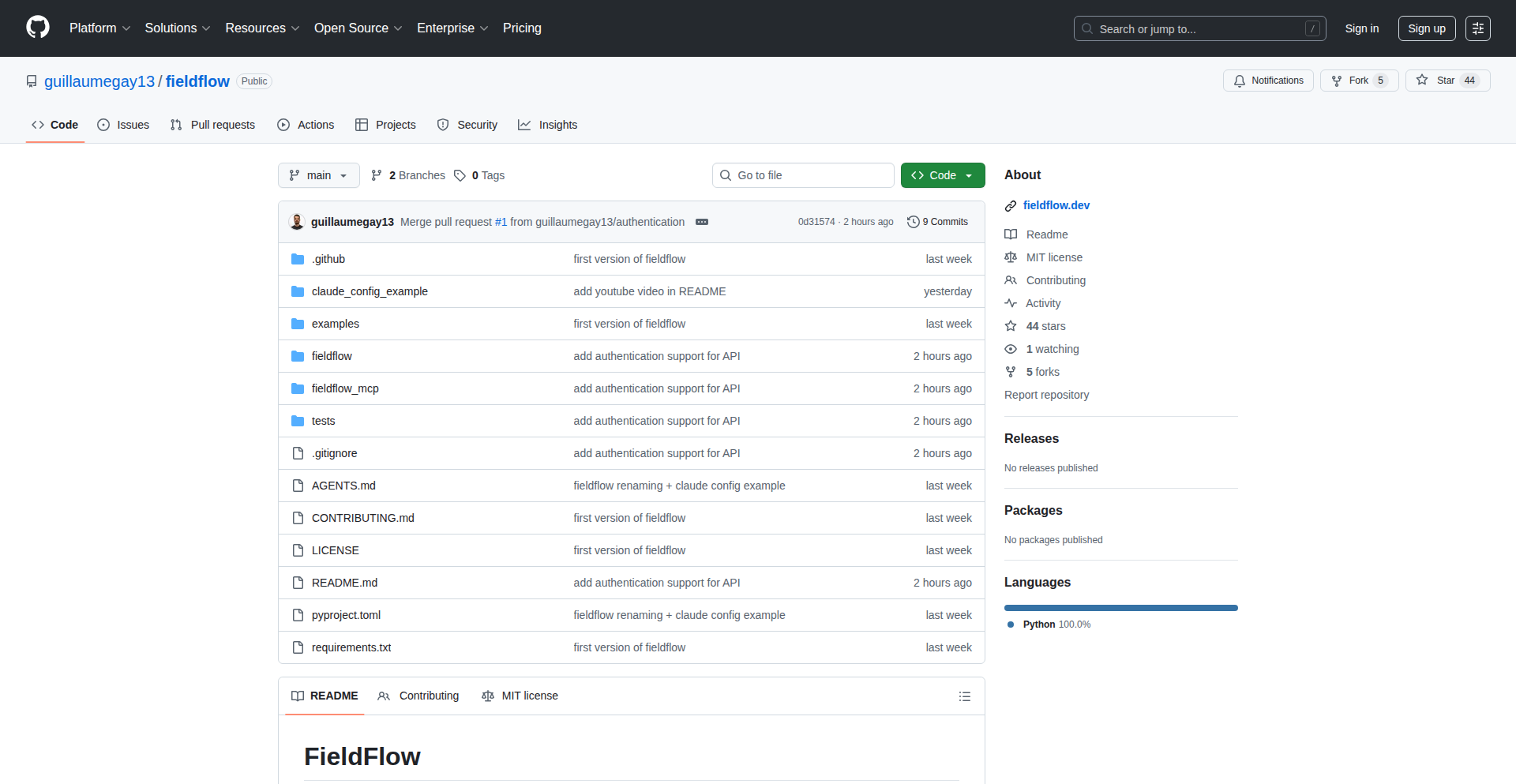

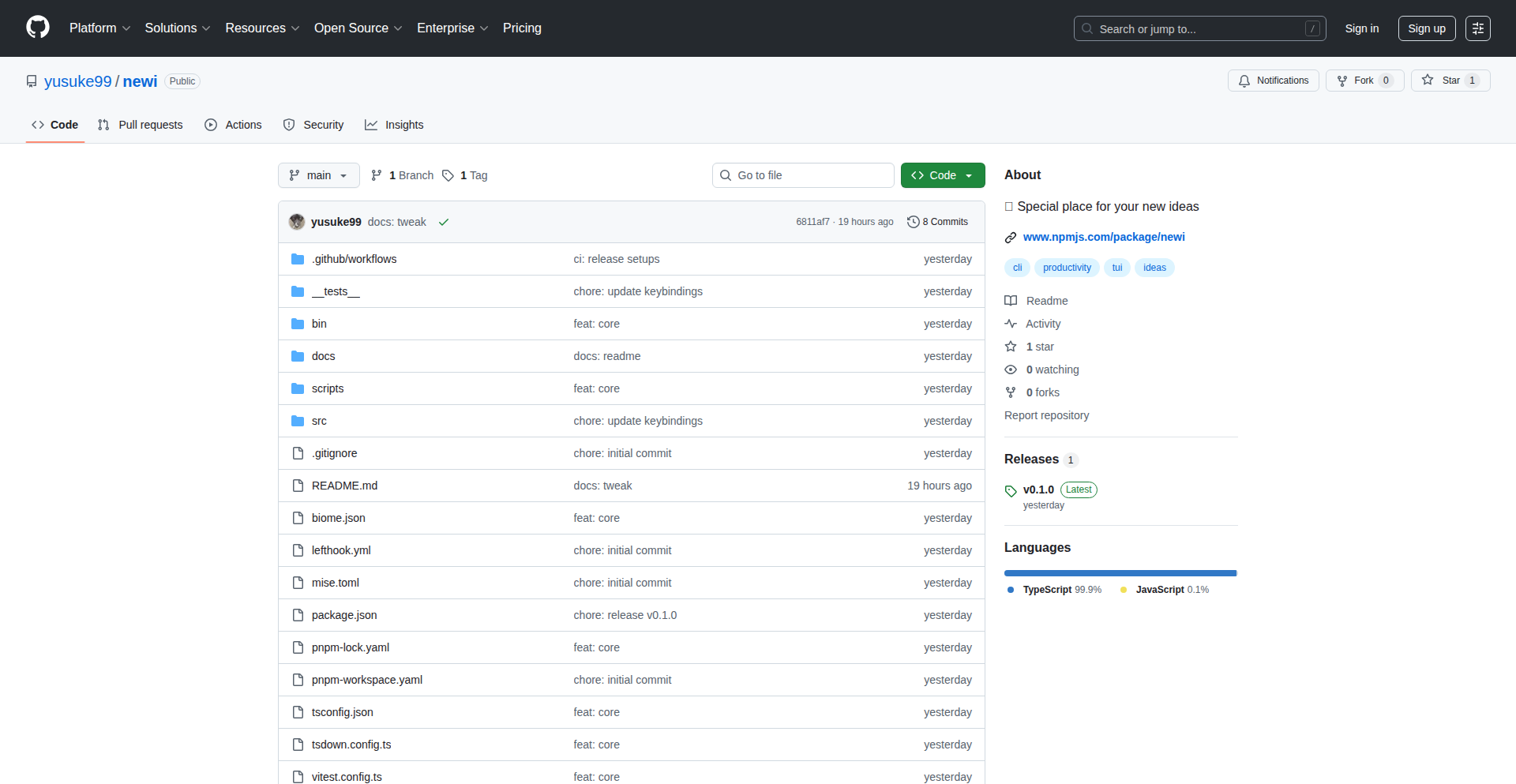

Kekkai: Immutable Code Guardian

Author

catatsuy

Description

Kekkai is a Go-based tool designed for robust file integrity monitoring in production environments. It addresses the critical need to detect unauthorized modifications to application code, often caused by security vulnerabilities like OS command injection or direct tampering. By focusing solely on file content hashing and incorporating symlink protection, Kekkai provides a reliable method to ensure code immutability, differentiating itself from traditional metadata-based approaches that can lead to false positives. Its deployment as a single, lightweight binary with secure S3 storage integration makes it practical for a wide range of web applications running on platforms like AWS EC2.

Popularity

Points 52

Comments 16

What is this product?

Kekkai is a file integrity monitoring (FIM) tool built in Go. Its core innovation is its 'content-only hashing' approach. Unlike tools that also consider file timestamps or other metadata, Kekkai computes a digital fingerprint (a hash) from the actual content of each file alone. This means it reliably detects when any part of your application's code has been altered, even if timestamps or permissions are manipulated to disguise the change. It also includes 'symlink protection', which detects attackers who use symbolic links to point legitimate paths at malicious files. Why is this important? If your web application's code is tampered with, the result can be data breaches, unauthorized actions, or downtime. Kekkai acts as a digital sentinel that checks your deployed code is still exactly what you shipped. Its lightweight design and secure S3 storage for the recorded hashes make it easy to integrate and to trust, giving you assurance that your production code has not been modified.
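A minimal sketch of content-only hashing with symlink protection, written in Python for illustration (Kekkai itself is written in Go, and this is not its code). It assumes SHA-256 as the hash and records each symlink by its target path instead of following it; the function names are hypothetical. Because only file bytes and link targets are hashed, touching a file's timestamp leaves its fingerprint unchanged, while editing its content or re-pointing a link changes it.

```python
import hashlib
import os
from pathlib import Path


def content_hash(path: Path) -> str:
    """SHA-256 of a regular file's bytes; mtime, owner and mode are ignored."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def fingerprint(path: Path) -> str:
    """Fingerprint one entry without following symlinks.

    A symlink is recorded by its target string, so re-pointing the link
    changes the fingerprint even if the new target has identical content.
    """
    if path.is_symlink():
        target = os.readlink(path)
        return "symlink:" + hashlib.sha256(target.encode()).hexdigest()
    return "file:" + content_hash(path)


def build_manifest(root: Path) -> dict[str, str]:
    """Map every file and symlink under root (by relative path) to its fingerprint."""
    manifest: dict[str, str] = {}
    # os.walk does not descend into symlinked directories by default,
    # but it does list them in dirnames, so they are recorded as links.
    for dirpath, dirnames, filenames in os.walk(root):
        base = Path(dirpath)
        for name in dirnames + filenames:
            entry = base / name
            if entry.is_symlink() or entry.is_file():
                manifest[str(entry.relative_to(root))] = fingerprint(entry)
    return manifest
```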

How to use it?

Developers use Kekkai by building and deploying its single Go binary onto their production servers. During deployment, Kekkai is run to record the content hashes of all critical application files. These hashes are stored somewhere the application servers cannot modify, ideally an S3 bucket where deployment servers have write-only access and application servers have read-only access. Kekkai is then scheduled to run periodically, recalculating the hashes of the deployed files and comparing them against the stored baseline; any discrepancy is flagged as a potential compromise. This enables rapid detection of unauthorized changes and quick remediation before significant damage occurs. To integrate it, trigger the initial hash recording from your CI/CD pipeline after each deployment, and schedule regular verification checks as a cron job or within your application's monitoring stack.
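The periodic verification step comes down to comparing two manifests. A sketch in Python, assuming each manifest is a mapping from relative file path to a fingerprint string; the `verify` function and `VerifyReport` type are hypothetical and not Kekkai's interface.

```python
from dataclasses import dataclass, field


@dataclass
class VerifyReport:
    modified: list[str] = field(default_factory=list)  # content changed
    added: list[str] = field(default_factory=list)     # present now, not in the baseline
    removed: list[str] = field(default_factory=list)   # in the baseline, missing now

    @property
    def clean(self) -> bool:
        return not (self.modified or self.added or self.removed)


def verify(baseline: dict[str, str], current: dict[str, str]) -> VerifyReport:
    """Compare the stored baseline manifest with a freshly computed one."""
    report = VerifyReport()
    for path, expected in sorted(baseline.items()):
        if path not in current:
            report.removed.append(path)
        elif current[path] != expected:
            report.modified.append(path)
    report.added = sorted(set(current) - set(baseline))
    return report


# Example: one edited file and one dropped-in file are both reported.
report = verify(
    {"index.php": "file:aaa", "lib.php": "file:bbb"},
    {"index.php": "file:aaa", "lib.php": "file:ccc", "shell.php": "file:ddd"},
)
# report.modified == ["lib.php"], report.added == ["shell.php"], report.clean is False
```

A cron wrapper could exit non-zero whenever `report.clean` is false so existing alerting picks it up. Reporting added files separately matters because a dropped-in web shell is a new file rather than a modified one.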

Product Core Function

· Content-only hashing: This function calculates a fingerprint (hash) for each file from its data alone, ignoring metadata such as modification times. Only actual content changes trigger an alert, which prevents false alarms and makes this a highly reliable check of your application's codebase.

· Symlink protection: This feature checks symbolic links (pointers to other files) to ensure they haven't been re-pointed or replaced with malicious links. Attackers often use symlinks to redirect an application to harmful code, so detecting this kind of tampering is an important security measure.

· Secure S3 storage integration: Kekkai can store the generated file hashes in an Amazon S3 bucket. By giving deployment servers write-only access and application servers read-only access to this bucket, you keep the integrity baseline isolated, so an attacker who compromises an application server cannot rewrite the records themselves.

· Single Go binary deployment: Kekkai is packaged as a single executable file written in Go. This significantly simplifies deployment and reduces dependencies, meaning you don't need to install complex runtimes or libraries on your production servers. It's a 'drop-and-go' solution for immediate file integrity protection.

Product Usage Case

· Monitoring a PHP web application on AWS EC2: After deploying updates to a critical PHP application, Kekkai can record the hashes of all PHP files. If an OS command injection vulnerability is later exploited to modify files, Kekkai's scheduled checks will detect the altered file content and alert the operations team, helping contain potential data exfiltration or service disruption.

· Ensuring the integrity of a Python API backend: For a Python-based API service, Kekkai can monitor all Python source and configuration files. If malicious or unreviewed code is introduced outside the normal deployment process, or if a compromised system administrator account is used to alter files, Kekkai will detect the changes on its next verification run, allowing swift rollback and investigation.

· Protecting static assets in a Ruby on Rails application: Static assets such as JavaScript and CSS files can also be targets for tampering. Kekkai can hash these files as part of the build process. If an attacker uses a file upload vulnerability to inject malicious scripts into these assets, Kekkai will identify the altered content, limiting how long users are exposed to cross-site scripting (XSS) attacks.

· Verifying the integrity of server-side code in a Node.js application: In a Node.js environment, Kekkai can monitor the core application files. If unauthorized modifications are made, perhaps due to a compromised npm package or direct server access, Kekkai's content-based hashing will catch these deviations, ensuring that the Node.js application continues to run the intended, safe code.

2

FlyCode: Smart Retry for Stripe Subscriptions

Author

JakeVacovec

Description

FlyCode is a Stripe app designed to drastically reduce revenue loss from failed subscription payments by intelligently retrying with backup cards. It tackles the common problem of subscription churn due to payment failures, even when customers have alternative payment methods stored, by automatically identifying and utilizing these backup cards. This leads to significant improvements in payment recovery rates without increasing refunds or chargebacks, democratizing a capability previously only available to large enterprises.

Popularity

Points 16

Comments 36

What is this product?

FlyCode is a smart payment recovery tool for subscription businesses that use Stripe. It addresses 'involuntary churn', where customers lose their subscriptions not because they want to cancel, but because their primary payment card fails. Many customers have several valid cards on file, yet standard retry logic, including Stripe's, keeps retrying only the card that failed, and once those retries are exhausted the subscription can be canceled. FlyCode connects to your Stripe account and detects whether a customer has other valid cards available. When a payment fails, FlyCode retries the payment using those backup cards. The innovation lies in programmatically finding and using these alternative payment methods, mimicking the sophisticated internal systems of large companies to prevent avoidable revenue loss. It operates during the 'dunning period' (the time between a payment failure and subscription cancellation) and lets you customize retry timing and card validity rules, offering a robust yet simple solution to a persistent business challenge.

How to use it?

Developers and business owners can integrate FlyCode seamlessly into their existing Stripe workflow. Since it's a Stripe app, no code changes are required on your end. You simply connect your Stripe account to FlyCode. Once connected, you can configure how FlyCode operates: you can set rules for when it should attempt retries during the dunning process (e.g., at the beginning, middle, or end of the retry window) and define criteria for which backup cards are considered valid (e.g., cards that have been successfully used in the last 180 days). FlyCode then automatically monitors for failed invoices within your Stripe account and initiates the intelligent retry process using available backup payment methods. This integration allows for immediate impact on payment recovery with minimal technical overhead.
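The "valid backup card" rule can be sketched as a filter. This is an illustrative Python model, not FlyCode's code: the `Card` type is hypothetical, and in a real integration the card list might come from Stripe (for example `stripe.PaymentMethod.list(customer=..., type="card")`), while the last-successful-charge date would have to be derived from the customer's payment history. The 180-day window mirrors the example rule described above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Card:
    # Hypothetical, simplified view of a saved card; not Stripe's object model.
    id: str
    exp_month: int
    exp_year: int
    last_success: Optional[datetime]  # last successful charge, if any


def is_expired(card: Card, now: datetime) -> bool:
    # Cards are valid through the last day of their expiry month.
    return (card.exp_year, card.exp_month) < (now.year, now.month)


def select_backup_cards(
    cards: list[Card],
    failed_card_id: str,
    now: datetime,
    max_idle_days: int = 180,
) -> list[Card]:
    """Return eligible backup cards, most recently successful first."""
    cutoff = now - timedelta(days=max_idle_days)
    eligible = [
        c for c in cards
        if c.id != failed_card_id          # never retry the card that just failed
        and not is_expired(c, now)
        and c.last_success is not None     # require a proven track record
        and c.last_success >= cutoff
    ]
    return sorted(eligible, key=lambda c: c.last_success, reverse=True)
```

Ordering by most recent success is one reasonable heuristic: a card that worked last month is more likely to work today than one last used half a year ago.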

Product Core Function

· Intelligent Backup Card Detection: Automatically identifies customers with multiple valid payment methods on file, preventing churn due to a single card failure. This means more predictable revenue for your business.

· Automated Smart Retries: Systematically retries failed subscription payments using detected backup cards during the dunning period. This significantly increases the chance of successful payment capture and reduces lost revenue.

· Configurable Retry Logic: Allows businesses to customize when retries occur (early, mid, late dunning) and set validity rules for backup cards, giving control over the recovery process and optimizing for customer experience.

· Stripe Integration (No Code Required): Seamlessly connects with your existing Stripe account, eliminating the need for complex development or changes to your current codebase. This makes it quick and easy to implement and see results.

· Enhanced Revenue Recovery: Reported to increase payment recovery rates by 18-20%, with additional gains from backup card usage, directly improving your bottom line by saving revenue that would otherwise be lost to involuntary churn.
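Configurable retry timing can be modeled as a position within the dunning window. A sketch under stated assumptions: the mapping of "early", "mid", and "late" to fractions of the window (0, 0.5, 0.75) is hypothetical and not FlyCode's actual setting.

```python
from datetime import datetime, timedelta

# Hypothetical mapping from a retry-timing setting to a fraction of the dunning window.
TIMING_FRACTION = {"early": 0.0, "mid": 0.5, "late": 0.75}


def backup_attempt_time(failed_at: datetime, dunning_days: int, timing: str) -> datetime:
    """Return when to retry with a backup card, given the configured timing."""
    if timing not in TIMING_FRACTION:
        raise ValueError(f"unknown timing {timing!r}; expected one of {sorted(TIMING_FRACTION)}")
    if dunning_days <= 0:
        raise ValueError("dunning_days must be positive")
    # timedelta supports multiplication by a float, so fractional days are kept.
    return failed_at + timedelta(days=dunning_days) * TIMING_FRACTION[timing]
```

For a failure at midnight on 1 September with a 14-day window, "mid" schedules the backup attempt for 8 September and "late" for noon on 11 September. Acting early recovers revenue sooner; acting late gives the primary card's own retries a chance first.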

Product Usage Case

· A SaaS company losing 30% of its monthly recurring revenue to involuntary churn, largely from expired credit cards. By implementing FlyCode, it can automatically recover payments using backup cards; in this scenario, churn drops by 20% and the revenue stream stabilizes. This translates directly into more predictable income and less time spent chasing failed payments.

· An e-commerce subscription box service where customers add new payment cards to their accounts but forget to make them the card used for recurring orders. FlyCode ensures that if the primary card fails, a backup card is used, preventing subscription cancellations and preserving customer lifetime value. Customers keep receiving their boxes without interruption.

· A digital content provider facing issues with international payment failures due to varying card networks and expiry dates. FlyCode's smart retry mechanism, combined with the ability to define card validity, helps recover payments that would otherwise be lost, improving global revenue collection without manual intervention. This allows them to serve a wider customer base effectively.

· A membership platform for a fitness studio where members often have multiple cards saved for convenience. When one card expires, FlyCode automatically uses another available card, ensuring members retain access to services without interruption and the studio avoids lost revenue from lapsed memberships. This enhances the customer experience and operational efficiency.

3

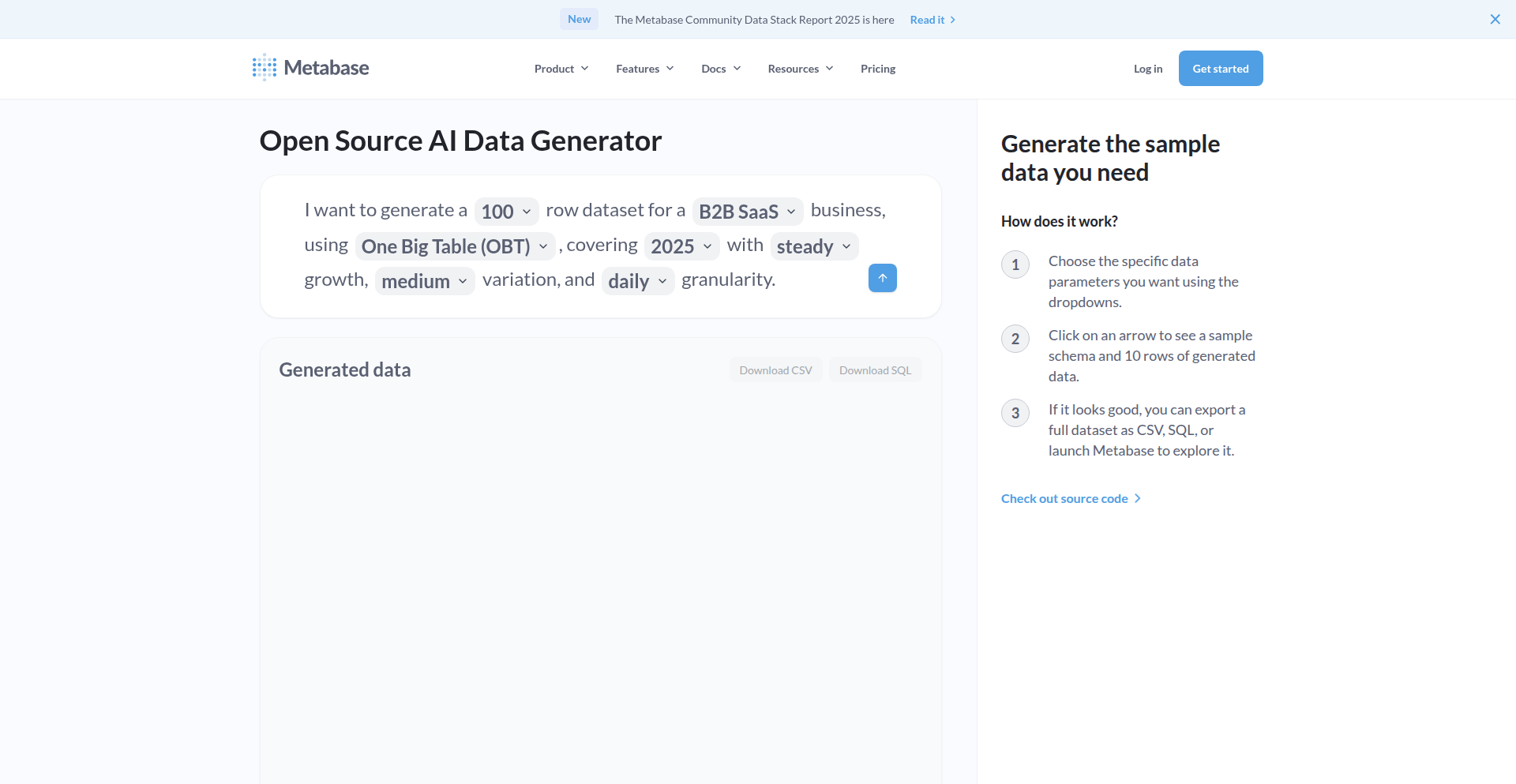

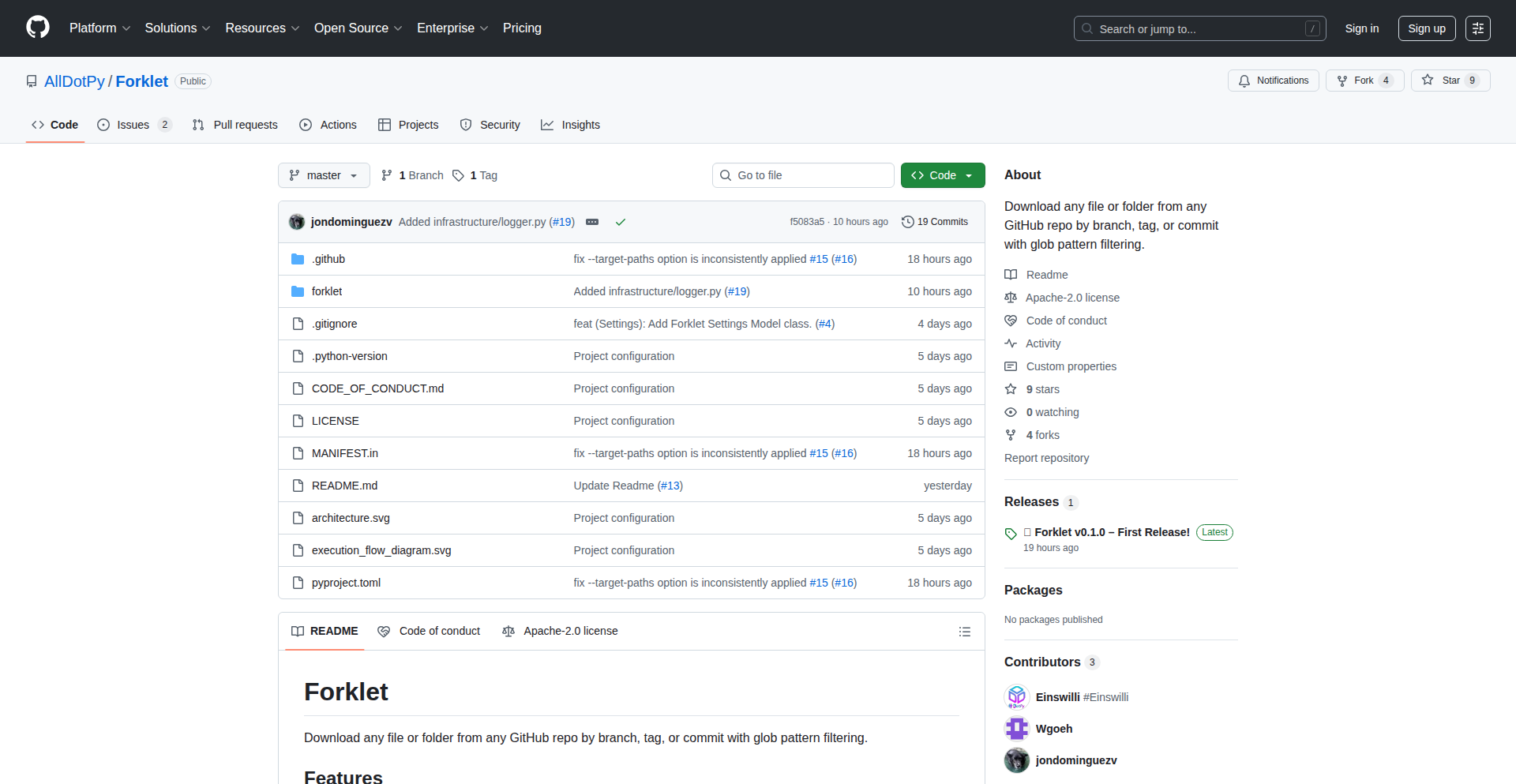

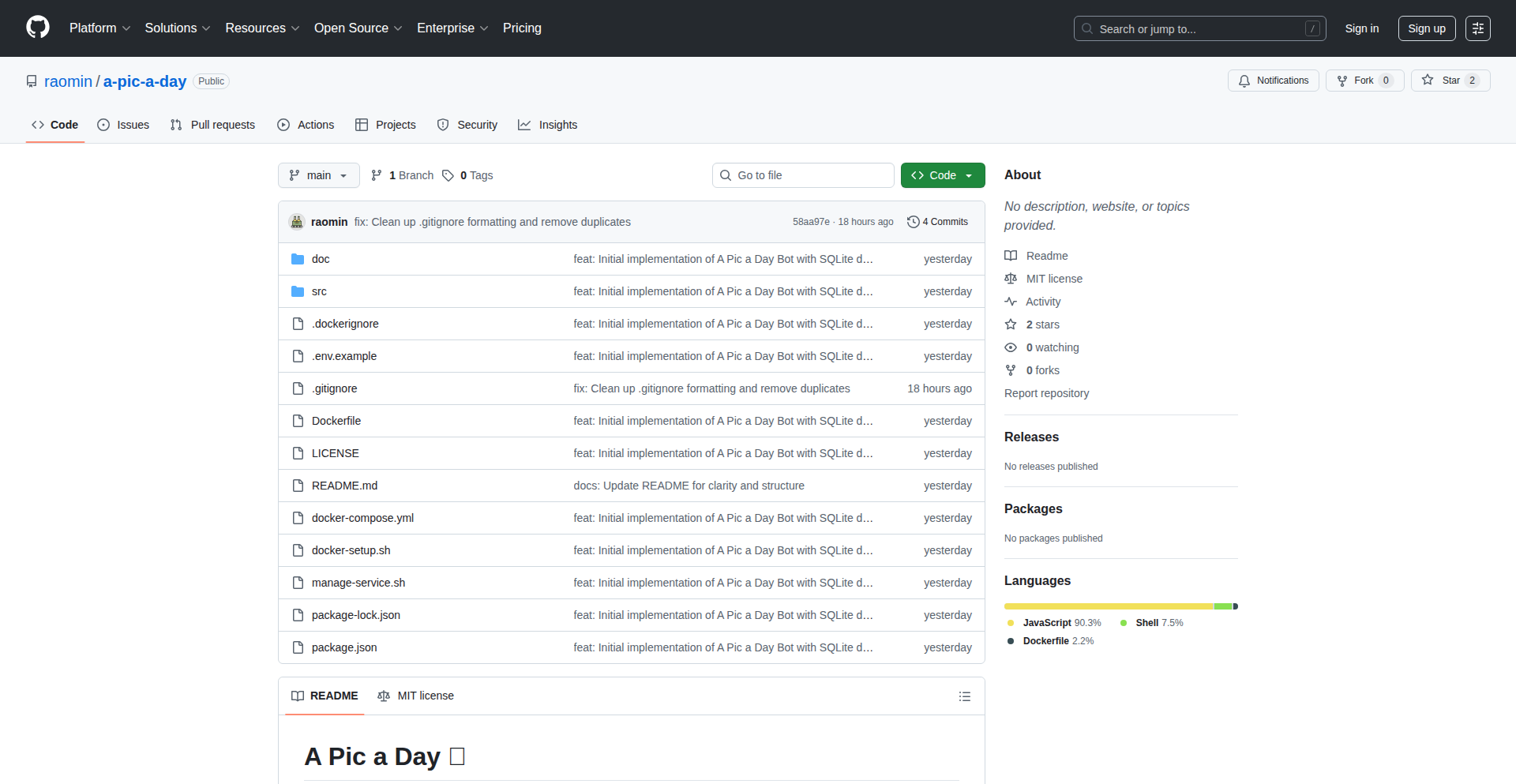

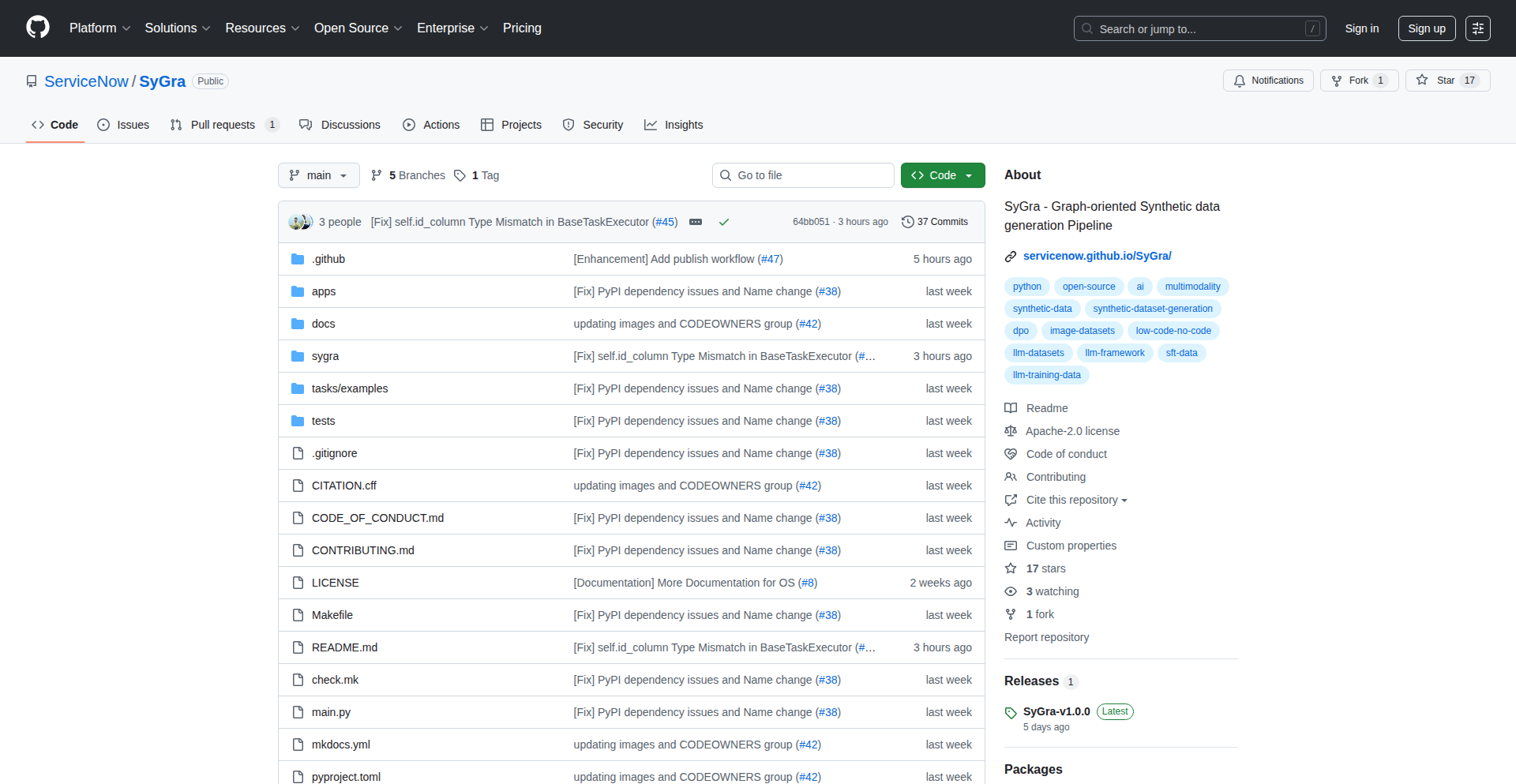

AI-Gen: Open-Source Synthetic Data Engine

Author

margotli

Description

AI-Gen is an open-source tool that uses AI to generate synthetic datasets for various applications. It addresses the common challenge of acquiring large, diverse, and high-quality datasets for machine learning model training. The innovation lies in its programmatic approach to data generation: users specify the parameters and characteristics of the desired data, and AI models translate them into realistic synthetic examples. This reduces the need for manual data collection and annotation and sidesteps many of the privacy concerns associated with real-world data. The project offers both a convenient hosted version for immediate use and a fully open-source repository for self-hosting and customization, promoting community contribution and flexibility.

Popularity

Points 34

Comments 0

What is this product?

AI-Gen is an AI-driven data synthesis tool that creates artificial datasets. Instead of manually gathering and labeling data, which is time-consuming and can be privacy-sensitive, AI-Gen uses AI models to generate realistic data that mimics real-world patterns. The core innovation is its ability to understand user-defined data requirements and translate them into diverse, high-quality synthetic data. It also supports multiple AI language models through LiteLLM, offering flexibility in the underlying AI technology used for generation. So, it's like having an AI assistant that creates tailored test data for your AI projects on demand.

How to use it?

Developers can use AI-Gen in several ways. For immediate use, they can access the hosted version, which provides a web interface to define dataset parameters and generate data directly. For greater control and integration into existing workflows, they can download the open-source code and self-host it. This allows for deep customization and embedding the data generation process within their CI/CD pipelines or custom ML frameworks. Integration is straightforward: define your data schema and characteristics, select your preferred AI model provider via LiteLLM, and AI-Gen generates the dataset. This is particularly useful for teams needing reproducible datasets for testing, prototyping, or training models where real data is scarce or sensitive. So, you can easily get custom datasets for your AI model training without starting from scratch.
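A schema-driven generation loop can be sketched as: turn the user's column spec into a prompt, call a model, and keep only rows that match the spec. This is a hypothetical Python illustration, not AI-Gen's code. The `llm` callable is an assumption; with LiteLLM it could wrap `litellm.completion(model=..., messages=[...])` and return `response.choices[0].message.content`.

```python
import json
from typing import Callable

# Hypothetical schema format: column name -> expected Python type.
Schema = dict[str, type]


def build_prompt(schema: Schema, n_rows: int, description: str) -> str:
    cols = ", ".join(f"{name} ({t.__name__})" for name, t in schema.items())
    return (
        f"Generate {n_rows} rows of synthetic data: {description}. "
        f"Columns: {cols}. Respond with a JSON array of objects only."
    )


def _matches(value: object, expected: type) -> bool:
    if isinstance(value, bool):  # bool is a subclass of int; only accept it for bool columns
        return expected is bool
    if expected is float:  # JSON numbers like 20 parse as int, which is fine for a float column
        return isinstance(value, (int, float))
    return isinstance(value, expected)


def validate_rows(raw: str, schema: Schema) -> list[dict]:
    """Parse model output (raises json.JSONDecodeError if it is not JSON) and keep matching rows."""
    rows = json.loads(raw)
    if not isinstance(rows, list):
        return []
    return [
        row for row in rows
        if isinstance(row, dict)
        and set(row) == set(schema)
        and all(_matches(row[k], t) for k, t in schema.items())
    ]


def generate(schema: Schema, n_rows: int, description: str, llm: Callable[[str], str]) -> list[dict]:
    return validate_rows(llm(build_prompt(schema, n_rows, description)), schema)
```

Validation matters because LLMs do not always follow the requested structure; dropping malformed rows keeps the dataset consistent with the schema.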

Product Core Function

· AI-powered data generation: Creates synthetic data with realistic attributes and distributions, reducing manual effort and cost in data acquisition. This is valuable for getting started with ML projects quickly.

· Customizable dataset parameters: Allows users to define the structure, types, and characteristics of the data to be generated, ensuring the synthetic data aligns with specific project needs. This means you get data that's actually useful for your problem.

· Multi-provider LLM integration: Supports various AI language models via LiteLLM, offering flexibility and the ability to leverage different AI capabilities for data synthesis. This lets you pick the best AI for the job, making your data generation more robust.

· Open-source and self-hostable: Provides the source code for free, enabling users to modify, extend, and deploy the tool on their own infrastructure, fostering transparency and community collaboration. This gives you full control over your data generation process.

· Hosted version for convenience: Offers a ready-to-use cloud-based service for quick access and generation of datasets without managing infrastructure. This is great for rapid prototyping and experimentation.

Product Usage Case

· Training a customer churn prediction model: A developer needs a dataset with realistic customer demographics, purchase history, and interaction logs to train a machine learning model. AI-Gen can generate a large synthetic dataset with these features, simulating various customer behaviors without using sensitive real customer data. This allows for faster model development and testing.

· Testing a new e-commerce recommendation engine: Before launching, a team needs to test their recommendation system with a diverse range of user profiles and product interactions. AI-Gen can create synthetic user data and product catalogs, enabling comprehensive testing of the recommendation algorithms in various scenarios. This ensures the system performs well under different conditions.

· Prototyping a natural language processing (NLP) application: A researcher is developing an NLP model to extract information from product reviews. AI-Gen can generate synthetic product reviews with specific sentiment and key entity distributions, allowing the researcher to quickly iterate on model architectures and feature engineering. This speeds up the early stages of NLP research.

· Creating benchmark datasets for AI model evaluation: An AI research lab needs standardized datasets to compare the performance of different algorithms. AI-Gen can generate controlled synthetic datasets with specific biases or complexities, providing a consistent basis for evaluating and benchmarking AI models. This leads to more reliable comparisons.

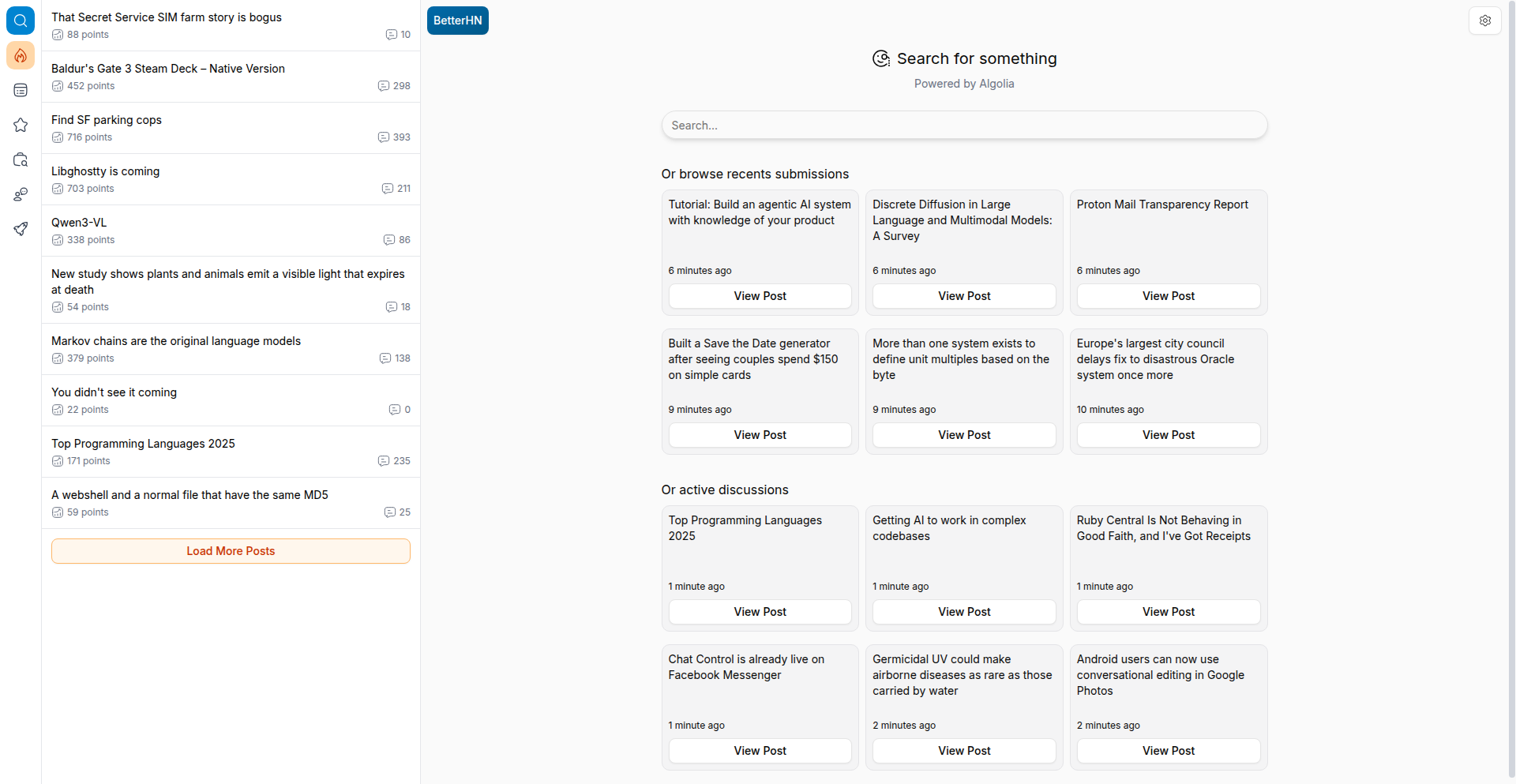

4

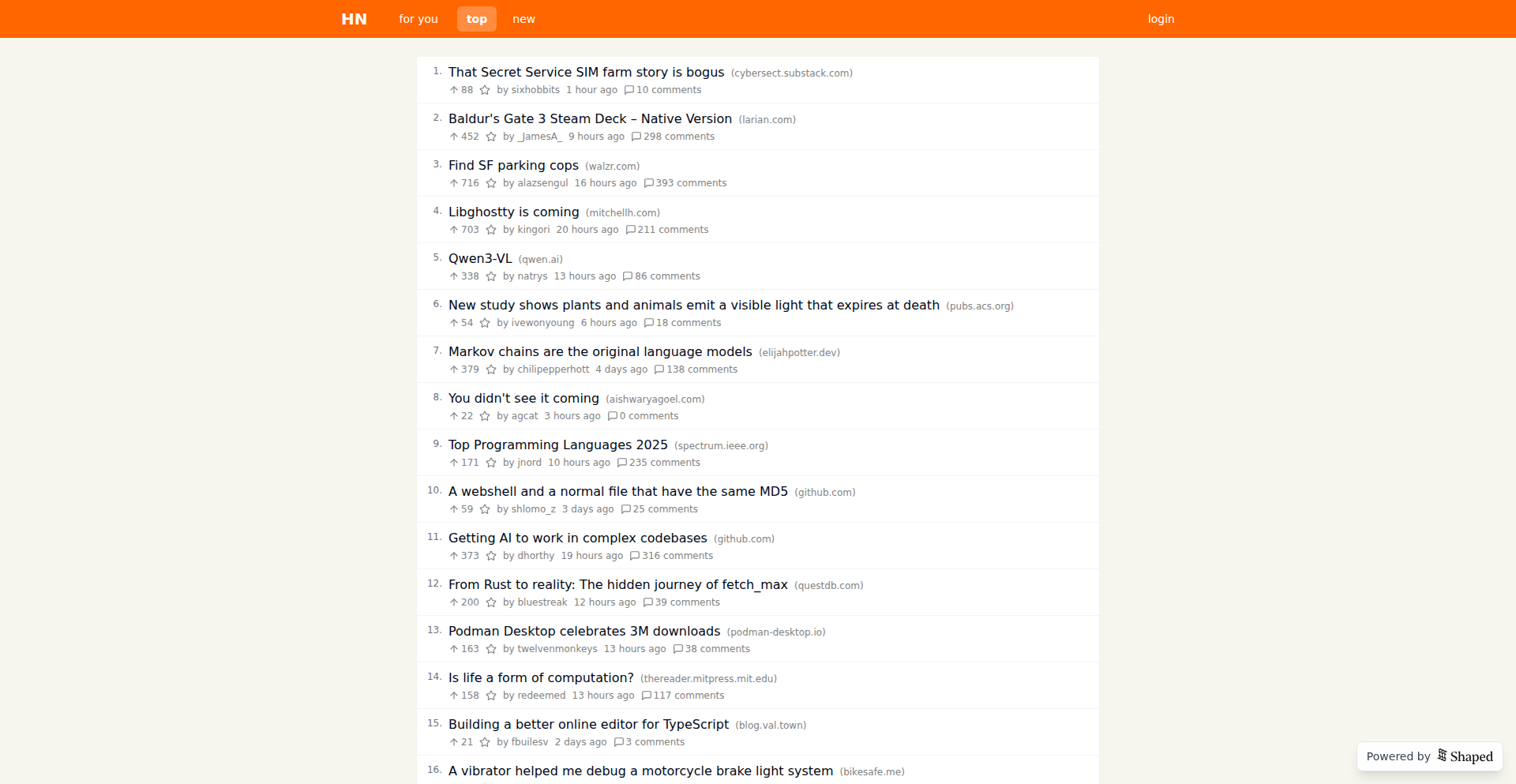

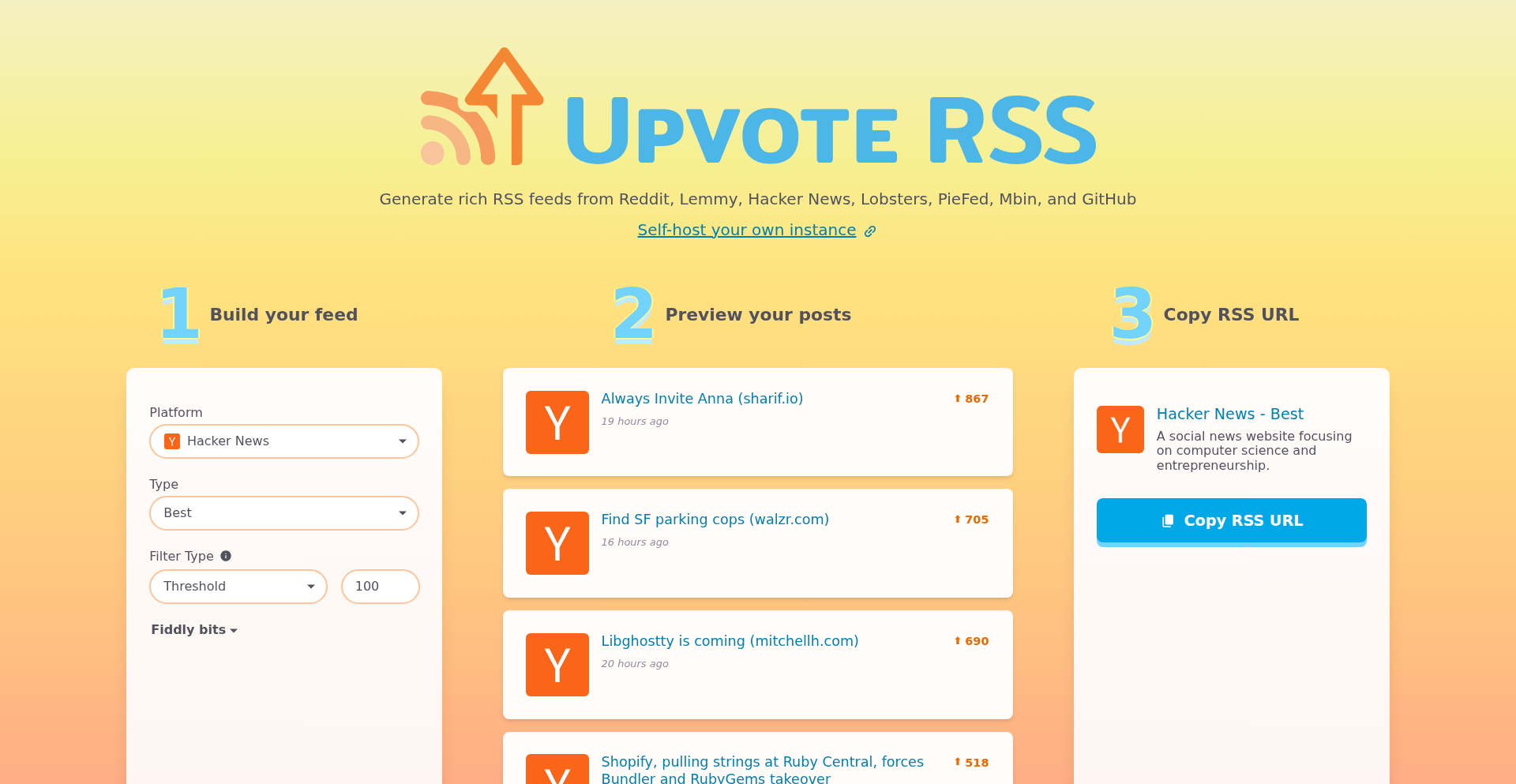

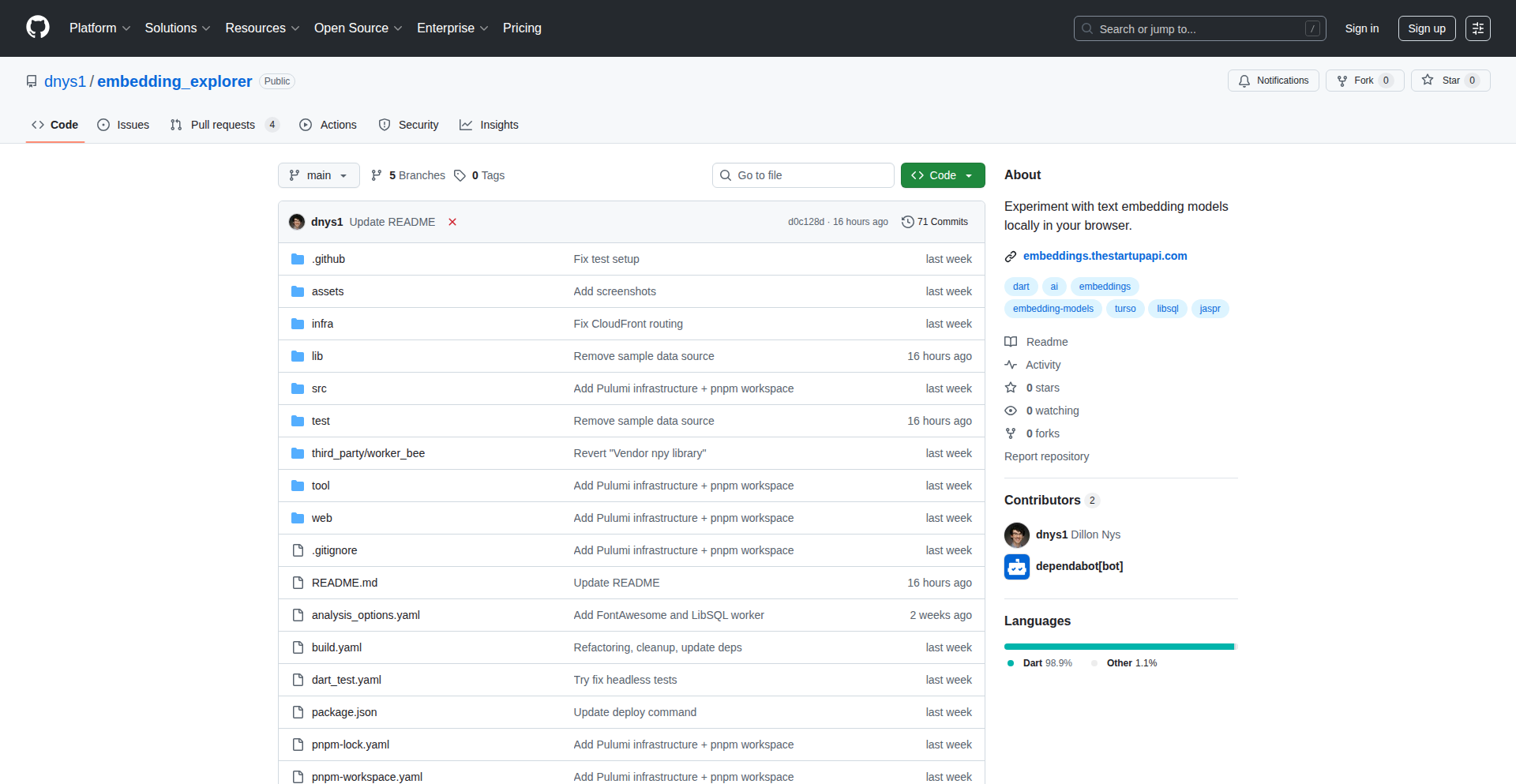

HN Personalized Feed Engine

Author

tullie

Description

This project offers a personalized Hacker News feed that learns from your favorited stories. It addresses the staleness of traditional feeds by re-ranking content based on your demonstrated interests, using a custom algorithm that incorporates content similarity derived from AI-powered text embeddings. This provides a more relevant and engaging experience for users who want to discover technical content tailored to their preferences.

Popularity

Points 22

Comments 10

What is this product?

HN Personalized Feed Engine is a web application that creates a custom Hacker News feed just for you. Unlike the standard Hacker News feed which shows popular or recent posts, this tool learns what you like by tracking the stories you 'favorite'. It then adjusts the ranking of all Hacker News stories to show you more content that's similar to what you've previously enjoyed. The core innovation lies in its use of AI to understand the 'similarity' between the text of different articles, combined with a configurable formula that balances this similarity with the original Hacker News scoring and time decay. This means you get a feed that feels more relevant to your specific technical interests, a significant upgrade from a one-size-fits-all approach.
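The project's exact formula isn't given here, so the following Python sketch only illustrates the idea under stated assumptions: it uses the commonly cited approximation of HN's ranking, (points - 1) / (age + 2)^1.8, for score and time decay; takes `content_similarity` as the highest cosine similarity between a story's embedding and any favorited story's embedding; and blends the two multiplicatively, with a hypothetical `strength` knob standing in for the personalization setting.

```python
import math

GRAVITY = 1.8  # exponent in the commonly cited approximation of HN's ranking


def hn_base_score(points: int, age_hours: float) -> float:
    """Approximate HN score with time decay: (points - 1) / (age + 2) ** gravity."""
    return (points - 1) / (age_hours + 2) ** GRAVITY


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def content_similarity(story_vec: list[float], favorite_vecs: list[list[float]]) -> float:
    """Highest cosine similarity between a story and any favorited story (0 if none)."""
    return max((cosine_similarity(story_vec, f) for f in favorite_vecs), default=0.0)


def personalized_score(points: int, age_hours: float, story_vec: list[float],
                       favorite_vecs: list[list[float]], strength: float = 1.0) -> float:
    """Boost the base score by up to (1 + strength)x for stories close to the user's favorites."""
    sim = max(0.0, content_similarity(story_vec, favorite_vecs))  # ignore negative similarity
    return hn_base_score(points, age_hours) * (1.0 + strength * sim)
```

With `strength=0` the feed reduces to the plain approximate HN ordering, so a single slider can move smoothly between the standard and the personalized view.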

How to use it?

Developers can use this project by logging in with their existing Hacker News credentials. As they browse and favorite stories on the personalized feed, the system gathers data on their preferences. The backend, built with Supabase, caches these interactions and uses them to inform the re-ranking algorithm. The personalization strength can be adjusted directly within the user interface. For integration, developers could potentially leverage the underlying ranking logic or data caching mechanisms in their own applications if they are building similar personalized content discovery systems. The project is built with React/Next.js for the frontend and Supabase for the backend, making it relatively straightforward to understand and potentially extend.

Product Core Function

· Personalized Feed Generation: Dynamically re-ranks Hacker News stories based on user favorites, ensuring content relevance and reducing information overload.

· AI-driven Content Similarity: Utilizes AI to analyze the text of articles and user favorites, calculating 'content_similarity' to identify related topics and technologies.

· Configurable Ranking Algorithm: Employs a formula that balances traditional Hacker News scoring with personalized content relevance, allowing users to tune the level of personalization.

· Real-time Data Ingestion and Caching: Uses Supabase to store user interactions (favorites) and cache posts, enabling immediate updates to the personalized feed.

· User Authentication via HN Credentials: Allows seamless login using existing Hacker News accounts, simplifying user adoption and data association.

Product Usage Case

· A seasoned developer looking to stay updated on niche programming languages or frameworks can use this to filter out general tech news and focus on deep-dive articles relevant to their specific stacks.

· A researcher interested in a particular area of AI or machine learning can favorite relevant papers and discussions, leading to a feed that surfaces more cutting-edge research and experimental projects.

· A hobbyist builder working on IoT projects can favorite posts related to microcontrollers and sensor technology, receiving a curated feed of new hardware releases and DIY project ideas.

· An engineer exploring new database technologies can use favorites to signal their interest, prompting the system to surface more comparative analyses and performance benchmarks relevant to their evaluation process.

5

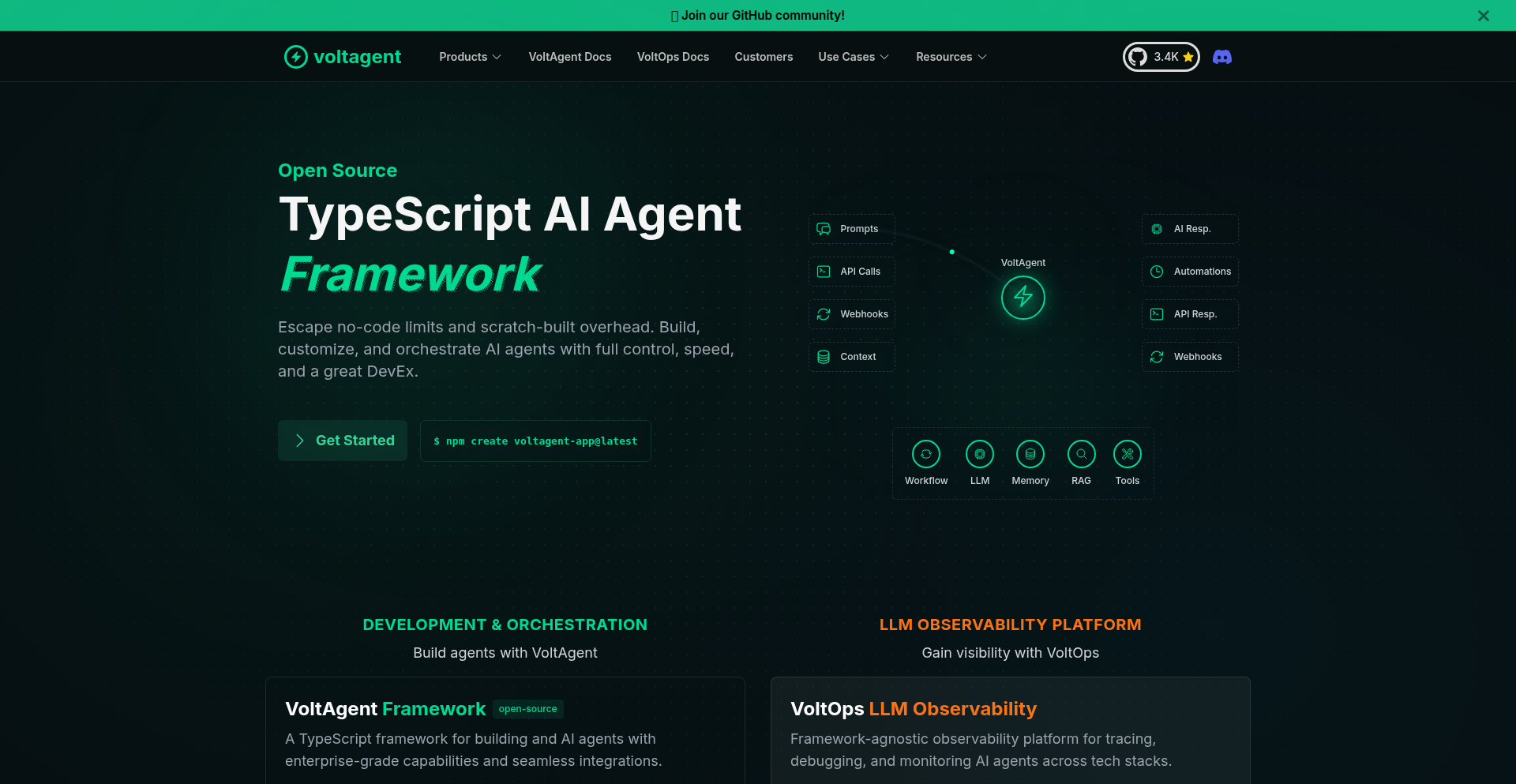

VoltAgent: AI Agent Orchestration Framework

Author

omeraplak

Description

VoltAgent is an open-source TypeScript framework designed to simplify the creation and management of AI agents. It provides a structured way to define, chain, and execute complex AI workflows, tackling the challenge of orchestrating multiple AI models and tools for sophisticated tasks. Its innovation lies in its modular design and TypeScript-native approach, making AI agent development more accessible and maintainable for developers.

Popularity

Points 19

Comments 5

What is this product?

VoltAgent is a framework built with TypeScript that helps developers build AI agents. Think of AI agents as specialized digital workers that can perform tasks by using AI models and tools. Building these agents can be complex because you often need to connect different AI capabilities in a specific sequence. VoltAgent provides a clean, code-based structure to define how these agents should operate, how they communicate, and what tools they can use. The core innovation is its type-safe environment through TypeScript, which helps catch errors early and makes managing complex agent logic much easier. It’s like giving developers a well-organized toolkit and a clear blueprint for building smart, automated AI systems, which is far more efficient than cobbling things together manually.

How to use it?

Developers can use VoltAgent by installing it as a dependency in their TypeScript projects. They then define their AI agents using classes and functions provided by the framework. This involves specifying the agent's goals, the AI models (like LLMs) it will interact with, and any external tools or APIs it can leverage. VoltAgent handles the execution flow, allowing agents to perform multi-step operations. For instance, a developer could build an agent that first analyzes a customer review, then searches a knowledge base for relevant information, and finally generates a personalized response. Integration is straightforward: if you're building a web application or a backend service, you can embed VoltAgent logic to empower parts of your application with AI agent capabilities.
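VoltAgent is a TypeScript framework, and the Python sketch below does not use its API. It only illustrates the orchestration pattern described above (analyze a review, search a knowledge base, draft a reply) as sequential steps sharing state. The `Step` and `Pipeline` types are hypothetical, and the model and knowledge-base calls are stubs.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    name: str                   # key under which this step's output is stored
    run: Callable[[dict], str]  # reads shared state, returns this step's output


@dataclass
class Pipeline:
    steps: list[Step]

    def execute(self, user_input: str) -> dict:
        state: dict = {"input": user_input}  # fresh context per run
        for step in self.steps:
            state[step.name] = step.run(state)  # later steps can read earlier outputs
        return state


# Stubbed "model" and "knowledge base"; a real agent would call an LLM and a search API here.
KNOWLEDGE_BASE = {
    "negative": "Damaged items can be returned for a full refund within 30 days.",
    "positive": "Thanks for the kind words!",
}


def review_pipeline() -> Pipeline:
    return Pipeline([
        Step("sentiment", lambda s: "negative" if "broken" in s["input"].lower() else "positive"),
        Step("kb_article", lambda s: KNOWLEDGE_BASE[s["sentiment"]]),
        Step("reply", lambda s: f"[{s['sentiment']}] {s['kb_article']}"),
    ])
```

Keeping each step's output in a named slot of shared state is what lets later steps build on earlier ones, which is the core of multi-step agent workflows.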

Product Core Function

· Agent Definition: Allows developers to define custom AI agents as classes, specifying their purpose and capabilities. This provides a clear and organized way to build individual AI components, ensuring each agent has a specific role and understands its own functions.

· Workflow Orchestration: Enables chaining multiple agents or actions together to create complex, multi-step AI processes. This is crucial for tasks that require sequential reasoning or the combination of different AI skills, like research and report generation.

· Tool Integration: Provides mechanisms to connect AI agents with external tools and APIs (e.g., search engines, databases, custom scripts). This extends the agent's abilities beyond just AI model interaction, allowing them to fetch real-world data or perform actions.

· State Management: Handles the internal state and memory of AI agents during their operation. This ensures that agents can maintain context throughout a conversation or a task, leading to more coherent and intelligent interactions.

· TypeScript-Native Design: Leverages TypeScript's static typing to improve code quality, reduce runtime errors, and enhance developer productivity. This means fewer bugs and a more robust AI agent system from the start.

Product Usage Case

· Automated Content Generation Pipeline: A developer could use VoltAgent to build an agent that takes a topic, researches it using a search tool, synthesizes information from multiple sources with an LLM, and then writes a blog post. This solves the problem of manually gathering and processing information for content creation.

· Customer Support Automation: An agent can be created to analyze incoming customer queries, fetch relevant information from a knowledge base using an API, and draft an appropriate response. This improves customer service efficiency by automating initial responses and information retrieval.

· Data Analysis and Reporting: Developers can build agents that ingest data from various sources, perform statistical analysis with a specialized AI model, and generate insightful reports. This streamlines the process of extracting value from data without requiring manual coding for each analysis step.

· Personalized Recommendation Systems: An agent could be designed to learn user preferences, query a database for product information, and then recommend items tailored to the user. This makes building sophisticated recommendation engines more manageable.

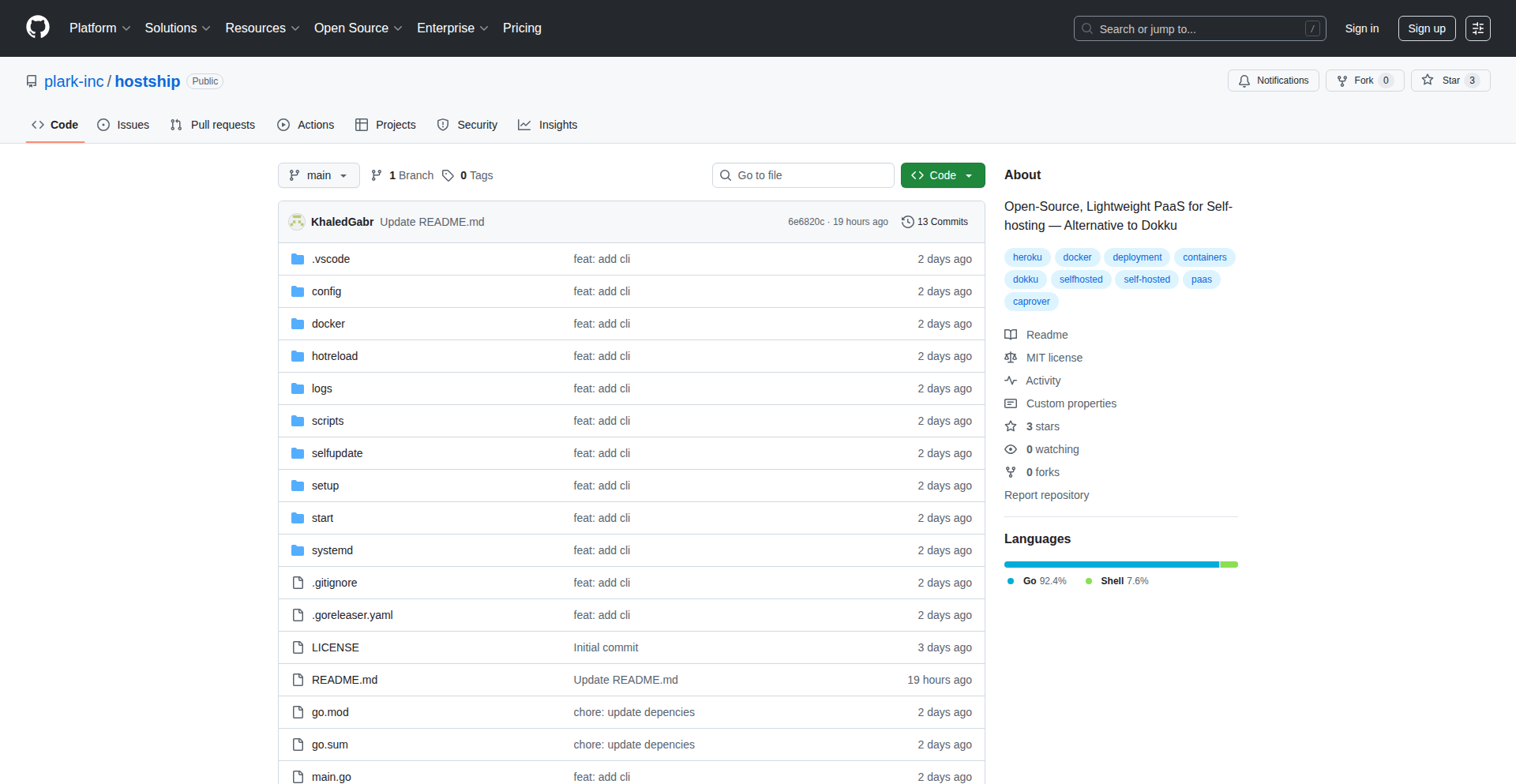

6

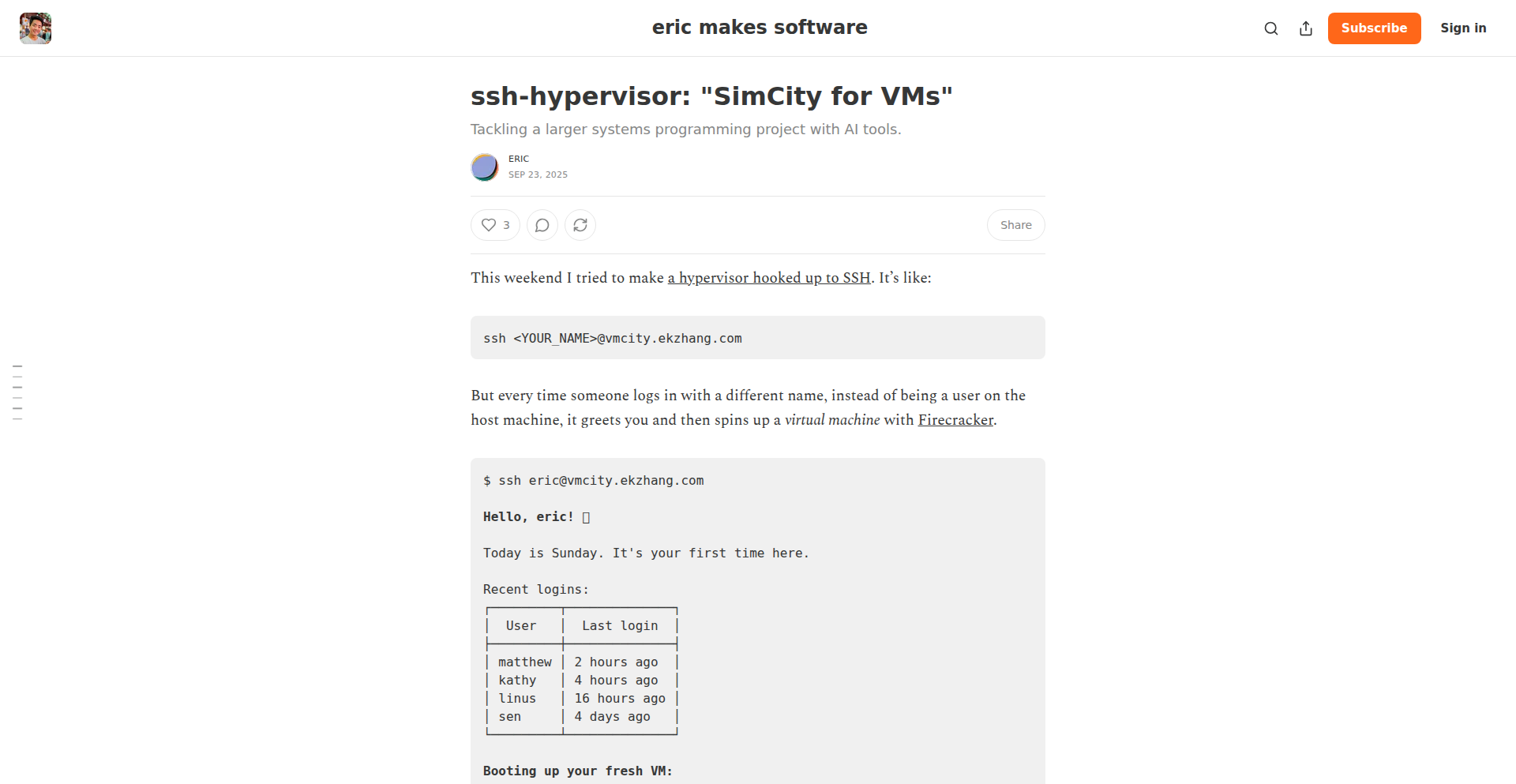

SSH-hypervisor: Personalized VM per SSH Session

Author

ekzhang

Description

SSH-hypervisor transforms your SSH experience by provisioning a dedicated, isolated microVM for each user session. Instead of a shared server environment, you get a fresh, personal virtual machine every time you connect, offering a 'SimCity'-like experience for managing your individual compute environments. This project tackles the complexity of VM setup, boot, and networking, bundling essential components like the Linux kernel, Firecracker microVM, an SSH server, and networking tools into a single, statically-linked binary.

Popularity

Points 13

Comments 2

What is this product?

SSH-hypervisor is an innovative tool that redefines remote access by providing each user with their own isolated microVM upon SSH login. Traditional SSH connects you to a shared server, but this project leverages technologies like Firecracker (a lightweight virtualization technology developed by AWS) to spin up a minimal virtual machine specifically for your session. This means you get a clean, predictable, and secure computing environment, akin to having your own miniature operating system instance. The technical innovation lies in its ability to seamlessly integrate VM provisioning with the familiar SSH protocol, including custom progress bars and animations for a more engaging user experience. The project's creator specifically navigated challenges in compiling the Linux kernel with correct configurations and overcoming boot issues, such as silent hangs due to insufficient system entropy, demonstrating a deep understanding of low-level system operations.

How to use it?

Developers can integrate SSH-hypervisor into their workflow by using the provided statically-linked binary. After setting up the hypervisor on a host machine, users can SSH into the designated IP address or hostname. The hypervisor intercepts the SSH connection, automatically provisions a new microVM for that user, and then hands off the SSH session to this newly created VM. This allows developers to have an isolated environment for running experiments, developing applications, or testing code without interference from other users or the host system. It’s particularly useful for scenarios where reproducible environments are crucial, or when developers need to test software in a clean, controlled setting.
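Per-session provisioning with Firecracker amounts to a short sequence of calls to its REST API on a Unix socket. The Python sketch below only builds those requests; endpoint paths and field names follow Firecracker's documented API, but the per-session tap device and MAC scheme, the boot arguments, and the resource defaults are assumptions, not ssh-hypervisor's actual code.

```python
# Each entry is (HTTP method, path, JSON body) for Firecracker's API socket.
Request = tuple[str, str, dict]


def session_requests(session_id: int, kernel: str, rootfs: str,
                     vcpus: int = 1, mem_mib: int = 256) -> list[Request]:
    """Build the Firecracker API calls that configure and boot one session's microVM."""
    if not 0 <= session_id <= 0xFFFF:
        raise ValueError("session_id must fit in the last two MAC octets")
    hi, lo = divmod(session_id, 256)
    return [
        ("PUT", "/boot-source", {
            "kernel_image_path": kernel,
            "boot_args": "console=ttyS0 reboot=k panic=1",
        }),
        ("PUT", "/machine-config", {"vcpu_count": vcpus, "mem_size_mib": mem_mib}),
        ("PUT", "/drives/rootfs", {
            "drive_id": "rootfs",
            "path_on_host": rootfs,  # should be a per-session copy for isolation
            "is_root_device": True,
            "is_read_only": False,
        }),
        ("PUT", "/network-interfaces/eth0", {
            "iface_id": "eth0",
            "host_dev_name": f"tap{session_id}",  # hypothetical per-session tap device
            "guest_mac": f"06:00:ac:10:{hi:02x}:{lo:02x}",  # locally administered, unique per session
        }),
        ("PUT", "/actions", {"action_type": "InstanceStart"}),  # boot only after configuration
    ]
```

Giving each session its own copy of the root filesystem (the `rootfs` argument) is what keeps one user's changes out of another user's VM.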

Product Core Function

· MicroVM provisioning on SSH connection: This core function automatically spins up a dedicated microVM for each incoming SSH session, providing a personalized and isolated computing environment. Its value is in ensuring a clean slate for every user, enhancing security and preventing conflicts between different users' tasks. This is directly applicable in shared development or testing environments.

· SSH server integration with custom UI: The project includes a custom SSH server that offers enhanced user interface elements like progress bars and color animations. This adds a layer of user-friendliness and visual feedback to the otherwise opaque process of VM provisioning, making the developer experience more intuitive and engaging.

· Statically-linked binary distribution: The entire solution, including the kernel, Firecracker, SSH server, and networking components (iptables, bridge, masquerade), is bundled into a single, statically-linked binary. This simplifies deployment and reduces dependency issues, making it easier for developers to get started without complex setup procedures.

· Cross-architecture support (x86_64/aarch64): The ability to compile and run on both x86_64 and aarch64 architectures broadens the project's applicability across different hardware platforms, allowing for flexible deployment on a variety of servers and devices.
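The bundled networking pieces (a bridge, per-VM tap devices, and iptables masquerading) usually boil down to a handful of host commands. The sketch below only builds the argv lists instead of executing them, so it can be read and tested without root. The bridge name, subnet, uplink interface, and IP-allocation scheme are assumptions for illustration; SSH-hypervisor performs this setup inside its own binary and not necessarily with these exact commands.

```python
import ipaddress


def bridge_setup(bridge: str = "fcbr0", subnet: str = "172.16.0.0/24",
                 uplink: str = "eth0") -> list[list[str]]:
    """One-time host setup: a bridge with NAT to the uplink (argv lists only)."""
    net = ipaddress.ip_network(subnet)
    gateway = next(net.hosts())  # first usable address goes to the bridge
    return [
        ["ip", "link", "add", "name", bridge, "type", "bridge"],
        ["ip", "addr", "add", f"{gateway}/{net.prefixlen}", "dev", bridge],
        ["ip", "link", "set", bridge, "up"],
        ["sysctl", "-w", "net.ipv4.ip_forward=1"],
        # Masquerade guest traffic leaving through the uplink interface.
        ["iptables", "-t", "nat", "-A", "POSTROUTING", "-s", str(net),
         "-o", uplink, "-j", "MASQUERADE"],
    ]


def session_tap(session_id: int, bridge: str = "fcbr0",
                subnet: str = "172.16.0.0/24") -> tuple[str, list[list[str]]]:
    """Per-session tap device attached to the bridge, plus the guest's IP."""
    net = ipaddress.ip_network(subnet)
    # Hypothetical scheme: guest IP = network address + 2 + session_id.
    guest_ip = net.network_address + 2 + session_id
    if guest_ip not in net or guest_ip == net.broadcast_address:
        raise ValueError("subnet exhausted")
    tap = f"tap{session_id}"
    cmds = [
        ["ip", "tuntap", "add", "dev", tap, "mode", "tap"],
        ["ip", "link", "set", tap, "master", bridge],
        ["ip", "link", "set", tap, "up"],
    ]
    return str(guest_ip), cmds
```

On a real host these would be run as root, for example with `subprocess.run(argv, check=True)` for each list. Keeping the bridge and NAT rule as one-time setup and only the tap device per session keeps per-login work small.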

Product Usage Case

· Isolated development environments for a team: Imagine a team working on a project where each developer needs a consistent, clean environment to build and test their code. SSH-hypervisor can provide each developer with their own microVM, ensuring that their work doesn't affect others and that their testing is reproducible. This solves the problem of 'it works on my machine' by providing a standardized environment.

· Temporary sandboxed environments for code snippets: A developer might want to quickly test a specific piece of code or a new library without polluting their main development machine. SSH-hypervisor allows them to SSH into a system, get a fresh microVM, run their code, and then disconnect, leaving no trace. This is invaluable for rapid prototyping and experimentation.

· Secure remote access for specific tasks: For sensitive operations, or when several people share access to a powerful machine, giving each user a separate, isolated microVM adds a strong layer of separation. Because each session runs behind a VM boundary, a compromise inside one user's VM is much less likely to spread to other users' sessions or to the host.

· Educational platforms for teaching system administration or OS concepts: This project can serve as a powerful tool for educators. Students can SSH into a server and get their own virtual machine to experiment with Linux commands, kernel modules, or networking configurations, all within a safe, isolated, and easily resettable environment.

7

Gamma AI Content Weaver

Author

sarafina-smith

Description

Gamma API is a public beta service that transforms raw text inputs like meeting notes or CRM data into professionally designed, brandable, and exportable content such as slide decks, documents, and social media carousels. It streamlines content creation by automating the design and formatting process, offering support for over 60 languages and AI image integration. This solves the problem of time-consuming manual content design, especially for users who are not designers.

Popularity

Points 13

Comments 2

What is this product?

The Gamma API is an AI-powered tool that acts as an automated content designer. You feed it raw text, like a sales script or a lesson outline, and specify parameters like desired format (slides, docs, social posts), tone, and brand theme. The API then generates a fully designed, ready-to-share piece of content, which can be hosted on Gamma or exported as a PDF or PPTX file. Its innovation lies in bridging the gap between raw data and polished visual content without requiring design expertise, making content creation significantly faster and more accessible. This means you get professional-looking materials without the hassle of manual formatting.

How to use it?

Developers can integrate the Gamma API into their applications or automation workflows by making POST requests. You send your raw text input, along with configuration details such as the desired output format (e.g., 'slide deck', 'document', 'social carousel'), tone of voice (e.g., 'formal', 'casual'), and branding preferences (e.g., 'company theme'). The API will then return a link to the generated content hosted on Gamma or an exportable file like PDF or PPTX. This is ideal for building features within internal tools that need to automatically generate reports, presentations from meeting summaries, or personalized marketing materials from CRM data, making complex content creation effortless for your users.

Product Core Function

· Automatic Slide Deck Generation: Converts raw text into visually appealing slide presentations, useful for quickly creating sales pitches or educational materials from notes. This saves hours of manual design work.

· Document Creation from Input: Transforms text inputs into formatted documents, ideal for generating reports, lesson plans, or summaries that look professional and are ready for distribution.

· Social Media Carousel Production: Generates LinkedIn-style social media carousels from text, perfect for marketing teams needing to create engaging content efficiently. This helps boost social media presence without design bottlenecks.

· Multi-language Support: Works with over 60 languages, allowing global teams to generate content tailored to different linguistic and cultural contexts. This expands content reach and accessibility.

· Customizable Themes and Branding: Allows users to apply specific brand guidelines, ensuring consistency across all generated content. This maintains brand identity and professionalism across various outputs.

· AI Image Integration: Incorporates AI-generated images to enhance visual appeal and engagement in the created content. This adds a modern, professional touch to presentations and documents.

Product Usage Case

· A sales team can use Gamma API to automatically convert CRM data and call notes into a polished sales presentation deck before a client meeting. This addresses the need for rapid, high-quality pitch materials and saves sales reps significant time on deck creation.

· An educational platform can integrate the API to generate lesson plans and study guides from raw curriculum notes or lecture transcripts. This empowers educators to focus more on teaching content rather than the formatting, improving the learning experience.

· A marketing department can leverage the API to quickly create social media posts, specifically LinkedIn carousels, from product updates or blog post summaries. This accelerates content marketing efforts and increases engagement without requiring dedicated graphic designers for every piece of content.

· Internal HR tools could use the API to generate onboarding documents or company policy summaries from raw text inputs, ensuring all new hires receive consistent and well-formatted information.

· A business intelligence dashboard could feed aggregated data or executive summaries into the Gamma API to automatically produce monthly performance reports in PDF format for stakeholders, simplifying executive communication.

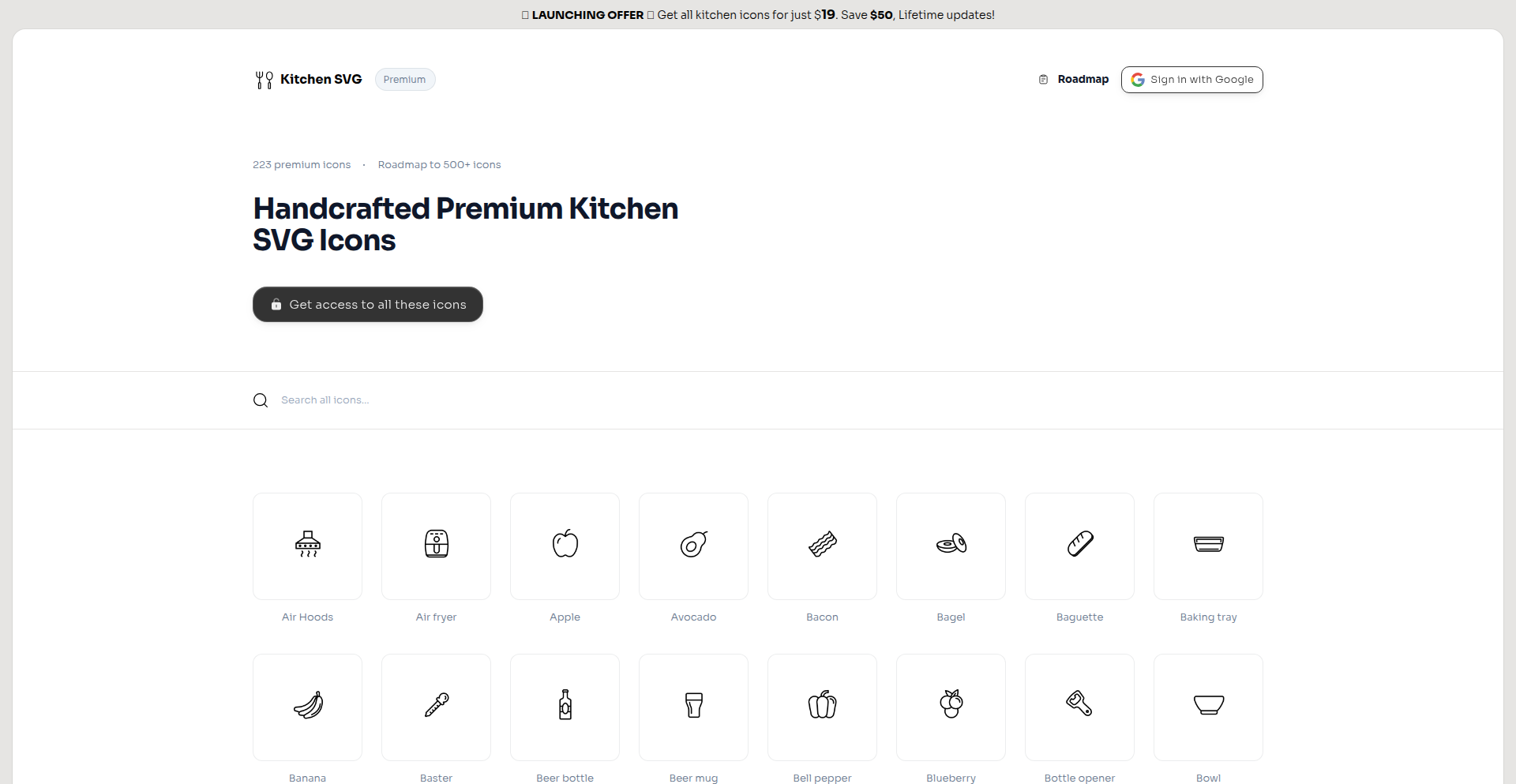

8

CraftedSVG KitchenIcons

Author

mddanishyusuf

Description

A curated collection of meticulously designed SVG icons specifically for kitchen-related themes. The innovation lies in the handcrafted approach to each SVG, ensuring a consistent, scalable, and aesthetically pleasing visual language for web and app development. This project tackles the common problem of finding high-quality, theme-specific icons that are easily customizable and performant.

Popularity

Points 9

Comments 4

What is this product?

CraftedSVG KitchenIcons is a library of Scalable Vector Graphics (SVG) icons designed for kitchen and culinary contexts. Unlike generic icon sets, each icon is individually crafted, giving the set a distinctive and consistent aesthetic. Because SVG is a vector format, the icons are resolution-independent: they stay sharp at any screen size and, when embedded inline, can be restyled with CSS (color, size, even animation). This gives more visual coherence and branding flexibility than raster images.

How to use it?

Developers can add these icons to web or mobile applications either by embedding the SVG markup directly in their HTML or by referencing them as external image files. Inline SVG can be styled with the page's CSS to match the application's theme, allowing color changes, lossless resizing, and interactive effects; an SVG loaded through an img tag cannot be recolored by page CSS, so that route suits fixed-color use. For example, a recipe website could recolor an inline 'fork and knife' icon to its brand's primary color with a single CSS rule.
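A common way to make an icon follow the surrounding CSS `color` property is to replace hard-coded `fill` and `stroke` values with `currentColor` before inlining it. The helper below does that with the standard library. It is a generic sketch, not tooling shipped with this icon set, and the sample markup in the test is made up.

```python
import xml.etree.ElementTree as ET

SVG_NS = "http://www.w3.org/2000/svg"
ET.register_namespace("", SVG_NS)  # serialize as xmlns="..." instead of ns0: prefixes


def recolorable(svg_markup: str) -> str:
    """Rewrite fill/stroke colors to currentColor so CSS `color` controls the icon."""
    root = ET.fromstring(svg_markup)
    for el in root.iter():  # includes the root <svg> element itself
        for attr in ("fill", "stroke"):
            value = el.get(attr)
            # Leave "none" alone: it means "no paint", not a color.
            if value is not None and value != "none":
                el.set(attr, "currentColor")
    return ET.tostring(root, encoding="unicode")
```

Once inlined, a rule such as `.icon { color: #c0392b; }` on the wrapping element recolors every painted part of the icon at once.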

Product Core Function

· High-quality, handcrafted SVG icons for kitchen and food themes: This provides a unique visual identity for culinary applications, making them stand out from generic designs. The SVG format ensures sharp rendering across all devices.

· Scalability and customization through SVG: Icons scale to any size without pixelation, and inline icons can be recolored via CSS. A single icon can therefore serve multiple design needs without creating new image files, saving development time and effort.

· Consistent visual style: The handcrafted nature ensures a cohesive look and feel across the entire icon set. This uniformity improves user experience and strengthens brand recognition.

· Web and app integration: Easily embeddable into any web project or mobile application via HTML or as standalone files. This versatility makes them adaptable to various development workflows.

Product Usage Case

· A recipe app developer uses a 'chef hat' icon next to the recipe author's name to visually identify them as a professional. They can easily change the icon's color with CSS to match their app's color scheme, providing a branded experience.

· A restaurant website uses a 'cutlery set' icon next to opening hours and contact information. The SVG format ensures the icon looks crisp on high-resolution displays and can be easily animated on hover for a more interactive user interface.

· An e-commerce site selling kitchenware can use various icons like 'spatula', 'whisk', and 'pot' to represent product categories or features. The ability to resize the SVGs ensures they fit perfectly within different product listing layouts without distortion.

9

Snapdeck: Agent-Powered Editable Slides

Author

unsexyproblem

Description

Snapdeck is an AI-powered tool that transforms your raw ideas into editable presentation slides and charts. It leverages a sophisticated orchestration layer that intelligently routes tasks to various open-source language models and commercial APIs. The key innovation is its ability to generate fully editable content, allowing users to drag and drop elements, modify visuals, or update text using natural language commands, overcoming the limitations of static AI-generated presentations. So, this helps you create professional-looking slides faster and easier, while retaining full control over the final output.

Popularity

Points 7

Comments 5

What is this product?

Snapdeck is a presentation builder that uses an 'agent' system to connect to different AI language models. Think of it like having a team of specialized assistants. You give it an idea, and this system intelligently breaks down the task and sends parts of it to the right AI model (whether it's an open-source one or a commercial service) to generate content, layouts, and charts. The standout feature is that unlike many AI tools that output fixed images or PDFs, Snapdeck generates content that you can still edit. This means you can rearrange slides, change charts, or tweak text using simple text commands, making the entire process fluid and flexible. So, its innovation lies in its intelligent task routing and the generation of truly editable AI-powered presentation content, offering both speed and customization.
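The "break the task down and send each part to the right model" idea can be sketched as a small dispatcher. The subtask kinds, the model identifiers, and the naive decomposition below are hypothetical stand-ins; Snapdeck's actual orchestration layer and model choices are not described in that much detail.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Subtask:
    kind: str      # e.g. "outline", "copy", "chart"
    prompt: str


# Hypothetical routing table: subtask kind -> model identifier.
ROUTES = {
    "outline": "open-model-small",
    "copy": "commercial-model-large",
    "chart": "open-model-code",
}
FALLBACK_MODEL = "commercial-model-large"


def decompose(request: str, wants_chart: bool) -> list[Subtask]:
    """Naive decomposition; a real agent would ask an LLM to plan the subtasks."""
    tasks = [Subtask("outline", f"Outline slides for: {request}"),
             Subtask("copy", f"Write slide text for: {request}")]
    if wants_chart:
        tasks.append(Subtask("chart", f"Emit chart data as JSON for: {request}"))
    return tasks


def run(request: str, wants_chart: bool,
        call_model: Callable[[str, str], str]) -> dict[str, str]:
    """Send each subtask to the model its kind is routed to and collect the results."""
    results = {}
    for task in decompose(request, wants_chart):
        model = ROUTES.get(task.kind, FALLBACK_MODEL)
        results[task.kind] = call_model(model, task.prompt)
    return results
```

Passing `call_model` in as a function keeps provider clients out of the routing logic, so the table can change without touching the code that talks to each API.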

How to use it?

Developers can use Snapdeck by inputting their raw data, concepts, or existing documents, and then using natural language prompts to guide the slide generation process. For example, you could say 'Create a presentation about Q3 sales performance, including a bar chart of revenue by region and key takeaways.' Snapdeck's agent system will then process this request, generate the slides, and provide them in an editable format. You can integrate it into your workflow by using its web interface, and potentially through future API access for more advanced automation. The ability to further refine the generated slides with simple text commands means you can iterate quickly without getting bogged down in manual design work. So, this helps you quickly create initial drafts of presentations and then easily refine them with AI assistance, saving significant time and effort.

Product Core Function

· AI-powered slide generation: Uses LLMs to create presentation content from prompts, speeding up the initial creation process. This is valuable for quickly drafting initial versions of presentations.

· Agent-based task routing: An orchestration layer intelligently distributes tasks to different AI models for optimal results, ensuring diverse capabilities are utilized. This adds robustness and potentially better quality to the generated content.

· Fully editable output: Generates slides with content, layouts, and visuals that can be modified by drag-and-drop or natural language commands, providing complete control and flexibility. This is crucial for adapting AI-generated content to specific needs without starting over.

· Editable chart generation: Creates charts that can be modified through text commands, allowing for easy data visualization adjustments. This makes data-driven presentations more dynamic and responsive to feedback.

· Natural language interaction: Allows users to edit and refine slides using simple text instructions, making the tool accessible and efficient for users of all technical backgrounds. This lowers the barrier to entry for advanced editing.

Product Usage Case

· A marketing team can use Snapdeck to quickly generate a performance review presentation from raw sales data and bullet points, then use natural language to tweak chart colors and add specific calls to action. This solves the problem of time-consuming manual slide creation for regular reports.

· A student can input research paper findings and use Snapdeck to generate a presentation outline and visuals, then easily edit the structure and add speaker notes using text commands. This helps students efficiently prepare for academic presentations.

· A product manager can feed user feedback and feature roadmaps into Snapdeck to create a stakeholder update presentation, then quickly adjust the layout and add specific product screenshots via text. This streamlines the process of communicating progress and plans to different audiences.

10

Anonymous Chat Weaver

Author

atsushii

Description

A free, anonymous chat application built with a focus on privacy and ease of use. It tackles the technical challenge of enabling real-time, private conversations without exposing user identity, by using WebRTC for direct peer-to-peer communication and a minimal relay that does not log message content or sender identity.

Popularity

Points 6

Comments 4

What is this product?

This project is a free, anonymous chat application. Its core innovation lies in a backend architecture designed to enable peer-to-peer communication without central servers storing user-identifiable information. It uses WebRTC for direct communication between users after an initial handshake, plus a minimalist relay that only routes messages without logging their content or sender identity. So, this is useful because it allows private conversations that are not tracked or stored by a central entity, offering more privacy than most mainstream chat apps. The innovation is in building a functional chat experience that prioritizes anonymity through decentralized communication wherever possible.

How to use it?

Developers can use this chat application as a standalone tool for private communication or integrate its underlying messaging framework into their own applications. The backend can be deployed to handle initial peer discovery and signaling, then WebRTC takes over for direct, encrypted communication. For integration, developers would leverage the signaling server to facilitate connection establishment between their users, then use the WebRTC APIs to send and receive messages. So, this is useful for developers looking to add private chat features to their existing platforms or build new communication-centric applications without the overhead and privacy concerns of traditional server-based messaging. The integration is technical but provides a privacy-focused messaging backbone.
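The signaling role described here is small: the server forwards WebRTC session descriptions (offer and answer) and ICE candidates between peers in the same room, after which data flows directly between them. The in-memory sketch below shows that shape with no persistence and no logging of message bodies. The room and peer naming, the message format, and the outbox queues standing in for live connections (for example WebSockets) are assumptions, not this project's actual protocol.

```python
from collections import defaultdict


class SignalingRelay:
    """Forwards opaque signaling messages between peers in a room; stores nothing else."""

    def __init__(self) -> None:
        # room -> {peer_id: outbox}; outboxes stand in for live socket connections.
        self.rooms: dict[str, dict[str, list[dict]]] = defaultdict(dict)

    def join(self, room: str, peer_id: str) -> list[str]:
        """Register a peer and return the ids of peers already present."""
        others = list(self.rooms[room])
        self.rooms[room][peer_id] = []
        return others

    def leave(self, room: str, peer_id: str) -> None:
        self.rooms[room].pop(peer_id, None)
        if not self.rooms[room]:
            del self.rooms[room]  # forget empty rooms entirely

    def relay(self, room: str, sender: str, target: str, message: dict) -> bool:
        """Queue an offer/answer/ICE message for one peer without inspecting or logging it."""
        peers = self.rooms.get(room, {})
        if sender not in peers or target not in peers:
            return False
        # "from" is set last so a client cannot spoof another sender.
        peers[target].append({**message, "from": sender})
        return True

    def drain(self, room: str, peer_id: str) -> list[dict]:
        """Hand queued messages to the peer and drop them from memory."""
        box = self.rooms.get(room, {}).get(peer_id, [])
        out = list(box)
        box.clear()
        return out
```

In a browser, each peer would pass a received description to the standard `RTCPeerConnection.setRemoteDescription()` method and each candidate to `addIceCandidate()`. WebRTC encrypts the resulting peer-to-peer traffic with DTLS, which is why the relay never needs to see chat content.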

Product Core Function

· Anonymous real-time messaging: Enables users to send and receive text messages instantly without revealing their identity or requiring account creation. The value here is enabling private communication for sensitive discussions or casual interactions where anonymity is preferred. This is achieved through a robust signaling mechanism and WebRTC's direct peer connection.

· End-to-end encryption: All messages are encrypted between the communicating peers, ensuring that only the sender and recipient can read the content. This provides a strong security guarantee, making conversations highly confidential and resistant to eavesdropping. The value is ensuring the privacy of your conversations.

· No user registration or data logging: The system is designed to minimize data collection. Users do not need to create accounts, and message content is not stored on servers. This significantly enhances user privacy and reduces the risk of data breaches or misuse. The value is peace of mind knowing your chat history isn't being stored or tracked.

· Peer-to-peer communication facilitated by signaling: Utilizes WebRTC for direct communication between users after a brief signaling phase. This reduces server load and latency, leading to a more efficient and responsive chat experience. The value is a faster and more direct communication channel.

· Cross-platform compatibility (potential): While not explicitly stated as a feature, the use of WebRTC implies potential for cross-platform use across different browsers and devices. This allows for wider accessibility. The value is being able to chat with a broader range of people regardless of their device.

Product Usage Case

· A journalist needs to communicate securely with a confidential source. With Anonymous Chat Weaver, the two can hold a private, end-to-end encrypted chat without either party creating an account or revealing their real identity. This addresses the need for secure, private communication in sensitive situations.

· A group of activists wants to organize an event without their communications being monitored. They can use this app to coordinate their activities, benefiting from the anonymity and encryption provided, ensuring their plans remain private. This solves the problem of secure group coordination for privacy-conscious communities.

· A developer is building a collaborative online game and wants to add an in-game chat feature that prioritizes player privacy. They can integrate the signaling and WebRTC logic from this project to enable direct, anonymous chats between players within the game. This solves the technical challenge of integrating a privacy-first chat system into a multiplayer application.

· Individuals who are concerned about big tech companies tracking their conversations can use this app for casual chats, providing a secure and private alternative. This addresses the user need for a private communication tool that doesn't monetize their personal interactions.

· A beta tester for a new product needs to provide anonymous feedback to the development team. This chat app allows them to communicate their findings without revealing their identity, ensuring honest and unbiased feedback. This solves the problem of collecting anonymous user feedback.

11

Airbolt: Backendless LLM Proxy

Author

mkw5053

Description

Airbolt is a service that allows you to securely call Large Language Model (LLM) APIs, like OpenAI, directly from your frontend application. It solves the common problem of needing a backend just to manage API keys, implement rate limiting, and handle graceful degradation for AI features. By using Airbolt, developers can integrate AI into their apps faster and with less code, avoiding the complexity and cost of managing their own backend infrastructure.

Popularity

Points 7

Comments 1

What is this product?

Airbolt acts as a secure intermediary between your frontend application and LLM APIs. Normally, to use services like OpenAI from a web browser, you need a backend server to hide your secret API keys and control usage. Airbolt instead provides a drop-in SDK that lets you make these calls directly from the frontend. Your API keys are stored encrypted on Airbolt's servers, and the service adds per-user rate limiting and origin allow lists to prevent abuse. This means you can add AI functionality to your app without building and maintaining your own backend, saving time and resources.

How to use it?

Developers can integrate Airbolt by dropping its provided SDK (available as a TypeScript API, React Hooks, and a React Component) into their frontend project. Once integrated, they can make calls to LLM APIs through Airbolt's interface, similar to how they would call any other API. For example, in a React app, you might use a provided hook to send a prompt to an LLM and receive a response, all without writing any backend code. Airbolt handles the secure key management and usage controls behind the scenes. Future integrations will include support for multiple LLM providers and easy configuration through a self-service dashboard, eliminating the need for code redeploys for many changes.

Product Core Function

· Secure API Key Management: Airbolt encrypts your LLM API keys (e.g., OpenAI) on its servers using AES-256-GCM, ensuring they are never exposed to the client-side code. This eliminates the security risk of embedding keys directly in your frontend and the need for a dedicated backend to protect them, making your app safer and simpler.

· Per-User Rate Limiting: Implements token-based rate limiting for each user. This helps manage API costs and prevent abuse by controlling how often individual users can access LLM features, ensuring a fair usage policy and predictable expenses.

· Direct Frontend LLM Calls: Allows developers to make calls to LLM APIs (like OpenAI) directly from their frontend applications using provided SDKs. This drastically reduces development time and complexity by removing the need to build and maintain a separate backend infrastructure for AI integrations.

· Origin Allow Lists: Provides a mechanism to specify which domains or origins are allowed to make requests through Airbolt. This adds an extra layer of security by ensuring that only your authorized applications can utilize the service, preventing unauthorized access and potential misuse.

Product Usage Case

· Building a customer support chatbot: A startup can use Airbolt to integrate OpenAI's GPT models into their React-based customer support portal. Instead of building a Node.js or Python backend to handle API requests and manage rate limits for concurrent users, they can use Airbolt's React hooks. This allows them to ship the feature much faster and avoid the operational overhead of managing a backend, enabling them to focus on the chatbot's conversational logic and user experience.

· Developing an AI-powered content generation tool: A solo developer can create a web application that helps users generate blog post ideas or marketing copy using an LLM. By using Airbolt, they can avoid writing any backend code to proxy requests to the LLM API. Airbolt handles the secure storage of their OpenAI API key and provides per-user limits, so the developer can concentrate on building a user-friendly interface and unique features for content creation, deploying their MVP much quicker.

12

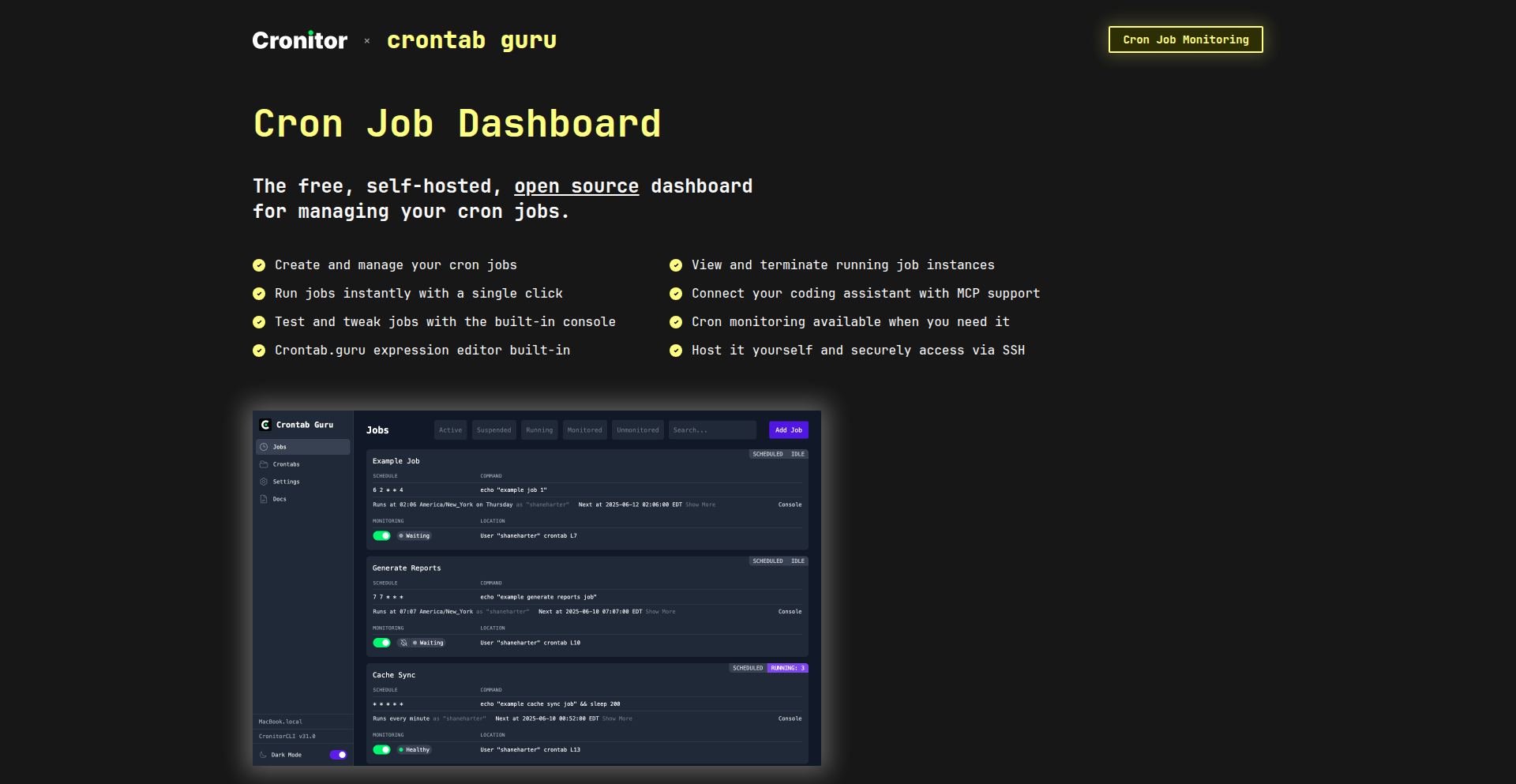

Crontab Guru Dashboard: The Self-Hosted Cron Job Orchestrator

Author

augustflanagan

Description

Crontab Guru Dashboard is an open-source, self-hosted web GUI designed to simplify the management and execution of cron jobs. It addresses the common pain points of working with cron on the command line by offering an intuitive interface for creating, updating, suspending, and deleting jobs. It also integrates directly with AI coding assistants such as Cursor and Claude Code through an MCP (Model Context Protocol) server, enabling natural-language configuration and health checks for scheduled tasks. So, you can manage your background tasks visually and even use AI to set them up and monitor them, making automation more accessible and powerful.

Popularity

Points 6

Comments 1

What is this product?

This project is a web-based graphical user interface (GUI) for managing cron jobs, the automated tasks that Unix-like systems run on a schedule. Cron jobs are traditionally edited through terse command-line tools; Crontab Guru Dashboard replaces that with a visual interface for creating, modifying, pausing, and deleting scheduled tasks. A key addition is its integration with AI coding assistants, which lets users configure cron jobs in natural language: the MCP server acts as a bridge, translating an assistant's requests into concrete cron job configurations and reporting status back. Together with a local console for debugging, this lowers the barrier to managing automated processes and turns often-intimidating cron administration into a user-friendly experience, even for people less comfortable on the command line.

How to use it?

Developers self-host Crontab Guru Dashboard on their own server and open the web GUI in a browser. From there they can create a cron job by specifying its schedule (in standard cron syntax, or in natural language via the AI integration) and the command to run, and they can edit, pause, or delete existing jobs. For deeper integration, the provided MCP server can be connected to an AI coding assistant, so a developer can ask, for example, to 'schedule a nightly backup of the database at 2 AM' and have the dashboard create and configure the corresponding job. The result is a visual control panel for all scheduled tasks, with AI tools optionally streamlining setup and monitoring.
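Whether a job is entered through the form or produced by an AI assistant, it ends up as a standard five-field cron expression; "a nightly backup at 2 AM" is `0 2 * * *`. The sketch below is a small, self-contained matcher for that syntax: lists, ranges, steps, and the classic Vixie cron rule that day-of-month and day-of-week are OR-ed when both are restricted. It is written for illustration and is not part of the dashboard; it does not handle names such as `MON` or shortcuts such as `@daily`.

```python
from datetime import datetime

# (min, max) for minute, hour, day-of-month, month, day-of-week (0 and 7 = Sunday).
FIELD_RANGES = [(0, 59), (0, 23), (1, 31), (1, 12), (0, 7)]


def parse_field(spec: str, lo: int, hi: int) -> set[int]:
    """Expand one cron field such as '*/15', '1-5', or '0,30' into a set of values."""
    values: set[int] = set()
    for part in spec.split(","):
        base, _, step_text = part.partition("/")
        step = int(step_text) if step_text else 1
        if step < 1:
            raise ValueError(f"bad step in {part!r}")
        if base == "*":
            start, end = lo, hi
        elif "-" in base:
            start, end = (int(x) for x in base.split("-", 1))
        else:
            start = int(base)
            end = hi if step_text else start  # 'a/n' means a..max every n
        if not lo <= start <= end <= hi:
            raise ValueError(f"out of range: {part!r}")
        values.update(range(start, end + 1, step))
    return values


def cron_matches(expr: str, when: datetime) -> bool:
    """Return True if the five-field cron expression fires at the given minute."""
    fields = expr.split()
    if len(fields) != 5:
        raise ValueError("expected 5 fields")
    minute, hour, dom, month, dow = (
        parse_field(f, lo, hi) for f, (lo, hi) in zip(fields, FIELD_RANGES))
    if 7 in dow:
        dow.add(0)  # both 0 and 7 mean Sunday
    cron_dow = (when.weekday() + 1) % 7  # Python: Monday=0; cron: Sunday=0
    dom_ok = when.day in dom
    dow_ok = cron_dow in dow
    # Vixie cron treats a day field starting with '*' as unrestricted.
    if fields[2].startswith("*") or fields[4].startswith("*"):
        day_ok = dom_ok and dow_ok
    else:
        day_ok = dom_ok or dow_ok
    return (when.minute in minute and when.hour in hour
            and when.month in month and day_ok)
```

For example, `*/15 9-17 * * 1-5` fires every quarter hour during working hours on weekdays, and `0 0 1 * 0` fires at midnight on the first of each month and also on every Sunday.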

Product Core Function

· Create, Update, Suspend, and Delete Cron Jobs: Provides a visual interface to manage the lifecycle of scheduled tasks, eliminating the need for manual command-line editing. This streamlines workflow and reduces errors for automated processes.

· On-Demand Job Execution: Allows users to manually trigger a cron job immediately, useful for testing or immediate task execution outside of its scheduled time. This offers flexibility in managing background processes.

· Kill Hanging Job Instances: Enables users to terminate cron jobs that are stuck or running longer than expected, preventing resource contention and ensuring system stability. This is crucial for maintaining reliable operations.

· Local Console for Debugging: Offers an integrated console environment to test and debug commands before they are scheduled, helping to identify and fix issues proactively. This improves the reliability of your automated workflows.

· AI Coding Assistant Integration (via MCP Server): Facilitates configuration and health checks of cron jobs using natural language prompts through AI assistants like Cursor and Claude Code. This makes complex scheduling and monitoring more intuitive and accessible.

Product Usage Case

· Automating daily database backups: A developer can use the dashboard to create a cron job that runs a backup script every night at 3 AM. If the backup fails, they can use the AI integration to ask 'Why did the database backup fail last night?' and get insights or debugging instructions. This solves the problem of setting up reliable and easily debuggable backup processes.

· Scheduling regular website content updates: A web administrator can set up cron jobs to pull new content from an API and update a website at specific intervals. The dashboard's GUI makes it easy to manage these frequent updates without complex command-line entries. This addresses the need for efficient and manageable content refresh cycles.

· Monitoring system health checks: A system administrator can schedule a script that checks server disk space and memory usage every hour. Using the AI integration, they can ask 'Check server health and notify me if disk usage is above 90%', streamlining the monitoring process. This provides a proactive approach to system maintenance.

· Managing batch processing jobs: A data engineer can schedule complex data processing pipelines using cron. The ability to start jobs on-demand and kill errant processes provides fine-grained control over these critical data workflows. This resolves the challenge of managing potentially long-running and resource-intensive data tasks.

13

PureRouter: AI Model Orchestrator

Author

TheDuuu

Description

PureRouter is an open-beta, multi-model AI routing system that gives developers precise control over how their Large Language Model (LLM) queries are handled. It supports customizable routing strategies based on speed, cost, or quality, with fine-grained options for advanced parameter tuning. This makes it easier to manage AI workloads across multiple providers and deployment environments.

Popularity

Points 4

Comments 2

What is this product?

PureRouter is an AI-powered routing system that acts as an intelligent traffic manager for your LLM interactions. Instead of sending all your requests to a single AI model or provider, PureRouter lets you define rules and strategies to send each query to the best-suited model. This is achieved by allowing you to select from a growing list of LLM providers (like OpenAI, Cohere, Gemini, Groq, DeepSeek, etc.) and fine-tune parameters such as context length, batch size, precision, memory usage, and generation controls (like temperature, top-p, and top-k). The innovation lies in its ability to abstract away the complexities of different LLM APIs and hardware deployments, offering a unified interface for complex AI orchestration, whether your workloads run on the cloud, at the edge, or on your own servers. This means you get more control over performance, cost, and the quality of AI outputs, tailored to your specific needs.

How to use it?

Developers can integrate PureRouter into their applications by directing their LLM requests through the PureRouter API. You would first define your routing strategies, perhaps favoring speed for real-time applications or cost-efficiency for batch processing. Then, when your application needs to interact with an LLM, it sends the query to PureRouter. PureRouter, based on your configured rules, then forwards the query to the most appropriate LLM provider and model, and returns the response. This can be done via simple API calls. For example, if you want to compare the speed of different models for a specific task, you can set up a routing rule that sends the same prompt to multiple models concurrently and then selects the fastest response. It's designed to be straightforward, even for complex setups, with a user-friendly UI to manage these strategies and monitor performance.
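The rule "send the same prompt to several models and keep the fastest answer" can be sketched with the standard `concurrent.futures` module. The model callables here are stand-ins with simulated latency; a real router would wrap each provider's client, and nothing below reflects PureRouter's actual API.

```python
import time
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from typing import Callable

Model = Callable[[str], str]


def fastest_response(prompt: str, models: dict[str, Model],
                     timeout_s: float = 30.0) -> tuple[str, str]:
    """Query all models concurrently; return (name, answer) of the first to succeed."""
    if not models:
        raise ValueError("no models given")
    pool = ThreadPoolExecutor(max_workers=len(models))
    futures = {pool.submit(fn, prompt): name for name, fn in models.items()}
    pending = set(futures)
    deadline = time.monotonic() + timeout_s
    try:
        while pending:
            done, pending = wait(pending, timeout=max(0.0, deadline - time.monotonic()),
                                 return_when=FIRST_COMPLETED)
            if not done:
                break  # deadline reached
            for fut in done:
                if fut.exception() is None:
                    return futures[fut], fut.result()
            # Every call that finished so far failed; keep waiting on the rest.
        raise TimeoutError("no model answered successfully in time")
    finally:
        # Do not block on slower models. Python threads cannot be killed, so the
        # losers finish in the background and their results are discarded.
        pool.shutdown(wait=False, cancel_futures=True)
```

Racing trades extra spend (every model is billed for the prompt) for lower tail latency, so it fits latency-critical paths better than bulk batch work.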

Product Core Function

· Multi-Provider LLM Routing: Enables sending LLM requests to various providers like OpenAI, Cohere, Gemini, and others through a single interface. This provides flexibility and prevents vendor lock-in, allowing you to pick the best model for each task and benefit from competitive pricing and performance.

· Configurable Routing Strategies: Allows defining custom rules to route queries based on specific criteria such as cost, speed, or output quality. This is valuable for optimizing AI applications for different use cases, ensuring you get the best balance of performance and expense.

· Advanced Parameter Control: Offers granular control over LLM parameters like context length, batch size, precision, memory usage, and generation settings (temperature, top-p, top-k). This level of detail is crucial for fine-tuning AI model behavior and ensuring precise, high-quality outputs for critical applications.

· Flexible Deployment Options: Supports deploying workloads across cloud, edge, or on-premises hardware, with scalable options from single GPUs to multi-GPU setups. This lets you match each workload to the most suitable and cost-effective infrastructure.

· Unified Management Interface: Provides a clean and intuitive UI for managing all routing strategies, monitoring performance, and overseeing AI workloads. This simplifies the complexity of orchestrating multiple AI models and providers, making it accessible even for less technical users.
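A cost, speed, or quality routing rule like the ones listed above might look like the following sketch. The `ModelProfile` fields, the model names, and the numbers in `CATALOG` are hypothetical; PureRouter's actual strategy format is not described here, so treat this as an illustration of rule-based model selection rather than its implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str           # hypothetical model identifier
    cost_per_1k: float  # assumed price per 1K tokens (USD)
    latency_ms: float   # assumed typical response latency
    quality: float      # assumed 0..1 score from your own evaluations


# Sort key for each strategy: min() picks the best model under that key.
STRATEGIES = {
    "cost": lambda m: m.cost_per_1k,
    "speed": lambda m: m.latency_ms,
    "quality": lambda m: -m.quality,
}


def route(models: list[ModelProfile], strategy: str, min_quality: float = 0.0) -> ModelProfile:
    """Pick one model by strategy, skipping models below a quality floor."""
    if strategy not in STRATEGIES:
        raise ValueError(f"unknown strategy: {strategy}")
    eligible = [m for m in models if m.quality >= min_quality]
    if not eligible:
        raise ValueError("no model meets the quality floor")
    return min(eligible, key=STRATEGIES[strategy])


CATALOG = [
    ModelProfile("small-fast", cost_per_1k=0.1, latency_ms=200, quality=0.60),
    ModelProfile("mid-tier", cost_per_1k=0.5, latency_ms=600, quality=0.80),
    ModelProfile("large-accurate", cost_per_1k=2.0, latency_ms=1500, quality=0.95),
]
```

For example, `route(CATALOG, "cost", min_quality=0.75)` skips `small-fast` and returns `mid-tier`: the cheapest model that still clears the quality floor.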

Product Usage Case

· An e-commerce platform looking to provide instant product recommendations. Using PureRouter, they can route recommendation queries to a fast, low-cost LLM for immediate responses, ensuring a smooth user experience, while perhaps routing more complex analytical queries to a higher-quality but slower model during off-peak hours.

· A research team analyzing large datasets of text. They can configure PureRouter to leverage multiple models for sentiment analysis, with routing rules prioritizing models known for accuracy on their specific data type. This allows for more robust and reliable analysis without manually switching between different API endpoints.

· A startup developing a customer support chatbot. They can use PureRouter to dynamically route customer queries to different LLMs based on the query's complexity. Simple FAQs could go to a very fast, inexpensive model, while more nuanced troubleshooting requests could be directed to a more sophisticated model, optimizing both cost and customer satisfaction.

· A developer experimenting with different LLM architectures. PureRouter allows them to easily A/B test various models and configurations for their generative art or text creation projects without needing to rewrite significant portions of their code when switching providers or parameters.
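For the A/B-testing case, one common approach (assumed here, not confirmed as PureRouter's) is to hash a stable user ID so that each user is consistently assigned to the same model variant, in proportion to traffic weights. The variant names used with this sketch are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, weights: dict[str, float]) -> str:
    """Deterministically assign a user to a variant in proportion to weights."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    # Keep the top 53 bits so the float is exact and strictly below 1.0.
    point = (int.from_bytes(digest[:8], "big") >> 11) / 2**53
    cumulative = 0.0
    for variant, weight in weights.items():
        cumulative += weight / total
        if point < cumulative:
            return variant
    # Floating-point rounding can leave cumulative just below 1.0;
    # fall back to the last variant that actually receives traffic.
    return [v for v, w in weights.items() if w > 0][-1]
```

Because the assignment depends only on the user ID, a user keeps seeing the same model across sessions, which keeps comparisons between variants clean.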

14

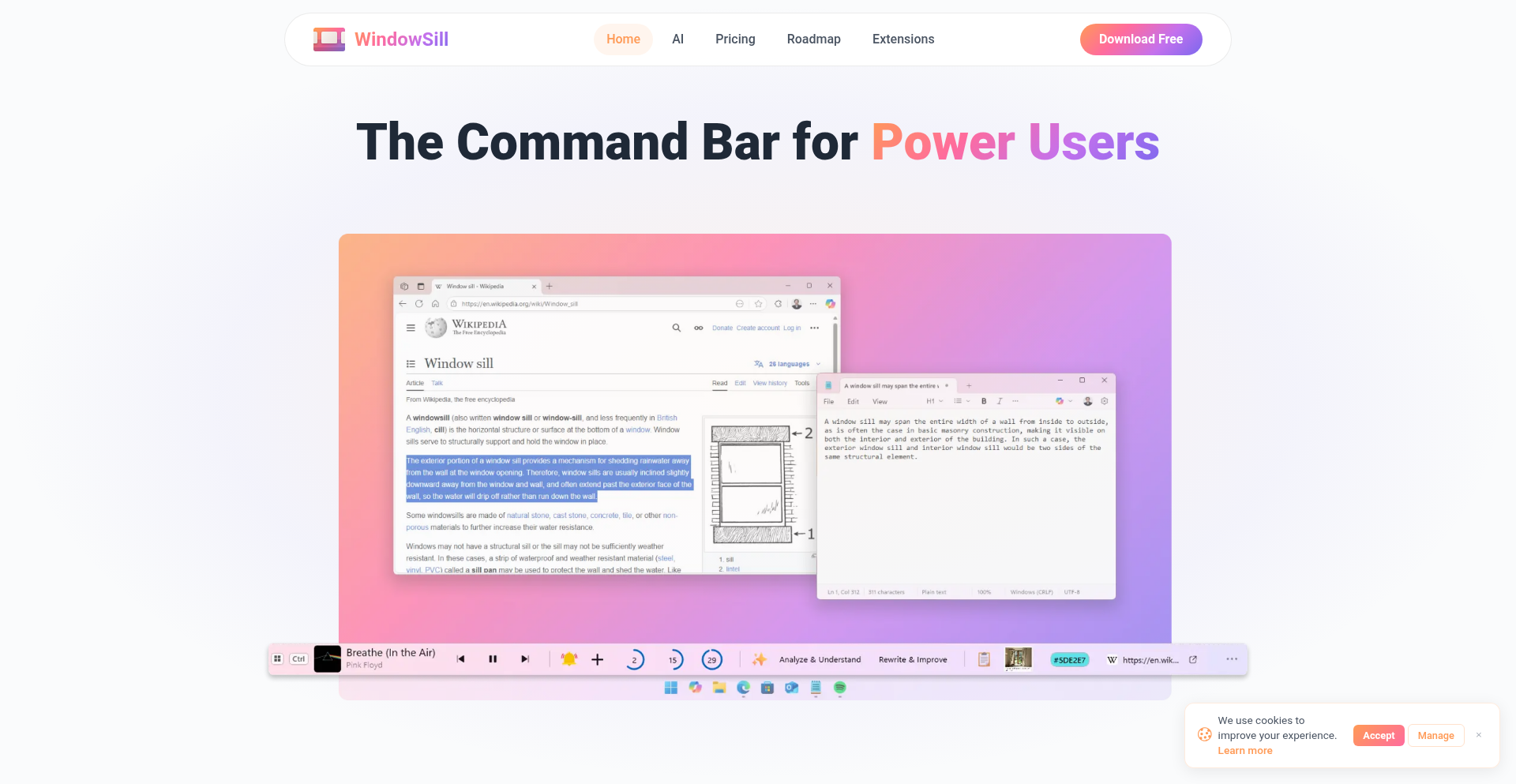

WindowSill

Author

veler

Description

WindowSill is a universal, AI-powered command bar for Windows that integrates seamlessly into your workflow. It provides context-aware text assistance (like summarizing or rewriting), quick reminders, clipboard history, URL utilities, and media controls, all accessible without switching applications. Its key innovation lies in bringing advanced, on-demand AI and productivity tools directly to any text or application on Windows, inspired by concepts like the MacBook Touch Bar and Apple Intelligence but tailored for the Windows ecosystem. This offers a significant productivity boost by reducing friction and context switching for users.

Popularity

Points 6

Comments 0

What is this product?

WindowSill is a productivity tool for Windows that acts as a universal command bar. Its core innovation is bringing AI-powered text manipulation and a suite of handy utilities directly to your cursor's location, no matter what application you're using. Think of it as a smart overlay that understands the text you've selected or the application you're in. For example, you can select text in any application and instantly get options to summarize, rewrite, translate, or fix grammar without copy-pasting or opening a new window. It's built to be non-intrusive and accessible on demand, solving the problem of fragmented workflows and the need to constantly switch between different tools and apps.

How to use it?

Developers can use WindowSill by installing it on a Windows 10 or 11 machine. Once installed, the "sill" (the command bar) can be invoked with a hotkey or a subtle gesture, depending on configuration. You can then select text in any application, and WindowSill will present relevant AI actions or utility options. For example, if you encounter a long piece of documentation, you can highlight it and choose 'summarize'. For developers, this is particularly useful for quickly understanding code snippets, documentation, or error messages. WindowSill is also extensible: it provides an SDK that lets developers build custom actions and plug their own tools or services directly into the command bar, creating bespoke workflows.
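The idea of context-aware actions, where the bar offers only the actions that make sense for the current selection, can be sketched as a small registry. This is a hypothetical Python illustration of the pattern, not WindowSill's SDK; `Action`, `register`, `actions_for`, `run_action`, and both example actions are invented for this example.

```python
from dataclasses import dataclass
from typing import Callable
from urllib.parse import urlparse


@dataclass(frozen=True)
class Action:
    name: str
    applies_to: Callable[[str], bool]  # should the bar offer this for the selection?
    run: Callable[[str], str]          # transform the selected text


REGISTRY: list[Action] = []


def register(action: Action) -> None:
    REGISTRY.append(action)


def actions_for(selection: str) -> list[str]:
    """Names of the registered actions that apply to the current selection."""
    return [a.name for a in REGISTRY if a.applies_to(selection)]


def run_action(name: str, selection: str) -> str:
    for action in REGISTRY:
        if action.name == name and action.applies_to(selection):
            return action.run(selection)
    raise KeyError(f"no applicable action named {name!r}")


def looks_like_url(text: str) -> bool:
    parsed = urlparse(text.strip())
    return parsed.scheme in ("http", "https") and bool(parsed.netloc)


# Two hypothetical custom actions: one for any text, one only for URLs.
register(Action("Word count", lambda s: bool(s.strip()), lambda s: str(len(s.split()))))
register(Action("Remove query string", looks_like_url, lambda s: s.strip().split("?", 1)[0]))
```

Selecting plain prose would surface only "Word count", while selecting a link would also surface "Remove query string", mirroring how URL utilities appear only for URLs.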

Product Core Function

· AI Text Assistance: Enables users to interact with selected text for summarization, rewriting, translation, and grammar correction without leaving their current application. This saves time and reduces cognitive load by keeping AI capabilities contextually available.

· Short-Term Reminders: Allows users to set immediate, unmissable reminders that can appear as full-screen notifications. This is invaluable for staying on track with tasks or deadlines, especially for those who multitask or benefit from prominent cues.

· Clipboard History: Provides quick access to recently copied items without needing to switch to a separate clipboard manager application. This streamlines the process of reusing copied text or data, improving efficiency.

· URL and Text Utilities: Offers shortcuts for common actions like URL shortening or QR code generation directly from selected URLs. This simplifies web-based tasks and sharing information quickly.

· Media and Meetings Controls: Enables control over media playback and quick muting/unmuting in applications like Microsoft Teams, even when these applications are minimized or in the background. This enhances focus during calls or when managing media consumption.

· Personalization and Extensibility: Lets users save custom AI prompts and customize the appearance and docking position of the command bar, while developers can extend its functionality through an SDK. This caters to individual user preferences and fosters community-driven innovation.
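A clipboard history like the one listed above is often modeled as a bounded, most-recent-first list in which re-copying an item moves it back to the top. The sketch below assumes that behavior and a 25-item default capacity; it does not describe how WindowSill stores its history.

```python
from collections import deque
from typing import Optional


class ClipboardHistory:
    """Most-recent-first clipboard history with a fixed capacity and no duplicates."""

    def __init__(self, capacity: int = 25) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        # maxlen makes the deque drop the oldest entry (right end) when full.
        self._items: deque[str] = deque(maxlen=capacity)

    def push(self, text: str) -> None:
        if text in self._items:
            self._items.remove(text)  # re-copying an item moves it back to the top
        self._items.appendleft(text)

    def recent(self, n: Optional[int] = None) -> list[str]:
        items = list(self._items)
        return items if n is None else items[:n]
```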

Product Usage Case

· A developer is reading a lengthy technical article and needs a quick overview. They highlight the article's text and use WindowSill's 'Summarize' AI function to get a concise summary without leaving their browser. This saves them from context switching to a separate summarization tool.

· A remote worker is in a video conference and needs to quickly mute their microphone to avoid background noise. Instead of finding the Teams window, they use WindowSill's media controls to mute instantly. This ensures seamless participation and avoids awkward interruptions.

· A student is working on an essay and needs to rephrase a sentence for better clarity. They select the sentence and use WindowSill's 'Rewrite' AI function. This helps them improve their writing efficiently within their word processor.

· A marketer needs to share a long URL on social media. They select the URL in an email and use WindowSill's 'Shorten URL' utility to create a more manageable link, then copy the shortened URL directly.

· A busy professional needs to remember to take a break in 30 minutes. They set a 'Short-Term Reminder' using WindowSill, which will appear as a prominent notification, ensuring they don't forget.

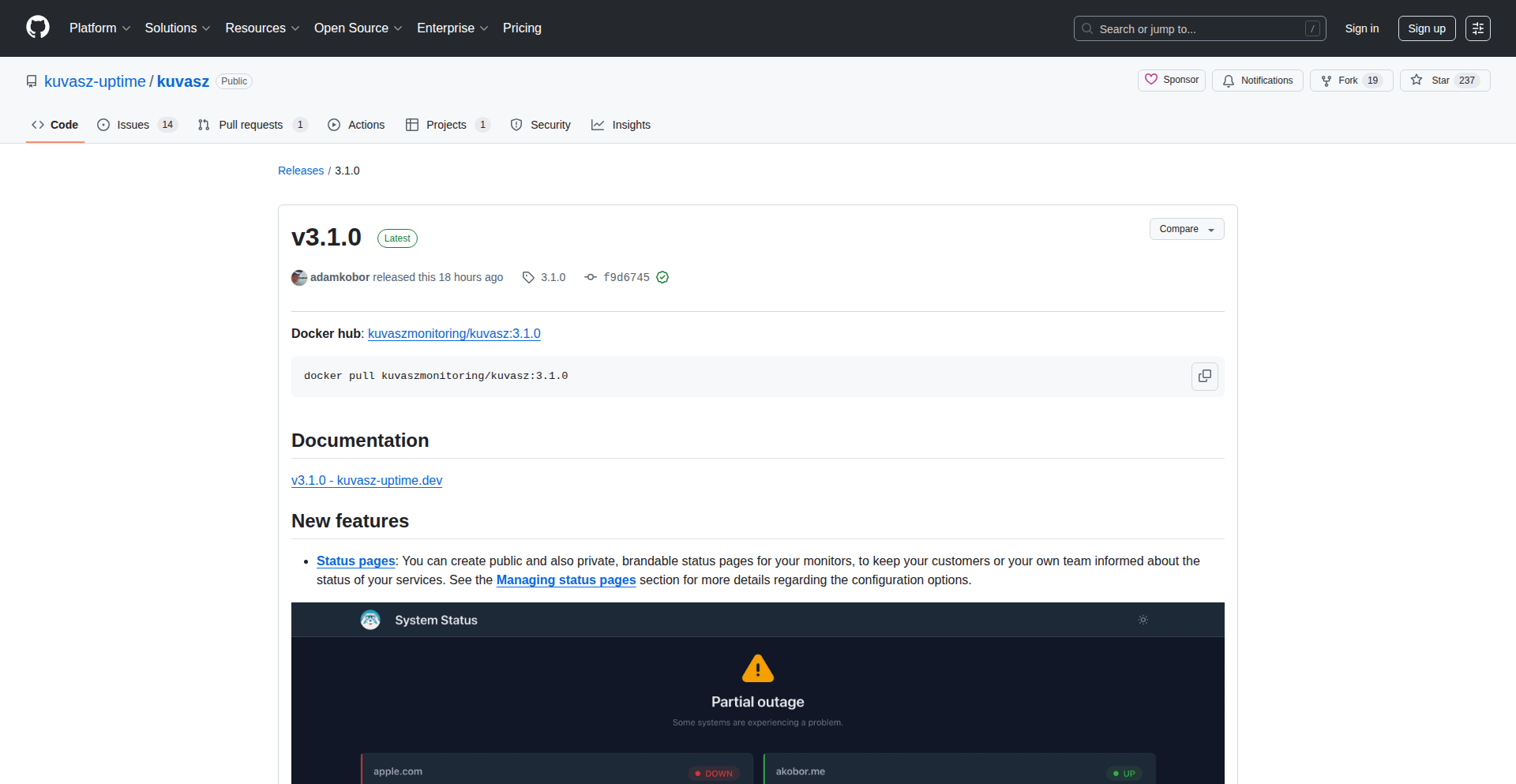

15

Kuvasz Uptime - Status Page Weaver

Author

selfhst12

Description

Kuvasz Uptime v3.1.0 introduces the highly anticipated status page functionality. This project is a self-hosted uptime monitoring tool that now allows developers to create and manage public-facing status pages for their services. It bridges the gap between internal system health and external communication, offering transparency and a clear way to inform users about incidents or maintenance.

Popularity

Points 6

Comments 0

What is this product?

Kuvasz Uptime is a self-hosted, open-source monitoring solution designed to keep track of your application's availability. Version 3.1.0's key innovation is the addition of 'Status Pages'. Technically, it achieves this by providing a flexible framework to define and display the health status of various components or services. Developers can configure checks (like HTTP endpoints, database connectivity, etc.) and map their statuses to user-friendly messages on a public-facing page. This offers a transparent and automated way to communicate system reliability to end-users, which is crucial for building trust and managing expectations during outages or planned downtime.

How to use it?

Developers can integrate Kuvasz Uptime by deploying it on their own infrastructure (e.g., a VPS or container). Once installed, they can configure monitors for their specific applications and services. To use the status pages, developers define which monitors contribute to the overall status displayed on the page. This involves setting up rules for how individual service statuses aggregate into a system-wide status (e.g., 'Operational', 'Degraded Performance', 'Major Outage'). The status page is then accessible via a public URL, allowing anyone to check the health of the services without needing to contact support or developers directly. It's like having a live dashboard for your service's well-being, broadcast to the world.
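The aggregation step, collapsing individual monitor results into a single page status, can be sketched as follows. The rule used here (a critical monitor down, or every monitor down, counts as a major outage; any other failure is degraded performance) is an assumption for illustration; Kuvasz lets you configure your own rules, and this is not its code.

```python
from enum import Enum


class PageStatus(Enum):
    OPERATIONAL = "Operational"
    DEGRADED = "Degraded Performance"
    MAJOR_OUTAGE = "Major Outage"


def aggregate(monitors: dict[str, bool], critical: frozenset[str] = frozenset()) -> PageStatus:
    """Collapse per-monitor up/down results (True = up) into one page-level status."""
    down = {name for name, is_up in monitors.items() if not is_up}
    if not down:
        return PageStatus.OPERATIONAL
    # Assumed rule: losing a critical service, or everything, is a major outage.
    if (down & critical) or len(down) == len(monitors):
        return PageStatus.MAJOR_OUTAGE
    return PageStatus.DEGRADED
```

Marking, say, an authentication monitor as critical means its failure alone escalates the page to "Major Outage", while a non-critical failure only shows "Degraded Performance".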

Product Core Function

· Uptime Monitoring: Automatically checks the availability of your services at configurable intervals and alerts you to downtime. This means you learn about problems quickly, ideally before your users notice them.

· Status Page Generation: Creates customizable, public-facing status pages to communicate service health. This solves the problem of how to inform your users about service issues, providing them with real-time updates and reducing support load.

· Incident Management: Allows for manual or automated updates to the status page during incidents, providing clear communication about ongoing issues and their resolution. This helps manage user perception and maintain trust during difficult times.

· Service Grouping: Organizes monitored services into logical groups, enabling a consolidated view of system health. This makes it easier to understand dependencies and the overall impact of an issue across your infrastructure.

· Customizable Branding: Enables personalization of the status page with your company's logo and color scheme, maintaining brand consistency. This ensures that even your status updates feel professional and on-brand.
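To avoid alerting on a single flaky check, monitors commonly require several consecutive failures before declaring a service down. The sketch below assumes a default threshold of 3 and also tracks a simple uptime percentage; the class and its fields are hypothetical, not Kuvasz's internals.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MonitorState:
    failure_threshold: int = 3  # assumed: consecutive failures before alerting
    consecutive_failures: int = 0
    is_down: bool = False
    checks: int = 0
    successes: int = 0

    def record(self, ok: bool) -> Optional[str]:
        """Record one check result; return "DOWN" or "RECOVERED" when the state flips."""
        self.checks += 1
        if ok:
            self.successes += 1
            self.consecutive_failures = 0
            if self.is_down:
                self.is_down = False
                return "RECOVERED"
            return None
        self.consecutive_failures += 1
        if not self.is_down and self.consecutive_failures >= self.failure_threshold:
            self.is_down = True
            return "DOWN"
        return None

    @property
    def uptime_percent(self) -> float:
        return 100.0 * self.successes / self.checks if self.checks else 100.0
```

A higher threshold trades detection speed for fewer false alarms; the right value depends on how often your checks run.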

Product Usage Case

· A SaaS company wants to inform its customers about any service disruptions in real-time. They deploy Kuvasz Uptime and configure monitors for their API, database, and authentication services. The generated status page displays 'All Systems Operational' during normal times and automatically updates to 'Degraded Performance' or 'Outage' when a specific service fails, with accompanying messages detailing the issue and expected resolution time. This reduces customer anxiety and incoming support tickets.

· A gaming studio needs to announce planned maintenance for its online game servers. They use Kuvasz Uptime to create a status page that clearly outlines the maintenance schedule, including the start time, end time, and affected services. This proactive communication ensures players are informed and can plan accordingly, minimizing frustration.

· A developer managing a personal project with a public API needs a simple way to show its reliability. They set up Kuvasz Uptime to monitor their API endpoint. The status page provides a quick visual indicator (e.g., a green checkmark or a red 'X') of the API's current availability, giving potential users confidence in its stability.

· An e-commerce platform experiences intermittent issues with its payment gateway. Kuvasz Uptime is used to monitor the payment gateway's health. When an issue is detected, the status page is updated to reflect a 'partial outage' affecting payments, and a message explains that the team is actively working on a fix. This transparency reassures customers that their payment concerns are being addressed.

16

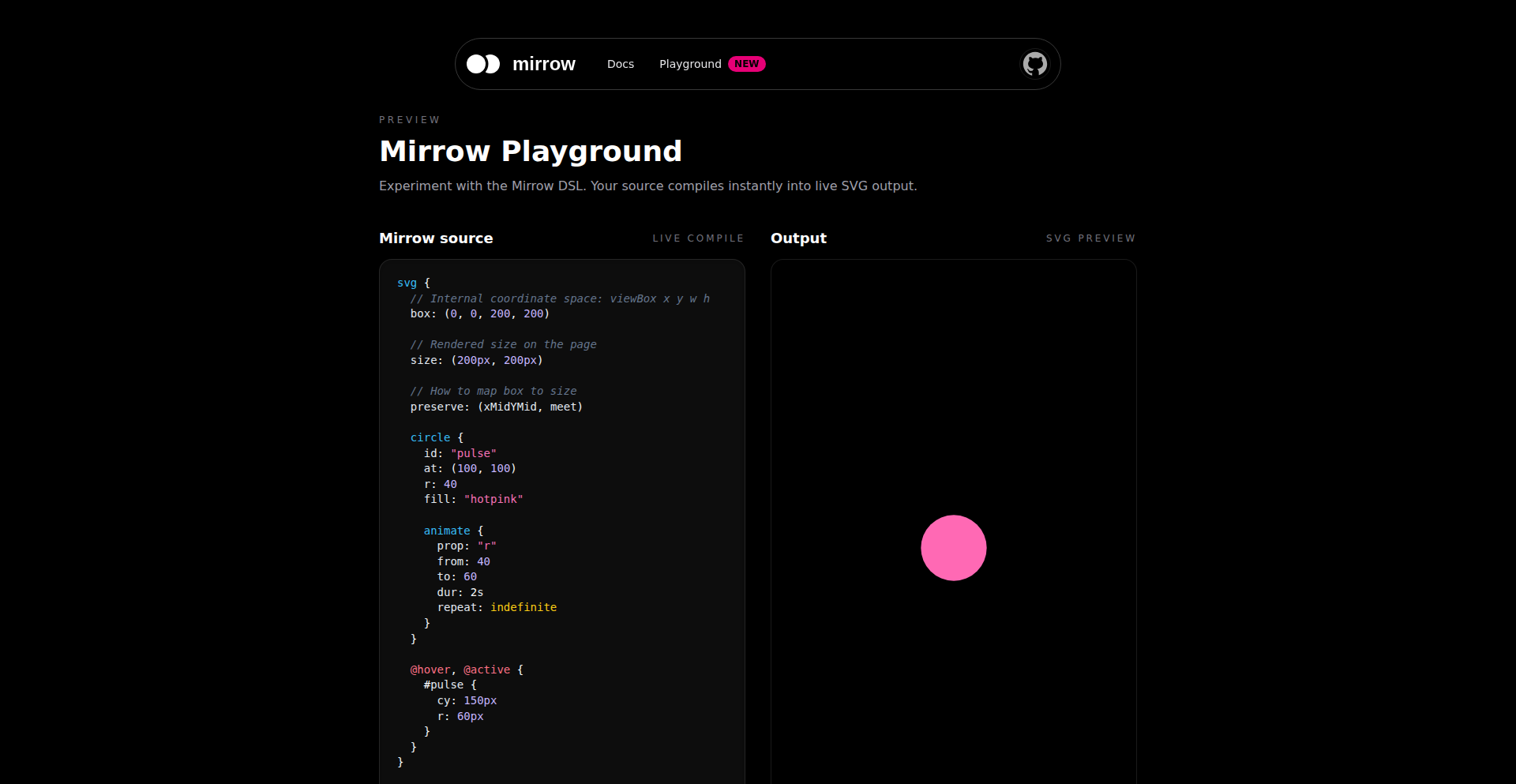

Mirrow SVG Synthesis

Author

era37

Description

Mirrow is a specialized language built with TypeScript that translates into Scalable Vector Graphics (SVG) and supports animations. It simplifies the creation of dynamic SVGs without requiring complex JavaScript libraries or manual CSS styling. Mirrow offers compile-time checks for attributes and allows inline event handling like click and hover, making SVG development more robust and interactive. Its command-line interface (CLI) enables easy conversion of .mirrow files into static SVGs or integration as components, enhancing developer workflow and code quality.

Popularity

Points 3

Comments 2

What is this product?

Mirrow is a domain-specific language (DSL) written in TypeScript that compiles directly to SVG code, including support for animations. Think of it as a streamlined way to write SVG with built-in checks. Instead of writing raw SVG tags and complex CSS for animations, you write in Mirrow's syntax, and the Mirrow compiler validates it (for example, checking attributes) at compile time, catching potential errors before the SVG is ever rendered. It also lets you attach interactive behaviors like clicks or hovers directly within the Mirrow code, much as you would in regular web development. This approach aims to make animated and interactive SVGs more approachable and less error-prone.
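To make "compile-time attribute validation" concrete, here is a toy Python illustration: element attributes are checked against a small allow-list before markup for that element is emitted, so a typo like `radius` fails at build time instead of silently producing a circle that never renders. It does not use Mirrow's syntax or compiler; `ALLOWED`, `CompileError`, and `compile_element` are hypothetical.

```python
from xml.sax.saxutils import quoteattr

# Deliberately tiny allow-list; real SVG elements accept many more attributes.
ALLOWED = {
    "svg": {"width", "height", "viewBox", "xmlns"},
    "circle": {"cx", "cy", "r", "fill"},
    "rect": {"x", "y", "width", "height", "fill"},
}


class CompileError(ValueError):
    """Raised instead of emitting markup when validation fails."""


def compile_element(tag: str, attrs: dict, *children: str) -> str:
    if tag not in ALLOWED:
        raise CompileError(f"unknown element <{tag}>")
    unknown = sorted(set(attrs) - ALLOWED[tag])
    if unknown:
        raise CompileError(f"<{tag}> does not accept: {', '.join(unknown)}")
    # quoteattr escapes and quotes each value for safe use in markup.
    rendered = "".join(f" {key}={quoteattr(str(value))}" for key, value in attrs.items())
    if not children:
        return f"<{tag}{rendered}/>"
    return f"<{tag}{rendered}>{''.join(children)}</{tag}>"
```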

How to use it?

Developers can use Mirrow by writing their animation and SVG logic in `.mirrow` files. These files can then be processed using the Mirrow CLI. For example, you can use the command `npx mirrow -i input.mirrow -o output.svg` to convert your Mirrow code into a standard SVG file that can be used anywhere. Alternatively, you can integrate Mirrow directly into your build process, using the compiled SVGs as reusable components within your web projects, similar to how you might use other UI components.
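If you want to run the documented `npx mirrow -i input.mirrow -o output.svg` command as part of a build, a small wrapper can compile every `.mirrow` file in a source tree into a mirrored output tree. This sketch assumes Node.js (and therefore `npx`) is installed and uses only the `-i` and `-o` flags shown above; the directory layout and the function names are hypothetical.

```python
import subprocess
from pathlib import Path


def mirrow_commands(src_dir: Path, out_dir: Path) -> list[list[str]]:
    """One `npx mirrow -i <in> -o <out>` command per .mirrow file, mirroring the tree."""
    commands = []
    for src in sorted(src_dir.rglob("*.mirrow")):
        dest = out_dir / src.relative_to(src_dir).with_suffix(".svg")
        commands.append(["npx", "mirrow", "-i", str(src), "-o", str(dest)])
    return commands


def build_all(src_dir: Path, out_dir: Path) -> None:
    for cmd in mirrow_commands(src_dir, out_dir):
        Path(cmd[-1]).parent.mkdir(parents=True, exist_ok=True)
        subprocess.run(cmd, check=True)  # check=True stops the build on the first failure


if __name__ == "__main__":
    build_all(Path("src/graphics"), Path("dist/graphics"))
```

Separating command construction from execution keeps the file-discovery logic easy to test without invoking the CLI.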

Product Core Function

· Compile-time attribute validation: Ensures your SVG attributes are correct before runtime, reducing bugs and saving debugging time. This means you catch mistakes early in the development process, leading to more stable graphics.