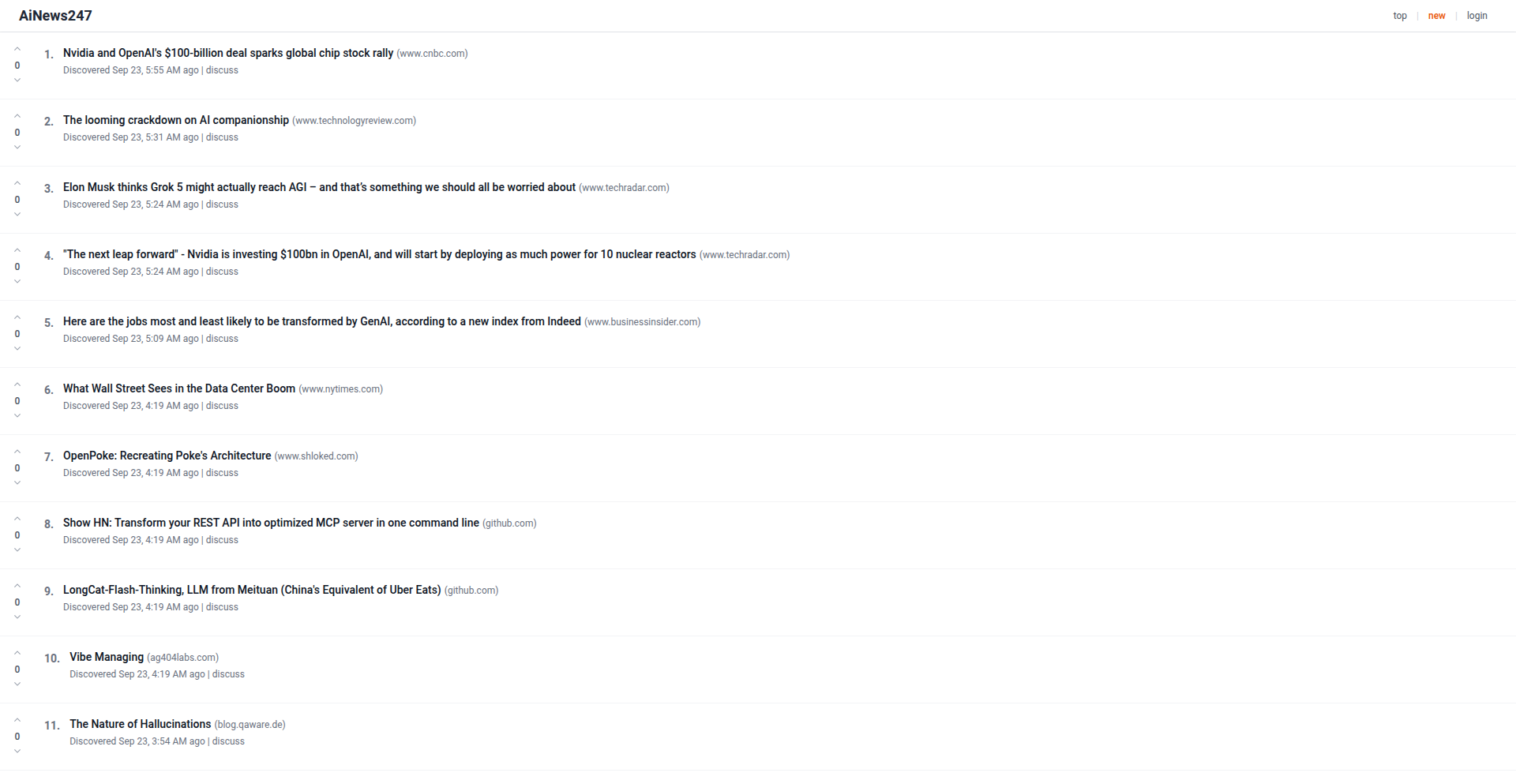

Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-09-22

SagaSu777 2025-09-23

Explore the hottest developer projects on Show HN for 2025-09-22. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The current wave of Show HN projects highlights a strong embrace of AI not just as a buzzword, but as a practical tool to solve real-world inefficiencies. From streamlining contract generation to accelerating electronic component discovery, AI is being applied to reduce friction and enhance human capabilities. There's a clear trend towards making complex domains accessible through intuitive interfaces and intelligent automation, embodying the hacker spirit of building powerful tools that empower individuals. For developers and innovators, this signifies an opportunity to identify niche problems in any field and explore how AI, coupled with clever data handling and workflow design, can provide elegant solutions. The emphasis on local-first AI and efficient agentic systems also points towards a future where powerful AI capabilities can be more private, cost-effective, and customizable, offering fertile ground for new ventures and open-source contributions.

Today's Hottest Product

Name

Zenode – an AI-powered electronic component search engine

Highlight

This project applies AI to the massive volume of electronic component data that PCB designers work with. It tackles the tedious and error-prone task of datasheet analysis, allowing engineers to find and understand components using natural language queries. The 'Deep Dive' feature, which enables cross-component analysis, is particularly innovative: it is meant to speed up the design process and reduce costly mistakes. Developers can learn about data wrangling techniques for large, unstructured datasets and the practical application of AI in specialized engineering domains.

Popular Category

AI & Machine Learning

Developer Tools

Productivity Software

Web Development

Data Analysis

Popular Keyword

AI

LLM

Automation

Data

Code

Productivity

Search

Agent

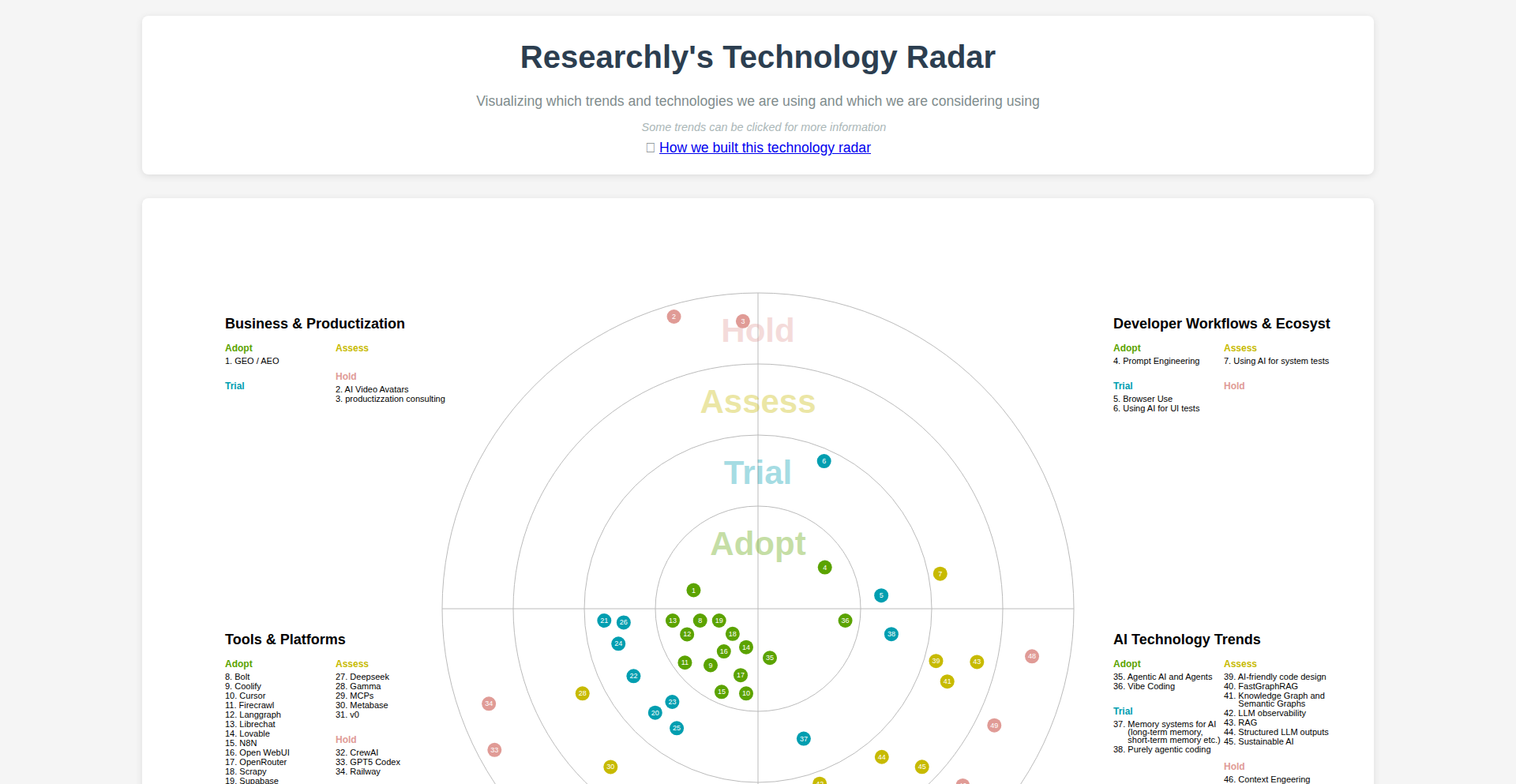

Technology Trends

AI-powered solutions for complex problems

Agentic workflows for automation

Local-first AI processing

Data wrangling and analysis at scale

Personalized and adaptive user experiences

Democratization of complex technical tasks

Efficient resource management in development environments

Project Category Distribution

AI/ML Tools (30%)

Developer Productivity (25%)

Web Applications/Services (20%)

Data Tools (10%)

Specialty Tools (Legal, Audio, etc.) (10%)

Creative/Entertainment (5%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | FreelanceContractGen | 137 | 47 |
| 2 | LocalSpeechTranscriber | 84 | 24 |
| 3 | Zenode AI-Component Navigator | 17 | 35 |
| 4 | VillagerErrorSoundboard | 28 | 1 |
| 5 | CodeAgent Swarm | 9 | 8 |
| 6 | AI Presentation Coach | 9 | 7 |
| 7 | Spiderseek: AI Search Visibility Tracker | 7 | 6 |
| 8 | SoloSync Encrypted Knowledge Hub | 1 | 6 |
| 9 | Lessie AI: Automated People Discovery Agent | 5 | 0 |
| 10 | Devbox: Containerized Dev Environments | 3 | 2 |

1

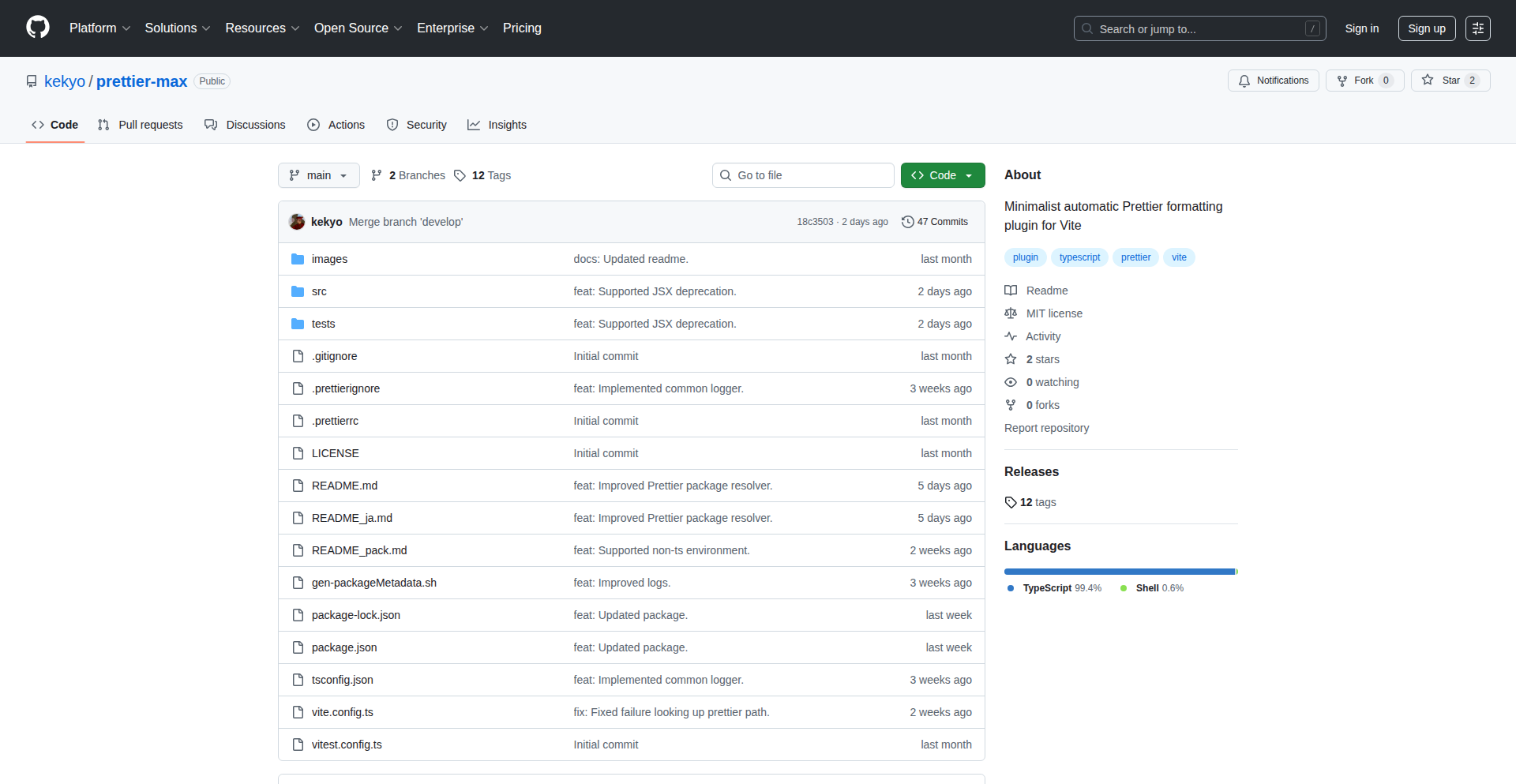

FreelanceContractGen

Author

baobabKoodaa

Description

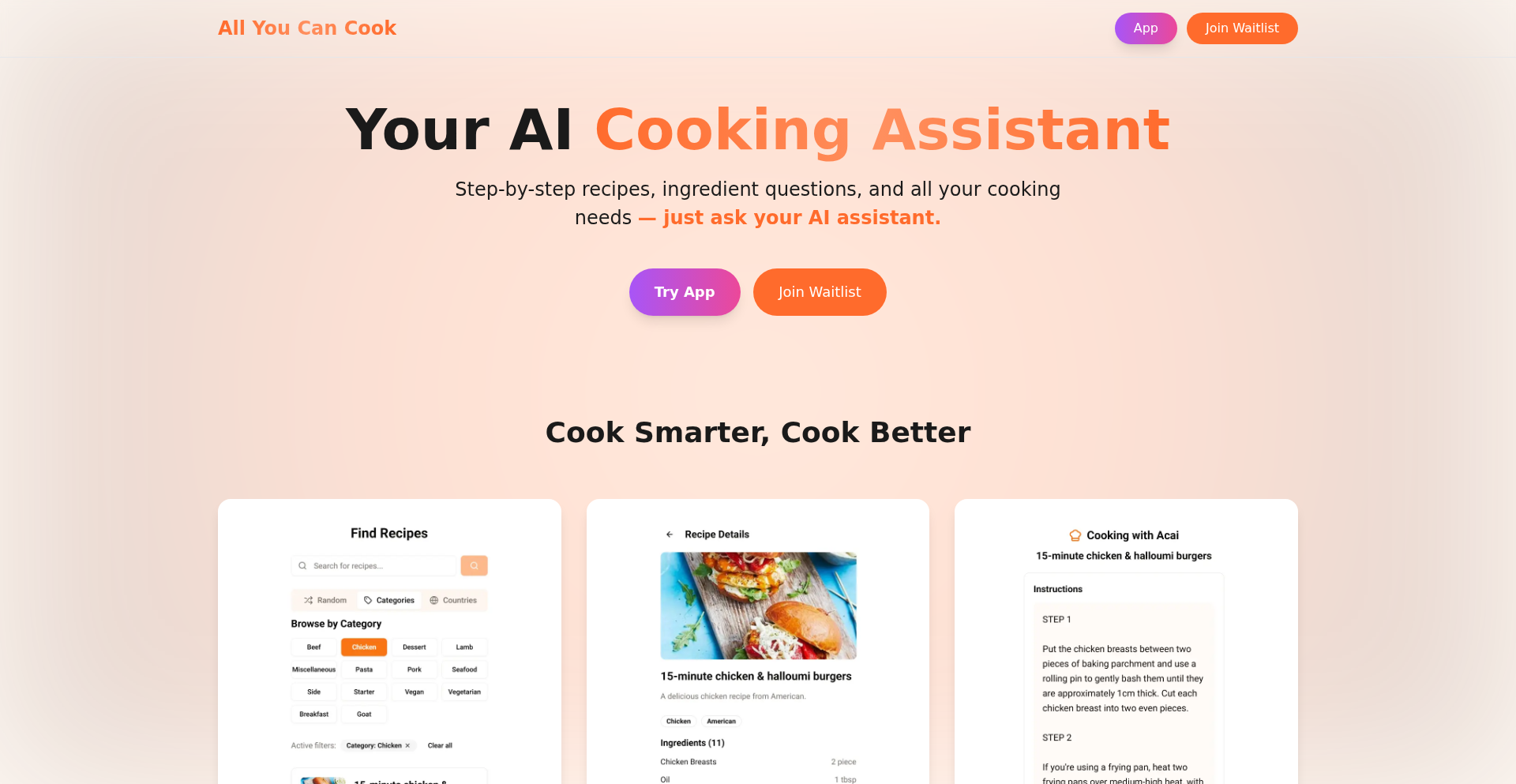

A web-based generator for creating customizable freelance contract templates, specifically designed for the Finnish market but adaptable for other jurisdictions. It simplifies contract creation by eliminating boilerplate text and reducing common errors, offering a free and open-source alternative to expensive legal templates.

Popularity

Points 137

Comments 47

What is this product?

FreelanceContractGen is a web application that generates personalized freelance contract documents. Its templating system hides or shows specific clauses based on user input, so users do not have to hand-edit generic placeholder text. This streamlines drafting an agreement, reducing the risk of errors and saving time compared to manual document editing. The core technology is conditional logic within the template engine, which makes contract generation interactive and less error-prone. So, what's the value? It provides a user-friendly, cost-effective way to get a contract drafted, even if you're not a legal expert.
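To make the conditional-clause idea concrete, here is a minimal Python sketch. It is not the project's actual code: the clause wording, the question names (`billing`, `ip_transfer`, and so on), and the `render_contract` helper are all assumptions made up for illustration.

```python
from typing import Callable

Answers = dict  # answers to the guided questions, keyed by question name


def always(answers: Answers) -> bool:
    """Condition for clauses that appear in every contract."""
    return True


# Hypothetical clauses: (condition, text). The wording is placeholder text,
# not legal language, and the question names are invented for this sketch.
CLAUSES: list[tuple[Callable[[Answers], bool], str]] = [
    (always, "Parties: {client} and {freelancer}."),
    (lambda a: a["billing"] == "hourly", "Fee: {hourly_rate} EUR per hour."),
    (lambda a: a["billing"] == "fixed", "Fee: a fixed price of {fixed_price} EUR."),
    (lambda a: a.get("ip_transfer", False),
     "IP: all rights transfer to the client on payment."),
]


def render_contract(answers: Answers) -> str:
    """Keep only the clauses whose condition holds, fill them in, and number them."""
    kept = [text.format(**answers) for include_if, text in CLAUSES if include_if(answers)]
    return "\n".join(f"{n}. {line}" for n, line in enumerate(kept, start=1))


contract = render_contract({
    "client": "Acme Oy",
    "freelancer": "Jane Doe",
    "billing": "fixed",
    "fixed_price": 5000,
})
```

Because hidden clauses are never formatted, a fixed-price contract does not need an `hourly_rate` answer at all, and numbering happens after filtering so the output has no gaps.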

How to use it?

Developers can use FreelanceContractGen by visiting the web application directly. The process involves answering a series of guided questions about the freelance project (e.g., scope of work, payment terms, intellectual property rights). Based on these answers, the generator dynamically populates a pre-defined legal template. This generated contract can then be downloaded and used. For integration, the open-source nature allows developers to inspect the code, potentially fork it, or even adapt parts of the templating logic for their own internal tools or platforms that require dynamic document generation. So, how does this help you? You can quickly generate a professional contract for your freelance gigs without needing to hire an expensive lawyer or wrestle with complex legal documents.

Product Core Function

· Dynamic contract generation: Creates tailored contracts by asking user-specific questions and populating a template accordingly, reducing manual effort and potential errors. The value here is speed and accuracy in contract drafting.

· Conditional clause display: Intelligently hides or shows contract sections based on user input, simplifying the template and preventing confusion. This provides a cleaner, more relevant contract tailored to the specific project, saving time and reducing mistakes.

· Open-source and free: Provides access to a high-quality contract template and generator without cost, promoting accessibility for freelancers. This means significant cost savings and transparency for users.

· User-friendly interface: Designed to be intuitive for users with minimal legal background, making contract creation accessible to everyone. The value is empowering non-legal professionals to create legally sound documents.

· Jurisdiction-specific (Finnish) and adaptable: While optimized for Finland, the underlying logic can be a blueprint for contracts in other regions. This offers a starting point for international freelancers or those needing to understand contract generation mechanics.

Product Usage Case

· A freelance software developer needs to draft an agreement for a new client project. Instead of buying an expensive template or writing one from scratch, they use FreelanceContractGen, answer a few questions about the project scope and payment, and generate a professional contract in minutes. This solves the problem of time-consuming and costly contract creation.

· A graphic designer starting their freelancing career needs a solid contract but is on a tight budget. They discover FreelanceContractGen, which allows them to create a legally robust agreement tailored to their services for free. This addresses the financial barrier to securing proper client agreements.

· A small co-working space for freelancers wants to provide resources to its members. They can recommend FreelanceContractGen as a go-to tool for generating client contracts, enhancing the value proposition for their members and fostering a supportive community. This showcases how the tool can benefit a broader community.

2

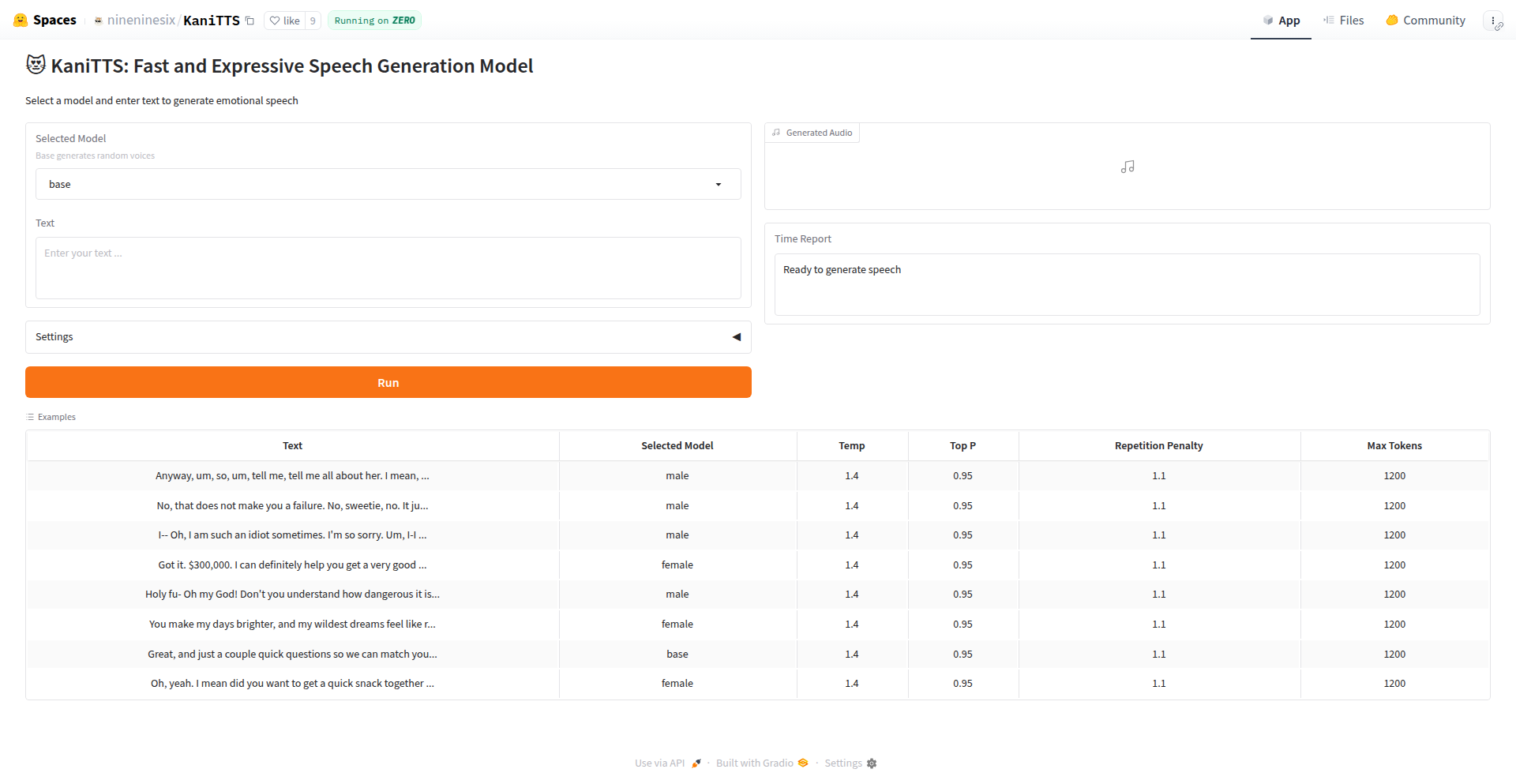

LocalSpeechTranscriber

Author

Pavlinbg

Description

A Python-based tool for local audio transcription, converting speech to text without relying on cloud services. This project addresses the need for privacy-conscious and cost-effective speech-to-text solutions by leveraging local processing power, offering a tangible alternative to expensive or data-sensitive cloud-based APIs. Its innovation lies in making advanced speech recognition accessible and manageable directly on a developer's machine.

Popularity

Points 84

Comments 24

What is this product?

LocalSpeechTranscriber is a Python application that allows you to transform audio files into written text directly on your computer. It utilizes advanced speech recognition models that run locally, meaning your audio data never leaves your system. The core innovation here is the democratization of speech-to-text technology, moving it away from proprietary cloud platforms and into the hands of individual developers. This offers significant advantages in terms of data privacy, cost savings, and the ability to work offline.

How to use it?

Developers can integrate LocalSpeechTranscriber into their Python projects by installing it via pip. The library provides straightforward functions to load audio files (like WAV or MP3) and initiate the transcription process. It's designed for ease of use, allowing for quick experimentation and seamless integration into existing workflows, such as building custom chatbots, analyzing meeting recordings, or creating accessibility features for applications. For example, you could write a simple script to batch transcribe a folder of audio files.
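The batch script mentioned above could look roughly like the following. The digest does not show the library's API, so this sketch takes the transcription step as a `transcribe` callable that you would supply yourself; `batch_transcribe` and `AUDIO_SUFFIXES` are hypothetical names, not part of LocalSpeechTranscriber.

```python
from pathlib import Path
from typing import Callable

# File types mentioned in the text; extend as needed.
AUDIO_SUFFIXES = {".wav", ".mp3"}


def batch_transcribe(folder: Path, transcribe: Callable[[Path], str]) -> dict[str, str]:
    """Transcribe every audio file in `folder` and write a .txt file next to it.

    `transcribe` is a placeholder for whatever local speech-to-text call
    you use; it receives the audio path and returns the transcript.
    """
    results: dict[str, str] = {}
    # sorted() snapshots the listing first, so the .txt files written below
    # are not picked up while iterating.
    for audio in sorted(folder.iterdir()):
        if audio.suffix.lower() not in AUDIO_SUFFIXES:
            continue
        text = transcribe(audio)
        audio.with_suffix(".txt").write_text(text, encoding="utf-8")
        results[audio.name] = text
    return results
```

Passing the transcriber in as a function keeps the loop independent of any particular model, which also makes it easy to test with a stub.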

Product Core Function

· Local audio processing: The system processes audio files directly on the user's machine, ensuring data privacy and security. This means sensitive audio content can be transcribed without fear of it being uploaded to external servers.

· High-accuracy speech recognition: It employs sophisticated machine learning models trained for accurate transcription, comparable to many online services. This provides reliable text output for various audio qualities and accents.

· Offline functionality: Once set up, the transcription can be performed without any internet connection. This is invaluable for developers working in environments with limited or no connectivity.

· Python integration: The library is built with Python, making it easy for developers to incorporate into their existing Python applications and workflows. This reduces the barrier to entry for adding speech-to-text capabilities.

· Customizable models: The underlying models can potentially be fine-tuned or swapped for different languages or specialized vocabularies, offering flexibility for diverse use cases.

Product Usage Case

· Transcribing interviews for journalists or researchers without uploading sensitive recordings to third-party services, ensuring data confidentiality.

· Building an offline voice command system for embedded devices or specialized software where internet connectivity is unreliable or undesirable.

· Automating the creation of subtitles or transcripts for video content produced by independent creators, bypassing subscription fees for cloud transcription services.

· Developing internal tools for businesses to analyze customer service calls or internal meeting recordings, maintaining complete control over proprietary data.

· Creating accessibility features for applications, allowing users to interact with software using voice commands or to convert spoken content into text for easier consumption.

3

Zenode AI-Component Navigator

Author

bbourn

Description

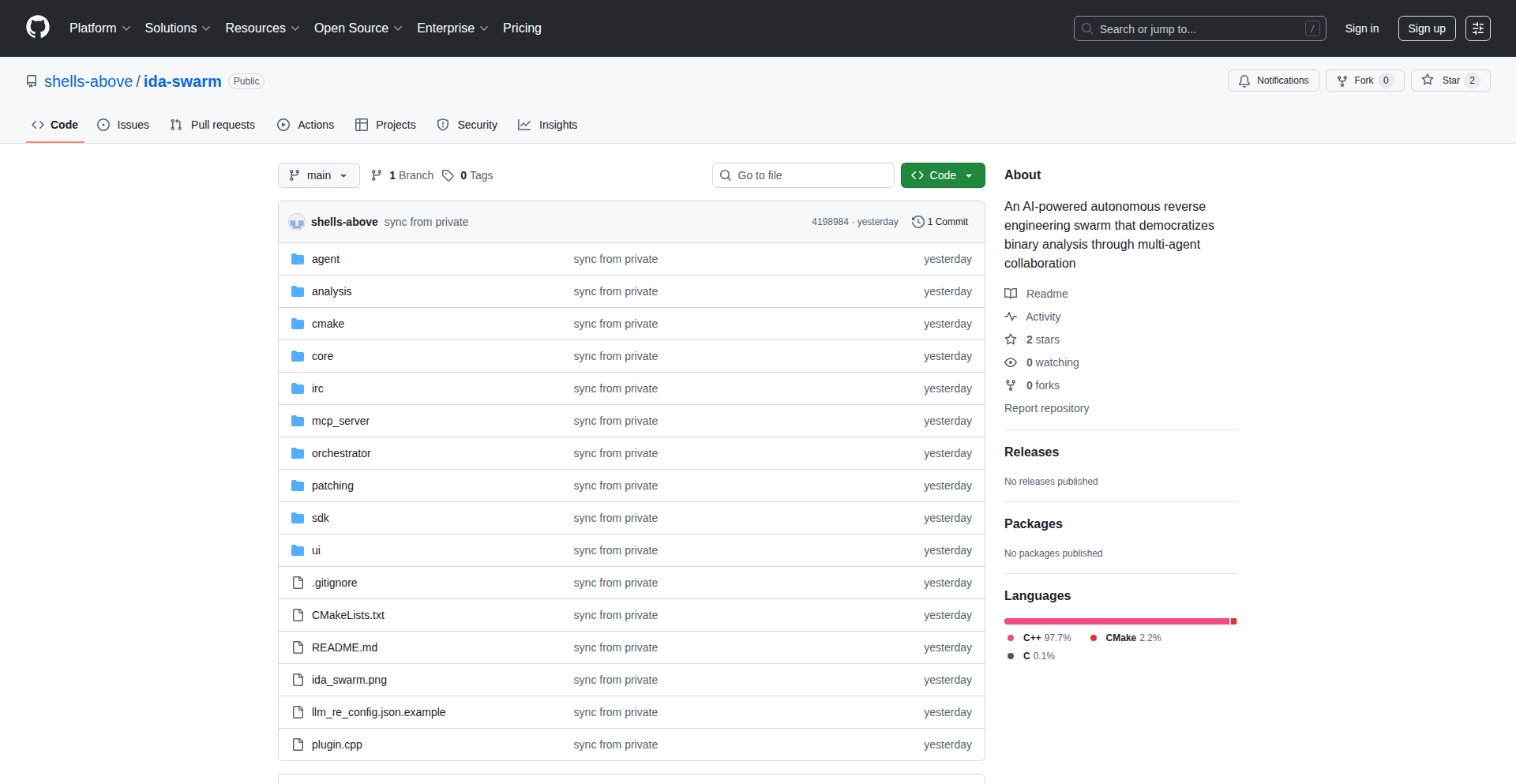

Zenode is an AI-powered search engine for electronic components designed to revolutionize PCB (Printed Circuit Board) design. It tackles the incredibly time-consuming and error-prone process of finding and understanding components by leveraging AI to process vast amounts of data, including datasheets. This innovation helps engineers find suitable parts faster, reduce design errors, and manage design changes more efficiently, ultimately saving time and resources in electronics development. The core technological leap is using AI to make sense of messy, inconsistent data from millions of components, allowing for natural language queries and cross-component analysis.

Popularity

Points 17

Comments 35

What is this product?

Zenode is an AI-driven platform that acts as a super-powered search engine for electronic components. Think of it as a much smarter, more comprehensive version of traditional component catalogs. Its core innovation lies in its ability to process and understand the complex, often poorly formatted, information found in datasheets. Traditionally, engineers would spend a significant portion of their project time sifting through dense PDF documents, looking for specific parameters, and cross-referencing multiple components. Zenode uses advanced AI techniques to ingest and interpret this data, allowing for natural language searches and direct answers to technical questions, complete with source references. This is like having an AI assistant that can read and summarize thousands of technical documents for you instantly.

How to use it?

Developers can integrate Zenode into their PCB design workflow to accelerate the component selection and verification process. After signing up for a free account on zenode.ai, engineers can start by using natural language queries in the discovery search to find components based on functional requirements or desired parameters. For instance, an engineer could search for 'low-power accelerometers with I2C interface'. The platform also allows for 'Deep Dive' searches where engineers can query across multiple components simultaneously, such as asking 'what is the cheapest 3.3V microcontroller with at least 6 ADC channels?'. The interactive documents feature lets users ask specific questions about a component's datasheet, and Zenode provides answers with highlighted sources. This allows for quick verification of critical specs without manually scanning lengthy documents, greatly improving the efficiency of design iterations and ensuring critical details are not missed.

Product Core Function

· Largest and Deepest Part Catalog: Aggregates data from dozens of distributors and manufacturers, offering a unified view of over 40 million component sources. This provides engineers with a broader selection pool than traditional tools, improving the chances of finding the optimal part for their design.

· Discovery Search: Enables natural language queries to quickly find component categories, set filters, and rank results. This simplifies the initial part discovery process, moving beyond rigid keyword searches to more intuitive, conversational interactions.

· Modern Parametric Filters: Rebuilt filters that use numeric ranges instead of string values, making it easier to search for components based on precise technical specifications. This addresses a common pain point in existing tools where filtering by numerical values can be cumbersome and inaccurate.

· Interactive Documents: Utilizes AI to extract information from single-component datasheets and manuals, allowing users to ask questions and receive answers with highlighted source references. This drastically reduces the time spent reading and interpreting technical documentation.

· Deep Dive: Facilitates simultaneous searching and comparison across multiple components. This powerful feature allows engineers to ask complex comparative questions, such as identifying the most power-efficient component within a specific category, significantly accelerating trade-off analysis.
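The point about numeric ranges versus string values can be illustrated with a short Python sketch. This is not Zenode's implementation; the part records, the `vcc` field, and the helper names are invented, and only a handful of SI prefixes are handled.

```python
# A few SI prefixes; a real catalog would need more (and more careful parsing).
SI_PREFIXES = {"m": 1e-3, "u": 1e-6, "µ": 1e-6, "n": 1e-9, "k": 1e3, "M": 1e6}


def parse_value(raw: str, unit: str) -> float:
    """Turn a catalog string such as '500 mV' into a float in base units."""
    text = raw.strip()
    if not text.endswith(unit):
        raise ValueError(f"expected a value in {unit}: {raw!r}")
    text = text[: -len(unit)].strip()
    prefix = text[-1] if text and text[-1] in SI_PREFIXES else ""
    number = text[: len(text) - len(prefix)]
    return float(number) * SI_PREFIXES.get(prefix, 1.0)


def filter_by_range(parts: list[dict], field: str, unit: str, lo: float, hi: float) -> list[dict]:
    """Keep parts whose parsed value falls inside [lo, hi]."""
    return [p for p in parts if lo <= parse_value(p[field], unit) <= hi]


# Hypothetical parts with string-valued supply voltages.
parts = [
    {"mpn": "PART-A", "vcc": "3.3 V"},
    {"mpn": "PART-B", "vcc": "500 mV"},
    {"mpn": "PART-C", "vcc": "10 V"},
]

# Compared as strings, "10 V" sorts before "3.3 V", which is why
# string-valued filters misbehave for numeric specifications.
string_order_is_wrong = "10 V" < "3.3 V"

in_range = filter_by_range(parts, "vcc", "V", 1.0, 5.0)
```

Once values are normalized to base units, a range query is a plain numeric comparison and mixed notations such as `500 mV` and `0.5 V` land in the same place.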

Product Usage Case

· A firmware engineer needs to find a low-power Bluetooth Low Energy (BLE) System-on-Chip (SoC) with a specific set of peripherals and a minimal current draw. Instead of manually sifting through hundreds of datasheets from different manufacturers, they can use Zenode's discovery search with a query like 'BLE SoC with SPI and I2C, lowest power consumption'. Zenode will then present a ranked list of suitable components, and the engineer can use interactive documents to verify critical power specifications, saving hours of research.

· A hardware design team is designing a complex sensor module and needs to identify the best combination of a specific accelerometer and a temperature sensor that meet tight power and size constraints. Using Zenode's 'Deep Dive' feature, they can query across multiple accelerometer and temperature sensor datasheets simultaneously, asking questions like 'find the lowest power accelerometer and temperature sensor that are under 5x5mm'. This allows for rapid cross-component analysis and selection, identifying optimal pairings much faster than manual comparison.

· During the design phase, an engineer is reviewing the datasheet for a microcontroller and needs to confirm the maximum operating voltage for a specific GPIO pin. Instead of scrolling through a 100-page PDF, they can use Zenode's interactive document feature and ask, 'what is the maximum voltage for GPIO pin PA5?'. Zenode will provide the answer directly from the datasheet, highlighting the relevant section, ensuring accuracy and saving valuable debugging time.

4

VillagerErrorSoundboard

Author

vin92997

Description

This project reimagines terminal error notifications by replacing standard beeps with iconic sound effects from Minecraft villagers. It cleverly uses Rust to hook into system processes, triggering specific villager sounds based on the type of error encountered. The innovation lies in creating an engaging and recognizable user experience for otherwise mundane technical alerts, making debugging more intuitive and less jarring.

Popularity

Points 28

Comments 1

What is this product?

VillagerErrorSoundboard is a command-line utility that injects memorable sound effects from Minecraft villagers into your system's error notification process. Instead of a generic system beep, you'll hear a Minecraft villager's vocalizations when a terminal error occurs. Technically, it leverages Rust's system programming capabilities to monitor for specific error codes or events and then plays pre-selected audio files. The innovation is in the creative application of these sounds to provide context and a touch of personality to error handling, transforming a common developer pain point into something more engaging. So, what's in it for you? It makes identifying and reacting to errors more intuitive and less disruptive to your workflow, adding a layer of playful familiarity to a frustrating experience.

How to use it?

Developers can install and run VillagerErrorSoundboard on their Linux or macOS systems. Once running in the background, it automatically intercepts system-level error signals. When an error occurs, it maps the error type to a specific villager sound (e.g., a 'hmmm' for a file not found error, or a 'grolk' for a permission denied error). Users can also customize which sounds are triggered by which error types through a configuration file. Integration is seamless; it runs as a background process and doesn't interfere with your regular terminal operations. So, how can you use it? You can simply run it after installing, and your terminal will instantly sound more like a Minecraft adventure when things go wrong, helping you quickly distinguish different error types by ear.
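The error-to-sound mapping with user overrides can be sketched as follows. The tool itself is written in Rust and its config format is not shown in this digest, so the Python below is only an illustration: the sound file names, the `NAME = file` override syntax, and the function names are assumptions. It resolves a sound for an error but does not play audio.

```python
import errno

# Default mapping, following the examples in the text. File names are hypothetical.
DEFAULT_SOUNDS = {
    errno.ENOENT: "hmmm.ogg",   # file not found
    errno.EACCES: "grolk.ogg",  # permission denied
}
FALLBACK_SOUND = "villager_default.ogg"


def load_overrides(lines) -> dict[int, str]:
    """Parse override lines of the (assumed) form 'ENOENT = custom.ogg'.

    Anything after '#' is a comment. An unknown errno name raises AttributeError.
    """
    overrides: dict[int, str] = {}
    for line in lines:
        line = line.split("#", 1)[0].strip()
        if not line:
            continue
        name, _, sound = line.partition("=")
        overrides[getattr(errno, name.strip())] = sound.strip()
    return overrides


def sound_for(error: OSError, overrides=None) -> str:
    """Pick the sound for an OS error, letting user overrides win over defaults."""
    table = {**DEFAULT_SOUNDS, **(overrides or {})}
    return table.get(error.errno, FALLBACK_SOUND)
```

For example, `sound_for(OSError(errno.EACCES, "denied"))` resolves to the 'grolk' sound unless an override line remaps `EACCES`.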

Product Core Function

· Error Signal Interception: This core function uses Rust to monitor for system-level error events, providing the foundation for custom sound notifications. Its value is in enabling context-aware audio feedback for developers.

· Customizable Sound Mapping: Developers can define which villager sound plays for specific error codes or types. This adds a personalized and intuitive layer to error identification, making it easier to quickly diagnose issues.

· Background Process Execution: The utility runs silently in the background, ensuring that error notifications are handled without requiring active user intervention. This provides a continuous and unobtrusive enhancement to the developer environment.

· Cross-Platform Compatibility (Linux/macOS): Designed to work on common developer operating systems, making it accessible to a broad range of users. This maximizes its utility by supporting widely used development platforms.

Product Usage Case

· During a compilation process, receiving a 'permission denied' error and hearing the distinct 'grolk' sound from a librarian villager, immediately signaling a file access issue. This saves precious seconds in diagnosing the root cause.

· When a network request fails with a timeout, hearing the 'hmmm' sound associated with a farmer villager, helping to quickly differentiate it from other types of errors without needing to constantly look at the screen.

· A developer working on multiple projects simultaneously can associate different villager sounds with specific project error patterns, creating an auditory map of their ongoing tasks and potential issues.

· When encountering a 'file not found' error, the 'huh?' sound from a nitwit villager alerts the developer, offering a subtle yet effective cue to check file paths and directory structures.

5

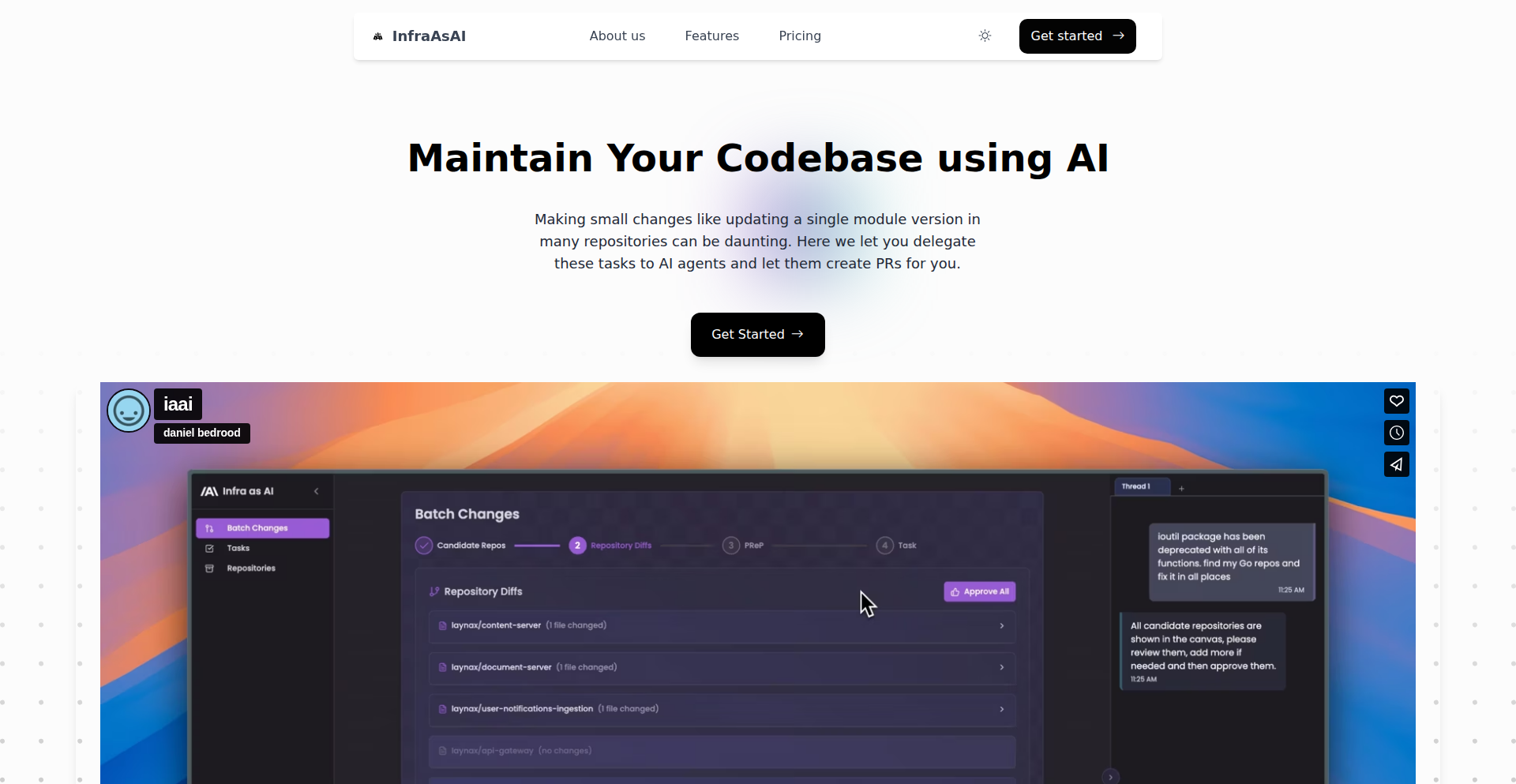

CodeAgent Swarm

Author

FreeFrosty

Description

This project introduces a novel approach to managing code changes across multiple repositories using AI agents. Instead of manually updating identical code snippets or configurations in numerous projects, developers can instruct a swarm of AI agents to identify and implement the change across all designated repositories in parallel. This dramatically reduces the tedious work of repetitive code modifications, freeing up developer time for more impactful tasks.

Popularity

Points 9

Comments 8

What is this product?

CodeAgent Swarm is a system that leverages AI agents to automate code modifications across a large number of software repositories. The core innovation lies in its ability to understand a high-level instruction (e.g., 'update the workflow job version to 2.0') and then have autonomous agents intelligently navigate, locate, and modify the relevant code in each repository. These agents handle the entire pull request process, including generating descriptions and ensuring consistency, thereby solving the problem of massive manual effort required for widespread code updates. It's like having an army of intelligent assistants that can code for you.

How to use it?

Developers can use CodeAgent Swarm by providing a natural language instruction that describes the desired code change. The system then dispatches specialized AI agents to your connected code repositories. These agents will analyze the codebase, identify all instances where the change needs to be applied, create new branches, make the modifications, and submit pull requests. This can be integrated into your existing CI/CD pipeline or used as a standalone tool to manage cross-repository code hygiene and updates.
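The fan-out step described above, one agent per repository running in parallel, might be orchestrated along these lines. This is a sketch under stated assumptions, not the project's code: `apply_change` is a stand-in for a real agent run (clone, edit, push, open a pull request), and `PullRequest`, `run_swarm`, and the `swarm/` branch prefix are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class PullRequest:
    repo: str
    branch: str
    title: str


def apply_change(repo: str, instruction: str) -> PullRequest:
    """Stand-in for a single agent run against one repository.

    A real agent would analyze the code, edit it, and open a PR; here we
    only derive a branch name so the orchestration can be shown end to end.
    """
    branch = "swarm/" + "-".join(instruction.lower().split())[:40]
    return PullRequest(repo=repo, branch=branch, title=instruction)


def run_swarm(repos: list[str], instruction: str, max_workers: int = 8) -> dict[str, PullRequest]:
    """Dispatch one agent per repository in parallel and collect the results."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {repo: pool.submit(apply_change, repo, instruction) for repo in repos}
    # Leaving the with-block waits for all runs; result() re-raises any failure.
    return {repo: future.result() for repo, future in futures.items()}


prs = run_swarm(["svc-a", "svc-b", "svc-c"], "Update workflow job version to 2.0")
```

Threads suit this shape of work because each agent run is dominated by network and model calls rather than local CPU time. In practice you would likely catch per-repository failures instead of letting one exception abort the whole collection.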

Product Core Function

· AI-driven code analysis to locate specific code patterns or configurations across diverse repositories, enabling precise targeting of changes.

· Automated pull request generation with AI-written descriptions, streamlining the code submission process and improving clarity for reviewers.

· Parallel execution of tasks across multiple repositories, significantly accelerating the deployment of widespread code changes.

· Intelligent agent orchestration to manage the lifecycle of code modification tasks, from identification to completion.

· Natural language command interface for intuitive user interaction, abstracting away complex coding operations.

Product Usage Case

· Scenario: Updating a dependency version across 20 microservices. Problem: Manually creating a pull request for each service is time-consuming and error-prone. Solution: Instruct CodeAgent Swarm to update the dependency version. Agents will find and update the version in all 20 repositories simultaneously, generating PRs for each.

· Scenario: Standardizing logging format across a monorepo with many modules. Problem: Inconsistent logging can hinder debugging. Solution: Use CodeAgent Swarm to enforce a new logging standard. Agents will identify and refactor logging statements across all modules.

· Scenario: Applying a security patch to a critical configuration file in multiple cloud-native applications. Problem: Ensuring the patch is applied consistently and quickly is paramount. Solution: Deploy CodeAgent Swarm to apply the patch to all affected application configurations, minimizing vulnerability exposure.

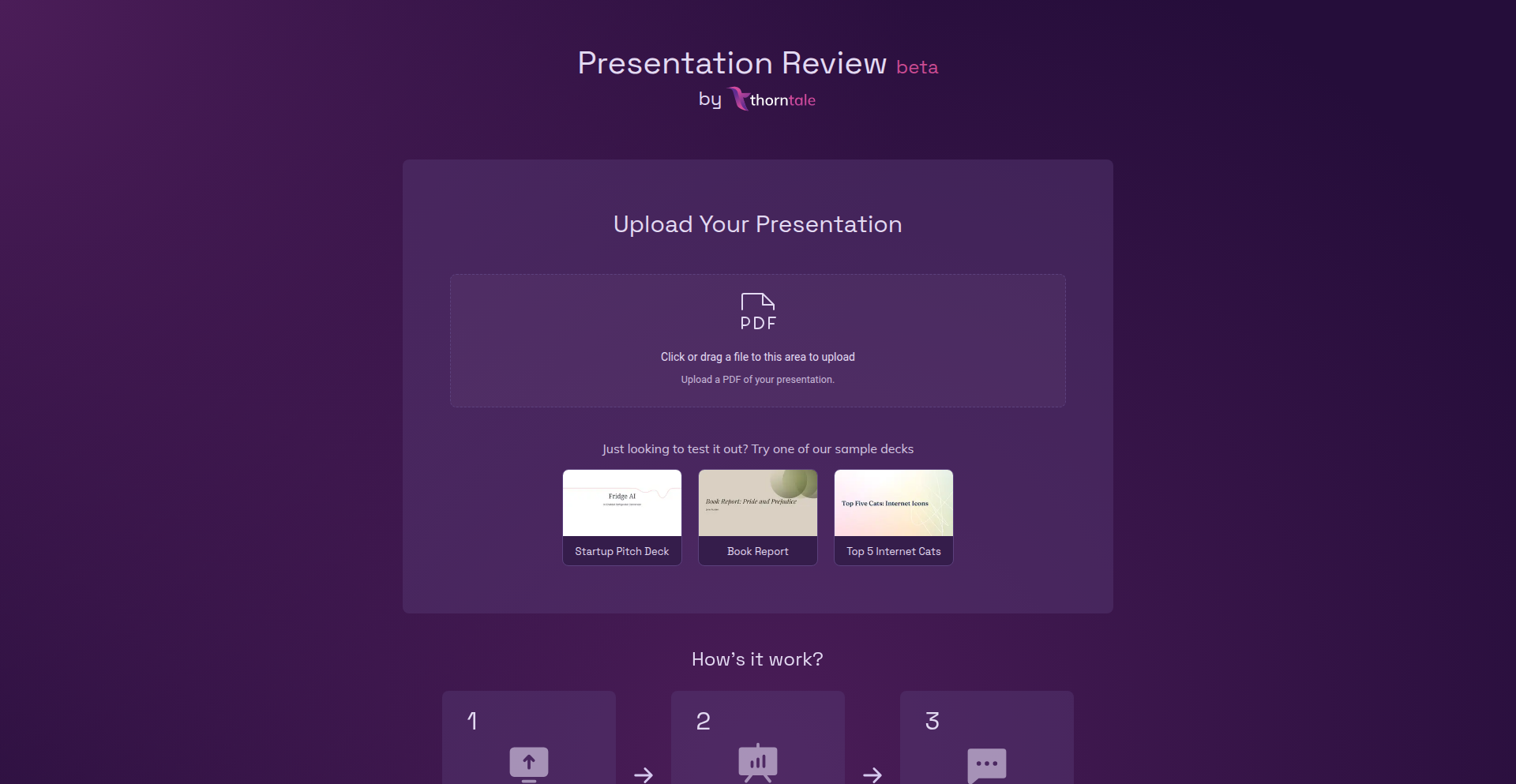

6

AI Presentation Coach

Author

ellenfkh

Description

This project is an AI-powered tool designed to help individuals practice and improve their presentation skills. Users upload their presentation slides (PDF) and deliver their talk. The AI then analyzes the slides and spoken transcript, offering feedback from simulated personas like an investor, teacher, or marketing lead. The core innovation lies in providing realistic, persona-based feedback to overcome the embarrassment of repeated practice sessions with familiar people, thereby enhancing presentation confidence and effectiveness.

Popularity

Points 9

Comments 7

What is this product?

This is an AI-powered presentation practice tool. You upload your presentation slides, record yourself delivering the presentation, and receive feedback from simulated AI personas. The innovation here is the use of AI to mimic different audience perspectives (e.g., investor, teacher, marketing lead), providing nuanced and constructive criticism that goes beyond generic advice. This helps users understand how their presentation might be perceived by specific types of audiences, a crucial aspect of effective communication that's hard to get through traditional practice.

How to use it?

Developers and presenters can use this tool by visiting the provided URL (review.thorntale.com). The process is simple: upload your presentation as a PDF, start your practice presentation, and the AI will analyze your delivery and slides. You can then review the feedback provided by the different AI personas. This is incredibly useful for anyone preparing for high-stakes meetings, job interviews, or public speaking engagements. It allows for focused practice and iterative improvement in a private and supportive environment.

Product Core Function

· AI-driven feedback generation: The system analyzes presentation content and delivery to offer constructive criticism, providing actionable insights for improvement and understanding audience perception.

· Persona-based review: Feedback is tailored from the perspective of distinct roles (investor, teacher, marketing lead, etc.), simulating real-world audience reactions and helping users refine their message for specific groups.

· Speech-to-text analysis: Transcribes spoken words to analyze content and delivery in real-time, identifying areas for clarity and impact.

· Slide content analysis: Evaluates the effectiveness and clarity of visual aids, ensuring they support the spoken narrative.

· Zero-friction user experience: No signup or login required, allowing for immediate use and rapid iteration during practice sessions.
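One plausible way to get persona-based feedback is to send the same slides and transcript to a language model once per persona, each time with a different brief. The sketch below only builds those prompts; the persona briefs, the prompt layout, and `build_review_prompts` are assumptions, and how the tool actually prompts its model is not described in the source.

```python
# Hypothetical persona briefs; the three roles come from the text above.
PERSONAS = {
    "investor": "You are an investor hearing a pitch. Focus on market, traction, and risks.",
    "teacher": "You are a teacher grading a talk. Focus on clarity and supporting evidence.",
    "marketing lead": "You are a marketing lead. Focus on messaging and audience appeal.",
}


def build_review_prompts(slide_text: list[str], transcript: str) -> dict[str, str]:
    """Return one review prompt per persona over the same slides and transcript."""
    material = (
        "SLIDES:\n" + "\n---\n".join(slide_text)
        + "\n\nTRANSCRIPT:\n" + transcript
    )
    return {
        name: f"{brief}\n\n{material}\n\nGive three specific improvements."
        for name, brief in PERSONAS.items()
    }


prompts = build_review_prompts(
    ["Problem: contracts are slow", "Solution: a generator"],
    "Today I want to show you how we cut drafting time.",
)
```

Keeping the material identical across personas means differences in the feedback come from the brief alone, which makes the reviews easier to compare.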

Product Usage Case

· A startup founder preparing for an investor pitch can upload their pitch deck, practice their delivery, and receive feedback from the 'investor' persona. This helps them identify any gaps in their financial projections or market strategy explanation, leading to a more persuasive pitch.

· A student preparing for an academic presentation can get feedback from the 'teacher' persona. This allows them to refine their explanation of complex concepts and ensure their arguments are well-supported, improving their grade.

· A marketing professional practicing a new product launch presentation can use the 'marketing lead' persona to gauge how well their messaging resonates with brand positioning and target audience appeal, ensuring a more impactful launch.

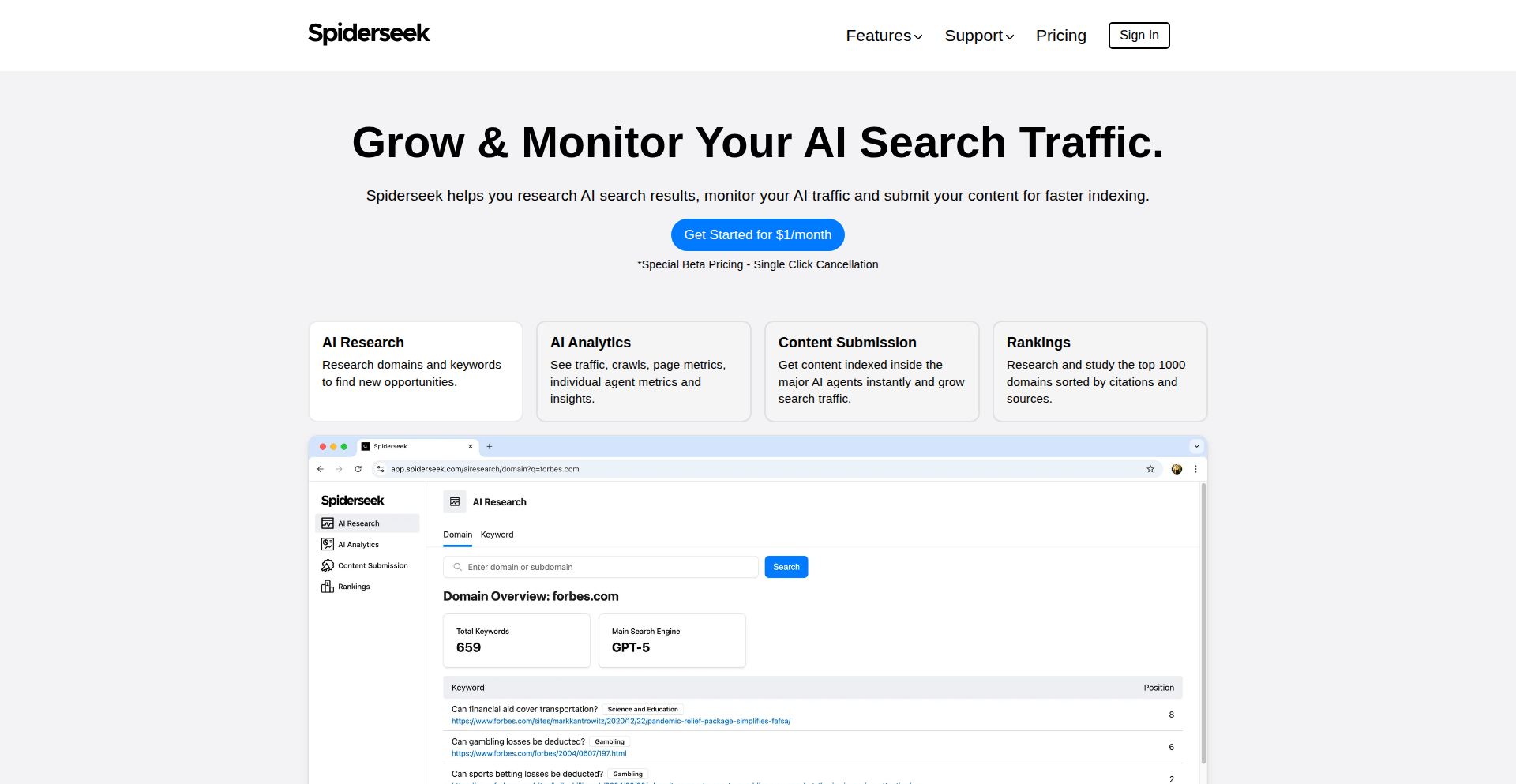

7

Spiderseek: AI Search Visibility Tracker

Author

asteroidandy

Description

Spiderseek is a lightweight, AI-first platform designed to help website owners track and grow their visibility in emerging AI-powered search engines like Perplexity, ChatGPT, and other AI agents. It offers AI research for new opportunities, AI analytics including AI agent insights, content submission for instant indexing, and rankings based on AI citations, providing a new angle on SEO beyond traditional Google-focused tools.

Popularity

Points 7

Comments 6

What is this product?

Spiderseek is a novel SEO tool that focuses on the growing landscape of AI search engines. Instead of traditional keyword rankings in Google, it helps you understand how your website appears and performs when AI agents like ChatGPT or Perplexity are used to find information. It analyzes which domains are frequently cited by these AI agents and allows you to submit your content for direct indexing, aiming to capture traffic from this new wave of information discovery. This is innovative because it addresses the uncharted territory of AI-driven search behavior, which is rapidly changing how users access information, and offers a practical way to adapt your online presence to this shift.

How to use it?

Developers can use Spiderseek to monitor their website's performance in AI search. For instance, if you have a blog post about a niche topic, you can use Spiderseek to see if AI agents are referencing your content and how often. You can submit new articles or website updates directly through the platform to ensure they are quickly discoverable by AI agents. This helps in understanding new traffic sources and optimizing content for AI discoverability. You can integrate this by understanding which content resonates with AI agents and then creating more of it, or by using the analytics to inform your content strategy for this emerging search channel.

Product Core Function

· AI Research: Discover new content opportunities by exploring what domains and keywords are being surfaced or cited by AI search agents. This is useful for identifying gaps in AI-generated knowledge or popular topics that AI is already referencing, helping you create content that AI can easily find and use.

· AI Analytics: Gain insights into your website's performance within AI search. See metrics like traffic, crawl activity, and page metrics, specifically looking at how AI agents interact with your site and what insights they draw. This helps you understand if your content is being understood and valued by AI.

· Content Submission: Expedite the indexing of your website's content on major AI agents. Instead of waiting for AI crawlers to discover your new articles, you can proactively submit them, ensuring they are available for AI-powered search results much faster. This is crucial for timely visibility.

· Rankings: Browse a list of top-performing domains based on their citations and sources within AI search. This provides a benchmark and helps you understand what kind of content or authority is being recognized by AI, giving you a competitive edge.
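A citation-based ranking of the kind described above boils down to counting how often each domain appears among the sources an AI agent cites. Here is a minimal sketch, assuming you already have a list of cited URLs; how Spiderseek collects or weights citations is not stated in the source, and `rank_domains` is a hypothetical helper.

```python
from collections import Counter
from urllib.parse import urlparse


def rank_domains(cited_urls: list[str], top: int = 10) -> list[tuple[str, int]]:
    """Count citations per domain and return the most-cited domains first."""
    counts: Counter = Counter()
    for url in cited_urls:
        host = urlparse(url).hostname
        if host:  # skip strings that are not absolute URLs
            counts[host.removeprefix("www.")] += 1
    return counts.most_common(top)


ranking = rank_domains([
    "https://example.com/a",
    "https://www.example.com/b",
    "https://docs.python.org/3/",
    "not a url",
])
```

Stripping a leading `www.` treats `www.example.com` and `example.com` as one site; a production ranking would probably normalize subdomains more carefully.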

Product Usage Case

· A content marketer wants to understand if their latest technical article is being used by AI chatbots when answering developer questions. Spiderseek can show them if their domain is cited and provide metrics on AI interaction, helping them refine the article for better AI discoverability.

· A startup is launching a new product and wants to ensure it's discoverable through AI agents used for product research. They can use Spiderseek's content submission feature to get their product pages indexed quickly by AI, driving early traffic and awareness.

· A niche blogger is trying to grow their audience. By using Spiderseek's AI Research feature, they can discover what related topics AI agents are actively referencing, helping them create new, relevant content that is likely to be picked up by AI search.

8

SoloSync Encrypted Knowledge Hub

Author

las_nish

Description

A minimalist, encrypted knowledge platform designed for solo developers and founders, offering secure and private note-taking and knowledge management. Its core innovation lies in its end-to-end encryption and straightforward, unopinionated design, prioritizing user control and data privacy.

Popularity

Points 1

Comments 6

What is this product?

SoloSync is a digital workspace for individuals, particularly solo developers and founders, to securely store and organize their thoughts, project notes, code snippets, and ideas. Technically, it utilizes end-to-end encryption (E2EE) to ensure that only the user can access their data. This means the encryption keys are held solely by the user, and the data is unreadable by anyone else, including the platform's creators. The minimalist approach focuses on core functionality, avoiding unnecessary features to maintain simplicity and performance, making it a lightweight yet powerful tool for personal knowledge management. The innovation is in providing a highly secure and private environment for sensitive work without the complexity of enterprise solutions.
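
The source does not describe SoloSync's actual cryptography, so the following is only a minimal Python sketch of one common building block of end-to-end encryption: deriving the encryption key on the user's device from a passphrase, so the key itself never has to be sent to a server. The function name `derive_key`, the choice of PBKDF2-HMAC-SHA256, and the iteration count are assumptions for illustration.

```python
import hashlib
import hmac
import os


def derive_key(passphrase: str, salt: bytes, iterations: int = 600_000) -> bytes:
    """Derive a 32-byte key from a passphrase with PBKDF2-HMAC-SHA256.

    Hypothetical helper: the key is computed locally and is never uploaded.
    """
    return hashlib.pbkdf2_hmac(
        "sha256", passphrase.encode("utf-8"), salt, iterations, dklen=32
    )


if __name__ == "__main__":
    salt = os.urandom(16)  # random per-user salt; not secret, can be stored with the data
    key = derive_key("correct horse battery staple", salt)
    # The same passphrase and salt always reproduce the same key on any device.
    same = derive_key("correct horse battery staple", salt)
    print(len(key), hmac.compare_digest(key, same))
```

Under these assumptions, only the salt and the encrypted notes would need to be stored remotely. A real client would feed the derived key into an authenticated cipher such as AES-GCM, which is outside Python's standard library.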

How to use it?

Developers and founders can use SoloSync as a secure place to jot down ideas for new projects, store important code snippets with context, manage personal task lists, or document research findings. It can be integrated into a developer's workflow by saving project specifications, client requirements, or even personal learning notes. The platform likely offers a web interface and potentially a desktop or mobile application, allowing for easy access and data synchronization across devices. Its simplicity means it can be adopted quickly without a steep learning curve, and its focus on privacy makes it ideal for handling proprietary information or sensitive personal strategies.

Product Core Function

· End-to-End Encrypted Note-Taking: Securely store and retrieve any text-based information, like code, project ideas, or personal thoughts, with the guarantee that only you can read it. This is crucial for protecting intellectual property and confidential business strategies.

· Minimalist User Interface: Offers a distraction-free writing and organization experience, enabling users to focus on capturing and structuring their knowledge without being overwhelmed by features. This speeds up the process of knowledge capture and retrieval.

· Private Knowledge Management: Provides a dedicated, secure space for managing personal and professional knowledge, acting as a digital brain for your projects and ideas. This helps in organizing thoughts and recalling information efficiently, preventing lost ideas and boosting productivity.

· Cross-Device Synchronization (Likely): Allows users to access their knowledge base from multiple devices, ensuring that their information is always up-to-date and available wherever they are working. This enhances accessibility and continuous productivity.

· Plain Text Focused Storage: Emphasizes storing knowledge in a simple, portable format, making it resilient and future-proof. This ensures that your data is not locked into a proprietary format and can be easily moved or processed by other tools.

Product Usage Case

· A solo game developer can use SoloSync to store game design documents, character backstories, and code snippets for unique mechanics, all encrypted to prevent competitors from accessing their ideas. This safeguards their unique game concept and implementation details.

· A founder can use SoloSync to draft business plans, jot down market research findings, and store confidential investor outreach notes, ensuring that sensitive business information remains private and secure. This protects the integrity of their business strategy and competitive advantage.

· A freelance developer can use SoloSync to keep track of client project requirements, custom code libraries, and billing details, all encrypted for client confidentiality and personal data security. This maintains client trust and ensures the privacy of project-specific information.

· A programmer learning a new language can use SoloSync to store code examples, syntax rules, and personal explanations, creating a personalized and secure learning resource. This facilitates efficient learning and provides a readily accessible reference.

9

Lessie AI: Automated People Discovery Agent

Author

Snorix

Description

Lessie AI is an AI-powered agent that automates the process of finding specific individuals for various professional needs, such as identifying influencers, potential collaborators, or industry experts. It streamlines what traditionally takes hours of manual searching on platforms like LinkedIn and Google into a fast, automated workflow.

Popularity

Points 5

Comments 0

What is this product?

Lessie AI is an intelligent system designed to quickly locate people based on your defined criteria. It works by understanding your request, intelligently searching across various data sources (like public professional profiles), using AI to analyze and rank potential matches, and even helping you draft initial outreach messages. The innovation lies in its ability to automate and optimize the often tedious and time-consuming task of professional networking and talent identification, using AI to sift through vast amounts of data and present the most relevant contacts.

How to use it?

Developers and professionals can use Lessie AI by simply describing the type of person they are looking to find. For example, you could type 'Find AI researchers in San Francisco working on natural language processing' or 'Identify marketing influencers specializing in sustainable fashion.' The agent will then process this request, search relevant data, and provide a ranked list of potential contacts along with options to generate personalized outreach messages. Integration could involve using its API to feed potential leads directly into CRM systems or marketing automation tools.

Product Core Function

· Automated People Search: Leverages AI to search across diverse data sources to find individuals matching specific criteria, saving significant manual search time.

· Intelligent Request Understanding: Utilizes natural language processing to interpret user prompts for precise targeting of desired profiles.

· AI-Powered Scoring and Ranking: Employs machine learning to evaluate and rank found profiles, ensuring the most relevant contacts are presented first.

· Automated Outreach Generation: Creates personalized, on-brand outreach messages, reducing the effort required to initiate contact.

· Multi-Source Data Aggregation: Integrates information from various professional and public data sources to provide comprehensive profiles.

Product Usage Case

· A marketing manager needs to find micro-influencers in the sustainable fashion niche for an upcoming campaign. Instead of spending days on Instagram and Google, they enter their requirements into Lessie AI and receive a curated list of relevant influencers with contact details and social media handles, along with draft introductory emails.

· A startup founder is looking for potential co-founders with expertise in blockchain technology and prior startup experience. Lessie AI can quickly scan professional networks and databases to identify suitable candidates, accelerating the crucial early-stage hiring process.

· A researcher needs to connect with experts in a niche scientific field. Lessie AI can identify leading academics and professionals in that domain, providing their research interests and contact information, thus facilitating knowledge sharing and potential collaborations.

10

Devbox: Containerized Dev Environments

Author

TheRealBadDev

Description

Devbox is a lightweight, open-source CLI tool that simplifies development by creating isolated, disposable environments using Docker. It addresses 'dependency hell' and clutter on developer machines by running each project in its own container while still letting you edit code directly on the host. This approach ensures reproducible setups and easy environment management, making development cleaner and more efficient.

Popularity

Points 3

Comments 2

What is this product?

Devbox is a command-line interface (CLI) tool that manages a separate development environment for each of your projects. Instead of installing all your programming tools and libraries directly onto your main computer, which can lead to conflicts and mess (often called 'dependency hell'), Devbox uses Docker to create a clean 'sandbox' for each project. Think of it like giving each of your projects its own dedicated, pristine workshop. This workshop is a container: a self-contained package of software and its dependencies. The innovation here is how it bridges the gap between the container and your host machine: you edit your code in ordinary folders on your computer, and Devbox makes those files available inside the container, so you don't have to configure volume mounts or deal with file synchronization issues yourself. The 'disposable' nature means you can throw away an environment and recreate it if something goes wrong without losing your work, because your actual code always stays on your host machine. It's designed for ease of use: setup and configuration go through a simple JSON file, which also makes it easy to share your development environment with teammates.

How to use it?

Developers can use Devbox to quickly set up isolated and reproducible development environments for any project. After installing Devbox (typically via a simple curl command), you can initialize a new environment for your project with `devbox init <your-project-name>`. This creates a basic structure and a `devbox.json` file. You then configure this `devbox.json` file to specify which programming languages, libraries, and tools (like Node.js, Python, Go, or specific databases) your project needs. For example, you might list `"nodejs": "latest"` or `"python": "3.9"`. Once configured, you can enter your isolated development shell using `devbox shell`. Within this shell, all the specified tools are available. To share this environment with teammates, you simply commit the `devbox.json` file to your project's repository. Anyone else with Devbox installed can then run `devbox up` in the project directory to get the exact same development environment automatically set up. This makes onboarding new team members or switching between projects incredibly smooth.
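
To make the idea of "code on the host, tools in the container" more concrete, here is a minimal Python sketch of what a tool like this might do under the hood: read a `devbox.json`-style config and assemble a `docker run` command that bind-mounts the project folder into a container. The config keys (`image`, `workdir`), the function name `build_docker_command`, and the use of a bind mount are assumptions for illustration; the source does not document Devbox's actual schema or internals.

```python
import json
import shlex
from pathlib import Path


def build_docker_command(config_text: str, project_dir: str) -> list[str]:
    """Turn a devbox.json-style config into a `docker run` argument list."""
    config = json.loads(config_text)
    image = config["image"]                        # hypothetical key
    workdir = config.get("workdir", "/workspace")  # hypothetical key
    host_path = Path(project_dir).resolve()
    return [
        "docker", "run", "--rm", "-it",
        # Bind mount: files edited on the host are visible inside the container.
        "-v", f"{host_path}:{workdir}",
        # Start the shell in the mounted project folder.
        "-w", workdir,
        image,
        "bash",
    ]


if __name__ == "__main__":
    example_config = '{"image": "python:3.9-slim", "workdir": "/workspace"}'
    # Print the command instead of running it, so the sketch works without Docker.
    print(shlex.join(build_docker_command(example_config, ".")))
```

Because `--rm` deletes the container when the shell exits, the environment is disposable while the code, which lives on the host side of the mount, is untouched.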

Product Core Function

· Ephemeral Development Environments: Creates isolated, temporary environments for each project using Docker. This prevents conflicts between project dependencies, offering a clean slate for every project and making it easy to experiment without affecting your system. So, you can try new tools or versions without fear of breaking your existing setup, which means less troubleshooting and more coding.

· Host-Friendly Code Editing: Allows developers to edit code directly on their host machine in standard folders, with changes automatically reflected inside the isolated container. This eliminates the common complexity of Docker volume management and file syncing, making the development workflow feel natural and efficient. So, you can use your favorite code editor without any special setup for Docker, saving time and reducing frustration.

· Reproducible Environment Configuration: Uses a simple `devbox.json` file to define project dependencies, services, and configurations. This file can be committed to version control, ensuring that any developer on the team can recreate the exact same development environment with a single command. So, everyone on your team works with the same tools and versions, eliminating 'it works on my machine' issues and speeding up collaboration.

· Instant Project Setup: Provides commands like `devbox init` and `devbox shell` for rapid creation and entry into new development environments. Pre-built templates for popular languages and frameworks accelerate the initial setup process even further. So, you can start coding on a new project within minutes, instead of spending hours configuring your environment.

· Docker-in-Docker Capability: Enables building and running Docker containers within your Devbox environment without requiring additional configuration. This is useful for projects that themselves rely on containerized services or for building Docker images as part of your development workflow. So, you can seamlessly integrate container-based workflows into your development process, such as building microservices or running CI/CD pipelines locally.

Product Usage Case

· A Node.js developer needs to work on a project that requires a specific version of Node.js and a particular version of a database like PostgreSQL. Instead of installing both globally and risking conflicts with other projects, they initialize a Devbox environment for their project, specify `"nodejs": "16.x"` and `"postgresql": "14.x"` in `devbox.json`, and then run `devbox shell`. Now, they have a dedicated, isolated environment with exactly the versions they need, ensuring compatibility and preventing system-wide changes.

· A team is collaborating on a Python project that relies on several data science libraries, some of which have complex dependencies. To ensure consistency, the team lead defines the exact Python version and all required libraries in the `devbox.json` file and commits it to the Git repository. New team members can clone the repository and run `devbox up` to instantly have a fully configured Python development environment ready to go, dramatically reducing onboarding time and eliminating 'it works on my machine' issues.

· A developer wants to experiment with a new Go framework. They create a new Devbox environment, add `"go": "latest"` to their `devbox.json`, and start coding. If the framework proves to be unsuitable or they encounter too many issues, they can simply destroy the Devbox environment (`devbox destroy`) without affecting their main system. This allows for risk-free exploration of new technologies.

· A web developer is working on a project that requires a specific version of Ruby and also needs to run a local Redis server. They configure `devbox.json` to include both `"ruby": "3.0"` and a `"redis"` service. Devbox spins up both within the isolated environment, making Ruby available in the shell and the Redis server accessible on a specific port, all without manual setup on the host machine. This simplifies complex application stacks for development.

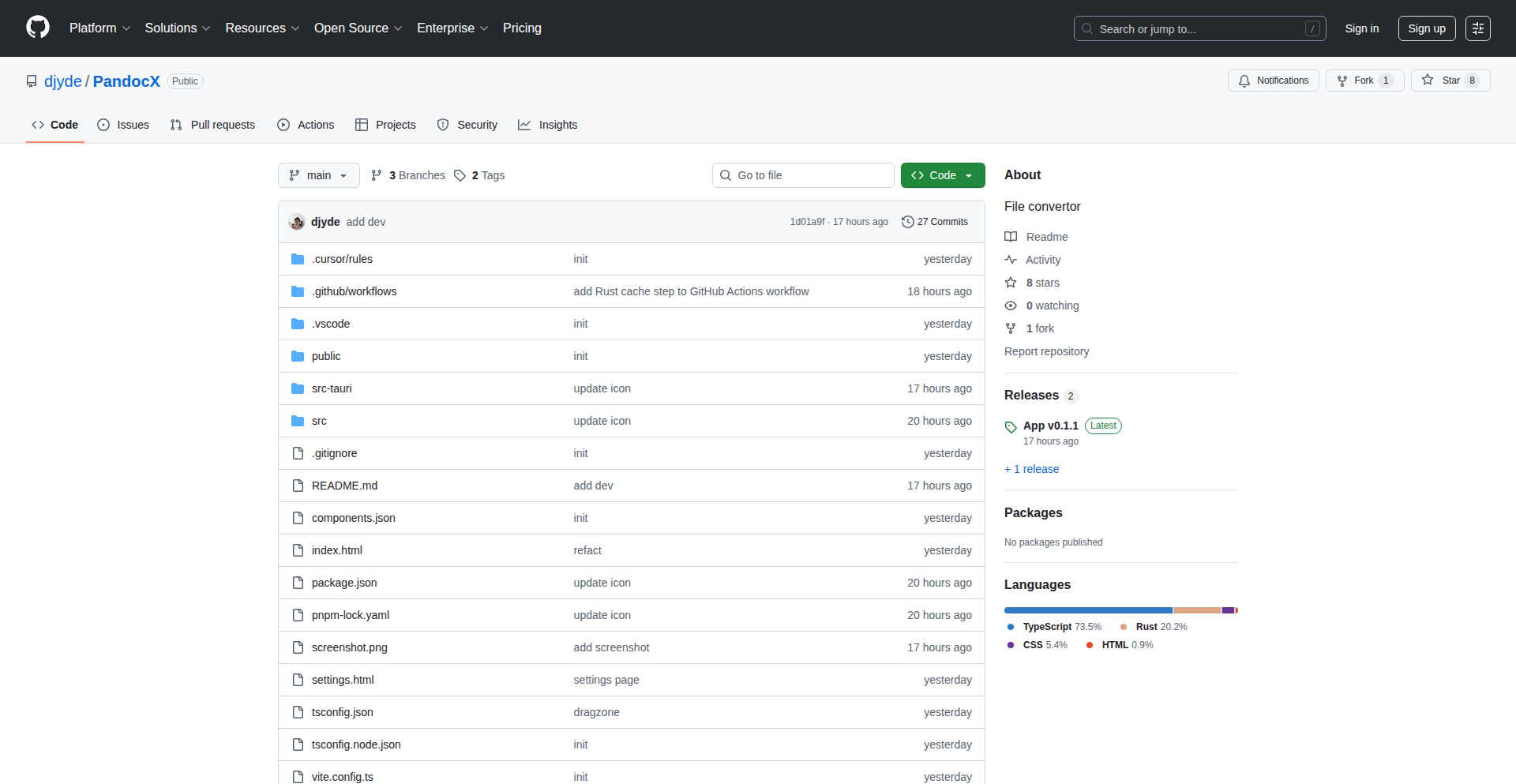

11

Spatialbound: 3D Physical World Designer

Author

mibrahimSB

Description

Spatialbound is an online platform that transforms any real-world location into an interactive 3D playground. It leverages built-in GIS tools and a global spatial data store to allow users to design, simulate, and reimagine physical spaces. With a native Python API, it empowers developers to automate complex spatial workflows, saving significant time and effort. This innovation addresses the gap in accessible, digital tools for planning and visualizing physical environments, offering a powerful yet user-friendly solution for a wide range of applications.

Popularity

Points 3

Comments 2

What is this product?

Spatialbound is a groundbreaking online platform that acts like 'Figma for the physical world'. At its core, it uses advanced Geographical Information System (GIS) tools and accesses a vast global spatial data store to create detailed 3D representations of any real-world location. This means you can import and interact with terrain, buildings, infrastructure, and more in a virtual 3D space. The innovation lies in its ability to bridge the gap between digital design and the complexities of the physical environment, making intricate spatial planning and simulation accessible. Its native Python API is a key differentiator, allowing for deep customization and automation of spatial tasks, a capability typically reserved for specialized, high-end software.

How to use it?

Developers can use Spatialbound in several ways. For custom workflows, the native Python API allows you to programmatically access and manipulate spatial data, automate design processes, run simulations (e.g., solar analysis, shadow studies), and integrate Spatialbound into existing software pipelines. For less code-intensive use cases, the platform offers an intuitive web interface for manual design, annotation, and visualization of 3D spaces. You can import your own data (like CAD models or sensor readings) or utilize the built-in global datasets. Integration examples include using Spatialbound to visualize proposed urban developments, analyze the impact of new construction on surrounding areas, or even create interactive training environments for field operations. Essentially, if you need to understand, design, or simulate something in a physical location, Spatialbound provides the tools.

Product Core Function

· 3D World Generation: Converts real-world locations into interactive 3D models using global spatial data, enabling visualization of any environment. This is useful for understanding context and scale for any project.

· GIS Integration: Embeds robust GIS capabilities, allowing for precise spatial analysis, data querying, and manipulation of geographic information. This helps in making data-driven decisions about physical spaces.

· Design and Simulation Tools: Provides tools for designing within the 3D environment and running simulations (e.g., environmental, structural). This allows for testing ideas and predicting outcomes before physical implementation.

· Python API for Automation: Offers a native Python API to automate complex spatial workflows, integrate with other systems, and develop custom spatial applications. This significantly speeds up repetitive or complex tasks for developers.

· Data Import and Export: Supports importing various geospatial and 3D data formats, and exporting results for further analysis or use in other applications. This ensures flexibility and interoperability with existing tools and datasets.

Product Usage Case

· Urban Planning Visualization: A city planner can use Spatialbound to import a proposed building design into the existing 3D city model. They can then simulate sunlight patterns and shadows cast by the new building on surrounding properties, helping to identify potential issues and communicate the impact to stakeholders.

· Environmental Impact Assessment: An environmental consultant can use Spatialbound to model a new industrial site, incorporating terrain data, weather patterns, and potential emission sources. They can then simulate dispersion models to assess environmental impact and plan mitigation strategies.

· Infrastructure Development Simulation: A civil engineer can use Spatialbound to visualize a new road or bridge project in its real-world context. They can simulate traffic flow, or analyze the impact of construction on existing utilities and terrain, ensuring a more efficient and less disruptive development process.

· Real Estate Development Preview: A real estate developer can create immersive 3D walkthroughs of proposed developments within their actual locations. This allows potential buyers or investors to experience the environment and the property from anywhere, enhancing marketing and sales efforts.

12

InfiniteContext LLM Copilot

Author

mingtianzhang

Description

This project introduces an innovative MCP (Memory Context Processor) designed to overcome the inherent context length limitations of Large Language Models (LLMs). It achieves this by implementing a novel context management strategy, allowing developers to process and interact with significantly larger amounts of information than standard LLM APIs permit. This breaks down the barriers for applications requiring deep historical context or extensive document analysis.

Popularity

Points 5

Comments 0

What is this product?

InfiniteContext LLM Copilot is a system that enhances Large Language Models (LLMs) by working around their built-in context window limitations. Standard LLMs can only process a certain amount of text at once; this context window acts like a short-term memory. This project uses a Memory Context Processor (MCP) which manages and retrieves relevant information from a much larger knowledge base, feeding it to the LLM as needed. This is achieved through techniques like intelligent chunking, embedding, and retrieval mechanisms, so the LLM has access to the most pertinent data without exceeding its processing capacity. The innovation lies in how it dynamically filters and prioritizes information, making vast datasets accessible for LLM interaction.
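
The chunking, embedding, and retrieval steps mentioned above can be sketched in a few lines of Python. This is a toy illustration, not the project's implementation: the "embedding" is a plain word-count vector rather than a learned model, and every name here (`chunk_text`, `embed`, `retrieve`, `build_prompt`) is hypothetical.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, max_words: int = 40) -> list[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a real system would use a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, chunks: list[str], top_k: int = 2) -> str:
    """Assemble a prompt containing only the retrieved context, not the whole corpus."""
    context = "\n---\n".join(retrieve(query, chunks, top_k))
    return f"Context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    notes = [
        "The invoice is due on Friday.",
        "Cats sleep most of the day.",
        "Payment terms: invoice due within 30 days.",
    ]
    print(build_prompt("when is the invoice due", notes))
```

The point of the design is that the prompt grows with `top_k`, not with the size of the knowledge base, which is how a large corpus can sit behind a fixed context window.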

How to use it?

Developers can integrate InfiniteContext LLM Copilot into their applications by leveraging its API. This involves configuring the system with their desired knowledge sources (e.g., large text files, databases, web content). The Copilot then handles the background processing of this data, preparing it for LLM interaction. Developers can query the system with prompts, and the Copilot will retrieve and present the necessary context to the LLM to generate a comprehensive response. This is ideal for building chatbots that remember long conversations, analytical tools that process extensive reports, or knowledge management systems that can query vast document repositories.

Product Core Function

· Unlimited Context Window: Enables LLMs to work with text far beyond their standard context limits by retrieving the relevant pieces on demand, providing deeper insights and more comprehensive answers. This means your AI can draw on an entire long conversation or a whole book, even though it never loads all of it at once.

· Intelligent Context Management: Uses advanced algorithms to store, retrieve, and prioritize relevant information from a large knowledge base, ensuring the LLM receives the most crucial data for accurate responses. This avoids overwhelming the LLM with irrelevant details.

· Scalable Knowledge Ingestion: Allows developers to easily ingest and manage large volumes of text data from various sources, making it suitable for diverse application needs. You can feed it all your company's documentation or a large collection of research papers.

· Efficient Information Retrieval: Optimizes the process of finding and delivering specific pieces of information to the LLM, reducing latency and improving the responsiveness of AI applications. This ensures quick and relevant answers.

Product Usage Case

· Building an AI-powered customer support chatbot that can recall entire customer interaction histories, leading to more personalized and effective support. This solves the problem of chatbots forgetting previous customer issues.

· Developing a legal document analysis tool that can process and summarize lengthy contracts or case files, identifying key clauses and potential risks. This allows lawyers to quickly understand complex legal texts.

· Creating a research assistant that can search and synthesize information from thousands of academic papers, helping researchers uncover trends and connections. This accelerates scientific discovery by making vast amounts of research easily searchable.

· Implementing a personal knowledge management system that allows users to ask questions about their entire digital library of notes and documents, creating a truly intelligent personal assistant. This lets you ask your computer anything about your personal files.

13

DecayBlock

Author

academic_84572

Description

DecayBlock is a browser extension that tackles web distractions by adding a dynamic, escalating timeout to the websites you tend to get lost in. Unlike rigid blockers, it uses an adaptive friction principle: the more often you visit a distracting site, the longer the delay before it loads, which makes procrastination progressively harder without outright banning access. If you stay away, the delay slowly decreases over time. It's built on the insight that small, increasing friction can effectively break subconscious habit loops and improve focus.

Popularity

Points 2

Comments 2

What is this product?

DecayBlock is a browser extension designed to help users regain focus by subtly discouraging habitual visits to distracting websites. It works by implementing an 'adaptive friction' mechanism. When you access a site flagged as a distraction, a small initial delay is introduced. This delay isn't static; it increases with each subsequent visit to that same site, making it incrementally more effortful to access. Crucially, this accumulated delay 'decays' over time, meaning if you avoid the site for a configured period, the delay gradually reduces. This approach aims to disrupt the quick, almost automatic pattern of succumbing to distractions without the frustration of being completely locked out, which often leads users to disable other blockers.

How to use it?

As a developer, you can use DecayBlock by installing it as a browser extension on Chrome or Firefox. Once installed, you can navigate to the extension's settings to create a personalized list of distracting websites. Within the settings, you can also fine-tune the 'timeout growth rate' (how quickly the delay increases with each visit) and the 'decay half-life' (how long it takes for the accumulated delay to halve when you stay away from a site). This allows you to tailor the blocker's behavior to your specific habits and needs. For instance, a developer might add their social media feeds or news sites to the distraction list and set a moderate growth rate to gently nudge themselves back to productive coding tasks.
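
How the two settings interact is easier to see in code. The following Python sketch models one plausible rule, assumed for illustration rather than taken from the extension: the stored delay halves every `half_life_s` seconds away from the site, and each visit multiplies what is left by `growth_rate`, never dropping below `base_delay_s`. The names `SiteState`, `decayed_delay`, and `register_visit`, and the default values, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SiteState:
    delay_s: float = 0.0       # accumulated delay in seconds
    last_visit_s: float = 0.0  # timestamp of the most recent visit


def decayed_delay(state: SiteState, now_s: float, half_life_s: float) -> float:
    """Delay remaining after exponential decay since the last visit."""
    elapsed = max(0.0, now_s - state.last_visit_s)
    return state.delay_s * 0.5 ** (elapsed / half_life_s)


def register_visit(
    state: SiteState,
    now_s: float,
    base_delay_s: float = 5.0,
    growth_rate: float = 2.0,
    half_life_s: float = 3600.0,
) -> float:
    """Return the delay to impose on this visit and update the stored state."""
    current = decayed_delay(state, now_s, half_life_s)
    imposed = max(base_delay_s, current * growth_rate)
    state.delay_s = imposed
    state.last_visit_s = now_s
    return imposed


if __name__ == "__main__":
    site = SiteState()
    for t in (0.0, 60.0, 120.0, 7320.0):  # three quick visits, then one two hours later
        print(f"t={t:>7.0f}s  delay={register_visit(site, t):.1f}s")
```

Under this model, with a growth rate of 2, visiting exactly once per half-life keeps the delay constant; visiting more often makes it climb, and staying away longer lets it fall back toward the base delay.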

Product Core Function

· Adaptive Timeout Mechanism: Introduces a delay before distracting websites load, which increases with repeated visits. This provides a gentle, escalating barrier to procrastination, helping users break the habit loop.

· Configurable Friction Parameters: Allows users to set the rate at which timeouts increase and the speed at which they decay over time. This personalization ensures the tool adapts to individual browsing habits and focus goals, making it effective without being overly restrictive.

· Habit Breaking Focus Aid: Acts as a cognitive nudge rather than a strict ban. The small, increasing friction interrupts the subconscious impulse to visit distracting sites, encouraging more mindful web browsing and improving productivity.

· Cross-Browser Extension: Available for both Chrome and Firefox, making it accessible to a broad range of desktop users who rely on these popular browsers for their development workflow.

· User-Defined Distraction Lists: Enables users to manually specify which websites they find most distracting, ensuring the tool addresses their personal productivity challenges directly.

Product Usage Case

· A software engineer struggling with frequent social media checks during coding sprints can add their favorite platforms (e.g., Reddit, Twitter) to DecayBlock. By setting a moderate timeout growth, they experience a slight delay each time they reflexively open these sites, which is often enough to remind them of their work and get back to coding, ultimately improving their sprint velocity.

· A web developer working on a time-sensitive project can list news aggregators or entertainment sites that often pull them away from their tasks. The escalating timeouts make it inconvenient to quickly browse these sites, helping them maintain focus on delivering the project on schedule.

· A student learning a new programming language can use DecayBlock to limit their access to gaming or streaming sites during study hours. The adaptive friction makes it harder to fall into prolonged sessions, encouraging them to allocate more time to learning and practice.

· A designer working on a complex UI can add reference sites that they tend to get sidetracked on. The increasing delays help them limit the scope of their research and return to the design task more efficiently, reducing context switching.

14

Ingredient Substitution Manual

Author

cookingguru

Description

A straightforward website that catalogues ingredient substitutions, offering plain English explanations for common kitchen needs. It addresses the frustration of missing specific ingredients in recipes by providing accessible alternatives, making cooking more approachable for beginners.

Popularity

Points 3

Comments 1

What is this product?

This project is a web-based tool designed to help home cooks find suitable replacements for ingredients they don't have in their pantry. It addresses a common pain point in cooking: recipes often call for obscure or specialty items that aren't readily available. The innovation lies in its simplicity and focus on clear, easy-to-understand English explanations. Instead of complex culinary terms, it provides practical, everyday advice on how to substitute ingredients, making it useful for anyone, regardless of their cooking experience. Essentially, it demystifies ingredient sourcing and empowers users to cook with what they have.

How to use it?

Developers can utilize this project as a reference tool within their own cooking applications or websites. For example, a recipe app could integrate this service to offer real-time substitution suggestions when a user flags a missing ingredient. It could be used as a backend API to power a "smart pantry" feature, or even as a standalone widget on a food blog. The core idea is to leverage its curated database of substitutions to enhance user experience in digital cooking platforms.

Product Core Function

· Ingredient substitution lookup: Provides quick and easy access to alternative ingredients for commonly used items, easing the burden on cooks who lack specific pantry staples.

· Plain English explanations: Offers clear, jargon-free descriptions of each substitution, ensuring that users of all skill levels can understand and confidently make the switch.

· Focused on practical solutions: Prioritizes common cooking scenarios and widely available ingredients, making it a practical resource for everyday cooking challenges.

· User-friendly interface: Designed with simplicity in mind, allowing for quick navigation and efficient searching for the required information.

Product Usage Case

· A user is following a baking recipe that calls for arrowroot starch but they only have cornstarch. By searching 'arrowroot' on the site, they can find that cornstarch is a suitable substitute and learn the proper ratio to use, allowing them to complete the recipe without a trip to the store.

· A beginner cook is making a stir-fry and realizes they don't have fresh ginger. They can quickly look up 'ginger' and find suggestions such as ground (powdered) ginger, with guidance on how to use it in the dish, saving them from having to abandon the recipe.

· A food blogger wants to add a helpful feature to their recipes. They can integrate the ingredient substitution functionality into their website, so readers encountering missing ingredients can instantly find viable alternatives, improving reader engagement and satisfaction.

15

TermiNav: The Colorful Terminal Web Browser

Author

den_dev

Description

TermiNav is a minimalist, terminal-based web browser written in C on top of the ncurses and libcurl libraries. It aims to provide a visually enhanced way to browse the web directly from your command line, rendering common HTML elements with distinct colors and formatting. Unlike other text-based browsers, TermiNav focuses on clear visual cues for different content types, making it easier to grasp the structure of a webpage at a glance. So, what's the benefit to you? It offers a unique and efficient way to access web content without leaving your terminal, especially useful for developers who spend a lot of time in the command line and want to quickly check web resources.

Popularity

Points 3

Comments 1

What is this product?

TermiNav is a terminal-native web browser written in C that uses the ncurses library for its terminal user interface (TUI) and libcurl for fetching web content. The core innovation lies in its ability to parse HTML and render it with semantic coloring and formatting within the terminal. This means headings are distinct, links are clearly identifiable and clickable, and other elements like lists, quotes, and code blocks are styled to be easily readable. It's like bringing a bit of the visual web into the stark environment of your terminal. So, how does this help you? It provides a more intuitive and visually informative way to consume web content when you're already working in the terminal, reducing context switching.

How to use it?

To use TermiNav, you would typically compile the C source code on your system. Once compiled, you can launch it from your terminal and enter a URL. The browser will then fetch the HTML content using libcurl and render it using ncurses. Navigation can be done through simple keyboard commands, such as 'q' to quit and 'r' to reload. For developers, this means you can easily integrate web checks into your workflow. For instance, you could quickly browse documentation, check status pages, or access APIs directly from your development shell. So, what's the practical use? It allows you to browse web resources without leaving your command-line environment, streamlining your development process.

Product Core Function

· HTML Parsing and Semantic Rendering: Interprets HTML tags and displays content with distinct colors and formatting for elements like headings, links, lists, and code blocks. This provides a more readable and structured web experience in the terminal, helping you quickly understand content hierarchy and actionable elements.

· Interactive Link Navigation: Renders hyperlinks in underlined blue and, in compatible terminals, lets you click them directly, so you can move between web pages without typing URLs by hand. This saves time and effort by keeping you within your terminal workflow.

· Text Formatting Support: Recognizes and renders bold, italic, underline, and strikethrough text, accurately reflecting the intended emphasis and styling of the original webpage. This ensures that important text distinctions are preserved, aiding comprehension.

· Basic Media and Form Handling: Displays images, video, and audio as clickable links, and renders web forms as ASCII mockups, providing a functional overview of page content and interactive elements. This lets you see what multimedia and forms a page contains without leaving the terminal.

· Terminal-Based Controls: Offers simple keyboard shortcuts like 'q' for quitting and 'r' for reloading, providing an intuitive and efficient user experience tailored for command-line users. This allows for quick and easy interaction with the browser.

Product Usage Case

· Developer Documentation Browsing: A developer can quickly access and read API documentation or library manuals hosted on a website directly from their terminal, without switching to a graphical browser. This is useful for understanding parameters or examples while in the middle of coding. The clear rendering of code blocks and headings makes it easy to find the information needed.

· Monitoring Server Status Pages: A system administrator can use TermiNav to check internal status pages or monitoring dashboards that are web-based. This allows for quick checks of server health or application performance without needing a separate GUI application, improving efficiency during operations.

· Quick Web Resource Checks: A developer might need to quickly verify a snippet of HTML or check if a web resource is available. TermiNav allows them to enter a URL and see the rendered content, including links, which is faster than opening a full browser tab for such a small task. This is particularly handy when debugging or testing front-end snippets.

· Interactive API Exploration: When interacting with a web API that returns HTML, TermiNav can display the structured output directly. For APIs that return formatted data or simple web interfaces, it offers a convenient way to preview the results. This helps in understanding the API's output structure visually.

16

CreditUtilGuard

Author

soelost

Description

CreditUtilGuard is a personal finance tool designed to help individuals manage their credit card utilization ratio, a key factor in credit scoring. It provides real-time traffic light indicators for each credit card, visualizing the risk of exceeding the optimal utilization threshold. By simplifying complex credit management, it empowers users to make informed spending decisions and protect their credit scores.

Popularity

Points 1

Comments 3

What is this product?

CreditUtilGuard is a smart credit card management system that visualizes your credit utilization for each card with a simple traffic light analogy. Green means your spending on that card is well within the safe utilization limit (typically below 30%), Yellow means you are approaching the limit and should be cautious, and Red signifies that your spending is likely to negatively impact your credit score. It addresses the common problem of users not knowing which card to spend on to avoid hurting their credit, especially when managing multiple cards with fluctuating balances.

How to use it?

Developers can integrate CreditUtilGuard into their personal finance dashboards or budgeting applications. The system typically requires access to credit card transaction data, which users must authorize. The core logic calculates each card's utilization (current balance divided by credit limit) and compares it to a customizable threshold. The output is a simple status indicator (Green, Yellow, or Red) that can be displayed alongside each card, offering an immediate visual cue for spending decisions.
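The calculation described above can be sketched in a few lines. This is a minimal illustration, not CreditUtilGuard's actual code: the `Card` class, the `status` function, and the 20% caution level are assumptions made for the example. Only the 30% figure comes from the description above.

```python
from dataclasses import dataclass


@dataclass
class Card:
    """Hypothetical card record; field names are illustrative."""
    name: str
    balance: float   # current balance owed
    limit: float     # total credit limit


def utilization(card):
    """Return the card's utilization ratio (balance divided by limit)."""
    if card.limit <= 0:
        raise ValueError(f"{card.name}: credit limit must be positive")
    return card.balance / card.limit


def status(card, caution=0.20, threshold=0.30):
    """Map utilization to a traffic-light color.

    `threshold` is the customizable limit (30% by default); `caution` is an
    assumed level at which the card turns yellow.
    """
    ratio = utilization(card)
    if ratio >= threshold:
        return "red"
    if ratio >= caution:
        return "yellow"
    return "green"


cards = [
    Card("Card A", balance=100.0, limit=1000.0),   # 10% utilization
    Card("Card B", balance=250.0, limit=1000.0),   # 25% utilization
    Card("Card C", balance=400.0, limit=1000.0),   # 40% utilization
]
for card in cards:
    print(f"{card.name}: {utilization(card):.0%} -> {status(card)}")
```

Keeping both cut-offs as parameters mirrors the customizable-threshold idea: a more cautious user could call `status(card, caution=0.05, threshold=0.10)` without changing the logic.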

Product Core Function

· Credit Utilization Monitoring: Tracks the real-time balance of each credit card against its credit limit to calculate utilization ratio. This helps users understand their current spending impact on their credit score.

· Visual Risk Indicators: Displays each credit card with a color-coded status (Green, Yellow, Red) based on its utilization level, providing an instant, easy-to-understand risk assessment.

· Customizable Thresholds: Allows users to set their preferred credit utilization thresholds, offering flexibility to align with personal financial goals and risk tolerance.

· Multi-Card Management: Consolidates and displays utilization status for all linked credit cards, enabling users to manage their credit holistically.

Product Usage Case

· A user planning a large purchase can check CreditUtilGuard to see which card has the lowest utilization, allowing them to make the purchase on that card to minimize the impact on their credit score.

· A freelancer managing multiple business credit cards can use CreditUtilGuard to ensure their overall spending remains within optimal utilization limits across all cards, preventing a dip in their personal credit score.

· Someone trying to build or improve their credit can use the visual cues from CreditUtilGuard to make small, regular payments to keep utilization low, actively working towards a better credit score.
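The first scenario, choosing a card for a large purchase, can be sketched as follows. The function name `best_card_for` and the dictionary layout are hypothetical, and ranking by projected utilization after the purchase (rather than by current utilization) is an assumption added for this example.

```python
def projected_utilization(card, amount):
    """Utilization ratio the card would have after charging `amount`."""
    return (card["balance"] + amount) / card["limit"]


def best_card_for(cards, amount):
    """Return the card whose utilization stays lowest after the purchase.

    Cards that the purchase would push over their limit are skipped.
    Returns None when no card can absorb the purchase.
    """
    usable = [
        c for c in cards
        if c["limit"] > 0 and c["balance"] + amount <= c["limit"]
    ]
    if not usable:
        return None
    return min(usable, key=lambda c: projected_utilization(c, amount))


cards = [
    {"name": "Card A", "balance": 100.0, "limit": 1000.0},  # 10% now, 40% after
    {"name": "Card B", "balance": 750.0, "limit": 5000.0},  # 15% now, 21% after
    {"name": "Card C", "balance": 50.0, "limit": 400.0},    # 12.5% now, 87.5% after
]
choice = best_card_for(cards, 300.0)
print(choice["name"])
```

In this sample data, Card A has the lowest utilization today, but Card B ends up lowest once the 300.00 purchase is included, which is why the sketch ranks by the projected figure.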

17

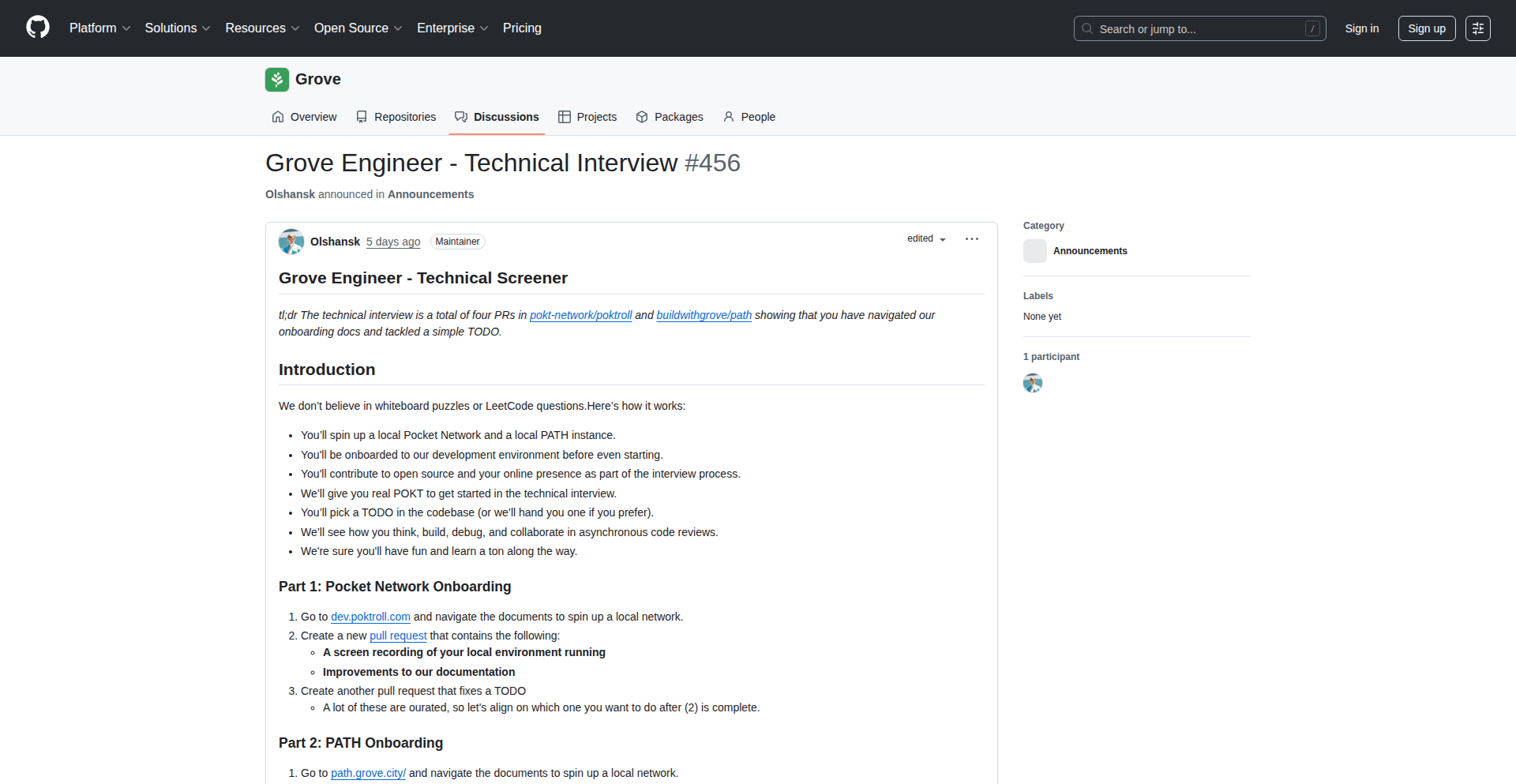

Grove Engineering: Open-Source Dev Interview Simulator

Author

Olshansky

Description

This project is a technical interview platform designed specifically for open-source teams. It simulates a real-world development environment, allowing candidates to contribute to an open-source project directly, providing a practical and authentic assessment of their skills and fit within an open-source culture. The innovation lies in its bidirectionality – assessing technical fit while also showcasing the collaborative nature of open-source development.

Popularity

Points 4

Comments 0

What is this product?

This is a technical interview platform that spins up a local development environment for candidates to work on an open-source project. Instead of theoretical questions, candidates actively contribute code. The innovation here is the move from abstract Q&A to hands-on problem-solving within a familiar open-source context. This allows interviewers to see how a candidate actually codes, debugs, and integrates their work, mirroring the daily tasks of an open-source developer.

How to use it?

Developers can use this platform as a structured way to conduct technical interviews. A candidate is provided with access to a cloned open-source repository and a pre-configured development environment. They are given a specific task or bug to fix within the project. The interviewer monitors their progress, code quality, and communication. This integrates seamlessly into existing hiring workflows by replacing or augmenting traditional coding challenges.

Product Core Function

· Environment Setup Automation: Automates the spinning up of local development environments, saving significant time for both interviewers and candidates and ensuring consistency in testing conditions.

· Real-World Project Contribution: Enables candidates to make actual contributions to an open-source project, providing a realistic assessment of their coding skills and ability to integrate into existing codebases.

· Bidirectional Skill Assessment: Not only evaluates a candidate's technical proficiency but also their understanding of open-source collaboration, communication, and contribution workflows.

· Code Quality and Debugging Evaluation: Allows direct observation of a candidate's approach to writing clean code, identifying bugs, and debugging effectively within a live project context.

· Interview Process Standardization: Provides a repeatable and consistent method for technical interviews, ensuring all candidates are evaluated under similar conditions and on similar tasks.

Product Usage Case

· Assessing a candidate's ability to fix a bug in a popular Python web framework: The platform would spin up the framework's dev environment, the candidate would clone the repo, identify and fix a reported bug, and submit a pull request. This demonstrates their debugging skills and understanding of framework architecture.

· Evaluating a candidate's suitability for a remote open-source role working on a JavaScript frontend library: The candidate would be tasked with implementing a new feature or refactoring an existing component. This showcases their UI development skills, React/Vue/Angular proficiency, and ability to work with a component-based architecture.

· Testing a candidate's understanding of CI/CD pipelines in an open-source context: The candidate might be asked to fix a failing build or add a new test. This reveals their familiarity with build tools, testing frameworks, and deployment processes.

· Onboarding new contributors to an open-source project: The platform can be used as a guided entry point for new community members, helping them get familiar with the project's codebase and contribution process through small, manageable tasks.

18

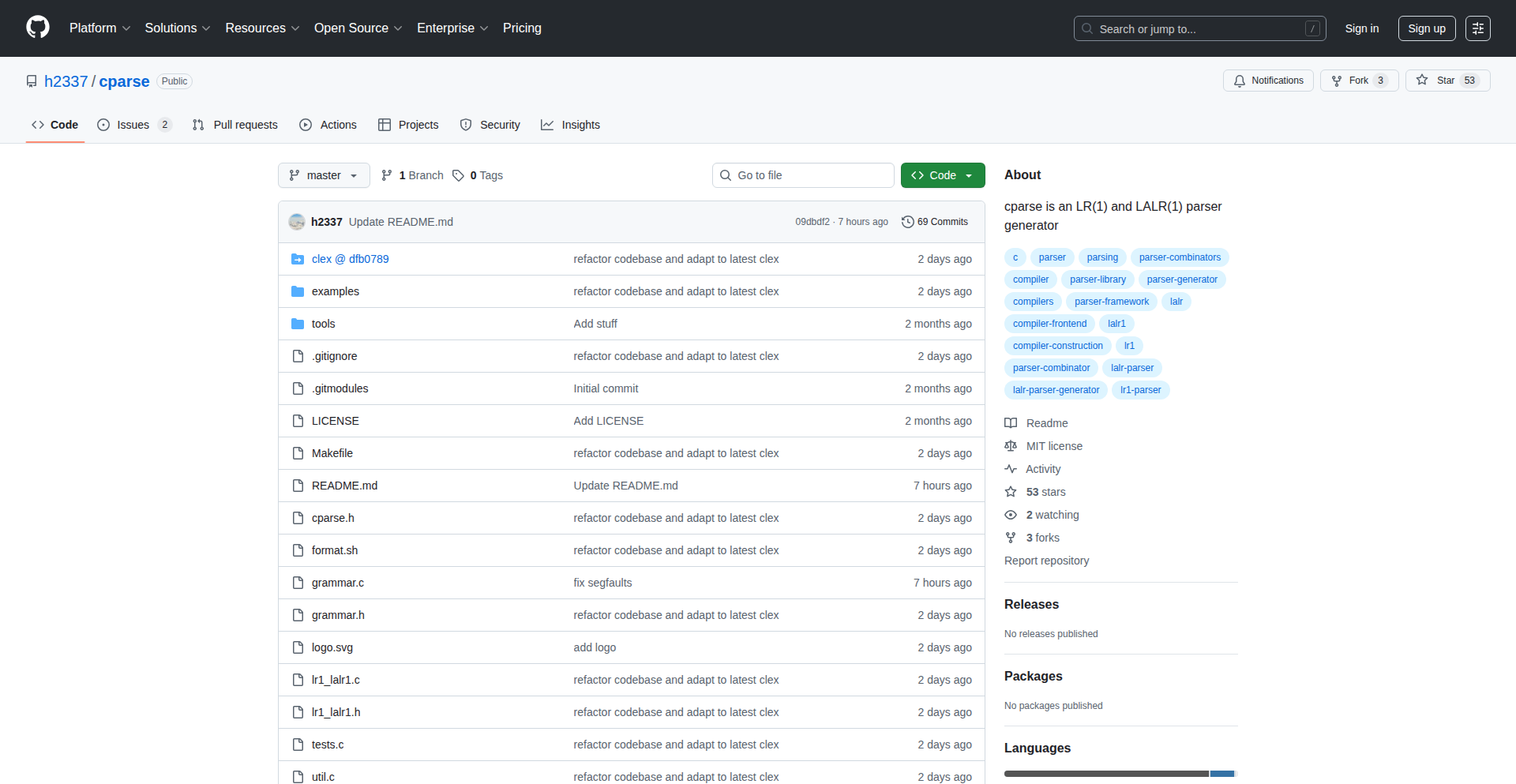

Cparse: The C-Native LR Parser Generator

Author

h2337

Description

Cparse is a lean and efficient parser generator written entirely in C. It empowers developers to create parsers for LR(1) and LALR(1) grammars directly in C, bypassing the need for intermediate languages or complex build steps. This means faster compilation, smaller binaries, and tighter integration with existing C projects. It addresses the common challenge of efficiently processing structured text or data within C applications.

Popularity

Points 2

Comments 2

What is this product?

Cparse is a tool that automatically generates C code for parsers. A parser acts like a translator: it takes a stream of input (such as text from a file or the network) and recovers its structure according to predefined rules, called a grammar. Cparse supports two established bottom-up, table-driven parsing techniques, LR(1) and LALR(1), which can handle the grammars of most programming languages and structured data formats. The innovation is that Cparse is written purely in C, so the generated parsers are performant, lightweight, and easy to drop into any C project without external dependencies or heavy frameworks. This matters because many embedded systems and performance-critical applications are built in C, where adding a custom parser often means wrestling with tools that are not C-friendly or are overly complex. So, for a C developer, it offers a straightforward way to build robust parsers that are native to their environment.
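To make the LR idea concrete, here is a minimal sketch of the kind of table-driven, shift-reduce loop that LR parser generators produce. It is not Cparse's output or API: it is written in Python rather than C, the tables are built by hand for a toy grammar (`E -> E + n | n`), and the names `ACTION`, `GOTO`, `RULES`, and `parse` are hypothetical.

```python
# Toy grammar:  (1) E -> E + n    (2) E -> n
# ACTION maps (state, token kind) to a parser move; the table is hand-built.
ACTION = {
    (0, "n"): ("shift", 2),
    (1, "+"): ("shift", 3),
    (1, "$"): ("accept",),
    (2, "+"): ("reduce", 2),
    (2, "$"): ("reduce", 2),
    (3, "n"): ("shift", 4),
    (4, "+"): ("reduce", 1),
    (4, "$"): ("reduce", 1),
}
GOTO = {(0, "E"): 1}  # state to enter after reducing to nonterminal E
# Rule number -> (left-hand side, right-hand side length, semantic action).
RULES = {
    1: ("E", 3, lambda args: args[0] + args[2]),
    2: ("E", 1, lambda args: args[0]),
}


def tokenize(text):
    """Split whitespace-separated input into (kind, value) pairs."""
    tokens = []
    for part in text.split():
        if part == "+":
            tokens.append(("+", None))
        elif part.isdecimal():
            tokens.append(("n", int(part)))
        else:
            raise ValueError(f"unexpected token: {part!r}")
    tokens.append(("$", None))  # end-of-input marker
    return tokens


def parse(text):
    """Run the shift-reduce loop and return the computed sum."""
    tokens = tokenize(text)
    states, values, pos = [0], [], 0
    while True:
        kind, value = tokens[pos]
        action = ACTION.get((states[-1], kind))
        if action is None:
            raise SyntaxError(f"unexpected {kind!r} at token {pos}")
        if action[0] == "shift":
            states.append(action[1])
            values.append(value)
            pos += 1
        elif action[0] == "reduce":
            lhs, length, semantic = RULES[action[1]]
            args = values[-length:]
            del values[-length:]
            del states[-length:]
            states.append(GOTO[(states[-1], lhs)])
            values.append(semantic(args))
        else:  # accept
            return values[-1]


print(parse("1 + 2 + 3"))
```

A parser generator's job is to compute tables like `ACTION` and `GOTO` automatically from the grammar, so the developer writes only the rules and the semantic actions.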

How to use it?

Developers can use Cparse by defining their grammar in a specific format, typically a text file. This grammar file describes the rules of the language or data structure they want to parse. They then run Cparse with this grammar file as input. Cparse will generate C source code files (e.g., .c and .h files) which contain the actual parser. These generated files can then be compiled and linked into the developer's C application. The generated parser code will have functions that can be called to process input data. This is ideal for scenarios like building custom compilers, interpreters, data deserializers (like JSON or XML parsers), or command-line interface (CLI) parsers within C projects. So, for a developer, it’s about defining their language rules once and letting Cparse do the heavy lifting of writing the complex parsing logic, saving significant development time and effort.

Product Core Function

· Grammar to C code generation: Converts a grammar definition into ready-to-compile C source files, enabling native C parsing. This is valuable because it directly produces usable C code for parsing, integrating seamlessly with existing C projects, thus saving development time.