Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-09-21

SagaSu777 2025-09-22

Explore the hottest developer projects on Show HN for 2025-09-21. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

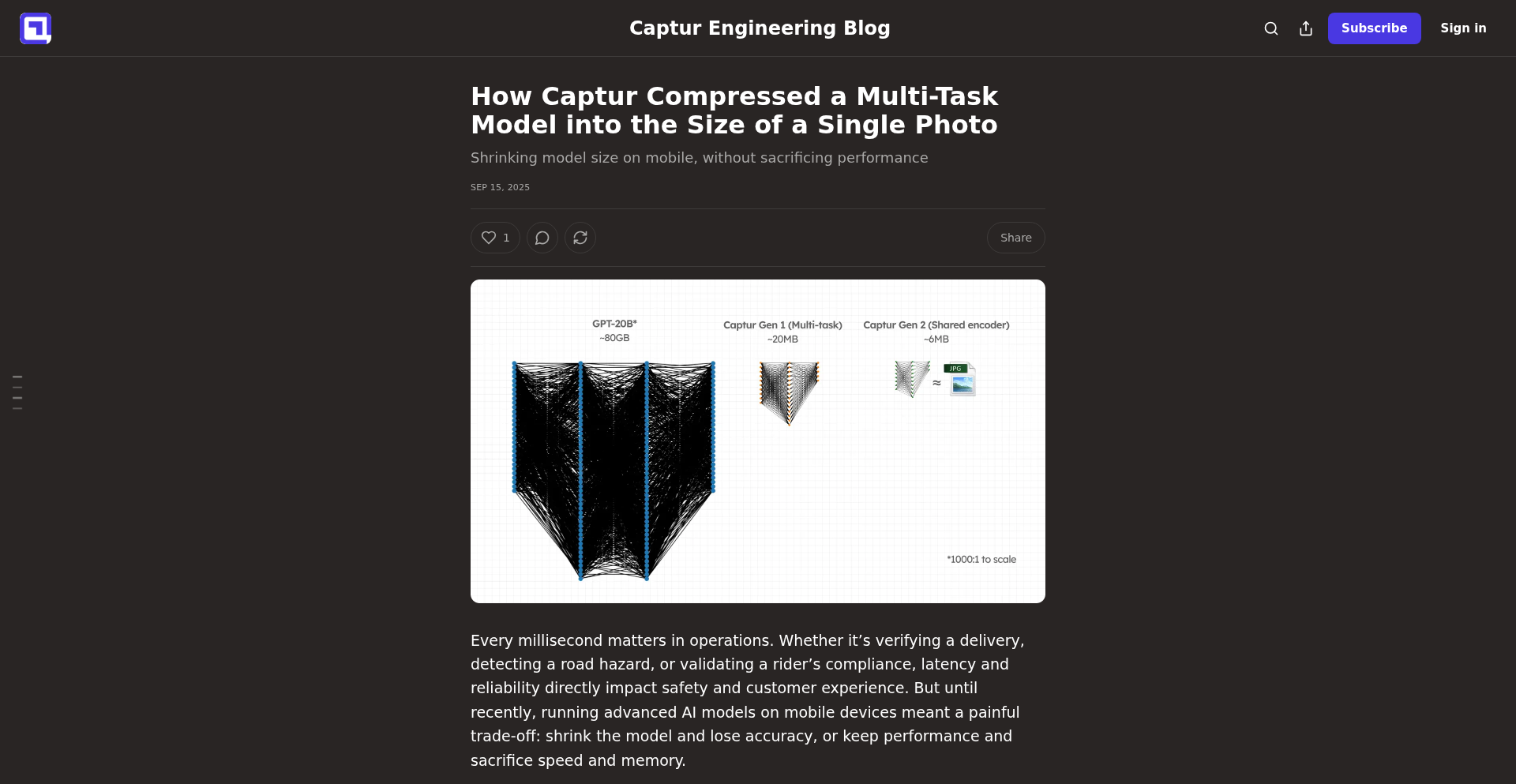

Today's Show HN landscape showcases a vibrant intersection of AI, developer tooling, and creative computation. We're seeing a strong trend towards leveraging AI to boost developer productivity, whether it's automating code reviews with Shieldcode, simplifying microservice updates via InfraAsAI, or even generating entire projects from a single prompt. For developers, this means exploring how AI agents and LLM orchestration, like in ArchGW's routing or AgentSafe's sandboxing, can streamline workflows and unlock new levels of efficiency. Beyond tooling, the sheer ambition of projects like 'The Atlas' demonstrates the power of combining cutting-edge web tech (Three.js) with complex mathematical models for creating immersive, deterministic simulations. This inspires creators to push the boundaries of what's possible in a browser, turning abstract concepts into tangible, interactive experiences. For entrepreneurs, the rise of AI-assisted content creation, from ebooks with QuickTome AI to story generation with Novel AI, highlights opportunities to build tools that democratize creativity. Furthermore, the focus on security, like with the NPM supply chain tips, reminds us that innovation must be coupled with robust practices. Embrace the hacker ethos: identify a pain point, devise an elegant technical solution, and build it. The future is being coded today, often with AI as a co-pilot.

Today's Hottest Product

Name

The Atlas – A 3D Universe Simulation with Python and Three.js

Highlight

This project is a mind-blowing procedural universe simulator that generates over a sextillion galaxies from a single mathematical seed. It relies on pure math and deterministic algorithms (SHA-256, the golden ratio) to create a vast, explorable cosmos with realistic physics such as Kepler's laws and tidal locking, served by a Python/Flask backend and rendered in the browser with React/Three.js. Developers can learn about advanced procedural generation, real-time simulation, deterministic systems, and integrating complex mathematical concepts with web technologies for immersive experiences. It embodies the hacker spirit by tackling an astronomical problem with elegant code and accessible technology.

Popular Category

AI/Machine Learning

Developer Tools

Simulation

Web Development

Security

Popular Keyword

AI

LLM

Python

JavaScript

CI/CD

Security

Simulation

Open Source

Developer Productivity

Technology Trends

AI-powered productivity tools

Lightweight CI/CD solutions

Enhanced developer security practices

Procedural generation and simulation

AI for creative content generation

Personalized user experience tools

Browser-based real-time applications

LLM orchestration and routing

Project Category Distribution

AI/Machine Learning (20%)

Developer Tools & Productivity (25%)

Simulation & Entertainment (15%)

Web Applications & Services (20%)

System & Infrastructure (10%)

Security (10%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | NPM Guardian | 64 | 33 |
| 2 | GPU Rescue Bot | 35 | 0 |
| 3 | Subscription Tracker | 3 | 23 |
| 4 | Gocd: Laptop-Native Deployer | 18 | 4 |
| 5 | Wan-Animate | 12 | 1 |
| 6 | The Atlas: Deterministic Universe Engine | 7 | 4 |
| 7 | JobSynth AI | 5 | 5 |
| 8 | Poem-as-Code C | 5 | 5 |
| 9 | QuickTome AI | 3 | 4 |
| 10 | Viralwalk: Serendipitous Web Discovery Engine | 4 | 0 |

1

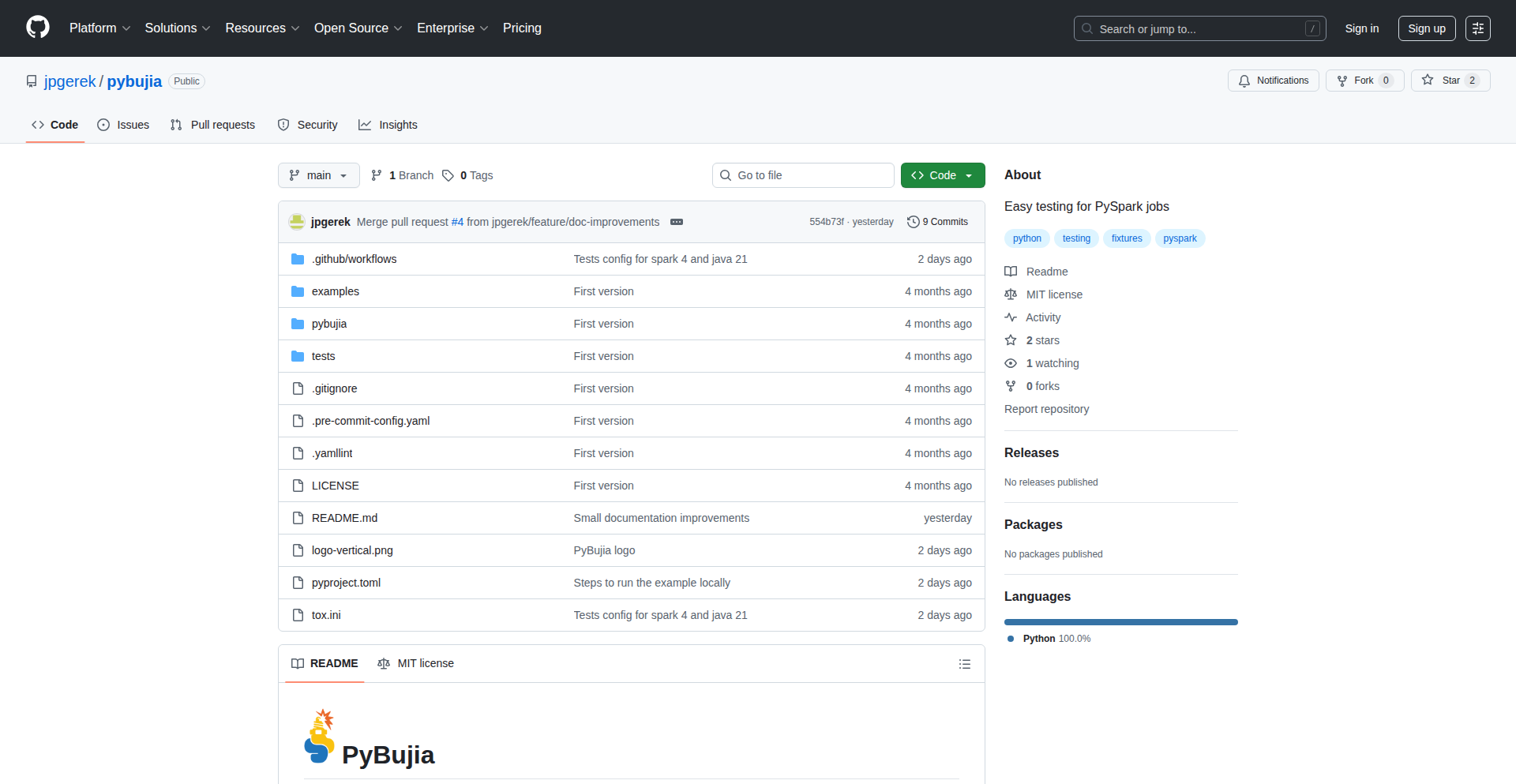

NPM Guardian

Author

bodash

Description

This project is a curated collection of best practices and actionable tips designed to protect developers from the growing threat of NPM supply chain attacks. It addresses the critical need for enhanced security in the JavaScript ecosystem by offering practical guidance on identifying and mitigating risks associated with third-party packages.

Popularity

Points 64

Comments 33

What is this product?

NPM Guardian is a developer-focused resource that consolidates crucial security strategies for navigating the NPM ecosystem. Its innovation lies in its practical, community-driven approach to a complex problem. Instead of a single tool, it's a knowledge base that empowers developers with the understanding to proactively defend against malicious code injected into the software supply chain. This means you can build software more confidently, knowing you're applying the latest security wisdom.

How to use it?

Developers can use NPM Guardian by reading its GitHub repository, which serves as a reference guide for implementing secure development workflows: how to vet packages, manage dependencies, and detect suspicious activity. Integrate these practices into your daily coding habits and CI/CD pipelines to continuously improve your project's security posture. The payoff is a significantly lower risk of compromised code reaching your applications.

Product Core Function

· Package Vetting Guidance: Provides methodologies to evaluate the trustworthiness and security of NPM packages before integration, minimizing the risk of introducing vulnerabilities.

· Dependency Management Strategies: Offers best practices for updating, locking, and monitoring dependencies to prevent the inclusion of compromised versions.

· Threat Detection Insights: Educates developers on common attack vectors used in supply chain compromises, enabling them to recognize and avoid potential threats.

· Secure Coding Practices: Details secure coding principles specifically tailored to the NPM environment, helping developers write more resilient code.

· Community Contribution Platform: Allows developers to share their own security insights and discovered vulnerabilities, fostering a collective defense against threats.
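Part of the vetting and dependency-management guidance above can be automated in CI. The sketch below assumes npm's lockfile v2/v3 format, in which each entry under `packages` may carry `resolved`, `integrity`, and `hasInstallScript` fields. The function name `audit_lockfile` and the `TRUSTED_REGISTRY_HOST` constant are hypothetical illustrations, not part of NPM Guardian.

```python
import json
from urllib.parse import urlparse

# Assumption: packages should come from the public npm registry; change for a private mirror.
TRUSTED_REGISTRY_HOST = "registry.npmjs.org"


def audit_lockfile(lock: dict) -> list[str]:
    """Return human-readable warnings for risky entries in a v2/v3 package-lock.json."""
    warnings = []
    # "packages" maps install paths to metadata; the "" key is the root project itself.
    for path, meta in sorted(lock.get("packages", {}).items()):
        if path == "" or meta.get("link"):
            continue  # skip the root project and local workspace symlinks
        name = path.rsplit("node_modules/", 1)[-1]
        if "integrity" not in meta:
            warnings.append(f"{name}: no integrity hash pinned")
        resolved = meta.get("resolved", "")
        if resolved and urlparse(resolved).hostname != TRUSTED_REGISTRY_HOST:
            warnings.append(f"{name}: resolved from untrusted source {resolved}")
        if meta.get("hasInstallScript"):
            warnings.append(f"{name}: runs install scripts; review before installing")
    return warnings


if __name__ == "__main__":
    with open("package-lock.json", encoding="utf-8") as fh:
        for line in audit_lockfile(json.load(fh)):
            print(line)
```

A non-zero exit when the warning list is non-empty would turn this into a simple CI gate; flagging install scripts matters because they run arbitrary code at install time.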

Product Usage Case

· A developer is about to install a new, popular package for a critical feature. By consulting NPM Guardian, they learn to check the package's recent commit history, issue tracker, and author's activity, identifying a suspicious spike in recent changes that might indicate a compromise, thus avoiding a potential attack.

· A team is experiencing unexpected behavior in their application after updating a dependency. They use NPM Guardian's troubleshooting tips to systematically audit their dependency tree, identify the problematic package, and roll back to a secure version, restoring application stability and security.

· A project manager wants to enforce stricter security policies for their development team. They integrate the principles from NPM Guardian into their team's onboarding process and code review checklist, ensuring all developers are aware of and adhere to secure dependency management practices.
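The first usage case relies on spotting a suspicious spike in recent changes. As a rough proxy, this sketch looks at publish timestamps rather than commit history: it assumes the caller has already fetched the package's metadata from the npm registry and passes in its `time` object (version to ISO timestamp, plus the bookkeeping keys `created` and `modified`). The function name, window, and threshold are illustrative choices, not NPM Guardian's method.

```python
from datetime import datetime, timedelta


def release_bursts(time_map: dict[str, str], window: timedelta, threshold: int) -> list[list[str]]:
    """Return runs of versions where each release came within `window` of the previous one,
    keeping only runs of at least `threshold` versions."""
    releases = sorted(
        (datetime.fromisoformat(ts.replace("Z", "+00:00")), version)
        for version, ts in time_map.items()
        if version not in ("created", "modified")  # registry bookkeeping, not versions
    )
    bursts, current = [], []
    for when, version in releases:
        if current and when - current[-1][0] > window:
            if len(current) >= threshold:
                bursts.append([v for _, v in current])
            current = []
        current.append((when, version))
    if len(current) >= threshold:
        bursts.append([v for _, v in current])
    return bursts
```

Several releases in quick succession after a long quiet period is not proof of compromise, but it is a cheap signal worth a manual look before upgrading.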

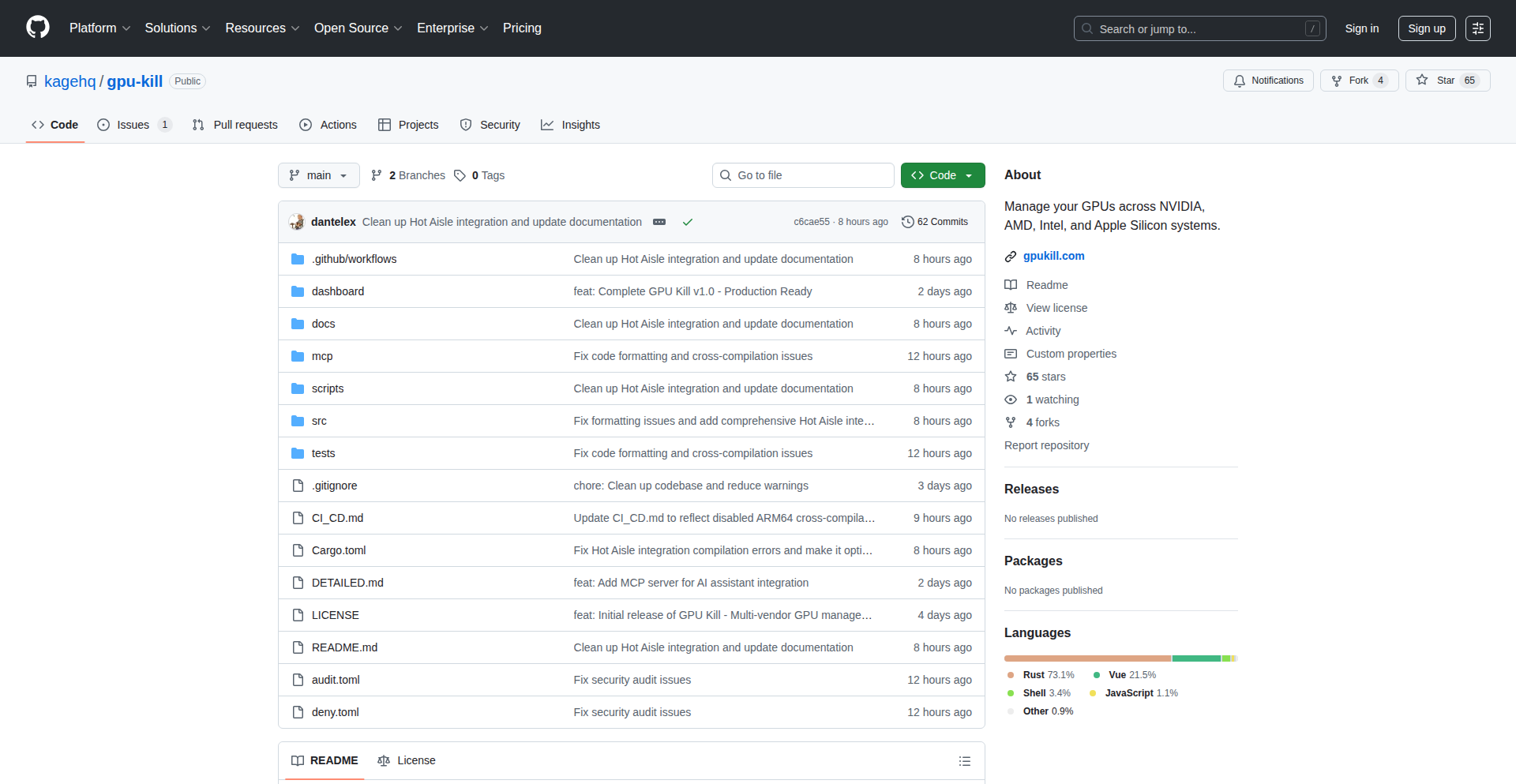

2

GPU Rescue Bot

Author

lexokoh

Description

This project addresses a common pain point for GPU users: runaway jobs that consume all available GPU memory and processing power, making the GPU unusable. The innovation lies in a proactive monitoring system that identifies and terminates stuck processes, effectively freeing up the GPU for new tasks without manual intervention. This is a clever application of system monitoring and process management to solve a frustrating hardware resource blockage.

Popularity

Points 35

Comments 0

What is this product?

GPU Rescue Bot is a system designed to automatically detect and terminate misbehaving or hung processes that are consuming excessive GPU resources. At its core, it works by periodically querying the GPU for process activity and resource utilization. If a process exceeds predefined thresholds for resource usage (like memory or compute time) or shows no signs of progress, the bot can be configured to safely terminate that process. The innovation here is moving beyond simple 'kill -9' commands to a more intelligent, resource-aware approach to process management, preventing GPU lockups.
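The polling-and-threshold loop described above can be sketched in two parts: parsing `nvidia-smi`'s per-process memory report, and tracking how long each heavy process has gone without progress. The `nvidia-smi` query flags in the docstring are real; the `StallDetector` class, its thresholds, and the caller-supplied `progressing` signal are hypothetical stand-ins, since the project's actual detection heuristics are not described here.

```python
import csv
import io
from dataclasses import dataclass, field


def parse_compute_apps(csv_text: str) -> dict[int, int]:
    """Parse the output of
    nvidia-smi --query-compute-apps=pid,used_memory --format=csv,noheader,nounits
    into {pid: used_memory_in_MiB}."""
    usage = {}
    for row in csv.reader(io.StringIO(csv_text)):
        if len(row) == 2:  # skip blank or malformed lines
            usage[int(row[0])] = int(row[1].strip())
    return usage


@dataclass
class StallDetector:
    mem_threshold_mib: int  # hypothetical: memory use that counts as "heavy"
    stall_seconds: float    # hypothetical: how long a heavy process may go without progress
    _idle_since: dict[int, float] = field(default_factory=dict, init=False, repr=False)

    def observe(self, now: float, usage: dict[int, int], progressing: set[int]) -> list[int]:
        """Record one polling sample and return PIDs whose stall budget is exhausted.

        `progressing` is a hypothetical progress signal supplied by the caller,
        e.g. PIDs whose log file or checkpoint changed since the last poll."""
        victims = []
        for pid, mib in usage.items():
            if mib < self.mem_threshold_mib or pid in progressing:
                self._idle_since.pop(pid, None)  # healthy sample: reset the timer
                continue
            start = self._idle_since.setdefault(pid, now)
            if now - start >= self.stall_seconds:
                victims.append(pid)
        for pid in list(self._idle_since):  # forget processes that have exited
            if pid not in usage:
                del self._idle_since[pid]
        return sorted(victims)
```

In a daemon, each poll would feed the `stdout` of that `nvidia-smi` command (run via `subprocess.run(..., capture_output=True, text=True)`) into `parse_compute_apps` and then into `observe`. Requiring a process to stay stalled for a whole window, rather than reacting to a single sample, is what keeps legitimate but bursty jobs from being killed.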

How to use it?

Developers can integrate GPU Rescue Bot into their deep learning or high-performance computing workflows. It typically runs as a background service on the machine hosting the GPU. After installation, users configure the monitoring thresholds and the action to take (e.g., send a notification, gracefully terminate the process). This can be set up to run automatically at system startup, ensuring continuous protection. For integration, it might involve setting up cron jobs for monitoring or running the bot as a daemon. The primary use case is for anyone running multiple GPU-intensive jobs, especially in shared environments or on personal workstations where unattended jobs can cause significant downtime.

Product Core Function

· GPU Process Monitoring: The bot continuously observes running processes on the GPU, tracking their memory usage and computational activity. This is valuable because it provides real-time visibility into what's consuming your GPU resources, allowing you to spot potential problems early.

· Stuck Process Detection: It intelligently identifies processes that are no longer progressing or are excessively consuming resources, often indicators of a job crash or infinite loop. This is useful because it automates the tedious task of manually finding and killing hung processes, saving you time and frustration.

· Automated Process Termination: When a stuck process is detected, the bot can be configured to safely terminate it, releasing the GPU for other tasks. This is highly valuable as it prevents your GPU from being completely unusable due to a single rogue process, ensuring consistent availability of your hardware.

· Configurable Thresholds: Users can customize the resource usage limits and inactivity periods that trigger a termination action. This customization is important because different workloads have different resource needs, allowing you to tune the bot to your specific environment and avoid accidentally killing legitimate processes.
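"Safely terminate" usually means escalating: ask the process to exit, wait, and only then force it. A minimal POSIX-only sketch, assuming the bot knows the PID; `terminate_gracefully` and its parameters are hypothetical names, not the project's API. Because a zombie child still passes the default `os.kill(pid, 0)` check, the function accepts an optional `is_alive` callback for jobs the bot launched itself.

```python
import os
import signal
import time
from typing import Callable, Optional


def pid_alive(pid: int) -> bool:
    """Best-effort liveness check for an arbitrary PID (POSIX)."""
    try:
        os.kill(pid, 0)  # signal 0 performs error checking only; nothing is delivered
    except ProcessLookupError:
        return False
    except PermissionError:
        return True  # the process exists but belongs to another user
    return True


def terminate_gracefully(pid: int, grace_seconds: float = 10.0,
                         is_alive: Optional[Callable[[], bool]] = None,
                         poll_interval: float = 0.1) -> str:
    """Send SIGTERM, wait up to `grace_seconds`, then escalate to SIGKILL.

    Returns "terminated" if the process exited after SIGTERM (or was already gone),
    "killed" if SIGKILL was needed."""
    alive = is_alive or (lambda: pid_alive(pid))
    try:
        # SIGTERM lets the job run its shutdown handlers (e.g. save a checkpoint).
        os.kill(pid, signal.SIGTERM)
    except ProcessLookupError:
        return "terminated"
    deadline = time.monotonic() + grace_seconds
    while time.monotonic() < deadline:
        if not alive():
            return "terminated"
        time.sleep(poll_interval)
    try:
        os.kill(pid, signal.SIGKILL)  # last resort: cannot be caught or ignored
    except ProcessLookupError:
        return "terminated"
    return "killed"
```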

Product Usage Case

· Scenario: A data scientist is running several large deep learning training jobs on their workstation overnight. One job crashes and enters an infinite loop, consuming 100% of the GPU's memory. Without the bot, the workstation's GPU would be locked until morning. With GPU Rescue Bot, the bot detects the hung job and terminates it, freeing the GPU for other potential jobs or allowing the user to restart the problematic job immediately upon returning.

· Scenario: In a shared server environment where multiple users access GPUs, one user's experimental script accidentally allocates all GPU memory without releasing it, blocking other users. GPU Rescue Bot, running on the server, identifies this runaway allocation and terminates the offending process, restoring GPU availability to everyone else and preventing unfair resource monopolization.

· Scenario: A developer is experimenting with real-time GPU-accelerated simulations. An unexpected input causes the simulation to freeze. Manually checking and killing the process can disrupt the workflow. GPU Rescue Bot proactively monitors the simulation's resource usage and identifies the stall, automatically killing the process and allowing the developer to quickly restart and debug the issue without losing their session.

· Scenario: For continuous integration and continuous deployment (CI/CD) pipelines that involve GPU testing, a faulty test case could hang a GPU. GPU Rescue Bot can be integrated into the pipeline to automatically clean up any stuck GPU processes, ensuring the pipeline can proceed and test results are not delayed by hardware resource issues.

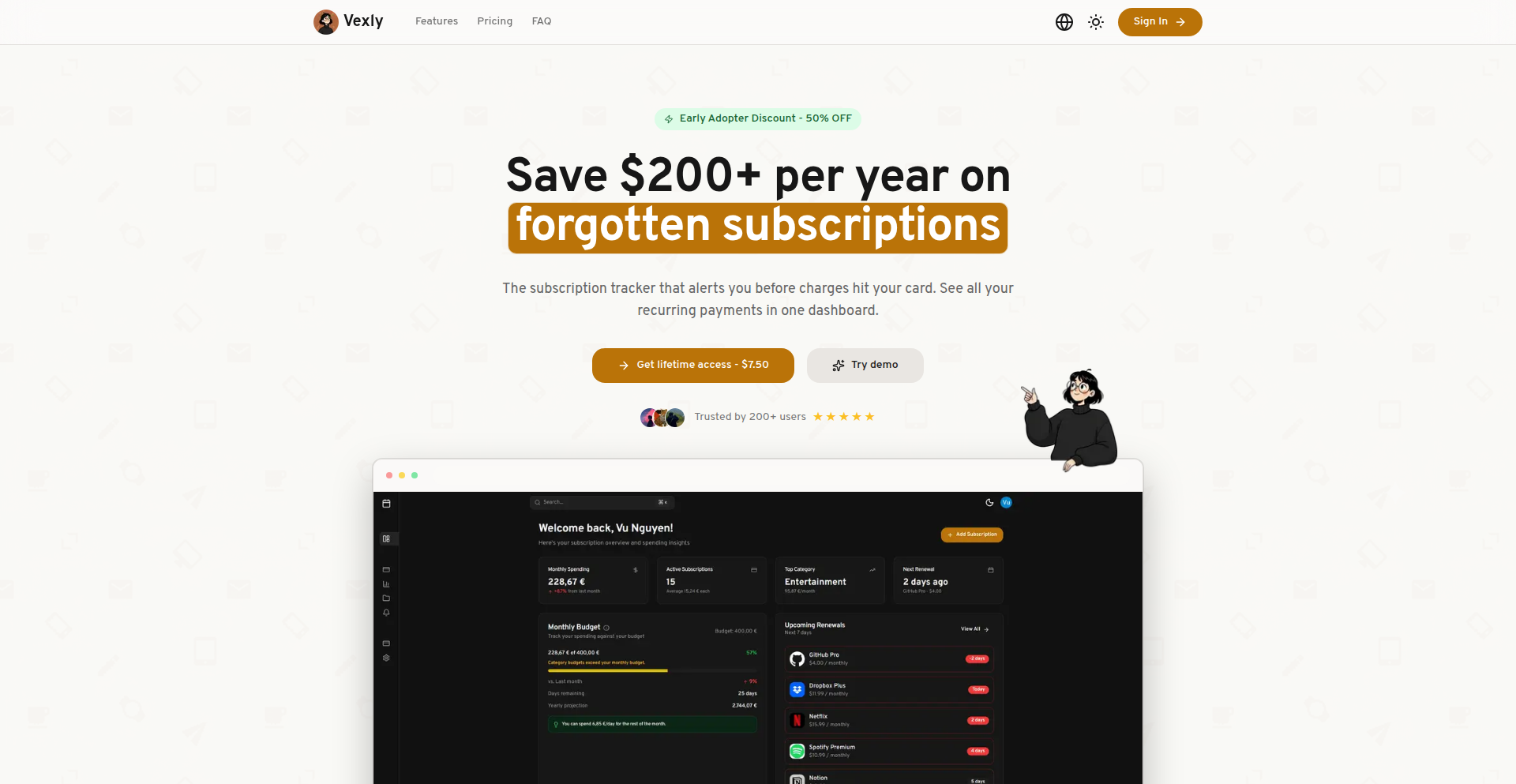

3

Subscription Tracker

Author

hoangvu12

Description

A website designed to help users meticulously track all their recurring subscriptions, from streaming services to software licenses. It focuses on providing a clear overview of monthly and annual costs, renewal dates, and potential savings, addressing the common problem of 'subscription creep' and forgotten recurring charges.

Popularity

Points 3

Comments 23

What is this product?

This is a web application that allows you to centralize and manage all your subscriptions in one place. Technically, it likely utilizes a robust backend to store subscription data (service name, cost, billing cycle, renewal date, payment method) and a user-friendly frontend for data entry and visualization. The innovation lies in its simplicity and direct focus on solving the often-overlooked issue of managing diverse recurring payments, which can easily lead to overspending and missed cancellation opportunities. Think of it as your personal subscription dashboard that keeps you informed and in control.
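The project's data model isn't published here, so the following is a hypothetical schema that shows how monthly and annual totals can be computed from subscriptions on different billing cycles. It uses `Decimal` to avoid floating-point rounding on money; the cycle names and per-year factors are assumptions (52 weekly charges per year is itself an approximation).

```python
from dataclasses import dataclass
from decimal import Decimal

# Assumption: supported billing cycles and how many charges each produces per year.
CHARGES_PER_YEAR = {"weekly": 52, "monthly": 12, "quarterly": 4, "yearly": 1}


@dataclass
class Subscription:
    name: str
    cost: Decimal   # amount charged once per billing cycle
    cycle: str      # a key of CHARGES_PER_YEAR
    category: str = "uncategorized"


def annual_cost(sub: Subscription) -> Decimal:
    return sub.cost * CHARGES_PER_YEAR[sub.cycle]


def totals(subs: list[Subscription]) -> tuple[Decimal, Decimal]:
    """Return (monthly, annual) spend; monthly is the annual total spread over 12 months."""
    annual = sum((annual_cost(s) for s in subs), Decimal("0"))
    monthly = (annual / 12).quantize(Decimal("0.01"))
    return monthly, annual
```

Normalizing everything to a yearly figure first means a $120/year plan and a $15.99/month plan can be compared and summed directly.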

How to use it?

Developers can integrate this project into their personal finance tools or even build upon it as a base for more comprehensive financial management applications. For individual users, it's a straightforward web interface where you can manually input your subscription details. Once entered, the website automatically calculates your total subscription spending, alerts you before renewal dates, and provides insights into potential cost savings. It’s about getting a clear picture of where your money is going with minimal effort.

Product Core Function

· Subscription input and management: Allows users to add, edit, and delete subscription details, providing a centralized hub for all recurring payments. This helps by making it easy to see all your subscriptions at a glance, so you don't forget what you're paying for.

· Cost visualization and analysis: Displays total monthly and annual subscription costs, offering insights into spending patterns. This is useful because it clearly shows how much you spend on subscriptions, helping you identify areas where you might be overspending.

· Renewal date reminders: Sends timely notifications before subscription renewal dates to prevent unwanted automatic charges. This is valuable because it prevents you from being charged for services you no longer use or want.

· Categorization and tagging: Enables users to categorize subscriptions (e.g., entertainment, productivity, news) for better organization and analysis. This helps by allowing you to group similar subscriptions, making it easier to understand your spending in different life areas.

· Payment method tracking: Records the payment method used for each subscription, aiding in financial reconciliation. This is beneficial for keeping track of which card or account is being used for each service, simplifying your financial management.
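Renewal reminders depend on getting calendar arithmetic right, in particular for subscriptions that started on the 29th, 30th, or 31st of a month. A minimal sketch, assuming each subscription is stored as a start date plus a cycle length in months; the function names and the 7-day default are hypothetical.

```python
import calendar
from datetime import date, timedelta


def add_months(d: date, months: int) -> date:
    """Shift a date by whole months, clamping to month end (Jan 31 + 1 month -> Feb 28/29)."""
    index = d.month - 1 + months
    year, month = d.year + index // 12, index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def next_renewal(start: date, months_per_cycle: int, today: date) -> date:
    """First renewal on or after `today`, always counted from the original start date."""
    n, renewal = 0, start
    while renewal < today:
        n += 1
        renewal = add_months(start, n * months_per_cycle)
    return renewal


def due_soon(subs: dict[str, tuple[date, int]], today: date, days: int = 7) -> list[tuple[str, date]]:
    """Subscriptions renewing within `days` of `today`, soonest first."""
    horizon = today + timedelta(days=days)
    upcoming = []
    for name, (start, months) in subs.items():
        renewal = next_renewal(start, months, today)
        if renewal <= horizon:
            upcoming.append((name, renewal))
    return sorted(upcoming, key=lambda item: item[1])
```

Counting each renewal from the start date, rather than adding one month to the previous renewal, avoids drift: a plan started on Jan 31 renews on Feb 29 and then Mar 31, not Mar 29.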

Product Usage Case

· A freelancer who subscribes to multiple project management tools, cloud storage, and design software can use this to track all their business-related recurring costs and ensure they are only paying for active tools.

· A student with various streaming services, online learning platforms, and gaming subscriptions can use this to monitor their entertainment budget and avoid exceeding their spending limits, especially during academic breaks.

· A home user who has multiple streaming services, music subscriptions, and security software can utilize this to get a consolidated view of their monthly household expenses and identify any subscriptions they might have forgotten about, thus saving money.

· A developer can fork this project and add features like API integrations with payment gateways or subscription providers to automate data entry and gain deeper insights into subscription revenue for their own SaaS products.

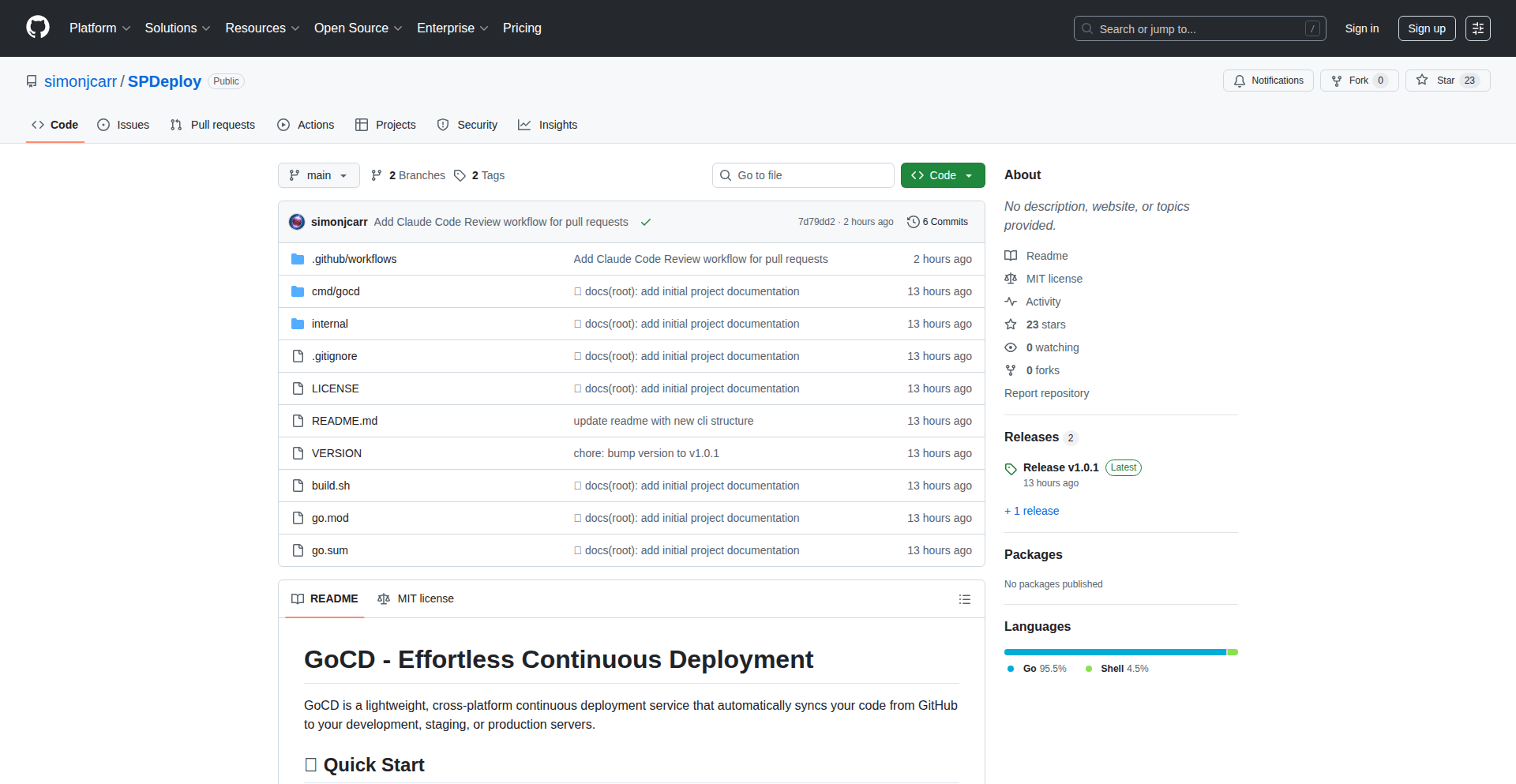

4

Gocd: Laptop-Native Deployer

Author

soxprox

Description

Gocd is a minimalist Go-based Continuous Integration and Continuous Deployment (CI/CD) tool designed to run directly on your development machine. It simplifies deploying changes from GitHub pull requests by bypassing the need for complex CI/CD infrastructure. Instead of setting up dedicated servers or cloud runners, Gocd allows developers to automate builds and deployments directly from their laptop, integrating seamlessly with GitHub issues and pull requests. This offers a streamlined workflow for quickly testing and deploying code, especially for individual developers or small teams who find traditional CI/CD stacks overly burdensome.

Popularity

Points 18

Comments 4

What is this product?

Gocd is a lightweight CI/CD tool built with Go that enables developers to run their entire build and deployment pipeline on their local development machine. Its core innovation lies in its simplicity and resource efficiency. Unlike traditional CI/CD solutions that require setting up and managing runners, servers, or cloud infrastructure, Gocd allows you to directly leverage your existing development environment. It achieves this by integrating with version control systems like GitHub, monitoring for changes in pull requests, and then triggering automated build and deployment processes. This approach significantly reduces the overhead typically associated with CI/CD, making it an ideal solution for rapid iteration and testing of code changes directly from your laptop or a small, dedicated server. The value here is a dramatically simplified path from code commit to a runnable application, without the complexity of a full-blown CI/CD platform.

How to use it?

Developers can use Gocd by cloning the GitHub repository and running the Go application. It's configured to connect to your GitHub account and watch specific repositories or branches for pull requests. When a pull request is opened or updated, Gocd automatically triggers a build process (which you define in its configuration) and can then deploy the resulting artifact. This deployment can be to a local environment, a staging server, or even accessed remotely via tools like Tailscale for immediate testing. The key integration point is GitHub, where it acts as an automated agent responding to pull request events. This makes it incredibly easy to test the impact of your code changes in a near-production environment before merging.
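How Gocd detects pull request changes (polling or webhooks) is not described, so here is a polling sketch of the idea. `fetch_open_pulls` calls GitHub's REST "list pull requests" endpoint; the token, function names, and the in-memory `last_built` map are assumptions. A PR needs a build when it is new or its head commit SHA has changed.

```python
import json
import urllib.request


def fetch_open_pulls(owner: str, repo: str, token: str) -> list[dict]:
    """List open pull requests via GitHub's REST API (first page of up to 100 only)."""
    req = urllib.request.Request(
        f"https://api.github.com/repos/{owner}/{repo}/pulls?state=open&per_page=100",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
        },
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)


def pulls_needing_build(pulls: list[dict], last_built: dict[int, str]) -> list[tuple[int, str]]:
    """Return (PR number, head SHA) for new or updated PRs and mark them as seen."""
    todo = []
    for pr in pulls:
        number, sha = pr["number"], pr["head"]["sha"]
        if last_built.get(number) != sha:
            todo.append((number, sha))
            last_built[number] = sha  # so the next poll skips this exact commit
    return todo
```

Keying on the head SHA rather than on "updated_at" means only new commits trigger a rebuild; comments or label changes on the PR do not.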

Product Core Function

· GitHub Pull Request Integration: Automatically detects new or updated pull requests, triggering build and deployment workflows. This means every code change you propose gets a quick automated check, helping you catch errors early and ensuring your code works as expected before it's merged.

· Local Machine Execution: Runs directly on your laptop or a small server, eliminating the need for complex cloud infrastructure or dedicated CI servers. This dramatically lowers the barrier to entry for setting up automated workflows and saves on costs associated with managing external infrastructure.

· Automated Builds: Executes custom build commands defined by the developer for each pull request. This ensures that your code is compiled and packaged correctly, providing a consistent build artifact for deployment.

· Automated Deployments: Deploys the built artifacts to a target environment upon successful build. This allows you to test your changes in a realistic environment immediately, speeding up the feedback loop and reducing manual deployment steps.

· Remote Access Capabilities: Facilitates easy access to the running application, potentially through tools like Tailscale. This is invaluable for quickly demoing your changes to teammates or for testing the deployed application remotely from anywhere.
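The "custom build commands" step can be pictured as running a list of commands in order and stopping at the first failure, so a broken build never reaches the deploy step. Gocd's real configuration format is not shown here; this sketch assumes steps are plain argument lists such as `["go", "test", "./..."]`, and `run_pipeline` is a hypothetical name.

```python
import subprocess


def run_pipeline(steps: list[list[str]], workdir: str) -> tuple[bool, list[str]]:
    """Run build/deploy commands in order, stopping at the first non-zero exit.

    Returns (success, log lines)."""
    log = []
    for cmd in steps:
        result = subprocess.run(cmd, cwd=workdir, capture_output=True, text=True)
        log.append(f"$ {' '.join(cmd)} -> exit {result.returncode}")
        if result.returncode != 0:
            log.append(result.stderr.strip())  # keep the failing step's error output
            return False, log
    return True, log
```

For example, `run_pipeline([["go", "test", "./..."], ["go", "build", "-o", "app", "."]], checkout_dir)` would only build if the tests pass; a deploy command appended as a third step would run only if both succeed.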

Product Usage Case

· A solo developer working on a personal project wants to quickly test a new feature from a GitHub pull request. Instead of manually building and deploying the application, they can configure Gocd to automatically build and deploy the code whenever a pull request is opened. This allows them to test the feature in a live environment on their laptop without any complex setup, greatly accelerating their development cycle.

· A small team is developing a web application and wants a simple way to deploy updates to a staging server for QA testing. Gocd can be set up on a small server, integrated with their GitHub repository, and configured to automatically deploy any successful build from a specific branch to the staging server. This eliminates manual deployment steps for the QA team and ensures faster feedback on new releases.

· A developer is experimenting with a new library in a side project. They create a pull request for each experiment. Gocd automatically builds the project with the new library and deploys it locally. This allows the developer to immediately run and interact with the updated application, making it easy to evaluate the library's impact without interrupting their main workflow.

· A developer wants to share a work-in-progress feature with a colleague for review. By pushing a branch and opening a pull request, Gocd can automatically build and deploy the application, making it accessible via a temporary remote link (e.g., through Tailscale). This allows for seamless collaboration and feedback on features that are not yet ready for a formal release.

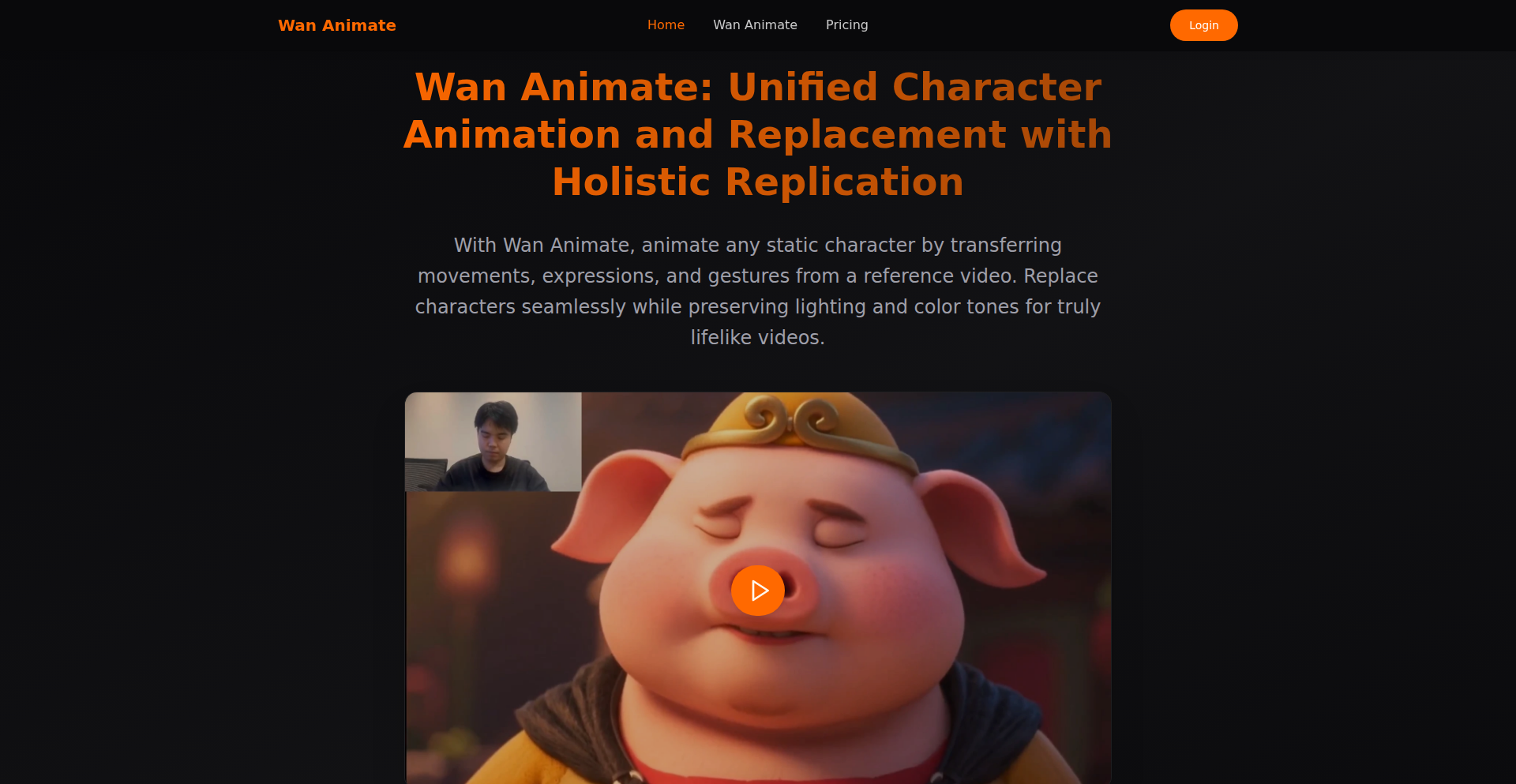

5

Wan-Animate

Author

laiwuchiyuan

Description

Wan-Animate is an AI-powered tool that breathes life into static characters by transferring motion and expressions from a reference video. It enables seamless character replacement while maintaining consistent gestures, expressions, and artistic style, generating animations up to 120 seconds at 480p or 720p. The system excels at accurate lip-sync and realistic expression transfer, and can be controlled using multimodal inputs like video, images, and text prompts. This aims to make character animation natural and adaptable for various applications beyond rigid templates.

Popularity

Points 12

Comments 1

What is this product?

Wan-Animate is an innovative AI system that allows you to animate static character images or 3D models by using a video of a person performing actions and expressions as a guide. Essentially, it "copies" the movements and facial expressions from the reference video and applies them to your chosen character, making the character appear to perform those actions. It uses advanced techniques like motion transfer and holistic replication, which means it tries to capture the entire essence of the movement, not just isolated parts. The innovation lies in its ability to replace characters seamlessly, ensuring consistency in how the character moves and looks, and it can also synchronize speech with mouth movements very accurately. So, it's like giving your characters a digital puppet show controller powered by real-world performances.

How to use it?

Developers can integrate Wan-Animate into their existing pipelines or use it as a standalone tool. In game development, it can quickly animate NPCs or player avatars from motion capture data or even simple video recordings. In content creation, it can bring illustrations or 3D character models to life for marketing videos, educational content, or virtual influencers. The multimodal control allows flexible workflows: you could use a video of an actor to drive a character's performance, an image to define the character's base appearance, and text prompts to guide specific actions or emotions. Its features suggest potential integration with game engines (like Unity or Unreal Engine) or video editing software, so that character animations could be generated and applied within those environments. For example, a game developer could record their own facial expressions and have a game character mirror them, or a filmmaker could quickly animate a digital character delivering a specific line of dialogue.

Product Core Function

· Animate static characters by transferring movements and expressions from a reference video: This allows any static character image or model to mimic the actions of a person in a video, making it useful for quickly animating existing assets without manual keyframing, saving significant time and effort in character animation.

· Seamless character replacement with consistent gestures, expressions, and style: This feature ensures that when you swap out a character, the new character's movements and expressions still look natural and in line with the original style, crucial for maintaining visual coherence in projects like games or animated series.

· Video generation up to 120 seconds in 480p or 720p: This provides a practical output length and resolution for common animation needs, suitable for short clips, social media content, or parts of larger productions, offering a good balance between quality and processing time.

· Accurate lip–audio alignment and realistic expression transfer: This is key for creating believable dialogue animations, ensuring that the character's mouth movements match the spoken audio precisely and that facial emotions are conveyed realistically, making character performances much more engaging and professional.

· Multimodal instruction control using video, image, and text prompts: This offers flexibility in how users can guide the animation process. You can use a video to capture nuanced performance, an image for character definition, and text for specific instructions, providing a powerful and adaptable creative workflow for diverse animation tasks.

Product Usage Case

· Game Development: A game studio wants to create a diverse cast of characters with unique animations. Instead of hiring expensive motion capture actors for every character, they can use Wan-Animate with a single reference actor and apply their performance to multiple character models, ensuring consistent animation quality and reducing production costs.

· Virtual Influencers: A social media personality wants to create a digital avatar that performs their content. They can record themselves talking and emoting, and then use Wan-Animate to transfer these performances to their custom 3D avatar, making the avatar's communication more dynamic and engaging for their audience.

· Educational Content: An educator is creating animated lessons. They can record themselves explaining a concept and then use Wan-Animate to make a cartoon character on screen repeat the explanation with matching lip movements and expressions, making the learning material more visually appealing and easier to follow.

· Marketing and Advertising: A company needs a short animated explainer video. They can use a stock character image and a video of a spokesperson, then use Wan-Animate to make the character deliver the marketing message with the spokesperson's expressions and speech rhythm, creating professional-looking promotional content efficiently.

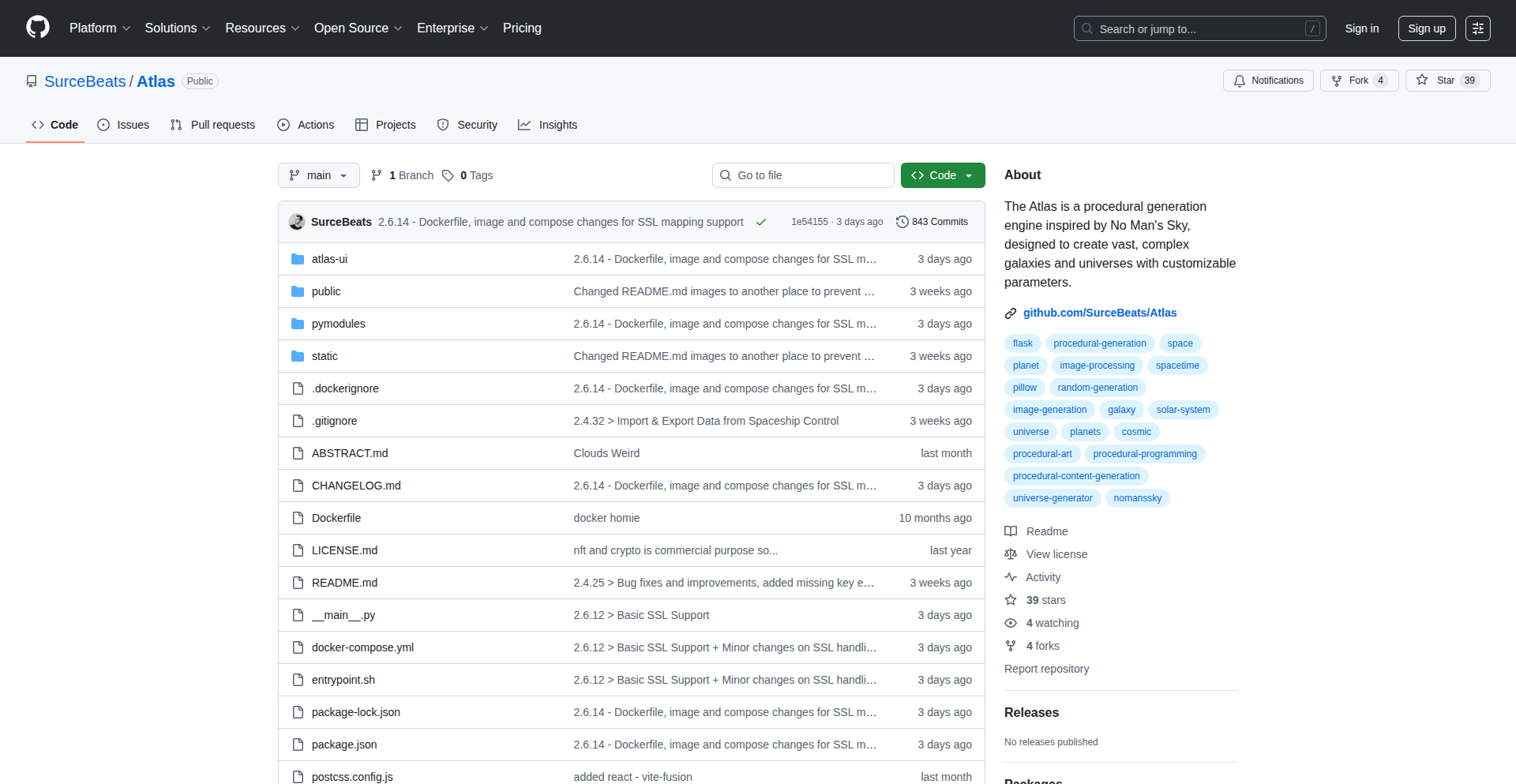

6

The Atlas: Deterministic Universe Engine

Author

SurceBeats

Description

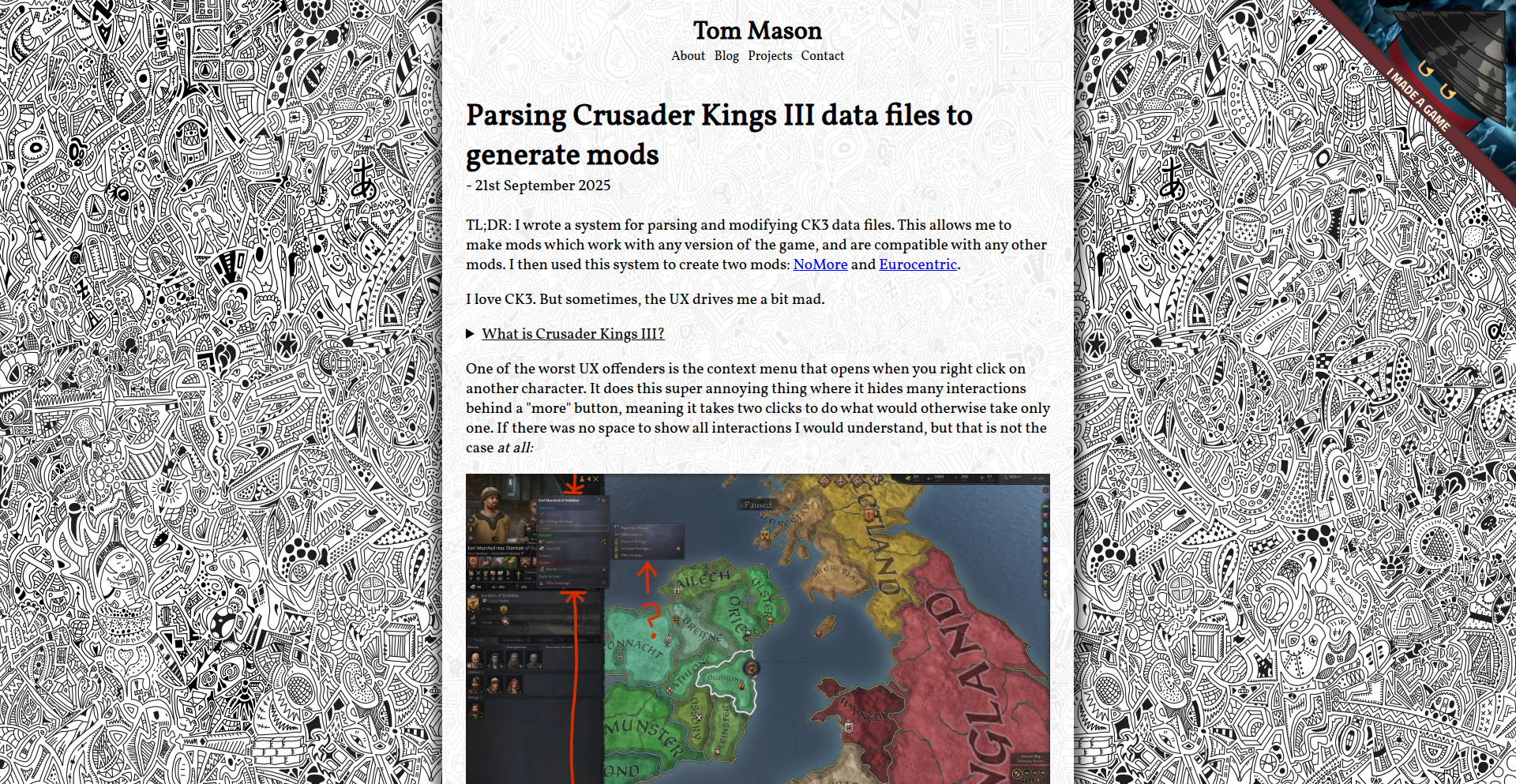

The Atlas is a groundbreaking browser-based universe simulator that procedurally generates over one sextillion galaxies from a single mathematical seed. It uniquely combines theoretical physics with real-time rendering, offering a boundless, explorable cosmos without requiring any pre-saved data. This innovative approach ensures perfect consistency across all devices and sessions, acting as a playable, large-scale implementation of Einstein's block universe theory.

Popularity

Points 7

Comments 4

What is this product?

The Atlas is a universe simulation that uses pure mathematics and deterministic algorithms to create an unimaginably vast cosmos. Instead of storing massive amounts of pre-generated data, it calculates everything on demand from a mathematical seed. Think of it as a complex recipe that can produce a practically endless variety of unique cakes. The core innovation lies in treating time as a spatial dimension, so the simulation can compute the state of the universe at any moment on demand. If you pause the simulation and come back later, planets will have continued their orbits exactly as expected, and if you open the same universe on different computers, everything will look exactly the same, a result of deterministic generation. It applies real-world physics such as gravity and orbital mechanics to create believable celestial bodies and phenomena. For you, this means a universe that is effectively endless to explore, perfectly consistent, and always available, no matter when or where you access it.
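The highlight mentions SHA-256 and the golden ratio, but the project's exact scheme is not described. A common way to get "everything from one seed" is hierarchical hashing: each object's seed is a hash of its parent's seed plus its own address. The sketch below is that generic technique under stated assumptions; `derive_seed`, `unit_float`, and `golden_angle_layout` are hypothetical names, and the golden-angle layout is Vogel's sunflower model, shown as one plausible use of the golden ratio.

```python
import hashlib
import math

GOLDEN_RATIO = (1 + math.sqrt(5)) / 2
GOLDEN_ANGLE = 2 * math.pi * (1 - 1 / GOLDEN_RATIO)  # about 2.39996 rad (137.5 degrees)


def derive_seed(parent: int, *path: object) -> int:
    """Derive a child seed from a parent seed plus a path such as ("galaxy", 42).

    The same inputs always yield the same 64-bit value on every machine,
    so nothing has to be stored: any object can be regenerated on demand."""
    key = ":".join([str(parent), *map(str, path)]).encode("utf-8")
    return int.from_bytes(hashlib.sha256(key).digest()[:8], "big")


def unit_float(seed: int) -> float:
    """Map a 64-bit seed to a float in [0, 1) using its top 53 bits."""
    return (seed >> 11) / float(1 << 53)


def golden_angle_layout(n: int, radius: float) -> list[tuple[float, float]]:
    """Spread n points evenly over a disk (Vogel's sunflower layout)."""
    points = []
    for i in range(n):
        r = radius * math.sqrt((i + 0.5) / n)
        theta = i * GOLDEN_ANGLE
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points
```

Seeds chain naturally: `star = derive_seed(derive_seed(universe_seed, "galaxy", 42), "star", 7)` identifies one star without generating any of its neighbors, which is why such a universe needs no saved data.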

How to use it?

Developers can experience The Atlas through its live demo or run their own universe locally by cloning the GitHub repository. The project uses a Python/Flask backend with Hypercorn and a React frontend enhanced with Three.js for 3D rendering, connected by a custom 'vite-fusion' plugin. This setup allows for real-time generation and rendering directly in the browser. You can choose to explore a shared universe that evolves based on a fixed historical seed, or create your own unique cosmos by providing a new seed. For integration, developers can explore the project's MIT-licensed codebase to understand its procedural generation and physics simulation techniques, potentially applying similar deterministic principles to their own projects, such as game development, scientific visualizations, or large-scale data generation.

Product Core Function

· Procedural Universe Generation: Creates 10^21 galaxies from a single seed using deterministic mathematical algorithms, offering endless exploration possibilities. The value here is the ability to generate vast, unique content without massive storage needs, applicable to games and simulations.

· Real-time Physics Simulation: Implements Kepler's laws, tidal locking, Roche limits, and hydrostatic relaxation for moons, ensuring realistic celestial mechanics. This provides accurate and believable behavior for celestial bodies, crucial for scientific visualizations and educational tools.

· Deterministic Consistency: Ensures that the universe state is identical across all devices and sessions by recalculating events from the seed, guaranteeing perfect synchronization and replayability. This is valuable for collaborative environments or when precise replication of states is required.

· Time as a Coordinate: Treats time as a dimension, allowing any moment of the universe to be computed on demand. This innovation enables efficient simulation of long-term cosmic evolution without requiring continuous background processing, making complex simulations more feasible.

· Browser-based Accessibility: Renders in the browser with React and Three.js, backed by a Python/Flask server, so the complex simulation is accessible to anyone with a web browser and an internet connection. This democratizes access to advanced simulations and lowers the barrier to exploration and experimentation.

· Gamified Progression: Includes features like resource mining and spaceship progression to add an engaging layer for users. This demonstrates how complex simulations can be made more interactive and enjoyable for a wider audience.
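"Time as a Coordinate" works for orbits because Kepler's laws give a planet's position as a direct function of time, with no step-by-step integration. The sketch below is the standard textbook method (mean anomaly, then Kepler's equation M = E - e·sin E solved by Newton's method), not necessarily The Atlas's own code; function names and parameters are illustrative, and it assumes an elliptical orbit (0 <= e < 1) in its own plane with the focus at the origin.

```python
import math


def solve_kepler(mean_anomaly: float, e: float, tol: float = 1e-12) -> float:
    """Solve M = E - e*sin(E) for the eccentric anomaly E (0 <= e < 1) by Newton's method."""
    E = mean_anomaly if e < 0.8 else math.pi  # standard starting guesses
    for _ in range(50):
        delta = (E - e * math.sin(E) - mean_anomaly) / (1 - e * math.cos(E))
        E -= delta
        if abs(delta) < tol:
            break
    return E


def orbital_position(a: float, e: float, period: float, t: float,
                     t_periapsis: float = 0.0) -> tuple[float, float]:
    """(x, y) at time t for semi-major axis a, with periapsis on the +x axis."""
    # The mean anomaly grows linearly with time; wrap it into [0, 2*pi).
    M = 2 * math.pi * ((t - t_periapsis) / period % 1.0)
    E = solve_kepler(M, e)
    x = a * (math.cos(E) - e)
    y = a * math.sqrt(1 - e * e) * math.sin(E)
    return x, y
```

Because the position depends only on `t`, jumping a billion years ahead costs the same as advancing one frame, and two machines given the same orbital elements and time agree on where every planet is.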

Product Usage Case

· Game Development: A game studio could use The Atlas's engine to generate unique, explorable star systems for a space exploration game, ensuring that every player experiences a distinct yet consistent universe.

· Scientific Visualization: Researchers could adapt the physics simulation to visualize complex astronomical phenomena or test theories about universe formation in a controlled, reproducible environment.

· Educational Tools: Educators could use The Atlas to demonstrate principles of astrophysics, gravity, and orbital mechanics in an interactive and visually engaging way for students.

· Generative Art: Artists could utilize the procedural generation algorithms to create unique, evolving cosmic landscapes as digital art pieces, with each generation offering a novel visual experience.

· Software Engineering Experimentation: Developers could study The Atlas's 'vite-fusion' plugin and its efficient deterministic generation techniques to improve performance and data management in their own web applications.

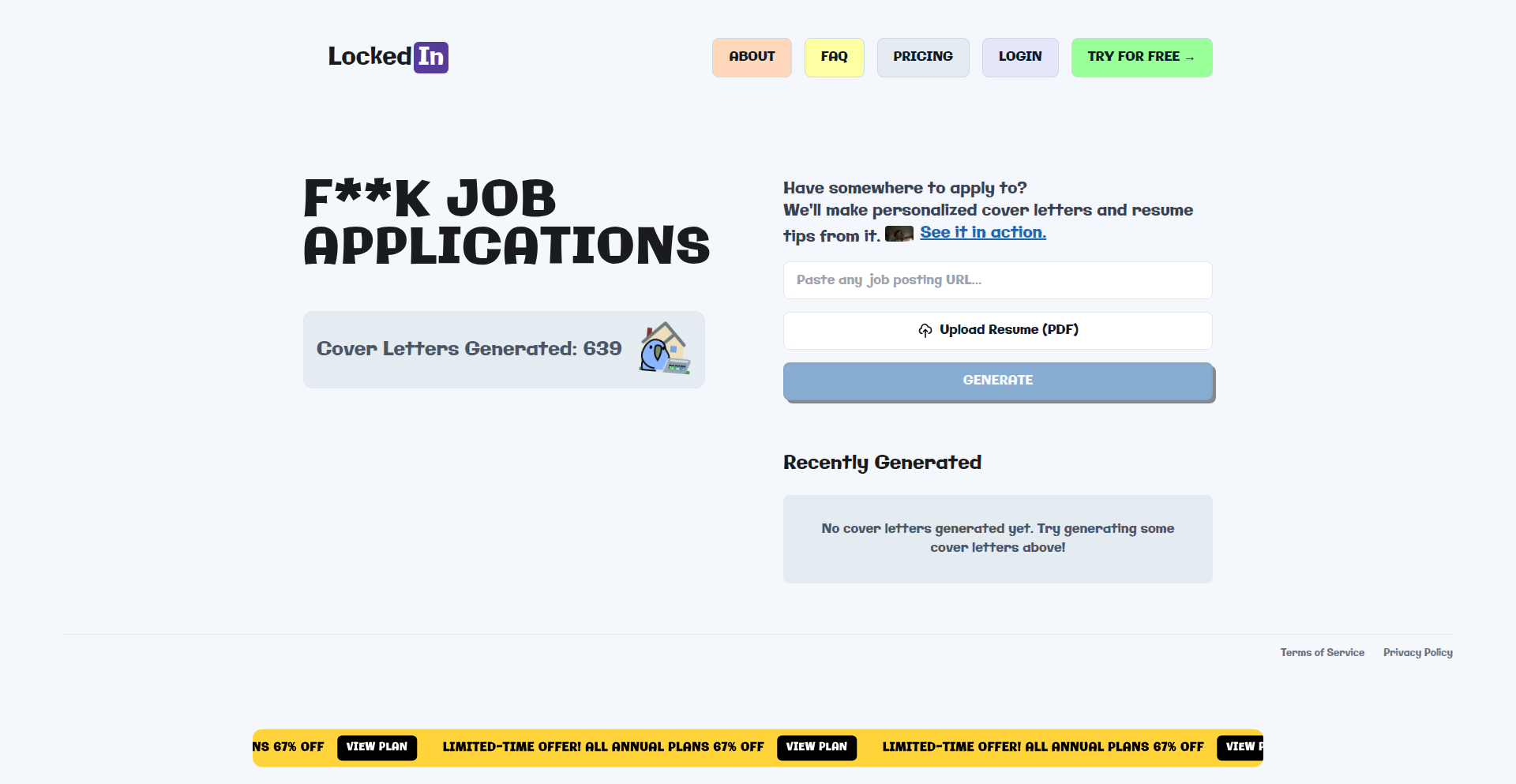

7

JobSynth AI

Author

irfahm_

Description

JobSynth AI is a tool that automates much of the tedious job-application process by generating personalized cover letters and resume tips. Given a job posting URL, it uses browser automation to research the company and the job description, mimicking the investigation a person would do, and tailors the application materials accordingly. This reduces the stress and time commitment for job seekers.

Popularity

Points 5

Comments 5

What is this product?

JobSynth AI is a smart assistant for job applications. It works by using sophisticated browser automation, similar to having a virtual assistant who can browse the internet. When you give it a job posting URL, it 'reads' the job description and then visits the company's website to understand its culture, mission, and values. The AI then uses this information to automatically write a tailored cover letter that highlights why you're a good fit. Additionally, it provides specific, actionable tips on how to modify your resume to better match the job requirements. The core innovation lies in its ability to perform this research and personalization in near real-time, allowing you to see the process unfold, making job applications feel less like a chore and more strategic.

How to use it?

Using JobSynth AI is straightforward. As a developer, you can integrate it into your job search workflow by simply pasting the URL of a job description into the application. The tool then takes over, performing its automated research and generating a personalized cover letter and resume suggestions. This can be particularly useful for developers who need to apply to multiple roles quickly but want to ensure each application is customized. You can use it directly through its web interface, or for more advanced integration, you could explore its underlying automation logic to build custom workflows for mass applications or to analyze job trends across different platforms. The key benefit is saving significant time while improving the quality of your applications.

Product Core Function

· Automated Job Description Analysis: Extracts key requirements and responsibilities from a job posting URL, providing insights into what employers are looking for. This helps you understand the specific skills and experiences that are most valued for a particular role.

· Real-time Company Research: Scans the company's website to gather information about their mission, values, and culture. This allows for a deeper understanding of the organization beyond the job description, enabling you to align your application with their ethos.

· Personalized Cover Letter Generation: Crafts a unique cover letter based on the analyzed job description and company research, highlighting your most relevant qualifications and demonstrating a genuine interest in the position. This makes your application stand out from generic submissions.

· Resume Optimization Tips: Provides targeted advice on how to tailor your resume, including suggesting keywords to include and specific achievements to emphasize, based on the job requirements. This increases the chances of your resume passing automated screening systems.

· Transparent Automation Process: Allows users to observe the AI's research process in real-time, offering a unique insight into how automated job application assistance works. This transparency builds trust and helps users understand the value being generated.
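The keyword side of "Resume Optimization Tips" can be approximated with a simple keyword-gap check. This is a minimal sketch, not JobSynth's method, which likely relies on an LLM. `keyword_gaps`, the tokenizer, and the small stopword list are illustrative assumptions. The function returns the most frequent job-posting terms that never appear in the resume.

```python
import re
from collections import Counter

# Illustrative stopword list; a real tool would use a much fuller one.
STOPWORDS = {
    "a", "an", "and", "the", "to", "of", "in", "for", "with", "on", "is",
    "are", "you", "we", "our", "your", "will", "be", "as", "or", "at", "by",
}

def tokenize(text: str) -> list[str]:
    """Lowercase tokens that keep tech-style terms such as 'c++' or 'node.js' intact."""
    return re.findall(r"[a-z0-9+#]+(?:\.[a-z0-9+#]+)*", text.lower())

def keyword_gaps(job_text: str, resume_text: str, top_n: int = 5) -> list[tuple[str, int]]:
    """Most frequent job-posting terms (with counts) that are absent from the resume."""
    job_terms = Counter(t for t in tokenize(job_text) if t not in STOPWORDS and len(t) > 1)
    resume_terms = set(tokenize(resume_text))
    missing = [(term, n) for term, n in job_terms.most_common() if term not in resume_terms]
    return missing[:top_n]
```

Generic words such as "need" or "required" will still surface with a list this short. Filtering those out, and ranking skills above filler, is where a language model adds value over plain counting.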

Product Usage Case

· A software engineer applying for a senior backend role at a fast-growing fintech startup. By pasting the job URL, JobSynth AI identified that the startup emphasizes collaboration and agile methodologies. It then generated a cover letter highlighting the engineer's experience in cross-functional teams and rapid prototyping, while suggesting the engineer add specific examples of their agile project contributions to their resume.

· A front-end developer seeking a position at a design-focused agency. The tool researched the agency's portfolio, noting their emphasis on user experience and clean aesthetics. The generated cover letter articulated the developer's passion for creating intuitive interfaces, and the resume tips suggested showcasing personal design projects with a focus on UI/UX impact.

· A data scientist applying for a research position at a medical technology company. JobSynth AI analyzed the job description's requirement for strong statistical modeling skills and researched the company's recent breakthroughs in AI-driven diagnostics. The output included a cover letter that emphasized the candidate's experience with predictive modeling and its potential application in healthcare, along with suggestions to include specific statistical techniques used in past projects on their resume.

8

Poem-as-Code C

Author

ironmagma

Description

This project presents a poem about the C programming language, framed as a piece of 'code'. It highlights the expressive and even artistic potential of code, using poetic language to convey the essence and impact of C. The innovation lies in blurring the lines between traditional code and creative expression, offering a unique perspective on a foundational programming language.

Popularity

Points 5

Comments 5

What is this product?

This project is a poem written in a format that resembles code, specifically focusing on the C programming language. It's an artistic interpretation of C's history, characteristics, and influence, presented in a structured, almost programmatic way. The core innovation is the conceptual overlap: treating a poem as a form of executable idea, much like code. This allows for a creative exploration of programming concepts through metaphor and narrative, demonstrating that even highly technical subjects can be approached with artistic flair. It's about seeing the 'logic' and 'structure' in poetry and the 'narrative' in programming.

How to use it?

Developers can engage with this project as a source of inspiration and a novel way to think about programming languages. It can be read to gain a different appreciation for C, perhaps to understand its foundational role in a more relatable, humanistic way. It's not intended for direct computational use, but rather as a conceptual tool for sparking creativity, understanding cultural impact, or even as a unique way to discuss programming in non-technical contexts. Think of it as a programmer's sonnet or a data scientist's haiku.

Product Core Function

· Artistic Interpretation of C: Presents the C programming language through a narrative poem, translating technical concepts into evocative language. The value is in offering a fresh, humanistic perspective on a foundational technology, making it more accessible and inspiring.

· Code-like Structure: The poem is structured to mimic code, potentially using line breaks, indentation, or even pseudo-keywords to suggest a programmatic flow. This highlights the inherent structure in both poetry and code, showing how creative expression can adopt logical frameworks.

· Inspiration for Developers: By reframing a technical subject into an art form, the project aims to inspire developers to think beyond pure functionality and explore the creative potential within their own work and the languages they use. It's about finding the art in the algorithm.

· Cross-Disciplinary Exploration: Bridges the gap between computer science and literature, demonstrating how concepts from one field can enrich understanding in another. This is valuable for fostering broader intellectual curiosity and creative problem-solving.

Product Usage Case

· A C++ developer struggling with writer's block might read this poem to find new inspiration for their projects, seeing their tools in a new light. It helps them remember the 'why' behind the code.

· A university professor teaching introductory programming could use this poem as a supplementary material to make the history and significance of C more engaging for students who might find purely technical explanations dry. It provides an emotional hook.

· A programmer attending a tech conference could use this poem as a conversation starter in a social setting, offering a unique and memorable way to discuss their passion for technology with non-technical individuals. It makes complex subjects approachable.

· A creative coder could be inspired to create their own 'code poems' for other programming languages, fostering a new niche of artistic expression within the software development community.

9

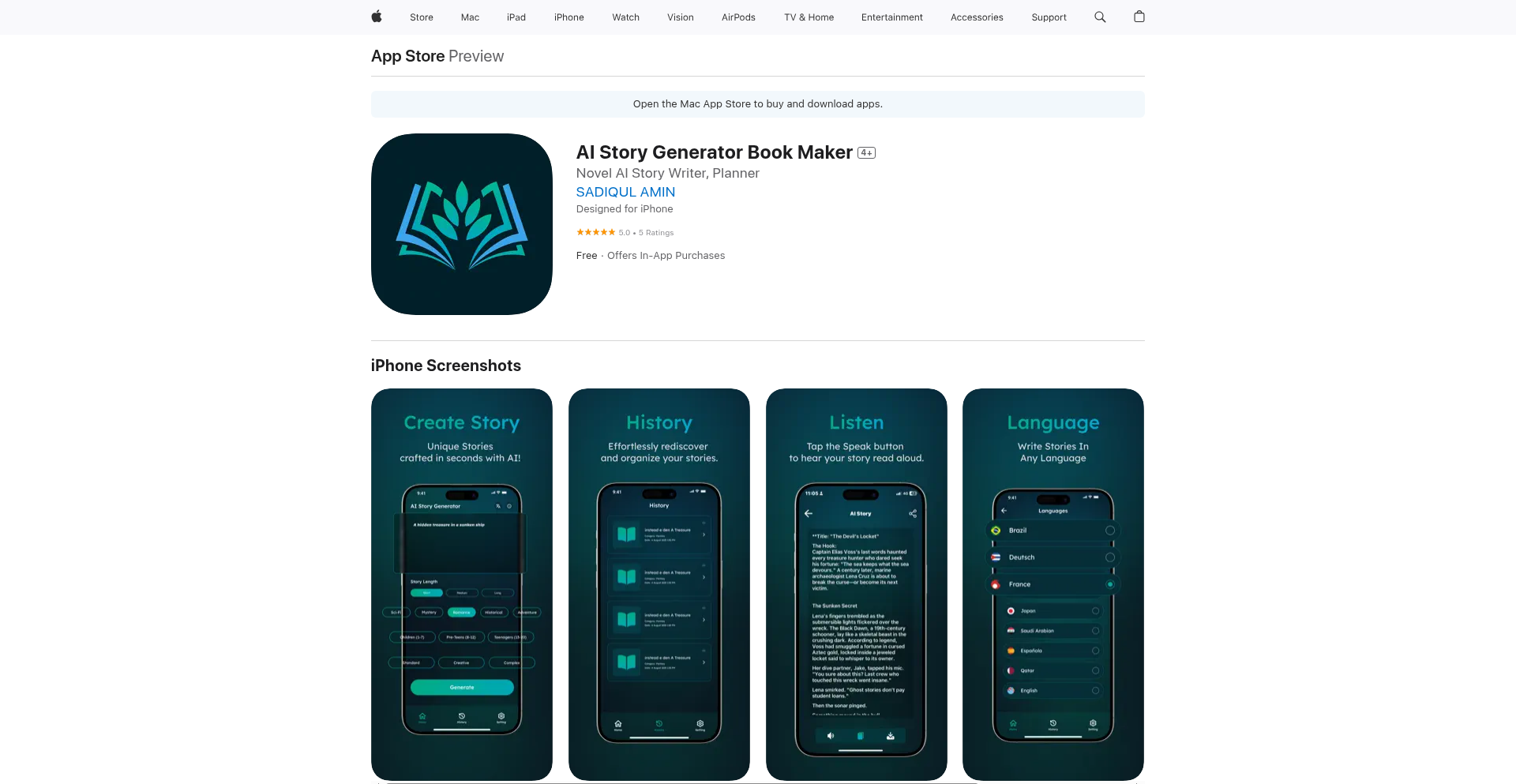

QuickTome AI

Author

safwanbouceta

Description

QuickTome AI is an AI-powered platform that allows users to generate full eBooks in minutes. It leverages GPT-4 technology to transform user inputs and ideas into well-structured and comprehensive eBooks, drastically reducing the time and effort traditionally required for eBook creation. This solves the problem of lengthy and expensive eBook writing processes, making content creation accessible to a wider audience.

Popularity

Points 3

Comments 4

What is this product?

QuickTome AI is a web application that utilizes the advanced capabilities of GPT-4, a sophisticated large language model. Instead of manually writing, designing, and editing an eBook, users provide their core ideas, topics, or even rough outlines. The AI then processes this input and generates a complete eBook, including content, structure, and a coherent narrative. The innovation lies in its ability to automate a complex creative process, democratizing eBook publishing for individuals and small businesses who may lack the time, resources, or expertise.

How to use it?

Developers and content creators can use QuickTome AI through its intuitive web interface. Users input their desired eBook topic, target audience, and key points they wish to cover. They can also provide existing content or prompts to guide the AI. The platform then generates the eBook content, which can be exported and further refined. It can be integrated into content creation workflows by acting as a rapid prototyping tool for educational materials, marketing collateral, or personal projects.

Product Core Function

· AI-driven eBook generation: Transforms user prompts into complete eBooks with structured content, saving significant writing time and effort.

· Topic-based content creation: Enables users to input specific subjects or keywords to guide the AI in producing relevant and informative eBook content.

· Customizable output: Allows users to refine and edit the AI-generated content, ensuring the final eBook meets their specific requirements and quality standards.

· Time and cost efficiency: Drastically reduces the hours and financial investment typically associated with hiring writers or using complex content creation software.
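The "How to use it?" workflow takes a topic, a target audience, and key points and produces a structured eBook. That flow can be sketched as an outline-driven pipeline. QuickTome's actual prompts are not documented here. `EbookSpec`, `chapter_prompts`, `assemble_ebook`, and the `generate` callable (a stand-in for a GPT-4 request) are hypothetical. Generating one chapter per request keeps each prompt focused and lets a single chapter be regenerated without redoing the whole book.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class EbookSpec:
    topic: str
    audience: str
    key_points: list[str]

def chapter_prompts(spec: EbookSpec) -> list[str]:
    """One prompt per key point, so chapters can be generated and revised independently."""
    return [
        f"Write chapter {i} of an eBook titled '{spec.topic}' for {spec.audience}. "
        f"Focus on: {point}. Use headings and end with a short summary."
        for i, point in enumerate(spec.key_points, start=1)
    ]

def assemble_ebook(spec: EbookSpec, generate: Callable[[str], str]) -> str:
    """Call the (hypothetical) text generator for each chapter and join the results as Markdown."""
    parts = [f"# {spec.topic}\n"]
    for i, (point, prompt) in enumerate(zip(spec.key_points, chapter_prompts(spec)), start=1):
        parts.append(f"## Chapter {i}: {point}\n\n{generate(prompt)}\n")
    return "\n".join(parts)
```

Passing a placeholder such as `lambda prompt: "(draft)"` as `generate` lets you check the book's structure before wiring in a real model.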

Product Usage Case

· An aspiring entrepreneur wants to create a guide to starting a small business to attract leads. They input the core concepts into QuickTome AI and in minutes have a well-written eBook that can be offered as a free download on their website, improving lead generation.

· A blogger wants to expand their content into a more in-depth eBook about a niche topic. They use QuickTome AI to quickly draft the eBook, then add their personal insights and unique perspective, resulting in a valuable product they can sell or offer to their audience.

· A student needs to create a supplementary study guide for a challenging subject. They input the key topics and learning objectives, and QuickTome AI generates a comprehensive guide, saving them hours of manual research and writing, and improving their study efficiency.

10

Viralwalk: Serendipitous Web Discovery Engine

Author

justachillguy

Description

Viralwalk is a 'StumbleUpon'-inspired tool that lets users discover random websites based on their existing browsing activity. It is designed to surface content that is likely to be engaging and relevant, offering a novel way to explore the internet. Its stated innovation is using patterns in web traffic and user behavior to predict and present interesting, often overlooked, web pages. In practice, this means you can stumble upon new ideas, niche communities, or useful tools you never knew existed.

Popularity

Points 4

Comments 0

What is this product?

Viralwalk is a web discovery platform that acts like a personalized digital compass for the internet. Instead of searching with a specific destination in mind, it guides you to unexpected and potentially interesting websites. Its technical foundation likely involves analyzing publicly available web data, possibly incorporating elements of content similarity and popularity trends. The 'viral' aspect hints at an underlying mechanism to identify content that is gaining traction or has a high engagement potential. Think of it as a smart librarian who knows your taste and occasionally surprises you with a book you didn't know you'd love, but for the entire internet. The innovation is in its ability to break free from traditional search and recommendation silos by embracing randomness with intelligent curation.

How to use it?

Developers can use Viralwalk as a source of inspiration for new projects, a way to discover emerging technologies or trends, or even to find unique online communities relevant to their work. It can be integrated into development workflows by bookmarking interesting finds or by using its underlying principles to build similar discovery tools. For example, a developer looking for inspiration for a new UI element could use Viralwalk to find websites with innovative designs, saving them from repetitive manual searching.

Product Core Function

· Random website suggestion: Presents users with a stream of unexpected web pages, breaking them out of their usual browsing habits and potentially introducing them to new content or ideas. The value is in uncovering hidden gems on the web.

· Personalized discovery algorithm: While the exact mechanism isn't detailed, the 'viral' aspect suggests it intelligently surfaces content based on underlying trends or user engagement signals, offering a more curated experience than pure random selection. This makes the discovery process more rewarding and less like a shot in the dark.

· Inspiration for creative projects: By exposing users to diverse and often unconventional websites, Viralwalk serves as a powerful catalyst for brainstorming and creative thinking, helping developers overcome creative blocks and find novel solutions.

· Exploration of niche communities: The tool can help developers discover specialized online forums, communities, or resources that might be relevant to their specific interests or technical challenges, fostering collaboration and knowledge sharing.
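Viralwalk's exact mechanism is not documented. One generic way to blend randomness with popularity signals is weighted random sampling with a tunable bias. The sketch below makes that assumption; the site list, scores, and the `popularity_bias` parameter are hypothetical. A bias of 0 gives uniform random picks, 1 gives picks proportional to popularity, and already-shown pages are removed so the stream does not repeat.

```python
import random

def pick_site(sites: dict[str, float], popularity_bias: float, rng: random.Random) -> str:
    """Pick one URL with probability proportional to popularity ** popularity_bias."""
    urls = list(sites)
    weights = [max(score, 1e-9) ** popularity_bias for score in sites.values()]
    return rng.choices(urls, weights=weights, k=1)[0]

def discovery_stream(sites: dict[str, float], popularity_bias: float, n: int, seed=None) -> list[str]:
    """Return up to n distinct sites, never showing the same page twice."""
    rng = random.Random(seed)
    remaining = dict(sites)
    picks: list[str] = []
    while remaining and len(picks) < n:
        url = pick_site(remaining, popularity_bias, rng)
        picks.append(url)
        del remaining[url]
    return picks
```

Intermediate values such as 0.5 keep popular pages more likely while still giving obscure ones a real chance, which is the "randomness with curation" trade-off described above.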

Product Usage Case

· A web developer stuck on a design problem could use Viralwalk to discover visually interesting and unconventional website layouts, sparking new ideas for their own project's UI/UX. The value here is overcoming creative stagnation.

· A data scientist looking for inspiration for a new data visualization technique might stumble upon a website showcasing a unique charting method they hadn't encountered before, providing a new approach to their analytical challenges. This helps them learn and innovate faster.

· An indie game developer seeking unique art styles or gameplay mechanics could use Viralwalk to discover smaller, less-known game sites that feature innovative approaches, feeding into their own creative process. The benefit is accessing a broader spectrum of creative output.

· A community builder looking for new platforms or engagement strategies might find niche forums or discussion groups that offer valuable insights into building and managing online communities. This provides actionable strategies for their work.

11

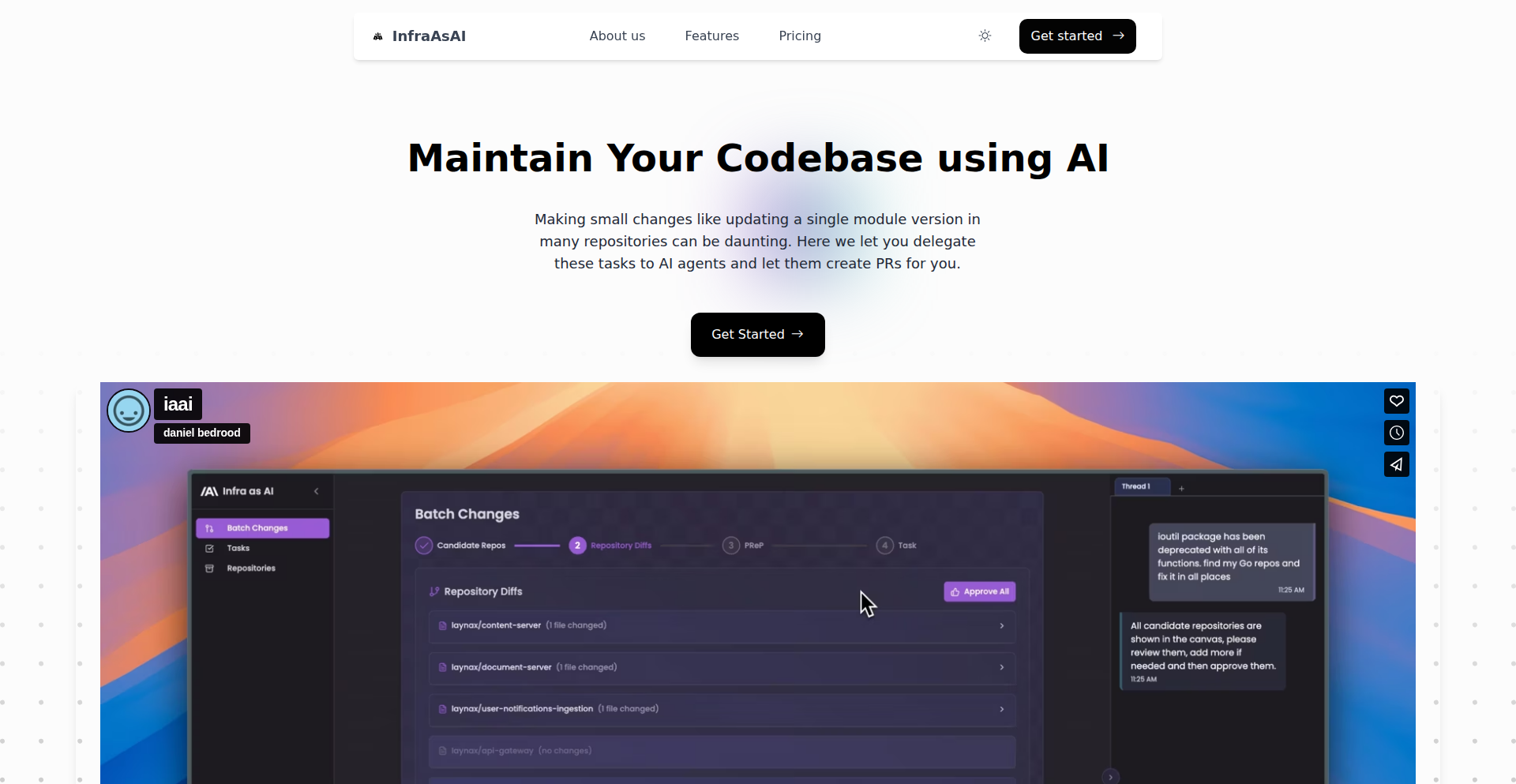

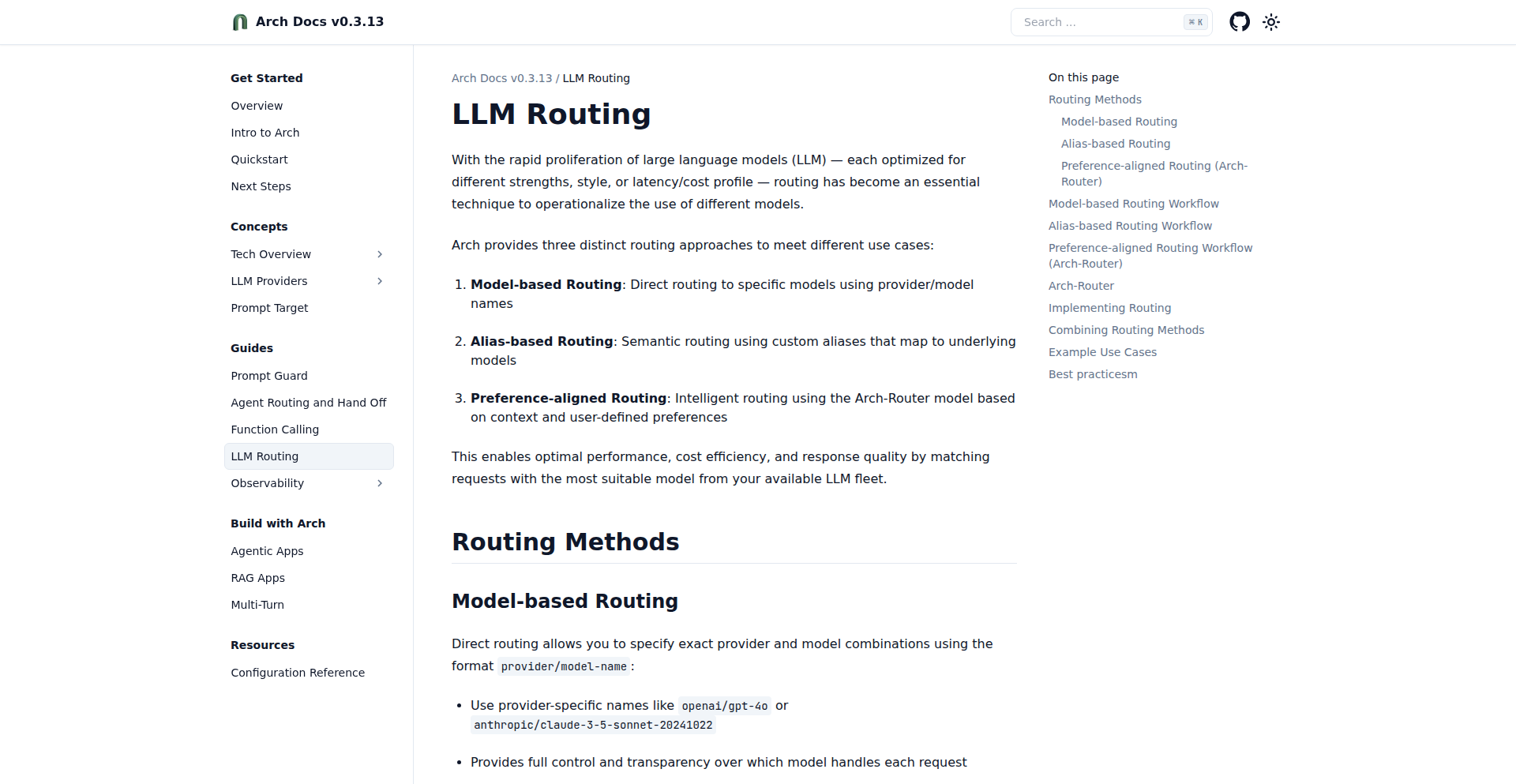

InfraAsAI: Microservice Consistency Orchestrator

Author

danielbedrood

Description

InfraAsAI is a dashboard with an integrated chatbot designed to streamline the process of making consistent changes across multiple microservices. It leverages AI coding agents to understand requests, identify relevant repositories, and automatically generate pull requests for specified modifications, significantly reducing manual effort and potential errors in large microservice environments. This solves the tedious and error-prone task of updating numerous codebases individually.

Popularity

Points 2

Comments 2

What is this product?

InfraAsAI is a platform that uses AI agents to manage changes across your microservice architecture. Imagine you need to update a dependency or a configuration setting in dozens or even hundreds of your microservices. Manually opening each repository, making the change, and submitting a pull request (PR) is time-consuming and error-prone. InfraAsAI automates this: you describe the change you want in a natural-language query to a chatbot, and AI agents search your repositories, determine which ones need the change, and generate the necessary PRs for you to review. It uses a vector database (like Pinecone) to store repository information for efficient searching, an AI coding agent (Claude Code) for the actual code modifications, and a large language model (GPT-5) for identifying relevant repositories. This approach brings a new level of automation and consistency to infrastructure management.

How to use it?

Developers can use InfraAsAI by first indexing their repositories into the platform. Once indexed, they can interact with the chatbot by typing requests like 'Update all Dockerfiles to use Alpine version 3.18' or 'Change the default timeout in all Node.js services to 30 seconds'. The platform will then process this request, identify candidate repositories, and present a list of generated PRs. Developers can review these PRs and approve their creation, effectively pushing the changes across their microservice landscape. This integrates seamlessly into existing development workflows by automating the repetitive parts of the update process.
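A request such as "Update all Dockerfiles to use Alpine version 3.18" ultimately becomes a small, mechanical edit in each repository. The sketch below shows that deterministic part applied to Dockerfile text. It is not InfraAsAI's code: `pin_alpine` and its regex are hypothetical, and digest-pinned references (`alpine:3.16@sha256:...`) would need extra handling. It rewrites only `FROM alpine:<tag>` lines, preserves `--platform` flags and `AS` stage names, and reports how many lines actually changed, so repositories with nothing to change can be skipped instead of receiving an empty PR.

```python
import re

# Matches e.g. "FROM alpine:3.16" or "FROM --platform=$BUILDPLATFORM alpine:3.17 AS build".
FROM_ALPINE = re.compile(
    r"^(?P<prefix>[ \t]*FROM[ \t]+(?:--platform=\S+[ \t]+)?)alpine:(?P<tag>[\w.\-]+)(?P<rest>.*)$",
    re.IGNORECASE | re.MULTILINE,
)

def pin_alpine(dockerfile: str, new_tag: str) -> tuple[str, int]:
    """Point every 'FROM alpine:<tag>' line at new_tag; return (new_text, lines_changed)."""
    changed = 0

    def repl(m: re.Match) -> str:
        nonlocal changed
        if m["tag"] != new_tag:
            changed += 1
        return f"{m['prefix']}alpine:{new_tag}{m['rest']}"

    return FROM_ALPINE.sub(repl, dockerfile), changed
```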

Product Core Function

· AI-powered code modification: Enables natural language commands to instruct AI agents to make specific changes in codebases, reducing the need for manual coding for repetitive updates.

· Cross-microservice consistency: Automates the application of a single change across a vast number of microservices, ensuring uniformity and reducing configuration drift.

· Intelligent repository identification: Uses AI to pinpoint which repositories require a specific change based on the user's query and repository analysis.

· Automated Pull Request generation: Creates pull requests with the necessary code modifications, streamlining the review and merge process.

· Pipeline status monitoring: Displays the status of CI/CD pipelines for generated PRs, providing immediate feedback on the impact of the changes.

· Vector database for efficient search: Stores repository metadata for quick retrieval of relevant projects when processing change requests, improving performance and scalability.

· Document and changelog analysis: Allows AI agents to read documentation and changelogs to understand deprecations or required updates, leading to more accurate code modifications.
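The repository-identification step works by storing repository descriptions as vectors and ranking them against the request. It can be illustrated without Pinecone or an LLM. This sketch uses toy bag-of-words vectors as a stand-in for learned embeddings. The repository names, summaries, `rank_repos`, and the `min_score` cutoff are hypothetical. Repositories are ranked by cosine similarity to the request, and anything below the cutoff is dropped.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words term-count vector (stand-in for a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(u: Counter, v: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * v[term] for term, count in u.items())
    norm = math.sqrt(sum(c * c for c in u.values())) * math.sqrt(sum(c * c for c in v.values()))
    return dot / norm if norm else 0.0

def rank_repos(query: str, repo_summaries: dict[str, str],
               top_k: int = 3, min_score: float = 0.1) -> list[tuple[str, float]]:
    """Up to top_k repositories whose summaries are most similar to the change request."""
    q = embed(query)
    scored = [(name, cosine(q, embed(summary))) for name, summary in repo_summaries.items()]
    scored = [pair for pair in scored if pair[1] >= min_score]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_k]
```

A real embedding model would also match "service" to "services" or "image" to "Dockerfile", which plain word overlap cannot. That is the main reason to use a vector database with learned embeddings at scale.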

Product Usage Case

· Scenario: A company needs to update all microservices to use a new, more secure version of a common dependency (e.g., a security patch for Log4j). InfraAsAI can be used to automate the process of updating the dependency in all relevant microservices, saving countless hours of manual work and reducing the risk of human error.

· Scenario: A team wants to enforce a new coding standard or update a common configuration parameter (e.g., setting a default cache expiration time) across their entire microservice portfolio. InfraAsAI can be instructed to find all files that need this change and generate PRs for each service, ensuring consistency.

· Scenario: After reading release notes for a framework or library, a developer realizes a particular function is deprecated and needs to be replaced across many services. InfraAsAI can be used to find these instances and automatically refactor the code, making the transition smoother.

· Scenario: A large organization needs to ensure all services use a specific base image in their Dockerfiles. InfraAsAI can be given a command to find all Dockerfiles and update them with the new base image, ensuring infrastructure consistency.

12

YouTube Speaker Navigator

Author

hamza_q_

Description

This project is a browser extension that allows users to navigate YouTube videos by speaker. It leverages AI to identify different speakers in a video and provides a timeline with timestamps for each speaker's segment. This solves the problem of finding specific content within a video when you know who is speaking but not necessarily the exact timestamp.

Popularity

Points 2

Comments 2

What is this product?

This is a browser extension that uses audio processing and speaker diarization (identifying who is speaking when) to create a navigable index of speakers within a YouTube video. It essentially listens to the audio, figures out when different people are talking, and creates clickable jump points for each speaker. The innovation lies in applying readily available AI speech recognition and diarization models to a common user pain point on a popular platform, making video content more accessible and searchable.

How to use it?

Developers can integrate this functionality into their own workflows or build upon it. As a user, you would install the browser extension, and then when watching a YouTube video, a new interface would appear allowing you to see a list of identified speakers and click on their names to jump to the part of the video where they are speaking. For developers, the underlying AI models could be used to process any audio or video content to create similar speaker-based navigation.

Product Core Function

· Speaker identification: The system analyzes the audio track of a YouTube video to distinguish between different voices, providing a list of unique speakers present in the video. The value here is automatically creating an index of who talks and when, saving manual effort.

· Timestamped speaker segments: For each identified speaker, the extension records the start and end times of their speaking segments. This allows for precise jumping within the video, making it easy to find specific contributions.

· Interactive speaker timeline: A user-friendly interface displays the identified speakers and their corresponding timestamps, enabling quick navigation. This offers a much more efficient way to revisit parts of a video related to a particular speaker.

· Browser integration: The extension seamlessly integrates with the YouTube player, adding its functionality without disrupting the viewing experience. This means you can use it directly on YouTube, enhancing its practicality.
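Speaker diarization output is commonly a list of (start, end, speaker) segments. Turning it into the per-speaker timeline described above is mostly a grouping step plus deep links. This sketch assumes that segment format, since the extension's internal data structures are not described. `speaker_index`, `jump_link`, and the `merge_gap` threshold are hypothetical. It merges a speaker's consecutive segments that are less than `merge_gap` seconds apart and builds links using YouTube's `t` URL parameter.

```python
from collections import defaultdict

Segment = tuple[float, float, str]  # (start_seconds, end_seconds, speaker_label)

def speaker_index(segments: list[Segment], merge_gap: float = 1.0) -> dict[str, list[tuple[float, float]]]:
    """Group segments by speaker, merging a speaker's segments that are < merge_gap apart."""
    index: dict[str, list[tuple[float, float]]] = defaultdict(list)
    for start, end, speaker in sorted(segments):
        spans = index[speaker]
        if spans and start - spans[-1][1] < merge_gap:
            spans[-1] = (spans[-1][0], max(spans[-1][1], end))
        else:
            spans.append((start, end))
    return dict(index)

def jump_link(video_id: str, seconds: float) -> str:
    """Link that starts playback at the given offset, in whole seconds."""
    return f"https://www.youtube.com/watch?v={video_id}&t={int(seconds)}s"
```

Inside an extension the same offsets would more likely seek the existing player than open a new URL, but the index structure is the same.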

Product Usage Case

· Academic research: Students and researchers can use this to quickly find segments of lectures or interviews where specific professors or interviewees are speaking, speeding up the process of reviewing material. For example, if you need to recall a point made by Professor Smith in a recorded lecture, you can directly jump to her speaking parts.

· Podcast repurposing: Content creators can use this to easily extract clips featuring different hosts or guests from longer podcast episodes, making it simpler to create highlight reels or social media snippets. This allows for efficient content creation by isolating segments of interest.

· Accessibility for meetings: In recorded online meetings, users can quickly navigate to segments spoken by specific participants, improving comprehension and recall of discussions. If you missed a decision made by your manager, you can find it by jumping to their speaking time.

· Content analysis for journalists: Journalists can analyze interviews by quickly jumping to the responses of specific individuals, streamlining the process of finding relevant quotes and information. This helps in faster media production by directly accessing key information.

13

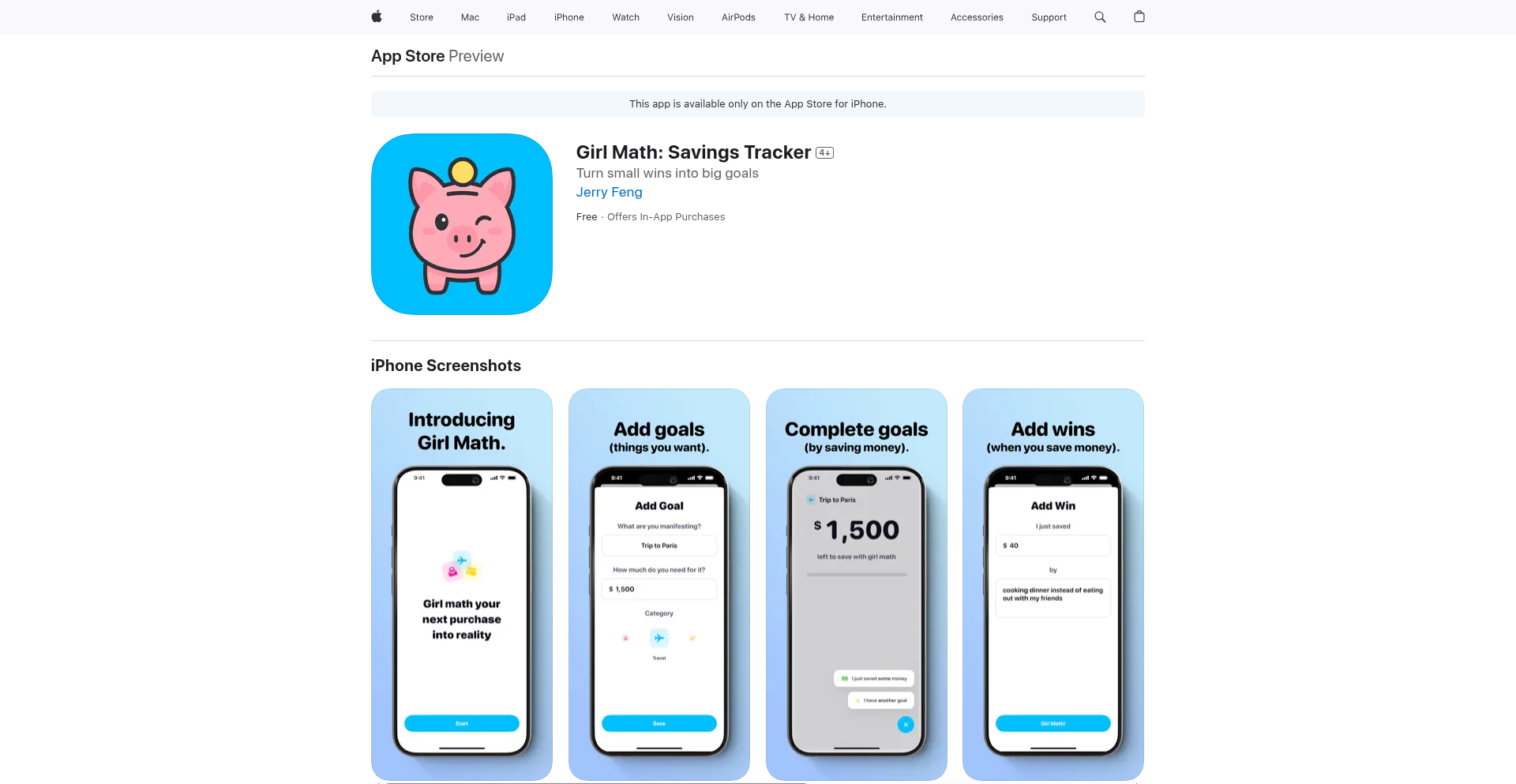

GirlMath Habit Tracker

Author

jfeng5

Description

Girl Math is a mobile application designed to gamify personal finance by transforming small, money-saving actions into visible progress towards user-defined goals. It leverages behavioral psychology principles, such as immediate gratification and habit loops, to encourage consistent saving behavior. The app focuses on micro-wins and avoids traditional financial tracking methods, offering a playful and guilt-free approach to financial discipline.

Popularity

Points 4

Comments 0

What is this product?

Girl Math is a personal finance habit-building app that turns everyday money-saving moments into tangible progress towards your goals. It uses a playful interface with haptic feedback, confetti animations, and digital receipts to reward users for small wins like skipping a coffee or walking instead of taking an Uber. The core innovation lies in its application of behavioral economics principles, creating a dopamine-driven feedback loop that reinforces positive financial habits. Unlike traditional budgeting apps, it focuses on celebrating 'not spending' rather than meticulously tracking every transaction, making saving feel rewarding and achievable.

How to use it?

Developers can integrate Girl Math's principles into their own habit-tracking or gamified applications by implementing similar reward mechanisms for positive user actions. This could involve triggering visual or haptic feedback for achieving mini-goals, creating progress bars that fill up, and employing celebratory animations upon goal completion. The underlying concept is to create a simple, on-device experience with no external accounts or tracking, focusing solely on the user and their goals. For individual users, it's a straightforward mobile app where they set financial goals, log small saving 'wins' in seconds, and watch their progress grow in a fun, engaging way.

Product Core Function

· Goal Setting: Allows users to define specific financial goals, providing a clear target for their saving efforts and the motivation to achieve it.

· Micro-win Logging: Enables quick and easy recording of small money-saving actions, making the process frictionless and encouraging frequent engagement.

· Progress Visualization: Displays saved amounts as a visual progress bar, offering a satisfying representation of achievement and momentum.

· Gamified Rewards: Incorporates haptic feedback, confetti animations, and playful digital receipts to provide immediate positive reinforcement for saving actions.

· On-Device Functionality: Operates entirely locally on the user's device, ensuring privacy and eliminating the need for accounts or data tracking.
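The core feedback loop is to log a micro-win, add it to a goal, and celebrate when progress crosses a threshold. That loop can be sketched with purely local state, which matches the app's no-account design. `Goal`, `log_win`, and the 25/50/75/100% milestones are hypothetical, and the return value marks where an app might fire haptics or confetti. `Decimal` avoids floating-point rounding surprises with money.

```python
from dataclasses import dataclass, field
from decimal import Decimal

MILESTONES = (25, 50, 75, 100)  # percent thresholds that trigger a celebration (assumed)

@dataclass
class Goal:
    name: str
    target: Decimal
    saved: Decimal = Decimal("0")
    wins: list[tuple[str, Decimal]] = field(default_factory=list)

    def progress_pct(self) -> int:
        """Whole-percent progress toward the target, capped at 100."""
        return min(100, int(self.saved * 100 / self.target))

    def log_win(self, label: str, amount: Decimal) -> list[int]:
        """Record a micro-win and return the milestones this win crossed (celebration cues)."""
        before = self.progress_pct()
        self.wins.append((label, amount))
        self.saved += amount
        after = self.progress_pct()
        return [m for m in MILESTONES if before < m <= after]
```

For example, logging "Skipped daily latte" for `Decimal("5.50")` against a 200 goal moves the bar to 2% without a milestone. A later win that pushes progress past 25% returns `[25]`, which the UI can turn into a confetti moment.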

Product Usage Case

· A user wants to save for a new gadget but struggles with impulse coffee purchases. They log 'Skipped daily latte' as a micro-win in Girl Math, seeing their gadget savings bar fill up a little, reinforcing the behavior.

· Someone is saving for a vacation and often takes ride-sharing services for short trips. They log 'Walked instead of Uber' and receive a satisfying visual reward, encouraging them to choose walking more often.

· A student aims to save for textbooks. They log 'Packed lunch instead of buying' and get a 'digital receipt' confirming their saving, making the abstract goal feel more concrete and achievable through small, consistent actions.

14

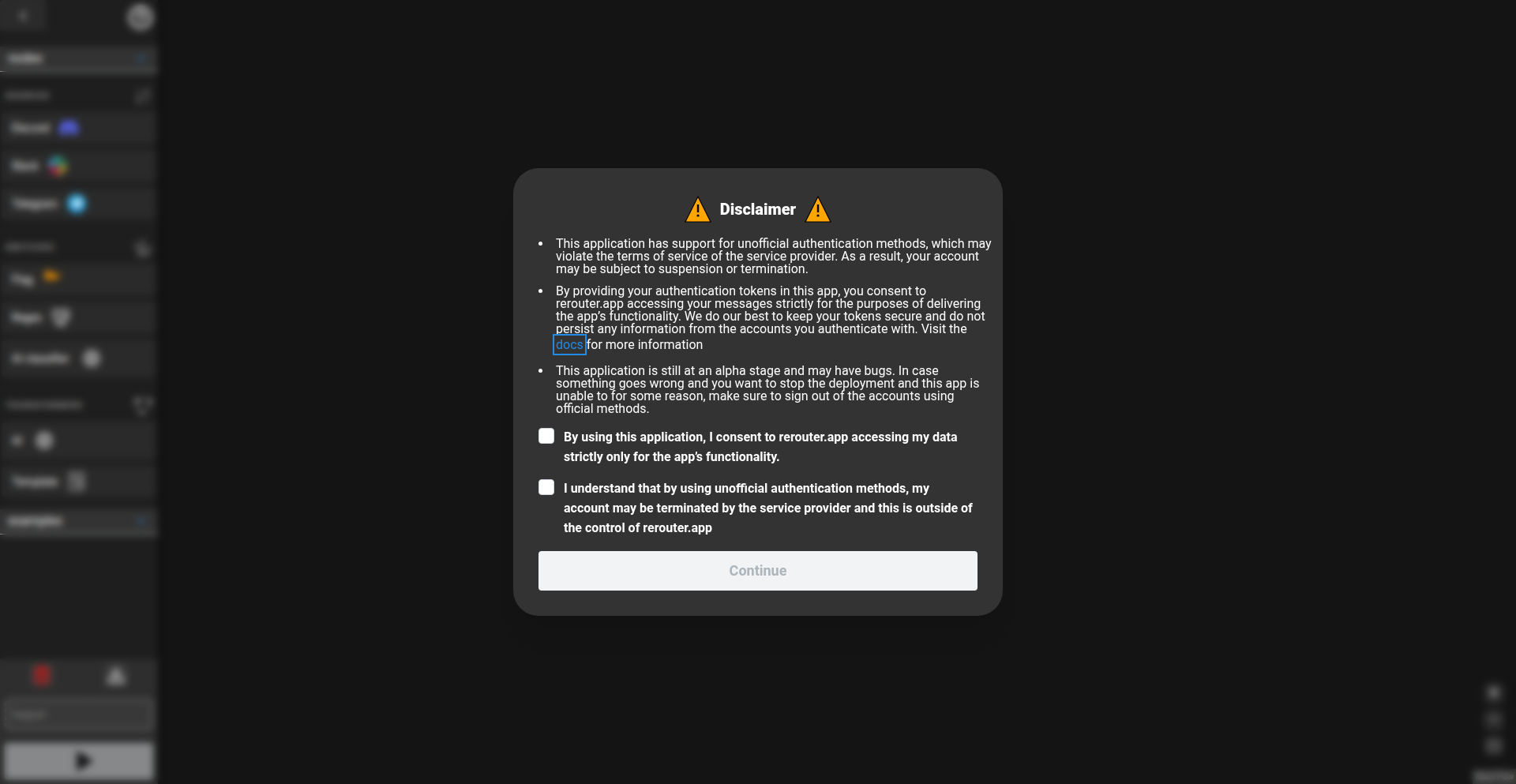

Rerouter: Streamlined Automation for Developers

Author

Cabbache

Description

Rerouter is a minimalist, no-code automation platform designed to empower developers by abstracting away the complexities of building and managing automated workflows. It provides a visual interface to connect various services and trigger actions based on specific events, offering a powerful yet accessible solution for routine tasks and integrations. The core innovation lies in its ability to enable rapid prototyping and deployment of automations without requiring deep coding expertise for each step, thereby accelerating development cycles and reducing the barrier to entry for automation.

Popularity

Points 4

Comments 0

What is this product?

Rerouter is a visual, no-code automation platform. Think of it like building with digital LEGO bricks. Each brick represents a service or an action (like sending an email or updating a database). You connect these bricks visually to create automated workflows. For example, you can set it up so that whenever you receive a specific type of email (the trigger brick), Rerouter automatically saves the attachment to a cloud storage service (the action brick). The technical innovation is in its abstract layer that handles the underlying API calls and data transformations behind the scenes, allowing users to focus on the logic of their automation rather than the intricate details of each service's communication protocol. This simplifies the process of creating integrations and custom business logic.

How to use it?

Developers can use Rerouter to automate repetitive tasks, integrate different software applications, and build custom workflows without writing extensive code. For instance, a developer might use Rerouter to automatically post updates to a Slack channel whenever a new commit is pushed to their GitHub repository. Integration is typically done through API connections. You'd connect your chosen services (e.g., GitHub, Slack, Google Drive) to Rerouter, configure the trigger event and the subsequent actions using the platform's intuitive interface, and then activate the automation. This significantly speeds up the development of common integration patterns and allows for quick experimentation with different automation ideas.

Product Core Function

· Visual Workflow Builder: Allows users to design automation logic by connecting pre-built modules, reducing the need for manual coding and simplifying complex process mapping. Its value is in enabling rapid development and clear visualization of automation flows.

· Service Integrations: Provides a growing library of connectors to popular services (e.g., cloud storage, communication platforms, databases), enabling seamless data exchange and interaction between different applications. This offers value by centralizing integration points and saving developers time on writing custom API clients.

· Event-Driven Triggers: Enables automations to be initiated by specific events from connected services, such as receiving an email, a file being updated, or a new record being created in a database. This is valuable for creating responsive and real-time automated processes.

· Data Transformation: Offers basic tools to manipulate data as it flows between services, ensuring compatibility and correctness. This adds value by handling common data formatting needs without requiring custom scripting for each transformation step.
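The trigger, transform, and action model behind these functions can be sketched in a few lines of Python. This is not Rerouter's API. `Workflow`, `Router`, the `"github.push"` event name, and the payload shape are hypothetical, and an in-memory list stands in for a Slack channel, so no real GitHub or Slack call is made. It reproduces the "post to Slack on every push" example from "How to use it?".

```python
from dataclasses import dataclass, field
from typing import Any, Callable

Event = dict[str, Any]

@dataclass
class Workflow:
    trigger: str                                # event type that starts this workflow
    transform: Callable[[Event], Event]         # reshape data between services
    actions: list[Callable[[Event], None]] = field(default_factory=list)

class Router:
    def __init__(self) -> None:
        self.workflows: list[Workflow] = []

    def register(self, workflow: Workflow) -> None:
        self.workflows.append(workflow)

    def dispatch(self, event_type: str, payload: Event) -> int:
        """Run every workflow whose trigger matches the event; return how many ran."""
        ran = 0
        for wf in self.workflows:
            if wf.trigger == event_type:
                data = wf.transform(payload)
                for action in wf.actions:
                    action(data)
                ran += 1
        return ran

# Usage: a stand-in Slack channel that receives a message on every push.
slack_messages: list[str] = []
router = Router()
router.register(Workflow(
    trigger="github.push",
    transform=lambda e: {"text": f"{e['author']} pushed {len(e['commits'])} commit(s) to {e['repo']}"},
    actions=[lambda d: slack_messages.append(d["text"])],
))
router.dispatch("github.push", {"author": "ana", "repo": "api", "commits": ["a1", "b2"]})
```

A hosted platform adds the parts this sketch omits: authenticated connectors, webhook ingestion, retries, and a visual editor that builds the `Workflow` objects for you.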

Product Usage Case

· Automating new employee onboarding: When a new employee is added to an HR system (trigger), Rerouter can automatically create their user accounts in various cloud services (like Google Workspace, Slack) and send them a welcome email. This solves the problem of manual account provisioning, saving IT significant time.

· Social media monitoring: Rerouter can monitor social media platforms for specific keywords or mentions (trigger). When a relevant mention is found, it can automatically log it into a spreadsheet or send an alert to a team via Slack. This provides value by automating market research and customer feedback collection.

· E-commerce order fulfillment: When a new order is placed on an e-commerce site (trigger), Rerouter can automatically update inventory levels in a database, notify the shipping department, and send a confirmation email to the customer. This streamlines operations and reduces the risk of errors in the fulfillment process.

15

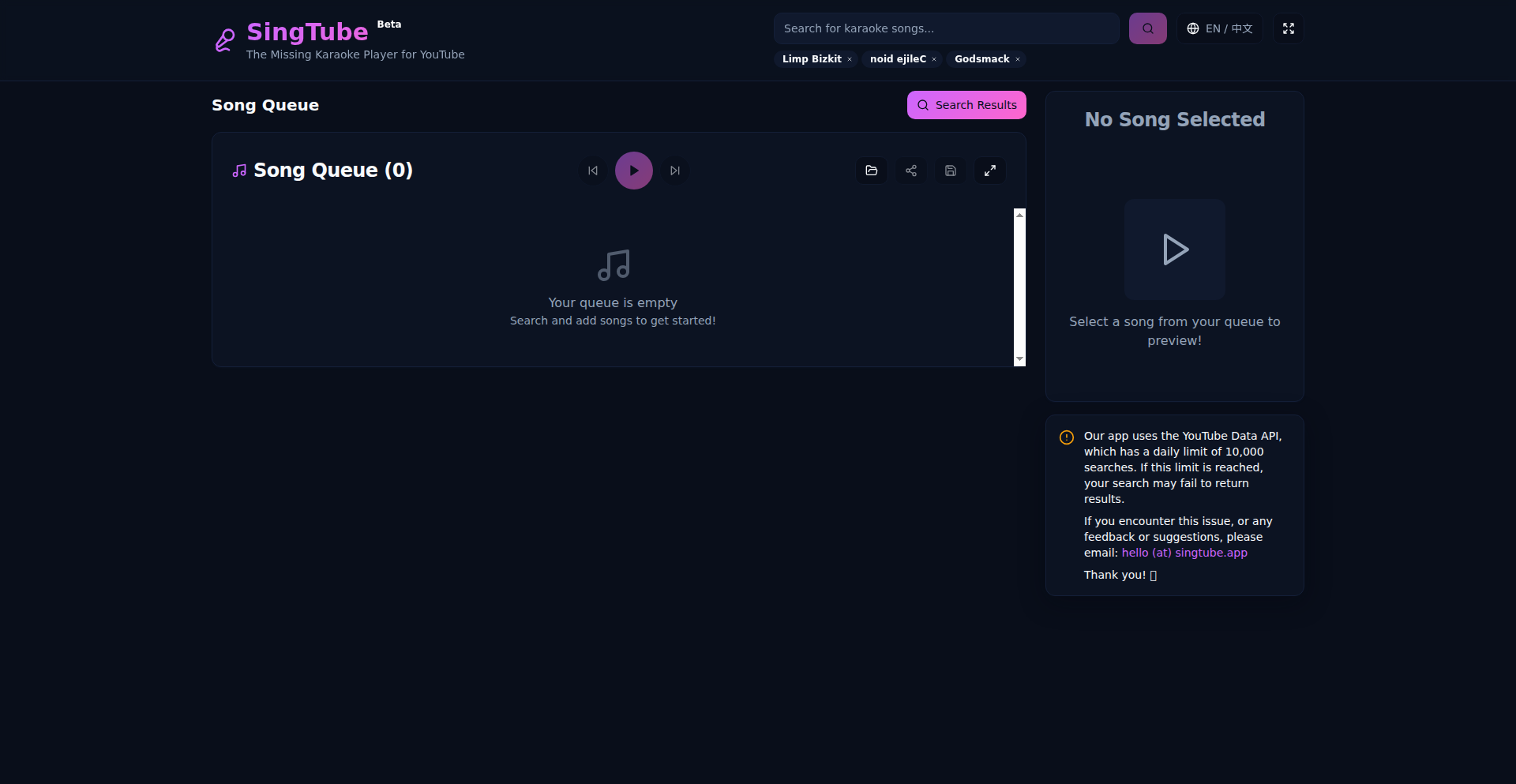

SingTube: The YouTube Karaoke Engine

Author

chenster

Description

SingTube is a novel application that transforms YouTube videos into a karaoke experience. It leverages AI to extract vocals from songs, synchronizes lyrics with audio playback, and provides a dedicated karaoke interface. This addresses the lack of dedicated karaoke functionality within YouTube, enabling users to sing along to their favorite music directly from the platform.

Popularity

Points 2

Comments 1

What is this product?

SingTube is a software application that unlocks karaoke functionality for YouTube videos. It uses artificial intelligence, specifically a vocal separation algorithm, to isolate the singing voice from the original audio track of a YouTube video so that the vocals can be removed or turned down. At the same time, it employs natural language processing (NLP) and time-series analysis to identify the lyrics and synchronize them with the extracted vocals, producing a seamless karaoke experience. This is innovative because it bridges the gap between the vast library of music on YouTube and the desire for interactive singing, a feature YouTube does not support natively.
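The description doesn't say how SingTube stores its timing data. Assuming the aligner's output ends up as timestamped lines, as in the widely used LRC lyrics format (`[mm:ss.xx] text`), the player side reduces to a sorted-list lookup: at each playback tick, binary-search for the last line whose start time has already passed. This minimal sketch covers only that lookup; the alignment step that produces the timestamps, which the description attributes to NLP and time-series analysis, is not shown. `parse_lrc` and `LyricTrack` are illustrative names, not SingTube's code.

```python
import bisect
import re
from typing import Optional

# Matches LRC-style lines such as "[01:23.45] some lyric".
LRC_LINE = re.compile(r"\[(\d+):(\d+(?:\.\d+)?)\](.*)")


def parse_lrc(text: str) -> list[tuple[float, str]]:
    """Parse '[mm:ss.xx] lyric' lines into (start_seconds, lyric) pairs."""
    cues = []
    for raw in text.splitlines():
        match = LRC_LINE.match(raw.strip())
        if match:
            minutes, seconds, lyric = match.groups()
            cues.append((int(minutes) * 60 + float(seconds), lyric.strip()))
    return cues


class LyricTrack:
    """Looks up which lyric line to highlight at a given playback position."""

    def __init__(self, cues: list[tuple[float, str]]) -> None:
        self.cues = sorted(cues)
        self.starts = [start for start, _ in self.cues]  # precomputed for bisect

    def line_at(self, t: float) -> Optional[str]:
        # Index of the last cue whose start time is <= t; -1 means "before the first line".
        i = bisect.bisect_right(self.starts, t) - 1
        return self.cues[i][1] if i >= 0 else None


track = LyricTrack(parse_lrc("[00:12.00]First line\n[00:17.50]Second line\n[00:23.10]Third line"))
print(track.line_at(20.0))  # Second line
```

Binary search keeps each lookup logarithmic in the number of lines, so the highlight can be refreshed on every frame of playback without cost concerns.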

How to use it?

Developers can integrate SingTube into their workflows by utilizing its API or command-line interface. For a quick karaoke session, users can simply paste a YouTube video URL into the SingTube web interface or desktop application. For developers looking to build custom karaoke applications or add karaoke features to existing platforms, SingTube's backend services can be accessed programmatically. This allows for embedding a dynamic karaoke player within websites, gaming platforms, or even specialized music learning tools.

Product Core Function

· AI-powered Vocal Separation: Separates the vocal track from the instrumental in existing YouTube videos, so the original singer can be removed or turned down and the user's own voice is heard clearly. This is valuable for creating karaoke versions of any song on YouTube, making music more accessible for singing practice.

· Automatic Lyric Synchronization: Analyzes the video and audio to time the lyrics accurately against the music. This removes the tedious manual effort of aligning lyrics, providing an instant karaoke experience.

· Dedicated Karaoke Interface: Presents lyrics in a scrolling, sing-along format, often with highlighting, similar to traditional karaoke machines. This enhances the user experience by providing a familiar and engaging way to perform songs.

· Broad Video Compatibility: Works with a wide range of YouTube videos across genres and recording styles, not only tracks produced for karaoke. This means users aren't limited to a curated list of karaoke songs but can access virtually any music available on YouTube.

· Customizable Playback Settings: Allows users to adjust vocal volume, pitch, and tempo to better suit their singing. This personalizes the karaoke experience and helps users adapt songs to their vocal range and skill level.
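SingTube's separation model isn't described beyond "AI-powered". For contrast, the classic non-AI way to make a karaoke track is center-channel cancellation, sketched below under the assumption of a stereo mix with the lead vocal panned dead center. This is not SingTube's method. It shows why a learned model is attractive: the trick also cancels anything else mixed to the center (often bass and kick drum), collapses the result to mono, leaves stereo reverb on the vocal behind, and silences mono recordings entirely.

```python
def remove_center(left: list[float], right: list[float]) -> list[float]:
    """Classic 'vocal remover': keep only the side signal (L - R) / 2.

    Anything mixed identically into both channels (typically the lead vocal,
    but also centered bass and drums) cancels out; the output is mono.
    """
    if len(left) != len(right):
        raise ValueError("left and right channels must have the same length")
    return [(lv - rv) / 2 for lv, rv in zip(left, right)]


# Toy stereo mix: a centered "vocal" plus a "guitar" panned hard left.
vocal = [0.5, -0.25, 0.25]
guitar = [0.2, 0.4, -0.2]
left = [v + g for v, g in zip(vocal, guitar)]
right = vocal[:]
print(remove_center(left, right))  # roughly half the guitar; the vocal is gone
```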

Product Usage Case

· A music education platform could use SingTube's API to offer interactive singing lessons, allowing students to practice vocal exercises with their favorite songs from YouTube. This solves the problem of finding instrumentals and synchronized lyrics for diverse learning materials.

· A social media app focused on music could integrate SingTube to enable users to record themselves singing along to YouTube tracks, automatically generating karaoke-style videos for sharing. This addresses the need for engaging, user-generated musical content.

· A content creator could use SingTube to produce karaoke versions of niche or trending songs not yet available in traditional karaoke libraries, expanding the options for their audience. This overcomes the content limitations of existing karaoke services.

· A developer building a smart home entertainment system might integrate SingTube to allow families to instantly turn any YouTube music video into a sing-along session. This adds a fun, interactive element to home entertainment without requiring separate karaoke hardware.

16

Kanji Palace: Mnemonic Image Weaver

Author

langitbiru

Description

Kanji Palace is a Show HN project that transforms complex Kanji characters into memorable visual mnemonics. It addresses the common challenge of memorizing Kanji, a fundamental part of learning Japanese. The core innovation lies in its programmatic generation of custom images, each designed to represent the semantic components and pronunciation of a Kanji, thereby enhancing recall through visual association. This is a powerful tool for language learners seeking a more engaging and effective memorization strategy.

Popularity

Points 2

Comments 1

What is this product?

Kanji Palace is a web application that acts as a smart Kanji memorization aid. It takes a Kanji character as input and algorithmically breaks it down into its constituent radicals and phonetic components. It then uses a library of visual elements and rules to construct a unique, often whimsical, image that represents the meaning and sound of the Kanji. For example, a Kanji meaning 'tree' might be depicted with branches forming the shape of the character itself. This creative image generation is the key innovation, moving beyond rote memorization to a more intuitive, story-based learning method. So, what does this mean for you? It means learning Kanji can become significantly easier and more enjoyable, as you're building mental connections rather than simply repeating characters.

How to use it?

Developers can integrate Kanji Palace into their language learning applications or personal study tools. The project likely exposes an API endpoint where a user can submit a Kanji character. The API would then return a URL to the generated mnemonic image, or the image data itself. This could be used to populate flashcards, interactive quizzes, or even personalized learning dashboards. Imagine building a custom Japanese learning app where every new Kanji you encounter is automatically paired with a unique, mnemonic image generated by Kanji Palace. This offers a highly flexible and scalable way to enhance the learning experience.

Product Core Function

· Kanji Decomposition: The system intelligently analyzes a Kanji character to identify its constituent radicals and phonetic elements. This provides the foundational building blocks for mnemonic creation, offering a technical breakdown of the character's structure for efficient learning.

· Mnemonic Image Generation: Based on the decomposed components, the system algorithmically generates a visual mnemonic image. This use of generative imagery links abstract symbols to concrete visual representations, with the aim of improving how well learners retain and apply what they memorize.

· Customizable Visual Library: The project likely utilizes a pre-defined yet extensible library of visual assets and thematic rules for image creation. This allows for a broad range of mnemonic styles and ensures that the generated images are relevant and memorable, providing a consistent yet diverse learning experience.

· API Access for Integration: If the project exposes an API, developers could integrate Kanji Palace's core functionality into other educational platforms or personal tools. This would enable richer, more interactive learning environments and broaden the reach of this memorization technique.
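The decomposition step described above can be pictured as a lookup from a Kanji to its components, each tagged as contributing meaning or sound, which is then turned into a description an image generator can work from. This is a minimal sketch with a tiny hand-written table and a plain-text prompt as the hand-off; the table, `Component`, `mnemonic_prompt` and the prompt wording are hypothetical, not Kanji Palace's implementation. The decompositions themselves are standard: 休 (rest) is 亻 (person) beside 木 (tree), and in 晴 the 青 component supplies the reading sei.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    glyph: str
    meaning: str
    role: str  # "semantic" (contributes meaning) or "phonetic" (hints at the reading)


# Hypothetical hand-written table; a real tool would load radicals and
# components from a dataset rather than hard-coding them.
DECOMPOSITIONS: dict[str, tuple[str, list[Component]]] = {
    "休": ("rest", [Component("亻", "person", "semantic"),
                    Component("木", "tree", "semantic")]),
    "明": ("bright", [Component("日", "sun", "semantic"),
                      Component("月", "moon", "semantic")]),
    "晴": ("clear weather", [Component("日", "sun", "semantic"),
                             Component("青", "blue, read 'sei'", "phonetic")]),
}


def mnemonic_prompt(kanji: str) -> str:
    """Turn a Kanji's components into a text prompt for an image generator."""
    if kanji not in DECOMPOSITIONS:
        raise KeyError(f"no decomposition for {kanji!r}")
    meaning, parts = DECOMPOSITIONS[kanji]
    pieces = "; ".join(f"{c.glyph} = {c.meaning} ({c.role})" for c in parts)
    return f"Scene illustrating '{meaning}' ({kanji}) built from: {pieces}"


print(mnemonic_prompt("休"))
# Scene illustrating 'rest' (休) built from: 亻 = person (semantic); 木 = tree (semantic)
```

Tagging each component's role lets the generated scene carry both halves of the mnemonic: what the character means and a cue for how it is read.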

Product Usage Case

· A language learning app developer could use Kanji Palace to automatically generate mnemonic images for each new Kanji introduced to learners. This tackles the problem of tedious flashcard creation and provides a more engaging way for students to internalize Kanji meanings and pronunciations. The benefit to the user is a more intuitive and effective learning process.

· A Japanese literature student struggling with the sheer volume of Kanji in their studies could use Kanji Palace as a personal study companion. By inputting Kanji they find difficult, they receive unique visual aids that help them remember the characters for exams and deeper comprehension of texts. This offers a personalized solution to a common academic challenge.

· An educational content creator could leverage Kanji Palace to create visually rich learning materials, such as interactive e-books or online courses on Japanese language. This solves the problem of creating engaging visual aids for complex linguistic elements, making their content stand out and improving learner outcomes.

· A game developer creating an educational game about Japanese culture might integrate Kanji Palace to provide visual cues for Kanji within the game's puzzles or narrative. This directly addresses the challenge of making educational content fun and immersive, enhancing player engagement and learning retention through gamification.

17

Gigawatt: Adaptive Terminal Prompt

Author

aparadja

Description

Gigawatt is a customizable shell prompt built with Rust that automatically adapts its colors to your current terminal theme. It solves the problem of having shell prompts that clash with different terminal color schemes or applications, offering a subtle and aesthetically pleasing experience.

Popularity

Points 3

Comments 0

What is this product?

Gigawatt is a command-line prompt, the text you see before you type a command in your terminal. Unlike many prompts that use fixed, often bright, colors, Gigawatt is innovative because it analyzes your terminal's current color settings. It then intelligently adjusts its own colors to blend seamlessly with your theme, using a technique called 'Lab color interpolation' to ensure smooth transitions and visually appealing results. This means your prompt will look great whether you're using a dark mode, light mode, or any custom color scheme, without you having to manually reconfigure it. So, what's in it for you? Your terminal experience becomes more visually cohesive and less jarring, making it more pleasant to use.
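The description doesn't spell out what Gigawatt interpolates between. One plausible reading is blending colors from the terminal's palette, such as background and foreground, in CIELAB space, where equal numeric steps look roughly equally different to the eye, so a partial blend gives a tone that sits visibly but only slightly off the background. The sketch below is in Python rather than Gigawatt's Rust and uses the standard sRGB/D65 conversion formulas; the `blend` helper, the 0.35 factor and the example colors are assumptions, not Gigawatt's code.

```python
RGB = tuple[int, int, int]
Lab = tuple[float, float, float]

XN, YN, ZN = 0.95047, 1.0, 1.08883  # D65 reference white (XYZ)
DELTA = 6 / 29


def _to_linear(c: int) -> float:
    """sRGB 0-255 channel -> linear light 0-1."""
    v = c / 255
    return v / 12.92 if v <= 0.04045 else ((v + 0.055) / 1.055) ** 2.4


def _to_srgb(v: float) -> int:
    """Linear light -> sRGB 0-255 channel, clamping out-of-gamut values."""
    v = min(max(v, 0.0), 1.0)
    s = 12.92 * v if v <= 0.0031308 else 1.055 * v ** (1 / 2.4) - 0.055
    return round(s * 255)


def _f(t: float) -> float:
    return t ** (1 / 3) if t > DELTA ** 3 else t / (3 * DELTA ** 2) + 4 / 29


def _f_inv(t: float) -> float:
    return t ** 3 if t > DELTA else 3 * DELTA ** 2 * (t - 4 / 29)


def rgb_to_lab(rgb: RGB) -> Lab:
    r, g, b = (_to_linear(c) for c in rgb)
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b
    fx, fy, fz = _f(x / XN), _f(y / YN), _f(z / ZN)
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


def lab_to_rgb(lab: Lab) -> RGB:
    l_star, a_star, b_star = lab
    fy = (l_star + 16) / 116
    x = XN * _f_inv(fy + a_star / 500)
    y = YN * _f_inv(fy)
    z = ZN * _f_inv(fy - b_star / 200)
    r = 3.2404542 * x - 1.5371385 * y - 0.4985314 * z
    g = -0.9692660 * x + 1.8760108 * y + 0.0415560 * z
    b = 0.0556434 * x - 0.2040259 * y + 1.0572252 * z
    return _to_srgb(r), _to_srgb(g), _to_srgb(b)


def blend(c1: RGB, c2: RGB, t: float) -> RGB:
    """Interpolate from c1 (t=0) to c2 (t=1) in Lab space."""
    lab1, lab2 = rgb_to_lab(c1), rgb_to_lab(c2)
    return lab_to_rgb(tuple(p + (q - p) * t for p, q in zip(lab1, lab2)))


# Hypothetical dark theme: derive a muted prompt color between background and foreground.
background, foreground = (0x1E, 0x1E, 0x1E), (0xD4, 0xD4, 0xD4)
muted = blend(background, foreground, 0.35)
print(muted)
```

Blending in Lab tends to give more even-looking steps than averaging raw sRGB channel values, which are gamma-encoded and not perceptually uniform; swapping the palette for a light theme yields a correspondingly muted color with no extra configuration.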

How to use it?

Developers can install Gigawatt and configure it to be their default shell prompt. It can be integrated into popular shells like Zsh, Bash, or Fish by modifying their configuration files. Once installed, Gigawatt automatically detects your terminal's color palette and applies its adaptive coloring. For example, if you switch your terminal from a dark background to a light background, Gigawatt's prompt elements will adjust their colors accordingly. This makes it incredibly easy to maintain a consistent look across different environments and workflows. So, how does this help you? You get a professional-looking and consistent terminal interface without the hassle of manual color adjustments every time you change your setup.

Product Core Function

· Adaptive Color Styling: The core innovation is the automatic adjustment of prompt colors based on the terminal's theme. This means your prompt will always complement your existing visual setup, providing a more harmonious user experience. Its value to you is a visually polished and consistent terminal.

· Minimalist Design: Focuses on subtle, non-intrusive colors. This design choice reduces visual clutter and distraction, allowing you to focus on your commands and code. This helps you stay more productive by minimizing visual noise.

· Customization Options: While adaptive, the prompt is still configurable to allow users to fine-tune its appearance and the information it displays. This gives you control over your personal command-line environment.

· Rust Implementation: Built with Rust, a language known for its performance and memory safety. This ensures a fast and reliable prompt experience, which is crucial for a tool you use constantly. This means your terminal will feel snappy and responsive.

Product Usage Case

· A developer working on a project switches their terminal theme from a dark background to a light background. Gigawatt automatically adjusts the prompt's colors to be easily visible and aesthetically pleasing against the new light theme, eliminating the need for manual re-configuration. This saves the developer time and ensures their workflow remains uninterrupted.

· A designer uses different terminal applications (e.g., a standalone terminal and VS Code's integrated terminal), which may have slightly different color profiles. Gigawatt keeps the prompt consistent and well integrated in both environments, maintaining a unified visual experience across their tools. This improves the designer's overall productivity and satisfaction with their setup.

· A developer wants a clean and uncluttered command-line interface. Gigawatt's minimalist color approach, combined with its adaptability, provides a professional and non-distracting prompt that enhances focus on the actual work being done in the terminal. This leads to a more efficient and enjoyable coding session.

18

SiliconBoot: Android on Apple Silicon

Author

ushakov

Description

This project demonstrates the technical feasibility of booting the Android operating system on Apple Silicon hardware (such as M1/M2 Macs). It tackles the fundamental challenge of porting a mobile OS, built for phone and tablet hardware, to a desktop-class ARM-based chip. The innovation lies in bypassing typical hardware restrictions and reverse-engineering the necessary drivers and bootloader configurations.

Popularity

Points 3

Comments 0

What is this product?