Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-09-19

SagaSu777 2025-09-20

Explore the hottest developer projects on Show HN for 2025-09-19. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN landscape is a vibrant testament to the hacker spirit, with a strong current of innovation flowing through AI, developer tooling, and foundational systems. The prevalence of AI-powered solutions, from personalized summaries to advanced video generation and intelligent financial management, highlights the democratization of complex technologies. For developers and entrepreneurs, this means immense opportunity to leverage AI for practical problem-solving across industries. Simultaneously, the resurgence of interest in low-level systems programming, exemplified by a Redis clone in Zig, signals a desire for deeper control, performance optimization, and language exploration. This trend empowers developers to build more robust and efficient infrastructure. The focus on developer productivity, with tools simplifying workflows, test writing, and deployment, underscores the continuous effort to streamline the software creation process. For aspiring builders, identifying friction points in existing workflows and crafting elegant, efficient solutions remains a powerful avenue for innovation. The underlying theme is a persistent drive to solve real-world problems with creative technical solutions, pushing boundaries and making advanced capabilities accessible.

Today's Hottest Product

Name

Zedis – A Redis clone I'm writing in Zig

Highlight

This project showcases a deep dive into systems programming by reimplementing a widely used in-memory data store, Redis, from scratch using the Zig programming language. It demonstrates a mastery of low-level memory management, concurrency, and network protocols. Developers can learn about building performant data structures, understanding the intricacies of distributed systems, and the benefits of using modern languages like Zig for systems-level work. The project tackles the complex challenge of creating a robust and efficient key-value store, offering valuable insights into performance optimization and architectural design.

Popular Category

AI/ML

Developer Tools

Systems Programming

Productivity

Web Development

Popular Keyword

AI

CLI

Rust

Python

Open Source

Web

Data

Agent

LLM

Technology Trends

AI-powered applications for diverse tasks

Low-level systems programming and language innovation (Zig, Rust)

Developer productivity and workflow enhancement tools

Decentralization and privacy-focused solutions

Efficient data handling and storage

Agent-based systems and communication

Project Category Distribution

AI/ML (20%)

Developer Tools (25%)

Systems Programming (10%)

Productivity (15%)

Web Development (15%)

Utilities/Libraries (10%)

Gaming/Entertainment (5%)

Today's Hot Product List

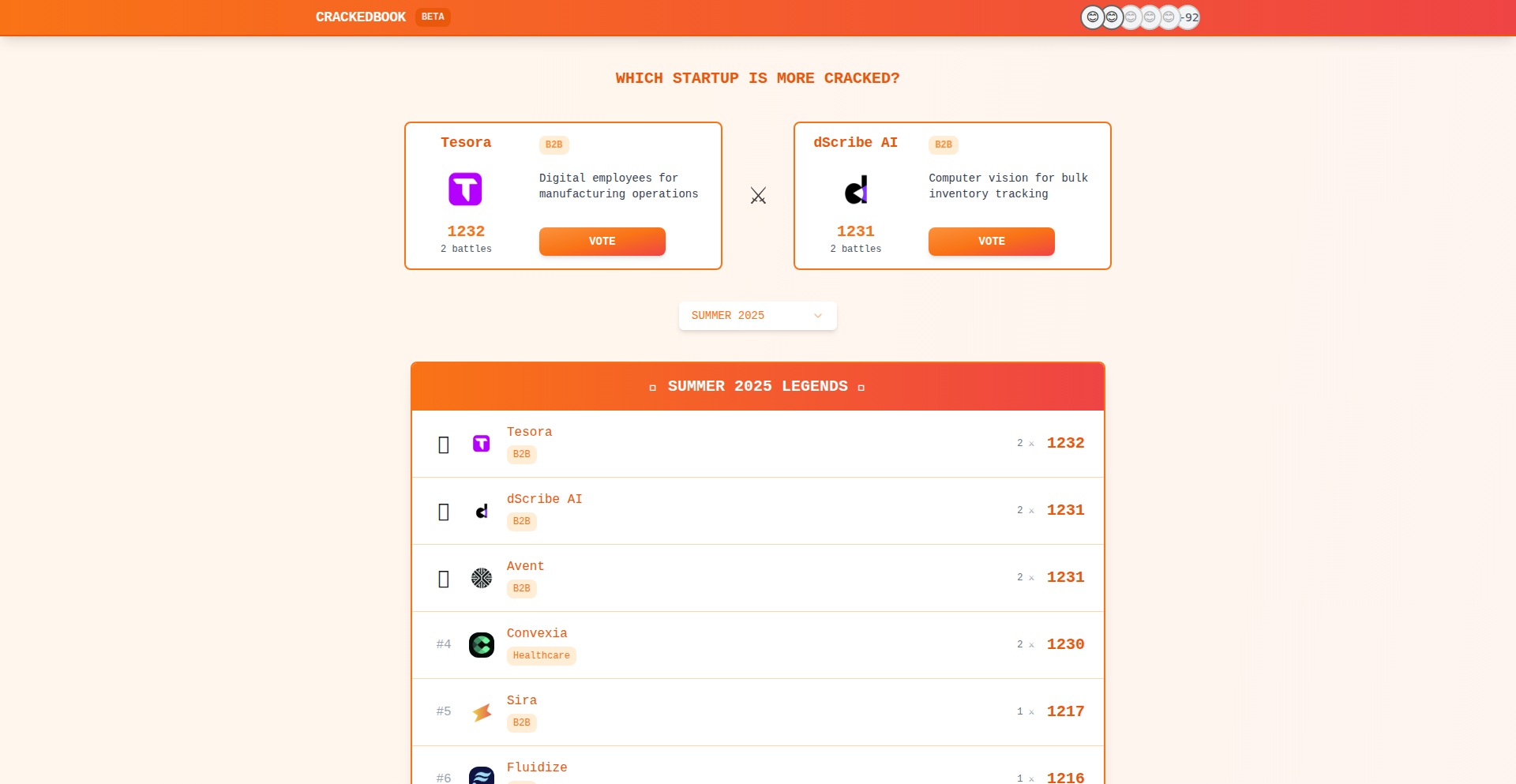

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | ElixirProjectHub | 161 | 29 |
| 2 | Zedis: Redis Reimagined in Zig | 105 | 75 |
| 3 | Blots: Expressive Data Scripting | 13 | 3 |
| 4 | RDMA-AccelCache | 13 | 0 |
| 5 | Gmail Follow-up Sentinel | 2 | 8 |
| 6 | Savr: Offline-First Read-It-Later | 10 | 0 |
| 7 | KaniTTS: Compact High-Fidelity Speech Synthesizer | 4 | 5 |
| 8 | emdash: Parallel Codex Orchestrator | 7 | 2 |
| 9 | PromptLead AI | 6 | 1 |
| 10 | RustNet: Real-time Network Insight | 4 | 2 |

1

ElixirProjectHub

Author

taddgiles

Description

A community-driven directory for Elixir projects, showcasing innovative Elixir applications and fostering knowledge sharing. It highlights the versatility of Elixir in building robust and scalable software solutions, offering a central resource for developers looking for inspiration and best practices.

Popularity

Points 161

Comments 29

What is this product?

ElixirProjectHub is a curated collection of projects built using Elixir, a powerful and highly concurrent programming language. Its innovation lies in its community-driven approach, allowing developers to submit and discover real-world applications, libraries, and frameworks. This provides valuable insights into Elixir's capabilities for tasks like web development, distributed systems, and embedded systems, offering a practical demonstration of its technical strengths and how it solves complex problems.

How to use it?

Developers can use ElixirProjectHub to explore existing Elixir projects. They can browse by category, search for specific technologies or use cases, and view detailed descriptions of each project, including its technical stack and contribution. This allows them to find inspiration for their own projects, discover useful libraries, and learn from successful implementations. For those looking to contribute, it also provides a gateway to engage with the Elixir community and its ongoing developments.

Product Core Function

· Project submission and curation: Allows developers to share their Elixir projects, creating a growing repository of real-world examples. This helps showcase the practical application of Elixir and its innovative uses.

· Categorized browsing and search: Enables users to easily discover projects based on their application domain (e.g., web, data processing, systems) or specific Elixir libraries used. This makes it efficient to find relevant solutions and learn from specific technical approaches.

· Detailed project descriptions: Provides in-depth information about each project, including its architecture, technical challenges overcome, and the specific Elixir features leveraged. This offers valuable learning material for understanding Elixir's problem-solving capabilities.

· Community engagement features: Facilitates interaction among Elixir developers, allowing for discussions, feedback, and potential collaboration on projects. This fosters a vibrant ecosystem and accelerates collective learning and innovation.

Product Usage Case

· A developer building a high-concurrency real-time chat application can browse ElixirProjectHub to find examples of similar projects that have successfully handled thousands of simultaneous connections using Elixir's actor model. This helps them understand how to implement efficient communication protocols and manage state effectively in their own application.

· A team developing a fault-tolerant distributed system can find projects on ElixirProjectHub that have implemented supervision trees and distributed databases. This provides practical blueprints for building resilient systems that can withstand failures and maintain uptime, showcasing Elixir's inherent strengths in this area.

· A programmer learning Elixir might discover a project on the hub that uses Ecto for database interactions. By examining the project's code and description, they can learn effective patterns for data modeling and querying in Elixir, accelerating their understanding of database integration.

2

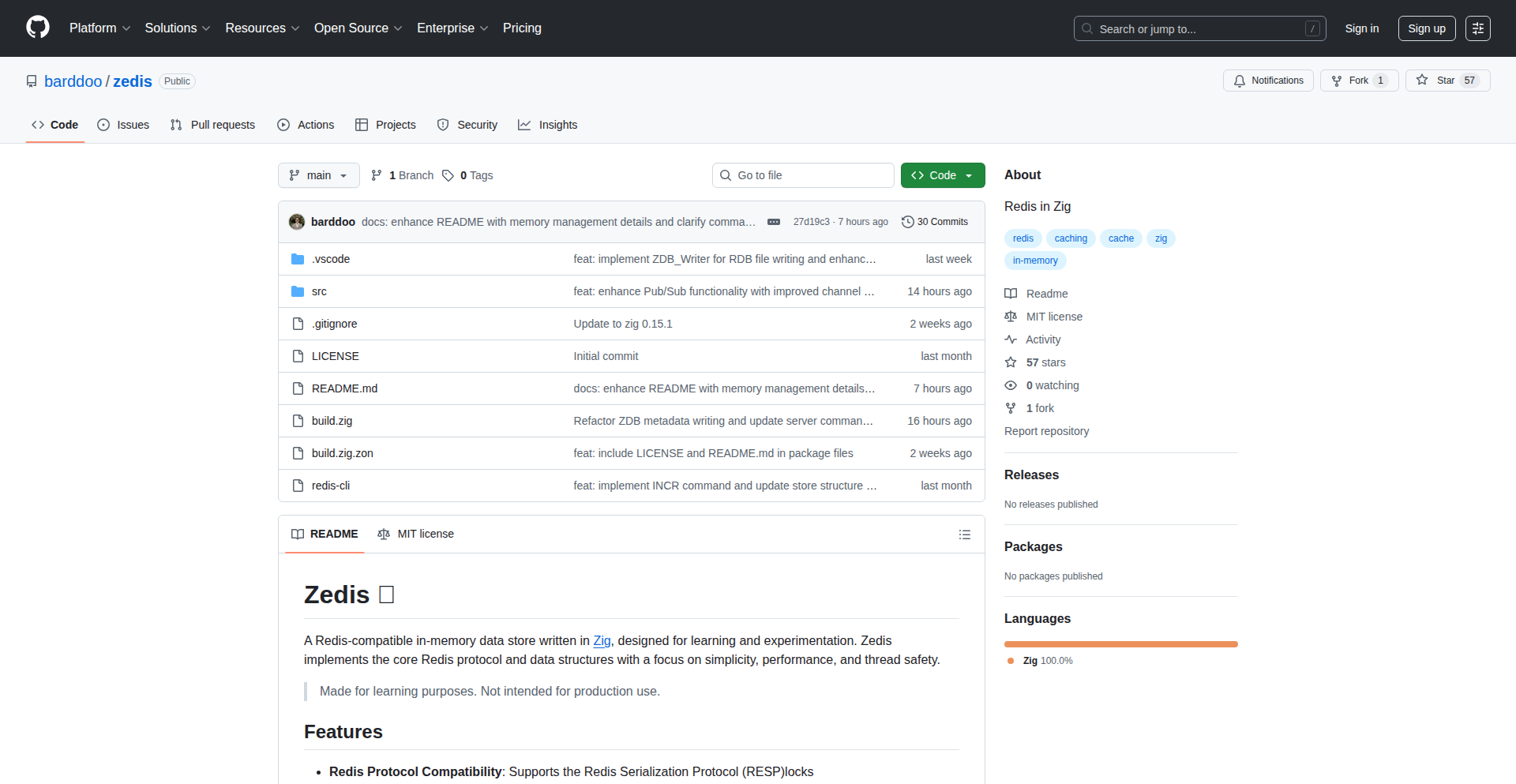

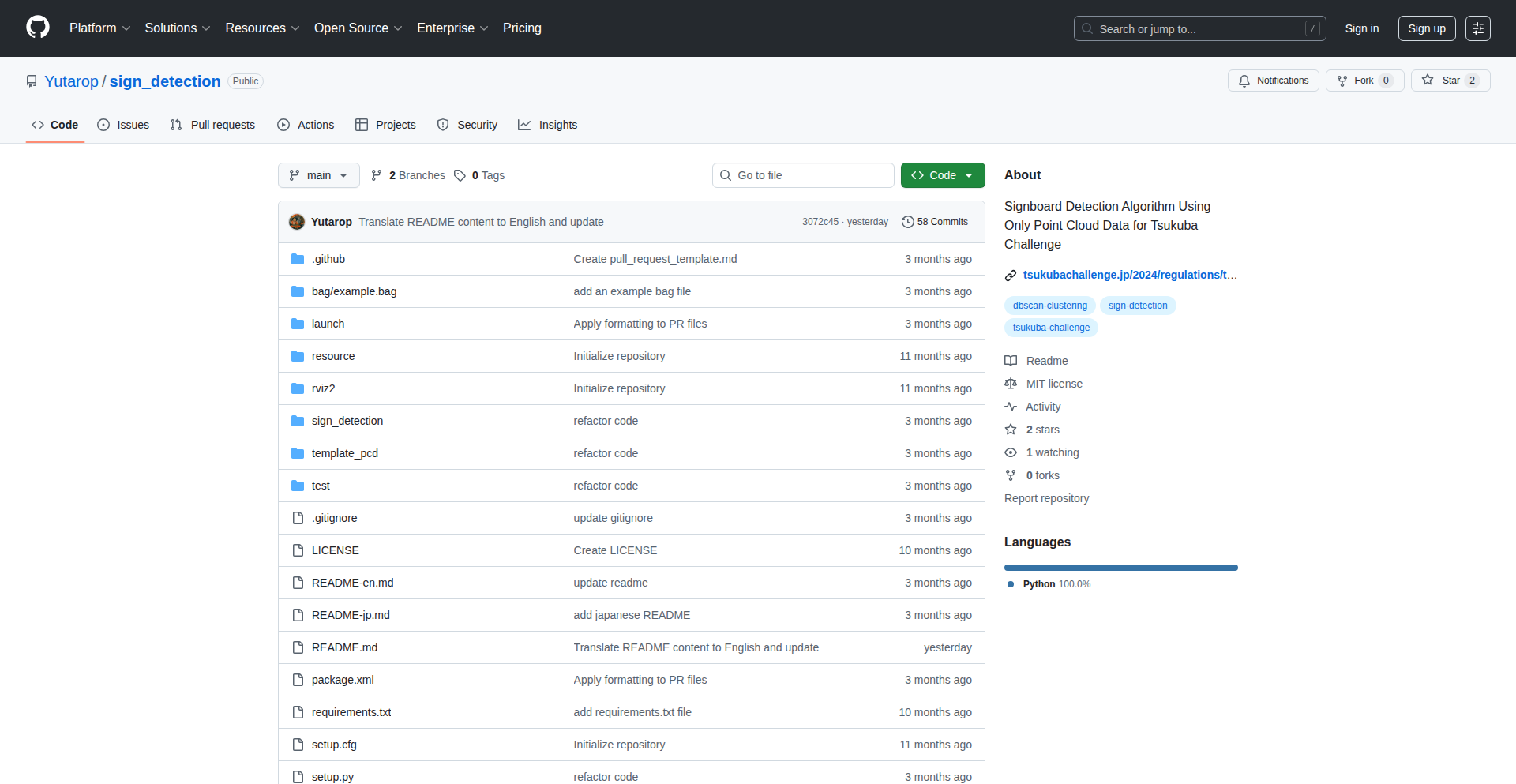

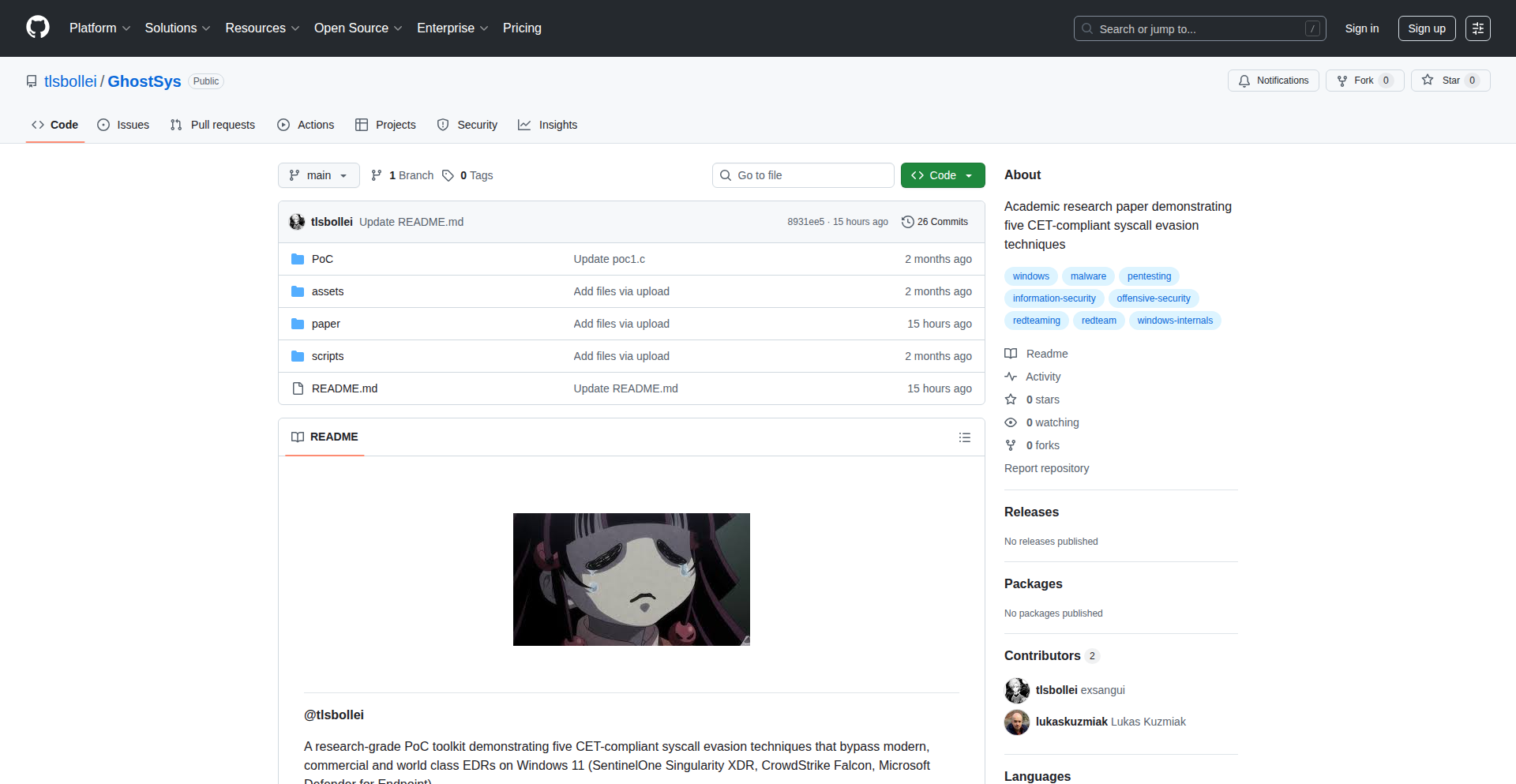

Zedis: Redis Reimagined in Zig

Author

barddoo

Description

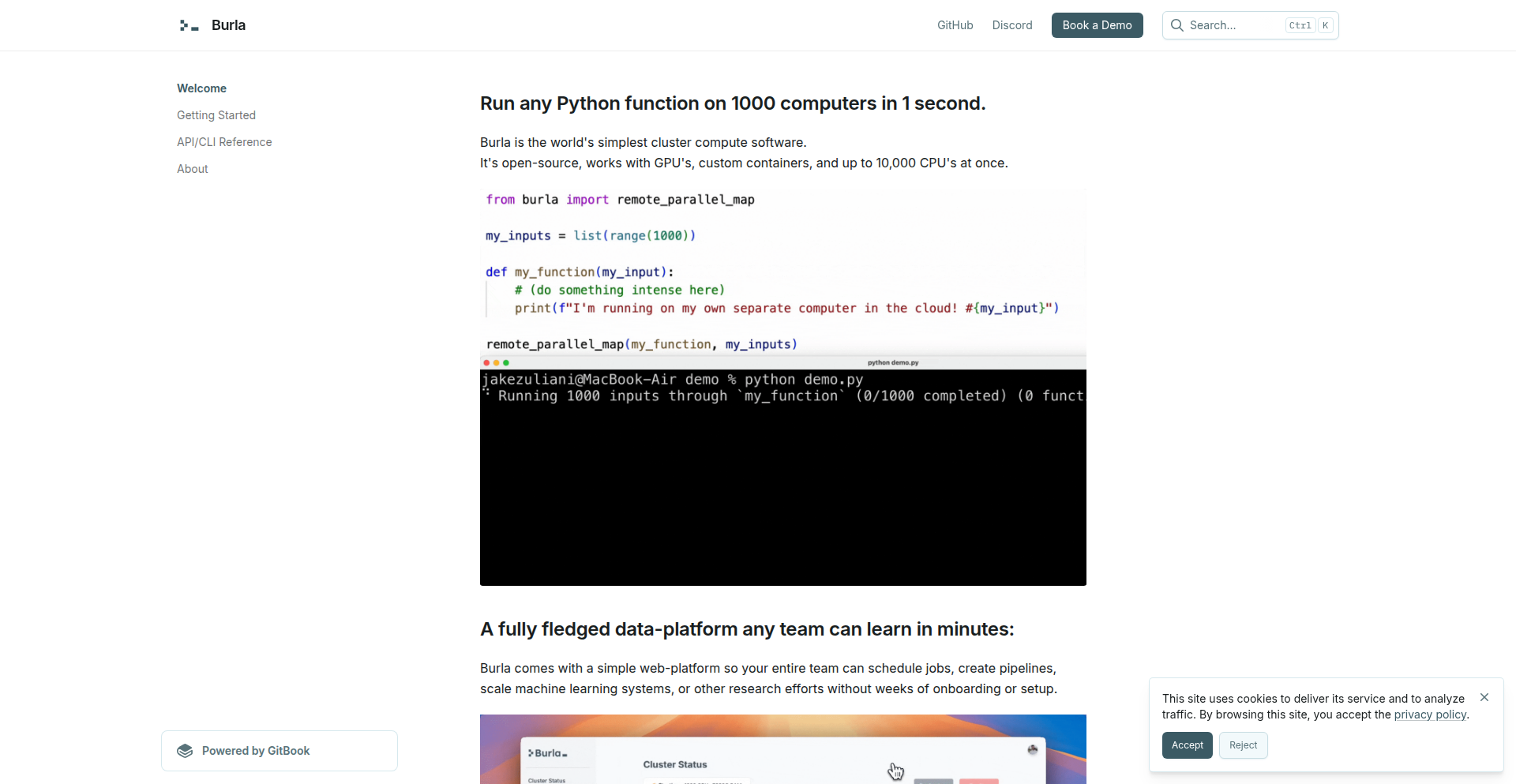

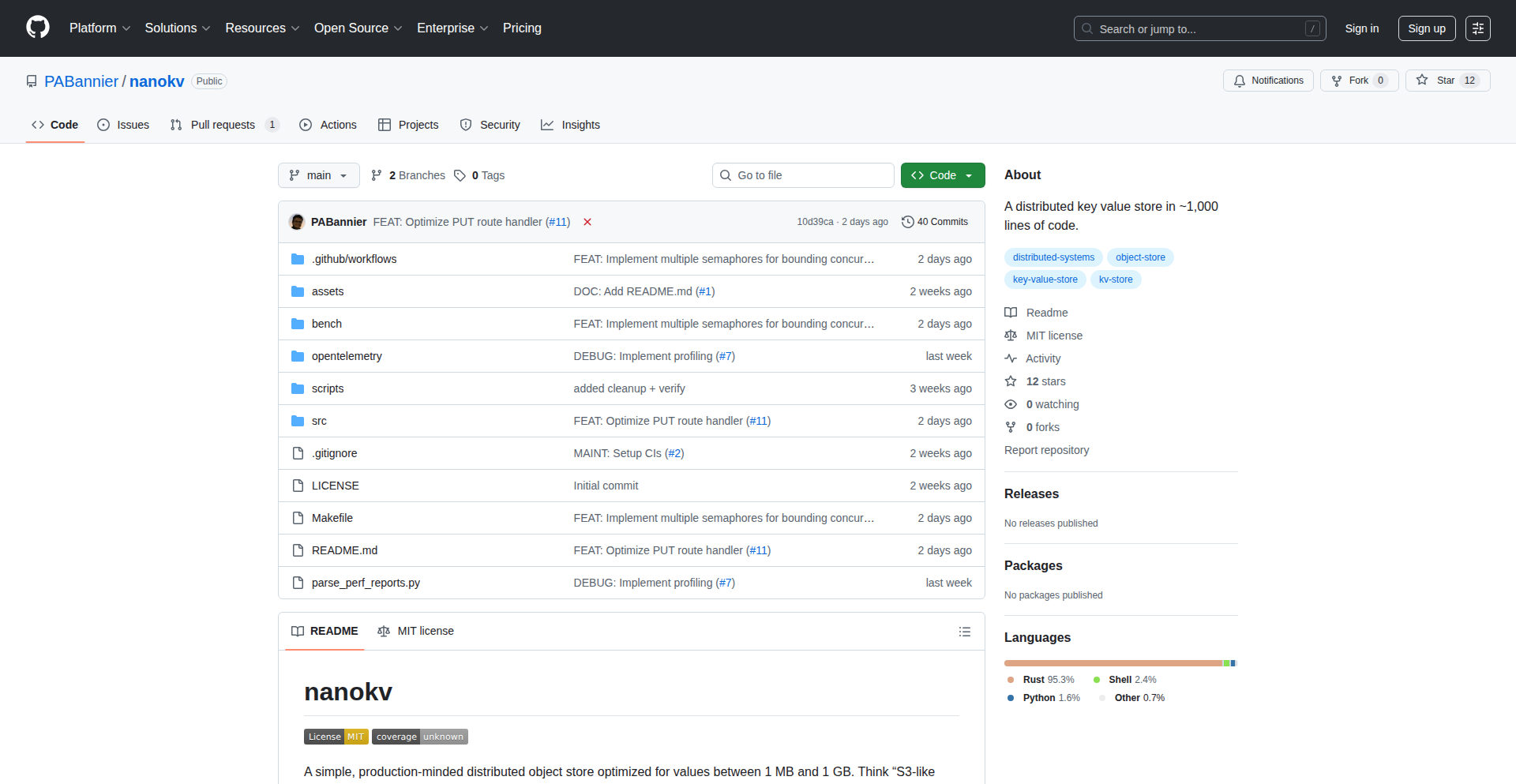

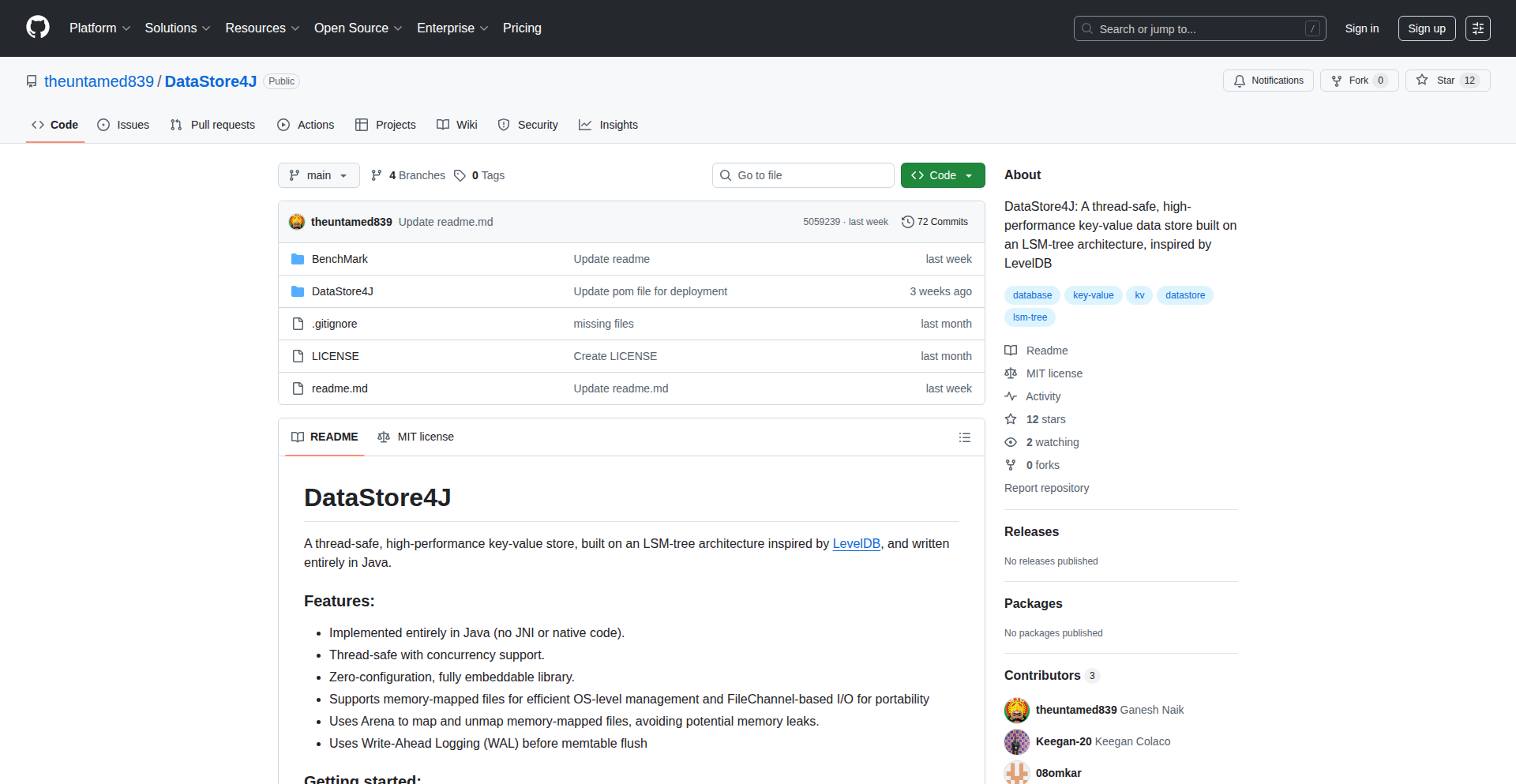

Zedis is a high-performance, from-scratch Redis clone built entirely in Zig. It aims to use Zig's explicit memory management, safety-checked build modes, and compile-time metaprogramming to offer a potentially more robust and efficient alternative to traditional Redis implementations. This project showcases the power of low-level systems programming for building critical infrastructure components.

Popularity

Points 105

Comments 75

What is this product?

Zedis is a new implementation of the Redis in-memory data structure store, built from the ground up in the Zig programming language. Unlike Redis itself, which is written in C, Zedis relies on Zig's distinct approach to memory management: allocations are explicit, and safety-checked build modes catch many out-of-bounds and overflow errors at runtime. Zig's 'comptime' (compile-time execution) allows code generation and specialization before the program runs, potentially leading to fewer runtime errors and more predictable performance. The project's innovation lies in demonstrating how Zig's safety checks and low-level control can be applied to a widely used, performance-critical database system.

How to use it?

Developers can integrate Zedis into their applications by connecting to it as they would with any standard Redis instance, assuming Zedis implements the Redis Serialization Protocol (RESP). This could involve using existing Redis client libraries in their preferred programming language or utilizing any custom client they might build. For those interested in the underlying technology, developers can clone the repository and build Zedis directly from the Zig source code, allowing for experimentation, modification, and deeper understanding of its internal workings. This offers a unique opportunity to tailor a high-performance key-value store to specific, niche requirements or to explore the performance characteristics of Zig in a real-world application.
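
To make the RESP assumption above concrete, here is a minimal sketch of how a client frames a command on the wire. It only illustrates the standard Redis protocol format; the helper names `encode_resp_command` and `parse_simple_reply` are made up for this example and are not taken from Zedis or from any client library.

```python
def encode_resp_command(*parts: str) -> bytes:
    """Encode a command as a RESP array of bulk strings."""
    # Array header: '*' followed by the number of elements.
    chunks = [f"*{len(parts)}\r\n".encode("ascii")]
    for part in parts:
        data = part.encode("utf-8")
        # Each element is a bulk string: '$' + byte length, then the bytes.
        chunks.append(b"$" + str(len(data)).encode("ascii") + b"\r\n" + data + b"\r\n")
    return b"".join(chunks)


def parse_simple_reply(reply: bytes) -> str:
    """Parse a RESP simple string ('+OK') or error ('-ERR ...') reply."""
    if not reply.endswith(b"\r\n"):
        raise ValueError("incomplete reply")
    kind, body = reply[:1], reply[1:-2].decode("utf-8")
    if kind == b"+":
        return body
    if kind == b"-":
        raise RuntimeError(body)
    raise ValueError(f"unsupported reply type: {kind!r}")


if __name__ == "__main__":
    print(encode_resp_command("SET", "greeting", "hello"))
    print(parse_simple_reply(b"+OK\r\n"))
```

Any server that speaks RESP should accept bytes framed this way over a TCP socket, which is why existing Redis client libraries are expected to work against a compatible clone.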

Product Core Function

· Key-Value Storage: Implements the fundamental ability to store and retrieve data using unique keys, the bedrock of any Redis-like system. This is essential for caching, session management, and simple data storage needs.

· Redis Protocol Compatibility: Aims to speak the same wire protocol as standard Redis, so existing clients and tools can connect without code changes. Where the commands an application relies on are implemented, Zedis can be swapped in where Redis would normally be used.

· Memory Management with Zig: Utilizes Zig's explicit memory management and safety features to reduce the likelihood of memory-related bugs like buffer overflows or use-after-free errors, leading to a more stable and secure data store.

· Compile-time Optimizations: Leverages Zig's 'comptime' to perform complex logic and code generation during compilation, potentially resulting in faster execution and more efficient resource utilization at runtime.

· Concurrency Handling: Designed to manage multiple client connections efficiently, a critical aspect for a high-performance database that needs to serve many users simultaneously.

Product Usage Case

· Caching Layer for Web Applications: A developer could replace their existing Redis cache with Zedis to potentially benefit from improved performance and stability, especially in applications that are highly sensitive to latency and memory errors. This means faster data retrieval for users.

· Real-time Data Processing: For applications requiring rapid data ingestion and retrieval, such as financial trading platforms or IoT data aggregators, Zedis could offer a performant and reliable backend, ensuring data is processed quickly and without unexpected crashes.

· Building Custom High-Performance Services: Developers looking to build niche distributed systems or microservices that require a fast, in-memory data store could use Zedis as a foundation, benefiting from its low-level control and Zig's unique capabilities for tailored performance.

· Educational Exploration of Systems Programming: Researchers or enthusiasts curious about how high-performance network services are built from scratch, and how modern languages like Zig can be applied to such tasks, can study Zedis to understand its architecture and implementation details.

3

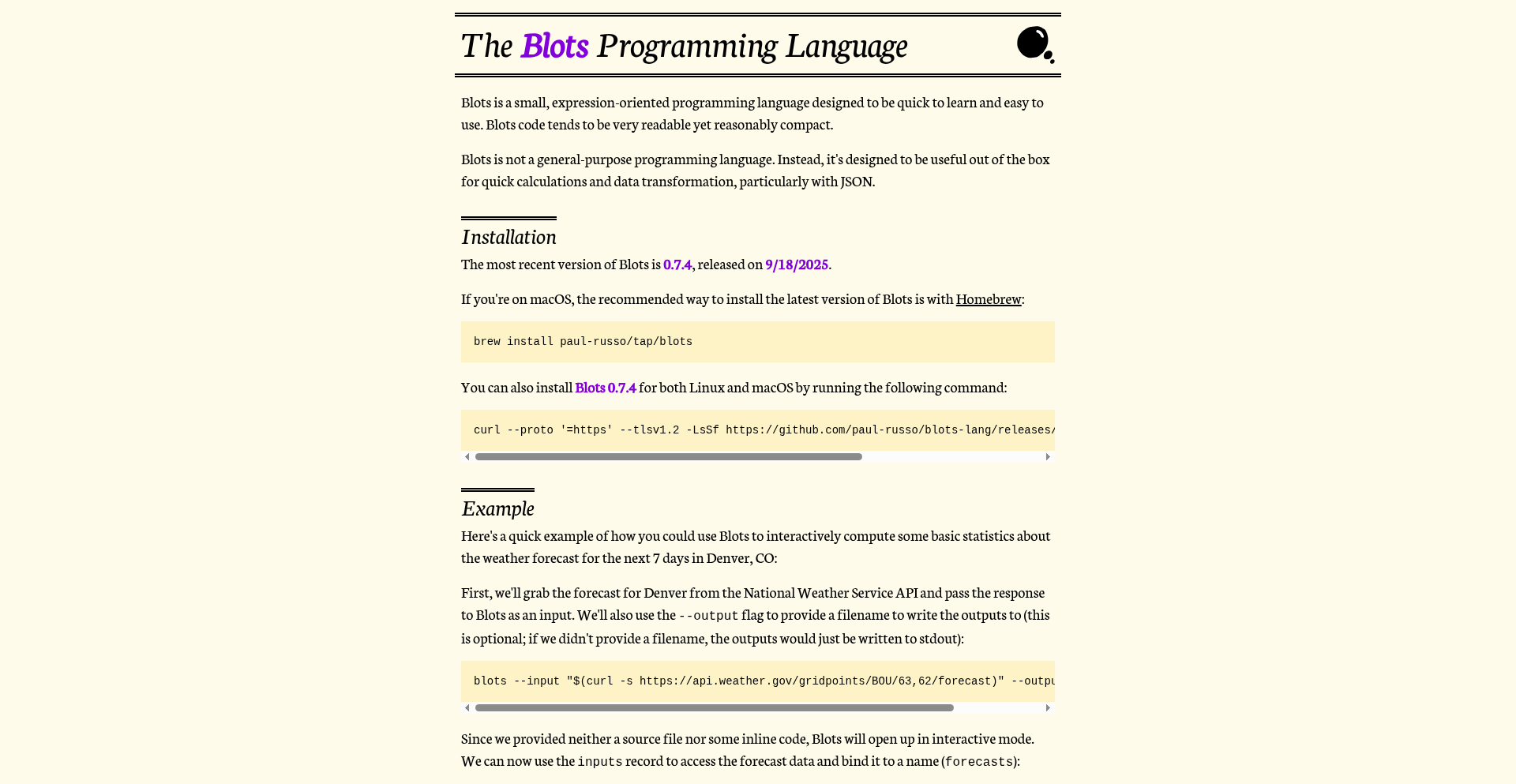

Blots: Expressive Data Scripting

Author

paulrusso

Description

Blots is a novel, expression-oriented programming language designed for quick data manipulation and mathematical scratchpad tasks. It excels at extracting and processing information from complex data structures like JSON, offering a concise way to get things done without the overhead of larger programming languages. Its innovation lies in its focus on expressive syntax for data interaction, making it a powerful tool for developers needing rapid insights from their data.

Popularity

Points 13

Comments 3

What is this product?

Blots is a lightweight, experimental programming language that focuses on writing short, expressive code snippets for data tasks. Think of it as a supercharged calculator and data explorer combined. Its core innovation is its expression-oriented design, meaning almost everything you write results in a value, making it natural to chain operations. This is built on a custom interpreter that, while still being optimized, has seen significant performance gains, demonstrating a commitment to making it practical. The 'weirdness' comes from its unique syntax, designed for clarity and conciseness in data operations, allowing you to solve problems quickly.

How to use it?

Developers can integrate Blots into their workflow for immediate data analysis or quick scripting. You can run Blots code directly through its interpreter. For example, if you have a JSON payload, you can write a short Blots script to extract specific values or perform calculations on them. It's ideal for ad-hoc data wrangling, prototyping data processing logic, or even as a powerful scratchpad for mathematical problems. Imagine needing to quickly sum up a specific field from a large JSON file – Blots can do this with a few lines of code, saving you from writing more extensive scripts in traditional languages.
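
For a sense of the baseline Blots is compared against, this is roughly what the "sum one field from a JSON payload" task looks like in a general-purpose language. The sketch is plain Python, not Blots syntax (which is not shown in this write-up), and the function name `sum_field` and the sample data are assumptions for illustration.

```python
import json


def sum_field(json_text: str, field: str) -> float:
    """Sum a numeric field across a JSON array of objects, skipping records that lack it."""
    records = json.loads(json_text)
    return sum(record[field] for record in records if field in record)


if __name__ == "__main__":
    payload = '[{"price": 2.5}, {"price": 4}, {"name": "no price here"}]'
    print(sum_field(payload, "price"))
```

An expression-oriented language aims to collapse this parse, filter, and aggregate sequence into a single chained expression.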

Product Core Function

· Concise expression evaluation: Allows for chaining operations and immediate results, making data transformation more intuitive and faster to write.

· JSON data interaction: Provides specialized syntax for easily navigating and extracting data from JSON structures, simplifying common data fetching tasks.

· Mathematical operations: Supports standard arithmetic and logical operations, serving as a powerful scratchpad for quick calculations.

· Customizable syntax: The language is designed to be flexible, encouraging experimentation and adaptation for specific user needs.

· Lightweight interpreter: Engineered for speed in typical data manipulation scenarios, with ongoing improvements to handle larger datasets efficiently.

Product Usage Case

· Extracting specific user IDs from a large JSON log file to analyze error patterns. Blots makes it simple to pinpoint and collect these IDs in a few lines.

· Calculating the average price of products from a JSON data feed. Instead of writing a full script, you can use Blots for a quick, on-the-fly calculation.

· Prototyping data filtering logic. Developers can quickly test conditions and data transformations before implementing them in a larger application.

· Using Blots as a command-line tool to process data piped from other commands, offering a compact way to perform quick data manipulations.

4

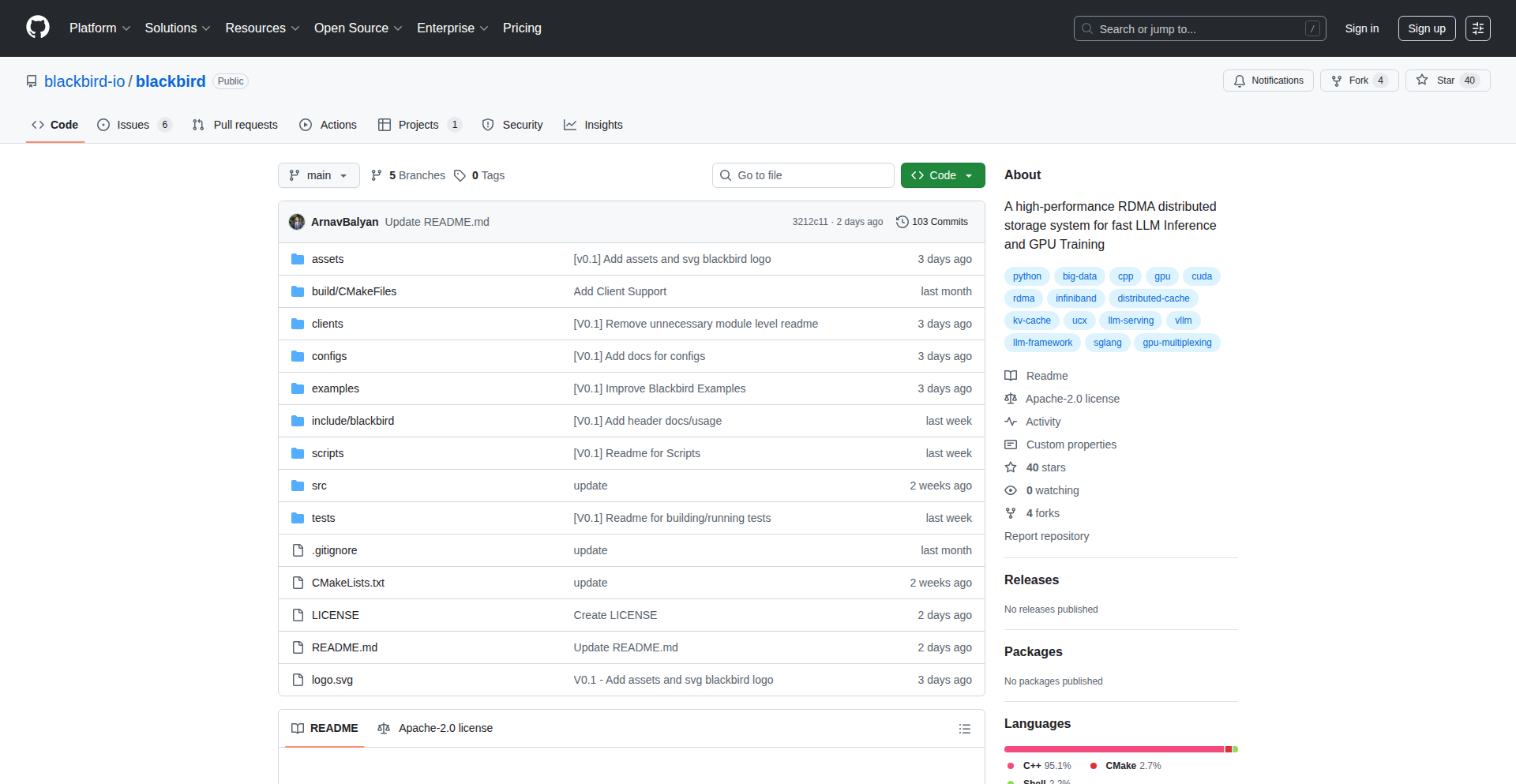

RDMA-AccelCache

Author

hackercat0101

Description

A distributed cache leveraging RDMA/InfiniBand for ultra-low latency data access, designed to accelerate AI inference and training by minimizing data transfer bottlenecks. This project tackles the critical challenge of slow data retrieval in large-scale machine learning workloads by using high-speed interconnects to bypass traditional network stacks.

Popularity

Points 13

Comments 0

What is this product?

RDMA-AccelCache is a specialized distributed caching system. It uses RDMA (Remote Direct Memory Access) and InfiniBand, which are technologies for direct memory-to-memory communication between computers over a network, bypassing the CPU and operating system. This means data can be sent and received much faster than traditional network methods. The innovation lies in applying these high-speed networking capabilities to build a cache that significantly speeds up access to frequently used data, which is crucial for demanding applications like AI model inference (making predictions) and training (teaching AI models). So, it's a super-fast memory storage for AI that's directly accessible by multiple machines without much delay.

How to use it?

Developers can integrate RDMA-AccelCache into their AI training or inference pipelines. It typically involves setting up an InfiniBand network infrastructure and then deploying the cache nodes. Your application would then be configured to query the cache for data (e.g., model weights, training datasets) before resorting to slower storage. This could be done via a client library provided by the cache system. For example, if you're training a deep learning model and need to access large datasets or model parameters frequently, you would first check RDMA-AccelCache. If the data is there, it's retrieved almost instantly. If not, it's fetched from the original source and then cached for future access. So, you use it by connecting your AI application to this fast cache to get the data it needs for processing.
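
The check-the-cache-first flow described above is the classic cache-aside pattern. The sketch below shows only that control flow, with an in-process dictionary standing in for the remote cache nodes. The class name `CacheAside` and its methods are hypothetical and are not the project's client API, and no RDMA transport is involved.

```python
from typing import Callable, Dict


class CacheAside:
    """Cache-aside lookup: serve from the cache on a hit, load and store on a miss."""

    def __init__(self, load_from_source: Callable[[str], bytes]) -> None:
        self._store: Dict[str, bytes] = {}  # stand-in for the distributed cache
        self._load = load_from_source       # slow path, e.g. disk or object storage
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> bytes:
        if key in self._store:
            self.hits += 1
            return self._store[key]
        # Miss: fetch from the original source, then cache for later requests.
        self.misses += 1
        value = self._load(key)
        self._store[key] = value
        return value


if __name__ == "__main__":
    cache = CacheAside(lambda key: f"weights-for-{key}".encode("utf-8"))
    cache.get("layer0")   # miss, loaded from the source
    cache.get("layer0")   # hit, served from the cache
    print(cache.hits, cache.misses)
```

In a real deployment the dictionary lookup would be replaced by a read against a cache node over the interconnect, and only that step is what RDMA is meant to speed up.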

Product Core Function

· RDMA-based data retrieval: Enables direct memory access for fetched data, drastically reducing latency compared to TCP/IP. This means your AI can get the data it needs to process almost immediately, boosting its speed.

· Distributed caching architecture: Spreads cached data across multiple nodes, allowing for horizontal scaling and high availability. This ensures that as your AI workload grows, the cache can grow with it, maintaining performance.

· AI workload optimization: Specifically designed to reduce I/O bottlenecks in machine learning inference and training. This directly translates to faster training times and quicker responses from your AI models, making them more efficient.

· InfiniBand network compatibility: Leverages the high bandwidth and low latency of InfiniBand networks for optimal performance. This ensures that the underlying network infrastructure is fully utilized to deliver maximum speed for data access.

Product Usage Case

· Accelerating large-scale deep learning training: In a scenario where a team is training a massive neural network that requires constant access to large datasets and model parameters, RDMA-AccelCache can store these frequently accessed items. By retrieving them through RDMA, the training process, which might otherwise be slowed down by network latency, can proceed much faster, leading to quicker model development.

· Reducing latency for real-time AI inference: For applications that need to make predictions very quickly, such as in autonomous driving or fraud detection, the time it takes to fetch model weights and input data is critical. RDMA-AccelCache can serve these components with ultra-low latency, ensuring the AI can respond in milliseconds, thereby improving the system's real-time capabilities.

· Improving data accessibility in distributed ML platforms: When multiple worker nodes in a distributed machine learning system need to access the same large data files or model checkpoints, a traditional network file system can become a bottleneck. RDMA-AccelCache can act as a shared, high-speed data layer, ensuring all workers get fast access to the data they need, synchronizing their work more efficiently.

5

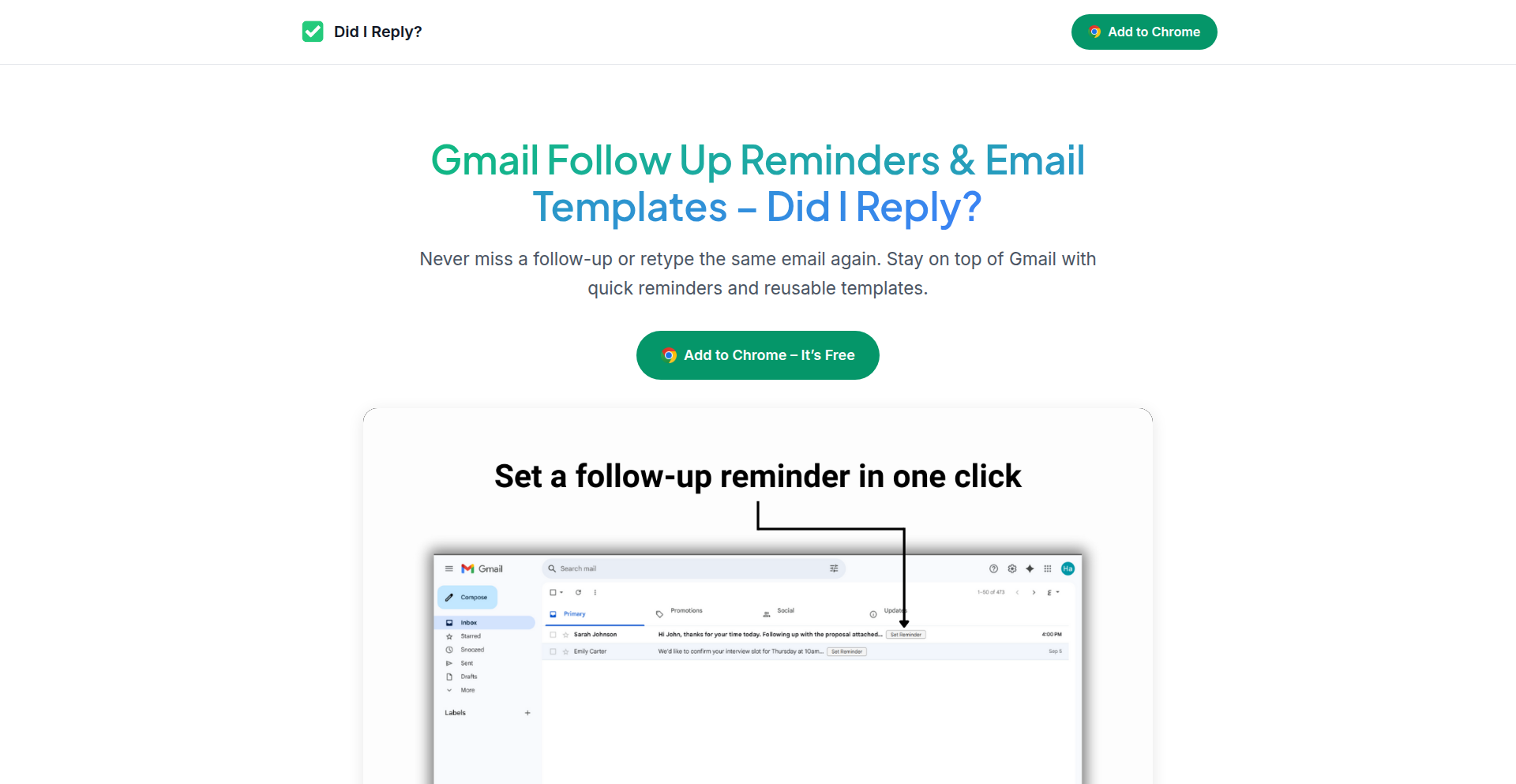

Gmail Follow-up Sentinel

Author

Homos

Description

Did I Reply? is a lightweight Chrome extension designed to solve the common problem of forgetting to follow up on important Gmail threads. It offers one-click follow-up reminders and reusable templates directly within Gmail, streamlining client communication without the overhead of heavy CRM systems. Its innovation lies in its seamless integration and privacy-focused design, making email follow-ups effortless and efficient.

Popularity

Points 2

Comments 8

What is this product?

Did I Reply? is a Chrome extension that acts as your personal assistant for managing Gmail communication. It's built on a simple yet powerful idea: help you never miss an important follow-up. Technically, it injects functionality into your Gmail interface, allowing you to schedule reminders for specific emails with a single click. It also stores and allows you to quickly insert pre-written text snippets, called templates. The innovation is in its deep integration with Gmail, making these actions feel like native Gmail features, and its commitment to privacy, as all your data stays within your browser, meaning it's not sent to any servers. So, for you, this means no more manually tracking emails or digging through your inbox to remember who you need to contact – it handles the reminders and quick replies for you.

How to use it?

Developers can use Did I Reply? by simply installing it as a Chrome extension. Once installed, it automatically enhances their Gmail experience. When composing or reading an email, they can click a button provided by the extension to set a follow-up reminder, choosing a specific date and time. They can also create and save custom reply templates. For example, if you're a developer who frequently sends updates to clients or collaborators, you can create a template for 'Weekly Progress Report' and insert it with one click instead of typing it out each time. This saves significant time and reduces the chance of errors or forgotten messages. It integrates directly into the Gmail UI, so no external tools or complex setup are needed.

Product Core Function

· One-click Gmail follow-up reminders: This allows users to schedule a reminder for any email conversation directly from their inbox. The technical implementation involves injecting a button into the Gmail interface that triggers a background process to set a reminder, ensuring that the user is prompted to reply at a later time, thus improving communication timeliness and effectiveness.

· View scheduled reminders: Users can see a clear overview of all their upcoming email follow-ups. This feature is implemented by maintaining a local list of scheduled reminders within the browser's local storage, providing an easily accessible list for users to manage their pending tasks.

· Save and insert reusable templates: This function enables users to store frequently used email text and insert them quickly into new emails. Technically, this utilizes the browser's local storage to save and retrieve template content, significantly speeding up the process of writing common responses and ensuring consistency in communication.

· Instant Gmail integration: The extension works seamlessly within the existing Gmail interface without requiring any login or complex setup. This is achieved through JavaScript code that runs in the browser, modifying the Gmail web page to add its features, making the user experience smooth and intuitive.

Product Usage Case

· A freelance developer needs to follow up with a potential client after sending a proposal. They use Did I Reply? to set a reminder for three days later directly on the sent email. If they don't receive a response, the extension will remind them to send a follow-up, preventing a missed business opportunity.

· A developer is collaborating on a project and frequently needs to send status updates to the team. They create a 'Daily Status Update' template that includes placeholders for key information. When they need to send an update, they simply insert the template and fill in the specific details, saving time and ensuring all necessary information is included.

· A developer who manages multiple client accounts uses the extension to set reminders for important emails that require a response within a specific timeframe. This helps them stay organized and responsive, maintaining good client relationships without the need for a full-fledged CRM.

· A developer is testing a new feature and wants to gather feedback. They send out a series of emails to beta testers. Did I Reply? helps them track who has responded and schedule follow-ups for those who haven't, ensuring they gather comprehensive feedback for product improvement.

6

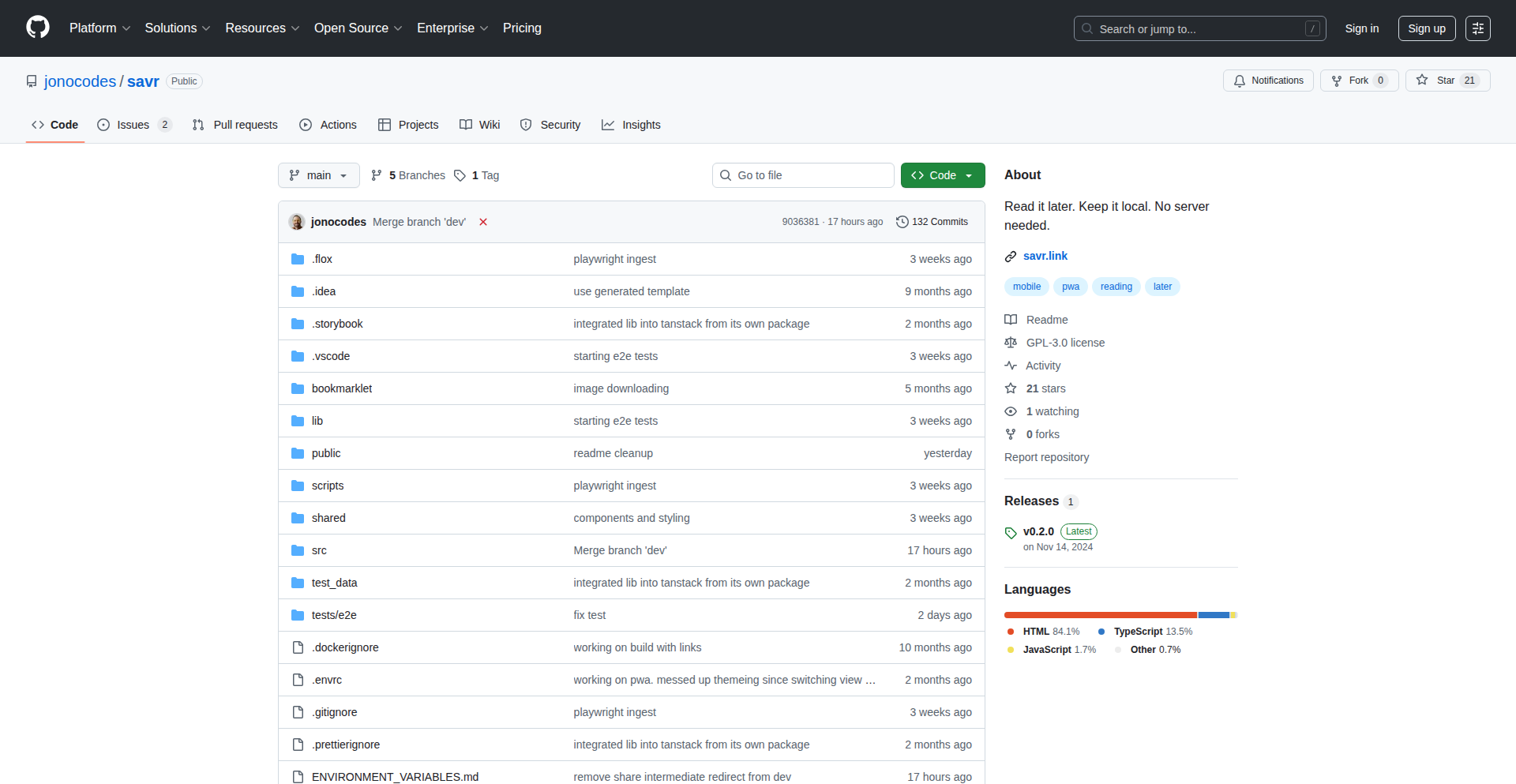

Savr: Offline-First Read-It-Later

Author

jonotime

Description

Savr is a local-first alternative to services like Pocket, designed for users who need reliable offline access to their saved articles. It tackles the common problem of web services failing when offline or becoming bloated with features. The innovation lies in its 'local-first' architecture, meaning content is primarily stored and accessed directly on your device, ensuring functionality even without an internet connection. This approach is built using modern web technologies like Progressive Web Apps (PWAs) and TanStack libraries, emphasizing developer-friendly design and robust offline capabilities. So, what's in it for you? You can save articles from anywhere and read them comfortably, even on a plane or subway, without worrying about your connection.

Popularity

Points 10

Comments 0

What is this product?

Savr is a web application that lets you save articles to read later, but with a key difference: it prioritizes storing content directly on your device. This 'local-first' approach, leveraging Progressive Web App (PWA) technology, means your saved articles are accessible offline. Think of it like having your own personal, always-available digital library of web content. The innovation here is in its robust offline-first design, moving away from traditional cloud-centric models. It's built to be reliable when the internet is not. So, what does this mean for you? It means you can save articles today and be confident you can read them tomorrow, regardless of your internet availability.

How to use it?

As a developer, you can use Savr as a personal tool to manage your reading list, especially if you often find yourself in situations with poor or no internet connectivity. Its PWA nature allows it to be installed on your desktop or mobile device for a more app-like experience. You can integrate it into your workflow by bookmarking articles directly through your browser or using its sharing features. The project is built with TanStack libraries, which are known for their flexibility and composability, making it easier for developers to understand and potentially extend its functionality. So, how can you use it? Save articles from your browser, install it like an app, and read them anywhere, anytime, enhancing your productivity and learning.

Product Core Function

· Offline article saving and retrieval: The core value is enabling users to access saved articles without an internet connection, a significant improvement for users with inconsistent connectivity. This is achieved through PWA offline capabilities and local storage.

· Cross-device synchronization (future potential): While currently focused on local-first, the underlying architecture can be extended to synchronize saved articles across multiple devices when an internet connection is available, providing a seamless experience.

· Clean reading interface: Savr aims to provide a distraction-free reading experience by stripping away unnecessary web page elements, making it easier for users to focus on the content.

· Developer-friendly architecture: Built with modern libraries like TanStack, the codebase is designed for maintainability and extensibility, encouraging community contributions and further innovation.

Product Usage Case

· A commuter who saves articles during their morning train ride and reads them on the subway where there's no signal. Savr ensures the articles are available for reading without interruption.

· A researcher who needs to access a large volume of saved articles for offline study. Savr allows them to download and access all their research material, even in remote locations without internet access.

· A developer attending a conference with unreliable Wi-Fi. They can save important technical articles and access them during sessions without relying on the venue's network, ensuring continuous learning.

· A student preparing for exams who wants to save lecture notes and supplementary reading materials. Savr enables them to access all their study resources offline, creating a dedicated and accessible study environment.

7

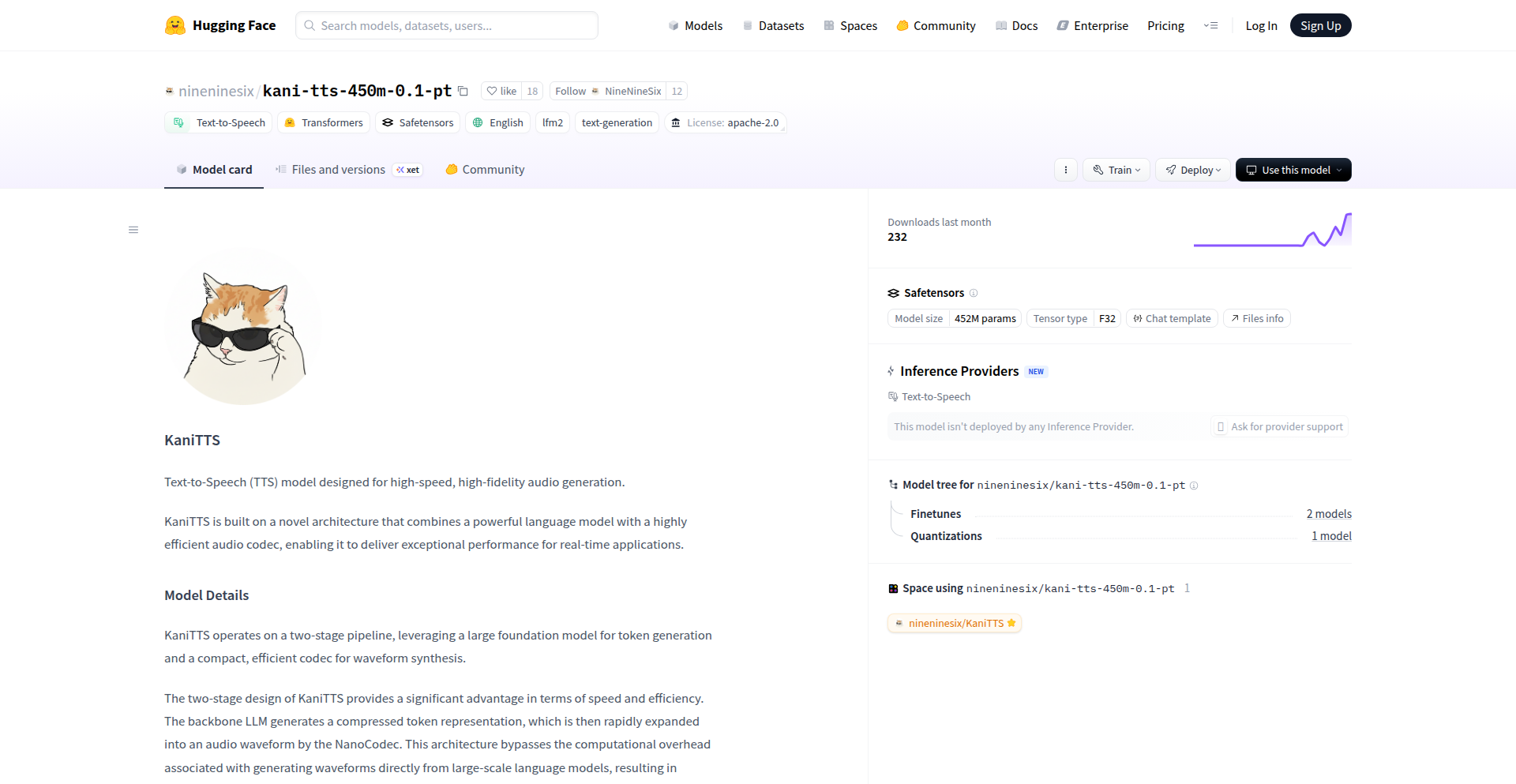

KaniTTS: Compact High-Fidelity Speech Synthesizer

Author

ulan_kg

Description

KaniTTS is an open-source Text-to-Speech (TTS) system that achieves remarkably high fidelity voice generation using a surprisingly small model size (450 million parameters). It represents a significant step forward in making advanced, natural-sounding speech synthesis accessible to a wider range of developers and applications, overcoming the typical resource limitations of comparable quality TTS systems.

Popularity

Points 4

Comments 5

What is this product?

KaniTTS is a lightweight yet powerful Text-to-Speech (TTS) engine. Its core innovation lies in its significantly reduced model size (450M parameters) without sacrificing speech quality. This is achieved through advanced model architecture and training techniques that allow it to learn and reproduce the nuances of human speech with great accuracy. Think of it like compressing a very high-quality audio file without losing much of the original sound – KaniTTS does something similar for spoken words, making it much easier to deploy and run on less powerful hardware or within resource-constrained environments, yet still producing very natural-sounding speech.

How to use it?

Developers can integrate KaniTTS into their applications by leveraging its open-source library. This typically involves installing the KaniTTS package and using its API to convert text into speech. Common integration scenarios include adding voice capabilities to chatbots, creating audio content for educational platforms, developing accessibility features for applications, or generating natural-sounding voiceovers for videos and games. The smaller model size makes it ideal for deployment on edge devices, mobile applications, or web servers where computational resources are limited. You'd essentially call a function like `kaniTTS.synthesize('Hello, world!')` and receive the audio output.

Product Core Function

· High-Fidelity Speech Synthesis: Generates natural and human-like speech from text input, capturing emotional nuances and prosody, which is valuable for creating engaging user experiences and realistic voice assistants.

· Compact Model Size (450M Params): Enables deployment on a wider range of devices and platforms, including mobile and edge computing, making advanced TTS technology more accessible and cost-effective for developers.

· Open-Source and Customizable: Allows developers to modify, fine-tune, and adapt the model to specific voice styles or languages, fostering community-driven improvements and enabling highly tailored voice solutions for niche applications.

· Efficient Inference: The optimized model architecture leads to faster speech generation compared to larger models, improving real-time performance for interactive applications and reducing latency for users.

· Cross-Platform Compatibility: Designed to be runnable across various operating systems and hardware, providing flexibility for developers building applications for diverse user bases.

Product Usage Case

· Creating an AI-powered educational app where KaniTTS provides engaging narration for lessons, making learning more interactive and accessible to students who prefer auditory content, solving the problem of delivering high-quality narration without requiring powerful servers.

· Developing a mobile customer support chatbot that can respond to user queries with natural-sounding voice, enhancing the user experience by providing a more human-like interaction, made possible by KaniTTS's compact size and efficient processing on mobile devices.

· Building an indie game that requires character voiceovers. KaniTTS allows developers to generate custom voices for multiple characters without the need for expensive voice actors or large model deployments, democratizing voice production for smaller game studios.

· Implementing accessibility features in a web application, such as reading out content for visually impaired users. KaniTTS's high-quality output ensures a clear and understandable experience, and its lightweight nature allows for seamless integration into existing web architectures without impacting performance.

· Prototyping voice-controlled interfaces for smart home devices. KaniTTS can run directly on some devices and provide immediate spoken feedback, showcasing its potential for embedded systems where connectivity and processing power are often limited.

8

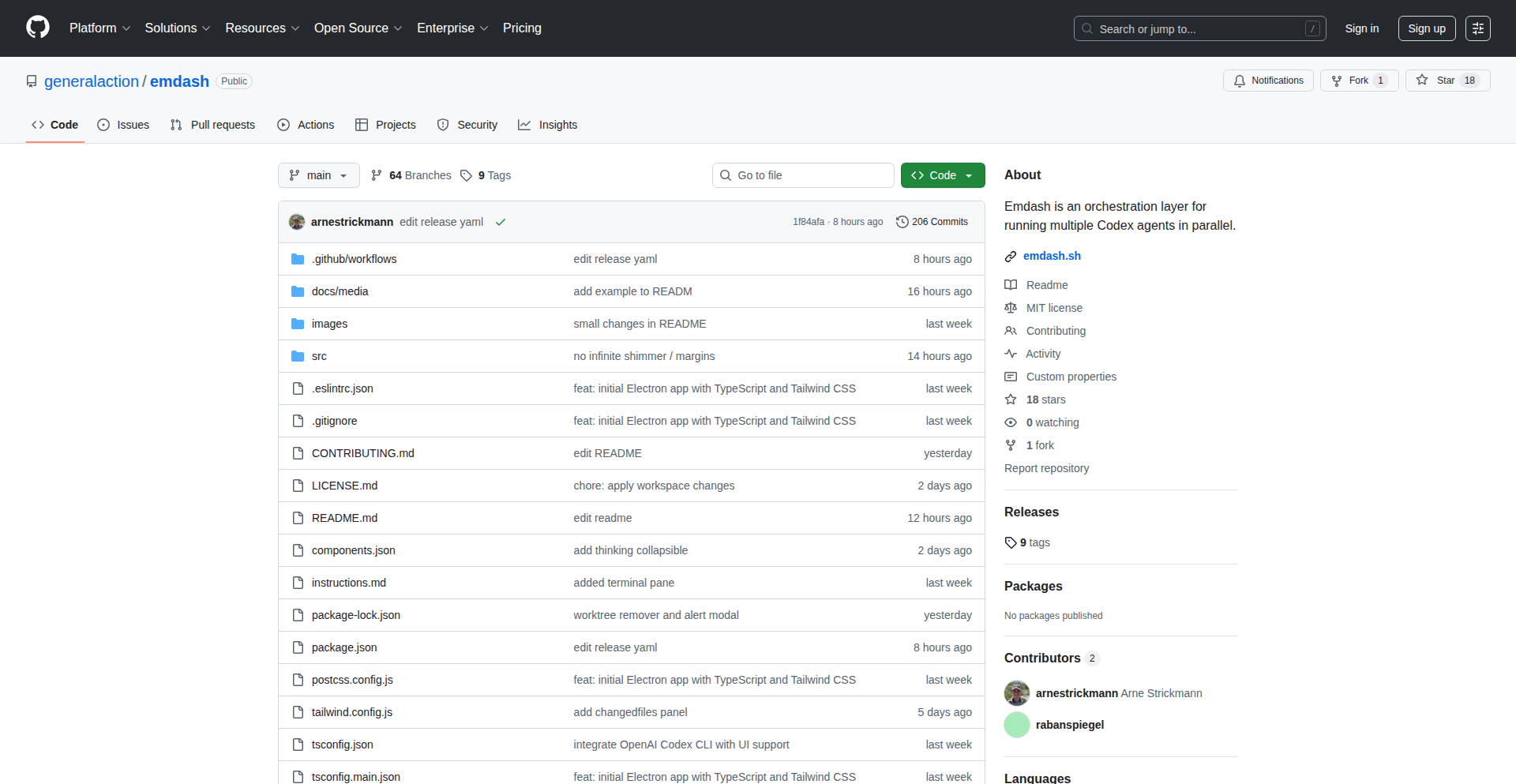

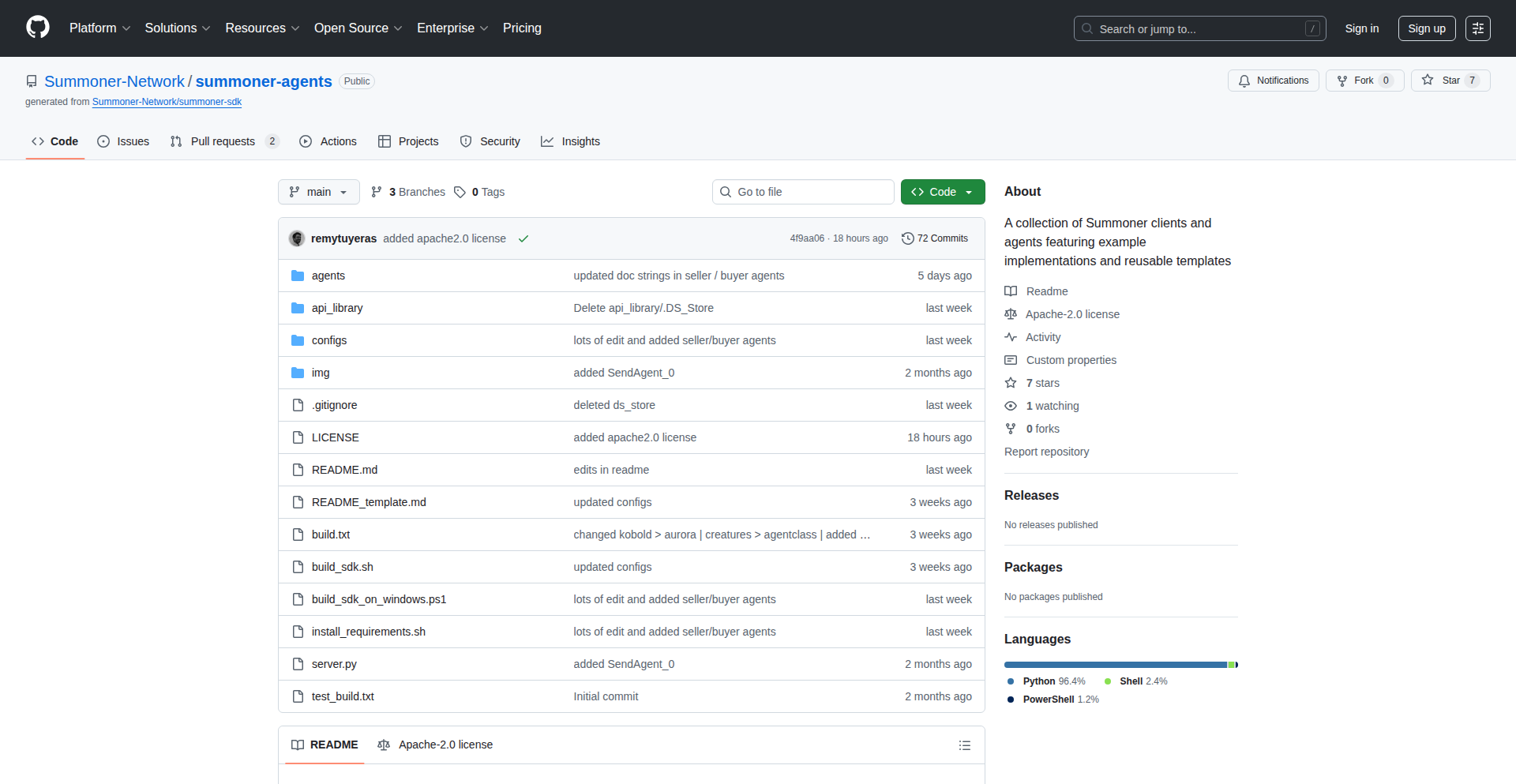

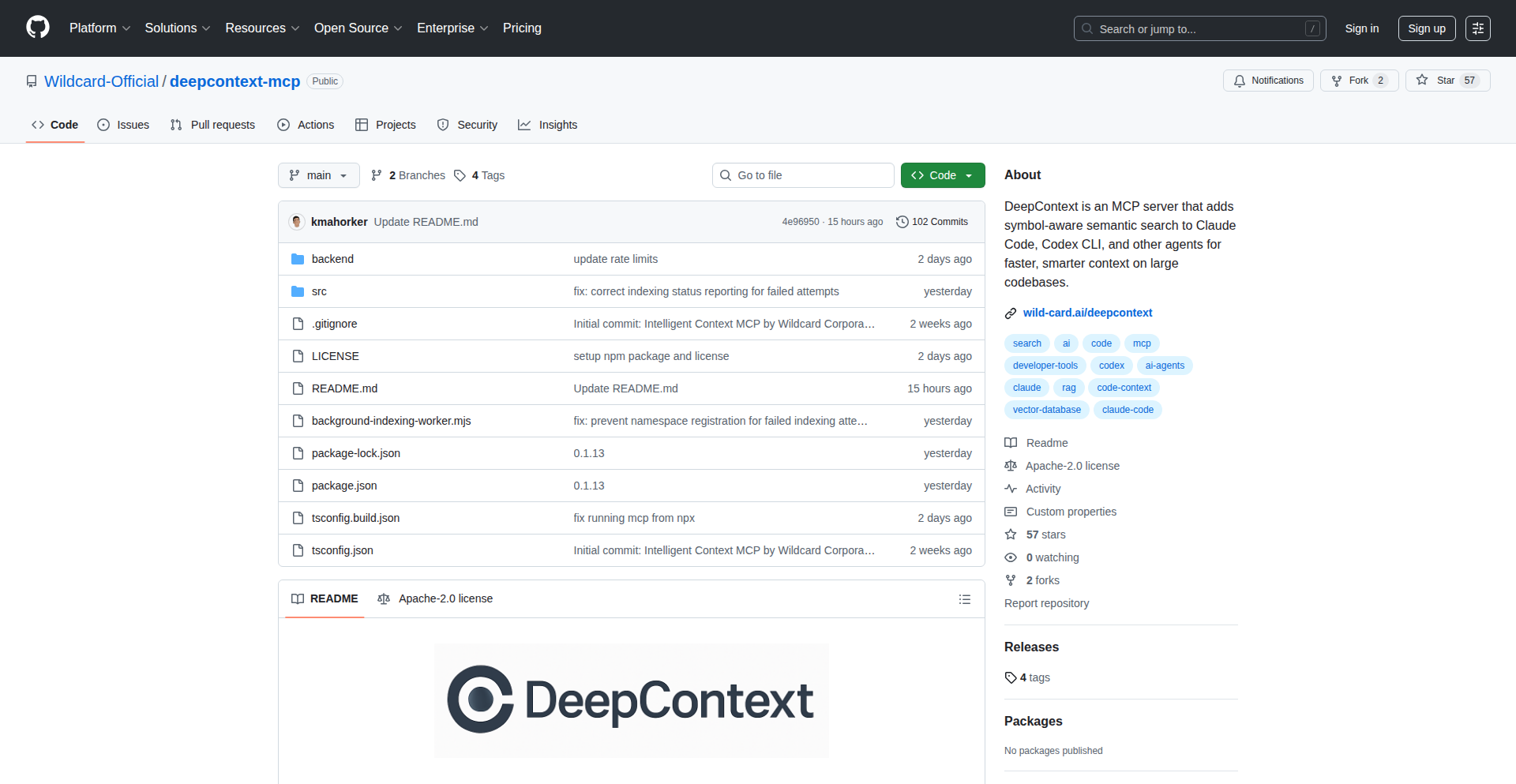

emdash: Parallel Codex Orchestrator

Author

arnestrickmann

Description

emdash is an open-source layer designed to manage and run multiple Codex AI agents concurrently. It addresses the challenge of coordinating numerous AI agents, often scattered across different terminal windows, by providing isolated workspaces for each agent. This isolation simplifies monitoring agent status, identifying bottlenecks, and tracking code changes, thereby boosting productivity and visibility.

Popularity

Points 7

Comments 2

What is this product?

emdash is a system that allows developers to run and control many AI coding assistants (like OpenAI's Codex) at the same time, in an organized way. Normally, when you run multiple AI coding agents, they can get messy and hard to keep track of. emdash creates separate, clean environments for each AI agent. This means you can easily see what each AI is doing – whether it's actively working, stuck on a problem, or what code it has recently generated. It’s like having a dashboard for your AI coding team, making sure everyone is productive and you know exactly what’s happening.

How to use it?

Developers can use emdash by installing it and configuring it to manage their Codex agents. Instead of opening multiple terminal windows and manually starting each AI agent, developers can define their agent configurations within emdash. emdash then launches and manages these agents, presenting them in a clear, organized interface. This allows for easier switching between agents, observing their output in real-time, and understanding the overall progress of AI-assisted coding tasks. It can be integrated into existing development workflows where multiple AI agents are used for various coding tasks like code generation, debugging, or code refactoring.

Product Core Function

· Parallel Agent Execution: Allows multiple Codex agents to run simultaneously, speeding up AI-assisted development because independent tasks no longer wait on one another. This means you can get more AI-generated code or analysis done in the same amount of time.

· Isolated Workspaces: Each agent operates in its own dedicated environment. This prevents interference between agents and makes it easy to distinguish their outputs and states. You can see exactly what each AI is working on without confusion.

· Status Monitoring: Provides a clear overview of each agent's activity, showing whether they are active, idle, or encountering errors. This helps in quickly identifying and resolving issues, and understanding which AI is performing best.

· Change Tracking: Tracks the code and outputs generated by each agent. This makes it simple to review the contributions of individual agents and revert to previous states if needed. You know what code each AI produced and when.

· Resource Management: Offers a structured way to manage the resources consumed by each agent. This helps in optimizing performance and preventing any single agent from hogging system resources.
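The parallel execution, isolated workspaces, and status monitoring described above can be sketched in a few lines. This is a minimal illustration and not emdash's actual implementation: the function names `run_agent` and `run_all`, the one-directory-per-agent layout, and the stand-in commands are all assumptions made for the example.

```python
import subprocess
import sys
import tempfile
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def run_agent(name: str, command: list[str], root: Path) -> dict:
    """Run one agent command inside its own workspace directory (hypothetical layout)."""
    workspace = root / name
    workspace.mkdir(parents=True, exist_ok=True)
    result = subprocess.run(command, cwd=workspace, capture_output=True, text=True)
    return {
        "agent": name,
        "workspace": str(workspace),
        "status": "ok" if result.returncode == 0 else "failed",
        "output": result.stdout.strip(),
    }


def run_all(agents: dict[str, list[str]], root: Path) -> list[dict]:
    """Start every agent concurrently and collect one status report per agent."""
    with ThreadPoolExecutor(max_workers=max(1, len(agents))) as pool:
        futures = [pool.submit(run_agent, name, cmd, root) for name, cmd in agents.items()]
        return [future.result() for future in futures]


if __name__ == "__main__":
    # Stand-in commands; a real setup would launch an agent CLI here instead.
    demo_agents = {
        "backend": [sys.executable, "-c", "print('backend task finished')"],
        "tests": [sys.executable, "-c", "print('test task finished')"],
    }
    with tempfile.TemporaryDirectory() as tmp:
        for report in run_all(demo_agents, Path(tmp)):
            print(report["agent"], report["status"], report["output"])
```

Giving each agent its own working directory is what keeps outputs from colliding, and the per-agent report is the raw material a dashboard would display.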

Product Usage Case

· Scenario: A developer is using several AI agents to generate different parts of a web application, such as a backend API, a frontend component, and unit tests. emdash allows them to run these agents in parallel, with each agent working in its own isolated workspace. Problem Solved: Instead of juggling multiple terminal windows and losing track of which AI is generating what, the developer can monitor all agents from a single interface, see the progress of each component generation, and quickly identify if one agent is stuck, ensuring the entire application development proceeds smoothly.

· Scenario: A team of developers is experimenting with fine-tuning a large language model using multiple Codex agents to explore different training parameters. emdash helps them manage these parallel training runs. Problem Solved: Each training run is isolated, preventing data corruption and making it easy to compare the results of different parameter sets. The status monitoring helps them see which training jobs are completing successfully and which are failing, allowing for rapid iteration and experimentation.

· Scenario: A developer is using AI agents for a complex debugging task, with different agents assigned to analyze different code modules or logs. emdash organizes these agents. Problem Solved: The developer can clearly see which agent is analyzing which part of the system and what findings each agent has produced. This structured approach simplifies the debugging process, allowing the developer to consolidate insights from multiple AI analyses effectively.

9

PromptLead AI

Author

aurelienvasinis

Description

This project is an AI agent designed to automate and enhance B2B lead generation for sales and marketing teams. It leverages natural language prompts to find, curate, enrich, and qualify prospect leads, including identifying key decision-makers and their contact information. The innovation lies in its ability to mimic the manual, highly relevant prospect sourcing process, but at scale, by using AI to build custom lead databases automatically. This means businesses can significantly speed up their outreach efforts and improve the quality of leads they pursue, ultimately driving better sales outcomes.

Popularity

Points 6

Comments 1

What is this product?

PromptLead AI is an intelligent automation tool that acts like a virtual research assistant for B2B lead generation. Instead of manually searching through countless websites, databases, and professional networks to find potential customers, you simply describe the ideal lead you're looking for using plain English prompts. The AI then goes to work, sourcing companies and individuals that match your criteria, gathering relevant information like company size, industry, and technologies used, and even finding direct contact details for the right people within those companies. The core innovation is its sophisticated prompt-to-lead pipeline, which transforms unstructured requests into structured, actionable lead data, a significant leap from traditional, often labor-intensive, lead qualification methods. This makes acquiring high-quality, targeted leads much more efficient and cost-effective.

How to use it?

Developers and sales professionals can use PromptLead AI by visiting the Kuration AI website and signing up for an account. After onboarding, you interact with the AI agent through a user-friendly interface where you input your specific lead generation requirements as natural language prompts. For example, you might prompt: 'Find me marketing managers at SaaS companies in the US with 50-200 employees that use HubSpot.' The AI then processes this prompt, performing web scraping, data enrichment, and qualification to build a custom database of matching leads. The generated lists can be integrated into existing CRM systems (like Salesforce, HubSpot) or sales engagement platforms via exports or potential future API integrations, streamlining the workflow from lead discovery to outreach. The demo video shows this process in action, illustrating how easily you can define and obtain valuable lead data.
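To make the idea of turning a prompt into structured lead data concrete, here is a small sketch of what the structured form of the example prompt above might look like, and how it could filter candidate records. The `LeadCriteria` and `Lead` types, their fields, and the sample records are hypothetical; the product's real data model and pipeline are not described in the source.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LeadCriteria:
    """Hypothetical structured form of a natural language lead request."""
    title_keyword: str
    industry: str
    country: str
    min_employees: int
    max_employees: int
    technology: str


@dataclass(frozen=True)
class Lead:
    """Hypothetical enriched lead record."""
    name: str
    title: str
    industry: str
    country: str
    employees: int
    technologies: tuple[str, ...]


def matches(lead: Lead, criteria: LeadCriteria) -> bool:
    """Return True when a lead satisfies every criterion."""
    return (
        criteria.title_keyword.lower() in lead.title.lower()
        and lead.industry.lower() == criteria.industry.lower()
        and lead.country.lower() == criteria.country.lower()
        and criteria.min_employees <= lead.employees <= criteria.max_employees
        and criteria.technology.lower() in (t.lower() for t in lead.technologies)
    )


def qualify(leads: list[Lead], criteria: LeadCriteria) -> list[Lead]:
    """Keep only the leads that match the criteria."""
    return [lead for lead in leads if matches(lead, criteria)]


# 'Marketing managers at SaaS companies in the US with 50-200 employees that use HubSpot'
criteria = LeadCriteria("marketing manager", "SaaS", "US", 50, 200, "HubSpot")
```

In this framing, the language model's job is to produce something like `criteria` from the prompt; the filtering step that follows is ordinary, auditable code.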

Product Core Function

· AI-powered lead sourcing: The AI automatically searches various online sources to find companies and individuals matching your defined criteria, significantly reducing manual search time. This helps businesses find potential customers they might otherwise miss.

· Data enrichment: The system gathers and adds crucial details to each lead, such as company size, industry, technologies used, and news mentions. This provides a more comprehensive understanding of each prospect, enabling more personalized outreach.

· Decision-maker identification: The AI specifically targets and finds the key individuals within target companies who are most likely to be the decision-makers for your product or service. This ensures your sales efforts are directed to the right people, increasing conversion rates.

· Contact information retrieval: The tool works to find accurate contact details, such as email addresses and sometimes phone numbers, for the identified decision-makers. This directly addresses a major bottleneck in sales outreach, allowing for immediate engagement.

· Customizable lead database generation: The AI builds a unique database tailored to your specific business needs based on your prompts. This ensures that the leads generated are highly relevant and actionable, saving time and resources on unqualified prospects.

Product Usage Case

· A startup founder needs to find potential customers for their new AI-powered marketing analytics tool. They prompt the AI to find CMOs and Marketing Directors at mid-sized e-commerce companies in North America that are using Google Analytics and have recently raised funding. The AI generates a list of qualified leads with contact information, enabling the founder to start their sales outreach immediately and get early feedback.

· A sales development representative (SDR) is tasked with expanding into a new vertical market. They use the AI to identify companies in the healthcare technology sector that are experiencing rapid growth and are looking for solutions to improve patient data management. The AI finds the relevant IT managers and VPs of Operations with their contact details, allowing the SDR to book meetings and initiate conversations.

· A business development manager wants to find potential partners for a new software integration. They prompt the AI to identify companies that develop complementary software solutions, are of a similar size, and have expressed interest in API integrations. The AI provides a curated list of potential partners, complete with company profiles and contact information for their partnership teams, streamlining the partnership outreach process.

10

RustNet: Real-time Network Insight

Author

hubabuba44

Description

RustNet is a terminal-based network monitoring tool built with Rust. It provides a live view of network connections, crucially linking each connection to the specific process generating it and identifying the network protocol being used (like HTTP, HTTPS, DNS, QUIC). It utilizes advanced techniques like eBPF on Linux and PKTAP on macOS for deep packet inspection and process identification, even for very short-lived processes that traditional methods might miss. The software is designed to be a faster, more lightweight alternative to tools like Wireshark for quick network analysis directly from your terminal.

Popularity

Points 4

Comments 2

What is this product?

RustNet is a command-line interface (CLI) application that acts like a smart, real-time network traffic visualizer. Instead of just showing raw network data, it dives deep to figure out *what* is making the network connection (which program) and *how* it's communicating (which protocol, such as web browsing or domain name lookups). Its innovation lies in using kernel-level technologies like eBPF (a way to run safe, sandboxed programs inside the Linux kernel) and PKTAP (a macOS packet-capture interface that attaches process information to captured packets). These methods allow RustNet to accurately identify the process behind a network connection, even if that process starts and stops very quickly, something simpler tools struggle with. It's built using Rust for performance and safety, employing techniques like lock-free data structures to ensure the user interface remains responsive even when processing a lot of network activity simultaneously.

How to use it?

Developers can use RustNet directly in their terminal. After building it from source (using `cargo build --release`) or installing it via Homebrew, you typically run it with administrator privileges (`sudo`) or by granting specific network capabilities. This is because it needs low-level access to network traffic. Once running, you'll see a live, updating list of network connections. You can then easily see which application on your system is using the network, what protocol it's using, and the destination it's communicating with. This is incredibly useful for debugging network issues, identifying unexpected network activity from applications, or understanding how different services on your machine interact with the internet. It can also be used alongside scripts in automated network monitoring or troubleshooting workflows.

Product Core Function

· Deep Packet Inspection: Analyzes network packets to identify specific protocols like HTTP, HTTPS (TLS/SNI), DNS, and QUIC, allowing you to understand the type of communication happening. This is valuable for pinpointing how different applications are using the network.

· Process Identification (Linux/macOS): Leverages eBPF on Linux and PKTAP on macOS to precisely link network connections to the originating processes, including those with very short lifespans. This helps you quickly identify which program is responsible for specific network traffic.

· Real-time TUI Display: Presents network activity in a user-friendly, text-based interface within your terminal, updating dynamically. This provides immediate visibility into your network's state, making it easy to spot anomalies.

· Cross-platform Support: Runs on Linux, macOS, and Windows, offering a consistent network monitoring experience across different operating systems, with advanced process identification features on Linux and macOS.

· Lightweight Performance: Engineered for efficiency using Rust and optimized data structures, RustNet offers a faster and less resource-intensive alternative to heavy graphical network analysis tools, ideal for quick checks on any system.
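The deep packet inspection function listed above boils down to recognizing protocol signatures in the first bytes of a payload. The sketch below is an illustration of that general technique, not RustNet's code: the function name `classify` and the specific heuristics are assumptions, and a real inspector would check far more (for example QUIC headers or the TLS SNI extension).

```python
import struct

# Request lines start with a method and a space; responses start with the version.
HTTP_PREFIXES = (
    b"GET ", b"POST ", b"PUT ", b"DELETE ", b"HEAD ", b"OPTIONS ", b"PATCH ", b"HTTP/1.",
)


def classify(payload: bytes, port: int) -> str:
    """Guess the application protocol from a payload prefix (simplified heuristics)."""
    if payload.startswith(HTTP_PREFIXES):
        return "HTTP"
    # A TLS handshake record starts with content type 0x16 and major version 0x03.
    if len(payload) >= 3 and payload[0] == 0x16 and payload[1] == 0x03 and payload[2] <= 0x04:
        return "TLS"
    # A DNS message has a 12-byte header; bytes 4-5 hold the question count.
    if port == 53 and len(payload) >= 12:
        question_count = int.from_bytes(payload[4:6], "big")
        if question_count >= 1:
            return "DNS"
    return "unknown"


if __name__ == "__main__":
    # Minimal DNS header: id, flags (recursion desired), 1 question, no other records.
    dns_query = struct.pack(">HHHHHH", 0x1234, 0x0100, 1, 0, 0, 0)
    print(classify(b"GET /index.html HTTP/1.1\r\n", 80))
    print(classify(bytes([0x16, 0x03, 0x01, 0x00, 0x2E]), 443))
    print(classify(dns_query, 53))
```

Signature checks like these are cheap, which is part of why a terminal tool can label connections live without the overhead of a full protocol dissector.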

Product Usage Case

· Debugging unexpected network usage: A developer notices their system is consuming a lot of bandwidth. By running RustNet, they can immediately see which specific application is making all the connections and what protocols are being used, enabling them to investigate further.

· Identifying rogue processes: A security-conscious user wants to ensure no unauthorized applications are communicating externally. RustNet can help them spot any unfamiliar processes making network connections.

· Monitoring API interactions: A web developer can use RustNet to see their application making calls to external APIs, verifying that it's using the correct protocols (e.g., HTTPS) and identifying any potential connection errors.

· Analyzing network behavior of short-lived services: For developers working with microservices or serverless functions that start and stop rapidly, RustNet's ability to catch processes using eBPF/PKTAP ensures they don't miss crucial network activity that might otherwise go unnoticed.

11

Orgtools: Scaling Decision Engine

Author

ttruett

Description

Orgtools is decision-making software designed for scaling companies, focusing on structured approaches to complex organizational choices. Its innovation lies in providing a framework to objectively evaluate and select the best path forward, tackling the chaos that often accompanies rapid growth by codifying decision-making processes. This empowers teams to make faster, more informed, and less emotionally-driven choices, ultimately optimizing resource allocation and strategic direction. So, what's in it for you? It helps your company grow without losing its strategic focus, making crucial decisions with clarity and confidence.

Popularity

Points 4

Comments 2

What is this product?

Orgtools is specialized software that helps companies, especially those growing rapidly, make better decisions. It works by providing a structured system to analyze different options and choose the most suitable one. Think of it as a digital assistant for critical business choices, using logic and data to guide the process rather than intuition alone. The innovation here is in translating complex organizational challenges into a systematic evaluation framework, making opaque decision-making transparent and repeatable. So, what's in it for you? It brings order to decision-making chaos, leading to more consistent and effective outcomes as your company expands.

How to use it?

Developers can integrate Orgtools into their existing workflows by leveraging its API or by using its standalone interface for strategic planning sessions. It can be used for various company-level decisions, from choosing new technology stacks to evaluating market entry strategies or restructuring teams. By inputting key criteria, potential outcomes, and associated risks for each decision option, Orgtools helps teams visualize trade-offs and identify the optimal path. So, how can you use it? Imagine your team is deciding between two cloud providers. You'd input factors like cost, performance, scalability, and vendor support for each provider into Orgtools, and it would help you objectively rank them and justify your choice. This makes it a powerful tool for any developer involved in architectural or strategic decisions.
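The cloud provider comparison above is a classic weighted decision matrix, which can be sketched as follows. This illustrates the general technique rather than Orgtools' own API: the function `rank_options`, the weights, and the 1-10 scores are all made-up values for the example.

```python
def rank_options(
    weights: dict[str, float],
    scores: dict[str, dict[str, float]],
) -> list[tuple[str, float]]:
    """Rank options by weighted average score, highest first."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    ranked = []
    for option, option_scores in scores.items():
        missing = set(weights) - set(option_scores)
        if missing:
            raise ValueError(f"{option} is missing scores for: {sorted(missing)}")
        weighted = sum(weights[c] * option_scores[c] for c in weights) / total_weight
        ranked.append((option, round(weighted, 3)))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Hypothetical weights (importance) and scores (1-10) for two providers.
weights = {"cost": 4, "performance": 3, "scalability": 2, "vendor_support": 1}
scores = {
    "Provider A": {"cost": 7, "performance": 8, "scalability": 6, "vendor_support": 9},
    "Provider B": {"cost": 9, "performance": 6, "scalability": 8, "vendor_support": 5},
}

if __name__ == "__main__":
    for option, score in rank_options(weights, scores):
        print(f"{option}: {score}")
```

With these numbers Provider B comes out ahead (7.5 versus 7.3) because cost carries the largest weight; changing the weights is how a team would test whether the ranking is robust.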

Product Core Function

· Decision Matrix Analysis: Allows users to create custom matrices to score and compare different options against predefined criteria, providing a quantitative basis for choice. This is valuable for objective evaluation and demonstrating the rationale behind decisions, crucial for team alignment and investor confidence.

· Scenario Planning: Enables the modeling of various future scenarios and their potential impact on different decision outcomes, helping to anticipate challenges and opportunities. This foresight allows for proactive strategy adjustments, ensuring your company is prepared for different futures.

· Stakeholder Impact Assessment: Facilitates the analysis of how different decisions will affect various internal and external stakeholders, promoting more inclusive and well-rounded choices. Understanding stakeholder impact leads to smoother implementation and broader buy-in.

· Data-driven Insights Generation: Processes input data to provide visualizations and summaries that highlight key trade-offs and optimal decision paths, simplifying complex information for actionable insights. This helps you quickly grasp the core of the decision problem and its potential solutions.

Product Usage Case

· A rapidly growing startup needs to decide on its next major product feature. By using Orgtools, the product team can define criteria like market demand, development effort, and potential revenue for each feature, objectively selecting the most impactful one. This prevents wasted development cycles on less promising ideas.

· A company considering expanding into a new international market can use Orgtools to evaluate different market entry strategies (e.g., direct sales, partnerships, acquisitions) based on factors like regulatory environment, competitive landscape, and logistical costs. This leads to a data-backed decision on the most viable market entry plan.

· An engineering team needs to choose between migrating to a new database technology or upgrading their current system. Orgtools can be used to compare performance improvements, migration complexity, long-term costs, and team expertise for each option, ensuring the most efficient and scalable solution is chosen.

12

AgentSea: Secure AI Chat for Sensitive Workflows

Author

miletus

Description

AgentSea is a privacy-focused AI chat application designed for handling sensitive information. It addresses the critical concern of data leakage and unauthorized training when using commercially available AI models. By enabling users to run open-source models locally or on dedicated servers, AgentSea ensures that confidential data like contracts, health records, and financial information remains private and is never used for training external AI models. It also provides access to a wide range of community-built AI agents and integrates with popular tools like Reddit, X, and YouTube for enhanced functionality.

Popularity

Points 5

Comments 0

What is this product?

AgentSea is a secure AI chat platform that prioritizes user data privacy. Unlike many commercial AI services where your conversations might be stored, used for model training, or shared with third parties, AgentSea offers a 'Secure Mode'. In this mode, all AI interactions are handled by open-source models running either on your local machine or on AgentSea's private servers. This means your sensitive data, such as legal documents, personal health information, or proprietary business secrets, is never exposed to external training pipelines or data breaches. It's like having a personal, highly capable AI assistant that you can trust with your most confidential information, built with the principle of 'your data stays yours'.

How to use it?

Developers can integrate AgentSea into their workflows by leveraging its secure chat environment for tasks involving sensitive data. For example, instead of pasting confidential code snippets or internal company documents into a public AI chatbot for analysis, a developer can use AgentSea. This ensures that proprietary algorithms or trade secrets are not inadvertently shared or fed into models that could expose them. AgentSea also allows developers to access and utilize specialized community-built AI agents that can perform specific tasks, such as code analysis, data summarization, or API interaction, all within a secure and private context. Integration could involve using AgentSea as a secure backend for internal tools or as a standalone platform for handling sensitive research and development queries.

Product Core Function

· Secure AI Chat: Enables private AI interactions by running models locally or on dedicated servers, preventing data from being used for external training or exposed to third parties. This is valuable for developers handling proprietary code or sensitive project details.

· Open-Source Model Support: Allows the use of a variety of open-source AI models, giving developers flexibility and control over their AI tools without compromising data privacy.

· Community Agent Ecosystem: Provides access to a curated collection of specialized AI agents built by the community, allowing developers to extend AI capabilities for tasks like code review, documentation generation, or system monitoring.

· External Tool Integration: Connects with popular tools like Reddit, X (formerly Twitter), and YouTube, enabling developers to perform research, gather insights, or monitor relevant information securely within the AgentSea environment.

· Data Leakage Prevention: Directly tackles the risk of data being stored, trained on, or shared by closed-source AI models, offering peace of mind when working with confidential business or personal data.

Product Usage Case

· A software engineer uses AgentSea to analyze a proprietary codebase for bugs or security vulnerabilities. By running the analysis on AgentSea, they ensure that their company's intellectual property remains secure and is not exposed to external AI model training.

· A legal professional drafts sensitive contract clauses or reviews confidential documents using AgentSea. This prevents the accidental leakage of client data or privileged information that could occur with public AI chatbots.

· A financial analyst uses AgentSea to process and summarize sensitive financial reports or market data. The secure environment guarantees that confidential financial information is protected from unauthorized access or use in AI model training.

· A researcher working with sensitive patient data uses AgentSea for analysis and insight generation. This ensures compliance with privacy regulations and protects patient confidentiality.

13

TermChat: SSH-Powered Textual Communication

Author

unkn0wn_root

Description

TermChat is a novel chat application that leverages the ubiquitous Secure Shell (SSH) protocol to enable text-based communication directly from your terminal. It sidesteps the need for heavy desktop clients or web apps by using SSH as its transport layer, offering a lightweight, dependency-free way to connect. This project solves the problem of bloated communication tools by providing a minimalist, efficient chat experience that requires only an SSH client, which is already installed on most developer machines. Its innovation lies in repurposing a standard network protocol for real-time chat, showcasing a clever application of existing technology for a new purpose.

Popularity

Points 3

Comments 2

What is this product?

TermChat is a chat application that allows users to communicate with each other using only their terminal and the SSH protocol. Instead of installing a separate chat application, you can connect to TermChat by simply typing `ssh termchat.me` into your terminal. The innovation here is using SSH, a tool most developers already have installed and are familiar with, as the foundation for a chat service. This eliminates the need for installing additional software, making it incredibly accessible and lightweight. It's built on the idea that simple, text-based communication shouldn't require complex setups.

How to use it?

Developers can use TermChat by having an SSH client installed on their machine (which is standard on Linux, macOS, and available for Windows). To join, they simply type `ssh termchat.me` in their terminal. Once connected, they can use commands like `/register` to create an account and `/login` to sign in. They can then create or join public and private chat rooms, send direct messages (DMs) to other users, and switch between conversations using tabs or keyboard navigation (like 'hjkl' or arrow keys). This makes it easy to integrate into a developer's existing workflow, allowing them to chat without leaving their familiar terminal environment.

Product Core Function

· SSH-based connection: Enables users to connect to the chat service using only an SSH client, which is pre-installed on most systems. This bypasses the need for downloading and installing separate applications, offering a highly accessible and lightweight entry point for communication.

· Public and Private Rooms: Users can create and join public chat rooms for group discussions or create private rooms for more focused conversations. This provides flexibility for different communication needs, allowing for both broad community interaction and secure, targeted discussions.

· Direct Messaging (DMs): Supports private one-on-one conversations between users. This feature is crucial for discreet communication or specific user interactions, enhancing the utility of the platform for personal or project-specific exchanges.

· Username/Password Authentication: Offers a simple registration and login process using just a username and password, without requiring email verification. This minimalist approach aligns with the project's goal of simplicity and speed, reducing friction for new users.

· End-to-end encryption for private chats and rooms: Ensures that private conversations and room messages are encrypted, both at rest and in transit. This provides a layer of security and privacy for sensitive discussions, assuring users that their conversations are protected.

· Terminal-based interface: Provides a user experience entirely within the terminal, allowing for efficient navigation and interaction using keyboard commands. This appeals to developers who prefer command-line interfaces and want to streamline their workflow without context switching.

Product Usage Case

· A developer working on a project can quickly create a private room for their team to discuss urgent issues without leaving their terminal window. This streamlines communication and keeps all project-related conversations in one place, directly within their development environment.

· A programmer who wants to ask a quick question to a colleague can send a direct message via TermChat. This avoids the overhead of opening a separate chat application or sending an email, leading to faster response times and increased productivity.

· A user who prefers a minimalist, text-only experience for communication can join public rooms on TermChat to discuss topics of interest without being distracted by graphical interfaces or unnecessary features. This caters to users who value efficiency and a clutter-free interaction.

· A system administrator can use TermChat to communicate with other administrators in a secure and lightweight manner, even from a remote server where installing additional software might be problematic. The SSH dependency makes it ideal for server-side communication.

14

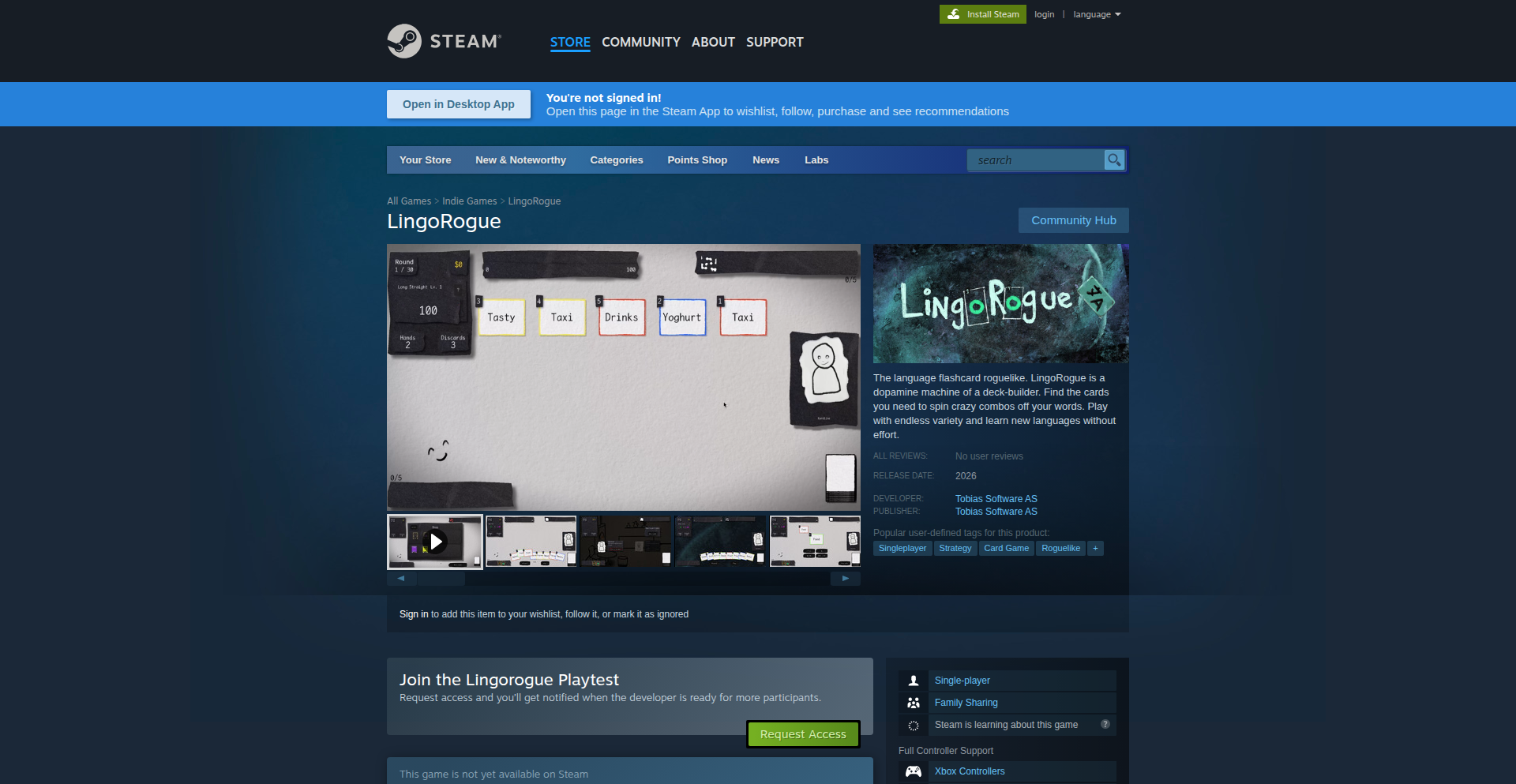

Lexi Roguelike Deckbuilder

Author

boxedsound

Description

A roguelike deckbuilding game that gamifies language learning. It combines addictive gameplay mechanics with flashcards to make vocabulary acquisition effortless and fun. The innovation lies in transforming rote memorization into a dynamic, strategic experience.

Popularity

Points 2

Comments 3

What is this product?

This is a roguelike deckbuilding game where players build decks of 'super cards' and 'flashcards' to learn new vocabulary. The core innovation is integrating language flashcards directly into the game's combat and progression system. Instead of just seeing a word, players interact with it, forming word combos and scoring points by playing cards with letters that form target vocabulary. This turns passive learning into an active, engaging experience, similar to popular deckbuilders such as Slay the Spire, but with a focus on language acquisition.

How to use it?

Developers can use this as a highly engaging platform to learn new languages or vocabulary lists. By creating custom decks, users can tailor their learning experience to specific subjects or difficulty levels. The game is designed to be playable directly, with a focus on seamless integration of flashcard mechanics into the gameplay loop. Think of it as learning Spanish while battling monsters, where successfully recalling vocabulary powers your attacks and unlocks new abilities.

Product Core Function

· Vocabulary Integration: Flashcards are transformed into playable game cards, allowing players to directly use words in strategic gameplay. This makes remembering vocabulary feel less like studying and more like mastering a game mechanic.

· Deckbuilding for Language: Players construct decks of language cards, enabling them to practice specific word sets or grammar rules. This provides a structured yet flexible approach to targeted vocabulary learning.

· Roguelike Progression: The game features procedurally generated challenges and persistent progression, encouraging repeated engagement and reinforcing learned vocabulary over time. Each playthrough offers a new learning path and challenge.

· Combo System: Players can create word combos by strategically playing cards with specific letters, rewarding deeper understanding and application of vocabulary. This encourages actively thinking about word construction and meaning.

· Gamified Learning Loop: The combination of combat, progression, and vocabulary practice creates a highly addictive learning loop. You're motivated to learn more words to improve your in-game performance, which in turn helps you learn more words.
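The combo idea above, playing letter cards that spell target vocabulary, reduces to a simple check: does the hand contain enough of each letter? The sketch below shows that check only; the function names and the sample hand are assumptions, and the game's actual rules and scoring are not documented in the source.

```python
from collections import Counter


def can_spell(hand: list[str], word: str) -> bool:
    """Return True if the letter cards in hand can spell the word."""
    needed = Counter(word.lower())
    available = Counter(letter.lower() for letter in hand)
    return all(available[letter] >= count for letter, count in needed.items())


def playable_words(hand: list[str], vocabulary: list[str]) -> list[str]:
    """List the target words the current hand can form, in vocabulary order."""
    return [word for word in vocabulary if can_spell(hand, word)]


if __name__ == "__main__":
    hand = ["t", "a", "c", "h", "s"]
    french_targets = ["chat", "sac", "chien"]
    print(playable_words(hand, french_targets))
```

Counting letters rather than checking set membership matters for words with repeated letters, where a single card should not be allowed to count twice.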

Product Usage Case

· Language Learners: A student struggling with French vocabulary can build a deck of French nouns and verbs, then play through a roguelike dungeon, using their word knowledge to defeat enemies and progress. This makes the learning process enjoyable and rewarding.

· Educators: A teacher could create custom decks for their students to practice specific scientific terms or historical dates, turning revision into an interactive game. This offers a novel way to reinforce classroom learning.

· Developers learning a new programming language's keywords: While the current focus is on natural language, the underlying mechanic could be adapted. Imagine a game where you 'cast spells' using programming keywords to solve puzzles, thereby learning syntax and common functions.

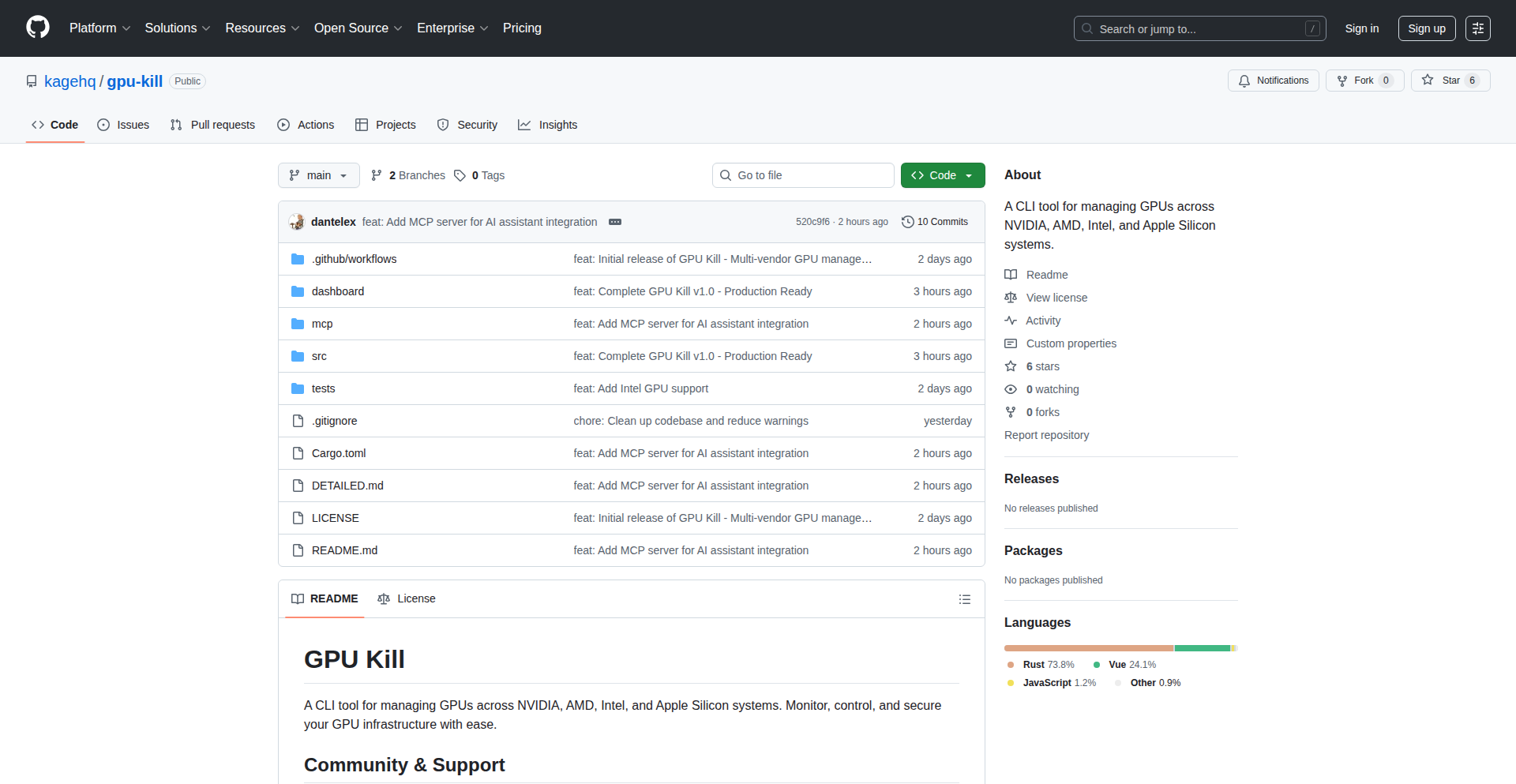

15

GPU Guardian CLI

Author

lexokoh

Description

GPU Guardian CLI is a command-line tool designed to forcefully terminate stuck GPU processes without requiring a system reboot. It leverages low-level system interactions to identify and kill unresponsive GPU jobs, offering a crucial utility for developers and researchers who frequently encounter frozen computations on their GPUs.

Popularity

Points 5

Comments 0

What is this product?

GPU Guardian CLI is a command-line interface (CLI) tool that acts as a troubleshooter for your graphics processing unit (GPU). GPUs are powerful processors often used for intensive tasks like machine learning or complex simulations. Sometimes, these tasks can freeze or become unresponsive. Instead of the drastic measure of restarting your entire computer, this tool provides a targeted way to identify and terminate those problematic GPU processes. It achieves this by interacting directly with the operating system's process management and GPU driver interfaces, allowing it to forcefully end runaway GPU tasks.

How to use it?

Developers and users can execute GPU Guardian CLI from their terminal. After identifying a frozen GPU job (often through visual cues or error messages from their applications), they can run the tool with specific commands to list active GPU processes and then select the one to terminate. This is particularly useful in environments where a system reboot would interrupt ongoing, long-running experiments or data processing, saving valuable time and preventing data loss. It can be integrated into scripts for automated monitoring and recovery of GPU workloads.

Product Core Function

· Identify stuck GPU processes: The tool scans active processes and flags those associated with GPU utilization that appear unresponsive, enabling quick diagnosis.

· Forceful process termination: It provides a mechanism to send a kill signal directly to problematic GPU processes, ensuring they are stopped even if they don't respond to normal termination requests.

· Non-disruptive operation: By targeting specific processes, it avoids the need to restart the entire system, allowing other applications and ongoing work to continue uninterrupted.

· Command-line interface: Its CLI nature makes it scriptable and easily callable from other automation tools, fitting seamlessly into development workflows.
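The identify-then-terminate flow described above can be sketched in a few lines. This is a minimal illustration, not GPU Guardian CLI's actual implementation: it assumes an NVIDIA GPU with `nvidia-smi` on the PATH and a POSIX system, and the function names (`parse_compute_apps`, `list_gpu_processes`, `force_kill`) are hypothetical.

```python
import os
import signal
import subprocess

# These query flags are standard nvidia-smi options; the rest is illustrative.
QUERY = [
    "nvidia-smi",
    "--query-compute-apps=pid,process_name,used_memory",
    "--format=csv,noheader,nounits",
]


def parse_compute_apps(csv_text):
    """Parse nvidia-smi CSV rows into (pid, name, used_memory_mib) tuples."""
    apps = []
    for line in csv_text.strip().splitlines():
        if line.count(",") < 2:
            continue  # skip blank or unexpected rows
        # The pid is the first field and memory the last, so a process
        # name that itself contains a comma is still kept in one piece.
        pid, rest = line.split(",", 1)
        name, mem = rest.rsplit(",", 1)
        pid, name, mem = pid.strip(), name.strip(), mem.strip()
        if not pid.isdigit():
            continue
        # Memory can be reported as a non-numeric placeholder.
        apps.append((int(pid), name, int(mem) if mem.isdigit() else None))
    return apps


def list_gpu_processes():
    """Ask nvidia-smi for the processes currently holding a compute context."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_compute_apps(result.stdout)


def force_kill(pid):
    """Send SIGKILL, which the target process cannot catch or ignore."""
    os.kill(pid, signal.SIGKILL)
```

Deciding whether a listed process is actually stuck is left to the user here. Note also that a process blocked inside a driver call in uninterruptible sleep may not exit until that call returns, even after SIGKILL.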

Product Usage Case

· Machine learning training: A researcher is running a deep learning model training that freezes. Instead of rebooting and losing hours of training progress, they use GPU Guardian CLI to kill the stuck training process and restart it efficiently.

· CUDA or OpenCL development: A developer working with parallel computing frameworks encounters an infinite loop in their GPU kernel. GPU Guardian CLI allows them to terminate the process running the rogue kernel without losing their entire development session.

· Interactive data visualization: An analyst is working with a large dataset that causes their GPU-accelerated visualization tool to hang. GPU Guardian CLI quickly frees up the GPU resources, allowing them to continue their analysis.

16

NaturalUML

Author

ivonellis

Description

NaturalUML is a user-friendly, AI-powered PlantUML editor that transforms plain English descriptions into visual diagrams with instant live previews. It streamlines the process of creating diagrams from ideation to visual representation, eliminating the frustration of complex editor interfaces. Key technologies include Next.js for the frontend, Supabase for backend services, Fly.io for deployment, and Vercel for hosting. This project democratizes diagram creation, making it accessible to everyone.

Popularity

Points 4

Comments 1

What is this product?

NaturalUML is a web application that allows users to create diagrams using simple English sentences. It leverages Natural Language Processing (NLP) to interpret these descriptions and automatically generate the corresponding PlantUML code, which is then rendered into a visual diagram in real-time. This bypasses the steep learning curve often associated with traditional diagramming tools and their specific syntax, offering a more intuitive and efficient workflow. The core innovation is the AI's ability to translate loosely worded ideas into structured PlantUML code, which makes technical diagramming more accessible.

How to use it?

Developers can use NaturalUML by simply typing their diagram requirements in plain English into the provided text area. For example, to create a sequence diagram showing a user logging in, one might type: 'User initiates login. The system validates credentials. If valid, the system displays the dashboard.' As the user types, the diagram preview updates instantly. The generated PlantUML code can be easily copied, and the final diagrams can be exported in various formats like SVG, PNG, or even the raw TXT for further modification or integration. Projects can be organized for managing multiple diagrams, and sharing via links with comment capabilities facilitates collaborative review and feedback, making it ideal for software architecture discussions, brainstorming sessions, or documentation.
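To make the target format concrete, here is a rough sketch of the PlantUML sequence-diagram text that the login description above might map to. The helper `to_plantuml_sequence` and the hand-written `login_flow` list are hypothetical; they stand in for the structured output of the interpretation step and say nothing about how NaturalUML's AI works internally.

```python
def to_plantuml_sequence(messages):
    """Render (sender, receiver, text) triples as PlantUML sequence-diagram source."""
    lines = ["@startuml"]
    for sender, receiver, text in messages:
        # "A -> B: label" is PlantUML's basic message arrow syntax.
        lines.append(f"{sender} -> {receiver}: {text}")
    lines.append("@enduml")
    return "\n".join(lines)


# Hand-written stand-in for what an interpretation step might extract.
login_flow = [
    ("User", "System", "initiate login"),
    ("System", "System", "validate credentials"),
    ("System", "User", "display dashboard"),
]

print(to_plantuml_sequence(login_flow))
```

The "If valid" branch is left out for brevity; in PlantUML it would typically be expressed with an `alt` ... `end` grouping around the final message.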

Product Core Function

· Natural-language to PlantUML conversion: Allows users to describe diagrams in plain English, making diagram creation intuitive and fast. The AI interprets natural language and generates the necessary PlantUML code, significantly reducing the time and effort required compared to manual coding.

· Live Diagram Preview: Provides instant visual feedback as the user types their description. This immediate rendering helps users quickly identify and correct any misinterpretations of their intent, ensuring the final diagram accurately reflects their vision.

· Multiple Export Formats: Supports exporting diagrams as SVG, PNG, and TXT. This flexibility allows users to integrate diagrams into various documents, presentations, or version control systems, catering to diverse workflow needs.

· Project Organization: Enables users to group related diagrams into projects. This feature is invaluable for managing complex systems or maintaining a coherent set of diagrams for a specific initiative, improving workflow efficiency and organization.

· Shareable Links with Comments: Facilitates collaboration by allowing users to share their diagrams via unique links and enable comments for peer review. This is crucial for teams to discuss, refine, and approve diagrams collectively, fostering a more dynamic and efficient design process.

Product Usage Case

· Software Architecture Visualization: A developer can describe a microservices architecture in English, such as 'Service A calls Service B, which then accesses Database X', and instantly see a visual representation of these interactions. This helps in quickly communicating complex system designs to team members or stakeholders who may not be familiar with specific diagramming syntaxes.

· Brainstorming Flowcharts: A product manager can outline a user workflow for a new feature using natural language, like 'User clicks button. System shows loading spinner. If successful, display results. If error, show error message', and get an immediate flowchart. This speeds up the initial conceptualization and validation phases of product development.

· API Interaction Diagrams: For API documentation, a developer can describe the request-response flow between a client and an API endpoint. For instance: 'Client sends POST request to /users. Server processes data. If validation passes, server returns 201 Created. Otherwise, returns 400 Bad Request.' This makes it easy to create clear, visual explanations of API interactions.

· Educational Content Creation: Educators can quickly generate diagrams for teaching programming concepts or system designs. By describing a data structure or an algorithm in simple terms, they can produce clear visual aids for students without needing advanced technical skills in diagramming tools.

17

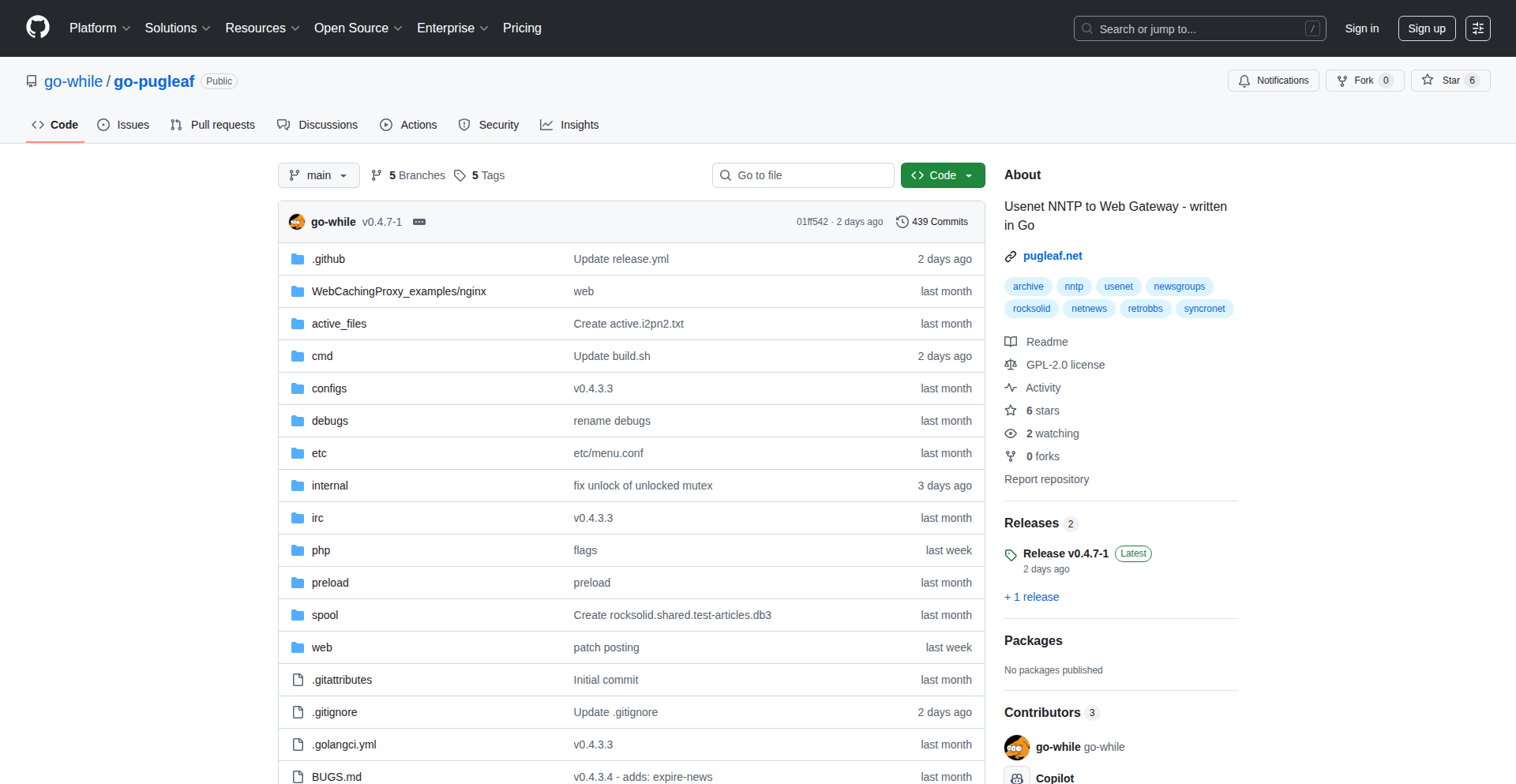

Go-Pugleaf: Usenet Web Gateway

Author

newhuser

Description

Go-Pugleaf is a modern web gateway for Usenet newsgroups, written in Go. It allows users to access and interact with Usenet content through a web browser, making the legacy Usenet protocol accessible to a wider audience. The project's core innovation lies in its efficient Go implementation of the NNTP (Network News Transfer Protocol) to web interface translation, providing a familiar and user-friendly experience for accessing historical and ongoing Usenet discussions.

Popularity

Points 3

Comments 1

What is this product?

Go-Pugleaf is a software project that bridges the gap between the old-school Usenet newsgroup system and the modern web. Usenet is a bit like an early internet discussion forum, but it uses a different communication method called NNTP. Most people today interact with discussions through websites. Go-Pugleaf takes the information from Usenet and presents it in a way that looks and feels like a normal website. The technical innovation here is building this bridge using the Go programming language, known for its speed and efficiency. This means it can handle a lot of Usenet data and serve it to users quickly without needing complex setup.

How to use it?

Developers can use Go-Pugleaf to set up their own web-accessible Usenet server. You can run it as a standalone application on your own server or integrate it into existing web applications. Setup typically involves configuring it to connect to a Usenet server (or to act as one) and then accessing the newsgroups through a web browser. This is particularly useful for archiving Usenet content, building custom interfaces for specific newsgroups, or providing a more accessible way for people to browse historical discussions without needing specialized NNTP client software.

Product Core Function

· NNTP to Web Translation: Efficiently converts Usenet articles and discussions from the NNTP protocol into a web-browsable format, making it accessible via a standard browser. This offers a familiar interface for users accustomed to web forums.

· Go Implementation for Performance: Leverages the Go programming language to provide high performance and concurrency, enabling smooth handling of large volumes of Usenet data and concurrent user requests. This means faster loading of discussions and a more responsive experience.

· Usenet Content Access: Enables users to read, post, and manage Usenet articles through a web interface, bypassing the need for traditional NNTP client software. This democratizes access to Usenet content.

· Customizable Interface: While the core functionality is translation, the web gateway architecture leaves room for customizing the user interface, so you can adapt how discussions look and feel.

· Archival and Integration Capabilities: Can be used to archive Usenet conversations or integrate Usenet content into other web-based platforms. This is valuable for preserving historical data or enriching other online communities.
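The NNTP-to-web translation step can be illustrated with a small sketch. Go-Pugleaf itself is written in Go; the example below is in Python and is not taken from the project. It assumes the raw article text has already been fetched from a news server, and the function name `article_to_html` and the markup it emits are hypothetical.

```python
from email import message_from_string
from html import escape


def article_to_html(raw_article):
    """Turn one raw Usenet article (headers, blank line, body) into an HTML fragment."""
    # Usenet articles use the same header/body layout as email,
    # so the standard library email parser can read them.
    msg = message_from_string(raw_article)
    subject = escape(msg.get("Subject", "(no subject)"))
    author = escape(msg.get("From", "(unknown)"))
    groups = escape(msg.get("Newsgroups", ""))
    if msg.is_multipart():
        body = "(multipart article not rendered in this sketch)"
    else:
        body = escape(msg.get_payload())
    # Everything taken from the article is escaped before it reaches the page.
    return (
        "<article>\n"
        f"<h1>{subject}</h1>\n"
        f"<p>From: {author} | Newsgroups: {groups}</p>\n"
        f"<pre>{body}</pre>\n"
        "</article>"
    )


raw = (
    "From: Ada <ada@example.org>\n"
    "Newsgroups: comp.lang.misc\n"
    "Subject: Hello <world>\n"
    "\n"
    "First line & more.\n"
)
print(article_to_html(raw))
```

A real gateway would also need to decode encoded header words and character sets, and to thread replies (for example via the `References` header); those steps are omitted here.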

Product Usage Case

· A developer wants to archive a specific set of Usenet newsgroups for historical research. They can set up Go-Pugleaf to connect to these newsgroups and create a searchable web archive, making the information readily available without needing to run an NNTP client.

· A community manager wants to integrate discussions from a niche Usenet group into their existing community forum. Go-Pugleaf can be used to pull this content and display it within the forum, creating a unified discussion space.

· An individual wants to access Usenet discussions on their mobile device without installing a dedicated newsreader app. By running Go-Pugleaf, they can access all the content through their mobile browser, offering convenience and broader device compatibility.

· A team is building a new platform and wants to incorporate historical Usenet data. Go-Pugleaf can act as a data provider, fetching and formatting Usenet content that can then be used by the new platform's backend.

· A retro computing enthusiast wants to revive access to old Usenet discussions from the 1990s. Go-Pugleaf can be configured to serve these historical archives via a web interface, making them accessible again to a new generation.

18

MonkeyC BikeApp Forge

Author

donttrunright

Description

This project showcases the creation of a custom application for bike computers using Monkey C, a programming language designed for Garmin devices. It highlights the innovation of extending device functionality and creating tailored user experiences for cyclists, moving beyond pre-installed apps. The core technical insight lies in leveraging a niche language to unlock new possibilities on specialized hardware.

Popularity

Points 4

Comments 0

What is this product?

This project demonstrates how to build a custom application for Garmin bike computers using Monkey C. Monkey C is a specialized, object-oriented programming language developed by Garmin for its Connect IQ platform. The innovation here is in enabling developers to create unique functionalities and interfaces that aren't available in standard bike computer software. This allows for highly specific data display, custom training metrics, or integration with external sensors tailored precisely to a cyclist's needs.

How to use it?