Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-09-18

SagaSu777 2025-09-19

Explore the hottest developer projects on Show HN for 2025-09-18. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN submissions paint a vibrant picture of innovation, heavily influenced by the transformative power of AI and the enduring quest for developer efficiency. We're seeing a clear trend towards leveraging Large Language Models (LLMs) not just for content generation, but for building entire applications with minimal human input, as exemplified by 'manyminiapps'. This suggests a future where complex software can be rapidly prototyped and deployed, democratizing creation. Simultaneously, there's a strong undercurrent of building specialized tools that enhance AI agent capabilities, manage AI workflows, and integrate AI into existing systems, like Nanobot for turning MCP servers into agents or Kortyx for providing a personal memory layer. Developers are also focusing on core infrastructure challenges, from novel multi-tenant database solutions using Postgres to distributed rate limiting systems and secure server access without traditional keys, showcasing a pragmatic approach to scaling and security. The abundance of open-source projects across these domains underscores the community's commitment to sharing knowledge and fostering collaborative innovation. For aspiring creators and developers, this means an opportunity to tap into powerful AI primitives, build highly specialized tools that solve specific pain points, and contribute to a more robust and accessible technological ecosystem. Embrace the hacker spirit by identifying an inefficiency or a complex problem, and imagine how these emerging AI and infrastructure technologies can be creatively applied to build something new and valuable.

Today's Hottest Product

Name

manyminiapps: One Prompt Generates an App with its Own Database

Highlight

This project showcases the power of Large Language Models (LLMs) to create functional mini-apps with persistent data, directly from a simple text prompt. The core technical innovation lies in their multi-tenant graph database built on a single Postgres instance, using an Entity-Attribute-Value (EAV) model. To overcome the performance challenges typically associated with EAV tables in Postgres, they implemented a custom statistics system leveraging count-min sketches and `pg_hint_plan` to guide query optimization. Developers can learn about novel database architectures for multi-tenancy, advanced Postgres tuning techniques, and practical strategies for prompt engineering with LLMs.
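A count-min sketch is a standard fixed-memory structure for approximate frequency counts. The post does not say how manyminiapps connects it to Postgres, so the following is only a minimal Python sketch of the general idea: count how often each (attribute, value) pair occurs, then turn that estimate into a `pg_hint_plan` comment. The `triples` table name, the 5% threshold, and the `scan_hint` helper are hypothetical. Only the `/*+ IndexScan(...) */` and `/*+ SeqScan(...) */` hint syntax comes from `pg_hint_plan` itself.

```python
import hashlib


class CountMinSketch:
    """Approximate frequency counts in fixed memory (never underestimates)."""

    def __init__(self, width=2048, depth=4):
        self.width = width
        self.depth = depth
        self.rows = [[0] * width for _ in range(depth)]

    def _cells(self, key):
        # One hash per row: salt the key with the row number before hashing.
        for r in range(self.depth):
            digest = hashlib.blake2b(f"{r}:{key!r}".encode(), digest_size=8).digest()
            yield r, int.from_bytes(digest, "big") % self.width

    def add(self, key, count=1):
        for r, c in self._cells(key):
            self.rows[r][c] += count

    def estimate(self, key):
        # Collisions only inflate counters, so the minimum is the tightest bound.
        return min(self.rows[r][c] for r, c in self._cells(key))


def scan_hint(sketch, total_rows, attribute, value, table="triples", threshold=0.05):
    """Hypothetical helper: choose a pg_hint_plan comment for `attribute = value`."""
    selectivity = sketch.estimate((attribute, value)) / max(total_rows, 1)
    if selectivity < threshold:
        return f"/*+ IndexScan({table}) */"  # rare value: an index lookup is cheap
    return f"/*+ SeqScan({table}) */"  # common value: scanning is likely cheaper
```

Because hash collisions can only add to a counter, the estimate errs toward overcounting, which biases ambiguous values toward the sequential-scan hint rather than an index scan that would touch most of the table.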

Popular Category

AI & Machine Learning

Web Development

Databases

Developer Tools

SaaS

Popular Keyword

LLM

AI Agents

Databases

Postgres

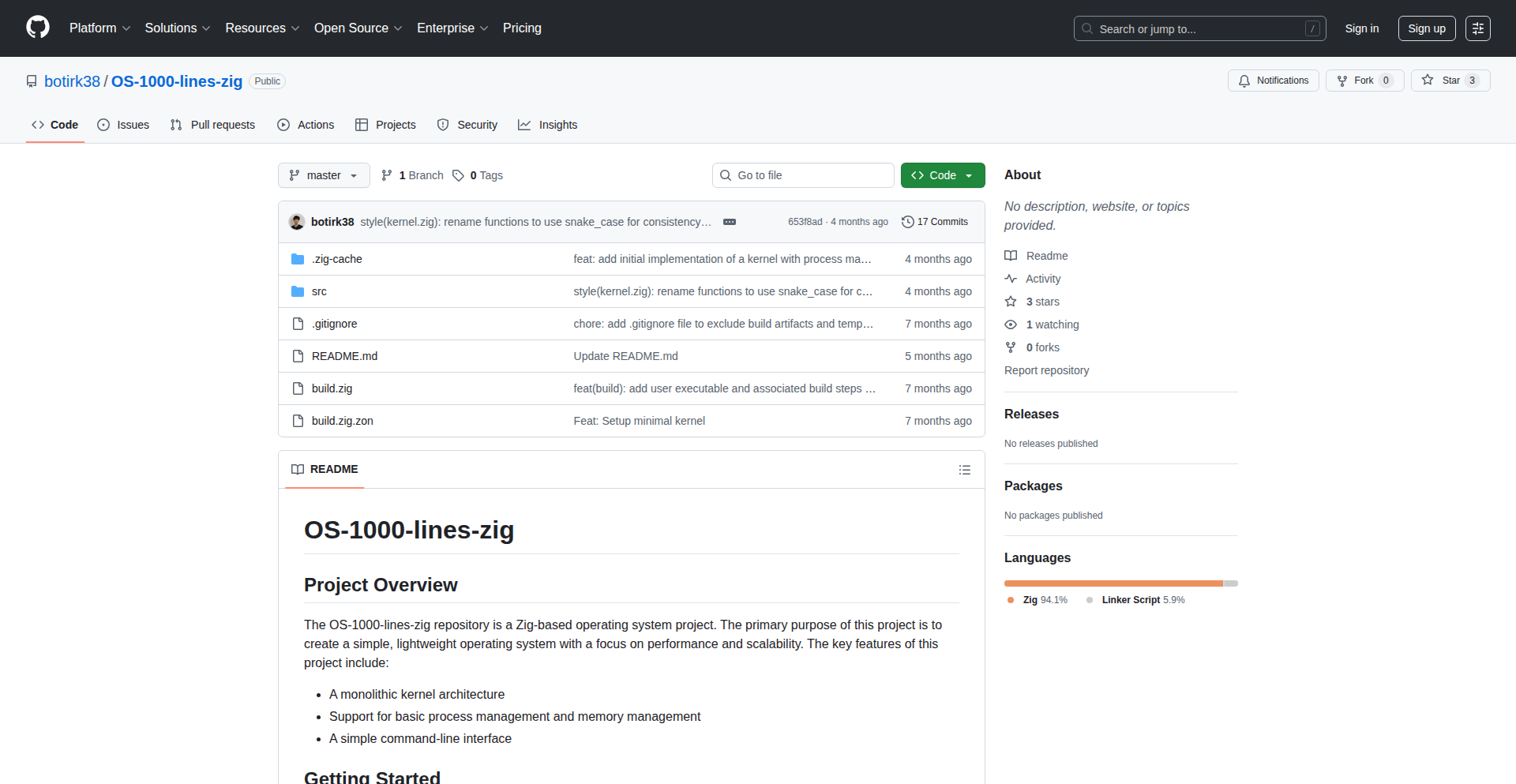

Open Source

Prompt Engineering

API

Technology Trends

LLM-driven Application Generation

Decentralized and Privacy-Focused Solutions

AI Agent Orchestration and Management

Novel Database Architectures for Scalability

Developer Productivity Tools

Creative AI Applications (Video, Music, Art)

Enhanced Configuration Management

Serverless and Edge Computing

Modernizing Legacy Systems

Project Category Distribution

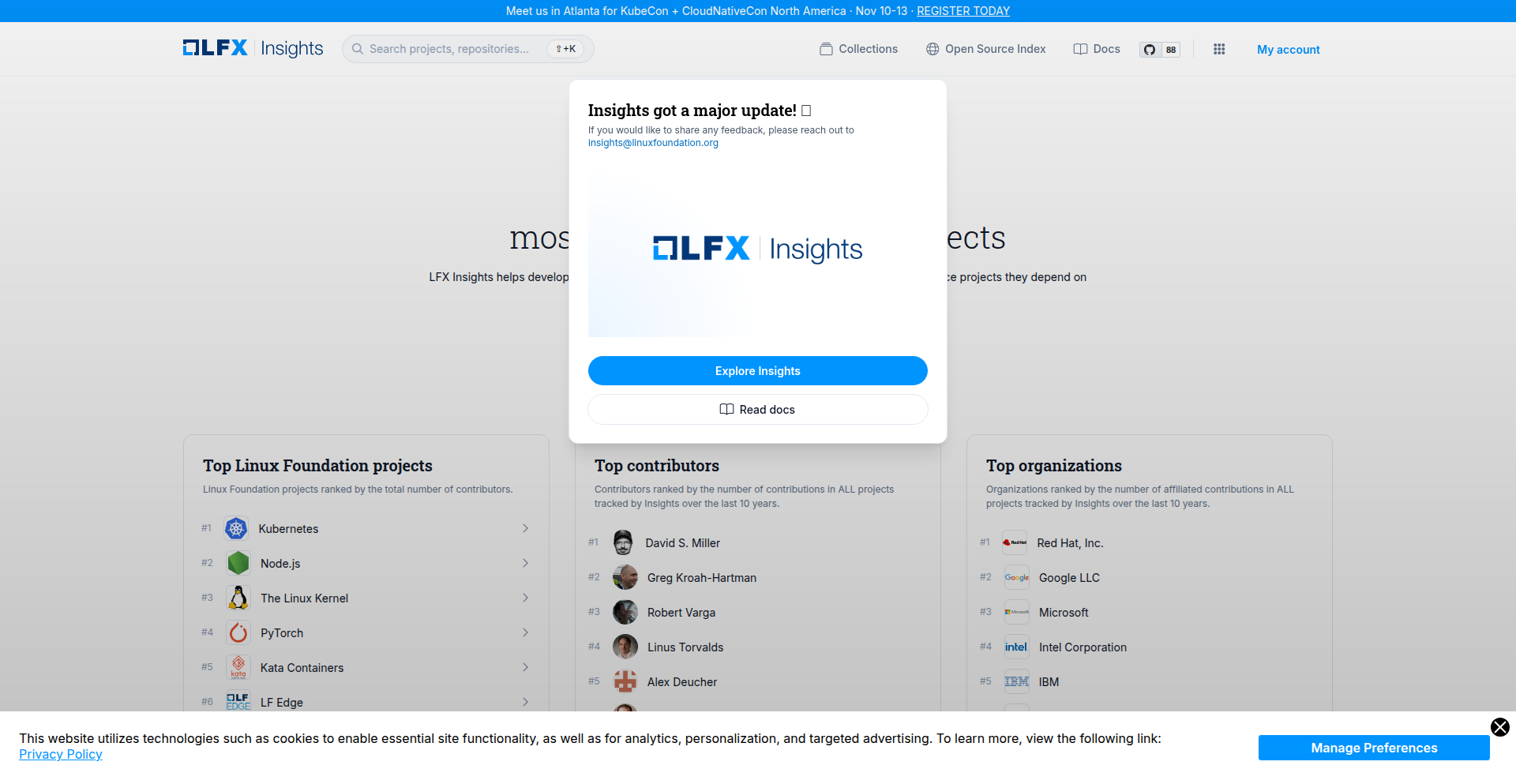

AI & Machine Learning Tools (30%)

Developer Productivity & Tools (25%)

Web Applications & Services (20%)

Data Management & Databases (10%)

Creative & Entertainment Tech (10%)

System & Infrastructure Tools (5%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | PromptApp Engine | 65 | 48 |
| 2 | AntFeeder2D: Procedural Landscape Ant Game | 71 | 29 |
| 3 | KSON: Config Interface Supercharger | 28 | 8 |
| 4 | HyperOptRL | 32 | 3 |
| 5 | Dyad AI Builder | 14 | 8 |
| 6 | Nanobot: AI Agent Fabric | 19 | 0 |
| 7 | Nallely: Adaptive Signal Weaver | 16 | 2 |
| 8 | Open Register Navigator | 11 | 1 |
| 9 | PostgresRLS-TenantGuard | 6 | 4 |
| 10 | BurntUSD: Stablecoin Art Explorer | 7 | 3 |

1

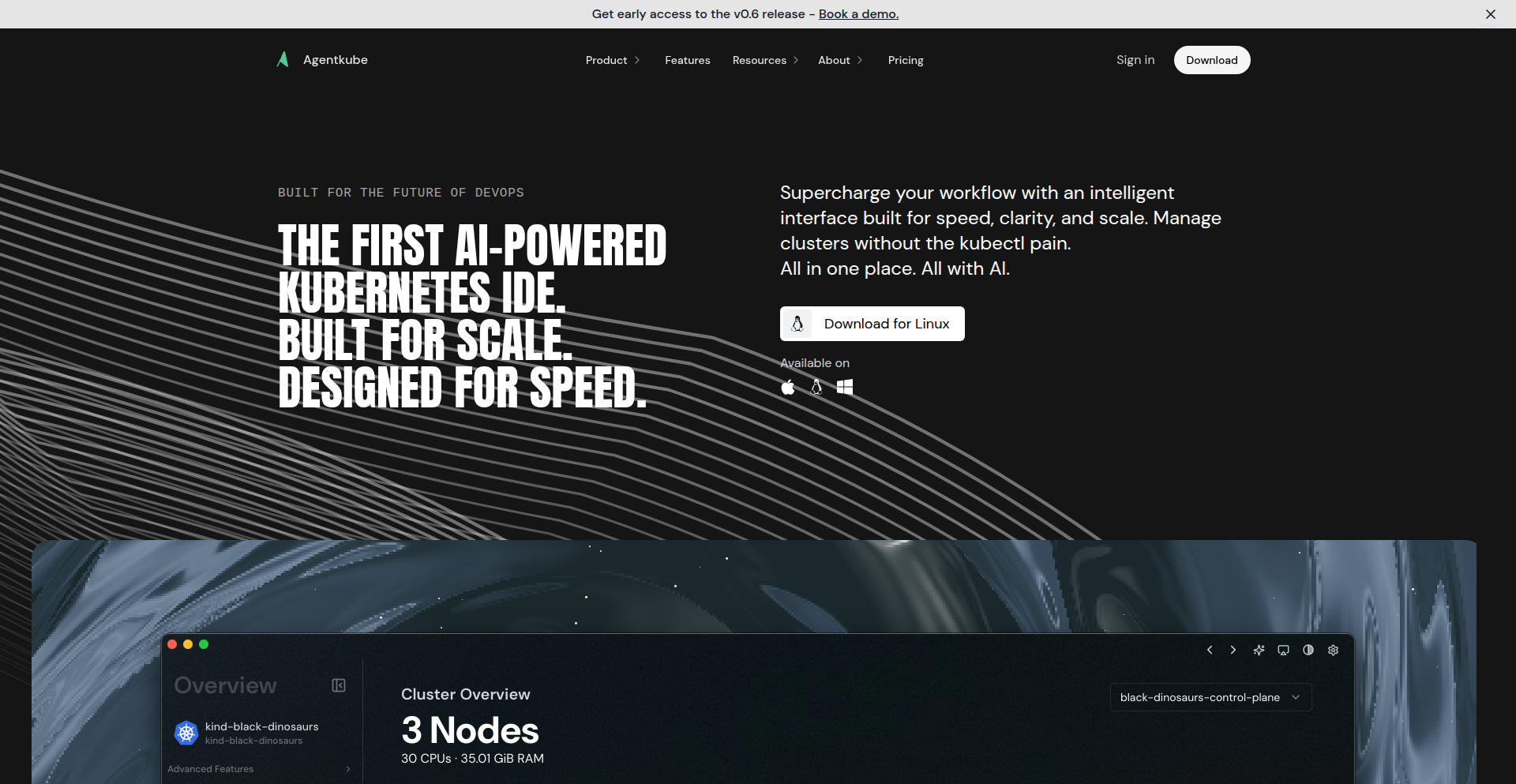

PromptApp Engine (manyminiapps)

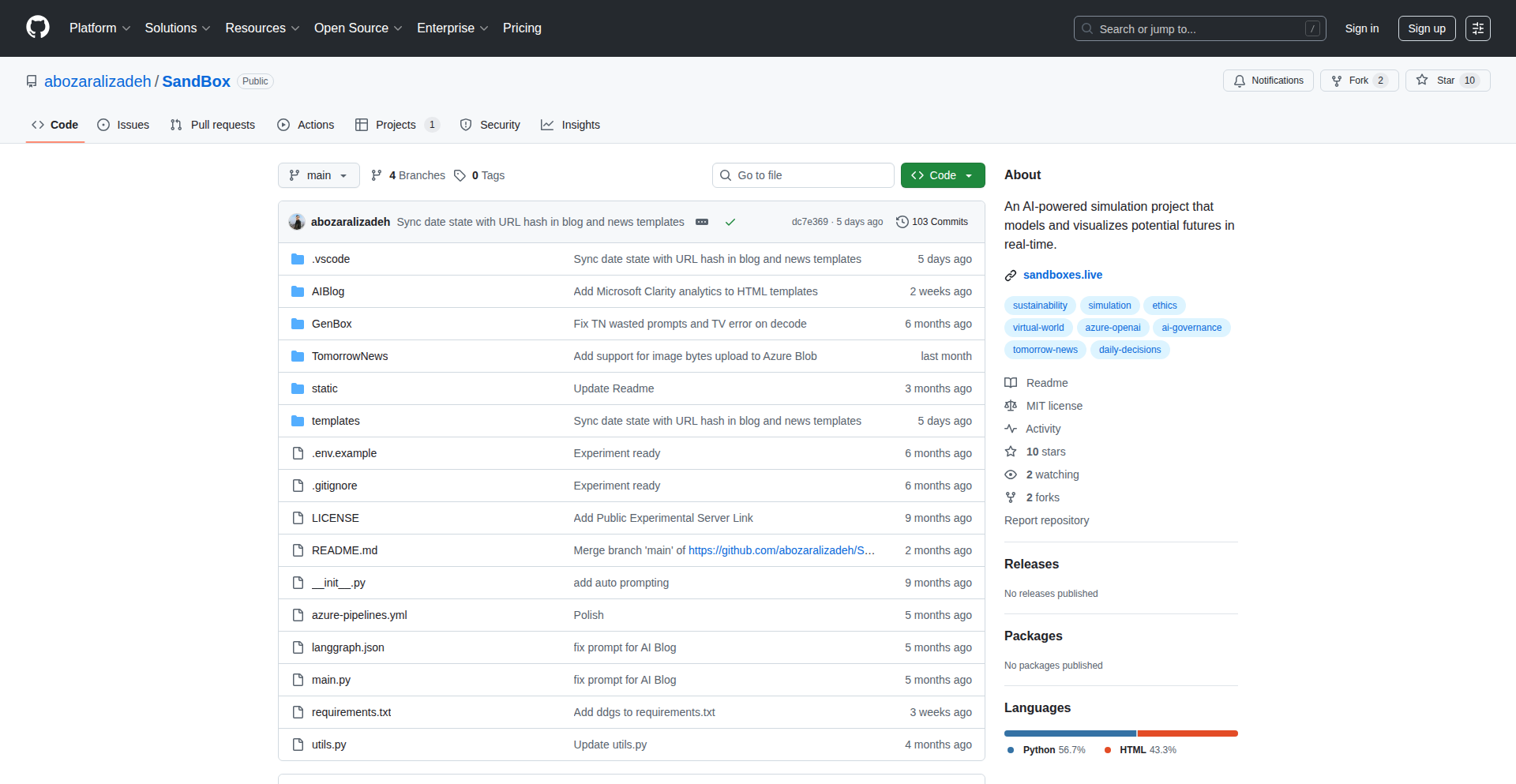

Author

stopachka

Description

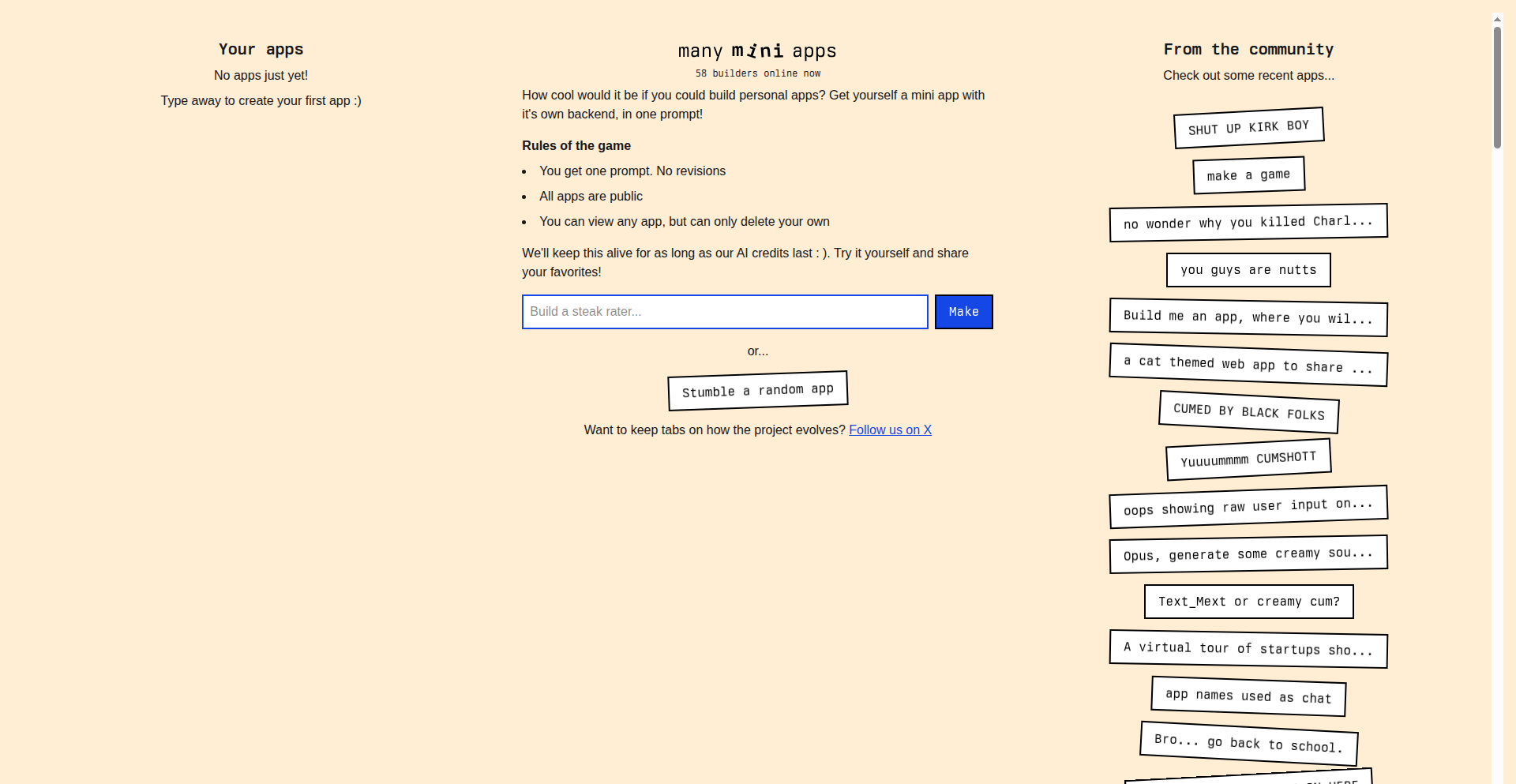

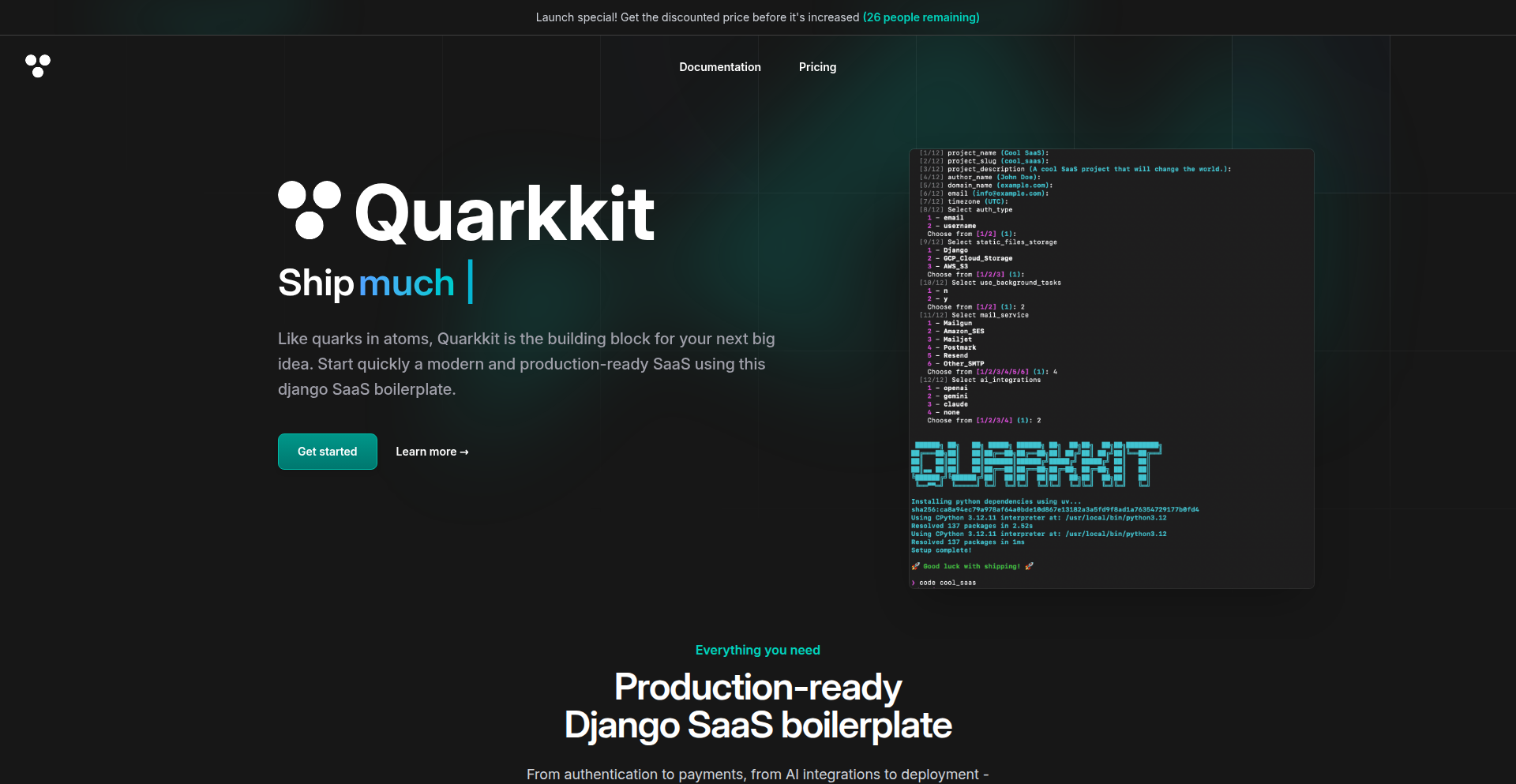

PromptApp Engine is a platform that turns a single text prompt into a functional mini-app with its own database and backend in under two minutes. It tackles the complexity of app development by using LLMs to generate personalized software, letting users create shareable, data-saving applications without coding. Its core innovation is a multi-tenant graph database built on a single PostgreSQL instance using an EAV (Entity-Attribute-Value) model, kept fast with custom statistics and query-planner hints so that creating an app stays lightweight.

Popularity

Points 65

Comments 48

What is this product?

PromptApp Engine is a massively multiplayer online mini-app builder. It uses Large Language Models (LLMs) to interpret a user's text prompt and generate a complete, albeit small, application in under two minutes. The key aspect is its backend architecture: instead of provisioning separate infrastructure for each app, it uses a single PostgreSQL instance with a multi-tenant graph database implemented as an EAV table. This design makes creating an 'app' as simple as adding a new row. EAV tables usually perform poorly in PostgreSQL because the planner keeps statistics per column, and a single value column that mixes every attribute of every app gives it little useful information. The system therefore collects its own statistics using count-min sketches and uses `pg_hint_plan` to guide PostgreSQL's query execution, enabling efficient data retrieval for each app.
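To make the "an app is a new row" idea concrete, here is a minimal sketch of a multi-tenant EAV layout. It uses Python's built-in `sqlite3` as a stand-in for PostgreSQL so it runs anywhere; the `apps`/`triples` tables and all helper names are hypothetical illustrations, not manyminiapps' actual schema, and storing every value as TEXT is a simplification.

```python
import sqlite3

# Hypothetical schema: one row per app, one row per (entity, attribute) fact.
SCHEMA = """
CREATE TABLE apps (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE triples (
    app_id    INTEGER NOT NULL REFERENCES apps(id),
    entity_id TEXT    NOT NULL,
    attribute TEXT    NOT NULL,
    value     TEXT,
    PRIMARY KEY (app_id, entity_id, attribute)
);
CREATE INDEX triples_attr_value ON triples (app_id, attribute, value);
"""


def new_store():
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


def create_app(conn, name):
    # "Provisioning" a new tenant is a single INSERT, not new infrastructure.
    return conn.execute("INSERT INTO apps (name) VALUES (?)", (name,)).lastrowid


def put(conn, app_id, entity_id, attrs):
    # Upsert one triple per attribute; values are stored as text for simplicity.
    conn.executemany(
        "INSERT OR REPLACE INTO triples VALUES (?, ?, ?, ?)",
        [(app_id, entity_id, k, str(v)) for k, v in attrs.items()],
    )


def get_entity(conn, app_id, entity_id):
    rows = conn.execute(
        "SELECT attribute, value FROM triples WHERE app_id = ? AND entity_id = ?",
        (app_id, entity_id),
    )
    return dict(rows.fetchall())


def find(conn, app_id, attribute, value):
    # Every query is scoped by app_id, which is what keeps tenants apart.
    rows = conn.execute(
        "SELECT entity_id FROM triples WHERE app_id = ? AND attribute = ? AND value = ?",
        (app_id, attribute, str(value)),
    )
    return sorted(r[0] for r in rows)
```

Isolation here comes only from filtering on `app_id`; a production deployment on Postgres would also need per-tenant access control, which this sketch leaves out.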

How to use it?

Developers can use PromptApp Engine by visiting the platform, typing a descriptive text prompt outlining the desired functionality and structure of their mini-app, and submitting it. Within minutes, a fully functional app with its own isolated database will be generated. This generated app can then be shared with others. It's ideal for quickly prototyping ideas, building small personal tools, or experimenting with LLM-driven code generation. Developers can integrate the generated apps into their workflows or use them as a starting point for more complex projects.

Product Core Function

· Instant App Generation from Prompt: Leverages LLMs to convert natural language descriptions into functional applications, allowing for rapid prototyping and experimentation without manual coding.

· Personalized Software Creation: Enables users to build custom tools tailored to their specific needs, moving beyond generic templates to create unique, personal software experiences.

· Real-time Collaborative Environment: Displays creations from all users in real-time, fostering a community of experimentation and inspiration. This allows developers to see what others are building and learn from their approaches.

· Isolated Data and Backend per App: Each generated mini-app gets its own logically isolated database and backend, implemented as a tenant in the shared PostgreSQL store, so apps can be shared while persisting their data independently.

· Efficient Multi-Tenant Database Architecture: Utilizes a unique EAV model on a single PostgreSQL instance to manage multiple tenant databases, making app creation extremely lightweight and resource-efficient.

Product Usage Case

· Building a personal budget tracker by providing a prompt like: 'Create a simple app to track my monthly expenses, with fields for date, category, description, and amount.' This allows for quick data entry and visualization of spending habits without writing any code.

· Developing a flashcard app for studying by prompting: 'Generate a flashcard app where I can input questions and answers, and it will quiz me randomly.' This is useful for students or anyone needing to memorize information.

· Creating a collaborative wedding planner tool where each guest can add suggestions or RSVPs, by entering a prompt like: 'Build a shared app for wedding planning, allowing guests to submit song requests and RSVP.' This facilitates group organization and idea gathering for events.

· Experimenting with a retro-style game by describing its mechanics in a prompt: 'Design a simple 2D game where a player dodges falling obstacles, with scoring based on survival time.' This showcases the LLM's ability to interpret game logic and generate interactive experiences.

2

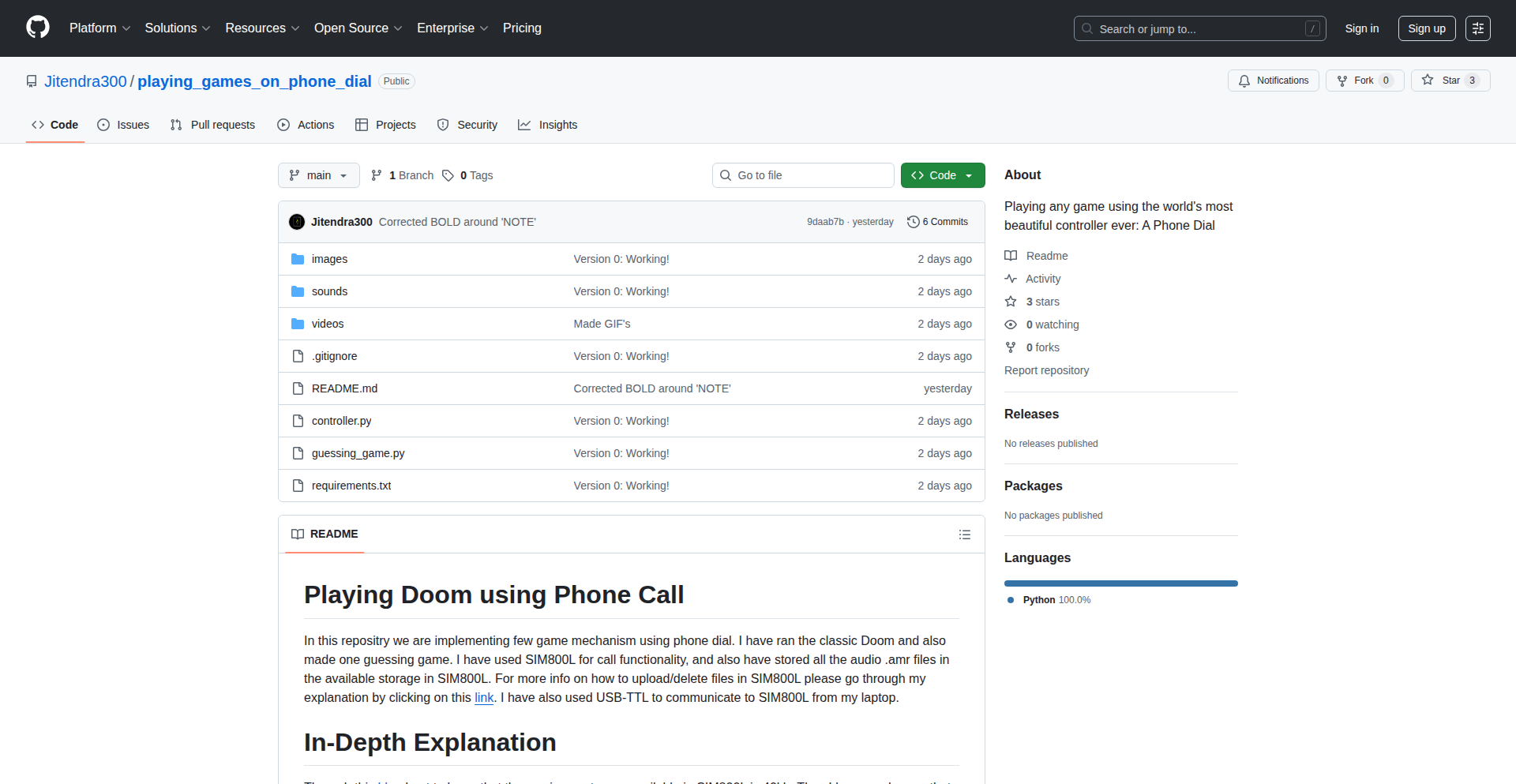

AntFeeder2D: Procedural Landscape Ant Game

Author

aanthonymax

Description

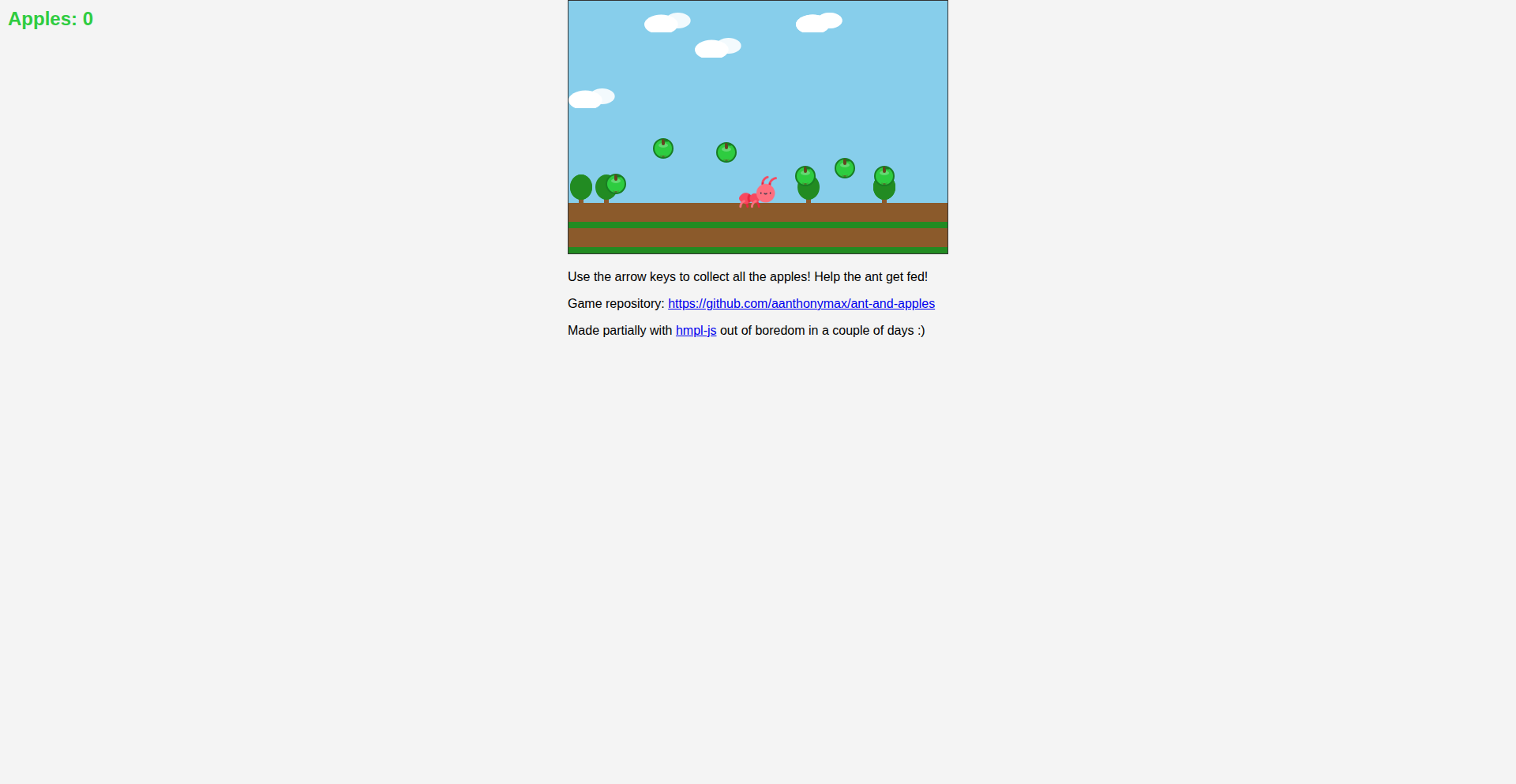

A charming, short 2D game developed in just a few days in which players control an ant that must eat apples. The game's standout technical feature is its randomly generated landscape, with clouds and trees scattered chaotically across the whole coordinate space. This procedural generation, while simple, adds replayability and gives each playthrough a distinct look.

Popularity

Points 71

Comments 29

What is this product?

AntFeeder2D is a simple yet technically interesting 2D game where you play as an ant that needs to eat apples. The core technical innovation is its 'procedural landscape generation'. Instead of manually designing every cloud and tree, the game uses algorithms to create a unique, random landscape every time you play. This means clouds and trees are scattered across the game world in a chaotic, unpredictable pattern, making each game session feel fresh and visually distinct. So, what does this mean for you? It means you get a visually surprising and varied experience every time you launch the game, without needing complex setup.

How to use it?

As a player, you simply download and run the game. The game itself handles the landscape generation. For developers interested in the technical side, the project showcases a straightforward implementation of procedural generation for 2D environments. You can examine the source code to understand how algorithms are used to place objects like clouds and trees randomly across the coordinate space, creating a dynamic game world. This can be a starting point for learning how to create your own randomized game levels or interactive art pieces. So, how can you use this? If you're a player, just enjoy the game. If you're a developer, study the code to learn about simple procedural generation techniques.
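The post does not include AntFeeder2D's source, so the following is only a minimal Python sketch of the same idea under stated assumptions: props are scattered uniformly at random over the whole play area, and a seeded `random.Random` instance makes a layout reproducible. The `Prop` type and `generate_landscape` function are hypothetical names.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Prop:
    kind: str  # "cloud" or "tree"
    x: float
    y: float


def generate_landscape(width, height, n_clouds=8, n_trees=12, seed=None):
    """Scatter clouds and trees uniformly over the whole play area.

    The same seed reproduces the same layout; seed=None gives a fresh
    layout on every call.
    """
    rng = random.Random(seed)  # private generator, independent of other game randomness
    props = [Prop("cloud", rng.uniform(0, width), rng.uniform(0, height))
             for _ in range(n_clouds)]
    props += [Prop("tree", rng.uniform(0, width), rng.uniform(0, height))
              for _ in range(n_trees)]
    return props
```

Using a private `Random` instance instead of the module-level functions means level generation neither disturbs nor is disturbed by other random calls in the game, and a stored seed is enough to replay a level.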

Product Core Function

· Ant control and interaction: Allows players to directly control an ant's movement to gather food, demonstrating basic character physics and input handling for a fun, interactive experience.

· Apple feeding mechanic: Implements a core game loop where the ant must consume apples, showcasing simple game state management and objective tracking.

· Random landscape generation: Utilizes algorithms to procedurally create a unique game environment with scattered clouds and trees for every playthrough, offering a dynamic and replayable visual experience. This is the key innovation, providing variety and surprise.

· Chaotic object placement: Employs techniques to arrange environmental elements like clouds and trees in a non-uniform, unpredictable manner across all coordinates, enhancing the visual interest and uniqueness of each generated level.

Product Usage Case

· Learning procedural generation for 2D games: A beginner developer can study the source code to see a practical, albeit simple, example of how to generate game environments randomly. This helps understand the concepts of using algorithms to create 'content on the fly', which can be applied to creating endless runners or more complex level designs.

· Experiencing generative art in games: Players can enjoy a casual game that highlights the creative potential of algorithms in visual design. The chaotic, random placement of elements makes each game session a unique visual discovery, demonstrating how randomness can be a source of aesthetic appeal.

· Quick game prototyping: This project serves as an example of how to quickly build a functional game prototype with a focus on a specific technical challenge, like procedural generation. It shows that even with limited time, interesting technical ideas can be implemented and shared with the community.

3

KSON: Config Interface Supercharger

Author

dmarcotte

Description

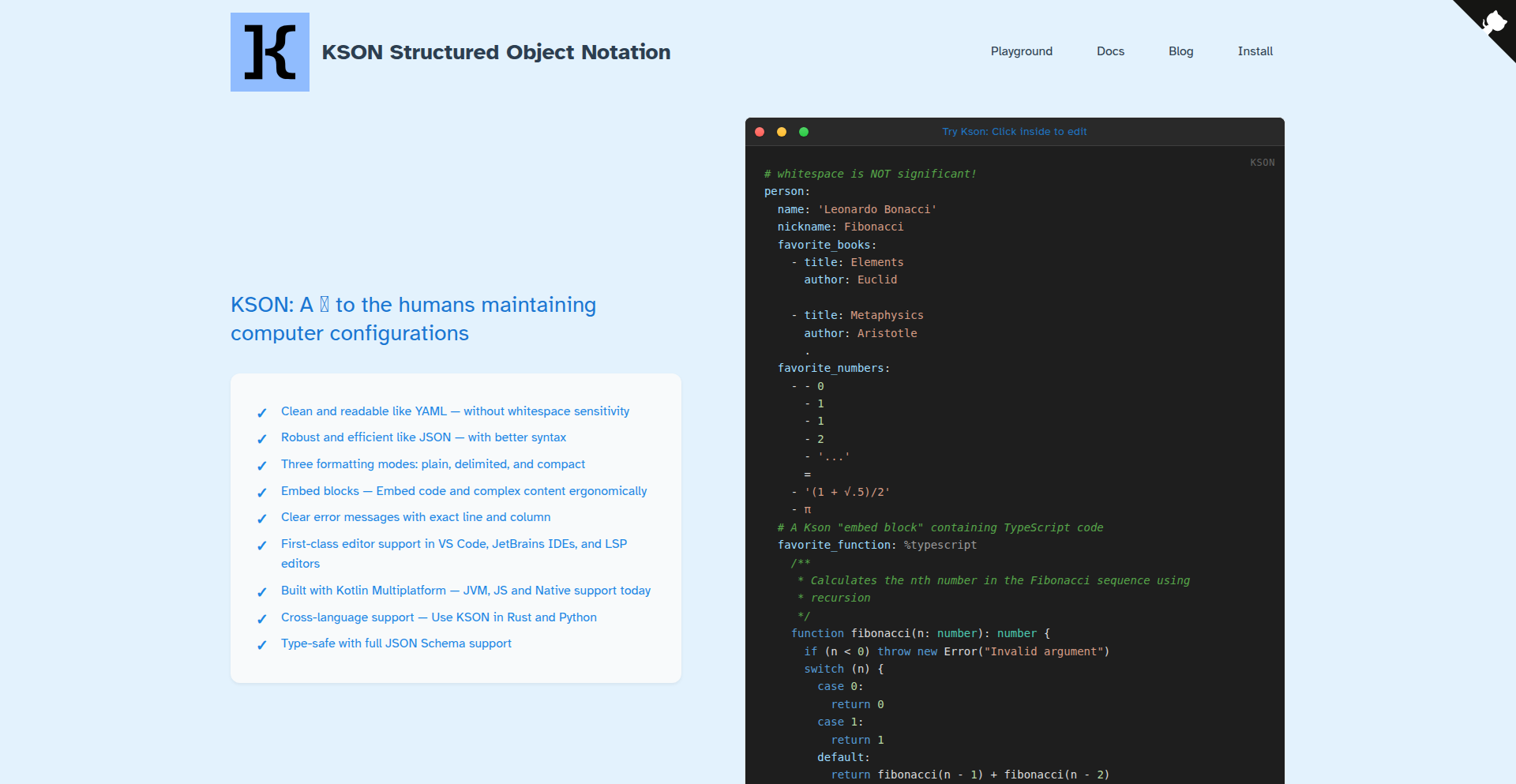

KSON is a configuration language designed to improve the human experience of working with configuration data such as JSON, YAML, and TOML. It is a verified superset of JSON, supports JSON Schema natively, and can transpile cleanly to YAML while preserving comments. Its core aim is to make configuration files more toolable, robust, and pleasant to work with, bridging the gap between human readability and machine processability.

Popularity

Points 28

Comments 8

What is this product?

KSON is a configuration language that builds upon existing formats like JSON, YAML, and TOML. Think of it as an upgrade for how humans interact with configuration files. Technically, it's a verified superset of JSON, meaning all valid JSON is also valid KSON. It natively understands JSON Schema, which is a way to describe the structure and constraints of your data, making your configurations more reliable. It also has a neat trick: it can convert itself into YAML while keeping your original comments intact. This means you get the benefits of modern features and robust validation without losing the human-friendly comments that make configurations understandable. The innovation here is in creating a configuration interface that is both powerful for machines and pleasant for humans to use, addressing the common pain points of managing complex configurations.

How to use it?

Developers can integrate KSON into their workflow in several ways. You can install KSON libraries for your preferred programming language (currently supporting JS/TS, Python, Rust, JVM, and Kotlin Multiplatform) to work with KSON files directly within your code. For a seamless editing experience, KSON integrates with popular developer tools like VS Code and JetBrains IDEs, often through the Language Server Protocol (LSP). This allows for features like syntax highlighting, autocompletion, and real-time validation directly in your editor. You can also experiment with KSON using its online playground, which makes it easy to try out KSON's features and see how it handles your existing configuration data without any setup.

Product Core Function

· JSON Superset: Allows you to leverage existing JSON knowledge and files directly, ensuring compatibility and reducing the learning curve. This means if your project already uses JSON, you can start using KSON with minimal disruption, benefiting from its enhanced features immediately.

· Native JSON Schema Support: Enables robust data validation by understanding and enforcing schema definitions, preventing common configuration errors before they impact your application. This makes your configurations more reliable and reduces debugging time by catching structural issues early.

· Comment-Preserving YAML Transpilation: Converts KSON to YAML while retaining original comments, facilitating collaboration and understanding between developers. This ensures that valuable human-readable annotations are never lost when moving between formats, aiding in documentation and maintainability.

· Multi-language SDKs: Provides libraries for popular programming languages, allowing seamless integration of KSON into diverse development environments and projects. This means you can use KSON in your Python scripts, Rust applications, or Java backends, making it adaptable to your tech stack.

· IDE & Editor Integration (LSP): Offers enhanced developer experience through intelligent features like syntax highlighting, autocompletion, and error checking directly in your preferred code editor. This boosts productivity by making configuration management more intuitive and less error-prone.

Product Usage Case

· Managing complex application settings: A large microservices architecture often has numerous configuration files. KSON can enforce consistency and prevent common mistakes across these files, ensuring all services are configured correctly and reducing deployment failures.

· Team collaboration on infrastructure as code: When multiple developers work on defining infrastructure, KSON's ability to preserve comments and its strong validation ensure that everyone understands the intent and structure of the configuration, preventing accidental misconfigurations.

· CI/CD pipeline validation: KSON can be integrated into a CI/CD pipeline to automatically validate configuration files before deployment. If a configuration doesn't meet the defined schema, the pipeline can fail, preventing problematic deployments and saving significant debugging time.

· Data serialization and configuration for libraries: Developers building libraries that require configuration can use KSON to provide a user-friendly and robust way for their users to configure the library, making it easier to adopt and integrate.
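As a rough illustration of the CI/CD validation use case, the following Python sketch checks a parsed config against a tiny subset of JSON Schema (`type`, `required`, `properties`). It is not KSON's API: it parses plain JSON with the standard `json` module, which is only valid input here because every JSON document is also valid KSON, and the `validate`/`check_config` helpers and `SERVICE_SCHEMA` are hypothetical. A real pipeline would use KSON's own tooling or a complete JSON Schema validator.

```python
import json

# Subset of JSON Schema type names mapped to Python types.
TYPE_MAP = {
    "object": dict, "array": list, "string": str,
    "integer": int, "number": (int, float), "boolean": bool, "null": type(None),
}


def validate(instance, schema, path="$"):
    """Return a list of human-readable errors (empty list means valid)."""
    errors = []
    expected = schema.get("type")
    if expected is not None:
        # bool is a subclass of int in Python, so reject it for numeric types.
        if isinstance(instance, bool) and expected in ("integer", "number"):
            ok = False
        else:
            ok = isinstance(instance, TYPE_MAP[expected])
        if not ok:
            return [f"{path}: expected {expected}, got {type(instance).__name__}"]
    if isinstance(instance, dict):
        for key in schema.get("required", []):
            if key not in instance:
                errors.append(f"{path}: missing required property '{key}'")
        for key, sub in schema.get("properties", {}).items():
            if key in instance:
                errors.extend(validate(instance[key], sub, f"{path}.{key}"))
    return errors


SERVICE_SCHEMA = {  # hypothetical schema for one microservice's config
    "type": "object",
    "required": ["service", "port"],
    "properties": {"service": {"type": "string"}, "port": {"type": "integer"}},
}


def check_config(text, schema=SERVICE_SCHEMA):
    return validate(json.loads(text), schema)
```

In a pipeline, a non-empty error list would be printed and turned into a non-zero exit code so the deployment step never runs.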

4

HyperOptRL

Author

gabyhaffner

Description

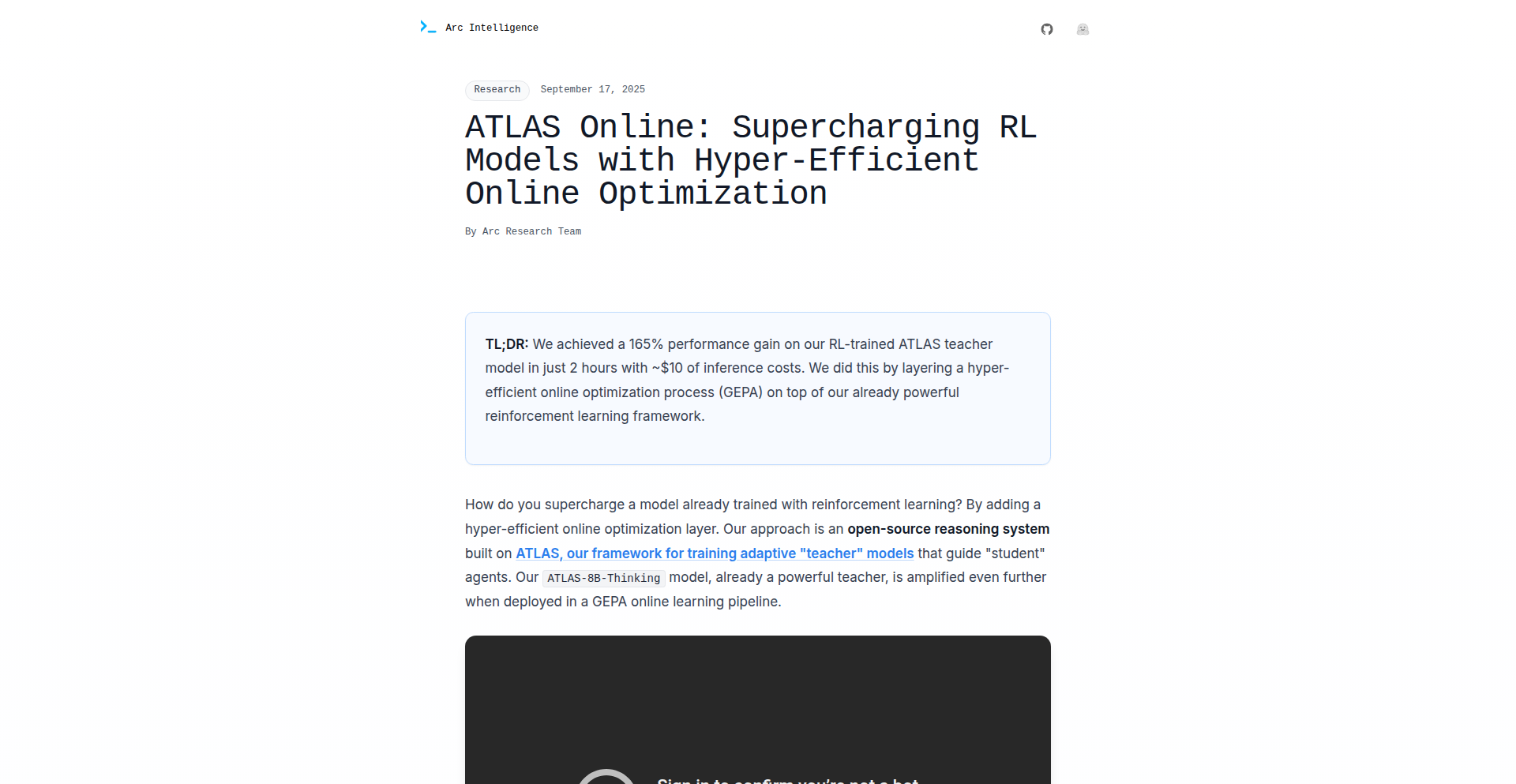

HyperOptRL is an approach to speeding up Reinforcement Learning (RL) by integrating efficient online hyperparameter optimization into training. The project reports a performance improvement of over 165% within 2 hours, suggesting potential for rapid RL model development and tuning.

Popularity

Points 32

Comments 3

What is this product?

HyperOptRL is a research-oriented project that explores the synergy between advanced optimization algorithms and Reinforcement Learning training. Traditional RL training can be slow and computationally expensive, often requiring extensive hyperparameter tuning. This project introduces a method to optimize RL hyperparameters in real time during the learning process, accelerating convergence and improving final performance. The core innovation lies in its 'hyper-efficient online optimization', which adjusts learning parameters (like learning rate, exploration strategy, etc.) as the RL agent learns, without needing to stop and restart the training process. This is like a pilot continuously adjusting the aircraft's controls based on real-time flight data, rather than waiting for a manual to be updated. The practical implication is that RL models can become effective much faster and potentially with fewer computational resources.

How to use it?

Developers and researchers can integrate HyperOptRL into their existing RL frameworks (e.g., TensorFlow, PyTorch) that utilize common RL algorithms like DQN, PPO, or A2C. The primary usage involves wrapping their RL agent's training loop with HyperOptRL's optimization engine. This engine will monitor the agent's performance metrics and dynamically adjust hyperparameters. For example, if the agent is stuck in a local optimum, HyperOptRL might automatically increase exploration. If it's learning too slowly, it could increase the learning rate. The integration typically involves minimal code changes, often requiring the instantiation of a HyperOptRL optimizer object and passing it to the RL training process. This makes it accessible for experimentation with different RL environments and tasks, from game playing to robotics control.
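HyperOptRL's actual interface is not shown in the post, so the following is a hypothetical Python sketch of the adjustment rules described above: compare the mean reward of consecutive windows, raise exploration when progress stalls, raise the learning rate when progress is positive but slow, and anneal exploration otherwise. The `OnlineTuner` class, its thresholds, and its multipliers are illustrative assumptions, not the project's algorithm.

```python
class OnlineTuner:
    """Hypothetical plateau-based tuner; not HyperOptRL's real API."""

    def __init__(self, lr=1e-3, epsilon=0.1, window=10, slow_gain=0.01):
        self.lr = lr              # learning rate handed to the optimizer
        self.epsilon = epsilon    # exploration rate for epsilon-greedy actions
        self.window = window      # episodes per evaluation window
        self.slow_gain = slow_gain  # relative gain below which progress is "slow"
        self._buffer = []
        self._prev_mean = None

    def observe(self, episode_reward):
        """Record one episode's reward; adjust settings at each window boundary."""
        self._buffer.append(episode_reward)
        if len(self._buffer) < self.window:
            return
        mean = sum(self._buffer) / len(self._buffer)
        self._buffer.clear()
        if self._prev_mean is not None:
            gain = (mean - self._prev_mean) / (abs(self._prev_mean) + 1e-8)
            if gain <= 0:
                # No progress: possibly stuck in a local optimum, so explore more.
                self.epsilon = min(1.0, self.epsilon * 1.5)
            elif gain < self.slow_gain:
                # Improving, but slowly: take larger update steps.
                self.lr = min(1e-2, self.lr * 2)
            else:
                # Healthy progress: gradually reduce exploration.
                self.epsilon = max(0.01, self.epsilon * 0.9)
        self._prev_mean = mean
```

In a PyTorch training loop, the tuner's `lr` could be pushed into the optimizer after each `observe` call with `for group in optimizer.param_groups: group["lr"] = tuner.lr`, and `epsilon` read by an epsilon-greedy action selector.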

Product Core Function

· Dynamic Hyperparameter Optimization: The system automatically adjusts key learning parameters in real-time based on the RL agent's ongoing performance. This accelerates the learning process by ensuring the agent is always operating under optimal settings, leading to faster convergence and better final results. This is useful for anyone training RL models who wants to avoid tedious manual tuning.

· Performance Monitoring and Feedback Loop: HyperOptRL continuously tracks performance metrics (e.g., reward, loss). This data is fed back into the optimization engine to make informed decisions about hyperparameter adjustments, making the learning process more robust and adaptive. It also helps you understand how the agent is learning and spot potential issues early.

· Interfacing with RL Frameworks: The project is designed to be compatible with popular deep learning and RL libraries. This means developers can easily plug HyperOptRL into their current projects without a complete overhaul of their existing code. This saves time and effort when trying to improve existing RL implementations.

· Efficiency Gains: By optimizing the training process, HyperOptRL reduces the overall time and computational resources required to achieve a high-performing RL agent. This makes advanced RL more accessible and cost-effective for a wider range of applications. This is valuable for anyone who wants to get better RL results with less waiting and lower infrastructure costs.

Product Usage Case

· Accelerating Game AI Development: An RL developer working on a complex game AI might find their agent learning too slowly. By integrating HyperOptRL, the system could automatically increase the learning rate when progress stalls, leading to a skilled AI opponent much faster, saving development hours. The benefit is a more responsive and effective game character trained efficiently.

· Robotics Control Tuning: A robotics engineer training an agent to control a robotic arm for a manufacturing task might struggle with finding the right balance between precision and speed. HyperOptRL can dynamically adjust exploration parameters, allowing the arm to experiment with different movements and quickly find the optimal trajectory, improving efficiency and accuracy in production. This means robots can be programmed to perform tasks more effectively with less manual fine-tuning.

· Optimizing Trading Strategies: In algorithmic trading, an RL agent learning a profitable strategy needs to adapt quickly to changing market conditions. HyperOptRL can help by fine-tuning the agent's decision-making parameters on the fly, allowing it to capitalize on short-term market opportunities more effectively, leading to potentially higher returns. This enables more adaptive and profitable automated trading systems.

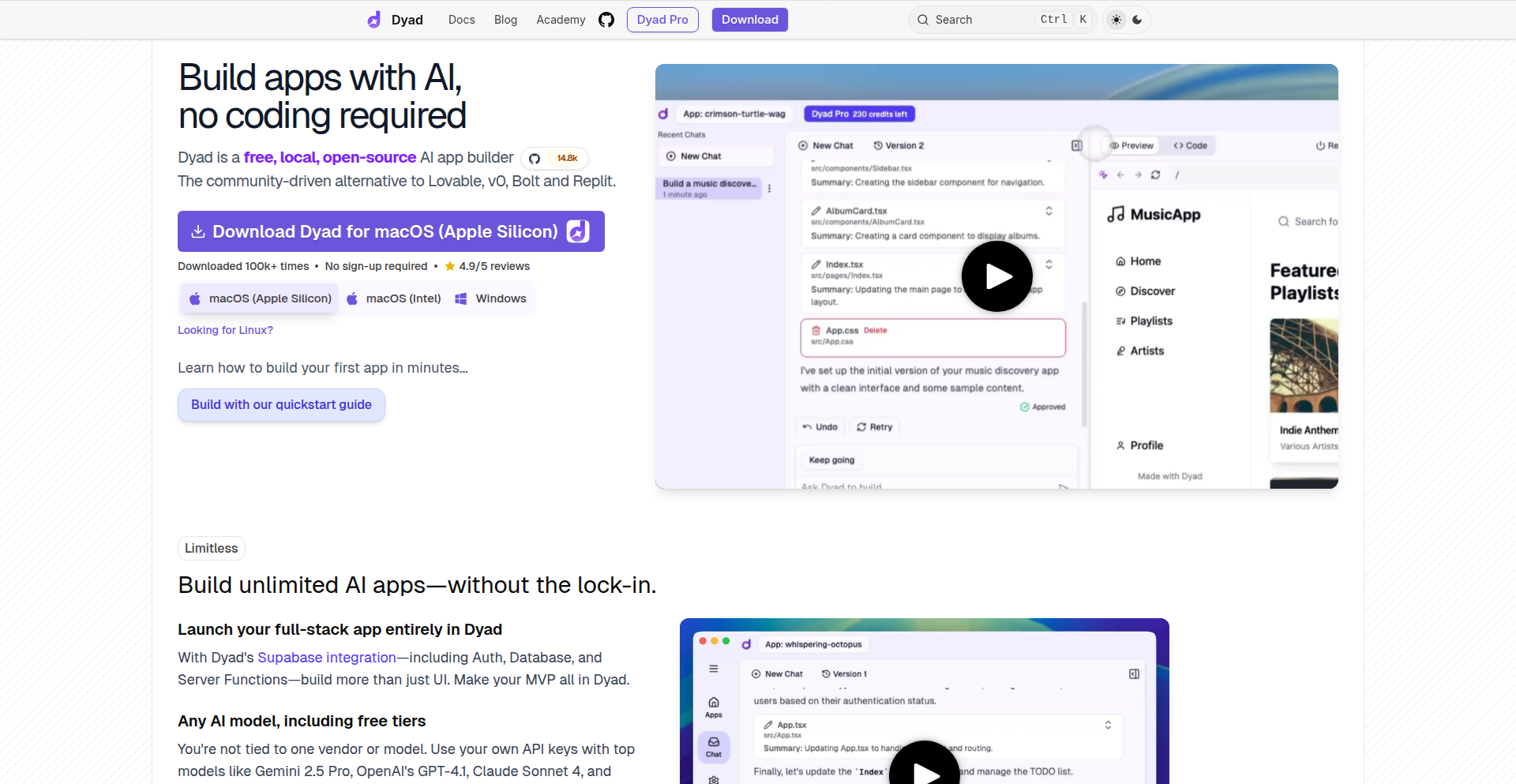

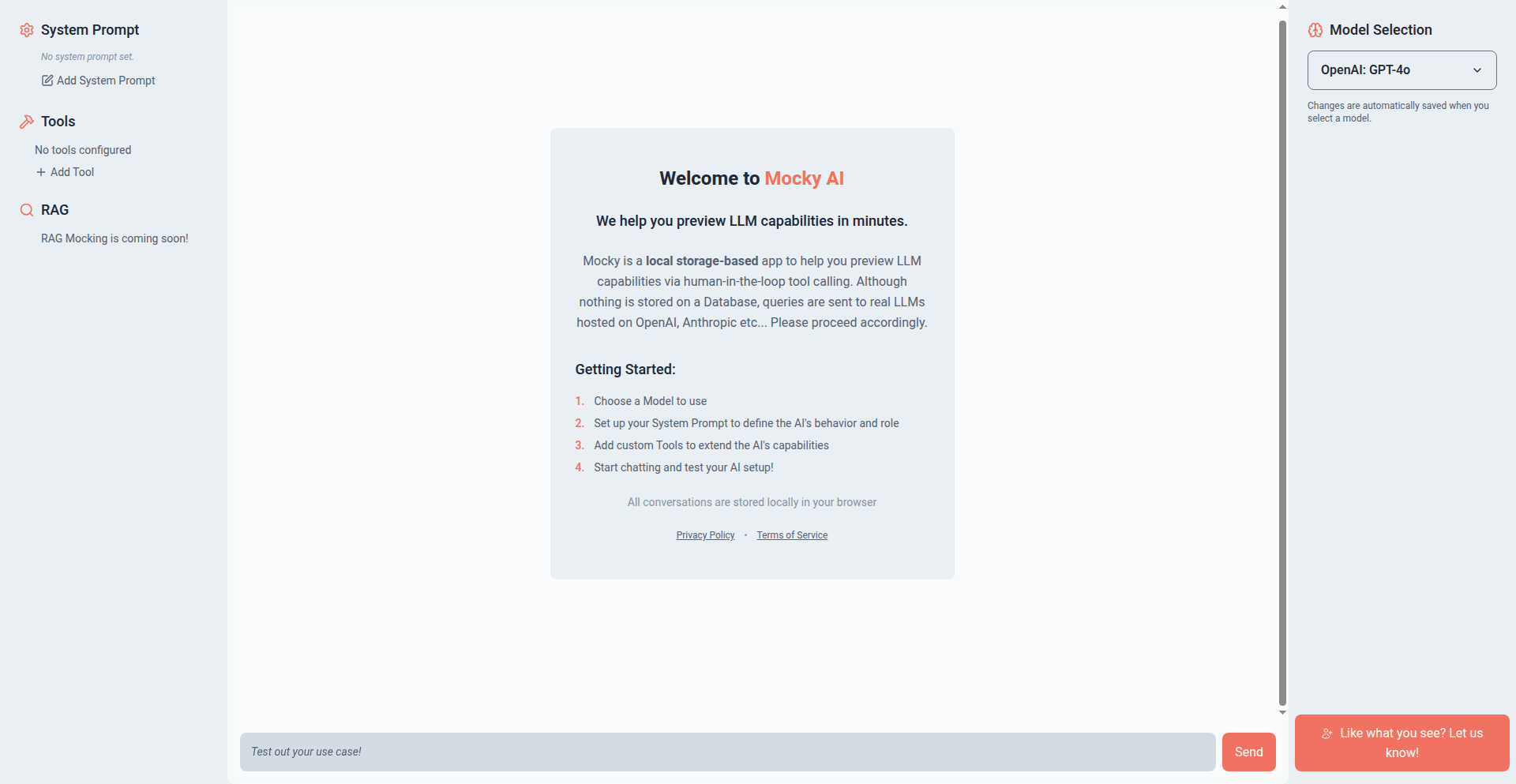

5

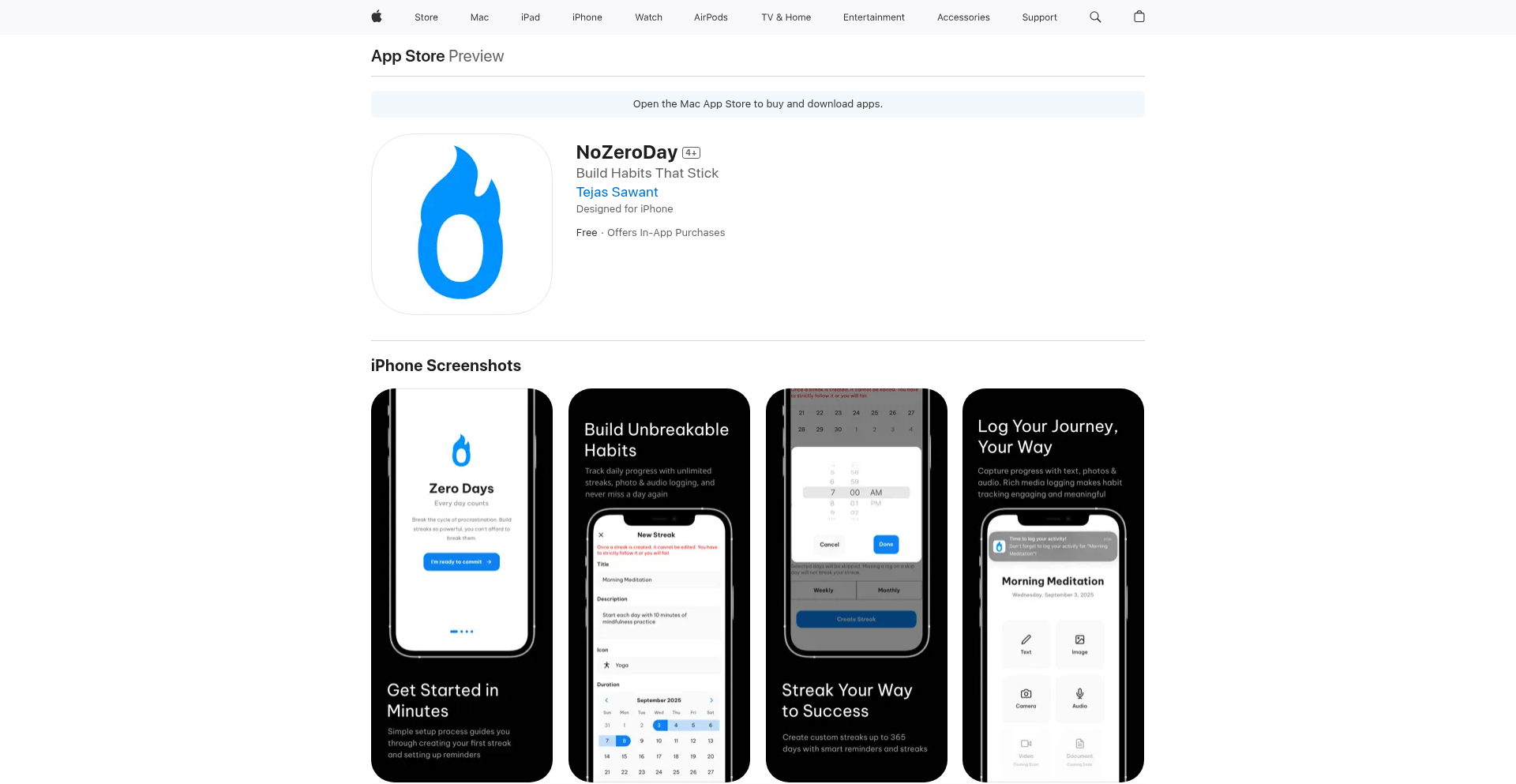

Dyad AI Builder

Author

willchen

Description

Dyad is a local, open-source AI application builder developed with Electron. It addresses the frustration of cloud-based AI builders that are difficult to run and debug locally. Dyad runs entirely on your computer, enabling seamless switching between the app builder and your favorite coding tools like Cursor or Claude Code, offering a more integrated and efficient development workflow for AI applications.

Popularity

Points 14

Comments 8

What is this product?

Dyad is a desktop application that allows you to build AI-powered applications locally on your machine. The core technical innovation lies in its ability to provide a fully self-contained environment for AI development, eliminating the complexities and limitations often associated with cloud-based solutions. It leverages Electron to create a cross-platform desktop application. A key aspect of its technical design is how it facilitates 'tool calling' using XML tags, which essentially allows AI models to understand and trigger specific functions or actions within your application, making the AI more interactive and controllable. This approach provides developers with direct access to the AI's underlying logic and the ability to debug it in their familiar coding environments.
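Dyad's exact tag vocabulary is not described here, so the following minimal Python sketch assumes a hypothetical `<tool-call name="...">...</tool-call>` tag and a small registry of handler functions. It shows the general pattern behind XML-tag tool calling: scan the model's free-form output for tags, then dispatch each one to local code. A regular expression is used rather than an XML parser because model output mixed with prose is rarely a well-formed XML document.

```python
import re
from html import unescape

# Hypothetical tag format; Dyad's real tag names may differ.
TOOL_CALL = re.compile(r'<tool-call name="([\w-]+)">(.*?)</tool-call>', re.DOTALL)

# Hypothetical local tools: each takes the tag body and returns a string result.
TOOLS = {
    "echo": lambda body: body,
    "word_count": lambda body: str(len(body.split())),
}


def run_tool_calls(model_output, tools=TOOLS):
    """Find every tagged tool call in the model's text and dispatch it."""
    results = []
    for name, body in TOOL_CALL.findall(model_output):
        handler = tools.get(name)
        if handler is None:
            # Unknown tools are reported back instead of being executed.
            results.append((name, f"error: unknown tool {name!r}"))
        else:
            # Bodies may contain XML entities such as &lt; or &amp;.
            results.append((name, handler(unescape(body.strip()))))
    return results
```

The results would typically be fed back to the model as the next message, so it can continue reasoning with the tool output.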

How to use it?

Developers can download and install Dyad on their local machine. Once installed, they can start building AI applications directly within the Dyad interface. The primary benefit for developers is the ability to easily integrate Dyad with their existing local development setup. For example, if you're building an AI chatbot that needs to interact with your local file system or other applications, Dyad allows you to do this without sending sensitive data to the cloud. You can switch between designing your AI app in Dyad and writing or debugging the code that powers its features using tools like Cursor or other code editors, creating a fluid development loop. This makes it ideal for projects where data privacy, offline functionality, or deep integration with local resources is crucial.

Product Core Function

· Local AI App Development Environment: Build AI applications entirely on your computer without reliance on cloud servers. This is valuable because it ensures your data stays private and allows for offline development, giving you full control over your project.

· Seamless IDE Integration: Effortlessly switch between Dyad and your preferred local code editors like Cursor or VS Code. This saves time and context switching by allowing you to design and code in a unified workflow, boosting productivity.

· Tool Calling with XML Tags: Enable AI models to intelligently trigger specific functions or actions within your applications using a structured XML format. This allows for more sophisticated and controllable AI interactions, making your apps smarter and more responsive.

· Open-Source and Extensible: Access and modify the source code to customize and extend Dyad's capabilities. This empowers developers to tailor the tool to their specific needs and contribute to its evolution, fostering a collaborative development community.

· Free and No Sign-up Required: Download and use Dyad without any cost or registration. This removes barriers to entry, making powerful AI development tools accessible to everyone, regardless of their budget or commitment.

Product Usage Case

· Building a local AI assistant that can read and summarize documents stored on your computer. Dyad's local nature ensures your sensitive company documents are not exposed to cloud AI services. You can use Dyad to define the AI's capabilities and then easily switch to Cursor to write the specific Python code that interfaces with your local file system and the AI model.

· Developing an AI-powered code generation tool that runs offline. Dyad allows you to prototype and test the AI's ability to generate code snippets, and its local execution means you can develop this tool even without an internet connection, ensuring continuous productivity.

· Creating an AI agent that automates tasks within your desktop environment, such as organizing files or sending emails. Dyad's ability to integrate with local tools means you can instruct the AI to perform these actions directly on your machine, with the flexibility to debug the AI's logic and your automation scripts side-by-side.

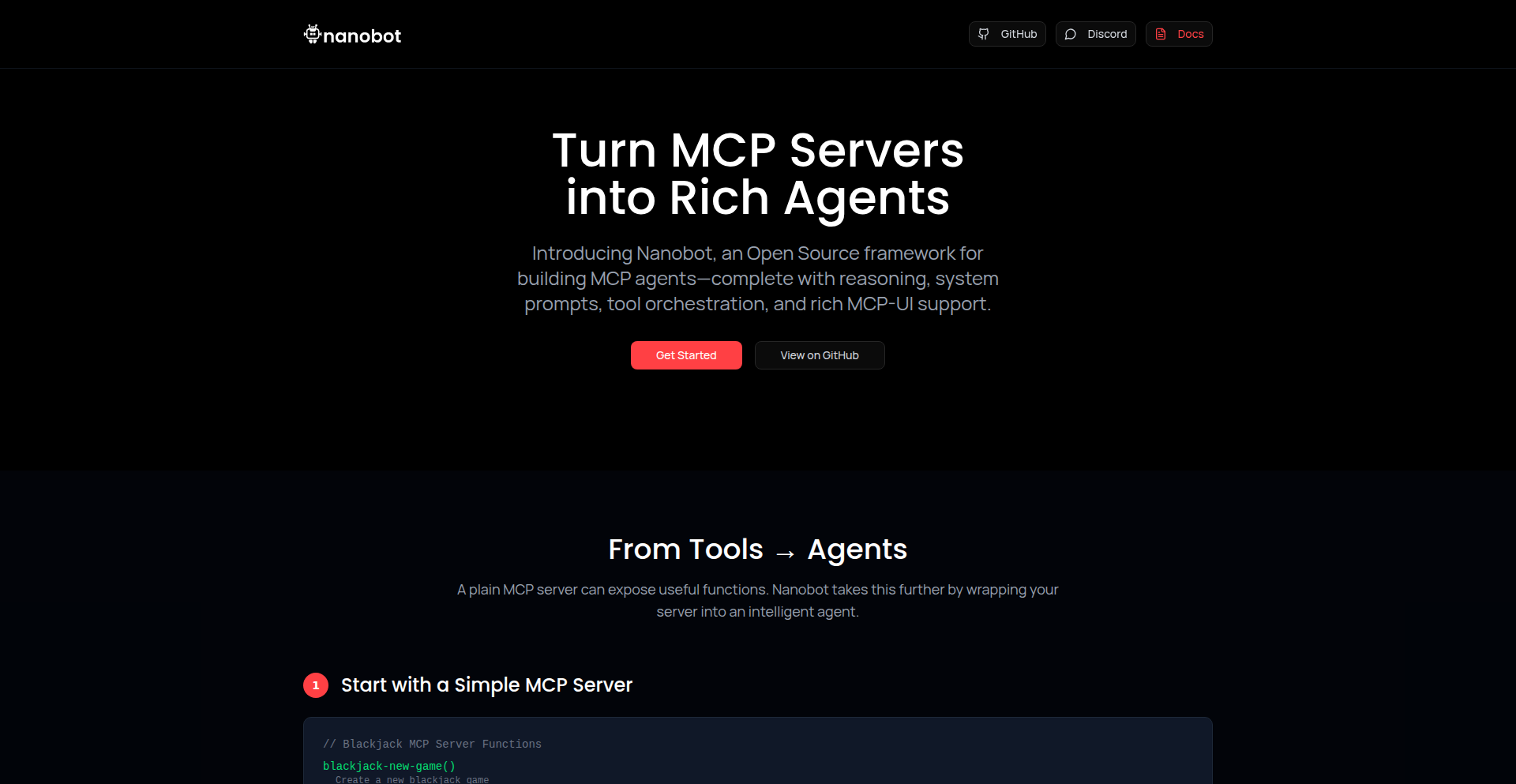

6

Nanobot: AI Agent Fabric

Author

smw355

Description

Nanobot is an open-source framework that transforms existing Model Context Protocol (MCP) servers into sophisticated AI agents. It adds reasoning capabilities, system prompts, and orchestration to MCP tools, enabling them to act like intelligent agents. A key innovation is its full support for MCP-UI, allowing agents to present interactive components like forms, dashboards, and mini-applications directly within chat interfaces. This moves AI agents beyond simple text and function calls into rich, interactive experiences, solving the problem of creating more engaging and useful AI applications.

Popularity

Points 19

Comments 0

What is this product?

Nanobot is a developer framework for building AI agents. At its core, it takes the structured tools exposed by MCP servers – which are essentially collections of functions – and wraps them with advanced AI capabilities. Think of it like giving a simple calculator the ability to understand your requests, plan how to solve a math problem, and then present the answer in an interactive graph. The truly innovative part is its support for MCP-UI. This means an AI agent built with Nanobot can not only respond with text but also display dynamic, interactive elements directly in the chat. So, instead of just telling you how to play Blackjack, an agent could show you an actual interactive Blackjack table within the conversation. This solves the limitation of traditional AI interactions being confined to text or basic button clicks, allowing for much richer user experiences.

How to use it?

Developers can use Nanobot to enhance their existing MCP-based tools and services. If you have an MCP server that exposes functionalities for managing data, controlling devices, or accessing information, you can integrate Nanobot to create an AI agent that can understand natural language requests, reason about those requests, and then present results or actions through interactive UIs within a chat environment. This can be achieved by configuring Nanobot with a system prompt that defines the agent's persona and goals, and by pointing it to your MCP server. For example, a customer support tool could become an interactive agent that guides users through troubleshooting steps using dynamic forms and visual aids, all within the chat. The provided GitHub repository offers the codebase for integration, and the live demo showcases the practical application of building an interactive Blackjack agent.
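Nanobot's configuration format is not shown in the post, so the following is a hypothetical Python sketch of the layer it adds on top of an MCP server: a system prompt plus a planning step that turns a user message into an MCP `tools/call` request. MCP messages are JSON-RPC 2.0, and `tools/list` and `tools/call` (with `name` and `arguments` params) are the protocol's tool methods. The keyword matcher is only a stand-in for LLM reasoning, and `ToyAgent` and the `deal_hand` tool are invented for illustration.

```python
import itertools
import json

_ids = itertools.count(1)  # JSON-RPC request ids must be unique per session


def jsonrpc(method, params=None):
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)


def tools_call(name, arguments):
    return jsonrpc("tools/call", {"name": name, "arguments": arguments})


class ToyAgent:
    """Hypothetical agent: a keyword matcher standing in for an LLM planner."""

    def __init__(self, system_prompt, tools):
        # A real agent would send system_prompt to the model; unused here.
        self.system_prompt = system_prompt
        self.tools = tools  # tool name -> trigger keywords (hypothetical)

    def plan(self, user_message):
        """Return a serialized tools/call request, or None to answer in text."""
        text = user_message.lower()
        for name, keywords in self.tools.items():
            if any(k in text for k in keywords):
                return tools_call(name, {"query": user_message})
        return None
```

An MCP-UI-capable client would then render any interactive resource returned in the tool result, such as the Blackjack table from the demo.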

Product Core Function

· Agent Orchestration: Enables the AI agent to manage and coordinate multiple tools or services provided by MCP servers, ensuring that complex tasks are broken down and executed effectively. This is valuable for building AI assistants that can perform multi-step operations, like planning a trip which involves booking flights, hotels, and generating an itinerary.

· Reasoning and Intent Understanding: Implements AI models that interpret user natural language requests and infer the underlying intent. This allows users to interact with the agent in a conversational manner, rather than having to learn specific commands. The value here is a more intuitive and accessible user experience for leveraging complex systems.

· MCP-UI Integration: Facilitates the seamless embedding of interactive user interface components (forms, dashboards, mini-applications) directly within chat interfaces. This is a significant innovation for creating engaging and functional AI applications, moving beyond static text responses and enabling real-time data visualization and user input within conversations.

· System Prompt Configuration: Allows developers to define the agent's personality, role, and operational guidelines through a system prompt. This is crucial for ensuring the AI agent behaves consistently and appropriately for its intended purpose, such as a helpful customer service bot or a precise technical assistant.

Product Usage Case

· Building an interactive Blackjack game agent: A developer could use Nanobot to wrap a Blackjack MCP server. The agent could then explain the rules, guide the player through betting and hitting, and render a visually interactive Blackjack table directly in the chat, making the game engaging and playable without leaving the conversation.

· Creating a customer support chatbot with interactive diagnostics: For a technical support scenario, Nanobot could connect to an MCP server that controls diagnostic tools. The agent could ask users to fill out an interactive form, collect system information, and then display troubleshooting steps or error logs in a clear, interactive format within the chat, speeding up resolution times.

· Developing an e-commerce assistant that displays product information dynamically: An online store could use Nanobot to build an agent that interacts with product catalog MCP servers. When a user asks about a product, the agent could present interactive product details, size selectors, or even a 3D model viewer directly in the chat, enhancing the shopping experience.

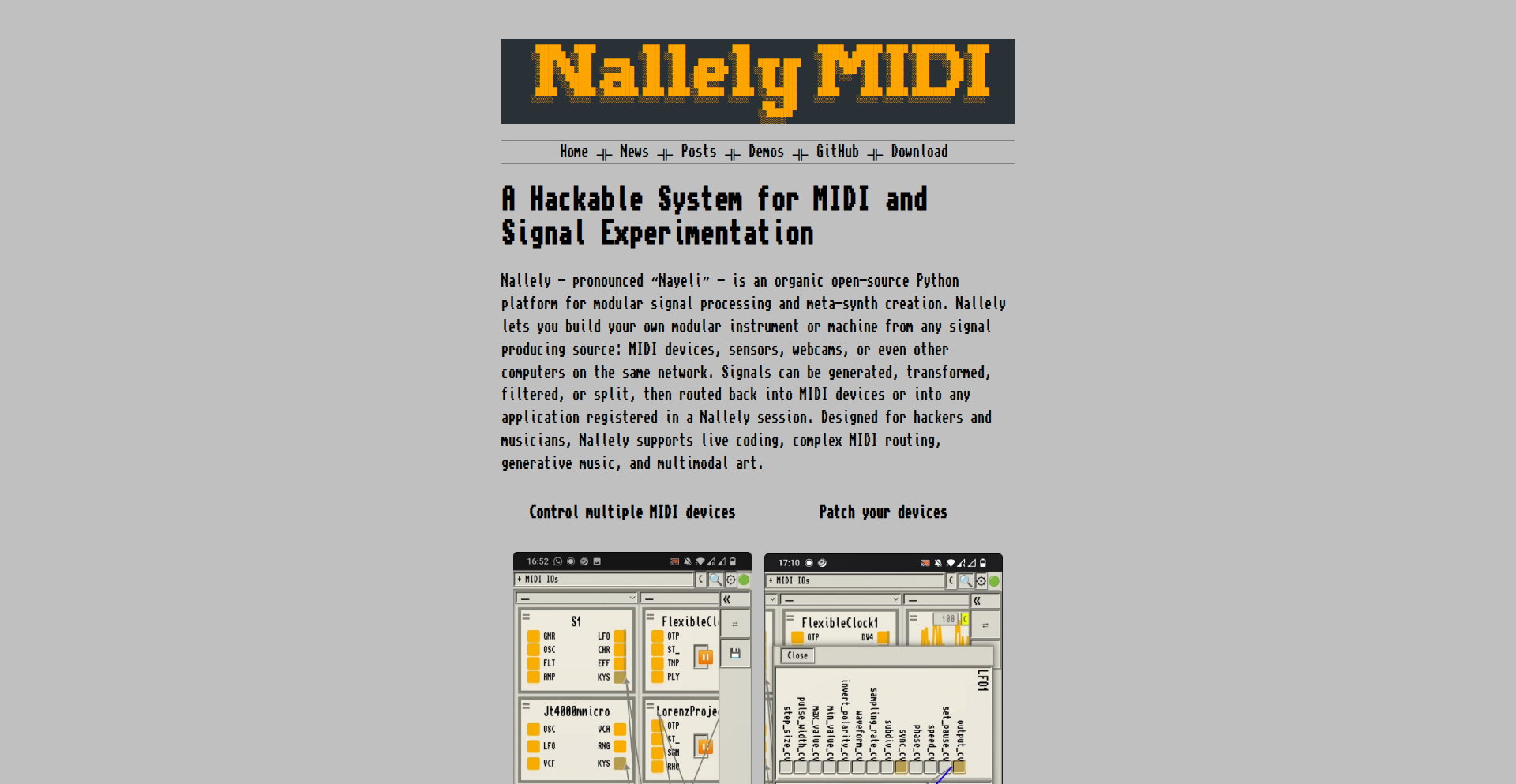

7

Nallely: Adaptive Signal Weaver

Author

drschlange

Description

Nallely is a Python-based system for routing, processing, and interacting with signals, inspired by the 'Systems as a Living Thing' philosophy. It allows developers to create self-adaptive and resilient signal processing workflows, visualizing connections and enabling interaction with external systems via a network bus. The innovation lies in its dynamic, emergent behavior and runtime adaptability, prioritizing extensibility over raw performance.

Popularity

Points 16

Comments 2

What is this product?

Nallely is a Python framework that lets you build dynamic signal processing systems. Think of it like a digital workbench where signals (like MIDI data from a musical instrument, or data from sensors) are like threads, and you can create custom 'neurons' (small pieces of code) to manipulate these signals. These neurons are connected by 'patches' (channels) to create complex processing chains. The core innovation is its ability to adapt and evolve in real-time, much like a living organism. It's built with a focus on extensibility, allowing you to easily add new types of signal processing logic, and boasts a user-friendly graphical interface for visually patching these components together. Even though it's written in Python, it's designed to be efficient, running on devices like a Raspberry Pi with minimal resource usage, making it suitable for embedded and real-time applications. So, what does this mean for you? It means you can build sophisticated, custom signal processing applications that can learn and adapt, without needing to be a low-level systems programming expert.

How to use it?

Developers can use Nallely by writing custom 'neurons' in Python, which are essentially functions or classes that process input signals and produce output signals. These neurons can be linked together using 'patches' through the visual GUI or programmatically. For instance, you could create a neuron that adjusts the volume of an incoming MIDI signal based on its pitch, and another neuron that triggers a light show when a specific sound frequency is detected. Nallely also offers a network-bus neuron, allowing neurons written in other technologies or languages to connect and interact with the Nallely system. This makes it easy to integrate Nallely into existing projects or build new, complex systems by leveraging different technologies. So, how would you use it? You'd define your signal processing logic as neurons, connect them to create desired workflows, and deploy it where needed, whether it's on a Raspberry Pi for an interactive art installation or a more powerful server for complex audio processing.
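
The neuron-and-patch idea can be illustrated with a toy in plain Python. This is not Nallely's API: the `Neuron` and `Patch` classes, the `process`/`receive` methods, and `connect` are hypothetical names chosen only to show how small processing units joined by channels form a chain. The example adjusts MIDI note velocity based on pitch, similar to the volume-by-pitch neuron described above.

```python
from typing import Any, List, Optional


class Neuron:
    """Hypothetical processing unit: receives a signal and may emit a new one."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.patches: List["Patch"] = []

    def process(self, value: Any) -> Optional[Any]:
        # Default behaviour: pass the signal through unchanged.
        return value

    def receive(self, value: Any) -> None:
        result = self.process(value)
        if result is not None:  # None means "emit nothing"
            for patch in self.patches:
                patch.send(result)


class Patch:
    """Hypothetical channel that forwards signals to a target neuron."""

    def __init__(self, target: Neuron) -> None:
        self.target = target

    def send(self, value: Any) -> None:
        self.target.receive(value)


def connect(src: Neuron, dst: Neuron) -> Patch:
    """Patch the output of `src` into the input of `dst`."""
    patch = Patch(dst)
    src.patches.append(patch)
    return patch


class VelocityByPitch(Neuron):
    """Scale a (pitch, velocity) MIDI note: louder for higher notes, clamped to 0-127."""

    def process(self, note):
        pitch, velocity = note
        scaled = round(velocity * (0.5 + pitch / 127))
        return (pitch, max(0, min(127, scaled)))


class Recorder(Neuron):
    """Sink standing in for a synth or MIDI output; records what it receives."""

    def __init__(self, name: str) -> None:
        super().__init__(name)
        self.received: List[Any] = []

    def process(self, value):
        self.received.append(value)
        return None  # end of the chain


if __name__ == "__main__":
    midi_in, scaler, synth = Neuron("midi_in"), VelocityByPitch("scaler"), Recorder("synth")
    connect(midi_in, scaler)
    connect(scaler, synth)
    for note in [(60, 100), (127, 100)]:
        midi_in.receive(note)
    print(synth.received)  # [(60, 97), (127, 127)]
```

Keeping each neuron's logic in `process` and the wiring in patches is what makes chains easy to rearrange; a real system would also need scheduling, threading, and the runtime re-patching Nallely emphasizes, none of which this toy attempts.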

Product Core Function

· Signal Routing and Patching: The ability to visually connect different signal processing modules (neurons) using channels, allowing for flexible and dynamic signal flow management. This is valuable because it simplifies the creation of complex processing pipelines without manual coding for every connection, enabling rapid prototyping of signal manipulation ideas.

· Custom Neuron Development: A Python API that enables developers to easily write their own signal processing modules (neurons) with custom logic. This is valuable as it empowers developers to extend the system's capabilities with specialized processing algorithms or integrations tailored to specific needs, such as unique audio effects or data transformations.

· Runtime Adaptability and Emergent Behavior: The system's design focuses on creating dynamic, self-adapting behaviors where the interactions between neurons can lead to unexpected yet functional outcomes. This is valuable for building systems that can respond intelligently to changing conditions or inputs, mimicking organic systems and leading to more robust and creative applications.

· Networked Neuron Interaction: The inclusion of a network-bus neuron allows external applications or neurons written in different technologies to integrate with Nallely. This is valuable for interoperability, enabling Nallely to act as a central hub for diverse signal processing components and enhancing the scalability and collaborative potential of projects.

· Mobile-Friendly GUI: A graphical user interface that is accessible and usable on mobile devices for visually patching and managing the signal processing workflows. This is valuable because it democratizes the creation and control of these complex systems, making them accessible to a wider range of users and environments, even on the go.

Product Usage Case

· Building an adaptive music synthesizer where different 'neurons' control aspects like filter cutoff, LFO speed, and envelope decay, with the system automatically adjusting parameters based on real-time performance input. This solves the problem of static synth presets by enabling dynamic, performance-driven sound design.

· Creating an interactive art installation where sensor data (like motion or touch) is processed through Nallely neurons to control lighting and sound output, with the system learning and evolving its responses over time. This allows for more engaging and responsive artistic experiences.

· Developing a custom MIDI controller mapping system where raw MIDI messages are routed and transformed by specific neurons to control complex software instruments or hardware. This overcomes the limitations of standard MIDI mapping tools by allowing highly customized and conditional MIDI processing.

· Integrating Nallely with a robotics project, where sensor inputs are processed to drive motor outputs, and the system learns to optimize movement based on environmental feedback. This enables the creation of more intelligent and responsive robotic systems.

· Constructing a real-time audio processing pipeline for live performance, where input audio streams are routed through various custom-written effects neurons and the signal chain can be reconfigured through the GUI during the performance. This gives performers live, fine-grained control over their sound.

8

Open Register Navigator

Author

sudojosh

Description

This project transforms cumbersome PDF documents of New Zealand Members of Parliament's financial disclosures into a searchable, web-based interface. It leverages AI, specifically Gemini 2.5 Flash, to extract structured data from these reports, making it easy for anyone to find information about MPs' financial interests by name, company, or interest type. This addresses the accessibility issue of public data locked in unsearchable formats, enhancing transparency and allowing for quick analysis of political financial ties. The innovation lies in the practical application of advanced AI for data extraction from a common, yet challenging, public data format (PDFs).

Popularity

Points 11

Comments 1

What is this product?

Open Register Navigator is a web application that makes the public financial disclosures of New Zealand Members of Parliament (MPs) easily searchable. This information is normally released as lengthy, unsearchable PDF documents. The project uses an AI model (Gemini 2.5 Flash) in a two-pass process: the first pass identifies MP names and the pages on which each MP appears, and the second pass extracts structured financial-interest data from only those pages. This turns raw, hard-to-navigate data into something users can query to quickly see which MPs hold which interests.
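
The two-pass flow can be sketched as follows. The model call is replaced by an injectable function, and the prompts, JSON shapes, and function names (`find_mp_pages`, `extract_interests`, `two_pass_extract`) are assumptions for illustration; the project's actual prompts and Gemini client code live in its repository and are not reproduced here. The point of the second pass is that each extraction prompt contains only the pages relevant to one MP.

```python
import json
from typing import Callable, Dict, List

# A model is any function from a prompt string to a JSON reply string. In the
# real project this role is played by Gemini 2.5 Flash; here it is injected.
Model = Callable[[str], str]


def find_mp_pages(pages: List[str], model: Model) -> Dict[str, List[int]]:
    """Pass 1: ask which MPs appear on which (1-based) pages."""
    numbered = "\n".join(f"--- page {i} ---\n{text}" for i, text in enumerate(pages, 1))
    prompt = 'List every MP named below as JSON {"MP name": [page numbers]}.\n' + numbered
    return json.loads(model(prompt))


def extract_interests(pages: List[str], mp: str, page_numbers: List[int],
                      model: Model) -> List[dict]:
    """Pass 2: send only this MP's pages and ask for structured records."""
    excerpt = "\n".join(pages[n - 1] for n in page_numbers)
    prompt = (f"Extract the declared interests of {mp} as a JSON list of "
              + '{"category": ..., "entity": ...} objects.\n' + excerpt)
    return json.loads(model(prompt))


def two_pass_extract(pages: List[str], model: Model) -> Dict[str, List[dict]]:
    index = find_mp_pages(pages, model)
    return {mp: extract_interests(pages, mp, nums, model) for mp, nums in index.items()}


if __name__ == "__main__":
    def fake_model(prompt: str) -> str:
        # Stub replies so the flow runs without an API key.
        if prompt.startswith("List every MP"):
            return json.dumps({"Jane Doe": [2]})
        return json.dumps([{"category": "Directorships", "entity": "Acme Ltd"}])

    pages = ["Register of Pecuniary Interests (cover)", "Jane Doe\nDirectorships: Acme Ltd"]
    print(two_pass_extract(pages, fake_model))
```

Injecting the model as a plain function keeps the pipeline testable offline; a production version would also need to validate the model's JSON, since LLM output can be malformed or wrong.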

How to use it?

Developers and interested individuals can use Open Register Navigator through its web interface, searching for MPs by name, by company names mentioned in disclosures, or by type of financial interest (e.g., 'shares', 'directorships'). For developers who want to integrate this data or replicate the process, the project is open source on GitHub. Its stack is Ruby on Rails, SQLite (with FTS5 for efficient full-text search), and Tailwind/DaisyUI, and the code shows how structured data was extracted from the PDFs and made available for querying. The same approach can be adapted for transparency initiatives in other regions or for other types of official documents.
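
For readers unfamiliar with FTS5, here is a minimal illustration of full-text search over extracted interest records using Python's built-in `sqlite3` module. The app itself is Rails, so this is not its code, and the table and column names are hypothetical. FTS5 must be compiled into the SQLite library Python links against; most current builds include it, but that is not guaranteed.

```python
import sqlite3
from typing import Iterable, List, Tuple

Row = Tuple[str, str, str]  # (mp, category, entity)


def build_index(rows: Iterable[Row]) -> sqlite3.Connection:
    """Load extracted interests into an in-memory FTS5 full-text table."""
    conn = sqlite3.connect(":memory:")
    # Raises sqlite3.OperationalError ("no such module: fts5") if FTS5 is missing.
    conn.execute("CREATE VIRTUAL TABLE interests USING fts5(mp, category, entity)")
    conn.executemany("INSERT INTO interests (mp, category, entity) VALUES (?, ?, ?)", rows)
    conn.commit()
    return conn


def search(conn: sqlite3.Connection, query: str) -> List[Row]:
    """Match terms across all columns (case-insensitive), best matches first."""
    cur = conn.execute(
        "SELECT mp, category, entity FROM interests WHERE interests MATCH ? ORDER BY rank",
        (query,),
    )
    return cur.fetchall()


if __name__ == "__main__":
    conn = build_index([
        ("Jane Doe", "Directorships", "Acme Ltd"),
        ("Jane Doe", "Shares", "Acme Ltd"),
        ("John Roe", "Shares", "Kiwi Dairy Co"),
    ])
    print(search(conn, "dairy"))        # the John Roe row
    print(search(conn, "jane shares"))  # both terms must match (implicit AND)
```

Multiple terms in an FTS5 query are combined with an implicit AND, and without a column filter each term may match any indexed column, which is what lets one search box cover names, companies, and interest types at once.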

Product Core Function

· Searchable MP Financial Data: Provides a user-friendly interface to search across all MPs' disclosed financial interests, enabling quick access to information on shareholdings, directorships, and consultancies. This is valuable for journalists, researchers, and citizens who need to understand potential conflicts of interest or the financial networks of their representatives.

· AI-Powered Data Extraction: Uses Gemini 2.5 Flash in a two-pass process to turn unstructured PDF text into structured, queryable records. This saves significant manual effort and unlocks data that would otherwise stay buried in the documents.

· Filtering and Categorization: Allows users to filter search results by category of interest (e.g., specific industries) or by political party. This functionality helps in analyzing trends and understanding the distribution of financial interests across different political affiliations or sectors, providing deeper insights.

· Open Source Codebase: The project's code is publicly available on GitHub, promoting transparency and allowing other developers to learn from the implementation, contribute improvements, or adapt the methodology for their own projects. This fosters community collaboration and the advancement of open data practices.

Product Usage Case

· A journalist investigating potential conflicts of interest for a specific MP can use the tool to quickly find all disclosed financial interests related to a particular company or sector that the MP might be associated with, saving hours of manual PDF review.

· A researcher studying the influence of specific industries on political decision-making can search for all MPs who have declared interests in that industry, gathering data for analysis without needing to process individual PDF files.

· A concerned citizen wanting to understand their local representative's financial dealings can easily search for their MP's name and review their disclosed interests, fostering greater accountability and public trust.

· A developer in another country with similar public disclosure laws might adapt the project's AI-driven PDF parsing technique to make their own government's transparency data more accessible, replicating the success of this initiative.

9

PostgresRLS-TenantGuard

Author

noctarius

Description

A demonstration of building multi-tenancy in applications using PostgreSQL's Row-Level Security (RLS) feature. It showcases how RLS can effectively isolate user data, preventing common security oversights like forgotten WHERE clauses. This approach offers a robust and less error-prone method for managing data access in multi-tenant environments. The core innovation lies in leveraging a built-in database feature for a critical security and data isolation concern, making development simpler and more secure.

Popularity

Points 6

Comments 4

What is this product?

PostgresRLS-TenantGuard is an example project that demonstrates how to use PostgreSQL's Row-Level Security (RLS) to build secure multi-tenant applications. Multi-tenancy means a single instance of your application serves multiple customers (tenants), with each tenant's data kept separate and private. Traditionally, developers might implement this by adding `WHERE tenant_id = current_tenant_id` to every database query. This project shows how RLS can automate this, by defining security policies directly in the database. When a user queries a table, PostgreSQL automatically enforces these policies, ensuring they only see their own tenant's data, without requiring explicit filtering in application code. This is innovative because it shifts security enforcement to the database layer, reducing the risk of bugs and simplifying application logic.

How to use it?

Developers can use this project as a reference for implementing multi-tenancy in their own PostgreSQL-backed applications. The approach involves setting up a PostgreSQL database, enabling RLS on the relevant tables, and defining policies that tie the logged-in user's identity or tenant association to the rows they can access. To integrate it into an existing application, configure the database connection to use a role that is actually subject to the RLS policies, and make sure the authentication system passes the necessary context (such as a user ID or tenant ID) to PostgreSQL. For example, after a user logs in, the application can store the tenant ID in a session- or transaction-level setting that the RLS policies read, so every query is automatically filtered to that user's tenant.
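
As a sketch of that setup, the helpers below only build SQL text (they do not connect to a database); they are not the project's own code. The `tenant_id` column of type `uuid`, the `tenant_isolation` policy name, and the `app.current_tenant` setting name are hypothetical. `ENABLE`/`FORCE ROW LEVEL SECURITY`, `CREATE POLICY ... USING ... WITH CHECK`, `current_setting()`, and `set_config()` are standard PostgreSQL. Superusers and roles with `BYPASSRLS` always bypass policies, and a table's owner does too unless `FORCE ROW LEVEL SECURITY` is set, so the application should connect as a dedicated non-superuser role.

```python
from typing import List, Tuple

SETTING = "app.current_tenant"  # hypothetical custom setting name


def rls_setup_sql(table: str, setting: str = SETTING) -> List[str]:
    """DDL that limits every row of `table` to the tenant named in `setting`.

    `table` is interpolated directly, so it must be a trusted identifier.
    """
    # current_setting(name, true) returns NULL instead of erroring when unset;
    # nullif() also turns an empty string into NULL, so "no tenant" matches no rows.
    predicate = f"tenant_id = nullif(current_setting('{setting}', true), '')::uuid"
    return [
        f"ALTER TABLE {table} ENABLE ROW LEVEL SECURITY;",
        # Owners bypass RLS by default; FORCE applies the policy to them too.
        f"ALTER TABLE {table} FORCE ROW LEVEL SECURITY;",
        # USING filters rows that are read, updated, or deleted;
        # WITH CHECK rejects inserted or updated rows belonging to another tenant.
        f"CREATE POLICY tenant_isolation ON {table} "
        f"USING ({predicate}) WITH CHECK ({predicate});",
    ]


def set_tenant_sql(tenant_id: str, setting: str = SETTING) -> Tuple[str, Tuple[str, str]]:
    """Statement to run at the start of each transaction after login.

    Uses the %s paramstyle (as psycopg does). is_local = true scopes the value
    to the current transaction, which is safer with pooled connections.
    """
    return "SELECT set_config(%s, %s, true)", (setting, tenant_id)


if __name__ == "__main__":
    for stmt in rls_setup_sql("projects"):
        print(stmt)
    print(set_tenant_sql("7c9e6679-7425-40de-944b-e07fc1f90ae7"))
```

With these policies in place, an application query such as `SELECT * FROM projects` needs no `WHERE tenant_id = ...` clause: PostgreSQL applies the predicate itself, and a transaction that never set a tenant sees no rows rather than all of them.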

Product Core Function

· Data isolation via RLS: Implements security policies directly in PostgreSQL to automatically filter data based on the tenant or user accessing it. This means each user only sees their own data, enhancing security and privacy.

· Simplified application logic: By offloading data access control to the database, application code becomes cleaner and less prone to errors related to missing WHERE clauses, reducing development time and potential bugs.

· Enhanced security posture: Prevents accidental data leakage between tenants by enforcing data boundaries at the database level, a more robust solution than application-level filtering alone.

· Demonstrates practical RLS implementation: Provides a concrete example of how to configure and use RLS for multi-tenancy, making it easier for other developers to adopt this powerful database feature.

Product Usage Case

· Building a SaaS platform where each customer has their own independent data: Imagine a project management tool where each company (tenant) should only see their projects and tasks. Using RLS, you can ensure that users from Company A cannot access data belonging to Company B, even if they try to query directly.

· Developing an e-commerce platform with multiple vendors: Each vendor should only be able to manage their own products and orders. RLS can automatically restrict a vendor's access to only their specific inventory and sales records.

· Creating a shared database for a collaborative application: For instance, a document editing tool where users belong to different teams. RLS can ensure that users only see and edit documents shared with their team, maintaining confidentiality.

10

BurntUSD: Stablecoin Art Explorer

Author

scyclow

Description

BurntUSD is an art project that visually represents the concept of stablecoins by burning actual US dollars and creating digital art from the remnants. It explores the inherent value and scarcity principles of digital currencies through a physical-to-digital transformation.

Popularity

Points 7

Comments 3

What is this product?

This project, BurntUSD, is an artistic exploration of stablecoins. It takes the tangible act of burning US dollars and translates that physical destruction into unique digital art pieces. The core idea is to draw parallels between the physical scarcity of the burnt currency and the designed scarcity or value stability of stablecoins, offering a tangible, albeit abstract, representation of these financial concepts. The innovation lies in using a physical, destructive process to generate digital assets, prompting reflection on value, scarcity, and the digital representation of worth. So, what's the value to you? It provides a thought-provoking, artistic lens through which to understand the abstract concept of stablecoin value through a concrete, albeit destructive, process.

How to use it?

As an art project, BurntUSD is primarily for observation and contemplation. Developers can engage with it by studying the methodology presented in the Hacker News Show HN post, which likely details the process of documenting the burning and digitizing the results. Potential technical integrations could involve using the generated art as NFTs, exploring blockchain-based provenance for the physical burning event, or building interactive visualizations of the burning process. So, how can you use this? You can appreciate the art, understand the concept of stablecoin value through this unique medium, or draw inspiration for your own projects that bridge physical and digital realms.

Product Core Function

· Physical currency destruction for artistic creation: This function provides a unique, tangible link to the creation of digital art, highlighting scarcity and value. Its value is in creating a physical anchor for an abstract digital concept, making it more relatable. This is relevant for artists and creators looking for novel ways to produce digital assets with a narrative.

· Digital art generation from physical remnants: The project transforms the burnt dollar remnants into digital art, offering a unique aesthetic and conceptual output. The value here is in the creation of distinctive digital assets that carry a story of destruction and transformation, appealing to collectors and digital art enthusiasts.

· Conceptual exploration of stablecoins: By referencing stablecoins, the project invites contemplation on monetary value, scarcity, and digital representation. Its value lies in providing an artistic commentary on financial technology, helping audiences grasp complex ideas through visual and conceptual means. This is useful for anyone interested in the intersection of art, finance, and technology.

· Documentation of a unique process: The project likely documents the entire process, from currency burning to digital art creation, providing a case study in conceptual art and digital asset generation. The value is in sharing the methodology and inspiring others with a unique approach to art and technology. This is beneficial for fellow developers and artists seeking inspiration for their own experimental projects.

Product Usage Case

· An artist wants to create a series of digital art pieces that represent the fragility of currency and the concept of inflation. They can draw inspiration from BurntUSD's methodology of physically altering currency to create unique digital visuals, potentially exploring similar destructive or transformative processes.

· A developer interested in NFTs and the narrative behind them can see how BurntUSD imbues its digital art with a compelling story of physical destruction and conceptual linkage to stablecoins, demonstrating how a strong narrative can enhance the perceived value of a digital asset.

· A financial technology enthusiast curious about alternative representations of value could use BurntUSD as a case study to discuss how physical actions can be metaphorically linked to digital financial instruments, prompting discussions about digital scarcity and intrinsic value in cryptocurrencies.

· A conceptual artist experimenting with the intersection of physical and digital mediums could adapt BurntUSD's approach to explore themes of decay, transformation, and value in their own work, using physical artifacts as a source for digital art generation.

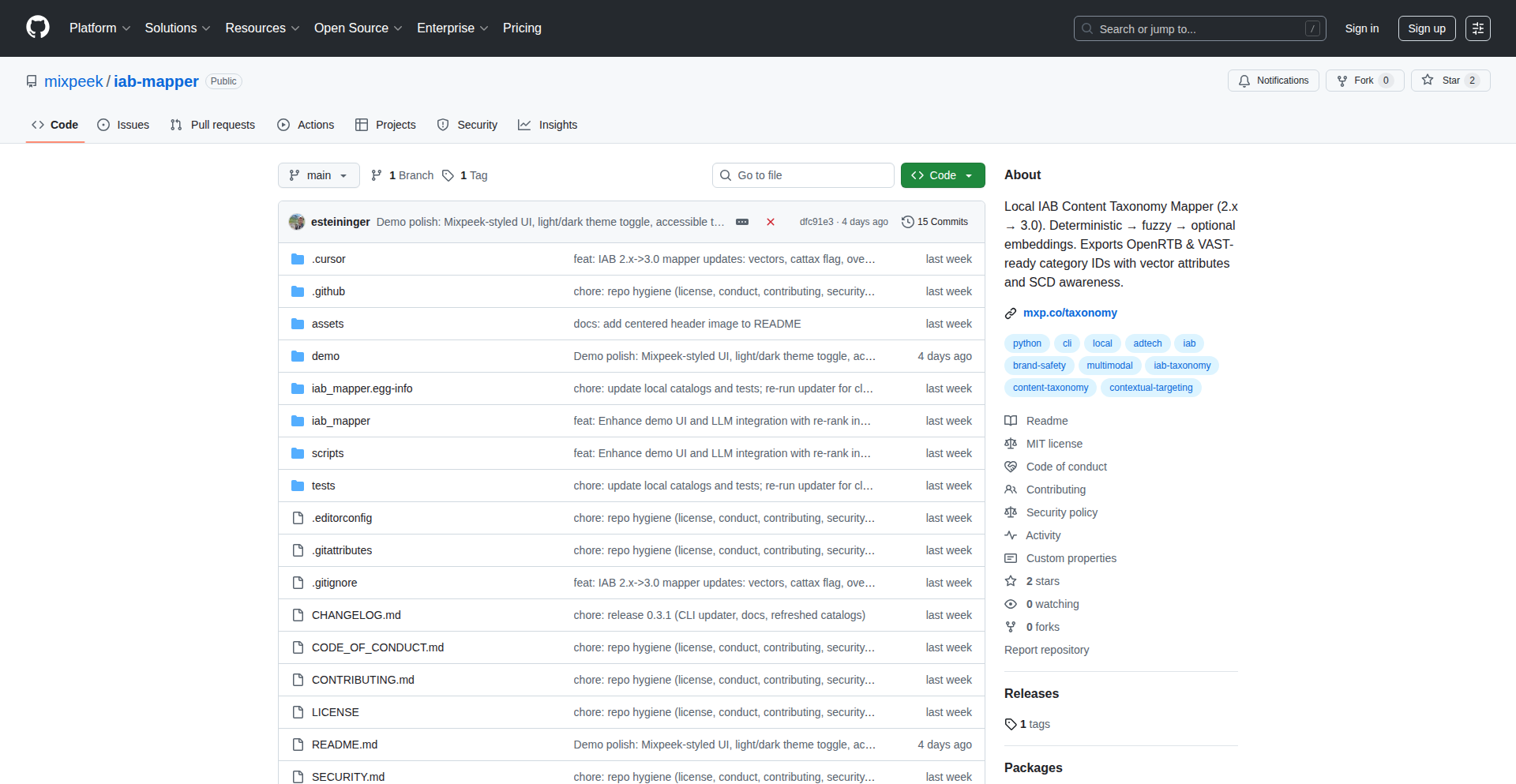

11

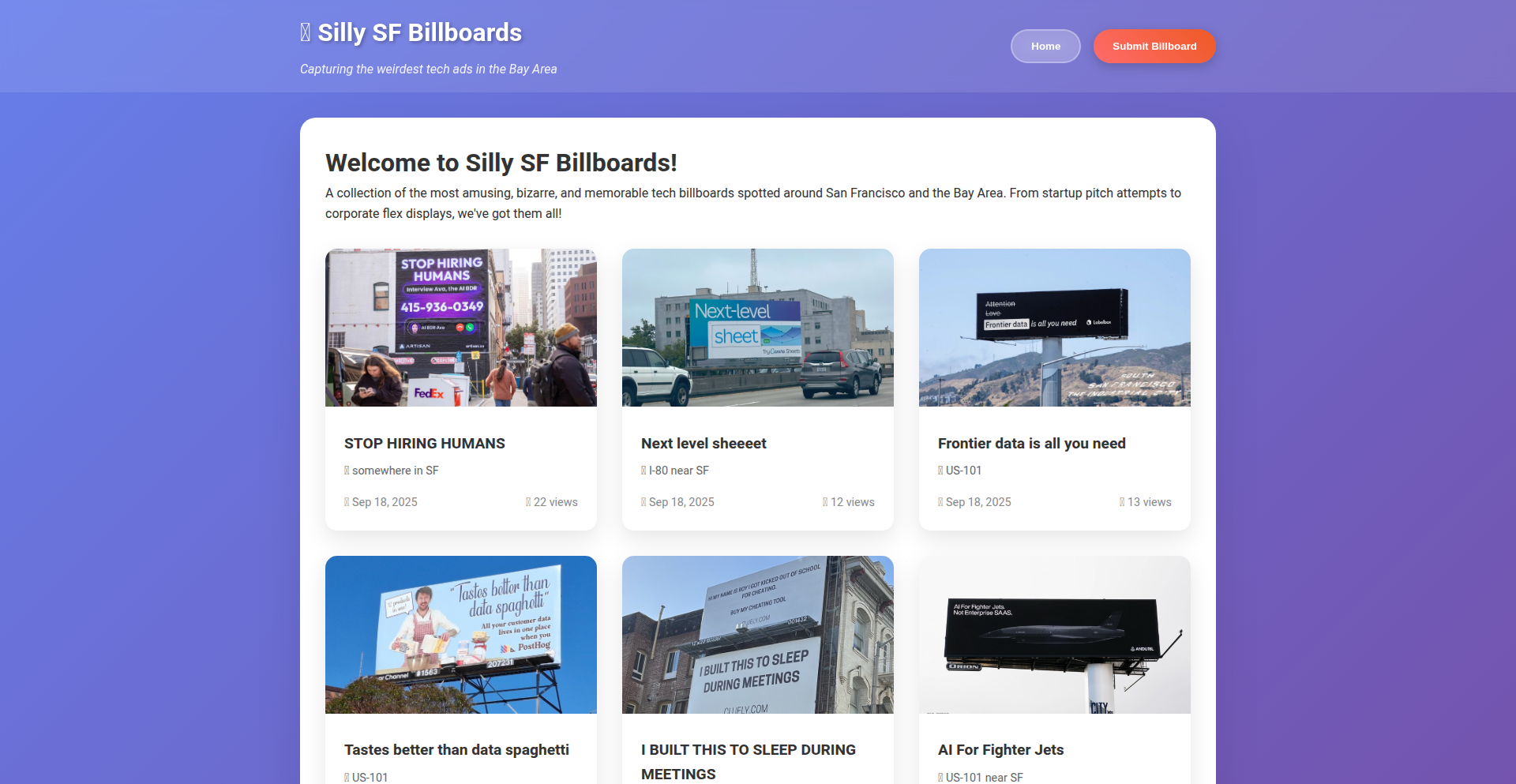

BillboardSnap

Author

yuedongze

Description

BillboardSnap is a novel application designed to capture and curate the often fleeting and distracting tech billboards seen while driving. It tackles the problem of wanting to remember or share these visual cues but being unable to do so safely. The core innovation lies in its ability to provide a hands-free or near-hands-free method for capturing images of these billboards and organizing them for later enjoyment and sharing, inspired by the rapid, visually overwhelming nature of urban tech advertising.

Popularity

Points 7

Comments 1

What is this product?

BillboardSnap is a mobile application that allows users to capture images of roadside tech billboards, primarily while on the go. The technical approach likely involves leveraging smartphone camera capabilities with a user-friendly interface that minimizes interaction while driving. The innovation is in creating a seamless workflow from observation to capture and organization, addressing the specific pain point of missing out on visually interesting advertisements due to the constraints of driving. It’s like having a digital scrapbook for the visual noise of the city.

How to use it?

Developers can integrate BillboardSnap into their workflow by using it as a reference for visual trends in advertising or as a tool for inspiration in their own creative projects. For instance, a marketing team could use the curated collection to analyze current advertising strategies. A designer might pull inspiration for typeface or layout from captured billboards. The app acts as a personal, context-aware visual archive.

Product Core Function

· Automated Capture Trigger: Lets users capture an image quickly with minimal interaction, to minimize distraction while driving. This is valuable for anyone who wants to document things seen on the road without compromising their focus.

· Intelligent Image Curation: Organizes captured images, potentially with metadata like date and location, making it easy to revisit and categorize visual content. This means you can find that specific billboard you liked weeks ago without endless scrolling.

· Sharing Capabilities: Enables users to share their curated billboard collection with friends or collaborators, fostering community and shared experience. This is useful for friends who want to see the unique advertising landscape of a city or for team members to discuss visual trends.

· Offline Accessibility: Designed to work even with intermittent network connectivity, ensuring that captured moments are not lost. This is important for users who are frequently in areas with poor signal strength.

· Customizable Tagging and Categorization: Allows users to add tags and organize images into custom albums, making the collection highly searchable and personalized. This transforms a random collection of photos into a meaningful visual library.

Product Usage Case

· A marketing professional uses BillboardSnap to collect examples of recent tech company advertisements in San Francisco to identify emerging visual themes for their next campaign. This helps them stay ahead of the competition by understanding current industry aesthetics.

· A designer, inspired by the visual clutter of tech billboards, uses BillboardSnap to create a mood board for a new branding project, focusing on typography and color palettes. This provides concrete visual references for creative brainstorming.

· A resident of San Francisco uses BillboardSnap to document the ever-changing tech billboard landscape to share with out-of-town friends, showcasing the city's unique culture. This offers an entertaining way to give friends a glimpse into the local environment.

· A researcher studying urban visual communication employs BillboardSnap to gather data on the prevalence and messaging of tech advertisements in a specific geographic area. This provides empirical evidence for their studies on advertising impact.

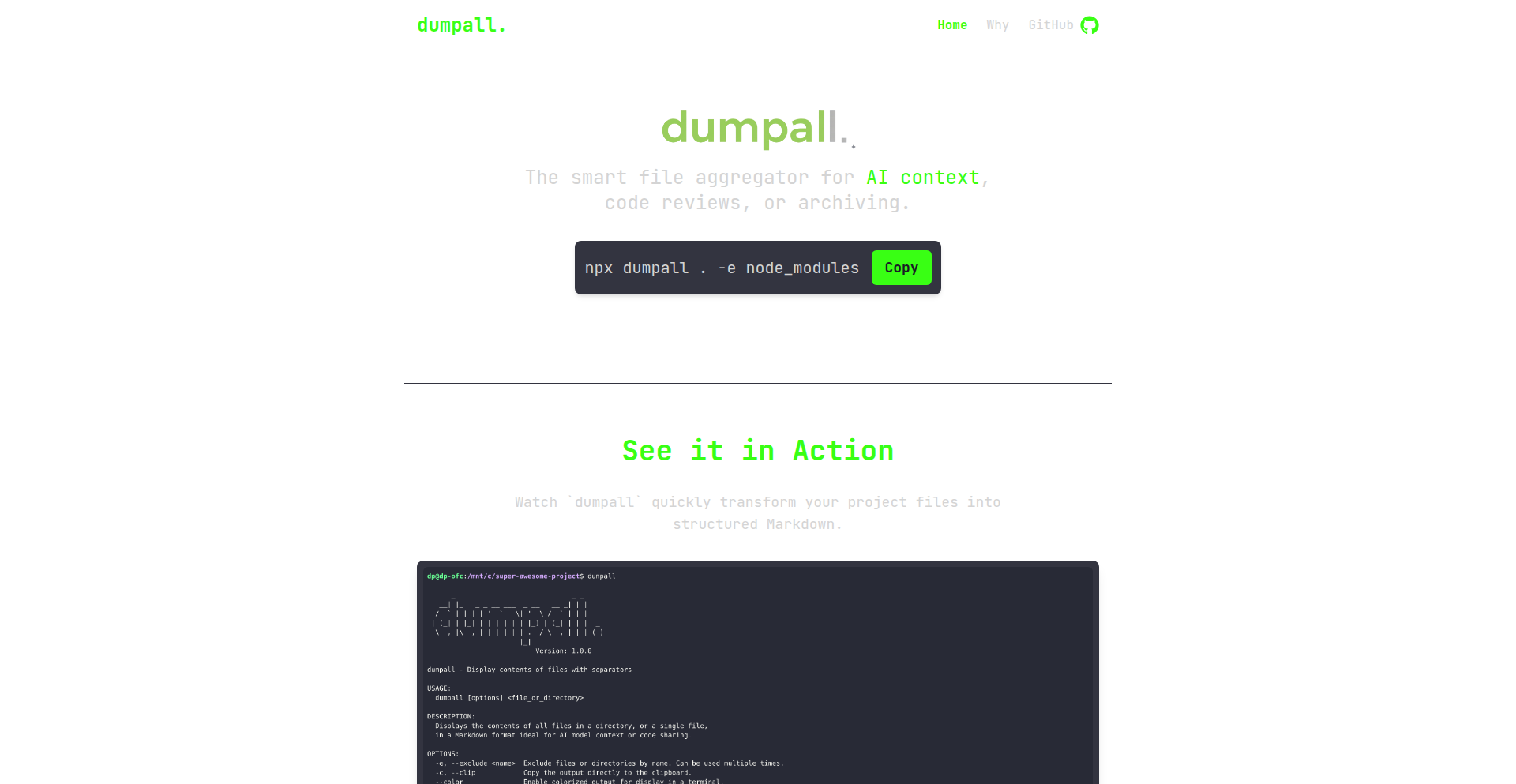

12

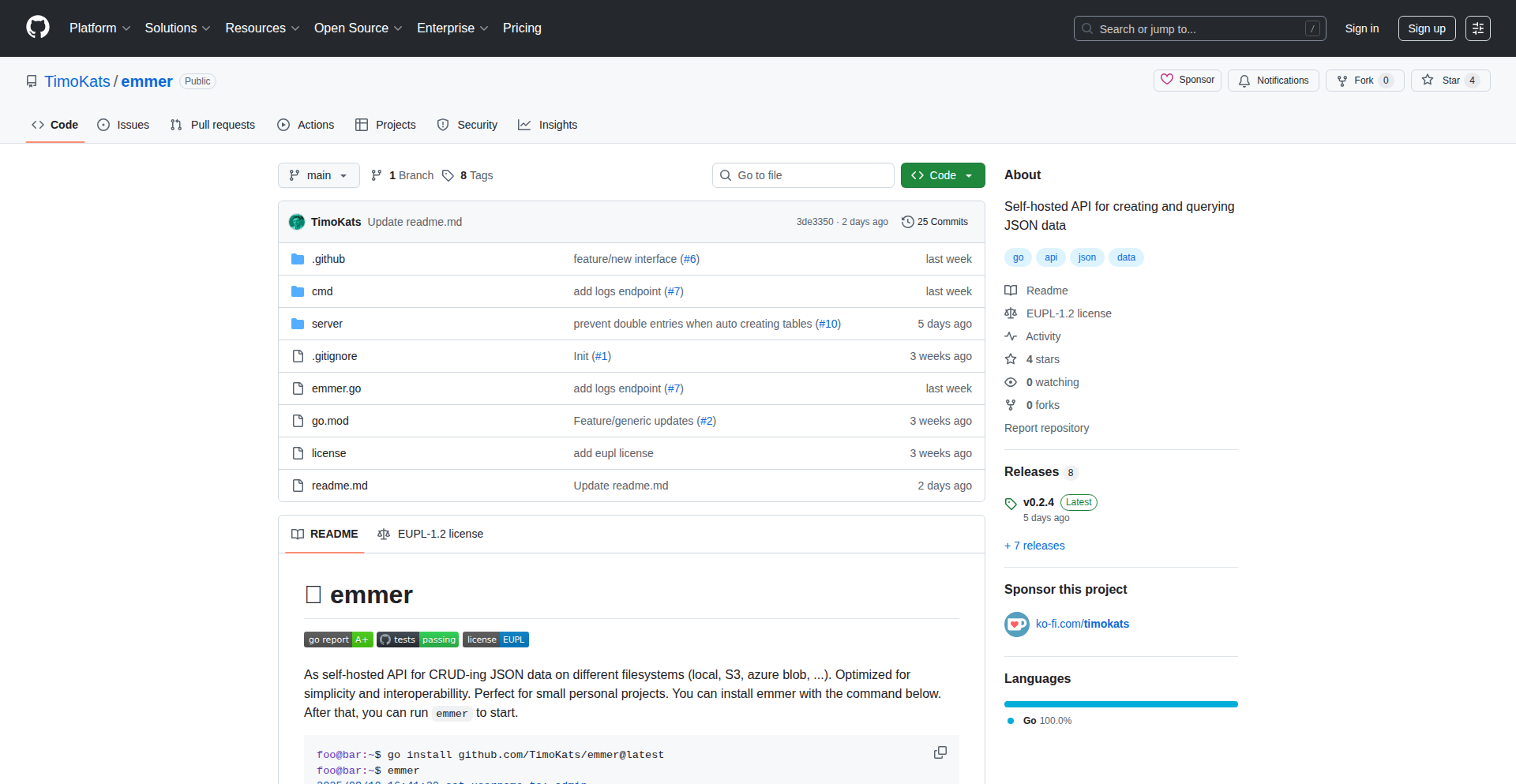

GoCRUD-JSON API

Author

tiemster

Description

A self-hosted API built in Go that allows developers to perform Create, Read, Update, and Delete (CRUD) operations on JSON data. It's designed for simplicity and interoperability, making it ideal for small personal projects. The API intelligently adapts to your JSON structure, enabling direct manipulation of your data through simple HTTP requests.

Popularity

Points 3

Comments 4

What is this product?

This is a lightweight, self-hosted API server written in Go. Its core innovation lies in its ability to directly interact with your JSON files using standard HTTP methods (GET, PUT, DELETE). Unlike traditional databases, it doesn't require a rigid schema. Instead, it understands your JSON structure on the fly. For example, if you have a JSON file with nested keys like `{"user": {"profile": {"name": "Alice"}}}`, you can access and modify 'Alice' using a URL like `/api/user/profile/name`. This makes it easy to manage configuration files, simple data storage, or backend logic for prototypes without the overhead of a full-fledged database. The clever part is how it handles different data types and nested structures seamlessly, offering helper functions for appending items to arrays or incrementing numerical values within your JSON.
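
The path-to-key mapping can be sketched in Python. The project itself is written in Go, and these function names (`get_path`, `put_path`, `delete_path`, `append_path`, `increment_path`) and the treatment of numeric segments as array indices are assumptions for illustration, not its API. Each URL segment after `/api/` selects a key in an object or an index in an array.

```python
from typing import Any, List, Tuple


def _segments(path: str) -> List[str]:
    """'/api/user/profile/name' -> ['user', 'profile', 'name']."""
    parts = [p for p in path.split("/") if p]
    return parts[1:] if parts and parts[0] == "api" else parts


def _step(node: Any, key: str) -> Any:
    # Arrays are addressed by numeric index, objects by key.
    return node[int(key)] if isinstance(node, list) else node[key]


def get_path(doc: Any, path: str) -> Any:
    node = doc
    for key in _segments(path):
        node = _step(node, key)
    return node


def _parent(doc: Any, path: str) -> Tuple[Any, str]:
    keys = _segments(path)
    if not keys:
        raise KeyError("path must name at least one key")
    parent = doc
    for key in keys[:-1]:
        parent = _step(parent, key)
    return parent, keys[-1]


def put_path(doc: Any, path: str, value: Any) -> None:
    """Set (or create) the value at path; intermediate containers must exist."""
    parent, last = _parent(doc, path)
    if isinstance(parent, list):
        parent[int(last)] = value
    else:
        parent[last] = value


def delete_path(doc: Any, path: str) -> None:
    parent, last = _parent(doc, path)
    del parent[int(last) if isinstance(parent, list) else last]


def append_path(doc: Any, path: str, item: Any) -> None:
    """Helper: append item to the array at path."""
    get_path(doc, path).append(item)


def increment_path(doc: Any, path: str, by: float = 1) -> Any:
    """Helper: add `by` to the number at path and return the new value."""
    new = get_path(doc, path) + by
    put_path(doc, path, new)
    return new


if __name__ == "__main__":
    doc = {"user": {"profile": {"name": "Alice", "visits": 1}}}
    put_path(doc, "/api/user/profile/name", "Bob")
    increment_path(doc, "/api/user/profile/visits")
    print(doc)
```

A server built on helpers like these would load the file with `json.load`, apply the operation matching the request's method and path, and write the document back, which is essentially the workflow the description outlines.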

How to use it?

Developers can integrate this API into their projects by simply running the Go executable and pointing it to their JSON data file. Once the API is running, you can interact with your JSON data using standard HTTP clients (like `curl`, Postman, or code libraries in various programming languages). For instance, to get data, you'd make a GET request to `/api/your/json/path`. To update a value, you'd use a PUT request to the same path with the new value in the request body. Appending to a list or incrementing a number is just as straightforward with specific endpoint helpers. This makes it perfect for quick backend services for front-end applications, configuration management for microservices, or even as a simple data store for personal scripting needs.

Product Core Function

· Create, Read, Update, Delete (CRUD) operations on JSON data: Enables basic data manipulation directly on your JSON files, allowing you to easily manage configuration or simple datasets without complex database setup. This means you can quickly add, retrieve, modify, or remove data points as needed for your application.

· Dynamic JSON structure adaptation: The API automatically understands and works with any JSON structure you provide, eliminating the need for rigid schemas and offering flexibility for diverse data formats. This makes it adaptable to various project requirements without upfront data modeling.

· Nested data access via URL paths: Allows targeting specific values within deeply nested JSON objects by using the key hierarchy in the URL, simplifying data targeting and manipulation. This provides a straightforward way to access and change specific pieces of information within complex data.

· Helper functions for array appending and value incrementing: Provides convenient built-in functionality to easily add items to JSON arrays or increment numerical values within your data, reducing boilerplate code for common data modifications.

· Self-hosted and lightweight: Offers complete control over your data and requires minimal server resources, making it an efficient solution for personal projects or environments where external dependencies are undesirable.

Product Usage Case

· A front-end developer building a small portfolio website might use this API to store and retrieve project details, testimonials, and contact information directly from a JSON file, avoiding the need for a backend database for a simple static site. The API handles all the data updates, making it easy to manage content via simple file edits or custom admin interfaces.

· A DevOps engineer could use this API to manage configuration settings for multiple microservices. Each service could have its configuration stored in a JSON file, and this API would allow for centralized and programmatic updates to these configurations without redeploying services. This simplifies configuration management and ensures consistency across different parts of the system.

· A data scientist creating a quick prototype for data visualization might use this API to serve small datasets. They can convert CSV or other data to JSON, update it as needed, and use the API to feed it to a web-based visualization tool, enabling rapid iteration on data exploration.

· A hobbyist building a smart home automation system could use this API to store device states and user preferences. The API would allow different smart devices or a central controller to read and update statuses (like light on/off, temperature settings) efficiently, making it easy to manage a connected home environment.

13

AquaShell: Custom Windows Automation Environment

Author

foxiel

Description

AquaShell is a custom scripting and automation environment for Windows, inspired by classic tools like AutoIt and AutoHotkey. It features a unique, user-defined syntax designed for ease of use and personal expression, allowing developers to create custom administration tools, automate repetitive tasks, and even build fully scripted applications. Its innovation lies in providing a fresh, personalizable approach to Windows automation, enabling efficient problem-solving through code.

Popularity

Points 6

Comments 1

What is this product?

AquaShell is a new programming language and execution environment built specifically for Windows. Think of it as a way to write your own small programs or scripts to make your computer do what you want, automatically. Its core innovation is its highly flexible and personalizable syntax, allowing developers to craft a language that feels intuitive and natural to them. This makes automating complex tasks or building specialized tools more accessible and enjoyable. So, it's a powerful, yet personal, tool for making your Windows experience more efficient and tailored to your needs.

How to use it?

Developers can use AquaShell by writing scripts in its custom syntax and then executing them using the AquaShell interpreter on Windows. It's ideal for automating mundane tasks like file manipulation, application launching, form filling, or system administration. You can integrate AquaShell scripts into your workflow to streamline repetitive actions, saving you time and reducing errors. This means you can automate the things you do on your computer every day, making your work faster and easier.

Product Core Function

· Customizable Scripting Language: Allows users to define their own syntax, making it feel more natural and intuitive. This means you can tailor how you write commands to better suit your thinking, making automation easier.

· Windows Automation: Enables control over Windows applications, windows, and system processes. This lets you automate tasks like opening programs, clicking buttons, and typing text, all without manual intervention.

· Application Development: Supports building complete, standalone applications through scripting. This means you can create your own tools and utilities written in a language you've personalized.

· Task Scheduling and Execution: Provides the capability to schedule and run scripts automatically. This is useful for setting up recurring tasks, like backups or system checks, to run on their own.

· Open-Source and MIT Licensed: Freely available for anyone to use, modify, and distribute. This means you can use it without cost and even contribute to its development, fostering a collaborative community.

Product Usage Case

· Automating software installation and configuration: A developer can write an AquaShell script to install and set up multiple software applications on a new machine sequentially, saving hours of manual work. This means a new computer setup becomes much faster.

· Custom data entry and form submission: An AquaShell script can be created to read data from a spreadsheet and automatically fill out online forms or desktop application fields. This eliminates the tedious process of manual data entry.

· System administration tasks: A system administrator can use AquaShell to automate routine checks on server health, log file analysis, or user account management across many machines. This makes managing systems more efficient and less error-prone.

· Creating simple utility applications: A user might write an AquaShell script to create a quick file organizer that sorts files into specific folders based on their type or date. This helps keep digital workspaces tidy with minimal effort.

14

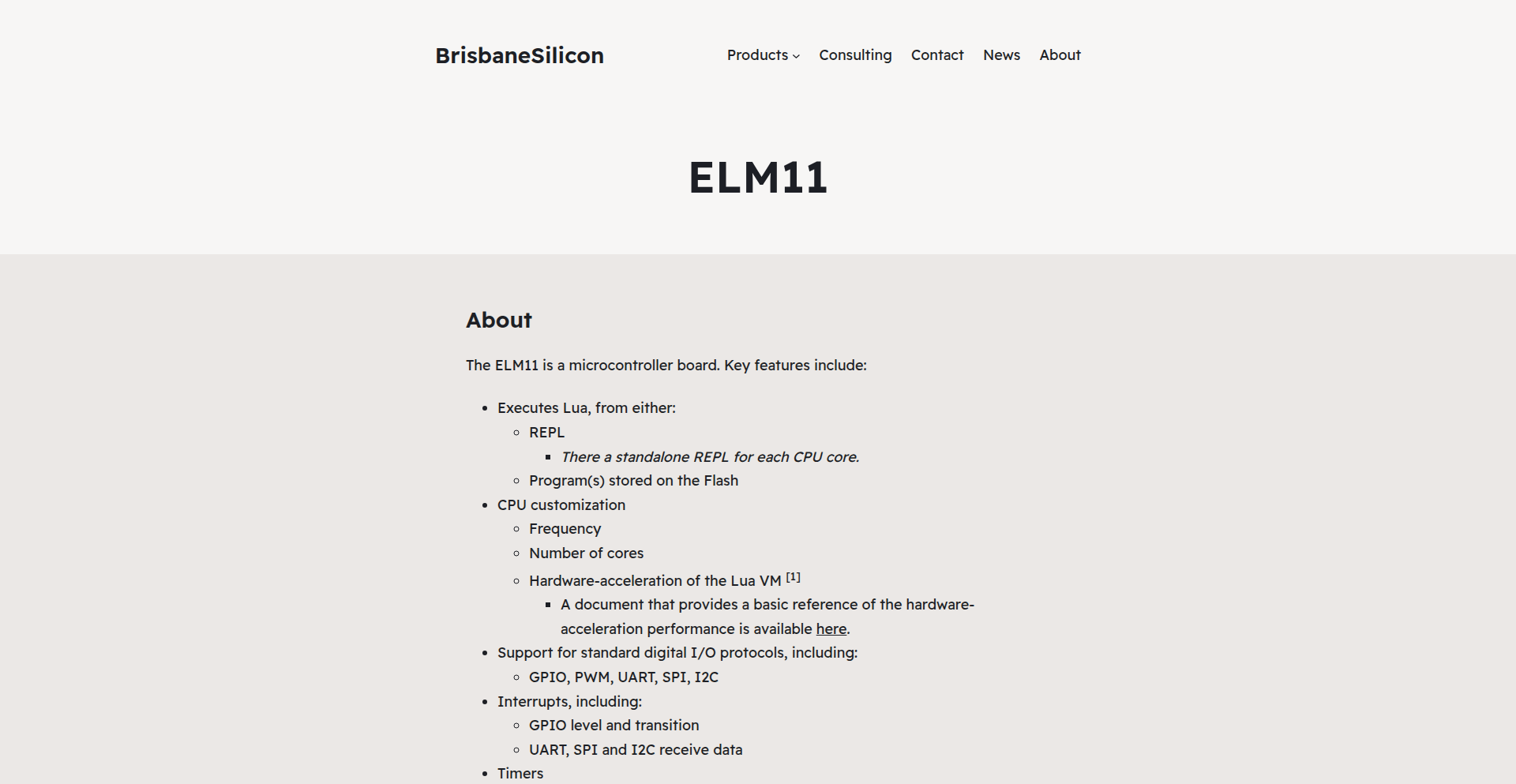

HwLuaVM: Hardware Accelerated Lua Microcontroller

Author

brisbanesilicon

Description

This project showcases a microcontroller that integrates a hardware-accelerated Lua Virtual Machine (VM). It aims to provide a more efficient and powerful scripting environment for embedded systems by offloading computationally intensive tasks of the Lua VM to dedicated hardware. This means developers can write more complex logic and handle real-time operations on resource-constrained devices with greater performance.

Popularity

Points 7

Comments 0

What is this product?

This is a microcontroller system featuring a Lua Virtual Machine (VM) that has been enhanced with hardware acceleration. Normally, Lua source is compiled to bytecode that the Lua VM, itself a software interpreter, executes instruction by instruction, which can be slow on small embedded devices. This project implements specific hardware components on the microcontroller to speed up common Lua VM operations, such as arithmetic calculations, string manipulation, and bytecode execution. The innovation lies in bringing the performance benefits of specialized hardware to a popular scripting language, making embedded development more agile and capable. So, what's the benefit? It allows you to run more sophisticated Lua code on your microcontroller, leading to faster response times and the ability to implement more advanced features on your embedded projects.
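To make "bytecode execution" concrete, here is a toy register-based dispatch loop in Python, loosely modeled on Lua 5.x opcodes such as LOADK, ADD, and RETURN. Every fetch, decode, and dispatch step below is work a software interpreter repeats for each instruction, and this is the kind of loop hardware acceleration targets. The tuple encoding and the opcode subset are assumptions for readability; the submission does not say which operations HwLuaVM implements in hardware.

```python
from typing import Any

# Simplified opcodes, named after (but not encoded like) Lua 5.x instructions.
LOADK, ADD, MUL, RETURN = "LOADK", "ADD", "MUL", "RETURN"


def run(code: list[tuple], constants: list[Any], num_registers: int = 8) -> Any:
    """Execute a toy register-machine program and return the value RETURN names."""
    regs: list[Any] = [None] * num_registers
    pc = 0  # program counter
    while True:
        op, *args = code[pc]  # fetch + decode
        pc += 1
        # Dispatch: a software VM pays this branching cost on every instruction.
        if op == LOADK:  # R[a] = K[bx]
            a, bx = args
            regs[a] = constants[bx]
        elif op == ADD:  # R[a] = R[b] + R[c]
            a, b, c = args
            regs[a] = regs[b] + regs[c]
        elif op == MUL:  # R[a] = R[b] * R[c]
            a, b, c = args
            regs[a] = regs[b] * regs[c]
        elif op == RETURN:  # return R[a]
            (a,) = args
            return regs[a]
        else:
            raise ValueError(f"unknown opcode {op!r}")


# Hand-assembled program for (2 + 3) * 4.
program = [
    (LOADK, 0, 0),   # R0 = 2
    (LOADK, 1, 1),   # R1 = 3
    (ADD, 2, 0, 1),  # R2 = R0 + R1
    (LOADK, 3, 2),   # R3 = 4
    (MUL, 4, 2, 3),  # R4 = R2 * R3
    (RETURN, 4),     # return R4
]
print(run(program, constants=[2, 3, 4]))  # prints 20
```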

How to use it?

Developers can use this system by writing Lua scripts that leverage the accelerated VM. The hardware acceleration is transparent to the script writer; you simply write standard Lua code. The system then automatically utilizes the hardware for performance gains. Integration involves using the provided firmware and development tools to compile and upload Lua scripts to the microcontroller. This could be integrated into IoT devices, robotics, sensor networks, or any embedded application requiring flexible and performant control. So, how can you use it? You write your control logic in Lua, upload it to the device, and the hardware makes it run much faster, allowing for more responsive and complex behaviors in your gadget.

Product Core Function

· Hardware Accelerated Lua VM Execution: Speeds up Lua script processing by using dedicated hardware for common VM operations. This allows for real-time responsiveness in embedded applications.

· Microcontroller Integration: Provides a complete embedded system solution where Lua scripting is directly managed by the microcontroller's hardware. This enables simpler development for embedded projects.

· Efficient Resource Management: By offloading computation to hardware, it reduces the software overhead on the microcontroller, freeing up resources for other tasks. This means your device can do more with less power.

· Simplified Embedded Scripting: Enables developers to use the user-friendly Lua scripting language for complex embedded control logic, rather than low-level C/C++. This speeds up development cycles and reduces errors.

Product Usage Case

· Robotics Control: A developer can use this to implement sophisticated movement algorithms and sensor processing for a robot using Lua scripts, achieving smoother and faster robot movements. This solves the problem of slow control loop updates on traditional microcontrollers.

· IoT Sensor Data Processing: An embedded device collecting environmental data can use Lua scripts to perform real-time analysis and filtering of sensor readings directly on the device before sending them out, reducing network traffic and latency. This addresses the challenge of processing data efficiently at the edge.

· Real-time Audio Effects: A music synthesizer project could use Lua to control complex audio processing chains, with hardware acceleration ensuring low-latency sound generation and manipulation. This overcomes the performance limitations of software-based audio processing on microcontrollers.

· Industrial Automation: A smart factory sensor node can be programmed with Lua to monitor machine status and trigger alerts based on complex conditional logic, reacting instantly to critical events. This provides a flexible and fast response mechanism for industrial monitoring.
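The IoT use case above, filtering readings on the device so that less data crosses the network, can be illustrated with exponential smoothing followed by a send-on-delta (deadband) check. On HwLuaVM this logic would be written in Lua; it is sketched in Python here for consistency, and the `alpha` and `threshold` defaults are illustrative assumptions, not values from the project.

```python
from typing import Iterable, Iterator


def filter_readings(readings: Iterable[float], alpha: float = 0.5,
                    threshold: float = 1.0) -> Iterator[tuple[int, float]]:
    """Yield (index, smoothed_value) only when the smoothed value has moved by at
    least `threshold` since the last value that was sent.

    - Exponential moving average: s = alpha * x + (1 - alpha) * s_prev damps sensor noise.
    - Send-on-delta: small changes are suppressed, so fewer packets are transmitted.
    """
    smoothed = None
    last_sent = None
    for i, x in enumerate(readings):
        smoothed = x if smoothed is None else alpha * x + (1 - alpha) * smoothed
        if last_sent is None or abs(smoothed - last_sent) >= threshold:
            last_sent = smoothed
            yield i, smoothed


# Five readings in, two transmissions out: the first value and the jump toward 24.
print(list(filter_readings([20.0, 20.5, 20.5, 24.0, 24.0])))
```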

15

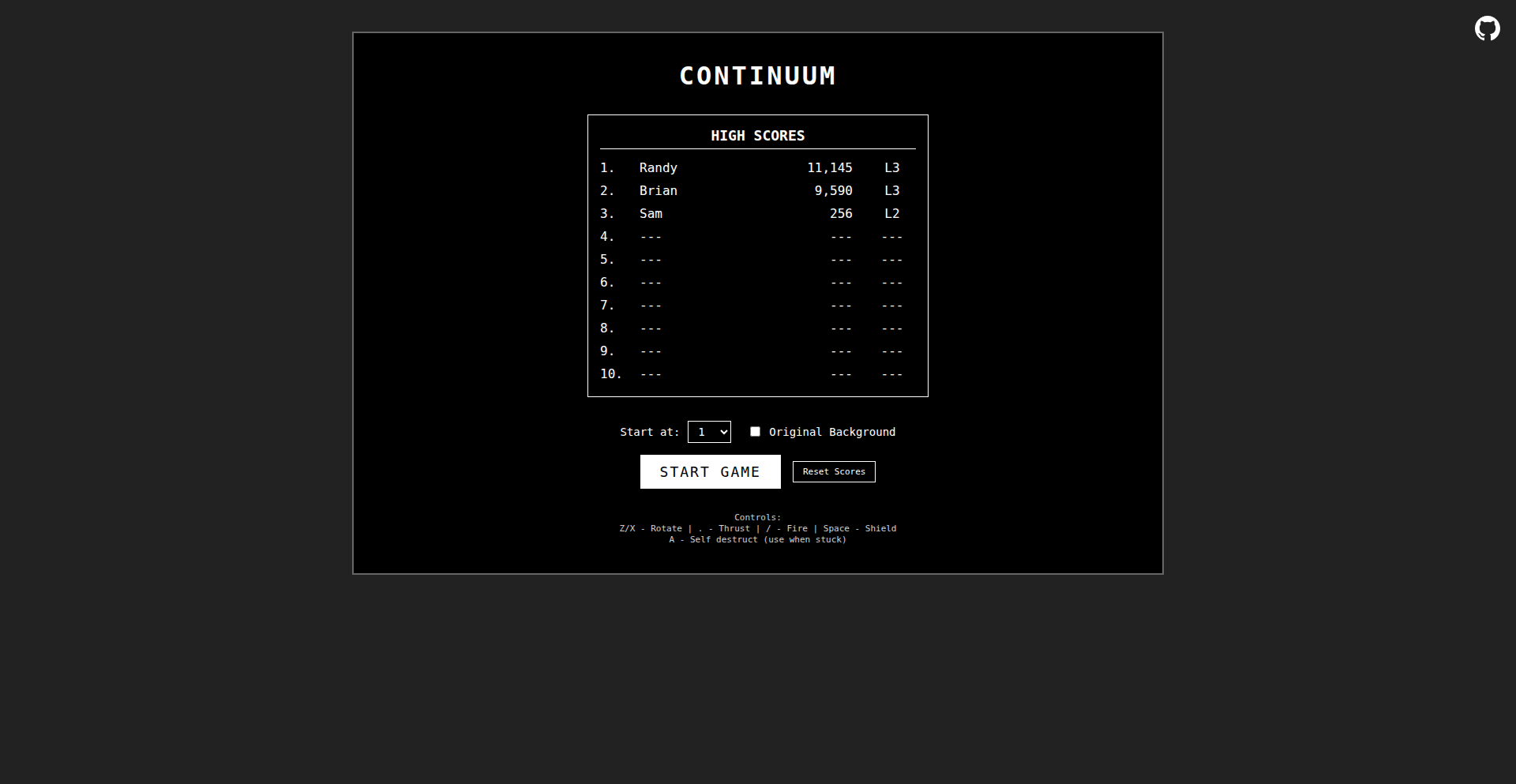

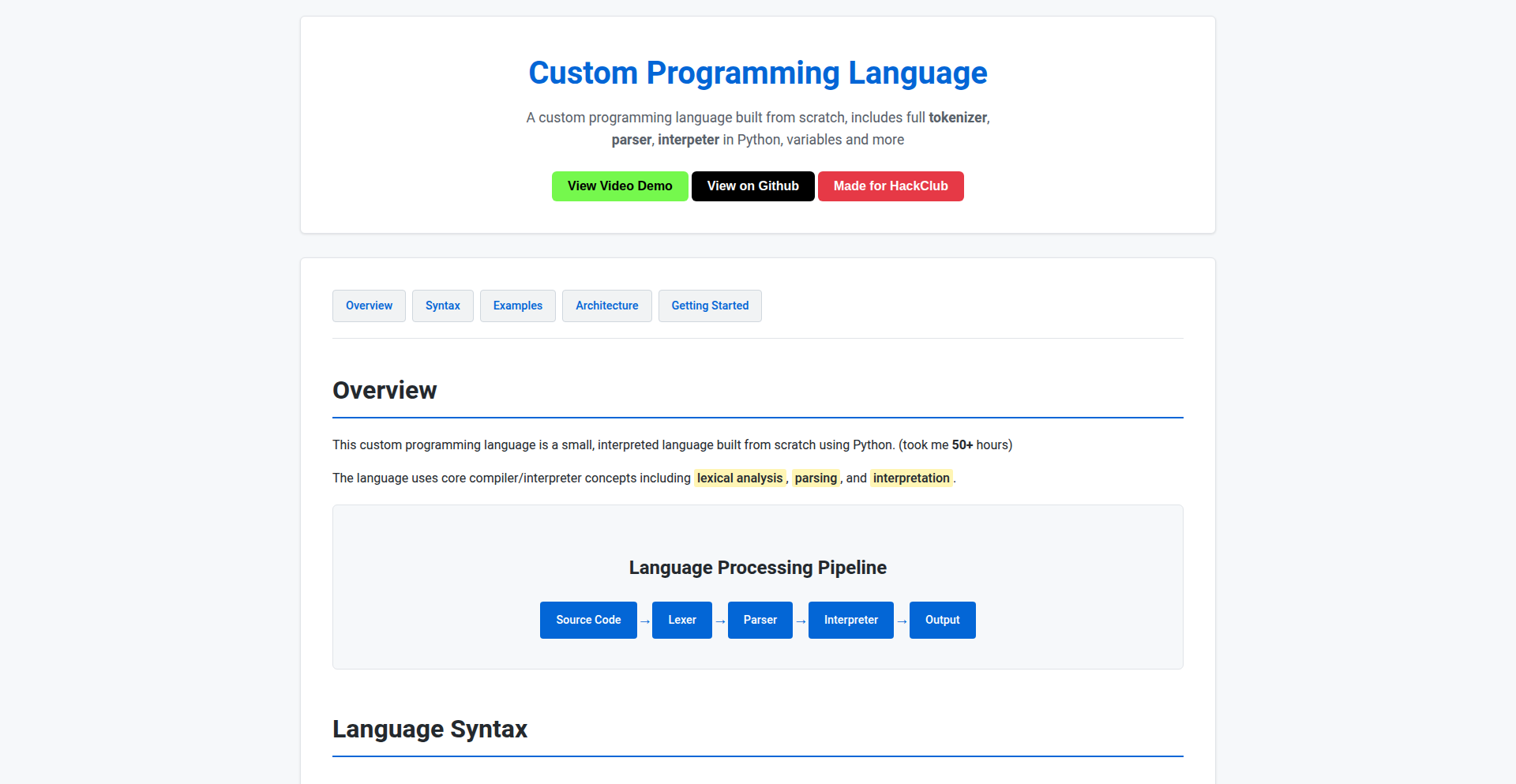

ContinuumJS: 68k Mac Classic Reimagined in JavaScript

Author

sam256

Description

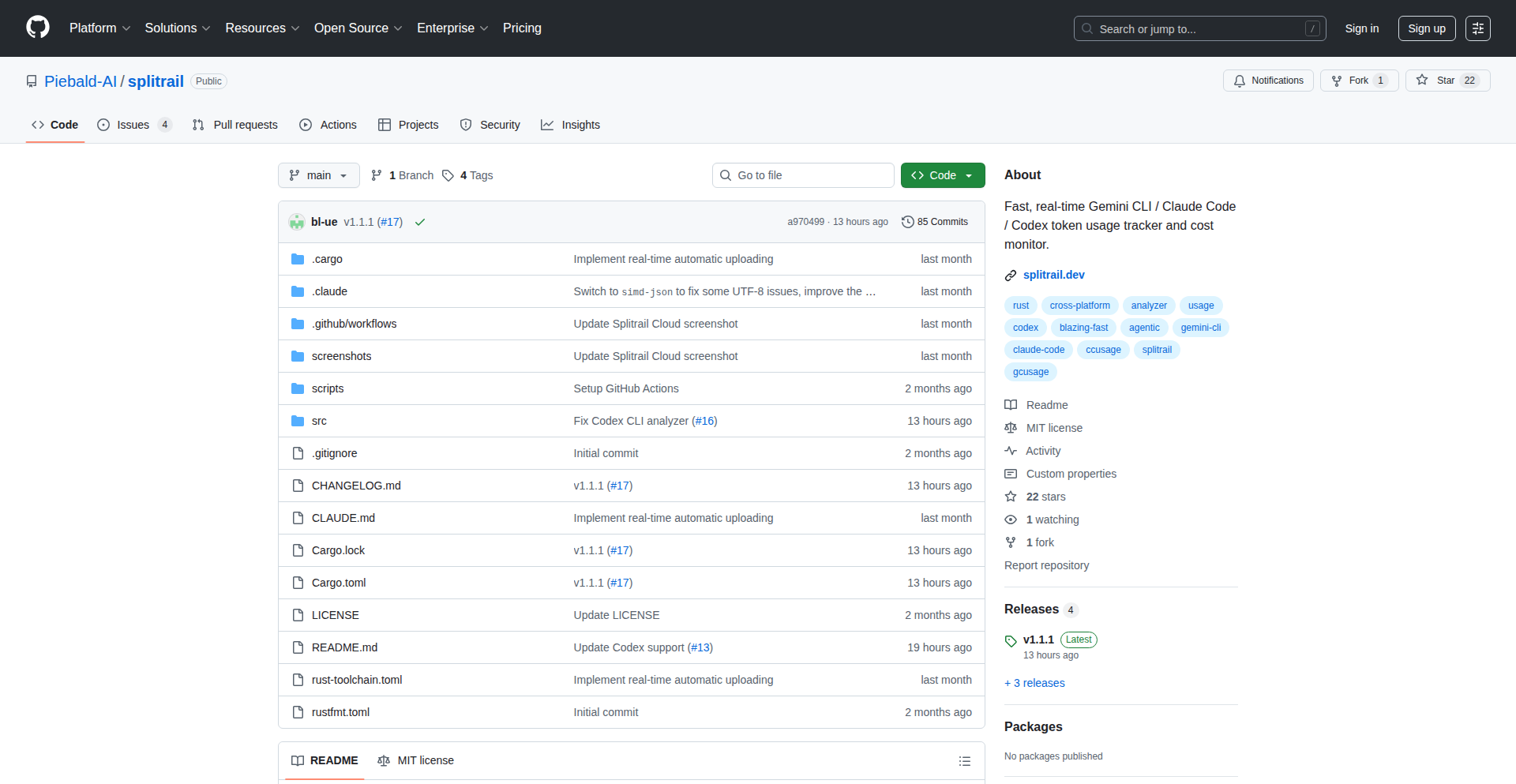

ContinuumJS is a JavaScript port of the classic 1984 "Continuum" arcade game, originally designed for the 68k Mac. This project showcases innovative use of AI, specifically Claude Code and Gemini CLI, to assist in porting low-level 68k assembly code. The entire game state, including physics and movement, is managed using Redux and Redux Toolkit, allowing for state observation and debugging via the RTK debugger. The project highlights the creative application of modern state management to preserve the essence of vintage software.

Popularity

Points 6

Comments 0

What is this product?

ContinuumJS is a faithful JavaScript recreation of a groundbreaking 1984 arcade game that originally ran on the memory-limited 128 KB Mac yet still achieved smooth scrolling. The innovation here lies in the developer's use of AI tools like Claude Code and Gemini CLI to facilitate the challenging task of porting 68k assembly code to modern JavaScript. Furthermore, the game's entire operational state, from player movement to game physics, is meticulously managed through Redux and Redux Toolkit. This approach not only organizes the game's logic in a highly structured way but also enables developers to visualize and debug the game's progression step-by-step using the Redux Toolkit debugger, offering a unique blend of retro gaming and modern development practices.

How to use it?

Developers can use ContinuumJS as a reference for understanding how to port complex, low-level code to modern JavaScript with AI assistance. The Redux state management architecture serves as an excellent example for structuring game logic and managing intricate game states in a clear, observable manner. The project's open-source MIT license encourages exploration and modification. Developers can integrate specific game mechanics or state management patterns into their own JavaScript projects, or simply use it as a learning tool to understand the fusion of retro programming challenges with cutting-edge AI and state management techniques.

Product Core Function

· AI-assisted 68k assembly code porting: Leverages AI tools to translate complex legacy code, demonstrating a new paradigm for modernizing old software.

· Redux/Redux Toolkit for game state management: Encapsulates all game logic (physics, movement, etc.) into predictable state updates, allowing for detailed inspection and debugging.

· RTK debugger integration: Provides visual insight into the game's state evolution, making it easier to understand and troubleshoot game mechanics.

· Faithful original game recreation: Aims to preserve the original gameplay experience and aesthetics of the 1984 Continuum game.

· Open-source MIT license: Encourages community contribution, learning, and reuse of the codebase and its innovative approaches.
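The Redux approach described above keeps all game state in one store that changes only when a pure reducer is applied to a dispatched action. Because every action is recorded, any earlier state can be rebuilt by replaying the log, which is what makes step-through inspection in a Redux debugger possible. The sketch below mirrors that pattern in Python to stay consistent with the other examples here; it is not Redux Toolkit's API and not ContinuumJS's code, and the `ShipState` shape and the `thrust`/`tick` action names are hypothetical.

```python
from dataclasses import dataclass, replace
from typing import Callable


@dataclass(frozen=True)
class ShipState:
    """Hypothetical slice of game state: 1-D position and velocity for brevity."""
    x: float = 0.0
    vx: float = 0.0


@dataclass(frozen=True)
class Action:
    type: str
    payload: float = 0.0


def ship_reducer(state: ShipState, action: Action) -> ShipState:
    """Pure function: returns a new state and never mutates the old one."""
    if action.type == "thrust":  # change velocity
        return replace(state, vx=state.vx + action.payload)
    if action.type == "tick":  # advance physics by `payload` seconds
        return replace(state, x=state.x + state.vx * action.payload)
    return state  # unknown actions leave state unchanged, as in Redux


class Store:
    """Minimal store that logs every action so past states can be replayed."""

    def __init__(self, reducer: Callable[[ShipState, Action], ShipState],
                 initial: ShipState) -> None:
        self._reducer = reducer
        self._initial = initial
        self.state = initial
        self.log: list[Action] = []

    def dispatch(self, action: Action) -> None:
        self.log.append(action)
        self.state = self._reducer(self.state, action)

    def state_at(self, step: int) -> ShipState:
        """Rebuild the state after the first `step` actions (time-travel debugging)."""
        s = self._initial
        for action in self.log[:step]:
            s = self._reducer(s, action)
        return s


store = Store(ship_reducer, ShipState())
for a in (Action("thrust", 2.0), Action("tick", 0.5), Action("tick", 0.5)):
    store.dispatch(a)
print(store.state, store.state_at(1))
```

Keeping the reducer pure is the design choice that matters: replaying the same log always yields the same states, so a debugger can jump to any step.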

Product Usage Case

· Modernizing legacy software: A developer facing a similar challenge of porting older, assembly-based code could learn from the AI assistance strategies employed in this project.

· Educational tool for state management: Game developers or web application developers can study how Redux and Redux Toolkit can be applied to manage complex, dynamic states in real-time applications.

· Retro game development: Enthusiasts looking to recreate classic games can use this project as a blueprint for handling physics and rendering within a structured state management framework.

· AI in software development: Researchers or practitioners interested in the practical application of AI for code translation and bug detection can find valuable case study insights here.

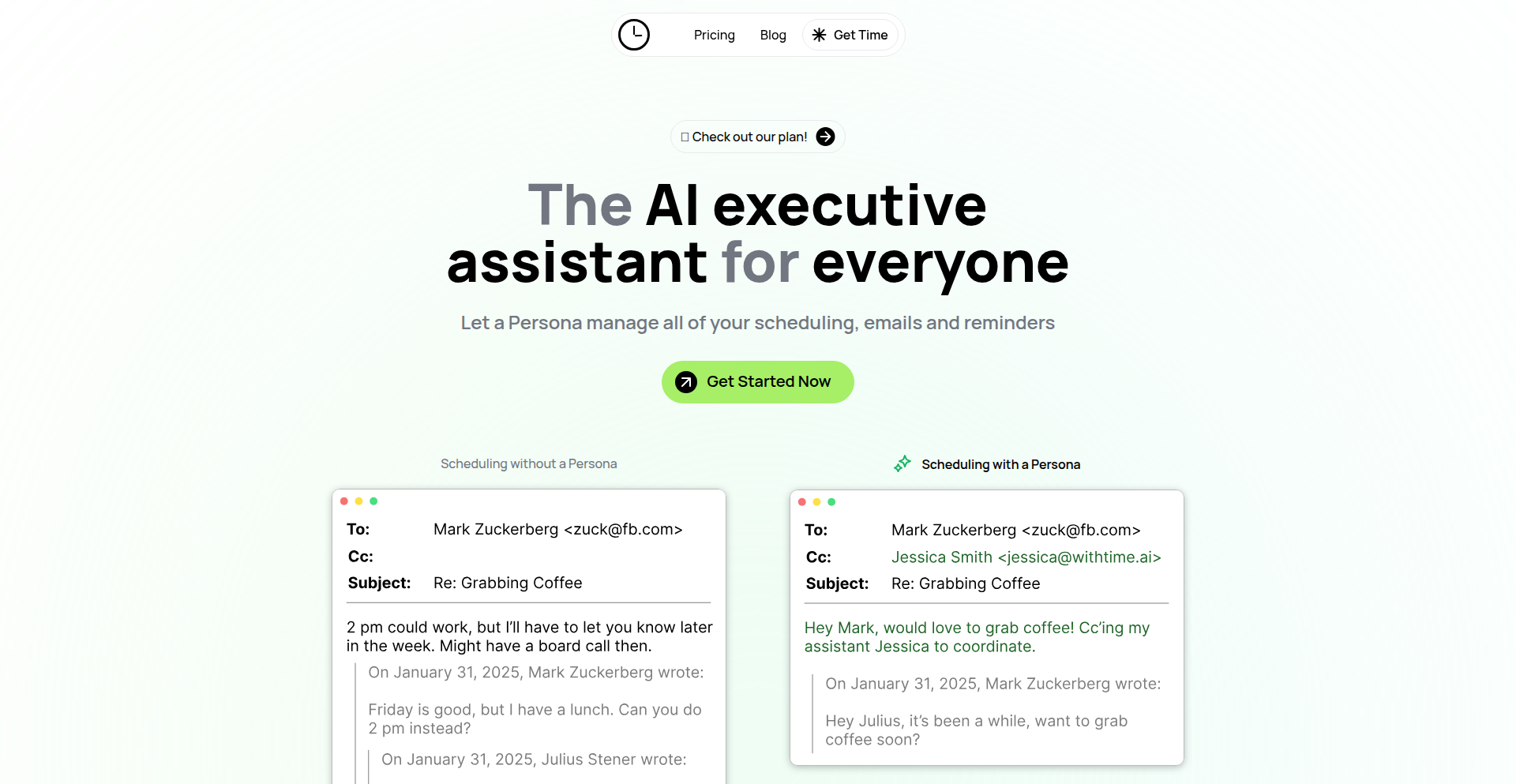

16

Persona-AI Colleagues

Author

notanaiagent

Description

This project introduces AI-powered 'Personas' that act as personalized remote coworkers. Each Persona is given a unique phone number and email address, enabling them to interact with the real world on your behalf. They learn and retain context from these interactions, becoming more capable over time. This innovative approach moves beyond basic chatbots by integrating AI deeply into your existing workflow, allowing them to proactively manage tasks like scheduling and reminders.

Popularity

Points 6

Comments 0

What is this product?

Persona-AI Colleagues are AI agents designed to function as your personal virtual assistants. Unlike typical chatbots that require constant re-prompting, each Persona is assigned a dedicated phone number and email address. This allows them to engage in real-world communications, such as emailing contacts or making calls. Their core innovation lies in a sophisticated memory system that combines knowledge graphs, social graphs, and vector databases. This system allows Personas to learn from every interaction—emails, files, messages, and calls—building a rich context about people and tasks. This means they don't need you to re-explain things; they remember and use that context to complete tasks more effectively. So, what's the point? It's like having a colleague who genuinely remembers past conversations and projects, making them more capable and less reliant on your constant guidance.
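Of the three memory components named above, the vector-database part is the easiest to illustrate: past interactions are stored as vectors, and those most similar to a new query are recalled as context. The sketch below is a toy in-memory version in Python that substitutes bag-of-words counts for a real embedding model; `MemoryStore`, `embed`, and `cosine` are hypothetical names, not Persona's implementation, and the knowledge-graph and social-graph layers are not modeled.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class MemoryStore:
    """Hypothetical toy memory: remembers snippets and recalls the most similar ones."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []

    def remember(self, text: str) -> None:
        self._items.append((text, embed(text)))

    def recall(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


memory = MemoryStore()
memory.remember("Email from Dana: the design review moved to Thursday at 3pm")
memory.remember("Call with Sam about the Q3 budget spreadsheet")
memory.remember("Dana prefers morning meetings")
# Returns ['Email from Dana: the design review moved to Thursday at 3pm']
print(memory.recall("When is the design review with Dana?", k=1))
```

A production system would swap `embed` for a learned embedding model and the list scan for an indexed vector database; the recall logic stays the same shape.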

How to use it?

Developers can integrate Persona-AI Colleagues into their workflow by signing up and creating a Persona. You can customize their name and profile picture. Once set up, you can 'cc' them on emails, call them for updates, or even add them to meetings. For example, if you're coordinating a project, you can have your Persona email participants to gather information or schedule follow-ups. They can also handle reminders and manage your calendar. The key is to let them interact with your existing communication channels. So, how does this help you? It automates tedious communication tasks and ensures that information is captured and leveraged by your AI assistant without you having to manually transfer it.

Product Core Function

· Dedicated communication channels (email/phone): Provides a unique identity for each AI assistant, allowing them to interact with external parties directly and build a communication history. This means your AI can independently manage correspondence, solving the problem of isolated chatbot interactions.

· Contextual memory system: Utilizes a combination of knowledge graphs, social graphs, and vector databases to store and recall information from all interactions. This allows Personas to learn and adapt, reducing the need for repetitive instructions and improving task efficiency. This is valuable because your AI gets smarter with every use.

· Proactive task management: Empowers Personas to handle tasks like reminders and scheduling by learning from ongoing interactions rather than solely relying on explicit commands. This offers a more seamless and integrated experience for managing your daily workload.

· Inter-agent communication (future capability): The architecture is designed to allow Personas to potentially interact with each other, creating a team of AI assistants that can collaborate on more complex tasks. This points to future efficiency gains by enabling coordinated AI efforts.

Product Usage Case

· Project management outreach: A developer can set up a Persona to email team members for status updates or to schedule project review meetings. The Persona will use its learned context from previous project discussions to draft the emails and manage responses, saving the developer significant time on administrative tasks.

· Onboarding new clients: A sales representative can have a Persona handle initial client outreach and information gathering. The Persona can send introductory emails, collect basic company information via email exchanges, and schedule follow-up calls, ensuring a consistent and efficient onboarding process.

· Personalized reminders for complex tasks: Imagine a Persona that tracks a long-term research project. It can proactively remind you about key milestones, relevant articles you've previously discussed, or even follow up with collaborators based on context learned from your notes and emails, keeping your project momentum high.

17

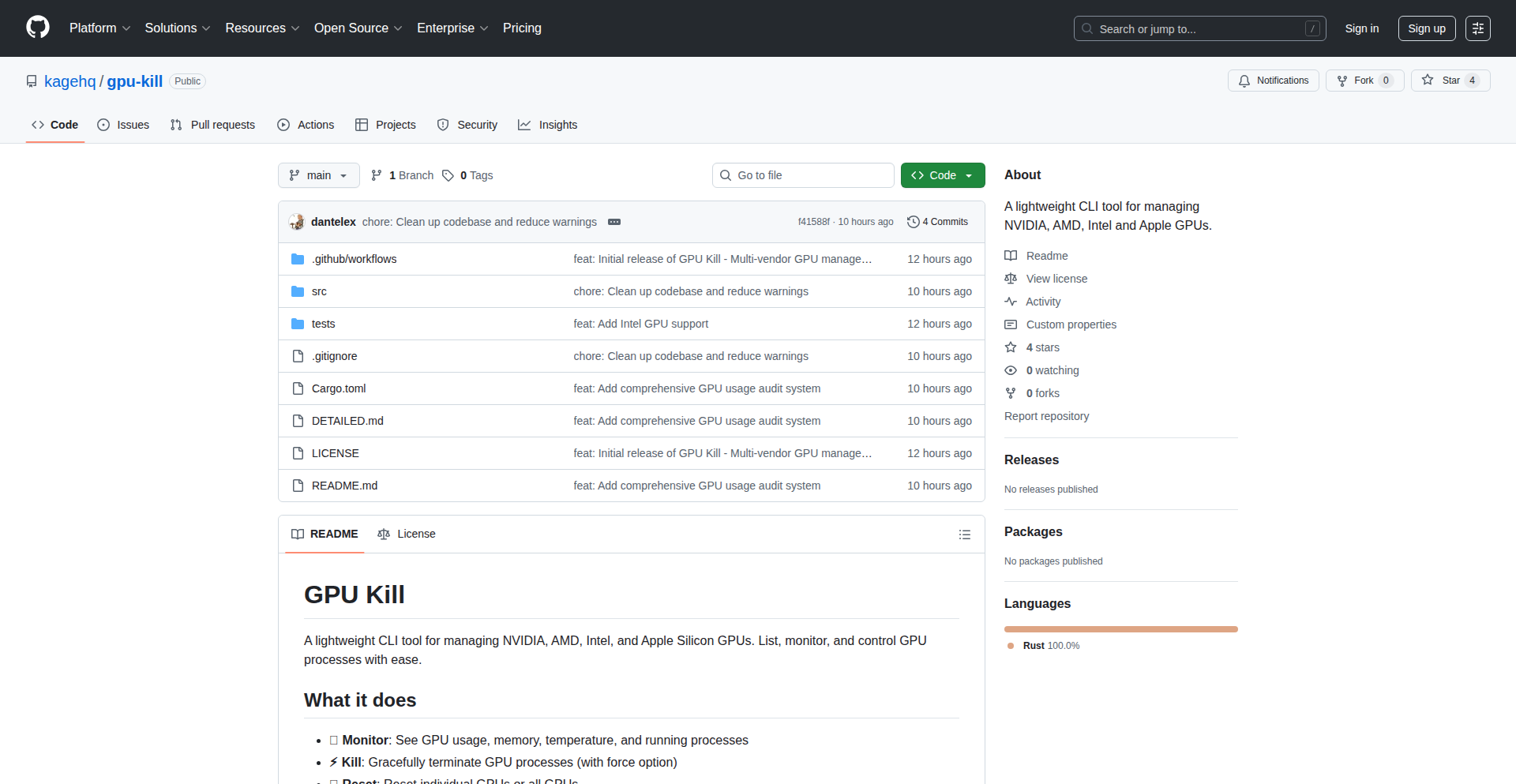

GPUKill: GPU Job Warden

Author

lexokoh

Description

GPUKill is a lightweight command-line utility designed to effectively terminate unresponsive GPU processes. It addresses the common developer frustration of stuck GPU jobs that consume valuable hardware resources and hinder productivity. Its innovation lies in its simple yet robust approach to identifying and killing these problematic processes, saving developers time and improving GPU utilization.

Popularity

Points 4

Comments 2

What is this product?

GPUKill is a command-line tool that acts like a 'guard' for your GPU. Imagine you're running a complex simulation or training a machine learning model on your graphics card (GPU), and sometimes these processes freeze or get stuck, tying up the GPU's memory and compute. GPUKill is designed to detect these 'stuck' jobs and forcefully shut them down. The core innovation is its lightweight nature and its targeted approach to identify and terminate these specific GPU processes without disrupting other normal operations. This is particularly valuable because it helps you reclaim your GPU resources quickly when things go wrong, a common problem in demanding computational tasks.

How to use it?

Developers run GPUKill from the terminal. After compiling and installing the tool, they can execute commands like `gpukill --pid <process_id>` to kill a specific stuck process, or `gpukill --all` to attempt to terminate all identified stuck GPU processes. It fits into a developer's workflow either interactively during development sessions or inside automated scripts that manage GPU resources in distributed computing environments. For example, if you're running multiple experiments and one gets stuck, you can quickly identify its process ID and use GPUKill to free up your GPU for the next experiment.
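The submission doesn't describe how GPUKill finds GPU processes internally. For comparison, here is a minimal Python sketch of the general approach on NVIDIA hardware: ask `nvidia-smi` for compute processes via its `--query-compute-apps` option, then signal a chosen PID. The helper names and the "at least N MiB" policy in `memory_hogs` are hypothetical; this is not GPUKill's implementation, and `kill_pid` really terminates processes, so the example call is left commented out.

```python
import os
import signal
import subprocess
from dataclasses import dataclass
from typing import Optional

# nvidia-smi query: one "pid, name, MiB" line per compute process.
QUERY = [
    "nvidia-smi",
    "--query-compute-apps=pid,process_name,used_memory",
    "--format=csv,noheader,nounits",
]


@dataclass
class GpuProcess:
    pid: int
    name: str
    used_mib: Optional[int]  # None when the driver reports "[N/A]"


def parse_compute_apps(csv_text: str) -> list[GpuProcess]:
    """Split the pid off the front and memory off the back, so names with commas survive."""
    procs: list[GpuProcess] = []
    for line in csv_text.strip().splitlines():
        pid, rest = line.split(",", 1)
        name, used = rest.rsplit(",", 1)
        used = used.strip()
        procs.append(GpuProcess(int(pid), name.strip(), int(used) if used.isdigit() else None))
    return procs


def list_gpu_processes() -> list[GpuProcess]:
    """Requires an NVIDIA driver with nvidia-smi on PATH."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_compute_apps(result.stdout)


def memory_hogs(procs: list[GpuProcess], min_mib: int) -> list[int]:
    """Hypothetical policy: PIDs using at least `min_mib` MiB of GPU memory."""
    return [p.pid for p in procs if p.used_mib is not None and p.used_mib >= min_mib]


def kill_pid(pid: int, force: bool = False) -> None:
    """Send SIGTERM, or SIGKILL when force=True on platforms that define it."""
    sig = signal.SIGKILL if force and hasattr(signal, "SIGKILL") else signal.SIGTERM
    os.kill(pid, sig)


# Destructive, so left commented out:
# for pid in memory_hogs(list_gpu_processes(), min_mib=8000):
#     kill_pid(pid)
```

Sending SIGTERM first gives a training script a chance to clean up; `force=True` is the fallback for processes that ignore it.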

Product Core Function