Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-09-17

SagaSu777 2025-09-18

Explore the hottest developer projects on Show HN for 2025-09-17. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The sheer volume of AI-driven innovation showcased today highlights a significant shift: AI is no longer just a tool for generating content, but a foundational element for building entirely new applications and improving existing workflows across the board. Developers are leveraging AI for everything from creating synthetic data to powering natural language interfaces for databases, and even automating complex development documentation. The trend towards specialized AI agents and tools that enhance productivity, such as those for code generation or data analysis, signals a maturing ecosystem. For entrepreneurs, this is a clear signal to explore niche problems where AI can provide a unique, cost-effective, or significantly faster solution. The emphasis on open-source further democratizes access, empowering individual creators and small teams to build sophisticated applications. The core hacker spirit is evident in tackling tedious tasks, bridging complex technical domains (like physics simulations with AI), and focusing on practical, impactful solutions that redefine how we interact with technology.

Today's Hottest Product

Name

Witness by Reel Human

Highlight

This project tackles the critical issue of digital content authenticity by cryptographically signing photos and videos. It embeds metadata like capture time and device info within the media file itself. The innovation lies in providing a verifiable, human-authored proof of content, addressing concerns around AI-generated or manipulated media. Developers can learn about cryptographic signing, secure metadata embedding within media files, and building privacy-first applications.

Popular Category

AI & Machine Learning

Developer Tools

Utilities & Productivity

Content & Media

Data & Analytics

Popular Keyword

AI

LLM

Data

Python

Rust

Security

Open Source

Developer Docs

Technology Trends

AI-powered Development Tools

Data Privacy and Verifiability

Natural Language Processing for Databases

Efficient Code Generation and Documentation

Secure and Private Content Creation

Low-Code/No-Code AI Integrations

Rust for System-Level Security

Synthetic Data Generation

Personalized Learning and Data Exploration

Project Category Distribution

AI/ML Tools (35%)

Developer Productivity (25%)

Data Management & Analytics (15%)

Content Creation & Media (10%)

System Utilities & Security (10%)

Education & Learning (5%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | StealthText | 228 | 85 |
| 2 | Pgmcp: Natural Language SQL Query Engine | 11 | 3 |
| 3 | GibsonAI Docs | 6 | 5 |
| 4 | Pingoo: Rust-Powered Reverse Proxy with Integrated WAF and Bot Defense | 11 | 0 |
| 5 | STT-LLM-TTS C++ Pipeline | 9 | 0 |
| 6 | Witness by Reel Human | 3 | 4 |
| 7 | Data Center Chronicle | 5 | 2 |
| 8 | Cyberpunk Audio Deck | 5 | 2 |
| 9 | DataGuessr | 4 | 2 |
| 10 | LLMyourself: AI-Powered Persona Reporter | 2 | 4 |

1

StealthText

Author

zikero

Description

StealthText is an application that makes text vanish when a screenshot is taken. It addresses the risk of sensitive information being accidentally captured and shared, offering a way to protect data during ephemeral communication or presentations.

Popularity

Points 228

Comments 85

What is this product?

StealthText is a client-side application that employs a clever technique to detect screenshot actions on supported operating systems. When a screenshot is initiated, it rapidly alters the displayed text content, effectively removing it from the captured image. The innovation lies in its near-instantaneous response to screenshot events, making it difficult for users to capture the original text. This is achieved through system-level event monitoring, which triggers a data-obfuscation routine. So, what's the value to you? It provides an immediate layer of digital privacy for sensitive information you are viewing or composing.

How to use it?

Developers can integrate StealthText into their web applications or desktop software. For web applications, this typically involves including a JavaScript library that monitors user interactions and system events. When a user triggers a screenshot (which the script attempts to detect), the library dynamically modifies the DOM elements containing the sensitive text, replacing them with blank characters or other obfuscation. For desktop applications, the integration would be at a lower level, potentially involving OS-specific APIs to intercept screenshot events. So, how can you use this? You can embed it into your web portal to protect user data displayed on screen, or into a messaging app to ensure sensitive conversations aren't captured by screenshots.
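The detect-then-obfuscate flow described above can be sketched in a few lines. This is a minimal, language-neutral illustration written in Python, not StealthText's actual code: `ProtectedField`, `is_screenshot_shortcut`, `mask`, and the shortcut table are all hypothetical names, and the key names are illustrative only. In a browser, the same logic would run in JavaScript against DOM nodes, and it could only react to shortcuts the page is able to observe.

```python
from dataclasses import dataclass

# Hypothetical set of key combinations treated as screenshot triggers.
# Real shortcuts vary by OS, and many capture paths never reach the app.
SCREENSHOT_SHORTCUTS = {
    frozenset({"PrintScreen"}),
    frozenset({"Meta", "Shift", "S"}),
}


def is_screenshot_shortcut(pressed_keys: set[str]) -> bool:
    """Return True if the currently pressed keys match a known shortcut."""
    return frozenset(pressed_keys) in SCREENSHOT_SHORTCUTS


def mask(text: str, fill: str = "\u2022") -> str:
    """Replace every non-whitespace character so the layout is preserved."""
    return "".join(ch if ch.isspace() else fill for ch in text)


@dataclass
class ProtectedField:
    """Stand-in for a UI element that holds sensitive text."""

    secret: str
    hidden: bool = False

    def render(self) -> str:
        # What would be drawn on screen right now.
        return mask(self.secret) if self.hidden else self.secret

    def on_keys(self, pressed_keys: set[str]) -> None:
        # Hide the text as soon as a screenshot shortcut is observed.
        if is_screenshot_shortcut(pressed_keys):
            self.hidden = True


field = ProtectedField("card 4242")
field.on_keys({"PrintScreen"})
print(field.render())
```

Masking character by character keeps the field the same width, so the page layout does not jump when the text is hidden. A shortcut table like this only covers keyboard-triggered captures, which is one reason such protection should be treated as best effort.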

Product Core Function

· Screenshot Detection: The system monitors for common screenshot triggers, such as keyboard shortcuts (e.g., Print Screen) or specific OS APIs. This allows the application to know when to act. So, what's the value? It's the trigger that initiates the protection mechanism.

· Dynamic Text Obfuscation: Upon detecting a screenshot attempt, the application rapidly changes the visible text to innocuous or blank content. This ensures that what is captured by the screenshot is not the original sensitive information. So, what's the value? This is the core mechanism that actively protects your data from being captured.

· Cross-Platform Potential: While initial implementations might be OS-specific, the underlying principle of event detection and UI manipulation can be adapted across different platforms, offering broad applicability. So, what's the value? It means the protection can potentially work on many different devices and systems you use.

Product Usage Case

· Protecting sensitive user credentials displayed briefly on a web application's interface during a login process. If the user takes a screenshot, the credential field appears blank in the capture. So, how does this help you? It prevents accidental exposure of login details.

· Securing confidential meeting notes or financial figures shown during a remote presentation. If an unauthorized participant tries to screenshot the shared screen, the critical data will not be captured. So, how does this help you? It ensures that sensitive business information remains private during presentations.

· Adding an extra layer of security for password managers or sensitive data entry fields in desktop applications. When a user is about to type or view sensitive data, StealthText can be activated. So, how does this help you? It reduces the risk of private data being compromised through screenshots.

2

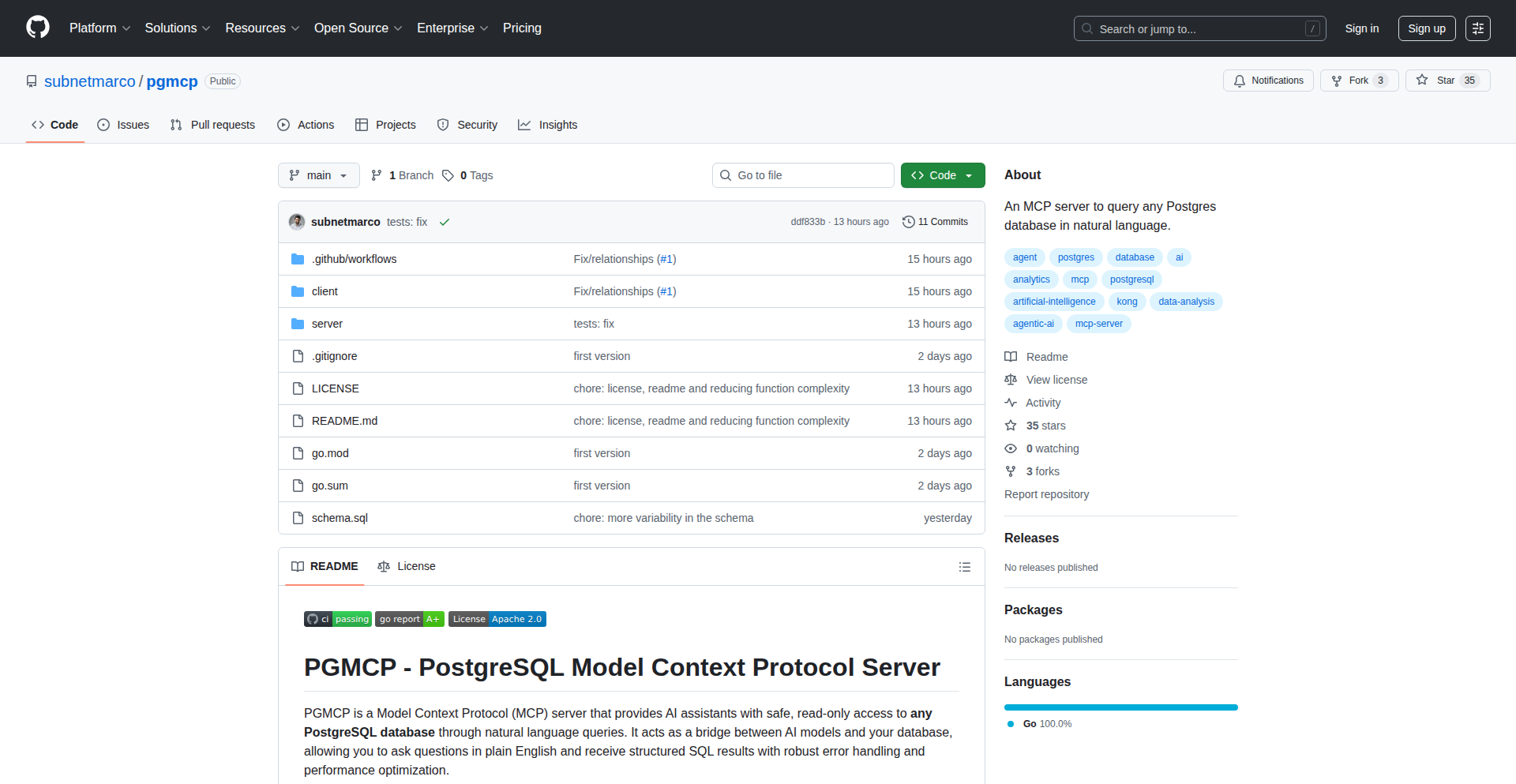

Pgmcp: Natural Language SQL Query Engine

Author

fosk

Description

Pgmcp is a Show HN project that changes how developers interact with Postgres databases. It acts as an MCP (Model Context Protocol) server, enabling users to query any Postgres database using natural language by translating human-readable requests into executable SQL. This reduces the need for developers to memorize complex SQL syntax, directly addressing the friction in data exploration and rapid prototyping.

Popularity

Points 11

Comments 3

What is this product?

Pgmcp is a server that acts as an intermediary between you and your Postgres database. Instead of writing SQL queries, you speak to Pgmcp in plain English (or other natural languages), and it intelligently translates your request into SQL commands that it then executes against your Postgres database. The core innovation lies in its Natural Language Processing (NLP) capabilities, likely employing techniques such as intent recognition, entity extraction, and semantic parsing to understand user queries. This makes accessing and manipulating data significantly more intuitive and accessible, especially for those less familiar with SQL. The value here is democratizing data access and accelerating development workflows by abstracting away the complexities of SQL.

How to use it?

Developers can integrate Pgmcp into their workflows in several ways. Command-line users can interact with Pgmcp directly via a terminal interface, typing their natural language queries. For application developers, Pgmcp can be exposed as an API endpoint. Your application could then send user-generated natural language requests to this API and receive either the translated SQL or the query results directly. This allows for building data-driven features in applications where users might not have direct SQL knowledge. For example, a dashboard application could use Pgmcp to allow users to ask questions about the data shown on the dashboard in plain English.
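To make the request flow concrete, here is a minimal sketch of the question-to-SQL-to-results loop. It is not Pgmcp's implementation or API: the translation step is stubbed with a hypothetical lookup table (`CANNED_TRANSLATIONS`) where a real system would call a language model, and Python's built-in `sqlite3` stands in for Postgres so the example runs without a server. The `is_read_only` guard is an added assumption, shown because generated SQL is usually worth checking before it is executed.

```python
import sqlite3

# Hypothetical stand-in for the translation step: a real system would
# call a language model here instead of looking up canned questions.
CANNED_TRANSLATIONS = {
    "how many products are in each category?": (
        "SELECT category, COUNT(*) FROM products "
        "GROUP BY category ORDER BY category"
    ),
}


def translate_to_sql(question: str) -> str:
    key = question.strip().lower()
    if key not in CANNED_TRANSLATIONS:
        raise ValueError(f"no translation for: {question!r}")
    return CANNED_TRANSLATIONS[key]


def is_read_only(sql: str) -> bool:
    """Crude guard: accept a single statement that starts with SELECT."""
    stripped = sql.strip().rstrip(";")
    return stripped.upper().startswith("SELECT") and ";" not in stripped


def ask(conn: sqlite3.Connection, question: str) -> list[tuple]:
    sql = translate_to_sql(question)
    if not is_read_only(sql):
        raise PermissionError("generated SQL is not a read-only query")
    return conn.execute(sql).fetchall()


# sqlite3 is used only so the sketch is self-contained.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (name TEXT, category TEXT)")
conn.executemany(
    "INSERT INTO products VALUES (?, ?)",
    [("kettle", "kitchen"), ("toaster", "kitchen"), ("lamp", "lighting")],
)
rows = ask(conn, "How many products are in each category?")
print(rows)  # [('kitchen', 2), ('lighting', 1)]
```

A string-prefix check like `is_read_only` is deliberately simplistic. In practice, a read-only database role is a more reliable way to enforce the same constraint.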

Product Core Function

· Natural Language to SQL Translation: Converts human-readable queries into valid SQL statements for Postgres. This significantly reduces the learning curve for interacting with databases, allowing faster data analysis and quicker iteration on features.

· Database Abstraction Layer: Acts as a unified interface for any Postgres database, meaning you don't need to worry about specific database connection details for each query. This simplifies data access and makes it easier to manage different data sources.

· Intelligent Query Understanding: Utilizes advanced NLP techniques to comprehend the intent and context of user queries, even if they are phrased imprecisely. This leads to more accurate results and a smoother user experience, reducing the frustration of getting incorrect data.

· Server-based Interaction: Runs as a server, allowing for centralized access and management of database queries. This is beneficial for team collaboration and for building applications where multiple users need to query the same database.

Product Usage Case

· A data analyst needs to quickly find customer demographics for a new marketing campaign. Instead of writing a complex SQL query to join tables and filter by specific criteria, they can simply ask Pgmcp: 'Show me the average age of customers in California who have purchased product X in the last month.' Pgmcp translates this into SQL, retrieves the data, and provides the answer, saving significant time and effort.

· A web application developer is building a customer support portal. Users often have specific questions about their orders or account details. By integrating Pgmcp, the portal can allow users to type questions like 'What is the status of my order 12345?' or 'When was my last payment?' Pgmcp handles the translation to SQL and retrieves the relevant information, enhancing the user experience without requiring them to know SQL.

· A developer is experimenting with a new dataset and wants to explore relationships between different fields. Rather than manually crafting SQL statements for each exploration, they can use Pgmcp to ask questions like 'List all unique product categories and the number of products in each.' This rapid, natural language-driven exploration accelerates the understanding of the data and the development of insights.

· A small business owner wants to understand their sales performance without hiring a dedicated data analyst. They can connect their sales database to Pgmcp and ask questions like 'What were my total sales last quarter?' or 'Which product generated the most revenue in May?' This empowers business owners to gain valuable insights from their data directly.

3

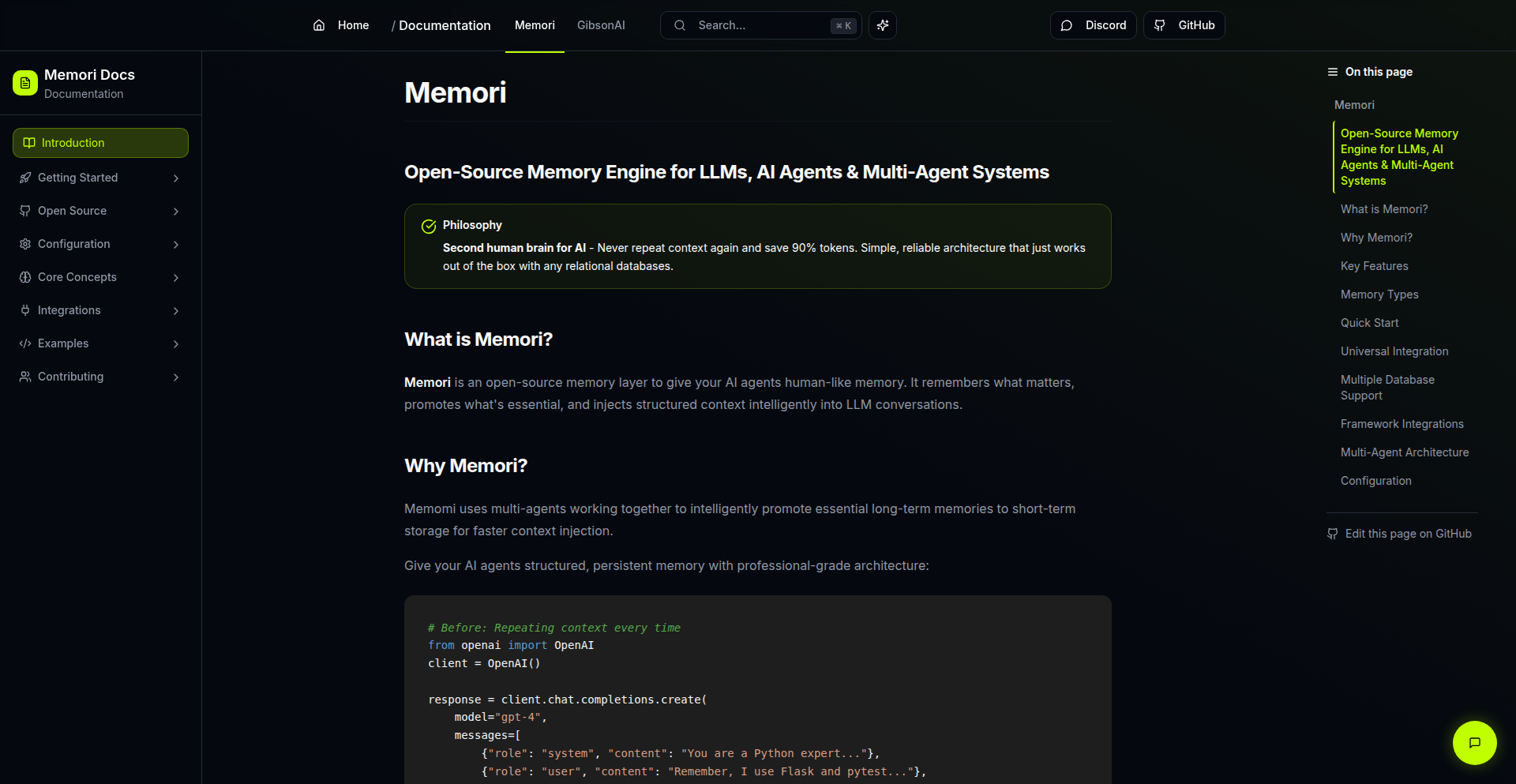

GibsonAI Docs

Author

boburumurzokov

Description

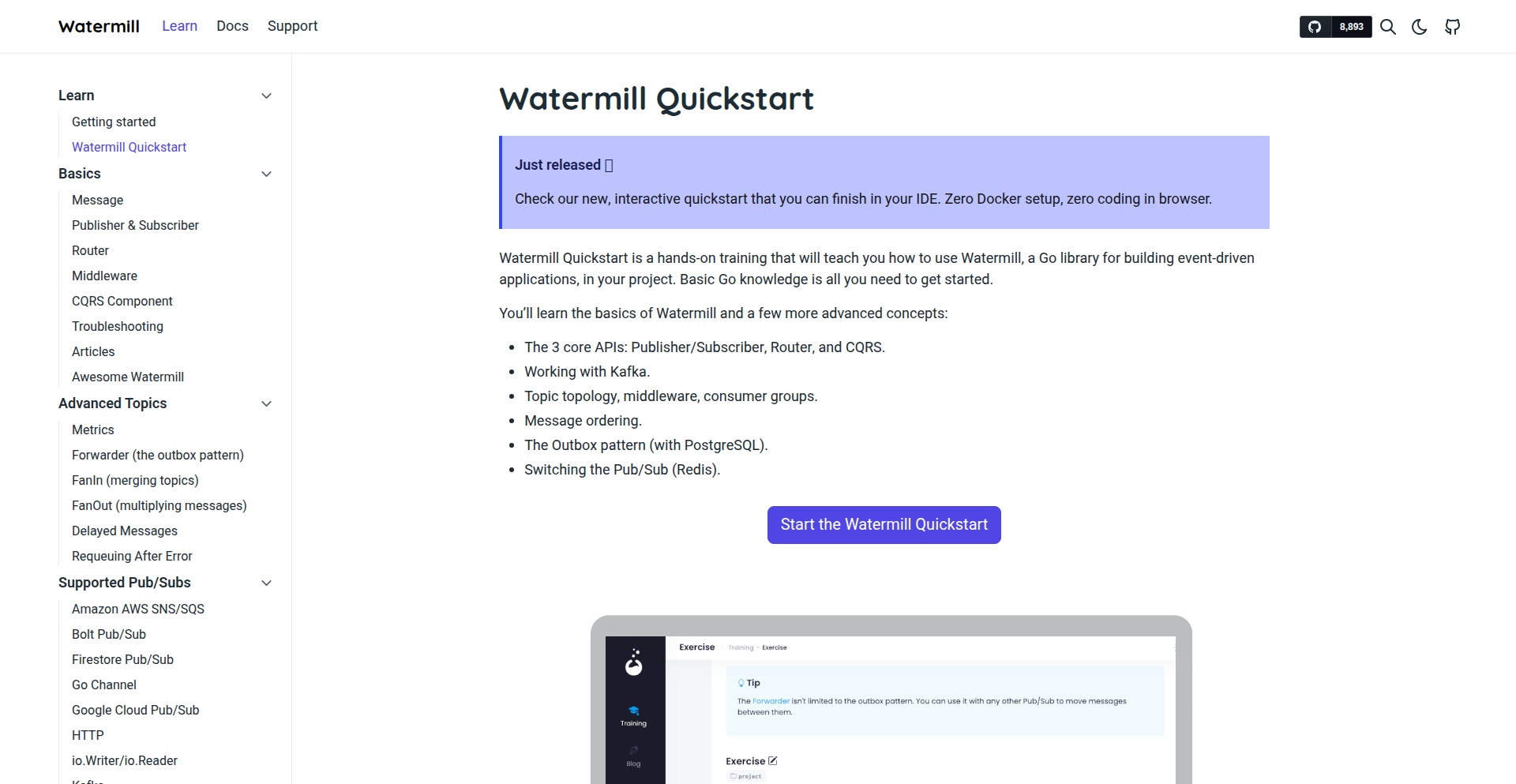

GibsonAI Docs is a cost-effective, customizable alternative to premium AI-powered documentation platforms. It leverages AI for personalized Q&A and a 'smart educator' experience, allowing developers to easily create and deploy beautiful, searchable documentation from their GitHub-hosted Markdown files.

Popularity

Points 6

Comments 5

What is this product?

GibsonAI Docs is a platform for building AI-enhanced developer documentation. It addresses the high cost of existing solutions by offering a fully customizable and affordable option. The core innovation lies in its integration of a sophisticated AI agent for documentation, powered by Agno and Memori, which enables personalized question-answering and a 'smart educator' functionality. Document content, stored as Markdown in GitHub, is rendered beautifully using Lovable UI components. Embeddings for AI search are stored in LanceDB, with metadata in a SQL database, providing a robust and efficient system for managing and interacting with your documentation. This approach democratizes access to advanced documentation features, making them available to a wider range of developers and projects.
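The split between an embedding store and a metadata store can be illustrated with a toy semantic search. The sketch below is an assumption-laden stand-in, not GibsonAI Docs code: plain dictionaries replace LanceDB and the SQL database, the three-dimensional vectors are made up, and `search` is a hypothetical helper. It only shows the general technique of ranking documents by cosine similarity and then joining the hits with their metadata.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Made-up embeddings; a real system would get these from an embedding
# model and keep them in a vector store.
DOC_VECTORS = {
    "auth.md": [0.9, 0.1, 0.0],
    "deploy.md": [0.1, 0.9, 0.1],
    "billing.md": [0.0, 0.2, 0.9],
}

# Stand-in for the metadata table, keyed by the same document id.
DOC_METADATA = {
    "auth.md": {"title": "Authenticating requests"},
    "deploy.md": {"title": "Deploying the docs site"},
    "billing.md": {"title": "Billing and plans"},
}


def search(query_vector: list[float], top_k: int = 2) -> list[tuple[str, str]]:
    """Rank documents by similarity, then attach their titles."""
    ranked = sorted(
        DOC_VECTORS,
        key=lambda doc: cosine(query_vector, DOC_VECTORS[doc]),
        reverse=True,
    )
    return [(doc, DOC_METADATA[doc]["title"]) for doc in ranked[:top_k]]


# Pretend this vector is the embedding of "How do I authenticate a request?"
results = search([0.8, 0.2, 0.1])
print(results)
```

The top-ranked passages would then be handed to the AI agent as context for its answer.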

How to use it?

Developers can use GibsonAI Docs to create and deploy their project documentation with built-in AI capabilities. The process typically involves storing your documentation as Markdown files in a GitHub repository. GibsonAI Docs then connects to this repository, rendering the Markdown into a user-friendly interface using Lovable UI components. The AI agent, trained on your documentation content, can be queried directly through the platform's UI, providing instant answers and explanations. The system is designed for easy deployment, with options to host on platforms like Vercel. You can also leverage the provided reusable design templates and source code for further customization and cost savings, effectively bypassing the need for expensive proprietary solutions. This makes it simple to get sophisticated, AI-powered documentation up and running quickly.

Product Core Function

· AI-Powered Q&A: Enables users to ask natural language questions about the documentation and receive accurate, context-aware answers, enhancing discoverability and understanding of technical content.

· Smart Educator: Acts as an intelligent tutor, guiding users through complex topics by providing explanations and context based on the documentation, simplifying learning curves for new users.

· GitHub-Hosted Markdown Rendering: Automatically fetches and renders Markdown files stored in GitHub repositories into a polished and readable documentation website, streamlining content management.

· Lovable UI Component Integration: Utilizes reusable UI components for a beautiful and intuitive user interface, ensuring a pleasant experience for documentation readers.

· LanceDB Embeddings Storage: Stores document embeddings efficiently in LanceDB, enabling fast and accurate semantic search capabilities within the documentation.

· SQL Metadata Management: Manages documentation metadata in a standard SQL database, allowing for organized tracking and retrieval of information alongside AI-search capabilities.

· Customizable Templates & Source Code: Provides reusable design templates and access to source code for full customization, empowering developers to tailor the look, feel, and functionality to their specific needs.

Product Usage Case

· A startup launching a new API can use GibsonAI Docs to provide interactive documentation. Developers consuming the API can ask specific questions like 'How do I authenticate a request?' and get immediate, precise answers, reducing support overhead and accelerating API adoption.

· An open-source project maintainer can create a user-friendly documentation portal. New contributors can use the 'smart educator' feature to understand the project's architecture and contribution guidelines, making it easier to onboard new team members.

· A software development team can build internal knowledge base documentation. Employees can quickly find information on company-specific tools or processes by asking the AI, improving internal efficiency and knowledge sharing.

· A developer creating a complex library can integrate GibsonAI Docs to demonstrate usage patterns. Users can ask 'Show me an example of using function X with parameter Y' and receive code snippets directly from the documentation, facilitating practical application.

· A creator offering a paid course can use GibsonAI Docs to supplement learning materials. Students can get instant clarification on course concepts through the AI assistant, enhancing their learning experience and reducing the need for direct instructor intervention.

4

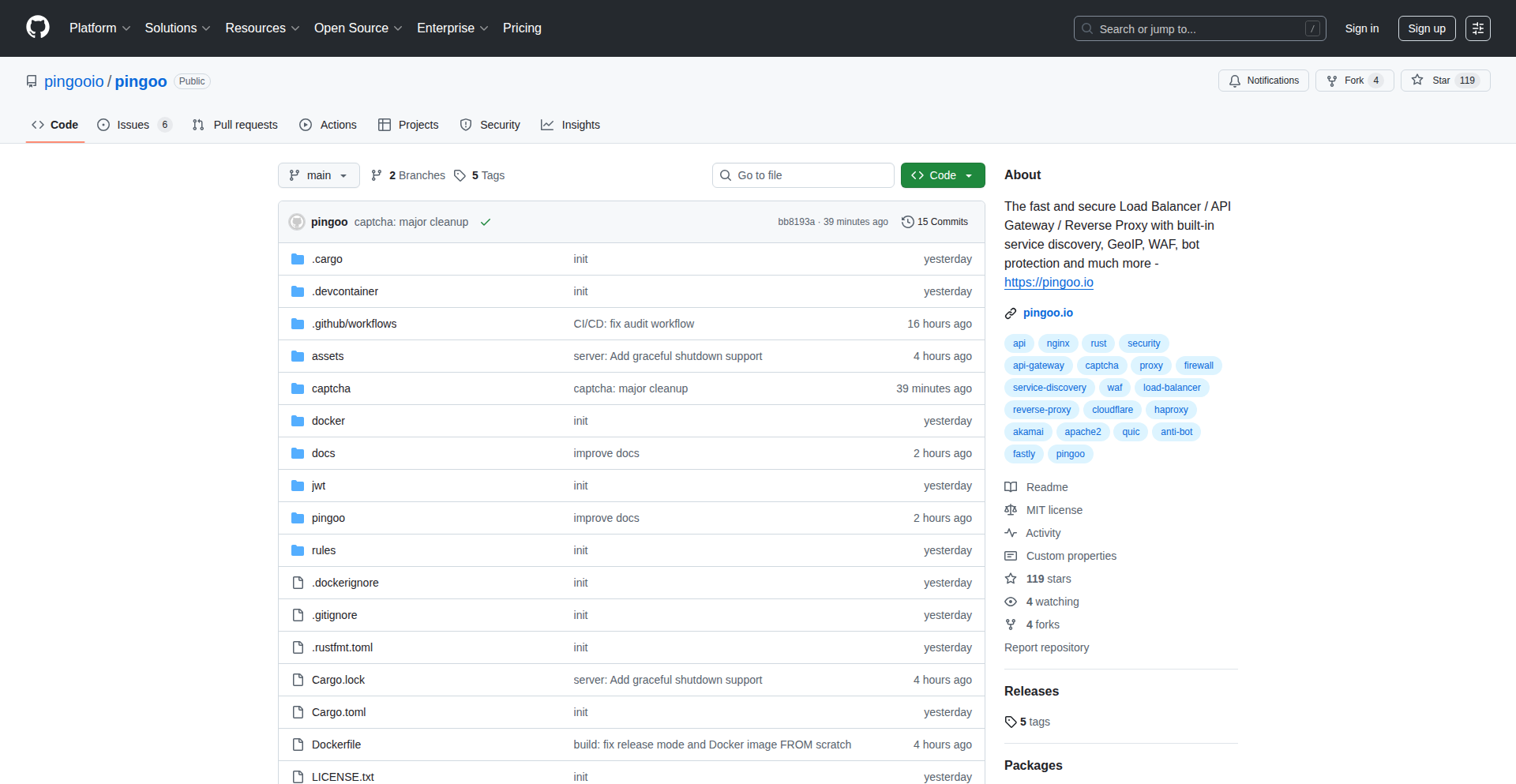

Pingoo: Rust-Powered Reverse Proxy with Integrated WAF and Bot Defense

Author

sylvain_kerkour

Description

Pingoo is a high-performance reverse proxy built in Rust, offering integrated Web Application Firewall (WAF) capabilities and sophisticated bot protection. It aims to simplify web security by combining essential proxy functions with advanced threat mitigation in a single, efficient package, all powered by the safety and speed of Rust.

Popularity

Points 11

Comments 0

What is this product?

Pingoo is a reverse proxy, which acts like a traffic manager for your web servers. Imagine it as a doorman for your website. Instead of visitors talking directly to your servers, they talk to Pingoo. Pingoo then decides where to send the traffic, making sure legitimate visitors get through and blocking malicious ones. The 'innovative' part is that it has built-in security features usually found in separate tools. It includes a WAF, which is like a security guard that inspects incoming requests for common web attacks (like SQL injection or cross-site scripting) and stops them before they reach your applications. It also has bot protection, which identifies and blocks automated malicious bots, preventing them from overwhelming your site or scraping data. The entire system is written in Rust, a programming language known for its speed and memory safety, meaning it's very efficient and less prone to security vulnerabilities itself. So, for you, this means better security and performance for your web applications without needing to manage multiple complex security systems.

How to use it?

Developers can use Pingoo by deploying it as a gateway in front of their existing web applications or microservices. It's configured to listen on public-facing ports and forward requests to the appropriate backend servers. Integration typically involves setting up Pingoo's configuration files to define routing rules and security policies, such as WAF rulesets (e.g., OWASP Core Rule Set) and bot detection thresholds. It can be deployed as a standalone service, within containerized environments like Docker or Kubernetes, or even as a sidecar proxy. This makes it a versatile solution for protecting a wide range of web architectures. For you, this means you can easily drop Pingoo into your existing infrastructure to immediately bolster your web application's security and manage traffic efficiently, protecting your users and resources.
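As a rough picture of what "inspect, then route" means at the gateway, consider the sketch below. It is not Pingoo's configuration format or rule engine: the two regular expressions are toy patterns far simpler than the OWASP Core Rule Set, and the route table, upstream addresses, and function names are hypothetical.

```python
import re
from typing import Optional
from urllib.parse import unquote_plus

# Toy detection patterns for illustration only; real rulesets are far
# more thorough and harder to evade.
WAF_RULES = {
    "sql_injection": re.compile(
        r"(?i)\bunion\b.+\bselect\b|'\s*or\s+'?1'?\s*=\s*'?1"
    ),
    "xss": re.compile(r"(?i)<\s*script\b"),
}

# Hypothetical routing table: first matching prefix wins.
ROUTES = [
    ("/api/", "http://127.0.0.1:8081"),
    ("/", "http://127.0.0.1:8080"),
]


def inspect(path_and_query: str) -> Optional[str]:
    """Return the name of the first rule that matches, or None."""
    decoded = unquote_plus(path_and_query)  # undo URL encoding first
    for name, pattern in WAF_RULES.items():
        if pattern.search(decoded):
            return name
    return None


def route(path: str) -> str:
    for prefix, upstream in ROUTES:
        if path.startswith(prefix):
            return upstream
    raise LookupError(f"no route for {path!r}")


def handle(path_and_query: str) -> tuple[int, str]:
    """Block suspicious requests, otherwise pick an upstream."""
    rule = inspect(path_and_query)
    if rule is not None:
        return 403, f"blocked by rule: {rule}"
    path = path_and_query.split("?", 1)[0]
    return 200, route(path)


print(handle("/api/users?id=7"))
print(handle("/search?q=1'+OR+'1'%3D'1"))
```

Decoding before matching matters: without it, an encoded payload such as `%3Cscript%3E` would slip past the XSS pattern.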

Product Core Function

· Reverse Proxying: Efficiently routes incoming web traffic to the correct backend servers, improving load balancing and availability. This helps your applications handle more users without crashing, keeping them online and responsive.

· Web Application Firewall (WAF): Inspects HTTP traffic for malicious patterns and blocks common web attacks like SQL injection and cross-site scripting. This prevents attackers from exploiting vulnerabilities in your applications, safeguarding your data and users.

· Bot Protection: Identifies and mitigates automated threats from malicious bots, such as scraping, credential stuffing, and denial-of-service attacks. This ensures legitimate users have a smooth experience and protects your site from being overloaded or misused.

· Performance Optimization: Built with Rust for high speed and low resource consumption, ensuring efficient handling of traffic without becoming a bottleneck. This means your applications remain fast and responsive even under heavy load.

· Configurability: Offers flexible configuration options for routing, WAF rules, and bot protection policies, allowing customization to specific application needs. You can tailor the security to precisely match the threats your applications face.
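One simple signal that bot protection can build on is request rate per client. The sketch below shows a sliding-window threshold as an example of the general idea. It is an assumption for illustration, not a description of how Pingoo detects bots, and `SlidingWindowLimiter` and its parameters are hypothetical. Timestamps are passed in explicitly so the behavior is deterministic.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `max_requests` per client within `window_seconds`."""

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits: dict[str, deque] = defaultdict(deque)

    def allow(self, client_id: str, now: float) -> bool:
        hits = self._hits[client_id]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over the threshold: treat as suspicious
        hits.append(now)
        return True


limiter = SlidingWindowLimiter(max_requests=3, window_seconds=10.0)
decisions = [limiter.allow("203.0.113.7", t) for t in (0.0, 1.0, 2.0, 3.0)]
print(decisions)  # [True, True, True, False]
```

Rate alone cannot separate a busy legitimate client from a bot, so a threshold like this would normally be combined with other signals.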

Product Usage Case

· Protecting a public-facing API from automated scraping and common web exploits by deploying Pingoo in front of the API gateway. This prevents data theft and ensures the API remains available for legitimate users.

· Securing a dynamic web application by integrating Pingoo to filter out malicious requests, such as those attempting SQL injection or cross-site scripting attacks, before they reach the application servers. This enhances the overall security posture of the application.

· Managing and securing traffic for a fleet of microservices by using Pingoo as an edge proxy, providing consistent WAF and bot protection across all services. This simplifies security management in complex architectures.

· Improving website uptime and user experience during traffic spikes by using Pingoo's load balancing capabilities, while simultaneously filtering out bot traffic that consumes valuable resources. This ensures a better experience for your real visitors.

5

STT-LLM-TTS C++ Pipeline

Author

RhinoDevel

Description

This project provides a C++ pipeline for integrating speech-to-text (STT), large language model (LLM) inference, and text-to-speech (TTS). It leverages efficient C++ implementations of Whisper.cpp for STT, Llama.cpp for LLM inference, and Piper for TTS, offering a streamlined way to build voice-interactive applications with local LLMs. The innovation lies in its cross-library compatibility and the efficient data flow between these distinct AI models, enabling developers to create powerful voice-enabled tools without relying on cloud services.

Popularity

Points 9

Comments 0

What is this product?

This is a C++ library that stitches together three separate AI capabilities: understanding spoken words (Speech-to-Text), processing and generating text responses (Large Language Model Inference), and converting text back into spoken words (Text-to-Speech). It uses optimized C++ versions of popular AI models (Whisper.cpp, Llama.cpp, Piper) to create a smooth workflow. The key innovation is building a direct connection between these models in C++, making it much faster and more private than sending data to the cloud. So, it allows you to build applications that can listen to you, think like a chatbot, and talk back, all running locally on your computer.

How to use it?

Developers can integrate these C++ libraries into their existing applications or build new ones. The project provides wrapper libraries, meaning it simplifies the process of calling the underlying AI models. You would typically:

1. Include the provided C++ header files in your project.

2. Initialize the STT, LLM, and TTS components with your chosen models.

3. Capture audio input from your microphone.

4. Feed the audio to the STT component to get text.

5. Send the transcribed text to the LLM component for processing and a response.

6. Take the LLM's text response and pass it to the TTS component to generate speech.

7. Play the generated speech.

The project supports both Windows and Linux. So, if you're building a voice assistant, an interactive game, or any application that needs to process speech and respond vocally, you can use these libraries to add that functionality efficiently.
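The data flow in steps 4 to 6 can be sketched as three stages chained together. The example below is written in Python purely for illustration and does not reflect the project's C++ headers or function names: `VoicePipeline` is hypothetical, and the three stub functions stand in for Whisper.cpp, Llama.cpp, and Piper so the sketch runs without any models.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VoicePipeline:
    """Chains three stages; each one is injected as a plain callable."""

    transcribe: Callable[[bytes], str]   # STT: audio -> text
    generate: Callable[[str], str]       # LLM: prompt -> reply
    synthesize: Callable[[str], bytes]   # TTS: text -> audio

    def run(self, audio_in: bytes) -> bytes:
        text = self.transcribe(audio_in)
        if not text.strip():
            return b""  # nothing was heard, so skip the LLM and TTS
        reply = self.generate(text)
        return self.synthesize(reply)


# Stub stages so the sketch runs without models. Real stages would wrap
# the speech, language, and voice models.
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_llm(prompt: str) -> str:
    return f"You said: {prompt}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


pipeline = VoicePipeline(fake_stt, fake_llm, fake_tts)
audio_out = pipeline.run(b"hello")
print(audio_out)  # b'You said: hello'
```

Passing the stages in as callables keeps the orchestration independent of any one model, which also makes it straightforward to test with stubs like these.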

Product Core Function

· Speech-to-Text (STT) Conversion: Utilizes Whisper.cpp to accurately transcribe spoken audio into text. This is valuable for converting voice commands or conversations into machine-readable text, enabling applications to understand what the user is saying.

· Large Language Model (LLM) Inference: Integrates Llama.cpp for running LLM models locally. This allows for advanced text generation, question answering, and complex reasoning directly on the user's machine, providing intelligent responses without internet dependency.

· Text-to-Speech (TTS) Synthesis: Employs Piper for generating natural-sounding speech from text. This capability is crucial for applications that need to provide spoken feedback or communicate audibly with users, making interactions more engaging and accessible.

· Pipeline Orchestration: Manages the seamless flow of data from STT output to LLM input, and then from LLM output to TTS input. This integration is the core innovation, ensuring efficient and low-latency communication between the different AI components, crucial for real-time voice interactions.

Product Usage Case

· Local Voice Assistant: Build a private voice assistant that runs entirely on your computer. Capture audio, send commands to a local LLM for processing (e.g., controlling smart home devices or answering questions), and receive spoken responses, all without sending sensitive data to the cloud.

· Interactive Storytelling Game: Create a game where the player can speak their dialogue choices. The game transcribes the player's speech, feeds it to an LLM to generate dynamic story outcomes, and then has the game characters respond vocally using TTS.

· Accessible Productivity Tool: Develop an application that allows users to dictate notes, process them with an LLM (e.g., summarize, organize, or extract action items), and then have the results read back to them, improving efficiency and accessibility for people who prefer voice interaction.

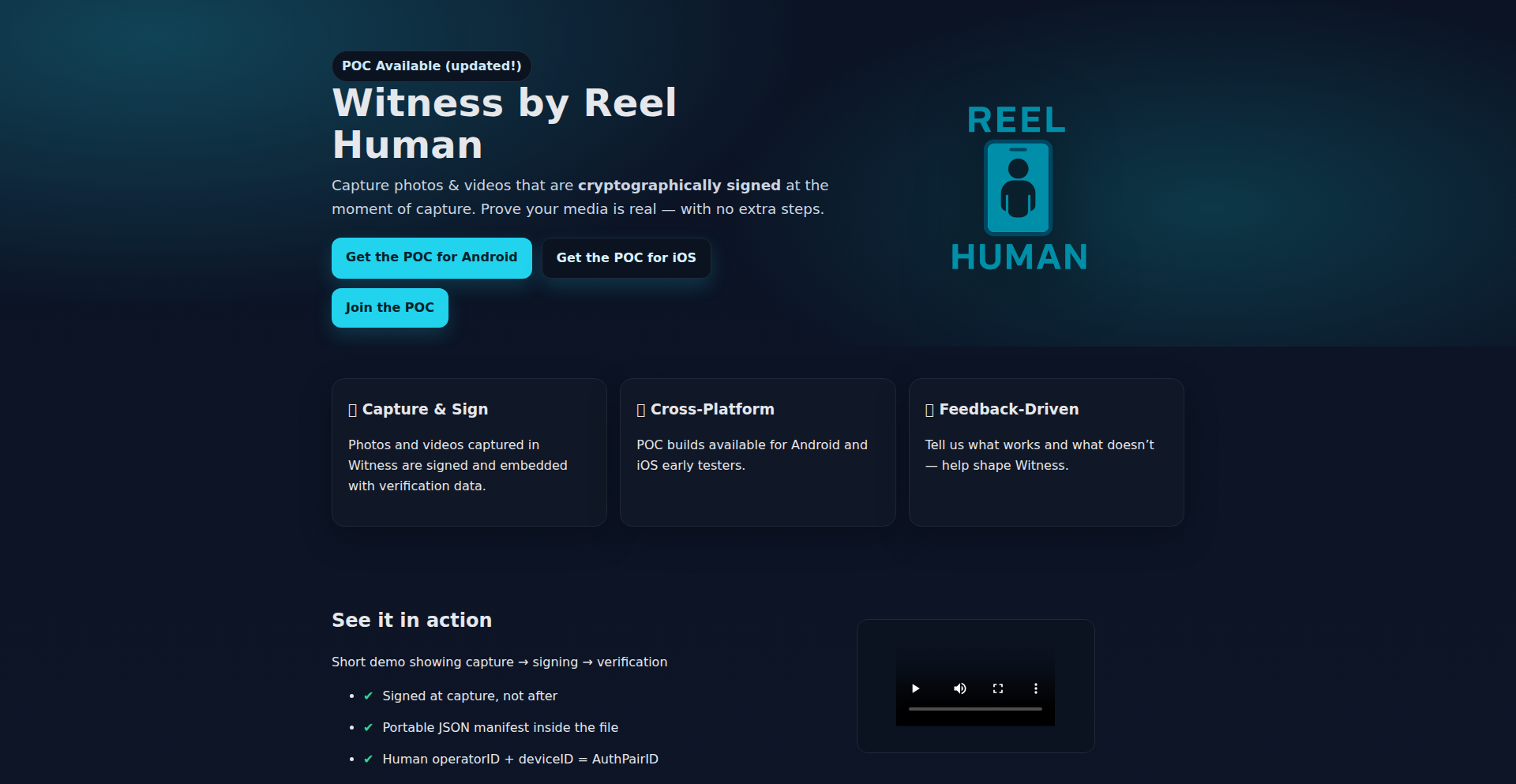

6

Witness by Reel Human

Author

rh-app-dev

Description

Witness by Reel Human is a privacy-focused camera application that empowers users to prove the authenticity of their captured photos and videos. It cryptographically signs each media file, embedding a manifest that includes crucial metadata like capture time, device information, and app version. This ensures that the content is verifiable as human-authored and untampered with, addressing the growing concern of AI-generated or manipulated digital media. So, if you need to confidently share content and prove it's genuine, this app offers a technical solution to establish trust.

Popularity

Points 3

Comments 4

What is this product?

Witness by Reel Human is a camera app that acts like a digital notary for your photos and videos. When you capture something with the app, it automatically creates a unique digital signature for that file. Think of it like a tamper-proof seal. This seal is embedded directly into the media file itself and contains information like exactly when it was taken, what kind of device was used (but not who you are), and details about the app version. This makes it incredibly difficult for anyone to claim the content was faked or altered after it was captured. The innovation lies in its privacy-first approach, embedding verifiable metadata directly into the media without requiring user accounts or tracking, offering a verifiable chain of authenticity for digital content, which is especially relevant in an era of deepfakes and AI-generated content.

How to use it?

Developers can use Witness by Reel Human by integrating its core functionality into their own applications or workflows. The current Proof of Concept (POC) allows for direct use of the Android and iOS apps to capture signed media. For deeper integration, the project plans to release an Open API, enabling platforms and services to programmatically verify the authenticity of media captured by Witness. This means you could build a system that automatically checks if content submitted to your platform is verifiable and human-authored. For instance, a news aggregation service could use the API to flag or verify user-submitted footage, ensuring journalistic integrity. The app is available for testing on both Android and iOS.

Product Core Function

· Cryptographically signed photos and videos: This means each media file gets a unique digital fingerprint that proves its origin and integrity, ensuring that the content hasn't been tampered with since capture. This is valuable for any situation where authenticity is paramount, like legal evidence or journalistic reporting.

· Embedded JSON manifest: This manifest acts as a digital certificate within the media file, containing details like the exact capture time, device information (without personal identification), and app version. This provides verifiable evidence of when and on what kind of device the content was created, which is crucial for establishing context and verifying events.

· Privacy-first design: The app operates without requiring user accounts, tracking, or uploading your data. This ensures that your captured content remains private and under your control, while still being verifiable. This is important for users who are concerned about their digital footprint and data privacy.

· Cross-platform availability (POC): Both Android and iOS apps are available for testing, allowing developers and users to experience the functionality on their preferred mobile devices. This broad availability means the technology can be tested and adopted across a wide range of users.

· Public verification portal (in progress): This future feature will allow anyone to upload a Witness-signed file and verify its authenticity through a web interface. This makes the verification process accessible to everyone, not just technical users, increasing the trust and usability of the content.

· Open API for platform integration: This planned feature will allow other applications and services to integrate Witness's verification capabilities directly. This is incredibly valuable for developers looking to build trust into their platforms, such as social media sites, content management systems, or legal documentation tools.
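The signing and verification features listed above follow a common pattern: hash the media, put the hash and capture details in a manifest, sign the manifest, and recheck both on verification. The sketch below illustrates that pattern under loud assumptions. It is not Witness's format or algorithm: the manifest field names are hypothetical, and HMAC-SHA256 with a shared key is swapped in for the signature only because it is available in Python's standard library. A production design would more likely use an asymmetric key pair so that verifiers never hold the signing key.

```python
import hashlib
import hmac
import json


def build_manifest(media: bytes, captured_at: str, device: str,
                   app_version: str) -> dict:
    """Bind the capture details to a hash of the media bytes."""
    return {
        "sha256": hashlib.sha256(media).hexdigest(),
        "captured_at": captured_at,
        "device": device,
        "app_version": app_version,
    }


def canonical(manifest: dict) -> bytes:
    # Stable serialization so signer and verifier hash identical bytes.
    return json.dumps(manifest, sort_keys=True,
                      separators=(",", ":")).encode("utf-8")


def sign(manifest: dict, key: bytes) -> str:
    # HMAC is a stand-in here; see the assumptions in the text above.
    return hmac.new(key, canonical(manifest), hashlib.sha256).hexdigest()


def verify(media: bytes, manifest: dict, signature: str, key: bytes) -> bool:
    """Check that the media matches the manifest and the manifest is intact."""
    if hashlib.sha256(media).hexdigest() != manifest.get("sha256"):
        return False
    return hmac.compare_digest(sign(manifest, key), signature)


key = b"demo-key-not-for-production"
media = b"\x89fake image bytes"
manifest = build_manifest(media, "2025-09-17T12:00:00Z", "Pixel 8", "0.1.0")
signature = sign(manifest, key)

print(verify(media, manifest, signature, key))         # True
print(verify(media + b"!", manifest, signature, key))  # False
```

Because the manifest contains the media hash, changing either the pixels or the recorded capture time invalidates verification.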

Product Usage Case

· A journalist receiving footage from a whistleblower can use Witness to verify that the video wasn't manipulated and was captured at the time it claims. This strengthens the credibility of the news report and protects the journalist from distributing fake content.

· A legal team needing to present photographic or video evidence in court can use Witness-signed media to prove its authenticity. This helps ensure that the evidence is admissible and trustworthy, supporting the case.

· An academic researcher documenting an experiment can use Witness to create a verifiable record of their observations. This adds scientific rigor and allows others to trust the integrity of the documented data.

· A social media platform could integrate the Witness API to verify user-submitted content, flagging or prioritizing content that can prove it's human-authored. This helps combat the spread of misinformation and AI-generated fake content, creating a more trustworthy online environment.

· An individual wanting to share proof of an incident or experience can use Witness to ensure their account is taken seriously. For example, documenting damage to a property or witnessing a traffic violation becomes more credible when the media can be independently verified as authentic.

7

Data Center Chronicle

Author

ben8128

Description

A 4-hour conversational audiobook exploring the evolution of data centers, from early punch cards to modern AI infrastructure. This project tackles the challenge of making complex technological history accessible and engaging, offering a narrative journey through the core innovations that shaped our digital world. It's for anyone curious about the backbone of the internet.

Popularity

Points 5

Comments 2

What is this product?

This is a 4-hour conversational audiobook that takes you on a journey through the history of data centers. It explains the core technologies and concepts, from the mechanical computing of punch cards to the massive cloud infrastructures and AI factories of today. The innovation lies in its 'conversational' format, which makes a potentially dry technical history feel like an engaging story rather than a lecture. It demystifies the evolution of the physical spaces and technologies that power our digital lives.

How to use it?

You can listen to this audiobook anywhere you listen to podcasts or audiobooks. It's ideal for developers, IT professionals, or anyone interested in the history of technology who wants to understand the foundational infrastructure. It can be used as a learning tool to gain context on current tech trends or simply as an enjoyable way to learn about the history of computing in a relatable, story-driven format.

Product Core Function

· Narrative History of Data Centers: Provides a chronological overview of key milestones in data center development, explaining the 'why' and 'how' behind each technological leap. Value: Offers foundational knowledge and historical context for modern computing. Application: Learning about the infrastructure that underpins all digital services.

· Conversational Audiobook Format: Delivers technical history in an engaging, spoken-word narrative, making complex topics easier to grasp. Value: Improves accessibility and retention of information compared to traditional texts. Application: Casual learning during commutes or downtime, making tech history enjoyable.

· Technological Evolution Explained: Breaks down complex concepts like punch cards, networking, cloud computing, and AI infrastructure into understandable segments. Value: Bridges the gap between technical jargon and general understanding. Application: Understanding the progression of computing power and its physical manifestations.

· Infrastructure Context: Places data center development within the broader history of computing infrastructure, including early mechanical systems and modern hyperscalers. Value: Provides a holistic view of how computing has evolved physically and conceptually. Application: Appreciating the long-term trends and challenges in building and maintaining digital capacity.

Product Usage Case

· A software engineer wanting to understand the historical context of cloud computing's rise can listen to the audiobook to grasp the limitations of previous infrastructures that led to the development of distributed systems and virtualized environments. This helps them appreciate the architectural choices made in modern cloud platforms.

· An IT manager curious about the future of AI infrastructure can gain insight by listening to the segments discussing the scaling challenges of previous computing eras, such as the transition from mainframes to distributed clusters. This historical perspective can inform their own infrastructure planning and investment decisions.

· A student of computer science can use the audiobook to supplement their coursework, learning about the physical and technological evolution of computing hardware and facilities in an engaging way, providing a tangible connection to the abstract concepts they study.

· A technology enthusiast can enjoy this audiobook as a rich narrative that connects seemingly disparate technological eras, from the mechanical computation of punch cards to the modern AI factories, offering a comprehensive and accessible history of the digital age's backbone.

8

Cyberpunk Audio Deck

Author

hirako2000

Description

An offline-first, browser-based audio playback station designed for DJs and audio enthusiasts. It leverages HTML5 and tone.js to provide rich audio manipulation features like smooth track transitions, equalization, compression, pitch, and speed control, all while supporting local audio file uploads up to 2GB. This project represents a creative application of web technologies to deliver powerful audio processing capabilities directly in the browser.

Popularity

Points 5

Comments 2

What is this product?

This is a web application that acts as a digital audio deck, similar to what a DJ might use. Its core innovation lies in its offline-first design, meaning it can function without a constant internet connection after initial loading, and its heavy reliance on the `tone.js` library. `tone.js` is a powerful Web Audio API framework that allows for sophisticated audio synthesis, sequencing, and processing directly within the web browser. The project demonstrates how to combine `tone.js` with HTML5 features to create a feature-rich audio workstation, including smooth crossfades between tracks, customizable EQ to shape sound, a compressor to control dynamic range, and pitch and speed adjustments for creative mixing. This essentially brings professional-grade audio manipulation tools to a web environment, making them accessible to a wider audience.
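To make the idea of a smooth crossfade concrete, here is a minimal sketch of an equal-power crossfade curve. It is written in Python purely for illustration; the project itself runs in the browser on `tone.js`, and the function names `equal_power_gains` and `crossfade` are hypothetical, not taken from the project's code.

```python
import math


def equal_power_gains(position: float) -> tuple[float, float]:
    """Return (gain_a, gain_b) for a crossfader position in [0, 1].

    0.0 plays only deck A, 1.0 plays only deck B. The cosine/sine pair keeps
    gain_a**2 + gain_b**2 equal to 1, so the combined power stays constant
    across the fade instead of dipping in the middle.
    """
    p = min(max(position, 0.0), 1.0)  # clamp out-of-range slider values
    angle = p * math.pi / 2
    return math.cos(angle), math.sin(angle)


def crossfade(sample_a: float, sample_b: float, position: float) -> float:
    """Mix one sample from each deck at the given crossfader position."""
    gain_a, gain_b = equal_power_gains(position)
    return sample_a * gain_a + sample_b * gain_b


if __name__ == "__main__":
    for pos in (0.0, 0.25, 0.5, 0.75, 1.0):
        a, b = equal_power_gains(pos)
        print(f"pos={pos:.2f}  deck A={a:.3f}  deck B={b:.3f}")
```

A linear fade (gain A = 1 - p, gain B = p) is simpler, but it tends to sound quieter at the midpoint, which is why DJ-style mixers commonly use an equal-power curve like this one.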

How to use it?

Developers can use this project as a foundation for building their own web-based audio applications or as a reference for integrating advanced audio processing with `tone.js`. It can be directly run in a modern web browser that supports HTML5 and the Web Audio API. To use it, one would typically load it via a web server, then drag and drop local audio files (like MP3, WAV, etc.) into the application's interface. The built-in controls would then allow for manipulation such as adjusting playback speed, pitch, applying EQ presets, and blending between multiple tracks seamlessly. It's a great starting point for anyone looking to experiment with real-time audio manipulation in the browser, whether for personal projects, music production tools, or even interactive audio installations.

Product Core Function

· Offline Audio Playback: Allows users to load and play large audio files (up to 2GB) locally from their computer, providing a reliable experience even with unstable internet. The value here is uninterrupted audio performance.

· Smooth Track Transitions: Implements crossfading between audio tracks for seamless mixing, enhancing the user experience for audio playback and performance. This provides a professional audio mixing feel.

· Equalization (EQ): Offers controls to adjust the frequency content of audio, allowing users to shape the sound of individual tracks to fit a mix or achieve a desired sonic character. This adds significant creative control over audio.

· Compressor: Includes a compressor effect to manage the dynamic range of audio, making quieter parts louder and louder parts quieter, resulting in a more consistent and impactful sound. This is crucial for professional audio production and mastering.

· Pitch and Speed Control: Enables real-time adjustment of audio playback speed and pitch, opening up possibilities for creative sampling, remixing, and performance manipulation. This provides powerful tools for sonic experimentation.

· HTML5 and tone.js Integration: Demonstrates a robust integration of modern web technologies and a sophisticated JavaScript audio library, showcasing best practices for browser-based audio development. This highlights the technical feasibility of advanced audio applications on the web.
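The compressor described above can be summarized by its static gain curve. The following is a rough sketch in Python of a hard-knee downward compressor working on levels in decibels; the function name `compress_db` and the default threshold, ratio, and make-up values are illustrative assumptions, not the project's actual settings.

```python
def compress_db(level_db: float, threshold_db: float = -24.0,
                ratio: float = 4.0, makeup_db: float = 0.0) -> float:
    """Map an input level (dB) to an output level (dB).

    Below the threshold the signal passes unchanged. Above it, every `ratio`
    dB of input yields only 1 dB of extra output, which narrows the dynamic
    range. Make-up gain then lifts the whole signal, which is what makes the
    quiet parts end up louder relative to the peaks.
    """
    if ratio < 1.0:
        raise ValueError("ratio must be >= 1 for a compressor")
    if level_db <= threshold_db:
        out = level_db
    else:
        out = threshold_db + (level_db - threshold_db) / ratio
    return out + makeup_db


if __name__ == "__main__":
    for level in (-40.0, -24.0, -12.0, 0.0):
        print(f"in {level:6.1f} dB -> out {compress_db(level):6.1f} dB")
```

A real-time compressor also smooths gain changes with attack and release times, which this static sketch leaves out.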

Product Usage Case

· A bedroom DJ using their laptop to mix tracks for a party without needing specialized hardware or expensive software. The project's smooth transitions and EQ controls allow for professional-sounding mixes directly in the browser, solving the problem of expensive entry-level DJ equipment.

· A music producer experimenting with vocal samples by pitching and speeding them up to create unique effects. The real-time pitch and speed controls enable rapid iteration and creative discovery within the browser, simplifying the experimentation process.

· A web developer building an interactive music visualization tool that requires real-time audio analysis and manipulation. This project provides a solid foundation of in-browser audio processing capabilities that can be extended with visualization components, solving the challenge of integrating complex audio features into web apps.

· A student learning about audio engineering principles who wants to experiment with equalization and compression. The project offers an accessible platform to understand how these effects alter sound, making complex audio concepts tangible and understandable through direct interaction.

9

DataGuessr

Author

davidbauer

Description

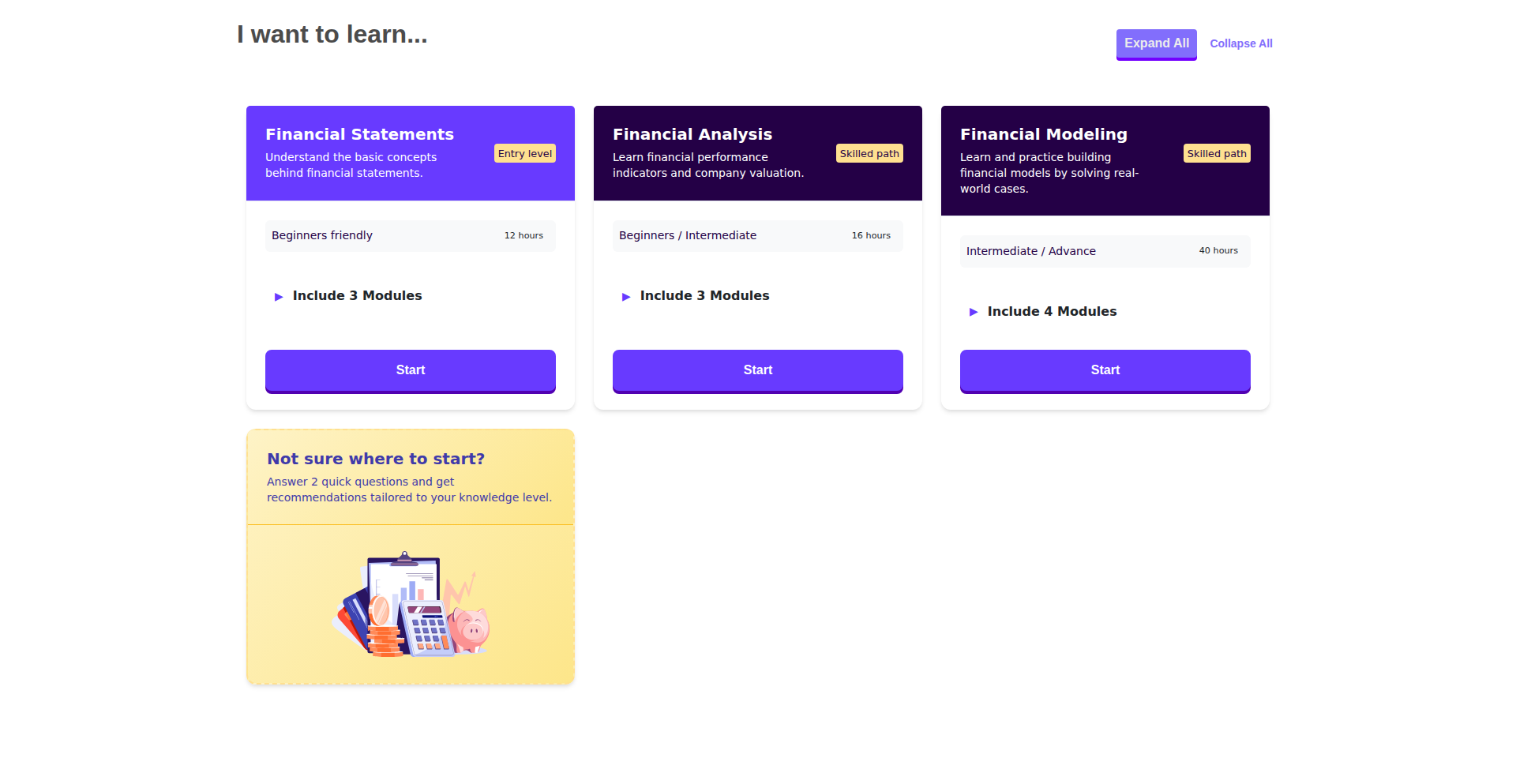

DataGuessr is a daily quiz game designed to make learning global statistics engaging and fun. It leverages the extensive datasets from Our World in Data, allowing users to guess statistics for various countries and time periods. This approach democratizes access to complex data, transforming it into an interactive experience powered by AI assistance for content generation.

Popularity

Points 4

Comments 2

What is this product?

DataGuessr is a web-based educational game that uses AI to create daily quizzes based on real-world global statistics from sources like Our World in Data. The core innovation lies in its playful, gamified approach to data exploration. Instead of reading dense reports, users guess values (e.g., CO2 emissions, life expectancy) for different countries and years. The underlying technology likely involves natural language processing (NLP) to generate quiz questions and data retrieval mechanisms to pull relevant statistics. The author may also have used an AI coding assistant such as Cursor to speed up development. This makes understanding complex global trends accessible and enjoyable, allowing anyone to learn about the world's data in a low-friction way.

How to use it?

Developers can use DataGuessr as an inspiration for building educational tools or data visualization applications. The project demonstrates how to integrate large datasets into an interactive format. Those wanting to build similar functionality could use APIs provided by data sources like Our World in Data, together with data and AI libraries (e.g., Python's pandas for data manipulation and NLP libraries like Hugging Face Transformers for question generation), to create their own data-driven quizzes or educational games. The project also highlights the potential of AI-assisted development to quickly prototype and deploy complex ideas, even for those with limited coding time.

Product Core Function

· Daily Quiz Generation: Provides users with a new set of questions each day, fostering consistent engagement and learning. This uses AI to dynamically select data points and create challenging yet solvable statistical puzzles, offering a fresh learning experience daily.

· Interactive Data Guessing: Allows users to actively participate by inputting their statistical guesses for specific countries and years. This hands-on approach reinforces learning and improves data literacy, making the learning process more memorable than passive reading.

· AI-Powered Content Creation: Utilizes AI to source, curate, and present statistical data in an engaging quiz format. This significantly reduces the manual effort required to create educational content, enabling rapid expansion of topics and data coverage.

· Global Data Exploration: Provides access to a wide range of global statistics, enabling users to discover trends and patterns across different regions and timeframes. This broadens users' understanding of global development and challenges.

· Feedback and Learning: Offers immediate feedback on user guesses, explaining the correct answer and providing context. This crucial step solidifies learning and helps users understand the 'why' behind the data, turning guesswork into genuine comprehension.
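As a rough illustration of the daily-quiz and feedback loop described above, the sketch below picks one question per calendar day and scores a guess by its relative error. Everything here is an assumption made for illustration: the names `question_for_day` and `score_guess`, the scoring rule, and the placeholder question pool are hypothetical and not taken from DataGuessr.

```python
import datetime
import random

# Placeholder pool of (prompt, true_value) pairs. The values are dummies,
# not real statistics; a real quiz would load them from a dataset.
POOL = [
    ("Placeholder question A", 100.0),
    ("Placeholder question B", 2500.0),
    ("Placeholder question C", 0.75),
]


def question_for_day(day: datetime.date, pool: list[tuple[str, float]]) -> tuple[str, float]:
    """Pick the same question for everyone on a given date.

    Seeding the generator with the date's ordinal makes the choice
    deterministic without storing any per-day state.
    """
    rng = random.Random(day.toordinal())
    return rng.choice(pool)


def score_guess(guess: float, actual: float, max_points: int = 100) -> int:
    """Award points that shrink linearly with the relative error of the guess."""
    if actual == 0:
        return max_points if guess == 0 else 0
    relative_error = abs(guess - actual) / abs(actual)
    return max(0, round(max_points * (1 - relative_error)))


if __name__ == "__main__":
    prompt, actual = question_for_day(datetime.date.today(), POOL)
    print(prompt, "| points for guessing 90:", score_guess(90.0, actual))
```

A linear penalty is only one option. For statistics that span several orders of magnitude, scoring on the logarithm of the ratio between guess and answer may feel fairer.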

Product Usage Case

· Educational institutions can embed DataGuessr into their curriculum to teach students about global development, economics, and environmental science in an interactive way. It helps students grasp abstract statistical concepts through concrete examples and challenges.

· Journalists and researchers can use it as a tool to quickly test their knowledge of global statistics or to create engaging social media content to highlight specific data trends. It provides a quick way to self-assess and share interesting data points.

· Individuals interested in global affairs can use it to improve their understanding of different countries and their progress over time. It offers a fun, accessible way to stay informed about the world without getting bogged down in technical reports.

· Developers can fork or reference the project's architecture to build similar data-driven games or educational platforms for niche topics. It serves as a practical example of applying AI and data APIs for interactive learning experiences.

10

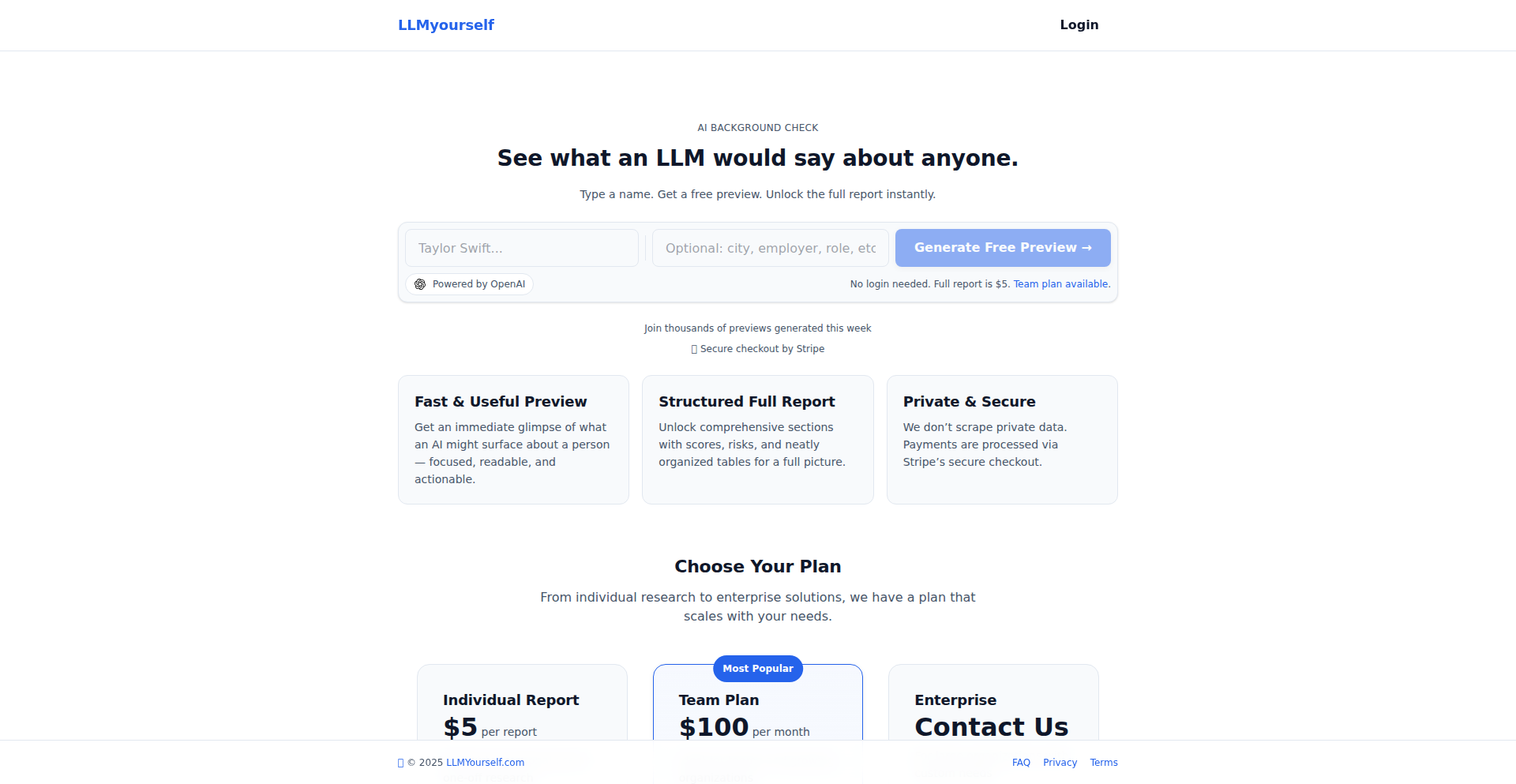

LLMyourself: AI-Powered Persona Reporter

Author

AlexNicita

Description

LLMyourself is a web application that leverages AI to generate personalized reports based on a user-provided name. It transforms the concept of background checking into an engaging and informative experience, akin to reading a Wikipedia page but tailored to an individual. The innovation lies in its accessible AI implementation for generating rich, albeit redacted, personal narratives, making AI-driven insights readily available to anyone curious.

Popularity

Points 2

Comments 4

What is this product?

LLMyourself is a platform that uses artificial intelligence to create intriguing reports about individuals. When you input a name, the AI, through a process that resembles assembling information like an encyclopedia entry, generates a unique report. The core technology involves a Large Language Model (LLM) that has been trained on vast amounts of data to understand and synthesize information. The 'innovation' here is making this complex AI accessible through a simple web interface, allowing for quick generation of personalized, albeit stylized and redacted, content. So, what's the use? It offers a fun and novel way to explore AI's ability to craft narratives about people, making complex AI accessible and entertaining.

How to use it?

Developers can use LLMyourself by simply visiting the website, typing in a name, and receiving an AI-generated report. The project is built using a modern tech stack including React for the frontend, TypeScript for robust coding, Supabase for backend services and database, and Vercel for seamless deployment. This means developers can see a practical example of how these technologies integrate to create a functional AI-powered web application. For integration, one could imagine using the API (if exposed in future versions) to pull similar AI-generated content into their own applications, perhaps for creative writing tools, personalized content generation, or even as a proof-of-concept for building AI-driven user experiences. So, how can you use it? As a developer, you can learn from its architecture, see how modern frontend and backend tools are used together, and get inspired to build your own AI-powered features.

Product Core Function

· AI-driven report generation: Utilizes a Large Language Model to create unique, narrative-style reports based on a name input. The value is in demonstrating how AI can synthesize information into engaging content, providing a novel way to experience personalized data.

· Redacted preview reports: Offers a glimpse into the full report with sensitive information removed, ensuring privacy while showcasing the AI's output. This highlights a responsible approach to AI content generation, making the output shareable and intriguing without revealing personal details.

· User-friendly web interface: Built with React and TypeScript for a smooth and intuitive user experience. This demonstrates how modern frontend frameworks can be used to create accessible AI tools, making advanced technology easy for anyone to interact with.

· Scalable backend with Supabase and Vercel: Employs Supabase for database management and Vercel for efficient deployment, showcasing a robust and modern cloud infrastructure for web applications. This provides a blueprint for developers looking to build and scale AI-powered services effectively.
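The redacted preview mentioned above can be pictured with a small masking routine. This is only a sketch under stated assumptions: the source does not say how LLMyourself redacts its reports, so the function name `redact`, the patterns, and the choice of what counts as sensitive (email addresses and digits) are hypothetical.

```python
import re

# Hypothetical patterns for illustration: a loose email matcher and any digit.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
DIGIT = re.compile(r"\d")


def redact(text: str, mask: str = "*") -> str:
    """Return a preview of `text` with emails and digits masked.

    Emails are replaced by a run of mask characters of the same length, then
    every remaining digit is masked, so the shape of the text survives while
    the specifics do not.
    """
    text = EMAIL.sub(lambda m: mask * len(m.group()), text)
    return DIGIT.sub(mask, text)


if __name__ == "__main__":
    print(redact("Call 555-0100 or mail a@b.co"))
```

Pattern-based masking like this is easy to reason about but will miss anything it has no pattern for, so a production system would likely need a more careful approach.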

Product Usage Case

· Content creation for creative writing: A writer could use LLMyourself to generate unique character backgrounds or story prompts based on names, helping overcome writer's block. It solves the problem of needing inspiration by providing AI-generated narrative starting points.

· Personalized digital experiences: A developer building a fan website or community platform could integrate an LLMyourself API, if one is exposed in a future version, to generate fun, AI-created 'profiles' for users or fictional characters. This enhances user engagement by offering unique, personalized content.

· Demonstrating AI accessibility: This project serves as an excellent example for educational purposes, showing how complex AI models can be wrapped in simple interfaces to illustrate their capabilities to a broader audience. It solves the problem of AI seeming too abstract or difficult to understand by providing a tangible, interactive example.

· Prototyping AI-powered products: Entrepreneurs or developers looking to build AI-driven services can use LLMyourself as a reference for rapid prototyping. It showcases a practical implementation of AI for generating user-specific content, accelerating the development of similar products.

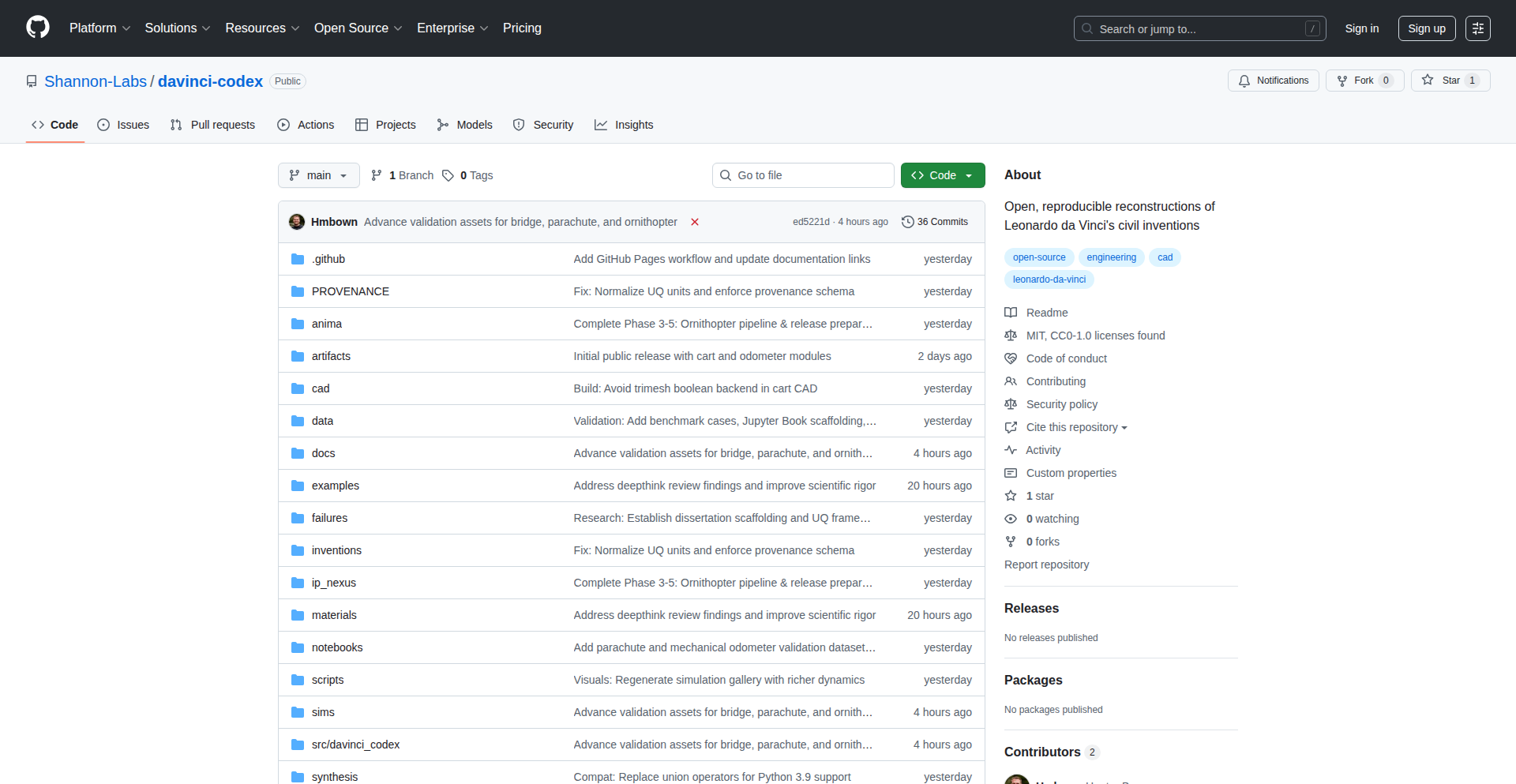

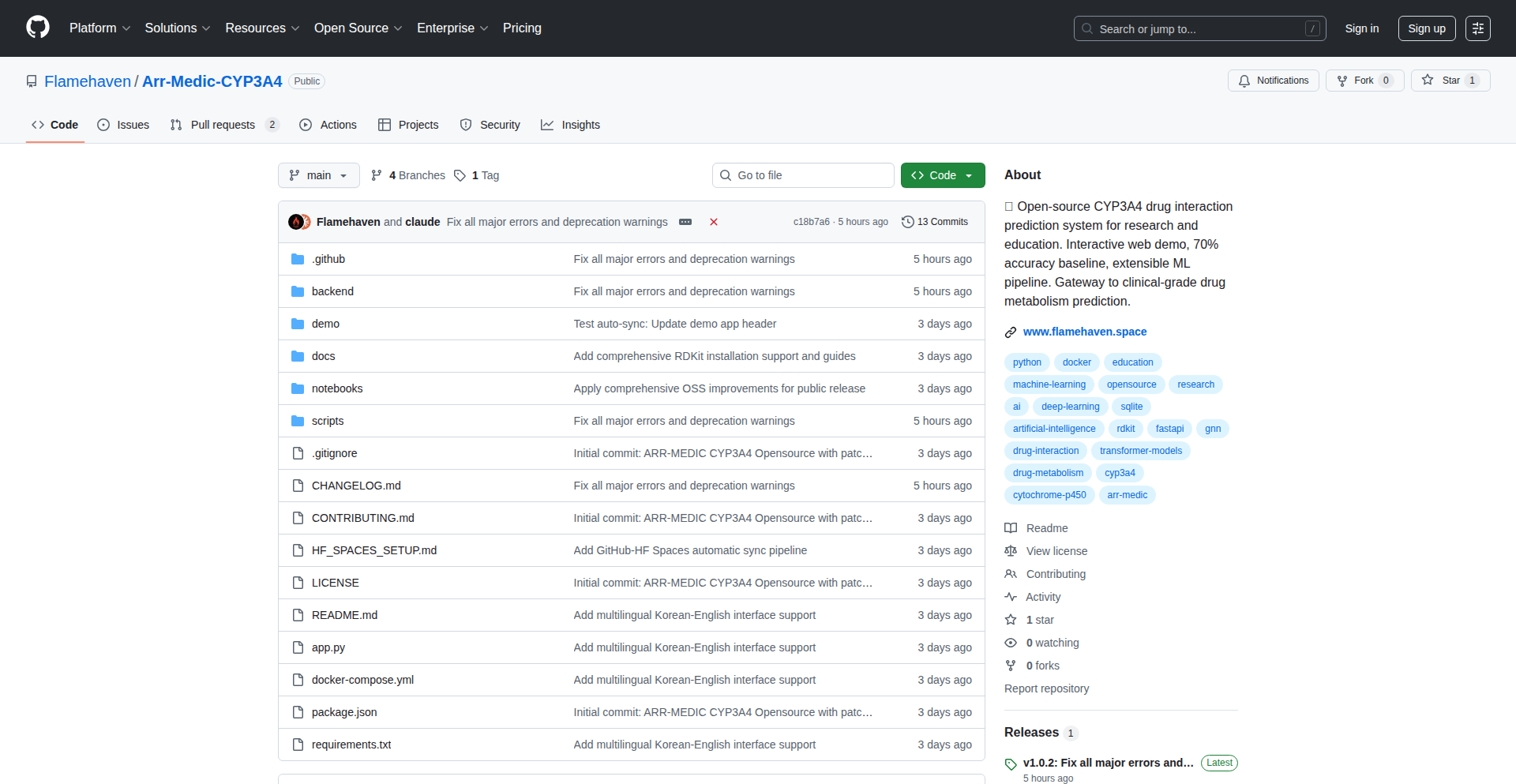

11

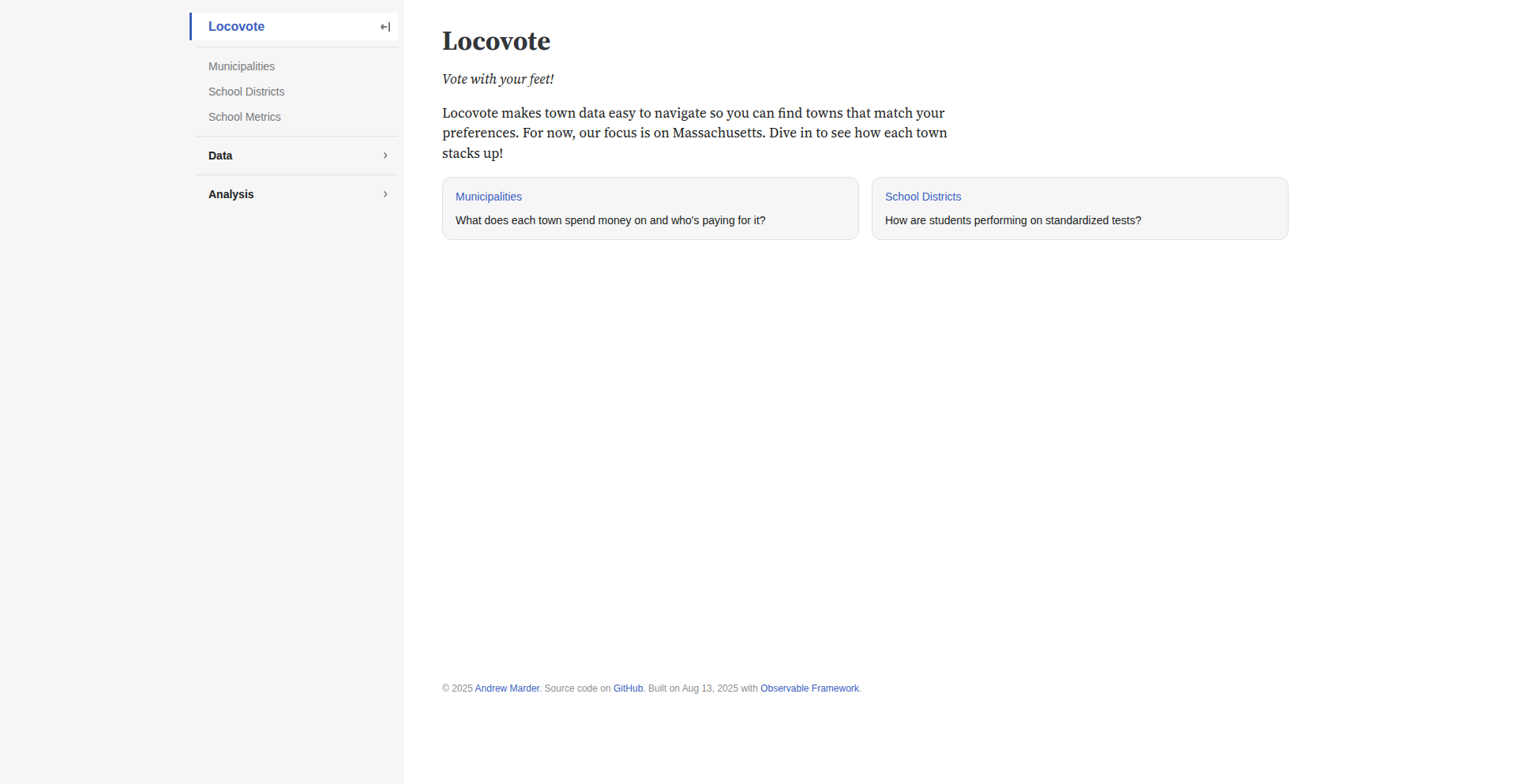

Locovote.com: Civic Data Navigator

Author

amarder

Description

Locovote.com is an open-source dashboard that consolidates and visualizes key local government data, such as school performance scores, tax rates, and municipal financial information. It aims to simplify the process of comparing towns for residents, particularly those house hunting, by presenting complex information in an accessible and easy-to-navigate format. The project leverages Observable Framework for its data visualization and presentation, offering a practical solution to a common information-gathering challenge.

Popularity

Points 3

Comments 2

What is this product?

Locovote.com is a web-based application that acts as a central hub for critical local government data. Instead of visiting multiple disparate government websites, which often have complex interfaces, Locovote aggregates this information into a single, user-friendly dashboard. It uses data visualization techniques to make comparisons between different towns straightforward. The core innovation lies in its ability to distill complex financial and performance metrics into easily digestible charts and tables, helping users quickly understand the fiscal health and educational quality of different communities. The use of Observable Framework, a JavaScript framework for building reactive data graphics and interactive documents, allows for dynamic and efficient data presentation, making it easier for users to explore and interact with the data.

How to use it?

Developers can use Locovote.com as a reference for how to aggregate and visualize public data. For instance, if you're building a tool that helps people understand community statistics, you can study Locovote's approach to data sourcing and presentation. You can also contribute to the open-source project on GitHub to improve its functionality or expand its data coverage. In terms of integration, a developer might integrate a similar data aggregation and visualization pattern into their own applications, perhaps for real estate platforms, community planning tools, or civic engagement initiatives, by adapting the data fetching and charting methodologies. This project demonstrates a practical application of front-end data visualization frameworks to solve a real-world problem.

Product Core Function

· School Performance Data Aggregation: Collects and displays standardized test scores and other educational metrics for local schools, enabling parents and residents to assess educational quality. This provides a clear picture of academic outcomes, helping users make informed decisions about where to live based on educational opportunities.

· Tax Rate Comparison: Gathers and presents property tax rates across different towns, allowing users to easily compare the tax burden. This is crucial for understanding the overall cost of living in a particular area and for budget planning.

· Municipal Finance Overview: Summarizes key financial data for local governments, such as budgets and expenditures, to offer insights into fiscal management. This helps users understand how taxpayer money is being utilized and the financial stability of a town.

· Interactive Town Comparison Dashboard: Provides a user interface with charts and tables that allow for side-by-side comparison of selected towns based on the aggregated data. This visual comparison makes it easy to identify differences and similarities between communities at a glance.

· Open-Source Contribution Platform: The project is hosted on GitHub, allowing developers to view the source code, suggest improvements, and contribute new features or data sources. This fosters community collaboration and the continuous improvement of the tool for broader public benefit.
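The side-by-side comparison at the heart of the dashboard boils down to pivoting per-town records into one row per metric. Locovote itself is built with Observable Framework, so the Python sketch below only illustrates the pattern. The function name `comparison_rows`, the metric keys, and the town values are made-up placeholders, not real data.

```python
def comparison_rows(towns: dict[str, dict[str, float]],
                    metrics: list[str]) -> list[list[str]]:
    """Build a table with one row per metric and one column per town.

    Towns are sorted by name so the column order is stable, and a missing
    metric is shown as "n/a" instead of raising an error.
    """
    names = sorted(towns)
    rows = [["metric", *names]]
    for metric in metrics:
        row = [metric]
        for name in names:
            value = towns[name].get(metric)
            row.append("n/a" if value is None else f"{value:g}")
        rows.append(row)
    return rows


if __name__ == "__main__":
    # Placeholder records for two fictional towns.
    sample = {
        "Town B": {"tax_rate": 12.5},
        "Town A": {"tax_rate": 10.0, "school_score": 80},
    }
    for row in comparison_rows(sample, ["tax_rate", "school_score"]):
        print(" | ".join(f"{cell:<12}" for cell in row))
```

Handling missing values explicitly matters for this kind of tool, since public datasets rarely cover every town for every metric.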

Product Usage Case

· A family relocating to Massachusetts uses Locovote.com to compare school district ratings and property taxes for several towns they are considering. By quickly viewing the aggregated data, they can narrow down their options without spending hours sifting through individual town websites, saving them significant time and effort in their house hunting process.

· A community organizer utilizes Locovote.com to gather data for a presentation on local economic development. They can easily pull up financial data and tax structures for different towns to illustrate trends and inform discussions about local investment and policy.

· A developer interested in data visualization builds a similar tool for their local city by referencing Locovote.com's GitHub repository. They adapt the Observable Framework implementation to visualize public transportation data, creating a new resource for their community.

12

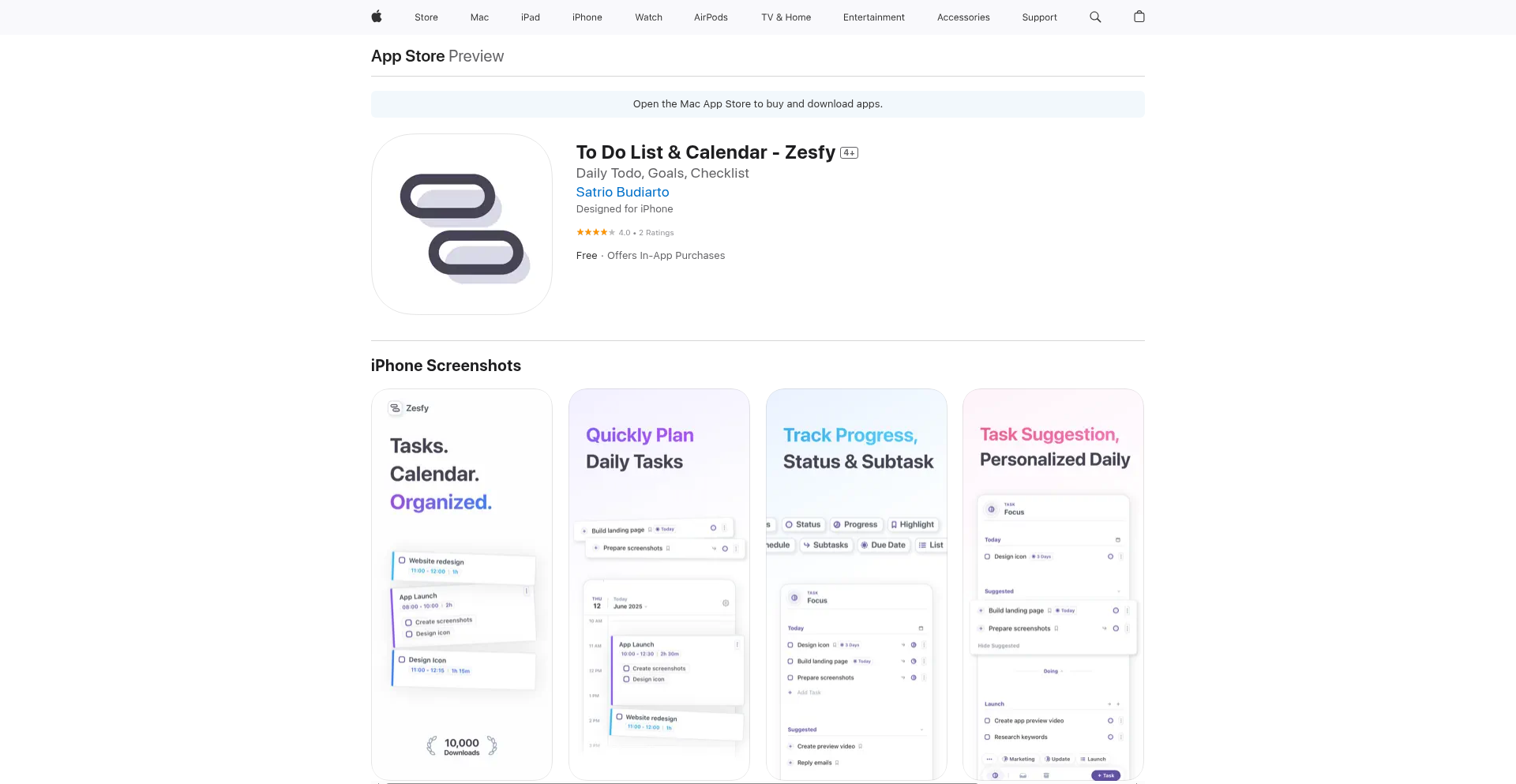

WeeklyFocus Todo

Author

zesfy

Description

A todo app that only displays tasks for the current week, simplifying focus and reducing overwhelm. It leverages a constraint-based design to enhance productivity by limiting the scope of visible tasks.

Popularity

Points 4

Comments 1

What is this product?

This is a task management application designed to boost productivity by focusing on a limited timeframe. Instead of showing all your tasks, it cleverly filters them to only display what needs to be done within the current week. This approach is innovative because it combats task paralysis and information overload, common issues in traditional to-do lists. The core technical insight is in how it prioritizes and presents information, a common challenge in UI/UX design, by using a time-bound filtering mechanism. For a developer, the value lies in a simpler, more effective way to manage personal or team workflows, leading to better task completion rates.

How to use it?

Developers can integrate this concept into their personal task management or adapt the core filtering logic for team-based project tracking. For personal use, you input your tasks with due dates, and the app automatically surfaces only those due within the current week. For integration, the underlying principle of time-bound filtering can be applied to various project management tools or custom dashboards. Imagine a developer using this for their sprint tasks: they would only see what's relevant for the current sprint week, improving their workflow and eliminating distractions from future sprints.
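The time-bound filtering principle is small enough to sketch in a few lines. The example below assumes a Monday-to-Sunday week and a task represented as a dictionary with a `due` date. Both are assumptions for illustration, as are the names `week_bounds` and `tasks_this_week`; the app's actual data model is not described in the source.

```python
import datetime


def week_bounds(today: datetime.date) -> tuple[datetime.date, datetime.date]:
    """Return the Monday and Sunday of the week containing `today`."""
    monday = today - datetime.timedelta(days=today.weekday())
    sunday = monday + datetime.timedelta(days=6)
    return monday, sunday


def tasks_this_week(tasks: list[dict], today: datetime.date) -> list[dict]:
    """Keep only the tasks whose due date falls inside the current week."""
    start, end = week_bounds(today)
    return [task for task in tasks if start <= task["due"] <= end]


if __name__ == "__main__":
    today = datetime.date.today()
    demo = [
        {"title": "due today", "due": today},
        {"title": "due in three weeks", "due": today + datetime.timedelta(weeks=3)},
    ]
    for task in tasks_this_week(demo, today):
        print(task["title"], task["due"].isoformat())
```

Swapping `week_bounds` for a function that returns sprint start and end dates would give the sprint-scoped view described above.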

Product Core Function

· Weekly task visibility: Presents only tasks due within the current week, reducing cognitive load and improving focus on immediate priorities. This directly helps users by making their workload feel more manageable and achievable.

· Task scheduling with due dates: Allows users to assign due dates to tasks, which is the fundamental mechanism for the weekly filtering. This provides structure and ensures tasks are categorized effectively for timely completion.

· Task completion tracking: Enables users to mark tasks as complete, offering a sense of accomplishment and progress. This visual feedback loop motivates users to continue managing their tasks efficiently.

· Simplified user interface: Designed for clarity and ease of use, minimizing distractions and making task management a straightforward process. This means less time spent fiddling with the tool and more time on actual work.

Product Usage Case

· A freelance developer managing multiple client projects can use WeeklyFocus Todo to see exactly which client tasks are due this week, preventing missed deadlines and ensuring consistent delivery. This solves the problem of feeling overwhelmed by a long list of future commitments.

· A software team lead could adapt this principle to a shared task board, highlighting only the tasks assigned to the current sprint's focus. This improves team alignment and helps everyone understand immediate priorities, solving the challenge of team members getting sidetracked by tasks outside the current sprint's scope.

· A student developer working on a personal project can use this to break down large assignments into weekly actionable items. It helps them stay on track for deadlines without feeling discouraged by the sheer volume of work ahead.

13

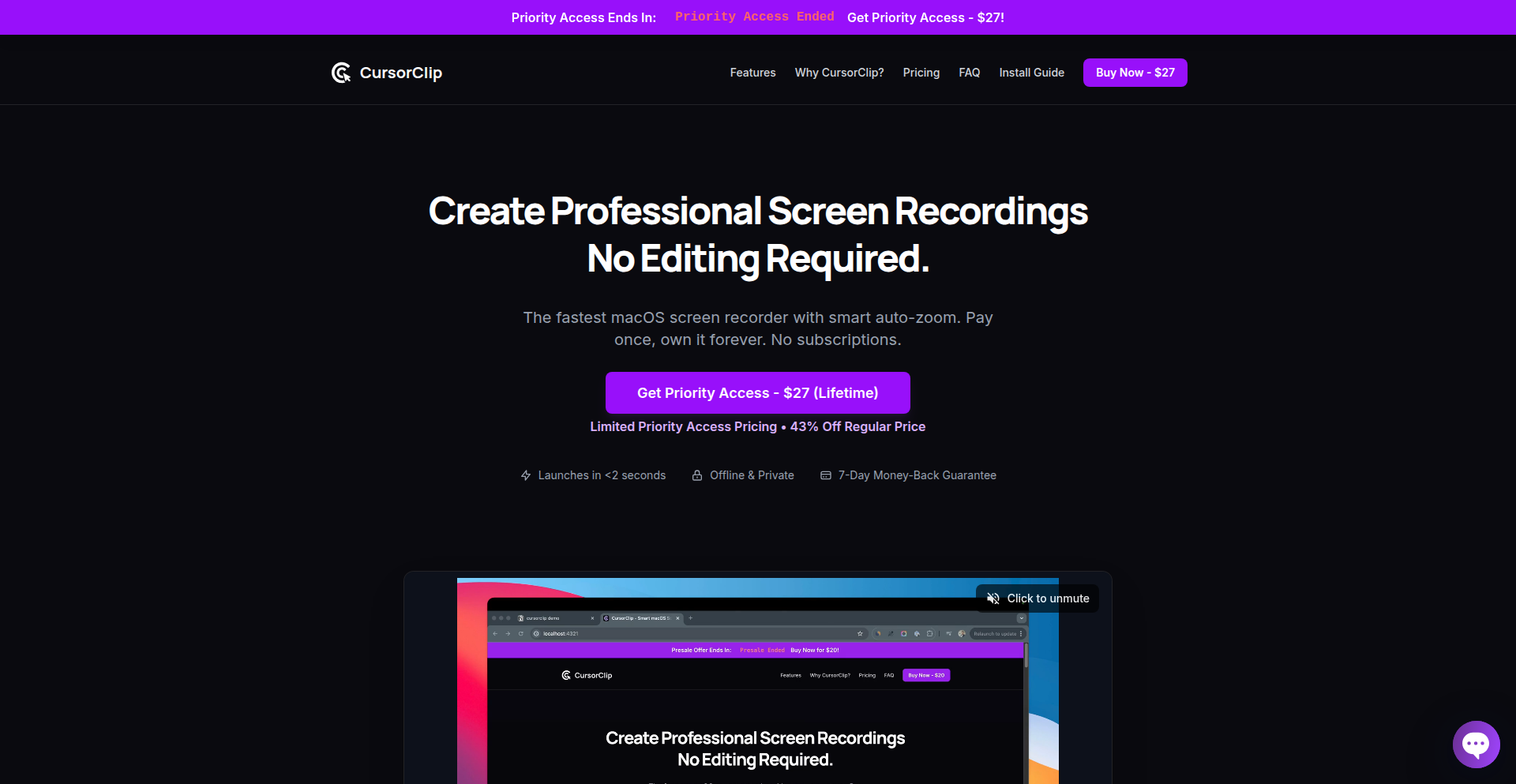

UltraLite macOS Screen Recorder

Author

shadabshs

Description

A hyper-lightweight native macOS application that records your screen with an innovative auto-zoom feature, solving the problem of viewers missing crucial details during demonstrations. Its small 18MB footprint means it's fast to download and doesn't hog system resources, making it incredibly practical for everyday use.

Popularity

Points 3

Comments 2

What is this product?

This project is a native macOS screen recording application that has been meticulously optimized for size and performance, weighing in at only 18MB. The core innovation lies in its 'auto-zoom' functionality, which intelligently magnifies specific areas of the screen during recording. This is achieved through a combination of screen capture APIs and sophisticated image processing algorithms that detect user interaction or pre-defined focus areas, then seamlessly apply a digital zoom. Essentially, it automates the process of highlighting important parts of your screen without manual intervention, ensuring your audience never misses a key step or detail. So, what's the value to you? It means creating clearer, more engaging video tutorials or bug reports without needing complex editing later, and with an app that's incredibly light on your system.
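One way to picture the auto-zoom step is as choosing a crop rectangle around a point of interest. The sketch below computes such a rectangle centered on the cursor and clamped to the screen edges. It is a geometric illustration in Python only: the app is a native macOS program, and the function name `zoom_rect` and the centering strategy are assumptions, not its actual implementation.

```python
def zoom_rect(cursor_x: float, cursor_y: float,
              screen_w: float, screen_h: float,
              zoom: float = 2.0) -> tuple[float, float, float, float]:
    """Return (x, y, width, height) of the screen region to magnify.

    The region is 1/zoom of the screen in each dimension and centered on the
    cursor where possible. Near an edge it is shifted back inside the screen
    so the zoomed view never shows empty space.
    """
    if zoom < 1.0:
        raise ValueError("zoom must be >= 1")
    width = screen_w / zoom
    height = screen_h / zoom
    x = min(max(cursor_x - width / 2, 0.0), screen_w - width)
    y = min(max(cursor_y - height / 2, 0.0), screen_h - height)
    return x, y, width, height


if __name__ == "__main__":
    for point in ((960, 540), (0, 0), (1900, 1000)):
        print(point, "->", zoom_rect(*point, 1920, 1080))
```

A recorder would typically also ease the rectangle between positions over several frames so the zoom does not jump abruptly.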

How to use it?

Developers can use this app directly by downloading and running it on their macOS devices. It integrates seamlessly into any workflow requiring screen recording. For instance, you can launch the app, select the desired recording area, and start capturing. The auto-zoom feature can be configured to activate based on mouse clicks, keyboard shortcuts, or specific application windows, making it ideal for demonstrating software features, coding walkthroughs, or troubleshooting. Its low resource usage means you can run it alongside demanding development tools without performance degradation. So, how does this benefit you? You get a powerful, yet unobtrusive tool to create polished screencasts for documentation, team collaboration, or sharing your technical expertise.

Product Core Function

· Native macOS Screen Recording: Captures high-quality video of your screen using macOS's built-in graphics and capture frameworks. The value is a smooth, efficient recording process optimized for the platform, ensuring good performance and compatibility. This is useful for creating any type of video content on your Mac.

· Ultra-Lightweight (18MB): Achieved through careful code optimization and reliance on native macOS frameworks, minimizing dependencies. The value is a fast download, quick startup, and minimal impact on your system's storage and memory. This is great for users with limited disk space or those who prefer lean applications.

· Automatic Zoom Functionality: Intelligently identifies and magnifies areas of interest during recording, such as mouse cursor movements or specific window focus changes. The value is enhanced viewer comprehension by automatically directing attention to critical elements, making tutorials and demonstrations much clearer. This helps your audience follow along easily.

· Configurable Zoom Triggers: Allows users to define specific events (e.g., mouse clicks, application focus) that initiate the zoom effect. The value is granular control over the auto-zoom behavior, allowing for tailored recordings that match the specific content being shared. This provides flexibility for different demonstration styles.

Product Usage Case

· Demonstrating a new UI element in a web application: A developer can record a walkthrough of a new feature, and the auto-zoom will highlight the button being clicked or the input field being typed into, ensuring viewers can clearly see the interaction. This solves the problem of viewers squinting at small click targets.

· Creating a bug report video for a complex software issue: The auto-zoom can follow the sequence of actions leading to the bug, automatically magnifying each step. This provides a clear, step-by-step visual guide for testers or support teams, speeding up bug resolution.

· Tutorials on coding specific algorithms or functions: When demonstrating a code snippet, the auto-zoom can focus on the relevant lines of code as the developer scrolls or highlights them, making it easier for students or colleagues to follow the logic. This eliminates the need for manual zooming during editing.

· Onboarding new team members to a development workflow: A concise screen recording with auto-zoom can illustrate critical steps in setting up a project or using internal tools, ensuring clarity and reducing the learning curve for new hires. This makes knowledge transfer more efficient.

14

GlyphEngine

Author

s_petrov

Description

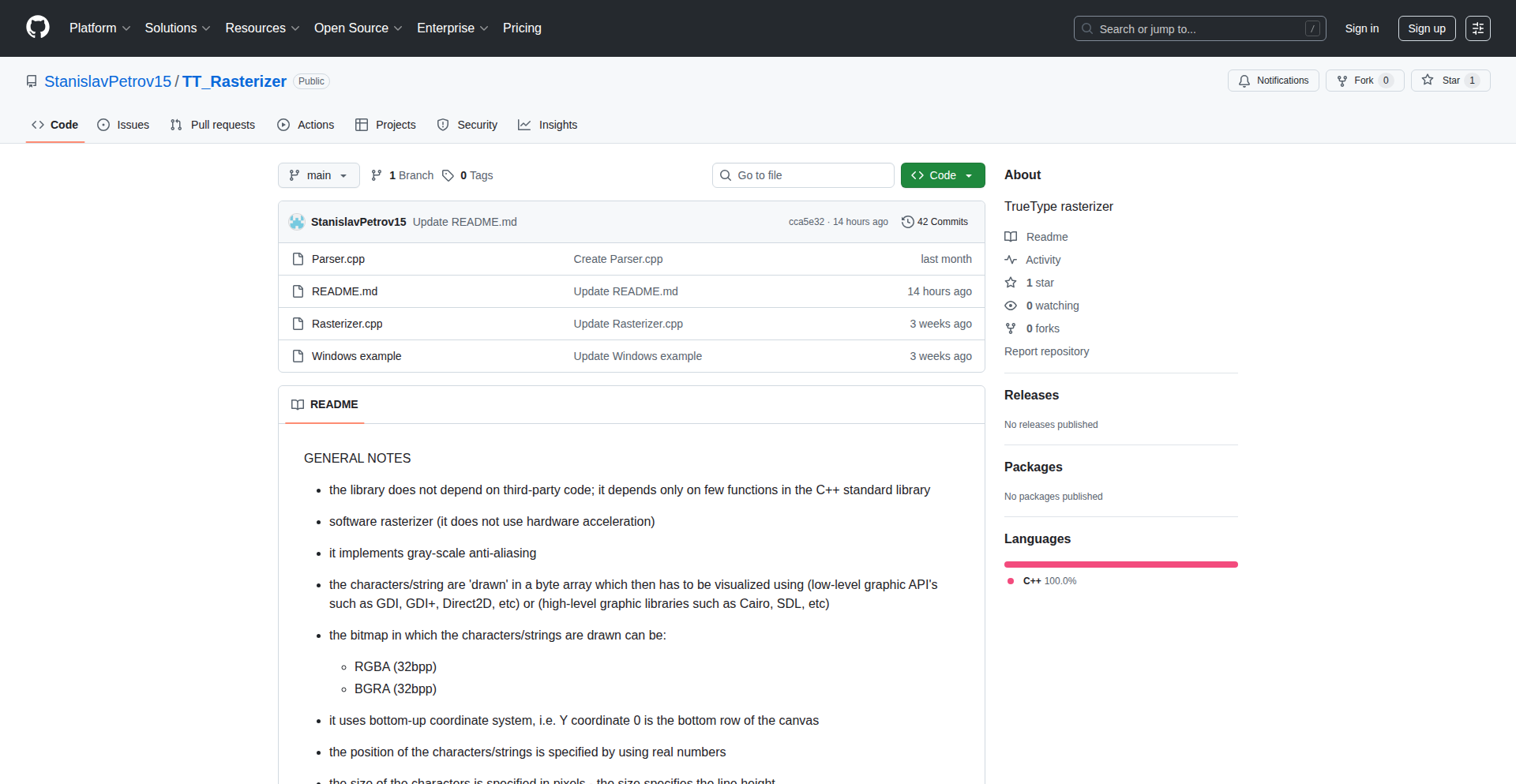

GlyphEngine is a lightweight TrueType rasterizer, a tool that converts vector font outlines into pixelated images for display on screens. It excels at rendering the vast majority of Western characters accurately, offering a foundational building block for applications needing to display text. Its innovation lies in its direct implementation of font rendering logic, providing a deeper understanding of how text appears on screen and serving as a customizable solution for developers.

Popularity

Points 3

Comments 1

What is this product?

GlyphEngine is a C++ TrueType rasterizer. In simple terms, it takes the mathematical descriptions of characters in a font file (like TrueType) and turns them into the tiny dots (pixels) that form the image you see on your screen. The innovative part is its direct, from-scratch implementation. This means it doesn't rely on operating system libraries for basic font rendering, giving developers fine-grained control and a clear understanding of the rendering pipeline. While currently focused on Western characters and lacking advanced features like hinting for small text, it represents a fundamental step in font display technology, built with a hacker's mindset of understanding and rebuilding core functionalities.
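To show what turning outlines into pixels involves, here is a toy rasterizer sketch. It is written in Python for brevity, whereas GlyphEngine is C++, and it is not GlyphEngine's code: the function names are hypothetical, there is no anti-aliasing or font file parsing, and the y-axis is treated as pointing down for simplicity. It flattens quadratic Bézier curves (the curve type TrueType outlines use) into line segments and fills pixels using the nonzero winding rule.

```python
def flatten_quadratic(p0, p1, p2, steps=8):
    """Approximate a quadratic Bezier with `steps` points (start point excluded)."""
    points = []
    for i in range(1, steps + 1):
        t = i / steps
        u = 1.0 - t
        x = u * u * p0[0] + 2 * u * t * p1[0] + t * t * p2[0]
        y = u * u * p0[1] + 2 * u * t * p1[1] + t * t * p2[1]
        points.append((x, y))
    return points


def winding_number(contour, px, py):
    """Sum signed crossings of a ray cast from (px, py) toward +x."""
    winding = 0
    n = len(contour)
    for i in range(n):
        x0, y0 = contour[i]
        x1, y1 = contour[(i + 1) % n]  # wrap around to close the contour
        if (y0 <= py) != (y1 <= py):  # edge straddles the scanline
            x_cross = x0 + (py - y0) * (x1 - x0) / (y1 - y0)
            if x_cross > px:
                winding += 1 if y1 > y0 else -1
    return winding


def rasterize(contour, width, height):
    """Sample each pixel center and mark it '#' when inside the contour."""
    rows = []
    for row in range(height):
        py = row + 0.5
        rows.append("".join(
            "#" if winding_number(contour, col + 0.5, py) != 0 else "."
            for col in range(width)
        ))
    return rows


if __name__ == "__main__":
    # A flat-bottomed shape whose top edge is a single quadratic curve.
    shape = [(1.0, 7.0), (11.0, 7.0)] + flatten_quadratic((11.0, 7.0), (6.0, -5.0), (1.0, 7.0))
    print("\n".join(rasterize(shape, 12, 8)))
```

Sampling once per pixel center gives hard, jagged edges. Smoother text usually comes from computing how much of each pixel the outline covers, which is where anti-aliasing fits in.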

How to use it?

Developers can integrate GlyphEngine into their projects to handle font rendering directly, bypassing system-level font renderers. This is particularly useful for embedded systems, game development, or any application where precise control over text appearance and performance is critical. You can include the C++ source code in your project, and then use its API to load font files, select characters, and render them into a bitmap buffer. This buffer can then be displayed on a screen or further manipulated. For example, you could use it in a custom UI framework or a game engine that needs to render text without relying on the OS's default text rendering.

Product Core Function

· Vector to Bitmap Conversion: Takes font outlines and renders them into a grid of pixels, enabling text display on digital screens. This is crucial for any application that needs to show text, ensuring characters are correctly formed from dots.

· Western Glyph Support: Accurately renders 99.9% of Western glyphs, meaning most common English and European characters will display correctly. This provides a reliable foundation for displaying text in these languages.

· Font File Parsing: Reads and interprets TrueType font files, extracting the necessary data to draw characters. This allows your application to use a wide range of available fonts.

· Customizable Rendering: As a direct implementation, it allows developers to modify and extend the rendering process, such as experimenting with different anti-aliasing techniques or, as a planned feature, color effects. This offers flexibility for unique visual styles.

Product Usage Case

· Custom Game UI: A game developer can use GlyphEngine to render game menus and in-game text with a specific, consistent style across different platforms, avoiding OS-specific text rendering variations.

· Embedded System Displays: For devices with limited resources or custom display hardware, GlyphEngine can provide a lightweight and efficient way to render text without depending on a full-fledged OS font system.

· Graphics Library Development: Developers building their own graphics libraries or frameworks can use GlyphEngine as a core component for text rendering, ensuring tight integration and control over performance.

· Educational Tools: Students learning about computer graphics and font rendering can use GlyphEngine as a reference implementation to understand the underlying algorithms and data structures involved in displaying text.

15

Math2Tex: Alchemy for Academia

Author

leoyixing

Description

Math2Tex is a specialized web application designed to tackle the tedious task of transcribing academic content, particularly mathematical formulas, from images into editable LaTeX or plain text. It addresses the common pain point for students and researchers who struggle with manually inputting complex equations, saving them time and reducing syntax errors by leveraging a fine-tuned AI model for accurate recognition.

Popularity

Points 2

Comments 2

What is this product?

Math2Tex is a web-based tool that utilizes a custom-trained AI model, built upon a transformer architecture, to recognize and convert academic content, especially handwritten or printed mathematical formulas, from images into machine-readable formats like LaTeX or plain text. Unlike general-purpose AI models, Math2Tex is optimized for speed and accuracy in this specific domain, acting as a precision instrument for academic transcription. Think of it as a highly skilled scribe that can instantly translate your visual notes into code.

How to use it?

Developers and academics can easily use Math2Tex via its single-page web interface. Simply upload an image file (such as a scan of handwritten notes, a screenshot from a PDF, or a photo of a textbook page) to the platform. Math2Tex will then process the image and display a real-time preview of the converted LaTeX or text output. Users can then copy the generated code with a single click, making it readily available for integration into their documents, research papers, or study materials.

Product Core Function

· Image to LaTeX Conversion: Accurately transforms mathematical equations and academic notations from images into valid LaTeX code, which is crucial for typesetting scholarly documents, saving significant manual input time and effort.

· Image to Plain Text Conversion: Extracts textual content from images and converts it into easily editable plain text, streamlining the process of digitizing notes or references.

· Real-time Preview: Provides an immediate visual representation of the converted output, allowing users to quickly verify the accuracy of the recognition and make any necessary adjustments.

· One-Click Copy: Enables users to effortlessly copy the generated LaTeX or text to their clipboard, facilitating seamless integration into their workflow and other applications.

· Specialized Recognition Model: Employs a lightweight, fine-tuned AI model specifically trained on academic materials, ensuring higher precision and faster processing speeds for complex mathematical and symbolic notations compared to general AI tools.

Product Usage Case

· A student taking notes in a math lecture can photograph a complex multi-line equation written on the board, upload it to Math2Tex, and instantly get the LaTeX code to paste into their digital notebook, avoiding tedious manual typing and potential errors.

· A researcher working with a scanned PDF of an old academic paper can upload pages containing intricate formulas, and Math2Tex will convert them into editable LaTeX, allowing them to easily incorporate these formulas into their own research papers or analyses.

· An educator preparing lecture slides can take a picture of a challenging mathematical problem from a textbook, convert it using Math2Tex, and then easily insert the properly formatted equation into their presentation slides.

· Anyone who needs to digitize handwritten mathematical formulas, whether for personal study, collaboration, or publication, can use Math2Tex as a quick and reliable method to convert their visual notes into a usable digital format.

16

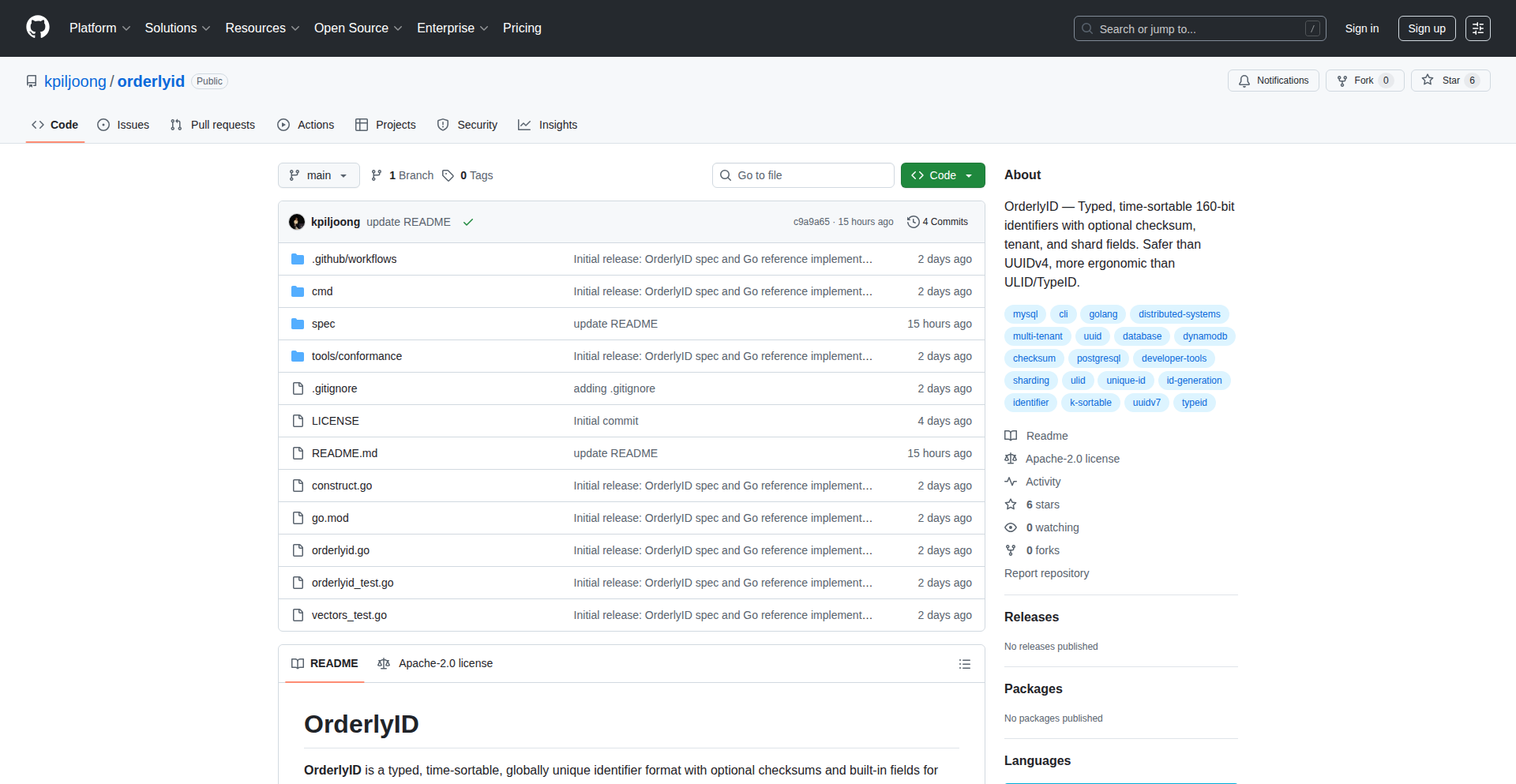

OrderlyID: Typed, Time-Sortable 160-bit IDs

Author

piljoong

Description

OrderlyID is a novel identifier format designed for distributed systems, offering a more structured and informative alternative to traditional UUIDs or ULIDs. It introduces human-readable prefixes for better identification, embeds creation time for efficient sorting, and includes optional checksums for data integrity. This makes it easier to manage and debug IDs in complex, spread-out systems, providing clear insights into data origins and ensuring accuracy.

Popularity

Points 4

Comments 0

What is this product?

OrderlyID is a new type of unique identifier, similar in purpose to a UUID or ULID, but with several improvements aimed at distributed systems. Think of it as a smart, unique code. It starts with a readable prefix (like 'order_' or 'user_') that immediately tells you what the ID represents, making it much easier for humans to understand. The core part of the ID is built so that sorting IDs lexicographically also roughly sorts them by creation time. This is helpful for databases and logs where you often need to see things in chronological order. At 160 bits, the payload is also larger than the 128 bits of a UUID or ULID, leaving room for the time, which system it belongs to (tenant), which part of that system (shard), a sequence number, and some random data. Optionally, it can carry a small checksum to catch mistakes if an ID is copied incorrectly. There's even a privacy feature that coarsens timestamps into buckets for public-facing data. So, what's the big deal? It brings order and clarity to the often chaotic world of unique IDs in distributed applications.

How to use it?

Developers can integrate OrderlyID into their applications by using the provided Go reference implementation or the command-line interface (CLI) tool. You can generate new OrderlyIDs for various entities like orders, users, or events within your distributed system. For example, in a microservices architecture, each service can generate its own IDs with specific prefixes (e.g., 'payment_order_...', 'user_profile_...'). The k-sortable nature means you can efficiently query and retrieve data chronologically without needing separate timestamp indexes in many cases. The structured fields allow for more intelligent data sharding and routing. The checksums can be used to validate IDs during data transfer or storage, preventing subtle errors. This makes managing and debugging data across multiple services much more straightforward.
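To make the idea of a typed, time-sortable ID concrete, here is a small Python sketch. It is not the OrderlyID reference implementation (which is in Go) and does not follow the OrderlyID specification. The bit layout (48-bit millisecond timestamp, 16-bit tenant, 16-bit shard, 16-bit sequence, 64 random bits), the base32 alphabet, and the function names are all assumptions chosen for illustration.

```python
import secrets
import time
from typing import Optional

# Crockford-style base32 alphabet. Its characters are in ascending ASCII order,
# so fixed-length encoded strings sort the same way as the numbers they encode.
ALPHABET = "0123456789ABCDEFGHJKMNPQRSTVWXYZ"


def encode_160(value: int) -> str:
    """Encode a 160-bit integer as 32 base32 characters, most significant first."""
    return "".join(ALPHABET[(value >> shift) & 0x1F] for shift in range(155, -1, -5))


def make_id(prefix: str, tenant: int, shard: int, seq: int,
            ts_ms: Optional[int] = None) -> str:
    """Build '<prefix>_<payload>' using a hypothetical 160-bit layout."""
    if ts_ms is None:
        ts_ms = time.time_ns() // 1_000_000
    payload = (ts_ms & (2 ** 48 - 1)) << 112   # bits 159..112: timestamp (ms)
    payload |= (tenant & 0xFFFF) << 96         # bits 111..96:  tenant
    payload |= (shard & 0xFFFF) << 80          # bits 95..80:   shard
    payload |= (seq & 0xFFFF) << 64            # bits 79..64:   sequence
    payload |= secrets.randbits(64)            # bits 63..0:    random
    return f"{prefix}_{encode_160(payload)}"


def timestamp_of(id_str: str) -> int:
    """Recover the embedded millisecond timestamp from an ID made by make_id."""
    body = id_str.rsplit("_", 1)[1]
    value = 0
    for ch in body:
        value = (value << 5) | ALPHABET.index(ch)
    return value >> 112


# Usage: IDs with the same prefix sort by creation time as plain strings.
earlier = make_id("order", tenant=1, shard=2, seq=0, ts_ms=1_000)
later = make_id("order", tenant=1, shard=2, seq=0, ts_ms=2_000)
print(sorted([later, earlier]) == [earlier, later])
```

Placing the timestamp in the most significant bits is what makes string order follow time order. IDs created within the same millisecond are then ordered by the remaining fields, which is why the ordering is only approximately chronological.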

Product Core Function

· Typed Identifiers: Generates IDs with human-readable prefixes (e.g., 'order_xxx', 'user_xxx'). This makes it easy to understand what an ID refers to at a glance, improving debugging and data management, especially in systems with many different types of data.

· K-Sortable by Creation Time: The IDs are designed so that their alphabetical order closely matches their creation time. This allows for efficient chronological sorting of data in databases and logs without needing separate time indexing, simplifying queries and improving performance.

· Structured 160-bit Payload: The main part of the ID contains embedded information including a timestamp, tenant identifier, shard identifier, sequence number, and random bits. This allows for built-in sharding and routing logic within the ID itself, making distributed systems easier to manage and scale.

· Optional Checksums: Includes an optional integrity check (like a small error-detecting code) that helps catch copy-paste errors or data corruption. This is crucial for maintaining data accuracy and reliability in distributed environments.

· Privacy Flag for Bucketing: Offers a flag to group timestamps, useful for anonymizing or generalizing data for public-facing systems. This helps maintain privacy while still allowing for some temporal ordering, balancing transparency and data protection.
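The checksum and timestamp-bucketing ideas in the list above can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the weighted-sum checksum, the '-' separator, the two check characters, and the 60-second bucket size are not taken from the OrderlyID specification, which may define these differently.

```python
ALPHABET = "0123456789ABCDEFGHJKMNPQRSTVWXYZ"  # same base32 alphabet as a typical ID body
MODULUS = 1021  # largest prime below 1024, so the result fits in two base32 characters


def check_value(id_str: str) -> int:
    """Position-weighted sum of character codes, reduced modulo a prime."""
    return sum((i + 1) * ord(ch) for i, ch in enumerate(id_str)) % MODULUS


def with_checksum(id_str: str) -> str:
    """Append '-' plus two check characters to an ID string."""
    value = check_value(id_str)
    return f"{id_str}-{ALPHABET[value >> 5]}{ALPHABET[value & 0x1F]}"


def verify(checked: str) -> bool:
    """Return True if the trailing check characters match the ID body."""
    id_str, sep, _ = checked.rpartition("-")
    return sep == "-" and with_checksum(id_str) == checked


def bucket_timestamp(ts_ms: int, bucket_ms: int = 60_000) -> int:
    """Round a millisecond timestamp down to the start of its bucket."""
    return ts_ms - ts_ms % bucket_ms


# Usage: a single mistyped character is caught by the checksum.
good = with_checksum("order_0000ABC")
typo = "order_0000ABD" + good[-3:]
print(verify(good), verify(typo))
print(bucket_timestamp(125_000))
```

Because the modulus is prime and the weights differ by position, this particular scheme detects any single-character substitution and any swap of two adjacent, different characters in IDs shorter than 1021 characters. Bucketing trades precision for privacy: IDs still sort by bucket, but the exact creation instant is no longer recoverable.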

Product Usage Case

· In a distributed e-commerce platform, OrderlyIDs can be used for orders ('order_...') and payments ('payment_...'). The time-sortable nature allows easy retrieval of all orders placed within a specific hour or day, improving reporting and analysis. The tenant field can help isolate data if the platform serves multiple businesses.

· For a real-time analytics system tracking user events ('event_...'), OrderlyIDs can provide an immediate timestamp context and a shard identifier to route events to the correct processing node. The optional privacy flag could be used for public-facing dashboards that show trends without revealing exact user activity times.

· In a large-scale content management system, media assets could have IDs like 'media_asset_...'. The k-sortable property helps in managing content versions chronologically, while the structured fields can help distribute storage across different servers based on the content's origin or type.

· When building microservices that need to communicate and maintain a consistent order of operations, OrderlyIDs provide a shared, understandable format. For example, a user registration service might generate 'user_reg_...' IDs, so that even if messages are processed out of order, the timestamps embedded in the IDs help reconstruct the approximate sequence.

17

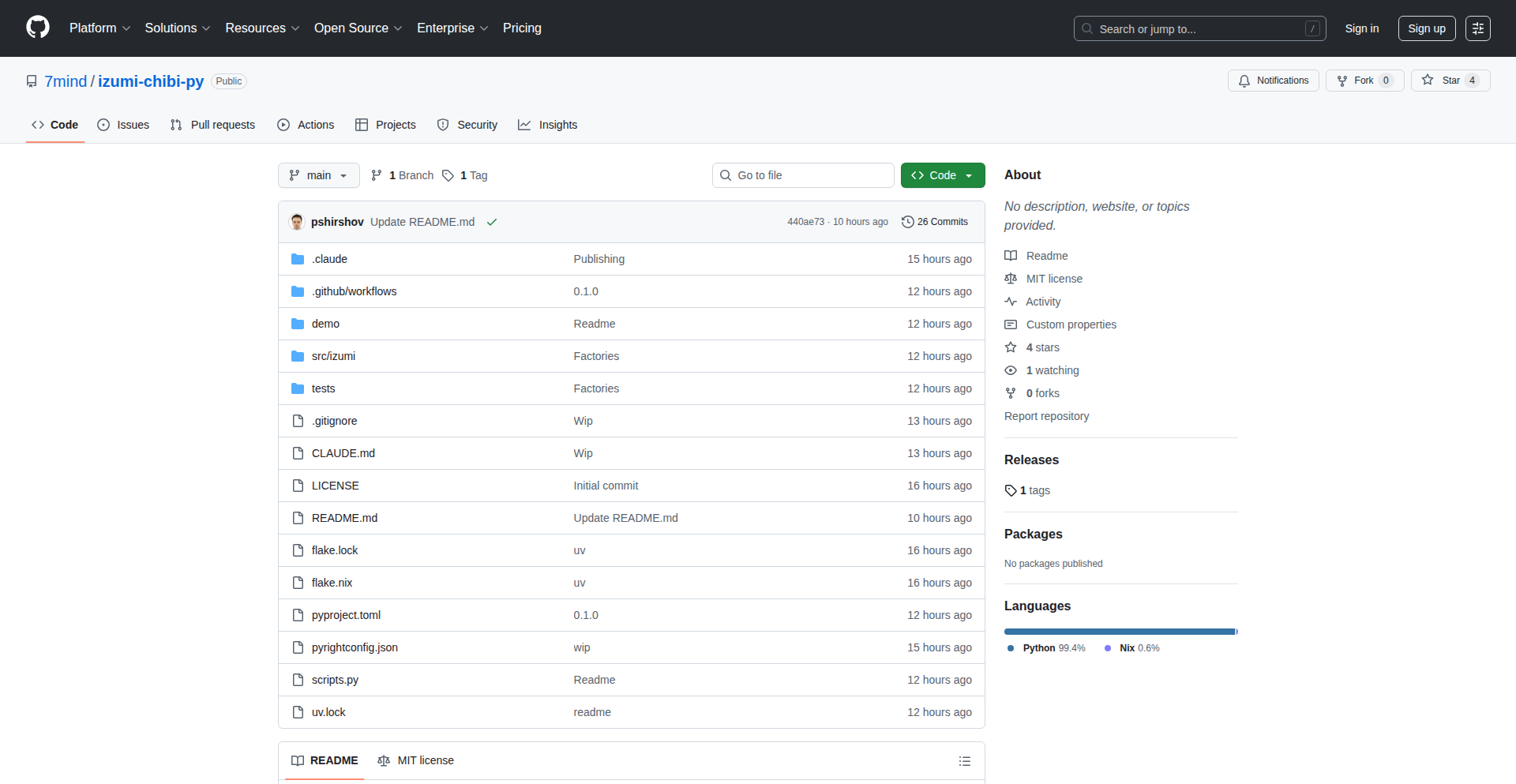

Chibi Izumi: Staged Dependency Injection for Python

Author

pshirshov

Description

Chibi Izumi is a Python library that offers staged dependency injection, a novel approach to managing how different parts of your Python code rely on each other. Instead of injecting dependencies all at once, it allows you to define injection steps and execute them in a specific order, enhancing control and clarity in complex applications. This tackles the common challenge of managing intricate relationships between code components, making development more organized and less error-prone.

Popularity

Points 2

Comments 2

What is this product?

Chibi Izumi is a Python dependency injection framework that introduces 'staged' injection. Traditional dependency injection often injects all required components at once. Chibi Izumi breaks this down into stages, allowing developers to define a sequence of injection operations. This means you can decide when and how certain dependencies are provided to your code components. The innovation lies in providing more granular control over the dependency lifecycle, which can be crucial for applications with complex initialization sequences or when dealing with external resources that need to be set up progressively. Think of it like building a LEGO structure: instead of dumping all the bricks at once, you follow specific instructions for each stage, ensuring everything fits perfectly.

How to use it?

Developers can use Chibi Izumi by defining their dependencies and the stages at which they should be injected. You would typically decorate your classes or methods to indicate which dependencies they require and associate these with specific injection stages. The framework then orchestrates the injection process based on your defined stages. For example, in a web application, you might inject a database connection in an early stage and a user authentication service in a later stage. This can be integrated into existing Python projects by installing the library and applying its decorators to your classes and functions.
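The general idea of staged injection can be sketched in plain Python without any library. The example below is a generic illustration and does not use Chibi Izumi's actual API. The `StagedInjector` class, its `provide` decorator, and the rule of matching factory parameters to dependencies by name are all hypothetical.

```python
import inspect
from typing import Any, Callable, Dict, List, Tuple


class StagedInjector:
    """Toy container: factories are registered with a stage and built in stage order."""

    def __init__(self) -> None:
        self._providers: List[Tuple[int, str, Callable[..., Any]]] = []
        self.instances: Dict[str, Any] = {}

    def provide(self, name: str, stage: int) -> Callable:
        """Decorator that registers a factory under `name`, to be built in `stage`."""
        def register(factory: Callable[..., Any]) -> Callable[..., Any]:
            self._providers.append((stage, name, factory))
            return factory
        return register

    def run(self) -> List[str]:
        """Build every component, lowest stage first, and return the build order."""
        order = []
        for stage, name, factory in sorted(self._providers, key=lambda p: p[0]):
            # Each factory parameter names a dependency built in an earlier step.
            params = list(inspect.signature(factory).parameters)
            missing = [p for p in params if p not in self.instances]
            if missing:
                raise LookupError(f"{name} (stage {stage}) needs {missing}, not built yet")
            self.instances[name] = factory(**{p: self.instances[p] for p in params})
            order.append(name)
        return order


injector = StagedInjector()


@injector.provide("auth", stage=2)
def make_auth(db):
    # Later stage: the auth service receives the already-built database handle.
    return {"service": "auth", "db": db}


@injector.provide("db", stage=1)
def make_db():
    # Early stage: stands in for opening a database connection.
    return {"service": "db"}


order = injector.run()
print(order)
```

Even though the auth factory is registered first, the database is built first because it belongs to an earlier stage. A dependency that is requested before its stage has run fails immediately with a clear error instead of surfacing later as a half-initialized object.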

Product Core Function

· Staged Dependency Injection: Allows defining multiple injection points executed in a defined order, providing fine-grained control over dependency setup. This is valuable for managing complex application startup or when certain services depend on others being initialized first.

· Declarative Dependency Declaration: Uses decorators to clearly mark where and what dependencies are needed, making code more readable and maintainable. This helps developers quickly understand the relationship between different code modules.

· Stage-based Initialization: Enables grouping injections into logical stages, promoting cleaner initialization and easier debugging of dependency issues. This is useful for applications that have distinct phases during their lifecycle, like setting up network connections before processing requests.

· Extensible Injection Mechanisms: Supports various ways to provide dependencies, from simple object instantiation to more complex factory patterns. This offers flexibility to adapt to different project needs and integration requirements.

Product Usage Case