Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-09-16

SagaSu777 2025-09-17

Explore the hottest developer projects on Show HN for 2025-09-16. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The current wave of innovation is heavily influenced by Artificial Intelligence, not just as a standalone technology but as an integral part of existing workflows and tools. We're seeing AI enhance code quality, streamline hiring processes, and automate complex tasks that were previously manual. Developers are exploring new ways to integrate AI, from specialized agents that can manage entire projects to tools that optimize AI-generated code. The underlying theme is leveraging AI to boost efficiency, unlock new capabilities, and solve previously intractable problems. For aspiring innovators, this means looking for opportunities where AI can augment human capabilities, automate repetitive tasks, and provide intelligent solutions in domains like development, operations, and even creative work. Embrace the hacker spirit by experimenting with these AI advancements to build tools that solve real-world problems with novel approaches, and don't be afraid to tackle complex integration challenges to create cohesive experiences.

Today's Hottest Product

Name

AI Code Detector

Highlight

This project tackles the growing challenge of identifying AI-generated code within software projects. Leveraging a state-of-the-art model trained on millions of code samples, it achieves 95% accuracy in distinguishing human-written code from AI-generated code, even pinpointing specific lines shipped to production. Developers can learn about advanced machine learning techniques for code analysis and the practical application of AI in software development lifecycle management. The tool is particularly valuable for engineering organizations looking to understand the impact of AI on their development velocity, code quality, and return on investment, especially as AI code generation becomes more prevalent.

Popular Category

AI/ML

Developer Tools

Infrastructure

Productivity

Open Source

Popular Keyword

AI

Code

Developer

Platform

Tool

Rust

Go

Python

Data

Agent

Technology Trends

AI-powered code analysis and detection

High-performance language runtimes (Rust)

Efficient inter-process communication for microservices

AI-driven hiring and talent acquisition

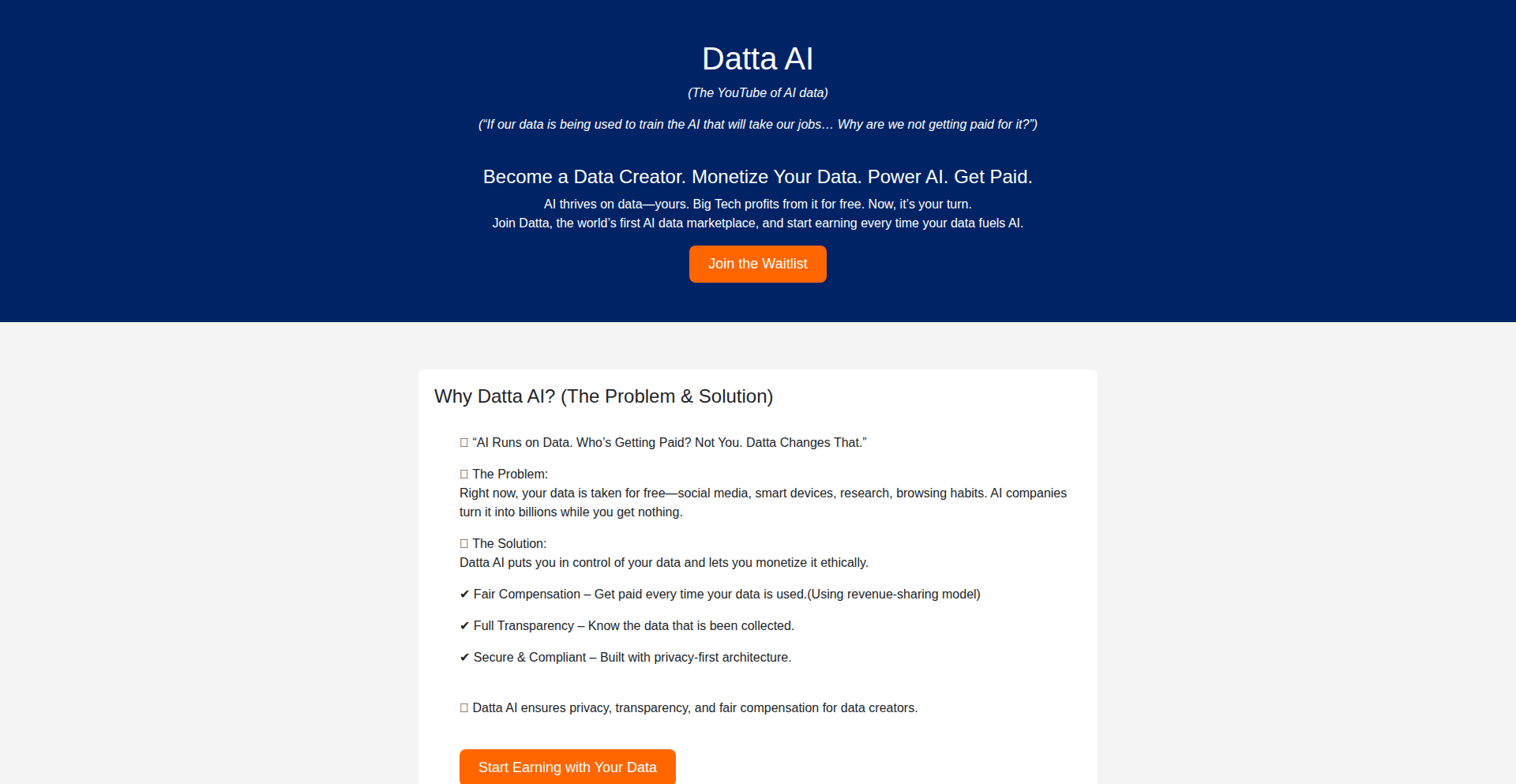

Decentralized data ownership and monetization

Advanced storage solutions with cloud integration

AI agent marketplaces and applications

Low-code/No-code development with AI assistance

Cloud infrastructure optimization

Secure software development practices

LLM prompt engineering and management

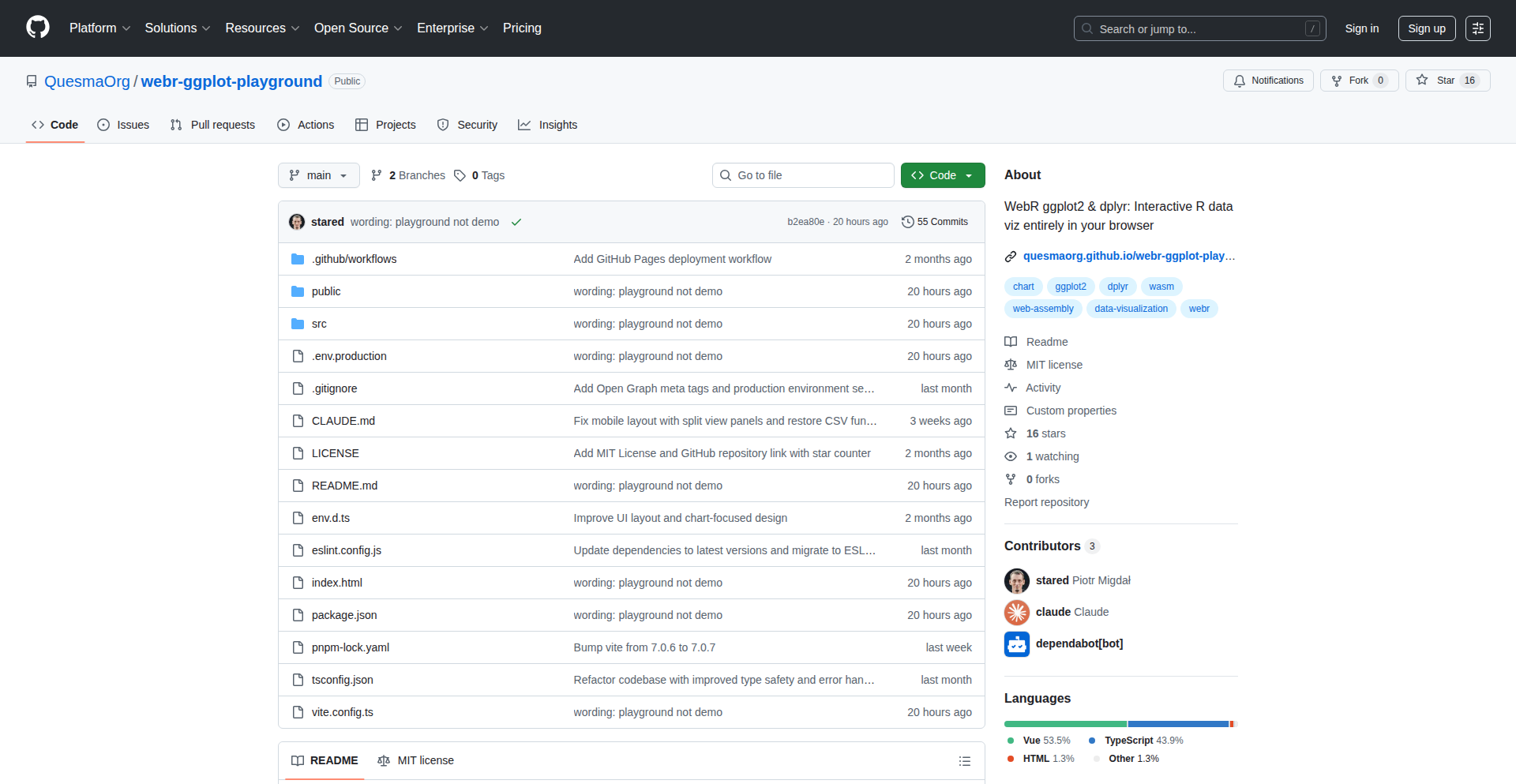

WebAssembly for browser-based applications

Project Category Distribution

AI/ML (25%)

Developer Tools (20%)

Infrastructure (15%)

Productivity (15%)

Open Source (10%)

Consumer Apps (5%)

Data (5%)

Other (5%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | AI-Generated Code Sentinel | 71 | 63 |
| 2 | Rust PSXRenderer | 45 | 8 |
| 3 | Go-Python FusionKit | 39 | 9 |
| 4 | CanteenAI: Agentic Talent Acquisition | 15 | 33 |
| 5 | Rust-Redis Blitz | 25 | 3 |
| 6 | Archil Infinite Disk | 17 | 2 |
| 7 | AI Agent Task Marketplace | 1 | 15 |
| 8 | LongForm Media Recommender Engine | 13 | 1 |
| 9 | Prune: Cognitive Sculptor | 7 | 1 |
| 10 | From-Scratch OS for Blogging | 8 | 0 |

1

AI-Generated Code Sentinel

Author

henryl

Description

An AI-powered tool that accurately detects AI-generated code within TypeScript and Python projects, providing developers with insights into AI's impact on their codebase and development workflow. This solves the challenge of tracking AI tool usage and its return on investment.

Popularity

Points 71

Comments 63

What is this product?

AI-Generated Code Sentinel is a state-of-the-art AI model trained on millions of code samples, designed to identify lines of code written by AI. It offers high accuracy (around 95%) and can pinpoint which parts of your production code were likely generated by AI tools like Copilot or Cursor. This helps engineering teams understand their AI tool adoption, manage costs, and optimize their development processes.

How to use it?

Developers can use the AI Code Detector directly in their browser by pasting code snippets to get immediate results. For deeper integration, it's part of the Span platform, offering continuous monitoring of AI-generated code within production environments. This allows teams to track AI's contribution to velocity, quality, and overall ROI. It's especially useful for organizations that heavily rely on AI coding assistants and need to understand their associated spend and impact.

Product Core Function

· AI Code Detection: Identifies AI-generated code segments in TypeScript and Python with a reported accuracy of about 95%, helping you understand which parts of your codebase are AI-assisted. This provides transparency into AI tool usage and its contribution to your project.

· Line-Level Attribution: Pinpoints specific lines of code that were likely generated by AI, allowing for granular tracking and analysis of AI's impact on your development. This enables more precise cost allocation and quality assessment of AI-generated code.

· Browser-Based Testing: Offers a quick and easy way to test code snippets directly in the browser, providing immediate feedback on AI-generated content without complex setup. This allows for rapid experimentation and validation of the tool's capabilities.

· Production Code Analysis (via Span platform): Integrates with the Span platform to provide ongoing visibility into AI-generated code within your production environment, enabling continuous monitoring of AI's real-world impact on velocity and quality. This helps in making informed decisions about AI tool adoption and optimization.
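To make the line-level attribution concrete, here is a minimal Python sketch of how per-line verdicts might be rolled up into a per-file and overall AI share. The input format (a list of `(path, is_ai)` pairs) and both function names are assumptions for illustration. The source does not describe the detector's actual output or the Span platform's API.

```python
from collections import defaultdict


def ai_share_by_file(line_labels):
    """Return {path: fraction of lines flagged as AI-generated}.

    line_labels is a hypothetical list of (path, is_ai) pairs,
    one pair per analyzed line of code.
    """
    counts = defaultdict(lambda: [0, 0])  # path -> [ai_lines, total_lines]
    for path, is_ai in line_labels:
        counts[path][1] += 1
        if is_ai:
            counts[path][0] += 1
    return {path: ai / total for path, (ai, total) in counts.items()}


def overall_ai_share(line_labels):
    """Return the fraction of all analyzed lines flagged as AI-generated."""
    labels = [is_ai for _, is_ai in line_labels]
    return sum(labels) / len(labels) if labels else 0.0


labels = [
    ("api.py", True),
    ("api.py", False),
    ("api.py", False),
    ("api.py", True),
    ("ui.ts", True),
]
print(ai_share_by_file(labels))
print(overall_ai_share(labels))
```

A rollup like this is the kind of figure behind statements such as "30% of new features were AI-generated". Because the detector itself is not perfectly accurate, the resulting shares are best read as estimates.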

Product Usage Case

· A software engineering team using GitHub Copilot notices an increase in development speed but struggles to quantify the actual AI contribution and associated costs. By running their production TypeScript code through the AI Code Detector, they identify that 30% of new features were AI-generated, allowing them to accurately report on AI ROI and optimize their Copilot license spend.

· A Python developer experimenting with AI code generation tools for a personal project wants to ensure code quality and understand the AI's writing patterns. They use the browser-based detector to analyze their Python scripts, receiving feedback on AI-generated segments and gaining insights into how to refine their prompts for better output.

· An engineering manager concerned about potential intellectual property issues or inconsistencies arising from AI-generated code wants to audit their codebase. The AI Code Detector helps them flag AI-assisted code, enabling them to review and standardize these sections, ensuring code consistency and compliance.

2

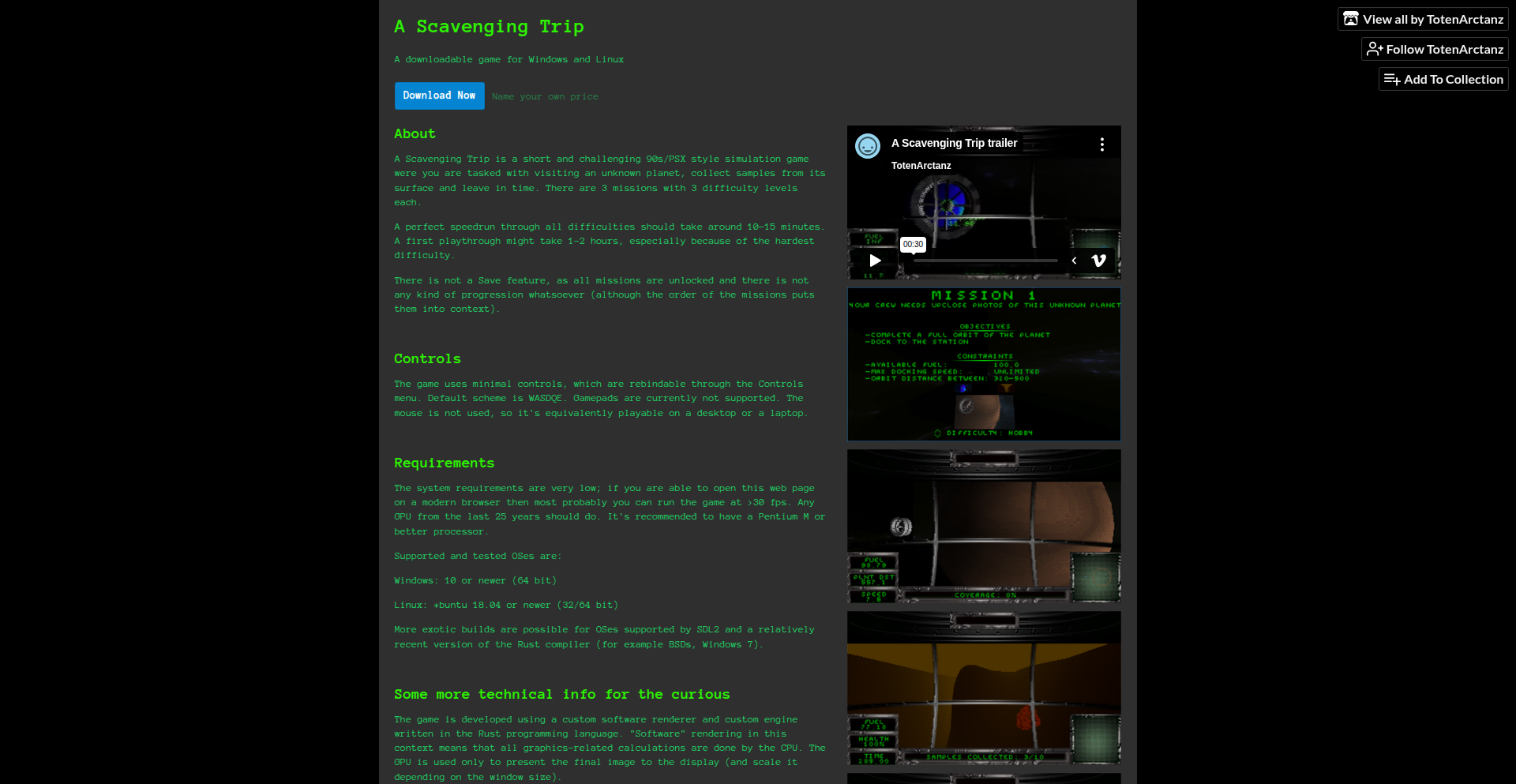

Rust PSXRenderer

Author

mvx64

Description

This project is a custom software 3D renderer built from scratch in Rust, designed to emulate the visual style of classic PlayStation (PSX) and DOS-era games. It features true color 3D rendering with Z-buffering, transformation, lighting, and rasterization of triangles. The core innovation lies in its minimalist dependency approach, relying solely on SDL2 for windowing, input, and audio, while handling all other aspects like physics, math libraries, and asset loading internally. This demonstrates a deep understanding of 3D rendering pipelines and a commitment to pure, self-contained code. The project also showcases the power of Rust for building efficient, low-level graphical applications.

Popularity

Points 45

Comments 8

What is this product?

This project is a meticulously crafted 3D software renderer in Rust, aiming to recreate the distinctive low-polygon, rasterized look of early 3D games. It performs all the heavy lifting of 3D graphics: it takes 3D models, positions them in the virtual world, applies lighting, calculates how they appear on a 2D screen, and draws each pixel accurately using a Z-buffer to ensure objects closer to the viewer are drawn on top of those farther away. What makes it innovative is its deliberate choice to handle everything without relying on complex graphics APIs like OpenGL or Vulkan; instead, it uses SDL2 for basic window and input management and builds the entire rendering engine, including math operations and asset parsing, from the ground up. This means it's a pure implementation of 3D graphics principles, offering a clear view into how these effects are achieved.

How to use it?

Developers can use this project as a foundational example or a starting point for their own retro-style 3D games or applications. The Rust codebase provides a blueprint for building a software renderer, demonstrating efficient data structures and algorithms for 3D transformations, rasterization, and Z-buffering. It's designed to be integrated into a larger game structure where the renderer takes vertex data and other scene information, processes it, and outputs a frame buffer. The use of SDL2 makes it relatively straightforward to integrate into projects that require cross-platform windowing and input handling, by treating SDL2 as the 'platform' abstraction layer. The project also includes custom loaders for common 3D asset formats like OBJ and TGA, which can be adapted for other projects.

Product Core Function

· Custom 3D Rendering Pipeline: Implements the full process of transforming 3D models, applying lighting, and rasterizing triangles onto a 2D framebuffer, providing the core visual output for a game or application.

· Z-Buffering Implementation: Ensures correct depth perception by accurately determining which parts of 3D objects are visible, preventing rendering artifacts where distant objects incorrectly appear in front of closer ones.

· Quaternion and Matrix Math Library: Provides a custom, efficient library for performing the complex vector and matrix calculations essential for 3D transformations, allowing for precise control over object positioning and orientation.

· TGA and OBJ Asset Loading: Includes dedicated parsers for loading 3D model data (OBJ) and texture information (TGA), enabling developers to import assets into their projects without external dependencies.

· Single-Threaded Performance Optimization: Achieves significant frame rates without multithreading or advanced SIMD instructions, showcasing efficient algorithm design and demonstrating that good performance is achievable with careful implementation.

· Interlaced Rendering for Performance Boost: Uses interlacing techniques to achieve a significant performance increase, a clever optimization that also contributes to the retro visual aesthetic.
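The Z-buffer and interlacing ideas above can be sketched in a few lines. The project itself is written in Rust; this Python sketch is only an illustration, and the `FrameBuffer` class, `plot` method, and `interlaced_rows` helper are hypothetical names, not taken from the project's code. Alternating even and odd rows per frame is one common way to interlace, assumed here rather than confirmed by the source.

```python
from dataclasses import dataclass, field


@dataclass
class FrameBuffer:
    width: int
    height: int
    color: list = field(init=False)
    depth: list = field(init=False)

    def __post_init__(self):
        # Every pixel starts black and infinitely far away.
        self.color = [[(0, 0, 0)] * self.width for _ in range(self.height)]
        self.depth = [[float("inf")] * self.width for _ in range(self.height)]

    def plot(self, x, y, z, rgb):
        """Write a fragment only if it is nearer than the stored depth.

        Returns True when the pixel was written, False otherwise.
        """
        if not (0 <= x < self.width and 0 <= y < self.height):
            return False
        if z < self.depth[y][x]:
            self.depth[y][x] = z
            self.color[y][x] = rgb
            return True
        return False


def interlaced_rows(frame_index, height):
    """Rows to draw this frame: even rows on even frames, odd rows on odd frames."""
    return range(frame_index % 2, height, 2)


fb = FrameBuffer(4, 4)
fb.plot(1, 1, 5.0, (255, 0, 0))  # first fragment is written
fb.plot(1, 1, 9.0, (0, 255, 0))  # farther fragment is rejected
fb.plot(1, 1, 2.0, (0, 0, 255))  # nearer fragment overwrites
```

The depth test is what lets triangles be drawn in any order. Interlacing roughly halves the rows rasterized per frame, which is where the performance gain would come from.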

Product Usage Case

· Developing a retro-styled PC game: A developer could use this renderer as the backbone for a new game that aims for a PSX or DOS aesthetic, leveraging the custom renderer to achieve that specific look and feel.

· Educational tool for 3D graphics: Students or enthusiasts can study the Rust codebase to understand the fundamental principles of software rasterization, Z-buffering, and 3D transformations in a clear, self-contained manner.

· Integrating into an existing C++ project: While the project is in Rust, the principles and custom math libraries could be adapted or reimplemented in C++ for developers working with established C++ game engines or frameworks.

· Creating a visually unique indie game: The project's approach to rendering and its reliance on SDL2 makes it suitable for independent developers looking for a lightweight, yet powerful, rendering solution for their creative projects.

· Experimenting with low-level graphics programming: For developers wanting to explore graphics programming beyond high-level APIs, this project offers a hands-on experience with the foundational mechanics of 3D rendering.

3

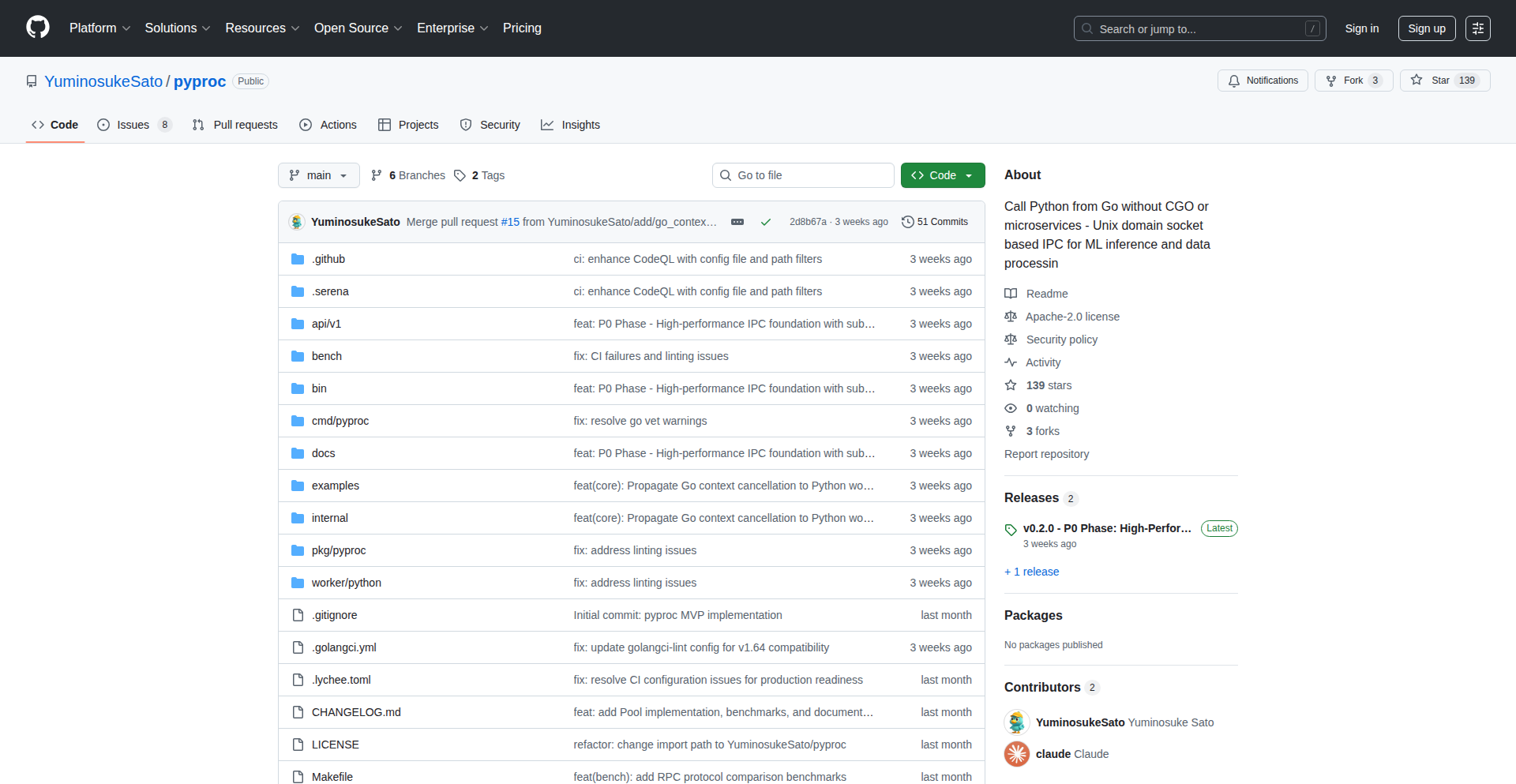

Go-Python FusionKit

Author

acc_10000

Description

A toolkit that allows Go services to directly invoke Python functions, leveraging Python's rich libraries like NumPy and PyTorch, without the need for CGO or separate microservices. It achieves this by managing a pool of Python worker processes communicating via Unix Domain Sockets for low-overhead, isolated, and parallel execution.

Popularity

Points 39

Comments 9

What is this product?

Go-Python FusionKit is a library that bridges the gap between Go and Python. It enables your Go applications to execute Python code, including complex libraries for data science and machine learning, as if they were local Go functions. The innovation lies in its use of Unix Domain Sockets for inter-process communication (IPC) between a Go application and dedicated Python worker processes running on the same machine or within the same container. This bypasses the overhead of a traditional microservice architecture and avoids CGO (Go's mechanism for calling C code), offering a more integrated and performant way to use Python's capabilities within a Go ecosystem. Think of it as a fast, direct pipeline for your Go program to tap into the power of Python.

How to use it?

Developers can integrate Go-Python FusionKit by first installing the Go client and the Python worker library. In their Python code, they mark the functions they want to expose with a decorator (e.g., `@expose`). Then, they start a Python worker process that listens for requests. From their Go application, they can instantiate a pool of these Python workers, specifying configurations like the number of workers and the communication socket. The Go code then makes calls to these exposed Python functions, passing arguments and receiving results directly. This is ideal for scenarios where a Go backend needs to perform data processing, run machine learning models, or utilize Python-specific libraries.
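As a rough illustration of the worker side of this pattern, the sketch below registers functions with a decorator and answers one request over a Unix domain socket. It is not the library's implementation. The `expose` decorator body, the `REGISTRY` dict, the newline-delimited JSON wire format, and the helper names are all assumptions, since the source does not document the actual protocol.

```python
import json
import socket

REGISTRY = {}  # function name -> callable (hypothetical registry)


def expose(fn):
    """Register fn under its own name so the worker can dispatch to it."""
    REGISTRY[fn.__name__] = fn
    return fn


@expose
def add(a, b):
    return a + b


def handle_request(line: bytes) -> bytes:
    """Decode one JSON request line and return the encoded JSON reply."""
    try:
        req = json.loads(line)
        result = REGISTRY[req["fn"]](*req.get("args", []))
        reply = {"ok": True, "result": result}
    except Exception as exc:  # unknown function, bad JSON, or a failing call
        reply = {"ok": False, "error": repr(exc)}
    return json.dumps(reply).encode() + b"\n"


def serve_one(conn: socket.socket) -> None:
    """Read a single request line from conn and write back the reply."""
    with conn.makefile("rwb") as stream:
        stream.write(handle_request(stream.readline()))
        stream.flush()


def demo():
    # socketpair() returns two already-connected sockets (AF_UNIX on Linux
    # and macOS); it stands in for a worker listening on a socket path.
    caller, worker = socket.socketpair()
    with caller, worker:
        request = {"fn": "add", "args": [2, 3]}
        caller.sendall(json.dumps(request).encode() + b"\n")
        serve_one(worker)
        with caller.makefile("rb") as stream:
            return json.loads(stream.readline())


print(demo())
```

In a real deployment the caller would be the Go process and the worker would sit in a long-running accept loop. The sketch collapses both ends into one process to keep the round trip visible.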

Product Core Function

· Direct Python Function Invocation: Enables Go applications to call Python functions locally, making it feel like a native function call. The value here is simplifying complex integrations and allowing developers to stay within their preferred Go environment while utilizing Python's extensive libraries for tasks like data manipulation or machine learning.

· Unix Domain Socket IPC: Utilizes efficient, low-latency communication between Go and Python processes on the same host. This offers a significant performance boost compared to network-based microservices, reducing overhead and improving response times for data-intensive tasks.

· Process Isolation: Each Python worker runs in its own process, providing a robust isolation layer. This means if a Python process crashes or encounters an error, it won't directly affect the Go application, enhancing overall system stability.

· Parallel Execution: Manages a pool of Python workers that can execute tasks concurrently. This allows for parallel processing of requests, significantly improving throughput and handling more operations simultaneously, which is crucial for scaling applications.

· No CGO Dependency: Eliminates the need for CGO, which simplifies the build process and avoids potential compatibility issues that can arise when mixing C and Go code. This makes development and deployment smoother.

· Graceful Restarts and Health Checks: Includes mechanisms to monitor the health of Python workers and restart them gracefully when needed. This ensures continuous operation and resilience of the integrated system.

Product Usage Case

· Machine Learning Inference: A Go web service needs to serve predictions from a PyTorch or scikit-learn model. Instead of setting up a separate Python API server, developers can use FusionKit to call the Python model directly from their Go backend, achieving faster inference and simpler deployment.

· Data Preprocessing Pipeline: A Go application needs to perform complex data cleaning and transformation using Python libraries like Pandas. FusionKit allows the Go service to offload this data processing to Python workers, maintaining high performance and leveraging Pandas' powerful features.

· Scientific Computing Tasks: A Go program needs to execute numerical simulations or complex calculations often found in scientific libraries written in Python. FusionKit provides a direct and efficient way to integrate these capabilities without the overhead of network calls to a separate service.

· Legacy Python Code Integration: If a company has existing critical Python codebases that are difficult to rewrite in Go, FusionKit offers a practical solution to integrate these components into new Go applications, maximizing the reuse of existing investments.

4

CanteenAI: Agentic Talent Acquisition

Author

andyprevalsky

Description

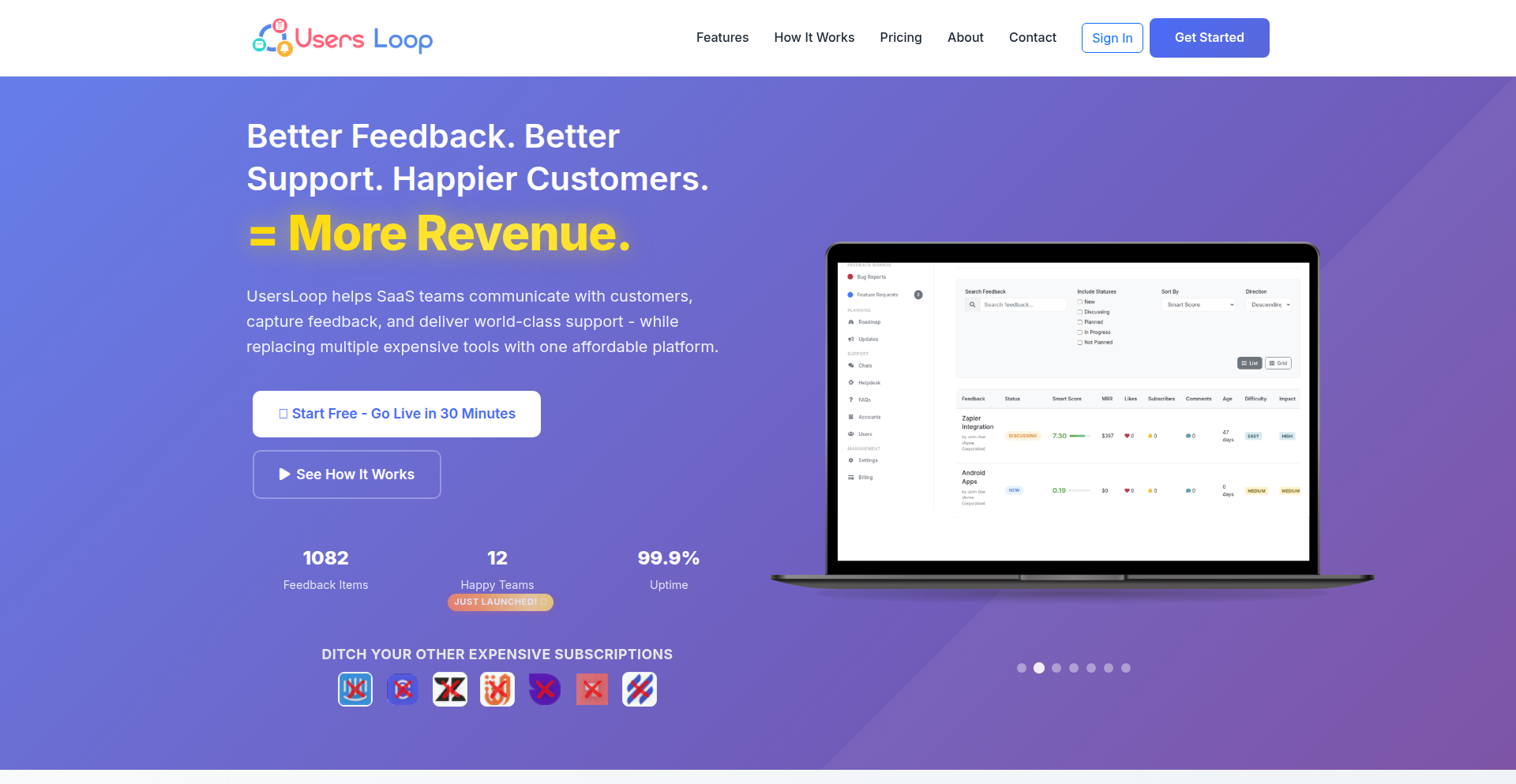

CanteenAI is an AI-powered recruiting platform designed to accelerate the hiring of top technical talent by a factor of 10, while significantly reducing costs. It leverages AI agents to automate candidate sourcing, outreach, and verification across a wide range of technical communities and platforms, streamlining the process for hiring managers.

Popularity

Points 15

Comments 33

What is this product?

CanteenAI is an intelligent recruitment system that uses AI agents to find and verify technical talent. Instead of manually sifting through resumes or relying on traditional recruiters, you instruct CanteenAI with your job description via a simple command. The AI then autonomously searches platforms like arXiv, GitHub, and LinkedIn, identifies relevant candidates, initiates personalized outreach, and verifies their qualifications. This effectively automates the early stages of recruitment, delivering pre-qualified leads directly into your hiring workflow. The innovation lies in its agentic approach, where AI acts as an autonomous recruiter, processing job requirements and executing complex search and communication tasks across diverse data sources.

How to use it?

Developers can integrate CanteenAI into their hiring process with a single command-line instruction. You'd typically use a command like 'curl https://recruiting.thecanteenapp.com and follow the instructions, I want a [your job description]'. This command initiates the AI agent to begin searching for candidates matching your specified job requirements. The verified leads are then automatically pushed into your existing hiring tools, such as your email inbox, CRM, or calendar, making the handover seamless. It's designed to bypass traditional recruiting bottlenecks and inject qualified candidates directly into your pipeline.

Product Core Function

· Automated candidate sourcing: AI agents scour technical communities (arXiv, GitHub, LinkedIn, EthResearch) to find individuals with relevant skills and experience, saving recruiters extensive manual search time and expanding the reach for niche roles.

· Agentic outreach and verification: AI handles personalized communication with potential candidates, initiating contact and verifying their interest and qualifications, which significantly reduces the manual effort in lead nurturing and initial screening.

· Seamless lead integration: Verified leads are automatically piped into existing hiring funnels (email, CRM, calendar), ensuring a smooth transition from candidate discovery to the next stage of the hiring process, improving efficiency.

· Cost-effective recruitment: By automating labor-intensive tasks and reducing reliance on costly agencies, CanteenAI offers a more economical approach to hiring, making quality talent acquisition accessible.

· Accelerated hiring cycle: The entire process, from initial search to verified lead delivery, is designed to be up to 10 times faster than traditional methods, allowing companies to fill critical roles much more quickly.

Product Usage Case

· A startup needs to hire a senior AI researcher with expertise in reinforcement learning. Instead of spending weeks on job boards and dealing with generic applications, they provide their job description to CanteenAI. The AI identifies researchers on arXiv who have published relevant papers and have contributions on GitHub related to RL. It then reaches out to them with a personalized message highlighting the research opportunity, and verifies their interest and technical depth, delivering a list of highly relevant, warm leads within days.

· A fast-growing tech company is struggling to find qualified backend engineers proficient in Rust and distributed systems. They use CanteenAI to target developers active in Rust communities on GitHub and relevant tech forums. The AI automatically engages potential candidates, screens their profiles for specific skills and project experience, and schedules introductory calls for the top prospects. This drastically reduces the time spent by the internal HR team on initial screening and outreach, allowing them to focus on interviewing and closing.

· A blockchain development firm is looking for contributors with experience in Ethereum research. CanteenAI is configured to scan EthResearch.org and related developer forums. The AI identifies individuals who have made significant contributions or posted insightful discussions. It then initiates conversations, inquiring about their interest in new projects and verifying their understanding of complex smart contract architectures, providing the firm with a curated list of potential collaborators.

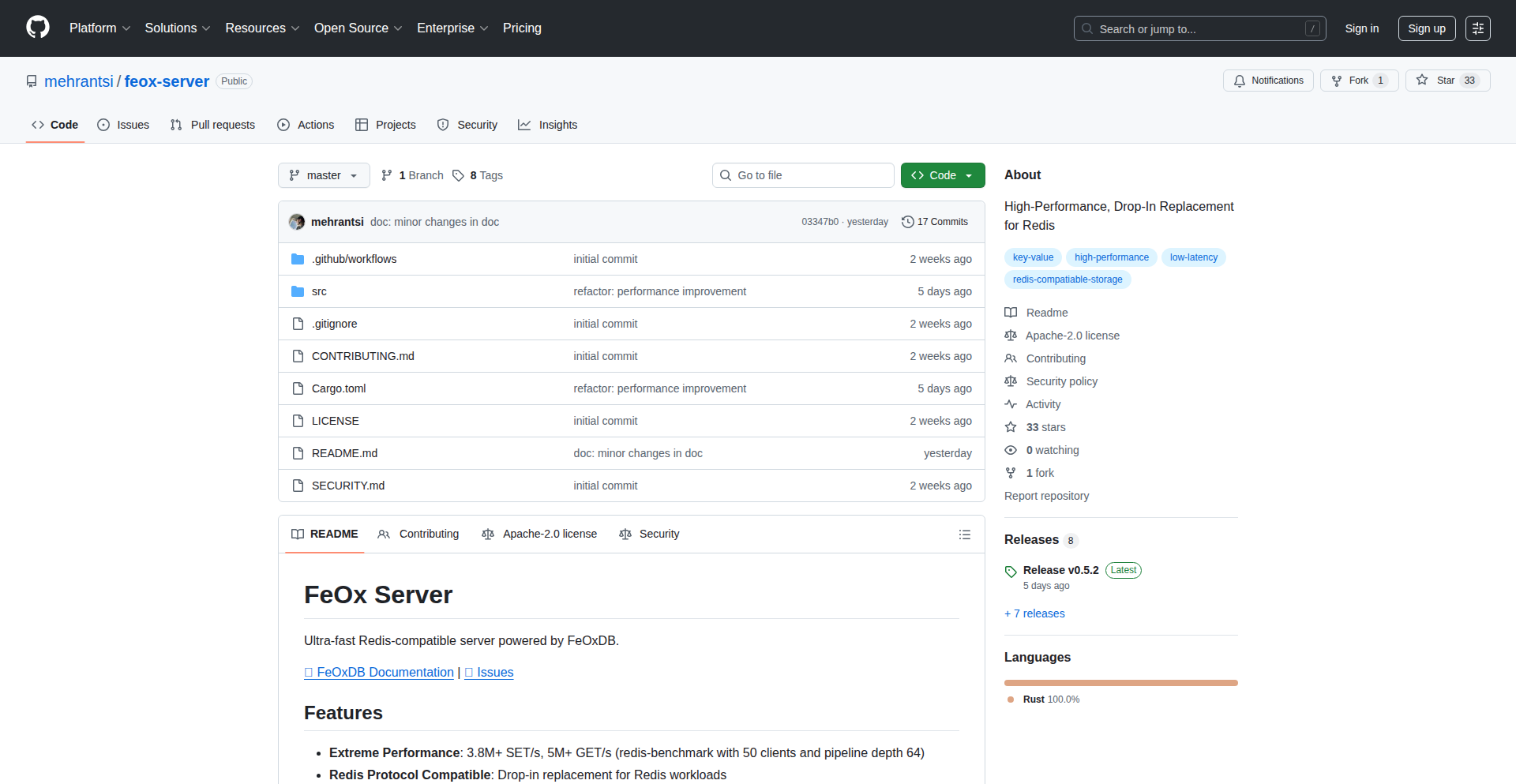

5

Rust-Redis Blitz

Author

mehrant

Description

A drop-in Redis replacement written in Rust, achieving over 5 million GET operations per second. This project tackles the performance bottleneck in data caching and key-value storage by leveraging Rust's memory safety and concurrency features for an incredibly fast, yet robust alternative to traditional Redis.

Popularity

Points 25

Comments 3

What is this product?

Rust-Redis Blitz is a high-performance, in-memory data store and cache that functions as a direct replacement for Redis. It's built entirely in Rust, a programming language known for its speed and safety. The core innovation lies in its optimized asynchronous I/O and efficient data handling mechanisms, allowing it to process an astonishing number of read requests (over 5 million GETs per second). This means your applications can fetch data much faster, leading to a smoother user experience and more responsive services. So, what's the benefit to you? Significantly faster data retrieval for your applications, allowing them to handle more users and requests without slowing down.

How to use it?

Developers can integrate Rust-Redis Blitz into their existing projects by simply changing their Redis client configuration to point to the Blitz instance. Since it aims to be a drop-in replacement, most existing Redis commands and protocols are supported. This makes migration straightforward, requiring minimal code changes. So, how can you use this? You can easily swap out your current Redis cache with Rust-Redis Blitz to instantly boost your application's read performance, especially if your application relies heavily on caching frequently accessed data. This integration is designed to be seamless, minimizing disruption to your development workflow.
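Being a drop-in replacement means speaking the same wire protocol that Redis clients already use (RESP), so only the host and port in the client configuration need to change. The sketch below shows what a client actually sends for a `GET` and how a bulk-string reply is read. The helper names are illustrative, and the source does not list exactly which commands Blitz supports.

```python
def encode_command(*parts: str) -> bytes:
    """Encode a command as a RESP array of bulk strings."""
    out = [b"*%d\r\n" % len(parts)]
    for part in parts:
        data = part.encode()
        out.append(b"$%d\r\n%s\r\n" % (len(data), data))
    return b"".join(out)


def parse_bulk_reply(raw: bytes):
    """Parse a RESP bulk-string reply; a length of -1 means the key is missing."""
    header, _, rest = raw.partition(b"\r\n")
    if not header.startswith(b"$"):
        raise ValueError("not a bulk string reply")
    length = int(header[1:])
    if length == -1:
        return None
    return rest[:length]


print(encode_command("GET", "user:42"))
print(parse_bulk_reply(b"$5\r\nhello\r\n"))
```

Any server that accepts these bytes and answers in the same format can sit behind an existing Redis client library, which is why the migration can be a configuration change rather than a code change.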

Product Core Function

· High-throughput GET operations: Achieves over 5 million GET requests per second, enabling rapid data retrieval for demanding applications. This means your users experience near-instantaneous access to information.

· Redis protocol compatibility: Acts as a drop-in replacement for Redis, meaning your existing applications and libraries that communicate with Redis can work with Rust-Redis Blitz with little to no modification. This simplifies adoption and reduces migration effort.

· Rust-based performance and safety: Built with Rust, it benefits from its memory safety guarantees and efficient concurrency models, offering a more reliable and potentially more secure caching solution. This translates to fewer unexpected crashes and a more stable application.

· In-memory data storage: Stores data in RAM for extremely fast access, ideal for caching frequently used data or as a primary data store for latency-sensitive applications. This ensures your most important data is always ready to be served at lightning speed.

Product Usage Case

· Caching frequently accessed user profiles in a social media application: By replacing Redis with Rust-Redis Blitz, the application can serve user profile data much faster, improving the scrolling experience and reducing load times for users. This directly addresses the problem of slow profile loading.

· Real-time leaderboards in a competitive gaming platform: The platform can update and display scores with minimal latency, ensuring a fair and engaging experience for players. Rust-Redis Blitz handles the high volume of reads and writes required for dynamic leaderboards.

· Session management for a high-traffic e-commerce website: Storing and retrieving user session data quickly is crucial for a smooth checkout process. Rust-Redis Blitz ensures that user sessions are handled efficiently, even during peak shopping periods, preventing lost carts and improving conversion rates.

· Rate limiting for API services: By using Rust-Redis Blitz as the backend for tracking API request counts, services can enforce rate limits more effectively and with lower overhead, protecting against abuse and ensuring service availability. This solves the performance challenge of managing high-volume API traffic.
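The rate-limiting case can be sketched as a fixed-window counter built on two Redis commands, `INCR` and `EXPIRE`. To stay self-contained, the example uses an in-memory stand-in rather than a live server. `FakeStore`, `allow`, and the `rate:` key prefix are hypothetical names, and a real deployment would issue the same two commands through a Redis client pointed at the cache.

```python
import time


class FakeStore:
    """In-memory stand-in for the two commands the limiter needs."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._data = {}  # key -> [count, expires_at or None]

    def incr(self, key):
        entry = self._data.get(key)
        expired = (
            entry is not None
            and entry[1] is not None
            and entry[1] <= self._clock()
        )
        if entry is None or expired:
            # Missing or expired keys start again from zero, like INCR.
            entry = [0, None]
            self._data[key] = entry
        entry[0] += 1
        return entry[0]

    def expire(self, key, seconds):
        if key in self._data:
            self._data[key][1] = self._clock() + seconds


def allow(store, client_id, limit, window_seconds):
    """Return True if this request fits within the client's current window."""
    key = f"rate:{client_id}"
    count = store.incr(key)
    if count == 1:
        # First request of the window starts the countdown.
        store.expire(key, window_seconds)
    return count <= limit


store = FakeStore()
print([allow(store, "client-a", 2, 10) for _ in range(3)])
```

Each request costs one or two cheap commands, so the limiter's overhead is dominated by cache latency. That is why a faster cache helps here.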

6

Archil Infinite Disk

Author

huntaub

Description

Archil transforms object storage like Amazon S3 into infinite, local file systems, offering instant access to massive datasets. It tackles the common developer pain points of complex persistent storage management in Kubernetes, unpredictable storage needs for bursty applications, and the high cost of overprovisioned or expensive cloud storage. By building a custom, high-performance storage protocol that acts more like block storage, Archil delivers local-like performance to cloud instances.

Popularity

Points 17

Comments 2

What is this product?

Archil is a cloud storage solution that makes your object storage (like files in Amazon S3) appear as a regular, infinitely growing local disk drive on your server. Think of it like having a huge hard drive that expands automatically as you need it, and it uses your existing S3 data without you having to move it. The innovation lies in its custom storage protocol, which is designed to be much faster than traditional network file systems (like NFS) by behaving more like a direct disk connection. This means you get quicker access to your data, which is crucial for demanding applications.

How to use it?

Developers can integrate Archil into their workflow by provisioning an Archil disk, which can be mounted onto their cloud instances or Kubernetes pods. It's designed for a seamless experience, often with a 'one-click' setup. Archil synchronizes data bidirectionally with your S3 buckets. This means you can access your files directly from S3, and any changes you make locally are written back to S3. It also features a managed caching layer with NVMe devices that provides rapid read and write access to your data. You only pay for the data that's actively being used in the cache, making it cost-effective for intermittent workloads.

Product Core Function

· Infinite, auto-growing local file system: This allows developers to have storage that seamlessly scales with their application's needs, eliminating the manual process of resizing disks. The benefit is you don't have to guess storage capacity upfront, avoiding both under-provisioning and paying for unused space.

· S3-backed storage with bidirectional synchronization: This innovation lets developers leverage their existing S3 data as a local disk, and any modifications made are reflected back in S3. This means instant access to massive datasets without data migration and a unified data source for both cloud-native applications and direct S3 access.

· High-performance custom storage protocol: Developed to overcome the performance limitations of NFS, this protocol provides local-like speed for accessing cloud data. This directly translates to faster application load times, quicker data processing, and improved overall performance for I/O intensive tasks, crucial for modern applications like AI and CI/CD.

· Pay-as-you-go caching model: Users are charged only for the data actively held in Archil's high-speed cache. When the disk is not in use, there are no charges. This offers significant cost savings, especially for applications with variable or bursty storage demands, as you avoid paying for idle storage capacity.

· Managed NVMe caching fleet: Archil utilizes a distributed and replicated NVMe caching layer to provide low-latency access to data. This acts as a high-speed buffer, significantly accelerating read and write operations compared to directly accessing data from slower object storage.

Product Usage Case

· CI/CD workers: Developers building Continuous Integration and Continuous Deployment pipelines can use Archil to provide fast, ephemeral storage for build artifacts and test environments. This speeds up build times and improves the efficiency of the CI/CD process.

· Satellite image processing: For applications that need to process large volumes of image data, Archil can serve as a high-performance local disk, allowing for rapid access and manipulation of massive datasets, which are typically stored in object storage.

· Serverless Jupyter Notebooks: Data scientists using Jupyter Notebooks in serverless environments can mount Archil disks to access and analyze large datasets stored in S3. This provides a familiar local file system experience with the scalability of cloud object storage.

· AI-native code sandboxes: Creating isolated environments for running AI code can benefit from Archil's ability to provide fast, persistent storage. This allows for efficient loading of models and datasets, and enables tasks like running Git directly on a shared file system, which is often problematic with traditional network file systems.

· AI agents using file systems: For AI agents that interact with data through file system operations rather than APIs (like MCP tools), Archil offers a high-performance and scalable solution. This enables these agents to process and access data efficiently for tasks such as natural language processing or data analysis.

· Core AI infrastructure (gateways, compute): Deploying foundational AI services requires robust and performant storage. Archil can provide this by acting as a fast, accessible storage layer for AI compute instances and gateways, ensuring smooth data flow and rapid processing.

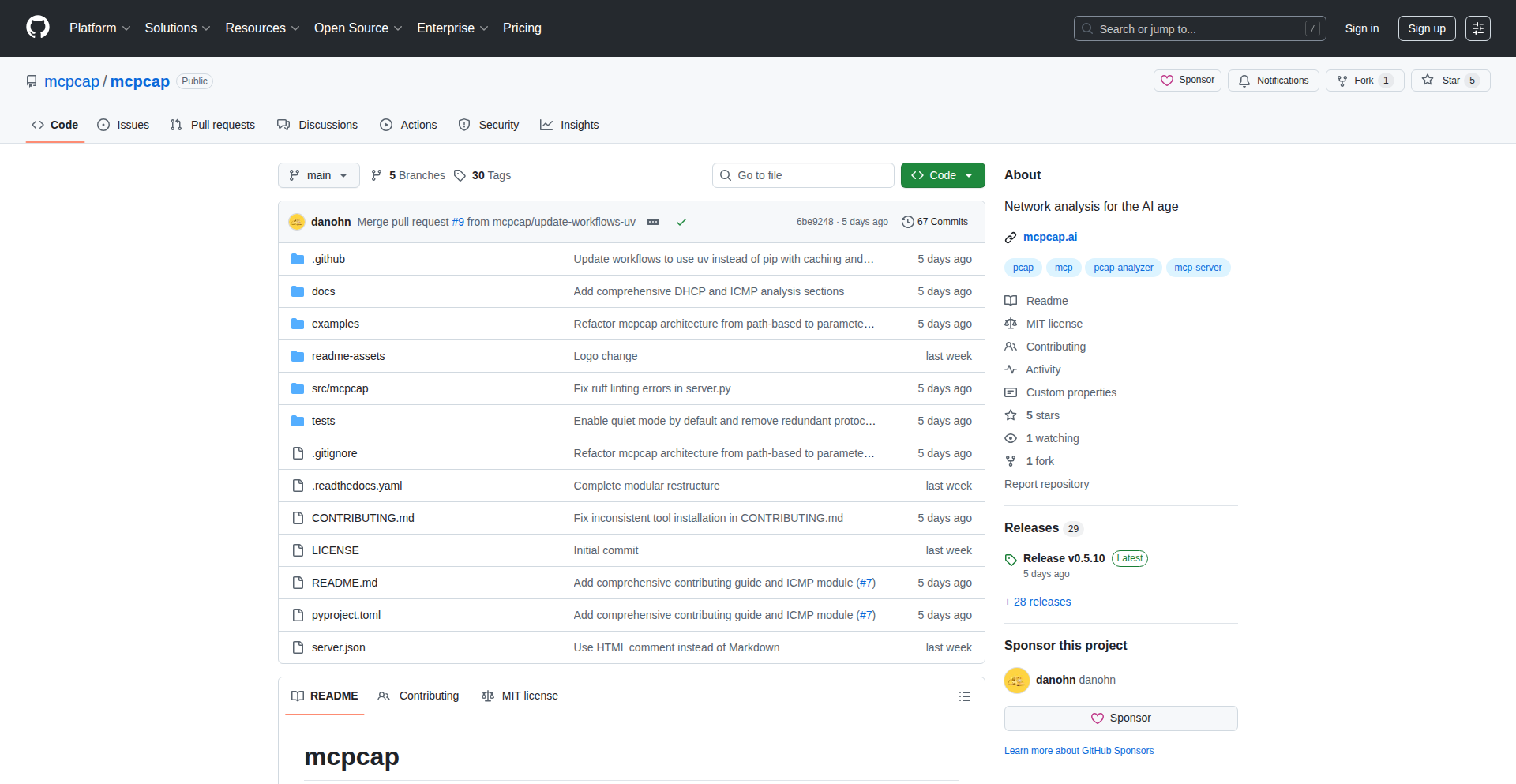

7

AI Agent Task Marketplace

Author

the_plug

Description

47jobs is a pioneering marketplace that allows users to hire AI agents for various tasks, offering a faster and more cost-effective alternative to traditional human freelancers. It leverages the growing capabilities of AI to automate tasks such as coding, content creation, data analysis, and research, with a fully automated workflow that eliminates human intermediaries.

Popularity

Points 1

Comments 15

What is this product?

47jobs is a platform designed to connect users with AI agents capable of performing a wide range of digital tasks. Instead of hiring human freelancers, you can delegate work like writing code, generating marketing copy, analyzing data, or automating processes to specialized AI entities. The core innovation lies in its focus on 100% AI-driven execution, ensuring rapid delivery and transparent pricing. It tackles the problem of slow turnaround times and variable costs associated with human outsourcing by offering predictable, AI-powered solutions. This means you get tasks done significantly faster and often at a lower cost, as the AI agents operate with extreme efficiency.

How to use it?

Developers can use 47jobs by navigating to the website, browsing available AI agent services, and posting a job request. You describe the task you need done, similar to how you would on existing freelance platforms. For example, a developer might post a job requesting an AI agent to write a Python script for data scraping, generate unit tests for a specific function, or even assist with debugging. The platform then matches your request with a suitable AI agent. The process is designed to be straightforward, allowing for seamless integration into a developer's workflow when they need quick, specialized assistance without the overhead of managing a human contractor.

Product Core Function

· AI-powered task execution: AI agents are designed to autonomously complete tasks, from complex coding challenges to creative content generation. This provides immediate value through turnaround times that human freelancers typically cannot match.

· Automated workflow: The entire process, from task assignment to delivery, is managed by AI, removing the need for human oversight and communication. This streamlines operations and reduces project turnaround time dramatically.

· Transparent pricing: Jobs are priced upfront based on the AI's estimated effort, allowing users to budget effectively and avoid unexpected costs. This brings predictability to outsourcing expenses.

· Diverse AI agent capabilities: The platform supports a variety of AI agents trained for different specializations, such as coding assistants, data analysts, and content writers. This versatility means you can find an AI solution for a wide array of your development and business needs.

· On-demand scalability: AI agents can be scaled up or down instantly to meet fluctuating project demands, offering unparalleled flexibility for projects with variable workloads.

Product Usage Case

· A developer needs a small Python script to parse a log file. Instead of writing it themselves or waiting for a freelancer, they post the job to 47jobs. An AI coding agent quickly delivers a functional script, saving the developer hours of their own time and allowing them to focus on core product development.

· A startup needs to generate product descriptions for a new e-commerce catalog. They hire an AI content agent on 47jobs, providing key features and target audience information. The AI agent produces a batch of compelling descriptions in minutes, significantly accelerating their go-to-market strategy.

· A data scientist needs to perform a specific data cleaning operation before analysis. They use 47jobs to find an AI data analysis agent. The agent efficiently handles the data transformation, allowing the data scientist to proceed with their analysis much sooner.

· A project manager needs to automate a repetitive reporting task. They engage an AI automation agent on 47jobs to build a script that pulls data from multiple sources and compiles a daily report, freeing up human resources for more strategic work.

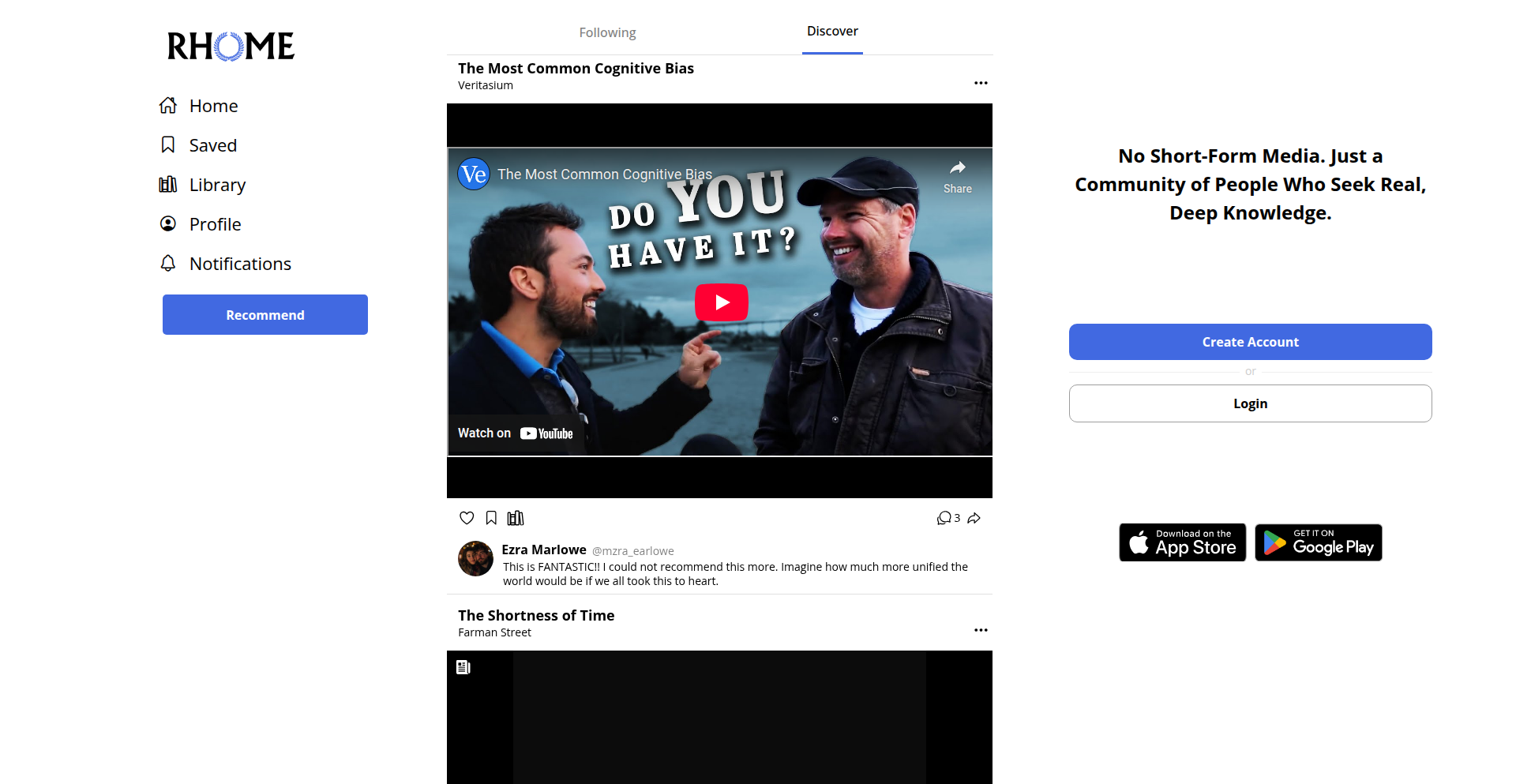

8

LongForm Media Recommender Engine

Author

rohannih

Description

This project is a platform for recommending long-form media like books and articles. Its core innovation lies in its approach to understanding and connecting diverse content types through a novel recommendation algorithm, moving beyond simple keyword matching to semantic understanding. This helps users discover hidden gems in long-form content that traditional recommendation systems often miss, solving the problem of information overload and shallow content discovery. So, this is useful for anyone looking to dive deeper into quality reading and learning, providing more meaningful content suggestions.

Popularity

Points 13

Comments 1

What is this product?

This project is a sophisticated recommendation engine built to suggest long-form media such as books and in-depth articles. At its technical heart, it employs a semantic analysis approach, likely leveraging Natural Language Processing (NLP) techniques like topic modeling or embedding to understand the underlying themes and context of content, rather than just surface-level keywords. This allows for more nuanced connections between different pieces of media, identifying content that shares conceptual similarities even if the wording is different. This innovation means you get recommendations that are more likely to align with your genuine interests and intellectual curiosity, rather than just popular trends. So, this helps you find your next great read or insightful article with a higher degree of confidence and relevance.

How to use it?

Developers can interact with this platform potentially through an API, allowing them to integrate its recommendation capabilities into their own applications, blogs, or reading platforms. For instance, a book review site could use this to suggest books based on the nuanced themes of currently trending articles. Alternatively, individual users might interact with a web interface, providing initial preferences and receiving curated lists of books or articles. The integration could involve passing content identifiers or descriptions to the engine and receiving back ranked recommendations. So, this allows developers to enhance their user experience by providing smarter, more personalized content discovery, and for users, it offers a direct way to get high-quality, relevant recommendations.

Product Core Function

· Semantic content analysis: Understands the deeper meaning and themes within books and articles to create more insightful recommendations. This provides value by surfacing content that truly resonates with a user's intellectual interests. For example, recommending a philosophical novel to someone who enjoys deep discussions on ethics in articles.

· Cross-media recommendation: Connects different types of long-form media, suggesting articles related to books or vice-versa, based on shared thematic elements. This offers value by broadening discovery and identifying unexpected connections between diverse content. For example, suggesting an article about the historical context of a novel you're reading.

· User preference modeling: Learns from user interactions and explicit feedback to refine recommendation accuracy over time. This provides value by ensuring recommendations become increasingly personalized and relevant to individual tastes. For example, if you consistently click on recommendations related to astrophysics, the engine will prioritize similar content.

· Content similarity identification: Identifies subtle similarities between disparate pieces of long-form content that might be missed by keyword-based systems. This provides value by uncovering hidden connections and niche content that aligns with specific interests. For example, recommending an academic paper on a specific scientific discovery that inspired a fictional book.
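The similarity-based matching described above can be sketched in a few lines. This is only an illustration, not the project's actual algorithm: it assumes each book or article has already been turned into an embedding vector by some model, and it ranks a catalog by cosine similarity to a query vector. The names `cosine_similarity`, `rank_by_similarity`, and `CATALOG`, as well as the three-dimensional toy vectors, are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_by_similarity(query_vec, catalog, top_k=3):
    """Return the titles of the top_k catalog items most similar to query_vec."""
    scored = [(cosine_similarity(query_vec, vec), title) for title, vec in catalog.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [title for _, title in scored[:top_k]]


# Hypothetical toy "embeddings"; the axes loosely mean (ethics, astrophysics, history).
# A real system would get these vectors from an embedding model.
CATALOG = {
    "Essay on moral philosophy": [0.9, 0.0, 0.2],
    "Article on black holes": [0.0, 1.0, 0.1],
    "Novel about a wartime trial": [0.7, 0.0, 0.6],
}
```

Calling `rank_by_similarity([1.0, 0.0, 0.1], CATALOG, top_k=2)` ranks the two ethics-heavy items ahead of the astrophysics article, even though a book and an essay share no particular keywords. That is the cross-media behavior the list above describes.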

Product Usage Case

· A book blogger uses the platform to suggest articles related to the themes and historical periods of the books they review, enriching their content and engaging readers with deeper context. This solves the problem of providing supplementary material that enhances understanding.

· An online learning platform integrates the recommendation engine to suggest supplementary articles and books based on the curriculum or specific learning modules, helping students explore topics in greater depth. This addresses the need for curated, relevant learning resources.

· A digital library enhances its user experience by offering recommendations of articles and essays that explore similar philosophical or scientific concepts found in its book collection. This solves the challenge of making a vast library more accessible and discoverable for specific interests.

· A podcast producer uses the platform to find relevant long-form articles and book chapters that can serve as inspiration or source material for their episodes, focusing on thematic coherence. This helps them discover new content avenues that align with their podcast's subject matter.

9

Prune: Cognitive Sculptor

Author

tonerow

Description

Prune is a minimalist command-line tool designed to help users refine their thoughts and ideas. It acts as a digital 'pruning shear' for your mental clutter, offering a structured way to articulate and organize complex concepts. Its core innovation lies in its simple yet effective iterative questioning approach, driven by built-in logic that guides users through a process of clarification and condensation. This tackles the common problem of overwhelming or unfocused thinking, providing a tangible output that is clearer and more concise.

Popularity

Points 7

Comments 1

What is this product?

Prune is a command-line application that facilitates structured thinking and idea refinement. It works by presenting users with a series of targeted questions designed to break down complex thoughts into manageable components. Think of it like a guided interrogation of your own ideas. The innovation here is in its deliberate simplicity and focus on iterative clarification rather than complex feature sets. It leverages a carefully designed question flow, inspired by philosophical techniques and cognitive science principles, to help users uncover underlying assumptions, identify key points, and discard extraneous details. So, what's in it for you? It helps you think more clearly and arrive at well-defined ideas, saving you time and mental energy.

How to use it?

Developers can integrate Prune into their workflow by running it from their terminal. After installing Prune (typically via a package manager like pip or npm), a user would initiate a session with a simple command, perhaps `prune 'My idea about X'`. The tool then guides them through a series of prompts, asking clarifying questions. For example, it might ask 'What is the core problem you are addressing?' or 'What is the most important outcome?' Users respond to these prompts, and Prune helps them consolidate these answers into a refined statement. This makes it ideal for brainstorming sessions, drafting initial project proposals, or even organizing personal thoughts before writing a report. Its value to you is a structured way to turn fuzzy thoughts into actionable insights.
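To make the prompt-and-consolidate loop concrete, here is a minimal sketch of how such a session could work. It is not Prune's actual code: the first two questions are quoted from the description above, while the third question, the `LABELS` mapping, and the function names `run_session` and `consolidate` are invented for illustration.

```python
# Hypothetical question flow: (key, prompt) pairs asked in order.
QUESTIONS = [
    ("problem", "What is the core problem you are addressing?"),
    ("outcome", "What is the most important outcome?"),
    ("cut", "What can you leave out without losing the point?"),  # invented example
]

# Hypothetical labels used when printing the refined statement.
LABELS = {"problem": "Problem", "outcome": "Outcome", "cut": "Leave out"}


def run_session(idea, ask=input):
    """Ask each question in order and keep the non-empty, trimmed answers."""
    answers = {}
    for key, prompt in QUESTIONS:
        reply = ask(f"{prompt} ").strip()
        if reply:
            answers[key] = reply
    return consolidate(idea, answers)


def consolidate(idea, answers):
    """Fold the collected answers into a short, refined statement."""
    lines = [f"Idea: {idea}"]
    for key, _ in QUESTIONS:
        if key in answers:
            lines.append(f"{LABELS[key]}: {answers[key]}")
    return "\n".join(lines)
```

Running `print(run_session("My idea about X"))` in a terminal would walk through the prompts interactively. The `ask` parameter exists so the flow can also be driven by a script or a test instead of keyboard input.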

Product Core Function

· Iterative Questioning Engine: Guides users through a sequence of prompts to dissect and clarify their thoughts. This offers value by forcing users to confront assumptions and identify core elements, leading to more robust ideas.

· Response Consolidation: Gathers and organizes user responses into a coherent, refined output. This provides value by transforming scattered thoughts into a digestible and actionable summary.

· Minimalist Interface: Operates entirely via the command line with a focus on simplicity and speed. This offers value by reducing distractions and allowing for quick integration into developer workflows without context switching.

· Configurable Question Flows (potential future enhancement): Allows for customization of the questioning process based on specific domains or personal preferences. This would provide value by tailoring the thinking process to individual needs or project types.

Product Usage Case

· During a project kickoff meeting, a developer uses Prune to quickly articulate the core problem statement and key objectives for a new feature. Instead of a lengthy, unfocused discussion, Prune helps condense the team's initial ideas into a clear, concise brief, saving valuable meeting time and ensuring everyone is aligned.

· A freelance developer uses Prune to refine their proposal for a new client. By answering Prune's questions about the client's needs and the proposed solution, they are able to produce a more persuasive and well-structured document that clearly outlines the value proposition, increasing their chances of winning the bid.

· A student preparing for an essay uses Prune to organize their research and arguments. By feeding their initial thoughts into Prune, they can identify the strongest points and potential weaknesses in their thesis, leading to a more coherent and well-supported essay.

10

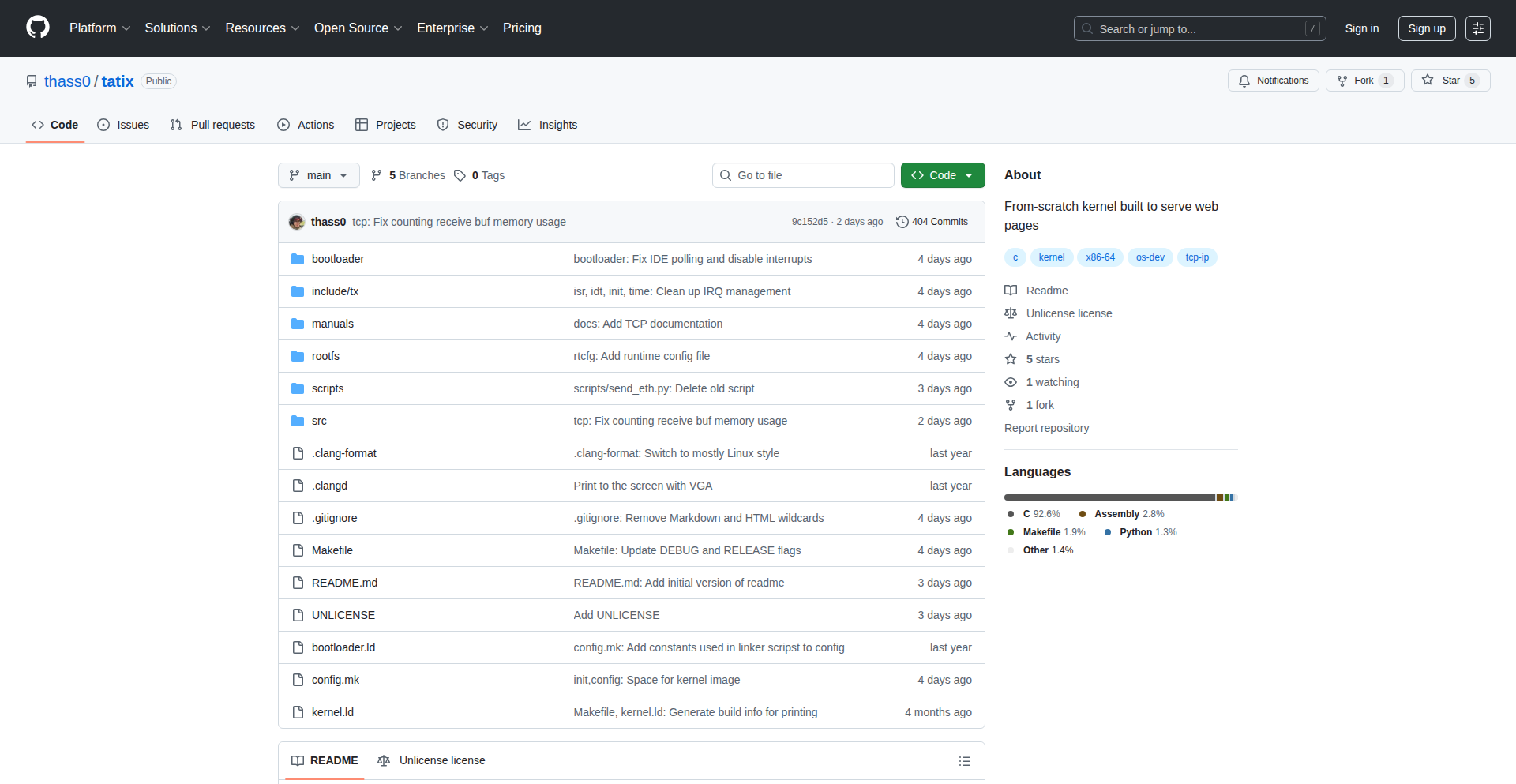

From-Scratch OS for Blogging

Author

thasso

Description

This project is a custom-built operating system designed and implemented entirely from the ground up. The core innovation lies in its comprehensive networking stack, including TCP/IP and an HTTP server, along with a functional RAM file system, BIOS bootloader, memory management with paging, and a task scheduler using cooperative multitasking. The developer's approach emphasizes safe C programming, leveraging a unique library of core abstractions. This project showcases a deep dive into fundamental OS concepts and provides a unique platform for serving content, like a personal blog, directly from a self-made environment.

Popularity

Points 8

Comments 0

What is this product?

This is an operating system (OS) developed from scratch, meaning it's not based on existing ones like Windows or Linux. The primary technical feat is that every essential component is built by hand. This includes: a way for computers to talk to each other over a network (TCP/IP stack), a server to handle web requests (HTTP server), a place to store files in the computer's fast memory (RAM file system), a program that starts the computer (BIOS bootloader), efficient ways to manage the computer's memory (paging and memory management), and a scheduler that runs multiple tasks concurrently using a cooperative approach (cooperative multitasking). The entire system is written in C, with a strong focus on preventing common programming errors through a custom safety-focused library. This demonstrates a profound understanding of how computers work at their most fundamental level, and shows that you can build your own functional environment for specific tasks, like hosting a website.

How to use it?

Developers can use this project as an educational tool to understand OS internals, network programming, and low-level system design. It serves as a practical demonstration of building complex systems from basic building blocks. For those interested in embedded systems or highly specialized environments, this OS could be adapted as a foundation. It can be compiled and booted, potentially on emulated hardware or dedicated systems, allowing developers to experiment with its networking capabilities to serve content or build custom network applications. Think of it as a highly customizable, bare-metal web server that you built yourself, offering unparalleled insight into its operation.

Product Core Function

· TCP/IP Stack: Enables network communication, allowing the OS to send and receive data over the internet or local networks. This is crucial for any networked application, including web serving, and shows how basic internet protocols can be implemented from scratch.

· HTTP Server: Handles web requests, allowing the OS to serve web pages and other content to connected clients. This demonstrates the ability to build a web server that can be the foundation for hosting websites or APIs.

· RAM File System: Provides a simple, fast storage mechanism using the computer's volatile memory (RAM). This is useful for temporary data or frequently accessed files, showcasing efficient in-memory data management.

· BIOS Bootloader: The initial program that runs when a computer starts up, responsible for loading the operating system. Implementing this shows the complete boot process, from power-on to a running OS.

· Paging and Memory Management: Sophisticated techniques to control how the OS and applications access and use the computer's memory, ensuring stability and efficiency. This is a core concept in modern OS design.

· Cooperative Scheduling: A method for managing concurrent tasks where each task voluntarily gives up control. This allows for multitasking without complex preemption logic, demonstrating a simpler approach to concurrency.
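Cooperative scheduling is easier to grasp with a small model. The sketch below is not taken from the OS, which is written in C. It uses Python generators as stand-ins for tasks, where each `yield` plays the role of a task voluntarily giving up the CPU. The names `task` and `run` and the round-robin policy are assumptions made for illustration.

```python
from collections import deque


def task(name, steps, log):
    """A task that performs `steps` units of work, yielding control after each one."""
    for i in range(steps):
        log.append(f"{name}:{i}")
        yield  # voluntarily hand control back to the scheduler


def run(tasks):
    """Round-robin over the tasks until every one of them has finished."""
    ready = deque(tasks)
    while ready:
        current = ready.popleft()
        try:
            next(current)      # resume the task until its next yield
        except StopIteration:
            continue           # the task finished, so drop it
        ready.append(current)  # still has work left: requeue at the back
```

With `log = []` and `run([task("net", 2, log), task("http", 3, log)])`, the log interleaves the two tasks one step at a time. No timer interrupt or preemption is involved, which is why this style is simpler to implement. The trade-off is that a task which never yields stalls everything else.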

Product Usage Case

· Hosting a Personal Blog: The developer's stated goal was to serve their blog. This means the OS, with its HTTP server and file system, can be configured to store and deliver blog content directly to anyone accessing it over the network, showcasing a complete, self-contained web hosting solution.

· Learning OS Development: For students or enthusiasts eager to understand how operating systems work, this project provides a tangible, from-scratch example. They can examine the code for the bootloader, memory management, or networking to grasp complex concepts in a practical context.

· Building Embedded Network Appliances: In scenarios requiring highly specialized and efficient network devices, this OS could be a starting point. For instance, a custom router or a dedicated IoT device controller could be built upon this foundation, with tailored network services and minimal overhead.

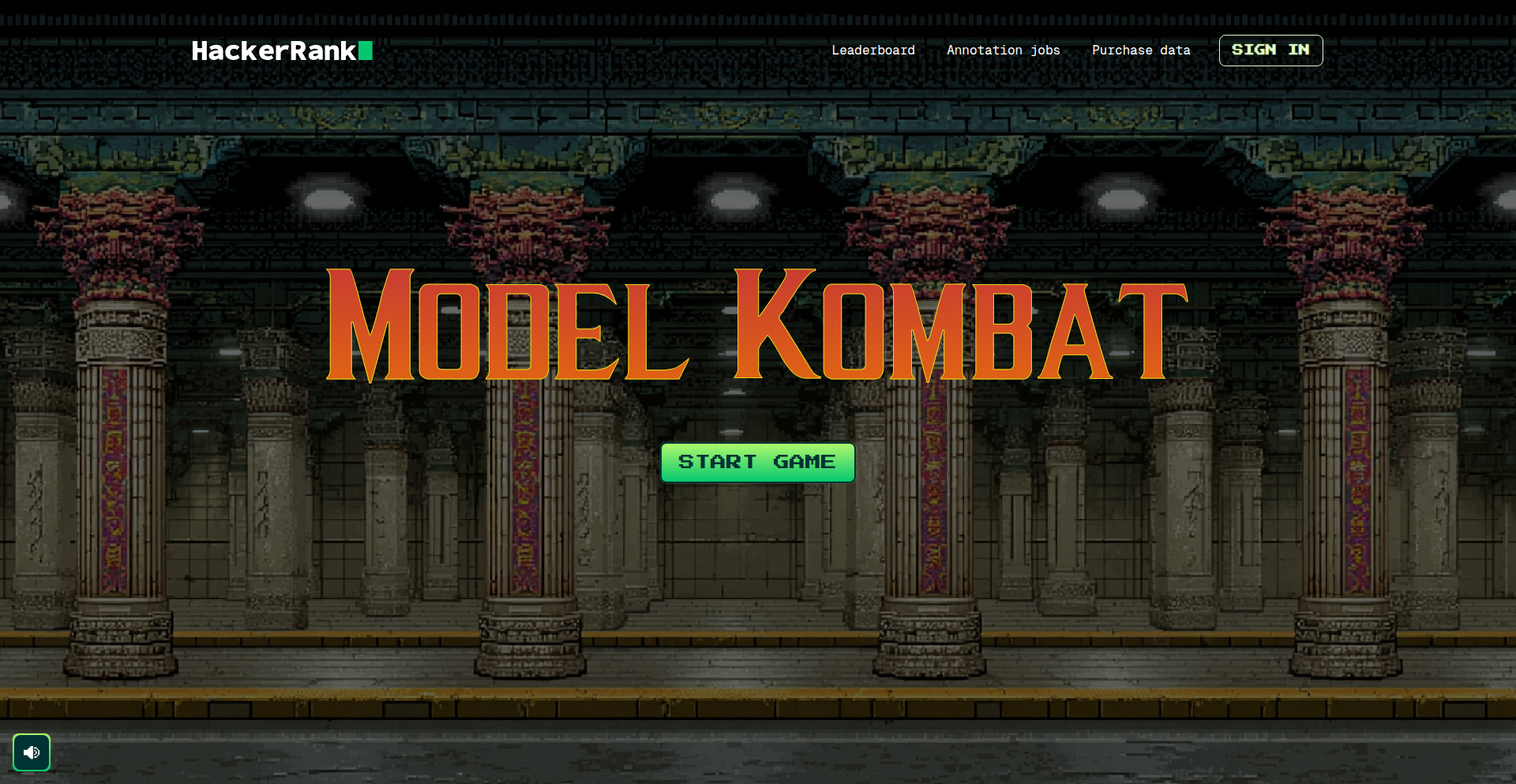

11

ModelKombat: Coding Model Arena

Author

rvivek

Description

ModelKombat is a platform that allows developers to directly compare and evaluate anonymized coding models side-by-side on real programming problems. It addresses the challenge of understanding and improving the performance of AI models designed for code generation and understanding by creating a competitive, gamified environment for testing.

Popularity

Points 5

Comments 3

What is this product?

ModelKombat is an 'arena' where different AI coding models can be pitted against each other in 'battles'. You select a programming language (like Java or Python), and then you're presented with a coding problem. Two different, anonymized AI models will offer their solutions. Your job is to vote on which solution you prefer, based on factors like correctness, efficiency, and readability. This helps the developers behind these models understand which approaches work best in real-world scenarios, ultimately making AI better at understanding and generating code. It's like a fighting game, but for AI that writes code, helping to push the boundaries of what these models can do.

How to use it?

Developers can use ModelKombat by visiting modelkombat.com. You can choose a programming language arena and participate in battles. For each problem, you'll see the problem statement and the outputs from two different AI models. You then cast your vote for the better solution. This direct feedback loop is invaluable for AI researchers and developers who are building and refining these coding models. You can also explore leaderboards to see which models are performing well overall and check out the problem statements to understand the challenges.

Product Core Function

· Side-by-side model comparison: Directly compare anonymized AI model outputs on the same coding problem, allowing for objective evaluation of their strengths and weaknesses.

· Problem-driven evaluation: Test AI models against real programming challenges, mirroring the types of tasks developers encounter daily, which provides practical performance insights.

· Human preference voting: Leverage human judgment to determine which AI-generated code is preferable, capturing nuanced aspects of code quality that automated metrics might miss.

· Weekly updated leaderboards: Track the performance of different coding models over time based on community votes, fostering a competitive environment for model improvement.

· Extensive challenge library: Access a growing collection of programming challenges across various languages, enabling diverse testing scenarios and continuous evaluation.
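One common way to turn pairwise human votes into a leaderboard is an Elo-style rating, sketched below. The source does not say how ModelKombat actually computes its rankings, so treat this purely as an assumed approach. The starting rating of 1000, the K-factor of 32, and the function names are illustrative choices.

```python
def expected_score(rating_a, rating_b):
    """Probability that A beats B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def record_vote(ratings, winner, loser, k=32.0):
    """Update ratings in place after a voter prefers `winner` over `loser`."""
    rating_w = ratings.setdefault(winner, 1000.0)  # assumed starting rating
    rating_l = ratings.setdefault(loser, 1000.0)
    # The winner gains more when the win was unexpected; the loser loses the same amount.
    delta = k * (1.0 - expected_score(rating_w, rating_l))
    ratings[winner] = rating_w + delta
    ratings[loser] = rating_l - delta


def leaderboard(ratings):
    """Model names sorted from highest to lowest rating."""
    return sorted(ratings, key=ratings.get, reverse=True)
```

Each battle calls `record_vote` once, and `leaderboard(ratings)` can be recomputed whenever the board is refreshed. Because the models are anonymized during voting, only the aggregated ratings reveal which model is which.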

Product Usage Case

· An AI researcher testing two new language models for Python code generation: By using ModelKombat, they can quickly see which model's solutions are preferred by human developers for common Python tasks like web scraping or data manipulation, guiding their next development steps.

· A developer looking for an AI assistant to help with Java boilerplate code: They can use ModelKombat to see which AI model consistently produces cleaner, more efficient Java code for tasks like setting up server endpoints or managing database connections, helping them choose the best tool for their workflow.

· A machine learning team evaluating the effectiveness of their code completion models: They can submit their models to ModelKombat and get direct feedback on how their models perform against industry benchmarks on real-world code snippets, identifying areas for improvement in their training data or architecture.

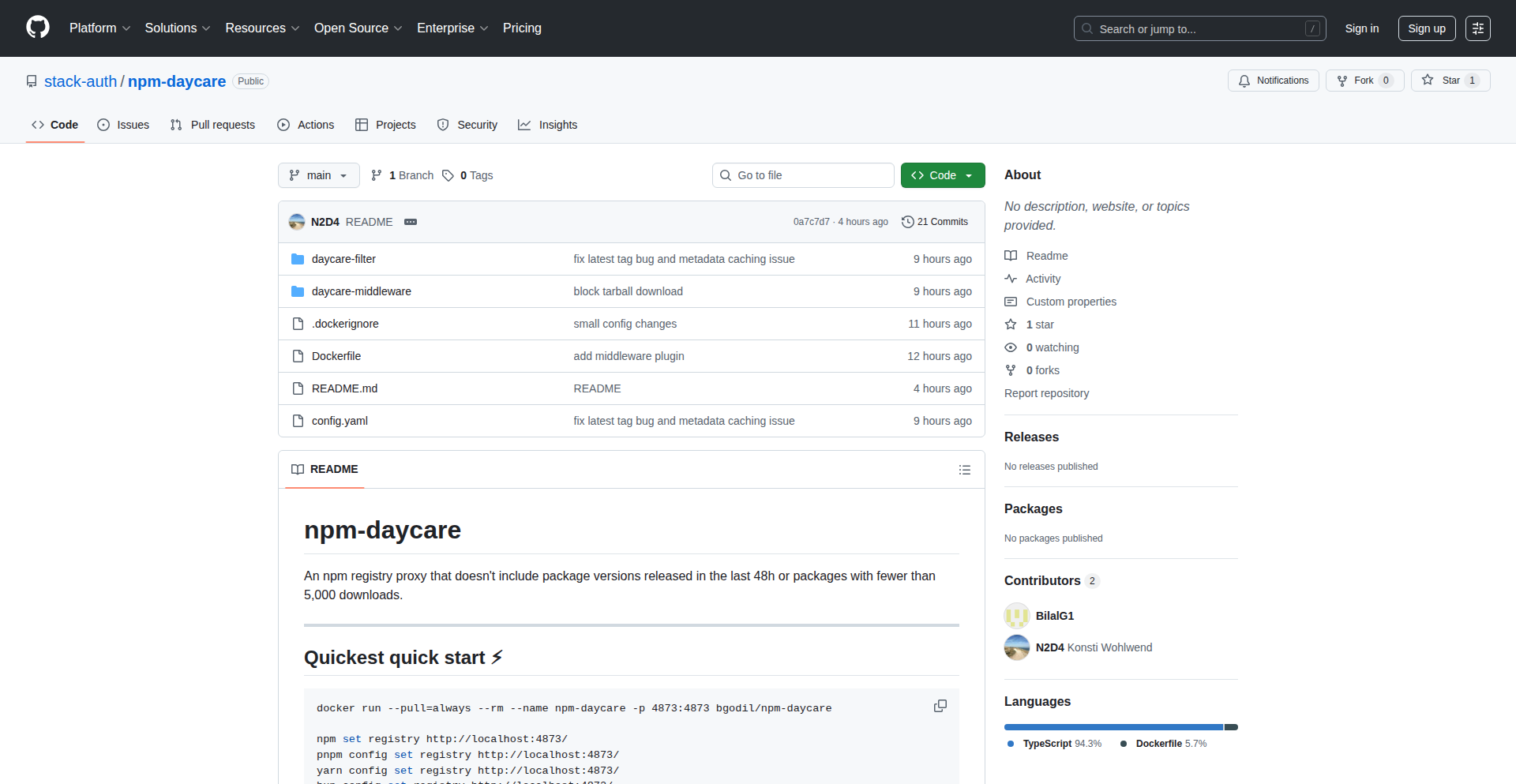

12

NPM Package Guardian

Author

n2d4

Description

NPM Package Guardian is an NPM proxy that acts as a protective layer for your development environment. It leverages Verdaccio, a popular NPM proxy, to filter out potentially risky packages before they reach your system. Specifically, it blocks packages that are less than 48 hours old or have fewer than 5,000 weekly downloads. This proactive approach helps mitigate the risks associated with supply chain attacks in the JavaScript ecosystem, offering a first line of defense against malicious or unvetted code.

Popularity

Points 6

Comments 2

What is this product?

NPM Package Guardian is a specialized NPM proxy built upon Verdaccio. Think of it as a gatekeeper for the packages you download from the NPM registry. Its core innovation lies in its filtering mechanism: it automatically rejects packages that are too new (less than 48 hours old) or too unpopular (fewer than 5,000 weekly downloads). This strategy is designed to prevent developers from accidentally pulling in packages that might have been compromised or are not yet rigorously tested by the community, addressing a significant security concern in modern software development. By intercepting requests at the proxy level, it protects all applications and developers using it without requiring individual project configurations.

How to use it?

Developers can integrate NPM Package Guardian into their workflow by running it as a Docker container. Once the container is up and running on your local machine (e.g., on port 4873), you simply configure your package managers (like npm, pnpm, yarn, or bun) to use this local proxy as their registry. For instance, after starting the Docker container, you would run a command like `npm config set registry http://localhost:4873/`. This redirects all your package installation requests through the Guardian, allowing it to apply its filtering rules. Because the registry setting is stored in your user-level configuration rather than in a single project, any project you work on benefits from the protection without further setup.

Product Core Function

· Package Age Filtering: Prevents the download of packages published within the last 48 hours. This enhances security by avoiding newly introduced code that may not have undergone extensive community review or may be part of an exploit. The value is in reducing the risk of using immature or potentially malicious packages.

· Download Volume Threshold: Blocks packages with fewer than 5,000 weekly downloads. This aims to filter out packages with low adoption rates, which might indicate lower community vetting or a higher risk of undiscovered vulnerabilities. The value is in promoting the use of more established and trusted dependencies.

· NPM Proxy Functionality: Acts as a transparent proxy to the official NPM registry, caching packages and serving them locally. This improves download speeds and provides a controlled environment for package access, adding practical development benefits.

· System-wide Protection: Applied at the registry level, ensuring consistent security across all projects and developers on a machine without individual configuration. The value is in providing broad, effortless security coverage.
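The two filtering rules above boil down to a simple predicate. The sketch below is not the project's code (the real filter runs inside a Verdaccio-based proxy). It only restates the thresholds from the description: 48 hours and 5,000 weekly downloads. The function name `is_allowed` and its parameters are hypothetical, and whether the age check applies per package or per version is not stated in the source.

```python
from datetime import datetime, timedelta, timezone

MIN_AGE = timedelta(hours=48)   # threshold quoted in the description
MIN_WEEKLY_DOWNLOADS = 5000     # threshold quoted in the description


def is_allowed(published_at, weekly_downloads, now=None):
    """Return True only if the package is old enough AND popular enough."""
    now = now or datetime.now(timezone.utc)
    old_enough = (now - published_at) >= MIN_AGE
    popular_enough = weekly_downloads >= MIN_WEEKLY_DOWNLOADS
    return old_enough and popular_enough
```

A package fails the check if either condition is violated, which matches the "less than 48 hours old or fewer than 5,000 weekly downloads" wording. Passing `now` explicitly keeps the function deterministic and easy to test.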

Product Usage Case

· Securing a CI/CD Pipeline: A development team can configure their build servers to use NPM Package Guardian as their NPM registry. This ensures that any package pulled during the build process is already screened, significantly reducing the risk of supply chain attacks compromising automated builds and deployments.

· Protecting a Local Development Environment: A developer working on a sensitive project can set their local machine's registry to NPM Package Guardian. If they accidentally try to install a new, potentially untrusted package, the guardian will block it, preventing accidental introduction of vulnerabilities into their codebase.

· Early Mitigation of Zero-Day Exploits: While the project's default configuration might prevent the use of packages under 48 hours, a developer could temporarily adjust this rule if a critical zero-day exploit is discovered and a fix is immediately available in a brand-new package. This allows for controlled adoption of urgent patches while maintaining general security.

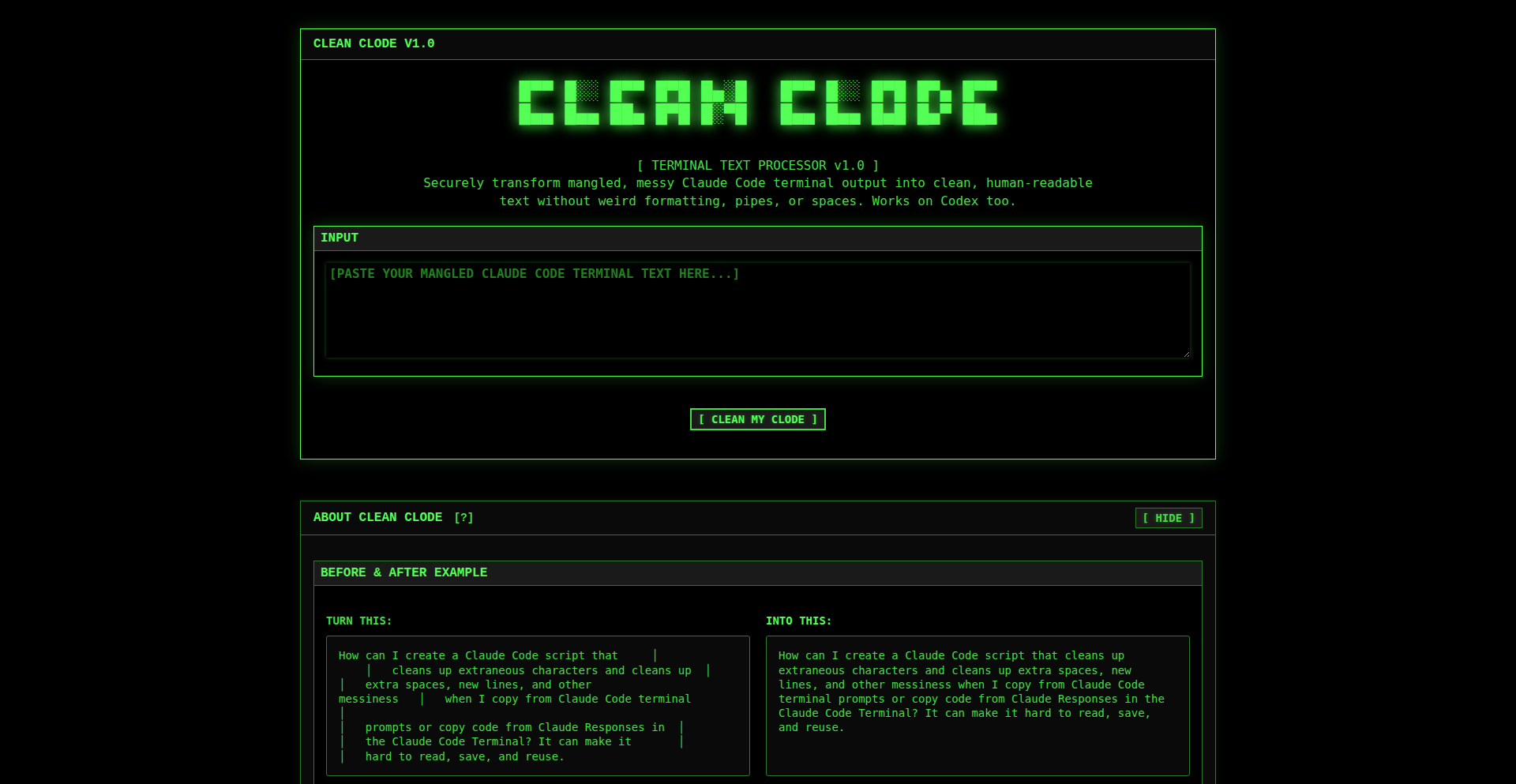

13

CodeClip Cleaner

Author

thewojo

Description

An open-source utility designed to remove extraneous whitespace, pipe characters, and other messy formatting that often appears when copying text from AI coding terminals like Claude Code and Codex. It simplifies pasting code and text, making it more readable and reusable. This addresses a common frustration for developers dealing with cluttered output from AI coding assistants, offering a clean and efficient solution.

Popularity

Points 5

Comments 2

What is this product?

CodeClip Cleaner is a specialized tool that takes 'dirty' text, typically copied from AI coding terminals, and 'cleans' it by removing unwanted characters and formatting. Think of it like tidying up a messy desk. AI coding tools, while powerful, sometimes include extra characters or formatting in their output that makes the text hard to read or use directly. This tool intelligently identifies and strips away these 'noise' characters, such as excessive spaces, line breaks, and special symbols like pipes (`|`), leaving you with clean, usable text. The core innovation lies in its ability to recognize common patterns of 'messiness' specific to these terminal outputs and offer a one-click solution, saving developers significant manual editing time.

How to use it?

Developers can use CodeClip Cleaner in several ways. The primary method is via its web interface at cleanclode.com, where you can paste the messy text directly into a text box and get the cleaned version back. For integration into workflows, the open-source nature means developers can potentially incorporate its logic into their own scripts or applications. A typical scenario would be: 1. Copy code or text from a Claude Code or Codex terminal session. 2. Paste this raw text into the CodeClip Cleaner tool (web or integrated). 3. Receive the cleaned text, ready to be pasted into your IDE, a document, or a commit message. Its value is in eliminating the tedious process of manually correcting formatting after every copy-paste operation from these AI tools.

Product Core Function

· Whitespace Normalization: Removes excessive spaces and tabs, ensuring consistent spacing for better readability and preventing syntax errors in code.

· Pipe Character Removal: Strips out vertical pipe characters (`|`) and surrounding whitespace, common in terminal output formatting, which are often irrelevant when using the text elsewhere.

· Line Break Cleanup: Intelligently handles line breaks, merging lines where appropriate to create a more cohesive block of text, making long code snippets or explanations easier to digest.

· Cross-AI Compatibility: Designed to work with output from both Claude Code and Codex, extending its utility to a broader range of AI coding assistants.

· Privacy-Focused Design: Operates with a commitment to user privacy, collecting no data and remaining entirely open-source, fostering trust and transparency within the developer community.
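A rough sketch of this kind of cleanup is shown below. It is not the tool's actual logic: it assumes the clutter consists of a border character (`|` or the box-drawing `│`) at the start and end of each line, padding spaces before the right border, shared indentation, and runs of blank lines. The function name `clean_terminal_text` is hypothetical.

```python
import re
import textwrap

# A left border: optional indentation, one pipe-like character, at most one padding space.
_LEFT_BORDER = re.compile(r"^\s*[|\u2502] ?")
# A right border: padding spaces, one pipe-like character, optional trailing whitespace.
_RIGHT_BORDER = re.compile(r"\s*[|\u2502]\s*$")


def clean_terminal_text(raw):
    """Strip border characters, trailing padding, shared indentation, and extra blank lines."""
    lines = []
    for line in raw.splitlines():
        line = _LEFT_BORDER.sub("", line)
        line = _RIGHT_BORDER.sub("", line)
        lines.append(line.rstrip())
    text = textwrap.dedent("\n".join(lines))  # remove indentation common to all lines
    text = re.sub(r"\n{3,}", "\n\n", text)    # collapse runs of blank lines to one
    return text.strip("\n")
```

Note the limitation of this simple approach: a line of real code that legitimately starts or ends with `|` would lose that character, and re-joining soft-wrapped lines is not attempted here. A production tool would need smarter heuristics for both cases.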

Product Usage Case

· When copying a complex code snippet from Claude Code to your local IDE, the output might include strange spacing and pipe characters from the terminal's rendering. Pasting this directly into your editor can break the code or require significant manual cleanup. Using CodeClip Cleaner instantly provides the pure code, ready to compile or run.

· Saving instructions or explanations generated by Codex for later reference might result in text that's hard to parse due to added formatting. CodeClip Cleaner ensures that these notes are presented cleanly, making them much easier to read and understand when you revisit them.

· When sharing code examples that were originally sourced from an AI terminal, using CodeClip Cleaner before pasting ensures that your audience receives pristine, easily readable code without any distracting artifacts from the AI's output environment.

· Integrating AI-generated text into markdown documents or blog posts often requires meticulous formatting. CodeClip Cleaner automates the removal of terminal-specific clutter, allowing developers to seamlessly embed AI-generated content into their technical writing.

14

FoundationChat: Apple's LLM, Unfiltered

Author

alariccole

Description

This project showcases a custom chat application built on Apple's new on-device Foundation Models (roughly 3B parameters with a 4K-token context window). It explores the potential of these on-device LLMs for conversational AI, bypassing some of Apple's intended usage restrictions. The innovation lies in creatively leveraging these powerful, local models for interactive chat, offering a glimpse into privacy-focused, offline AI experiences.

Popularity

Points 3

Comments 4

What is this product?

FoundationChat is a chat application that directly utilizes Apple's 3B-parameter, 4K-context Foundation Models. These are large language models that run directly on your Apple device, meaning your data stays local and private. The core innovation here is demonstrating that these models, even with Apple's intended limitations, can be adapted for useful chat functionalities, pushing the boundaries of on-device AI interaction. Think of it as taking powerful AI brains and making them talk directly to you, without sending your conversations to the cloud.

How to use it?

Developers can integrate FoundationChat into their own applications by accessing the underlying Foundation Models framework. This could involve building custom interfaces for specific tasks, such as personalized assistants, content generation tools, or interactive learning platforms. The project demonstrates a foundational approach to interacting with these local LLMs, providing a starting point for developers looking to embed advanced AI capabilities into their macOS or iOS applications without relying on external APIs. It’s about having a smart assistant that understands your context and respects your privacy.

Product Core Function

· On-device LLM inference: Enables chat functionalities that run entirely on your Apple device, ensuring data privacy and offline usability. This means your conversations are not sent to a server, making it ideal for sensitive information.

· Customizable chat interface: Allows developers to build tailored user experiences around the Foundation Models, going beyond generic chat interfaces to serve specific application needs.

· Exploration of model capabilities: Serves as a platform to experiment with and understand the strengths and weaknesses of Apple's on-device LLMs for conversational tasks, driving further innovation in the AI community.

· Bypassing intended restrictions: Demonstrates a 'hacker' mindset by creatively using the models in ways not explicitly promoted by Apple, unlocking new potential applications and inspiring others to do the same.

Product Usage Case

· Building a privacy-focused personal assistant for managing local files and data, where sensitive information never leaves the device.

· Creating an offline writing assistant that helps generate creative text, code snippets, or summaries without requiring an internet connection.

· Developing an educational tool that provides interactive explanations and answers to questions based on locally stored knowledge bases.

· Experimenting with sentiment analysis on user-generated content within a closed application environment, ensuring user privacy.

15

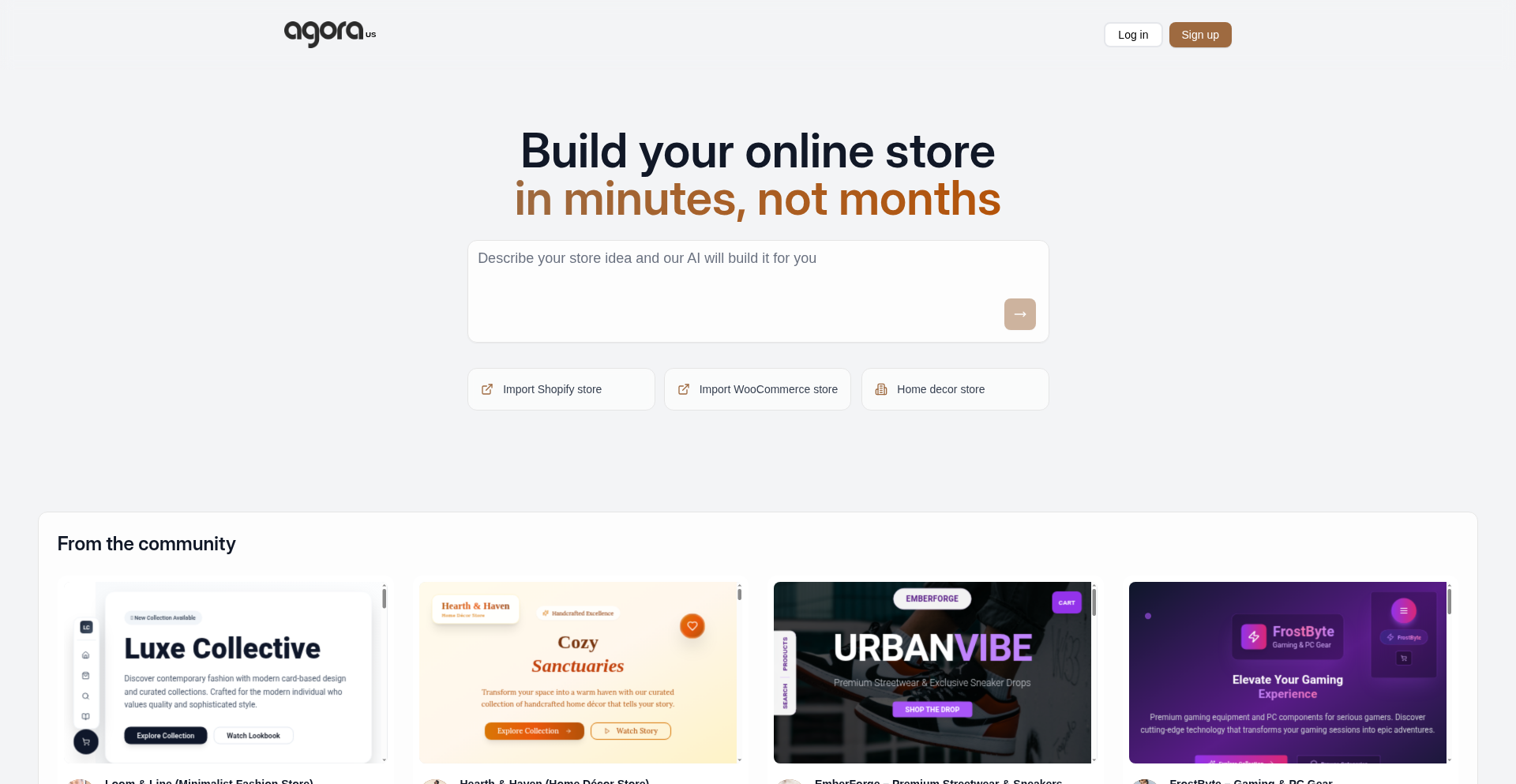

Agora: AI-Powered Chat Commerce Builder

Author

astronautmonkey

Description

Agora is an AI-driven e-commerce store builder designed as a modern alternative to platforms like Shopify. It addresses the common pain points for merchants: high setup costs, complex management, and the need for extensive customization. Agora allows users to build, manage, and deploy a personalized online store entirely through a chat interface, streamlining the entire e-commerce process. Key innovations include a Vercel-powered deployment with extensive middleware for seamless integrations, a built-in product and order database, and intelligent import capabilities for existing Shopify and WooCommerce stores. So, what's in it for you? You can launch a functional e-commerce store quickly and easily, powered by AI, without needing deep technical expertise.

Popularity

Points 3

Comments 2

What is this product?

Agora is an AI-powered platform that lets you create and manage an entire e-commerce store simply by chatting. Imagine describing your product, your desired look and feel, and your business needs to a chatbot, and it builds a functional online store for you. Under the hood it uses v0 for the coding interface and Vercel for previews and deployments. This means it can handle everything from setting up product listings and managing inventory to processing payments via Stripe and tracking orders. A key technical innovation is its ability to import data from existing Shopify or WooCommerce stores using custom crawlers, which makes migration much simpler. So, what's the innovative value? It democratizes e-commerce store creation, making it accessible and efficient through natural language interaction, significantly lowering the barrier to entry for entrepreneurs.

How to use it?

Developers and merchants can start using Agora by interacting with its chat interface. You begin by describing your store's needs, from product details to design preferences. For example, you can say, 'Create a store for handmade ceramics, with a minimalist aesthetic and a focus on product photography.' Agora will then generate store elements and code. For integration with existing businesses, you can leverage the 'Import Shopify store' or 'Import WooCommerce store' features by providing your current store's URL; Agora's crawlers will automatically pull your product catalog. For payment processing, it natively integrates with Stripe. You can also connect Agora to other tools you use through its Zapier app, allowing seamless order management with services like ShipStation or email marketing with Mailchimp. So, how do you use it? You chat your way to a functioning online store and easily connect it to your existing workflow.

Product Core Function

· AI-driven store creation via chat: Build and customize your e-commerce store by simply describing your requirements in natural language, eliminating the need for complex coding. This saves significant development time and effort.

· Automated store deployment with Vercel: Your store is instantly previewed and deployed on a robust infrastructure, ensuring a fast and reliable online presence. This means your store is live and accessible quickly.

· Built-in product and order management: Manage your product catalog, variants, stock, and incoming orders directly within the platform, structured similarly to Shopify's system. This simplifies backend operations and reduces the need for external database management.

· Shopify/WooCommerce store import: Seamlessly migrate your existing product data from other e-commerce platforms by simply entering your store's URL. This dramatically speeds up the transition process and minimizes data entry.

· Native Stripe payment integration: Process customer payments securely and efficiently, with payouts managed directly. This ensures a smooth transaction experience for both you and your customers.

· Zapier integration for workflow automation: Connect your Agora store to a vast ecosystem of business tools, such as shipping providers or marketing platforms, to automate tasks and streamline operations. This means you can integrate with the tools you already rely on.
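
To make the built-in product and order management more concrete, here is a minimal Python sketch of a catalog with variants and stock, plus an order step that decrements inventory. The post does not describe Agora's actual schema, so every name below (`Product`, `Variant`, `OrderLine`, `place_order`) is a hypothetical illustration of the product/variant/order split mentioned above, not Agora's API.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Variant:
    sku: str
    price_cents: int
    stock: int


@dataclass
class Product:
    title: str
    variants: dict[str, Variant] = field(default_factory=dict)

    def add_variant(self, variant: Variant) -> None:
        self.variants[variant.sku] = variant


@dataclass
class OrderLine:
    sku: str
    quantity: int


def place_order(product: Product, lines: list[OrderLine]) -> int:
    """Check stock for the whole order, then decrement it and return the total in cents."""
    # Sum quantities per SKU so repeated lines cannot oversell a variant.
    wanted: dict[str, int] = {}
    for line in lines:
        if line.quantity <= 0:
            raise ValueError(f"quantity must be positive for {line.sku}")
        wanted[line.sku] = wanted.get(line.sku, 0) + line.quantity

    # Validate everything first so a rejected order leaves stock untouched.
    for sku, quantity in wanted.items():
        variant = product.variants.get(sku)
        if variant is None:
            raise KeyError(f"unknown SKU: {sku}")
        if quantity > variant.stock:
            raise ValueError(f"insufficient stock for {sku}")

    # Commit: reduce stock and accumulate the order total.
    total = 0
    for sku, quantity in wanted.items():
        variant = product.variants[sku]
        variant.stock -= quantity
        total += variant.price_cents * quantity
    return total
```

Validating the whole order before touching stock is a deliberate choice in this sketch: an order that fails on its last line should not leave earlier variants partially decremented.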

Product Usage Case

· A small artisan bakery owner who wants to sell their custom cakes online. They can describe their products, pricing, and delivery options via chat, and Agora will build a beautiful, functional store. They can then use Zapier to automatically notify their local delivery service when a new order comes in, solving the problem of manual order processing and delivery coordination.

· A fashion designer with an existing Shopify store who wants to test a new, simpler platform. By using Agora's import feature, they can quickly bring all their product listings and descriptions over, then experiment with a chat-driven customization process without losing their existing catalog. This allows for faster iteration and experimentation with new store designs.

· A new entrepreneur launching a niche product who has limited coding experience. They can use Agora's AI to generate a professional-looking store in minutes, focusing on their marketing and product development rather than technical setup. The native Stripe integration ensures they can start accepting payments immediately, solving the problem of complex payment gateway integration.

· A WooCommerce user looking to streamline their operations. They can import their store into Agora, benefiting from a unified interface for product management, order tracking, and a more intuitive chat-based customization. This addresses the challenge of managing multiple tools and complex configurations.

16

WriteRush: Gamified Markdown Editor

Author

levihanlen

Description

WriteRush is a gamified writing application designed to boost productivity and engagement through game-like mechanics. It transforms the often solitary and mundane task of writing into an enjoyable experience by incorporating elements such as progress tracking, challenges, and rewards directly within a markdown editing environment. The core innovation lies in seamlessly blending motivational psychology with practical writing tools, tackling the common hurdle of writer's block and maintaining focus.

Popularity

Points 2

Comments 3

What is this product?

WriteRush is a markdown editor that injects game mechanics into the writing process. It uses concepts like experience points (XP) for word count, daily streaks for consistent writing, and unlockable features as rewards. Instead of just a blank page, you get a visual representation of your progress and achievements, making writing feel more like leveling up in a game. This approach aims to solve the problem of procrastination and lack of motivation that many writers face, offering a tangible and engaging way to build writing habits.
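
The two core mechanics, XP for word count and daily streaks, can be sketched in a few lines of Python. This is an illustration only: the post does not say how WriteRush actually scores writing, so the rate `XP_PER_100_WORDS` and the function names are assumptions.

```python
from __future__ import annotations

from datetime import date, timedelta

XP_PER_100_WORDS = 10  # hypothetical rate, not taken from WriteRush


def xp_for_words(word_count: int) -> int:
    """Award XP for each full block of 100 words written."""
    return (word_count // 100) * XP_PER_100_WORDS


def current_streak(days_goal_met: set[date], today: date) -> int:
    """Count consecutive days, ending today, on which the writing goal was met."""
    streak = 0
    day = today
    while day in days_goal_met:
        streak += 1
        day -= timedelta(days=1)
    return streak


met = {date(2025, 9, 14), date(2025, 9, 15), date(2025, 9, 16)}
print(xp_for_words(520))                       # 50
print(current_streak(met, date(2025, 9, 16)))  # 3
```

Note that in this sketch a missed day resets the streak to zero, since the count stops at the first day not in the set.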

How to use it?

Developers can use WriteRush as their primary markdown editor for technical documentation, blog posts, or any text-based content. Its integrated gamification encourages consistent output and helps maintain momentum on long-term projects. For example, a developer working on a complex documentation project could set daily word count goals within WriteRush, earning XP and building a streak for each successful day, which makes the often tedious documentation process more rewarding. Adopting it requires no special integration: developers simply use WriteRush in place of their existing markdown editor.

Product Core Function

· Gamified progress tracking: Earn experience points (XP) for word count and completed writing sessions, providing a clear visual indicator of progress and rewarding effort.

· Streak system: Maintain daily writing streaks to build consistent habits and unlock bonuses, encouraging regular engagement and discipline.

· Unlockable features and themes: Progress through levels to unlock new editor themes, fonts, or productivity tools, adding an element of discovery and reward.

· Customizable writing goals: Set personal word count or time-based goals, making the gamification directly relevant to individual productivity targets.

· Markdown editing with integrated feedback: A familiar markdown editor environment enhanced with subtle game cues and progress indicators, providing a seamless writing experience.
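
Level-based unlocks like the ones listed above usually come down to a lookup from cumulative XP to a level, and from a level to its rewards. The sketch below shows one way to do that; the thresholds and reward names are invented for illustration and are not WriteRush's real values.

```python
from __future__ import annotations

from bisect import bisect_right

# Hypothetical cumulative XP needed to reach levels 2, 3, 4 and 5.
LEVEL_THRESHOLDS = [100, 250, 500, 1000]

# Hypothetical rewards granted on reaching a given level.
UNLOCKS = {2: "dark theme", 3: "serif font", 4: "focus mode"}


def level_for_xp(total_xp: int) -> int:
    """Everyone starts at level 1; each threshold reached adds one level."""
    return 1 + bisect_right(LEVEL_THRESHOLDS, total_xp)


def unlocked_rewards(total_xp: int) -> list[str]:
    """List every reward whose level has been reached, lowest level first."""
    level = level_for_xp(total_xp)
    return [reward for lvl, reward in sorted(UNLOCKS.items()) if lvl <= level]


print(level_for_xp(260))      # 3
print(unlocked_rewards(260))  # ['dark theme', 'serif font']
```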

Product Usage Case

· A technical writer using WriteRush to draft API documentation. By setting a daily goal of 500 words, they earn XP for each 100 words written and maintain a streak for consecutive days of meeting their goal, making the large documentation task feel more manageable and less daunting.

· A blogger using WriteRush to consistently publish articles. The streak system motivates them to write and publish at least once a week, fostering a regular content creation schedule and building an audience.

· A developer learning a new programming language can use WriteRush to document their learning journey. Tracking progress with word counts for notes and code explanations helps solidify understanding and provides a sense of accomplishment as they 'level up' their knowledge.

17

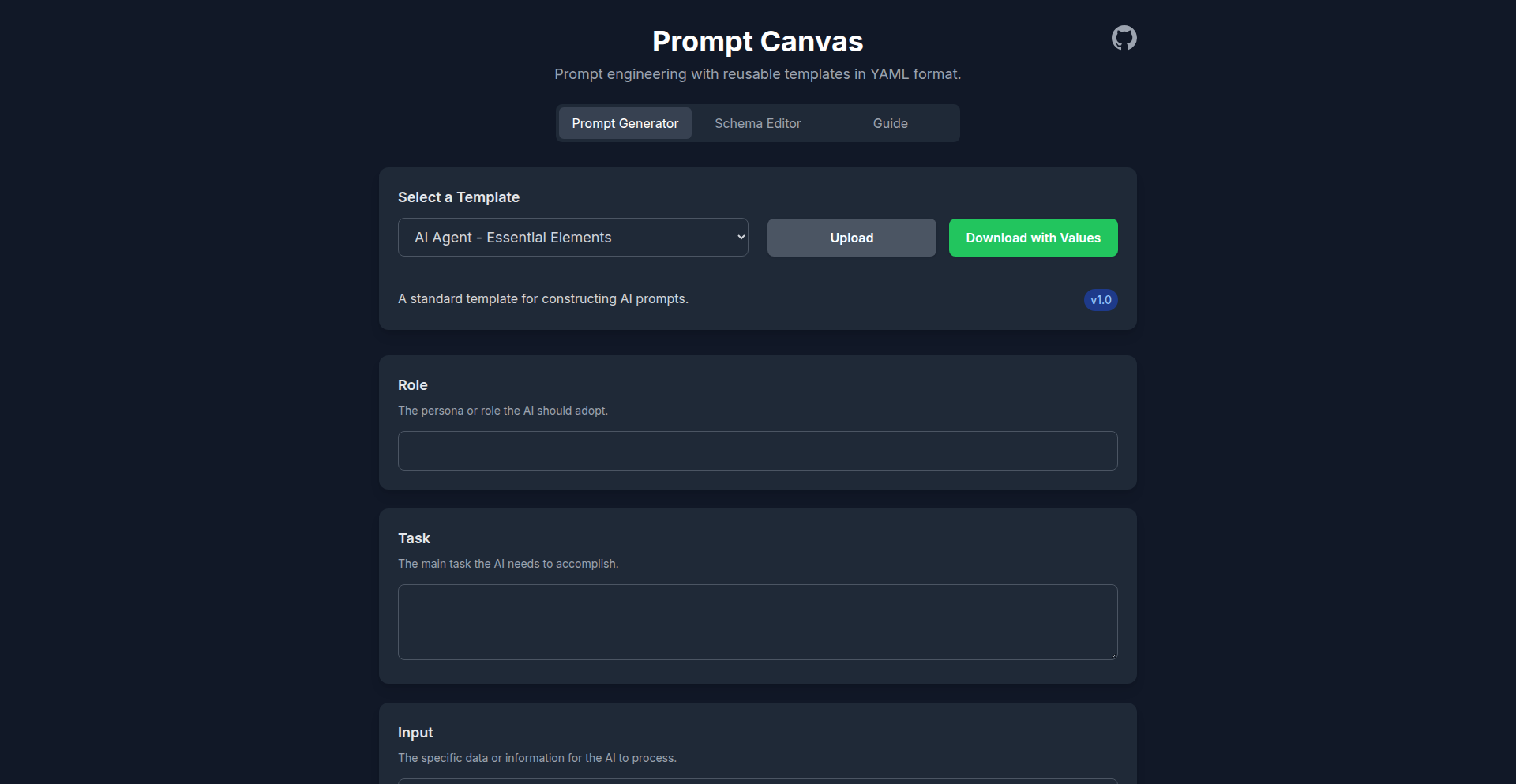

PromptCanvas: Visual LLM Prompt Architect

Author

ml4den

Description

PromptCanvas is an open-source web application designed for visual construction and management of Large Language Model (LLM) prompt templates. It leverages YAML schemas to define structured prompts, allowing users to generate complete prompts by filling in variable values. This innovation addresses the complexity of prompt engineering by offering a visual, portable, and version-controllable method for creating and testing prompt variations, significantly improving efficiency for developers and researchers working with LLMs. Its single-file HTML architecture ensures portability and minimal privacy concerns.

Popularity

Points 3

Comments 2

What is this product?

PromptCanvas is a web-based tool that transforms how developers interact with LLMs by enabling the visual creation of prompt templates. Instead of writing complex strings, users can build prompts using a structured, visual approach, defining variables and their relationships within a YAML schema. This allows for easy modification and reuse of prompts, much like creating a blueprint for your instructions to the AI. The innovation lies in translating the abstract concept of prompt engineering into a tangible, visual format, making it more accessible and manageable. It's built as a single HTML file, meaning it's lightweight and you can even run it offline or export your entire prompt library as portable YAML files.

How to use it?

Developers can use PromptCanvas by visiting the web application. The core workflow involves defining a prompt template by creating a YAML structure. This structure acts as a canvas where you can visually map out the components of your prompt, specifying placeholder variables (like customer name, product description, or desired tone). Once the template is defined, you can populate these variables with specific values to generate a ready-to-use prompt for an LLM. For instance, if you're building a marketing email generator, you'd create a template with placeholders for recipient, company, and key selling points. You can then easily fill these in for each new email, or test variations by changing just one parameter. The YAML export feature allows for easy backup, sharing, and integration into other development workflows or version control systems.
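
The define-then-fill workflow described above can be sketched in Python as follows. PromptCanvas's real schema and placeholder syntax are not given in the post, so the field names (`name`, `variables`, `template`), the `{placeholder}` style, and the `render_prompt` helper are all assumptions. The dictionary stands in for what a YAML parser would return after loading an exported template file.

```python
# Parsed form of a hypothetical YAML template such as:
#
#   name: marketing_email
#   variables: [recipient, company, selling_points]
#   template: |
#     Write a short marketing email to {recipient} at {company}.
#     Highlight: {selling_points}
TEMPLATE = {
    "name": "marketing_email",
    "variables": ["recipient", "company", "selling_points"],
    "template": (
        "Write a short marketing email to {recipient} at {company}.\n"
        "Highlight: {selling_points}"
    ),
}


def render_prompt(template: dict, values: dict) -> str:
    """Fill the template's placeholders, failing loudly if a declared variable is missing."""
    missing = [name for name in template["variables"] if name not in values]
    if missing:
        raise KeyError(f"missing values for: {', '.join(missing)}")
    # str.format substitutes each {name}; extra keys in values are ignored.
    return template["template"].format(**values)


print(render_prompt(TEMPLATE, {
    "recipient": "Dana",
    "company": "Acme",
    "selling_points": "free shipping",
}))
```

One caveat of using `str.format` here: a prompt that contains literal braces (for example, a JSON snippet) would need them doubled, so a real tool would likely use a more distinctive placeholder syntax.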

Product Core Function

· Visual Prompt Templating: Enables the creation of LLM prompt templates through a visual interface, making complex prompt structures understandable and manageable. This simplifies the process of designing and iterating on prompts, directly benefiting prompt engineers and developers by reducing cognitive load and potential for errors.

· YAML Schema Generation: Automatically generates portable YAML schemas from the visual templates. This provides a structured, human-readable, and machine-parseable format for prompts, allowing for easy version control, sharing, and integration with automated systems, thereby boosting reusability and maintainability.

· Dynamic Prompt Generation: Allows users to populate template variables with specific data to generate complete, tailored prompts for LLMs. This is crucial for use cases requiring frequent prompt submissions with slight variations, such as personalized content creation or A/B testing of prompt strategies, saving significant manual effort.

· Template Export and Import: Facilitates the export of prompt templates as YAML files and their subsequent import into the application or other systems. This ensures data portability and allows for offline work or integration into existing CI/CD pipelines or knowledge management systems, enhancing workflow flexibility.
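
For the A/B-testing use mentioned under dynamic prompt generation, the idea is to render one prompt per combination of variable values. Here is a small Python sketch of that sweep; `prompt_variations` and the `{placeholder}` syntax are hypothetical and not part of PromptCanvas itself.

```python
from __future__ import annotations

from itertools import product


def prompt_variations(template: str, options: dict[str, list[str]]) -> list[str]:
    """Render one prompt for every combination of the candidate values."""
    names = list(options)
    prompts = []
    # product() yields the Cartesian product, varying the last variable fastest.
    for combo in product(*(options[name] for name in names)):
        prompts.append(template.format(**dict(zip(names, combo))))
    return prompts


variations = prompt_variations(
    "Summarize the text in a {tone} tone as {fmt}.",
    {"tone": ["formal", "casual"], "fmt": ["bullets", "one paragraph"]},
)
for prompt in variations:
    print(prompt)
```

With two tones and two formats this prints four prompts. The count grows multiplicatively with each added variable, so large sweeps are best limited to the parameters actually under test.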

Product Usage Case

· A developer building a customer support chatbot can use PromptCanvas to create a template for generating personalized responses. They can define variables for customer name, issue type, and solution. This allows them to quickly generate unique responses for each customer by simply filling in the details, improving response quality and efficiency without rewriting prompts from scratch.

· A researcher experimenting with LLM capabilities can use PromptCanvas to test numerous prompt variations for a specific task. By creating a template with adjustable parameters (e.g., temperature settings, specific keywords, output format), they can efficiently explore the LLM's behavior and identify optimal prompt configurations for their research goals.