Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-09-15

SagaSu777 2025-09-16

Explore the hottest developer projects on Show HN for 2025-09-15. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN landscape reflects the hacker spirit: ingenuity applied to practical problems. AI-driven tools are surging, aiming to streamline complex workflows and raise productivity, from automating content creation to providing personalized coaching. The push to make powerful AI accessible, whether through simplified interfaces like `Blocks` or on-device processing as in `Aotol AI`, points toward democratizing advanced technology. Alongside this runs a steady drive to improve developer tooling, exemplified by `pooshit` for remote code syncing and `Daffodil` for e-commerce frontends, both of which target common developer pain points. The same spirit extends to system-level hacks, such as enabling custom Lock Screen wallpapers on macOS, showing that even established platforms can be pushed further.

For developers and innovators, the lesson is threefold: use AI to solve niche problems, invest in developer experience to build essential tools, and don't shy away from system internals when they hold the key to new capabilities. The core message is clear: identify a friction point, apply a creative technical solution, and build something that empowers users or makes life easier.

Today's Hottest Product

Name

Show HN: I reverse engineered macOS to allow custom Lock Screen wallpapers

Highlight

This project showcases a deep dive into macOS internals through reverse engineering to unlock a previously unsupported feature: custom animated wallpapers on the Lock Screen. The developer’s tenacity in understanding and manipulating system-level behavior offers a powerful lesson in how to push the boundaries of existing operating systems and create new user experiences. It demonstrates that even seemingly locked-down platforms can be customized with enough technical skill and determination.

Popular Category

AI & Machine Learning

Developer Tools

Operating Systems & Utilities

Productivity

Popular Keyword

AI

macOS

Reverse Engineering

Automation

Framework

CLI

Developer Experience

Technology Trends

AI-powered productivity and workflow automation

System-level customization and reverse engineering

Developer experience and tooling enhancement

Decentralized and distributed computing

Semantic data processing and LLM applications

Niche utility and tool development

Project Category Distribution

AI & Machine Learning (25.00%)

Developer Tools (30.00%)

Operating Systems & Utilities (15.00%)

Productivity (20.00%)

Data & Analytics (5.00%)

Web Development (5.00%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | Backdrop: Custom Lock Screen & Desktop Video Wallpapers | 73 | 52 |
| 2 | Omarchy CachyOS Fusion Installer | 60 | 61 |
| 3 | Pooshit: Live Remote Code Sync | 53 | 45 |
| 4 | Daffodil Commerce Connect | 63 | 7 |
| 5 | Semlib: Semantic Data Orchestrator | 57 | 12 |
| 6 | FinFam: Collaborative Spreadsheet Financial Modeler | 38 | 14 |
| 7 | MCP Server Connect-o-Matic | 19 | 5 |
| 8 | Ruminate AI-Reader | 15 | 3 |
| 9 | Blocks: AI-Native Workflow Builder | 11 | 3 |
| 10 | LLM Quote Vault | 5 | 4 |

1

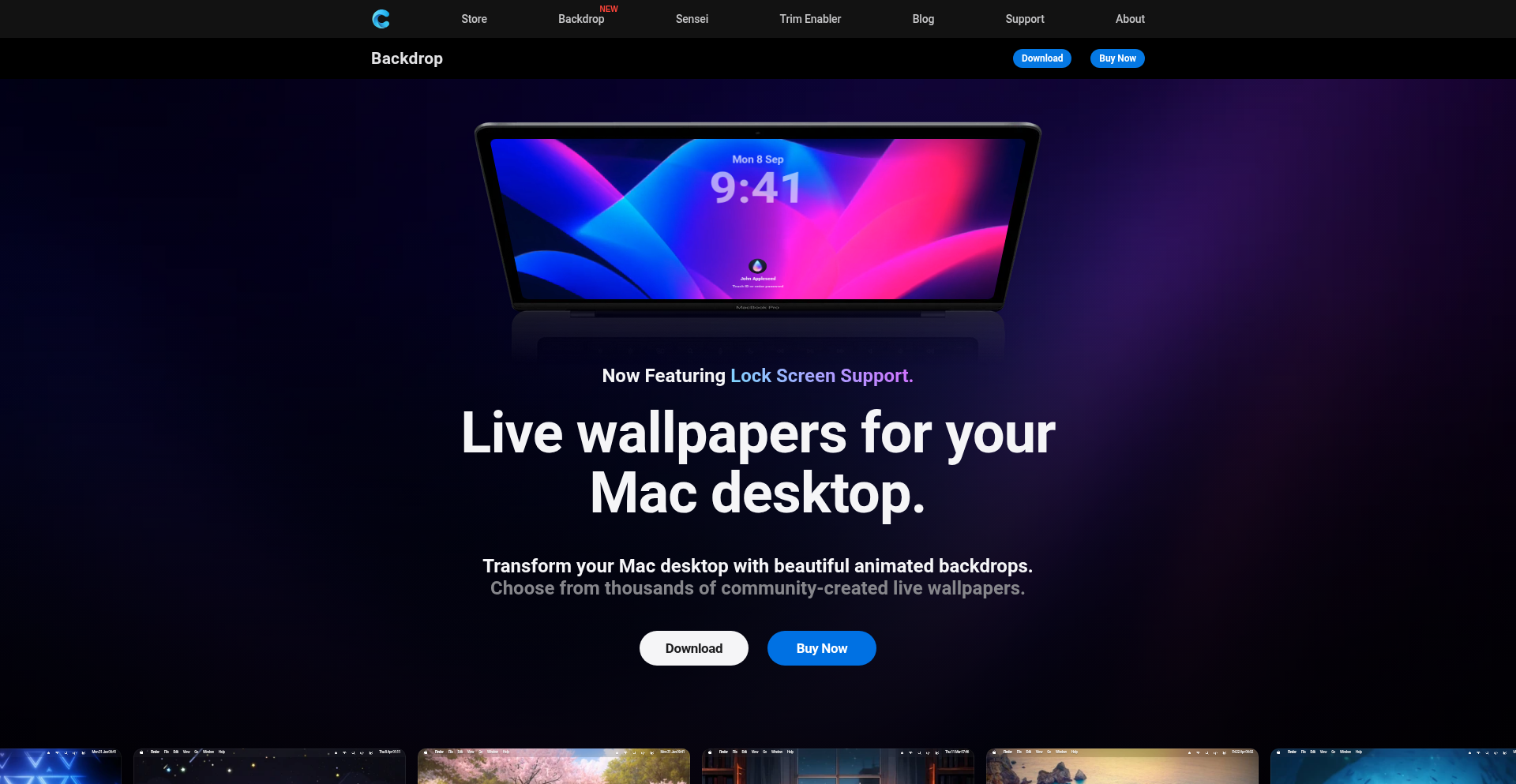

Backdrop: Custom Lock Screen & Desktop Video Wallpapers

Author

cindori

Description

Backdrop is a Mac application that lets users set their own video files as live wallpapers on the desktop and, unusually, on the macOS Lock Screen. The developer reverse-engineered the macOS wallpaper system to enable this, which Apple does not officially support, giving Mac users a new level of personalization.

Popularity

Points 73

Comments 52

What is this product?

Backdrop is a Mac application that turns your static desktop and Lock Screen into dynamic video displays. Its core innovation is bypassing Apple's limits on custom Lock Screen content by reverse-engineering the macOS wallpaper system, so you can use your own video files rather than only Apple-provided animated wallpapers. The result is control over your Mac's look beyond what Apple normally allows.

How to use it?

Install the application on your Mac, then pick any video file to use as a desktop or Lock Screen wallpaper. The app integrates with macOS System Settings, so custom wallpapers can be selected and managed there. For developers interested in the technical underpinnings, the project is a case study in deep macOS system knowledge and creative reverse engineering.

Product Core Function

· Video Wallpaper for Desktop: Allows users to play any video file as their Mac desktop background, offering a more engaging visual experience than static images. This provides a dynamic and personal touch to your workspace.

· Video Wallpaper for Lock Screen: Enables the use of custom video files as the Lock Screen wallpaper, a feature not natively supported by macOS. This allows for a highly personalized login experience.

· macOS System Integration: Seamlessly integrates custom wallpapers into macOS, including a dedicated section within System Settings. This means the functionality feels native and is easy to manage.

· Reverse Engineered Functionality: The core innovation is the technical feat of reverse-engineering macOS internals to inject custom video content into the Lock Screen system. This demonstrates a deep understanding of system architecture and the power of code to overcome limitations.

Product Usage Case

· A graphic designer wanting to showcase their animation work directly on their Mac desktop. They can set a loop of their best animations as their wallpaper, making their workspace inspiring and functional.

· A user who wants their Mac Lock Screen to display a calming nature video when they step away from their computer. This provides a more personalized and less jarring experience than a static image.

· A developer interested in exploring macOS internals and pushing the boundaries of what's possible. They can study Backdrop's implementation to learn about system hooks, reverse engineering techniques, and macOS application development.

· Anyone frustrated by Apple's limited customization options for the Lock Screen, who wants to express their individuality. Backdrop provides the technical solution to achieve this unique personalization.

2

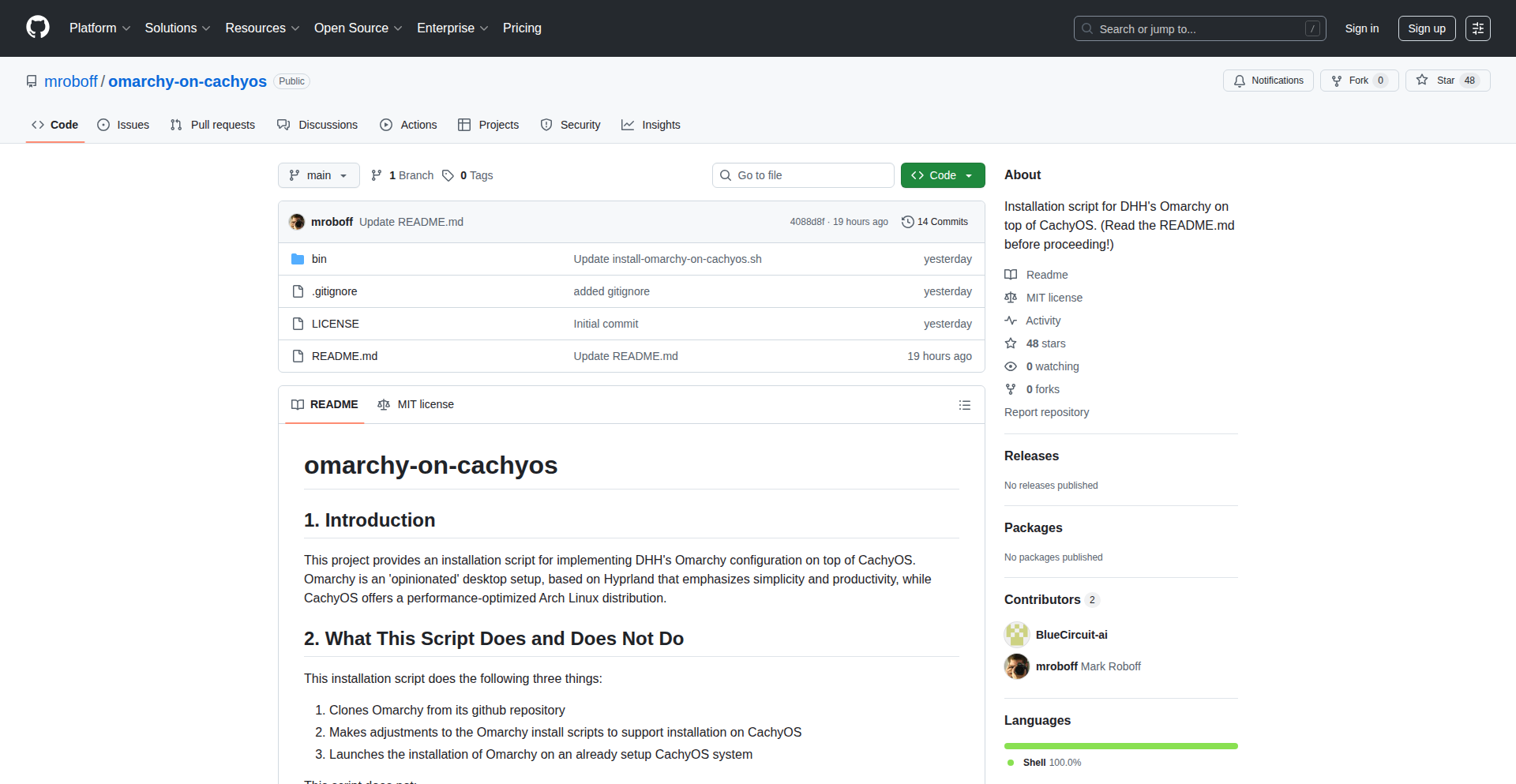

Omarchy CachyOS Fusion Installer

Author

theYipster

Description

This project provides an installation script that sets up Omarchy, DHH's opinionated Arch Linux desktop configuration built around the Hyprland window manager, on top of CachyOS, a performance-focused Arch-based distribution. It tackles the challenge of making the two blend stably, giving developers a streamlined path to Omarchy's preconfigured environment on CachyOS with minimal manual configuration. The innovation lies in its targeted approach: ensuring compatibility and performance for this specific combination.

Popularity

Points 60

Comments 61

What is this product?

Omarchy CachyOS Fusion Installer is an automated script that installs and configures Omarchy, an opinionated, preconfigured Arch Linux desktop setup, on top of CachyOS, a Linux distribution known for its performance optimizations. The core innovation is not a new technology but careful scripting that combines Omarchy's ready-made environment with CachyOS's speed. Because CachyOS is itself Arch-based, the two are natural partners, but combining them is still a potentially complex setup process; the script simplifies it so that Omarchy is readily available and well integrated within the CachyOS ecosystem. The payoff is a pre-optimized, hassle-free development environment without hours of manual setup and configuration.

How to use it?

First install CachyOS, following the instructions in the project's README. Then run the provided installation script, which automates downloading, installing, and configuring Omarchy, checks that dependencies are met, and configures both systems to work together. Think of it as a specialized setup wizard: after a short guided installation you have Omarchy running on CachyOS. This suits developers who want a consistent, high-performance environment quickly, without getting bogged down in manual configuration.

Product Core Function

· Automated Omarchy installation: The script handles the entire process of downloading and installing Omarchy, ensuring all necessary components are present and correctly placed. This simplifies the setup for users, saving them from manual package management and dependency resolution, thus accelerating their ability to start using Omarchy.

· CachyOS compatibility optimization: The script is tailored specifically to CachyOS, including any performance tuning or system tweaks that benefit running Omarchy on this optimized OS. The aim is an Omarchy setup that is faster and more stable than a generic installation, improving the speed and reliability of your development workflow.

· Dependency management: The installer automatically identifies and installs any required software packages or libraries that Omarchy needs to function correctly on CachyOS. This prevents frustrating 'missing dependency' errors, allowing you to focus on development rather than system administration, which is a direct benefit for productivity.

· Stable system integration: The primary goal is to create a strong and stable blend between Omarchy and CachyOS. The script performs checks and configurations to ensure that the two systems coexist harmoniously, minimizing potential conflicts and system instability. This stability ensures your development environments are reliable, reducing downtime and interruptions.
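Installers of this kind usually confirm the target system and work out what is missing before changing anything. The sketch below shows such a preflight step in Python. Everything in it is an assumption, not taken from the project: that the check reads `/etc/os-release`, that CachyOS reports `ID=cachyos` there, and that the package names are placeholders. It takes the file's text as a string so it can be exercised without touching a real system; the project's actual checks may differ.

```python
# Hypothetical preflight for an installer like the Fusion Installer.
# Assumptions: distro detection via os-release text, CachyOS ID "cachyos",
# placeholder package names. Not the project's real logic.


def parse_os_release(text: str) -> dict[str, str]:
    """Parse os-release style KEY=value lines into a dict."""
    info: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blanks, comments, and malformed lines
        key, _, value = line.partition("=")
        # Values may be wrapped in double or single quotes.
        info[key] = value.strip().strip('"').strip("'")
    return info


def is_cachyos(info: dict[str, str]) -> bool:
    """True if the ID field identifies CachyOS (assumed value: 'cachyos')."""
    return info.get("ID", "").lower() == "cachyos"


def missing_packages(required: list[str], installed: set[str]) -> list[str]:
    """Return required packages that are not installed yet, in order."""
    return [pkg for pkg in required if pkg not in installed]


def preflight(os_release_text: str, required: list[str], installed: set[str]) -> list[str]:
    """Abort unless on CachyOS; otherwise return packages still to install."""
    if not is_cachyos(parse_os_release(os_release_text)):
        raise RuntimeError("This installer expects CachyOS; aborting.")
    return missing_packages(required, installed)


if __name__ == "__main__":
    sample = 'NAME="CachyOS Linux"\nID=cachyos\nID_LIKE=arch\n'
    # "example-pkg-*" are placeholders, not Omarchy's real dependencies.
    print(preflight(sample, ["git", "example-pkg-a", "example-pkg-b"], {"git"}))
```

Failing fast on the wrong distribution before installing anything keeps a half-finished run from leaving the system in a mixed state.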

Product Usage Case

· A developer already running CachyOS for its performance optimizations wants Omarchy's preconfigured Hyprland desktop and tooling without switching to a plain Arch install. The Fusion Installer layers Omarchy onto the existing system, so they keep CachyOS's speed while gaining Omarchy's ready-made workflow, and can focus on coding rather than system configuration.

· A developer setting up a new workstation wants an opinionated, consistent environment they can reproduce on other machines. Installing CachyOS and then running the Fusion Installer yields the same Omarchy setup each time, instead of hand-tuning configuration on every machine.

· A developer curious about Omarchy but unwilling to give up CachyOS's optimized base can try the combination with a single guided script, avoiding the trial and error of merging the two by hand.

3

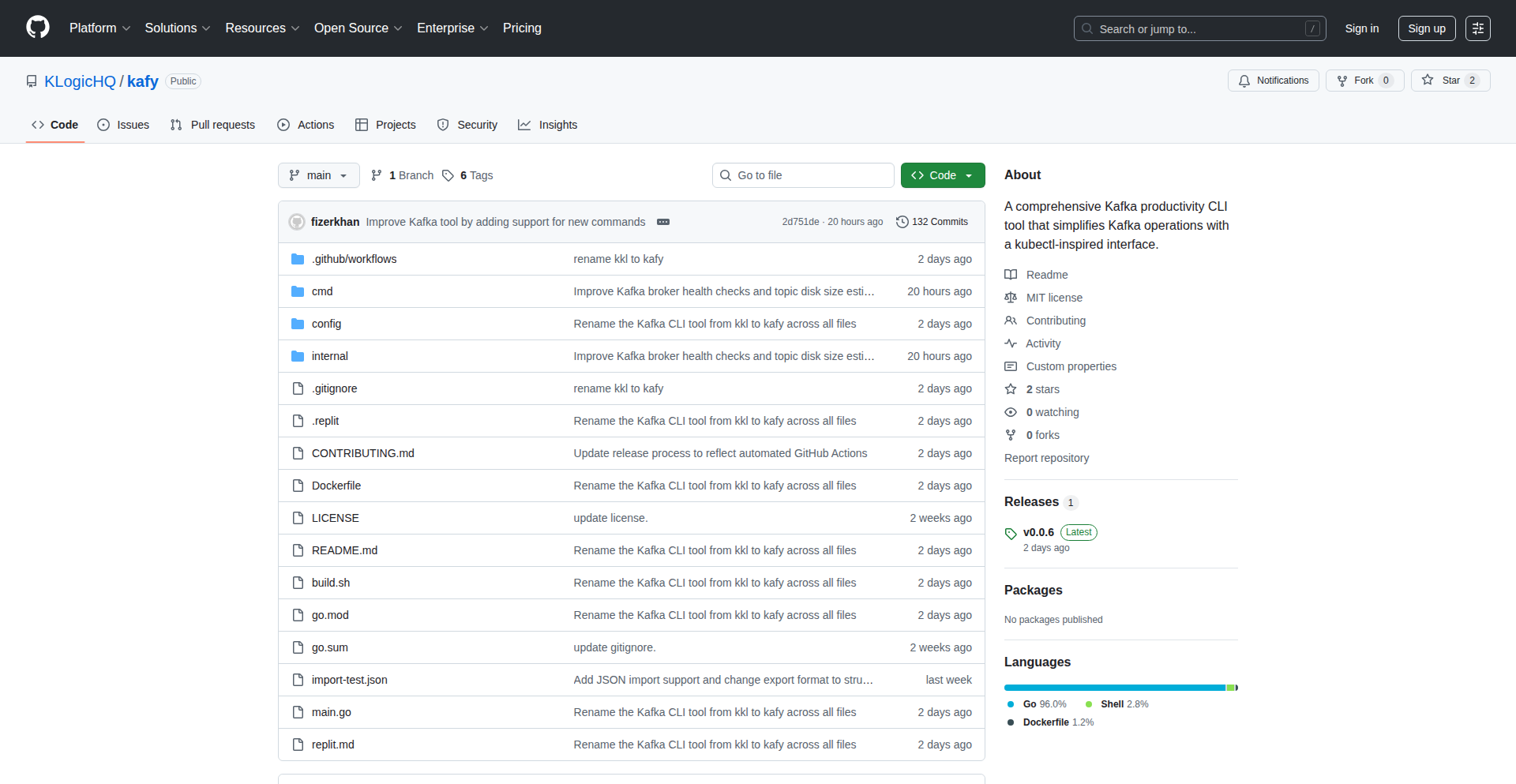

Pooshit: Live Remote Code Sync

Author

marktolson

Description

Pooshit is a developer tool for running local code in remote Docker containers without the usual overhead of pushing images through a registry, syncing via cloud repositories, or juggling Git workflows. It pushes local files to a remote VM, stops the relevant containers, and builds and runs updated containers with a single command, shortening the remote development feedback loop. It is pitched at the 'lazy developer': automate the tedious steps so changes can be iterated on and tested in a live remote environment quickly.

Popularity

Points 53

Comments 45

What is this product?

Pooshit is a utility that automates the synchronization of your local development code to a remote server and the subsequent rebuilding and restarting of Docker containers that run that code. Instead of manually copying files, rebuilding Docker images in the cloud, and then updating your running containers, Pooshit handles this entire pipeline with a simple command. It uses a configuration file to define how your code should be transferred, which containers to manage, and the specific Docker commands to execute, effectively acting as a remote development workflow accelerator. The core innovation lies in its ability to bypass intermediate steps that typically consume developer time, enabling a 'push-to-run' experience for remote development.

How to use it?

Developers can use Pooshit by first installing it on their local machine and setting up a simple configuration file (`pooshit_config`). This file specifies the source directory of your local code, the destination directory on the remote server (a VM), the Docker container(s) to manage (e.g., by name or labels), and the Docker commands to run the updated container. Once configured, a single command like `pooshit push` will transfer your local code, stop and remove the specified running containers, and then build and start new containers with the latest code. This makes it incredibly easy to test changes on a remote staging or production-like environment instantly.
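The push sequence described above (sync files, stop and remove containers, build and run new ones) can be sketched as a function that turns a configuration into the commands to run. This is a hedged illustration, not Pooshit's implementation: the config keys are hypothetical rather than the real `pooshit_config` format, file transfer is assumed to use rsync over SSH, and containers are assumed to be stopped and removed with `docker rm -f`. The function only builds command lines (a dry run); executing them, for example with `subprocess.run`, is left out.

```python
import shlex

# Hypothetical config keys; Pooshit's real config format may differ.


def build_push_commands(cfg: dict) -> list[list[str]]:
    """Return the argv lists for one 'push': sync, remove, rebuild."""
    host = cfg["remote_host"]  # e.g. "deploy@203.0.113.10"
    remote_dir = cfg["remote_dir"].rstrip("/")
    commands: list[list[str]] = []

    # 1. Mirror the local directory onto the VM; --delete also propagates
    #    files removed locally. Trailing slashes copy contents, not the dir.
    commands.append([
        "rsync", "-az", "--delete",
        cfg["local_dir"].rstrip("/") + "/",
        f"{host}:{remote_dir}/",
    ])

    # 2. Stop and remove each listed container. `docker rm -f` kills a
    #    running container before removing it.
    for name in cfg["containers"]:
        commands.append(["ssh", host, f"docker rm -f {shlex.quote(name)}"])

    # 3. Run the user-defined build/run commands inside the synced directory.
    for docker_cmd in cfg["docker_commands"]:
        commands.append(["ssh", host, f"cd {shlex.quote(remote_dir)} && {docker_cmd}"])

    return commands


if __name__ == "__main__":
    cfg = {
        "local_dir": "./app",
        "remote_host": "deploy@203.0.113.10",
        "remote_dir": "/srv/app",
        "containers": ["web"],
        "docker_commands": [
            "docker build -t web:latest .",
            "docker run -d --name web -p 8080:80 web:latest",
        ],
    }
    for argv in build_push_commands(cfg):
        print(shlex.join(argv))  # show each command as a shell line
```

Keeping command construction separate from execution makes the pipeline easy to test and lets a dry run show exactly what would happen on the VM.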

Product Core Function

· Local to Remote File Synchronization: Efficiently transfers your local project files to a specified directory on a remote virtual machine, ensuring your remote environment always has the latest code.

· Automated Container Restart: Stops and then restarts or rebuilds the Docker containers named in the configuration after each sync, eliminating manual container management.

· Customizable Docker Command Execution: Allows developers to define precise Docker build and run commands in a configuration file, enabling fine-grained control over how containers are updated and started, supporting complex setups like reverse proxies.

· One-Command Workflow: Consolidates the entire process of code pushing, container restarting, and application deployment into a single, easy-to-remember command, drastically reducing development friction.

Product Usage Case

· Testing a web application's frontend changes on a remote staging server: A developer can make changes to HTML, CSS, or JavaScript files locally, run `pooshit push`, and instantly see those changes reflected in the live remote application without manually deploying or rebuilding a full Docker image.

· Iterating on backend API logic in a remote development environment: For a developer working on a backend service running in Docker on a remote VM, Pooshit can push updated code files, rebuild the container with the new code, and restart the service, allowing for rapid testing of API endpoints.

· Deploying microservices with specific reverse proxy configurations: If a microservice needs to be updated and its associated Nginx or Caddy reverse proxy needs to be reloaded or reconfigured, Pooshit's ability to execute custom Docker commands allows for seamless integration of these related tasks.

· Quickly demonstrating a new feature to a stakeholder on a remote server: Instead of a lengthy deployment process, a developer can use Pooshit to push the updated code and get the feature running remotely in minutes for a live demo.

4

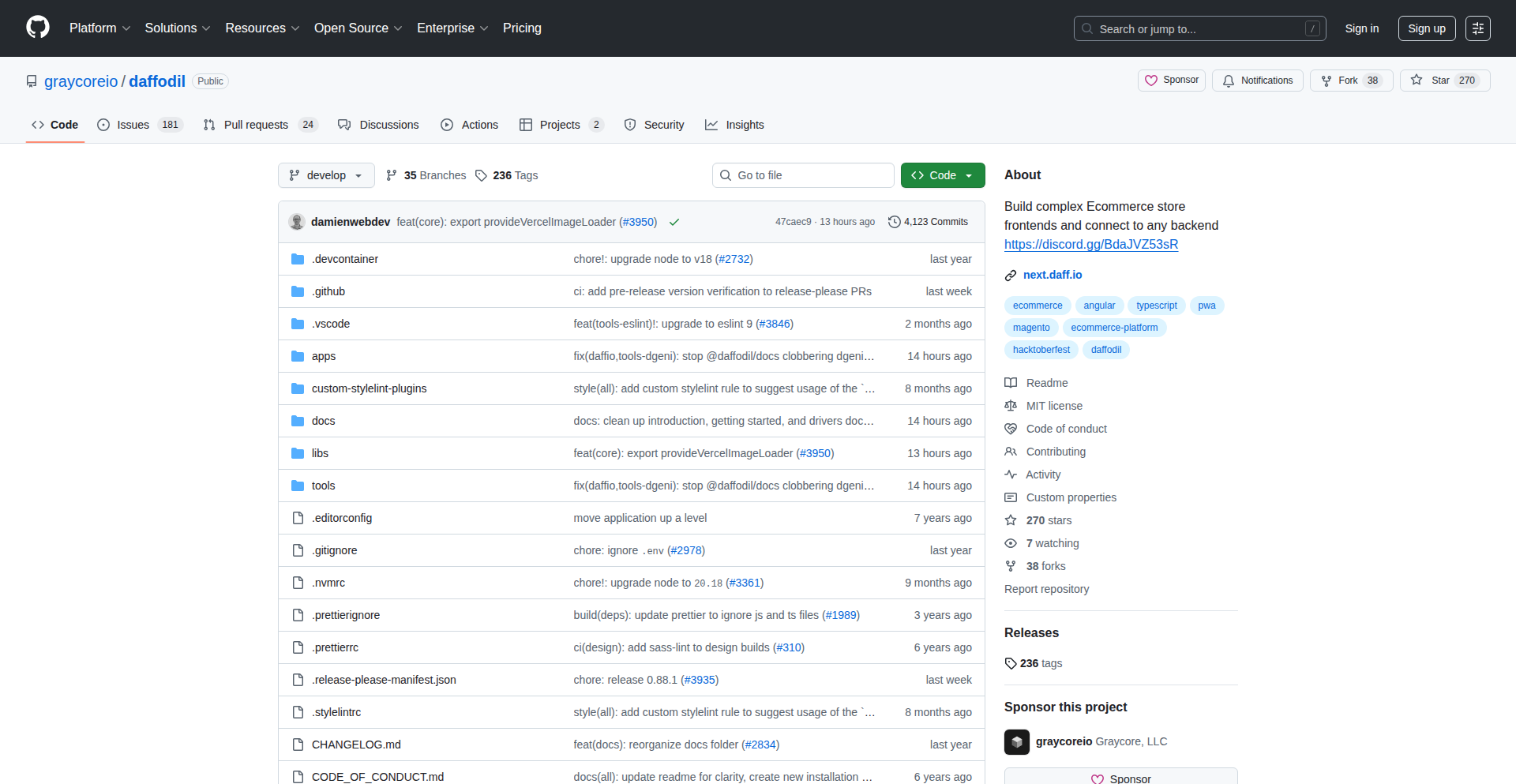

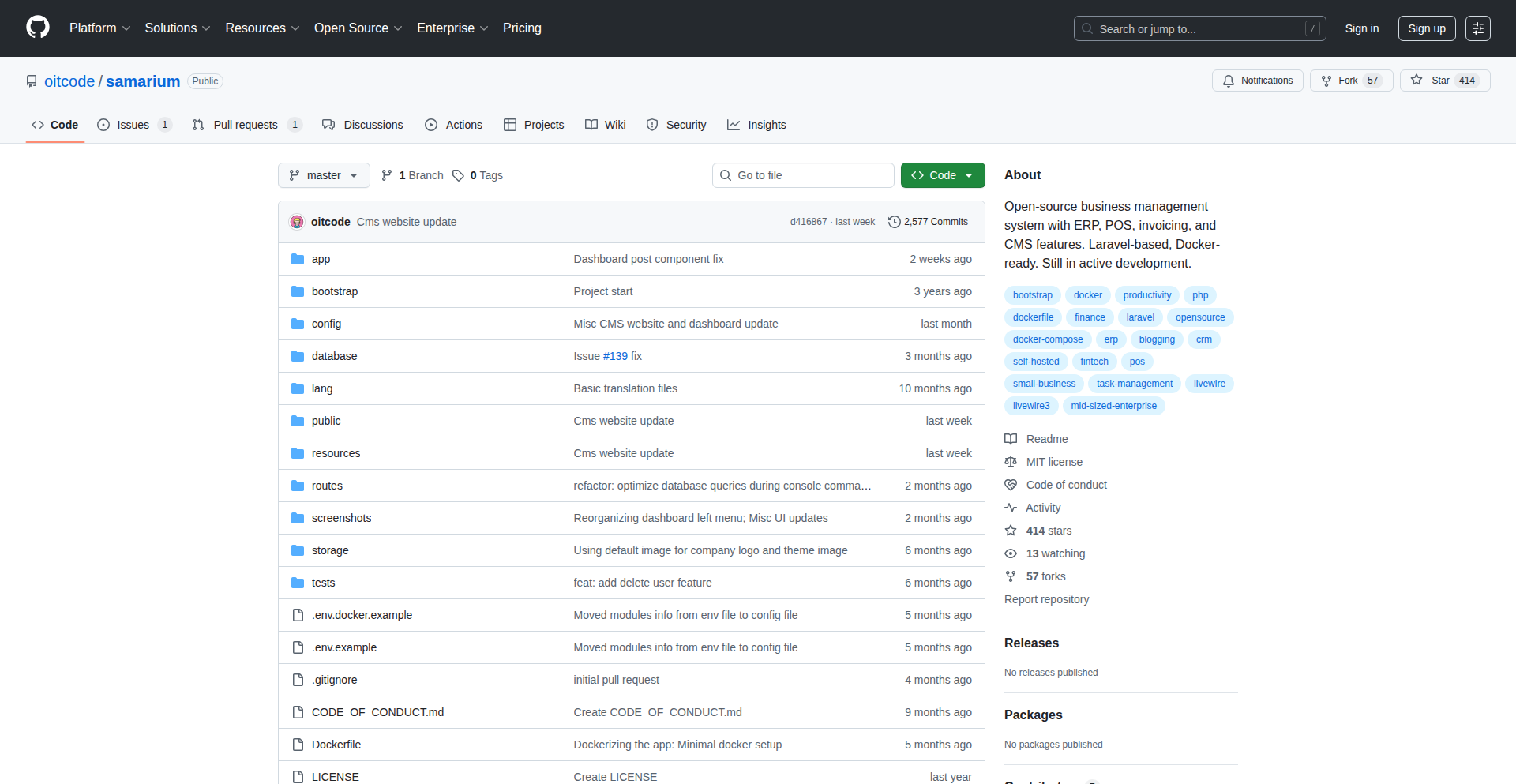

Daffodil Commerce Connect

Author

damienwebdev

Description

Daffodil is an open-source e-commerce framework for Angular that acts like an operating system for e-commerce platforms. It allows developers to connect to various e-commerce backends using a unified interface, abstracting away the complexities of individual platform APIs. This means developers can focus on building their frontend user experience without needing to learn a new e-commerce system for each project, and can start building locally with minimal setup. It aims to simplify frontend development for e-commerce by providing a consistent way to interact with different e-commerce solutions.

Popularity

Points 63

Comments 7

What is this product?

Daffodil is an Angular-based open-source framework designed to simplify frontend development for e-commerce. It functions like an 'adapter' or 'driver' system, similar to how operating systems handle different hardware devices. Instead of learning the unique way each e-commerce platform (like Magento, Shopify, or Medusa) exposes its data and functionality, Daffodil provides a single, consistent API. Developers write code against Daffodil's standard interface, and Daffodil translates those requests into the specific format required by the connected e-commerce backend. This removes the need to repeatedly learn new platform-specific APIs, making the development process much more efficient and less repetitive. It's built to allow developers to start quickly without complex local environment setups.
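The driver idea can be illustrated with a small adapter-pattern sketch. This is not Daffodil's API: Daffodil is an Angular/TypeScript framework, and the class names and backend payload shapes below are simplified, hypothetical stand-ins for real Magento and Shopify responses. What it shows is the principle: frontend code depends on one interface, and each driver translates a platform's data into a shared shape.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass(frozen=True)
class Product:
    """Platform-neutral product shape that frontend code depends on."""
    id: str
    name: str
    price: float


class ProductDriver(ABC):
    """The single interface every backend driver implements."""

    @abstractmethod
    def get(self, product_id: str) -> Product: ...


class MagentoLikeDriver(ProductDriver):
    """Translates a hypothetical Magento-style record into a Product."""

    def __init__(self, backend: dict):
        self.backend = backend  # stands in for the platform's API

    def get(self, product_id: str) -> Product:
        raw = self.backend[product_id]
        return Product(id=raw["sku"], name=raw["name"], price=float(raw["price"]))


class ShopifyLikeDriver(ProductDriver):
    """Translates a hypothetical Shopify-style record into a Product."""

    def __init__(self, backend: dict):
        self.backend = backend

    def get(self, product_id: str) -> Product:
        raw = self.backend[product_id]
        # In this mock, price lives on the first variant and is a string.
        return Product(id=raw["handle"], name=raw["title"],
                       price=float(raw["variants"][0]["price"]))


class ProductService:
    """What UI code calls; it never sees platform-specific payloads."""

    def __init__(self, driver: ProductDriver):
        self.driver = driver

    def label(self, product_id: str) -> str:
        product = self.driver.get(product_id)
        return f"{product.name} (${product.price:.2f})"


if __name__ == "__main__":
    magento = MagentoLikeDriver({"tee": {"sku": "TEE-1", "name": "Tee", "price": 19}})
    shopify = ShopifyLikeDriver(
        {"tee": {"handle": "tee", "title": "Tee", "variants": [{"price": "19.00"}]}}
    )
    # Swapping backends means swapping the driver; ProductService is unchanged.
    for driver in (magento, shopify):
        print(ProductService(driver).label("tee"))  # Tee ($19.00)
```

Because `ProductService` only knows `ProductDriver`, migrating backends touches the wiring, not the UI code, which is the benefit the migration use case below relies on.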

How to use it?

Developers familiar with Angular can integrate Daffodil into their projects using the Angular CLI. After creating a new Angular application, they can add Daffodil with a simple command: `ng add @daffodil/commerce`. This command installs the necessary packages and configures the project to use Daffodil. Once integrated, developers can then install specific 'drivers' for the e-commerce platforms they want to connect to (e.g., `@daffodil/magento`, `@daffodil/shopify`, `@daffodil/medusa`). These drivers act as the translation layer. Developers then interact with Daffodil's services to fetch product data, manage carts, and process orders, without directly dealing with the underlying platform's API. This allows for rapid prototyping and development, and makes it easy to switch or support multiple e-commerce backends.

Product Core Function

· Unified E-commerce API: Provides a single, consistent interface for interacting with various e-commerce platforms. This means you write your frontend code once, and it works with different backends, saving significant development time and reducing the learning curve for new e-commerce systems.

· Platform Drivers: Offers specific 'drivers' that translate Daffodil's standard requests into the native API calls for platforms like Magento, Shopify, and Medusa. This allows Daffodil to abstract the differences between these platforms, so you don't have to.

· Simplified Local Development: Enables developers to start building e-commerce frontends without needing complex server setups like Docker, Kubernetes, or SaaS subscriptions. You can get a local HTTP server running and start coding immediately, accelerating your development workflow.

· Angular Integration: Seamlessly integrates with Angular applications, leveraging the Angular ecosystem and development patterns. This makes it easy for existing Angular developers to adopt and benefit from Daffodil.

· Extensible Architecture: Designed to be extended with support for new e-commerce platforms. If your project uses a less common e-commerce solution, the framework's design allows for the creation of new drivers.

Product Usage Case

· Building a headless e-commerce frontend for a client using Magento: Instead of wrestling with Magento's specific REST or GraphQL APIs, the developer uses Daffodil's unified API to fetch product listings and manage the shopping cart, making the development process faster and more maintainable.

· Migrating an Angular e-commerce site from Shopify to Medusa: The developer can largely reuse their existing Daffodil-based frontend code. They only need to swap out the Shopify driver for the Medusa driver, significantly reducing the effort and risk associated with the migration.

· Creating a proof-of-concept e-commerce feature quickly: A developer needs to demonstrate a new feature that interacts with product data. By using Daffodil and its Medusa driver, they can set up a local development environment in minutes and start coding without waiting for backend infrastructure provisioning.

· Developing a frontend that supports multiple e-commerce channels: A business wants to sell products through both their main Magento store and a smaller Shopify instance. Daffodil allows the frontend to seamlessly communicate with both platforms through their respective drivers, providing a unified customer experience.

5

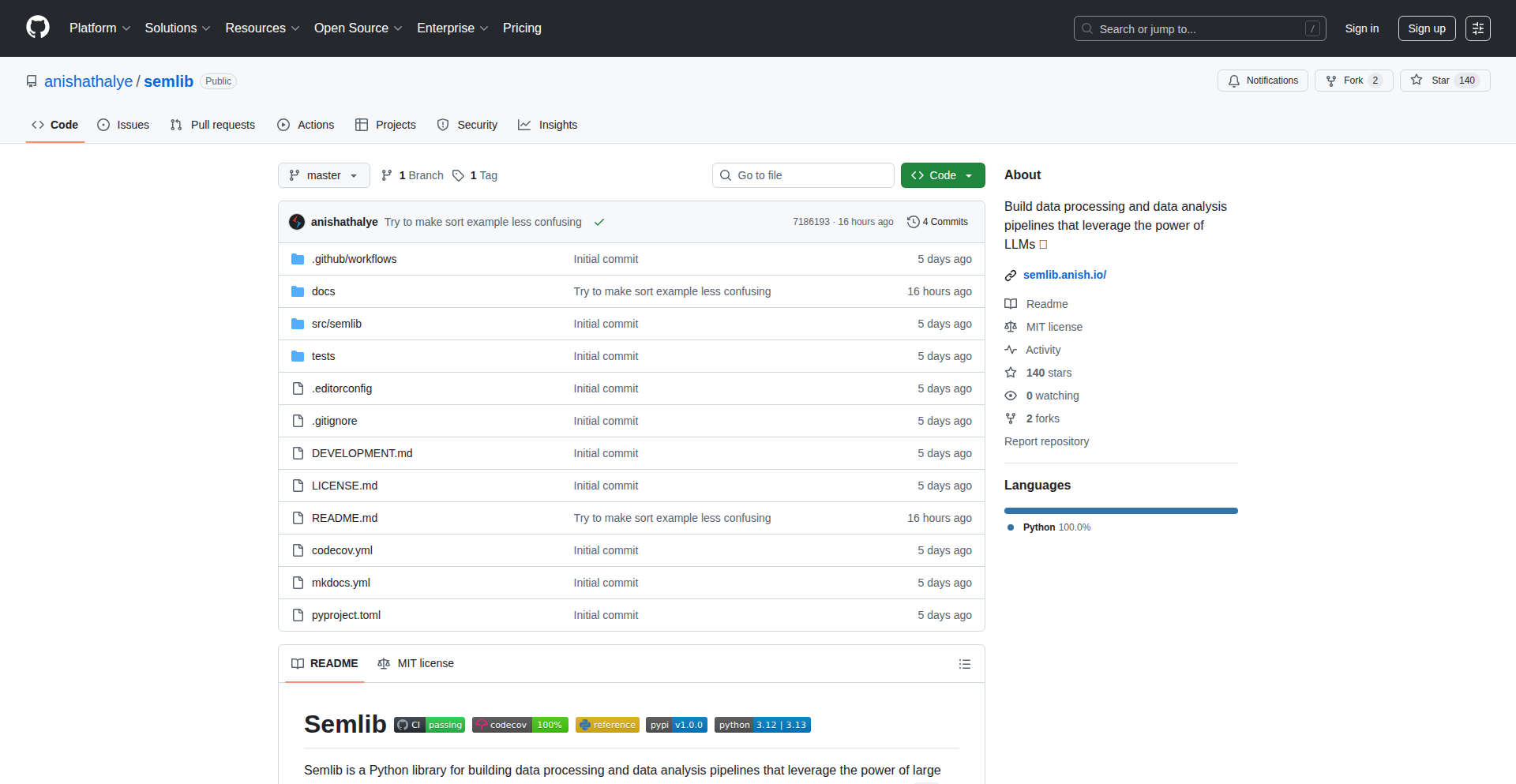

Semlib: Semantic Data Orchestrator

Author

anishathalye

Description

Semlib is a Python library for semantic data processing: building data processing and analysis pipelines powered by large language models (LLMs). It offers familiar functional primitives such as map, filter, sort, and reduce, but each operation is specified in natural language rather than code, so it can handle tasks that are hard to express as rules, like judging tone or relevance. This gives developers a practical tool for knowledge-intensive data work.

Popularity

Points 57

Comments 12

What is this product?

Semlib is a library for semantic data processing. Rather than storing data in tables and querying it with exact-match logic, you describe what you want in plain language (for example, "keep only reviews that describe a bug" or "sort these papers by relevance to protein folding"), and an LLM carries out each step over your data. Its innovation lies in packaging these LLM calls as composable primitives, so complex, meaning-dependent analysis can be built up from small, familiar operations.

How to use it?

Developers add Semlib to a Python project, configure the LLM it should call, and then compose operations such as map, filter, sort, and reduce over their own data, giving each operation's behavior as a natural-language instruction. For example, a developer building a recommendation engine could filter a catalog by "fits the user's stated interests" and then sort the results by how well they match, producing more personalized recommendations than keyword matching. It can back knowledge-rich applications or serve as a standalone tool for data exploration.
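To make "operations programmed in natural language" concrete, here is a minimal sketch of an LLM-driven map and filter. It is not Semlib's API: `complete` is a hypothetical stand-in for any function that sends a prompt to a model and returns its text reply, and the prompt wording is illustrative. A deterministic stub replaces the model so the example runs offline.

```python
from typing import Callable

# A "complete" function sends a prompt to some LLM and returns its reply.
# Hypothetical stand-in: plug in whatever model client you actually use.
Complete = Callable[[str], str]


def semantic_map(items: list[str], instruction: str, complete: Complete) -> list[str]:
    """Transform each item by asking the model to follow `instruction`."""
    return [
        complete(f"{instruction}\n\nInput: {item}\nOutput:").strip()
        for item in items
    ]


def semantic_filter(items: list[str], criterion: str, complete: Complete) -> list[str]:
    """Keep the items for which the model answers 'yes' to `criterion`."""
    kept = []
    for item in items:
        prompt = f"Does this text satisfy: {criterion}?\n\n{item}\n\nAnswer yes or no."
        if complete(prompt).strip().lower().startswith("yes"):
            kept.append(item)
    return kept


if __name__ == "__main__":
    # Offline stub so the example runs without an API key: it answers "yes"
    # whenever the prompt mentions a crash.
    def fake_complete(prompt: str) -> str:
        return "yes" if "crash" in prompt.lower() else "no"

    reviews = ["App crashes on launch", "Love the new design"]
    print(semantic_filter(reviews, "describes a software bug", fake_complete))
    # -> ['App crashes on launch']
```

A production version would batch or parallelize the model calls and handle malformed replies; the sequential loops keep the sketch short.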

Product Core Function

· Semantic Map: Transforms each item according to a natural-language instruction, for example extracting a field or summarizing a document. This is valuable for turning unstructured text into structured data without hand-written parsers.

· Semantic Filter: Keeps only the items that satisfy a criterion described in plain language, which can capture context that SQL predicates or regular expressions miss. This speeds up analysis for tasks like triaging reports or screening research papers.

· Semantic Sort and Reduce: Orders items by a described property, or combines many items into one result such as a ranked list or an aggregated summary. This lets applications draw conclusions across a whole data set, useful for intelligent assistants or analytics.

· Composable Pipelines: Because each primitive works on ordinary collections, operations chain together into multi-step pipelines. This makes complex, evolving analyses easier to build and maintain over time.

Product Usage Case

· Building a personalized medical diagnosis assistant: A developer could use Semlib to model medical knowledge, including diseases, symptoms, and treatments. By querying Semlib with a patient's symptoms, the assistant can infer potential diagnoses and suggest relevant treatment options, offering a more intelligent and informative user experience.

· Developing an advanced fraud detection system: For financial institutions, Semlib can be used to model transaction patterns, customer relationships, and known fraudulent activities. By analyzing the semantic connections between transactions and entities, the system can identify suspicious activities that might be missed by simpler rule-based systems, thus improving security and reducing financial losses.

· Creating a smart content recommendation engine: In media or e-commerce platforms, Semlib can model user preferences, content metadata, and their interdependencies. This allows for highly personalized recommendations that go beyond simple keyword matching, leading to increased user engagement and satisfaction by understanding the nuances of user interests.

· Automating scientific literature analysis: Researchers can use Semlib to process vast amounts of scientific papers, identifying relationships between genes, proteins, and experimental results. This can accelerate discovery by highlighting potential research avenues or validating hypotheses, empowering scientific progress.

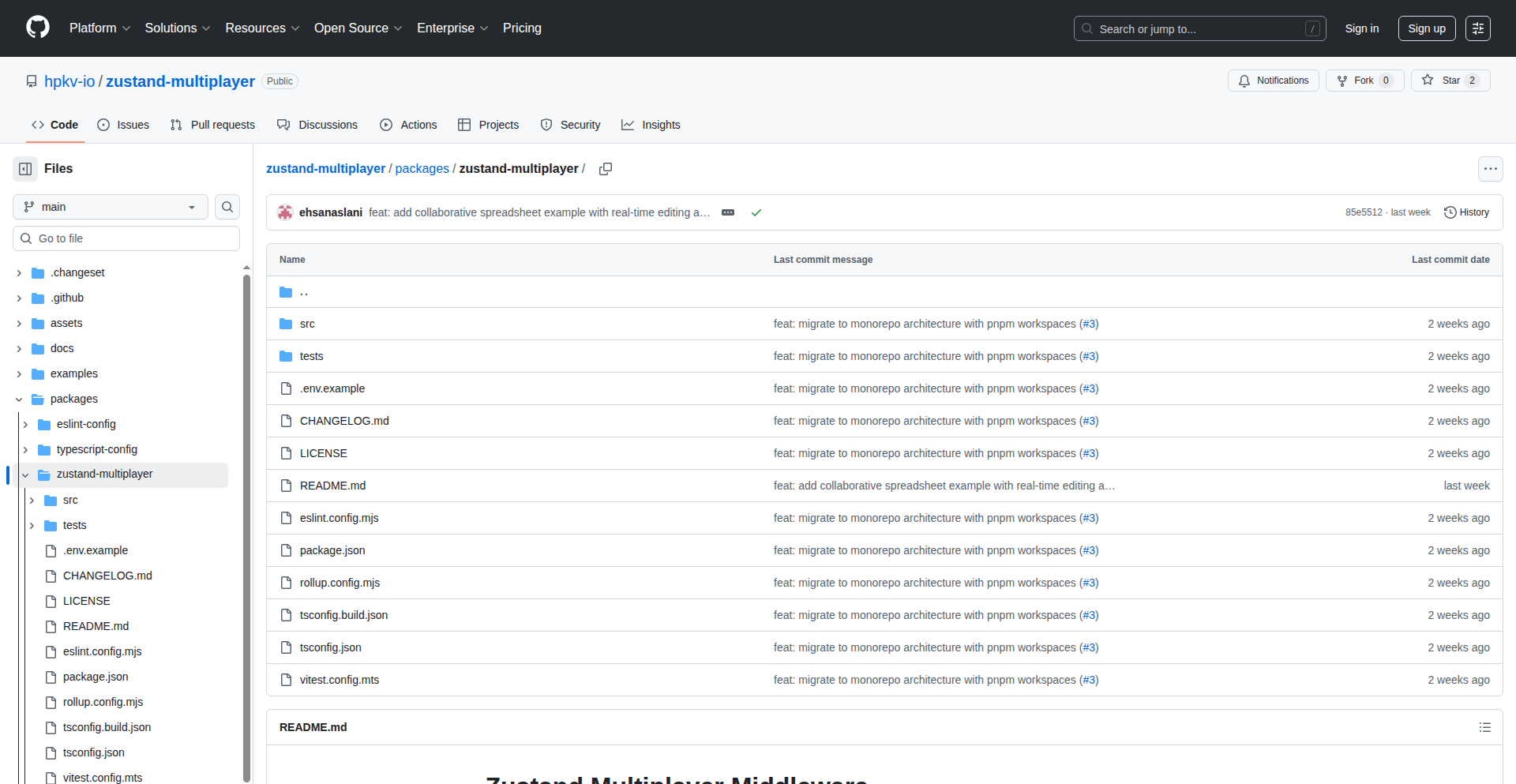

6

FinFam: Collaborative Spreadsheet Financial Modeler

Author

mhashemi

Description

FinFam is a platform that transforms ordinary spreadsheets (XLSX and Google Sheets) into interactive, shareable financial models. It addresses the complexity and opacity of personal finance by providing a transparent and collaborative environment, allowing users to build and explore financial scenarios with context-rich explanations and integrated discussion. This democratizes financial planning, empowering individuals to understand and manage their finances without needing deep technical expertise.

Popularity

Points 38

Comments 14

What is this product?

FinFam is a web application designed to make financial modeling accessible and collaborative. Instead of complex, proprietary software or messy manual spreadsheets, it allows users to leverage the familiarity of spreadsheet interfaces (like Excel or Google Sheets) to build dynamic financial plans. The innovation lies in its ability to take these standard spreadsheets and add interactive elements, explorable explanations (like interactive blog posts), and even chatbot-enhanced discussions directly linked to the financial data. This means you can create a financial model and share it, allowing others to play with variables and see real-time results, all while understanding the underlying logic because it's based on a format they already know. It solves the 'black box' problem of many financial tools by being transparent and verifiable, much like open-source software.

How to use it?

Developers and non-developers alike can use FinFam to create and share financial models. You can start by uploading an existing XLSX file or linking a Google Sheet. Within FinFam, you can then define interactive elements that allow viewers to change input variables (like income, expenses, or investment rates). You can also add narrative explanations and embed a context-aware chatbot to answer questions about the model. This makes it ideal for sharing with family members to discuss retirement plans, for clients to understand financial advice, or for educators to demonstrate financial concepts. Integration can be as simple as sharing a link to your FinFam model, which can be embedded into websites or shared via email.

Product Core Function

· Spreadsheet-powered financial modeling: Enables users to build financial models using familiar spreadsheet software like Excel or Google Sheets, translating complex financial logic into an accessible format. This provides immediate utility for anyone comfortable with spreadsheets, allowing them to visualize financial futures.

· Interactive model exploration: Allows viewers to manipulate input variables within a model and see the results update in real-time. This fosters a deeper understanding of how different financial decisions impact outcomes, offering a hands-on learning experience.

· Explorable explanations: Integrates narrative content, akin to interactive blog posts, directly with the financial models. This ensures that the 'why' behind the numbers is as clear as the numbers themselves, making financial concepts more digestible.

· Context-aware chatbot integration: Augments models with a chatbot that can answer user questions based on the specific financial data and logic presented. This provides personalized support and clarifies complex financial scenarios on demand.

· Collaborative sharing and versioning: Facilitates easy sharing of financial models with others, promoting discussion and collective decision-making. This is crucial for family financial planning or team-based financial analysis.
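Interactive exploration boils down to recomputing outputs whenever a viewer changes an input. The sketch below is not FinFam's engine (FinFam builds models from spreadsheets); it mirrors what a few spreadsheet rows of a simple retirement model might compute, under assumed simplifications: a fixed annual growth rate and one contribution added at the end of each year.

```python
def project_balances(start: float, annual_contribution: float,
                     rate: float, years: int) -> list[float]:
    """End-of-year balances: grow by `rate`, then add the year's contribution."""
    balances = []
    balance = start
    for _ in range(years):
        balance = balance * (1 + rate) + annual_contribution
        balances.append(round(balance, 2))  # round for display only
    return balances


if __name__ == "__main__":
    # Moving a "slider" is just calling the model again with a new input.
    base = project_balances(10_000, 5_000, 0.05, 3)
    higher_savings = project_balances(10_000, 8_000, 0.05, 3)
    print(base)            # [15500.0, 21275.0, 27338.75]
    print(higher_savings)
```

For a constant rate r, the final value matches the closed form P(1+r)^n + C((1+r)^n - 1)/r, where P is the starting balance and C the annual contribution; with P = 10,000, C = 5,000, r = 0.05, and n = 3 both give 27,338.75.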

Product Usage Case

· Family retirement planning: A user can create a retirement model using FinFam, inputting family income, savings, and expected expenses. They can then share this model with their spouse, allowing both to adjust retirement ages or savings rates to see the impact on their financial security. This replaces confusing spreadsheets and disconnected documents with a clear, shared financial vision.

· Personalized financial advice: A financial advisor can build a model for a client, outlining investment growth, tax implications, and potential future expenses. The client can then use the interactive elements to explore different scenarios, such as taking an early retirement or making a large purchase, fostering trust and understanding through transparency.

· Cost of living analysis: A user could build a model to calculate the cost of raising a child in a specific city, using real-time data for expenses like childcare, education, and healthcare. This model can then be shared, allowing others to adjust variables like the number of children or specific city choices to understand the financial implications.

· Career decision-making: A developer could create a model comparing the financial outcomes of working at a large tech company versus a startup, factoring in salary, stock options, and potential bonuses. This model could be shared with peers to facilitate discussions about career paths and their long-term financial impact.

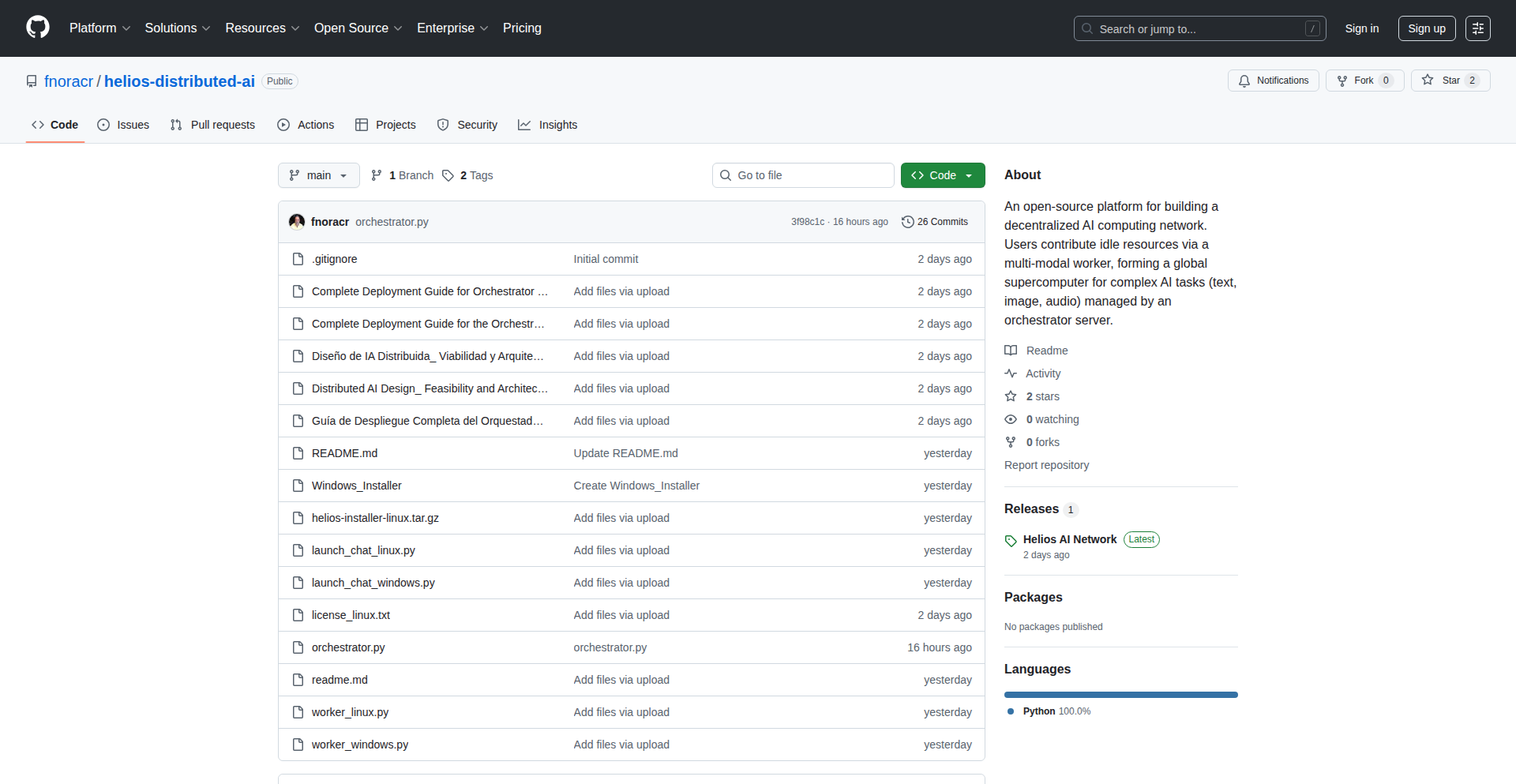

7

MCP Server Connect-o-Matic

Author

pmig

Description

A library that simplifies connecting MCP clients to remote MCP servers by generating installation instructions on the fly. It offers one-click installation buttons and links for a wide range of clients, significantly reducing the setup time and complexity for users wanting to join remote MCP servers. It can also provide web-based HTML instructions.

Popularity

Points 19

Comments 5

What is this product?

MCP is the Model Context Protocol, an open protocol that lets AI applications such as Claude Desktop, Cursor, or VS Code connect to external servers that provide tools and data. This project addresses a common pain point: connecting an MCP client to a remote MCP server usually means a multi-step, tedious process of manually editing each client's configuration. The library automatically generates the necessary connection instructions, working out what needs to be done and presenting it in a user-friendly form, often a single button or link. Users no longer need to sift through documentation or hand-edit configuration files.

How to use it?

Developers who run a remote MCP server can integrate this library into their project to make it easy for others to connect. The library generates a personalized installation link or button that can be placed in the server's README file, on a website, or anywhere else you share information about the server. When a potential user clicks the button or link, they receive the specific instructions, and potentially pre-configured files, needed to connect their client to your server. This reduces friction and increases adoption. The library can also serve HTML instructions directly to people who visit your server's web page.

Product Core Function

· On-the-fly instruction generation: The library creates the connection steps at the moment they are requested, so they match the specific server's setup. The value here is immediate, tailored guidance that is convenient for the end user.

· One-click installation buttons/links: Instead of a lengthy manual, users get a direct path to connect. This dramatically lowers the barrier to entry, so new users can start using the server sooner.

· Cross-client compatibility: The library aims to support 'most clients out there,' meaning it's flexible and adaptable. The value is in reaching a broader audience without worrying about whether they are using a specific version or type of MCP client.

· Markdown and HTML output: This offers flexibility in how instructions are delivered. For developers, it means they can easily embed these instructions into their documentation (like GitHub READMEs) or web pages, enhancing the user experience wherever the information is found.
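The sketch below makes the Markdown and HTML outputs concrete with a minimal form of on-the-fly instruction generation. It is not the library's API. `client_config`, `render_markdown`, and `render_html` are hypothetical names. The `mcpServers`/`url` configuration shape is an assumption loosely modeled on the JSON files some MCP clients use; real clients differ in file locations, key names, and install mechanisms.

```python
import html
import json


def client_config(server_name: str, url: str) -> dict:
    """Hypothetical client config entry pointing at a remote MCP server.

    The {"mcpServers": {name: {"url": ...}}} shape is an assumption; each
    real client has its own file location and key names.
    """
    return {"mcpServers": {server_name: {"url": url}}}


def render_markdown(server_name: str, url: str) -> str:
    """Instructions suitable for a GitHub README."""
    # Built at runtime so this snippet can itself live inside a Markdown fence.
    fence = "`" * 3
    config = json.dumps(client_config(server_name, url), indent=2)
    return "\n".join([
        f"## Connect to {server_name}",
        "",
        "Add this entry to your MCP client's configuration file:",
        "",
        f"{fence}json",
        config,
        fence,
    ])


def render_html(server_name: str, url: str) -> str:
    """The same instructions as an HTML fragment, with user input escaped."""
    config = json.dumps(client_config(server_name, url), indent=2)
    return (
        f"<h2>Connect to {html.escape(server_name)}</h2>\n"
        "<p>Add this entry to your MCP client's configuration file:</p>\n"
        f"<pre><code>{html.escape(config)}</code></pre>"
    )
```

A real generator would emit one section or button per supported client, each in that client's own format, from the same server name and URL.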

Product Usage Case

· The maintainer of a remote MCP server wants to make it effortless for new users to connect. They integrate the library into the server's README.md on GitHub. Visitors now see an install button for their MCP client, click it, and are guided through the exact steps to connect, bypassing complex setup.

· A developer hosting a custom MCP-based application needs users to connect to their specific remote instance. They embed a generated link on their project's website. When a user clicks the link, they are presented with clear, step-by-step instructions, perhaps even downloading a pre-configured file, allowing them to connect without any manual fiddling.

· A community manager for an MCP server wants to provide quick support. They use the library to generate a quick link they can share in chat or forums. This link immediately directs users to the precise instructions needed, resolving connection issues much faster.

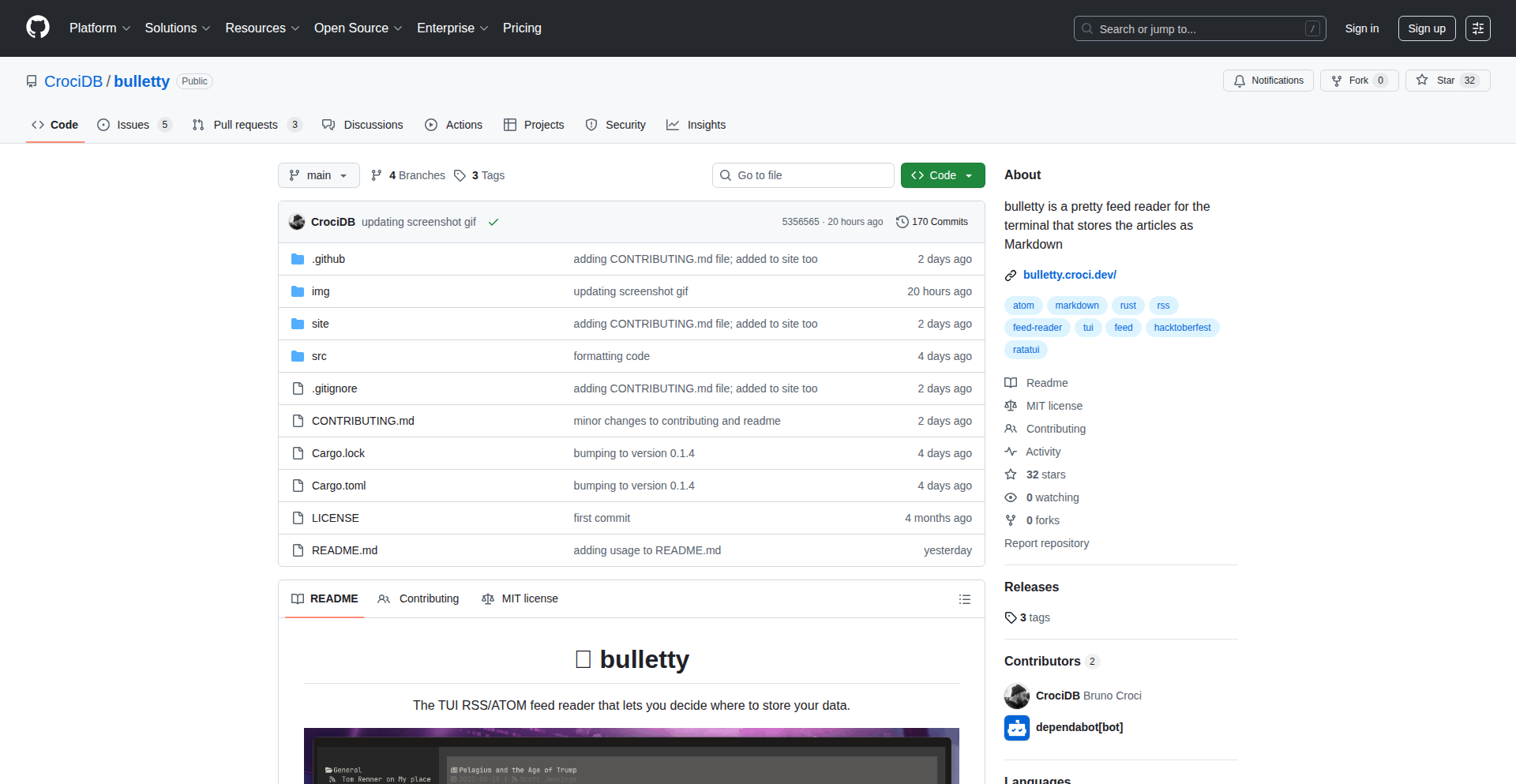

8

Ruminate AI-Reader

Author

rshanreddy

Description

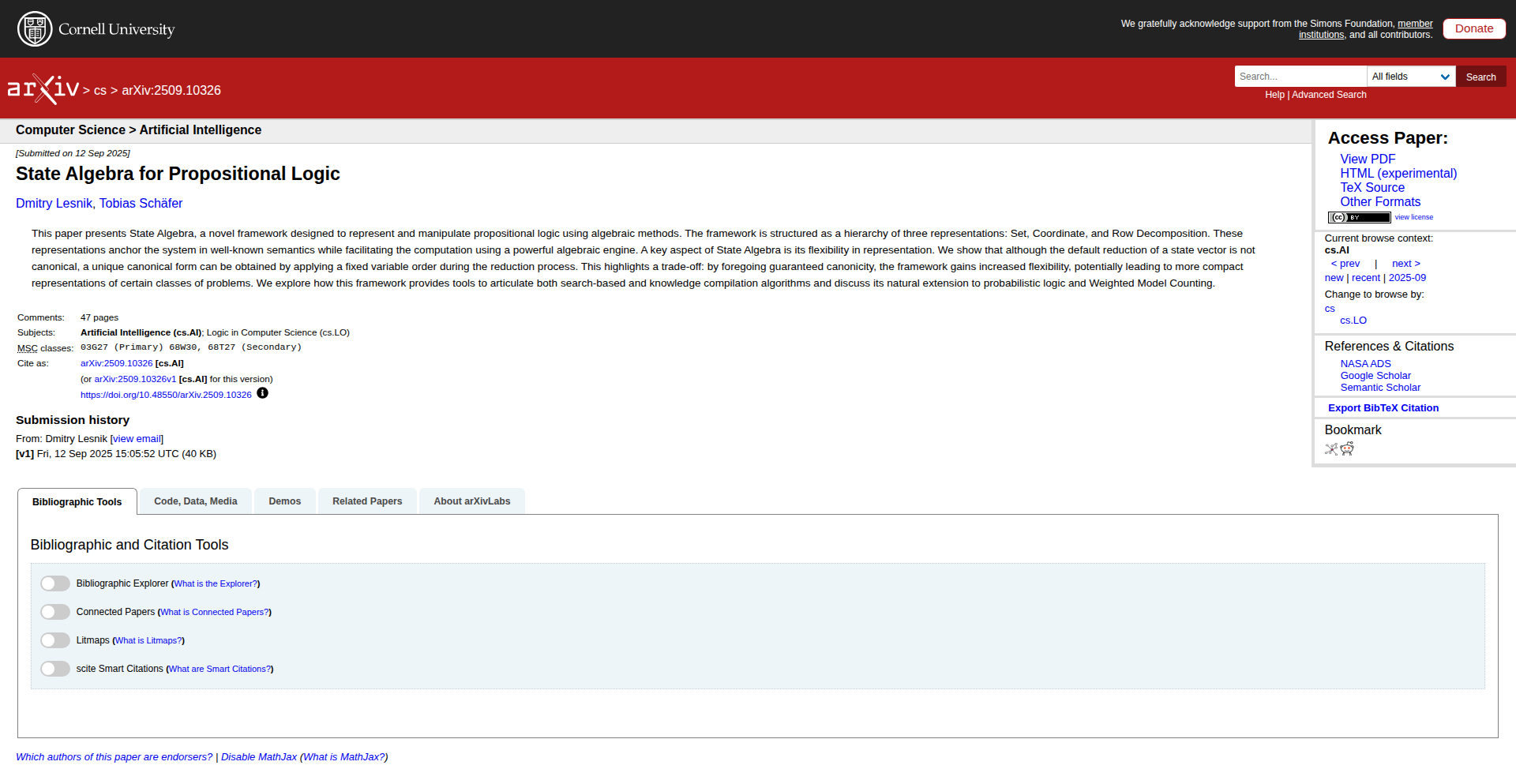

Ruminate is an AI-powered reading tool designed to help users understand complex texts like research papers, novels, and long articles. It addresses the frustration of fragmented research workflows by providing a unified interface where users can read documents, interact with an LLM to ask questions and get definitions, and save notes and annotations in one place, eliminating the need for constant tab switching. This innovative approach leverages LLM context awareness and web search capabilities to deepen comprehension and streamline the learning process.

Popularity

Points 15

Comments 3

What is this product?

Ruminate is a sophisticated AI reading companion that transforms how you interact with challenging content. It ingests various document formats, including PDFs, EPUBs, and web articles (using headless browser automation for web content). Its core innovation lies in its ability to maintain a comprehensive understanding of the entire document, your reading progress, and even perform web searches to enrich answers. When you highlight text, Ruminate allows you to instantly ask questions, request definitions, or engage in discussions with an LLM that has access to all this context. The tool also meticulously saves your notes, definitions, and annotations, creating a personal knowledge base accessible through dedicated tabs. This means you get a cohesive, contextualized learning experience, making it easier to absorb and retain information from dense material.
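One plausible way to give the LLM "all this context" is to build a prompt from the document, the highlighted passage, and the reader's saved notes. The sketch below is a hypothetical illustration, not Ruminate's implementation. When the document fits within a character budget (`max_chars`, an assumed parameter), the prompt includes all of it. Otherwise it falls back to a window of roughly that size centered on the highlight.

```python
from __future__ import annotations


def document_context(document: str, start: int, end: int,
                     max_chars: int = 4000) -> str:
    """Return the whole document if it fits, else a window around [start, end)."""
    if len(document) <= max_chars:
        return document
    half = max_chars // 2
    lo = max(0, start - half)
    hi = min(len(document), end + half)
    return document[lo:hi]


def build_prompt(document: str, start: int, end: int, question: str,
                 notes: list[str] | None = None, max_chars: int = 4000) -> str:
    """Combine document context, the highlight, prior notes, and the question."""
    parts = [
        "You are helping a reader understand a document.",
        "Document context:\n" + document_context(document, start, end, max_chars),
        "Highlighted text:\n" + document[start:end],
    ]
    if notes:
        parts.append("Reader's earlier notes:\n" + "\n".join(f"- {n}" for n in notes))
    parts.append("Question: " + question)
    return "\n\n".join(parts)


paper = "Transformers use self-attention. " * 3
start = paper.index("self-attention")
prompt = build_prompt(paper, start, start + len("self-attention"),
                      "What does this term mean?", notes=["Read section 2 first."])
print(prompt)
```

Sending the resulting prompt to a model, and adding web search results as another section, are left out here.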

How to use it?

Developers can use Ruminate by uploading their research papers, technical documentation, or any lengthy text they need to understand. For web articles, they can simply provide the URL. By highlighting specific sections or terms, they can ask clarifying questions about the content, request definitions of technical jargon, or even brainstorm ideas related to the material directly within the Ruminate interface. The ability to save annotations and notes means developers can build a repository of their learning, reference key insights, and revisit complex topics efficiently. For example, a developer studying a new framework can upload its documentation, highlight a confusing API call, and ask Ruminate for a simpler explanation or an example usage, with the AI considering the entire documentation context.

Product Core Function

· Document Ingestion (PDF, EPUB, Web Articles): Allows users to seamlessly upload and read various digital text formats, providing a centralized content hub for study. This saves time compared to managing multiple file types and readers, making it easier to get started with any material.

· Context-Aware LLM Interaction: Enables users to highlight text and interact with an AI that understands the entire document and reading history. This means questions are answered with richer, more relevant context, leading to deeper comprehension than generic AI queries.

· Integrated Note-Taking and Annotation: Provides a dedicated space to save notes, definitions, and highlights, creating a personalized learning artifact. This structured approach helps users organize their thoughts, track key information, and easily review their learning process later.

· Web Search Augmentation: Empowers the LLM to perform web searches to provide more comprehensive answers, bridging knowledge gaps and offering broader perspectives. This feature enhances the AI's ability to explain complex concepts by drawing on external information when needed.

· Tab-less Unified Interface: Offers a single, focused environment for reading and interacting with content, eliminating distractions from multiple open tabs. This design promotes immersive learning and reduces cognitive load, allowing users to stay focused on the material.

Product Usage Case

· A software engineer researching a new database technology can upload the official documentation. By highlighting a complex query syntax, they can ask Ruminate for a plain-language explanation and an example of how to achieve a specific task, with the AI referencing the entire documentation context to provide a precise answer.

· A PhD student working on a thesis can upload multiple research papers. When encountering a dense methodological section, they can highlight key terms and ask Ruminate for definitions or a summary of the approach discussed in that specific paper and across others they've uploaded, consolidating their understanding.

· A reader working through a lengthy novel can upload the ebook. When they encounter an unfamiliar literary device or character detail, they can highlight it and ask Ruminate for context or its significance, enriching their reading experience without breaking immersion.

· A product manager analyzing user feedback reports can upload long articles summarizing survey results. They can highlight critical insights or recurring themes and ask Ruminate to extract key takeaways or identify potential action items, streamlining the analysis process.

9

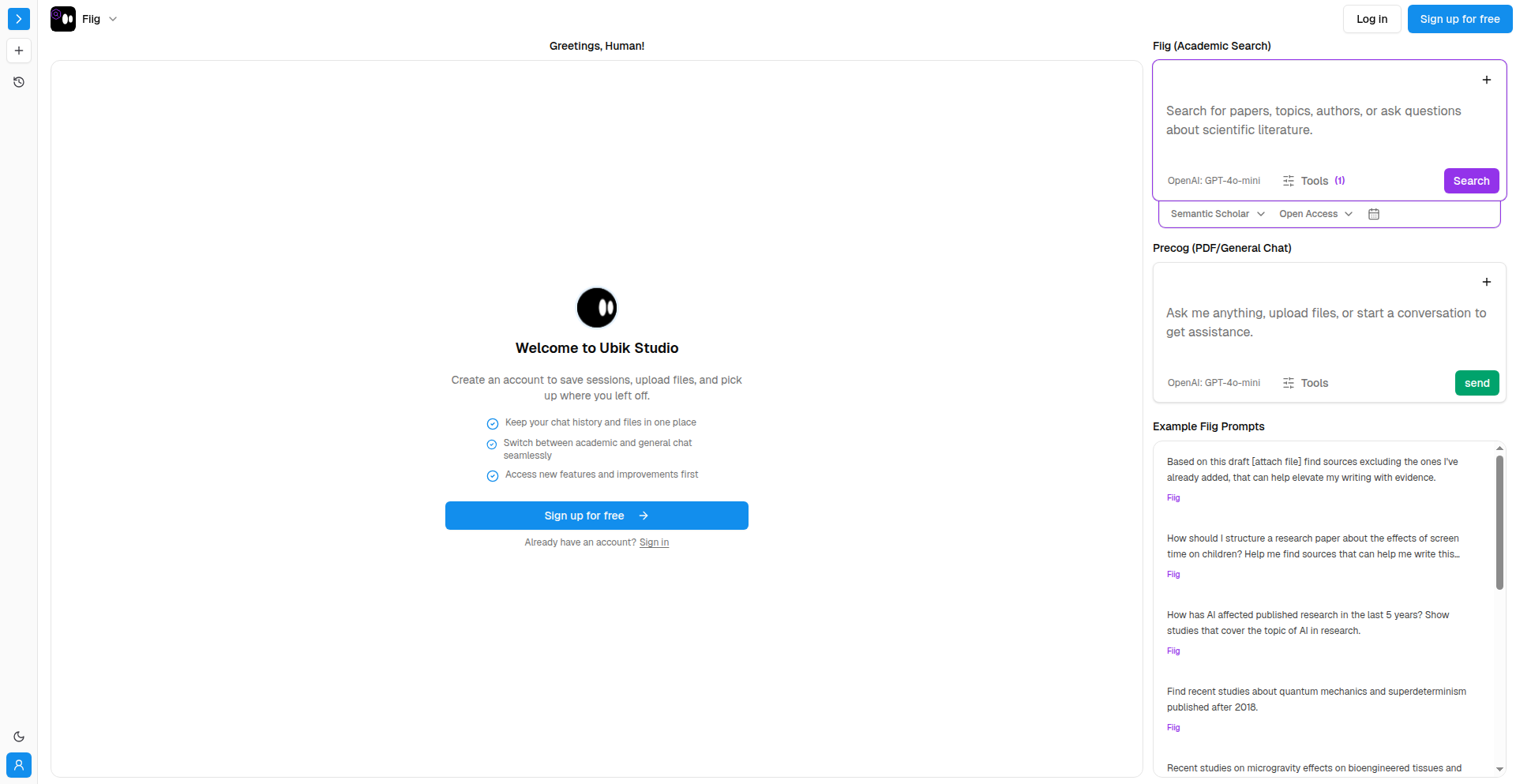

Blocks: AI-Native Workflow Builder

Author

shelly_

Description

Blocks is an AI platform that empowers anyone to create custom work applications and AI agents in minutes without writing any code. It addresses the common challenge of off-the-shelf software not fitting specific needs and the high cost of custom development. Blocks offers a unique third option by allowing users to simply describe their requirements in plain language, and an AI builder constructs the app, including a user interface, AI agents for automation, and a built-in database. This approach democratizes software creation, enabling those who identify problems to build the solutions themselves, fostering efficiency and adaptability in workflows. It integrates with popular tools like Google Sheets, Slack, and HubSpot.

Popularity

Points 11

Comments 3

What is this product?

Blocks is an AI-native platform designed to let anyone build custom work applications and AI agents quickly, without needing to code. The core innovation lies in its ability to translate natural language descriptions into functional software. It operates on three interconnected layers: customizable User Interfaces (UI) tailored for different roles, AI agents that automate tasks and interact with other systems, and an integrated database for data management. By unifying these elements, Blocks provides more than just a tool; it delivers an adaptive system that can automate and improve over time. The AI builder, named Ella, handles the creation of the UI, agents, and database based on user input, allowing for subsequent editing and integration with existing services.

How to use it?

Developers and non-technical users can leverage Blocks by visiting the platform and describing the desired application or AI agent using plain language. For instance, you could say, 'Create an app to track customer support tickets, assign them to agents, and send follow-up reminders.' The AI builder then generates the application. From there, users can refine the UI, configure the AI agents' behavior (e.g., 'automatically escalate tickets older than 24 hours'), and connect it to their existing tools like Google Sheets for data import/export, Slack for notifications, or HubSpot for CRM integration. This makes it easy to build bespoke solutions for specific business processes or automate repetitive tasks without a steep learning curve.
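The example agent rule above ("automatically escalate tickets older than 24 hours") boils down to a small piece of scheduled logic. This hypothetical sketch shows what such a rule might look like if written by hand. It is not code generated by Blocks, and the `Ticket` fields are assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Ticket:
    id: int
    opened_at: datetime
    status: str = "open"      # hypothetical states: "open" or "closed"
    escalated: bool = False


def escalate_stale(tickets: list[Ticket], now: datetime,
                   max_age: timedelta = timedelta(hours=24)) -> list[int]:
    """Mark open tickets older than max_age as escalated; return their ids.

    Already-escalated tickets are skipped, so running the rule on a
    schedule never escalates the same ticket twice.
    """
    newly_escalated = []
    for ticket in tickets:
        if (ticket.status == "open" and not ticket.escalated
                and now - ticket.opened_at > max_age):
            ticket.escalated = True
            newly_escalated.append(ticket.id)
    return newly_escalated


now = datetime(2025, 9, 15, 12, 0)
tickets = [
    Ticket(1, now - timedelta(hours=25)),                   # stale: escalate
    Ticket(2, now - timedelta(hours=1)),                    # still fresh
    Ticket(3, now - timedelta(hours=48), status="closed"),  # closed: ignore
]
print(escalate_stale(tickets, now))  # → [1]
```

The returned ids are what an integration step would act on, for example by posting a Slack notification for each newly escalated ticket.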

Product Core Function

· Natural Language to App Generation: Enables users to describe their needs in plain English, and the AI automatically builds the application. This democratizes software creation, allowing anyone to solve their problems with custom tools.

· AI Agent Automation: Allows the creation of intelligent agents that can perform tasks, integrate with external systems, and take actions. This automates repetitive work and streamlines complex processes, saving time and reducing errors.

· Customizable UI Design: Provides the ability to create user interfaces tailored to specific roles and workflows. This ensures that the application is intuitive and efficient for the end-users, improving productivity.

· Integrated Data Management: Includes a built-in database to store and manage application data. This consolidates information, making it easier to access, analyze, and utilize for business insights.

· Third-Party Integrations: Supports connections with popular services like Google Sheets, Slack, and HubSpot, allowing for seamless data flow and enhanced functionality. This extends the utility of the custom apps by leveraging existing tools and data sources.

Product Usage Case

· A sales team could build a 'Lead Qualification Tracker' app where they input lead information, and an AI agent automatically assigns follow-up tasks and schedules reminders based on lead status, improving conversion rates.

· A customer support department could create a 'Ticket Management System' that categorizes incoming requests, routes them to the appropriate team member, and generates automated responses for common queries, enhancing response times and customer satisfaction.

· An operations manager might build an 'Inventory Monitoring Tool' that pulls data from a Google Sheet, alerts when stock levels are low, and generates a reorder request, preventing stockouts and optimizing supply chain efficiency.

· A marketing team could develop a 'Campaign Performance Dashboard' that pulls data from various sources, allowing them to visualize key metrics and identify trends at a glance, leading to more data-driven campaign optimization.

10

LLM Quote Vault

Author

jcoulaud

Description

LLM Quote Vault is a lightweight, open-source web application built with Next.js and PostgreSQL, deployed on Vercel. It provides a simple platform for users to collect, share, and discover amusing or peculiar outputs from Large Language Models (LLMs) like ChatGPT and Claude. The innovation lies in its direct, no-login submission of LLM snippets, combined with community voting and manual spam moderation, creating a curated repository of AI's unexpected moments. This addresses the common problem of losing interesting AI-generated text in chat histories and offers a centralized, discoverable space for these unique interactions.

Popularity

Points 5

Comments 4

What is this product?

LLM Quote Vault is a web application designed to capture and showcase interesting, funny, or strange outputs generated by Large Language Models (LLMs). The core technical innovation is its streamlined submission process, allowing users to submit LLM quotes without requiring an account. It uses a Next.js frontend for a responsive user experience and a PostgreSQL backend to store the submitted quotes, author information (optionally a Twitter handle), and voting data. The system is deployed on Vercel, enabling efficient scaling and management. The platform also features upvoting and favoriting mechanisms to highlight popular content, with a manual moderation process to maintain quality. The value is in creating a dedicated, accessible archive for the often surprising and humorous side of AI interactions, making it easy to save, share, and browse these AI quirks.
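The sketch below shows how no-login submission, one-upvote-per-voter, and manual moderation might map onto tables. It is a hypothetical schema, not the project's actual one. SQLite (via Python's `sqlite3`) stands in for PostgreSQL so the example runs anywhere. In PostgreSQL, `INSERT ... ON CONFLICT DO NOTHING` would replace SQLite's `INSERT OR IGNORE`.

```python
import sqlite3

# Hypothetical schema; SQLite stands in for PostgreSQL.
SCHEMA = """
CREATE TABLE quotes (
    id INTEGER PRIMARY KEY,
    body TEXT NOT NULL,
    twitter_handle TEXT,                  -- optional, no account required
    approved INTEGER NOT NULL DEFAULT 0   -- flipped by a human moderator
);
CREATE TABLE votes (
    quote_id INTEGER NOT NULL REFERENCES quotes(id),
    voter TEXT NOT NULL,                  -- e.g. an anonymous browser token
    PRIMARY KEY (quote_id, voter)         -- at most one upvote per voter
);
"""


def connect() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


def submit(conn, body, twitter_handle=None):
    """Store a quote; it stays hidden until a moderator approves it."""
    cur = conn.execute(
        "INSERT INTO quotes (body, twitter_handle) VALUES (?, ?)",
        (body, twitter_handle))
    return cur.lastrowid


def approve(conn, quote_id):
    conn.execute("UPDATE quotes SET approved = 1 WHERE id = ?", (quote_id,))


def upvote(conn, quote_id, voter):
    # A repeat vote hits the primary key and is silently ignored.
    conn.execute("INSERT OR IGNORE INTO votes (quote_id, voter) VALUES (?, ?)",
                 (quote_id, voter))


def top_quotes(conn, limit=10):
    """Approved quotes ordered by vote count, oldest first on ties."""
    return conn.execute(
        """
        SELECT q.id, q.body, COUNT(v.voter) AS score
        FROM quotes AS q
        LEFT JOIN votes AS v ON v.quote_id = q.id
        WHERE q.approved = 1
        GROUP BY q.id, q.body
        ORDER BY score DESC, q.id ASC
        LIMIT ?
        """, (limit,)).fetchall()
```

A favorites table would follow the same pattern as `votes`.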

How to use it?

Developers can use LLM Quote Vault as a template or inspiration for building similar content-sharing platforms. The open-source nature allows for direct contribution and modification. For end-users, the process is simple: visit the LLM Quotes website (llmquotes.com). To share an interesting LLM output, you can directly submit it via the provided form. You have the option to include your Twitter handle if you wish. Once submitted, other users can browse, upvote, and favorite your contribution. This provides a direct way to centralize and share amusing AI conversations without the hassle of managing your own infrastructure.

Product Core Function

· Quote Submission: Allows users to submit LLM-generated text snippets without the need for account creation. This simplifies the process for immediate sharing of interesting AI outputs, directly solving the problem of losing good quotes in chat logs.

· Upvoting and Favoriting: Enables community-driven curation by allowing users to upvote and favorite their preferred LLM outputs. This helps surface the most engaging content and provides social validation for submissions.

· Manual Spam Moderation: Implements a manual moderation system to filter out spam or inappropriate content. This ensures the quality and relevance of the showcased LLM quotes, making the platform a more pleasant experience for all users.

· Optional Twitter Handle Integration: Provides the option to associate a Twitter handle with submissions. This allows users to gain recognition for their shared content and connect with others interested in LLM outputs.

· Open Source Accessibility: The project is open-source, meaning its code is publicly available for inspection, modification, and contribution. This fosters collaboration and allows developers to learn from and build upon the project's codebase.

Product Usage Case

· A user encounters a particularly bizarre or funny response from a chatbot and wants to share it with friends. Instead of taking a screenshot and sending it individually, they can submit it to LLM Quote Vault, where it can be seen and appreciated by a wider audience interested in AI quirks.

· A content creator who frequently uses LLMs for brainstorming or creative writing can use LLM Quote Vault to collect and showcase the most unusual or insightful outputs they discover. This can serve as a portfolio of their prompt engineering skills or simply as entertaining content for their followers.

· A developer experimenting with different LLM models or fine-tuning techniques might use LLM Quote Vault to document and share surprising emergent behaviors or unexpected results from their experiments. This can contribute to the collective understanding of LLM capabilities and limitations.

· Someone looking for a good laugh or interesting AI insights can browse LLM Quote Vault to discover a curated collection of humorous and thought-provoking LLM outputs, providing entertainment and inspiration.

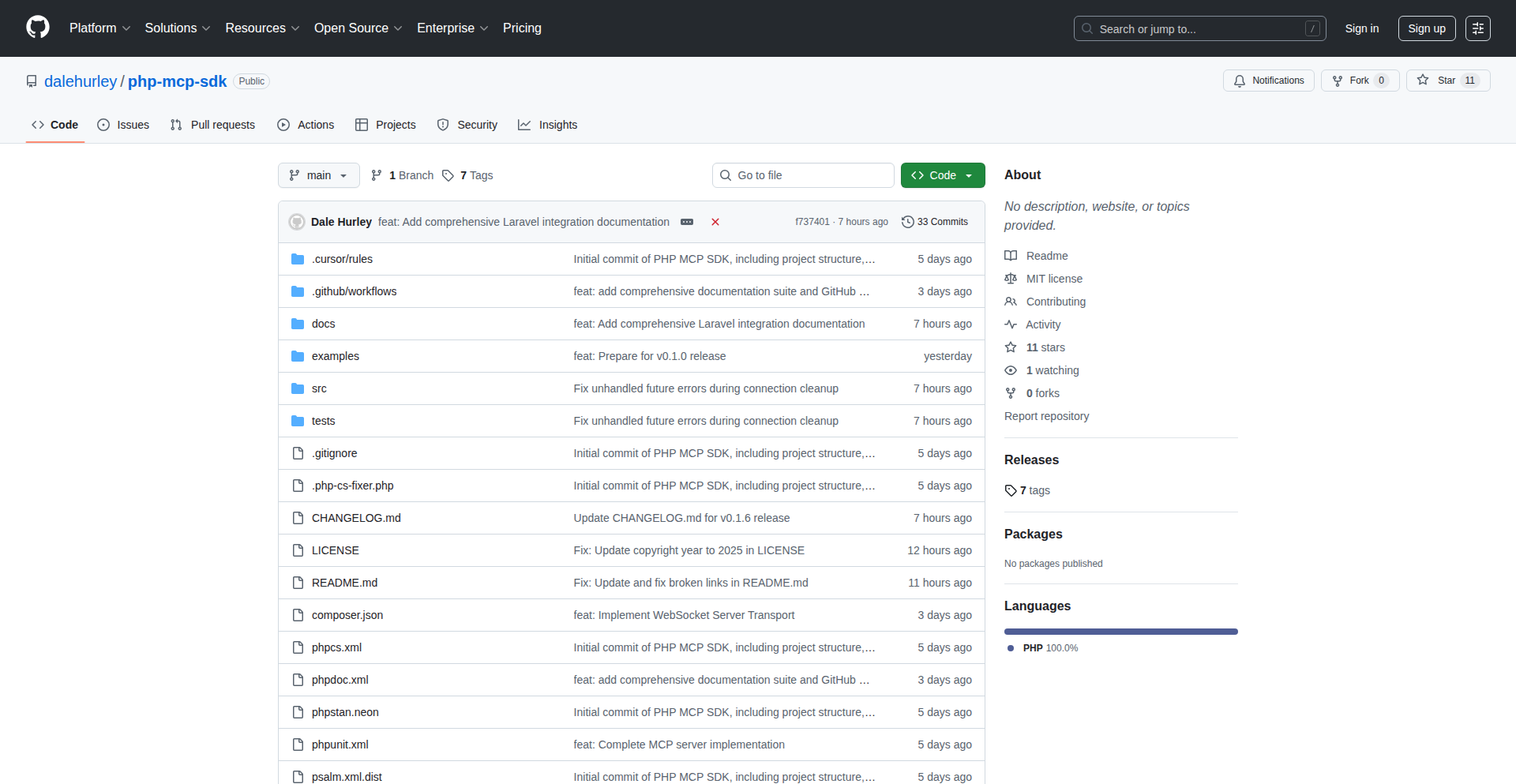

11

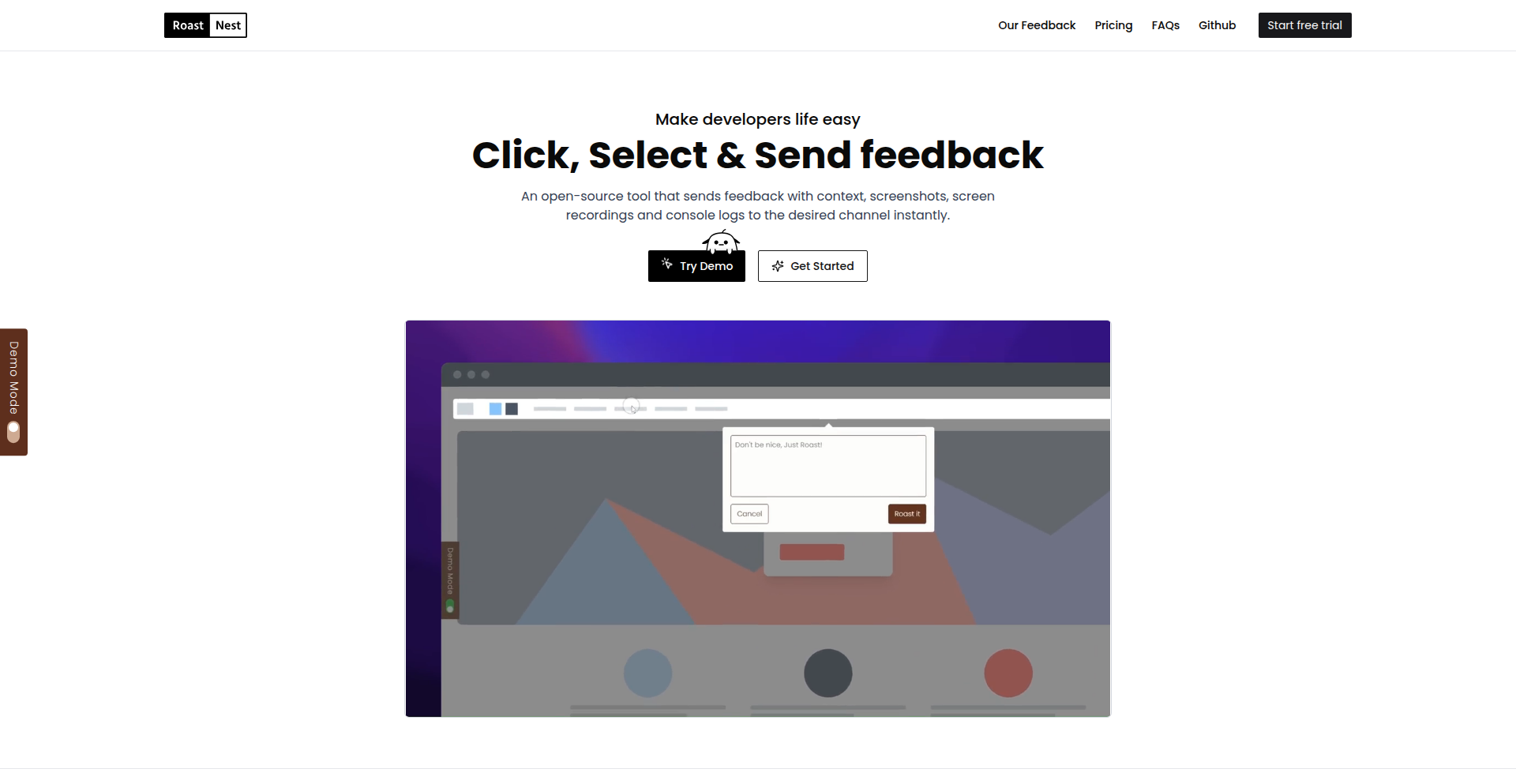

React Roast: Contextual Feedback SDK

Author

satyamskillz

Description

React Roast is an open-source Software Development Kit (SDK) designed to streamline the process of collecting user feedback for websites. It allows users to provide feedback directly on web pages, with the SDK automatically capturing essential context like screenshots, logs, and metadata. This eliminates the need for developers to build custom feedback systems and provides them with the rich information needed to quickly understand and address issues. The project aims to automate the entire feedback loop, from collection and user rewards to follow-up and even solution suggestions.

Popularity

Points 6

Comments 2

What is this product?

React Roast is a lightweight JavaScript SDK that integrates into your website to enable a more efficient and context-rich user feedback mechanism. Unlike traditional forms, users can highlight specific elements on a page and attach their comments. The SDK automatically captures crucial developer-oriented information such as console logs, browser metadata (like user agent and screen resolution), and a screenshot of the page at the time of feedback. This comprehensive data capture, coupled with the ability to send instant notifications to platforms like Slack or Discord, significantly reduces the time developers spend debugging and understanding reported issues. The innovation lies in its proactive data collection and direct integration into the user interface, making feedback frictionless for users and highly actionable for developers.
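The sketch below shows the kind of context bundle described above and how it might become an instant notification. It is written in Python for consistency with the other examples here and is not React Roast's API. `FeedbackReport` and its fields are hypothetical. The only external format it relies on is Slack's incoming-webhook JSON body, which carries the message in a `text` field. A Discord webhook expects a `content` field instead.

```python
from __future__ import annotations

import json
from dataclasses import dataclass, field


@dataclass
class FeedbackReport:
    """Hypothetical shape of one piece of feedback and its captured context."""
    page_url: str
    selector: str                      # CSS selector of the highlighted element
    comment: str
    user_agent: str
    screen: tuple[int, int]            # (width, height) in CSS pixels
    console_logs: list[str] = field(default_factory=list)
    screenshot_url: str | None = None  # where an uploaded screenshot lives


def to_slack_payload(report: FeedbackReport, max_logs: int = 5) -> str:
    """Format a report as a Slack incoming-webhook JSON body."""
    width, height = report.screen
    lines = [
        f"New feedback on {report.page_url}",
        f"> {report.comment}",
        f"Element: `{report.selector}`",
        f"Browser: {report.user_agent} ({width}x{height})",
    ]
    if report.console_logs:
        lines.append("Recent console output:")
        # Keep only the latest entries so the message stays readable.
        lines.extend(f"- {entry}" for entry in report.console_logs[-max_logs:])
    if report.screenshot_url:
        lines.append(f"Screenshot: {report.screenshot_url}")
    return json.dumps({"text": "\n".join(lines)})
```

Posting the returned string to a webhook URL is omitted, since that URL is specific to each workspace.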

How to use it?

Developers can integrate React Roast into their React applications by installing the SDK via npm or yarn and initializing it within their application's root component. The SDK provides a configurable widget that users interact with to submit feedback. Usage scenarios include beta testing, bug reporting during development, gathering user suggestions for new features, or even for customer support. Developers can customize the appearance of the feedback widget and configure notification channels (e.g., Slack, Discord) to receive alerts in real-time. The self-hostable nature offers flexibility and control over data privacy. For example, a developer working on an e-commerce site could easily add React Roast to allow users to report issues with specific product pages, providing developers with immediate context to fix bugs.

Product Core Function

· Element selection for feedback: Allows users to pinpoint specific UI elements, providing developers with precise location context for reported issues, which speeds up debugging.

· Automatic metadata and log capture: Captures browser logs, user agent, screen resolution, and other relevant data automatically, giving developers a complete picture of the user's environment when the feedback was submitted.

· Screenshot capture: Automatically takes a screenshot of the page when feedback is submitted, offering visual confirmation of the issue and enhancing clarity for developers.

· Instant notifications: Sends immediate alerts to designated channels like Slack or Discord, enabling rapid response from development teams to user feedback.

· User reward system: Facilitates rewarding users for providing feedback, which can boost user engagement and conversion rates by incentivizing participation.

· User tracking links: Provides users with a link to track the status of their feedback, building accountability and trust between the user and the development team.

· Self-hostable and customizable widget: Offers developers the flexibility to host the feedback widget on their own infrastructure and customize its appearance to match their brand, ensuring data privacy and a consistent user experience.

Product Usage Case

· A startup testing a new web application can use React Roast to collect bug reports from beta testers. Testers can highlight specific interface elements that are not working as expected and attach console errors, allowing developers to quickly identify and fix the bugs before public release.

· An e-commerce platform can integrate React Roast to allow customers to report issues with product pages, such as incorrect pricing or missing images. The automatic screenshot and metadata capture will provide the support team with all the necessary information to resolve customer queries efficiently.

· A SaaS company developing a project management tool can use React Roast to gather user feedback on new features during internal testing. Users can point out usability issues or suggest improvements directly on the feature's UI, with all relevant context sent to the product team for iteration.

· A developer building a portfolio website can use React Roast to receive feedback on the design and functionality from potential clients or peers. This helps in refining the presentation and ensuring a smooth user experience.

12

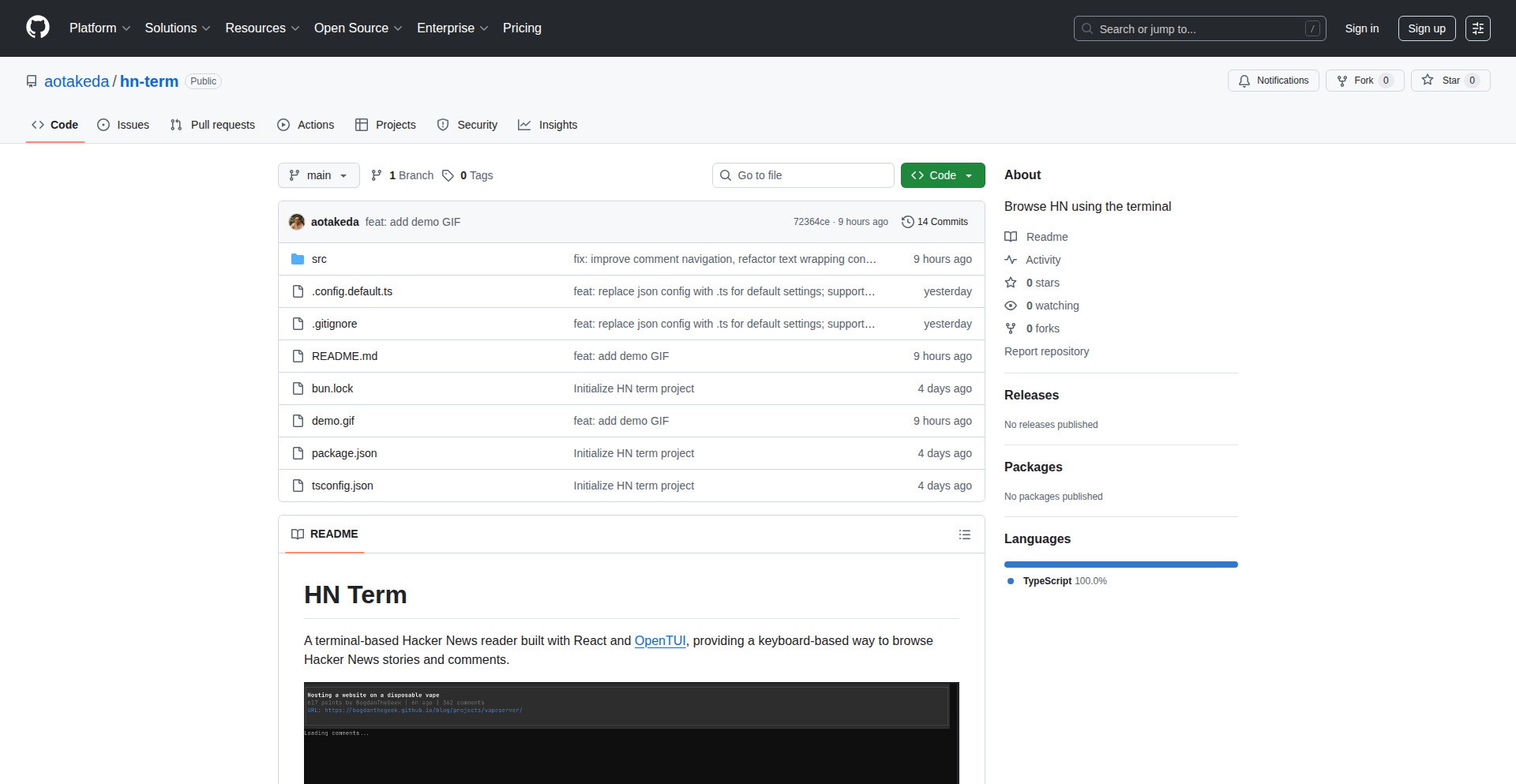

HN Term

Author

arthurtakeda

Description

A terminal-based interface for browsing Hacker News that lets users navigate posts, comments, and sections such as 'top', 'new', 'ask', and 'jobs' using only keyboard shortcuts. It also supports expanding and hiding replies and opening external links straight from the terminal. The core innovation is bringing a rich web experience into a text-only environment, boosting productivity for developers who spend much of their time on the command line.

Popularity

Points 8

Comments 0

What is this product?

HN Term is a command-line application that provides a full Hacker News browsing experience without needing a web browser. It leverages the Hacker News API to fetch data and presents it in a structured, interactive format within your terminal. The innovation is in using a terminal UI framework (OpenTUI) with React and Bun to create a responsive and customizable experience. This means you can get the latest tech news and discussions without context switching away from your coding environment. So, what's in it for you? You can stay updated on tech trends and valuable insights directly from your terminal, streamlining your workflow.
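The public Hacker News API needs no key. It exposes one JSON endpoint per section, which returns a list of item ids, plus an `item` endpoint for each story or comment. The sketch below shows that data flow in Python, whereas HN Term itself is built with Bun. The helper names are hypothetical; the URLs are the API's documented endpoints.

```python
import json
import urllib.request

# Official Hacker News API (hosted on Firebase); no authentication needed.
BASE_URL = "https://hacker-news.firebaseio.com/v0"
FEEDS = {
    "top": "topstories",
    "new": "newstories",
    "ask": "askstories",
    "show": "showstories",
    "jobs": "jobstories",
}


def feed_url(feed: str) -> str:
    """URL returning a JSON array of item ids for one section."""
    return f"{BASE_URL}/{FEEDS[feed]}.json"


def item_url(item_id: int) -> str:
    """URL returning one story, comment, or job as JSON."""
    return f"{BASE_URL}/item/{item_id}.json"


def fetch_json(url: str, timeout: float = 10.0):
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.load(response)


def fetch_stories(feed: str, limit: int = 10) -> list:
    """Fetch the first `limit` items of a section, one request per item."""
    ids = fetch_json(feed_url(feed))[:limit]
    return [fetch_json(item_url(item_id)) for item_id in ids]
```

For example, `fetch_stories("show", limit=5)` would return the first five Show HN items as dictionaries. Fields include `title`, `url`, `score`, and `kids` (the ids of top-level comments), which a client then fetches the same way.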

How to use it?

Developers can install HN Term, likely via a package manager or by running the Bun application directly. Once installed, they can launch it from their terminal by typing a command (e.g., 'hn-term'). Inside the application, they'll use predefined keyboard shortcuts to navigate between stories, view comments, switch between different Hacker News feeds (like 'top', 'new', 'ask', 'show', 'jobs'), and open links in their default browser. Customization of key bindings and color themes is also a key feature, allowing users to tailor the experience to their preferences. This makes it easy to integrate into your daily development routine, keeping you informed without breaking your focus.

Product Core Function

· Terminal-based navigation of Hacker News: Browse posts, comments, and different categories using only keyboard commands. This saves you from opening a web browser, keeping your coding environment uninterrupted.

· Expand/hide comment threads: Manage the complexity of discussions by collapsing or expanding replies, making it easier to follow conversations. This helps you quickly digest information and find the most relevant points.

· Open external links: Seamlessly open links to articles, projects, or other resources in your default web browser without leaving the terminal. This allows for quick access to further information without losing your place.

· Customizable key bindings and themes: Personalize the application's controls and appearance to match your workflow and aesthetic preferences. This ensures the tool feels natural and efficient for your specific needs.

· Support for all HN sections (top, new, ask, show, jobs): Access the full spectrum of Hacker News content directly from your terminal. This provides a comprehensive view of the tech landscape and opportunities.
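Expanding and hiding threads is essentially a tree walk that stops at collapsed nodes. The sketch below is a hypothetical illustration of that idea, not HN Term's code. It shows a collapsed comment with a count of its hidden replies, and `toggle` is the kind of function a key binding might call.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Comment:
    id: int
    text: str
    kids: list[Comment] = field(default_factory=list)


def count_descendants(comment: Comment) -> int:
    """Number of replies at any depth below this comment."""
    return sum(1 + count_descendants(kid) for kid in comment.kids)


def toggle(collapsed: set[int], comment_id: int) -> None:
    """Flip one thread between expanded and collapsed."""
    if comment_id in collapsed:
        collapsed.remove(comment_id)
    else:
        collapsed.add(comment_id)


def render(comments: list[Comment], collapsed: set[int], depth: int = 0) -> list[str]:
    """Indented lines for display; collapsed threads show a hidden-reply count."""
    lines = []
    for comment in comments:
        indent = "  " * depth
        if comment.id in collapsed and comment.kids:
            lines.append(f"{indent}{comment.text} [+{count_descendants(comment)} hidden]")
        else:
            lines.append(f"{indent}{comment.text}")
            lines.extend(render(comment.kids, collapsed, depth + 1))
    return lines
```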

Product Usage Case

· A backend developer working on a critical feature can quickly check the 'new' Hacker News feed for relevant discussions or new tools without switching applications. This allows them to stay current with industry trends that might inform their work.

· A front-end developer wanting to explore a project linked in a Hacker News discussion can open the link directly from HN Term, see the project details in their browser, and then easily return to reading comments in the terminal.

· A developer who prefers keyboard-driven workflows can customize HN Term's key bindings to align with their existing terminal shortcuts, creating a highly efficient and integrated experience for consuming Hacker News content.

· A developer wanting to discover new job opportunities can browse the 'jobs' section of Hacker News directly in their terminal, filtering and previewing postings efficiently during short breaks.

13

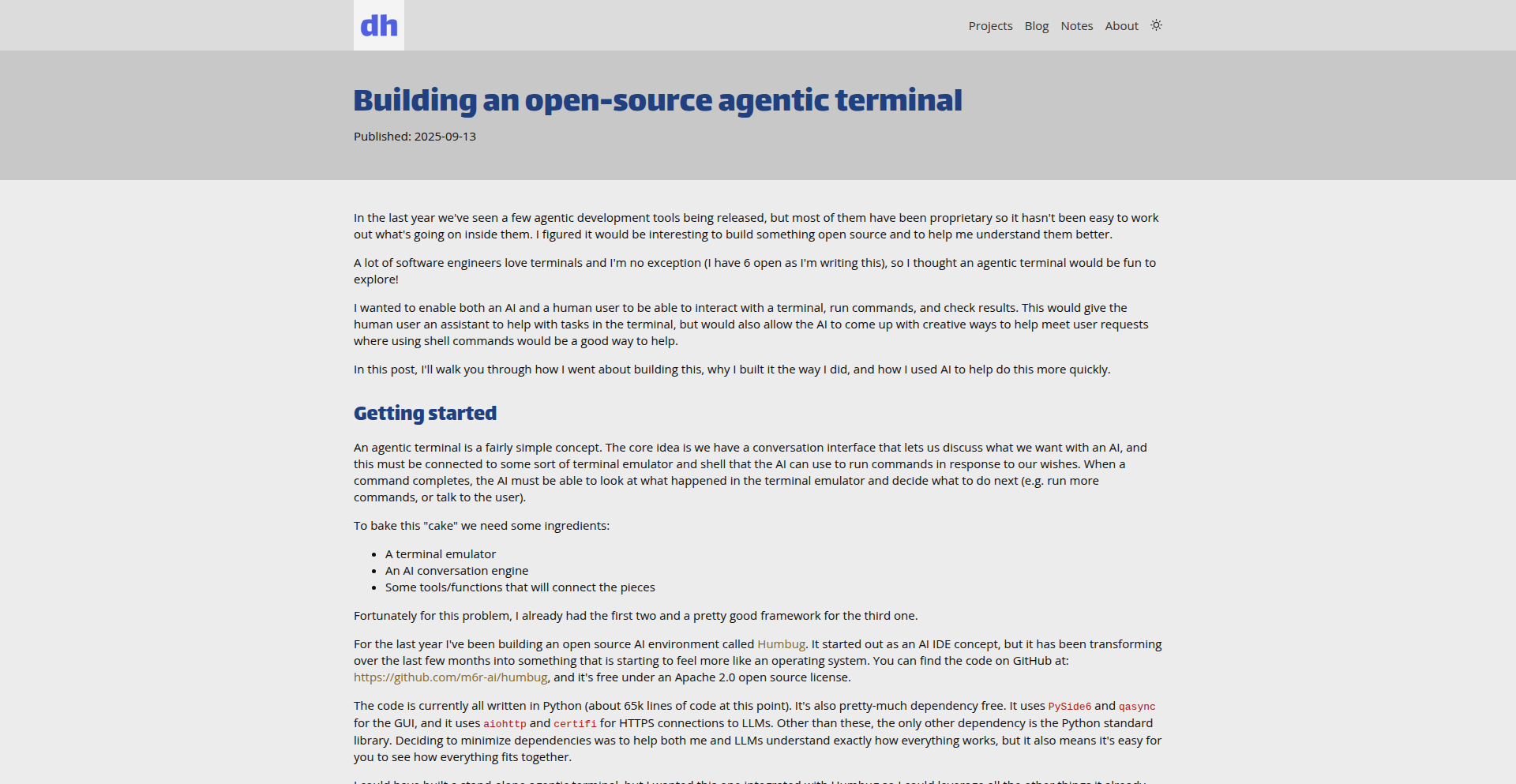

AgenticTerminal

Author

tritondev

Description

An open-source terminal agent that automates complex command-line tasks by understanding natural language instructions. It bridges the gap between human intent and shell execution, offering a more intuitive way to interact with the command line.

Popularity

Points 4

Comments 3

What is this product?

This project is an open-source terminal that acts as an intelligent agent. Instead of remembering exact commands and their syntax, you can describe what you want to achieve in plain English, and the AgenticTerminal translates that into the correct shell commands. Its core innovation lies in leveraging Large Language Models (LLMs) to interpret user intent and generate executable shell commands, making the command line accessible to a broader audience and significantly boosting productivity for experienced users by automating repetitive or complex sequences.

How to use it?

Developers can integrate this terminal into their workflow by installing it as a replacement for their current shell (e.g., bash, zsh). Once installed, they can interact with it by typing natural language prompts like 'find all files modified in the last week and zip them' or 'set up a simple web server to serve the current directory'. The AgenticTerminal then displays the proposed command, allows for confirmation, and executes it. It can also be used programmatically through its API for scripting more complex agentic workflows.
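The propose, confirm, execute cycle described above can be sketched in a few lines. This is a hypothetical illustration, not AgenticTerminal's code or API. The `translate` hook stands in for the LLM call and is assumed to receive the request plus the history of approved commands as context.

```python
from __future__ import annotations

import subprocess
from typing import Callable


def ask_user(command: str) -> bool:
    """Interactive confirmation: only an explicit 'y' runs the command."""
    answer = input(f"Run `{command}`? [y/N] ")
    return answer.strip().lower() == "y"


def run_agent_step(request: str,
                   translate: Callable[[str, list[str]], str],
                   history: list[str],
                   confirm: Callable[[str], bool] = ask_user,
                   ) -> subprocess.CompletedProcess | None:
    """Translate a request, show it, and run it only if the user approves.

    Approved commands are appended to `history` so the translator can use
    earlier steps as context for the next request.
    """
    command = translate(request, history)
    if not confirm(command):
        return None  # rejected: nothing is executed
    history.append(command)
    # shell=True lets generated pipes, globs, and redirects work, which is
    # exactly why the confirmation step above matters.
    return subprocess.run(command, shell=True, capture_output=True, text=True)
```

In practice, `translate` would send the request and history to a model. For "find all files modified in the last week and zip them", it might propose something like `find . -type f -mtime -7 -print | zip week.zip -@`, which the user can inspect before answering y.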

Product Core Function

· Natural Language to Shell Command Translation: Understands human-readable requests and converts them into precise shell commands, reducing cognitive load and command recall errors.

· Command Execution and Confirmation: Safely executes generated commands after user approval, preventing unintended actions and providing transparency.

· Contextual Awareness: Maintains context of previous commands and system state to inform future command generation, allowing for more fluid and sequential task completion.

· Task Automation & Orchestration: Enables the chaining of multiple commands to automate complex workflows, such as setting up development environments or deploying applications.

· Open-Source Extensibility: Built with an open-source philosophy, allowing developers to contribute, customize, and extend its capabilities for specific needs.

Product Usage Case

· Automating CI/CD pipeline setup: Instead of remembering exact `docker build`, `docker push`, and `kubectl apply` commands, a developer can simply type 'build and deploy the latest version of my app to production'. This saves time and reduces errors in a critical process.

· Data analysis and manipulation: A data scientist can ask 'list the top 10 most frequent words in this log file and save the results to a CSV' without needing to recall specific `grep`, `sort`, and `awk` syntax, accelerating the data exploration phase.

· System administration tasks: A system administrator can request 'find all running processes using more than 500MB of memory and restart them' to quickly manage system resources, enhancing operational efficiency.

14

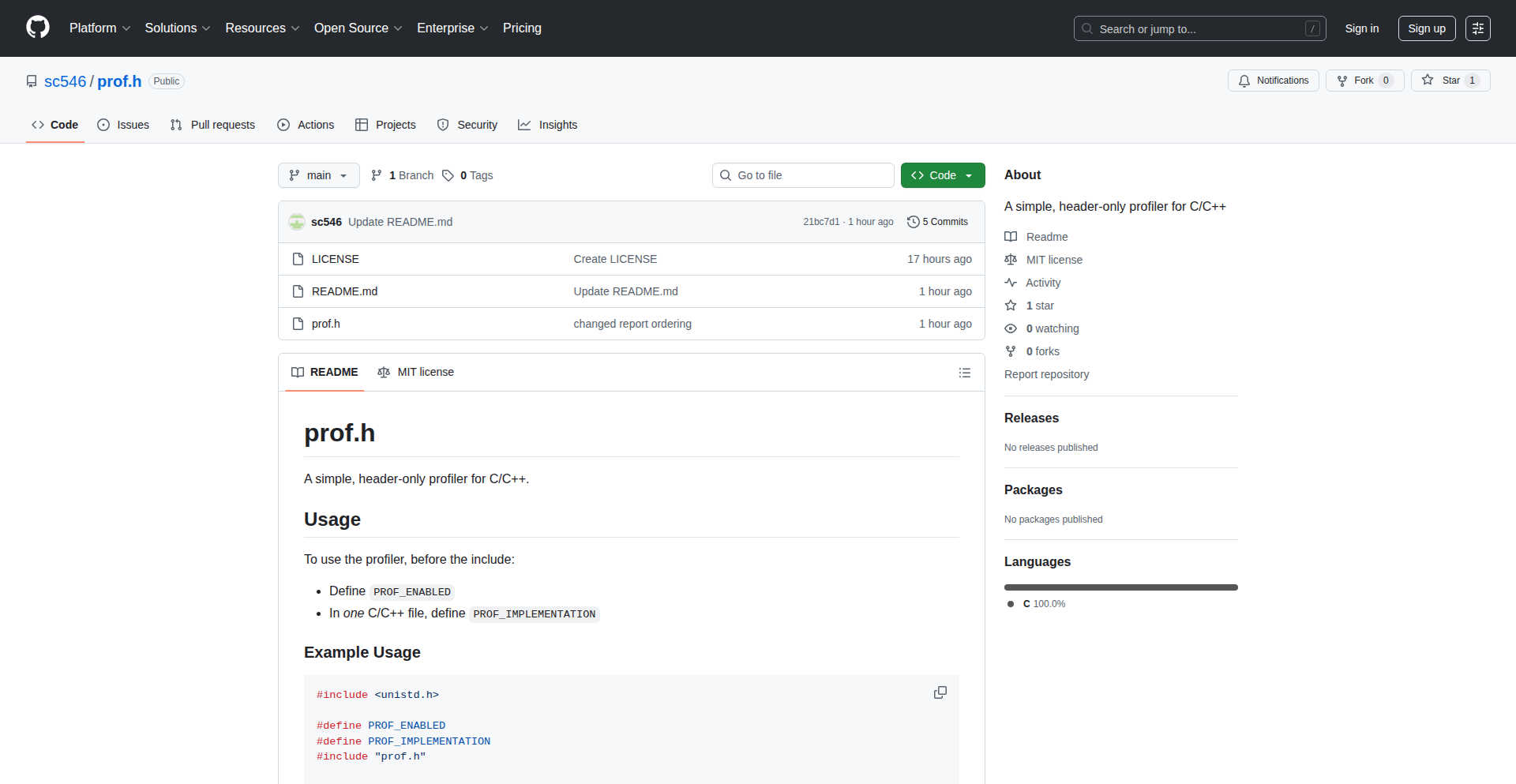

HeaderCppProfiler

Author

sc546

Description

A lightweight, header-only profiler for C/C++ that allows developers to easily measure code execution time without complex setup. It helps identify performance bottlenecks by providing detailed timing information for specific code blocks, leading to more optimized and efficient software.

Popularity

Points 5

Comments 2

What is this product?

This is a C/C++ profiling tool distributed as a single header file. Its core innovation lies in its simplicity and minimal overhead. Instead of requiring separate compilation or linking steps, you simply include the header file in your project. In C++ code, the profiler relies on RAII (Resource Acquisition Is Initialization): a timing object's constructor starts the measurement and its destructor stops it. When execution enters a scope, the timer starts; when execution leaves that scope, the timer stops and records the duration. This makes it easy to integrate and use for performance analysis without disrupting your existing build process. The value proposition is clear: pinpointing slow parts of your code without adding complexity.

How to use it?

Developers can use this profiler by including the provided header file (e.g., `profiler.h`) directly in their C++ source files. They then wrap the code sections they want to profile in scope objects provided by the profiler. For instance, a common pattern would be: `ProfilerScope scope("my_function_section");`. When this line executes, the profiler starts timing. When `scope` goes out of scope (e.g., at the end of a function or block), the profiler automatically records the elapsed time under the name 'my_function_section'. The collected profiling data can then be printed to the console or written to a file for analysis. This makes it ideal for quick performance checks during development or for deep dives into performance-critical sections of applications.

Product Core Function

· Automatic Scope Timing: Measures the duration of code execution within defined scopes using RAII. The value is in instantly knowing how long specific operations take without manual timing code, directly improving development efficiency.

· Header-Only Distribution: Integrated by simply including a single header file. The value is zero build system complexity and minimal dependency management, allowing for rapid adoption.

· Customizable Output: Supports outputting profiling data to the console or a file. The value is flexibility in how performance metrics are consumed and analyzed, catering to different workflow preferences.

· Minimal Overhead: Designed to have a negligible impact on program performance. The value is accurate profiling without significantly altering the behavior of the code being measured, ensuring reliable results.

Product Usage Case

· Measuring the execution time of a database query function to identify potential slowdowns in data retrieval. This helps optimize data access patterns and improve application responsiveness.

· Profiling complex rendering loops in a game engine to pinpoint performance bottlenecks in visual computations. This allows for targeted optimizations to achieve smoother frame rates.

· Analyzing the performance of different algorithms or data structures by timing their execution within specific test cases. This provides empirical evidence for choosing the most efficient solutions.

· Identifying slow network communication operations in a client-server application. This is crucial for improving user experience by reducing latency and ensuring timely data exchange.

15

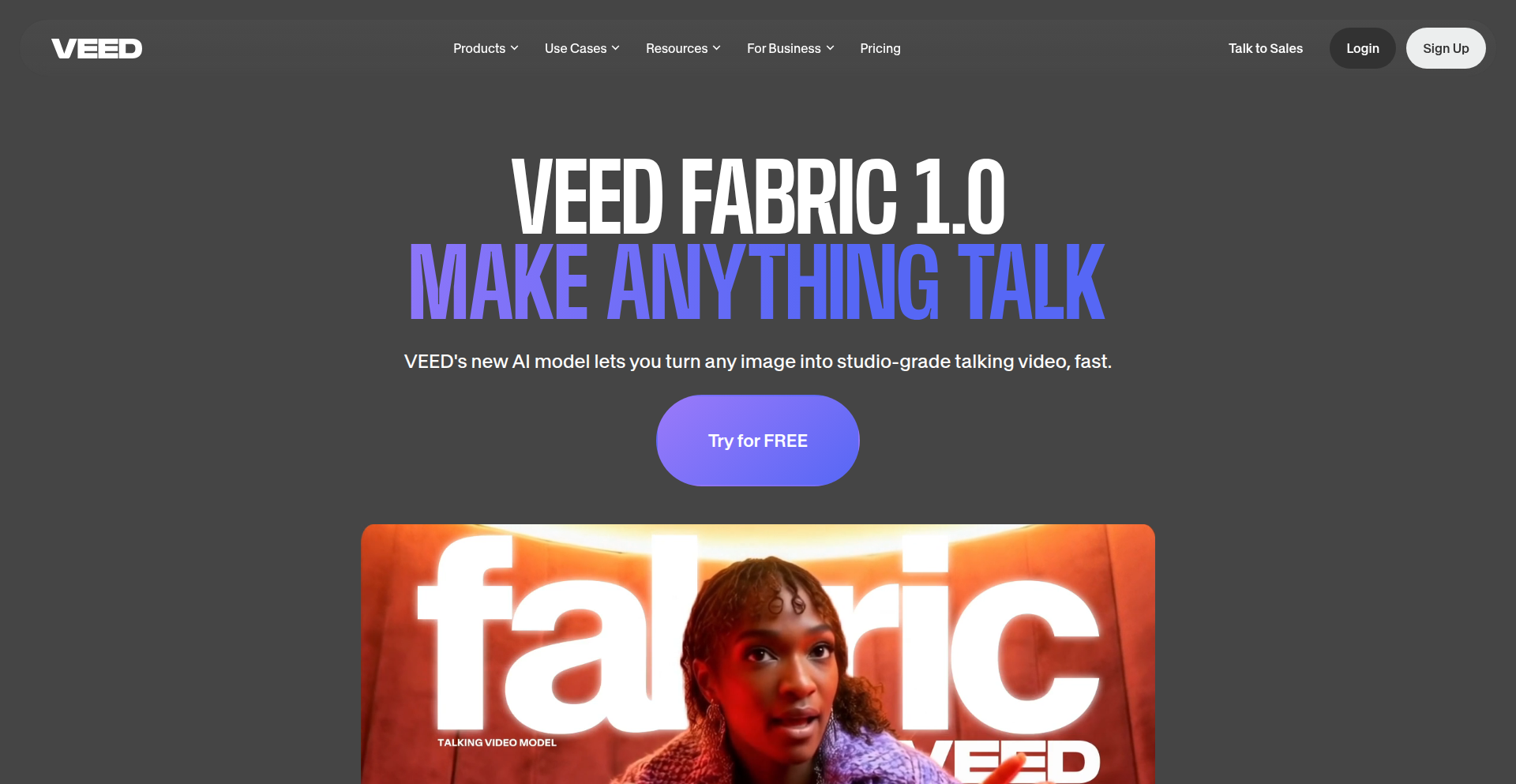

AI-Powered Fast Talking Video Synthesis

Author

totruok

Description

This project showcases a novel approach to generating realistic talking-head videos at high speed using AI. It tackles the challenge of synchronizing lip movements and facial expressions with audio input, and it is presented as significantly faster than traditional methods. The innovation lies in its efficient model architecture and optimized inference process, which enable rapid video generation, a key requirement for applications like automated content creation and personalized communication.

Popularity

Points 6

Comments 1

What is this product?

This project is an AI model that quickly turns text or audio into a video of a person speaking those words. It relies on deep generative modeling, either generative adversarial networks (GANs) or diffusion models, to synthesize photorealistic facial movements with lip sync matched to the provided audio. The core innovation is speed: it aims to create talking videos much faster than older methods without sacrificing quality. So, what's in it for you? You can generate speaking videos almost instantly for a range of purposes.

How to use it?

Developers can integrate this model into their applications through an API, or run it locally if they have the necessary hardware. The typical workflow is to provide an audio file or text and receive a video file of a generated avatar speaking. This could power personalized video messages in a web application, dynamic NPC dialogue in a game, or rapid video production in a content creation tool. So, how can you use it? Plug it into your existing software to add video generation capability.

Product Core Function

· Near-real-time audio-to-video synthesis: Generates talking-head videos in near real time from an audio input, enabling dynamic and responsive video content. This means you can create videos of people speaking almost as fast as they talk.

· High-fidelity lip-sync: Accurately matches lip movements to the spoken audio, ensuring natural and believable speech patterns in the generated video. This makes the videos look like the person is actually speaking the words.

· Customizable avatar support: Allows for the use of different pre-trained or user-provided facial models, offering flexibility in the visual appearance of the generated talking heads. You can choose who appears to be speaking.

· Efficient model architecture: Leverages optimized AI model design and inference techniques to achieve significantly faster video generation speeds. This is the core of why it's 'fast' – the AI is built for speed.

Product Usage Case

· Creating personalized marketing videos: A business could use this to generate thousands of unique video messages for customers, each featuring a presenter speaking the customer's name and specific product details. This makes personalization feasible at scale.

· Automated news anchoring: A media company could use this to generate video summaries of news articles with AI presenters, allowing for rapid dissemination of information. This speeds up content delivery significantly.

· Interactive educational content: An e-learning platform could use this to create dynamic tutorials where an AI tutor explains concepts with realistic facial expressions and voice. This makes learning more engaging and accessible.

16

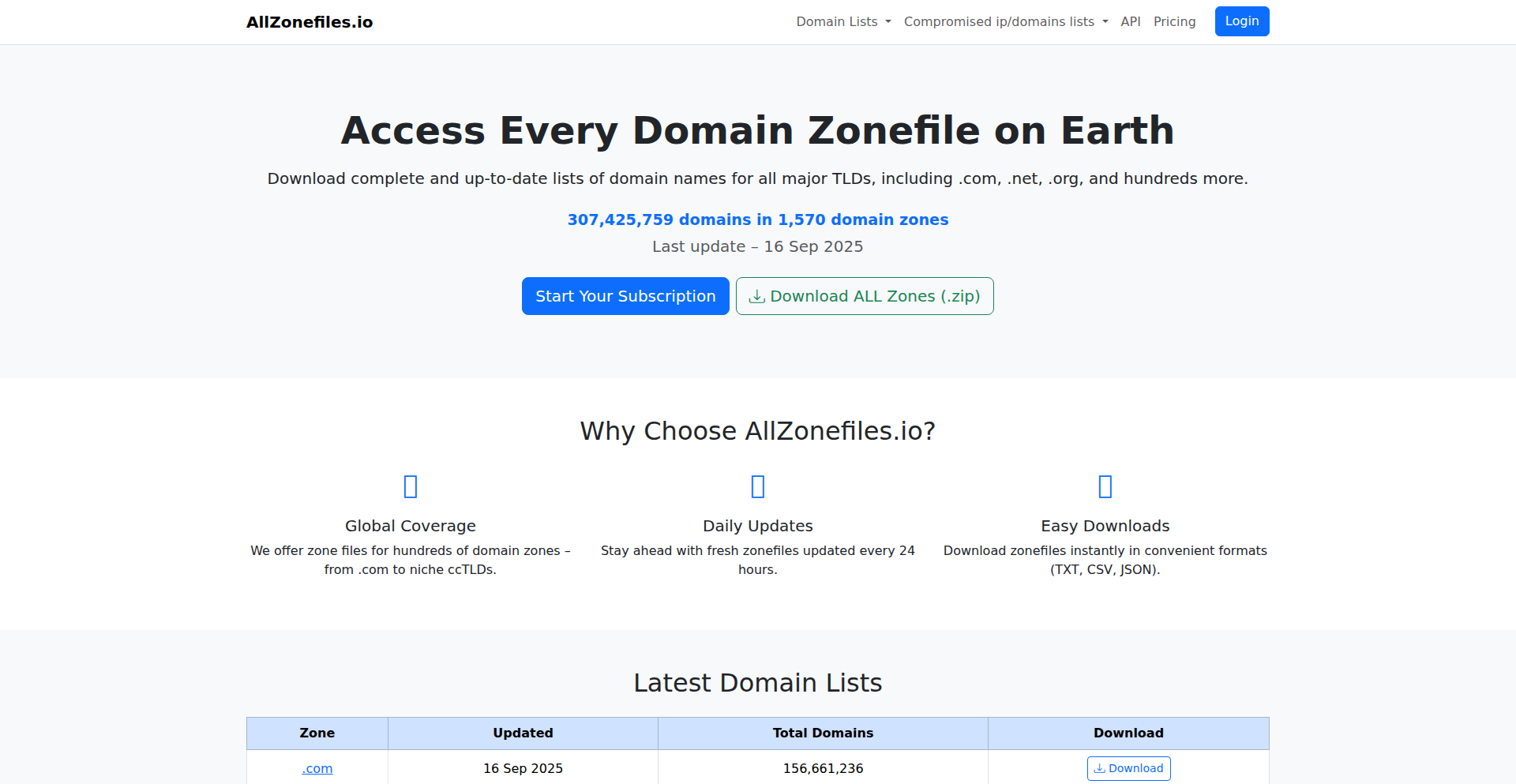

Allzonefiles.io: Domain Name Data Hub

Author

iryndin

Description

Allzonefiles.io provides comprehensive datasets of registered domain names, offering access to 307 million registered domains across 1570 zones and 78 million domains across 312 ccTLDs. It also supplies daily lists of newly registered and expired domains. This project tackles the challenge of aggregating and making accessible vast amounts of domain registration data, which is crucial for market analysis, cybersecurity threat intelligence, and SEO research. The innovation lies in its ability to process and deliver such a massive dataset in an easily downloadable format, enabling developers and researchers to leverage this information for various applications.

Popularity

Points 4

Comments 3

What is this product?

Allzonefiles.io is a project that collects and publishes registered domain name data at large scale. It currently covers 307 million domain names across various top-level domains (like .com, .net, .org) and 78 million under country-code top-level domains (like .uk, .de). Essentially, it's a giant directory of which domain names are registered, plus which ones are newly registered or expiring. The innovation is the scale of the aggregation and the straightforward download mechanism, which make previously hard-to-obtain information accessible. For you, this means a readily available resource for understanding the domain name landscape.

How to use it?

Developers can use Allzonefiles.io by downloading the comprehensive `.zip` file (1.2 GB) containing all registered domain names. This data can then be integrated into custom applications, analyzed for market trends, or used in cybersecurity tools to identify potential threats or monitor domain registrations. For example, you could build a tool that tracks newly registered domains in a specific niche or identifies expired domains that might be valuable. The data is provided in a raw format, allowing for maximum flexibility in how you choose to process and utilize it.
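The archive's internal layout isn't described beyond a 1.2 GB `.zip` of raw data, so the sketch below assumes plain-text members with one domain name per line. Under that assumption, it streams names out of the archive without extracting it to disk, counts them per TLD, and filters them by keyword, the kind of niche tracking described above. The file layout and all function names are hypothetical.

```python
import io
import zipfile
from collections import Counter


def iter_domains(lines):
    """Yield normalized names from text lines, assuming one domain per line."""
    for line in lines:
        name = line.strip().lower().rstrip(".")  # drop whitespace and any trailing root dot
        if name:
            yield name


def domains_from_zip(path):
    """Stream domains from every file in a zip archive (the layout is an assumption)."""
    with zipfile.ZipFile(path) as zf:
        for member in zf.namelist():
            if member.endswith("/"):  # skip directory entries
                continue
            # Closing the text wrapper also closes the underlying member stream.
            with io.TextIOWrapper(zf.open(member), encoding="utf-8", errors="replace") as text:
                yield from iter_domains(text)


def count_by_tld(domains):
    """Count domains by their last label, e.g. 'example.co.uk' -> 'uk'."""
    return Counter(d.rsplit(".", 1)[-1] for d in domains)


def matching(domains, keyword):
    """Keep domains whose first label contains the keyword."""
    return [d for d in domains if keyword in d.split(".", 1)[0]]
```

For example, `count_by_tld(domains_from_zip("all_domains.zip")).most_common(10)` would list the ten largest TLDs (the file name is hypothetical). Streaming matters here: hundreds of millions of names will not fit comfortably in memory as a Python list, so keep only the counts or the filtered subset you need.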

Product Core Function

· Bulk Domain Data Download: Provides a single 1.2GB zip file containing over 307 million registered domain names across a wide range of zones. The value here is having a massive, consolidated dataset for in-depth analysis or tool development, saving you the immense effort of gathering this data yourself.

· Daily New Domain Lists: Offers daily updates on newly registered domain names. This is valuable for identifying emerging trends, tracking competitors, or discovering new opportunities in specific markets.

· Daily Expired Domain Lists: Provides daily lists of expired domain names. This is incredibly useful for domain investors looking for valuable expiring domains to re-register, or for security professionals monitoring domains that might be abandoned and pose a risk.

· ccTLD Data Coverage: Includes data for 312 country-code top-level domains. This adds significant value for localized market research or for understanding domain registration patterns within specific countries.

Product Usage Case

· Market Research: A business analyst could download the entire dataset to identify market saturation in specific domain name extensions or to analyze the growth of new TLDs. This helps in making informed decisions about branding and online presence.

· Cybersecurity Threat Intelligence: A security researcher could use the daily lists of newly registered domains to scan for suspicious names that mimic legitimate ones (typosquatting) or are associated with phishing campaigns. This helps in proactive defense against online threats.

· Domain Flipping: An entrepreneur looking to profit from domain names could use the expired domain lists to find valuable domains that are about to become available, allowing them to secure them before others.

· SEO and Digital Marketing: A digital marketer might analyze the dataset to understand the prevalence of certain keywords in registered domain names, informing their SEO strategy and keyword targeting.
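For the threat-intelligence case, one simple way to screen a daily list of newly registered domains is to compare each name's first label against a watch list of protected brands by edit distance. The sketch below uses classic Levenshtein distance; the function names, the watch-list format, and the one-edit threshold are illustrative assumptions, and production typosquat detection often adds homoglyph and keyword checks on top.

```python
def levenshtein(a, b):
    """Classic dynamic-programming edit distance (insertions, deletions, substitutions)."""
    if len(a) < len(b):
        a, b = b, a  # keep the rolling row as short as possible
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(
                prev[j] + 1,               # delete ca
                cur[j - 1] + 1,            # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = cur
    return prev[-1]


def flag_lookalikes(new_domains, protected, max_distance=1):
    """Return (new, protected) pairs whose first labels are within max_distance edits."""
    hits = []
    for new in new_domains:
        label = new.split(".", 1)[0]
        for legit in protected:
            if new == legit:
                continue  # the legitimate domain itself is not a lookalike
            if levenshtein(label, legit.split(".", 1)[0]) <= max_distance:
                hits.append((new, legit))
    return hits
```

Comparing every new name against every protected brand costs one distance computation per pair, which is fine for a short watch list against a daily feed; scanning the full dataset would call for cheaper prefilters such as bucketing by label length.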

17

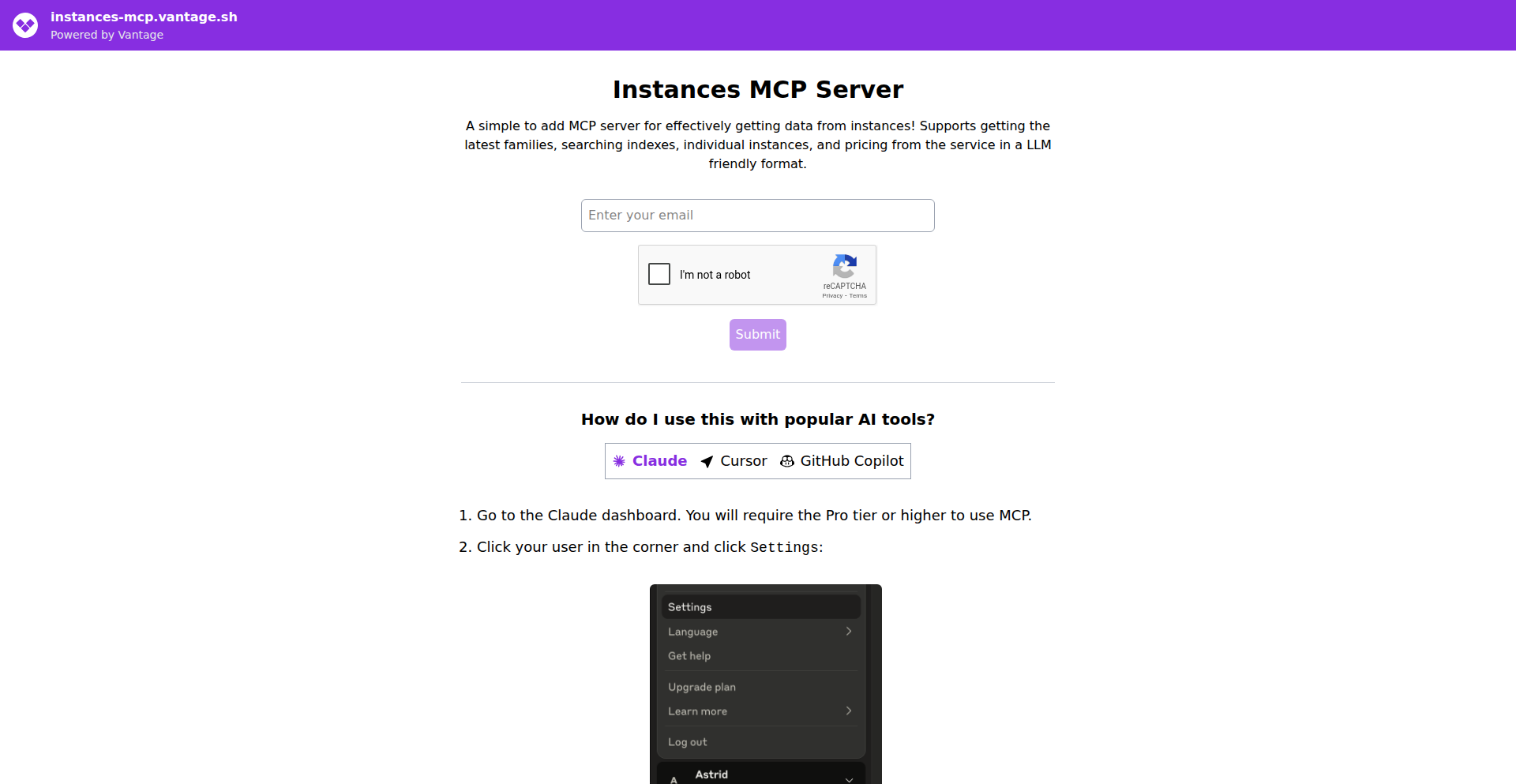

MCP for EC2Instances

Author

StratusBen

Description

A command-line interface (CLI) tool that simplifies managing and interacting with EC2 instances. It aims to provide a more streamlined, user-friendly experience than complex cloud provider interfaces by offering intelligent auto-completion and context-aware commands.

Popularity

Points 6

Comments 1

What is this product?

MCP for EC2Instances is a developer-focused CLI tool designed to make managing Amazon Elastic Compute Cloud (EC2) instances on AWS much easier. It parses your commands and tracks context to predict what you want to do next. For example, right after you launch an EC2 instance, it suggests common actions for that instance, such as 'ssh', 'stop', or 'tag'. This reduces the mental overhead of remembering exact commands and parameters, so developers can work faster and make fewer errors. Think of it as a smart assistant for your cloud servers.
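One minimal way to read "context awareness" is to key suggestions on the selected instance's lifecycle state. The sketch below assumes exactly that. The state names are EC2's documented instance states, but the action names and the `suggest` function are hypothetical and are not the tool's actual commands.

```python
# Actions that make sense in each EC2 instance state. The state names follow
# EC2's instance lifecycle; the action names are hypothetical CLI verbs.
SUGGESTIONS = {
    "pending": ["wait"],
    "running": ["ssh", "stop", "reboot", "tag", "terminate"],
    "stopping": ["wait"],
    "stopped": ["start", "tag", "terminate"],
    "shutting-down": ["wait"],
    "terminated": [],
}


def suggest(state, typed=""):
    """Return the actions valid for `state` that start with the partially typed word."""
    return [action for action in SUGGESTIONS.get(state, []) if action.startswith(typed)]
```

For instance, `suggest("running", "t")` returns `["tag", "terminate"]`, while a stopped instance never offers `ssh`. In a real tool the state would come from the EC2 API, for example the `State.Name` field that `DescribeInstances` returns for each instance.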

How to use it?

Developers can install MCP for EC2Instances using a package manager like pip (for Python). Once it is installed, they open a terminal and type commands related to their EC2 instances. For instance, after authenticating with AWS credentials, a developer might type 'mcp ec2 list' to see their running instances. To interact with a specific instance, they could type 'mcp ec2 <instance_name>', and the tool would offer relevant actions. It fits into existing development workflows that rely on the command line.

Product Core Function

· Intelligent Command Auto-completion: Provides smart suggestions for commands and parameters as you type, reducing errors and speeding up operations. This is valuable because it saves time and prevents mistakes when managing cloud resources.

· Context-Aware Command Execution: Understands the current state and selected EC2 instances to offer the most relevant next actions, simplifying complex workflows. This is valuable as it guides users to perform the right operations without needing to memorize intricate command sequences.

· Simplified EC2 Instance Management: Offers a cleaner and more intuitive way to list, start, stop, and tag EC2 instances directly from the terminal. This is valuable for quickly performing essential tasks without navigating through complex web dashboards.

· Cross-Platform Compatibility: Designed to work across different operating systems (Linux, macOS, Windows) where developers typically work, ensuring broad usability. This is valuable as it allows developers to maintain a consistent workflow regardless of their development environment.

Product Usage Case

· A developer needs to quickly spin up a new EC2 instance for testing a web application. They use MCP to list available AMIs, select the desired one, and launch an instance with specific security group and key pair configurations, all through a few intuitive commands. This saves them time compared to navigating the AWS console and remembering all the necessary parameters.

· During a production incident, a DevOps engineer needs to stop a misbehaving EC2 instance immediately. They use MCP to identify the instance by its tag or name and run the 'stop' command in a single line, limiting the incident's impact. The context-aware suggestions help them find the correct instance quickly.

· A team is working on a project and needs to consistently tag their EC2 instances for cost allocation and organization. They use MCP to apply specific tags to multiple instances at once with a straightforward command, ensuring consistency across their cloud infrastructure. This helps in better resource management and cost tracking.

18

LLM Translation Round-Trip Benchmark

Author

zone411

Description

A benchmark designed to evaluate the quality and consistency of large language models (LLMs) in performing round-trip translations (translating text from a source language to a target language, and then back to the source language). It highlights how LLMs handle nuances, preserve meaning, and avoid introducing errors or distortions across multiple translation steps, addressing the common challenge of maintaining fidelity in AI-driven text transformations.

Popularity

Points 6

Comments 0

What is this product?

This project is a specialized benchmark for assessing the performance of Large Language Models (LLMs) in translation tasks. The core innovation lies in its 'round-trip' methodology. Unlike simple one-way translation tests, this benchmark translates text from a source language (e.g., English) to a target language (e.g., French), and then translates the French output back into English. By comparing the original English text with the final back-translated English text, the benchmark reveals how well the LLM preserves the original meaning, context, and style, and importantly, identifies any degradation or 'hallucinations' introduced during the two-step translation process. This approach offers a more robust evaluation of translation accuracy and model robustness in handling linguistic transformations.
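The benchmark's scoring method isn't specified here, so the sketch below assumes a simple surface metric: a chrF-style character n-gram F-score (orders 1 to 6, recall-weighted with beta = 2) between the original text and its back-translation. `translate` is a hypothetical callable standing in for whatever LLM call performs each leg of the round trip; a real benchmark might instead use an LLM judge or a semantic-similarity model.

```python
from collections import Counter


def char_ngrams(text, n):
    """Character n-gram counts with whitespace removed, as in chrF."""
    s = "".join(text.split())
    return Counter(s[i:i + n] for i in range(len(s) - n + 1))


def round_trip_score(original, back_translated, max_n=6, beta=2.0):
    """Average character n-gram F-beta over orders 1..max_n, in [0, 1]."""
    scores = []
    for n in range(1, max_n + 1):
        ref = char_ngrams(original, n)
        hyp = char_ngrams(back_translated, n)
        if not ref or not hyp:
            continue  # text too short for this order
        overlap = sum((ref & hyp).values())  # matches, clipped to the smaller count
        precision = overlap / sum(hyp.values())
        recall = overlap / sum(ref.values())
        if precision + recall == 0:
            scores.append(0.0)
        else:
            b2 = beta * beta
            scores.append((1 + b2) * precision * recall / (b2 * precision + recall))
    return sum(scores) / len(scores) if scores else 0.0


def evaluate(texts, translate, source="en", pivot="fr"):
    """Translate each text source -> pivot -> source and return the mean round-trip score."""
    scores = []
    for text in texts:
        forward = translate(text, source, pivot)
        back = translate(forward, pivot, source)
        scores.append(round_trip_score(text, back))
    return sum(scores) / len(scores)
```

Character n-grams tolerate small morphological drift (a back-translation that returns "mats" for "mat" is only lightly penalized), while dropped or hallucinated content lowers recall or precision respectively.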

How to use it?