Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-09-14

SagaSu777 2025-09-15

Explore the hottest developer projects on Show HN for 2025-09-14. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

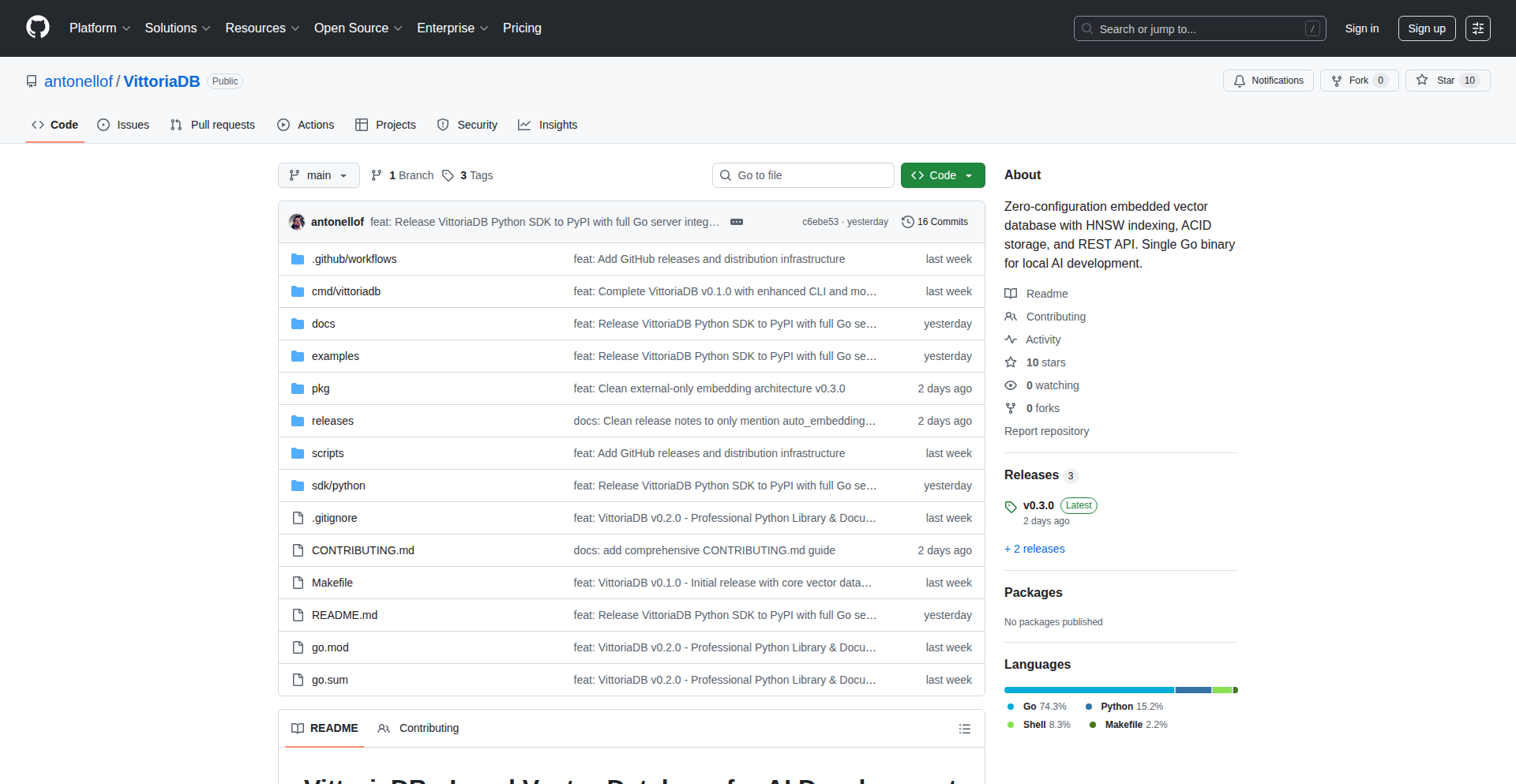

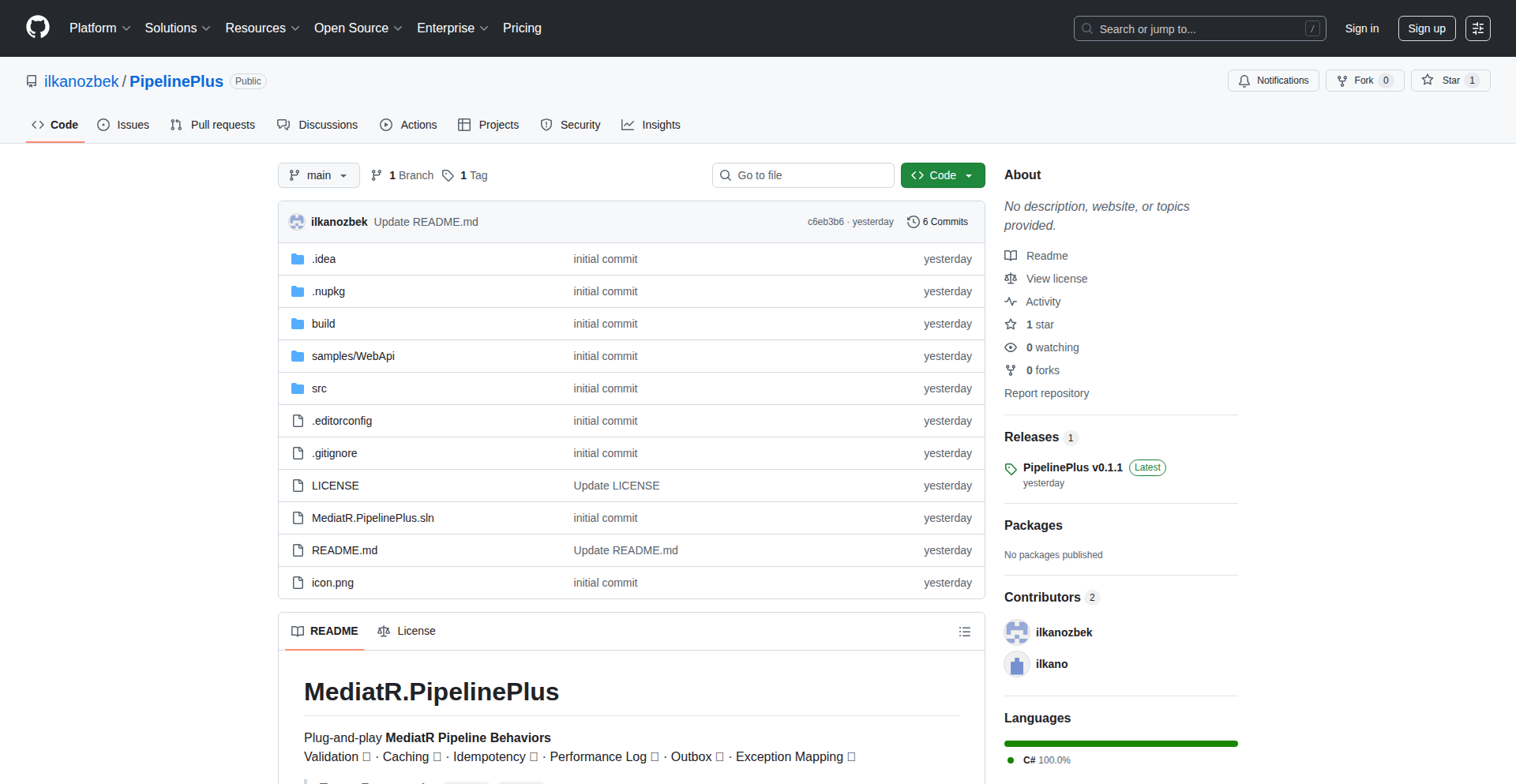

The Hacker News Show HN community continues to showcase a strong hacker spirit by tackling complexity with elegant, often minimalist, solutions. Today's projects highlight a significant trend towards 'buildless' and 'runtime-only' approaches in web development, exemplified by Dagger.js, which aims to streamline deployment and reduce development overhead. Simultaneously, the pervasive influence of AI is evident in numerous projects focused on agent orchestration, data analysis, and intelligent automation, such as Kodosumi and TabTabTab. There's a clear emphasis on developer productivity through libraries that reduce boilerplate and offer integrated functionalities, like PipelinePlus for .NET. Furthermore, privacy and data control remain paramount, with projects like Secluso and Supakey offering innovative ways to safeguard user information and data ownership. For developers and innovators, this signals an opportunity to embrace simplicity, leverage AI thoughtfully, and prioritize user data security. The ability to abstract complex infrastructure, as seen in TNX API and VittoriaDB, is key to unlocking new possibilities for businesses and individuals alike. Keep experimenting, keep building, and always challenge the status quo with pragmatic, inventive solutions.

Today's Hottest Product

Name

Dagger.js – A buildless, runtime-only JavaScript micro-framework

Highlight

Dagger.js revolutionizes front-end development by eliminating the need for bundlers and compile steps. Its 'buildless, runtime-only' approach leverages native Web Components and HTML-first directives (+click, +load), allowing developers to ship dynamic web pages by simply including a script tag from a CDN. This paradigm shift significantly simplifies the development workflow, reduces build times, and makes it easier to deploy lightweight applications, especially for edge and serverless environments. Developers can learn about declarative programming, efficient runtime hydration, and the power of leveraging native browser features for enhanced performance and reduced complexity.

Popular Category

Web Frameworks

AI/ML Tools

Developer Productivity

System Tools

Popular Keyword

AI

JavaScript

Open Source

Framework

Database

Automation

Web Components

Technology Trends

Buildless Web Development

Runtime-Only Frameworks

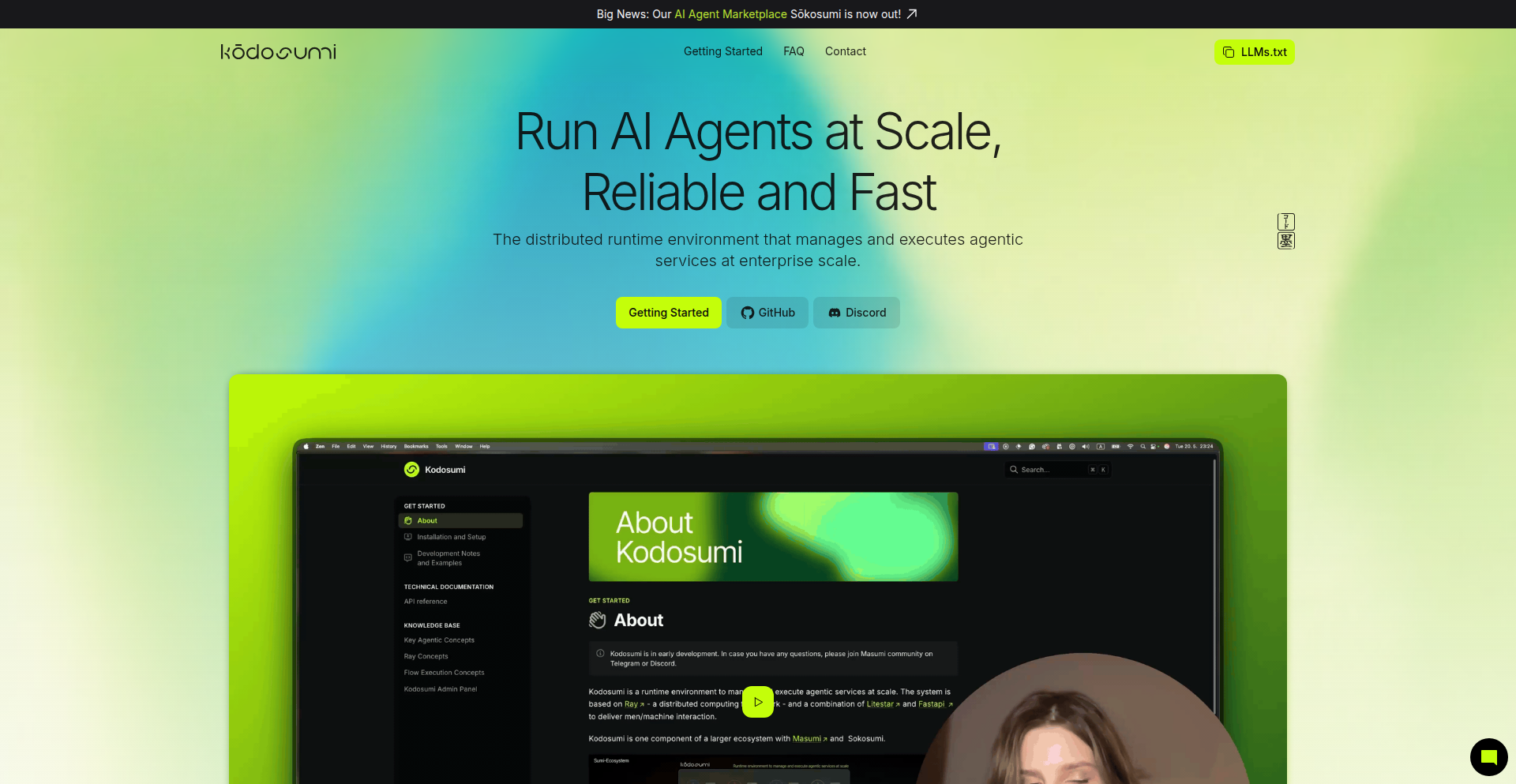

AI Agent Orchestration

Privacy-Preserving Technology

Efficient Data Handling

Declarative UI/UX

Observability & Security

Project Category Distribution

Web Development (25%)

AI/ML (20%)

Developer Tools (15%)

Databases/Storage (10%)

System/Infrastructure (10%)

Productivity/Utilities (10%)

Security (5%)

Other (5%)

Today's Hot Product List

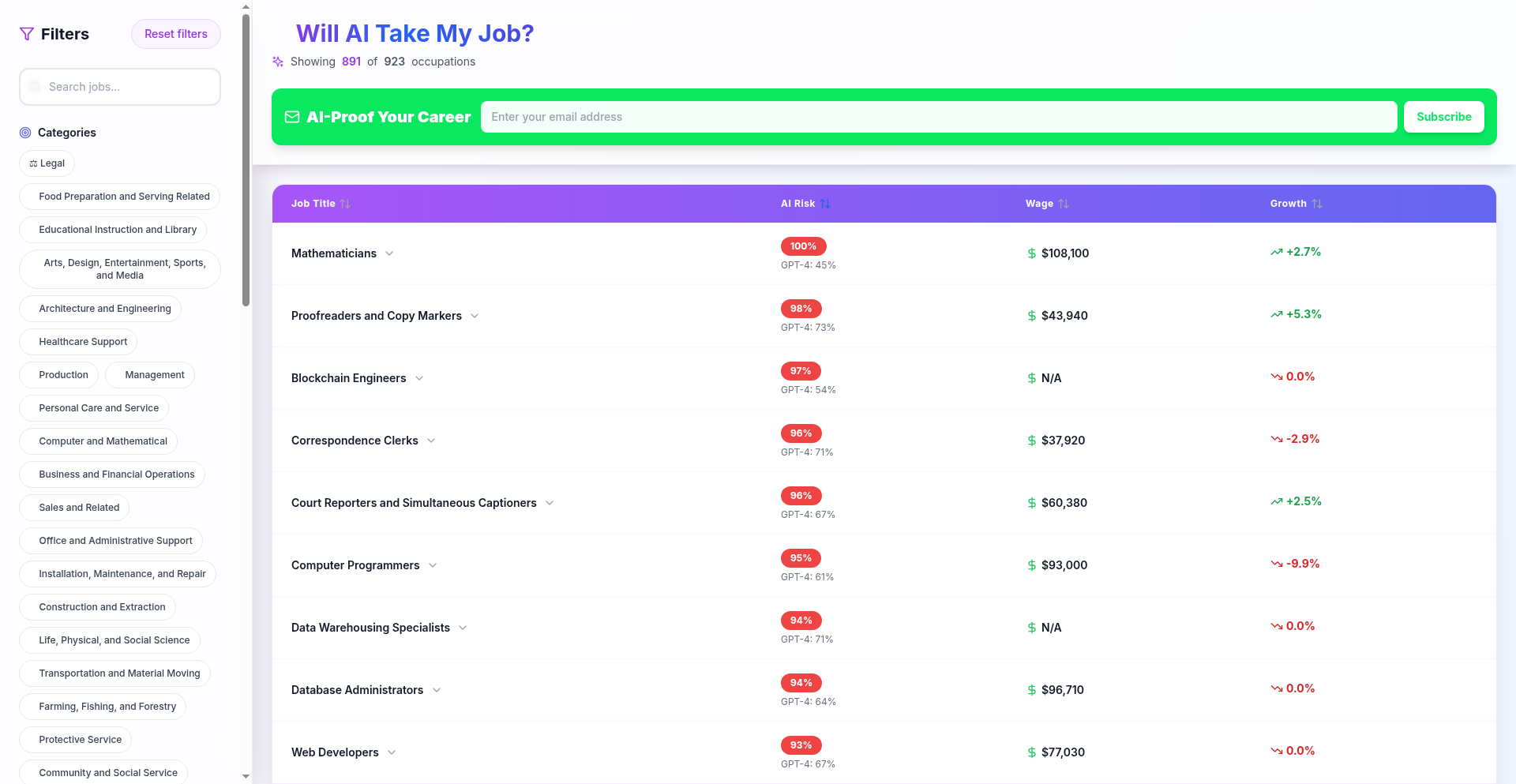

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | Dagger.js: The Buildless Runtime Composer | 60 | 55 |
| 2 | DriftDB: Time-Traveling Append-Only Database | 23 | 19 |
| 3 | Secluso: Open-Source Privacy-First Home Security | 16 | 2 |
| 4 | RDMA-InfiniBand Distributed Cache | 9 | 0 |
| 5 | PetHealth AI | 5 | 4 |
| 6 | PaperSync: Collaborative ArXiv Reader | 6 | 3 |
| 7 | ForkLaunch: Typed DSL for Express Upgrades | 7 | 1 |
| 8 | 180InvestTools | 7 | 0 |
| 9 | Cloudflare Plan Detector | 1 | 5 |
| 10 | Freeze Trap Canvas Game | 4 | 2 |

1

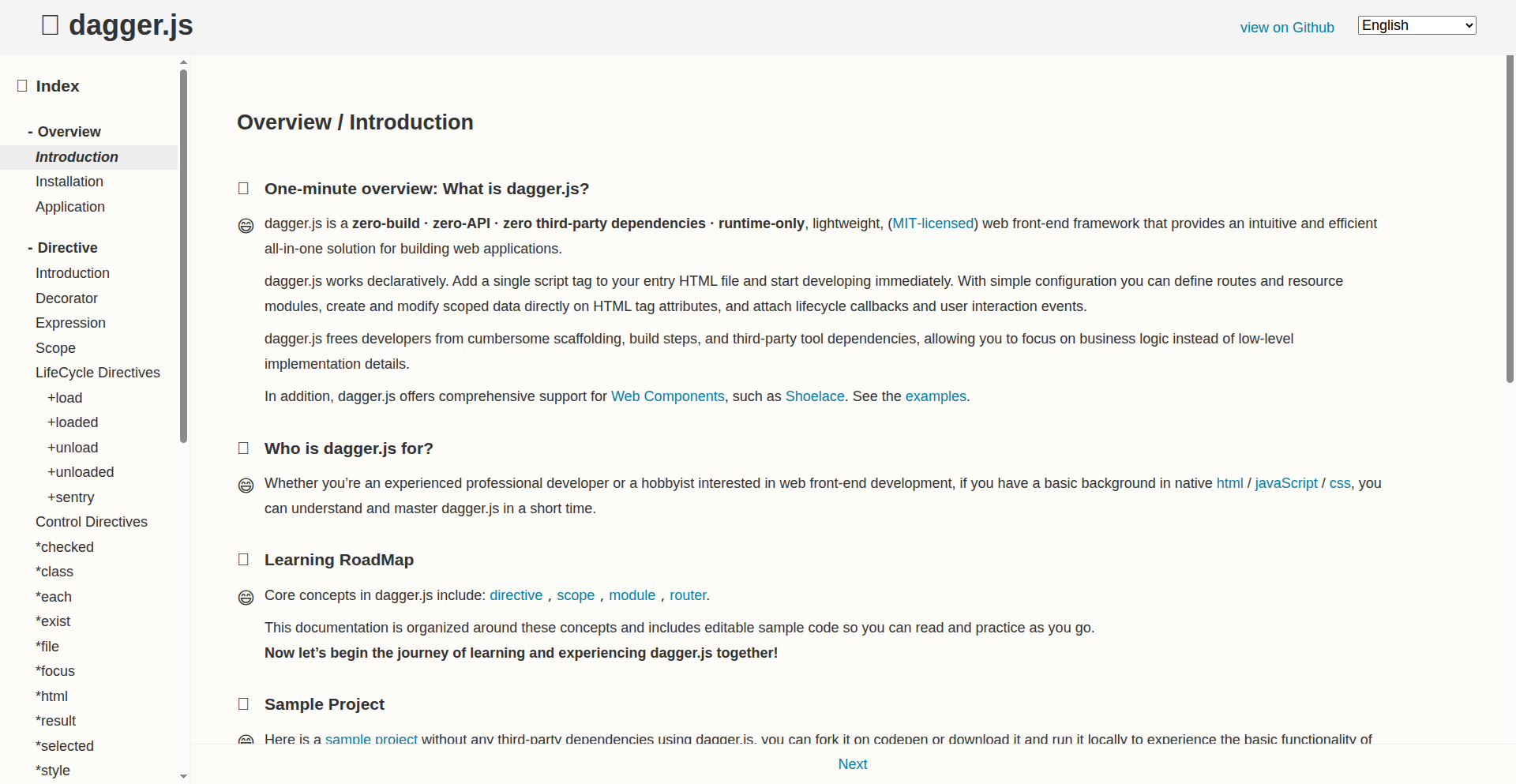

Dagger.js: The Buildless Runtime Composer

Author

TonyPeakman

Description

Dagger.js is a JavaScript micro-framework that revolutionizes web development by eliminating the need for build tools and compile steps. It focuses on a runtime-only approach, allowing developers to ship interactive web pages by simply including a script from a CDN. Its innovation lies in its HTML-first directive system, which attaches behaviors directly to HTML elements, making it incredibly lightweight and easy to integrate. This empowers developers to build anything from simple widgets to complex applications with unparalleled simplicity, especially for scenarios where speed, ease of deployment, and developer experience are paramount.

Popularity

Points 60

Comments 55

What is this product?

Dagger.js is a JavaScript framework designed to make building interactive web applications simple and fast by removing the traditional build process. Instead of complex configurations and compilation steps, Dagger.js uses special attributes directly within your HTML, like `+click` for handling clicks or `+load` for initializing components when an element appears. This means you can write your JavaScript and have it run directly in the browser as soon as the page loads, without needing to bundle or transpile anything. It integrates with Web Components, allowing for encapsulated and reusable UI pieces. The core idea is 'runtime-only': all the intelligence is in the JavaScript that runs when the user visits your site, not in a pre-processed build artifact. This translates to faster development cycles and smaller deployment sizes, which suits scenarios where you want to get something working quickly and efficiently.

How to use it?

Developers can start using Dagger.js by including a single script tag in their HTML file, pointing to the Dagger.js CDN. For example: `<script src="https://cdn.jsdelivr.net/npm/dagger.js"></script>`. Then, you can attach behaviors to your HTML elements using Dagger.js directives. For instance, to make a button trigger an alert when clicked, you'd write `<button +click="alert('Hello!')">Click Me</button>`. For more complex interactions or to manage component lifecycles, you can define JavaScript modules and reference them using directives like `+load`. Dagger.js is designed to work alongside native Web Components, so you can easily incorporate custom elements with Dagger.js behaviors for a modular and maintainable architecture. It's ideal for integrating interactive features into existing HTML, building small standalone applications, or for environments like serverless functions where minimizing overhead is critical.
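Because directives are ordinary HTML attributes, they can be discovered by walking the markup. The sketch below illustrates that idea outside the browser using Python's standard `html.parser`. It is not how Dagger.js is implemented; `DirectiveScanner` and `find_directives` are hypothetical names introduced here for illustration only.

```python
from html.parser import HTMLParser


class DirectiveScanner(HTMLParser):
    """Collect attributes whose names start with '+' (directive-style attributes)."""

    def __init__(self):
        super().__init__()
        self.directives = []  # list of (tag, attribute name, attribute value)

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name.startswith("+"):
                self.directives.append((tag, name, value))


def find_directives(html: str):
    """Return every directive-style attribute found in the given markup."""
    scanner = DirectiveScanner()
    scanner.feed(html)
    scanner.close()
    return scanner.directives
```

Feeding it the button example above would yield one entry for the `button` tag with the `+click` attribute and its expression as the value. A real runtime would then bind that expression to a DOM event instead of just listing it.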

Product Core Function

· Runtime-only execution: Behaviors are applied directly by the browser when the page loads, meaning no build tools or compilation are needed. This drastically speeds up development and deployment.

· HTML-first directives: Custom attributes like `+click`, `+load`, `+loaded`, `+unload`, `+unloaded` are used to attach JavaScript logic directly to HTML elements. This keeps your logic close to your markup, improving readability and maintainability.

· Zero API surface: Dagger.js aims to be declarative and simple. All functionality is provided through its directive system and distributed, small JavaScript modules, avoiding complex configuration or setup.

· Web Components compatibility: Dagger.js is built to work harmoniously with native Web Components, allowing you to create encapsulated and reusable UI elements with interactive behaviors managed by Dagger.js.

· Distributed modules: Developers can load small, focused JavaScript modules on demand from a CDN, ensuring only necessary code is fetched, optimizing performance and load times.

· Progressive enhancement: The core page content renders even without the Dagger.js script, and the interactivity is layered on top, providing a robust experience even in less capable environments.

Product Usage Case

· Building interactive documentation sites: You can include dynamic examples and code snippets that update in real-time without needing a complex build pipeline for the documentation itself.

· Creating embedded widgets for third-party sites: A small, self-contained widget with interactive elements can be easily shared and dropped into any webpage via a simple script tag, enhancing user engagement.

· Developing admin panels or internal tools: For applications that don't require a full-blown modern JavaScript framework, Dagger.js offers a much simpler and faster way to build functional interfaces, saving significant setup time.

· Deploying applications on edge or serverless platforms: The minimal overhead and lack of a build step make Dagger.js ideal for environments where cold starts and resource efficiency are critical, ensuring faster responses and lower costs.

· Rapid prototyping of interactive features: Developers can quickly add dynamic behavior to static HTML pages to test ideas and gather feedback without the friction of setting up a development environment.

2

DriftDB: Time-Traveling Append-Only Database

Author

DavidCanHelp

Description

DriftDB is an experimental database designed with an append-only data model, allowing for time-travel queries. This innovative approach enables developers to query data as it existed at any point in time, offering a unique perspective on data evolution and facilitating powerful debugging and auditing capabilities. It addresses the challenge of understanding historical data states in a straightforward manner, making complex data lineage tracking accessible.

Popularity

Points 23

Comments 19

What is this product?

DriftDB is a novel database system that stores data by only adding new entries (append-only). Think of it like a logbook where you can only write new entries, never erase or modify old ones. Its core innovation lies in its ability to let you ask questions not just about the current state of your data, but about how it looked at any specific moment in the past, like rewinding a video. This is achieved through data indexing and versioning mechanisms that allow efficient retrieval of historical data states. In practice, this gives you an easier way to see how your data has changed over time, which is useful for tracking down bugs or auditing changes.

How to use it?

Developers can integrate DriftDB into their applications by using its client libraries, available for various programming languages. Data is inserted via simple `put` operations, which are automatically versioned. To query historical data, you specify a timestamp along with your query. For example, you could ask 'What was the user's email address last Tuesday at 3 PM?'. This makes it ideal for backend services that need robust auditing, debugging tools that require inspecting past states, or even for building applications with a strong focus on data immutability and history. It's like having a built-in time machine for your application's data.
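To make the versioned `put` and timestamped query concrete, here is a minimal in-memory sketch of an append-only store with as-of reads. It is not DriftDB's API or storage engine. The class name, method names, and integer timestamps are assumptions chosen for illustration.

```python
import bisect
from collections import defaultdict


class AppendOnlyStore:
    """Toy append-only key-value store with as-of (time-travel) reads."""

    def __init__(self):
        # Per key: parallel lists of timestamps and values, in insertion order.
        self._timestamps = defaultdict(list)
        self._values = defaultdict(list)

    def put(self, key, value, ts):
        """Append a new version; existing versions are never modified."""
        stamps = self._timestamps[key]
        if stamps and ts < stamps[-1]:
            raise ValueError("timestamps must be non-decreasing per key")
        stamps.append(ts)
        self._values[key].append(value)

    def get_as_of(self, key, ts):
        """Return the latest value written at or before ts, or None."""
        stamps = self._timestamps.get(key, [])
        # Binary search for the first version strictly newer than ts.
        idx = bisect.bisect_right(stamps, ts)
        return self._values[key][idx - 1] if idx else None
```

Because versions are only ever appended in time order, a binary search over the timestamps finds the right version without scanning the full history. This is one simple way to get the "efficient version retrieval" property described below.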

Product Core Function

· Append-Only Data Storage: Data is never overwritten or deleted; only new versions are added. This ensures data integrity and provides a complete audit trail of all changes. The value here is in data immutability and a guaranteed history.

· Time-Travel Queries: The ability to query data as it existed at any specific past timestamp. This unlocks powerful debugging, auditing, and historical analysis capabilities. For you, this means understanding exactly what happened and when.

· Efficient Version Retrieval: Optimized indexing and data structures for fast retrieval of historical data states without scanning the entire history. This ensures performance even with extensive data history, meaning you get your answers quickly.

· Data Lineage Tracking: Implicitly tracks the provenance of data by its temporal ordering. This helps in understanding the flow and transformation of data over its lifecycle. The practical benefit for you is a clear understanding of how data arrived at its current state.

· Experimental API: Provides a foundation for building applications with a strong emphasis on historical data states and immutability. This offers a unique development paradigm that can lead to more robust and auditable software.

Product Usage Case

· Debugging a production issue: Imagine a user reports a problem. Instead of trying to guess what happened, you can use DriftDB to query the state of that user's record exactly when the issue occurred, pinpointing the exact data that caused the problem. This saves significant debugging time.

· Auditing financial transactions: For applications dealing with sensitive financial data, DriftDB allows you to meticulously audit every change to an account balance, showing who made what change and when, down to the millisecond. This builds trust and meets compliance requirements.

· Replaying user interactions: Build a feature that lets users 'replay' their past actions within the application, similar to how a video game records gameplay. DriftDB's historical data capability makes this technically feasible and engaging for users.

· Testing database rollback scenarios: Developers can easily simulate and test how their application behaves when rolling back to a previous data state, ensuring resilience and data recovery mechanisms are sound. This leads to more reliable applications.

3

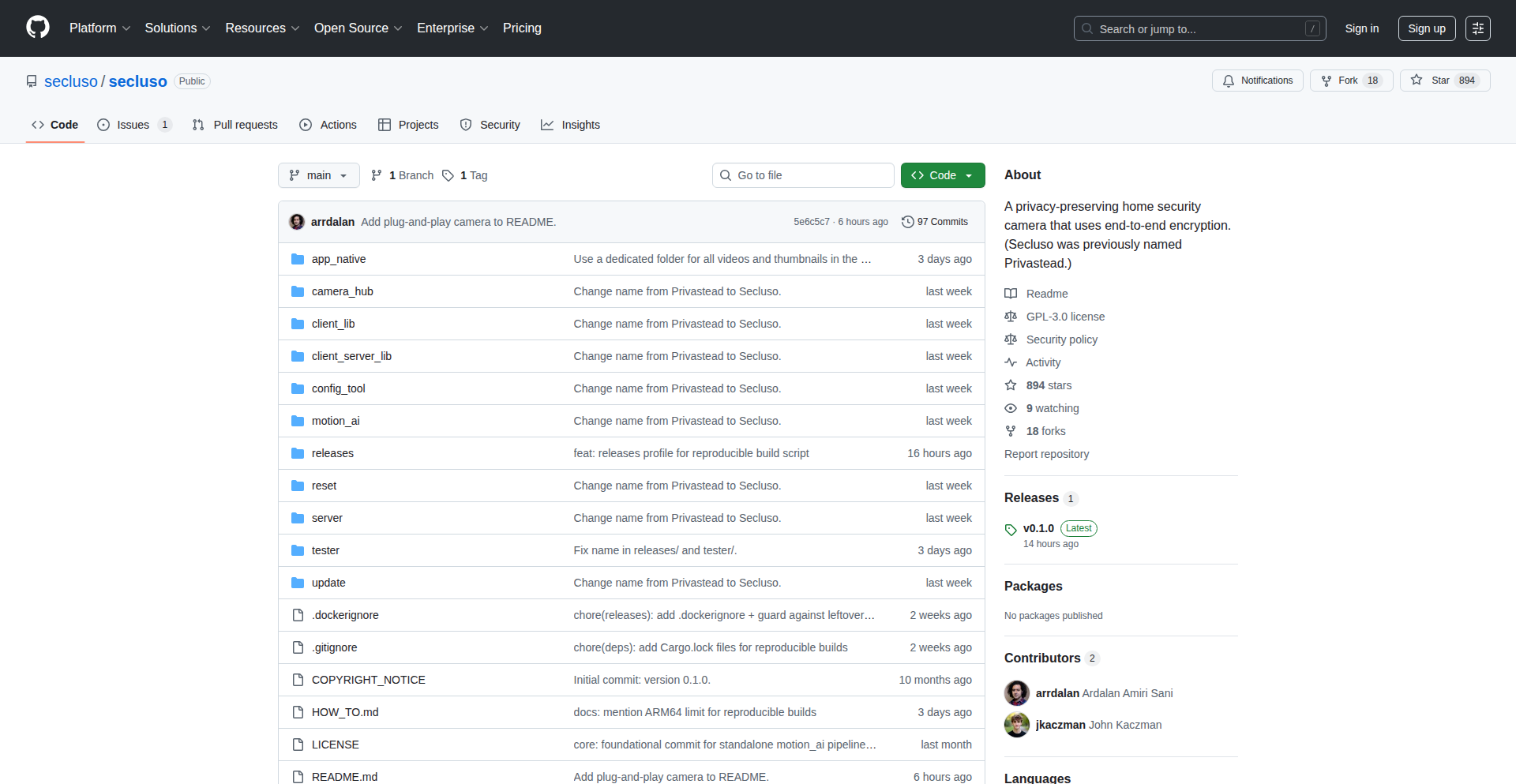

Secluso: Open-Source Privacy-First Home Security

Author

arrdalan

Description

Secluso is a fully open-source home security camera system designed with privacy at its core. It leverages end-to-end encryption using OpenMLS, ensuring that only you can access your camera's feed. The project has evolved to run directly on low-power devices like a Raspberry Pi Zero 2 W, enabling intelligent AI-powered detection of people, pets, and vehicles for timely notifications. Its reproducible builds ensure software integrity, and it supports both iOS and Android mobile apps. So, what does this mean for you? It means you can build your own highly secure and customizable home security camera system without compromising your privacy, or you can explore a convenient plug-and-play hardware option.

Popularity

Points 16

Comments 2

What is this product?

Secluso is an open-source project that transforms a Raspberry Pi into a private home security camera. Its key innovation lies in its use of OpenMLS (Messaging Layer Security), which provides end-to-end encryption. This means the video and audio data from your camera is scrambled by the camera itself and can only be unscrambled by your authorized mobile device. Even the developers of Secluso cannot access your footage. Furthermore, the system incorporates AI capabilities directly on the Raspberry Pi to detect specific events like people, pets, or vehicles, triggering notifications to your phone. The software stack is entirely open-source, and reproducible builds are used to verify the integrity of the camera software. So, what's the technical depth here? It's about building a secure, encrypted communication channel for your camera feed and integrating intelligent, on-device processing for event detection, all within an auditable and customizable open-source framework. This offers a level of privacy and control rarely found in commercial security cameras.
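The idea behind reproducible builds is that anyone can rebuild the software from source and check that the result is byte-for-byte identical to the published artifact, typically by comparing cryptographic digests. The sketch below shows only that comparison step. It is not Secluso's verification tooling, and `sha256_hex` and `builds_match` are hypothetical helper names.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()


def builds_match(local_artifact: bytes, published_digest: str) -> bool:
    """True when a locally rebuilt artifact hashes to the published digest."""
    return sha256_hex(local_artifact) == published_digest.strip().lower()
```

A mismatch means the local build differs from the published one, which is a signal to investigate the build environment or the artifact's provenance before trusting it.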

How to use it?

Developers can utilize Secluso by installing the camera software onto a Raspberry Pi. This involves flashing an SD card with the Secluso image or compiling the source code. Once the software is running on the Raspberry Pi, it can connect to a compatible camera module. The user then pairs their mobile device (iOS or Android) with the Raspberry Pi camera via the Secluso app. The app allows users to view live feeds, review recorded events, configure AI detection settings, and receive alerts. For those who prefer a ready-to-go solution, Secluso also offers a prototype hardware camera built using this open-source project. So, how do you integrate this into your life? You can either set up the software yourself on a Raspberry Pi for maximum customization and learning, or consider their hardware product for a simpler deployment. Both options provide a secure and private way to monitor your home.

Product Core Function

· End-to-end encrypted video streaming: Utilizes OpenMLS to secure camera feed from the device to the mobile app, ensuring only authorized users can view footage. This directly addresses privacy concerns of traditional cameras, meaning your personal moments remain yours.

· On-device AI event detection (People/Pets/Vehicles): Performs intelligent analysis on the Raspberry Pi to identify specific subjects, reducing false positives and allowing for targeted notifications. This translates to receiving alerts only when something important happens, saving you time and reducing unnecessary disturbances.

· Open-source software stack: Provides full transparency and control over the security camera's functionality, allowing for customization and community contributions. This empowers you with the freedom to understand, modify, and improve your security system, fostering trust and innovation.

· Reproducible builds: Guarantees the integrity and authenticity of the camera software by allowing anyone to verify that the compiled code matches the source code. This assures you that the software you're running hasn't been tampered with, building confidence in the system's security.

· Cross-platform mobile app (iOS/Android): Offers a user-friendly interface for managing and monitoring the security camera from any smartphone. This ensures you can easily access and control your camera system regardless of your mobile device preference.

Product Usage Case

· A privacy-conscious individual setting up a security camera in their home to monitor their pet while they are away. They can trust that the footage is encrypted end-to-end and only accessible by them. This solves the problem of wanting to keep an eye on their pet without worrying about their data being compromised.

· A developer wanting to build a custom home surveillance system for a specific need, such as monitoring a garden for wildlife. They can leverage the open-source nature of Secluso to modify detection parameters or integrate with other smart home systems, offering a flexible and powerful solution.

· A remote worker who wants to monitor their front door for package deliveries without relying on a cloud service that might collect their data. Secluso provides local processing and encrypted communication, ensuring their privacy is maintained while they stay informed about important events.

· An enthusiast looking to experiment with AI and computer vision on a low-cost hardware platform. They can use Secluso as a base to learn about real-time object detection and build their own custom notification triggers, providing a hands-on educational experience.

· A small business owner who needs to monitor their premises but is concerned about the security and privacy implications of commercial surveillance systems. Secluso offers a secure, self-hosted alternative that they can control and trust.

4

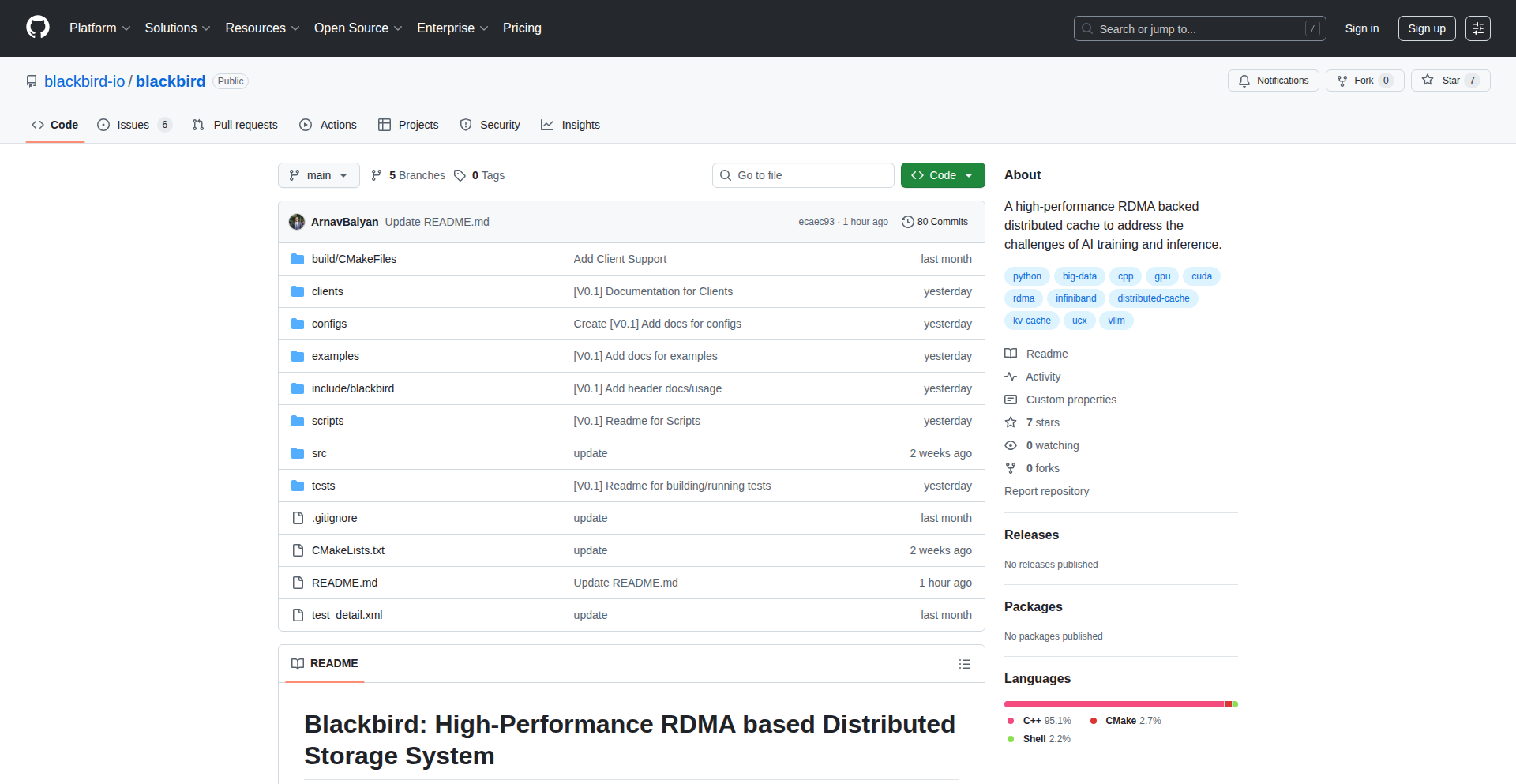

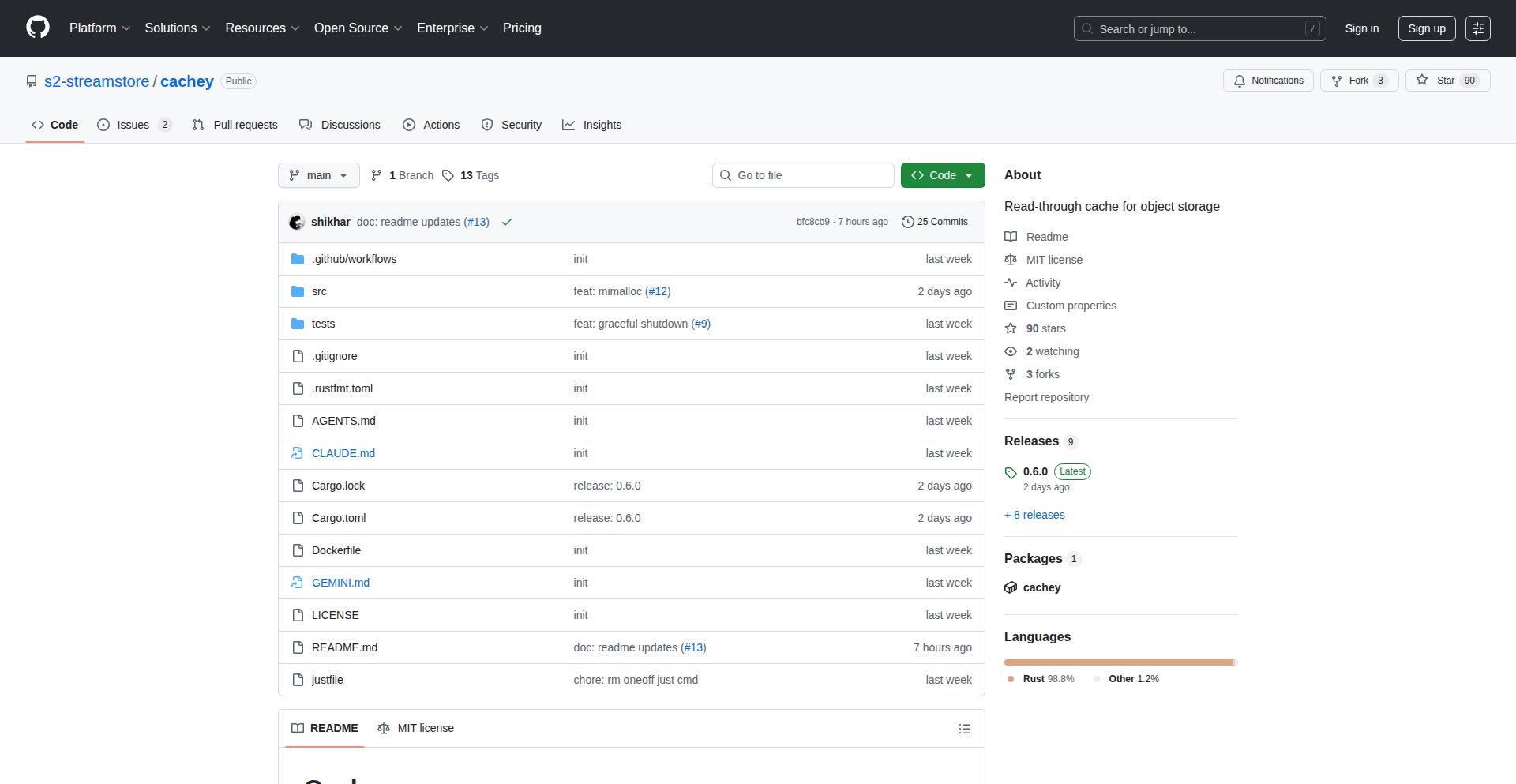

RDMA-InfiniBand Distributed Cache

Author

hackercat010

Description

A high-performance distributed cache designed for accelerating deep learning inference and training. It leverages RDMA/InfiniBand networking to achieve ultra-low latency and high throughput data access, overcoming traditional network bottlenecks.

Popularity

Points 9

Comments 0

What is this product?

This project is a distributed caching system that utilizes RDMA (Remote Direct Memory Access) and InfiniBand networking. RDMA allows one computer's memory to be accessed directly by another computer without involving either machine's operating system or CPU in the data path. InfiniBand is a specialized high-speed interconnect for servers and storage. By combining these, the cache can read and write data across multiple machines extremely quickly, bypassing the usual overhead of network protocols. This is innovative because traditional distributed caches often rely on standard TCP/IP, which involves more CPU processing and is slower. So, what's the benefit for you? It means your machine learning models can access the data they need for training or making predictions much faster, leading to quicker results and more efficient use of your computing resources.

How to use it?

Developers can integrate this distributed cache into their machine learning pipelines. The system typically runs as a set of cache nodes accessible by your training or inference applications. You would configure your ML framework or data loading scripts to point to the cache cluster. When your application needs a piece of data, it first checks the cache. If the data is there, it's retrieved directly from the cache's memory over RDMA/InfiniBand. If not, it's fetched from the primary storage and potentially loaded into the cache for future access. This is useful for scenarios where your ML models frequently access large datasets or require very fast data retrieval during training epochs or when processing inference requests. It can be integrated with popular ML frameworks like TensorFlow or PyTorch, or used as a standalone data acceleration layer.
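The lookup flow described above (check the cache, fall back to primary storage on a miss, then populate the cache) is commonly called the cache-aside pattern. The sketch below shows that control flow with a plain dictionary standing in for the remote cache nodes. It does not use RDMA or InfiniBand, and the `CacheAside` class and its loader callback are assumptions made for illustration, not this project's interface.

```python
from typing import Callable, Dict


class CacheAside:
    """Cache-aside lookup: check the cache, fall back to primary storage on a miss."""

    def __init__(self, load_from_storage: Callable[[str], bytes]):
        self._cache: Dict[str, bytes] = {}  # stand-in for remote cache nodes
        self._load = load_from_storage      # slow path to primary storage
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> bytes:
        if key in self._cache:
            self.hits += 1
            return self._cache[key]
        # Miss: fetch from primary storage and keep a copy for future reads.
        self.misses += 1
        value = self._load(key)
        self._cache[key] = value
        return value
```

In a real deployment the dictionary lookup would be replaced by a network read from a cache node. The hit and miss counters are a cheap way to check whether the cache is actually absorbing repeated reads across training epochs.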

Product Core Function

· RDMA/InfiniBand Data Transfer: Enables direct memory access between nodes, drastically reducing latency and CPU overhead for data fetching. This means your ML jobs spend less time waiting for data and more time computing.

· Distributed Cache Management: Provides a scalable way to store and retrieve frequently accessed data across multiple servers. This ensures that your ML models have quick access to the data they need, especially in large-scale training.

· Cache Coherence: Maintains consistency of data across all cache nodes, ensuring that your ML training or inference always uses the most up-to-date information. This prevents data inconsistencies that could lead to incorrect model behavior.

· Key-Value Storage Interface: Offers a simple interface for storing and retrieving data, making it easy for developers to integrate with their existing data pipelines. This makes it straightforward to plug into your current ML workflows.

Product Usage Case

· Accelerating Large-Scale Deep Learning Training: Imagine training a massive neural network on a huge dataset. Without this cache, fetching data for each training step could be a significant bottleneck. With the distributed cache, data is served quickly enough to keep the GPUs fed, leading to faster training times. The practical benefit is fewer compute hours spent waiting on I/O.

· Low-Latency Real-time Inference: For applications that require instant predictions, like fraud detection or autonomous driving, even small delays in data access can be critical. This cache drastically reduces the time it takes to retrieve input data for inference, enabling near real-time performance. This means your application can respond to events much faster, improving its effectiveness.

· High-Throughput Data Loading for HPC Workloads: In scientific computing and high-performance computing (HPC), where massive datasets are common, efficient data loading is paramount. This system can serve data at extremely high speeds, making it ideal for HPC workloads that are often I/O bound. This allows scientists and engineers to run more simulations or analyses in the same amount of time.

5

PetHealth AI

Author

pcrausaz

Description

An AI-powered tool that provides instant health assessments for pets by analyzing user-provided symptoms. It aims to alleviate owner anxiety by offering immediate, data-driven insights into potential pet health issues, bridging the gap until professional veterinary consultation.

Popularity

Points 5

Comments 4

What is this product?

PetHealth AI is an experimental project leveraging machine learning models to interpret pet health symptoms described by users. It acts as a first-line information source, translating complex biological indicators into understandable assessments. The innovation lies in its accessibility and speed, offering instant, preliminary feedback on potential pet ailments without requiring immediate veterinary intervention, thereby empowering pet owners with information.

How to use it?

Pet owners can interact with PetHealth AI through a simple text-based interface, describing their pet's symptoms, behavior, and any observed changes. The system then processes this natural language input, identifies key health indicators, and returns a summarized assessment of potential concerns, along with actionable advice such as 'monitor closely' or 'seek veterinary attention within 24 hours'. It can be integrated into pet care apps or community forums.

Product Core Function

· Symptom analysis engine: Parses natural language descriptions of pet symptoms to identify relevant medical keywords and patterns. This allows for quick identification of potential issues, so you know what to look out for.

· AI-driven assessment generation: Utilizes a trained machine learning model to provide a preliminary health assessment based on analyzed symptoms. This gives you an immediate, albeit unofficial, indication of your pet's well-being.

· Actionable advice provision: Offers guidance on the next steps, ranging from continued observation to seeking professional veterinary care. This helps you make informed decisions about your pet's health, saving you time and potential worry.

· User-friendly interface: Designed for ease of use by pet owners who may not have technical backgrounds. This makes accessing valuable health information straightforward and stress-free.

Product Usage Case

· A dog owner notices their pet is lethargic and not eating. They input these symptoms into PetHealth AI and receive an assessment suggesting potential gastrointestinal issues, advising them to monitor for vomiting and dehydration. This helps the owner understand the urgency and what specific signs to watch for before their scheduled vet appointment.

· A cat owner observes their cat grooming excessively. They use PetHealth AI to describe this behavior and receive information that excessive grooming can sometimes indicate stress, skin irritation, or underlying medical conditions. This prompts the owner to investigate environmental factors or consider a vet visit if the behavior persists.

· A pet owner is considering a new diet for their pet and notices mild digestive upset. They query PetHealth AI about the symptoms, and it provides context on typical digestive responses to dietary changes, reassuring them that mild upset might be normal, but advising to contact a vet if symptoms worsen. This helps manage expectations and provides peace of mind.

6

PaperSync: Collaborative ArXiv Reader

Author

qflop

Description

PaperSync is a novel platform designed to transform the way researchers interact with academic papers, specifically those hosted on ArXiv. It allows users to not only read papers but also to annotate specific sections, ask questions directly within the context of the paper, and engage in collaborative discussions with other readers. This project tackles the inherent isolation in individual paper reading by fostering a community-driven annotation and Q&A experience, making complex research more accessible and understandable.

Popularity

Points 6

Comments 3

What is this product?

PaperSync is a web-based application that enhances the reading of research papers by enabling contextualized comments and discussions. At its core, it leverages document parsing to identify specific paragraphs or sections of a paper. Users can then highlight these parts and attach their own notes, questions, or answers, which are visible to other PaperSync users interacting with the same document. This creates a dynamic layer of community knowledge and clarification on top of static research articles. The innovation lies in its ability to tie conversations directly to specific text fragments, facilitating a more focused and efficient understanding of intricate research.
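One way to tie a conversation to a specific text fragment is to key each discussion thread by an anchor made of a paper identifier, a paragraph index, and a character range. The sketch below models that idea. It is not PaperSync's data model, and `Anchor`, `Thread`, and `AnnotationIndex` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Anchor:
    paper_id: str   # identifier of the paper (placeholder format)
    paragraph: int  # index of the paragraph in the parsed document
    start: int      # character offsets of the highlight in that paragraph
    end: int


@dataclass
class Thread:
    anchor: Anchor
    comments: List[Tuple[str, str]] = field(default_factory=list)  # (author, text)


class AnnotationIndex:
    """Groups comments into threads keyed by the highlighted fragment."""

    def __init__(self):
        self._threads: Dict[Anchor, Thread] = {}

    def comment(self, anchor: Anchor, author: str, text: str) -> Thread:
        # Comments on the same highlight join the same thread.
        thread = self._threads.setdefault(anchor, Thread(anchor))
        thread.comments.append((author, text))
        return thread

    def threads_for_paragraph(self, paper_id: str, paragraph: int) -> List[Thread]:
        return [
            t for a, t in self._threads.items()
            if a.paper_id == paper_id and a.paragraph == paragraph
        ]
```

Offsets like these only stay valid while the parsed text is stable. A production system would likely need a more robust anchoring scheme, such as storing the quoted text alongside the offsets.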

How to use it?

Developers can use PaperSync to gain deeper insights into research papers by leveraging the collective knowledge of the community. When encountering a confusing section in a paper, a user can highlight it and see if other users have already asked or answered questions about it. They can also contribute their own insights. For integration, developers might find inspiration in how PaperSync structures its annotation system, potentially adapting similar techniques for collaborative code review or documentation platforms. The tool itself can be accessed via a web browser, where users can search for papers or upload their own (though the current HN mention focuses on ArXiv integration).

Product Core Function

· Contextual Annotation: Allows users to highlight specific text within a research paper and add their own comments or questions. This provides a focused way to discuss parts of a paper, making it easier to track down specific points of confusion or interest.

· Collaborative Q&A: Enables users to ask questions about annotated sections and receive answers from other users. This democratizes knowledge by pooling community understanding, potentially clarifying complex concepts much faster than individual study.

· Shared Reading Experience: Creates a shared environment where multiple users can read and interact with the same paper simultaneously, seeing each other's annotations and discussions. This fosters a sense of community and shared learning, making research less of a solitary activity.

· ArXiv Integration: Specifically targets research papers from ArXiv, a popular repository for pre-print scientific papers, making it immediately useful for a large segment of the academic and research community.

Product Usage Case

· A computer science student struggling with a complex algorithm described in a paper can highlight the relevant section and find pre-existing questions and answers from other students, or post their own question to get help.

· A researcher reviewing a new paper can add their own interpretations or critical remarks to specific sections, which can then be seen and discussed by other reviewers, leading to a more thorough and collaborative peer review process.

· A team working on a research project can all access the same paper through PaperSync, leaving notes and questions for each other directly within the document, streamlining internal discussions and knowledge sharing.

7

ForkLaunch: Typed DSL for Express Upgrades

Author

rohinbharg

Description

ForkLaunch is a framework designed to help developers incrementally modernize their existing Express.js applications by adopting modern open standards. It acts as a layer on top of your current Express endpoints, allowing a smoother transition to newer technologies without a full rewrite. The core innovation lies in its fully typed Domain Specific Language (DSL), which provides a structured and safe way to define API behavior and integrate new standards.

Popularity

Points 7

Comments 1

What is this product?

ForkLaunch is a framework that allows you to upgrade your Express.js applications by progressively introducing modern open standards. Think of it like adding new, safer, and more standardized features to your existing Express server without having to rebuild everything from scratch. The key to its innovation is a 'fully typed DSL'. This means it uses a specialized language that's designed for this specific task (defining API rules) and is 'typed', meaning it checks for errors automatically during development. So, if you try to do something incorrectly, it flags it immediately. This dramatically reduces bugs and makes your API more predictable and easier to work with, especially as you add newer features.

How to use it?

Developers can integrate ForkLaunch into their existing Express projects. You define your API endpoints and their expected behavior using ForkLaunch's typed DSL. This DSL then acts as a smart proxy or enhancer for your original Express endpoints. For example, you can define input validation rules, data transformation logic, or even new API specification formats (like OpenAPI) using the DSL. ForkLaunch handles the enforcement and integration of these rules with your existing Express routes. It's particularly useful for teams that have a large, mature Express codebase and want to adopt newer technologies like GraphQL, gRPC, or standardized API schemas without disrupting current operations.
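The general pattern of declaring a contract and enforcing it in front of an unchanged handler can be sketched in a few lines. The example below is written in Python purely for illustration. It is not ForkLaunch's DSL and does not use Express; `validated`, `create_user`, and the schema format are all hypothetical.

```python
from typing import Any, Callable, Dict, Tuple

Schema = Dict[str, type]
Response = Tuple[int, Dict[str, Any]]


def validated(schema: Schema, handler: Callable[[Dict[str, Any]], Response]):
    """Wrap a handler so requests that fail the schema are rejected with a 400."""

    def wrapper(body: Dict[str, Any]) -> Response:
        errors = [
            f"{name}: expected {expected.__name__}"
            for name, expected in schema.items()
            if not isinstance(body.get(name), expected)
        ]
        if errors:
            return 400, {"errors": errors}
        return handler(body)

    return wrapper


def create_user(body: Dict[str, Any]) -> Response:
    # The existing handler logic stays unchanged.
    return 201, {"name": body["name"]}


# The contract is layered on top of the existing handler.
create_user_checked = validated({"name": str, "age": int}, create_user)
```

The wrapper rejects malformed input before the handler runs, so validation can be introduced one endpoint at a time. A typed DSL goes further by checking the contract at development time rather than only at request time.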

Product Core Function

· Incremental Adoption of Open Standards: Allows integrating modern API standards like GraphQL or OpenAPI into existing Express apps without a complete rewrite. This means you can gradually introduce new capabilities and benefit from them sooner, reducing risk and development time.

· Fully Typed Domain Specific Language (DSL): Provides a structured and type-safe way to define API contracts and behavior. This catches errors early in the development process, leading to more robust and maintainable APIs, and makes your API logic clearer to understand.

· Production Use: The framework is reportedly already used in production by several companies, which suggests it has been exercised on real-world workloads. That track record can give you more confidence when evaluating it for critical services.

· CLI Tooling: While still in beta, the accompanying CLI tool simplifies the process of managing and integrating ForkLaunch into your project. This makes the adoption process smoother and more automated.

Product Usage Case

· Modernizing a Legacy Express API: Imagine you have an Express API that's been running for years. You want to add features like automatic API documentation generation (using OpenAPI) or start serving data through GraphQL. ForkLaunch lets you define these new standards alongside your existing Express routes, allowing users to interact with the new capabilities without changing the underlying Express code immediately.

· Enforcing Strict Input Validation: You can use ForkLaunch's DSL to precisely define what kind of data your API expects (e.g., specific data types, formats, ranges). If a user sends data that doesn't match these rules, ForkLaunch will reject it early, preventing errors deeper within your application logic and making your API more secure.

· Gradually Migrating to a Microservices Architecture: If you're breaking down a monolithic Express app into microservices, ForkLaunch can help manage the interface between the old and new parts. You can define new, standardized interfaces for services as they are extracted, ensuring compatibility during the transition.
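
The input-validation case above can be illustrated with a small sketch. It is written in Python rather than TypeScript and does not use ForkLaunch's DSL, whose syntax is not shown here. `FieldRule`, `validate`, `with_contract`, and the order schema are hypothetical names chosen for illustration. The idea is only that a declared contract wraps an existing handler and rejects bad input before the handler runs.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional, Tuple


@dataclass(frozen=True)
class FieldRule:
    """Hypothetical rule: an expected type plus an optional numeric range."""
    expected_type: type
    minimum: Optional[float] = None
    maximum: Optional[float] = None


Schema = Dict[str, FieldRule]
Handler = Callable[[Dict[str, Any]], Dict[str, Any]]


def validate(payload: Dict[str, Any], schema: Schema) -> List[str]:
    """Return a list of violations; an empty list means the payload is valid."""
    errors: List[str] = []
    for name, rule in schema.items():
        if name not in payload:
            errors.append(f"{name}: missing")
            continue
        value = payload[name]
        # bool is a subclass of int in Python, so exclude it unless bool is expected.
        is_stray_bool = isinstance(value, bool) and rule.expected_type is not bool
        if is_stray_bool or not isinstance(value, rule.expected_type):
            errors.append(f"{name}: expected {rule.expected_type.__name__}")
            continue
        if rule.minimum is not None and value < rule.minimum:
            errors.append(f"{name}: below minimum {rule.minimum}")
        if rule.maximum is not None and value > rule.maximum:
            errors.append(f"{name}: above maximum {rule.maximum}")
    return errors


def with_contract(
    schema: Schema, handler: Handler
) -> Callable[[Dict[str, Any]], Tuple[int, Dict[str, Any]]]:
    """Wrap an existing handler so invalid input is rejected before it runs."""
    def wrapped(payload: Dict[str, Any]) -> Tuple[int, Dict[str, Any]]:
        errors = validate(payload, schema)
        if errors:
            return 400, {"errors": errors}
        return 200, handler(payload)
    return wrapped


def create_order(payload: Dict[str, Any]) -> Dict[str, Any]:
    # Stand-in for an existing route handler that stays unmodified.
    return {"sku": payload["sku"], "quantity": payload["quantity"]}


ORDER_SCHEMA: Schema = {
    "sku": FieldRule(str),
    "quantity": FieldRule(int, minimum=1, maximum=100),
}
safe_create_order = with_contract(ORDER_SCHEMA, create_order)
```

Calling `safe_create_order({"sku": "A1", "quantity": 0})` returns a 400 status with the violation list, and `create_order` itself is never invoked.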

8

180InvestTools

Author

jera_value

Description

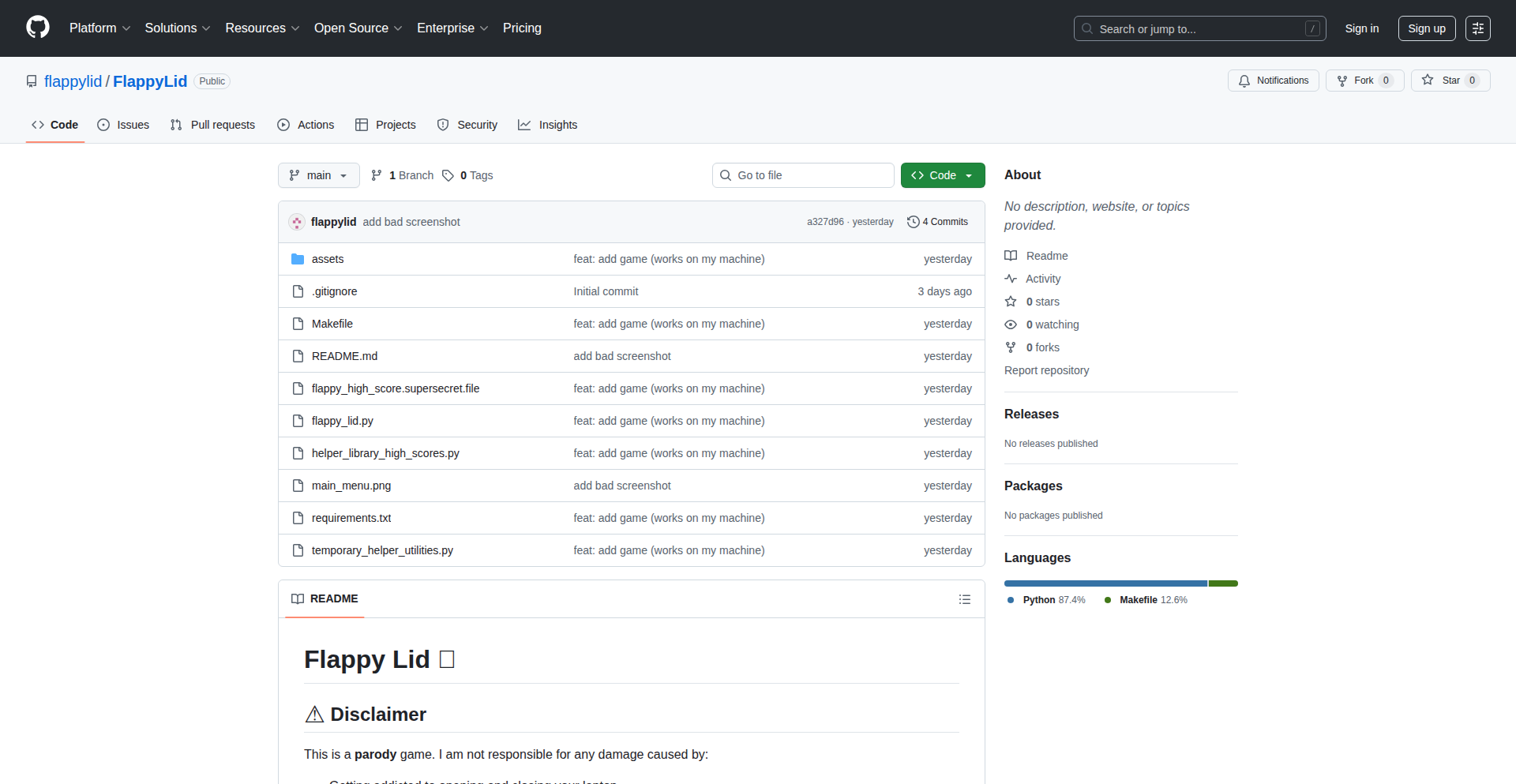

A curated GitHub repository offering 180 meticulously chosen tools and scripts for investment analysis and decision-making. This project aggregates and organizes a diverse range of open-source solutions, addressing the challenge of fragmented resources for investors and developers alike.

Popularity

Points 7

Comments 0

What is this product?

180InvestTools is a comprehensive collection of 180 open-source tools and scripts hosted on GitHub, designed to empower individuals and developers with advanced capabilities for investment research, analysis, and strategy execution. Instead of developers having to hunt for disparate solutions for tasks like backtesting trading strategies, analyzing financial data, or generating market insights, this repository provides a centralized, high-quality resource. The innovation lies in the thoughtful curation and organization of these tools, making sophisticated financial engineering accessible and practical for a wider audience. It’s like having a well-organized toolbox filled with specialized instruments for dissecting and navigating the financial markets, built by the developer community for the developer community.

How to use it?

Developers can leverage 180InvestTools by cloning the GitHub repository to their local machine. Each tool within the repository typically comes with its own set of instructions and dependencies, which can be found in its respective subdirectory's README file. Common usage scenarios include integrating specific Python scripts for data scraping and analysis into existing trading platforms, using JavaScript libraries for visualizing market trends in web applications, or deploying command-line tools for automated financial report generation. The project encourages contribution and modification, allowing developers to fork the repository, adapt tools to their specific needs, and submit pull requests for community review. This means you can either use these tools as-is to enhance your current financial projects, or use them as building blocks to create entirely new investment-related applications.

Product Core Function

· Algorithmic Trading Strategy Development: Offers pre-built scripts and frameworks for creating, testing, and deploying automated trading algorithms. This provides developers with foundational code to experiment with complex trading logic and potentially automate profit-seeking strategies, saving significant development time.

· Financial Data Analysis and Visualization: Includes tools for fetching, cleaning, and analyzing vast amounts of financial market data (e.g., stock prices, economic indicators) and presenting it in insightful visualizations. This helps developers quickly identify patterns, trends, and anomalies in financial data, leading to more informed investment decisions.

· Portfolio Optimization Tools: Provides scripts and methodologies for constructing and managing diversified investment portfolios, aiming to maximize returns for a given level of risk. Developers can use these to build tools that help themselves and others balance risk and reward effectively.

· Sentiment Analysis and News Aggregation: Features tools that process financial news and social media to gauge market sentiment, offering insights into potential market movements. This allows developers to incorporate real-time sentiment data into their analysis, adding another layer of predictive power.

· Backtesting and Simulation Frameworks: Includes robust libraries for simulating the performance of trading strategies on historical data. This is crucial for validating the effectiveness of investment ideas before risking real capital, enabling developers to rigorously test their hypotheses.

· Quantitative Research Utilities: A collection of scripts for performing various quantitative research tasks, such as statistical modeling, factor analysis, and risk management calculations. These utilities equip developers with the mathematical and statistical rigor needed for sophisticated financial analysis.

Product Usage Case

· A quantitative analyst uses a Python script from the repository to backtest a new mean-reversion trading strategy on historical S&P 500 data, identifying profitable parameters and avoiding costly real-world experimentation.

· A fintech startup integrates a JavaScript charting library from the collection into their web application to provide users with interactive, real-time stock price visualizations, enhancing user engagement and data comprehension.

· A retail investor automates the process of downloading daily financial statements for a basket of companies using a command-line tool, streamlining their research workflow and freeing up time for higher-level analysis.

· A data scientist builds a machine learning model to predict stock price movements by leveraging data parsing and feature engineering scripts from the repository, improving the accuracy of their predictive capabilities.

· A student learning about algorithmic trading uses the provided backtesting framework to understand how different risk management techniques impact strategy performance, gaining practical experience without financial risk.
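
To make the backtesting idea concrete, here is a minimal sketch of a mean-reversion backtest in plain Python. It is not taken from the repository. The trading rule, the `backtest_mean_reversion` function, its parameters, and the price list are illustrative assumptions, and transaction costs are ignored.

```python
from statistics import mean, pstdev
from typing import List, Optional


def backtest_mean_reversion(
    prices: List[float], window: int = 5, entry_z: float = 1.0
) -> float:
    """Return the cumulative return of a long-only mean-reversion rule.

    Assumed rule: buy when the price sits more than `entry_z` standard
    deviations below its rolling mean, and sell once it is back at or
    above that mean.
    """
    equity = 1.0
    entry_price: Optional[float] = None  # None means no open position
    for i in range(window, len(prices)):
        history = prices[i - window:i]  # past prices only, to avoid look-ahead
        mu, sigma = mean(history), pstdev(history)
        price = prices[i]
        if sigma == 0:
            continue  # no variation in the window, so no signal
        z_score = (price - mu) / sigma
        if entry_price is None and z_score < -entry_z:
            entry_price = price
        elif entry_price is not None and price >= mu:
            equity *= price / entry_price
            entry_price = None
    # Close any open position at the last price so the result is realized.
    if entry_price is not None:
        equity *= prices[-1] / entry_price
    return equity - 1.0


# Made-up prices for illustration, not market data.
prices = [100, 101, 100, 99, 100, 95, 97, 100, 101, 100, 99, 100]
total_return = backtest_mean_reversion(prices)
print(f"total return: {total_return:.2%}")
```

Using only the preceding `window` prices to compute the signal is the key design choice: it keeps the simulated strategy from peeking at data it could not have known at decision time.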

9

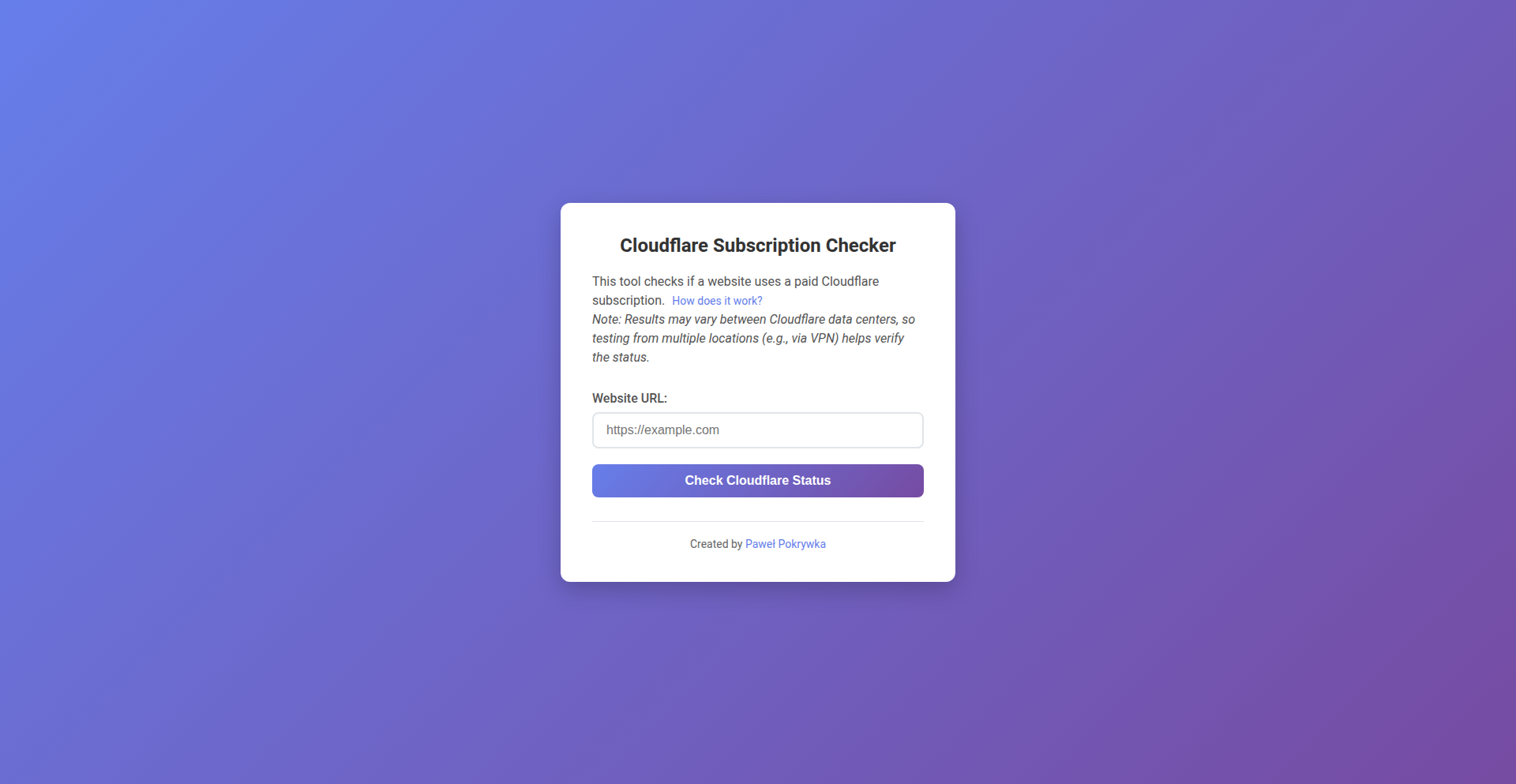

Cloudflare Plan Detector

Author

rapawel

Description

This project is a clever tool that identifies whether a website is using a paid Cloudflare subscription by analyzing a specific network endpoint. It leverages a subtle technical detail about Cloudflare's paid features: only paid plans allow disabling Encrypted Client Hello (ECH). By checking for 'sni=plaintext' in the public '/cdn-cgi/trace' response, it reveals if ECH is disabled, thus indicating a paid plan. This offers a practical way to understand a website's underlying infrastructure.

Popularity

Points 1

Comments 5

What is this product?

This is a diagnostic tool that detects whether a website uses a paid Cloudflare subscription. The core innovation lies in inspecting '/cdn-cgi/trace', a publicly accessible endpoint that Cloudflare serves on proxied sites. According to the project, only Cloudflare's paid tiers permit disabling Encrypted Client Hello (ECH). ECH is a TLS privacy feature that encrypts the ClientHello message, including the Server Name Indication (SNI) that would otherwise reveal which site is being visited before the secure connection is established. By looking for the 'sni=plaintext' field in the trace output, the tool infers that ECH has been turned off, which it treats as a strong indicator of a paid subscription. So, it tells you if a site is likely investing in premium Cloudflare services.

How to use it?

Developers can easily use this tool through its command-line interface or by integrating its logic into their own scripts. For instance, you could run it against a list of competitor websites to see if they are using paid Cloudflare features, which might inform your own infrastructure decisions or competitive analysis. It's a simple HTTP request and response parsing task. So, you can quickly check any Cloudflare-protected website without needing direct access to their Cloudflare account.
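
The parsing half of that task can be sketched as follows. This is not the project's code. `parse_trace`, `classify`, and the sample body are illustrative assumptions, and the sample values are placeholders rather than a captured response. One further assumption matters: the `sni` field appears to describe the connection that made the request, so the body should come from a client that supports ECH. Python's standard library is not known to negotiate ECH, which is why this sketch parses a saved body instead of fetching one.

```python
from typing import Dict


def parse_trace(body: str) -> Dict[str, str]:
    """Parse the key=value lines of a /cdn-cgi/trace response body."""
    fields: Dict[str, str] = {}
    for line in body.splitlines():
        key, separator, value = line.partition("=")
        if separator:  # skip blank or malformed lines
            fields[key.strip()] = value.strip()
    return fields


def classify(fields: Dict[str, str]) -> str:
    """Apply the heuristic described above to the parsed fields."""
    if fields.get("sni") == "plaintext":
        return "ECH appears disabled: likely a paid plan"
    return "inconclusive"


# Placeholder body for illustration only; real responses carry more fields.
SAMPLE_TRACE = """h=example.com
ip=203.0.113.7
visit_scheme=https
http=http/2
tls=TLSv1.3
sni=plaintext
"""

fields = parse_trace(SAMPLE_TRACE)
print(classify(fields))
```

Returning "inconclusive" rather than "free plan" for every other value keeps the sketch honest: the heuristic only supports a positive inference.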

Product Core Function

· Inspects the '/cdn-cgi/trace' endpoint for network information. This allows the tool to gather data about the connection, providing the raw material for analysis.

· Analyzes the 'sni' field within the trace response. Specifically, it looks for 'sni=plaintext', which signifies that Encrypted Client Hello (ECH) is disabled. This is the key indicator of a paid plan.

· Determines if a website uses a paid Cloudflare subscription based on the ECH status. This provides a clear yes/no answer about whether the website is likely on a premium Cloudflare plan.

· Works on any website using Cloudflare's proxy service. This broad applicability means you can analyze a wide range of internet sites without needing special permissions.

Product Usage Case

· A web developer investigating competitors' infrastructure to understand their investment in performance and security. By running this tool against competitor sites, they can see if competitors are using paid Cloudflare features, suggesting a higher level of service for performance or security, which helps in strategic planning.

· A cybersecurity analyst monitoring the web for specific configurations. They might use this to identify organizations that have opted for advanced Cloudflare features, potentially indicating a higher security posture or more critical web assets.

· A freelance developer assessing a potential client's setup. Before proposing services, they can quickly check if the client is already using premium Cloudflare, which might affect the scope or nature of their recommendations.

· An SEO specialist looking for clues about a website's technical sophistication. While not a direct SEO factor, knowing if a site uses paid Cloudflare can be a proxy for how seriously they take their online presence and performance.

10

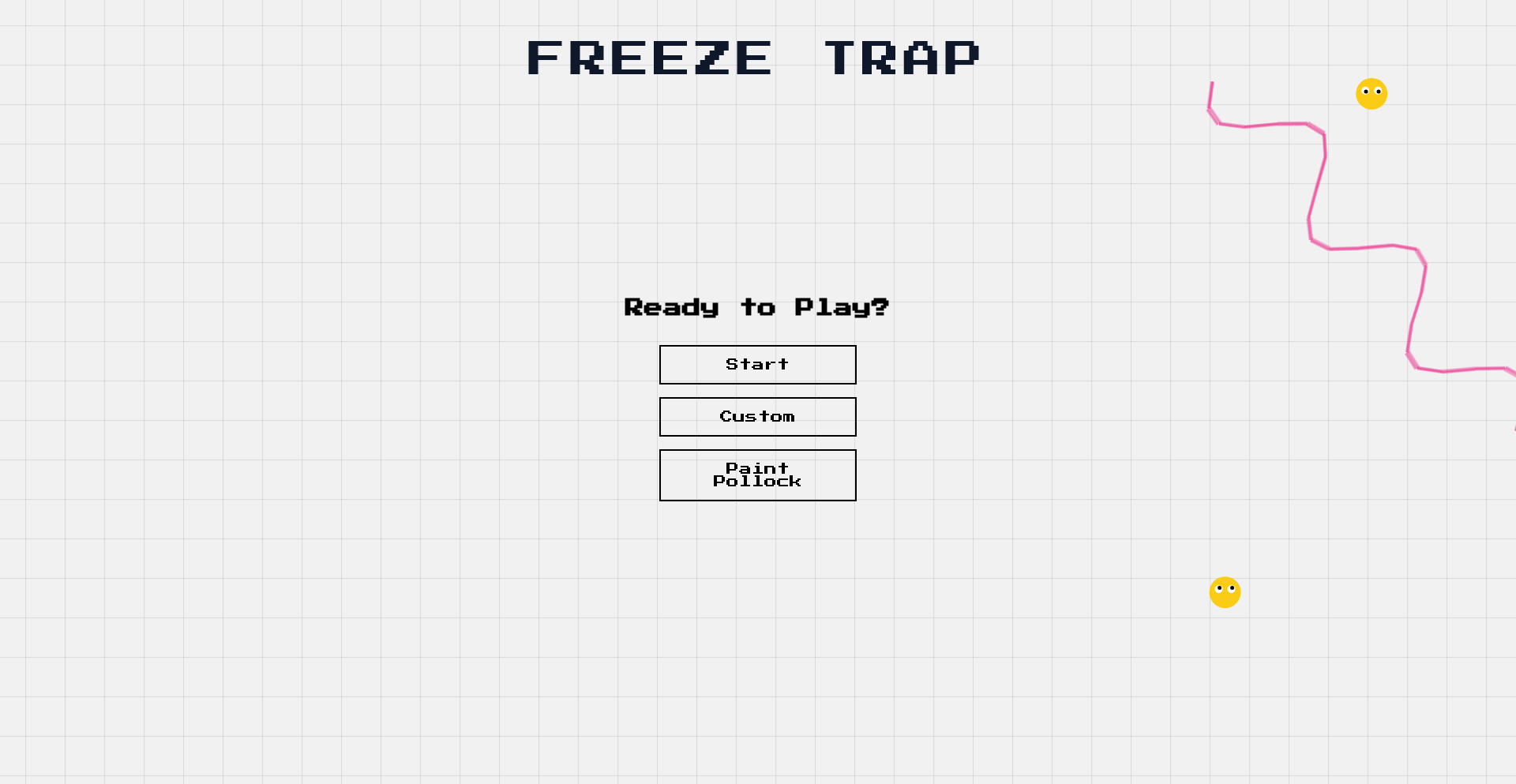

Freeze Trap Canvas Game

Author

dwa3592

Description

A web-based game built using only vanilla JavaScript and HTML5 Canvas. The core innovation lies in its real-time physics simulation and interactive boundary drawing, allowing players to trap bouncing balls. The 'Pollock' mode adds a generative art element, showcasing the creative potential of canvas manipulation.

Popularity

Points 4

Comments 2

What is this product?

Freeze Trap is a game where players draw boundaries on an HTML5 Canvas to capture bouncing balls. The balls exhibit a 'smart' behavior, actively evading the player's cursor. The underlying technology is pure JavaScript and the HTML5 Canvas API, meaning no external libraries are required, demonstrating efficient client-side graphics and interaction. The 'Pollock' feature uses a simple, yet effective algorithm to generate randomized lines over time, mimicking abstract expressionist art. The innovation here is in demonstrating a complex, interactive experience and a generative art piece using only fundamental web technologies.

How to use it?

Developers can use Freeze Trap as a learning resource for HTML5 Canvas game development, physics simulation in JavaScript, and event handling. It can be integrated into a webpage as an interactive element or a standalone game. The code is open and can be forked and modified to experiment with different game mechanics, ball behaviors, or artistic generative patterns. For instance, one could modify the ball evasion logic or create new ways to interact with the canvas.

Product Core Function

· Interactive Boundary Drawing: Allows users to draw lines and shapes on the canvas in real-time to strategically trap objects, demonstrating direct manipulation and graphical feedback capabilities.

· Ball Physics Simulation: Simulates bouncing ball movement with realistic reflections and interactions, showcasing a fundamental understanding of game physics and collision detection.

· Cursor-Averse Ball Behavior: Implements an AI-like behavior where balls react and move away from the player's cursor, adding a layer of challenge and dynamic interaction.

· Generative Art Mode (Pollock Inspiration): Creates abstract visual patterns by drawing random lines over time, highlighting the use of algorithms for artistic creation on the canvas, useful for dynamic backgrounds or visualizers.

· Vanilla JS/HTML5 Canvas Implementation: Achieves all functionalities without external libraries, demonstrating efficient, lightweight web development and the power of native browser APIs for rich interactive experiences.
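
The bouncing and cursor-avoidance behaviors listed above can be sketched in a few lines. The game itself is vanilla JavaScript. This Python version is an illustration only, and the `Ball` class, the `step` and `evade` functions, and every constant are assumptions rather than the game's actual code.

```python
import math
from dataclasses import dataclass


@dataclass
class Ball:
    x: float
    y: float
    vx: float
    vy: float
    radius: float = 5.0


def step(ball: Ball, width: float, height: float, dt: float = 1.0) -> None:
    """Advance one frame and reflect the velocity off the canvas edges."""
    ball.x += ball.vx * dt
    ball.y += ball.vy * dt
    # Clamp the ball back inside and flip the velocity component that hit a wall.
    if ball.x - ball.radius < 0:
        ball.x = ball.radius
        ball.vx = abs(ball.vx)
    elif ball.x + ball.radius > width:
        ball.x = width - ball.radius
        ball.vx = -abs(ball.vx)
    if ball.y - ball.radius < 0:
        ball.y = ball.radius
        ball.vy = abs(ball.vy)
    elif ball.y + ball.radius > height:
        ball.y = height - ball.radius
        ball.vy = -abs(ball.vy)


def evade(
    ball: Ball,
    cursor_x: float,
    cursor_y: float,
    danger_radius: float = 50.0,
    strength: float = 0.5,
) -> None:
    """Nudge the velocity directly away from the cursor when it is close."""
    dx = ball.x - cursor_x
    dy = ball.y - cursor_y
    distance = math.hypot(dx, dy)
    if 0 < distance < danger_radius:
        ball.vx += strength * dx / distance
        ball.vy += strength * dy / distance


ball = Ball(x=96.0, y=50.0, vx=3.0, vy=0.0)
step(ball, width=100.0, height=100.0)  # hits the right wall and bounces back
```

In a browser the same two functions would run once per animation frame, with `evade` called before `step` so the nudge takes effect in the same frame.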

Product Usage Case

· Learning Game Development: A student can study the source code to understand how to build simple physics-based games directly in the browser, learning about event listeners, canvas rendering, and game loops.

· Interactive Web Art Installation: A web designer could integrate the 'Pollock' mode into a website as a dynamic, ever-changing visual element, creating a unique artistic experience for visitors.

· Client-side Physics Experimentation: A developer looking to build a web application with physics simulations can use this project as a reference for implementing collision detection and object movement without server-side processing.

· Educational Tool for Canvas API: Educators can use this project to teach students about the capabilities of the HTML5 Canvas API, showing practical applications of drawing, animation, and user interaction.

11

Navly - AI Tool Navigator

Author

airobus

Description

Navly is a curated directory for the latest AI websites and tools. It addresses the challenge of discovering and organizing cutting-edge AI resources in a rapidly evolving landscape. The core innovation lies in its intelligent curation and presentation of AI tools, making it easier for developers and enthusiasts to stay abreast of the newest advancements.

Popularity

Points 4

Comments 1

What is this product?

Navly is a meticulously curated online directory showcasing the newest AI websites and tools. It's built to cut through the noise and highlight genuinely innovative AI resources. The technology behind it likely involves sophisticated web scraping and aggregation techniques, combined with a human curation layer to ensure quality and relevance. Think of it as a smart, continuously updated guide to the AI frontier. So, what's in it for you? It saves you countless hours of searching and helps you discover powerful AI tools you might otherwise miss, keeping your own projects at the cutting edge.

How to use it?

Developers can use Navly by visiting the website and exploring the categorized listings of AI tools. The site allows for filtering and searching based on AI categories (e.g., natural language processing, computer vision, machine learning platforms) and specific functionalities. Integration with your workflow could involve bookmarking useful tools, referencing Navly when seeking solutions for specific development challenges, or even subscribing to updates on new AI releases. So, how does this help you? When you need a specific AI capability for your next project, you can quickly find vetted and relevant tools without starting your search from scratch.

Product Core Function

· Curated AI Tool Listings: Centralized access to a hand-picked selection of the most promising AI websites and tools. This saves you time and effort in finding high-quality resources.

· Categorization and Tagging: AI tools are organized into logical categories and tagged with relevant keywords, making it easy to discover tools for specific AI domains. This means you can quickly find tools for your niche requirements.

· Up-to-date Information: The directory is continuously updated to reflect the latest AI releases and trends, ensuring you're always aware of the newest advancements. This keeps your knowledge base current and your projects competitive.

· User Submissions and Community Feedback: Potential for community contributions and feedback mechanisms to further refine the directory. This allows for collective intelligence in identifying the best AI tools.

Product Usage Case

· A machine learning engineer needing a new open-source library for optimizing neural network training could use Navly to quickly find and evaluate several promising options, rather than sifting through hundreds of GitHub repositories. This accelerates the development cycle.

· A web developer looking for AI-powered APIs to integrate into their application, such as image recognition or sentiment analysis, can use Navly to discover and compare different service providers and their features. This leads to faster and more informed integration decisions.

· A researcher exploring the latest advancements in natural language processing can use Navly to find new models, datasets, and research tools that are relevant to their work. This aids in staying at the forefront of their field.

12

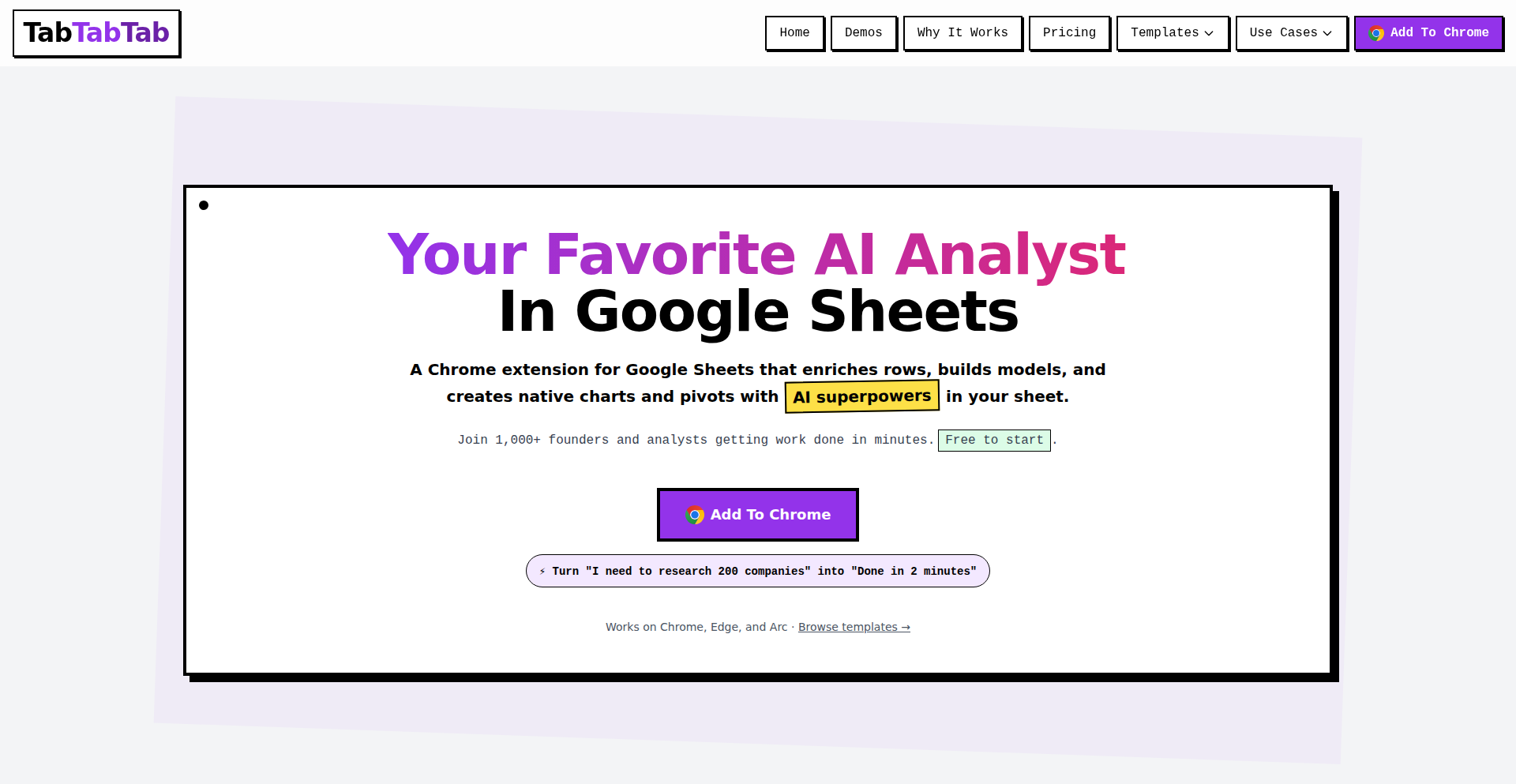

TabTabTab: AI-Powered Google Sheets Copilot

Author

break_the_bank

Description

TabTabTab is a Chrome extension that transforms Google Sheets into an intelligent workspace. It acts as an AI agent, understanding your data within sheets, performing web searches, enriching data from external sources, and even executing code. This innovation aims to empower non-technical users with AI capabilities previously reserved for software engineers, streamlining tasks like data structuring, analysis, and business modeling directly within the familiar environment of Google Sheets.

Popularity

Points 5

Comments 0

What is this product?

TabTabTab is an AI agent designed to enhance productivity within Google Sheets. It leverages AI models to understand the context of your data in the sheet, allowing you to perform complex operations with natural language prompts. Technically, it functions as a Chrome extension that can access and interpret your Google Sheets data. It integrates with web search and various data enrichment APIs, and can execute code (like Python or JavaScript) either in your browser or on its backend. The innovation lies in its ability to go beyond simple copy-pasting by understanding the structure and intent of your data, and then using AI and external resources to automate tasks and provide insights directly within your spreadsheets. For example, instead of manually searching for company information for a list of names, you can ask TabTabTab to enrich your sheet, and it will fetch and insert the data for you.

How to use it?

Developers can use TabTabTab by installing it as a Chrome extension. Once installed, it integrates seamlessly with Google Sheets. You can interact with TabTabTab through a dedicated interface within your Google Sheet. For instance, if you have a list of company names and want to find their websites, you can select the column, open TabTabTab, and type a prompt like 'Find websites for these companies'. TabTabTab will then use its AI and web search capabilities to find and populate the website URLs in your sheet. For more advanced use cases, developers can leverage its code execution capabilities to run custom scripts on their data. This means you can move from simply inputting data to actively manipulating and analyzing it using AI-driven commands within your familiar spreadsheet workflow.

Product Core Function

· AI-driven data structuring: Automatically organizes and formats data copied from various sources into clean, usable formats within Google Sheets, saving manual cleaning time.

· Web data enrichment: Fetches and inserts relevant information from the web (like company details, contact information) into your spreadsheets based on existing data, eliminating the need for manual web searches.

· Code execution within sheets: Allows users to run custom scripts (e.g., Python, JavaScript) directly on their spreadsheet data, enabling complex data transformations and analyses without leaving Google Sheets.

· Natural language querying and analysis: Enables users to ask questions about their data in plain English and receive AI-generated insights, categorizations, or summaries, making data analysis accessible to everyone.

· Chromium browser compatibility: Ships as a Chrome extension, so it should also run in other Chromium-based browsers that accept Chrome extensions, giving users consistent functionality across those environments.

Product Usage Case

· A small business owner uses TabTabTab to import customer lists from various booking platforms into Google Sheets. They then use it to segment customers based on purchase history and marketing engagement, automating what would have been tedious manual analysis.

· A marketing team uses TabTabTab to gather competitor pricing data from websites. They provide a list of competitor URLs, and TabTabTab scrapes the pricing information, structures it in a sheet, and allows them to perform sensitivity analysis to inform their pricing strategy.

· An academic researcher uses TabTabTab to process survey responses. They copy raw text responses into a sheet and use TabTabTab's AI to categorize sentiment and identify common themes, accelerating qualitative data analysis.

· A startup founder models business expansion plans in Google Sheets. They use TabTabTab to pull market data, generate financial projections with AI assistance, and create scenario analyses to evaluate different growth strategies.

13

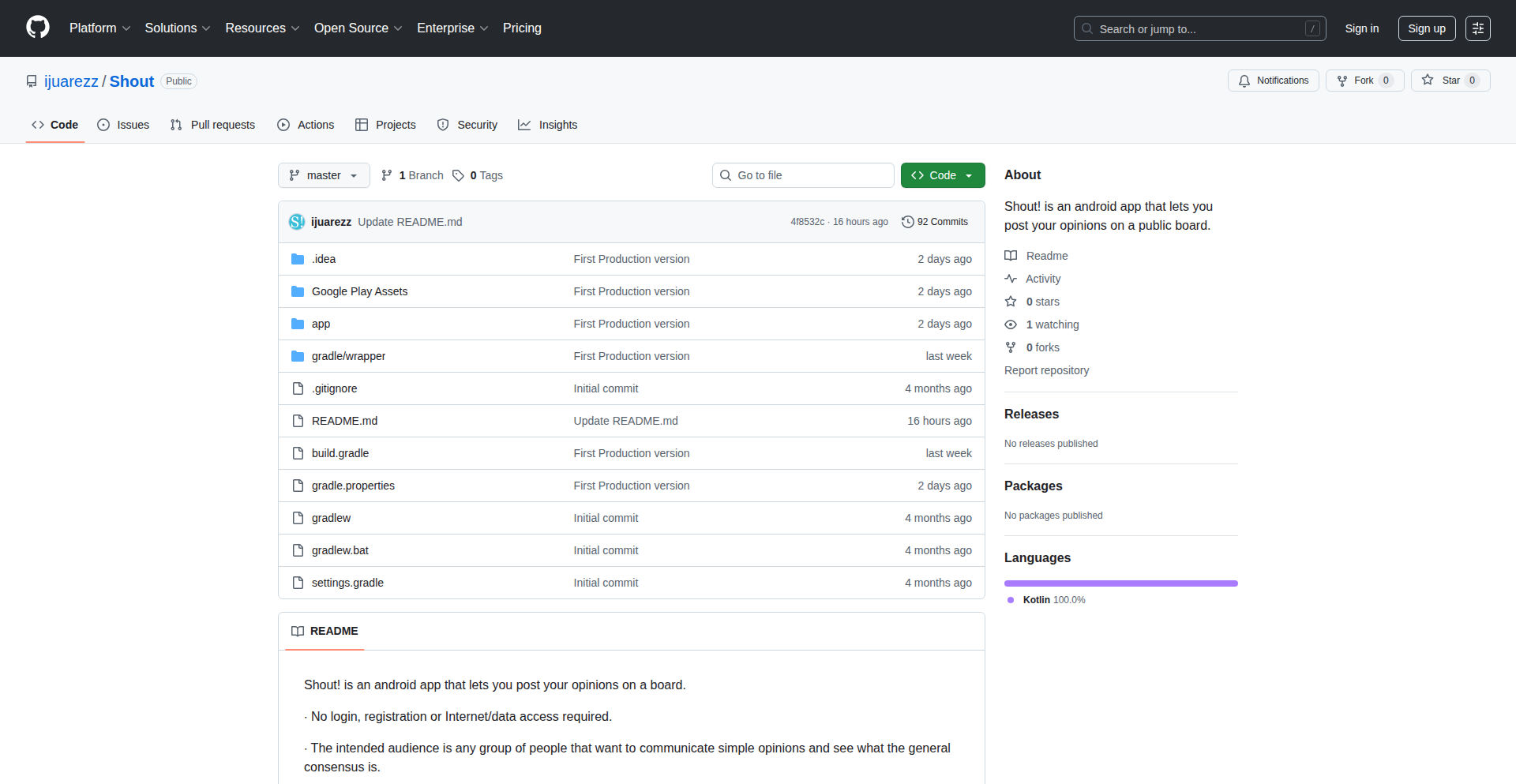

Shout: Proximity Opinion Broadcast

Author

ijuarezz

Description

Shout is an innovative Android application that allows users to share simple opinions and gauge group consensus without needing any login, registration, or internet connection. Leveraging the Google Nearby API and built with Kotlin and Jetpack Compose, it offers a novel way for people in close physical proximity to communicate and vote on shared sentiments. This fundamentally changes how local, ephemeral group discussions can happen, focusing on immediate peer-to-peer interaction.

Popularity

Points 2

Comments 3

What is this product?

Shout is a mobile application for Android that enables users in close physical proximity to broadcast their opinions and see what others nearby think, all without requiring any internet access or personal data. It uses the Google Nearby API, which is a technology that allows devices to discover and connect with each other directly, like a peer-to-peer network. The app is built using Kotlin and Jetpack Compose, Android's modern declarative UI toolkit (its UI functions are called composables), which keeps the interface code compact. The core innovation lies in its ability to facilitate instant, offline group polling and sentiment sharing, fostering spontaneous communication within a local group.

How to use it?

Developers can use Shout in scenarios where immediate, localized feedback or consensus is needed among a group of people who are physically together. For example, during a meeting, a workshop, or even a casual gathering, participants can use Shout to quickly vote on a proposal or express their general feeling about a topic without relying on a central server or internet connection. The app can be integrated into existing Android projects by utilizing the Google Nearby API for device discovery and message passing. Developers can adapt its core functionality to build custom communication or polling tools that operate in offline, localized environments.

Product Core Function

· Offline opinion broadcasting: Allows users to post their opinions without any internet connection, enabling communication in environments with limited or no connectivity. This is valuable for quick, on-the-spot feedback.

· Proximity-based discovery: Utilizes the Google Nearby API to find and connect with other users within physical range, facilitating direct peer-to-peer interaction without a central server. This enables spontaneous group communication.

· Consensus visualization: Displays the collective opinions of nearby users, allowing for a quick understanding of group sentiment or agreement. This provides immediate insight into what the group thinks.

· Multilingual support: Offers availability in English, Portuguese, and Spanish, making it accessible to a broader range of users for international or multilingual group interactions. This enhances usability across different language groups.
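
The consensus step can be pictured as a simple tally over the messages a device has received from its neighbors. The sketch below is an assumption about how such a tally might work, not Shout's implementation. It involves no Nearby API calls, and the `(sender_id, opinion)` message shape and the `tally` function are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def tally(messages: Iterable[Tuple[str, str]]) -> List[Tuple[str, int]]:
    """Count one opinion per sender, keeping each sender's latest message."""
    latest: Dict[str, str] = {}
    for sender_id, opinion in messages:
        latest[sender_id] = opinion  # a later message replaces an earlier one
    return Counter(latest.values()).most_common()


# Hypothetical messages received from nearby devices, in arrival order.
received = [
    ("device-a", "yes"),
    ("device-b", "yes"),
    ("device-c", "no"),
    ("device-a", "no"),  # device-a changes its mind
    ("device-d", "yes"),
    ("device-e", "yes"),
]
result = tally(received)
print(result)
```

Keying the tally on the sender means a participant who rebroadcasts or changes their opinion is still counted once.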

Product Usage Case

· In a classroom setting, a teacher can use Shout to quickly poll students' understanding of a concept without needing individual student devices connected to the internet. Students can anonymously broadcast their confidence level, and the teacher can instantly see the general consensus.

· During a team brainstorming session, participants can use Shout to vote on different ideas being discussed. This provides real-time, anonymous feedback on which ideas resonate most with the group, helping to prioritize and move forward efficiently.

· At a local community event or a small gathering, attendees can use Shout to vote on minor decisions, like choosing music or deciding on the next activity, without needing a shared Wi-Fi or mobile data. This fosters democratic and immediate decision-making within the group.

14

Sentrilite-eBPFFleetCommander

Author

gaurav1086

Description

Sentrilite is a lightweight, unified control plane designed to observe and secure hybrid multi-cloud environments (AWS, Azure, GCP, on-prem) from a single point. It excels at rapid onboarding, providing live kernel-level telemetry, enabling fleet-wide rule targeting, and generating audit-ready PDFs, all without needing to integrate multiple disparate tools. This project is innovative for its seamless integration of eBPF's deep visibility with Kubernetes metadata, simplifying complex multi-cloud fleet management for developers.

Popularity

Points 2

Comments 2

What is this product?

Sentrilite is a control plane that acts as a central hub for managing and monitoring your servers and applications across different cloud providers and on-premises infrastructure. It uses eBPF (extended Berkeley Packet Filter) technology, which allows it to run custom, safe programs directly within the Linux kernel. This means it can gain extremely detailed insights into what your systems are doing, such as network traffic, process execution, and file access, at a very low level. The innovation lies in its ability to correlate this low-level data with Kubernetes context and apply security or operational rules across your entire fleet, or specific groups of servers, all from one interface. Think of it as a universal remote for your cloud infrastructure, but with the ability to see exactly what every button press does in real-time.

How to use it?

Developers can onboard their fleet by simply providing a CSV file with server IPs and group assignments. For Kubernetes environments like EKS, deploying Sentrilite is as easy as running a `kubectl apply` command. This sets up an agent on each node. Once deployed, you can immediately see fleet health, recent alerts, and AI-driven insights. You can then define high-risk rules (like detecting the use of potentially dangerous commands or unauthorized access to sensitive files) and target them to specific groups of servers (e.g., only production AWS instances). The system provides live telemetry, such as process and network events, enriched with Kubernetes metadata, allowing for quick diagnosis of issues like Out-Of-Memory (OOM) container kills. Finally, you can generate comprehensive PDF reports for auditing and compliance.
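
The CSV onboarding and group-targeting flow described above can be sketched as follows. Sentrilite's actual file format and rule schema are not shown here, so the `ip` and `group` column names, the rule dictionary, and the `load_fleet` and `targets_for` helpers are all assumptions made for illustration. The addresses come from documentation-reserved ranges.

```python
import csv
import io
from collections import defaultdict
from typing import Any, Dict, List


def load_fleet(csv_text: str) -> Dict[str, List[str]]:
    """Group server IPs by their assigned group (assumed columns: ip, group)."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        groups[row["group"].strip()].append(row["ip"].strip())
    return dict(groups)


def targets_for(rule: Dict[str, Any], fleet: Dict[str, List[str]]) -> List[str]:
    """Return the servers a rule applies to, based on its target groups."""
    return [ip for group in rule["groups"] for ip in fleet.get(group, [])]


# Hypothetical fleet file.
FLEET_CSV = """ip,group
192.0.2.10,aws-prod
192.0.2.11,aws-prod
198.51.100.5,azure-staging
203.0.113.9,onprem
"""

# Hypothetical rule: flag reads of a sensitive file on production AWS hosts only.
rule = {
    "name": "sensitive-file-read",
    "match": "/etc/passwd",
    "groups": ["aws-prod"],
}

fleet = load_fleet(FLEET_CSV)
print(targets_for(rule, fleet))
```

Resolving targets from group names rather than hard-coded IPs is what makes a staged rollout practical: the same rule can be pointed at a staging group first and a production group later.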

Product Core Function

· Fleet Onboarding in Seconds: Upload a CSV with server IPs and groups to instantly populate a dashboard with fleet status, health, and alerts. This simplifies the initial setup for managing distributed systems, reducing manual configuration time and errors.

· Live Kernel-Level Telemetry: Utilizes eBPF to gather real-time process, file, and network event data from each node. This provides deep visibility into system behavior, enabling faster troubleshooting and security incident response.

· Kubernetes Context Enrichment: Automatically correlates system events with Kubernetes metadata (like pod and container names). This crucial context makes it easier to understand the impact of events and identify the root cause of issues within containerized applications.

· Fleet-wide Rule Targeting: Allows users to define and apply security or operational rules to specific groups of servers (e.g., by cloud provider, environment, or custom labels). This enables granular policy enforcement and phased rollout of changes, minimizing risk.

· OOMKilled Container Detection: Identifies containers that have been terminated due to memory exhaustion, providing exact pod and container context for rapid debugging and resource optimization.

· Audit-Ready PDF Export: Generates a one-click chronological report with summaries, tags, and Kubernetes context, simplifying compliance checks and historical analysis of system behavior.

Product Usage Case

· A developer managing a hybrid cloud setup with servers on AWS, Azure, and an on-premises data center can use Sentrilite to onboard all these machines within minutes. By uploading a single CSV, they can visualize the health of their entire infrastructure and set up a rule to alert them if any sensitive file like `/etc/passwd` is read on any of their production servers, regardless of where they are hosted.

· In a Kubernetes cluster, a DevOps engineer can deploy Sentrilite agents with a single `kubectl apply` command. When a pod starts consuming excessive memory and gets OOMKilled, Sentrilite will immediately flag it, showing the specific pod and container name, along with the node it was running on, allowing the engineer to quickly investigate the memory leak without manually digging through logs.

· A security analyst needs to ensure that no unauthorized network listeners are running across their fleet. They can define a rule in Sentrilite to detect commands like `nc` (netcat) listening on ports. This rule can be hot-reloaded and applied only to servers tagged as 'staging' before being rolled out to 'production', providing a safe and efficient way to enforce security policies.

· For compliance purposes, a system administrator can generate a PDF report detailing all detected security events and system activities for the past month, including the Kubernetes context for relevant workloads. This report can be provided to auditors, demonstrating adherence to security policies and providing a clear audit trail.

15

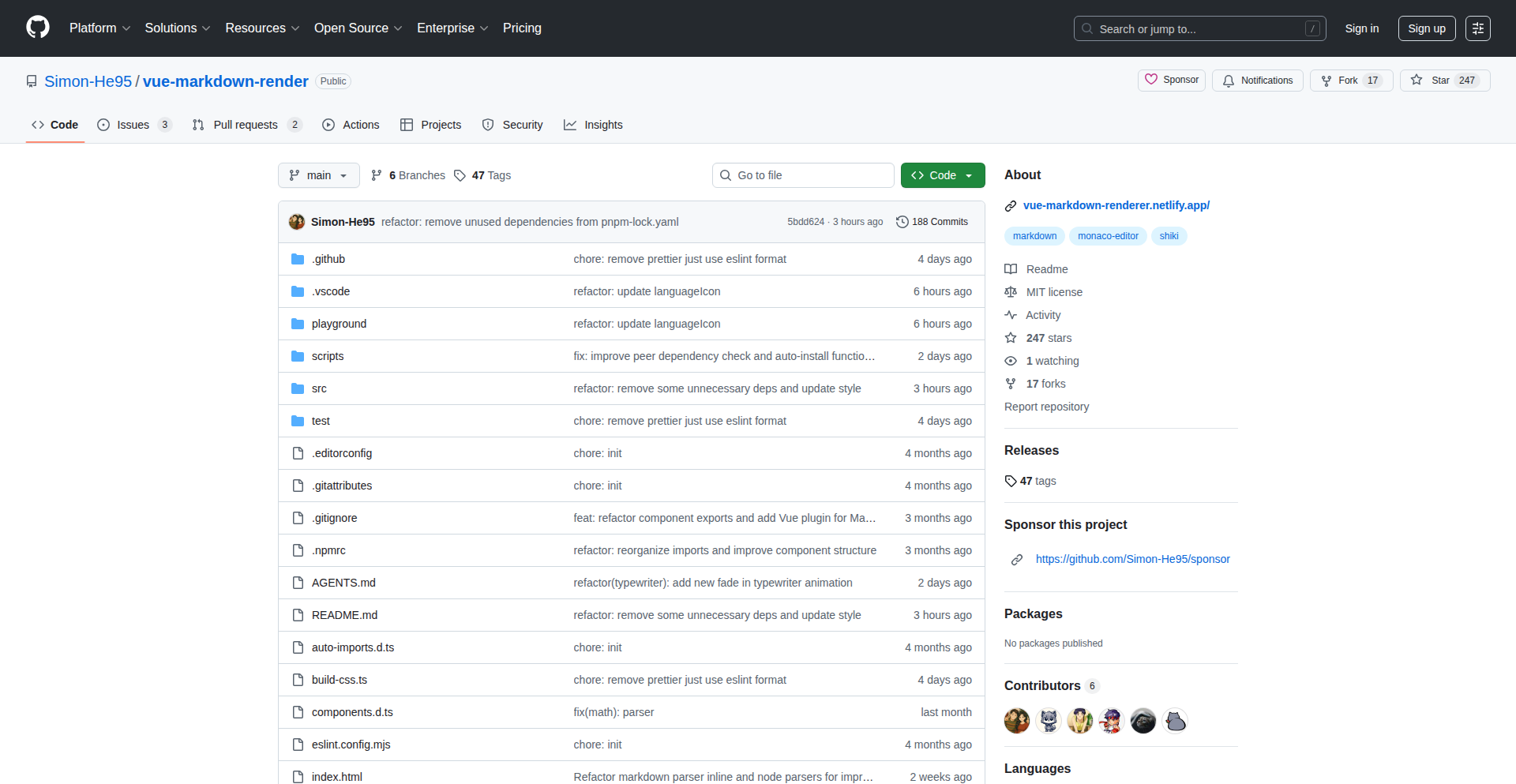

Vue-Markdown-Nitro

Author

simon_he

Description

Vue-Markdown-Nitro is a lightning-fast, client-side Markdown renderer specifically designed for Vue 3 applications. It tackles the common problem of slow Markdown rendering in the browser, especially for content-rich documents like those found in AI chatbots or technical documentation. By optimizing for client-side performance, it achieves significantly lower CPU usage and latency compared to server-side focused solutions, offering up to 100x speed improvements.

Popularity

Points 4

Comments 0

What is this product?

Vue-Markdown-Nitro is a JavaScript library that displays Markdown content directly within Vue 3 web applications, without sending it to a server for processing. Its core innovation is a highly optimized rendering engine built from the ground up for the browser. Think of it as a super-efficient translator that takes plain text with Markdown formatting (like asterisks for bold or hash signs for headings) and turns it into formatted HTML, all in the user's browser. This is particularly beneficial for applications that deal with large amounts of text, such as AI chatbot interfaces where messages need to appear without delay, or in-browser documentation viewers. The 'nitro' in the name highlights its speed.
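To make the "translator" idea concrete, here is a minimal sketch of a Markdown-to-HTML conversion for a tiny subset (headings, bold, paragraphs). It is written in Python for illustration only and is not Vue-Markdown-Nitro's engine; the function name `render_markdown` is an assumption made up for this example.

```python
import html
import re


def render_markdown(text: str) -> str:
    """Convert a tiny Markdown subset (headings, bold, paragraphs) to HTML.

    Illustrative only: real renderers handle far more syntax and edge cases.
    """
    out = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue  # blank lines only separate blocks
        escaped = html.escape(line)  # never pass raw user HTML through
        # **bold** becomes <strong>bold</strong>
        escaped = re.sub(r"\*\*(.+?)\*\*", r"<strong>\1</strong>", escaped)
        # One to six leading '#' characters mark a heading of that level
        heading = re.match(r"(#{1,6})\s+(.*)", escaped)
        if heading:
            level = len(heading.group(1))
            out.append(f"<h{level}>{heading.group(2)}</h{level}>")
        else:
            out.append(f"<p>{escaped}</p>")
    return "\n".join(out)


print(render_markdown("# Title\n\nSome **bold** text"))
```

A production renderer does the same kind of work at much larger scale, which is why rendering cost becomes noticeable on long documents or on chat messages that update frequently.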

How to use it?

Developers can easily integrate Vue-Markdown-Nitro into their Vue 3 projects by installing it via npm or yarn: `npm install vue-markdown-render` or `yarn add vue-markdown-render`. Once installed, you can import the component and use it directly in your Vue templates. For example, you can pass your Markdown content as a prop to the component. This makes it incredibly simple to embed dynamic Markdown content from APIs or user inputs into your existing Vue applications, providing an immediate performance boost for rendering text.

Product Core Function

· High-performance client-side rendering: The library efficiently converts Markdown to HTML in the user's browser, leading to a snappier user experience for displaying text content.

· Vue 3 compatibility: Built specifically for Vue 3, it seamlessly integrates with the latest Vue ecosystem and features, ensuring smooth development.

· Low CPU usage and latency: Optimized for speed, it consumes fewer device resources and responds much faster, crucial for real-time applications like chatbots.

· Large document optimization: It excels at rendering lengthy and complex Markdown files, including those with extensive code blocks, making technical documentation and lengthy responses feel instantaneous.

· Simple API for integration: Easy to use with a straightforward prop-based interface, allowing developers to quickly add Markdown rendering capabilities to their projects.

Product Usage Case

· AI Chatbot Interfaces: Displaying AI-generated responses that contain formatted text, code snippets, or lists instantly to the user, improving the perceived speed and responsiveness of the chatbot.

· In-browser Documentation Viewers: Rendering technical manuals, API documentation, or knowledge base articles directly in the web application without a page reload, providing a fluid reading experience.

· Blog Post Rendering: Quickly displaying blog posts written in Markdown within a Vue.js application, allowing for rapid content updates and a faster load time for articles.

· Markdown-based Content Management Systems: Enabling users to write and preview content in Markdown directly within a web interface, with immediate visual feedback on how it will be rendered.

16

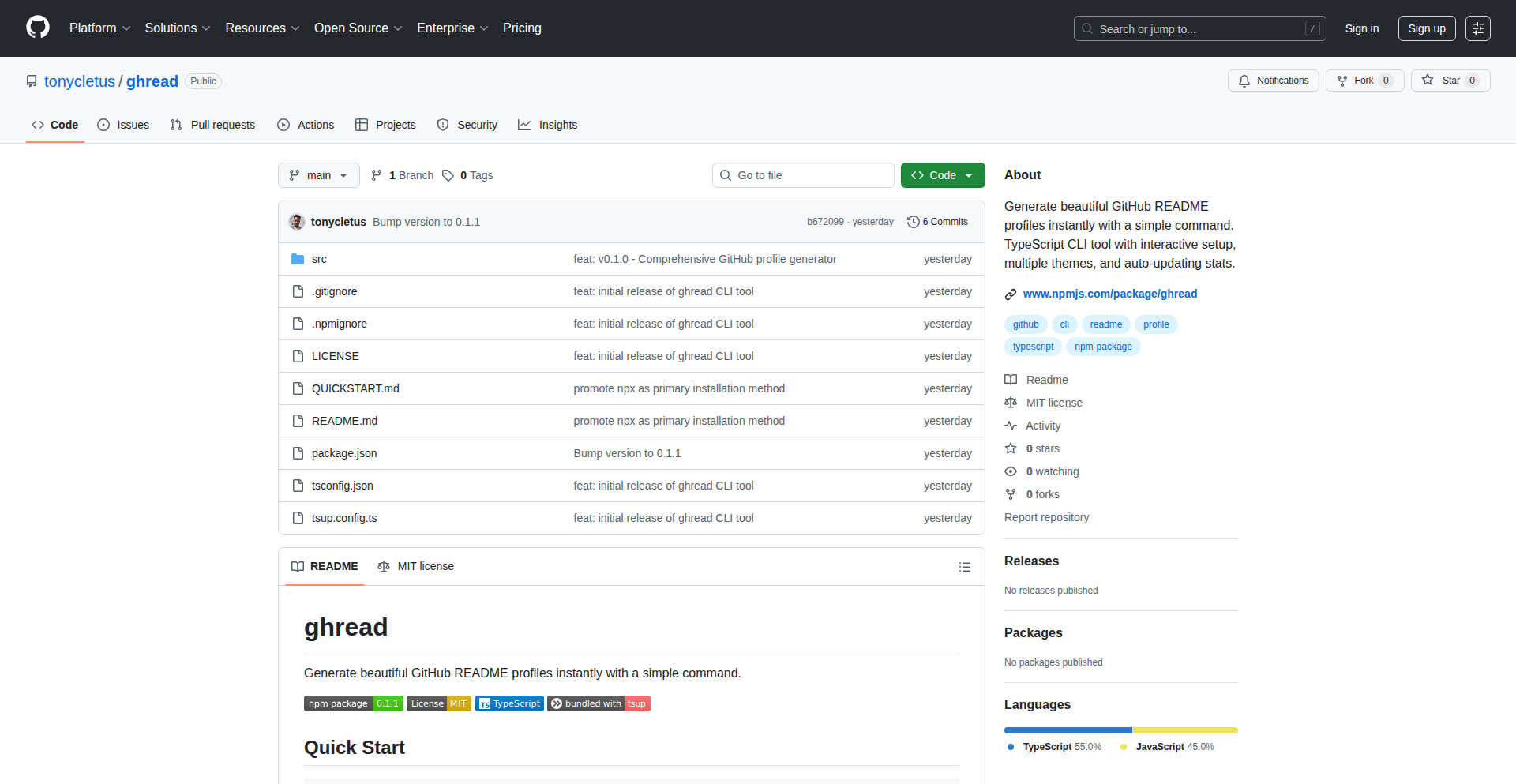

Ghread - Instant GitHub Profile README Generator

Author

omojo

Description

Ghread is a tool that instantly generates attractive, informative GitHub profile READMEs. It simplifies the process of creating a compelling personal presence on GitHub, allowing developers to showcase their skills and projects without extensive manual effort. The core innovation lies in its parsing of GitHub data and user-provided inputs to construct a visually appealing and functional README.

Popularity

Points 2

Comments 2

What is this product?

Ghread is a project that programmatically creates custom GitHub profile READMEs. It fetches information like pinned repositories, contributions, and badges from your GitHub account, and combines it with user-specified sections (e.g., skills, contact information, projects). The innovation is in its ability to automate the creation of a visually rich and well-organized README, turning raw GitHub data into a polished personal brand showcase. Think of it as a smart assistant that designs your digital handshake on GitHub.

How to use it?

Developers can use Ghread by either running it locally or accessing a hosted version (if available). The typical workflow involves connecting your GitHub account, selecting the modules you want to include (like top languages, recent activity, or social links), and potentially adding custom text or images. Ghread then processes this information and outputs a Markdown file that can be directly copied and pasted into your GitHub profile README. It's like choosing pre-built components to assemble your professional online identity.
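The "select modules, get Markdown" workflow can be sketched roughly as follows. This is a hypothetical illustration, not Ghread's code: the `Profile` type, the module names `"skills"` and `"pinned"`, and the example URL are all assumptions, and the GitHub data is passed in directly rather than fetched.

```python
from dataclasses import dataclass, field


@dataclass
class Profile:
    """Data a generator might collect; the field names are hypothetical."""
    name: str
    bio: str
    skills: list[str] = field(default_factory=list)
    pinned_repos: list[tuple[str, str]] = field(default_factory=list)  # (name, url)


def build_readme(profile: Profile, modules: list[str]) -> str:
    """Assemble a profile README from the modules the user selected."""
    sections = [f"# Hi, I'm {profile.name}", profile.bio]
    if "skills" in modules and profile.skills:
        items = "\n".join(f"- {skill}" for skill in profile.skills)
        sections.append("## Skills\n" + items)
    if "pinned" in modules and profile.pinned_repos:
        items = "\n".join(f"- [{name}]({url})" for name, url in profile.pinned_repos)
        sections.append("## Pinned repositories\n" + items)
    # Blank lines between sections keep the Markdown blocks separate
    return "\n\n".join(sections) + "\n"


profile = Profile(
    name="Ada",
    bio="I build developer tools.",
    skills=["Python", "TypeScript"],
    pinned_repos=[("demo", "https://github.com/example/demo")],  # placeholder URL
)
print(build_readme(profile, ["skills", "pinned"]))
```

The printed Markdown is what would be pasted into the profile repository's README file.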

Product Core Function

· Automated GitHub Data Fetching: Retrieves key information like pinned repositories, contribution graphs, and popular languages directly from your GitHub account, saving you the manual task of looking them up and reducing the chance of errors. So, this gives you an up-to-date snapshot of your activity without you having to do anything.

· Customizable Profile Sections: Allows users to add and configure various sections such as 'About Me', 'Skills', 'Projects', 'Contact', and 'Social Media Links'. This lets you tailor your profile to precisely represent your professional identity and reachability. So, you can easily highlight what matters most to you.

· Visually Appealing Layouts: Employs well-designed templates and Markdown rendering to create aesthetically pleasing and easy-to-read README files. This ensures your profile looks professional and engaging to visitors. So, your profile will be attractive and make a good first impression.

· Dynamic Badge Generation: Integrates with services to generate and display relevant badges (e.g., programming languages, deployed status, testing results), adding visual cues to your technical proficiency. This provides a quick and clear overview of your expertise. So, you can instantly show off your tech stack and achievements.

· Markdown Output: Generates the README in standard Markdown format, which is universally compatible with GitHub and other platforms that support Markdown. This ensures seamless integration with your GitHub profile. So, you can easily copy and paste it into your profile without any formatting issues.

Product Usage Case

· A freelance developer wanting to create a professional portfolio on GitHub to attract clients. By using Ghread, they can quickly generate a README that highlights their best projects, technical skills, and contact information, making it easy for potential clients to understand their capabilities and get in touch. This solves the problem of spending hours formatting and manually updating their profile.

· A junior developer aiming to impress potential employers during their job search. Ghread helps them create a polished and informative profile README that showcases their learning progress, contributions to open-source projects, and actively used technologies, helping them stand out from other applicants. This addresses the challenge of presenting their developing skillset in a professional manner.

· An experienced developer who wants to maintain an active and organized online presence but has limited time. Ghread allows them to quickly update their profile README with recent achievements or new projects without needing to manually craft the entire document, ensuring their GitHub profile remains a relevant representation of their work. This solves the problem of profile staleness due to time constraints.

17

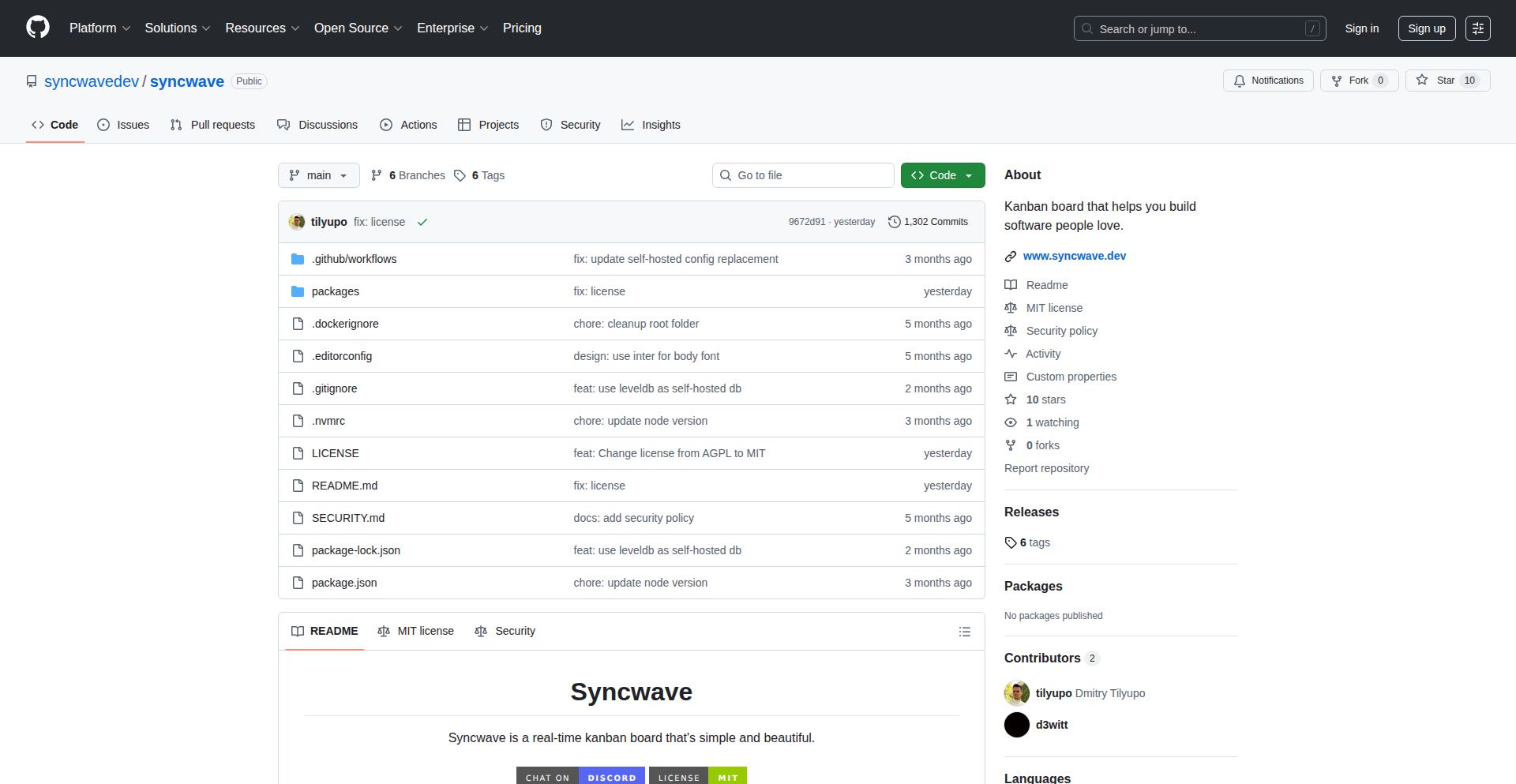

Syncwave: Real-time Kanban Sync Engine

Author

tilyupo

Description

Syncwave is an MIT-licensed, real-time Kanban board designed for collaborative project management. Its core innovation lies in its robust real-time synchronization engine, enabling multiple users to update and view board changes instantaneously. This addresses the common challenge of data staleness and collaboration bottlenecks in traditional project management tools.

Popularity

Points 2

Comments 2

What is this product?

Syncwave is a real-time Kanban board powered by a synchronization engine. It likely relies on WebSockets or a similar persistent-connection technology, which allows bi-directional communication between the server and clients. When one user moves a card, creates a new one, or updates its details, the change is immediately broadcast to all other connected users, updating their views without a manual refresh. This instant propagation of changes is the key technical innovation, ensuring everyone is always on the same page.
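The broadcast pattern described here can be sketched in a few lines. This is an in-memory illustration under stated assumptions, not Syncwave's implementation: plain callbacks stand in for network connections, and the names `BoardHub` and `ClientView` are invented for the example.

```python
from typing import Callable

Event = dict  # e.g. {"type": "move", "card": "c1", "to": "Done"}


class BoardHub:
    """In-memory stand-in for a server that pushes board changes to clients."""

    def __init__(self) -> None:
        self.columns: dict[str, str] = {}  # card id -> column name
        self.subscribers: list[Callable[[Event], None]] = []

    def subscribe(self, on_event: Callable[[Event], None]) -> None:
        # In a real deployment each subscriber would be an open connection
        self.subscribers.append(on_event)

    def move_card(self, card: str, to: str) -> None:
        self.columns[card] = to  # update the authoritative state first
        event = {"type": "move", "card": card, "to": to}
        for notify in self.subscribers:  # then push the change to everyone
            notify(event)


class ClientView:
    """A client's local copy of the board, kept current by pushed events."""

    def __init__(self, hub: BoardHub) -> None:
        self.columns: dict[str, str] = {}
        hub.subscribe(self.apply)

    def apply(self, event: Event) -> None:
        if event["type"] == "move":
            self.columns[event["card"]] = event["to"]


hub = BoardHub()
alice, bob = ClientView(hub), ClientView(hub)
hub.move_card("c1", "In Progress")
print(alice.columns == bob.columns == hub.columns)
```

Because every client applies the same event stream, their views converge without polling. A real engine also has to handle reconnects, ordering, and concurrent edits, which this sketch ignores.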

How to use it?

Developers can integrate Syncwave into their existing project management workflows or build new applications around it. It can be used as a standalone Kanban board for personal task tracking or team collaboration. For integration, developers can leverage its API to embed Kanban functionality within other platforms, like internal dashboards or client portals. The MIT license makes it highly flexible for both open-source and commercial projects, allowing developers to customize and extend its capabilities.

Product Core Function

· Real-time Card Synchronization: Allows multiple users to see card updates (creation, deletion, movement, editing) as they happen, eliminating data lag and ensuring everyone has the latest information. This is valuable for teams needing to coordinate tasks efficiently.

· Instantaneous Board Updates: When a change occurs on the Kanban board, all connected users' views are updated immediately, providing a fluid and responsive collaborative experience. This removes the frustration of working with outdated information.

· MIT Licensed Flexibility: Provides complete freedom for developers to use, modify, and distribute the code for any purpose, including commercial applications. This encourages adoption and customization within the developer community.

· Collaborative Task Management: Facilitates seamless teamwork by allowing multiple users to contribute and view project progress on a shared Kanban board. This is useful for project managers and team leads to visualize workflow and identify bottlenecks.

Product Usage Case

· A software development team using Syncwave to manage their sprint backlog, allowing developers to instantly see newly assigned tasks or completed user stories as they are moved across columns.

· A freelance project manager using Syncwave to track client project progress, providing clients with real-time visibility into task status and deliverables without needing to send constant email updates.

· An individual developer using Syncwave for personal task management, visualizing their to-do list and seeing their progress update in real-time as they complete tasks.

18

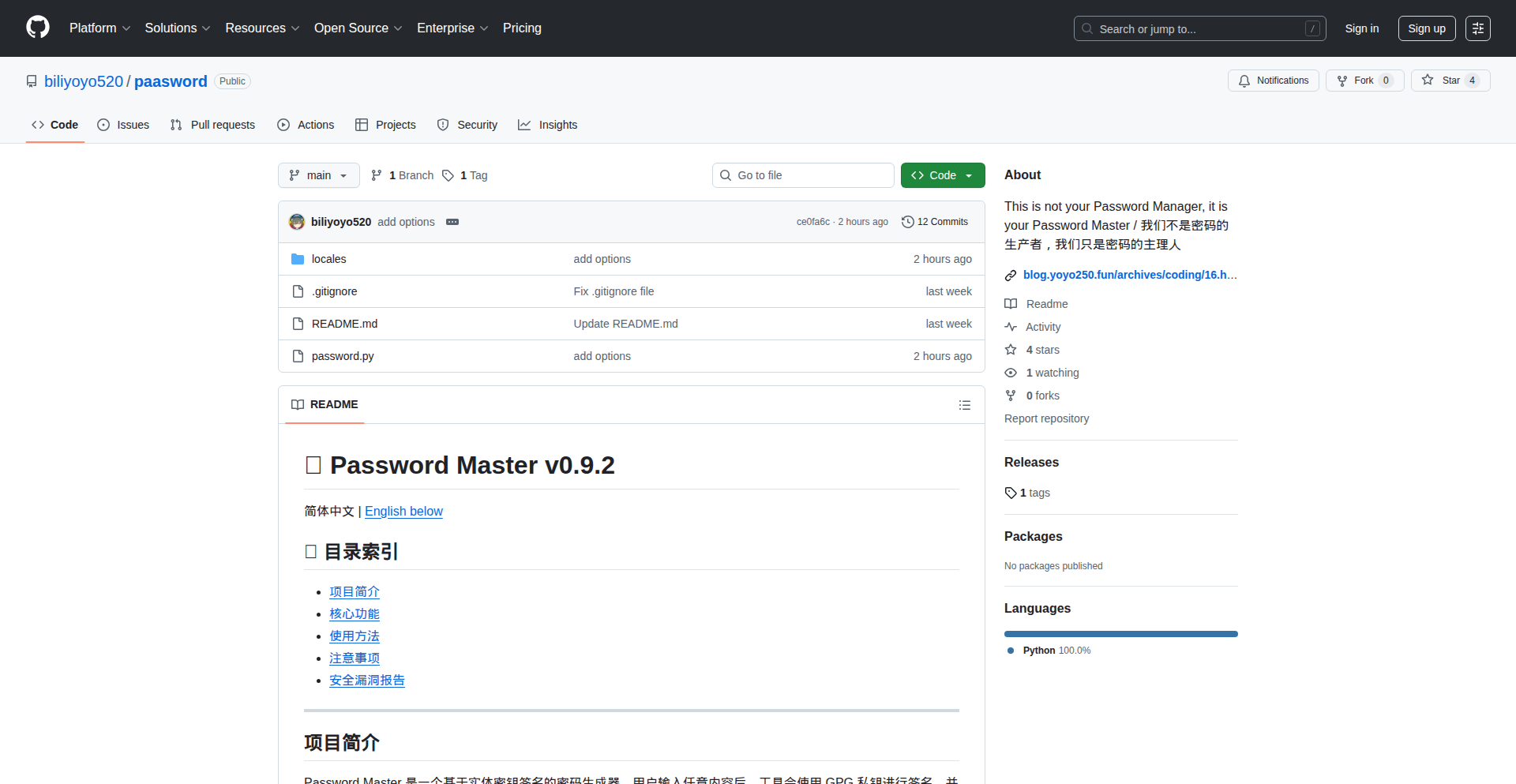

Paasword: The Demand-Derived Credential Engine

Author

yoyo250

Description

Paasword is a novel approach to password management. Instead of storing your sensitive credentials in a vault, it dynamically derives them on demand. By combining your domain, username, a short passphrase, and a physical OpenPGP key (like a smartcard or YubiKey), it generates a unique, reproducible password for each service. This eliminates the risk of data breaches exposing your stored passwords, as they are never persisted.

Popularity

Points 2

Comments 2

What is this product?

Paasword is a pre-release, experimental password generation system that doesn't store your actual passwords. It leverages the cryptographic power of your physical OpenPGP key, combined with the domain you're accessing, your username, and a personal passphrase, to derive the password for that service whenever you need it. Each service gets its own password, and the same inputs always produce the same result. Think of it like a master key that, when combined with specific contextual information, unlocks the right door without ever leaving a trace of the key itself.

How to use it?

Developers can integrate Paasword by using its underlying logic to generate passwords for applications or services. For example, during authentication flows, instead of fetching a stored password, you can call Paasword's derivation function with the necessary inputs. It is currently tested with RSA 4096-bit keys on Windows using GnuPG 2.4.x. The core idea is to replace static password storage with on-demand generation, enhancing security for sensitive systems.

Product Core Function

· On-demand password derivation: Generates passwords using a combination of domain, username, passphrase, and a physical OpenPGP key. This means your passwords are never stored, significantly reducing the risk of theft if a system is compromised.

· Reproducible password generation: Each service gets its own password, but the derivation is deterministic. Given the same inputs, Paasword will always produce the same password for a specific service, ensuring you can reliably access your accounts.

· Physical security integration: Leverages physical security hardware like smartcards or YubiKeys for the OpenPGP key. This adds a strong layer of security, as possession of the physical device is required for password generation, making remote attacks much harder.

· No persistent storage: The fundamental innovation is the absence of a password database. This eliminates a major attack vector for credential theft.
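The idea of deterministic, storage-free derivation can be illustrated with a short sketch. The source does not describe Paasword's actual algorithm, so this is not it: PBKDF2 from Python's standard library is swapped in as a generic stand-in, and `key_secret` is a hypothetical placeholder for whatever the hardware OpenPGP key contributes (in a real setup that value would come from an operation performed on the card, not from a bytes literal).

```python
import base64
import hashlib


def derive_password(domain: str, username: str, passphrase: str,
                    key_secret: bytes, length: int = 20) -> str:
    """Derive a per-service password from its inputs; nothing is stored.

    Illustrative stand-in only, not Paasword's real derivation.
    """
    # Length-prefix each field so ("ab", "c") and ("a", "bc") cannot collide
    context = b"".join(
        len(part).to_bytes(2, "big") + part
        for part in (domain.encode(), username.encode())
    )
    # The service context acts as the salt; the secrets act as the password
    raw = hashlib.pbkdf2_hmac(
        "sha256", passphrase.encode() + key_secret, context, 100_000, dklen=32
    )
    return base64.urlsafe_b64encode(raw).decode()[:length]


secret = b"placeholder-for-hardware-key-output"  # assumption, see lead-in
first = derive_password("example.com", "alice", "short phrase", secret)
again = derive_password("example.com", "alice", "short phrase", secret)
other = derive_password("example.org", "alice", "short phrase", secret)
print(first == again, first != other)
```

The same inputs reproduce the same password, while changing the domain (or lacking the key-derived secret) yields an unrelated one, which is what makes a stored vault unnecessary in this model.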

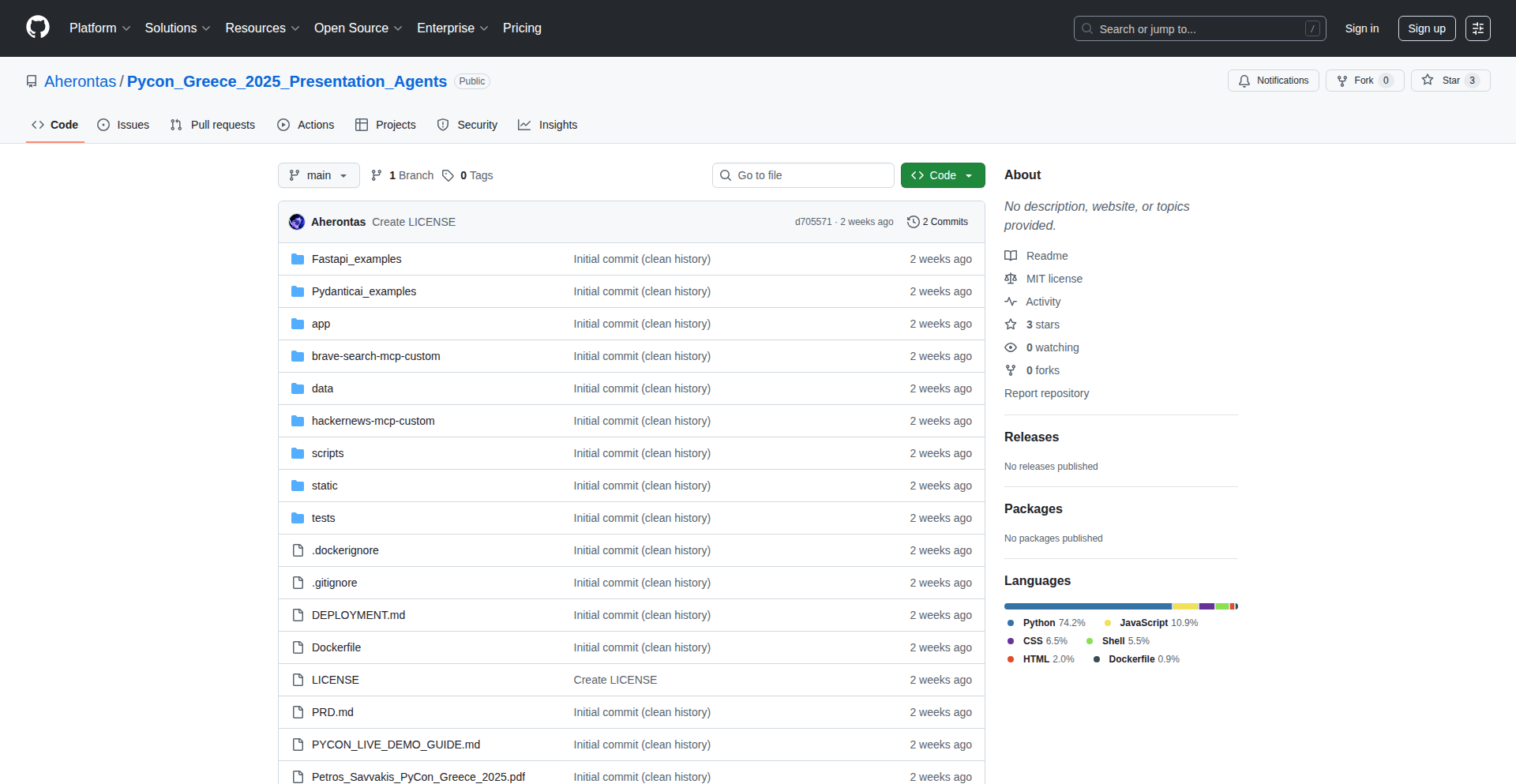

Product Usage Case