Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-09-10

SagaSu777 2025-09-11

Explore the hottest developer projects on Show HN for 2025-09-10. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The current wave of innovation is heavily driven by the integration of AI into everyday developer workflows, aiming to boost productivity and simplify complex tasks. From Haystack's intelligent code review to Agentic platforms managing real machines, the theme is clear: empower developers by abstracting away complexity and automating tedious processes. This trend signifies a shift towards more collaborative and intelligent development environments, where AI acts as a co-pilot, not just a code generator. For developers, this means embracing tools that leverage AI for analysis, automation, and intelligent decision-making, pushing the boundaries of what's possible with code. For entrepreneurs, it's an opportunity to build specialized AI solutions that address niche pain points in software development, robotics, or data analysis, creating new paradigms for efficiency and innovation. The hacker spirit thrives in this landscape by finding novel ways to combine existing technologies to solve problems that were previously intractable, making powerful capabilities accessible to everyone.

Today's Hottest Product

Name

Haystack

Highlight

Haystack revolutionizes code reviews by transforming raw diffs into a coherent narrative. It leverages advanced code analysis (like language servers and tree-sitter) combined with AI to explain changes, highlight critical parts, and trace cross-file context. This approach significantly reduces the time reviewers spend untangling complex codebases, allowing them to focus on architectural decisions and correctness. Developers can learn how to combine static analysis with AI for enhanced developer tooling, creating more intuitive and efficient workflows. The core innovation lies in presenting code changes not as a linear diff, but as a story, making complex technical information accessible and actionable.

Popular Category

AI/ML

Developer Tools

Productivity

Robotics

Code Analysis

Popular Keyword

AI

LLM

Code Review

ROS

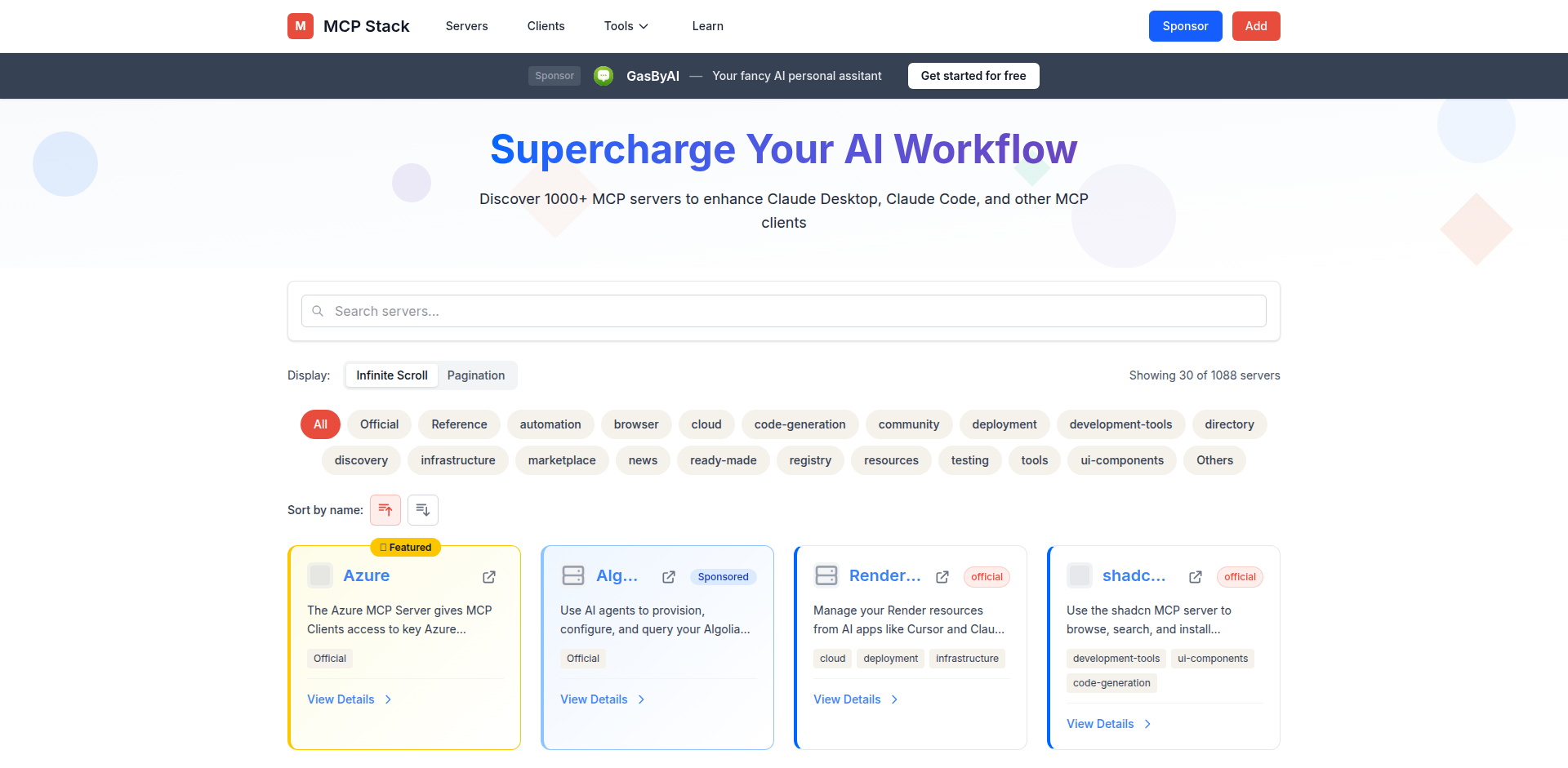

MCP

Agent

CLI

Productivity

Automation

Data Analysis

Technology Trends

AI-powered Developer Productivity

Agentic Workflows

Code Understanding & Analysis

Robotics Integration with AI

Decentralized/P2P Communication

Efficient AI Model Deployment

Data Analysis & Visualization

Project Category Distribution

AI/ML Tools (35%)

Developer Productivity & Tools (25%)

Robotics & IoT (10%)

Data Science & Analysis (10%)

Web Development & Services (15%)

Utilities & Misc (5%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | Haystack PR Navigator | 68 | 40 |
| 2 | MicroCharge | 43 | 25 |
| 3 | HumanAlarm: The Human Wake-Up Service | 31 | 36 |
| 4 | RoboTalker MCP | 26 | 27 |
| 5 | ArkECS Go | 16 | 2 |
| 6 | Deep Research MCP Agent | 12 | 3 |
| 7 | AI Rules Manager (ARM) | 12 | 3 |
| 8 | Codebase MCP Engine | 13 | 1 |
| 9 | Dependency Insight Engine | 12 | 0 |
| 10 | Flox-NixCUDA | 9 | 0 |

1

Haystack PR Navigator

Author

akshaysg

Description

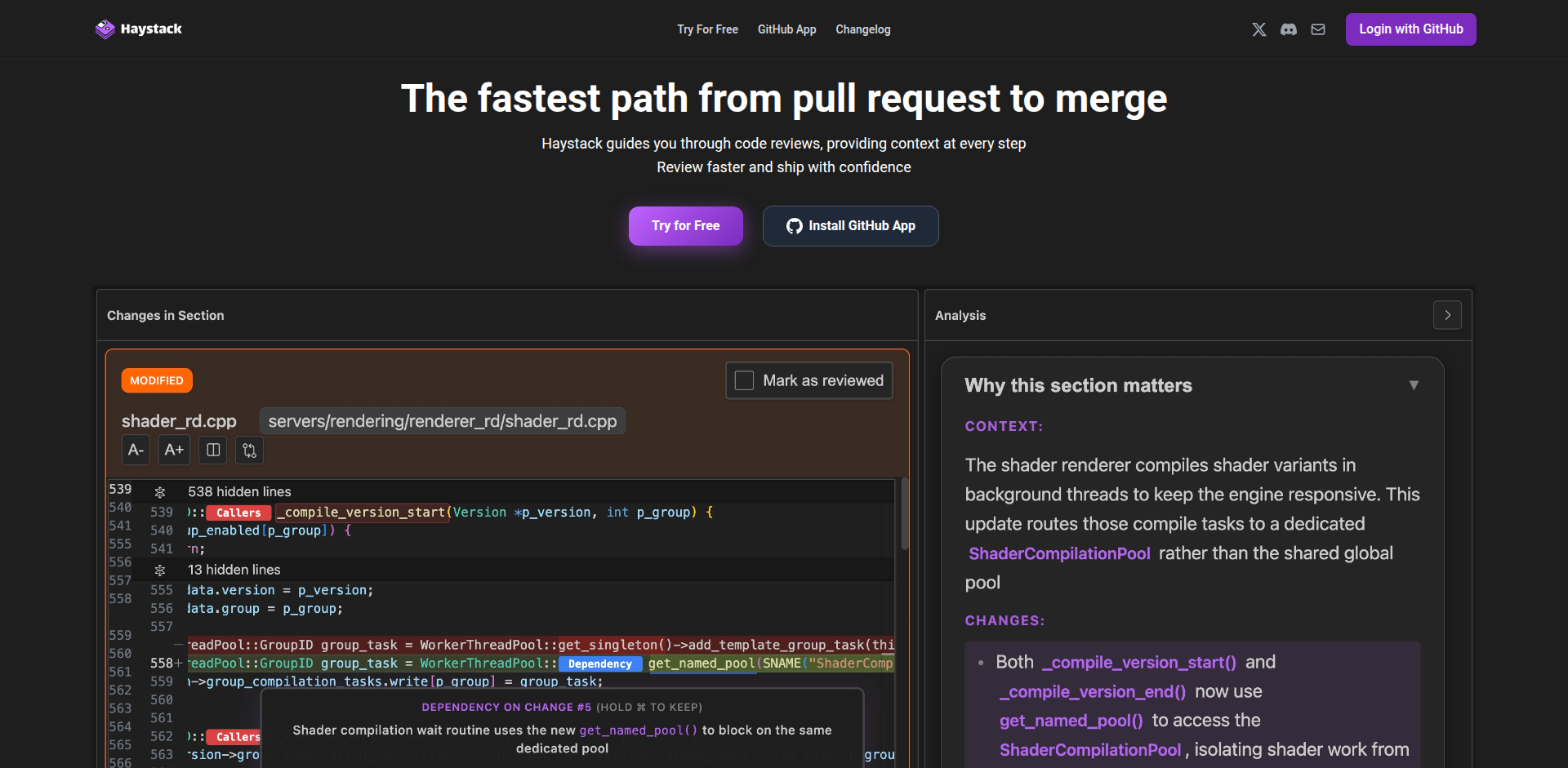

Haystack is a tool designed to revolutionize the code review process by transforming complex pull requests (PRs) into clear, narrative-driven explanations. It leverages static code analysis and AI to organize code changes logically, highlight critical updates, and provide cross-file context, allowing developers to understand and review code more efficiently, even in AI-generated codebases. This dramatically reduces the time spent deciphering code, enabling reviewers to focus on providing valuable feedback.

Popularity

Points 68

Comments 40

What is this product?

Haystack is a smart code review assistant that takes raw code changes in a pull request and reconstructs them into a coherent story. Instead of just showing lines of code that changed, it uses advanced techniques like language servers and tree-sitter to understand the code's structure and then applies AI to explain the purpose and impact of these changes. It goes beyond simple diffs by tracing how a change affects other parts of the codebase, making it easier to grasp the full picture. The core innovation lies in its ability to synthesize code logic and intent into easily digestible explanations, effectively organizing the 'noise' of minor changes to highlight the 'signal' of significant architectural or functional updates. This is particularly valuable in modern development where AI-generated code can introduce complexity that even the original author might not fully grasp.

How to use it?

Developers can integrate Haystack into their workflow by visiting haystackeditor.com/review. The tool offers demo pull requests that showcase its capabilities. For actual use, you can typically integrate Haystack with your version control system (like GitHub) to analyze your pull requests automatically. When a new PR is created, Haystack processes it, and the organized, explained version of the changes is made available within your review interface or a linked report. This allows developers to quickly access a summarized, context-rich overview of the PR, enabling faster and more insightful reviews.

Product Core Function

· Narrative PR Structuring: Organizes code changes into a logical flow, presenting them as a coherent story rather than a jumble of diffs. This helps reviewers understand the 'why' behind the changes, not just the 'what'.

· Intelligent Change Prioritization: Identifies and groups routine or minor changes (like refactors or boilerplate updates) into skimmable sections, allowing reviewers to quickly bypass less critical parts and focus on significant design or correctness issues.

· Cross-File Contextualization: Traces new or modified functions and variables across the entire codebase to show how they are used elsewhere. This provides crucial context that is often missed in standard diff views, preventing knowledge gaps.

· AI-Powered Explanations: Utilizes AI to generate plain-language explanations for code changes, making complex logic and intent understandable to anyone, regardless of their familiarity with the specific codebase.

· AI Agent Integration with Static Analysis: Empowers AI models to leverage static code analysis results, leading to more accurate and contextually relevant code understanding and explanation.
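The cross-file contextualization idea above can be illustrated with a small sketch. It is not Haystack's implementation: Haystack is described as using language servers and tree-sitter, whereas this example swaps in Python's standard `ast` module as a stand-in, and the file names and the `compute_total` function are hypothetical. It only shows the general shape of "find every place a changed function is called".

```python
import ast


def find_call_sites(sources: dict[str, str], function_name: str) -> list[tuple[str, int]]:
    """Return (filename, line number) for every call to `function_name`."""
    sites = []
    for filename, code in sources.items():
        tree = ast.parse(code, filename=filename)
        for node in ast.walk(tree):
            if isinstance(node, ast.Call):
                callee = node.func
                # Match plain calls `f()` as well as attribute calls `module.f()`.
                if isinstance(callee, ast.Name):
                    name = callee.id
                else:
                    name = getattr(callee, "attr", None)
                if name == function_name:
                    sites.append((filename, node.lineno))
    return sorted(sites)


# Hypothetical three-file codebase in which a PR modified `compute_total`.
SOURCES = {
    "billing.py": "def compute_total(items):\n    return sum(items)\n",
    "checkout.py": "import billing\n\ndef pay(cart):\n    return billing.compute_total(cart)\n",
    "report.py": "from billing import compute_total\n\nprint(compute_total([1, 2]))\n",
}

call_sites = find_call_sites(SOURCES, "compute_total")
for filename, line in call_sites:
    print(f"{filename}:{line} calls compute_total")
```

A name-based match like this will produce false positives when two unrelated functions share a name, which is one reason a real tool would lean on a language server for proper symbol resolution.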

Product Usage Case

· New Developer Onboarding: A junior developer joining a large, unfamiliar project can use Haystack to quickly understand the context and intent of a pull request, accelerating their learning curve and ability to contribute meaningfully.

· Complex Feature Review: When a pull request introduces a significant new feature involving changes across multiple files and modules, Haystack can untangle the dependencies and interactions, ensuring reviewers don't miss critical edge cases or design flaws.

· AI-Generated Code Auditing: In scenarios where code is generated or heavily modified by AI tools, Haystack can provide a structured explanation and trace the impact of that AI-generated code, helping human developers ensure correctness and security.

· Reducing Reviewer Fatigue: For pull requests with hundreds of changes, Haystack can distill the core modifications and their impact, preventing reviewers from getting overwhelmed and reducing the likelihood of overlooking important issues due to mental exhaustion.

2

MicroCharge

Author

strnisa

Description

MicroCharge is a novel payment processing solution designed for SaaS businesses. It tackles the challenge of low-value transactions and per-request charging by offering an extremely granular fee structure, starting at just $0.000001 USD per request. This allows SaaS providers to monetize even the smallest actions within their applications, making micro-transactions economically viable.

Popularity

Points 43

Comments 25

What is this product?

MicroCharge is a payment gateway that allows Software-as-a-Service (SaaS) companies to charge for very small actions or events within their applications. Traditional payment processors typically charge a percentage of the transaction plus a fixed fee, and the fixed portion alone makes charging for extremely low-value events impractical. MicroCharge breaks this barrier by offering a minuscule, per-request fee. This means a SaaS business can now charge its users for individual API calls, feature usage, or any discrete action, even if the monetary value of that action is a fraction of a cent. The innovation lies in its ability to aggregate and efficiently process these tiny charges, making it cost-effective for both the SaaS provider and, ultimately, the end-user.

How to use it?

Developers can integrate MicroCharge into their SaaS applications via an API. Instead of handling traditional payment processing for each micro-transaction, they make a simple API call to MicroCharge whenever a chargeable event occurs. MicroCharge then handles the accumulation of these small charges for a user and processes the payment when a predefined threshold is met. This is particularly useful for applications with granular feature access or usage-based pricing models. For example, a developer could integrate it by calling a `trackEvent('user_action', { userId: 'user123', cost: 0.000001 })` function, and MicroCharge manages the billing on the backend. This can be integrated into backend services, API gateways, or even directly within client-side applications for certain use cases.
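The accumulate-then-settle flow described above can be sketched as follows. This is a minimal illustration, not MicroCharge's actual API: the `ChargeAggregator` class, its method names, and the threshold value are all assumptions made for the example. Amounts are kept as integer micro-dollars so that millions of tiny charges do not accumulate floating-point error.

```python
from collections import defaultdict

MICRO = 1_000_000  # micro-dollars per dollar; integers avoid float rounding drift


class ChargeAggregator:
    """Hypothetical aggregator: collects tiny charges, settles once a threshold is hit."""

    def __init__(self, settle_threshold_micros: int):
        self.settle_threshold_micros = settle_threshold_micros
        self.pending = defaultdict(int)  # user_id -> unsettled micro-dollars
        self.settled = []  # (user_id, amount_micros) batches handed off for payment

    def track_event(self, user_id: str, cost_micros: int) -> None:
        """Record one chargeable event; settle the balance when it reaches the threshold."""
        self.pending[user_id] += cost_micros
        if self.pending[user_id] >= self.settle_threshold_micros:
            self.settled.append((user_id, self.pending[user_id]))
            self.pending[user_id] = 0


# Settle whenever a user owes at least $0.01 (10,000 micro-dollars).
aggregator = ChargeAggregator(settle_threshold_micros=MICRO // 100)
for _ in range(25_000):
    aggregator.track_event("user123", cost_micros=1)  # one event at $0.000001

print(aggregator.settled)
print(aggregator.pending["user123"])
```

With 25,000 one-micro-dollar events and a one-cent threshold, two batches are settled and half a cent remains pending, so only two real payments are processed instead of 25,000.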

Product Core Function

· Ultra-low per-request charging: Allows monetization of actions costing as little as $0.000001, making micro-transactions feasible. This is useful for charging for every API call or user interaction, unlike traditional systems that are too expensive for such granular billing.

· Transaction aggregation and batching: Efficiently collects and processes numerous small charges into larger, manageable payments. This reduces the overhead of processing individual micro-transactions, keeping costs down for the SaaS provider.

· API-driven integration: Provides a straightforward API for developers to trigger charges based on application events. This allows seamless integration into existing software without complex payment infrastructure.

· SaaS monetization flexibility: Enables new pricing models based on granular usage, feature access, or specific events. This offers SaaS businesses more ways to generate revenue and align costs with value provided to the user.

Product Usage Case

· A SaaS platform offering AI-powered text generation can charge users per word or per generated paragraph, with MicroCharge handling the tiny costs associated with each individual generation event. This provides a transparent and usage-based billing for the end-user.

· An analytics service that provides real-time data insights can charge based on the number of queries a user makes. MicroCharge allows them to bill for each individual query, no matter how small the associated cost, making it pay-as-you-go.

· A developer tool that offers access to specific API functionalities can charge users for each API call made to that functionality. MicroCharge enables this by processing each call as a minuscule transaction, protecting the developer from high processing fees.

· A collaboration tool can attach a nominal cost to specific actions, such as sending an automated notification or triggering a workflow. MicroCharge allows the platform to monetize these small, valuable automated actions.

3

HumanAlarm: The Human Wake-Up Service

Author

soelost

Description

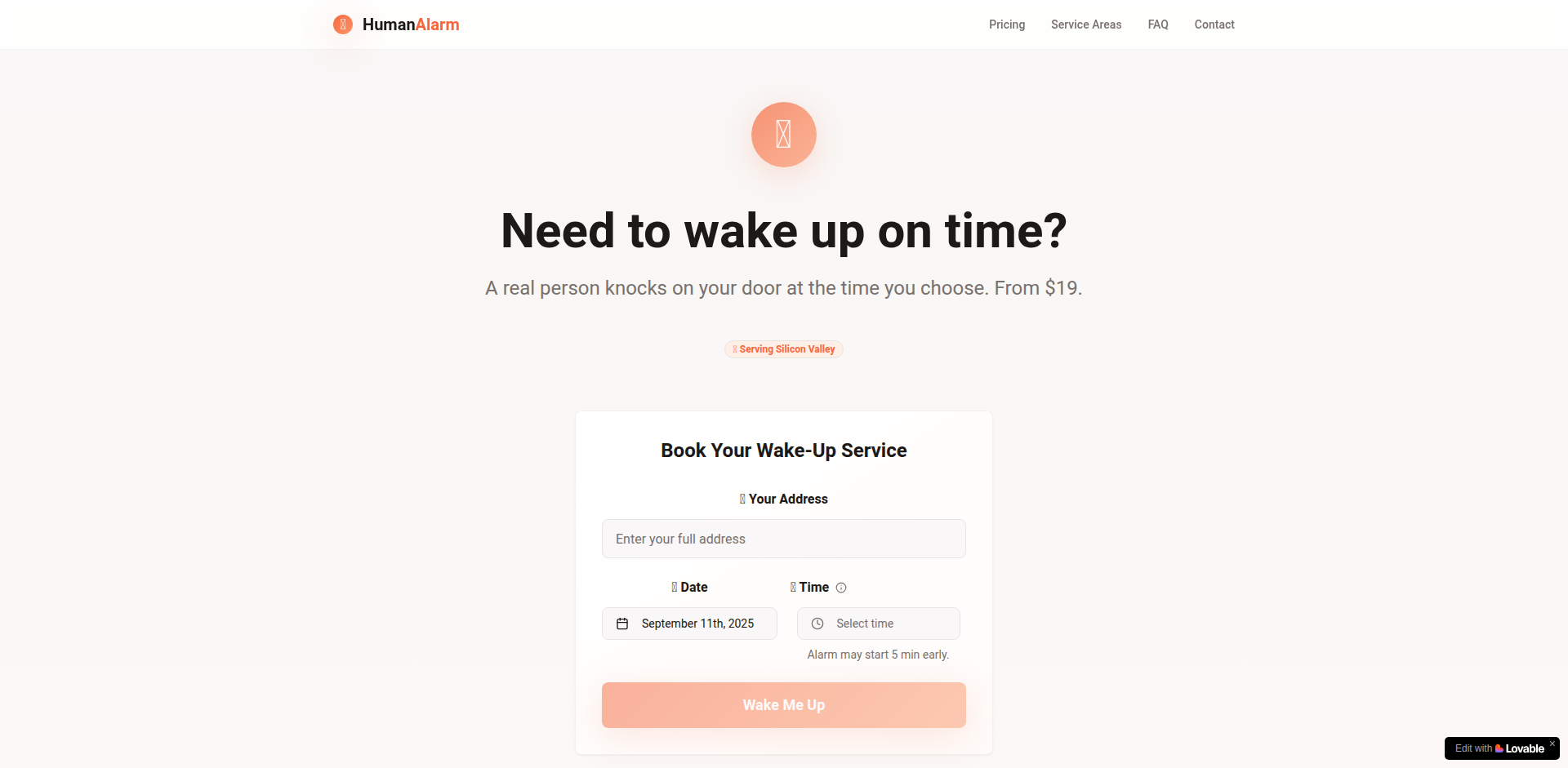

HumanAlarm is a novel service that addresses the persistent problem of oversleeping and missing important events by leveraging human intervention. Instead of relying solely on digital alarms, it connects users with real people who will physically knock on their door to ensure they wake up. This innovative approach tackles the limitations of traditional alarms by introducing accountability and a tangible consequence for not responding.

Popularity

Points 31

Comments 36

What is this product?

HumanAlarm is a service where you schedule a wake-up time, and a real person is dispatched to your location to knock on your door. The 'human alarm' will knock for two minutes. If there's no response, they wait 3-5 minutes and knock again. This human-powered system offers a more robust and reliable wake-up solution for heavy sleepers or those who frequently miss crucial appointments, going beyond the limitations of phone-based alarms by adding a layer of personal accountability and physical presence.

How to use it?

Developers can integrate HumanAlarm into their applications or workflows to provide a unique wake-up service. For example, a productivity app could offer a premium feature allowing users to book a human alarm for critical meetings. A travel app could integrate it for travelers who need an extra layer of assurance for early morning flights. The service is currently live in select cities, and integration would likely involve an API to schedule wake-up calls and manage user bookings. The core idea is to build systems that leverage this human-powered alert mechanism for scenarios where waking up is paramount.

Product Core Function

· Scheduled Human Wake-Up: Allows users to book a specific time for a physical knock on their door, providing a reliable wake-up mechanism beyond digital alerts. This is valuable for anyone who struggles with oversleeping and has experienced missed events.

· Two-Stage Knocking System: Implements an initial two-minute knock followed by a follow-up knock after a 3-5 minute delay if no response is detected. This escalation process ensures a higher probability of waking the user and addressing potential issues.

· Real-time Accountability: The human element introduces direct accountability for waking up, which is a key differentiator from automated systems. This is useful for users who need a strong behavioral motivator to be on time for important commitments.

· Location-Based Service: Operates in select physical locations, offering a tangible, in-person service. This is beneficial for users who need a wake-up solution that transcends their digital devices, especially in situations where phone alarms might be silenced or ignored.

Product Usage Case

· A productivity app could offer HumanAlarm as a 'guaranteed attendance' feature for users to book before important client calls or critical work deadlines. If a user fails to wake up and respond, the app can log this as a 'missed' event, providing a clear record and potentially triggering follow-up actions.

· A travel booking platform could allow users to add a HumanAlarm to their flight or early morning train reservations. This would provide peace of mind for travelers, especially those in unfamiliar locations or dealing with early departure times, reducing the risk of missing their transportation.

· A personal assistant service could integrate HumanAlarm for clients who have consistently missed important appointments. By using a physical presence to ensure wakefulness, the service enhances its reliability and perceived value to the client.

4

RoboTalker MCP

Author

r-johnv

Description

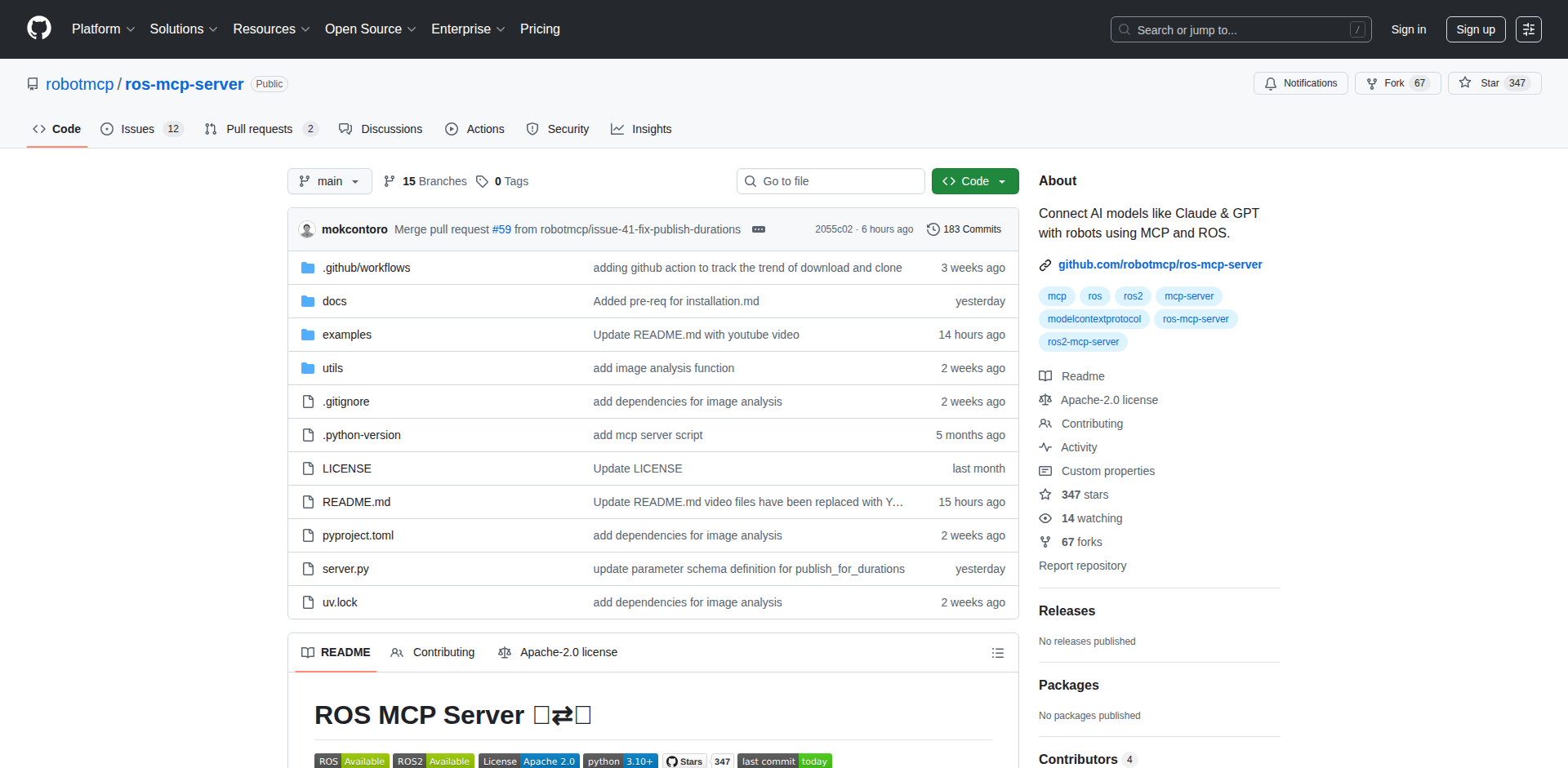

RoboTalker MCP is a groundbreaking open-source server that bridges the gap between large language models (LLMs) and robots running ROS (Robot Operating System) versions 1 or 2. It allows LLMs to interact with robots using natural language, translating human commands into robot actions and understanding robot responses. This innovation enables seamless integration without modifying the robot's existing code, paving the way for easier AI-robot application development and a standardized interface for safe AI-robot communication.

Popularity

Points 26

Comments 27

What is this product?

RoboTalker MCP acts as a translator and communication hub. It leverages the Model Context Protocol (MCP) to connect any LLM to robots that use ROS. Essentially, it takes your spoken or typed commands in plain English (or other natural languages), figures out what robot actions (like moving a specific joint, activating a sensor, or performing a task) correspond to those commands, and sends them to the robot. Crucially, it can also listen to what the robot is doing and report that back to the LLM. The innovation here is the ability to achieve this without needing to alter the robot's original programming, making it incredibly versatile and easy to adopt.

How to use it?

Developers can integrate RoboTalker MCP into their AI-robot projects by setting up the server alongside their ROS-enabled robots. Once the server is running, they connect their chosen LLM to it through an MCP-compatible client. From the LLM's interface, developers can then issue commands in natural language. For instance, they could type 'move the robot arm to the left' or 'check the lidar sensor reading'. RoboTalker MCP will interpret these, send the appropriate ROS commands to the robot, and if the robot provides feedback (e.g., 'arm moved successfully'), that feedback will be relayed back to the LLM. This allows for rapid prototyping of AI-driven robotic behaviors and creating intuitive control interfaces.
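To make the translation step more concrete, here is a rough sketch of how a structured tool call emitted by an LLM might be turned into a ROS velocity command. It does not use RoboTalker MCP's real interface: the `move_base` tool name and the argument layout are hypothetical, and no ROS installation is needed because the message is built as a plain dict shaped like `geometry_msgs/Twist`. The `/cmd_vel` topic is a common ROS convention, not something the project description confirms.

```python
def twist_from_command(direction: str, speed: float = 0.2) -> dict:
    """Build a dict shaped like a geometry_msgs/Twist message."""
    linear = {"x": 0.0, "y": 0.0, "z": 0.0}
    angular = {"x": 0.0, "y": 0.0, "z": 0.0}
    if direction == "forward":
        linear["x"] = speed
    elif direction == "backward":
        linear["x"] = -speed
    elif direction == "left":
        angular["z"] = speed  # positive yaw is counter-clockwise in ROS conventions
    elif direction == "right":
        angular["z"] = -speed
    else:
        raise ValueError(f"unknown direction: {direction!r}")
    return {"linear": linear, "angular": angular}


def handle_tool_call(call: dict) -> dict:
    """Translate a (hypothetical) LLM tool call into a publish request for a ROS bridge."""
    if call["tool"] != "move_base":
        raise ValueError(f"unsupported tool: {call['tool']!r}")
    args = call["arguments"]
    return {
        "topic": "/cmd_vel",
        "type": "geometry_msgs/Twist",
        "msg": twist_from_command(args["direction"], args.get("speed", 0.2)),
    }


# What an LLM might emit for "turn left at half a radian per second".
request = handle_tool_call(
    {"tool": "move_base", "arguments": {"direction": "left", "speed": 0.5}}
)
print(request)
```

Restricting the LLM to a small set of validated tool calls, rather than letting it publish arbitrary messages, is one way such a bridge could keep robot control predictable.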

Product Core Function

· Natural Language to ROS Topic/Service/Action Translation: Enables users to command robots using everyday language, translating these into the specific protocols ROS robots understand, making robot control accessible to a wider audience.

· ROS Topic/Service/Action to Natural Language Feedback: Allows robots to communicate their status and sensor data back to the LLM in an understandable format, providing crucial situational awareness.

· No Robot Source Code Modification: Facilitates integration with existing robotic systems without the need for complex and time-consuming code changes on the robot itself, significantly reducing setup friction.

· Model Context Protocol (MCP) Integration: Provides a standardized way for LLMs to interact with robots, fostering interoperability and a common language for AI-robot communication.

Product Usage Case

· A researcher wants to quickly test a new AI-driven object manipulation strategy on a robotic arm. Instead of writing complex ROS code to control each joint, they can use RoboTalker MCP to tell the LLM 'pick up the blue cube', and the LLM, via RoboTalker MCP, instructs the robot arm accordingly, speeding up experimentation.

· A developer is building a smart home robot that needs to respond to voice commands. They can connect their LLM-powered voice assistant to RoboTalker MCP to control household robots like vacuum cleaners or smart appliances, enabling intuitive voice control over physical devices.

· A team is developing an autonomous delivery robot. RoboTalker MCP allows them to have the LLM monitor the robot's navigation status and receive simple commands like 'report current location' or 'avoid obstacle', making it easier to manage and monitor the robot's operation in real-time.

5

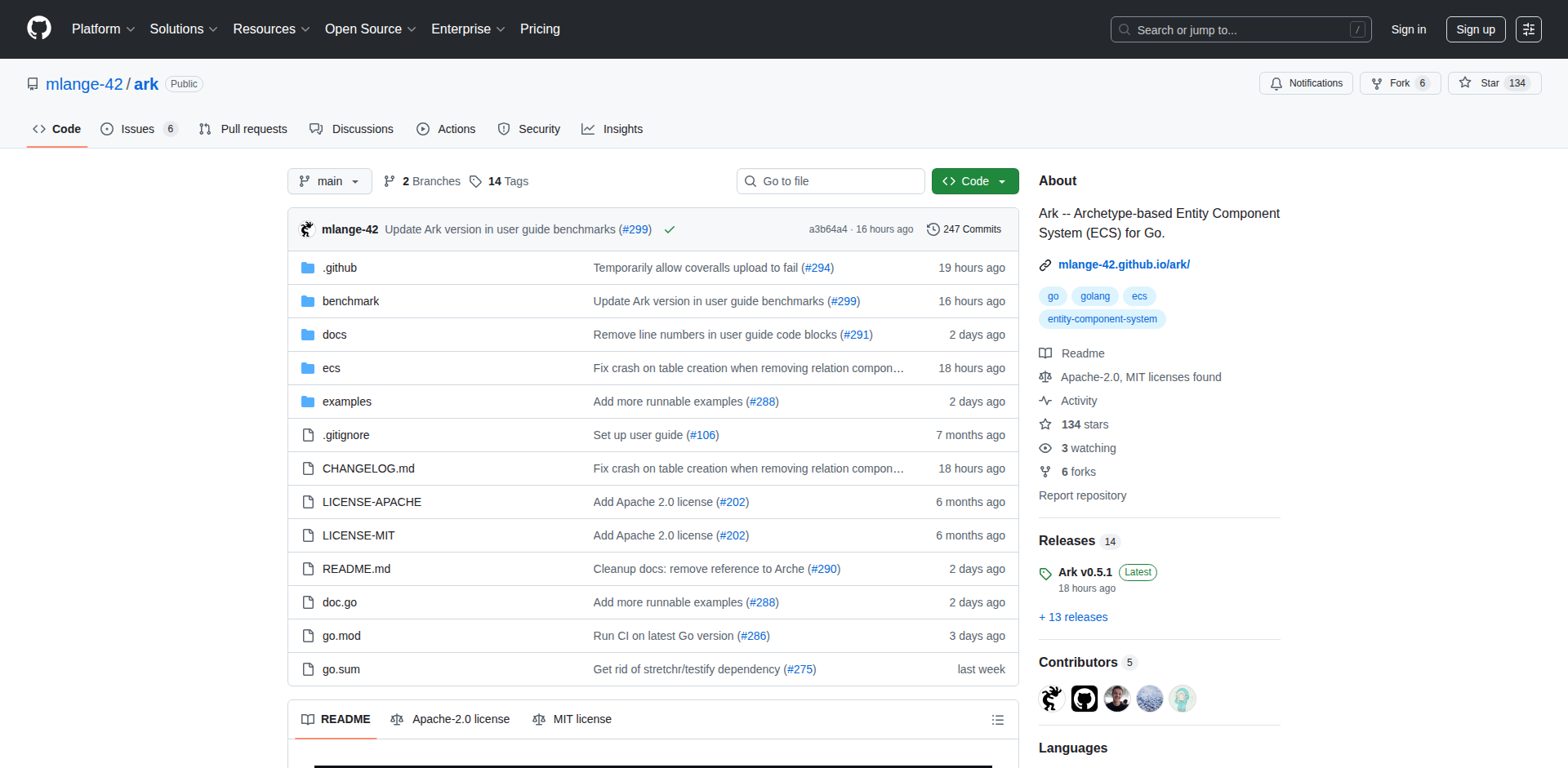

ArkECS Go

Author

mlange-42

Description

ArkECS Go is a minimalist, high-performance Entity Component System (ECS) library for Go. It focuses on speed and ease of use, offering ultra-fast batch operations and robust support for entity relationships. This release enhances query performance through intelligent indexing and adds features for randomly selecting entities, making it ideal for game development and simulations where managing complex game objects efficiently is crucial. So, this helps developers build faster, more scalable applications by providing a structured and efficient way to manage game entities and their properties.

Popularity

Points 16

Comments 2

What is this product?

ArkECS Go is a lightweight Go library that implements the Entity Component System (ECS) pattern, which is widely used in game development. Think of it as a highly organized way to manage all the 'things' (entities) in your game or simulation, like characters, enemies, or items. Instead of having a single big object for each character that holds all its data and behaviors, ECS breaks things down into smaller, reusable pieces called 'components' (like position, health, or appearance). The 'system' part is where the logic operates on entities that have specific combinations of components. ArkECS Go is innovative because it's designed to be extremely fast, especially when processing many entities at once (batch operations), and it has special features for how entities relate to each other. It uses smart indexing to speed up how it finds entities with certain components, making your game run smoother. So, the innovation lies in its speed, simplicity, and how it efficiently handles complex relationships between game elements, allowing for more sophisticated and performant applications.

How to use it?

Developers can integrate ArkECS Go into their Go projects by importing the library. They would define their game's data into distinct components (e.g., `PositionComponent`, `VelocityComponent`, `RenderComponent`). Then, they create entities and attach these components to them. Systems, which are pieces of logic, can then query for entities that possess specific sets of components and process them in batches. For example, a physics system might query for entities with `PositionComponent` and `VelocityComponent` to update their positions. Its zero-dependency nature makes integration straightforward. So, developers use it by defining their game's data as components, creating entities, attaching components, and writing systems to process these entities efficiently, enabling faster game logic execution.
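The component, entity, and system workflow described above can be sketched in a few lines. This is a toy illustration of the ECS pattern written in Python, not ArkECS Go's API; the `World` class and its `new_entity`, `get`, and `query` methods are names invented for this example, and the dictionary-based storage is far simpler than what a performance-focused library would use.

```python
from dataclasses import dataclass


@dataclass
class Position:
    x: float
    y: float


@dataclass
class Velocity:
    dx: float
    dy: float


class World:
    """Toy ECS world: one dict per component type, keyed by entity id."""

    def __init__(self):
        self._next_id = 0
        self._components = {}  # component type -> {entity id -> component}

    def new_entity(self, *components) -> int:
        entity = self._next_id
        self._next_id += 1
        for component in components:
            self._components.setdefault(type(component), {})[entity] = component
        return entity

    def get(self, entity: int, component_type):
        return self._components[component_type][entity]

    def query(self, *types):
        """Yield (entity, components) for entities that have every requested type."""
        stores = [self._components.get(t, {}) for t in types]
        if not stores:
            return
        # Scanning the smallest store first keeps the membership checks cheap.
        for entity in min(stores, key=len):
            if all(entity in store for store in stores):
                yield entity, tuple(store[entity] for store in stores)


def movement_system(world: World, dt: float) -> None:
    """A system: advance every entity that has both Position and Velocity."""
    for _, (position, velocity) in world.query(Position, Velocity):
        position.x += velocity.dx * dt
        position.y += velocity.dy * dt


world = World()
mover = world.new_entity(Position(0.0, 0.0), Velocity(1.0, 2.0))
rock = world.new_entity(Position(5.0, 5.0))  # no Velocity, so the system skips it
movement_system(world, dt=0.5)
print(world.get(mover, Position), world.get(rock, Position))
```

The key design point is that `movement_system` never asks what kind of object an entity is; it only asks which components it has, which is what lets one system process many different kinds of game objects in a single pass.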

Product Core Function

· Entity creation and management: Provides a clean API to create, destroy, and manage entities, which are unique identifiers for game objects. This allows for flexible object lifecycle management in simulations. So, this helps organize and control individual game elements.

· Component-based data modeling: Enables developers to define and attach various data components (like position, health, etc.) to entities, promoting data-driven design and reusability. So, this makes it easy to define and customize game objects without repetitive code.

· Efficient querying: Offers optimized methods to retrieve entities based on their attached components, crucial for performance in large-scale simulations. So, this allows quick access to specific game objects needed for updates or logic.

· High-performance batch operations: Designed to process large numbers of entities with common components very quickly, essential for demanding real-time applications like games. So, this ensures smooth performance even with many on-screen elements.

· First-class entity relationship support: Provides built-in mechanisms to define and manage relationships between entities (e.g., parent-child), simplifying the representation of complex hierarchies. So, this makes it easier to model intricate interactions between game objects.

· Smarter indexing for queries: Utilizes advanced indexing techniques to accelerate data retrieval, reducing processing time for complex queries. So, this means faster execution of game logic that depends on finding specific entity configurations.

Product Usage Case

· Developing a 2D or 3D game: A game developer can use ArkECS Go to manage all game characters, enemies, and environment objects. By attaching components like 'Transform' (position, rotation, scale), 'SpriteRenderer' (visual appearance), and 'Rigidbody' (physics properties), they can efficiently update all entities in a game loop. For example, a system can iterate through all entities with 'Transform' and 'Velocity' components to update their positions, ensuring smooth movement. So, this helps build games with many moving parts that run smoothly.

· Building a real-time strategy (RTS) simulation: In an RTS game with hundreds or thousands of units, ArkECS Go's performance and batch processing capabilities are vital. Each unit (entity) can have components like 'UnitStats' (health, attack damage), 'MovementAI' (pathfinding data), and 'Targeting' (current objective). Systems can then efficiently process large groups of units for actions like attacking, moving, or resource gathering. So, this allows for complex simulations with many active elements without performance degradation.

· Creating a physics simulation engine: Developers can use ArkECS Go to represent physical objects (entities) with components like 'Position', 'Velocity', 'Mass', and 'Collider'. Physics systems can then efficiently query for entities with these components to apply forces, detect collisions, and update positions, enabling complex physical interactions. So, this makes it easier to create realistic simulations of how objects interact in the real world.

6

Deep Research MCP Agent

Author

saqadri

Description

This project showcases a deep research agent capable of autonomously exploring and summarizing complex topics. It addresses the challenge of information overload by systematically navigating and synthesizing information, highlighting novel approaches to automated knowledge discovery and the practical hurdles encountered during development.

Popularity

Points 12

Comments 3

What is this product?

This is an AI-powered agent designed for in-depth research. It operates by intelligently navigating through vast amounts of data, such as academic papers or online content, to identify key themes, synthesize information, and present a coherent summary. Here 'MCP' refers to the Model Context Protocol, the standard through which the agent reaches external tools and data sources. The innovation lies in its ability to pursue multiple research avenues simultaneously and adapt its strategy based on intermediate findings, much like a human researcher would. This goes beyond simple keyword searching by understanding context and relationships between pieces of information. So, what does this mean for you? It means getting to the core of a complex subject much faster and with greater depth than traditional search methods, reducing your research time significantly.

How to use it?

Developers can integrate this agent into their workflows to automate literature reviews, market analysis, or competitive intelligence gathering. It can be used as a standalone tool for personal research or embedded within larger applications that require sophisticated information processing. The agent's core logic can be extended or fine-tuned to specific domains. For instance, you could point it towards a set of patents to identify emerging trends or a collection of news articles to understand public sentiment on a particular issue. So, how does this help you? You can build smarter research tools or automate tedious data synthesis tasks, freeing up your development time for more creative problem-solving.

Product Core Function

· Concurrent Research Threads: Allows the agent to explore multiple research threads concurrently, mimicking sophisticated human research strategies, leading to more comprehensive and nuanced insights. This helps you uncover connections you might have missed.

· Autonomous Information Synthesis: Automatically processes and summarizes gathered information, distilling complex datasets into digestible insights. This saves you the time and effort of manually reading and summarizing large volumes of text.

· Adaptive Research Strategy: Dynamically adjusts its research approach based on early findings, prioritizing more promising avenues and avoiding unproductive paths. This ensures your research stays focused and efficient, getting you to the answers you need faster.

· Pitfall Identification and Mitigation: The project openly discusses the challenges encountered, providing practical lessons for building robust research agents. This learning accelerates your own development process and helps you avoid common mistakes.
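One way to picture the adaptive research strategy listed above is as a best-first search over open questions: the agent always expands whichever thread currently looks most promising and drops the rest once its budget runs out. The sketch below is an assumption about how such a loop could be structured, not the project's code. The `run_research` function, the `explore` callback, and the scores are hypothetical, and the lookup table stands in for real tool calls with made-up placeholder content.

```python
import heapq


def run_research(seed_question, explore, budget):
    """Best-first exploration: always expand the most promising open thread."""
    # heapq is a min-heap, so scores are negated to pop the highest score first.
    frontier = [(-1.0, seed_question)]
    seen = {seed_question}
    findings = []
    while frontier and len(findings) < budget:
        _, question = heapq.heappop(frontier)
        summary, follow_ups = explore(question)
        findings.append((question, summary))
        for score, follow_up in follow_ups:
            if follow_up not in seen:
                seen.add(follow_up)
                heapq.heappush(frontier, (-score, follow_up))
    return findings


# Stand-in for real search and summarization: question -> (summary, scored follow-ups).
FAKE_SOURCES = {
    "topic overview": ("placeholder summary A", [(0.9, "key mechanism"), (0.2, "background")]),
    "key mechanism": ("placeholder summary B", [(0.6, "open problems")]),
    "background": ("placeholder summary C", []),
    "open problems": ("placeholder summary D", []),
}


def fake_explore(question):
    return FAKE_SOURCES[question]


findings = run_research("topic overview", fake_explore, budget=3)
for question, summary in findings:
    print(f"{question}: {summary}")
```

With a budget of three steps, the low-scoring "background" thread is never explored, which mirrors the idea of prioritizing promising avenues and avoiding unproductive ones.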

Product Usage Case

· Automating academic literature reviews for a specific field, allowing researchers to quickly grasp the state of the art and identify research gaps. This saves researchers countless hours of sifting through papers.

· Building a market intelligence tool that constantly monitors industry news and competitor activities, providing executives with real-time insights into market dynamics. This helps businesses stay competitive by understanding their landscape.

· Developing a system to summarize complex legal documents or regulatory updates, enabling legal professionals to quickly understand critical changes. This streamlines legal analysis and reduces the risk of overlooking important details.

· Creating a personalized learning assistant that can research and explain complex concepts based on user queries, adapting its explanations to the user's understanding. This makes learning more accessible and efficient for individuals.

7

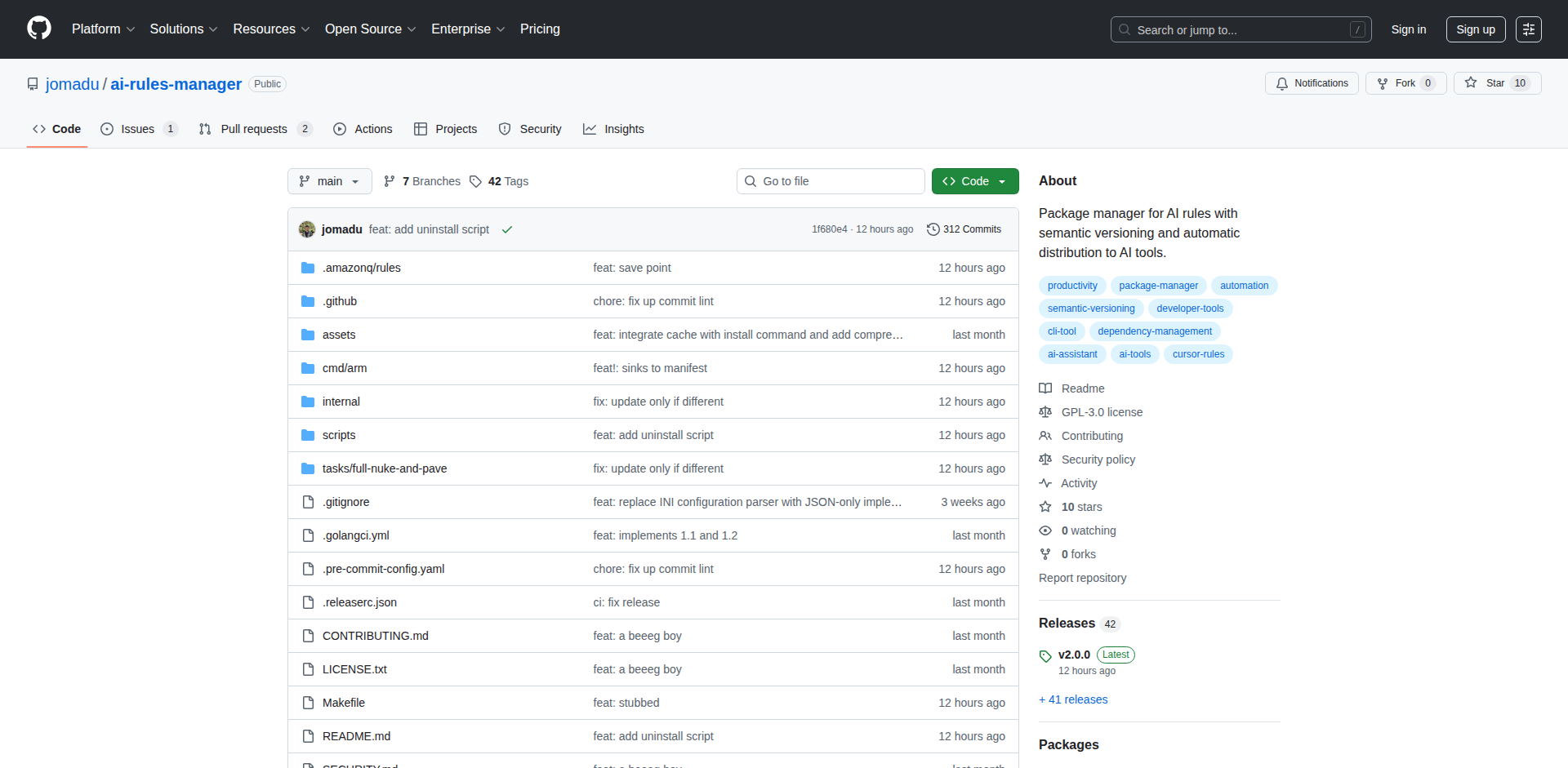

AI Rules Manager (ARM)

Author

jomadu

Description

This project introduces a novel way to manage AI rules for coding assistants like Cursor, GitHub Copilot, and Amazon Q. Instead of manually copying and pasting rule files, ARM treats AI rules as versioned dependencies, similar to how JavaScript developers manage packages with npm. It solves the chaos of inconsistent and outdated AI behavior across projects by enabling version control, updates, and shared management of AI rule sets, ensuring predictable and reliable AI assistance.

Popularity

Points 12

Comments 3

What is this product?

AI Rules Manager (ARM) is a tool that brings dependency management principles to AI interaction rules. Think of it like a system for managing software libraries, but for the instructions that guide AI coding assistants. Currently, when people want to customize AI behavior for specific tasks (like writing Python code or reviewing specific types of errors), they often copy-paste rule files. This leads to problems: rules become outdated, it's hard to share consistent configurations, and you don't know if a rule change will break things. ARM solves this by allowing you to 'install' AI rules from repositories (like Git), manage different versions of these rules, and update them predictably, just like you update your project's dependencies. The innovation lies in treating AI instructions as first-class, manageable code artifacts, bringing order and reliability to AI customization.

How to use it?

Developers can integrate ARM into their workflow by first installing the tool (usually via a simple script provided on GitHub). Once installed, they configure ARM to connect to a registry where AI rule sets are stored, such as a Git repository. Then, they specify where these rules should be applied (e.g., in a specific directory for Cursor). They can then 'install' specific AI rule sets (e.g., 'python' rules from an 'awesome-cursorrules' collection). ARM creates configuration files (similar to package.json) to track these dependencies. When the AI rule set in the registry is updated, developers can choose to 'update' their local rules, ensuring their AI assistant benefits from the latest improvements while maintaining control over when these changes are applied. This ensures consistency and manageability of AI behavior across different projects and team members.
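The manifest-plus-lock-file idea can be sketched as a tiny version resolver. The real layout of ARM's configuration files is not shown in this write-up, so the `rulesets` key, the constraint syntax, and the registry data below are all assumptions for illustration. The caret rule here is also simplified: it accepts any version with the same major number that is at least the stated base.

```python
import json


def parse_version(text: str) -> tuple[int, int, int]:
    """Turn '1.4.3' into (1, 4, 3) so versions compare numerically."""
    major, minor, patch = (int(part) for part in text.split("."))
    return major, minor, patch


def resolve(constraint: str, available: list[str]) -> str:
    """Pick the highest available version that satisfies the constraint."""
    if constraint.startswith("^"):
        base = parse_version(constraint[1:])
        candidates = [
            version
            for version in available
            if parse_version(version)[0] == base[0] and parse_version(version) >= base
        ]
    else:
        candidates = [version for version in available if version == constraint]  # exact pin
    if not candidates:
        raise LookupError(f"no version satisfies {constraint!r}")
    return max(candidates, key=parse_version)


def build_lock(manifest: dict, registry_tags: dict) -> dict:
    """Resolve every rule set in the manifest to one exact version."""
    return {
        name: resolve(constraint, registry_tags[name])
        for name, constraint in manifest["rulesets"].items()
    }


# Hypothetical manifest and the version tags a Git registry might expose.
MANIFEST = {"rulesets": {"python": "^1.2.0", "sql": "2.0.1"}}
REGISTRY_TAGS = {
    "python": ["1.1.0", "1.2.0", "1.4.3", "2.0.0"],
    "sql": ["2.0.0", "2.0.1", "2.1.0"],
}

lock = build_lock(MANIFEST, REGISTRY_TAGS)
print(json.dumps(lock, indent=2))
```

Committing the resolved versions alongside the manifest is what makes a setup reproducible: a teammate who installs from the lock data gets exactly the same rule versions, even if the registry has published newer ones since.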

Product Core Function

· Versioned AI Rule Installation: Allows developers to install specific versions of AI rule sets, ensuring predictable AI behavior and enabling rollbacks if needed. This is valuable for maintaining stable AI assistance in production environments.

· Git Registry Integration: Connects to Git repositories to fetch AI rule sets, leveraging existing version control systems for storing and distributing rules. This makes sharing and collaborating on AI rules straightforward and secure.

· Cross-AI Tool Compatibility: Supports targeting multiple AI tools (Cursor, Copilot, Amazon Q) with different rule layouts. This means a single set of managed rules can be adapted and used across various AI coding assistants, increasing efficiency and reducing duplication.

· Dependency Management Files: Generates arm.json and arm-lock.json files to track AI rule dependencies and their exact versions. This provides transparency and reproducibility of AI configurations for teams.

· Rule Set Updates: Enables developers to update AI rule sets when new versions are available in the registry, similar to updating software dependencies. This allows teams to easily benefit from improvements and bug fixes in AI rules.
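
The manifest-plus-lock idea behind the functions above can be sketched in a few lines. This is only an illustration of version pinning in general: the rule set names, the version tags, and the "highest version within a major line" policy are assumptions made for the example, and the dictionaries below are not ARM's actual arm.json or arm-lock.json format.

```python
import json

def parse_version(text):
    """Turn '1.10.1' into (1, 10, 1) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))

def resolve(available, major):
    """Pick the highest available version within the requested major line."""
    candidates = [v for v in available if parse_version(v)[0] == major]
    if not candidates:
        raise ValueError(f"no version found for major {major}")
    return max(candidates, key=parse_version)

def build_lock(manifest, registry):
    """Map each rule set in the manifest to one exact, pinned version."""
    return {name: resolve(registry[name], major) for name, major in manifest.items()}

# Hypothetical data: names and tags are invented for illustration.
manifest = {"python-rules": 1, "security-rules": 2}
registry = {
    "python-rules": ["1.0.0", "1.2.0", "1.10.1", "2.0.0"],
    "security-rules": ["2.0.0", "2.0.3"],
}

lock = build_lock(manifest, registry)
print(json.dumps(lock, indent=2, sort_keys=True))
```

Because the lock records exact versions, two teammates resolving the same manifest against the same registry end up with identical rules, and a downgrade is just a change to the pinned value.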

Product Usage Case

· A team working on a Python project can use ARM to install a curated set of Python-specific AI coding rules from a shared Git repository. This ensures all team members have consistent AI assistance for Python code generation and review, improving code quality and reducing integration issues.

· An individual developer customizing GitHub Copilot for web development can install versioned sets of AI rules for HTML, CSS, and JavaScript. If a new, beneficial set of JavaScript rules is released, they can update their setup with a simple command, instantly improving Copilot's performance without manual file management.

· A company can maintain a private Git repository of company-specific AI rules for security code analysis. Developers across different projects can then install these approved rules, ensuring compliance and consistent security posture enforced by their AI assistants.

· When an AI rule set is found to have a bug that negatively impacts AI output, developers can quickly 'downgrade' to a previously known good version of the rule set using ARM, minimizing disruption to their workflow.

· A developer experimenting with custom AI prompts for a specific task, like optimizing SQL queries, can package these prompts as a shareable AI rule set. Other developers can then easily install and use these optimized prompts via ARM, fostering a community of AI-powered efficiency.

8

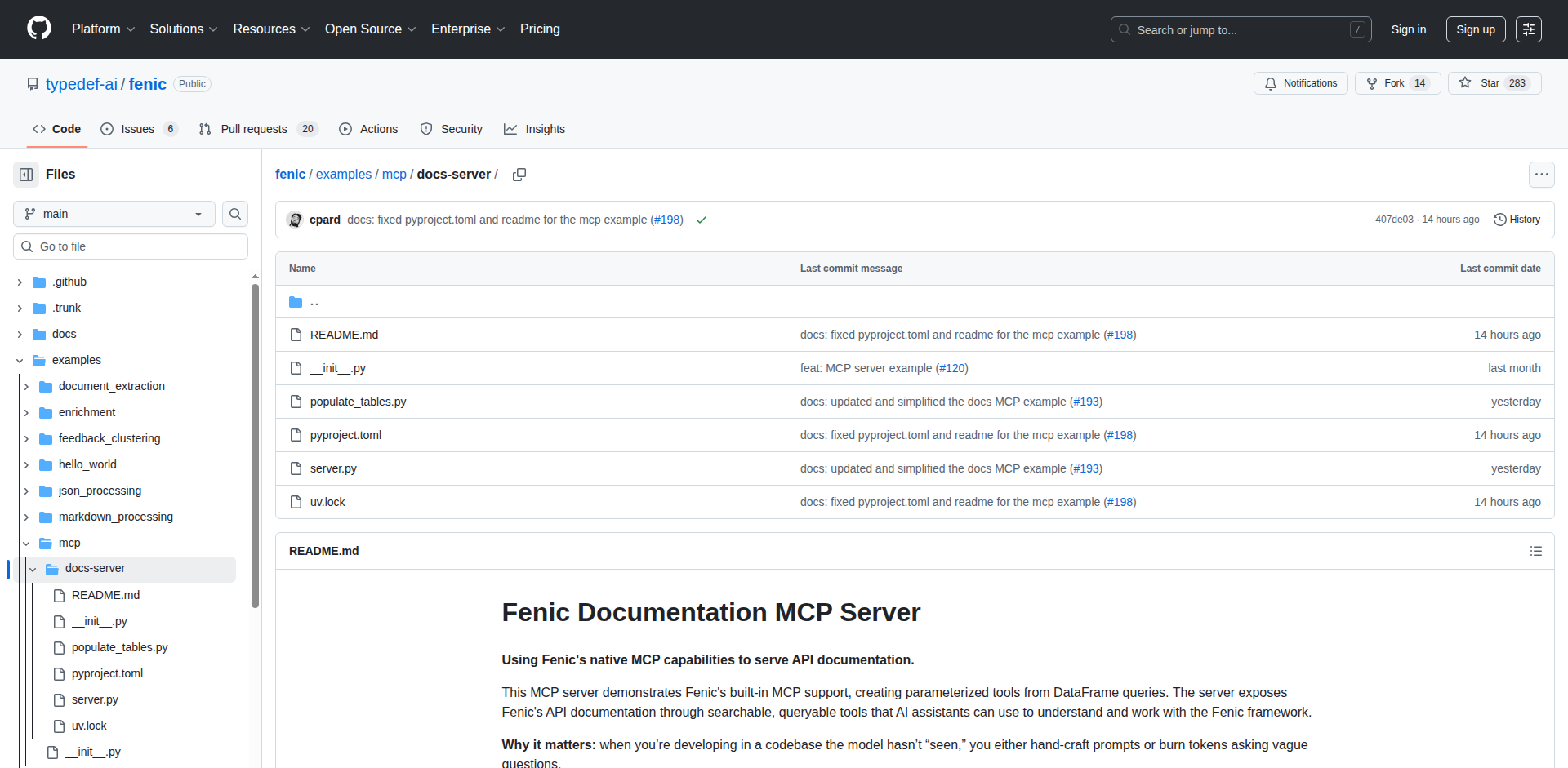

Codebase MCP Engine

Author

cpard

Description

This project provides a reference implementation of an MCP (Model Context Protocol) server that lets AI models work directly from source code, including signatures, types, Abstract Syntax Trees (AST), and comments. It aims to improve developer experience by grounding AI answers in actual code, bypassing the need for often outdated or mediocre generated documentation. The core innovation lies in structuring code information for AI consumption, making it readily available and switchable across different code versions or branches.

Popularity

Points 13

Comments 1

What is this product?

This is an MCP (Model Context Protocol) server that transforms a codebase into a structured data source for AI models. Instead of relying solely on text-based documentation, which can be hard to maintain and often lags behind actual code, this system ingests raw code elements like function definitions, variable types, code structure (AST), and comments. It then exposes this processed code information via an HTTP endpoint, essentially making the entire codebase accessible to AI agents. The innovation is in providing AI with a direct, structured understanding of the code, leading to more accurate and contextually relevant answers and assistance, rather than abstract, potentially inaccurate, generated prose. So, what's in it for you? It means your AI assistant can answer from the source of truth, the code itself, rather than from documentation that may have drifted.

How to use it?

Developers can integrate this project into their AI-powered development workflows by pointing their AI agent or editor to the provided HTTP endpoint. For example, using a tool like Claude Code, a developer can add the server's address (e.g., `https://mcp.fenic.ai`) as a data source. Once added, they can then query the AI with natural language questions about the codebase, such as 'What does this function do?' or 'How is this class structured?'. The AI agent will then use the information served by the MCP engine to provide an informed answer based directly on the code. For local experimentation and deeper customization, the project offers example implementations and setup guides. So, how can you use it? You can connect your favorite AI coding tools to your project's codebase to get instant, code-accurate answers and assistance.

Product Core Function

· Codebase Ingestion and Parsing: Processes source code files to extract key information like function signatures, data types, AST structure, and comments. This provides a foundational understanding of the code, enabling AI to grasp the 'what' and 'how' of your project.

· Structured Data Exposure via HTTP Server: Serves the extracted codebase information over a standard HTTP API. This makes the structured code data accessible to any AI agent or tool that can consume web services, allowing for seamless integration into existing workflows.

· Version/Branch Awareness: The system can be configured to index and serve information from different versions or branches of a codebase. This allows AI assistants to understand code in the context of specific releases or development stages, ensuring relevance and accuracy across project evolution.

· AI Agent Integration Ready: Designed to be a data source for AI agents, facilitating tasks like code explanation, debugging assistance, and code generation based on direct code context. This directly translates to enhanced developer productivity and faster problem-solving.
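
To make the ingestion step concrete, here is a minimal sketch of pulling signatures, type annotations, and docstrings out of Python source with the standard library's `ast` module. It is an assumption-laden illustration, not the project's implementation: the function name `describe_functions` and the output shape are invented here, and only plain positional parameters are covered.

```python
import ast

def describe_functions(source):
    """Return name, annotated parameters, return type and docstring per function."""
    results = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            params = []
            # Only plain positional parameters; *args, **kwargs and
            # keyword-only parameters are left out of this sketch.
            for arg in node.args.args:
                annotation = ast.unparse(arg.annotation) if arg.annotation else None
                params.append((arg.arg, annotation))
            results.append({
                "name": node.name,
                "params": params,
                "returns": ast.unparse(node.returns) if node.returns else None,
                "doc": ast.get_docstring(node),
            })
    return results

SAMPLE = '''
def area(width: float, height: float) -> float:
    """Return the area of a rectangle."""
    return width * height
'''

info = describe_functions(SAMPLE)
print(info)
```

Records like these are what a server could hand to an AI agent in place of prose documentation. `ast.unparse` requires Python 3.9 or later.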

Product Usage Case

· AI-powered code explanation: A developer is looking at an unfamiliar function in a large legacy codebase. Instead of digging through scattered documentation or guessing, they ask their AI assistant (connected to the Codebase MCP Engine), 'Explain this function and its parameters.' The AI, having directly parsed the function's signature, AST, and comments, provides a precise explanation, saving the developer hours of investigation.

· Onboarding new team members: A new developer joins a project. They can use the AI assistant, powered by the Codebase MCP Engine, to ask questions like 'What are the main modules in this project?' or 'How do I use the user authentication service?' The AI can provide accurate answers by referencing the codebase structure and relevant code snippets, significantly speeding up the onboarding process.

· Debugging assistance across branches: A bug is reported in production. The development team is working on a new feature in a separate branch. The AI assistant, switching its context to the production branch via the MCP server, can help analyze the bug by understanding the exact code that was deployed, even before the team manually checks out that specific branch.

9

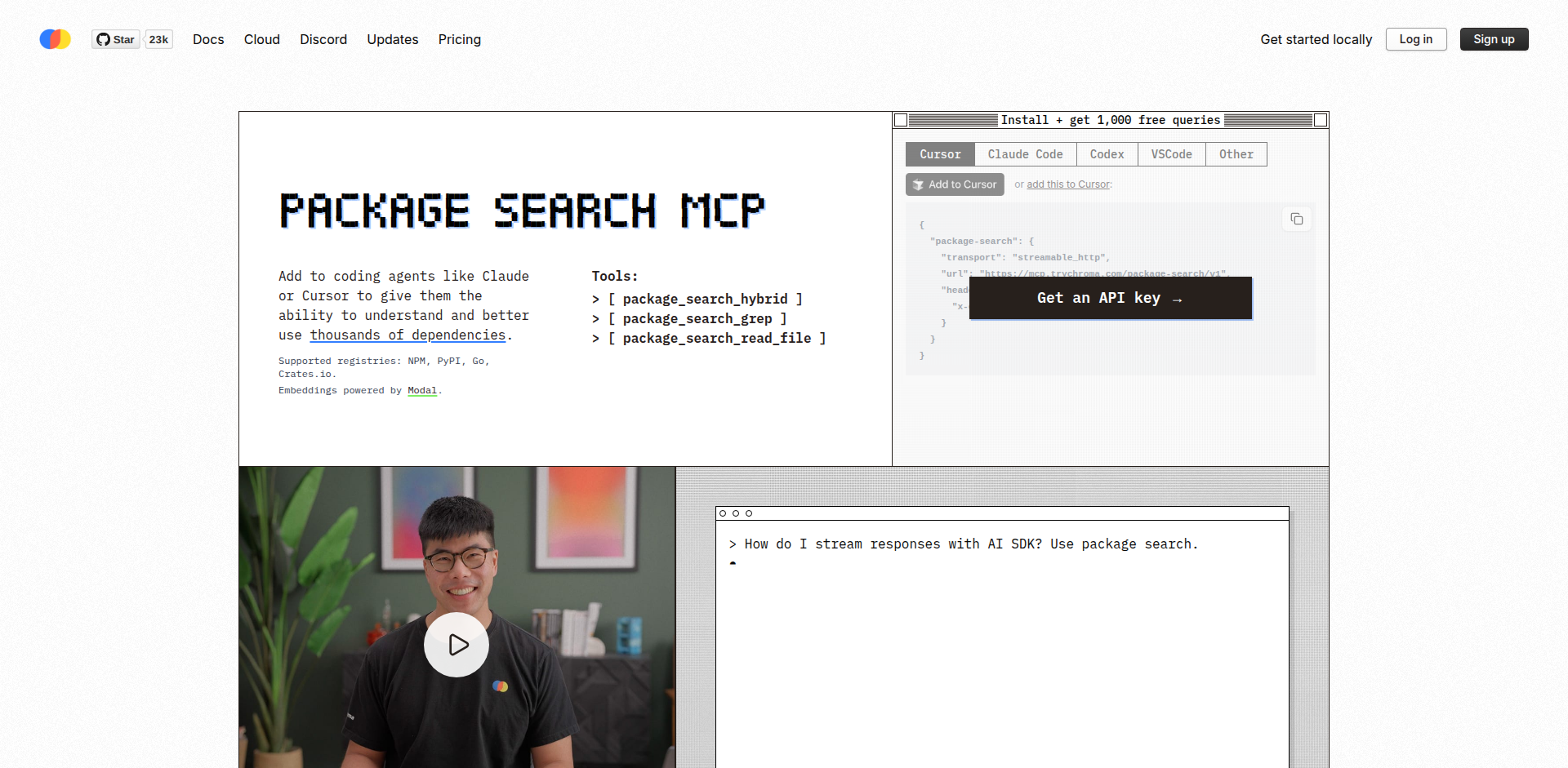

Dependency Insight Engine

Author

HammadB

Description

A tool that indexes and semantically searches public software dependencies across NPM, PyPI, Go, and Crates.io. It uses Tree-sitter for code parsing and Qwen3-Embedding for generating searchable representations, enabling AI agents to understand and utilize dependencies more effectively, thus solving the problem of AI systems hallucinating about dependencies.

Popularity

Points 12

Comments 0

What is this product?

This is a service that makes it easier for AI coding assistants (like AI code completion bots or PR review agents) to understand and use external code libraries (dependencies). Many AI tools struggle because they only understand the code you write directly, not the vast amount of code in the libraries your project relies on. This engine solves that by ingesting, parsing with Tree-sitter (a tool that understands code structure), and then creating searchable 'embeddings' (numerical representations that capture meaning) of these public dependencies using Qwen3-Embedding. It then indexes these across different versions of each dependency. This means your AI assistant can 'look up' information within these dependencies, making it much smarter about how to use them without making things up (hallucinating).

How to use it?

Developers can integrate this engine into their AI coding agents or AI SDKs (like Cursor, Claude, Codex, OpenAI). By simply adding the Package Search MCP server to their agent's configuration, the AI will immediately gain enhanced knowledge of dependencies. When the AI needs to find information within a dependency, it can be prompted to 'Use package search', and it will automatically know where to look for relevant code and information within indexed public libraries. This dramatically improves the AI's ability to write accurate and efficient code that leverages existing libraries.

Product Core Function

· Dependency Indexing: Ingests and organizes public code dependencies from multiple sources (NPM, PyPI, Go, Crates.io) across various versions. This is valuable because it creates a structured knowledge base of external code, preventing AI from guessing or inventing dependency behavior.

· Code Parsing with Tree-sitter: Analyzes the structure of source code for dependencies. This is valuable as it allows for a deeper understanding of code relationships and syntax, which is crucial for semantic search and accurate AI reasoning.

· Semantic Embedding with Qwen3-Embedding: Converts code into meaningful numerical representations that capture context and meaning. This is valuable because it enables AI to understand the 'intent' and functionality of code snippets, going beyond simple keyword matching.

· Semantic Search Interface: Provides a way for AI agents to perform natural language queries against the indexed dependency code. This is valuable because it allows developers to ask AI questions like 'How do I use this function from library X?' and get accurate, code-aware answers.

· Versioned Indexing: Indexes each specific version of a dependency independently. This is valuable for ensuring AI's recommendations are consistent with the exact versions of libraries being used in a project, avoiding compatibility issues.
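
The combination of embeddings, semantic search, and per-version indexes can be sketched with a toy example. Everything here is a stand-in: the `embed` function uses simple word counts instead of a real embedding model such as Qwen3-Embedding, and the index contents and symbol names are hypothetical.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy embedding: lowercase word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def search(query, index, version):
    """Rank symbols indexed under one dependency version by similarity to the query."""
    query_vec = embed(query)
    scored = [(cosine(query_vec, embed(text)), name) for name, text in index[version].items()]
    return [name for score, name in sorted(scored, reverse=True) if score > 0]

# Hypothetical index: package version -> {symbol: description}
INDEX = {
    "1.0": {
        "read_csv": "read a csv file into a table",
        "to_json": "write a table to a json string",
    },
    "2.0": {
        "read_csv": "read a csv file into a table",
        "read_parquet": "read a parquet file into a table",
    },
}

results = search("read parquet file", INDEX, "2.0")
print(results)
```

Searching the "1.0" index with the same query never returns `read_parquet`, which is the point of indexing each version separately: the agent cannot be steered toward an API that does not exist in the version a project actually uses.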

Product Usage Case

· AI Code Assistant for Python: An AI assistant integrated with Dependency Insight Engine can accurately suggest how to use specific functions from the 'pandas' library in PyPI, providing code examples and explanations based on the actual source code of different pandas versions, thereby preventing incorrect API usage.

· Automated PR Review Bot: A bot that uses this engine can verify if the code changes in a pull request correctly utilize specific features of a Go dependency. If the developer attempts to use a deprecated function or an incorrect parameter, the bot can flag it by understanding the dependency's API through semantic search.

· Code Autocompletion Tool: When a developer is writing code that uses an NPM package, the autocompletion tool powered by this engine can provide highly relevant suggestions for method calls and parameters by semantically understanding the package's source code, leading to faster and more accurate coding.

· Debugging Agent: An AI agent tasked with debugging a complex application that relies heavily on various Rust crates from Crates.io can leverage the engine to search for known issues or common patterns of misuse within those dependencies, significantly speeding up the debugging process.

10

Flox-NixCUDA

Author

ronef

Description

Flox is a project that integrates NVIDIA's CUDA toolkit into the Nix ecosystem, making CUDA-accelerated software easily accessible to Nix users. Previously, getting CUDA to work on Nix was complex and time-consuming. Flox simplifies this by allowing developers to declare CUDA dependencies directly in their Nix configurations, enabling them to seamlessly use powerful tools like PyTorch, TensorFlow, and TensorRT without manual setup headaches. This innovation addresses a significant pain point for developers working with machine learning and high-performance computing on Nix.

Popularity

Points 9

Comments 0

What is this product?

Flox is a solution that brings NVIDIA's CUDA, the company's parallel computing platform for its graphics processing units (GPUs), to the Nix package management system. Nix is known for its reliable and reproducible builds, but integrating complex software like CUDA has historically been challenging. Flox's innovation lies in its ability to package and distribute prebuilt, pre-patched CUDA software directly through Nix's infrastructure. This means developers can simply specify CUDA as a dependency in their Nix expressions (like `shell.nix` or flakes) and have access to CUDA-enabled libraries and applications without building them locally. This approach leverages Nix's declarative nature to solve the problem of complex software dependency management for GPU computing, a significant hurdle for many in the AI and HPC communities.

How to use it?

Developers using Nix can integrate Flox by adding Flox's package cache to the `extra-substituters` setting in their Nix configuration (e.g., `nix.conf` or `configuration.nix`). Once configured, they can then declare CUDA dependencies in their project's Nix expressions. For instance, a developer wanting to use PyTorch with CUDA acceleration would add PyTorch and the necessary CUDA libraries to their `shell.nix` or flake. Nix will then fetch and manage these dependencies, ensuring a consistent and reproducible environment with CUDA capabilities. This eliminates the need for manual installation, compilation, or complex environment variable setups typically associated with CUDA on Linux.

Product Core Function

· CUDA Toolkit Distribution: Allows Nix users to access prebuilt CUDA toolkits directly via Nix. This means developers don't have to manually download and install NVIDIA drivers and CUDA libraries, saving significant setup time and reducing the risk of configuration errors. The value is in immediate access to GPU computing power.

· CUDA-Accelerated Package Availability: Provides access to popular machine learning and scientific computing packages (like PyTorch, TensorFlow, TensorRT, OpenCV) that are pre-compiled and optimized with CUDA support. This enables developers to leverage GPU acceleration for their applications out-of-the-box, dramatically speeding up computation and experimentation. The value is in enabling faster AI model training and complex simulations.

· Reproducible CUDA Environments: Leverages Nix's core principle of reproducibility to ensure that CUDA-enabled environments are consistent across different machines and over time. Developers can be confident that their CUDA setup will work reliably, preventing "it works on my machine" scenarios and facilitating collaboration. The value is in predictable and reliable development workflows.

· Simplified Dependency Management: Integrates CUDA seamlessly into Nix's declarative dependency system. Developers specify what they need, and Nix handles the rest. This abstracts away the complexity of managing GPU software dependencies, making advanced computing tools more accessible. The value is in lowering the barrier to entry for GPU computing.

Product Usage Case

· Machine Learning Development: A data scientist using Nix to manage their development environment can add Flox's cache and then declare PyTorch with CUDA support in their `shell.nix`. This allows them to train deep learning models significantly faster on their GPU without manually configuring CUDA drivers or libraries, directly addressing the need for efficient model training.

· Scientific Simulation: A researcher working on complex physics simulations that require GPU acceleration can use Flox to ensure their Nix environment includes the necessary CUDA libraries and CUDA-enabled scientific software. This facilitates reproducible research and accelerates computation-intensive tasks, solving the problem of setting up complex scientific software stacks.

· High-Performance Computing (HPC) Projects: Developers building HPC applications on Nix can use Flox to easily access and integrate CUDA-accelerated libraries like cuFFT or cuBLAS. This simplifies the process of building and deploying high-performance applications, allowing them to take full advantage of GPU capabilities without intricate manual setups.

· Containerized GPU Workloads: For developers using Nix to build container images for GPU workloads, Flox simplifies the process of including CUDA. Instead of complex scripting to install NVIDIA drivers and toolkits within a container, they can declaratively add CUDA dependencies, ensuring reproducible and efficient GPU-enabled containers.

11

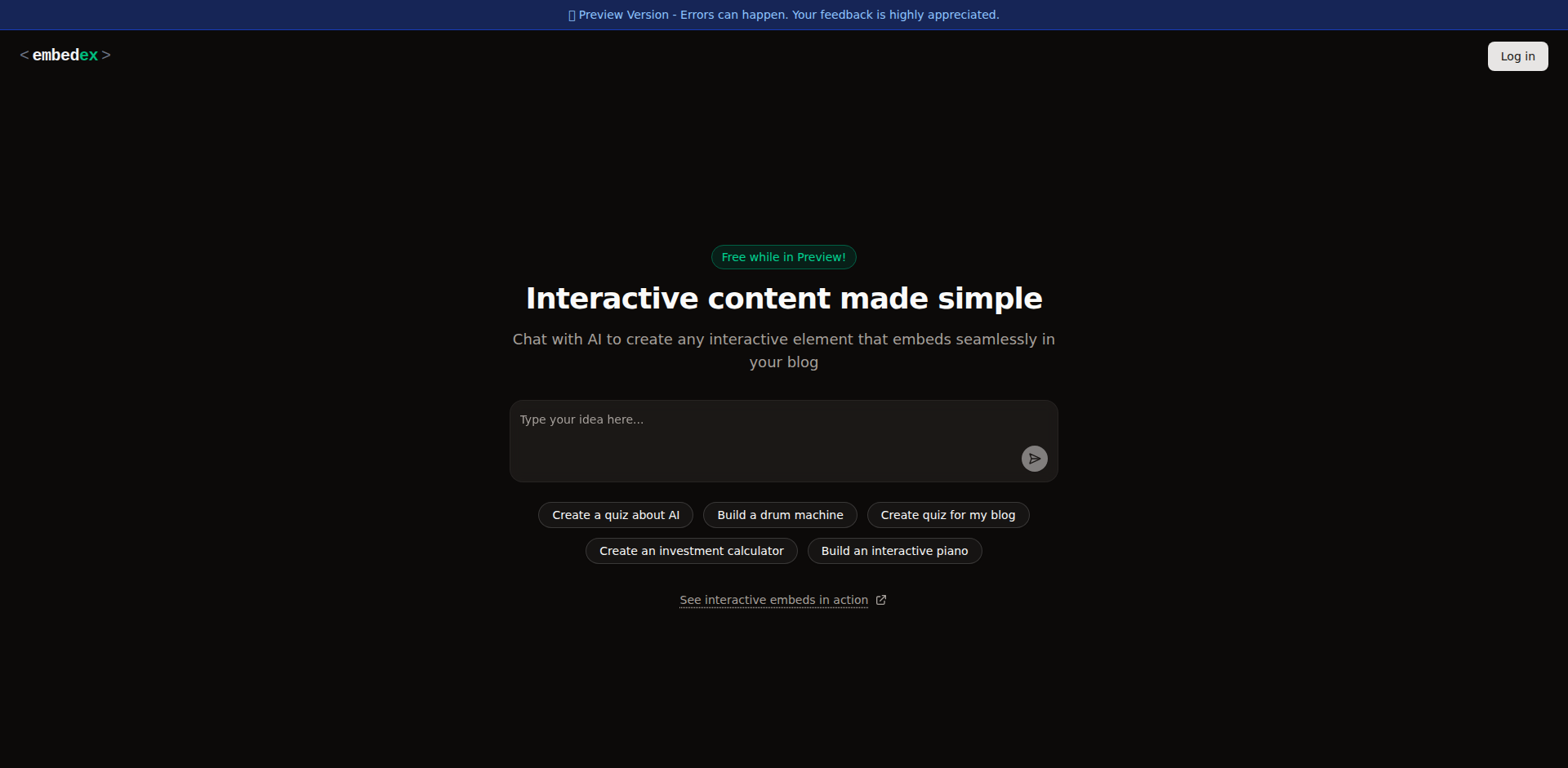

AI-Powered Interactive Embed Builder

Author

BohdanPetryshyn

Description

This project is an AI-driven tool that empowers bloggers and content creators to easily embed interactive elements like quizzes, calculators, and mini-games into their blog posts. Instead of complex coding, users can simply describe their desired interactive element to an AI, which then generates the embeddable code. This democratizes the creation of engaging, dynamic content for websites, making blogs more engaging for readers.

Popularity

Points 6

Comments 2

What is this product?

This is an AI tool that translates your ideas for interactive web content into functional, embeddable code. Think of it as a no-code way to add engaging features to your blog. The core innovation lies in using AI to understand your natural language descriptions of interactive elements and translating them into the necessary HTML, CSS, and JavaScript. This bypasses the need for developers to manually write code for custom widgets, making interactive content creation accessible to anyone. For example, if you want a quiz, you describe the questions and answers, and the AI builds it.

How to use it?

Developers and bloggers can use this tool by visiting the Embedex website and describing, in natural language, the interactive element they want to create. For instance, you can ask for 'a quiz about space facts with multiple-choice answers' or 'a simple investment calculator that takes initial deposit and monthly contributions'. Once the AI generates the interactive element, you'll receive a piece of code that can be directly copied and pasted into an HTML or embed block on most blogging platforms, including WordPress, Ghost, Wix, and Squarespace. This means you can easily add interactive content to your existing blog without needing to modify your website's theme or architecture.

Product Core Function

· AI-powered interactive element generation: Allows users to create quizzes, calculators, and games by simply describing them in plain language, reducing the need for coding expertise and enabling rapid prototyping of engaging content.

· Cross-platform embeddability: Generates code that is compatible with major blogging platforms and website builders, making it easy for anyone to integrate interactive content into their existing online presence without complex setup.

· Variety of interactive types: Supports the creation of diverse interactive content, from educational quizzes and financial tools to simple games, enhancing reader engagement and providing unique value to blog posts.

· User-friendly interface: Focuses on a conversational AI interaction, abstracting away the technical complexities of web development and making interactive content creation accessible to a broader audience.

Product Usage Case

· A blogger wants to create a 'Which historical figure are you?' quiz for their history blog. They describe the quiz to the AI, and Embedex generates the code, which the blogger then embeds into a post. Readers can interact with the quiz directly on the blog, increasing engagement and time spent on the page.

· A financial advisor wants to offer a simple retirement savings calculator on their personal finance blog. Instead of hiring a developer, they use Embedex to generate the calculator by describing its input fields (initial savings, monthly contribution, interest rate) and output. The calculator is then embedded into their blog posts, providing a useful tool for readers.

· A game developer wants to showcase a simple interactive puzzle game as part of a blog post explaining game design principles. They use Embedex to quickly generate the embeddable game code, allowing readers to play and experience the concept directly within the article.

12

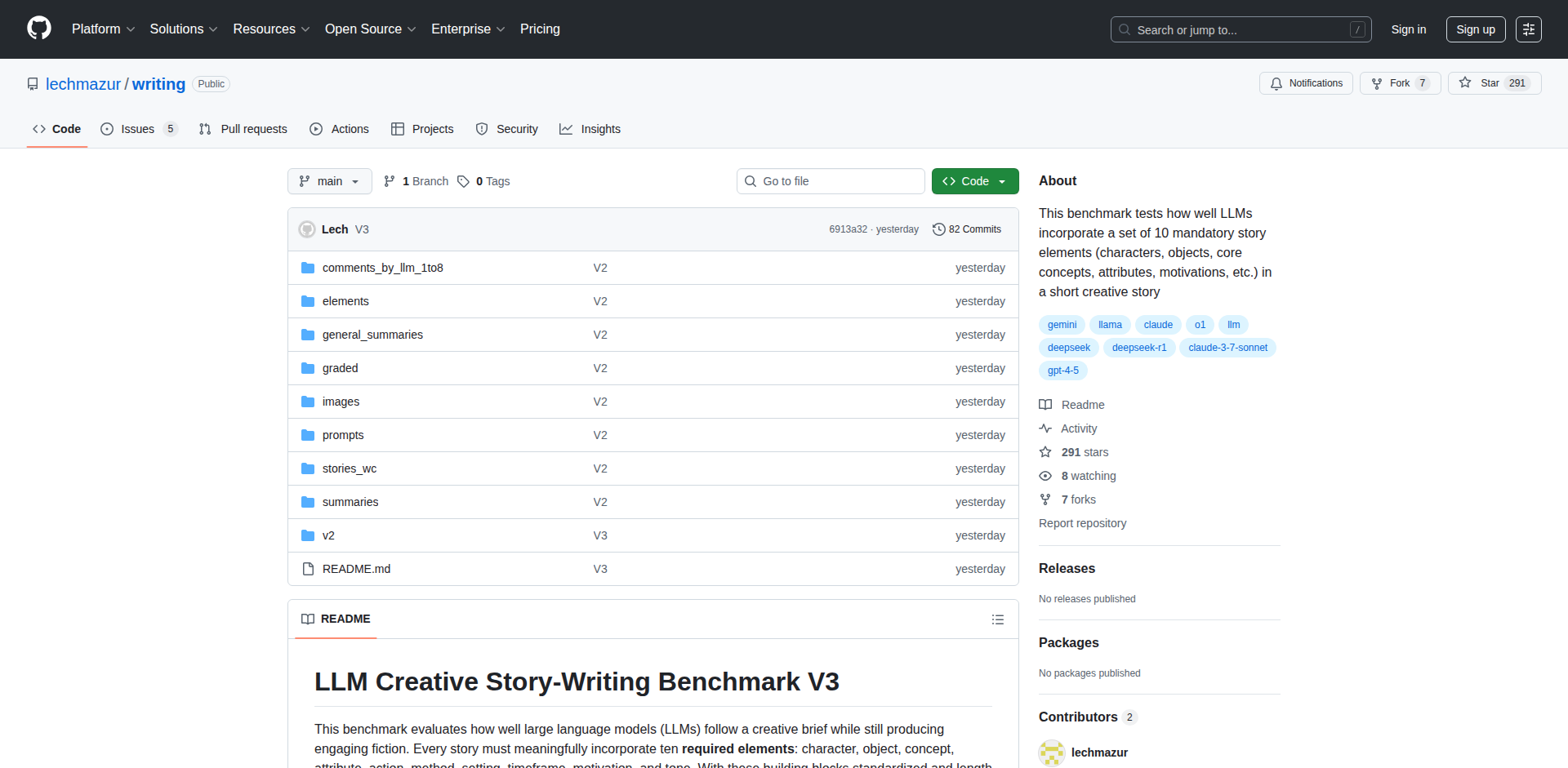

LLM Creative Story-Writing Benchmark V3

Author

zone411

Description

This project presents a benchmark for evaluating the creative storytelling capabilities of Large Language Models (LLMs). It focuses on assessing how well LLMs can generate novel, coherent, and engaging narratives, addressing the challenge of quantifying creative output in AI. The innovation lies in its structured approach to testing LLM creativity, providing a standardized way to compare different models' storytelling prowess.

Popularity

Points 8

Comments 0

What is this product?

This project is a benchmark designed to measure the creative storytelling ability of AI language models (LLMs). Think of it as a standardized test for AI writers. LLMs are trained on vast amounts of text, but judging their creativity is tricky. This benchmark uses a set of specific prompts and evaluation criteria to objectively assess how well an LLM can write a compelling and original story. The innovation is in its systematic methodology for evaluating qualitative aspects of AI-generated text, moving beyond simple factual accuracy to explore imaginative generation.

How to use it?

Developers can use this benchmark to test and compare different LLMs for their creative writing potential. Imagine you're building an AI-powered storytelling app or a creative writing assistant. You would feed the same prompts from this benchmark into your chosen LLMs and then use the benchmark's evaluation framework to see which LLM produces the most creative and engaging stories. It's also useful for researchers who are developing new LLMs and want to understand their creative output.

Product Core Function

· Structured Prompting System: Provides a consistent set of creative writing prompts to ensure fair comparison between different LLMs. The value is in creating a level playing field for testing, so you can trust the results when comparing models.

· Evaluation Metrics for Creativity: Defines specific criteria to measure aspects like originality, coherence, and narrative engagement in AI-generated stories. This is valuable because it gives you concrete ways to judge if an AI's story is truly creative, not just a jumble of words.

· Comparative Analysis Framework: Offers a method to analyze and compare the performance of various LLMs on creative writing tasks. The value here is in helping you pick the best LLM for your creative writing projects, based on objective data.

· Reproducible Testing Environment: Aims to allow researchers and developers to run the same tests and get similar results, ensuring the benchmark's reliability. This is crucial for building trust in the evaluation results and for tracking progress in AI creativity.
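
As a rough illustration of how per-criterion scores could be turned into a model ranking, the sketch below averages rubric scores over prompts and criteria. The criterion names echo the ones mentioned above; the 1-10 scale, the model names, the numbers, and the plain average are all assumptions for the example and are not taken from the benchmark itself.

```python
from statistics import mean

def rank_models(scores):
    """Average each model's rubric scores over all prompts and criteria, best first."""
    averages = {
        model: mean(value for per_prompt in prompts.values() for value in per_prompt.values())
        for model, prompts in scores.items()
    }
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)

# Hypothetical scores on a 1-10 scale: model -> prompt -> criterion -> score
SCORES = {
    "model-a": {
        "p1": {"originality": 7, "coherence": 9},
        "p2": {"originality": 6, "coherence": 8},
    },
    "model-b": {
        "p1": {"originality": 8, "coherence": 8},
        "p2": {"originality": 9, "coherence": 7},
    },
}

ranking = rank_models(SCORES)
print(ranking)
```

Feeding every model the same prompts and scoring against the same criteria is what makes the averages comparable; changing either for one model would invalidate the ranking.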

Product Usage Case

· A game developer wants to integrate AI-generated plot lines into their game. They can use this benchmark to test various LLMs and select the one that produces the most unique and interesting story arcs for their game world.

· A content creation platform aims to use AI to generate marketing copy that is more engaging and imaginative. By using this benchmark, they can identify LLMs that excel at creative copywriting and integrate them into their platform.

· A researcher studying the evolution of AI language capabilities can use this benchmark to track improvements in LLM creativity over time and understand the impact of new training techniques on storytelling.

13

AgentFlow: The Universal App Orchestrator

Author

emilwagman

Description

AgentFlow is an AI-powered assistant designed to bridge the gap between human intent and the vast landscape of software applications. It leverages a powerful orchestration engine and over 200 pre-built app connectors to automate complex workflows across different services. The core innovation lies in its ability to understand natural language commands and translate them into actionable sequences of operations executed by various applications, effectively creating a 'personal AI agent' for productivity.

Popularity

Points 4

Comments 4

What is this product?

AgentFlow is an intelligent automation platform that acts as a central hub for your digital tools. At its heart, it's an AI assistant that understands what you want to achieve, like 'plan my trip' or 'summarize my meetings'. It then uses its extensive library of over 200 app connectors – think of these as special plugins for popular apps like Google Calendar, Slack, Notion, Gmail, and many more – to execute these tasks. The innovation is in how it intelligently chains these app operations together, often in ways you wouldn't manually orchestrate, to achieve a complex goal. Instead of you logging into multiple apps and performing step-by-step actions, AgentFlow does it for you, powered by AI understanding your request.

How to use it?

Developers can integrate AgentFlow into their workflows by connecting their existing accounts for supported applications. For example, if you use Google Calendar and Slack, you can grant AgentFlow access to both. Then, you can simply tell AgentFlow, 'Remind me to review the Q3 sales report in Slack tomorrow morning, linking to the relevant document in Google Drive.' AgentFlow will understand this, find the sales report in your Drive, and schedule a reminder in Slack. It's designed to be a proactive assistant, reducing context switching and manual labor between different tools.

Product Core Function

· Natural Language Understanding for Command Execution: This allows users to express complex tasks in plain English, which the AI then parses to identify the required actions and the applications involved. The value is in enabling intuitive control over automated processes without needing to learn specific scripting languages.

· Extensive App Connector Library (200+): This provides the foundational capability to interact with a wide range of popular productivity and business applications. The value is in offering broad compatibility and reducing the effort required to build cross-application workflows.

· Intelligent Workflow Orchestration: This is the core AI engine that determines the optimal sequence of operations across different apps to fulfill a user's request. The value is in automating multi-step processes that would otherwise be time-consuming and error-prone for humans.

· Proactive Task Automation: AgentFlow can monitor connected applications for certain events or triggers and initiate actions automatically. The value is in staying ahead of tasks and ensuring timely execution of important actions without direct user intervention.

· Contextual Awareness Across Applications: The AI maintains context about your data and interactions across different connected services. The value is in enabling more relevant and personalized automation, as the system understands relationships between your information.
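
The orchestration idea, chaining app operations so that one step's output feeds the next, can be sketched as a small plan executor. This is a hypothetical illustration only: the connectors are in-memory stand-ins, the plan is written by hand rather than produced by an AI, and none of the names reflect AgentFlow's real API.

```python
def run_plan(plan, connectors):
    """Execute steps in order; each step reads from and writes to a shared context."""
    context = {}
    for step in plan:
        action = connectors[step["app"]][step["action"]]
        context[step["save_as"]] = action(context, **step.get("args", {}))
    return context

# Hypothetical connectors standing in for real app integrations.
def find_file(context, name):
    return f"https://drive.example/{name}"

def schedule_reminder(context, text):
    # Uses the result of the earlier step, read from the shared context.
    return f"Reminder: {text} ({context['doc_url']})"

CONNECTORS = {
    "drive": {"find_file": find_file},
    "slack": {"schedule_reminder": schedule_reminder},
}

# In a real system an AI model would produce this plan from the user's request.
PLAN = [
    {"app": "drive", "action": "find_file",
     "args": {"name": "q3-sales-report"}, "save_as": "doc_url"},
    {"app": "slack", "action": "schedule_reminder",
     "args": {"text": "Review Q3 sales report"}, "save_as": "reminder"},
]

result = run_plan(PLAN, CONNECTORS)
print(result["reminder"])
```

Keeping the plan as plain data separates the two concerns described above: language understanding decides which steps to run, and the connector library decides how each step is carried out.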

Product Usage Case

· Automating meeting follow-ups: A developer could tell AgentFlow, 'After my Zoom meeting ends, summarize the key discussion points and send a follow-up email to attendees with action items listed in a Notion page.' AgentFlow would connect to Zoom, extract data, process it with AI, create a Notion entry, and then draft an email, all automatically.

· Streamlining project updates: A user might say, 'Compile my daily progress from Jira and my team's Slack channel, and post a summary to a dedicated project channel.' AgentFlow would fetch data from both platforms and consolidate it into a single update, saving significant manual effort.

· Personalized information retrieval: A developer needing to research a new API could ask, 'Find all relevant documentation and examples for the 'X' API from my saved bookmarks, GitHub repositories, and read-it-later queue, and create a single consolidated PDF.' AgentFlow would search across these sources and compile the information efficiently.

14

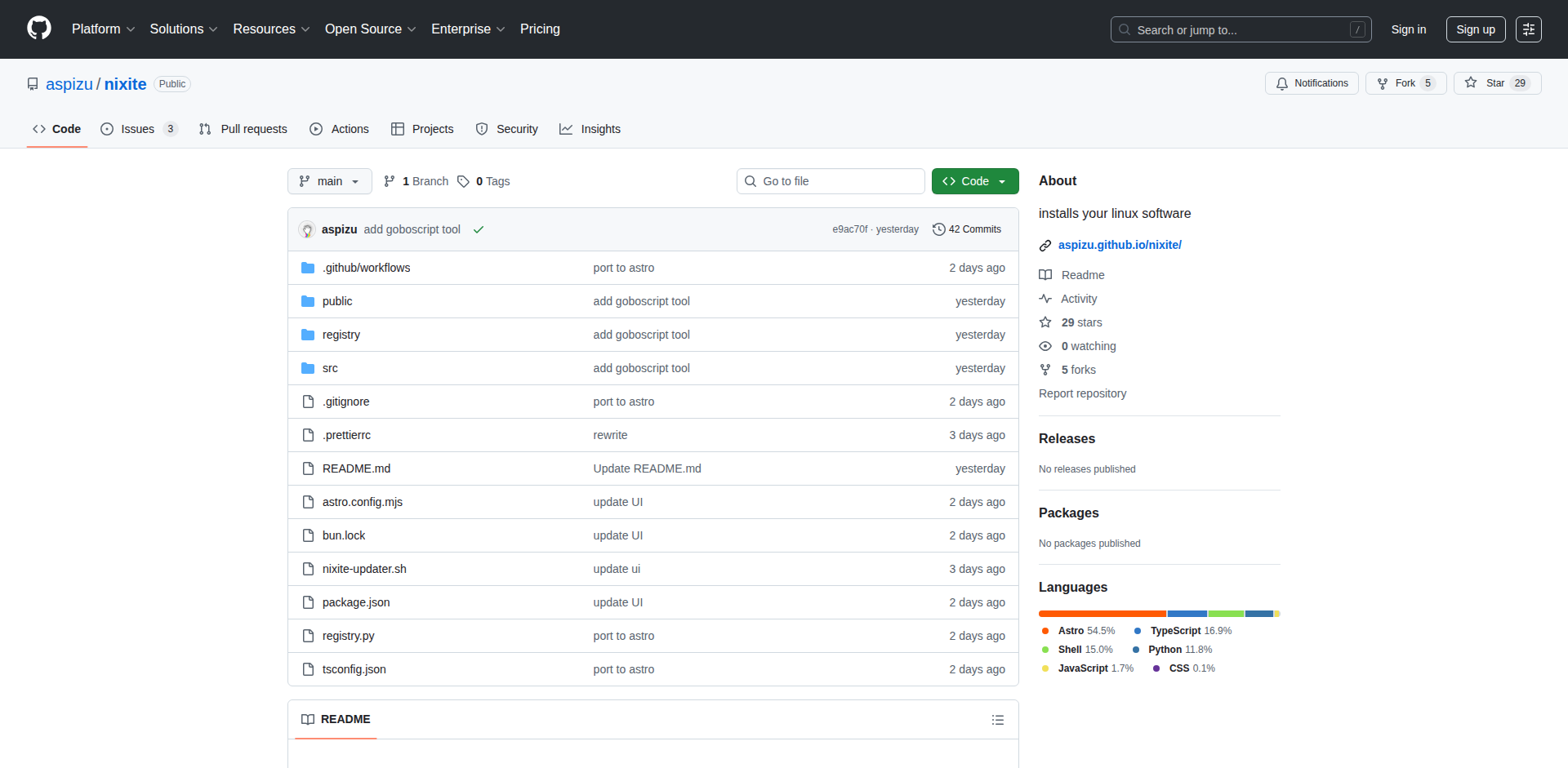

Nixite: Unattended Linux Software Provisioner

Author

aspizu

Description

Nixite is a bash script generator that automates the installation of all your Linux software without manual intervention. It intelligently selects the optimal installation method for each package and prevents any interactive prompts, ensuring a completely unattended setup. Nixite is designed for Ubuntu-based and Arch-based Linux distributions and includes a built-in updater to keep all installed software and package managers current.

Popularity

Points 6

Comments 1

What is this product?

Nixite is a clever tool that crafts a bash script to automate software installation on your Linux system. Think of it as a personalized setup wizard that runs entirely in the background. Its core innovation lies in its ability to understand your Linux distribution (like Ubuntu or Arch) and then figure out the best way to install each piece of software – whether that's through the standard package manager (like apt or pacman), a dedicated installer, or even compiling from source. It smartly avoids asking you questions, making the whole process hands-off. It also sets up a system to update everything at once, saving you from managing multiple update commands. So, what does this mean for you? It means you can set up a new Linux machine or reconfigure an existing one with all your favorite software in a fraction of the time, without needing to click through a single prompt.

How to use it?

Developers can use Nixite by first specifying the software they want to install for their target Linux distribution. Nixite then generates a custom bash script. This script can be saved and executed on a new or existing Linux machine to perform the automated installation. For integration, the generated script can be a part of a larger CI/CD pipeline, a post-installation setup for development environments, or even a way to quickly provision new servers. This script acts as an 'infrastructure as code' for your software dependencies. So, what does this mean for you? It means you can reliably and quickly get your development environment set up consistently across multiple machines, or deploy your applications with all their required software dependencies pre-installed.

Product Core Function

· Automated Software Installation: Nixite generates scripts that install specified software packages without user interaction, ensuring a seamless setup. This is valuable for saving time and reducing errors during system configuration.

· Intelligent Installation Method Selection: The tool analyzes packages and chooses the most efficient installation method, such as using package managers (apt, pacman) or compiling from source, optimizing the installation process. This means your software is installed the 'right' way for your system, leading to better compatibility.

· Unattended Operation: Nixite eliminates the need for manual prompts during software installation, allowing for completely hands-off automation. This is crucial for repeatable and scalable deployments.

· Cross-Distribution Support: Nixite supports major Linux families like Ubuntu-based and Arch-based systems, making it versatile for a wide range of users. This expands its utility across different development workflows.

· Unified Software Updater: It installs a script to update all package managers and software simultaneously, simplifying system maintenance. This ensures your tools are always up-to-date without manual effort.
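
A minimal sketch of the script-generation idea follows. It shows how a generator might emit prompt-free install commands for the two distribution families, using `apt-get install -y` with `DEBIAN_FRONTEND=noninteractive` and `pacman -S --noconfirm --needed`. The function name and structure are assumptions, this is not Nixite's actual output, and it naively assumes a package has the same name on both distributions.

```python
import shlex

# Non-interactive install command per distribution family.
INSTALL_COMMANDS = {
    "ubuntu": "sudo env DEBIAN_FRONTEND=noninteractive apt-get install -y {packages}",
    "arch": "sudo pacman -S --noconfirm --needed {packages}",
}

def generate_script(distro, packages):
    """Return a bash script that installs the packages without prompting."""
    if distro not in INSTALL_COMMANDS:
        raise ValueError(f"unsupported distribution family: {distro}")
    quoted = " ".join(shlex.quote(name) for name in packages)
    lines = ["#!/usr/bin/env bash", "set -euo pipefail"]
    if distro == "ubuntu":
        # Refresh package lists first so the install sees current versions.
        lines.append("sudo apt-get update")
    lines.append(INSTALL_COMMANDS[distro].format(packages=quoted))
    return "\n".join(lines) + "\n"

script = generate_script("arch", ["git", "curl"])
print(script)
```

Generating the script rather than running commands directly means the result can be reviewed, committed to version control, and replayed on another machine.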

Product Usage Case

· Rapid Development Environment Setup: A developer needs to set up a new workstation with specific tools like Python, Node.js, Docker, and various IDE extensions. Instead of manually installing each, they run a Nixite script that installs everything automatically, getting them productive in minutes rather than hours.

· Server Provisioning for Web Applications: A web developer deploys a new backend service that requires a database (e.g., PostgreSQL), a web server (e.g., Nginx), and specific libraries. Nixite can generate a script that installs and configures all these dependencies on a new server, ensuring the application environment is ready to go.

· Consistent Team Workflows: Within a development team, ensuring everyone has the same set of development tools and versions is critical. Nixite can be used to generate a standardized setup script that all team members run, eliminating 'it works on my machine' issues by providing a consistent software foundation.

· Automating Post-OS Install Tasks: After installing a fresh copy of Linux, users often need to install common utilities and applications. Nixite can automate this post-installation process, transforming a manual setup into a single command execution.

15

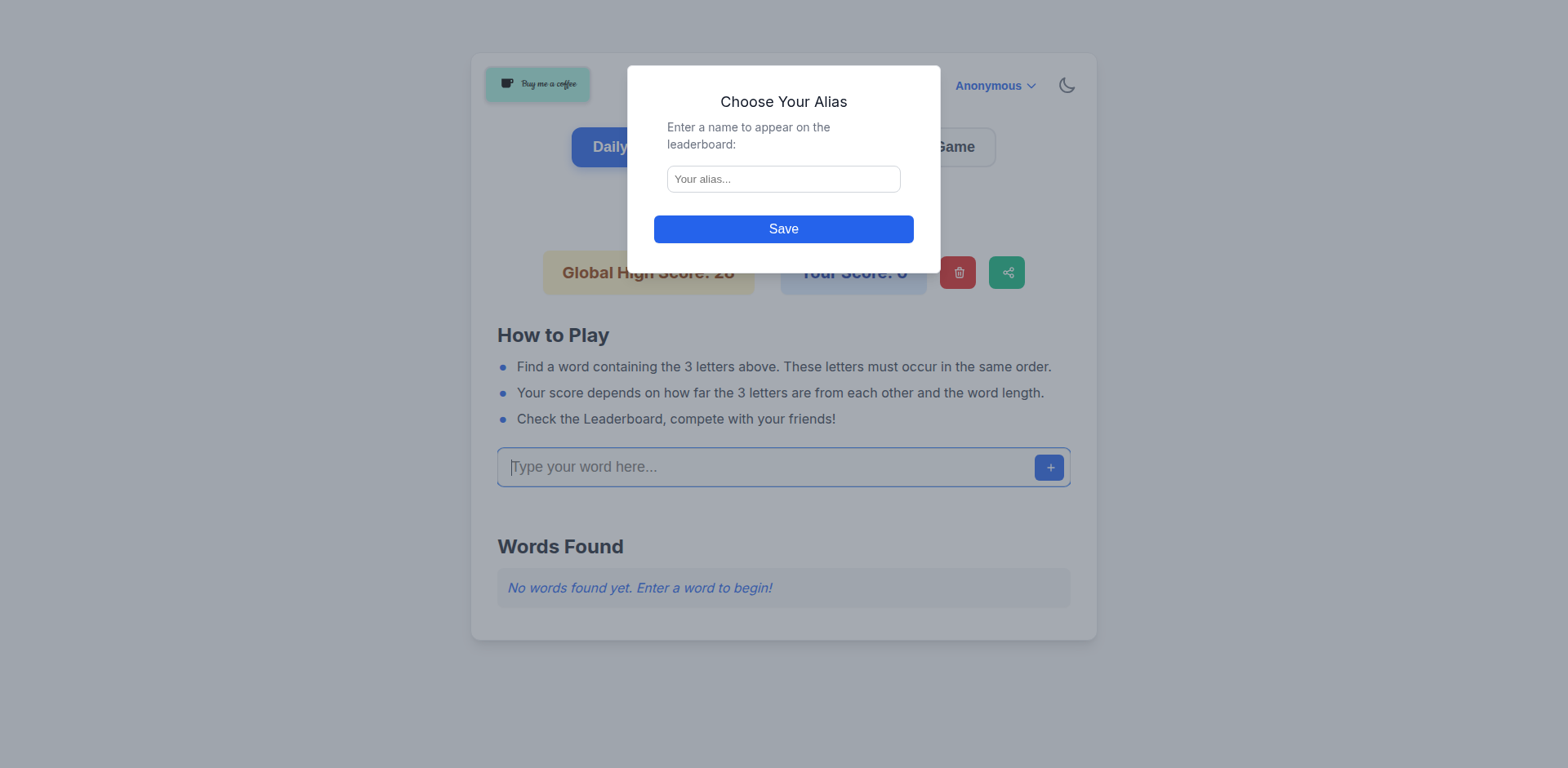

Spanara: Lexical Pattern Weaver

Author

bsmith

Description

Spanara is a web-based word game inspired by a Finnish license plate game where players find words from a sequence of three letters. It showcases a clever application of string manipulation and algorithmic pattern matching to solve a creative problem, offering a fun and accessible way to engage with language and code.

Popularity

Points 2

Comments 5

What is this product?

Spanara is a word game application that lets users enter a three-letter sequence and find words that contain those letters in the same order. The core innovation lies in its efficient string searching algorithm, likely employing techniques similar to subsequence matching or optimized brute-force searches, to quickly identify candidate words from a dictionary. This provides a technical demonstration of how algorithmic thinking can be applied to linguistic puzzles, making it a neat example of 'code as a creative tool'.
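
To make the idea concrete, here is a minimal sketch of subsequence matching in Python. It is not Spanara's actual implementation, which is not described in detail. The function names and the tiny word list are made up for illustration.

```python
# Illustrative sketch of subsequence matching; the word list is made up.

def contains_in_order(letters: str, word: str) -> bool:
    """True if every letter of `letters` appears in `word`, in order."""
    remaining = iter(word.lower())
    # `ch in remaining` consumes the iterator up to the first match, so
    # each later letter can only match a later position in the word.
    return all(ch in remaining for ch in letters.lower())


def find_words(letters: str, dictionary: list[str]) -> list[str]:
    """Return the dictionary words that contain `letters` as a subsequence."""
    return [w for w in dictionary if contains_in_order(letters, w)]


if __name__ == "__main__":
    words = ["spanner", "pasta", "snap", "spring", "aspen"]
    print(find_words("spn", words))
```

Each word is scanned at most once, so a full pass costs time proportional to the total length of the dictionary, which is fast enough for an interactive game without any index.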

How to use it?

Developers can use Spanara as a fun, self-contained project to explore string algorithms and data structures. It can be integrated into other applications by exposing its core word-finding logic as an API. For example, a language learning app could use Spanara's engine to generate practice exercises, or a writer's tool could use it to find thematic word connections. The project's simplicity makes it a great starting point for understanding how to build interactive web applications with a backend logic component.

Product Core Function

· Lexical Pattern Matching: Implements an algorithm to find words containing a specified letter subsequence. This is valuable for developers looking to understand efficient string searching, useful in tasks like search functionality, autocomplete, or data validation.

· Dictionary Integration: Utilizes a word dictionary to provide valid word results. This highlights the importance of data management in applications and demonstrates how external data sources can be leveraged to power functionality.

· User Interface for Word Discovery: Offers a simple, intuitive web interface for users to input letters and view results. This showcases how to build user-friendly frontends that interact with backend logic, demonstrating the end-to-end development process.

· Algorithmic Creativity Demonstration: Provides a clear example of applying computational thinking to a creative, game-like problem. This inspires developers by showing that even simple algorithms can lead to engaging applications.

Product Usage Case

· Educational Tool for Computer Science Students: Can be used in introductory programming courses to teach concepts like string manipulation, algorithms, and data structures in a tangible, engaging way.

· Creative Writing Assistant: A writer could use Spanara to find words that share a specific letter pattern, potentially sparking new ideas or helping overcome writer's block.

· Developer Productivity Tool: For developers interested in linguistics or word puzzles, Spanara offers a quick way to explore word relationships and patterns, serving as a light-hearted technical exploration.

16

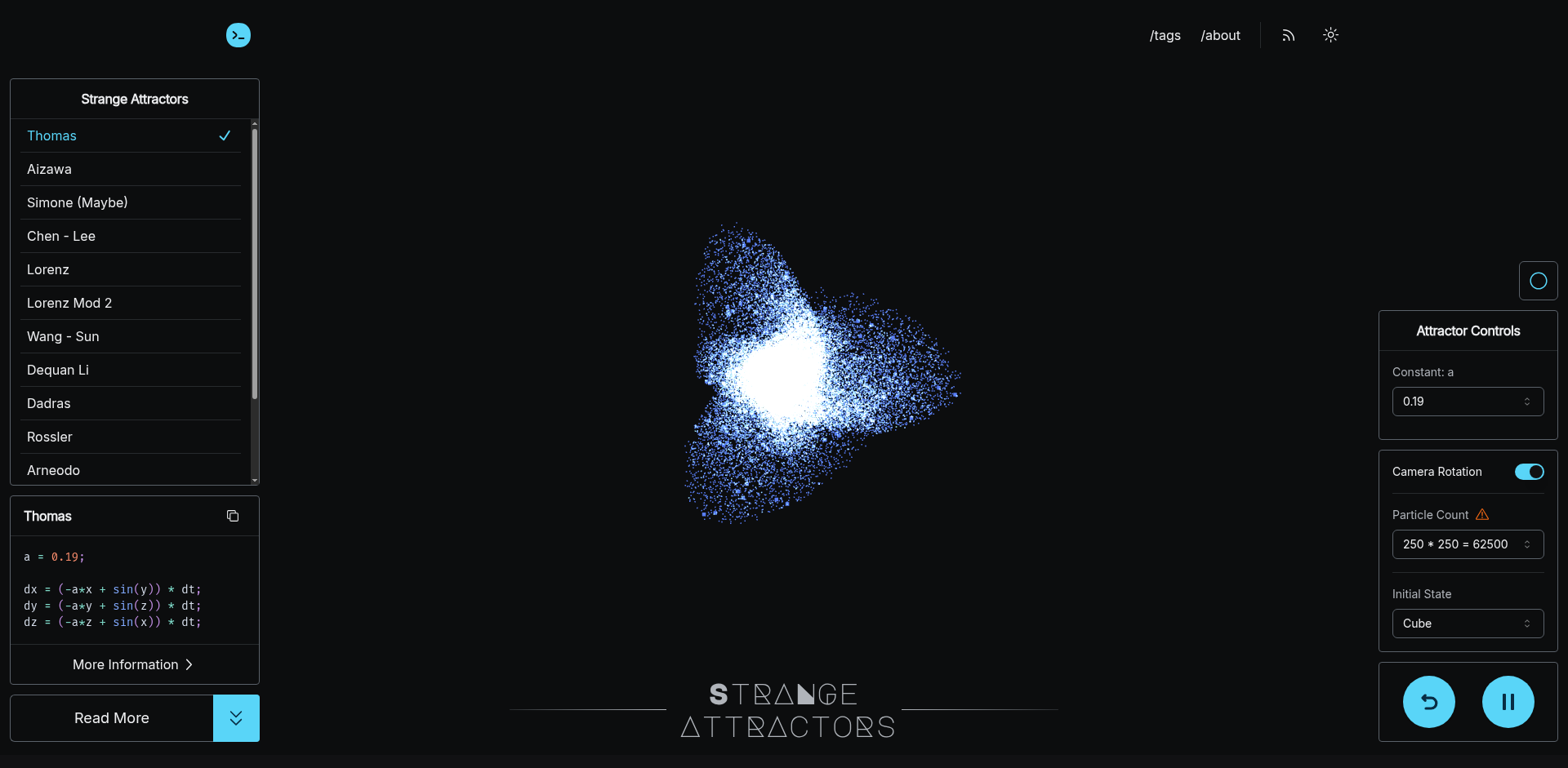

AttractorExplorer

Author

shashanktomar

Description

A web-based visualization tool for exploring the fascinating world of strange attractors, built with Three.js. It allows users to interact with and generate complex fractal patterns, offering a unique blend of mathematical art and computational creativity. The project highlights the beauty of emergent complexity from simple mathematical rules and provides a playground for experimenting with algorithmic art.

Popularity

Points 6

Comments 1

What is this product?

AttractorExplorer is a project that uses Three.js, a JavaScript 3D library, to render and visualize 'strange attractors'. A strange attractor is the set of states toward which a chaotic dynamical system evolves, and it typically has an intricate fractal structure. The innovation lies in making these typically abstract mathematical concepts visually accessible and interactive through a web interface. It allows users to explore the beauty of chaos and the emergent complexity that arises from simple iterative equations, transforming mathematical theory into engaging visual art. This offers a unique way to appreciate the underlying mathematical structures that govern many natural phenomena.
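
As a rough illustration of how simple equations produce such shapes, the sketch below integrates the Lorenz system, a classic strange attractor. The choice of the Lorenz system, the parameter values, the RK4 integrator, and all function names are assumptions for this example. The project's own attractors and code may differ.

```python
# Sketch under assumptions: the Lorenz system with its classic parameters,
# integrated with fixed-step RK4. Not taken from AttractorExplorer's source.

Point = tuple[float, float, float]


def lorenz(p: Point, sigma: float, rho: float, beta: float) -> Point:
    """Right-hand side of the Lorenz equations at point p."""
    x, y, z = p
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(p: Point, dt: float, sigma: float, rho: float, beta: float) -> Point:
    """Advance p by one time step dt using classical Runge-Kutta (RK4)."""
    def offset(k: Point, scale: float) -> Point:
        return (p[0] + scale * k[0], p[1] + scale * k[1], p[2] + scale * k[2])

    k1 = lorenz(p, sigma, rho, beta)
    k2 = lorenz(offset(k1, dt / 2), sigma, rho, beta)
    k3 = lorenz(offset(k2, dt / 2), sigma, rho, beta)
    k4 = lorenz(offset(k3, dt), sigma, rho, beta)
    return tuple(
        p[i] + dt / 6 * (k1[i] + 2 * k2[i] + 2 * k3[i] + k4[i]) for i in range(3)
    )


def trajectory(start: Point = (1.0, 1.0, 1.0), steps: int = 5000, dt: float = 0.01,
               sigma: float = 10.0, rho: float = 28.0,
               beta: float = 8.0 / 3.0) -> list[Point]:
    """Return the start point followed by `steps` integrated points."""
    points = [start]
    for _ in range(steps):
        points.append(rk4_step(points[-1], dt, sigma, rho, beta))
    return points


if __name__ == "__main__":
    pts = trajectory()
    print(len(pts), pts[-1])
```

A renderer would treat the returned list as vertices of a line or point cloud. The familiar butterfly shape only appears once a few thousand points are drawn.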

How to use it?

Developers can use AttractorExplorer as a live demo and a source of inspiration for their own creative coding projects. The project's codebase, built with Three.js, can be forked and extended to explore different attractor equations, implement custom rendering techniques, or integrate attractor generation into other 3D applications. It's also valuable for educators or students wanting to visually demonstrate concepts in chaos theory or fractal geometry. The project provides configurable parameters for the attractors, allowing for deep experimentation and discovery of unique visual forms.

Product Core Function

· Interactive 3D visualization of strange attractors: This allows users to see the dynamic generation of fractal patterns in real-time, offering a visual understanding of mathematical chaos and its aesthetic output. The value is in making complex math accessible and beautiful.

· Configurable attractor parameters: Users can tweak various mathematical constants and initial conditions to generate a wide array of unique attractor shapes. This provides immense creative freedom and the ability to discover novel visual expressions.

· Three.js rendering engine: Utilizes a powerful and widely adopted JavaScript library for creating and displaying 3D graphics in a web browser. This ensures high performance and broad compatibility, making the visualizations smooth and accessible.

· Mathematical formula exploration: The project is built around mathematical formulas that define the attractors, allowing users to indirectly engage with and learn about chaos theory and fractal geometry through experimentation.

· Potential for integration: The underlying Three.js structure makes it adaptable for integration into other web-based 3D experiences, games, or artistic installations, providing a versatile component for creative developers.
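
The effect of configurable parameters is easiest to see with a discrete map. The sketch below uses the Peter de Jong attractor as an assumed example (the project may or may not include it), where four constants `a`, `b`, `c`, `d` fully determine the shape. The function name and the sample parameter values are illustrative.

```python
import math

# Sketch under assumptions: the Peter de Jong map, chosen only to show how
# a few constants control an attractor's shape. Not the project's code.


def de_jong_points(a: float, b: float, c: float, d: float, n: int = 10000,
                   start: tuple[float, float] = (0.0, 0.0)) -> list[tuple[float, float]]:
    """Iterate the de Jong map n times and return the visited points."""
    x, y = start
    points = []
    for _ in range(n):
        # Both new coordinates are computed from the previous (x, y).
        x, y = (math.sin(a * y) - math.cos(b * x),
                math.sin(c * x) - math.cos(d * y))
        points.append((x, y))
    return points


if __name__ == "__main__":
    first = de_jong_points(1.4, -2.3, 2.4, -2.1, n=5)
    second = de_jong_points(1.5, -2.3, 2.4, -2.1, n=5)
    print(first[-1], second[-1])
```

Because each coordinate is a difference of a sine and a cosine, every point stays within the square from -2 to 2, so the output can be scaled to the canvas without tracking bounds.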

Product Usage Case

· A developer building a generative art website could use AttractorExplorer's core logic to create unique, evolving visual backgrounds for their pages, solving the problem of static or repetitive design.

· An educator teaching a course on chaos theory could use this project to visually demonstrate how simple mathematical rules lead to complex, unpredictable patterns, making abstract concepts more concrete for students.

· A game developer looking for procedural content generation could adapt the attractor algorithms to create unique in-game environments, textures, or visual effects, solving the challenge of creating varied and organic-looking digital worlds.

· An artist interested in math art could experiment with the configurable parameters to discover new and visually striking fractal forms, using the project as a digital canvas for mathematical exploration and creation.

17

Anvil: Dev Environment Orchestrator

Author

rocajuanma

Description

Anvil is a macOS command-line tool designed to automate the tedious process of setting up a new development environment. It tackles the common pain point of repeatedly installing the same applications and configuring them across multiple machines, saving developers significant time. By leveraging Homebrew for package management and GitHub for configuration syncing, Anvil streamlines the setup, allowing developers to focus on coding rather than system configuration.

Popularity

Points 3

Comments 3

What is this product?

Anvil is a developer-centric utility that automates the installation and configuration of applications and settings on macOS. It acts as a central command to manage your entire software toolchain. The core innovation lies in its ability to group related applications and their configurations, allowing for a single command execution to set up a complete development environment. It uses Homebrew to install applications, which is a widely adopted package manager for macOS, and syncs configuration files via a remote GitHub repository. This means you declare what you need, Anvil fetches and installs it, and then applies your personal configurations, ensuring consistency across all your machines. So, it solves the problem of repetitive setup tasks, making new machine onboarding significantly faster and more reliable. What used to take hours can now be done in minutes.

How to use it?

Developers can use Anvil by first installing it on their macOS machine. Once installed, they can define their desired application groups and configuration file locations in a simple configuration file. This configuration file is typically stored in a private GitHub repository. To set up a new machine or refresh an existing one, a developer simply runs the `anvil install` command in their terminal. Anvil then reads the configuration, uses Homebrew to install all specified applications, and pulls the relevant configuration files from the GitHub repository, applying them to the correct locations. This makes it incredibly easy to replicate a familiar and productive development environment on any Mac. For example, if you're switching to a new company Mac or getting a personal machine upgrade, you can have your entire coding setup ready in under 20 minutes.
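
The group-to-install step can be pictured with a short sketch. Anvil's real configuration schema is not shown here, so the `GROUPS` structure, its keys, and the function `brew_commands` are hypothetical. The `brew install` and `brew install --cask` commands are standard Homebrew usage, and the package names are only examples.

```python
import shlex

# Hypothetical group definitions; Anvil's real config schema may differ.
GROUPS = {
    "web-dev": {"formulae": ["node", "git"], "casks": ["visual-studio-code"]},
    "data-science": {"formulae": ["python", "jupyterlab"], "casks": []},
}


def brew_commands(group: str, groups: dict = GROUPS) -> list[str]:
    """Return the Homebrew commands needed to install one app group."""
    spec = groups[group]
    commands = []
    if spec["formulae"]:
        # Command-line tools and libraries are installed as formulae.
        names = " ".join(shlex.quote(n) for n in spec["formulae"])
        commands.append(f"brew install {names}")
    if spec["casks"]:
        # GUI applications are installed as casks.
        names = " ".join(shlex.quote(n) for n in spec["casks"])
        commands.append(f"brew install --cask {names}")
    return commands


if __name__ == "__main__":
    for command in brew_commands("web-dev"):
        print(command)
```

Producing the commands as plain strings keeps the sketch easy to inspect. A real tool would run them and report which installs failed.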

Product Core Function

· Automated Application Installation: Installs software packages via Homebrew with a single command, eliminating the need to manually search and install each application. This saves time and ensures you have all necessary tools ready to go.

· Configurable App Grouping: Allows developers to organize applications into logical groups (e.g., 'web-dev', 'data-science'). This means you can install a specific set of tools for a particular project or task with one command, making your workflow more efficient.

· Configuration Synchronization: Enables syncing of personal configuration files (like `.bashrc`, editor settings, etc.) across multiple machines using GitHub. This ensures your personalized environment and preferences are consistent everywhere you work, so you don't have to remember where each file lives or how to reconfigure it.

· Basic Troubleshooting: Includes simple fixes for common setup issues encountered during the installation process. This helps resolve minor annoyances automatically, allowing you to get back to coding faster.

· Cross-Machine Consistency: Keeps your applications and synced settings the same on all your Macs, whether personal or work machines. This predictability reduces cognitive load and potential errors.
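
Configuration syncing, as described in the list above, amounts to copying tracked files from a repository checkout to their expected locations. The sketch below assumes the repository has already been cloned locally. The function `sync_configs` and the mapping format are hypothetical and are not Anvil's actual interface.

```python
import shutil
import tempfile
from pathlib import Path

# Hypothetical sketch: assumes the config repository is already cloned to
# `repo_dir`. The mapping format is made up for illustration.


def sync_configs(repo_dir: Path, home_dir: Path, mapping: dict[str, str]) -> list[Path]:
    """Copy each mapped repo file under home_dir; return the written paths."""
    written = []
    for src_rel, dest_rel in mapping.items():
        src = repo_dir / src_rel
        dest = home_dir / dest_rel
        if not src.is_file():
            continue  # skip entries that are missing from the clone
        dest.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, dest)  # copy contents and file metadata
        written.append(dest)
    return written


if __name__ == "__main__":
    # Demonstrate against temporary directories instead of a real home.
    with tempfile.TemporaryDirectory() as tmp:
        repo, home = Path(tmp) / "repo", Path(tmp) / "home"
        repo.mkdir()
        home.mkdir()
        (repo / "zshrc").write_text("export EDITOR=vim\n")
        print(sync_configs(repo, home, {"zshrc": ".zshrc"}))
```

Copying is the simplest strategy. Symlinking the files into place instead would let edits flow back to the repository, at the cost of a more fragile setup.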

Product Usage Case

· Onboarding a new developer to a team: A team lead can share a pre-defined Anvil configuration, allowing new hires to get their development machines fully set up and productive within minutes of receiving their hardware, drastically reducing onboarding time.

· Switching between personal and work laptops: A developer can use Anvil to quickly set up their preferred coding environment on their work laptop, mirroring their personal machine's setup, ensuring seamless productivity across both devices without manual configuration.

· Recovering from a hardware failure: If a Mac needs to be wiped and reinstalled, Anvil allows a developer to restore their complete software environment and configurations from their GitHub repository, minimizing downtime and the frustration of manual re-setup.

· Experimenting with different toolchains: A developer can create separate Anvil configurations for different types of projects (e.g., one for Python development, one for Go development). They can then set up the tools for a given project type by running the relevant `anvil install` command, keeping their tools organized by purpose.

18

Oboe: AI-Powered Learning Catalyst

Author

nir-zicherman

Description

Oboe is an AI-driven platform that transforms a single text prompt into comprehensive learning courses. It democratizes education by making any topic accessible and engaging, offering diverse learning formats like articles, podcasts, games, and quizzes. This project highlights an innovative approach to content generation and personalized learning experiences, driven by cutting-edge AI, making it easier for anyone to acquire new knowledge.

Popularity

Points 6

Comments 0

What is this product?