Show HN Today: Discover the Latest Innovative Projects from the Developer Community

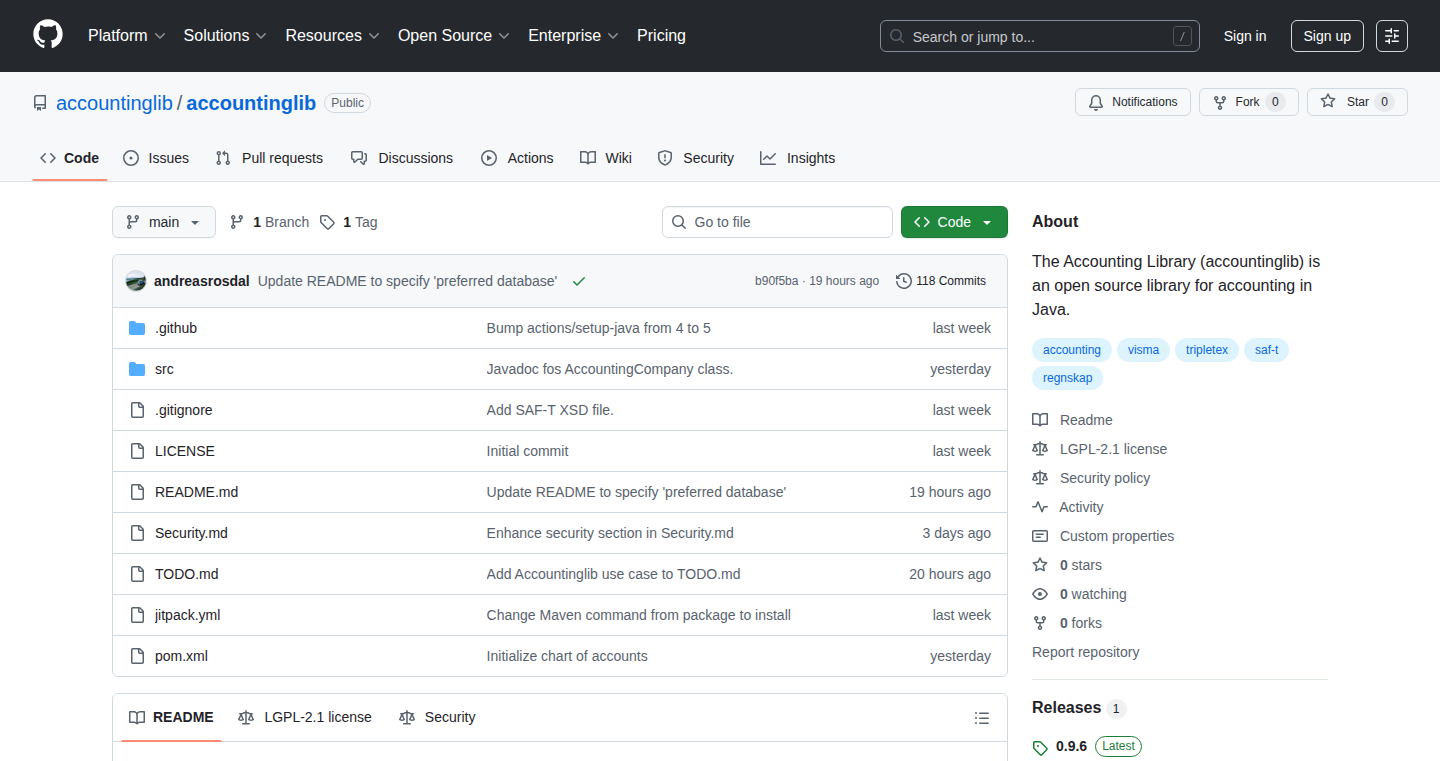

Show HN Today: Top Developer Projects Showcase for 2025-09-07

SagaSu777 2025-09-08

Explore the hottest developer projects on Show HN for 2025-09-07. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN submissions lean heavily on AI to solve practical problems and improve developer workflows. AI-powered tools, from semantic code search to personalized workout generators and AI-assisted content creation, point to a key trend: making complex technologies accessible and actionable. Developers are integrating LLMs not just for novel applications but also to streamline existing processes, such as generating code from spreadsheets or providing local, cost-free alternatives to cloud-based AI services. For aspiring innovators, this means that embedding AI capabilities into user-centric products or developer tools can unlock significant value. Data privacy is also a growing concern, and the resulting interest in local-first solutions presents opportunities for secure, self-hosted alternatives. Consider how you can combine accessible AI with robust, privacy-preserving architectures to build the next generation of intelligent tools and platforms.

Today's Hottest Product

Name

SelecTube – Curated YouTube for Kids

Highlight

This project tackles a common parental concern about uncontrolled YouTube content for children by creating a curated platform with a hand-picked selection of creators. The developer leveraged AI tools, specifically Cursor, to accelerate the development process, showcasing how AI can be a powerful co-pilot for even infrequent coders. It solves the problem of excessive or low-quality content by offering a focused, ad-free (with YT Premium) viewing experience, demonstrating a practical application of AI in content curation and development acceleration.

Popular Category

AI/ML

Developer Tools

Web Applications

Open Source

Utilities

Popular Keyword

AI

LLM

Developer Tools

Open Source

Web App

Productivity

Data

Security

Content Creation

Automation

Technology Trends

AI-Powered Applications

LLM Integration

Semantic Search & Embeddings

Developer Productivity Tools

Visual Programming/Editors

Data Privacy & Local-First Solutions

Content Curation & Personalization

Security Frameworks

Cross-Platform Development

Real-time Collaboration

Project Category Distribution

AI/ML Tools (25%)

Developer Utilities & Libraries (20%)

Web Applications & Services (20%)

Productivity & Personal Tools (15%)

Educational & Content Tools (10%)

Security & Infrastructure (5%)

Creative & Niche Applications (5%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |

|---|---|---|---|

| 1 | SkinCancer VibeLearn | 400 | 244 |

| 2 | LocalEmbed-Grep | 170 | 73 |

| 3 | GoWebRTC-OpenCV | 34 | 6 |

| 4 | AI FutureScape | 6 | 18 |

| 5 | Infinipedia: The Ever-Evolving Knowledge Weaver | 9 | 2 |

| 6 | Beelzebub: AI Agent Tripwire | 8 | 0 |

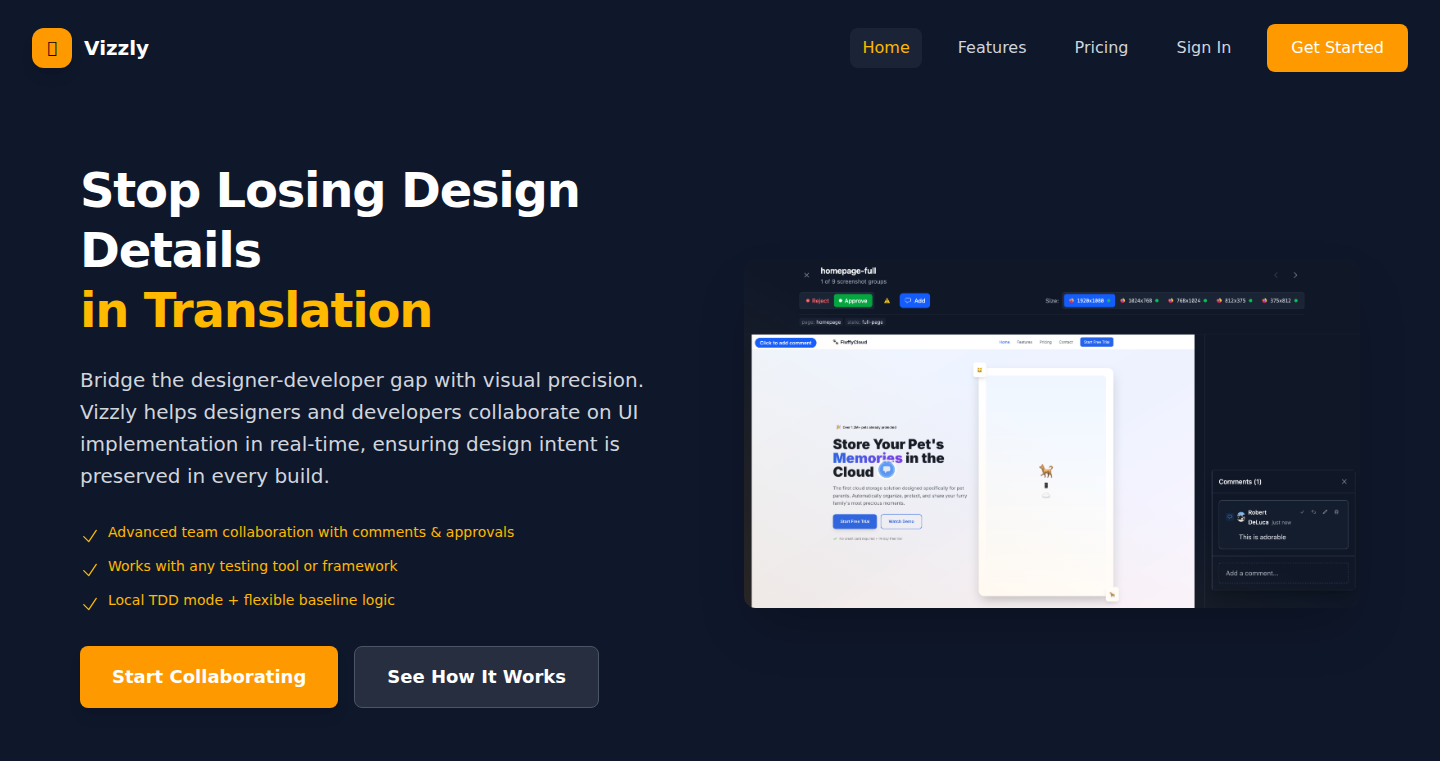

| 7 | Vizzly - Pixel Perfect Sync | 8 | 0 |

| 8 | Pocket: The Unbreakable Focus Enforcer | 6 | 2 |

| 9 | Uxia: AI User Testing Synthesizer | 3 | 4 |

| 10 | NovelCompressor | 5 | 1 |

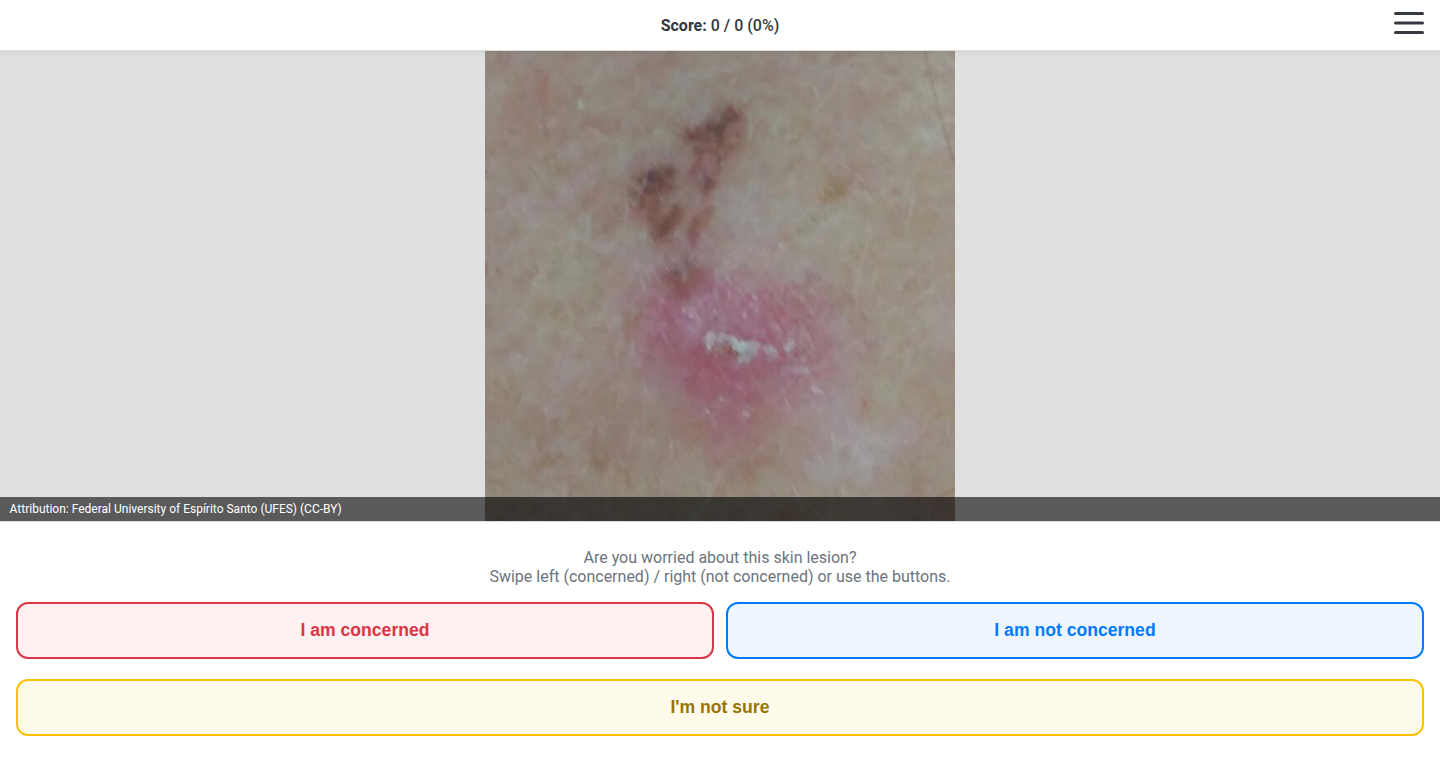

1

SkinCancer VibeLearn

Author

sungam

Description

A browser-based educational tool for learning about skin cancer, built by a dermatologist. It uses Vanilla JavaScript and stores scores locally, making it accessible and user-friendly. The project showcases how a domain expert can leverage simple web technologies to create a practical learning resource.

Popularity

Points 400

Comments 244

What is this product?

SkinCancer VibeLearn is a single-page web application designed to help users learn about different types of skin cancer. It's built entirely with client-side technologies, meaning it runs directly in your web browser without needing a server for core functionality. The innovation lies in its accessibility and the direct involvement of a medical professional in its creation. It uses Vanilla JavaScript for interactivity and saves your progress or scores using the browser's localStorage feature, so your learning journey can be picked up right where you left off without a complex login system. The use of a single file for HTML, CSS, and JavaScript simplifies deployment and maintenance. So, what does this mean for you? It means you get an easy-to-access, no-fuss learning tool that you can use anytime, anywhere, directly from your browser, and it remembers your progress.

How to use it?

Developers can use SkinCancer VibeLearn as a reference for building similar single-page, client-side educational applications. Its straightforward structure, with all code in one file, makes it easy to understand and adapt. You can integrate its learning module into existing websites or use it as a foundation for creating your own interactive learning experiences. The project also demonstrates a cost-effective deployment strategy using a minimal server setup or even static hosting, with assets like images stored on AWS S3. So, how can you use this? If you're a developer looking to build a quick, interactive educational tool without a heavy backend, this project is a great blueprint. You can learn from its code, adapt its structure for your own projects, or even contribute to its development.

Product Core Function

· Interactive learning modules for skin cancer types: This allows users to engage with educational content in a dynamic way, improving comprehension and retention. Its value is in making learning about a serious medical topic more accessible and engaging.

· Score persistence using localStorage: This feature enables users to track their learning progress without requiring user accounts or server-side databases. The value here is a seamless user experience where progress is saved automatically and is readily available.

· Single-file HTML/CSS/JS structure: This design choice simplifies the development, deployment, and understanding of the application. It's valuable because it reduces complexity and makes the project highly portable and easy to replicate.

· Client-side only operation: The application runs entirely in the browser, meaning no server is needed for core functionality. This makes it incredibly fast, accessible, and cost-effective to host, offering users immediate access without complex setups.

Product Usage Case

· A medical student using SkinCancer VibeLearn to quickly revise and test their knowledge of common skin cancer presentations before an exam. The app's simple interface and local score saving allowed for focused study sessions without internet connectivity issues.

· A web developer building a prototype for a health awareness campaign, leveraging SkinCancer VibeLearn's single-file structure and Vanilla JS implementation as a starting point. They were able to quickly deploy a functional learning component that could be easily integrated into their campaign website.

· A community health educator looking for an easy-to-share online resource about skin cancer. SkinCancer VibeLearn's no-backend, browser-only nature meant it could be hosted on a simple static site, providing a readily available and reliable tool for public education.

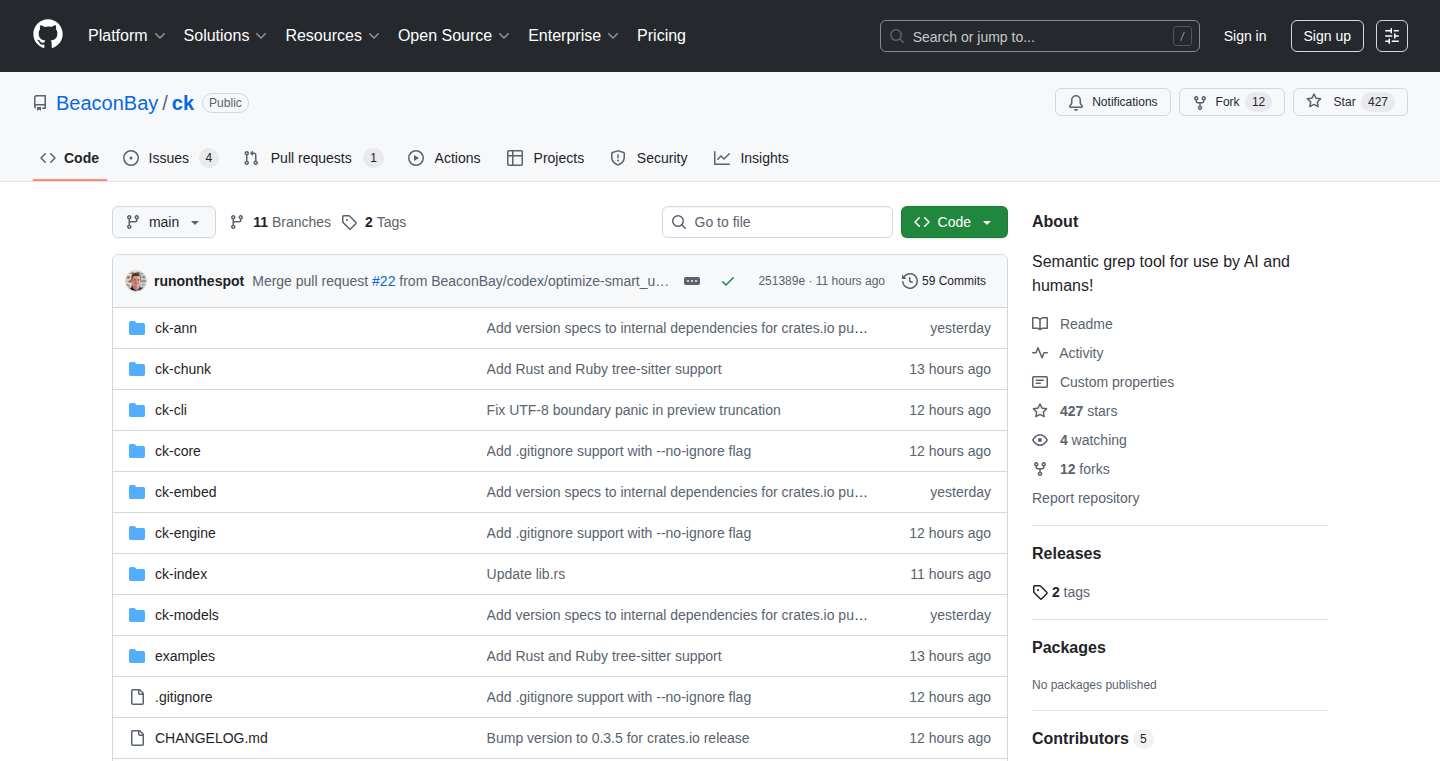

2

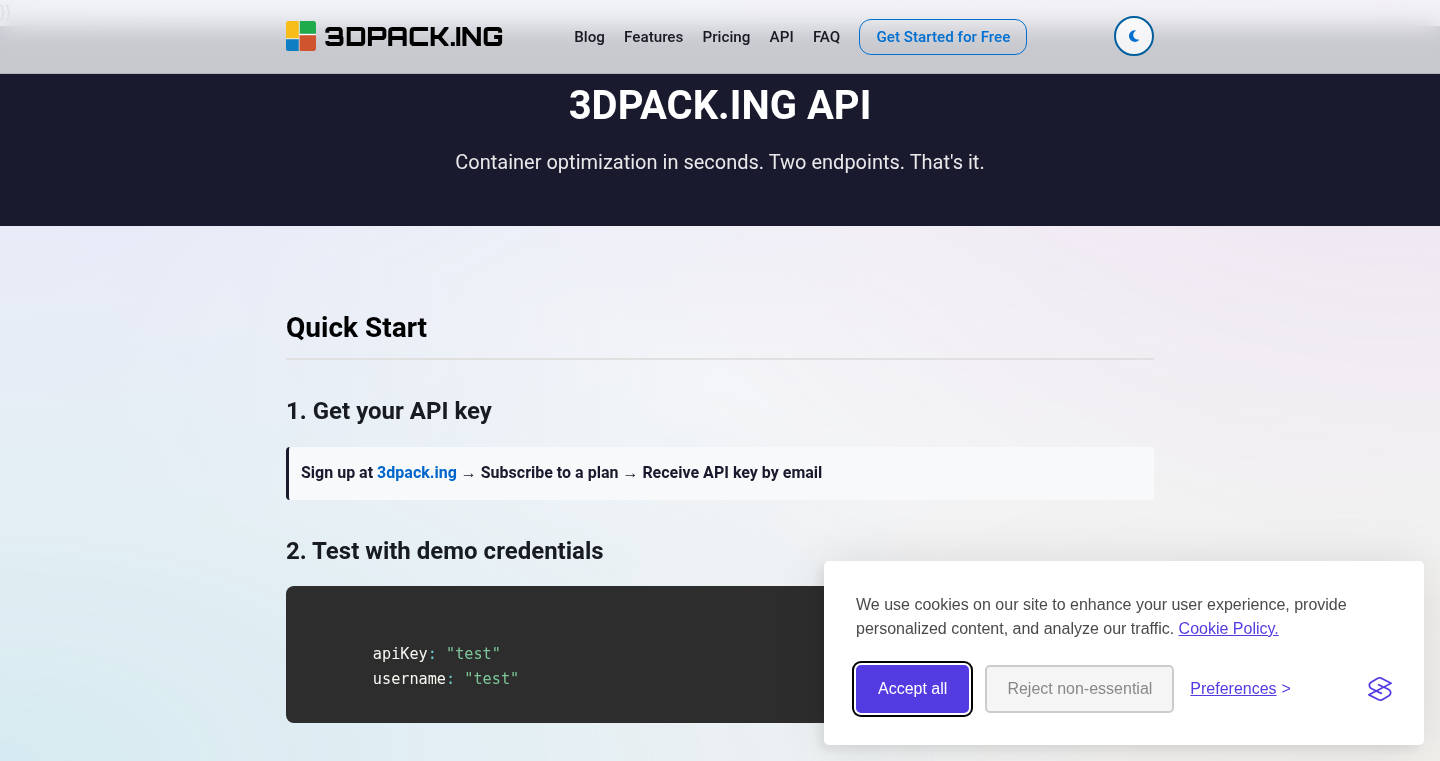

LocalEmbed-Grep

Author

Runonthespot

Description

A tool that uses local machine learning embeddings to perform semantic searching within codebases. It allows developers to find code snippets based on meaning rather than just keyword matching, solving the problem of discovering relevant code in large or unfamiliar projects.

Popularity

Points 170

Comments 73

What is this product?

LocalEmbed-Grep is a command-line tool that leverages local machine learning models to understand the meaning of your code. Instead of just looking for specific words, it can find code that does similar things or expresses similar ideas. It achieves this by converting your code into numerical representations called 'embeddings' that capture semantic relationships. This is innovative because traditional code search tools rely on exact text matches, which often miss relevant code that is phrased differently. So, for you, it means finding code more intelligently and efficiently, even if you don't know the exact keywords.

How to use it?

Developers can integrate LocalEmbed-Grep into their workflow by installing it as a command-line tool. After installation, they can initialize it in their project directory to generate embeddings for their codebase. Then, they can query this index using natural language descriptions or even example code snippets. The tool will return the most semantically similar code blocks. This is useful for tasks like refactoring, understanding legacy code, or finding reusable components. For example, you could ask it to 'find code that handles user authentication' instead of guessing keywords like 'login' or 'session'.
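The index-then-query flow described above can be sketched in a few lines. This is a minimal illustration, not LocalEmbed-Grep's implementation: the `embed` function is a hypothetical stand-in that only counts word tokens, so it captures word overlap rather than meaning. A real tool would replace it with a learned embedding model, while the ranking by cosine similarity would stay the same. The file names and snippets are invented for the example.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts.

    camelCase and snake_case identifiers are split so that
    `check_password` and "password check" share tokens.
    """
    spaced = re.sub(r"([a-z])([A-Z])", r"\1 \2", text)
    return Counter(re.findall(r"[a-z]+", spaced.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def search(query: str, snippets: dict[str, str], top_k: int = 2) -> list[tuple[str, float]]:
    """Rank snippets by similarity to the query, best match first."""
    q = embed(query)
    scored = [(name, cosine(q, embed(code))) for name, code in snippets.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical mini codebase used only for this illustration.
SNIPPETS = {
    "auth.py": "def check_password(user, password): return verify_hash(user.password_hash, password)",
    "cart.py": "def add_item(cart, item): cart.items.append(item)",
    "report.py": "def render_pdf(report): return pdf.render(report.rows)",
}

results = search("verify the user password", SNIPPETS)
print(results[0][0])  # the authentication snippet ranks first
```

With a real embedding model in place of `embed`, a query such as "find code that handles user authentication" could match this snippet even without shared words, which is the behavior the tool is described as providing.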

Product Core Function

· Code Embedding Generation: Creates numerical representations of code snippets that capture their meaning, enabling semantic search. This helps in understanding the underlying functionality of code blocks.

· Semantic Search: Allows querying the codebase using natural language or example code to find semantically similar snippets, greatly improving code discovery and comprehension.

· Local Processing: Operates entirely on the user's machine, ensuring privacy and independence from external services, which is crucial for proprietary codebases.

· Command-Line Interface: Provides a flexible and scriptable way to integrate semantic search into existing development workflows and CI/CD pipelines.

Product Usage Case

· Refactoring a large, unfamiliar codebase: A developer can use LocalEmbed-Grep to find all instances of code that perform a specific type of operation, even if the naming conventions vary widely, making the refactoring process more robust.

· Discovering reusable code components: When building a new feature, a developer can search for existing code that performs a similar task to avoid reinventing the wheel, saving development time and effort.

· Understanding complex algorithms: By providing a high-level description of the desired functionality, a developer can use LocalEmbed-Grep to locate the relevant parts of an implementation, aiding in learning and debugging.

· Onboarding new team members: New developers can quickly grasp the functionality of different parts of the codebase by semantically searching for code related to specific features or concepts, accelerating their learning curve.

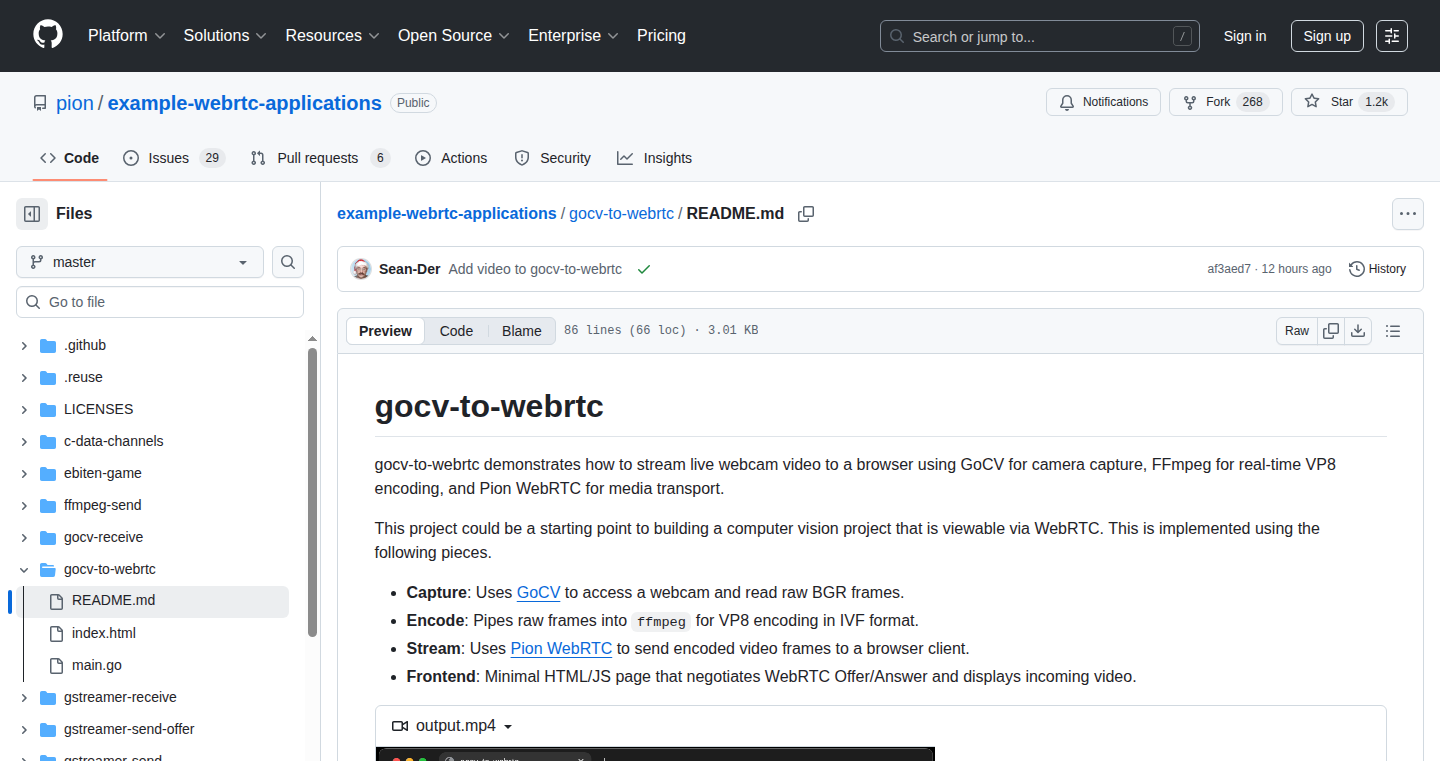

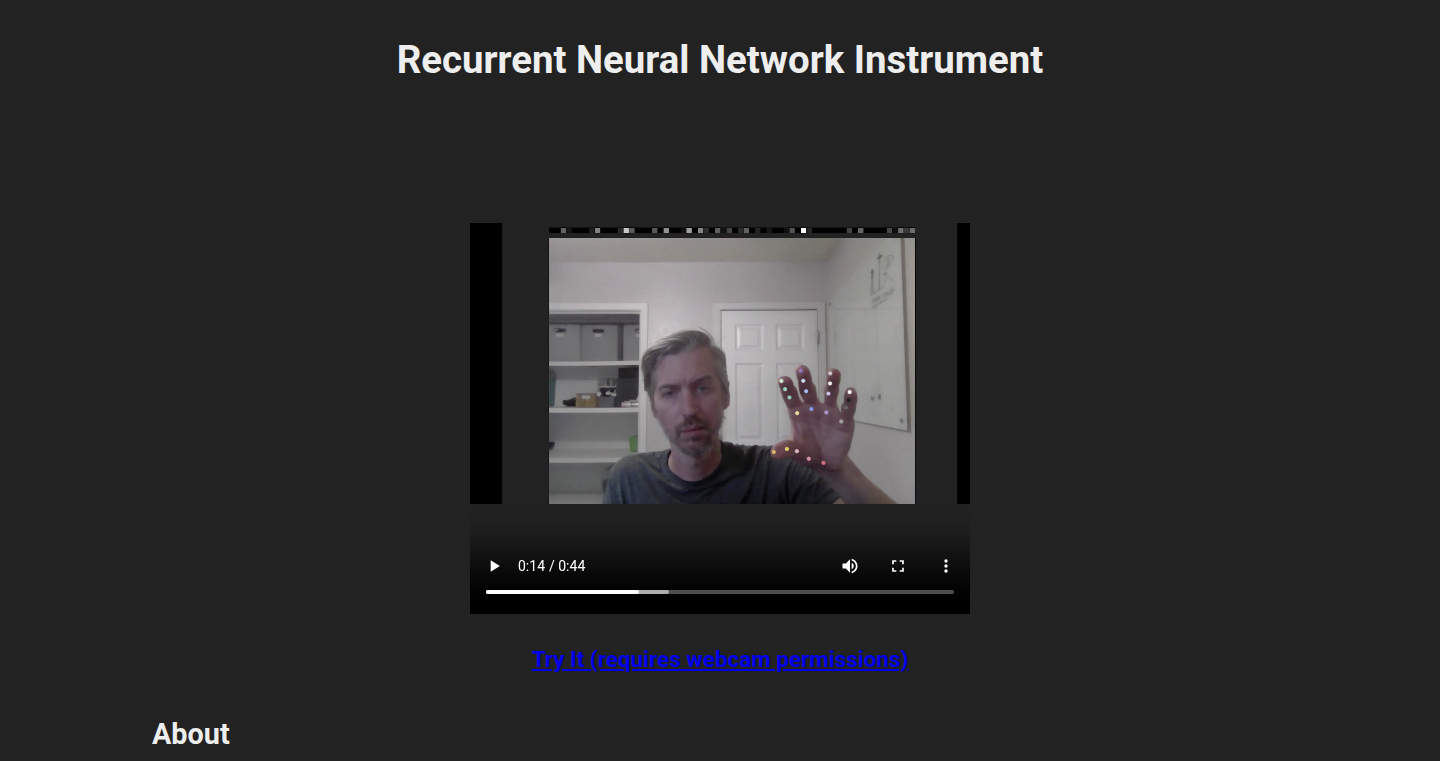

3

GoWebRTC-OpenCV

Author

Sean-Der

Description

This project enables real-time video processing and computer vision for browser-based applications using Go, WebRTC, and OpenCV. It bridges the gap between powerful server-side vision libraries and interactive web applications by streaming processed video data efficiently.

Popularity

Points 34

Comments 6

What is this product?

This project is a Go-based implementation that uses WebRTC for real-time video streaming and OpenCV for computer vision operations. The innovation lies in combining OpenCV's image analysis capabilities with the low-latency, peer-to-peer communication that WebRTC provides. In this setup, video is streamed over WebRTC to a Go process, OpenCV processes each frame there, and the processed video is streamed back to the browser, which keeps latency low. Think of it as making the vision processing power of a desktop machine available to a web page in real time.

How to use it?

Developers can integrate this project into their Go applications to build interactive web experiences that involve visual analysis. This typically involves setting up a WebRTC signaling server in Go, capturing video from a source (like a webcam), sending it to the Go application where OpenCV performs processing (e.g., object detection, face recognition, image filtering), and then streaming the processed video back to the web browser using WebRTC. It's useful for creating web-based surveillance systems, interactive art installations, or collaborative visual tools.

Product Core Function

· Real-time video capture and streaming: Enables capturing video from various sources and transmitting it over WebRTC, providing a foundation for live visual applications.

· OpenCV integration for image processing: Allows developers to apply a wide range of computer vision algorithms to video streams, from simple filters to complex object tracking, unlocking advanced visual features.

· WebRTC communication: Facilitates peer-to-peer, low-latency video transmission directly between the server and browser, crucial for responsive and interactive visual experiences.

· Go backend for efficient processing: Utilizes Go's concurrency and performance advantages to handle video streams and OpenCV operations effectively, leading to better scalability.

· Browser-based visualization: Delivers processed video directly to web browsers, making sophisticated visual analysis accessible without requiring users to install desktop software.

Product Usage Case

· Building a real-time collaborative whiteboard where remote participants can see and interact with each other's drawings, with features like gesture recognition for drawing tools, by processing video streams of their hands.

· Creating a web-based security camera system that performs motion detection and alerts users in real-time directly through their browser, without needing a separate server application to decode and analyze video feeds.

· Developing an interactive augmented reality experience on the web, where a webcam feed is analyzed to overlay virtual objects or information onto the real world, all processed and streamed efficiently.

· Implementing a live video analysis tool for remote quality control, where defects on a product can be identified and highlighted in real-time by a remote expert viewing a processed video stream.

4

AI FutureScape

Author

mandarwagh

Description

This project explores the profound societal and technological shifts anticipated in the next 3, 5, 10, 25, 50, and 100 years, driven by Artificial Intelligence. It's a conceptual exploration presented through a blog, leveraging AI to forecast potential timelines and impacts. The innovation lies in using AI to synthesize and predict future scenarios, offering a structured, albeit speculative, view of our AI-driven tomorrow.

Popularity

Points 6

Comments 18

What is this product?

AI FutureScape is a visionary blog post that uses Artificial Intelligence to paint a picture of what the world might look like at various future points, from a few years from now to a century ahead. The core technical idea is to apply AI, likely through sophisticated predictive modeling and natural language processing, to analyze current trends and extrapolate potential future states across different sectors like technology, society, and human existence. The innovation is in creating a narrative that is informed by AI's analytical capabilities, offering a data-informed yet imaginative glimpse into humanity's future evolution with AI.

How to use it?

Developers can engage with AI FutureScape by reading and reflecting on the insights provided. For those interested in the underlying technology, it serves as inspiration to explore AI-driven forecasting and scenario planning. You can integrate similar AI techniques into your own projects for market analysis, strategic planning, or even creative storytelling. For instance, if you're building a new product, you could use AI to predict its potential market adoption curve or future competitive landscape.

Product Core Function

· Future Scenario Generation: Utilizes AI algorithms to forecast potential developments and impacts of AI across different timeframes. This helps in understanding long-term trends and preparing for them.

· Trend Analysis Synthesis: Analyzes vast amounts of data on current technological and societal trends to identify patterns and predict future trajectories. This provides a structured overview of potential future challenges and opportunities.

· Narrative Construction: Employs AI to weave these predictions into a coherent and engaging narrative, making complex future possibilities more accessible and understandable.

· Long-Term Impact Visualization: Presents a forward-looking perspective that aids in strategic decision-making by highlighting potential shifts and the role of AI in shaping them.

Product Usage Case

· Strategic Planning for Tech Companies: A startup could use AI-driven forecasting to understand the evolving market needs over the next decade and align their product roadmap accordingly.

· Policy Making and Societal Preparedness: Governments or research institutions could leverage such AI analysis to anticipate societal changes and develop proactive policies to address potential disruptions from AI.

· Personalized Future Exploration: Individuals could use similar AI tools to explore their own career paths or investment strategies in the context of predicted technological advancements.

· Educational Content Creation: Educators can use the insights to create engaging learning materials about the future of technology and its societal implications.

5

Infinipedia: The Ever-Evolving Knowledge Weaver

Author

avhwl

Description

Infinipedia is a groundbreaking project that aims to create an infinitely expanding and self-improving wiki. It tackles the challenge of knowledge curation and growth by leveraging advanced AI techniques to automatically write, connect, and optimize content. This means a wiki that doesn't just store information but actively learns and adapts, making it an incredibly powerful and dynamic resource. For developers, it offers a novel approach to building knowledge bases that can scale organically and stay relevant without constant manual intervention.

Popularity

Points 9

Comments 2

What is this product?

Infinipedia is an ambitious project that seeks to build a wiki that writes and optimizes itself. Think of it as a living encyclopedia that continuously learns and expands. Its core innovation lies in its ability to generate new content, establish relationships between disparate pieces of information, and refine its own structure and accuracy using AI. This is achieved through a sophisticated interplay of natural language generation (NLG) for content creation, knowledge graph construction for interlinking information, and reinforcement learning or similar optimization algorithms to improve content quality and discoverability. So, what does this mean for you? It means a knowledge base that actively grows with you, uncovering connections you might have missed and presenting information in a more coherent and comprehensive way, without you having to do all the heavy lifting.

How to use it?

Developers can integrate Infinipedia into their applications or workflows by leveraging its API. Imagine building a specialized knowledge base for your company's internal documentation, a support forum that automatically generates answers based on existing discussions, or even a research tool that surfaces novel connections in scientific literature. The API would allow you to feed it new data sources, query existing information, and potentially even guide its learning process. This makes it incredibly versatile for creating intelligent, self-sustaining information systems. So, how can you use it? You can embed it into your existing software to add an intelligent layer of knowledge management, or use it as a standalone tool to build dynamic, evolving content platforms.

Product Core Function

· Automated Content Generation: Utilizes AI to write new articles and expand existing ones, ensuring a constantly growing knowledge base. This is valuable because it reduces the burden of manual content creation and keeps the information fresh and comprehensive.

· Self-Optimizing Knowledge Graph: Automatically connects related pieces of information, creating a rich web of interconnected knowledge. This provides deeper insights and facilitates more efficient information retrieval.

· Content Refinement and Accuracy Improvement: Employs AI algorithms to review and improve the quality and accuracy of the wiki's content over time. This ensures that the information remains reliable and useful.

· Scalable Knowledge Management: Designed to handle an ever-increasing amount of information without degradation in performance or usability. This means your knowledge base can grow without limits.

· API-driven Integration: Provides a flexible API for developers to integrate Infinipedia's capabilities into their own applications. This allows for seamless incorporation into existing workflows and custom solutions.

Product Usage Case

· Building a personalized learning platform where Infinipedia dynamically generates study materials and connects concepts based on a user's learning progress. This helps users understand complex topics more deeply by showing how different ideas relate.

· Enhancing customer support by creating a knowledge base that automatically answers frequently asked questions and suggests relevant solutions based on ongoing customer interactions. This leads to faster and more effective customer service.

· Developing a research assistant that can analyze large datasets of academic papers and automatically identify emerging trends and novel research connections. This empowers researchers to discover new avenues of investigation.

· Creating an internal company wiki that automatically updates with new project information, best practices, and team knowledge, ensuring everyone has access to the latest relevant information. This improves team collaboration and efficiency.

6

Beelzebub: AI Agent Tripwire

Author

mariocandela

Description

Beelzebub introduces 'canary tools' for AI agents using MCP honeypots. These are fake functions disguised as legitimate ones that your AI agent should never call. If the agent attempts to use one, it immediately signals a potential security breach like prompt injection or tool hijacking, providing a clear, low-noise indicator of compromise. This helps secure AI agents by creating a deterministic alert system, unlike methods relying on complex heuristics or additional model calls. It's especially relevant for protecting against attacks targeting AI in software supply chains, ensuring unauthorized actions are detected.

Popularity

Points 8

Comments 0

What is this product?

Beelzebub is an open-source Go framework that creates 'canary tools' for AI agents. Think of these as fake traps designed to look like real tools (with convincing names, parameters, and descriptions) that an AI agent might use. When the AI agent interacts with these fake tools, they return harmless dummy data but simultaneously send out an alert, like a tripwire being activated. This provides a high-fidelity signal of malicious activity such as prompt injection (where someone tricks the AI into doing something it shouldn't) or tool hijacking (where the AI's access to its tools is compromised). It's a direct way to know if something has gone wrong, without needing to guess or run extra AI checks.
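The tripwire idea can be shown with a small sketch. It is written in Python for brevity and is not Beelzebub's code or API: the `ToolRegistry` class, the tool names, and the alert payload are all hypothetical. It shows only the core mechanism described above, where a decoy tool answers with harmless dummy data while emitting an alert.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class ToolRegistry:
    """Holds real tools and decoys behind the same call interface."""

    alert: Callable[[dict], None]
    real: dict[str, Callable[..., Any]] = field(default_factory=dict)
    canaries: dict[str, Any] = field(default_factory=dict)

    def call(self, name: str, **kwargs: Any) -> Any:
        if name in self.canaries:
            # A decoy was invoked: raise the alarm, then answer with dummy
            # data so the caller cannot tell it hit a trap.
            self.alert({"event": "canary_tool_called", "tool": name, "args": kwargs})
            return self.canaries[name]
        return self.real[name](**kwargs)


# Alerts are collected in a list here; a real setup might forward them
# to a webhook or a monitoring system instead.
alerts: list[dict] = []
registry = ToolRegistry(alert=alerts.append)
registry.real["read_file"] = lambda path: f"contents of {path}"
registry.canaries["export_secrets"] = {"status": "ok", "secrets": []}

normal = registry.call("read_file", path="README.md")
trapped = registry.call("export_secrets", target="attacker.example")
print(len(alerts))  # one alert, raised by the decoy call only
```

Because a legitimate agent has no reason to call the decoy, the alert needs no scoring or extra model call: a single invocation is the signal.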

How to use it?

Developers can integrate Beelzebub into their AI agent workflows by running the Go framework alongside their agent's actual tools. The canary tools are exposed via MCP (Model Context Protocol, an open protocol for connecting AI agents to external tools and data sources). The framework is configured to emit alerts when a canary tool is invoked. These alerts can be sent to standard output, a webhook, or directly into monitoring systems like Prometheus/Grafana or ELK (Elasticsearch, Logstash, Kibana). This setup allows developers to monitor their AI agents for suspicious behavior by observing these canary tool invocations, which are indicators of security incidents.

Product Core Function

· Decoy Tool Exposure: Creates fake tools that mimic real ones, providing a realistic lure for malicious actors attempting to manipulate AI agents. This helps detect unauthorized actions by presenting fake actions that trigger alerts.

· Telemetry Emission: When a canary tool is called, Beelzebub emits alerts. This provides immediate, actionable data about security breaches, enabling quick response and investigation.

· Low-Noise Alerting: Unlike methods that rely on complex patterns or additional AI processing, Beelzebub triggers alerts directly from tool calls. This means fewer false alarms, making it easier to identify real threats.

· Integration with Monitoring Systems: Alerts can be streamed to standard output, webhooks, or common observability platforms (Prometheus, Grafana, ELK). This ensures that security events are captured and can be analyzed within existing infrastructure.

· Protection Against Prompt Injection and Tool Hijacking: By detecting unexpected tool usage, Beelzebub directly combats common AI security vulnerabilities, safeguarding the agent's intended functionality.

Product Usage Case

· Securing an IDE AI Assistant: Imagine an AI assistant that helps you code. If a malicious prompt tries to steal your API keys and tricks the agent into calling a fake 'export secrets' tool registered by Beelzebub, an alert fires immediately. Because the decoy returns only dummy data, nothing sensitive leaves through that call, and the attempt is visible right away.

· Detecting Lateral Movement in AI Workflows: In a complex AI system where agents interact, if one agent is compromised and attempts to use a canary tool from another agent (e.g., a fake 'access sensitive data' function), Beelzebub will immediately signal this breach, preventing the attacker from spreading further.

· Preventing Supply Chain Attacks on AI: If an AI agent used in a software supply chain is compromised, and the attacker tries to leverage it for malicious purposes, like a fake 'exfiltrate repository' function, Beelzebub will detect this unauthorized action. This is crucial for preventing AI from being used as a weapon in cyberattacks.

7

Vizzly - Pixel Perfect Sync

Author

Robdel12

Description

Vizzly is a visual testing platform designed to bridge the gap between design specifications and developer implementation. It addresses the common problem of design details getting lost in translation, leading to discrepancies between what's designed and what ships. Unlike traditional visual testing tools that focus solely on CSS breaks, Vizzly captures actual screenshots produced by your application, ensuring that the visual output truly reflects the intended design. It offers integrated collaboration features for design and development review, flexible baseline management, and aims to provide a seamless experience from local development to CI/CD pipelines. So, this is useful because it helps teams ensure their shipped products are visually identical to the approved designs, reducing costly rework and improving overall quality.

Popularity

Points 8

Comments 0

What is this product?

Vizzly is a visual testing and review platform that goes beyond simply checking for broken CSS. Its core innovation lies in its 'capture-first' approach: it uses actual pixel data from your application's rendering, whether it's in the browser, on a specific operating system, or within another environment. This means you're testing against the real output, not just a DOM interpretation. This approach tackles the 'it doesn't look like that on my machine' problem head-on. The platform integrates collaboration tools like reviewer assignment, approvals, and thread-level discussions directly on screenshots. It also supports flexible baseline management, allowing for automatic, manual, or hybrid approaches to defining what 'correct' looks like. So, what's the value? It provides a definitive source of truth for visual consistency, making sure the final product is a true representation of the design intent, directly from the user's perspective.
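Comparing a captured screenshot against a baseline comes down to a pixel diff. The sketch below is an illustration of that general technique, not Vizzly's comparison algorithm: images are plain nested lists of RGB tuples, and the `tolerance` and `max_ratio` parameters are assumptions introduced for the example.

```python
Pixel = tuple[int, int, int]
Image = list[list[Pixel]]


def diff_ratio(baseline: Image, candidate: Image, tolerance: int = 0) -> float:
    """Fraction of pixels with any channel differing by more than `tolerance`."""
    if len(baseline) != len(candidate) or any(
        len(b) != len(c) for b, c in zip(baseline, candidate)
    ):
        return 1.0  # treat a size change as a full mismatch
    total = sum(len(row) for row in baseline)
    changed = sum(
        1
        for b_row, c_row in zip(baseline, candidate)
        for b, c in zip(b_row, c_row)
        if any(abs(x - y) > tolerance for x, y in zip(b, c))
    )
    return changed / total if total else 0.0


def matches_baseline(
    baseline: Image, candidate: Image, max_ratio: float = 0.0, tolerance: int = 0
) -> bool:
    """True when the share of changed pixels stays within `max_ratio`."""
    return diff_ratio(baseline, candidate, tolerance) <= max_ratio


WHITE, BLACK = (255, 255, 255), (0, 0, 0)
baseline = [[WHITE, WHITE], [WHITE, WHITE]]
candidate = [[WHITE, WHITE], [WHITE, BLACK]]  # one of four pixels changed

print(diff_ratio(baseline, candidate))        # 0.25
print(matches_baseline(baseline, candidate))  # False
```

A strict default (`max_ratio=0.0`) flags any change for review; loosening `tolerance` is one way to ignore small rendering differences such as anti-aliasing noise.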

How to use it?

Developers can integrate Vizzly into their workflow by installing the Vizzly CLI. During their testing process, specifically after capturing screenshots of their application's UI (e.g., using Playwright or Cypress), they can send these screenshots to Vizzly for visual comparison and review. This can be done locally during development for instant feedback, mirroring the process that will occur in the CI/CD pipeline. You can configure Vizzly to automatically capture and upload screenshots for specific test cases, linking them to your codebase via Git. Custom properties can be added to these screenshots to categorize them by component, viewport size, theme, or any other relevant criteria, making reviews more organized. So, how to use it? Install the CLI, configure your Vizzly token, and then within your automated tests, use the provided SDK function to upload your captured screenshots. This ensures your visual tests are part of your existing development and testing infrastructure, providing immediate insights and facilitating collaboration.

Product Core Function

· Pixel-perfect screenshot capture: Captures the exact visual output of your application from its rendering environment, ensuring fidelity to the intended design. This provides an accurate baseline for comparison, eliminating "looks different on my machine" issues.

· Integrated review workflows: Allows teams to collaborate directly on screenshots, with features for assigning reviewers, granting approvals, and discussing specific visual elements through threaded conversations. This streamlines the design and development feedback loop.

· Flexible baseline management: Supports automatic baseline updates tied to your Git branches, manual baseline creations, or a hybrid approach, giving teams control over how visual standards are maintained and evolved.

· Customizable review filtering: Enables the use of custom properties (e.g., component name, viewport, theme) to categorize and filter screenshots, making it easier to manage and review specific aspects of the application's UI.

· Local and CI parity: Provides the ability to run visual tests locally with instant feedback during development and ensures the same process is used in the CI/CD pipeline, guaranteeing consistency across all environments.

Product Usage Case

· A UI developer is implementing a new feature and wants to ensure the layout and styling precisely match the designer's Figma mockups. They use Vizzly within their local testing setup to capture screenshots of the new component at various screen sizes and apply custom properties like 'component: button-group', 'viewport: desktop', 'theme: dark'. They instantly see any visual deviations compared to the approved baseline. This saves them from extensive manual comparison and prevents visual bugs from reaching the review stage.

· A QA engineer is responsible for regression testing a large e-commerce website. Before deploying a new version, they run an automated test suite using Playwright that captures screenshots of key pages. These screenshots are uploaded to Vizzly, where they are automatically compared against the baselines from the previous stable version. Vizzly flags any new visual differences, allowing the QA engineer to quickly identify and report regressions. The collaboration features enable them to assign specific flagged screenshots to the responsible developer for immediate investigation.

· An open-source project is facing challenges with visual consistency across different browser versions and operating systems used by its contributors. They integrate Vizzly into their CI pipeline. Each pull request triggers a visual test that captures screenshots across specified environments. Any detected visual regressions are automatically highlighted, and contributors receive feedback before merging. This significantly improves the overall visual quality and reduces the burden on core maintainers to manually check every visual aspect.

8

Pocket: The Unbreakable Focus Enforcer

Author

mojambo96

Description

Pocket is a highly opinionated iOS app designed to enforce deep focus by making your phone physically unusable. It leverages innovative interaction methods to lock users out of their devices, punishing distractions and rewarding discipline, all to combat the productivity drain of modern digital life. The core innovation lies in its 'no escape' philosophy, where accidental distraction leads to immediate loss of progress, thus building true focus habits.

Popularity

Points 6

Comments 2

What is this product?

Pocket is an iOS application that aims to provide the 'hardest' focus experience. Unlike traditional focus apps that rely on willpower or gentle reminders, Pocket enforces focus through strict, physical interaction constraints. The core technological innovation is its 'punishment' mechanism: if you break your focus session by interacting with your phone outside the allowed parameters (like checking notifications or opening other apps), all your progress is wiped out. It uses the device's native lock screen and background processing capabilities to maintain the focus state, ensuring that even restarting the phone doesn't easily bypass the session. The app introduces a unique 'double-tap' gesture on the back of the phone to instantly initiate a focus session, bypassing traditional menus. This creates a seamless and almost ritualistic entry into a distraction-free state.

How to use it?

Developers can use Pocket by installing it on their iOS devices. To start a focus session, they can either place their phone in their pocket when outdoors, lay it face down while working or studying, or simply lock the device. The app proactively prevents usage during these sessions. A unique feature allows users to summon the focus session by double-tapping the back of their phone. This gesture immediately locks the screen and begins the focus timer. If a user is tempted to check their phone, they receive a 5-second warning to correct their behavior. Failure to do so results in the immediate termination of the focus session and loss of any accumulated progress. For urgent situations, like important calls, users can answer them, but this action itself breaks the 'focus pact' and ends the session once the device is unlocked.
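The warning-then-reset rule described above can be sketched as a tiny state machine. This is only an illustration of the logic in Python, not the app's iOS implementation; the class and method names are invented for the example, and only the 5-second grace period comes from the description.

```python
from dataclasses import dataclass
from typing import Optional

GRACE_SECONDS = 5.0  # the 5-second warning mentioned in the description


@dataclass
class FocusSession:
    started_at: float
    warning_started_at: Optional[float] = None
    active: bool = True
    progress_seconds: float = 0.0

    def on_pickup(self, now: float) -> None:
        """The user starts interacting: open the warning window once."""
        if self.active and self.warning_started_at is None:
            self.warning_started_at = now

    def on_put_down(self, now: float) -> None:
        """The user stops interacting: cancel the warning if still in time."""
        self.tick(now)
        if self.active:
            self.warning_started_at = None

    def tick(self, now: float) -> None:
        """Advance the clock; end the session and wipe progress if the grace period ran out."""
        if not self.active:
            return
        warned = self.warning_started_at
        if warned is not None and now - warned >= GRACE_SECONDS:
            self.active = False
            self.progress_seconds = 0.0
        else:
            self.progress_seconds = now - self.started_at
```

Timestamps are passed in explicitly rather than read from the system clock, which keeps the rule easy to test.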

Product Core Function

· Physical Lockout Mechanism: The app ensures the phone's screen is locked and inaccessible during focus sessions, preventing any temptation to browse or use other applications. This directly combats the habit of picking up the phone for quick, distracting checks: the only way to 'escape' a session is to deliberately unlock and use the device, which ends it.

· Punitive Progress Reset: If a user breaks the focus by interacting with the phone inappropriately, the app ruthlessly deletes all their accumulated focus progress. This 'tough love' approach creates a strong incentive to maintain discipline and avoid distractions, as the cost of failure is high.

· Gesture-Based Session Initiation: A quick double-tap on the back of the phone instantly activates a focus session, locking the device and starting the timer. This innovative input method simplifies the process of entering a focused state, making it quick and almost automatic, reducing friction associated with starting a productivity session.

· Break-in Warning System: Users are given a 5-second grace period to correct any accidental interaction that might break focus. This provides a last chance to re-commit to the session without immediate penalty, acknowledging that accidental touches can happen.

· Emergency Call Handling: While the phone is locked, users can still answer incoming calls. However, the focus session is only truly broken when the device is unlocked, allowing for essential communication without completely abandoning the focus commitment.

Product Usage Case

· Deep Work Sessions: A developer facing a complex coding problem can use Pocket to create an uninterrupted block of time. By initiating a session and placing their phone face down, they can focus on writing code without the constant ping of notifications, leading to more efficient problem-solving and faster development.

· Studying for Technical Exams: A student preparing for a certification exam can leverage Pocket to eliminate digital distractions. By committing to a focus session while studying, they can absorb information more effectively, leading to better retention and improved exam performance.

· Eliminating Social Media Addiction: For developers struggling with excessive social media use that disrupts their workflow, Pocket provides a direct solution. The harsh penalties for distraction make it an effective deterrent against mindlessly scrolling through feeds, helping to reclaim valuable time and mental energy.

· Mindfulness During Commutes: A user can use Pocket to ensure their commute, whether walking or on public transport, is a period of focused thought rather than smartphone absorption. This promotes mental clarity and can lead to creative insights or problem-solving outside of a typical work environment.

9

Uxia: AI User Testing Synthesizer

Author

borja_d

Description

Uxia is an AI-powered user testing platform that simulates thousands of synthetic users to provide actionable insights in minutes, rather than days. It addresses the slow, expensive, and often biased nature of traditional user testing by leveraging AI to replicate realistic human behaviors and interactions with digital products. This makes user testing accessible and rapid for any team, accelerating product iteration cycles.

Popularity

Points 3

Comments 4

What is this product?

Uxia is an innovative user testing tool that replaces traditional, time-consuming user recruitment and feedback collection with AI-driven simulations. Instead of waiting days for a handful of human testers, Uxia's AI models generate thousands of 'synthetic users' who interact with your prototype, design, or flow. These AI users are trained to mimic realistic human behaviors, allowing them to encounter issues, take different paths, and provide feedback much like real users would. The core innovation lies in using AI to drastically speed up the user testing process and reduce costs, making it feasible for teams to test more frequently and gain insights rapidly.

How to use it?

Developers can integrate Uxia into their workflow by uploading their product's prototype, design mockups, or user flow diagrams directly to the platform. Once uploaded, Uxia's AI simulates user interactions. Developers can then analyze the results, which highlight areas where users get stuck, the paths they take, and their overall interaction patterns. This allows for quick identification of usability issues and potential improvements. It can be used early in the design process to validate concepts or before launch to catch critical bugs and refine user experience.
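As a rough illustration of the "where do users get stuck" analysis, the sketch below aggregates simulated sessions into a per-step abandonment rate. The session format (a list of step names each simulated user reached) and the `drop_off_by_step` helper are assumptions made for this example; Uxia's real output format is not described in the post.

```python
from collections import Counter


def drop_off_by_step(sessions, flow):
    """Fraction of all sessions that ended at each non-final step of the flow."""
    total = len(sessions)
    if total == 0:
        return {step: 0.0 for step in flow[:-1]}
    last_steps = Counter(session[-1] for session in sessions if session)
    return {step: last_steps[step] / total for step in flow[:-1]}


flow = ["welcome", "permissions", "profile", "done"]
# Illustrative, made-up sessions: 2 of 10 simulated users stop at "permissions".
sessions = [["welcome", "permissions"]] * 2 + [flow] * 8

rates = drop_off_by_step(sessions, flow)
worst_step = max(rates, key=rates.get)
print(worst_step, rates[worst_step])
```

Sorting steps by this rate gives a quick shortlist of screens worth inspecting first.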

Product Core Function

· AI-powered user simulation: Replicates thousands of user behaviors to test designs rapidly, providing insights without the delay and cost of recruiting human testers.

· Instant feedback generation: Delivers actionable insights within minutes, enabling faster iteration cycles and quicker product development.

· Prototype and design testing: Allows uploading of various design formats (prototypes, mockups, flows) for comprehensive usability analysis.

· Behavioral analysis: Tracks user paths, identifies friction points, and analyzes interaction patterns to pinpoint areas for improvement.

· Cost-effective pricing: Offers flat pricing for unlimited tests and users, removing the financial barrier often associated with professional user testing.

Product Usage Case

· A product manager needs to quickly validate a new onboarding flow for a mobile app. Instead of spending days recruiting beta testers, they upload the flow to Uxia. The AI simulates 1000 users navigating the onboarding, revealing that 20% of AI users abandon the process at a specific step due to an unclear button. This insight allows the team to fix the button immediately, improving conversion rates.

· A startup is developing a new e-commerce website and wants to ensure users can easily find and purchase products. They upload their website prototype to Uxia. Uxia's AI simulates users searching for specific items, adding them to the cart, and proceeding to checkout. The analysis highlights that the 'add to cart' button on product pages is not prominent enough for some AI users, leading to them missing it. This feedback helps the design team refine the UI before launch, enhancing the shopping experience.

· A game developer is testing a new game mechanic. They provide Uxia with a playable demo. The AI simulates players engaging with the mechanic, identifying frustration points or unexpected behaviors. This allows the developer to tweak the game's difficulty or controls based on simulated player experience, leading to a more enjoyable game.

10

NovelCompressor

Author

Forgret

Description

A novel compression algorithm that deviates from standard approaches, offering a new perspective on data reduction. It addresses the limitations of existing algorithms by exploring different mathematical models and data encoding techniques, providing a potentially more efficient way to shrink data sizes for various applications.

Popularity

Points 5

Comments 1

What is this product?

This project is a new data compression algorithm that breaks away from traditional methods. Instead of relying on common techniques like Huffman coding or Lempel-Ziv variants, it explores alternative mathematical principles for representing data more compactly. The core innovation lies in its unique approach to identifying and exploiting redundancies within data, potentially achieving higher compression ratios or offering different trade-offs (e.g., speed vs. compression ratio) compared to established algorithms. Think of it like finding a secret handshake for your data that makes it smaller without losing any information.

How to use it?

Developers can integrate NovelCompressor into their projects by utilizing its provided library or command-line interface. For instance, in a web application, it could be used to compress API responses to reduce bandwidth usage and improve loading times. In data storage, it can be applied to shrink large datasets, saving disk space and accelerating data transfer. The primary use case is any scenario where reducing data size is critical for performance, cost, or efficiency. You can plug it into your build process to compress assets or use it within your application's data handling logic.

Product Core Function

· Custom data encoding: Implements a unique method for encoding data, reducing its footprint by leveraging less common patterns. The value is achieving potentially better compression than existing solutions.

· Algorithmic exploration: Offers a novel approach to data compression by exploring alternative mathematical models and data transformation techniques. The value is pushing the boundaries of data reduction possibilities.

· Performance tuning: Allows for adjustments to compression parameters, enabling developers to balance compression ratio with processing speed. The value is adaptability to specific application needs.

· Data integrity preservation: Ensures that no data is lost during the compression and decompression process, maintaining the original integrity of the information. The value is reliable data handling.
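Claims about compression ratio, tunable speed, and lossless round trips are easy to check with a small harness. The sketch below uses Python's standard `zlib` purely as a stand-in codec, since NovelCompressor's own interface is not described here; `evaluate_codec` is a hypothetical helper into which any compress/decompress pair could be plugged.

```python
import time
import zlib


def evaluate_codec(data, compress, decompress):
    """Measure compression ratio and time, and verify the round trip is lossless."""
    start = time.perf_counter()
    packed = compress(data)
    elapsed = time.perf_counter() - start
    if decompress(packed) != data:
        raise ValueError("round trip failed: codec is not lossless for this input")
    ratio = len(data) / len(packed) if packed else float("inf")
    return {
        "original_bytes": len(data),
        "compressed_bytes": len(packed),
        "ratio": ratio,
        "compress_seconds": elapsed,
    }


# Repetitive JSON-like payload, similar in spirit to an API response.
sample = b'{"id": 1, "status": "ok"}' * 200

for level in (1, 9):  # zlib levels: 1 favors speed, 9 favors ratio
    report = evaluate_codec(sample, lambda d: zlib.compress(d, level), zlib.decompress)
    print(level, report["compressed_bytes"], round(report["ratio"], 1))
```

Running the same harness over representative data with both an established codec and a new one is a fair way to compare the trade-offs the project describes.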

Product Usage Case

· Web Development: Compress JSON responses from a backend API to reduce latency and bandwidth consumption, leading to faster page loads and a better user experience.

· Data Archiving: Shrink large log files or backup data to save significant storage space, lowering infrastructure costs.

· Mobile Applications: Reduce the size of application assets or data payloads to minimize download times and data usage for end-users on cellular networks.

· Scientific Computing: Compress large simulation results or experimental datasets to make them more manageable for analysis and sharing.

11

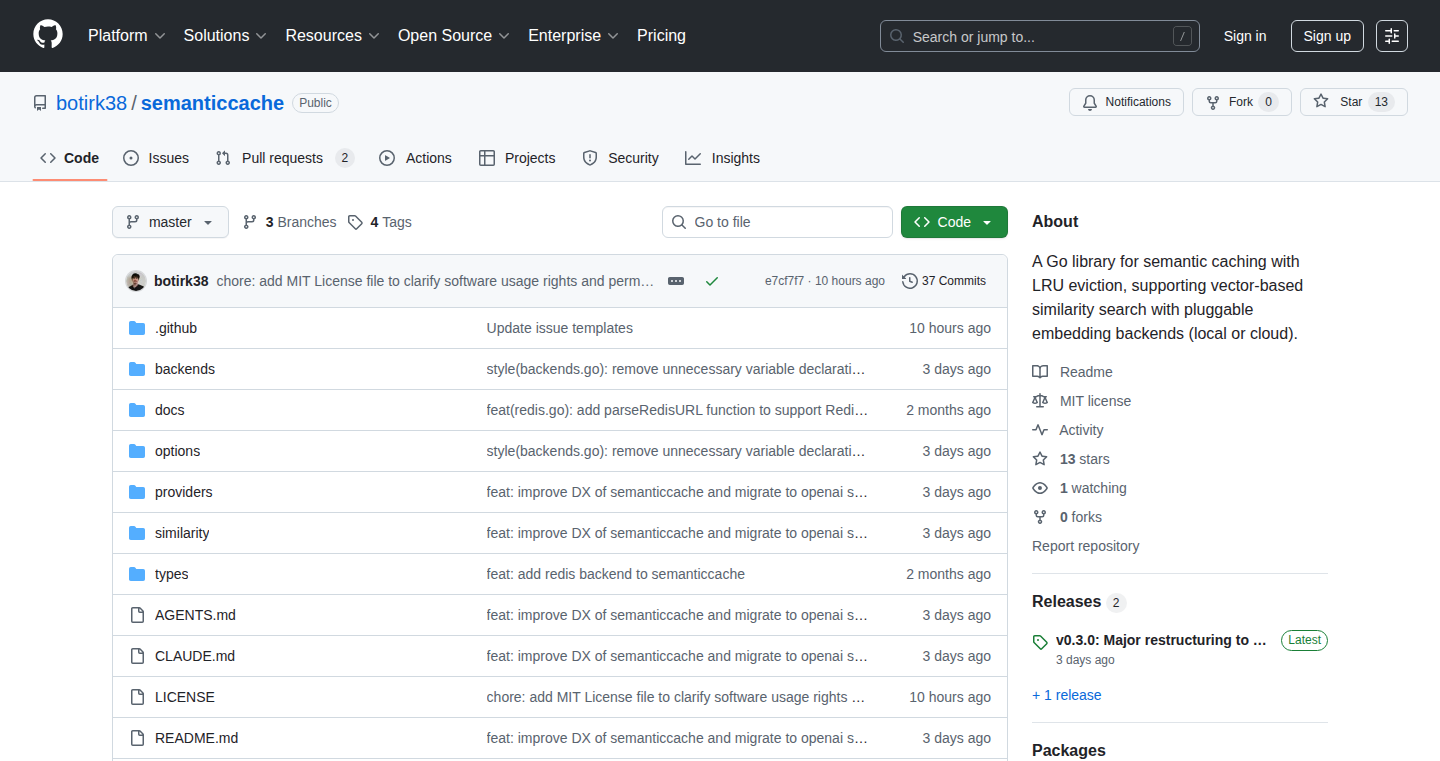

SemanticCache Go

Author

botirk

Description

SemanticCache Go is a high-performance caching library for Go applications that leverages vector embeddings to store and retrieve content based on meaning, not just exact keywords. It's ideal for Large Language Model (LLM) applications, advanced search systems, and any scenario where understanding the semantic similarity of information is crucial. Think of it as a smart cache that remembers not just what you asked for, but what you *meant*.

Popularity

Points 4

Comments 2

What is this product?

SemanticCache Go is a specialized caching system for Go that uses vector embeddings to understand the meaning of data. Instead of just storing and retrieving data based on identical text, it converts your data into numerical representations (vectors) that capture their semantic essence. When you search for something, it finds the data whose vectors are closest in meaning to your query. This means you can find relevant information even if you don't use the exact same words. It supports various caching strategies like LRU (Least Recently Used), LFU (Least Frequently Used), and FIFO (First-In, First-Out), and can store this cache in memory or in Redis for persistence. It integrates with OpenAI for generating these meaning-vectors, making it easy to get started with powerful semantic capabilities.

How to use it?

Developers can integrate SemanticCache Go into their Go projects to intelligently cache and retrieve data for LLM applications or search functionalities. You would typically initialize the cache with a chosen backend (like in-memory or Redis) and an embedding provider (like OpenAI). Then, you can store data, such as LLM responses or documents, by converting them into vectors and associating them with the original content. When a new query comes in, you convert the query into a vector and use the cache to find the most semantically similar stored items. Serving cached, semantically equivalent results in this way cuts redundant LLM calls and database queries, which lowers latency and cost.
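The store-then-lookup flow can be sketched in a few lines. Note the assumptions: this is a language-neutral illustration written in Python, not the library's Go API, and `toy_embed` is a word-count stand-in for a real embedding model (it only captures word overlap, not meaning). The cosine-similarity threshold is likewise an illustrative parameter.

```python
import math
from collections import Counter


def toy_embed(text):
    """Stand-in embedding: word counts. A real setup would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as Counters."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, threshold=0.8):
        self.threshold = threshold
        self.entries = []  # list of (vector, cached value)

    def set(self, key_text, value):
        self.entries.append((toy_embed(key_text), value))

    def lookup(self, query_text):
        """Return the value of the most similar entry, or None below the threshold."""
        query = toy_embed(query_text)
        best_score, best_value = 0.0, None
        for vector, value in self.entries:
            score = cosine(query, vector)
            if score > best_score:
                best_score, best_value = score, value
        return best_value if best_score >= self.threshold else None


cache = SemanticCache(threshold=0.7)
cache.set("how do I reset my password", "Use the reset link on the login page.")
print(cache.lookup("how do i reset my password please"))  # close enough: cache hit
print(cache.lookup("what is the weather today"))          # unrelated: None
```

The threshold is the key tuning knob: too low and unrelated queries get stale answers, too high and the cache rarely hits.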

Product Core Function

· Vector Embedding Integration: Converts text into numerical vectors that capture meaning, allowing for semantic retrieval. This is valuable for finding related information without exact keyword matches, enhancing search and LLM response relevance.

· Multiple Cache Backends: Supports in-memory (LRU, LFU, FIFO) and Redis persistence. This offers flexibility in managing cache data, from fast, ephemeral caches to durable, scalable storage, improving application performance and reliability.

· Vector Similarity Search: Allows searching for items based on semantic closeness using pluggable comparators. This is crucial for building smart recommendation engines or Q&A systems where understanding context is key.

· Extensibility: Provides a framework for custom backends and embedding providers. Developers can adapt the cache to their specific needs, integrating with different vector databases or custom embedding models, fostering innovation.

· Type-Safe Generics: Ensures type safety during cache operations, reducing runtime errors and improving code quality for Go developers.

· Batch Operations: Supports batching for caching and retrieval operations, significantly improving throughput and performance for high-load applications.

Product Usage Case

· LLM Response Caching: In an LLM-powered chatbot, cache identical or semantically similar user queries and their corresponding LLM responses. When a user asks a question, the system first checks the semantic cache for a similar query. If found, it returns the cached response instead of calling the LLM again, saving cost and reducing latency. This directly addresses the need for faster, more efficient LLM interactions.

· Intelligent Document Retrieval: For a knowledge base system, store documents as vector embeddings. When a user searches for information, the system converts their query into a vector and retrieves the most semantically similar documents from the cache. This allows users to find relevant articles even if they use different phrasing than in the original documents, improving the discoverability of information.

· Personalized Recommendations: In an e-commerce platform, cache user interaction data (e.g., viewed products, search terms) as vectors. When a user browses, use their current activity vector to find semantically similar past interactions or products from the cache. This provides more relevant and personalized product recommendations, boosting user engagement and sales.

12

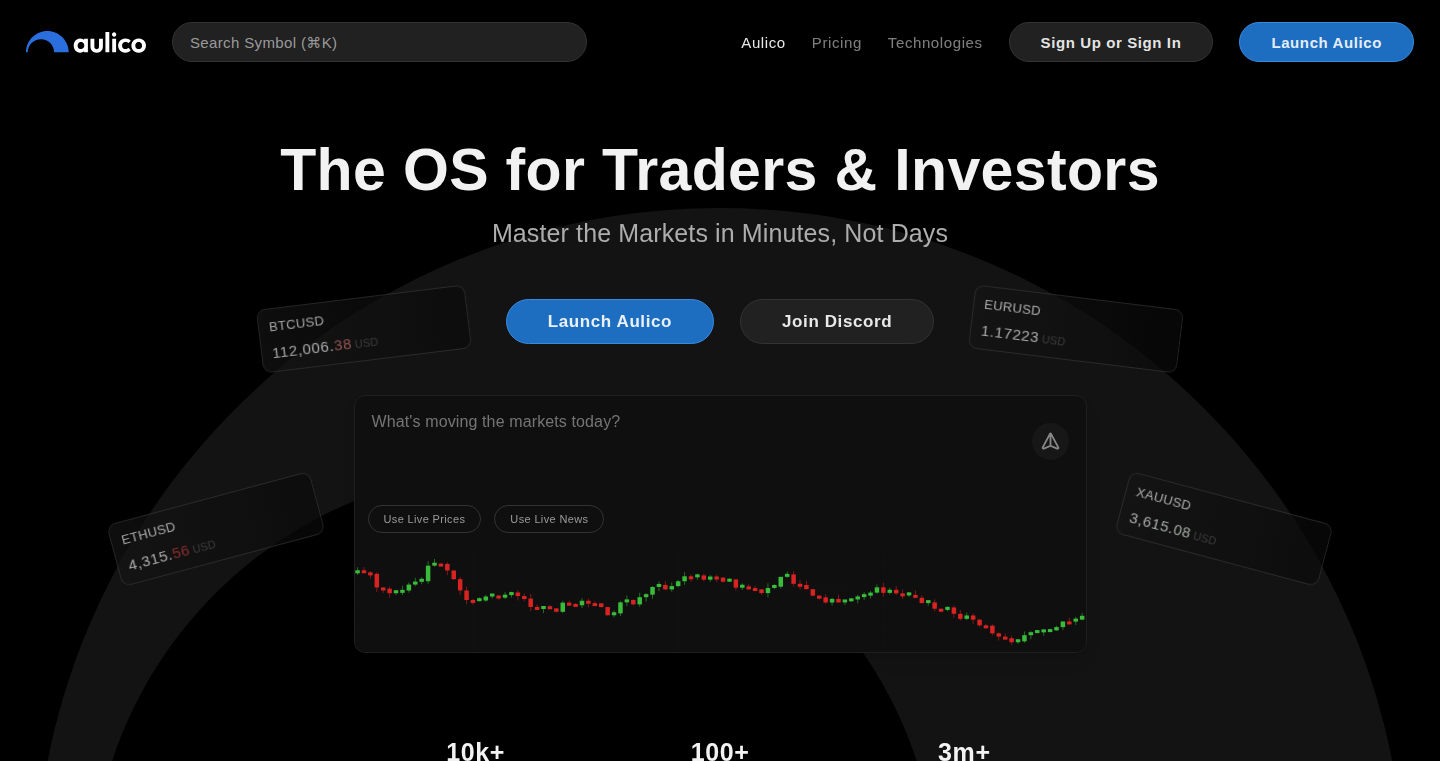

LLM Stock & Crypto Navigator

Author

rendernos

Description

This project showcases an innovative approach to interacting with financial market data by wrapping a Large Language Model (LLM) around stocks and cryptocurrency information. It addresses the challenge of extracting actionable insights from vast and complex financial datasets in a user-friendly, conversational manner. The core innovation lies in the LLM's ability to interpret natural language queries about market trends, specific asset performance, and sentiment, and then translate these into relevant data queries and summarized responses.

Popularity

Points 5

Comments 0

What is this product?

This project is a novel application of Large Language Models (LLMs) designed to navigate and interpret stock and cryptocurrency market data. Instead of directly querying complex databases or APIs with specific syntax, users can ask natural language questions like 'What's the sentiment around Bitcoin today?' or 'How has Apple's stock performed in the last quarter?'. The LLM acts as an intelligent intermediary, understanding these queries, fetching the relevant data from underlying financial sources (which could be APIs, historical datasets, or news feeds), processing it, and then generating a human-readable summary or answer. The innovation is in transforming raw, often overwhelming, financial data into accessible knowledge through the power of AI-driven language understanding.

How to use it?

Developers can integrate this project into their own applications or trading platforms. The LLM can be accessed via an API. For instance, a developer building a personalized finance dashboard could call this API with a user's question about a specific stock. The project would then return a concise answer, such as 'Apple's stock saw a 2% increase yesterday, driven by positive earnings reports and strong consumer demand.' This allows for the creation of intelligent chatbots, automated market analysis tools, or personalized financial advisory services where users can simply ask what they want to know about the markets.
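The interpret, fetch, and summarize pipeline described above might look roughly like the sketch below. Everything here is a stand-in: a keyword matcher replaces the LLM, `SAMPLE_CLOSES` holds made-up prices instead of a market data source, and none of the names reflect the project's actual API.

```python
# Made-up closing prices for illustration only, oldest first.
SAMPLE_CLOSES = {"AAPL": [100.0, 102.0], "BTC": [50000.0, 49000.0]}
ALIASES = {"apple": "AAPL", "bitcoin": "BTC"}


def interpret(question):
    """Stand-in for the LLM step: map a question to a ticker by keyword."""
    lowered = question.lower()
    for name, ticker in ALIASES.items():
        if name in lowered or ticker.lower() in lowered:
            return ticker
    return None


def percent_change(closes):
    """Percentage change between the last two closing prices."""
    return (closes[-1] - closes[-2]) / closes[-2] * 100.0


def answer(question):
    ticker = interpret(question)
    if ticker is None:
        return "Sorry, I could not tell which asset you mean."
    change = percent_change(SAMPLE_CLOSES[ticker])
    direction = "rose" if change >= 0 else "fell"
    return f"{ticker} {direction} {abs(change):.1f}% over the last period."


print(answer("How has Apple's stock performed?"))
print(answer("What happened to Bitcoin?"))
```

Keeping the numeric work in plain functions such as `percent_change`, and using the language model only for interpretation and wording, is one way to avoid figures being invented in the summary.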

Product Core Function

· Natural Language Query Processing: Understands user questions about financial markets using plain language, making complex data accessible. This is valuable because it eliminates the need for users to learn specific financial query languages or data analysis tools.

· Intelligent Data Retrieval: Fetches relevant stock and crypto data based on the interpreted query, ensuring accuracy and specificity. This saves developers time and effort in building complex data fetching logic.

· Data Summarization and Insight Generation: Processes retrieved data to provide concise, easy-to-understand summaries and identify key trends or insights. This provides immediate value to users by highlighting important information without them needing to sift through raw data.

· Sentiment Analysis Integration: Can analyze news and social media sentiment related to specific assets to provide a broader market perspective. This adds a layer of qualitative analysis that is crucial for financial decision-making, offering a more holistic view.

· Cross-Asset Information Access: Can provide information across both stocks and cryptocurrencies, allowing users to get a unified view of their diverse investment portfolios. This simplifies portfolio management and comparative analysis for users.

Product Usage Case

· Building a customer support chatbot for a brokerage firm that can answer client questions about stock performance and market news in real-time. Instead of a human agent needing to look up data, the LLM handles it instantly, improving customer satisfaction and operational efficiency.

· Developing a personalized investment research tool where users can ask the LLM 'What are the emerging trends in renewable energy stocks?' and receive a curated list of relevant companies and market analysis. This helps investors discover opportunities more effectively.

· Integrating into a trading platform to provide quick, conversational analysis of a cryptocurrency's recent price movements and community sentiment. A trader could ask, 'What's the general feeling about Ethereum today?' and get a quick summary, aiding in faster trading decisions.

· Creating an educational application for financial literacy where students can ask questions like 'Explain the difference between a stock and a bond in simple terms' and receive clear, concise answers powered by the LLM's understanding of financial concepts and data.

13

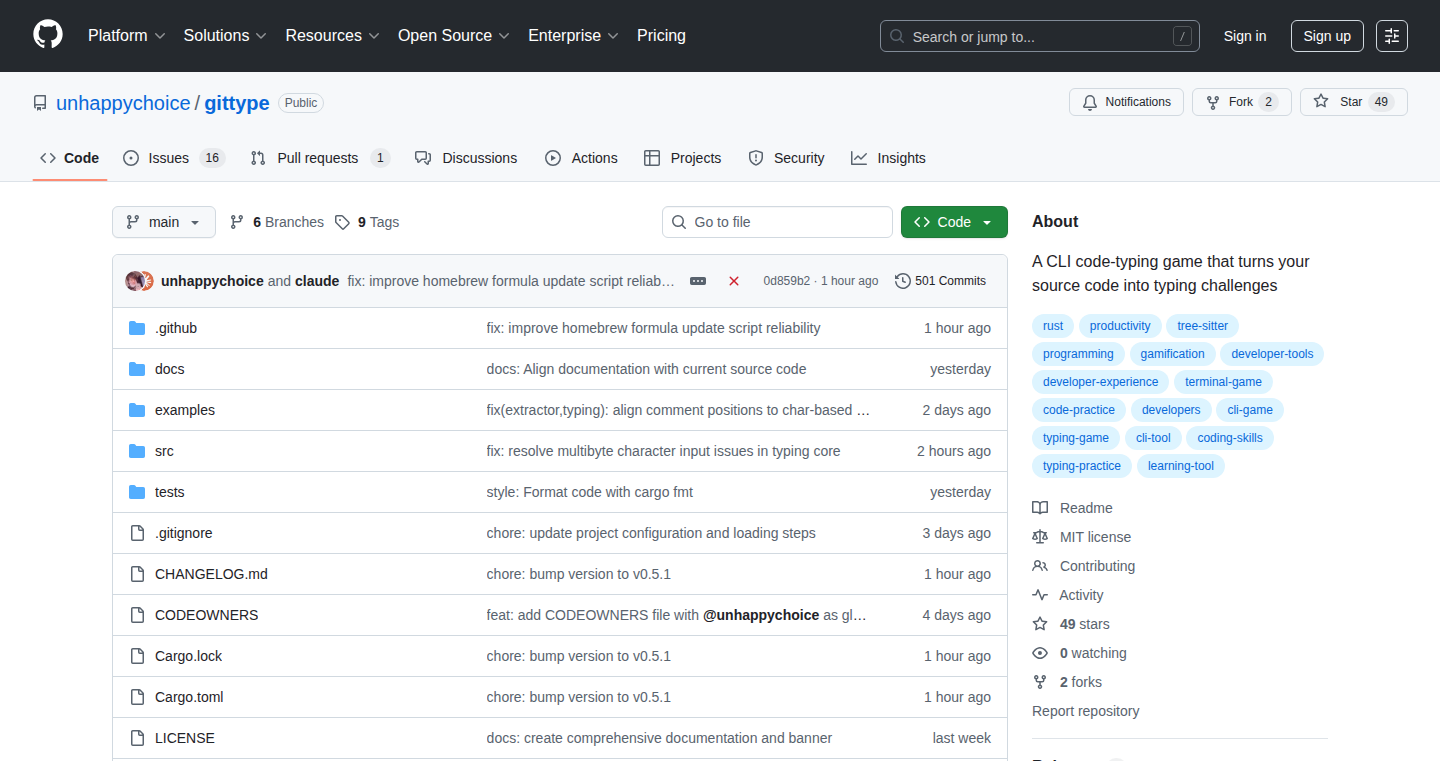

GitType: Your Code, Your Practice

Author

unhappychoice

Description

GitType is a Rust-based command-line interface (CLI) typing game that transforms your personal Git repositories into engaging typing practice material. Instead of generic text, you'll type actual code, comments, and functions you've written, making your practice sessions more relevant and effective for real-world programming. This project showcases creative problem-solving by repurposing existing developer assets for skill enhancement.

Popularity

Points 5

Comments 0

What is this product?

GitType is a typing practice tool that leverages your own Git repositories. Its core innovation lies in extracting text directly from your code files within a local Git repository and presenting it as typing challenges in the terminal. This means you're not just typing random words, but actual lines of code, function names, and comments you've previously written. This approach provides a highly personalized and contextually relevant typing experience, directly improving your familiarity and speed with your own coding style and syntax. It runs entirely in the terminal, making it lightweight and accessible without needing a graphical interface.

How to use it?

Developers can use GitType by first installing it from its GitHub repository (instructions provided in the original post). Once installed, you navigate your terminal to any local Git repository you want to use for practice. Then, you simply run the `gittype` command, specifying the path to your repository. The game will then automatically scan the repository, select code snippets, and present them to you for typing practice. You can track your Words Per Minute (WPM) and accuracy, and the tool keeps a history of your performance, allowing you to monitor your progress over time. It can be easily integrated into a developer's daily routine as a quick, focused skill-building exercise.
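For readers curious how the WPM and accuracy figures are typically derived, here is a minimal sketch using the common convention of counting five characters as one word. GitType's exact scoring formula is not given in the post, so treat `typing_stats` as an illustrative assumption rather than the tool's implementation.

```python
def typing_stats(target, typed, seconds):
    """Compute words per minute and accuracy for one typing attempt.

    Uses the common convention that one "word" is five characters, and
    counts only characters that match the target at the same position.
    """
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    correct = sum(1 for expected, actual in zip(target, typed) if expected == actual)
    accuracy = correct / len(typed) if typed else 0.0
    wpm = (correct / 5) / (seconds / 60)
    return {"wpm": wpm, "accuracy": accuracy}


snippet = "let x = 1;"
print(typing_stats(snippet, "let x = 1;", seconds=60))  # perfect attempt
print(typing_stats(snippet, "let y = 1;", seconds=60))  # one wrong character
```

Code is heavy on symbols and short identifiers, so character-based scoring like this is a better fit than counting whitespace-separated words.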

Product Core Function

· Terminal-based Typing Practice: Provides a typing game experience directly within the command line, eliminating the need for additional software or a graphical interface. This means you can practice typing anywhere you have a terminal, making it highly accessible and convenient.

· Personalized Practice Material from Git Repositories: Dynamically pulls text content from your local Git repositories. This allows for highly relevant practice that mirrors the code you actually write, improving muscle memory and familiarity with your own coding patterns, thus making your typing practice directly applicable to your development workflow.

· Performance Tracking (WPM and Accuracy): Monitors and displays your typing speed (Words Per Minute) and accuracy for each practice session. This crucial feedback loop helps you understand your strengths and weaknesses, enabling targeted improvement and showing tangible progress over time.

· Practice History and Progress Monitoring: Stores records of your past typing sessions, allowing you to review your progress and identify trends. This historical data is invaluable for motivation and for seeing how your typing skills improve with consistent practice.

· Gamified Ranking System: Assigns fun ranking titles based on your typing scores. This adds an element of enjoyment and competition to the practice, making it more engaging and encouraging consistent use.

Product Usage Case

· A developer struggling with typing specific programming language syntax accurately and quickly can use GitType with their existing project repository. By typing their own code, they reinforce correct syntax and improve their typing speed for the specific language they work with daily, directly boosting their coding efficiency.

· A junior developer wanting to improve their overall coding speed and reduce typing errors can use GitType to practice with code they are already familiar with from their personal projects. This makes the learning curve less daunting and provides practical, immediate benefits to their productivity.

· A developer looking for a quick mental break and skill refresh between coding tasks can launch GitType in their terminal. It offers a highly focused, engaging activity that sharpens their typing skills without requiring them to switch contexts entirely or install separate applications.

14

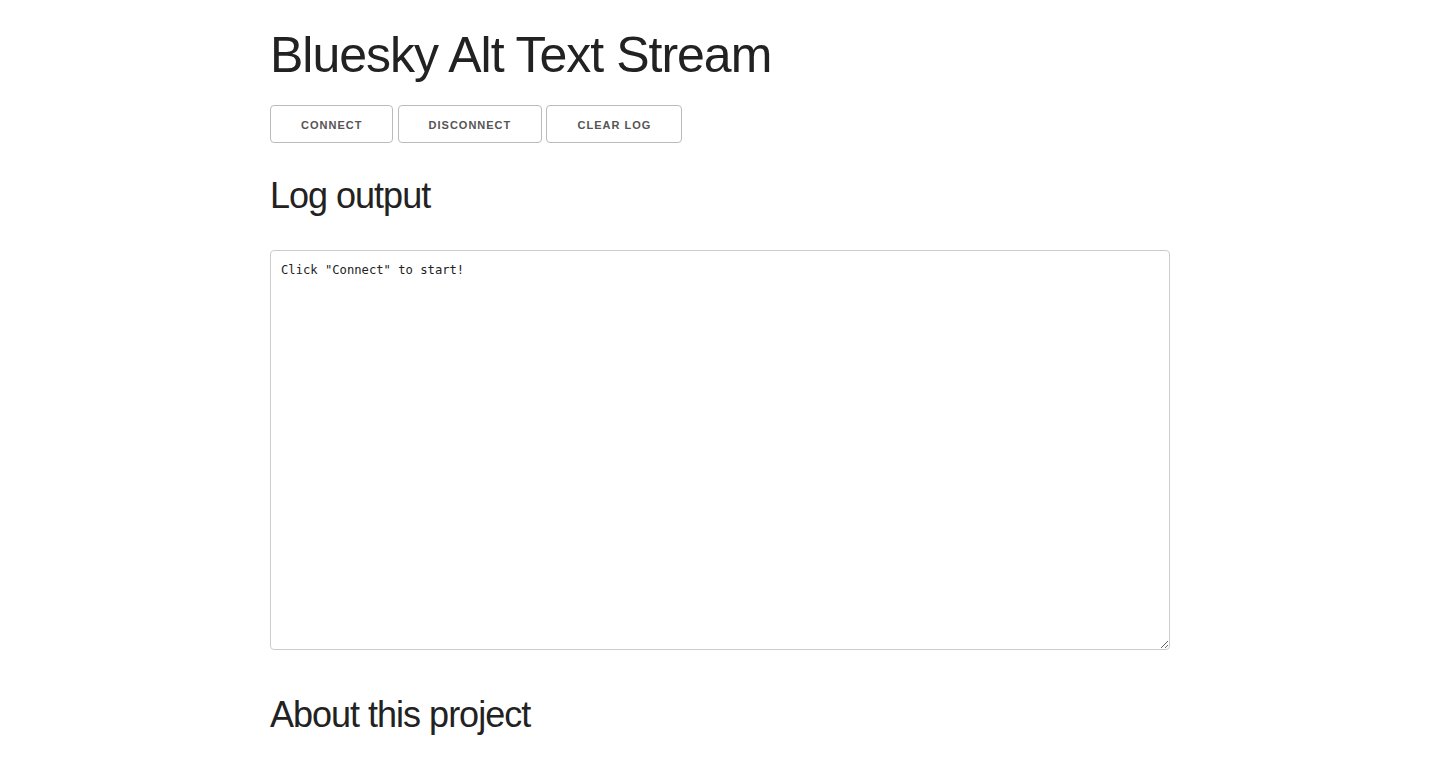

Bluesky AltText Stream Visualizer

Author

bobbiechen

Description

This project provides a live stream of all image descriptions (alt text) found on the Bluesky platform. It highlights the scarcity of alt text in online image sharing, particularly for accessibility, and reveals interesting patterns in content like bot activity and niche communities. The core innovation lies in building a real-time data pipeline to analyze and present this often-overlooked aspect of social media.

Popularity

Points 3

Comments 2

What is this product?

This is a live monitoring tool that scans the Bluesky social media platform for posts containing images that have accompanying alt text. Alt text is a short description that screen readers use to describe images for visually impaired users. The project's technical innovation is in setting up a system to tap into the Bluesky firehose (a stream of all public posts), filter for image posts, extract the alt text, and then display it in a real-time feed. It's like having a window into how much descriptive information is being provided for images on this platform, revealing insights into accessibility and content trends.

How to use it?

Developers can integrate this project by leveraging the Bluesky API to subscribe to the public post stream. The project's underlying logic involves processing these incoming posts, identifying those with image attachments and alt text, and then displaying this information. This could be used to build custom dashboards for social media analysis, accessibility auditing tools, or even integrated into content moderation systems to flag images lacking descriptions.
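The filter-and-extract step can be sketched as a pure function over a decoded post record. The field names below (`embed`, `$type`, `images`, `alt`, `media`) follow my understanding of the public `app.bsky.embed.images` and `app.bsky.embed.recordWithMedia` schemas and should be checked against the current lexicon; connecting to the stream itself is left out, and `extract_alt_texts` is a name invented for this example.

```python
def extract_alt_texts(record):
    """Return the non-empty alt texts attached to images in one post record."""
    embed = record.get("embed") or {}
    # Quote posts with media wrap the image embed in a "media" field.
    if embed.get("$type") == "app.bsky.embed.recordWithMedia":
        embed = embed.get("media") or {}
    if embed.get("$type") != "app.bsky.embed.images":
        return []
    alts = []
    for image in embed.get("images", []):
        alt = (image.get("alt") or "").strip()
        if alt:
            alts.append(alt)
    return alts


post = {
    "$type": "app.bsky.feed.post",
    "text": "Morning walk",
    "embed": {
        "$type": "app.bsky.embed.images",
        "images": [
            {"alt": "A foggy path through pine trees"},
            {"alt": ""},  # image posted without a description
        ],
    },
}
print(extract_alt_texts(post))
```

Comparing the number of images seen with the number of alt texts returned gives the adoption rate that the project highlights.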

Product Core Function

· Real-time Bluesky post stream monitoring: This allows for continuous data ingestion from Bluesky, providing up-to-the-minute insights into platform activity, which is valuable for trend analysis and understanding user behavior.

· Image and alt text extraction: The system intelligently identifies posts with images and their associated alt text, enabling focused analysis on visual content accessibility, a crucial aspect for inclusive design.

· Live alt text feed display: Presents the extracted alt text in a dynamic stream, making it easy to visually scan and identify patterns or interesting content related to image descriptions. This directly addresses the 'what's being described' question.

· Content pattern analysis: By observing the stream, users can identify trends in how alt text is used, including types of content commonly described (or not described), bot activity, and thematic clusters, providing a deeper understanding of the platform's ecosystem.

Product Usage Case

· An accessibility advocate can use this tool to monitor the overall alt text adoption rate on Bluesky, demonstrating the need for better image description practices to the platform's developers or community.

· A researcher studying online communication patterns might use the stream to analyze the correlation between specific topics and the presence or quality of alt text, uncovering how visual content is discussed and described.

· A developer building a social media analytics dashboard could integrate this data to offer a unique 'accessibility score' for user-generated content, helping brands or individuals understand their platform's inclusivity.

· A content curator looking for interesting visual narratives could sift through the alt text descriptions to discover unique stories or themes being shared through images on Bluesky.

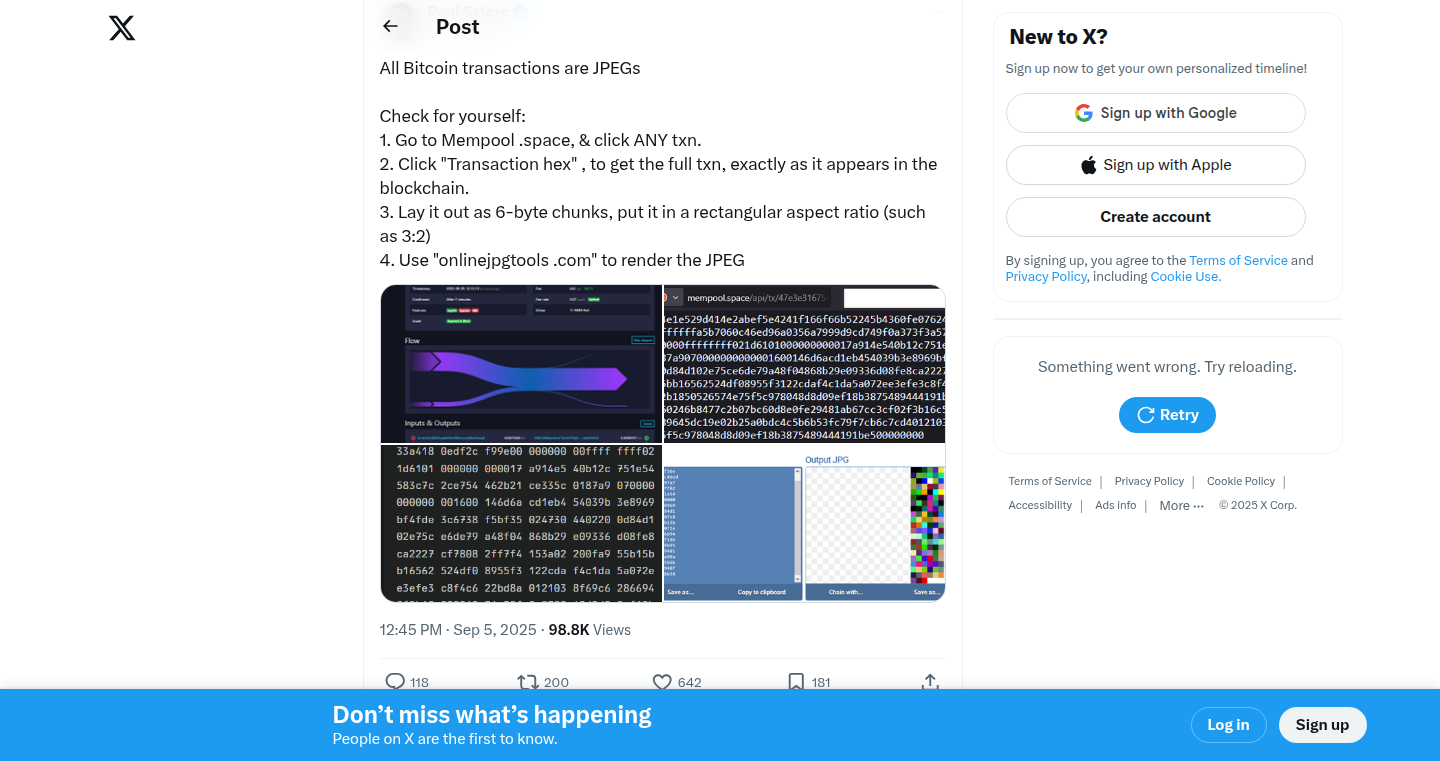

15

TxBitmap

Author

rektlessness

Description

TxBitmap visualizes Bitcoin transactions as unique JPEG images, turning raw blockchain data into observable art. It explores the idea that artistic patterns exist within the mathematical structures of financial transactions, offering a novel way to perceive blockchain data beyond its utilitarian function.

Popularity

Points 4

Comments 0

What is this product?

TxBitmap is a project that translates the data within each Bitcoin transaction into a unique visual representation, specifically a JPEG image. The core innovation lies in treating the inherent mathematical patterns and data fields of a transaction – like inputs, outputs, and scriptSig – as pixels and color values. This isn't just about making pretty pictures; it's about finding artistic expression and aesthetic value within the digital ledger of the world's largest cryptocurrency. Think of it like a digital artist who uses code to 'paint' with transaction data, revealing hidden visual characteristics that are otherwise invisible to the naked eye. So, what's in it for you? It offers a fresh, artistic perspective on blockchain technology, showing that even seemingly dry financial data can possess beauty and be a source of creative exploration.

How to use it?

Developers can interact with TxBitmap through its web interface at transactionbitmap.com. You can input a Bitcoin transaction ID (txid) and see the resulting JPEG generated from its data. For more advanced use cases, the underlying logic and code, likely available via its GitHub repository, can be integrated into custom dashboards, data analysis tools, or even art installations. Imagine building a tool that tracks unusual transaction patterns and visually represents them, or creating dynamic art that changes as new blocks are mined. The practical application is in enriching data visualization and exploring the intersection of art and finance.

Product Core Function

· Transaction to Image Conversion: The system takes a Bitcoin transaction ID, retrieves the transaction data, and through a deterministic algorithm, maps specific data fields and their values to image pixels and color properties. This means every transaction generates a one-of-a-kind visual. This is valuable because it transforms complex financial data into an easily digestible and aesthetically engaging format.

· Unique Visual Fingerprint: Each transaction's data results in a distinct JPEG. This visual uniqueness serves as a sort of digital signature or fingerprint for the transaction, which can be used for recognition or even as a unique identifier in artistic contexts. This is useful for developers looking for novel ways to represent or tag blockchain events.

· Data-Driven Art Generation: The project fundamentally treats blockchain data as raw material for artistic creation. This approach opens up possibilities for generative art projects, data-driven installations, and new forms of digital expression. For artists and developers interested in generative art, this provides a unique source of creative input.
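To show what a deterministic bytes-to-pixels mapping can look like, the sketch below turns a hex-encoded transaction into rows of RGB tuples, three bytes per pixel. This is one simple mapping chosen for illustration; the post does not spell out TxBitmap's actual algorithm, and the JPEG encoding step is omitted. The `tx_hex_to_pixels` name and the `width` parameter are assumptions.

```python
def tx_hex_to_pixels(tx_hex, width=8):
    """Map raw transaction bytes to rows of (r, g, b) pixels, 3 bytes per pixel."""
    data = bytes.fromhex(tx_hex)
    data += b"\x00" * (-len(data) % 3)  # zero-pad to a whole number of pixels
    pixels = [tuple(data[i:i + 3]) for i in range(0, len(data), 3)]
    return [pixels[i:i + width] for i in range(0, len(pixels), width)]


# Six illustrative bytes (not a real transaction): one red and one green pixel.
rows = tx_hex_to_pixels("ff000000ff00", width=2)
print(rows)
```

Because the mapping has no randomness, the same transaction bytes always yield the same grid, which is what lets an image act as a visual fingerprint.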

Product Usage Case

· Visualizing large volumes of transactions: A developer could build a service that continuously processes new Bitcoin transactions, generating and archiving these unique JPEGs. This could then be used to create a sprawling digital art gallery of the Bitcoin network's activity, allowing anyone to browse the 'art' of thousands of transactions. This solves the problem of making massive amounts of blockchain data more accessible and engaging.

· Artistic exploration of Bitcoin's history: Imagine creating a time-lapse of Bitcoin's transaction 'art' from its early days to the present. This could highlight visual trends or changes in transaction patterns over time, offering a new way to understand Bitcoin's evolution. This is valuable for historical analysis and creating compelling visual narratives.

· Developing unique digital collectibles: The generated JPEGs could be minted as non-fungible tokens (NFTs), with each transaction's 'art' becoming a unique digital collectible. This leverages the immutability of blockchain to create verifiable digital art tied directly to specific financial events. This opens up new avenues for digital ownership and creative monetization.

16

Nocodo: AI-Powered Dev Environment Orchestrator

Author

brainless

Description

Nocodo is an ambitious project that consolidates the entire application development lifecycle onto your own Linux machine or cloud server. It integrates code management with Git, issue tracking via GitHub, and CI/CD pipelines, offering a unified view of your project's progress. A key innovation is its ability to manage and leverage various AI coding assistants (like Claude, Gemini, Qwen) using your own API keys, facilitating a 'vibe coding' experience. Nocodo aims to simplify the process of building full-stack applications, even for teams without extensive engineering backgrounds, by automating complex tasks like deployment, DNS management, and CI pipeline generation through AI.

Popularity

Points 4

Comments 0

What is this product?

Nocodo is a self-hosted developer environment that acts as a central hub for managing your entire app development workflow. Think of it as an intelligent operating system for your code. It connects to your Git repositories, GitHub issues, and CI/CD pipelines, giving you a single pane of glass to see everything. Its core technical innovation lies in its ability to orchestrate multiple AI coding agents. This means you can use your preferred AI models, like Claude or Gemini, directly within Nocodo to help write code, manage tasks, and even generate deployment configurations. It also automates tricky deployment tasks, like setting up DNS for test environments, directly from your development machine or server. The system is built with a Rust backend and a SolidJS frontend, using SQLite for database management with Litestream for backups, ensuring flexibility and reducing vendor lock-in.

How to use it?

Developers can use Nocodo by installing it on their Linux development machine or a cloud server. Once set up, they connect to Nocodo via a web interface or a soon-to-be-released mobile app. You can then point Nocodo to your Git repositories. It will integrate with your GitHub account to pull in issues and track code changes. You can initiate AI-assisted coding sessions by sending prompts to integrated AI models through Nocodo's interface. Nocodo handles the execution of these AI agents and displays their output, which can include code suggestions, bug fixes, or even entire CI pipeline configurations. For deployments, Nocodo can manage DNS records and deploy your applications to cloud providers, streamlining the process from development to testing and live environments.

Product Core Function

· Unified Project View: Provides a consolidated dashboard for Git commits, GitHub issues, and CI/CD status, giving developers a clear overview of project health and progress. This saves time by eliminating the need to check multiple platforms independently.

· AI Coding Agent Orchestration: Integrates and manages various AI coding assistants (e.g., Claude, Gemini, Qwen) using the developer's own API keys. This allows developers to leverage powerful AI for code generation, refactoring, and debugging directly within their workflow, enhancing productivity and code quality.

· Automated CI Pipeline Generation: AI agents within Nocodo can generate CI/CD pipelines based on project needs, simplifying the setup and maintenance of continuous integration and deployment. This reduces the manual effort and expertise required for setting up robust build and deployment processes.

· Test Deployment Management: Handles the setup of test deployments, including DNS management and database configuration, directly from the development machine or server. This accelerates the testing cycle by making it easier and faster to deploy and test new features.

· Self-Hosted and Extensible Architecture: Built to be self-hosted with a focus on avoiding vendor lock-in, supporting multiple AI agents and cloud providers. This gives developers control over their data and infrastructure, offering greater flexibility and cost-efficiency in the long run.
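The "bring your own API keys" idea behind agent orchestration can be sketched roughly as follows. This is an illustration only, not Nocodo's code (which the post says is written in Rust): the `Agent` class, the `AGENTS` registry, and the environment variable names are all hypothetical, chosen to show how a tool might decide which configured agent should receive a prompt.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Agent:
    name: str
    api_key_env: str  # hypothetical environment variable holding the user's key


# Hypothetical registry; the variable names are illustrative assumptions.
AGENTS = [
    Agent("claude", "ANTHROPIC_API_KEY"),
    Agent("gemini", "GEMINI_API_KEY"),
    Agent("qwen", "QWEN_API_KEY"),
]


def available_agents(env=None):
    """Return the agents whose API key is present and non-empty."""
    env = os.environ if env is None else env
    return [agent for agent in AGENTS if env.get(agent.api_key_env)]


def pick_agent(preferred, env=None):
    """Pick the first agent in the user's preference order that has a key."""
    ready = {agent.name: agent for agent in available_agents(env)}
    for name in preferred:
        if name in ready:
            return ready[name]
    return None


if __name__ == "__main__":
    agent = pick_agent(["claude", "gemini", "qwen"])
    print(agent.name if agent else "no agent configured")
```

Keeping the keys in the user's own environment, rather than in a hosted service, is what lets a self-hosted tool switch between providers without routing prompts through a third party.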

Product Usage Case

· A solo developer working on a new web application can use Nocodo to manage their Rust backend and TypeScript frontend. They can instruct Nocodo to use Gemini to generate boilerplate code for a new API endpoint, and then use Claude to suggest improvements for their database schema. Nocodo then updates the Git branch, triggers a test deployment on a cloud server, and manages the DNS for the test URL, allowing for immediate review.

· A small startup team can use Nocodo to onboard a new junior developer. Instead of spending hours explaining complex build and deployment processes, the team can leverage Nocodo's AI capabilities to generate the necessary CI/CD pipelines and deployment scripts. The junior developer can then focus on writing code, with Nocodo providing a streamlined environment for collaboration and rapid iteration.

· A developer experimenting with different cloud providers can configure Nocodo to manage deployments across Scaleway and DigitalOcean using their respective API keys. Nocodo's abstraction layer allows them to switch providers or deploy to multiple targets without rewriting their deployment configurations, making infrastructure management more agile.

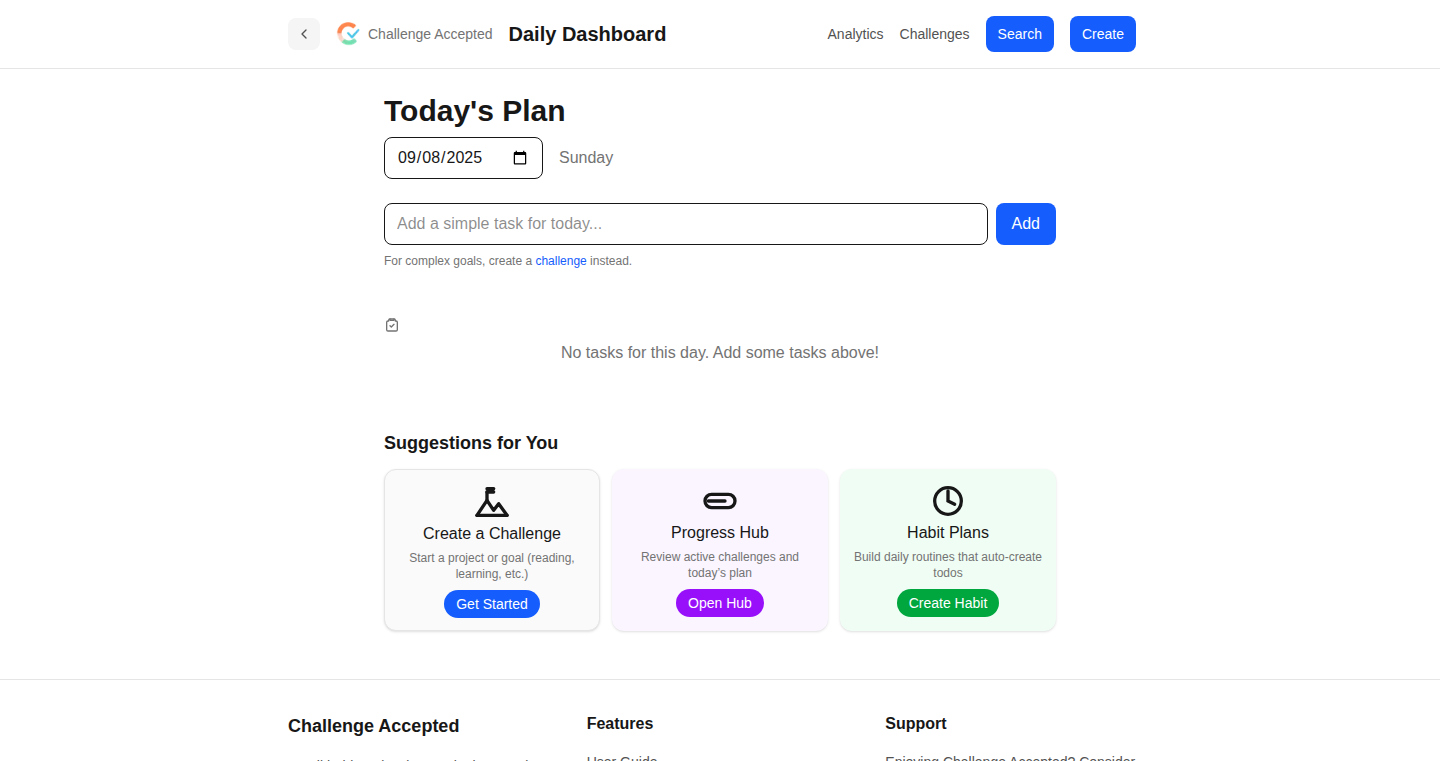

17

Caccepted - Browser-Native Habit Tracker

Author

yusufaytas

Description

Caccepted is a no-frills, browser-based application designed for private habit tracking, daily challenges, and simple to-dos. It eliminates the need for user accounts and saves all data locally using browser storage, offering a quick and privacy-focused solution for personal progress monitoring.

Popularity

Points 4

Comments 0

What is this product?

Caccepted is a web application that allows users to track their habits, daily challenges, and to-do lists directly within their web browser. Its core technical innovation lies in its reliance on local browser storage, meaning no user data is sent to a server and no account is required. This approach prioritizes user privacy and provides an instantly accessible tool. The 'hack' here is leveraging existing browser capabilities to create a fully functional, self-contained application without the overhead of traditional backend infrastructure or authentication systems.

How to use it?

Developers can use Caccepted by simply opening the provided URL in their web browser. It's designed for immediate use without any installation or setup, so bookmarking the page is all it takes to keep it within reach. If the project were open-sourced and exposed as a library or component, a developer could also embed its habit tracking into a larger web application or workflow.

Product Core Function

· Local Data Storage: Uses the browser's localStorage to save all user data directly on the user's device. This means your habit progress stays private and is immediately accessible without needing to log in, offering a convenient way to manage your personal goals.

· Habit Creation and Tracking: Allows users to define custom habits, daily challenges, and simple to-do items. The value is in providing a structured way to log daily activities and monitor completion, which is crucial for building consistency.

· Streak and Progress Visualization: Displays streaks for completed habits and basic progress statistics. This feature provides immediate visual feedback on consistency and progress, motivating users by showing their ongoing commitment and achievements.

· No Account Requirement: Designed to run entirely in the browser without any sign-up or login process. This significantly lowers the barrier to entry and ensures user privacy, making it an ideal tool for those who prefer to keep their personal data off external servers.
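The streak feature described above comes down to a small piece of date arithmetic. The sketch below shows one plausible version in Python; it is not Caccepted's code, which runs in the browser, and the storage format (a JSON array of ISO dates, the kind of string that could sit under a single localStorage key) and the function names are assumptions made for illustration.

```python
import json
from datetime import date, timedelta


def load_days(stored):
    """Parse a JSON array of ISO dates into a set of date objects.

    The format is an assumption: it mimics a string a browser app
    could keep under one localStorage key.
    """
    return {date.fromisoformat(item) for item in json.loads(stored)}


def current_streak(completed_days, today):
    """Count consecutive completed days ending today.

    If today has not been logged yet, the streak is counted up to
    yesterday so it does not reset before the day is over.
    """
    day = today if today in completed_days else today - timedelta(days=1)
    streak = 0
    while day in completed_days:
        streak += 1
        day -= timedelta(days=1)
    return streak


if __name__ == "__main__":
    stored = '["2025-09-03", "2025-09-05", "2025-09-06", "2025-09-07"]'
    print(current_streak(load_days(stored), date(2025, 9, 7)))
```

Storing only the completion dates keeps the saved data tiny and lets the streak be recomputed on every page load, so nothing needs to be kept in sync with a server.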

Product Usage Case

· A developer looking to kickstart a new daily coding challenge can use Caccepted to track their progress and build a consistent practice routine without needing to create yet another online account.

· An individual aiming to drink more water or exercise regularly can use Caccepted to easily log their daily intake or workout sessions, benefiting from the streak counter to stay motivated and visualize their commitment.

· A user who wants a lightweight, no-nonsense way to manage their daily tasks, like 'take out the trash' or 'read for 30 minutes,' can rely on Caccepted's simple to-do list functionality to stay organized without any complex setup.

18

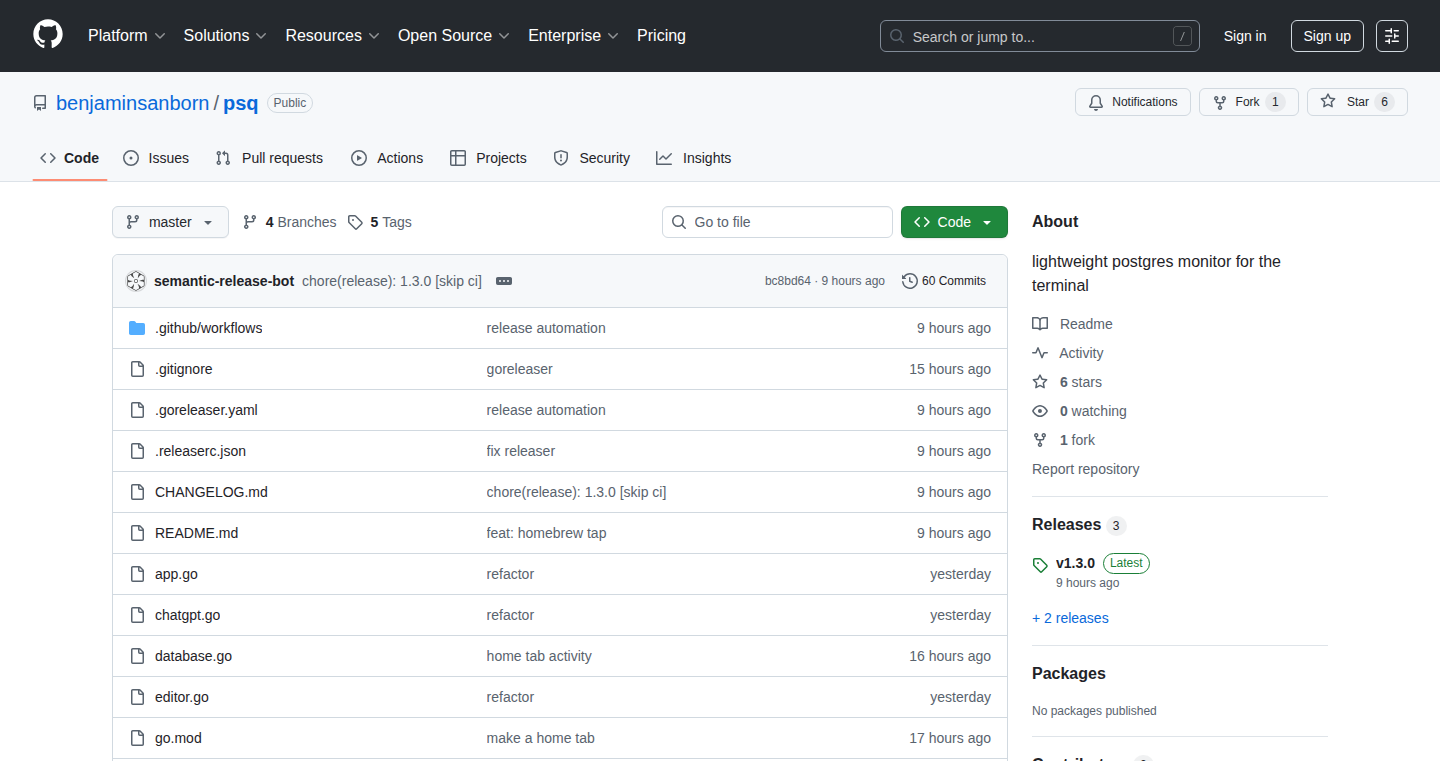

Psq: Postgres CLI Navigator

Author

benjaminsanborn

Description

Psq is a command-line interface (CLI) tool designed to simplify monitoring and interacting with PostgreSQL databases. It provides developers and database administrators with a streamlined way to inspect database performance, identify bottlenecks, and execute queries directly from their terminal, offering a more efficient alternative to traditional GUI tools for certain tasks.

Popularity

Points 4

Comments 0

What is this product?

Psq is a CLI tool that acts as a smart interface for PostgreSQL databases. Instead of needing a separate graphical application to see what's happening inside your database, Psq lets you do it all from your command line. Its innovation lies in its ability to present complex database metrics and query information in a digestible, actionable format. For example, it can show you which queries are taking the longest to run, or how much memory your database is using, in a clear, easy-to-read way without overwhelming you with data. This means you can quickly diagnose performance issues or understand your database's current state without leaving your terminal, which is particularly useful for developers who spend a lot of time in the command line.

How to use it?

Developers can use Psq by installing it on their system and then connecting it to their PostgreSQL database using connection strings or environment variables. Once connected, they can issue commands like 'psq status' to get an overview of the database's health, 'psq top-queries' to see the slowest queries, or 'psq query <SQL_STATEMENT>' to execute a specific SQL command and see its results. It's ideal for quick checks during development, troubleshooting on remote servers where GUI access might be limited, or for automating database monitoring tasks within scripts.

Product Core Function

· Real-time database status: Provides an immediate snapshot of key database metrics like connection count, active transactions, and query throughput, allowing developers to quickly understand the operational state of their database and spot potential issues early.

· Top query identification: Automatically surfaces the queries that are consuming the most resources or taking the longest to execute, enabling developers to pinpoint performance bottlenecks and optimize their application's database interactions.

· Query execution and results: Allows direct execution of SQL queries from the command line and presents the results in a clean, formatted output, making it efficient for testing, debugging, and data retrieval without switching contexts.

· Resource utilization insights: Displays information on CPU, memory, and disk I/O usage by the PostgreSQL server, helping developers understand the resource demands of their database and plan for scaling or optimization.

· Connection monitoring: Shows active client connections, their current states, and the queries they are running, which is invaluable for identifying rogue processes or understanding database load.
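For a sense of where this kind of information comes from, PostgreSQL exposes running sessions through its built-in `pg_stat_activity` view. The sketch below pairs a query against that view with a small ranking and formatting helper. Whether Psq issues this exact query is an assumption, and the helper names `top_queries` and `format_rows` are hypothetical; the helpers work on plain tuples so the example runs without a database connection.

```python
from datetime import timedelta

# pg_stat_activity is a standard PostgreSQL view; that Psq uses this
# exact query is an assumption made for illustration.
ACTIVE_QUERIES_SQL = """
SELECT pid, state, now() - query_start AS duration, query
FROM pg_stat_activity
WHERE state = 'active'
ORDER BY duration DESC
LIMIT 5;
"""


def top_queries(rows, limit=5):
    """Rank (pid, state, duration, query) rows, longest-running first."""
    active = [row for row in rows if row[1] == "active"]
    return sorted(active, key=lambda row: row[2], reverse=True)[:limit]


def format_rows(rows, width=40):
    """Render rows as a compact fixed-width table for a terminal."""
    lines = [f"{'PID':>6}  {'DURATION':>10}  QUERY"]
    for pid, _state, duration, query in rows:
        text = " ".join(query.split())  # collapse newlines and extra spaces
        if len(text) > width:
            text = text[: width - 3] + "..."
        lines.append(f"{pid:>6}  {duration.total_seconds():>9.1f}s  {text}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Sample rows shaped like the result of ACTIVE_QUERIES_SQL.
    sample = [
        (101, "active", timedelta(seconds=2), "SELECT 1"),
        (102, "idle", timedelta(seconds=99), "SELECT 2"),
        (103, "active", timedelta(seconds=30), "UPDATE t SET x = 1"),
    ]
    print(format_rows(top_queries(sample)))
```

In a real script, the sample rows would be replaced by the result of running `ACTIVE_QUERIES_SQL` through a PostgreSQL driver.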

Product Usage Case

· A developer is experiencing slow response times in their web application. They use Psq to run 'psq top-queries' and quickly identify a few inefficient SQL queries that are causing the slowdown, allowing them to optimize these queries and improve application performance.

· A system administrator needs to check the health of a PostgreSQL database on a remote server. Instead of setting up a GUI client, they SSH into the server and use 'psq status' to get an instant overview of the database's performance, enabling rapid troubleshooting.