Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-09-03

SagaSu777 2025-09-04

Explore the hottest developer projects on Show HN for 2025-09-03. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's projects show AI being applied to two goals: better reasoning and higher developer productivity. There is a clear trend toward making AI models more efficient and capable through clever architectural design rather than brute force, as with the Entropy-Guided Loop, which helps smaller models reason better. At the same time, there is a strong push for developer empowerment through tools that streamline workflows, manage secrets, and offer alternative deployment platforms, reflecting the 'hacker ethos' of building better, faster, and more accessible tools. For aspiring developers and entrepreneurs, this signals an opportunity to focus on niche problems within the AI ecosystem or to build foundational tools that make complex technologies accessible and manageable. The prevalence of Rust and CLI tools also indicates demand for performance, reliability, and fine-grained control, suggesting that skill in these areas is valuable.

Today's Hottest Product

Name

Entropy-Guided Loop

Highlight

This project introduces an approach to improving the reasoning of smaller language models by using their own internal signals. It captures token log probabilities and perplexity during generation and builds an inference loop that lets the model 're-think' uncertain outputs: an 'uncertainty report' is passed back to the model, guiding it to refine its response. Developers can learn how to introspect LLM confidence and implement self-correction mechanisms, potentially leading to more cost-effective and capable AI systems in which smaller models punch above their weight class.
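The loop described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the `generate` callable, the thresholds, and the report wording are all assumptions, and `generate` stands in for any model call that returns text together with per-token log probabilities.

```python
import math
from typing import Callable, List, Tuple

# (token, log-probability) pairs, as a model API might return them (assumed shape)
TokenLogprob = Tuple[str, float]
Generate = Callable[[str], Tuple[str, List[TokenLogprob]]]


def perplexity(logprobs: List[float]) -> float:
    """Perplexity is exp of the negative mean token log-probability."""
    if not logprobs:
        return 1.0
    return math.exp(-sum(logprobs) / len(logprobs))


def uncertainty_report(tokens: List[TokenLogprob], min_prob: float = 0.5) -> str:
    """List the tokens whose probability fell below min_prob (hypothetical format)."""
    lines = [
        f"- token {tok!r} had probability {math.exp(lp):.2f}"
        for tok, lp in tokens
        if math.exp(lp) < min_prob
    ]
    return "Low-confidence tokens:\n" + "\n".join(lines)


def entropy_guided_answer(
    generate: Generate,
    prompt: str,
    max_perplexity: float = 1.5,  # assumed threshold, tune per model
    max_rounds: int = 2,          # assumed cap on refinement passes
) -> str:
    """Regenerate with an uncertainty report while the draft looks uncertain."""
    text, tokens = generate(prompt)
    for _ in range(max_rounds):
        if perplexity([lp for _, lp in tokens]) <= max_perplexity:
            break  # the draft is confident enough, stop refining
        report = uncertainty_report(tokens)
        text, tokens = generate(
            f"{prompt}\n\nDraft answer: {text}\n{report}\n"
            "Please reconsider the uncertain parts and answer again."
        )
    return text
```

Capping the number of rounds keeps the extra inference cost bounded, which matters if the goal is to stay cheaper than calling a larger model.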

Popular Category

AI & Machine Learning

Developer Tools

Productivity

Data Visualization

Popular Keyword

LLM

AI Agent

Rust

CLI

Sandboxing

Code Generation

Developer Tools

Technology Trends

AI Reasoning Enhancement

Developer Productivity Tools

Efficient LLM Utilization

Rust Ecosystem Growth

Privacy-Focused Applications

Interactive Data Visualization

Cross-Platform Development

Project Category Distribution

AI/ML (25%)

Developer Tools (30%)

Productivity/Utilities (20%)

Data/Web Tech (15%)

Games/Experiments (10%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |

|---|---|---|---|

| 1 | VoiceGecko - Universal Dictation | 53 | 9 |

| 3 | Chibi - AI-Powered User Behavior Analyzer | 11 | 3 |

| 4 | Tradomate.one: Algorithmic Stock Analysis Engine | 6 | 7 |

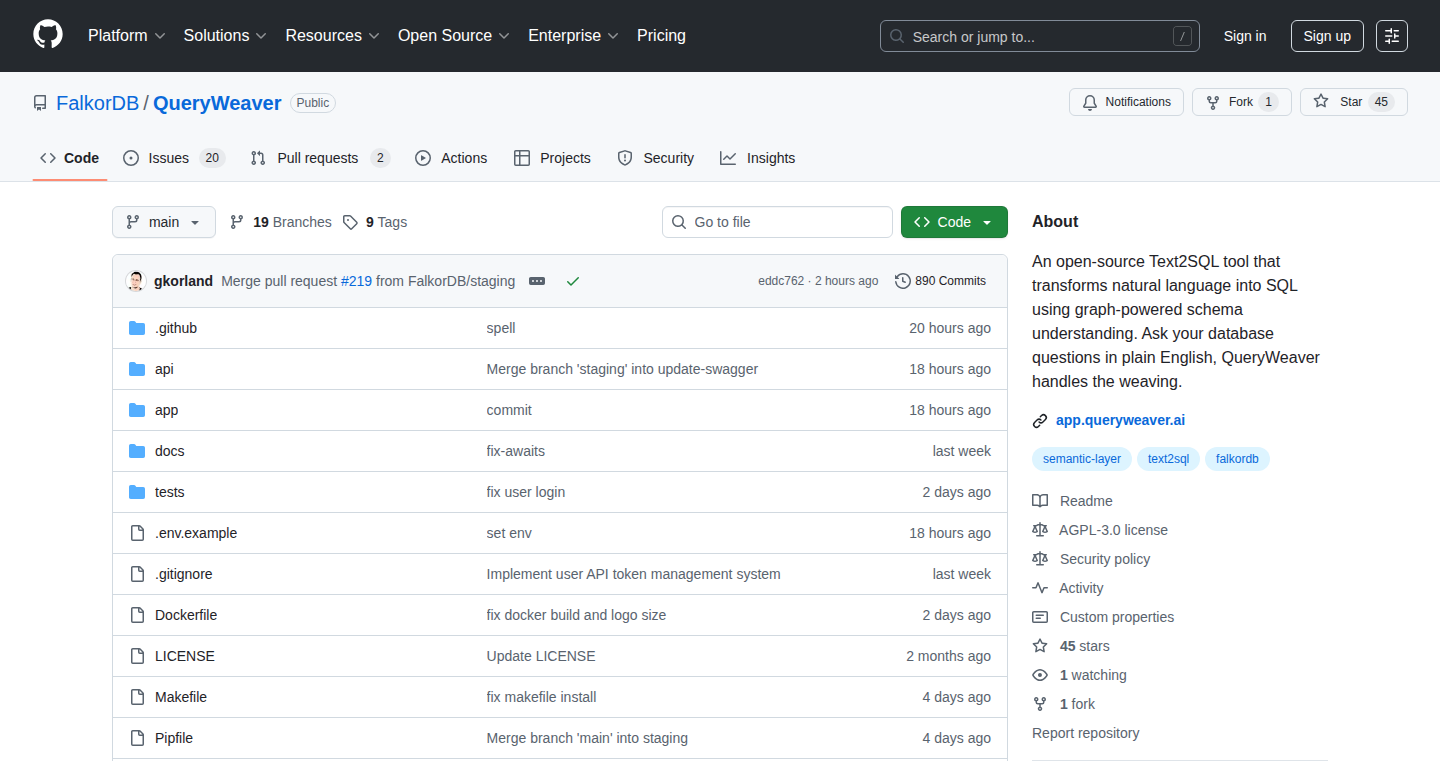

| 5 | GraphQL-SQL Weaver | 7 | 2 |

| 6 | TailLens: Live Tailwind CSS Visual Editor | 7 | 1 |

| 7 | TheAIMeters: Real-time AI Footprint Tracker | 2 | 6 |

| 8 | JSON DiffCraft | 3 | 4 |

| 9 | Metacognitive AI Invention Engine | 3 | 3 |

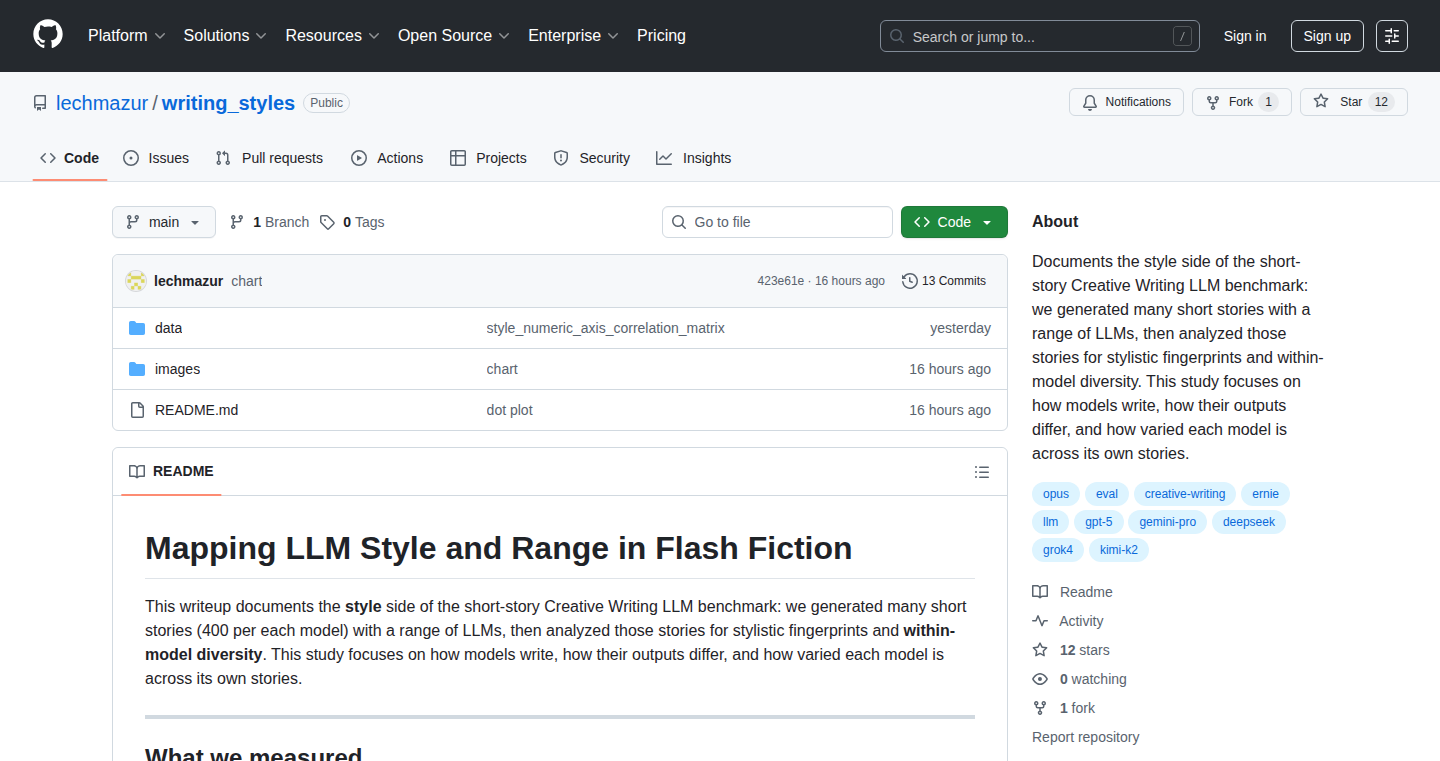

| 10 | LLM Style Mapper | 6 | 0 |

| 11 | Parallel Stream AI Transformer | 4 | 2 |

1

VoiceGecko - Universal Dictation

Author

Lukem121

Description

VoiceGecko is a system-wide voice-to-text application that allows users to dictate text into any application on their operating system. It tackles the challenge of integrating voice input seamlessly across diverse software environments, a common pain point for productivity enthusiasts and those seeking accessible input methods. The innovation lies in its ability to intercept system-level text input and replace it with transcribed speech, effectively making voice a universal input method.

Popularity

Points 53

Comments 9

What is this product?

VoiceGecko is a desktop application that turns your spoken words into text, allowing you to type in any program on your computer just by talking. Its core technical innovation is its system-wide integration. Unlike basic dictation tools that only work within specific apps (like a web browser or a word processor), VoiceGecko operates at a lower level of the operating system. It essentially acts as a virtual keyboard, capturing your voice, converting it to text using advanced speech recognition, and then injecting that text into whatever application is currently active and ready to receive input. This means you can dictate emails, write code, fill out forms, or even chat in messaging apps without ever touching your keyboard. The value here is enabling a hands-free, more natural way to interact with your computer, boosting productivity and accessibility for everyone.

How to use it?

Developers can use VoiceGecko by simply installing the application on their desktop (Windows, macOS, or Linux, depending on availability). Once installed, they can activate VoiceGecko and start speaking. The application typically runs in the background, listening for a wake word or a hotkey press. When activated, it transcribes their speech in real-time and sends it to the currently focused application. For integration, developers could theoretically build plugins or extensions for specific development tools that leverage VoiceGecko's input stream, though the primary use case is direct dictation into existing applications.

Product Core Function

· System-wide dictation: Enables voice input into any application on the operating system, providing a universal typing experience. This solves the problem of limited dictation support in many desktop applications.

· Real-time speech recognition: Utilizes advanced algorithms to convert spoken words into text accurately and quickly. This ensures that your thoughts are captured as efficiently as possible.

· Background operation: Runs silently in the background, ready to transcribe when needed without interrupting the user's workflow. This means you don't have to actively switch to a specific dictation window.

· Cross-application compatibility: Works seamlessly with a wide range of software, from simple text editors to complex IDEs and chat clients. This removes the frustration of dictation tools only working in select places.

· Customizable hotkeys/wake words: Allows users to trigger dictation through personalized shortcuts or voice commands, offering a convenient way to control the application. This provides personalized control and efficiency.

Product Usage Case

· Writing code: A developer can dictate code snippets, comments, and even commit messages directly into their IDE without breaking their flow. This speeds up the coding process by eliminating manual typing.

· Document creation: An author or student can dictate entire documents, reports, or essays into word processors or note-taking apps, significantly reducing writing time. This makes long-form writing more accessible and faster.

· Communication: Users can dictate messages in email clients, Slack, Discord, or other communication platforms, making it easier to respond quickly and hands-free. This improves communication efficiency.

· Form filling: Filling out online forms, registration pages, or application fields can be done entirely by voice, saving time and effort. This streamlines data entry tasks.

· Accessibility for users with disabilities: Individuals with mobility impairments or repetitive strain injuries can use VoiceGecko to interact with their computer without physical strain, providing a crucial accessibility tool. This empowers users who might otherwise struggle with traditional input methods.

3

Chibi - AI-Powered User Behavior Analyzer

Author

kiranjohns

Description

Chibi is an AI tool designed to analyze user session replays, automatically identifying points of friction, confusion, and churn. It leverages AI to condense hours of raw user behavior data into actionable insights, saving product managers and developers significant time and effort in understanding user experience issues. The core innovation lies in its ability to process complex user interaction data and surface critical issues without manual review.

Popularity

Points 11

Comments 3

What is this product?

Chibi is an intelligent assistant that acts like an AI product manager by analyzing user session recordings. Instead of manually watching endless videos of how users interact with a website or application, Chibi's AI watches them for you. It's built using Elixir and Phoenix for a robust backend, rrweb to capture detailed session replays, and Google's Gemini AI to process and interpret the behavioral data. The innovation is in its ability to automatically detect patterns that indicate user frustration, confusion, or reasons for leaving an application, thereby pinpointing usability problems that might otherwise be missed.

How to use it?

Developers and product managers can integrate Chibi by implementing session recording on their application using tools like rrweb. Once session data is captured, Chibi processes these recordings. The output is a summary of identified issues, such as where users frequently encountered errors, got stuck, or abandoned a process. This can be used to prioritize bug fixes, refine user interfaces, or even suggest new features based on observed user struggles. It integrates into the development workflow by providing direct feedback on user pain points.

Product Core Function

· Automated Session Replay Analysis: Chibi automatically processes user session recordings, eliminating the need for manual review. This saves significant time and effort in understanding user behavior.

· Friction and Confusion Detection: It identifies specific moments in a user's session where they experienced difficulties, got confused, or encountered errors. This directly highlights usability issues.

· Churn Point Identification: Chibi pinpoints the exact stages or interactions that lead to user drop-off or churn. This provides crucial data for improving retention strategies.

· Actionable Insights Generation: The AI summarizes its findings into clear, actionable recommendations. This helps teams understand what needs to be fixed or improved in the product.

· Scalable Data Processing: Built on Elixir and Phoenix, Chibi can handle large volumes of session replay data efficiently, making it suitable for growing applications.

Product Usage Case

· A product manager uses Chibi to understand why users are abandoning a complex signup form. Chibi analyzes the session replays and flags that users are repeatedly getting stuck on a specific validation error message that is not clear, allowing the PM to simplify the message and improve conversion rates.

· A development team notices a drop in engagement for a key feature. They feed Chibi the session recordings of users interacting with this feature. Chibi identifies that users are confused by the feature's navigation and often click on the wrong elements, indicating a need for UI redesign or clearer instructions.

· An e-commerce site experiences high cart abandonment. Chibi analyzes sessions of users who added items to their cart but didn't complete the purchase. It reveals that users are confused by the shipping cost calculation and opt out of the checkout process, prompting the team to make the shipping information more transparent earlier in the funnel.

4

Tradomate.one: Algorithmic Stock Analysis Engine

Author

askyashu

Description

Tradomate.one is an innovative stock screener that goes beyond simple filtering by integrating technical indicators, price action analysis, fundamental data, and news sentiment. Its core innovation lies in its ability to backtest custom screening strategies against historical data, providing developers with empirical insights into the effectiveness of their trading approaches. This solves the problem of subjective stock selection by offering a data-driven, quantitatively validated method for identifying potentially profitable investment opportunities.

Popularity

Points 6

Comments 7

What is this product?

Tradomate.one is an AI-powered stock analysis platform that allows developers to build and test complex trading strategies. It combines multiple data sources (technical indicators like moving averages and RSI, price patterns, company financials, and sentiment analysis from news) into a unified framework. The key technological novelty is its robust backtesting engine. Instead of just telling you which stocks match your criteria today, it allows you to simulate how your chosen criteria would have performed on past market data. This means you can scientifically validate if your stock picking logic actually would have made money in the past, helping you understand its potential future performance. Think of it as a sophisticated simulator for your investment ideas, powered by code and data.

How to use it?

Developers can use Tradomate.one to build custom stock screening models. For example, a developer could define a strategy that looks for stocks with an RSI below 30 (indicating oversold conditions), an earnings growth rate above 10%, and recent news sentiment classified as 'positive'. Once the screen is defined, the platform allows the developer to run a backtest on this screen over a specified historical period (e.g., the last 5 years). The output would be metrics like total return, win rate, and maximum drawdown, showing how effective that specific combination of filters would have been. This can be integrated into automated trading systems or used for research to refine investment hypotheses. It's a powerful tool for anyone looking to bring scientific rigor to their stock market analysis.
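To make the example screen and the backtest metrics concrete, here is a small sketch. It does not use Tradomate.one's API, which the post does not describe; the `Snapshot` type, the field names, and the way trades are summarized are all assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Snapshot:
    """One stock on one day (hypothetical record shape)."""
    ticker: str
    rsi: float              # relative strength index, 0 to 100
    earnings_growth: float  # fraction, so 0.10 means 10%
    sentiment: str          # e.g. "positive", "neutral", "negative"


def passes_screen(s: Snapshot) -> bool:
    """The example screen: oversold, growing earnings, positive news."""
    return s.rsi < 30 and s.earnings_growth > 0.10 and s.sentiment == "positive"


def summarize(trade_returns: List[float]) -> Dict[str, float]:
    """Compound per-trade returns into total return, win rate, and max drawdown."""
    equity, peak, max_drawdown = 1.0, 1.0, 0.0
    for r in trade_returns:
        equity *= 1.0 + r
        peak = max(peak, equity)
        # Drawdown is the fractional drop from the highest equity seen so far.
        max_drawdown = max(max_drawdown, (peak - equity) / peak)
    wins = sum(1 for r in trade_returns if r > 0)
    win_rate = wins / len(trade_returns) if trade_returns else 0.0
    return {
        "total_return": equity - 1.0,
        "win_rate": win_rate,
        "max_drawdown": max_drawdown,
    }
```

A real backtest would also need to account for transaction costs and avoid look-ahead bias; this sketch only shows how the three reported metrics relate to a list of trade outcomes.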

Product Core Function

· Technical Indicator Integration: Allows users to screen stocks based on standard technical indicators (e.g., Moving Averages, RSI, MACD). This provides value by enabling the identification of stocks exhibiting specific market behaviors that often precede price movements.

· Price Action Analysis: Enables screening based on observed price movements and patterns. This offers value by allowing developers to capture strategies based on established chart reading techniques.

· Fundamental Data Filtering: Provides the ability to filter stocks using core financial metrics like P/E ratio, revenue growth, and debt-to-equity. This adds value by identifying fundamentally sound companies that align with investment principles.

· News Sentiment Analysis: Incorporates sentiment analysis of financial news to gauge market mood towards specific stocks. This is valuable for capturing the impact of external information on stock performance.

· Backtesting Engine: The core innovative feature, allowing users to test their custom screening strategies on historical market data. This provides immense value by quantifying the potential effectiveness of a trading strategy before risking real capital, enabling data-driven decision-making.

Product Usage Case

· A quantitative trader wants to test a strategy that identifies tech stocks with high revenue growth and positive recent news sentiment. They can use Tradomate.one to set up this screen, backtest it over the past three years, and analyze the historical performance metrics to see if this strategy would have been profitable, thus validating their approach and avoiding costly mistakes.

· An investor looking for value stocks could use Tradomate.one to screen for companies with a low P/E ratio, a dividend yield above 3%, and a positive net profit margin. By backtesting this screen, they can understand how this specific value-oriented approach has performed historically, helping them refine their investment criteria.

· A developer building an algorithmic trading bot could use Tradomate.one to create and rigorously test various entry and exit conditions based on a combination of technical and fundamental factors. This allows them to iterate on their bot's logic, ensuring it's based on proven performance rather than intuition.

5

GraphQL-SQL Weaver

Author

danshalev7

Description

QueryWeaver is an open-source tool that translates natural language questions into SQL queries, utilizing a graph-based semantic layer. This approach allows it to understand complex relationships within your databases, enabling intuitive data querying. It excels at handling follow-up questions by maintaining conversational context, effectively acting like a smart assistant for your data. The innovation lies in using a graph to represent your business logic and data connections, rather than just raw database schemas, making it easier to ask nuanced questions about your data and get accurate results without needing to know the underlying SQL.

Popularity

Points 7

Comments 2

What is this product?

QueryWeaver is a text-to-SQL engine that uses a graph database to build a semantic understanding of your business data. Instead of just knowing about tables and columns, it understands concepts like 'customers,' 'orders,' and their relationships, such as which products are part of a campaign. It employs a conversational memory system, so an initial question like 'show me customers who bought product X in a given region over a given period' can be followed by 'just the ones from Europe,' and the earlier context is carried over. It leverages FalkorDB for efficient relationship mapping and Graphiti for conversation tracking, and your data stays within your existing databases. The output is standard SQL that can be executed anywhere.

How to use it?

Developers can integrate QueryWeaver into their applications or use it directly to query databases. You would typically connect it to your existing databases, providing it with your schema. The core idea is to define a graph that represents the business meaning and relationships within your data. For example, you'd map 'customer' to your customer table and define how it relates to 'orders' and 'products.' Once the semantic layer is established, you can query your data using natural language through an API. The tool generates executable SQL based on your natural language input and the established graph, simplifying data access for both technical and non-technical users.
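The idea of mapping business concepts to tables and relationships can be illustrated with a toy semantic layer. This is not QueryWeaver's implementation and does not use FalkorDB or Graphiti; the table names, join conditions, and function names below are invented for the sketch, and the natural-language step is left out entirely.

```python
from collections import deque
from typing import Dict, Iterator, List, Tuple

# Hypothetical semantic layer: business concept -> table name.
TABLES: Dict[str, str] = {
    "customer": "customers",
    "order": "orders",
    "product": "products",
}

# Hypothetical relationship edges: (concept, concept) -> SQL join condition.
JOINS: Dict[Tuple[str, str], str] = {
    ("customer", "order"): "customers.id = orders.customer_id",
    ("order", "product"): "orders.product_id = products.id",
}


def neighbors(concept: str) -> Iterator[Tuple[str, str]]:
    """Yield (related concept, join condition); edges are undirected."""
    for (a, b), condition in JOINS.items():
        if a == concept:
            yield b, condition
        elif b == concept:
            yield a, condition


def join_path(start: str, goal: str) -> List[Tuple[str, str]]:
    """Breadth-first search for the shortest chain of joins from start to goal."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for nxt, condition in neighbors(node):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, path + [(nxt, condition)]))
    raise ValueError(f"no relationship between {start!r} and {goal!r}")


def build_sql(select: str, start: str, goal: str, where: str) -> str:
    """Assemble a SELECT with the joins found in the graph.

    Plain string formatting is used for brevity; real code should bind
    user-supplied values as query parameters instead.
    """
    sql = f"SELECT {select} FROM {TABLES[start]}"
    for concept, condition in join_path(start, goal):
        sql += f" JOIN {TABLES[concept]} ON {condition}"
    return f"{sql} WHERE {where}"
```

For example, `build_sql("customers.name", "customer", "product", "products.name = 'X'")` walks customer → order → product and emits both joins, which is the part a schema-only approach would have to guess.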

Product Core Function

· Natural Language to SQL translation: Understands plain English questions and converts them into executable SQL queries, making data access easier for everyone.

· Graph-based Semantic Layer: Creates a conceptual model of your business data and relationships, allowing for more intelligent and context-aware queries than traditional schema-based approaches.

· Conversational Context Management: Remembers previous questions and answers, enabling users to ask follow-up questions naturally without restating all the context.

· Data Privacy and Security: Operates directly on your existing databases without migrating data, ensuring your sensitive information remains secure.

· Standard SQL Output: Generates standard SQL queries that can be run on any SQL-compliant database, providing broad compatibility.

Product Usage Case

· A business analyst wants to understand sales performance by region over the last quarter. They can ask: 'Show me total sales for each region in the last quarter.' QueryWeaver translates this into SQL, joining sales and region tables.

· After seeing the sales figures, the analyst wants to drill down into a specific region, say 'Europe.' They can ask: 'Just the ones from Europe.' QueryWeaver, remembering the previous query, filters the results to include only European sales.

· A marketing manager needs to identify customers who purchased a specific product during a promotional campaign. They can ask: 'Which customers bought product "Awesome Gadget" during the "Summer Sale" campaign?' QueryWeaver uses the graph to link products to campaigns and customers to orders.

· A data scientist wants to find users who have been active in the last 30 days and have made more than two purchases. They can ask: 'List active users who made more than 2 purchases in the last month.' QueryWeaver interprets 'active' based on the semantic layer and translates the request into SQL.

6

TailLens: Live Tailwind CSS Visual Editor

Author

jayasurya2006

Description

TailLens is a browser-based developer tool that allows developers to visually edit Tailwind CSS classes in real-time directly within the browser. It eliminates the need to constantly switch back to a code editor, offering an intuitive workflow for inspecting, modifying, and previewing CSS. This significantly speeds up the UI development process, especially for projects heavily relying on Tailwind CSS.

Popularity

Points 7

Comments 1

What is this product?

TailLens is a developer tool designed for web developers who use Tailwind CSS. Its core innovation lies in its ability to let you see and change Tailwind CSS classes applied to elements on a webpage, live, without needing to open your code editor. Imagine you're building a website and want to adjust the spacing or color of a button. Instead of finding the right line of code, changing it, saving, and then refreshing the page, TailLens lets you click on the button, see its current Tailwind classes, and then directly modify them in a user-friendly interface. The tool works by inspecting the Document Object Model (DOM) of the webpage and then communicating those changes back, allowing for instant visual feedback. It supports both current and upcoming versions of Tailwind CSS, making it a future-proof solution.

How to use it?

Developers can integrate TailLens into their workflow by installing it as a browser extension (typically for Chrome or Firefox). Once installed, they can navigate to their web application in the browser. When they activate the TailLens extension (usually by clicking an icon), it will overlay interactive controls on the webpage. Developers can then click on any element to inspect its Tailwind classes, see suggestions for available classes, and directly edit them. The changes are reflected instantly on the page. This allows for rapid prototyping and iteration of UI styles. For more advanced users, it might be possible to integrate its functionality into build processes or use its API for programmatic styling, though the primary use case is interactive browser editing.

Product Core Function

· Live Class Editing: Enables direct modification of Tailwind CSS classes on webpage elements without code editor context switching, accelerating UI adjustments and experiments.

· DOM Inspection and Navigation: Allows developers to easily select and understand the structure of their webpage, making it simple to target specific elements for styling.

· Real-time Class Suggestions: Provides intelligent autocompletion for Tailwind CSS classes, reducing the cognitive load of remembering class names and improving coding efficiency.

· Visual Preview: Offers immediate visual feedback on class changes, allowing developers to see the impact of their modifications instantly, thus enhancing the design iteration process.

· Copy Class Utility: Enables developers to copy the modified or suggested Tailwind CSS classes directly, facilitating easy integration back into their codebase.

Product Usage Case

· Rapid Prototyping of UI Components: A designer wants to quickly test different button styles on a marketing page. They can use TailLens to apply and modify Tailwind classes like 'bg-blue-500 hover:bg-blue-700 text-white font-bold py-2 px-4 rounded' to a button element directly in the browser, previewing variations instantly, and then copy the final class string to their code.

· Debugging Layout Issues: A developer is struggling to align elements correctly in a complex layout. By using TailLens, they can select the misaligned elements, inspect their current spacing and alignment classes (e.g., 'm-4', 'p-2', 'flex', 'justify-center'), and experiment with different Tailwind utility classes in real-time to find the correct combination that fixes the layout.

· Iterative Styling of Forms: A front-end developer is styling a form. They can use TailLens to select an input field, tweak its padding, border, and focus states using Tailwind classes, see the immediate visual result, and then easily copy the updated class attributes to paste into their HTML or JSX code.

7

TheAIMeters: Real-time AI Footprint Tracker

Author

rboug

Description

TheAIMeters provides a live, real-time visualization of the environmental impact of global AI activity. It quantifies critical resources like electricity, water, and CO2 emissions, along with GPU-hours used. The project tackles the growing concern of AI's hidden environmental cost by aggregating data from operator disclosures, research papers, and regional grid factors, presenting it in an accessible, dynamic format. This offers transparency and a critical understanding of AI's resource consumption.

Popularity

Points 2

Comments 6

What is this product?

TheAIMeters is a live dashboard that monitors and visualizes the environmental impact of artificial intelligence operations worldwide. It aggregates data from various sources, including direct reports from AI operators, scientific research, and information about local energy grids, to estimate the consumption of electricity, water, and the generation of CO2 equivalents. It also tracks the utilization of GPU-hours, a key metric for AI processing power. The innovation lies in its real-time aggregation and presentation of these often-opaque metrics, providing a unified view of AI's resource footprint. This helps us understand the tangible environmental consequences of the rapid growth of AI.

How to use it?

Developers can utilize TheAIMeters as a reference for understanding the environmental context of their AI projects or for making more informed decisions about resource allocation. For instance, a developer working on a large-scale AI model could consult TheAIMeters to gain insights into the typical resource intensity of similar operations and potentially identify more energy-efficient methods or deployment strategies. It can be integrated into project planning or impact assessment workflows to add a layer of environmental consideration, helping developers be more mindful of their AI's real-world footprint. While not a direct code integration tool, it serves as an invaluable data source for sustainability-focused AI development.

Product Core Function

· Real-time AI resource tracking: Monitors electricity, water, and CO2e consumption, as well as GPU-hours used by AI systems globally. This provides actionable insights into the direct environmental cost of AI, enabling better resource management and sustainability planning.

· Data aggregation and synthesis: Combines operator disclosures, academic research, and grid-specific factors to provide a comprehensive impact assessment. This allows for a more accurate and nuanced understanding of AI's environmental footprint, addressing data fragmentation.

· Live visualization dashboard: Presents complex environmental data in an easily digestible, dynamic format. This makes the impact of AI accessible to a wider audience, including non-technical stakeholders, fostering transparency and awareness.

· Cross-referencing AI operator data with grid factors: Analyzes energy consumption in relation to the carbon intensity of local power grids. This highlights the differential environmental impact of AI based on location, guiding decisions towards greener energy sources.
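The relationship between GPU-hours, electricity, and grid carbon intensity mentioned in the functions above can be written out as simple arithmetic. TheAIMeters' actual methodology is not given in this post, so the function names and parameters below are assumptions, and any numbers passed in are placeholders rather than measured values.

```python
def energy_kwh(gpu_hours: float, avg_gpu_watts: float, pue: float = 1.0) -> float:
    """Estimate facility energy from GPU-hours.

    avg_gpu_watts is the assumed average draw per GPU; pue is the data
    center's power usage effectiveness (total power / IT power).
    """
    return gpu_hours * avg_gpu_watts / 1000.0 * pue


def co2e_kg(energy_kwh_used: float, grid_g_co2e_per_kwh: float) -> float:
    """Emissions = energy x carbon intensity of the local grid, in kg CO2e."""
    return energy_kwh_used * grid_g_co2e_per_kwh / 1000.0


def footprint_kg(
    gpu_hours: float,
    avg_gpu_watts: float,
    pue: float,
    grid_g_co2e_per_kwh: float,
) -> float:
    """Chain both steps: GPU-hours -> kWh -> kg CO2e."""
    return co2e_kg(energy_kwh(gpu_hours, avg_gpu_watts, pue), grid_g_co2e_per_kwh)
```

Because the grid factor multiplies everything else, the same workload run on a grid with half the carbon intensity produces half the estimated emissions, which is why location appears as its own factor.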

Product Usage Case

· An AI researcher developing a new natural language processing model could use TheAIMeters to estimate the potential energy and water consumption of their training runs based on current global trends. This helps in setting realistic resource budgets and exploring optimization techniques to reduce environmental impact.

· A cloud infrastructure provider offering AI/ML services might monitor TheAIMeters to benchmark their own energy efficiency against the global average. This allows them to identify areas for improvement in their data center operations and communicate their sustainability efforts effectively to clients.

· An environmental policy advisor could leverage TheAIMeters data to advocate for regulations that promote energy-efficient AI development and the use of renewable energy sources for AI computing. This provides concrete data to support policy decisions aimed at mitigating the environmental effects of technology.

8

JSON DiffCraft

Author

sourabh86

Description

A client-side JSON comparison tool that offers real-time, dynamic JSON path highlighting and flexible comparison options. It addresses the common developer need for an accurate, feature-rich, and user-friendly way to visualize differences between JSON structures.

Popularity

Points 3

Comments 4

What is this product?

JSON DiffCraft is a web-based application designed for developers to compare two JSON documents side-by-side. Its innovation lies in its real-time comparison engine, which immediately highlights differences as you type or paste JSON. A key technical feature is its dynamic JSON path generation; as you navigate through the JSON data, it precisely shows the 'address' or path to that specific piece of data. This makes understanding complex JSON structures and pinpointing differences much more intuitive. It also offers advanced options like synchronized scrolling, sorting JSON for cleaner diffs, the ability to swap the documents being compared, and even client-side file export. Crucially, it operates entirely in the browser, meaning your data never leaves your machine, enhancing privacy and security. So, this helps you quickly and safely understand how two pieces of JSON data differ, which is vital for debugging, data migration, or understanding API changes.
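A path-aware JSON comparison of the kind described can be sketched in a few lines. This is an illustrative recursive diff, not JSON DiffCraft's code (which runs in the browser); the `$.key[index]` path notation and the `MISSING` sentinel are choices made for this example.

```python
import json
from typing import Any, Iterator, Tuple

MISSING = object()  # sentinel for "this path exists on one side only"


def diff(a: Any, b: Any, path: str = "$") -> Iterator[Tuple[str, Any, Any]]:
    """Yield (path, old, new) for every location where a and b differ."""
    if isinstance(a, dict) and isinstance(b, dict):
        # Visiting keys in sorted order ignores key-order differences.
        for key in sorted(a.keys() | b.keys()):
            child = f"{path}.{key}"
            if key not in a:
                yield child, MISSING, b[key]
            elif key not in b:
                yield child, a[key], MISSING
            else:
                yield from diff(a[key], b[key], child)
    elif isinstance(a, list) and isinstance(b, list):
        # Lists are compared position by position.
        for i in range(max(len(a), len(b))):
            child = f"{path}[{i}]"
            if i >= len(a):
                yield child, MISSING, b[i]
            elif i >= len(b):
                yield child, a[i], MISSING
            else:
                yield from diff(a[i], b[i], child)
    elif type(a) is not type(b) or a != b:
        # The type check keeps JSON true and 1 distinct; it also means
        # 1 and 1.0 are reported as different.
        yield path, a, b


old = json.loads('{"name": "a", "tags": ["x", "y"], "meta": {"v": 1}}')
new = json.loads('{"meta": {"v": 1, "w": true}, "name": "b", "tags": ["x"]}')
changes = list(diff(old, new))
```

Here `changes` holds three entries, at `$.meta.w`, `$.name`, and `$.tags[1]`. Keys containing dots or brackets would make these paths ambiguous; a fuller tool would escape them.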

How to use it?

Developers can use JSON DiffCraft by navigating to the web application. The interface provides two distinct editing panels. You can either paste your JSON content directly into these panels, drag and drop JSON files into the editors, or import them using a file selection dialog. Once both JSON documents are loaded, the comparison begins automatically. You can then enable or disable synchronized scrolling to move through both documents simultaneously, choose to sort the JSON content before comparison for a more organized diff, or swap the positions of the left and right JSON documents if needed. The tool also allows for downloading each compared JSON file separately. So, this allows you to easily load your JSON data, see the differences clearly highlighted with their exact locations, and manipulate the view to your preference, making the process of comparing JSON straightforward and efficient.

Product Core Function

· Real-time JSON comparison: Immediately visualizes differences as JSON is edited or loaded, reducing the need for manual checks and speeding up the debugging process.

· Dynamic JSON path highlighting: Shows the exact 'address' of data elements in the JSON structure as you navigate, making it easier to understand and reference specific parts of the JSON for debugging or data manipulation.

· Client-side operation: Processes all JSON data and comparisons within the user's browser, ensuring data privacy and security as no sensitive information is sent to a server.

· Multiple input methods (paste, drag-drop, file import): Provides flexibility in how users load their JSON data, catering to different workflow preferences and making it easy to get started.

· Optional synchronized scrolling: Allows users to scroll through both JSON documents in tandem, ensuring that the context of differences is maintained when examining large or complex JSON files.

· Optional JSON sorting for comparison: Enables sorting of JSON keys before comparison, which is useful for standardizing data and highlighting meaningful differences rather than just key order variations.

· JSON formatter and minifier: Offers utility features to format (pretty-print) or minify JSON data, which is helpful for making JSON more readable or reducing its size for transmission.

Product Usage Case

· Debugging API responses: A developer receives a new API response that isn't behaving as expected. They can paste the old and new responses into JSON DiffCraft to quickly see exactly what fields or values have changed, pinpointing the cause of the bug.

· Migrating data between systems: When moving data from one database or service to another, which uses slightly different JSON schemas, a developer can use JSON DiffCraft to compare the output of both systems to ensure data integrity and identify any mapping issues.

· Understanding configuration file changes: A team updates a complex configuration file that uses JSON. One developer can compare their modified version against the original using JSON DiffCraft to ensure no unintended changes were introduced.

· Analyzing data transformations: After applying a series of data transformations to a JSON dataset, a developer can use JSON DiffCraft to compare the transformed data against the original to verify that the transformations worked correctly and produced the expected results.

· Reviewing JSON-based code settings: When working with projects that embed configuration in JSON files, developers can use JSON DiffCraft to easily review and understand the impact of changes to these settings on the application's behavior.

9

Metacognitive AI Invention Engine

Author

WiseRob

Description

This project showcases an AI agent designed for inventive problem-solving. Unlike typical AI that might get stuck on a core issue, this system incorporates a 'metacognitive loop'. This means it can recognize when it's facing a fundamental roadblock in its invention process, then autonomously initiate a sub-task to resolve that specific bottleneck before proceeding. The goal is to create an AI that is more robust, self-aware of its limitations, and capable of overcoming complex inventive challenges by learning and adapting its own problem-solving strategy. So, what's in it for you? This AI offers a path to more sophisticated AI-driven innovation, potentially accelerating creative processes and discovering novel solutions that might be out of reach for current AI systems.

Popularity

Points 3

Comments 3

What is this product?

This project is an AI system built to tackle inventive challenges by employing a 'metacognitive loop'. Think of it like an AI that can think about its own thinking. When it encounters a problem it can't solve immediately, it doesn't just give up or guess. Instead, it pauses, identifies the specific fundamental issue it's stuck on (the bottleneck), and then spins up a separate, focused AI task to figure out how to overcome that particular hurdle. Once that sub-problem is solved, the AI integrates the solution and continues with its original inventive task. This 'self-reflection' and targeted problem-solving approach aims to make the AI more effective and less prone to generating incorrect or nonsensical outputs (hallucinations). So, how does this help you? It means AI can be used for more complex, nuanced creative and scientific discovery, potentially automating R&D or generating entirely new product ideas.
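The control flow described above (attempt, detect a bottleneck, solve it as a sub-task, integrate, retry) can be sketched in a few lines of Python. This is only an illustration of the loop, not the project's implementation: `Attempt`, `MetacognitiveSolver`, and the `attempt`/`resolve` callables are hypothetical names, and in a real system both callables would presumably wrap model calls.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Attempt:
    result: Optional[str]       # the answer, or None if the attempt was blocked
    bottleneck: Optional[str]   # what blocked progress, if anything


@dataclass
class MetacognitiveSolver:
    # Hypothetical main-task step: receives the task and everything learned so far.
    attempt: Callable[[str, dict[str, str]], Attempt]
    # Hypothetical sub-task solver: receives a bottleneck, returns a resolution.
    resolve: Callable[[str], str]
    max_rounds: int = 5
    knowledge: dict[str, str] = field(default_factory=dict)

    def solve(self, task: str) -> Optional[str]:
        for _ in range(self.max_rounds):
            outcome = self.attempt(task, self.knowledge)
            if outcome.result is not None:
                return outcome.result
            if outcome.bottleneck is None or outcome.bottleneck in self.knowledge:
                return None  # blocked, and there is nothing new to investigate
            # Spin up a focused sub-task and fold its answer back into the main task.
            self.knowledge[outcome.bottleneck] = self.resolve(outcome.bottleneck)
        return None


def toy_attempt(task: str, knowledge: dict[str, str]) -> Attempt:
    """Toy stand-in: the task is blocked until a 'binder' has been researched."""
    if "binder" not in knowledge:
        return Attempt(None, "binder")
    return Attempt(f"{task} using {knowledge['binder']}", None)


if __name__ == "__main__":
    solver = MetacognitiveSolver(attempt=toy_attempt, resolve=lambda bottleneck: "epoxy")
    print(solver.solve("composite design"))
```

The round limit and the check for an already-investigated bottleneck are design choices in this sketch that stop the loop from chasing the same roadblock forever.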

How to use it?

As a developer, you can leverage this AI's architecture and principles in your own projects. The core concept of a metacognitive loop can be integrated into any AI system that requires self-assessment and adaptive problem-solving. For instance, you could implement similar logic in AI for scientific research where hypotheses need refinement, in creative coding for generating evolving art forms, or in complex software debugging where the AI needs to identify and fix its own logic errors. The project provides insights into designing AI that can independently strategize and learn from its own failures. So, what's the practical application for you? You can build more resilient and intelligent AI agents that can autonomously overcome challenges, reducing the need for constant human intervention in complex AI workflows.

Product Core Function

· Metacognitive Loop: Allows the AI to monitor its progress and identify critical roadblocks in problem-solving, providing a mechanism for self-correction and improved efficiency. This means the AI can get unstuck on its own, leading to more consistent and reliable results.

· Autonomous Sub-Mission Generation: When a bottleneck is detected, the AI can independently create and execute targeted sub-tasks to resolve the specific issue. This allows for a more focused and effective approach to overcoming complex challenges, similar to how a human expert might break down a large problem.

· Evidence-Grounded Reasoning: The AI is designed to be self-critical and rely on verifiable information to minimize speculative or incorrect outputs. This builds trust in the AI's conclusions and makes its inventive contributions more reliable.

· Iterative Refinement: The system supports an iterative process where the AI continuously learns from its problem-solving attempts, refining its strategies for future tasks. This ensures the AI becomes progressively better at inventing and problem-solving over time.

Product Usage Case

· Imagine an AI tasked with designing a novel material. If it hits a fundamental chemical incompatibility, the metacognitive loop could trigger a sub-task to research and propose alternative atomic structures that overcome this issue, rather than simply failing. This is useful for speeding up materials science research.

· In software development, an AI could use this system to debug its own code. If it identifies a logical flaw that's causing errors, it can launch a sub-task to analyze the faulty logic, propose a fix, and test it, leading to more automated and efficient software maintenance.

· For scientific discovery, an AI could be tasked with generating hypotheses for a complex biological process. If it encounters a lack of supporting evidence for a particular pathway, it can initiate a sub-mission to search for more relevant research or suggest experiments to validate its initial idea, accelerating the scientific discovery pipeline.

· A creative AI could be tasked with writing a story. If it gets stuck on a plot point, the metacognitive loop could prompt it to generate character motivations or explore alternative narrative branches to resolve the narrative conflict, enhancing the AI's creative output and storytelling capabilities.

10

LLM Style Mapper

Author

zone411

Description

This project is a novel visualization tool that maps the stylistic range and diversity of Large Language Models (LLMs) when generating flash fiction. It addresses the challenge of quantitatively understanding and comparing the creative output of different LLMs, offering insights into their unique writing 'personalities'. The core innovation lies in its approach to analyzing and visualizing textual features to represent abstract concepts like 'style' and 'range'.

Popularity

Points 6

Comments 0

What is this product?

LLM Style Mapper is a tool designed to visually represent the stylistic variations and creative spectrum of Large Language Models (LLMs) when they produce short stories (flash fiction). Think of it like a heat map for writing styles. Instead of just saying one LLM writes 'formal' and another writes 'creative', this tool analyzes various linguistic elements – such as sentence complexity, word choice, and narrative structure – to create a multidimensional map. This map helps users see not only the typical style of an LLM but also how much its style can stretch or change. The innovation is in transforming complex, qualitative text analysis into an easily interpretable visual format, allowing for a deeper, data-driven understanding of LLM creative capabilities.

How to use it?

Developers can use LLM Style Mapper to evaluate and compare different LLMs for their creative writing applications. You can feed various prompts to different LLMs, collect the generated flash fiction, and then run it through the mapper. The output is a visual representation that highlights the stylistic similarities and differences between the LLMs. This can be integrated into prompt engineering workflows, where developers can use the insights to select the LLM best suited for a specific writing task or to fine-tune prompts to elicit desired stylistic outcomes. It's a powerful tool for anyone working with LLMs for content generation, creative writing assistance, or even for academic research into AI creativity.

Product Core Function

· LLM Output Analysis: The system processes text generated by LLMs, breaking it down into quantifiable linguistic features that contribute to style, such as vocabulary richness, sentence length variation, and use of figurative language. This provides a data-driven foundation for understanding style.

· Dimensionality Reduction and Visualization: It employs techniques to reduce the complexity of stylistic features into a lower-dimensional space, allowing for intuitive graphical representation. This means taking many text characteristics and boiling them down to a few key dimensions that are easy to plot and understand, showcasing the 'shape' of an LLM's style.

· Style Range Mapping: The project generates visual maps that illustrate the breadth of an LLM's stylistic capabilities. This helps users see if an LLM is a 'one-trick pony' stylistically or if it can adapt to a wide range of writing approaches.

· Comparative Analysis: It facilitates direct comparison between different LLMs' stylistic profiles, enabling users to identify the strengths and weaknesses of each model for specific creative writing tasks. This answers the question: 'Which LLM is best for the kind of writing I need?'
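As a rough illustration of the analysis steps listed above, the sketch below turns each story into a small feature vector and measures how far a model's stories spread around their average. The three features and the `style_range` measure are assumptions chosen for brevity, not the tool's actual feature set, and the projection to a 2-D map (for example with PCA) is left out to keep the example dependency-free.

```python
import math
import re
import statistics


def style_features(text: str) -> tuple[float, float, float]:
    """Return (mean sentence length, sentence-length spread, type-token ratio)."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(s.split()) for s in sentences]
    words = re.findall(r"[a-z']+", text.lower())
    if not lengths or not words:
        return (0.0, 0.0, 0.0)
    return (
        statistics.mean(lengths),        # how long sentences are on average
        statistics.pstdev(lengths),      # how much sentence length varies
        len(set(words)) / len(words),    # vocabulary richness
    )


def style_range(stories: list[str]) -> float:
    """Mean Euclidean distance of each story's features from the centroid.

    Expects at least one story. Features are left unscaled here; a real tool
    would likely normalise them so no single feature dominates the distance.
    """
    vectors = [style_features(s) for s in stories]
    centroid = [statistics.mean(dim) for dim in zip(*vectors)]
    return statistics.mean(math.dist(v, centroid) for v in vectors)


if __name__ == "__main__":
    uniform = ["The cat sat. The dog ran.", "The bird flew. The fish swam."]
    varied = ["Go.", "The quick brown fox jumps over the lazy dog near the river."]
    print(style_range(uniform), style_range(varied))
```

Under this measure, a model whose stories all share the same sentence rhythm and vocabulary density scores near zero, while a model that can shift style between prompts scores higher.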

Product Usage Case

· Prompt Engineering for a Novel: A writer wants to use an LLM to help draft a novel in a specific historical period. They can use LLM Style Mapper to test several LLMs, feeding them prompts related to the novel's setting and characters. The mapper helps them visualize which LLM consistently produces text with the appropriate stylistic nuances and which has the most flexibility to adapt to different character voices, saving them significant time in model selection and prompt refinement.

· AI Content Generation Platform Evaluation: A company building an AI-powered content generation platform needs to choose the best underlying LLM. They can use the mapper to compare the stylistic output of various LLMs on a diverse set of flash fiction prompts. This helps them ensure their platform can generate content with the desired variety and quality, directly impacting user satisfaction and the platform's perceived intelligence.

· Academic Research on AI Creativity: Researchers studying the evolution of AI's creative writing abilities can use this tool to track how stylistic range and characteristics of LLMs change over time or with different training methodologies. This provides quantifiable data to support their hypotheses about AI's artistic development.

11

Parallel Stream AI Transformer

Author

etler

Description

This project introduces a novel approach to AI agent interaction by leveraging stream delegation, enabling parallel token generation with sequential consumption. This bypasses traditional blocking outputs, facilitating the breakdown of complex prompts into recursive, manageable units and enhancing agent meta-prompting capabilities. It aims to achieve up to a 10x performance improvement for AI tasks involving recursive prompts.

Popularity

Points 4

Comments 2

What is this product?

This project is an advanced stream processing system designed for AI agents. Instead of waiting for one AI process to finish generating all its output tokens before the next step can begin (which is like waiting in a single-file line), it allows multiple AI processes to generate their output tokens simultaneously. These tokens are then fed into a sequential consumption pipeline, meaning the next part of your AI task can start processing as soon as the first tokens are ready, without waiting for the entire generation to complete. This is achieved through a concept called 'stream delegation,' which is a fancy way of saying it intelligently manages the flow of information between different AI processes. The core innovation lies in breaking down large, monolithic AI prompts into smaller, recursive prompts that can be processed in parallel, significantly speeding up complex AI workflows and enabling more sophisticated AI agent interactions, like an AI teaching another AI or planning its own tasks. So, how does this help you? It means your AI applications can be much faster, especially for tasks that require intricate reasoning or step-by-step processing, making your AI feel more responsive and powerful.

How to use it?

Developers can integrate this system into their existing AI workflows or build new applications using its stream delegation capabilities. For example, if you're building a chatbot that needs to research information, summarize it, and then formulate a response, you can use this system to parallelize the research and summarization steps. The system allows for asynchronous processing, meaning you don't have to wait for one stage to finish entirely before the next begins. This can be implemented by defining AI agents as distinct transform streams. A 'higher-order transform stream' orchestrates these agents, feeding the output of one agent as the input to another, but in a non-blocking, parallel fashion. This allows for recursive prompting, where an AI agent can, for instance, generate a plan, then execute the first step of the plan, and then recursively call itself to process the next step of the plan based on the outcome of the first. So, how does this help you? It means you can build more complex and efficient AI systems that are not bottlenecked by sequential processing, leading to faster development cycles and more dynamic AI behaviors.
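The idea of generating in parallel while consuming in order can be sketched with Python's `asyncio`. This is an assumption-laden illustration rather than the project's API: `fake_agent` stands in for a streaming model call, and `delegate` is a hypothetical name for the orchestrating stream.

```python
import asyncio
from typing import AsyncIterator

_DONE = object()  # sentinel marking the end of one agent's stream


async def fake_agent(name: str, n_tokens: int, delay: float) -> AsyncIterator[str]:
    """Hypothetical stand-in for a streaming model call."""
    for i in range(n_tokens):
        await asyncio.sleep(delay)
        yield f"{name}{i}"


async def _pump(stream: AsyncIterator[str], queue: asyncio.Queue) -> None:
    """Copy one stream into its own queue, always ending with the sentinel."""
    try:
        async for token in stream:
            queue.put_nowait(token)
    finally:
        queue.put_nowait(_DONE)


async def delegate(streams: list[AsyncIterator[str]]) -> AsyncIterator[str]:
    """Run every stream concurrently, but yield tokens in stream order."""
    queues = [asyncio.Queue() for _ in streams]
    # All producers start immediately, so later streams buffer tokens
    # while the consumer is still reading an earlier one.
    tasks = [asyncio.create_task(_pump(s, q)) for s, q in zip(streams, queues)]
    try:
        for queue in queues:
            while (token := await queue.get()) is not _DONE:
                yield token
    finally:
        for task in tasks:
            task.cancel()


async def collect() -> list[str]:
    streams = [fake_agent("a", 3, 0.02), fake_agent("b", 3, 0.01)]
    return [token async for token in delegate(streams)]


if __name__ == "__main__":
    print(asyncio.run(collect()))
```

In this sketch the second agent finishes before the first, yet its tokens are only emitted after the first stream is exhausted, so the output order stays deterministic while the waiting time overlaps. Error propagation from the producer tasks is deliberately left out.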

Product Core Function

· Parallel Token Generation: Enables multiple AI agents to produce output tokens concurrently, avoiding delays and speeding up overall processing. This is valuable for tasks where different aspects can be worked on simultaneously, such as generating different parts of a creative text or analyzing different data sources at once.

· Sequential Consumption Pipeline: Allows downstream processes to consume generated tokens as they become available, rather than waiting for the entire generation to complete. This ensures that your AI workflow remains fluid and responsive, minimizing idle time.

· Stream Delegation: Manages the efficient flow of data between AI agents, acting as an intelligent intermediary. This is crucial for orchestrating complex AI interactions and ensuring smooth communication between different AI components.

· Recursive Prompting Support: Facilitates breaking down complex problems into smaller, self-similar sub-problems that can be processed efficiently. This is incredibly useful for tasks requiring deep reasoning, planning, or iterative refinement, leading to more robust and intelligent AI solutions.

· Agent Meta-Prompting: Enhances the ability of AI agents to manage and direct other AI agents or their own processes. This allows for more sophisticated AI architectures where agents can learn, adapt, and optimize their behavior, leading to more autonomous and capable AI systems.

Product Usage Case

· Accelerating AI-powered content generation pipelines: For instance, an AI could be tasked with writing a detailed report. Instead of generating the entire report sequentially, one AI agent could research facts, another could summarize findings, and a third could draft paragraphs, all running in parallel and feeding into a final assembly process. This significantly reduces the time to produce high-quality content.

· Building more responsive AI assistants for complex tasks: Imagine an AI assistant helping a programmer debug code. One agent could analyze the error message, another could search for relevant documentation, and a third could suggest potential fixes. By running these in parallel and feeding results back quickly, the assistant provides much faster and more actionable debugging support.

· Enabling AI agents to perform complex planning and execution: A robotic AI could use recursive prompting to plan a multi-step operation. One agent generates the overall plan, another executes the first step, and then recursively calls itself to plan and execute the subsequent steps based on the outcome of the previous one. This allows for more adaptable and intelligent robotic behavior in dynamic environments.

· Developing more sophisticated AI-driven game or simulation environments: An AI controlling non-player characters (NPCs) in a game could use this system to manage multiple behaviors simultaneously, like pathfinding, decision-making, and reacting to player actions, all in parallel. This leads to more dynamic and believable AI in simulations.

12

EventMatchr

Author

aammundi

Description

EventMatchr is a platform that connects people based on shared event interests, moving away from profile-centric dating apps. It uses an 'event-first' approach, allowing users to 'Twoot' (post) about an event they want to attend. The core innovation lies in prioritizing shared plans over individual profiles, using the event itself as the primary icebreaker for genuine connection. This solves the problem of finding companions for events or meeting new people organically around common interests, making social planning more natural and less about curated online personas.

Popularity

Points 3

Comments 3

What is this product?

EventMatchr is a social connection app where the primary mechanism for meeting people is by sharing events you're interested in attending. Instead of endless swiping through profiles, users 'Twoot' an event, essentially broadcasting their desire to go and find a companion. The platform then facilitates matches based on these shared event plans, allowing users to chat and decide if they want to attend together. The innovation here is the shift from a profile-first, algorithm-driven matching system to an event-first, interest-driven connection model. This makes meeting new people feel more organic, as the event serves as an immediate common ground and conversation starter, rather than relying on potentially superficial profile information. It's designed to be broader than just dating, enabling people to find partners for concerts, games, shows, or any shared activity.

How to use it?

Developers can use EventMatchr by downloading the iOS app or visiting the website. The typical user flow is:

1. Sign up and browse or search for events.

2. 'Twoot' an event you're interested in attending, indicating your availability and perhaps a brief note about what you're looking for in a companion.

3. Browse 'Twoots' from other users for events you're also interested in.

4. If you find a potential match, initiate a chat to discuss the event and compatibility.

5. Decide with your match whether to attend the event together.

No matching API is described as available today; if one is offered in the future, developers could integrate with it directly. In the meantime, the event-first concept can be borrowed to build similar event-centric social features within their own applications.

Product Core Function

· Event Posting (Twooting): Users can announce events they plan to attend, making their intentions public to the community. This allows others with similar interests to discover them, providing a direct way to signal availability and desire for companionship around specific activities.

· Event-Based Matching: The system automatically suggests potential connections based on users 'Twooting' the same or similar events. This leverages shared real-world plans as the primary matching criterion, leading to more relevant and organic connections.

· In-App Chat: Once a potential connection is made, users can communicate directly within the app to coordinate and decide if they want to attend the event together. This provides a secure and convenient way to build rapport before meeting.

· Profile Second, Plans First: User profiles are de-emphasized until an event-based connection is initiated. This design choice aims to reduce superficial judgment and focus on shared interests and activities, making the initial interaction more genuine.
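For developers who want to borrow the event-first idea, the matching step can be as simple as grouping posts by event. The sketch below is a guess at the general shape, not EventMatchr's actual logic; `match_by_event` and the `(user, event)` tuple format are hypothetical, and it only handles identical events, not "similar" ones.

```python
from collections import defaultdict
from itertools import combinations


def match_by_event(twoots: list[tuple[str, str]]) -> dict[str, list[tuple[str, str]]]:
    """Map each event to the candidate pairs of users who posted about it."""
    attendees: dict[str, set[str]] = defaultdict(set)
    for user, event in twoots:
        attendees[event].add(user)
    # Only events with at least two interested users can produce a match.
    return {
        event: list(combinations(sorted(users), 2))
        for event, users in attendees.items()
        if len(users) > 1
    }


if __name__ == "__main__":
    posts = [("ana", "concert"), ("bo", "concert"), ("cy", "game"), ("dee", "concert")]
    print(match_by_event(posts))
```

Note that nothing about a user's profile enters the function: the shared plan is the only matching criterion, which is the point of the design.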

Product Usage Case

· A user has two tickets to a sold-out concert but their friend cancelled last minute. They 'Twoot' the concert on EventMatchr, specifying they are looking for someone to go with. Another user who also wants to see the concert but couldn't find anyone to accompany them sees the 'Twoot' and initiates a chat, leading to a new friendship and a great concert experience for both.

· A new person moves to a city and wants to explore local baseball games. They 'Twoot' a specific upcoming game. Another user, a local baseball enthusiast, sees this and also wants to attend. They connect via chat, discussing team history and game strategies, and decide to attend the game together, helping the newcomer feel more integrated into the city.

· Someone is interested in attending a particular art exhibition but prefers to discuss the pieces with someone else. They 'Twoot' the exhibition. Another art lover who appreciates the same artist discovers the 'Twoot' and reaches out. They discuss their favorite works via the app and arrange to visit the exhibition together, sharing insights and enhancing their cultural experience.

13

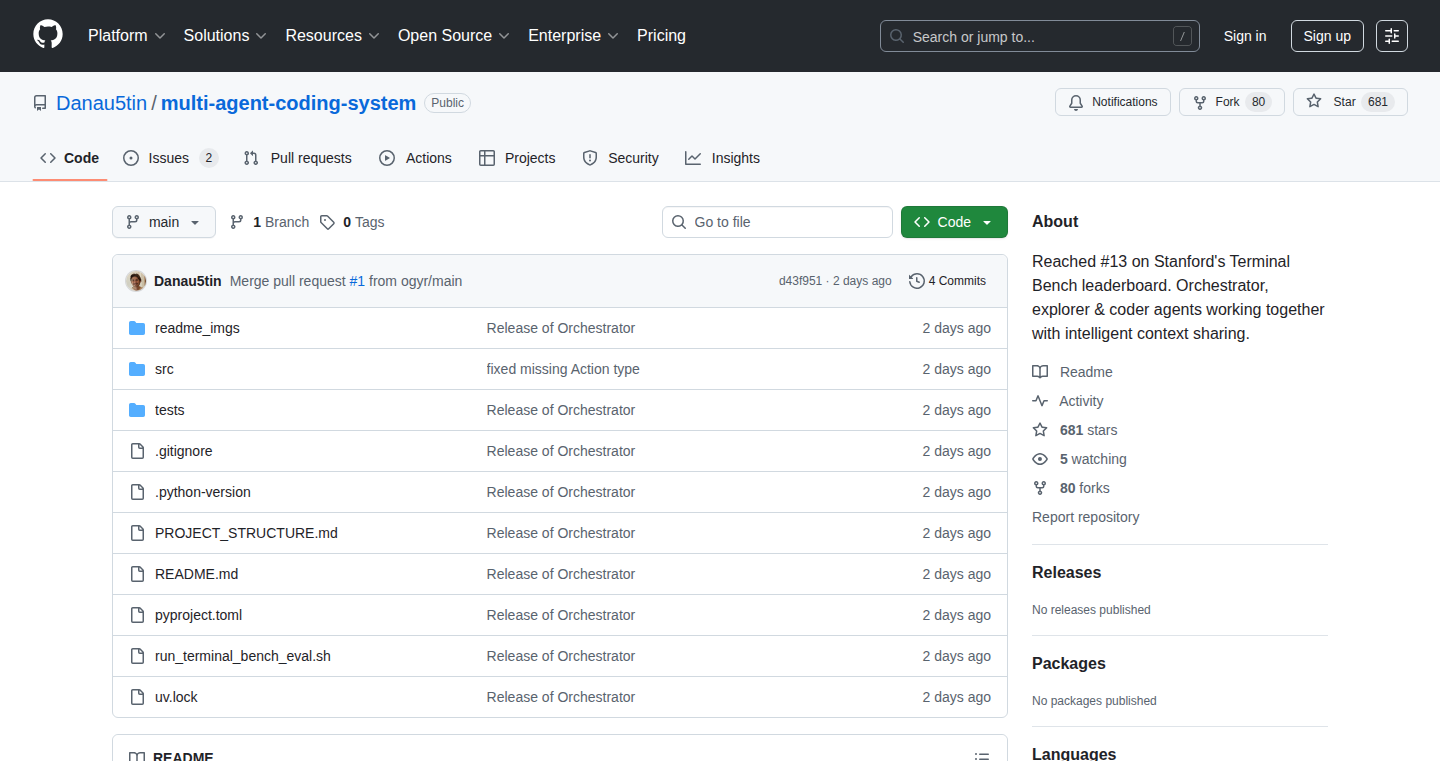

Multi-Agent Orchestrator

Author

Danau5tin

Description

A multi-agent coding system that outperforms leading AI models on complex terminal-based tasks. It features an orchestrator agent that manages explorer and coder sub-agents, employing an intelligent context sharing mechanism to tackle intricate challenges.

Popularity

Points 4

Comments 1

What is this product?

This project is a sophisticated AI system designed to automate and solve complex tasks within a terminal environment. At its core, it employs a multi-agent architecture. Think of it like having a team of specialized AI assistants. An 'orchestrator' agent acts as the manager, coordinating 'explorer' agents that figure out what needs to be done and 'coder' agents that write the code to do it. The magic happens with its 'intelligent context sharing,' which is like a smart note-taking system that ensures all agents are on the same page, preventing them from repeating mistakes or working against each other. This allows the system to achieve remarkable performance, even surpassing established AI models on benchmarks like Stanford's Terminal Bench. So, what's the value? It offers a powerful way to automate difficult, multi-step processes that typically require human coding expertise in the terminal, making complex command-line operations more accessible and efficient.

How to use it?

Developers can leverage this system by integrating it into their workflows for automating complex terminal tasks. You can clone the repository and explore the provided code and prompts to understand its inner workings. For practical use, you can deploy the orchestrator agent to manage sub-agents for specific terminal-based projects, such as setting up development environments, automating data processing pipelines, or executing intricate sequences of command-line operations. Its modular design also allows for customization and extension, meaning you can create your own specialized agents or refine existing ones for particular use cases. The core idea is to use it as an intelligent automation layer for your terminal activities, freeing up your time and cognitive load. So, how does this help you? It can automate repetitive or complex command-line tasks, allowing you to focus on higher-level problem-solving and innovation.
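The manager-and-specialists arrangement with a shared "note-taking" store can be sketched as follows. This is a simplified illustration under stated assumptions, not the repository's code: the `Orchestrator` class, the `Agent` signature, and the `explore:`/`code:` context keys are invented here, and the real agents would be model-backed rather than plain functions.

```python
from dataclasses import dataclass, field
from typing import Callable

# A hypothetical agent: takes a task plus the shared context, returns a finding.
Agent = Callable[[str, dict[str, str]], str]


@dataclass
class Orchestrator:
    explorer: Agent
    coder: Agent
    context: dict[str, str] = field(default_factory=dict)

    def run(self, task: str) -> str:
        # 1. The explorer investigates and its finding is recorded under a key.
        self.context[f"explore:{task}"] = self.explorer(task, dict(self.context))
        # 2. The coder gets a copy of everything learned so far, so it does not
        #    have to rediscover what the explorer already found.
        result = self.coder(task, dict(self.context))
        self.context[f"code:{task}"] = result
        return result


def toy_explorer(task: str, context: dict[str, str]) -> str:
    return "project uses pytest"


def toy_coder(task: str, context: dict[str, str]) -> str:
    return f"wrote tests because {context['explore:' + task]}"


if __name__ == "__main__":
    orchestrator = Orchestrator(explorer=toy_explorer, coder=toy_coder)
    print(orchestrator.run("add tests"))
    print(sorted(orchestrator.context))
```

Passing copies of the context to each agent, and letting only the orchestrator write to the store, is one way to keep sub-agents from overwriting each other's notes.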

Product Core Function

· Orchestration of Multiple AI Agents: Manages specialized 'explorer' and 'coder' agents to break down and solve complex tasks. Value: Provides a structured and efficient way to tackle multi-faceted problems that would be difficult for a single AI.

· Intelligent Context Sharing: Enables seamless information flow and memory between different agents. Value: Prevents redundant work and ensures consistent progress by allowing agents to learn from each other's actions and insights.

· Terminal Task Execution: Designed to understand and execute tasks directly within a command-line interface. Value: Automates command-line operations, from setup to complex sequences, reducing manual effort and potential errors.

· Benchmark Performance: Demonstrated ability to outperform other AI models on specialized terminal tasks. Value: Offers a state-of-the-art solution for developers needing highly effective AI assistance for terminal-based development and automation.

Product Usage Case

· Automating CI/CD pipeline setup: An explorer agent can analyze project requirements and an orchestrator can deploy coder agents to configure build scripts, test runners, and deployment configurations in the terminal. This saves significant manual setup time and reduces configuration errors.

· Complex data processing workflows: A system could be tasked with downloading, cleaning, transforming, and analyzing data, with different agents specializing in each step. This allows for the automation of intricate data pipelines that might otherwise require extensive scripting.

· Development environment provisioning: Agents could be tasked with installing dependencies, configuring IDEs, and setting up virtual environments on new machines. This streamlines the onboarding process for new developers or for setting up new projects, ensuring consistency across environments.

14

Libinjection-Rust-Port

Author

willsaar

Description

This project is a reimplementation of the `libinjection` security library from C to Rust. It focuses on detecting SQL injection and command injection vulnerabilities in user input. The core innovation lies in leveraging Rust's memory safety and concurrency features to create a more robust and potentially faster security scanning tool.

Popularity

Points 3

Comments 2

What is this product?

This project is a port of the well-known `libinjection` security library, originally written in C, into Rust. `libinjection` is designed to identify malicious patterns in user-provided input that could lead to SQL injection or command injection attacks. The key technical innovation here is the translation of C's low-level memory management to Rust's safer, ownership-based system. This transition aims to eliminate common C-related bugs like buffer overflows and use-after-free errors, which are frequent sources of security vulnerabilities themselves. By using Rust, the goal is to provide a more secure and reliable way to scan for and prevent injection attacks, potentially with improved performance due to Rust's efficient compilation and concurrency capabilities.

How to use it?

Developers can integrate this Rust-based library into their web applications or security tools. It can be used as a backend component to scan incoming data before it's processed by the application's core logic. For example, if you are building a web framework in Rust, you could use this library to validate user-submitted form data or API requests to prevent malicious SQL queries. It can also be used as a standalone command-line tool for auditing existing code or data for potential injection flaws. The integration would typically involve calling specific functions from the library to analyze strings of text.

Product Core Function

· SQL Injection Detection: Analyzes input strings for patterns commonly found in SQL injection attempts, such as `' OR '1'='1'`. This helps protect databases from unauthorized access or data manipulation, meaning your sensitive data stays safe.

· Command Injection Detection: Scans input for sequences that could be used to execute arbitrary operating system commands, like `; rm -rf /`. This prevents attackers from taking control of your servers, keeping your infrastructure secure.

· Rust Memory Safety: Built in Rust, this library benefits from ownership-based memory management and compile-time checks (no garbage collector is involved), significantly reducing the risk of security vulnerabilities caused by memory errors. This means the security tool itself is less likely to be a weak point.

· Performance Optimization: Rust compiles to optimized native code, so scanning may be as fast as, or faster than, the original C implementation, though no benchmark figures are given. Fast scanning keeps the application responsive and shortens security audits.
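One common way to detect injection patterns like the ones above is to reduce the input to a sequence of token types and compare that "fingerprint" against known-bad sequences. The toy below illustrates the idea only: it does not use the port's API, the token classes and the `SUSPICIOUS` set are made up for this example, and a real detector relies on a much larger curated list.

```python
import re

TOKEN_RE = re.compile(
    r"""
    (?P<s>'[^']*'?)                                          # quoted string, maybe unterminated
  | (?P<n>\d+)                                               # number
  | (?P<l>\b(?:or|and)\b)                                    # logical operator
  | (?P<k>\b(?:union|select|insert|update|delete|drop)\b)    # SQL keyword
  | (?P<c>--|\#|/\*)                                         # comment opener
  | (?P<o>[=<>!]+)                                           # comparison operator
  | (?P<w>\w+)                                               # any other word
    """,
    re.IGNORECASE | re.VERBOSE,
)

# Made-up fingerprints for this demo only.
SUSPICIOUS = {"slsos", "nlnon", "slnon"}


def fingerprint(text: str, limit: int = 5) -> str:
    """Return the first `limit` token-type codes found in the text."""
    return "".join(m.lastgroup for m in TOKEN_RE.finditer(text))[:limit]


def looks_like_sqli(text: str) -> bool:
    # Check the input as-is, and as if it landed inside a single-quoted SQL string.
    return any(fingerprint(ctx) in SUSPICIOUS for ctx in (text, "'" + text))


if __name__ == "__main__":
    for sample in ["' OR '1'='1", "1 OR 1=1", "O'Reilly", "fish and chips"]:
        print(repr(sample), looks_like_sqli(sample))
```

Matching on token types rather than raw substrings is what lets a name like O'Reilly pass while a tautology such as `1 OR 1=1` is flagged, though a toy list this small will miss most real attacks.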

Product Usage Case

· Web Application Firewall (WAF): Integrate this Rust library into a WAF to automatically filter out malicious requests targeting web applications, preventing SQL injection attacks before they reach the application server. This shields your web application from common attacks.

· API Security Gateway: Use this port in an API gateway to sanitize incoming API requests, ensuring that data passed to downstream services is free from injection attempts. This protects your internal services and data flow.

· Code Auditing Tool: Develop a command-line tool using this library to scan code repositories for potential injection vulnerabilities, helping developers identify and fix security flaws early in the development cycle. This makes your codebase more secure.

· Database Security Monitoring: Employ this library to analyze logs or database queries for suspicious patterns, providing an extra layer of defense against data breaches. This adds an additional check to protect your database.

15

GPT-OSS-20B-On-8GB-GPU

Author

anuarsh

Description

This project enables running a 20 billion parameter open-source GPT model on GPUs with as little as 8GB of VRAM. It tackles the common barrier of high VRAM requirements for large language models by employing efficient inference techniques. This unlocks access to powerful AI capabilities for developers and researchers with more modest hardware.

Popularity

Points 5

Comments 0

What is this product?

This is a project that makes it possible to use large, advanced AI language models, specifically a 20 billion parameter version of an open-source GPT model, on graphics cards (GPUs) that typically don't have enough memory to handle such models. The core innovation lies in optimizing the model's memory footprint and computation process so it can run efficiently even on GPUs with just 8GB of VRAM. Think of it like fitting a powerful engine into a compact car – it's about smart engineering to make high-end technology accessible.

How to use it?

Developers can integrate this project into their workflows by loading the optimized model weights and using the provided inference code. This might involve a Python library that simplifies the process of sending text prompts to the model and receiving generated responses. For example, you could use it to build custom chatbots, automate content generation, or perform text analysis directly on your development machine without needing expensive cloud infrastructure or high-end hardware. It's designed to be relatively straightforward to plug into existing Python-based AI projects.

Product Core Function

· Efficient Model Quantization: Reduces the precision of model parameters, significantly decreasing VRAM usage without a drastic loss in accuracy, making large models runnable on less powerful hardware.

· Optimized Inference Engine: Implements techniques like kernel fusion and intelligent memory management to speed up text generation and reduce computational overhead, directly translating to faster responses and lower resource consumption.

· Accessible Deployment: Provides a straightforward way to load and run the GPT-OSS-20B model, lowering the technical barrier for developers who want to experiment with and deploy advanced NLP capabilities on their own machines.

· Fine-tuning Capabilities (potential): While the primary focus is inference, the underlying architecture could be extended to allow for limited fine-tuning on smaller datasets, offering a pathway for customization for specific tasks.
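A quick back-of-the-envelope calculation shows why precision matters so much for VRAM. The sketch below assumes exactly 20 billion parameters stored at a uniform bit width and counts the weights only (no activations or cache); both are simplifications, and the helper name `weight_memory_gib` is made up for this example.

```python
def weight_memory_gib(n_params: float, bits_per_param: float) -> float:
    """Memory needed for the weights alone, in GiB (2**30 bytes)."""
    return n_params * bits_per_param / 8 / 2**30


if __name__ == "__main__":
    N_PARAMS = 20e9  # assumption: the "20 billion" figure taken at face value
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit weights: {weight_memory_gib(N_PARAMS, bits):.1f} GiB")
```

Under these assumptions the weights need roughly 37.3 GiB at 16 bits, 18.6 GiB at 8 bits, and 9.3 GiB at 4 bits. Since even the 4-bit figure is above 8 GB, quantization alone would not be enough on these numbers, which suggests the memory-management side of the inference engine (for example, keeping only part of the model on the GPU at a time) carries much of the load; the exact mechanism is not described here.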

Product Usage Case

· Building a personal AI assistant: A developer can use this to create a chatbot that runs locally, answering questions and generating text without an internet connection or external APIs, which addresses both privacy and cost concerns.

· Prototyping AI-powered applications: Quickly test out ideas for content creation, code generation assistance, or summarization tools on a standard laptop, accelerating the development cycle and providing immediate feedback.

· Educational purposes: Students and hobbyists can experiment with state-of-the-art language models without needing access to specialized AI labs or expensive cloud computing, democratizing AI learning and research.

· Offline NLP tasks: For applications requiring text analysis or generation in environments with limited or no internet access, this project provides a viable solution by enabling local model execution.

16

YAP: Virtual Knob Media Player

Author

benwu232

Description

YAP is a media player for Android and iOS that introduces a novel 'virtual knob' gesture for controlling media. Instead of tapping or swiping, users mimic the action of turning a physical knob to adjust volume, brightness, and playback progress. This innovative gesture aims to make human-machine interaction more intuitive and efficient, offering a fresh approach to controlling device functions.

Popularity

Points 4

Comments 1

What is this product?

YAP is a mobile media player that reimagines how users interact with their device controls by introducing a 'virtual knob' gesture. Think of it like the volume dial on a stereo system. Instead of a flat slider or buttons, you use a circular motion on the screen to increase or decrease a setting. By borrowing the intuitive control of a physical knob, the gesture allows precise adjustments to volume, screen brightness, and media playback progress. The innovation lies in translating a familiar, physical interaction into a digital gesture, making controls feel more natural and responsive. So, what's the benefit? Controlling your media and device settings feels more like using a physical device, with smoother and more precise adjustments than traditional touch interfaces.

How to use it?

Developers can experience YAP by downloading it from the App Store or Google Play. The app itself showcases the virtual knob gesture. For developers interested in integrating this gesture into their own applications, the underlying technology is detailed in the author's blog posts. These resources can guide developers on how to implement similar gesture recognition systems, potentially enhancing the user experience of their own mobile apps. Imagine adding this intuitive control to a music app, a photo editor, or even a smart home control panel. It's about providing an alternative, more engaging way for users to interact with digital interfaces.

Product Core Function

· Virtual Knob Gesture Recognition: This core function allows the app to detect and interpret circular gestures on the screen as knob turns. The value of this is in providing a more nuanced and precise control method compared to standard sliders or buttons, allowing users to make fine-tuned adjustments quickly. This is useful for scenarios where incremental changes are important, like adjusting audio levels or screen dimming.

· Volume Control via Knob Gesture: Users can turn the virtual knob to adjust the device's audio volume. The technical implementation here involves mapping the detected gesture to the system's volume API. This offers a tactile and intuitive way to manage audio, akin to old-school radio dials, making it easier to set the perfect listening volume without looking directly at the screen.

· Brightness Control via Knob Gesture: Similar to volume, the virtual knob can be used to adjust the screen's brightness. This involves interfacing with the device's display settings. The benefit is a fluid way to manage ambient light conditions, making it comfortable for users to adjust their screen in various lighting environments, from bright sunlight to dark rooms.

· Playback Progress Scrubbing: The gesture can also be used to navigate through media playback, like rewinding or fast-forwarding a video or song. This is achieved by mapping the gesture's rotational movement to seek positions within the media file. This provides a more direct and satisfying way to jump to specific parts of content, enhancing the media consumption experience.
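The functions above all rest on turning a circular finger motion into a change in some value. A minimal sketch of that idea follows, assuming touch points arrive as screen coordinates. The class and method names are hypothetical and this is not YAP's actual implementation.

```python
import math


def rotation_delta(prev_angle: float, curr_angle: float) -> float:
    """Signed smallest difference between two angles, wrapped into [-pi, pi)."""
    d = curr_angle - prev_angle
    return (d + math.pi) % (2 * math.pi) - math.pi


class VirtualKnob:
    """Maps circular drags around a center point to a value in [0, 1]."""

    def __init__(self, cx: float, cy: float, value: float = 0.5,
                 turns_for_full_range: float = 1.0):
        self.cx, self.cy = cx, cy
        self.value = value
        self.turns_for_full_range = turns_for_full_range
        self._last_angle = None

    def _angle(self, x: float, y: float) -> float:
        # Screen y grows downward, so increasing angles look clockwise on screen.
        return math.atan2(y - self.cy, x - self.cx)

    def touch_down(self, x: float, y: float) -> None:
        self._last_angle = self._angle(x, y)

    def touch_move(self, x: float, y: float) -> float:
        angle = self._angle(x, y)
        delta = rotation_delta(self._last_angle, angle)
        self._last_angle = angle
        # One full turn covers 1 / turns_for_full_range of the value range.
        self.value += delta / (2 * math.pi * self.turns_for_full_range)
        self.value = min(1.0, max(0.0, self.value))
        return self.value


knob = VirtualKnob(cx=0.0, cy=0.0)
knob.touch_down(1.0, 0.0)
print(knob.touch_move(0.0, 1.0))  # a quarter turn clockwise on screen
```

The resulting value could then be mapped to a volume level, a brightness level, or a seek position. Wrapping the angle difference keeps the knob from jumping when the finger crosses the point where the angle flips from +pi to -pi.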

Product Usage Case

· In a music player application, a user could use the virtual knob gesture to smoothly adjust the volume while listening to music without needing to precisely hit a small volume slider. This solves the problem of imprecise volume adjustments on touchscreens, especially when the user is engaged with the audio content.

· For a video editing application, a developer could integrate this gesture to allow users to scrub through a timeline or adjust effects parameters by simply turning the virtual knob. This offers a more intuitive control for creative professionals who are accustomed to physical dials and sliders, improving workflow efficiency.

· In a smart home control app, users could use the virtual knob to dim lights or adjust thermostat settings. This provides a visually consistent and familiar interaction pattern for managing various smart devices, making the app feel more polished and user-friendly.

· During a presentation, a presenter could use the virtual knob gesture on their phone to adjust the volume of the connected speakers or even control the progression of slides, allowing for smoother transitions and fewer fumbles with traditional controls.

17

PaySimulate API

Author

d_sai

Description

PaySimulate API is a mock Payment Service Provider (PSP) API designed for developers. It allows you to test payment workflows, simulate various payment states (like authorize, decline, capture), and practice handling webhook events without integrating with real payment gateways. This saves time and resources during development and testing, enabling a more robust and efficient workflow. The innovation lies in providing a realistic, yet isolated, environment for crucial payment logic testing.

Popularity

Points 3

Comments 2

What is this product?

PaySimulate API is a simulated Payment Service Provider (PSP) API. Think of it as a sandbox for payment processing. Instead of connecting to a live payment system like Stripe or Adyen, which can involve complex setups and costs, developers can use PaySimulate to mimic the behavior of these systems. Its core innovation is the ability to programmatically control and test the different stages of a payment transaction – from initiation to authorization, capture, or even decline. It also simulates the crucial webhook notifications that payment systems send to your application, allowing you to build and test how your system reacts to these real-world events. This is powerful because it isolates the payment integration logic, making it easier to debug and build confidently.

How to use it?

Developers can use PaySimulate API by obtaining a free API key directly from the provided console. Once you have the key, you can start making API calls to simulate payment intents and payments. For example, you might POST to the `/payment-intents` endpoint to create a new payment request, and then POST to `/payment-intents/:id/payments` to simulate specific payment statuses like 'authorized' or 'declined'. You can also subscribe to webhook events and view their delivery history in the console. This is particularly useful when building backend services that need to react to payment confirmations or failures. You can integrate it into your local development environment or staging servers as a placeholder for the actual PSP, allowing your application's payment handling logic to be tested thoroughly before going live.
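As a rough sketch of the two calls described above, the snippet below builds (but does not send) the POST requests. Only the two endpoint paths come from the description. The base URL, the authorization header, and the JSON field names are placeholders, so check the project's console for the real values.

```python
import json
import urllib.request

BASE_URL = "https://paysimulate.example"  # placeholder host, not the real one
API_KEY = "your-api-key"                  # placeholder key from the console


def build_post(path: str, payload: dict) -> urllib.request.Request:
    """Build a JSON POST request without sending it."""
    return urllib.request.Request(
        url=BASE_URL + path,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",  # assumed auth scheme
        },
        method="POST",
    )


def create_intent(amount: int, currency: str) -> urllib.request.Request:
    # Field names "amount" and "currency" are hypothetical.
    return build_post("/payment-intents", {"amount": amount, "currency": currency})


def simulate_payment(intent_id: str, status: str) -> urllib.request.Request:
    # The "status" field name is hypothetical; the path is from the description.
    return build_post(f"/payment-intents/{intent_id}/payments", {"status": status})


req = simulate_payment("pi_123", "declined")
print(req.get_method(), req.full_url)
```

Passing a built request to `urllib.request.urlopen` would send it. Keeping construction separate from sending makes the request shape easy to unit test.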

Product Core Function

· Mock Payment Intent Creation: This allows developers to simulate the initiation of a payment request, providing a realistic starting point for testing payment flows. The value is in testing how your application handles the creation of payment requests without needing a live backend.

· Simulate Payment States: Developers can programmatically set payment statuses such as 'initiated', 'authorising', 'authorised', 'declined', 'capturing', 'expired', 'captured', 'capture_failed'. This is crucial for testing all possible outcomes of a payment transaction and ensuring your application correctly handles each scenario, preventing errors in production.

· Webhook Simulation and History: The API allows for subscription to and delivery of simulated webhook events, along with a history log. This is invaluable for testing how your application processes asynchronous notifications from a PSP, ensuring critical updates like payment confirmations are handled reliably.

· API Key Management: Easy generation of API keys directly from the console enables quick access and testing without complex signup processes. This accelerates the initial setup for developers wanting to experiment with payment integrations.
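On the application side, handling these simulated states usually comes down to a small dispatch on the status carried by each webhook event. The sketch below uses the state names listed above. The grouping of states, the event shape, and the action names are assumptions made for illustration.

```python
# State names come from the list above; how they are grouped is an assumption.
PENDING = {"initiated", "authorising", "authorised", "capturing"}
SUCCEEDED = {"captured"}
FAILED = {"declined", "expired", "capture_failed"}


def handle_webhook(event: dict, seen_event_ids: set) -> str:
    """Return the action to take for one webhook event.

    The event shape {"id": ..., "status": ...} is hypothetical.
    """
    status = event["status"]
    if status not in PENDING | SUCCEEDED | FAILED:
        raise ValueError(f"unknown payment status: {status!r}")
    # Webhooks may be delivered more than once, so skip events already handled.
    if event["id"] in seen_event_ids:
        return "ignore_duplicate"
    seen_event_ids.add(event["id"])
    if status in SUCCEEDED:
        return "fulfil_order"
    if status in FAILED:
        return "release_inventory"
    return "wait"


seen = set()
print(handle_webhook({"id": "evt_1", "status": "captured"}, seen))
print(handle_webhook({"id": "evt_1", "status": "captured"}, seen))
```

Tracking event IDs that have already been processed is a common precaution, because a repeated delivery should not trigger fulfilment twice.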

Product Usage Case

· Testing order fulfillment logic: A developer can use PaySimulate to simulate a successful payment capture for an order. Their application, upon receiving this simulated 'captured' status via webhook, can then proceed to trigger order fulfillment processes like shipping, all without needing a real transaction.

· Handling payment declines: A developer can simulate a 'declined' payment status and test how their e-commerce frontend displays an error message to the user and how the backend handles inventory management in case of a failed payment, ensuring a smooth user experience even when payments fail.

· Developing payment retry mechanisms: By simulating intermittent payment failures or declines, developers can test and refine their application's logic for retrying payments, improving the chances of successful transactions over time.

· Integrating with a new payment gateway: Before committing to a full integration with a real PSP, a developer can use PaySimulate to build and test the core payment handling features of their application, ensuring the architecture is sound and the logic is correct.
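The retry use case can be exercised without any network by scripting the sequence of simulated outcomes. In the sketch below, `attempt` stands in for whatever call triggers a payment and returns its final status. Which statuses count as retryable, and the backoff schedule, are assumptions rather than anything PaySimulate prescribes.

```python
import time
from typing import Callable

RETRYABLE = {"declined", "capture_failed"}  # assumption: a business decision


def pay_with_retries(attempt: Callable[[], str], max_attempts: int = 3,
                     base_delay: float = 1.0,
                     sleep: Callable[[float], None] = time.sleep) -> tuple[str, int]:
    """Call attempt() until it returns a non-retryable status or attempts run out.

    Returns the last status and the number of attempts made.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for n in range(1, max_attempts + 1):
        status = attempt()
        if status not in RETRYABLE or n == max_attempts:
            return status, n
        sleep(base_delay * 2 ** (n - 1))  # exponential backoff: 1s, 2s, 4s, ...


# Scripted outcomes play the role of the mock PSP's simulated statuses.
outcomes = iter(["declined", "declined", "captured"])
delays = []
print(pay_with_retries(lambda: next(outcomes), sleep=delays.append), delays)
```

Injecting the `sleep` function keeps tests fast and lets them assert on the backoff delays directly.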

18

PrivacyGuard Location

Author

ezeoleaf

Description

This project is a 'hacky' app designed for location sharing with a strong emphasis on privacy, aiming to circumvent traditional surveillance methods. It provides a way for users to share their real-time location without compromising their overall privacy, addressing a growing concern about data exploitation in location-based services.

Popularity

Points 3

Comments 1

What is this product?

PrivacyGuard Location is a decentralized and privacy-focused application that allows users to share their current location with selected contacts. Unlike mainstream location-sharing apps that often collect and store vast amounts of user data, this project takes a more 'hacky' and experimental approach to minimize its data footprint. The core innovation lies in its peer-to-peer communication model and potentially obfuscated location data transmission, which make it difficult for third parties to track individual movements or build comprehensive location histories. As a result, users can share their whereabouts without worrying about constant monitoring or data breaches.

How to use it?

Developers can integrate PrivacyGuard Location into their existing applications or use it as a standalone tool. For integration, the project likely exposes an API or SDK that allows other applications to request and display location data. Developers can use this to build features like real-time event coordination, collaborative mapping, or even location-based alerts for specific groups. For standalone use, it would typically involve a simple interface where users can initiate sharing with specific individuals, defining how long the sharing lasts and what level of detail is provided. This provides a direct and secure way to let friends or colleagues know where you are without relying on corporate-controlled platforms.

Product Core Function

· Decentralized Location Sharing: Users share their location directly with chosen contacts, reducing reliance on central servers and enhancing privacy. This means your location data isn't stored in a central database that could be hacked or misused.

· Ephemeral Location Data: Location sharing can be set to expire after a specific duration, ensuring that location history is not persistently stored. This limits the amount of time your location is available to others.

· Minimal Data Footprint: The application is designed to collect and transmit only the necessary location information, reducing the risk of over-collection and potential misuse of personal data. Less data collected means less risk of it falling into the wrong hands.

· API for Integration: Provides developers with the tools to incorporate privacy-conscious location sharing into their own applications. This allows for building new, privacy-respecting location-aware features without starting from scratch.
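Two of the ideas above, expiring shares and a minimal data footprint, can be illustrated in a few lines. This is a sketch under stated assumptions, not the project's code. The type and function names are hypothetical, and coordinate rounding is just one simple way to limit the detail that is shared.

```python
import time
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class LocationShare:
    lat: float
    lon: float
    expires_at: float  # Unix timestamp after which the share is unreadable


def make_share(lat: float, lon: float, ttl_seconds: float,
               decimals: int = 3, now: Optional[float] = None) -> LocationShare:
    """Create a share that keeps only coarse coordinates and an expiry time."""
    now = time.time() if now is None else now
    # Three decimal places of latitude is on the order of 100 m.
    return LocationShare(round(lat, decimals), round(lon, decimals), now + ttl_seconds)


def read_share(share: LocationShare,
               now: Optional[float] = None) -> Optional[Tuple[float, float]]:
    """Return the coordinates, or None once the share has expired."""
    now = time.time() if now is None else now
    if now >= share.expires_at:
        return None
    return (share.lat, share.lon)


share = make_share(52.520008, 13.404954, ttl_seconds=600, now=1000.0)
print(read_share(share, now=1200.0))  # still valid
print(read_share(share, now=1600.0))  # expired
```

In a real peer-to-peer setup the recipient's device would also need to discard expired shares, since expiry enforced only by the sender cannot stop a recipient from keeping a copy.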

Product Usage Case

· Collaborative Event Planning: Users meeting up for an event can share their live locations with each other to coordinate arrival times and find each other easily in a crowded place, without broadcasting their location to the world. This solves the problem of 'where are you?' texts.