Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-09-02

SagaSu777 2025-09-03

Explore the hottest developer projects on Show HN for 2025-09-02. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The current wave of innovation on Show HN is heavily influenced by the drive to streamline workflows and enhance user productivity through AI. Developers are leveraging large language models not just for content generation but as integral components for data analysis, communication management, and even automating complex coding tasks. We're seeing a strong trend towards creating specialized tools that tackle specific pain points, like organizing vast amounts of information or simplifying intricate development processes. The emphasis on open-source solutions and on-device processing signals a growing demand for privacy and user control. For aspiring creators and developers, this means identifying niche problems that can be solved with intelligent automation and offering transparent, user-friendly solutions. Embracing AI as a co-pilot for tasks, rather than a complete replacement, and focusing on secure, adaptable architectures will be key to building impactful products.

Today's Hottest Product

Name

Amber – better Beeper, a modern all-in-one messenger

Highlight

Amber redefines the all-in-one messenger experience by unifying all communications (WhatsApp, Telegram, iMessage) in a carefully crafted, user-centric interface. Its key innovations include true folder-based inboxes for better focus, a 'mark done' feature that bypasses read receipts, and a personal CRM with planned AI integration for extracting key facts from conversations. Developers can learn about building highly responsive UIs, handling messages from many networks in a single client, and designing user experiences that prioritize focus and privacy. Its on-device, end-to-end encrypted architecture is also a useful reference for privacy-first product design.

Popular Category

AI & Machine Learning

Developer Tools

Productivity

Communication

Data Management

Popular Keyword

AI

LLM

CLI

Open Source

Productivity

Data

Developer Tools

API

Messenger

Automation

Technology Trends

AI-Powered Productivity Tools

Developer Workflow Automation

Decentralized/On-Device Data Handling

LLM Integration for Enhanced Functionality

Cross-Platform Communication Solutions

Data Visualization and Analysis

Secure Communication and Privacy

Project Category Distribution

AI/ML Tools (30%)

Developer Utilities (25%)

Productivity & Communication (20%)

Data Management & Analysis (15%)

Creative & Design Tools (10%)

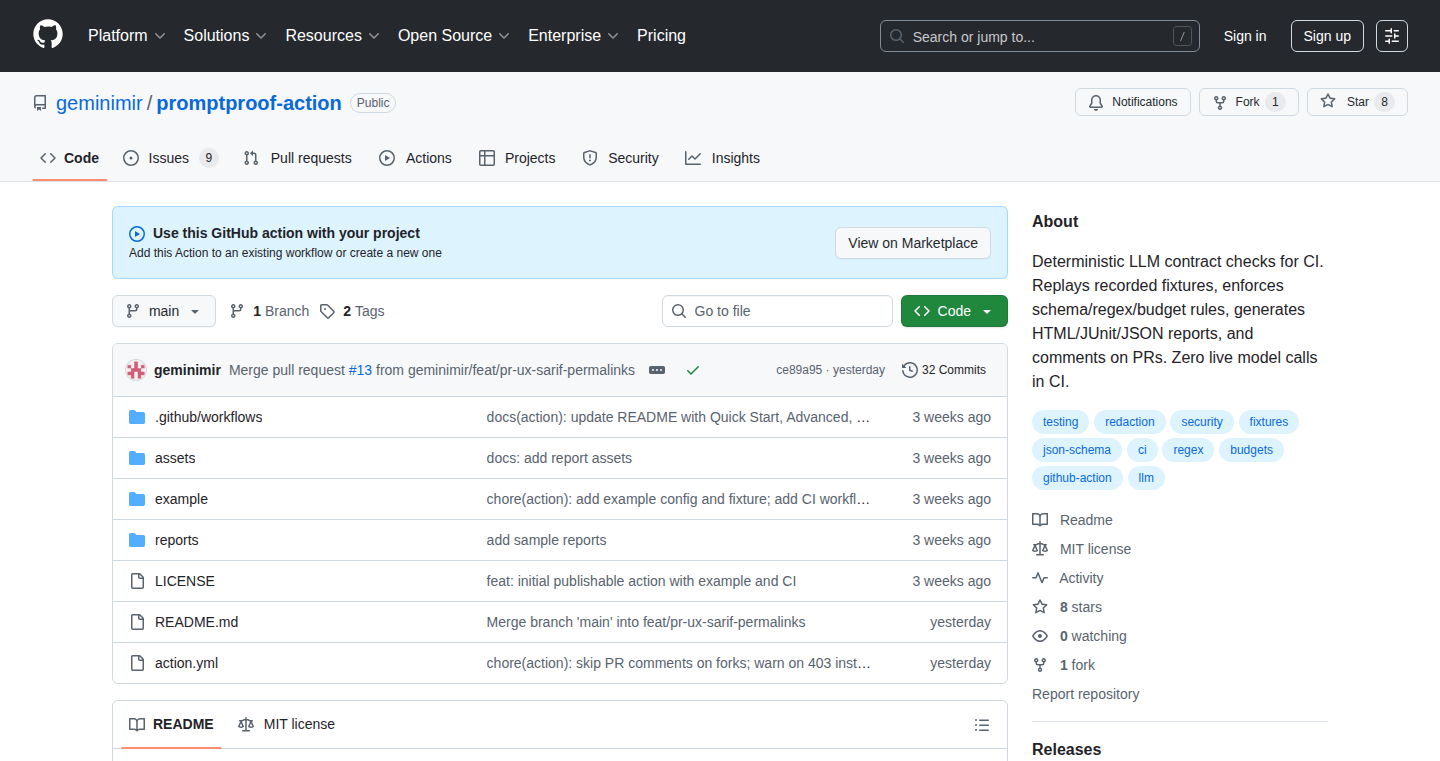

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | Amber - Unified Communication Hub | 62 | 74 |
| 2 | Moribito: The LDAP Navigator | 98 | 23 |
| 3 | LightCycle.rs | 35 | 12 |
| 4 | ZenStack: Unified Data Layer | 13 | 0 |
| 5 | DeepDoc Local Research Assistant | 10 | 2 |
| 6 | MCP Secrets Vault | 11 | 1 |
| 7 | AppForge Republic | 12 | 0 |
| 8 | HeyGuru: Conversational AI for Deep Inquiry | 10 | 1 |
| 9 | Zyg: Commit Narrative Generator | 5 | 4 |
| 10 | ESP32 On-Call Alert Beeper | 6 | 3 |

1

Amber - Unified Communication Hub

Author

DmitryDolgopolo

Description

Amber is a novel all-in-one messenger aiming to surpass existing solutions by offering advanced organization, privacy, and productivity features. It consolidates all your messages from platforms like WhatsApp, Telegram, and iMessage into a single, intuitive interface. Key innovations include robust folder-based inboxes for focused communication, a 'mark read' feature that bypasses sender read receipts for enhanced privacy, and an integrated personal CRM with upcoming AI-powered insights. This project addresses the fragmentation and limitations of current messaging apps by providing a developer-centric, privacy-first, and highly customizable communication experience.

Popularity

Points 62

Comments 74

What is this product?

Amber is an all-in-one messenger that unifies messages from platforms like WhatsApp, Telegram, and iMessage into a single, streamlined application. Its core organizational feature is folder-based 'split inboxes', which let users segment conversations by work, personal life, or specific projects for better focus and workflow management. Unlike other aggregators, Amber offers a 'mark read' feature that intentionally omits read receipts, even on platforms that support them such as WhatsApp and Telegram, so you decide when a sender sees their message as read. It is also building personal CRM functionality that stores contextual information about contacts, with planned AI integration to extract key facts from conversations automatically. The experience is designed with developer sensibilities, including a command bar for quick actions and keyboard shortcuts, alongside 'send later' and 'reminders' features for productivity. All data is stored on-device and end-to-end encrypted, so messages are never processed or stored on Amber's servers.

How to use it?

Developers can use Amber to consolidate all their cross-platform communication into one organized space, significantly reducing context switching and improving productivity. Instead of juggling multiple apps for team chats, client conversations, and personal messages, developers can leverage Amber's split inboxes to create dedicated folders for different projects or client interactions. For example, a developer working on Project Alpha can have a specific inbox for all Project Alpha related communications from Slack, Telegram, and email, keeping work segmented. The 'mark read' feature is particularly valuable for developers who need to manage their communication flow without the pressure of immediate acknowledgment, allowing them to process messages at their own pace. The upcoming personal CRM with AI can help developers quickly recall details about clients or collaborators by surfacing key information directly from past conversations, enhancing professional interactions. Integration is straightforward, as Amber connects to your existing messaging accounts, acting as a unified front-end. Its command bar and keyboard shortcuts are designed for efficiency, enabling developers to navigate and manage messages rapidly without touching their mouse.
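
The split-inbox and 'mark read' behavior described above can be pictured as a small local data model. The Python sketch below is an illustration only: `Chat`, `FolderRule`, `Inbox`, and the `receipts_sent` list (a stand-in for calls to each network's read-receipt API) are hypothetical names, not Amber's code. Folder membership is decided by rules evaluated in order, and marking a chat read changes local state without notifying the sender.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Chat:
    chat_id: str
    network: str  # e.g. "whatsapp", "telegram", "imessage"
    title: str
    unread: int = 0


@dataclass
class FolderRule:
    folder: str
    matches: Callable[[Chat], bool]  # predicate deciding folder membership


@dataclass
class Inbox:
    rules: list[FolderRule]
    # Hypothetical stand-in for calls to each network's read-receipt API.
    receipts_sent: list[str] = field(default_factory=list)

    def folder_for(self, chat: Chat) -> str:
        # First matching rule wins; unmatched chats land in "Other".
        for rule in self.rules:
            if rule.matches(chat):
                return rule.folder
        return "Other"

    def split(self, chats: list[Chat]) -> dict[str, list[str]]:
        folders: dict[str, list[str]] = {}
        for chat in chats:
            folders.setdefault(self.folder_for(chat), []).append(chat.chat_id)
        return folders

    def mark_read(self, chat: Chat, send_receipt: bool = False) -> None:
        # Clearing the unread badge is local state only; a receipt is
        # sent to the network only when explicitly requested.
        chat.unread = 0
        if send_receipt:
            self.receipts_sent.append(chat.chat_id)


inbox = Inbox(rules=[
    FolderRule("Project Alpha", lambda c: "alpha" in c.title.lower()),
    FolderRule("Personal", lambda c: c.network == "imessage"),
])
chats = [
    Chat("1", "telegram", "Alpha standup", unread=3),
    Chat("2", "imessage", "Family", unread=1),
    Chat("3", "whatsapp", "Landlord"),
]
print(inbox.split(chats))
# {'Project Alpha': ['1'], 'Personal': ['2'], 'Other': ['3']}
```

First-match-wins keeps routing predictable when a chat satisfies several rules; an alternative design would let one chat appear in several folders.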

Product Core Function

· Unified Messaging Experience: Consolidates messages from multiple platforms (e.g., WhatsApp, Telegram, iMessage) into a single interface, reducing the need to switch between apps and providing a holistic view of all communications, which helps developers stay organized and save time.

· Split Inboxes (Folders): Enables users to create custom folders to categorize conversations by project, client, or personal context, offering enhanced organizational capabilities and allowing developers to focus on critical communications without distractions.

· Mark Read Feature: Allows users to mark messages as read without sending read receipts to the sender, giving individuals control over their communication availability and reducing the pressure for instant responses, which is highly beneficial for managing asynchronous developer workflows.

· Personal CRM with AI (Upcoming): Integrates a lightweight contact database to store personalized notes and will soon feature AI to extract key information from conversations, aiding developers in remembering details about clients or collaborators for more effective professional networking and communication.

· Command Bar and Shortcuts: Provides a keyboard-driven interface for quick navigation and execution of actions, boosting efficiency for developers accustomed to keyboard-centric workflows and reducing reliance on mouse interactions.

· Send Later and Reminders: Allows scheduling messages to be sent at a specific time and setting reminders for follow-ups, improving communication planning and ensuring important messages are delivered at the optimal moment, vital for project management and client engagement.
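
'Send later' and reminders both reduce to a time-ordered queue of pending actions. A minimal sketch, assuming a hypothetical `Scheduler` class and plain numeric timestamps (a real client would persist the queue and use wall-clock time): items sit in a heap keyed on their due time, and `pop_due` releases everything whose time has arrived, earliest first.

```python
import heapq
import itertools
from dataclasses import dataclass, field


@dataclass(order=True)
class ScheduledItem:
    due: float
    seq: int  # tie-breaker: equal due times keep insertion order
    kind: str = field(compare=False)  # "send" or "reminder"
    payload: str = field(compare=False)


class Scheduler:
    def __init__(self) -> None:
        self._heap: list[ScheduledItem] = []
        self._counter = itertools.count()

    def schedule(self, due: float, kind: str, payload: str) -> None:
        heapq.heappush(self._heap, ScheduledItem(due, next(self._counter), kind, payload))

    def pop_due(self, now: float) -> list[ScheduledItem]:
        # Release every item due at or before `now`, earliest first.
        ready = []
        while self._heap and self._heap[0].due <= now:
            ready.append(heapq.heappop(self._heap))
        return ready

    def __len__(self) -> int:
        return len(self._heap)


sched = Scheduler()
sched.schedule(120.0, "send", "Morning update to client")
sched.schedule(60.0, "reminder", "Follow up on invoice")
sched.schedule(300.0, "send", "Weekly report")

for item in sched.pop_due(now=150.0):
    print(item.due, item.kind, item.payload)
# 60.0 reminder Follow up on invoice
# 120.0 send Morning update to client
```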

Product Usage Case

· A freelance developer managing client communications across Slack, Discord, and email can create a 'Client X' inbox in Amber. This allows them to see all project-related messages in one place, preventing missed updates and making it easy to track discussions. The 'mark read' feature means they can review messages without signaling immediate availability, allowing for focused coding sessions.

· A developer in a large organization using multiple internal communication tools like Microsoft Teams and custom internal chat can use Amber to consolidate these, alongside external channels like WhatsApp for specific project collaborations. This provides a single pane of glass for all communication, streamlining workflow and improving overall efficiency by reducing app fatigue.

· A founder communicating with hundreds of potential users and partners quarterly can leverage Amber's upcoming personal CRM feature. They can store notes about each individual, and soon, AI will automatically pull key discussion points from conversations, helping them to remember crucial details about each person and nurture relationships more effectively without manual note-taking.

· A developer working on a time-sensitive bug fix can use Amber's 'mark read' feature to acknowledge a message from their lead without sending a read receipt. This allows them to continue their focused work without interruption, while still having the message marked as reviewed internally, maintaining communication hygiene without sacrificing productivity.

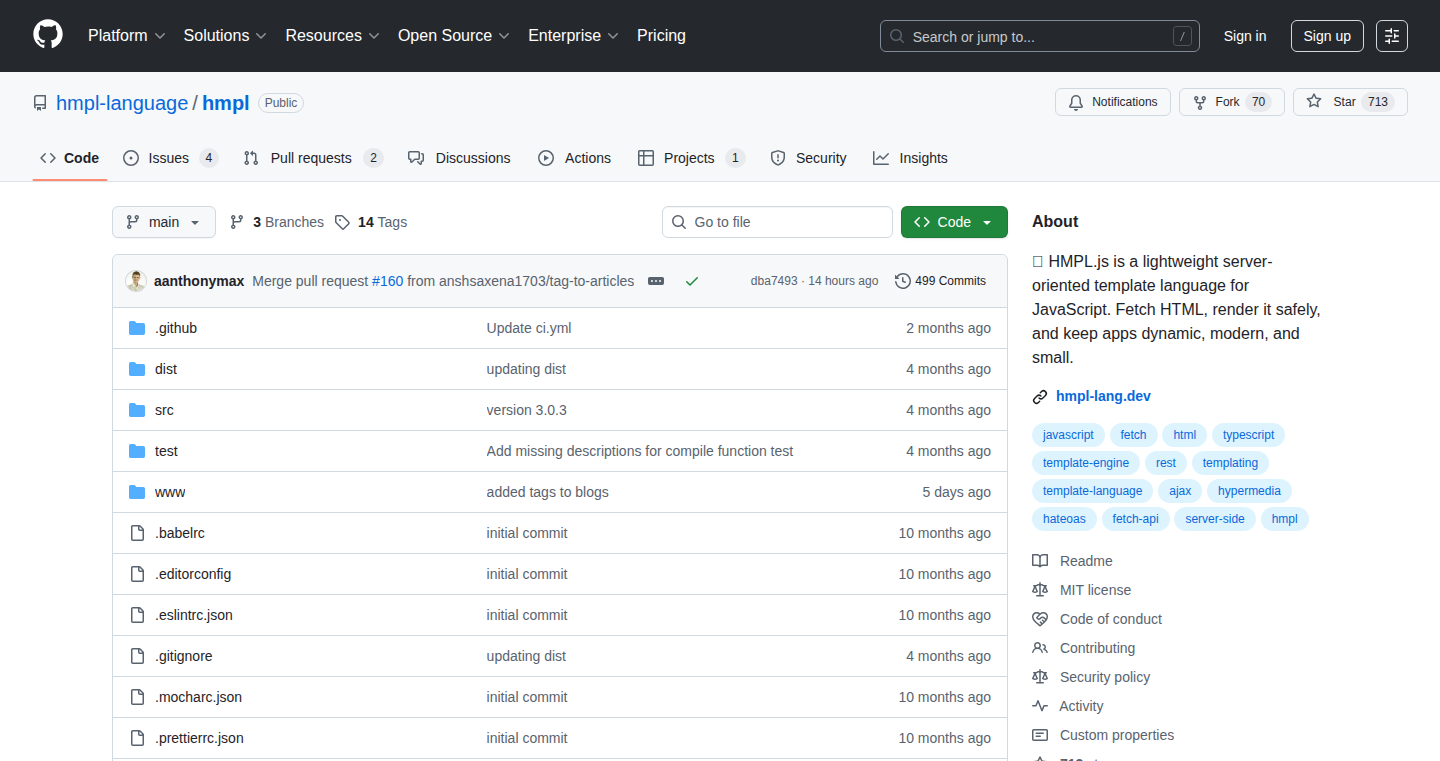

2

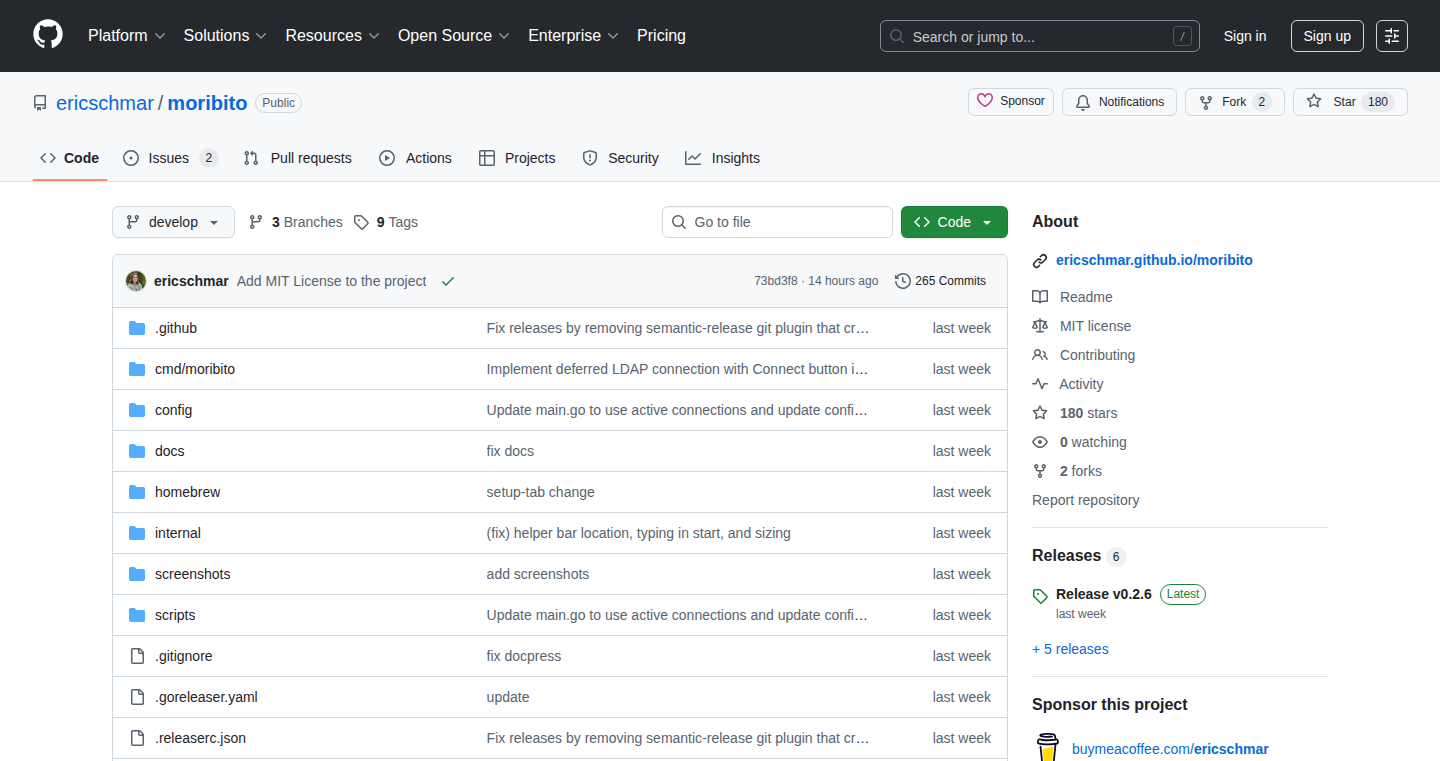

Moribito: The LDAP Navigator

Author

woumn

Description

Moribito is a command-line interface (CLI) tool designed for efficiently viewing and querying Lightweight Directory Access Protocol (LDAP) databases. It addresses the lack of user-friendly and performant LDAP tools for macOS by providing a text-based interface that simplifies daily tasks like basic queries and data validation. This tool empowers developers and system administrators to interact with LDAP directories directly from their terminal, streamlining workflow and improving productivity.

Popularity

Points 98

Comments 23

What is this product?

Moribito is a TUI (Text User Interface) application built for interacting with LDAP servers. Unlike graphical applications that can be resource-intensive and often clunky on certain operating systems like macOS, Moribito offers a lightweight and responsive experience within the terminal. Its innovation lies in its intuitive keyboard navigation and search capabilities, allowing users to quickly browse directory structures, execute complex queries using familiar LDAP filter syntax, and validate data entries without leaving their command-line environment. This approach directly tackles the usability issues found in existing solutions, providing a developer-centric way to manage LDAP data.

How to use it?

Developers can install Moribito via a package manager or build it from source. Once installed, they launch the tool with the LDAP server connection details (hostname, port, bind DN, and password), supplied either as command-line arguments or through a configuration file. An invocation might look like the following (flag names are illustrative; check the project's documentation for the exact options): `moribito --host ldap.example.com --bind-dn 'cn=admin,dc=example,dc=com' --password 'secret'`. After connecting, users can navigate the directory tree with the arrow keys, run searches with specific filters (e.g., `(uid=johndoe)`), and view the attribute data of entries. It can also be integrated into scripts for automated LDAP validation or data retrieval tasks.
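
Filters such as `(uid=johndoe)` use the LDAP string filter syntax defined in RFC 4515, where the characters `*`, `(`, `)`, `\` and NUL must be escaped inside assertion values. When a script builds filters from user input before passing them to Moribito or any other LDAP client, escaping prevents filter injection. A minimal Python sketch; the helper names are hypothetical, and attribute names are assumed to come from trusted code.

```python
# RFC 4515 requires these characters to be escaped inside filter values.
_ESCAPES = {"\\": r"\5c", "*": r"\2a", "(": r"\28", ")": r"\29", "\x00": r"\00"}


def escape_filter_value(value: str) -> str:
    # Character-by-character mapping, so an inserted backslash is never re-escaped.
    return "".join(_ESCAPES.get(ch, ch) for ch in value)


def eq_filter(attr: str, value: str) -> str:
    # Equality assertion; `attr` is assumed to be trusted, only `value` is escaped.
    return f"({attr}={escape_filter_value(value)})"


def and_filter(*parts: str) -> str:
    return "(&" + "".join(parts) + ")"


print(and_filter(eq_filter("objectClass", "person"), eq_filter("uid", "j*doe")))
# (&(objectClass=person)(uid=j\2adoe))
```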

Product Core Function

· LDAP Connection Management: Securely connect to LDAP servers using various authentication methods, enabling access to directory data from any terminal. This is valuable for developers needing to interact with corporate directories or manage service accounts.

· Interactive Directory Browsing: Navigate through LDAP entries and their hierarchical structure using intuitive keyboard commands, making it easy to explore complex directory trees without manual searching. This speeds up data discovery and troubleshooting.

· Advanced Querying and Filtering: Execute LDAP queries with standard filter syntax and receive results directly in the terminal, allowing for precise data retrieval and validation. This is crucial for developers who need to verify user attributes or find specific organizational units.

· Data Viewing (Read-Only): Inspect the attributes and values of LDAP entries in a clean, readable format, facilitating quick data verification. Editing is not currently supported, but read-only viewing already provides essential insight into directory contents.

· Command-Line Integration: Seamlessly integrate Moribito into shell scripts and existing development workflows for automated tasks like checking user credentials or fetching configuration data from LDAP.
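
Interactive browsing requires arranging the flat DNs returned by a search into a tree, with the directory suffix at the root. The sketch below shows that step in Python; the function names are hypothetical, it is not Moribito's implementation, and its DN parsing is deliberately simplified compared with RFC 4514.

```python
import re
from typing import List


def split_dn(dn: str) -> List[str]:
    # Split on commas not preceded by a backslash, so an escaped comma such as
    # "cn=Doe\, John" stays inside its RDN. (Simplified: an escaped backslash
    # directly before a separator is not handled.)
    return [rdn.strip() for rdn in re.split(r"(?<!\\),", dn) if rdn.strip()]


def build_tree(dns: List[str]) -> dict:
    tree: dict = {}
    for dn in dns:
        node = tree
        # Walk from the suffix (rightmost RDN) down to the entry itself.
        for rdn in reversed(split_dn(dn)):
            node = node.setdefault(rdn, {})
    return tree


def render(tree: dict, depth: int = 0) -> List[str]:
    # Depth-first listing, indented two spaces per level, siblings sorted.
    lines = []
    for rdn in sorted(tree):
        lines.append("  " * depth + rdn)
        lines.extend(render(tree[rdn], depth + 1))
    return lines


dns = [
    "uid=jdoe,ou=People,dc=example,dc=com",
    "uid=asmith,ou=People,dc=example,dc=com",
    "cn=admins,ou=Groups,dc=example,dc=com",
]
print("\n".join(render(build_tree(dns))))
```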

Product Usage Case

· A developer needs to quickly find all users in a specific department within an LDAP directory for an application's user management feature. Using Moribito, they can navigate to the relevant organizational unit and apply a filter like `(department=Engineering)` to instantly retrieve the required user entries, significantly faster than using a graphical tool.

· A system administrator is troubleshooting an authentication issue and needs to verify that a user's attributes in LDAP are correctly configured. They can use Moribito to connect to the LDAP server, search for the user by their `uid`, and inspect all their attributes to identify any discrepancies, saving time compared to debugging logs or using a less responsive GUI.

· A DevOps engineer wants to automate the process of validating service account configurations stored in LDAP before deploying a new application. They can write a shell script that calls Moribito with specific query parameters to fetch and check the account's attributes, ensuring successful deployment and preventing potential errors.

3

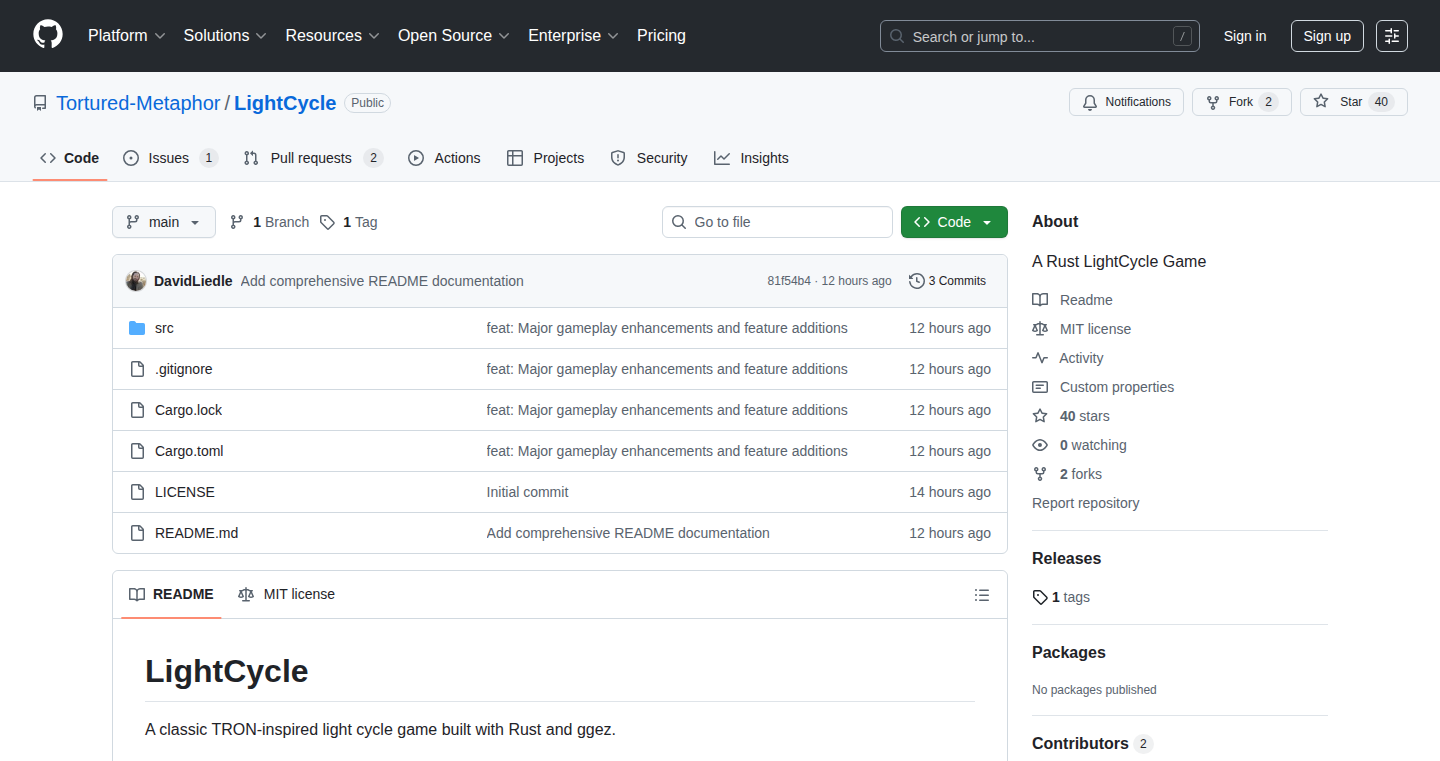

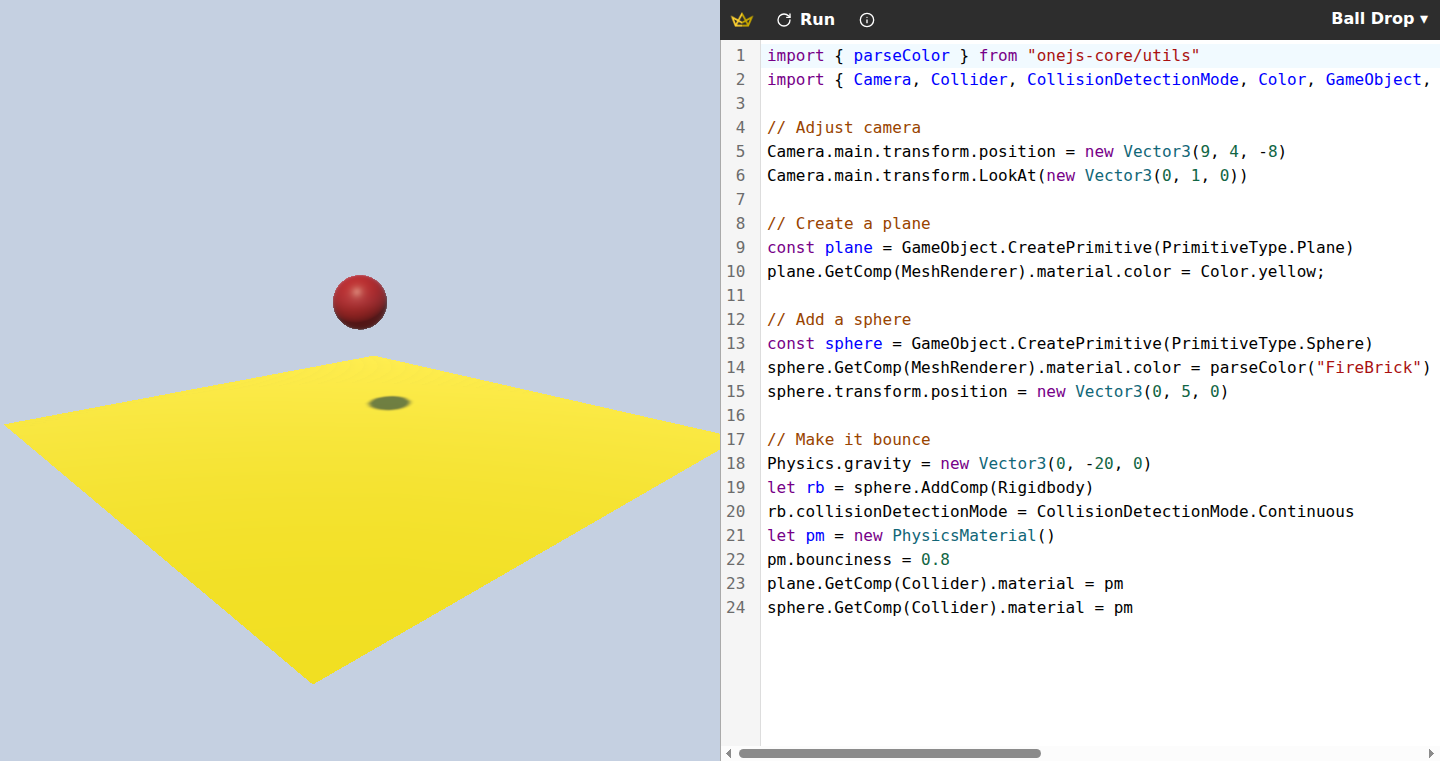

LightCycle.rs

Author

DavidCanHelp

Description

A FOSS game in Rust inspired by Tron, demonstrating efficient game loop management and real-time rendering in a memory-safe environment. It tackles the challenge of creating a responsive, multiplayer-capable game with a focus on low-level performance and developer experience.

Popularity

Points 35

Comments 12

What is this product?

LightCycle.rs is an open-source game built with the Rust programming language, inspired by the classic Tron light cycle battles. The core innovation lies in its efficient implementation of game logic and rendering. Rust provides memory safety without a garbage collector, which allows for predictable performance, crucial for real-time games. The project likely uses a game engine or a custom rendering loop, possibly leveraging libraries like `wgpu` or `vulkano` for graphics, to achieve smooth visual updates and handle player inputs with minimal latency. This approach allows for a fast, reliable gaming experience that is also protected from memory errors such as use-after-free and data races, which commonly surface as segmentation faults.

How to use it?

Developers interested in game development with Rust or those looking to explore low-level graphics and real-time simulation can use LightCycle.rs as a reference. They can fork the repository, study its codebase, and experiment with modifications. For instance, a developer could integrate its rendering pipeline into their own project, adapt its game logic for different game types, or contribute new features like AI opponents or network multiplayer. The project serves as a practical example of how to structure a game in Rust, manage game state, and handle input events efficiently. It's a great starting point for anyone wanting to learn game development in Rust or contribute to an open-source gaming project.
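
Real-time games commonly separate a fixed-timestep simulation from variable-rate rendering, so that movement and collisions behave the same at any frame rate. The project description does not say whether LightCycle.rs uses this exact pattern; the Python sketch below, with hypothetical names, illustrates the accumulator technique in general.

```python
def run_fixed_timestep(frame_times, dt, update, max_frame=1.0):
    """Feed measured frame durations (seconds) into fixed-size simulation steps.

    Returns (steps_run, leftover_time). A renderer would typically use
    leftover_time / dt to interpolate between the last two game states.
    """
    accumulator = 0.0
    steps = 0
    for frame_time in frame_times:
        # Clamp very long frames (e.g. after a pause) so the loop cannot
        # fall ever further behind while trying to catch up.
        accumulator += min(frame_time, max_frame)
        while accumulator >= dt:
            update(dt)  # advance the game state by exactly one tick
            accumulator -= dt
            steps += 1
    return steps, accumulator


ticks = []
print(run_fixed_timestep([0.125, 0.5, 0.25], dt=0.25, update=ticks.append))
# (3, 0.125)
```

Clamping long frames trades a brief slowdown for stability: without it, a stall of several seconds would force hundreds of catch-up updates in a single frame.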

Product Core Function

· Real-time rendering of dynamic game elements: This allows players to see their light cycles move and interact instantly, providing immediate visual feedback crucial for gameplay. The value is in experiencing a fluid and responsive visual experience.

· Player input handling for game control: This enables players to steer their light cycles, a fundamental mechanic for navigating the game arena and avoiding collisions. The value is in direct, intuitive control over the game character.

· Collision detection and game state management: This ensures that when a light cycle hits a wall, a trail, or another cycle, the game registers the event and updates the game state (e.g., eliminating the player or ending the round). The value is in enforcing game rules and creating a challenging experience.

· Multiplayer networking capabilities (potential): If implemented, this would allow multiple players to compete simultaneously, greatly enhancing the game's replayability and social interaction. The value is in shared competitive experiences.

· Cross-platform compatibility (Rust's promise): Rust's ability to compile for various operating systems means the game could potentially run on Windows, macOS, and Linux with minimal changes. The value is in wider accessibility for players and developers.
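
Collision detection in a light-cycle game is mostly grid bookkeeping: every cell a cycle has occupied becomes a wall, and a cycle is eliminated if its next cell is a wall, outside the arena, or entered by another cycle on the same tick. The following is a hypothetical Python model of those rules, not code taken from LightCycle.rs; it assumes cycle names are unique.

```python
from dataclasses import dataclass

DIRS = {"up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0)}
OPPOSITE = {"up": "down", "down": "up", "left": "right", "right": "left"}


@dataclass
class Cycle:
    name: str
    x: int
    y: int
    heading: str
    alive: bool = True

    def steer(self, new_heading: str) -> None:
        # A 180-degree turn would drive straight into the cycle's own trail,
        # so this sketch simply ignores it.
        if new_heading != OPPOSITE[self.heading]:
            self.heading = new_heading


def step(cycles: list[Cycle], trails: set[tuple[int, int]], width: int, height: int) -> list[str]:
    """Advance every living cycle one cell; return the names eliminated this tick."""
    targets = {}
    for c in cycles:
        if c.alive:
            dx, dy = DIRS[c.heading]
            targets[c.name] = (c.x + dx, c.y + dy)
    # Two cycles entering the same cell on the same tick both crash.
    claims: dict[tuple[int, int], int] = {}
    for cell in targets.values():
        claims[cell] = claims.get(cell, 0) + 1

    crashed = []
    for c in cycles:
        if not c.alive:
            continue
        nx, ny = targets[c.name]
        off_grid = not (0 <= nx < width and 0 <= ny < height)
        if off_grid or (nx, ny) in trails or claims[(nx, ny)] > 1:
            c.alive = False
            crashed.append(c.name)
        else:
            c.x, c.y = nx, ny
    # Every surviving cycle leaves a wall on its new cell.
    for c in cycles:
        if c.alive:
            trails.add((c.x, c.y))
    return crashed


a = Cycle("a", 0, 2, "right")
b = Cycle("b", 4, 2, "left")
trails = {(a.x, a.y), (b.x, b.y)}  # starting cells count as walls
print(step([a, b], trails, 5, 5))  # []
print(step([a, b], trails, 5, 5))  # ['a', 'b']: both enter (2, 2) at once
```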

Product Usage Case

· A game developer wanting to build a retro-style racing game can examine LightCycle.rs' rendering pipeline to understand how to create sharp vector graphics and smooth animations in Rust, saving time on foundational graphics implementation.

· A student learning about game loop design and state management can study how LightCycle.rs handles game updates, player actions, and win/loss conditions, applying these principles to their own educational projects.

· A Rust enthusiast looking to contribute to open-source projects can explore the codebase, identify areas for performance optimization, or add new game modes, thereby sharpening their Rust programming skills and contributing to a community effort.

· A hobbyist programmer interested in graphics APIs like Vulkan or wgpu can check whether LightCycle.rs builds on one of them and, if so, use it as a practical example of how such an API is integrated into a working game, facilitating learning and experimentation.

4

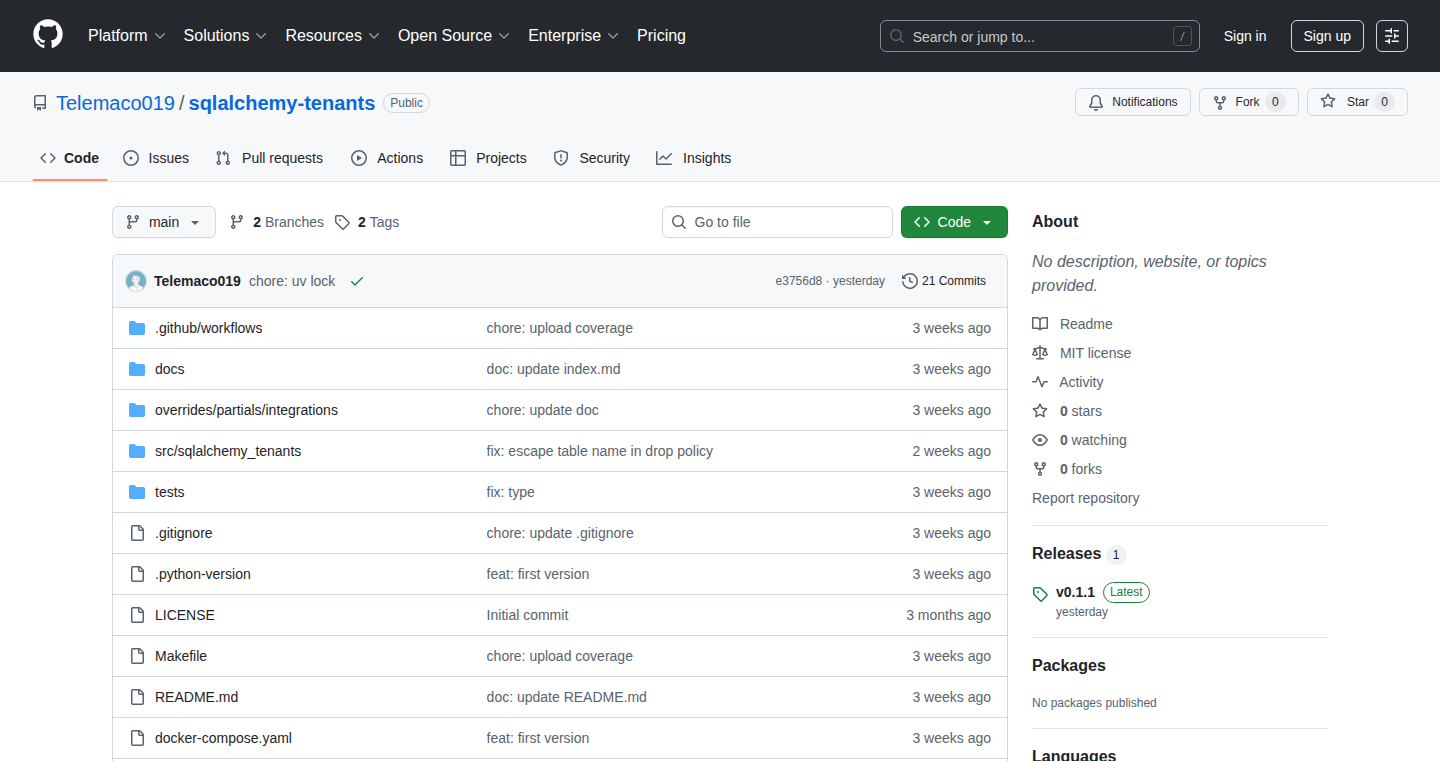

ZenStack: Unified Data Layer

Author

carlual

Description

ZenStack V3 is a modern, AI-friendly data layer for TypeScript applications. It revolutionizes how developers manage data by treating a comprehensive schema as the single source of truth, automatically generating essential components like access control, APIs, and frontend hooks. This reimplementation, powered by Kysely's type-safe query builder, offers enhanced flexibility and features beyond traditional ORMs while maintaining a developer experience similar to Prisma.

Popularity

Points 13

Comments 0

What is this product?

ZenStack V3 is a data management solution that acts as a unified layer for your application's data. Instead of manually writing code for various data-related tasks, you define your data structure and rules in a single, coherent schema. ZenStack then automatically generates the necessary code for things like database queries, security rules, and even frontend data fetching logic. The innovation lies in its 'model-first' approach and its reimplementation using Kysely, a type-safe query builder. This provides greater control and enables advanced features like database-side computed fields and polymorphic models, all while offering a smooth development experience.

How to use it?

Developers use ZenStack by defining their application's data model in its schema definition language. This schema acts as the central hub for all data logic. ZenStack then generates a type-safe ORM client (similar to Prisma's `PrismaClient`) for TypeScript backend code, along with hooks for the frontend. To integrate it, install the ZenStack package and set up your schema. The new VSCode extension provides autocompletion and error checking, further streamlining the process. For advanced scenarios, the runtime plugin system allows customization by intercepting queries.
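
The runtime plugin system is described only as intercepting queries. A common way to build that kind of hook is a middleware chain, in which each plugin receives the query plus a `proceed` callback and may rewrite the query or inspect the results. The Python sketch below shows the pattern with hypothetical names and an in-memory table standing in for the database; it does not reproduce ZenStack's actual TypeScript plugin API.

```python
from typing import Any, Callable, Dict, List, Optional

Query = Dict[str, Any]
Row = Dict[str, Any]
Proceed = Callable[[Query], List[Row]]
Plugin = Callable[[Query, Proceed], List[Row]]


def _wrap(plugin: Plugin, proceed: Proceed) -> Proceed:
    return lambda query: plugin(query, proceed)


class Client:
    """Toy query client whose plugins can intercept every query (hypothetical)."""

    def __init__(self, tables: Dict[str, List[Row]], plugins: Optional[List[Plugin]] = None):
        self.tables = tables
        self.plugins = plugins or []

    def _execute(self, query: Query) -> List[Row]:
        # Stand-in for SQL: equality filtering over an in-memory table.
        where = query.get("where", {})
        return [r for r in self.tables[query["model"]]
                if all(r.get(k) == v for k, v in where.items())]

    def find_many(self, model: str, where: Optional[Row] = None) -> List[Row]:
        handler: Proceed = self._execute
        # Wrap from the inside out so the first registered plugin runs first.
        for plugin in reversed(self.plugins):
            handler = _wrap(plugin, handler)
        return handler({"model": model, "where": dict(where or {})})


def exclude_soft_deleted(query: Query, proceed: Proceed) -> List[Row]:
    # Rewrite the query before it runs: hide rows flagged as deleted.
    return proceed({**query, "where": {**query["where"], "deleted": False}})


seen: List[str] = []


def log_models(query: Query, proceed: Proceed) -> List[Row]:
    # Observe the query and its result without changing either.
    rows = proceed(query)
    seen.append(f"{query['model']}: {len(rows)} rows")
    return rows


db = Client({"Post": [{"id": 1, "deleted": False}, {"id": 2, "deleted": True}]},
            plugins=[log_models, exclude_soft_deleted])
print(db.find_many("Post"))  # [{'id': 1, 'deleted': False}]
print(seen)                  # ['Post: 1 rows']
```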

Product Core Function

· Schema-driven ORM: Automatically generates a type-safe database client from your schema, simplifying data access and reducing boilerplate code.

· Automatic Access Control: Defines security rules within the schema, which are then enforced automatically by ZenStack, ensuring data security without manual implementation.

· API Generation: Creates RESTful APIs based on your data models and schema, enabling quick integration with frontend applications.

· Frontend Hooks: Generates client-side data fetching and manipulation hooks, making it easier for frontends to interact with the backend data layer.

· Type-Safe Query Builder (via Kysely): Offers a flexible and robust way to write database queries with strong compile-time type checking, catching errors early in the development process.

· Database-Side Computed Fields: Allows defining fields whose values are calculated directly in the database based on other fields, improving performance and simplifying logic.

· Polymorphic Models: Enables defining models that can represent different types of data within a single structure, offering flexibility in data representation.

· Strongly-Typed JSON: Provides enhanced type safety when working with JSON data types in your database, preventing runtime errors.

· Runtime Plugin System: Allows developers to intercept and modify queries at various stages, offering deep customization and integration possibilities.
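
Schema-level access control means the rules travel with the model, and the generated client applies them to every operation, so application code cannot forget a check. The Python sketch below mimics that idea with hypothetical names: rules are predicates attached to a model, and reads and creates pass through them. It is not ZModel syntax or ZenStack's enforcement engine; a real implementation would typically translate read rules into database query filters rather than filtering rows in memory.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional

Row = Dict[str, Any]
User = Optional[Dict[str, Any]]
Rule = Callable[[User, Row], bool]


@dataclass
class ModelPolicy:
    read: Rule
    create: Rule


# Rules live next to the model definition (hypothetical, not ZModel syntax).
POLICIES: Dict[str, ModelPolicy] = {
    "Message": ModelPolicy(
        # Signed-in users can read messages in rooms they belong to.
        read=lambda user, row: user is not None and row["room"] in user["rooms"],
        # Only signed-in users can post, and only as themselves.
        create=lambda user, row: user is not None and row["author"] == user["id"],
    ),
}


class PolicyError(Exception):
    pass


class EnhancedClient:
    """Every operation goes through the model's policy; callers cannot skip it."""

    def __init__(self, tables: Dict[str, List[Row]], user: User):
        self.tables = tables
        self.user = user

    def find_many(self, model: str) -> List[Row]:
        policy = POLICIES[model]
        return [row for row in self.tables[model] if policy.read(self.user, row)]

    def create(self, model: str, row: Row) -> Row:
        if not POLICIES[model].create(self.user, row):
            raise PolicyError(f"create on {model} denied")
        self.tables[model].append(row)
        return row


tables = {"Message": [
    {"id": 1, "room": "general", "author": "u1"},
    {"id": 2, "room": "staff", "author": "u2"},
]}
alice = EnhancedClient(tables, {"id": "u1", "rooms": ["general"]})
print([m["id"] for m in alice.find_many("Message")])  # [1]
```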

Product Usage Case

· Building a real-time chat application: Define user and message models in ZenStack. Automatically generate APIs for sending and receiving messages, and implement access control to ensure only authenticated users can send messages. The frontend can use the generated hooks to fetch and display new messages.

· Developing an e-commerce platform: Model products, orders, and customers. Use ZenStack to automatically generate APIs for product catalog browsing and order processing. Expose derived values, such as an order's total or a product's in-stock status, as database-side computed fields.

· Creating a content management system: Define content types and fields in the schema. Automatically generate APIs for creating, reading, updating, and deleting content. Leverage Polymorphic Models to handle different types of content, such as blog posts and product reviews, within a unified structure.

· Integrating with AI assistants: The coherent schema and predictable output from ZenStack make it an ideal data layer for AI-assisted coding. AI tools can leverage the schema to understand data relationships and generate relevant code snippets or queries more effectively.

5

DeepDoc Local Research Assistant

Author

FineTuner42

Description

DeepDoc is a command-line tool that transforms your local documents (PDFs, DOCX, TXT, JPG) into structured, queryable knowledge bases. It automatically extracts text, breaks it into manageable pieces, performs semantic searches based on your queries, and generates a detailed markdown report, section by section. Think of it as a smart research assistant for your personal files, helping you quickly find and organize information from long documents, papers, or even scanned images.

Popularity

Points 10

Comments 2

What is this product?

DeepDoc is a local, terminal-based research tool that indexes and allows you to query your own files. It tackles the problem of extracting meaningful insights from diverse local document formats like PDFs, Word documents, text files, and even images containing text. The core innovation lies in its 'deep research workflow' applied to your personal data. It uses techniques to extract text, intelligently split it into contextually relevant chunks, and then leverages semantic search (understanding the meaning behind words, not just keywords) to find answers to your questions. Finally, it structures these answers into a coherent markdown report. This means you can ask questions about your documents and get back organized, relevant information, much like a human researcher would.

How to use it?

Developers can install DeepDoc from its repository. Once installed, you point the tool at a directory or specific files from the terminal and then issue queries; an invocation might look like `deepdoc --files my_thesis.pdf my_notes.docx` followed by `deepdoc --query "Summarize the main arguments of chapter 3"` (flag names are illustrative; see the project's README for the exact options). It's designed to be integrated into personal workflows for research, note-taking, or knowledge management. You can point it at folders containing research papers, project documentation, or personal notes to quickly extract and synthesize information without needing to manually open and read each file.
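
DeepDoc's exact chunking strategy is not described. A common baseline, sketched below in Python with a hypothetical `chunk_words` helper, is a sliding window over words with a configurable overlap, so that text near a chunk boundary keeps some of its surrounding context in both neighboring chunks. Production tools often count tokens rather than words and prefer sentence or paragraph boundaries.

```python
from typing import List


def chunk_words(text: str, size: int = 200, overlap: int = 40) -> List[str]:
    """Split text into windows of `size` words, each sharing `overlap` words with the previous one."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this window already reached the end of the text
    return chunks


print(chunk_words("a b c d e f g h i j", size=4, overlap=1))
# ['a b c d', 'd e f g', 'g h i j']
```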

Product Core Function

· Local Document Ingestion: Ability to process various local file formats (PDF, DOCX, TXT, JPG) and extract text. This provides the foundational step for making your personal files searchable and actionable, solving the problem of siloed information.

· Text Chunking: Intelligent splitting of extracted text into smaller, contextually relevant pieces. This is crucial for semantic search as it ensures that the search can pinpoint the most relevant parts of a document, improving the accuracy of answers.

· Semantic Search: Utilizes advanced natural language processing to understand the meaning and context of your queries and document content. This is far more powerful than simple keyword matching, allowing you to ask complex questions and get nuanced answers from your files.

· Structured Report Generation: Automatically builds and outputs a markdown report that organizes the retrieved information section by section based on your query. This saves significant time and effort in synthesizing research findings or extracting specific data points, making information readily usable.

· Command-Line Interface (CLI): Designed for developers and technically inclined users to easily integrate into their existing workflows and scripts. This offers flexibility and automation possibilities for managing personal knowledge.
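
Semantic search embeds the query and every chunk as vectors and ranks chunks by cosine similarity. Real semantic search relies on a learned embedding model; to keep this Python sketch self-contained, the hypothetical `embed` function substitutes plain word counts, which captures word overlap but not meaning. Swapping in a real embedding model changes `embed` only, while the ranking logic stays the same.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    # Stand-in for a learned embedding model: lowercase word counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(query: str, chunks: List[str], k: int = 3) -> List[Tuple[float, str]]:
    q = embed(query)
    scored = [(cosine(q, embed(c)), c) for c in chunks]
    # Stable sort: equally scored chunks keep their document order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


chunks = [
    "Chapter 3 argues that caching reduces latency",
    "The appendix lists hardware specs",
    "Latency budgets are discussed in chapter 5",
]
for score, text in top_k("caching latency", chunks, k=2):
    print(f"{score:.2f}  {text}")
# 0.53  Chapter 3 argues that caching reduces latency
# 0.27  Latency budgets are discussed in chapter 5
```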

Product Usage Case

· Research Papers Analysis: A student can point DeepDoc to a folder of research papers related to their thesis. By querying 'What are the key findings on topic X from these papers?', DeepDoc can generate a summary report, saving hours of manual reading and note-taking.

· Personal Notes Synthesis: A developer can feed DeepDoc their extensive collection of personal notes and code snippets. A query like 'Explain the core principles of the new framework I was learning about' can yield a structured overview, helping them recall and reinforce learning.

· Business Report Summarization: A project manager can point DeepDoc to a directory of project reports and documentation. Asking 'What were the major roadblocks identified in Q3?' can generate a concise report of issues, aiding in quick decision-making and problem identification.

· Scanned Document Retrieval: For legacy documents or scanned files, DeepDoc can extract text (if the scanner output is good or OCR is applied) and then allow users to search for specific information within them, unlocking valuable historical data that was previously inaccessible.

6

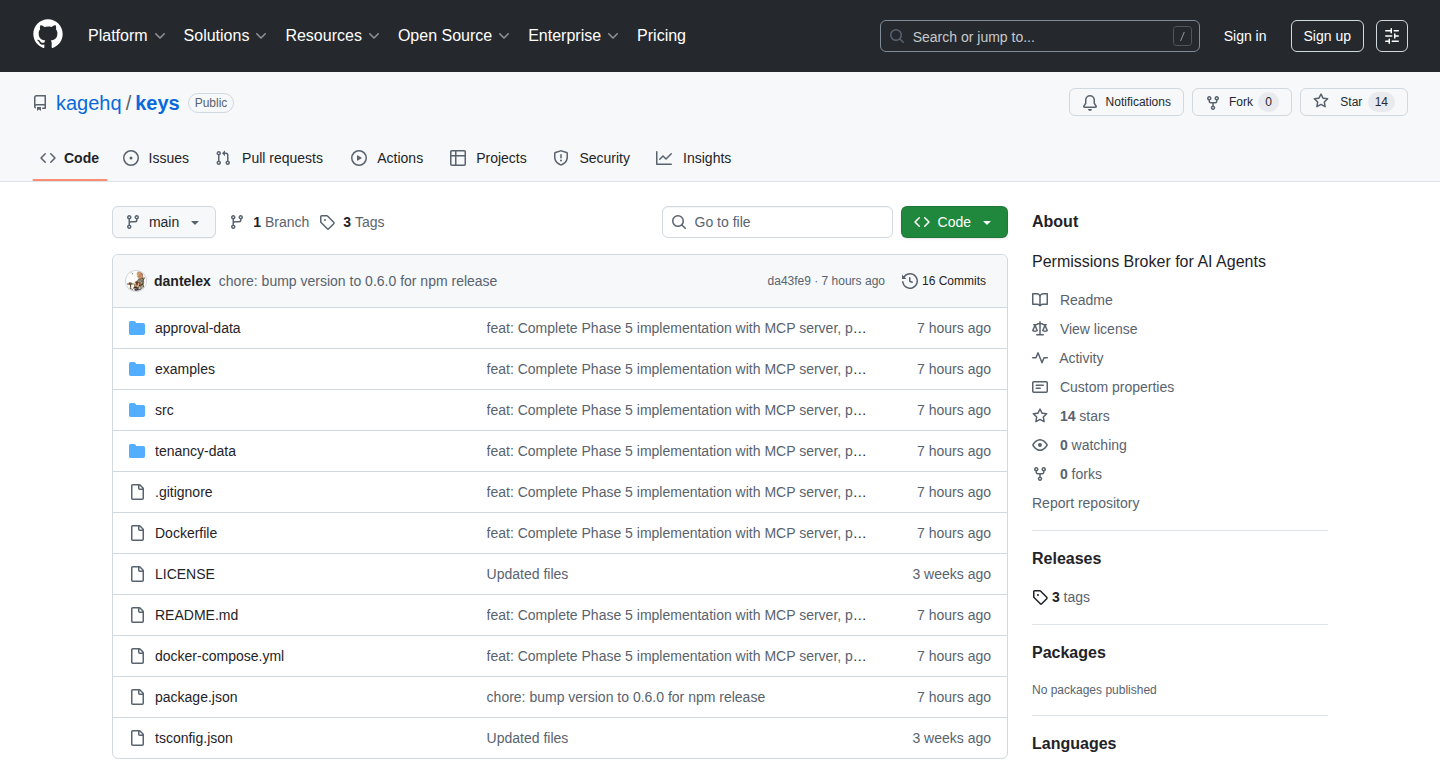

MCP Secrets Vault

Author

Rachid-Chabane

Description

MCP Secrets Vault is a local proxy designed to safeguard your sensitive API keys and secrets from being exposed to Large Language Models (LLMs). It acts as an intermediary, intercepting requests to LLM APIs, and injecting your credentials securely without them ever entering the LLM's context window. This innovative approach shields your critical data, such as API keys and private information, from accidental leakage or unauthorized access during LLM interactions, thereby enhancing security and privacy.

Popularity

Points 11

Comments 1

What is this product?

MCP Secrets Vault is a locally running proxy that acts as a gatekeeper for your sensitive information when interacting with LLM APIs. Instead of directly sending your API keys or secrets to the LLM provider, you route your requests through this proxy. The vault intelligently identifies sensitive data, such as API keys or personally identifiable information, and substitutes them with placeholders or securely manages their injection into the request headers or body only when necessary. This prevents your secrets from being processed or potentially logged by the LLM, offering a robust solution for privacy-conscious developers and organizations. Its innovation lies in its ability to selectively handle secrets at the network level, keeping them out of the LLM's computational context.

How to use it?

Developers can integrate MCP Secrets Vault into their LLM interaction workflows by configuring their applications or scripts to send API requests to the local proxy's endpoint instead of the LLM provider's direct URL. For instance, if you're using a Python script to query an LLM, you would update your script's API endpoint configuration to point to the MCP Secrets Vault. The vault can be set up to automatically detect and manage common types of secrets, such as API keys in authorization headers. This makes it a seamless addition to existing development pipelines, requiring minimal code changes. It's particularly useful for applications that frequently interact with LLMs and handle sensitive data, ensuring compliance with security policies and protecting intellectual property.
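
One way to keep a credential out of the model's context is to let the model and the calling code refer to it only by a placeholder, which the local process resolves just before sending the HTTP request. The Python sketch below illustrates that idea; the `{{secret:NAME}}` syntax, the in-memory `store`, the host allowlist, and the function names are hypothetical and are not MCP Secrets Vault's actual interface.

```python
import re
from typing import Dict, Set

PLACEHOLDER = re.compile(r"\{\{secret:([A-Z0-9_]+)\}\}")


class UnknownSecret(KeyError):
    pass


def resolve(text: str, store: Dict[str, str]) -> str:
    """Replace {{secret:NAME}} placeholders with values from the local store."""
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in store:
            raise UnknownSecret(name)
        return store[name]
    return PLACEHOLDER.sub(substitute, text)


def prepare_request(request: dict, store: Dict[str, str], allowed_hosts: Set[str]) -> dict:
    """Build the outgoing request; the caller only ever handles placeholders."""
    # Only inject secrets into requests bound for hosts the user approved.
    if request["host"] not in allowed_hosts:
        raise PermissionError(f"host {request['host']!r} is not allowed")
    headers = {k: resolve(v, store) for k, v in request.get("headers", {}).items()}
    # Return a copy so the placeholder version (the one the LLM saw) is untouched.
    return {**request, "headers": headers}


store = {"WEATHER_API_KEY": "abc123"}  # demo value; never hard-code real secrets
request = {
    "host": "api.weather.example",
    "headers": {"Authorization": "Bearer {{secret:WEATHER_API_KEY}}"},
}
outgoing = prepare_request(request, store, allowed_hosts={"api.weather.example"})
print(outgoing["headers"]["Authorization"])  # Bearer abc123
```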

Product Core Function

· Secure API Key Management: Intercepts and manages API keys, injecting them into requests only when required, preventing them from being part of the LLM's processed context. This protects your API access credentials from unauthorized exposure.

· Sensitive Data Redaction: Automatically identifies and can redact or mask other sensitive data in prompts, ensuring privacy and compliance with data protection regulations. This means your private information stays private.

· Local Proxy Architecture: Runs as a proxy on your own machine, so secrets are stored and injected locally and leave it only inside the outgoing requests the proxy builds, reducing the attack surface and increasing control over your sensitive information.

· Configurable Secret Injection: Allows developers to define specific rules for how and when secrets are injected into requests, offering fine-grained control over security policies. You can customize how your secrets are handled.

· LLM Context Isolation: Keeps your secrets out of the LLM's context, so they are never processed or stored by the model itself, which is crucial for maintaining confidentiality and preventing accidental data leakage. The LLM sees only what it needs, not your keys.
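
Pattern-based masking is one plausible way to implement the redaction described above: matches are swapped for numbered tokens before a prompt leaves the machine, and a local mapping lets tokens echoed in the response be restored. The Python sketch uses hypothetical patterns and names rather than the project's actual rules, and regular expressions can only catch secrets whose format is known in advance.

```python
import itertools
import re
from typing import Dict, Tuple

# Illustrative patterns only; real rules must match the secret formats you use.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "BEARER": re.compile(r"\bBearer\s+[A-Za-z0-9._~+/-]+=*", re.IGNORECASE),
    "AWS_KEY_ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}


def redact(text: str) -> Tuple[str, Dict[str, str]]:
    """Swap sensitive matches for numbered tokens; return the text and a local mapping."""
    mapping: Dict[str, str] = {}
    ids = itertools.count(1)
    for label, pattern in PATTERNS.items():
        def to_token(match: re.Match) -> str:
            token = f"[{label}_{next(ids)}]"
            mapping[token] = match.group(0)  # original value stays in this process
            return token
        text = pattern.sub(to_token, text)
    return text, mapping


def restore(text: str, mapping: Dict[str, str]) -> str:
    """Put original values back into a model response that echoes the tokens."""
    for token, original in mapping.items():
        text = text.replace(token, original)
    return text


prompt = "Email bob@example.com, auth: Bearer abc.def-123, key AKIAABCDEFGHIJKLMNOP"
safe, mapping = redact(prompt)
print(safe)  # Email [EMAIL_1], auth: [BEARER_2], key [AWS_KEY_ID_3]
```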

Product Usage Case

· Protecting Credentials in Shared Code: A developer can send code snippets to an LLM for analysis or code generation while the vault keeps the API keys and other credentials that the code uses out of the prompt. The code itself is still visible to the LLM; what the vault protects are the secrets.

· Securing User Data in LLM Applications: When building a chatbot that accesses user-specific information (e.g., account details), the vault can manage API calls to retrieve this data, ensuring that user PII remains out of the LLM's direct processing. This enhances user trust and data security.

· Integrating with Private LLMs: For organizations running private LLM instances on-premises, the vault can act as a secure intermediary, managing access credentials to the internal LLM services and ensuring that no external sensitive data is accidentally exposed. This streamlines internal access while maintaining high security.

· Automated Data Analysis with Sensitive Sources: An analyst might use an LLM to process financial reports containing sensitive company data. The vault can manage the credentials for the LLM and data-source APIs and can redact or mask identifiers the analysis does not need, so less confidential information is exposed. Any figures the model must actually analyze will still be sent to it.

7

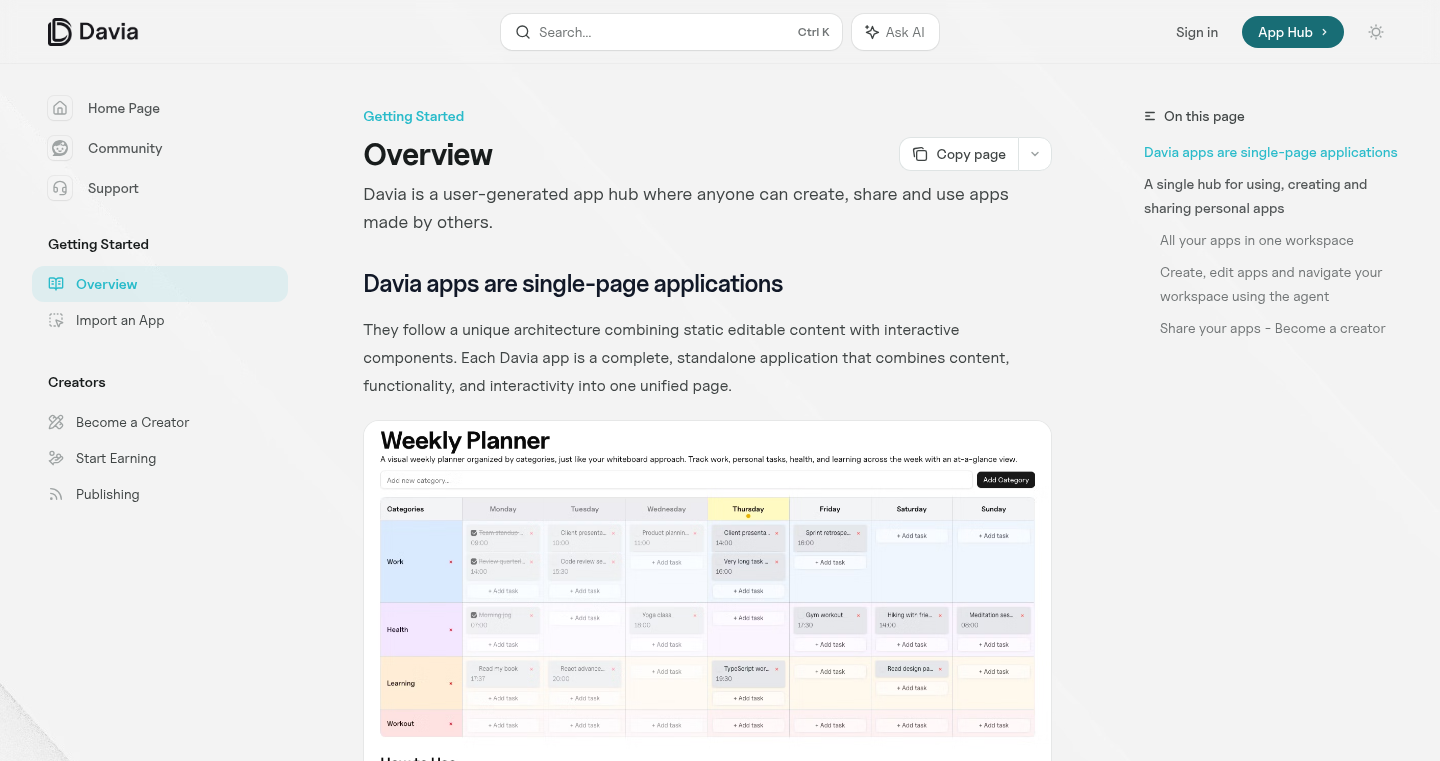

AppForge Republic

Author

ruben-davia

Description

AppForge Republic is a novel platform that reimagines application development and sharing. It functions like a YouTube for applications, where creators publish their apps with open, editable code. This allows users to not only discover and use applications but also to remix and customize them for their specific needs. The platform addresses the discoverability problem for side projects and community apps, and it incentivizes creators by rewarding them based on the usage and reuse of their applications, fostering a collaborative and economically viable ecosystem for AI-assisted development.

Popularity

Points 12

Comments 0

What is this product?

AppForge Republic is an application development and sharing platform that prioritizes open code and community collaboration. Unlike traditional code repositories like GitHub, which are primarily for developers, or app stores with closed-source applications, AppForge Republic acts as a marketplace and a collaborative workshop for applications. Its core innovation lies in a 'YouTube for apps' model where builders publish applications with their source code accessible, allowing anyone to view, fork, and modify them. This fosters a culture of reusability and adaptation. The platform tackles the discoverability challenge for independent app creators by providing a centralized hub. It also introduces a creator economy by rewarding builders when their applications are used as a foundation for new projects. This model is particularly well suited to AI-assisted development, enabling rapid iteration and specialization.

How to use it?

Developers can use AppForge Republic in several ways. First, they can browse and discover applications built by others, building on existing solutions rather than starting from scratch. If an existing app is close to what they need, they can fork its code directly within the platform, modify it, and even deploy it. Builders can publish their own work, making it discoverable to a wider audience and potentially earning rewards as others reuse their code. Forked code can be developed locally or deployed on the platform's hosting, which is priced by team usage. This makes it a good fit for prototyping, rapid application development, and building specialized tools within a community context.

Product Core Function

· Application Discovery and Browsing: Allows users to find a wide range of applications built by the community, solving the problem of reinventing the wheel for common tasks and providing inspiration for new projects. This helps users quickly find functional software that addresses their specific needs.

· Open Code Forking and Editing: Enables users to take existing applications, view their underlying code, and modify it to suit their unique requirements. This empowers customization and adaptation, allowing users to tailor solutions without starting from zero, thereby saving significant development time and effort.

· Creator Monetization and Rewards: Incentivizes application creators by providing rewards when their published applications are used as a base for new projects or are heavily utilized. This fosters a sustainable ecosystem for independent developers and encourages the creation of high-quality, reusable applications.

· Collaborative Development Environment: Provides a space for developers to share their work, receive feedback, and build upon each other's creations. This accelerates innovation and allows for collective problem-solving, fostering a strong sense of community within the development process.

· Platform Hosting and Scalability: Offers optional hosting services for deployed applications, with usage-based pricing for teams. This provides a convenient path for users to scale their customized applications without the overhead of managing their own infrastructure.
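The post does not say how rewards are calculated. The sketch below is a purely hypothetical illustration of rewarding reuse. It records which app each fork came from and splits the credit for one use of an app along its fork lineage: each creator keeps part of the remaining credit and passes a `decay` fraction up to the app it was forked from.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class App:
    app_id: str
    creator: str
    parent: Optional[str] = None  # app_id this app was forked from, if any


def attribute_usage(apps: dict[str, App], used_app: str,
                    points: float, decay: float = 0.5) -> dict[str, float]:
    """Split `points` for one use of `used_app` across its fork lineage.

    Hypothetical scheme: each creator keeps (1 - decay) of the remaining
    credit and passes the rest to the parent app; the original app, which
    has no parent, keeps whatever is left. Assumes forks form a tree.
    """
    credits: dict[str, float] = {}
    current: Optional[str] = used_app
    remaining = points
    while current is not None:
        app = apps[current]
        share = remaining if app.parent is None else remaining * (1 - decay)
        credits[app.creator] = credits.get(app.creator, 0.0) + share
        remaining -= share
        current = app.parent
    return credits
```

With `decay=0.5`, one 100-point use of a fork of a fork credits its creator 50, the intermediate forker 25, and the original author 25.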

Product Usage Case

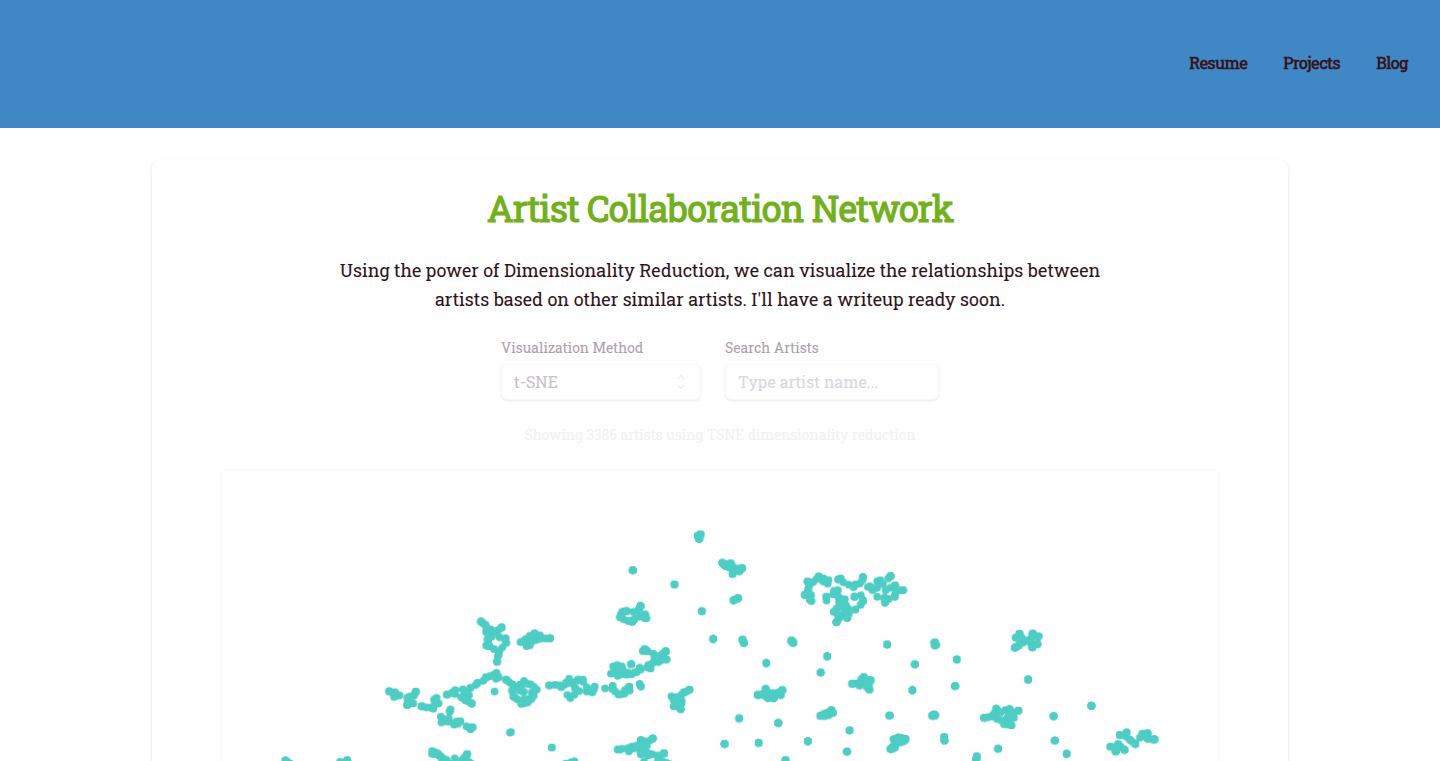

· A web developer needs a specific chart visualization component for a new project but doesn't want to build it from scratch. They discover an open-source charting app on AppForge Republic, fork its code, make minor adjustments to the styling, and integrate it into their project, saving hours of development time.

· An AI enthusiast creates a niche AI-powered text summarization tool as a side project. They publish it on AppForge Republic. Another developer finds it useful, forks the code, adds multilingual support, and deploys it for their own users. The original creator receives a reward for their foundational work.

· A startup team is building a customer support chatbot. They find a basic chatbot framework on AppForge Republic, fork it, and then customize it extensively with their specific business logic and AI models. They then use the platform's hosting for their internal team, benefiting from a pre-built, adaptable foundation.

· A data scientist wants to share a tool they built for analyzing specific types of sensor data. They publish it on AppForge Republic with open code. Other data scientists in similar fields discover the tool, fork it, and adapt it for their own datasets or add new analytical features, thereby enhancing the tool's utility and reach.

8

HeyGuru: Conversational AI for Deep Inquiry

Author

beabhinov

Description

HeyGuru is a platform designed for thoughtful exploration of complex questions. It leverages advanced conversational AI to provide users with a dedicated, uninterrupted space for deep thinking and dialogue, acting as a sophisticated sounding board for challenging ideas. Its core innovation lies in its ability to maintain context and offer nuanced responses, simulating a focused, one-on-one intellectual exchange.

Popularity

Points 10

Comments 1

What is this product?

HeyGuru is an AI-powered conversational tool that creates a distraction-free environment for users to engage with their most profound questions. Unlike typical chatbots that aim for quick answers, HeyGuru is engineered to facilitate extended, in-depth exploration. It utilizes large language models (LLMs) with fine-tuning for sophisticated dialogue and reflective inquiry. This means it's not just about getting an answer, but about collaboratively unpacking a concept, exploring different facets, and pushing your own understanding forward. The 'quiet space' aspect is conceptual – the AI's interaction style is designed to be focused and non-intrusive, mimicking a dedicated intellectual session.

How to use it?

Developers can integrate HeyGuru into their workflows for personal reflection, research, or even as a tool for brainstorming complex technical challenges. You can start a conversation by posing a question, a problem statement, or a hypothesis. For example, a developer could ask, 'How can I optimize this database query for extreme read loads?' and then engage in a back-and-forth discussion, exploring different indexing strategies, caching mechanisms, or architectural patterns. It can be used directly through its interface, or potentially via API for custom applications needing a sophisticated conversational agent for analytical purposes.

Product Core Function

· Deep Question Analysis: The AI is trained to deconstruct complex queries, breaking them down into smaller, manageable parts for focused discussion. This helps users systematically tackle intricate problems, providing clarity by offering a structured approach to their thinking.

· Contextual Continuity: Maintains a deep understanding of the ongoing conversation, remembering previous points and building upon them. This allows for a natural, flowing dialogue that mirrors human interaction, preventing repetitive questions and ensuring progress on the user's topic.

· Nuanced Response Generation: Produces responses that go beyond surface-level information, offering insights, alternative perspectives, and thoughtful continuations. This enables users to gain a richer understanding of their subject matter, as the AI acts as a facilitator for deeper intellectual engagement.

· Reflective Dialogue Simulation: Designed to prompt further thought and exploration by asking clarifying questions or suggesting avenues of inquiry. This encourages users to critically examine their own ideas, leading to more robust conclusions and personal learning.

· Distraction-Free Interface: While the core is AI, the user experience is intentionally streamlined to minimize external distractions. This aids concentration, allowing users to fully immerse themselves in the thinking process, maximizing productivity and depth of thought.
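Contextual continuity of this kind is commonly implemented by resending recent conversation history with each model request. The sketch below shows that generic pattern, not HeyGuru's implementation. It approximates tokens by counting words, an assumption that a real system would replace with the model's own tokenizer.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Rolling history for a long, single-topic dialogue."""
    system_prompt: str
    budget: int = 3000  # approximate max words sent per request (word count ~ tokens)
    turns: list[tuple[str, str]] = field(default_factory=list)

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))

    def context(self) -> list[tuple[str, str]]:
        """System prompt plus as many of the most recent turns as fit the budget."""
        used = len(self.system_prompt.split())
        kept: list[tuple[str, str]] = []
        # Walk backwards so the newest turns are kept first.
        for role, text in reversed(self.turns):
            cost = len(text.split())
            if used + cost > self.budget:
                break
            kept.append((role, text))
            used += cost
        return [("system", self.system_prompt)] + list(reversed(kept))
```

Dropping the oldest turns is the simplest policy. A long deep-inquiry session might instead summarize older turns so that earlier reasoning is not lost entirely.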

Product Usage Case

· A software architect is exploring a new microservices architecture. They use HeyGuru to discuss potential trade-offs of different communication protocols (e.g., gRPC vs. REST) and the implications for scalability and fault tolerance. The AI helps them articulate their concerns and suggests design patterns for asynchronous communication, leading to a more resilient system design.

· A data scientist is trying to understand a complex machine learning algorithm. They engage HeyGuru in a dialogue about the algorithm's mathematical underpinnings and its practical application, exploring hyperparameter tuning strategies and potential biases. The AI's detailed explanations and probing questions help them grasp the nuances, improving model performance.

· A product manager is developing a strategy for a new feature. They use HeyGuru to brainstorm potential user pain points and explore different monetization models. The AI's ability to consider various business angles and user psychology provides valuable insights for their product roadmap.

· A developer is debugging a challenging race condition in a multithreaded application. They use HeyGuru to describe the symptoms and their current hypotheses. The AI helps them systematically analyze potential causes, suggesting debugging techniques and tools, and ultimately aiding in pinpointing the root issue.

9

Zyg: Commit Narrative Generator

Author

flyingsky

Description

Zyg is a CLI tool and dashboard designed to transform your git commits into human-readable progress updates. It addresses the common developer pain point of articulating work-in-progress without breaking concentration. By analyzing your code changes, Zyg automatically generates detailed commit messages and can then create project updates from these commits, streamlining communication with stakeholders. This innovative approach tackles the 'invisible progress' problem in software development by making technical advancements easily understandable, fostering better team alignment and reducing the overhead of manual status reporting.

Popularity

Points 5

Comments 4

What is this product?

Zyg is a developer tool that bridges the gap between raw code commits and clear project status updates. Think of it as a smart assistant for your development workflow. Technically, it leverages the power of git to inspect your code changes. It then uses natural language processing, likely powered by a large language model (like Anthropic's Claude, given the mention of API credits), to interpret these changes and generate a narrative summary. This summary can be embedded directly into commit messages, or used to create standalone updates for platforms like Slack or email. The innovation lies in automating the creation of meaningful progress reports directly from the code itself, turning complex diffs into digestible insights. So, for you, it means less time spent manually summarizing your work and more time actually doing it.

How to use it?

Developers can integrate Zyg into their workflow by installing it as a command-line interface (CLI) tool. You would typically run `zyg` before or after making your commits. Zyg will then analyze your staged or committed changes. You can choose to have Zyg automatically generate a detailed commit message that includes a human-readable summary of your work. Alternatively, you can select specific commits or a range of commits to generate a consolidated project update. This update can then be copied and pasted into your team's communication channels (like Slack or email) or, for more automated workflows, Zyg can potentially be configured to push these updates directly to subscribed stakeholders. The core idea is to make status reporting a natural byproduct of coding, rather than an extra task. This means your project manager or team lead can quickly understand your progress without needing to decipher code itself.
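The commit-to-update flow described above can be sketched with plain git commands. This is not Zyg's code. `git diff --cached` and `git log --pretty=format:%s` are standard git invocations, but the prompt wording and the `build_update_prompt` helper are hypothetical. The actual LLM call is left out because Zyg's internals are not documented here.

```python
import subprocess


def staged_diff() -> str:
    """Diff of the staged changes, i.e. what `git commit` would record."""
    result = subprocess.run(["git", "diff", "--cached"],
                            capture_output=True, text=True, check=True)
    return result.stdout


def commit_subjects(rev_range: str) -> list[str]:
    """One-line commit subjects for a range such as 'main..HEAD'."""
    result = subprocess.run(["git", "log", "--pretty=format:%s", rev_range],
                            capture_output=True, text=True, check=True)
    return [line for line in result.stdout.splitlines() if line.strip()]


def build_update_prompt(subjects: list[str], diff: str = "",
                        max_diff_chars: int = 8000) -> str:
    """Assemble a prompt asking an LLM for a stakeholder-friendly progress update.

    The diff is truncated so a large change cannot overflow the model's context.
    """
    lines = ["Summarize this work as a short progress update for non-engineers.",
             "", "Commits:"]
    lines += [f"- {subject}" for subject in subjects]
    if diff:
        lines += ["", "Diff (truncated):", diff[:max_diff_chars]]
    return "\n".join(lines)
```

For example, `build_update_prompt(commit_subjects("main..HEAD"), staged_diff())` produces a prompt that can be sent to any LLM client.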

Product Core Function

· Automatic commit message generation: Zyg analyzes code changes within a commit and generates a human-readable summary of the modifications, making it easier for anyone to understand what was done. This saves you from having to manually craft detailed commit messages.

· Project update creation from commits: Zyg can process a single commit or a series of commits to create a concise and informative project status update. This is incredibly useful for quickly informing your team or stakeholders about your progress without manual compilation.

· Stakeholder notifications: Zyg can notify subscribed individuals about project updates derived from your commits. This automates the communication process, ensuring relevant parties are always in the loop about your development activities.

· Manual update generation: Even if you don't want automated notifications, Zyg allows you to easily copy the generated summaries to share manually via Slack, email, or any other platform. This provides flexibility in how you communicate your progress.

· Integration with development workflow: As a CLI tool, Zyg is designed to fit seamlessly into existing git workflows, minimizing disruption and adding value without requiring a complete overhaul of how you work.

Product Usage Case

· Scenario: You've been working on a new feature for several hours, making multiple commits along the way. Your product manager asks for an update. Instead of sifting through your commit history and manually writing a summary, you run Zyg on your recent commits. Zyg generates a clear narrative like 'Implemented user authentication flow, including frontend components and backend API endpoints,' which you can then paste into Slack. Problem solved: You provide an immediate and accurate update without interrupting your coding flow.

· Scenario: Your team uses GitHub for code management and Linear for task tracking. You complete a significant chunk of work and want to update your task in Linear and potentially your team's general status channel. You can use Zyg to generate a comprehensive summary of all commits related to that task, then copy that summary to update your Linear ticket and post it to your team's Slack channel. Problem solved: Status updates are consolidated and easily transferable across different tools, saving manual re-entry.

· Scenario: You want to ensure your stakeholders are always aware of the progress you're making on a critical bug fix. You configure Zyg to automatically generate updates from your commits related to that bug and to notify specific individuals whenever a new update is created. Problem solved: Proactive and automated communication keeps everyone informed without you needing to manually send out reports.

10

ESP32 On-Call Alert Beeper

Author

TechSquidTV

Description

This project is a custom-built on-call alerting device leveraging an ESP32 microcontroller. It aims to provide a physical, dedicated notification system for on-call engineers, moving away from relying solely on smartphone apps or desktop alerts which can be easily missed or drowned out. The core innovation lies in its hardware-software integration for reliable, ambient notifications.

Popularity

Points 6

Comments 3

What is this product?

This project is a hardware device that uses an ESP32 microcontroller to receive and act on on-call alerts. Think of it as a smart, physical pager for modern developers. The ESP32 is programmed to connect to a cloud service (like Pushover or similar notification platforms) and trigger a physical alert when an on-call notification arrives. The innovation is in creating a dedicated, tangible notification system that is less intrusive than a buzzing phone but more attention-grabbing than a desktop banner. It’s about a focused, reliable way to know when you're needed, without the distractions of a general-purpose device.

How to use it?

Developers can use this project as a standalone alert device in their workspace. The ESP32 is programmed to receive specific webhook triggers or API calls from their existing incident management or notification systems (e.g., PagerDuty, Opsgenie, or custom scripts). Upon receiving a trigger, the ESP32 can activate an LED, a small buzzer, or even control other connected hardware. It's ideal for developers who want a distinct, always-on alert system in their home office or development environment. Integration typically involves configuring the ESP32 with Wi-Fi credentials and setting up the chosen notification service to send a trigger to an endpoint the ESP32 listens on. A device on a home network is usually not reachable from the internet, so that path generally needs a relay, a tunnel, or port forwarding. The alternative is for the ESP32 to connect out to the notification service itself.
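Before writing firmware, the alert endpoint can be prototyped on a desktop with Python's standard library. This sketch is not the project's firmware. It assumes a hypothetical JSON body such as `{"severity": "critical"}` rather than any real PagerDuty or Opsgenie webhook schema, and the `PATTERNS` table stands in for the LED and buzzer code that would run on the ESP32.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical mapping from alert severity to (LED color, number of beeps).
PATTERNS = {
    "critical": ("red", 3),
    "warning": ("amber", 1),
    "info": ("blue", 0),
}


def pattern_for(payload: dict) -> tuple[str, int]:
    """Choose an alert pattern; unknown or missing severities count as warnings."""
    severity = str(payload.get("severity", "")).lower()
    return PATTERNS.get(severity, PATTERNS["warning"])


class AlertHandler(BaseHTTPRequestHandler):
    # Stand-in for driving GPIO pins: remembers the last pattern triggered.
    last_pattern = None

    def do_POST(self) -> None:
        if self.path != "/alert":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length) or b"{}")
        except json.JSONDecodeError:
            self.send_error(400, "invalid JSON")
            return
        if not isinstance(payload, dict):
            self.send_error(400, "expected a JSON object")
            return
        type(self).last_pattern = pattern_for(payload)
        self.send_response(204)  # No Content: the alert was accepted
        self.end_headers()


def serve(port: int = 8080) -> None:
    """Listen on all interfaces so other machines on the LAN can send alerts."""
    HTTPServer(("0.0.0.0", port), AlertHandler).serve_forever()
```

Once the payload handling behaves as intended, the same logic can be ported to the device.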

Product Core Function

· Customizable alert triggers: The ESP32 can be programmed to respond to various notification services, allowing developers to integrate it with their existing on-call tools. This means you get notified by a system you already trust, but with a dedicated physical device.

· Physical notification mechanisms: The device can be configured to use LEDs of different colors, audible beeps, or even vibrations to alert the user. This provides a multi-sensory alert that's harder to ignore than a silent phone notification.

· Power options: The ESP32 supports low-power sleep modes, but keeping Wi-Fi connected for instant alerts draws far more current than sleeping, so battery life with an always-on connection is limited. A small USB power adapter is the practical choice for a reliable, always-on workspace companion.

· Wi-Fi connectivity: Seamless integration into the developer's network allows for real-time communication with alert services. This ensures you get your alerts instantly, without relying on Bluetooth or direct wired connections.

· Open-source firmware: The project's open-source nature allows developers to inspect, modify, and extend its functionality to perfectly suit their needs. This empowers you to tailor the alerting experience to your specific workflow.

Product Usage Case

· Scenario: A developer working on critical infrastructure wants a distinct alert for system outages, separate from their daily Slack notifications. They integrate the ESP32 to blink a bright red LED when a PagerDuty incident is assigned to them. This ensures they don't miss critical alerts even when deeply focused on coding or other tasks.

· Scenario: A freelance developer needs to be reachable for client emergencies but wants to avoid constantly checking their phone. They configure the ESP32 to emit a soft, melodic chime whenever a new urgent email arrives or they are mentioned in a specific Slack channel. This provides peace of mind and allows for focused work without the anxiety of missing something important.

· Scenario: A team lead who wants a visual indicator in their shared office space for when their team is on-call. They set up multiple ESP32 devices that light up with a team color when an alert is active. This provides an ambient awareness of the team's operational status for everyone.

11

StoryMotion

Author

chunza2542

Description

StoryMotion is a web-based, hand-drawn motion graphics editor that empowers educational content creators to produce engaging animated explanations. It builds upon the familiar Excalidraw canvas, offering a Keynote-like interface with an integrated effects animation library and scene transition capabilities, including a 'Magic Move' feature. Its core innovation lies in democratizing complex animation creation through an intuitive timeline editor and live preview, making it accessible for anyone to create professional-looking motion graphics without extensive animation expertise.

Popularity

Points 5

Comments 2

What is this product?

StoryMotion is a web application designed to simplify the creation of hand-drawn style animated videos, often referred to as motion graphics. At its heart, it leverages the Excalidraw canvas, which provides a freehand drawing experience, and combines it with a user-friendly interface similar to presentation software like Keynote. The innovation lies in its ability to add dynamic animations to these drawings. Think of it as a digital whiteboard that can bring your sketches and ideas to life. It allows you to define animated effects for individual elements and smooth transitions between different scenes, all within a visual timeline. This means you can create animated walkthroughs, explainer videos, or step-by-step tutorials with a distinctive, handcrafted aesthetic.

How to use it?

StoryMotion is accessed through a web browser at storymotion.video/editor. Developers and content creators can use it by starting a new project, drawing or importing elements onto the Excalidraw-like canvas, and then applying pre-built animation effects from the library. You can control the timing and sequence of these animations using the intuitive timeline editor. For example, you can select a drawing, choose a 'fade-in' or 'slide-out' effect, and set precisely when it should happen. You can also define how one scene smoothly transforms into the next. The editor integrates with Google Fonts, allowing you to add animated text to your creations. The final output can be exported as a video, making it easy to embed in blogs, presentations, or social media to explain concepts visually.

Product Core Function

· Keynote-like interface with Excalidraw canvas: Provides a familiar and intuitive drawing and editing environment, enabling users to create hand-drawn visuals easily and apply animations without a steep learning curve. This means you can start creating animated content right away, even if you're not a professional animator.

· Effects animation library: Offers a collection of pre-designed animation presets (e.g., move, scale, rotate, fade) that can be applied to any element on the canvas. This saves time and effort in creating custom animations, making complex visual movements accessible to everyone.

· Scene transition animation: Enables smooth and engaging transitions between different visual states or scenes, including a 'Magic Move' feature similar to Keynote's. This adds a professional polish to your animations and guides the viewer through your content seamlessly.

· Timeline editor with live preview: Allows precise control over the timing and sequencing of animations for each element and scene. The live preview means you can see the results of your edits instantly, facilitating rapid iteration and fine-tuning of your animated sequences.

· Google Fonts integration: Provides access to a wide range of fonts, allowing for visually appealing and branded text elements within the animations. This enhances the aesthetic quality and readability of your explanatory content.
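A 'Magic Move'-style transition can be understood as matching elements that appear in both scenes and interpolating their properties over time with an easing curve. The sketch below illustrates that general technique. The `Element` fields, the id-based matching, and the cubic ease-in-out curve are assumptions, not StoryMotion's or Excalidraw's actual data model.

```python
from dataclasses import dataclass, replace
from typing import Callable


@dataclass(frozen=True)
class Element:
    x: float
    y: float
    scale: float = 1.0
    opacity: float = 1.0


def ease_in_out_cubic(t: float) -> float:
    """Cubic ease-in-out: slow start, fast middle, slow finish."""
    return 4 * t ** 3 if t < 0.5 else 1 - (-2 * t + 2) ** 3 / 2


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation from a (t=0) to b (t=1)."""
    return a + (b - a) * t


def magic_move(before: dict[str, Element], after: dict[str, Element], t: float,
               ease: Callable[[float], float] = ease_in_out_cubic) -> dict[str, Element]:
    """Return the frame at progress t in [0, 1] of a transition between two scenes."""
    k = ease(min(max(t, 0.0), 1.0))
    frame: dict[str, Element] = {}
    for eid in before.keys() | after.keys():
        a, b = before.get(eid), after.get(eid)
        if a is not None and b is not None:
            # Present in both scenes: tween every property.
            frame[eid] = Element(lerp(a.x, b.x, k), lerp(a.y, b.y, k),
                                 lerp(a.scale, b.scale, k), lerp(a.opacity, b.opacity, k))
        elif a is not None:
            # Only in the first scene: fade out in place.
            frame[eid] = replace(a, opacity=a.opacity * (1 - k))
        else:
            # Only in the second scene: fade in at its final position.
            frame[eid] = replace(b, opacity=b.opacity * k)
    return frame
```

Calling `magic_move(scene_a, scene_b, frame / total_frames)` once per frame renders the whole transition. Passing a different `ease` function changes how the motion feels.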

Product Usage Case

· Explaining a complex coding concept on a blog: A developer can use StoryMotion to create a step-by-step animated walkthrough of a code snippet, highlighting specific lines or functions as they are explained, making the technical concept easier to grasp for their audience.

· Creating educational video tutorials for students: An educator can use StoryMotion to animate diagrams, charts, or historical timelines, making learning more interactive and memorable for students. For instance, animating the stages of photosynthesis with hand-drawn elements.

· Developing engaging onboarding sequences for a new software feature: A product manager can create a short animated guide demonstrating how to use a new feature, using StoryMotion's scene transitions and element animations to clearly show the workflow and benefits.

· Producing animated marketing snippets for social media: A small business owner can quickly create short, eye-catching animated graphics to promote their products or services, using the hand-drawn style to convey authenticity and creativity.

12

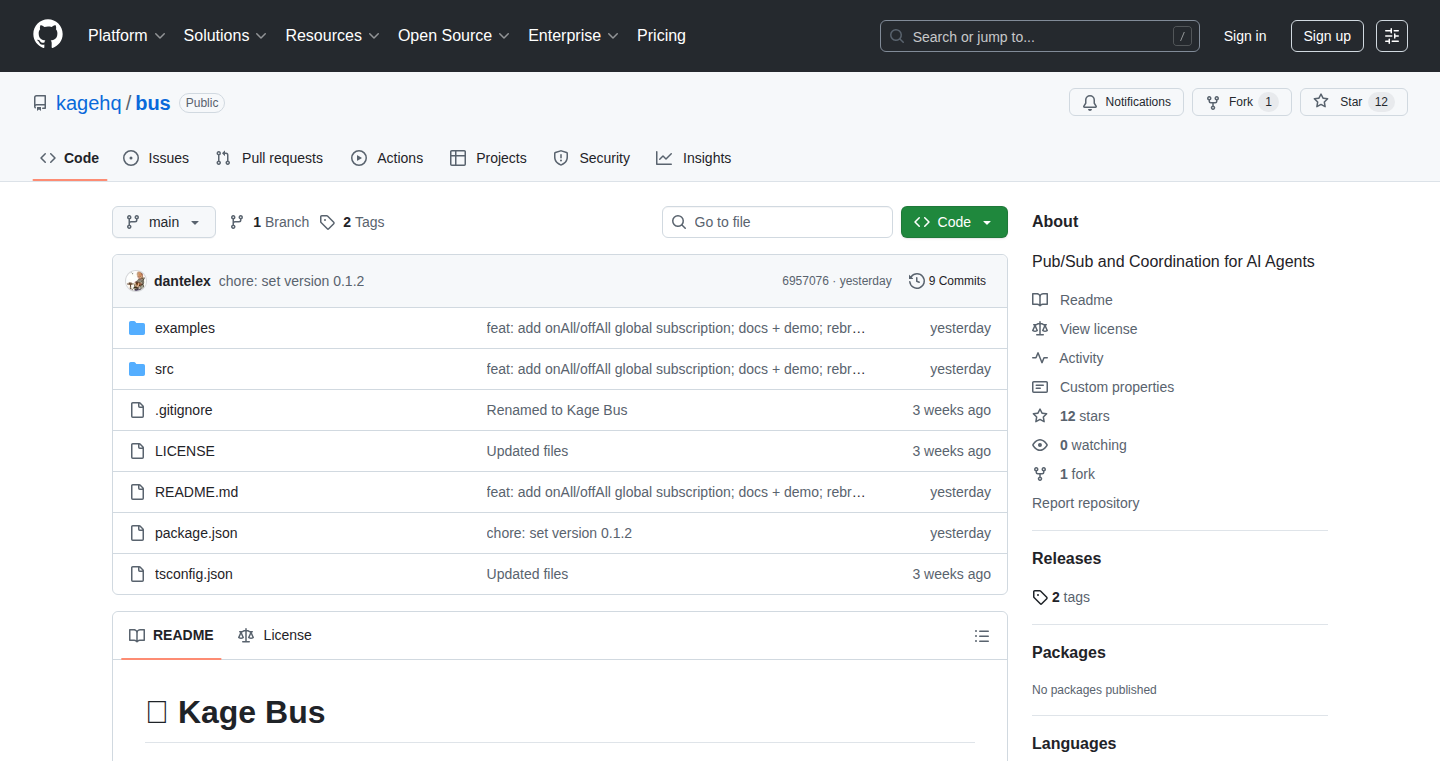

AgentSea

Author

miletus

Description

AgentSea is a private and secure AI chat interface that consolidates access to multiple AI models, specialized agents, and integrated tools. It addresses common frustrations with AI tools, such as subscription overload, loss of context when switching between platforms, and privacy concerns. By offering a unified experience, AgentSea allows users to seamlessly switch between different AI models mid-conversation without losing memory, utilize community-built specialized agents for specific tasks, and leverage integrated search and image generation capabilities. The 'Secure Mode' further enhances privacy by exclusively using open-source models or models hosted on AgentSea's servers.

Popularity

Points 6

Comments 0

What is this product?

AgentSea is an AI chat platform designed to simplify your interaction with various advanced AI models and tools. Think of it as a central hub for all your AI needs. The core innovation lies in its ability to allow you to use different AI models, like those from OpenAI, Anthropic, or open-source alternatives, all within the same chat conversation. You can switch between them on the fly, and your conversation's memory and context are preserved, meaning you don't have to start over. Furthermore, it offers a 'Secure Mode' which is a major privacy upgrade. In this mode, your chats are handled by AI models that are either open-source or hosted entirely on AgentSea's own infrastructure. This means your sensitive information is not shared with third-party model providers, addressing a critical concern for professional and personal use. It also integrates over 1,000 specialized AI 'agents' created by the community, which are like AI experts tailored for specific jobs (e.g., summarizing articles, writing code). Additionally, you can use it to search the web, Reddit, X (formerly Twitter), and YouTube, and even generate images, all from within the chat interface. So, what does this mean for you? It means less hassle with multiple subscriptions, no more losing your place in conversations when you want to try a different AI, and the peace of mind that your data is more private and secure.

How to use it?

Developers can use AgentSea through its web interface (agentsea.com) for quick access to AI models and tools. For integration into custom workflows or applications, AgentSea is designed to be a flexible platform. You can initiate conversations, specify which AI model to use (or let it intelligently switch), prompt for information, and leverage integrated tools like web search. For instance, a developer could use AgentSea to quickly research a new API by having it search documentation, summarize relevant forum discussions, and even generate code snippets using different AI models to compare their outputs. The platform's ability to maintain context across model switches is invaluable for iterative development or debugging. You can also use the community-built agents to automate specific tasks within your development process, such as code refactoring or generating test cases. So, how does this help you? It streamlines your research and development process by providing a single, efficient interface to powerful AI capabilities, saving you time and the complexity of managing multiple AI service accounts.

Product Core Function

· Unified access to diverse AI models: Allows seamless switching between leading AI models (e.g., GPT, Claude, Llama) within a single chat session, preserving conversational context. This is valuable for comparing AI performance on specific tasks or leveraging the unique strengths of different models without disruption.

· Private and secure chat mode: Offers a privacy-focused environment that uses only open-source models or models hosted on AgentSea's own servers, ensuring user data is not used for training or shared with third parties. This is crucial for handling sensitive information and for users who prioritize data security.

· Extensive community-built agent library: Provides access to over 1,000 specialized AI agents designed for niche tasks, enabling users to tackle a wide range of specific problems with tailored AI assistance. This significantly expands the utility of the platform beyond general chat.

· Integrated web and media search: Enables direct searching of Google, Reddit, X, and YouTube from within the chat interface, fetching real-time information to inform conversations and tasks. This makes the AI a more potent research and information-gathering tool.

· AI-powered image generation: Incorporates the ability to generate images using the latest AI models, adding a creative dimension to the platform. This is useful for content creation, design ideation, or simply exploring visual AI capabilities.
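Switching models mid-conversation without losing context follows naturally if every model receives the same shared message history. The sketch below illustrates that idea together with a Secure Mode allowlist. The model names, the `ModelInfo` type, and the plain callables that stand in for real model APIs are all hypothetical and do not represent AgentSea's API.

```python
from dataclasses import dataclass, field
from typing import Callable

# Stand-in for a real model API: takes the full message history, returns a reply.
ModelFn = Callable[[list[dict]], str]


@dataclass
class ModelInfo:
    fn: ModelFn
    secure_ok: bool  # True if open-source or hosted on the platform's own servers


@dataclass
class Chat:
    models: dict[str, ModelInfo]
    secure_mode: bool = False
    history: list[dict] = field(default_factory=list)

    def ask(self, model_name: str, text: str) -> str:
        info = self.models[model_name]
        # Check before touching history so a rejected request leaves no trace.
        if self.secure_mode and not info.secure_ok:
            raise PermissionError(f"{model_name} is not allowed in Secure Mode")
        self.history.append({"role": "user", "content": text})
        # Every model sees the same shared history, so switching models
        # mid-conversation keeps the earlier context.
        reply = info.fn(self.history)
        self.history.append({"role": "assistant", "content": reply, "model": model_name})
        return reply
```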

Product Usage Case

· A developer researching a new programming concept can use AgentSea to ask questions of GPT-4, then switch to Claude for a different perspective, and then use the integrated Google search to find relevant Stack Overflow threads, all without losing the thread of their inquiry.

· A writer working on sensitive company information can utilize AgentSea's Secure Mode with an open-source model to draft internal documents or summarize confidential reports, ensuring their proprietary data remains private and protected.

· A marketer can leverage a community-built agent within AgentSea to analyze social media sentiment for a specific campaign or generate marketing copy variations using different AI models to see which performs best.

· A student preparing for an exam can use AgentSea to get explanations of complex topics from multiple AI models, then use its integrated Reddit search to find student discussions or study guides related to the subject matter.

· A designer can use AgentSea to generate a series of visual concepts for a new product by prompting different image generation models, allowing for rapid ideation and exploration of design directions.

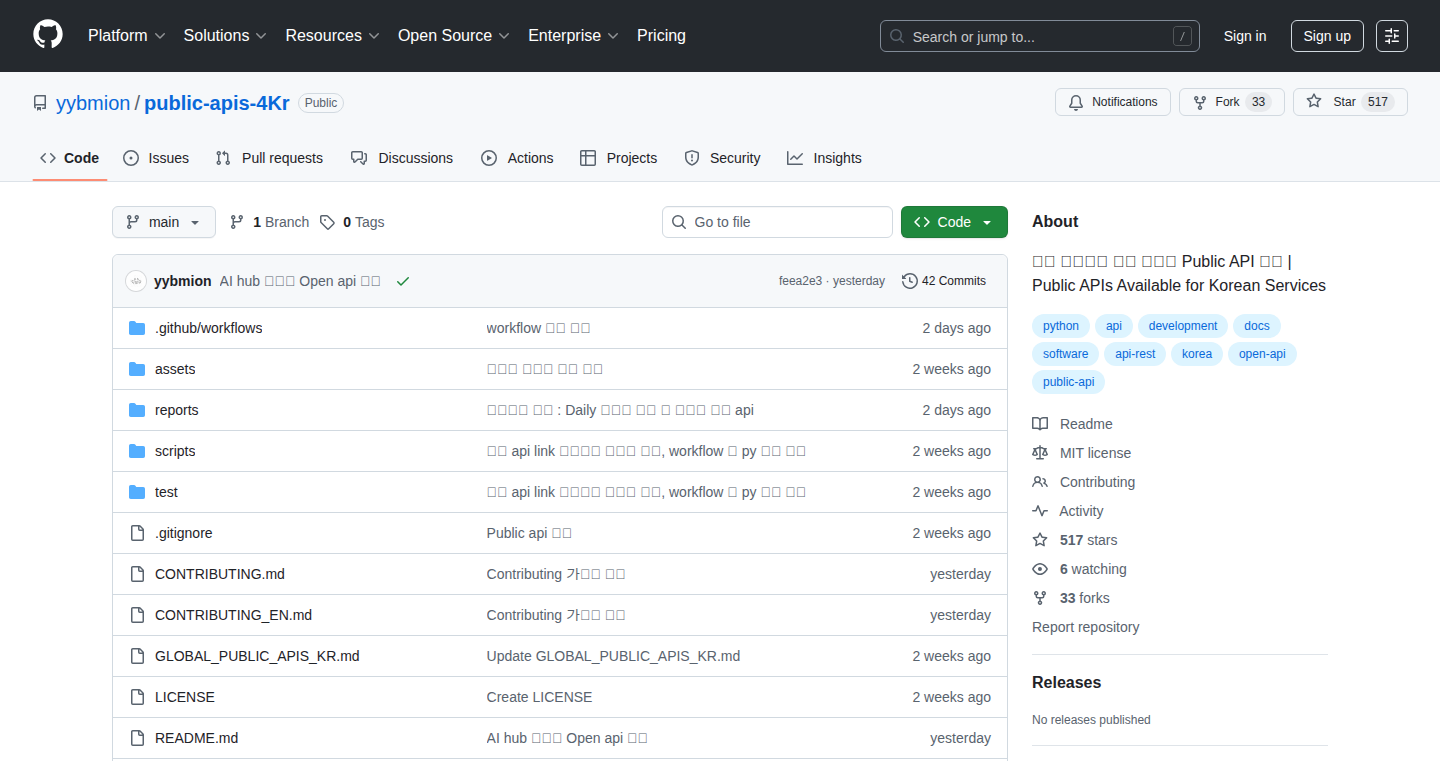

13

Founder Anonymity Hub

Author

audaciousdelulu

Description

This project addresses the often-unspoken challenges faced by startup founders. It provides a truly anonymous online space designed for honest conversations about the difficult, lonely aspects of building a business. The core innovation lies in its architecture, which deliberately avoids social connections and uses unlinkable aliases to ensure user privacy. This allows founders to share their struggles without fear of repercussions, fostering a supportive environment unlike typical engagement-driven platforms.

Popularity

Points 4

Comments 2

What is this product?

Founder Anonymity Hub is a community platform built from the ground up with user privacy as its cornerstone. Unlike most social platforms, which track your activity and connections, this project uses a "no social graph" approach. This means there are no profiles, no follower lists, and no way to link posts or comments back to a single user. Each post is assigned a randomly generated name, and even comment threads use different, fresh names. Authentication is also handled in a privacy-preserving way: the platform stores only a keyed one-way hash (HMAC) of your email, not the address itself, so the stored value cannot be reversed into your email. The platform also features a "hard delete" option that lets users permanently and irreversibly wipe their account with a single click, with no backups or data retention. This technical design prioritizes safe, open communication over metrics like engagement or virality. The main technical insight is that true psychological safety for discussing sensitive topics requires the complete absence of identifiable traces.

How to use it?

Developers can use Founder Anonymity Hub as a platform to share their experiences and challenges as founders. Imagine a developer facing a difficult decision about their startup's direction, or struggling with team management. Instead of posting on a platform where their identity might be inferred or linked to their professional profile, they can anonymously share their situation here. This project's open, post-first design means you don't even need an account to start sharing. The platform is ideal for asking for advice on sensitive topics, seeking support during tough times, or simply venting frustrations without the pressure of maintaining a public persona. For developers interested in the technical architecture, it serves as a practical example of how to build privacy-first applications, demonstrating techniques like HMAC-based authentication and the removal of social graph dependencies.

Product Core Function

· True Anonymity through unlinkable aliases: Each post is given a unique, random name, and comments use different names, ensuring no persistent identity is attached to user contributions. This allows for candid sharing of vulnerabilities without personal identification, directly addressing the fear of judgment or professional repercussions.

· No Social Graph: The absence of profiles, follows, and connection tracking means there's no way to build a network or link users across different posts. This design choice is crucial for creating a safe space where founders can speak freely, as it removes the incentive or ability for others to identify or scrutinize them based on their network.

· Privacy-Focused Authentication: Applying an HMAC (Hash-based Message Authentication Code) to your email turns it into a one-way, irreversible code. The platform keeps only that code, not your original address, so its stored data cannot reveal who you are. This closes off deanonymization through leaked records or email recovery.

· Hard Delete Functionality: The one-click account wipe permanently removes all user data without backups. This empowers users with complete control over their digital footprint, ensuring that any information shared can be entirely erased, reinforcing the commitment to privacy and the ability to disappear if desired.
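Per-post and per-comment aliases become unlinkable if each one is drawn fresh from a cryptographically secure random source instead of being derived from the account. The following is a minimal sketch. The word lists, the alias format, and the dictionary-based post structure are hypothetical and are not the platform's actual scheme.

```python
import secrets

# Hypothetical word lists; any sufficiently large vocabulary works.
ADJECTIVES = ["quiet", "brave", "amber", "tired", "steady", "curious"]
ANIMALS = ["otter", "heron", "lynx", "badger", "falcon", "newt"]


def random_alias() -> str:
    """Draw a fresh alias that takes no input from the user's identity."""
    word1 = secrets.choice(ADJECTIVES)
    word2 = secrets.choice(ANIMALS)
    return f"{word1}-{word2}-{secrets.randbelow(10_000):04d}"


def new_post(body: str) -> dict:
    # The alias is independent of the author, so two posts by the same
    # person share nothing a reader could correlate.
    return {"alias": random_alias(), "body": body, "comments": []}


def add_comment(post: dict, body: str) -> dict:
    # Each comment gets its own fresh alias, even within one thread.
    comment = {"alias": random_alias(), "body": body}
    post["comments"].append(comment)
    return comment


post = new_post("Three months of runway left and I haven't told my team.")
add_comment(post, "Been there. Happy to share what helped.")
print(post["alias"], [c["alias"] for c in post["comments"]])
```

The important design choice is what the alias is not computed from. An alias such as a hash of the user ID would stay the same across posts, which would make those posts linkable.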

Product Usage Case

· A founder struggling with imposter syndrome shares their feelings anonymously. The platform's anonymity allows them to receive empathetic responses from other founders who have experienced similar emotions, validating their feelings without exposing their professional identity.

· A developer working on a sensitive product pivots due to unforeseen market changes. They anonymously post about the difficult decision-making process and the fear of failure, receiving constructive feedback and encouragement from the community, which helps them navigate the uncertainty.

· A startup co-founder anonymously discusses the challenges of managing a co-founder relationship and the emotional toll it takes. This allows for open dialogue about sensitive interpersonal issues that might be difficult to discuss in a public or semi-public forum, leading to shared coping strategies.

· A developer considering a radical technical approach for their startup anonymously seeks opinions on the risks and potential rewards, without revealing their specific project or company. This allows for unbiased technical advice and exploration of unconventional ideas in a safe environment.

14

Prototyper: Fluid AI Design Accelerator

Author

thijsverreck

Description

Prototyper is an AI-powered software design platform built from the ground up on a custom compiler and rendering engine. Its goal is to dramatically speed up iterative design. It targets the slow feedback loops of traditional design tools with instant updates, a streamlined UI, and a responsive-by-default approach. Developers and designers can then explore and refine ideas rapidly instead of working around rigid workflows. Because the technology stack is developed in-house, the team can build features and optimizations that existing toolkits would not allow.

Popularity

Points 6

Comments 0

What is this product?

Prototyper is a new kind of software design platform that uses AI and a custom-built technology stack to make creating and refining product prototypes fast and intuitive. Traditional tools can be slowed down by lagging updates and complex workflows, whereas Prototyper is designed for instant feedback. Its core innovation is its in-house compiler and rendering engine, built to eliminate the lag typically associated with applying changes. When you make a design adjustment, you see the result immediately. It is also responsive out of the box, meaning your designs adapt to different screen sizes automatically. You are free to experiment without being held back by the tool, and you can find the best solution through rapid iteration.

How to use it?

Developers can use Prototyper as a standalone tool for rapidly sketching and iterating on user interfaces and product concepts. Its simplified UI and instant updates make it well suited to testing different design directions quickly. It also fits into existing workflows as an early rapid-prototyping stage, before work moves to more complex development environments. For example, a developer could use Prototyper to design and validate a new feature's user flow. They could then hand the resulting design to a dedicated UI/UX team or use it as the reference for implementation in code. Because the workflow is customizable, the tool can also be adapted to a project's specific needs.

Product Core Function

· Instant Updates: Allows for immediate visual feedback on design changes without manual compilation or refreshing, accelerating the iterative design cycle and making it easier to spot and fix issues quickly.

· Simplified User Interface: Designed to remove unnecessary steps and friction in the design process, enabling users to focus on creativity and rapid experimentation rather than wrestling with complex software.

· Responsive by Default: Ensures that designs automatically adapt to various screen sizes and devices, saving significant time and effort in creating cross-platform compatible interfaces.

· Customizable Workflow: Offers flexibility to tailor the tool to individual or team needs, allowing users to bend the tool to their workflow rather than being forced into a rigid, one-size-fits-all approach.

· Custom Compiler and Rendering Engine: This is the engine under the hood, enabling unique optimizations and features not possible with standard toolkits, providing a performance advantage and the ability to innovate beyond existing technological boundaries.
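How Prototyper's engine implements "responsive by default" isn't described here. As a generic illustration of the idea only, a breakpoint-driven fluid grid picks a column count from the container width and then divides the remaining space evenly. The breakpoint values and the 16 px gap below are hypothetical.

```python
# Hypothetical breakpoints: (minimum container width in px, column count).
BREAKPOINTS = [(0, 1), (600, 2), (1024, 3), (1440, 4)]


def columns_for(width: int) -> int:
    """Pick the column count of the widest breakpoint the container reaches."""
    cols = 1
    for min_width, n in BREAKPOINTS:
        if width >= min_width:
            cols = n
    return cols


def card_width(width: int, gap: int = 16) -> float:
    """Split the container into equal columns separated by fixed gaps."""
    cols = columns_for(width)
    return (width - gap * (cols - 1)) / cols


for w in (375, 800, 1280):
    print(w, columns_for(w), round(card_width(w), 1))
```

A design expressed this way needs no per-device variants, because the same rules are re-evaluated whenever the width changes.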

Product Usage Case

· A startup founder can quickly mock up and test multiple landing page variations to gauge user interest and optimize conversion rates without needing to write any code.

· A mobile app developer can rapidly prototype a new user onboarding flow, getting immediate visual feedback on each step and making adjustments on the fly to improve user experience.

· A backend engineer who needs to visualize a new API endpoint's data structure can quickly create a mock UI to represent the data, making it easier to understand and communicate the data flow.

· A product manager can create interactive wireframes to demonstrate a new feature's functionality to stakeholders, allowing for early feedback and validation before significant development resources are committed.

15

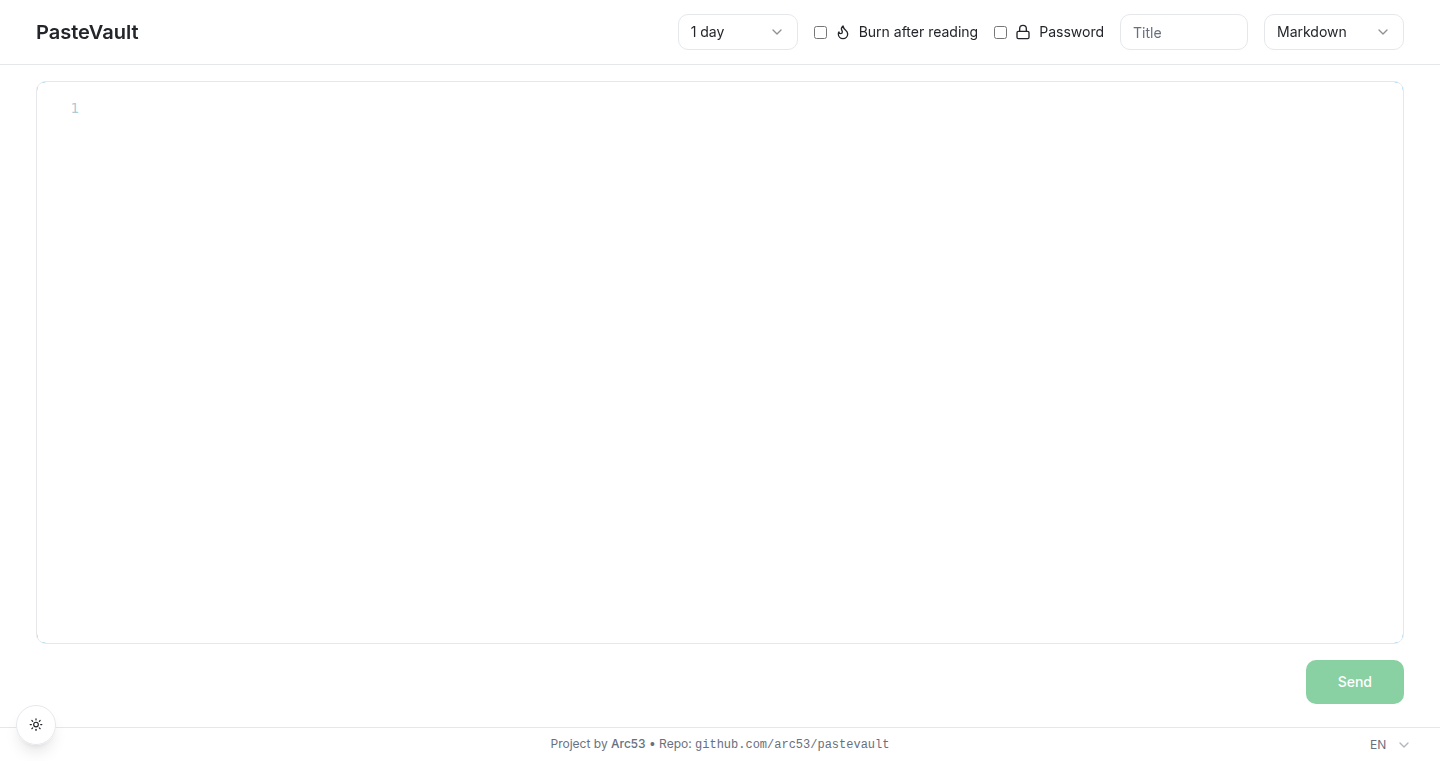

PasteVault-E2EE-VSCode

Author

larry-the-agent

Description

PasteVault is an open-source pastebin with End-to-End Encryption (E2EE) and a familiar VS Code-like editor. It tackles the common need for securely sharing code snippets or sensitive text, offering enhanced privacy and a user-friendly editing experience without compromising on the core functionality of a pastebin.

Popularity

Points 5

Comments 1

What is this product?

PasteVault is a pastebin service built with strong security and developer convenience in mind. Its core innovation is End-to-End Encryption (E2EE): only people who hold the decryption key, such as the sender and the intended recipients, can read the pasted content. The data is encrypted in the user's browser before it is sent to the server and decrypted only when a recipient opens the link, so the server stores only ciphertext. The familiar VS Code-like editor significantly improves the experience for developers. It provides syntax highlighting, autocompletion, and other familiar editing features that make creating and managing pastes more efficient. So, what's in it for you? You can share sensitive information like API keys, configuration files, or private code snippets with confidence that they are protected from prying eyes, and you can do so with the comfort and power of a professional code editor.
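Many browser-based E2EE pastebins keep the decryption key in the URL fragment, which is the part after `#`. Browsers never send the fragment to the server. Whether PasteVault uses exactly this scheme is an assumption here. The stdlib-only sketch below covers just the link handling. It generates a random 256-bit key, places it in the fragment, and shows that the request target the server receives contains only the path. The encryption step itself is left out; in a browser it could be done with AES-GCM via the Web Crypto API. The domain, the `/p/` path, and the helper names are hypothetical.

```python
import base64
import secrets
from urllib.parse import urlsplit


def make_share_link(base_url: str, paste_id: str) -> tuple[str, bytes]:
    """Build a share link whose fragment carries a fresh 256-bit key."""
    key = secrets.token_bytes(32)  # would be used client-side to encrypt the paste
    key_b64 = base64.urlsafe_b64encode(key).rstrip(b"=").decode("ascii")
    return f"{base_url}/p/{paste_id}#{key_b64}", key


def request_target(url: str) -> str:
    """What an HTTP client sends to the server: path and query, never the fragment."""
    parts = urlsplit(url)
    return parts.path + (f"?{parts.query}" if parts.query else "")


def key_from_link(url: str) -> bytes:
    """Recipient side: recover the key from the fragment, restoring base64 padding."""
    frag = urlsplit(url).fragment
    return base64.urlsafe_b64decode(frag + "=" * (-len(frag) % 4))


link, key = make_share_link("https://paste.example", "abc123")
print(request_target(link))  # /p/abc123
print(key_from_link(link) == key)  # True
```

The server only ever sees the paste ID and the ciphertext. Anyone who receives the full link can decrypt, so the link itself has to be treated as the secret.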

How to use it?

Developers can use PasteVault by visiting the website and pasting their content directly into the editor. For advanced use cases, the project is open-source, allowing developers to self-host their own secure pastebin instance, integrate it into their CI/CD pipelines for sharing build logs or secrets, or even build custom tools that leverage its secure sharing capabilities. The encryption is handled client-side, so there are no special client applications needed for basic usage. You can simply paste and share. So, how does this help you? You can quickly and securely share debugging information with a colleague, or manage temporary credentials for your deployment process.

Product Core Function

· End-to-End Encryption: Encrypts data in the browser before transmission, so only holders of the key can read it. Because the server never sees the plaintext, a server-side breach does not expose your content.

· VS Code-like Editor: Provides a rich and familiar editing experience with syntax highlighting, line numbers, and basic formatting, making it easy for developers to create and review code snippets. This elevates the utility of a simple pastebin to a developer-centric tool.

· Open-Source and Self-Hostable: Allows for community contributions and the ability to deploy your own private, secure pastebin instance, giving you full control over your data. This offers flexibility and enhanced security for sensitive internal projects.

· Simple Sharing Interface: Offers a straightforward way to generate shareable links for pasted content, enabling quick and easy collaboration. This makes sharing information efficient and hassle-free.

Product Usage Case

· Sharing sensitive API keys or database credentials with a team member for a temporary project, ensuring the keys are encrypted and only accessible via a secure link.

· Debugging a complex code issue by pasting error logs or relevant code snippets to a colleague, with the assurance that the content is protected by E2EE.

· Self-hosting PasteVault within a private network to securely share internal documentation or configuration files among development teams.

· Integrating PasteVault's functionality into a custom build script to automatically share build output or warnings securely after a successful compilation.

16

Cross-Platform Guitar Looper

Author

ralph_sc

Description

This project is a native Android guitar looping application built with C (C99). It enables musicians to create seamless, bar-perfect audio loops with a pre-count click, allowing for improvisation and practice. The innovative aspect lies in its cross-platform native implementation, utilizing OpenGL ES for custom GUI rendering and JNI for Android integration. This approach prioritizes performance and a unique user experience.

Popularity

Points 1

Comments 5

What is this product?

This is a native Android application for guitarists that lets them record short audio segments, called loops, and play them back repeatedly. Think of it as a digital tape recorder that instantly replays what you just played, so you can play along with yourself. The key innovation is that it's written in C, to the C99 standard. C is a low-level language that offers significant performance benefits. It also allows a highly customized user interface, rendered directly with OpenGL ES, a graphics API. Communication between the C code and the Android operating system is handled through JNI (Java Native Interface). So, why is this useful? The app is likely to be very responsive and efficient, giving a smooth and precise looping experience for practice and jamming without the overhead of higher-level runtimes.

How to use it?

Guitarists can use this app by connecting their instrument to their Android device, possibly via an audio interface. The app lets them set the tempo for a count-in, record a musical phrase, and then immediately play it back in a continuous loop while they play or sing along. The custom GUI, rendered with OpenGL ES, provides distinctive visual feedback for recording, playback, and loop management. The performance-critical core is written in C and connected to the Android platform through JNI. This is useful for anyone who wants to practice new song parts, experiment with improvisation, or simply jam along with themselves.
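Bar-perfect looping comes down to sample arithmetic. At a given tempo, time signature, and sample rate, one bar spans a fixed number of samples, and a recording can be snapped to a whole number of bars. The app itself is written in C; this Python sketch only illustrates the arithmetic. The function names and the rounding policy (snap to the nearest whole bar, with a minimum of one) are assumptions.

```python
def samples_per_beat(bpm: float, sample_rate: int) -> float:
    """Length of one beat in samples: seconds per beat times samples per second."""
    return sample_rate * 60.0 / bpm


def samples_per_bar(bpm: float, beats_per_bar: int, sample_rate: int) -> int:
    """Whole-sample length of one bar."""
    return round(samples_per_beat(bpm, sample_rate) * beats_per_bar)


def count_in_clicks(bpm: float, beats_per_bar: int, sample_rate: int) -> list[int]:
    """Sample offsets of the pre-count clicks: one click per beat for one bar."""
    beat = samples_per_beat(bpm, sample_rate)
    return [round(i * beat) for i in range(beats_per_bar)]


def snap_loop_length(recorded: int, bar_len: int) -> int:
    """Snap a raw recording length to the nearest whole number of bars (at least one)."""
    bars = max(1, round(recorded / bar_len))
    return bars * bar_len


bar = samples_per_bar(bpm=120, beats_per_bar=4, sample_rate=48_000)
print(bar)                              # 96000
print(count_in_clicks(120, 4, 48_000))  # [0, 24000, 48000, 72000]
print(snap_loop_length(200_000, bar))   # 192000
```

Each click offset is computed as round(i * beat) from the exact beat length. Adding a rounded beat length repeatedly would instead accumulate rounding error across the bar.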

Product Core Function