Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-08-13

SagaSu777 2025-08-14

Explore the hottest developer projects on Show HN for 2025-08-13. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Hacker News showcases a vibrant ecosystem of projects leveraging AI and developer tools. The surge in AI-powered solutions, from content generation to sales assistance, highlights the potential for automation across industries. The rise of no-code and low-code platforms empowers a wider audience to build and deploy applications. These trends point towards a future where technology becomes more accessible and where developers can focus on creativity and innovation, not just complex technical implementations. Embrace this wave of innovation by experimenting with AI APIs, exploring open-source solutions, and building tools that enhance developer productivity. Focus on user privacy and data security as crucial elements in any modern application. This is a call to action for all developers and aspiring entrepreneurs: build, innovate, and push the boundaries of what's possible, and don't be afraid to create tools that solve real-world problems.

Today's Hottest Product

Name

Yet another memory system for LLMs

Highlight

This project introduces a content-addressed storage system with block-level deduplication, aiming to reduce storage costs for LLM workflows and research. The innovation lies in its efficiency (saving 30-40% on codebases) and integration with popular development tools. Developers can learn about building efficient storage systems, especially how to use deduplication to save space. It shows how to optimize memory usage in LLM applications.

Popular Category

AI/ML

Tools/Utilities

Web Development

Popular Keyword

AI

LLM

No-code

Open Source

API

Browser

Technology Trends

AI-powered tools for various tasks: From generating content to automating sales research and creating thumbnails, AI is being integrated into different areas to increase efficiency and productivity.

No-code/Low-code platforms: Several projects aim to simplify complex tasks through user-friendly interfaces, enabling users with less technical expertise to create and deploy applications.

Focus on developer productivity and tooling: Projects like AI agents, debugging tools, and frameworks are being developed to enhance developer workflows, aiming to streamline development processes.

Privacy-focused applications: There is a growing emphasis on developing tools that prioritize user privacy, offering local processing, secure storage, and control over data.

Project Category Distribution

AI/ML (35%)

Tools/Utilities (30%)

Web Development (15%)

Other (20%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | Content-Addressed Persistent Memory for LLMs (CAPM) | 80 | 18 |
| 2 | Vaultrice: Real-time Key-Value Store with localStorage API | 14 | 2 |
| 3 | FakeFind: AI-Powered Review Authenticity Analyzer | 4 | 6 |
| 4 | PortalPass: A Web Browser for Wi-Fi Captive Portal Bypass | 4 | 5 |
| 5 | IntelliSell.ai: AI-Powered Prospect Research and Sales Strategy Generator | 3 | 5 |
| 6 | YC Galaxy: Interactive 3D Map of Y Combinator Companies | 7 | 1 |
| 7 | Inworld Runtime: A Graph-Based C++ Engine for AI Applications | 6 | 2 |
| 8 | U: A Programming Language for Unified Abstraction | 1 | 7 |
| 9 | langdiff: Real-time, Type-Safe JSON Streaming from LLMs | 6 | 1 |
| 10 | BrowserPilot: Command-Line Control for Your Browser | 2 | 5 |

1

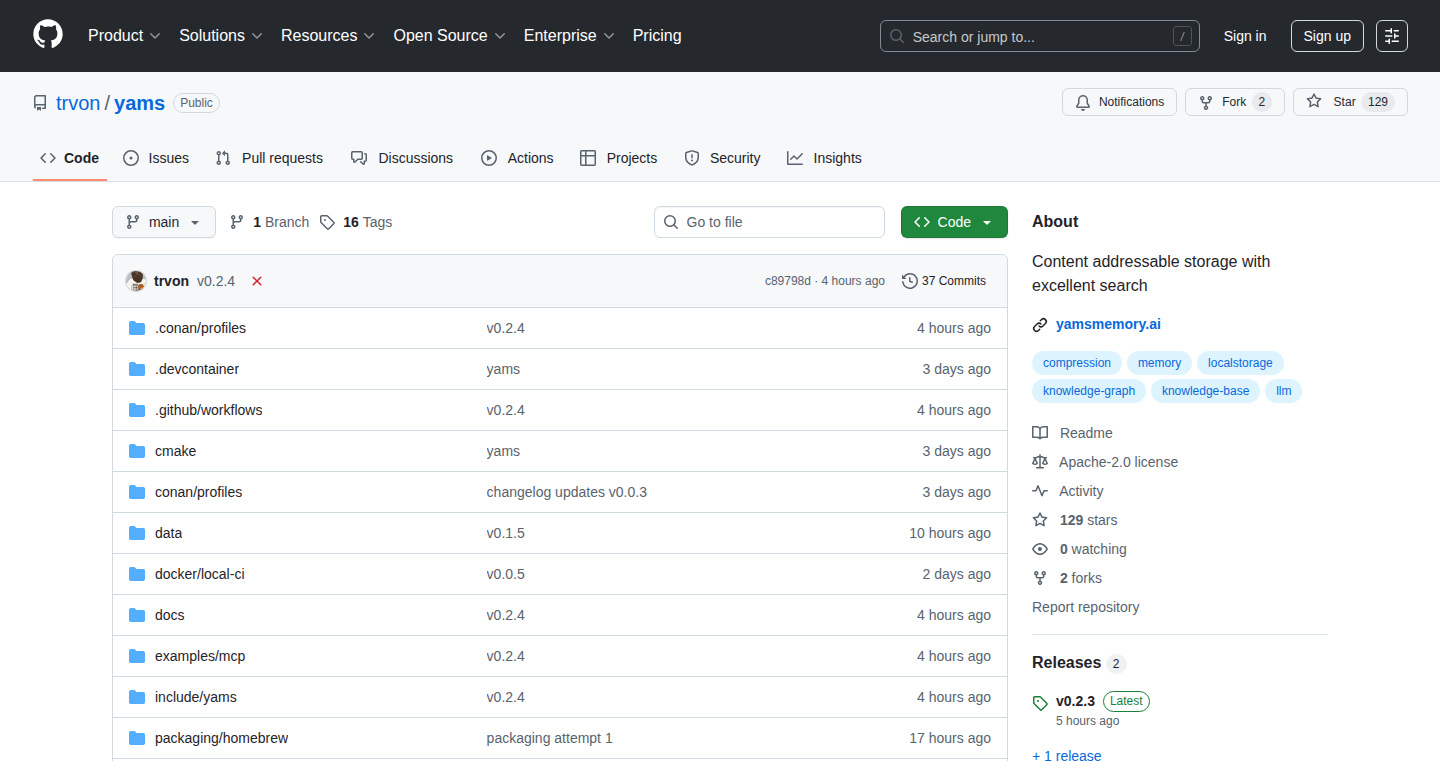

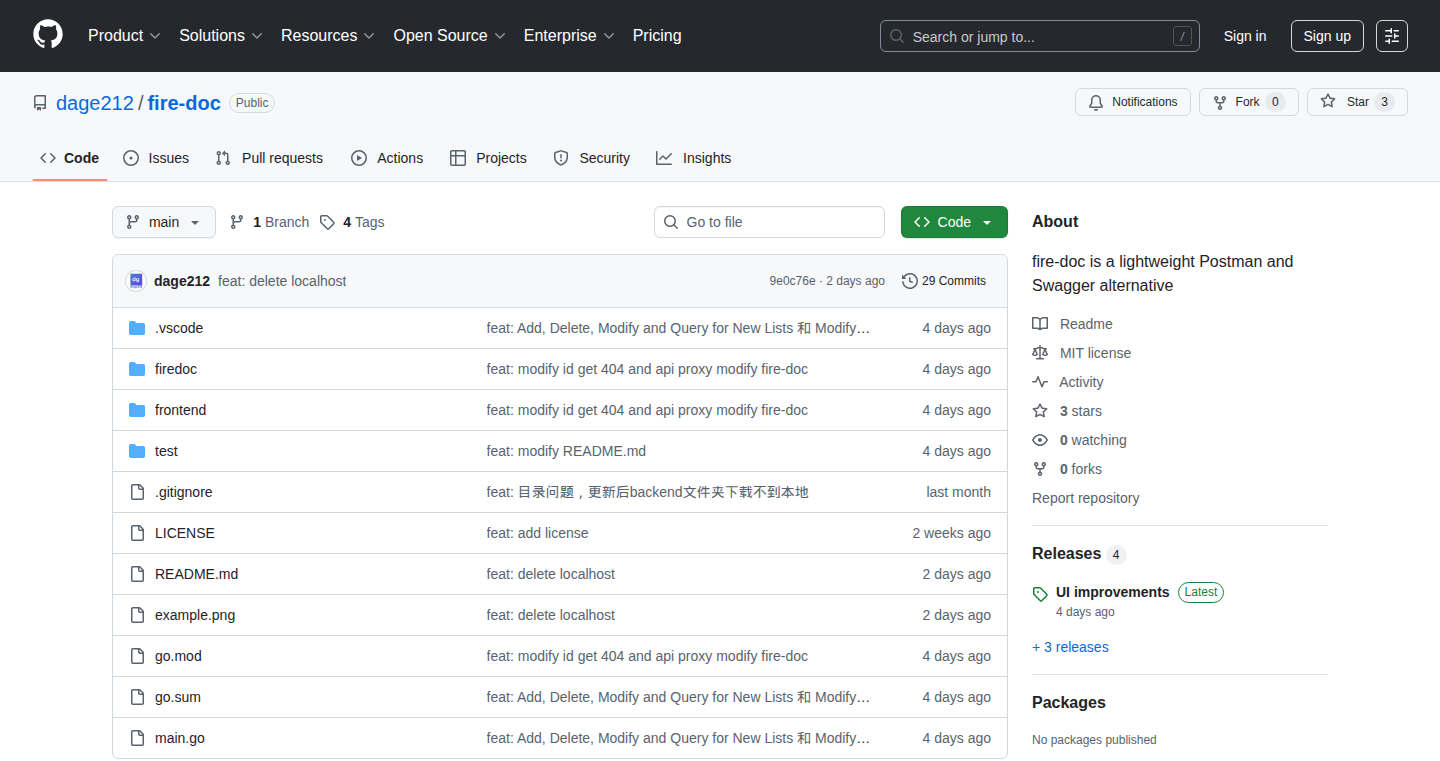

Content-Addressed Persistent Memory for LLMs (CAPM)

Author

blackmanta

Description

CAPM is a content-addressed storage system designed to provide searchable and persistent memory for Large Language Models (LLMs), while significantly reducing storage costs. It achieves this through block-level deduplication, which can save 30-40% on storage space, especially for codebases. The system is built in C++ and is intended for local use, enabling researchers and developers to efficiently manage and retrieve information within their LLM workflows.

Popularity

Points 80

Comments 18

What is this product?

CAPM is like a smart filing cabinet for your LLM's memories. Instead of saving every item in full, it looks at the *content* of each piece of information (like a code block or a research paper) and saves only the unique pieces. If an identical piece is already saved, it just points to the existing copy, avoiding duplication. This technique, called block-level deduplication, is the core innovation. It saves a lot of storage space, which is especially helpful when you work with large language models that generate a lot of data. So this is useful because it helps you store your LLM's knowledge more efficiently, meaning you can keep more information without running out of space or spending a fortune on storage.

How to use it?

Developers can use CAPM through a command-line interface (CLI) to integrate it into their development environments, such as code editors and LLM interfaces. For instance, the project is already integrated into popular tools like Zed, Claude Code, and Cursor. You provide the content you want to store and search, and CAPM handles the deduplication and retrieval. Imagine you are working on a coding project and need to store code snippets along with their associated prompts. CAPM gives you a convenient way to save those notes so that you can quickly search and reuse them when needed.

Product Core Function

· Content-Addressed Storage: This means the system finds and stores data based on what the data *is*, rather than just where it's located. This is the fundamental principle of the system, allowing for efficient storage and retrieval. So this is useful for quickly finding the exact information you need without having to remember where you put it.

· Block-Level Deduplication: This is the core technology. It breaks down data into smaller chunks (blocks) and identifies duplicate blocks. Instead of storing identical blocks multiple times, it stores each unique block only once and links to it from different places. This results in significant space savings, especially when dealing with code or similar datasets. So this is useful for saving a lot of storage space, meaning you can keep more information without running out of space or spending a fortune on storage.

· Persistent Memory: The system is designed to store data permanently, so your LLM's memories are preserved between sessions. This allows your LLM to 'remember' things over time. So this is useful for allowing LLMs to 'learn' and improve over time, making them more capable and useful.

· CLI Integration: The CLI tool lets developers incorporate CAPM into their existing workflows, such as code editors, so that memory storage and retrieval become part of their regular development routines. So this is useful for making the memory system easy to use within existing developer tools.
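To make the deduplication idea concrete, here is a minimal sketch in Python. It is not CAPM's implementation (CAPM is written in C++ and its internals are not described here); the `BlockStore` class, the fixed 64-byte block size, and the use of SHA-256 as the content address are all assumptions chosen for illustration.

```python
import hashlib

BLOCK_SIZE = 64  # bytes per block; an illustrative value, not CAPM's


class BlockStore:
    """Toy content-addressed store with fixed-size block deduplication."""

    def __init__(self) -> None:
        self.blocks: dict[str, bytes] = {}        # block hash -> block bytes
        self.objects: dict[str, list[str]] = {}   # object name -> ordered block hashes

    def put(self, name: str, data: bytes) -> None:
        hashes = []
        for start in range(0, len(data), BLOCK_SIZE):
            block = data[start:start + BLOCK_SIZE]
            digest = hashlib.sha256(block).hexdigest()
            # Identical blocks hash to the same key, so each is stored once.
            self.blocks.setdefault(digest, block)
            hashes.append(digest)
        self.objects[name] = hashes

    def get(self, name: str) -> bytes:
        # Rebuild the object by concatenating its blocks in order.
        return b"".join(self.blocks[h] for h in self.objects[name])

    def stored_bytes(self) -> int:
        return sum(len(b) for b in self.blocks.values())


store = BlockStore()
header = b"#" * 128  # two identical 64-byte blocks shared by both files
store.put("a.py", header + b"print('a')")
store.put("b.py", header + b"print('b')")
```

In this toy example the two files total 276 bytes, but the store keeps only three unique blocks (84 bytes), because the shared header blocks are stored once and referenced from both objects. Real systems often use variable-size, content-defined chunking rather than fixed-size blocks; that refinement is left out here.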

Product Usage Case

· Code Snippet Storage: A developer working on a complex software project wants to save useful code snippets along with the prompts that produced them. CAPM allows them to efficiently store and search these snippets. This means developers can quickly find and reuse snippets, avoiding repetitive coding and saving development time.

· Research Data Management: A researcher is working on a project that generates large amounts of data. CAPM can be used to efficiently store and retrieve this data, reducing storage costs and making it easier to find specific information. This means researchers can manage their datasets more efficiently, locate findings more easily, and accelerate their research.

· LLM Training Data Caching: When training an LLM, it's common to reuse data. CAPM can be used to cache and deduplicate training data. So this is useful for reducing the storage cost and time spent handling repeated training data.

2

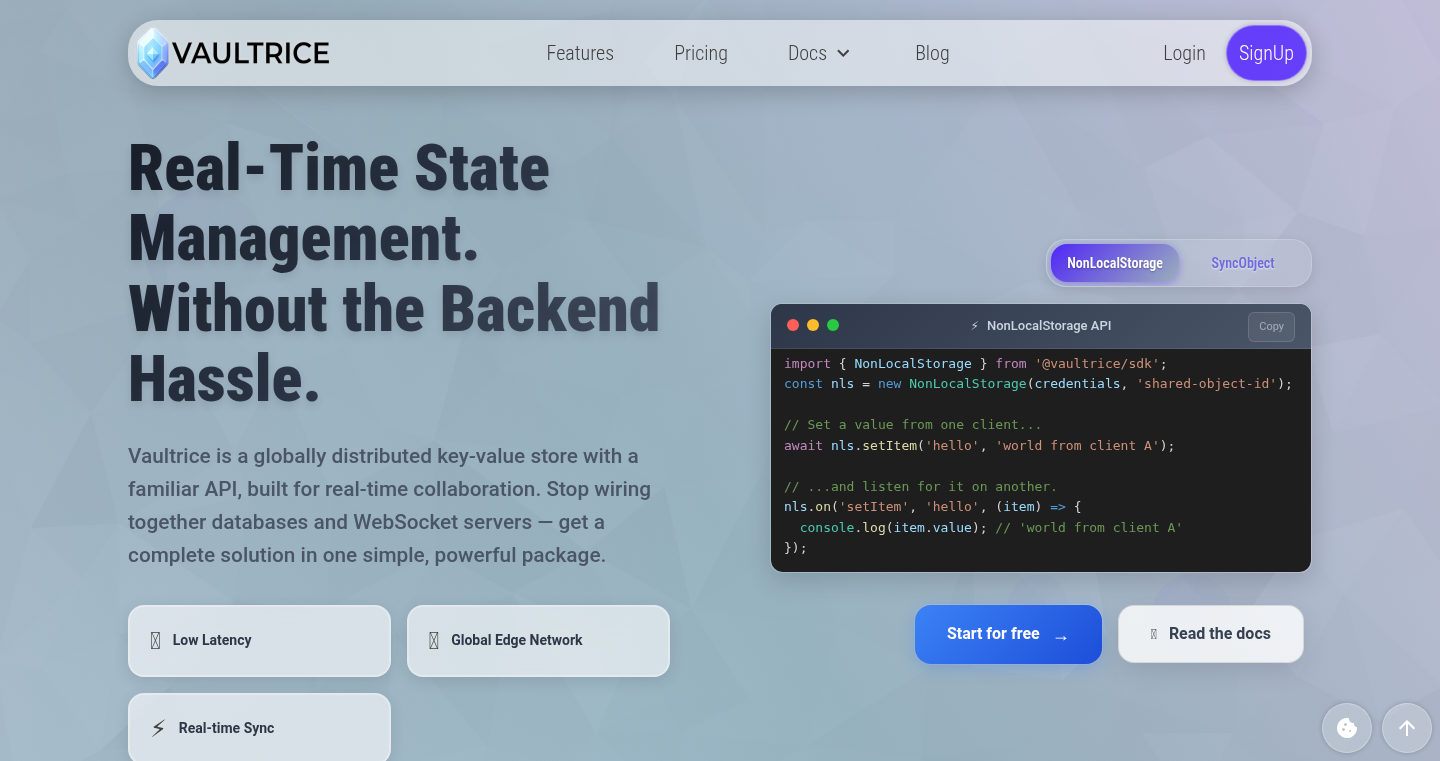

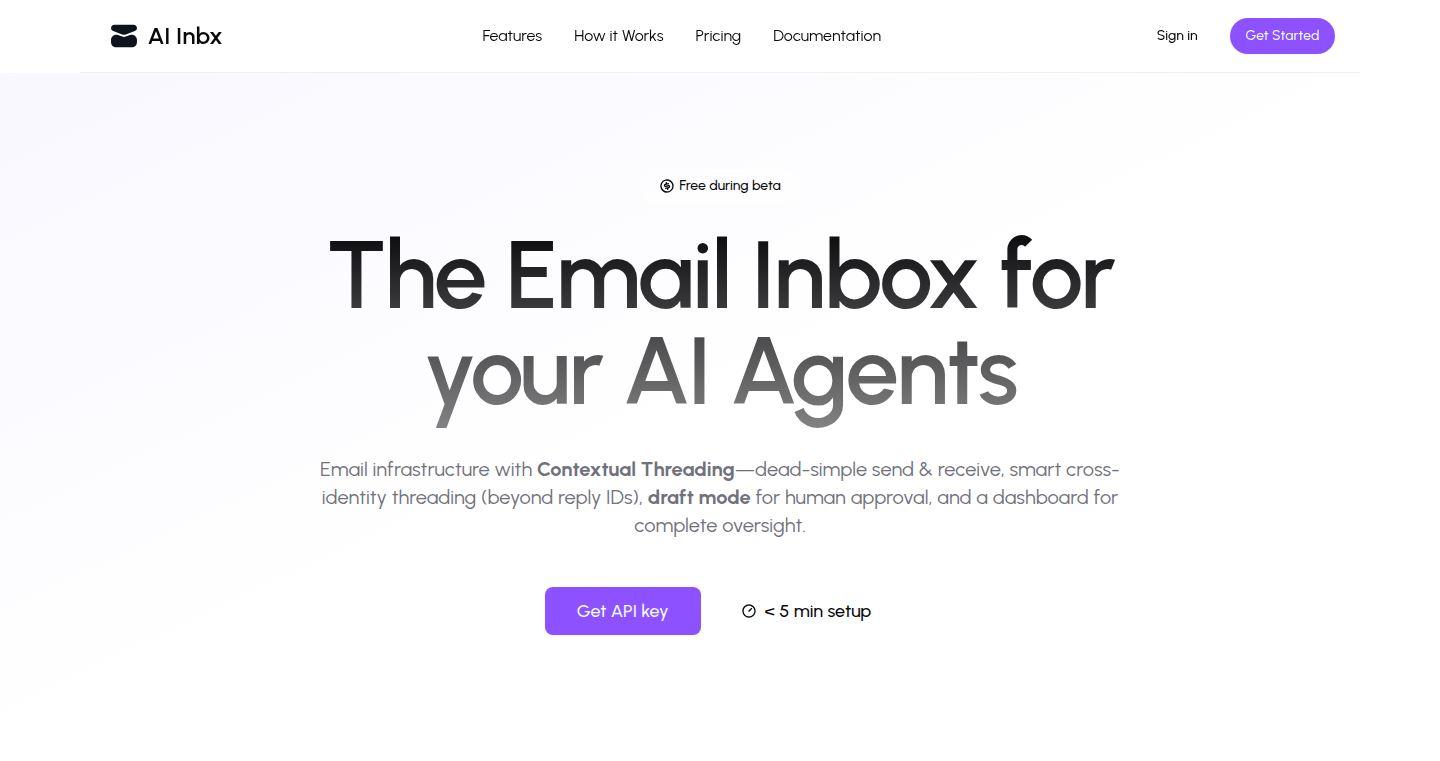

Vaultrice: Real-time Key-Value Store with localStorage API

Author

adrai

Description

Vaultrice is a real-time key-value data store designed to simplify the creation of real-time features like "who's online" lists, collaborative apps, and cross-device state sharing. It leverages Cloudflare's Durable Objects for a consistent backend, offering a JavaScript/TypeScript SDK with a familiar localStorage-like API and reactive object synchronization. It eliminates the complexity of setting up databases, WebSocket servers, and managing connection states, making real-time functionality easy to implement. The product also incorporates a layered security model, from simple API key restrictions to end-to-end encryption, ensuring data security.

Popularity

Points 14

Comments 2

What is this product?

Vaultrice is a real-time data storage service. Think of it as a shared, persistent `localStorage` that works across different websites, devices, and users. It uses a technology called 'Durable Objects' provided by Cloudflare, which ensures that your data is stored reliably. You interact with it using a JavaScript SDK, allowing you to easily save and retrieve data, and automatically synchronize updates in real-time. It also offers a reactive object system where changes to an object automatically sync with other clients. So this removes the need to manually write complicated code to handle real-time updates. Finally, Vaultrice includes security features like API keys and end-to-end encryption to keep your data safe.

How to use it?

Developers can integrate Vaultrice into their web applications by installing the Vaultrice JavaScript/TypeScript SDK. They can then use the SDK's `localStorage`-like API to store and retrieve data. For more advanced features, they can use the reactive `SyncObject` to easily sync data changes across all connected clients. You can use Vaultrice in any project where you need real-time data synchronization, such as creating collaborative applications, building live dashboards, or updating user interfaces instantly. The product is designed to be easy to use, even for developers new to real-time technologies.

Product Core Function

· `localStorage`-like API: Allows developers to store and retrieve data using familiar methods like `setItem`, `getItem`, and `removeItem`. This significantly reduces the learning curve and makes it easy to implement real-time features. So this lets you quickly add real-time functionalities to your website, like updating user information across multiple browsers.

· Real-time Events and Presence: Offers methods like `.on()` and `.join()` to listen for data changes and track who's online. This functionality simplifies the creation of real-time interactions, such as collaborative editing, live chat, or presence indicators. So this allows you to know who's currently viewing a document or participating in a chat.

· Reactive JavaScript Proxy (`SyncObject`): This feature enables developers to create JavaScript objects that automatically synchronize their data with other connected clients. When you change a property on one object, that change is reflected in all other instances. This is great for building highly interactive and collaborative applications. So this means you can build apps that synchronize in real time with only a few lines of code.

· Layered Security Model: Includes various security options, from simple API key restrictions to server-signed object IDs and client-side end-to-end encryption. This gives developers control over the security level of their applications. So this allows you to choose the level of data protection that best fits your specific needs.
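The pattern behind a `localStorage`-like API with change events and a reactive proxy can be sketched as follows. This is an in-process Python illustration only, not the Vaultrice SDK (which is JavaScript/TypeScript and talks to a remote backend); `SharedStore`, `SyncedObject`, the snake_case method names, and the `"setItem"` / `"removeItem"` event names are hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable

Listener = Callable[[str, Any], None]


class SharedStore:
    """In-process stand-in for a shared key-value store with change events."""

    def __init__(self) -> None:
        self._data: dict[str, Any] = {}
        self._listeners: dict[str, list[Listener]] = defaultdict(list)

    def on(self, event: str, listener: Listener) -> None:
        self._listeners[event].append(listener)

    def set_item(self, key: str, value: Any) -> None:
        self._data[key] = value
        self._emit("setItem", key, value)

    def get_item(self, key: str) -> Any:
        return self._data.get(key)

    def remove_item(self, key: str) -> None:
        self._data.pop(key, None)
        self._emit("removeItem", key, None)

    def _emit(self, event: str, key: str, value: Any) -> None:
        # Notify every listener registered for this event name.
        for listener in self._listeners[event]:
            listener(key, value)


class SyncedObject:
    """Forwards attribute reads and writes to the store, loosely mimicking a reactive proxy."""

    def __init__(self, store: SharedStore) -> None:
        # Bypass our own __setattr__ so the store reference lives on the instance.
        object.__setattr__(self, "_store", store)

    def __setattr__(self, name: str, value: Any) -> None:
        self._store.set_item(name, value)

    def __getattr__(self, name: str) -> Any:
        return self._store.get_item(name)


store = SharedStore()
seen = []
store.on("setItem", lambda key, value: seen.append((key, value)))

doc = SyncedObject(store)
doc.title = "Draft"  # triggers the listener, as a remote client would be notified
```

In a real-time service the listener would be fired on other clients over the network; here everything runs in one process so the flow is easy to follow.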

Product Usage Case

· Collaborative Document Editors: Imagine a Google Docs-like experience where multiple users can edit a document simultaneously, and all changes are reflected in real-time. Vaultrice's `SyncObject` makes this easy to achieve. So this allows multiple people to edit documents, view changes, and see who is online.

· Real-time Dashboards: Build dashboards that update in real-time with live data from various sources. Vaultrice can store and synchronize the data, and update user interfaces immediately when the data changes. So this lets you get an instant view of performance, like a live sales tracker or real-time stock prices.

· Live Chat Applications: Quickly build chat applications where messages are sent and received instantly. Vaultrice can handle the storage and synchronization of chat messages in real-time. So this allows you to develop a live chat function within your applications.

3

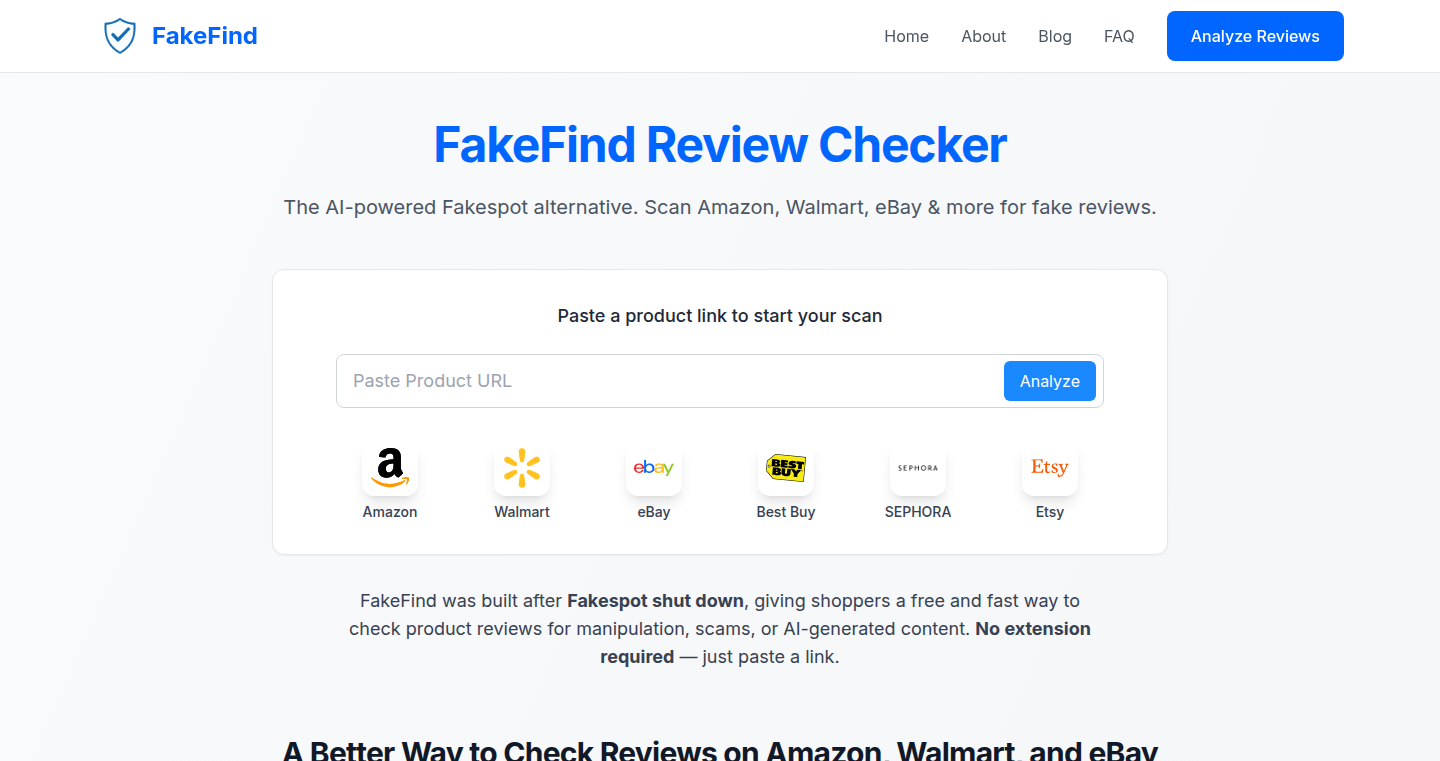

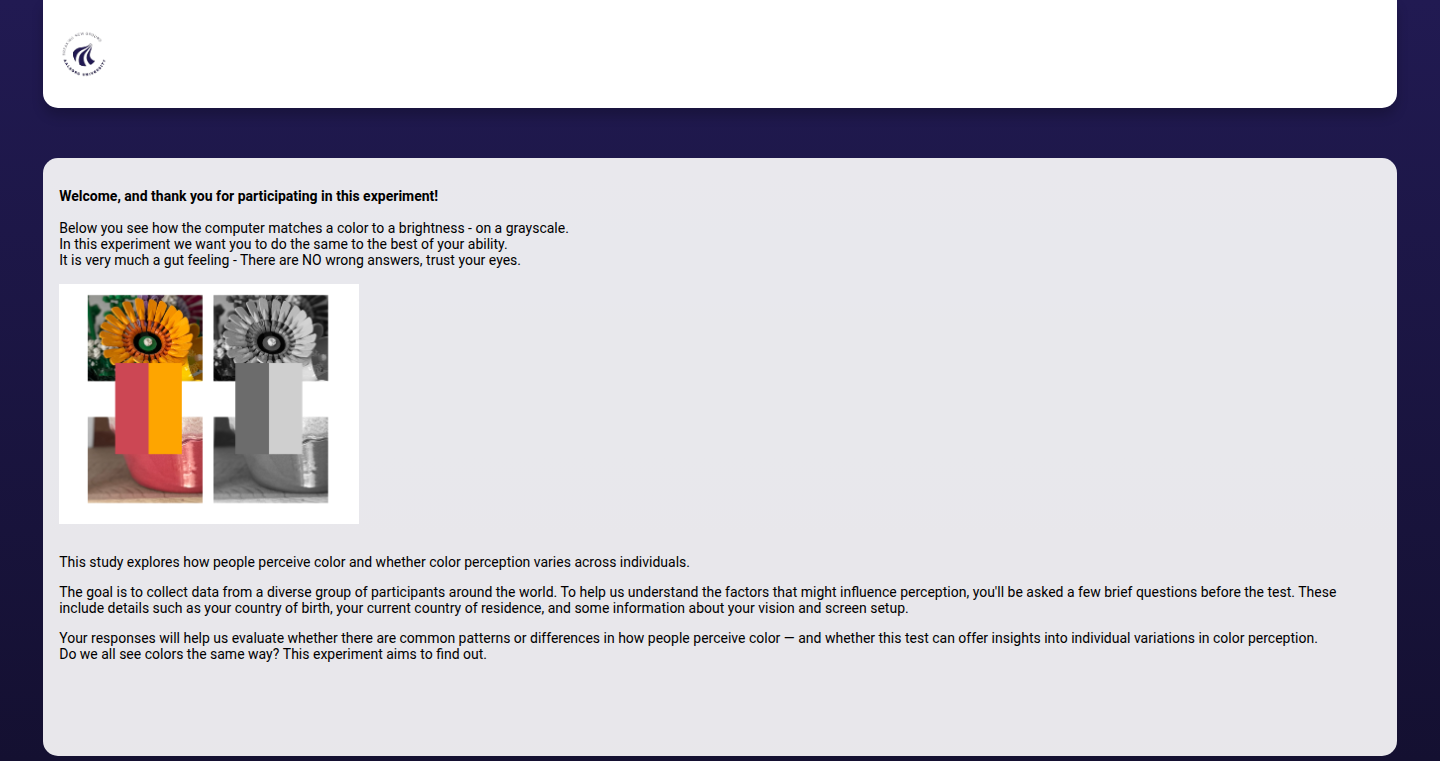

FakeFind: AI-Powered Review Authenticity Analyzer

Author

FakeFind

Description

FakeFind is a web-based tool designed to detect fake product reviews, acting as a free alternative to Fakespot. It leverages AI to analyze reviews on platforms like Amazon, Walmart, eBay, Best Buy, and Etsy, providing a Trust Score (1-10) and a concise review summary to help users make informed purchasing decisions. The tool focuses on identifying suspicious patterns in reviews, offering a streamlined and accessible solution without requiring account creation or browser extensions. This project demonstrates a practical application of AI for enhancing online shopping safety.

Popularity

Points 4

Comments 6

What is this product?

FakeFind uses AI to analyze product reviews for authenticity. It looks for patterns and inconsistencies that often indicate fake or biased reviews. Think of it as a smart detective that sifts through a mountain of reviews to find clues. Its innovation lies in using AI to automate the process, making it faster and more comprehensive than manual analysis. It provides a 'Trust Score' which simplifies the complex analysis into a single, easy-to-understand number. So, instead of spending hours reading reviews and trying to figure out if they are real, you get a quick, AI-powered assessment.

So this gives you a quick way to assess whether the reviews for a product are trustworthy, saving you time and helping you avoid potentially problematic purchases.

How to use it?

Users can simply paste a product link from Amazon, Walmart, eBay, Best Buy, or Etsy into FakeFind. The AI then analyzes the reviews and provides a Trust Score, along with a summary highlighting any potential issues. It's easy to use: just copy and paste the product URL into the tool, and it does the rest. There is no need to create an account or install a browser extension.

So, this tool integrates seamlessly into your online shopping experience, making it easy to check review authenticity before you buy anything.

Product Core Function

· AI-Powered Review Analysis: FakeFind uses AI algorithms to analyze product reviews for suspicious patterns, such as repetitive language, inconsistencies in reviewer profiles, and signs of paid or biased reviews. This provides a more accurate and efficient way to identify potentially fake reviews compared to manual analysis.

· Trust Score: The tool assigns a Trust Score (1-10) to each product based on the analysis of the reviews. This score is a simplified way to understand the overall reliability of the reviews, making it easy for users to quickly assess the trustworthiness of a product.

· Platform Compatibility: FakeFind supports major e-commerce platforms like Amazon, Walmart, eBay, Best Buy, and Etsy. This cross-platform support allows users to analyze reviews across a wide range of online retailers, improving their shopping safety.

· Concise Review Summary: FakeFind provides a summary of the key findings from its analysis. This includes highlighting any red flags or potential issues with the reviews, giving users a quick overview of the reviews' quality.

· User-Friendly Interface: The tool's web-based interface is straightforward, requiring no installation or account creation. Users can easily paste product links and get instant results, making it accessible for anyone who shops online.
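One of the suspicious patterns mentioned above, repetitive language, can be illustrated with a simple heuristic. The sketch below is not FakeFind's model, which is not described in detail here; the Jaccard word-overlap measure, the 0.8 threshold, and the linear mapping onto a 1-10 score are all assumptions made for illustration.

```python
from itertools import combinations


def tokens(text: str) -> set[str]:
    """Lowercased words with surrounding punctuation stripped."""
    return {w.strip(".,!?").lower() for w in text.split() if w.strip(".,!?")}


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of words two reviews have in common (0.0 to 1.0)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def repetition_ratio(reviews: list[str], threshold: float = 0.8) -> float:
    """Fraction of review pairs whose wording overlaps at or above threshold."""
    pairs = list(combinations([tokens(r) for r in reviews], 2))
    if not pairs:
        return 0.0
    near_duplicates = sum(1 for a, b in pairs if jaccard(a, b) >= threshold)
    return near_duplicates / len(pairs)


def trust_score(reviews: list[str]) -> int:
    """Map the repetition ratio onto a 1-10 scale (10 = least repetitive)."""
    return max(1, round(10 - 9 * repetition_ratio(reviews)))


sample = [
    "Great product, works perfectly!",
    "Great product works perfectly",
    "Battery died after two weeks.",
]
score = trust_score(sample)
```

A production analyzer would combine many more signals (reviewer history, timing, rating distribution); this sketch covers only near-duplicate wording.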

Product Usage Case

· Avoiding Scam Products on Amazon: Before purchasing a popular tech gadget on Amazon, a user pastes the product link into FakeFind. The tool flags several suspicious reviews and gives a low Trust Score, indicating potential issues. The user then avoids the product, saving money and time.

· Evaluating Products on Walmart: A shopper is considering buying a new kitchen appliance on Walmart. They use FakeFind to analyze the reviews and find that many reviews have similar wording, raising a red flag. Based on this, the user reconsiders the purchase and avoids a potentially unreliable product.

· Checking eBay Listings: A buyer is interested in a used item on eBay. They use FakeFind to assess the product's reviews before bidding. The tool identifies a pattern of inconsistent feedback, which warns the buyer about potential issues with the seller. The buyer then decides not to bid.

· Ensuring Purchases from Best Buy: Before buying a new TV, a user runs FakeFind on the product's reviews on Best Buy. The tool confirms the authenticity of the reviews, allowing the user to make a confident purchasing decision.

· Verifying Reviews on Etsy: A customer is looking to buy a handmade item on Etsy. They utilize FakeFind to analyze the reviews, ensuring that they’re purchasing from a trusted seller. This helps them avoid low-quality products.

4

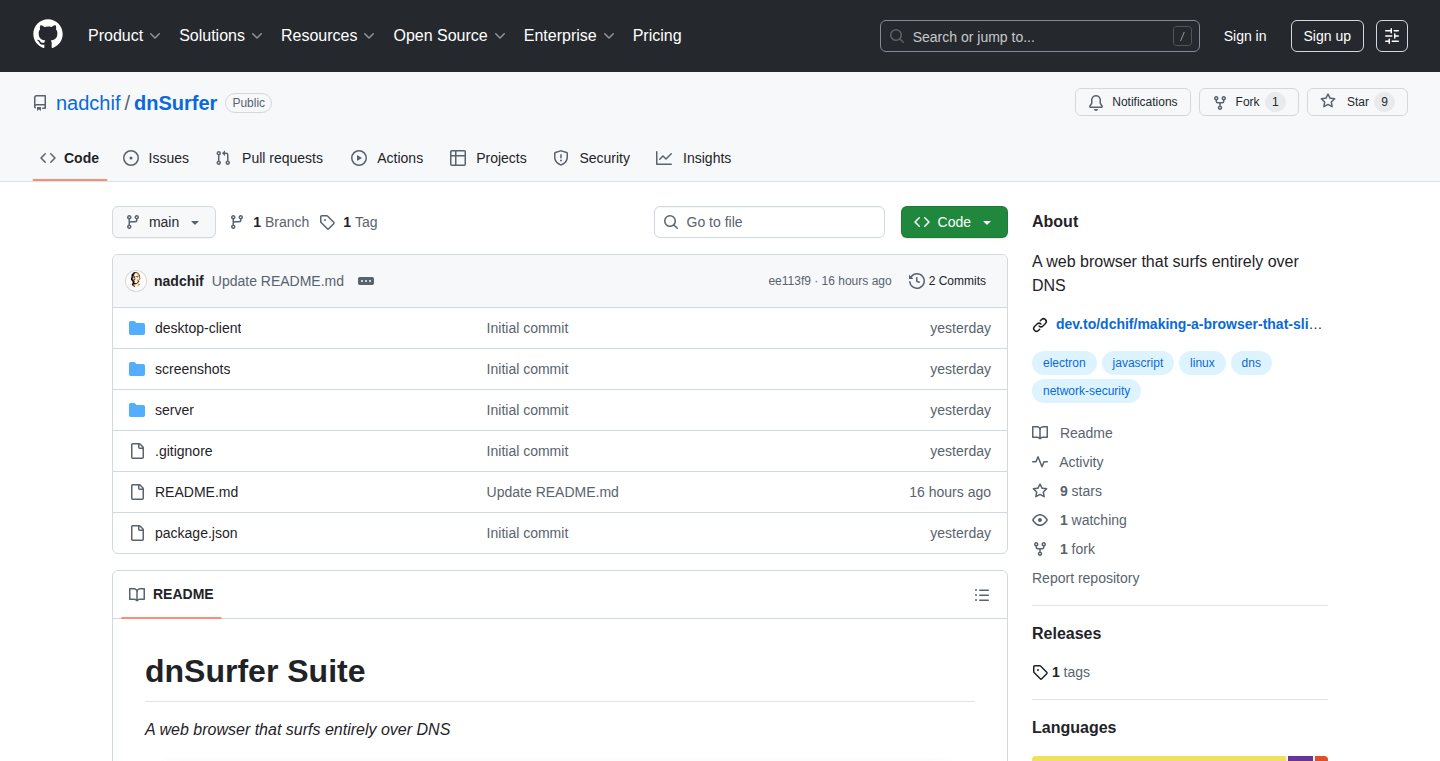

PortalPass: A Web Browser for Wi-Fi Captive Portal Bypass

Author

nadchif

Description

PortalPass is a specialized web browser designed to automatically navigate and bypass Wi-Fi captive portals. It cleverly uses a combination of HTTP requests and pattern matching to identify and interact with these portals, allowing users to connect to Wi-Fi networks without manual interaction. The innovation lies in its automated approach, reducing the need for manual logins and potentially circumventing restrictive network policies. This solves the problem of annoying Wi-Fi login pages, making public Wi-Fi more accessible.

Popularity

Points 4

Comments 5

What is this product?

PortalPass is essentially a smart web browser. It’s designed to automatically detect and bypass those login pages you often encounter when using public Wi-Fi. It does this by sending specific requests and analyzing the responses from the network. Think of it as a little detective for your internet connection, figuring out how to get you online without you having to manually enter a password or click any buttons. The innovation is in its automation – it handles the tedious process of logging in for you.

How to use it?

Developers can use PortalPass to integrate captive portal bypass functionality into their applications or devices. This could involve embedding the browser directly or leveraging its underlying logic to automate Wi-Fi authentication. For example, in a device that connects to public Wi-Fi, PortalPass could handle the initial login, so the user doesn't have to. This integration is done by modifying network configurations and using APIs to automate the portal interaction. So, you can make sure your device automatically connects to the internet without any user interaction.

Product Core Function

· Automated Captive Portal Detection: This function intelligently identifies the presence of a captive portal. It does this by sending HTTP requests to a predefined set of URLs and analyzing the server’s response. This avoids the need for the user to manually determine if a login is required. So, you get a seamless Wi-Fi experience.

· Dynamic Portal Interaction: The browser interacts with the captive portal to automate the login procedure. This includes submitting forms, parsing HTML for relevant fields, and automatically entering credentials. This automation streamlines the entire login process. So, it saves you time and effort when connecting to Wi-Fi.

· HTTP Request Analysis: The core of PortalPass lies in the analysis of HTTP responses. The browser parses the responses received from the Wi-Fi network. This helps it determine the best way to interact with the portal, finding hidden login fields or any other information needed to log in automatically. So, it provides a smooth connection.

· Credential Management: For ease of use, the browser can store and manage Wi-Fi login credentials securely. Users only need to enter their credentials once, and the browser can automatically fill them in when connecting to a new Wi-Fi network. So, you don't have to remember your login details every time.
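A common way to detect a captive portal is to request a URL that is known to return an empty HTTP 204 response and treat anything else as interception. The sketch below illustrates that general technique together with a first step of form parsing; it is not PortalPass's code, and `ProbeResponse`, `detect_portal`, `FormFieldParser`, and the example portal URL are hypothetical names. It works on already-fetched responses so it runs without network access.

```python
from dataclasses import dataclass
from html.parser import HTMLParser


@dataclass
class ProbeResponse:
    status: int
    headers: dict[str, str]
    body: str


def detect_portal(resp: ProbeResponse) -> str:
    """Classify a response to a probe URL that should return an empty HTTP 204."""
    if resp.status == 204 and not resp.body:
        return "online"           # the probe reached the real server untouched
    if 300 <= resp.status < 400 and "Location" in resp.headers:
        return "portal-redirect"  # the network redirected us to a login page
    return "portal-page"          # the network served its own content instead


class FormFieldParser(HTMLParser):
    """Collect the names of <input> fields as a first step toward filling a login form."""

    def __init__(self) -> None:
        super().__init__()
        self.fields: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "input":
            name = dict(attrs).get("name")
            if name:
                self.fields.append(name)


redirect = ProbeResponse(302, {"Location": "http://portal.example/login"}, "")
state = detect_portal(redirect)

parser = FormFieldParser()
parser.feed('<form><input name="email"><input type="submit" name="accept"></form>')
```

After this step, an automated client would still need to submit the form and re-run the probe to confirm the connection is open.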

Product Usage Case

· Embedded Systems: Developers building IoT devices, smart TVs, or other internet-connected devices that frequently connect to public Wi-Fi can integrate PortalPass to streamline the user experience. The device can automatically handle the login process, eliminating the need for users to manually interact with captive portals. So, it will make your devices more user-friendly.

· Custom Wi-Fi Routers: Advanced users and developers who build their own Wi-Fi router solutions can use PortalPass to handle upstream captive portal logins automatically. This can be particularly useful where many users share the connection. So, it provides a better experience for your users.

· Mobile Application Integration: A mobile application for managing Wi-Fi connections could use PortalPass to automatically log users into captive portal networks. The application would handle the interaction with the login pages, making the connection process seamless. So, your app users enjoy a smoother Wi-Fi connection experience.

5

IntelliSell.ai: AI-Powered Prospect Research and Sales Strategy Generator

Author

troyethaniel

Description

IntelliSell.ai is an AI-driven sales research assistant designed to automate and enhance B2B sales prospecting. It aggregates information from various public sources, uses AI to analyze the data, and generates actionable insights and sales strategies. This project addresses the limitations of traditional CRMs and static sales intelligence tools by providing a dynamic and strategic approach to understanding and engaging with potential customers. It simplifies the tedious process of manual research and personalized outreach by leveraging the power of AI to connect the dots and provide a comprehensive 360° view of each prospect.

Popularity

Points 3

Comments 5

What is this product?

IntelliSell.ai is a tool that acts like a smart research assistant for sales teams. It works by first collecting information about a potential customer from many different online sources, like news articles, social media, and company websites. Then, it uses Artificial Intelligence to analyze this information and understand the company's priorities, recent activities, and challenges. The tool then creates a complete profile of the customer, including insights and suggested sales strategies, such as customized email drafts. So, it's like having a team of researchers and strategists all in one place. This reduces the amount of manual research needed and helps sales teams to engage with potential customers in a much more informed and effective way.

How to use it?

Sales professionals can use IntelliSell.ai by first creating a profile that describes their company and the types of customers they want to reach. Then, they provide the website addresses of the companies they are interested in. IntelliSell.ai will automatically gather information about these companies. Users can then ask specific questions or make requests about the companies, like "What are their current priorities?" or "Draft an email about their recent product launch." The tool also generates account plans and engagement strategies. Finally, it tracks updates and buying signals weekly, providing a summary of the latest developments and insights about each prospect. This information can be used to refine sales efforts and tailor outreach messages.

Product Core Function

· Automated Data Aggregation: Gathers information from multiple public sources like news, social media, and company websites. So, this allows sales reps to avoid manually searching through multiple sources, saving time and effort.

· AI-Powered Insight Generation: Uses AI to analyze the aggregated data and derive insights about a prospect's priorities, challenges, and activities. So, sales teams can gain a deeper understanding of their potential customers.

· Customized Sales Strategy Generation: Generates account plans and engagement strategies tailored to each prospect. So, sales reps can focus on using proven strategies to increase the effectiveness of their outreach.

· Question Answering: Allows users to ask specific questions about prospects and get AI-generated answers. So, this enables sales reps to find relevant information immediately.

· Buying Signal Tracking: Provides a weekly summary of the latest updates, including insights, buying signals, sentiment analysis, and tags. So, sales reps can stay informed about changes within each account and adjust their outreach accordingly.

Product Usage Case

· Scenario: A sales representative wants to understand the current challenges of a potential client. With IntelliSell.ai, the representative provides the client's website and asks, "What are this company's top 3 challenges?" The AI analyzes the available data (news articles, industry reports, etc.) and responds with a list of challenges, which allows the sales rep to tailor their solution to address the specific needs of the client. This improves the chances of a successful sale.

· Scenario: A sales team needs to quickly respond to a new product launch from a competitor. The sales rep enters the competitor's website into IntelliSell.ai and asks, "Draft an email about the latest product launch." The AI generates a draft email, ready to send out to the sales team's contacts. This allows the sales team to respond quickly and address the potential impact of the product launch.

· Scenario: A sales manager wants to track the progress of a prospect. IntelliSell.ai tracks updates and buying signals for each company and provides weekly summaries of the latest developments, which enables sales reps to react promptly to new opportunities.

· Scenario: A sales team needs to prepare for a sales meeting with a new prospect. They use IntelliSell.ai, providing the prospect's website. The AI generates a comprehensive account profile, including company overview, recent news, and key decision-makers, helping the team to conduct a strategic sales meeting.

6

YC Galaxy: Interactive 3D Map of Y Combinator Companies

Author

ernests

Description

YC Galaxy is a fascinating project that creates a 3D map of Y Combinator companies, visualizing their relationships based on product similarity. It uses web crawlers to gather information, then employs Machine Learning (ML) techniques like embeddings and UMAP to cluster and project these companies onto a 3D space. The interactive interface, built with Three.js and D3, allows users to explore and understand the landscape of YC companies in a visually intuitive way. So, this provides a bird's-eye view of the startup ecosystem and reveals interesting trends and connections.

Popularity

Points 7

Comments 1

What is this product?

YC Galaxy is a web-based interactive 3D map visualizing Y Combinator companies based on their product similarities. It works by:

1. **Crawling:** The system uses a web crawler to gather information from company websites.

2. **Embedding:** Then, ML embeddings are applied to understand product features and represent them as numerical data.

3. **Projection:** The UMAP algorithm reduces the dimensions into 3D coordinates suitable for visualization.

4. **Clustering:** Similar companies are grouped together.

5. **Visualization:** Finally, Three.js and D3 are used to create the interactive 3D map.

This innovative approach offers a novel way to explore the startup landscape. So, the technology provides a visual understanding of how different companies relate to each other based on their products.

How to use it?

Developers can explore the map directly via the provided link (no signup required). The project utilizes web technologies like Three.js and D3 for visualization, making it accessible in any modern web browser. You can interact with the map by panning, zooming, and clicking on companies to view detailed profiles. It showcases a practical application of web scraping, machine learning, and interactive data visualization techniques. So, this project provides insights into how to build similar interactive data visualizations and can inspire developers to explore and visualize complex datasets.

Product Core Function

· Web Crawling: The project crawls company websites to collect product information. This involves automated data extraction, which is critical for gathering the necessary data. So, you can understand how to build a data collection pipeline to extract information from various websites.

· ML Embeddings: It uses machine learning to represent the extracted product information as numerical data points. This converts complex information into a format suitable for processing and analysis. So, you can apply the same embedding technique to represent any kind of object or entity.

· UMAP Projection: The UMAP algorithm is used to reduce the high-dimensional data from the embeddings into a 3D space. This makes it possible to visualize the relationships between companies. So, you can apply this algorithm to other data sets to create 3D maps.

· Clustering: Similar companies are grouped together using a hybrid algorithm. This helps in identifying patterns and relationships. So, you can apply this method to group similar data points into clusters and better understand the structure within the data.

· Interactive 3D Visualization: The project uses Three.js and D3 to create an interactive 3D map. This allows users to explore the data in an intuitive and engaging way. So, you can take inspiration from these tools to create other interactive visualizations of any kind of data.
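The embed-then-cluster part of this pipeline can be sketched with the standard library alone. This is a simplified stand-in, not the project's code: word-count vectors replace learned embeddings, a greedy threshold rule replaces the project's hybrid clustering algorithm, and the UMAP projection and Three.js rendering steps are omitted. The company names and descriptions are made up for the example.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Word-count vector; a crude stand-in for a learned embedding."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def cluster(descriptions: dict[str, str], threshold: float = 0.5) -> list[list[str]]:
    """Greedy clustering: join the first cluster whose seed vector is similar enough."""
    clusters: list[tuple[Counter, list[str]]] = []
    for name, text in descriptions.items():
        vec = embed(text)
        for seed, members in clusters:
            if cosine(seed, vec) >= threshold:
                members.append(name)
                break
        else:
            # No existing cluster matched, so this item seeds a new one.
            clusters.append((vec, [name]))
    return [members for _, members in clusters]


companies = {
    "PayA": "online payments api for developers",
    "PayB": "payments api for online marketplaces",
    "BioC": "gene sequencing lab automation",
}
groups = cluster(companies)
```

Here the two payments companies share four of five words (cosine similarity 0.8) and land in one group, while the biotech company forms its own. In a fuller pipeline, a dimensionality-reduction step such as UMAP would then turn each vector into 3D coordinates for rendering.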

Product Usage Case

· Startup Ecosystem Exploration: The core application of the project is exploring the Y Combinator ecosystem. It allows users to discover relationships between companies, identify clusters of similar businesses, and understand the landscape of innovation. So, you can utilize this approach to map various markets or industries.

· Data Visualization in Education: The project can be adapted for educational purposes to teach students about data science, machine learning, and data visualization techniques. The interactive 3D map provides an engaging way to learn. So, you can utilize this visualization method to display various data in a more intuitive manner.

· Market Research and Competitive Analysis: Businesses can use this approach to visualize their competitors and the broader market landscape. By mapping similar companies, they can identify potential partners, understand competitive positioning, and make better strategic decisions. So, you can perform competitive analysis and market research to find opportunities.

7

Inworld Runtime: A Graph-Based C++ Engine for AI Applications

Author

rogilop

Description

Inworld Runtime is a high-performance engine built in C++ designed to simplify the development and deployment of AI-powered applications, especially those involving natural language processing. It tackles the common problem of engineers spending too much time managing AI infrastructure and integrations rather than focusing on building new features. The core innovation lies in its graph-based architecture: AI logic is defined as interconnected nodes (e.g., speech-to-text, language models, text-to-speech), streamlining the data flow and making it easier to manage complex AI workflows. It supports multiple platforms and on-device execution, and it abstracts the complexity of managing AI models by providing a unified API for multiple providers along with tools for traffic handling, A/B testing, and performance monitoring.

Popularity

Points 6

Comments 2

What is this product?

Inworld Runtime is a C++-based system that allows developers to build AI applications more efficiently. It uses a graph-based approach, meaning that AI functions are represented as interconnected blocks (nodes). Think of it like building with Lego blocks: you connect different blocks (e.g., speech recognition, language understanding, text generation) to create a complete system. The core innovation is that this graph structure is designed for high performance, particularly when dealing with large amounts of data and complex AI workflows. It includes a web interface (The Portal) for managing and monitoring the AI applications, and offers a unified API to access various AI model providers. So, if you're building something that uses AI, this can speed up your development time and make it easier to manage your AI systems.

How to use it?

Developers can use Inworld Runtime to build AI-powered applications by defining AI logic as a series of connected nodes in a graph. The SDKs (Node.js now, with Python, Unity, Unreal, and native C++ coming soon) will allow developers to build and integrate the graph engine into their specific applications. Developers define the nodes (e.g., STT, LLM, TTS), and the edges define the data flow and any conditions. The web-based Portal UI enables developers to deploy, configure, test, and monitor these applications. In practical terms, developers can select models from providers such as OpenAI or Anthropic using a single interface, implement A/B testing to compare different AI models, and monitor performance metrics to debug issues and optimize results. The core is written in C++, ensuring high performance and the ability to run the AI logic on the device, and SDKs will be open-sourced. So, developers can focus on building the user-facing features rather than dealing with the underlying AI infrastructure.
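To make the node-and-edge idea concrete, here is a minimal sketch of a graph where nodes transform a value and edges (optionally guarded by a condition) pick the next node. It is not the Inworld Runtime SDK: the `Graph` class, `build_voice_graph`, and the stand-in STT, LLM, and TTS functions are all hypothetical names made up for illustration.

```python
from typing import Callable, Dict, List, Optional, Tuple

Node = Callable[[str], str]
Condition = Optional[Callable[[str], bool]]


class Graph:
    """A tiny pipeline graph: nodes transform a value, edges pick the next node."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Node] = {}
        self.edges: Dict[str, List[Tuple[str, Condition]]] = {}

    def add_node(self, name: str, fn: Node) -> None:
        self.nodes[name] = fn

    def add_edge(self, src: str, dst: str, condition: Condition = None) -> None:
        # Edges are tried in insertion order; an edge without a condition always matches.
        self.edges.setdefault(src, []).append((dst, condition))

    def run(self, start: str, value: str) -> str:
        current: Optional[str] = start
        while current is not None:
            value = self.nodes[current](value)
            # Follow the first outgoing edge whose condition accepts the new value.
            current = next(
                (dst for dst, cond in self.edges.get(current, [])
                 if cond is None or cond(value)),
                None,
            )
        return value


def build_voice_graph() -> Graph:
    """Wire up placeholder STT -> LLM -> TTS nodes (all stand-ins, no real models)."""
    graph = Graph()
    graph.add_node("stt", lambda audio: audio.strip().lower())
    graph.add_node("llm", lambda text: f"You said: {text}")
    graph.add_node("reprompt", lambda _: "Sorry, I did not catch that.")
    graph.add_node("tts", lambda text: f"<audio:{text}>")
    graph.add_edge("stt", "llm", condition=lambda text: bool(text))
    graph.add_edge("stt", "reprompt")  # taken only when the transcript is empty
    graph.add_edge("llm", "tts")
    graph.add_edge("reprompt", "tts")
    return graph
```

Calling `build_voice_graph().run("stt", "  Hello ")` walks the STT, LLM, and TTS nodes in order, while an empty transcript takes the conditional branch through `reprompt` instead.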

Product Core Function

· Graph-based Architecture: This is the core of the system. Instead of writing complex code to manage AI processes, developers define them as a series of connected nodes. This simplifies the development process and makes the AI system easier to understand and maintain. For example, if you're building a chatbot, you can connect nodes for understanding user input, generating a response, and speaking the response.

· Extensions: This allows developers to add custom components to the AI system. If a pre-built component doesn't exist, developers can create their own and reuse it across applications without rewriting it each time. This makes applications more flexible and easier to tailor to unique requirements.

· Routers: This feature helps manage traffic and select the best AI models or settings depending on the current load. It can configure what should happen if the application encounters an error. For example, it can automatically switch to a different AI model if the first one is overloaded, or retry failed requests. This functionality makes the application more robust and ready for production.

· The Portal: A web-based control panel is offered so developers can deploy and configure the AI graphs, instantly push out any configuration changes, run A/B tests to compare different AI setups, and monitor performance with logs, traces, and metrics. This simplifies the management and helps with optimizing the performance of an application.

· Unified API: This provides a single, easy-to-use interface for accessing multiple AI providers (OpenAI, Anthropic, Google, etc.). So, developers can switch between different AI models without rewriting the code, and can focus on building the user-facing features rather than getting bogged down with the intricacies of various APIs.
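The fallback and retry behavior described under Routers can be sketched roughly as follows. This is a generic illustration, not Inworld's router: `ProviderError`, `route`, and the provider callables are hypothetical names, and a real router would also consider load and latency.

```python
from typing import Callable, List, Sequence, Tuple


class ProviderError(Exception):
    """Raised by a provider callable when a request fails (hypothetical)."""


def route(
    prompt: str,
    providers: Sequence[Tuple[str, Callable[[str], str]]],
    retries_per_provider: int = 1,
) -> Tuple[str, str]:
    """Try providers in order, retrying each before falling back to the next.

    Returns (provider_name, response) from the first call that succeeds.
    """
    errors: List[str] = []
    for name, call in providers:
        for attempt in range(retries_per_provider + 1):
            try:
                return name, call(prompt)
            except ProviderError as exc:
                errors.append(f"{name} attempt {attempt + 1}: {exc}")
    # Every provider failed; surface the full history for debugging.
    raise ProviderError("; ".join(errors))
```

Keeping the provider list as plain data means the order of preference can be changed through configuration rather than code, which is the kind of change a control panel like the Portal is described as pushing out.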

Product Usage Case

· Building Conversational AI Agents: Develop interactive characters for games or virtual assistants for customer service by connecting speech recognition (STT), language models (LLM), and text-to-speech (TTS) nodes. Developers can rapidly prototype and iterate on AI-driven dialogues.

· Creating Interactive Storytelling Applications: Build applications where the user's interaction influences the unfolding story. The graph can use the user's actions to choose how the plot will develop using AI models and enhance the gaming experience.

· Developing AI-Powered Content Creation Tools: This could be used to develop tools that automatically generate text, translate languages, or summarize information. The graph structure can be used to streamline the process of managing multiple AI tasks.

· Creating Virtual Assistants and Chatbots: Developers can quickly build chatbots for customer service or internal applications by connecting various AI components through the runtime. The router features enable managing and selecting the appropriate AI model for specific user requests. The portal allows for easy deployment and monitoring of these conversational interfaces.

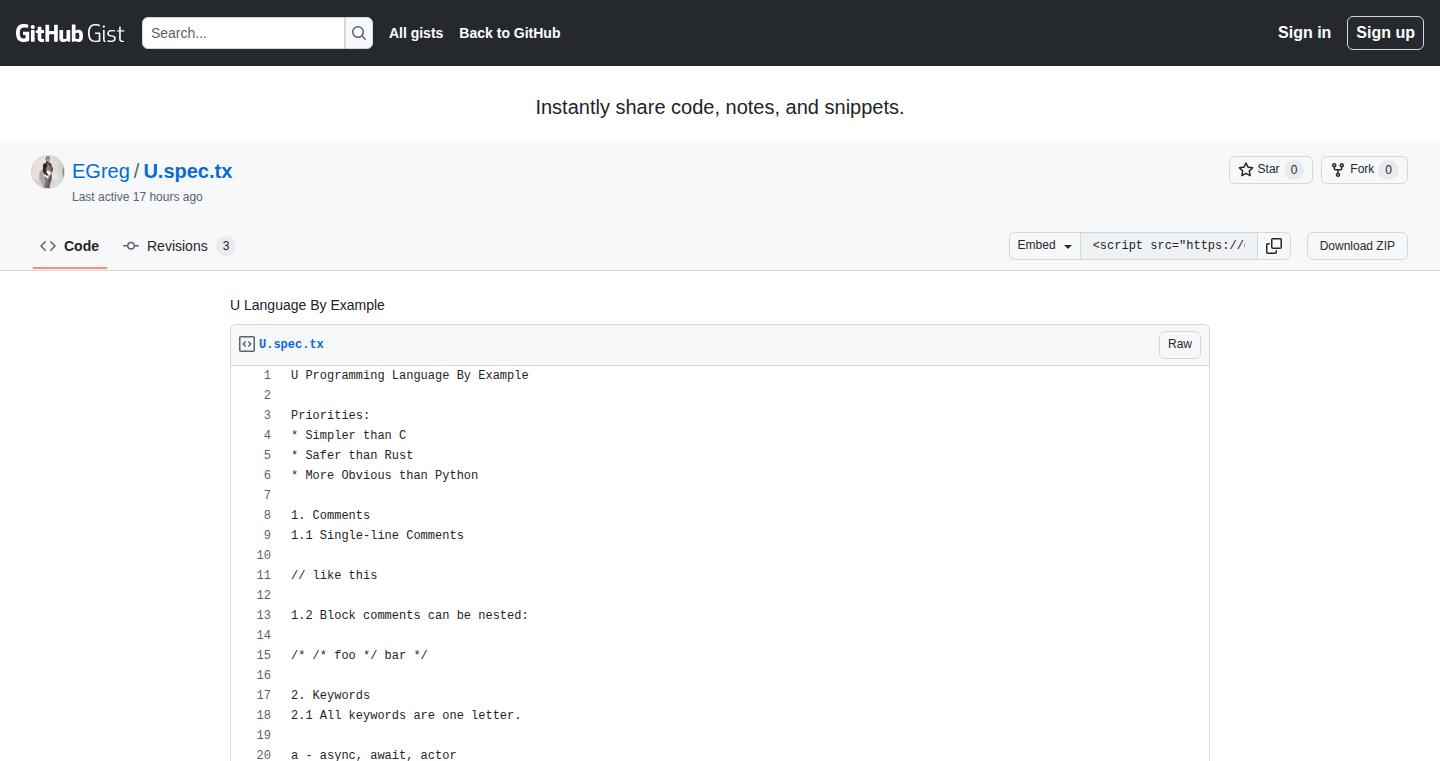

8

U: A Programming Language for Unified Abstraction

Author

EGreg

Description

U is a new programming language that aims to unify the development experience by providing a single language for both backend and frontend development. It tackles the problem of context switching and the complexity of managing different languages and toolchains. The key technical innovation lies in its ability to compile to multiple targets (e.g., JavaScript, native binaries) from a single codebase, simplifying the development process and improving code reuse.

Popularity

Points 1

Comments 7

What is this product?

U is a programming language designed to be used for everything, from building web servers to creating user interfaces. The innovative part is that you write your code once, and the U compiler figures out how to turn it into something that can run on different platforms, like your web browser or your computer. So instead of needing to learn JavaScript for your website's front end and Python for your back end, you could write everything in U. This simplifies development, reduces the need to switch between different programming languages, and makes it easier to share code.

How to use it?

Developers can use U by writing their application code in the U language. The U compiler then takes this code and translates it into the appropriate format for the target platform. For instance, you can write a single U program that compiles to both JavaScript (for the website's front end) and a native binary (for the backend server). This could be integrated by writing U code, compiling to the desired target, and deploying the generated output. So, if you're building a website, you can create your entire application logic in U, including the interface you see and all the behind-the-scenes workings.

Product Core Function

· Cross-Platform Compilation: The core feature allows the U compiler to generate code for various platforms (JavaScript, native binaries, etc.) from a single source code. This simplifies deployment and allows developers to write once and run anywhere. The value here is in the reduced development time and effort, especially when building applications that require both frontend and backend components. It simplifies the process of managing multiple technologies.

· Unified Abstraction: The language unifies development by providing a single language for both frontend and backend. This removes the need to switch between different programming languages and toolchains. Developers only need to learn and master one language. This reduces the cognitive load on developers. It also makes code easier to maintain and understand. So it's useful to anyone who wants to have a simpler and faster development cycle.

· Improved Code Reuse: Code written in U can be easily reused across different parts of an application or even across different projects. This reduces redundancy and increases efficiency, which is useful for projects with a lot of shared logic, such as e-commerce sites or web apps. So it helps to avoid rewriting code when you need to use the same piece of logic in different parts of your app.

Product Usage Case

· Building a full-stack web application where the UI is coded in U and compiled to JavaScript to run in the browser, while the server-side logic is also coded in U and compiled to a native binary running on the server. This means you only have to learn one language and share code easily. So you can save time by reusing the same programming skills.

· Creating a mobile application using U where the core logic and UI are written once and can be compiled to native code for both iOS and Android. This helps in quickly releasing the apps to different platforms. This enables you to build cross-platform apps with less effort.

9

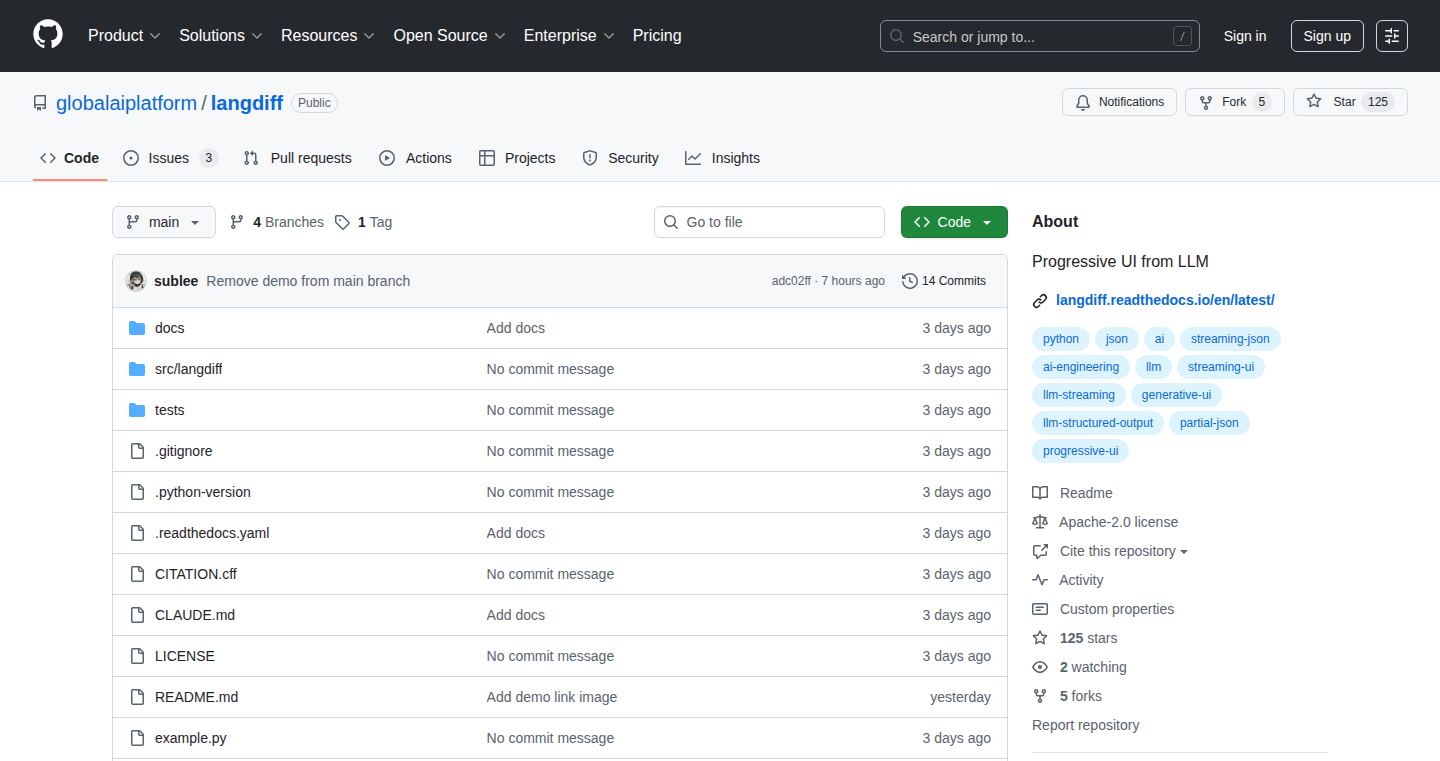

langdiff: Real-time, Type-Safe JSON Streaming from LLMs

Author

maitrouble

Description

langdiff addresses a common problem when working with Large Language Models (LLMs): getting valid, structured JSON data in real-time from a stream of text. It offers a method that uses a schema-based approach coupled with callbacks. You define the structure (schema) of the JSON you expect, then attach functions (callbacks) that are triggered when specific parts of your JSON are recognized. As the LLM generates tokens (pieces of text), langdiff processes them and immediately fires the associated callbacks as structured events. This avoids the issues of incomplete or malformed JSON during streaming, making it reliable for integrating LLM output into applications.

Popularity

Points 6

Comments 1

What is this product?

langdiff is a tool that makes it easier to work with JSON data generated by LLMs, especially when the LLM is providing this data in real-time, piece by piece. The core idea is to define the structure of the JSON (like what fields it should have and what types of data they contain) and then tell langdiff what to do when it finds specific parts of that JSON. This is done through 'callbacks,' which are basically small functions that are activated when certain data is found. The innovation is that langdiff keeps what you receive structurally valid even while the JSON is still being generated and streamed. So if the LLM has emitted a field name and only part of its text value, langdiff recognizes the field and lets its callback start working on the partial value right away.

How to use it?

Developers integrate langdiff by defining a JSON schema that describes the format of the data the LLM will generate. They then associate functions (callbacks) with specific parts of this schema. As the LLM outputs its response in a streaming fashion, the data is fed to langdiff. When a schema element is parsed, the appropriate callback will be invoked immediately, in a type-safe manner. Example: a developer building a chatbot that provides structured information like product details could define a schema for product name, description, and price, and associate each part with a dedicated function to perform operations like updating the UI in real time. It’s integrated by importing langdiff, defining a schema, setting up callbacks that do something with the data, and providing the generated data stream to langdiff.
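The general technique of firing callbacks while JSON is still arriving can be sketched as below. This does not use langdiff's actual API, which is not shown here; `FlatJsonStream`, `on_append`, and `on_complete` are hypothetical names, and the parser is deliberately limited to a flat object whose values are strings (no numbers, nesting, or `\uXXXX` escapes).

```python
from typing import Callable, List

# Escape letters that map to control characters; other escaped characters
# (quote, backslash, slash) stand for themselves.
ESCAPES = {"n": "\n", "t": "\t", "r": "\r", "b": "\b", "f": "\f"}


class FlatJsonStream:
    """Incrementally parse {"key": "value", ...} and emit events per field."""

    def __init__(
        self,
        on_append: Callable[[str, str], None],
        on_complete: Callable[[str, str], None],
    ) -> None:
        self.on_append = on_append
        self.on_complete = on_complete
        self._in_string = False
        self._escaped = False
        self._expect_value = False  # True between ':' and the end of the value
        self._key = ""
        self._buf: List[str] = []

    def feed(self, chunk: str) -> None:
        pending: List[str] = []  # value characters seen in this chunk

        def flush() -> None:
            if pending:
                self.on_append(self._key, "".join(pending))
                pending.clear()

        for ch in chunk:
            if not self._in_string:
                if ch == '"':
                    self._in_string = True
                    self._buf = []
                elif ch == ":":
                    self._expect_value = True
                continue  # braces, commas, and whitespace need no action here
            if self._escaped:
                ch = ESCAPES.get(ch, ch)
                self._escaped = False
            elif ch == "\\":
                self._escaped = True
                continue
            elif ch == '"':
                self._in_string = False
                if self._expect_value:
                    flush()
                    self.on_complete(self._key, "".join(self._buf))
                    self._expect_value = False
                else:
                    self._key = "".join(self._buf)
                continue
            self._buf.append(ch)
            if self._expect_value:
                pending.append(ch)
        flush()
```

Feeding the chunks `'{"na'`, `'me": "Wid'`, and `'get"}'` one at a time produces an append event for `Wid`, another for `get`, and then a completion event carrying `Widget`, so a UI could render the product name while it is still being generated.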

Product Core Function

· Schema Definition: langdiff allows developers to specify the expected structure (schema) of the JSON data from the LLM. This is crucial for ensuring the correct parsing and processing of the LLM's output. So what: Defines your data structure, prevents issues due to bad JSON formatting.

· Callback Mechanism: Developers can attach functions (callbacks) to schema elements. As tokens arrive, langdiff validates them against the schema and invokes the associated callback as soon as a schema element is recognized. So what: Enables developers to react instantly to specific data in the LLM's output. Example: update the UI immediately based on the LLM response.

· Streaming JSON Parsing: langdiff is designed to parse JSON data in real-time as it arrives, piece by piece (streaming). This is particularly important when the LLM is delivering a response in chunks, since it makes the whole process more efficient. So what: The parsing happens in real-time without waiting for a full JSON, to improve the user experience and system responsiveness.

Product Usage Case

· Building Chatbots: Developers can use langdiff to build chatbots that provide structured responses like product details, reservation confirmations, or summaries in real-time. As the model generates the JSON, langdiff validates it and hands each parsed piece to the chatbot application for processing. So what: Provides structured data in a chatbot conversation without making the user wait until all the data is available.

· Real-time Data Visualization: Imagine receiving data from an LLM and immediately displaying it in a graph or chart. langdiff allows developers to parse the data and update the visualization as the LLM generates it. So what: Create dynamic, instantly updated visualizations based on LLM output.

· Automated Data Processing Pipelines: Develop systems that automatically process data extracted from LLMs, such as automatically extracting information from text to be saved in a database or integrated into other systems. So what: Streamlined data processing to speed up tasks such as information extraction or translation.

10

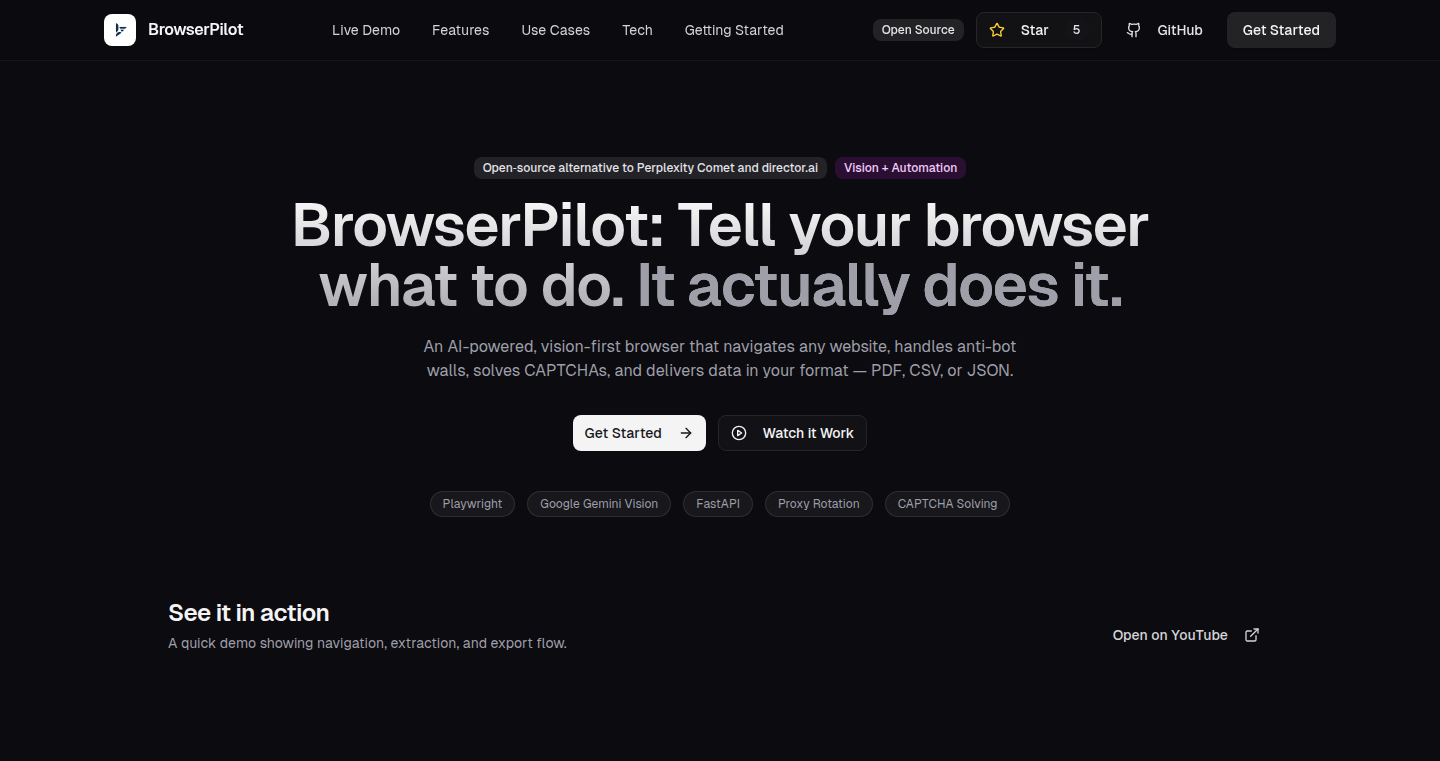

BrowserPilot: Command-Line Control for Your Browser

Author

naymul

Description

BrowserPilot allows you to control your web browser using simple text commands, like a programming interface. It's a bit like having a robot assistant for your browser. The innovative part is its ability to understand and execute natural language instructions, making complex browser actions easy to automate. It tackles the problem of automating web interactions, something that's usually complicated and requires specialized tools.

Popularity

Points 2

Comments 5

What is this product?

BrowserPilot uses a combination of techniques like Natural Language Processing (NLP) to understand your commands and then uses browser automation tools like Selenium under the hood to execute them. The innovation lies in the easy-to-use command interface, enabling almost anyone to automate browser tasks without writing complex code. For example, you can tell it: go to Google, search for "Hacker News", and click on the first result.
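To illustrate the first half of that pipeline, here is a rough sketch that turns a command like the one above into a list of structured steps. It is a rule-based stand-in for the NLP stage, not BrowserPilot's implementation: `parse_command`, the action names, and the patterns are hypothetical, and executing the steps in a browser is left out.

```python
import re
from typing import List, Tuple

# (pattern, action) pairs; the first matching pattern wins for each step.
PATTERNS = [
    (re.compile(r"^go to (?P<arg>.+)$", re.IGNORECASE), "navigate"),
    (re.compile(r"^search for (?P<arg>.+)$", re.IGNORECASE), "search"),
    (re.compile(r"^click (?:on )?(?P<arg>.+)$", re.IGNORECASE), "click"),
]

# Steps are separated by commas and/or the word "and".
STEP_SEPARATOR = re.compile(r",\s*(?:and\s+)?|\s+and\s+")


def parse_command(command: str) -> List[Tuple[str, str]]:
    """Split a command into steps and map each step to (action, argument)."""
    steps: List[Tuple[str, str]] = []
    for part in STEP_SEPARATOR.split(command.strip()):
        part = part.strip()
        if not part:
            continue
        for pattern, action in PATTERNS:
            match = pattern.match(part)
            if match:
                # Drop surrounding quotes so 'Hacker News' becomes Hacker News.
                steps.append((action, match.group("arg").strip("'\" ")))
                break
        else:
            raise ValueError(f"unrecognized step: {part!r}")
    return steps
```

A limitation of this naive splitter is that a search phrase containing " and " would be cut in two, which is one reason a real tool would lean on a language model rather than regular expressions.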

How to use it?

Developers can use BrowserPilot by typing simple commands into a terminal. You can integrate it into scripts, automate repetitive tasks like web scraping, data extraction, or automated testing. It's particularly useful for anyone who needs to interact with websites programmatically. Imagine wanting to automate logging into multiple websites or collecting data from various sources without needing to manually interact with each site.

Product Core Function

· Natural Language Command Processing: BrowserPilot understands human language commands. This means you don't need to learn a specific syntax; just tell it what to do. So what? It makes automation super accessible, saving time and reducing the learning curve.

· Browser Automation: It performs actions like clicking links, filling forms, and navigating pages. So what? Allows you to automate complex interactions with websites, from simple tasks like logging in to more complicated processes like data entry.

· Customizable Actions: You can define your own commands and workflows to tailor the browser’s actions to specific needs. So what? Makes it adaptable to various use cases, ensuring the tool fits your requirements and isn't limited by its pre-programmed features.

· Script Integration: It can be integrated into existing scripts and workflows. So what? Lets you add browser automation to existing pipelines and projects, and orchestrate it from within more complex tools.

Product Usage Case

· Web Scraping: Extracting data from websites, such as product prices or news articles, by automating the process of browsing to a page, finding the content, and saving it. So what? Automatically collecting data from the web for analysis, research, or monitoring.

· Automated Testing: Creating scripts to automatically test the functionality of web applications, by automating user interactions like clicks, form filling, and page navigation. So what? Ensures web applications function correctly and reduces the need for manual testing.

· Workflow Automation: Automating repetitive tasks, such as filling out forms or logging into multiple websites, by executing a series of browser actions based on your instructions. So what? Saves time and reduces manual effort when dealing with recurring browser-based tasks.

· Data Entry Automation: Filling forms on websites, or moving data between multiple web applications by automating all of the form entries. So what? Saves considerable time and prevents human error.

11

Capital Compass: Finding Funding with Tech

Author

nischalb

Description

Capital Compass is a tool built to help startups and founders discover investors with recently raised funds (meaning they have money to invest!), grants, and other resources. It sifts through public financial filings and announcements, normalizes the data, and provides a user-friendly way to identify potential funding opportunities. This project is a testament to the power of using tech to streamline the often-opaque process of finding funding.

Popularity

Points 5

Comments 2

What is this product?

This project works by gathering financial data from public sources, like government filings and announcements. The data is then organized into a database, making it easy to search and filter. The core innovation is automating the process of identifying active investors (those who have recently raised funds) and discovering grant opportunities, instead of manually searching through numerous documents. So, what does this mean for you? It means you can spend less time hunting for funding and more time building your product.
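As a sketch of the normalize-then-filter idea, the snippet below maps raw records to a common shape and keeps investors whose fund filing falls inside a recent window. The field names (`name`, `amount`, `filed`), the `FundRaise` type, and the 180-day window are assumptions for illustration, not Capital Compass's actual schema or rules.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Iterable, List


@dataclass(frozen=True)
class FundRaise:
    investor: str
    amount_usd: int
    filed_on: date


def normalize(raw: dict) -> FundRaise:
    """Map a raw record (hypothetical field names) to a common shape."""
    amount = str(raw["amount"]).replace("$", "").replace(",", "")
    return FundRaise(
        investor=" ".join(raw["name"].split()),  # collapse stray whitespace
        amount_usd=int(float(amount)),
        filed_on=date.fromisoformat(raw["filed"]),
    )


def active_investors(
    raises: Iterable[FundRaise], today: date, window_days: int = 180
) -> List[str]:
    """Return investors with a filing inside the last `window_days` days."""
    cutoff = today - timedelta(days=window_days)
    return sorted({r.investor for r in raises if r.filed_on >= cutoff})
```

Passing `today` explicitly, rather than reading the clock inside the function, keeps the filter easy to test and reproduce.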

How to use it?

Developers can use Capital Compass to build tools or integrate it with their own applications to provide funding insights. For example, a developer could create a browser extension that automatically highlights investors who have recently raised funds while browsing industry news sites. It can also be used to build tools that help startups compare different grant programs or track funding trends. The data is presented in a structured way, making it easier to integrate into various platforms and applications. This tool can be used for research, analytics, and lead generation in the startup funding space. So, if you're a developer, it opens the door to create specialized funding-related tools for your needs.

Product Core Function

· Identifying Active Investors: This function analyzes recent financial filings to identify investors with fresh capital. It sifts through a ton of information and does the work of finding investors who are likely to be actively looking to invest. So, what's in it for you? It saves you time by surfacing the investors most likely to fund your startup.

· Grant Discovery: The tool indexes and catalogs grant programs from various sources, including federal, state, and local government portals. You can easily search for grants relevant to your startup's needs, dramatically cutting down the time spent searching. So, you could potentially find opportunities you'd otherwise miss.

· Accelerator and Venture Builder Information: Capital Compass provides information on accelerators and venture builders, including program terms, equity requirements, and timelines. This gives you a quick overview of these programs to assist in the decision-making process. So, this helps you evaluate programs that match your needs.

Product Usage Case

· Finding Active Investors: A startup founder uses Capital Compass to identify venture capital firms that have recently raised a new fund. They use this information to tailor their outreach and increase their chances of securing funding. So, it focuses your efforts on the right investors.

· Grant Application Research: A research team uses Capital Compass to identify and compare various government grant programs relevant to their project. This enables them to efficiently prepare their grant applications and save time by not having to look at many websites. So, this helps you find funding opportunities from grants.

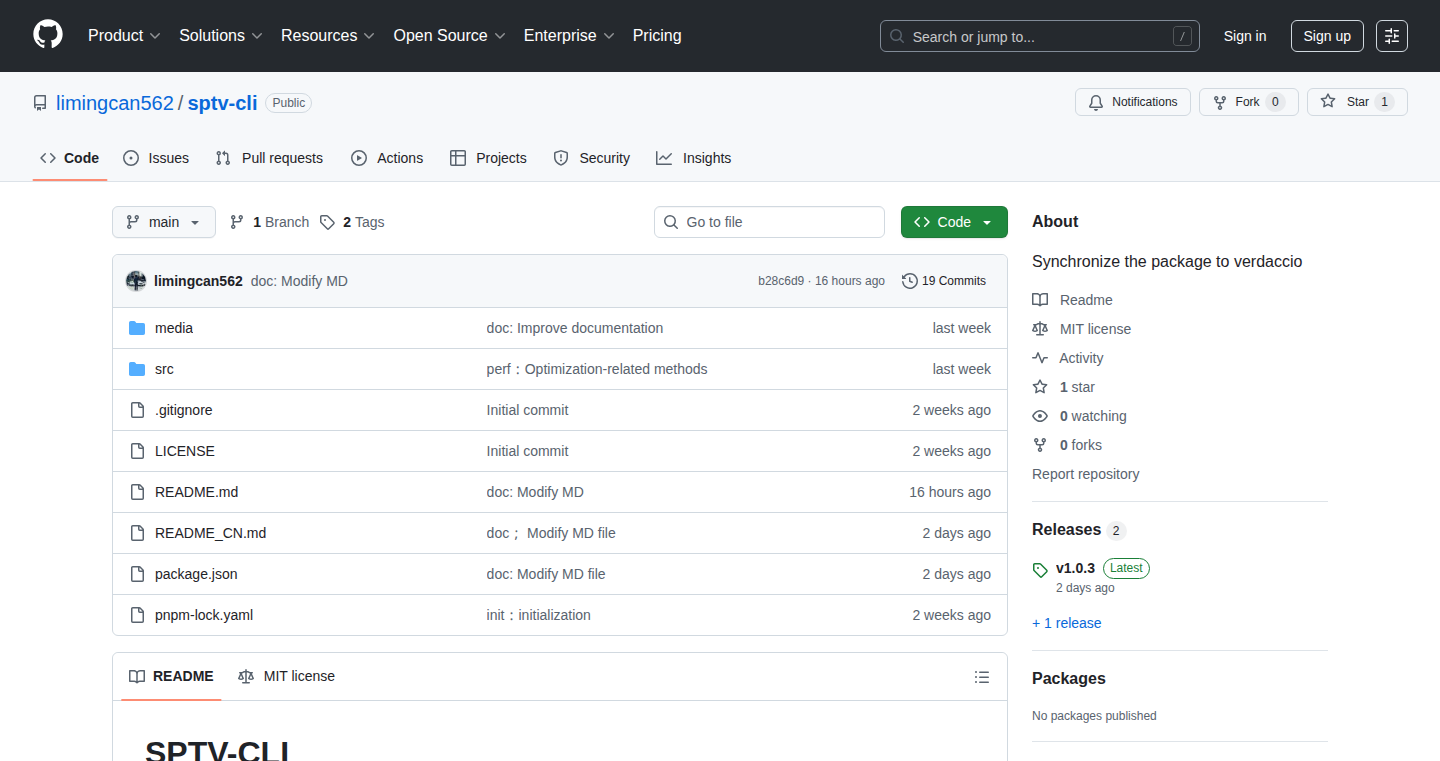

12

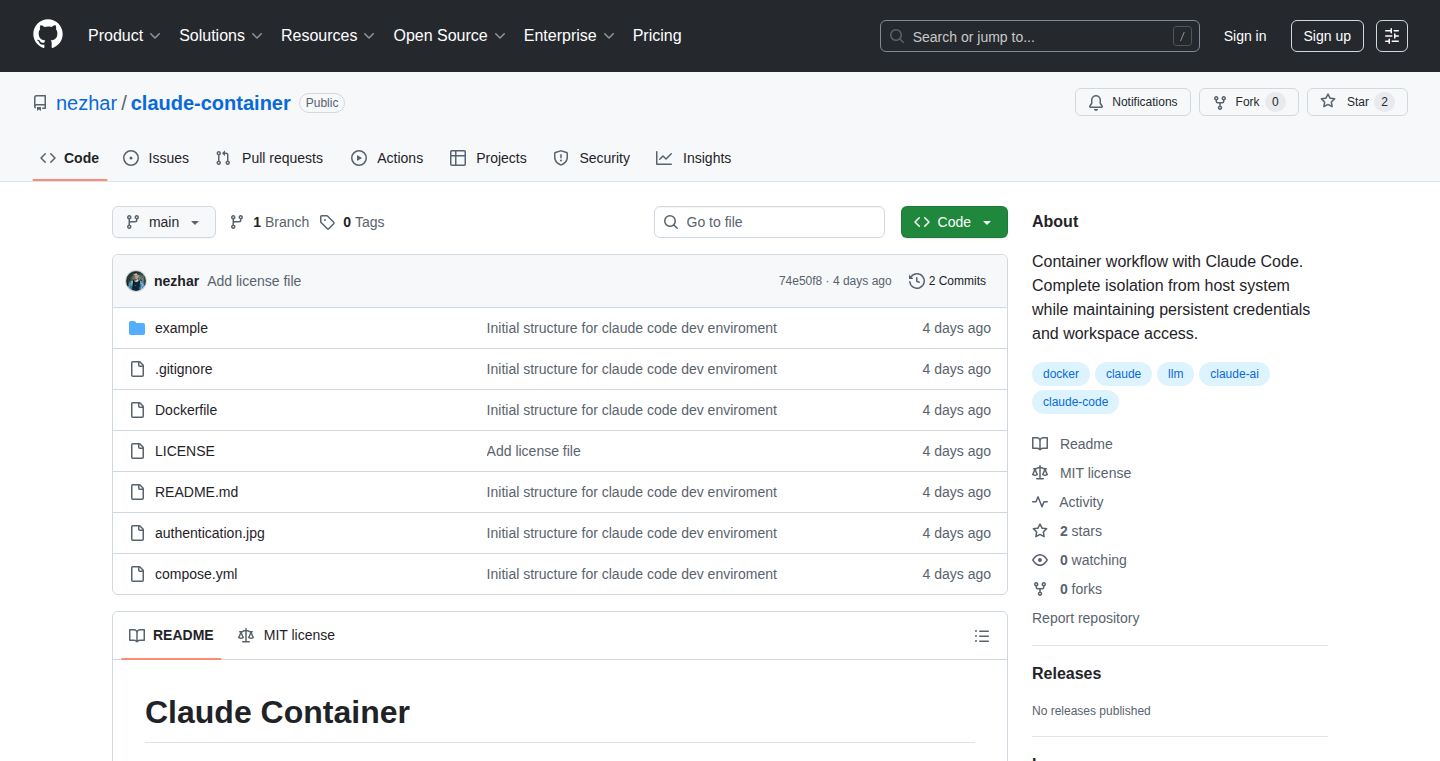

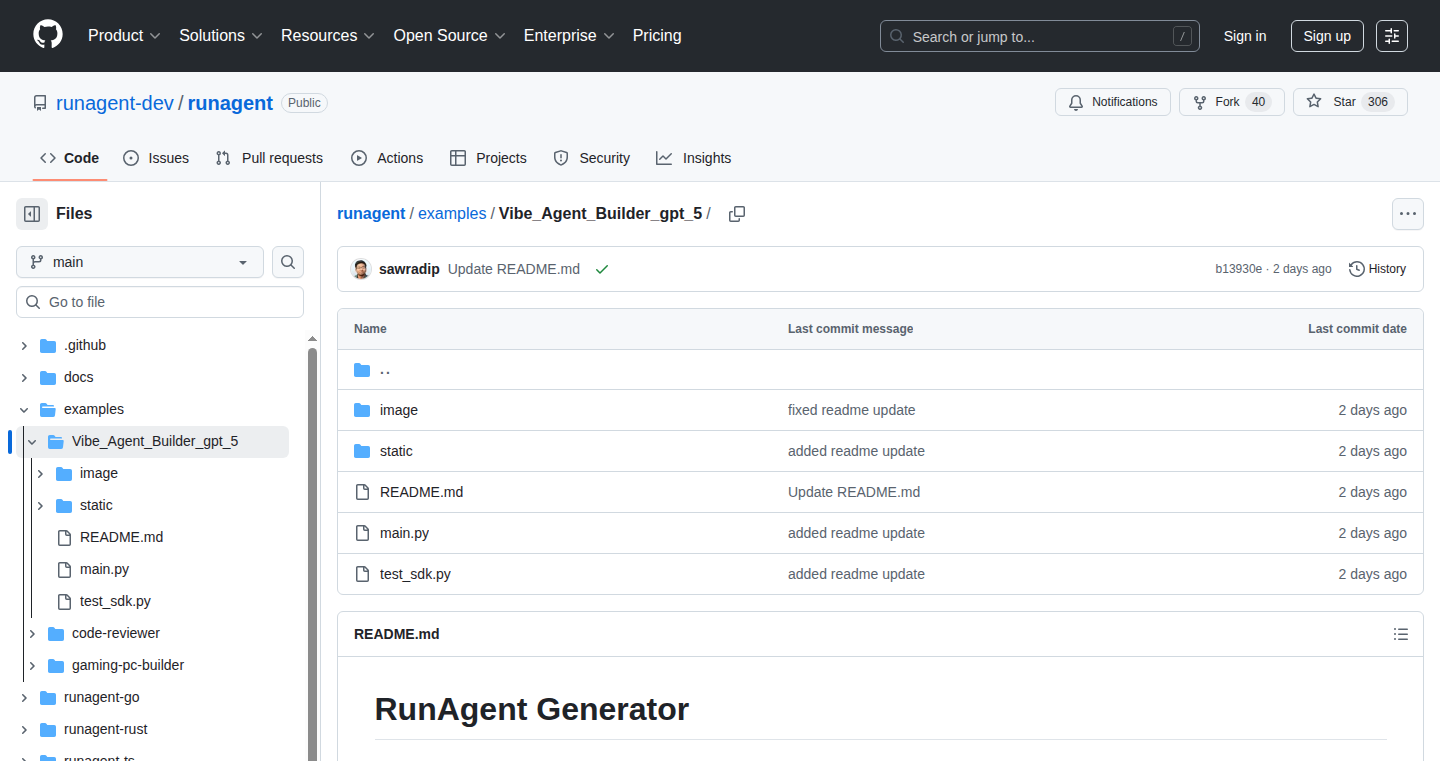

Claude Code DockerBox: Isolated AI Coding Playground

Author

nezhar

Description

This project packages Claude Code, an AI coding assistant, inside a Docker container. It addresses the common problem of wanting to experiment with AI tools without polluting your computer's environment with installations or dependencies. Think of it as a clean, sandboxed area for trying out AI coding features. This offers complete isolation for your system, preserving a clean workspace and making it easy to remove the AI tool after experimentation.

Popularity

Points 2

Comments 5

What is this product?

It's a Docker container pre-configured with Claude Code. Instead of installing AI tools directly on your computer, which can lead to compatibility issues or a cluttered system, you run them inside a self-contained container. This isolation prevents conflicts and keeps your main system clean. The project uses bind mounts to persist your credentials, so you don't have to re-authenticate every time. In short, it is a ready-to-use, isolated environment for trying out AI coding without worrying about breaking anything on your system.
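The bind-mount approach can be sketched by assembling a `docker run` command line. The flags used (`--rm`, `-it`, `-v`, `-w`) are standard Docker options, but the image name, the in-container paths, and the `docker_run_args` helper are placeholders, not the project's documented command.

```python
from typing import List


def docker_run_args(
    image: str,
    credentials_dir: str,
    project_dir: str,
    container_home: str = "/home/user",  # hypothetical home directory in the image
) -> List[str]:
    """Build an argv list for an interactive, disposable container."""
    return [
        "docker", "run",
        "--rm",   # delete the container on exit so nothing lingers
        "-it",    # interactive terminal for the assistant's prompts
        # Bind mount: credentials written inside the container persist on the host.
        "-v", f"{credentials_dir}:{container_home}/.claude",
        # Bind mount the project so the tool can read and edit its files.
        "-v", f"{project_dir}:/workspace",
        "-w", "/workspace",
        image,
    ]
```

The resulting list could be handed to `subprocess.run`. Because only the two mounted directories are shared with the host, everything else the tool installs or writes disappears when the container exits.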

How to use it?

Developers can use this project by installing Docker and then running the provided command. Once authenticated, you can start using Claude Code within the container, accessing its AI-powered code assistance features. The documentation includes examples for integrating this container with existing projects, meaning you can use AI to help on your current projects without needing to install and configure it first. You can then easily remove the container when finished, leaving no trace on your system.

Product Core Function

· Isolated Execution: Runs Claude Code in a Docker container, keeping it separate from the host operating system. The technical value is protection against dependency conflicts and system clutter. Application scenario: Testing AI coding tools without affecting the main development environment. So this allows you to experiment with AI coding assistance without potentially harming your system.

· Credential Persistence: Uses bind mounts to store credentials securely, so you don't have to re-enter them every time the container is used. The technical value is convenience and efficiency. Application scenario: Seamlessly using the AI coding tool across multiple sessions. So this prevents having to log in every time you want to use the AI tool.

· Easy Removal: Designed for easy removal and clean-up after use. The technical value is a clean system with no residual files or configurations. Application scenario: Quickly removing the AI tool when finished experimenting. So this avoids any potential system clutter.

Product Usage Case

· Development Workflow Testing: A developer wants to evaluate Claude Code for its ability to automate code generation. They can use this Docker container without risking any impact to their existing IDE or installed libraries. So this lets the developer easily try out a new code tool.

· Project Integration: A developer wants to integrate AI assistance with their existing project. They can use this container to avoid installing new dependencies directly. So this allows them to focus on the project without wrestling with the new AI tool's setup.

· Experimentation on a New Machine: A developer setting up a new machine wants to start working with AI assistance without installing its dependencies on the host. So this gives them a quick start to using AI tools on a new system.

13

OOMProf: eBPF-Powered Memory Profiling for Go Programs at OOM Kill

Author

gnurizen

Description

OOMProf is a tool that helps you understand why your Go program is getting killed by the operating system due to running out of memory (OOM - Out Of Memory). It uses a technology called eBPF to trace memory allocations and deallocations, providing insights into which parts of your code are consuming the most memory at the time of the OOM event. This is a critical issue for developers as it makes debugging memory-related crashes much easier, saving time and preventing production issues. So this tool is super valuable if you develop in Go and want to find the cause of memory problems quickly.

Popularity

Points 5

Comments 1

What is this product?

OOMProf works by integrating with the operating system's kernel using eBPF. eBPF lets the tool observe the process from inside the kernel at the moment memory problems occur, such as when the system is about to terminate the program for using too much memory. The tool then analyzes the memory usage data it collects to pinpoint the memory-hungry parts of the Go code. The innovation lies in using eBPF during the OOM kill event, providing a real-time view of the memory situation right before the crash. So, instead of guessing, you get the exact memory usage details.

How to use it?

Developers use OOMProf by integrating it into their Go programs. Typically, you’d run the tool alongside your application during development or in production to capture memory usage profiles. When an OOM event occurs, OOMProf generates a memory profile that can be analyzed to identify memory leaks or inefficiencies. This often involves running the program with OOMProf enabled and examining the output reports generated after the program crashes or is killed by the system. So it helps you identify memory problems and optimize your code.
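Once a profile exists, the analysis step largely comes down to ranking call sites by live memory. The sketch below shows that ranking on made-up data, using the same in-use definition as Go's heap profile records (allocated bytes minus freed bytes). The `Sample` type and `top_in_use` function are hypothetical and do not read OOMProf's real output format.

```python
from typing import Dict, Iterable, List, NamedTuple, Tuple


class Sample(NamedTuple):
    call_site: str    # e.g. a function name taken from the allocation stack
    alloc_bytes: int  # bytes allocated at this call site
    free_bytes: int   # bytes from this call site that were later freed


def top_in_use(samples: Iterable[Sample], limit: int = 3) -> List[Tuple[str, int]]:
    """Rank call sites by bytes still in use (allocated minus freed)."""
    in_use: Dict[str, int] = {}
    for sample in samples:
        live = sample.alloc_bytes - sample.free_bytes
        in_use[sample.call_site] = in_use.get(sample.call_site, 0) + live
    # Largest live footprint first; these are the likeliest leak candidates.
    return sorted(in_use.items(), key=lambda item: item[1], reverse=True)[:limit]
```

A call site that allocates heavily but frees everything ranks low here, which is why in-use bytes are usually a better guide to an OOM kill than total allocations.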

Product Core Function

· eBPF-Based Memory Profiling: This function monitors memory allocations and deallocations in real-time using eBPF. This provides a detailed view of memory usage. It's valuable because it lets you see which parts of your code are consuming the most memory, allowing for precise identification of memory hogs. It can identify memory leaks and other inefficiencies, speeding up debugging.

· OOM Kill Event Integration: OOMProf specifically triggers memory profiling when the program is about to be killed due to OOM. This ensures that the profiling captures the exact state of memory usage just before the crash, leading to more relevant information and faster resolution of memory-related issues. So you get the right information just when you need it, right before a crash.

· Detailed Memory Usage Reports: The tool generates reports that detail memory allocation patterns, including information about memory allocation hotspots. This helps developers understand where the memory is being used. This is useful for identifying the parts of your code that are consuming the most memory, helping you focus on optimizations. So you can analyze how your program uses memory at a granular level.

· Integration with Go Programs: OOMProf is designed for use with Go programs, which makes it easy to integrate into your development and deployment pipeline, helping you streamline debugging. This is useful because it seamlessly fits into your existing Go development workflow, making memory analysis easier.

Product Usage Case

· Debugging Memory Leaks: Imagine your web server, written in Go, is crashing due to excessive memory usage. By integrating OOMProf, you can pinpoint the specific Go functions responsible for the memory leak. You can then analyze the generated profiles to identify problematic code areas where memory is not being freed correctly. So you fix the leak and prevent future crashes.

· Optimizing Resource Usage: In a data processing application, memory optimization is crucial. Using OOMProf, you can analyze which parts of your code consume the most memory during a peak load. This helps identify inefficient data structures or algorithms that may be contributing to high memory consumption, allowing you to rewrite those parts of the code to better utilize resources. So you make your application use less memory and improve performance.

· Preventing Production Outages: Consider a microservices architecture in a containerized environment. If a single service begins to consume excessive memory, it can bring down the entire system. Integrating OOMProf into these services enables early detection of memory issues, preventing these outages. So your services run more reliably and downtime is reduced.

14

Private AI List: Your Guide to Data Sovereignty Tools

Author

tdi

Description

This project, a "Private AI List," is a curated collection of resources focused on data sovereignty and privacy-preserving AI technologies. It's designed to help developers and anyone interested in regaining control over their data. It aims to solve the growing concern of data privacy by providing a central hub for discovering and understanding tools that enable users to keep their data private while still leveraging the power of AI. It’s a community-driven list, showcasing various projects and resources related to private AI, contributing to a more privacy-conscious and secure digital landscape.

Popularity

Points 6

Comments 0

What is this product?

This project is essentially a public, community-editable list of tools, libraries, and projects related to private AI and data sovereignty. It acts as a knowledge base, compiling information about different approaches to keeping your data safe and private while using artificial intelligence. The innovation lies in its focus on a specific niche (private AI) and its collaborative, open-source nature, allowing anyone to contribute and improve the resource. It highlights technologies like homomorphic encryption, federated learning, and differential privacy, explaining how they can be used to build AI systems without exposing sensitive data. So this is useful because it gives you a starting point for understanding cutting-edge AI technologies that protect your data.

How to use it?

Developers can use this list to discover and learn about specific tools and technologies for building privacy-preserving AI applications. They can find libraries for homomorphic encryption or frameworks for federated learning. The project acts as a starting point for research and experimentation. Developers can explore different projects, understand their use cases, and potentially integrate them into their own projects. For example, if you're building a medical AI application, you could use the list to find resources on secure data sharing or privacy-preserving machine learning techniques. So you could build more secure and privacy-aware applications.
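To give a feel for one of the techniques mentioned, here is a minimal sketch of the aggregation step in federated averaging: each client trains locally and shares only model weights, and the server combines them weighted by how many examples each client used. The `federated_average` function and the plain-list weight format are illustrative choices, not taken from any library on the list.

```python
from typing import List, Sequence, Tuple


def federated_average(
    client_updates: Sequence[Tuple[Sequence[float], int]]
) -> List[float]:
    """Combine (weights, num_examples) pairs into one example-weighted average.

    Only model weights cross the network; the raw training data stays on
    each client.
    """
    if not client_updates:
        raise ValueError("need at least one client update")
    total = sum(n for _, n in client_updates)
    if total <= 0:
        raise ValueError("clients must report a positive number of examples")
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged
```

For two clients reporting `[1.0, 2.0]` from 1 example and `[3.0, 4.0]` from 3 examples, the larger client pulls the average toward its weights. Production systems typically add protections such as secure aggregation or differential privacy on top, since shared weights can still leak information.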

Product Core Function

· Curated Resource Listing: The list provides a curated and categorized collection of tools, libraries, and projects related to private AI and data sovereignty. This saves developers time by aggregating information from various sources, making it easier to find relevant resources. So it saves developers time and research.

· Technology Descriptions: Each entry in the list includes a description of the technology, its purpose, and its potential use cases. This helps developers understand the functionality and applicability of each tool. This feature helps you understand the technical aspects of tools you may want to use.

· Community Contribution: The list is designed to be community-driven, allowing anyone to contribute new resources, update existing entries, and provide feedback. This ensures that the list remains up-to-date and relevant. The community-driven approach keeps it fresh, and allows you to learn from the community.

· Categorization and Tagging: The list is organized by category and tags, making it easier to search and filter resources based on specific needs. So you can find the tools that are most relevant to your specific use case.

· Focus on Data Sovereignty: The project’s core focus on data sovereignty ensures that the listed tools and technologies prioritize user privacy and data control. This can help developers build more ethical and secure applications.

Product Usage Case

· Medical Data Analysis: A developer working on a medical AI project could use the list to find tools for secure data sharing and privacy-preserving machine learning techniques. This allows the project to train AI models on sensitive patient data while complying with data privacy regulations. So you could build more secure medical applications.

· Financial Services: Financial institutions can use the list to discover tools for building AI models on sensitive financial data without compromising security. Federated learning, for example, could be used to train models across different banks without sharing their actual customer data. So you could develop AI-powered financial services without risking sensitive customer information.

· Personalized Recommendation Systems: Developers can use the list to find tools to build personalized recommendation systems that respect user privacy. Instead of directly accessing user data, they can explore techniques like differential privacy or federated learning. So you can improve user experience while protecting privacy.

· Government and Public Services: Public service developers can use the list to enhance data privacy when building AI-powered public services such as fraud detection and personalized citizen services. So you can deliver better services to citizens in a secure manner.
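The federated learning idea mentioned in the financial services case can be sketched as follows. This is a toy federated averaging loop under simplifying assumptions: a one-parameter model `y ≈ w * x`, two hypothetical clients (`bank_a`, `bank_b`) with made-up data, and no secure aggregation. Only the model weight leaves each client, never the raw records.

```python
def local_update(w, data, lr=0.1, epochs=20):
    # Gradient descent on mean squared error for y ≈ w * x,
    # using only this client's local data.
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def federated_average(global_w, clients, rounds=10):
    for _ in range(rounds):
        # Each client trains locally and reports (weight, sample count).
        updates = [(local_update(global_w, data), len(data)) for data in clients]
        total = sum(n for _, n in updates)
        # The server combines the weights, weighted by sample count.
        global_w = sum(w * n for w, n in updates) / total
    return global_w

# Hypothetical private datasets; both follow y = 2 * x.
bank_a = [(1.0, 2.0), (2.0, 4.0)]
bank_b = [(3.0, 6.0), (0.5, 1.0), (1.5, 3.0)]

w = federated_average(0.0, [bank_a, bank_b])
print(f"learned weight: {w:.4f}")
```

Real deployments typically layer secure aggregation or differential privacy on top, because model updates alone can still leak information about the training data.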

15

Blue Dwarf: A Text-Based Social Haven for the Antiquated Web

Author

lardbgard

Description

Blue Dwarf is a radical take on social media, stripping away all the fancy visual effects and focusing on the core: text. It's designed to run smoothly on ancient hardware and slow internet connections, like the ThinkPads of yesteryear. The innovation lies in its minimalist approach, eliminating JavaScript, ads, and tracking, leading to incredibly fast loading times and a lightweight experience. It's solving the problem of modern web bloat and accessibility issues for users with older devices or limited bandwidth.

Popularity

Points 4

Comments 2

What is this product?

Blue Dwarf is a social platform built entirely on text, without the typical web technologies that slow things down. It's built to be lean and efficient, avoiding JavaScript, intrusive advertisements, and user tracking. Instead of complex code, it focuses on delivering content directly to the browser, offering a simple and fast user experience, even on very old machines. So what does this mean? This provides a social experience free from distractions and speed issues, creating a better experience on a wide range of hardware.

How to use it?

Developers can interact with Blue Dwarf by simply accessing its text-based interface through their web browsers. Its minimalist design makes it easy to understand and potentially repurpose for similar text-based applications. It offers a model for building accessible, lightweight web applications that focus on content delivery over fancy features. Because there are no complex technologies, it’s easy to integrate into existing projects. So you can explore a new way of building web apps that are much faster and easier to use.

Product Core Function

· Text-based Content Sharing: Users can share thoughts and ideas in a purely textual format. This prioritizes the message over visual clutter, and is easily viewable on any device with a browser. So you can communicate effectively without the burden of images and videos.

· Minimalist Interface: The platform's design is deliberately simple, using only basic HTML and CSS. This means very fast loading times and easy navigation, especially on old computers. So you can experience a social media platform that does not require advanced hardware.

· No JavaScript or Tracking: Eliminating JavaScript keeps the platform lightweight, and the site does not track users. This provides a faster, more private experience. So you are protected from data collection and enjoy a more streamlined experience.

· Accessibility-focused Design: The text-only approach and simple HTML make the site highly accessible to users with disabilities or those using older assistive technologies. So everyone can participate regardless of their hardware or disability.
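The "basic HTML, no JavaScript" approach described above can be sketched as a server-side function that turns posts into a static page. This is not Blue Dwarf's actual code; `render_feed` and the sample post are hypothetical, and the sketch only shows that a text feed needs nothing beyond escaped HTML.

```python
from html import escape

def render_feed(posts):
    # Escape all user-supplied text so it can never inject markup or scripts.
    items = "\n".join(
        f"<article><h2>{escape(author)}</h2><p>{escape(text)}</p></article>"
        for author, text in posts
    )
    return (
        "<!DOCTYPE html>\n"
        '<html lang="en"><head><meta charset="utf-8"><title>Feed</title></head>\n'
        f"<body>\n{items}\n</body></html>"
    )

page = render_feed([("alice", "Hello from a 2005 ThinkPad <script>alert(1)</script>")])
print(page)
```

Because the page is plain markup with semantic elements, it renders in text browsers and stays small enough for slow connections.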

Product Usage Case

· Developing a lightweight blog: A developer could use the Blue Dwarf model to build a personal blog or a small project that requires simplicity and speed, avoiding the complications of modern web frameworks. So you can easily create a blog that loads really fast, which is especially important for SEO.

· Designing command-line interface (CLI) tool output: A developer could use Blue Dwarf's minimalist style as a reference for building CLI tools that deliver text-based output. This could also lead to building a text-based social app. So you can quickly make tools that are friendly to older systems or situations where resources are limited.

· Experimenting with web accessibility: Developers can use Blue Dwarf as inspiration to design websites and applications that prioritize accessibility, ensuring they can be used by everyone. So you can develop apps that are accessible to a larger audience.

16

InterviewPrep AI: Automated Mock Interview Generator

Author

fahimulhaq

Description

This project uses the power of artificial intelligence to create realistic mock interviews for software engineers. It tackles the common challenge of preparing for technical interviews by providing a platform to practice answering questions and receive feedback on performance, focusing on code quality and problem-solving skills.

Popularity

Points 4

Comments 2

What is this product?

InterviewPrep AI generates mock interviews for software engineers using AI. It simulates a real interview experience by asking technical questions, evaluating the responses, and providing feedback. The innovation lies in the automated generation of these interviews, making practice more accessible and affordable. It analyzes code, assesses problem-solving approaches, and provides insights, which is a great example of applying AI to a practical need: interview preparation. So this helps engineers to prepare more effectively.

How to use it?

Developers can access InterviewPrep AI through a web interface. They can select a role (e.g., Frontend, Backend) and desired level of difficulty to start an interview session. The system presents coding challenges and behavioral questions, and users can respond either by typing code or describing their thought processes. After the user submits answers, the AI provides feedback on code quality, efficiency, and clarity. It works as a self-service learning tool. So developers could use it as a daily practice tool.

Product Core Function

· Automated Question Generation: The system automatically generates a diverse set of technical interview questions based on the chosen role and difficulty level. This saves time and resources compared to traditional interview preparation methods. So it saves you preparation time.

· Real-time Code Evaluation: The AI analyzes code snippets written by the user, identifying potential issues like syntax errors, inefficient algorithms, and code readability. This improves the quality of code that developers are writing and enables a better understanding of their strengths and weaknesses. So, you will receive useful feedback on the code you wrote.

· Performance Feedback: The system provides overall feedback on the performance, including problem-solving skills, communication, and technical expertise, offering an objective evaluation of the user's strengths and weaknesses. This allows the developer to identify areas for improvement. So you will have a better understanding of your current coding level.

· Personalized Learning: The AI dynamically adapts to the user's performance, choosing subsequent questions based on how earlier ones were answered. This focuses learning and practice on the areas that need it most. So you will prepare more efficiently.
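One simple way such adaptation could work is a rule that moves the difficulty level up or down based on recent scores. The sketch below is an assumption for illustration, not InterviewPrep AI's actual logic; the function `next_difficulty`, the 1 to 5 scale, and the thresholds are all hypothetical.

```python
def next_difficulty(current: int, recent_scores: list[float],
                    lowest: int = 1, highest: int = 5) -> int:
    # Scores are assumed to be in the range 0.0 (failed) to 1.0 (perfect).
    if not recent_scores:
        return current
    average = sum(recent_scores) / len(recent_scores)
    if average >= 0.8:
        current += 1   # consistently strong answers: raise the level
    elif average < 0.5:
        current -= 1   # struggling: lower the level
    # Keep the level within the allowed range.
    return max(lowest, min(highest, current))

level = next_difficulty(3, [0.9, 0.85])
print(f"next question difficulty: {level}")
```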

Product Usage Case

· Interview Practice: A junior software engineer preparing for their first job interviews can use InterviewPrep AI to practice coding challenges, which will build confidence. So it is a great help for new engineers.

· Skill Enhancement: An experienced developer can use InterviewPrep AI to brush up on specific technical skills or explore areas where they lack expertise. This can increase their chances of passing the interview. So experienced developers can enhance specific skills.

· Company Hiring Preparation: Tech recruiters can use InterviewPrep AI to prepare potential candidates for interviews by focusing on relevant technical questions.

17

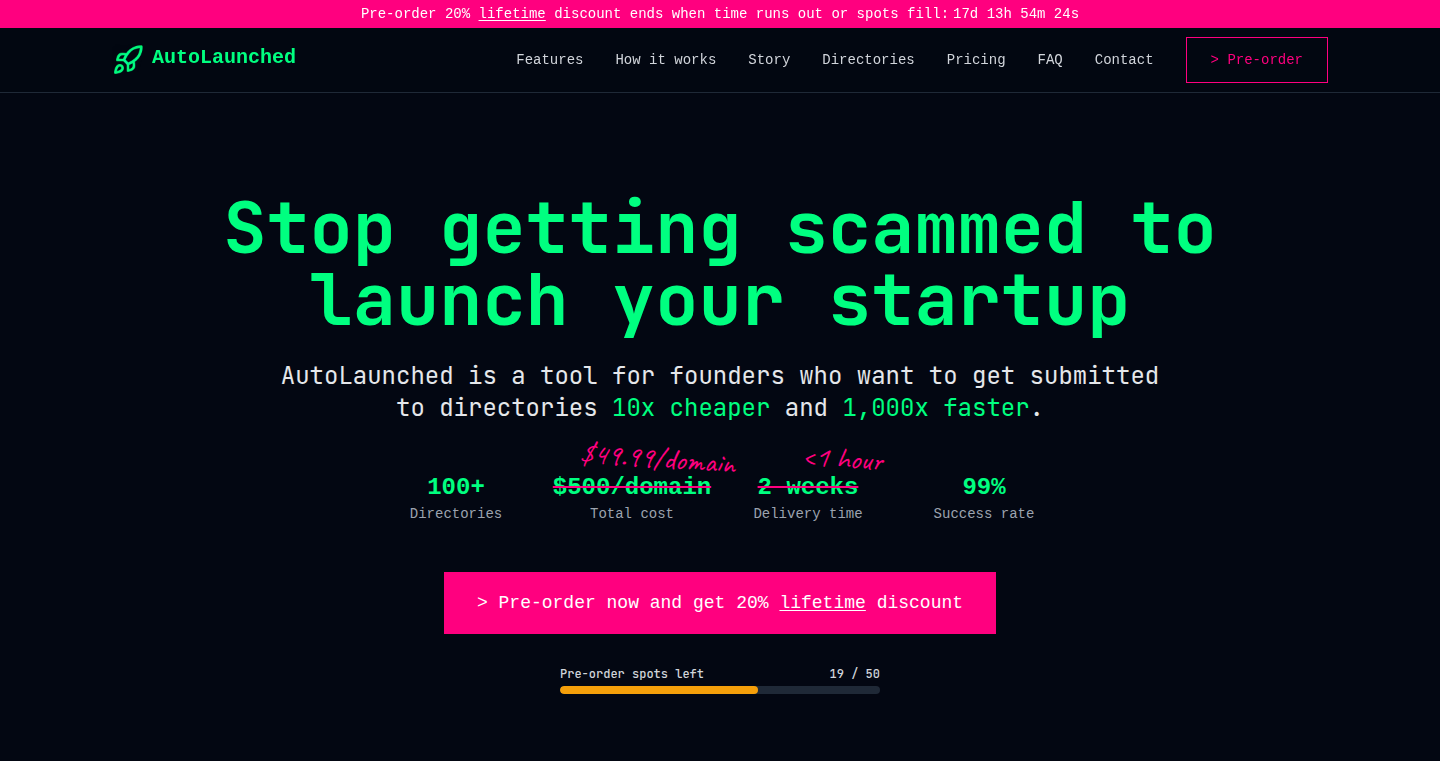

LinkCraft: Automated Backlink & SEO Booster

Author

thevinodpatidar

Description

LinkCraft is a tool designed to help early-stage startups improve their domain rating and search engine optimization (SEO) performance. It automates the process of building backlinks, which are crucial for improving a website's visibility in search results. This project focuses on innovative techniques for identifying and securing valuable backlinks, effectively addressing the common problem of low domain authority for new businesses.

Popularity

Points 2

Comments 3

What is this product?

LinkCraft works by intelligently analyzing the web to find opportunities for building backlinks. It leverages techniques like web scraping and content analysis to identify relevant websites and pages where the startup can potentially acquire a backlink. It then automates the outreach process, making it easier for startups to connect with website owners and bloggers. This is a technical solution to a very practical problem: how to get your website noticed by search engines and potential customers. So what's cool? It automates a really tough process, saving you a ton of time.

How to use it?

Developers can use LinkCraft by entering their website's URL and providing information about their target audience and industry. The tool then identifies potential backlink opportunities. Developers vet the suggestions, fold them into their content strategy and existing SEO workflows, and use the tool to reach out to other sites. So you'd use it to save time and quickly build up your SEO.

Product Core Function

· Automated Backlink Discovery: This feature uses web scraping and content analysis to find relevant websites that might link to your content. Value: Saves time and effort in identifying potential backlink sources. Application: Ideal for finding websites for content promotion and guest posting.

· Outreach Automation: The tool can automate parts of the outreach process, helping you contact website owners and bloggers. Value: Streamlines the process of requesting backlinks. Application: Helps expedite the link building process, boosting efficiency.

· Content Analysis: Analyzes your website content to match it with relevant backlink opportunities. Value: Ensures that the backlinks are relevant and provides better SEO value. Application: This helps in creating targeted content that attracts links.
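The content-matching step could, for example, score candidate pages by keyword overlap with your own site. The sketch below uses Jaccard similarity on word sets; it is an illustrative assumption, not LinkCraft's actual method, and the helper names, stopword list, and example URLs are hypothetical.

```python
import re

STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "for", "in", "on", "with"}

def keywords(text):
    # Lowercase word set with common stopwords removed.
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}

def jaccard(a, b):
    # Size of the intersection divided by size of the union (0.0 if both are empty).
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def rank_candidates(site_text, candidates):
    # Return (score, url) pairs, most relevant first.
    site_kw = keywords(site_text)
    scored = [(jaccard(site_kw, keywords(text)), url) for url, text in candidates.items()]
    return sorted(scored, reverse=True)

site = "Handmade leather wallets and bags"
candidates = {
    "https://blog.example/leather-care": "Guide to leather wallets care",
    "https://news.example/crypto": "Crypto market news today",
}
ranked = rank_candidates(site, candidates)
for score, url in ranked:
    print(f"{score:.2f}  {url}")
```

A real tool would likely use richer signals (topic models, embeddings, domain authority), but the ranking structure would look similar.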

Product Usage Case

· A new e-commerce startup struggling to rank for its target keywords can use LinkCraft to identify authoritative blogs in their niche and reach out for guest posting opportunities. The tool would help in automatically finding those blogs and assisting with the outreach process. The startup's SEO improves, and their website gains visibility in search results. So this improves visibility in search results.

· A tech blog can use LinkCraft to find websites that cover the same technology or offer similar content, allowing them to build relationships through link exchange. By identifying relevant websites and automating the outreach, the blog can raise its domain authority. Its content gains a wider audience and ranks higher in search results. So this helps gain a wider audience.

18

xtop – The eBPF-Powered Time Tracker

Author

tanelpoder

Description

xtop is a 'top' command reimagined: instead of focusing on CPU usage, it shows which processes are consuming wall-clock time, using eBPF. This means it measures the actual elapsed time a process spends, including time spent waiting on things like network requests. The technical innovation lies in its use of eBPF, which lets it 'peek' inside the kernel (the core of the operating system) to gather more accurate timing information. This avoids the inaccuracies of traditional methods. It solves the problem of understanding where your time is *really* going when your computer feels slow, going beyond just CPU utilization.
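The difference between wall-clock time and CPU time can be seen with a few lines of Python. This sketch does not use eBPF and is unrelated to how xtop is implemented; it only illustrates why a CPU-focused view misses time spent waiting. The helper `measure` is hypothetical.

```python
import time

def measure(fn):
    # perf_counter tracks elapsed (wall-clock) time;
    # process_time tracks CPU time used by this process and excludes sleeping.
    wall_start = time.perf_counter()
    cpu_start = time.process_time()
    fn()
    wall = time.perf_counter() - wall_start
    cpu = time.process_time() - cpu_start
    return wall, cpu

# Sleeping stands in for waiting on a network request or disk I/O.
wall, cpu = measure(lambda: time.sleep(0.2))
print(f"wall-clock: {wall:.3f}s, CPU: {cpu:.3f}s")
```

The sleeping call takes about 0.2 seconds of wall-clock time but almost no CPU time, which is exactly the kind of gap a CPU-only `top` view hides.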

Popularity

Points 4

Comments 1

What is this product?