Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-07-29

SagaSu777 2025-07-30

Explore the hottest developer projects on Show HN for 2025-07-29. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's projects showcase a surge in applying AI to automate tasks and create novel user experiences. Developers are using LLMs for code generation, review, and even refactoring, with the aim of boosting productivity. The trend toward client-side processing emphasizes user privacy and responsiveness. Open-source initiatives are giving the community tools for applications ranging from security to content creation. For developers and innovators, this points toward AI-first development, tools that automate workflows, and client-side processing that improves privacy and performance. Explore open-source projects to accelerate your learning and build on existing foundations, and think about how AI can redefine existing formats or processes to offer unique value.

Today's Hottest Product

Name

Show HN: I built an AI that turns any book into a text adventure game

Highlight

This project ingeniously leverages AI to transform any book into an interactive text adventure game. It demonstrates a creative application of AI by letting users make choices that shape the narrative, offering a fresh way to experience literature. Developers can learn from this project by exploring how to use AI to create personalized, dynamic content and how to integrate AI into existing creative formats, which opens up new possibilities for interactive storytelling.

Popular Category

AI (Artificial Intelligence)

Productivity Tools

Developer Tools

Popular Keyword

AI

LLM

GitHub

Open Source

Technology Trends

AI-Powered Content Generation

AI for Code Review and Automation

Client-Side Processing

Open Source AI Tools and Frameworks

Tools for Workflow Automation

Project Category Distribution

AI-driven applications (35%)

Developer Tools & Utilities (30%)

Productivity & Automation (25%)

Other (Games, Security, etc.) (10%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | BookQuest AI: Turn Any Book into a Text Adventure | 249 | 99 |
| 2 | Terminal-Bench-RL: Long-Horizon Terminal Agent Training Infrastructure | 115 | 10 |
| 3 | PR Quiz: AI-Powered Code Comprehension Tool | 84 | 29 |
| 4 | ELF Injector: Code Injection for Enhanced Binary Manipulation | 37 | 12 |
| 5 | Xorq: The Compute Catalog for Reusable and Observable AI Pipelines | 35 | 10 |
| 6 | Monchromate: Smart Greyscale Browser Extension | 38 | 5 |
| 7 | ElectionTruth: Interactive Data Visualization and Simulations for Election Analysis | 8 | 4 |
| 8 | StudyTurtle: AI-Powered Explanations for Kids and Parenting Insights | 5 | 3 |
| 9 | YouTubeTldw: Instant YouTube Summaries | 5 | 3 |
| 10 | Maia Chess: Human-like AI for Chess Engagement | 7 | 1 |

1

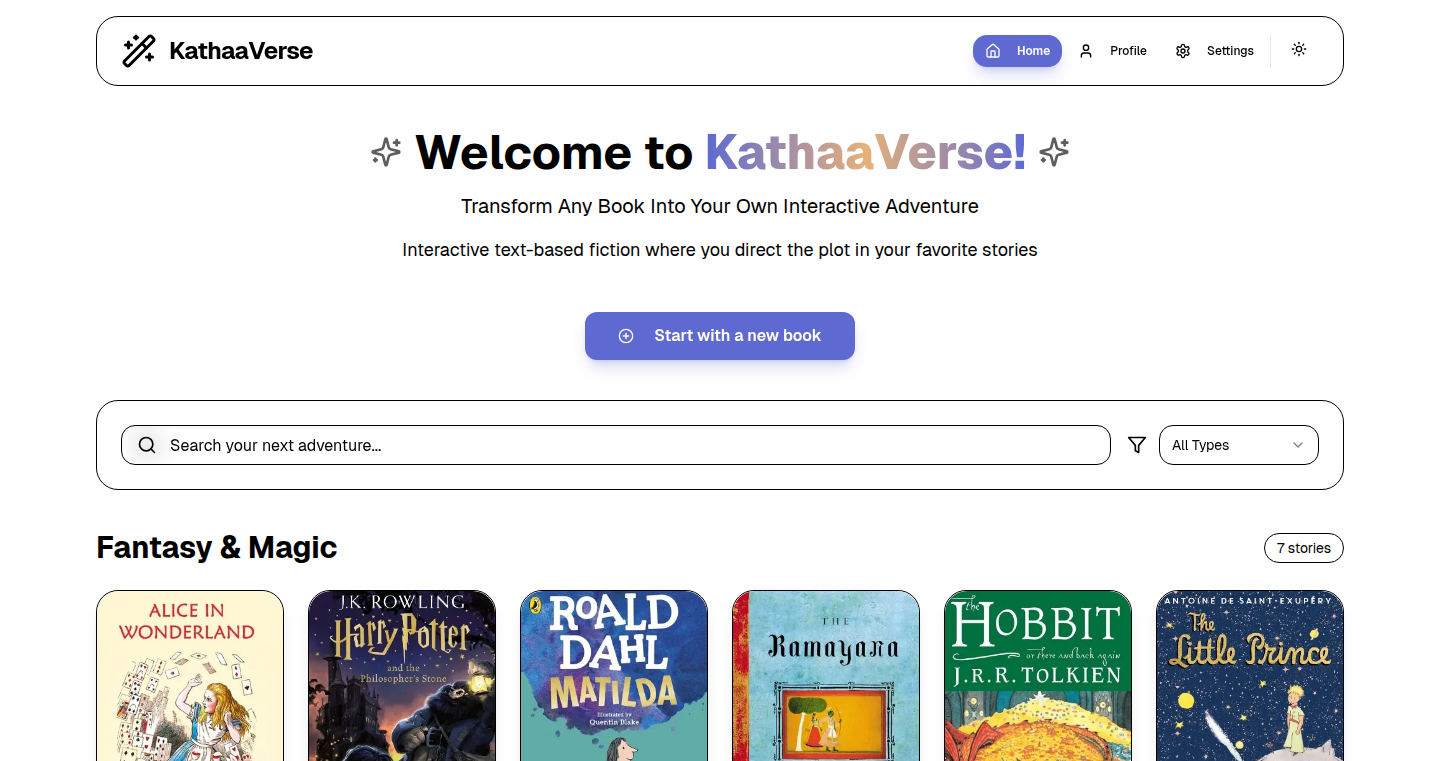

BookQuest AI: Turn Any Book into a Text Adventure

Author

rcrKnight

Description

BookQuest AI is a web application that leverages the power of Artificial Intelligence to transform any book into an interactive text adventure game. It allows you to experience your favorite stories in a new, engaging way by making choices that shape the narrative. The project uses AI to analyze the text, understand the plot, and generate interactive scenarios based on your decisions. A key innovation is the ability to "remix" genres, allowing you to experience classic tales in completely new contexts, such as playing Dune as a noir detective story.

Popularity

Points 249

Comments 99

What is this product?

BookQuest AI is essentially an AI-powered book-to-game converter. It takes a book, processes it with AI algorithms, and creates a playable text adventure. The AI analyzes the book's content – characters, plot, settings – and generates branching narratives based on your input. The innovation lies in how the AI understands and interacts with the book's content, allowing for dynamic storytelling and the ability to reimagine stories in different genres. So this is like a digital playground where you and the AI create new experiences with the same material.

How to use it?

Developers could use BookQuest AI as a fascinating example of AI-driven content generation and interactive storytelling. They can analyze the app's architecture and see how AI is used to process text, generate interactive scenarios, and manage user input. This could be a great learning resource for developers interested in AI, NLP (Natural Language Processing), and game development. Think of it as a practical demonstration of how complex AI models can be applied to create interactive experiences. So you can learn how to build similar applications yourself.

Product Core Function

· Book Conversion: The core function is converting a book into a text adventure game. This involves complex text analysis and generation processes managed by AI. The value is in providing a novel way to engage with books, making reading more interactive and customizable. This can be applied in educational settings to reinforce learning.

· Genre Remixing: The ability to transform a book into a different genre (e.g., making Dune into a noir detective story). The technical achievement lies in the AI's ability to understand the underlying themes and plot structures of a book and adapt them to different narrative styles. This feature provides immense creative possibilities, allowing users to experience books in completely new and unexpected ways. This is useful for game designers and writers to explore creative narrative possibilities.

· Interactive Storytelling: The system allows users to make choices that affect the story's progression. The AI dynamically generates narrative paths based on these choices. This offers an immersive and engaging reading experience. It can be applied in education, gamification, or even personalized entertainment.

· AI-Powered Analysis: The project utilizes AI to analyze the source text, breaking down complex elements such as character interactions, plot points, and settings, allowing the generation of more dynamic and compelling interactions with the reader. The value lies in its use of AI to automate the traditionally manual work of adapting text for interactive formats. So it provides a way to understand text interactions via AI.
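The choice-driven loop described in the functions above can be sketched in a few lines of Python. This is an illustration only, not BookQuest AI's actual implementation: `Scene`, `StoryState`, `stub_generator`, `advance`, and `play` are hypothetical names, and the stub stands in for whatever model call the real app makes.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Scene:
    text: str
    choices: List[str]


@dataclass
class StoryState:
    genre: str  # e.g. "noir", for the genre-remix idea
    history: List[str] = field(default_factory=list)  # choices made so far


# A generator maps the current state to the next scene. A real app would
# presumably call a language model here; this sketch uses a deterministic stub.
Generator = Callable[[StoryState], Scene]


def stub_generator(state: StoryState) -> Scene:
    step = len(state.history) + 1
    last = state.history[-1] if state.history else "the beginning"
    return Scene(
        text=f"[{state.genre}] Scene {step}, following '{last}'.",
        choices=["investigate", "leave"],
    )


def advance(state: StoryState, scene: Scene, choice_index: int) -> StoryState:
    """Record the player's choice and return the updated state."""
    if not 0 <= choice_index < len(scene.choices):
        raise ValueError("choice_index out of range")
    return StoryState(state.genre, state.history + [scene.choices[choice_index]])


def play(generator: Generator, genre: str, picks: List[int]) -> List[str]:
    """Run a scripted session and return the text of each scene shown."""
    state = StoryState(genre)
    shown = []
    for pick in picks:
        scene = generator(state)
        shown.append(scene.text)
        state = advance(state, scene, pick)
    return shown
```

Because the generator only sees the state, swapping the stub for a model-backed function would not change the loop, and the `history` list is what makes each path through the story different.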

Product Usage Case

· Educational Game: Create interactive educational games based on historical texts. The AI can adapt historical events into game scenarios, allowing students to engage with the material in a hands-on and engaging way. This can make learning history more fun and memorable.

· Interactive Fiction Development: Using the BookQuest AI framework to generate interactive stories. Game developers can use the AI to prototype new games, experiment with narrative structures, and quickly generate large amounts of content. This saves time and allows developers to focus on design and gameplay.

· Personalized Storytelling: Individuals can use the AI to customize classic stories to their liking. You could, for instance, change the protagonist, the setting, or the plot of a book to see how it changes the outcome. This is a powerful tool for creative writing exercises and personal enjoyment.

· Adaptive Learning Platforms: Integrated with adaptive learning platforms, this could enhance how students interact with learning materials. By turning books into interactive games, platforms can better engage students and offer personalized learning experiences.

2

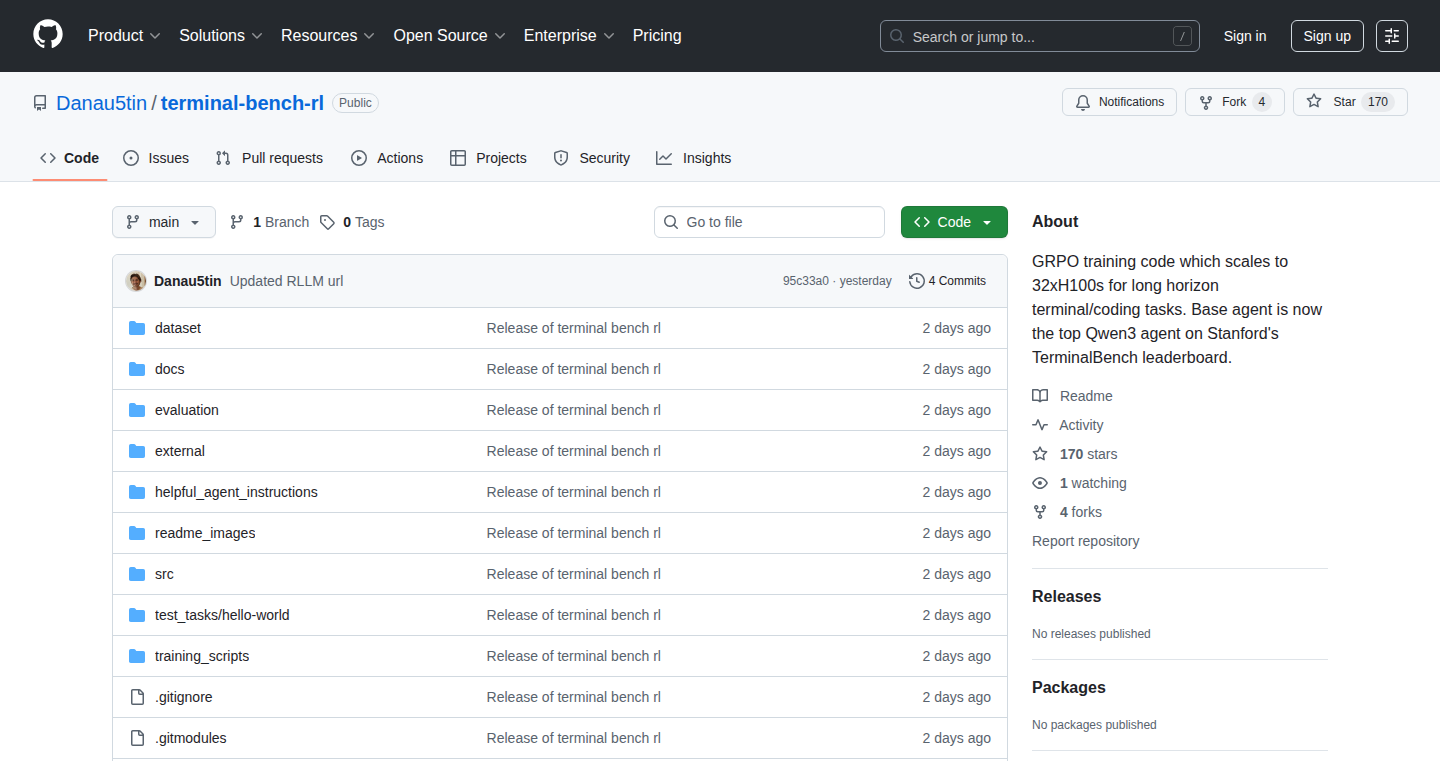

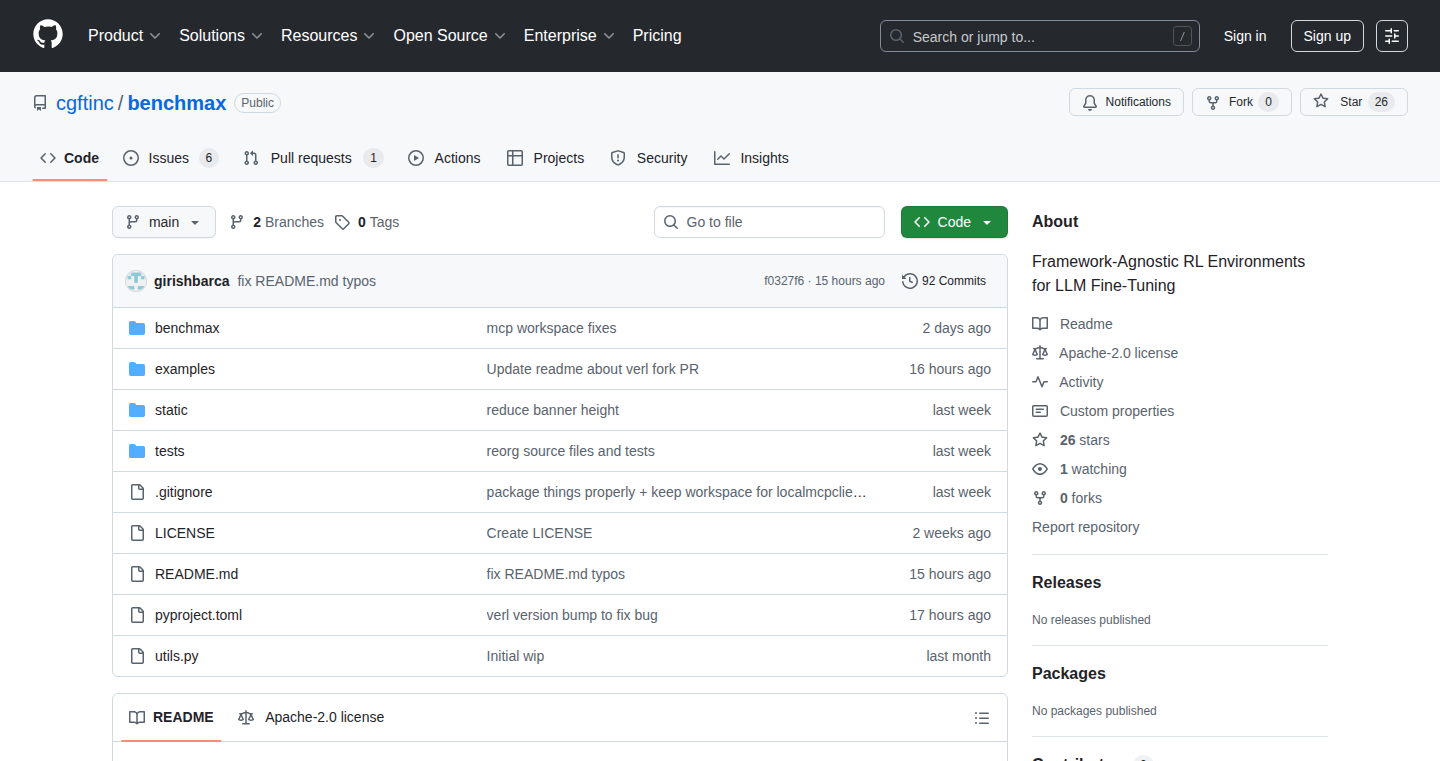

Terminal-Bench-RL: Long-Horizon Terminal Agent Training Infrastructure

Author

Danau5tin

Description

This project builds a system for training AI agents to perform complex tasks in a terminal environment, like using the command line to solve problems. It uses a technique called Reinforcement Learning (RL) to teach the agent. The system is designed to scale from small setups to large, expensive ones, allowing for efficient training. The project's core innovation is the infrastructure built for training these 'long-horizon' agents (agents that have to make multiple decisions over a long period to achieve a goal), which is open-sourced. This project tackles the challenge of teaching AI agents to handle extended, multi-step tasks, something often difficult for current AI systems. In short, the agent learns to work in a terminal the way a human user would, issuing commands step by step to reach a goal.

Popularity

Points 115

Comments 10

What is this product?

This project is a framework for training AI agents in a terminal environment, using Reinforcement Learning (RL). It is similar to teaching a robot how to use a computer. The agent interacts with the terminal, executing commands to accomplish tasks. The core innovation is the infrastructure, including a synthetic data pipeline for creating training data, a multi-agent training setup, and the ability to scale the training across multiple powerful computers. So this allows researchers and developers to experiment with and train complex AI agents that can automate tasks in a terminal environment.

How to use it?

Developers can use this project as a starting point for building and training their own terminal agents. They can customize the agent's behavior, the tasks it performs, and the environment it operates in. The project provides pre-built components such as the agent design inspired by Claude Code, data generation pipelines, and the infrastructure to run the training. The code is open-source on GitHub. So, if you are a developer, you can use this as a base, adapt it, and train AI agents to perform automated tasks, like scripting, software development, or even cybersecurity.

Product Core Function

· Docker-isolated GRPO Training: This allows each training run (rollout) to happen in its own container, preventing conflicts and ensuring that the system can handle lots of tasks at the same time. So this ensures stability and efficiency when training agents with different configurations.

· Multi-agent synthetic data pipeline: This uses a pipeline to create training data, including validation. It uses models like Opus-4 to generate diverse and challenging training tasks, which is key for teaching the agent how to solve real-world problems. So this provides high-quality training data for the agent.

· Hybrid reward signals: The agent is rewarded based on both unit tests that check if the agent's actions are correct and a behavioral LLM judge, which gives feedback on the quality of the results. This helps guide the agent towards the correct solutions. So this makes the AI agent better at problem solving by providing feedback on its performance.

· Scalable infrastructure: The system is designed to scale from small setups to large clusters of computers, making it possible to train agents that perform complex tasks. So this makes the training faster and more efficient.

· Config Presets: Simple configuration presets allow users to adapt the training process for different hardware setups with minimal effort. So this simplifies the setup and allows for faster iteration.
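To make the hybrid reward and the GRPO step above more concrete, here is a minimal sketch. It is not taken from the project's code: the function names and the `test_weight` default are assumptions, and the advantage formula is the commonly described GRPO-style normalization of each rollout's reward against the other rollouts in its group.

```python
from statistics import mean, pstdev
from typing import List


def hybrid_reward(tests_passed: int, tests_total: int,
                  judge_score: float, test_weight: float = 0.7) -> float:
    """Blend a unit-test pass rate with an LLM-judge score in [0, 1].

    test_weight is an assumed value chosen for illustration only.
    """
    if tests_total <= 0:
        raise ValueError("tests_total must be positive")
    if not 0.0 <= judge_score <= 1.0:
        raise ValueError("judge_score must be in [0, 1]")
    pass_rate = tests_passed / tests_total
    return test_weight * pass_rate + (1.0 - test_weight) * judge_score


def group_relative_advantages(rewards: List[float],
                              eps: float = 1e-8) -> List[float]:
    """GRPO-style advantages: normalize each reward against its own group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    # eps avoids division by zero when every rollout earns the same reward.
    return [(r - mu) / (sigma + eps) for r in rewards]
```

Rollouts that beat their group's average get a positive advantage and are reinforced, while below-average rollouts are pushed down, so no separate value model is needed in this sketch.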

Product Usage Case

· Automated Scripting: A developer could use this to train an agent to automate repetitive scripting tasks, saving time and reducing errors. So this makes your work faster and more efficient.

· Software Development: The project can be used to train agents to assist in software development tasks, like code generation, debugging, and testing. So this can help developers write code, and can also help them find and fix errors in the code.

· Cybersecurity: The technology could be adapted to train agents to perform tasks in cybersecurity, such as threat detection and response, and incident management. So this could help companies detect and respond to cyber threats.

3

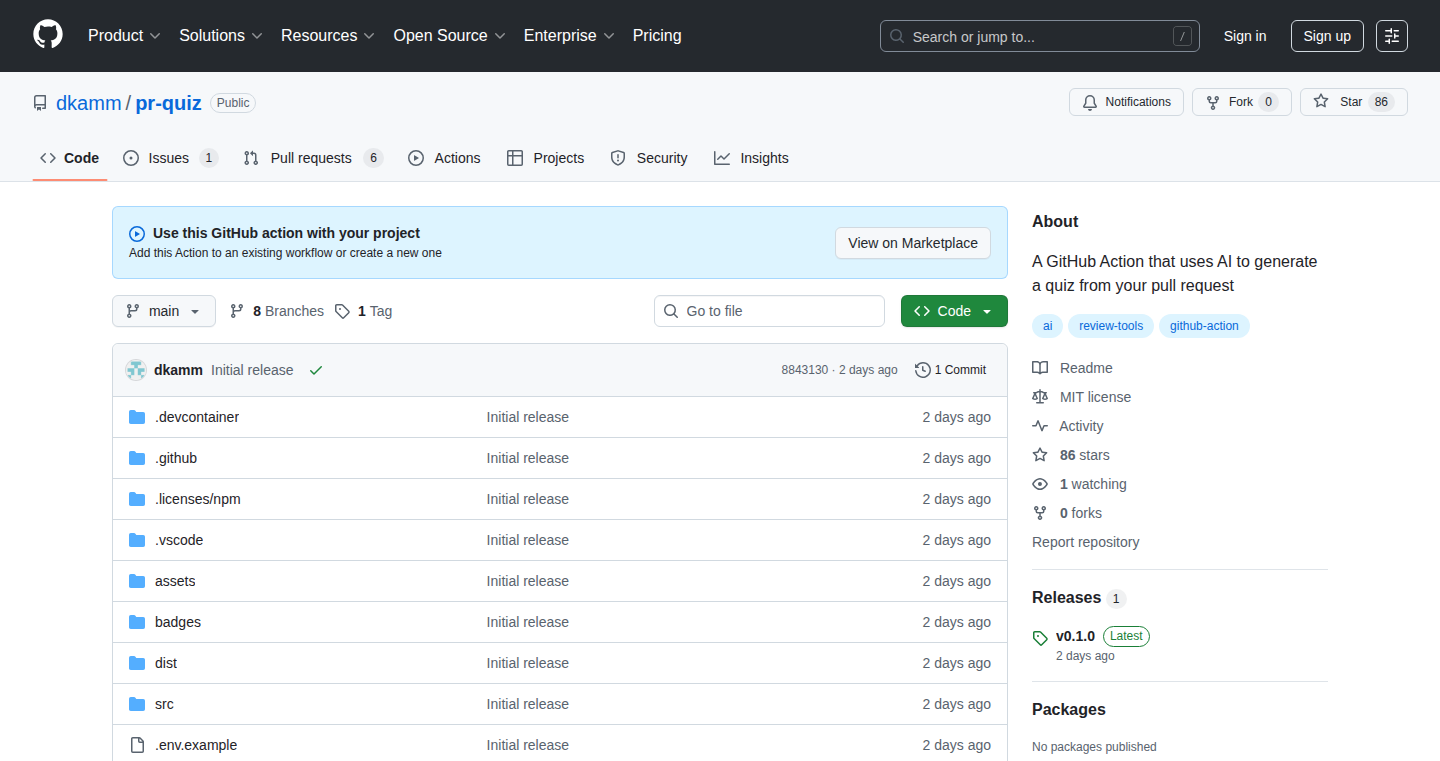

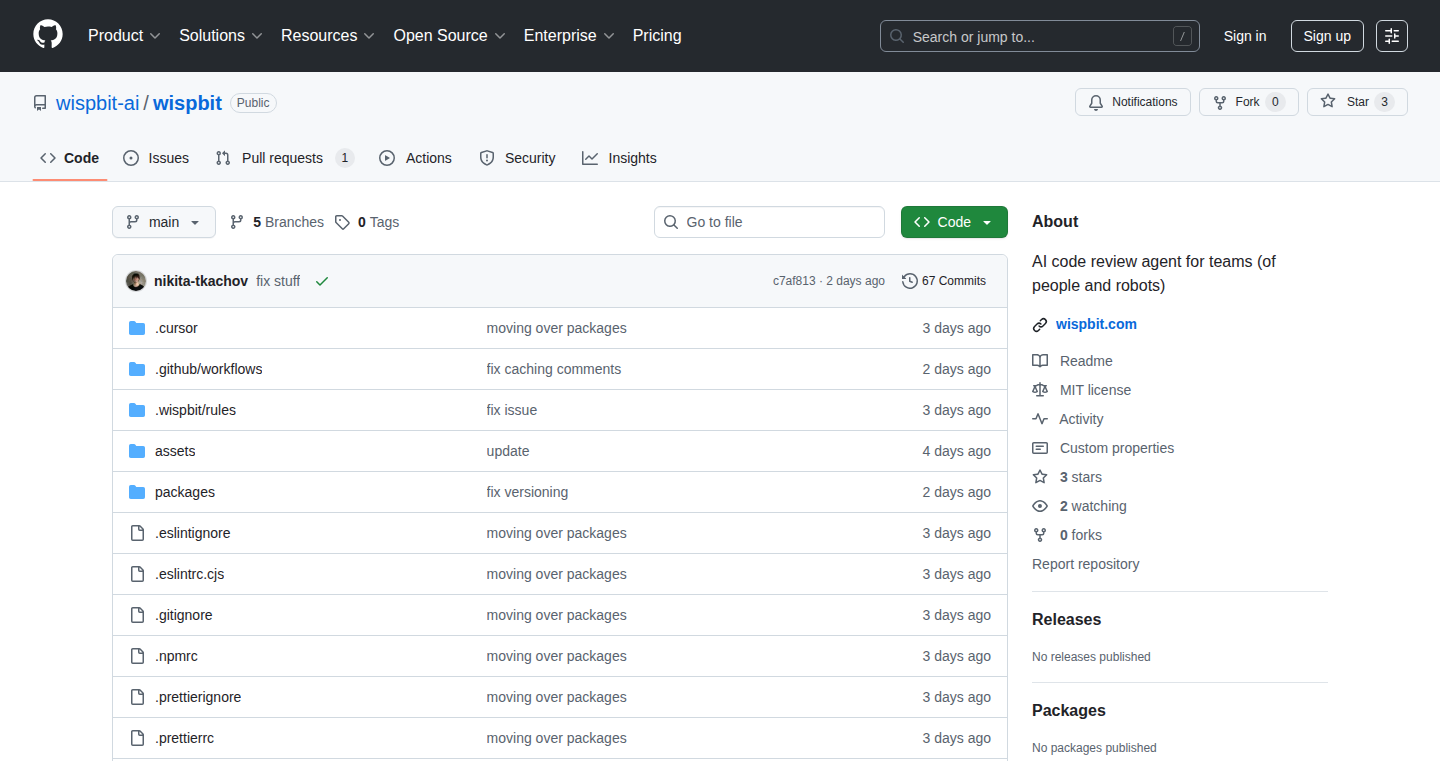

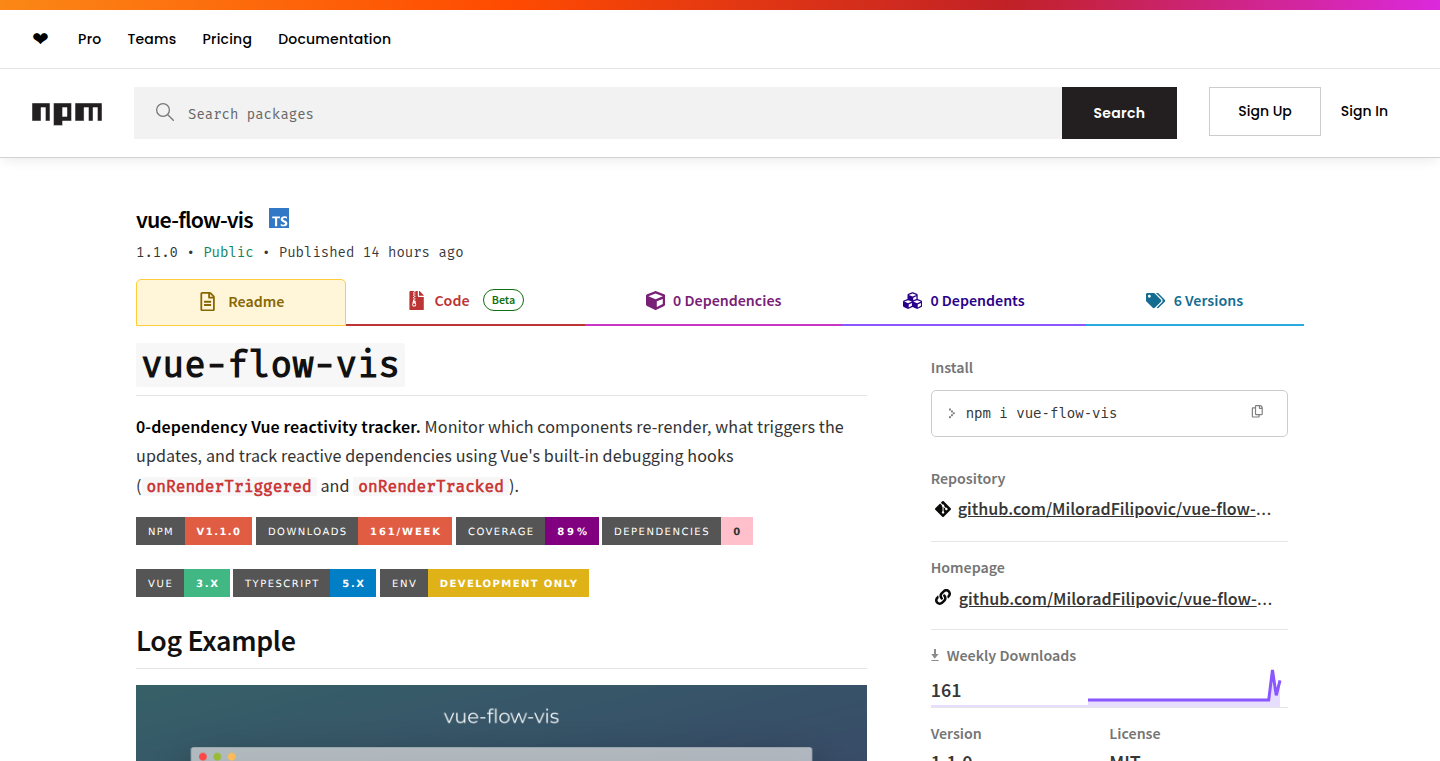

PR Quiz: AI-Powered Code Comprehension Tool

Author

dkamm

Description

PR Quiz is a GitHub Action that uses AI to quiz developers on pull requests before merging. It leverages Large Language Models (LLMs), such as those offered by OpenAI, to generate quizzes based on the code changes in the pull request. This helps developers better understand the code they are about to merge, reducing the risk of errors and improving code quality. The innovation lies in using AI to automatically assess code understanding, making code reviews more efficient and effective.

Popularity

Points 84

Comments 29

What is this product?

PR Quiz is a GitHub Action. It analyzes the changes in a pull request and uses a Large Language Model (such as one of OpenAI's) to generate a quiz about those changes. Before you can merge the pull request, you must pass the quiz. So what? It helps developers understand new code and blocks merges of code they don't understand, which should lead to fewer bugs and better code.

How to use it?

Developers install the PR Quiz GitHub Action in their repository. Whenever a pull request is created, the action automatically generates a quiz based on the code changes. The developer must then answer the quiz questions before the pull request can be merged. You can configure settings like which AI model to use, how many times a developer can try the quiz, and the minimum size of the changes to trigger a quiz. This action runs a local webserver to host the quiz and uses ngrok to provide a temporary URL for the quiz. This means your code is only sent to the AI model provider (like OpenAI), not stored anywhere else. So, this makes code review more effective, improves code quality, and integrates seamlessly into the development workflow.

Product Core Function

· Automatic Quiz Generation: The core function is to automatically generate quizzes from code changes in a pull request. This uses AI to analyze the code and formulate questions about it. So what? It saves developers time and effort by automating a previously manual process, allowing them to focus on understanding and reviewing the changes more efficiently.

· AI-Powered Question Generation: Leveraging LLMs to create quiz questions ensures that the questions are relevant and test the developer's understanding of the changes. So what? This creates better tests, meaning more thorough reviews and a better overall understanding of the code.

· Integration with GitHub: The action is designed to seamlessly integrate with GitHub pull requests, blocking merges until the quiz is passed. So what? This forces developers to understand the changes before merging, thereby preventing errors and ensuring quality.

· Configurable Options: Users can configure various settings, such as the AI model, the number of quiz attempts, and the minimum pull request size. So what? It offers flexibility and allows developers to customize the tool to their specific needs and preferences.
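The configurable gating described above (a minimum change size to trigger a quiz, and a cap on attempts) could look roughly like the sketch below. The names `QuizConfig`, `min_changed_lines`, and `max_attempts`, along with their defaults, are hypothetical and are not PR Quiz's real option names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QuizConfig:
    # Field names and defaults are hypothetical, for illustration only.
    min_changed_lines: int = 20
    max_attempts: int = 3


def count_changed_lines(unified_diff: str) -> int:
    """Count added and removed lines in a unified diff, skipping file headers.

    Simplification: a changed line whose content itself begins with "--" or
    "++" would be mistaken for a header and skipped.
    """
    count = 0
    for line in unified_diff.splitlines():
        if line.startswith(("+++", "---")):
            continue
        if line.startswith(("+", "-")):
            count += 1
    return count


def should_quiz(unified_diff: str, config: QuizConfig) -> bool:
    """Only quiz when the change is at least the configured size."""
    return count_changed_lines(unified_diff) >= config.min_changed_lines


def may_merge(passed: bool, attempts_used: int, config: QuizConfig) -> bool:
    """Allow the merge only if the quiz was passed within the attempt limit."""
    return passed and attempts_used <= config.max_attempts
```

Keeping the thresholds in one frozen config object makes it easy to see which knobs a repository has changed from the defaults.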

Product Usage Case

· Code Review Improvement: A software development team uses PR Quiz to improve their code review process. Before merging a pull request, developers are required to pass a quiz generated by the action. This leads to a better understanding of code changes and a reduction in merge conflicts. So what? This helps a team deliver higher quality software more reliably.

· Onboarding New Developers: When a new developer joins a team, PR Quiz is used to help them understand the existing codebase. Whenever a pull request is made, the new developer is quizzed, which helps them learn the code and quickly understand what's going on. So what? This tool reduces ramp-up time for new developers and makes them productive faster.

· Reducing Bug Introduction: In a project with a lot of contributors, PR Quiz is employed to minimize the introduction of bugs during the merging process. Every pull request undergoes a quiz generated by the action, allowing developers to catch potential problems before they become part of the main codebase. So what? It helps in improving software quality by preventing bugs before they happen.

4

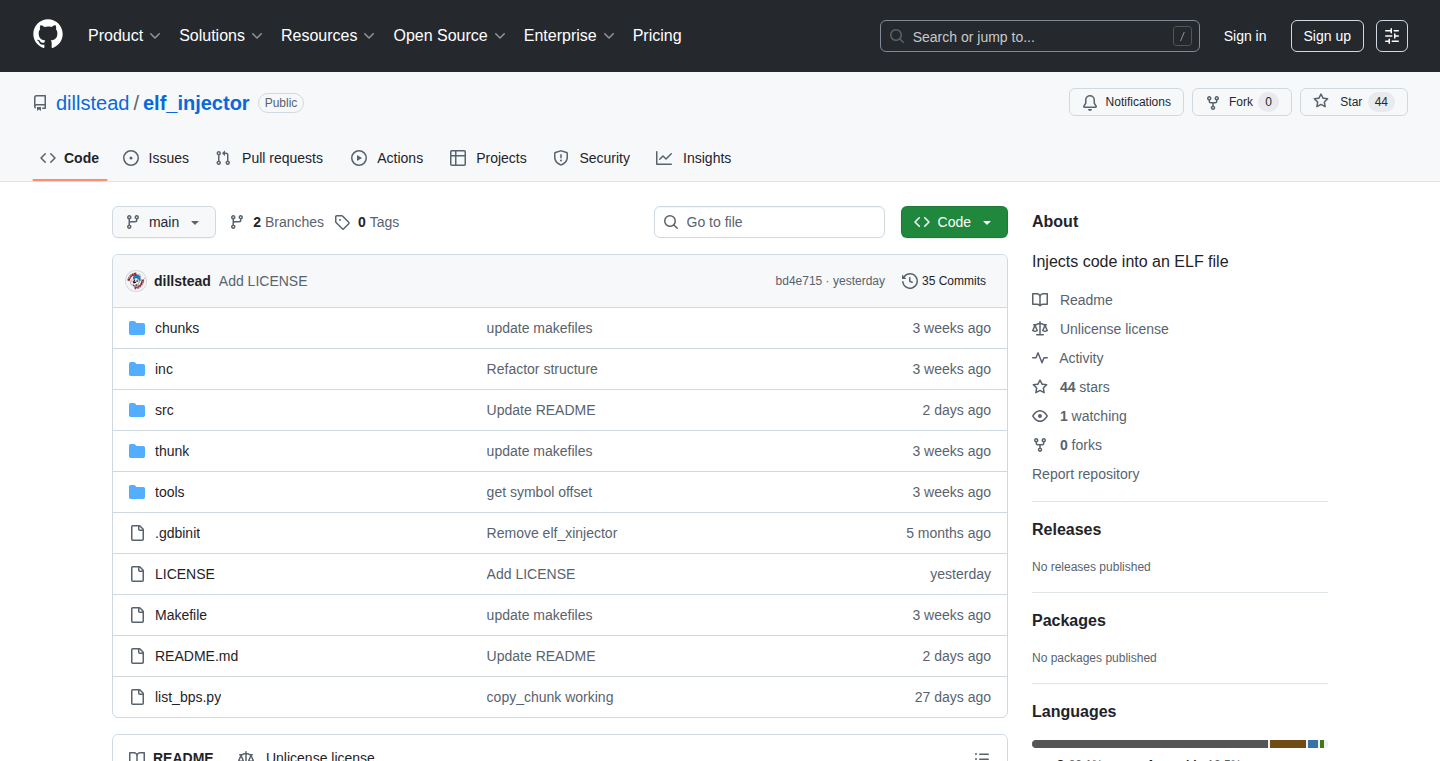

ELF Injector: Code Injection for Enhanced Binary Manipulation

Author

dillstead

Description

This project, the ELF Injector, allows you to insert your own code directly into existing executable files (ELF files). Think of it like adding a secret agent into a program before it even starts. It's like giving a program a pre-flight checkup, or adding extra capabilities without changing the original code. The project focuses on injecting code chunks before the program’s regular start point. The cool part is that it includes examples and a detailed guide, making it easier to understand and experiment. This solves the problem of modifying the behavior of a program without needing its source code.

Popularity

Points 37

Comments 12

What is this product?

This is a tool that lets you inject your own code into an ELF executable so that it runs at startup, before the program's original entry point. It's built using C and assembly language and currently works on 32-bit ARM systems, though it's designed to be adaptable to other types of processors. The innovation lies in its ability to add functionality to existing programs or modify their behavior without touching their source code. So this lets you customize or enhance a program’s behavior even when you only have the binary.

How to use it?

Developers can use the ELF Injector to modify or extend the functionality of existing ELF executables. Imagine you want to add security checks or debugging tools to a program you don't have the source code for. You'd inject your code, and it would run first. It's useful for things like adding extra security layers, or patching bugs in software when you don't have access to the original code. You can integrate it by using the injector tool on the executable files you want to modify, and then running the modified file. So, you can extend or modify existing programs without the original code.

Product Core Function

· Code Injection: This is the primary function, allowing developers to inject custom code into the ELF executable. Value: Enables runtime modification of programs. Application: Debugging, security patching, or adding features to closed-source software.

· Relocation Support: The injected code can be relocatable, meaning it can work correctly regardless of where it's loaded in memory. Value: Makes the injected code adaptable and compatible with different versions of the executable. Application: Ensures the injected code works on different systems and executable versions.

· Architecture Portability: Although currently optimized for 32-bit ARM, the tool is designed for easy adaptation to other architectures. Value: Broadens the tool's applicability across various hardware platforms. Application: Allows for cross-platform code injection and modification.
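To see what "before the program's regular start point" refers to, it helps to look at the ELF file header, which records the address where execution begins (`e_entry`). The sketch below reads that field from a 32-bit little-endian ELF header and shows how the field could be rewritten. It is written in Python purely for illustration and is not the ELF Injector's code (which is C and assembly). A real injector also has to place the new code in the file and hand control back to the original entry point, which this sketch does not attempt.

```python
import struct
from typing import NamedTuple

# ELF32 file header layout: e_ident[16], e_type, e_machine, e_version,
# e_entry, e_phoff, e_shoff, e_flags, e_ehsize, e_phentsize, e_phnum,
# e_shentsize, e_shnum, e_shstrndx (52 bytes; little-endian assumed here).
ELF32_HEADER = struct.Struct("<16sHHIIIIIHHHHHH")
E_ENTRY_OFFSET = 24  # 16 (e_ident) + 2 + 2 + 4
EM_ARM = 40          # e_machine value for 32-bit ARM


class Elf32Info(NamedTuple):
    machine: int
    entry: int
    phoff: int
    phnum: int


def read_elf32_header(data: bytes) -> Elf32Info:
    """Parse the fields of interest from a 32-bit little-endian ELF header."""
    if len(data) < ELF32_HEADER.size:
        raise ValueError("too short for an ELF32 header")
    fields = ELF32_HEADER.unpack_from(data)
    ident = fields[0]
    if ident[:4] != b"\x7fELF":
        raise ValueError("missing ELF magic")
    if ident[4] != 1 or ident[5] != 1:
        raise ValueError("this sketch handles only 32-bit little-endian ELF")
    return Elf32Info(machine=fields[2], entry=fields[4],
                     phoff=fields[5], phnum=fields[10])


def with_entry(data: bytes, new_entry: int) -> bytes:
    """Return a copy of the file bytes with e_entry replaced.

    This only rewrites the header field; it does not add any code.
    """
    buf = bytearray(data)
    struct.pack_into("<I", buf, E_ENTRY_OFFSET, new_entry)
    return bytes(buf)
```

Reading the header first and checking `machine == EM_ARM` is a cheap guard before touching a binary built for a different architecture.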

Product Usage Case

· Security Auditing: A security researcher wants to audit a closed-source binary for vulnerabilities. They use the injector to insert code that monitors system calls, allowing them to identify potential security flaws without needing the original source code. So, this helps you find security holes in existing programs.

· Bug Patching: A developer finds a bug in a third-party application they use but cannot directly modify the application's source code. They use the injector to inject a code patch that fixes the bug at runtime. So, you can fix bugs in existing applications.

· Feature Enhancement: A user wants to add a new feature to a program. By injecting code into the executable, they can add functionality without access to the program's source code. So, you can add customized features to programs that you use every day.

5

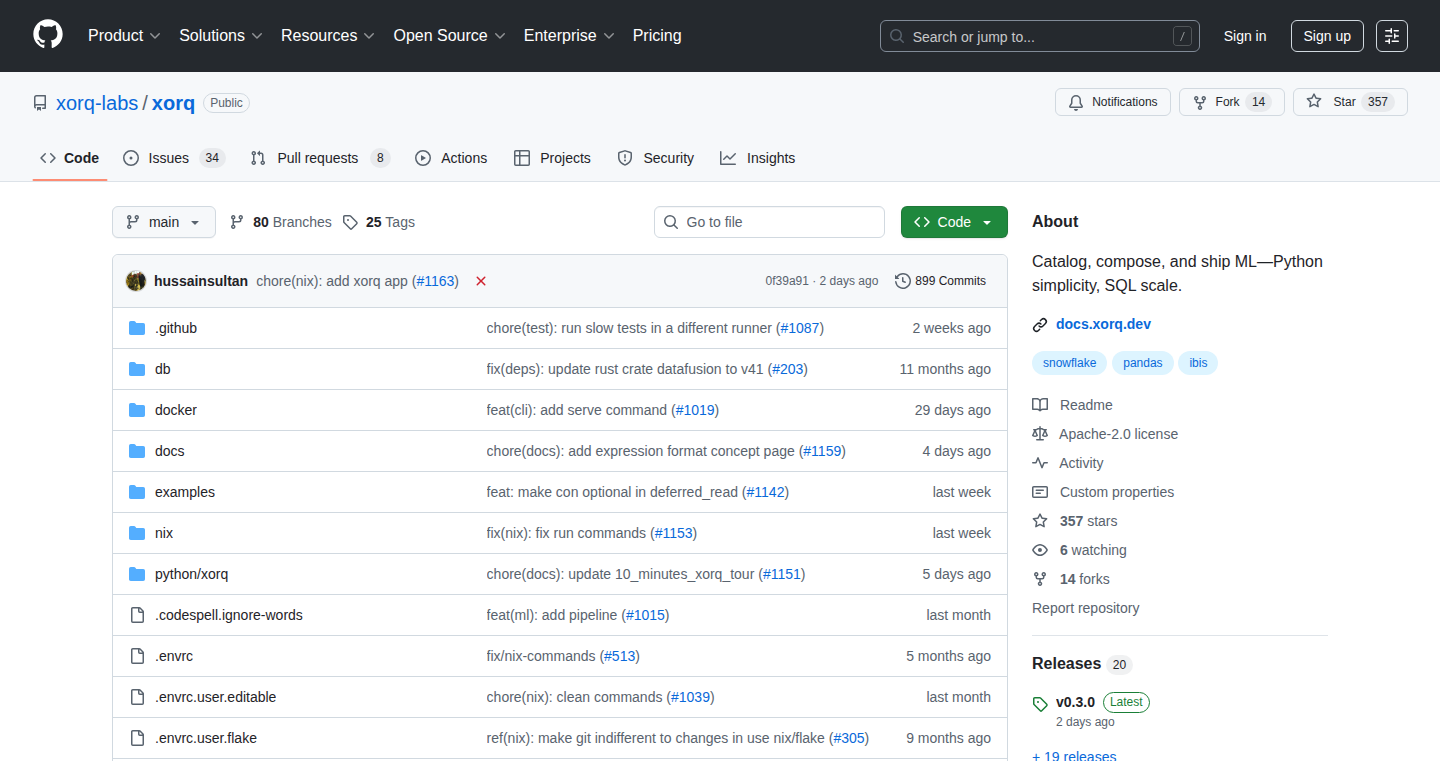

Xorq: The Compute Catalog for Reusable and Observable AI Pipelines

Author

mousematrix

Description

Xorq is a "compute catalog" designed to streamline how data scientists and engineers build, share, and monitor AI pipelines. It addresses the common problem of code and computational work being trapped in isolated environments like notebooks or custom scripts, which often leads to duplicated effort and difficulty in scaling. It leverages technologies like Arrow Flight for fast data transfer, Ibis for cross-engine data transformations (making it easier to switch between tools like DuckDB or Snowflake), and a portable UDF engine to compile pipelines into various formats. This enables users to create reusable components, track the lineage of their data, and deploy their work more efficiently. So it is a tool to manage and share your data processing work like a library for code, making your work more efficient and reproducible.

Popularity

Points 35

Comments 10

What is this product?

Xorq is like a central library for your data processing and AI tasks. It works by providing a standardized way to define and manage the building blocks of your data pipelines (transformations, features, models). Think of it as a digital version of a catalog, where you can browse, reuse, and observe these components. It uses several key technologies:

* **Arrow Flight:** A super-fast way to move data around, making processing quicker.

* **Ibis:** Allows you to write your data transformation code in a platform-agnostic way, so you can use it on different databases or processing engines without rewriting. It converts your code into instructions understood by various data processing systems.

* **Portable UDF Engine:** This enables you to write your own custom functions (UDFs) that can run in different environments.

* **uv:** This tool guarantees the reproducibility of the software environment, ensuring that the same code will run consistently across different machines and over time.

It allows you to build reusable data transformation and AI pipeline components, execute them across different engines (like DuckDB or Snowflake), track where the data comes from and goes, and make it easy to share your work. It's especially good for teams working on AI and data science projects.

How to use it?

Developers use Xorq by defining their data transformations and AI pipelines using a declarative, pandas-style interface, which is then translated into code that can run on different processing engines. They can then register these transformations in the Xorq catalog, making them reusable. Users can then execute their pipelines against different engines. It integrates with other systems through its various components like the Flight endpoints for UDFs. You could use it in a variety of ways, for example:

* **As a Feature Store:** Store and retrieve pre-calculated features used in machine learning models.

* **As a Semantic Layer:** Create a consistent view of your data for different teams and applications.

* **Integration with Model and Application Components:** Connect the data used by Machine Learning models with their applications.

For example, you might write a Python script that uses the Xorq library to define a data transformation. You then 'register' this transformation with Xorq, giving it a name and description. Later, you (or someone else on your team) can find this transformation in the catalog and use it in a new pipeline, potentially running it on a different data processing engine than the original.
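The register-then-reuse workflow in that example can be pictured with a toy in-memory catalog. To be clear, this is plain Python and does not use or imitate the Xorq API: `Catalog`, `CatalogEntry`, and `add_total` are made-up names that only illustrate the idea of naming a transformation once and looking it up later.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Row = Dict[str, float]
Transform = Callable[[List[Row]], List[Row]]


@dataclass(frozen=True)
class CatalogEntry:
    name: str
    description: str
    transform: Transform


class Catalog:
    """A toy in-memory catalog: register a transform once, reuse it by name."""

    def __init__(self) -> None:
        self._entries: Dict[str, CatalogEntry] = {}

    def register(self, name: str, description: str,
                 transform: Transform) -> None:
        if name in self._entries:
            raise KeyError(f"{name!r} is already registered")
        self._entries[name] = CatalogEntry(name, description, transform)

    def get(self, name: str) -> CatalogEntry:
        return self._entries[name]

    def names(self) -> List[str]:
        return sorted(self._entries)


def add_total(rows: List[Row]) -> List[Row]:
    """Example transform: add a 'total' column as price * quantity."""
    return [{**r, "total": r["price"] * r["quantity"]} for r in rows]
```

A teammate who finds `add_total` via `names()` can apply it to their own rows without knowing where it was first written, which is the reuse story the catalog is meant to support.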

Product Core Function

· Declarative transformations with a pandas-style interface: This lets developers express data transformations in a simple, readable way, similar to how they work with pandas DataFrames. This makes the code easier to understand and maintain. The value is it reduces complexity, making data processing tasks more accessible and less prone to errors. It is useful for anyone who works with data transformation, especially data scientists and engineers who use pandas. You can define the transformation steps by writing code, and then easily reuse them.

· Multi-engine execution: Xorq can execute transformations across multiple data processing engines (like DuckDB or Snowflake). The value is that it gives you flexibility to choose the best tool for the job, and can scale your tasks as needed. It is useful for anyone who wants the freedom to switch between data processing systems, especially in scenarios where you're dealing with large datasets or need to optimize performance on different platforms.

· UDFs as portable Flight endpoints: You can write your own custom functions (UDFs) and make them easily accessible to different parts of your data pipelines. The value is that it allows for custom logic and integration with external tools. It is useful for any developer who needs to extend the functionality of their data processing system with custom or specialized calculations, especially for those involved in data science and machine learning.

· Serveable transforms by way of flight_udxf operator: Allows for serving of the transformation through a flight_udxf operator, which provides a standard way to run your transformations as reusable services. The value is it promotes code reusability and enables building scalable data pipelines. It is useful when you want to create reusable data transformations that can be integrated into various applications. This helps avoid code duplication.

· Built-in caching and lineage tracking: Xorq automatically caches results and tracks the origins of your data. The value is that it helps improve performance by avoiding re-computation and provides valuable context for debugging and understanding how data flows through your systems. It is useful for any team or individual involved in data processing and AI, because it reduces computing costs and allows for simplified debugging and data lineage auditing.

· Diff-able YAML artifacts, great for CI/CD: Xorq uses YAML files to define your data transformation, and it provides a standard way to manage the configuration and changes to the code. The value is that it facilitates version control, which is crucial for managing your data processing and machine-learning workflows. It is useful for any team or individual working with CI/CD pipelines, as it makes it easier to track changes and reproduce experiments.
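One common way to get the caching and lineage tracking listed above is to key every step by a hash of its definition plus the keys of its inputs. The sketch below shows that general technique under that assumption. It is not Xorq's mechanism, and `step_key` and `CachedRunner` are hypothetical names.

```python
import hashlib
import json
from typing import Any, Callable, Dict, List, Tuple


def step_key(name: str, params: Dict[str, Any],
             parent_keys: List[str]) -> str:
    """Derive a stable key from a step's definition and its upstream keys."""
    payload = json.dumps(
        {"name": name, "params": params, "parents": parent_keys},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class CachedRunner:
    def __init__(self) -> None:
        self.cache: Dict[str, Any] = {}
        self.lineage: Dict[str, List[str]] = {}  # step key -> upstream keys
        self.computations = 0

    def run(self, name: str, params: Dict[str, Any],
            parents: List[Tuple[str, Any]],
            fn: Callable[..., Any]) -> Tuple[str, Any]:
        """Run fn on the parent values unless an identical step is cached."""
        parent_keys = [key for key, _ in parents]
        key = step_key(name, params, parent_keys)
        if key not in self.cache:
            self.computations += 1
            self.cache[key] = fn(*[value for _, value in parents], **params)
            self.lineage[key] = parent_keys
        return key, self.cache[key]
```

Because a step's key includes its parents' keys, changing anything upstream changes every downstream key, so stale results are never reused, and the `lineage` map can be walked backwards to see where a result came from.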

Product Usage Case

· Feature Stores: Xorq is used to build feature stores, which are systems designed to store and serve features for machine learning models. This helps ensure consistency and reusability of features across different models and applications. The value is it improves the efficiency and consistency of machine learning workflows. So, in your machine learning project, you can extract data transformation steps for different machine learning models, and create a feature store to reuse them.

· Semantic Layers: Xorq is used to create semantic layers, which provide a consistent and business-friendly view of the underlying data. This allows different teams to understand and work with the data in a uniform way. The value is it simplifies data access and promotes collaboration across teams. If you want to build a dashboard for business users, you can use semantic layers to provide unified data from a variety of data sources.

· MCP + ML Integration: Xorq helps integrate machine learning models with other components, such as monitoring and alerting systems. This enables teams to monitor the performance of their models and take action when needed. The value is it increases the reliability and maintainability of machine learning systems. If you want to deploy machine learning models on the production server, you can monitor the model performance through this integration.

6

Monchromate: Smart Greyscale Browser Extension

Author

lirena00

Description

Monchromate is a browser extension that intelligently converts webpages to grayscale, helping users reduce eye strain and improve focus. The key innovation lies in its smart features: it allows users to exclude specific websites from the greyscale effect, schedule when the effect is active, and adjust the intensity of the greyscale. It's a practical solution for programmers and anyone who spends a lot of time on the web, allowing them to manage their visual experience and reduce digital fatigue.

Popularity

Points 38

Comments 5

What is this product?

Monchromate works by applying a greyscale filter to web pages. It’s more than just a simple filter; it offers advanced control. You can prevent it from affecting certain websites, set a schedule for when it turns on and off, and even control how strong the greyscale effect is. The extension provides the same experience across different browsers like Chrome and Firefox. So what's cool about it? It's about giving you control over how you see the internet to make it less tiring and more focused.

How to use it?

As a developer, you'd install Monchromate as a browser extension. Once installed, you can customize it to fit your needs. For example, you might exclude your code editor or documentation sites from the greyscale, while keeping it active on distracting sites. You can also schedule it to activate during certain hours. Integration is straightforward: install the extension, configure your preferences, and start browsing with the benefits of reduced eye strain and enhanced focus.

Product Core Function

· Greyscale Conversion: The core function is to apply a greyscale filter to webpages, which can significantly reduce visual stimulation. So what's the value? Less strain on your eyes during long coding sessions.

· Website Exclusion: Allows users to specify websites that should not be converted to greyscale. Value: Prevents the greyscale effect from interfering with your workflow on important sites (like your code editor).

· Scheduler: Enables users to schedule when the greyscale effect is active (e.g., during work hours). Value: Automates the process of greyscale activation and deactivation, so you don't have to manually turn it on and off.

· Intensity Control: Users can adjust the intensity of the greyscale effect. Value: Customization, lets you find the perfect balance between eye relief and being able to see the content clearly.

· Cross-Browser Compatibility: Works consistently across different browsers, including Chrome and Firefox. Value: Flexibility to use the tool with your preferred browser.
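The exclusion list, scheduler, and intensity control above boil down to one decision per page: which CSS `filter` value to apply. Here is a minimal sketch of that decision logic. It is written in Python for illustration (a browser extension would be JavaScript), the function names are hypothetical, and it is not the extension's actual code; only the `grayscale(<percentage>)` CSS filter function itself is standard.

```python
from datetime import time
from typing import Iterable


def in_schedule(now: time, start: time, end: time) -> bool:
    """True if now is in [start, end); handles windows that cross midnight."""
    if start <= end:
        return start <= now < end
    return now >= start or now < end


def is_excluded(hostname: str, excluded: Iterable[str]) -> bool:
    """Match the host itself or any subdomain of an excluded site."""
    host = hostname.lower()
    return any(host == site or host.endswith("." + site) for site in excluded)


def css_filter(hostname: str, now: time, excluded: Iterable[str],
               start: time, end: time, intensity: int) -> str:
    """Return the CSS filter value to apply to the page."""
    if is_excluded(hostname, excluded) or not in_schedule(now, start, end):
        return "none"
    clamped = max(0, min(100, intensity))  # keep the percentage in 0..100
    return f"grayscale({clamped}%)"
```

For example, with a 09:00 to 17:00 schedule and `docs.example.org` excluded, a news site visited at 10:00 with intensity 80 gets `grayscale(80%)`, while the excluded docs site and any page visited at 20:00 get `none`.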

Product Usage Case

· A developer uses Monchromate to reduce eye strain while working on a coding project. They exclude their browser-based code editor to keep color-coded syntax highlighting, and enable greyscale on distracting websites to stay focused. So what's the payoff? Increased productivity and less eye fatigue.

· A designer uses the extension to help focus on UI/UX design tasks. They set a schedule to activate greyscale during their work hours. Value: Improved focus during crucial design work.

· A student uses Monchromate while studying online. They set up exclusions for educational sites and enable greyscale to reduce visual distractions during study. So what's the advantage? Improved focus and studying more efficiently.

7

ElectionTruth: Interactive Data Visualization and Simulations for Election Analysis

Author

hannasanarion

Description

ElectionTruth is a web-based project that debunks election fraud claims using interactive data visualizations and simulations. It leverages the Law of Large Numbers to analyze election data, highlighting statistical errors in common arguments. The project is built using handmade HTML, CSS, and JavaScript, with initial analysis done in Python. Key innovations include client-side simulations for real-time data processing and visualizations, and performance optimizations to handle large datasets within a web browser.

Popularity

Points 8

Comments 4

What is this product?

ElectionTruth is a project designed to analyze election data and challenge misinformation. It uses interactive visualizations and simulations, meaning you can play with the data yourself to understand the claims made by others. Technically, it's built on HTML, CSS, and JavaScript, with Python for initial data processing. Visualizations are created using Observable Plot and D3.js. The project also includes client-side simulations, which run directly in your web browser, so it doesn't need a powerful server to do its analysis. It’s focused on showing how statistics are sometimes misused and how to interpret data correctly.

How to use it?

As a developer, you can learn from how ElectionTruth handles large datasets and creates performant visualizations entirely within a web browser. You can study the project’s code to understand techniques for client-side simulation and optimization. You could potentially adapt its visualization techniques to your own projects, such as data dashboards or interactive reports. You can directly visit the project to interact with it and understand its approach. Also, the source code is accessible, so you can copy and adapt it to your own needs.

Product Core Function

· Interactive Data Visualization: The project presents election data through interactive charts and graphs, allowing users to explore the data and see the patterns themselves. This is useful for anyone trying to understand complex data.

· Client-Side Simulations: Simulations run directly in the user's browser. This allows real-time calculations and immediate feedback when users interact with the visualizations. It's like having a powerful calculator inside your website.

· Law of Large Numbers Analysis: The project is built on the Law of Large Numbers, which says that as the number of ballots counted grows, the observed vote share settles close to the underlying rate. This helps in analyzing claims of election fraud by checking them against the probabilities involved, and it helps users understand how accurate large samples are.

· Performance Optimization for Large Datasets: The project efficiently handles a large amount of election data (around 600,000 ballot records) without slowing down the user's browser. This means that users can interact with the visualizations smoothly, without long loading times. It allows the project to scale and deal with a large amount of data.

· Open-Source and Accessible Code: The project is built with accessible web technologies (HTML, CSS, and JavaScript) and standard libraries. This makes the code easy to understand and adapt. This is great for anyone who wants to learn or modify the project for their own needs.
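
The Law of Large Numbers idea described above can be illustrated with a short simulation. This is a sketch in Python rather than the project's own browser code, and the 52% vote share, the sample sizes, and the function names are made-up values for illustration. It draws simulated ballots and reports how far the observed share strays from the true one.

```python
import random


def simulated_share(true_share, n_ballots, seed=0):
    """Fraction of simulated ballots cast for a candidate with the given true share."""
    rng = random.Random(seed)
    votes = sum(1 for _ in range(n_ballots) if rng.random() < true_share)
    return votes / n_ballots


def max_deviation(true_share, n_ballots, trials=20):
    """Largest gap between observed and true share across several simulated counts."""
    return max(
        abs(simulated_share(true_share, n_ballots, seed=s) - true_share)
        for s in range(trials)
    )


if __name__ == "__main__":
    for n in (100, 10_000, 100_000):
        print(n, round(max_deviation(0.52, n, trials=5), 4))
```

Small batches can swing by several percentage points purely by chance, while counts in the hundreds of thousands stay within a fraction of a point, which is why a large deviation in a small precinct is weak evidence on its own.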

Product Usage Case

· Data Visualization for News Articles: A journalist could integrate similar interactive visualizations into a news article about an election. By letting readers interact directly with the data, the journalist could illustrate complex statistical concepts and give more context to the discussion. For example, using the visualization, a journalist can debunk claims about suspicious events by comparing the distributions of votes.

· Educational Tool for Statistics: A teacher could use ElectionTruth as a teaching tool for statistics. The interactive simulations can help students understand statistical concepts like the Law of Large Numbers and probability distribution in a practical, visual way. This could make statistics more engaging and easier to understand.

· Development of Interactive Reports: Developers could take inspiration from ElectionTruth to build interactive dashboards for complex data sets. By adapting the techniques for client-side data processing and visualization, they could create user-friendly reports that allow for easy exploration and deeper insights for different organizations.

· Building Tools for Data Analysis: Developers can learn from the project’s architecture and methods for optimizing performance, particularly when working with large datasets. This can be used in different data-intensive applications such as financial data analysis or scientific research.

8

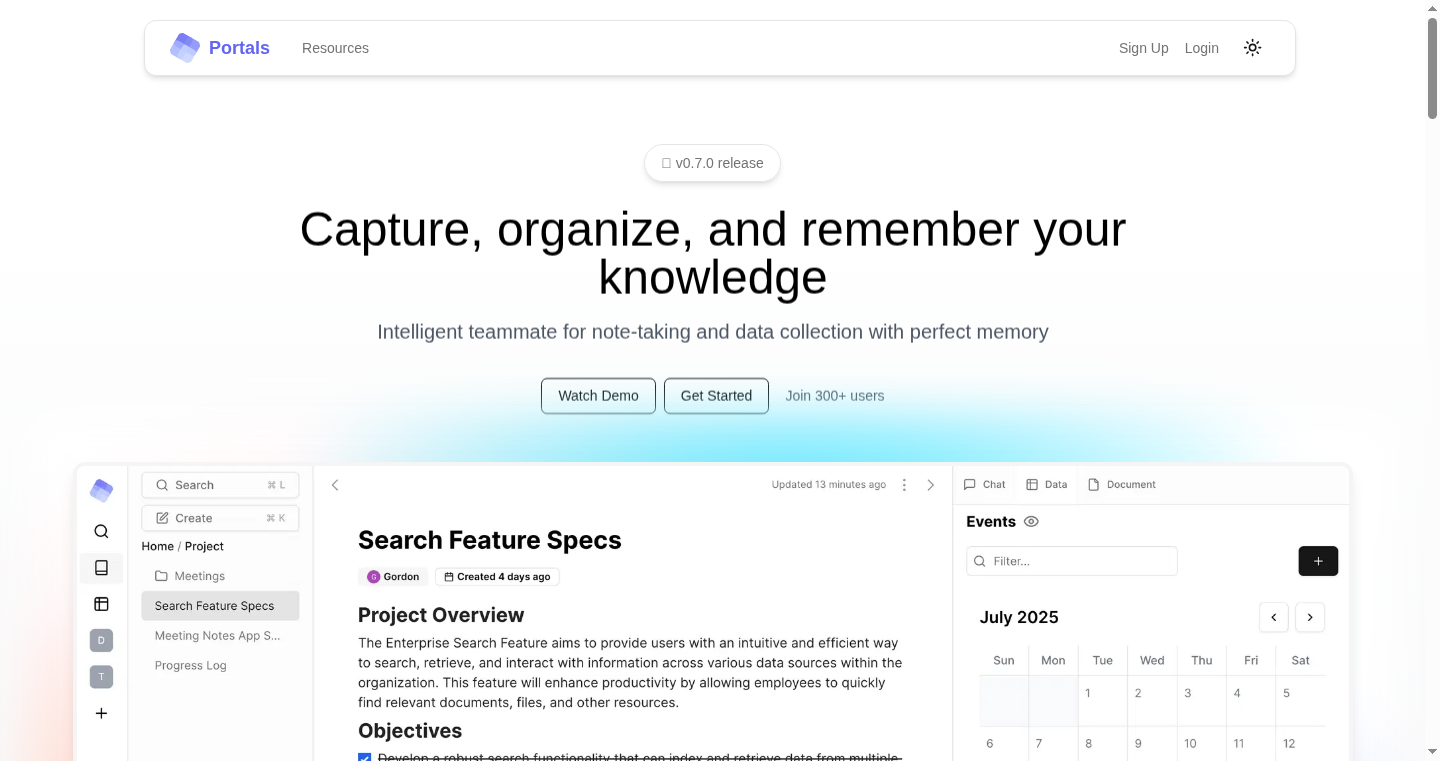

StudyTurtle: AI-Powered Explanations for Kids and Parenting Insights

Author

toisanji

Description

StudyTurtle is an innovative application leveraging AI to provide simplified explanations for children's questions and comprehensive research for parenting challenges. It employs web crawling and natural language processing (NLP) to gather information, rewrite it in a child-friendly format, and offer diverse perspectives on parenting issues. This project stands out by not aiming for a single 'best' answer but presenting various viewpoints, enabling parents and children to explore a wider range of information.

Popularity

Points 5

Comments 3

What is this product?

StudyTurtle utilizes AI to simplify complex topics for children and provide well-researched information for parents. For children, it takes a question, searches the web, and rephrases the answer in a way a child can understand. It also finds related images, videos, and activities. For parents, it answers parenting questions by scouring the internet for diverse information and presenting different perspectives, including research papers, articles, and videos. So, in effect, it uses AI to act as a simplified search engine and research assistant.

How to use it?

Users can access StudyTurtle through a web interface to ask questions. For children, input a question, and the system generates a kid-friendly explanation with supplementary resources. For parents, pose a parenting question to receive a comprehensive research report. The application is designed for easy use, allowing integration into daily interactions with children and providing quick access to parenting solutions. So you can use this application by simply asking a question via a website.

Product Core Function

· Kid-Friendly Explanation Generation: This core feature uses NLP to understand a child's question and then search the web for relevant information. The information is then rewritten in simplified language suitable for children, using accessible vocabulary and tone. The value is that it simplifies complex concepts, making it easier for children to learn and understand various topics. This is useful for parents and educators who need to explain complex topics in a way that children can easily grasp. So this feature can empower kids to learn new things easily.

· Comprehensive Parenting Research: The application crawls the web to find information related to parenting questions, including research papers, articles, and videos. It presents diverse perspectives and avoids offering a single 'best' solution, allowing parents to consider various viewpoints. This feature is valuable because it gives parents a broad view of possible solutions, gathered in one place. So this helps parents make better-informed decisions.

· Multimedia Resource Integration: For both children and parents, the application integrates related images, videos, and other multimedia resources to enhance understanding and engagement. For children, it provides visual aids and interactive content to complement the text explanations, whereas for parents, it offers related videos. This feature enriches learning experiences, making them more engaging and effective. This is useful because it appeals to different learning styles and makes the learning process more interesting. So this feature makes it more fun to learn.

Product Usage Case

· Explaining Scientific Concepts: A parent can input the question 'Why does the plate break when it falls?' StudyTurtle will provide an answer in accessible language with related images, and will also find additional experiments to try. This shows how complex concepts are broken down to be kid-friendly. This is useful in an educational environment because it simplifies education. So this could be useful for answering complex science problems.

· Parenting Challenges: A parent can ask, 'How do I get my sons to stop fighting whenever there is a pizza?' StudyTurtle will generate a research report with multiple viewpoints, including research papers and articles, on the topic. It offers parents diverse solutions. This use case is useful because it helps parents to make better decisions and learn parenting strategies. So this could solve tough parenting problems.

9

YouTubeTldw: Instant YouTube Summaries

Author

dudeWithAMood

Description

This project creates concise summaries of YouTube videos using the open-source `tldw` Python library. It addresses the problem of long, ad-supported YouTube videos by providing quick overviews, allowing users to grasp the key points without investing significant time. The project's innovation lies in its simplicity and focus on user experience: it's ad-free, requires no login, and is entirely free to use, making information access faster and more convenient.

Popularity

Points 5

Comments 3

What is this product?

This is a web application that leverages the `tldw` Python library to generate summaries for YouTube videos. The `tldw` library likely uses techniques like Natural Language Processing (NLP) and potentially speech-to-text conversion to analyze the video's transcript or audio and identify the most important information. It then condenses this information into a short, easy-to-understand summary. So, it's like CliffsNotes for YouTube. This project stands out by being ad-free and requiring no user registration, focusing on providing a quick and clean way to get the gist of a video.

How to use it?

Developers can integrate the `tldw` library into their own projects to offer similar summary functionalities. For example, you could build a browser extension that provides summaries alongside YouTube videos, or create a chatbot that answers questions about videos using their summaries. To use the web application, you simply paste the YouTube video URL into the provided input field, and the summary will be generated.
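
Any tool that accepts a pasted YouTube URL first has to work out which video it refers to. The snippet below is a minimal sketch of that step using only Python's standard library. The helper name `extract_video_id` is hypothetical and is not part of the `tldw` API, and it only handles the two most common URL shapes.

```python
from urllib.parse import urlparse, parse_qs


def extract_video_id(url):
    """Return the video ID from a YouTube URL, or None if it is not recognized."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()

    # Short links: https://youtu.be/<id>
    if host == "youtu.be":
        video_id = parsed.path.lstrip("/").split("/")[0]
        return video_id or None

    # Standard links: https://www.youtube.com/watch?v=<id>
    if host in {"youtube.com", "www.youtube.com", "m.youtube.com"} and parsed.path == "/watch":
        values = parse_qs(parsed.query).get("v")
        return values[0] if values else None

    return None


print(extract_video_id("https://www.youtube.com/watch?v=abc123XYZ_-&t=10s"))
```

Returning `None` for unrecognized input lets the calling code show a clear "not a YouTube link" message before any summarization work starts.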

Product Core Function

· YouTube Video Summarization: This core function uses the tldw library to extract key information from YouTube videos and present them in a summarized format. Value: Saves users time by providing a quick overview, instead of watching an entire video. Application: Useful for researchers, students, or anyone wanting a quick understanding of the video's content.

· Ad-Free Experience: The service is designed to be ad-free, offering a clean user experience. Value: Improves the user experience by removing distractions and annoying ads. Application: Makes the summarized content more easily accessible and enjoyable to the user.

· No Login Required: The website doesn't require users to create an account or log in. Value: Enhances user convenience and privacy, as users can access summaries without providing personal information. Application: Makes the service immediately accessible to anyone without any barriers.

Product Usage Case

· Education: A teacher wants to quickly review the key points of a lecture or tutorial video for lesson planning. Using the tool, the teacher can get a summary in seconds, saving valuable time that would be spent watching the entire video.

· Research: A researcher needs to scan multiple YouTube videos on a specific topic. The researcher uses the tool to quickly scan the summaries to identify relevant content, without having to watch each video in its entirety. This saves time and improves efficiency.

· News Consumption: A user wants to quickly understand the context of a news video. The user uses the tool to get a summary of the main points, allowing for quick understanding, without the need to watch the full news report.

10

Maia Chess: Human-like AI for Chess Engagement

Author

ashtonanderson

Description

Maia Chess is a unique chess AI project developed at the University of Toronto, designed to play chess in a more human-like manner. This goes beyond simply winning games; it focuses on simulating human cognitive errors and playing styles, offering a novel approach to human-AI collaboration in chess. The core innovation lies in its ability to model individual human behavior and adapt to different skill levels, providing a more engaging and educational chess experience.

Popularity

Points 7

Comments 1

What is this product?

Maia Chess is not just another chess engine; it’s a chess AI designed to mimic human playing styles. It uses advanced machine learning techniques to analyze human chess games and learn common mistakes and thought processes. The system’s architecture involves sophisticated algorithms that model individual player behaviors. So, instead of the AI playing perfectly like other chess engines, Maia makes moves more akin to how a human would play, creating a more realistic and relatable opponent. So what? This makes it ideal for learning and understanding chess from a human perspective.

How to use it?

Developers and chess enthusiasts can access Maia Chess through its open beta website. Users can play against Maia-2 (the latest version of the AI), analyze their games to compare with Maia's human-like evaluations, solve puzzles curated by Maia, drill on openings with personalized feedback, and even play team chess with Maia. Integration could be done through their API (if available in the future) or by using their game analysis data to improve other chess applications. So what? You can use it to build interactive chess tools, enhance chess training programs, or simply enjoy a more human-like chess experience.

Product Core Function

· Play against Maia-2: This allows users to play chess against an AI that plays like a human, making the game more relatable and improving user experience.

· Analyze your games: Users can analyze their games and compare them with Maia's predictions. This helps in understanding human-like decision-making and evaluating their performance with human-like metrics. So what? This aids in understanding how humans approach chess, not just how a perfect AI plays.

· Maia-powered puzzles: Provides tactics puzzles curated through Maia's unique lens. These puzzles are specifically designed to align with how humans learn and think in chess.

· Openings drill: Users can select openings and play through them against Maia, receiving personalized feedback instantly. This offers a unique opportunity to refine and learn opening strategies through interactive human-like feedback. So what? It personalizes chess learning and feedback.

· Hand & Brain: Users can play team chess with Maia as a human-AI team. This creates a new way to experience and learn the game by collaborating with AI. So what? Enhances human-AI collaboration.

· Bot-or-not: A chess Turing Test: A fun way to see if you can spot the bot in a real human-vs-bot game.

· Leaderboards: Users can compete on leaderboards across different modes to assess skill and track progress. So what? Increases engagement and promotes a competitive learning environment.

Product Usage Case

· Chess Training Platforms: Integrate Maia's game analysis and human-like AI to provide more realistic feedback and personalized coaching.

· Educational Games: Developers can incorporate Maia's AI into educational chess games to create an engaging and interactive learning experience.

· Chess Applications: Build new chess applications that incorporate Maia's unique human-like AI, providing players with a fresh perspective and challenges.

· Game Analysis Tools: Integrate the AI for better analysis of human games.

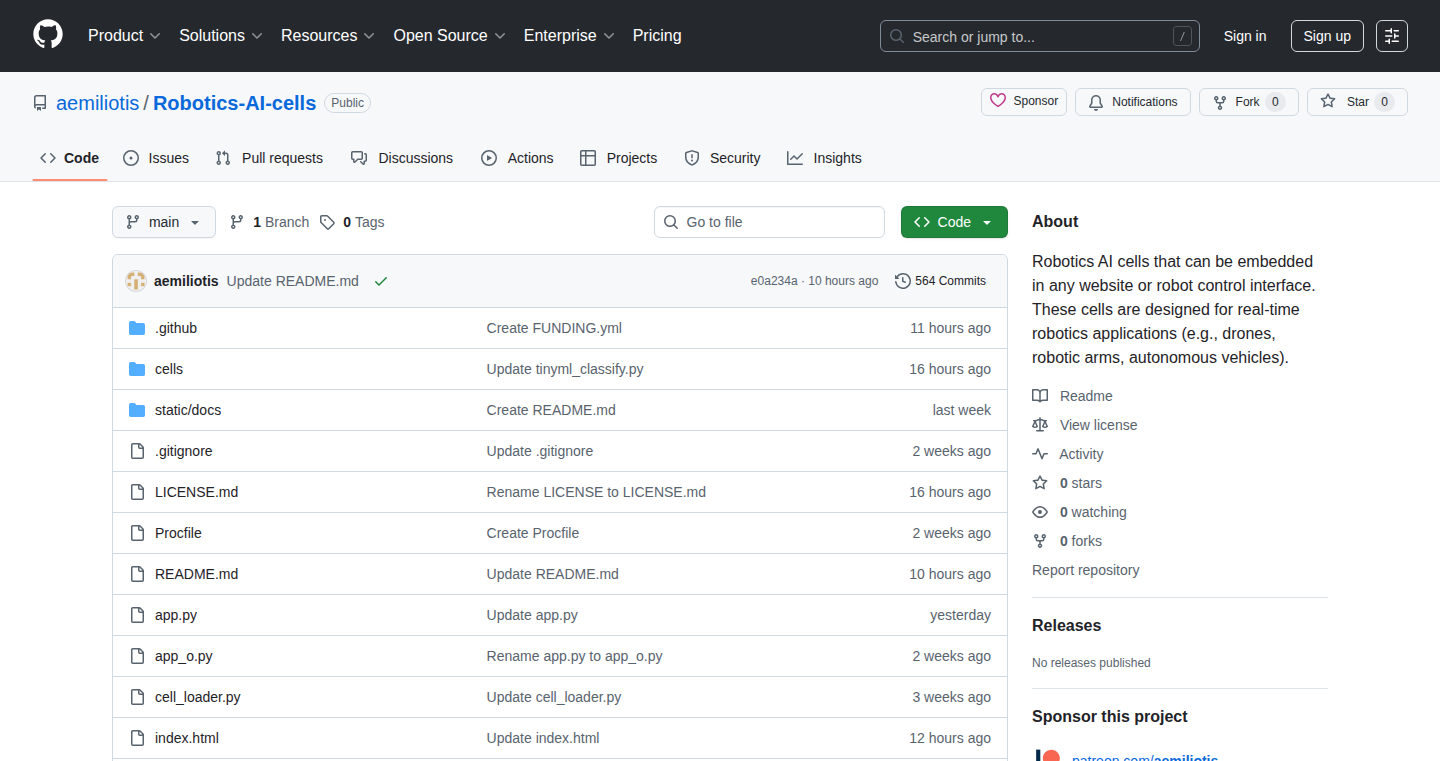

11

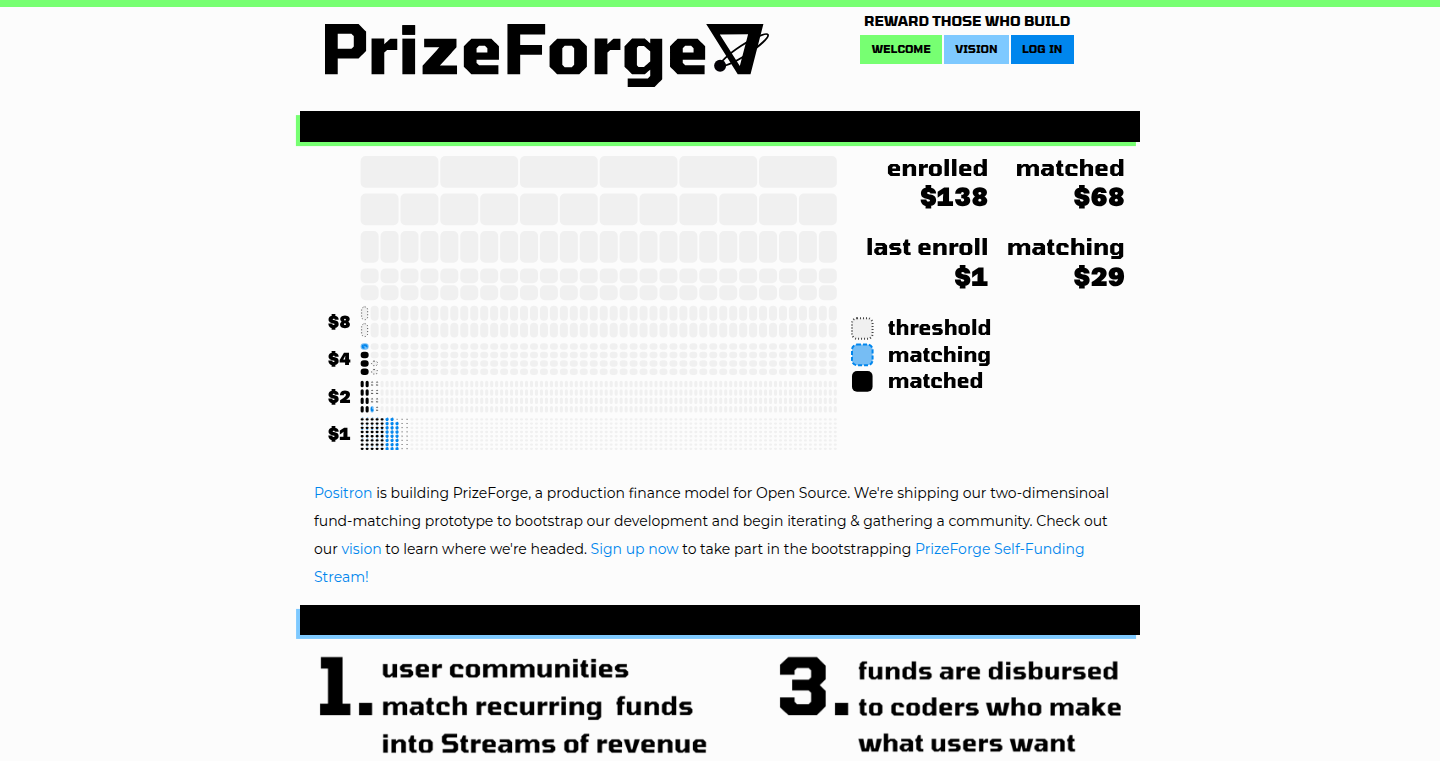

PrizeForge: Elastic Production Finance for Open Source

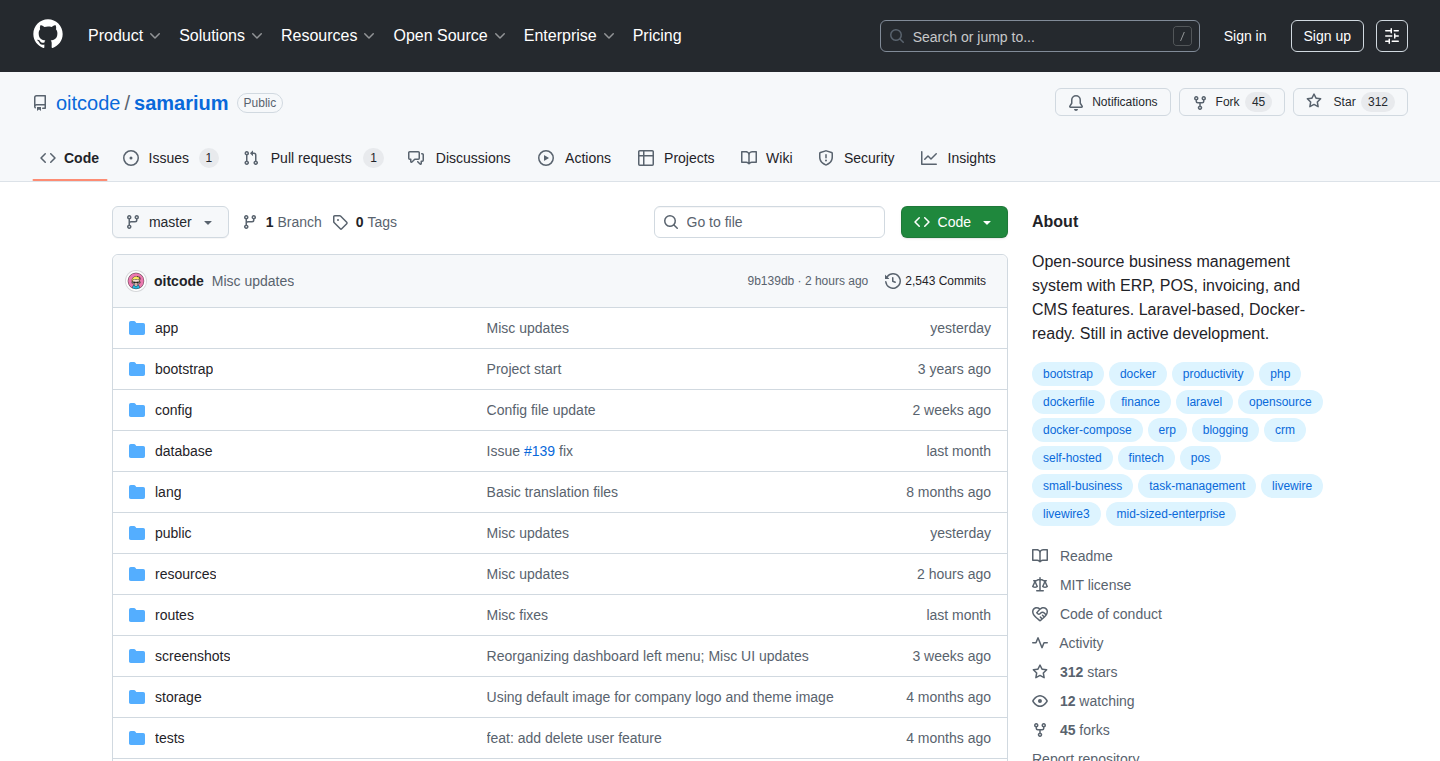

Author

positron26

Description

PrizeForge is a platform designed to connect demand for downstream value creation with upstream enabling technologies, focusing on open-source projects. It combines the accountability of Patreon with the coordinated action of Kickstarter, allowing users to fund projects based on achieved milestones. The core innovation lies in its 'Elastic Fund Raising' feature, enabling flexible and community-driven funding models, along with decision delegation tools optimized for open communities. It utilizes a full-stack Rust application with Leptos for the frontend, Axum on the backend, and integrates with Postgres and NATS.

Popularity

Points 8

Comments 0

What is this product?

PrizeForge is a platform that helps fund open-source projects. It differs from traditional crowdfunding by allowing users to contribute based on project progress and milestones. Think of it as a more flexible and community-driven version of Patreon and Kickstarter combined. It uses advanced tech like Rust and other open-source tools to create this platform.

How to use it?

Developers can use PrizeForge to get funding for their open-source projects. Users can create campaigns with specific goals and milestones, and funders can contribute to the project with a system similar to a pre-pay system. The platform offers features like community decision tools.

So, as a developer, you can create a project, set milestones, and have the community support you in your work. As a contributor, you get to support projects you love, knowing that your money is tied to tangible progress. You can integrate with the platform as a developer.

Product Core Function

· Elastic Fund Raising: This allows projects to receive funds in a flexible way, tied to the achievement of certain milestones. This means contributors only pay when the project reaches specific goals. So this is useful because it assures contributors and keeps developers motivated to deliver.

· Community Decision Tools: These tools are designed to facilitate decision-making within open-source communities. This ensures fair and efficient project direction. This is great because it helps organize the community and ensure the project is developed in their best interests.

· Full-Stack Rust Application: The platform is built using Rust, Leptos (frontend), Axum (backend), Postgres, and NATS. This tech stack provides performance, security, and scalability. So this is useful because it ensures a robust and secure platform for users and developers.

Product Usage Case

· Open-Source Project Funding: Developers of open-source projects can use PrizeForge to get funding for their work, setting milestones and receiving funds based on progress. This is great because it ensures that developers can get funded for open-source projects.

· Community-Driven Development: Communities can use PrizeForge to support their favorite open-source projects, participating in decision-making and monitoring progress. This is useful because it empowers the community and encourages collaboration.

· Supporting Deep Tech: This can also be used to support projects that need funding, like those working on complex technologies. So this is useful because it promotes innovation.

12

Vibe-Coded Fish-Tinder: A CNN-Powered Image Moderation System

Author

hallak

Description

This project is a website where you can draw a fish and watch it swim with others. Behind the scenes, it uses a Convolutional Neural Network (CNN), a type of AI, trained to identify potentially offensive images (penises and swastikas). Anything that doesn't get a high confidence score from the AI goes to a moderation queue. The project showcases a fun and creative use of AI for image moderation. So, it's a simple drawing game with a smart backend.

Popularity

Points 4

Comments 3

What is this product?

This project is a website built using HTML5 for the front-end (what you see and interact with) and Node.js running on Google Cloud Platform (GCP) for the back-end (the server-side logic). The cool part is the integration of a Convolutional Neural Network (CNN). CNNs are like AI eyes, trained to look at images and make educated guesses about what they contain. In this case, the CNN is trained to identify potentially offensive content. This is a cool example of using AI to help moderate user-generated content. So, this uses AI in a creative way to moderate content.

How to use it?

Developers can use the core AI moderation logic in their own applications. The project provides a foundation for integrating a CNN-based image moderation system. You could adapt the code, retrain the CNN with different datasets (e.g., identify other types of inappropriate images), and integrate it into your own web applications, forums, or social media platforms. So, if you're building a website that allows users to upload images, you could use a similar AI model to automatically filter out offensive content.

Product Core Function

· CNN-based Image Recognition: The core function is the CNN that analyzes images. This AI model is trained to recognize specific patterns (like penises and swastikas) and assign a confidence score to each image. This means it can automatically flag suspicious content. So, you can automatically filter out unwanted content.

· Moderation Queue: Images that the CNN is uncertain about (low confidence score) are sent to a moderation queue. This gives human moderators a chance to review the images and make the final call. This reduces the chance of false positives. So, it provides a backup system when the AI isn’t sure.

· Front-end and Back-end Integration: The project seamlessly integrates the front-end (where users draw) with the back-end (where the AI runs and moderation happens). This shows how to build a complete system. So, you can understand the whole pipeline of a web app.

· Vibe-Coding Fish-Tinder: The project’s unique touch is how the drawing feature is integrated with the moderation system, turning the whole thing into a fish-themed game. This is a fun way to approach content moderation, showing that you can make boring tasks interesting.
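
The confidence-based routing described above can be sketched in a few lines. This is an illustrative Python sketch, not the project's Node.js code. It assumes the CNN returns a single score between 0 and 1 for how confident it is that a drawing is acceptable, and the 0.9 threshold and all names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ModerationRouter:
    """Publish confidently-safe drawings; send everything else to human review."""

    approve_threshold: float = 0.9  # assumed cut-off, tune against real data
    published: list = field(default_factory=list)
    review_queue: list = field(default_factory=list)

    def route(self, drawing_id, safe_score):
        # Only a high-confidence "safe" score skips human review.
        if safe_score >= self.approve_threshold:
            self.published.append(drawing_id)
            return "published"
        self.review_queue.append(drawing_id)
        return "queued"


router = ModerationRouter()
print(router.route("fish-1", 0.97))
print(router.route("fish-2", 0.40))
```

Defaulting to the queue means a model mistake costs a moderator's time rather than exposing users to offensive content, which is the conservative choice for user-generated images.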

Product Usage Case

· Content Moderation for Online Forums: Imagine building an online forum where users can share images. You can use the AI model to automatically scan all uploaded images and flag those containing offensive content, reducing the workload for human moderators and making the forum safer. So, protect your user base.

· Social Media Image Filtering: A social media platform can use this technique to filter out harmful or inappropriate images before they are posted to users' timelines. This can ensure a safer online environment for everyone. So, your social media becomes more secure.

· E-commerce Product Image Review: An e-commerce website can use this type of AI to automatically review product images uploaded by vendors, ensuring they meet certain criteria. So, ensure your product images are professional and suitable.

· Custom Image Classification Applications: The underlying CNN technology can be adapted for various image classification needs. For instance, it could be modified to detect defects in manufacturing, classify medical images for research, or even classify plant species in ecology studies. So, you can use this in almost any image classification project.

13

Hybrid Groups: Agentic AI for Collaborative Teamwork

Author

cstub

Description

Hybrid Groups introduces a novel approach to team collaboration by integrating agentic AI directly into existing communication platforms like Slack and GitHub. It addresses the challenge of incorporating AI into group workflows, allowing AI agents to participate in conversations, proactively offer assistance, and execute tasks on behalf of users. This fosters a more seamless and efficient collaboration environment, blending human and artificial intelligence capabilities within existing team structures. So, it lets AI be a proactive team member, not just a personal assistant.

Popularity

Points 7

Comments 0

What is this product?

This project allows AI agents to join group chats as virtual team members within platforms like Slack and GitHub. These agents can understand conversations, offer relevant suggestions, and perform actions, such as managing calendars or updating to-do lists. The core innovation is the agent's ability to interact directly within the group context, understanding the shared workspace and proactively contributing to team goals. This leverages concepts like natural language processing (NLP) and task automation to enhance team productivity. So, you get AI that works with your team, not just for you.

How to use it?

Developers can integrate Hybrid Groups by running a Docker container, connecting it to their Slack or GitHub workspace. They can then use predefined demo agents or create custom agents tailored to their specific needs. The project is open-source, providing flexibility for developers to extend and customize the AI agents' capabilities. The quickstart guide provides detailed instructions on setup and usage. So, you can easily add AI team members to your existing workflows.

Product Core Function

· Group Chat Participation: AI agents actively participate in group conversations, allowing them to understand the context of discussions and offer relevant insights or suggestions. This uses NLP to understand human language.

· Proactive Task Management: Agents proactively suggest actions or perform tasks, such as scheduling meetings or updating to-do lists, based on group discussions and user requests. This leverages task automation.

· Integration with Existing Platforms: Seamless integration with popular platforms like Slack and GitHub ensures compatibility with existing team communication and project management workflows. This streamlines how teams get things done.

· Custom Agent Development: Developers can create custom AI agents with specialized skills and knowledge tailored to their team's needs, improving efficiency in specific workflows.

· Data Privacy and Security: Designed to operate without requiring access to users' private resources, ensuring data privacy and security within group contexts.

Product Usage Case

· Meeting Scheduling: A team discussing a meeting can have the AI agent proactively suggest available times based on team members' calendars and automatically send out calendar invites. This solves the time-consuming task of coordinating schedules.

· Task Management: In a project management channel, the AI agent can automatically update the status of tasks based on conversation updates and reminders, saving time and reducing the risk of tasks being missed. This improves project tracking.

· Code Review Assistance: In a GitHub environment, an AI agent could offer suggestions for code improvements or automatically flag potential issues in pull requests, improving code quality and speeding up reviews. This helps write better code.

· Customer Support Automation: An AI agent integrated into a customer support channel can respond to common queries, route tickets to the appropriate team members, or provide automated responses, streamlining customer interactions. This makes customer service more efficient.

· Knowledge Base Integration: The AI agent can answer questions based on information contained within a company knowledge base, making critical information more accessible. This makes knowledge readily available.

14

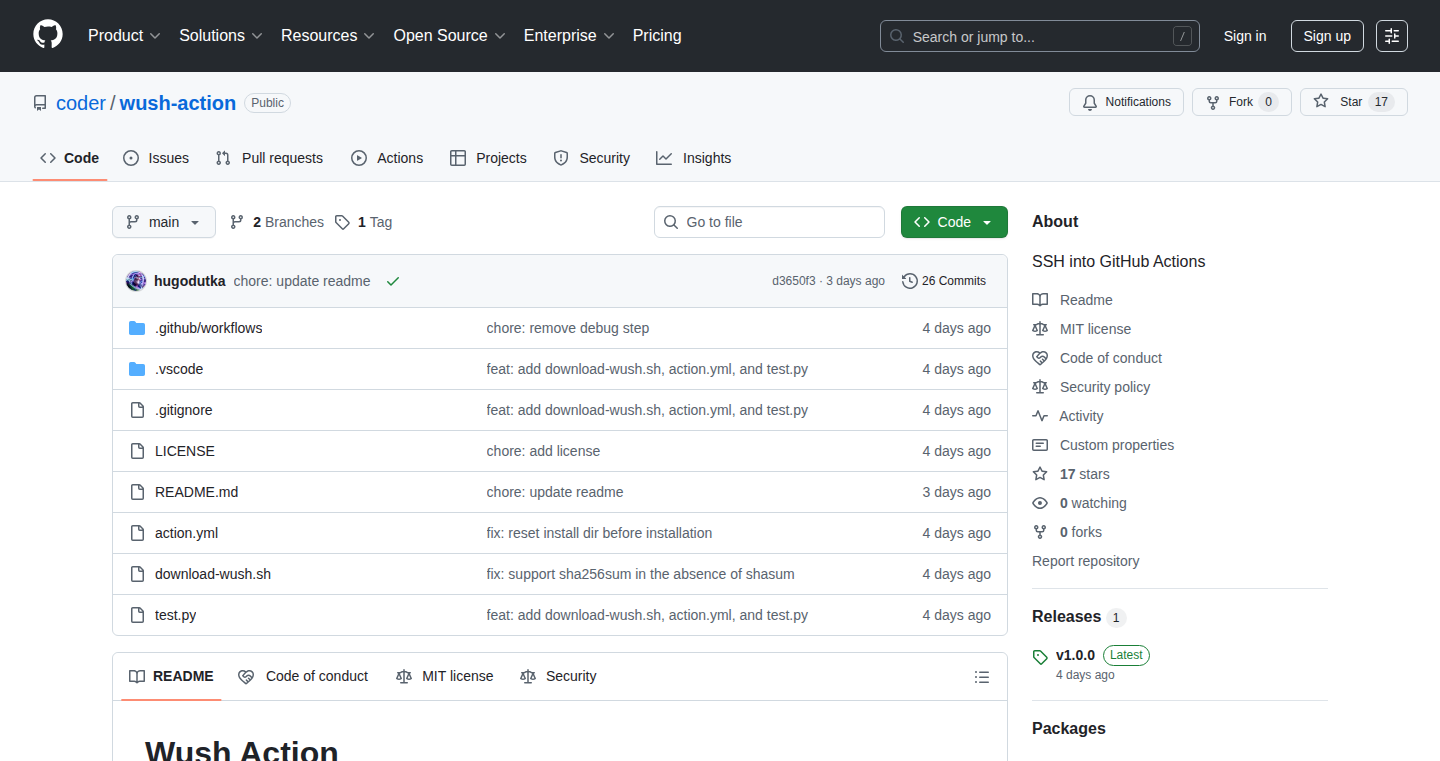

Wush-Action: Secure SSH Tunneling for GitHub Actions via WireGuard

Author

hugodutka

Description

Wush-Action allows developers to securely SSH into their GitHub Actions workflows using WireGuard. It creates a private and encrypted connection between the GitHub Actions runner and the developer's machine, enabling real-time debugging, inspection of workflow state, and direct interaction with the workflow environment. This overcomes the limitations of traditional debugging methods in CI/CD pipelines by providing a secure and interactive shell. The core innovation is leveraging WireGuard for secure tunnel creation and integrating it seamlessly with the GitHub Actions environment, offering a level of interactivity and control typically absent in automated CI/CD processes. This is a huge improvement over debugging complex issues in CI/CD workflows through logs alone.

Popularity

Points 7

Comments 0

What is this product?

Wush-Action sets up a secure and encrypted connection using WireGuard, a modern VPN protocol, directly into your running GitHub Actions workflow. Think of it as a private, secure tunnel that lets you remotely control and observe the workflow in real-time. The innovation lies in automating this process and integrating it tightly with GitHub Actions. It solves the problem of limited debugging capabilities and lack of direct interaction in CI/CD pipelines.

How to use it?

Developers integrate Wush-Action into their GitHub Actions workflow files (YAML). When the workflow runs, Wush-Action will create a secure tunnel and provide the developer with SSH credentials. The developer can then SSH into the workflow runner from their local machine. This is particularly useful for troubleshooting complex bugs, inspecting the state of the workflow during runtime, and interacting with the environment directly. For example, you could inspect files, run commands, or debug code step-by-step. This offers much more control over the automated process.

Product Core Function

· Secure SSH Tunneling: Wush-Action uses WireGuard to create an end-to-end encrypted connection. This ensures that all communication between the developer and the GitHub Actions runner is private and protected. So what? You can safely debug sensitive information without worrying about interception.

· Automated Setup: The project automates the setup of the WireGuard tunnel, eliminating the need for manual configuration. This makes it easy to integrate the feature into existing workflows. So what? It saves developers time and reduces the chance of errors during configuration.

· Real-time Debugging: Developers can SSH into the workflow runner and debug code in real-time, inspect files, and run commands. This allows for quicker identification and resolution of issues. So what? This drastically reduces debugging time compared to traditional logging and print statements.

· Interactive Environment: The SSH connection provides direct access to the workflow's environment, so developers can work inside it rather than infer its state from logs. So what? This gives developers a much more granular understanding of the workflow's state and operation.

Product Usage Case

· Debugging a failing deployment: A developer can use Wush-Action to SSH into the deployment workflow, inspect the deployment scripts, check file permissions, and identify the root cause of the failure directly. So what? Resolving deployment issues becomes a much quicker and less frustrating process.

· Inspecting runtime dependencies: A developer can use Wush-Action to verify the versions of the installed packages or other dependencies within the workflow's environment. So what? This is vital for ensuring that dependencies are correctly installed and that there are no compatibility issues.

· Troubleshooting complex build failures: A developer facing issues with complex build processes can connect to the workflow runner, execute build commands step-by-step, and examine the output to pinpoint the source of the error. So what? Build issues get resolved quickly and efficiently.

15

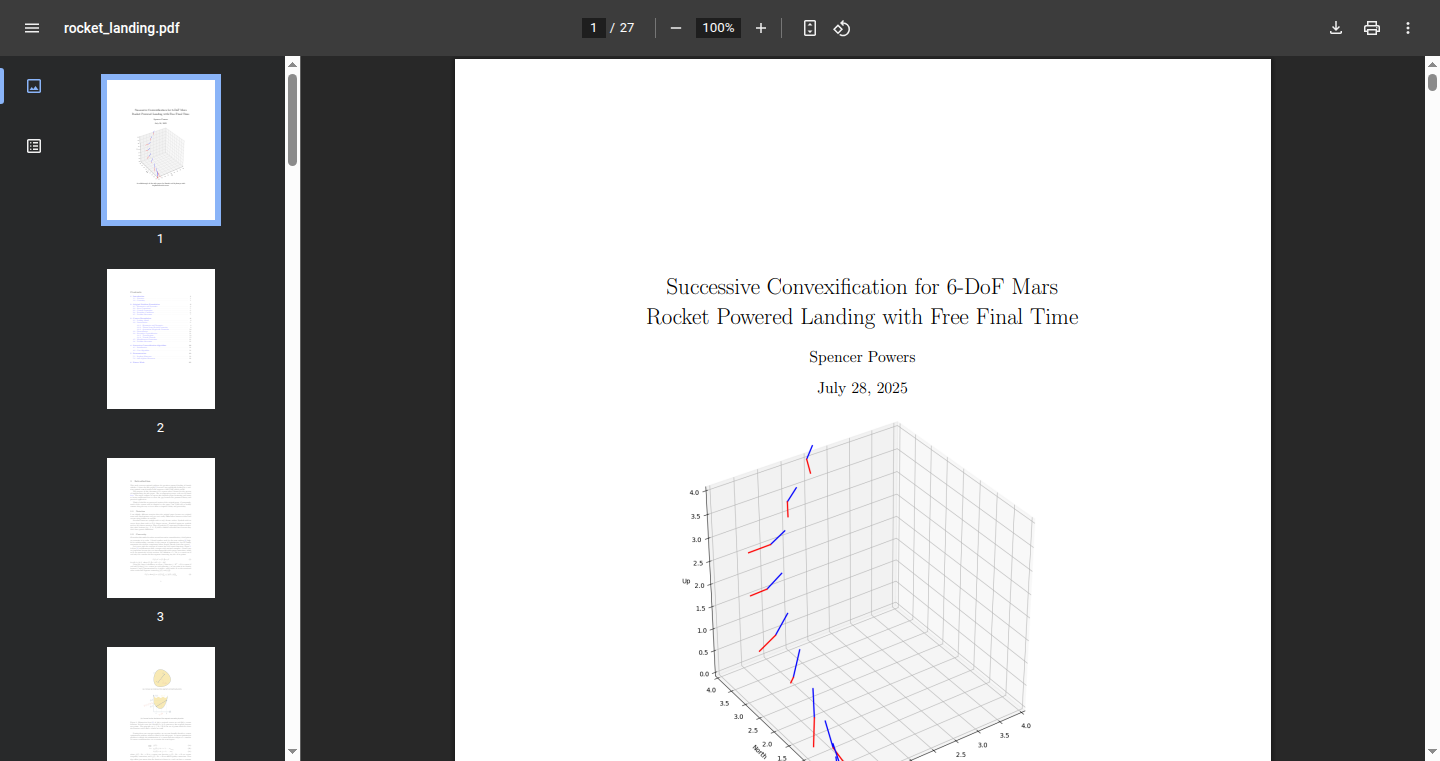

RocketLandingOpt – Demystifying Rocket Landing Trajectory Optimization

Author

scpowers

Description

This project offers a detailed explanation and implementation guide for a rocket landing trajectory optimization algorithm. It's based on a research paper, but the creator has added extra details and clarifications to make it easier for others to understand and apply. It helps solve the complex problem of planning the most efficient and safe path for a rocket to land, considering factors like fuel consumption and time. So this project provides a practical guide for anyone interested in space travel and optimization algorithms.

Popularity

Points 5

Comments 1

What is this product?

This project breaks down a complex optimization problem: how to land a rocket in the most efficient way. It does this by explaining a specific algorithm from a research paper. The creator has rewritten and expanded the original content with extra details, code examples, and insights to help others understand the math and implement it themselves. This kind of project helps bridge the gap between theoretical research and practical application, making complex concepts accessible. So, the key innovation is making a complex topic easier to grasp through clear explanation and practical code.

How to use it?

Developers can use this project as a learning resource and a starting point for their own rocket landing simulations or related projects. They can read the provided explanations to understand the core algorithms and then adapt the accompanying code to their specific needs. The code itself can be integrated into other projects, used as a basis for building more advanced simulations, or even adapted for other optimization problems in different domains. So you can learn the optimization algorithms, apply the theory to working code, and then customize it to solve real-world engineering problems.

Product Core Function

· Understanding Rocket Landing Trajectory Optimization: The project offers a comprehensive explanation of the optimization problem. This helps developers grasp the underlying physics and constraints involved in planning a rocket's landing path. This is useful for anyone interested in space engineering or robotics, providing a strong foundational understanding before diving into implementations.

· Algorithm Breakdown: The project breaks down a complex algorithm step by step, clarifying the math and the logic. This approach lets developers understand the 'how' and 'why' behind the calculations, so they can debug the implementation and adapt the code to their own needs.

· Code Implementation: The project includes accompanying source code. This code acts as a practical example of how the algorithm works. It gives developers a working example to follow, making it easier to understand and test the core concepts.

· Detailed Explanations: The author has added detailed explanations, clarifications, and code examples to the original paper. This lowers the learning curve and makes the code easier to reuse across a wide range of rocket landing simulation scenarios.
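To give a feel for the kind of problem involved, here is a deliberately simplified sketch. It is not the algorithm from the paper or the project's code: it assumes a one-dimensional vertical descent, constant gravity, constant engine acceleration, and no mass change, and simply asks at what altitude a full-thrust burn must begin so the rocket reaches zero speed exactly at the ground. The function names and the sample numbers are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2, assumed constant


def ignition_altitude(h0: float, v0: float, thrust_accel: float) -> float:
    """Altitude (m) at which a full-thrust burn must start to reach zero speed at h = 0.

    h0: starting altitude in m; v0: starting downward speed in m/s;
    thrust_accel: engine acceleration in m/s^2 (must exceed G).
    """
    if thrust_accel <= G:
        raise ValueError("engine must out-accelerate gravity")
    # Coast phase:  v_ign^2 = v0^2 + 2*G*(h0 - h_ign)
    # Burn phase:   v_ign^2 = 2*(thrust_accel - G)*h_ign
    # Equating the two expressions gives the switch altitude below.
    h_ign = (v0**2 + 2 * G * h0) / (2 * thrust_accel)
    if h_ign > h0:
        raise ValueError("already too low and too fast to stop in time")
    return h_ign


def touchdown_speed(h0: float, v0: float, thrust_accel: float, h_ign: float) -> float:
    """Speed (m/s) at h = 0 if the burn starts at h_ign.

    Returns 0.0 when the rocket comes to rest at or above the ground.
    """
    v_sq = v0**2 + 2 * G * (h0 - h_ign) - 2 * (thrust_accel - G) * h_ign
    return math.sqrt(max(v_sq, 0.0))


# Hypothetical scenario: 1000 m up, falling at 50 m/s, engine gives 30 m/s^2.
h_ign = ignition_altitude(h0=1000.0, v0=50.0, thrust_accel=30.0)
print(f"start burn at {h_ign:.1f} m")
print(f"touchdown speed: {touchdown_speed(1000.0, 50.0, 30.0, h_ign):.3f} m/s")
print(f"burn started 50 m late: {touchdown_speed(1000.0, 50.0, 30.0, h_ign - 50):.1f} m/s")
```

The last line shows why timing matters even in this toy setting: starting the burn slightly too late leaves a large impact speed. Real trajectory optimization adds horizontal motion, changing mass, and thrust limits, which is where a full optimization algorithm becomes necessary.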

Product Usage Case

· Spacecraft trajectory planning: This project can be used to design and test new control systems for rocket landing. Developers can adapt the provided code to try out landing strategies on simulated data before attempting a real landing, saving resources and reducing the risk of accidents.

· Autonomous Robotics: The optimization techniques explained in this project are not limited to rockets. The same approach can be applied to path planning for self-driving cars, drones, and other robots to find an efficient and safe route. This shows how the core concepts carry over to other fields, with the code as a starting point.

· Simulation and Research: The project offers a solid starting point for researchers exploring different optimization strategies in aerospace or robotics. They can modify the base algorithm to tackle more advanced problems and test variations.

16

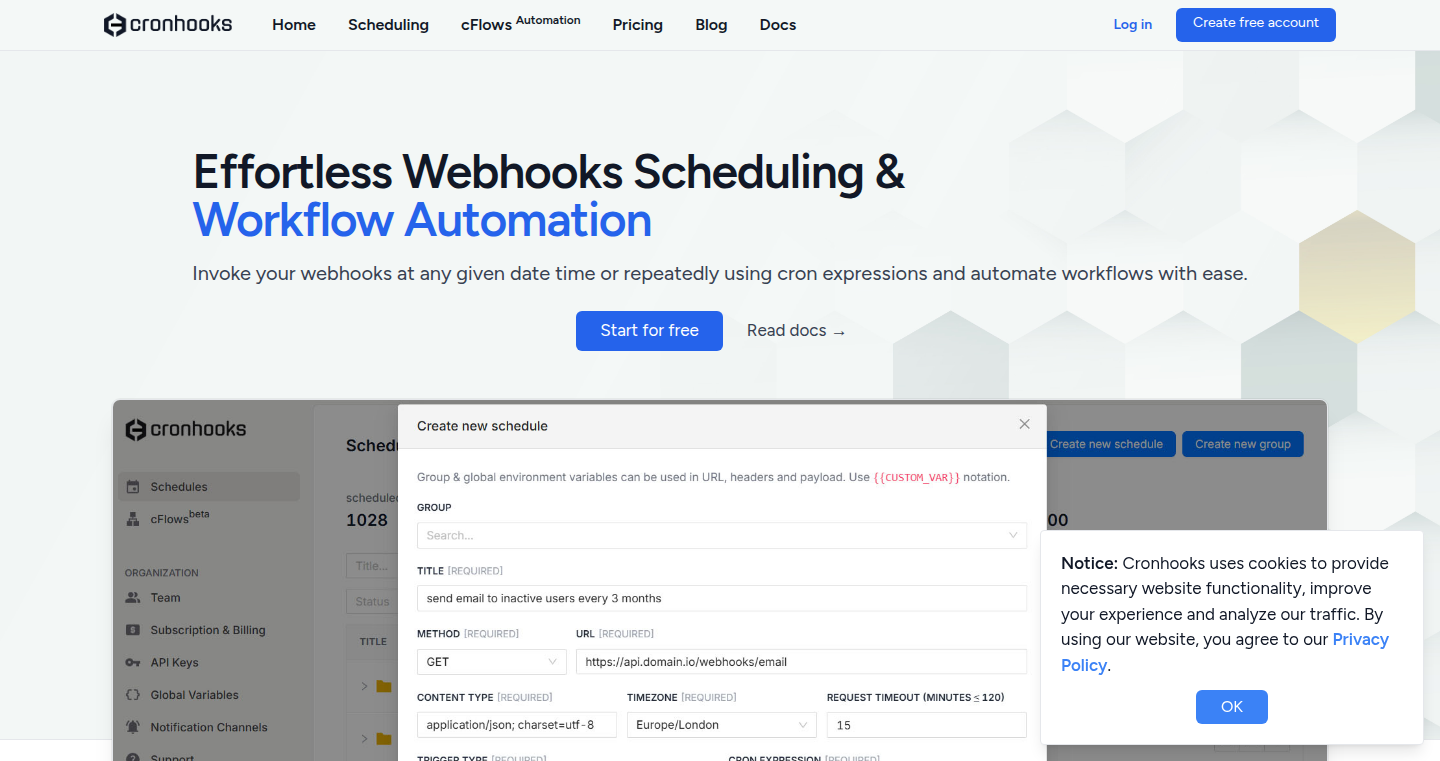

Suggest.dev: Rage-Click Driven Feedback with Session Replay

Author

tsergiu

Description

Suggest.dev is a feedback widget designed to capture user frustration. It intelligently triggers when users exhibit 'rage clicks' (repeated rapid clicking), prompting them to leave feedback. The magic? Each piece of feedback automatically includes a full session replay. This innovative approach allows developers to instantly understand user problems by visualizing the user's entire journey, saving time and guesswork, and dramatically improving the quality of feedback.

Popularity

Points 4

Comments 2

What is this product?

Suggest.dev is a feedback tool that detects signs of user frustration on your website, such as repeated clicking in the same area. When this happens, it prompts the user to leave feedback. What's special is that it records the entire user session, including what they clicked, what they typed, and how they interacted with the site. So, it's like having a video recording of what went wrong, which gives developers detailed information to fix problems quickly. Rage clicks are detected from click behavior, and each report is paired with a session replay that captures the user's interactions. So this gives you a much clearer picture of what happened and why, with far less guesswork, and simplifies the process of understanding and fixing user-facing issues.

How to use it?

Developers can integrate Suggest.dev into their website by adding a simple snippet of code. When a user 'rage clicks,' they’ll be prompted to provide feedback. This feedback, along with the session replay, is then sent to a dashboard where developers can view and analyze it. Imagine you're a developer building a new e-commerce site. A user tries to add an item to their cart multiple times, but nothing happens. With Suggest.dev, the developer receives not only the user’s feedback but also a replay of the entire session. They can watch exactly what the user did, pinpoint the issue (maybe a broken button or a slow-loading script), and quickly fix it. Another use case is internal testing within a team, where replays give immediate feedback on problems testers run into. In both cases, the time needed to identify and fix issues drops, improving your product’s quality.

Product Core Function

· Rage-Click Detection: The system intelligently monitors user behavior and identifies instances of rapid, repeated clicking in a specific area, indicating potential frustration. This triggers the feedback mechanism. Value: Automatically identifies potentially problematic areas on a site, which saves developers from manually searching for issues. Application: Immediately flags UI glitches or usability issues that might otherwise go unnoticed.

· Session Replay Recording: When a rage click is detected, Suggest.dev captures the user's entire session, including all interactions, clicks, and form entries. Value: Provides developers with a complete visual of the user's experience. Application: Allows developers to instantly understand the context and reproduce issues exactly as the user encountered them.

· Feedback Collection: Users can quickly provide feedback when prompted, without having to navigate to separate feedback forms. Value: Lowers the barrier for user feedback, leading to a higher volume of high-quality reports. Application: Encourages more active and relevant user contributions to improving product quality.

· Centralized Dashboard: The collected feedback and session replays are organized in a centralized dashboard, providing a clear and comprehensive overview of user issues. Value: Simplifies issue management and prioritization for developers. Application: Streamlines the developer's workflow, reducing the effort required to analyze and fix issues.
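The rage-click rule described above can be sketched in a few lines. This is an illustrative guess at the general technique, not Suggest.dev's implementation: the class name, the thresholds (3 clicks within 1000 ms inside a 30 px radius), and the reset-after-trigger behavior are all assumptions. In a real widget this logic would run in the browser; it is written here in Python purely to show the algorithm.

```python
import math
from collections import deque
from dataclasses import dataclass


@dataclass
class Click:
    t_ms: float  # timestamp in milliseconds
    x: float     # page coordinates in pixels
    y: float


class RageClickDetector:
    """Flags a burst of clicks that are close together in both time and space."""

    def __init__(self, min_clicks: int = 3, window_ms: float = 1000, radius_px: float = 30):
        self.min_clicks = min_clicks
        self.window_ms = window_ms
        self.radius_px = radius_px
        self._recent = deque()  # clicks still inside the time window

    def on_click(self, click: Click) -> bool:
        """Record a click; return True if it completes a rage-click burst."""
        self._recent.append(click)
        # Forget clicks that are older than the time window.
        while click.t_ms - self._recent[0].t_ms > self.window_ms:
            self._recent.popleft()
        # Count recent clicks that landed near the current one.
        nearby = [
            c for c in self._recent
            if math.hypot(c.x - click.x, c.y - click.y) <= self.radius_px
        ]
        if len(nearby) >= self.min_clicks:
            self._recent.clear()  # reset so one burst triggers only once
            return True
        return False


detector = RageClickDetector()
burst = [Click(0, 100, 100), Click(200, 102, 101), Click(400, 99, 98)]
results = [detector.on_click(c) for c in burst]
print(results)
```

When `on_click` returns `True`, a widget along these lines would show the feedback prompt and attach the recorded session to the report.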

Product Usage Case

· E-commerce Website Bug Fix: A user repeatedly tries to add an item to their cart but fails. The session replay shows the add-to-cart button isn't working properly. The developer fixes the button. So what? You get a happy user who successfully buys your product.

· Internal Testing for New Features: Developers testing a new version of their own website notice an issue with a form. The replay gives them enough information to debug the issue easily. So what? You quickly identify usability problems and reduce the time it takes to find bugs.

· Customer Support Improvement: A user contacts customer support with a problem. The session replay shows the steps they took, and the team uses it to pinpoint a confusing part of the design. So what? Better customer satisfaction through improved issue resolution.

17

Raq.com: AI-Powered Internal Tool Builder with Self-Correcting Code

Author

hawke

Description

Raq.com is a platform that uses Claude Code, Anthropic's agentic coding tool, to build functional internal tools directly in your web browser. The key innovation lies in its self-correcting capabilities. Unlike many AI coding tools that struggle with real-world API integrations or require constant manual adjustments, Raq.com aims to generate working solutions from a single prompt. This is achieved through a feedback loop that lets Claude test, debug, and refine its own code, raising the success rate and reducing the need for developer intervention. This means you get working tools faster.

Popularity

Points 6

Comments 0

What is this product?

Raq.com is essentially a smart assistant for building internal web applications. You provide a description of the tool you want, and the AI generates the code. The magic happens because it’s not just generating code; it's also testing and fixing its own mistakes. Think of it as a highly skilled programmer that learns from its errors. The platform provides isolated development and production environments (using Docker), a persistent terminal in the browser, and a self-correction loop. The self-correction loop uses various tools like PHPUnit, syntax checkers, and Playwright to test and debug the generated code. So, this AI-powered tool is more than just a code generator; it is a self-improving solution.

How to use it?

Developers can use Raq.com by simply describing the internal tool they need. For example, if you want a tool to fetch and display company information, you would provide a prompt specifying the desired functionality and the APIs to integrate (like Companies House, FinUK, or OpenRouter). Raq.com then takes care of the coding, debugging, and integration, and you can deploy the working tool with a single click. This is particularly useful for building admin dashboards, data reporting tools, and other internal applications. So, by just explaining what you need, you let the platform handle the heavy lifting.

Product Core Function

· AI-Powered Code Generation: Raq.com uses Claude Code to generate code from natural language prompts. This drastically reduces the time and effort required to build applications. The advantage is clear: faster development cycles and reduced reliance on manual coding.

· Self-Correcting Code: A built-in feedback loop allows the AI to test and debug its own code, resulting in more robust and reliable applications. So, your tools work more reliably.

· Isolated Docker Environments: Raq.com provides separate development and production environments using Docker, ensuring a clean and secure workspace for each project. The benefit is increased stability and security of the tools.

· Persistent Terminal in the Browser: A persistent terminal streamed to the browser keeps the session running even when the browser tab is closed, so developers can pick up where they left off. This provides a seamless development experience.

· One-Click Deployment: Deploying the generated application to a live environment is made simple with a single-click deployment feature. This simplifies the overall development and deployment workflow.

· Integrated Testing and Debugging: The platform incorporates tools like PHPUnit, syntax checkers, and Playwright to automatically test and debug the generated code. This improves code quality and reduces errors.
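The generate, test, and refine cycle described above can be sketched as a small loop. This is a minimal illustration under stated assumptions, not Raq.com's actual implementation: `self_correct`, `fake_generate`, `syntax_check`, and `behaviour_check` are hypothetical names, the generator is a stub standing in for the AI, and the two checks stand in for tools such as a syntax checker and PHPUnit.

```python
from typing import Callable, List, Optional, Tuple

Check = Callable[[str], List[str]]  # returns a list of problems (empty = pass)


def self_correct(
    prompt: str,
    generate: Callable[[str, List[str]], str],
    checks: List[Check],
    max_rounds: int = 3,
) -> Tuple[Optional[str], int]:
    """Regenerate code until every check passes or the round budget runs out."""
    feedback: List[str] = []
    for round_no in range(1, max_rounds + 1):
        code = generate(prompt, feedback)
        # Run all checks and collect their complaints as feedback for the next round.
        feedback = [msg for check in checks for msg in check(code)]
        if not feedback:
            return code, round_no
    return None, max_rounds


def fake_generate(prompt: str, feedback: List[str]) -> str:
    """Stub generator: buggy first draft, corrected once it receives feedback."""
    if not feedback:
        return "def add(a, b):\n    return a - b\n"
    return "def add(a, b):\n    return a + b\n"


def syntax_check(code: str) -> List[str]:
    try:
        compile(code, "<generated>", "exec")
        return []
    except SyntaxError as exc:
        return [f"syntax error: {exc.msg}"]


def behaviour_check(code: str) -> List[str]:
    namespace: dict = {}
    try:
        exec(code, namespace)  # acceptable here only because the input is our own stub
        if namespace["add"](2, 3) != 5:
            return ["add(2, 3) should equal 5"]
    except Exception as exc:
        return [f"runtime error: {exc!r}"]
    return []


code, rounds = self_correct("write add(a, b)", fake_generate, [syntax_check, behaviour_check])
print(f"passed after {rounds} round(s)")
```

The point of the design is that failures become input to the next attempt instead of work for the developer. Running untrusted generated code is also why isolated environments, such as the Docker containers mentioned above, matter in a setup like this.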

Product Usage Case

· Building Internal Admin Dashboards: Developers can use Raq.com to quickly generate admin dashboards for managing internal data and processes. Instead of spending weeks coding, a functional dashboard can be up and running in a matter of hours. So, save time and effort with a ready-to-use admin panel.

· Automated Data Reporting Tools: The platform can be used to create custom data reporting tools that automatically pull data from various APIs and generate reports. This is especially useful for businesses needing quick insights. This allows you to easily generate and customize your reports.

· API Integration Projects: Raq.com excels in integrating with different APIs. For instance, a user can describe an application that pulls data from a financial API (like FinUK) and displays it in a user-friendly interface. The AI handles the complexities of API interactions, and you get the application faster.

· Prototyping and Testing: It is perfect for quickly prototyping new ideas and testing them in a live environment. You can rapidly build proof-of-concept applications without extensive coding. So, try out your new ideas with minimal effort.

· Internal Tooling for Non-Coders: Raq.com also enables non-coders to build internal tools that streamline their workflow and boost efficiency. It gives non-programmers the capability to create custom software solutions.

18

TanStack DB: The Client-Side Data Dynamo

Author

samwillis

Description

TanStack DB is a clever piece of technology that helps your web and mobile applications handle data much more efficiently. It's like having a mini-database living right inside your app. The magic lies in a technique called Differential Dataflow. Instead of re-doing everything when something changes, it only updates the bits that are actually different. This means lightning-fast updates, even when dealing with mountains of data. It seamlessly integrates with existing tools like REST, GraphQL, and WebSockets, and plays nicely with technologies like ElectricSQL and Firebase for real-time data synchronization. So, if you're tired of slow data updates and want a snappier user experience, this could be your new best friend.
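The idea of updating only what changed can be illustrated with a small sketch. This is not TanStack DB's API and omits most of what differential dataflow does; the class and method names are hypothetical. It maintains per-customer order totals by applying a stream of changes, where each change carries a multiplicity of `+1` (row inserted) or `-1` (row deleted), so an edit costs work proportional to the change and not to the size of the data.

```python
from collections import defaultdict
from typing import Iterable, Set, Tuple

Change = Tuple[str, int, int]  # (customer, order amount, diff: +1 insert / -1 delete)


class IncrementalTotals:
    """Keeps sum(amount) per customer up to date from deltas, without rescanning rows."""

    def __init__(self) -> None:
        self.totals = defaultdict(int)
        self.counts = defaultdict(int)

    def apply(self, changes: Iterable[Change]) -> Set[str]:
        """Apply a batch of changes; return the customers whose totals changed."""
        touched: Set[str] = set()
        for customer, amount, diff in changes:
            self.totals[customer] += diff * amount
            self.counts[customer] += diff
            touched.add(customer)
            if self.counts[customer] == 0:
                # No rows left for this customer: drop it from the view.
                del self.totals[customer]
                del self.counts[customer]
        return touched


view = IncrementalTotals()
view.apply([("alice", 30, +1), ("alice", 20, +1), ("bob", 10, +1)])

# An update is a delete of the old row plus an insert of the new one.
touched = view.apply([("alice", 20, -1), ("alice", 25, +1)])
print(dict(view.totals), touched)
```

Only `alice` is reported as touched by the second batch, so a UI built on a view like this would need to re-render just that one entry.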

Popularity

Points 4

Comments 2

What is this product?