Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-07-28

SagaSu777 2025-07-29

Explore the hottest developer projects on Show HN for 2025-07-28. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The hacker spirit of building practical solutions with AI is thriving. We're seeing a surge in projects that automate everyday tasks. Take note, developers: the future is about creating intelligent agents that do the work for you. There's also a strong emphasis on empowering developers, which suggests a big opportunity for tools that accelerate development, from code generation to project setup. The focus on local-first applications reflects a growing concern for privacy and control. Embrace this by building tools that work offline or with minimal reliance on external services. The key is to apply AI and developer tooling to real-world problems and create new efficiencies.

Today's Hottest Product

Name

Piper: AI that makes phone calls for you

Highlight

This project leverages AI to automate phone calls. Instead of manually dialing, you tell Piper what you need, and it handles the entire conversation. Key technologies include optimizing for low latency to make conversations feel natural and custom context engineering to keep the AI agent on track. The innovative aspect is applying AI to handle routine phone calls at scale. Developers can learn from the focus on optimizing for real-time conversation and building robust AI agents.

Popular Category

AI Applications

Developer Tools

Productivity

Popular Keyword

AI

Automation

Open Source

API

Technology Trends

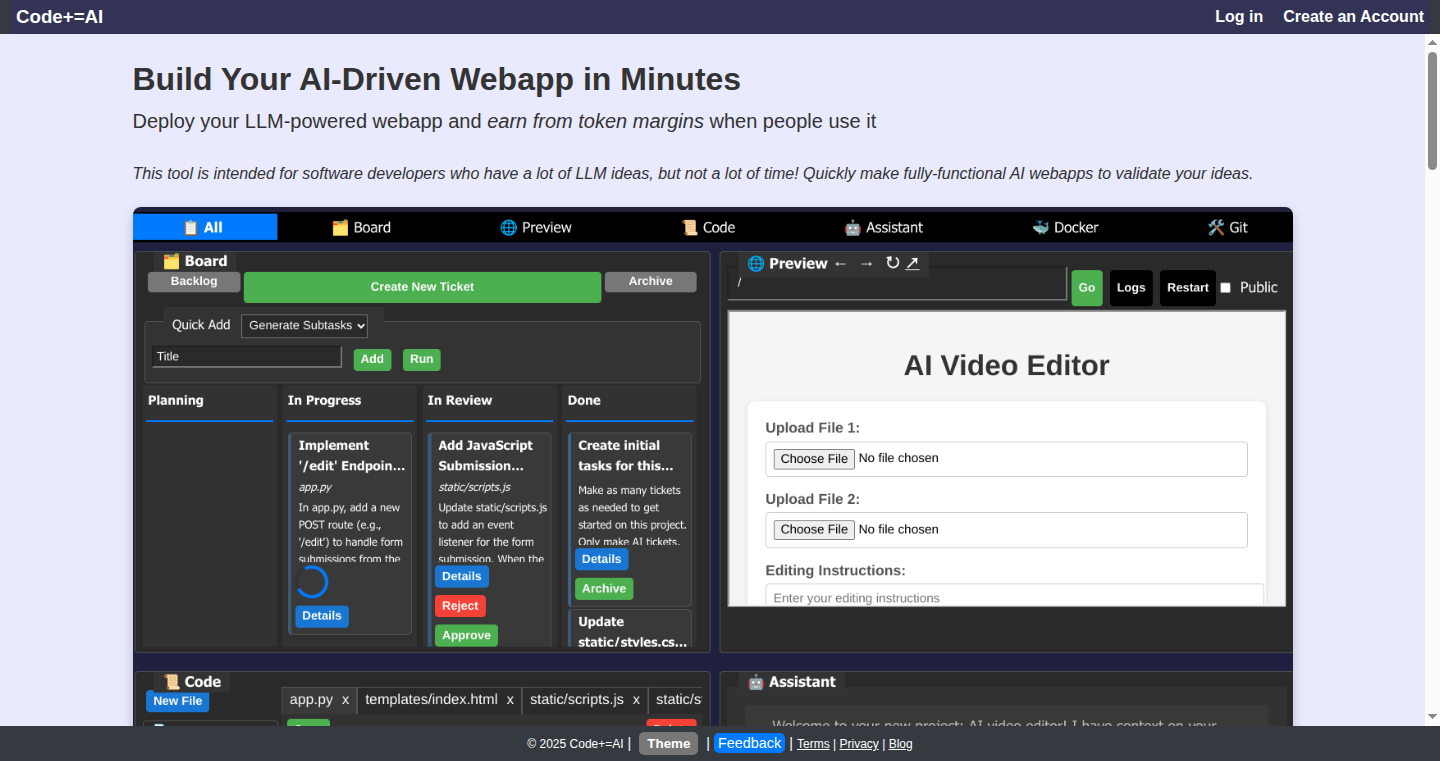

AI-powered automation of routine tasks, such as phone calls and meeting scheduling.

Tools for AI-assisted development, including code review, code generation, and project scaffolding.

Focus on local-first and privacy-focused applications that minimize reliance on the cloud.

Project Category Distribution

AI and Automation (45%)

Developer Tools (30%)

Productivity and Utilities (25%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | Use Their ID: AI-Generated Mock IDs for Political Protest | 709 | 205 |
| 2 | Piper: AI-Powered Autonomous Calling Agent | 85 | 76 |
| 3 | Browser-Based Photomosaic Generator | 119 | 39 |
| 4 | JustRef: AI-Powered Sports Refereeing System | 16 | 3 |
| 5 | Allzonefiles.io: Global Domain Data Explorer | 3 | 10 |
| 6 | PendingDelete.Domains: AI-Powered Expired Domain Finder | 5 | 5 |
| 7 | RunAgent: Cross-Language AI Code Reviewer with Seamless Streaming | 6 | 3 |
| 8 | Kiln: The AI Project Forge | 8 | 1 |
| 9 | OpenCodeSpace: YOLO Mode Development Environments | 8 | 0 |
| 10 | Whisper-Optimized: Edge Inference with Custom Kernels | 6 | 2 |

1

Use Their ID: AI-Generated Mock IDs for Political Protest

Author

timje1

Description

This project takes a UK postcode and generates a fake ID for the local Member of Parliament (MP) using AI. It's a playful protest against the UK's Online Safety Act, highlighting the potential for misuse of personal identification. The technical innovation lies in using AI to create realistic, albeit fake, visual representations based on limited input data. This addresses the question: can we quickly generate convincing visual representations based on available information, and what are the implications?

Popularity

Points 709

Comments 205

What is this product?

This project uses AI to generate mock IDs of UK MPs based on a postcode in their constituency. The core innovation is using AI image generation to create realistic visuals from limited data: the MP's name and constituency. In short, it uses AI to turn a political statement into a visual protest.

How to use it?

Users input a UK postcode, and the system retrieves the MP's information. The AI then generates a mockup ID, showcasing how personal data could be used (or misused) under legislation like the Online Safety Act. Developers can use the underlying AI image generation techniques for similar projects needing rapid visual prototyping or creating visualizations of hypothetical scenarios. The system is very simple: the user provides the postcode and the website displays the AI-generated image.

Product Core Function

· Postcode Input and MP Lookup: Takes a UK postcode as input and retrieves the corresponding MP's details. This has value for any application needing to connect location data with political or geographic information. So this can be used for any application that needs to know who the MP is for a certain area.

· AI-Powered ID Generation: The core feature is using AI to create a visual representation (the mock ID). This demonstrates the power of AI in quickly generating visual content from text data, showing its potential in areas from data visualization to creative prototyping. So this can be used for fast visual prototyping.

· User Interface: A simple web interface displaying the generated ID. This provides a practical example of how to display AI-generated content in a user-friendly way. So this can show how to put the content generated by AI to use.
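
The postcode input step described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the regular expression is a simplified approximation of the UK postcode format rather than a complete validator, and `lookup_mp` together with its `directory` mapping are hypothetical stand-ins for whatever MP data source the site really uses.

```python
import re
from typing import Dict, Optional

# Simplified pattern for a postcode with whitespace removed:
# 1-2 letters, a digit, an optional letter/digit, then digit + 2 letters.
# Assumption: good enough for a demo, not a full official validation.
_POSTCODE = re.compile(r"^[A-Z]{1,2}[0-9][A-Z0-9]?[0-9][A-Z]{2}$")


def normalize_postcode(raw: str) -> Optional[str]:
    """Return the postcode as 'OUTWARD INWARD' in upper case, or None if malformed."""
    compact = re.sub(r"\s+", "", raw).upper()
    if not _POSTCODE.match(compact):
        return None
    # The inward code is always the last three characters.
    return f"{compact[:-3]} {compact[-3:]}"


def lookup_mp(raw: str, directory: Dict[str, str]) -> Optional[str]:
    """Look up an MP name in a caller-supplied mapping (hypothetical data source)."""
    postcode = normalize_postcode(raw)
    return directory.get(postcode) if postcode else None
```

Normalizing before the lookup means inputs such as `sw1a1aa` and `SW1A 1AA` resolve to the same key, so the data source only has to store one canonical form.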

Product Usage Case

· Protest Art: The project itself is a form of digital protest art, leveraging AI to create a striking visual representation of a political statement. It's a creative demonstration of AI's potential to quickly generate visual communication.

· Educational Demonstration: The project can be used to demonstrate the capabilities and potential of AI-based image generation in educational settings. It shows how AI can create things and what its possibilities are.

· Rapid Prototyping: Developers could use the same underlying AI techniques to quickly prototype visual concepts in areas such as UI design or product mockups. So developers can quickly create mockups of their ideas.

2

Piper: AI-Powered Autonomous Calling Agent

Author

michaelphi

Description

Piper is a web application and soon-to-be Chrome extension designed to make phone calls on your behalf using AI. It addresses the asymmetry where businesses use AI to interact with customers via phone calls, but consumers are still manually dialing. The core innovation lies in automating the entire calling process: you provide the task (e.g., book an appointment), and Piper handles the conversation. Key technical challenges addressed include minimizing latency in the voice interaction and maintaining contextual awareness throughout the call, allowing for a seamless and natural user experience.

Popularity

Points 85

Comments 76

What is this product?

Piper is essentially an AI-powered phone assistant. It uses AI to understand your requests and autonomously conduct phone calls to achieve your goals. Technically, it relies on techniques such as optimized key-value (KV) caching to reduce response latency, keeping the conversation flowing naturally. It also implements custom context engineering to keep track of the call's progress, including transfers and hold times. So what? It frees up your time by handling routine phone tasks.

How to use it?

You'll be able to instruct Piper through a web interface or, soon, by clicking any phone number directly via a Chrome extension. You'll provide a task description, and Piper will initiate the call and manage the entire conversation. Imagine you need to book a doctor's appointment: you'd tell Piper, and it would handle the back-and-forth with the clinic. So what? It's like having a personal assistant for your phone calls.

Product Core Function

· Autonomous Call Initiation: The core function of Piper is to initiate phone calls based on user instructions. So what? This allows users to delegate the tedious task of manually dialing and navigating phone menus.

· Natural Language Understanding and Generation: Piper uses AI to understand user requests and generate human-like responses to engage in a conversation with the other party. So what? This provides a seamless and natural experience, eliminating the need for manual interaction.

· Contextual Awareness: Piper maintains situational awareness throughout the call, recognizing when the call is transferred, when the user is on hold, and other call states. So what? This enables it to navigate complex phone systems and handle different scenarios.

· Latency Optimization: Piper minimizes call latency through techniques such as optimized key-value (KV) caching, which keeps response times short. So what? This creates a more natural and responsive conversational experience.
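
To make the contextual awareness idea more concrete, here is one way call state could be tracked and summarized for an agent. This is a sketch under stated assumptions, not Piper's implementation: the `CallState` values, the `CallContext` class, and the summary format are all hypothetical, and detecting the state changes themselves (for example, recognizing hold music) is assumed to happen elsewhere.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Tuple


class CallState(Enum):
    DIALING = "dialing"
    IVR_MENU = "ivr_menu"
    ON_HOLD = "on_hold"
    TRANSFERRED = "transferred"
    TALKING = "talking"
    ENDED = "ended"


@dataclass
class CallContext:
    task: str
    state: CallState = CallState.DIALING
    history: List[Tuple[float, CallState]] = field(default_factory=list)
    hold_seconds: float = 0.0
    transfers: int = 0

    def transition(self, new_state: CallState, at: float) -> None:
        """Record a state change at time `at` (seconds since the call started)."""
        # When leaving a hold, add the time elapsed since the hold began.
        if self.state is CallState.ON_HOLD and self.history:
            self.hold_seconds += at - self.history[-1][0]
        if new_state is CallState.TRANSFERRED:
            self.transfers += 1
        self.state = new_state
        self.history.append((at, new_state))

    def summary(self) -> str:
        """Compact status line that could accompany the transcript in a prompt."""
        return (
            f"Task: {self.task}. State: {self.state.value}. "
            f"Transfers: {self.transfers}. Time on hold: {self.hold_seconds:.0f}s."
        )
```

Keeping the summary short and regenerating it on every transition is one design option: the agent sees the current situation in a single line instead of having to infer it from a long transcript.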

Product Usage Case

· Appointment Scheduling: A user needs to schedule a doctor's appointment. They instruct Piper, and Piper calls the clinic, navigating any automated phone systems and confirming the appointment details. So what? It saves the user from having to make the call themselves and wait on hold.

· Order Tracking: A user wants to check the status of an online order. Piper calls the retailer, interacts with the automated system or a customer service representative, and provides the user with the order status. So what? This eliminates the time-consuming task of manually calling and navigating through menus.

· Complaint Resolution: A user needs to dispute a charge on their credit card. Piper calls the bank, explains the issue, and works to resolve the dispute. So what? It allows the user to quickly address billing issues without having to spend time on the phone.

3

Browser-Based Photomosaic Generator

Author

jakemanger

Description

This project is a web-based tool that creates photomosaics directly in your browser. A photomosaic is an image made from many smaller images, arranged to form a larger picture. The innovative aspect is that it all happens within your web browser; no images are uploaded to any server and no registration is needed. This approach utilizes JavaScript and potentially WebAssembly for image processing, offering a fast and privacy-focused solution. So what's the big deal? It means you can create stunning art pieces using your own photos, all without compromising your privacy or waiting for slow server-side processing.

Popularity

Points 119

Comments 39

What is this product?

This tool takes a target image and a set of tile images (your photos). It analyzes each tile image's color and texture and then places them in the mosaic based on how well they match the corresponding sections of the target image. The clever part is that all this computation happens locally within your browser, leveraging the power of your computer's processor. This eliminates the need for uploading your photos to a server, keeping your data secure and speeding up the process. The core innovation lies in its in-browser image processing capabilities, probably involving algorithms like k-means clustering for color matching and efficient image scaling and manipulation using HTML5 Canvas and potentially WebAssembly for speed.

How to use it?

Developers can use this project to understand how to perform image processing and manipulation efficiently within a web browser. They could potentially integrate similar image processing techniques into their own web applications. For example, a developer could use it as a starting point for building a photo editing app, or a tool for creating custom visual effects. The tool would be integrated by understanding the underlying JavaScript code and modifying it to suit their specific requirements. This could involve creating a new user interface for customization, adding new image processing filters, or integrating with a service for obtaining tile images.

Product Core Function

· Image Analysis: The tool analyzes both the target image and the tile images. It likely uses algorithms to calculate color averages and potentially texture information for each image. This is useful because it allows you to 'understand' what each image looks like, leading to intelligent selection.

· Color Matching: It matches the colors of the tile images to the corresponding sections of the target image. This is probably achieved using a color distance algorithm. This is useful for finding the best tile image to use in each mosaic tile.

· Image Resizing and Manipulation: The tool resizes the tile images and arranges them to create the final mosaic. This is achieved using HTML5 Canvas or WebGL. This is useful because it enables creating the actual mosaic arrangement.

· In-Browser Processing: All these operations are performed within the user's browser, without the need for any server-side processing. This is useful for privacy, performance, and ease of use.
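
The analysis and matching steps above can be sketched as follows. The real tool runs in the browser, so this Python version only illustrates the logic; it assumes plain average-color matching with squared Euclidean distance in RGB space, which may differ from what the project actually does. Images are represented as nested lists of `(r, g, b)` tuples, and all function names are hypothetical.

```python
from typing import List, Tuple

Color = Tuple[float, float, float]
Image = List[List[Tuple[int, int, int]]]  # rows of (r, g, b) pixels


def average_color(pixels: Image) -> Color:
    """Mean RGB over every pixel in the image."""
    count = sum(len(row) for row in pixels)
    totals = [0, 0, 0]
    for row in pixels:
        for r, g, b in row:
            totals[0] += r
            totals[1] += g
            totals[2] += b
    return (totals[0] / count, totals[1] / count, totals[2] / count)


def color_distance(a: Color, b: Color) -> float:
    """Squared Euclidean distance in RGB space (enough for ranking candidates)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def best_tile(cell: Image, tile_colors: List[Color]) -> int:
    """Index of the tile whose average color is closest to the cell's average."""
    target = average_color(cell)
    return min(range(len(tile_colors)), key=lambda i: color_distance(target, tile_colors[i]))


def build_mosaic(target: Image, tiles: List[Image], cell_size: int) -> List[List[int]]:
    """Split the target into square cells and choose a tile index for each cell."""
    tile_colors = [average_color(t) for t in tiles]  # computed once, reused per cell
    layout = []
    for top in range(0, len(target), cell_size):
        row_choice = []
        for left in range(0, len(target[0]), cell_size):
            cell = [r[left:left + cell_size] for r in target[top:top + cell_size]]
            row_choice.append(best_tile(cell, tile_colors))
        layout.append(row_choice)
    return layout
```

The result is a grid of tile indices; drawing the chosen tiles at their grid positions (on a canvas, in the browser case) would produce the final mosaic. Precomputing each tile's average color keeps the per-cell work to a simple nearest-color search.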

Product Usage Case

· Artistic Project: A designer wants to create a personalized gift, so they use the tool to create a photomosaic from family photos. They select a portrait photo as the target and then choose a collection of photos of family events as tiles; everything stays in the browser. The tool generates a mosaic ready for printing. This shows that you can create meaningful personalized gifts that are technically impressive.

· Web Application Feature: A web developer building a photo sharing platform integrates similar image processing techniques to allow users to create photomosaics of their own photos directly on the site. This adds a cool feature that enhances user engagement, showing how it can make any user experience more fun.

· Educational Purpose: Students studying web development can use the tool's source code to understand how image processing is implemented in JavaScript and the browser. This demonstrates the code can serve as a learning tool.

4

JustRef: AI-Powered Sports Refereeing System

Author

justref

Description

JustRef is an AI-powered system that acts as a referee for sports, analyzing video footage to make calls. The innovation lies in its use of computer vision and machine learning to automatically detect events, track players, and identify rule violations. It tackles the problem of human error and subjectivity in refereeing, providing a more objective and data-driven approach. This project explores the application of AI to automate the decision-making process in sports, offering a potentially fairer and more efficient officiating experience.

Popularity

Points 16

Comments 3

What is this product?

JustRef uses artificial intelligence to analyze sports videos, acting like a referee. It uses computer vision, which is like teaching a computer to 'see' and understand video. The AI is trained with machine learning, meaning it learns from watching many videos of sports events. It can detect things like fouls, goals, and player movements, helping make accurate calls. The main innovation is in automating this process – replacing human referees with an AI system. So, it's essentially a smart camera that can see what's happening in a game and make decisions. So what? It could significantly reduce human error in sports.

How to use it?

Developers can use JustRef in a few ways. For example, they could integrate it with existing sports broadcasting systems to provide real-time analysis and replay highlights, powered by AI-generated call suggestions. They can also use the project as a foundation to build similar solutions for other sports or applications. The system could be adapted to different sports by training it on data from those specific games, effectively creating specialized AI referees. So what? Developers can build more innovative sports-related apps and tools.

Product Core Function

· Automated Event Detection: Automatically identifies key events in a sports game (e.g., goals, fouls, penalties). This reduces the need for manual review and speeds up the process. It's valuable for real-time game analysis and generating highlight reels.

· Player Tracking and Movement Analysis: Tracks player positions and movements on the field. This provides valuable data for understanding game dynamics, strategy, and identifying potential rule violations, improving sports analytics.

· Rule Violation Identification: Identifies rule violations based on video analysis, such as offsides, out-of-bounds, or illegal contact. This is core to the referee functionality and allows the system to suggest or make calls, improving accuracy and fairness.

· Data Visualization and Reporting: Presents the analyzed data in a clear and easy-to-understand format. This can include heatmaps of player activity, statistics on fouls, and summaries of key events, which gives better insight into the game for fans and coaches.
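
As a small illustration of rule violation identification, the sketch below flags an out-of-bounds event from already-tracked ball positions. It assumes the computer vision stage has produced per-frame coordinates in metres on a rectangular field; the `Track` type, the function name, and the debounce threshold are hypothetical and not taken from JustRef.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Track:
    frame: int
    x: float  # metres along the field length
    y: float  # metres along the field width


def first_out_of_bounds(
    track: List[Track], length: float, width: float, min_frames: int = 3
) -> Optional[int]:
    """Return the frame where the ball first left the field, or None.

    The ball must stay outside for `min_frames` consecutive frames, which
    filters out single-frame tracking noise near the boundary lines.
    """
    run_start: Optional[int] = None
    run_len = 0
    for p in track:
        inside = 0.0 <= p.x <= length and 0.0 <= p.y <= width
        if inside:
            run_start, run_len = None, 0
            continue
        if run_len == 0:
            run_start = p.frame
        run_len += 1
        if run_len >= min_frames:
            return run_start
    return None
```

Requiring several consecutive frames trades a little latency for fewer false calls; a real system would also need to account for the ball's size and the sport's exact definition of out of play.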

Product Usage Case

· Real-time Broadcasting Enhancement: Integrate JustRef into a sports broadcasting system to provide instant replays, highlight key moments, and automatically generate statistical data during live games. So what? Viewers get a better experience and understanding of the game.

· Training and Coaching: Use JustRef to analyze training sessions or games, helping players and coaches identify areas for improvement and refine strategies. So what? Athletes can train more effectively and coaches can gain better insights.

· Sports Analytics Platform: Develop a platform that offers detailed analysis of game events, player performance, and team strategies, using data from JustRef. So what? Fans, analysts, and teams will benefit from a data-driven understanding of the game.

5

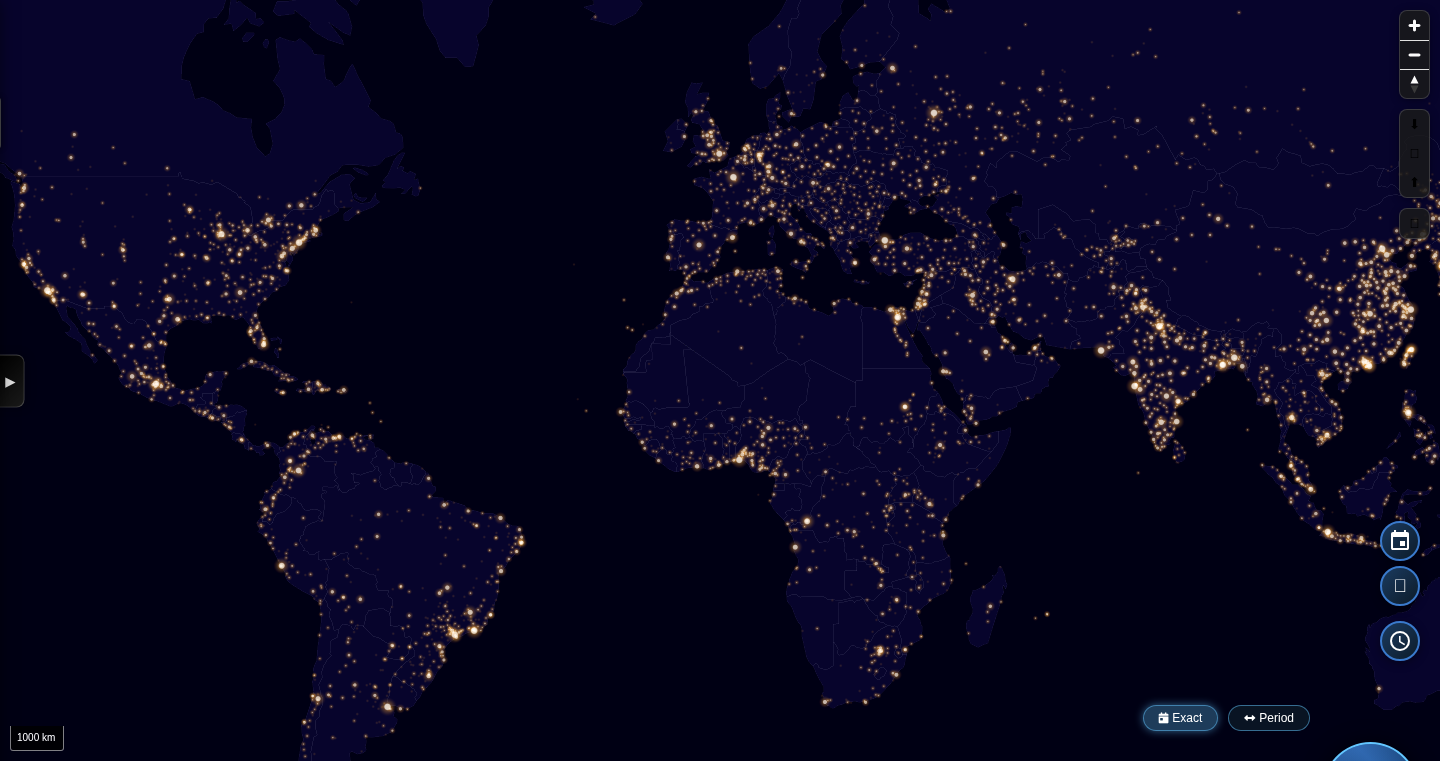

Allzonefiles.io: Global Domain Data Explorer

Author

iryndin

Description

Allzonefiles.io is a service that provides comprehensive lists of registered domain names across the entire internet. It meticulously crawls and compiles data from 1570 domain zones, offering a massive dataset of over 305 million domain names. The service allows users to download these domain lists or access them via an API, with daily updates for most zones. This project tackles the complex problem of collecting and maintaining a global, up-to-date inventory of all active domain names, offering a valuable resource for various technical applications. It demonstrates an efficient method for parsing and processing massive datasets, presenting a significant advancement in domain data management.

Popularity

Points 3

Comments 10

What is this product?

This project is like a massive library catalog for the internet. It gathers registered domain names from all over the world, a task that is challenging because the internet is constantly changing. It does this by collecting information from various domain name servers and organizing it into downloadable lists and an API. The innovation is in its scale, its daily updates, and its accessibility. So what? It offers a central resource for anyone who needs to work with domain data, whether for security, SEO, research, or building domain-related applications.

How to use it?

Developers can use Allzonefiles.io in several ways. They can download the entire dataset or specific zone files directly from the website. More advanced users can integrate the provided API into their own applications to access and process the domain data in real time. For example, they might use it in a script to monitor the registration of new domains. So what? It’s like having a super-powered search engine for domain names, allowing you to track trends, identify potential security threats, or build tools to understand the domain landscape. The API lets applications consume this data dynamically instead of relying on static downloads.
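
A monitoring script of the kind mentioned above could compare two daily snapshots of a zone. The sketch below assumes each downloaded list is plain text with one domain per line, which is an assumption about the file format rather than something documented here; the function names are hypothetical and no Allzonefiles.io API calls are shown.

```python
from typing import Iterable, List, Set, Tuple


def load_domains(lines: Iterable[str]) -> Set[str]:
    """Normalize one-domain-per-line text into a set of lowercase names."""
    domains = set()
    for line in lines:
        name = line.strip().lower().rstrip(".")  # drop whitespace and a trailing root dot
        if name and not name.startswith("#"):    # skip blanks and comment lines
            domains.add(name)
    return domains


def diff_snapshots(previous: Set[str], current: Set[str]) -> Tuple[List[str], List[str]]:
    """Return (newly seen, no longer listed) domains between two snapshots."""
    added = sorted(current - previous)
    removed = sorted(previous - current)
    return added, removed
```

In practice each set would be loaded from a file, for example `load_domains(open(path, encoding="utf-8"))`. For very large zones, holding two full sets in memory may be too costly, and a streaming comparison of sorted files would be a reasonable alternative.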

Product Core Function

· Comprehensive Domain Listing: The core function is providing complete lists of registered domain names. Technical value: This involves complex data collection, parsing, and regular updates. Application: Useful for SEO analysis, brand monitoring, and competitive analysis.

· Daily Zone Updates: The system updates most domain zones daily. Technical value: Ensuring data freshness and accuracy via automated processes. Application: Ideal for detecting new domain registrations, domain squatting, and other real-time trends.

· API Access: Provides an API for programmatic data access. Technical value: Allows integration with other applications and services. Application: Enables developers to build custom tools that interact directly with the domain data, such as security tools, domain name generation tools, and market research platforms.

· Bulk Download: Enables the download of large zone files. Technical value: Efficient access to massive datasets. Application: Useful for offline analysis, large-scale data mining, and building local domain name databases.

Product Usage Case

· Security Monitoring: A security company can use Allzonefiles.io to identify and monitor newly registered domains, looking for potentially malicious websites. So what? It allows quick identification of suspicious domains and prevention of cyberattacks.

· SEO Research: An SEO specialist can use the data to identify expired domains with high authority, aiding in the acquisition of backlinks and boosting search engine rankings. So what? Helps improve website traffic and online visibility.

· Domain Name Generation: A developer could build a tool that uses the data to analyze available domain names based on keyword trends and availability. So what? Gives users a competitive advantage in securing suitable domain names.

· Market Research: Researchers can analyze domain registrations to study the rise of new industries, track market trends, and identify emerging business opportunities. So what? Allows for data-driven decision-making in market entry and expansion strategies.

6

PendingDelete.Domains: AI-Powered Expired Domain Finder

Author

hikerell

Description

This project is a free tool that uses AI to help you discover valuable expired domain names. It analyzes a massive dataset of domains about to expire, sifting through the junk to find those with existing traffic, search engine optimization (SEO) value, or simply appealing names. The core innovation lies in its use of AI to automate the process of identifying valuable domains, saving users hours of manual research. It combines domain history, traffic data, SEO metrics, and AI-driven insights to prioritize potentially valuable expired domains. So, it helps you quickly identify and acquire domains with existing value.

Popularity

Points 5

Comments 5

What is this product?

This tool is essentially a smart domain name scanner. It automatically analyzes a huge list of domain names that are about to expire. The cool part? It doesn't just look at the name; it digs deeper. It uses AI to check their past performance (like how much traffic they got, and if they were popular on search engines). It also looks at SEO data. It is trying to find domains that are still valuable even after they expire. So, this means you can potentially find great domain names for new projects or boost your existing ones.

How to use it?

You can use this tool by simply visiting the website (pendingdelete.domains). The tool is updated daily with a new list of expired domains. You can view this list without needing to log in or pay anything. You can look through the listed domains to spot opportunities that match your needs. This tool is perfect for developers, marketers, or anyone who wants to quickly find domains with some existing online presence and value. You can integrate the found domain names into your projects or use them to improve your SEO.

Product Core Function

· AI-driven Domain Valuation: The core function is to use AI to automatically assess the potential value of an expired domain. It analyzes various metrics like historical traffic, backlinks, and SEO data to predict if a domain still has value. So, you get a quick and intelligent assessment of the domains, saving you from manually analyzing each one.

· Daily Updates of Expired Domain Lists: The tool offers a fresh list of expiring domains every day. This means you always have access to the newest opportunities for potentially valuable domain names. So, you always have the latest data for potential domain acquisitions.

· Combined Data Analysis: The tool combines domain history, traffic metrics, SEO data and other insights to give a comprehensive view of each domain. This allows users to make informed decisions about which domains to pursue. So, you can quickly see a complete picture of a domain's past performance and potential value.

· Free and Accessible: The tool requires no registration or payment. This makes it accessible to anyone who needs to find valuable expired domains. So, it breaks down the barriers to entry and allows everyone to participate in the process.
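
The combined data analysis described above can be pictured as folding several metrics into a single ranking score. The sketch below swaps in a simple hand-weighted heuristic in place of the tool's AI model, whose details are not described here; the metric fields, weights, and function names are all illustrative assumptions.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class DomainMetrics:
    name: str
    monthly_visits: int  # hypothetical historical traffic estimate
    backlinks: int       # hypothetical count of referring links
    age_years: float     # years since first registration


def score(d: DomainMetrics) -> float:
    """Combine metrics into one number; log scaling keeps huge counts from dominating."""
    traffic = math.log10(1 + d.monthly_visits)
    links = math.log10(1 + d.backlinks)
    age = min(d.age_years, 10) / 10                          # capped at 10 years
    label = d.name.split(".")[0]
    brevity = max(0.0, 1.0 - len(label) / 20)                # shorter names score higher
    return 0.4 * traffic + 0.4 * links + 0.1 * age + 0.1 * brevity


def rank(domains: List[DomainMetrics], top: int = 10) -> List[str]:
    """Names of the highest-scoring domains, best first."""
    ordered = sorted(domains, key=score, reverse=True)
    return [d.name for d in ordered[:top]]
```

A learned model could replace `score` without changing `rank`, which is one reason to keep the scoring function separate from the ranking step.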

Product Usage Case

· Startup Projects: A developer wants to create a new tech blog. They can use this tool to find an expired domain with a good name and some existing traffic. They can then re-purpose that domain for the blog, immediately gaining an audience and some SEO benefits. So, the developer can launch a new blog with an established online presence.

· SEO Optimization: A marketing agency wants to boost the SEO for a client's website. They find an expired domain name related to the client’s industry that has a good backlink profile. The agency can then redirect the expired domain to the client's website, boosting the client's search engine ranking. So, the marketing agency significantly improves their client's SEO.

· Domain Flipping: An investor in domain names uses this tool to find expired domains with high potential. They buy these domains and then sell them for a profit. So, the investor can easily identify high-potential domains for the domain flipping business.

· Content Creation: A content creator needs a good domain for their new project, like an online course. They could use this tool to identify an expiring domain name, for example, one that was previously used for a project similar to the planned course. Then, they can acquire and repurpose the domain for their course. So, the content creator can have a relevant domain name with some existing online authority.

7

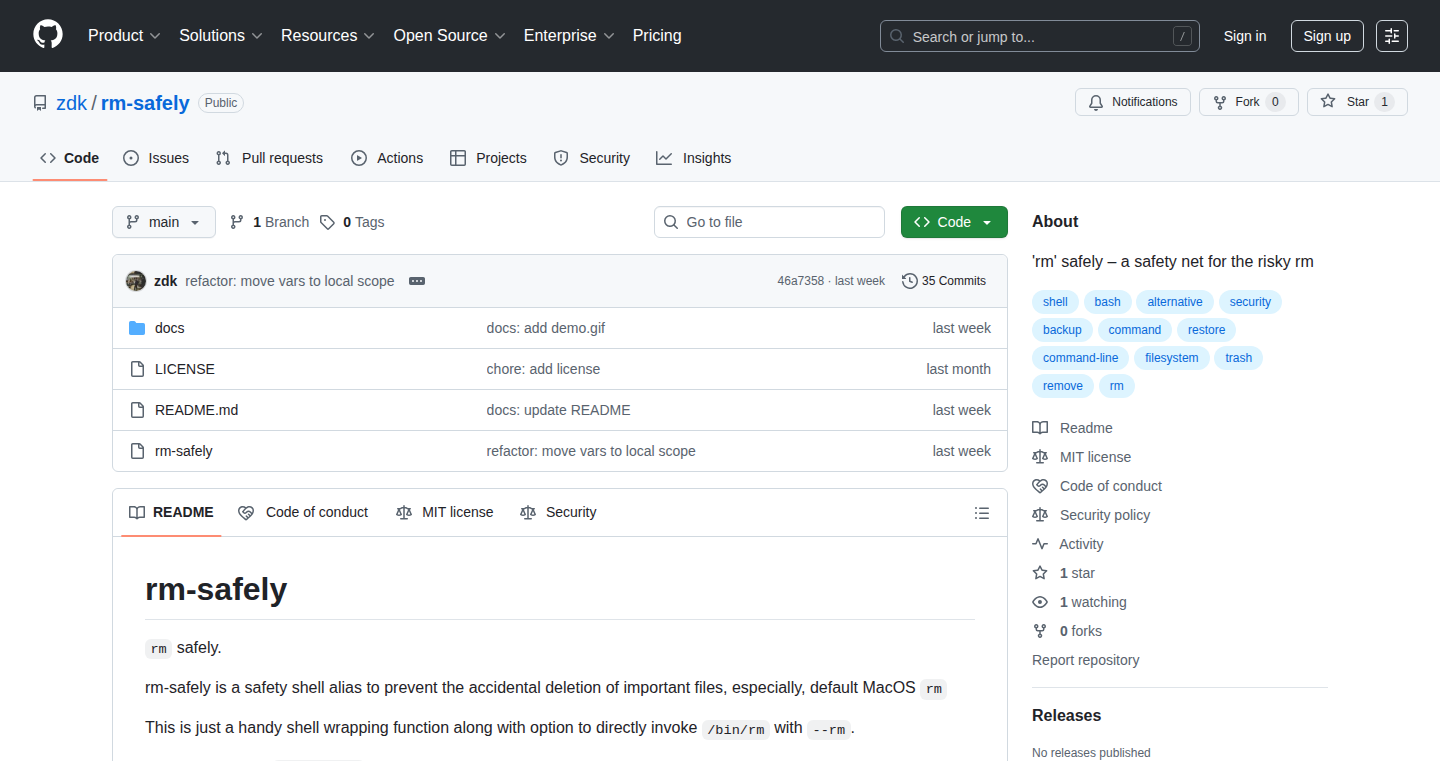

RunAgent: Cross-Language AI Code Reviewer with Seamless Streaming

Author

sawradip

Description

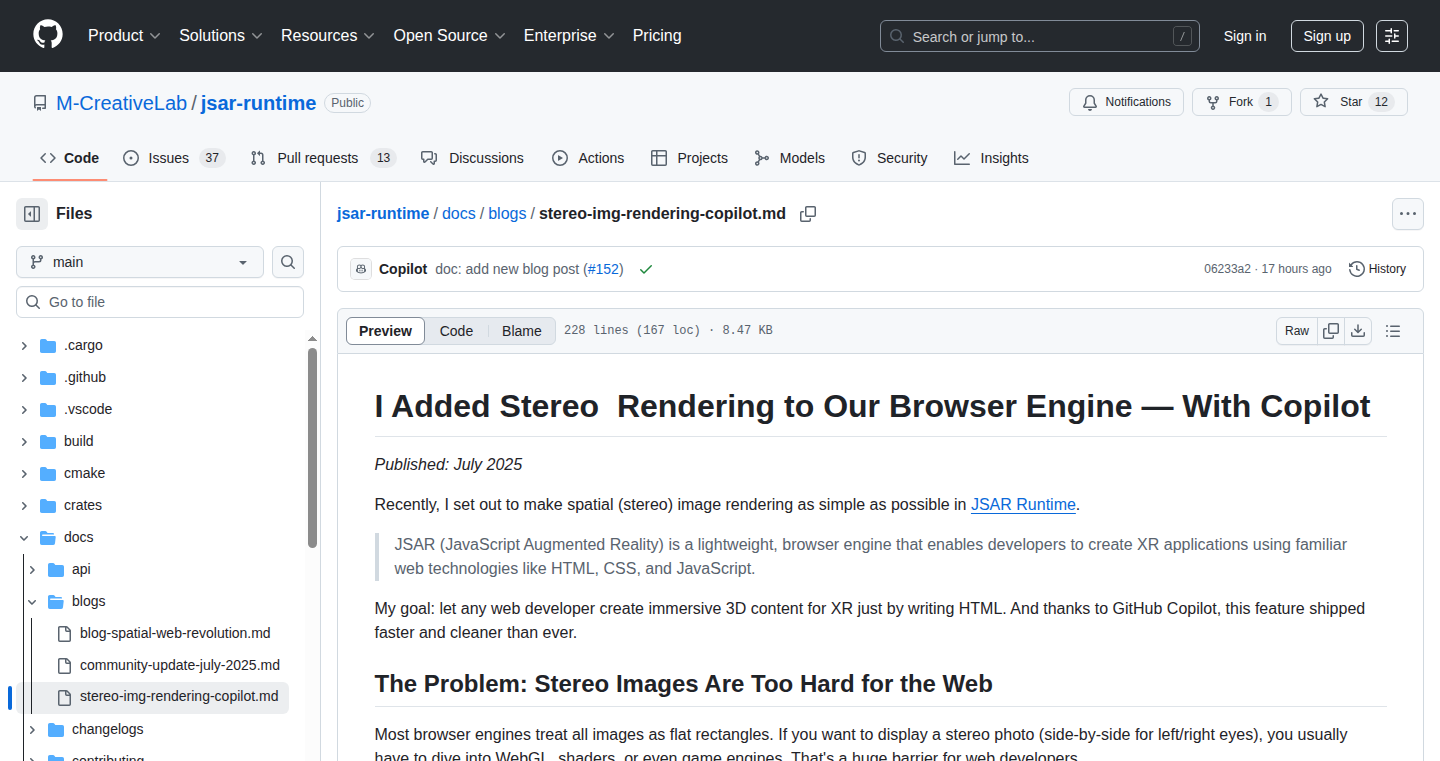

This project showcases an AI-powered code reviewer that cleverly bridges the gap between Rust and Python. It allows developers to call a Python-based AI agent directly from their Rust applications, enabling real-time code reviews with zero hassle. The innovation lies in its ability to achieve seamless, high-performance streaming across these different programming languages without relying on complex technologies like WebSockets or Foreign Function Interface (FFI). This provides a native feeling of calling a Rust library, making it incredibly easy to integrate AI-powered code review into existing Rust projects. It leverages Letta, a Pythonic AI agent framework for agentic memory management, allowing the AI agent to learn and remember coding patterns, providing more intelligent and personalized code reviews. The project focuses on deployment abstraction making cross-language interaction simpler.

Popularity

Points 6

Comments 3

What is this product?

This project builds a code reviewer using an AI agent written in Python and integrated with a Rust application. The core innovation is the RunAgent technology, which allows for native-feeling, real-time streaming between Python and Rust without needing complex bridging mechanisms. This allows the AI agent to stream the code review process, as it happens, directly into the Rust application. It utilizes an AI agent that remembers coding patterns and learns from prior reviews, making code review more efficient and relevant.

How to use it?

Developers can integrate this AI code reviewer into their Rust projects by leveraging the RunAgent framework. By calling the Python AI agent (built using Letta) from their Rust code, they can receive real-time code reviews during development. This involves setting up the agent in Python, then calling it from the Rust application as if it were a native Rust library. Think of it as adding an AI code assistant that can instantly review your code as you type it. This is particularly valuable for codebases that use both Python and Rust, or for anyone looking to integrate AI assistance into their Rust development workflow.

Product Core Function

· Real-time Streaming Code Reviews: The ability to stream code review results in real-time across language boundaries offers instant feedback, accelerating the development process and enabling immediate correction of errors. This is valuable to developers because it provides immediate feedback while coding, saving time and improving code quality.

· Cross-Language Integration: Seamlessly integrating a Python-based AI agent with a Rust application, offering a simplified and efficient way to combine the benefits of different programming languages. This means developers don't need to build a bridge between different language environments and their projects.

· AI-Powered Code Analysis and Learning: The AI agent remembers coding patterns and learns from previous reviews, improving its ability to identify potential issues, suggest improvements, and offer personalized guidance. This is very useful, especially for complex projects.

· Simplified Deployment: The project's focus on deployment abstraction simplifies the setup and usage of the AI code reviewer, reducing the time and effort required to integrate the tool into a developer's workflow.

Product Usage Case

· Automated Code Reviews in Rust Projects: Imagine you're working on a Rust project. Instead of waiting for a code review from another person, the AI agent in Python analyzes your code in real time as you write it. It suggests best practices, highlights potential bugs, and points out areas for improvement. So you instantly have an AI assistant while developing Rust code.

· Cross-Language Development Workflows: A team works with a codebase that involves both Rust for performance-critical components and Python for AI-related tasks. The code reviewer enables smooth integration of AI-driven code analysis in Rust parts, improving the entire workflow.

· Learning and Adapting to Coding Style: The AI agent is trained to understand the specific coding patterns used in a project, allowing for customized code reviews. With each review, the AI learns from the project, ensuring the recommendations become more tailored to the team's coding standards.

8

Kiln: The AI Project Forge

Author

scosman

Description

Kiln is an open-source, local-first toolkit designed as a 'boilerplate' for AI projects, akin to what exists for web apps. It bundles essential components like evaluation systems (including LLM-as-judge), fine-tuning capabilities, synthetic data generation, and model routing, all integrated for seamless AI project development. It runs locally, ensuring data privacy and control, and leverages Git for collaboration. This project addresses the common pain points in AI development by providing a unified, efficient workflow, enabling developers to quickly prototype, experiment, and deploy AI models.

Popularity

Points 8

Comments 1

What is this product?

Kiln is essentially a pre-packaged set of tools designed to speed up the development of AI projects. It's like having a toolbox with all the necessary components already assembled. The core concept is integration – the tools work together, optimizing the workflow. For example, the synthetic data generator knows what kind of data is needed for evaluating the AI model or fine-tuning it, and the evaluation system can automatically test different combinations of AI models and tuning methods. It runs on your computer, meaning your data stays private, and uses Git for collaboration. So this gives you a head start when building AI projects.

How to use it?

Developers use Kiln by cloning the project and using its integrated components. You can integrate it into your own AI projects or use it as a starting point. For instance, you can use the evaluation system to measure the performance of different AI models, the fine-tuning feature to improve the model's performance on specific tasks, and the synthetic data generation to create the data needed for your project. All this can be managed locally through your Git repository, allowing for easy collaboration and version control. So if you're an AI developer, you can immediately start working on your project with the pre-configured tools provided by Kiln.

Product Core Function

· Eval System: This feature lets developers evaluate their AI models using techniques such as LLM-as-judge, eval data generation, and human baselines. It shows how well a model performs and where it falls short. This is useful because it lets developers measure and improve the quality of their AI models.

· Fine-tuning: Kiln provides an interface to fine-tune AI models through providers like Fireworks, Together, OpenAI, and Unsloth. Fine-tuning enhances an AI model's performance on a specific task or dataset. This helps developers adapt pre-trained AI models to their project's unique requirements. So if you're building a specialized AI model, this is a key component.

· Synthetic Data Generation: Kiln integrates a synthetic data generator that can produce data for both evaluations and fine-tuning. This is useful because it can create diverse datasets tailored to the model being developed and the task at hand. So, if you need to create specific training data, this helps speed up the process.

· Model Routing: Kiln includes a model routing feature that allows developers to use different AI model providers, such as Ollama and OpenRouter. This helps you to easily test and compare different models, and choose the best provider. So if you need to test multiple AI models, this will speed up the model selection process.

· Git-Based Collaboration: Kiln supports Git-based collaboration, allowing developers to manage their projects through their own Git servers. This is useful because teams can track changes and keep everything under version control, which is crucial for team projects.

Product Usage Case

· Building a 'natural language to ffmpeg command' demo: Kiln was used to create a demo that translates natural language instructions into ffmpeg commands. This project used the evaluation system, fine-tuning, and synthetic data to develop the AI model. This illustrates how you can use Kiln to turn complex problems into practical solutions, such as converting plain language instructions into executable code. So you can create a specialized tool to automatically convert natural language to executable commands.

· Rapid Prototyping of AI Projects: Kiln can be used to quickly set up and test different AI models for different tasks. For example, in a project to develop a chatbot, you can iterate through various models, then train and evaluate them using Kiln's features. So you can experiment with different AI models, save time, and accelerate the prototyping phase.

9

OpenCodeSpace: YOLO Mode Development Environments

Author

vadepaysa

Description

OpenCodeSpace is a command-line interface (CLI) tool that allows developers to quickly spin up temporary, self-hosted VS Code environments. It leverages Docker for local execution or Fly.io for remote hosting (with AWS and GCE support planned), pre-configured with tools like Claude Code, OpenAI, and Gemini CLI. The core innovation lies in providing a streamlined, disposable environment for rapid prototyping and experimental development, enabling parallel development workflows and eliminating the need to configure complex development setups. This is especially useful for leveraging AI code generation tools like Claude Code without impacting your local machine.

Popularity

Points 8

Comments 0

What is this product?

OpenCodeSpace is a tool to create disposable, isolated development environments based on VS Code. Think of it like creating a temporary workspace on your computer, but in a self-contained package. It uses Docker to run these environments locally or Fly.io for remote hosting, giving you flexibility. It also pre-installs useful AI tools like Claude Code, so you can test and experiment with them right away. So, what's the innovation? It simplifies the process of setting up and tearing down development environments, making it easy to try out new ideas without cluttering your main workspace. So, this is useful because it saves you time and reduces the risk of messing up your main project.

How to use it?

Developers use OpenCodeSpace through the command line. Simply navigate to a project folder and run `opencodespace .`. The tool then either uses Docker (if you have it installed) to run the environment locally, or deploys it remotely using Fly.io. After this, a browser window opens with a VS Code instance ready to use, pre-configured with necessary tools like AI coding assistants. The whole process is designed to be quick and easy. This is useful because it avoids the tedious setup process required by traditional development environments, letting you focus on coding.
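The local-or-remote choice described above can be pictured with a small Python sketch. This is only an illustration of the decision, not OpenCodeSpace's actual logic: `choose_backend` and its `prefer_remote` flag are hypothetical names, and the only real call is the standard library's `shutil.which`.

```python
import shutil


def choose_backend(docker_available: bool, prefer_remote: bool = False) -> str:
    """Pick where to run the environment: local Docker or remote Fly.io."""
    if docker_available and not prefer_remote:
        return "docker"
    return "fly"


# shutil.which returns the executable's path, or None when it is not on PATH.
docker_available = shutil.which("docker") is not None
print(f"Would launch the environment with: {choose_backend(docker_available)}")
```

Keeping the decision in a pure function makes it easy to test without Docker installed.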

Product Core Function

· One-command environment creation: The core functionality is the ability to create a new, isolated VS Code environment with a single command (`opencodespace .`). This simplifies the process of setting up development environments. So this is useful because it reduces the setup time for new projects or experimental work.

· Local or Remote Execution: Developers can choose to run their environments locally using Docker or remotely on Fly.io, giving them flexibility in terms of resource usage and accessibility. So this is useful because it allows you to use environments with more power than your local machine and use them from anywhere.

· Pre-configured AI tools: The environments come pre-installed with AI coding assistants like Claude Code, OpenAI, and Gemini CLI. This means developers can immediately start using these tools without needing to configure them. So this is useful because it makes it easy to explore AI-assisted coding.

· Disposable Environments: These are meant to be throwaway, meaning developers can quickly spin up temporary sessions for testing, experimenting, and trying out new things without the need to maintain them long-term. So this is useful because it allows for rapid prototyping and reduces the risk of affecting your main codebase.

· YOLO Mode: The tool is designed for 'YOLO mode development', emphasizing the use of temporary and parallel sessions. This allows developers to experiment quickly without considering the impact on their local environment. So this is useful because it provides a sandbox for reckless experimentation.

Product Usage Case

· Quick Prototyping: A developer wants to try a new coding framework. They use `opencodespace .` to instantly create a new environment, write some code, and see how it works without needing to install anything on their main computer. So this is useful because it accelerates the prototyping phase without polluting the primary development environment.

· Parallel Development with AI tools: A developer wants to use Claude Code to help with debugging a project. They can use `opencodespace .` to spin up a new environment where Claude Code is already set up. At the same time, they work on other parts of the project in their main VS Code instance. This is useful because it improves productivity by allowing parallel operation of coding assistant tools.

· Testing Experimental Code: A developer is working on a risky feature. They can use `opencodespace .` to create an isolated environment to implement and test it without the risk of destabilizing the main project. So this is useful because it provides a safety net for experimentation.

10

Whisper-Optimized: Edge Inference with Custom Kernels

Author

coolhanhim

Description

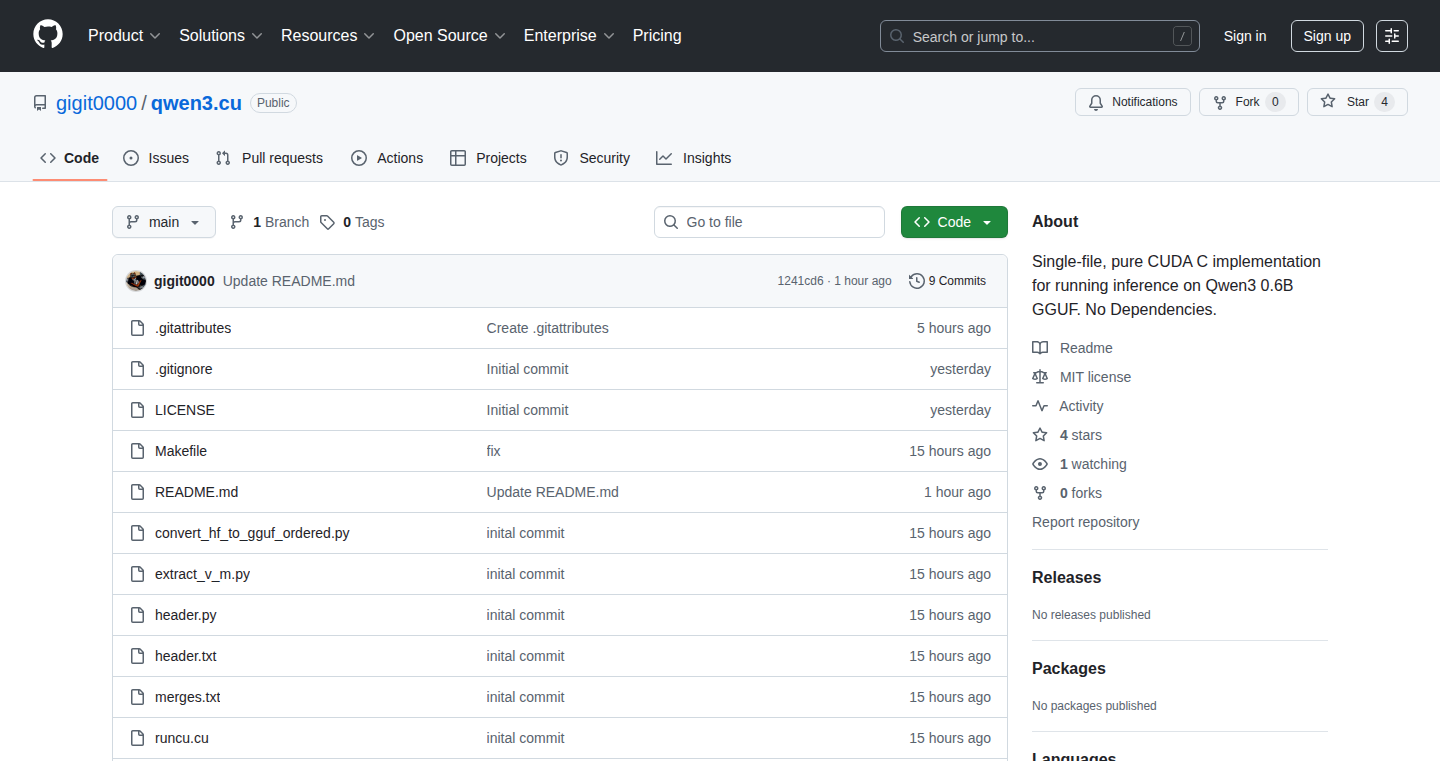

This project focuses on running the Whisper speech recognition model on resource-constrained devices (like your phone or a Raspberry Pi) by using custom kernels. The innovation lies in optimizing the model's core computations (matrix multiplications, convolutions) to work with far less data per value, specifically 1.58 bits, which makes edge inference practical. This drastically reduces the computational load, enabling real-time speech-to-text transcription even on devices with limited processing power and battery life. This is a huge deal for privacy and offline functionality. It solves the problem of needing a powerful server or internet connection to transcribe audio.

Popularity

Points 6

Comments 2

What is this product?

Whisper-Optimized uses a technique called quantization to compress the Whisper model. Instead of representing numbers with the usual high precision, it uses a much smaller set of values (only 1.58 bits per value in this case, a figure that usually means each value is one of three options, since log2(3) ≈ 1.58). Then, it rewrites the code that runs the model using custom kernels, which are optimized for the hardware it runs on. This means the model can run much faster and use less power. So this means you can now run speech recognition on devices that previously couldn't handle it.
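As a rough illustration of what 1.58-bit quantization can look like, the sketch below maps weights to the three values -1, 0, and +1 using a single scale factor. It assumes an absmean-style scheme, which is one common way to do ternary quantization; the project's real kernels and quantization recipe are not described here, and the function names are made up for this example.

```python
import math
from typing import List, Tuple


def quantize_ternary(weights: List[float]) -> Tuple[List[int], float]:
    """Map each weight to -1, 0, or +1 using one absmean scale for the tensor."""
    scale = sum(abs(w) for w in weights) / len(weights) or 1.0
    quantized = [max(-1, min(1, round(w / scale))) for w in weights]
    return quantized, scale


def ternary_dot(quantized: List[int], scale: float, inputs: List[float]) -> float:
    """Dot product that only adds or subtracts inputs, then rescales once."""
    total = 0.0
    for q, x in zip(quantized, inputs):
        if q == 1:
            total += x
        elif q == -1:
            total -= x
    return total * scale


weights = [0.9, -0.05, -1.1, 0.4]
quantized, scale = quantize_ternary(weights)
print(quantized, round(scale, 4))
print(f"bits per ternary value: {math.log2(3):.2f}")
print(ternary_dot(quantized, scale, [1.0, 2.0, 3.0, 4.0]))
```

Because every quantized weight is -1, 0, or +1, the inner loop needs no multiplications, which is the property a custom kernel on low-power hardware would try to exploit.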

How to use it?

Developers can integrate this optimized Whisper model into their applications. This involves using the project's provided code (or adapting it) to load the quantized model and then running the custom kernels on the target device. Think of it as a plug-and-play solution. A developer would load the model into the application, provide the audio input, and receive the transcribed text as output. This is especially useful for applications requiring offline speech-to-text capabilities, voice control, or real-time transcription on low-power devices. So, for a developer, this simplifies the implementation of real-time transcription into their applications.

Product Core Function

· Model Quantization: Reducing the precision of the model's parameters (from higher-bit floating point to 1.58 bits), significantly decreasing the memory footprint and computational requirements. This makes the model much smaller and faster to run, so you can run it on your phone.

· Custom Kernel Implementation: Replacing the standard matrix operations and convolutional operations within the model with specialized code tailored for specific hardware architectures. This allows the model to take advantage of the unique features of the hardware, leading to substantial performance gains. By optimizing the 'engine' of the model, it can run much more efficiently.

· Edge Inference: Running the speech recognition model directly on a local device (e.g., a smartphone, embedded system). This means no need for an internet connection or cloud servers. This offers improved privacy, lower latency (faster results), and the ability to function offline. This is great because you can use it anywhere without a connection!

· Low-Bit Representation: Utilizing low-bit representations (1.58 bits) for model weights and activations. This is the heart of memory and computation reduction. Low-bit representation cuts memory and power needs, making real-time speech-to-text possible even on very basic hardware. This extends the utility of the technology to almost any device.

Product Usage Case

· Offline Voice Assistants: Create a voice assistant that can operate on a smartphone without an internet connection. Process voice commands and provide responses locally, protecting the user's privacy. So, your voice commands won't have to leave your phone.

· Real-Time Transcription Apps: Develop apps that transcribe lectures, meetings, or interviews in real-time on a tablet or laptop. The application will not need a powerful device or fast connection. Thus, you can instantly take notes or create transcripts without uploading audio to a server.

· Embedded Speech Control: Build voice-controlled interfaces for smart home devices or other embedded systems. These systems can be tiny and draw very little power while still handling tasks such as controlling devices around your house. Therefore, users can control devices through voice commands without needing a constant internet connection or a powerful computer. This is also great for energy conservation.

11

StoryAtlas: A Spatial-Temporal Story Explorer

Author

ebrizzzz

Description

StoryAtlas is an interactive map that visualizes a vast collection of games, books, and movies, indexed by both their narrative timelines and geographical locations. It allows users to explore stories based on where and when they occur, addressing the challenge of discovering content relevant to specific time periods or places. The innovation lies in its ability to spatially and temporally correlate disparate media, offering a unique and intuitive way to navigate cultural narratives.

Popularity

Points 7

Comments 1

What is this product?

StoryAtlas is a digital map that plots the events of games, books, and movies. Imagine a visual timeline and a world map combined. The map allows you to see stories based on where and when they take place. It uses clever techniques to connect the timeline of a story with its geographical setting. It's like having a librarian who can instantly show you all the stories happening in Paris in the 18th century, or a game set in ancient Rome. The innovation here is not just listing these stories, but providing an interactive and visual way to explore them, which is useful for research or simple discovery.
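One way to picture the "where and when" lookup is a filter over records that carry both coordinates and a year range. The sketch below is an assumption about how such a query could work, not StoryAtlas's data model: the `Story` fields, the `stories_in` helper, and the sample entries are all invented placeholders.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Story:
    title: str
    medium: str  # "book", "game", or "movie"
    lat: float
    lon: float
    start_year: int
    end_year: int


def stories_in(
    stories: List[Story],
    bbox: Tuple[float, float, float, float],
    years: Tuple[int, int],
) -> List[Story]:
    """Return stories inside the bounding box whose time span overlaps the years."""
    south, west, north, east = bbox
    first, last = years
    return [
        s
        for s in stories
        if south <= s.lat <= north
        and west <= s.lon <= east
        and s.start_year <= last
        and s.end_year >= first
    ]


# Placeholder entries, not real catalogue data.
catalogue = [
    Story("Example Paris Novel", "book", 48.86, 2.35, 1775, 1793),
    Story("Example Rome Game", "game", 41.90, 12.50, -50, -44),
    Story("Example Paris Film", "movie", 48.86, 2.35, 1940, 1944),
]

paris_box = (48.0, 1.5, 49.5, 3.0)  # south, west, north, east
matches = stories_in(catalogue, paris_box, (1700, 1799))
print([s.title for s in matches])
```

The time test is an interval overlap rather than a containment check, so a story that only partly falls within the requested century still shows up.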

How to use it?

Developers can use StoryAtlas as a data source to build new applications. You could integrate the map’s API (Application Programming Interface) to create educational tools or to enhance content recommendations. Imagine a website where you can view historical novels related to the city you are currently in, based on location data. Developers could also adapt the visualization components of StoryAtlas directly into their own projects. This allows for a new kind of search and discovery engine for media.

Product Core Function

· Spatial Visualization: Plotting stories on a world map, allowing users to visualize the geographic setting of different narratives. This is useful because it reveals the geographical context of the stories. So what? It enables users to explore media based on location, discover common themes across stories set in the same place, and gain a new understanding of how places shape narratives.

· Temporal Visualization: Representing narratives along a timeline to highlight the chronological sequence of events. This is useful because a visual timeline makes historical context easier to understand. So what? This helps users discover stories that take place during specific historical periods, compare events across different narratives, and understand the evolution of storytelling across time.

· Data Integration: Connecting data from various sources (games, books, movies) to create a unified map view. This is useful for combining different content types and offering a broader range of content. So what? It allows users to explore a rich and diverse set of media, discover hidden connections between seemingly unrelated stories, and find new content.

· Interactive Exploration: Offering tools to filter, search, and navigate the map. This is useful for creating an intuitive interface. So what? It makes it easy for users to find stories of interest based on location, time, or keywords. It also offers a much more engaging way of exploration.

Product Usage Case

· Educational Application: A history teacher could use StoryAtlas to show students the locations of historical events featured in books and movies. This is especially useful in bringing history lessons to life. So what? It provides students with a more interactive and memorable learning experience, enabling them to connect the past with the present.

· Content Recommendation Engine: A streaming service could use the map to recommend movies and TV shows based on the user's location or current events. This helps provide personalized content to users. So what? Users can discover relevant media they might not have found otherwise, leading to greater satisfaction with the streaming platform.

· Game Development Tool: A game developer could use StoryAtlas to research historical settings and narratives for their game. This can provide a deeper understanding of cultural context and historical information. So what? It helps developers create more authentic and engaging game worlds, leading to enhanced user experience and critical acclaim.

12

AI-Powered Scheduling Agent: Automated Meeting Scheduling from Natural Language

Author

Riphyak

Description

This project introduces an AI agent that intelligently schedules meetings directly from natural language conversations in emails (like Gmail) or chat platforms (like Slack). The core innovation lies in its ability to understand the context of meeting requests, identify availability links (Calendly, cal.com, etc.), and compare availabilities to find the best time for everyone. It then automatically drafts email responses and reschedules meetings, saving users time and effort. So this automates the tedious back-and-forth of scheduling, which means less time wasted on logistics and more time focusing on the actual meeting.

Popularity

Points 6

Comments 2

What is this product?

This AI agent leverages Natural Language Processing (NLP) and Machine Learning (ML) to understand the meaning behind meeting requests. It identifies scheduling links, fetches available times from both parties, and finds the optimal time slot. It essentially acts as a smart assistant that manages the entire scheduling process, freeing up your time. So, it's like having a personal assistant that handles all your meeting arrangements automatically.
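The "find the optimal time slot" step boils down to intersecting two people's free intervals. Here is a minimal sketch of that step in Python, assuming the availability has already been fetched and sorted; `common_slots` is a hypothetical helper, and the project's own matching logic may differ.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

Interval = Tuple[datetime, datetime]  # (start, end) of a free period


def common_slots(a: List[Interval], b: List[Interval], min_length: timedelta) -> List[Interval]:
    """Intersect two sorted lists of free intervals, keeping overlaps that are long enough."""
    i = j = 0
    overlaps = []
    while i < len(a) and j < len(b):
        start = max(a[i][0], b[j][0])
        end = min(a[i][1], b[j][1])
        if end - start >= min_length:
            overlaps.append((start, end))
        # Advance whichever interval finishes first; it cannot overlap anything later.
        if a[i][1] < b[j][1]:
            i += 1
        else:
            j += 1
    return overlaps


def at(hour: int) -> datetime:
    """Build a timestamp on an arbitrary example day."""
    return datetime(2025, 7, 28, hour)


alice = [(at(9), at(11)), (at(14), at(16))]
bob = [(at(10), at(12)), (at(15), at(17))]

slots = common_slots(alice, bob, timedelta(minutes=30))
for start, end in slots:
    print(start.strftime("%H:%M"), "to", end.strftime("%H:%M"))
```

Taking the first returned slot gives the earliest mutually free time; ranking by other preferences (time of day, buffer between meetings) would be a separate step on top.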

How to use it?

Developers can use this project by integrating its API into their existing communication tools or creating a standalone application. The integration would involve feeding the AI agent the relevant conversations (emails or chat logs) and providing it with access to scheduling links. Developers can then customize the agent's behavior and integrate it with their preferred calendar systems. So, you could plug this into your existing workflow to automatically manage your meeting schedules.

Product Core Function

· Natural Language Understanding (NLU) for Context Extraction: The agent analyzes meeting requests from emails and chats. This understanding of human language is critical for recognizing requests for meetings, understanding the preferences of the people involved, and determining the context. So, this ensures the AI agent accurately interprets and responds to meeting requests.

· Availability Link Detection and Processing: The agent can identify availability links (like Calendly) in the conversation and use them to retrieve the available times. So, this ensures the agent can automatically access and use people's calendars to find the best time.

· Availability Comparison and Optimization: It compares the availability of all participants to find the best time that works for everyone. So, this automates the tedious task of manually comparing calendars and figuring out the best meeting time.

· Automated Email Drafting and Sending: The agent drafts and sends email responses, proposing meeting times and rescheduling meetings when necessary. So, this removes the need for manual email back-and-forth, saving time and effort.

· Calendar Integration: Integrates with calendar services (e.g., Google Calendar, Outlook Calendar) to book and update meeting events automatically. So, the scheduling is seamlessly integrated into your existing calendar system.

Product Usage Case

· Sales Team Automation: A sales team uses the agent to automatically schedule demos with potential clients directly from email exchanges. The agent handles the scheduling process, allowing the sales team to focus on building relationships and closing deals. So, this helps salespeople close deals faster because they are spending less time organizing meetings.

· Project Management Optimization: Project managers use the agent to schedule meetings between team members and stakeholders, ensuring everyone is on the same page and informed about project updates. The agent finds the optimal time that works for everyone. So, this helps project managers make better use of their time by not having to manually book meetings.

· Personal Productivity Enhancement: Individuals use the agent to manage their personal and professional schedules, automatically arranging meetings with colleagues, friends, and family. The agent streamlines the scheduling process, freeing up valuable time. So, you can reclaim hours spent scheduling meetings every week.

13

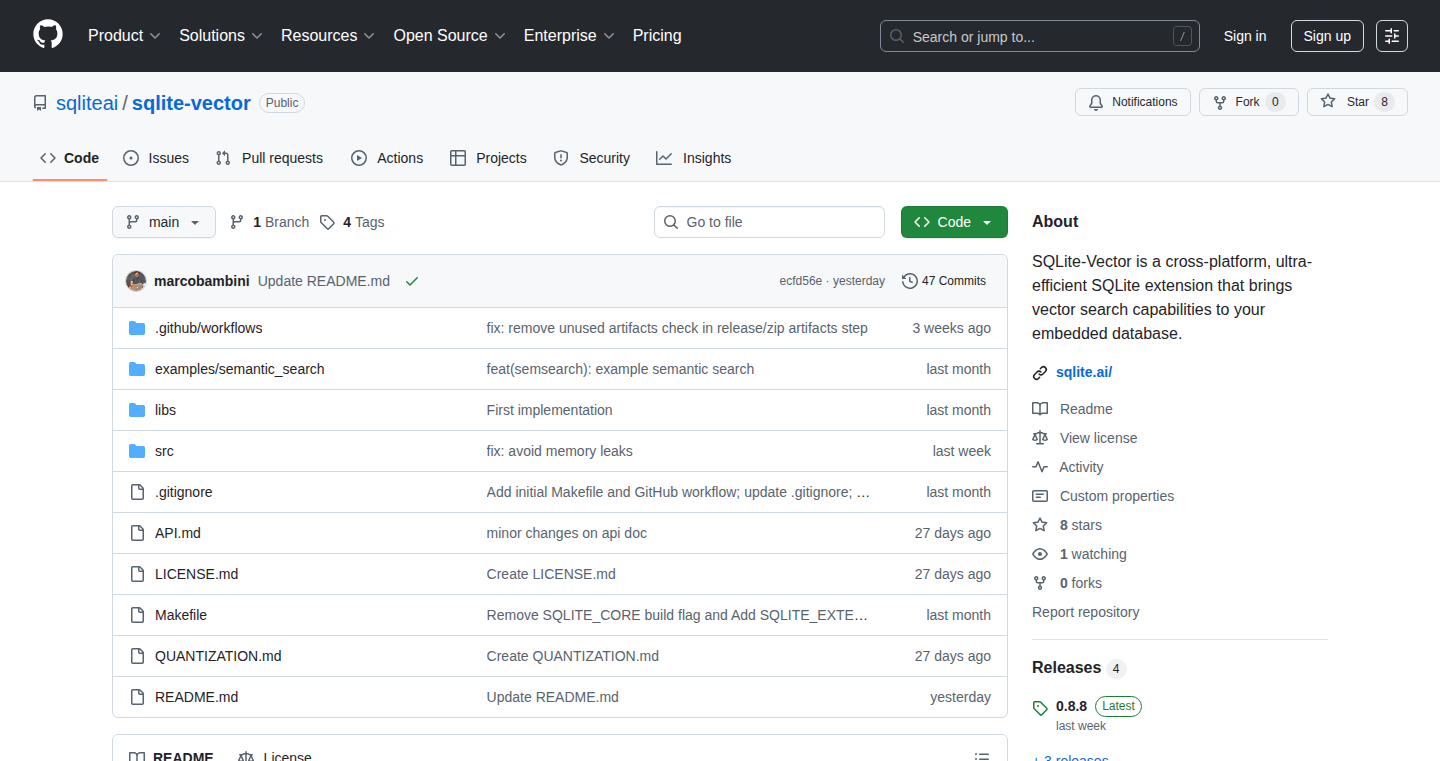

SQLite-vector: Lightweight Vector Search for SQLite

Author

marcobambini

Description

This project introduces a vector search extension for SQLite. It allows you to perform similarity searches on vector data directly within your SQLite database, without needing external index structures or excessive memory usage. The key innovation lies in its efficient implementation that keeps the memory footprint to just 30MB, making it suitable for resource-constrained environments. So, this allows you to do advanced search operations on your data, like finding similar text or images, directly in your database.

Popularity

Points 5

Comments 2

What is this product?

SQLite-vector extends SQLite with the ability to perform vector similarity searches. It achieves this without the need for separate index files, instead leveraging optimized algorithms within SQLite itself. This means you can directly query your data, like finding the most similar pieces of text, using vectors. The project optimizes for low memory usage, allowing for use on smaller machines or within mobile applications. So, this is like giving your SQLite database superpowers to understand and find things that are similar to each other.

How to use it?

Developers integrate this extension by loading it into their SQLite environment. Once loaded, they can then use SQL functions to calculate vector similarities (e.g., cosine similarity) and query for the nearest neighbors of a given vector. You can use this with any existing SQLite setup. Think of scenarios where you need to find similar documents, products, or even recommendations based on feature vectors extracted from your data. So, you can take your existing SQLite database and make it smarter, allowing for richer search and analysis.
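To show the general shape of a similarity query in SQL, the sketch below registers a cosine-similarity function with Python's built-in `sqlite3` module and runs a brute-force nearest-neighbor query. It does not use SQLite-vector itself: the `cosine_sim` function name, the JSON storage format, and the table layout are assumptions made for illustration, and the extension's own functions and storage may look quite different.

```python
import json
import math
import sqlite3


def cosine_similarity(a_json: str, b_json: str) -> float:
    """Cosine similarity between two vectors stored as JSON arrays."""
    a, b = json.loads(a_json), json.loads(b_json)
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


conn = sqlite3.connect(":memory:")
# Expose the Python function to SQL under a hypothetical name.
conn.create_function("cosine_sim", 2, cosine_similarity)

conn.execute("CREATE TABLE items (name TEXT, embedding TEXT)")
conn.executemany(
    "INSERT INTO items VALUES (?, ?)",
    [
        ("a", json.dumps([1.0, 0.1])),
        ("b", json.dumps([0.0, 1.0])),
        ("c", json.dumps([0.7, 0.7])),
    ],
)

query = json.dumps([1.0, 0.0])
rows = conn.execute(
    "SELECT name FROM items ORDER BY cosine_sim(embedding, ?) DESC LIMIT 2",
    (query,),
).fetchall()
print([name for (name,) in rows])
```

With a compiled extension, the usual route in Python is `conn.enable_load_extension(True)` followed by `conn.load_extension(...)` with the path to the library, after which the extension's SQL functions replace the hand-written one above.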

Product Core Function

· Vector Similarity Calculation: SQLite-vector provides SQL functions to calculate the similarity between vectors. This includes implementations of cosine similarity, Euclidean distance, and potentially others. This is valuable because it allows you to compare data points based on their vector representations. So, you can find data that's close to a given vector, which is useful for things like content recommendation.

· Nearest Neighbor Search: The extension enables you to efficiently search for the k-nearest neighbors of a given vector within your database. This means you can quickly find the data points most similar to a query vector. This is useful for implementing search features. So, you can quickly find data that's most similar to a given query.

· Lightweight Footprint: The project's design focuses on keeping memory usage low (around 30MB). This makes it suitable for resource-constrained environments. This is valuable because it allows developers to use vector search capabilities on devices with limited resources. So, you can use advanced search capabilities even on your phone or embedded systems.

Product Usage Case

· Content-Based Recommendation: Imagine an e-commerce site using SQLite. You could store product descriptions as vectors and use SQLite-vector to quickly find products similar to the one a user is currently viewing. This can improve customer engagement and increase sales. So, you can build product recommendation features directly in your database, without needing a separate recommendation engine.

· Document Similarity Analysis: You can use SQLite-vector to compare documents based on their content vectors. This is useful for detecting plagiarism, finding similar articles, or building a search engine for a collection of documents. So, you can analyze document similarity and build powerful search features within your SQLite environment.

· Image Similarity Search: While the examples above use text, the technology can be adapted to image data. You can extract feature vectors from images and use SQLite-vector to find visually similar images. This would be valuable for image search applications. So, you can search and organize images based on their visual content.

15

RipeKiwis: Guided Self Inquiry Tool

Author

zenape

Description

RipeKiwis is a web-based tool designed to guide users through self-inquiry, a meditation technique aimed at understanding one's true self. It leverages a simple, intuitive interface to facilitate a process of questioning and reflection, inspired by the teachings of Ramana Maharshi. The innovation lies in its accessibility: it demystifies a complex spiritual practice and makes it available via a user-friendly digital platform. So this helps people easily start and practice self-inquiry meditation.

Popularity

Points 2

Comments 3

What is this product?

This project offers a digital interface for self-inquiry. The core idea is to provide a structured way to explore the 'self' through guided questions and prompts. It simplifies the process of self-inquiry, making it easier for beginners to engage with the technique. So this means you get a structured way to examine your thoughts and beliefs, potentially leading to a better understanding of yourself.

How to use it?

Users can access RipeKiwis through a web browser. They will be presented with a series of prompts and questions designed to encourage introspection. Users can type their responses, reflect on their thoughts, and follow the prompts as a guide. This tool can be used as a part of a daily meditation practice or as a means of exploring difficult emotions or situations. So this helps you to connect with your inner self in an accessible and structured manner, anytime, anywhere.

Product Core Function

· Guided Questioning: The tool provides a series of carefully crafted questions that prompt users to reflect on their thoughts, feelings, and beliefs. This allows you to delve deeper into your own consciousness.

· Journaling Interface: Users can type their responses to the questions, effectively creating a personal journal or record of their self-inquiry journey. This can be used to track progress and insights.

· User-Friendly Design: The simple interface is designed to be easy to navigate and use, making it accessible to people with various levels of tech skills.

· Web-Based Accessibility: Because the tool is web-based, it can be accessed on any device with a web browser, allowing for practice from anywhere. This provides convenience and consistency in your practice.

Product Usage Case

· Meditation Practice Enhancement: A user struggling with daily meditation can use RipeKiwis as a structured method to focus their mind during meditation sessions. So this can provide a framework for deeper contemplation.

· Emotional Processing: Someone facing a difficult life event can use the tool to reflect on their feelings, potentially helping them to process emotions and gain self-awareness. So this can be used for emotional exploration and self-discovery.

· Mindfulness Training: Individuals interested in practicing mindfulness can use the guided prompts to stay present and observe their thoughts without judgment. So this helps to develop mindfulness and self-regulation.

· Self-Reflection: A person looking for greater self-awareness can use the tool to explore personal values, beliefs, and experiences. So this leads to a better understanding of yourself.

16

AI Equalizer: Shaping AI Personality with Attribute Sliders

Author

FicPeter

Description

AI Equalizer is a conceptual interface allowing users to define and 'lock in' personality traits for their AI assistant, like empathy, rationality, and directness. The key innovation lies in providing fixed attributes for an extended period, moving away from AI chatbots that change too readily based on user input. It explores how shaping an AI's character can build trust and encourage user growth, much like a consistent friend. So, this is about creating AI that helps you grow, rather than simply catering to your every whim.

Popularity

Points 2

Comments 2

What is this product?

AI Equalizer works by offering adjustable sliders, much like an audio equalizer, but instead of sound frequencies, it controls AI personality traits. You set levels for qualities like empathy (e.g., 40%), strictness (e.g., 70%), and others, and these settings remain fixed for a set duration, like a week. This approach allows the AI to develop a consistent 'character,' offering a more stable and trustworthy interaction, unlike AI that adjusts too easily. The core idea is to build an AI that offers a more reliable and less reactive experience. So, you get an AI that becomes a more stable companion.
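Since the app is only a concept so far, the following is purely a sketch of how locked sliders could be modeled. Every name in it (`PersonalityProfile`, `PersonalityLocked`, `set_traits`, `system_prompt`) is hypothetical, and turning the trait levels into a prompt string is just one possible way to apply them.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Dict, Optional


class PersonalityLocked(Exception):
    """Raised when traits are changed before the lock period has ended."""


@dataclass
class PersonalityProfile:
    lock_days: int = 7
    traits: Dict[str, int] = field(default_factory=dict)
    locked_until: Optional[datetime] = None

    def set_traits(self, new_traits: Dict[str, int], now: datetime) -> None:
        """Apply new slider values (0 to 100) and start a fresh lock period."""
        if self.locked_until is not None and now < self.locked_until:
            raise PersonalityLocked(f"Traits are fixed until {self.locked_until:%Y-%m-%d}")
        for name, value in new_traits.items():
            if not 0 <= value <= 100:
                raise ValueError(f"{name} must be between 0 and 100")
        self.traits = dict(new_traits)
        self.locked_until = now + timedelta(days=self.lock_days)

    def system_prompt(self) -> str:
        """Render the fixed trait levels as an instruction for the assistant."""
        parts = [f"{name} {value}%" for name, value in sorted(self.traits.items())]
        return "Respond with these fixed trait levels: " + ", ".join(parts) + "."


day_zero = datetime(2025, 7, 28)
profile = PersonalityProfile()
profile.set_traits({"empathy": 40, "strictness": 70}, now=day_zero)
print(profile.system_prompt())

try:
    profile.set_traits({"empathy": 90}, now=day_zero + timedelta(days=3))
except PersonalityLocked as error:
    print("Rejected:", error)
```

Passing `now` in explicitly keeps the lock logic easy to test and makes the "fixed for a week" rule visible in one place.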

How to use it?

While the app isn't public yet, the concept involves a user interface where you control sliders to set your AI's personality levels. Think of it as tuning a friend's personality. For example, if you desire an AI assistant that provides thoughtful feedback, you could crank up 'empathy' and 'depth' sliders. Or, if you are looking for direct and to-the-point answers, you would boost the 'directness' parameter. This approach is applicable in various settings, such as developing a personal AI assistant, building an AI tutor, or even for AI-driven customer support systems where consistency is crucial. So, you could have a reliable AI friend, teacher, or assistant.

Product Core Function

· Personality Sliders: Users define the AI's personality by adjusting sliders for attributes like empathy, rationality, and directness. This allows for granular control over the AI's behavior. So, you can tailor an AI to your exact needs.

· Fixed Attribute Duration: The defined personality settings are locked in for a specified period (e.g., 7 days), ensuring consistent behavior and promoting long-term trust. So, you won’t get a different AI every day.

· Character-Building Focus: Unlike AI that constantly adapts, AI Equalizer aims to help users build a more stable and trustworthy relationship with AI. It's like having a friend with stable values. So, you can build trust with your AI companion.

· Conceptual Interface: The project currently focuses on the conceptual framework and visualization, exploring the potential of shaping AI personality. So, it's a vision of how things could be, offering a new perspective on AI interaction.

Product Usage Case

· Personal AI Assistant: Use AI Equalizer to craft a virtual assistant with specific traits, such as a highly empathetic assistant for emotional support or a brutally honest assistant for unfiltered feedback. So, you can customize your AI friend.

· Educational Tools: Develop AI tutors with consistent teaching styles and personalities, which could improve learning outcomes and build rapport with students. So, your AI teacher will be reliable.

· Customer Service: Implement AI-powered customer support systems with fixed personalities, ensuring a predictable and trustworthy experience for users. So, your customer service AI is reliable.

· Mental Health Support: Utilize AI to provide mental health support with consistent, pre-defined levels of empathy and understanding. So, you can get consistent and reliable mental health support.

17

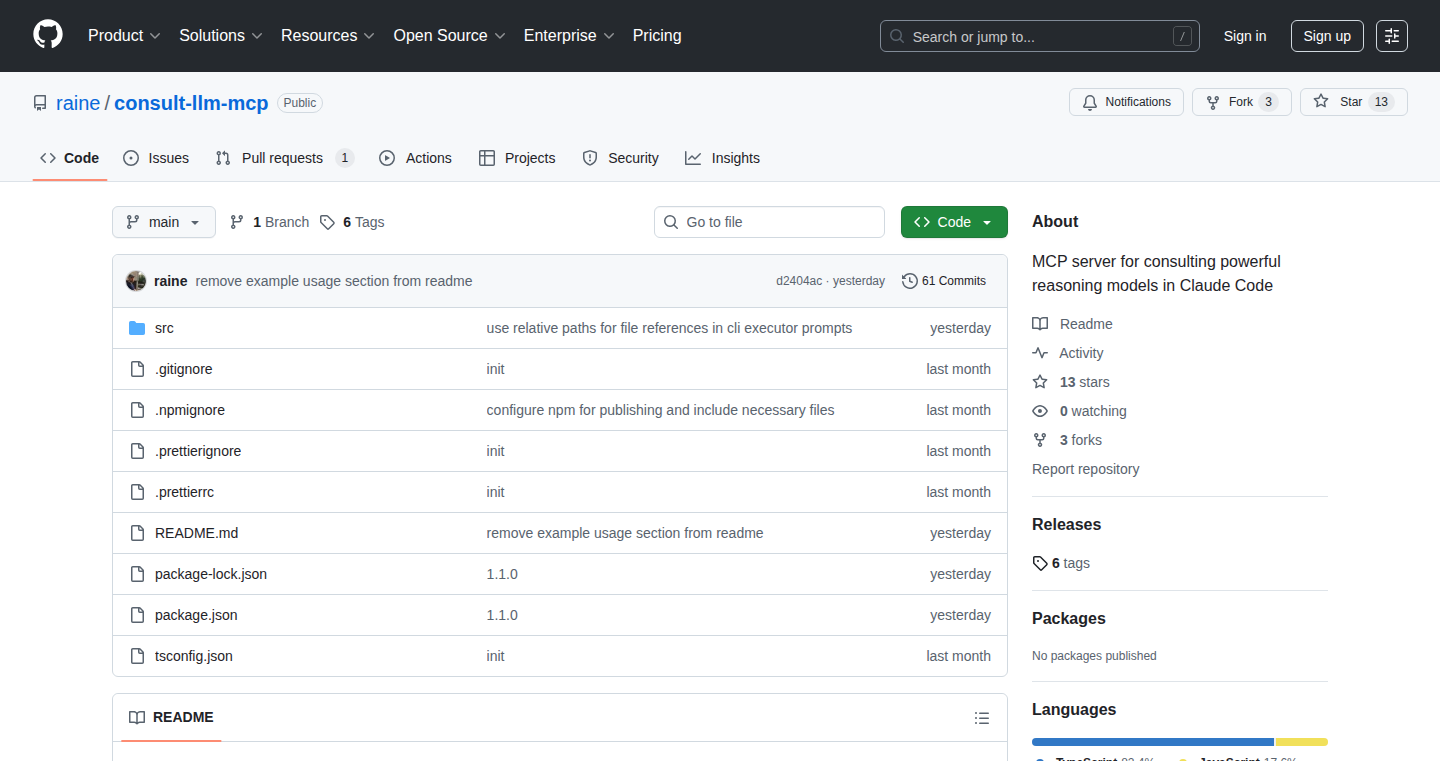

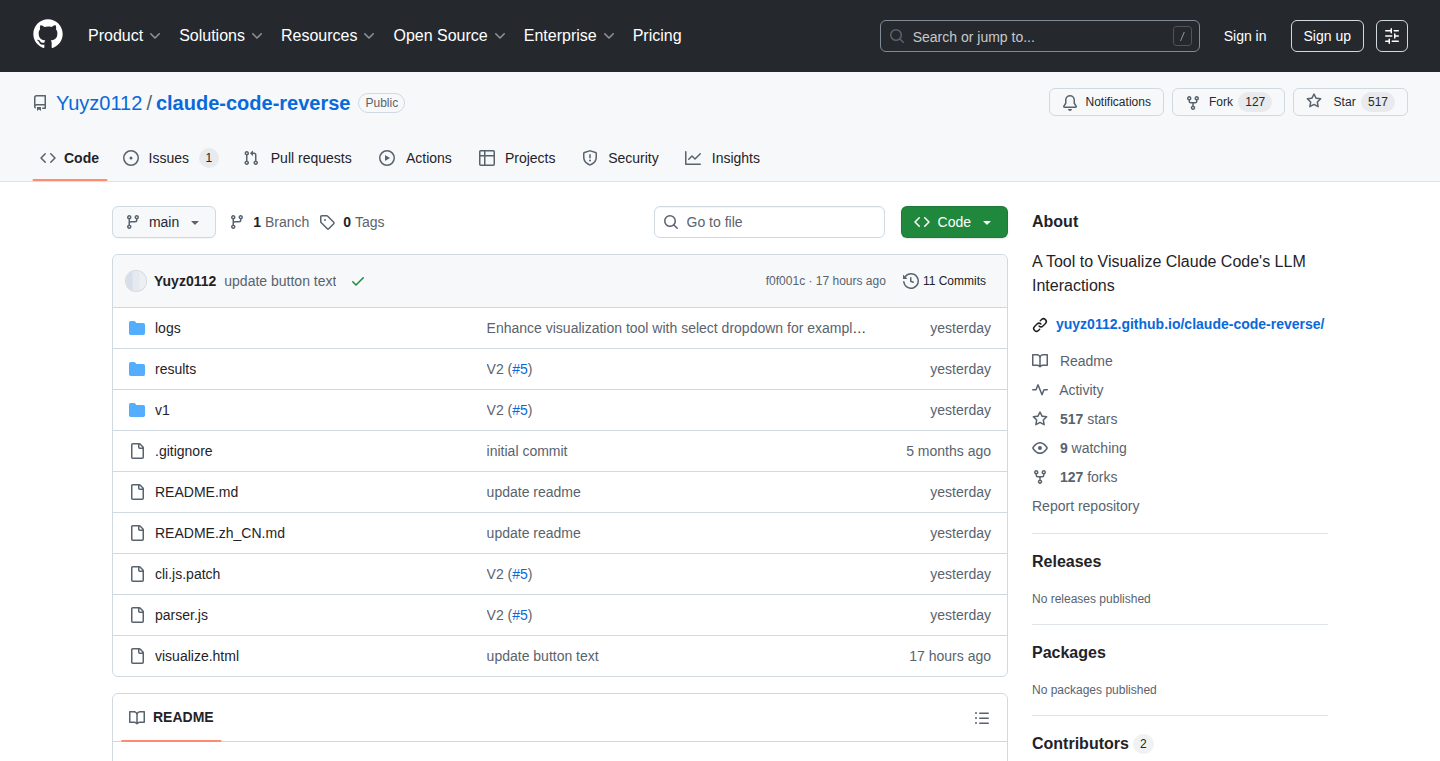

Claude Code Collaborator: Multi-LLM Consultation Server

Author

rane

Description

This project builds a server that lets Claude Code consult other Large Language Models (LLMs). The core innovation lies in enabling Claude Code to draw on the expertise of various LLMs during code generation and problem-solving. This approach tackles the limits of relying on a single LLM and can lead to more accurate, comprehensive, and diverse solutions. It essentially creates a 'committee of experts' within the AI realm, enhancing the problem-solving capabilities of Claude Code. So this means you can get better code and better answers by using multiple AI brains at once.

Popularity

Points 3

Comments 1

What is this product?

This project is a server that acts as a bridge, allowing Claude Code to communicate and collaborate with other LLMs. The key is in the architecture: the server routes prompts, receives answers from multiple LLMs, and then feeds these responses back to Claude Code, essentially providing it with external expert opinions. It's like giving a student access to a panel of tutors, all providing their insights on the same problem. So this lets you combine different LLMs to get more accurate coding answers.

How to use it?

Developers can use this server by integrating it into their existing Claude Code workflows. They submit prompts to the server and specify which other LLMs they want Claude Code to consult. The server then handles the interaction with these LLMs, aggregates the responses, and passes the results back to Claude Code. This allows developers to leverage the combined knowledge of multiple AI models for tasks like code generation, debugging, or even understanding complex technical concepts. So you can integrate this into your workflow just by sending it the right instructions.

Product Core Function

· LLM Orchestration: The server efficiently manages the interaction between Claude Code and other LLMs. It handles the routing of prompts, collection of responses, and the formatting of feedback to Claude Code. This simplifies the process of using multiple LLMs.

· Value: Allows users to harness the strengths of various LLMs, resulting in improved code quality and debugging capabilities. Application: Useful for developers building complex applications that require accurate and robust code.

· Response Aggregation: The server compiles and presents the responses from multiple LLMs in a way that's helpful for Claude Code. This removes the need for manual interpretation, providing a structured answer.

· Value: Enables Claude Code to make informed decisions based on a wide range of expert opinions. Application: Streamlines the process of getting high-quality solutions from multiple AI models.

· Model Selection and Configuration: Developers can specify which LLMs Claude Code should consult with, tailoring the advice it receives to the specific task. It allows for flexibility and customization.

· Value: Allows you to choose the most relevant AI brains for your project, optimizing performance. Application: Offers a tailored and efficient approach to problem-solving, ensuring the best results.

· Error Handling and Robustness: The server includes mechanisms to handle errors and ensure the continued operation of the consultation process. It anticipates and manages potential issues.

· Value: Keeps a consultation running when one model fails or times out, so results stay consistent. Application: Important for production setups that rely on AI assistance to solve programming problems.
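
The orchestration, aggregation, model selection, and error handling described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the project's actual code: the `consult` function, the `Consultant` type, and the stub models are hypothetical, and a real server would wrap vendor SDKs or HTTP calls where the stubs are.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

# A consultant is any callable that maps a prompt to an answer (hypothetical interface).
Consultant = Callable[[str], str]


def consult(prompt: str, consultants: Dict[str, Consultant], selected: List[str]) -> str:
    """Send the prompt to the selected models and merge their replies into one report."""
    unknown = [name for name in selected if name not in consultants]
    if unknown:
        raise KeyError(f"unknown models: {unknown}")

    def ask(name: str) -> str:
        try:
            return consultants[name](prompt)
        except Exception as exc:  # one failing model should not sink the whole request
            return f"[error: {exc}]"

    # Query all selected models concurrently; map() preserves the input order.
    with ThreadPoolExecutor(max_workers=max(1, len(selected))) as pool:
        answers = list(pool.map(ask, selected))

    sections = [f"## {name}\n{answer}" for name, answer in zip(selected, answers)]
    return "\n\n".join(sections)


# Stub models standing in for real LLM backends.
def model_a(prompt: str) -> str:
    return f"Use a dict for: {prompt}"


def model_b(prompt: str) -> str:
    raise TimeoutError("upstream timed out")


report = consult("cache lookups", {"model-a": model_a, "model-b": model_b}, ["model-a", "model-b"])
print(report)
```

Returning a labeled section per model, including failures, keeps the combined report structured, so the calling model can see which opinions arrived and which did not.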

Product Usage Case

· Code Generation: A developer uses the server to generate complex Python code. The server consults with GPT-3 and Bard to ensure the code adheres to best practices and resolves potential performance issues. The final code is more optimized and less prone to errors.

· Value: Improves the efficiency and quality of code generation, which saves you time and reduces errors.

· Debugging Assistance: A developer is struggling to debug a Java application. By using the server and consulting with multiple LLMs, the developer gets multiple suggestions, which helps them identify and fix the bug much faster.

· Value: Improves troubleshooting speed by getting more perspectives. Application: Helps you quickly solve software problems.

· Technical Documentation Creation: A technical writer utilizes the server to generate documentation for a new API. The server gathers information from various LLMs and provides recommendations to ensure comprehensive and accurate documentation.

· Value: Improves the speed and precision of technical documentation generation. Application: Speeds up documentation creation and improves its accuracy.

18

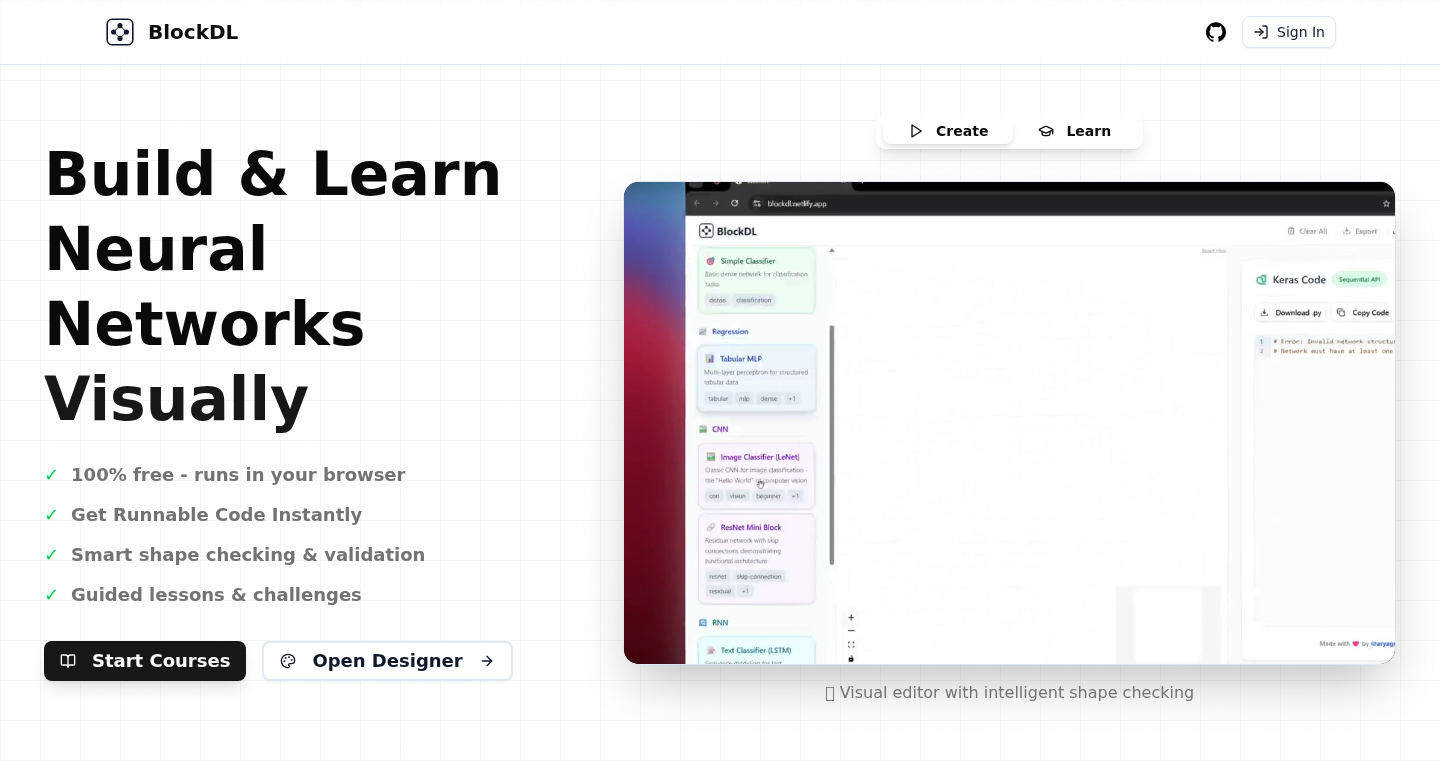

BlockDL: Visual Neural Network Designer

Author

Aryagm

Description

BlockDL is a free and open-source tool that lets you design neural networks visually in your web browser. It addresses the common problem of architects needing to sketch out neural networks before coding them. The innovation lies in its real-time code generation (Python/Keras) and shape validation. As you drag and drop layers, BlockDL instantly updates the code and flags any errors, such as mismatched input and output shapes. It also includes interactive courses to learn about network design. So this is useful because it saves time and reduces errors for anyone building neural networks by laying them out visually first.

Popularity

Points 2

Comments 2

What is this product?

BlockDL is a web-based tool that allows you to visually design neural networks. Instead of writing code from scratch, you can drag and drop different layer types (like convolutional layers, dense layers, and LSTM layers) to create your network architecture. As you build, BlockDL automatically generates the equivalent Python/Keras code and checks for common errors. The benefit is that this simplifies the design process and reduces the chance of shape mismatches or connectivity errors. It also provides learning resources with visual and interactive lessons. So this helps users prototype ideas quickly and learn concepts more effectively.

How to use it?

Developers can access BlockDL through their web browser (blockdl.com). After opening the website, the developer can drag and drop different layer types from a panel onto the design canvas. The developer connects the layers to define the network's structure. As they add layers and connections, BlockDL instantly generates Python/Keras code, which can then be copied and pasted into their machine learning project. Developers can also utilize the learning resources to improve their architecture design abilities. So you can use this tool to quickly experiment with different network designs and validate your ideas without writing code.
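
To make the design-to-code step concrete, here is a minimal sketch of turning an ordered layer list into Keras source text. The `emit_keras` function and the `(class name, keyword arguments)` layer format are assumptions made for this example. BlockDL's real generator and its exact output are not described in the source and may look quite different.

```python
from typing import Any, Dict, List, Tuple

Layer = Tuple[str, Dict[str, Any]]  # (Keras layer class name, keyword arguments)


def emit_keras(input_shape: Tuple[int, ...], layers: List[Layer]) -> str:
    """Render an ordered layer list as Keras Sequential source code."""
    lines = [
        "from tensorflow import keras",
        "from tensorflow.keras import layers",
        "",
        "model = keras.Sequential([",
        f"    keras.Input(shape={input_shape!r}),",
    ]
    for name, kwargs in layers:
        # repr() quotes strings and leaves numbers as-is, giving valid Python literals.
        args = ", ".join(f"{key}={value!r}" for key, value in kwargs.items())
        lines.append(f"    layers.{name}({args}),")
    lines.append("])")
    return "\n".join(lines)


design = [
    ("Conv2D", {"filters": 32, "kernel_size": 3, "activation": "relu"}),
    ("Flatten", {}),
    ("Dense", {"units": 10, "activation": "softmax"}),
]
print(emit_keras((28, 28, 1), design))
```

The sketch only handles a straight chain of layers. Multiple inputs, multiple outputs, and skip connections would need the Keras functional API instead of `Sequential`.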

Product Core Function

· Visual Design Interface: Allows users to drag and drop different neural network layers, making the design process visual and intuitive. This enables developers to experiment rapidly with different architectures.

· Real-time Code Generation: Automatically generates Python/Keras code based on the visual design. This means less time spent on manual coding and fewer coding errors.

· Shape and Connectivity Validation: Checks for errors in the network architecture, such as incompatible layer shapes and broken connections. This is helpful because it catches mistakes early in the design process and accelerates debugging.

· Supports Common Layer Types: Includes a wide range of common layers like Conv2D, Dense, and LSTM, catering to various neural network types. This broadens the scope of networks you can design.

· Multiple Input/Output Network Support: Enables the design of networks with multiple inputs and outputs. This allows developers to tackle more complex applications.

· Skip Connection Support: Supports the addition of skip connections. This gives the user greater flexibility when designing a neural network.

· Interactive Learning Section: Provides beginner-to-intermediate courses focusing on neural network architecture design with interactive and visual lessons. This is useful for learning or improving how you design these networks.
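
The shape validation described above can be illustrated with a small, framework-free sketch. It assumes a straight chain of layers, channels-last inputs, and Conv2D with stride 1 and 'valid' padding. The function names and the layer format are hypothetical, and BlockDL's own checks are likely broader.

```python
from math import prod
from typing import Any, Dict, List, Tuple

Shape = Tuple[int, ...]  # per-sample shape, batch dimension omitted


def infer_shape(kind: str, params: Dict[str, Any], shape: Shape) -> Shape:
    """Return the output shape of one layer, or raise ValueError on a mismatch."""
    if kind == "Conv2D":  # assumes channels-last, stride 1, 'valid' padding
        if len(shape) != 3:
            raise ValueError(f"Conv2D expects (height, width, channels), got {shape}")
        k = params["kernel_size"]
        height, width = shape[0] - k + 1, shape[1] - k + 1
        if height < 1 or width < 1:
            raise ValueError(f"kernel {k}x{k} does not fit input {shape}")
        return (height, width, params["filters"])
    if kind == "Flatten":
        return (prod(shape),)
    if kind == "Dense":  # Dense transforms only the last axis
        return shape[:-1] + (params["units"],)
    raise ValueError(f"unsupported layer type: {kind}")


def validate(input_shape: Shape, layers: List[Tuple[str, Dict[str, Any]]]) -> List[str]:
    """Walk the layers in order and stop at the first one that rejects its input."""
    errors: List[str] = []
    shape = input_shape
    for index, (kind, params) in enumerate(layers):
        try:
            shape = infer_shape(kind, params, shape)
        except ValueError as exc:
            errors.append(f"layer {index} ({kind}): {exc}")
            break  # later shapes are undefined once one layer fails
    return errors


good = [("Conv2D", {"filters": 32, "kernel_size": 3}), ("Flatten", {}), ("Dense", {"units": 10})]
bad = [("Flatten", {}), ("Conv2D", {"filters": 32, "kernel_size": 3})]
print(validate((28, 28, 1), good))
print(validate((28, 28, 1), bad))
```

Running the check on every edit, rather than at training time, is what lets a visual editor flag a misplaced layer the moment it is dropped onto the canvas.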

Product Usage Case

· Rapid Prototyping: A machine learning engineer can quickly prototype a new convolutional neural network for image classification by dragging and dropping convolutional layers, pooling layers, and fully connected layers in BlockDL. The tool immediately generates the Keras code, which can be integrated into the training pipeline. The engineer can iterate through different architectures in minutes. This accelerates the entire development cycle.

· Educational Tool: A student learning deep learning can use BlockDL's interactive lessons and visual design interface to understand neural network concepts and experiment with different architectures. The visual nature of the tool makes it easier to understand how each layer works and how they're connected. This allows students to build their understanding through hands-on experience.

· Architecture Exploration: A researcher can use BlockDL to explore and validate innovative network designs. They can quickly build and test various architectures. The real-time error checking allows for quick identification of problems in architectures. This speeds up design iteration.

· Code Generation for Production: A developer building a production machine learning system can design a neural network in BlockDL, then use the generated Keras code to integrate the network into their application. This tool streamlines the coding process and minimizes errors.

19

Steam Achievement Resetter for Linux

Author

t9t

Description

A small, lightweight C application for Linux that allows users to reset their Steam achievements for games. It works by loading the Steam API library and making specific calls to mimic game behavior, effectively allowing players to 're-earn' achievements. The project was inspired by the limitations of existing tools like Steam Achievement Manager (SAM) on Linux, and it showcases a practical application of understanding and interacting with game APIs. So this enables me to replay games with a fresh start and complete the achievements again.

Popularity

Points 2

Comments 2

What is this product?

This tool is essentially a 'hacker's tool' that lets you reset your Steam achievements in Linux games. It works by using the Steam API (Application Programming Interface), which is the set of rules and tools games use to talk to Steam. The tool mimics the game's behavior, telling Steam that you haven't earned the achievements yet. The innovation lies in its simplicity and Linux-specific implementation, offering a workaround for users who couldn't get existing tools to work on Linux. So this gives me a way to enjoy the game again, and provides a solution that is not easily available on Linux.

How to use it?

The user needs to run the compiled C program. The program then interacts with the Steam client to clear the achievements for a selected game. This involves loading specific libraries and making calls to the Steam API. It could be integrated into scripts or workflows to automate achievement resets for testing or other purposes. So this is easy to use, and can be integrated with other tools.

Product Core Function

· Steam API Interaction: The core function is to interact with the Steam API to manipulate achievement data, for example to reset all of a game's achievements. So this lets you 'start over' in games.

· Library Loading: The tool loads the libsteam_api.so library, the core library for Steam interaction. This enables the tool to communicate with the Steam client. So this is the piece that makes the achievement reset possible.

· Achievement Clearing: The primary function of the application is to clear Steam achievements, allowing the user to 're-earn' them. So it lets you start fresh in a game with all achievements reset and enjoy the game again.

· Linux Compatibility: It's built specifically for Linux, addressing a gap in available tools. So, this tool provides a working method to perform the task, specifically on Linux.

· Simplicity and Size: The tool is designed to be small and lightweight, focusing on the core functionality without unnecessary features. This allows for easy usage and good performance.
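
The library-loading step can be illustrated with Python's `ctypes`, which plays the role that `dlopen` and `dlsym` would play in a C program. This sketch only loads a library and checks whether certain symbols are exported; it does not call into Steam. The helper names are hypothetical, the symbol names listed are assumed from the Steamworks SDK's flat C API and may vary between SDK versions, and the source does not say which functions the tool actually calls.

```python
import ctypes
from typing import Dict, List, Optional


def load_library(path: str) -> Optional[ctypes.CDLL]:
    """Try to load a shared library; return None if it cannot be opened."""
    try:
        return ctypes.CDLL(path)
    except OSError:
        return None


def probe_symbols(lib: ctypes.CDLL, names: List[str]) -> Dict[str, bool]:
    """Report which of the named symbols the loaded library exports."""
    return {name: hasattr(lib, name) for name in names}


# Assumed symbol names; check the SDK headers shipped with your library version.
CANDIDATES = [
    "SteamAPI_Init",
    "SteamAPI_ISteamUserStats_ClearAchievement",
    "SteamAPI_ISteamUserStats_StoreStats",
]

steam = load_library("./libsteam_api.so")  # path is an assumption for this example
if steam is None:
    print("libsteam_api.so not found; nothing to probe")
else:
    print(probe_symbols(steam, CANDIDATES))
```

Probing before calling is a cautious design choice: a tool that loads a vendor library at runtime can fail with a clear message when a symbol is missing instead of crashing mid-call.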

Product Usage Case

· Game Replay: A user wants to replay The Witcher 3 and earn all the achievements again. The tool allows them to reset achievements and play the game from a fresh start. So this provides a fresh gaming experience.

· Achievement Testing: A game developer needs to test the achievement system of their game by repeatedly triggering and verifying achievements. The tool helps in testing and debugging achievement logic. So it simplifies the test and debug process for developers.

· Linux-Specific Need: A Linux user finds that existing Windows-based achievement managers don't work on their system. They can use this tool as a Linux-compatible alternative. So it fulfills the requirements of Linux users.

· Experimentation: The project can serve as an example for anyone wanting to learn about how games interact with the Steam API or how to create custom tools for interacting with game services. So it offers a learning opportunity to developers.

20

Red Candle: Ruby-Native LLMs with Rust Acceleration

Author