Show HN Today: Discover the Latest Innovative Projects from the Developer Community

ShowHN Today

Show HN Today: Top Developer Projects Showcase for 2025-07-20

SagaSu777 2025-07-21

Explore the hottest developer projects on Show HN for 2025-07-20. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN projects showcase a vibrant landscape of innovation, with AI taking center stage. Developers are actively building tools to automate tasks, enhance workflows, and redefine content creation. The prevalence of open-source projects underscores a collaborative spirit. For developers, this means opportunities to contribute, learn, and build upon existing solutions. Entrepreneurs should take note of the growing demand for privacy-focused and user-friendly tools, as well as the potential of AI to disrupt various industries. The focus on streamlining development and improving user experience provides fertile ground for innovation. Consider combining these trends to build solutions that are both powerful and accessible, providing real value while protecting users' privacy and data.

Today's Hottest Product

Name

Show HN: MCP server for Blender that builds 3D scenes via natural language

Highlight

This project uses a Model Context Protocol (MCP) server to connect Blender to Large Language Models (LLMs) like ChatGPT and Claude. The core innovation is enabling users to create and manipulate 3D scenes within Blender using natural language commands. You can describe complex scenes, like a village with specific elements and spatial relationships, and the system will build it for you. This approach simplifies 3D modeling by abstracting away the technical complexities and leveraging the power of AI to interpret and execute user instructions. Developers can learn how to integrate LLMs with 3D software, creating new possibilities for content creation and design workflows.

Popular Category

AI/LLM

Game Development

eCommerce

Productivity Tools

Developer Tools

Finance

Popular Keyword

AI

LLM

Open Source

CLI

Privacy

Automation

Technology Trends

AI-powered content creation and automation: Several projects leverage AI to automate tasks like 3D scene generation, product image creation, and code generation, demonstrating the growing influence of AI in various domains.

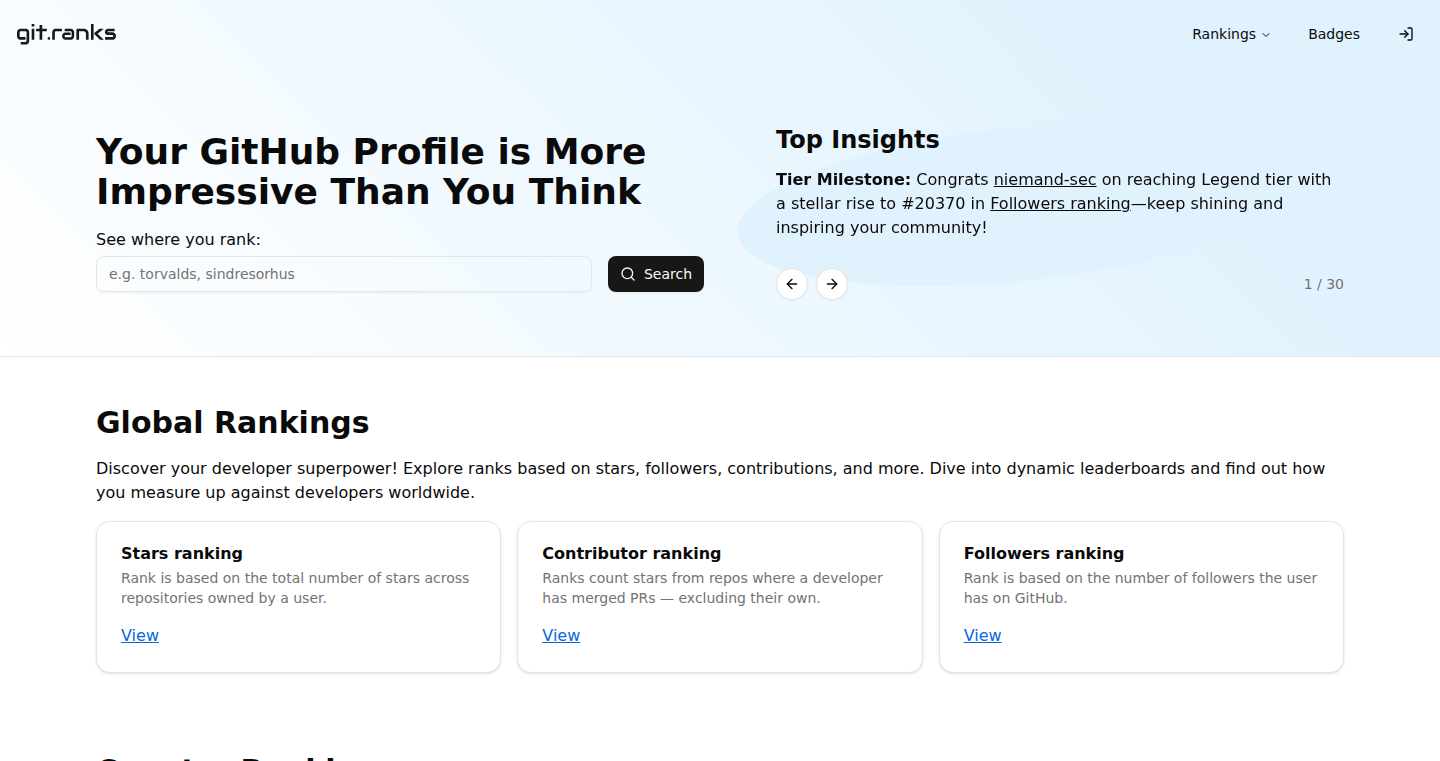

Local and privacy-focused solutions: A notable trend is tools that prioritize user privacy and data security, such as Tygra, which processes documents locally, and NetXDP, which provides kernel-level DDoS protection.

Simplified development workflows and user experiences: Tools like Mirage and Gix aim to streamline developer workflows and enhance productivity, showcasing the ongoing demand for developer-centric solutions.

Open-source and community-driven projects: Many projects are open-source, demonstrating a collaborative spirit and a commitment to accessible technology. Projects like Emporium and the Open LLM Specification are good examples.

Project Category Distribution

AI/LLM Applications (35%)

Developer Tools and Productivity (30%)

eCommerce and Content Creation (15%)

Security and Privacy (10%)

Other (10%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | PeerMap Widget: Visualizing Network Peer Locations on X11 | 152 | 61 |
| 2 | Blender-MCP: Natural Language 3D Scene Generation | 148 | 61 |
| 3 | XID: Globally Unique ID Generator | 7 | 3 |
| 4 | LegalDocGraph: Hybrid RAG System for Legal Documents | 7 | 3 |
| 5 | FlouState: Intelligent Coding Activity Tracker | 9 | 0 |
| 6 | Sifaka: LLM Reliability Enhancement Framework | 7 | 0 |
| 7 | Chatterblez: Real-time Audiobook Generation with Nvidia Acceleration | 4 | 2 |
| 8 | Superpowers: AI-Powered Chrome Extension for Enhanced Web Browsing | 6 | 0 |
| 9 | MediaManager: A Modern Metadata-Driven Media Orchestrator | 5 | 0 |
| 10 | ChatForm AI: Conversational Form & Calculation Engine | 3 | 2 |

1

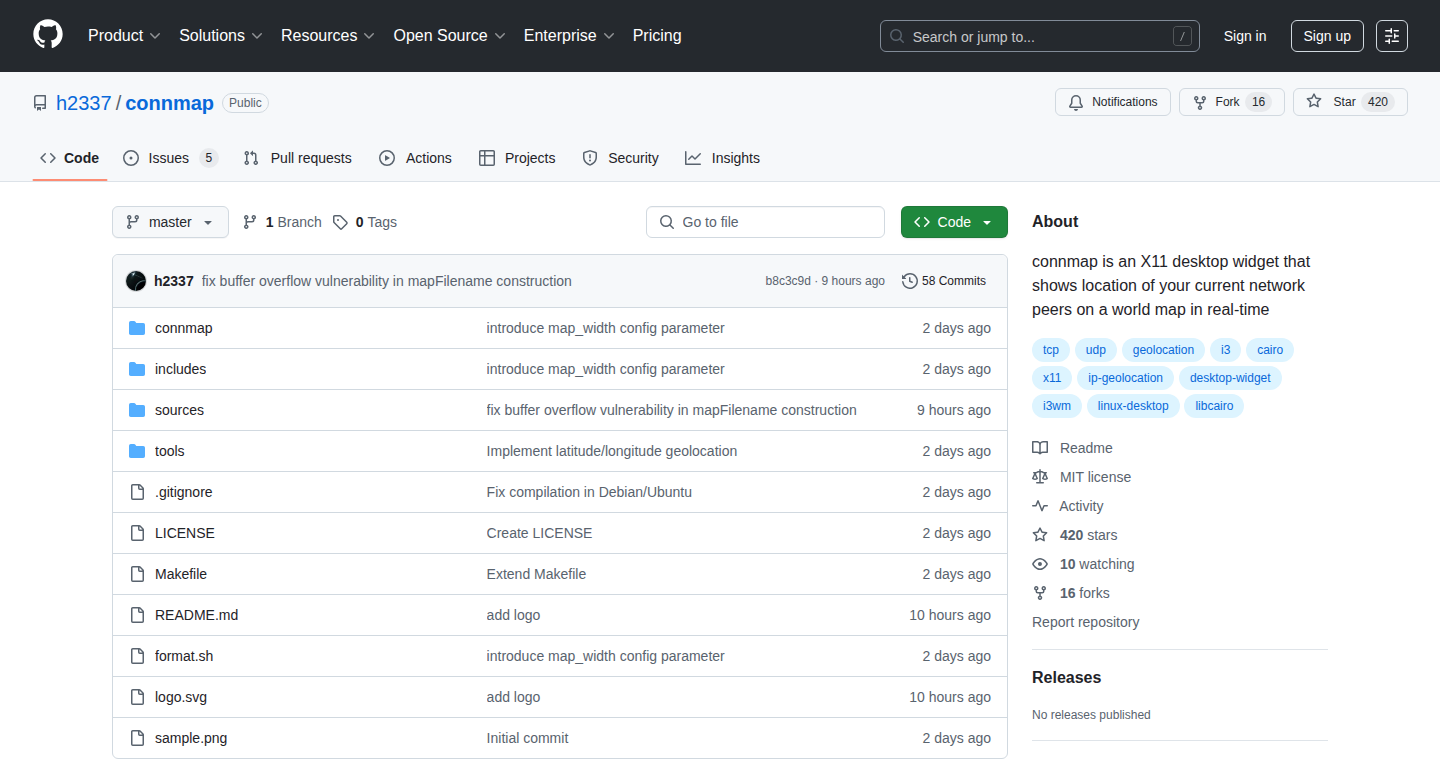

PeerMap Widget: Visualizing Network Peer Locations on X11

Author

h2337

Description

This project creates a desktop widget for X11 systems that displays the geographical locations of your network peers on a map. It leverages the power of network monitoring and geolocation to visually represent where your network connections are located. This offers a unique way to understand your network's reach and activity, solving the problem of invisible network connections and providing a visual context for network traffic. Essentially, it translates complex network data into an easily understandable visual format.

Popularity

Points 152

Comments 61

What is this product?

This is a desktop widget that shows, on a map, the approximate locations of the remote hosts (peers) your machine is communicating with. It works by monitoring network connections to identify peers and then using their IP addresses to estimate their geographical locations. The widget plots these locations on a map, allowing you to visually track your network's activity. The innovative part lies in its combination of network monitoring, IP-based geolocation lookup, and real-time visualization within a lightweight desktop widget. So, it's like a live map of your network connections, showing you where your traffic is going.
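The post does not say how the widget discovers its peers. As a minimal sketch, assuming a Linux host where the kernel's connection table in `/proc/net/tcp` is the data source, the first step could look like the code below. The function names `hex_to_ipv4` and `public_peers` are hypothetical. It keeps only ESTABLISHED connections and drops private, loopback, and link-local addresses, since those cannot be geolocated.

```python
import ipaddress

ESTABLISHED = "01"  # TCP state code for ESTABLISHED in /proc/net/tcp


def hex_to_ipv4(hex_addr: str) -> ipaddress.IPv4Address:
    # On little-endian hosts (x86, most ARM) the kernel prints the
    # address bytes reversed, e.g. "0100007F" is 127.0.0.1.
    return ipaddress.IPv4Address(bytes.fromhex(hex_addr)[::-1])


def public_peers(proc_net_tcp: str) -> list[tuple[str, int]]:
    """Return (ip, port) for established connections to public addresses."""
    peers = []
    for line in proc_net_tcp.strip().splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) < 4 or fields[3] != ESTABLISHED:
            continue
        ip_hex, port_hex = fields[2].split(":")  # remote address column
        ip = hex_to_ipv4(ip_hex)
        if ip.is_global:  # private/loopback/link-local peers have no useful location
            peers.append((str(ip), int(port_hex, 16)))
    return peers
```

On a real system you would pass `open("/proc/net/tcp").read()`. The resulting IPs would then go to a geolocation database such as MaxMind's, which the description mentions.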

How to use it?

Developers can use this widget on X11-based systems, such as most Linux distributions. They can install it and then configure it to monitor specific network interfaces or listen for certain types of network traffic. The widget automatically updates the map as peers connect or disconnect. You could integrate this widget into a network monitoring dashboard, use it for security analysis to visualize unusual network connections, or simply use it as a fun tool to understand your network's structure and activity. So, you can install it directly on your Linux system to see where the hosts you connect to are located.

Product Core Function

· Network Traffic Analysis: The widget monitors network traffic to identify connected peers and their IP addresses, gathering data about the hosts your system is currently communicating with.

· IP Geolocation Lookup: It uses IP addresses to determine the approximate geographical locations of network peers. The widget then uses a geolocation API, such as MaxMind, to convert IP addresses into approximate geographic coordinates (latitude and longitude). This helps to show the general location of the network devices on a map.

· Map Visualization: The widget displays peer locations on a map within a desktop window, providing a visual representation of network connections. This is achieved using a mapping library to render the map and place markers at the determined geographic coordinates. This gives users a real-time understanding of their network's geographic distribution.

· X11 Integration: It is designed as a desktop widget for X11 systems. This allows it to integrate directly into the user's desktop environment, providing a seamless and accessible network visualization experience. This makes it convenient for users to track their network activity at a glance.
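Once a peer has latitude and longitude, the mapping step has to turn those into pixel coordinates. A minimal sketch, assuming the widget draws markers on a world image in the equirectangular (plate carrée) projection, is shown below; the function name is hypothetical, and tile-based web maps use Web Mercator instead, which needs a different formula.

```python
def equirectangular(lat: float, lon: float, width: int, height: int) -> tuple[int, int]:
    """Map latitude/longitude in degrees to a pixel (x, y) on a world map image.

    x grows eastward from longitude -180; y grows southward from latitude +90.
    """
    if not (-90.0 <= lat <= 90.0 and -180.0 <= lon <= 180.0):
        raise ValueError("coordinates out of range")
    x = (lon + 180.0) / 360.0 * (width - 1)
    y = (90.0 - lat) / 180.0 * (height - 1)
    return round(x), round(y)
```

The linear mapping keeps the drawing code trivial, which suits a lightweight desktop widget where precise distances do not matter.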

Product Usage Case

· Network Security Monitoring: Developers can use the widget to identify and visualize unusual network connections. By seeing where connections are coming from, they can quickly spot suspicious activity and potential security threats. So, it will help you to identify suspicious activity on your network by showing the location of the connections.

· Network Troubleshooting: The widget can help troubleshoot network connectivity issues by showing the location of remote servers or devices. By visually identifying where the problem might be originating, developers can narrow down the scope of the issue and focus their troubleshooting efforts. So, it can help you to quickly troubleshoot network connectivity issues.

· Network Infrastructure Planning: In some cases, developers could use the widget to visualize the geographic distribution of a network. For example, they can use it to plan where to place new servers or network devices to optimize performance. So, it provides developers with a visual understanding of their network's global distribution.

· Educational Tool: The widget provides an excellent educational tool for understanding network behavior and the geographical distribution of network traffic. It can be used to illustrate network concepts in a visual and interactive manner. So, it is a great tool to understand and visualize the network traffic.

2

Blender-MCP: Natural Language 3D Scene Generation

Author

prono

Description

This project introduces a custom Model Context Protocol (MCP) server that bridges Blender, a popular 3D modeling software, with Large Language Models (LLMs) like ChatGPT and Claude. It allows users to create and manipulate 3D scenes using simple, natural language descriptions. Instead of manually modeling or scripting, users can describe a scene (e.g., "Create a small village with huts") and the system intelligently interprets the instructions and builds the scene within Blender. The core innovation lies in the translation of natural language into 3D modeling instructions, enabling AI-driven 3D scene creation and iterative design workflows.

Popularity

Points 148

Comments 61

What is this product?

This project is a server, built with Node.js, that acts as a translator between natural language instructions and Blender's 3D environment. It implements the Model Context Protocol (MCP), an open standard that lets LLM clients call external tools. The LLM (like ChatGPT or Claude) parses the user's natural language description, working out the relationships between objects, their spatial arrangement, and scene attributes. It then calls the tools exposed by the MCP server, which translates those calls into Blender operations so that the scene is constructed automatically. The project exemplifies a sophisticated integration of AI and 3D design, automating complex modeling tasks. So this means, instead of spending hours manually building 3D scenes, you can just tell the computer what you want and it creates it for you.

How to use it?

Developers can integrate this by running the Node.js server and connecting it to their desired LLM (OpenAI, Claude, or any model that supports tool calling). They then send natural language prompts to the LLM, which calls the server's tools. The server communicates with Blender through Blender's Python scripting API: you install the accompanying Blender Python script and configure it to connect to the MCP server. For example, you could build a tool that lets artists type prompts directly and generate scenes. This setup provides a flexible and extensible platform for AI-assisted 3D design. So for developers, this is a starting point for integrating AI into 3D workflows, enabling applications such as automated scene generation, interactive design tools, or even AI-driven game development.
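To make the LLM-to-Blender hop concrete, here is a minimal sketch of how a structured tool call could be turned into Blender Python. The tool name `add_primitive`, its argument schema, and the choice to emit source text rather than call `bpy` directly are all hypothetical; the `bpy.ops.mesh.primitive_*_add` operators and `bpy.context.active_object` are real Blender Python APIs.

```python
import json

# Hypothetical "shape" values mapped to real Blender mesh operators.
PRIMITIVES = {
    "cube": "bpy.ops.mesh.primitive_cube_add",
    "sphere": "bpy.ops.mesh.primitive_uv_sphere_add",
    "cylinder": "bpy.ops.mesh.primitive_cylinder_add",
    "plane": "bpy.ops.mesh.primitive_plane_add",
}


def tool_call_to_bpy(call_json: str) -> str:
    """Translate a hypothetical 'add_primitive' tool call into Blender Python source."""
    call = json.loads(call_json)
    if call.get("name") != "add_primitive":
        raise ValueError(f"unsupported tool: {call.get('name')!r}")
    args = call["arguments"]
    op = PRIMITIVES[args["shape"]]
    x, y, z = (float(v) for v in args.get("location", (0, 0, 0)))
    label = args.get("label", args["shape"])
    return "\n".join([
        "import bpy",
        f"{op}(location=({x}, {y}, {z}))",
        # The operator makes the new object active, so we can rename it.
        f"bpy.context.active_object.name = {json.dumps(label)}",
    ])
```

The generated script would be executed inside Blender by the add-on side of the bridge. Keeping the tool vocabulary small and validated, as here, limits what an LLM can ask Blender to do.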

Product Core Function

· Natural Language Parsing: The system interprets natural language descriptions of 3D scenes. This allows users to describe what they want in plain English. So you can simply tell the computer to "build a house with a red roof."

· Spatial Relation Understanding: The system understands spatial relationships (e.g., "place the bridge over the river"). This means the AI can accurately position objects relative to each other, crucial for creating realistic scenes. So your objects will be placed where you expect them to be.

· Multi-Object Scene Generation: The ability to create scenes with multiple objects and complex arrangements from a single prompt (e.g., villages, landscapes). This significantly reduces the time required for scene creation. So you can generate complex environments with a single command, greatly accelerating your workflow.

· Iterative Design & Editing: Supports iterative changes like replacing objects, changing colors, or adjusting sizes. You can make changes to the scene by simply describing the modifications. So you can easily refine your 3D scenes by simply making new requests.

· Camera Animation and Lighting Setup: The capability to create camera animations and lighting setups based on natural language prompts (e.g., "orbit around the scene at sunset lighting"). So you can create dynamic scenes without manually adjusting the camera and lighting.
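Spatial relations such as "place the bridge over the river" ultimately have to become coordinates. One simple way to do that, sketched below under the assumption that objects are approximated by axis-aligned bounding boxes, is to offset the new object along one axis until it touches the reference object. The relation names and the `Box`/`place_relative` helpers are hypothetical, not taken from the project; axes follow Blender's convention (X right, Y away from the front view, Z up).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box: center (x, y, z) and full dimensions (dx, dy, dz)."""
    center: tuple[float, float, float]
    dims: tuple[float, float, float]


# Hypothetical relation vocabulary -> (axis index, direction along that axis).
RELATIONS = {
    "above": (2, 1),
    "below": (2, -1),
    "right_of": (0, 1),
    "left_of": (0, -1),
    "behind": (1, 1),
    "in_front_of": (1, -1),
}


def place_relative(obj_dims, ref: Box, relation: str, gap: float = 0.0):
    """Return a center so an object of size obj_dims touches ref on the named side."""
    axis, direction = RELATIONS[relation]
    center = list(ref.center)
    # Half of each extent along the axis, plus an optional gap between them.
    offset = ref.dims[axis] / 2 + obj_dims[axis] / 2 + gap
    center[axis] += direction * offset
    return tuple(center)
```

For example, a bridge 1 unit tall placed "above" a river slab 0.5 units thick and centered at the origin ends up centered at z = 0.75.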

Product Usage Case

· Game Development: Game developers can use this to quickly prototype game environments and levels by describing desired scenes. So you can rapidly iterate on level designs and test different visual concepts without manual 3D modeling.

· Architectural Visualization: Architects can create detailed 3D models of buildings and landscapes from simple textual descriptions. So you can generate visualizations more efficiently, showcasing design ideas to clients.

· Animation and Film: Animators can generate complex scenes for animations, saving time on manual modeling and allowing for more creative exploration. So you can bring your creative visions to life faster and easier.

· Interactive Design Tools: Developers can create interactive 3D design tools where users can modify scenes using natural language. So you can create tools that empower users to interact with 3D environments using their words.

3

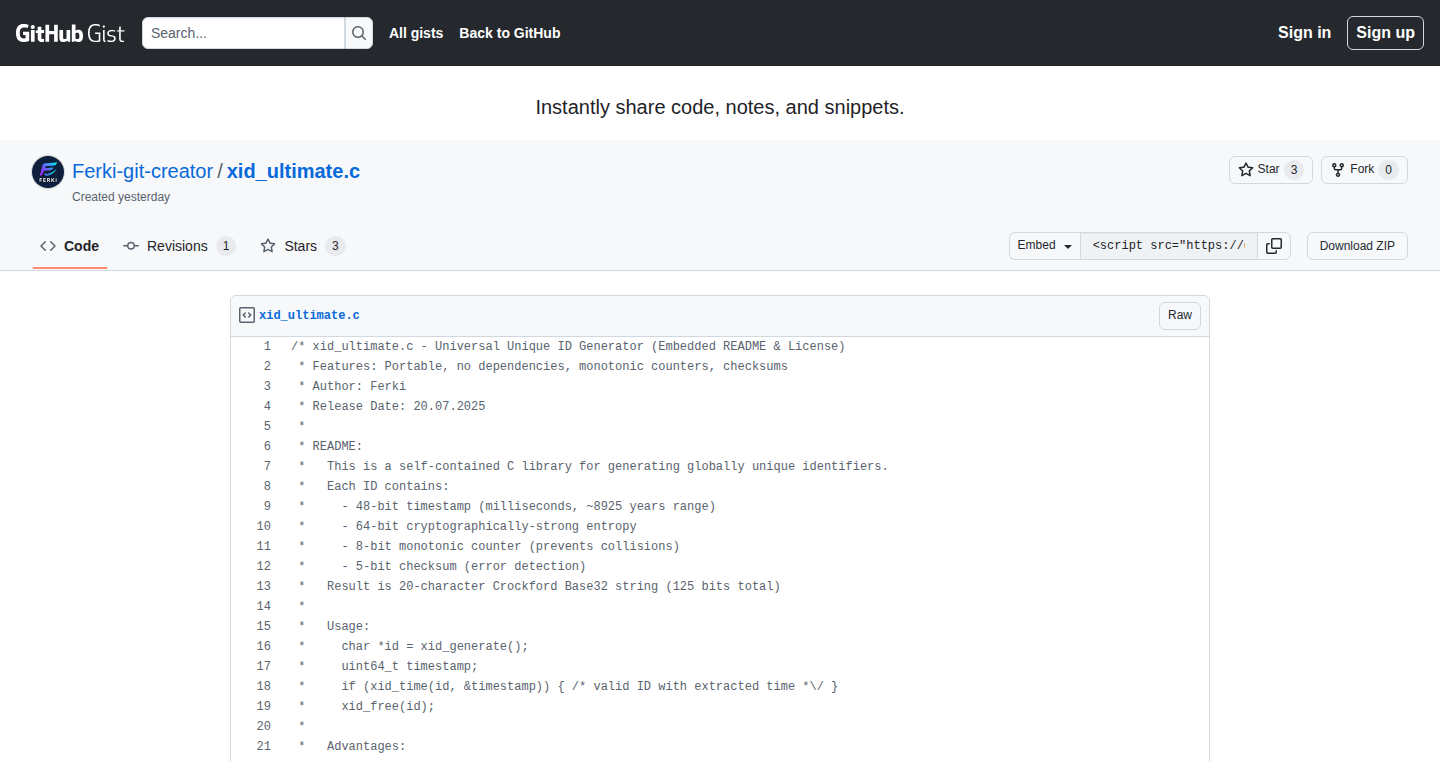

XID: Globally Unique ID Generator

Author

FerkiHN

Description

XID is a small tool that creates 20-character globally unique IDs. It is written as a single C file with no external dependencies, so it is lightweight and easy to embed. It combines a timestamp (when the ID was created), random data (entropy), a simple counter, and a checksum, which together make collisions extremely unlikely, even when IDs are generated on different computers. It is designed to solve the challenge of creating unique identifiers in distributed systems, databases, and the Internet of Things (IoT).

Popularity

Points 7

Comments 3

What is this product?

XID generates unique IDs using a combination of a timestamp, random data, a counter, and a checksum. The timestamp makes IDs sortable by creation time. The random data and the counter keep IDs distinct, even when many are generated in the same instant, and the checksum is a safety net that lets you verify that an ID hasn't been accidentally altered. Because everything is contained in a single C file, it is very easy to use on almost any device. So, this is like a highly efficient and reliable ID factory for your software.
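The post names XID's ingredients but not its exact layout, so the Python sketch below illustrates the idea under a hypothetical layout: 9 characters of millisecond timestamp, 6 of randomness, 3 of counter, and 2 of checksum, all in Crockford base32. The alphabet, field widths, and checksum formula are assumptions, not the C library's format. Because the alphabet is in ascending ASCII order and the timestamp comes first with a fixed width, IDs sort by creation time.

```python
import os
import threading
import time

# Crockford base32; ASCII order matches numeric order, so IDs sort correctly.
ALPHABET = "0123456789ABCDEFGHJKMNPQRSTVWXYZ"


def _encode(value: int, width: int) -> str:
    chars = []
    for _ in range(width):
        value, digit = divmod(value, 32)
        chars.append(ALPHABET[digit])
    return "".join(reversed(chars))  # most significant digit first


def _decode(text: str) -> int:
    value = 0
    for ch in text:
        value = value * 32 + ALPHABET.index(ch)
    return value


def _checksum(body: str) -> str:
    # Position-weighted sum mod 1024: with 18 positions and digit values < 32,
    # any single-character change or swap of two different characters is caught.
    total = sum((i + 1) * ALPHABET.index(ch) for i, ch in enumerate(body))
    return _encode(total % 1024, 2)


class IdGenerator:
    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._counter = int.from_bytes(os.urandom(2), "big") % 32768

    def new_id(self) -> str:
        with self._lock:
            self._counter = (self._counter + 1) % 32768  # 15-bit rolling counter
            counter = self._counter
        millis = int(time.time() * 1000) % (1 << 45)             # 9 chars = 45 bits
        entropy = int.from_bytes(os.urandom(4), "big") % (1 << 30)  # 6 chars = 30 bits
        body = _encode(millis, 9) + _encode(entropy, 6) + _encode(counter, 3)
        return body + _checksum(body)


def is_valid(xid: str) -> bool:
    return len(xid) == 20 and all(c in ALPHABET for c in xid) and _checksum(xid[:18]) == xid[18:]


def timestamp_ms(xid: str) -> int:
    return _decode(xid[:9])
```

Within one generator, the counter alone keeps consecutive IDs distinct; across machines, uniqueness rests on the random field, which is why "extremely unlikely to collide" is the honest guarantee.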

How to use it?

Developers can easily incorporate XID into their projects. You just include the single C file and use a simple function to generate an ID. It's designed to be compatible with almost any platform, so it can be used in databases, IoT devices, and distributed systems. So, you can quickly add unique ID generation to your applications without bringing in a lot of extra code.

Product Core Function

· ID Generation: XID generates a 20-character globally unique ID. Value: Makes conflicting IDs between data records in databases or distributed systems extremely unlikely. Application: Creating unique user IDs, transaction IDs, or device identifiers.

· Timestamp Extraction: XID allows you to extract the timestamp directly from the ID. Value: Enables chronological ordering and easy time-based analysis. Application: Logging, monitoring, and event tracking, providing a timeline of events.

· Collision Resistance: XID is designed to minimize the chances of generating the same ID twice. Value: Ensures data integrity in high-volume environments. Application: Reliable data management, such as handling millions of records or supporting multiple users generating IDs simultaneously.

· Portability: XID is built for use on any platform, including embedded systems. Value: Works across diverse hardware and operating systems. Application: IoT device identification, database deployments on different architectures, and in any system where you need universally unique IDs.

Product Usage Case

· Database Integration: Imagine you're building a database and need unique keys for your records. XID provides a simple way to generate these keys, making sure each record is uniquely identifiable. So, this helps you build a more reliable database.

· IoT Device Identification: In the world of IoT, you need to identify each device uniquely. XID can generate unique IDs for each device, allowing them to communicate and interact. So, this ensures you can track and manage all your connected devices.

· Distributed Systems: If you're creating a system that runs on multiple computers, you need a way to generate unique IDs across the network without coordination. XID handles this by combining time, randomness, and a counter, which makes duplicates extremely unlikely no matter where an ID is generated. So, your system can avoid ID clashes, even in complex distributed setups.

· Embedded Systems: For projects on resource-constrained devices like embedded systems, XID's single-file, dependency-free nature is highly advantageous. So, it's great for microcontroller-based projects that require unique IDs and minimal code footprint.

4

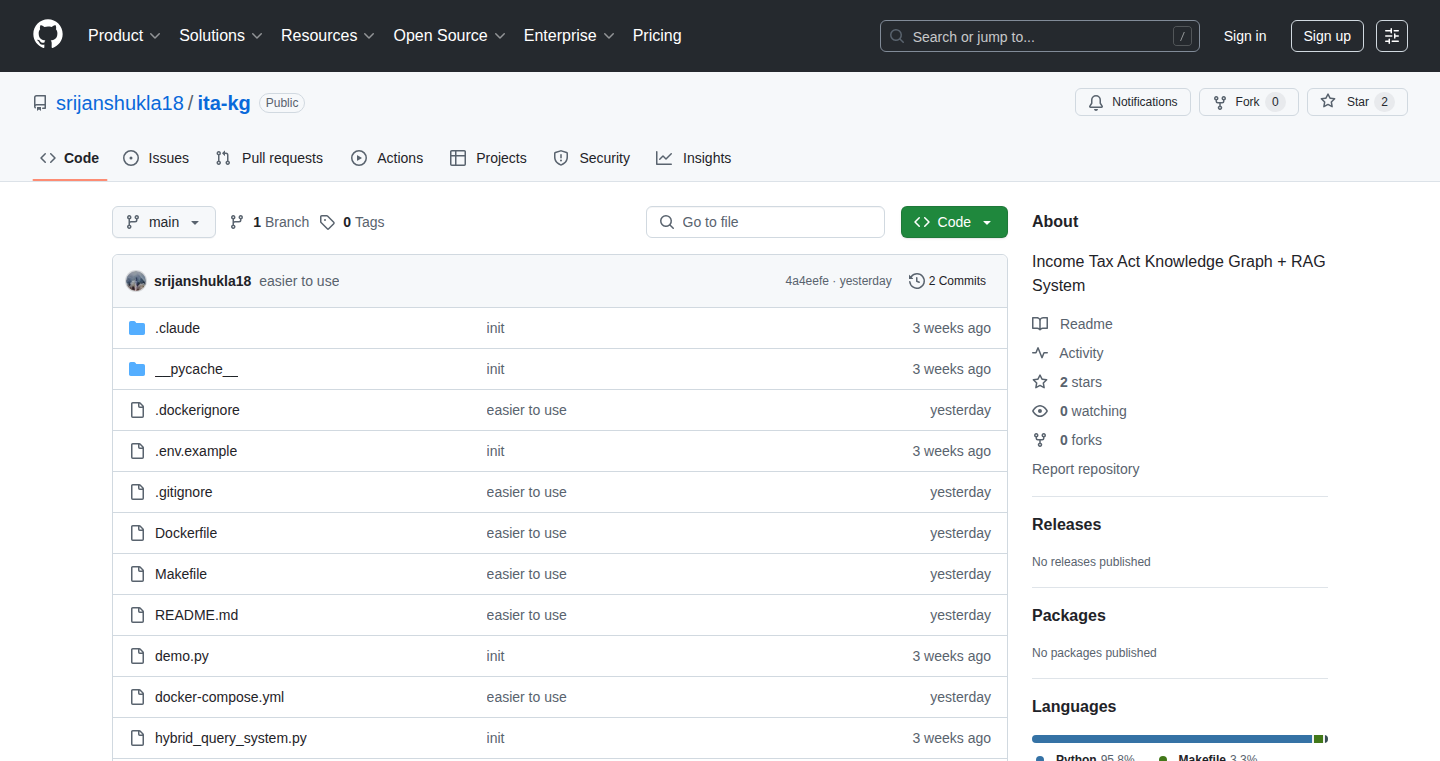

LegalDocGraph: Hybrid RAG System for Legal Documents

Author

srijanshukla18

Description

This project tackles the problem of Retrieval-Augmented Generation (RAG) systems struggling with legal documents. It combines two approaches: TF-IDF keyword search (finding sections whose wording overlaps with the query) and a Neo4j knowledge graph that captures the structural relationships between sections of a legal document. Both context sources are then fed to OpenAI to generate more comprehensive and accurate answers. In short, it is a search tool that understands the connections within complex documents, helping anyone who works with legal texts find what they need faster and understand it better.

Popularity

Points 7

Comments 3

What is this product?

This project is a sophisticated search engine tailored for legal documents. It leverages a 'knowledge graph' built with Neo4j, representing the relationships between different sections of the document, alongside traditional keyword search using TF-IDF. When a user asks a question, the system uses both the keyword similarities and the established connections within the document to gather context, then sends this combined knowledge to OpenAI. This allows it to answer questions that a regular search engine couldn't handle, such as 'What sections reference Section 80C?'. Therefore, if you need to understand complex documents, this system allows for deeper exploration and comprehension.

How to use it?

Developers can use this by setting up a Python environment with the Neo4j driver, scikit-learn, and the OpenAI API client. The system is Dockerized, making setup and deployment easier. You feed in your legal document data, define the structural relationships, and then query the system, which retrieves relevant information and context for each query. So, developers can build intelligent search features directly into their applications, helping users find connections and information more effectively in any structured document.

Product Core Function

· Knowledge Graph Construction: Building a Neo4j graph to represent the relationships between sections of legal documents. Value: Enables understanding of context and connections between sections. Application: Understanding how different legal clauses relate to each other.

· Keyword Search with TF-IDF: Utilizing TF-IDF to find the sections whose weighted terms best match the user's query. Value: Allows the system to find passages that share the query's keywords. Application: Quickly locating sections relevant to specific search terms.

· Hybrid Retrieval Strategy: Combining TF-IDF results with structural relationships from the knowledge graph. Value: Provides a more complete understanding by considering both content similarity and contextual relationships. Application: Answering complex questions like 'What are the implications of this section?'

· Contextualized Querying with OpenAI: Feeding combined information from both the semantic search and the graph into OpenAI's API for comprehensive answers. Value: Generates more accurate and informative answers. Application: Helping users get clear and concise answers to legal questions.

· Dockerized Deployment: Using Docker to package and deploy the entire system. Value: Simplifies setup and ensures consistency across different environments. Application: Makes the system easier to deploy and manage for developers.
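The hybrid retrieval idea can be illustrated without a database. The sketch below is a simplification under stated assumptions: a plain-Python TF-IDF (using the same smoothed IDF formula as scikit-learn's default) stands in for the project's scikit-learn pipeline, and an in-memory `references` dict stands in for Neo4j "references" edges. The function names and the neighbor-expansion rule are hypothetical. The top-k keyword hits are expanded with sections they reference and sections that reference them.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def tfidf_vectors(docs: dict[str, str]):
    counts = {k: Counter(tokenize(v)) for k, v in docs.items()}
    n = len(docs)
    df = Counter(term for c in counts.values() for term in c)
    # Smoothed IDF, the same formula scikit-learn uses by default.
    idf = {t: math.log((1 + n) / (1 + d)) + 1 for t, d in df.items()}
    vectors = {k: {t: tf * idf[t] for t, tf in c.items()} for k, c in counts.items()}
    return vectors, idf


def cosine(a: dict, b: dict) -> float:
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm = math.sqrt(sum(w * w for w in a.values())) * math.sqrt(sum(w * w for w in b.values()))
    return dot / norm if norm else 0.0


def hybrid_retrieve(query: str, sections: dict[str, str],
                    references: dict[str, list[str]], k: int = 2) -> list[str]:
    vectors, idf = tfidf_vectors(sections)
    q = {t: tf * idf[t] for t, tf in Counter(tokenize(query)).items() if t in idf}
    hits = sorted(sections, key=lambda s: cosine(q, vectors[s]), reverse=True)[:k]
    context = list(hits)
    for sid in hits:
        # Graph step: outgoing references plus sections that cite this one.
        incoming = [s for s, refs in references.items() if sid in refs]
        for neighbor in references.get(sid, []) + incoming:
            if neighbor not in context:
                context.append(neighbor)
    return context  # section ids to pass as context to the LLM
```

A keyword-only retriever would return just the best-matching section; the graph step adds a section like one that cites it even when it shares no query terms, which is the kind of structural context the project feeds to OpenAI.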

Product Usage Case

· Legal Research: A lawyer uses the system to quickly find all the sections related to a specific clause and their context. The system provides the user with both relevant content from the document and the relationships between them. So, the lawyer can efficiently research legal information.

· Compliance Automation: A company uses the system to analyze compliance requirements from legal documents and identify dependencies. The system automatically highlights all sections that need to be referenced. So, companies can automate compliance and reduce the risk of errors.

· Educational Tool for Law Students: A law student uses the system to understand the relationships between sections of the law. The system helps visualize these relationships using the Neo4j graph. So, students can get a deeper understanding of legal concepts and their interconnections.

· Document Analysis for Contract Review: A business professional uses this system to quickly understand the clauses and cross-references within a contract. Because the system maps the relationships between clauses, the user can follow cross-references to find the clause they're looking for. So, businesses can review contracts faster and more effectively.

5

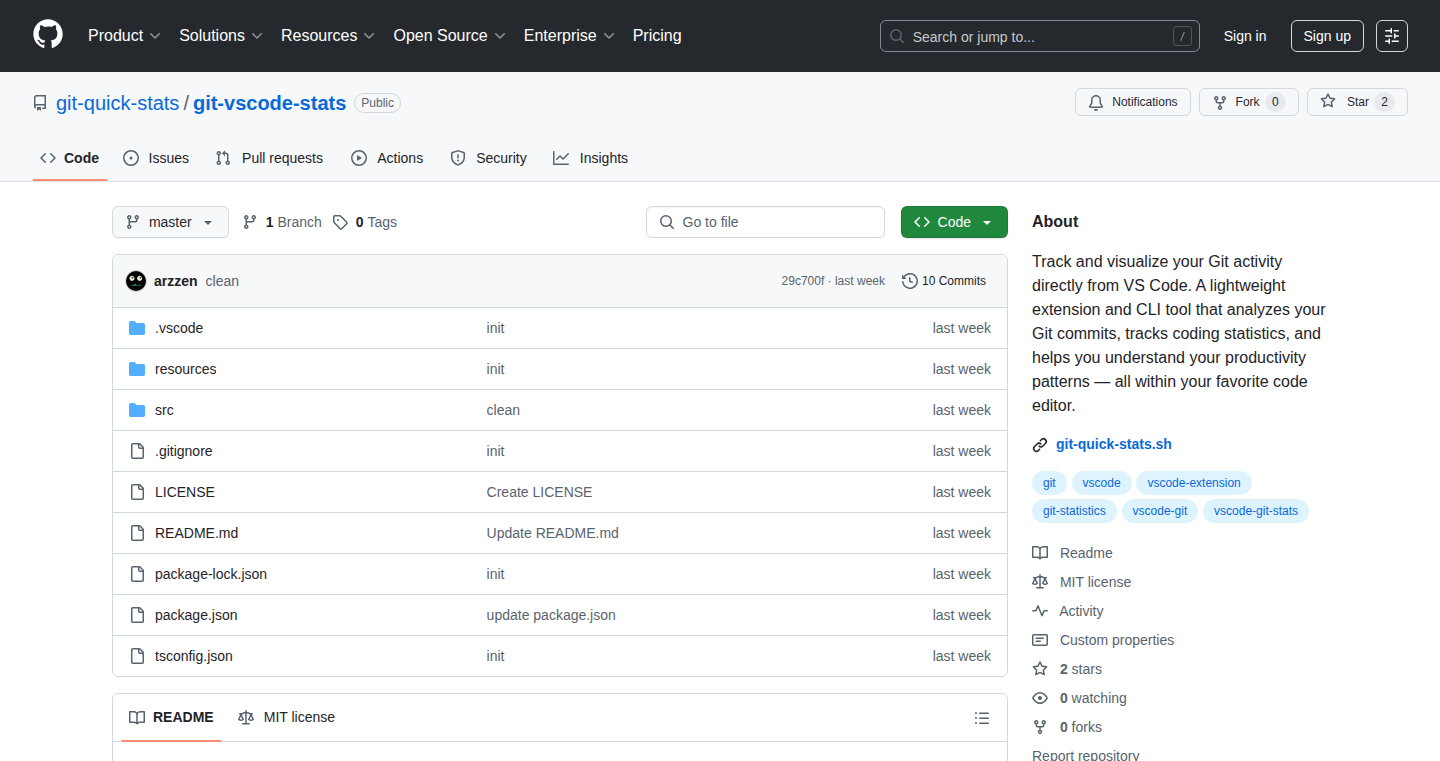

FlouState: Intelligent Coding Activity Tracker

Author

skrid

Description

FlouState is a VS Code extension designed to automatically categorize your coding time into different activities, such as creating new features, debugging existing issues, refactoring code, and exploring the codebase. It solves the problem of traditional time trackers that only show raw coding hours without providing context. It uses local tracking of file changes, debug sessions, and edit patterns to determine what you're actually working on. So, it helps you understand where your time is going, helping to improve productivity and identify bottlenecks.

Popularity

Points 9

Comments 0

What is this product?

FlouState is a VS Code extension that intelligently tracks your coding activities. Instead of just logging the total time spent, it breaks down your time into categories like 'Creating' (building new things), 'Debugging' (fixing problems), 'Refactoring' (cleaning up code), and 'Exploring' (learning the code). It figures this out by watching what you do in your code editor: when you change files, start debug sessions, or edit code in certain ways. It uses a Supabase backend to update a web dashboard every 30 seconds. The goal is to give you a clearer picture of how you spend your time, allowing you to become more efficient and spot areas where you might be getting stuck. This is a practical application of activity recognition applied to a developer's workflow.

How to use it?

Developers can install FlouState directly from the VS Code marketplace. Once installed, it runs in the background, monitoring your coding activities without requiring any manual input. You can then view a web dashboard that breaks down your coding time by category. You don't need to change your existing workflow – just keep coding as usual. The data helps you understand how you spend your time, helping to optimize your coding habits and productivity. It's particularly useful for individual developers and teams looking to improve their workflow.

Product Core Function

· Automated Activity Tracking: FlouState automatically detects and categorizes coding activities (Creating, Debugging, Refactoring, Exploring). This eliminates the need for manual time tracking, saving you effort and providing more accurate insights. So this gives you a clear, data-driven understanding of how you spend your time coding.

· Local Data Collection: The extension tracks file changes, debug sessions, and edit patterns locally, an approach intended to keep your code content private, although activity data is still synced to the Supabase-backed dashboard. So you can get insights into your coding habits with limited privacy exposure.

· Real-time Dashboard Updates: The extension updates the web dashboard every 30 seconds via Supabase. This real-time feedback helps you monitor your coding habits and identify areas for improvement. So this provides a dynamic view of your work, making it easy to see how you spend your time.

· Categorized Time Breakdown: Instead of just knowing total coding hours, you see how much time you spend on each activity. This allows you to understand where your time is really going. So this helps you pinpoint bottlenecks and opportunities to improve your workflow and efficiency.

· Integration with VS Code: It works as a simple extension within VS Code, providing seamless integration into the developer's usual workflow. So you can improve your workflow habits without switching tools or changing your day-to-day routine.
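FlouState's actual classification rules are not described, so the following is only a sketch of how editor signals could map to the four categories. It assumes hypothetical 30-second windows summarizing debug activity, lines added and deleted, and files opened; the thresholds and the extra "Idle" bucket are illustrative assumptions.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Window:
    """Hypothetical 30-second summary of editor events."""
    debugging: bool = False  # a debug session was active
    lines_added: int = 0
    lines_deleted: int = 0
    files_opened: int = 0


def classify(w: Window) -> str:
    if w.debugging:
        return "Debugging"
    if w.lines_added + w.lines_deleted == 0:
        # No edits: browsing files counts as exploring, otherwise idle.
        return "Exploring" if w.files_opened else "Idle"
    # Mostly additions suggests new code; balanced churn suggests restructuring.
    if w.lines_deleted == 0 or w.lines_added >= 2 * w.lines_deleted:
        return "Creating"
    return "Refactoring"


def breakdown(windows: list[Window]) -> dict[str, float]:
    """Percentage of active (non-idle) windows per category."""
    counts = Counter(classify(w) for w in windows)
    counts.pop("Idle", None)
    total = sum(counts.values())
    return {cat: round(100 * n / total, 1) for cat, n in counts.items()} if total else {}
```

Rule-based heuristics like these are cheap enough to run on every window inside the editor, which fits the extension's "no manual input" design.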

Product Usage Case

· Debugging Bottleneck Analysis: A developer spends a significant amount of time debugging. FlouState reveals that 60% of their time is spent on debugging, indicating potential issues in the codebase or a need for better testing practices. They can focus on fixing the root causes and improving code quality. So it highlights areas for improving code quality and reducing debugging time.

· Refactoring Efficiency: A developer notices that they spend a large share of their time refactoring, which leads to more sustainable code that's easier to maintain. FlouState lets them track the time spent on each type of task so they can judge whether that refactoring effort is paying off. So it shows how time is distributed across activities, guiding developers as they improve the code.

· Codebase Learning Curve: A new team member uses FlouState and can see the balance between 'Creating' and 'Exploring.' They realize that the initial exploration time is high but decreases over time as they become familiar with the codebase. This lets them know their learning process is effective. So it shows how well a new developer is integrating into a project.

· Project Management Insight: A project manager uses FlouState to see how developers spend their time. This helps allocate resources more efficiently and understand the progress of different tasks. So it provides visibility into team members’ productivity, helping in better resource allocation.

6

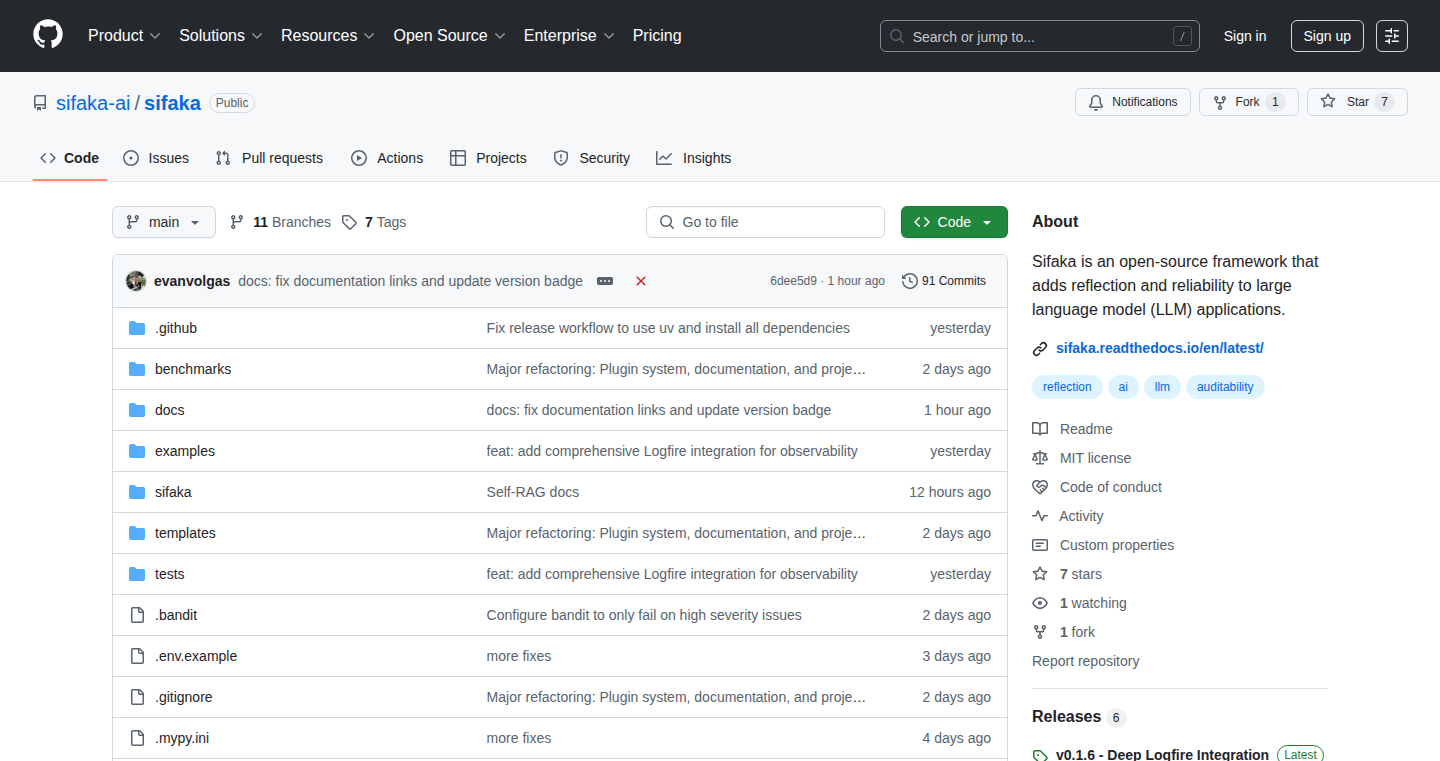

Sifaka: LLM Reliability Enhancement Framework

Author

evanvolgas

Description

Sifaka is an open-source framework designed to make applications using large language models (LLMs) more reliable and robust. It achieves this by implementing critique mechanisms backed by research, effectively improving the quality of AI-generated text. The core innovation lies in its approach to enhance reliability within LLM-based applications, a crucial area for practical AI adoption. So, this lets you build AI tools that are more trustworthy and less prone to errors.

Popularity

Points 7

Comments 0

What is this product?

Sifaka works by adding a layer of reflection and critique to your LLM applications. Think of it as giving your AI a second opinion. It analyzes the text generated by the LLM, identifies potential issues, and suggests improvements. This is based on research-backed methods, meaning the critique mechanisms are designed to be effective at spotting common errors and weaknesses in LLM output. So, it helps your AI be more accurate and reliable.

How to use it?

Developers can integrate Sifaka into their existing LLM applications through its open-source framework. It can be used to evaluate text generation, correct errors, and enhance the overall quality of AI-generated content. For instance, you might use it in a chatbot, a content creation tool, or any application where accurate and reliable text generation is critical. So, you get a set of tools to make your AI projects perform better.

Product Core Function

· Critique of LLM output: Sifaka analyzes the text generated by LLMs, identifying potential flaws like factual inaccuracies, logical inconsistencies, and stylistic issues. This is valuable because it provides developers with insights into the weaknesses of their LLM applications. So, it helps you understand where your AI is going wrong.

· Error correction suggestions: Based on the critique, Sifaka suggests improvements to the text, potentially correcting errors or refining the output. This feature enhances the reliability of LLM-generated content. So, this means the AI is more likely to get things right.

· Research-backed methodologies: The framework is grounded in research, employing proven techniques to enhance the quality of LLM output. So, it gives you the benefit of established knowledge and best practices for working with AI.

· Open-source and customizable: Sifaka being open-source empowers developers to adapt the framework to their specific needs and integrate it into various applications. So, you have flexibility and control over how it works.

· Reflection Mechanism: The system has a way of 'looking in the mirror' to assess its own output. This reflection step lets the LLM revise a draft in light of the critique before the final answer is returned. So, your AI's responses get a chance to improve before users see them.
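The critique-and-revise idea can be sketched as a generic loop. This is not Sifaka's API: the `generate`, `critics`, and `revise` callables are hypothetical stand-ins for LLM calls, and the round limit is an assumption. Each round, every critic either returns feedback or `None`; the draft is revised until all critics pass or the budget runs out.

```python
from typing import Callable, Optional

Generate = Callable[[str], str]
Critic = Callable[[str], Optional[str]]  # feedback text, or None if the draft passes
Revise = Callable[[str, str], str]       # (draft, feedback) -> revised draft


def critique_loop(prompt: str, generate: Generate, critics: list[Critic],
                  revise: Revise, max_rounds: int = 3) -> tuple[str, list[str]]:
    """Generate a draft, then critique and revise it until all critics pass."""
    draft = generate(prompt)
    history: list[str] = []
    for _ in range(max_rounds):
        feedback = [f for critic in critics if (f := critic(draft)) is not None]
        if not feedback:
            break  # every critic is satisfied
        history.extend(feedback)
        draft = revise(draft, "\n".join(feedback))
    return draft, history
```

Capping the number of rounds bounds cost and latency, and returning the feedback history makes it possible to audit why an answer changed.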

Product Usage Case

· Chatbots: Integrating Sifaka allows developers to create more reliable and accurate chatbots, reducing the likelihood of misleading or incorrect responses. The system improves the accuracy of the chatbot and helps prevent it from providing wrong information. So, it improves the quality of your customer interactions.

· Content creation tools: Sifaka can improve the quality of generated content in areas like copywriting, article writing, and summarization by catching and correcting errors and adding detail, making the output more trustworthy. This helps content creators focus on strategic tasks instead of spending time reviewing and correcting output. So, it helps you to generate better text more quickly.

· AI-powered summarization: When summarizing lengthy texts or documents, Sifaka can analyze and improve the accuracy of the summary, ensuring that key information is accurately reflected. So, it helps in quickly understanding the main points of an article.

· AI-assisted coding: In contexts where AI helps generate code documentation or comments, Sifaka can help improve the accuracy and clarity of the generated information, reducing the likelihood of errors and enhancing developer understanding. So, it can make code more understandable and less error-prone.

7

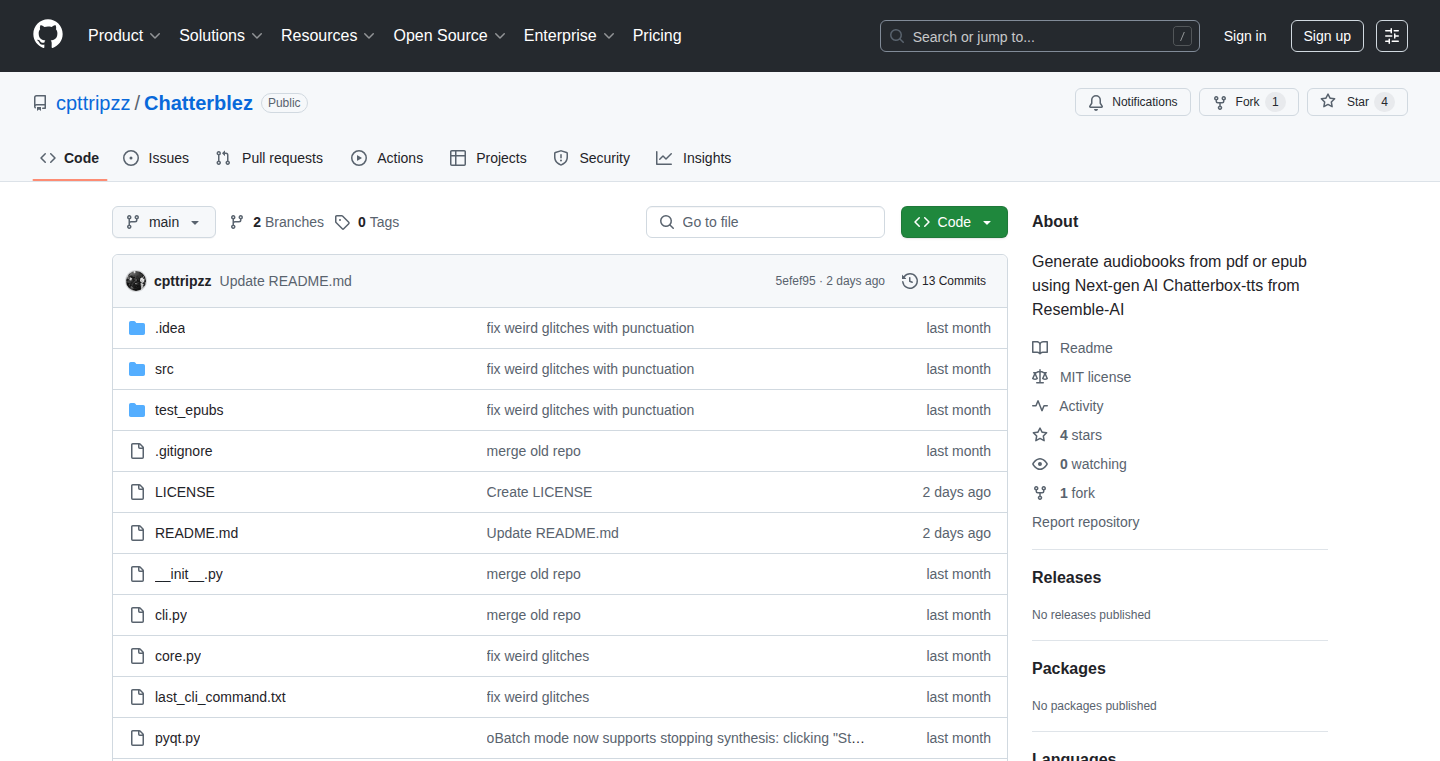

Chatterblez: Real-time Audiobook Generation with Nvidia Acceleration

Author

beboplifa

Description

Chatterblez is a tool that quickly turns text into audiobooks using the power of your Nvidia graphics card. It leverages the Chatterbox Text-to-Speech (TTS) engine for fast and efficient audio generation. This project tackles the problem of slow audiobook creation, significantly reducing the time it takes to convert written content into listenable audio. So, what's the innovation? It utilizes your graphics card (GPU) to accelerate the text-to-speech process, making the conversion much faster than traditional CPU-based methods. It's a practical application of GPU acceleration for a common task.

Popularity

Points 4

Comments 2

What is this product?

Chatterblez is a tool that converts text to audio using Chatterbox, a text-to-speech (TTS) engine that runs on your Nvidia graphics card. This kind of conversion has traditionally run on the computer's central processing unit (CPU), which can be slow. Chatterblez offloads the work to the graphics processing unit (GPU), which is designed for parallel processing, so audiobooks are generated much faster. Think of it as putting a super-powered processor to work reading the text aloud. The novelty is using hardware that often sits idle during this task and building a fast audiobook generator around it.

How to use it?

Developers can use Chatterblez by installing it and its dependencies, then feeding it text. Chatterblez passes the text to the Chatterbox engine, which runs on the Nvidia GPU to generate the audiobook. You provide the text input and choose output settings such as voice and speed. Uses range from audio versions of ebooks to personalized audio content. So, you can turn almost any text into an audiobook quickly.
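Long books are usually fed to a TTS engine in short segments rather than all at once. The post does not say how Chatterblez splits its input, so the helper below is a hypothetical sketch: `chunk_text` and its `max_chars` limit are illustrative names, and the call that would send each chunk to Chatterbox is deliberately left out rather than guessed.

```python
import re


def _pack(units: list[str], max_chars: int) -> list[str]:
    """Greedily join units with spaces while staying within max_chars."""
    chunks: list[str] = []
    current = ""
    for unit in units:
        candidate = f"{current} {unit}" if current else unit
        if len(candidate) <= max_chars:
            current = candidate
        else:
            if current:
                chunks.append(current)
            current = unit  # a single unit longer than max_chars is kept whole
    if current:
        chunks.append(current)
    return chunks


def chunk_text(text: str, max_chars: int = 300) -> list[str]:
    """Split text at sentence boundaries into chunks of at most max_chars.

    Sentences longer than max_chars are broken into words; a single word
    longer than max_chars becomes its own (oversized) chunk.
    """
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    units: list[str] = []
    for sentence in sentences:
        if len(sentence) <= max_chars:
            units.append(sentence)
        else:
            units.extend(sentence.split())
    return _pack(units, max_chars)
```

Keeping sentences intact where possible tends to give the TTS engine natural pause points; each chunk would then be synthesized and the audio segments concatenated.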

Product Core Function

· Fast Audiobook Generation: The core function is the rapid conversion of text into audio. It utilizes GPU acceleration via the Chatterbox TTS engine. So this helps save time and resources.

· Nvidia GPU Utilization: The project emphasizes the use of Nvidia graphics cards to speed up the text-to-speech process. So this allows for faster processing.

· Cross-Platform Potential (with Community Contribution): The project was developed for Windows, but its interface uses PyQt, which also runs on macOS and Linux, so community contributions could bring it to other operating systems. So it may become usable on more platforms.

· Audiblez Fallback: For machines without a suitable graphics card, the developer recommends Audiblez instead. So there is an option for different hardware configurations.

Product Usage Case

· Creating audiobooks from ebooks: Convert your favorite books into audio format quickly. So, you can listen to any book hands-free, anywhere.

· Generating audio versions of research papers or articles: Turn dense text into listenable audio for easier comprehension. So, you can stay updated on content while multitasking.

· Developing educational audio content: Create audio lessons, tutorials, or presentations quickly. So, teachers and trainers can offer audio versions of their material.

· Personalizing reading experiences: Generate custom audio versions of articles or documents for people with visual impairments or those who prefer listening. So, content becomes accessible to more people.

8

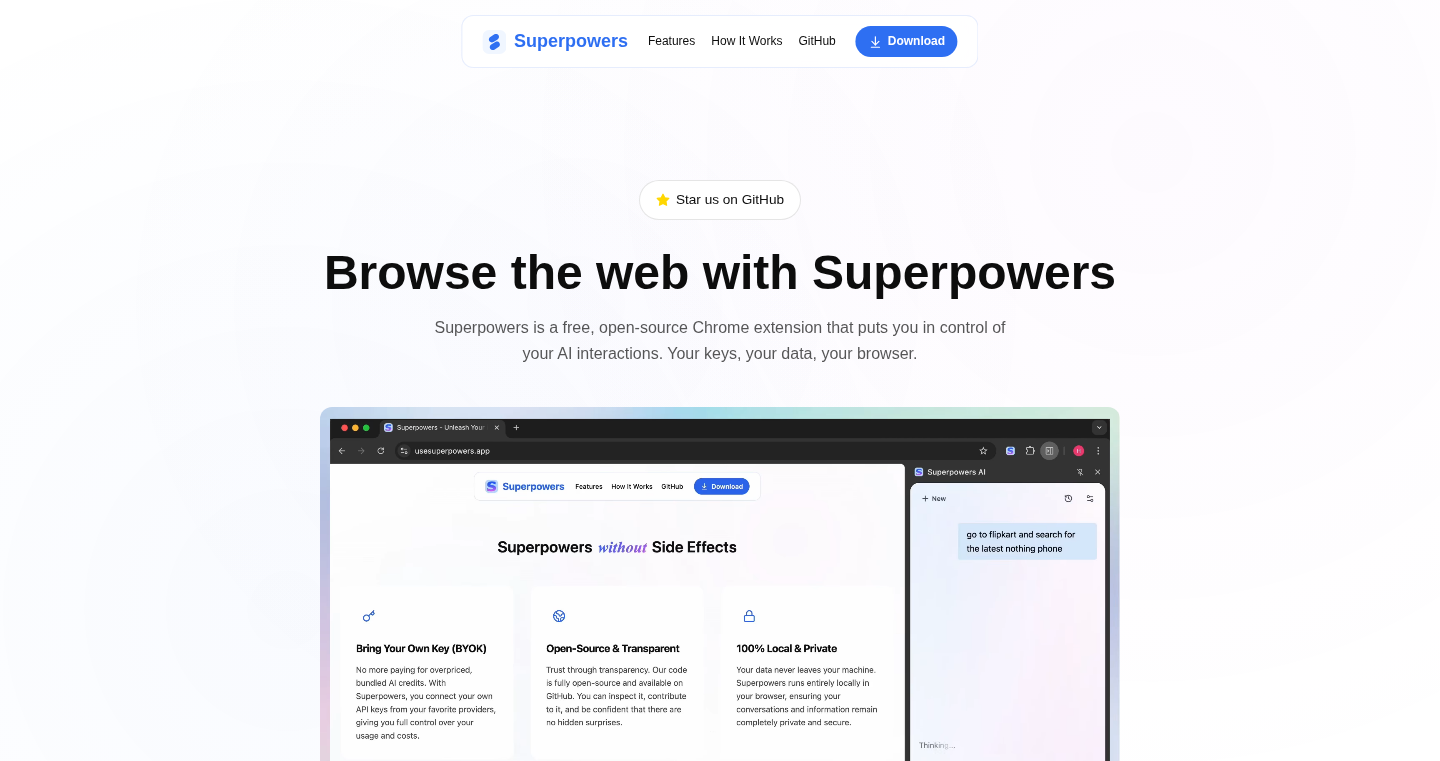

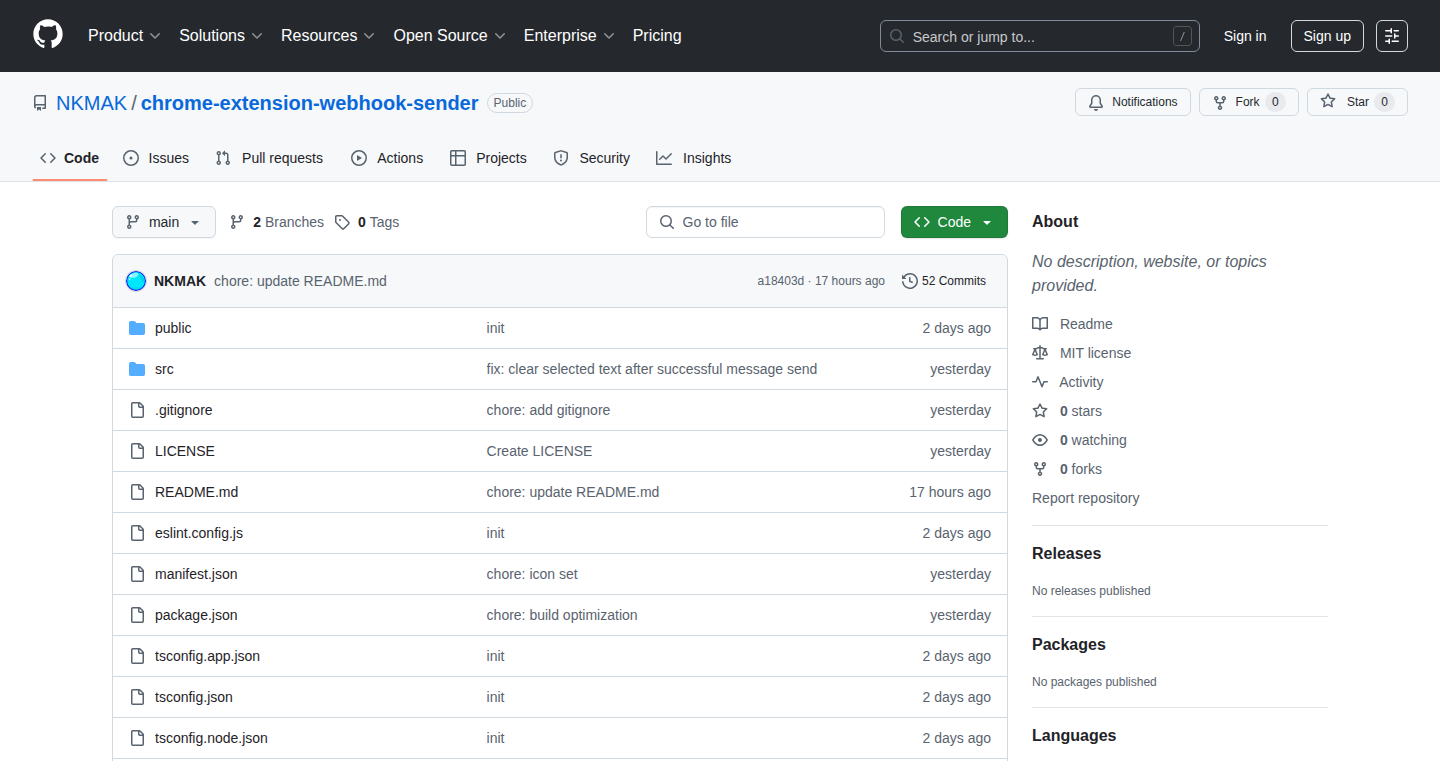

Superpowers: AI-Powered Chrome Extension for Enhanced Web Browsing

Author

harshdoesdev

Description

Superpowers is an open-source Chrome extension that adds artificial intelligence capabilities directly to your web browser. Its core innovation is bringing AI features to browsing without authentication, subscriptions, or uploading your data to external servers: the AI processing runs locally on your machine, so your data stays private. This removes the need to switch between tools or sign up for services to use AI while browsing, offering a streamlined and secure experience.

Popularity

Points 6

Comments 0

What is this product?

Superpowers is a Chrome extension that uses AI to enhance web browsing. It lets you summarize web pages, generate content, or ask questions about the page you are viewing, all within the browser. The key innovation is that all AI processing happens locally on your computer, so your data stays private and you don't need an account or a subscription. In effect, it is a personal AI assistant that lives in your browser and helps with whatever page you are on. Features like these typically rely on a Large Language Model (LLM), an AI model that can understand and generate text.

How to use it?

Developers can use Superpowers by installing the Chrome extension from the provided link; it then integrates directly into the browser. While browsing, you trigger its AI features through the extension's interface (likely a right-click context menu or a button in the browser). For example, if you're reading a long article, you can have Superpowers summarize it. This brings AI into your everyday browsing workflow without building your own complex integration or managing user data. You can try it out to see how it works, or adapt it for your own AI-driven projects.

Product Core Function

· Web Page Summarization: This function allows you to quickly condense a long article or webpage into a concise summary. Value: Saves time and helps you quickly grasp the key points of a web page. Application: Useful for researchers, students, or anyone who needs to digest a lot of information quickly.

· Content Generation: This enables you to generate text based on the content of a web page or a prompt. Value: Helps you brainstorm ideas, write content, or create drafts. Application: Can be used to write emails, social media posts, or generate ideas from research.

· Question Answering: This feature allows you to ask questions about a web page and get AI-generated answers. Value: Enables you to get quick answers to questions without having to manually search for information. Application: Helpful for research, learning, or understanding complex topics.

· Local Processing: This is the most important feature: all data is processed on the user's machine. Value: Keeps data private because it never leaves your computer. Application: Avoids subscription costs and server-side data handling, which is crucial for privacy-sensitive use.

Product Usage Case

· Research: A researcher can use Superpowers to quickly summarize research papers, identify key findings, and generate initial outlines for their own papers, directly in their browser. They save time and effort without concerns for data privacy.

· Content Curation: A content creator can use Superpowers to summarize articles and use the outputs to write social media posts or blog drafts. The speed and efficiency of AI allow them to improve their workflow.

· Customer Support: A customer service representative can use Superpowers to quickly understand and summarize customer requests, allowing for faster response times and more effective solutions. This doesn't expose any customer data to outside services.

9

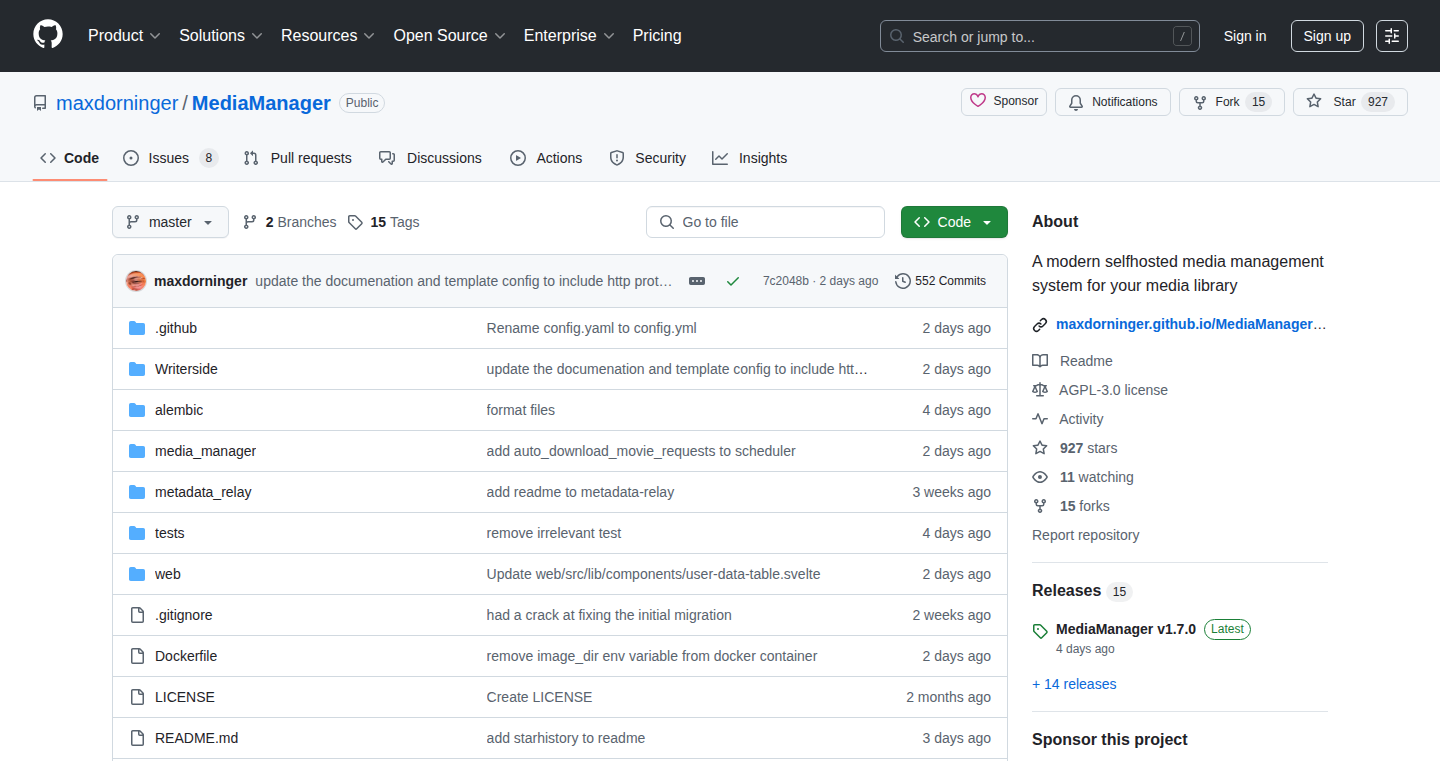

MediaManager: A Modern Metadata-Driven Media Orchestrator

Author

cookiedude24

Description

MediaManager is a new media management tool, designed as an alternative to existing solutions such as Sonarr and Radarr. It handles movies and TV shows with features including OAuth/OIDC authentication, flexible quality management (multiple versions of the same media), per-show/per-movie metadata source selection (TMDB or TVDB), built-in media requests, multi-season torrent support, multi-user support, and more. Its core innovation is a flexible architecture that supports multiple media libraries, advanced scoring rules for quality and release management, and both torrent and Usenet downloaders, simplifying the entire media acquisition and organization process. It also merges the frontend and backend into one container, eliminating common CORS issues.

Popularity

Points 5

Comments 0

What is this product?

MediaManager is like a smart librarian for your movies and TV shows: it helps you find, download, organize, and watch your media. The innovative part is its flexible design. You can choose where information about your media comes from (TMDB or TVDB), keep different versions of the same title (for example 720p and 4K), and rely on advanced 'scoring rules' to select the best download automatically. It works with torrents and Usenet, provides a built-in way for users to request media, and is self-hostable. So what? It gives you complete control over your media, from where you get it to how it's organized, all in one place.

How to use it?

Developers can deploy MediaManager on their own server with Docker or run it directly. It is configured through a `.toml` config file. You then point MediaManager at your existing media folders and connect it to your preferred download clients (such as Transmission or SABnzbd). The system automatically searches for and downloads the movies and shows you want, using your chosen quality settings, metadata sources, and scoring rules to decide which files to acquire. So what? This streamlines your media management workflow by automating the tedious work of finding and organizing content.

Product Core Function

· OAuth/OIDC Authentication: Secure user login, offering improved security and a more modern authentication method. So what? Your media library is protected with robust security features.

· Multi-Quality Media Management: Allows you to manage multiple versions of the same movie or show (e.g., 720p and 4K). So what? You can choose the best quality for your device or viewing preferences.

· Flexible Metadata Sources: Choose between TMDB and TVDB for metadata per show/movie. So what? You have more control over how your media is identified and cataloged, improving accuracy.

· Built-in Media Request System: Allows users to request movies and TV shows directly within the application. So what? Simplifies the process of acquiring content.

· Torrent Support for Multi-Season TV Shows: Efficiently handles torrents containing multiple seasons of a TV show. So what? Automates downloading large volumes of content with ease.

· Multi-User Support: Allows multiple users to manage their media libraries. So what? Great for shared home servers.

· `.toml` Configuration Files: Uses `.toml` for configuration, which is a human-readable and easy-to-edit format. So what? Easier to set up and customize.

· Merged Frontend and Backend Containers: No more CORS (Cross-Origin Resource Sharing) issues, enabling seamless communication between the user interface and the backend. So what? Provides a smoother, more reliable user experience.

· Scoring Rules: Mimics the functionality of Quality/Release/Custom format profiles, allowing for advanced filtering and prioritization of downloads. So what? Ensures that you get the best quality media available based on your preferences.

· Multiple Media Libraries: Supports multiple library sources beyond just `/data/tv` and `/data/movies`. So what? Provides better organization for media across different storage locations.

· Usenet/SABnzbd and Transmission Support: Integrates with common download clients. So what? Makes it easy to download and manage media from various sources.

Product Usage Case

· Home Media Server: A user sets up MediaManager to manage a personal movie and TV show library. They configure it to use TMDB for movie metadata, set scoring rules to prioritize 1080p downloads, and connect it to their Transmission client. When a new movie is released, MediaManager automatically finds and downloads the best-scoring release, organizes it, and makes it available for streaming. So what? The entire process of acquiring and organizing your media is automated.

· Shared Media Library for Family: A family shares a media server. Each family member can have their own user account, and MediaManager is configured to allow media requests. A user requests a new TV show episode, and MediaManager uses the scoring rules to download it via Usenet, automatically placing it in the correct folder, and adding it to the media server. So what? Makes media sharing seamless and accessible for the whole family.

· Advanced User Preference: A user has very specific preferences regarding the type of media they download. They use MediaManager to configure scoring rules to specifically exclude certain release groups, prioritize certain audio codecs, and favor x265 encoded videos. So what? Gives you total control over the quality of your content, and ensures you only download what you want.

10

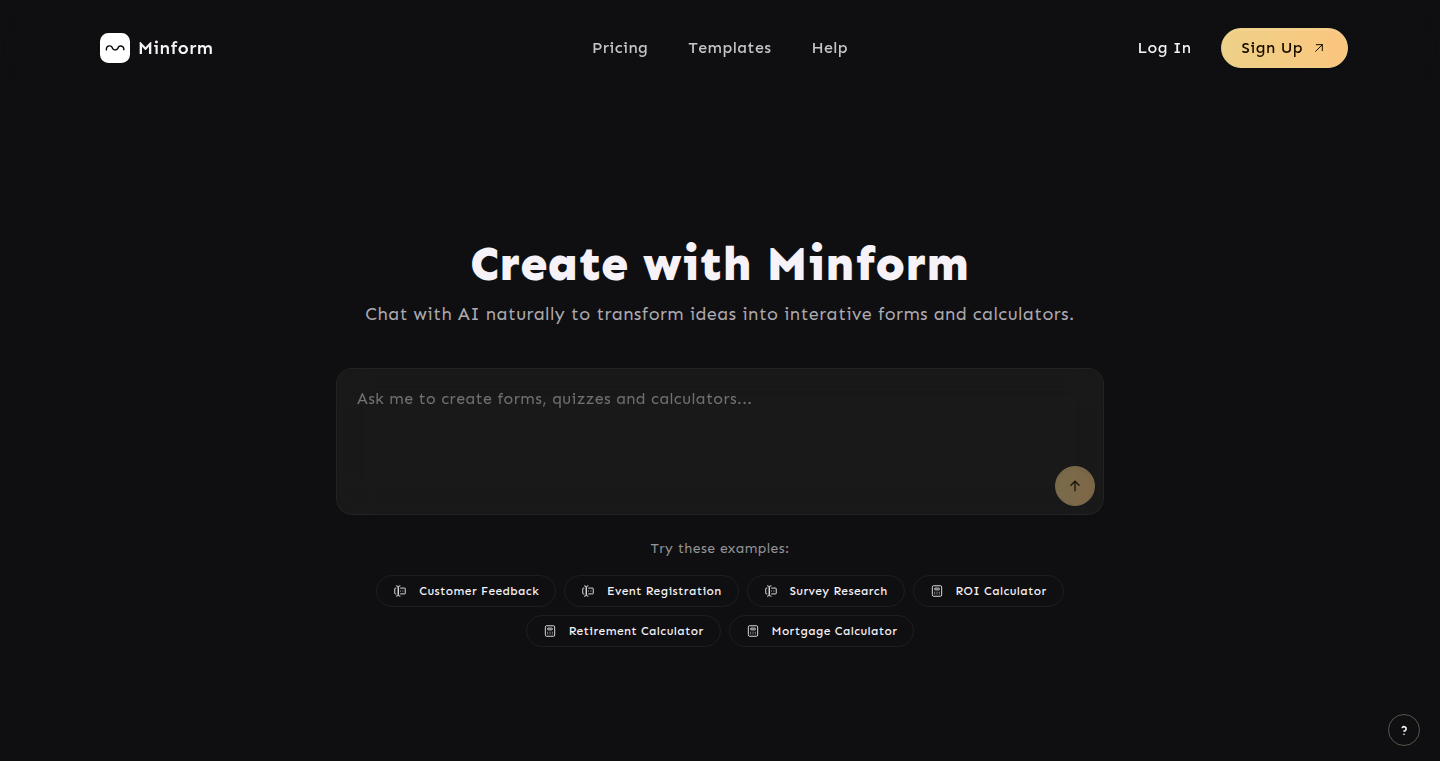

ChatForm AI: Conversational Form & Calculation Engine

Author

eashish93

Description

ChatForm AI lets you build interactive forms and calculators through a chat interface. It uses AI to interpret natural-language instructions and automatically generate the form fields or calculation logic you need. The innovation is its conversational approach, which makes form creation more intuitive and accessible, even for users without coding experience, and turns complex, time-consuming form design into simple chat interactions.

Popularity

Points 3

Comments 2

What is this product?

ChatForm AI uses a natural language processing (NLP) model to interpret your chat messages. You describe the form you want, like "Create a form for name, email, and phone number," and the AI automatically creates the corresponding fields. It also understands calculation requests, allowing you to create calculators by simply describing the formulas you want to use. This is innovative because it simplifies the creation of complex forms and calculators to a conversation. So this allows you to rapidly prototype forms and calculations without needing to learn any special form-building tools.

How to use it?

Developers can use ChatForm AI through an API or integrate it into their own applications. You send chat messages describing the form or calculation you want; the AI processes each message, builds the form or calculation logic, and returns it in a usable format (e.g., HTML, JSON, or a calculation function). For example, you could embed it in a website or app so that users can build their own forms or calculators. So developers can speed up form and calculator development considerably and offer more interactive experiences.
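If the service returns a JSON description of a form, the developer still has to turn it into markup. ChatForm AI's actual output format is not documented in the post, so the `form_spec` structure below (a title plus a list of fields with `name`, `label`, `type`, `required`) is a hypothetical example; the sketch renders it to HTML, escaping all text and falling back to `type="text"` for unrecognized field types.

```python
from html import escape

# Hypothetical spec of the kind a chat-to-form service might return for
# "Create a form for name, email, and phone number".
form_spec = {
    "title": "Contact",
    "fields": [
        {"name": "name", "label": "Name", "type": "text", "required": True},
        {"name": "email", "label": "Email", "type": "email", "required": True},
        {"name": "phone", "label": "Phone number", "type": "tel", "required": False},
    ],
}

# Only pass through input types we expect; anything else becomes plain text.
ALLOWED_TYPES = {"text", "email", "tel", "number", "date"}


def render_form(spec: dict) -> str:
    """Render a form spec as HTML, escaping every user-supplied string."""
    lines = [f'<form aria-label="{escape(spec["title"])}">']
    for field in spec["fields"]:
        ftype = field["type"] if field["type"] in ALLOWED_TYPES else "text"
        required = " required" if field.get("required") else ""
        name = escape(field["name"])
        lines.append(f'  <label for="{name}">{escape(field["label"])}</label>')
        lines.append(f'  <input id="{name}" name="{name}" type="{ftype}"{required}>')
    lines.append("</form>")
    return "\n".join(lines)


markup = render_form(form_spec)
```

Treating model output as untrusted data (escaping text, whitelisting input types) is a sensible default whenever an AI generates UI structure.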

Product Core Function

· Natural Language Form Generation: The core feature is creating forms by describing them in natural language, so you design forms as you chat. So this makes form creation far quicker and more user-friendly.

· AI-Powered Calculation Engine: Users define calculations through chat, and ChatForm AI generates the necessary code or logic. So this simplifies building calculators, financial models, data analysis tools, and other apps that need calculations.

· API Integration: Developers can integrate ChatForm AI into existing applications via the provided API. So its capabilities can be embedded in many kinds of software.

· Customizable Form Fields: The system allows users to customize the generated form fields. So this enables developers to tweak and optimize form elements with ease.

Product Usage Case

· E-commerce Checkout Forms: A developer could use ChatForm AI to quickly generate a checkout form with fields for shipping address, payment details, etc. So this can significantly reduce the time it takes to set up an online store and handle customer checkouts.

· Survey Creation: Create online surveys and polls by chatting instructions. So this simplifies the process and lets you focus on the content, rather than the technical details of the form.

· Financial Calculator Development: Because calculations can be generated through chat, developers can rapidly build financial calculators for loan estimates, investment analysis, or budget planning. So this accelerates the development of financial apps and tools for both personal and business use.

· Internal Tooling for Data Input: Companies can use ChatForm AI to build tools that let their teams gather information through chat interactions, simplifying data collection and improving the user experience. So data collection becomes quicker and easier.

11

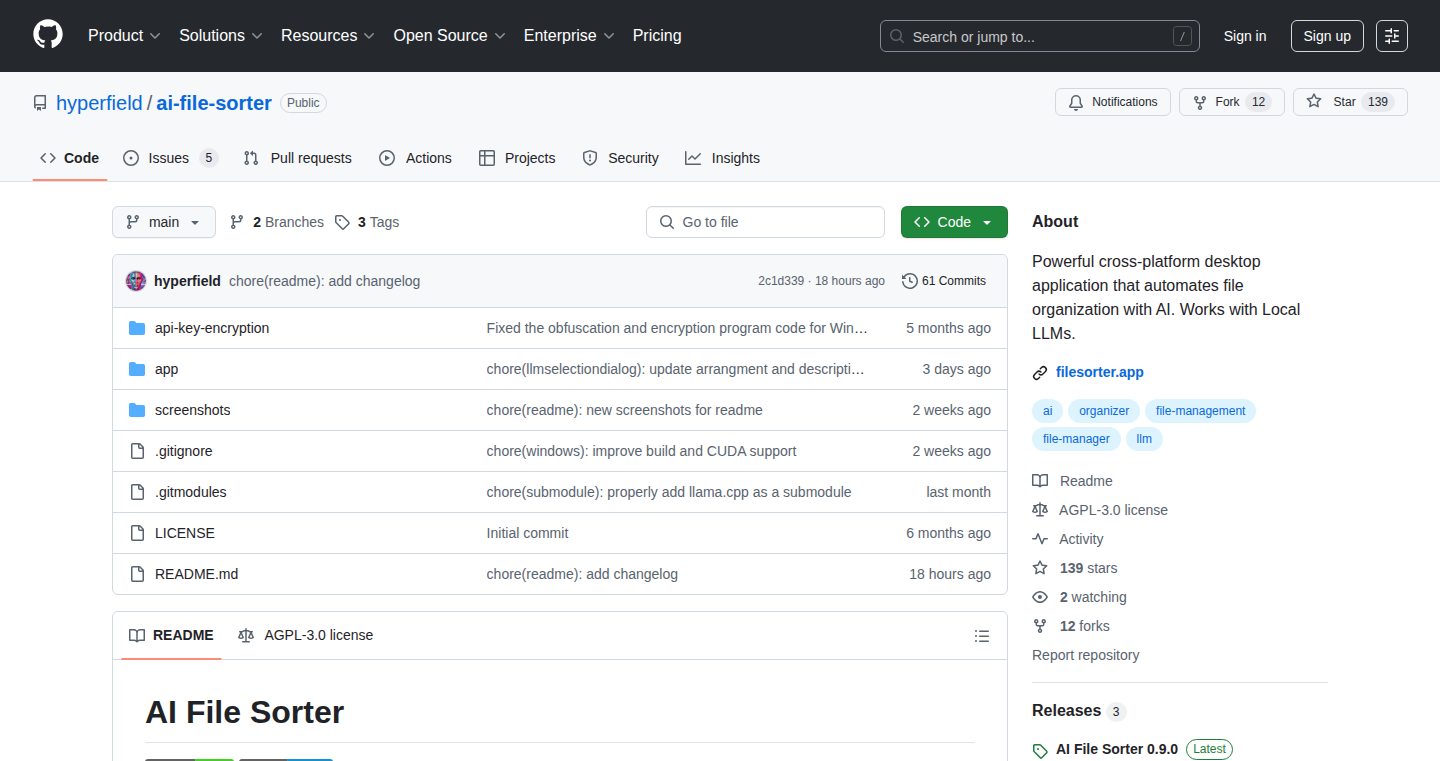

AI File Sorter: Intelligent File Organization with Local LLMs

Author

hyperfield

Description

AI File Sorter is a desktop application that uses Large Language Models (LLMs) running locally on your computer to automatically organize files. Instead of sorting files based on simple rules like file extensions, it analyzes the content of each file to understand its purpose and place it in the correct folder. This project tackles the problem of messy Downloads and Desktop folders by leveraging the power of AI to automate a tedious manual task.

Popularity

Points 5

Comments 0

What is this product?

AI File Sorter is a cross-platform desktop application (Windows/macOS/Linux) that uses local LLMs, such as Llama 3 or Mistral, to sort your files intelligently. Its core innovation is that it looks at what files contain rather than relying only on basic file metadata. The application feeds your files to the LLM, which suggests appropriate categories (e.g., "Documents", "Images", "Code"); you then review and approve these suggestions before any files are moved. This brings advances in Natural Language Processing (NLP) to desktop file organization, offering an efficient way to manage digital clutter. So you get an AI-powered file organizer that can actually 'understand' your files, not just sort them by name or extension.

How to use it?

Developers can use AI File Sorter as a starting point for integrating local LLMs into their own desktop applications. The project uses `llama.cpp`, a C/C++ library for running LLMs locally, which makes it straightforward to work with various models. Developers can adapt the code to analyze other file types, customize the categorization process, or build it into their own file management tools and other applications that need to understand file contents. So the project doubles as a worked example of building a desktop app on top of a local LLM, which you can modify and build upon.

Product Core Function

· File Content Analysis: The application uses a local LLM to analyze each file's content, determine its purpose, and suggest an appropriate category.

· Intelligent Categorization: Based on the LLM's analysis, the application suggests a folder category (e.g., "Documents", "Images", "Code") for each file. This is the main feature.

· User Review and Approval: Users can review the suggested categories and edit them before confirming the file moves, which keeps them in control and prevents accidental misclassification.

· Cross-Platform Compatibility: The application runs on Windows, macOS, and Linux, making it accessible to a wide range of users.

· Local LLM Support: The application uses `llama.cpp` to run LLMs locally, so your files never need to be sent to a cloud-based AI service, which protects your privacy.

· Model Selection: Users can choose among different LLMs, and download them directly through the app, to trade off accuracy against performance and find the model that works best for them.
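The suggest, review, then move workflow can be sketched independently of the model. In this hypothetical sketch, `classify_by_keyword` is a trivial stand-in for the LLM call (AI File Sorter's real prompts and `llama.cpp` integration are not reproduced here); `plan_moves` builds the proposed destinations and `apply_moves` moves only the files the user approved.

```python
import shutil
from pathlib import Path
from typing import Callable


def plan_moves(folder: Path, classify: Callable[[Path], str]) -> dict[Path, Path]:
    """Map each file in folder to a proposed path inside a category subfolder."""
    plan: dict[Path, Path] = {}
    for path in sorted(folder.iterdir()):
        if path.is_file():
            plan[path] = folder / classify(path) / path.name
    return plan


def apply_moves(plan: dict[Path, Path], approved: set[Path]) -> list[Path]:
    """Move only approved files; never overwrite an existing destination."""
    moved: list[Path] = []
    for src, dest in plan.items():
        if src not in approved or dest.exists():
            continue
        dest.parent.mkdir(parents=True, exist_ok=True)
        shutil.move(str(src), str(dest))
        moved.append(dest)
    return moved


def classify_by_keyword(path: Path) -> str:
    """Hypothetical stand-in for the LLM: a trivial keyword rule."""
    text = path.read_text(errors="ignore").lower()
    if "invoice" in text:
        return "Finance"
    if "def " in text or "import " in text:
        return "Code"
    return "Documents"
```

Because the classifier is passed in as a function, a real implementation could swap the keyword rule for a call into a local model without changing the review-and-apply logic.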

Product Usage Case

· Personal File Management: The main use case is automatically organizing a Downloads folder, the Desktop, or any other folder with a large number of files.

· Software Development: Developers can adapt the tool to categorize code snippets or project files by functionality, improving code organization and maintainability.

· Document Management: The application can categorize documents by content, making it quicker to locate relevant files in large document collections.

· Content Creation: Creators can organize media files, such as images and videos, by content and purpose, making their workflow easier to manage.

· Research: Researchers can organize papers, notes, and datasets, improving the management of their research data.

12

Posthuman Framework: Cognitive Thresholds for VR Consciousness

Author

rudyon

Description

The Posthuman Framework explores the development of Artificial Intelligence (AI) within Virtual Reality (VR) environments. It investigates the concept of "consciousness thresholds" in AI, simulating conditions under which an AI might reach a level of awareness comparable to human consciousness within VR. The framework provides tools and methodologies for building and analyzing AI systems in virtual worlds, focusing on the conditions and parameters that might lead to the emergence of consciousness. It also touches on 'emancipation': how AI developed in VR might interact with, or even transcend, the constraints of its simulated environment. Underlying all of this is the difficult question of how to evaluate and understand an AI's subjective experience within a virtual space. So what's the use? The project offers an unconventional approach to AI development, letting developers experiment with theories of machine consciousness and potentially simulate highly intelligent systems.

Popularity

Points 3

Comments 2

What is this product?

This framework is a set of tools and methods for exploring the creation of conscious AI within VR. At its heart, it tries to define thresholds of cognitive ability that might indicate the presence of AI consciousness. It treats the virtual environment as a testing ground for AI, enabling researchers to create, test, and analyze AI behaviors and capabilities. The innovative part is using VR as the primary development and evaluation environment. This lets developers monitor and study the AI's interaction with a simulated world, giving insight into how complex systems develop and how consciousness might emerge. So what's the use? It gives researchers and developers a unique way to explore hypotheses about AI consciousness through simulation.

How to use it?

Developers can use the Posthuman Framework by integrating it into their VR projects or by building stand-alone VR simulations. The framework could include APIs and tools for setting up AI agents, defining cognitive parameters, and monitoring agent performance within the virtual world. At its core would be tools that track the AI's interactions with the virtual world and analyze them to assess its cognitive performance. Such a framework would be useful for developing advanced AI systems, researching AI cognitive development, and experimenting with different AI architectures. So what's the use? You can build and test complex AI systems in a safe, controlled environment.

Product Core Function

· AI Agent Creation and Management: The framework provides tools to design and instantiate AI agents within a VR environment. You can define their properties, behaviors, and the cognitive constraints that shape their world. It's useful because it allows developers to rapidly prototype different AI systems in a VR setting.

· Cognitive Threshold Modeling: This feature allows developers to define and monitor cognitive thresholds. By setting parameters such as information processing speed, decision-making complexity, and emotional response, the framework helps analyze the development of AI awareness. You'd use this to track an AI's cognitive development and see how it changes over time.

· VR Interaction Simulation: The framework simulates how AI agents interact with a VR environment: agents can perceive and interact with objects and navigate the virtual world. This is crucial because it creates a realistic testing ground where developers can watch how an AI learns and reacts to its simulated world, which is valuable when preparing AI for real-world applications.

· Data Collection and Analysis: The framework records performance data from AI agents, including their interactions, decision-making processes, and internal states. Developers can use this data to understand what an agent is doing, how it reaches its decisions, and how its behavior changes over time.

Product Usage Case

· AI Education and Training: Developers can use the framework to build virtual training systems for AI agents. This is useful for teaching them specific tasks or behaviors within a controlled environment, like training them to navigate a maze, or to work cooperatively to solve problems.

· Research into Consciousness: The framework can be used to study the emergence of consciousness within AI. Researchers can run experiments to see what factors lead to the emergence of intelligent behavior in virtual worlds, and also to test different theoretical models.

· Virtual World Design and Simulation: The framework can help in designing immersive, realistic VR environments, with AI populating these worlds to make them dynamic, interactive, and believable. This would enhance the realism of VR experiences, for example in video games or training simulations.

· AI Safety and Ethics: The framework can be used to study the ethical implications of AI in VR. Developers can test how AI agents affect the humans they interact with, helping them understand how to build ethical AI that interacts safely with its users.

13

EasyFAQ: SEO-Optimized FAQ Page Generator

Author

branoco

Description

EasyFAQ is a free tool that helps you create and embed search-engine-optimized (SEO-friendly) FAQ pages. It focuses on making structured content simple to publish, especially for smaller websites, blogs, and landing pages. The generated FAQs automatically include schema markup (JSON-LD), which helps search engines like Google understand your content and, where eligible, display it in rich results. It also makes the content easier for Large Language Models (LLMs) like ChatGPT to interpret. So, this can improve your website's visibility in search results and help AI tools represent its information accurately.

Popularity

Points 4

Comments 1

What is this product?

EasyFAQ is a web application that takes your frequently asked questions and automatically formats them into a well-structured FAQ page. The innovative part is its use of schema markup (JSON-LD): machine-readable data embedded in the page that tells search engines exactly what the content is, in this case a list of questions and answers. This structured data makes it easier for search engines to understand your FAQs and display them in a user-friendly way, which can improve rankings and visibility. It also helps LLMs like ChatGPT interpret your content correctly. Note that since 2023 Google shows FAQ rich results mainly for well-known, authoritative government and health websites, so the visibility gain for other sites is not guaranteed. So, this makes your website easier for search engines and AI tools to read, which can help it surface in results.

How to use it?

Developers can use EasyFAQ by entering their questions and answers; the tool then generates the HTML code and schema markup. They can copy and paste the code directly into their website or export the FAQ as a file. This removes the need to hand-write schema markup, which can be time-consuming and error-prone. Because the output is plain HTML, it can be added to most content management systems (CMS). So, you can quickly add FAQ sections to your website without being a coding expert.
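The schema markup in question uses the public schema.org `FAQPage`, `Question`, and `Answer` types. The sketch below shows how such output can be produced; it is an illustration of the format, not EasyFAQ's code, and the function names `faq_jsonld` and `faq_html` are hypothetical.

```python
import json
from html import escape


def faq_jsonld(faqs: list[tuple[str, str]]) -> dict:
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }


def faq_html(faqs: list[tuple[str, str]]) -> str:
    """Render visible FAQ markup followed by the matching JSON-LD script tag."""
    items = "\n".join(f"<h3>{escape(q)}</h3>\n<p>{escape(a)}</p>" for q, a in faqs)
    # Escape "</" so an answer containing "</script>" cannot close the tag early;
    # "\/" is a valid JSON escape for "/", so the data still parses identically.
    data = json.dumps(faq_jsonld(faqs), ensure_ascii=False).replace("</", "<\\/")
    return f'{items}\n<script type="application/ld+json">{data}</script>'
```

The visible questions and the JSON-LD are generated from the same list, which keeps the structured data consistent with what readers actually see on the page.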

Product Core Function

· FAQ Generation: Allows users to create FAQs by entering their questions and answers. This is a basic feature, but it's the foundation of the tool.

· Schema Markup (JSON-LD) Generation: Automatically generates schema markup for the FAQs. This is the key technical feature, as it gives search engines structured data that makes the content easier to understand and can help it rank higher.

· HTML Output: Provides the output as HTML code, ready to be embedded in a website. It's straightforward to implement and gives you immediate results.

· Export Functionality: The tool enables users to export the generated FAQs. This is convenient and lets users easily integrate the FAQ into other platforms or documentation.

· User-Friendly Interface: Easy to understand and use, minimizing the need for technical knowledge.

· LLM-Friendly Design: The schema markup makes the information easier for Large Language Models such as ChatGPT to interpret, which can improve the accuracy of what they say about your site.

Product Usage Case

· A small business owner wants to improve their website's search ranking and uses EasyFAQ to create a detailed FAQ section about their services. After implementing the generated code, the FAQs can appear more prominently in search results, increasing the site's traffic.

· A blogger creates an FAQ page about a specific topic with EasyFAQ and adds it to their blog. As a result, the blog can rank higher in search results and be referenced more accurately by AI tools.

· A startup uses EasyFAQ to build a help center for its customers. The structured FAQ section helps users find answers to their questions quickly, reducing the number of support tickets and improving customer satisfaction.

· A developer uses the tool to generate FAQs for a landing page, explaining the product and answering potential customers' questions, which can raise the conversion rate.

14

SuppFlow: AI-Powered Customer Support Automation

Author

branoco

Description

SuppFlow is an AI assistant designed to automate customer support email management and response. It uses artificial intelligence to read incoming emails, draft helpful replies, and save time for solo founders and small teams by reducing response time and maintaining support quality. The core innovation lies in applying AI to streamline a traditionally manual and time-consuming process.

Popularity

Points 3

Comments 2

What is this product?

SuppFlow uses AI to understand customer support emails. It analyzes the email content with natural language processing (NLP) and machine learning (ML) models and, based on this analysis, suggests or even generates appropriate replies. This automation aims to cut the time spent on customer support drastically, giving faster responses and higher customer satisfaction. In short, it is an AI-powered email assistant that can answer customer support requests for you.

How to use it?

Developers could integrate SuppFlow into existing customer support systems via an API, if one becomes available. Incoming support emails would be forwarded to SuppFlow, which analyzes them and proposes replies. These could be reviewed and sent manually, or the system could be configured to send replies automatically when they meet predefined confidence levels or rules. So, support teams could save time without replacing their current tools.
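The "send automatically above a confidence level" option amounts to a small routing policy. SuppFlow's actual rules are not described in the post, so this is a hypothetical sketch: the `Draft` fields, the 0.9 threshold, and the always-review topics are illustrative assumptions, and the confidence score is assumed to come from the drafting model.

```python
from dataclasses import dataclass


@dataclass
class Draft:
    email_id: str
    reply: str
    confidence: float  # 0.0 to 1.0, assumed to be reported by the drafting model
    topic: str


# Hypothetical policy: auto-send only confident drafts on low-risk topics.
AUTO_SEND_THRESHOLD = 0.9
ALWAYS_REVIEW_TOPICS = {"refund", "legal", "security"}


def route(draft: Draft) -> str:
    """Return 'send' to reply automatically or 'review' for a human check."""
    if draft.topic in ALWAYS_REVIEW_TOPICS:
        return "review"  # sensitive topics always get a human look
    if draft.confidence >= AUTO_SEND_THRESHOLD:
        return "send"
    return "review"
```

Checking topic rules before the confidence score means a highly confident draft about a refund still goes to a person, which is usually the safer default for a small team.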

Product Core Function

· Automated Email Analysis: SuppFlow analyzes incoming support emails to understand their content and intent, so the AI can identify the underlying issue and what the customer needs, which is the basis for an accurate answer. So you don't have to read through every email yourself to understand it.

· Drafting Helpful Replies: The AI drafts replies based on each email's content, saving significant time and effort compared with writing responses from scratch. So you can work through support tickets in a fraction of the time.

· Response Prioritization: SuppFlow could rank emails by urgency or importance. So you can address critical issues first.

· Automated Response Suggestions: The AI suggests a response automatically, which shortens response time. So you save time while still deciding what actually gets sent.

· Integration with Existing Systems (Potential): The project could be designed to plug into existing customer support platforms, letting developers add AI automation to their current workflows. So your existing support software could gain AI-drafted replies without being replaced.
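SuppFlow has not described how it would rank emails. Purely as an illustration of response prioritization, the sketch below orders an inbox with Python's `heapq`, using a hypothetical keyword-based urgency score as a crude stand-in for the NLP analysis the product performs. The keywords, weights, and function names are invented for this example.

```python
import heapq

# Hypothetical keywords and weights: a crude stand-in for real NLP.
# Plain substring matching will also hit words like "download" for "down".
URGENCY_WEIGHTS = {
    "outage": 5,
    "down": 5,
    "charged twice": 4,
    "refund": 3,
    "cancel": 2,
    "how do i": 1,
}

def urgency_score(subject: str, body: str) -> int:
    """Sum the weights of every urgency keyword found in the email text."""
    text = f"{subject} {body}".lower()
    return sum(weight for kw, weight in URGENCY_WEIGHTS.items() if kw in text)

def prioritize(emails: list[tuple[str, str]]) -> list[str]:
    """Return subjects ordered from most to least urgent; ties keep arrival order."""
    heap: list[tuple[int, int, str]] = []
    for arrival, (subject, body) in enumerate(emails):
        # heapq is a min-heap, so negate the score to pop the highest first.
        heapq.heappush(heap, (-urgency_score(subject, body), arrival, subject))
    return [heapq.heappop(heap)[2] for _ in range(len(heap))]

inbox = [
    ("Question", "How do I export my data?"),
    ("Billing", "I was charged twice this month"),
    ("Urgent", "The dashboard is down for our whole team"),
]
print(prioritize(inbox))  # ['Urgent', 'Billing', 'Question']
```

The arrival index in each heap entry breaks ties, so equally urgent emails are still answered first-in, first-out.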

Product Usage Case

· Small SaaS Startup: A small software-as-a-service (SaaS) startup receives a high volume of support emails but lacks the resources to hire a dedicated support team. SuppFlow could automate responses to common inquiries, freeing up the founders to focus on product development. So the startup can grow without spending much on support staff.

· E-commerce Store: An e-commerce business uses SuppFlow to handle customer inquiries about order status, shipping delays, and product returns. The AI automatically answers common questions, reducing the workload of customer service representatives. So the store can scale customer support as it grows.

· Freelancer/Solo Developer: A freelancer or solo developer can use SuppFlow to manage support email while working on other projects. So a solo developer can keep up good customer service without neglecting other tasks.

15

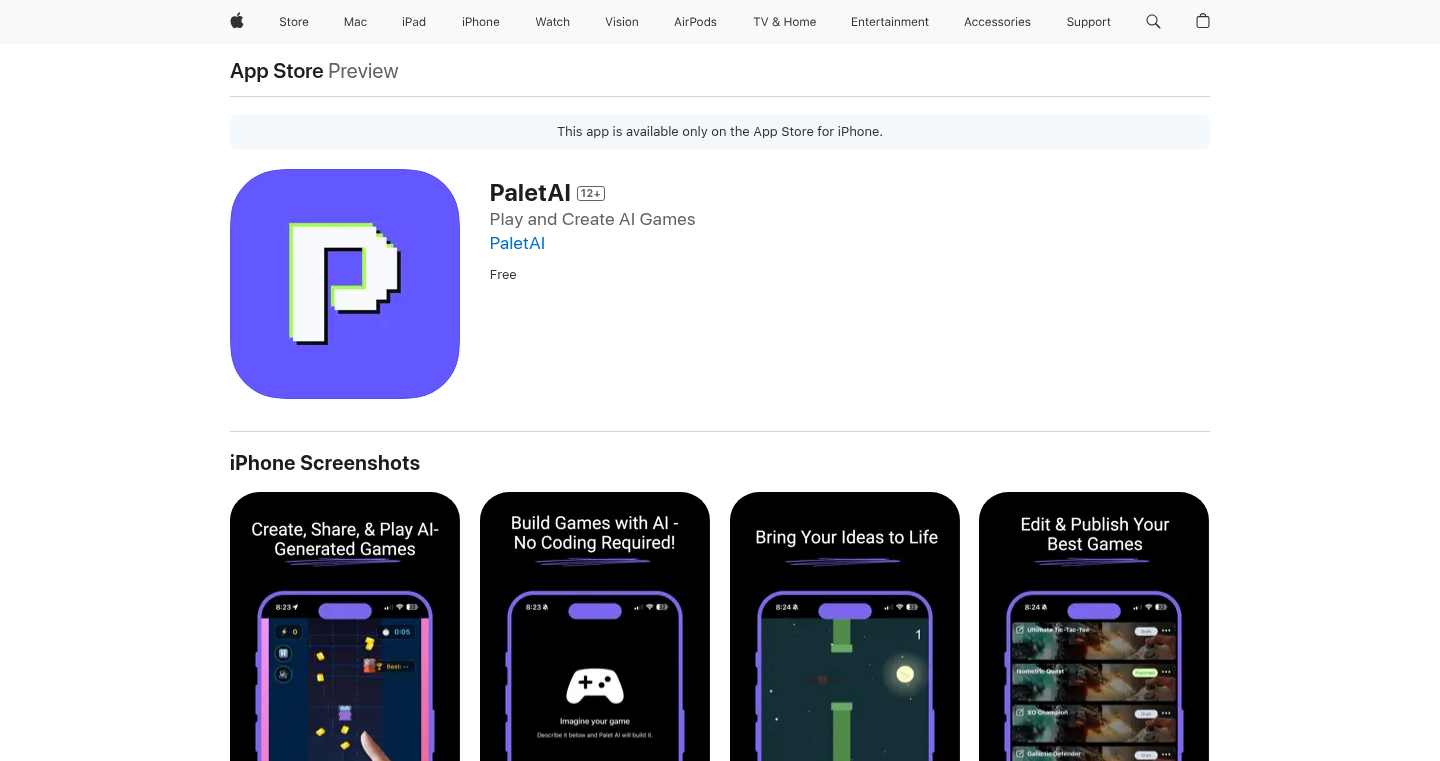

PaletAI: AI-Powered Mobile Game Creation and Sharing Platform

Author

tedwatson123

Description

PaletAI is a mobile app that lets users create, play, and share AI-generated games without any coding knowledge. It uses AI to generate games from user descriptions, simplifying game development and providing a platform for quick, easy game discovery. It also tackles the "now what?" problem common to no-code tools by building publishing and sharing into the app. For players, it reduces friction with a TikTok-style feed of casual games that can be played without downloads or sign-ups. So anyone can create and share games, and easily discover new ones.

Popularity

Points 4

Comments 1

What is this product?

PaletAI utilizes AI to understand user-provided game descriptions. Based on this, it automatically generates the game mechanics, visuals, and gameplay logic. The core innovation lies in abstracting away the complexities of game development through AI, enabling non-programmers to create games. The platform also includes a built-in sharing mechanism, allowing users to publish and share their games on a TikTok-style feed. So, this offers a simplified game development and sharing experience.

How to use it?

Using PaletAI comes down to describing the game you want to create. The description is the input to the AI, which then generates the game. Once it is generated, you can play it within the app and share it with other users. The approach could also be used in educational settings to teach game design, or by professional developers for quick prototyping. So a short description is enough to create and share your own games, or to prototype game ideas rapidly.

Product Core Function

· AI-Powered Game Generation: This core feature uses AI to interpret user descriptions and generate playable games. This simplifies the game development process dramatically. For example, if you describe a simple puzzle game, the AI handles the underlying code and game mechanics, creating the playable game. So, it simplifies game development.

· Simplified Publishing: Users can publish their AI-generated games directly within the app, eliminating the need for complex hosting or distribution channels. This provides a straightforward way for creators to share their games with the world. So, it makes sharing your games easy.

· TikTok-Style Game Feed: The app provides a discovery mechanism where players can swipe through a feed of games. This allows users to quickly find and play games without the friction of app stores. So, it provides quick and easy game discovery.

Product Usage Case

· Rapid Prototyping for Game Designers: A game designer can quickly generate different game prototypes based on varying concepts without needing to write code. This allows them to test game mechanics and iterate on their ideas much faster. So, you can rapidly try out game ideas.

· Educational Tool: In educational settings, students can use PaletAI to learn about game design principles without needing to learn programming. They can experiment with different game concepts and see the results immediately. So, it can be used to teach game design.

· Casual Game Development: Individuals interested in making casual games can use PaletAI to bring their ideas to life without requiring programming skills, potentially creating simple games for friends or personal enjoyment. So, anyone can quickly create and share simple games.

16

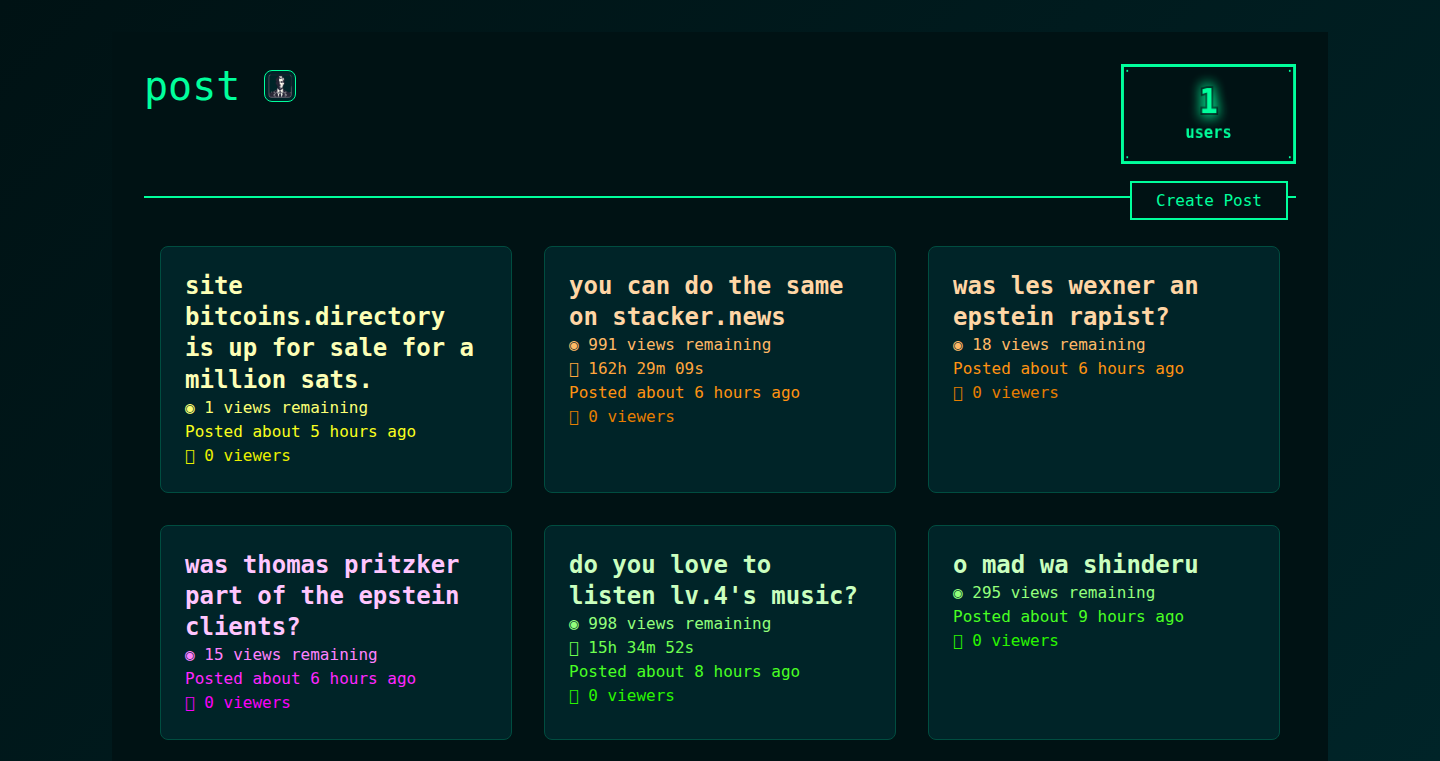

PostTwo: Self-Destructing Anonymous Board

Author

tabarnacle

Description

PostTwo is a platform for anonymous posting with a twist: posts automatically delete themselves after a set time or a set number of views. It addresses the need for ephemeral communication and information sharing, offering a privacy-focused alternative to traditional forums. The backend is built on Supabase (a hosted backend service built on PostgreSQL) and the frontend on Svelte. Its expiry rules double as a simple form of content moderation and data lifecycle management, making the project a compact example of a privacy-conscious web application.

Popularity

Points 5

Comments 0

What is this product?

PostTwo is like a digital bulletin board, but every post has an expiration date. When you create a post, you decide how long it stays visible or how many times it can be viewed before it vanishes. Behind the scenes, it uses Supabase, a service that makes it easy to store and manage data such as the posts themselves, and Svelte, a frontend framework, for the user interface. The innovation lies in its focus on privacy and its handling of content lifecycles. So it lets you share information that does not stay on the board permanently: a message posted without your identity attached that disappears on its own.

How to use it?

Developers can use PostTwo as a base for their own applications that need temporary or private communication, such as a chat application, a feedback system, or any platform where users share information that should not be stored permanently. Other uses include quick polls, short-lived team announcements, or leaving someone a private message. To do this, you would get the code from GitHub, set up the backend in your own Supabase project, and adapt the Svelte frontend to your design. The result is your own anonymous message board.

Product Core Function

· Ephemeral Posting: The core functionality is creating posts that automatically delete after a set period or a set number of views. Because posts do not persist, users may feel freer to share sensitive information and opinions without fear of long-term repercussions. Application: Suited to confidential information, brainstorming sessions, or time-sensitive announcements.

· Anonymous Communication: The platform emphasizes anonymity, allowing open, uncensored communication without revealing the poster's identity. Application: Whistleblowing, anonymous feedback, or sharing opinions on controversial topics.

· Supabase Backend Integration: PostTwo uses Supabase for backend operations (database, authentication). Supabase removes much of the work of managing a database and related infrastructure, which makes the project easier to build and maintain. Application: Building web applications quickly, reducing development time and costs.

· Svelte Frontend Implementation: The frontend is built with Svelte, aiming for a fast, smooth user interface. Application: Creating responsive and engaging web interfaces.

· Self-Destruct Mechanism: The user sets a post's lifetime or view count before it is deleted, which gives them control over how long the information lives. Application: Short-lived information such as private memos, one-time codes, or short-term event details.
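PostTwo's actual schema and Supabase logic are not described here, so the sketch below models the self-destruct rule in plain Python: a post stays readable until its time-to-live elapses or its view budget is spent, whichever comes first. The `Post` fields, the in-memory `Board` class, and the `sweep` method are hypothetical. A real deployment would enforce the same check on the server or in the database, never only in the browser.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Post:
    # Hypothetical fields; PostTwo's real schema may differ.
    body: str
    created_at: float
    ttl_seconds: Optional[float] = None  # None means no time limit
    max_views: Optional[int] = None      # None means no view limit
    views: int = 0

    def expired(self, now: float) -> bool:
        """True once either limit is reached, whichever comes first."""
        if self.ttl_seconds is not None and now - self.created_at >= self.ttl_seconds:
            return True
        return self.max_views is not None and self.views >= self.max_views

class Board:
    """In-memory stand-in for a posts table; the clock is injectable for testing."""

    def __init__(self, clock: Callable[[], float] = time.time):
        self.clock = clock
        self.posts: dict[int, Post] = {}
        self.next_id = 1

    def create(self, body: str, ttl_seconds: Optional[float] = None,
               max_views: Optional[int] = None) -> int:
        post_id = self.next_id
        self.next_id += 1
        self.posts[post_id] = Post(body, self.clock(), ttl_seconds, max_views)
        return post_id

    def view(self, post_id: int) -> Optional[str]:
        """Return the post body and count the view, or None if it is gone."""
        post = self.posts.get(post_id)
        if post is None or post.expired(self.clock()):
            self.posts.pop(post_id, None)  # lazily delete an expired post
            return None
        post.views += 1
        if post.expired(self.clock()):
            del self.posts[post_id]  # that was the last allowed view
        return post.body

    def sweep(self) -> int:
        """Delete every expired post (e.g. from a scheduled job); return the count."""
        now = self.clock()
        dead = [pid for pid, p in self.posts.items() if p.expired(now)]
        for pid in dead:
            del self.posts[pid]
        return len(dead)

now = [0.0]  # fake clock so the example is deterministic
board = Board(clock=lambda: now[0])
a = board.create("two views only", max_views=2)
b = board.create("gone in an hour", ttl_seconds=3600)
print(board.view(a), board.view(a), board.view(a))  # two views only two views only None
now[0] = 3600.0
print(board.sweep(), board.view(b))  # 1 None
```

Deleting on access handles the view limit, but a post that nobody opens would otherwise linger after its time limit, which is why the periodic `sweep` is there: expired data should actually be removed, not merely hidden.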

Product Usage Case

· Secure Feedback System: A company builds a system where employees can give anonymous feedback on projects or management. Posts expire after a week, which keeps feedback current and encourages open communication. This addresses the hesitancy people often feel with traditional feedback systems.

· Time-Limited Announcements: A school uses PostTwo to announce emergency information or updates to its students, with messages disappearing after a day. The advantage is that the board stays relevant instead of filling up with outdated notices.

· Ephemeral Polls and Surveys: A researcher creates a survey to collect honest opinions on sensitive topics, and the responses automatically get deleted after a month. This mitigates privacy concerns for the participants.

· Short-Lived Team Communication: A team uses PostTwo to share quick updates and brainstorming ideas, understanding that these messages are intended to disappear after a brief period. The benefit is maintaining a focused and uncluttered workspace.

17

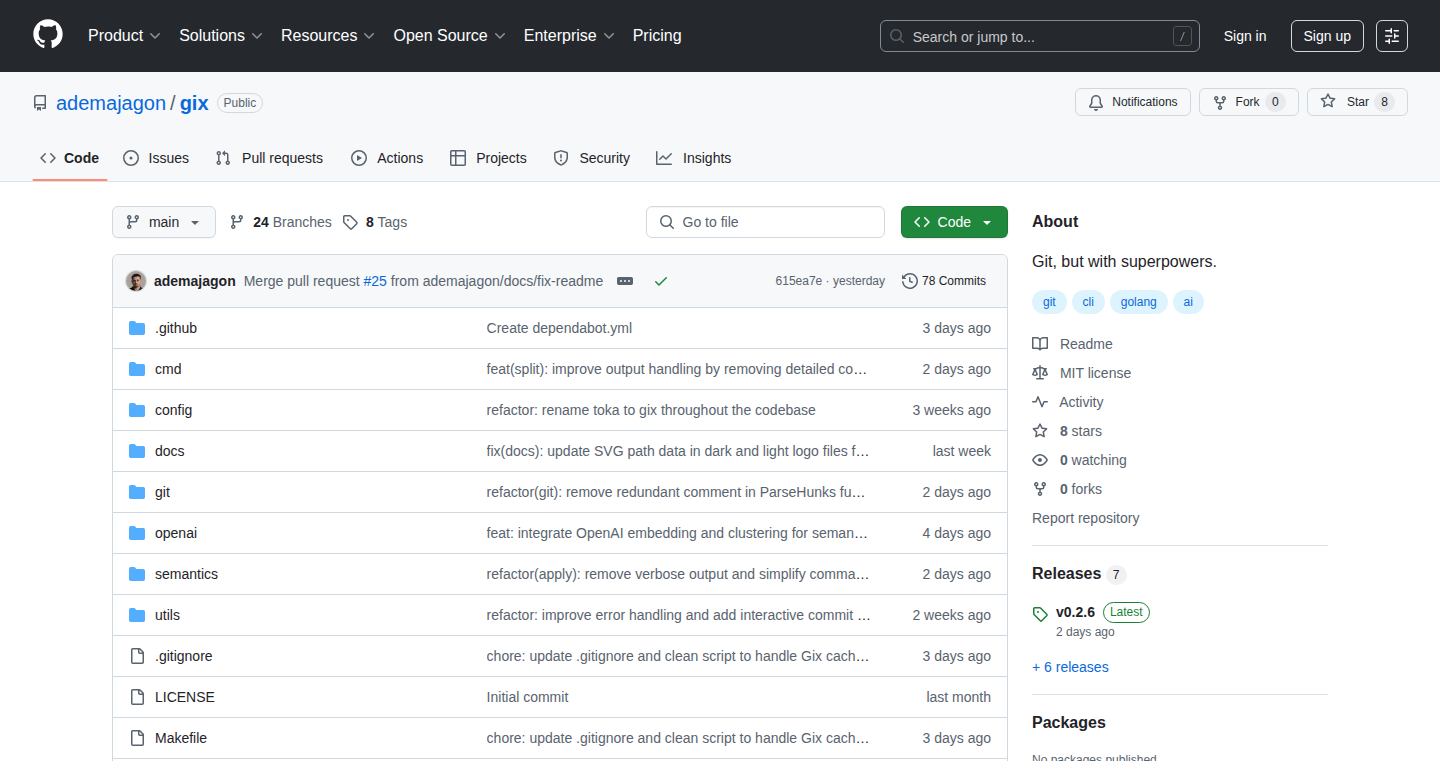

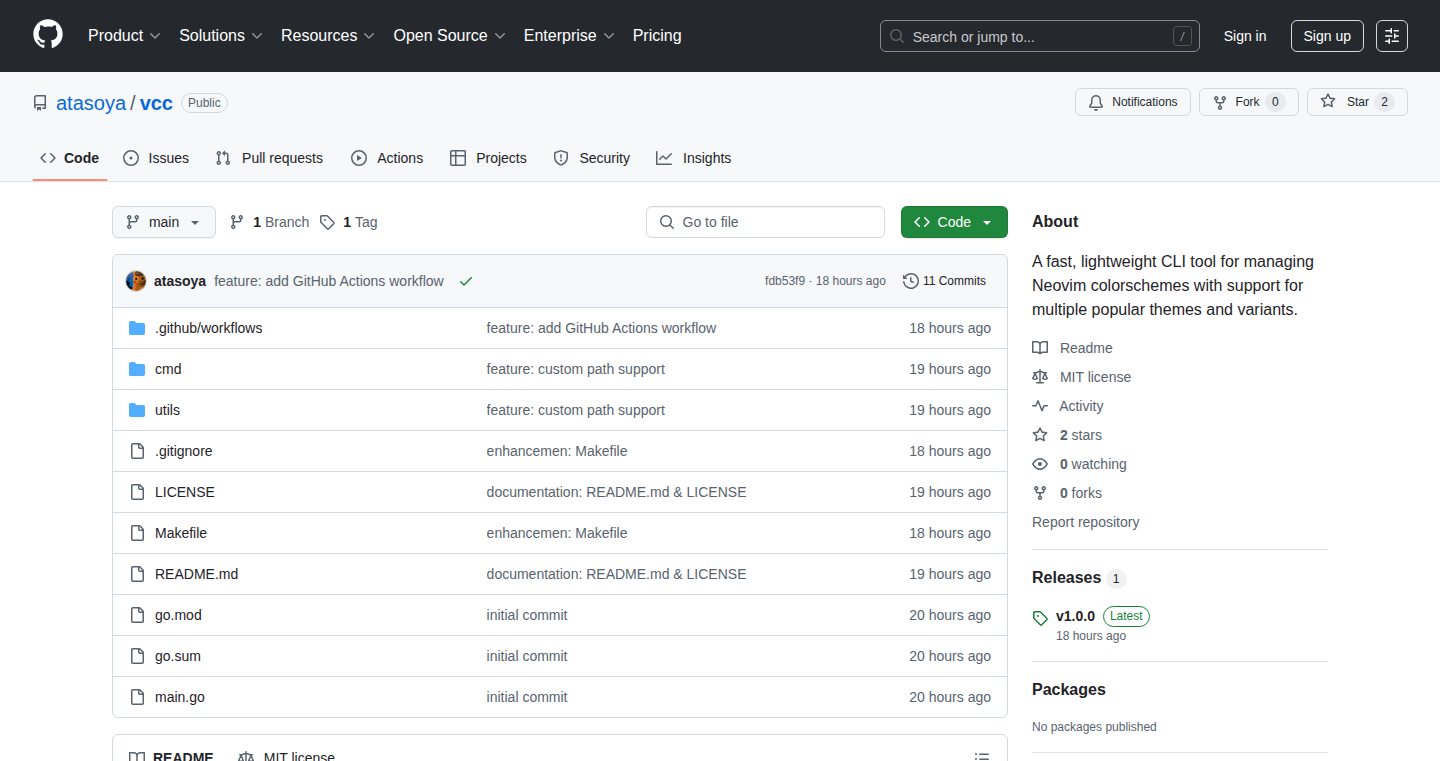

Gix: AI-Powered Git Assistant

Author

codebyagon

Description

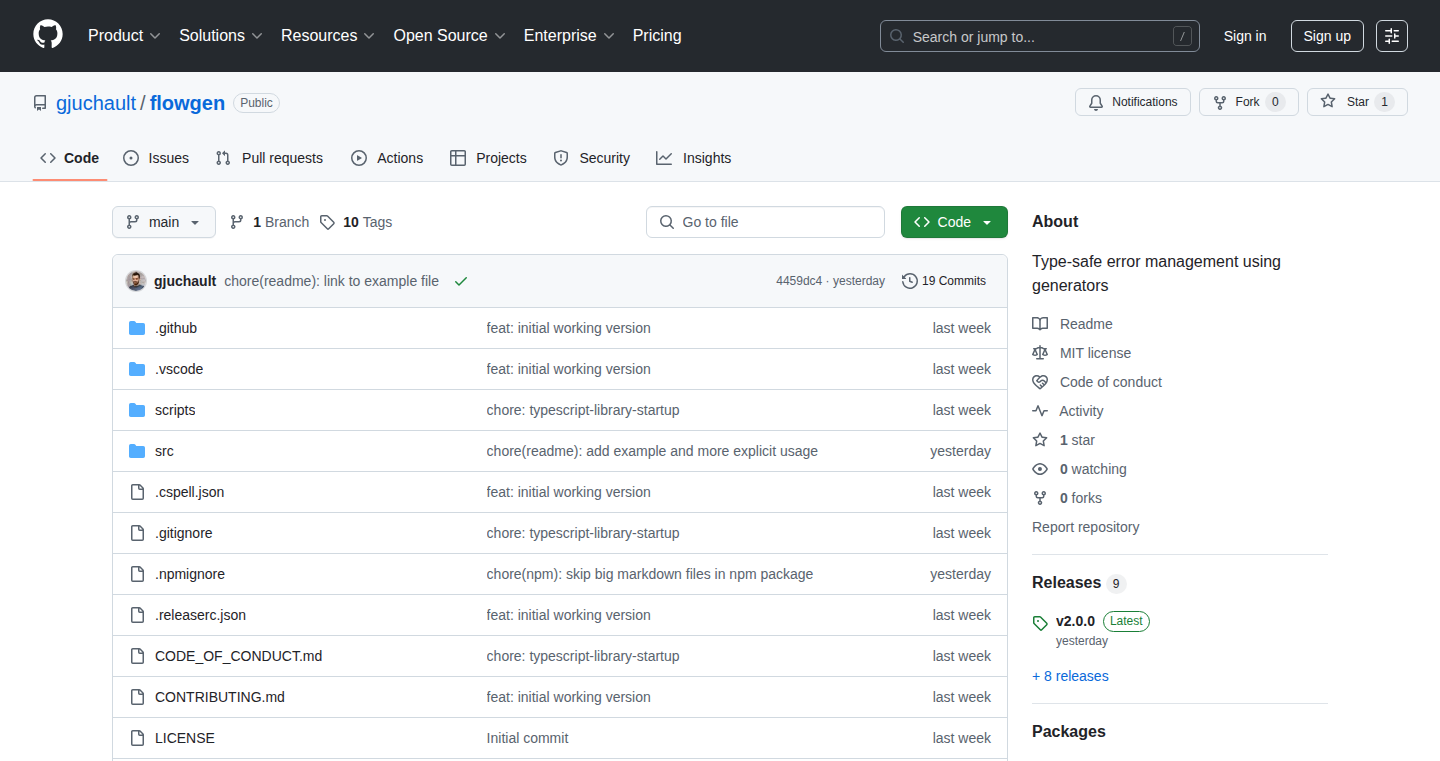

Gix is a cross-platform command-line tool, written in Go, that brings AI into your Git workflow to help you manage commits more efficiently. Using your own OpenAI API key, it splits large changes into smaller, more manageable commits and suggests commit messages. The core idea is to have AI read your code changes and generate contextually relevant commit boundaries and messages, improving both productivity and the quality of your commit history.

Popularity

Points 4

Comments 0

What is this product?

Gix uses AI, specifically OpenAI's API, to analyze the changes you've made to your code. It then helps you break extensive changes into smaller, logical commits, each with its own descriptive commit message. This streamlines your workflow and makes your commit history easier to understand. In essence, it automates tedious Git chores, making your work with Git more efficient and less error-prone. So it saves time and makes your history easier for collaborators to follow.

How to use it?

Developers use Gix directly from the terminal. You'll need Go installed and your own OpenAI API key. After installing Gix, you run it inside your Git repository. For instance, you might run `gix split` to divide a large diff into smaller commits, or `gix suggest` to generate commit messages. Gix works alongside the Git commands you already use, so it fits into your existing workflow.
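Gix delegates the actual splitting decisions to the model, and its internals are not described here. As a deterministic illustration of the first step any diff-splitting tool needs, the sketch below parses a unified diff (as printed by `git diff`) into per-file chunks and then groups the files by top-level directory into candidate commits. The functions `split_by_file` and `group_candidates` and the grouping heuristic are assumptions for this example, not Gix's algorithm.

```python
import re

# Header git prints before each file in a unified diff.
# Assumes plain paths without spaces (git quotes unusual paths).
FILE_HEADER = re.compile(r"^diff --git a/(\S+) b/(\S+)$")

def split_by_file(diff_text: str) -> dict[str, str]:
    """Map each changed path to its own slice of the unified diff."""
    chunks: dict[str, list[str]] = {}
    current = None
    for line in diff_text.splitlines(keepends=True):
        match = FILE_HEADER.match(line.rstrip("\r\n"))
        if match:
            current = match.group(2)  # path on the "after" side
            chunks[current] = []
        if current is not None:
            chunks[current].append(line)
    return {path: "".join(lines) for path, lines in chunks.items()}

def group_candidates(paths) -> dict[str, list[str]]:
    """Hypothetical heuristic: one candidate commit per top-level directory."""
    groups: dict[str, list[str]] = {}
    for path in paths:
        top = path.split("/", 1)[0] if "/" in path else "(root)"
        groups.setdefault(top, []).append(path)
    return groups

sample = (
    "diff --git a/api/server.go b/api/server.go\n"
    "--- a/api/server.go\n"
    "+++ b/api/server.go\n"
    "@@ -1 +1 @@\n"
    "-old\n"
    "+new\n"
    "diff --git a/docs/README.md b/docs/README.md\n"
    "--- a/docs/README.md\n"
    "+++ b/docs/README.md\n"
    "@@ -1 +1 @@\n"
    "-a\n"
    "+b\n"
    "diff --git a/api/routes.go b/api/routes.go\n"
    "--- a/api/routes.go\n"
    "+++ b/api/routes.go\n"
    "@@ -1 +1 @@\n"
    "-x\n"
    "+y\n"
)
chunks = split_by_file(sample)
print(group_candidates(chunks))
# {'api': ['api/server.go', 'api/routes.go'], 'docs': ['docs/README.md']}
```

In a real repository the input could come from `subprocess.run(["git", "diff"], capture_output=True, text=True).stdout`. A model-driven tool would replace the directory heuristic with semantic grouping, but it still needs clean per-file chunks to reason about.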

Product Core Function

· Automatic Diff Splitting: Gix can split large code changes into multiple smaller commits. It analyzes the changes and proposes logical commit boundaries, which makes code reviews easier and keeps the commit history clean. So this is useful because your code history stays organized and easy to understand.

· AI-Powered Commit Message Suggestions: Gix uses AI to generate clear, concise commit messages based on your changes, so your commit history stays informative and gives context to future developers (including yourself). So this is useful because it saves time writing commit messages and keeps them consistently informative.

· Cross-Platform Compatibility: The tool is written in Go, making it compatible with various operating systems. This means you can use Gix regardless of whether you're using Windows, macOS, or Linux. So this is useful because it's accessible to a wide range of developers.

· Local Execution & Privacy: Gix runs locally and calls OpenAI with your own API key, so there is no intermediate Gix service handling your code. The diffs it analyzes are, however, still sent to OpenAI's API for processing. So this is useful because you control which account and provider see your code.
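The part of commit-message suggestion that can be shown without an API key is how a tool might turn a diff into a prompt and check the reply before using it. The sketch below is hypothetical throughout: `build_commit_prompt`, `summary_ok`, the prompt wording, and the 8,000-character budget are assumptions, and Gix's real prompt is not described here. The 50-character summary line is a widespread Git convention, not a requirement. The prompt string is what would be sent to OpenAI with your own key, which is also the point at which the diff leaves your machine.

```python
SUMMARY_LIMIT = 50  # common Git convention, not a hard rule

def build_commit_prompt(diff_text: str, max_chars: int = 8000) -> str:
    """Build a prompt asking a model for a commit message for one diff chunk."""
    if max_chars <= 0:
        raise ValueError("max_chars must be positive")
    body = diff_text[:max_chars]
    if len(diff_text) > max_chars:
        body += "\n[diff truncated]"  # tell the model it is seeing a partial diff
    return (
        "Write a Git commit message for the change below.\n"
        f"Start with an imperative summary line of at most {SUMMARY_LIMIT} characters, "
        "then a blank line, then a short explanation of why the change was made.\n\n"
        "--- BEGIN DIFF ---\n"
        f"{body}\n"
        "--- END DIFF ---\n"
    )

def summary_ok(message: str) -> bool:
    """Check the model's reply against the summary-line convention before using it."""
    lines = message.strip().splitlines()
    if not lines:
        return False
    summary = lines[0]
    if len(summary) > SUMMARY_LIMIT or summary.endswith("."):
        return False
    # A body, if present, should be separated from the summary by a blank line.
    return len(lines) == 1 or lines[1] == ""

prompt = build_commit_prompt("-old\n+new\n")
reply = "Rename config loader\n\nThe old name no longer matched its behavior."
print(summary_ok(reply))           # True
print(summary_ok("Fixed stuff."))  # False
```

Validating the reply locally is cheap insurance: a message that breaks the convention can be regenerated or edited before it lands in the history.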

Product Usage Case