Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-07-14

SagaSu777 2025-07-15

Explore the hottest developer projects on Show HN for 2025-07-14. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN projects show a strong move toward using AI to solve real-world problems. AI-driven automation and AI-assisted tools appear across many domains, a sign of how quickly AI is being integrated into everyday work. Developers and entrepreneurs can apply AI to automate tedious tasks, extract insights from data, and build more efficient workflows. At the same time, the rise of local, self-hosted solutions points to growing demand for privacy and control, so consider building tools that give users more control over their data. The growing number of tools for API creation and backend development also suggests room to streamline and speed up development. Building on these trends is a good way to create useful, user-centric applications.

Today's Hottest Product

Name

legacy-use – add REST APIs to legacy software with computer-use

Highlight

This project uses AI agents to automate interactions with legacy Windows software, emulating mouse and keyboard input to build an API layer on top of it. It tackles the challenge of integrating outdated software that has no API with modern systems. Developers can learn how to apply AI agents to automation, see the nuances of GUI interaction, and explore ways to extract data and expose it through APIs. This makes it a practical way to unlock value trapped in legacy systems.

Popular Category

AI/ML

Utilities

API

Productivity

Popular Keyword

AI

Automation

API

Legacy Software

GraphQL

Technology Trends

AI-driven Automation: Using AI agents for automating tasks, particularly in legacy systems, demonstrates a shift towards intelligent automation.

No-Code/Low-Code Solutions: Tools that generate APIs with minimal code, along with visual API builders, show the ongoing push to simplify development.

AI-Assisted Productivity: AI is being built into a range of tools to boost productivity, such as ticket management, code generation, and content creation.

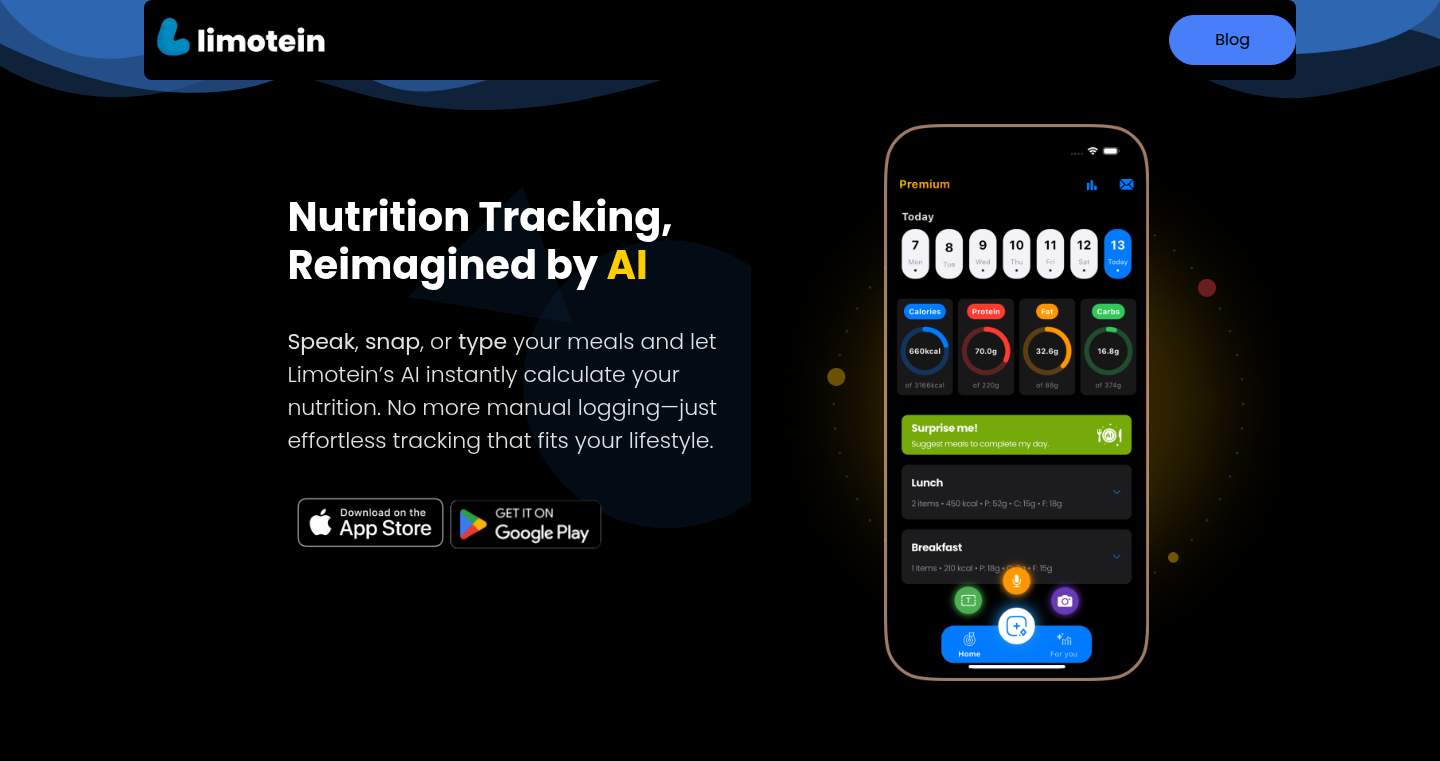

Focus on Data and Insights: Tools for data extraction, analysis, and visualization, and the development of an AI-powered food tracker, highlight the importance of data-driven decision-making.

Edge Computing and Local Processing: Several projects emphasize local processing and self-hosting, improving privacy and security.

Project Category Distribution

AI/ML Applications (25%)

Developer Tools (20%)

Productivity/Utilities (30%)

API/Backend (10%)

Content Creation/Media (15%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |

|---|---|---|---|

| 1 | Refine: Your Local AI Writing Assistant | 389 | 198 |

| 2 | CallFS - S3-compatible Object Store in a Single Go Binary | 63 | 28 |

| 3 | TechBro Generator: A Satirical Text Generation Tool | 50 | 15 |

| 4 | HTML Maze: A Browser-Based Labyrinth Explorer | 48 | 12 |

| 5 | Portia: Stateful CrewAI Alternative with Authentication and Extensive Tooling | 16 | 6 |

| 6 | legacy-use: Agentic API Layer for Legacy Software | 15 | 1 |

| 7 | Vectra: The AI Board Babysitter for Developers | 8 | 8 |

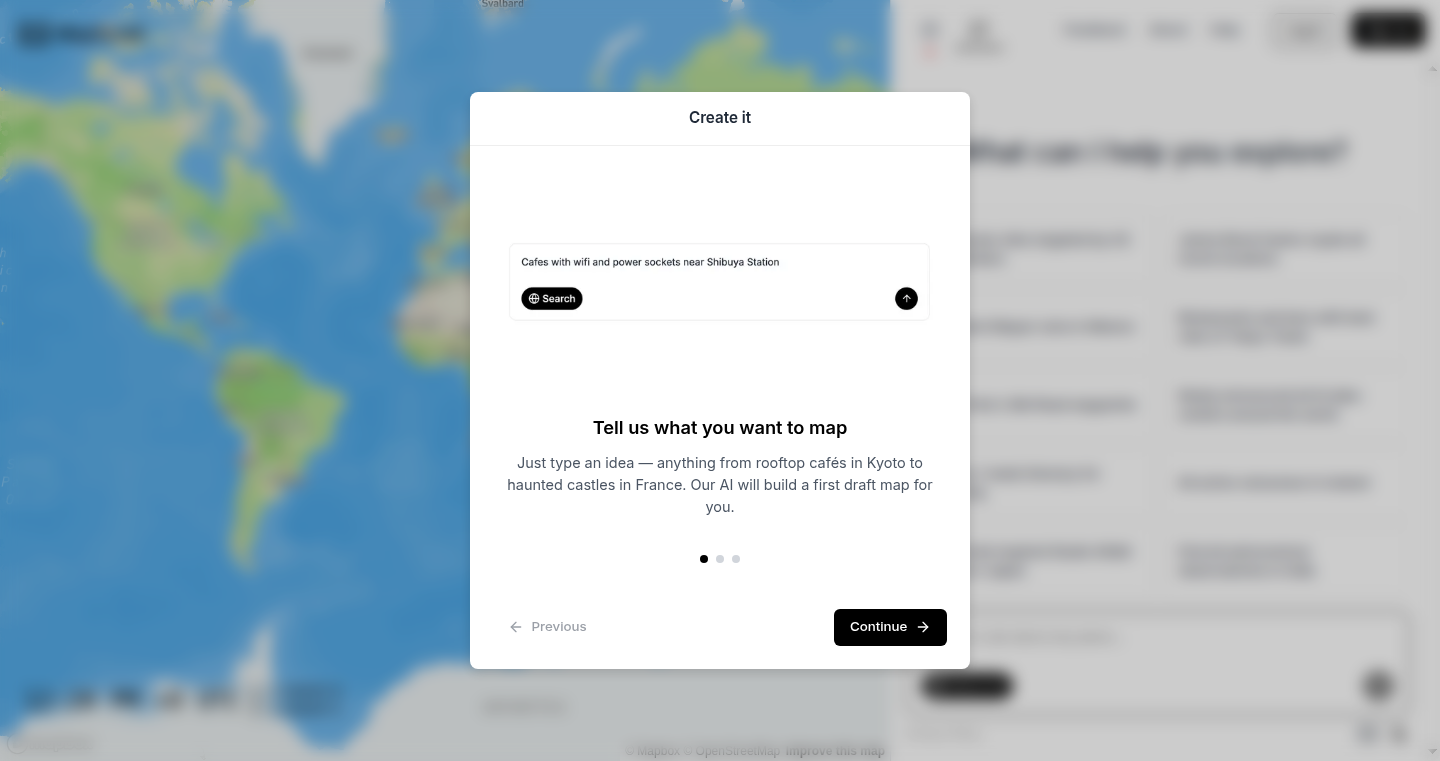

| 8 | MapScroll: AI-Powered Storytelling Maps | 7 | 5 |

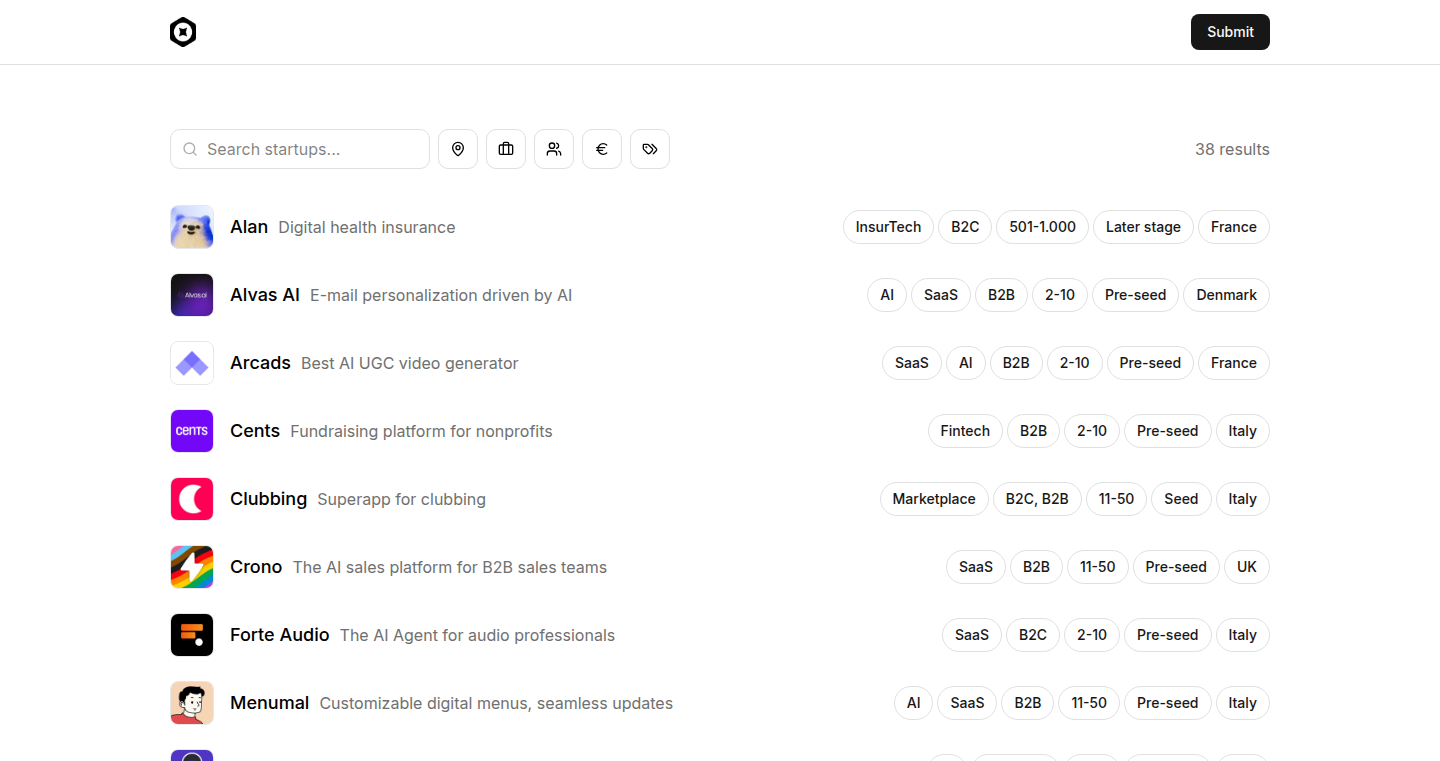

| 9 | StartupList EU: A Public Directory of European Startups | 7 | 4 |

| 10 | Secnote - Self-Destructing Encrypted Notes | 3 | 6 |

1

Refine: Your Local AI Writing Assistant

Author

runjuu

Description

Refine is a locally-running alternative to Grammarly, leveraging the power of open-source language models to provide real-time suggestions for grammar, style, and clarity in your writing. The core innovation lies in its local execution, offering enhanced privacy and control over your data, while still delivering intelligent writing assistance. This project tackles the challenge of providing high-quality writing feedback without relying on cloud-based services, addressing concerns about data security and internet dependency. So this is useful for anyone who wants to improve their writing without sending their text to the cloud.

Popularity

Points 389

Comments 198

What is this product?

Refine uses large language models (LLMs), the same technology behind powerful AI like ChatGPT, but runs these models directly on your computer. This means your text data never leaves your device. The system analyzes your writing in real-time, identifying errors in grammar, suggesting style improvements, and helping you clarify your meaning. The innovative part is that it achieves this with open-source models, giving you more control and transparency. So this offers you an alternative to the popular, but cloud-based, writing tools, ensuring your writing stays private.

How to use it?

Developers can integrate Refine into their existing writing workflows. It can run as a standalone application or be used alongside text editors and IDEs. You can also use an API to feed your text to Refine, calling it from your own code to refine the grammar and style of your content. So you can easily make it part of your writing or coding environment.

Product Core Function

· Real-time grammar checking: The system instantly identifies and flags grammatical errors. This is valuable for improving the accuracy and professionalism of any written communication.

· Style suggestions: Refine suggests improvements to writing style, such as sentence structure and word choice, making your writing more engaging and effective. This helps you polish your writing.

· Clarity enhancements: The tool identifies areas where your writing might be unclear and offers suggestions for improvement. This is great for making sure your ideas are easily understood by your audience.

· Local execution: Refine runs entirely on your computer, providing enhanced privacy and data security. This is important for users who are concerned about data privacy, or who want a writing tool that works offline.

· Open-source foundation: Being built on open-source models means the system is transparent and can be customized, allowing developers to tailor it to their specific needs. This is important if you want to extend the system in a specific way.

Product Usage Case

· A developer writing technical documentation: Refine can check the documentation for grammar and clarity, keeping it clear and consistent for the developers who read it.

· A writer crafting a blog post: Refine can suggest improvements to the post's style and clarity, making it more readable and engaging. This can help attract more readers.

· An academic writing a research paper: The tool can catch grammatical errors and improve the overall flow of the paper, so the writing does justice to the research.

· A developer writing code comments: Refine can help make code comments clear and concise, which improves the project's documentation.

· Anyone drafting an email or social media post: It can polish text for professional or personal use, so your writing is more effective.

2

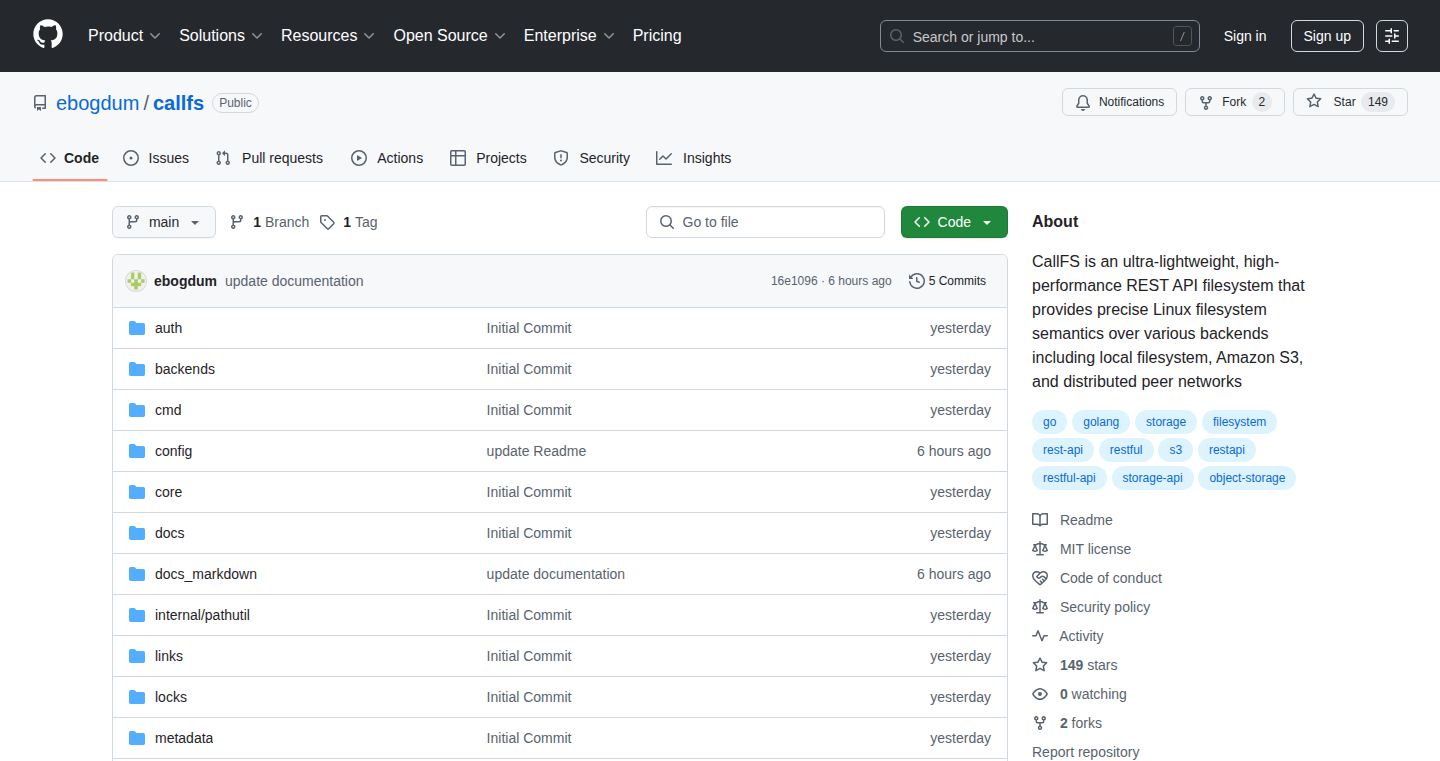

CallFS - S3-compatible Object Store in a Single Go Binary

Author

ebogdum

Description

CallFS is a self-contained file storage service, built in Go, designed to solve the common problem of data disappearing or becoming inaccessible in complex storage setups. It aims to simplify file management by offering an S3-compatible API, allowing seamless integration with existing tools. The core innovation lies in its simplicity: it’s a single, small binary with no external dependencies, storing 'hot' data locally for speed and 'cold' data in S3-compatible buckets. It also includes built-in monitoring, providing insights into what's happening with your data. This project provides a simple, observable, and efficient way to manage and store files, reducing the headache of fragile storage solutions.

Popularity

Points 63

Comments 28

What is this product?

CallFS is essentially a mini cloud storage service that you can run on your own hardware. It exposes an S3-compatible API, so you can use the tools you already use with Amazon S3 to upload and download files. The innovation is that it's a single, small program written in Go. It keeps frequently accessed files (hot data) on local disk for quick access, while less frequently used files (cold data) can live in more cost-effective S3-compatible storage such as Amazon S3. Crucially, CallFS provides built-in monitoring, so you can see exactly what's going on with your files. So this is useful because you can avoid the complexity and fragility of assembling a storage solution from multiple tools, while still keeping control and visibility.

How to use it?

Developers can use CallFS by downloading the single executable file and running it on their server or computer. They can then use existing tools like `aws cli` or any S3-compatible client to upload, download, and manage files. You would typically configure CallFS to point to your S3 bucket for cold storage, and the local disk for your hot data cache. CallFS also exports metrics and logs, which you can monitor with tools like Prometheus. So, you use it by replacing your complex storage system with this single, easily manageable tool.

Product Core Function

· S3 API Compatibility: This allows easy integration with existing tools and services that already work with S3, such as backup utilities, content delivery networks (CDNs), and data analysis pipelines, without changing existing workflows. So this is useful because you don't have to rewrite what you have already built on S3 to switch to CallFS.

· Local Disk Caching (Hot Data): CallFS stores frequently accessed files on the local disk, increasing read and write speeds. This feature significantly improves performance for frequently accessed data, making applications feel faster and more responsive. So this is useful because it speeds up access to your important files.

· S3-Compatible Cold Storage: Files that aren't accessed as often (cold data) are stored in S3-compatible buckets. This utilizes cost-effective cloud storage for large datasets. So this is useful because you can store a lot of files cheaply.

· Prometheus Metrics & JSON Logs: Built-in monitoring through Prometheus and JSON logs provides visibility into storage operations, allowing for easy troubleshooting and performance analysis. Developers can monitor how the storage system is performing. So this is useful because you always know what’s going on with your data and can fix problems easily.

· Single Binary Deployment: CallFS is packaged as a single, ~25 MB static binary with no external dependencies. This makes deployment incredibly simple and portable. So this is useful because you can get up and running with your storage very quickly on any machine.
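To make the hot/cold split and the built-in metrics concrete, here is a minimal Python sketch of a two-tier store. It is not CallFS's implementation (CallFS is written in Go, and its internals are not described here): the `TieredStore` class, the least-recently-used eviction policy, the plain dict standing in for an S3 bucket, and the metric names are all assumptions for illustration.

```python
from collections import OrderedDict


class TieredStore:
    """Hypothetical two-tier object store: a small LRU "hot" cache in front of a "cold" backend."""

    def __init__(self, cold_backend: dict, hot_capacity: int = 2):
        self.cold = cold_backend          # stands in for an S3-compatible bucket
        self.hot = OrderedDict()          # stands in for the local-disk cache
        self.hot_capacity = hot_capacity
        self.hits = 0                     # reads served from the hot tier
        self.misses = 0                   # reads that had to go to the cold tier

    def put(self, key: str, data: bytes) -> None:
        # Write through to cold storage so a durable copy always exists,
        # then keep the object hot because it was just touched.
        self.cold[key] = data
        self._promote(key, data)

    def get(self, key: str) -> bytes:
        if key in self.hot:
            self.hits += 1
            self.hot.move_to_end(key)     # mark as most recently used
            return self.hot[key]
        self.misses += 1
        data = self.cold[key]             # raises KeyError if the object does not exist
        self._promote(key, data)
        return data

    def metrics_text(self) -> str:
        """Render the counters in Prometheus' text exposition format (metric names are made up)."""
        lines = []
        for name, help_text, value in (
            ("tiered_store_hot_hits_total", "Reads served from the hot tier.", self.hits),
            ("tiered_store_hot_misses_total", "Reads that fell through to cold storage.", self.misses),
        ):
            lines += [f"# HELP {name} {help_text}", f"# TYPE {name} counter", f"{name} {value}"]
        return "\n".join(lines) + "\n"

    def _promote(self, key: str, data: bytes) -> None:
        self.hot[key] = data
        self.hot.move_to_end(key)
        while len(self.hot) > self.hot_capacity:
            self.hot.popitem(last=False)  # evict the least recently used object; the cold copy stays


if __name__ == "__main__":
    store = TieredStore(cold_backend={}, hot_capacity=2)
    for name in ("a.txt", "b.txt", "c.txt"):
        store.put(name, name.encode())
    print(list(store.hot))        # ['b.txt', 'c.txt']: a.txt was evicted from the hot tier
    print(store.get("a.txt"))     # b'a.txt', read from cold storage and promoted back
    print(store.metrics_text(), end="")
```

Writing through to the cold tier on every `put` keeps eviction cheap, since dropping an object from the hot tier never loses data; a real service would also have to handle deletes, concurrency, and objects too large to cache.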

Product Usage Case

· Web Application Hosting: A web application can use CallFS to store static assets like images, videos, and CSS/JavaScript files. Because of its S3 compatibility, existing deployment pipelines easily work with CallFS. With local caching, these files load quickly for users. So this is useful because your website loads faster and it’s easy to deploy.

· Backup and Archival: CallFS can serve as a local or on-premises backup solution for important files, and its cold-storage tier also suits low-cost long-term archival. This gives you a reliable place to keep backups that remain easy to access. So this is useful because you can back up your data easily and safely.

· Content Delivery Network (CDN) Edge Caching: Deploying CallFS on edge servers caches content closer to users, which reduces latency and speeds up content delivery. So this is useful because your users get content faster, improving their overall experience.

· Software Development: Developers can use CallFS during development to store build artifacts or other temporary files. This simplifies the development process. So this is useful because it makes development more efficient.

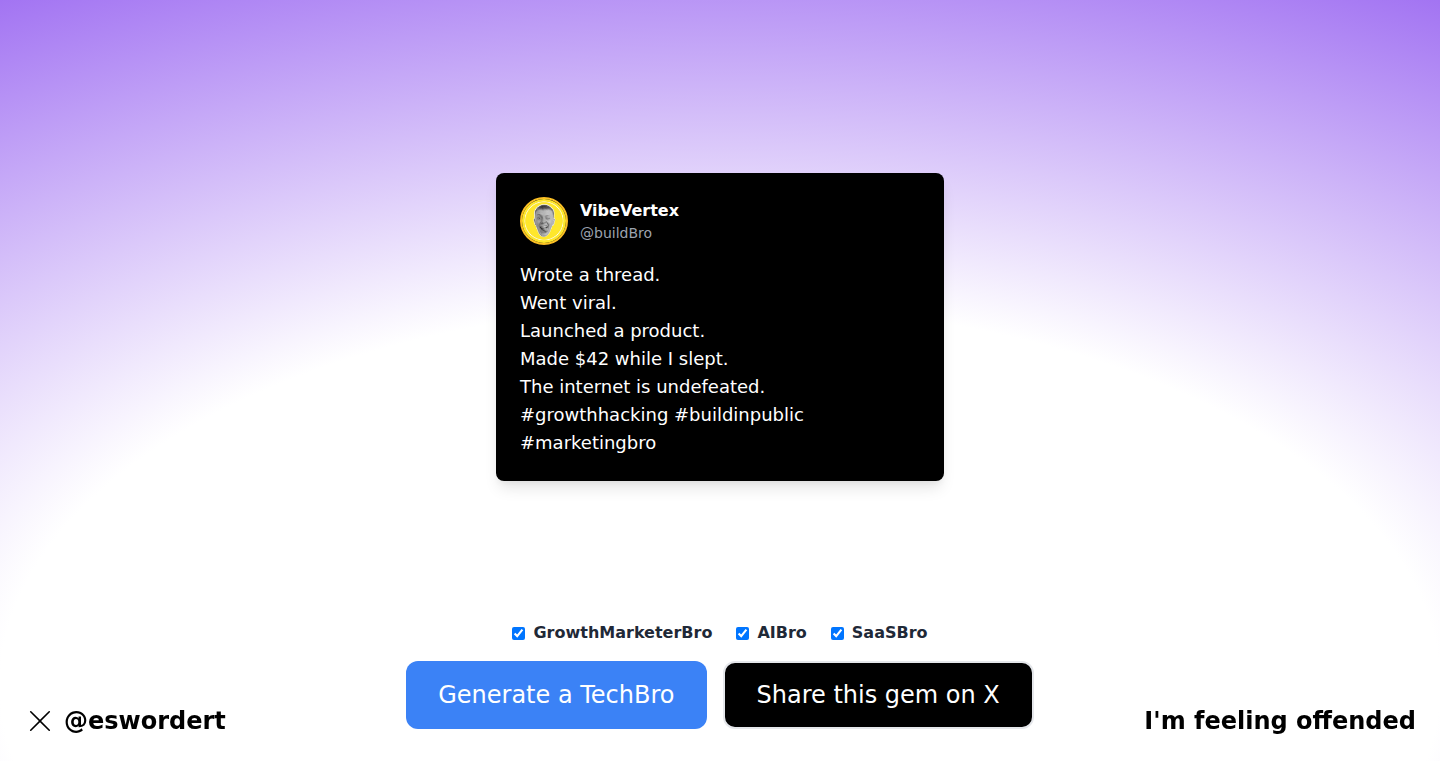

3

TechBro Generator: A Satirical Text Generation Tool

Author

ahmetomer

Description

This project, the TechBro Generator, is a satirical text generation tool. It uses a language model to create humorous and exaggerated social media posts in the style of 'tech bros'. The core innovation lies in its application of natural language processing (NLP) to mimic a specific tone and style. This solves the problem of generating humorous content on demand, providing a fun and engaging way to poke fun at tech culture.

Popularity

Points 50

Comments 15

What is this product?

It's a program that writes satirical posts. The TechBro Generator uses a pre-trained language model, a sophisticated piece of AI, to understand the patterns of language. The model is then fine-tuned with data that resembles the style of typical tech-focused social media posts, allowing it to generate new content that is similar. So the innovation is in the use of AI for creative content generation, specifically for satire.

How to use it?

Developers can use this by interacting with the tool. They can provide initial prompts or keywords, and the generator will create a post that fits the 'tech bro' style. It can be integrated into other applications, such as social media automation tools, content creation platforms, or even as a form of entertainment. For example, a developer might use it to create mock social media content for testing, or to quickly produce a series of humorous posts.

Product Core Function

· Satirical Text Generation: Generates posts in a specific, recognizable style, enabling humorous content on demand. So this lets developers produce funny content quickly for a variety of applications.

· Style Mimicry: The ability to imitate a specific writing style provides a novel tool for content personalization and generation. So this enables developers to target specific audiences with content tailored to their preferences and cultural norms, potentially increasing audience engagement.

· Prompt-based Content Creation: Users can supply prompts or keywords to steer the generated text, specifying what is being satirized for more targeted output. So this lets developers customize content generation to their needs.

Product Usage Case

· Social Media Campaign Testing: A developer could use the generator to create multiple satirical posts for testing a social media marketing campaign. This lets you experiment with the messaging and tone before launching your main campaign. So this provides a cost-effective way to test different content approaches.

· Content Creation for Humor: Developers can integrate this generator into a larger content platform that creates comedic content. So developers can now offer humor-focused content for their audience.

· Automated Mocking: A developer might build a bot that comments satirically on tech news, providing humor through the application of machine learning. So, developers can provide entertainment value and satire.

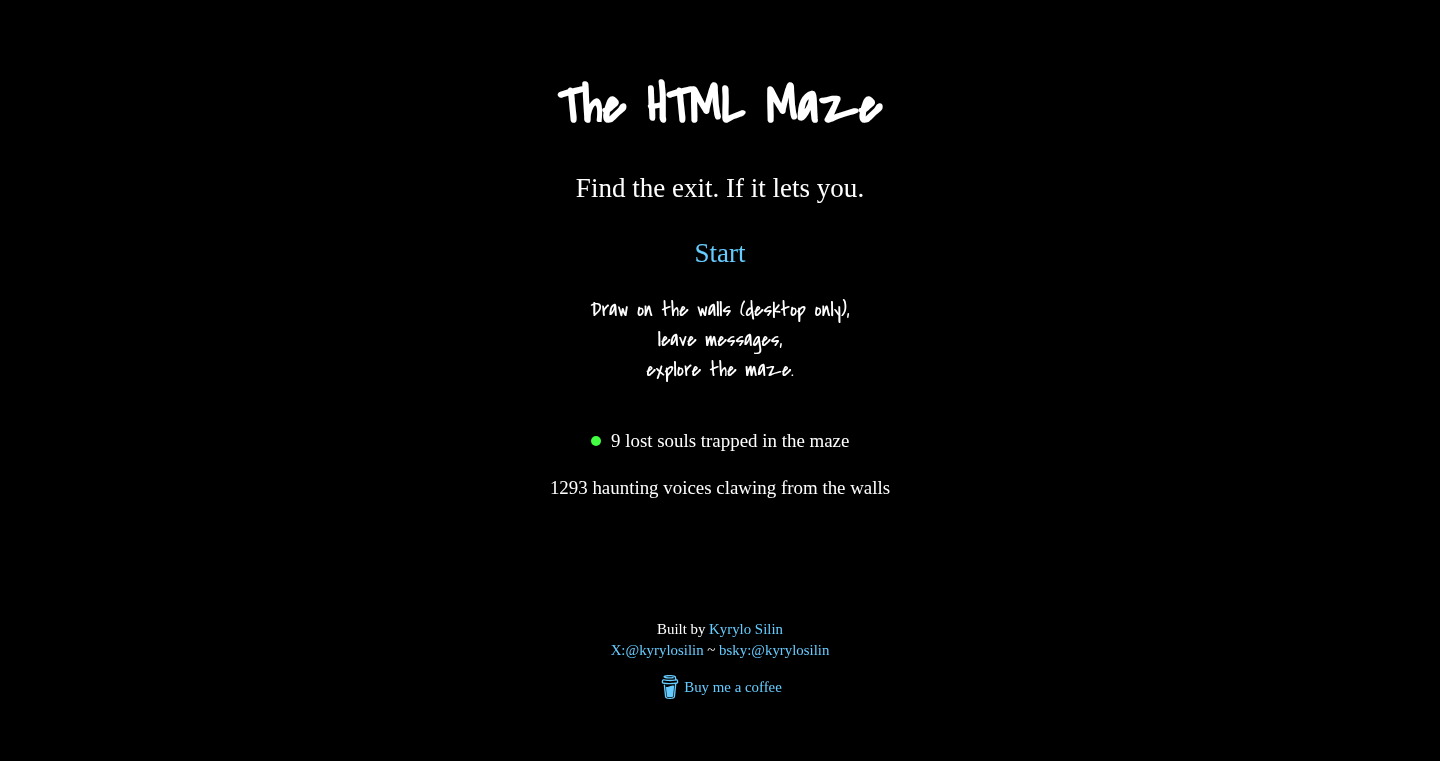

4

HTML Maze: A Browser-Based Labyrinth Explorer

Author

kyrylo

Description

HTML Maze is a fascinating project that crafts an interactive labyrinth entirely using HTML pages. It leverages HTML's ability to link pages (think of it as creating different rooms) to build a complex and visually engaging maze. The innovative aspect lies in its pure HTML implementation, demonstrating the versatility of web technologies for creating interactive experiences beyond typical content display. It's a clever exploration of what you can build with basic web building blocks. The project addresses the technical problem of creating a navigable, interactive environment purely within the constraints of HTML, showcasing a creative use of hypertext and page navigation.

Popularity

Points 48

Comments 12

What is this product?

HTML Maze constructs an immersive experience using only HTML files and hyperlinks. It's essentially a series of interconnected web pages, where each page represents a 'room' in the maze. Navigation between rooms is achieved through HTML links. The innovative part is that it does all this using just the basic elements of the web – HTML tags and hyperlinks. This project demonstrates a creative way to build interactive content using only fundamental web technologies. So what? It shows you can achieve complex interactive designs without needing JavaScript or fancy frameworks; it's a minimalist approach demonstrating the power of the core technologies.

How to use it?

Developers can explore HTML Maze by navigating its pages. The core concept is transferable: developers can adapt this approach to build interactive stories, tutorials, or simple games by linking HTML pages together into a navigable experience, which also makes it a good educational tool. So what? You can apply the same concept to create interactive presentations or improve user flow on a website.

Product Core Function

· Page Navigation: HTML Maze uses hyperlinks to let users move between 'rooms' within the maze; this is its primary function. The underlying technology is the `<a>` tag and its `href` attribute. So what? This teaches developers how to build navigation with hyperlinks, which is central to interactive websites and applications.

· Structure and Layout: HTML structures and lays out each 'room' of the maze, exposing the basic building blocks of a web page. So what? It demonstrates how HTML structure works, using tags such as `div`, `span`, and `p` that are essential for website layouts and content.

· Visual Representation: The 'rooms' of the maze likely rely on basic HTML and CSS for their visuals. Although there are no complex visuals here, it shows what HTML and CSS alone can achieve. So what? This approach can be used to build quick prototypes of interactive concepts.
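The linked-pages idea is easy to generate programmatically. The Python sketch below is an illustration, not HTML Maze's actual source: the `ROOMS` layout and the `build_maze`/`render_room` helpers are hypothetical. Each room becomes one standalone page whose only navigation is plain `<a href>` links, so the result needs no JavaScript.

```python
import html

# Hypothetical layout: room name -> {direction label: destination room}
ROOMS = {
    "entrance": {"north": "hall"},
    "hall": {"south": "entrance", "east": "library", "west": "dead-end"},
    "library": {"west": "hall", "down": "exit"},
    "dead-end": {"back": "hall"},
    "exit": {},
}


def page_name(room: str) -> str:
    return f"{room}.html"


def render_room(room: str, exits: dict) -> str:
    """Render one room as a standalone HTML page; navigation is only <a href> links."""
    if exits:
        links = "\n".join(
            f'    <li><a href="{page_name(dest)}">Go {html.escape(label)}</a></li>'
            for label, dest in exits.items()
        )
        body = f"  <ul>\n{links}\n  </ul>"
    else:
        body = "  <p>You found the way out!</p>"
    title = html.escape(room)
    return (
        "<!DOCTYPE html>\n"
        f"<html>\n<head><title>{title}</title></head>\n"
        f"<body>\n  <h1>{title}</h1>\n{body}\n</body>\n</html>\n"
    )


def build_maze(rooms: dict) -> dict:
    """Return {filename: html} for every room; every exit must point at a known room."""
    for room, exits in rooms.items():
        for dest in exits.values():
            if dest not in rooms:
                raise ValueError(f"{room} links to unknown room {dest!r}")
    return {page_name(r): render_room(r, e) for r, e in rooms.items()}


if __name__ == "__main__":
    # Print the generated file names; saving each page to disk and opening
    # entrance.html in a browser would let you walk the maze.
    for filename in sorted(build_maze(ROOMS)):
        print(filename)
```

Checking every exit against the room list before rendering catches broken links up front, since a bad `href` would strand the player on a missing page.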

Product Usage Case

· Interactive Storytelling: Imagine building an interactive story where each HTML page represents a scene, and the links offer choices that direct the user to different plot points. So what? It provides an easy way to build interactive stories.

· Tutorials: A step-by-step guide to a complex topic, where each HTML page displays a step and links to the next. So what? This method enables you to construct comprehensive and organized tutorials, each accessible with a click.

· Simple Games: You can build simple text-based adventure games with different locations using nothing but linked HTML pages. So what? It lets you build basic games or interactive content with very little effort.

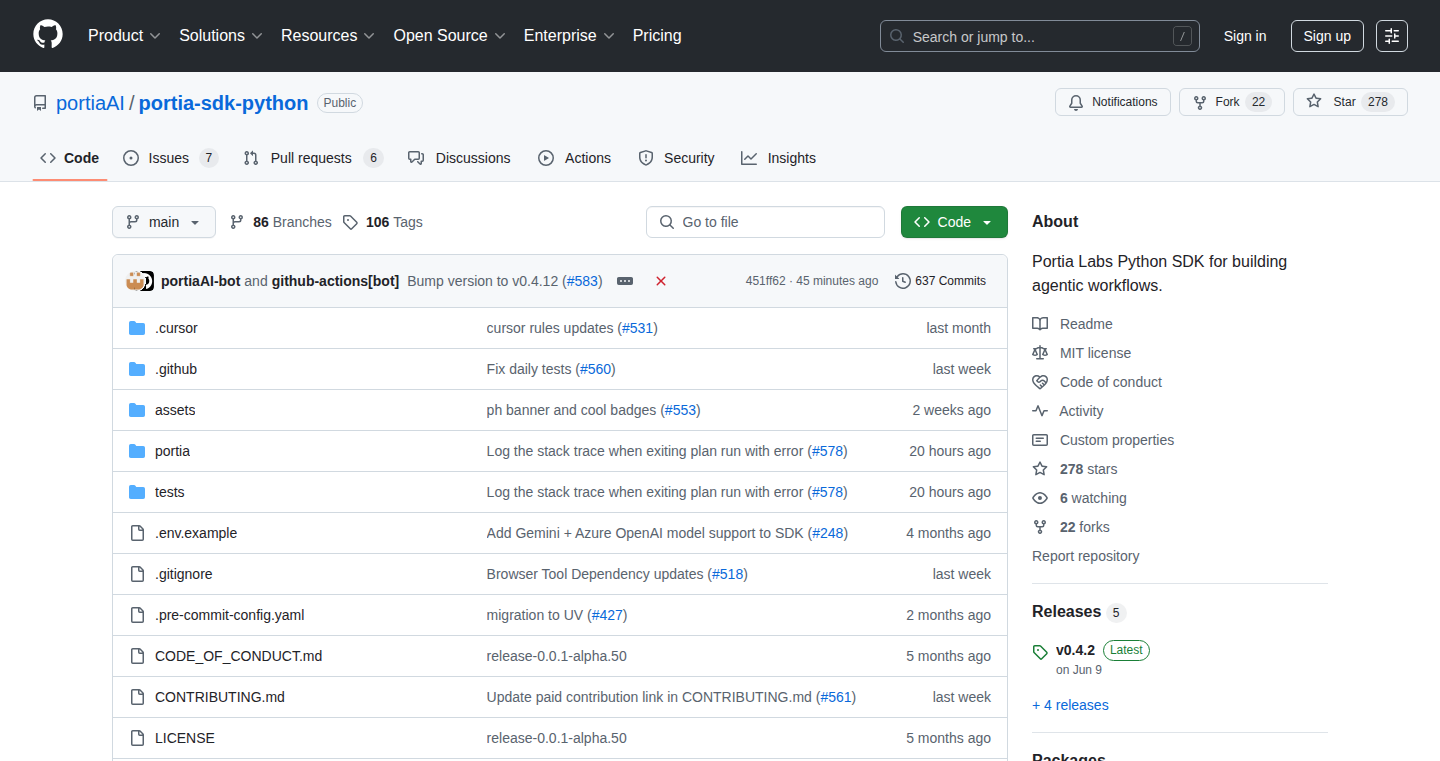

5

Portia: Stateful CrewAI Alternative with Authentication and Extensive Tooling

Author

mounir-portia

Description

Portia offers a new way to build and manage AI agents. It is an alternative to CrewAI, a popular tool for orchestrating AI agents, but it goes further by adding statefulness (remembering past interactions) and authentication (secure access), and it supports more than 1000 tools. This removes the need to repeatedly re-initialize AI agents and gives developers secure, comprehensive tooling.

So this allows developers to build more advanced and capable AI-powered applications faster.

Popularity

Points 16

Comments 6

What is this product?

Portia lets you create intelligent AI agents that can remember what they've done and what they've learned. Unlike basic AI bots that forget everything after each task, Portia keeps a 'memory' of past conversations and actions. It uses state management (think of it as a detailed diary) to track each agent's history. It also provides secure access with authentication, ensuring only authorized users can use the agents. Portia integrates with a huge library of tools (over 1000), giving your AI agents access to a wide variety of functions and information sources.

This is innovative because it goes beyond simple AI task automation. It allows for building persistent, intelligent agents capable of complex tasks, while providing secure access and extensive tool support.

How to use it?

Developers can use Portia to build custom AI assistants, automate complex workflows, and create intelligent systems. You can define agents with specific roles (e.g., a customer service agent, a data analyst) and provide them with access to tools they need. You can integrate Portia agents with your existing applications via APIs or SDKs, or use them independently. Portia provides an intuitive interface for defining agent behavior, managing tool access, and monitoring agent performance.

So this is useful for developers working on AI projects that require memory, security, and broad tool integration.

Product Core Function

· Stateful Agent Management: Portia's ability to remember past interactions enables agents to learn and adapt over time. This creates more intelligent and helpful AI assistants, suitable for complex tasks and long-running processes. For example, imagine a customer service agent that remembers past interactions with a customer, providing personalized assistance.

· Authentication and Access Control: The built-in authentication feature ensures that only authorized users can interact with and use AI agents. This protects sensitive data and prevents unauthorized use of AI-powered systems. In enterprise settings, this protects valuable data and ensures compliance with privacy regulations.

· Extensive Tool Integration: Portia supports over 1000 tools, including web scraping, data analysis, and various APIs. This allows agents to perform a wide range of tasks, access diverse data sources, and interact with various systems. Useful for automating complex workflows and enabling AI agents to solve a wide range of problems.

· Agent Orchestration and Task Management: Portia helps organize AI agents and their activities. It allows developers to define tasks for agents, manage agent interactions, and monitor agent performance. This is valuable for large-scale AI projects.

· API and SDK Integration: Portia lets developers integrate AI agent capabilities into their existing applications through APIs or SDKs, adding intelligent agents without rewriting their core codebase. This makes it easier to build more intelligent, interactive applications.
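The "stateful" and "authentication" ideas can be illustrated without any agent framework. This is a generic Python sketch, not Portia's SDK or API: `StatefulAgent`, `AgentRun`, the user allow-list, and the in-memory JSON snapshot store are all hypothetical stand-ins. The point is that every step is recorded and persisted, so a run can be resumed with its history instead of being re-initialized.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class AgentRun:
    """Hypothetical record of one agent run, persisted after every step."""
    run_id: str
    user: str
    history: list = field(default_factory=list)  # [tool, input, output] entries


class StatefulAgent:
    """Minimal sketch: an allow-list of users, named tools, and state saved after each step."""

    def __init__(self, tools: dict, authorized_users: set):
        self.tools = tools                        # tool name -> callable(str) -> str
        self.authorized_users = authorized_users
        self.store = {}                           # run_id -> JSON snapshot (stand-in for a database)

    def start(self, run_id: str, user: str) -> AgentRun:
        if user not in self.authorized_users:     # simple authorization check
            raise PermissionError(f"user {user!r} may not run this agent")
        run = AgentRun(run_id, user)
        self._save(run)
        return run

    def resume(self, run_id: str) -> AgentRun:
        # Rebuild the run from its last snapshot instead of starting from scratch.
        return AgentRun(**json.loads(self.store[run_id]))

    def step(self, run: AgentRun, tool_name: str, arg: str) -> str:
        output = self.tools[tool_name](arg)
        run.history.append([tool_name, arg, output])
        self._save(run)                           # persist after every step so the run can be resumed
        return output

    def _save(self, run: AgentRun) -> None:
        self.store[run.run_id] = json.dumps(asdict(run))


if __name__ == "__main__":
    agent = StatefulAgent(tools={"shout": str.upper}, authorized_users={"alice"})
    run = agent.start("run-1", "alice")
    agent.step(run, "shout", "hello")
    print(agent.resume("run-1").history)          # [['shout', 'hello', 'HELLO']]
```

Serializing the run to JSON after every step is what makes `resume` possible; swapping the dict for a database would let the state survive process restarts.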

Product Usage Case

· Customer Service Automation: Build an AI agent that can remember previous interactions, understand user context, and provide personalized support. This reduces the load on human support staff and improves customer satisfaction. It is able to answer complex inquiries, resolve issues, and guide users through complex processes.

· Data Analysis and Reporting: Create an AI agent that can connect to various data sources, extract relevant information, and generate reports. The agent learns from its past analyses, improving its accuracy and efficiency over time. Useful in analyzing large datasets, generating customized reports, and identifying trends.

· Workflow Automation in Business: Developers can use Portia to build systems that automate the repetitive parts of a business, from sending emails to entering data, adapting to certain situations along the way. This helps businesses run more smoothly with less manual work.

· Personalized Learning Platforms: Developers can use Portia to create an AI tutor that adapts to a student. It keeps a memory of what the student has learned and uses it to personalize instruction, for example by creating quizzes, giving instant feedback, and tailoring lessons to the student's progress and needs.

· Security Monitoring and Incident Response: Portia could be used to manage security alerts. For example, Portia agents could be designed to analyze logs, identify anomalies, and take action based on those anomalies. The 'memory' enables the agent to learn from previous incidents and refine its detection capabilities. This can improve response times and decrease the impact of security threats.

6

legacy-use: Agentic API Layer for Legacy Software

Author

schuon

Description

legacy-use bridges the gap between modern AI agents and outdated software, particularly software running on Windows. It creates an 'agentic API layer' that lets AI control legacy GUI-based applications by emulating a human's mouse and keyboard input. This addresses the challenge of automating tasks on systems that lack modern APIs, such as those still running on Windows XP, and lets companies bring their existing legacy systems into agent-driven workflows for data extraction and automation. It is built on Anthropic's Computer Use and has been extended to run in Windows and Linux environments.

Popularity

Points 15

Comments 1

What is this product?

legacy-use is a tool that lets AI programs 'talk' to old software. Imagine a robot that clicks buttons and types on your computer; instead of a physical robot, it's an AI. It does this for older software that lacks the integration features of today's programs, such as healthcare systems or financial tools. The innovation is in how the AI interacts with the software: by acting like a person using a mouse and keyboard. This matters because many businesses still rely on these older but important programs. The project builds on Anthropic's Computer Use and extends its capabilities to legacy tools on Windows and Linux, allowing companies to automate old processes and potentially save time and money.

How to use it?

Developers can integrate legacy-use into their projects by connecting it to the legacy systems, for example over RDP or VNC, with VPNs where needed. They then send instructions (prompts) to the agent, and the tool handles logging, monitoring, and data extraction. legacy-use lets the AI agent extract data and expose it as a REST API, and it provides guardrails that bring in human operators if something goes wrong. You could use it to automate data entry, generate reports, or connect old software to new systems. For example, with a very old accounting program, you could use legacy-use to generate compliance reports automatically or extract key financial data. This lets companies keep using important legacy software while still taking advantage of modern technology.

Product Core Function

· GUI Interaction Emulation: The core functionality involves simulating mouse clicks and keyboard inputs to control the legacy software. This is how the AI ‘interacts’ with the software, just like a human user would.

· Connection Infrastructure: This involves setting up the connections to the legacy systems, often using protocols like RDP or VNC. Think of it as setting up the 'doorway' for the AI to access the old software.

· Prompt Execution and Management: The system receives instructions (prompts) from the AI and executes them on the target software. It also handles the logging and monitoring of these actions.

· Data Extraction and API Exposure: legacy-use can extract data from the legacy software and expose it through a REST API. This allows other systems to access and use the data from the legacy software.

· Guardrails and Human Intervention: To ensure reliability, the system includes guardrails to allow human operators to step in if any issues arise. This safety net prevents the automation from going haywire.
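The pattern of putting a REST-style endpoint in front of GUI automation, with a guardrail that escalates to a human, can be sketched in a few lines of Python. This is not legacy-use's code or API: `GuiDriver` is a hypothetical stand-in that records clicks and keystrokes and reads from a fake screen instead of driving a real RDP or VNC session, and the `/invoices/<id>` route, the `lookup_invoice` task, and the 202 "needs review" response are invented for illustration.

```python
import json
from dataclasses import dataclass, field


@dataclass
class GuiDriver:
    """Hypothetical stand-in for the agent's mouse/keyboard layer.

    It records actions and reads from a fake screen so the sketch runs anywhere.
    """
    screen: dict                                  # field label -> text currently shown
    actions: list = field(default_factory=list)

    def click(self, target: str) -> None:
        self.actions.append(("click", target))

    def type_text(self, text: str) -> None:
        self.actions.append(("type", text))

    def read_field(self, label: str) -> str:
        return self.screen.get(label, "")


def lookup_invoice(driver: GuiDriver, invoice_id: str) -> dict:
    """Hypothetical task: search for an invoice in the legacy app and read its total."""
    driver.click("Search box")
    driver.type_text(invoice_id)
    driver.click("Find")
    return {"invoice_id": invoice_id, "total": driver.read_field("Total")}


def handle_request(driver: GuiDriver, path: str) -> tuple[int, str]:
    """Map a REST-style GET /invoices/<id> onto the GUI task, with a simple guardrail."""
    prefix = "/invoices/"
    if not path.startswith(prefix):
        return 404, json.dumps({"error": "not found"})
    result = lookup_invoice(driver, path[len(prefix):])
    try:
        float(result["total"])                    # guardrail: the extracted value must parse
    except ValueError:
        # Don't return garbage; flag the job for a human operator instead.
        return 202, json.dumps({"status": "needs_human_review", "partial": result})
    return 200, json.dumps(result)


if __name__ == "__main__":
    driver = GuiDriver(screen={"Total": "129.50"})
    print(handle_request(driver, "/invoices/INV-7"))
    # (200, '{"invoice_id": "INV-7", "total": "129.50"}')
```

In a real deployment the handler would sit behind an HTTP server and the guardrail would check more than whether a number parses, but the shape is the same: callers see a normal API, and anything the agent cannot verify goes to a person instead of back to the caller.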

Product Usage Case

· Healthcare Automation: A medical provider automated a significant portion of their administrative work using GPT and legacy-use. This demonstrates how AI can streamline tedious tasks in the healthcare industry, freeing up staff for more important duties.

· Financial Compliance Reporting: An accounting firm integrated legacy-use with an older financial application to generate compliance reports automatically. This highlights the potential to modernize and automate processes in the financial sector, saving time and reducing the risk of errors.

7

Vectra: The AI Board Babysitter for Developers

Author

thomask1995

Description

Vectra is an AI-powered tool designed to automate ticket updates in project management systems by analyzing every code commit. It addresses the common pain points of manual ticket updates, outdated information, and lack of visibility into team activities. The core innovation lies in its ability to intelligently interpret code changes and automatically update tickets, create new ones, and provide summaries, thus freeing developers from tedious paperwork. So this can help developers to stay focused on coding, improve project tracking, and enhance team collaboration.

Popularity

Points 8

Comments 8

What is this product?

Vectra uses Artificial Intelligence (AI) to streamline project management. It connects to your code repository (like GitHub) and project management tools (like Linear). When a developer pushes code, Vectra's AI analyzes the commit. Based on the code changes, it automatically updates the relevant tickets, adds comments, and links the commit to the ticket. This saves developers time and ensures that project information is always up-to-date. Vectra understands the context of your code changes and translates them into meaningful updates in your project management system. So this means you spend less time on administrative tasks and more time coding.

How to use it?

Developers integrate Vectra by linking their GitHub repository, their project management tool (such as Linear or another supported platform), and optionally Slack; setup takes only a couple of minutes. Once connected, Vectra works in the background, analyzing every commit, updating the corresponding tickets automatically, and notifying teams of updates via Slack. So this simplifies the developer's workflow by automating tedious tasks, letting developers focus on development.

Product Core Function

· Automated Ticket Updates: This core feature analyzes code commits and automatically updates corresponding project management tickets. It saves developers from manually updating tickets, ensuring accuracy and saving time. This is useful because it ensures project information is always up-to-date, thus improving efficiency and reducing the administrative burden on developers.

· Ticket Creation: If a ticket does not exist for a particular task, Vectra can create one based on the code changes. This feature ensures that all tasks are tracked and managed, even if developers forget to create a ticket. It avoids missed tasks and ensures nothing slips through the cracks in the project's management.

· Commit Tagging: The AI links associated commits to the project tickets. This helps to provide a clear history of all code changes linked to a specific task, which improves traceability and makes it easier to understand how a feature has evolved over time. So you can easily see the code changes that support a given ticket, thus improving project transparency and tracking.

· Contextual Comments: Vectra adds comments to tickets that explain what the code changes involve. These explanations give teams the context behind each change, which helps with code reviews, knowledge sharing, and onboarding new team members.
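
Vectra decides which ticket a commit belongs to with an AI model that reads the change itself. For comparison, the simplest deterministic form of commit tagging relies on a ticket key written in the commit message. The sketch below assumes Linear-style keys such as ENG-42; the regular expression and the function name are hypothetical and not taken from Vectra.

```python
import re
from collections import defaultdict

# Hypothetical ticket-key pattern: an uppercase team prefix, a dash, and a number (e.g. "ENG-42").
TICKET_KEY = re.compile(r"\b([A-Z][A-Z0-9]+-\d+)\b")

def link_commits_to_tickets(commits):
    """Map each ticket key to the SHAs of commits whose messages mention it.

    `commits` is an iterable of (sha, message) pairs.
    """
    links = defaultdict(list)
    for sha, message in commits:
        # A set avoids linking the same commit twice when a key is repeated.
        for key in sorted(set(TICKET_KEY.findall(message))):
            links[key].append(sha)
    return dict(links)

commits = [
    ("a1b2c3", "ENG-42: add retry to payment client"),
    ("d4e5f6", "Fix typo in README"),
    ("0789ab", "ENG-42 ENG-57 share backoff helper"),
]
print(link_commits_to_tickets(commits))
# {'ENG-42': ['a1b2c3', '0789ab'], 'ENG-57': ['0789ab']}
```

Commits without a key, like the README fix, are exactly the cases a content-aware approach such as Vectra's is meant to cover.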

Product Usage Case

· Automating Release Notes: Vectra can update release notes based on code changes. For example, when a new feature lands in the code, Vectra generates a description of it in the release notes so everyone knows what's new in the release. So this helps teams keep release notes accurate and up-to-date with little manual effort.

· Improving Sprint Management: By automatically updating tickets, Vectra ensures that all team members are aware of progress and any potential delays. During a sprint, developers push code and Vectra automatically updates the project tickets. Project managers can easily monitor progress, thus reducing the amount of time spent chasing updates and giving everyone better visibility into project status.

· Onboarding New Developers: New developers can use the comments and commit links that Vectra adds to get up to speed on the code and the project status. They can review a change and immediately see which task it belongs to, which shortens the onboarding process.

8

MapScroll: AI-Powered Storytelling Maps

Author

shekharupadhaya

Description

MapScroll is a tool that transforms simple prompts, like "Marco Polo's route" or "WWII sites in France", into interactive, shareable story maps. It leverages AI to automatically geocode locations, gather images and articles, and create a narrative-driven map. This solves the problem of manually creating complex maps with Google My Maps, which is time-consuming and lacks the storytelling aspect. So you can quickly create visually engaging maps for education, travel planning, or exploring historical narratives.

Popularity

Points 7

Comments 5

What is this product?

MapScroll uses AI to understand your search query, find relevant locations (geocoding), and then searches the web for images and articles associated with those locations. It then presents these locations on a map with accompanying images and text snippets, building a story around your initial prompt. The innovation lies in its automation and ability to weave together location data, images, and text into a cohesive narrative. So, you get a map that tells a story, not just a collection of pins.

How to use it?

You simply type in a prompt like "The Silk Road" or "Ancient Roman ruins in Italy." MapScroll then generates a map with markers for relevant locations, accompanied by images and linked sources. You can then refine the map by adding, removing, or adjusting the information. The finished map can be easily shared. So, you can create a visual narrative, ideal for education, travel planning, or personal projects.

Product Core Function

· AI-Powered Prompt Processing: The core of MapScroll is its ability to interpret natural language prompts and extract location information from them. It uses Natural Language Processing (NLP) techniques to grasp the intent behind a query such as "Marco Polo's route", so users can build complex map narratives from simple descriptions without supplying precise location data. So, you describe what you want in plain language and get back a usable map.

· Automated Geocoding: MapScroll automatically translates textual location descriptions into geographical coordinates. It finds latitude and longitude data for the places mentioned in the user prompt. This feature eliminates the need for manual pin-dropping and ensures that the map accurately reflects the locations. So, it saves time and effort by automating the process of plotting locations.

· Web Data Extraction and Aggregation: The tool automatically gathers images, articles, and other relevant information from the web related to each location. This includes scraping websites for relevant content. This functionality enriches the map with visual and contextual information. So, it offers a comprehensive experience, providing not only the location but also the story behind it.

· Interactive Storytelling Interface: MapScroll provides an interface where users can explore the map, view images, read text snippets, and share the story. So, it gives users a simple way to create and share engaging, visually appealing narratives.
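
After geocoding, a story map is essentially an ordered list of located stops with captions and sources. MapScroll's internal format is not described, so this sketch assumes GeoJSON, the standard interchange format that most web map libraries can load. The Stop fields and the function name are hypothetical, and the coordinates are approximate.

```python
import json
from dataclasses import dataclass

@dataclass
class Stop:
    name: str
    lat: float
    lon: float
    caption: str = ""
    source_url: str = ""

def story_map_geojson(title, stops):
    """Build a GeoJSON FeatureCollection: one Point per stop plus a LineString route."""
    features = []
    for order, stop in enumerate(stops, start=1):
        features.append({
            "type": "Feature",
            # GeoJSON (RFC 7946) orders positions as [longitude, latitude].
            "geometry": {"type": "Point", "coordinates": [stop.lon, stop.lat]},
            "properties": {"order": order, "name": stop.name,
                           "caption": stop.caption, "source": stop.source_url},
        })
    if len(stops) > 1:
        # Connect the stops in narrative order so the map can draw the route.
        features.append({
            "type": "Feature",
            "geometry": {"type": "LineString",
                         "coordinates": [[s.lon, s.lat] for s in stops]},
            "properties": {"name": f"{title} (route)"},
        })
    return {"type": "FeatureCollection", "features": features}

stops = [
    Stop("Venice", 45.44, 12.33, "Starting point"),
    Stop("Acre", 32.93, 35.08, "Port on the Levant coast"),
]
print(json.dumps(story_map_geojson("Marco Polo's route", stops), indent=2))
```

The [longitude, latitude] order is a common source of bugs, because people (and many geocoding results) usually state latitude first.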

Product Usage Case

· Educational Use: A teacher can use MapScroll to create a map of the voyages of Christopher Columbus, with images and descriptions of the places he visited. The students can explore the map during the lesson, which makes learning more interactive. So, this enables educators to transform static geography lessons into dynamic storytelling experiences.

· Travel Planning: A user can create a map of places to visit during a trip to Paris. The map includes photos, links to articles, and descriptions of attractions. The user can share the map with friends or use it as a personal travel guide. So, it assists travelers in creating customized travel plans and allows them to visually explore potential destinations.

· Historical Research: A historian can use MapScroll to create a map of the key battles during the American Civil War, including images, links to primary sources, and descriptions of events. This lets them visually track the battles and analyze the war's course. So, it empowers researchers to present complex historical data in a clear, engaging, and interactive format.

9

StartupList EU: A Public Directory of European Startups

Author

umbertotancorre

Description

StartupList EU is a publicly accessible directory designed to connect founders, investors, and operators within the European startup ecosystem. It addresses the fragmentation often experienced in Europe, making it easier to discover and learn about early-stage startups. The core innovation lies in providing a centralized, searchable database with detailed startup profiles, including team size, funding, revenue, and more, across various European countries, not just major hubs. This approach facilitates transparency and accessibility within the ecosystem.

Popularity

Points 7

Comments 4

What is this product?

StartupList EU is essentially a European startup search engine. It allows users to find startups based on various criteria such as country, industry, size, and funding. Its technical foundation is likely a database backend (possibly using technologies like PostgreSQL or MySQL) to store the startup information and a web-based frontend (potentially built with frameworks like React or Vue.js) to allow searching and browsing. The innovation is in aggregating and presenting this data in a user-friendly way to solve the discoverability problem inherent in the fragmented European startup landscape. So this lets investors, researchers and even potential employees easily find opportunities, and lets startups gain visibility.

How to use it?

Developers can use StartupList EU as a data source or as inspiration. They could integrate its data into their own platforms or build tools for analyzing the European startup ecosystem; for example, a Chrome extension that shows startup information while browsing company websites. They could also collect the listings for further analysis or for use in other systems. Developers looking for examples of directory implementations can learn from the project's structure and user interface. So this gives developers data for analysis and a starting point for building other tools.

Product Core Function

· Startup Submission: Founders can freely submit their startups to the directory. This builds the directory's dataset. So this lets startups increase their visibility.

· Advanced Search: Users can search by country, industry, name, team size, and business model. This provides easy data filtering. So this lets users find the startups they are interested in quickly.

· Detailed Startup Profiles: Each startup profile includes data such as team size, category, funding, revenue, location, and founders. So this gives users a detailed picture of each startup in one place.

· Cross-EU Coverage: The directory covers startups across the entire European Union, not just major tech hubs. So this gives startups outside the usual hubs more exposure and makes them easier to find.
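
The advanced search described above boils down to combining optional filters with AND. Here is a minimal in-memory sketch; the Startup fields, the ISO country codes, and the sample companies are hypothetical, and a real directory would push these conditions into a database query (the article only guesses at PostgreSQL or MySQL).

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Startup:
    name: str
    country: str         # ISO 3166-1 alpha-2 code, e.g. "IT"
    industry: str
    team_size: int
    business_model: str  # e.g. "B2B" or "B2C"

def search(startups, *, country: Optional[str] = None, industry: Optional[str] = None,
           name_contains: Optional[str] = None, min_team: int = 0,
           max_team: Optional[int] = None, business_model: Optional[str] = None):
    """Return startups matching every filter that is set; unset filters are ignored."""
    results = []
    for s in startups:
        if country and s.country != country:
            continue
        if industry and s.industry.lower() != industry.lower():
            continue
        if name_contains and name_contains.lower() not in s.name.lower():
            continue
        if s.team_size < min_team or (max_team is not None and s.team_size > max_team):
            continue
        if business_model and s.business_model != business_model:
            continue
        results.append(s)
    return results

startups = [
    Startup("Alpha Pay", "IT", "Fintech", 5, "B2B"),
    Startup("Beta Health", "DE", "Health", 40, "B2C"),
    Startup("Gamma Ledger", "IT", "fintech", 12, "B2C"),
]
print([s.name for s in search(startups, country="IT", industry="fintech", min_team=10)])
# ['Gamma Ledger']
```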

Product Usage Case

· Data Analysis Tool: A developer could use the directory's data to create a tool that visualizes startup trends, funding patterns, and geographic concentrations within Europe. So this helps create data-driven tools and insights.

· Investor Due Diligence: An investor could use StartupList EU to quickly identify potential investment opportunities within a specific industry or country. So this helps to streamline the investment process.

· Market Research: A researcher could use the directory to analyze the composition and growth of the European startup ecosystem. So this makes it possible to extract and analyze key metrics.

10

Secnote - Self-Destructing Encrypted Notes

Author

rahan_r

Description

Secnote is a web application for sending confidential messages that disappear automatically after being read. Its core is end-to-end encryption with XSalsa20-Poly1305, an authenticated encryption scheme that pairs the XSalsa20 stream cipher with the Poly1305 message authenticator, so that only the sender and the recipient can read the message. Each note is accessed through a single-use link, which removes the need for user accounts and reduces the risk of data breaches. This addresses the problem of sharing sensitive information, such as passwords or temporary credentials, without leaving a permanent record.

Popularity

Points 3

Comments 6

What is this product?

Secnote is a web-based tool that lets you send messages that are encrypted and self-destruct after being read. It uses a strong encryption method (XSalsa20-Poly1305) to scramble your message, so only the intended recipient can decipher it. Once the message is viewed, it's gone. So, what's innovative? The simplicity and the focus on security. No accounts are needed, and the message disappears automatically. It's about making it super easy and safe to share sensitive data. Therefore, it's useful when you need to share information that you don't want to be stored permanently.

How to use it?

Developers can use Secnote to share sensitive information during development, testing, or collaboration: for example, API keys, temporary credentials, or debugging information that should not end up stored insecurely. You create a note, paste your message, and the application generates a unique link to share with the recipient. Once the recipient opens the link and reads the message, it self-destructs. The same flow could serve CI/CD pipelines that need to pass credentials securely, or chat applications when sensitive data has to be shared. So, it's for anyone who wants to share information confidentially and safely.

Product Core Function

· End-to-end encryption with XSalsa20-Poly1305: This ensures that messages are encrypted before they leave the sender's browser and can only be decrypted by the recipient. This protects the data even if the server is compromised. It's useful for sending sensitive data like passwords and API keys.

· Self-destructing messages: Once a message is read, it is automatically deleted from the server. This is essential for one-time credentials and other temporary information that should not be stored permanently.

· Single-use links: Each note is reachable only through a unique link that works once. Because access is tied to the link itself, no user accounts or passwords are needed, which keeps sharing both simple and secure.

· No sign-up required: The tool requires no user accounts, enhancing privacy and making it quick and easy to use. This reduces barriers to entry and encourages usage.
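
The server side of a burn-after-read service can be very small. This minimal sketch assumes (as the description implies but does not spell out) that the XSalsa20-Poly1305 encryption happens in the browser, for example with libsodium's crypto_secretbox, so the server only ever stores opaque ciphertext. The class name, the in-memory dictionary, and the 24-hour expiry are hypothetical; a production service would use persistent storage with the same atomic read-and-delete step.

```python
import secrets
import threading
import time

class BurnAfterReadStore:
    """In-memory store for client-side-encrypted notes that can be fetched exactly once."""

    def __init__(self, ttl_seconds: float = 24 * 3600):
        self._notes = {}  # note_id -> (ciphertext, expires_at)
        self._lock = threading.Lock()
        self._ttl = ttl_seconds

    def put(self, ciphertext: bytes) -> str:
        # 16 random bytes (128 bits) make note IDs unguessable.
        note_id = secrets.token_urlsafe(16)
        with self._lock:
            self._notes[note_id] = (ciphertext, time.monotonic() + self._ttl)
        return note_id

    def take(self, note_id: str):
        """Return the ciphertext and delete it atomically; None if already read or expired."""
        with self._lock:
            entry = self._notes.pop(note_id, None)  # read and delete in one step
        if entry is None:
            return None
        ciphertext, expires_at = entry
        if time.monotonic() > expires_at:
            return None  # expired notes are discarded unread
        return ciphertext

store = BurnAfterReadStore()
note_id = store.put(b"opaque-ciphertext-bytes")
print(store.take(note_id))  # b'opaque-ciphertext-bytes'
print(store.take(note_id))  # None: the note was burned on first read
```

Holding the lock around the pop matters: two recipients racing on the same link can never both receive the note.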

Product Usage Case

· Sharing API Keys: A developer needs to securely provide an API key to a teammate. Using Secnote, they can create an encrypted note with the API key and send the link. Once the teammate accesses the key, it's no longer accessible through the link. So, the API key is kept secret.

· Sending Temporary Credentials: An IT administrator needs to provide temporary login credentials for a system. They can generate an encrypted note and send the link, and the note is destroyed once the recipient has read the credentials. This avoids the risk of those credentials being left behind.

· Bug Reporting with Sensitive Data: When reporting a bug, a developer needs to share sensitive information (e.g., database connection strings) with support staff. Using Secnote, the information is encrypted, and after the support team has reviewed the data, it is removed.

11

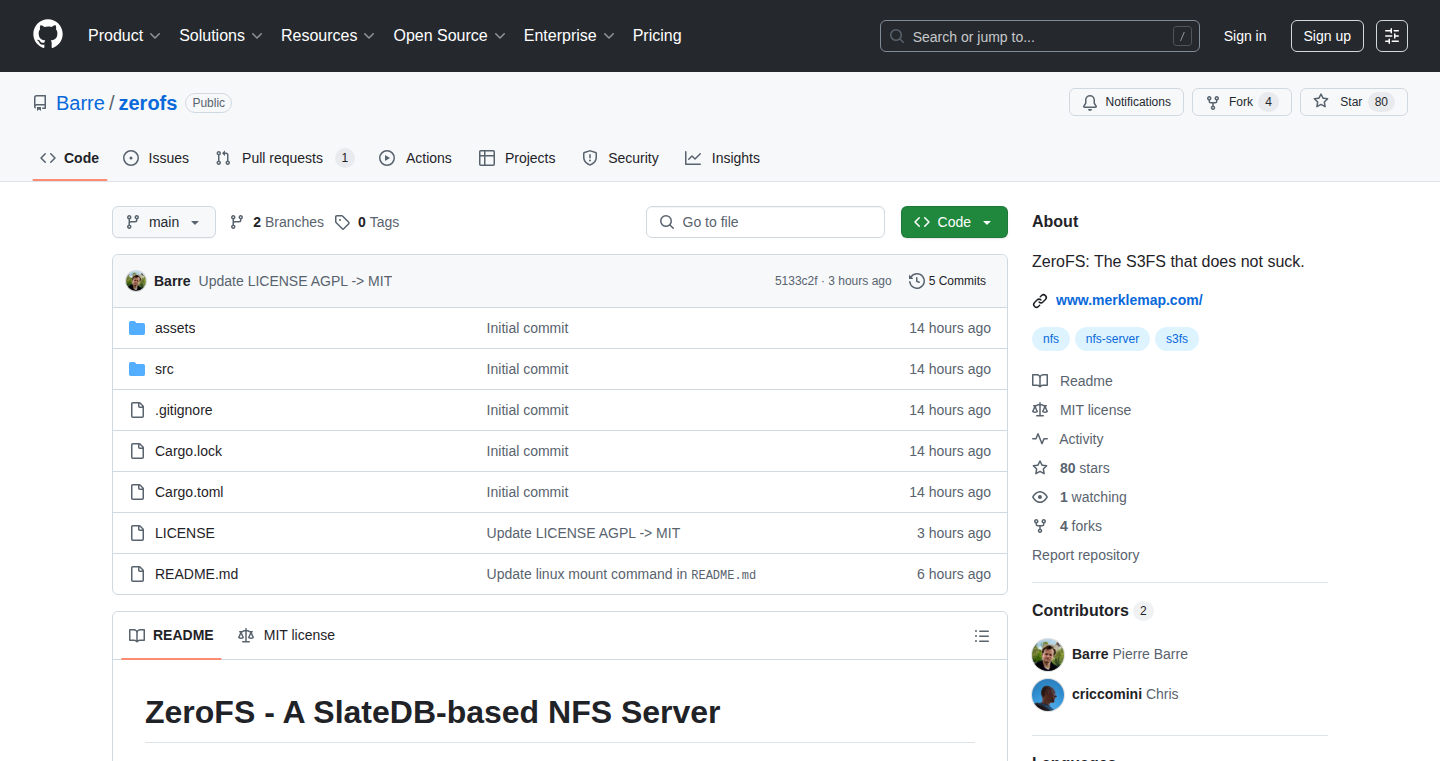

ZeroFS: A Non-Sucking S3 File System

Author

riccomini

Description

ZeroFS is a file system that lets you treat Amazon S3, a cloud storage service, like a local hard drive. The innovative aspect is that ZeroFS is designed to be much faster and more reliable than previous S3 file systems. It avoids common performance bottlenecks by carefully managing how files are accessed and cached, making it a more practical solution for developers who need to work with large datasets in the cloud. So, this is useful if you want faster access to your data stored in the cloud.

Popularity

Points 9

Comments 0

What is this product?

ZeroFS works by caching data locally and fetching only the necessary parts of a file from S3 when they are needed. It uses a technique called 'chunking', where files are broken into smaller pieces, which allows parallel downloads and improves performance. It also applies caching strategies that minimize the number of requests to S3, saving time and money. This matters because existing S3 file systems often suffer from slow speeds due to network latency and inefficient data retrieval. So, you get faster access to your files stored in the cloud.

How to use it?

Developers can 'mount' ZeroFS like any other file system on their Linux or macOS machines. After mounting, you can access your S3 buckets just like regular directories and files. You can read, write, and execute files stored in S3 through ZeroFS. Integration is straightforward: you need to install the ZeroFS software, configure it with your S3 credentials, and then mount your S3 bucket to a local directory. This is beneficial for developers who need to process large datasets or run applications that require fast access to cloud-based storage. So, it means you can use cloud storage in a way that feels as quick and responsive as your local hard drive.

Product Core Function

· Efficient Chunking: ZeroFS breaks down files into smaller chunks for parallel downloading. This increases the speed of file access, especially for large files. The value is faster data retrieval from S3, which is a major win when working with large files or datasets, speeding up your workflow.

· Smart Caching: ZeroFS intelligently caches frequently accessed data locally. This reduces the number of requests to S3 and minimizes latency. This means faster read operations and cost savings. This is great for applications that repeatedly access the same files.

· Background Uploads: ZeroFS handles uploads to S3 in the background, so write operations do not block on the network and the system stays responsive. So, you can save files and keep working instead of waiting for uploads to finish.

· Metadata Management: ZeroFS caches file metadata locally to speed up directory listings and file information lookups, which makes the whole file system feel more responsive. So, browsing directories and checking file details is fast, not just reading file contents.
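
The chunking and caching ideas above can be sketched together: a read of any byte range touches only the chunks that overlap it, and recently used chunks are served from a local LRU cache instead of S3. This illustrates the generic technique the description outlines, not ZeroFS's code. The chunk size, the fetch callback (standing in for an S3 ranged GET), and the class name are assumptions, and fetching chunks in parallel is left out for brevity.

```python
from collections import OrderedDict

CHUNK_SIZE = 4  # tiny for demonstration; real systems use far larger chunks (assumption)

class ChunkCache:
    """LRU cache of fixed-size file chunks, fetched on demand via `fetch_chunk`."""

    def __init__(self, fetch_chunk, capacity: int, chunk_size: int = CHUNK_SIZE):
        self._fetch = fetch_chunk    # chunk_index -> bytes; stands in for an S3 ranged GET
        self._cache = OrderedDict()  # chunk_index -> bytes, least recently used first
        self._capacity = capacity
        self._chunk_size = chunk_size
        self.fetches = 0             # number of remote requests made so far

    def _get_chunk(self, index: int) -> bytes:
        if index in self._cache:
            self._cache.move_to_end(index)  # mark as most recently used
            return self._cache[index]
        data = self._fetch(index)
        self.fetches += 1
        self._cache[index] = data
        if len(self._cache) > self._capacity:
            self._cache.popitem(last=False)  # evict the least recently used chunk
        return data

    def read(self, offset: int, length: int) -> bytes:
        """Read `length` bytes at `offset`, touching only the chunks that overlap the range."""
        if length <= 0:
            return b""
        first = offset // self._chunk_size
        last = (offset + length - 1) // self._chunk_size
        buf = b"".join(self._get_chunk(i) for i in range(first, last + 1))
        start = offset - first * self._chunk_size
        return buf[start:start + length]

remote = b"hello, object storage!"
cache = ChunkCache(lambda i: remote[i * CHUNK_SIZE:(i + 1) * CHUNK_SIZE], capacity=8)
print(cache.read(7, 6), cache.fetches)  # b'object' 3
print(cache.read(8, 4), cache.fetches)  # b'bjec' 3 (served from cache)
```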

Product Usage Case

· Data Science Workflows: A data scientist can use ZeroFS to access a large dataset stored in S3. ZeroFS significantly speeds up data loading and processing, making it easier to run machine learning models or analyze data. So, you can analyze your data more quickly.

· Web Application Hosting: Developers hosting a web application can use ZeroFS to serve static assets (images, JavaScript, etc.) from S3. ZeroFS provides faster access to these assets, improving the application's loading time. So, your users get a faster experience.

· Backup and Recovery: ZeroFS can be used to create backups of local data to S3. It allows for efficient and reliable backups with easy access to the backed-up files. So, your important files will be safe in the cloud and easy to retrieve.

12

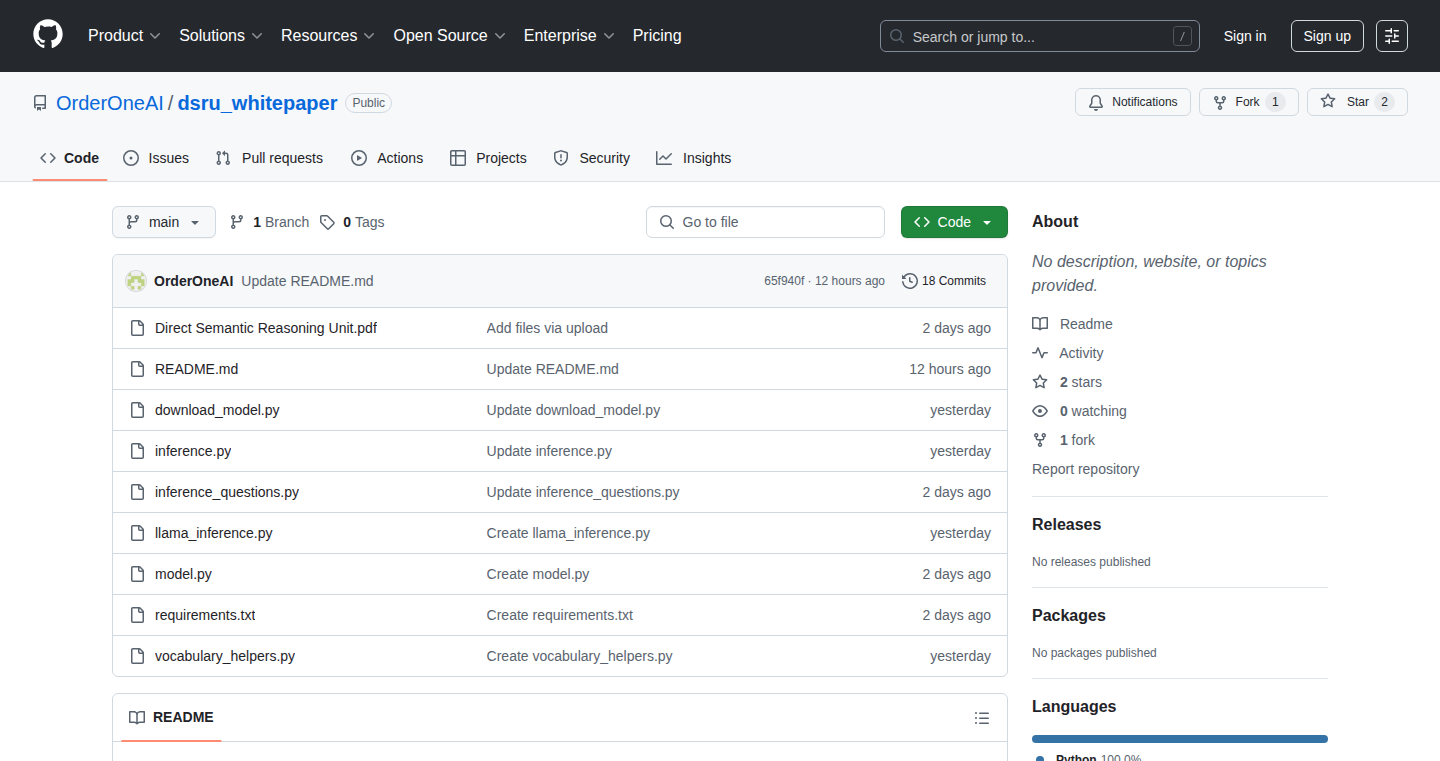

DSRU: Task-Oriented Reasoning in Latent Space

Author

orderone_ai

Description

This project introduces a new type of AI model that can understand and reason about entire tasks incredibly fast. Unlike the popular "Transformer" models (like those used in ChatGPT) which rely on attention mechanisms, tokens, and softmax, this model operates differently, achieving remarkable speed. It processes information in batches, taking only around 13 milliseconds for a batch of 10 examples, and an average of 1.3 milliseconds per example. The model focuses on understanding the core problem, rather than processing everything step-by-step. This offers a unique perspective on how AI can be built for specific tasks.
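
The two latency figures quoted above are consistent with each other, and they imply a rough throughput. A quick check, assuming the 13 ms batch time holds steadily (real numbers depend on hardware and batch size):

$$
t_{\text{per example}} = \frac{13\ \text{ms}}{10\ \text{examples}} = 1.3\ \text{ms}, \qquad
\text{throughput} \approx \frac{10\ \text{examples}}{0.013\ \text{s}} \approx 769\ \text{examples/s}
$$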

Popularity

Points 2

Comments 6

What is this product?

This is a new AI model, called DSRU (for its internal reasoning structure), designed to perform reasoning tasks quickly. It skips the core techniques of Transformer-based large language models (the foundation of many modern AI tools) and instead focuses on understanding the complete task. The model is available for download, together with detailed performance results on tasks such as sentiment analysis and emotion classification. Think of it as a specialized AI brain built for speed and efficiency. So this is useful because it offers a different, potentially faster and more efficient way to approach complex AI problems.

How to use it?

Developers can download the model and its associated scripts from the provided repository. They can then integrate it into their projects. The project also includes detailed benchmarks and training datasets, allowing developers to replicate and adapt the results. This means you could take the underlying technology and build your own specific tools for tasks. For example, if you needed to quickly classify customer support tickets by urgency, you could adapt this model. The model is designed to run on moderate hardware (around 10GB of VRAM), making it accessible for many developers. So this is useful for anyone who wants to experiment with new AI architectures, or build specialized tools that need to make quick decisions.

Product Core Function

· Fast Batch Processing: The model can process multiple examples (a batch) at once, making it quick for real-world use. It handles a batch of 10 examples in about 13 milliseconds. This leads to faster analysis and decision-making. So this is useful if you need quick results for a large number of inputs, like analyzing many customer reviews at once.

· Task-Specific Performance: The model has been tested on a range of tasks, from sentiment analysis to identifying sarcasm. This shows its adaptability to different types of problems. So this is useful for any application that requires a quick understanding of text, like filtering spam or categorizing information.

· Open Source and Reproducible Results: The project provides all the data, model, and scripts for developers to test, replicate and build upon. So this is useful for researchers and developers looking to learn and adapt a new AI architecture.

· Efficient Hardware Usage: The model can run on modest hardware (about 10 GB of VRAM), less than many large models require, which makes it accessible to a wider audience. So this is useful if you want to build an AI application but don't want to invest in expensive hardware.

· Reasoning in Latent Space: The model reasons in a hidden, or "latent," space. This allows it to quickly understand the problem and arrive at a solution. So this is useful because it demonstrates an alternative to traditional AI models, which can be important for building efficient and specialized AI applications.

Product Usage Case

· Sentiment Analysis Tool: A company wants to automatically analyze customer feedback to understand customer satisfaction. They could use the DSRU model to quickly classify reviews as positive, negative, or neutral, allowing them to instantly gauge customer opinion. So this is useful because you can get quick insights from large amounts of text data.

· Customer Support Ticket Prioritization: A support team needs to identify urgent customer issues. They could use the DSRU model to classify support tickets based on urgency, allowing the team to quickly address the most critical issues. So this is useful for quickly identifying high-priority tasks.

· Content Filtering System: A website wants to automatically filter inappropriate content. They could use the DSRU model to detect toxic or harmful language in user-generated content. So this is useful because you can automate the moderation of content to protect your users and maintain a safe environment.

· Domain Classification for Research: A research team studying different text genres can use the model to categorize documents into relevant domains. So this is useful because it helps researchers to categorize and organize their data more efficiently.

13

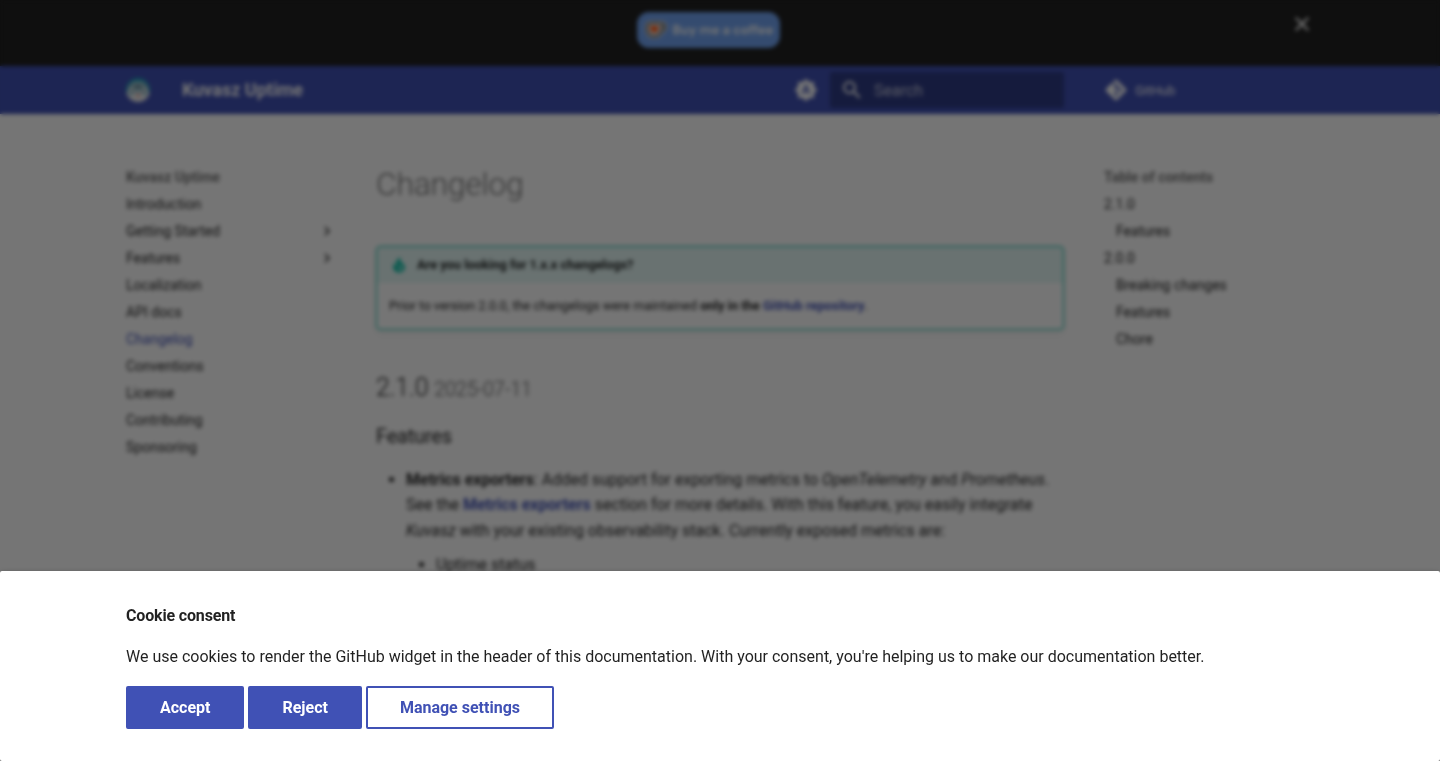

Kuvasz: Cloud-Native Uptime and SSL Monitoring with Prometheus & OpenTelemetry

Author

csirkezuza

Description

Kuvasz is an open-source tool for monitoring the uptime and SSL certificates of websites and services. It integrates with popular observability tools such as Prometheus and OpenTelemetry, so its monitoring data flows into the systems developers already run. The key innovation is a straightforward, cloud-native monitoring solution that connects seamlessly to a developer's existing setup. So, you can quickly identify when a website goes down or an SSL certificate is about to expire, without building an entire monitoring system from scratch.

Popularity

Points 6

Comments 0

What is this product?

Kuvasz is like a watchdog for your websites and services. It constantly checks if your websites are online and if the security certificates (SSL) are valid. It's built with modern technology (cloud-native) and integrates directly with Prometheus and OpenTelemetry, which are popular tools for collecting and visualizing data about how your systems are performing. It leverages existing open-source tools to avoid the need for a full-fledged monitoring system. So, you get a reliable way to monitor critical aspects of your online presence.

How to use it?

If you already have a system for monitoring your infrastructure (such as a dashboard showing how your servers are doing), Kuvasz can plug into it. You configure Kuvasz to check your websites' uptime and SSL certificate validity, and it makes the results of these checks available to your existing system through Prometheus and OpenTelemetry. You then get alerts and visual representations of your websites' status within your familiar monitoring tools. So, you don't have to learn a new system, and your alerts integrate seamlessly.

Product Core Function

· Uptime Monitoring: Kuvasz regularly checks if your websites and services are available. It will alert you if a website becomes unavailable. This helps developers identify and fix issues quickly. So, you can minimize downtime and keep users happy.

· SSL Certificate Monitoring: Kuvasz monitors the expiration dates of SSL certificates. It alerts you when a certificate is about to expire. This ensures your websites remain secure and accessible. So, you avoid the embarrassment of broken HTTPS connections and security warnings.

· Prometheus Integration: Kuvasz makes its monitoring data available to Prometheus, so you can collect metrics about uptime, SSL certificate validity, and other important factors. So, you get comprehensive data for detailed analysis and troubleshooting.

· OpenTelemetry Integration: Kuvasz supports OpenTelemetry, enabling integration with a wide range of observability backends. This gives you the flexibility to choose the monitoring and tracing tools that best suit your needs. So, you are not locked into a specific monitoring platform.

· Cloud-Native Design: Built with cloud-native principles, making it easy to deploy and manage in cloud environments. This simplifies the setup and maintenance process. So, you can easily scale your monitoring as your needs grow.
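
The two checks above can be sketched with Python's standard library: read a server certificate's notAfter date, convert it to days remaining, and render the results in the Prometheus text exposition format. This is not Kuvasz's implementation (Kuvasz has its own metric names); the function names and the monitor_up / monitor_ssl_days_left metrics are hypothetical, and label values are assumed to need no escaping.

```python
import socket
import ssl

def fetch_not_after(host: str, port: int = 443, timeout: float = 5.0) -> str:
    """Connect over TLS and return the server certificate's notAfter string.

    With the default context, an expired or untrusted certificate makes the
    handshake raise ssl.SSLCertVerificationError, which a monitor would
    record as a failed check.
    """
    ctx = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]

def days_until_expiry(not_after: str, now: float) -> float:
    """Days left before notAfter (e.g. "Jul 14 12:00:00 2025 GMT"); negative once expired."""
    expires_at = ssl.cert_time_to_seconds(not_after)  # seconds since the epoch, UTC
    return (expires_at - now) / 86400

def render_metrics(checks: dict) -> str:
    """Render {monitor_name: (is_up, days_left)} in the Prometheus text format."""
    lines = [
        "# HELP monitor_up Whether the last uptime check succeeded (1) or failed (0).",
        "# TYPE monitor_up gauge",
    ]
    for name, (is_up, _) in sorted(checks.items()):
        lines.append(f'monitor_up{{monitor="{name}"}} {1 if is_up else 0}')
    lines += [
        "# HELP monitor_ssl_days_left Days until the TLS certificate expires.",
        "# TYPE monitor_ssl_days_left gauge",
    ]
    for name, (_, days_left) in sorted(checks.items()):
        lines.append(f'monitor_ssl_days_left{{monitor="{name}"}} {days_left:.1f}')
    return "\n".join(lines) + "\n"

# Static example data; in a real monitor these values would come from live checks.
print(render_metrics({"homepage": (True, 42.5), "api": (False, 3.0)}), end="")
```

An alert rule such as "days left below 14" is then a plain threshold on the gauge, evaluated by whatever alerting stack already reads these metrics.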

Product Usage Case

· A small business that relies heavily on its website can use Kuvasz to ensure it's always available. By integrating with their existing monitoring system (using Prometheus, for example), they can receive immediate alerts if their website goes down, allowing them to quickly address the issue. So, a business can prevent lost revenue and maintain customer trust.

· A software development team uses Kuvasz to monitor the SSL certificates of its production websites. They set up alerts to notify them well in advance of certificate expirations. This helps them prevent service disruptions caused by expired certificates. So, they can ensure the security and availability of their applications.

· A DevOps engineer uses Kuvasz in a Kubernetes cluster. They deploy Kuvasz as a container and configure it to monitor all the services running in the cluster. They integrate Kuvasz with their existing monitoring dashboards. This provides them with a single pane of glass for monitoring the health and performance of their applications. So, they get a complete view of their infrastructure's performance.

· A developer is looking to build a new monitoring system but does not want to spend too much time on basic uptime and SSL checks. Kuvasz offers a ready solution with seamless integration capabilities through Prometheus and OpenTelemetry. So, the developer can focus on specific functionality.

14

kiln: Git-Native, Age-Encrypted Secrets Manager


Author

pacmansyyu

Description

kiln is a command-line tool for securely managing environment variables, which often contain sensitive information such as API keys and passwords. Its core innovation is a Git-native approach combined with age encryption. Instead of relying on an external secret management service or leaving secrets in plain text, kiln encrypts them with age, a modern and secure encryption tool, into files that can be safely committed to version control (such as Git). Your secrets travel with your code, work everywhere, and keep a version history. It also offers role-based access control, so you decide who on your team can decrypt which secrets. This addresses the common problem of insecure secret storage and simplifies deployment workflows. So, your sensitive data is protected, and your team can collaborate on projects more securely.

Popularity

Points 4

Comments 1

What is this product?

kiln is a tool that uses the age encryption standard to encrypt your environment variables. This lets you store your secrets in a way that is safe and works seamlessly with Git. It moves away from insecure methods, such as storing secrets in plain-text files, and from reliance on third-party secret management services, which can be problematic in various situations. It uses existing SSH keys or generates new age keys for encryption and decryption. Role-based access control lets you grant access only to authorized team members. So, your sensitive data is protected and accessible only to those who need it.

How to use it?

Developers can use kiln through a simple command-line interface. You define access control in a config file, encrypt your secrets with a single command, and then commit the encrypted files to Git. When running your applications, kiln can automatically inject the decrypted secrets as environment variables or render them into configuration templates. This makes it easy to integrate into existing development workflows. So, this simplifies your setup and makes your workflow more secure.
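
kiln's own command and flag names are not given here, so the following sketch only illustrates the two delivery mechanisms the paragraph names, assuming the secrets have already been decrypted into a dictionary: injecting them into a child process's environment and rendering them into a config template. All function names and the DATABASE_URL value are hypothetical.

```python
import os
import subprocess
import sys
from string import Template

def build_env(values: dict, base=None) -> dict:
    """Copy the base environment (default: os.environ) and overlay decrypted values."""
    env = dict(os.environ if base is None else base)
    env.update(values)  # decrypted secrets take precedence over inherited variables
    return env

def render_config(template_text: str, values: dict) -> str:
    """Fill ${NAME} placeholders; raises KeyError if a referenced secret is missing."""
    return Template(template_text).substitute(values)

def run_with_secrets(cmd: list, values: dict) -> int:
    """Run cmd as a child process that sees the secrets as environment variables."""
    return subprocess.run(cmd, env=build_env(values), check=False).returncode

# Hypothetical decrypted values; in kiln these would come from the age-encrypted file.
decrypted = {"DATABASE_URL": "postgres://app@db.internal/app"}
print(render_config("database_url = ${DATABASE_URL}\n", decrypted), end="")
run_with_secrets([sys.executable, "-c", "import os; print(os.environ['DATABASE_URL'])"], decrypted)
```

Injecting at process start keeps plaintext secrets out of files on disk; rendering a template trades that for compatibility with programs that only read configuration files.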

Product Core Function

· age Encryption: Kiln encrypts environment variables using age, a modern cryptographic tool. This makes it extremely difficult for unauthorized users to read your secrets, even if they have access to the encrypted files. So, this improves your security posture by ensuring that your secrets are unreadable without the correct key.

· Role-Based Access Control: You can define who on your team has access to specific environment variables. This prevents team members from accessing secrets they don't need, reducing the risk of data breaches. So, this helps manage access and enhance your team's security.

· Git Integration: Encrypted secrets are safely stored in Git, alongside your code. This makes it easy to track changes, collaborate with your team, and roll back to previous versions if needed. So, this facilitates better version control and simplifies the team's workflow.

· Automated Secret Injection: Kiln automatically injects decrypted secrets into your applications as environment variables or can render config templates. This minimizes the risk of errors when manually managing secrets and improves deployment automation. So, this simplifies your setup and makes your workflow more secure.

· Offline Operation: Kiln works completely offline, with no dependency on external services, so secret management keeps working without network access. So, this improves reliability and removes a class of risk, since there is no external service that could be unavailable or compromised.
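
One way role-based access control can map onto age: age can encrypt a file to several recipients, and any one of the matching private keys decrypts it, so granting a role access to an environment file amounts to adding its members' public keys to that file's recipient list. Whether kiln works exactly this way is an assumption; the Role structure and the age1... strings below are placeholders, not real keys or kiln's configuration format.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Role:
    members: frozenset  # team member names in this role
    files: frozenset    # encrypted env files this role may decrypt

def recipients_per_file(roles: dict, public_keys: dict) -> dict:
    """Collect, for each env file, the sorted public keys it must be encrypted to."""
    result = {}
    for role in roles.values():
        for path in role.files:
            keys = result.setdefault(path, set())
            for member in role.members:
                keys.add(public_keys[member])  # KeyError flags a member with no registered key
    return {path: sorted(keys) for path, keys in sorted(result.items())}

# Placeholder keys; real age public keys are long bech32 strings starting with "age1".
public_keys = {"alice": "age1alice", "bob": "age1bob", "carol": "age1carol"}
roles = {
    "developers": Role(frozenset({"alice", "bob"}), frozenset({"dev.env"})),
    "ops": Role(frozenset({"carol"}), frozenset({"dev.env", "prod.env"})),
}
print(recipients_per_file(roles, public_keys))
# {'dev.env': ['age1alice', 'age1bob', 'age1carol'], 'prod.env': ['age1carol']}
```

Under this model, revoking access means re-encrypting the file without the removed key, and strictly also rotating the secrets, because the old ciphertext remains in Git history.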

Product Usage Case

· Development teams can use kiln to manage API keys, database credentials, and other sensitive information needed for their applications. They can store encrypted secrets in the project's Git repository. For example, consider a web application that needs to connect to a database. Instead of hardcoding the database password in the code, you can encrypt it using kiln. This can then be injected as an environment variable during the application's runtime. So, this ensures that your sensitive data is protected, and the deployment process is simplified.

· DevOps engineers can use kiln to secure configuration files and deployment scripts. By using kiln, they can securely store secrets, and they can also automate the configuration and deployment processes. For example, a DevOps engineer can use kiln to encrypt database credentials for a staging environment. The secrets can then be injected into the server's configuration during the deployment. So, this improves automation while protecting secrets during deployment.

· Teams can use kiln to manage environment-specific configurations. Different environments (development, staging, production) may require different secrets. Kiln makes it easy to store these environment-specific secrets separately and ensure that the correct secrets are used in each environment. So, this ensures that the correct secrets are available in the correct environment and reduces security risks.

· If a team is using CI/CD pipelines, they can integrate kiln to securely pass sensitive information, such as API keys or credentials, during the build and deployment process. Kiln ensures that even in these automated workflows, secrets remain protected. So, this streamlines the CI/CD process without sacrificing security.
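Keeping environment-specific secrets apart mostly comes down to selecting exactly one environment's set and failing closed when something is missing. The Python sketch below assumes the secrets are already decrypted into a per-environment dictionary; the environment names, `REQUIRED_KEYS`, and `secrets_for` are hypothetical and say nothing about how kiln actually lays out its files.

```python
REQUIRED_KEYS = ("DATABASE_URL", "API_KEY")  # hypothetical key names


def secrets_for(environment: str,
                decrypted: dict[str, dict[str, str]],
                required: tuple[str, ...] = REQUIRED_KEYS) -> dict[str, str]:
    """Return one environment's secrets, never falling back to another's."""
    if environment not in decrypted:
        raise KeyError(f"no secrets defined for environment {environment!r}")
    secrets = decrypted[environment]
    missing = [key for key in required if key not in secrets]
    if missing:
        # Fail closed: a deploy with missing credentials should stop here.
        raise KeyError(f"{environment}: missing {', '.join(missing)}")
    return dict(secrets)  # copy so callers cannot mutate the shared mapping


decrypted = {
    "staging": {"DATABASE_URL": "postgres://staging-db/app", "API_KEY": "stg-key"},
    "production": {"DATABASE_URL": "postgres://prod-db/app"},  # API_KEY forgotten
}
```

Here `secrets_for("staging", decrypted)` returns the staging values, while `secrets_for("production", decrypted)` raises because `API_KEY` is absent, which is exactly the kind of mistake a CI/CD pipeline should catch before deploying.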

15

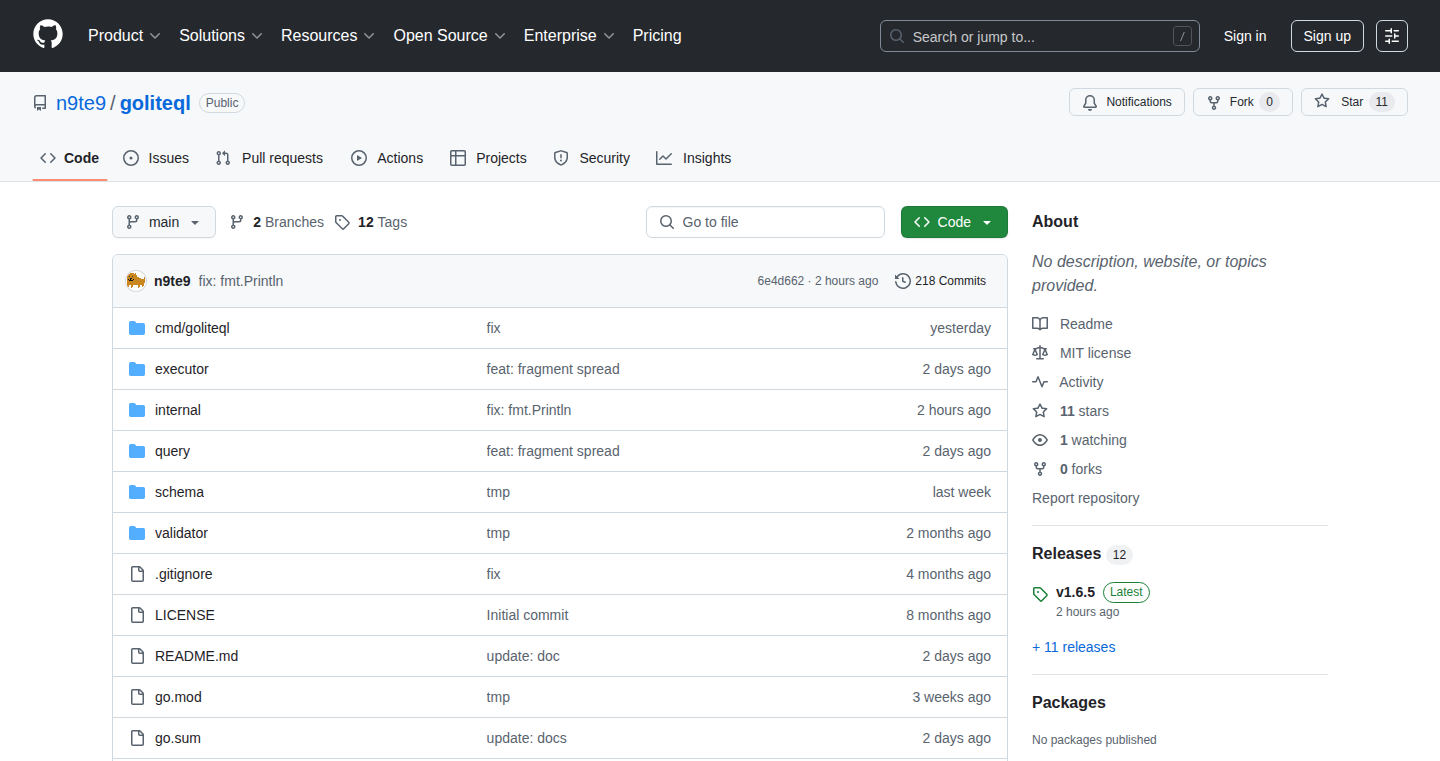

Goliteql: Blazing-Fast GraphQL Engine in Go

Author

n9te9

Description

Goliteql is a high-performance GraphQL engine and code generator written entirely in Go. It targets speed and efficiency in handling GraphQL queries, offering a lightweight alternative to existing solutions. Its key design choice is to parse, validate, and execute GraphQL operations without relying on reflection, which avoids reflection's runtime overhead and is especially useful in resource-constrained environments such as WebAssembly (WASM) or microservices. So, if you're looking for a way to serve GraphQL APIs really, really fast, this is for you.

Popularity

Points 5

Comments 0

What is this product?

Goliteql is like a supercharged translator for GraphQL. Think of GraphQL as a language for asking your server for data. Goliteql takes these requests, checks that they are valid, and then resolves the data without runtime reflection, which makes it well suited to performance-critical applications. Its code generation feature automatically creates Go code from your GraphQL schema, simplifying development. So, it interprets your GraphQL queries quickly, turns them into the data you asked for, and keeps your APIs easy to build and update. Why is this cool? Because it's fast and saves you time.

How to use it?

Developers can integrate Goliteql into their Go projects to build high-performance GraphQL APIs. You define your GraphQL schema, and Goliteql parses it, validates incoming queries, and executes your resolvers. You can use it inside microservices to handle API requests, or embed it in a WASM environment, such as a browser, for data fetching and transformation. In practice, you import the library into your Go project, define your schema, and call Goliteql's functions to execute queries against your resolvers. So, you write the schema, and Goliteql takes care of the rest.
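To make "parse, validate, execute" concrete, here is a deliberately tiny Python sketch. It is not Goliteql's API (Goliteql is a Go library), and it handles only nested field selections: no arguments, variables, fragments, or aliases. The schema, the `RESOLVERS` table, and every function name are hypothetical. The part worth noticing is the resolver table keyed by `(type, field)`: a code generator can emit such a lookup ahead of time, which is the general idea behind avoiding reflection at query time.

```python
import re

# Hypothetical schema: type name -> {field name: field type}.
SCHEMA = {
    "Query": {"user": "User"},
    "User": {"id": "ID", "name": "String"},
}
SCALARS = {"ID", "String", "Int", "Float", "Boolean"}

# Resolvers looked up by (type, field). A generator can emit a table like this
# ahead of time, so no field has to be discovered via reflection per query.
RESOLVERS = {
    ("Query", "user"): lambda parent: {"id": "1", "name": "Ada"},
    ("User", "id"): lambda parent: parent["id"],
    ("User", "name"): lambda parent: parent["name"],
}


def tokenize(query: str) -> list[str]:
    return re.findall(r"[{}]|[A-Za-z_]\w*", query)


def parse_selection(tokens: list[str], i: int = 0):
    """Parse '{ a b { c } }' into [(name, sub_selection_or_None)]; return (fields, next_index)."""
    if i >= len(tokens) or tokens[i] != "{":
        raise ValueError("expected '{'")
    i += 1
    fields = []
    while True:
        if i >= len(tokens):
            raise ValueError("unterminated selection set")
        if tokens[i] == "}":
            return fields, i + 1
        name, i = tokens[i], i + 1
        sub = None
        if i < len(tokens) and tokens[i] == "{":
            sub, i = parse_selection(tokens, i)
        fields.append((name, sub))


def validate(type_name: str, selection) -> None:
    for name, sub in selection:
        field_type = SCHEMA[type_name].get(name)
        if field_type is None:
            raise ValueError(f"{type_name} has no field {name!r}")
        if field_type in SCALARS and sub is not None:
            raise ValueError(f"scalar field {name!r} cannot have a selection")
        if field_type not in SCALARS:
            if sub is None:
                raise ValueError(f"object field {name!r} needs a selection")
            validate(field_type, sub)


def execute(type_name: str, selection, parent=None) -> dict:
    result = {}
    for name, sub in selection:
        value = RESOLVERS[(type_name, name)](parent)
        field_type = SCHEMA[type_name][name]
        result[name] = value if field_type in SCALARS else execute(field_type, sub, value)
    return result


def run(query: str) -> dict:
    tokens = tokenize(query)
    selection, end = parse_selection(tokens)
    if end != len(tokens):
        raise ValueError("unexpected tokens after the selection set")
    validate("Query", selection)  # reject bad queries before any resolver runs
    return {"data": execute("Query", selection)}
```

With this sketch, `run("{ user { id name } }")` returns `{"data": {"user": {"id": "1", "name": "Ada"}}}`, while a query that asks for an unknown field is rejected during validation, before any resolver is called.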

Product Core Function

· GraphQL Schema Parsing and Validation: This function parses your GraphQL schema and checks incoming queries against it, making sure they follow its rules. This catches malformed or invalid queries before any resolver runs. So, it makes sure your requests are well-formed before running them.

· Fast GraphQL Execution: The core of Goliteql, this function executes GraphQL queries without reflection, avoiding a common source of overhead. So, it's designed for speed, which can translate into faster responses and a smoother experience for users.

· Code Generation from GraphQL Schema: Goliteql generates Go code from your GraphQL schema. This saves developers time by automating repetitive tasks, ensuring consistency between your schema and your backend, and making it easier to maintain the API. So it helps you quickly create the backend code for your API.

· Introspection Support: Goliteql provides introspection features, allowing you to query your GraphQL schema to understand its structure. This is valuable for API documentation, testing, and development tools. So, you can easily explore and understand the structure of your API, making it easier to use.
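The code-generation idea can be sketched in a few lines as well. This hypothetical Python script reads a very small subset of GraphQL SDL (object types whose fields are scalars or named types; no lists, arguments, interfaces, or directives) and prints Go struct definitions, mapping nullable fields to pointers. The type mapping and naming rules are assumptions for illustration; Goliteql's real generator and its output format may differ.

```python
import re

# Hypothetical GraphQL scalar -> Go type mapping (GraphQL Int is 32-bit signed).
GO_TYPES = {"ID": "string", "String": "string", "Int": "int32",
            "Float": "float64", "Boolean": "bool"}


def go_field_name(field: str) -> str:
    """Export the field; follow Go's initialism convention for 'id'."""
    return "ID" if field == "id" else field[:1].upper() + field[1:]


def generate_go_structs(sdl: str) -> str:
    """Emit one Go struct per 'type Name { field: Type[!] ... }' block."""
    lines = []
    for type_name, body in re.findall(r"type\s+(\w+)\s*\{([^}]*)\}", sdl):
        lines.append(f"type {type_name} struct {{")
        for field, gql_type, non_null in re.findall(r"(\w+)\s*:\s*(\w+)(!?)", body):
            go_type = GO_TYPES.get(gql_type, gql_type)  # object types keep their name
            if not non_null:
                go_type = "*" + go_type  # nullable fields become pointers
            lines.append(f"\t{go_field_name(field)} {go_type} `json:\"{field}\"`")
        lines.append("}")
    return "\n".join(lines)


SDL = """
type User {
  id: ID!
  name: String
}

type Post {
  title: String!
  author: User!
}
"""

if __name__ == "__main__":
    print(generate_go_structs(SDL))
```

Generating the structs from the schema, instead of writing them by hand, is what keeps the backend types and the schema from drifting apart as the API evolves.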

Product Usage Case

· Microservices Architecture: A company uses Goliteql in their microservices architecture to provide a fast and efficient way to serve data to their front-end applications. Each microservice handles specific data, and Goliteql aggregates and delivers this data as requested. So, it makes microservices talk fast and get data where it needs to go.

· WebAssembly (WASM) Applications: A developer integrates Goliteql into a WASM application that runs in the browser. This lets the application resolve GraphQL queries locally, against data it already holds, instead of sending every query to a central server. So, you can create very responsive applications that keep working even when the network is poor.

· Performance-Sensitive Backends: An e-commerce platform uses Goliteql for its product search and catalog API. With faster query execution, users can search and browse products more quickly, which improves the overall experience and may help sales. So, customers find what they want faster, and your website feels snappier.

16

Aksara Jawa Transliteration Tool

Author

rahulbstomar

Description

This project is a free web tool that converts text between Latin characters and Aksara Jawa, the traditional Javanese script. It addresses the limited online tools available for this script by providing bidirectional transliteration, Unicode output, and a mobile-friendly interface. This is a great example of using technology to preserve and promote a cultural heritage. It tackles the complex problem of script mapping and regional variations, offering a practical solution for anyone working with the Javanese language and script.

Popularity

Points 2

Comments 2

What is this product?

This tool performs transliteration, which means converting characters from one script to another. It's like an advanced form of typing where the computer automatically replaces the letters you type with the corresponding characters in the Javanese script. The innovation lies in a comprehensive and accurate mapping between Latin and Aksara Jawa that handles the nuances of the script and works in both directions. So, this is helpful for anyone who wants to learn, use, or preserve the Javanese script.
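To show the core idea of such a mapping, here is a minimal Python sketch of Latin-to-Aksara Jawa transliteration. It is a simplification under stated assumptions, not the tool's code: it covers only the twenty basic consonants and the vowels a, i, u, e, ê, o; it writes a bare vowel on the carrier letter ha; and it marks every vowel-less consonant with pangkon instead of using the special final signs (layar, cecak, wignyan). In Unicode, pangkon also acts as the virama, so a consonant that follows it is normally shaped by the font as a pasangan. Characters are built from their Unicode names via `unicodedata.lookup`, so no code points are hand-typed.

```python
import unicodedata


def _jv(name: str) -> str:
    return unicodedata.lookup("JAVANESE " + name)


# Latin spelling -> Unicode letter name; digraphs are tried before single letters.
_CONSONANT_NAMES = {
    "ng": "NGA", "ny": "NYA", "th": "TTA", "dh": "DDA",
    "h": "HA", "n": "NA", "c": "CA", "r": "RA", "k": "KA",
    "d": "DA", "t": "TA", "s": "SA", "w": "WA", "l": "LA",
    "p": "PA", "j": "JA", "y": "YA", "m": "MA", "g": "GA", "b": "BA",
}
CONSONANTS = {latin: _jv("LETTER " + name) for latin, name in _CONSONANT_NAMES.items()}

# The inherent vowel "a" needs no sign; "o" is taling + tarung in Unicode order.
VOWEL_SIGNS = {
    "a": "",
    "i": _jv("VOWEL SIGN WULU"),
    "u": _jv("VOWEL SIGN SUKU"),
    "e": _jv("VOWEL SIGN TALING"),
    "ê": _jv("VOWEL SIGN PEPET"),
    "o": _jv("VOWEL SIGN TALING") + _jv("VOWEL SIGN TARUNG"),
}
PANGKON = _jv("PANGKON")  # cancels the inherent vowel


def latin_to_javanese(text: str) -> str:
    text = unicodedata.normalize("NFC", text.lower())
    out = []
    i = 0
    while i < len(text):
        consonant = None
        for size in (2, 1):  # longest match first, so "ng" beats "n"
            chunk = text[i:i + size]
            if len(chunk) == size and chunk in CONSONANTS:
                consonant = CONSONANTS[chunk]
                i += size
                break
        if consonant is None:
            if text[i] not in VOWEL_SIGNS:
                out.append(text[i])  # spaces, digits, punctuation pass through
                i += 1
                continue
            consonant = CONSONANTS["h"]  # a bare vowel is carried by ha
        if i < len(text) and text[i] in VOWEL_SIGNS:
            out.append(consonant + VOWEL_SIGNS[text[i]])
            i += 1
        else:
            out.append(consonant + PANGKON)
    return "".join(out)
```

For example, "aku" becomes ha, ka, and the suku vowel sign, and "tangan" ends in na followed by pangkon. A production tool would need more rules than this, for instance for final r, ng, and h and for regional spelling variants.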

How to use it?

Developers can use this tool when building websites, applications, or educational platforms that need Aksara Jawa support, such as a Javanese language learning app, a website that displays content in Aksara Jawa, or a cultural heritage project. The tool is offered through a web interface, so it can be used without any installation, and its Unicode output can be copied straight into other projects. So, you can easily enrich your digital content with Javanese script.

Product Core Function

· Bidirectional Transliteration: The core function converts text between Latin characters and Aksara Jawa in both directions. This is essential for reading, writing, and learning the script.

· Unicode Aksara Jawa Output: The tool outputs text in Unicode format, ensuring compatibility with modern operating systems and applications. This ensures the text is correctly displayed across various devices and platforms, which makes it accessible to everyone.

· Mobile-Friendly Interface: The web interface is designed to be responsive and accessible on mobile devices. This feature ensures that users can access and utilize the tool on the go, which enhances accessibility and convenience.
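Going the other way, from Unicode Aksara Jawa back to Latin, can be sketched by reading each character's Unicode name. This Python sketch is hypothetical and limited to the basic letters and vowel signs; everything else passes through unchanged. It also shows why the reverse direction is not a perfect inverse: a vowel-initial word such as "aku" is written with the carrier ha, so it comes back as "haku", and a real tool would need extra rules to decide when to drop that h.

```python
import unicodedata

# Unicode letter-name suffix -> Latin spelling (basic hanacaraka set only).
LETTERS = {
    "HA": "h", "NA": "n", "CA": "c", "RA": "r", "KA": "k",
    "DA": "d", "TA": "t", "SA": "s", "WA": "w", "LA": "l",
    "PA": "p", "DDA": "dh", "JA": "j", "YA": "y", "NYA": "ny",
    "MA": "m", "GA": "g", "BA": "b", "TTA": "th", "NGA": "ng",
}
VOWELS = {"WULU": "i", "SUKU": "u", "TALING": "e", "PEPET": "ê"}
LETTER, SIGN = "JAVANESE LETTER ", "JAVANESE VOWEL SIGN "


def _name(text: str, i: int) -> str:
    return unicodedata.name(text[i], "") if i < len(text) else ""


def javanese_to_latin(text: str) -> str:
    out = []
    i = 0
    while i < len(text):
        name = _name(text, i)
        key = name[len(LETTER):] if name.startswith(LETTER) else None
        if key not in LETTERS:
            out.append(text[i])  # spaces, punctuation, unsupported letters pass through
            i += 1
            continue
        i += 1
        vowel = "a"  # inherent vowel unless a sign or pangkon follows
        nxt = _name(text, i)
        if nxt == "JAVANESE PANGKON":
            vowel, i = "", i + 1
        elif nxt.startswith(SIGN) and nxt[len(SIGN):] in VOWELS:
            vowel, i = VOWELS[nxt[len(SIGN):]], i + 1
            if vowel == "e" and _name(text, i) == SIGN + "TARUNG":
                vowel, i = "o", i + 1  # taling + tarung spells "o"
        out.append(LETTERS[key] + vowel)
    return "".join(out)
```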

Product Usage Case

· Creating a Javanese Language Learning App: Developers can integrate the tool into an educational app to allow users to practice reading and writing in Aksara Jawa. This supports language learning by letting users transliterate text and interact with the script.

· Building a Website with Aksara Jawa Support: A website dedicated to Javanese culture and history can use this tool to display content in Aksara Jawa, reaching a wider audience and preserving cultural heritage. This allows for the creation of authentic cultural experiences online.

· Developing a Cultural Heritage Project: The tool can support projects that digitize historical documents written in Aksara Jawa, converting transcriptions between Latin script and Unicode Aksara Jawa so they are preserved for future generations. This makes historical resources easier to access.

17

MCP Explorer: Demystifying Model Context Protocol for AI Agent Orchestration

Author

abhisharma2001

Description

This project explains the Model Context Protocol (MCP), an open protocol that standardizes how AI applications connect to external tools and data sources, and shows how it can be used to coordinate and automate AI agents. Think of it as a shared blueprint for agents working together, much as a conductor guides an orchestra, so that complex tasks can be broken down into smaller, manageable steps. The project aims to make MCP easier to understand and implement, and showcases it with a sophisticated example: a flight booking system.

Popularity

Points 4

Comments 0

What is this product?

This project explains the Model Context Protocol (MCP), a standard way for AI applications and agents to discover and call external tools and data sources. In effect, it sets the rules of the game so that agents built on it can work together on a complex task. The innovation lies in a structured approach to coordinating AI agents, allowing them to collaborate effectively. The project breaks MCP down into understandable concepts and shows how it is used in a real-world example: a flight booking system. So, this allows for building more complex and capable AI systems.

How to use it?

Developers can use this project as a guide to understanding and implementing MCP in their own AI projects. It provides a practical framework and a real-world example for building agent-based systems. You could use it to create AI assistants for tasks such as managing customer service, automating data analysis, or building more sophisticated search engines. So, this helps in designing and developing smarter, more coordinated AI applications.
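For a sense of what implementing MCP involves at the wire level: MCP messages are JSON-RPC 2.0, and servers expose tools that clients discover with `tools/list` and invoke with `tools/call`. The Python sketch below is a minimal in-process handler for those two methods only, with a hypothetical `search_flights` tool that returns made-up data. It skips the `initialize` handshake, the transport layer (such as stdio or HTTP), and argument validation, so treat it as an outline rather than a conforming server; an official MCP SDK would normally handle those parts.

```python
import json


# Hypothetical tool for the flight-booking example; the flight data is made up.
def search_flights(origin: str, destination: str) -> list[dict]:
    return [{"flight": "XY123", "from": origin, "to": destination}]


TOOLS = {
    "search_flights": {
        "description": "Search flights between two airports",
        "inputSchema": {  # JSON Schema describing the tool's arguments
            "type": "object",
            "properties": {
                "origin": {"type": "string"},
                "destination": {"type": "string"},
            },
            "required": ["origin", "destination"],
        },
        "handler": search_flights,
    }
}


def _reply(req_id, *, result=None, error=None) -> str:
    msg = {"jsonrpc": "2.0", "id": req_id}
    if error is not None:
        msg["error"] = error
    else:
        msg["result"] = result
    return json.dumps(msg)


def handle(raw: str) -> str:
    """Handle one JSON-RPC request string and return the response string."""
    req = json.loads(raw)
    req_id, method = req.get("id"), req.get("method")
    params = req.get("params") or {}
    if method == "tools/list":
        tools = [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        return _reply(req_id, result={"tools": tools})
    if method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _reply(req_id, error={"code": -32602, "message": "Unknown tool"})
        output = tool["handler"](**params.get("arguments", {}))
        # Tool results are returned as a list of content items.
        return _reply(req_id, result={"content": [{"type": "text", "text": json.dumps(output)}]})
    return _reply(req_id, error={"code": -32601, "message": "Method not found"})
```

Because every tool advertises a JSON Schema for its inputs, a client (or the model behind it) can decide which tool to call and with what arguments without any tool-specific code.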

Product Core Function

· Understanding MCP Architecture: Explains the core components of MCP, which act as the building blocks for AI agent communication and coordination. It helps developers grasp the underlying principles and design choices, paving the way for them to build reliable and scalable AI systems. So this gives you a solid foundation to understand how to build AI systems that work together.

· Agent Coordination and Automation: Focuses on how MCP facilitates the automated execution of tasks across multiple AI agents. It emphasizes the importance of structured communication and task decomposition, which are key for building complex AI solutions. So this will allow you to automate complex tasks more effectively.

· Flight Booking System Example: A practical demonstration of how MCP can be applied to build a real-world system, in this case flight booking. So, this provides a practical illustration of how to apply MCP in building useful AI applications.
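The task decomposition described above can be pictured as an ordered plan of tool calls whose arguments may depend on earlier results. In this hypothetical Python sketch, the plan for "book the cheapest flight" is hard-coded and the tools are local stubs with made-up data; in an agent system, a language model would typically propose the plan, and each call would usually go to an MCP server rather than a local function.

```python
# Hypothetical stub tools; in an MCP setup these would live on a server.
def search_flights(origin: str, destination: str) -> list[dict]:
    return [
        {"flight": "XY123", "price": 320},
        {"flight": "XY456", "price": 280},
    ]


def book_flight(flight: str) -> dict:
    return {"status": "confirmed", "flight": flight}


TOOLS = {"search_flights": search_flights, "book_flight": book_flight}


def plan_cheapest_booking(origin: str, destination: str):
    """Decompose 'book the cheapest flight' into ordered (tool, args_builder) steps.

    Each args_builder receives the results gathered so far, so later steps
    can depend on earlier ones.
    """
    return [
        ("search_flights", lambda ctx: {"origin": origin, "destination": destination}),
        ("book_flight", lambda ctx: {
            "flight": min(ctx["search_flights"], key=lambda f: f["price"])["flight"]
        }),
    ]


def run_plan(plan) -> dict:
    """Execute steps in order, storing each tool's result under its name."""
    results = {}
    for tool_name, build_args in plan:
        results[tool_name] = TOOLS[tool_name](**build_args(results))
    return results


if __name__ == "__main__":
    print(run_plan(plan_cheapest_booking("SFO", "JFK"))["book_flight"])
```

Keeping the plan as data, separate from the code that executes it, makes each step easy to log, retry, or hand to a different agent.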

Product Usage Case

· Automated Customer Service: Use MCP to build an AI assistant that handles customer inquiries, routes them to the appropriate agent, and performs tasks like providing information and resolving issues. So this can result in a more efficient and responsive customer service experience.

· Data Analysis and Reporting: Employ MCP to create a system where AI agents collect and process data, generate reports, and provide insights, automating complex data analysis tasks. So this will make data analysis more accessible and efficient.

· Intelligent Search Engines: Utilize MCP to develop a search engine that can understand user intent, break down complex queries, and coordinate AI agents to retrieve and synthesize information from various sources. So this can result in more accurate and useful search results.

18

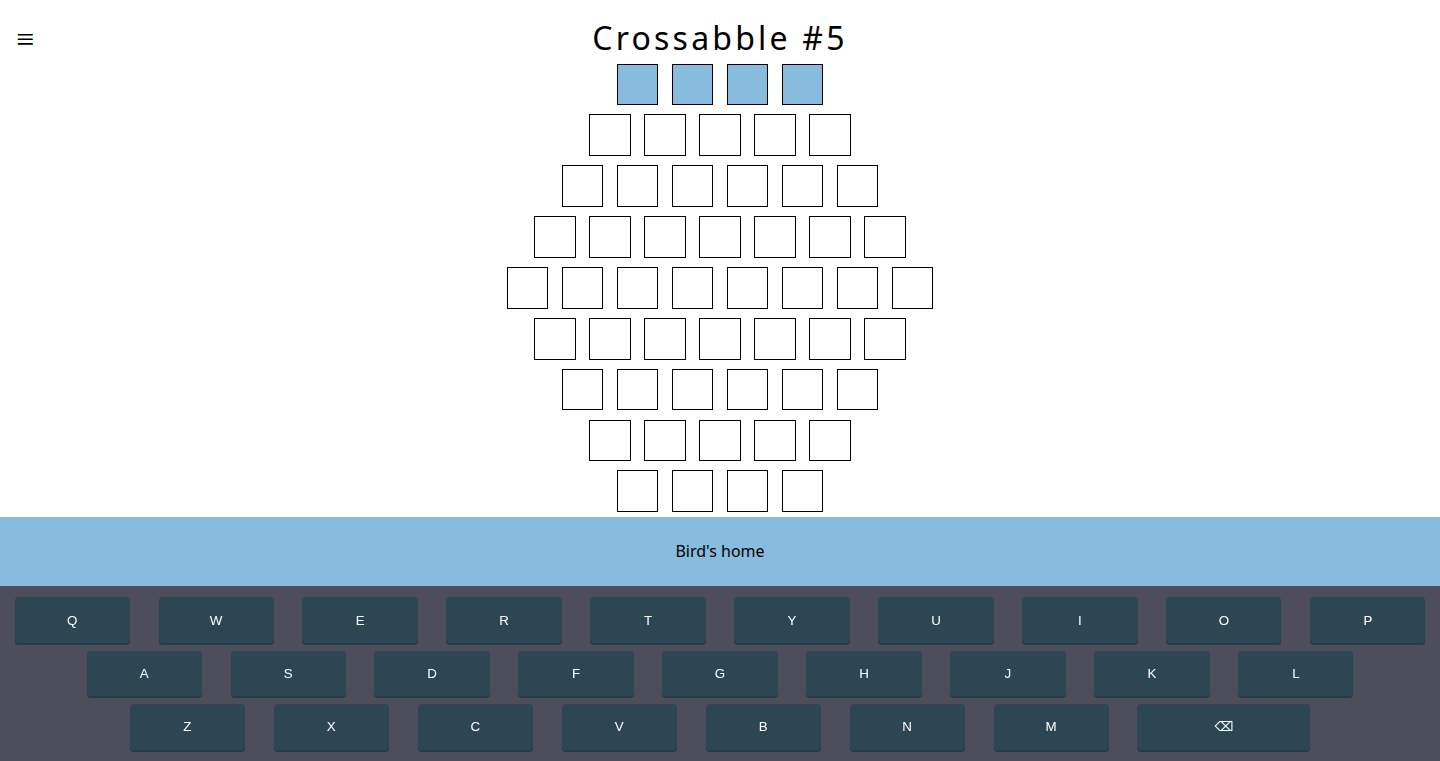

Crossabble: Weekly Word Puzzle Solver & Generator

Author

amenghra

Description

Crossabble is a weekly word puzzle game and puzzle generator. It uses natural language processing and graph theory to create and solve word puzzles. Its main innovation is generating puzzles intelligently, complete with hints and constraints, which makes it a versatile tool for word game enthusiasts and developers. It uses algorithms to find well-fitting word arrangements, aiming to keep puzzles both challenging and playable. This addresses the challenge of automatically creating engaging, solvable word puzzles.

Popularity

Points 2

Comments 2

What is this product?

Crossabble is a word puzzle generator and solver built on a core of natural language processing (NLP) and graph theory. Imagine it as a smart system that understands words and their relationships. It uses NLP to analyze words and their meanings and then uses graph theory to find connections and build puzzles. It allows you to generate puzzles of varying difficulty levels and offers hints and solutions. So, it offers an automated and intelligent way to build word puzzles instead of doing it manually.
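The graph-theory side can be illustrated without assuming anything about Crossabble's internals. In this hypothetical Python sketch, words are nodes, two words share an edge when they have a letter in common (a possible crossing), and each edge records the positions where that crossing could happen. A word list can only form a single interlocking grid if this graph is connected, which a depth-first search checks.

```python
from itertools import combinations


def crossing_graph(words: list[str]) -> dict[tuple[str, str], list[tuple[int, int]]]:
    """Edge (a, b) -> every (i, j) with a[i] == b[j], i.e. where a could cross b."""
    graph = {}
    for a, b in combinations(words, 2):
        crossings = [(i, j) for i, ca in enumerate(a) for j, cb in enumerate(b) if ca == cb]
        if crossings:
            graph[(a, b)] = crossings
    return graph


def is_connected(words: list[str], graph) -> bool:
    """Depth-first search: can every word be reached through shared letters?"""
    if not words:
        return True
    neighbours = {w: set() for w in words}
    for a, b in graph:
        neighbours[a].add(b)
        neighbours[b].add(a)
    seen, stack = {words[0]}, [words[0]]
    while stack:
        for nxt in neighbours[stack.pop()] - seen:
            seen.add(nxt)
            stack.append(nxt)
    return seen == set(words)
```

For example, "cat" and "tar" can cross at their shared "a" or where the "t" of "cat" meets the "t" of "tar", while adding "dog" to that list disconnects it, since "dog" shares no letters with the others. Connectivity is only a necessary condition; an actual generator still has to place the words so the crossings do not collide on the grid.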

How to use it?

Developers could integrate Crossabble's puzzle generation into their own games or educational apps, for example to supply a steady stream of new word puzzles for a daily challenge or a puzzle-of-the-week feature. The solving capabilities could also power in-game hints. The project could potentially be used through an API. So, you could easily plug new puzzles into your game.
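A solver-style hint can be as simple as listing which dictionary words a player could still form from the letters on hand. This Python sketch assumes Scrabble-like rules (each tile used at most once), which may not match Crossabble's actual rules; `playable_words` and the sample dictionary are hypothetical.

```python
from collections import Counter


def playable_words(rack: str, dictionary: list[str]) -> list[str]:
    """Words buildable from the rack's letters, each tile used at most once.

    Longest words come first, so the first entry makes a natural "big" hint.
    """
    tiles = Counter(rack.lower())
    # Counter subtraction drops non-positive counts, so an empty result means
    # the rack covers every letter the word needs.
    fits = [w for w in dictionary if not Counter(w.lower()) - tiles]
    return sorted(fits, key=lambda w: (-len(w), w))
```

With the rack "tacr" and the dictionary `["cat", "act", "cart", "tart", "rat"]`, this returns `["cart", "act", "cat", "rat"]`; "tart" is excluded because it needs two t tiles.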

Product Core Function

· Puzzle Generation: This function uses NLP to analyze word characteristics and pick words that satisfy various constraints, producing diverse and challenging puzzles. It provides a constant supply of puzzles without manual creation, which helps maintain player engagement. This saves time and effort for developers who need puzzles.

· Hint Generation: The system can generate hints for its puzzles. Hints help stuck players move forward, making them more likely to keep playing. This improves the user experience and lengthens engagement.