Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-07-11

SagaSu777 2025-07-12

Explore the hottest developer projects on Show HN for 2025-07-11. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

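Today's Show HN projects show AI being woven deeply into a wide range of fields. Developers are actively exploring how to use AI to raise efficiency and simplify workflows. Most notably, AI agents are no longer confined to code generation; they now reach into project management, process optimization, and other areas, giving developers a new way of working. The rise of on-device AI applications and low-code/no-code tools also lowers the technical barrier, so more non-specialists can take part in technical innovation, while cross-platform development and Web3 tools give developers a broader stage. Developers and founders should look closely at AI applications in vertical domains and combine them with their own expertise to build more innovative and practical products. Attention to user experience and simpler workflows will be key to winning the market.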

Today's Hottest Product

Name

Vibe Kanban – Kanban board to manage your AI coding agents

Highlight

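Vibe Kanban works with AI coding agents so that programmers can handle tasks in parallel: while the agents work, the programmer can focus on planning and review. Developers can learn from it how to use AI agents to raise productivity through parallelization, especially in situations where waiting on one synchronous task at a time invites distraction.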

Popular Category

AI Applications

Developer Tools

Popular Keyword

AI

Kanban

Open Source

Technology Trends

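AI agents in the development workflow

AI applications on the end-user side

Low-code/no-code tool development

Cross-platform application development

Practical tools for Web3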

Project Category Distribution

AI Tools (35%)

Developer Tools (25%)

Utilities (20%)

Mobile Apps (10%)

Other (10%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | Vibe Kanban: Parallelized AI Coding Agent Management | 158 | 102 |
| 2 | RULER: Universal Reward Function for Reinforcement Learning | 64 | 11 |
| 3 | Heim: Universal FaaS for All Languages | 22 | 5 |
| 4 | Director: Local-First MCP Gateway | 12 | 5 |
| 5 | AI Dognames Generator - Claude Code Powered | 3 | 5 |
| 6 | ByteWise Search: Client-Side, Community-Driven Search Engine | 4 | 2 |
| 7 | claude-code-setup.sh - Repository Issue Resolver with Claude | 6 | 0 |
| 8 | Phono: Terminal Image Viewer (Pure C) | 3 | 3 |
| 9 | AI Movie Finder: Natural Language Movie Discovery | 4 | 1 |
| 10 | NodeLoop: Electronics Design Toolbox | 5 | 0 |

1

Vibe Kanban: Parallelized AI Coding Agent Management

Author

louiskw

Description

Vibe Kanban is a project management tool designed to help developers efficiently manage multiple AI coding agents simultaneously. It addresses the common problem of developers getting distracted while waiting for AI agents to complete tasks. By allowing developers to run agents in the background, Vibe Kanban enables them to focus on planning, reviewing completed tasks, and other productive work, increasing overall productivity and reducing wasted time.

Popularity

Points 158

Comments 102

What is this product?

Vibe Kanban is built around the principles of a Kanban board. It allows you to create and manage tasks for multiple AI coding agents. The core innovation is the ability to run these agents in parallel, so you can have multiple tasks in progress simultaneously. This way, instead of waiting for one agent to finish a task, you can keep multiple agents working, maximizing your time. Think of it as a smart task manager for your AI assistants. So, this is useful because it prevents you from getting bogged down while waiting for AI to do its work, and helps you leverage AI more effectively.

How to use it?

Developers can use Vibe Kanban by integrating it into their existing development workflows. You define tasks for your AI agents, place them on the Kanban board (e.g., 'To Do', 'In Progress', 'Review', 'Done'), and assign agents to each task. As agents complete tasks, you review their output and move the cards along the board. Integration would involve setting up the connection to your preferred AI coding agents (such as OpenAI's Codex) and defining the tasks you want them to perform. So, you can use it to keep track of all your AI agents' tasks and easily see what they are working on and when they are done.

Product Core Function

· Parallel Task Management: The core feature is the ability to run multiple AI agents concurrently. This allows developers to work on multiple tasks simultaneously, dramatically reducing idle time and improving efficiency. For example, if you are generating a lot of code with AI, the parallel approach allows faster code generation.

· Kanban Board Interface: Provides a visual interface to manage tasks, similar to a traditional Kanban board. Tasks are represented as cards, and users can move them across different stages (e.g., 'To Do', 'In Progress', 'Review'). This offers a clear overview of the development process. Useful for keeping everything organized, allowing for easy tracking of work progress.

· Agent Task Assignment: Allows developers to easily assign tasks to specific AI agents. You can organize AI agents based on their specialty, allowing you to assign specific tasks to the appropriate agents. This helps to better leverage the capabilities of each AI agent.

· Task Review and Iteration: Enables developers to review the output generated by the AI agents. This is a key component of the workflow, where you can ensure quality and correct any errors. This is useful for ensuring that the generated results meet your requirements and for further iterating on the tasks.
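The parallel workflow described above can be sketched in a few lines of Python. This is only an illustration, not Vibe Kanban's actual code: `Card`, `run_agent`, `run_board`, and `approve` are hypothetical names, and the "agent" is simulated with a short sleep instead of a real coding agent.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class Card:
    """One task on the board (hypothetical structure)."""
    title: str
    stage: str = "To Do"
    output: str = ""


async def run_agent(card: Card, seconds: float) -> None:
    """Hypothetical stand-in for a real coding agent call."""
    card.stage = "In Progress"
    await asyncio.sleep(seconds)  # simulates the agent working
    card.output = f"draft patch for: {card.title}"
    card.stage = "Review"  # a human still has to approve the result


async def run_board(cards: list[Card]) -> list[Card]:
    # Start every agent at once instead of waiting on them one by one.
    await asyncio.gather(*(run_agent(card, 0.01) for card in cards))
    return cards


def approve(card: Card) -> None:
    """Move a reviewed card to 'Done'; refuse cards that were not reviewed."""
    if card.stage != "Review":
        raise ValueError("only cards in 'Review' can be approved")
    card.stage = "Done"


if __name__ == "__main__":
    board = asyncio.run(run_board([Card("add login form"), Card("write unit tests")]))
    for card in board:
        print(card.stage, "-", card.title)
```

The point of the design is that the human only touches a card at the 'Review' step; everything before that runs concurrently in the background.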

Product Usage Case

· Code Generation and Refactoring: Use Vibe Kanban to manage agents that generate code snippets, refactor existing code, or write unit tests. You could set up agents to generate code for various features, and then review the output to ensure it meets your requirements. You can then use it to continuously improve your code.

· Automated Documentation: Use Vibe Kanban to manage agents that automatically generate documentation for your projects. Configure agents to create API documentation or user guides. This streamlines the documentation process and reduces the time spent on repetitive tasks.

· Rapid Prototyping: During the early stages of a project, you can use Vibe Kanban to coordinate multiple AI agents working on different components of a prototype, such as UI design, backend setup, and database schema generation. This accelerates the initial development phase by allowing developers to quickly test out different ideas.

· Content Creation: For developers creating documentation, blog posts, or tutorials, Vibe Kanban can manage AI agents assigned to writing different sections or chapters, summarizing code examples, or generating supplementary material.

2

RULER: Universal Reward Function for Reinforcement Learning

Author

kcorbitt

Description

RULER is a groundbreaking tool that simplifies the application of Reinforcement Learning (RL) to various tasks. Traditionally, implementing RL requires a complex 'reward function' to measure success, often demanding extensive data and expertise. RULER eliminates this hurdle by leveraging Large Language Models (LLMs) to evaluate and rank different outcomes. This innovative approach allows developers to train agents more reliably and effectively, even on tasks where defining a specific reward function is challenging.

Popularity

Points 64

Comments 11

What is this product?

RULER is a 'drop-in' reward function that utilizes LLMs to judge and rank the quality of different actions or outputs generated by an agent. It shows multiple potential solutions (trajectories) to an LLM and asks the LLM to rank them. This method avoids common calibration issues found in other LLM-based evaluation techniques. Combined with the GRPO algorithm, RULER can train agents that consistently outperform other models without requiring hand-crafted reward functions. So, this is a smarter way to teach AI to achieve its goals.

How to use it?

Developers can integrate RULER into their RL training pipelines. They provide the agent's outputs to RULER, which then ranks these outputs using an LLM. This ranking data is then fed to the GRPO algorithm to optimize the agent's behavior. This is particularly useful for complex problems where defining a reward function is difficult or requires significant domain expertise, like improving chatbot responses, optimizing code generation, or enhancing game AI. You can integrate it by simply replacing your current reward function with RULER's evaluation process.

Product Core Function

· LLM-Based Ranking: RULER uses LLMs to evaluate and rank different solutions or actions generated by the agent. The value is, it automates the process of judging the quality of the output without manual intervention. The LLM's judgment offers a flexible and general approach, applicable to many different tasks, eliminating the need for task-specific reward functions. So, it allows you to focus on the goal rather than the evaluation.

· GRPO Integration: It integrates with the GRPO (Group Relative Policy Optimization) algorithm, which is a method for training RL agents based on relative scores. This means it focuses on improving the agent's performance within a group of outputs. The value is, GRPO's focus on relative performance helps it avoid issues related to the scaling and calibration of absolute reward scores. This provides a more robust and reliable training process. So, it ensures the agent learns what matters most - producing better results compared to other outputs.

· Simplified RL Implementation: RULER simplifies the complex process of setting up RL by removing the need for manually designing reward functions. The value is, it makes RL more accessible to a wider audience, lowering the barrier to entry for using this powerful technique in a variety of applications. This leads to faster development cycles and allows developers to explore RL without deep expertise in reward engineering. So, it simplifies the process of making AI smarter.
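To make the "relative scores" idea concrete, here is a minimal Python sketch of how judge scores for one group of trajectories could be turned into group-relative advantages, which is the quantity GRPO-style training optimizes. It assumes the judge returns one numeric score per trajectory; `toy_judge` is a hypothetical placeholder for an LLM call, and none of these names come from RULER itself.

```python
from statistics import mean, pstdev
from typing import Callable, Sequence


def group_relative_advantages(scores: Sequence[float]) -> list[float]:
    """Normalize scores within one group: (score - group mean) / group std."""
    mu = mean(scores)
    sigma = pstdev(scores)
    if sigma == 0:
        # Every trajectory scored the same, so there is no learning signal.
        return [0.0 for _ in scores]
    return [(s - mu) / sigma for s in scores]


def toy_judge(trajectories: Sequence[str]) -> list[float]:
    """Hypothetical stand-in for an LLM judge: longer answers score higher."""
    longest = max(len(t) for t in trajectories)
    return [len(t) / longest for t in trajectories]


def score_group(
    trajectories: Sequence[str],
    judge: Callable[[Sequence[str]], list[float]] = toy_judge,
) -> list[float]:
    # The judge sees the whole group at once, so its scores only need to be
    # consistent relative to each other, not calibrated on an absolute scale.
    return group_relative_advantages(judge(trajectories))


if __name__ == "__main__":
    print(score_group(["ok", "a fuller answer", "the most detailed answer here"]))
```

Because only the differences within a group matter, a judge that is systematically too generous or too harsh still yields the same advantages.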

Product Usage Case

· Chatbot Response Optimization: Imagine a chatbot that can understand and respond to user queries. With RULER, developers can train this chatbot to produce more helpful and relevant answers by feeding its various response options to RULER and using the LLM to rank them. The value is, the LLM can identify the best response based on its general knowledge, even when there are subtle contextual clues. This improves the chatbot's overall performance without having to write a specific reward function for each situation.

· Code Generation Improvement: For developers creating AI to write code, RULER can evaluate the quality of code snippets. After the code is created, the LLM ranks the various code samples. The value is, RULER is able to assess factors like correctness, efficiency, and readability. This enables the AI to learn to write better code over time, improving its coding ability. So, you can create better and faster code automatically.

· Game AI Enhancement: In game development, RULER can be used to train AI agents to perform better. After generating game actions, RULER can rank the effectiveness of these actions by leveraging an LLM. The value is, the LLM may understand game mechanics, strategies, and player preferences, allowing the AI agent to learn to make intelligent game decisions. So, you can create more engaging and challenging game experiences.

3

Heim: Universal FaaS for All Languages

Author

Silesmo

Description

Heim is a lightweight Function-as-a-Service (FaaS) platform designed to run code written in any programming language on any cloud provider. It addresses the limitations of existing FaaS solutions by offering greater flexibility and portability. The core innovation lies in its ability to decouple the language runtime from the underlying infrastructure, enabling developers to deploy functions written in their preferred language without being locked into specific vendor ecosystems. This simplifies cloud deployment and management significantly.

Popularity

Points 22

Comments 5

What is this product?

Heim is essentially a mini-cloud server for your code. Imagine you have a small piece of code that does something useful, like processing images or sending emails. Instead of setting up an entire server to run this, Heim allows you to upload just the code (your function), and it handles the rest – running it, scaling it, and managing resources. The innovation is its universal nature: it doesn't care what language your code is written in (Python, Go, JavaScript, etc.) and it works on any major cloud provider (AWS, Google Cloud, Azure). So this provides maximum flexibility and control.

How to use it?

Developers use Heim by writing their functions and deploying them to the Heim platform. They can then trigger these functions via HTTP requests, scheduled events, or other integrations. For example, you could write a Python function to resize images and then trigger it whenever a new image is uploaded to your cloud storage. You can also integrate Heim with your existing development workflows using its APIs and command-line tools. So this provides easy integration and automation.

Product Core Function

· Universal Language Support: Heim supports functions written in any programming language. This allows developers to use their existing skills and codebases without needing to rewrite everything for a specific platform. So this is extremely valuable because you don't have to learn something new.

· Cloud Agnostic Deployment: Deploy functions across any major cloud provider. This prevents vendor lock-in and enables developers to choose the best cloud for their needs, or even distribute their functions across multiple clouds. So this means better flexibility and cost management.

· Lightweight and Efficient: Heim is designed to be lightweight and resource-efficient, minimizing costs and improving performance. It is intended for small services and microservices.

· API-driven Management: Manage functions through APIs and command-line tools, streamlining deployment and management. This makes it easy to integrate Heim into automated build and deployment pipelines.

· Scalability and Autoscaling: Heim automatically scales functions based on demand, aiming to balance performance and cost. Your code scales up and down without manual intervention.

Product Usage Case

· Image Processing: Use a Python function deployed on Heim to automatically resize and optimize images uploaded to a cloud storage service, allowing for faster website loading times. So this keeps pages fast without any manual image handling.

· API Gateway Integration: Create a serverless API gateway using Heim, routing incoming requests to various backend functions written in different languages. This is really useful for modern app development.

· Scheduled Tasks: Schedule a function in Go to run daily, automating routine tasks like database backups or sending reports, without the need for dedicated servers. Automating tasks without worrying about a server is invaluable.

· Webhook Processing: Process incoming webhooks from third-party services (e.g., payment gateways, social media platforms) using functions written in JavaScript. So this means you can easily connect to other systems.

· Microservices Architecture: Build and deploy microservices with different languages and technologies, improving flexibility and allowing each component to scale independently on any cloud provider. Building microservices on demand gives developers full control over their infrastructure.

4

Director: Local-First MCP Gateway

Author

bwm

Description

Director is a fully open-source, local-first MCP gateway. It simplifies the process of connecting to MCP servers from tools like Claude, Cursor, or VSCode. The key innovation is providing a user-friendly, secure, and observable way to manage connections, addressing the common pain points of MCP technology, such as complex configuration, lack of monitoring, and security vulnerabilities. It offers a straightforward, local experience with the intention of expanding to cloud-based functionality.

Popularity

Points 12

Comments 5

What is this product?

Director acts as an intermediary, or 'gateway,' for MCP (Model Context Protocol) communication. MCP is a promising technology, but setting up and managing connections can be difficult. Director solves this by providing a simplified interface for connecting clients (like AI tools or code editors) to MCP servers. It handles the complexities of configuration, allows for easy inspection of data flowing between clients and servers (observability), and aims to improve security. So, this enables developers to more easily use and debug their MCP-based applications, and helps them avoid common pitfalls associated with MCP.

How to use it?

Developers can install Director locally and then configure it to connect to their specific MCP servers. The gateway then acts as a proxy, allowing tools like Claude, Cursor, or VSCode to communicate with the MCP servers. This simplifies the setup process, making it much easier to test and debug applications using MCP. For example, if you're working with an AI model that uses MCP, Director allows you to quickly connect your preferred AI tool to the model and monitor the data being sent back and forth. This streamlines the workflow and makes it easier to find and fix any issues. So, it helps developers by simplifying the process of connecting to and monitoring MCP servers. It makes debugging and testing easier.

Product Core Function

· Simplified Configuration: Director simplifies the complicated setup process required to connect to MCP servers. So, it saves developers time and reduces the likelihood of configuration errors.

· Enhanced Observability: Director allows developers to easily inspect and modify the data traffic between clients and servers. This makes it easier to debug and understand how data is being transmitted and processed. So, it allows developers to debug their applications more effectively and gain a better understanding of how data flows through them.

· Improved Security: Director aims to improve the security of MCP connections, addressing vulnerabilities like remote code execution and prompt injection attacks. This protects developers and users from potential threats. So, it makes MCP-based applications more secure.

· Context Window Management: Director assists in managing the amount of information provided to the LLM within the context window to prevent confusion and improve model performance. So, it helps optimize LLM performance and avoid confusing the model with too much data.
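The observability idea can be illustrated with a small pass-through gateway. MCP messages are JSON-RPC 2.0, so the Python sketch below logs each request and response as it forwards them. It is not Director's implementation: `LoggingGateway` and `fake_server` are hypothetical names, and the upstream server is a stub that always returns an empty tool list.

```python
import json
from typing import Callable


class LoggingGateway:
    """Forwards JSON-RPC messages to an upstream handler and records both directions."""

    def __init__(self, upstream: Callable[[dict], dict]) -> None:
        self.upstream = upstream
        self.log: list[dict] = []

    def handle(self, raw: str) -> str:
        request = json.loads(raw)
        self.log.append({"direction": "client->server", "message": request})
        response = self.upstream(request)
        self.log.append({"direction": "server->client", "message": response})
        return json.dumps(response)


def fake_server(request: dict) -> dict:
    """Hypothetical upstream server: answers every request with an empty tool list."""
    return {"jsonrpc": "2.0", "id": request.get("id"), "result": {"tools": []}}


if __name__ == "__main__":
    gateway = LoggingGateway(fake_server)
    gateway.handle('{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}')
    for entry in gateway.log:
        print(entry["direction"], json.dumps(entry["message"]))
```

Because every message passes through one place, the same hook could also filter or rewrite traffic, which is where security checks would naturally sit.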

Product Usage Case

· AI Model Debugging: A developer working on an AI-powered application can use Director to connect their AI model (using MCP) to their preferred AI tool. They can then monitor the data exchanged between the model and the tool in real time, allowing them to identify and fix any issues in the communication process. This streamlines development and improves the quality of AI applications. So, it speeds up the debugging process and makes AI model development more efficient.

· Secure Cloud Deployment: Director can be used to create a secure and observable connection to an MCP server running in the cloud. Developers can monitor traffic and handle security threats. So, it lets developers use MCP in cloud environments with enhanced security and monitoring.

5

AI Dognames Generator - Claude Code Powered

Author

yeeyang

Description

This project is an AI-powered dog name generator created entirely using Claude, an AI model, within just 24 hours, without any traditional coding. It demonstrates a novel approach to rapid prototyping and automation in software development by leveraging the capabilities of large language models (LLMs) to generate code and build applications. This showcases the potential of AI to accelerate the development process and remove the need for extensive manual coding.

Popularity

Points 3

Comments 5

What is this product?

It's a dog name generator that doesn't involve a human writing code, but is generated by an AI model, Claude. The AI writes the code and builds the application in response to prompts. The innovation here lies in the use of an LLM to handle the entire software development lifecycle – from generating the underlying code to deploying a functional application. It shows how AI can quickly build simple yet useful tools. So this is helpful if you want to quickly get a simple web app up and running without coding.

How to use it?

You can use it by interacting with the generated web interface. Input your preferences for a dog name, and the AI will generate a list of suggestions. Developers can explore the code generated by Claude to learn how the AI approached the problem, and adapt the code for similar projects. This also shows a new approach to rapidly building a prototype: simply prompting the AI model. So this can be helpful for anyone who wants to play around with AI-generated code and explore new ways of building small web apps.

Product Core Function

· Dog Name Generation: The core function is generating dog names based on user input or AI's creative suggestions. This showcases the AI's ability to understand and respond to user requests by interpreting and generating outputs. So this is helpful if you need a dog name immediately.

· Code Generation: The project's most significant function is the generation of code that implements the dog name generator. This demonstrates the AI's capability to translate natural language prompts into working code, automating the coding process. So this is helpful for exploring AI's code-generating abilities.

· User Interface: The AI generates a simple user interface allowing users to interact with the application and receive name suggestions. This involves the AI creating the necessary HTML, CSS, and JavaScript code. So this is helpful if you want to quickly create a simple web interface.

Product Usage Case

· Rapid Prototyping: A developer wants to quickly test the idea of a dog name generator. Instead of writing code, the developer can use Claude to build a basic prototype. This is helpful for quickly validating an idea. The AI writes the code, and the developer can use the resulting application right away.

· Education and Exploration: A student is studying AI and wants to see how it generates code. They use this project as a reference. This shows how AI can accelerate the coding process, helping developers of any skill level to experiment with AI-generated code and applications.

· Low-Code Development: A small business owner wants to create a tool to suggest names for their products. They can use this project as an example of using AI to accelerate development. This is helpful for creating simple, low-code applications for any business need, since the AI did all the coding.

6

ByteWise Search: Client-Side, Community-Driven Search Engine

Author

FerkiHN

Description

ByteWise Search is a revolutionary search engine that runs entirely in your web browser. It prioritizes privacy, speed, and efficiency by processing all searches locally, eliminating the need for external servers and API calls. The project leverages a community-driven approach where users contribute to a shared database, creating a curated and privacy-respecting search experience. This means your searches are private, fast, and consume zero network traffic after the initial load. It's a search engine built by the community, for the community.

Popularity

Points 4

Comments 2

What is this product?

ByteWise Search is a search engine that works directly within your web browser. Instead of sending your search queries to a server, everything happens on your computer. This means your searches are private because your data never leaves your device. The search results are pulled from a local database, and this database is built and maintained by the community. The core technology uses JavaScript, Service Workers, and IndexedDB to store and retrieve search results locally, providing instant responses and offline functionality. The database grows with contributions from users who add search terms and relevant links. This project is hosted on GitHub Pages, eliminating the need for costly servers or API keys. So, it's a fast, private, and community-driven search engine. So what? It's a search engine that respects your privacy and offers a lightning-fast search experience, especially for niche topics where community knowledge is valuable.

How to use it?

As a developer, you can use ByteWise Search as a starting point for building your own privacy-focused search tools or integrating community-curated knowledge bases into your projects. You can explore the codebase on GitHub, learn from its architecture, and adapt its client-side search functionalities for your own applications. You can contribute to the project by adding new search terms and relevant links to the database. This can be done within the app itself, exporting your contributions as a JSON file, and submitting them via pull requests to the main GitHub repository. Think of it like contributing to a giant, shared knowledge base. So what? By contributing, you help build a better, more comprehensive search experience for everyone. By using it, you get a private, fast, and community-powered search tool for specific knowledge domains.

Product Core Function

· Client-Side Search Processing: All search queries are processed within the user's web browser using JavaScript. This eliminates the need for sending data to external servers, enhancing privacy and reducing network traffic. This means your searches are private. So what? This ensures your search queries are never logged or tracked.

· Local Database Storage: Search results are stored in a local database, leveraging IndexedDB for efficient data management and retrieval. This provides instant search results and enables offline functionality. So what? This allows for incredibly fast search results, even without an internet connection.

· Community-Driven Content Curation: Users can add new query-link pairs, creating a community-maintained knowledge base. This allows for niche knowledge curation, which can be highly valuable for specific topics. So what? This lets you build a search engine tailored to your specific interests or areas of expertise.

· Offline Functionality: Using Service Workers and IndexedDB, ByteWise Search works offline. Search results are readily available even without an internet connection. So what? This means you can access your search results anytime, anywhere.

· Zero-Traffic Search: After the initial database download, all subsequent searches consume zero network traffic. This makes the search incredibly efficient and saves bandwidth. So what? You save on data usage while getting instant results.
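The core data flow (a local store of query-link pairs, searched without any network call and shared via JSON export) can be sketched as follows. ByteWise itself is described as JavaScript with IndexedDB; this Python version is only an illustration of the idea, and `LocalSearch` with its methods is a hypothetical name, as is the JSON layout.

```python
import json


class LocalSearch:
    """In-memory stand-in for a local query -> links database."""

    def __init__(self) -> None:
        self.index: dict[str, list[str]] = {}

    def add(self, query: str, link: str) -> None:
        """Store one query-link pair, ignoring case and duplicate links."""
        links = self.index.setdefault(query.strip().lower(), [])
        if link not in links:
            links.append(link)

    def search(self, text: str) -> list[str]:
        """Return links whose stored query contains the search text."""
        needle = text.strip().lower()
        if not needle:
            return []
        results: list[str] = []
        for key, links in self.index.items():
            if needle in key:
                for link in links:
                    if link not in results:
                        results.append(link)
        return results

    def export_json(self) -> str:
        """Serialize contributions so they can be shared, e.g. in a pull request."""
        return json.dumps(self.index, sort_keys=True)

    def merge_json(self, payload: str) -> None:
        """Merge contributions exported by another user."""
        for query, links in json.loads(payload).items():
            for link in links:
                self.add(query, link)


if __name__ == "__main__":
    mine = LocalSearch()
    mine.add("Python docs", "https://example.com/python")
    shared = LocalSearch()
    shared.merge_json(mine.export_json())
    print(shared.search("python"))
```

Everything after the initial load happens in local memory, which is why searches in this model need no network traffic.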

Product Usage Case

· Building a Privacy-Focused Search Tool: A developer can adapt the client-side search architecture to build a search tool focused on privacy, ideal for internal company use or for users who value their data. So what? You can offer a privacy-respecting search experience.

· Creating a Community-Curated Knowledge Base: A community focused on a specific topic (e.g., a programming language, a hobby) could use ByteWise Search to build a curated knowledge base of links and resources. So what? You build a resource hub for any specialized topic.

· Integrating a Search Feature into a Web Application: A web application developer could integrate the core ByteWise search mechanism into their application to provide users with a local, fast, and private search feature. So what? You can enhance user experience by providing fast search capabilities within your own application.

· Offline Documentation Search: A developer could use ByteWise Search to build an offline-accessible documentation search for a software project. Users could search documentation without needing an internet connection. So what? You provide users with instant access to documentation, even offline.

· Educational Resource Search: Teachers and educators could create and share community-built databases for educational topics, making learning resources easily searchable and available offline. So what? You provide easily accessible learning materials.

7

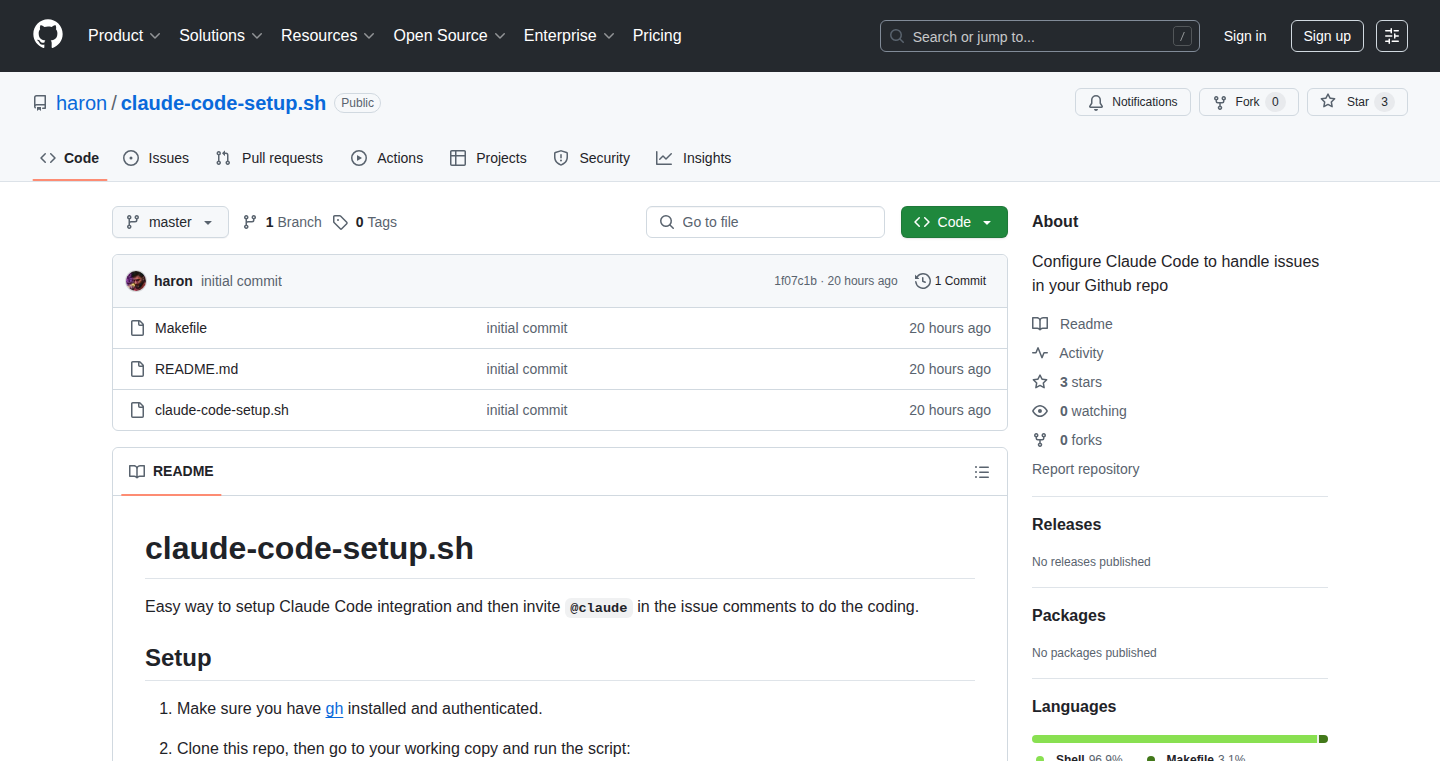

claude-code-setup.sh - Repository Issue Resolver with Claude

Author

haron

Description

This project provides a simple shell script that integrates Claude, a large language model, with your code repository to help you resolve issues. It automatically configures Claude to read your codebase and then allows you to ask questions about it, find bugs, and even get suggestions for fixes. The innovation lies in automating the setup and interaction with Claude to make it a practical tool for developers. This allows developers to more easily leverage the power of AI in understanding and improving their code.

Popularity

Points 6

Comments 0

What is this product?

This project is a shell script, `claude-code-setup.sh`, designed to automate the process of setting up Claude to work with your code repository. It essentially bridges the gap between a powerful AI and your codebase. It handles the complexities of configuring the AI model (Claude) to read and understand your code. This lets you then ask Claude to analyze the code, find potential bugs or even give you suggestions on how to fix problems. It's like having a very smart assistant who can instantly understand your code and help you find and fix issues. So this is useful if you want AI to help you with your code.

How to use it?

Developers can use this script by first cloning the repository and running the script in their own code repository. The script guides the user through setting up the necessary API keys for Claude. After this setup, developers can interact with Claude by asking questions about their code directly via command-line prompts or integrated workflows. This is particularly useful in the development process for understanding code, identifying potential bugs, and receiving code suggestions. So, it can be easily integrated into a developer's workflow.

Product Core Function

· Automated Setup: The script automates the often complicated process of connecting Claude to your code. So this saves you time and simplifies configuration.

· Code Analysis: The script is designed to analyze code. Once set up, you can ask Claude questions about your code and receive helpful analysis and insights. So this helps you understand your code better.

· Issue Resolution: The script helps resolve issues by assisting in finding potential bugs, suggesting fixes, and guiding the user through resolving the problems it identifies. So, this allows you to quickly identify and fix issues in your code.

· Integration with Claude: The script integrates directly with Claude. By leveraging Claude's capabilities, it helps developers find bugs, explain complex code, and get suggested fixes. So, the script uses AI to find issues in the code.

Product Usage Case

· Bug Finding: Imagine you have a complex piece of code and you suspect a bug. Instead of manually reading through everything, you can use this setup. You can ask Claude to analyze a specific function or area of the code and identify potential problems or bugs. So, it helps you easily find bugs.

· Code Documentation: You can use Claude to generate or augment your code documentation. You can ask questions about specific functions or classes to get clear explanations of what they do, greatly improving the readability and maintainability of the codebase. So, it allows you to generate your code documentation quickly.

· Code Review: When reviewing your code, you can ask Claude to analyze your code and suggest improvements. This can identify potential issues, like areas that could be written more efficiently, or areas that need more testing. So, you can improve the code by reviewing it with Claude.

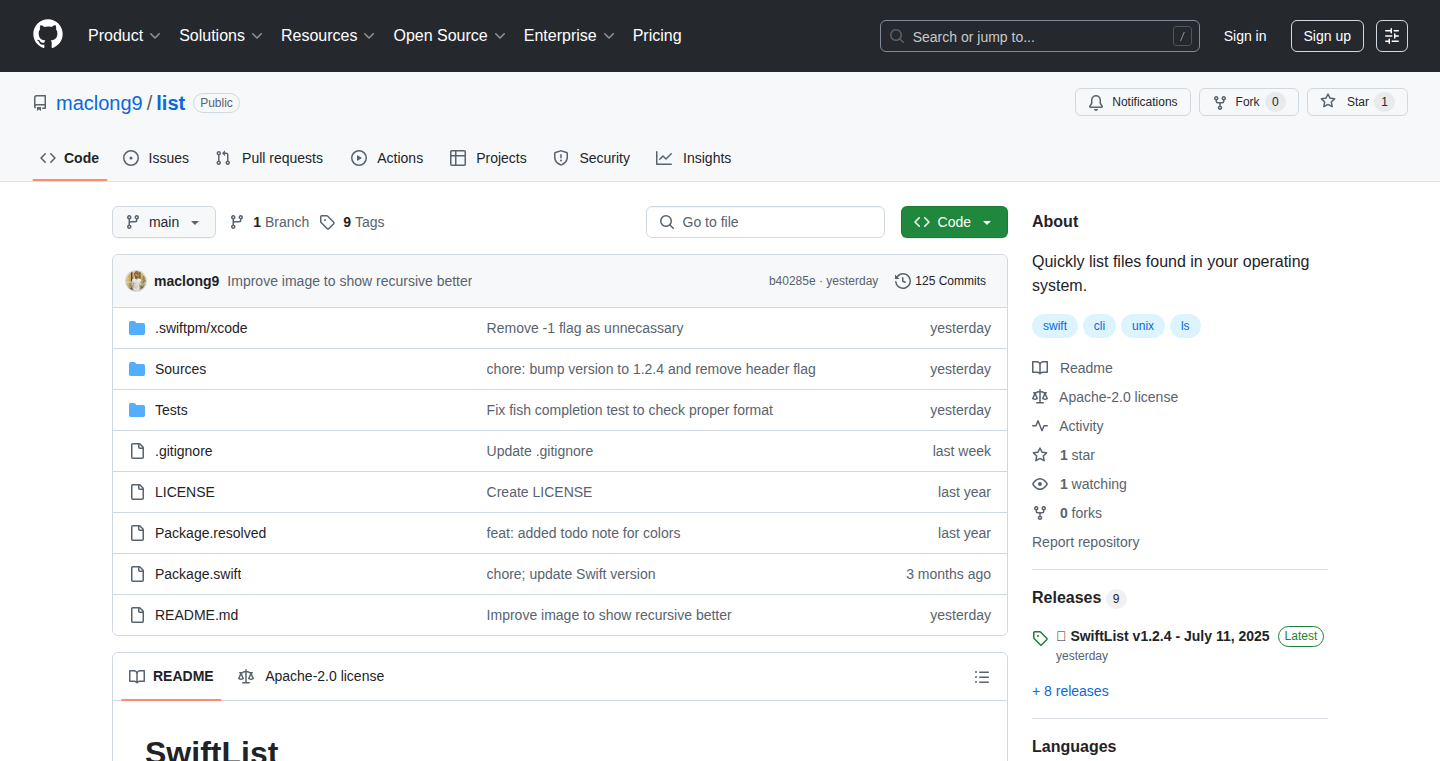

8

Phono: Terminal Image Viewer (Pure C)

Author

FerkiHN

Description

Phono is a lightweight image viewer that runs directly in your terminal (like the command prompt), written entirely in the C programming language. It allows you to view images without needing a graphical user interface (GUI), making it work even on older or resource-constrained devices. The key innovation is its ability to render images using text characters, offering a surprisingly fast and efficient way to visualize images in any terminal environment. So this is useful because you can quickly see images without needing a heavy graphical environment installed.

Popularity

Points 3

Comments 3

What is this product?

Phono works by taking an image file and converting it into a series of text characters that represent the image's pixels. It achieves this using the terminal's built-in capabilities, displaying these characters to create a visual representation of the image. It doesn't require X11 (the traditional graphical system on Linux) or any specific graphics libraries, making it exceptionally portable and easy to install. The benefit here is pure efficiency; it leverages the most basic functionalities of the terminal. So this is helpful because it offers a super simple way to view images directly in a terminal.
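The pixel-to-character idea described above can be illustrated in a few lines. The sketch below is written in Python for brevity rather than in Phono's C, and it is not Phono's actual code: the character ramp, the `to_ascii` name, and the tiny hand-written grayscale grid are all assumptions made for illustration.

```python
# Illustrative only: maps grayscale pixel values (0-255) to text characters.
# The ramp and the function name are assumptions, not taken from Phono.
RAMP = " .:-=+*#%@"  # darkest (space) to brightest (@)


def to_ascii(pixels):
    """Convert rows of 0-255 grayscale values into lines of characters."""
    lines = []
    for row in pixels:
        # Scale each value onto an index into RAMP (0 .. len(RAMP) - 1).
        chars = [RAMP[value * (len(RAMP) - 1) // 255] for value in row]
        lines.append("".join(chars))
    return "\n".join(lines)


if __name__ == "__main__":
    # A hand-made 2x5 "image": a gradient and its mirror.
    gradient = [
        [0, 64, 128, 192, 255],
        [255, 192, 128, 64, 0],
    ]
    print(to_ascii(gradient))
```

A real viewer would also need to decode the image file and scale it to the terminal's width, which this sketch leaves out.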

How to use it?

Developers can use Phono by simply running the program and pointing it to an image file from their terminal. For example, after compiling Phono, you could type something like `./phono image.png`. This is particularly useful for scripting, remote server administration (where you often interact via the terminal), or quick image previews without launching a separate image viewer. So, it allows you to inspect images very quickly without leaving the command line.

Product Core Function

· Image Rendering in Terminal: This is the core functionality. It takes an image and displays it using text characters within the terminal window. This is valuable because it allows image viewing in environments without graphical interfaces. You might use this when working with a remote server, or when developing on very limited systems.

· Cross-Platform Compatibility: Because it's written in C and relies on standard terminal functionality, Phono works across various operating systems (Linux, macOS, Windows, etc.). This is useful because your image viewing solution works no matter your OS.

· Small Footprint: The program is designed to be small, roughly 300KB. So this is useful because it won't hog your system's resources, making it suitable for older or resource-constrained devices.

· Pure C Implementation: The use of pure C means it doesn't depend on large libraries or external dependencies. This simplifies installation and enhances portability. So it's great because it's lightweight and won't bring along any complex installation requirements.

Product Usage Case

· Remote Server Monitoring: Imagine you're managing a remote server and need to quickly preview a screenshot or another image stored on it. Instead of transferring the image and opening a separate viewer, you can use Phono right in the terminal. So this is helpful because it lets you quickly verify image files on a server.

· Embedded System Development: Developers working on embedded systems often have limited resources and might access these systems via a terminal. Phono can be used to view images related to the system, such as a sensor's output or a UI mockup. So this helps debug graphical parts of your embedded system.

· Scripting and Automation: Integrate image viewing into scripts. For example, automatically display a graph after a data analysis script runs. So this saves a lot of time by providing automatic visual feedback.

· Resource-Constrained Environments: On older hardware or virtual machines, Phono provides a fast and efficient way to preview images without demanding significant resources. So this is very useful for older computers that struggle with many graphical applications.

9

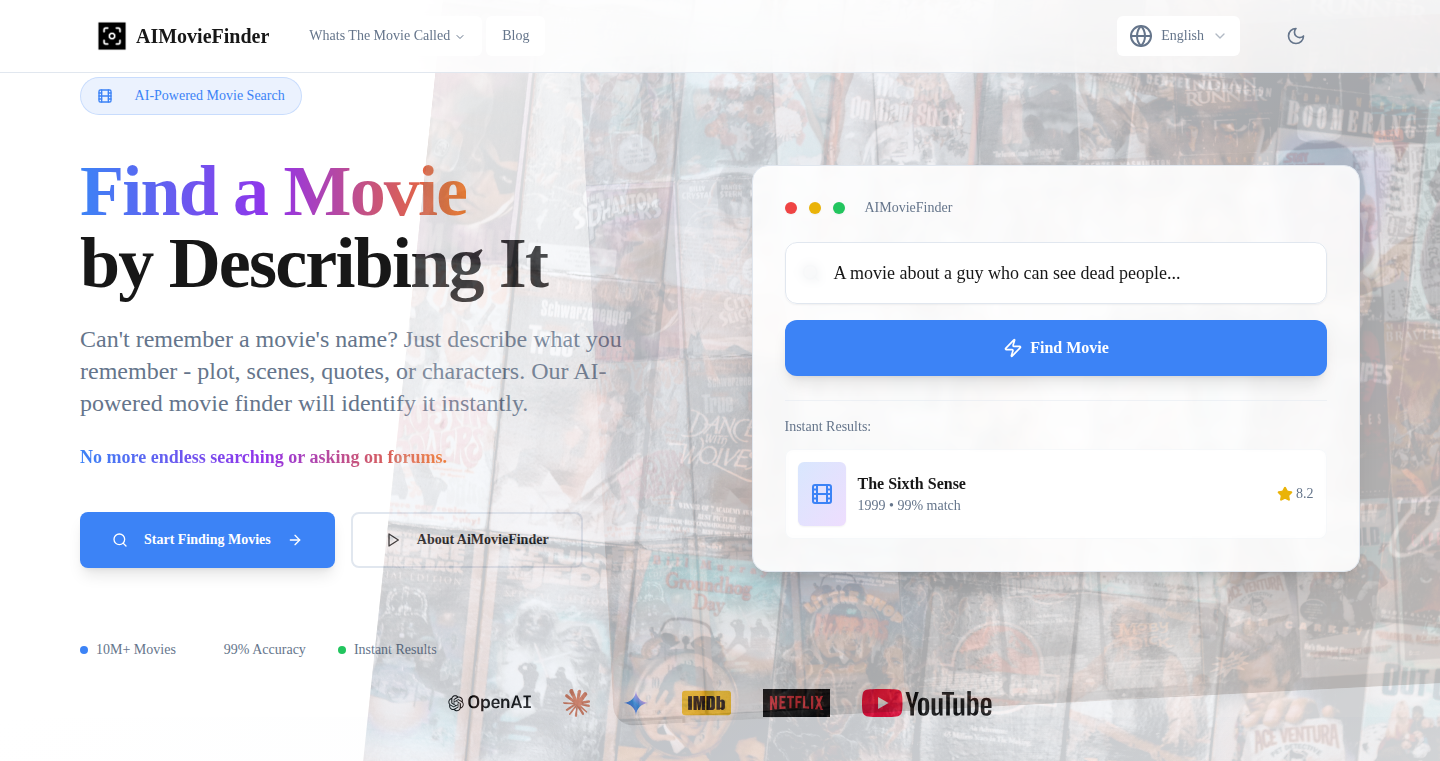

AI Movie Finder: Natural Language Movie Discovery

Author

mosbyllc

Description

AI Movie Finder is a project designed to help you find movies by describing them using natural language. Instead of relying on titles or actors, you can use descriptive phrases like "a movie about a time loop" or "a sci-fi film with robots." It utilizes a sentence transformer model, a sophisticated type of artificial intelligence, to understand the meaning behind your words and match them with movies in a database. The core innovation lies in its ability to handle vague and abstract queries, making movie discovery more intuitive and human-like.

Popularity

Points 4

Comments 1

What is this product?

AI Movie Finder is a search engine for movies that understands natural language. The core technology is a fine-tuned sentence transformer model, which converts your textual descriptions into mathematical representations (vector embeddings). These embeddings capture the semantic meaning of your description. The system then compares your query's embedding to the embeddings of movies in its database. This allows the system to find movies that match your description, even if you don't remember the exact title or actors. So, this helps movie enthusiasts discover films from vague memories and feelings, simply by describing them.
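The comparison step can be sketched with plain cosine similarity. This is a minimal illustration, not the project's code: the three-dimensional vectors and movie labels below are made up, whereas a real system would obtain much higher-dimensional embeddings from a sentence transformer model. The function names `cosine_similarity` and `rank_movies` are likewise hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_movies(query_vec, movie_vecs):
    """Return (title, score) pairs sorted from most to least similar."""
    scored = [
        (title, cosine_similarity(query_vec, vec))
        for title, vec in movie_vecs.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Made-up toy embeddings; real ones would come from a model.
    movies = {
        "time-loop comedy": [0.9, 0.1, 0.0],
        "robot sci-fi": [0.1, 0.9, 0.2],
        "courtroom drama": [0.0, 0.2, 0.9],
    }
    query = [0.8, 0.2, 0.1]  # stands in for "a movie about a time loop"
    for title, score in rank_movies(query, movies):
        print(f"{score:.3f}  {title}")
```

Because the score depends on direction rather than exact wording, a vague description can still land near the right movie as long as the model places them close together.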

How to use it?

To use AI Movie Finder, you simply type a description of the movie you're looking for into the search bar. The project uses the power of natural language processing to understand your words and find the movie. You can use it by visiting their website. For developers, this project offers a valuable demonstration of how to implement natural language processing for search tasks. It provides a real-world example of how to leverage sentence transformers to create intelligent search systems that go beyond simple keyword matching. So, you can integrate this technology into your own applications. It’s also a great learning resource to explore the potential of AI-powered search.

Product Core Function

· Natural Language Query Processing: This feature allows users to search for movies using everyday language, rather than relying on keywords or specific titles. This is achieved through a fine-tuned sentence transformer model that interprets the meaning of the user's input. This provides users with a more intuitive and user-friendly search experience. The value is in making movie discovery easier and more accessible to everyone. So, it allows you to search movies in plain English.

· Vector Embedding Matching: The project converts movie descriptions and user queries into vector embeddings. This allows the system to compare the semantic similarity between the query and the movie descriptions. It enables the system to find relevant movies even when the user's description is vague or incomplete. The value is in its ability to find matches based on meaning rather than just keywords. This is great for situations where you only remember a movie's plot or atmosphere. So, this matches your description to movies based on their meaning.

· Movie Database Integration: The project integrates with a movie database, giving the search engine a large collection of films to query. So, it provides a broad range of movie search results.

· Frontend Interface: The user interface is built using vanilla JavaScript, making the front-end fast, lightweight, and accessible. The value is in its ease of use and speed. So, it provides a quick and responsive user experience.

Product Usage Case

· Movie Recommendation System: Use this project as a foundation to build a more personalized movie recommendation system. By integrating this technology with user preferences and viewing history, you can create a system that suggests movies based on the user's tastes and even their vague recollections of what they've enjoyed in the past. So, you can make a movie recommendation engine.

· Intelligent Search for Media: The project's core technology can be adapted to find other types of media. If you have a large dataset of songs, books, or articles, you can use a similar model to create a search function that allows users to find content by describing the subject matter or the feeling they get from it. So, you can search for more than movies.

· Educational Tool for NLP: The project serves as an excellent learning tool for those interested in natural language processing. The code and underlying principles can be studied to understand how sentence transformers are used in practical applications. So, it's a good resource for learning about NLP.

10

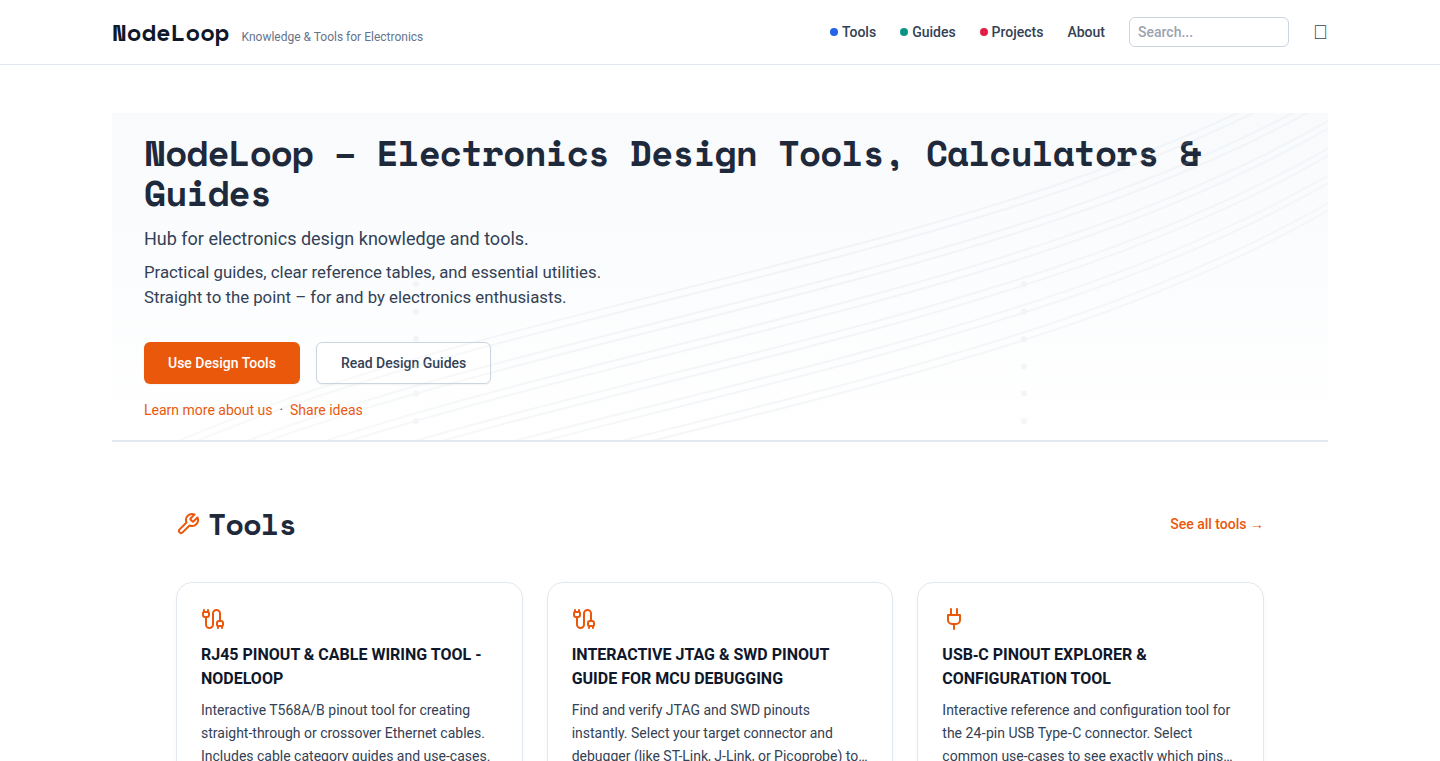

NodeLoop: Electronics Design Toolbox

Author

eezZ

Description

NodeLoop is a free, web-based toolbox specifically designed for hardware engineers. It tackles common challenges in electronics design, offering tools like a cable diagram generator, connector pinout viewers (supporting standards like M.2 and JTAG), and a serial monitor for microcontrollers. The project’s value lies in streamlining complex design tasks and providing readily accessible information. It removes the need to search through numerous documents or create similar tools from scratch, focusing on user convenience and a streamlined workflow.

Popularity

Points 5

Comments 0

What is this product?

NodeLoop is a collection of web-based tools built to help hardware engineers. It's like a digital Swiss Army knife for electronics. At its core is a cable diagram generator, which takes the guesswork out of connecting wires. It also includes a connector pinout viewer, a digital resource that clarifies the purpose of each pin in connectors like M.2 (used in laptops) or JTAG (used for debugging embedded systems). Furthermore, it incorporates a serial monitor for microcontroller communication, which lets developers easily observe data exchanged between a microcontroller and a computer. The innovative aspect is bringing these resources together in a free, easily accessible web interface, built from real user needs.

How to use it?

Developers can access NodeLoop directly through their web browser. For the cable diagram generator, engineers input the specifications of their cable, and the tool outputs a clear, visual representation. The pinout viewer provides a searchable database of connector information. The serial monitor lets you monitor and debug the output of your microcontrollers during the development phase. You'd access it by connecting your microcontroller to your computer, then selecting the correct serial port in NodeLoop. NodeLoop integrates within the existing hardware design process, reducing manual effort and error.

Product Core Function

· Cable Diagram Generator: This tool automatically generates visual representations of cable connections, eliminating the need for manual diagram creation. It saves time and reduces the chances of wiring errors. So what? It simplifies wiring processes, saving design and debugging time.

· Connector Pinout Viewer: This provides comprehensive information about connector pin configurations (like M.2, JTAG). It helps engineers understand the function of each pin, essential for connecting components. So what? It avoids having to search through many datasheets, saving time and preventing incorrect connections.

· Microcontroller Serial Monitor: This allows real-time observation of data transmitted from microcontrollers. It assists with debugging and troubleshooting, crucial during software development. So what? It speeds up the debugging process, helping developers find and fix problems with their code more quickly.

· Web-Based Accessibility: The tools are accessible via a web browser, making them available anywhere. This removes the need for installing any software and makes it easier for engineers to work from any device. So what? Engineers can access these tools from any device with internet access.

Product Usage Case

· Embedded System Development: An engineer designing an embedded system using an M.2 connector can use the pinout viewer to quickly understand the functions of each pin, accelerating the hardware setup. So what? This helps in rapid prototyping and debugging of hardware interfaces.

· Robotics Projects: A robotics engineer can use the cable diagram generator to quickly create wiring schematics for their robot, minimizing errors. So what? This greatly reduces the potential for wiring errors, preventing costly rework.

· Microcontroller Debugging: A developer working on a microcontroller project uses the serial monitor to see what data is being sent and received, allowing them to debug their code. So what? This provides immediate feedback on the microcontroller's behavior, which speeds up the debugging process.

11

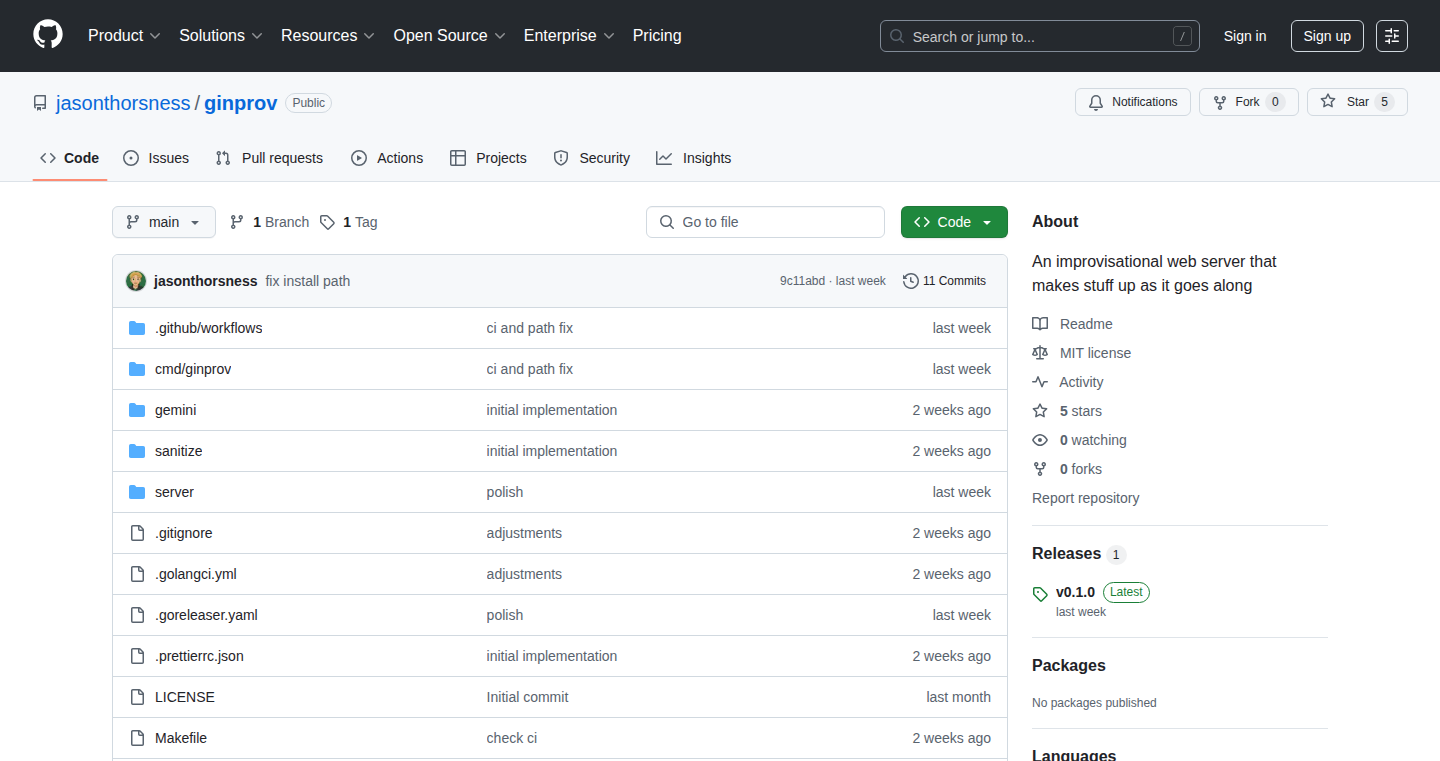

GinProv: On-Demand Web Page and Image Generation Server

Author

jasonthorsness

Description

GinProv is a web server that dynamically generates web pages and images using a Large Language Model (LLM) like Gemini. When a user requests a specific URL, the server uses the LLM to create the content and serve it in real-time. The innovation lies in the immediate content generation triggered by URL requests, allowing for highly customized and responsive web experiences. It tackles the problem of creating dynamic content without pre-generating all possible combinations, offering flexibility and reducing storage needs.

Popularity

Points 4

Comments 1

What is this product?

GinProv is essentially a 'live' web content creator. It uses the power of LLMs to conjure up web pages and images as soon as a user asks for them via a URL. Instead of storing pre-made content, GinProv uses the LLM to understand the request and generate the relevant page on the fly. This is groundbreaking because it removes the need to pre-create every possible version of a page, offering incredible flexibility and responsiveness. For example, if you ask for a page about 'cool-cars' (as in the provided example), GinProv uses the LLM to create that page dynamically. So what does this mean for you? It means you can build web applications with truly dynamic content without needing to manage a massive database or manually create numerous pages. Imagine a website that generates unique product descriptions based on user input, or an art gallery where each page displays a different AI-generated image based on the URL.

How to use it?

Developers can integrate GinProv by setting up the server and configuring it to use their preferred LLM provider (like Gemini). The key is to structure URLs in a way that triggers the content generation. For instance, a URL like `ginprov.com/topic/details` would trigger the LLM to create a page based on the 'details' related to the 'topic'. GinProv can be self-hosted, meaning you run it on your own computer or server. This offers flexibility and control, especially when using your own Gemini key. To use it, you will need to set up your web server (like Apache or Nginx) to forward requests to GinProv. So what does this mean for you? It lets you create websites with super flexible content without needing to manually create all the pages or store massive image libraries. You can bring your ideas to life by linking the LLM to the URL.
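To make the URL-to-content idea concrete, here is a rough sketch of how a request path might be turned into a prompt. It is an assumption-laden illustration, not GinProv's implementation: the function names `prompt_from_url` and `generate_page`, the prompt wording, and the stubbed-out `llm` callable are all hypothetical, and no real LLM API is called.

```python
import html
from urllib.parse import unquote, urlparse


def prompt_from_url(url):
    """Turn a request URL into a text prompt for a content generator."""
    path = urlparse(url).path
    # Split the path into segments and treat hyphens as spaces.
    segments = [
        unquote(part).replace("-", " ") for part in path.split("/") if part
    ]
    if not segments:
        return "Generate a home page."
    return "Generate a web page about: " + " / ".join(segments)


def generate_page(url, llm=None):
    """Build an HTML page for a URL.

    `llm` is any callable that takes a prompt string and returns text.
    The default stub just echoes the prompt so the sketch runs offline.
    """
    prompt = prompt_from_url(url)
    if llm is not None:
        body = llm(prompt)
    else:
        body = html.escape(f"[stub output for prompt: {prompt}]")
    return f"<html><body><p>{body}</p></body></html>"


if __name__ == "__main__":
    print(prompt_from_url("/cool-cars"))
    print(generate_page("https://example.com/topic/details"))
```

Passing the generator in as a callable keeps the routing logic testable without network access; swapping in a real provider would only change the `llm` argument.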

Product Core Function

· Dynamic Content Generation: The core function is to generate web pages and images on demand based on URL requests. This means the web server can give you a unique response every time you request a URL.

· LLM Integration: GinProv leverages LLMs like Gemini to create content. This allows for sophisticated and contextually relevant content generation based on the user's request.

· Real-time Rendering: The server generates and renders content in real time, responding immediately to the user's request. This improves the user experience by delivering dynamic content without a separate build step.

· Self-Hosting Capability: The ability to self-host the server allows developers to control the infrastructure, reduce costs, and customize the system to their specific needs. This is really useful if you're worried about cost, control, and privacy.

Product Usage Case

· Personalized Product Pages: Developers can create product pages that dynamically generate unique descriptions and images based on user input, like the product name and specifications, leading to unique user experiences and improved conversion rates.

· Interactive Learning Platforms: It can be used to create learning platforms where each lesson is generated dynamically based on the student's progress, providing adaptive and personalized content.

· AI-Generated Art Galleries: Developers can create galleries where each URL represents a new piece of art generated by the LLM, providing an endless stream of unique images.

· Dynamic Documentation: Automatically generate API documentation tailored to the user's query, creating custom documentation for specific functions or endpoints, thus saving time on documentation maintenance.

12

A01AI: Your AI-Powered Information Feed

Author

vincentyyy

Description

A01AI is a demo app designed to give you control over the information you consume. It combats the information overload problem caused by social media algorithms. Instead of passively scrolling through endless feeds, you tell A01AI what topics you want to follow, and it uses AI to fetch relevant updates. This gives you a focused, curated information stream, allowing you to avoid distractions and concentrate on what matters to you. This project is a practical application of AI for personalized content filtering, offering a novel solution to information overload.

Popularity

Points 2

Comments 2

What is this product?

A01AI is an application that leverages Artificial Intelligence to create a custom information feed. Unlike social media platforms that use algorithms to keep you engaged, A01AI lets you define exactly what information you want to see. You specify topics, and the app periodically uses AI to search for and provide you with the latest updates. This approach minimizes distractions and gives you control over your information consumption. So you can stay informed on your terms, and not be at the mercy of attention-grabbing algorithms.

How to use it?

Developers can use A01AI by signing up for beta testing, which grants access to the app. This is a demonstration of how AI can be integrated to create a personalized information stream. Developers can learn by observing the methodology of fetching updates from a variety of sources. The key takeaway is how the app facilitates user control over content discovery by letting you build a more focused stream of information. You could, for instance, integrate a similar system into your own applications to provide users with curated content.

Product Core Function

· Custom Topic Input: You specify the topics of interest, like 'recent crypto big things' or 'latest tech innovations'. This is the core of the personalization feature. Its value lies in letting users define their information needs.

· AI-Powered Update Retrieval: The app uses AI to proactively find information on your specified topics. This saves you time and effort from manually searching for updates, which streamlines the information gathering process. It is great for users because they get a curated stream of data without the distracting noise from social media algorithms.

· Curated Feed: A01AI provides a stream of information from the topics you select. The value is in giving you control over what you see. It allows you to filter out distractions and focus on relevant news and updates, which improves productivity.

Product Usage Case

· Focus on Specific Industry Trends: Imagine you are a fintech developer; you could use A01AI to exclusively follow the latest developments in blockchain technology, regulatory changes, and competitor moves. This gives you a laser-focused view, allowing you to stay ahead of trends.

· Monitor Specific Product or Technology: As a developer, if you are focusing on a specific tool or library, you can configure A01AI to pull the updates, so you can immediately see any new bug fixes, security patches, or any relevant announcements.

· Following Competitive Intelligence: Businesses, in particular, could use a similar technology to follow competitors' activities, track their announcements, product launches, and market strategies.

13

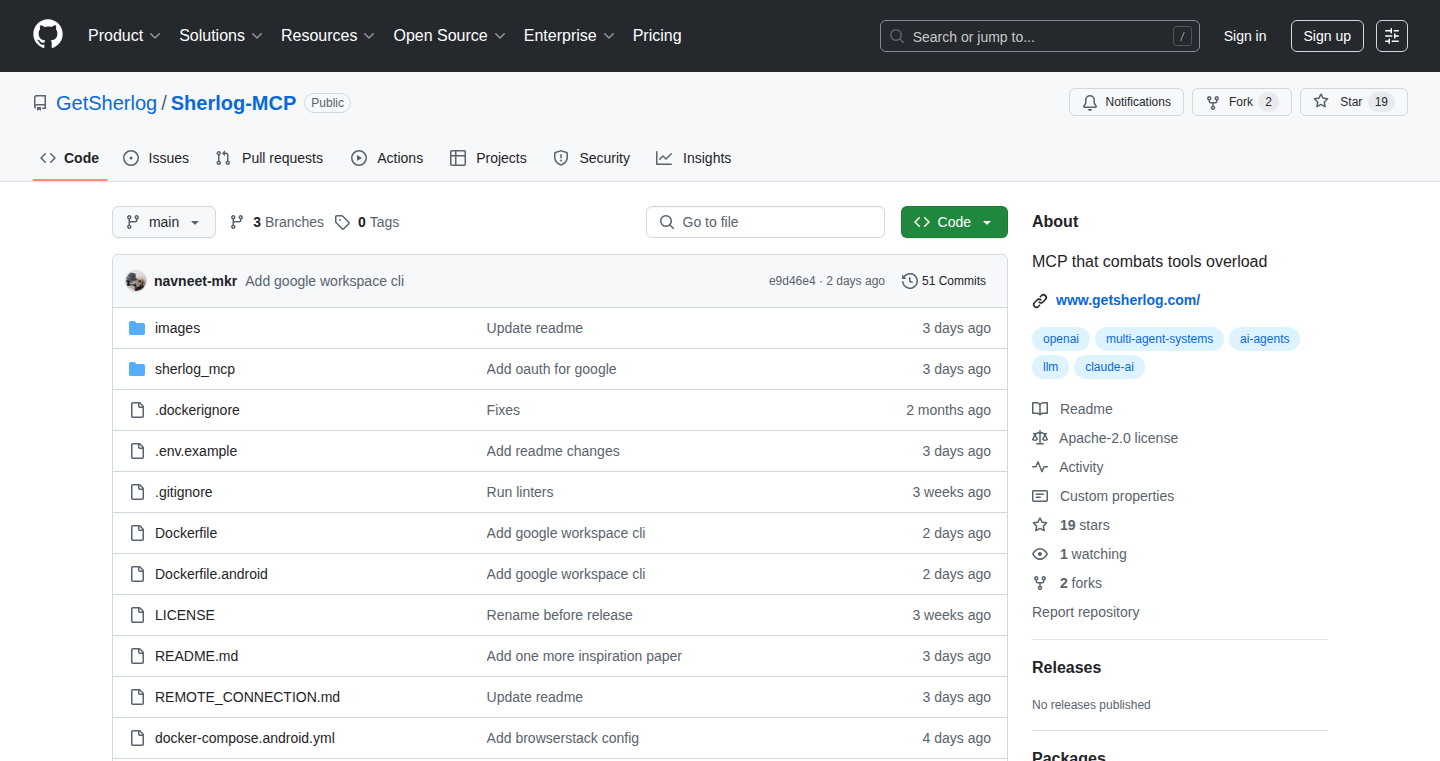

Sherlog MCP: Modern Code Processing Engine

Author

randomaifreak

Description

Sherlog MCP is a tool focused on code analysis and transformation, utilizing a novel approach to code understanding. Instead of relying on traditional parsing methods, it leverages machine learning to interpret code, enabling more flexible and powerful code manipulation capabilities. It tackles the limitations of traditional tools by offering a more intelligent and adaptable code analysis framework. So this helps developers understand and refactor code more effectively, especially in dynamic and evolving codebases.

Popularity

Points 4

Comments 0

What is this product?

Sherlog MCP employs a machine-learning-based approach to understand code structure and semantics. It does this by training models on a large dataset of code, allowing it to identify patterns, relationships, and potential issues within the code. This allows it to perform tasks like automated code refactoring, bug detection, and code generation with greater accuracy and adaptability than traditional methods. The innovation lies in its ability to understand code contextually, leading to a more nuanced and intelligent analysis. So this means it can potentially identify bugs and suggest improvements that are missed by traditional code analysis tools.

How to use it?

Developers can integrate Sherlog MCP into their existing development workflows through a command-line interface or APIs. This allows them to analyze code repositories, identify code smells, and generate suggested refactoring changes. It can be used in CI/CD pipelines to automate code quality checks and improve code maintainability. To use it, a developer might run a command that analyzes their code, and the tool will generate reports and suggestions that they can review and apply. So you can easily integrate it into your existing workflow.

Product Core Function

· Automated Code Refactoring: This functionality automatically identifies code that can be improved (e.g., dead code, inefficient loops) and suggests refactoring options. This saves time and reduces manual effort in code maintenance. So this means you can automate tedious refactoring tasks.

· Bug Detection: The tool uses machine learning to identify potential bugs and code vulnerabilities, alerting developers to areas that require attention. This improves code reliability and reduces the risk of errors. So this helps catch bugs early.

· Code Generation: It can generate new code snippets or even entire functions based on context and developer input, such as generating boilerplate code for common tasks. This can significantly accelerate development time. So this means quicker code creation.

· Code Similarity Analysis: This allows the tool to identify code clones and similar code blocks across a project, aiding in code cleanup and reducing redundancy. This feature helps maintain consistency in the codebase. So this allows developers to easily remove duplicated code.

Product Usage Case

· Automated Code Review in a large Java project: A development team could integrate Sherlog MCP into their continuous integration pipeline. When a developer submits code changes, the tool automatically analyzes the changes, identifies potential issues like inconsistent naming conventions or inefficient algorithms, and generates review comments. This ensures that code quality is consistently maintained throughout the project. So this helps ensure the team's code is consistently reviewed.

· Refactoring a Legacy Codebase: A team is tasked with refactoring a large, outdated Python codebase. Sherlog MCP can be used to automatically identify deprecated functions, unused variables, and potential performance bottlenecks. Then, the tool suggests refactoring options, reducing the manual effort required to modernize the codebase, allowing developers to focus on more complex logic. So this helps modernize older codebases.

· Generating code stubs for API interaction: A developer is building an application that interacts with a REST API. Sherlog MCP could analyze API documentation and automatically generate code stubs and boilerplate code for making API calls, handling responses, and managing error conditions. This speeds up the development process. So this makes integrating APIs faster.

14

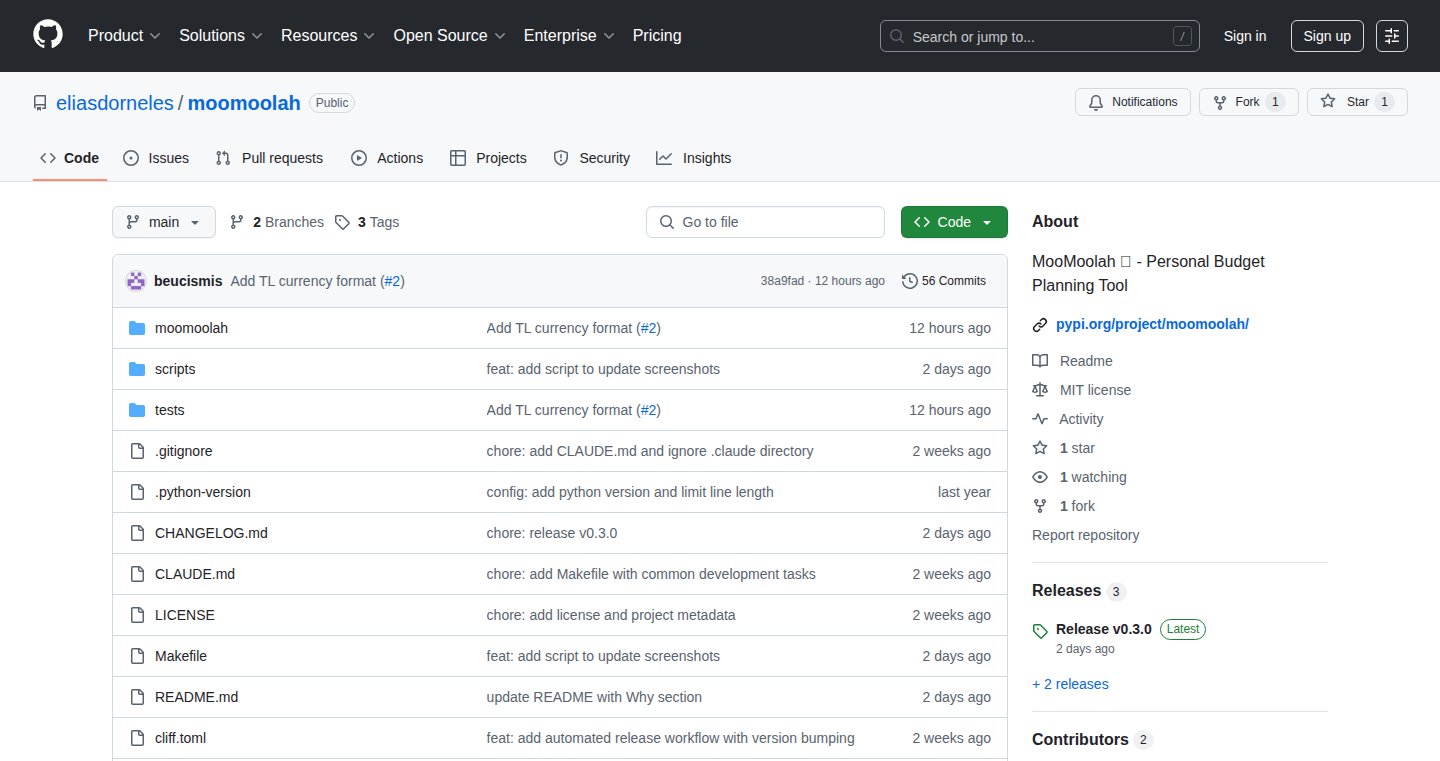

TextualBudget: A Terminal-Based Personal Budget Planner

Author

eliasdorneles

Description

TextualBudget is a personal budget planning application that runs in your terminal (the black screen you use to interact with your computer). It's built using Python and Textual, a framework for building interactive terminal applications. The cool part? Your financial data is stored locally in a simple JSON file. This means your budget stays private and you can access it even without an internet connection. It solves the problem of clunky spreadsheet solutions and the privacy concerns of online budget tools.

Popularity

Points 4

Comments 0

What is this product?

TextualBudget is like having a budget app right inside your terminal. Instead of using complex spreadsheets or web-based tools, you interact with it using text commands. The innovative part is how it uses Textual to create a visually appealing and interactive interface within the terminal. The data is stored as a simple JSON file, which is easy to manage and keeps your financial information secure. So what? It provides a fast, private, and offline-accessible way to manage your money.

How to use it?

Developers can use TextualBudget by installing the Python package and running it in their terminal. They can then add income, expenses, and track their spending. It's designed for anyone who wants a simple and private way to manage their budget. Integration is as simple as installing and running the application. So what? If you're a developer who prioritizes privacy and likes using the command line, this is a great tool to manage your personal finances.

Product Core Function

· Budget Tracking: The core function is tracking income and expenses. This allows users to monitor their cash flow and see where their money is going. This is useful because it provides insights into spending habits, helping to identify areas for potential savings.

· Data Visualization (through the Textual framework): The use of Textual enables visual representation of the budget data within the terminal, like charts or summaries, making it easy to understand financial status at a glance. This is useful because it presents the information in a more digestible format than raw numbers.

· Local Data Storage (JSON): The application stores all the financial data in a JSON file on your computer. This keeps the data private, as opposed to online budgeting tools that require data sharing. This is useful because it gives you complete control over your financial information and ensures privacy.

· Offline Accessibility: Because the data is stored locally, the application works without an internet connection. This is useful because it enables users to access and manage their budget at any time, from anywhere, regardless of internet availability.

· Terminal-Based Interface: The program is entirely operated within the terminal, providing a fast, keyboard-driven interface. This is useful because it allows for quick and efficient budget management for users familiar with terminal interfaces.
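The local JSON storage idea from the list above can be sketched with the standard library alone. This is a hedged illustration, not TextualBudget's code: the file layout (a single `transactions` list), the sign convention for amounts, and every function name here are assumptions.

```python
import json
from pathlib import Path


def load_budget(path):
    """Read the budget file, or start an empty budget if it is missing."""
    file = Path(path)
    if not file.exists():
        return {"transactions": []}
    return json.loads(file.read_text(encoding="utf-8"))


def add_transaction(budget, description, amount):
    """Record a transaction; positive is income, negative is an expense."""
    budget["transactions"].append(
        {"description": description, "amount": amount}
    )


def save_budget(budget, path):
    """Write the budget back to disk as human-readable JSON."""
    Path(path).write_text(json.dumps(budget, indent=2), encoding="utf-8")


def summarize(budget):
    """Total income, total expenses, and the resulting balance."""
    amounts = [t["amount"] for t in budget["transactions"]]
    income = sum(a for a in amounts if a > 0)
    expenses = -sum(a for a in amounts if a < 0)
    return {"income": income, "expenses": expenses, "balance": income - expenses}


if __name__ == "__main__":
    budget = load_budget("budget.json")  # hypothetical file name
    add_transaction(budget, "salary", 3000)
    add_transaction(budget, "rent", -1200)
    save_budget(budget, "budget.json")
    print(summarize(budget))
```

Keeping everything in one plain file is what makes the data easy to back up, inspect, or edit by hand, at the cost of features such as concurrent access.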

Product Usage Case

· Personal Finance Management: A software developer wants a simple way to track their monthly expenses and income without using web-based tools that require data sharing. They use TextualBudget to enter transactions, categorize them, and view summaries within the terminal. They achieve financial transparency and control using local data storage and a simple, private tool. So what? It provides a secure and efficient alternative to online budgeting apps.

· Learning Python and Textual: A developer is looking for a project to learn Python and the Textual framework. They download the TextualBudget code, study its structure, and modify it to suit their needs. This helps them understand the practical applications of Textual, build their own terminal-based applications, and contribute to open-source projects. So what? It provides a hands-on learning experience for terminal UI development.

· Building a Customized Budgeting Tool: A user finds TextualBudget's basic functionality sufficient but wants to customize it further to track specific investments or handle different income streams. They modify the Python code to add these features, extending the application to meet their personalized needs. So what? It provides a starting point for creating a highly customized personal finance tool.

15

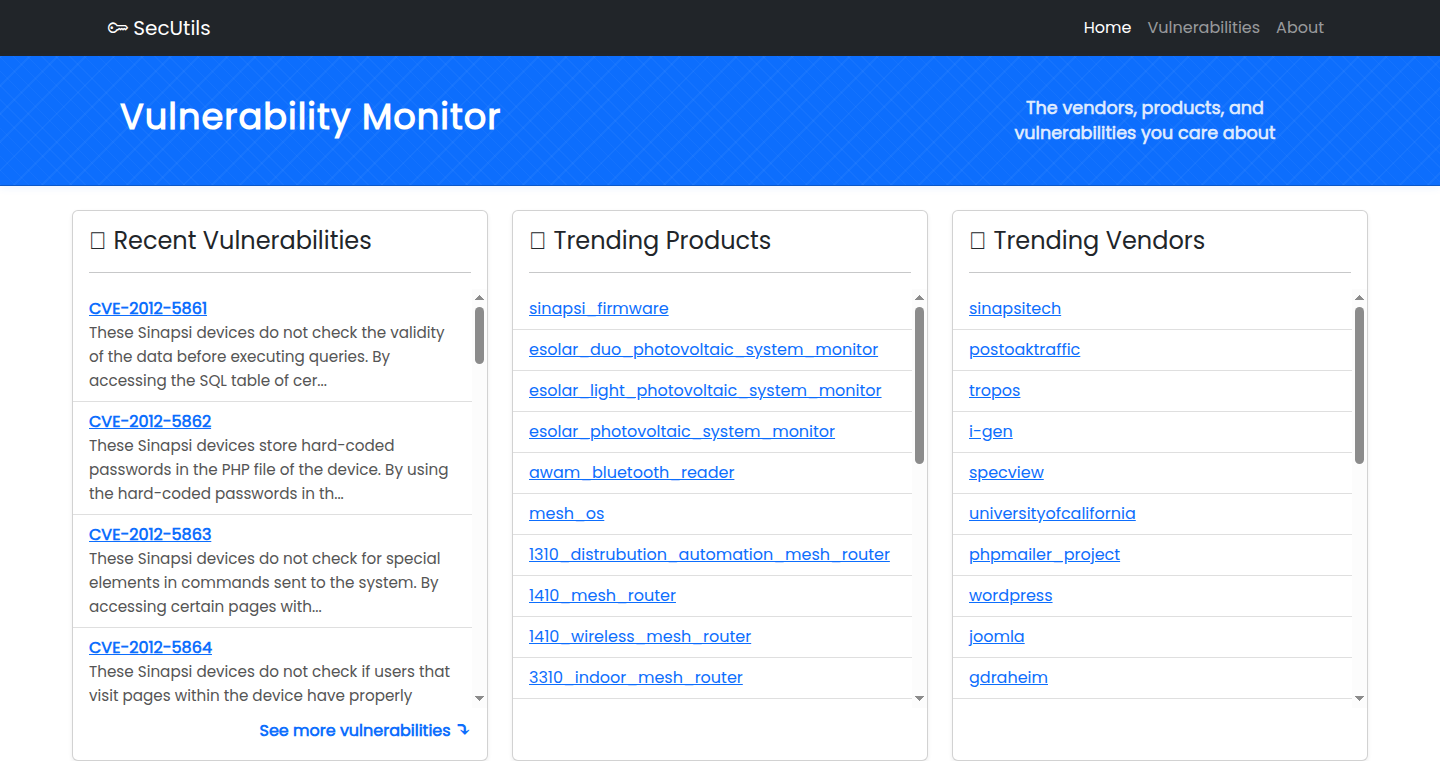

SecUtils: Lightning-Fast CVE Explorer

Author

SecOpsEngineer

Description

SecUtils is a tool for quickly browsing and filtering Common Vulnerabilities and Exposures (CVEs). It's designed to be fast and easy to use, providing a simple interface without the bloat of complex JavaScript frameworks. The project focuses on efficient indexing and filtering capabilities, allowing users to quickly find relevant vulnerability information based on criteria like severity, Common Weakness Enumeration (CWE) and publication date. This addresses the problem of slow and cumbersome interfaces often found in existing CVE viewers, making it easier for security researchers and developers to stay informed about potential security threats.

Popularity

Points 2

Comments 2

What is this product?

SecUtils is a web-based tool that allows users to explore CVE data rapidly. It achieves speed through efficient indexing, meaning the data is organized in a way that allows for very fast searching and filtering. It supports filtering by factors such as CWE (Common Weakness Enumeration, a way of categorizing software weaknesses), CVSS score (a numerical measure of vulnerability severity), and publication date. The project intentionally avoids using heavy JavaScript frameworks to maintain its speed and simplicity. So, this helps you quickly find critical security issues.
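The filtering described above (by CWE, CVSS score, and publication date) can be illustrated with a small in-memory example. This is only a sketch under stated assumptions: the records are placeholders with made-up IDs and scores, not real CVE entries, and the record layout and `filter_cves` signature are hypothetical rather than taken from SecUtils.

```python
from datetime import date

# Placeholder records for illustration; IDs, scores, and dates are invented.
CVES = [
    {"id": "CVE-EXAMPLE-0001", "cwe": "CWE-79", "cvss": 6.1,
     "published": date(2025, 1, 10)},
    {"id": "CVE-EXAMPLE-0002", "cwe": "CWE-89", "cvss": 9.8,
     "published": date(2025, 3, 2)},
    {"id": "CVE-EXAMPLE-0003", "cwe": "CWE-79", "cvss": 8.2,
     "published": date(2025, 6, 20)},
]


def filter_cves(records, cwe=None, min_cvss=0.0, published_after=None):
    """Keep records matching every criterion that was supplied."""
    result = []
    for record in records:
        if cwe is not None and record["cwe"] != cwe:
            continue
        if record["cvss"] < min_cvss:
            continue
        if published_after is not None and record["published"] <= published_after:
            continue
        result.append(record)
    return result


if __name__ == "__main__":
    # High-severity CWE-79 entries published after 1 February 2025.
    hits = filter_cves(CVES, cwe="CWE-79", min_cvss=7.0,
                       published_after=date(2025, 2, 1))
    for record in hits:
        print(record["id"], record["cvss"], record["published"])
```

A linear scan like this is fine for a demo; the indexing the project mentions would presumably replace it for large datasets.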

How to use it?

Developers and security researchers can use SecUtils to quickly assess vulnerabilities related to specific products, technologies, or software versions. They can filter the data to focus on vulnerabilities that are most relevant to their work. It can be integrated into security workflows by providing a quick way to check for newly published vulnerabilities or to investigate existing ones. For example, a developer could check if a library they use has any new CVEs associated with it. You can access the viewer directly through your web browser.

Product Core Function

· Fast Filtering: The ability to quickly filter CVEs by criteria such as CWE, CVSS score, and publication date. So, this lets you quickly narrow down the scope of vulnerabilities.

· Searchable CVE Views: Provides a detailed view for each CVE, backed by fast indexing for quick access to specific vulnerability information. So, you can quickly get to the details of a specific vulnerability.

· Minimalist User Interface: Designed with a simple and responsive user interface, without unnecessary features or performance-sapping frameworks. So, the tool is fast and easy to navigate without any fluff.

· Custom Views: Future versions of the project will likely add custom views. So, you will be able to tailor the information to a specific product.

Product Usage Case

· Security Audits: During a security audit, a security engineer can use SecUtils to quickly identify vulnerabilities in software components or third-party libraries used by the target application. So, you can rapidly assess your security posture.

· Software Development: Developers can use SecUtils during the software development lifecycle to check for new CVEs related to the libraries and dependencies used in their projects. So, developers can proactively address security issues.

· Incident Response: During a security incident, incident responders can use SecUtils to quickly understand the vulnerabilities exploited in an attack. So, you can quickly get the vulnerability details and respond effectively.

· Vulnerability Research: Security researchers can use SecUtils to efficiently explore and analyze large datasets of CVEs, helping them uncover patterns and trends in vulnerability data. So, researchers can quickly perform analysis on CVE data.

16

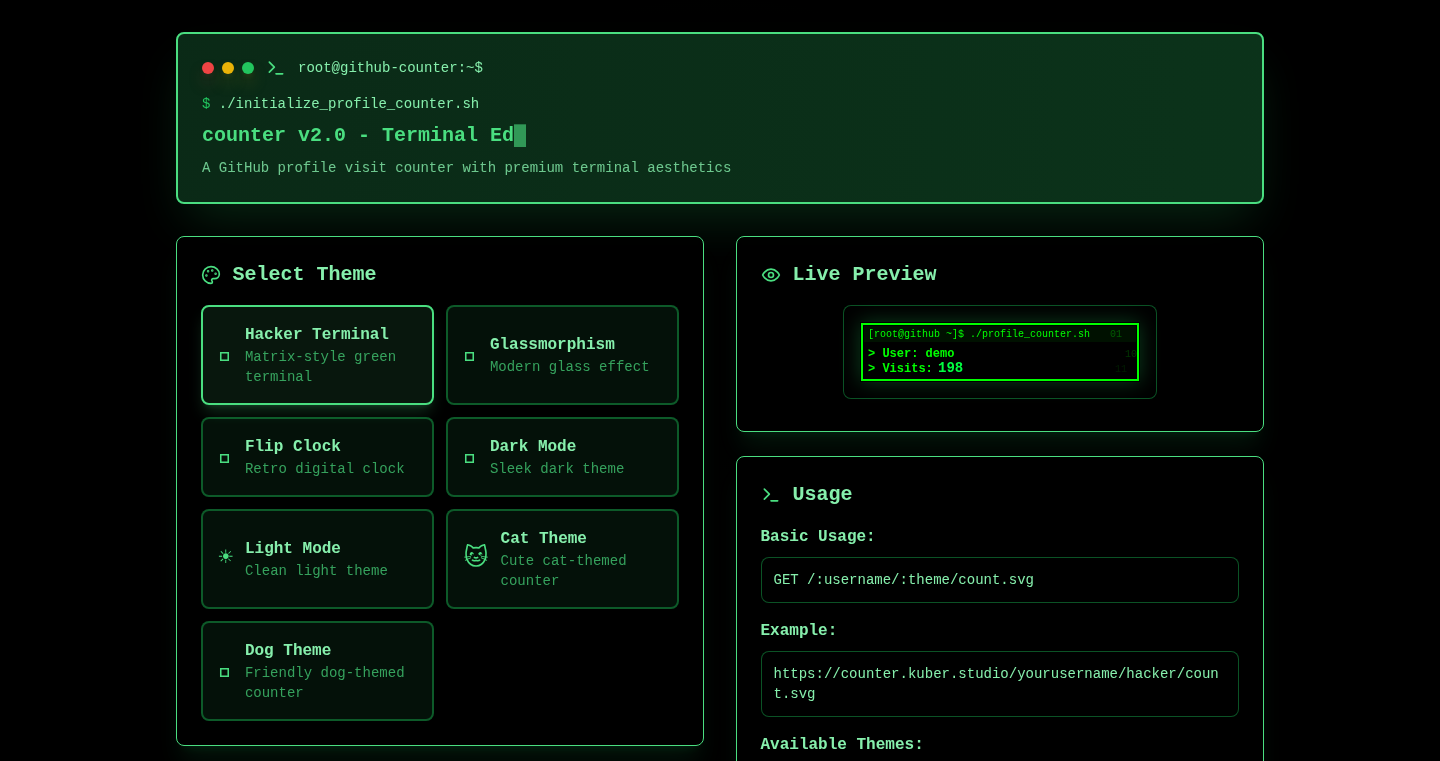

GitHub Profile View Counter - Always Up!

Author

kuberwastaken

Description

This project is a simple, self-hosted view counter for GitHub profiles. The developer created it because their previous counter (with over 100,000 views) went offline. This new version guarantees high availability and can be easily embedded directly in Markdown and HTML, updating with every page refresh. It includes 7 different themes, such as Glassmorphism and a terminal-style theme, with a CATS theme as a fun option. It's free to use and designed to enhance your GitHub profile. This solves the problem of needing a reliable, customizable, and visually appealing way to track profile views.

Popularity

Points 3

Comments 1

What is this product?

This project is a lightweight web counter. It uses basic web technologies to track how many times your GitHub profile is viewed. The innovation lies in its simplicity, reliability, and customization options. It doesn't rely on complex infrastructure, making it easy to deploy and maintain. Because the count updates on every page refresh, the displayed number stays current. So this is useful because you can see how many people have viewed your profile, which gives a rough signal of how much attention your work is getting.

How to use it?

Developers can easily embed this counter in their GitHub profile README files or any other webpage using Markdown or HTML. The counter generates a small image with the view count, which can be added using an `<img>` tag. Developers can also select from various themes to match their profile aesthetics. This is useful for developers who want to showcase the popularity of their profile and projects. For example, if you add the counter to a project README, visitors can see at a glance how much traffic the project gets.
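As a rough sketch of what the embed looks like, the helpers below build an image URL and wrap it in HTML or Markdown. The base URL and the `user` and `theme` query parameter names are assumptions made for illustration; check the project's README for the real endpoint and options.

```python
from html import escape
from urllib.parse import urlencode


def counter_url(base_url, username, theme):
    """Build the counter image URL (parameter names are hypothetical)."""
    return f"{base_url}?{urlencode({'user': username, 'theme': theme})}"


def html_embed(url, alt="Profile views"):
    """Return an <img> tag with the URL and alt text safely escaped."""
    return f'<img src="{escape(url, quote=True)}" alt="{escape(alt, quote=True)}">'


def markdown_embed(url, alt="Profile views"):
    """Return the Markdown image syntax for the same URL."""
    return f"![{alt}]({url})"


# example.com is a placeholder host, not the project's real address.
url = counter_url("https://example.com/counter", "octocat", "terminal")
print(html_embed(url))
print(markdown_embed(url))
```

Either output line can be pasted into a profile README, since GitHub renders both Markdown images and plain `<img>` tags.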

Product Core Function

· View Tracking: The core functionality is to track and display the number of times a GitHub profile is viewed. This provides a simple metric for gauging profile popularity and activity. The value is that developers get a quick overview of how popular their profile or project is, which can be important for networking and collaboration.

· Markdown and HTML Embedding: The counter can be integrated into GitHub profile README files or any other web page via Markdown or HTML. So, you can add it to an existing profile layout without complex coding or setup, and reuse the same snippet across different pages.

· Theme Customization: The project offers several themes, so users can match the counter's appearance to their profile aesthetic. The value is a polished presentation that complements the user's profile design.

· Self-Hosting: Since it's self-hosted, the counter is completely under the developer's control, which removes the dependency on external services. Because you host it, you control where the data goes and can keep it available yourself.

Product Usage Case

· Project READMEs: Developers can add the counter to the README file of their GitHub projects to track how many times the project's page is viewed. So, you can see how much activity the project attracts.

· Personal Portfolios: Developers can use the counter on their personal portfolio websites to showcase their profile activity, giving potential employers and collaborators a quick signal of interest in their work.

· Community Showcases: Community project maintainers can use the counter to monitor the popularity of the project on GitHub. This provides insights into the level of interest and engagement with the projects.

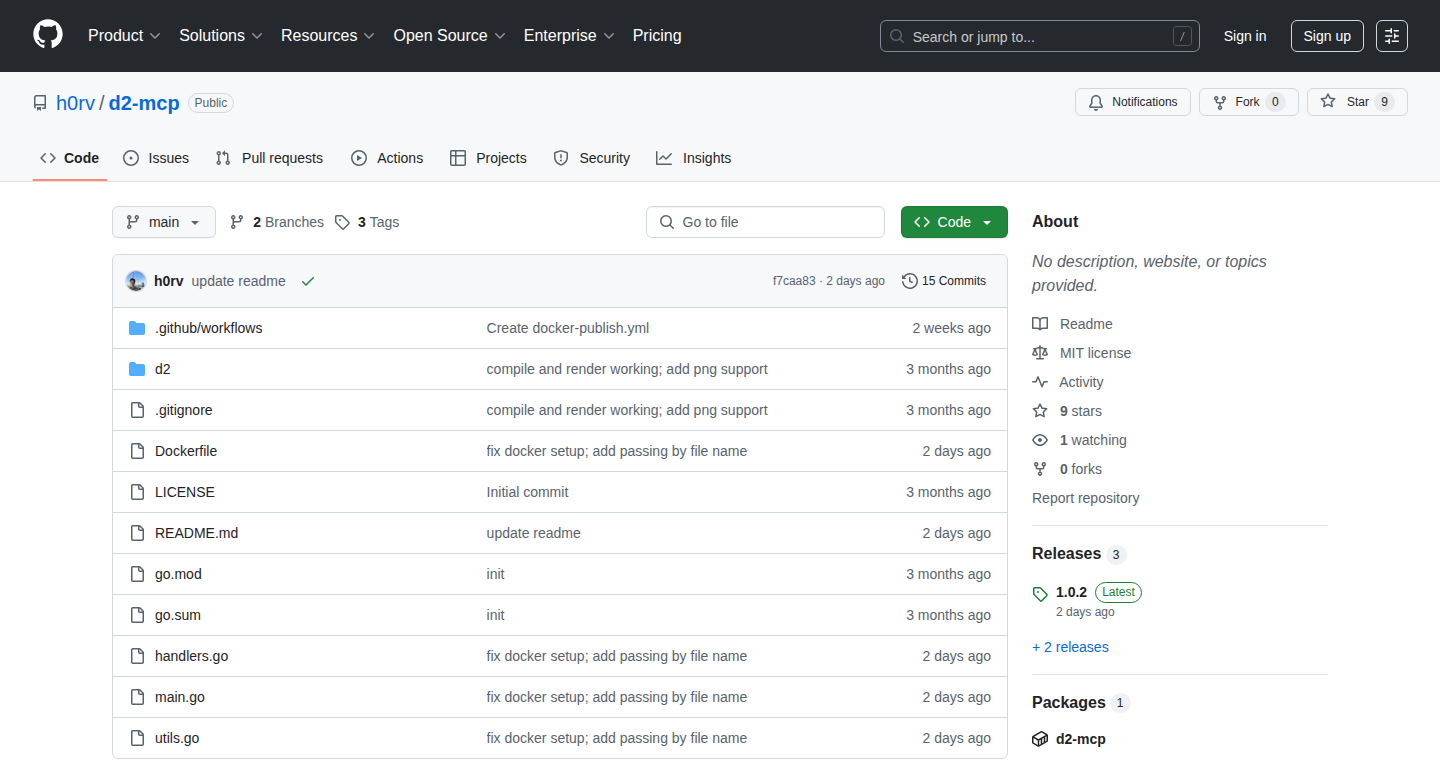

17

d2-mcp: Multimodal Diagram Compiler

Author

h0rv

Description

This project, d2-mcp, is a tool that takes diagrams written in the D2 language and compiles them into formats suitable for use with multimodal Large Language Models (LLMs). The key innovation is bridging the gap between textual descriptions of diagrams and the visual representations needed by these AI models. It solves the problem of incorporating diagrams directly into the input of multimodal LLMs, enabling them to understand and reason about visual information described in a structured way.

Popularity

Points 2

Comments 2

What is this product?

d2-mcp translates diagrams written in the D2 language (a text-based diagramming language) into formats that multimodal LLMs can understand and use. Think of it like a translator for diagrams, converting the textual description of a diagram into a visual representation that the LLM can process. The core technology lies in its ability to parse the D2 code and generate suitable visual inputs, like image files, that are compatible with these advanced AI models. So this is useful because it helps you integrate diagrams directly into your AI workflows, enabling more comprehensive analysis and understanding.

How to use it?

Developers can use d2-mcp by first writing their diagrams in the D2 language, which is known for its simplicity and ease of use. Then, they use d2-mcp to compile these D2 diagrams into a format that their multimodal LLM can accept as input. This could involve generating image files or other formats. Finally, the generated visual representation can be fed to the LLM along with other textual inputs for tasks like generating descriptions, answering questions about the diagram, or even generating code based on the diagram's structure. So this is useful because it enables developers to easily integrate diagrams into LLM-powered applications, enhancing their capabilities and reasoning abilities.
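The compile-then-attach flow can be sketched as follows. This is not d2-mcp's own code: it assumes the `d2` command-line tool is installed and on your PATH (invoked as `d2 input.d2 output.png`), and the message payload shape is purely illustrative, since each LLM API defines its own format.

```python
import base64
import subprocess
from pathlib import Path


def compile_d2(source_path: Path, output_path: Path) -> None:
    """Render a D2 file to an image using the d2 CLI (assumed to be installed)."""
    subprocess.run(["d2", str(source_path), str(output_path)], check=True)


def to_data_url(image_bytes: bytes, mime_type: str = "image/png") -> str:
    """Base64-encode image bytes as a data URL, a form some multimodal APIs accept."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def build_message(question: str, image_bytes: bytes) -> dict:
    """Pair a text question with the rendered diagram (hypothetical payload shape)."""
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image", "data_url": to_data_url(image_bytes)},
        ],
    }


# Typical flow, left commented out because it needs the d2 binary:
# compile_d2(Path("architecture.d2"), Path("architecture.png"))
# message = build_message("Which service has no fallback?",
#                         Path("architecture.png").read_bytes())
```

Keeping the rendering step separate from the encoding step means the second half works unchanged with any image source.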

Product Core Function

· D2 Parsing and Compilation: The core function is parsing the D2 diagram description and compiling it into an image or other suitable format. This allows the multimodal LLM to 'see' the diagram. Its value lies in transforming human-readable diagrams into machine-interpretable formats, broadening the scope of tasks an LLM can handle.

· Image Generation: The tool generates image files (e.g., PNG, SVG) from the compiled D2 diagrams. This is the visual representation that the multimodal LLM will 'see'. It allows developers to pass a diagram's visual elements directly to an LLM, so the model receives the visual structure alongside any text.

· Multimodal LLM Integration: d2-mcp is designed for easy integration with multimodal LLMs. It provides outputs that are compatible with the LLM’s input requirements. This is valuable because it simplifies incorporating diagrams into LLM applications, streamlining the development of visually-informed AI systems.

· Diagram Interpretation: Enables the LLM to interpret and reason about the content of the diagrams. The LLM can then draw on the diagram's structure when answering, which can improve the quality of its output.

Product Usage Case

· Software Architecture Documentation: Use d2-mcp to create diagrams that represent the software architecture. The diagrams are then fed to an LLM, which analyzes them and generates technical documentation or code snippets, or identifies potential design flaws. This automates documentation and can help improve code quality.

· Process Flow Visualization: Compile process flow diagrams (e.g., business workflows) using d2-mcp and input them into an LLM. The LLM then answers questions about the process, identifies bottlenecks, or suggests improvements. This facilitates streamlined process analysis and optimization.

· Knowledge Base Creation: Use d2-mcp to visualize complex relationships within a knowledge domain. Input the diagrams to an LLM and use the model to auto-generate summaries, insights, or even learning materials. This helps produce concise overviews of complex subjects.

18

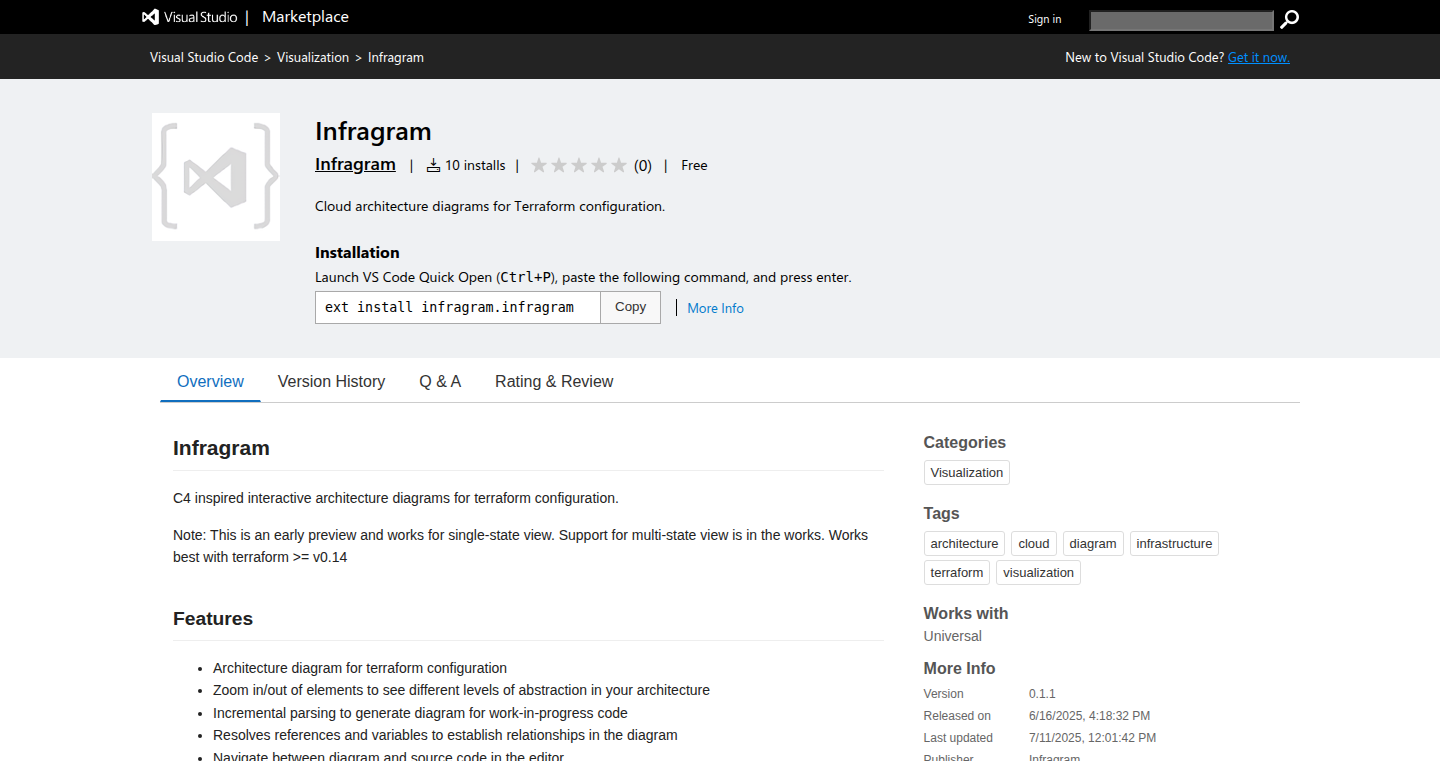

Infragram: Visual Infrastructure Architecting in Your IDE

Author

aqula

Description

Infragram is a Visual Studio Code extension that automatically generates interactive infrastructure diagrams based on the C4 model from your Terraform code. It helps developers visualize their cloud infrastructure, understand its architecture, and collaborate more effectively. The diagrams can be zoomed in and out, and can even link elements in the diagram back to your source code. The extension runs entirely locally, ensuring your code's security. So you can see how your infrastructure components interact without manually drawing diagrams, saving you time and effort.

Popularity

Points 4

Comments 0

What is this product?

Infragram is a VS Code extension that analyzes your infrastructure-as-code (like Terraform) and creates diagrams that visualize your cloud setup. It uses the C4 model, which lets you look at the big picture and then zoom into specifics. The core innovation is the automated generation of these diagrams directly within the developer's coding environment (VS Code), making infrastructure understanding more accessible and dynamic. Think of it as a live map of your cloud resources. So, you can easily understand your cloud infrastructure and how it's set up.

How to use it?

Developers can install the Infragram extension in their VS Code and point it to their Terraform configuration files. The extension will automatically parse the code and generate diagrams. These diagrams can be viewed directly within VS Code and updated automatically as the code changes. You can also navigate from the diagram to your source code, which can speed up debugging. So you can get a visual representation of your infrastructure with minimal setup.
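To give a feel for what "parse the code and generate diagrams" involves, here is a deliberately naive sketch that pulls resource blocks out of Terraform source and records which resources reference which. It is not how Infragram works internally (that is not documented here); the regex approach and the `resource_edges` helper are assumptions for illustration, and a real tool would use a proper HCL parser.

```python
import re

# Matches headers such as: resource "aws_vpc" "main" {
RESOURCE_RE = re.compile(r'resource\s+"([^"]+)"\s+"([^"]+)"\s*\{')


def resource_edges(terraform_source: str) -> dict:
    """Map each resource address to the other resources its block mentions."""
    matches = list(RESOURCE_RE.finditer(terraform_source))
    addresses = [f"{m.group(1)}.{m.group(2)}" for m in matches]
    edges = {}
    for index, match in enumerate(matches):
        # Rough block boundary: from this header to the next resource header.
        if index + 1 < len(matches):
            end = matches[index + 1].start()
        else:
            end = len(terraform_source)
        body = terraform_source[match.end():end]
        own = addresses[index]
        edges[own] = sorted(
            other for other in addresses
            if other != own and re.search(rf"\b{re.escape(other)}\b", body)
        )
    return edges


# Trimmed sample configuration, only detailed enough to show references.
SAMPLE = '''
resource "aws_vpc" "main" {
  cidr_block = "10.0.0.0/16"
}

resource "aws_subnet" "app" {
  vpc_id     = aws_vpc.main.id
  cidr_block = "10.0.1.0/24"
}

resource "aws_instance" "web" {
  subnet_id = aws_subnet.app.id
}
'''

for address, depends_on in resource_edges(SAMPLE).items():
    print(address, "->", depends_on)
```

The resulting mapping is the raw material for a diagram: each key is a node and each listed reference is an edge.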

Product Core Function

· Automated Diagram Generation: Automatically creates diagrams from your Terraform code, saving you from manual diagramming. So, you can focus on your infrastructure code instead of drawing diagrams.

· Interactive Visualization: Allows zooming and panning within the diagrams for different levels of detail. So, you can easily explore your infrastructure.

· Code Integration: Links diagram elements back to the original source code, enabling easier navigation and understanding. So, you can quickly jump from a diagram element to the code.

· C4 Model Support: Uses the C4 model to organize and present infrastructure at different levels of abstraction (Context, Container, Component, Code). So, you can understand your infrastructure at different zoom levels.

· Change Plan Visualization: Overlays change plans over the diagram, such as those generated by Terraform plan, to show upcoming modifications. So, you can anticipate the effects of your updates.

· Client-Side Execution: Runs entirely within your IDE and doesn't upload your code, ensuring privacy and security. So, your code remains secure.
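The change-plan overlay relies on knowing which resources a plan will touch. Terraform's machine-readable plan (from `terraform show -json` on a saved plan file) lists each resource under `resource_changes` with a `change.actions` array. The sketch below groups addresses by action; the `changes_by_action` helper and the sample data are hypothetical, and how Infragram itself consumes plans is not stated in the source.

```python
import json
from collections import defaultdict


def changes_by_action(plan_json: str) -> dict:
    """Group resource addresses by planned action, skipping unchanged resources."""
    plan = json.loads(plan_json)
    grouped = defaultdict(list)
    for change in plan.get("resource_changes", []):
        actions = change["change"]["actions"]
        if actions == ["no-op"]:
            continue
        # A delete paired with a create means the resource is being replaced.
        if set(actions) == {"create", "delete"}:
            label = "replace"
        else:
            label = actions[0]
        grouped[label].append(change["address"])
    return dict(grouped)


# Trimmed, made-up plan containing only the fields this sketch reads.
SAMPLE_PLAN = json.dumps({
    "resource_changes": [
        {"address": "aws_vpc.main", "change": {"actions": ["no-op"]}},
        {"address": "aws_subnet.app", "change": {"actions": ["update"]}},
        {"address": "aws_instance.web", "change": {"actions": ["delete", "create"]}},
        {"address": "aws_s3_bucket.logs", "change": {"actions": ["create"]}},
    ]
})

print(changes_by_action(SAMPLE_PLAN))
```

A diagram layer could then color nodes by these labels, for example highlighting replacements, which are usually the riskiest changes.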

Product Usage Case

· Debugging Infrastructure Issues: When a service is down, use Infragram to visually trace dependencies and identify the root cause by navigating from the diagram to the relevant code. So, you can quickly find and fix problems.

· Onboarding New Team Members: Provide new team members with automatically generated diagrams to help them quickly understand the infrastructure. So, new team members get up to speed faster.

· Planning Infrastructure Changes: Visualize the impact of infrastructure changes before applying them using diagrams generated from Terraform plans. So, you can prevent unexpected consequences.

· Documentation for Cloud Architecture: Automatically generate up-to-date architecture diagrams for documentation, eliminating the need for manual updates. So, you can keep your documentation accurate and current.

· Cost Analysis and Optimization: Integrate with cost estimation tools and visualize costs directly on the infrastructure diagrams. So, you can understand the financial implications of your infrastructure.

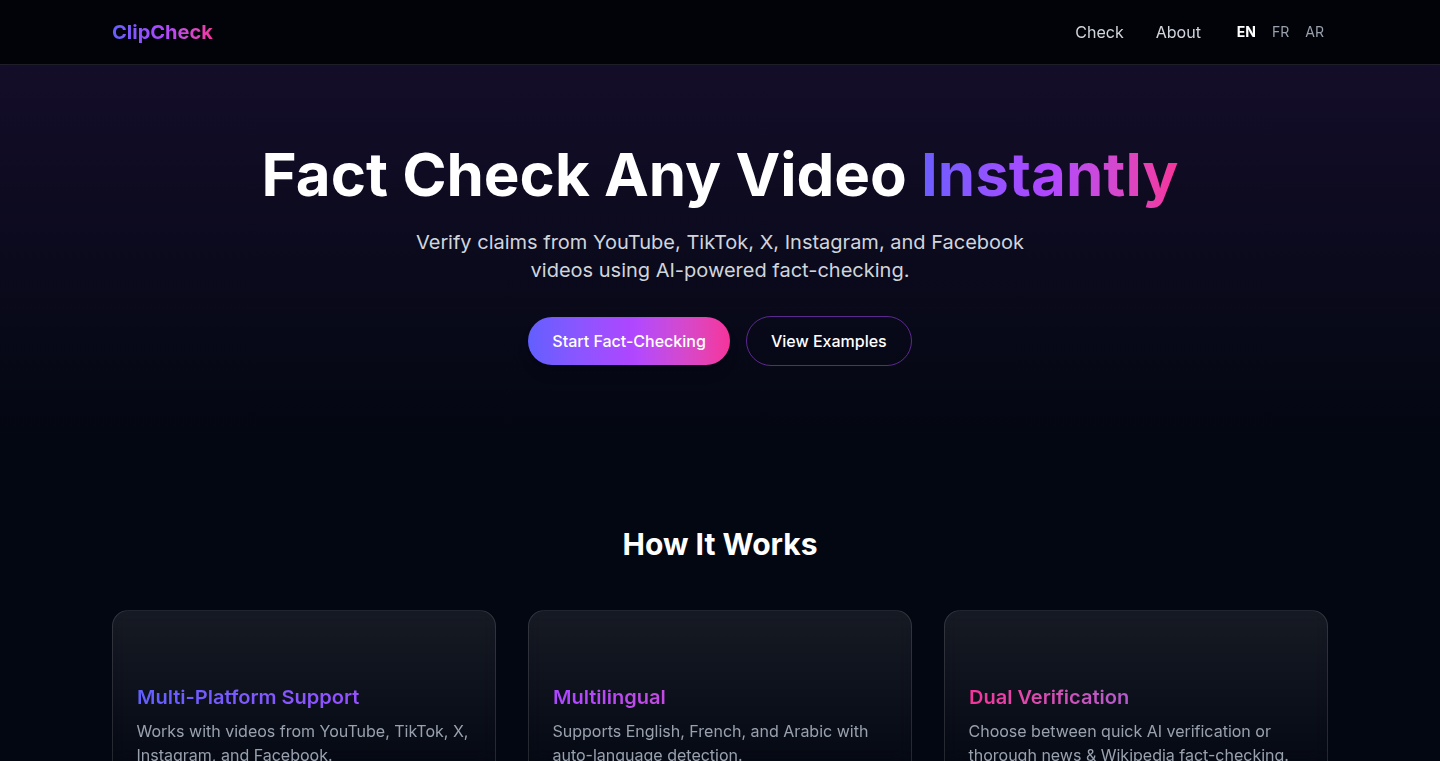

19

ClipCheck: Instant Video Fact-Checking Engine

Author

NovaDrift

Description

ClipCheck is a tool that analyzes any video you give it and checks the claims presented against a knowledge base. The innovation lies in its ability to process video content quickly by combining speech-to-text, natural language processing (NLP) to understand the claims made, and cross-referencing against verified information sources. This addresses the growing problem of misinformation in video form, providing a quick way to assess the credibility of video content. So this tool helps you check the validity of a video, making you less likely to be fooled by false information.
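The cross-referencing step can be illustrated with a toy example. This is only a sketch under strong assumptions: it starts from an already-transcribed string, and simple token overlap (Jaccard similarity) stands in for the NLP the description mentions. ClipCheck's real pipeline is not documented here, and all helper names and sample sentences are hypothetical.

```python
import re


def tokens(text: str) -> set:
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def split_claims(transcript: str) -> list:
    """Naively treat each sentence of the transcript as one claim."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", transcript) if s.strip()]


def best_match(claim: str, knowledge_base: list) -> tuple:
    """Return (entry, Jaccard score) for the closest knowledge-base entry."""
    claim_tokens = tokens(claim)
    best_entry, best_score = None, 0.0
    for entry in knowledge_base:
        entry_tokens = tokens(entry)
        union = claim_tokens | entry_tokens
        score = len(claim_tokens & entry_tokens) / len(union) if union else 0.0
        if score > best_score:
            best_entry, best_score = entry, score
    return best_entry, best_score


# Made-up transcript and knowledge base for illustration.
TRANSCRIPT = "Water boils at 100 degrees Celsius at sea level. The moon is made of cheese."
KNOWLEDGE_BASE = [
    "Water boils at 100 degrees Celsius at sea level.",
    "The Moon is a rocky body.",
]

for claim in split_claims(TRANSCRIPT):
    entry, score = best_match(claim, KNOWLEDGE_BASE)
    print(f"{claim!r} -> {entry!r} (score {score:.2f})")
```

Note that this only retrieves the most similar reference statement. Deciding whether the claim agrees with or contradicts that statement is a separate and harder step.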

Popularity

Points 1

Comments 3

What is this product?