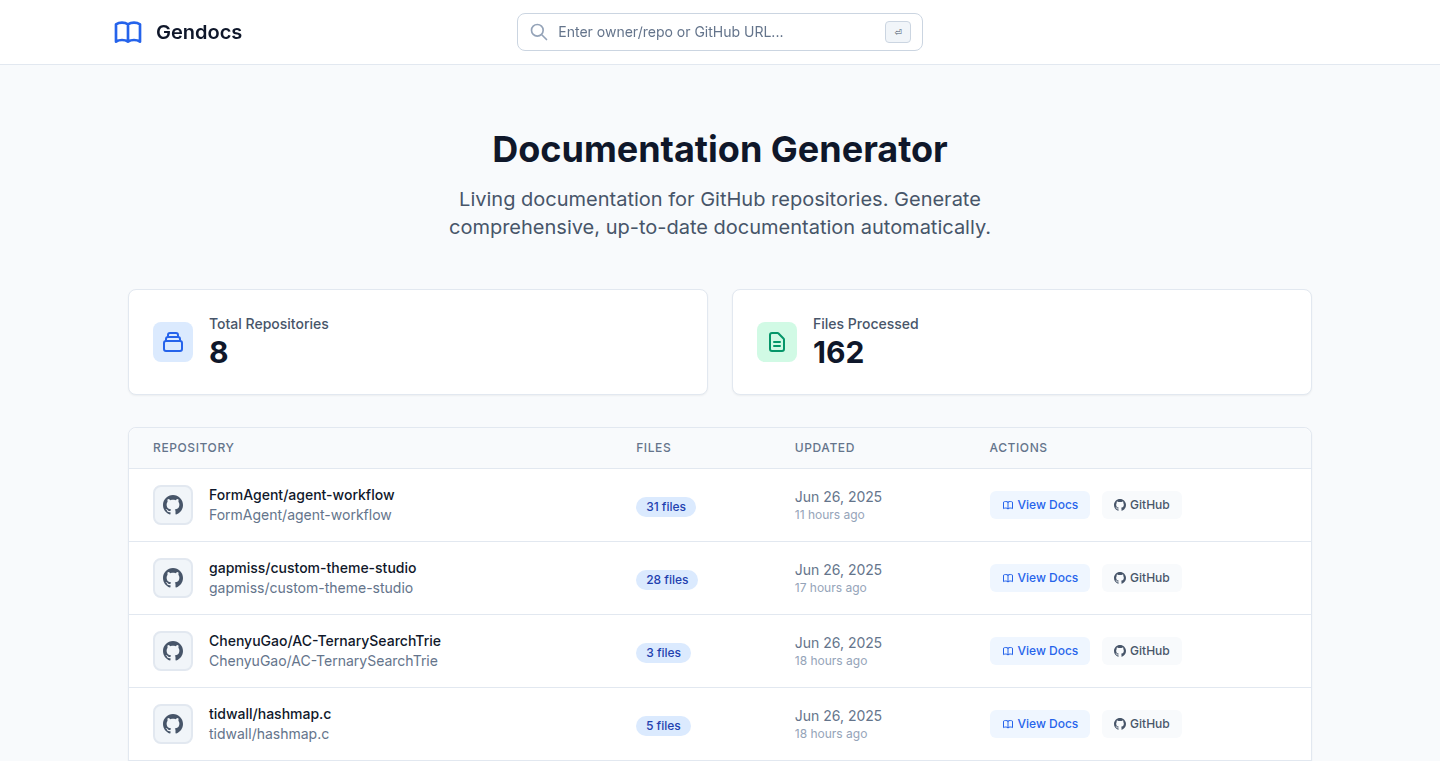

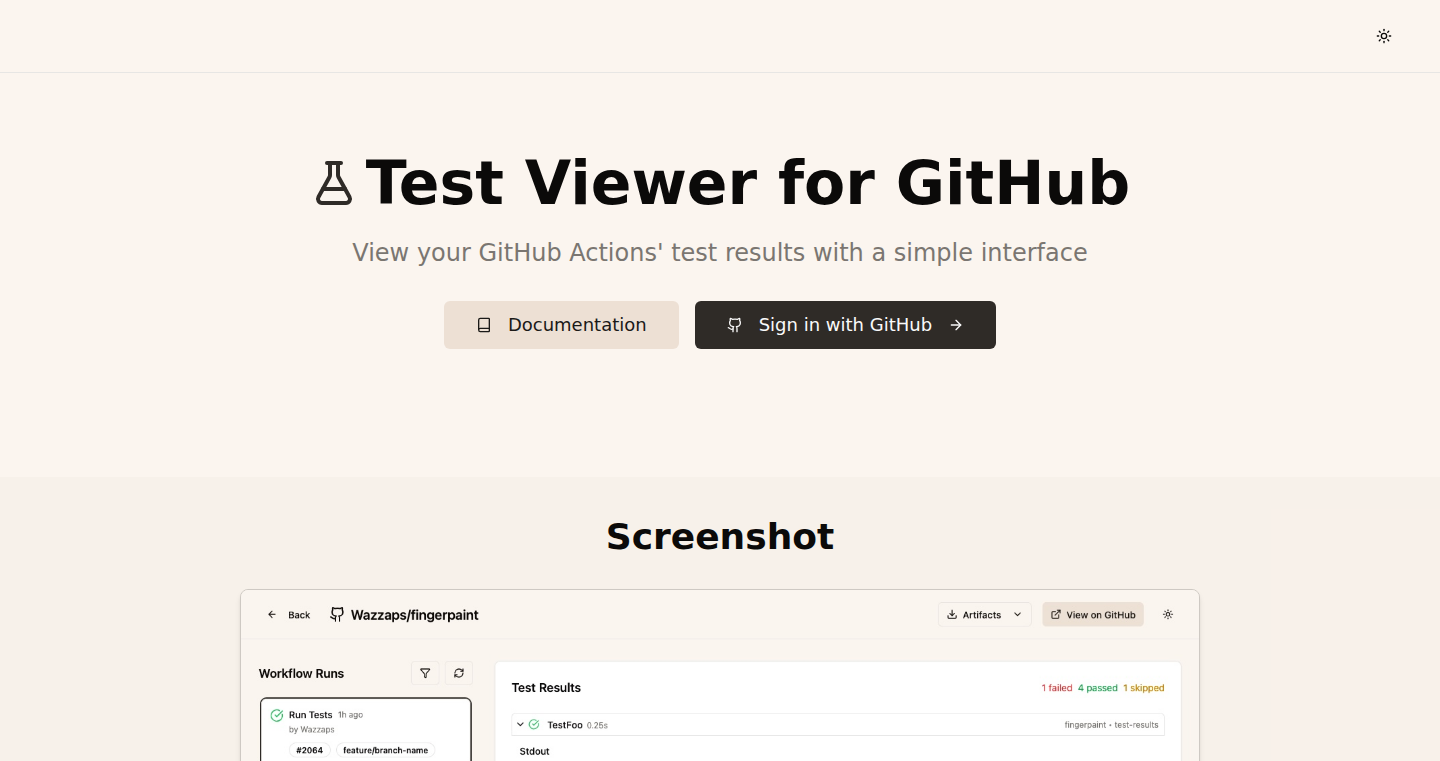

Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-06-26

SagaSu777 2025-06-27

Explore the hottest developer projects on Show HN for 2025-06-26. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

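Today's Show HN shows developers digging deeper into AI and web technology. AI remains the dominant theme, especially for automation and content generation. Notably, developers are exploring new ways of interacting with software, such as vision-based browser automation, which makes automated workflows more stable and reliable. Developers and founders can try building AI into their own tools, either to work more efficiently or to create smarter interactions. Developers are also actively working on local-first and privacy-preserving designs to build secure, dependable products. The hacker spirit encourages developers to try new technologies boldly, solve real problems, and open up new possibilities.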

Today's Hottest Product

Name

Magnitude – Open-source AI browser automation framework

Highlight

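Magnitude uses vision models for browser automation. Instead of relying on error-prone DOM navigation, it analyzes the rendered page and acts on pixel coordinates. This lets it handle complex interactions such as drag-and-drop and data visualizations. It also offers fine-grained control: developers can use the act() and extract() functions to steer the automation precisely. The project is a good way to learn how vision models can drive automation. Compared with traditional DOM-based automation, the approach is more capable and less brittle, which makes it well suited to writing more stable and reliable automation scripts.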

Popular Category

AI

Web Development

Tools

Popular Keyword

AI

Automation

Browser

Technology Trends

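AI-driven browser automation: applying AI to browser operation for smarter, more capable automation.

Local-first applications: data is stored locally, with an emphasis on privacy and offline availability.

Prompt-based tools: tools controlled through natural-language prompts, which simplifies user interaction.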

Project Category Distribution

AI tools (35%)

Web development tools (30%)

Productivity tools (15%)

Other (20%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | DataSetGen: Your AI Data Alchemist | 142 | 29 |
| 2 | Magnitude - AI-Powered Vision-First Browser Automation | 98 | 38 |
| 3 | PRSS Site Creator - Desktop-Powered Static Site Generator | 20 | 17 |
| 4 | Inworld TTS: High-Quality, Low-Latency Multilingual Text-to-Speech | 22 | 13 |
| 5 | Anytype: Your Private, Local-First, Collaborative Knowledge Hub | 17 | 0 |
| 6 | ZigJR: JSON-RPC Library with Compile-Time Reflection | 11 | 2 |
| 7 | CorpTimeViz: Visualizing Corporate Calendars | 11 | 0 |
| 8 | MolSearch: Fast 3D Molecular Search Without a GPU | 9 | 2 |
| 9 | Effect UI: Reactive UI Framework Powered by Effect | 8 | 2 |
| 10 | Kraa.io - Markdown-Powered Knowledge Base | 6 | 3 |

1

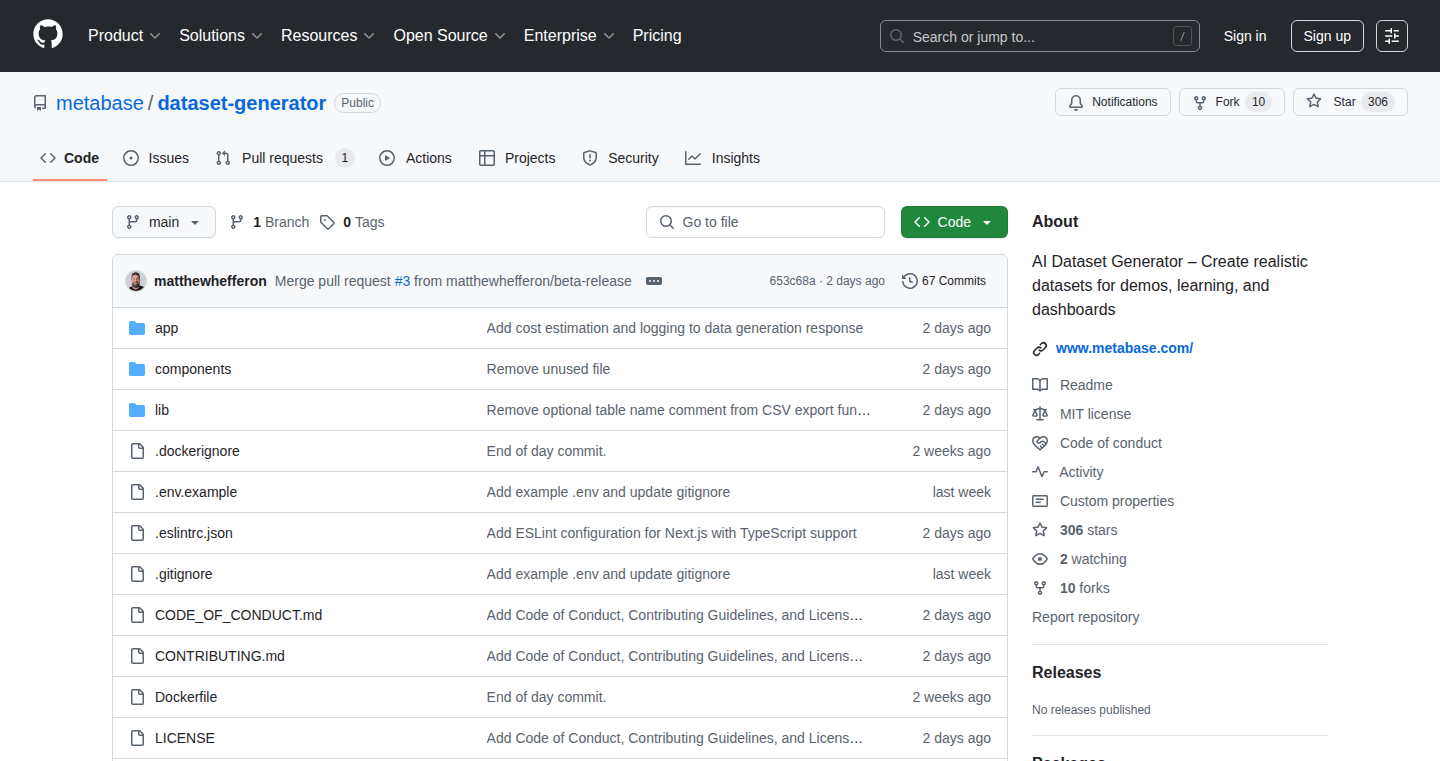

DataSetGen: Your AI Data Alchemist

Author

matthewhefferon

Description

DataSetGen is an AI-powered dataset generator. It tackles the challenge of acquiring high-quality, relevant datasets for AI model training. Instead of manually curating data, it leverages AI to automatically create synthetic data tailored to specific needs, addressing the common problem of data scarcity and bias, ultimately accelerating AI development.

Popularity

Points 142

Comments 29

What is this product?

DataSetGen is a tool that uses Artificial Intelligence to create datasets. Think of it as a digital chef that cooks up training data for your AI models. The innovation lies in generating synthetic data customized to your instructions, such as a dataset of driving scenes for self-driving cars or a set of medical images. This sidesteps the slow and sometimes expensive process of collecting real-world data. As a result, it can save time and money when building AI models, because large datasets no longer have to be assembled by hand.

How to use it?

Developers use DataSetGen by providing specifications – like the type of data (text, images, etc.) and the characteristics they want. Think of it as giving the chef the recipe. The tool then generates a dataset compliant with those instructions. Integration might involve uploading these generated datasets directly into your AI training pipelines. It is valuable because it speeds up the iterative process of model training and experimentation. You can quickly generate different types of datasets to test your models without waiting for manual data collection. For example, you can use it to create synthetic images for object detection or generate text for natural language processing tasks.

Product Core Function

· Synthetic Data Generation: Generates data based on user-defined parameters. For instance, generating a dataset of different types of flowers with specific features. This is valuable because it allows creating datasets that would be difficult or impossible to collect manually, like rare disease images or historical data.

· Data Augmentation: Increases the diversity of existing datasets through AI manipulation. Imagine taking an image of a car and making variations: changing the lighting or angle, or adding slight damage to the car. This is useful because exposing a model to a wider range of variations improves its robustness and generalization and helps reduce overfitting, which can raise its accuracy on real-world data.

· Dataset Customization: Allows users to specify data characteristics, like format, size, and features. Think of setting the ingredients and quantity in our recipe. This is useful because it makes the datasets precisely tailored to specific model training needs, avoiding the use of irrelevant data.

· Data Validation & Quality Control: Includes checks on the synthetic data to catch errors and inconsistencies. Imagine checking that the ingredients are fresh. This is useful because it improves data accuracy and reliability, which makes AI model training more effective.
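DataSetGen's actual interface isn't described here, so the following is only a minimal sketch of the spec-driven idea behind the core functions above. A hypothetical field spec (`FieldSpec`) drives a seeded generator, and a separate validation pass checks every row against the same spec. A real tool would typically use a model rather than uniform random sampling. All names below are illustrative, not DataSetGen's API.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldSpec:
    """Hypothetical spec for one column: an int/float range or a fixed set of choices."""
    name: str
    kind: str  # "int", "float", or "choice"
    low: float = 0
    high: float = 1
    choices: tuple = ()


def generate(specs, n, seed=0):
    """Generate n synthetic rows; the seed makes the dataset reproducible."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        row = {}
        for s in specs:
            if s.kind == "int":
                row[s.name] = rng.randint(int(s.low), int(s.high))
            elif s.kind == "float":
                row[s.name] = round(rng.uniform(s.low, s.high), 2)
            elif s.kind == "choice":
                row[s.name] = rng.choice(s.choices)
            else:
                raise ValueError(f"unknown field kind: {s.kind!r}")
        rows.append(row)
    return rows


def validate(specs, rows):
    """Quality-control pass: return (row_index, field, value) for every out-of-spec value."""
    problems = []
    for i, row in enumerate(rows):
        for s in specs:
            value = row.get(s.name)
            if s.kind in ("int", "float"):
                ok = value is not None and s.low <= value <= s.high
            else:
                ok = value in s.choices
            if not ok:
                problems.append((i, s.name, value))
    return problems


# Example: a toy transaction dataset for a fraud-detection model.
SPECS = [
    FieldSpec("amount", "float", low=1, high=5000),
    FieldSpec("hour", "int", low=0, high=23),
    FieldSpec("label", "choice", choices=("normal", "fraud")),
]
rows = generate(SPECS, 1000, seed=42)
```

Because validation reuses the spec, generated rows and hand-edited rows are checked by the same rules. Swapping the random sampler for a model-backed generator would leave `validate` unchanged.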

Product Usage Case

· Self-Driving Car Development: A developer needs to train an AI model to recognize road signs. DataSetGen can generate numerous images of road signs under various weather conditions (rain, snow, fog), angles, and lighting, which allows developers to train more effective self-driving car AI models. This improves the robustness of the AI model against diverse real-world scenarios.

· Medical Image Analysis: A research team trains an AI model to identify tumors in medical scans. DataSetGen can generate synthetic medical images with varying tumor sizes, shapes, and locations. This accelerates medical research by providing a large and diverse dataset for training the AI, which can then improve the ability of AI tools to help doctors make more accurate diagnoses.

· Natural Language Processing (NLP): A company builds a chatbot and needs large amounts of conversational data. DataSetGen generates synthetic conversations based on defined topics and user interactions. This improves the ability of the chatbot to answer questions and assist users, and improves the overall user experience.

· Fraud Detection System: Creating a model to identify fraudulent transactions requires a dataset. DataSetGen can generate synthetic transaction data representing both normal and fraudulent activities, to create an accurate and robust AI model. This allows the system to better identify fraudulent transactions, saving the business money.

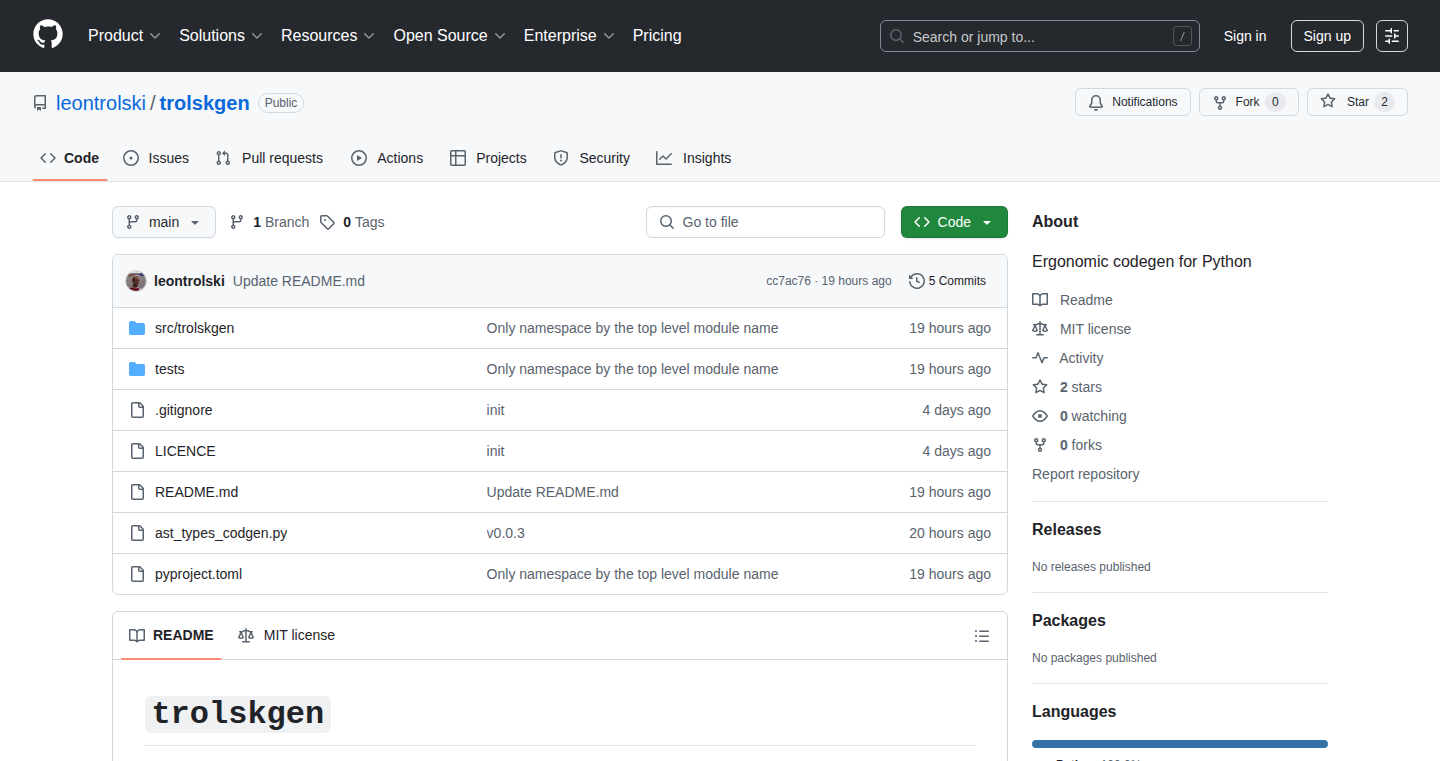

2

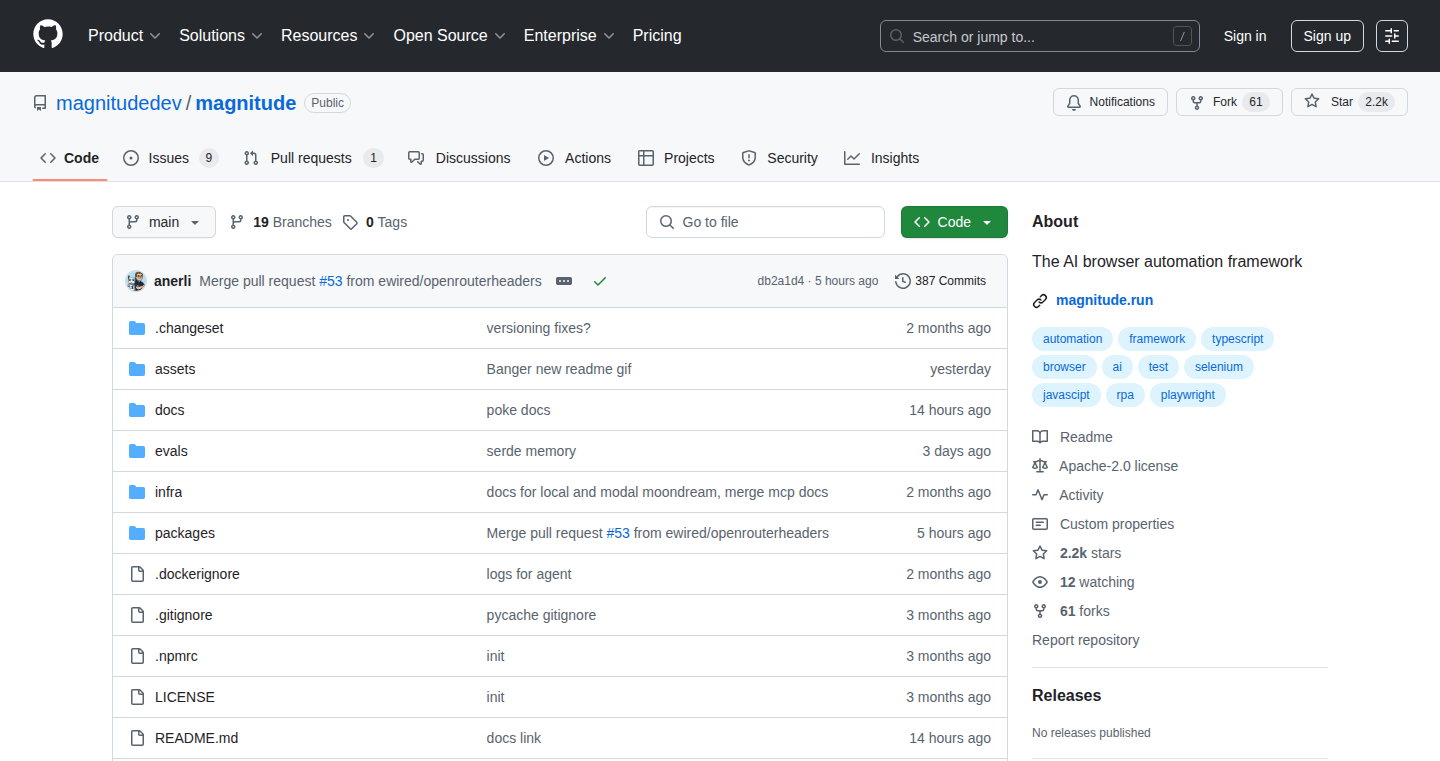

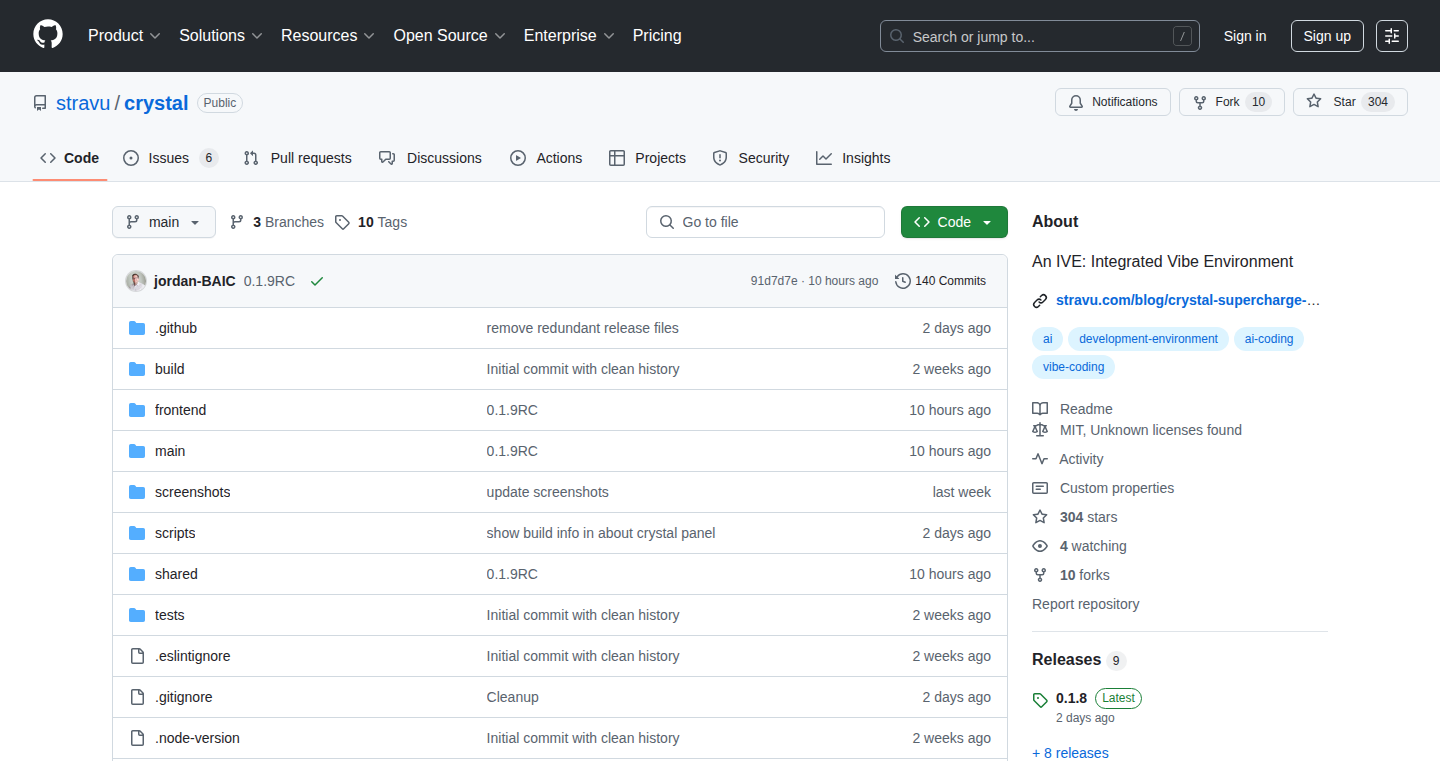

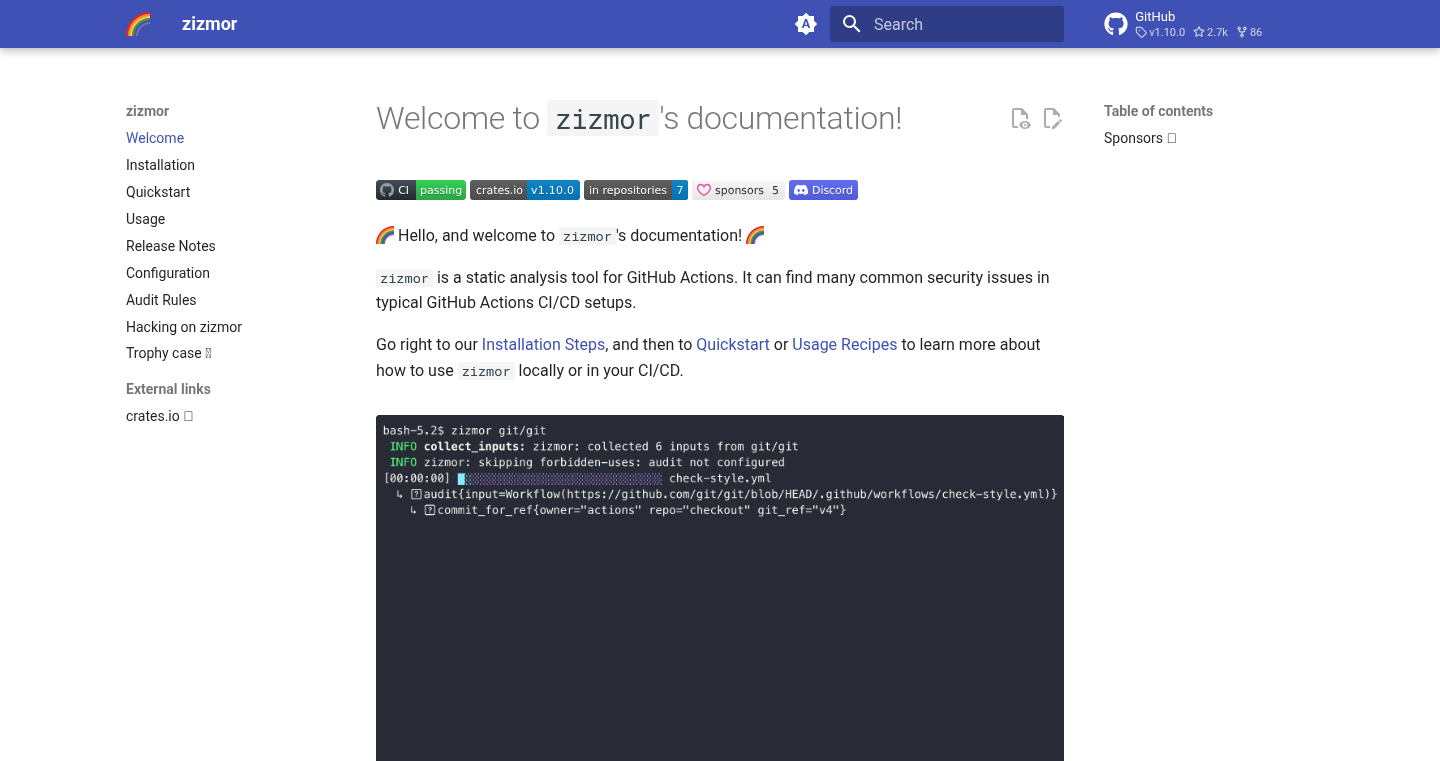

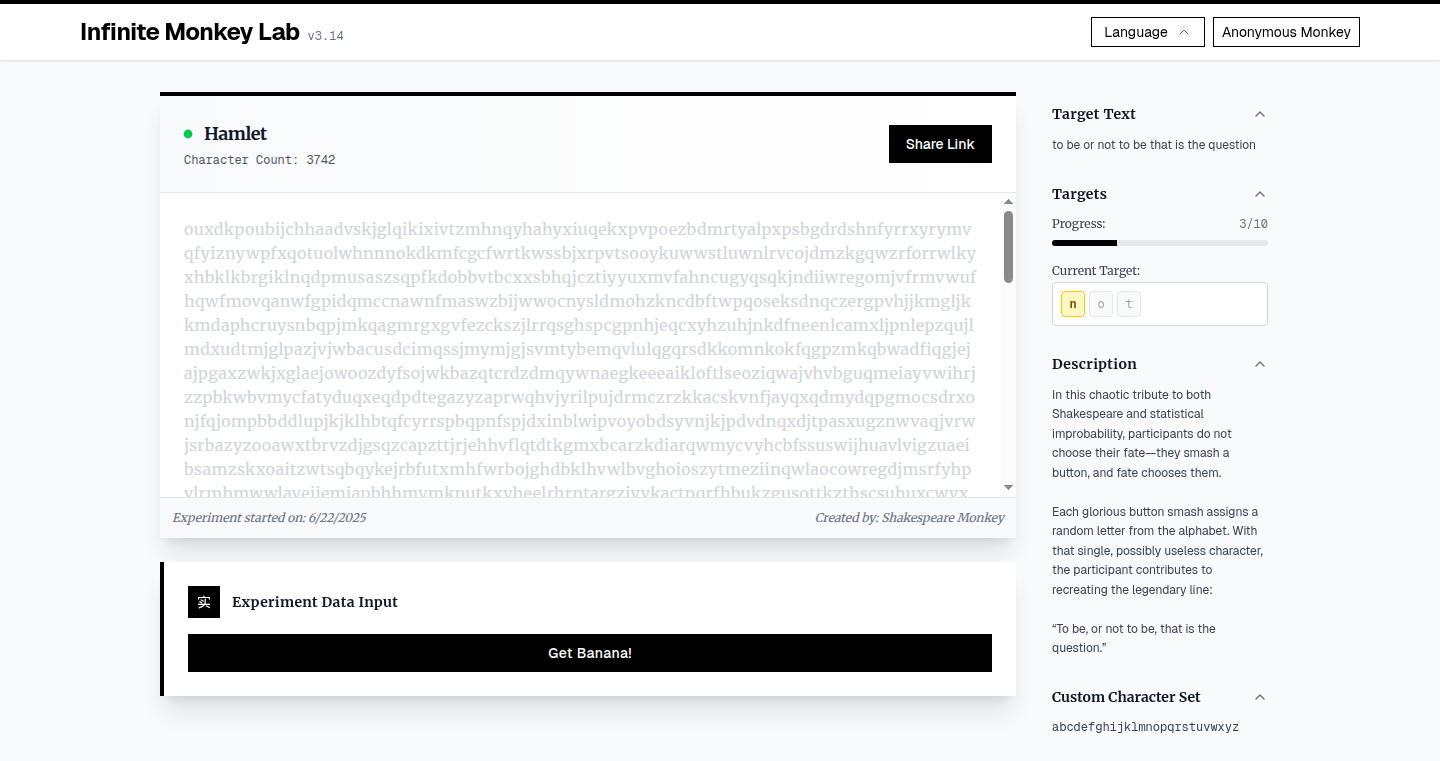

Magnitude - AI-Powered Vision-First Browser Automation

Author

anerli

Description

Magnitude is an open-source framework that uses AI to automate tasks in web browsers. Traditional methods rely on the browser's internal structure (the DOM). Magnitude instead takes a 'vision-first' approach, interacting with websites as a human would: by looking at what's on the screen. This lets it handle complex interactions such as drag-and-drop, data visualizations, and legacy applications more reliably. It uses visually grounded models and gives developers fine-grained control, offering both high-level task automation and precise low-level actions, along with data extraction. The result is web automation that is more robust and adaptable across complex websites and interactions.

Popularity

Points 98

Comments 38

What is this product?

Magnitude is a browser automation tool that uses AI to 'see' and interact with web pages the way a human does. Instead of working through a website's underlying code (the DOM), it relies on visual information. This makes it more resilient to changes in a site's markup and better at complex tasks. AI models interpret what's on the screen and carry out actions, while developers can define in detail how the automation behaves.

How to use it?

Developers can use Magnitude to automate web tasks, integrate apps that lack APIs, extract data from websites, test web applications, or build custom browser agents. To get started, run the setup script with "npx create-magnitude-app". You can then give the agent high-level instructions such as "Create an issue", or control low-level actions such as "Drag and drop". For data extraction, you define the structure of what you want, and Magnitude finds that information in the page content. This covers most everyday web activity, which makes it useful for testing, data gathering, and automating workflows between web apps.

Product Core Function

· Vision-First Approach: Magnitude interacts with web pages by 'seeing' them, like a human, instead of using the underlying code. This makes automation more stable and able to handle a wider range of websites.

· AI-Powered Interactions: Uses AI models to understand the visual elements of a webpage and perform actions like clicking, dragging, and typing accurately.

· Fine-Grained Control: Provides developers with detailed control over the agent's actions and the ability to mix it with their own code. This enables customization.

· Data Extraction: Allows you to extract specific data from webpages using a defined structure (schema). The agent can find existing information or generate new insights.

· Compatibility: Designed to work with complex websites, including those with drag-and-drop, data visualizations, legacy apps with nested iframes, and sites heavy on visuals like design tools or photo editing platforms.
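Magnitude itself is installed via npx, so it lives in the Node.js ecosystem, and it exposes functions such as act() and extract(). The sketch below does not use its API. It is a minimal Python illustration, under stated assumptions, of the coordinate bookkeeping any vision-first agent has to do. The model sees a possibly downscaled screenshot and returns a bounding box in that image's pixels, but the click must land in the browser's CSS-pixel space. `Box`, `to_css_point`, and `drag_path` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Bounding box predicted by a vision model, in the pixels of the image it was shown."""
    x: float
    y: float
    w: float
    h: float


def to_css_point(box, model_size, screenshot_size, device_pixel_ratio=1.0):
    """Map the centre of `box` back to CSS pixels for a click or drag."""
    model_w, model_h = model_size
    shot_w, shot_h = screenshot_size
    cx, cy = box.x + box.w / 2, box.y + box.h / 2
    # Undo the resize applied before the screenshot was sent to the model.
    px, py = cx * shot_w / model_w, cy * shot_h / model_h
    # Screenshots are captured in device pixels; CSS pixels = device pixels / DPR.
    return px / device_pixel_ratio, py / device_pixel_ratio


def drag_path(start, end, steps=10):
    """Linearly interpolated points for a drag-and-drop gesture, endpoints included."""
    (x0, y0), (x1, y1) = start, end
    return [(x0 + (x1 - x0) * i / steps, y0 + (y1 - y0) * i / steps) for i in range(steps + 1)]


# A 2560x1600 screenshot from a DPR-2 display, downscaled to 1024x640 for the model.
card = Box(100, 200, 40, 20)
column = Box(600, 180, 200, 400)
src = to_css_point(card, (1024, 640), (2560, 1600), device_pixel_ratio=2)
dst = to_css_point(column, (1024, 640), (2560, 1600), device_pixel_ratio=2)
path = drag_path(src, dst, steps=4)
```

Getting either scale factor wrong shifts every click by a constant factor. This is one reason vision-first tools have to track screenshot resolution carefully.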

Product Usage Case

· Automated Testing: Use Magnitude to automatically test web applications by simulating user interactions and verifying results. This saves time and makes tests more reliable, even if the website changes.

· Data Scraping: Extract structured data from websites for market research, competitor analysis, or data aggregation. Because Magnitude works from what is visible on screen, it can gather information from difficult websites, and changes to a site's underlying markup are less likely to break your script.

· Cross-Application Integration: Automate workflows between different web applications without needing APIs. You can set up processes that move information between platforms. For example, automatically creating a task in a project management tool based on an email received.

· Web Automation for Legacy Systems: Automate tasks on older web applications with nested iframes that are hard to interact with using traditional methods. Magnitude can reliably automate tasks across these systems by looking at the visible screen.

3

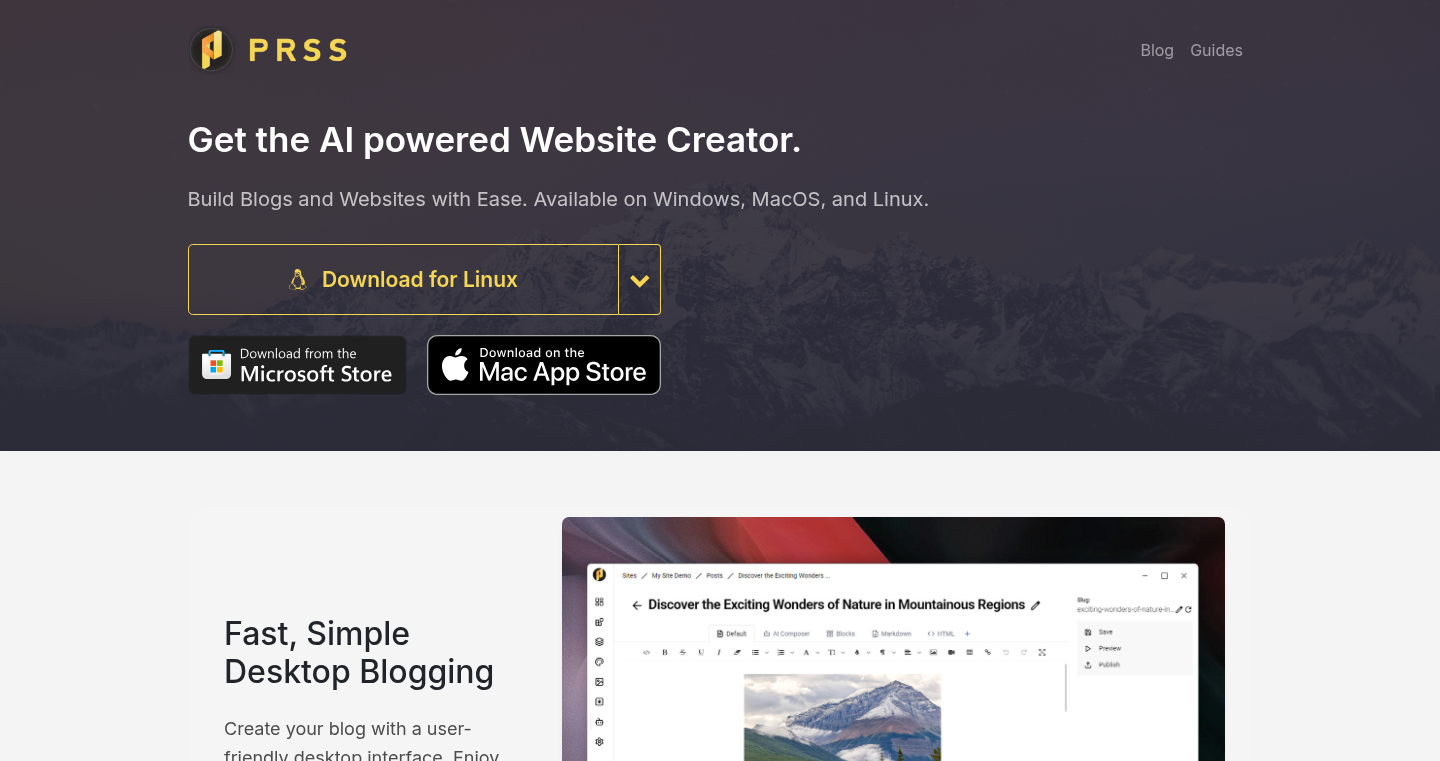

PRSS Site Creator - Desktop-Powered Static Site Generator

Author

volted

Description

PRSS Site Creator is a desktop application that helps you build blogs and websites by generating static HTML files. It takes content from your local computer and turns it into a ready-to-deploy website. The technical innovation lies in its user-friendly desktop interface, which hides the command-line complexity usually involved in building static sites and makes the process accessible to non-specialists. Because the output is static, no server-side code is needed to display content. Users can produce a website without understanding server-side scripting such as PHP or complex JavaScript frameworks, and the result is easy to maintain and deploy on a wide range of hosting platforms.

Popularity

Points 20

Comments 17

What is this product?

PRSS Site Creator is a program that turns the text you write on your computer into a website. Many modern websites generate pages on a server each time someone visits; PRSS instead produces plain HTML files. The innovative part is its user-friendly desktop interface, so you don't have to be a technical expert to generate a website. The resulting static sites are typically faster and more secure, because no code has to run on a server for each request. This allows more people to build websites for themselves quickly and easily.

How to use it?

Developers can use PRSS Site Creator by writing content in plain text, Markdown, or other supported formats on their computers. They then use the application to convert this content into a static website with customizable themes and layouts, and upload the result to a hosting platform. This is particularly useful for developers who want to build blogs, documentation sites, or personal portfolios without the overhead of managing server-side technologies.

Product Core Function

· Markdown and Plain Text Conversion: The core functionality is converting content written in Markdown or plain text formats into HTML. This eliminates the need for manual HTML coding and saves time. This is useful for bloggers and writers who want a simple and efficient way to publish their content online.

· Desktop Interface for Website Creation: It provides a user-friendly desktop interface, simplifying the creation process. Instead of using command-line tools, users can manage their website content and configurations with a graphical interface. This is beneficial for non-technical users or anyone who wants to quickly build a site without coding expertise.

· Static Site Generation: The application generates static HTML files, which can be hosted on any web server, offering advantages like speed and security. Unlike websites that rely on dynamic content generation, static sites are faster to load and less vulnerable to security risks. This feature is vital for developers who prioritize performance and security.

· Customizable Themes and Templates: The site creator offers themes and templates, so users can personalize their sites without writing CSS or JavaScript by hand. This makes it easy to change a website's look and feel.
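PRSS is a desktop GUI, so there is no code to show from it. As a rough sketch of what any static site generator does under the hood, the following converts a tiny Markdown subset (ATX `#` headings and plain paragraphs only) to HTML. It then writes one `.html` page per `.md` file. The function names and page template are hypothetical. A real tool would use a full Markdown parser and a theme system.

```python
import html
from pathlib import Path

PAGE_TEMPLATE = """<!doctype html>
<html>
<head><meta charset="utf-8"><title>{title}</title></head>
<body>
{body}
</body>
</html>
"""


def markdown_to_html(text):
    """Convert a tiny Markdown subset (# headings and plain paragraphs) to HTML."""
    parts = []
    for block in text.split("\n\n"):
        block = block.strip()
        if not block:
            continue
        hashes = len(block) - len(block.lstrip("#"))
        if 1 <= hashes <= 6 and block[hashes:hashes + 1] == " ":
            title = block[hashes:].strip()
            parts.append(f"<h{hashes}>{html.escape(title)}</h{hashes}>")
        else:
            # Join soft-wrapped lines into one paragraph; escape <, >, & and quotes.
            para = " ".join(line.strip() for line in block.splitlines())
            parts.append(f"<p>{html.escape(para)}</p>")
    return "\n".join(parts)


def build_site(src_dir, out_dir):
    """Render every *.md file in src_dir to out_dir/<name>.html; return the written paths."""
    src, out = Path(src_dir), Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    written = []
    for md_file in sorted(src.glob("*.md")):
        body = markdown_to_html(md_file.read_text(encoding="utf-8"))
        page = PAGE_TEMPLATE.format(title=html.escape(md_file.stem), body=body)
        target = out / f"{md_file.stem}.html"
        target.write_text(page, encoding="utf-8")
        written.append(target)
    return written
```

Calling `build_site("content", "public")` would produce `public/<name>.html` for each `content/<name>.md`. The `public` folder can then be uploaded as-is to a static host such as GitHub Pages or Netlify.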

Product Usage Case

· Personal Blog: A developer can create a personal blog using PRSS Site Creator. They write blog posts in Markdown on their desktop, use the application to generate the static website, and then deploy it to a hosting service like Netlify or GitHub Pages. The result loads quickly and is cheap to host.

· Documentation Site: A software team can use the tool to create a documentation site for their project. They write documentation in Markdown, generate the HTML files with the tool, and host them on GitHub Pages or another platform, making the documentation easily accessible to users.

· Portfolio Website: A designer or developer can create a portfolio website with the application. They write about their projects, generate the website, and upload it to a web hosting service. This provides a fast and secure way to showcase their work and build a personal brand.

4

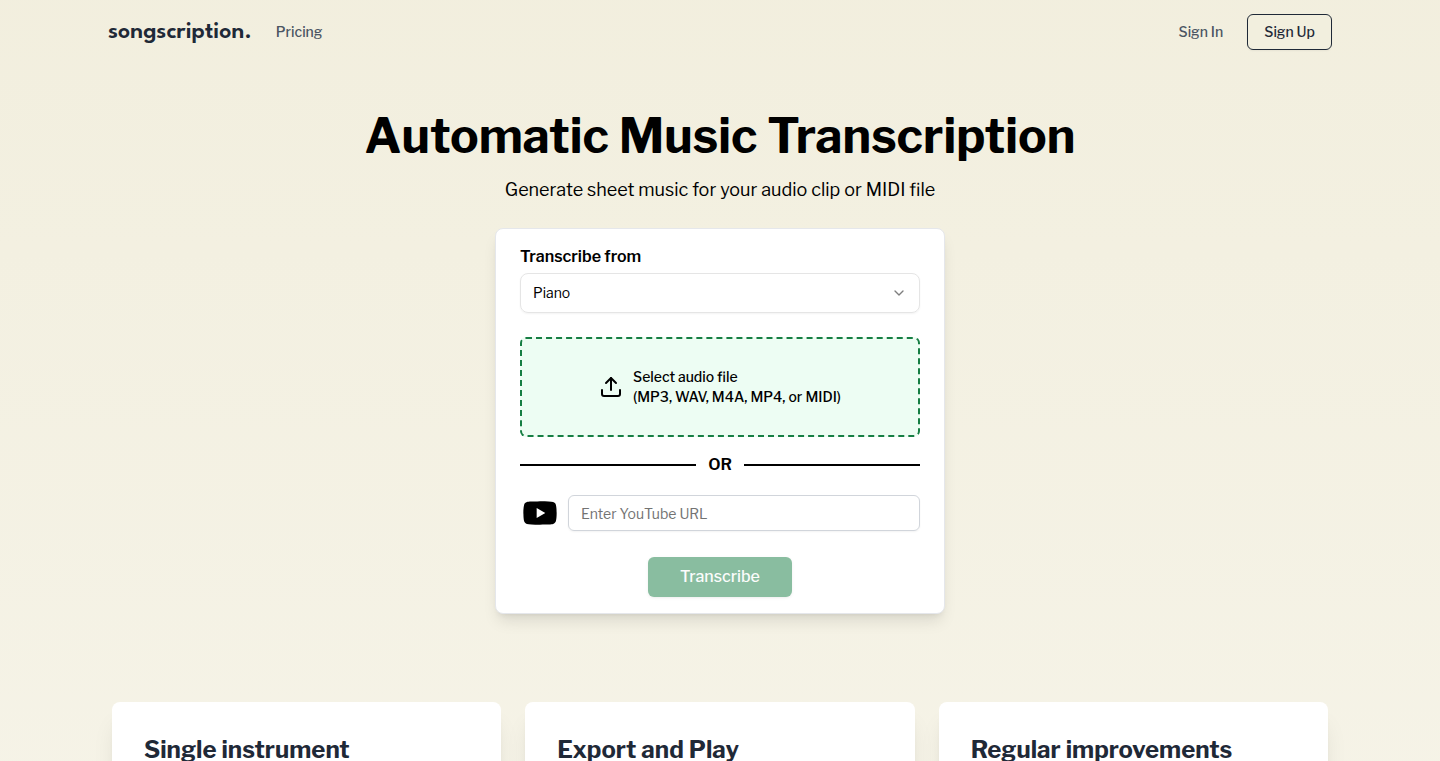

Inworld TTS: High-Quality, Low-Latency Multilingual Text-to-Speech

Author

rogilop

Description

Inworld TTS offers high-quality, affordable, and low-latency text-to-speech (TTS) services. It addresses the common trade-off between quality, speed, and cost in voice APIs. The project leverages large language models (LLaMA) as speech backbones, fine-tuned on text-audio pairs and optimized for real-time performance using Mojo. It supports markup tags for enhanced speech control and provides open-source code for training and benchmarking.

Popularity

Points 22

Comments 13

What is this product?

Inworld TTS is a service that converts text into natural-sounding speech. It is designed as an alternative to existing TTS solutions, which often sacrifice quality for speed or affordability. The core innovation is using LLaMA (a family of large language models) as the backbone for speech generation. The project offers two models:

- A small model (TTS-1), whose quality is comparable to the state of the art on objective metrics. These metrics include WER (word error rate), SIM (speaker similarity), and DNSMOS (a predicted perceptual quality score).
- A larger model (TTS-1-Max), which improves quality further.

The models were trained on a mixture of text and audio data and then fine-tuned on paired text and audio samples. They also support markup tags that let users control how the speech sounds. To make generation fast, the team migrated from vLLM, a widely used LLM serving engine, to a faster Mojo-based implementation.

How to use it?

Developers can integrate Inworld TTS into their applications through an API (Application Programming Interface): you send the text you want spoken, and the API returns the audio. Markup tags let you customize the speech, for example to change the emotional tone. A streaming API is available for the small model, and an API for the larger model is coming soon. Pricing is $5 per 1 million characters processed. This suits any app, game, or project that needs speech output, such as a talking chatbot, video voice-overs, an educational app, or an interactive game.
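At the quoted price of $5 per 1 million characters, cost scales linearly with input length. The quick estimator below assumes billing is based on the raw character count of the submitted text. Whether markup tags count toward billed characters is an assumption to check against Inworld's pricing page.

```python
PRICE_PER_MILLION_CHARS_USD = 5.0  # price quoted in the announcement


def estimate_cost_usd(texts, price_per_million=PRICE_PER_MILLION_CHARS_USD):
    """Estimate synthesis cost, assuming billing by len() of each submitted string.

    len() counts Unicode code points; whether markup tags or non-Latin scripts
    are billed differently is an assumption to verify against the pricing page.
    """
    total_chars = sum(len(t) for t in texts)
    return total_chars * price_per_million / 1_000_000


# A hypothetical 500,000-character manuscript split into two chapters.
chapters = ["a" * 300_000, "b" * 200_000]
book_cost = estimate_cost_usd(chapters)  # 500,000 chars -> $2.50
```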

Product Core Function

· Multilingual Support: The service supports 11 languages, expanding the accessibility of high-quality voice generation. This is valuable for reaching a global audience and building applications that cater to diverse language needs.

· Low Latency: The TTS-1 model offers a p90 latency of ~500ms for the first 2 seconds of audio. This is a significant advantage for real-time applications. This is key for creating responsive, engaging user experiences, especially in areas like virtual assistants, interactive games and real time communication.

· High-Quality Speech Generation: The project focuses on producing high-quality, realistic-sounding speech, comparable to or better than existing solutions. This is critical for creating applications where the audio is a key component of the user experience, like audiobooks, podcasts, or interactive storytelling.

· Markup Tag Support: It allows developers to add markup tags to the text to control how the speech is generated (e.g., add emphasis or change the emotional tone). This provides a higher degree of control over the output, which is essential for creating engaging and expressive voice-overs.

· Open-Source Code for Training and Benchmarking: The developers plan to release their training and benchmarking code on GitHub, so other developers can study the techniques used and adapt them to their own needs. This promotes transparency and lets others build on the work, accelerating progress in speech synthesis.
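A p90 figure like the one quoted for TTS-1 means that 90% of measured requests were at or below that latency. The sketch below computes it with the nearest-rank method, which is one common definition. Libraries such as NumPy default to interpolation and can give slightly different values. The sample latencies are made up for illustration and are not Inworld measurements.

```python
import math


def percentile_nearest_rank(samples, p):
    """Smallest sample such that at least p percent of samples are <= it."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Hypothetical latencies (ms) for producing the first 2 seconds of audio.
latencies_ms = [320, 350, 380, 410, 420, 450, 470, 490, 510, 900]
p50 = percentile_nearest_rank(latencies_ms, 50)
p90 = percentile_nearest_rank(latencies_ms, 90)
```

Here the median is 420 ms and p90 is 510 ms. The single 900 ms outlier only appears at p99, which is why tail percentiles are usually quoted alongside the median.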

Product Usage Case

· Game Development: Integrate Inworld TTS to create voice-overs for game characters and narrators. It could be used to generate dialogue for non-player characters dynamically, making gameplay more immersive.

· Educational Applications: Develop interactive language learning apps where text is converted to speech, helping users practice pronunciation and comprehension. It provides a more engaging way to learn a new language.

· Virtual Assistants: Build virtual assistants that can speak in multiple languages with high quality and low latency. This can be used to create voice-based interfaces for smart home devices or business applications, offering a more accessible and user-friendly experience.

· Content Creation: Automate the creation of voice-overs for videos, presentations, or audiobooks. This can save time and resources compared to hiring voice actors and improve productivity, especially for content creators.

· Accessibility Tools: Develop applications that convert text into speech for visually impaired users. This makes digital content more accessible to people with disabilities, promoting inclusivity.

5

Anytype: Your Private, Local-First, Collaborative Knowledge Hub

Author

sharipova

Description

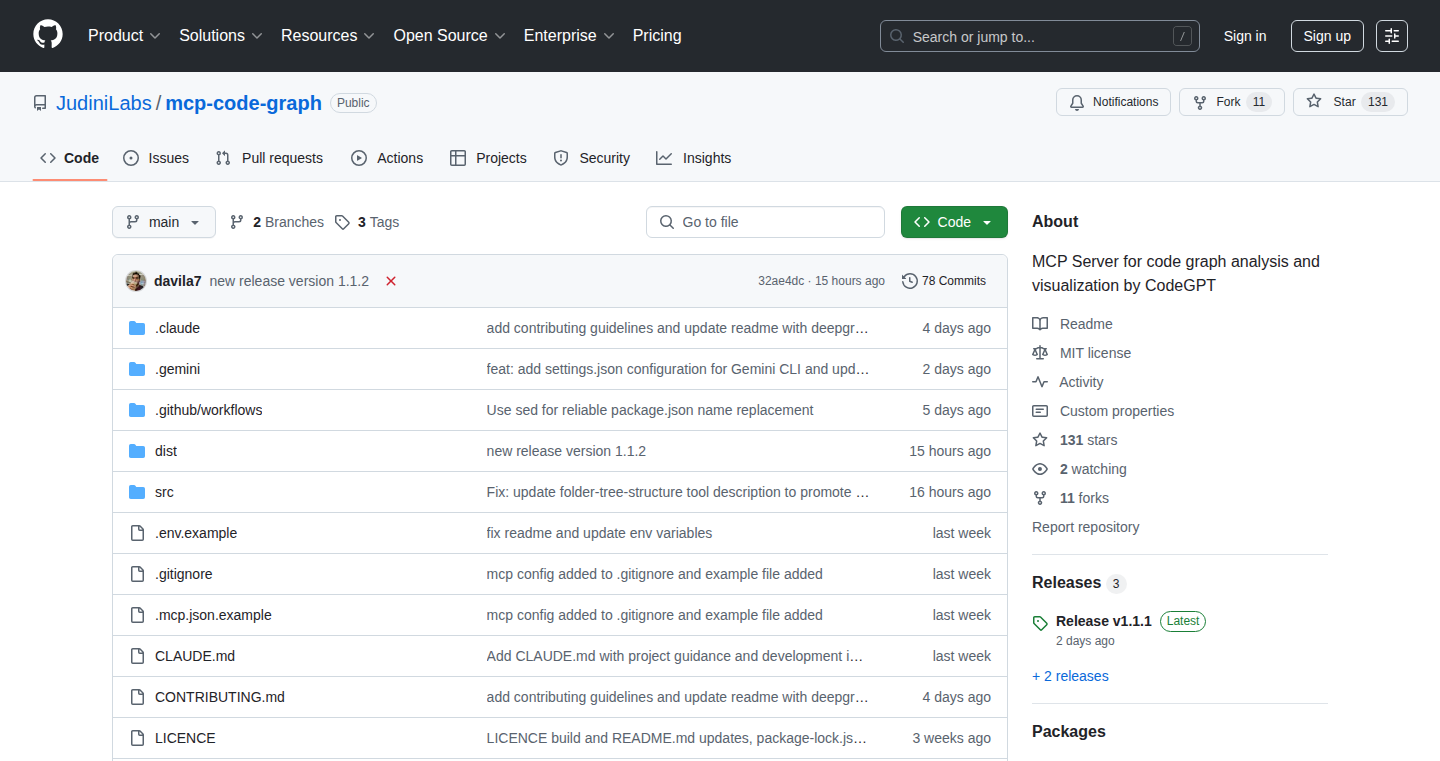

Anytype is a knowledge management tool that prioritizes your privacy and control. It lets you create and manage documents, databases, and files collaboratively, with all your data stored locally and end-to-end encrypted. The new local API and MCP server let developers integrate Anytype with other tools and build custom workflows, especially ones involving Large Language Models (LLMs). This is a significant step toward a truly private and independent digital workspace.

Popularity

Points 17

Comments 0

What is this product?

Anytype is a local-first, collaborative knowledge management tool. All your data lives on your device, which improves privacy and security because your information isn't stored on a central server. It uses end-to-end encryption to keep your data safe. A CRDT (Conflict-free Replicated Data Type)-based synchronization system provides seamless collaboration across users and devices. The new local API lets developers extend Anytype's functionality. The MCP (Model Context Protocol) server lets LLMs connect to Anytype, enabling AI-powered features such as summarizing documents or generating content.

How to use it?

Developers can integrate Anytype in multiple ways. The local API allows you to build custom workflows and connect Anytype with other applications on your desktop. For instance, you could create a script that automatically imports data from a CSV file into an Anytype database. The MCP server enables you to connect Anytype with LLMs. Imagine automatically summarizing your notes or generating new content based on your existing information. You would need to download Anytype, explore the developer portal (developers.anytype.io), and start experimenting with the local API and the MCP server functionalities. The Raycast extension provided is a great example of how to use the API.

Product Core Function

· Local-first storage: All your data is stored on your device, giving you control and privacy. So your information is accessible only to you and the people you choose to share it with.

· End-to-end encryption: Your data is encrypted on your device and can be decrypted only on the devices of the people you share it with. So this protects your content from anyone who intercepts it in transit. Like any end-to-end scheme, however, it cannot protect data on a device that is itself compromised.

· CRDT-based sync: This technology allows for seamless, real-time collaboration, even with offline capabilities. So this lets multiple people work on the same documents or databases simultaneously, and the system automatically handles conflicts, ensuring everyone's changes are reflected.

· Local API: This API allows developers to build custom integrations and extend the functionality of Anytype. So this enables the creation of powerful workflows and allows you to connect Anytype with other tools and services you use, customizing it to your specific needs.

· MCP server: This server facilitates the integration of Large Language Models (LLMs) such as ChatGPT, allowing for AI-powered features within Anytype. So this opens the door to AI-driven features like automated summarization, content generation, and smart organization of your knowledge base, improving efficiency and productivity.

· Collaborative features: Anytype supports real-time collaboration on documents, notes, tasks, and tables. So this means you can work with others on the same projects, share ideas, and track progress in real-time, boosting team productivity and ensuring everyone stays on the same page.
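To make the CRDT idea concrete, the sketch below implements a generic last-writer-wins (LWW) map, one of the simplest CRDTs. It is not Anytype's actual sync implementation. Each replica applies edits locally, and merging is commutative and idempotent, so replicas that exchange state in any order converge to the same result. The class name and the use of integer logical timestamps (for example, Lamport clocks) are assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class LWWMap:
    """Last-writer-wins map CRDT: for each key, the write with the highest
    (timestamp, replica_id) pair wins; replica_id breaks timestamp ties."""
    replica_id: str
    entries: dict = field(default_factory=dict)  # key -> (timestamp, replica_id, value)

    def set(self, key, value, timestamp):
        """Record a local edit; timestamp is assumed to be a logical clock value."""
        self._apply(key, (timestamp, self.replica_id, value))

    def get(self, key, default=None):
        entry = self.entries.get(key)
        return default if entry is None else entry[2]

    def merge(self, other):
        """Fold in another replica's state; commutative, associative, idempotent."""
        for key, entry in other.entries.items():
            self._apply(key, entry)

    def _apply(self, key, entry):
        current = self.entries.get(key)
        if current is None or entry[:2] > current[:2]:
            self.entries[key] = entry


# Two devices edit the same note while offline, then sync in either order.
laptop = LWWMap("laptop")
phone = LWWMap("phone")
laptop.set("title", "Draft", timestamp=1)
laptop.set("tags", "work", timestamp=1)
phone.set("title", "Final", timestamp=2)
laptop.merge(phone)
phone.merge(laptop)
```

LWW resolves conflicts by discarding the older write. That works for a field like a title but not for text that two people edit at the same time; collaborative editors generally use richer sequence CRDTs for that case.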

Product Usage Case

· Integrating with existing note-taking workflows: A developer could create a script using the local API to automatically import notes from other apps into Anytype. So, this helps you consolidate all your information in one place, streamlining your note-taking process and making it easier to find what you need.

· Building custom AI assistants: Developers can leverage the MCP server to build AI-powered features, such as a summarization tool that condenses lengthy documents within Anytype. So, you can quickly grasp the key points of any document, saving time and effort.

· Developing project management dashboards: Using Anytype's database capabilities and the API, a developer could create a custom dashboard to track tasks, deadlines, and progress for different projects. So, you can get a clear overview of your projects, track progress, and manage your tasks efficiently.

· Creating a personalized knowledge base: A user can link notes, documents, and tasks, structuring information in a way that suits their needs, then share parts of it selectively. So, you can organize your personal and professional knowledge in an easily accessible and interconnected way, improving information retention and productivity.

6

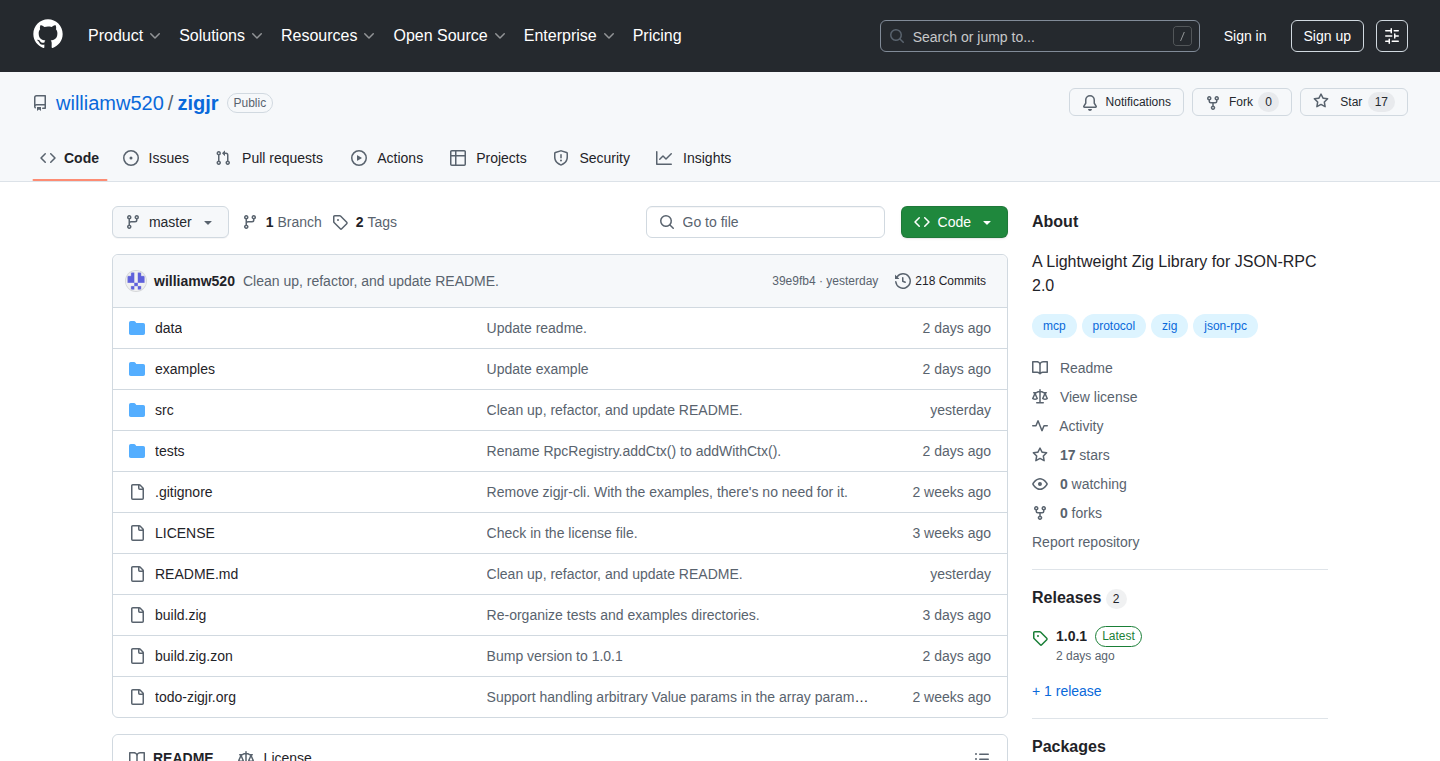

ZigJR: JSON-RPC Library with Compile-Time Reflection

Author

ww520

Description

ZigJR is a JSON-RPC library for the Zig programming language. Its core innovation is using Zig's 'comptime' feature (compile-time reflection) to achieve dynamic dispatch, that is, calling different functions depending on the incoming request. It does this without giving up static typing, where every variable's type is known at compile time. This addresses a hard problem: building a flexible system that can call functions with varied parameter and return types while keeping the code safe and efficient. Rather than resorting to dynamic typing or runtime tricks, ZigJR has the compiler inspect each function's signature and wrap it in a uniform form. Functions can then be called dynamically in a statically typed, type-safe way, which lets developers build robust, flexible, and efficient systems.

Popularity

Points 11

Comments 2

What is this product?

ZigJR is a JSON-RPC library, meaning it allows different software components to communicate with each other using JSON messages over a network. What makes it special is its internal mechanism: it utilizes 'comptime' reflection in Zig. In simple terms, during the compilation process, ZigJR looks at the functions you define. It figures out what parameters they take, what type of data they return, and wraps them in a standardized way. This allows you to call these functions dynamically (based on the request), while keeping the code type-safe and efficient. The innovation is the use of compile-time features to enable flexible function calls without sacrificing the advantages of a statically-typed language. So, imagine you want to remotely call a function 'add' and a function 'hello'. With ZigJR, you can set up a system where the request (e.g., asking to call 'add') is received and the correct function ('add') is then executed. The library handles the intricacies of packaging function details and ensuring everything works properly, offering a flexible, safe, and efficient way to handle dynamic function calls.

How to use it?

Developers integrate ZigJR into their Zig projects by including it as a dependency. They then define functions they want to expose via JSON-RPC. These functions can accept different types of inputs and produce various outputs. ZigJR handles the serialization (converting data into JSON) and deserialization (converting JSON back into data) of the data passed between the client and the server, and facilitates the dynamic calling of functions based on received JSON-RPC requests. The integration usually involves setting up routing rules for the functions, so when a specific request is received, ZigJR knows which function to call. For example, you would set up a mapping: When a JSON request comes in asking to run the function named 'add', the system knows to actually execute your 'add' function. This makes it easy to build applications that communicate with each other over a network using the JSON-RPC protocol. So, you can use this in distributed systems, microservices architectures, or any application where you need to have remote procedure calls.

Product Core Function

· JSON-RPC Handling: The library implements the JSON-RPC protocol, enabling communication with other applications over a network. So, it makes remote procedure calls straightforward.

· Compile-Time Reflection: ZigJR uses Zig's comptime feature to determine function parameters and return types during compilation. So, functions can be invoked dynamically and type-safely.

· Dynamic Dispatching: The library calls different functions based on incoming requests while maintaining strong typing. So, you can build applications that react to different inputs and scenarios.

· Serialization/Deserialization: It converts data to and from JSON, which is necessary for network communication. So, it provides the basis for data exchange between applications.

· Error Handling: ZigJR includes error handling for function calls. So, you can deal with problems gracefully and keep the system running.
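ZigJR resolves method signatures at compile time, while Python can only do the equivalent at run time, so the following is an analogy rather than a port. It sketches the JSON-RPC 2.0 dispatch flow described above:

1. Look up the method named in the request.
2. Check the params against the registered function's signature and type annotations.
3. Call the function.
4. Wrap the result, or return a standard error code: -32700 parse error, -32600 invalid request, -32601 method not found, or -32602 invalid params.

The `Dispatcher` class is hypothetical. Notifications (requests without an `id`) and batch requests are not handled.

```python
import inspect
import json


class Dispatcher:
    """Minimal JSON-RPC 2.0 dispatcher; checks params against signatures at run time."""

    def __init__(self):
        self._methods = {}

    def register(self, name, fn):
        self._methods[name] = (fn, inspect.signature(fn))

    def handle(self, raw):
        try:
            req = json.loads(raw)
        except json.JSONDecodeError:
            return self._error(None, -32700, "Parse error")
        if (not isinstance(req, dict) or req.get("jsonrpc") != "2.0"
                or not isinstance(req.get("method"), str)):
            return self._error(None, -32600, "Invalid Request")
        req_id = req.get("id")
        entry = self._methods.get(req["method"])
        if entry is None:
            return self._error(req_id, -32601, "Method not found")
        fn, sig = entry
        params = req.get("params", [])
        try:
            # Positional params arrive as a JSON array, named params as an object.
            if isinstance(params, list):
                bound = sig.bind(*params)
            elif isinstance(params, dict):
                bound = sig.bind(**params)
            else:
                raise TypeError("params must be an array or object")
        except TypeError:
            return self._error(req_id, -32602, "Invalid params")
        # Reject arguments whose runtime type does not match a declared annotation.
        for name, value in bound.arguments.items():
            expected = sig.parameters[name].annotation
            if (expected is not inspect.Parameter.empty and isinstance(expected, type)
                    and not isinstance(value, expected)):
                return self._error(req_id, -32602, "Invalid params")
        result = fn(*bound.args, **bound.kwargs)
        return json.dumps({"jsonrpc": "2.0", "result": result, "id": req_id})

    @staticmethod
    def _error(req_id, code, message):
        return json.dumps({"jsonrpc": "2.0", "error": {"code": code, "message": message}, "id": req_id})


def add(a: int, b: int) -> int:
    return a + b


def hello(name: str) -> str:
    return f"Hello, {name}"


rpc = Dispatcher()
rpc.register("add", add)
rpc.register("hello", hello)
```

The key design point is the same one ZigJR makes at compile time: the dispatcher keeps a uniform table of `(function, signature)` pairs, so adding a method means registering a function rather than writing a new parser for its arguments.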

Product Usage Case

· Microservices Architecture: Imagine a system composed of several small services (microservices), each running a different part of an application. With ZigJR, each microservice can expose its functionality via JSON-RPC, and one service can call functions in another over the network. For example, an e-commerce application might have one microservice managing user accounts and another managing the product catalog. The catalog service could use ZigJR to call the account service and check a user's permissions before serving product information. So, the developer can build a distributed, resilient system.

· API Gateway: You can build an API gateway that handles different API requests. ZigJR can dispatch each request to the handler function that serves it, for instance one that forwards to a back-end service. Data transformation, authentication, and authorization would be implemented in your own gateway code around it. So, you can create a centralized access point for your APIs and add new functionality without affecting consumers.

· Building RPC-based Applications: Use ZigJR in any scenario where you want different parts of your application (or other applications) to communicate. For example, build a system where a front-end application can trigger back-end functions over a network to do operations. ZigJR facilitates communication between the different applications. So, you can create flexible and interoperable applications.

7

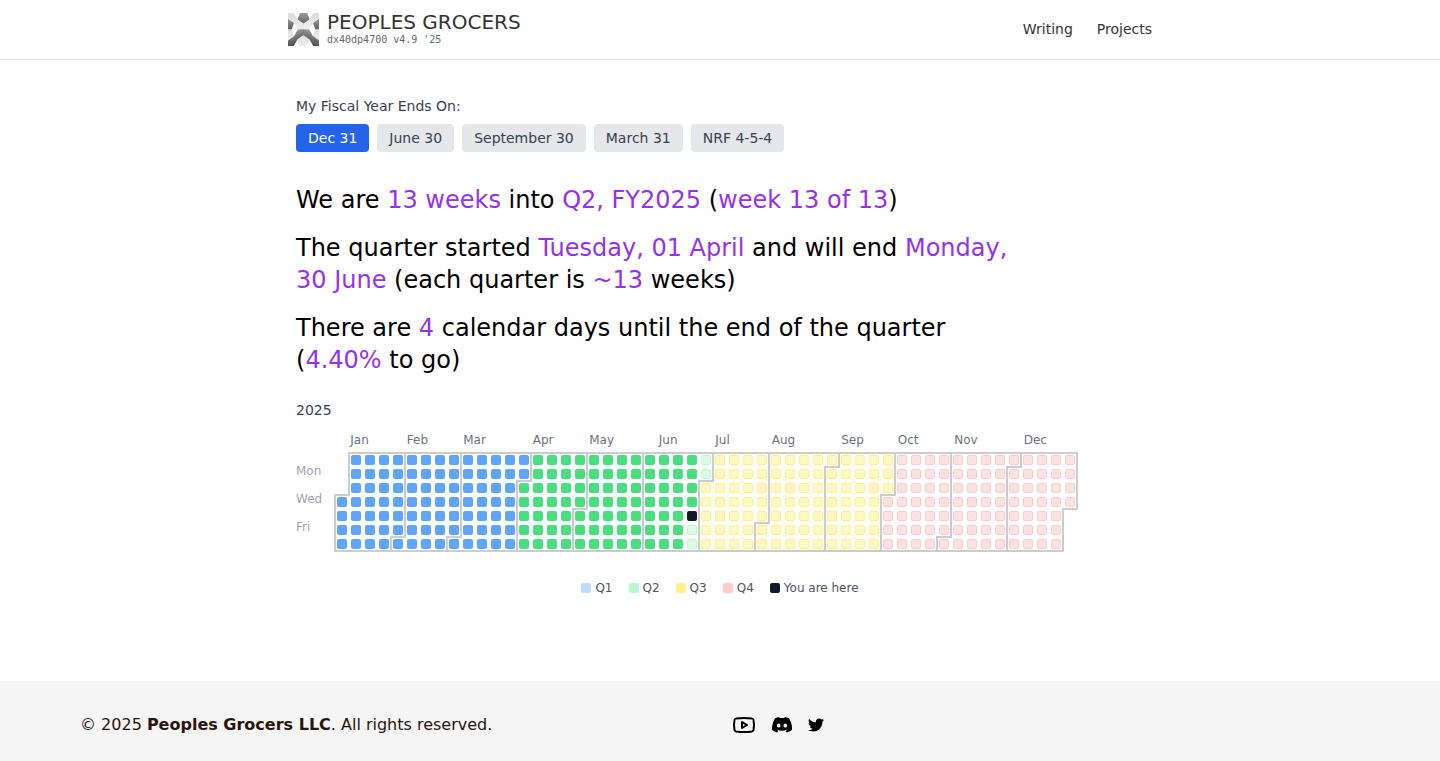

CorpTimeViz: Visualizing Corporate Calendars

Author

marxism

Description

CorpTimeViz is a web application that visualizes different corporate calendar types, including the National Retail Federation's 4-5-4 calendar. This helps users understand and compare various financial reporting periods, which can be particularly useful for analysts, investors, and anyone dealing with financial data. The project demonstrates a practical application of data visualization to solve the complexity of time-based corporate structures.

Popularity

Points 11

Comments 0

What is this product?

CorpTimeViz is a web-based tool that graphically represents different corporate calendar structures. It takes complex calendar systems, like the 4-5-4 calendar used in retail, and presents them in an easy-to-understand visual format. It focuses on the core issue of how companies structure their fiscal year, making it simpler to grasp financial reporting periods. This is achieved by converting the numerical calendar logic into visual timelines and comparative displays.

How to use it?

Developers can potentially use CorpTimeViz as a module within their own financial analysis tools. Imagine integrating it into a reporting dashboard to show the impact of different fiscal year structures on financial performance. It can also be used as a learning tool to teach others about complex financial calendars. The project could inspire further development by providing a practical base for visualizing other financial data tied to calendar periods.

Product Core Function

· Visualization of 4-5-4 Calendar: This core function displays the 4-5-4 calendar in a clear visual format. It converts the numerical structure into a visual representation that makes it easy to compare and contrast reporting periods. For developers, this is a good example of data visualization principles applied to solve a specific problem, like simplifying a complex concept to boost understanding.

· Visualization of other calendar types: The project likely supports other calendar types. Developers can use this to integrate different financial calendar comparisons into a tool, for example comparing the fiscal year across different companies. So, it provides a solid foundation for building calendar-aware data applications.

· Potential search integration for company symbols: The project hints at searching by company symbol to link companies to specific calendar structures. This would let users quickly see the financial calendar of a specific company, making the data easier to filter and navigate. It is also an opportunity for developers to build a scalable data-aggregation service.

Product Usage Case

· Financial Analysis Dashboard: An analyst could integrate CorpTimeViz into a dashboard that displays a company's fiscal year alongside its financial performance metrics, making the impact of different calendar periods on revenue visible.

· Investor Education Platform: An investment website could use the visualization to educate users on the fiscal year cycles of various companies. This allows investors to understand how different financial reporting periods may affect reported earnings or other financial data.

· Comparative Analysis Tool: Corporate finance professionals can leverage the visualization to compare the financial calendar structures of multiple companies. This helps them understand reporting timelines and make informed financial decisions more quickly.

8

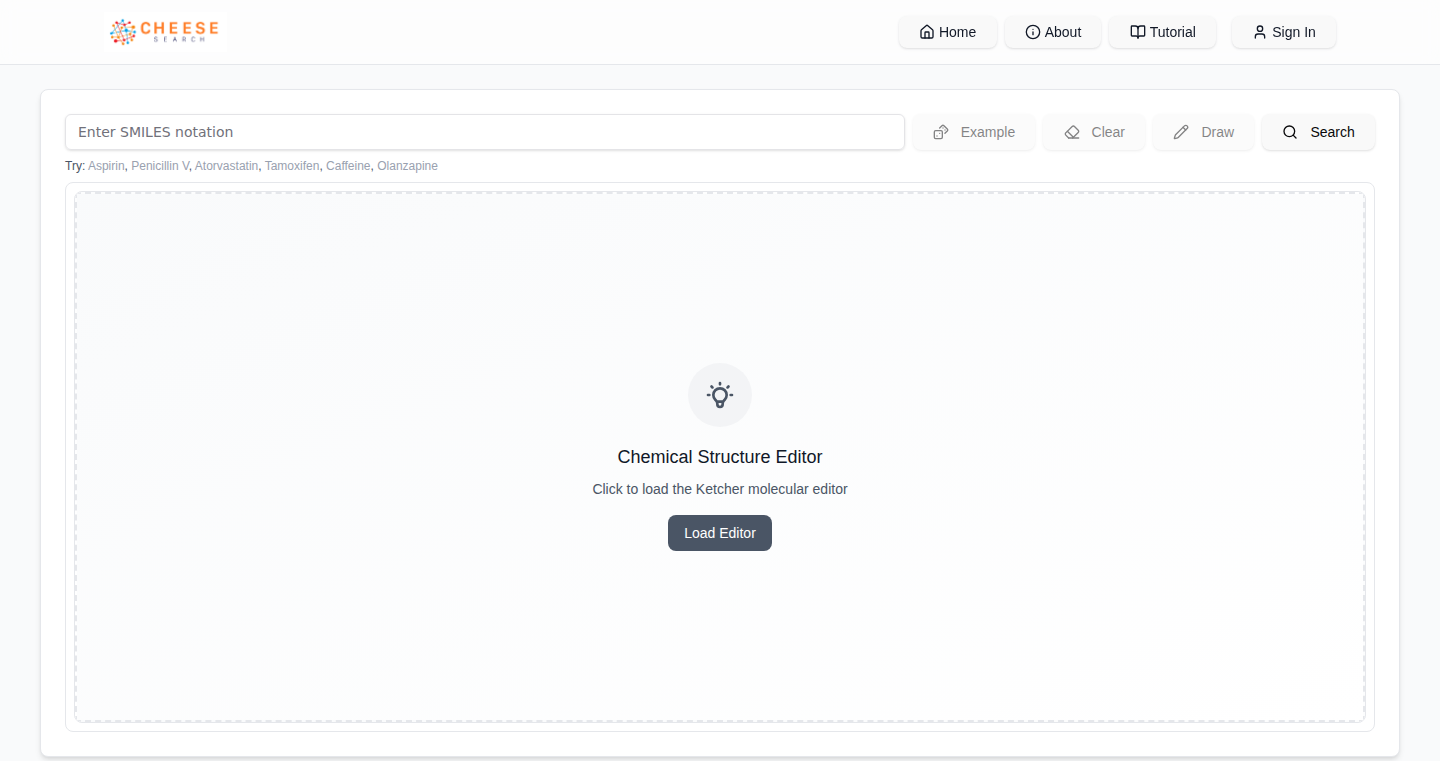

MolSearch: Fast 3D Molecular Search Without a GPU

Author

mireklzicar

Description

MolSearch is a tool that lets you find molecules similar in shape or electrical properties to a molecule you provide, from a database of billions of molecules. The cool part? It does all this quickly, in seconds, and it doesn't even need a powerful graphics card (GPU) during the search. It stores everything on your computer's hard drive and uses less than 10GB of RAM. The project was built from the ground up, avoiding reliance on existing database tools, and offers a cost-effective way to analyze vast chemical datasets.

Popularity

Points 9

Comments 2

What is this product?

MolSearch is a molecular search engine that uses advanced algorithms to compare molecules based on their 3D shape and electrostatic similarity. You give it a SMILES string (a text representation of a molecule) and it returns a list of the most similar molecules. The innovation lies in its ability to perform these complex searches quickly, using just your computer's regular processing power (CPU) and memory, and storing the entire index on your hard drive. The underlying technology includes custom-built indexing methods and similarity calculations. It avoids the need for expensive GPUs or massive in-memory databases. So this is useful if you want to quickly find similar molecules without needing expensive hardware or cloud services.
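MolSearch's custom index and similarity functions are not described in detail, so the following is only a generic sketch of the underlying idea: represent each molecule as a fixed-length descriptor vector, score candidates by cosine similarity to the query, and keep the top k. The three-number descriptors keyed by SMILES strings are invented for illustration (real shape and electrostatic descriptors are far richer), and the brute-force scan stands in for whatever pruning a billion-scale index performs.

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two descriptor vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def top_k(query: Sequence[float], library: Dict[str, Sequence[float]],
          k: int = 3) -> List[Tuple[str, float]]:
    """Return the k library entries most similar to the query, best first."""
    # Brute-force scan; a billion-scale index would prune candidates first.
    scored = ((cosine(query, vec), mol_id) for mol_id, vec in library.items())
    return [(mol_id, round(score, 3)) for score, mol_id in heapq.nlargest(k, scored)]


# Toy descriptors keyed by SMILES (invented values, for illustration only).
LIBRARY = {
    "CCO": [1.0, 0.2, 0.0],
    "CCN": [0.9, 0.3, 0.1],
    "c1ccccc1": [0.0, 1.0, 0.8],
}
```

With these toy vectors, `top_k([1.0, 0.25, 0.05], LIBRARY, k=2)` ranks `CCO` first and `CCN` second; the benzene entry scores far lower.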

How to use it?

Developers can use MolSearch through a user-friendly web interface, or potentially integrate it into their own chemical software workflows via its output formats (CSV/SDF). You input a SMILES string representing a molecule, and MolSearch returns similar molecules along with various properties. You might integrate this into drug discovery pipelines, materials science projects, or any application involving molecule analysis, especially when you need a fast, low-cost way to search huge chemical databases. For example, you can use it to predict ADMET properties, or export the results to other software for further analysis.

Product Core Function

· Fast 3D Shape Similarity Search: Quickly finds molecules with a 3D shape similar to a query molecule. Shape similarity helps identify molecules that might interact with the same biological targets or share physical properties, which matters in drug discovery and materials science.

· Electrostatic Similarity Search: Finds molecules with similar charge distributions, which is crucial for understanding how molecules interact with each other and with their environment. This helps predict things like drug-target interactions or how a molecule behaves in a particular solvent.

· Massive Database Indexing: Handles a database of billions of molecules, allowing searches across a huge chemical space. This lets researchers find relevant molecules that a smaller library would miss.

· GPU-less Operation: Performs searches without a powerful graphics card (GPU), which makes it more accessible and cost-effective for researchers. So you don't need to buy expensive hardware to get results.

· Cost-Effective Index Building: Builds the index for massive datasets on affordable hardware, such as a single Nvidia T4 GPU. So building and maintaining a massive index doesn't break the bank.

Product Usage Case

· Drug Discovery: Researchers can use MolSearch to identify molecules similar to known drugs, potentially leading to the discovery of new drug candidates. You could provide a SMILES string for a drug and find other molecules with a similar shape and electrostatic properties, thereby identifying potential new drug molecules. So, this helps speed up drug discovery.

· Material Science: Scientists can search for molecules with specific properties to design new materials. For example, you could identify molecules that are similar to a known polymer, enabling the design of new plastics. So this helps with finding and designing new materials.

· ADMET Prediction: MolSearch can be used to predict the ADMET (absorption, distribution, metabolism, excretion, and toxicity) properties of molecules. This allows researchers to filter out molecules that are likely to fail in clinical trials early on. So this reduces the time and cost to test molecules in the lab.

· Virtual Screening: Pharmaceutical scientists can use MolSearch in virtual screening campaigns to identify potential drug candidates from large databases of chemical compounds. So this helps make drug discovery more effective and efficient.

9

Effect UI: Reactive UI Framework Powered by Effect

Author

m9t

Description

Effect UI is a proof-of-concept (PoC) UI framework built entirely with the Effect library. It leverages Effect's functional programming paradigm to create a reactive UI. The core innovation is its use of SubscriptionRefs for fine-grained reactivity, similar to SolidJS, which minimizes unnecessary re-renders. Components are essentially Effects, enabling dependency injection of themes, clients, and stores using Contexts. The goal is to build UI components in a functional, predictable and efficient way, which leads to a more maintainable UI.

Popularity

Points 8

Comments 2

What is this product?

Effect UI is a UI framework that takes a different approach to building user interfaces. Instead of re-rendering whole components the way React does, it uses the Effect library to achieve fine-grained reactivity: when data changes, only the specific parts of the UI that depend on it are updated. Effect UI also uses dependency injection, so you can supply settings like themes and data sources to components without passing them through by hand. The innovation is building a UI in a way that is both functional and efficient, which makes it easier to maintain and understand. Think of it as building with Lego blocks, where each block is an Effect and the blocks fit together in predictable ways. So this is useful for developers who want UI components that are efficient and maintainable.
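Effect's SubscriptionRef lives in TypeScript; the Python sketch below is only an analogy of the fine-grained pattern, not Effect UI's code or API. A `Ref` cell notifies only the bindings subscribed to it, so changing `count` rewrites the count node and nothing else. `Ref`, `bind_text` and the `dom` dictionary are hypothetical stand-ins.

```python
from typing import Callable, Dict, Generic, List, TypeVar

T = TypeVar("T")


class Ref(Generic[T]):
    """A tiny observable cell, loosely analogous to a SubscriptionRef."""

    def __init__(self, value: T) -> None:
        self._value = value
        self._subscribers: List[Callable[[T], None]] = []

    def get(self) -> T:
        return self._value

    def set(self, value: T) -> None:
        if value == self._value:
            return  # unchanged value: notify nobody
        self._value = value
        for notify in self._subscribers:
            notify(value)

    def subscribe(self, fn: Callable[[T], None]) -> None:
        self._subscribers.append(fn)
        fn(self._value)  # render the current value immediately


# A fake "DOM": each node is rewritten only by the refs bound to it.
dom: Dict[str, str] = {}
updates: List[str] = []  # log of which nodes were re-rendered


def bind_text(node: str, ref: Ref) -> None:
    def render(value: object) -> None:
        dom[node] = str(value)
        updates.append(node)
    ref.subscribe(render)


count = Ref(0)
title = Ref("Dashboard")
bind_text("count", count)
bind_text("title", title)
count.set(1)  # re-renders the "count" node only
```

After `count.set(1)`, the update log shows a single extra render of `count`; the `title` node is untouched, and setting the same value again triggers nothing.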

How to use it?

Developers can use Effect UI to build reactive web applications. Its fine-grained update model can make apps more performant by minimizing unnecessary re-renders. You could bring Effect UI into an existing TypeScript project by importing its components and defining your UI with Effect-based principles, creating components that react to data changes and user interactions. The advantage is that you get a reactive UI without some of the re-render overhead of React-style frameworks. So it suits web app projects where responsiveness and performance matter, keeping in mind that Effect UI is still a proof of concept.

Product Core Function

· Fine-grained Reactivity with SubscriptionRefs: Effect UI utilizes SubscriptionRefs to precisely track and update UI elements that have changed, minimizing unnecessary re-renders. Application Scenario: This is incredibly useful for building highly interactive and dynamic user interfaces, where performance is critical. So this benefits you by making your UI faster, smoother, and more responsive, especially for complex applications.

· Component as Effects: The framework treats UI components as Effects, providing a clean, functional approach. Application Scenario: This facilitates dependency injection of resources like themes, clients, and data stores, improving the organization and maintainability of the code. So this benefits you by making components easier to maintain and reuse across your applications.

· Dependency Injection via Contexts: Effect UI enables dependency injection through Contexts, supporting a clean and modular architecture. Application Scenario: Configurations, services, and other dependencies are injected into components, promoting a modular and testable codebase. So this benefits you by improving modularity and making your components easier to test.

· Effect-Based Functional Programming: The core design is built on Effect, encouraging functional programming principles. Application Scenario: This enhances the predictability of the code and makes the UI easier to reason about. So this benefits you by making your code easier to debug, understand, and collaborate on.
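Effect UI injects dependencies through Effect's Context; as a rough Python analogy (not Effect's API), `contextvars` lets a component read a theme from the surrounding context instead of taking it as a parameter. `Theme`, `CurrentTheme`, `button` and `with_theme` are hypothetical names.

```python
from contextvars import ContextVar
from dataclasses import dataclass
from typing import Callable, TypeVar

R = TypeVar("R")


@dataclass(frozen=True)
class Theme:
    primary: str
    font: str


# Hypothetical context slot; components read it instead of taking a parameter.
CurrentTheme = ContextVar("CurrentTheme", default=Theme("#000000", "sans-serif"))


def button(label: str) -> str:
    theme = CurrentTheme.get()  # the injected dependency
    return f'<button style="color:{theme.primary};font-family:{theme.font}">{label}</button>'


def with_theme(theme: Theme, render: Callable[[], R]) -> R:
    """Provide `theme` to everything rendered inside `render`, then restore the old one."""
    token = CurrentTheme.set(theme)
    try:
        return render()
    finally:
        CurrentTheme.reset(token)
```

A test can render `with_theme(Theme("#ffffff", "monospace"), lambda: button("OK"))` to check the component under a different theme without changing `button` itself.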

Product Usage Case

· Building Interactive Dashboards: Suppose you're developing a real-time dashboard that needs to update frequently based on data changes. With Effect UI, you can easily create components that react immediately to incoming data, minimizing performance overhead. For you, this means your dashboard remains responsive even with a large number of updates.

· Developing Complex Web Applications: If you're working on a single-page application (SPA) with many interactive elements, Effect UI’s fine-grained reactivity can significantly improve performance by updating only what’s necessary. For you, this means a faster and more responsive web app, enhancing user experience.

· Theming and Customization: Imagine creating a website where users can customize the theme (colors, fonts, etc.). Effect UI's dependency injection capabilities would let you easily inject theme settings into your components. For you, this streamlines the customization process, making it easier to implement and maintain.

· Creating Reusable UI Components: Suppose you are building a component library to share across multiple projects. Effect UI's functional nature encourages components that are easy to understand and reuse. For you, this translates into more maintainable, standardized code and less development time.

10

Kraa.io - Markdown-Powered Knowledge Base

Author

levmiseri

Description

Kraa.io is a Markdown writing application designed for building and managing a knowledge base. It offers a simple and efficient way to create, organize, and share information using the universally compatible Markdown format. The innovation lies in its focus on streamlined writing and knowledge management, making it easy for anyone to document and retrieve information. It tackles the problem of disorganized notes and complex documentation tools by providing a lightweight, text-based solution.

Popularity

Points 6

Comments 3

What is this product?

Kraa.io is a web-based application where you write and organize notes in Markdown, a simple way to format text with plain-text symbols (like wrapping text in * for italic or ** for bold). The project's innovation is its focus on making it easy to write and organize information. Think of it as a specialized notebook that understands Markdown, so your notes look good and are easy to find. The underlying technology involves a Markdown parser and a content management system (CMS) tailored for quick writing and organization. So this is useful for anyone who needs to keep their notes in order and share them with others.

How to use it?

Developers can use Kraa.io to document their projects, write tutorials, or create internal wikis for their teams. Simply write in Markdown and organize your notes; Kraa.io handles the formatting and organization. You can share content by linking to it. For example, you can keep your project's documentation alongside your codebase or share it with clients. Because the content is plain Markdown, it stays compatible with other Markdown tools, including any text editor. So this is useful for creating documentation for any kind of project.

Product Core Function

· Markdown Editing: Lets users write and format text easily using Markdown syntax. This is valuable because Markdown is a simple, universal plain-text format that can be read anywhere, so you avoid file-format incompatibilities.

· Knowledge Base Organization: Provides a structure for organizing notes, enabling easy navigation and retrieval of information. This is useful because it creates a central hub for documentation, making your knowledge accessible and searchable.

· Content Sharing: Lets users share their notes easily with others. This is valuable because it facilitates collaboration and knowledge sharing within teams or with external users.

· Real-time Preview: Shows a live preview of the formatted Markdown, so users see how their notes will look as they write. This is useful because it supports visual editing and makes it easier to produce well-formatted documentation.

· Note Linking: Lets users link between notes, creating a web of interconnected information. This is valuable because it helps users connect related information and find what they need faster.
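Note linking is typically implemented by scanning note bodies for link syntax and building a reverse index of backlinks. The sketch below assumes the `[[Note Title]]` wiki-link convention used by many Markdown note tools; whether Kraa.io uses this syntax is not stated, and `backlinks` is an illustrative helper.

```python
import re
from collections import defaultdict
from typing import Dict, Set

# Assumed wiki-link syntax: [[Note Title]] (not confirmed for Kraa.io).
WIKI_LINK = re.compile(r"\[\[([^\[\]]+)\]\]")


def backlinks(notes: Dict[str, str]) -> Dict[str, Set[str]]:
    """Return {target title: {titles of notes linking to it}}, ignoring dangling links."""
    index: Dict[str, Set[str]] = defaultdict(set)
    for title, body in notes.items():
        for target in WIKI_LINK.findall(body):
            target = target.strip()
            if target in notes and target != title:  # skip missing notes and self-links
                index[target].add(title)
    return dict(index)
```

Showing each note's backlinks next to its content is what turns a folder of Markdown files into a navigable web of notes.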

Product Usage Case

· Software Documentation: A developer can use Kraa.io to create detailed documentation for their software projects, organizing technical specifications, tutorials, and API references in an easy-to-read format. Clear documentation improves the user experience and makes projects easier to maintain and update.

· Personal Knowledge Management: A student can use Kraa.io to keep track of class notes, create study guides, and organize research. This provides an organized system that helps with understanding complex material.

· Team Wikis: A team can use Kraa.io to set up an internal wiki for sharing knowledge, documenting processes, and collaborating on projects. This promotes information sharing across the organization.

· Project Management: A project manager can use Kraa.io to document meeting notes, create project plans, and track progress. This creates a central repository that keeps everything in one place.

· Content Creation: A content creator can use Kraa.io to write articles, blog posts, and other content for different platforms. Writing in Markdown preserves the formatting without having to worry about the specifics of each platform's requirements.

11

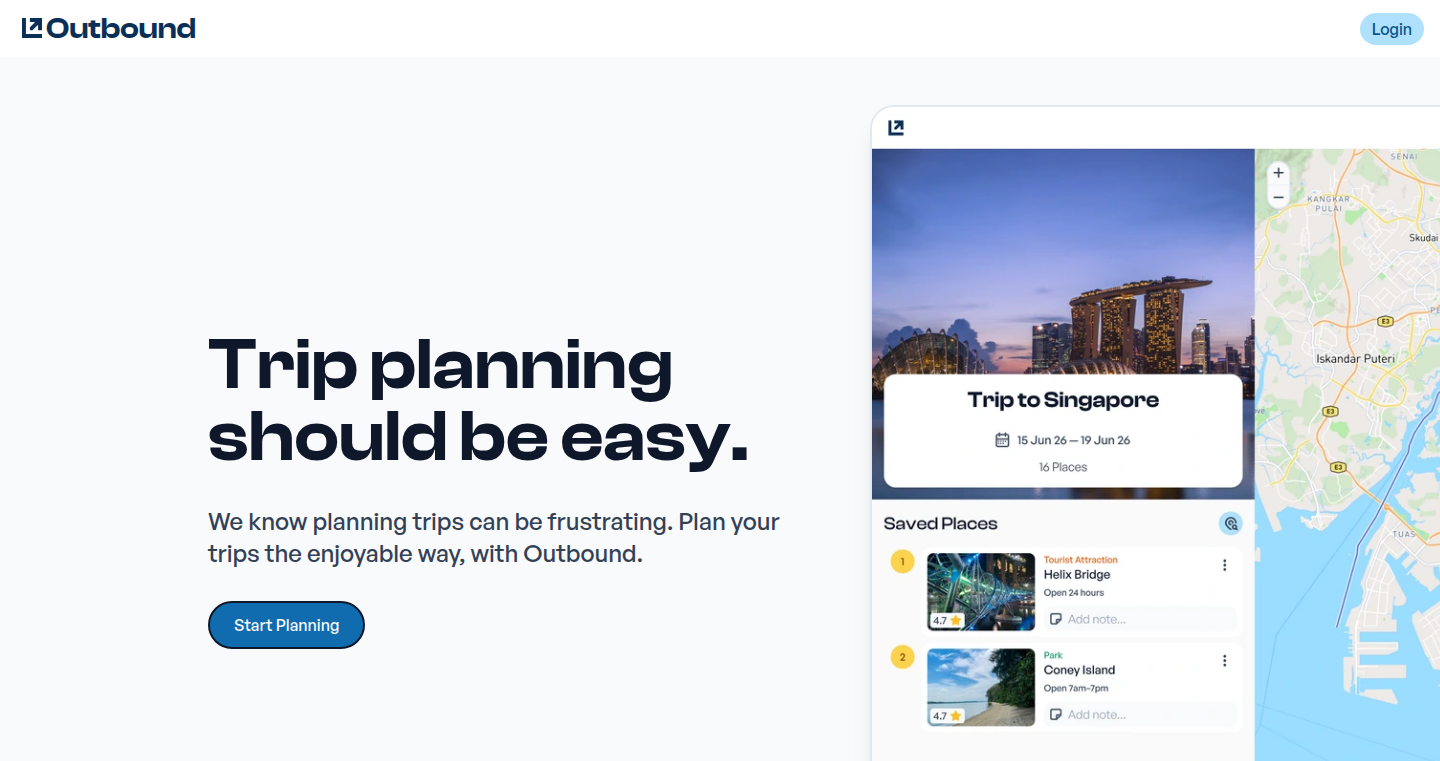

Outbound: Swipe to Plan – Your Brainrot-Era Trip Planner

Author

Su-

Description

Outbound is a trip planning tool that reimagines the process with a Tinder-like swiping interface. It allows users to quickly add attractions to their itinerary by simply swiping through them. The core innovation lies in its intuitive, drag-and-drop itinerary planner and automatic travel time calculations. It solves the common problem of tedious trip planning by streamlining the process and making it fun, especially for day trips.

Popularity

Points 6

Comments 3

What is this product?

Outbound is a web application that lets you plan trips using a swipe-to-add interface, much like how you would browse through profiles on Tinder. The core technology leverages a database of attractions and integrates with mapping services to provide travel time estimates between locations. The innovation is in making trip planning quick and easy. So, what this means is that instead of spending hours planning, you can swipe through different places and easily organize them into a daily itinerary.

How to use it?

Developers can access the source code and contribute to the project on GitHub. They can use the project as a starting point to learn about front-end development (likely using a framework like React), integrating with mapping APIs (like Google Maps), and building user interfaces that feel intuitive. You can integrate this into your own travel apps or create a custom planner tailored to specific interests. For example, you can adapt the swipe-to-add functionality to build a tool for choosing restaurants or activities. So, you can learn a lot about building fun, user-friendly interfaces and get a head start on creating similar applications.

Product Core Function

· Swipe-to-add attractions: Allows users to quickly add places to their itinerary by swiping, simplifying the process of selecting potential destinations. It's helpful because it makes browsing and choosing places much more enjoyable, saving you time.

· Drag-and-drop itinerary planner: Provides a visual interface for arranging places in the itinerary, enabling easy modification and organization. It provides a very direct way to control your trip and adjust your schedule on the go. So, you can customize your trip to fit your needs quickly.

· Automatic travel and arrival time estimates: Calculates travel times between places, so you don't have to check travel durations manually. This automates the most tedious part of trip planning and makes it much faster. So, you get realistic estimates of how long it takes to get from one place to another without leaving the app.

· Share trips with friends: Lets users collaborate on trip planning by sharing their itineraries with others. So, you can easily coordinate with friends and family.

· Notes for each place: Lets users store notes, details, and reminders for each place in the itinerary. This helps you add detail to your trip. So, you can organize your thoughts and plan a better trip.
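Outbound's travel times come from mapping services, whose routing APIs are not shown here. As a self-contained stand-in, the sketch below estimates arrival times from straight-line (haversine) distance, an assumed average speed of 30 km/h and an assumed 60-minute stay at each stop; real road routes will be longer.

```python
import math
from datetime import datetime, timedelta
from typing import List, Sequence, Tuple

EARTH_RADIUS_KM = 6371.0
LatLon = Tuple[float, float]


def haversine_km(a: LatLon, b: LatLon) -> float:
    """Great-circle distance between two (lat, lon) points given in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def arrival_times(stops: Sequence[LatLon], start: datetime,
                  speed_kmh: float = 30.0, dwell_min: int = 60) -> List[datetime]:
    """Estimate arrival at each stop in itinerary order (first stop = start time)."""
    if not stops:
        return []
    arrivals = [start]
    for prev, nxt in zip(stops, stops[1:]):
        travel = timedelta(hours=haversine_km(prev, nxt) / speed_kmh)
        arrivals.append(arrivals[-1] + timedelta(minutes=dwell_min) + travel)
    return arrivals
```

Because arrivals are derived purely from stop order, a drag-and-drop reorder only needs another call to `arrival_times` with the new list.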

Product Usage Case

· Integrating a Tinder-style UI for location selection in a travel app. This is an example of using the swipe interface to improve the user experience. So, you can make a travel planning app more intuitive.

· Using the drag-and-drop functionality to build an itinerary planner. This gives planning apps a simple way to arrange the user's day. So, you can create an easy-to-use itinerary planner.

· Implementing automatic travel time calculations through a mapping service integration that provides travel directions. This is especially helpful for day trips, where it reduces time spent on route planning. So, you can build travel time estimates directly into your app.

· Using sharing features to make it easier to collaborate with others. This helps with planning group trips and reduces the work involved. So, you can make trips with groups of friends easier to organize.

· Using the software as inspiration to build a more focused itinerary creator. This encourages developers to think about the needs of a specific group of users and solve a specific pain point. So, you can create a solution tailored to that audience.

12

PixelCraft: Rust-Powered Image-to-Pixel-Art Generator

Author

gametorch

Description

PixelCraft is a tool that transforms images into pixel art using the power of Rust and WebAssembly (WASM). It leverages the K-Means clustering algorithm for color quantization, which means it intelligently reduces the number of colors in an image while preserving its visual essence. This project tackles the problem of automatically generating pixel art, a task usually done manually, by providing a fast and efficient method for converting images into a retro pixelated style. So this is useful because it automates a tedious artistic process.

Popularity

Points 9

Comments 0

What is this product?

PixelCraft works by taking an image, analyzing its colors, and using the K-Means algorithm to group similar colors together. The algorithm then picks a representative color for each group, effectively reducing the color palette. This processing is written in Rust, a language known for its speed and memory efficiency. Because the Rust code can be compiled to WASM, it can run in web browsers and other environments where speed matters, producing the reduced palette and the pixelated image data. So this is useful because it offers a performant image-manipulation solution with cross-platform compatibility.
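PixelCraft implements this in Rust; the Python sketch below only illustrates the same technique (plain Lloyd's k-means over RGB values, followed by nearest-palette remapping) and is not the crate's code. Initializing from randomly chosen distinct colors, the fixed seed and the iteration count are assumptions chosen to keep the example deterministic.

```python
import random
from typing import List, Sequence, Tuple

Color = Tuple[int, int, int]


def _dist2(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance in RGB space."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans_palette(pixels: List[Color], k: int, iters: int = 10, seed: int = 0) -> List[Color]:
    """Lloyd's k-means over RGB pixels; returns k representative palette colors."""
    distinct = sorted(set(pixels))
    if len(distinct) < k:
        raise ValueError("need at least k distinct colors")
    rng = random.Random(seed)
    centers = [tuple(map(float, c)) for c in rng.sample(distinct, k)]
    for _ in range(iters):
        clusters: List[List[Color]] = [[] for _ in range(k)]
        for p in pixels:  # assignment step: each pixel joins its nearest center
            nearest = min(range(k), key=lambda i: _dist2(p, centers[i]))
            clusters[nearest].append(p)
        for i, members in enumerate(clusters):  # update step: center moves to cluster mean
            if members:  # an empty cluster keeps its previous center
                centers[i] = tuple(sum(ch) / len(members) for ch in zip(*members))
    return [tuple(round(v) for v in c) for c in centers]


def quantize(pixels: List[Color], palette: List[Color]) -> List[Color]:
    """Replace every pixel with its nearest palette color."""
    return [min(palette, key=lambda c: _dist2(p, c)) for p in pixels]
```

For an image made of reddish and bluish pixels, `kmeans_palette(pixels, k=2)` settles on one red and one blue entry, and `quantize` snaps every pixel to one of them.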

How to use it?

Developers can use PixelCraft in several ways. They can integrate the Rust crate directly into their projects, for example in game development, for efficiently processing images. Or, they could use it as a backend service, providing a pixel art generation API. The WASM compilation allows for easy integration in web-based image editors. So this is useful for image processing tools and game developers who need pixel art generation capability.

Product Core Function

· K-Means Color Quantization: This is the core algorithm that intelligently reduces the number of colors in an image. This is valuable because it allows for more efficient image representation, especially useful for low-resolution displays or retro art styles. You can use this to create pixel art and reduce the image file size.

· Rust Implementation: The use of Rust provides significant performance advantages, making the image processing extremely fast, even for large images. This is useful if you need to process a lot of images quickly or work in a resource-constrained environment.

· WASM Compilation: Compiling to WebAssembly allows the pixel art generation to run in web browsers or other environments that support WASM. This is useful because it gives a wider reach and allows image transformation client side in your browser.

· Image Input/Output: The project handles different image formats (e.g., PNG, JPEG), and outputs pixelated image data, providing a ready-to-use result. So this is useful because it simplifies the process of converting images into pixel art.

Product Usage Case

· Game Asset Creation: A game developer can use PixelCraft to automatically generate pixel art sprites from existing character images. This replaces time-consuming manual pixel art creation, allowing rapid prototyping and asset generation. This is useful for game developers who want to convert existing images into pixel art.

· Web-Based Image Editor Integration: A web developer can incorporate PixelCraft into an online image editor. Users can upload images, apply the pixelation effect, and download the result directly in their browser. So this is useful for websites or online applications requiring pixel-art processing capabilities.

· Educational Tool: PixelCraft can be used to demonstrate the principles of color quantization and image processing, showing how images can be transformed effectively. So this is useful for students and anyone learning the basics of digital image manipulation.

13

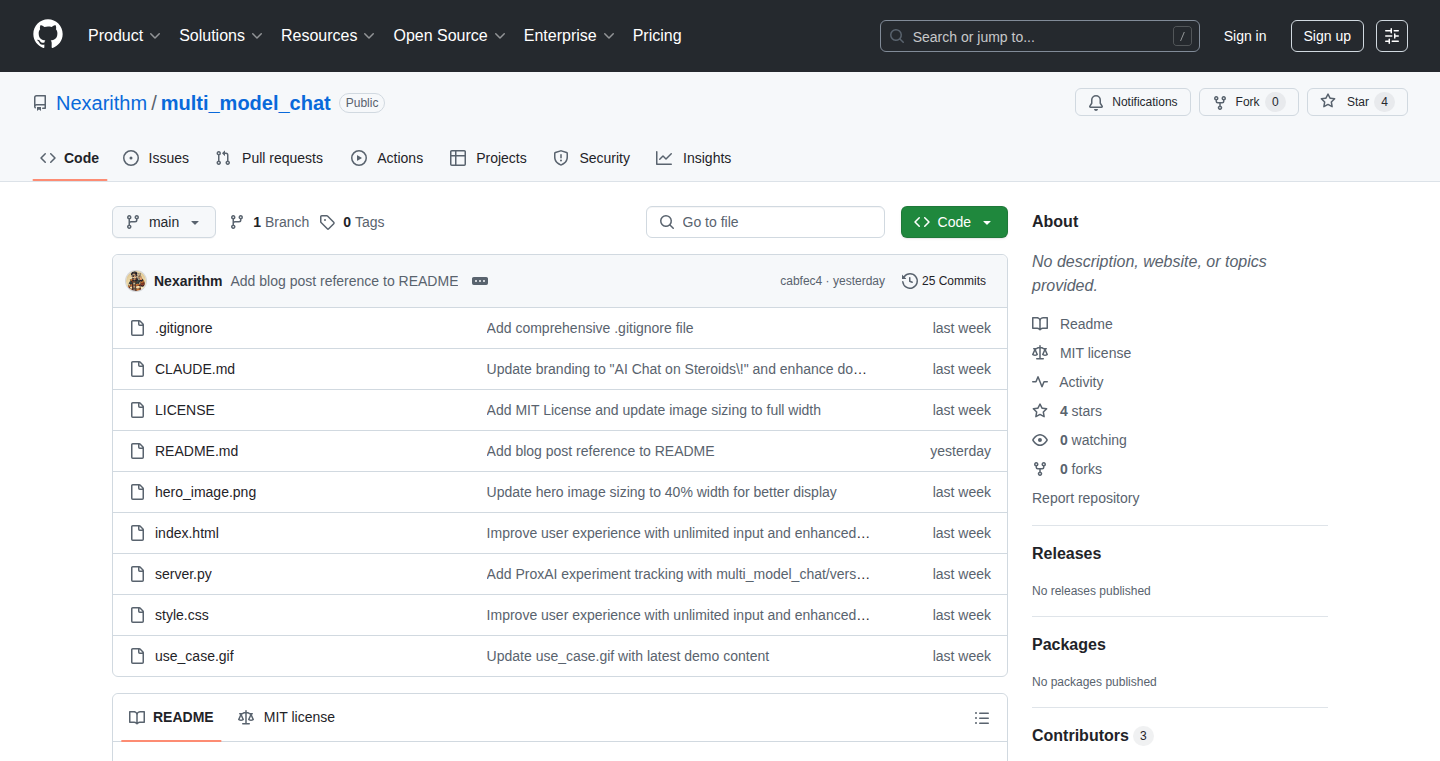

ParallelAI: Unleashing the Power of Multiple AI Models

Author

nexarithm

Description

ParallelAI is an open-source application that simultaneously queries over 10 different AI models (like Gemini, Claude, and others) for a single prompt, and then uses a 'combiner' AI model to summarize the responses. This addresses the challenge of getting diverse perspectives and the best possible answer by leveraging the strengths of multiple AI tools at once. It offers a simple interface for users to quickly compare and contrast responses from various AI models, enhancing the quality of answers to complex queries. Think of it as having a panel of experts working on your problem, each offering their insights.

Popularity

Points 5

Comments 4

What is this product?

ParallelAI works by sending your question to multiple AI models in parallel – think of it like asking ten different experts the same question at the same time. Each AI model processes the question and generates its own response. Then, a special 'combiner' AI model takes all the responses and creates a summary, giving you the most comprehensive and helpful answer. So, if you want more complete answers to complex questions, this provides a way to harness the power of different AI tools to get a richer set of information.
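The fan-out-then-combine flow can be sketched with `asyncio.gather`, which awaits all model calls concurrently, so total latency is roughly that of the slowest model rather than the sum. Real provider clients are replaced by hypothetical stubs (`make_stub`, `echo_combiner`), and the combiner prompt wording is an assumption, not ParallelAI's.

```python
import asyncio
from typing import Awaitable, Callable, Dict

Model = Callable[[str], Awaitable[str]]  # async function: prompt -> answer


async def fan_out(prompt: str, models: Dict[str, Model], combiner: Model) -> str:
    """Query every model concurrently, then ask a combiner model to merge the answers."""
    names = list(models)
    answers = await asyncio.gather(*(models[n](prompt) for n in names))
    digest = "\n\n".join(f"[{n}]\n{a}" for n, a in zip(names, answers))
    return await combiner(
        f"Question: {prompt}\n\nAnswers:\n{digest}\n\nSummarize the best answer."
    )


def make_stub(name: str, delay: float) -> Model:
    """Hypothetical stand-in for a real provider client."""
    async def call(prompt: str) -> str:
        await asyncio.sleep(delay)  # simulate network latency
        return f"{name} says: {prompt}"
    return call


async def echo_combiner(prompt: str) -> str:
    return prompt  # a real combiner would be one more LLM call


if __name__ == "__main__":
    stubs = {"model-a": make_stub("model-a", 0.2), "model-b": make_stub("model-b", 0.1)}
    print(asyncio.run(fan_out("Why is the sky blue?", stubs, echo_combiner)))
```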

How to use it?

Developers can download the open-source code from the GitHub repository. After setting up API keys for the different AI models, users can enter queries and receive combined responses. This lets developers experiment with different AI models without switching between them manually, and makes it easy to compare answers in applications where the quality of AI-generated output is critical. You can integrate it into your projects to build an AI-powered research assistant, a smart chatbot, or an automated content generation system, saving the effort of wiring up each model separately.

Product Core Function

· Simultaneous Querying: This feature sends a user's input to multiple AI models concurrently. This means you get multiple perspectives and answers in a short time. This is super valuable when you need to quickly compare different AI's capabilities.

· Response Summarization: The system combines responses from different models to create a comprehensive summary using another AI. This avoids the need to manually sift through various responses and provides a consolidated answer, which saves time and delivers more valuable insights. This is useful when you want an 'expert level' answer, as it reduces the chance of missing important information.

· Open-Source and Customizable: As an open-source project, it lets developers tailor the application to their needs, integrate it into existing projects, and change which AI models are used. This flexibility enables creative experiments with AI applications and makes it easy to learn about and adapt to different AI models.

Product Usage Case

· Research and Information Gathering: Researchers can use ParallelAI to quickly gather diverse perspectives on a research topic. Asking the same question to multiple AI models allows them to compare and contrast different insights. This helps in identifying new ideas and ensuring comprehensive coverage of the subject. It streamlines the research process and provides more in-depth analysis.

· Content Creation and Editing: Content creators can use the application to generate various drafts or improve existing articles. By querying different AI models, you can get different writing styles, tones, and suggestions. This enables faster content production and helps in improving the quality and originality of the content.

· Debugging and Troubleshooting: Developers can use ParallelAI to troubleshoot coding problems by querying several AI models for solutions. The application allows them to get different suggestions, compare approaches, and select the most effective solution more efficiently, saving time and increasing productivity. This offers the chance to discover unexpected solutions and accelerate their workflow.

14

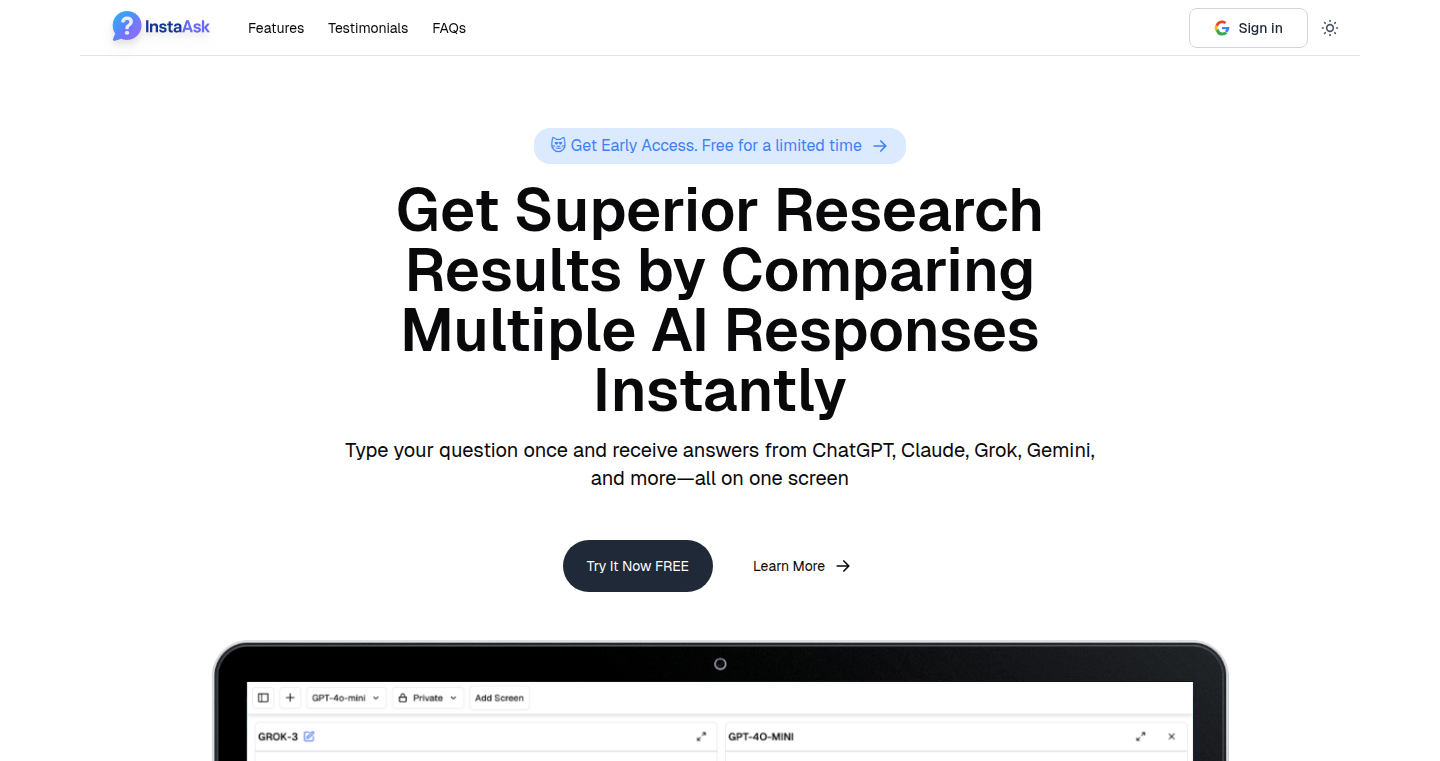

Multi-AI Chat Aggregator

Author

oksteven

Description

This project is a chat interface that lets you talk to multiple AI models (like ChatGPT, Claude, Grok, Gemini, and Llama) at the same time. It's designed to help you quickly compare answers from different AI and find the best response for your research or tasks. The technical innovation lies in providing a unified interface and consolidating responses from different AI backends, allowing for simultaneous querying and comparison.

Popularity

Points 5

Comments 4

What is this product?

This project combines the power of various AI models by allowing you to interact with them through a single interface. When you ask a question, it sends the question to all connected AI services. Then, it displays all the answers side-by-side. This helps you quickly see the different responses and compare them. The innovation is in the simultaneous use of multiple AI models, providing a faster and more complete overview of the information available. So what's in it for me? You can get better answers by comparing the output of different AI models, which helps you make decisions or get more reliable information.
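A side-by-side aggregator has to tolerate backends that are slow or failing. One way to sketch that, assuming each backend is an async function from prompt to text (the backends themselves are hypothetical), is to wrap every call in `asyncio.wait_for` and turn exceptions into per-model error strings, so one bad provider never blocks the others.

```python
import asyncio
from typing import Awaitable, Callable, Dict, Tuple

Backend = Callable[[str], Awaitable[str]]  # async function: prompt -> answer


async def ask_all(prompt: str, backends: Dict[str, Backend],
                  timeout_s: float = 10.0) -> Dict[str, str]:
    """Send one prompt to every backend concurrently; failures stay in their own column."""
    async def one(name: str, call: Backend) -> Tuple[str, str]:
        try:
            return name, await asyncio.wait_for(call(prompt), timeout_s)
        except asyncio.TimeoutError:
            return name, "<timed out>"
        except Exception as exc:  # report the error instead of failing the whole batch
            return name, f"<error: {exc}>"

    pairs = await asyncio.gather(*(one(n, c) for n, c in backends.items()))
    return dict(pairs)


def side_by_side(answers: Dict[str, str], width: int = 30) -> str:
    """Render answers as aligned columns, one per model (text truncated to width)."""
    header = " | ".join(name.ljust(width) for name in answers)
    row = " | ".join(text[:width].ljust(width) for text in answers.values())
    return f"{header}\n{row}"
```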

How to use it?

Developers can use this project by integrating it into their research tools, automation systems, or data analysis pipelines. You can either use the provided interface or integrate the API endpoints that the project provides into your own application to query these AI models. For example, you could build a tool to summarize documents, where the summary is generated by several AI models in parallel, and the user can then compare and select the best summary. So what's in it for me? You can save time by streamlining your research or data analysis tasks using parallel AI processing.

Product Core Function

· Simultaneous Querying: Sends your prompts to multiple AI models at the same time. Technical value: This allows for rapid comparison of responses, saving time and improving efficiency. Application scenario: Useful for research or tasks where accuracy and breadth of information are critical.

· Unified Interface: Provides a single interface for interacting with all AI models. Technical value: Simplifies the user experience by hiding the complexity of working with different AI platforms. Application scenario: Helps in various tasks like summarization, question answering, and content generation.

· Response Aggregation: Collects and displays answers from multiple AI models in a single view. Technical value: This lets the user compare the responses directly. Application scenario: Ideal for understanding nuances, identifying biases, or checking the accuracy of the information.

· Model Selection (Potential): Could let users choose and add any AI model that exposes an API, depending on developer effort. Technical value: Makes it easy to swap models in and out as new ones appear. Application scenario: Helps users keep up with rapidly developing AI technologies.
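The source does not show how the fan-out is implemented. As a minimal sketch, assuming each model is wrapped in an async function that takes a prompt and returns text, simultaneous querying and response aggregation could look like this in Python. `fan_out` is an illustrative name, and the model functions are hypothetical stand-ins rather than real provider clients.

```python
import asyncio
from typing import Awaitable, Callable, Dict, Tuple

# Hypothetical model-client shape: prompt in, answer text out.
ModelFn = Callable[[str], Awaitable[str]]


async def fan_out(prompt: str, models: Dict[str, ModelFn],
                  timeout: float = 30.0) -> Dict[str, str]:
    """Send one prompt to every model concurrently and collect answers by model name."""

    async def call(name: str, fn: ModelFn) -> Tuple[str, str]:
        try:
            return name, await asyncio.wait_for(fn(prompt), timeout)
        except Exception as exc:  # a failing or slow model must not hide the others
            return name, f"[error: {exc!r}]"

    # gather runs all calls at once and returns results in the order given
    results = await asyncio.gather(*(call(name, fn) for name, fn in models.items()))
    return dict(results)


# Stand-in "models" for illustration; a real client would call a provider API here.
async def echo_model(prompt: str) -> str:
    await asyncio.sleep(0.01)
    return f"echo: {prompt}"


async def upper_model(prompt: str) -> str:
    await asyncio.sleep(0.02)
    return prompt.upper()


async def broken_model(prompt: str) -> str:
    raise RuntimeError("quota exceeded")


if __name__ == "__main__":
    models = {"echo": echo_model, "upper": upper_model, "broken": broken_model}
    answers = asyncio.run(fan_out("What is RAG?", models))
    for name, answer in answers.items():
        print(f"{name:>8}: {answer}")
```

Because `asyncio.gather` runs the calls concurrently, the total wait is roughly that of the slowest model rather than the sum of all of them, and per-model error handling keeps one failing service from blanking the whole comparison.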

Product Usage Case

· Research Assistant: A researcher can use this to quickly compare the responses of different AI models when researching a topic. This helps to get a broader perspective and identify the most relevant information. So what's in it for me? Better information for your studies.

· Content Creation Tool: A content creator can use this to generate different drafts of an article or script, compare them, and select the best one. This can save time and improve the quality of the generated content. So what's in it for me? Increased content creation efficiency.

· Decision-Making Aid: In complex decision-making processes, users can input a question to several AI models to get varied perspectives on a given issue. They can then compare the models' responses and make a better decision. So what's in it for me? Make more informed decisions.

15

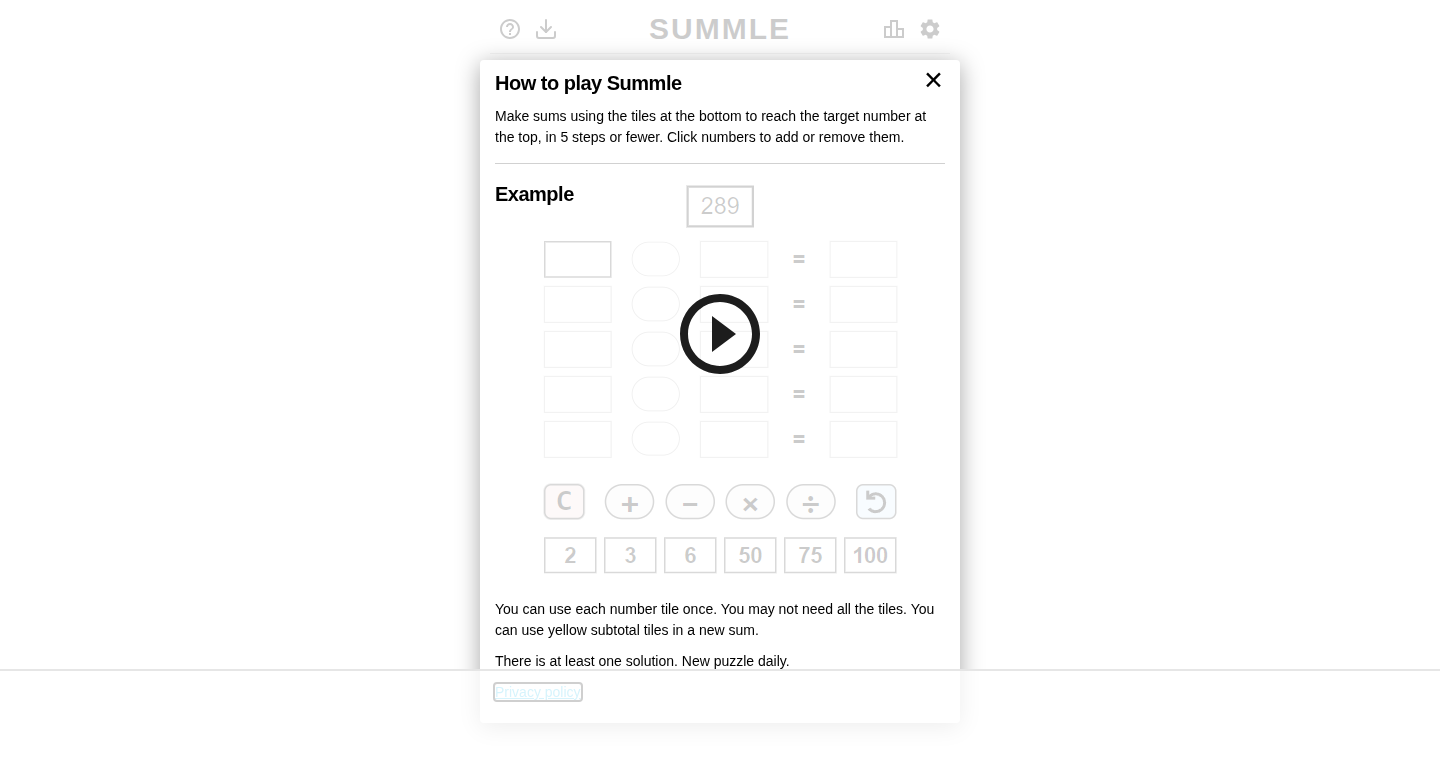

Summle: A Numerical Deductive Game

Author

kirchhoff

Description

Summle is a browser-based math game, a playful take on number puzzles. It challenges players to deduce a hidden number from a series of addition clues. The technical innovation lies in the simple but clever algorithm that generates these clues, keeping each puzzle solvable yet challenging. By combining simple arithmetic with logical deduction, it shows how engaging gameplay can be built from straightforward mathematical principles, and it makes practicing number sense both fun and educational.

Popularity

Points 5

Comments 3

What is this product?

Summle is a web-based game where you have to guess a secret number. The game gives you a series of addition problems as hints. The clever part is how the game creates these hints: its generator tunes the clues so they are neither too easy nor too hard, and the secret number can always be found through careful reasoning. So it teaches you to think logically and work with numbers in a fun way.

How to use it?

Developers can use Summle's core logic to build similar educational games or puzzles. The game's clue generation algorithm could be integrated into other projects requiring a similar level of puzzle design. You could embed it in your website or integrate it into other educational tools to provide users with math-based challenges.

Product Core Function

· Hint Generation Algorithm: This is the heart of Summle. It takes a target number and generates addition problems to guide the player, producing puzzles that are challenging but always solvable. For developers, it can be adapted to produce puzzles of varying difficulty for different age groups and skill levels, which makes it useful for educational games.

· User Interface (UI): Summle's UI is built with simple web technologies (HTML, CSS, JavaScript), so anyone with a web browser and internet access can play the game. For developers, it demonstrates how user-friendly, accessible interfaces can be built with basic web technologies.
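Summle's actual rules and generator are not described in detail here. The sketch below assumes a simplified format, a target number plus a pool of numbers where some of them add up to the target, and illustrates one common way to guarantee solvability: plant a solution, mix in distractors, then verify with a brute-force solver. `make_puzzle`, `count_solutions`, and their parameters are hypothetical.

```python
import itertools
import random
from typing import List, Optional, Tuple


def count_solutions(pool: List[int], target: int, max_terms: int) -> int:
    """Count index subsets of size 2..max_terms whose values sum to target (brute force)."""
    count = 0
    for k in range(2, max_terms + 1):
        for combo in itertools.combinations(range(len(pool)), k):
            if sum(pool[i] for i in combo) == target:
                count += 1
    return count


def make_puzzle(terms: int = 3, distractors: int = 3, low: int = 1, high: int = 20,
                rng: Optional[random.Random] = None,
                max_tries: int = 1000) -> Tuple[int, List[int]]:
    """Plant a solution, mix in distractors, and accept the puzzle only if exactly one
    subset of at most `terms` numbers reaches the target: solvable and unambiguous."""
    if terms < 2:
        raise ValueError("a puzzle needs at least two terms")
    rng = rng or random.Random()
    for _ in range(max_tries):
        solution = [rng.randint(low, high) for _ in range(terms)]
        target = sum(solution)
        pool = solution + [rng.randint(low, high) for _ in range(distractors)]
        rng.shuffle(pool)  # hide which numbers belong to the planted solution
        if count_solutions(pool, target, max_terms=terms) == 1:
            return target, pool
    raise RuntimeError("no uniquely solvable puzzle found; try other parameters")


if __name__ == "__main__":
    target, pool = make_puzzle(rng=random.Random(7))
    print(f"Reach {target} by adding some of: {pool}")
```

Raising `terms` or `distractors` makes puzzles harder, while the uniqueness check keeps them fair. Brute force is fine for small pools, but the number of subsets grows quickly, so larger puzzles would need a smarter solver.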

Product Usage Case

· Educational Game Development: Developers can leverage the hint generation algorithm to create other math-based games or educational tools. The algorithm and game could be modified to teach other mathematical concepts or other subjects that call for similar reasoning and deduction.

· Web-based Puzzle Design: Web developers can use this as an example of how to build and launch a simple yet effective web-based game. It shows how far plain front-end technologies and lightweight development can take a small game.

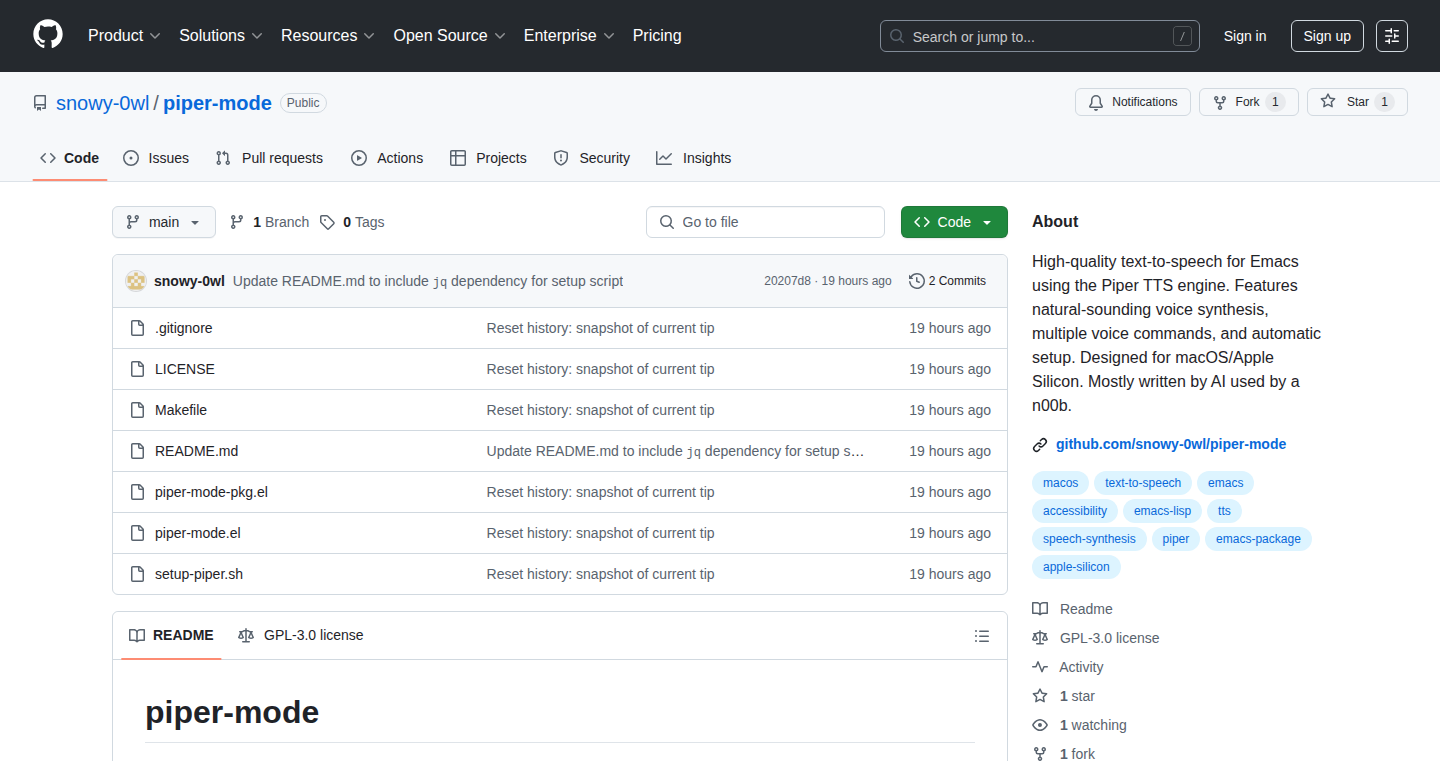

16

Piper-mode: Emacs' Voice with Piper's Power

Author

snowy_owl

Description

Piper-mode integrates the Piper text-to-speech (TTS) engine into the Emacs text editor. It allows Emacs users to have their text read aloud using high-quality, offline voices. The core innovation is running Piper, an open-source and privacy-focused TTS engine, directly within Emacs, giving programmers and writers spoken feedback that improves accessibility and productivity. The result is hands-free review of code or prose.

Popularity

Points 6

Comments 1

What is this product?

Piper-mode is a plugin that adds text-to-speech functionality to Emacs, utilizing the Piper TTS engine. Piper is known for its ability to generate natural-sounding speech offline. This project creatively combines these two elements, letting you listen to text within Emacs. You can have code read aloud, making debugging easier, or listen to documentation while coding. Its innovation lies in seamlessly integrating a powerful, open-source TTS engine into a widely-used text editor environment.

How to use it?

Developers can install Piper-mode through their Emacs configuration. Once installed, they can trigger text-to-speech with customizable commands. For instance, you could configure it to read the current line of code, the entire buffer, or a selected region, each invoked through a simple Emacs command. This makes it useful for a wide range of tasks, such as code review, reading documentation, or simply making text more accessible.
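Piper-mode itself is an Emacs package, and its source is not shown here. To illustrate the flow described above, here is a Python sketch that picks the text to read (current line, whole buffer, or region) and pipes it to the Piper command-line tool, which reads text on stdin and writes a WAV file via `--model` and `--output_file`. The function names, the default output path, and the voice model file you pass in are assumptions, and audio playback is left out.

```python
import shutil
import subprocess
from typing import List, Optional, Tuple


def select_text(buffer: str, point: int, mode: str = "line",
                region: Optional[Tuple[int, int]] = None) -> str:
    """Return the text to read: the line containing `point`, the whole buffer,
    or a region given as (start, end) character offsets in either order."""
    if mode == "buffer":
        return buffer
    if mode == "region":
        if region is None:
            raise ValueError("region mode needs (start, end) offsets")
        start, end = sorted(region)
        return buffer[start:end]
    if mode == "line":
        start = buffer.rfind("\n", 0, point) + 1  # just after the previous newline, or 0
        end = buffer.find("\n", point)            # next newline, or -1 on the last line
        return buffer[start:] if end == -1 else buffer[start:end]
    raise ValueError(f"unknown mode: {mode!r}")


def piper_command(model_path: str, wav_path: str) -> List[str]:
    """Piper CLI invocation: text arrives on stdin, audio is written to wav_path."""
    return ["piper", "--model", model_path, "--output_file", wav_path]


def speak(text: str, model_path: str, wav_path: str = "piper-mode.wav") -> None:
    """Synthesize `text` offline; requires the `piper` executable on PATH."""
    if shutil.which("piper") is None:
        raise FileNotFoundError("piper executable not found on PATH")
    subprocess.run(piper_command(model_path, wav_path), input=text, text=True, check=True)
```

Keeping text selection separate from the speech call mirrors how an editor command would work: choose the scope first, then hand plain text to the offline engine.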

Product Core Function

· Text-to-Speech for Code: Reads code aloud, allowing developers to catch errors and review their work hands-free. This is valuable for code quality and review.

· Text-to-Speech for Documentation: Reads documentation or comments. Useful for understanding lengthy text quickly while keeping focus on other tasks.

· Customizable Voice and Speed: Allows users to select different voices and adjust the speech rate to suit their preferences and workflow. This enhances personalization and utility.

· Offline Functionality: Works entirely offline, which protects privacy and makes it usable in environments without network connectivity.

Product Usage Case

· Code Review: A developer can configure Piper-mode to read out the current line of code after every modification. So you can catch mistakes faster.

· Documentation Consumption: A writer uses Piper-mode to read long articles or documentation aloud while writing or editing at the same time. This makes large documents faster to get through and easier to understand.

· Accessibility Aid: For users with visual impairments, Piper-mode can read out the entire buffer or selected parts, making Emacs more accessible to them. So more people can benefit from working in the editor.

17

Biohack: Longevity-Focused Food Scanner

Author

Fbue

Description

Biohack is a food scanner that analyzes food products and assigns them a 'longevity score' based on their potential impact on aging factors. It's like a personalized health advisor in your pocket, using data science to help you make smarter food choices for a longer, healthier life. The innovative part is how it integrates various data points – from inflammation triggers to omega ratios and toxin levels – into a single, easy-to-understand score. It's tackling the complex challenge of translating nutrition science into actionable insights for everyday consumers.

Popularity

Points 6

Comments 1

What is this product?

Biohack uses a combination of image recognition and nutritional databases to analyze food products. The user scans a food item, and the app retrieves detailed information about its ingredients. It then uses algorithms to calculate a 'longevity score' based on factors known to influence aging, such as the presence of inflammatory ingredients or the balance of omega-3 and omega-6 fatty acids. This is innovative because it moves beyond simple calorie counting or macronutrient analysis to provide a more holistic view of a food's impact on health. So what? It tells you if the food you're eating is likely to contribute to longevity or accelerate aging.
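Biohack's scoring formula is not published in the source. As an illustration only, assuming three sub-scores (omega-6 to omega-3 balance, flagged inflammatory ingredients, and toxin flags) combined with hypothetical weights, a longevity score could be computed as a weighted average scaled to 0-100. `FoodProfile`, every threshold, and every weight here are placeholders, not values taken from Biohack.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class FoodProfile:
    omega6_g: float  # grams of omega-6 per serving
    omega3_g: float  # grams of omega-3 per serving
    inflammatory_ingredients: List[str] = field(default_factory=list)
    toxin_flags: int = 0  # hypothetical count of flagged contaminants or additives


def omega_subscore(p: FoodProfile) -> float:
    """1.0 up to an illustrative 4:1 omega-6:omega-3 ratio, falling linearly to 0 at 20:1."""
    if p.omega3_g <= 0:
        return 0.0 if p.omega6_g > 0 else 1.0  # no fats at all: no penalty
    ratio = p.omega6_g / p.omega3_g
    return 1.0 if ratio <= 4 else max(0.0, 1.0 - (ratio - 4) / 16)


def inflammation_subscore(p: FoodProfile) -> float:
    # Each flagged ingredient costs 0.25 (placeholder weight), floored at 0.
    return max(0.0, 1.0 - 0.25 * len(p.inflammatory_ingredients))


def toxin_subscore(p: FoodProfile) -> float:
    # Each toxin flag costs 0.5 (placeholder weight), floored at 0.
    return max(0.0, 1.0 - 0.5 * p.toxin_flags)


WEIGHTS = {"omega": 0.3, "inflammation": 0.4, "toxins": 0.3}  # hypothetical, sums to 1


def longevity_score(p: FoodProfile) -> int:
    """Weighted average of sub-scores in [0, 1], scaled to a 0-100 integer."""
    subs = {
        "omega": omega_subscore(p),
        "inflammation": inflammation_subscore(p),
        "toxins": toxin_subscore(p),
    }
    return round(100 * sum(WEIGHTS[name] * value for name, value in subs.items()))


if __name__ == "__main__":
    chips = FoodProfile(5.0, 0.1, ["seed oil", "added sugar"], toxin_flags=1)
    print(longevity_score(chips))
```

Normalizing each factor to [0, 1] before weighting keeps any single factor from dominating just because of its units, and it lets the weights be tuned independently.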

How to use it?

Developers and health enthusiasts can potentially integrate Biohack's scoring system into their own health and wellness apps or devices. This could involve using its API (if available) to access the longevity scores for various foods or even integrating the scanner directly into their products. Imagine a smart refrigerator that automatically tracks the health scores of the items inside, or a fitness app that provides personalized food recommendations based on Biohack's analysis. So what? It allows developers to add a layer of health-focused intelligence to their existing projects.

Product Core Function

· Image Recognition: This feature allows the app to identify food products through image scanning. Value: Makes it easy to get nutritional information quickly, with no manual input needed. Application: Helps users quickly analyze products on the go.

· Nutritional Database Integration: Accessing and analyzing information from large databases about ingredients and nutrition facts. Value: Provides detailed information about the food products analyzed. Application: Forms the basis for the longevity score calculation.

· Longevity Score Calculation: Algorithms that calculate a single score based on multiple factors influencing aging. Value: Simplifies complex nutritional information into an easily understandable metric. Application: Helps users make informed food choices.

· Aging Factor Analysis: Analyzing food items against factors known to impact aging, like inflammation and toxins. Value: Offers a deeper understanding of a food's potential health impact. Application: Helps users understand the 'why' behind the longevity score.

· Data-Driven Recommendations: Providing personalized food recommendations based on the longevity score. Value: Guides users toward healthier food choices that can improve longevity. Application: Encourages users to choose products with higher longevity scores.

Product Usage Case

· Integration with Health Tracking Apps: Developers could integrate Biohack's API to display the longevity score of food items alongside other health metrics like activity levels, sleep quality, and weight. So what? Provides a holistic health view to the user.

· Smart Kitchen Integration: A smart fridge could use Biohack to scan its contents and alert the user to foods with low longevity scores. So what? Proactively assists with healthier grocery shopping and food selection.

· Personalized Nutrition Plans: Nutritionists and dietitians could use Biohack's data to create customized meal plans based on longevity factors. So what? It allows for more data-driven and personalized health recommendations.

· Research and Development: Scientists and researchers can use the scan data to study the relationship between food consumption and various health outcomes. So what? Supports deeper exploration of how nutrition affects aging.

18

TypeQuicker - Personalized Typing Practice with Intelligent Weakness Detection

Author

absoluteunit1

Description

TypeQuicker is a web-based typing tutor that analyzes your typing habits to identify your weaknesses and then generates customized practice sessions. The core innovation lies in its ability to dynamically adapt the practice content based on your performance, focusing on the letters, words, and patterns you struggle with the most. It's like a personalized typing gym, ensuring you spend your time improving where it matters most, not just mindlessly repeating exercises. This project tackles the inefficiency of generic typing tutors by providing targeted practice, which can significantly accelerate the improvement of typing speed and accuracy.

Popularity

Points 6

Comments 1

What is this product?

TypeQuicker is a smart typing tutor that goes beyond basic exercises. It analyzes your typing to pinpoint the specific keys, letter combinations, or words you make mistakes on, then creates custom drills designed to help you overcome those weaknesses. The clever part is that it adapts continuously: as you improve, the program adjusts the exercises to keep you challenged and progressing. So what? You build speed and accuracy with less wasted practice.
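TypeQuicker's algorithm is not described beyond this summary. A minimal Python sketch of the idea, assuming simple position-by-position alignment between the target text and what was typed: compute a per-key error rate, pick the weakest keys, and sample practice words weighted toward those keys. All function names here are hypothetical.

```python
import random
from collections import Counter
from typing import Dict, List, Optional


def key_error_rates(target: str, typed: str) -> Dict[str, float]:
    """Per-key error rate using position-by-position alignment (spaces skipped).
    A real tutor would also handle inserted or skipped characters."""
    attempts: Counter = Counter()
    errors: Counter = Counter()
    for expected, actual in zip(target, typed):
        if expected == " ":
            continue
        attempts[expected] += 1
        if actual != expected:
            errors[expected] += 1
    return {ch: errors[ch] / attempts[ch] for ch in attempts}


def weakest_keys(rates: Dict[str, float], n: int = 3) -> List[str]:
    """Up to n keys with nonzero error rates, highest first; ties broken alphabetically."""
    ranked = sorted(rates.items(), key=lambda kv: (-kv[1], kv[0]))
    return [ch for ch, rate in ranked[:n] if rate > 0]


def build_drill(words: List[str], weak: List[str], size: int = 10,
                rng: Optional[random.Random] = None) -> List[str]:
    """Sample practice words with replacement, weighting each word by 1 plus
    the number of weak-key characters it contains."""
    rng = rng or random.Random()
    weights = [1 + sum(word.count(ch) for ch in weak) for word in words]
    return rng.choices(words, weights=weights, k=size)


if __name__ == "__main__":
    rates = key_error_rates("the quick brown fox", "thw quicj brown fox")
    weak = weakest_keys(rates)
    print(weak, build_drill(["eke", "tab", "keen", "sun"], weak, size=5))
```

Re-running these steps after each session is what makes the drills adapt: as a key's error rate drops, words containing it stop being favored.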

How to use it?

To use TypeQuicker, you typically visit the website and start typing. As you type, the program tracks your speed, accuracy, and mistakes. You can then review your performance and see which areas need improvement, and TypeQuicker provides personalized practice sessions based on that analysis. Developers could embed the typing test components in their own websites or applications to assess users' typing skills, or simply use TypeQuicker to improve their own typing, and with it their everyday efficiency.
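For the speed and accuracy tracking mentioned above, a common convention counts five characters as one word. The sketch below applies it, with net WPM subtracting uncorrected errors per minute; whether TypeQuicker uses exactly these formulas is an assumption, and `typing_stats` is a hypothetical name.

```python
from typing import Dict


def typing_stats(target: str, typed: str, seconds: float) -> Dict[str, float]:
    """Gross WPM (5 characters = 1 word), accuracy, and net WPM for one session.
    Errors are typed characters that don't match the target at the same position."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    minutes = seconds / 60
    correct = sum(1 for expected, actual in zip(target, typed) if expected == actual)
    errors = len(typed) - correct  # includes any characters typed past the target's end
    gross_wpm = (len(typed) / 5) / minutes
    accuracy = correct / len(typed) if typed else 0.0
    net_wpm = max(0.0, gross_wpm - errors / minutes)  # penalize uncorrected errors
    return {"gross_wpm": gross_wpm, "accuracy": accuracy, "net_wpm": net_wpm}


if __name__ == "__main__":
    text = "hello world " * 5
    print(typing_stats(text, text[:-1] + "x", seconds=60))
```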

Product Core Function

· Weakness Detection: The core functionality is analyzing user input to identify specific areas of typing difficulty, such as frequently mistyped characters, letter combinations, or words. This allows the program to pinpoint where the user needs the most practice. This is valuable because it avoids wasting time on areas where the user is already proficient.