Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-06-25

SagaSu777 2025-06-26

Explore the hottest developer projects on Show HN for 2025-06-25. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

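Today's Show HN projects show developers actively embracing AI and applying it to a wide range of practical problems. The projects range from using AI to automate testing and generate code to personalized productivity tools and Chrome extensions, and they tie technology closely to user needs. For developers and founders, following this trend means asking how AI can simplify workflows, improve the user experience, and solve real user pain points. Don't be afraid to start small: the success of "Scream to Unlock" shows that innovation sometimes comes from a deep understanding of human nature rather than from complex technology. Focusing on user experience and real needs, while actively exploring applications of AI, will be key to successful innovation.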

Today's Hottest Product

Name

Show HN: Scream to Unlock – Blocks social media until you scream “I'm a loser”

Highlight

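This project takes an unconventional approach to social media addiction: users must shout "I'm a loser" out loud to unlock social media. It draws on behavioral psychology, breaking the habit with a deliberately uncomfortable trigger condition. The lesson for developers is that innovation is not only about more sophisticated features, but also about changing user behavior in simple, effective ways. The project's success rests on its insight into user psychology rather than on a complex tech stack.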

Popular Category

AI Tools

Productivity Tools

Developer Tools

Popular Keyword

AI

MCP

CLI

Chrome Extension

Technology Trends

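AI-assisted development: using AI to boost development efficiency, e.g., generating code, debugging, and writing documentation.

AI-based automation: using AI to automate repetitive tasks such as testing, data analysis, and marketing.

Innovation in CLI tools: developers keep building powerful, personalized command-line tools to work more efficiently.

Innovative uses of Chrome extensions: using Chrome extensions to enhance the user experience, e.g., content filtering and productivity tools.

Privacy-first local apps: processing data locally to protect user privacy and improve data security.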

Project Category Distribution

AI Tools (35%)

Productivity Tools (20%)

Developer Tools (20%)

Other (25%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | Scream to Unlock: The Embarrassment-Based Social Media Blocker | 223 | 122 |
| 2 | Elelem: CLI for LLM Tool-Calling in C | 39 | 3 |
| 3 | MCP Generator: Natural Language API Interface | 16 | 13 |
| 4 | Autohive: AI Agent Builder for Everyday Teams | 28 | 0 |
| 5 | AskMedically: AI-Powered Medical Research Assistant | 11 | 14 |
| 6 | PLJS: JavaScript for Postgres | 9 | 8 |
| 7 | Perch-Eye: On-Device Face Comparison SDK | 2 | 14 |
| 8 | CodeChange-Aware AI Testing Agent | 6 | 9 |
| 9 | ZigMCP: A JSON-RPC 2.0 Powered MCP Server | 13 | 2 |
| 10 | SuperDesign.Dev: IDE-Integrated Design Agent | 10 | 4 |

1

Scream to Unlock: The Embarrassment-Based Social Media Blocker

Author

madinmo

Description

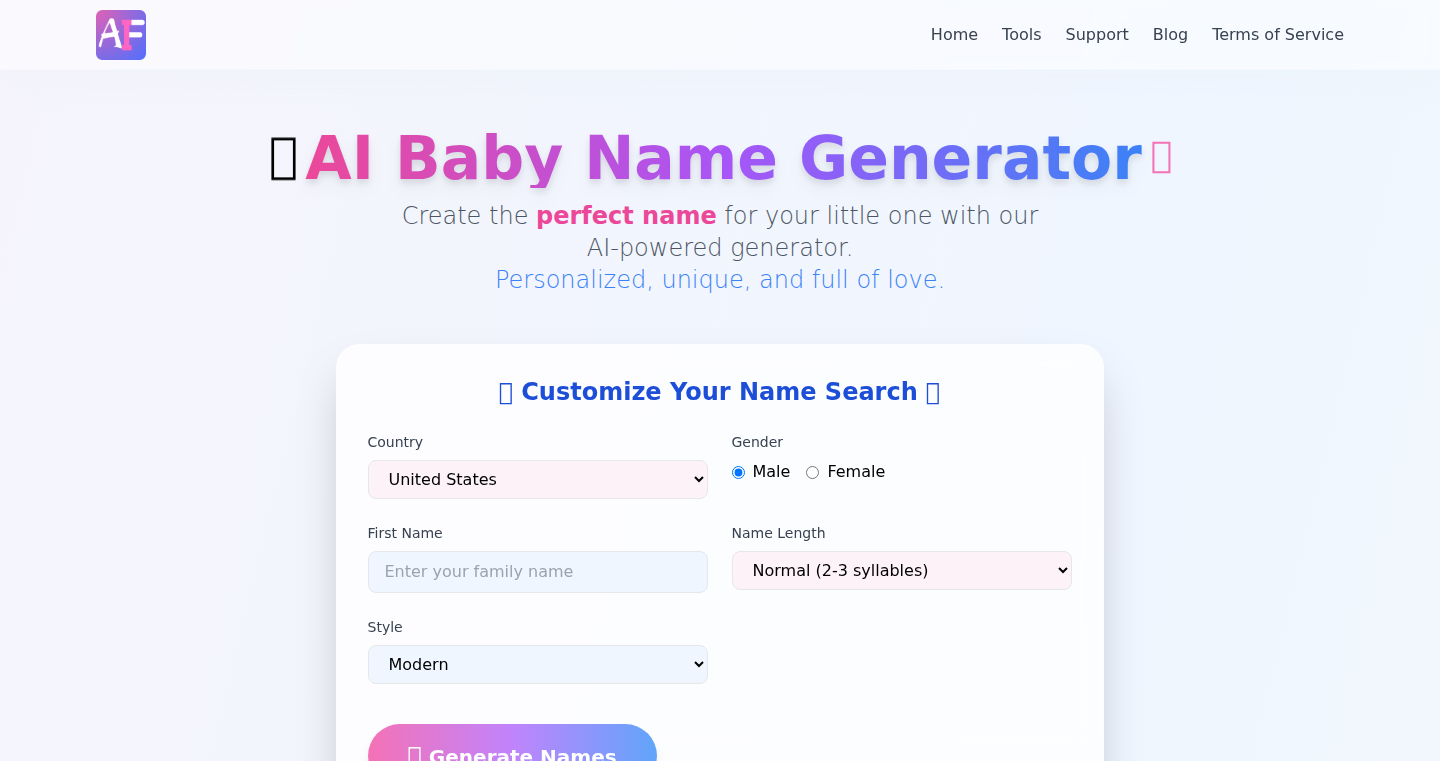

Scream to Unlock is a Chrome extension that blocks access to specified websites until the user yells a phrase into their microphone. It uses the Web Audio API for real-time audio analysis, taking a unique and arguably humorous approach to breaking social media addiction. Instead of blocking passively, it adds an active, potentially embarrassing challenge that makes users think twice before opening distracting sites, which helps them stay focused.

Popularity

Points 223

Comments 122

What is this product?

This is a browser extension that uses your microphone to check whether you have screamed a specific phrase loudly enough to unlock certain websites. It uses the Web Audio API, a set of audio-processing interfaces built into the browser, to analyze the sound coming from your microphone. The audio is analyzed locally rather than sent to a server, and the blocked websites are released once the screamed phrase is detected and meets a set decibel threshold. This makes it a privacy-conscious, creative way to tackle distraction and break bad habits.

How to use it?

Install the extension in Chrome, configure the websites you want to block, and set the phrase you must scream to unlock them. When you try to visit a blocked website, the extension prompts you to scream your embarrassing phrase. The louder you scream, the faster the site unlocks, or the longer it stays unlocked. For developers, the project shows what in-browser audio processing can do and offers a starting point for other audio-based applications. It is useful for anyone struggling with distractions.
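Configuring which websites to block comes down to deciding whether a requested URL falls under the block list. Below is a minimal sketch in Python. An actual Chrome extension would do this in JavaScript. The `BLOCKED_DOMAINS` set, the `is_blocked` helper, and the rule that subdomains inherit a block are illustrative assumptions, not the extension's documented behavior.

```python
from urllib.parse import urlsplit

# Hypothetical block list; the extension's real configuration format is not described.
BLOCKED_DOMAINS = {"twitter.com", "reddit.com", "facebook.com"}


def is_blocked(url: str, blocked: set[str] = BLOCKED_DOMAINS) -> bool:
    """Return True if the URL's host is a blocked domain or a subdomain of one."""
    # urlsplit(...).hostname is already lower-cased; strip a trailing root dot.
    host = (urlsplit(url).hostname or "").rstrip(".")
    labels = host.split(".")
    # Check "old.reddit.com", then "reddit.com", then "com" against the list.
    for i in range(len(labels)):
        if ".".join(labels[i:]) in blocked:
            return True
    return False
```

Matching on whole domain labels rather than substrings prevents a `reddit.com` entry from also catching an unrelated site such as `notreddit.com`.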

Product Core Function

· Website Blocking: The extension allows users to specify a list of websites they want to block, making it simple to manage distractions.

· Audio Analysis: Utilizing the Web Audio API, the extension analyzes the sound input from the microphone in real time. This is the core technical component, providing the means to detect the scream.

· Phrase Detection: The extension is designed to recognize a specific phrase yelled by the user. It triggers the unlocking mechanism only when the correct phrase is detected, which keeps the block in place against casual, impulsive visits.

· Volume Threshold: A key parameter is the sound-level threshold: the scream must register at a sufficient volume. This ensures that unlocking happens only when the user is deliberately trying to break the block, which helps you stay focused.

· Unlocking Mechanism: Once the phrase is detected and the volume threshold is met, the extension automatically lifts the block on the specified websites so the user can browse them. This is the extension's core purpose.
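The volume-threshold check can be sketched independently of the browser. In the extension, samples would come from the Web Audio API, for example an `AnalyserNode`'s `getFloatTimeDomainData()`, which fills an array with floats in [-1, 1]. The Python sketch below assumes frames in that form and measures their RMS level in dBFS. The -10 dBFS threshold and the three-consecutive-frames rule are illustrative assumptions. Recognizing the phrase itself would be a separate speech-recognition step, and that step is not shown here.

```python
import math
from typing import Sequence


def rms_dbfs(samples: Sequence[float]) -> float:
    """RMS level of one frame of samples in [-1.0, 1.0], in dB relative to full scale.

    0 dBFS is full scale; quieter frames give negative values, silence gives -inf.
    """
    if not samples:
        return float("-inf")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")


def loud_enough(frames: Sequence[Sequence[float]],
                threshold_db: float = -10.0,
                min_frames: int = 3) -> bool:
    """True once `min_frames` consecutive frames reach `threshold_db`.

    Requiring a sustained run filters out a single clap or knock.
    Both parameters are illustrative, not the extension's actual settings.
    """
    run = 0
    for frame in frames:
        run = run + 1 if rms_dbfs(frame) >= threshold_db else 0
        if run >= min_frames:
            return True
    return False
```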

Product Usage Case

· Preventing Social Media Addiction: This is the primary use case. Users block distracting social media sites and use "Scream to Unlock" to make themselves think twice before visiting them, which directly reduces wasted time.

· Focus Enhancement for Work/Study: The extension can block distracting websites during work or study sessions, encouraging focused work habits and improving productivity.

· Educational Tool: The project is a practical example of the Web Audio API, which makes it a useful learning resource for developers interested in audio processing.

· Customizable Productivity Tool: Developers can modify the project to use different unlocking criteria, such as requiring a specific melody, or to integrate with other APIs, tailoring the experience to their needs.

2

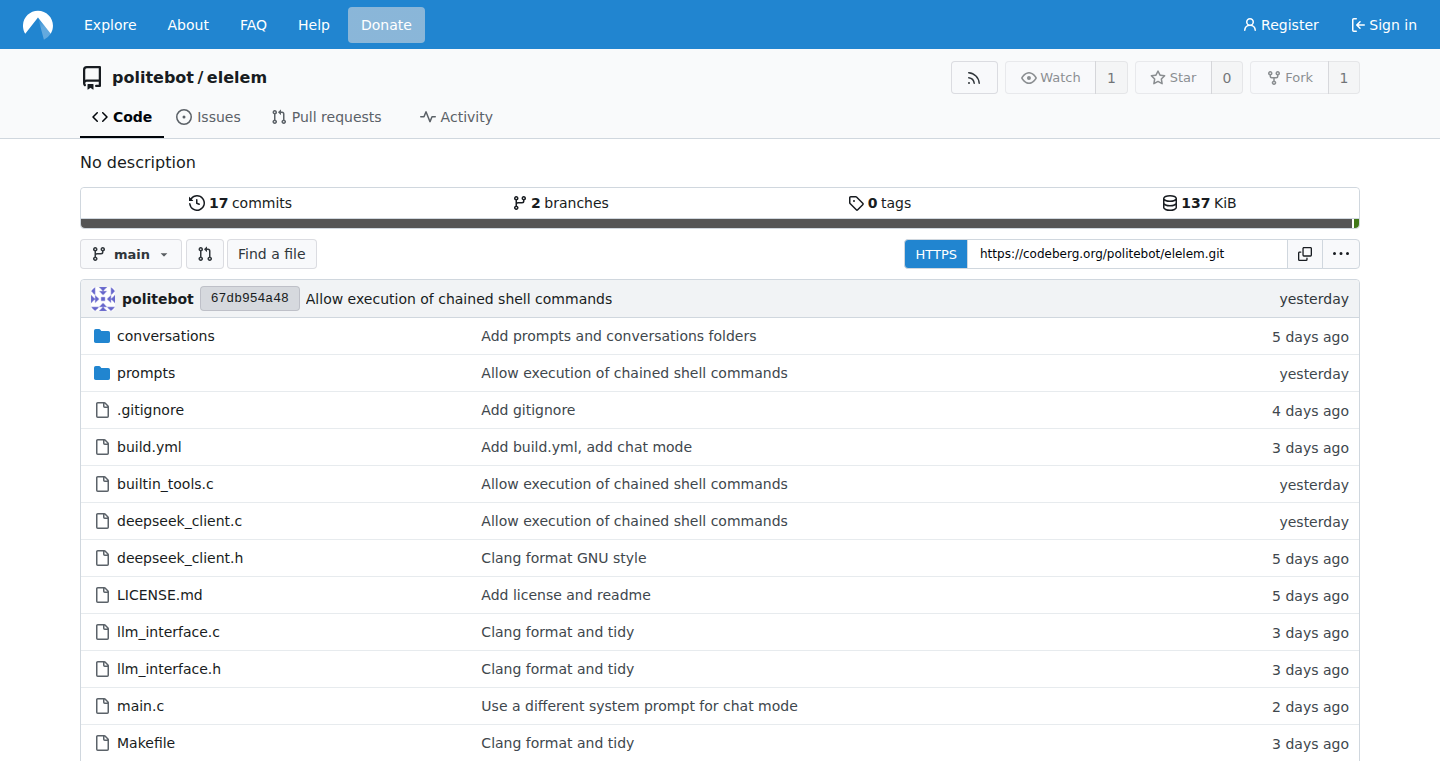

Elelem: CLI for LLM Tool-Calling in C

Author

atjamielittle

Description

Elelem is a command-line interface (CLI) tool for LLM tool-calling with models served through Ollama or DeepSeek. You give it a task, and the model works out which tools (e.g., a calculator or a search engine) to use to solve it. It is written in C, which keeps it fast and lightweight. It offers a simple, quick way to use LLMs for tasks that involve other tools, without complex setup.

Popularity

Points 39

Comments 3

What is this product?

Elelem is essentially a smart assistant you run from the command line. It understands natural language and can 'call' other tools to help solve your problem: instead of only answering questions, the LLM can actively use tools to complete tasks. Its distinguishing feature is the C implementation, which keeps the tool's own overhead and memory use low. So what? It makes using LLMs for practical tasks quick and easy.

How to use it?

Developers use Elelem by typing a command in their terminal, such as 'elelem "What's the weather in London?"'. If a weather tool is available, Elelem uses the LLM to work out that it needs the weather API, calls that API, and returns the answer. You can also call it from scripts or other programs. For example, you could build an automation tool that uses Elelem to analyze data, trigger actions, or manage your workflow. So what? Developers can quickly build automated workflows and LLM integrations directly from the command line.

Product Core Function

· Tool-calling: The core function is its ability to decide which tools to use based on your instructions, and then use them. For example, if you ask it to calculate something, it will use a calculator tool. This avoids the need for developers to manually manage the interaction between the LLM and external tools. So what? It makes automation simpler and reduces the amount of code needed to integrate LLMs into applications.

· CLI Interface: It provides a command-line interface, which means you can interact with it directly from your terminal. This makes it easy to use in scripts, automation pipelines, and other development workflows. So what? It provides a very accessible way for developers to experiment with and implement LLM-based tools.

· C Implementation: Being written in C matters. C is a low-level language known for its efficiency, so Elelem itself starts quickly and uses little memory. This suits resource-constrained environments and performance-critical applications. So what? Developers can run LLM-based tooling with less client-side overhead, on a wider range of devices.

· Integration with Ollama and DeepSeek: It supports Ollama (a runtime for running models locally) and DeepSeek, giving easy access to capable LLMs through a standard interface. So what? Developers get a head start and can use leading-edge AI capabilities with minimal configuration.
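Elelem itself is written in C, and the post does not describe its internal message format. The Python sketch below only illustrates the control flow of a generic tool-calling loop. At each step, the model either requests a tool or returns a final answer. A tool request is assumed here to be a `{"name": ..., "arguments": {...}}` object. `TOOLS`, `dispatch`, `run_agent`, and the stub `fake_model`, which stands in for Ollama or DeepSeek, are all hypothetical.

```python
import json
from typing import Any, Callable


# Hypothetical tools; Elelem's real tool set and schema are not described here.
def add(a: float, b: float) -> float:
    return a + b


def word_count(text: str) -> int:
    return len(text.split())


TOOLS: dict[str, Callable[..., Any]] = {"add": add, "word_count": word_count}


def dispatch(call: dict[str, Any]) -> str:
    """Run one tool call of the assumed form {"name": ..., "arguments": {...}}."""
    name = call.get("name")
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool: {name}"})
    try:
        result = TOOLS[name](**call.get("arguments", {}))
    except TypeError as exc:  # wrong, missing, or non-mapping arguments
        return json.dumps({"error": str(exc)})
    return json.dumps({"name": name, "result": result})


def run_agent(question: str, model: Callable[[list[dict]], dict],
              max_steps: int = 5) -> str:
    """Ask the model, execute any tool it requests, and feed the result back."""
    messages = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        reply = model(messages)
        if "tool_call" not in reply:
            return reply["content"]  # the model produced a final answer
        messages.append({"role": "tool", "content": dispatch(reply["tool_call"])})
    return "stopped: step limit reached"


def fake_model(messages: list[dict]) -> dict:
    """Stand-in for a real LLM: request `add`, then answer from its result."""
    last = messages[-1]
    if last["role"] == "user":
        return {"tool_call": {"name": "add", "arguments": {"a": 2, "b": 3}}}
    result = json.loads(last["content"])["result"]
    return {"content": f"The answer is {result}."}


print(run_agent("What is 2 + 3?", fake_model))  # The answer is 5.
```

Rejecting unknown tool names and malformed arguments before anything runs is worth keeping in any variant, because model output is untrusted input.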

Product Usage Case

· Automated Data Analysis: A developer could use Elelem to pull data from various sources, have the LLM analyze it, and then generate reports. For instance, it could scrape web pages, perform sentiment analysis, and identify key trends. This can greatly speed up data exploration and report generation. So what? It cuts down tedious manual analysis work.

· Workflow Automation: Integrate Elelem into your development workflow to automate tasks such as code generation, testing, or deployment. For instance, you can ask Elelem to 'Write a shell script to deploy my application' and then hook that script into your CI/CD pipeline. So what? It simplifies repetitive tasks, letting developers focus on more creative coding.

· Smart Command-line Tools: A developer could wrap Elelem around other command-line tools to give them AI-powered capabilities, such as LLM-assisted data transformation or code completion. So what? You can add AI smarts to your existing toolbox and make your tools more powerful.

· Building Custom Assistants: You could use Elelem as the core of a custom assistant that helps with software development. For example, you could ask it to 'Find bugs in this code' or 'Suggest improvements for this function'. So what? It allows you to build unique, tailored tools to fit specific needs.

3

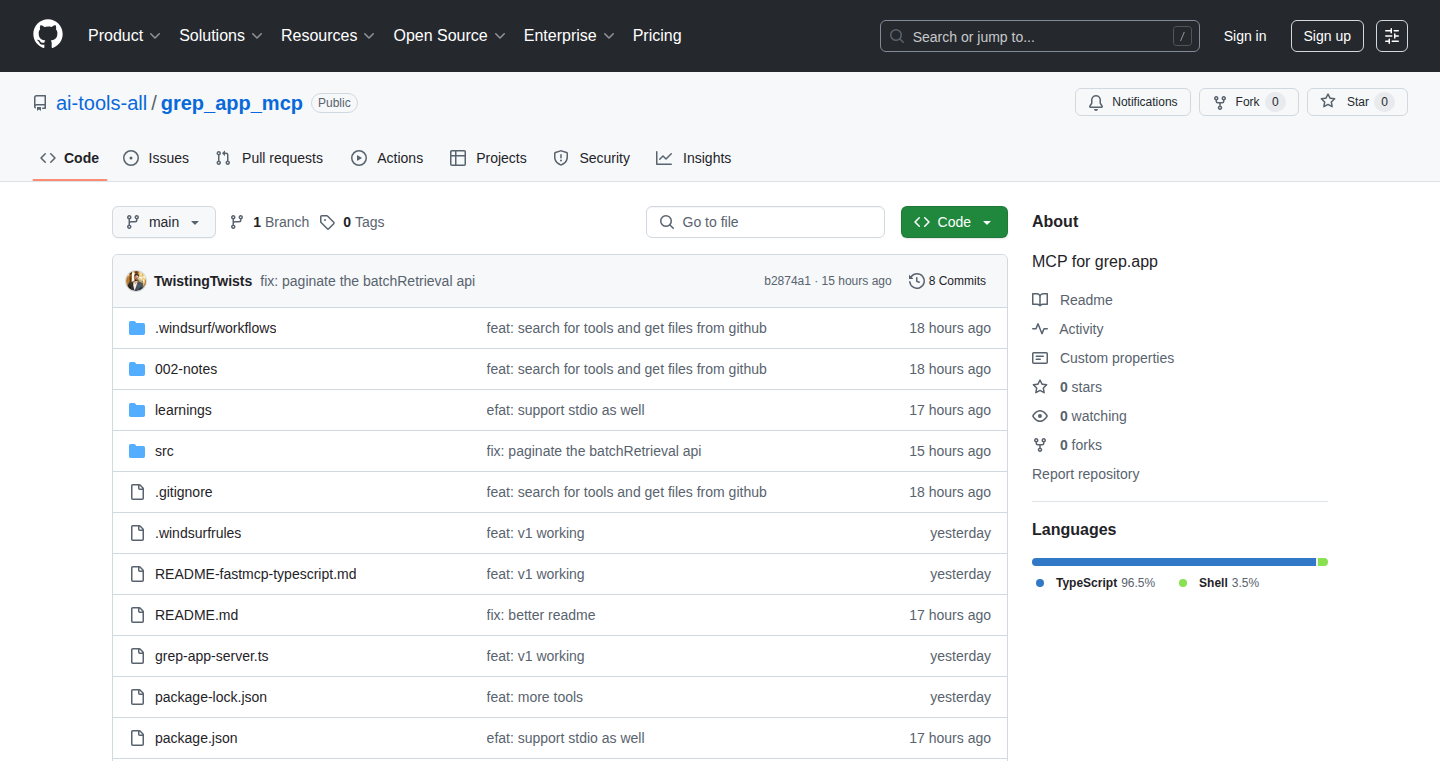

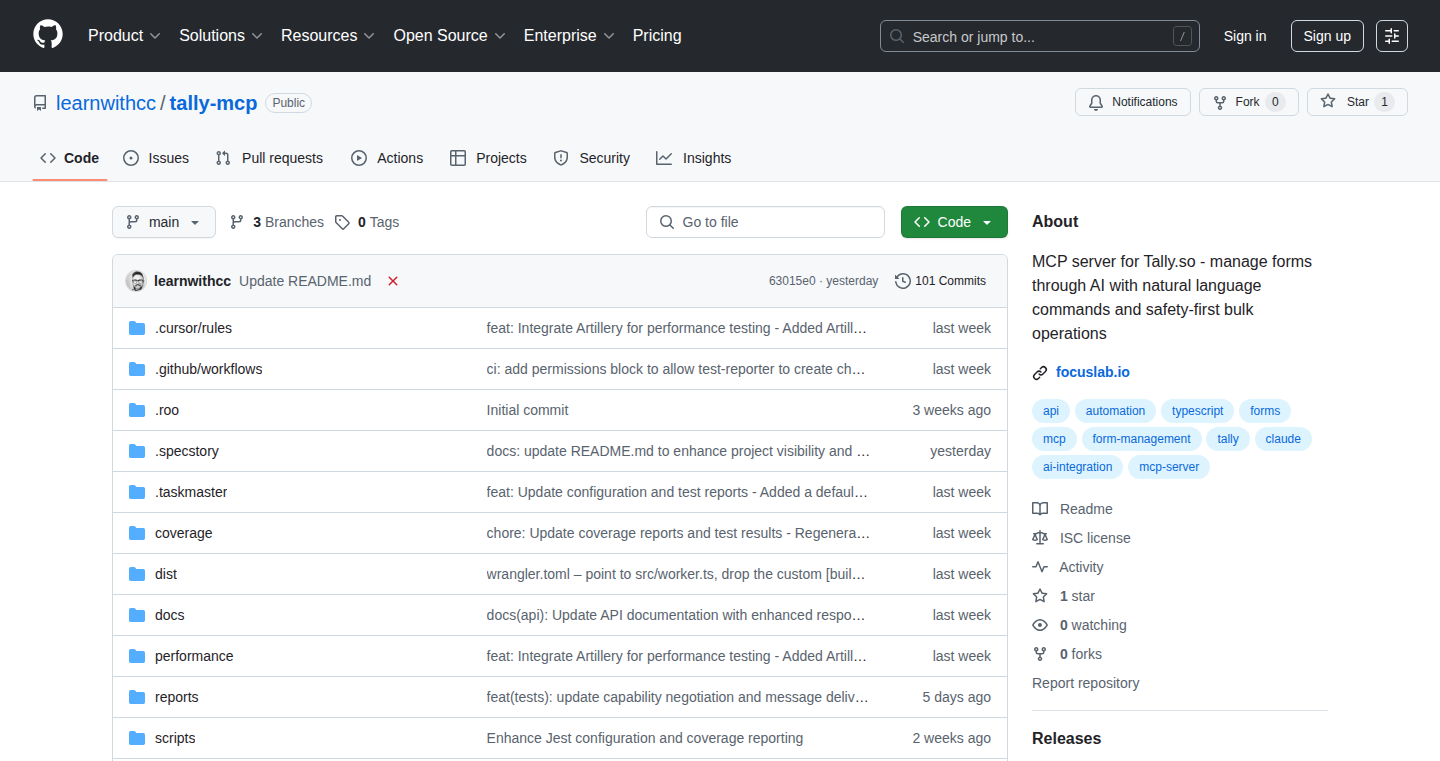

MCP Generator: Natural Language API Interface

Author

sagivo

Description

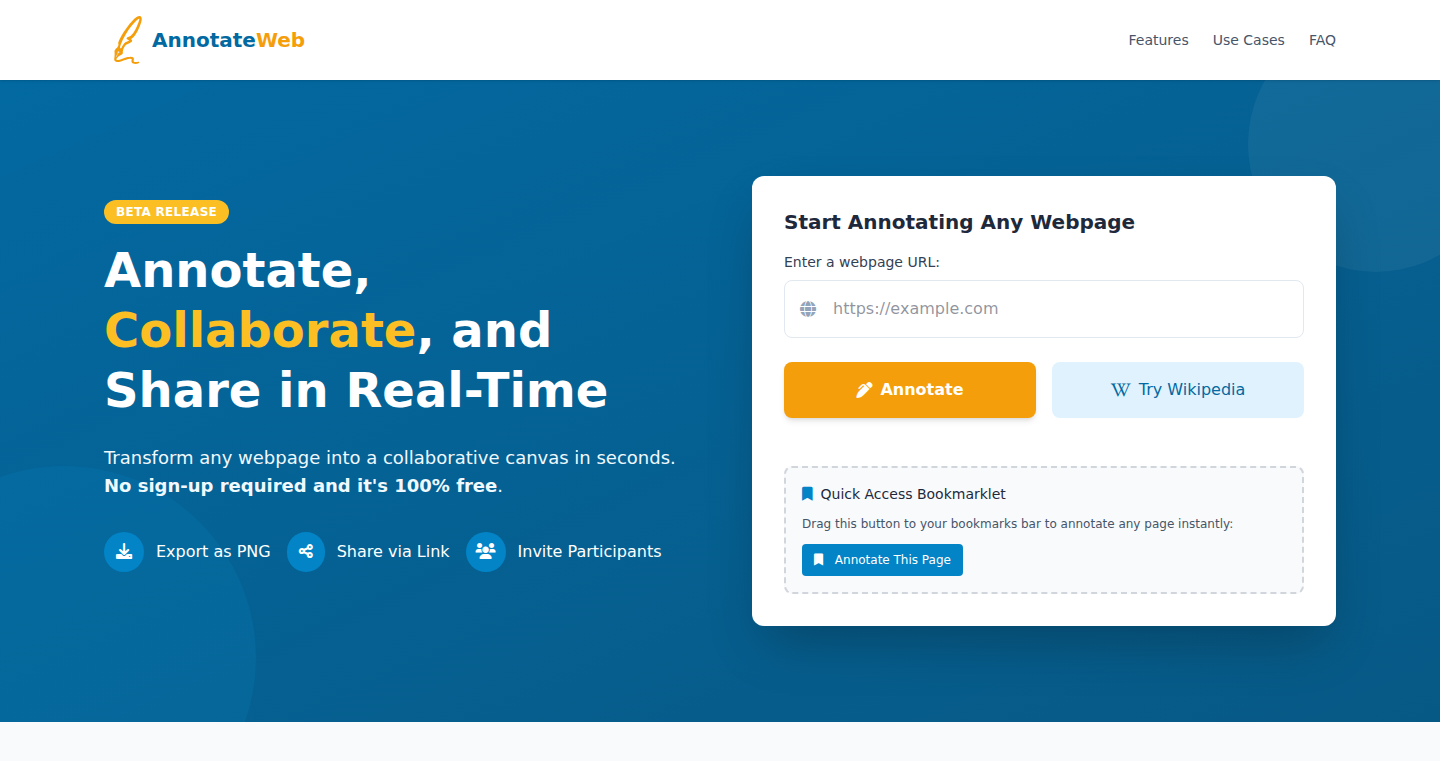

This project simplifies connecting AI tools (such as large language models, or LLMs) to existing APIs. It takes your API's specification (typically an OpenAPI document) and automatically generates a server that exposes the API to AI tools, so natural-language requests can be turned into API calls. Developers no longer have to hand-write the glue code between their APIs and AI tools, which saves time and effort. In short, it spares you the headache of building the bridge between your API and AI.

Popularity

Points 16

Comments 13

What is this product?

It is a code generator that creates a Model Context Protocol (MCP) server. Think of the server as a translator. You give it your API's blueprint (its OpenAPI specification), and it produces a server that presents each API operation as a tool an AI model can call. The model can then turn natural-language queries into concrete API calls. The generated code handles request translation, authentication, and connection management. This automates work that normally requires extensive manual coding, making it easier to integrate APIs with AI tools. It is like having an AI-powered assistant for your API.
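The core of the generation step is mapping each OpenAPI operation to a tool description that an AI client can discover. MCP advertises tools with a `name`, a `description`, and a JSON Schema `inputSchema`. The Python sketch below derives those fields from an OpenAPI `paths` object. It is a simplified illustration, not MCP Generator's actual code. It assumes the following:

* request bodies are JSON;
* only path and query parameters are handled;
* `$ref` references are not resolved.

The function name `openapi_to_tools` is hypothetical.

```python
import re
from typing import Any

HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options"}


def openapi_to_tools(spec: dict[str, Any]) -> list[dict[str, Any]]:
    """Derive one MCP-style tool descriptor per OpenAPI operation.

    Simplified: no $ref resolution, no auth, no header/cookie parameters.
    """
    tools = []
    for path, path_item in spec.get("paths", {}).items():
        for method, op in path_item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters" or "summary"
            properties: dict[str, Any] = {}
            required: list[str] = []
            for param in op.get("parameters", []):
                if param.get("in") not in ("path", "query"):
                    continue
                properties[param["name"]] = param.get("schema", {"type": "string"})
                if param.get("required"):
                    required.append(param["name"])
            body = op.get("requestBody", {})
            body_schema = body.get("content", {}).get("application/json", {}).get("schema")
            if body_schema:
                properties["body"] = body_schema
                if body.get("required"):  # requestBody is optional by default
                    required.append("body")
            # Fallback name when operationId is missing, e.g. "get_users_id".
            fallback = re.sub(r"[\W_]+", "_", f"{method}_{path}").strip("_")
            tools.append({
                "name": op.get("operationId", fallback),
                "description": op.get("summary", f"{method.upper()} {path}"),
                "inputSchema": {"type": "object", "properties": properties,
                                "required": required},
            })
    return tools
```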

How to use it?

Developers give the MCP Generator their API's OpenAPI specification. The generator then deploys a ready-to-use MCP server in the cloud, or lets you download it to run locally. To use it, point your AI tool (e.g., an LLM client) at the generated server's URL, and the AI can start interacting with your API through natural language. You provide the specification and the tool handles the rest, so linking an AI tool to your API comes down to a single URL.

Product Core Function

· Automated MCP Server Generation: It automatically generates a fully functional MCP server from an API specification (OpenAPI). This eliminates the need for manual coding, which simplifies the integration of AI with APIs. This is important because it accelerates the development process, reducing time to market for AI-powered features.

· Cloud Deployment and Local Usage Options: The generated MCP server can be deployed to the cloud or downloaded for local use, so developers can choose the deployment option that fits their needs and constraints. With the cloud option, they can use the server without managing infrastructure.

· Automatic API Synchronization: As the API evolves, the MCP server can be regenerated to stay in sync. Developers no longer need to update the integration code by hand, which improves maintainability and reduces drift between the API and what the AI sees.

· Natural Language to API Conversion: Natural-language requests are translated into structured API calls. This simplifies API interaction and lets developers control their APIs through AI tools. It also makes those APIs accessible to a wider range of users, including people without coding experience.

· OpenAPI Specification Based: The tool works from the OpenAPI specification, so it can handle any API that has an OpenAPI description with little manual work. This greatly improves its ease of use and applicability.
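In an MCP setup, the AI model handles the natural-language part by choosing a tool and filling in its arguments. Turning that structured call into an HTTP request is then a deterministic step. The Python sketch below shows that last step only. `build_request` and its parameters are hypothetical. It handles path and query parameters plus an optional JSON `body` argument.

```python
from typing import Any, Optional
from urllib.parse import quote, urlencode


def build_request(base_url: str, method: str, path: str,
                  operation: dict[str, Any],
                  arguments: dict[str, Any]) -> tuple[str, str, Optional[Any]]:
    """Map a tool call's arguments onto (method, url, json_body) for one operation.

    Path parameters fill the URL template, query parameters go into the query
    string, and an optional "body" argument is passed through as the JSON body.
    """
    url_path = path
    query: dict[str, Any] = {}
    for param in operation.get("parameters", []):
        name = param["name"]
        if name not in arguments:
            if param.get("required"):
                raise ValueError(f"missing required parameter: {name}")
            continue
        value = arguments[name]
        if param.get("in") == "path":
            # Percent-encode everything, including "/", so values cannot alter the path.
            url_path = url_path.replace("{" + name + "}", quote(str(value), safe=""))
        elif param.get("in") == "query":
            query[name] = value
    url = base_url.rstrip("/") + url_path
    if query:
        url += "?" + urlencode(query)
    return method.upper(), url, arguments.get("body")
```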

Product Usage Case

· Building Developer Tools: Developers can create tools that allow LLMs to perform actions against their internal or external APIs. This can automate tasks, create new workflows, or enhance existing ones. So it enables the building of AI-powered tools without extensive manual integration.

· Querying Internal Metrics: Users can query internal metrics or services with natural-language questions, getting quick access to data and insights without writing custom queries. This is especially useful when key data is needed quickly.

· Surfacing Documentation through Conversational AI: Structured documentation and content can be surfaced through conversational AI interfaces. Readers can ask questions instead of searching, which makes documentation more accessible and user-friendly.

· Speaking with Your API Service: Instead of writing client code, you can interact with your API service in natural language. This simplifies interaction and reduces the amount of code needed, making API work more intuitive and efficient.

4

Autohive: AI Agent Builder for Everyday Teams

Author

davetenhave

Description

Autohive is a platform that allows teams without extensive coding experience to build and deploy their own AI agents. It simplifies the creation process, handling the complex technical aspects like prompt engineering, model selection, and workflow management behind the scenes. The innovation lies in its user-friendly interface and pre-built integrations, enabling non-technical users to leverage AI to automate tasks and boost productivity. So this helps you automate tasks without needing to become a coding expert.

Popularity

Points 28

Comments 0

What is this product?

Autohive is like a LEGO set for AI. It provides a visual interface where you can drag and drop pre-built AI components – like the ability to summarize text, answer questions, or generate new content – and connect them together to create custom AI agents. It handles the tricky parts, such as figuring out the best AI models to use and how to communicate with them, so you can focus on what you want your AI agent to *do*. This allows even non-technical people to build intelligent systems without writing any code. So this lets you build intelligent tools easily.

How to use it?

Developers can use Autohive as a rapid prototyping tool for AI agents, or to quickly create AI-powered features for their own applications. You could integrate it via API to trigger agent workflows from within your existing system. For example, a developer could use Autohive to build an agent that automatically analyzes customer support tickets, summarizes the issue, and suggests a solution. So this lets developers quickly prototype AI solutions.

Product Core Function

· Workflow Builder: Autohive offers a visual interface that lets you design and assemble AI workflows. You can drag and drop pre-built components and connect them to create complex processes. This removes the need for writing code to orchestrate multiple AI tasks.

· Pre-built Integrations: The platform provides integrations with popular services and tools, enabling your AI agents to interact with real-world data sources and systems. This streamlines the development process by providing easy access to different tools.

· Agent Deployment: Autohive simplifies deploying your AI agents, making it easy for teams to put their AI-powered solutions into practice. You can host the agents or integrate them into existing environments, so the agents you build can actually be put to work.

· Model Selection and Management: Autohive takes care of selecting and managing the right AI models for the job. It also automatically handles model updates and performance monitoring, so your agents keep running in the background without manual tuning.
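Autohive exposes workflows through a visual canvas, and the post does not describe its internals. The Python sketch below only illustrates the general idea of chaining components, using the customer-support example from this section. `Workflow`, `then`, and the three step functions are hypothetical. Simple keyword rules stand in for the LLM calls that a real component would make.

```python
from dataclasses import dataclass, field
from typing import Callable

# A step reads the shared context and returns new keys to merge into it.
Step = Callable[[dict], dict]


@dataclass
class Workflow:
    name: str
    steps: list[tuple[str, Step]] = field(default_factory=list)

    def then(self, label: str, step: Step) -> "Workflow":
        """Append a step, like connecting one more block on a canvas."""
        self.steps.append((label, step))
        return self

    def run(self, context: dict) -> dict:
        trace = []
        for label, step in self.steps:
            context = {**context, **step(context)}  # later steps see earlier outputs
            trace.append(label)
        return {**context, "trace": trace}


def summarize(ctx: dict) -> dict:  # stand-in for an LLM summarization block
    return {"summary": ctx["ticket"].split(".")[0].strip()}


def classify(ctx: dict) -> dict:  # stand-in for an LLM classification block
    text = ctx["ticket"].lower()
    return {"category": "billing" if "refund" in text or "charge" in text else "general"}


def suggest(ctx: dict) -> dict:
    replies = {"billing": "Check the latest invoice and route to billing.",
               "general": "Send the standard troubleshooting guide."}
    return {"suggestion": replies[ctx["category"]]}


triage = (Workflow("support-triage")
          .then("summarize", summarize)
          .then("classify", classify)
          .then("suggest", suggest))
```

Each step returns only the keys it adds. With this design, steps can be reordered or swapped without changing the runner.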

Product Usage Case

· Customer Support Automation: A team uses Autohive to build an AI agent that automatically analyzes customer support tickets, identifies the main issue, and provides suggestions for the support agent. This reduces the time spent handling routine issues, allowing agents to focus on more complex inquiries.

· Content Generation: A marketing team uses Autohive to create an agent that generates social media posts based on provided keywords and descriptions. This saves time and effort in content creation.

· Data Analysis: A business analyst builds an agent to extract key insights from large datasets, such as sales reports or market research, without writing any code. This makes it easier to generate reports and analyze data.

· Lead Qualification: A sales team integrates Autohive with their CRM to build an AI agent that automatically qualifies leads based on predefined criteria and routes qualified leads to the appropriate sales representatives.

· Internal Task Automation: A project manager uses Autohive to automate repetitive tasks like project updates, meeting summaries, and task assignments based on predefined triggers. This frees the project manager to focus on the work that matters.

5

AskMedically: AI-Powered Medical Research Assistant

Author

arunbhatia

Description

AskMedically is an AI-powered tool that answers health and medical questions using information from reputable medical sources. It uses AI to sift through research papers and provide clear, concise answers supported by citations. It addresses information overload and misinformation in the health space by giving users access to evidence-based knowledge. It helps you quickly find reliable health information.

Popularity

Points 11

Comments 14

What is this product?

AskMedically uses AI to understand your health-related question. It then searches a large database of medical research from trusted sources such as PubMed and Cochrane. It summarizes the relevant findings into a clear answer and cites the original research. The innovation lies in processing complex scientific literature and presenting it in an easy-to-understand format, so you get credible and understandable medical information.

How to use it?

To use AskMedically, type your health question into the search bar. For example, you can ask, "Does intermittent fasting improve insulin sensitivity?" or "What are the benefits of creatine for brain health?". The tool then generates an answer with supporting research citations. It is designed to work well on both desktop and mobile devices, so you can get answers without digging through complex medical papers yourself.

Product Core Function

· Answer Generation: The core function is to provide answers to medical questions, summarized from research papers. The value lies in the quick access to summarized medical knowledge, eliminating the need to read numerous papers. This is useful if you want a quick overview of a medical topic.

· Citation & Source Verification: Each answer is accompanied by citations to the original research papers. These citations make the answer verifiable: you can check the sources yourself to confirm the information is reliable.

· AI-Powered Search & Summarization: The AI analyzes research papers to extract the most relevant information and summarize it for the user, giving precise answers without hours of research.
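The grounding idea behind citation-backed answers can be sketched without any AI. The approach is to select passages relevant to the question and attach a numbered source to each one. The Python sketch below uses plain keyword overlap as a swapped-in stand-in for the AI search and summarization described above. The post does not describe how AskMedically implements those. `rank_passages`, `cited_evidence`, and the stop-word list are hypothetical. The passages in the test data are placeholders, not real research.

```python
import re

# Tiny illustrative stop-word list; a real system would use proper retrieval.
STOPWORDS = {"a", "an", "the", "of", "for", "and", "in", "to", "is", "are",
             "does", "do", "what", "how"}


def terms(text: str) -> set[str]:
    """Lower-cased alphanumeric words, minus stop words."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def rank_passages(question: str, passages: list[dict], top_k: int = 3) -> list[dict]:
    """Keep passages sharing at least one term with the question, best overlap first."""
    q = terms(question)
    scored = [(len(q & terms(p["text"])), p) for p in passages]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable, so ties keep input order
    return [p for _, p in scored[:top_k]]


def cited_evidence(question: str, passages: list[dict]) -> str:
    """Number each supporting passage and list its source, so every line is traceable."""
    hits = rank_passages(question, passages)
    if not hits:
        return "No supporting sources found."
    body = [f"[{i}] {p['text']}" for i, p in enumerate(hits, 1)]
    refs = [f"[{i}] {p['source']}" for i, p in enumerate(hits, 1)]
    return "\n".join(body + ["", "References:"] + refs)
```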

Product Usage Case

· For Patients: A patient can use AskMedically to understand their condition or the effectiveness of a treatment option discussed with a doctor. This gives the patient a reliable source of information to complement the doctor's advice.

· For Students: Medical students can use AskMedically to quickly research medical topics and understand complex concepts, cutting down on research time.

· For Healthcare Professionals: Healthcare professionals can use it to find the latest research on specific medical topics and stay up to date. This helps them make more informed decisions.

6

PLJS: JavaScript for Postgres

Author

jerrysievert

Description

PLJS is a new tool that lets you run JavaScript code directly inside your PostgreSQL database. It cleverly combines a fast JavaScript engine called QuickJS with PostgreSQL. The main innovation is its speed and efficiency, especially in converting data between the database and JavaScript. It aims to be a lighter and faster alternative to existing solutions like PLV8, making it easier and more efficient to run JavaScript code for tasks like data processing and complex logic within your database. So this means you can make your database do more, faster.

Popularity

Points 9

Comments 8

What is this product?

PLJS is a JavaScript extension for PostgreSQL. It integrates QuickJS, a lightweight and quick JavaScript engine, with PostgreSQL. The magic happens in how it handles data conversion between JavaScript and the database, aiming for speed and efficiency. This allows developers to execute JavaScript code directly within their PostgreSQL database, opening up possibilities for tasks like complex data manipulation, business logic execution, and creating custom functions. Think of it as giving your database superpowers by letting it speak JavaScript. So this lets you get more done, faster, inside your database.

How to use it?

Developers use PLJS by installing it as an extension in their PostgreSQL database. Once it is installed, they can write functions in JavaScript and call them from SQL queries or other database operations, just as they would call SQL functions. For instance, you might use it to transform data, validate input, or create custom aggregations. It lets you extend SQL with JavaScript to solve problems that SQL alone does not handle easily.

Product Core Function

· Fast Type Conversion: PLJS efficiently converts data between PostgreSQL's data types and JavaScript, reducing overhead and improving performance. This means less waiting around when your JavaScript code interacts with the database. So this speeds up your data processing.

· QuickJS Integration: It uses QuickJS, a small, embeddable JavaScript engine, so JavaScript functions start quickly and run with little overhead inside the database. This keeps your database functions responsive.

· Lightweight Footprint: PLJS is designed to be lightweight, so it adds little extra baggage to your database. This helps keep your database running smoothly even with JavaScript functions in use.

· JavaScript Inside Postgres: Developers can execute JavaScript code directly inside the PostgreSQL database, allowing for flexible and powerful data manipulation and business logic implementation within the database. This moves the computation closer to your data. So this lets you avoid moving data back and forth, speeding up your applications.
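The "Fast Type Conversion" point is easiest to see with 64-bit integers. JavaScript numbers are IEEE-754 doubles, which are exact only up to 2^53 - 1. PostgreSQL's `bigint` (`int8`) goes up to 2^63 - 1. The Python sketch below shows one possible mapping policy for values crossing that boundary. The post does not describe PLJS's actual conversion rules, so treat `pg_to_js` and its choices as hypothetical.

```python
# Largest integer that a JavaScript number (an IEEE-754 double) represents exactly.
MAX_SAFE_INTEGER = 2**53 - 1


def pg_to_js(value: object, pg_type: str) -> object:
    """Illustrative Postgres-to-JavaScript value mapping (not PLJS's actual rules)."""
    if value is None:
        return None  # SQL NULL -> JS null
    if pg_type in ("int2", "int4", "float4", "float8"):
        return value  # representable as a JS number without loss
    if pg_type == "int8":
        # A 64-bit integer may exceed 2**53 - 1; pass large ones as text
        # (an engine with BigInt support could use BigInt instead).
        return value if abs(value) <= MAX_SAFE_INTEGER else str(value)
    if pg_type == "numeric":
        return str(value)  # arbitrary precision: never squeeze into a double
    if pg_type == "bool":
        return bool(value)
    return str(value)  # text, varchar and anything else fall back to strings


# Why the int8 rule matters: a double cannot tell 2**53 and 2**53 + 1 apart.
print(float(2**53 + 1) == float(2**53))  # True
```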

Product Usage Case

· Data Transformation: A developer needs to clean and transform data before analyzing it. They could write a JavaScript function with PLJS inside the database to quickly process data, avoiding the need to move it to an external application. So this saves time and bandwidth.

· Custom Validation: Implementing complex validation rules for incoming data. A developer could create a JavaScript function with PLJS to check data integrity, right where the data enters the database. So this ensures data quality.

· Advanced Aggregation: Performing custom calculations and aggregations on data. A developer might use PLJS to create advanced calculations that are difficult to do with SQL alone. So this enables more complex data analysis.

7

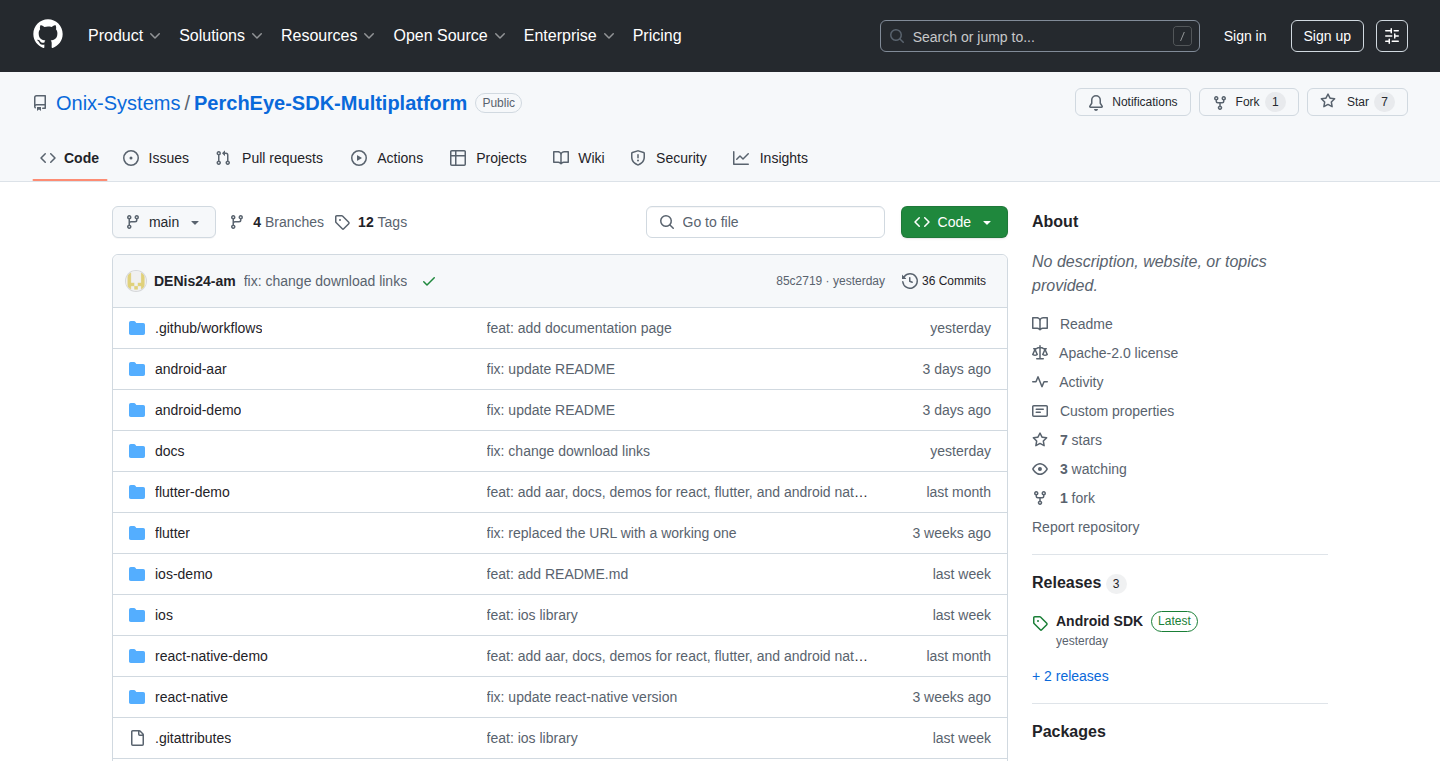

Perch-Eye: On-Device Face Comparison SDK

Author

vladimir_adt

Description

Perch-Eye is a lightweight, open-source SDK that allows developers to easily add face comparison features to their Android and iOS apps. The core innovation is its ability to perform this face comparison directly on the user's device, without needing to send any data to the cloud. This solves the privacy concerns associated with cloud-based face recognition and offers a faster, more reliable experience, especially in areas with limited internet connectivity. So this means your app can identify faces securely and quickly.

Popularity

Points 2

Comments 14

What is this product?

Perch-Eye uses advanced image processing algorithms to analyze faces captured by a device's camera. The SDK extracts unique facial features and compares them to a database of known faces. The key technology is its optimized approach to run these algorithms on the mobile device itself. This avoids the need for a network connection to process the images on a server, resulting in improved privacy and reduced latency. It provides native support for both Android and iOS platforms. So you can build apps that recognize faces without sending any information to a cloud service.

How to use it?

Developers integrate Perch-Eye into their apps through a straightforward SDK, allowing them to capture images of faces, compare them with existing data, and receive a match/no-match result. The SDK is designed to be flexible, supporting custom face detectors, which means developers can tailor the facial recognition process to meet specific needs. Imagine using it for secure app login, personalizing content, or automatically recognizing people in photos. So developers can add facial recognition features to their apps quickly and easily, ensuring user privacy and speed.

Product Core Function

· On-Device Face Comparison: This function performs face matching entirely on the user's device. This ensures user privacy by eliminating the need for cloud-based processing, keeping all sensitive data on the user's phone. This is great for apps needing secure and private identity verification. So it helps you protect user data and build trust.

· Cross-Platform Support: The SDK is designed to run on both Android and iOS. This means developers can use the same code base for face comparison functionality across both platforms. This functionality saves time and resources by avoiding platform-specific development. So you can reach more users with one implementation.

· Custom Detector Support: Perch-Eye allows developers to use their own face detection models. This flexibility allows developers to customize the face recognition process, potentially improving accuracy or supporting specific facial feature detection. So it helps in creating custom solutions tailored to specific app requirements.

· Offline Functionality: Since it processes data locally, the SDK works even without an internet connection. This is crucial for applications in areas with unreliable network access or that need to prioritize data privacy. So it makes your application reliable and privacy-focused even in challenging network environments.
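The post says the SDK extracts facial features and compares them. A common way to implement the comparison step has two parts:

1. Turn each face into a numeric feature vector (an embedding).
2. Score pairs of vectors with cosine similarity.

The post does not say which metric or threshold Perch-Eye uses. The Python sketch below is therefore a generic illustration with made-up three-dimensional vectors. Real face embeddings have far more dimensions and come from a trained model. `identify` and its 0.8 default threshold are hypothetical.

```python
import math
from typing import Optional, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def identify(probe: Sequence[float], gallery: dict[str, Sequence[float]],
             threshold: float = 0.8) -> Optional[str]:
    """Return the best-matching enrolled name, or None if no score clears the threshold.

    The threshold is illustrative; in practice it is tuned for the embedding model.
    """
    best_name, best_score = None, threshold
    for name, enrolled in gallery.items():
        score = cosine_similarity(probe, enrolled)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name
```

Because all of this is arithmetic on locally stored vectors, it runs entirely on the device, which is consistent with the offline and privacy points above.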

Product Usage Case

· Secure Mobile App Login: Integrate Perch-Eye to enable secure login by comparing the user's face with a pre-registered face. This enhances security and makes logging in easier compared to traditional passwords. So you can easily secure your app with face recognition.

· Personalized User Experience: Use face comparison to personalize app content or settings based on the recognized user. For example, dynamically adjust the user interface to fit a specific user. So you can build more engaging and user-friendly apps.

· Photo Organization: Perch-Eye can be integrated into photo management applications to automatically tag or group photos based on the people present. This reduces manual tagging and facilitates photo search. So it helps automate photo organization and make it easier to find photos of specific people.

· Access Control: Implement facial recognition to provide access control to physical locations or digital resources. For instance, allowing access to a locked door or protected content based on recognized faces. So you can improve security in any environment that requires access control.

8

CodeChange-Aware AI Testing Agent

Author

ElasticBottle

Description

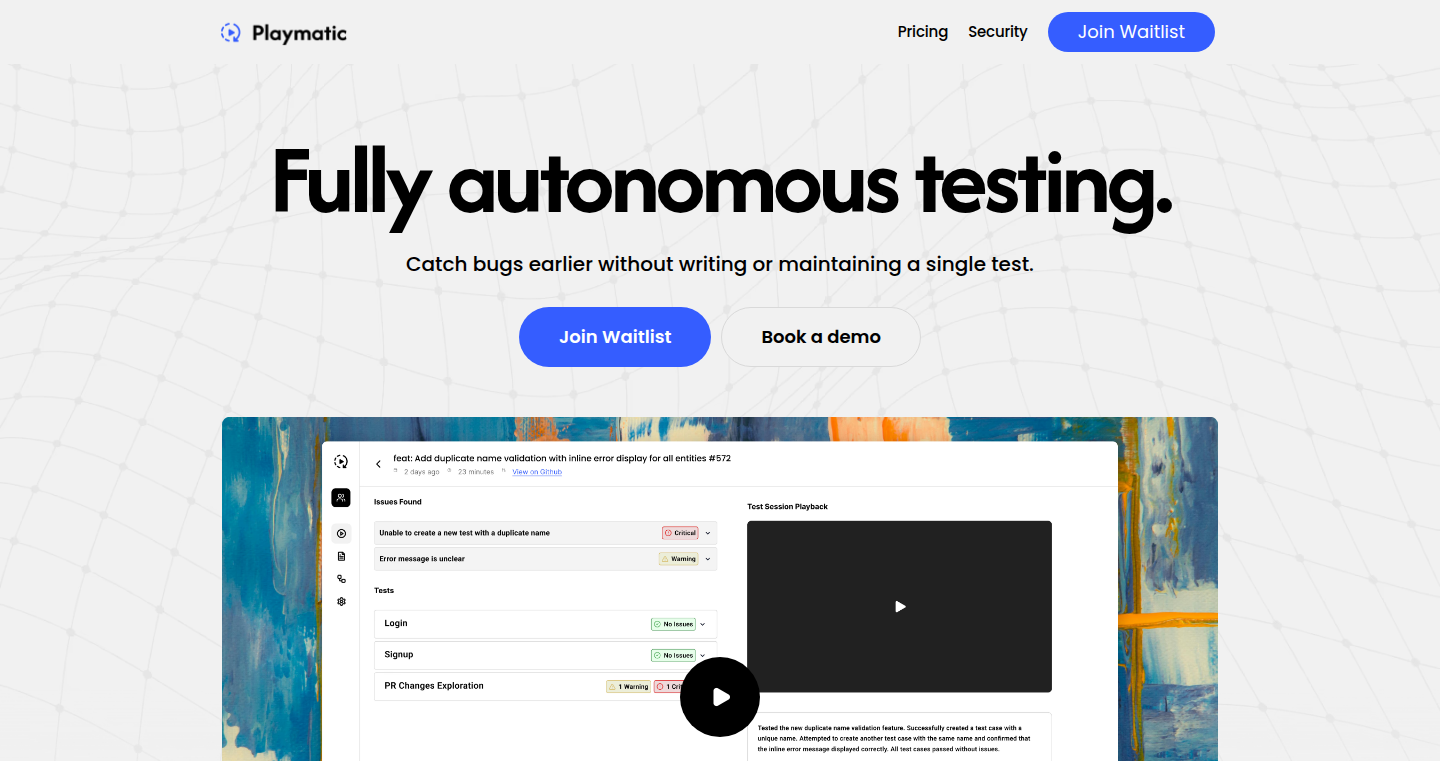

This project introduces an AI-powered testing agent designed to automate end-to-end (E2E) testing, addressing the tedious maintenance burden often associated with it. The core innovation lies in its ability to analyze code changes, visit preview environments, and simulate user interactions to validate functionality. It supports tests described in plain English and integrates seamlessly with GitHub Actions, allowing for continuous testing and proactive detection of regressions. So this automates repetitive tasks, freeing up developers to focus on building, rather than testing.

Popularity

Points 6

Comments 9

What is this product?

This is an AI-driven testing tool that streamlines E2E testing. Instead of manually writing and maintaining test scripts, developers can push a code change (like a Pull Request), and the agent automatically assesses the change. It then accesses the relevant testing environment and acts as a user, verifying that things work as intended. This tool can even interpret test instructions written in natural language. So the project is designed to take the pain out of repetitive testing, making it easier to catch bugs early in development.

How to use it?

Developers integrate this agent into their workflow by pushing code changes to their repository. When a pull request is submitted, the agent analyzes the code and runs the automated tests, including visiting preview environments. It can also be integrated as a GitHub Action, allowing tests to run automatically on a schedule or on specific events (e.g., code commits). So it fits seamlessly into a developer's existing workflow and reduces manual testing efforts.

Product Core Function

· Automated Code Change Analysis: The agent analyzes code diffs in a PR to understand what has changed, which parts of the system are affected, and what needs to be tested. This is valuable because it can intelligently focus the testing efforts on the relevant parts of the application, reducing test execution time and resource usage. So it saves developers time and testing resources.

· AI-Powered Test Execution: The AI simulates user interactions (e.g., clicking buttons, filling forms) to test the functionality of the application as a real user would. This allows the system to automatically validate the features being changed in the code with high fidelity. So it ensures that the application works properly from the user’s perspective.

· Natural Language Test Description Support: Developers can describe tests in plain English, and the AI translates these instructions into executable test steps. So there is no test scripting language to learn, which lowers the barrier to entry for test automation.

· GitHub Action Integration: The agent can be integrated as a GitHub Action, allowing tests to run automatically on code commits, pull requests, or on a schedule. So the system provides continuous testing, which catches problems earlier and more easily.

· Preview Environment Testing: The agent automatically accesses and tests the preview environment when a change is made, allowing developers to test code changes before merging them. So developers can validate the code changes and verify that everything is working before releasing.
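The agent uses an LLM to decide what a diff affects; the sketch below swaps in a much simpler, rule-based technique to illustrate the idea of change-aware test selection. It assumes input in the standard `git diff` unified format and a hypothetical `FLOWS_BY_PREFIX` table that maps source directories to E2E flows; neither the table nor the function names come from the project.

```python
from __future__ import annotations

import re

# git marks the post-change path of each file with a "+++ b/<path>" header line.
# Deleted files use "+++ /dev/null" and are therefore skipped here.
NEW_FILE_HEADER = re.compile(r"^\+\+\+ b/(.+)$")

# Hypothetical mapping from source directories to the E2E flows that exercise them.
FLOWS_BY_PREFIX: dict[str, list[str]] = {
    "src/auth/": ["login flow", "signup flow"],
    "src/checkout/": ["checkout flow"],
}


def changed_files(diff_text: str) -> list[str]:
    """List the files touched by a unified diff (e.g. the output of `git diff`)."""
    files: list[str] = []
    for line in diff_text.splitlines():
        match = NEW_FILE_HEADER.match(line)
        if match:
            files.append(match.group(1))
    return files


def flows_to_test(diff_text: str) -> list[str]:
    """Select only the E2E flows whose source area changed, without duplicates."""
    selected: list[str] = []
    for path in changed_files(diff_text):
        for prefix, flows in FLOWS_BY_PREFIX.items():
            if path.startswith(prefix):
                for flow in flows:
                    if flow not in selected:
                        selected.append(flow)
    return selected


if __name__ == "__main__":
    sample = "\n".join([
        "diff --git a/src/auth/login.ts b/src/auth/login.ts",
        "--- a/src/auth/login.ts",
        "+++ b/src/auth/login.ts",
        "@@ -1 +1 @@",
        "-const timeout = 30;",
        "+const timeout = 60;",
    ])
    print(flows_to_test(sample))  # ['login flow', 'signup flow']
```

A rule table like this is brittle (an added content line that happens to start with "++ b/" would be misread as a header), which is part of why an LLM-based agent that reads the diff semantically is attractive.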

Product Usage Case

· Continuous Integration and Deployment (CI/CD): Developers can integrate the agent into their CI/CD pipelines. When a code change is pushed, the agent automatically runs tests and gives immediate feedback on the health of the application; when it detects issues, the pipeline can be halted before the change is released. So it enables rapid and reliable software releases by automating tests at every stage of development.

· Regression Testing: After making code changes, the agent can be used to ensure existing functionality continues to work as expected. So it quickly validates that new changes haven’t broken existing features.

· UI/UX Validation: The agent can test the user interface and user experience (UI/UX) of the application, simulating user interactions to check that UI elements work correctly and that flows feel smooth. So UI regressions are caught before they reach users.

· Complex Workflow Testing: For applications with complex workflows, the agent can test the entire workflow end to end, simulating different user actions along the way. So complex workflows can be tested efficiently, with far less manual testing.

· Rapid Prototyping: When prototyping a new feature, the agent can test early versions of the functionality as soon as they are deployed to a preview environment. So problems surface sooner and development cycles speed up.

9

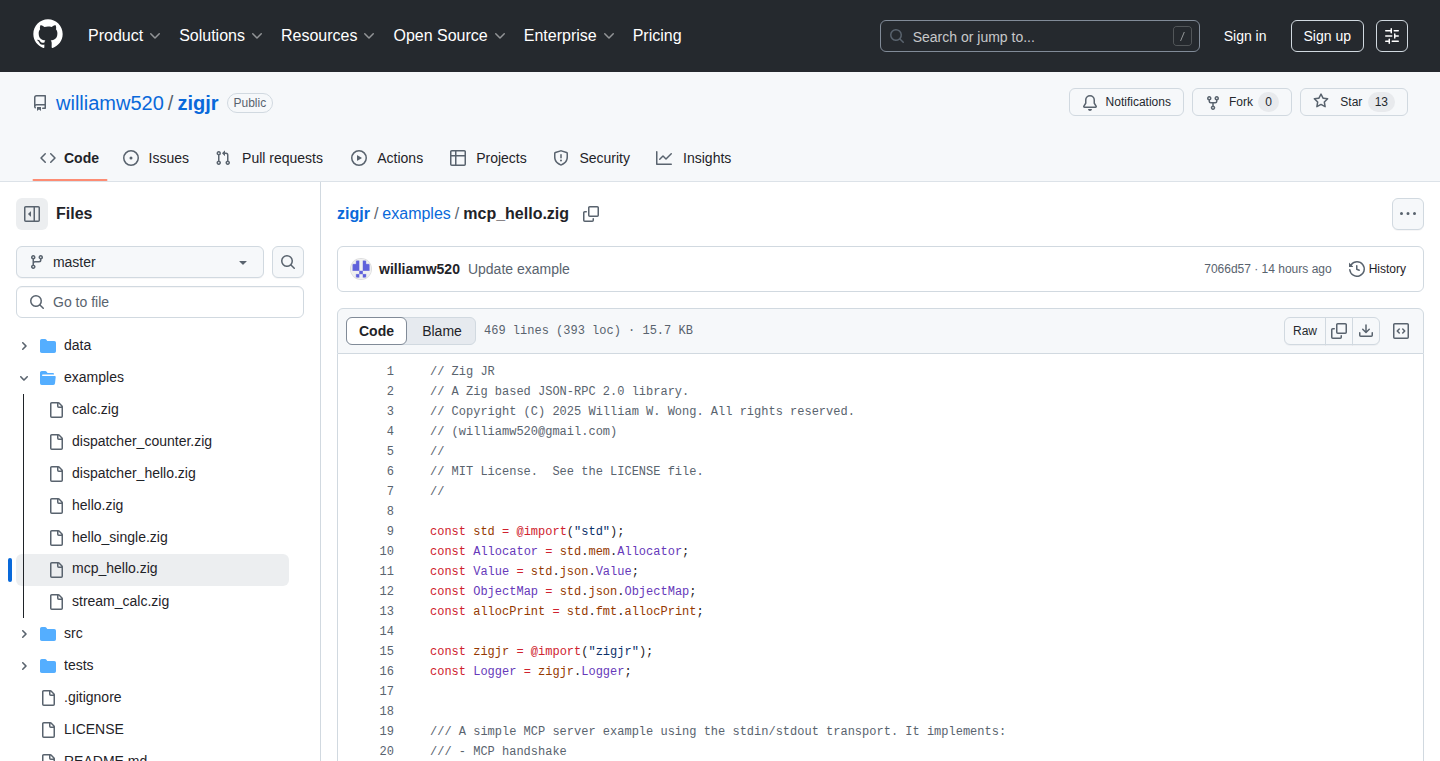

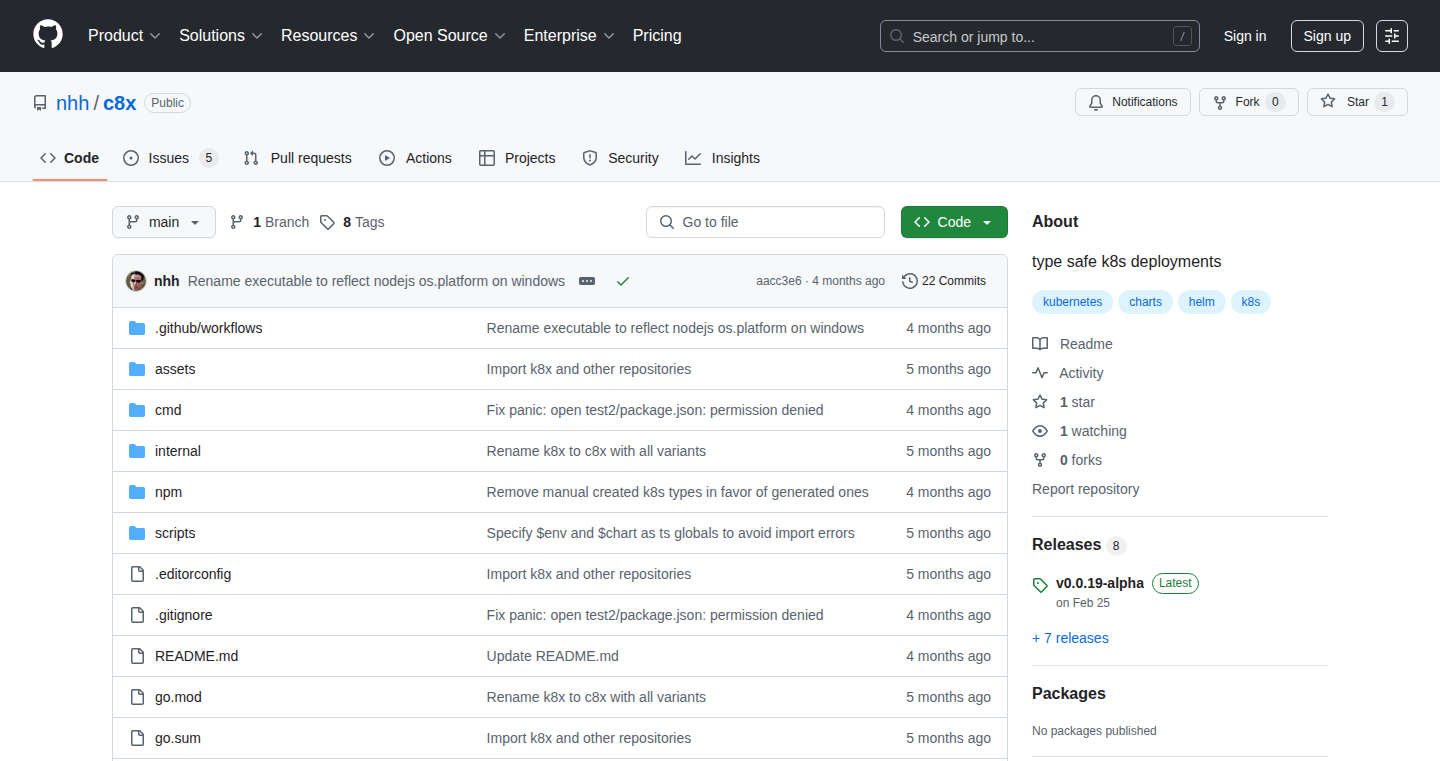

ZigMCP: A JSON-RPC 2.0 Powered MCP Server

Author

ww520

Description

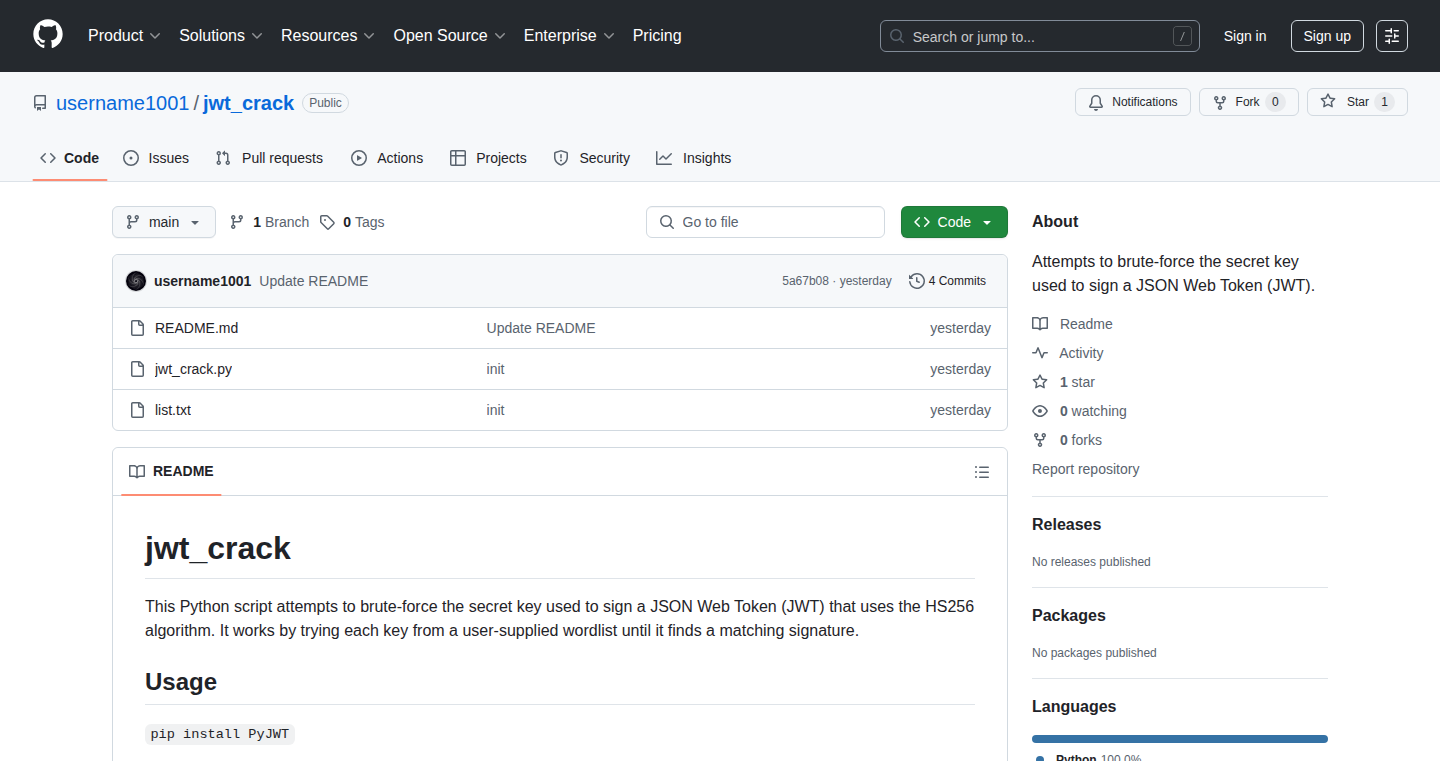

This project is a from-scratch implementation of an MCP (Model Context Protocol) server written in the Zig programming language. It uses a custom JSON-RPC 2.0 library developed by the author to handle communication. The core innovation is building the server directly from the MCP JSON schema, so that Large Language Models (LLMs) can interact with it. This approach demonstrates Zig's strength for low-level, high-performance tasks and its ability to interface with modern AI technologies. So what does this mean for me? It means a developer can now create a server that AI systems can communicate with.

Popularity

Points 13

Comments 2

What is this product?

This is a server built in Zig, a language known for its performance and safety. It uses the JSON-RPC 2.0 protocol for communication, a simple, standard request/response format that acts as a common language between different software systems. The interesting part is that it implements MCP from scratch, allowing LLMs to talk to it. This showcases Zig's capability to handle complex tasks and its suitability for AI integration. So what does this mean for me? It lets me build robust, high-performance servers that integrate with modern AI tools and applications.

How to use it?

Developers can use this project as a foundation or reference for building their own MCP servers or other networked applications in Zig. The JSON-RPC library can also be used independently in any project that needs inter-process communication. Integration involves incorporating the Zig code and adapting it to specific needs where necessary. The project provides example usage for both the server and the JSON-RPC library. So what does this mean for me? It provides building blocks for custom server applications and JSON-RPC-based communication in my projects.

Product Core Function

· MCP Server Implementation: This is the core function, allowing the server to receive and process requests defined by the MCP JSON schema. Value: Lets you expose your own commands and tools to LLM clients over a standard protocol. Application: Useful in building custom servers, command-line applications, or LLM integrations.

· JSON-RPC 2.0 Library: Provides the communication framework. This function allows different components of the system to talk to each other using a standard format (JSON). Value: Ensures interoperability and communication reliability. Application: Perfect for building APIs or integrating systems using JSON-based message exchange.

· Zig Language Implementation: The whole project is built in Zig, a systems programming language. This implies performance and low-level control. Value: Provides a foundation for a robust and high-performance infrastructure. Application: Suitable when needing servers with minimal resource overhead, such as high-load systems.
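To make the JSON-RPC 2.0 framing concrete, here is a minimal dispatcher sketched in Python rather than Zig; it illustrates the protocol, not the author's library. It handles the MCP methods `tools/list` and `tools/call` for a hypothetical `echo` tool, returns the standard JSON-RPC error codes (-32700 parse error, -32600 invalid request, -32601 method not found, -32602 invalid params), and sends no reply to notifications (messages without an `id`). The transport (stdio or HTTP) and the MCP `initialize` handshake are deliberately left out.

```python
from __future__ import annotations

import json
from typing import Any, Callable

# Hypothetical tool list; MCP servers describe each tool's input with a JSON Schema.
TOOLS = [
    {
        "name": "echo",
        "description": "Return the input text unchanged",
        "inputSchema": {
            "type": "object",
            "properties": {"text": {"type": "string"}},
            "required": ["text"],
        },
    }
]


def tools_list(params: dict) -> dict:
    return {"tools": TOOLS}


def tools_call(params: dict) -> dict:
    if params["name"] != "echo":
        raise KeyError(f"unknown tool {params['name']!r}")
    text = params.get("arguments", {})["text"]
    return {"content": [{"type": "text", "text": text}]}


METHODS: dict[str, Callable[[dict], dict]] = {
    "tools/list": tools_list,
    "tools/call": tools_call,
}


def response(req_id: Any, result: Any = None, error: dict | None = None) -> str:
    """Build a JSON-RPC 2.0 response carrying either a result or an error."""
    msg: dict[str, Any] = {"jsonrpc": "2.0", "id": req_id}
    if error is None:
        msg["result"] = result
    else:
        msg["error"] = error
    return json.dumps(msg)


def handle(raw: str) -> str | None:
    """Process one JSON-RPC 2.0 message; return the reply, or None for notifications."""
    try:
        req = json.loads(raw)
    except json.JSONDecodeError:
        return response(None, error={"code": -32700, "message": "Parse error"})
    if (not isinstance(req, dict) or req.get("jsonrpc") != "2.0"
            or not isinstance(req.get("method"), str)):
        req_id = req.get("id") if isinstance(req, dict) else None
        return response(req_id, error={"code": -32600, "message": "Invalid Request"})
    req_id = req.get("id")
    handler = METHODS.get(req["method"])
    if handler is None:
        reply = response(req_id, error={"code": -32601, "message": "Method not found"})
    else:
        try:
            reply = response(req_id, result=handler(req.get("params") or {}))
        except (KeyError, TypeError, AttributeError) as exc:
            reply = response(req_id, error={"code": -32602, "message": f"Invalid params: {exc}"})
    # Messages without an "id" are notifications: the server must not reply.
    return None if "id" not in req else reply
```

A real server would read one message at a time from its transport, pass it to `handle`, and write back any non-`None` reply.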

Product Usage Case

· Building a custom server for LLM interaction: The project's design allows an LLM to send commands to the MCP server. Application: Integrating an LLM into a custom server architecture, for instance, for automating services or adding voice control to applications.

· Creating inter-process communication in applications: Leveraging the JSON-RPC library to enable communication between different modules or components of an application, even if they are written in different languages. Application: Building a modular system of independent components, which also eases development and debugging.

· Developing high-performance network applications: The project demonstrates how to build network applications with the Zig language, especially in resource-constrained or high-traffic scenarios. Application: Ideal for creating efficient servers or microservices that require speed and low resource usage, such as in gaming or IoT.

10

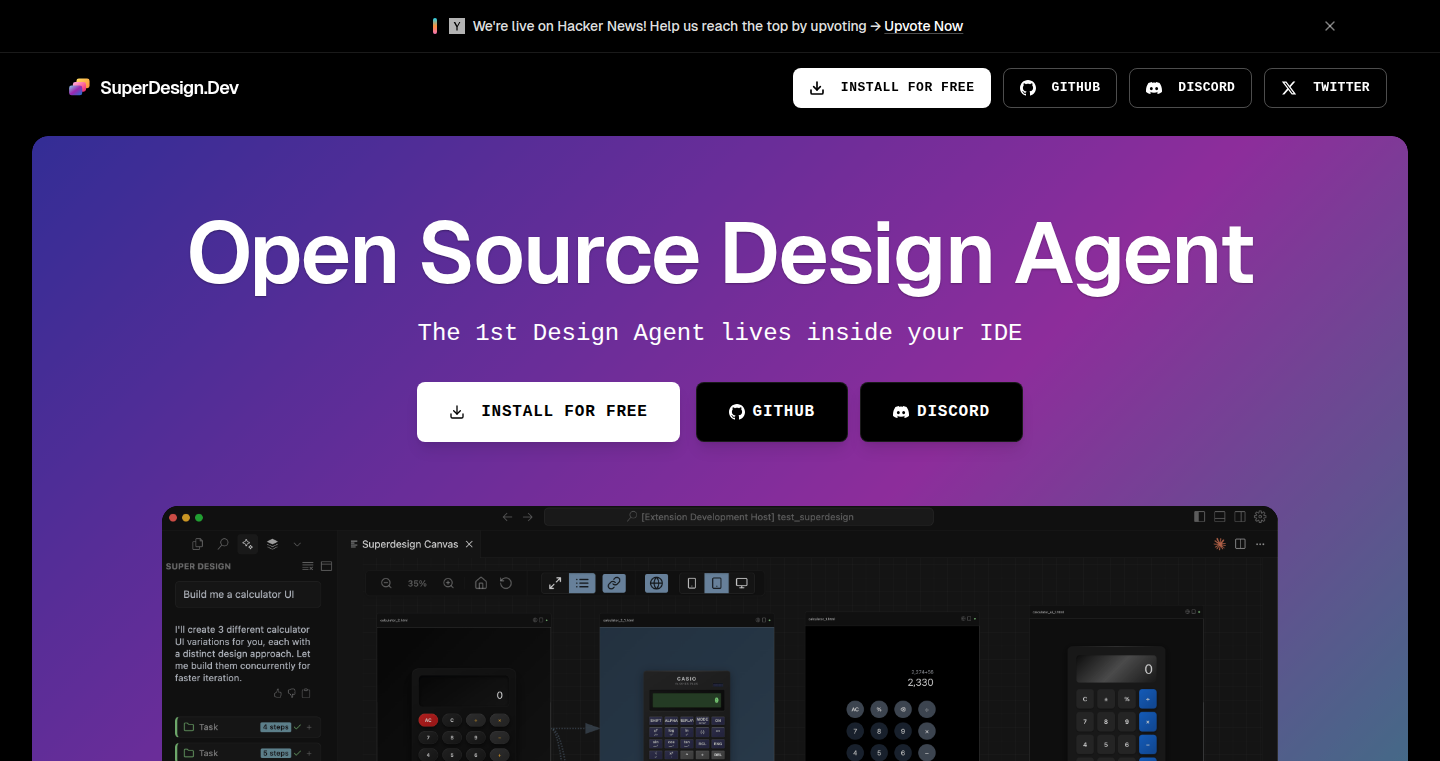

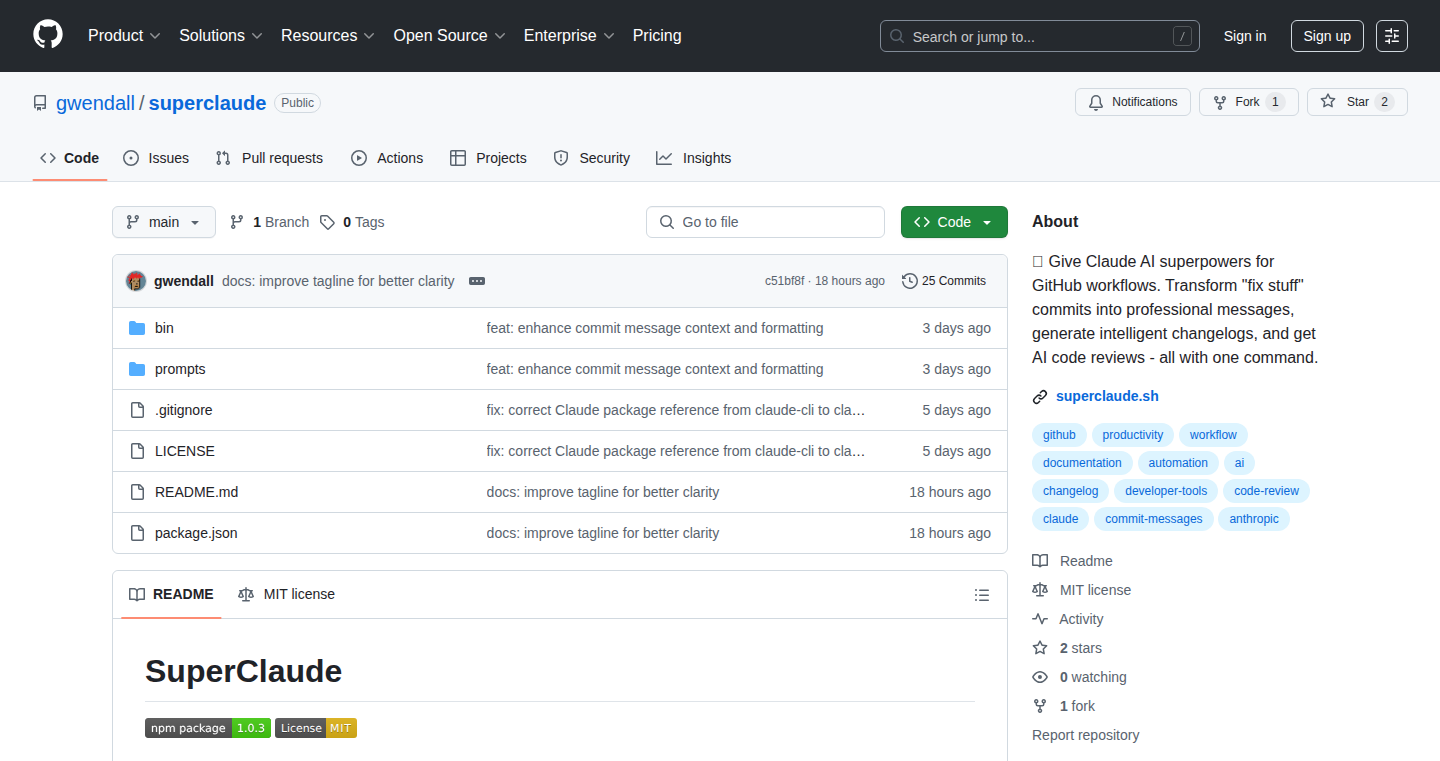

SuperDesign.Dev: IDE-Integrated Design Agent

Author

jzdesign1993

Description

SuperDesign.Dev is an open-source design tool that lives inside your code editor (like VS Code). It uses AI agents to help you design user interfaces (UIs) directly within your development environment. This is a major shift because it allows you to generate, modify, and iterate on designs without switching between different tools. The current version uses the Claude Code SDK to generate static HTML pages, but future versions aim to support full-stack web applications and improve the design flow.

Popularity

Points 10

Comments 4

What is this product?

SuperDesign.Dev is like having a design assistant built into your code editor. It uses AI agents, specifically the Claude Code SDK, to generate design mockups, UI components, and wireframes. Think of it as an AI that helps you create the visuals for your website or app, right where you write the code. The key innovation is the direct integration within the IDE (Integrated Development Environment), streamlining the design process. So this allows developers to design directly in their code editor, reducing the need to switch between design tools and code editors. This makes the design process much faster and more efficient.

How to use it?

To use SuperDesign.Dev, you install it as an extension in your code editor (e.g., Cursor, Windsurf, or VS Code). Once installed, you interact with the AI agent to generate designs. You might provide a prompt like "Create a landing page for a productivity app," and the agent would generate a basic HTML page with the design. You can then refine the design by editing the generated code or by giving more specific instructions. This lets you prototype and experiment with designs quickly. As a developer, you can use it to generate UI components, try out different design ideas, and iterate on designs in real time without leaving your coding environment.

Product Core Function

· Design Generation: Allows you to generate multiple design options (mockups, UI components, wireframes) based on prompts, leveraging the power of AI. This saves time by automatically generating initial designs, and gives developers a starting point for design experimentation.

· Design Iteration: Enables you to "fork" and modify existing designs directly within the IDE. This allows developers to quickly prototype and adjust designs based on feedback or evolving requirements, leading to a more iterative design process.

· IDE Integration: Deeply integrates into your IDE workflow. This eliminates the need to switch between different applications for designing and coding, optimizing the development process. Developers stay focused on their code while also shaping the visual presentation of their projects.

· Static HTML Generation: Currently generates static HTML pages for rapid prototyping. This gives developers a quick, easy way to see their design ideas rendered, which keeps the design loop short.

Product Usage Case

· Rapid Prototyping: Developers can use SuperDesign.Dev to quickly create UI prototypes for their web applications without needing to learn complex design tools. For example, a developer can use a prompt like "Create a simple signup form" and get a working design instantly, significantly speeding up the prototyping phase. So, it enables quicker design iteration and faster project development.

· UI Component Creation: It assists in the creation of UI components like buttons, forms, and navigation bars. This improves the efficiency of design by helping generate the initial building blocks. For example, developers can generate different versions of a button and see which works best for their design.

· Design Exploration: Developers can use the tool to explore different design options without spending a lot of time. For instance, a developer can ask the AI agent to suggest different color palettes for a website, helping them quickly choose the best design for their project.

· IDE-Centric Design Workflow: SuperDesign.Dev enables developers to maintain a consistent workflow within their IDE. This reduces context switching, increasing their productivity by minimizing distractions. For example, designers and developers collaborating on a project can see the design changes immediately without using external software, leading to faster collaboration.

11

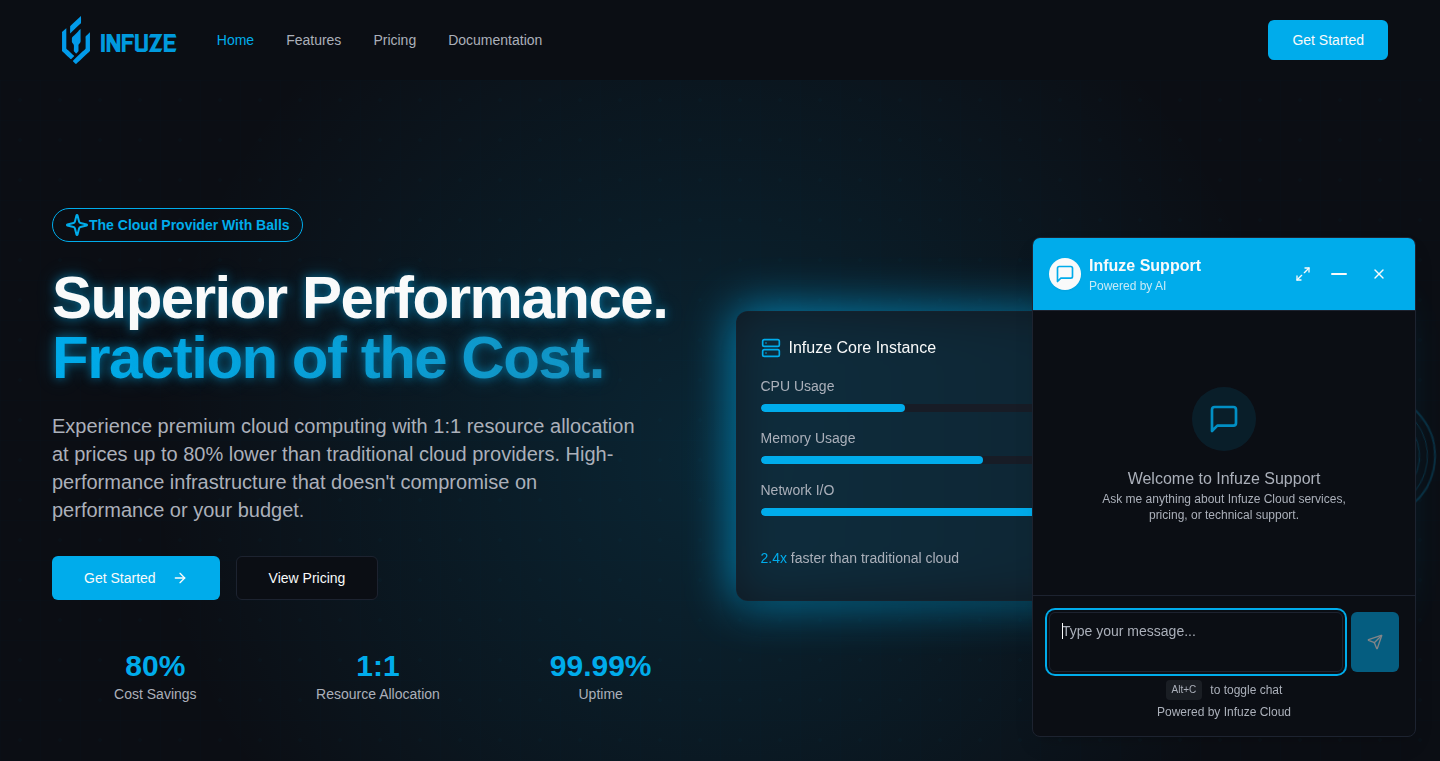

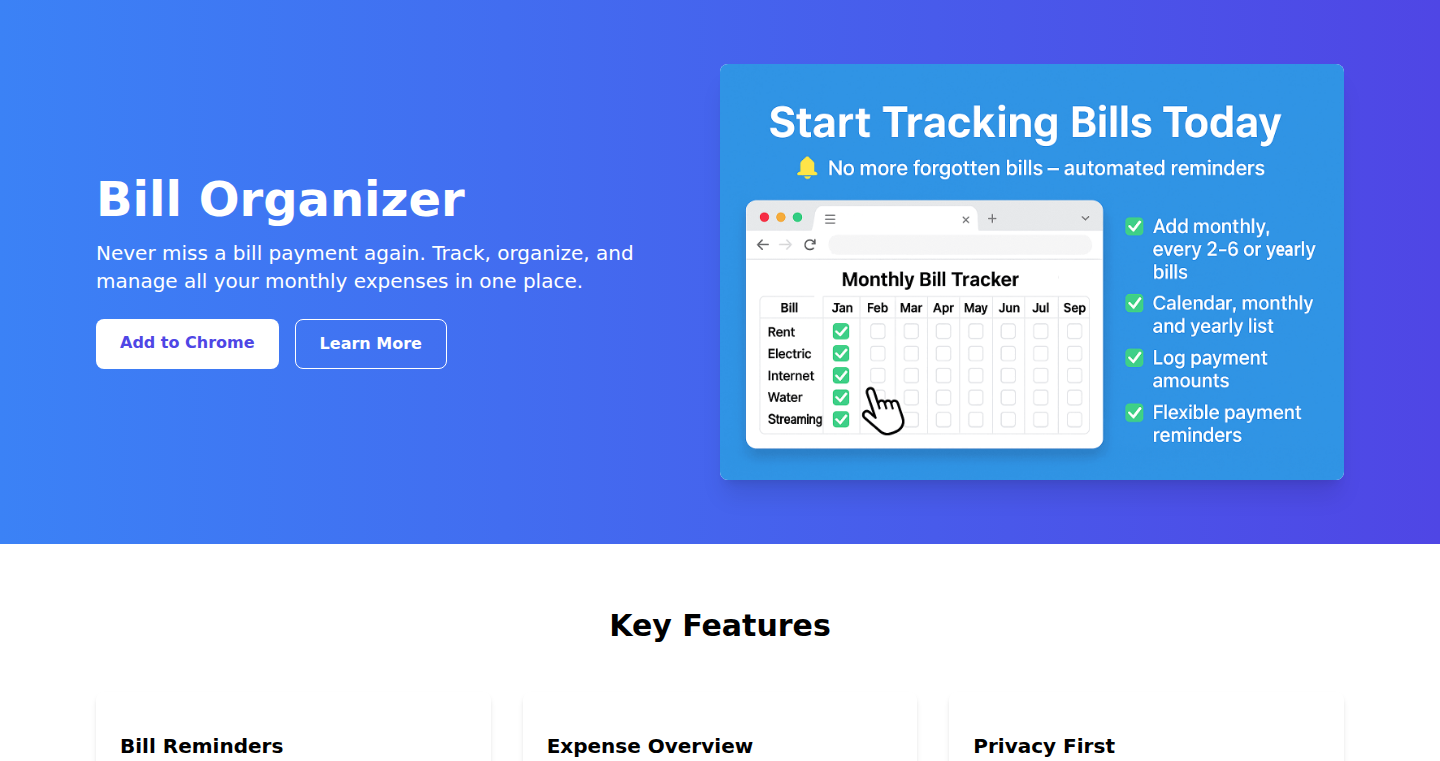

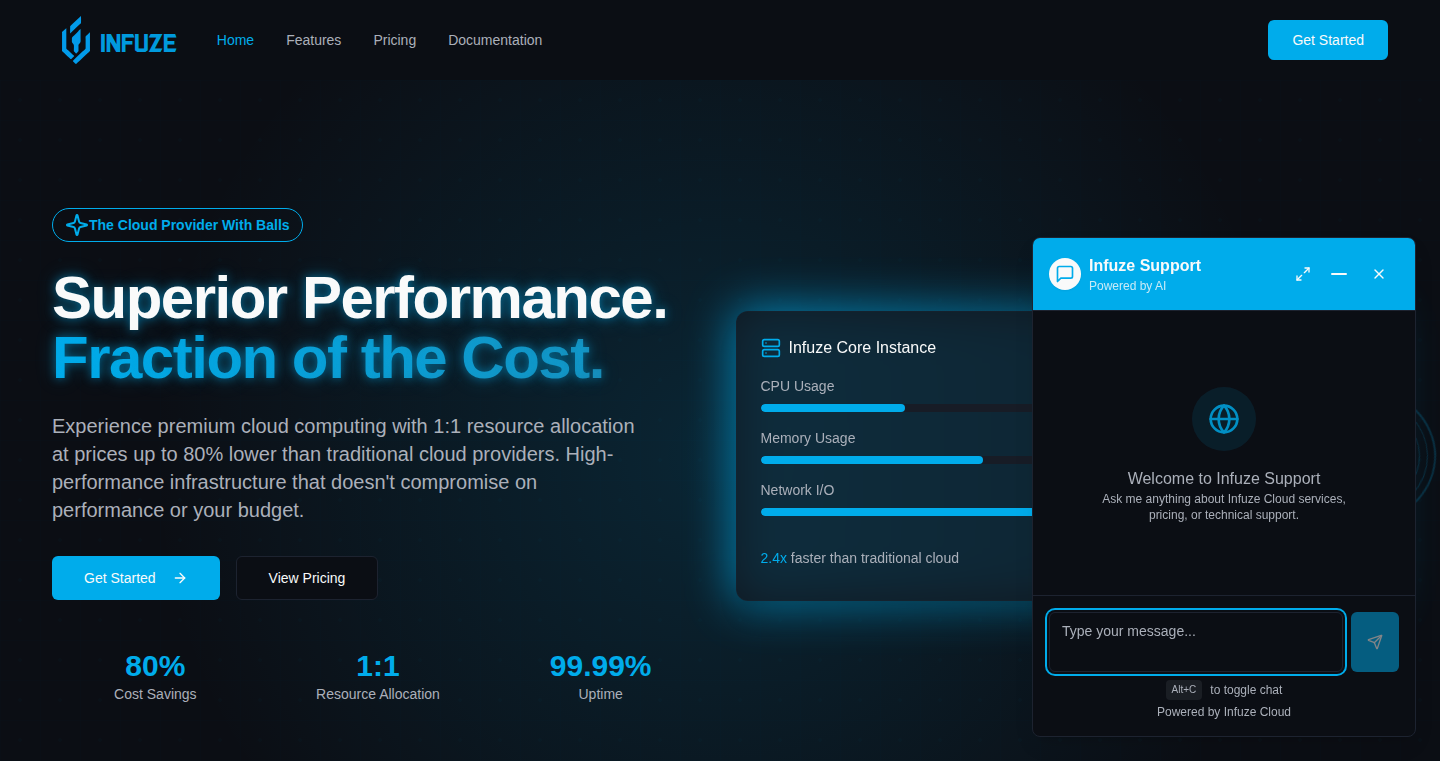

Infuze Cloud: Raw Performance, Custom Built Cloud Infrastructure

Author

ccheshirecat

Description

Infuze Cloud is a new cloud service built from scratch, offering raw, dedicated performance where 1 virtual CPU equals 1 physical thread, without overcommitment. It leverages its own hardware and IP space, providing transparent pricing based on actual usage. This project aims to disrupt the existing cloud market by offering a more cost-effective and performant alternative for developers seeking full control and optimal resource utilization. The core innovation lies in the custom-built infrastructure, offering direct access to resources and avoiding the overhead and costs associated with existing cloud providers.

Popularity

Points 5

Comments 5

What is this product?

Infuze Cloud is a cloud computing service. It's different because it's built from the ground up with custom components and doesn't rely on third-party cloud providers: it runs on the developer's own hardware and IP space, using open-source technology like Proxmox and ZFS. It gives you raw, dedicated computing power: what you pay for is exactly what you get, without any sneaky resource sharing. The pricing is designed to be transparent and closer to the actual cost of the infrastructure. So this provides a cloud service with transparent costs and dedicated performance.

How to use it?

Developers can use Infuze Cloud to run virtual machines (VMs) with root access via SSH, just like a regular server. You can deploy your applications, host websites, run databases, or experiment with new technologies. The service provides public IPv4 addresses and an IPv6 /64 subnet for each VM, offering direct network access. You can also use it to benchmark your applications on dedicated, non-overcommitted hardware. This gives developers more control over their environment and a clearer view of the cost/performance trade-offs.
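A /64 is an IPv6 prefix length: the first 64 of the 128 address bits are fixed, and the remaining 64 bits are free for hosts, so one VM's subnet holds 2^64 addresses. Python's standard `ipaddress` module makes the arithmetic easy to check. The prefix below comes from the documentation range 2001:db8::/32 (RFC 3849); it is illustrative only, not a real Infuze allocation.

```python
import ipaddress

# Documentation prefix (RFC 3849), used here purely for illustration.
subnet = ipaddress.ip_network("2001:db8:1234:5678::/64")

# A 128-bit address minus a 64-bit prefix leaves 64 host bits.
print(subnet.prefixlen)      # 64
print(subnet.num_addresses)  # 18446744073709551616 (2**64)

# Any address whose first 64 bits match the prefix belongs to the VM's subnet.
print(ipaddress.ip_address("2001:db8:1234:5678::1") in subnet)  # True
print(ipaddress.ip_address("2001:db8:1234:5679::1") in subnet)  # False

# Indexing into the network yields concrete addresses you could assign.
print(subnet[1])             # 2001:db8:1234:5678::1
```

In practice you would configure one or more addresses from your assigned /64 on the VM's network interface; the actual prefix comes from the provider.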

Product Core Function

· Dedicated CPU Allocation: Every virtual CPU (vCPU) is mapped directly to a physical thread on the server, so your applications never share that compute with other users. The value is consistent, guaranteed performance, making it suitable for performance-sensitive applications. So your app runs more predictably, without slowdowns caused by other tenants.

· Usage-Based Pricing: You only pay for the resources you use, with hourly billing and discounts for larger commitments. This provides a transparent and predictable cost structure. The value here is cost optimization. So this saves you money.

· Custom Infrastructure: The entire stack, from virtualization (Proxmox) to networking, is built and managed by the Infuze team. This offers greater control over the underlying infrastructure and allows optimizations and cost savings that are not possible when relying on third-party services. So this offers better performance and pricing.

· Root Access via SSH: Developers have full root access to their VMs via SSH, enabling them to fully customize their environment and install any software they need. This empowers developers with complete control over their server. So you can set up your server exactly how you want it.

· BGP-Routed IP Space: The cloud service operates on its own BGP-routed IP space, giving it direct control over network connectivity and routing. So this can mean more reliable and faster connections.

Product Usage Case

· Web Application Hosting: Developers can host their web applications on Infuze Cloud VMs, leveraging the dedicated CPU resources and transparent pricing to optimize costs. For example, a developer can run a high-traffic website that requires consistent CPU and RAM. So you can run your website more efficiently.

· Game Server Hosting: Game developers can utilize Infuze Cloud to host game servers, benefiting from the dedicated performance to minimize lag and improve the gaming experience. A multiplayer game might have significant performance needs. So you can create a better gaming experience.

· Development and Testing Environments: Developers can create isolated environments for testing and development. The full root access enables the installation of required tools and frameworks. A team can use this to build and test their code. So you can make your development and testing more efficient.

· Batch Processing and Data Analysis: Researchers or data scientists can use the VMs to run compute-intensive tasks like data analysis and scientific simulations. This is useful for large data processing needs. So this allows you to tackle large data sets.

· Building a Personal Cloud: With root access, you can install tools and services to manage your own data. For example, a user could use Infuze Cloud to host their own file storage or cloud services. So you can create your own personalized cloud services.

12

Prepin.ai: AI-Powered Phone Interviewer

Author

OlehSavchuk

Description

Prepin.ai offers an AI-driven phone interview service. It connects you with an AI that conducts a brief screening interview shortly after you enter your phone number. The core innovation is its use of artificial intelligence to automate the initial stages of the hiring process, providing instant feedback and generating basic reports. This tackles the time-consuming task of preliminary candidate screening, letting recruiters focus on more in-depth evaluations. So what does this mean for me? It automates the initial screening of job applicants, saving time and resources.

Popularity

Points 7

Comments 3

What is this product?

Prepin.ai is a service that uses AI to conduct short phone interviews. It employs natural language processing (NLP) and potentially machine learning (ML) to ask standard screening questions and analyze candidate responses. The innovative part is its automated approach, enabling almost instant interviews and generating reports. This significantly reduces the manual effort required for initial candidate evaluations. So this offers a quick and automated first-stage assessment tool for job candidates.

How to use it?

Recruiters and hiring managers can use Prepin.ai to quickly screen applicants. A candidate enters their phone number, and within seconds they receive a call from the AI interviewer. Recruiters then receive a simple report of the interview. Future versions could offer APIs for ATS (Applicant Tracking System) integration or custom question sets tailored to different roles. So I can use this to quickly screen applicants and get a brief overview of their qualifications.

Product Core Function

· Automated Phone Interview: The AI initiates a phone call and asks pre-programmed screening questions. This functionality automates the initial contact and assessment of a candidate. So this allows me to quickly and efficiently screen candidates.

· Report Generation: The system generates a basic report summarizing the interview, providing a preliminary evaluation of the candidate's responses. This feature delivers an overview of the candidate's performance. So this gives me a quick overview of each candidate.

· Natural Language Processing (NLP): NLP is used to understand and respond to candidates’ answers during the interview. This enables a more interactive and human-like interview experience. So this allows for a more natural and effective interview process.

· Scalable Screening: By automating the interview process, the system can handle a large volume of applicants simultaneously, making recruitment more efficient. So I can screen many candidates concurrently without spending extra time.

· Validation of Demand: The current MVP focuses on validating market demand before investing heavily in advanced features. This yields data for better understanding user needs and preferences. So the tool is expected to evolve based on user feedback.

Product Usage Case

· Startup Hiring: A small startup receives many applications and needs a way to quickly screen candidates. They use Prepin.ai to automate the initial screening, freeing up the hiring manager’s time for more in-depth interviews. So this is valuable because it streamlines their hiring process and saves the company time and money.

· Large Company Recruitment: A large company needs to handle hundreds of applications. If Prepin.ai were integrated into their ATS (Applicant Tracking System), it could automatically screen initial applicants and speed up candidate evaluation. So this would enable an efficient screening process and reduce the recruitment team's workload.

· Remote Interviewing: A company wants to assess candidates remotely. Prepin.ai offers phone interviews, reducing the need for scheduling and coordinating video calls in the initial phase of recruitment. So this simplifies and speeds up the remote hiring process, improving efficiency and enabling faster decision making.

13

LuxWeather: Pixel Art Weather with ASP.NET and HTMX

Author

thisislux

Description

LuxWeather is a unique weather website that delivers weather information in a visually appealing pixel art style, built using ASP.NET and HTMX. The core innovation lies in its use of HTMX to create a dynamic, interactive user experience without the complexities of traditional JavaScript frameworks. This project showcases a lightweight and efficient approach to web development, focusing on simplicity and rapid development, addressing the challenge of building interactive web applications with less code.

Popularity

Points 9

Comments 0

What is this product?

LuxWeather is a website that shows weather information, but instead of boring charts and graphs, it uses cool pixel art. The website uses ASP.NET, a framework for building websites, and HTMX, a clever tool that lets developers create interactive features without writing a lot of complex JavaScript. It’s a simplified way to build websites, focusing on quick development and less code. So this leverages the power of server-side rendering with a modern approach, delivering rich user experiences with minimal client-side code.

How to use it?

Developers can use LuxWeather's approach as a model for building their own interactive web applications. They can learn how to integrate HTMX into their ASP.NET projects to create dynamic features like real-time updates, without needing to master complex JavaScript frameworks. This is especially useful for developers who want to build simpler, faster websites. Integrating HTMX typically involves adding it to your HTML and then making requests to the server using HTML attributes. So this is an easy way for developers to make their websites more engaging and responsive with less effort.
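LuxWeather's backend is ASP.NET, but the HTMX pattern is backend-agnostic: the server returns a small HTML fragment and HTMX swaps it into the page according to `hx-*` attributes. The sketch below shows that pattern using Python's standard library instead of ASP.NET. The routes, the `/sprites/...` image paths, and the stubbed weather data are hypothetical; `hx-get`, `hx-trigger` (here `load` plus `every 600s` polling), and `hx-swap` are real HTMX attributes, and htmx itself is loaded from the unpkg CDN.

```python
from html import escape
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import parse_qs, quote, urlparse


def render_page(city: str) -> str:
    """Full page: htmx fetches the weather fragment on load, then every 600 s."""
    url = escape(f"/weather?city={quote(city)}", quote=True)
    return f"""<!doctype html>
<html>
  <head><script src="https://unpkg.com/htmx.org@2.0.4"></script></head>
  <body>
    <div id="weather" hx-get="{url}" hx-trigger="load, every 600s" hx-swap="innerHTML">
      Loading...
    </div>
  </body>
</html>"""


def render_weather_fragment(city: str, temp_c: float, condition: str) -> str:
    """HTML fragment that htmx swaps into #weather, with no full page reload."""
    sprite = escape(f"/sprites/{condition.lower()}.png", quote=True)  # hypothetical path
    return (
        f'<img src="{sprite}" alt="{escape(condition, quote=True)}" '
        'style="image-rendering: pixelated">'
        f"<p>{escape(city)}: {temp_c:.1f} °C</p>"
    )


class Handler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        url = urlparse(self.path)
        city = parse_qs(url.query).get("city", ["Berlin"])[0]
        if url.path == "/":
            body = render_page(city)
        elif url.path == "/weather":
            body = render_weather_fragment(city, 21.5, "Sunny")  # stubbed data
        else:
            self.send_error(404)
            return
        data = body.encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


if __name__ == "__main__":
    HTTPServer(("127.0.0.1", 8000), Handler).serve_forever()
```

Run it and open http://127.0.0.1:8000/?city=Oslo: the initial HTML contains no weather data, and htmx fills it in with a request to /weather, so the page never needs custom JavaScript.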

Product Core Function

· Pixel Art Weather Display: The website renders weather data as charming pixel art, which offers a unique visual experience. The technical value is the use of image generation techniques and the seamless integration of pixel art with weather data. Application Scenario: This can be applied in various web-based dashboards and data visualization tools needing a user-friendly display.

· Dynamic Updates with HTMX: The website uses HTMX for dynamic updates, meaning the page updates without needing to reload. This improves the user experience by making the website feel faster and more responsive. Technical Value: It simplifies the development process by minimizing the need for writing JavaScript and managing client-side state. Application Scenario: Useful for interactive dashboards and real-time data visualizations that need to be updated frequently without disrupting the user’s view.

· Ad-Free Experience: The website is ad-free, focusing on providing a clean and user-friendly experience. Technical Value: This prioritizes user experience and demonstrates a commitment to simplicity and direct value. Application Scenario: Suitable for any web service that puts the user experience first, like simple information sites or personal projects.

· ASP.NET Backend: The backend is built using ASP.NET. Technical Value: Offers a robust server-side platform for serving the application and handling data. Application Scenario: Provides a solid foundation for developers experienced with the Microsoft ecosystem.

Product Usage Case

· Building a Simple Dashboard: You could use HTMX and a similar backend to build a dashboard that updates without requiring a page reload. This would be perfect for displaying real-time data, like stock prices or sales figures. The key is using HTMX's ability to make small, efficient updates to the page. So this is great for a dashboard that shows information that changes quickly.

· Creating Interactive Forms: Use HTMX to handle form submissions and updates without reloading the entire page. This makes the forms feel more responsive and less clunky. Application: A user filling out a survey: when they complete one section, the next appears instantly. So this provides a better experience for filling out forms.

· Developing Interactive Charts and Graphs: Use HTMX to update charts and graphs dynamically when data changes. This way, the user will get real-time updates without the page flashing. The technical side is the ability to update the charts using only a small amount of data. So this could be used to make a data visualization tool more dynamic.

14

TrafficEscape: Real-time Traffic Prediction Tool

Author

BigBalli

Description

TrafficEscape leverages real-time traffic data and historical patterns to predict the optimal departure time, helping users avoid traffic congestion. It uses machine learning to analyze traffic flow and provide personalized recommendations. The project addresses the common problem of wasted time in traffic by providing actionable insights.

Popularity

Points 2

Comments 6

What is this product?

TrafficEscape is a tool that analyzes real-time and historical traffic data using machine learning models. It identifies patterns and predicts future traffic conditions based on current and past events. The innovation lies in its ability to generate departure time recommendations tailored to an individual's route, significantly reducing commute time. So, it's like having a smart traffic assistant that proactively helps you avoid jams.

How to use it?

Developers can integrate TrafficEscape's API into their applications or services. This could involve using the API to provide traffic predictions within a navigation app, a ride-sharing service, or even a calendar application. The integration requires setting up an API key and providing the necessary route information. So, developers can add a 'smart commute' feature to their apps to make them more useful.

Product Core Function

· Real-time Traffic Analysis: Analyzes current traffic conditions from various sources (e.g., GPS data, sensors) to provide up-to-the-minute traffic information. This enables the prediction model to stay relevant. So, you always get the latest traffic picture.

· Historical Data Analysis: Examines historical traffic patterns to identify recurring traffic bottlenecks and predict traffic changes. This improves the accuracy of predictions, especially during peak hours. So, you know when to expect congestion.

· Machine Learning Prediction: Employs machine learning algorithms to forecast traffic flow based on real-time data and historical patterns. This enables TrafficEscape to anticipate traffic changes. So, it knows what the roads will look like in advance.

· Personalized Route Recommendations: Suggests the best departure time and/or alternative routes based on the user's route and predicted traffic conditions. This provides actionable guidance. So, you can get personalized suggestions to improve your trip.
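TrafficEscape's model and API are not documented in the post, so this sketch only illustrates the final step: turning predictions into a departure recommendation. It assumes a hypothetical `predicted` mapping from candidate departure times to predicted travel minutes (produced by whatever model you use) and picks the shortest trip that still arrives before a deadline.

```python
from __future__ import annotations

from datetime import datetime, timedelta


def best_departure(
    predicted: dict[datetime, float], arrive_by: datetime
) -> tuple[datetime, float] | None:
    """Choose the departure with the shortest predicted trip that still arrives on time.

    Ties on travel time go to the later departure. Returns None if no slot works.
    """
    feasible = [
        (departure, minutes)
        for departure, minutes in predicted.items()
        if departure + timedelta(minutes=minutes) <= arrive_by
    ]
    if not feasible:
        return None
    return min(feasible, key=lambda slot: (slot[1], -slot[0].timestamp()))


if __name__ == "__main__":
    day = datetime(2025, 6, 25)
    # Hypothetical model output: departure time -> predicted travel time in minutes.
    predicted = {
        day.replace(hour=8, minute=0): 45,
        day.replace(hour=8, minute=15): 30,
        day.replace(hour=8, minute=30): 25,
        day.replace(hour=8, minute=45): 40,
    }
    print(best_departure(predicted, arrive_by=day.replace(hour=9)))
```

Here the 8:30 departure wins: it has the shortest predicted trip, while the 8:45 slot would arrive too late.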

Product Usage Case

· Navigation App Integration: Integrate TrafficEscape into a navigation app to provide users with optimized routes and departure time recommendations based on current and predicted traffic. This would improve the user's overall experience. So, users can plan their trips more effectively.

· Ride-Sharing Service Enhancement: Use TrafficEscape to optimize the routes and dispatch times for ride-sharing drivers, reducing travel time and improving efficiency. This leads to better resource utilization. So this can improve drivers' profitability.

· Calendar Application Integration: Incorporate TrafficEscape into a calendar application to suggest optimal departure times based on scheduled events and traffic predictions. This will help users better manage their time. So, it avoids delays when attending events.

15

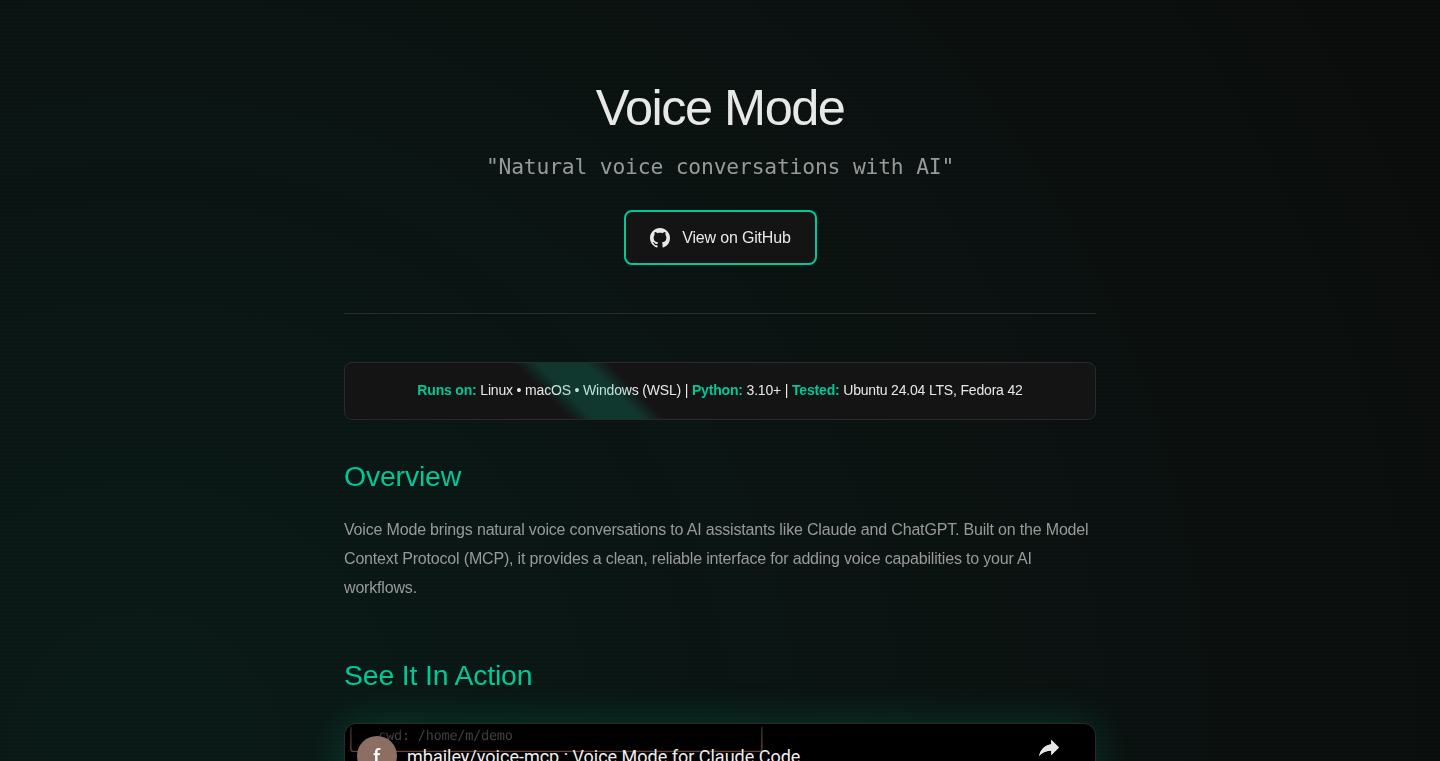

Voice-Mode MCP: Conversational Coding Bridge

Author

mike-bailey

Description

Voice-Mode MCP is a Free and Open Source Software (FOSS) server that enables two-way voice conversations with language models like Claude Code and Gemini. It lets developers interact with these models using voice commands, for a more natural and efficient coding experience. The core innovation lies in bridging spoken language and code generation, effectively turning the language model into a conversational coding assistant. So this lets me drive my coding assistant with my voice.

Popularity

Points 7

Comments 0

What is this product?

Voice-Mode MCP acts as a conversational interface. It takes voice input, processes it, and sends the instructions to a language model. The language model then generates or modifies code based on the voice commands, and the server relays the result back to the user as speech. This leverages Large Language Models (LLMs) to create a more interactive, less typing-intensive coding workflow. So coding becomes a spoken conversation instead of a typing session.

How to use it?

Developers install and configure Voice-Mode MCP by adding a server entry to a settings.json file, which lets command-line (CLI) tools such as Claude Code and Gemini talk to it. The entry defines the command and arguments used to start the server; the project launches it with 'uvx', the tool runner from the uv Python package manager. Everything runs from your terminal. So I can talk to a language model and get my coding done!
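Claude Code and Gemini CLI both accept MCP server definitions in an `mcpServers` object whose entries give a `command` and its `args`. A small Python helper can merge such an entry into an existing JSON settings file without clobbering other keys. The server name `voice-mode` and the `uvx voice-mode` invocation are assumptions based on the description above; check the project's README for the exact package name and any environment variables it needs.

```python
from __future__ import annotations

import json
import tempfile
from pathlib import Path


def add_mcp_server(settings_path: Path, name: str, command: str, args: list[str]) -> dict:
    """Add (or replace) one entry under "mcpServers", preserving the rest of the file."""
    settings = json.loads(settings_path.read_text()) if settings_path.exists() else {}
    settings.setdefault("mcpServers", {})[name] = {"command": command, "args": args}
    settings_path.write_text(json.dumps(settings, indent=2) + "\n")
    return settings


if __name__ == "__main__":
    # Demo on a temporary file; point this at your real settings.json
    # (for Gemini CLI, typically ~/.gemini/settings.json) once you're happy with it.
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "settings.json"
        path.write_text(json.dumps({"theme": "Default"}))
        add_mcp_server(path, "voice-mode", "uvx", ["voice-mode"])  # package name assumed
        print(path.read_text())
```

Editing the file programmatically like this keeps unrelated settings intact, which is easy to get wrong when pasting JSON by hand.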

Product Core Function

· Voice Command Interpretation: Voice-Mode MCP captures spoken instructions and converts them into a format suitable for the language model. This simplifies the coding process by replacing typing with talking. So this lets me command my code with my voice.

· Language Model Integration: The system seamlessly integrates with various language models (Claude Code, Gemini), leveraging their capabilities to generate and modify code. This opens up new possibilities for automated coding tasks and faster development cycles. So this means I can use smart AI to do my coding for me.

· Two-Way Communication: It provides a bi-directional communication channel, allowing the developer to give commands and receive feedback vocally. This conversational approach significantly enhances the user experience. So I can talk to my code and it talks back!

· Open Source and Customization: Being FOSS, the server is open for contributions from the community, and allows developers to extend and tailor the system to meet their specific needs and preferences. So I can make this software do exactly what I need.

Product Usage Case

· Automated Code Generation: Use voice commands to instruct the LLM to create specific code snippets or entire functions, saving time and reducing the need for extensive manual typing. For instance, a developer could say 'Write a function to sort an array' and the system would generate the code. So I can just tell the software what I want and it writes the code for me.

· Code Modification and Debugging: Developers can use voice to modify existing code, e.g., 'Change the variable name to x' or debug errors using conversational prompts. This streamlines the process of fixing problems. So I can fix bugs just by talking to my code.

· Rapid Prototyping: The tool allows rapid prototyping by letting the user quickly generate and test various code ideas through voice interactions, accelerating the exploratory phase of software development. So I can test out new ideas and make changes really fast.

16

iCloudDriveFix: A Windows Sync Savior

Author

instagib

Description

This project documents a fix for a common problem: iCloud Drive failing to sync on Windows. Frustrated by persistent sync issues that the usual online advice did not resolve, the author wrote a detailed troubleshooting guide. It is a direct, hands-on response to a practical need, in the hacker spirit of solving a real-world problem, and aims to make iCloud Drive smoother for other Windows users.

Popularity

Points 5

Comments 1

What is this product?

This project is a comprehensive guide to resolving iCloud Drive sync failures on Windows. It is not a piece of software but a series of steps and checks for identifying and fixing the underlying problem, based on what specifically is failing on your machine. This matters because the right fix depends on your situation: the guide first helps you find the root cause, then gives you the steps to fix it.

How to use it?

To use the guide, work through the author's troubleshooting steps in order on your own computer. The steps typically involve checking specific settings and background processes, and may involve modifying system files or reinstalling software. They are aimed at users who want a methodical way to find and fix sync problems when the standard solutions have failed, so you can potentially get iCloud Drive syncing again. The guide also doubles as an overview of common iCloud Drive pitfalls on Windows.
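One of the checks mentioned above, confirming that iCloud's background processes are actually running, can be scripted. The Python sketch below parses the output of the Windows `tasklist /FO CSV /NH` command (CSV format, no header row). The process names in `EXPECTED` are assumptions, not taken from the guide, so confirm them in Task Manager on your own machine before relying on the result.

```python
import csv
import io
import subprocess
import sys

# ASSUMED iCloud for Windows process names (lowercase); verify them in Task Manager.
EXPECTED = {"iclouddrive.exe", "icloudservices.exe"}


def running_process_names(tasklist_csv: str) -> set[str]:
    """Parse `tasklist /FO CSV /NH` output into a set of lowercase image names."""
    names = set()
    for row in csv.reader(io.StringIO(tasklist_csv)):
        if row:  # skip blank lines
            names.add(row[0].lower())
    return names


def missing_processes(tasklist_csv: str, expected: set[str] = EXPECTED) -> set[str]:
    """Return the expected processes that do not appear in the listing."""
    return set(expected) - running_process_names(tasklist_csv)


if __name__ == "__main__" and sys.platform == "win32":
    output = subprocess.run(
        ["tasklist", "/FO", "CSV", "/NH"], capture_output=True, text=True, check=True
    ).stdout
    missing = missing_processes(output)
    print("Missing:", ", ".join(sorted(missing)) if missing else "none")
```

If a process is missing, that points toward the service and reinstall steps in the guide rather than toward settings.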

Product Core Function

· Troubleshooting Guide: Provides a step-by-step process for diagnosing why iCloud Drive is not syncing, covering issues from simple misconfigured settings to more complex interactions with the rest of the system. So this helps you find the problematic setting or program quickly, saving hours of searching and testing.

· Problem Isolation: The guide walks you through checking each component involved in syncing so you can narrow down the exact cause of the failure, which is much faster than generic troubleshooting. So this helps you pinpoint the exact cause of the issue.

· Solution Implementation: Offers practical methods to resolve common sync issues, providing actionable steps to fix the problems. The solutions range from simple configuration adjustments to more advanced steps like file repair or reinstalling specific components. This means you could find your files syncing again after hours of frustration.

Product Usage Case

· Sync Problems with Photos: If your iCloud Photos aren't updating on your Windows computer, the guide helps you confirm that your settings are correct and that sync is running in the background as it should. If the settings look right, it helps you dig deeper. So you can get your photos back on your computer.

· Document Sync Failures: If your iCloud Drive documents are not syncing to your computer, the guide walks you through identifying the cause and fixing it. So you can keep working on your documents across all your devices.

17

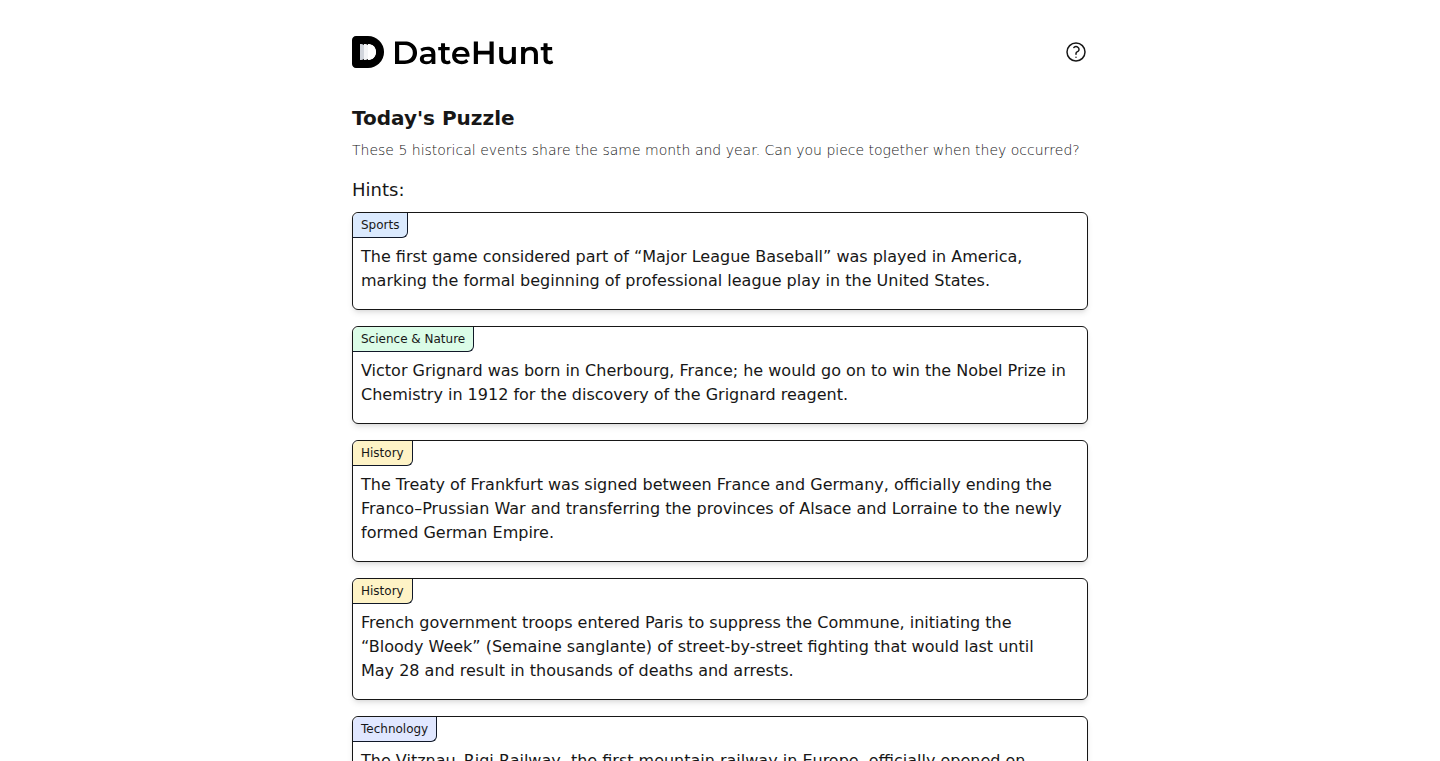

ChronosGuess: A Daily Historical Event Date Guessing Game

Author

cjo_dev

Description

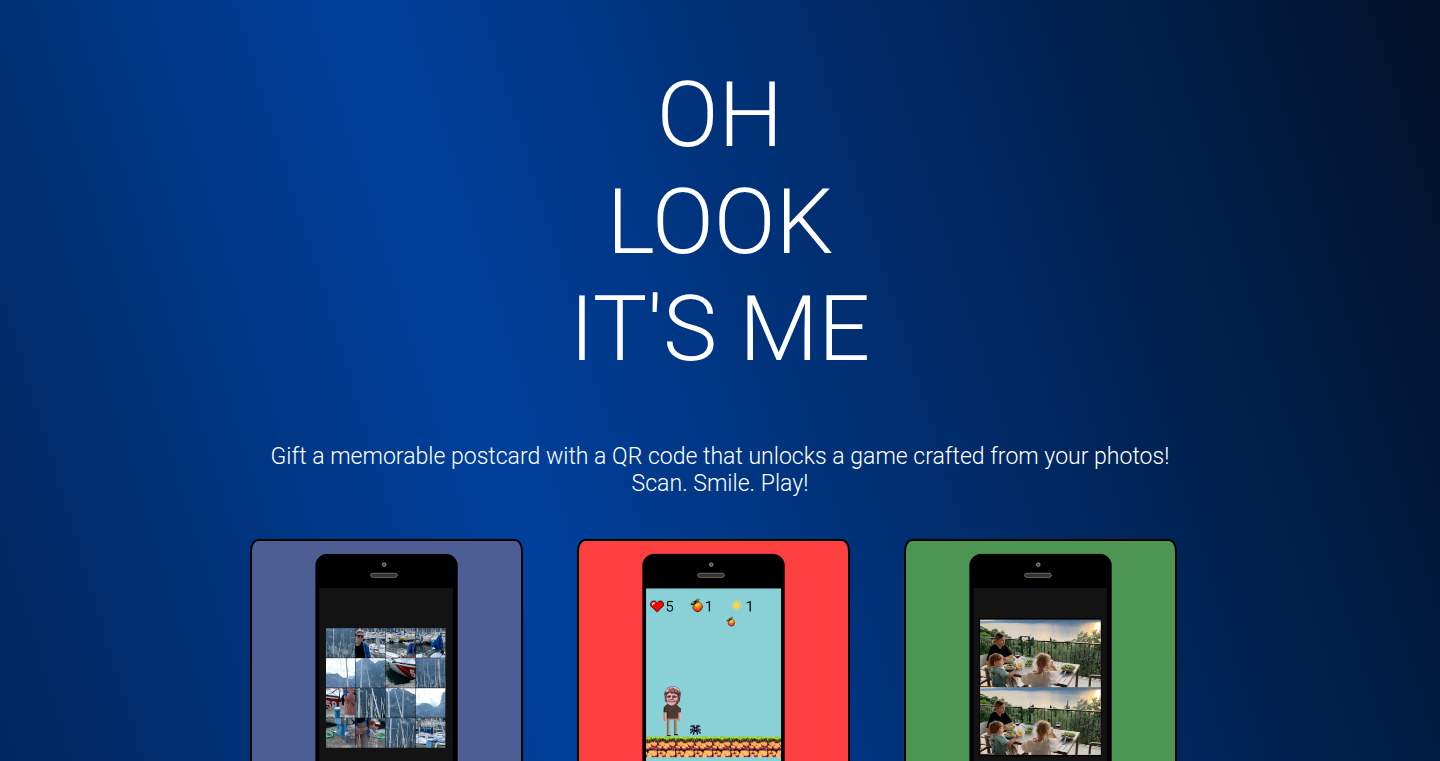

ChronosGuess is a daily puzzle game where you try to guess the date of a significant historical event. The core innovation lies in its curated event database and the interactive guessing mechanism that provides hints based on the user's input, allowing for a fun and educational experience. It addresses the challenge of making history engaging and accessible.

Popularity

Points 3

Comments 3

What is this product?

ChronosGuess is a game built around historical dates. Think of it as a word puzzle, except that instead of guessing words you guess the dates of important historical events. After each guess the game gives you a clue, for example whether the correct year is before or after your guess. The core innovations are the curated database of historical events and this interactive feedback, which lets you narrow down your guesses step by step even if you don't know the exact date at first. So it's a fun way to learn about history.
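The before/after feedback can be sketched in a few lines of Python. This is a hypothetical illustration, not ChronosGuess's code: it compares years only, and the `close_within` threshold for the "close"/"far" part of the hint is an assumption.

```python
def hint(answer_year: int, guess_year: int, close_within: int = 10) -> str:
    """Return feedback on a year guess: direction to move plus a rough distance."""
    if guess_year == answer_year:
        return "correct"
    # "later" means the real event happened after the guessed year.
    direction = "later" if answer_year > guess_year else "earlier"
    gap = abs(answer_year - guess_year)
    closeness = "close" if gap <= close_within else "far"
    return f"{direction}, {closeness}"


if __name__ == "__main__":
    print(hint(1969, 1900))  # moon landing guessed too early
```

With direction-only feedback, a player who halves the remaining range on each guess converges in roughly log2(range) guesses, which is why this kind of hint keeps the game winnable even when the exact date is unknown.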

How to use it?

Developers could potentially reuse the event database and guessing mechanism to build similar educational games or add historical context to their own applications, such as interactive storytelling platforms, educational apps, or gamified quizzes. In a learning app, for example, users might read a fact and then guess its date. Developers would need access to the game's core functionality, for example through an API or by adapting its code. So it's a possible building block for projects that want to combine historical facts with interactive elements.

Product Core Function

· Daily Puzzle Generation: A new historical event is presented each day, providing fresh content and encouraging daily engagement. The technical challenge lies in selecting events that are interesting and fair. In educational platforms, this could support a consistent daily learning routine.

· Interactive Guessing Mechanism: After each guess, the game gives feedback such as whether the correct date is before or after the guess, and possibly how close the guess is. This comparison-based feedback keeps the game enjoyable while helping users refine their sense of historical timelines. It could be used in educational games that encourage exploring chronology.

· Curated Event Database: The core of the game is its curated database of significant historical events. Such a database could also serve as a resource for other projects that need historical facts and dates, making it easier for developers to integrate historical data.
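One simple way to serve the same event to every player on a given day is to shuffle the event list once with a fixed seed and index it by the date's ordinal. ChronosGuess's own selection logic isn't described, so `event_for_day`, the in-memory list, and the seed value are hypothetical.

```python
import random
from datetime import date


def event_for_day(events: list[str], day: date, seed: int = 2025) -> str:
    """Pick a deterministic event for a calendar day, identical for all players."""
    if not events:
        raise ValueError("event list is empty")
    # A fixed seed makes the shuffled order reproducible on every run.
    order = random.Random(seed).sample(events, len(events))
    # Consecutive days map to consecutive positions in the shuffled order.
    return order[day.toordinal() % len(order)]


if __name__ == "__main__":
    events = ["Fall of the Berlin Wall", "Moon landing", "Battle of Hastings"]
    print(event_for_day(events, date(2025, 6, 25)))
```

Because the shuffled order is a permutation, any run of `len(events)` consecutive days shows each event exactly once. Adding events changes the order, though, so a production version would likely persist its schedule instead of recomputing it.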

Product Usage Case

· Educational App Integration: Imagine an educational app about the Roman Empire. Building on ChronosGuess, developers could add a mini-game where users guess the dates of key events such as the founding of Rome or the death of Caesar. This turns a knowledge check into an interactive, fun exercise.

· Interactive Storytelling Platform: A storytelling platform built around historical events could insert date-guessing mini-games at key moments in the story, letting users interact with the narrative. This enhances the story with an element of puzzle-solving.

18

IQMeals: AI-Powered Nutritional Assistant

Author

scalipsum

Description

IQMeals is a mobile application (iOS and Android) leveraging AI to assist users in making healthier food choices. It analyzes your dietary preferences and restrictions, provides personalized meal recommendations, and tracks your nutritional intake. The innovation lies in its use of AI algorithms to interpret user data and generate tailored dietary plans, offering a practical solution to managing healthy eating habits.

Popularity

Points 5

Comments 0

What is this product?

IQMeals is like a smart nutritionist in your pocket. It uses artificial intelligence to understand your eating habits, dietary needs (like allergies or specific diets), and personal preferences. Based on this information, it suggests meals that are good for you, helps you track what you eat, and provides insights into your nutritional intake. The innovative aspect is the use of AI to personalize these recommendations and make healthy eating easier.

How to use it?

Developers could integrate an IQMeals API (if one becomes available in future versions) into their own health and wellness apps to offer intelligent meal recommendations or nutritional tracking, such as personalized recipe suggestions or dietary analysis tools. This could form the basis of a more comprehensive health tracking platform. So this would let you enhance your existing apps with smart dietary features.

Product Core Function

· Personalized Meal Recommendations: The app suggests meals based on your individual dietary needs and preferences, using AI to analyze your data. So this can greatly simplify deciding what to eat, especially if you have specific dietary restrictions or goals.

· Nutritional Tracking: It allows users to log their meals and track their nutritional intake, helping them monitor their progress and make informed choices. So this gives you a clear picture of what you're eating and its impact on your health.

· Dietary Preference Customization: Users can specify their dietary requirements, like allergies or preferences (vegan, vegetarian, etc.), to receive customized recommendations. So it caters to your specific needs, making it easier to follow your chosen diet.
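The AI side of IQMeals isn't described here. As a much simpler, rule-based stand-in, the sketch below shows the filtering and tracking that any such assistant needs: excluding meals that conflict with allergies or diet tags, ranking the rest against a calorie budget, and summing a day's logged nutrients. The `Meal` fields and the ranking rule are assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Meal:
    name: str
    calories: int
    protein_g: float
    allergens: set[str] = field(default_factory=set)
    tags: set[str] = field(default_factory=set)  # e.g. {"vegan", "vegetarian"}


def recommend(meals: list[Meal], allergies: set[str], required_tags: set[str], calorie_budget: int) -> list[Meal]:
    """Keep safe, diet-compatible meals within budget, closest to the budget first."""
    suitable = [
        m for m in meals
        if not (m.allergens & allergies)  # no allergen overlap
        and required_tags <= m.tags       # meets every dietary requirement
        and m.calories <= calorie_budget
    ]
    return sorted(suitable, key=lambda m: calorie_budget - m.calories)


def daily_totals(log: list[Meal]) -> dict[str, float]:
    """Sum the nutrients of the meals logged for one day."""
    return {
        "calories": sum(m.calories for m in log),
        "protein_g": sum(m.protein_g for m in log),
    }
```

For example, with a peanut allergy, a vegan requirement, and a 600 kcal budget, `recommend` drops any meal containing peanuts or lacking the "vegan" tag, then lists the remaining meals from the one that uses most of the budget to the one that uses least.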

Product Usage Case

· Integration with Fitness Apps: A fitness app developer could use an IQMeals API, if one becomes available, to provide users with customized meal plans that complement their workout routines. So this provides a holistic health solution, helping users achieve their fitness goals through both exercise and diet.

· Wellness Platform Enhancement: A wellness platform could use IQMeals to offer its users personalized nutritional guidance, along with other wellness services. So this improves the user experience and provides a more comprehensive health and wellness offering.

· Recipe Website/App Augmentation: A recipe website or app could integrate IQMeals to recommend recipes that align with each user's dietary needs and preferences. So this allows users to find recipes tailored to them.

19

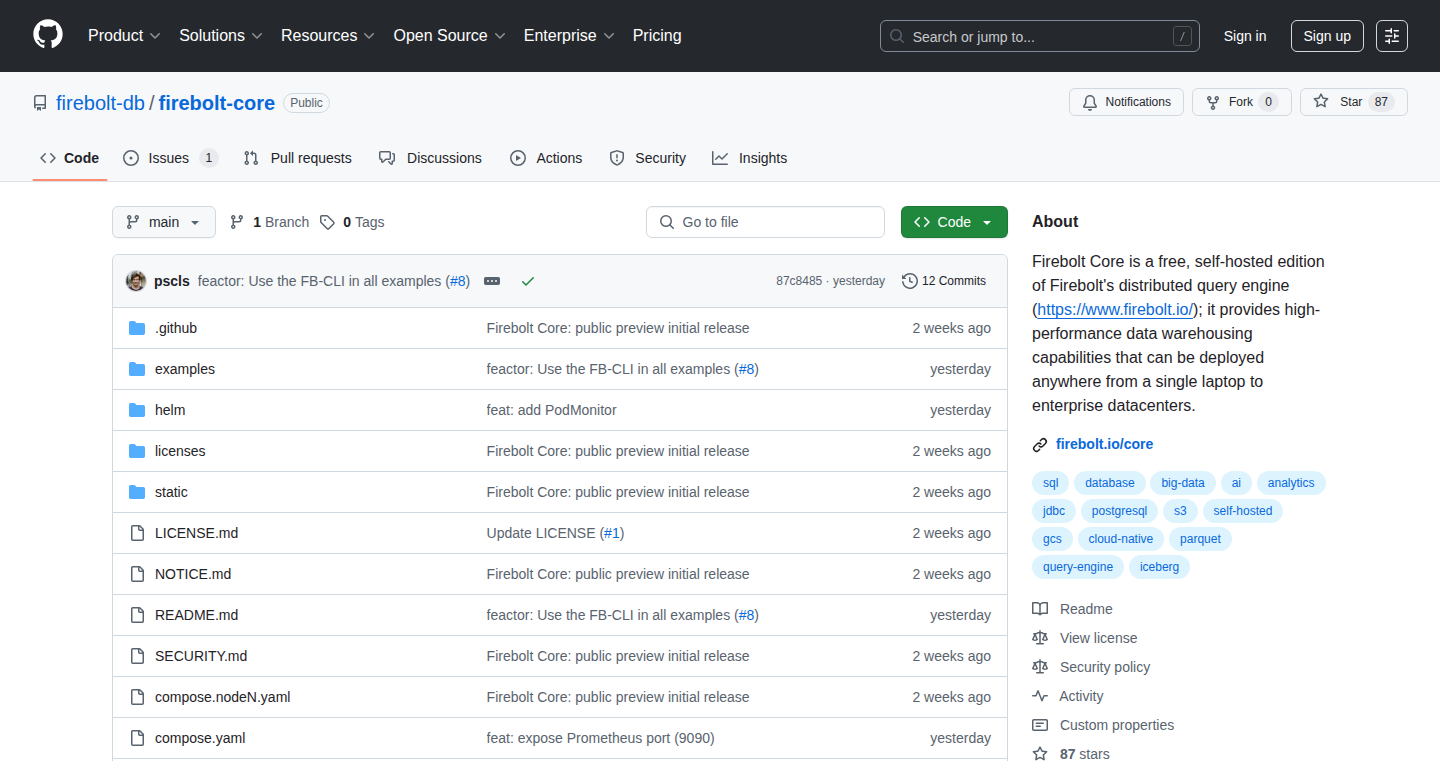

Firebolt Core: Self-Hosted, High-Performance Analytical SQL Engine

Author

lorenzhs

Description

Firebolt Core is a self-hosted, open-source analytical database engine designed for speed and scalability. It addresses the lack of modern, self-hosted query engines by providing a Docker image that you deploy on your own infrastructure. This allows you to run fast, concurrent analytics on your data, making it well suited to user-facing dashboards and data-heavy applications. According to the author, the engine took the top spot on the ClickBench benchmark, evidence of its ability to handle complex queries with low latency. Running it yourself gives you control over your data and avoids the vendor lock-in common with SaaS solutions. It is also free to use without restrictions, including commercially, so developers can build analytical solutions on their own terms.

Popularity

Points 5

Comments 0

What is this product?

Firebolt Core is a database engine for analyzing large amounts of data quickly. Think of it as a powerful tool for asking questions of your data and getting answers fast. It achieves this through advanced query optimization and distributed processing. Its key strength is running complex queries over very large datasets with very low latency. Because it is packaged as a Docker image, you can install and run it on your own computers or servers without complex setup. The open-source approach also lets you inspect and modify its inner workings, giving you flexibility and control over your data management.

How to use it?

Developers can use Firebolt Core by pulling the Docker image and deploying it on their infrastructure. The project provides Helm charts and Docker Compose files to simplify the deployment process. You can connect to it using SQL clients or integrate it with data visualization tools. This allows developers to run analytical queries on their data, build dashboards, or power data-intensive applications. For example, you can use it to analyze website traffic, track sales performance, or provide real-time insights to users. Its ease of deployment and integration means you can quickly get it working with your existing systems, allowing you to focus on the business logic rather than database administration.
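As a rough illustration of querying a self-hosted instance from application code, the sketch below sends SQL text over HTTP using only Python's standard library. It assumes the container exposes an HTTP endpoint at `http://localhost:3473` that accepts the SQL statement as the POST body. Treat both the URL and the request format as assumptions to verify against the Firebolt Core documentation.

```python
import urllib.request

# ASSUMPTION: endpoint of a locally running Firebolt Core container.
FIREBOLT_CORE_URL = "http://localhost:3473"


def build_query_request(sql: str, url: str = FIREBOLT_CORE_URL) -> urllib.request.Request:
    """Wrap a SQL statement in an HTTP POST request, with the SQL text as the body."""
    return urllib.request.Request(url, data=sql.encode("utf-8"), method="POST")


def run_query(sql: str, url: str = FIREBOLT_CORE_URL, timeout: float = 30.0) -> str:
    """Send the query and return the raw response body as text."""
    with urllib.request.urlopen(build_query_request(sql, url), timeout=timeout) as response:
        return response.read().decode("utf-8")
```

With an instance running, `run_query("SELECT 42")` would return the raw response body; how to parse it depends on the output format the server returns. A dashboard backend would typically wrap `run_query` with connection reuse and error handling rather than calling it directly.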

Product Core Function

· High-Performance Query Processing: Firebolt Core optimizes queries to return results with low latency, so answers to your data questions arrive quickly. So this lets you build responsive user interfaces that visualize data without noticeable delay.

· Scale-Out Architecture: The engine is designed to scale horizontally, meaning it can handle increasing amounts of data and user load by adding more computing resources. So this ensures your application can handle growth without performance degradation.

· Self-Hosted Deployment: Offered as a Docker image and easily deployable through Docker Compose and Helm, Firebolt Core runs on your own infrastructure, giving you flexibility and avoiding vendor lock-in. So this allows you to retain full control over your data and infrastructure.

· ETL/ELT Capabilities: Firebolt Core supports data transformation and loading (ETL/ELT), making it a versatile solution for data warehousing and analytics. So this allows you to consolidate data from different sources and prepare it for analysis within the same system.

· Open Source and Free for Commercial Use: Firebolt Core is provided with a permissive license, removing restrictions for commercial use. So this reduces the cost of ownership and promotes community involvement and collaboration.

Product Usage Case