Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-06-23

SagaSu777 2025-06-24

Explore the hottest developer projects on Show HN for 2025-06-23. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

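Today's Show HN projects show hackers embracing AI creatively. AI is no longer only a research topic. It is being used to solve practical problems and improve efficiency. Developers are using AI to automate repetitive work, such as transcribing videos, generating designs, managing databases, and even helping with job searches. The open-source spirit also remains strong. Many projects are released as open source, which speeds the spread of technology and lowers the barrier to innovation. Entrepreneurs can look at combining AI with existing tools and at finding processes that AI can accelerate. Technical innovators can learn from these projects how to integrate AI into existing workflows to cut costs, improve efficiency, and explore new business models. Remember that the hacker spirit is about hands-on practice and using technology to solve real problems. Don't be afraid to fail. Experiment boldly, and you too can build tools that change the world.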

Today's Hottest Product

Name

RUNRL JOB

Highlight

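This project lets you run reinforcement learning fine-tuning (RFT) workloads with one click. It simplifies RLHF experiments and lowers the barrier to entry for researchers, students, and indie hackers. You can use it to fine-tune your large language model and define your own reward functions so that the model better follows your intent. It removes the technical hurdles of launching an RLHF experiment. You don't need to configure a complex Docker environment, and a few clicks are enough to start an experiment. It also provides real-time training metrics and helps you save memory. Developers can learn from it how to integrate HPC-AI infrastructure into large-scale AI experiments.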

Popular Category

AI Tools

Developer Tools

Open-Source Libraries

Popular Keyword

AI

Open Source

API

Automation

Technology Trends

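Using AI to simplify tasks and speed up workflows (e.g., task automation, video transcription, database interaction, AI code generation)

Using AI to boost developer productivity (e.g., AI coding assistants, LLM-assisted debugging tools, generated UI designs)

Applying AI to practical problems (e.g., AI-assisted job hunting, AI-driven web content analysis, AI image generation, AI-assisted trading)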

Project Category Distribution

AI Applications (35%)

Developer Tools (30%)

Utilities (25%)

Other (10%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | Comparator: Open-Source Job Offer Analysis Tool | 48 | 28 |
| 2 | RUNRL JOB: One-Click Reinforcement Learning Fine-Tuning on HPC-AI | 20 | 6 |
| 3 | Artist Network Explorer | 6 | 7 |
| 4 | GitHub DeepDive: Your AI-Powered GitHub Research Assistant | 12 | 0 |
| 5 | Windowfied: Bring Windows' `dir` Command to macOS | 4 | 7 |
| 6 | Cargofetch: Rust Project Dependency Retriever | 8 | 3 |
| 7 | Blockdiff: Instant VM Disk Snapshotting with Block-Level Diffs | 10 | 1 |
| 8 | Reddit Recap Quiz: Programming Digest | 8 | 1 |
| 9 | InterviewReady: AI-Powered Resume Optimizer | 8 | 0 |
| 10 | WhisperTranscribe: YouTube Video Transcription & Cleaning CLI | 4 | 2 |

1

Comparator: Open-Source Job Offer Analysis Tool

Author

MediumD

Description

Comparator is a free and open-source application designed to help job seekers easily compare multiple job offers. It's built with the goal of simplifying the often complex task of evaluating compensation packages, considering not only salary but also benefits, stock options, and other perks. The technical innovation lies in its structured approach to parsing and presenting offer details, allowing for a clear side-by-side comparison. It tackles the problem of information overload by providing a centralized platform to evaluate offers, promoting informed decision-making.

Popularity

Points 48

Comments 28

What is this product?

Comparator is a web application that lets you input details from different job offers, like salary, bonuses, stock options, health insurance, and other perks. The application then organizes this information in a clear, comparable format. The technical innovation is the structured data entry and comparative display. It doesn't just list numbers; it converts them into a format that allows for quick and easy comparisons. So you'll be able to easily spot the best overall offer. It's also open-source, meaning anyone can see how it works and even contribute to improve it. This promotes transparency and community contribution.

How to use it?

Users enter the details of each job offer into the application. Because Comparator is web-based, there is nothing to install. Once the data is entered, it generates a comparison table that shows which offer is most advantageous. Developers and other technical workers who want a clearer picture of their offers can compare compensation packages side by side. During salary or benefits negotiations, the resulting figures give them concrete data to support their decisions.

Product Core Function

· Data Input: Users enter job offer details in a structured form that breaks a complex compensation package into understandable parts. These parts include yearly salary, bonuses, stock options, healthcare plan details, and other benefits. This makes it practical to reason about total compensation rather than salary alone.

· Comparative Display: The application shows the details of every entered offer side by side. The user no longer has to scroll through multiple documents and spreadsheets to see all offers at once. This helps with questions such as the equivalent value of stock options or the impact of different healthcare plans.

· Open-Source Nature: The code is available for anyone to view, modify, and contribute to. This makes the tool transparent and lets the community extend its capabilities. Users can also verify for themselves how the data they enter is handled.
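Comparator's data model is not described in the post. A side-by-side comparison needs each offer reduced to comparable figures, and the minimal sketch below shows one way to do that by folding each offer into a single annual number. The `Offer` fields, the straight-line treatment of equity, and the function names are all hypothetical. They are not Comparator's schema.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    """One job offer; all amounts are annual and in the same currency (hypothetical schema)."""
    company: str
    base_salary: float
    target_bonus: float          # expected annual cash bonus
    equity_grant_value: float    # total grant value at today's share price
    vesting_years: int           # years over which the grant vests
    employer_benefits: float     # e.g. retirement match, stipends
    employee_premiums: float     # what the employee pays for health insurance per year


def annual_total_comp(o: Offer) -> float:
    """Rough annual value: cash + straight-line equity + benefits - premiums."""
    equity_per_year = o.equity_grant_value / o.vesting_years
    return (o.base_salary + o.target_bonus + equity_per_year
            + o.employer_benefits - o.employee_premiums)


def compare(offers: list[Offer]) -> list[tuple[str, float]]:
    """Return (company, annual total) pairs, best offer first."""
    ranked = [(o.company, annual_total_comp(o)) for o in offers]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


offers = [
    Offer("Acme", 150_000, 15_000, 120_000, 4, 6_000, 2_400),
    Offer("Globex", 165_000, 0, 0, 4, 4_000, 1_200),
]
for company, total in compare(offers):
    print(f"{company}: {total:,.0f}")
```

In this example, the offer with the lower base salary ranks first once bonus and equity are counted. Showing that effect is the point of comparing total compensation.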

Product Usage Case

· Salary Negotiation: A software engineer with two job offers enters both into Comparator. They can look past the higher starting salary to the long-term value of stock options and benefits. This gives them a clearer basis for choosing and more leverage when negotiating with each company.

· Benefit Comparison: A designer receives three job offers, each with a different health insurance plan. After entering the details into Comparator, they can compare premiums, deductibles, and coverage. They can then choose the plan that best fits their personal healthcare needs, extending the comparison beyond salary.

· Financial Planning: A developer uses Comparator to project the value of their stock options over time. By entering the stock price and vesting schedule, they get a better picture of their future financial position at each company. They also see each offer's long-term value beyond the immediate salary.
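The post does not describe how Comparator models vesting. The minimal sketch below projects equity value over time, assuming the common four-year schedule with a one-year cliff and monthly vesting afterward. The schedule parameters are assumptions to replace with each grant's actual terms, and the function names are hypothetical.

```python
def vested_shares(total_shares: int, months_elapsed: int,
                  vesting_months: int = 48, cliff_months: int = 12) -> int:
    """Shares vested after `months_elapsed` months under a cliff-then-monthly schedule."""
    if months_elapsed < cliff_months:
        return 0                               # nothing vests before the cliff
    if months_elapsed >= vesting_months:
        return total_shares                    # fully vested
    # Linear monthly vesting; integer division drops fractional shares.
    return total_shares * months_elapsed // vesting_months


def projected_equity_value(total_shares: int, months_elapsed: int,
                           share_price: float, **schedule: int) -> float:
    """Value of the vested portion at an assumed share price."""
    return vested_shares(total_shares, months_elapsed, **schedule) * share_price


# Vested value of a 4,800-share grant at the end of each year, at an assumed $10/share.
for year in range(1, 5):
    print(year, projected_equity_value(4_800, 12 * year, 10.0))
```

Running the projection with a few share-price scenarios per company turns "stock options" from a headline number into a timeline.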

2

RUNRL JOB: One-Click Reinforcement Learning Fine-Tuning on HPC-AI

Author

cheerGPU

Description

RUNRL JOB is a service that lets you run Reinforcement Learning Fine-Tuning (RFT) jobs, like GRPO or PPO, with just one click on HPC-AI.com. It simplifies the process of training models with rewards by pre-configuring pipelines, optimizing memory usage, and providing monitoring tools. The main innovation is making complex Reinforcement Learning (RL) techniques, typically requiring significant setup, accessible to everyone, from researchers to hobbyists, without the need for Dockerfiles or dealing with dependencies.

Popularity

Points 20

Comments 6

What is this product?

RUNRL JOB simplifies Reinforcement Learning Fine-Tuning (RFT) by providing a pre-configured pipeline. This means it handles the complicated setup required for training models using techniques like GRPO and PPO, which involve giving the model rewards based on its performance. It includes pre-built configurations, memory optimizations to save resources, logging to track progress, and reward modules. The core innovation is making these complex RL techniques easy to use. For instance, it offers memory-efficient GRPO, using less memory than alternatives, making it possible to experiment with complex models even on limited hardware. This also allows users to easily swap in their own models and monitor progress through TensorBoard, a tool for visualizing machine learning training.
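The post does not show how RUNRL JOB implements GRPO. As background, GRPO differs from PPO mainly in how it estimates advantages. PPO trains a separate value (critic) network. GRPO instead scores several completions sampled for the same prompt and normalizes each reward against that group's mean and standard deviation. Dropping the critic is one reason GRPO tends to need less memory than PPO. The minimal sketch below shows only that normalization step in plain Python. The function name and the `eps` guard are illustrative choices, and implementations differ on details such as population versus sample standard deviation.

```python
import statistics


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """GRPO-style advantages for one prompt's group of sampled completions.

    Each reward is centered on the group mean and scaled by the group's
    population standard deviation. `eps` avoids division by zero when
    every completion received the same reward.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# Four completions for one prompt, scored 1.0 (correct) or 0.0 (wrong).
advantages = group_relative_advantages([1.0, 0.0, 1.0, 0.0])
# Correct completions get a positive advantage and wrong ones a negative one,
# so the policy update pushes probability toward the better answers.
```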

How to use it?

Developers can use RUNRL JOB by visiting HPC-AI.com, clicking to launch GPU instances (choosing H100 or H200 GPUs), selecting the RUNRL JOB template, and starting the job. The system handles all the complexities of the RL training pipeline, enabling developers to focus on their model and training goals. They can then monitor the progress using JupyterLab or TensorBoard.

This takes the technical setup off your plate, so you can start experimenting with RL quickly.

Product Core Function

· Pre-wired RFT Pipeline: It sets up the entire Reinforcement Learning Fine-Tuning (RFT) process for you, including the model, reward system, and logging, eliminating the need to configure these components manually. This dramatically reduces setup time and effort.

· Memory Optimization: The pipeline is optimized for memory usage, especially for GRPO. Lower memory requirements reduce training costs and make room for larger models or datasets on the same hardware. This is particularly valuable for users without access to the largest compute resources.

· Model Support: RUNRL JOB is compatible with popular models like Qwen-3B and Qwen-1.5 out of the box, making it easy to get started. You can also use your own models by simply plugging them into the system. This flexibility lets you leverage existing models or test your own ideas quickly.

· Real-Time Monitoring: Provides live metrics via TensorBoard, allowing users to track their training progress and performance in real-time. This is crucial for understanding how the model is learning and making necessary adjustments, improving efficiency.

Product Usage Case

· Research and Experimentation: Researchers can quickly test different reinforcement learning algorithms (e.g., GRPO, PPO) on custom models. For example, a researcher exploring new reward mechanisms can iterate on and evaluate ideas without spending weeks setting up a training environment. This shortens the time it takes to test a new idea.

· Model Tuning: Developers can use RUNRL JOB to fine-tune large language models and improve their performance. For example, a company that wants to improve its chatbot's conversational skills could train it with reinforcement learning. The reward would reflect how engaging and helpful each response is.

· Educational Purposes: Students and newcomers can learn and experiment with RL techniques without first setting up an environment from scratch, which usually involves a steep learning curve. For example, a student learning RL can train a model on a small dataset to see how the training process works.
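The post says users can define custom reward functions, but it does not document RUNRL JOB's reward-module interface. The following is a hypothetical example of the kind of rule-based reward often used when fine-tuning on math problems. The `<answer>` tag convention, the `math_reward` name and signature, and the 0.1 partial credit are all assumptions for illustration.

```python
import re

# Assumed output convention: the model wraps its final answer in <answer>...</answer>.
ANSWER_RE = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)


def math_reward(completion: str, ground_truth: str) -> float:
    """Hypothetical rule-based reward: correctness plus a small format bonus."""
    match = ANSWER_RE.search(completion)
    if match is None:
        return 0.0                        # no parsable answer at all
    answer = match.group(1).strip()
    if answer == ground_truth.strip():
        return 1.0                        # correct and well formatted
    return 0.1                            # wrong answer, but the format was followed
```

The small format bonus gives the model a learning signal even before it gets answers right. Whether that helps or encourages reward hacking depends on the task, so the weights are worth tuning.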

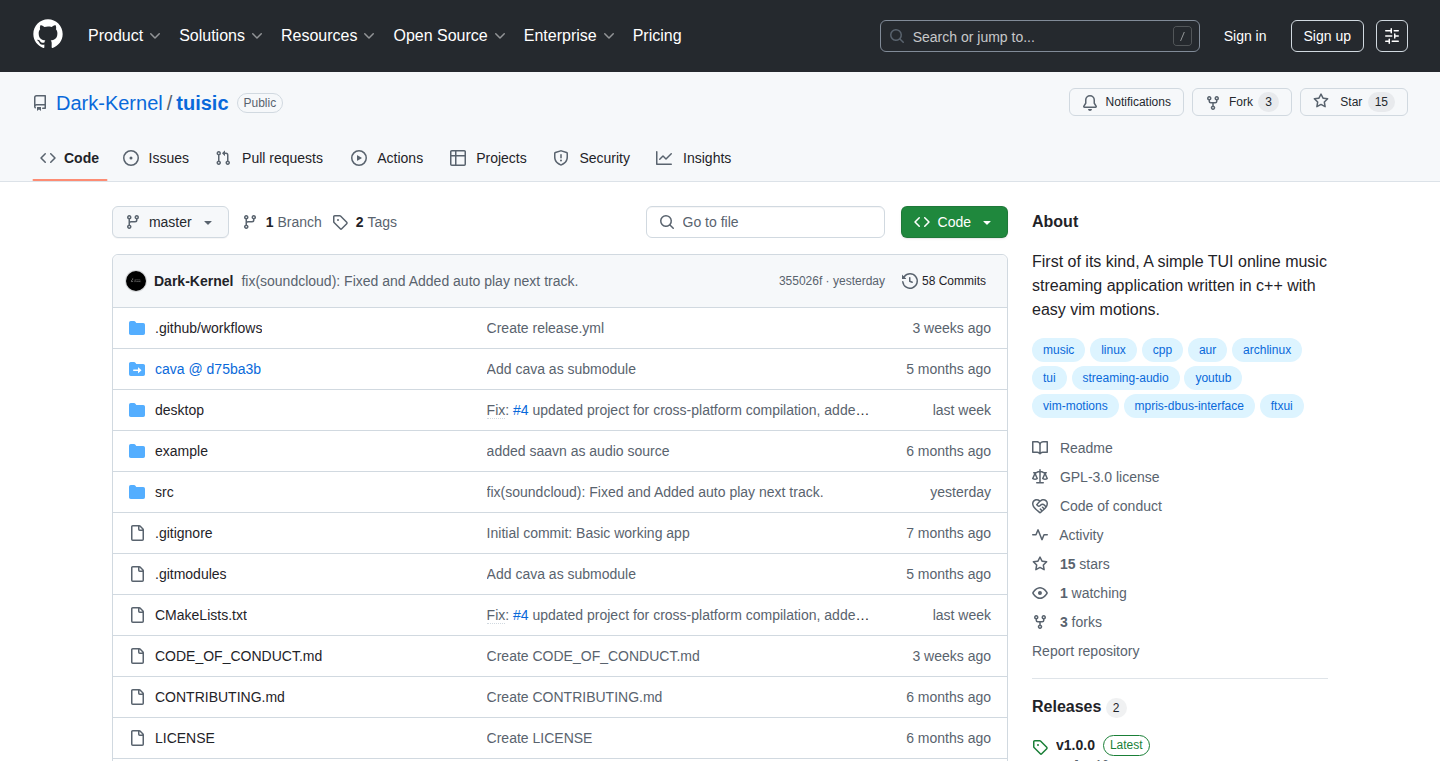

3

Artist Network Explorer

Author

fruitbarrel

Description

This project is a web-based tool for discovering new music artists. You start with a favorite artist and explore similar artists in a network graph. It uses the Apple Music API to retrieve artist data, and you can click on artists to expand the network. Hovering over an artist plays previews of their top songs. The result is a visual, interactive way to explore music.

Popularity

Points 6

Comments 7

What is this product?

This is a music discovery tool that visually maps similar artists. Each artist is a node in a network, and connections represent musical similarity. Clicking an artist expands the network to reveal more similar artists. The core innovation is this visual representation, built interactively from API data. It helps you find new music beyond searching by name or genre, with a visual way to discover artists that match your taste.
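The post does not describe how the explorer stores its graph or which Apple Music data it uses to decide that two artists are similar. The minimal sketch below shows one way click-to-expand could work. The `similar` lookup stands in for whatever data source backs the real tool (here a plain dictionary), and `ArtistGraph` is a hypothetical name.

```python
from collections import defaultdict
from typing import Callable


class ArtistGraph:
    """Undirected artist graph that grows as the user clicks nodes."""

    def __init__(self, similar: Callable[[str], list[str]]):
        self.similar = similar                 # returns similar artists for a name
        self.nodes: set[str] = set()
        self.edges: dict[str, set[str]] = defaultdict(set)
        self.expanded: set[str] = set()

    def expand(self, artist: str) -> list[str]:
        """Link an artist to its similar artists and return the newly added nodes."""
        self.nodes.add(artist)
        if artist in self.expanded:            # clicking twice adds nothing
            return []
        self.expanded.add(artist)
        new_nodes = []
        for other in self.similar(artist):
            if other not in self.nodes:
                self.nodes.add(other)
                new_nodes.append(other)
            self.edges[artist].add(other)
            self.edges[other].add(artist)
        return new_nodes


# Hypothetical similarity data standing in for an API lookup.
SIMILAR = {
    "Radiohead": ["Portishead", "Muse"],
    "Muse": ["Radiohead", "Placebo"],
}
graph = ArtistGraph(lambda name: SIMILAR.get(name, []))
graph.expand("Radiohead")   # adds Portishead and Muse
graph.expand("Muse")        # adds only Placebo; Radiohead is already present
```

Returning only the new nodes lets a front end animate just the part of the graph that changed after each click.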

How to use it?

To use Artist Network Explorer, go to the website and search for an artist. The tool generates a network of similar artists. Click artist nodes to expand the network further, and hover over them to hear song previews. The tool runs entirely in the browser and requires no sign-up. The same approach could also work in other web applications that need music recommendation and discovery. A streaming service, for example, could use a similar graph to suggest artists based on a user's listening history.

Product Core Function

· Visual Artist Network: Users explore artist relationships interactively, which is more engaging and intuitive than scrolling through a simple list.

· Apple Music API Integration: Artist data and song previews are fetched from the Apple Music API, so recommendations reflect current catalog data.

· Interactive Node Expansion: Clicking an artist node expands the network to reveal more similar artists. Users can keep going to explore the musical landscape in more depth.

· Song Preview on Hover: Hovering over an artist node plays previews of their top songs. Users can quickly judge whether an artist is worth exploring further.

Product Usage Case

· Music Streaming Service: A streaming service could build a similar explorer into its app to offer visual recommendations based on users' listening history. This could improve engagement and retention.

· Music Blog or Website: A music blog could embed the explorer as an interactive way to introduce new artists to readers, making its content more engaging.

· Personal Music Discovery: Listeners can use the tool on their own to find new music and build a more diverse library.

4

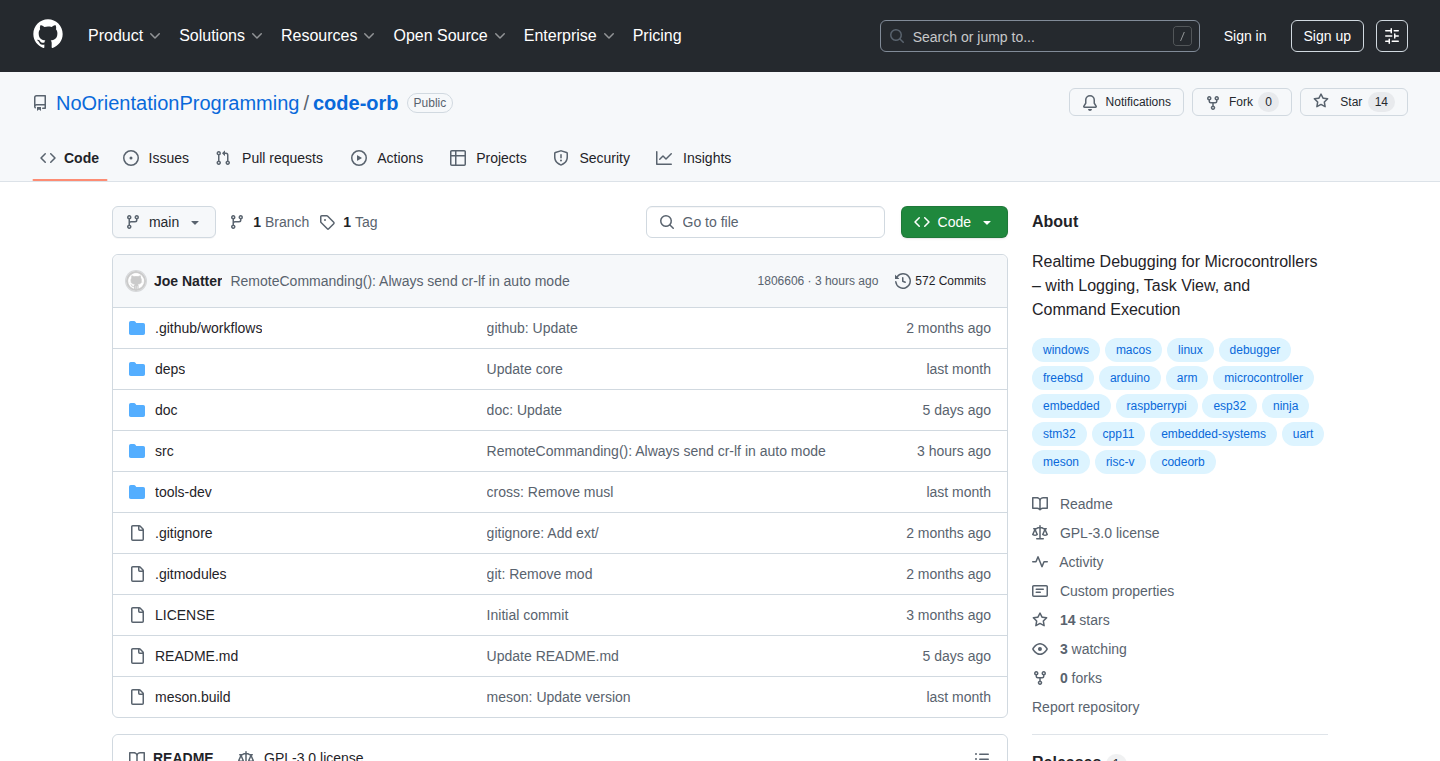

GitHub DeepDive: Your AI-Powered GitHub Research Assistant

Author

gustavoes

Description

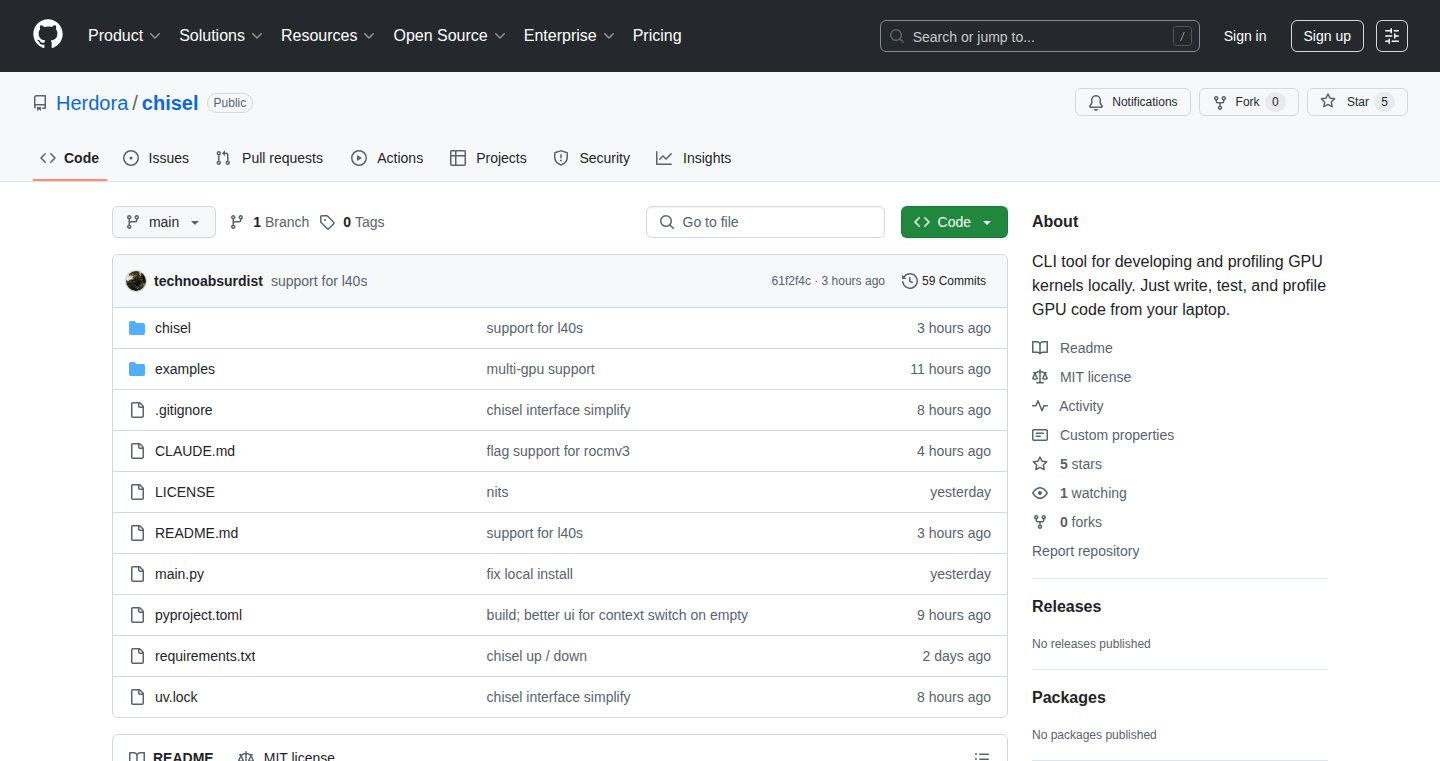

GitHub DeepDive is a tool for analyzing any GitHub repository in depth. It uses AI to summarize code, identify key functions, and explain the overall project architecture. This saves developers significant time by surfacing insights into complex codebases quickly. It speeds up learning an unfamiliar project and makes code reuse easier.

Popularity

Points 12

Comments 0

What is this product?

GitHub DeepDive acts like an AI expert that reads GitHub repositories for you. It combines natural language processing (NLP) with code analysis to break down a project's structure. It highlights critical pieces of code, explains their purpose, and gives you a clear picture of what the project does. By combining AI with code analysis, it goes beyond basic summaries, so you can follow code even in a language you don't know well.

How to use it?

Provide the URL of a GitHub repository, and the tool analyzes the code and presents a detailed overview. You can also ask specific questions about the code, and the AI will answer them. Everything runs through a web interface, so you can analyze any public GitHub repository without any setup.
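Analysis starts from the repository URL the user provides, but the post does not say how GitHub DeepDive handles that input. The minimal sketch below covers a first step any such tool needs: turning a `github.com` URL into an owner and repository name. `parse_repo_url` is a hypothetical helper built on Python's standard `urllib.parse`.

```python
from urllib.parse import urlparse


def parse_repo_url(url: str) -> tuple[str, str]:
    """Extract (owner, repo) from a github.com repository URL."""
    parsed = urlparse(url)
    if parsed.netloc.lower() not in ("github.com", "www.github.com"):
        raise ValueError(f"not a GitHub URL: {url}")
    parts = [p for p in parsed.path.split("/") if p]
    if len(parts) < 2:
        raise ValueError(f"missing owner or repository in: {url}")
    owner, repo = parts[0], parts[1]
    if repo.endswith(".git"):               # accept clone URLs as well
        repo = repo[: -len(".git")]
    return owner, repo


# Deep links into a repository resolve to the same (owner, repo) pair.
print(parse_repo_url("https://github.com/rust-lang/cargo/tree/master/src"))
```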

Product Core Function

· Code Summarization: Automatically generates summaries of code files and functions. Technical Value: Drastically reduces the time needed to understand what a piece of code does. Application: Quickly grasp the purpose of a function or file, even in a large project.

· Architecture Overview: Analyzes the project's structure and provides a high-level overview. Technical Value: Shows how the different parts of the project fit together. Application: Understand the project's design and how its components interact without reading through every file.

· Key Function Identification: Identifies the most important functions and code snippets in a repository. Technical Value: Focuses your attention on the most relevant code. Application: Quickly locate a project's core logic and key features.

· Code Question Answering: Lets developers ask specific questions about the code. Technical Value: Provides direct answers instead of requiring a manual search. Application: Find out, for example, how a particular feature is implemented.

· Dependency Analysis: Identifies and explains the project's dependencies. Technical Value: Clarifies which external components the project relies on and how they are used. Application: See at a glance which libraries and tools the project depends on.
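GitHub DeepDive uses AI to decide which functions matter most, and the post does not explain its method. A simple static alternative is to rank functions by how often the code calls them. The sketch below does this for a single Python file using the standard `ast` module. It is not DeepDive's approach. It also matches calls by bare name only, so functions with the same name in different classes or modules are counted together.

```python
import ast
from collections import Counter


def rank_functions_by_calls(source: str) -> list[tuple[str, int]]:
    """Rank functions defined in `source` by how often they are called within it."""
    tree = ast.parse(source)
    defined = {node.name for node in ast.walk(tree)
               if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))}
    calls: Counter[str] = Counter()
    for node in ast.walk(tree):
        if isinstance(node, ast.Call):
            func = node.func
            # Plain calls f() have a Name; method calls obj.f() have an Attribute.
            name = func.id if isinstance(func, ast.Name) else getattr(func, "attr", None)
            if name in defined:
                calls[name] += 1
    # Most-called first; ties broken alphabetically. Uncalled functions score 0.
    return sorted(((name, calls[name]) for name in defined),
                  key=lambda pair: (-pair[1], pair[0]))
```

Call frequency is a crude signal. Entry points such as `main` score zero even though they matter, which is one reason a tool might combine static analysis with a language model.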

Product Usage Case

· Understanding a New Open-Source Project: A developer wants to contribute to an open-source project but finds the codebase overwhelming. GitHub DeepDive provides an overview, identifies the key functions, and explains the architecture. The developer can then get oriented and start contributing sooner.

· Code Review Enhancement: During a code review, a reviewer can use GitHub DeepDive to understand unfamiliar code quickly. Asking questions about specific functions or code blocks helps them spot potential issues or areas for improvement more efficiently.

· Learning from Examples: A developer learning a new programming language or framework wants to see how a specific feature is implemented in other projects. Running GitHub DeepDive on relevant repositories helps them grasp the implementation details quickly.

· Code Reuse and Adaptation: A developer needs specific functionality for a new project. Analyzing existing projects with GitHub DeepDive helps them find code snippets they can adapt, which saves development time.

· Debugging and Troubleshooting: When a developer runs into a bug, GitHub DeepDive can summarize the related code and highlight key function calls. This helps narrow down where the problem lies.

5

Windowfied: Bring Windows' `dir` Command to macOS

Author

mnky9800n

Description

Windowfied is a playful attempt to bring `dir`, the Windows directory listing command, to macOS. It is distributed through Homebrew, a popular package manager for macOS. The core idea is to mimic the functionality, and perhaps the look, of the Windows `dir` command in a macOS environment. This involves adapting a Windows-centric command-line tool to a Unix-based system. For users coming from Windows, it offers a familiar command that can make the move to macOS a little smoother.

Popularity

Points 4

Comments 7

What is this product?

Windowfied essentially clones the functionality of the `dir` command, which is used to list files and directories in a Windows command prompt. It's built on macOS using Homebrew, and its key technical aspect is likely the parsing and re-implementation of `dir`'s numerous flags and formatting options within a Unix-like environment. This involves mapping the Windows-specific command's behavior to macOS's underlying file system and command-line tools. So it's like having a piece of Windows functionality running on your Mac.

How to use it?

Install Windowfied with Homebrew using `brew install mnky9800n/tools/windowfied`. After installation, run the `windowfied` command in the terminal much as you would run `dir` on Windows. This is especially useful for developers who are used to `dir` on Windows and want the same experience on a Mac. The project can also serve as a small case study in implementing a command-line tool across platforms.

Product Core Function

· Directory Listing: Windowfied lists files and directories, similar to the `ls` command but with a style closer to the Windows `dir` command. This helps developers who are familiar with the `dir` command easily navigate the macOS file system.

· File Attribute Display: It probably presents file attributes like size, modification date, and file type in a format similar to Windows `dir`. This aids in quickly identifying important file details in a familiar layout.

· Command-line Arguments and Options: Windowfied likely supports various command-line arguments and options that modify the output, mimicking the flags available in the Windows `dir` command. This offers developers customization options for how they want to view file listings.
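The post does not show Windowfied's source, so the following is not its implementation. It is a minimal Python sketch of what reproducing the `dir` layout involves. Each line shows the modification date and time, then either `<DIR>` or the file size with thousands separators, then the name. The column widths approximate the Windows output, and the directories-first ordering is a choice made here. `%p` renders AM/PM according to the current locale.

```python
import os
from datetime import datetime


def format_entry(name: str, is_dir: bool, size: int, mtime: datetime) -> str:
    """Format one entry in the style of a Windows `dir` line (approximate widths)."""
    stamp = mtime.strftime("%m/%d/%Y  %I:%M %p")
    # Directories get a <DIR> marker; files get a right-aligned, comma-grouped size.
    middle = "    <DIR>          " if is_dir else f"{size:>18,} "
    return f"{stamp}{middle}{name}"


def dir_listing(path: str = ".") -> list[str]:
    """List a directory's entries, directories first, each group sorted by name."""
    with os.scandir(path) as it:
        entries = sorted(it, key=lambda e: (not e.is_dir(), e.name.lower()))
        return [
            format_entry(e.name, e.is_dir(), e.stat().st_size,
                         datetime.fromtimestamp(e.stat().st_mtime))
            for e in entries
        ]


if __name__ == "__main__":
    print("\n".join(dir_listing(".")))
```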

Product Usage Case

· Cross-platform Development: A developer working on both Windows and macOS can use Windowfied to keep a consistent command-line experience, without switching between two directory listing formats.

· Transitioning from Windows: Developers moving from Windows to macOS get a familiar file listing command, which can ease adoption and smooth their workflow on macOS.

· Scripting and Automation: Scripts or automation workflows that expect a Windows-style listing can use Windowfied on macOS to produce one. This helps keep such scripts working on both platforms.

6

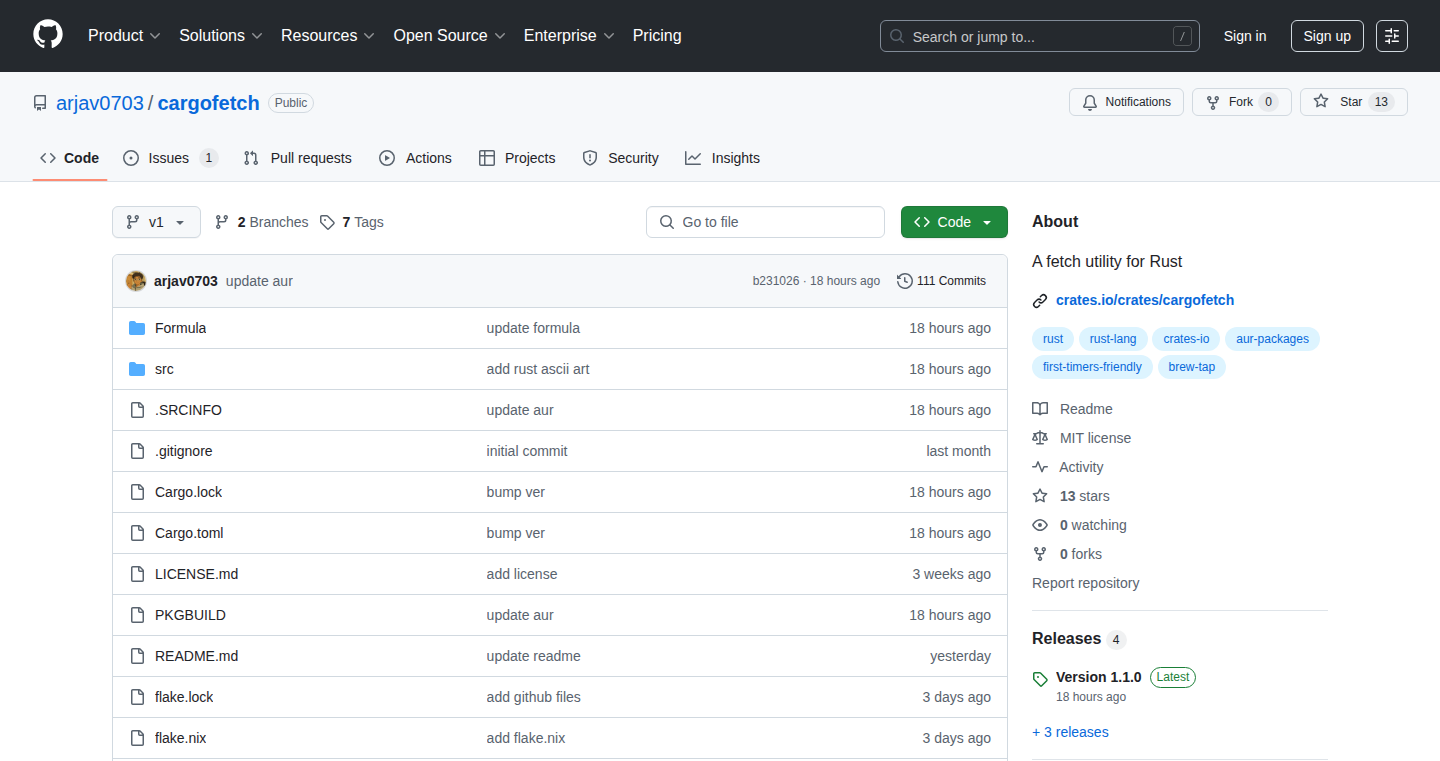

Cargofetch: Rust Project Dependency Retriever

Author

Manan-Coder

Description

Cargofetch is a utility designed to efficiently fetch and manage dependencies for Rust projects. The core innovation lies in its optimized approach to retrieving and caching dependencies, drastically speeding up build times and improving developer productivity. It tackles the common problem of slow dependency resolution in Rust projects, which often involves downloading and compiling numerous crates from the internet. This project offers a faster, more streamlined solution by leveraging intelligent caching mechanisms.

Popularity

Points 8

Comments 3

What is this product?

Cargofetch is a command-line tool that helps Rust developers get the necessary packages (dependencies) for their projects. The core idea is to download and store these dependencies in a smart way. It's like a super-efficient delivery service for your project's building blocks. It uses a caching system to avoid redownloading the same dependencies every time you build your project, which speeds up the whole process significantly. This involves understanding how Rust's package manager, Cargo, works and optimizing the download and caching process to minimize waiting time.

How to use it?

Developers can integrate Cargofetch into their Rust build process. For example, run it before building the project with `cargo build`. Cargofetch fetches and caches the dependencies so that Cargo can build the project faster. This is particularly useful for projects with many dependencies or for developers who switch between projects frequently. Integration is usually straightforward and involves setting a few environment variables or configuring build scripts.

Product Core Function

· Dependency Fetching: The primary function is downloading the dependencies a project needs from registries such as crates.io. Every Rust build depends on this step, so faster downloads mean faster builds.

· Caching Mechanism: Cargofetch stores each downloaded dependency locally so it does not have to be downloaded again. Think of borrowing a book from a library instead of reprinting it every time you need it. This cuts build time substantially, especially for projects with many dependencies.

· Build Process Integration: It fits into the standard Cargo build process, so developers can adopt it without significantly changing their current workflow.
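The post does not describe Cargofetch's cache layout or how it hooks into Cargo. The minimal sketch below shows the general idea of a download cache keyed by crate name and exact version, where a dependency is fetched only on a cache miss. `CrateCache` is a hypothetical class, and `download` is a stand-in for a real registry request.

```python
from pathlib import Path
from typing import Callable


class CrateCache:
    """Hypothetical on-disk cache keyed by crate name and exact version."""

    def __init__(self, root: Path, download: Callable[[str, str], bytes]):
        self.root = root
        self.download = download              # stand-in for a registry download
        self.root.mkdir(parents=True, exist_ok=True)

    def path_for(self, name: str, version: str) -> Path:
        # A published version never changes, so name + version is a safe cache key.
        return self.root / f"{name}-{version}.crate"

    def fetch(self, name: str, version: str) -> Path:
        """Return the cached archive, downloading it only on a cache miss."""
        target = self.path_for(name, version)
        if not target.exists():
            target.write_bytes(self.download(name, version))
        return target
```

Keying on the exact version is what makes the cache safe to reuse. A version range such as `^1.0` could resolve to different releases over time, so it would not work as a key.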

Product Usage Case

· Large Project Builds: Large Rust projects with many dependencies often have long build times. By caching downloaded dependencies, Cargofetch shortens these builds and speeds up iteration.

· Continuous Integration/Continuous Deployment (CI/CD) Pipelines: In CI/CD environments, projects are built and tested automatically, so faster builds are essential. Cargofetch reduces build time and speeds up the pipeline, which lets a project ship updates sooner.

· Local Development Speed: With dependencies cached, developers can test and iterate on their code locally without repeated downloads slowing them down.

7

Blockdiff: Instant VM Disk Snapshotting with Block-Level Diffs

Author

silasalberti

Description

Blockdiff is a custom file format and tool for very fast VM (virtual machine) disk snapshotting. It addresses slow snapshot times, a major bottleneck for developers and researchers who need to copy or roll back VMs quickly. Its key technique is block-level diffing, which identifies and stores only the differences between disk blocks. This makes it much faster than traditional snapshotting methods. The result is rapid VM startup, rollback, and forking, so developers can experiment and iterate on their code much faster.

Popularity

Points 10

Comments 1

What is this product?

Blockdiff uses a new file format optimized for quickly determining the differences between two versions of a disk image, such as a VM disk. Instead of copying the entire disk, it identifies and stores only the modified blocks of data. Think of a book in which you change only a few sentences. Blockdiff records *what* changed and *where* instead of copying the whole book. The custom file format and its block-level comparison tooling make snapshot creation 200x faster than on EC2, which removes most of the wait from taking snapshots.
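The post does not specify Blockdiff's on-disk format. The minimal sketch below illustrates only the underlying idea of a block-level diff. It splits two equal-sized disk images into fixed-size blocks, keeps just the blocks that changed, and rebuilds the newer image by patching those blocks onto the base. The 4096-byte block size and the function names are illustrative assumptions. The sketch also compares every byte for clarity. A tool aiming for near-instant snapshots would need a faster way to find changed blocks than reading both images in full.

```python
BLOCK_SIZE = 4096  # bytes per block; an illustrative choice


def block_diff(base: bytes, new: bytes, block_size: int = BLOCK_SIZE) -> dict[int, bytes]:
    """Return {block_index: new_block} for every block that differs from base."""
    if len(base) != len(new):
        raise ValueError("images must be the same size")
    diff = {}
    for offset in range(0, len(new), block_size):
        chunk = new[offset:offset + block_size]
        if chunk != base[offset:offset + block_size]:
            diff[offset // block_size] = chunk
    return diff


def apply_diff(base: bytes, diff: dict[int, bytes], block_size: int = BLOCK_SIZE) -> bytes:
    """Rebuild the newer image by overwriting only the changed blocks of base."""
    image = bytearray(base)
    for index, chunk in diff.items():
        start = index * block_size
        image[start:start + len(chunk)] = chunk
    return bytes(image)
```

Changing a single byte produces a one-block diff, however large the image is. Rolling back is then a matter of keeping the base image and discarding the diff.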

How to use it?

Developers can integrate Blockdiff into their VM management systems to make snapshot creation dramatically faster, which in turn speeds up VM cloning, rollback, and suspension. This helps, for example, when creating testing or development environments. Integration would involve using the blockdiff tool to create diffs between disk images and apply them. It could potentially also be built into systems such as Docker or Kubernetes for more efficient image management and faster deployment.

Product Core Function

· Block-level Diffing: The core of Blockdiff. It identifies differences at the level of individual blocks on a disk and stores only the changed blocks. This dramatically cuts the time needed to take a snapshot and makes managing virtual machines much faster.

· Custom File Format for Optimized Storage: Blockdiff uses a custom file format designed to store and retrieve block-level differences efficiently. The format minimizes storage space and applies changes quickly, which means faster operations and a smaller storage footprint.

· Rapid Snapshot Creation: With Blockdiff, creating a snapshot is nearly instant, taking seconds instead of minutes. Users can take as many snapshots as they need to protect data or experiment with code, then revert to any saved state.

· VM Forking and Rollback: Fast snapshots make it possible to fork a VM (create a copy) or roll it back to a previous state instantly. This adds flexibility to development and testing, because different configurations or code changes can be tried without risking data loss.

· VM Suspension and Resumption: Fast snapshotting can also speed up VM suspension and resumption. This improves resource management and lets users pick up where they left off more quickly.

Product Usage Case

· Development Workflow Speedup: A developer working on a complex application takes a snapshot of their development environment, then tries a new code change. If something breaks, they roll back to the snapshot instantly. Because reverting to a known-good state is cheap, they can experiment more freely and shorten development cycles.

· Continuous Integration and Testing: In a CI/CD pipeline, fast VM snapshotting makes testing environments quick to create. Each test run can use a fresh VM instance with a specific software configuration, so tests run in the same environment every time.

· Research and Experimentation: A researcher working with machine learning models needs copies of a VM to explore new model parameters or datasets. Fast snapshotting makes it easy to spin up multiple environments and run different experiments in parallel.

8

Reddit Recap Quiz: Programming Digest

Author

gametorch

Description

This project is a fun quiz generator that summarizes the top posts from the r/programming subreddit of the previous week. It uses natural language processing (NLP) to understand and extract key information from the Reddit posts, then generates multiple-choice quiz questions. This showcases how we can automate content summarization and gamify learning, offering a quick and engaging way to stay updated on current programming trends. It tackles the problem of information overload by providing a condensed and interactive learning experience.

Popularity

Points 8

Comments 1

What is this product?

It's a quiz that recaps the popular topics on the r/programming subreddit. The project leverages NLP to automatically analyze the text of the posts. Think of it like a smart reader that understands the key ideas and creates questions about them. The core innovation lies in automating the process of understanding and summarizing technical content, and then turning that into a quiz format. So you get a fun, fast way to catch up on what's hot in programming without reading dozens of articles.

How to use it?

Developers access the quiz through a web interface; starting a new quiz is as simple as clicking the link. Think of it as your weekly dose of programming news, but with a game attached. It can be used as a quick learning tool or as a way to test your existing knowledge. So this helps developers easily check their understanding of the latest developments in programming.

Product Core Function

· Automatic Content Summarization: It intelligently extracts the most important information from the r/programming posts. The value is that it saves you time by filtering out the noise and focusing on the core ideas. This can be used by developers to quickly get a handle on the important trends and topics of the week.

· Quiz Generation: The project transforms the summarized content into multiple-choice questions. The value is that it makes learning engaging and helps developers to retain the information better. This can be used for self-assessment or as a fun way to revise programming concepts.

· Weekly Update: The quiz is automatically generated every week based on the top posts. The value is that it provides a continuous stream of relevant and up-to-date programming news in an easily digestible format. This is very useful to stay up-to-date with what's happening in the programming community.

· Natural Language Processing (NLP): The project uses NLP techniques for text understanding. The value is that it allows the automation of summarizing and quiz generation. This can be used in other applications for automated content generation and knowledge extraction.
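
The write-up doesn't say how the NLP pipeline or question templates work. As a hedged illustration of the final step only, the sketch below assumes a summarizer has already produced a question prompt and its correct answer. It then builds a multiple-choice item by drawing distractors from other answers in the same week's batch. That is a common heuristic, not necessarily the project's. `QuizQuestion` and `make_question` are hypothetical names, and the sample data is placeholder text.

```python
import random
from dataclasses import dataclass
from typing import Optional


@dataclass
class QuizQuestion:
    prompt: str
    options: list[str]   # shuffled answer choices
    answer_index: int    # position of the correct option in `options`

    def is_correct(self, choice: int) -> bool:
        return choice == self.answer_index


def make_question(prompt: str, answer: str, answer_pool: list[str],
                  n_distractors: int = 3, rng: Optional[random.Random] = None) -> QuizQuestion:
    """Combine the correct answer with distractors sampled from other answers in the batch."""
    rng = rng or random.Random()
    # De-duplicate while keeping order, and never use the correct answer as a distractor.
    candidates = [a for a in dict.fromkeys(answer_pool) if a != answer]
    distractors = rng.sample(candidates, k=min(n_distractors, len(candidates)))
    options = distractors + [answer]
    rng.shuffle(options)
    return QuizQuestion(prompt, options, options.index(answer))


# Usage with placeholder data (not real r/programming content).
pool = ["Rust", "Zig", "Go", "Kotlin", "Rust"]
q = make_question("Which language was post #1 about?", "Rust", pool, rng=random.Random(0))
print(q.prompt, q.options)
```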

Product Usage Case

· Learning about new libraries and frameworks. Developers can use the quiz to test their understanding of new tools. So you can learn about a library that's gaining traction and check how well you understand it.

· Keeping up with industry trends. Developers can use the quiz to stay updated on the latest programming trends and technologies. So you can use it to see what's trending in the programming community and stay relevant.

· Self-assessment and revision. Developers can use the quiz to assess their understanding of programming concepts and revise them. So you can use it to assess your current programming knowledge and identify areas for improvement.

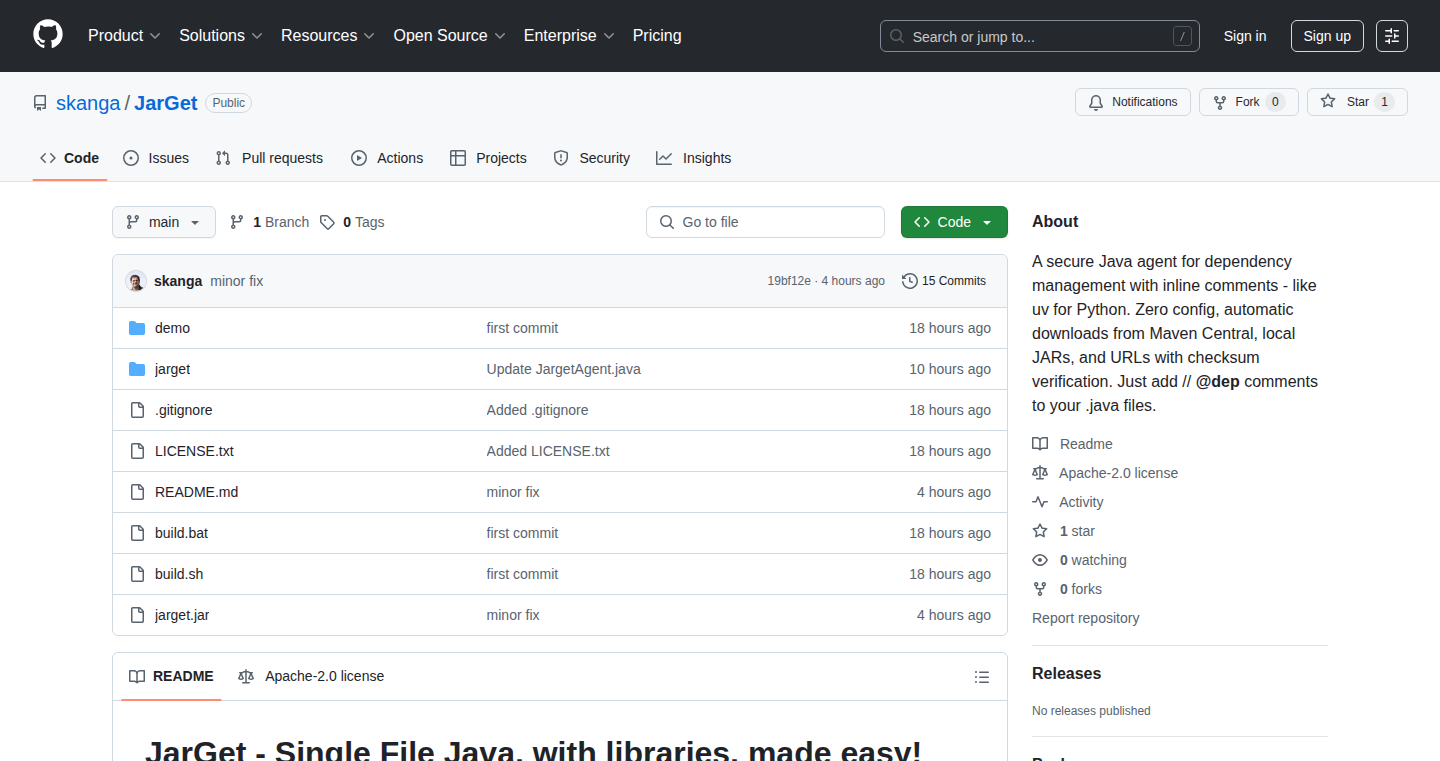

9

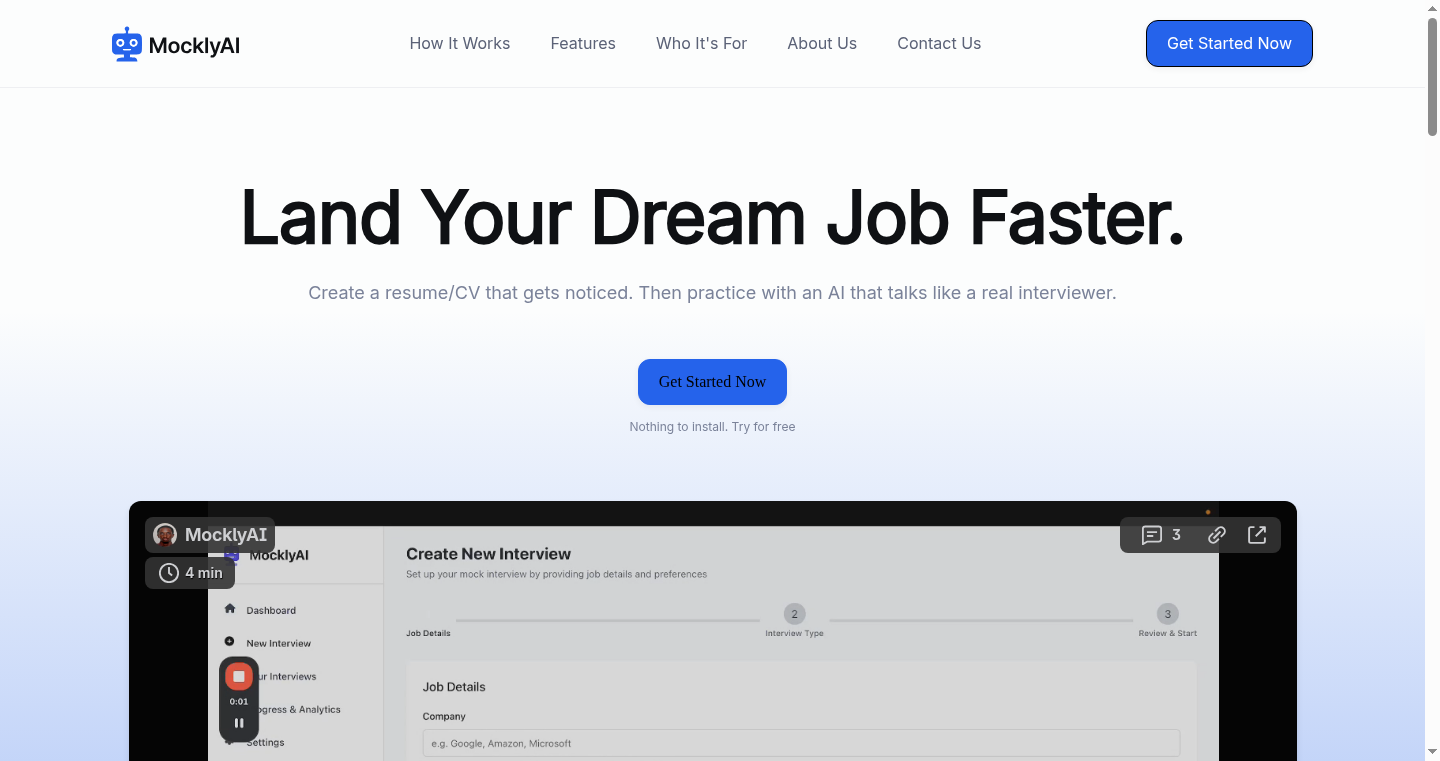

InterviewReady: AI-Powered Resume Optimizer

Author

kaly_codes

Description

InterviewReady is a web-based tool designed to help job seekers create resumes that are more likely to get them interviews. It uses Artificial Intelligence to analyze a resume against a given job description, highlighting key skills and tailoring the content to match the employer's needs. It focuses on solving the problem of resume screening, which often involves automated systems that filter out unqualified candidates based on keyword matching. The core innovation lies in its ability to provide feedback and suggest improvements to help candidates beat these automated systems and stand out to human recruiters.

Popularity

Points 8

Comments 0

What is this product?

InterviewReady analyzes your resume using AI. It works by taking your existing resume and a job description, then comparing them. The AI identifies the keywords and skills the employer values most, and then highlights areas in your resume where you can improve. It suggests changes to better match the job description. Think of it as a smart editor for your resume, ensuring it's tailored to each specific job application. The AI uses Natural Language Processing (NLP) to understand the meaning of the words and phrases in both your resume and the job description, going beyond simple keyword matching to grasp the underlying requirements and qualifications. So it helps you tailor your resume and improves your chances of getting an interview.

How to use it?

To use InterviewReady, you'll typically upload your resume and paste the job description into the tool. The AI engine then analyzes the two documents and generates a report. This report highlights missing keywords, provides suggestions for improvement, and shows you how well your resume matches the job description. You can then use these insights to edit your resume, making it more relevant. The tool could be integrated into existing job search websites or used as a standalone tool by job seekers. You can also download your optimized resume. So you can quickly see where you need to improve your resume.

Product Core Function

· **Resume Analysis:** Analyzes a resume against a job description so candidates can see how well it matches the specific requirements of a job posting. The value is saved time and a better chance of matching the job description. So this helps you quickly see if your resume is a good fit.

· **Keyword Highlighting:** Identifies and highlights the key skills and keywords the employer is looking for in a job description. This allows users to see what skills are most important for each job, and to tailor their resume to those specifics. So this ensures your resume speaks the right language.

· **Content Suggestion:** Provides suggestions for improving resume content. Suggests how to rephrase your achievements or experiences to more closely match the language used in the job description. This makes your resume better tailored for specific job postings. So this helps you write a better resume that will actually get read.

· **Match Percentage Calculation:** Calculates a 'match percentage' to show the overall similarity between your resume and the job description. This gives users a clear, quantifiable measure of how well their resume aligns with the job requirements. So this gives you a single number showing how closely your resume fits the posting.

· **Automated Formatting Assistance:** Offers formatting suggestions for your resume to help it pass through applicant tracking systems (ATS). This is important because many companies use these systems to filter out unqualified applicants. So this ensures your resume can get past automated filters.
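
The description says InterviewReady's NLP goes beyond plain keyword matching, and its scoring method isn't published. To show what a "match percentage" can mean, here is the simpler keyword-overlap baseline such tools are often compared against. It computes the share of job-description keywords that also appear in the resume, plus the list of missing ones. The stop-word list, tokenizer, and function names are illustrative assumptions, not the tool's own.

```python
import re

# Tiny illustrative stop-word list; a real tool would use a fuller list or an NLP library.
STOP_WORDS = {"a", "an", "and", "the", "to", "of", "in", "with", "for", "on", "or", "we", "you", "is", "are"}


def keywords(text: str) -> set[str]:
    """Lower-cased word tokens (keeping '+' and '#' for C++/C#) minus stop words."""
    return {t for t in re.findall(r"[a-z0-9+#]+", text.lower()) if t not in STOP_WORDS}


def match_report(resume: str, job_description: str) -> tuple[float, set[str]]:
    """Return (percentage of job keywords found in the resume, keywords the resume lacks)."""
    job_kw = keywords(job_description)
    if not job_kw:
        return 100.0, set()
    missing = job_kw - keywords(resume)
    pct = 100.0 * (len(job_kw) - len(missing)) / len(job_kw)
    return round(pct, 1), missing


score, missing = match_report(
    "Built Python services on Kubernetes",
    "Python, Kubernetes and PostgreSQL experience",
)
print(score, sorted(missing))  # 50.0 ['experience', 'postgresql']
```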

Product Usage Case

· **Job Application:** A user is applying for a software engineer position and uses InterviewReady to analyze their resume against the job description. The tool identifies missing keywords related to specific programming languages and frameworks. The user then updates their resume with those keywords and relevant experience, improving their chances of getting an interview. So this helps you apply to jobs more effectively.

· **Skill Gap Analysis:** A job seeker wants to find out which skills their resume is missing. Using InterviewReady, they can compare their resume against various job descriptions. The tool highlights the skills they lack, helping them focus their learning and experience-building efforts. So this helps you prepare for your next job.

· **Career Transition:** A professional changing careers can use InterviewReady to tailor their resume to a new industry. By comparing their resume against job descriptions in that industry, they can identify transferable skills and rewrite their resume to emphasize the relevant expertise. So this helps you make a career change.

· **Resume Optimization for Specific Companies:** A candidate has a dream company and wants to tailor their resume. InterviewReady can be used with various job postings from the target company to provide a comprehensive view of their ideal candidate profile, helping the user optimize their resume to match the company's expectations. So this helps you get noticed by your dream company.

· **Freelance Application Improvement:** A freelancer applying for several gigs can use InterviewReady to quickly identify the essential skills for each project. So this helps you land more freelance projects.

10

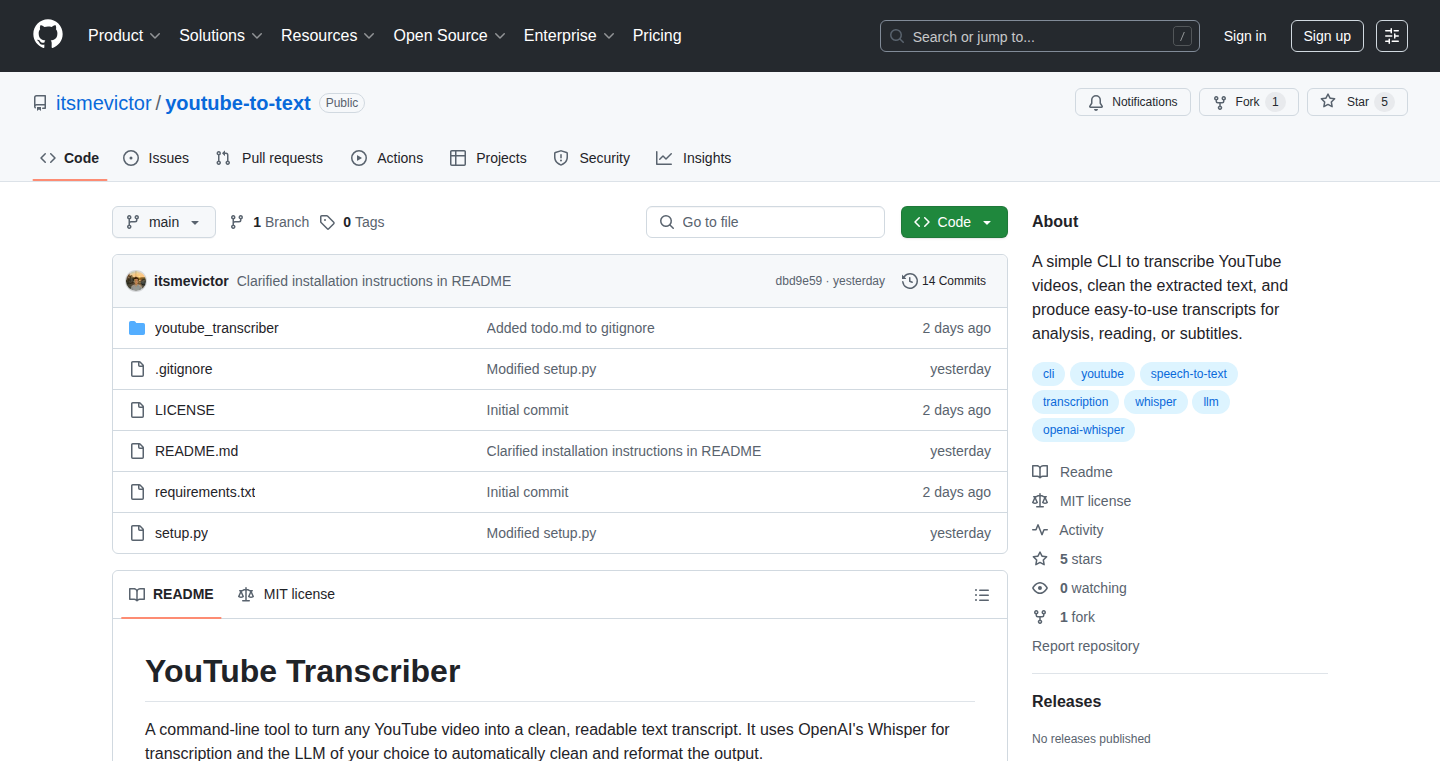

WhisperTranscribe: YouTube Video Transcription & Cleaning CLI

Author

itsmevictor

Description

WhisperTranscribe is a command-line tool that effortlessly converts YouTube videos into clean, readable text using the power of OpenAI's Whisper for transcription and a Large Language Model (LLM) of your choice for intelligent cleanup. It tackles the problem of extracting useful information from video content by automating the transcription process and enhancing readability, which is often a tedious and time-consuming task. It automatically downloads audio, supports various output formats, and tailors the cleaning process for presentations, conversations, or lectures. So this tool makes it much easier and faster to get the key takeaways from long videos.

Popularity

Points 4

Comments 2

What is this product?

This project is a command-line tool that simplifies the process of turning YouTube videos into text. It uses two main technologies. First, it uses Whisper, a powerful speech-to-text engine developed by OpenAI, to accurately transcribe the audio from the video. This converts the spoken words into raw text. Second, it leverages the capabilities of Large Language Models (LLMs) – like the ones that power advanced chatbots – to clean up the transcribed text. The LLM removes unnecessary filler words (like "um" and "ah"), corrects grammatical errors, and improves overall readability. This results in a polished transcript that's much easier to understand and use. So, it's a simple way to quickly get a clean text version of a YouTube video.

How to use it?

Developers use WhisperTranscribe through the command line interface (CLI). After installing the tool, they simply provide the YouTube video URL and the desired output format (like TXT, SRT, or VTT). The tool then automatically downloads the audio, transcribes it using Whisper, and cleans it up using the chosen LLM. Developers can integrate this tool into their workflows for various tasks, such as creating subtitles, generating summaries, or indexing video content. For example, a developer could easily process a batch of tutorial videos to create searchable documentation or transcripts for their website. So, developers can get a text transcript of a YouTube video with just a few commands.

Product Core Function

· Automatic YouTube Audio Download: The tool automatically downloads the audio from the YouTube video. This removes the need for manual audio extraction, streamlining the process. This is helpful for users who want to quickly get started without the extra step of downloading audio separately.

· Whisper-Powered Transcription: Utilizes OpenAI's Whisper to accurately convert spoken words into text. This technology is known for its high accuracy, even with different accents and background noise. This feature ensures that the initial transcription is as accurate as possible, providing a solid foundation for further processing.

· LLM-Driven Text Cleaning: Employs a Large Language Model (LLM) to clean up the transcript by removing filler words, correcting grammar, and improving readability. This significantly improves the quality of the output, making it easier to read and understand. This provides a more polished and professional-looking transcript, which can be used for various purposes.

· Multiple Output Formats: Supports various output formats, including TXT, SRT, and VTT. This flexibility enables users to easily integrate the transcripts into different applications, such as subtitle files for videos (SRT, VTT), or plain text for reading or further processing (TXT).

· Customizable Cleaning: Offers options to tailor the transcript cleaning process for different types of content, such as presentations, conversations, or lectures. This ensures that the cleaned transcript is optimized for its intended use. It helps in generating transcripts that are perfect for a variety of purposes, from taking notes during a lecture to quickly summarizing a presentation.
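
WhisperTranscribe's internals aren't shown, but the SRT output mentioned above is a simple text format. The sketch below assumes segment dicts shaped like those in the `segments` list returned by the open-source `openai-whisper` package's `transcribe()`, each with `start` and `end` in seconds and `text`. It renders them as numbered SRT cues with `HH:MM:SS,mmm` timestamps. The function names are hypothetical, and how the tool itself writes SRT may differ.

```python
def srt_timestamp(seconds: float) -> str:
    """Format a time in seconds as an SRT timestamp (HH:MM:SS,mmm)."""
    total_ms = int(round(seconds * 1000))
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, millis = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{millis:03d}"


def segments_to_srt(segments: list[dict]) -> str:
    """Render segments with 'start', 'end' (seconds) and 'text' keys as numbered SRT cues."""
    cues = []
    for index, seg in enumerate(segments, start=1):
        cues.append(
            f"{index}\n"
            f"{srt_timestamp(seg['start'])} --> {srt_timestamp(seg['end'])}\n"
            f"{seg['text'].strip()}\n"
        )
    # SRT separates consecutive cues with a blank line.
    return "\n".join(cues)


# Usage with hand-written segments in the same shape.
segments = [
    {"start": 0.0, "end": 2.5, "text": " Hello and welcome."},
    {"start": 2.5, "end": 3661.007, "text": " Thanks for watching."},
]
print(segments_to_srt(segments))
```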

Product Usage Case

· Content Creation: A video creator can use WhisperTranscribe to generate transcripts for their YouTube videos, which can be used for creating subtitles, improving SEO, and reaching a wider audience. So, a content creator could make their video more accessible and improve its visibility on search engines.

· Research and Note-Taking: Researchers and students can use this tool to transcribe lectures, presentations, or interviews. Then, they can quickly search through the text to find specific information and take notes more efficiently. So, a researcher can easily organize and access critical information from audio sources.

· Accessibility: Those with hearing impairments can use the tool to create text versions of videos, making the content accessible. So, people with hearing disabilities can get the information easily.

· Content Summarization: Developers can use WhisperTranscribe in combination with other tools to automatically summarize the content of videos. They can use this to quickly get the key takeaways from lengthy videos without having to watch them in their entirety. So, it helps users quickly get the essence of video content.

· Educational Purposes: Teachers and educators can use the tool to create transcripts of educational videos, facilitating student comprehension and retention. They can also integrate the transcripts in study materials. So, teachers can create learning resources more efficiently for their students.

11

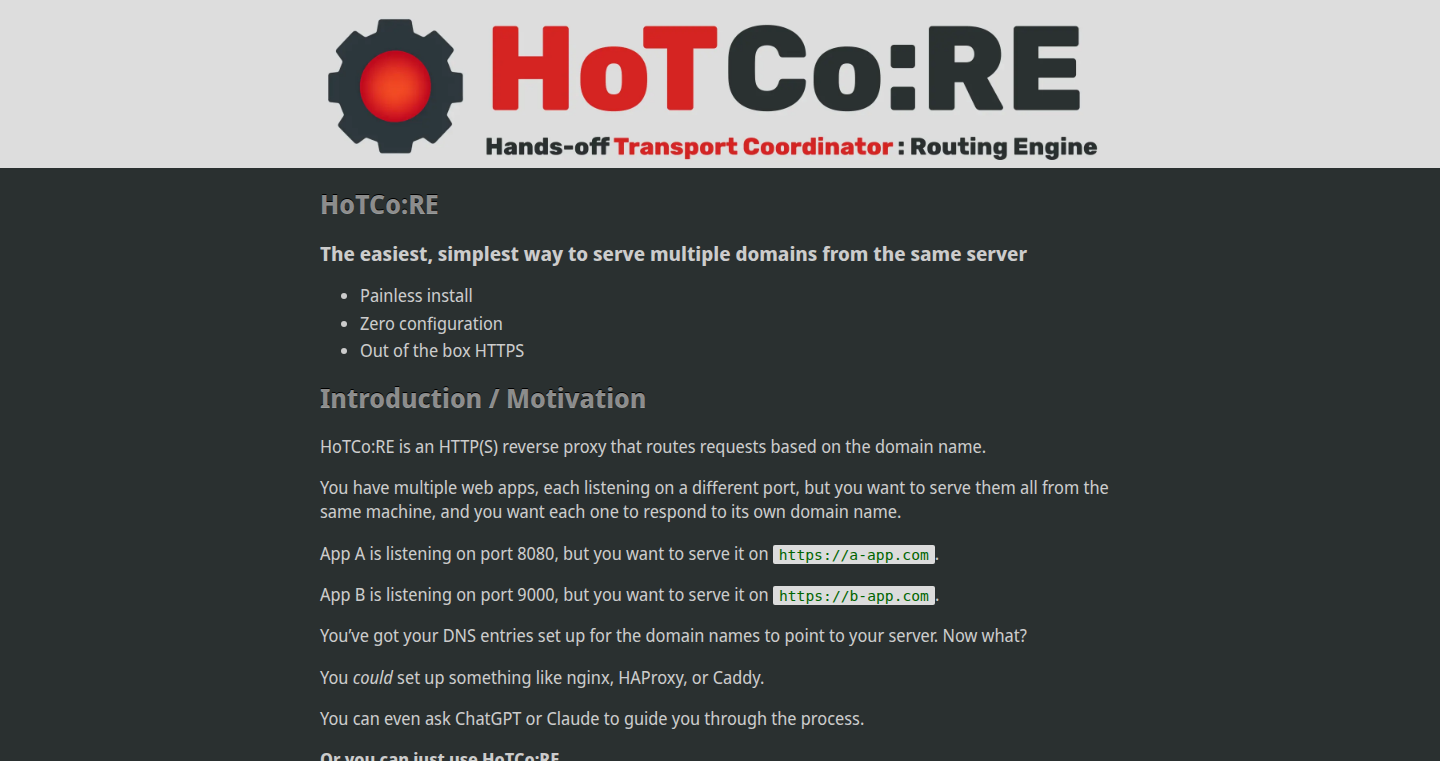

Hotcore - Command-Driven Reverse Proxy

Author

hsn915

Description

Hotcore is a reverse proxy that's configured entirely through commands, rather than traditional configuration files. This means instead of writing complex text files to tell the proxy how to route traffic, you just use simple commands. It simplifies the setup and management of web servers by making configuration changes quick and straightforward, improving developer productivity and reducing the likelihood of configuration errors. The core innovation lies in its command-line interface (CLI) driven approach, which allows for dynamic configuration adjustments and real-time monitoring of web traffic. This avoids the need to restart the proxy after every change, a common pain point with many reverse proxy solutions.

Popularity

Points 6

Comments 0

What is this product?

Hotcore is a reverse proxy that gets all its instructions from commands you type in your terminal. Think of it like a traffic controller for your website, but instead of setting it up with complicated text files, you give it instructions directly using simple commands. The innovation here is that you can change how the proxy works on the fly without restarting it. It handles the complex tasks of routing web traffic but makes the configuration process much easier and faster.

How to use it?

Developers use Hotcore by entering commands in their terminal to define how web traffic should be directed. For example, a command might tell Hotcore to send all requests for `example.com` to a server running on `localhost:8080`. The project's CLI tool is the main way developers interact with the reverse proxy. Hotcore is also intended for production use, so you can run it in front of your live website to manage its traffic.
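
The post doesn't give Hotcore's actual command syntax, so the `route add` / `route del` grammar below is invented for illustration. The sketch shows the idea behind restart-free configuration. Routes live in shared in-memory state guarded by a lock. Each command mutates that state, and the request path looks routes up on every request, so a change takes effect immediately with no reload. `RouteTable` is a hypothetical name.

```python
import threading
from typing import Optional


class RouteTable:
    """In-memory host -> backend map that can be changed while requests are being served."""

    def __init__(self) -> None:
        self._routes: dict[str, str] = {}
        self._lock = threading.Lock()

    def execute(self, command: str) -> str:
        """Apply a hypothetical text command such as 'route add example.com localhost:8080'."""
        parts = command.split()
        if parts[:2] == ["route", "add"] and len(parts) == 4:
            host, backend = parts[2], parts[3]
            with self._lock:
                self._routes[host] = backend
            return f"ok: {host} -> {backend}"
        if parts[:2] == ["route", "del"] and len(parts) == 3:
            with self._lock:
                removed = self._routes.pop(parts[2], None)
            return "ok" if removed else f"error: no route for {parts[2]}"
        return f"error: unknown command {command!r}"

    def lookup(self, host: str) -> Optional[str]:
        """Called per request, so it always sees the latest routes without a restart."""
        with self._lock:
            return self._routes.get(host)


table = RouteTable()
print(table.execute("route add example.com localhost:8080"))  # ok: example.com -> localhost:8080
print(table.lookup("example.com"))                            # localhost:8080
table.execute("route add example.com localhost:9090")          # re-point without a restart
print(table.lookup("example.com"))                            # localhost:9090
```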

Product Core Function

· Dynamic Configuration: Configure your reverse proxy using commands, allowing for quick changes without restarting. So, if you need to change where your website traffic goes, you just type a command and it's done immediately. This is great if you frequently make changes to your website's infrastructure.

· Real-time Traffic Monitoring: Monitor web traffic and the performance of your backend servers directly from the command line. This gives immediate feedback on how your system is performing. So, you can see if your website is getting slow or if some parts are down and take action right away.

· Simplified Setup: Configure web server routing with simple commands, avoiding the complexity of config files. This means that instead of spending time learning and writing a complex configuration file, you use simple commands that are easy to understand. So, setting up and managing your web server becomes simpler and faster.

· On-the-fly Updates: Make changes without restarting the reverse proxy, ensuring continuous availability. This allows for continuous delivery, letting you update your website or application without any downtime. So, when you change your website, your visitors won't notice any interruption.

Product Usage Case

· Load Balancing: Direct web traffic to multiple backend servers to balance the workload and prevent any one server from being overloaded. In simple terms, this is like having multiple helpers working on the same project, making sure the work is distributed evenly and everyone is working efficiently. So, your website remains fast and responsive even during peak traffic.

· A/B Testing: Route different percentages of traffic to different versions of your website for A/B testing. This allows you to test new features or design changes on a small group of users before rolling them out to everyone. So, you can see which version performs best without impacting all your users.

· Security Hardening: Use Hotcore to add an extra layer of security by hiding your backend servers and implementing access controls. This can help protect your web servers from attacks and unauthorized access. So, the website and the data behind it are better protected.

· Service Discovery: When you have a dynamic environment with services coming and going, use it to automatically discover and route traffic to new services as they become available. In a fast-changing environment where services are constantly updated, this allows you to ensure that traffic is always directed to the correct location. So, your website stays up-to-date with the latest version of your app, and users always get the latest features.
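
The post documents neither the load-balancing policy nor the A/B split mechanism. Two common techniques are sketched here as assumptions, not as Hotcore's behavior. The first is round-robin selection over backends. The second is a sticky weighted split that hashes a client identifier (for example a cookie value), so each visitor consistently sees the same variant. Function names, backend addresses, and weights are hypothetical.

```python
import hashlib
import itertools
from typing import Iterator


def round_robin(backends: list[str]) -> Iterator[str]:
    """Hand out backends in turn so requests are spread evenly."""
    return itertools.cycle(backends)


def ab_variant(client_id: str, weights: dict[str, int]) -> str:
    """Map a client to a variant in proportion to positive integer weights, stably."""
    if not weights or any(w <= 0 for w in weights.values()):
        raise ValueError("weights must be a non-empty mapping of positive integers")
    # A cryptographic hash gives a stable, evenly spread bucket for the same client id.
    point = int(hashlib.sha256(client_id.encode()).hexdigest(), 16) % sum(weights.values())
    for variant, weight in sorted(weights.items()):
        if point < weight:
            return variant
        point -= weight
    raise AssertionError("unreachable: point is always below the total weight")


# Usage: send roughly 10% of visitors to the new design.
weights = {"current": 90, "new": 10}
rr = round_robin(["10.0.0.1:8080", "10.0.0.2:8080"])
print([next(rr) for _ in range(3)])   # ['10.0.0.1:8080', '10.0.0.2:8080', '10.0.0.1:8080']
print(ab_variant("visitor-42", weights))
```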

12

SupOS: The Industrial Data Integration Hub

Author

M3rcyzzz

Description

SupOS is a platform designed to streamline the process of gathering and managing data from various industrial sources. Its core innovation lies in its modular design and focus on efficient data pipelines, making it easier for engineers to connect, transform, and analyze data from different industrial systems. This solves the complex problem of dealing with data silos and heterogeneous data formats commonly found in industrial environments, enabling better decision-making based on real-time information.

Popularity

Points 5

Comments 1

What is this product?

SupOS is like a central nervous system for industrial data. It uses a modular architecture, meaning it's built from independent components that can be easily plugged in or out. This architecture facilitates the integration of data from diverse sources such as sensors, machines, and databases. The platform employs data pipelines to move data through a series of steps, including collection, transformation, and storage. It's designed to handle the different formats and protocols that industrial systems use, making data accessible and usable for various applications. So, what's the benefit? It eliminates the headache of manually integrating and cleaning up industrial data, ultimately offering a unified view of operations, which is crucial for efficiency and data-driven decision making.

How to use it?

Developers can use SupOS by deploying it within their industrial infrastructure, or as a cloud service. It provides tools to define data sources, build data pipelines, and configure data transformations. Data can then be easily extracted, transformed and loaded (ETL). After setting it up, engineers can access transformed and structured data through various APIs and tools. It provides a unified interface for monitoring data flows, troubleshooting issues, and managing the entire data integration process. This could be used in manufacturing for predictive maintenance, supply chain optimization, or even in smart agriculture for precision farming.

Product Core Function

· Data Source Connectors: These components are designed to interact with specific industrial systems, such as Programmable Logic Controllers (PLCs), Supervisory Control and Data Acquisition (SCADA) systems, and various sensors. Value: Simplifies connecting to disparate data sources. Application: Collecting real-time data from factory floor machinery for performance monitoring.

· Data Transformation Engine: The engine allows developers to clean, format, and convert incoming data. Value: Ensures that data is usable and compatible across different systems. Application: Converting raw sensor readings (e.g., temperature in Celsius) into a standard format (e.g., Fahrenheit) for consistent reporting.

· Data Pipeline Orchestration: This feature allows developers to define and manage the flow of data from source to destination. Value: Automates the entire data ingestion process, ensuring data is consistently processed and delivered. Application: Creating a pipeline that automatically pulls data from a PLC, transforms it, and stores it in a data warehouse for analysis.

· Data Storage and Management: This supports data persistence, handling the storage of processed data in different formats. Value: Makes data available for reporting, analytics, and historical analysis. Application: Storing historical machine performance data to identify trends and improve operational efficiency.

· API and Data Access: Provides access to data through APIs. Value: Simplifies integration with external applications and analytical tools. Application: Integrating real-time production data with a dashboard for operators to monitor key performance indicators (KPIs).
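
SupOS's connector and pipeline APIs aren't shown, so this is a hedged, minimal sketch of the collect, transform, store flow described above. It uses the Celsius-to-Fahrenheit normalization example. `Reading`, the step functions, and `run_pipeline` are hypothetical. A plain list stands in for the storage layer, and real PLC/SCADA connectors are omitted.

```python
import math
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass
class Reading:
    sensor_id: str
    value: float
    unit: str  # e.g. "C", "F", "bar"


# A pipeline step transforms a reading or returns None to drop it.
Step = Callable[[Reading], Optional[Reading]]


def drop_invalid(r: Reading) -> Optional[Reading]:
    """Discard readings a sensor reported as NaN."""
    return None if math.isnan(r.value) else r


def celsius_to_fahrenheit(r: Reading) -> Reading:
    """Normalize temperatures to Fahrenheit; other units pass through unchanged."""
    if r.unit == "C":
        return Reading(r.sensor_id, r.value * 9 / 5 + 32, "F")
    return r


def run_pipeline(source: Iterable[Reading], steps: list[Step], sink: list[Reading]) -> None:
    """Collect -> transform -> store: push each reading through every step, then into the sink."""
    for reading in source:
        current: Optional[Reading] = reading
        for step in steps:
            current = step(current)
            if current is None:
                break
        if current is not None:
            sink.append(current)


# Usage: a list stands in for the storage layer.
store: list[Reading] = []
run_pipeline(
    [Reading("t1", 100.0, "C"), Reading("t2", float("nan"), "C"), Reading("p1", 2.0, "bar")],
    [drop_invalid, celsius_to_fahrenheit],
    store,
)
print(store)  # [Reading(sensor_id='t1', value=212.0, unit='F'), Reading(sensor_id='p1', value=2.0, unit='bar')]
```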

Product Usage Case

· Predictive Maintenance in Manufacturing: Use SupOS to collect sensor data from machines on a factory floor. The platform would then process this data, identify patterns that indicate potential failures, and alert maintenance staff before a machine breaks down. Value: Reduces downtime and maintenance costs.

· Smart Agriculture for Precision Farming: Integrate data from various sources, such as soil sensors, weather stations, and irrigation systems. Transform and unify this data to create optimal growing conditions. Value: Optimize resource usage (water, fertilizer), improve crop yields, and reduce environmental impact.

· Supply Chain Optimization: Collect data from suppliers, warehouses, and delivery systems. Create data pipelines to track goods in real-time. By analyzing the data, the platform offers insights into bottlenecks, inefficiencies, and opportunities for improvement. Value: Improves supply chain efficiency and ensures timely delivery of goods.

13

RateScape: A Free and Open API for FX and Crypto Data

Author

robBrownCC

Description

RateScape provides a free and open Application Programming Interface (API) to access real-time foreign exchange (FX) and cryptocurrency rates. It distinguishes itself by offering a completely free service, addressing the common need for developers to access financial data without incurring costs. The technical innovation lies in its efficient data aggregation and distribution mechanism, ensuring low latency and high availability of the rate information. It solves the problem of expensive or limited access to financial market data, making it accessible to everyone.

Popularity

Points 3

Comments 2

What is this product?

RateScape is like a digital library that gives you the latest prices for different currencies and cryptocurrencies. It gathers this information from various sources on the internet and provides it to you in a simple format. The innovation is that it's free and easy to use, unlike some other services that charge a lot of money. It addresses the technical problem of needing to collect and distribute data quickly and reliably to developers, so they can build their applications using the latest financial rates.

How to use it?

Developers can use RateScape by sending a simple request to its API, just like asking a question. The API will respond with the requested exchange rates in a structured format (like JSON), which can easily be integrated into their applications, websites, or trading bots. For example, you could get the current value of Bitcoin in USD, or the exchange rate between EUR and GBP. The integration involves making an HTTP request and parsing the response, a common task in modern software development.
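
The post doesn't give RateScape's endpoint URL or response schema, so rather than guess at them, this sketch starts from an already-fetched JSON body of an assumed shape, with rates quoted against a single base currency. It shows the arithmetic most integrations need. A cross rate such as EUR to GBP comes from two base-quoted rates as rate(GBP) / rate(EUR). The field names and figures are placeholders, not real market data.

```python
import json

# Assumed response shape: units of each currency per 1 unit of `base`.
# Placeholder numbers chosen for easy arithmetic, not market data.
SAMPLE_BODY = '{"base": "USD", "rates": {"USD": 1.0, "EUR": 0.5, "GBP": 0.4, "BTC": 0.00002}}'


def cross_rate(rates: dict[str, float], from_ccy: str, to_ccy: str) -> float:
    """Units of `to_ccy` per 1 unit of `from_ccy`, derived via the common base currency."""
    return rates[to_ccy] / rates[from_ccy]


def convert(amount: float, rates: dict[str, float], from_ccy: str, to_ccy: str) -> float:
    """Convert `amount` of `from_ccy` into `to_ccy`."""
    return amount * cross_rate(rates, from_ccy, to_ccy)


rates = json.loads(SAMPLE_BODY)["rates"]
print(cross_rate(rates, "EUR", "GBP"))   # 0.8 (placeholder data)
print(convert(2, rates, "BTC", "USD"))
```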

Product Core Function

· Real-time FX Rates: Provides the latest exchange rates for various currency pairs. The value is that this functionality allows developers to build applications that need to display currency conversions, like e-commerce platforms, travel booking websites, or financial calculators. This solves the technical problem of needing to constantly update currency values.

· Cryptocurrency Rates: Offers price data for a wide range of cryptocurrencies. The value is that it provides easy access to cryptocurrency prices, enabling the development of trading platforms, portfolio trackers, and price alert systems. This meets the growing demand for real-time crypto information.

· Free and Open Access: The API is completely free to use and does not require registration. The value is that it removes the financial barrier to entry for developers, democratizing access to financial data and allowing anyone to experiment with or build upon it without constraints. This is a core value in open source communities.

· Easy Integration: The API is designed to be simple and easy to integrate into any application. The value is that it reduces development time and effort, enabling developers to focus on building their core features instead of spending time on complex data collection and processing pipelines. This makes the data accessible to a wider audience.

Product Usage Case

· E-commerce Website: An online store selling products internationally can use RateScape to dynamically convert prices into different currencies, providing a localized experience for users from around the world. This helps in improving the user experience and boosting sales.

· Travel Booking Platform: A travel website can use RateScape to display real-time currency exchange rates when calculating and displaying travel costs in different currencies, enhancing user accessibility and transparency.

· Personal Finance App: A personal finance application can use RateScape to allow users to track their investments in different currencies and cryptocurrencies, thus providing up-to-date portfolio valuation and analysis.

· Trading Bots: Developers can leverage RateScape to feed real-time financial data into their trading algorithms, assisting in automated trading decisions and portfolio management.

14

WFGY: Semantic Reasoning Engine Experiment

Author

WFGY

Description

This project is a solo developer's experiment to evaluate how well ten different AI models can understand and reason about the same PDF document. The core innovation lies in the 'semantic reasoning engine' which attempts to extract meaning and relationships from the document. It tests the AI models' ability to handle abstract logic, understand concepts, and make consistent inferences. The project provides raw data, visualizations, and the entire experiment process, offering a transparent look at how different AI models perform. So this project is testing how good different AI systems are at understanding and making inferences from a single document.

Popularity

Points 3

Comments 2

What is this product?

This is a deep dive into the inner workings of AI models. The project uses a custom-built 'semantic reasoning engine' (WFGY) to analyze a PDF document. This engine is the heart of the experiment, designed to break down the document and find its meaning. The developer then fed this processed information into ten different AI models, testing their ability to answer questions, draw conclusions, and handle complex ideas. This project provides a transparent way to compare how different AI models tackle the same problem. So it’s about testing how smart these AI models are.

How to use it?

While not a readily usable tool, this project provides valuable insights for developers working with AI and natural language processing. Developers can use the project's methodology as a blueprint for comparing different AI models on their own data sets. They can also learn from the developer's approach to building a semantic reasoning engine, using it as a starting point to improve their own systems. Specifically, you might use the experiment's data to better understand the strengths and weaknesses of various AI platforms. You could also adapt the methods used in this project to assess the performance of your own AI models. Finally, it provides a peek into the development process, showing how one person can build and test complex AI systems. So it provides a model for how to test and understand AI capabilities.

Product Core Function

· Semantic Reasoning Engine: This is the core of the project, designed to extract meaning from a PDF. The value lies in its ability to prepare data for the AI models, making complex information understandable. This means you can feed it a document, and it will try to understand what the document is about and how the different ideas in it relate to each other. This is useful for developers who need to analyze documents for various AI applications, such as content summarization and question answering. So this is about enabling a deeper understanding of the content.

· Multi-Model Comparison: The project compares the performance of ten different AI models. This allows developers to see how different models perform with the same dataset and questions, and to understand the strengths and weaknesses of various AI platforms. This is great for anyone trying to figure out which AI platform will perform best on their specific tasks, allowing for more informed technology choices. So this is for people who want to compare different AI systems.

· Raw Data and Transparency: The project provides all the data and the entire experiment process. The value is that it lets developers see exactly what the AI models are 'thinking' and how they arrived at their answers. This makes it easier to understand why certain models perform well and others do not. This open approach fosters trust and accelerates learning in the AI community. So you can check how the AI models make decisions and how reliable they are.

· Abstract Logic and Conceptual Shifts Testing: The project tests the AI models' ability to handle abstract logic and conceptual shifts. The value lies in assessing how well the models cope with complex and nuanced information, which is crucial for any AI application that requires reasoning beyond simple facts. It helps developers understand the limits of AI models and gives insights into how to train them to reason more critically. So this helps you understand the limits of today's AI and build better AI systems.
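
WFGY's semantic engine and its grading method aren't described in detail. The hedged sketch below covers only the comparison step: asking every model the same questions and ranking them by exact-match accuracy. Exact match is a deliberately simple metric, and abstract-reasoning tasks usually need richer grading. The stub "models" are placeholders standing in for real API clients, and none of the output reflects the project's actual results.

```python
from typing import Callable

# Each model is any callable mapping a question to an answer string. Real API clients for
# the ten models are assumed and not shown; the stubs below only exercise the harness.
Model = Callable[[str], str]


def normalize(text: str) -> str:
    """Case-fold, collapse whitespace and drop a trailing period before comparing."""
    return " ".join(text.lower().split()).rstrip(".")


def score_models(models: dict[str, Model], qa_pairs: list[tuple[str, str]]) -> dict[str, float]:
    """Ask every model the same questions and return its exact-match accuracy (0.0 to 1.0)."""
    scores = {}
    for name, ask in models.items():
        correct = sum(normalize(ask(q)) == normalize(expected) for q, expected in qa_pairs)
        scores[name] = correct / len(qa_pairs)
    return scores


qa = [("What is 2 + 2?", "4"), ("What is the capital of France?", "Paris")]
stubs = {
    "stub_a": lambda q: "4" if "2 + 2" in q else "Paris.",
    "stub_b": lambda q: "5",
}
scores = score_models(stubs, qa)
for name, acc in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {acc:.0%}")   # stub_a: 100%, then stub_b: 0%
```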

Product Usage Case

· Research and Development: Researchers can use the project’s methodology to evaluate new AI models or to benchmark existing ones. By replicating the experiment on new models, researchers can gain valuable insights into their performance. For example, if you are building a chatbot that must understand very complex questions, rerunning the experiment on a candidate model shows whether it handles them better than older ones. This helps researchers judge whether a new AI system is an improvement and how capable these AI models really are.

· AI Model Selection: Developers can use the project's results to help choose the best AI model for their specific needs. For example, if a developer needs an AI model that excels in complex reasoning, they can refer to the project's results to identify models that performed well in that area. This makes it easier and more efficient to get the right tool for the job and reduce development time. So you can figure out which AI system is right for your project.

· Education and Learning: Students and anyone interested in AI can use the project as a practical example of how to test and evaluate AI models. It provides a real-world case study that makes learning about AI more engaging and accessible. This allows people to understand AI concepts more easily by looking at actual results, promoting a deeper understanding. So you can learn how AI works and how it is tested.

15

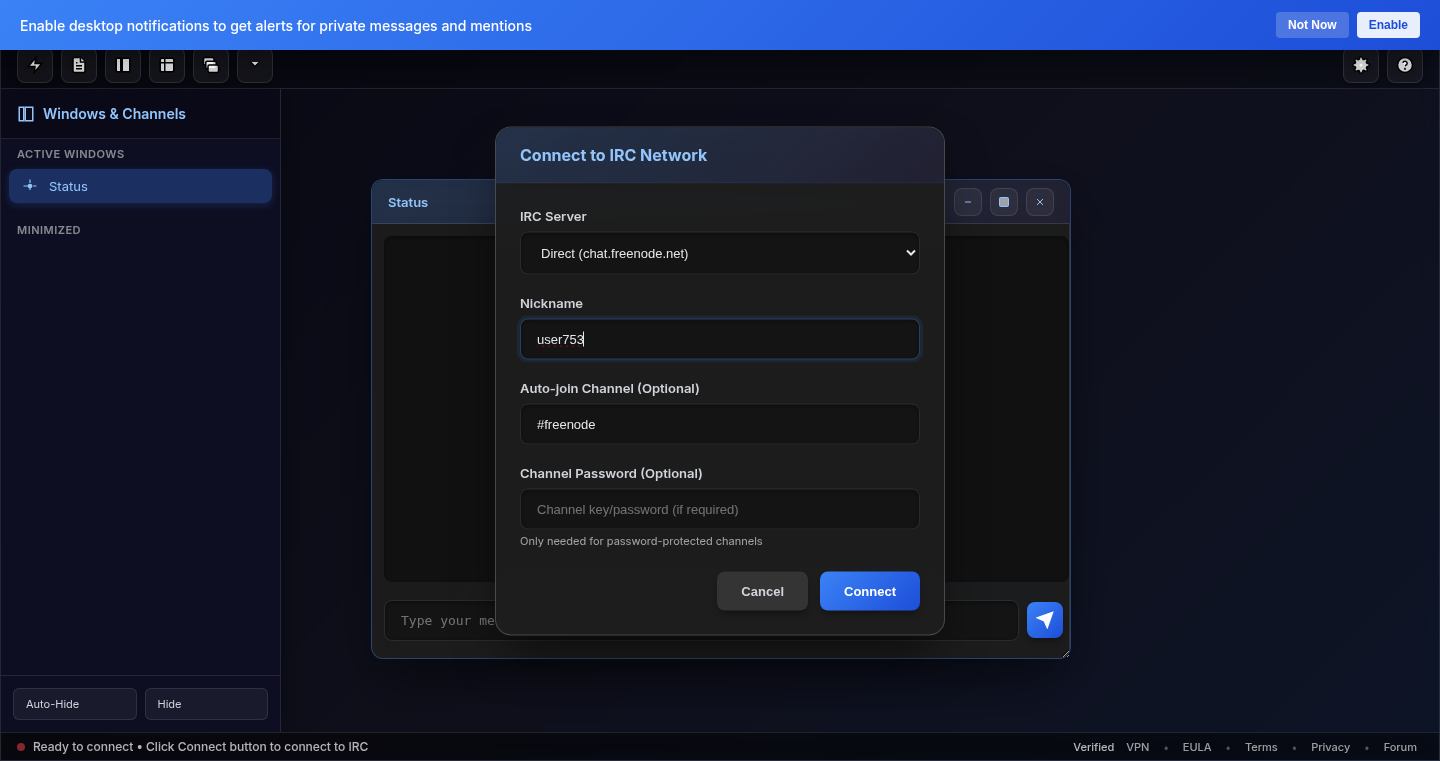

IRC.com: The Scriptable IRC Client – Your IRC, Your Way

Author

rasengan

Description

IRC.com is a web-based IRC client that you control entirely with JavaScript. Instead of being locked into a fixed interface, you can change how it looks, automate tasks, and add your own commands. The key idea is that the client's core functionality, including its handling of the IRC protocol, is exposed to JavaScript and can be modified. This addresses the limited customization of traditional IRC clients and gives advanced users and developers room to build their own tools on top of it.

Popularity

Points 3

Comments 2

What is this product?

IRC.com is an IRC (Internet Relay Chat) client with a twist: it is fully scriptable in JavaScript. The core functionality is what you would expect: it connects to IRC servers, handles messages, and manages channels. Because the client itself is built with JavaScript, however, you can change how it behaves: automate commands, modify the user interface, or add new features. Two components make this possible: a 'protocol shim' that translates between standard IRC commands and the JavaScript engine, and a 'window manager' that handles the UI elements.
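The source does not document how IRC.com's protocol shim works internally. As a rough sketch of the kind of translation such a layer performs, the code below parses raw IRC lines into structured messages and serializes outgoing commands, following the classic RFC 1459 message format. It is written in Python for illustration (IRC.com scripts themselves are JavaScript); `IrcMessage`, `parse_line`, and `format_line` are hypothetical names, not part of IRC.com's API, and IRCv3 message tags are not handled.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class IrcMessage:
    prefix: str | None            # e.g. "alice!a@host" or a server name
    command: str                  # e.g. "PRIVMSG", "JOIN", "001"
    params: list[str] = field(default_factory=list)

    @property
    def nick(self) -> str | None:
        """Nickname part of a 'nick!user@host' prefix, if there is a prefix."""
        return self.prefix.split("!", 1)[0] if self.prefix else None


def parse_line(line: str) -> IrcMessage:
    """Parse one raw line: [':' prefix ' '] command {param} [' :' trailing]."""
    line = line.rstrip("\r\n")
    prefix = None
    if line.startswith(":"):
        prefix, _, line = line[1:].partition(" ")
    trailing = None
    if " :" in line:
        line, _, trailing = line.partition(" :")
    parts = line.split()
    if not parts:
        raise ValueError("IRC line has no command")
    params = parts[1:]
    if trailing is not None:
        params.append(trailing)  # the trailing param may contain spaces
    return IrcMessage(prefix, parts[0].upper(), params)


def format_line(command: str, *params: str) -> str:
    """Serialize an outgoing command; a last param with spaces becomes trailing."""
    if params and (" " in params[-1] or params[-1].startswith(":") or not params[-1]):
        *head, last = params
        params = (*head, ":" + last)
    return " ".join((command, *params)) + "\r\n"
```

For example, `parse_line(":alice!a@host PRIVMSG #dev :hello there")` yields nick `alice`, command `PRIVMSG`, and params `["#dev", "hello there"]`, which is the shape a script-facing event would need.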

How to use it?

Open IRC.com in a web browser and write JavaScript to customize the client. For example, you could write a script that automatically joins specific channels when you connect, define custom commands that perform actions in a channel, or build an entirely different user interface. There is nothing to download or install: load the page, write your script, and the client executes it.
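The auto-join and custom-command examples above can be modeled as a small event registry. This is a hypothetical sketch of how such scripting hooks might be wired, written in Python for illustration; `ScriptHost`, `on`, `command`, `dispatch`, and `run_input` are invented names, not IRC.com's JavaScript API, and the channel names are placeholders. It relies on one real protocol detail: servers send numeric reply `001` (RPL_WELCOME) once registration completes, which is the usual moment to join channels.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Callable


class ScriptHost:
    """Hypothetical hook registry; not IRC.com's real API."""

    def __init__(self, send: Callable[[str], None]):
        self.send = send                    # writes one raw IRC line to the server
        self.handlers = defaultdict(list)   # IRC command or numeric -> callbacks
        self.commands = {}                  # custom "/name" -> callback

    def on(self, irc_command: str):
        """Decorator: run fn(params) whenever this IRC command arrives."""
        def register(fn):
            self.handlers[irc_command.upper()].append(fn)
            return fn
        return register

    def command(self, name: str):
        """Decorator: make '/name args' call fn(args)."""
        def register(fn):
            self.commands[name.lower()] = fn
            return fn
        return register

    def dispatch(self, irc_command: str, params: list[str]) -> None:
        """Feed one parsed incoming message to every matching handler."""
        for fn in self.handlers.get(irc_command.upper(), []):
            fn(params)

    def run_input(self, text: str) -> bool:
        """Run a custom command typed by the user; False if none matched."""
        if not text.startswith("/"):
            return False
        name, _, args = text[1:].partition(" ")
        fn = self.commands.get(name.lower())
        if fn is None:
            return False
        fn(args)
        return True


sent: list[str] = []
host = ScriptHost(sent.append)
AUTOJOIN = ["#dev", "#ops"]  # placeholder channel names


@host.on("001")  # RPL_WELCOME: registration is complete, safe to join
def autojoin(params):
    for channel in AUTOJOIN:
        host.send(f"JOIN {channel}")


@host.command("greet")
def greet(args):
    host.send(f"PRIVMSG #dev :Welcome, {args}!")


host.dispatch("001", ["me", "Welcome to the network"])
host.run_input("/greet alice")
print(sent)  # ['JOIN #dev', 'JOIN #ops', 'PRIVMSG #dev :Welcome, alice!']
```

Passing `send` in as a function keeps the scripts independent of the transport, so the same hooks can be exercised against a list, as here, or a live connection.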

Product Core Function

· Protocol Handling: The client takes care of the low-level details of talking to IRC servers (connecting, sending and receiving messages, and so on), so you can focus on your custom features instead of the IRC protocol. Typical applications include automating tasks such as setting channel modes or responding automatically to specific keywords.

· Scriptable UI: The user interface is designed to be modified with JavaScript. You can change the colors, add buttons, rearrange elements, or create a completely new layout, so the interface matches your preferences. Applications include designing a client tailored to a specific use case or adapting the UI for accessibility.

· Command Automation: Scripts can automate repetitive IRC commands such as joining channels, setting channel modes, or greeting new users, which makes everyday use less tedious. Applications include automating moderation in a large channel or building bots for specific tasks.

· Custom Command Creation: You can define your own commands that the client recognizes and executes, extending IRC with entirely new features and behaviors. Applications include custom bots, channel-management tools, and interactive services.

· Network Agnostic (planned): The client initially supports the IRC.com server, with plans to add other IRC networks. Once that support lands, the client should work with any IRC server, letting you follow channels and communities across different networks.

Product Usage Case

· Custom Bot Development: A developer writes a bot in JavaScript that moderates a channel, automatically removing spam and welcoming new users, and runs it inside the client. In the same way, you can build your own assistants for your chats.

· Personalized UI for Streamers: A streamer builds a custom interface for their IRC channel that displays recent donations and subscriber information alongside the chat, improving viewer engagement and community interaction.

· Automated Channel Management: A channel administrator writes scripts that automate moderation, such as banning spammers automatically or setting channel modes at specific times, which keeps large channels clean and orderly.

· Integrating with External APIs: A developer writes a script that pulls data from an external API (such as weather updates or stock prices) and posts it to the IRC channel, bringing real-time information into the chat.
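The moderation scenarios above (welcoming new users, removing spammers) come down to simple rules that map incoming events to outgoing IRC commands. Here is a minimal Python sketch under stated assumptions: the `Moderator` class and its thresholds are hypothetical, spam is approximated by a keyword blocklist plus a per-user flood limit, and the responses are raw `PRIVMSG`/`KICK` lines that a real script would hand to the client's send function. `KICK` only succeeds if the client holds channel-operator status.

```python
from __future__ import annotations

from collections import defaultdict, deque


class Moderator:
    """Hypothetical moderation rules: greet joiners, kick spam and floods."""

    def __init__(self, channel: str, banned_words: set[str],
                 max_msgs: int = 5, window: float = 10.0):
        self.channel = channel
        self.banned = {w.lower() for w in banned_words}
        self.max_msgs = max_msgs          # messages allowed per window
        self.window = window              # sliding window length, in seconds
        self.recent = defaultdict(deque)  # nick -> timestamps of recent messages

    def on_join(self, nick: str) -> list[str]:
        return [f"PRIVMSG {self.channel} :Welcome, {nick}!"]

    def on_message(self, nick: str, text: str, now: float) -> list[str]:
        """Return the raw IRC lines to send in response to one channel message."""
        stamps = self.recent[nick]
        stamps.append(now)
        while now - stamps[0] > self.window:  # drop timestamps outside the window
            stamps.popleft()
        words = {w.strip(".,!?") for w in text.lower().split()}
        if words & self.banned:
            return [f"KICK {self.channel} {nick} :banned keyword"]
        if len(stamps) > self.max_msgs:
            stamps.clear()
            return [f"KICK {self.channel} {nick} :flooding"]
        return []
```

Passing `now` explicitly instead of reading the clock keeps the rules a pure function of their inputs, so they can be tested without a live server or real delays.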

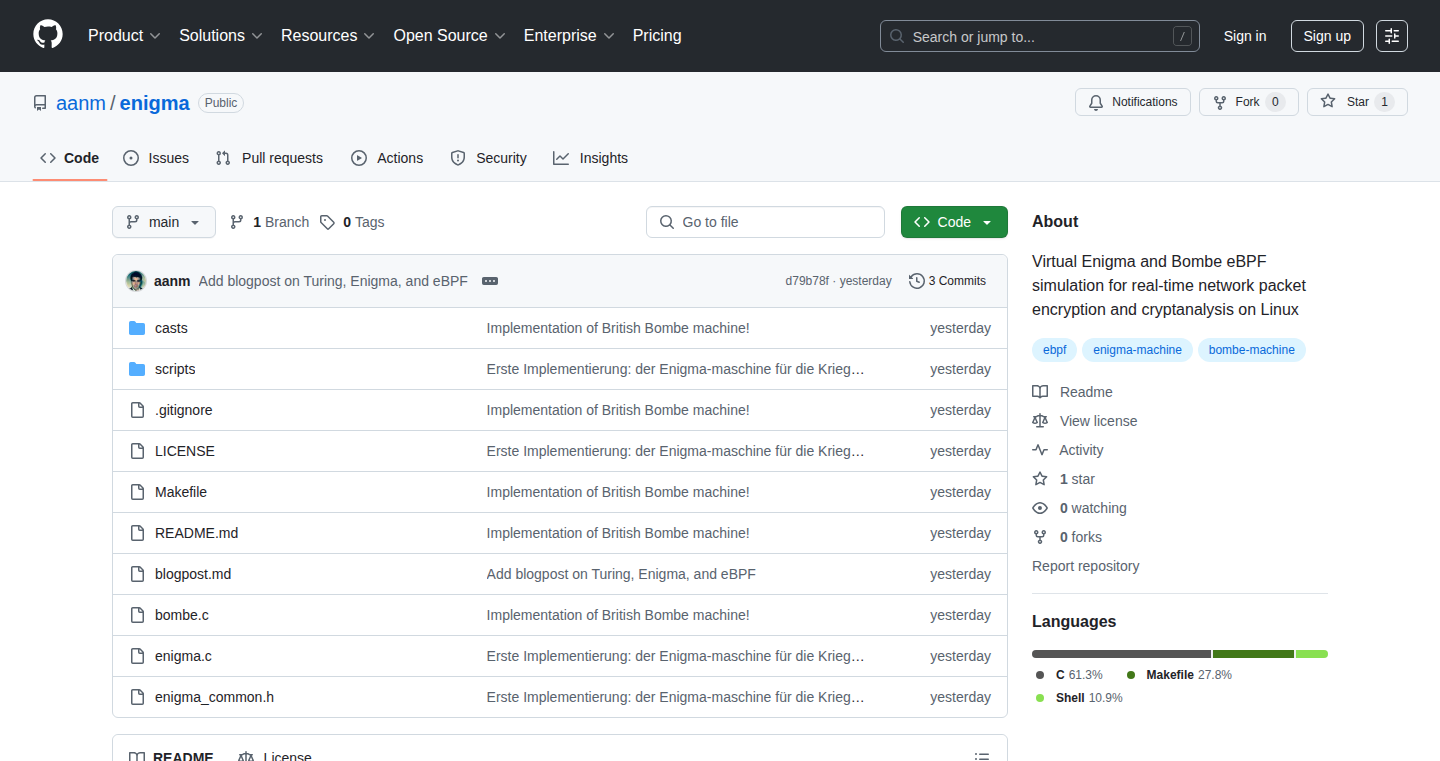

16

eBPF-Enigma: Real-time Network Encryption Inspired by Alan Turing

Author

aanm__

Description

This project recreates the Enigma encryption machine, together with the Bombe that Alan Turing designed to break it, in eBPF (extended Berkeley Packet Filter), a technology that lets developers run sandboxed programs inside the Linux kernel. The implementation passes network packets through virtual Enigma rotors and a reflector, mimicking the WWII-era machine. The novelty lies in running these historical cryptographic mechanisms inside the modern kernel, so that network traffic is encrypted and decrypted in real time. The project shows how historical cryptographic methods can be reimagined with modern systems programming.

Popularity

Points 5

Comments 0

What is this product?

This project brings the Enigma machine, the famous WWII encryption device, to life inside the Linux kernel using eBPF. Think of it as a miniature Enigma sitting in your computer's networking stack: as packets pass through, they are encrypted the way the original machine enciphered letters, and a matching setup on the other side decrypts them. Enigma was broken during the war and offers no real protection by modern standards, so the project is best understood as a demonstration of how a historical cipher can be rebuilt with today's kernel technology.

How to use it?

To use it, developers load the eBPF program into the kernel and configure virtual network interfaces. Any data sent through these interfaces is encrypted by eBPF-Enigma, and a second system running eBPF-Enigma with the same settings decrypts it in real time on the receiving end. This makes the project a fun, hands-on way to explore network security and kernel programming.

Product Core Function

· Real-time Encryption/Decryption: This is the core function. The eBPF code intercepts network packets and encrypts them using the configured Enigma rotors; on the receiving end, packets are decrypted in real time as they arrive.

· Configurable Rotors and Reflectors: Users can customize the Enigma's settings, including the type and order of the rotors and the reflector used. These settings act as the key, so both ends must use the same configuration; changing them lets you tailor the encryption to different setups.

· Virtual Network Interface Integration: Encrypted packets travel over virtual network interfaces, so any application that uses these interfaces gets Enigma encryption automatically, with little or no modification to the application itself.
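The rotor-and-reflector mechanism described above can be modeled in ordinary Python. This is a minimal sketch of the classic 26-letter Enigma I, assuming the historical wirings for rotors I to III and reflector B, with no plugboard and ring settings fixed at A. The source does not describe how the eBPF program maps packet bytes onto rotor positions, so the sketch works on letters only. `find_start` is a plain brute-force search over starting positions given a known plaintext fragment (a crib); it stands in for the Bombe's role but is not how the Bombe worked, since the real machine used crib-derived logical constraints to cope with the plugboard, which this sketch omits.

```python
from itertools import product
from string import ascii_uppercase as ABC

# Historical Enigma I wirings with each rotor's turnover (notch) letter.
ROTORS = {
    "I": ("EKMFLGDQVZNTOWYHXUSPAIBRCJ", "Q"),
    "II": ("AJDKSIRUXBLHWTMCQGZNPYFVOE", "E"),
    "III": ("BDFHJLCPRTXMVSWGZNYEOUQAIK", "V"),
}
REFLECTOR_B = "YRUHQSLDPXNGOKMIEBFZCWVJAT"


class Enigma:
    """Rotors (left, middle, right) plus reflector B; no plugboard, rings at A."""

    def __init__(self, order=("I", "II", "III"), start="AAA"):
        self.wirings = [ROTORS[name][0] for name in order]
        self.notches = [ROTORS[name][1] for name in order]
        self.pos = [ABC.index(c) for c in start.upper()]

    def _step(self):
        # A middle rotor on its notch steps itself and the left rotor ("double
        # step"); otherwise a right rotor on its notch steps the middle rotor.
        if ABC[self.pos[1]] == self.notches[1]:
            self.pos[0] = (self.pos[0] + 1) % 26
            self.pos[1] = (self.pos[1] + 1) % 26
        elif ABC[self.pos[2]] == self.notches[2]:
            self.pos[1] = (self.pos[1] + 1) % 26
        self.pos[2] = (self.pos[2] + 1) % 26  # the right rotor steps on every key

    def _rotor(self, c, i, inverse):
        """Pass contact c through rotor i, accounting for its current rotation."""
        shift = self.pos[i]
        entry = (c + shift) % 26
        wiring = self.wirings[i]
        out = wiring.index(ABC[entry]) if inverse else ABC.index(wiring[entry])
        return (out - shift) % 26

    def press(self, letter):
        self._step()                      # rotors move before the current flows
        c = ABC.index(letter)
        for i in (2, 1, 0):               # right to left through the rotors
            c = self._rotor(c, i, inverse=False)
        c = ABC.index(REFLECTOR_B[c])     # the reflector sends the signal back
        for i in (0, 1, 2):               # left to right through inverse wirings
            c = self._rotor(c, i, inverse=True)
        return ABC[c]

    def encrypt(self, text):
        """Encipher letters; other characters pass through and do not step rotors."""
        return "".join(self.press(ch) if ch in ABC else ch for ch in text.upper())


def find_start(ciphertext, crib, order=("I", "II", "III")):
    """Brute-force stand-in for the Bombe: all start positions under which the
    first len(crib) ciphertext characters decrypt to the crib (letters only)."""
    prefix = ciphertext[: len(crib)]
    return [
        "".join(p)
        for p in product(ABC, repeat=3)
        if Enigma(order, "".join(p)).encrypt(prefix) == crib.upper()
    ]
```

Because each keypress is its own inverse, a second machine with the same rotor order and start position recovers the plaintext from the ciphertext, which is why both ends of the link must share the configuration. Without a plugboard, the 26³ = 17,576 start positions for a fixed rotor order are few enough to search exhaustively.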

Product Usage Case

· Encrypted Traffic for IoT Devices: Small devices, such as the sensors in a smart home, often report back to a central hub. eBPF-Enigma could encrypt that traffic transparently in the kernel, which makes it a good way to prototype kernel-level encryption for such devices, although the Enigma cipher itself would not keep sensitive sensor data safe from a modern attacker.

· Encrypting Traffic in Cloud Environments: When data moves between virtual machines in the cloud, privacy matters. eBPF-Enigma could be used to experiment with encrypting VM-to-VM traffic inside the kernel without modifying the applications involved; production workloads would still need a modern cipher.

· Educational Tool for Cybersecurity: The project is a good teaching resource. Students and enthusiasts can learn about encryption, network security, and eBPF by experimenting with the system hands-on.

· Research in Modern Cryptography: Researchers can use the implementation to study the security implications of historical encryption techniques in a modern network setting and to explore how such techniques might be adapted to contemporary data-protection needs.

17

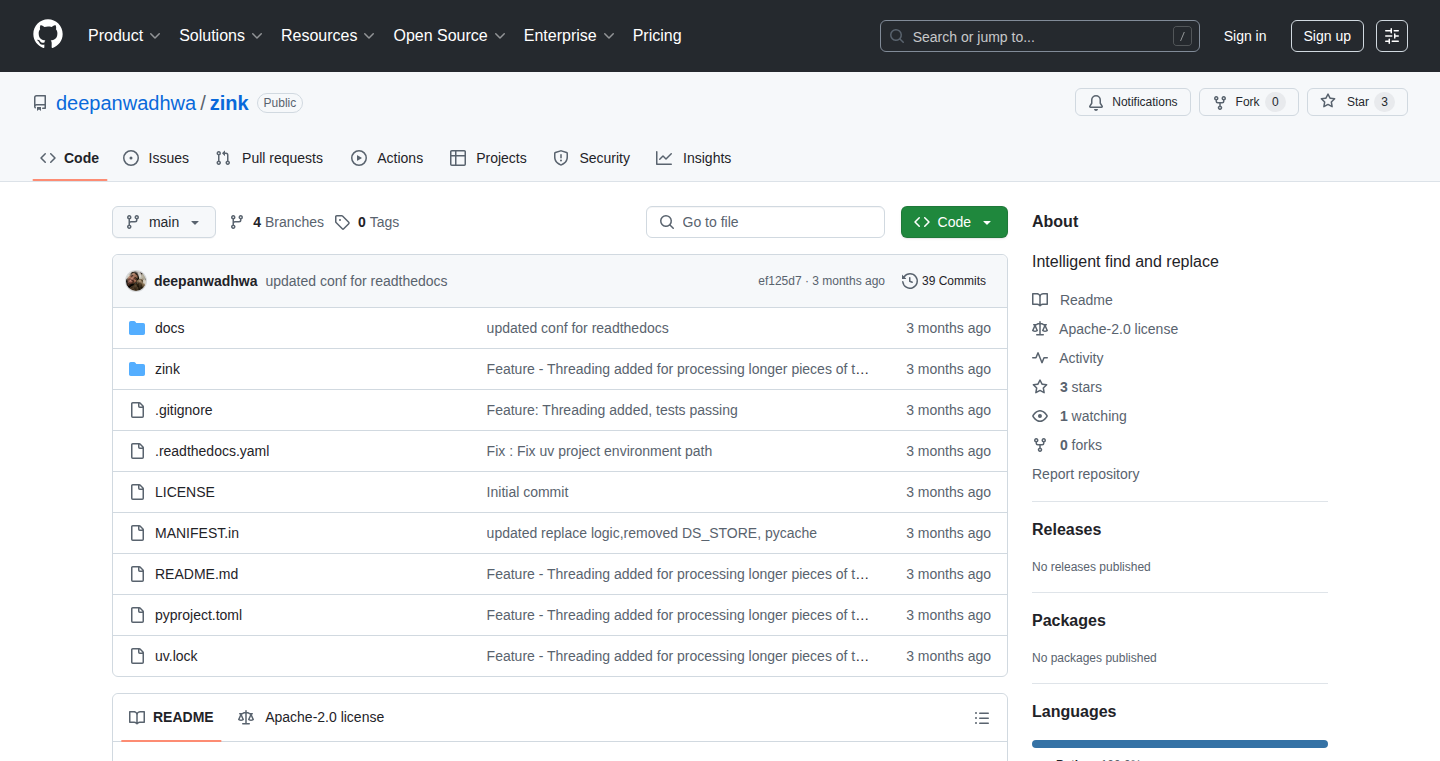

Zink: Self-Hosted Anonymization Pipeline

Author

dwa3592

Description

Zink is a project for anonymizing data. It provides a simple, self-hostable way to scrub sensitive information from text, images, and other data formats. Its main contribution is a straightforward pipeline that lets users control the anonymization process themselves instead of relying on third-party services, so their data stays private and under their control.

Popularity

Points 4

Comments 1

What is this product?

Zink runs your data through a series of processing steps. It first ingests the data (text, images, etc.), then applies a sequence of anonymization steps, which might include removing personally identifiable information (PII) such as names and addresses, blurring faces in images, or redacting entire sections of text, and finally outputs the anonymized result. The key point is that the whole process runs on your own servers, so your data never leaves your control.
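A text-only version of that ingest, anonymize, output flow can be sketched as a list of step functions applied in order. This is an illustration, not Zink's implementation: the source does not say how Zink detects PII, and the regular expressions below (email, phone, ISO date) are simple stand-ins. Detecting names or blurring faces would need NER or vision models.

```python
from __future__ import annotations

import re
from typing import Callable

Step = Callable[[str], str]

# Illustrative detectors only; production PII detection needs much more than regexes.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
DATE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(pattern: re.Pattern, label: str) -> Step:
    """Build a step that replaces every match with a [LABEL] placeholder."""
    return lambda text: pattern.sub(f"[{label}]", text)


def run_pipeline(text: str, steps: list[Step]) -> str:
    """Ingest text, apply each anonymization step in order, return the result."""
    for step in steps:
        text = step(text)
    return text


# DATE runs before PHONE because the loose phone pattern would also match ISO dates.
PIPELINE = [redact(EMAIL, "EMAIL"), redact(DATE, "DATE"), redact(PHONE, "PHONE")]

print(run_pipeline("Contact jane.doe@example.com or +1 555-123-4567 before 2024-05-01.", PIPELINE))
# Contact [EMAIL] or [PHONE] before [DATE].
```

Representing the pipeline as a plain list makes customization a matter of passing a different list of steps, and the comment on `PIPELINE` shows why step order is part of the configuration.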

How to use it?

Developers deploy Zink on their own servers (self-hosting), send it data, and receive the anonymized output, processed according to pre-configured settings or custom scripts they write. This is useful whenever data must be shared without compromising privacy, for example, sharing medical data for research or building machine learning training datasets without exposing individuals' identities. Zink can be integrated into existing data-processing workflows as a dedicated privacy step.

Product Core Function

· Data Ingestion: Zink accepts various data formats as input, which makes the anonymization process versatile. In healthcare, for example, patient data arrives in many formats, and a single pipeline can anonymize all of them.

· Anonymization Steps: The core functionality applies anonymization techniques such as removing names, dates, and location data. This helps with complying with privacy regulations like GDPR or HIPAA and lets developers protect sensitive information when publishing datasets.

· Customizable Pipelines: Users can define their own anonymization pipelines tailored to their specific needs. This flexibility matters because different industries and use cases call for different levels of anonymity.

· Self-Hosting: Because Zink is self-hostable, all processing happens on your own infrastructure. This keeps you in full control of your data and avoids handing it to third parties, an important capability for developers who are serious about protecting their users' privacy.

Product Usage Case

· Medical Research: A research team can use Zink to anonymize patient data before sharing it with collaborators, preserving patient privacy while still allowing analysis. This addresses the need to de-identify patient records for research.

· Journalism: Journalists can redact sensitive information from documents and images before publishing, protecting their sources and the subjects of their stories when reporting on sensitive topics.

· Machine Learning: Data scientists can use Zink to create anonymized datasets for training machine learning models, protecting individuals' data while still enabling model development.
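For the machine-learning case, a common refinement is consistent pseudonymization: each person is replaced by the same token everywhere, so records stay linkable for training while the real name disappears. The sketch below is not a documented Zink feature. It assumes the names to replace are already known (a real pipeline would detect them, e.g. with NER), `make_pseudonymizer` is a hypothetical helper, and tokens are derived with HMAC-SHA256 under a secret key. Pseudonymized data still counts as personal data under GDPR, since whoever holds the key can recompute the tokens for candidate names.

```python
from __future__ import annotations

import hashlib
import hmac
import re
from typing import Callable


def make_pseudonymizer(secret: bytes, names: list[str]) -> Callable[[str], str]:
    """Return a function that swaps each known name for a stable HMAC token.

    Matching is case-insensitive, and the same name always maps to the same
    token under the same key; without the key the tokens cannot be recomputed.
    """
    if not names:
        return lambda text: text
    # Longest names first so "Anna Lee" wins over "Anna" when both are listed.
    alternatives = [re.escape(n) for n in sorted(names, key=len, reverse=True)]
    pattern = re.compile(r"\b(?:" + "|".join(alternatives) + r")\b", re.IGNORECASE)

    def token(match: re.Match) -> str:
        key = match.group(0).lower().encode()
        return "PERSON_" + hmac.new(secret, key, hashlib.sha256).hexdigest()[:8]

    return lambda text: pattern.sub(token, text)


# Hypothetical key and names for illustration; load a real key from a secret store.
pseudo = make_pseudonymizer(b"replace-with-a-real-secret", ["Alice Smith", "Bob"])
print(pseudo("Alice Smith met Bob."))
print(pseudo("Bob emailed alice smith."))
```

Both printed lines contain the same two tokens in swapped order, which is the property a training pipeline needs: per-person patterns survive while the names do not.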

18

TNX API: Natural Language Database Interaction

Author

Marten42

Description